This documentation is for a release that is no longer maintained

See documentation for the latest supported version 3 or the latest supported version 4.

Container-Native Storage for OpenShift Container Platform 3.3

Deploying Container-Native Storage for OpenShift Container Platform

Edition 1

Abstract

Chapter 1. Introduction to Containerized Red Hat Gluster Storage

- Dedicated Storage Cluster - this solution addresses the use-case where the applications require both a persistent data store and a shared persistent file system for storing and sharing data across containerized applications. For information on creating an OpenShift Container Platform cluster with persistent storage using Red Hat Gluster Storage, see https://access.redhat.com/documentation/en/openshift-enterprise/3.2/installation-and-configuration/chapter-15-configuring-persistent-storage#install-config-persistent-storage-persistent-storage-glusterfs

- Chapter 2, Red Hat Gluster Storage Container Native with OpenShift Container Platform - this solution addresses the use-case where applications require both shared file storage and the flexibility of a converged infrastructure with compute and storage instances being scheduled and run from the same set of hardware.

Note

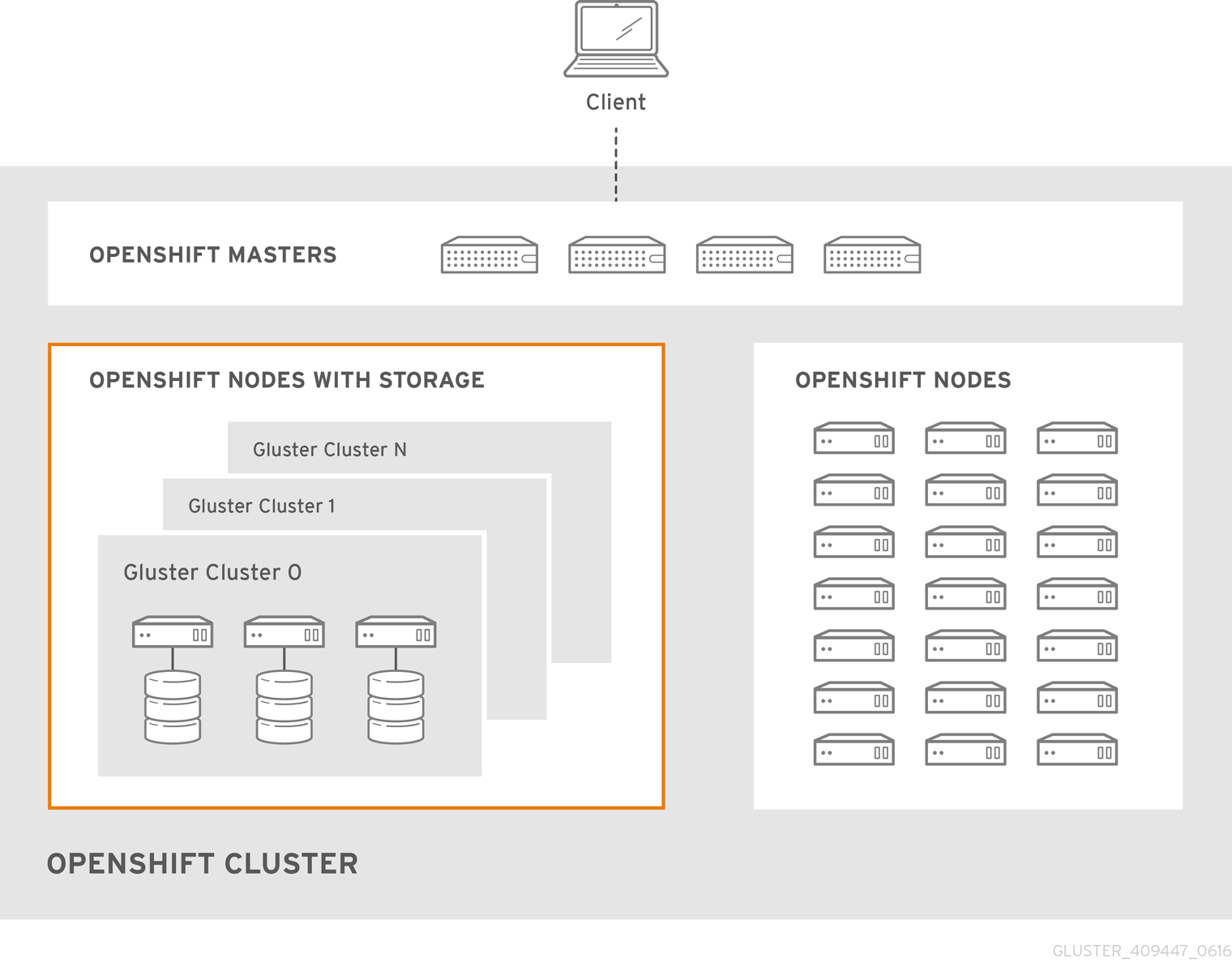

Chapter 2. Red Hat Gluster Storage Container Native with OpenShift Container Platform

- OpenShift provides the platform as a service (PaaS) infrastructure based on Kubernetes container management. Basic OpenShift architecture is built around multiple master systems where each system contains a set of nodes.

- Red Hat Gluster Storage provides the containerized distributed storage based on Red Hat Gluster Storage 3.1.3 container. Each Red Hat Gluster Storage volume is composed of a collection of bricks, where each brick is the combination of a node and an export directory.

- Heketi provides the Red Hat Gluster Storage volume life cycle management. It creates the Red Hat Gluster Storage volumes dynamically and supports multiple Red Hat Gluster Storage clusters.

- Create multiple persistent volumes (PV) and register these volumes with OpenShift.

- Developers then submit a persistent volume claim (PVC).

- A PV is identified and selected from a pool of available PVs and bound to the PVC.

- The OpenShift pod then uses the PV for persistent storage.

Figure 2.1. Architecture - Red Hat Gluster Storage Container Native with OpenShift Container Platform

Chapter 3. Support Requirements

3.1. Supported Versions

| Red Hat Gluster Storage | OpenShift Container Platform |
| --- | --- |
| 3.1.3 | 3.2 and later |

3.2. Environment Requirements

3.2.1. Installing Red Hat Gluster Storage Container Native with OpenShift Container Platform on Red Hat Enterprise Linux 7 based OpenShift Container Platform Cluster

3.2.1.1. Setting up the OpenShift Master as the Client

Subscribe to the Red Hat Gluster Storage repository. This enables you to install the heketi client packages, which are required to set up the client for Red Hat Gluster Storage Container Native with OpenShift Container Platform.

# subscription-manager repos --enable=rh-gluster-3-for-rhel-7-server-rpms

# yum install heketi-client heketi-templates

3.2.1.2. Setting up the Red Hat Enterprise Linux 7 Client for Installing Red Hat Gluster Storage Container Native with OpenShift Container Platform

Subscribe to the Red Hat Gluster Storage repository. This enables you to install the heketi client packages, which are required to set up the client for Red Hat Gluster Storage Container Native with OpenShift Container Platform.

# subscription-manager repos --enable=rh-gluster-3-for-rhel-7-server-rpms

# yum install heketi-client heketi-templates

If you are using OpenShift Container Platform 3.3, subscribe to the 3.3 repository to enable you to install the OpenShift client packages:

# subscription-manager repos --enable=rhel-7-server-ose-3.3-rpms --enable=rhel-7-server-rpms

# yum install atomic-openshift-clients

# yum install atomic-openshift

If you are using OpenShift Enterprise 3.2, subscribe to the 3.2 repository to enable you to install the OpenShift client packages:

# subscription-manager repos --enable=rhel-7-server-ose-3.2-rpms --enable=rhel-7-server-rpms

# yum install atomic-openshift-clients

# yum install atomic-openshift

3.2.2. Installing Red Hat Gluster Storage Container Native with OpenShift Container Platform on Red Hat Enterprise Linux Atomic Host OpenShift Container Platform Cluster

3.2.3. Red Hat OpenShift Container Platform Requirements

- The OpenShift cluster must be up and running.

- On each of the OpenShift nodes that will host the Red Hat Gluster Storage container, add the following rules to /etc/sysconfig/iptables in order to open the required ports:

-A OS_FIREWALL_ALLOW -p tcp -m state --state NEW -m tcp --dport 24007 -j ACCEPT
-A OS_FIREWALL_ALLOW -p tcp -m state --state NEW -m tcp --dport 24008 -j ACCEPT
-A OS_FIREWALL_ALLOW -p tcp -m state --state NEW -m tcp --dport 2222 -j ACCEPT
-A OS_FIREWALL_ALLOW -p tcp -m state --state NEW -m multiport --dports 49152:49664 -j ACCEPT

- Execute the following command to reload the iptables:

# systemctl reload iptables

- A cluster-admin user must be created. For more information, see Appendix A, Cluster Administrator Setup

- At least three OpenShift nodes must be created as the storage nodes with at least one raw device each.

- All OpenShift nodes on Red Hat Enterprise Linux systems must have glusterfs-client RPM installed.

- Ensure the nodes have the valid ports opened for communicating with Red Hat Gluster Storage. See the iptables configuration task in Step 1 of Section 4.1, “Preparing the Red Hat OpenShift Container Platform Cluster” for more information.
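The firewall rules listed in this section follow one pattern per port, so they can be generated rather than typed by hand. The following is a minimal sketch, assuming you want to review the lines before adding them to /etc/sysconfig/iptables. The function name gluster_firewall_rules is hypothetical, and the script only prints text; it does not modify the firewall.

```shell
#!/bin/sh
# Print the iptables rules from this section so they can be reviewed
# before being added to /etc/sysconfig/iptables.
# gluster_firewall_rules is a hypothetical helper, not part of any package.
gluster_firewall_rules() {
    # Single-port rules: 24007, 24008, and 2222.
    for port in 24007 24008 2222; do
        printf '%s\n' "-A OS_FIREWALL_ALLOW -p tcp -m state --state NEW -m tcp --dport ${port} -j ACCEPT"
    done
    # Port range 49152:49664 uses the multiport match.
    printf '%s\n' "-A OS_FIREWALL_ALLOW -p tcp -m state --state NEW -m multiport --dports 49152:49664 -j ACCEPT"
}

gluster_firewall_rules
```

After adding the printed lines to the file, reload iptables as described in the requirement above.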

3.2.4. Red Hat Gluster Storage Requirements

- Installation of Heketi packages must have valid subscriptions to Red Hat Gluster Storage Server repositories.

- Red Hat Gluster Storage installations must adhere to the requirements outlined in the Red Hat Gluster Storage Installation Guide.

- The versions of Red Hat Enterprise OpenShift and Red Hat Gluster Storage integrated must be compatible, according to the information in Section 3.1, “Supported Versions”.

- A fully-qualified domain name must be set for Red Hat Gluster Storage server node. Ensure that the correct DNS records exist, and that the fully-qualified domain name is resolvable via both forward and reverse DNS lookup.

3.2.5. Planning Guidelines

- Ensure that the Trusted Storage Pool is not scaled beyond 100 volumes per 3 nodes per 32G of RAM.

- A trusted storage pool consists of a minimum of 3 nodes/peers.

- Distributed-Three-way replication is the only supported volume type.

- Each physical node that needs to host a Red Hat Gluster Storage peer needs a minimum of 32 GB RAM and is expected to have the same disk type.

- By default, the heketidb utilises a 32 GB distributed replica volume.

- Red Hat Gluster Storage Container Native with OpenShift Container Platform supports up to 14 snapshots per volume.

Chapter 4. Setting up the Environment

4.1. Preparing the Red Hat OpenShift Container Platform Cluster

- On the client, execute the following command to log in as the cluster admin user:

# oc login

- Execute the following command to create a project, which will contain all the containerized Red Hat Gluster Storage services:

# oc new-project <project name>

For example:

# oc new-project storage-project
Now using project "storage-project" on server "https://master.example.com:8443"

- Execute the following steps to set up the router:

Note

If a router already exists, proceed to Step 5.

- Execute the following command on the client that is used to deploy Red Hat Gluster Storage Container Native with OpenShift Container Platform:

# oadm policy add-scc-to-user privileged -z router
# oadm policy add-scc-to-user privileged -z default

- Execute the following command to deploy the router:

# oadm router storage-project-router --replicas=1

- Edit the subdomain name in the /etc/origin/master/master-config.yaml file. For example:

subdomain: "cloudapps.mystorage.com"

- Restart the master OpenShift services by executing the following command:

# systemctl restart atomic-openshift-master

Note

If the router setup fails, use the port forward method as described in Appendix B, Client Configuration using Port Forwarding.

For more information regarding router setup, see https://access.redhat.com/documentation/en/openshift-enterprise/3.2/single/installation-and-configuration/#install-config-install-deploy-router

- After the router is running, the clients have to be set up to access the services in the OpenShift cluster. Execute the following steps to set up the DNS.

- On the client, edit the /etc/dnsmasq.conf file and add the following line to the file:

address=/.cloudapps.mystorage.com/<Router_IP_Address>

where Router_IP_Address is the IP address of one of the nodes running the router.

Note

Ensure you do not edit the /etc/dnsmasq.conf file until the router has started.

- Restart the dnsmasq service by executing the following command:

# systemctl restart dnsmasq

- Edit /etc/resolv.conf and add the following line:

nameserver 127.0.0.1

For more information regarding setting up the DNS, see https://access.redhat.com/documentation/en/openshift-enterprise/version-3.2/installation-and-configuration/#prereq-dns.

- After the project is created, execute the following command on the master node to deploy the privileged containers:

# oadm policy add-scc-to-user privileged -z default

Note

Red Hat Gluster Storage container can only run in privileged mode.
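The dnsmasq entry used in the DNS setup above combines the router subdomain with the IP address of a node running the router. As a small sketch of that pattern, the hypothetical helper below prints the line for a given subdomain and address. The IP address 192.0.2.10 is a placeholder from the documentation address range, not a value from this guide.

```shell
#!/bin/sh
# Print the dnsmasq entry that resolves every host under the router
# subdomain to the node running the router.
# dnsmasq_address_line is a hypothetical helper; both arguments below
# are example values.
dnsmasq_address_line() {
    subdomain=$1
    router_ip=$2
    printf 'address=/.%s/%s\n' "$subdomain" "$router_ip"
}

dnsmasq_address_line cloudapps.mystorage.com 192.0.2.10
```

The printed line can then be added to /etc/dnsmasq.conf once the router has started.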

4.2. Installing the Templates

- Ensure you are in the newly created containerized Red Hat Gluster Storage project:

# oc project
Using project "storage-project" on server "https://master.example.com:8443".

- Execute the following command to install the templates:

# oc create -f /usr/share/heketi/templates

Example output:

template "deploy-heketi" created
template "glusterfs" created
template "heketi" created

- Execute the following command to verify that the templates are installed:

# oc get templates

4.3. Deploying the Containers

- List out the hostnames of the nodes on which the Red Hat Gluster Storage container has to be deployed:

# oc get nodes

- Deploy a Red Hat Gluster Storage container on a node by executing the following command:

# oc process glusterfs -v GLUSTERFS_NODE=<node_hostname> | oc create -f -

For example:

# oc process glusterfs -v GLUSTERFS_NODE=node1.example.com | oc create -f -
deploymentconfig "glusterfs-dc-node1.example.com" created

Repeat the step of deploying the Red Hat Gluster Storage container on each node.

Note

This command deploys a single Red Hat Gluster Storage container on the node. This does not initialize the hardware or create trusted storage pools. That aspect will be taken care of by Heketi, which is explained in the further steps.

- Execute the following command to deploy heketi:

- Execute the following command to verify that the containers are running:

# oc get pods
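Because the glusterfs deployment step has to be repeated for each storage node, it can help to list the commands first. The sketch below is a dry run: it only prints the command for each node and does not call oc. The function name print_deploy_commands is hypothetical, and the node names are the example hostnames used in this guide.

```shell
#!/bin/sh
# Dry run: print the glusterfs deployment command for each storage node
# instead of executing it. print_deploy_commands is a hypothetical helper.
print_deploy_commands() {
    for node in "$@"; do
        printf 'oc process glusterfs -v GLUSTERFS_NODE=%s | oc create -f -\n' "$node"
    done
}

# Example node names; replace them with the hostnames from "oc get nodes".
print_deploy_commands node1.example.com node2.example.com node3.example.com
```

Each printed line can be reviewed and then run on the client, one node at a time.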

4.4. Setting up the Heketi Server

/usr/share/heketi/ folder.

Note

node.hostnames.manage section and node.hostnames.storage section with the IP address. For simplicity, the file only sets up 4 nodes with 8 drives each.

- Execute the following command to check if the bootstrap container is running:

# curl http://deploy-heketi-<project_name>.<sub-domain_name>/hello

For example:

# curl http://deploy-heketi-storage-project.cloudapps.mystorage.com/hello
Hello from Heketi

- Execute the following command to load the topology file:

# export HEKETI_CLI_SERVER=http://deploy-heketi-<project_name>.<sub_domain_name>

For example:

# export HEKETI_CLI_SERVER=http://deploy-heketi-storage-project.cloudapps.mystorage.com

# heketi-cli topology load --json=topology.json

- Execute the following command to verify that the topology is loaded:

# heketi-cli topology info

- Execute the following command to create the Heketi storage volume which will store the database on a reliable Red Hat Gluster Storage volume:

# heketi-cli setup-openshift-heketi-storage

For example:

# heketi-cli setup-openshift-heketi-storage
Saving heketi-storage.json

Note

If the Trusted Storage Pool where the heketidbstorage volume is created is down, then the Heketi service will not work. Hence, you must ensure that the Trusted Storage Pool is up before running heketi-cli.

- Execute the following command to create a job which will copy the database from the deploy-heketi bootstrap container to the volume:

# oc create -f heketi-storage.json

- Execute the following command to verify that the job has finished successfully:

# oc get jobs

For example:

# oc get jobs
NAME                      DESIRED   SUCCESSFUL   AGE
heketi-storage-copy-job   1         1            2m

- Execute the following command to deploy the Heketi service which will be used to create persistent volumes for OpenShift:

- Execute the following command to let the client communicate with the container:

# export HEKETI_CLI_SERVER=http://heketi-<project_name>.<sub_domain_name>

For example:

# export HEKETI_CLI_SERVER=http://heketi-storage-project.cloudapps.mystorage.com

# heketi-cli topology info

- Execute the following command to remove all the bootstrap containers which were used earlier to deploy Heketi:

# oc delete all,job,template,secret --selector="deploy-heketi"
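The HEKETI_CLI_SERVER values in this section share one shape: a route prefix (deploy-heketi for the bootstrap container, heketi for the final service), the project name, and the router subdomain. The following sketch builds the URL from those three parts. The function name heketi_route_url is hypothetical, and the project and subdomain are the example values used in this guide.

```shell
#!/bin/sh
# Build the route URL for a Heketi service from its route prefix, the
# project name, and the router subdomain.
# heketi_route_url is a hypothetical helper, not part of heketi-cli.
heketi_route_url() {
    prefix=$1      # "deploy-heketi" (bootstrap) or "heketi" (final service)
    project=$2     # OpenShift project name
    subdomain=$3   # router subdomain from master-config.yaml
    printf 'http://%s-%s.%s\n' "$prefix" "$project" "$subdomain"
}

# Example values from this guide.
HEKETI_CLI_SERVER=$(heketi_route_url deploy-heketi storage-project cloudapps.mystorage.com)
export HEKETI_CLI_SERVER
echo "$HEKETI_CLI_SERVER"
```

Switching the first argument from deploy-heketi to heketi gives the address used after the bootstrap containers are replaced by the Heketi service.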

Chapter 5. Creating Persistent Volumes

Labels are an OpenShift Container Platform feature that supports user-defined tags (key-value pairs) as part of an object’s specification. Their primary purpose is to enable the arbitrary grouping of objects by defining identical labels among them. These labels can then be targeted by selectors to match all objects with specified label values. This functionality is what enables a PVC to bind to a specific PV.

- To specify the endpoints you want to create, update the sample-gluster-endpoint.json file with the endpoints to be created based on the environment. Each Red Hat Gluster Storage trusted storage pool requires its own endpoint with the IP of the nodes in the trusted storage pool.

name: is the name of the endpoint

ip: is the IP address of the Red Hat Gluster Storage nodes.

- Execute the following command to create the endpoints:

# oc create -f <name_of_endpoint_file>

For example:

# oc create -f sample-gluster-endpoint.json
endpoints "glusterfs-cluster" created

- To verify that the endpoints are created, execute the following command:

# oc get endpoints

- Execute the following command to create a gluster service:

# oc create -f <name_of_service_file>

For example:

# oc create -f sample-gluster-service.json
service "glusterfs-cluster" created

- To verify that the service is created, execute the following command:

# oc get service

Note

The endpoints and the services must be created for each project that requires a persistent storage.

- Create a 100G persistent volume with Replica 3 from GlusterFS and output a persistent volume specification describing this volume to the file pv001.json:

$ heketi-cli volume create --size=100 --persistent-volume-file=pv001.json

Important

You must manually add the Labels information to the .json file.

The fields in the persistent volume file are as follows:

name: The name of the volume.

storage: The amount of storage allocated to this volume.

glusterfs: The volume type being used, in this case the glusterfs plug-in.

endpoints: The endpoints name that defines the trusted storage pool created.

path: The Red Hat Gluster Storage volume that will be accessed from the Trusted Storage Pool.

accessModes: accessModes are used as labels to match a PV and a PVC. They currently do not define any form of access control.

labels: Use labels to identify common attributes or characteristics shared among volumes. In this case, the gluster volume has a custom attribute (key) named storage-tier with a value of gold assigned. A claim will be able to select a PV with storage-tier=gold to match this PV.

Note

- heketi-cli also accepts the endpoint name on the command line (--persistent-volume-endpoint="TYPE ENDPOINT HERE"). This can then be piped to oc create -f - to create the persistent volume immediately.

- Creation of more than 100 volumes per 3 nodes per cluster is not supported.

- If there are multiple Red Hat Gluster Storage trusted storage pools in your environment, you can check on which trusted storage pool the volume is created using the heketi-cli volume list command. This command lists the cluster name. You can then update the endpoint information in the pv001.json file accordingly.

- When creating a Heketi volume with only two nodes with the replica count set to the default value of three (replica 3), an error "No space" is displayed by Heketi as there is no space to create a replica set of three disks on three different nodes.

- If all the heketi-cli write operations (for example, volume create, cluster create) fail and the read operations (for example, topology info, volume info) are successful, then the gluster volume may be operating in read-only mode.

- Edit the pv001.json file and enter the name of the endpoint in the endpoints section.

# oc create -f pv001.json

For example:

# oc create -f pv001.json
persistentvolume "glusterfs-4fc22ff9" created

- To verify that the persistent volume is created, execute the following command:

# oc get pv

For example:

# oc get pv
NAME                 CAPACITY   ACCESSMODES   STATUS      CLAIM     REASON    AGE
glusterfs-4fc22ff9   100Gi      RWX           Available                       4s

- Bind the persistent volume to the persistent volume claim by executing the following command:

# oc create -f pvc.json

For example:

# oc create -f pvc.json
persistentvolumeclaim "glusterfs-claim" created

- To verify that the persistent volume and the persistent volume claim are bound, execute the following commands:

# oc get pv
# oc get pvc

For example:

# oc get pv
NAME                 CAPACITY   ACCESSMODES   STATUS    CLAIM                             REASON    AGE
glusterfs-4fc22ff9   100Gi      RWX           Bound     storage-project/glusterfs-claim             1m

# oc get pvc
NAME              STATUS    VOLUME               CAPACITY   ACCESSMODES   AGE
glusterfs-claim   Bound     glusterfs-4fc22ff9   100Gi      RWX           11s

- The claim can now be used in the application. For example:

# oc create -f app.yml
pod "busybox" created

For more information about using the glusterfs claim in the application, see https://access.redhat.com/documentation/en/openshift-enterprise/version-3.2/installation-and-configuration/#complete-example-using-gusterfs-creating-the-pod.

- To verify that the pod is created, execute the following command:

# oc get pods

- To verify that the persistent volume is mounted inside the container, execute the following command:

# oc rsh busybox
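To make the label matching described in this chapter concrete, the sketch below writes an illustrative persistent volume and a matching claim to a scratch directory. It is not the file that heketi-cli generates: the path value vol_example and the Retain reclaim policy are assumptions, and the remaining values reuse the example names from this chapter. The claim selects the volume through the storage-tier=gold label.

```shell
#!/bin/sh
# Write an illustrative persistent volume and a matching claim to a
# scratch directory. All values are examples; heketi-cli generates the
# real persistent volume file, to which the labels are added by hand.
workdir=$(mktemp -d)

cat > "$workdir/pv001.json" <<'EOF'
{
  "kind": "PersistentVolume",
  "apiVersion": "v1",
  "metadata": {
    "name": "glusterfs-4fc22ff9",
    "labels": { "storage-tier": "gold" }
  },
  "spec": {
    "capacity": { "storage": "100Gi" },
    "glusterfs": {
      "endpoints": "glusterfs-cluster",
      "path": "vol_example"
    },
    "accessModes": [ "ReadWriteMany" ],
    "persistentVolumeReclaimPolicy": "Retain"
  }
}
EOF

# The claim selects any volume labeled storage-tier=gold.
cat > "$workdir/pvc.json" <<'EOF'
{
  "kind": "PersistentVolumeClaim",
  "apiVersion": "v1",
  "metadata": { "name": "glusterfs-claim" },
  "spec": {
    "accessModes": [ "ReadWriteMany" ],
    "resources": { "requests": { "storage": "100Gi" } },
    "selector": { "matchLabels": { "storage-tier": "gold" } }
  }
}
EOF

echo "wrote $workdir/pv001.json and $workdir/pvc.json"
```

The two files correspond to the pv001.json and pvc.json arguments passed to oc create -f in the steps above.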

Note

Chapter 6. Operations on a Red Hat Gluster Storage Pod in an OpenShift Environment

- To list the pods, execute the following command:

# oc get pods

For example, the following are the gluster pods:

glusterfs-dc-node1.example.com
glusterfs-dc-node2.example.com
glusterfs-dc-node3.example.com

Note

The topology.json file will provide the details of the nodes in a given Trusted Storage Pool (TSP). In the above example all the 3 Red Hat Gluster Storage nodes are from the same TSP.

- To enter the gluster pod shell, execute the following command:

# oc rsh <gluster_pod_name>

For example:

# oc rsh glusterfs-dc-node1.example.com
sh-4.2#

- To get the peer status, execute the following command:

# gluster peer status

- To list the gluster volumes on the Trusted Storage Pool, execute the following command:

# gluster volume info

- To get the volume status, execute the following command:

# gluster volume status <volname>

- To take the snapshot of the gluster volume, execute the following command:

# gluster snapshot create <snapname> <volname>

For example:

# gluster snapshot create snap1 vol_9e86c0493f6b1be648c9deee1dc226a6
snapshot create: success: Snap snap1_GMT-2016.07.29-13.05.46 created successfully

- To list the snapshots, execute the following command:

# gluster snapshot list

- To delete a snapshot, execute the following command:

# gluster snap delete <snapname>

For example:

# gluster snap delete snap1_GMT-2016.07.29-13.05.46
Deleting snap will erase all the information about the snap. Do you still want to continue? (y/n) y
snapshot delete: snap1_GMT-2016.07.29-13.05.46: snap removed successfully

For more information about managing snapshots, see https://access.redhat.com/documentation/en-US/Red_Hat_Storage/3.1/html-single/Administration_Guide/index.html#chap-Managing_Snapshots.
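Section 3.2.5 states that up to 14 snapshots per volume are supported, so it can be useful to check the current count before creating another one. The sketch below is one way to do that, assuming the snapshot names arrive on standard input one per line. The function name can_create_snapshot is hypothetical, and the example feeds two names inline instead of calling gluster.

```shell
#!/bin/sh
# Succeeds when the number of snapshot names read from standard input
# (assumed to be one per line) is below the documented limit of 14.
# can_create_snapshot is a hypothetical helper.
MAX_SNAPSHOTS=14

can_create_snapshot() {
    # Count non-empty lines; "|| true" keeps the exit status clean when
    # the input is empty and grep finds nothing.
    count=$(grep -c . || true)
    [ "$count" -lt "$MAX_SNAPSHOTS" ]
}

# Example with two snapshot names supplied inline.
if printf '%s\n' snap1 snap2 | can_create_snapshot; then
    echo "below the limit"
else
    echo "limit reached"
fi
```

Inside a gluster pod, the names would come from the snapshot list command for the volume in question; filter out any informational lines before counting.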

Chapter 7. Managing Clusters

7.1. Increasing Storage Capacity

- Adding devices

- Increasing cluster size

- Adding an entirely new cluster.

7.1.1. Adding New Devices

7.1.1.1. Using Heketi CLI

/dev/sde to node d6f2c22f2757bf67b1486d868dcb7794:

# heketi-cli device add --name=/dev/sde --node=d6f2c22f2757bf67b1486d868dcb7794

OUTPUT:

Device added successfully

7.1.1.2. Updating Topology File

/dev/sde drive added to the node:

7.1.2. Increasing Cluster Size

7.1.2.1. Using Heketi CLI

zone 1 to 597fceb5d6c876b899e48f599b988f54 cluster using the CLI:

/dev/sdb and /dev/sdc devices for 095d5f26b56dc6c64564a9bc17338cbf node:
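Adding several devices to one node repeats the heketi-cli device add syntax shown in Section 7.1.1.1 once per device. The sketch below is a dry run that prints those commands for the node and devices named above without contacting Heketi. The function name print_device_add_commands is hypothetical.

```shell
#!/bin/sh
# Dry run: print one "heketi-cli device add" command per device for a
# node, using the syntax from Section 7.1.1.1.
# print_device_add_commands is a hypothetical helper.
print_device_add_commands() {
    node_id=$1
    shift
    for device in "$@"; do
        printf 'heketi-cli device add --name=%s --node=%s\n' "$device" "$node_id"
    done
}

print_device_add_commands 095d5f26b56dc6c64564a9bc17338cbf /dev/sdb /dev/sdc
```

The printed commands can be reviewed and then run on the client once HEKETI_CLI_SERVER points at the Heketi service.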

7.1.2.2. Updating Topology File

after the existing ones so that the Heketi CLI identifies on which cluster this new node should be part of.

7.1.3. Adding a New Cluster

7.1.3.1. Updating Topology file

7.2. Reducing Storage Capacity

7.2.1. Deleting Devices

/dev/sde drive is deleted from the node:

7.2.2. Deleting Nodes

7.2.3. Deleting Clusters

Chapter 8. Uninstalling Containerized Red Hat Gluster Storage

- Cleanup Red Hat Gluster Storage using Heketi

- Remove any containers using the persistent volume claim from Red Hat Gluster Storage.

- Remove the appropriate persistent volume claim and persistent volume:

# oc delete pvc <pvc_name>
# oc delete pv <pv_name>

- Remove all OpenShift objects

- Delete all project specific pods, services, routes, and deployment configurations:

Wait until all the pods have been terminated.

- Check and delete the gluster service and endpoints from the projects that required a persistent storage:

# oc get endpoints,service
# oc delete endpoints <glusterfs-endpoint-name>
# oc delete service <glusterfs-service-name>

- Cleanup the persistent directories

- To clean up the persistent directories, execute the following command on each node as a root user:

# rm -rf /var/lib/heketi \
   /etc/glusterfs \
   /var/lib/glusterd \
   /var/log/glusterfs

- Force cleanup the disks

- Execute the following command to clean up the disks:

# wipefs -a -f /dev/<disk-id>

Appendix A. Cluster Administrator Setup

Set up authentication using the AllowAll authentication method.

Edit /etc/origin/master/master-config.yaml on the OpenShift master and change the value of DenyAllPasswordIdentityProvider to AllowAllPasswordIdentityProvider. Then restart the OpenShift master.

- Now that the authentication model has been set up, log in to the OpenShift master (for example, https://1.1.1.1:8443) as a user, for example admin/admin:

# oc login https://1.1.1.1:8443 --username=admin --password=admin

- Grant the admin user account the cluster-admin role:

# oadm policy add-cluster-role-to-user cluster-admin admin
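The identity provider change described at the start of this appendix can also be scripted. The following is a minimal sketch, assuming the provider kind appears verbatim in the configuration file; switch_to_allow_all is a hypothetical helper name, and the .bak backup file is a convention chosen here, not something the product creates.

```shell
# switch_to_allow_all is a hypothetical helper, not an OpenShift command.
# It replaces the DenyAll identity provider kind with AllowAll in the given
# master configuration file and keeps the original as <file>.bak.
switch_to_allow_all() {
    config_file="$1"
    if [ ! -f "$config_file" ]; then
        echo "no such file: $config_file" >&2
        return 1
    fi
    # Keep a backup (with the same mode and timestamps) before editing.
    cp -p "$config_file" "$config_file.bak" || return 1
    # Read from the backup and overwrite the original in place, which
    # preserves the ownership and permissions of the original file.
    sed 's/DenyAllPasswordIdentityProvider/AllowAllPasswordIdentityProvider/' \
        "$config_file.bak" > "$config_file"
}

# Usage (as root on the OpenShift master), followed by a master restart:
#   switch_to_allow_all /etc/origin/master/master-config.yaml
```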

Appendix B. Client Configuration using Port Forwarding

- Obtain the Heketi service pod name by running the following command:

# oc get pods

- To forward the port on your local system to the pod, execute the following command on another terminal of your local system:

# oc port-forward <heketi pod name> 8080:8080

This will forward the local port 8080 to the pod port 8080.

- On the original terminal, execute the following command to test the communication with the server:

# curl http://localhost:8080/hello

- Set up the Heketi server environment variable by running the following command:

# export HEKETI_CLI_SERVER=http://localhost:8080

- Get information from Heketi by running the following command:

# heketi-cli topology info
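The first step of this procedure, finding the Heketi pod name, can be automated by filtering the output of oc get pods. The following is a minimal sketch, assuming that the Heketi pod name starts with "heketi-" and that the output has the usual NAME, READY, STATUS columns; heketi_pod_name is a hypothetical helper, and the pod names in the sample are made up for illustration.

```shell
# heketi_pod_name reads `oc get pods` output on stdin and prints the name of
# the first pod whose name starts with "heketi-" (an assumed naming pattern)
# and whose STATUS column is Running.
heketi_pod_name() {
    awk '$1 ~ /^heketi-/ && $3 == "Running" { print $1; exit }'
}

# Demonstration on hypothetical sample output (not taken from a real cluster).
heketi_pod_name <<'EOF'
NAME                 READY     STATUS    RESTARTS   AGE
glusterfs-1-aaaaa    1/1       Running   0          1h
heketi-1-abcde       1/1       Running   0          1h
EOF
# prints: heketi-1-abcde

# Usage against a live cluster, following the steps above:
#   pod=$(oc get pods | heketi_pod_name)
#   oc port-forward "$pod" 8080:8080 &
#   export HEKETI_CLI_SERVER=http://localhost:8080
```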

Appendix C. Heketi CLI Commands

- heketi-cli topology info

Retrieves information about the current topology.

- heketi-cli cluster list

Lists the clusters managed by Heketi.

For example:

# heketi-cli cluster list
Clusters:
9460bbea6f6b1e4d833ae803816122c6

- heketi-cli cluster info <cluster_id>

Retrieves the information about the cluster.

- heketi-cli node info <node_id>

Retrieves the information about the node.

- heketi-cli volume list

Lists the volumes managed by Heketi.

For example:

# heketi-cli volume list
Id:142e0ec4a4c1d1cc082071329a0911c6 Cluster:9460bbea6f6b1e4d833ae803816122c6 Name:heketidbstorage
Id:638d0dc6b1c85f5eaf13bd5c7ed2ee2a Cluster:9460bbea6f6b1e4d833ae803816122c6 Name:scalevol-1

# heketi-cli --help

- heketi-cli [flags]

- heketi-cli [command]

For example:

# export HEKETI_CLI_SERVER=http://localhost:8080
# heketi-cli volume list

- cluster

Heketi cluster management

- device

Heketi device management

- setup-openshift-heketi-storage

Setup OpenShift/Kubernetes persistent storage for Heketi

- node

Heketi Node Management

- topology

Heketi Topology Management

- volume

Heketi Volume Management
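Several heketi-cli commands take an ID, so it can be convenient to look up a volume ID by name from the volume list output shown earlier in this appendix. The following is a minimal sketch, assuming the Id:, Cluster:, and Name: fields appear exactly as in that example; volume_id_by_name is a hypothetical helper, not a heketi-cli subcommand.

```shell
# volume_id_by_name reads `heketi-cli volume list` output on stdin and prints
# the Id of every volume whose Name matches the first argument.
volume_id_by_name() {
    awk -v want="$1" '
        {
            id = ""; name = ""
            for (i = 1; i <= NF; i++) {
                if ($i ~ /^Id:/)   id = substr($i, 4)    # text after "Id:"
                if ($i ~ /^Name:/) name = substr($i, 6)  # text after "Name:"
            }
            if (name == want) print id
        }'
}

# Demonstration using the sample output from this appendix.
volume_id_by_name scalevol-1 <<'EOF'
Id:142e0ec4a4c1d1cc082071329a0911c6 Cluster:9460bbea6f6b1e4d833ae803816122c6 Name:heketidbstorage
Id:638d0dc6b1c85f5eaf13bd5c7ed2ee2a Cluster:9460bbea6f6b1e4d833ae803816122c6 Name:scalevol-1
EOF
# prints: 638d0dc6b1c85f5eaf13bd5c7ed2ee2a

# Usage against a live server:
#   heketi-cli volume list | volume_id_by_name scalevol-1
```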

Appendix D. Cleaning up the Heketi Topology

- Delete all the volumes by executing the following command:

# heketi-cli volume delete <volume_id>

- Delete all the devices by executing the following command:

# heketi-cli device delete <device_id>

- Delete all the nodes by executing the following command:

# heketi-cli node delete <node_id>

- Delete all the clusters by executing the following command:

# heketi-cli cluster delete <cluster_id>

Note

- The IDs can be retrieved by executing the heketi-cli topology info command.

- The heketidbstorage volume cannot be deleted as it contains the heketi database.
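The first cleanup step, deleting every volume except heketidbstorage, can be prepared as a reviewable list of commands. The following is a minimal sketch, assuming the volume list output format shown in Appendix C; volume_delete_commands is a hypothetical helper that only prints commands and deletes nothing by itself.

```shell
# volume_delete_commands reads `heketi-cli volume list` output on stdin and
# prints one `heketi-cli volume delete <id>` line per volume, skipping the
# heketidbstorage volume because it holds the heketi database.
volume_delete_commands() {
    awk '
        {
            id = ""; name = ""
            for (i = 1; i <= NF; i++) {
                if ($i ~ /^Id:/)   id = substr($i, 4)    # text after "Id:"
                if ($i ~ /^Name:/) name = substr($i, 6)  # text after "Name:"
            }
            if (id != "" && name != "heketidbstorage")
                print "heketi-cli volume delete " id
        }'
}

# Usage: review the generated commands first, then run them.
#   heketi-cli volume list | volume_delete_commands
#   heketi-cli volume list | volume_delete_commands | sh
```

Printing the commands instead of running them keeps the destructive step explicit. Devices, nodes, and clusters still need to be deleted afterwards, in that order, as listed above.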

Appendix E. Known Issues

The glusterd service is responsible for allocating the ports for the brick processes of the volumes. Currently, for newly created volumes, glusterd does not reuse the ports that were used by the brick processes of volumes that have since been deleted. Hence, sizing the number of open ports in the system as a ratio of the number of active volumes does not work.

Legal Notice

Copyright © 2025 Red Hat

OpenShift documentation is licensed under the Apache License 2.0 (https://www.apache.org/licenses/LICENSE-2.0).

Modified versions must remove all Red Hat trademarks.

Portions adapted from https://github.com/kubernetes-incubator/service-catalog/ with modifications by Red Hat.

Red Hat, Red Hat Enterprise Linux, the Red Hat logo, the Shadowman logo, JBoss, OpenShift, Fedora, the Infinity logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries.

Linux® is the registered trademark of Linus Torvalds in the United States and other countries.

Java® is a registered trademark of Oracle and/or its affiliates.

XFS® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries.

MySQL® is a registered trademark of MySQL AB in the United States, the European Union and other countries.

Node.js® is an official trademark of Joyent. Red Hat Software Collections is not formally related to or endorsed by the official Joyent Node.js open source or commercial project.

The OpenStack® Word Mark and OpenStack logo are either registered trademarks/service marks or trademarks/service marks of the OpenStack Foundation, in the United States and other countries and are used with the OpenStack Foundation’s permission. We are not affiliated with, endorsed or sponsored by the OpenStack Foundation, or the OpenStack community.

All other trademarks are the property of their respective owners.