This documentation is for a release that is no longer maintained

See documentation for the latest supported version 3 or the latest supported version 4.

CLI tools

Learning how to use the command-line tools for OpenShift Container Platform

Abstract

Chapter 1. OpenShift Container Platform CLI tools overview

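A user performs a range of operations while working on OpenShift Container Platform, such as the following:

- Managing clusters

- Building, deploying, and managing applications

- Managing deployment processes

- Developing Operators

- Creating and maintaining Operator catalogs

OpenShift Container Platform offers a set of command-line interface (CLI) tools that simplify these tasks by enabling users to perform various administration and development operations from the terminal. These tools expose simple commands to manage applications, as well as to interact with each component of the system.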

1.1. List of CLI tools

The following set of CLI tools is available in OpenShift Container Platform:

- OpenShift CLI (oc): This is the CLI tool most commonly used by OpenShift Container Platform users. It helps both cluster administrators and developers perform end-to-end operations across OpenShift Container Platform by using the terminal. Unlike the web console, it allows the user to work directly with the project source code by using command scripts.

- Developer CLI (odo): The odo CLI tool helps developers focus on their main goal of creating and maintaining applications on OpenShift Container Platform by abstracting away complex Kubernetes and OpenShift Container Platform concepts. It lets developers create, build, and debug applications on a cluster from the terminal without needing to administer the cluster.

- Helm CLI: Helm is a package manager for Kubernetes applications that enables defining, installing, and upgrading applications packaged as Helm charts. The Helm CLI helps users deploy applications and services to OpenShift Container Platform clusters by using simple commands from the terminal.

- Knative CLI (kn): The kn CLI tool provides simple and intuitive terminal commands that you can use to interact with OpenShift Serverless components, such as Knative Serving and Eventing.

- Pipelines CLI (tkn): OpenShift Pipelines is a continuous integration and continuous delivery (CI/CD) solution in OpenShift Container Platform that uses Tekton internally. The tkn CLI tool provides simple and intuitive commands for interacting with OpenShift Pipelines from the terminal.

- opm CLI: The opm CLI tool helps Operator developers and cluster administrators create and maintain catalogs of Operators from the terminal.

- Operator SDK: The Operator SDK, a component of the Operator Framework, provides a CLI tool that Operator developers can use to build, test, and deploy an Operator from the terminal. It simplifies the process of building Kubernetes-native applications, which can require deep, application-specific operational knowledge.

Chapter 2. OpenShift CLI (oc)

2.1. Getting started with the OpenShift CLI

2.1.1. About the OpenShift CLI

With the OpenShift command-line interface (CLI), the oc command, you can create applications and manage OpenShift Container Platform projects from a terminal. The OpenShift CLI is ideal in the following situations:

- Working directly with project source code

- Scripting OpenShift Container Platform operations

- Managing projects when bandwidth is limited and the web console is unavailable

2.1.2. Installing the OpenShift CLI

You can install the OpenShift CLI (oc) by downloading the binary, from the web console, by using an RPM, or by using Homebrew.

2.1.2.1. Installing the OpenShift CLI by downloading the binary

You can install the OpenShift CLI (oc) to interact with OpenShift Container Platform from a command-line interface. You can install oc on Linux, Windows, or macOS.

If you installed an earlier version of oc, you cannot use it to complete all of the commands in OpenShift Container Platform 4.7. Download and install the new version of oc.
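Because an older oc cannot run every 4.7 command, it can help to check the client version first. The following sketch defines a hypothetical helper, version_at_least, that compares only the major and minor parts of a version string. It assumes that `oc version --client` prints a line of the form `Client Version: 4.7.x`; confirm that against your own client before relying on it.

```shell
# Hypothetical helper (not part of oc): succeed when version $1 is at least
# the major.minor version $2. Only the first two numeric components are
# compared, so 4.7.0 and 4.7.18 both satisfy "4.7".
version_at_least() {
  have_major=${1%%.*}
  have_rest=${1#*.}
  have_minor=${have_rest%%.*}
  want_major=${2%%.*}
  want_rest=${2#*.}
  want_minor=${want_rest%%.*}
  if [ "$have_major" -ne "$want_major" ]; then
    [ "$have_major" -gt "$want_major" ]
  else
    [ "$have_minor" -ge "$want_minor" ]
  fi
}
```

Under the output-format assumption above, you could run `version_at_least "$(oc version --client | awk '/Client Version/ {print $3}')" 4.7 || echo "Install a newer oc"`.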

2.1.2.1.1. Installing the OpenShift CLI on Linux

You can install the OpenShift CLI (oc) binary on Linux by using the following procedure.

Procedure

- Navigate to the OpenShift Container Platform downloads page on the Red Hat Customer Portal.

- Select the appropriate version in the Version drop-down menu.

- Click Download Now next to the OpenShift v4.7 Linux Client entry and save the file.

- Unpack the archive:

$ tar xvzf <file>

- Place the oc binary in a directory that is on your PATH.

To check your PATH, execute the following command:

$ echo $PATH
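To confirm that the directory you chose is on your PATH without reading the whole variable by eye, you can use a small check. The sketch below defines a hypothetical dir_on_path function using only POSIX shell features; it compares directory names literally, so /usr/local/bin/ and /usr/local/bin count as different entries.

```shell
# Hypothetical helper (not part of oc): succeed if directory $1 appears as an
# entry in the colon-separated PATH variable.
dir_on_path() {
  # Wrapping both sides in colons lets the first and last entries match too.
  case ":$PATH:" in
    *":$1:"*) return 0 ;;
    *) return 1 ;;
  esac
}
```

For example, `dir_on_path /usr/local/bin && sudo mv oc /usr/local/bin/` moves the binary only when that directory is on your PATH, and `command -v oc` afterwards prints the location your shell resolves for oc.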

After you install the OpenShift CLI, it is available using the oc command:

$ oc <command>

2.1.2.1.2. Installing the OpenShift CLI on Windows

You can install the OpenShift CLI (oc) binary on Windows by using the following procedure.

Procedure

- Navigate to the OpenShift Container Platform downloads page on the Red Hat Customer Portal.

- Select the appropriate version in the Version drop-down menu.

- Click Download Now next to the OpenShift v4.7 Windows Client entry and save the file.

- Unzip the archive with a ZIP program.

- Move the oc binary to a directory that is on your PATH.

To check your PATH, open the command prompt and execute the following command:

C:\> path

After you install the OpenShift CLI, it is available using the oc command:

C:\> oc <command>

2.1.2.1.3. Installing the OpenShift CLI on macOS

You can install the OpenShift CLI (oc) binary on macOS by using the following procedure.

Procedure

- Navigate to the OpenShift Container Platform downloads page on the Red Hat Customer Portal.

- Select the appropriate version in the Version drop-down menu.

- Click Download Now next to the OpenShift v4.7 MacOSX Client entry and save the file.

- Unpack and unzip the archive.

- Move the oc binary to a directory that is on your PATH.

To check your PATH, open a terminal and execute the following command:

$ echo $PATH

After you install the OpenShift CLI, it is available using the oc command:

$ oc <command>

2.1.2.2. Installing the OpenShift CLI by using the web console

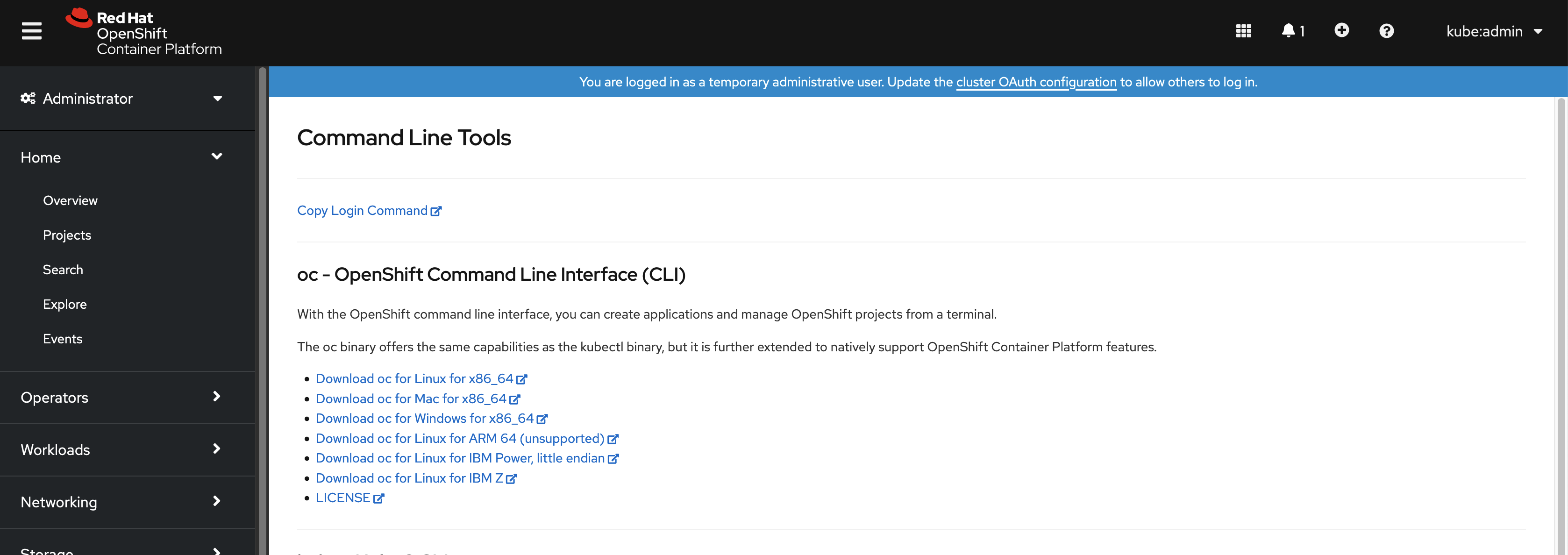

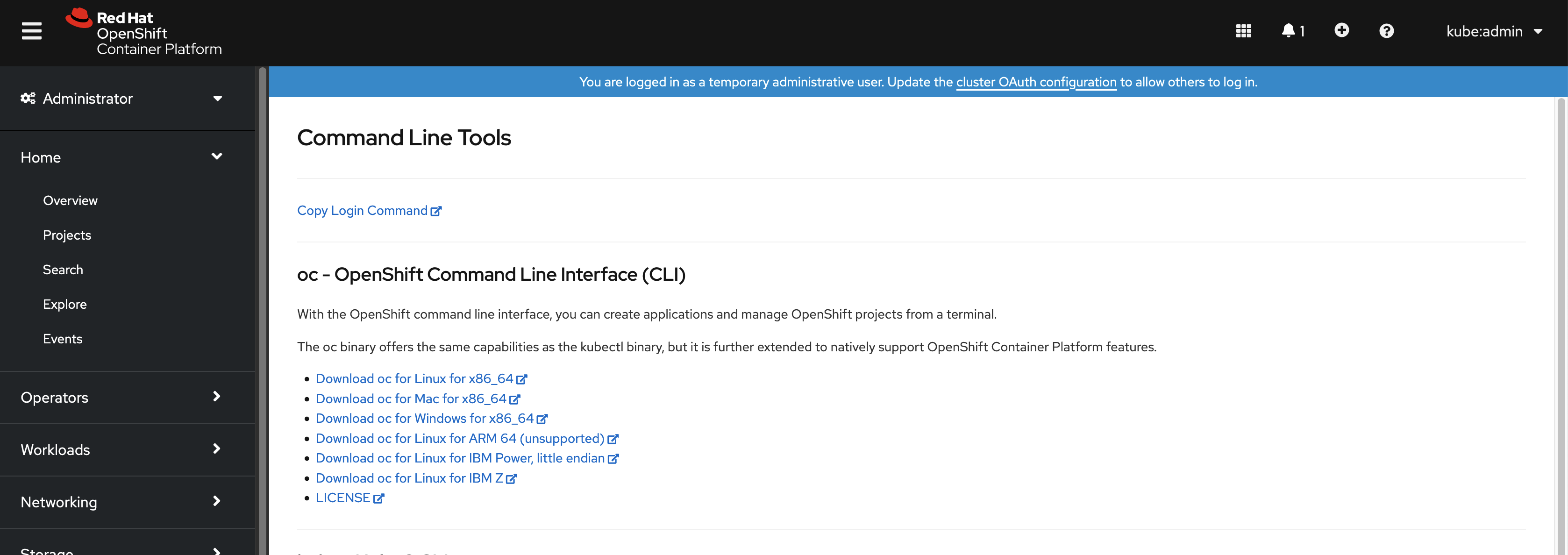

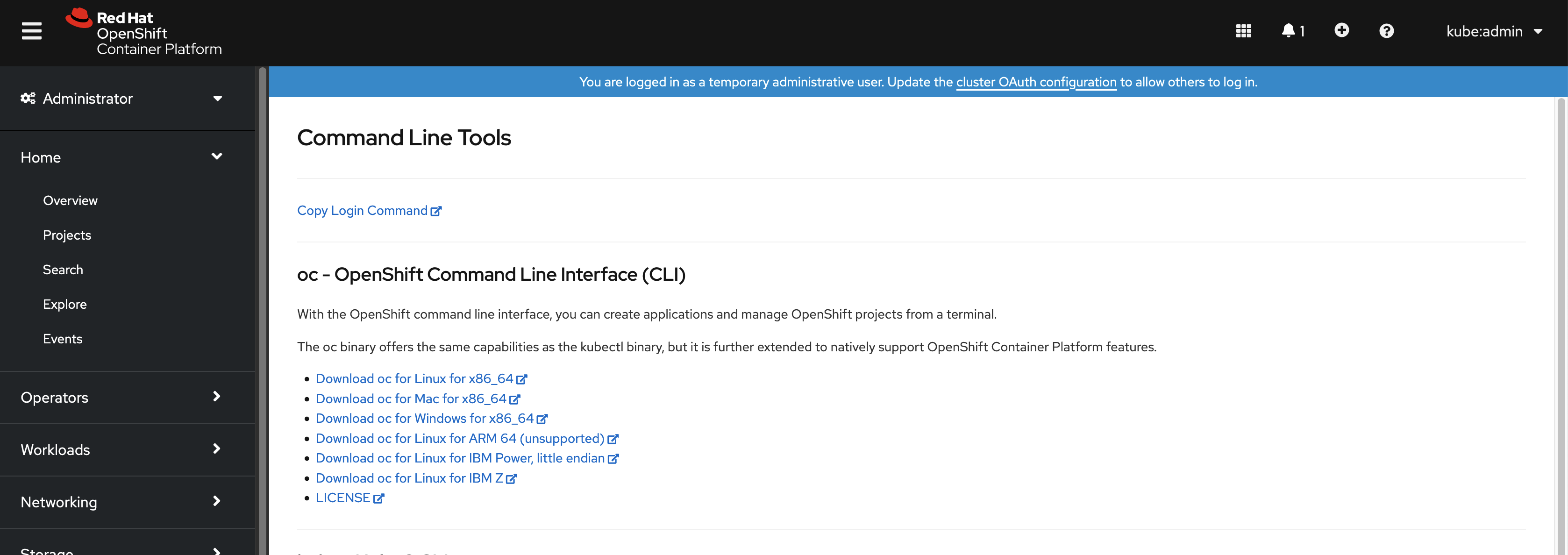

You can download the OpenShift CLI (oc) from the web console and install it to interact with OpenShift Container Platform. You can install oc on Linux, Windows, or macOS.

If you installed an earlier version of oc, you cannot use it to complete all of the commands in OpenShift Container Platform 4.7. Download and install the new version of oc.

2.1.2.2.1. Installing the OpenShift CLI on Linux by using the web console

You can install the OpenShift CLI (oc) binary on Linux by using the following procedure.

Procedure

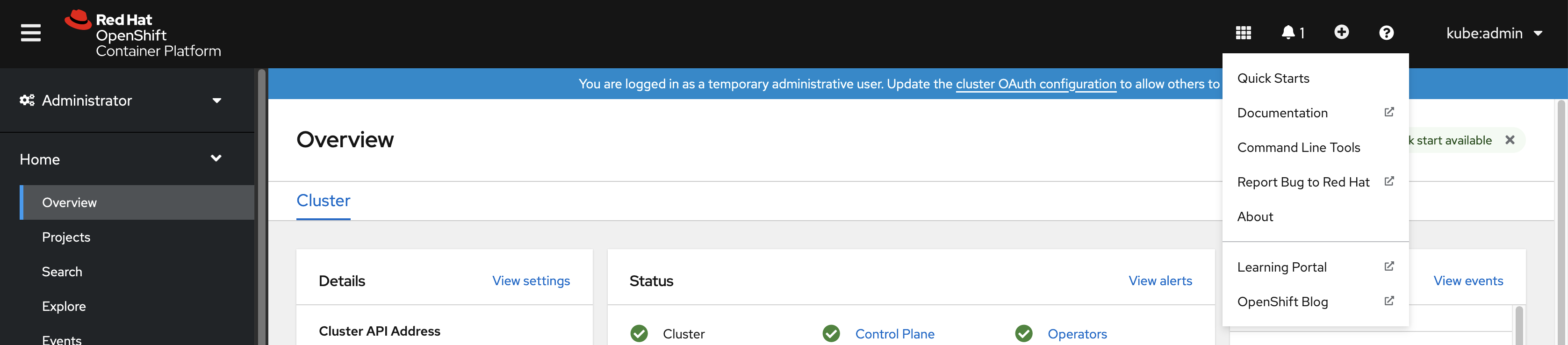

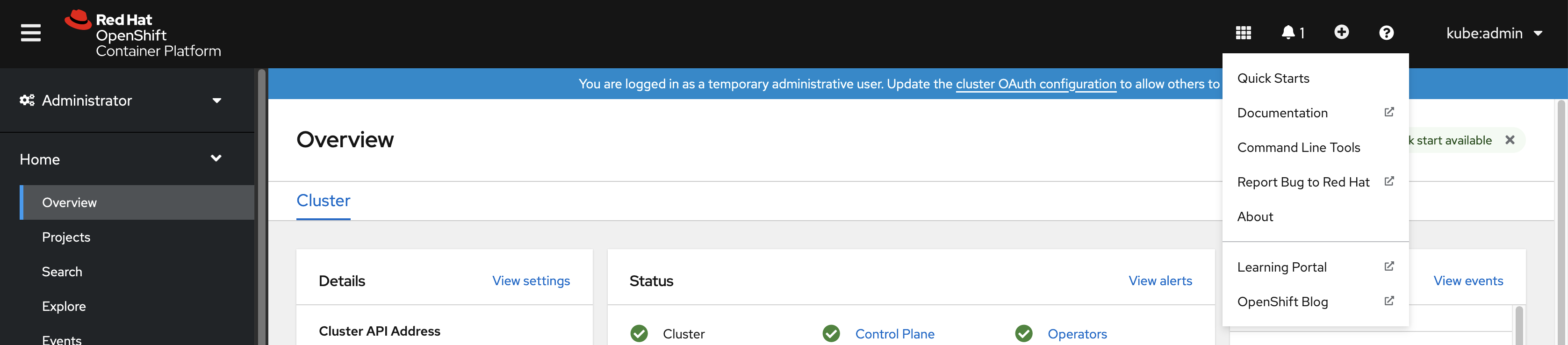

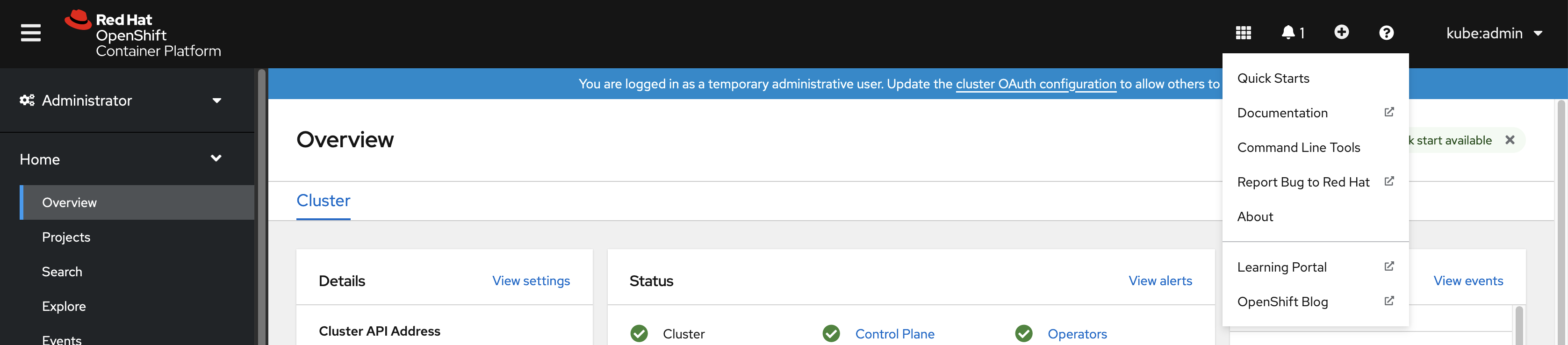

- From the web console, click ?.

- Click Command Line Tools.

- Select the appropriate oc binary for your Linux platform, and then click Download oc for Linux.

- Save the file.

- Unpack the archive:

$ tar xvzf <file>

- Move the oc binary to a directory that is on your PATH.

To check your PATH, execute the following command:

$ echo $PATH

After you install the OpenShift CLI, it is available using the oc command:

$ oc <command>

2.1.2.2.2. Installing the OpenShift CLI on Windows by using the web console

You can install the OpenShift CLI (oc) binary on Windows by using the following procedure.

Procedure

- From the web console, click ?.

- Click Command Line Tools.

- Select the oc binary for the Windows platform, and then click Download oc for Windows for x86_64.

- Save the file.

- Unzip the archive with a ZIP program.

- Move the oc binary to a directory that is on your PATH.

To check your PATH, open the command prompt and execute the following command:

C:\> path

After you install the OpenShift CLI, it is available using the oc command:

C:\> oc <command>

2.1.2.2.3. Installing the OpenShift CLI on macOS by using the web console

You can install the OpenShift CLI (oc) binary on macOS by using the following procedure.

Procedure

- From the web console, click ?.

- Click Command Line Tools.

- Select the oc binary for the macOS platform, and then click Download oc for Mac for x86_64.

- Save the file.

- Unpack and unzip the archive.

- Move the oc binary to a directory that is on your PATH.

To check your PATH, open a terminal and execute the following command:

$ echo $PATH

After you install the OpenShift CLI, it is available using the oc command:

$ oc <command>

2.1.2.3. Installing the OpenShift CLI by using an RPM

For Red Hat Enterprise Linux (RHEL), you can install the OpenShift CLI (oc) as an RPM if you have an active OpenShift Container Platform subscription on your Red Hat account.

Prerequisites

- You must have root or sudo privileges.

Procedure

- Register with Red Hat Subscription Manager:

# subscription-manager register

- Pull the latest subscription data:

# subscription-manager refresh

- List the available subscriptions:

# subscription-manager list --available --matches '*OpenShift*'

- In the output of the previous command, find the pool ID for an OpenShift Container Platform subscription and attach the subscription to the registered system:

# subscription-manager attach --pool=<pool_id>

- Enable the repositories required by OpenShift Container Platform 4.7.

For Red Hat Enterprise Linux 8:

# subscription-manager repos --enable="rhocp-4.7-for-rhel-8-x86_64-rpms"

For Red Hat Enterprise Linux 7:

# subscription-manager repos --enable="rhel-7-server-ose-4.7-rpms"

- Install the openshift-clients package:

# yum install openshift-clients
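The repository to enable in the procedure above depends on the RHEL major version. As a sketch, the hypothetical functions below map that version to the 4.7 repository names listed above and read the version from /etc/os-release. They assume the standard VERSION_ID field (for example "8.4"); neither function is part of subscription-manager.

```shell
# Hypothetical helper: print the OpenShift Container Platform 4.7 client
# repository for a RHEL major version, as listed in the procedure above.
ocp47_repo_for_rhel() {
  case "$1" in
    8) echo "rhocp-4.7-for-rhel-8-x86_64-rpms" ;;
    7) echo "rhel-7-server-ose-4.7-rpms" ;;
    *) echo "no OpenShift 4.7 client repository listed for RHEL $1" >&2
       return 1 ;;
  esac
}

# Hypothetical helper: print the RHEL major version, for example 8 when
# VERSION_ID="8.4". The body runs in a subshell so that sourcing
# /etc/os-release leaves no variables behind.
rhel_major_version() (
  . /etc/os-release
  echo "${VERSION_ID%%.*}"
)
```

As root, `repo=$(ocp47_repo_for_rhel "$(rhel_major_version)") && subscription-manager repos --enable="$repo"` would enable the matching repository and skip the command entirely on an unlisted version.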

After you install the CLI, it is available using the oc command:

$ oc <command>

2.1.2.4. Installing the OpenShift CLI by using Homebrew

For macOS, you can install the OpenShift CLI (oc) by using the Homebrew package manager.

Prerequisites

- You must have Homebrew (brew) installed.

Procedure

- Run the following command to install the openshift-cli package:

$ brew install openshift-cli

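2.1.3. Logging in to the OpenShift CLI

You can log in to the OpenShift CLI (oc) to access and manage your cluster.

Prerequisites

- You must have access to an OpenShift Container Platform cluster.

- You must have installed the OpenShift CLI (oc).

To access a cluster that is reachable only through an HTTP proxy server, you can set the HTTP_PROXY, HTTPS_PROXY and NO_PROXY variables. The oc CLI respects these environment variables so that all communication with the cluster goes through the HTTP proxy.

Authentication headers are sent only when using HTTPS transport.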
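For example, in a POSIX shell you might export the proxy variables before running oc login. The proxy host, port, and NO_PROXY entries below are placeholders rather than values from this document; substitute your own.

```shell
# Placeholder proxy settings; replace proxy.example.com:3128 with your proxy.
export HTTP_PROXY="http://proxy.example.com:3128"
export HTTPS_PROXY="http://proxy.example.com:3128"
# Comma-separated hosts that oc should contact directly, bypassing the proxy.
export NO_PROXY="localhost,127.0.0.1"
```

Any oc command run later in the same shell session, such as `oc login -u user1`, then uses these settings.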

Procedure

- Enter the oc login command and pass in a user name:

$ oc login -u user1

- When prompted, enter the required information.

If you are logged in to the web console, you can generate an oc login command that includes your token and server information. You can use this command to log in to the OpenShift Container Platform CLI without the interactive prompts. To generate the command, select Copy login command from the user name drop-down menu at the top right of the web console.

You can now create a project or issue other commands for managing your cluster.

2.1.4. Using the OpenShift CLI

Review the following sections to learn how to complete common tasks by using the CLI.

2.1.4.1. Creating a project

Use the oc new-project command to create a new project.

$ oc new-project my-project

Example output

Now using project "my-project" on server "https://openshift.example.com:6443".

2.1.4.2. Creating a new app

Use the oc new-app command to create a new application.

$ oc new-app https://github.com/sclorg/cakephp-ex

Example output

--> Found image 40de956 (9 days old) in imagestream "openshift/php" under tag "7.2" for "php"
...
Run 'oc status' to view your app.

2.1.4.3. Viewing pods

Use the oc get pods command to view the pods for the current project.

When you run oc inside a pod and do not specify a namespace, the namespace of the pod is used by default.

$ oc get pods -o wide

Example output

NAME                  READY   STATUS      RESTARTS   AGE     IP            NODE                           NOMINATED NODE
cakephp-ex-1-build    0/1     Completed   0          5m45s   10.131.0.10   ip-10-0-141-74.ec2.internal    <none>
cakephp-ex-1-deploy   0/1     Completed   0          3m44s   10.129.2.9    ip-10-0-147-65.ec2.internal    <none>
cakephp-ex-1-ktz97    1/1     Running     0          3m33s   10.128.2.11   ip-10-0-168-105.ec2.internal   <none>

2.1.4.4. Viewing pod logs

Use the oc logs command to view logs for a particular pod.

$ oc logs cakephp-ex-1-deploy

Example output

--> Scaling cakephp-ex-1 to 1
--> Success

2.1.4.5. Viewing the current project

Use the oc project command to view the current project.

$ oc project

Example output

Using project "my-project" on server "https://openshift.example.com:6443".

2.1.4.6. Viewing the status for the current project

Use the oc status command to view information about the current project, such as its services, deployments, and build configs.

$ oc status

2.1.4.7. Listing supported API resources

Use the oc api-resources command to view the list of API resources that the server supports.

$ oc api-resources

Example output

NAME                SHORTNAMES   APIGROUP   NAMESPACED   KIND
bindings                                    true         Binding
componentstatuses   cs                      false        ComponentStatus
configmaps          cm                      true         ConfigMap
...

2.1.5. Getting help

You can get help with CLI commands and OpenShift Container Platform resources in the following ways.

- Use oc help to get a list and description of all available CLI commands.

Example: Get general help for the CLI

$ oc help

- Use the --help flag to get help about a specific CLI command.

Example: Get help for the oc create command

$ oc create --help

- Use the oc explain command to view the description and fields for a particular resource.

Example: View documentation for the Pod resource

$ oc explain pods

2.1.6. Logging out of the OpenShift CLI

You can log out of the OpenShift CLI to end your current session.

- Use the oc logout command:

$ oc logout

Example output

Logged "user1" out on "https://openshift.example.com"

This deletes the saved authentication token from the server and removes it from your configuration file.

2.2. Configuring the OpenShift CLI

2.2.1. Enabling tab completion

You can enable tab completion for the Bash or Zsh shells.

2.2.1.1. Enabling tab completion for Bash

After you install the OpenShift CLI (oc), you can enable tab completion to automatically complete oc commands or suggest options when you press Tab. The following procedure enables tab completion for the Bash shell.

Prerequisites

- You must have the OpenShift CLI (oc) installed.

- You must have the bash-completion package installed.

Procedure

- Save the Bash completion code to a file:

$ oc completion bash > oc_bash_completion

- Copy the file to /etc/bash_completion.d/:

$ sudo cp oc_bash_completion /etc/bash_completion.d/

You can also save the file to a local directory and source it from your .bashrc file instead.
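The per-user alternative mentioned above can be scripted. This is a sketch with a hypothetical helper name: it assumes you saved the completion code to a file of your choice (for example ~/.oc_bash_completion) and appends a line that sources it to your .bashrc, only once.

```shell
# Hypothetical helper (not part of oc): make a shell startup file source a
# saved oc completion file. The line is appended only if it is not already
# present, so running the helper twice is harmless.
#   $1: completion file created with: oc completion bash > <file>
#   $2: startup file to update (defaults to ~/.bashrc)
enable_oc_bash_completion() {
  completion_file=$1
  rc_file=${2:-$HOME/.bashrc}
  line=". '$completion_file'"
  # -q quiet, -x whole-line match, -F fixed string (no regular expression)
  if ! grep -qxF "$line" "$rc_file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$rc_file"
  fi
}
```

For example, run `oc completion bash > ~/.oc_bash_completion` and then `enable_oc_bash_completion ~/.oc_bash_completion`.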

Tab completion is enabled when you open a new terminal.

2.2.1.2. Enabling tab completion for Zsh

After you install the OpenShift CLI (oc), you can enable tab completion to automatically complete oc commands or suggest options when you press Tab. The following procedure enables tab completion for the Zsh shell.

Prerequisites

- You must have the OpenShift CLI (oc) installed.

Procedure

- Add tab completion for oc to your .zshrc file.
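The command for this step is not reproduced in this copy of the document. As a sketch, the hypothetical helper below appends a snippet to .zshrc, once, that loads oc completion whenever oc is installed. It assumes that the code generated by oc completion zsh defines a completion function named _oc, which is what the compdef line registers.

```shell
# Hypothetical helper (not part of oc): append a snippet to a zsh startup
# file that loads oc completion whenever oc is installed.
#   $1: startup file to update (defaults to ~/.zshrc)
enable_oc_zsh_completion() {
  rc_file=${1:-$HOME/.zshrc}
  # Do nothing if an earlier run already added the snippet.
  if grep -qF 'oc completion zsh' "$rc_file" 2>/dev/null; then
    return 0
  fi
  # The quoted 'EOF' keeps $commands[oc] literal; zsh evaluates it at startup.
  cat >> "$rc_file" <<'EOF'
if [ $commands[oc] ]; then
  source <(oc completion zsh)
  compdef _oc oc
fi
EOF
}
```

Running `enable_oc_zsh_completion` with no argument updates ~/.zshrc.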

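Tab completion is enabled when you open a new terminal.

2.3. Managing CLI profiles

A CLI configuration file allows you to configure different profiles, or contexts, for use with the CLI tools. A context consists of user authentication and OpenShift Container Platform server information associated with a nickname.

2.3.1. About switches between CLI profiles

Contexts allow you to easily switch between multiple users across multiple OpenShift Container Platform servers, or clusters, when using CLI operations. Nicknames make managing CLI configurations easier by providing short-hand references to contexts, user credentials, and cluster details. After you log in with the CLI for the first time, OpenShift Container Platform creates a ~/.kube/config file if one does not already exist. As more authentication and connection details are provided to the CLI, either automatically during an oc login operation or by manually configuring CLI profiles, the updated information is stored in the configuration file.

CLI config file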
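The example file that the following callouts describe is not preserved in this copy. The sketch below writes a file with the same structure, reconstructed from the callouts; the server URLs and the token value are placeholders, and the (1) to (4) comments mark the sections that each callout explains.

```shell
# Write a sample CLI config file shaped like the one the callouts describe.
# Server URLs and the token value are placeholders.
cat > example-kubeconfig.yaml <<'EOF'
apiVersion: v1
kind: Config
clusters:                      # (1) cluster connection details
- name: openshift1.example.com:8443
  cluster:
    server: https://openshift1.example.com:8443
- name: openshift2.example.com:8443
  cluster:
    server: https://openshift2.example.com:8443
contexts:                      # (2) project + cluster + user
- name: alice-project/openshift1.example.com:8443/alice
  context:
    cluster: openshift1.example.com:8443
    namespace: alice-project
    user: alice/openshift1.example.com:8443
- name: joe-project/openshift1.example.com:8443/alice
  context:
    cluster: openshift1.example.com:8443
    namespace: joe-project
    user: alice/openshift1.example.com:8443
current-context: joe-project/openshift1.example.com:8443/alice   # (3)
users:                         # (4) user credentials
- name: alice/openshift1.example.com:8443
  user:
    token: <access_token>
EOF
```

You could then inspect it with `KUBECONFIG=example-kubeconfig.yaml oc config get-contexts`, which lists both contexts and marks the current one.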

(1) The clusters section defines connection details for OpenShift Container Platform clusters, including the address of their master server. In this example, one cluster is nicknamed openshift1.example.com:8443 and another is nicknamed openshift2.example.com:8443.

(2) The contexts section defines two contexts: one nicknamed alice-project/openshift1.example.com:8443/alice, using the alice-project project, the openshift1.example.com:8443 cluster, and the alice user; and another nicknamed joe-project/openshift1.example.com:8443/alice, using the joe-project project, the openshift1.example.com:8443 cluster, and the alice user.

(3) The current-context parameter shows that the joe-project/openshift1.example.com:8443/alice context is currently in use, allowing the alice user to work in the joe-project project on the openshift1.example.com:8443 cluster.

(4) The users section defines user credentials. In this example, the user nickname alice/openshift1.example.com:8443 uses an access token.

The CLI can support multiple configuration files, which are loaded at runtime and merged together along with any override options specified from the command line. After you are logged in, you can use the oc status or oc project command to verify your current working environment:

Verify the current working environment

$ oc status

List the current project

$ oc project

Example output

Using project "joe-project" from context named "joe-project/openshift1.example.com:8443/alice" on server "https://openshift1.example.com:8443".

You can run the oc login command again and supply the required information during the interactive process to log in using any other combination of user credentials and cluster details. A context is constructed based on the supplied information if one does not already exist. If you are already logged in and want to switch to another project that the current user already has access to, use the oc project command and enter the name of the project:

$ oc project alice-project

Example output

Now using project "alice-project" on server "https://openshift1.example.com:8443".

At any time, you can use the oc config view command to view your current CLI configuration. Additional CLI configuration commands are also available for more advanced usage.

If you have access to administrator credentials but are no longer logged in as the default system user system:admin, you can log back in as this user at any time, as long as the credentials are still present in your CLI config file. The following command logs in and switches to the default project:

$ oc login -u system:admin -n default

2.3.2. Manual configuration of CLI profiles

This section covers more advanced usage of CLI configurations. In most situations, you can use the oc login and oc project commands to log in and switch between contexts and projects.

If you want to manually configure your CLI config files, you can use the oc config command instead of directly modifying the files. The oc config command includes several helpful subcommands for this purpose:

| Subcommand | Usage |
|---|---|
| set-cluster | Sets a cluster entry in the CLI config file. If the referenced cluster nickname already exists, the specified information is merged in. Syntax: oc config set-cluster <cluster_nickname> [--server=<master_ip_or_fqdn>] [--certificate-authority=<path/to/certificate/authority>] [--api-version=<apiversion>] [--insecure-skip-tls-verify=true] |
| set-context | Sets a context entry in the CLI config file. If the referenced context nickname already exists, the specified information is merged in. Syntax: oc config set-context <context_nickname> [--cluster=<cluster_nickname>] [--user=<user_nickname>] [--namespace=<namespace>] |
| use-context | Sets the current context using the specified context nickname. Syntax: oc config use-context <context_nickname> |
| set | Sets an individual value in the CLI config file. Syntax: oc config set <property_name> <property_value> |
| unset | Unsets an individual value in the CLI config file. Syntax: oc config unset <property_name> |
| view | Displays the merged CLI configuration currently in use: oc config view. Displays the result of the specified CLI config file: oc config view --config=<specific_filename> |

Example usage

- Log in as a user that uses an access token. This token is used by the alice user:

$ oc login https://openshift1.example.com --token=ns7yVhuRNpDM9cgzfhhxQ7bM5s7N2ZVrkZepSRf4LC0

- View the cluster entry that was automatically created:

$ oc config view

- Update the current context so that it uses the desired namespace:

$ oc config set-context "$(oc config current-context)" --namespace=<project_name>

- Examine the current context to confirm that the changes are implemented:

$ oc whoami -c

All subsequent CLI operations use the new context, unless otherwise specified by overriding CLI options or until the context is switched.

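2.3.3. Load and merge rules

When you issue CLI operations, the CLI configuration is loaded and merged according to the following rules:

- CLI config files are retrieved from your workstation, using the following hierarchy and merge rules:

  - If the --config option is set, then only that file is loaded. The flag is set once and no merging takes place.

  - If the $KUBECONFIG environment variable is set, then it is used. The variable can be a list of paths, and if so the paths are merged together. When a value is modified, it is modified in the file that defines the stanza. When a value is created, it is created in the first file that exists. If no files in the chain exist, then the last file in the list is created.

  - Otherwise, the ~/.kube/config file is used and no merging takes place.

- The context to use is determined based on the first match in the following flow:

  - The value of the --context option.

  - The current-context value from the CLI config file.

  - An empty value is allowed at this stage.

- The user and cluster to use are determined. At this point, you may or may not have a context. The user and the cluster are each built based on the first match in the following flow, which is run once for the user and once for the cluster:

  - The value of the --user option for the user name, and of the --cluster option for the cluster name.

  - If the --context option is present, then use the context's value.

  - An empty value is allowed at this stage.

- The actual cluster information to use is determined. At this point, you may or may not have cluster information. Each piece of the cluster information is built based on the first match in the following flow:

  - The values of any of the following command-line options:

    - --server

    - --api-version

    - --certificate-authority

    - --insecure-skip-tls-verify

  - If cluster information and a value for the attribute are present, then use it.

  - If you do not have a server location, then there is an error.

- The actual user information to use is determined. Users are built using the same rules as clusters, except that you can have only one authentication technique per user; conflicting techniques cause the operation to fail. Command-line options take precedence over config file values. Valid command-line options are:

  - --auth-path

  - --client-certificate

  - --client-key

  - --token

- For any information that is still missing, default values are used and prompts are given for additional information.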
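To make the file-selection rules above concrete, the following sketch models them as two hypothetical shell functions. They inspect only their argument, the KUBECONFIG variable, and which files exist; they do not call oc or reproduce its actual implementation.

```shell
# Hypothetical helpers that model the file-selection rules described above.

# Print the config files that are read, one per line.
#   $1: value given to --config, or an empty string if the option is unset
oc_config_sources() {
  if [ -n "$1" ]; then
    # --config: only this file is loaded and no merging takes place
    printf '%s\n' "$1"
  elif [ -n "${KUBECONFIG:-}" ]; then
    # KUBECONFIG: every listed path; these files are merged together
    printf '%s\n' "$KUBECONFIG" | tr ':' '\n'
  else
    # Fallback: the default file, with no merging
    printf '%s\n' "$HOME/.kube/config"
  fi
}

# Print the file that receives a newly created value when KUBECONFIG lists
# several files: the first file that exists, or the last file in the list
# when none of them exist yet.
kubeconfig_new_value_target() {
  last=""
  while IFS= read -r f; do
    [ -n "$f" ] || continue   # skip empty entries such as the middle of "a::b"
    if [ -e "$f" ]; then
      printf '%s\n' "$f"
      return 0
    fi
    last=$f
  done <<EOF
$(printf '%s\n' "$KUBECONFIG" | tr ':' '\n')
EOF
  printf '%s\n' "$last"
}
```

For example, with `KUBECONFIG="$HOME/.kube/config:$HOME/.kube/lab-config"` (lab-config is a hypothetical second file), oc_config_sources '' lists both paths, while `oc config view` shows the merged result and `oc config view --flatten` prints it as a single self-contained file.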

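2.4. Extending the OpenShift CLI with plugins

You can write and install plugins to build on the default oc commands, allowing you to perform new and more complex tasks with the OpenShift Container Platform CLI.

2.4.1. Writing CLI plugins

You can write a plugin for the OpenShift Container Platform CLI in any programming language or script that allows you to write command-line commands. Note that you cannot use a plugin to overwrite an existing oc command.

OpenShift CLI plugins are currently a Technology Preview feature. Technology Preview features are not supported with Red Hat production service level agreements (SLAs), and Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information, see Technology Preview Features Support Scope.

Procedure

This procedure creates a simple Bash plugin that prints a message to the terminal when the oc foo command is issued.

- Create a file called oc-foo.

When naming your plugin file, keep the following in mind:

  - The file must begin with oc- or kubectl- to be recognized as a plugin.

  - The file name determines the command that invokes the plugin. For example, a plugin with the file name oc-foo-bar can be invoked by the command oc foo bar. You can also use underscores if you want the command to contain dashes. For example, a plugin with the file name oc-foo_bar can be invoked by the command oc foo-bar.

- Add the plugin's Bash code to the file.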
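The listing for this step is not reproduced in this copy of the document. The following is a minimal sketch of an oc-foo file that matches the description above; the message text and the version subcommand are illustrative assumptions, not the original contents. oc passes any words after oc foo to the plugin as arguments.

```shell
#!/bin/bash
# oc-foo: a minimal example plugin (illustrative sketch).
# oc runs this file for "oc foo" and passes any further words as arguments.

foo_plugin() {
  case "${1:-}" in
    version)
      # Hypothetical subcommand: "oc foo version"
      echo "1.0.0"
      ;;
    *)
      echo "I am a plugin named oc-foo"
      ;;
  esac
}

foo_plugin "$@"
```

Once installed, oc foo would print the message and oc foo version would print 1.0.0.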

After you install this plugin for the OpenShift Container Platform CLI, you can invoke it by using the oc foo command.

2.4.2. Installing and using CLI plugins

After you write a custom plugin for the OpenShift Container Platform CLI, you must install it to use the functionality that it provides.

OpenShift CLI plugins are currently a Technology Preview feature. Technology Preview features are not supported with Red Hat production service level agreements (SLAs), and Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information, see Technology Preview Features Support Scope.

Prerequisites

- You must have the oc CLI tool installed.

- You must have a CLI plugin file that begins with oc- or kubectl-.

Procedure

- If necessary, update the plugin file to be executable:

$ chmod +x <plugin_file>

- Place the file anywhere in your PATH, such as /usr/local/bin/:

$ sudo mv <plugin_file> /usr/local/bin/.

- Run oc plugin list to make sure that the plugin is listed:

$ oc plugin list

Example output

The following compatible plugins are available:

/usr/local/bin/<plugin_file>

If your plugin is not listed here, verify that the file begins with oc- or kubectl-, is executable, and is on your PATH.

- Invoke the new command or option introduced by the plugin.

For example, if you built and installed the kubectl-ns plugin from the Sample plugin repository, you can use the following command to view the current namespace:

$ oc ns

Note that the command to invoke the plugin depends on the plugin file name. For example, a plugin with the file name oc-foo-bar is invoked by the oc foo bar command.
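The installation steps and the file-name rule can be combined into one script. The sketch below uses hypothetical function names and does not call sudo; point it at a directory that you can write to and that is on your PATH, or run it from a root shell when the destination is /usr/local/bin.

```shell
# Hypothetical helper (not part of oc): print the command that invokes a
# plugin file, following the naming rule above. The oc- or kubectl- prefix is
# dropped, dashes become spaces, and then underscores become dashes.
plugin_command() {
  case "$1" in
    oc-*) name=${1#oc-} ;;
    kubectl-*) name=${1#kubectl-} ;;
    *) name=$1 ;;
  esac
  echo "oc $(printf '%s\n' "$name" | sed 's/-/ /g; s/_/-/g')"
}

# Hypothetical helper: make a plugin executable and move it into a directory
# on your PATH, refusing file names that oc would not treat as plugins.
#   $1: plugin file
#   $2: destination directory (defaults to /usr/local/bin)
install_oc_plugin() {
  src=$1
  dest_dir=${2:-/usr/local/bin}
  file_name=$(basename "$src")
  case "$file_name" in
    oc-*|kubectl-*) ;;
    *) echo "error: $file_name must start with oc- or kubectl-" >&2
       return 1 ;;
  esac
  chmod +x "$src" || return 1
  mv "$src" "$dest_dir/" || return 1
  echo "Installed $dest_dir/$file_name; run it as: $(plugin_command "$file_name")"
}
```

For example, `install_oc_plugin ./oc-foo "$HOME/bin"` (with $HOME/bin as a hypothetical directory on your PATH) reports that the plugin runs as oc foo; oc plugin list remains the way to confirm that oc actually finds it.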

2.5. OpenShift CLI developer commands

2.5.1. Basic CLI commands

2.5.1.1. explain

Display documentation for a certain resource.

Example: Display documentation for pods

$ oc explain pods

2.5.1.2. login

Log in to the OpenShift Container Platform server and save login information for subsequent use.

Example: Interactive login

$ oc login -u user1

2.5.1.3. new-app

Create a new application by specifying source code, a template, or an image.

Example: Create a new application from a local Git repository

$ oc new-app .

Example: Create a new application from a remote Git repository

$ oc new-app https://github.com/sclorg/cakephp-ex

Example: Create a new application from a private remote repository

$ oc new-app https://github.com/youruser/yourprivaterepo --source-secret=yoursecret

2.5.1.4. new-project

Create a new project and switch to it as the default project in your configuration.

Example: Create a new project

$ oc new-project myproject

2.5.1.5. project

Switch to another project and make it the default in your configuration.

Example: Switch to a different project

$ oc project test-project

2.5.1.6. projects

Display information about the current active project and existing projects on the server.

Example: List all projects

$ oc projects

2.5.1.7. status

Show a high-level overview of the current project.

Example: Show the status of the current project

$ oc status

2.5.2. Build and deploy CLI commands

2.5.2.1. cancel-build

Cancel a running, pending, or new build.

Example: Cancel a build

$ oc cancel-build python-1

Example: Cancel all pending builds from the python build config

$ oc cancel-build buildconfig/python --state=pending

2.5.2.2. import-image

Import the latest tag and image information from an image repository.

Example: Import the latest image information

$ oc import-image my-ruby

2.5.2.3. new-build

Create a new build config from source code.

Example: Create a build config from a local Git repository

$ oc new-build .

Example: Create a build config from a remote Git repository

$ oc new-build https://github.com/sclorg/cakephp-ex

2.5.2.4. rollback

Revert an application back to a previous deployment.

Example: Roll back to the last successful deployment

$ oc rollback php

Example: Roll back to a specific version

$ oc rollback php --to-version=3

2.5.2.5. rollout

Start a new rollout, view its status or history, or roll back to a previous version of your application.

Example: Roll back to the last successful deployment

$ oc rollout undo deploymentconfig/php

Example: Start a new rollout for a deployment with its latest state

$ oc rollout latest deploymentconfig/php

2.5.2.6. start-build

Start a build from a build config or copy an existing build.

Example: Start a build from the specified build config

$ oc start-build python

Example: Start a build from a previous build

$ oc start-build --from-build=python-1

Example: Set an environment variable to use for the current build

$ oc start-build python --env=mykey=myvalue

2.5.2.7. tag

Tag existing images into image streams.

Example: Make the ruby image stream's 2.0 tag refer to the image that its latest tag currently references

$ oc tag ruby:latest ruby:2.0

2.5.3. Application management CLI commands

2.5.3.1. annotate

Update the annotations on one or more resources.

Example: Add an annotation to a route

$ oc annotate route/test-route haproxy.router.openshift.io/ip_whitelist="192.168.1.10"

Example: Remove the annotation from the route

$ oc annotate route/test-route haproxy.router.openshift.io/ip_whitelist-

2.5.3.2. apply

Apply a configuration to a resource by file name or standard input (stdin) in JSON or YAML format.

Example: Apply the configuration in pod.json to a pod

$ oc apply -f pod.json

2.5.3.3. autoscale

Autoscale a deployment or replication controller.

Example: Autoscale to a minimum of two and a maximum of five pods

$ oc autoscale deploymentconfig/parksmap-katacoda --min=2 --max=5

2.5.3.4. create

Create a resource by file name or standard input (stdin) in JSON or YAML format.

Example: Create a pod by using the content in pod.json

$ oc create -f pod.json

2.5.3.5. delete

Delete a resource.

Example: Delete a pod named parksmap-katacoda-1-qfqz4

$ oc delete pod/parksmap-katacoda-1-qfqz4

Example: Delete all pods with the app=parksmap-katacoda label

$ oc delete pods -l app=parksmap-katacoda

2.5.3.6. describe

Return detailed information about a specific object.

Example: Describe a deployment named example

$ oc describe deployment/example

Example: Describe all pods

$ oc describe pods

2.5.3.7. edit

Edit a resource.

Example: Edit a deployment by using the default editor

$ oc edit deploymentconfig/parksmap-katacoda

Example: Edit a deployment by using a different editor

$ OC_EDITOR="nano" oc edit deploymentconfig/parksmap-katacoda

Example: Edit a deployment in JSON format

$ oc edit deploymentconfig/parksmap-katacoda -o json

2.5.3.8. expose

Expose a service externally as a route.

Example: Expose a service

$ oc expose service/parksmap-katacoda

Example: Expose a service and specify the host name

$ oc expose service/parksmap-katacoda --hostname=www.my-host.com

2.5.3.9. get

Display one or more resources.

Example: List pods in the default namespace

$ oc get pods -n default

Example: Get details about the python deployment in JSON format

$ oc get deploymentconfig/python -o json

2.5.3.10. label

Update the labels on one or more resources.

Example: Update the python-1-mz2rf pod with the label status set to unhealthy

$ oc label pod/python-1-mz2rf status=unhealthy

2.5.3.11. scale

Set the desired number of replicas for a replication controller or a deployment.

Example: Scale the ruby-app deployment to three pods

$ oc scale deploymentconfig/ruby-app --replicas=3

2.5.3.12. secrets

Manage secrets in your project.

Example: Allow my-pull-secret to be used as an image pull secret by the default service account

$ oc secrets link default my-pull-secret --for=pull

2.5.3.13. serviceaccounts

Get a token assigned to a service account, or create a new token or kubeconfig file for a service account.

Example: Get the token assigned to the default service account

$ oc serviceaccounts get-token default

2.5.3.14. set

Configure existing application resources.

Example: Set the name of a secret on a build config

$ oc set build-secret --source buildconfig/mybc mysecret

2.5.4. Troubleshooting and debugging CLI commands

2.5.4.1. attach

Attach to a process that is already running inside an existing container.

Example: Get output from the python container in the python-1-mz2rf pod

$ oc attach python-1-mz2rf -c python

2.5.4.2. cp

Copy files and directories to and from containers.

Example: Copy a file from the python-1-mz2rf pod to the local file system

$ oc cp default/python-1-mz2rf:/opt/app-root/src/README.md ~/mydirectory/.

2.5.4.3. debug

Launch a command shell to debug a running application.

Example: Debug the python deployment

$ oc debug deploymentconfig/python

2.5.4.4. exec

Execute a command in a container.

Example: Execute the ls command in the python container in the python-1-mz2rf pod

$ oc exec python-1-mz2rf -c python -- ls

2.5.4.5. logs

Retrieve the log output for a specific build, build config, deployment, or pod.

Example: Stream the latest logs from the python deployment

$ oc logs -f deploymentconfig/python

2.5.4.6. port-forward

Forward one or more local ports to a pod.

Example: Listen on port 8888 locally and forward to port 5000 in the pod

$ oc port-forward python-1-mz2rf 8888:5000

2.5.4.7. proxy

Run a proxy to the Kubernetes API server.

Example: Run a proxy to the API server on port 8011 that serves static content from ./local/www/

$ oc proxy --port=8011 --www=./local/www/

2.5.4.8. rsh

Open a remote shell session to a container.

Example: Open a shell session on the first container in the python-1-mz2rf pod

$ oc rsh python-1-mz2rf

2.5.4.9. rsync

Copy the contents of a directory to or from a running pod container. Only changed files are copied, using the rsync command from your operating system.

Example: Synchronize files from a local directory with a pod directory

$ oc rsync ~/mydirectory/ python-1-mz2rf:/opt/app-root/src/

2.5.4.10. run

Create a pod running a particular image.

Example: Start a pod running the perl image

$ oc run my-test --image=perl

2.5.4.11. wait

Wait for a specific condition on one or more resources.

This command is experimental and might change without notice.

Example: Wait for the python-1-mz2rf pod to be deleted

$ oc wait --for=delete pod/python-1-mz2rf

2.5.5. Advanced developer CLI commands

2.5.5.1. api-resources

サーバーがサポートする API リソースの詳細の一覧を表示します。

例: サポートされている API リソースの一覧表示

oc api-resources

$ oc api-resources2.5.5.2. api-versions

サーバーがサポートする API バージョンの詳細の一覧を表示します。

例: サポートされている API バージョンの一覧表示

oc api-versions

$ oc api-versions2.5.5.3. auth

パーミッションを検査し、RBAC ロールを調整します。

例: 現行ユーザーが Pod ログを読み取ることができるかどうかのチェック

oc auth can-i get pods --subresource=log

$ oc auth can-i get pods --subresource=log例: ファイルの RBAC ロールおよびパーミッションの調整

oc auth reconcile -f policy.json

$ oc auth reconcile -f policy.json2.5.5.4. cluster-info

マスターおよびクラスターサービスのアドレスを表示します。

例: クラスター情報の表示

oc cluster-info

$ oc cluster-info2.5.5.5. extract

設定マップまたはシークレットの内容を抽出します。設定マップまたはシークレットのそれぞれのキーがキーの名前を持つ別個のファイルとして作成されます。

例: ruby-1-ca 設定マップの内容の現行ディレクトリーへのダウンロード

oc extract configmap/ruby-1-ca

$ oc extract configmap/ruby-1-ca例: ruby-1-ca 設定マップの内容の標準出力 (stdout) への出力

oc extract configmap/ruby-1-ca --to=-

$ oc extract configmap/ruby-1-ca --to=-2.5.5.6. idle

スケーラブルなリソースをアイドリングします。アイドリングされたサービスは、トラフィックを受信するとアイドリング解除されます。 これは oc scale コマンドを使用して手動でアイドリング解除することもできます。

例: ruby-app サービスのアイドリング

oc idle ruby-app

$ oc idle ruby-app2.5.5.7. image

OpenShift Container Platform クラスターでイメージを管理します。

例: イメージの別のタグへのコピー

oc image mirror myregistry.com/myimage:latest myregistry.com/myimage:stable

$ oc image mirror myregistry.com/myimage:latest myregistry.com/myimage:stable2.5.5.8. observe

Observes changes to resources and takes action in response to those changes.

Example: Observe changes to services

$ oc observe services

2.5.5.9. patch

Updates one or more fields of an object by using a strategic merge patch in JSON or YAML format.

Example: Update the spec.unschedulable field for node node1 to true

$ oc patch node/node1 -p '{"spec":{"unschedulable":true}}'

If you must patch a custom resource definition, you must include the --type merge or --type json option in the command.
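For custom resources, the patch has to be sent with an explicit type, because strategic merge patches are not supported for them. The following is a minimal sketch that uses a JSON merge patch. The resource `mycustomresource/example` and the helper name `merge_patch` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: apply a JSON merge patch (--type merge), which
# works for custom resources where strategic merge patches do not.
merge_patch() {
  local resource="$1"   # for example, mycustomresource/example
  local patch="$2"      # JSON document merged into the object
  oc patch "${resource}" --type merge -p "${patch}"
}

# Usage: merge_patch mycustomresource/example '{"spec":{"replicas":2}}'
```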

2.5.5.10. policy

Manages authorization policies.

Example: Add the edit role to user1 for the current project

$ oc policy add-role-to-user edit user1

2.5.5.11. process

Processes a template into a list of resources.

Example: Convert template.json to a resource list and pass it to oc create

$ oc process -f template.json | oc create -f -

2.5.5.12. registry

Manages the integrated registry in OpenShift Container Platform.

Example: Display information about the integrated registry

$ oc registry info

2.5.5.13. replace

Modifies an existing object based on the contents of the specified configuration file.

Example: Update a pod by using the contents of pod.json

$ oc replace -f pod.json

2.5.6. CLI configuration commands

2.5.6.1. completion

Outputs shell completion code for the specified shell.
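To load completion in every new Bash session, you can source the output of `oc completion bash` from an rc file. Bash completion typically also requires the bash-completion package. The following is a minimal sketch. The helper name `enable_oc_completion` is hypothetical, and the helper appends the line only once.

```shell
#!/usr/bin/env bash
# Hypothetical helper: add oc Bash completion to an rc file exactly once.
enable_oc_completion() {
  local rcfile="${1:-$HOME/.bashrc}"
  local line='source <(oc completion bash)'
  touch "${rcfile}"
  # -x: whole-line match, -F: literal string, -q: no output
  if ! grep -qxF "${line}" "${rcfile}"; then
    printf '%s\n' "${line}" >> "${rcfile}"
  fi
}

# Usage: enable_oc_completion ~/.bashrc && source ~/.bashrc
```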

Example: Display completion code for Bash

$ oc completion bash

2.5.6.2. config

Manages the client configuration files.

Example: Display the current configuration

$ oc config view

Example: Switch to a different context

$ oc config use-context test-context

2.5.6.3. logout

Logs out of the current session.

Example: End the current session

$ oc logout

2.5.6.4. whoami

Displays information about the current session.

Example: Display the currently authenticated user

$ oc whoami

2.5.7. Other developer CLI commands

2.5.7.1. help

Displays general help information for the CLI and a list of available commands.

Example: Display the available commands

$ oc help

Example: Display the help for the new-project command

$ oc help new-project

2.5.7.2. plugin

Lists the available plugins on the user's PATH.

Example: List the available plugins

$ oc plugin list

2.5.7.3. version

Displays the oc client and server versions.

Example: Display version information

$ oc version

For cluster administrators, the OpenShift Container Platform server version is also displayed.

2.6. OpenShift CLI administrator commands

You must have cluster-admin or equivalent permissions to use these administrator commands.
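A script that runs administrator commands can check its permissions first. The following is a minimal sketch. It assumes that `oc auth can-i '*' '*' --all-namespaces` answering `yes` is an acceptable stand-in for cluster-admin or equivalent permissions. The helper name `require_cluster_admin` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical preflight check before running oc adm commands.
require_cluster_admin() {
  local answer
  # can-i prints "yes" or "no" and exits non-zero for "no";
  # capture the printed answer and ignore the exit status here.
  answer="$(oc auth can-i '*' '*' --all-namespaces 2>/dev/null || true)"
  if [ "${answer}" != "yes" ]; then
    echo "error: cluster-admin or equivalent permissions are required" >&2
    return 1
  fi
}

# Usage: require_cluster_admin && oc adm top pods
```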

2.6.1. Cluster management CLI commands

2.6.1.1. inspect

Gathers debugging information for a particular resource.

This command is experimental and might change without notice.

Example: Collect debugging data for the OpenShift API server cluster Operator

$ oc adm inspect clusteroperator/openshift-apiserver

2.6.1.2. must-gather

Bulk collects data about the current state of your cluster to debug issues.

This command is experimental and might change without notice.

Example: Gather debugging information

$ oc adm must-gather

2.6.1.3. top

Shows usage statistics of resources on the server.

Example: Show CPU and memory usage for pods

$ oc adm top pods

Example: Show usage statistics for images

$ oc adm top images

2.6.2. Node management CLI commands

2.6.2.1. cordon

Marks a node as unschedulable. Manually marking a node as unschedulable prevents any new pods from being scheduled on the node, but does not affect existing pods on the node.

Example: Mark node1 as unschedulable

$ oc adm cordon node1

2.6.2.2. drain

Drains a node in preparation for maintenance.
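A node maintenance sequence usually combines `drain` and `uncordon`. `oc adm drain` also marks the node unschedulable before evicting pods, so a separate `cordon` is not needed. The following is a minimal sketch. It assumes that DaemonSet-managed pods can stay on the node (`--ignore-daemonsets`). The helper name `maintain_node` and the maintenance command passed to it are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: drain a node, run a maintenance command, then
# make the node schedulable again even if the command failed.
maintain_node() {
  local node="$1"; shift
  oc adm drain "${node}" --ignore-daemonsets || return 1
  "$@"                     # the maintenance step, passed as a command
  local status=$?
  oc adm uncordon "${node}"
  return "${status}"
}

# Usage: maintain_node node1 echo "maintenance finished"
```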

Example: Drain node1

$ oc adm drain node1

2.6.2.3. node-logs

Displays and filters node logs.

Example: Get the logs for NetworkManager

$ oc adm node-logs --role master -u NetworkManager.service

2.6.2.4. taint

Updates the taints on one or more nodes.

Example: Add a taint to dedicate a node for a set of users

$ oc adm taint nodes node1 dedicated=groupName:NoSchedule

Example: Remove the taints with key dedicated from node node1

$ oc adm taint nodes node1 dedicated-

2.6.2.5. uncordon

Marks a node as schedulable.

Example: Mark node1 as schedulable

$ oc adm uncordon node1

2.6.3. Security and policy CLI commands

2.6.3.1. certificate

Approves or denies a certificate signing request (CSR).

Example: Approve a CSR

$ oc adm certificate approve csr-sqgzp

2.6.3.2. groups

Manages groups in your cluster.

Example: Create a new group

$ oc adm groups new my-group

2.6.3.3. new-project

Creates a new project and specifies administrative options.

Example: Create a new project by using a node selector

$ oc adm new-project myproject --node-selector='type=user-node,region=east'

2.6.3.4. pod-network

Manages pod networks in the cluster.

Example: Isolate project1 and project2 from other non-global projects

$ oc adm pod-network isolate-projects project1 project2

2.6.3.5. policy

Manages roles and policies on the cluster.

Example: Add the edit role to user1 for all projects

$ oc adm policy add-cluster-role-to-user edit user1

Example: Add the privileged security context constraint (SCC) to a service account

$ oc adm policy add-scc-to-user privileged -z myserviceaccount

2.6.4. Maintenance CLI commands

2.6.4.1. migrate

Migrates resources on the cluster to a new version or format, depending on the subcommand used.

Example: Perform an update of all stored objects

$ oc adm migrate storage

Example: Perform an update of only pods

$ oc adm migrate storage --include=pods

2.6.4.2. prune

Removes older versions of resources from the server.

Example: Prune older builds, including builds whose build configs no longer exist

$ oc adm prune builds --orphans

2.6.5. Configuration CLI commands

2.6.5.1. create-bootstrap-project-template

Creates a bootstrap project template.

Example: Output a bootstrap project template in YAML format to stdout

$ oc adm create-bootstrap-project-template -o yaml

2.6.5.2. create-error-template

Creates a template for customizing the error page.

Example: Output a template for the error page to stdout

$ oc adm create-error-template

2.6.5.3. create-kubeconfig

Creates a basic .kubeconfig file from client certificates.

Example: Create a .kubeconfig file with the provided client certificates

$ oc adm create-kubeconfig \
    --client-certificate=/path/to/client.crt \
    --client-key=/path/to/client.key \
    --certificate-authority=/path/to/ca.crt

2.6.5.4. create-login-template

Creates a template for customizing the login page.

Example: Output a template for the login page to stdout

$ oc adm create-login-template

2.6.5.5. create-provider-selection-template

Creates a template for customizing the provider selection page.

Example: Output a template for the provider selection page to stdout

$ oc adm create-provider-selection-template

2.6.6. Other administrator CLI commands

2.6.6.1. build-chain

Outputs the inputs and dependencies of your builds.

Example: Output the dependencies for the perl image stream

$ oc adm build-chain perl

2.6.6.2. completion

Outputs shell completion code for the oc adm commands for the specified shell.

Example: Display the oc adm completion code for Bash

$ oc adm completion bash

2.6.6.3. config

Manages the client configuration files. This command has the same behavior as the oc config command.

Example: Display the current configuration

$ oc adm config view

Example: Switch to a different context

$ oc adm config use-context test-context

2.6.6.4. release

Manages various aspects of the OpenShift Container Platform release process, such as viewing information about a release or inspecting the contents of a release.

Example: Generate a changelog between two releases and save it to changelog.md

$ oc adm release info --changelog=/tmp/git \
    quay.io/openshift-release-dev/ocp-release:4.7.0-x86_64 \
    quay.io/openshift-release-dev/ocp-release:4.7.1-x86_64 \
    > changelog.md

2.6.6.5. verify-image-signature

Verifies the image signature of an image imported to the internal registry by using the local public GPG key.

Example: Verify the nodejs image signature

$ oc adm verify-image-signature \
    sha256:2bba968aedb7dd2aafe5fa8c7453f5ac36a0b9639f1bf5b03f95de325238b288 \
    --expected-identity 172.30.1.1:5000/openshift/nodejs:latest \
    --public-key /etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release \
    --save

2.7. Using the oc and kubectl commands

The Kubernetes command-line interface (CLI), kubectl, is used to run commands against a Kubernetes cluster. Because OpenShift Container Platform is a certified Kubernetes distribution, you can use the supported kubectl binaries that ship with OpenShift Container Platform. Alternatively, you can use the oc binary to gain extended functionality.

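2.7.1. The oc binary

The oc binary offers the same capabilities as the kubectl binary, but it is extended to natively support additional OpenShift Container Platform features, including:

- Full support for OpenShift Container Platform resources: Resources such as DeploymentConfig, BuildConfig, Route, ImageStream, and ImageStreamTag objects are specific to OpenShift Container Platform distributions, and are built upon standard Kubernetes primitives.

- Authentication: The oc binary offers a built-in login command for authentication. It lets you work with OpenShift Container Platform projects, which map Kubernetes namespaces to authenticated users. For more information, see Understanding authentication.

- Additional commands: The additional command oc new-app, for example, makes it easier to start new applications by using existing source code or pre-built images. Similarly, the additional command oc new-project makes it easier to start a project that you can switch to as your default.

If you installed an earlier version of the oc binary, you cannot use it to complete all of the commands in OpenShift Container Platform 4.7. If you want the latest features, you must download and install the latest version of the oc binary that corresponds to your OpenShift Container Platform server version.

Non-security API changes involve at least two minor releases (for example, 4.1 to 4.2 to 4.3) to allow older oc binaries to update. Using new capabilities might require newer oc binaries. A 4.3 server might have additional capabilities that a 4.2 oc binary cannot use, and a 4.3 oc binary might have additional capabilities that a 4.2 server does not support.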

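| | X.Y (oc client) | X.Y+N [a] (oc client) |
|---|---|---|
| X.Y (server) | Fully compatible. | The oc client might provide options and features that are not compatible with the accessed server. |
| X.Y+N [a] (server) | The oc client might not be able to access server features. | Fully compatible. |

[a] Where N is a number greater than or equal to 1.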
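The table can be expressed as a small check that compares the Y (minor) components of the client and server versions. The following is a minimal sketch. It assumes plain `X.Y` or `X.Y.Z` strings that share the same major version, for example values copied from the output of `oc version`. The function name `oc_skew` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: classify oc client/server compatibility per the table.
# Arguments: client version and server version, each X.Y or X.Y.Z,
# assumed to share the same major version X.
oc_skew() {
  local client_minor="${1#*.}" server_minor="${2#*.}"
  client_minor="${client_minor%%.*}"   # keep only the Y component
  server_minor="${server_minor%%.*}"
  if [ "${client_minor}" -eq "${server_minor}" ]; then
    echo "fully compatible"
  elif [ "${client_minor}" -lt "${server_minor}" ]; then
    # older client (X.Y) against a newer server (X.Y+N)
    echo "client might not be able to access server features"
  else
    # newer client (X.Y+N) against an older server (X.Y)
    echo "client might offer options the server does not support"
  fi
}

# Usage: oc_skew 4.6.18 4.7.0
```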

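2.7.2. The kubectl binary

The kubectl binary supports the existing workflows and scripts of new OpenShift Container Platform users who come from a standard Kubernetes environment, and of users who prefer the kubectl CLI. Existing kubectl users can continue to use the binary to interact with Kubernetes primitives, with no changes required to the OpenShift Container Platform cluster.

You can install the supported kubectl binary by following the steps to Install the OpenShift CLI. The kubectl binary is included in the archive if you download the binary. It is also installed when you install the CLI by using an RPM.

For more information, see the kubectl documentation.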

Chapter 3. Developer CLI (odo)

3.1. odo release notes

3.1.1. Notable changes and improvements in odo version 2.5.0

- Creates unique routes for each component, using adler32 hashing.

- Supports additional fields in the devfile for assigning resources:

  - cpuRequest

  - cpuLimit

  - memoryRequest

  - memoryLimit

- Adds the --deploy flag to the odo delete command, to remove components deployed by using the odo deploy command:

  $ odo delete --deploy

- Adds mapping support to the odo link command.

- Supports ephemeral volumes by using the ephemeral field in volume components.

- Sets the default answer to yes when asking for Telemetry opt-in.

- Improves metrics by sending additional Telemetry data to the devfile registry.

- Updates the bootstrap image to registry.access.redhat.com/ocp-tools-4/odo-init-container-rhel8:1.1.11.

- The upstream repository is available at https://github.com/redhat-developer/odo.

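3.1.2. Bug fixes

- Previously, odo deploy failed if the .odo/env file did not exist. The command now creates the .odo/env file if required.

- Previously, interactive component creation by using the odo create command failed if you were disconnected from the cluster. This issue is fixed in the latest release.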

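3.1.3. Support

Product

If you find an error, encounter a bug, or have suggestions for improving the functionality of odo, file an issue in Bugzilla. Choose OpenShift Developer Tools and Services as the product type and odo as the component.

Provide as many details in the issue description as possible.

Documentation

If you find an error or have suggestions for improving the documentation, file a Jira issue for the most relevant documentation component.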

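3.2. Understanding odo

Red Hat OpenShift Developer CLI (odo) is a tool for creating applications on OpenShift Container Platform and Kubernetes. With odo, you can develop, test, debug, and deploy microservices-based applications on a Kubernetes cluster without a deep understanding of the platform.

odo follows a create and push workflow. As a user, when you create, the information (or manifest) is stored in a configuration file. When you push, the corresponding resources are created on the Kubernetes cluster. All of this configuration is stored in the Kubernetes API for seamless accessibility and functionality.

odo uses the service and link commands to link components and services together. odo achieves this by creating and deploying services based on Kubernetes Operators in the cluster. Services can be created by using any of the Operators available on the Operator Hub. After a service is linked, odo injects the service configuration into the component. Your application can then use this configuration to communicate with the Operator-backed service.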

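3.2.1. odo key features

odo is designed to be a developer-friendly interface to Kubernetes, with the ability to:

- Quickly deploy applications on a Kubernetes cluster by creating a new manifest or using an existing one.

- Use commands to easily create and update the manifest, without the need to understand and maintain Kubernetes configuration files.

- Provide secure access to applications running on a Kubernetes cluster.

- Add and remove additional storage for applications on a Kubernetes cluster.

- Create Operator-backed services and link your application to them.

- Create a link between multiple microservices that are deployed as odo components.

- Remotely debug applications you deployed by using odo in your IDE.

- Easily test applications deployed on Kubernetes by using odo.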

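3.2.2. odo core concepts

odo abstracts Kubernetes concepts into terminology that is familiar to developers:

- Application: A typical application, developed with a cloud-native approach, that is used to perform a particular task. Examples of applications include online video streaming, online shopping, and hotel reservation systems.

- Component: A set of Kubernetes resources that can run and be deployed separately. A cloud-native application is a collection of small, independent, loosely coupled components. Examples of components include an API back end, a web interface, and a payment back end.

- Project: A single unit containing your source code, tests, and libraries.

- Context: A directory that contains the source code, tests, libraries, and odo configuration files for a single component.

- URL: A mechanism to expose a component for access from outside the cluster.

- Storage: Persistent storage in the cluster. It persists data across restarts and component rebuilds.

- Service: An external application that provides additional functionality to a component. Examples of services include PostgreSQL, MySQL, Redis, and RabbitMQ. In odo, services are provisioned from the OpenShift Service Catalog and must be enabled within your cluster.

- devfile: An open standard for defining containerized development environments that enables developer tools to simplify and accelerate workflows. For more information, see the documentation at https://devfile.io. You can connect to publicly available devfile registries, or you can install a secure registry.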

3.2.3. Listing components in odo

odo uses the portable devfile format to describe components and their related URLs, storage, and services. odo can connect to various devfile registries to download devfiles for different languages and frameworks. For more information about managing the registries that odo uses to retrieve devfile information, see the documentation for the odo registry command.

You can list all the devfiles available on the different registries with the odo catalog list components command.

Procedure

1. Log in to the cluster with odo:

   $ odo login -u developer -p developer

2. List the available odo components:

   $ odo catalog list components

3.2.4. Telemetry in odo

odo collects information about how it is used, including metrics on the operating system, RAM, CPU, number of cores, odo version, errors, success or failure, and how long odo commands take to complete.

You can modify your Telemetry consent by using the odo preference command:

- odo preference set ConsentTelemetry true consents to Telemetry.

- odo preference unset ConsentTelemetry disables Telemetry.

- odo preference view shows the current preferences.

3.3. Installing odo

You can install the odo CLI on Linux, Windows, or macOS by downloading a binary. You can also install the OpenShift VS Code extension, which uses both the odo and the oc binaries to interact with your OpenShift Container Platform cluster. For Red Hat Enterprise Linux (RHEL), you can install the odo CLI as an RPM.

Currently, odo does not support installation in a restricted network environment.

3.3.1. Installing odo on Linux

The odo CLI is available to download as a binary and as a tarball for multiple operating systems and architectures, including:

| Operating system | Binary | Tarball |
|---|---|---|
| Linux | | |
| Linux on IBM Power | | |
| Linux on IBM Z and LinuxONE | | |

Procedure

1. Navigate to the content gateway and download the appropriate file for your operating system and architecture.

   - If you download the binary, rename it to odo:

     $ curl -L https://developers.redhat.com/content-gateway/rest/mirror/pub/openshift-v4/clients/odo/latest/odo-linux-amd64 -o odo

   - If you download the tarball, extract the binary:

     $ curl -L https://developers.redhat.com/content-gateway/rest/mirror/pub/openshift-v4/clients/odo/latest/odo-linux-amd64.tar.gz -o odo.tar.gz
     $ tar xvzf odo.tar.gz

2. Change the file permissions of the binary:

   $ chmod +x <filename>

3. Place the odo binary in a directory that is on your PATH. To check your PATH, run the following command:

   $ echo $PATH

4. Verify that odo is now available on your system:

   $ odo version
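You can script these steps by deriving the file name from the machine architecture. The following is a minimal sketch. It reuses the content gateway URL from the commands above. The `ppc64le` and `s390x` file names are assumptions that follow the `odo-linux-amd64` naming pattern and are not confirmed. The helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Base URL taken from the curl commands above.
ODO_BASE_URL="https://developers.redhat.com/content-gateway/rest/mirror/pub/openshift-v4/clients/odo/latest"

# Hypothetical helper: map `uname -m` output to an odo binary name.
# The ppc64le and s390x names are assumed, not confirmed.
odo_binary_name() {
  case "$1" in
    x86_64)  echo "odo-linux-amd64" ;;
    ppc64le) echo "odo-linux-ppc64le" ;;
    s390x)   echo "odo-linux-s390x" ;;
    *)       echo "unsupported architecture: $1" >&2; return 1 ;;
  esac
}

# Hypothetical installer: download, make executable, move into a PATH directory.
install_odo() {
  local dest="${1:-/usr/local/bin}" name
  name="$(odo_binary_name "$(uname -m)")" || return 1
  curl -L "${ODO_BASE_URL}/${name}" -o odo &&
    chmod +x odo &&
    mv odo "${dest}/odo"
}

# Usage: install_odo "$HOME/bin" && odo version
```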

3.3.2. Installing odo on Windows

The odo CLI for Windows is available to download as a binary and as an archive.

| Operating system | Binary | Tarball |
|---|---|---|
| Windows | | |

Procedure

1. Navigate to the content gateway and download the appropriate file:

   - If you download the binary, rename it to odo.exe.

   - If you download the archive, extract the binary with a ZIP program and then rename it to odo.exe.

2. Move the odo.exe binary to a directory that is on your PATH. To check your PATH, open the command prompt and run the following command:

   C:\> path

3. Verify that odo is now available on your system:

   C:\> odo version

3.3.3. Installing odo on macOS

The odo CLI for macOS is available to download as a binary and as a tarball.

| Operating system | Binary | Tarball |
|---|---|---|
| macOS | | |

Procedure

1. Navigate to the content gateway and download the appropriate file:

   - If you download the binary, rename it to odo:

     $ curl -L https://developers.redhat.com/content-gateway/rest/mirror/pub/openshift-v4/clients/odo/latest/odo-darwin-amd64 -o odo

   - If you download the tarball, extract the binary:

     $ curl -L https://developers.redhat.com/content-gateway/rest/mirror/pub/openshift-v4/clients/odo/latest/odo-darwin-amd64.tar.gz -o odo.tar.gz
     $ tar xvzf odo.tar.gz

2. Change the file permissions of the binary:

   # chmod +x odo

3. Place the odo binary in a directory that is on your PATH. To check your PATH, run the following command:

   $ echo $PATH

4. Verify that odo is now available on your system:

   $ odo version

3.3.4. Installing odo on VS Code

The OpenShift VS Code extension uses both odo and the oc binary to interact with your OpenShift Container Platform cluster. To work with these features, install the OpenShift VS Code extension on VS Code.

Prerequisites

- You have installed VS Code.

Procedure

1. Open VS Code.

2. Launch VS Code Quick Open with Ctrl+P.

3. Enter the following command:

   $ ext install redhat.vscode-openshift-connector

3.3.5. Installing odo on Red Hat Enterprise Linux (RHEL) using an RPM

For Red Hat Enterprise Linux (RHEL), you can install the odo CLI as an RPM.

Procedure

1. Register with Red Hat Subscription Manager:

   # subscription-manager register

2. Pull the latest subscription data:

   # subscription-manager refresh

3. List the available subscriptions:

   # subscription-manager list --available --matches '*OpenShift Developer Tools and Services*'

4. In the output of the previous command, find the Pool ID field for your OpenShift Container Platform subscription and attach the subscription to the registered system:

   # subscription-manager attach --pool=<pool_id>

5. Enable the repositories required by odo:

   # subscription-manager repos --enable="ocp-tools-4.9-for-rhel-8-x86_64-rpms"

6. Install the odo package:

   # yum install odo

7. Verify that odo is now available on your system:

   $ odo version

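3.4. Configuring the odo CLI

The global settings for odo are in the preference.yaml file, which is located in the $HOME/.odo directory by default.

You can set a different location for the preference.yaml file by exporting the GLOBALODOCONFIG variable.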
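For example, you can use a separate preference file for a single command or for a whole shell session. The following is a minimal sketch. It assumes that the variable holds the full path of the preference file. The path `/tmp/odo-ci/preference.yaml` and the helper name `odo_with_prefs` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: run one odo command against an alternate
# preference.yaml without changing the caller's environment.
odo_with_prefs() {
  local prefs="$1"; shift
  GLOBALODOCONFIG="${prefs}" odo "$@"
}

# For a whole shell session instead:
#   export GLOBALODOCONFIG=/tmp/odo-ci/preference.yaml
# Usage: odo_with_prefs /tmp/odo-ci/preference.yaml preference view
```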

3.4.1. Viewing the current configuration

You can view the current odo CLI configuration by using the following command:

$ odo preference view

3.4.2. Setting a value

You can set a value for a preference key by using the following command:

$ odo preference set <key> <value>

Preference keys are case-insensitive.

Example command

$ odo preference set updatenotification false

Example output

Global preference was successfully updated

3.4.3. Unsetting a value

You can unset a value for a preference key by using the following command:

$ odo preference unset <key>

You can use the -f flag to skip the confirmation.

Example command

$ odo preference unset updatenotification
? Do you want to unset updatenotification in the preference (y/N) y

Example output

Global preference was successfully updated

3.4.4. Preference key table

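The following table shows the available options for setting preference keys for the odo CLI:

| Preference key | Description | Default value |
|---|---|---|
| | | True |
| | | Current directory name |
| | Timeout for the Kubernetes server connection check. | 1 second |
| | Timeout for waiting for a git component build to complete. | 300 seconds |
| | Timeout for waiting for a component to start. | 240 seconds |
| | … to store source code | True |
| | | False |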

3.4.5. Ignoring files or patterns

You can configure a list of files or patterns to ignore by modifying the .odoignore file in the root directory of your application. This applies to both odo push and odo watch.

If the .odoignore file does not exist, the .gitignore file is used instead to ignore specific files and folders.

To ignore .git files, any files with the .js extension, and the folder tests, add the following to either the .odoignore or the .gitignore file:

.git
*.js
tests/

The .odoignore file allows any glob expressions.
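You can also create the same ignore list from a script, for example when preparing a component directory in CI. The following is a minimal sketch. The helper name `write_odoignore` is hypothetical, and the helper overwrites any existing .odoignore file in the given directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the example ignore patterns to <dir>/.odoignore.
# The quoted 'EOF' keeps *.js literal instead of expanding it.
write_odoignore() {
  local dir="${1:-.}"
  cat > "${dir}/.odoignore" <<'EOF'
.git
*.js
tests/
EOF
}

# Usage: write_odoignore ./node-backend && (cd ./node-backend && odo push)
```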

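3.5. odo CLI reference

3.5.1. odo build-images

odo can build container images based on Dockerfiles, and push these images to their registries.

When you run the odo build-images command, odo searches for all components of the image type in the devfile.yaml file.

For each image component, odo runs either podman or docker (the first one found, in this order) to build the image with the specified Dockerfile, build context, and arguments.

If the --push flag is passed to the command, the images are pushed to their registries after they are built.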
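To check in advance which tool odo will pick up, you can reproduce the lookup order described above in a script. The following is a minimal sketch that checks for podman first and docker second with `command -v`. The function name `detect_container_engine` is hypothetical, and this is not odo's own code.

```shell
#!/usr/bin/env bash
# Hypothetical check that mimics the documented lookup order:
# the first of podman or docker found on PATH is used.
detect_container_engine() {
  local engine
  for engine in podman docker; do
    if command -v "${engine}" >/dev/null 2>&1; then
      echo "${engine}"
      return 0
    fi
  done
  echo "neither podman nor docker was found on PATH" >&2
  return 1
}

# Usage: detect_container_engine && odo build-images --push
```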

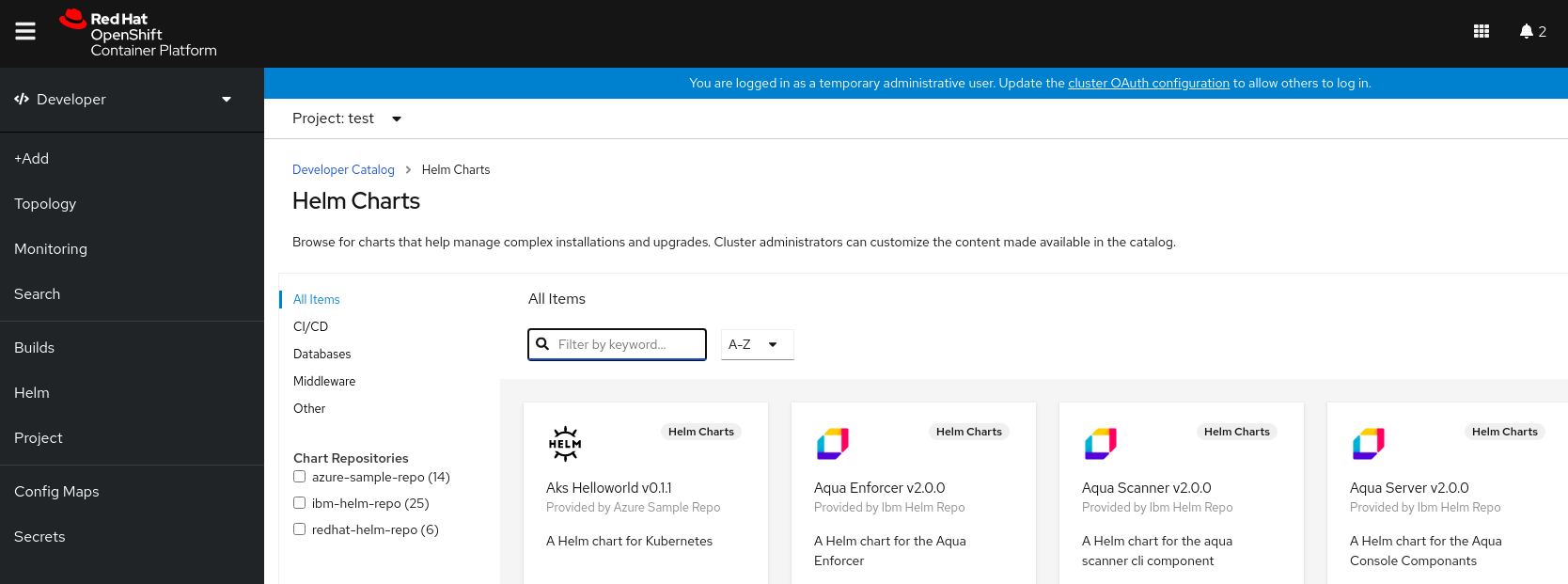

3.5.2. odo catalog

odo uses different catalogs to deploy components and services.

3.5.2.1. Components

odo uses the portable devfile format to describe components. It can connect to various devfile registries to download devfiles for different languages and frameworks. For more information, see odo registry.

3.5.2.1.1. Listing components

To list all the devfiles available on the different registries, run the following command:

$ odo catalog list components

3.5.2.1.2. Getting information about a component

To get more information about a specific component, run the following command:

$ odo catalog describe component

For example, run the following command:

$ odo catalog describe component nodejs

For more information about creating your project from a starter project, see odo create.

3.5.2.2. Services

odo can deploy services with the help of Operators.

odo supports only Operators that are deployed with the help of the Operator Lifecycle Manager.

3.5.2.2.1. Listing services

To list the available Operators and their associated services, run the following command:

$ odo catalog list services

Example output

Services available through Operators
NAME                          CRDs
postgresql-operator.v0.1.1    Backup, Database
redis-operator.v0.8.0         RedisCluster, Redis

In this example, two Operators are installed in the cluster. The postgresql-operator.v0.1.1 Operator deploys services related to PostgreSQL: Backup and Database. The redis-operator.v0.8.0 Operator deploys services related to Redis: RedisCluster and Redis.

To get a list of all the available Operators, odo fetches the ClusterServiceVersion (CSV) resources in the current namespace that are in the Succeeded phase. For Operators that support cluster-wide access, these resources are automatically added to each new namespace when it is created. However, the resources can take some time to reach the Succeeded phase, and until they are ready, odo might return an empty list.
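Because odo might return an empty list until the CSVs reach the Succeeded phase, a script can wait for that phase before it runs `odo catalog list services`. The following is a minimal sketch. It assumes that `oc` is logged in to the same namespace. The helper name, the 5-second interval, and the attempt limit are hypothetical choices.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll until every CSV in the current namespace
# reports the Succeeded phase, or give up after a number of attempts.
wait_for_csvs() {
  local attempts="${1:-60}" phases phase pending
  while [ "${attempts}" -gt 0 ]; do
    phases="$(oc get csv -o jsonpath='{.items[*].status.phase}' 2>/dev/null)"
    pending=0
    # word splitting on purpose: one phase per CSV
    for phase in ${phases}; do
      [ "${phase}" = "Succeeded" ] || pending=1
    done
    # an empty list also counts as not ready yet
    if [ -n "${phases}" ] && [ "${pending}" -eq 0 ]; then
      return 0
    fi
    attempts=$((attempts - 1))
    [ "${attempts}" -gt 0 ] && sleep 5
  done
  return 1
}

# Usage: wait_for_csvs 60 && odo catalog list services
```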

3.5.2.2.2. Searching services

To search for a specific service by a keyword, run the following command:

$ odo catalog search service

For example, to retrieve the PostgreSQL services, run the following command:

$ odo catalog search service postgres

Example output

Services available through Operators
NAME                          CRDs
postgresql-operator.v0.1.1    Backup, Database

A list of Operators that contain the searched keyword in their name is displayed.

3.5.2.2.3. Getting information about a service

To get more information about a specific service, run the following command:

$ odo catalog describe service

For example:

$ odo catalog describe service postgresql-operator.v0.1.1/Database

A service is represented in the cluster by a CustomResourceDefinition (CRD) resource. The previous command displays details about the CRD, such as the kind, the version, and the list of fields available to define an instance of this custom resource.

The list of fields is extracted from the OpenAPI schema included in the CRD. This information is optional in a CRD. If it is not present, it is extracted instead from the ClusterServiceVersion (CSV) resource that represents the service.

You can also request the description of an Operator-backed service without providing CRD type information. To describe the Redis Operator on a cluster without specifying a CRD, run the following command:

$ odo catalog describe service redis-operator.v0.8.0

3.5.3. odo create

odo uses a devfile to store the configuration of a component and to describe the component's resources, such as storage and services. The odo create command generates this file.

3.5.3.1. Creating a component

To create a devfile for an existing project, run the odo create command with the type and name of your component (for example, nodejs or go):

$ odo create nodejs mynodejs

In this example, nodejs is the type of the component and mynodejs is the name of the component that odo creates for you.

For a list of all the supported component types, run the command odo catalog list components.

If your source code exists outside the current directory, you can use the --context flag to specify the path. For example, if the source for the nodejs component is in a folder called node-backend relative to the current working directory, run the following command:

$ odo create nodejs mynodejs --context ./node-backend

The --context flag supports relative and absolute paths.

To specify the project or app where your component will be deployed, use the --project and --app flags. For example, to create a component that is part of the myapp app inside the backend project, run the following command:

$ odo create nodejs --app myapp --project backend

If these flags are not specified, they default to the active app and project.

3.5.3.2. Starter projects

Use a starter project if you do not have existing source code but want to get a devfile and component up and running quickly. To use a starter project, add the --starter flag to the odo create command.

To get a list of available starter projects for a component type, run the odo catalog describe component command. For example, to get all available starter projects for the nodejs component type, run the following command:

$ odo catalog describe component nodejs

Then specify the desired project by using the --starter flag on the odo create command:

$ odo create nodejs --starter nodejs-starter

This downloads the example template that corresponds to the chosen component type, in this example nodejs. The template is downloaded to your current directory, or to the location specified by the --context flag. If a starter project has its own devfile, this devfile is preserved.

3.5.3.3. Using an existing devfile

To create a new component from an existing devfile, specify the path to the devfile by using the --devfile flag. For example, to create a component called mynodejs based on a devfile from GitHub, use the following command:

$ odo create mynodejs --devfile https://raw.githubusercontent.com/odo-devfiles/registry/master/devfiles/nodejs/devfile.yaml

3.5.3.4. Interactive creation

You can also run the odo create command interactively, so that it guides you through the steps needed to create a component.

You are prompted to choose the component type, name, and project for the component. You can also choose whether to download a starter project. When you finish, a new devfile.yaml file is created in the working directory.

To deploy these resources to your cluster, run the command odo push.

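3.5.4. odo delete

The odo delete command is useful for deleting resources that are managed by odo.

3.5.4.1. Deleting a component

To delete a devfile component, run the odo delete command:

$ odo delete

If the component has been pushed to the cluster, the component is deleted from the cluster, along with its dependent storage, URL, secrets, and other resources. If the component has not been pushed, the command exits with an error stating that it could not find the resources on the cluster.

Use the -f or --force flag to avoid the confirmation questions.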

3.5.4.2. Undeploying devfile Kubernetes components

To undeploy the devfile Kubernetes components that were deployed with odo deploy, run the odo delete command with the --deploy flag:

$ odo delete --deploy

Use the -f or --force flag to avoid the confirmation questions.

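3.5.4.3. Delete all

To delete all artifacts, including the following items, run the odo delete command with the --all flag:

- devfile component

- Devfile Kubernetes components that were deployed by using the odo deploy command

- devfile

- Local configuration

$ odo delete --all

3.5.4.4. Available flags

-f, --force: Use this flag to avoid the confirmation questions.

-w, --wait: Use this flag to wait for the component and its dependencies to be deleted. This flag does not work when undeploying.

The documentation on Common Flags provides more information about the flags available for commands.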
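In a CI job, nobody is available to answer the confirmation questions, so the flags above are combined. The following is a minimal sketch. The helper name `odo_teardown` is hypothetical. The undeploy step runs separately because `--wait` does not apply when undeploying.

```shell
#!/usr/bin/env bash
# Hypothetical CI teardown: undeploy resources created by odo deploy,
# then delete the pushed component and wait for its dependencies to go.
odo_teardown() {
  odo delete --deploy -f || return 1
  odo delete -f -w
}

# Usage (from the component directory): odo_teardown
```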

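3.5.5. odo deploy

odo can deploy components in a manner similar to how a CI/CD system would deploy them. First, odo builds the container images, and then it deploys the Kubernetes resources required to deploy the components.

When you run the command odo deploy, odo searches the devfile for the default command of kind deploy, and runs this command. The deploy kind is supported by the devfile format starting from version 2.2.0.

The deploy command is typically a composite command, composed of several apply commands:

- A command that references an image component. When applied, it builds the image of the container to deploy, and then pushes the image to its registry.

- A command that references a Kubernetes component. When applied, it creates a Kubernetes resource in the cluster.

For example, with a devfile.yaml file that follows this pattern, a container image is built by using the Dockerfile present in the directory. The image is pushed to its registry, and a Kubernetes Deployment resource is then created in the cluster by using this freshly built image.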

3.5.6. odo link

The odo link command helps link an odo component to an Operator-backed service or to another odo component. It does this by using the Service Binding Operator. Currently, odo uses the Service Binding library, not the Operator itself, to achieve the desired functionality.

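3.5.6.1. Various linking options

odo provides various options for linking a component with an Operator-backed service or another odo component. All these options (or flags) can be used whether you are linking a component to a service or to another component.

3.5.6.1.1. Default behavior

By default, the odo link command creates a directory named kubernetes/ in your component directory and stores the information (YAML manifests) about services and links there. When you use odo push, odo compares these manifests with the state of the resources on the Kubernetes cluster. It then decides whether it needs to create, modify, or destroy resources to match what the user specified.

3.5.6.1.2. The --inlined flag

If you specify the --inlined flag to the odo link command, odo stores the link information inline in the devfile.yaml file in the component directory, instead of creating a file under the kubernetes/ directory. The --inlined flag behaves similarly in both the odo link and odo service create commands. This flag is helpful if you want everything stored in a single devfile.yaml file. You must remember to use the --inlined flag with each odo link and odo service create command that you run for the component.

3.5.6.1.3. The --map flag

Sometimes, you might want to add more binding information to the component, in addition to what is available by default. For example, if you are linking the component with a service and want to bind some information from the service's spec (short for specification), you can use the --map flag. Note that odo does not perform any validation against the spec of the service or component being linked. Using this flag is recommended only if you are comfortable working with Kubernetes YAML manifests.

3.5.6.1.4. The --bind-as-files flag

For all the linking options discussed so far, odo injects the binding information into the component as environment variables. If you want to mount this information as files instead, you can use the --bind-as-files flag. With this flag, odo injects the binding information as files into the /bindings location within your component's pod. Unlike in the environment variables scenario, when you use --bind-as-files, the files are named after the keys, and the value of each key is stored as the contents of its file.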
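Inside the container, an application or entrypoint script can then read each file as a key/value pair. The following is a minimal sketch. It assumes that the values are readable regular files somewhere under /bindings, either directly or in a subdirectory, because the exact layout can vary. The function name `print_bindings` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print every binding under a root directory as
# key=value, where the file name is the key and the content is the value.
print_bindings() {
  local root="${1:-/bindings}" file
  find "${root}" -type f | sort | while IFS= read -r file; do
    printf '%s=%s\n' "$(basename "${file}")" "$(cat "${file}")"
  done
}

# Usage (inside the pod): print_bindings /bindings
```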

3.5.6.2. Examples

3.5.6.2.1. Default odo link

In the following example, the backend component is linked with the PostgreSQL service by using the default odo link command. For the backend component, make sure that your component and service are pushed to the cluster:

$ odo list

Example output

APP     NAME        PROJECT       TYPE      STATE      MANAGED BY ODO
app     backend     myproject     spring    Pushed     Yes

$ odo service list

Example output

NAME                      MANAGED BY ODO      STATE      AGE
PostgresCluster/hippo     Yes (backend)       Pushed     59m41s

Now, run odo link to link the backend component with the PostgreSQL service:

$ odo link PostgresCluster/hippo

Example output

✓  Successfully created link between component "backend" and service "PostgresCluster/hippo"

To apply the link, please use `odo push`

Then run odo push to create the link on the Kubernetes cluster.

After a successful odo push, you see the following results:

バックエンドコンポーネントによってデプロイされたアプリケーションの URL を開くと、データベース内の

ToDoアイテムのリストが表示されます。たとえば、odo url listコマンドの出力では、todosが記載されているパスが含まれます。odo url list

$ odo url listCopy to Clipboard Copied! Toggle word wrap Toggle overflow 出力例

Found the following URLs for component backend
NAME STATE URL PORT SECURE KIND
8080-tcp Pushed http://8080-tcp.192.168.39.112.nip.io 8080 false ingress

The correct path for the URL is http://8080-tcp.192.168.39.112.nip.io/api/v1/todos. The exact URL depends on your setup. Also note that the database contains no todos unless you add some, so the URL might show only an empty JSON object.

You can also see the binding information related to the Postgres service that is injected into the backend component. By default, this binding information is injected as environment variables. You can check it by running the odo describe command from the backend component's directory:

$ odo describe

Example output:

Some of these variables are used in the backend component's src/main/resources/application.properties file so that the Java Spring Boot application can connect to the PostgreSQL database service.

Lastly, odo has created a directory called kubernetes/ in the backend component's directory, which contains the following files:

$ ls kubernetes
odo-service-backend-postgrescluster-hippo.yaml odo-service-hippo.yaml

These files contain the information (YAML manifests) for the following two resources:

- odo-service-hippo.yaml: the Postgres service, created by using the odo service create --from-file ../postgrescluster.yaml command.

- odo-service-backend-postgrescluster-hippo.yaml: the link, created by using the odo link command.
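Because the link manifest is either a separate file under kubernetes/ or inlined in devfile.yaml (see the --inlined flag below), a quick check of the directory tells you which case applies. In the following sketch, link_manifest_status is a hypothetical helper, and the file name pattern odo-service-<component>-<kind>-<service>.yaml, lower-cased, is inferred from the ls output above rather than documented as a fixed odo contract.

```shell
# Hypothetical helper: report whether a link manifest exists as a separate file.
# The naming pattern is an assumption inferred from the example ls output.
link_manifest_status() {
  local component_dir="$1" component="$2" kind="$3" service="$4" file
  # Build the expected file name and lower-case it, as in the ls output.
  file="odo-service-${component}-${kind}-${service}.yaml"
  file="$(printf '%s' "$file" | tr '[:upper:]' '[:lower:]')"
  if [ -f "$component_dir/kubernetes/$file" ]; then
    echo "separate manifest: kubernetes/$file"
  else
    echo "no separate manifest (link is inlined in devfile.yaml, or not created)"
  fi
}
```

From the backend component's directory, link_manifest_status . backend PostgresCluster hippo would report the separate file after a default odo link.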

3.5.6.2.2. Using odo link with the --inlined flag

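Using the --inlined flag with the odo link command has the same effect as running odo link without the flag, in that it injects the binding information. The difference is where the manifests are stored. In the previous case, there are two manifest files in the kubernetes/ directory: one for the Postgres service and another for the link between the backend component and this service. When you pass the --inlined flag, odo does not create a file under the kubernetes/ directory to store the YAML manifest; instead, it stores the manifest inline in the devfile.yaml file.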

To see this, first unlink the component from the PostgreSQL service:

$ odo unlink PostgresCluster/hippo

Example output:

✓ Successfully unlinked component "backend" from service "PostgresCluster/hippo"

To apply the changes, please use `odo push`

To unlink them on the cluster, run odo push. If you now inspect the kubernetes/ directory, you see only one file:

$ ls kubernetes

odo-service-hippo.yaml

Next, use the --inlined flag to create a link:

$ odo link PostgresCluster/hippo --inlined

Example output:

✓ Successfully created link between component "backend" and service "PostgresCluster/hippo"

To apply the link, please use `odo push`

As with the procedure that omits the --inlined flag, you need to run odo push to get the link created on the cluster. odo stores the configuration in devfile.yaml. In this file, you can see an entry like the following:

If you now run odo unlink PostgresCluster/hippo, odo first removes the link information from devfile.yaml, and the subsequent odo push removes the link from the cluster.

3.5.6.2.3. Custom bindings

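odo link accepts the --map flag, which injects custom binding information into the component. Such binding information is fetched from the manifest of the resource that you are linking to your component. For example, in the context of the backend component and the PostgreSQL service, you can inject information from the PostgreSQL service's manifest, the postgrescluster.yaml file, into the backend component.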

If your PostgresCluster service is named hippo (check the output of odo service list if your PostgresCluster service is named differently), run the following command to inject the value of postgresVersion from its YAML definition into the backend component:

$ odo link PostgresCluster/hippo --map pgVersion='{{ .hippo.spec.postgresVersion }}'

If your Postgres service is named something other than hippo, you must specify that name in place of .hippo in the value for pgVersion in the above command.
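Because the service name is embedded in the template, a small helper can build the --map value from the service name so that .hippo never has to be edited by hand. In the following sketch, map_value is a hypothetical helper, and the field path spec.postgresVersion is taken from the example above.

```shell
# Hypothetical helper: build a KEY={{ .<service>.<field path> }} value for --map.
map_value() {
  local key="$1" service="$2" field_path="$3"
  printf '%s={{ .%s.%s }}\n' "$key" "$service" "$field_path"
}
```

For example, with svc=hippo, odo link "PostgresCluster/$svc" --map "$(map_value pgVersion "$svc" spec.postgresVersion)" passes the same value as the command above. Quoting the command substitution keeps the template, which contains spaces, as a single argument.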

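After the link operation, run odo push as usual. When the push completes successfully, you can verify that the custom mapping was injected properly by running the following command from the backend component's directory: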

$ odo exec -- env | grep pgVersion

Example output:

pgVersion=13

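The grep check above only prints matching lines. A slightly stricter sketch, assuming env-style KEY=VALUE input such as the output of odo exec -- env, fails unless every expected key is present. has_env_keys is a hypothetical helper name.

```shell
# Hypothetical helper: read KEY=VALUE lines on stdin and succeed only if
# every key given as an argument appears at the start of some line.
has_env_keys() {
  local input key
  input="$(cat)"
  for key in "$@"; do
    if ! printf '%s\n' "$input" | grep -q "^${key}="; then
      echo "missing: $key" >&2
      return 1
    fi
  done
}
```

For example, odo exec -- env | has_env_keys pgVersion returns a non-zero status if the mapping was not injected. Unlike a single grep over several alternatives, which succeeds as soon as one key matches, this returns non-zero if any listed key is missing, so it can gate a script.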

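Because you might want to inject more than one piece of custom binding information, odo link accepts multiple key-value pairs. The only constraint is that each pair must be specified as --map <key>=<value>. For example, to inject the PostgreSQL image information along with the version, run: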

$ odo link PostgresCluster/hippo --map pgVersion='{{ .hippo.spec.postgresVersion }}' --map pgImage='{{ .hippo.spec.image }}'

Then run odo push. To check whether both mappings were injected correctly, run the following command:

$ odo exec -- env | grep -e "pgVersion\|pgImage"

Example output:

pgVersion=13

pgImage=registry.developers.crunchydata.com/crunchydata/crunchy-postgres-ha:centos8-13.4-0

3.5.6.2.3.1. To inline or not?

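You can accept the default behavior, in which odo link generates a manifest file for the link under the kubernetes/ directory. Alternatively, if you prefer to store everything in a single devfile.yaml file, you can use the --inlined flag.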

3.5.6.3. Binding as files

Another useful flag that odo link provides is --bind-as-files. When this flag is passed, the binding information is not injected into the component's Pod as environment variables; instead, it is mounted as a filesystem.

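Ensure that there is no existing link between the backend component and the PostgreSQL service. You can do this by running odo describe in the backend component's directory and checking whether you see output similar to the following: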

Linked Services:

· PostgresCluster/hippo

If it does, unlink the service from the component by using:

$ odo unlink PostgresCluster/hippo

$ odo push

3.5.6.4. --bind-as-files examples

3.5.6.4.1. Using the default odo link

By default, odo creates a manifest file under the kubernetes/ directory to store the link information. Link the backend component and the PostgreSQL service by using:

$ odo link PostgresCluster/hippo --bind-as-files

$ odo push

Example odo describe output:

Everything that was an environment variable in key=value format in the earlier odo describe output is now mounted as a file. Use the cat command to view the contents of some of these files.

Example command:

$ odo exec -- cat /bindings/backend-postgrescluster-hippo/password

Example output:

q({JC:jn^mm/Bw}eu+j.GX{k

Example command:

$ odo exec -- cat /bindings/backend-postgrescluster-hippo/user

Example output:

hippo

Example command:

$ odo exec -- cat /bindings/backend-postgrescluster-hippo/clusterIP

Example output:

10.101.78.56

3.5.6.4.2. Using --inlined

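The result of using --bind-as-files and --inlined together is similar to using odo link --inlined: the manifest of the link is stored in devfile.yaml instead of in a separate file under the kubernetes/ directory. Other than that, the odo describe output is the same as before.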

3.5.6.4.3. Custom bindings

When you pass custom bindings while linking the backend component to the PostgreSQL service, these custom bindings are mounted as files instead of being injected as environment variables. For example:

$ odo link PostgresCluster/hippo --map pgVersion='{{ .hippo.spec.postgresVersion }}' --map pgImage='{{ .hippo.spec.image }}' --bind-as-files

$ odo push

To validate that the custom bindings are mounted as files, run the following commands.

Example command:

$ odo exec -- cat /bindings/backend-postgrescluster-hippo/pgVersion

Example output:

13

Example command:

$ odo exec -- cat /bindings/backend-postgrescluster-hippo/pgImage

Example output:

registry.developers.crunchydata.com/crunchydata/crunchy-postgres-ha:centos8-13.4-0
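Because --bind-as-files changes where a value appears, a startup script might want to read a binding in either mode. The following sketch is one hypothetical approach: read_binding and BINDING_DIR are illustrative names, the default directory is the one used in this section, and it assumes the key name is the same in both modes, as it is for the pgVersion custom mapping shown in this section.

```shell
# Hypothetical helper: prefer the mounted binding file, fall back to the
# environment variable of the same name. BINDING_DIR is an assumed override.
read_binding() {
  local key="$1"
  local dir="${BINDING_DIR:-/bindings/backend-postgrescluster-hippo}"
  if [ -f "$dir/$key" ]; then
    cat "$dir/$key"
  else
    # printenv exits non-zero if the variable is not set.
    printenv "$key"
  fi
}
```

For example, read_binding pgVersion would print 13 in both the environment variable and the file-based setups shown above.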

3.5.7. odo registry

odo uses the portable devfile format to describe components. odo can connect to various devfile registries to download devfiles for different languages and frameworks.

You can connect to publicly available devfile registries, or you can install your own Secure Registry.

You can use the odo registry command to manage the registries that odo uses to retrieve devfile information.

3.5.7.1. Listing the registries

To list the registries that odo is currently connected to, run the following command:

$ odo registry list

Example output:

NAME URL SECURE

DefaultDevfileRegistry https://registry.devfile.io No

DefaultDevfileRegistry is the default registry used by odo. It is provided by the devfile.io project.
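In a setup script, it can help to add a registry only if it is not already listed. The following sketch checks the NAME column of odo registry list output. registry_configured is a hypothetical helper, and it assumes the output format shown above: a header row followed by one registry per line, with the name in the first column.

```shell
# Hypothetical helper: read `odo registry list` output on stdin and succeed
# if a registry with the given name appears in the first column.
registry_configured() {
  awk -v name="$1" 'NR > 1 && $1 == name { found = 1 } END { exit !found }'
}
```

For example, odo registry list | registry_configured StageRegistry || odo registry add StageRegistry https://registry.stage.devfile.io adds the staging registry only when it is missing.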

3.5.7.2. Adding a registry

To add a registry, run the following command:

$ odo registry add

Example output:

$ odo registry add StageRegistry https://registry.stage.devfile.io

New registry successfully added

If you are deploying your own Secure Registry, you can use the --token flag to specify a personal access token for authenticating to the secure registry:

$ odo registry add MyRegistry https://myregistry.example.com --token <access_token>

New registry successfully added

3.5.7.3. Deleting a registry

To delete a registry, run the following command:

$ odo registry delete

Example output:

$ odo registry delete StageRegistry

? Are you sure you want to delete registry "StageRegistry" Yes

Successfully deleted registry

Use the --force (or -f) flag to force the deletion of the registry without confirmation.
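In a script there is nobody to answer the confirmation prompt, so --force is what makes the deletion non-interactive. The following sketch deletes several registries in a loop. delete_registries and the ODO override variable are hypothetical; the override exists only so that the loop can be dry-run with echo.

```shell
# Hypothetical helper: delete each named registry without a confirmation prompt.
# ODO defaults to the real odo binary; set ODO=echo to print the commands instead.
delete_registries() {
  local name
  for name in "$@"; do
    "${ODO:-odo}" registry delete "$name" --force || return 1
  done
}
```

For example, ODO=echo delete_registries StageRegistry MyRegistry prints the two odo registry delete commands without running them; dropping ODO=echo runs them for real.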

3.5.7.4. Updating a registry

To update the URL or the personal access token of a registry that is already registered, run the following command:

$ odo registry update

Example output:

$ odo registry update MyRegistry https://otherregistry.example.com --token <other_access_token>