This documentation is for a release that is no longer maintained

See documentation for the latest supported version 3 or the latest supported version 4.

Scalability and performance

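Scaling your OpenShift Container Platform cluster and tuning performance in production environments

Abstract

Chapter 1. Recommended practices for installing large clusters

Apply the following practices when you install large clusters or scale a cluster to larger node counts.

1.1. Recommended practices for installing large scale clusters

When you install large clusters or scale a cluster to larger node counts, set the cluster network cidr accordingly in the install-config.yaml file before you install the cluster.

The default cluster network cidr 10.128.0.0/14 cannot be used if the cluster size exceeds 500 nodes. To grow beyond 500 nodes, set it to 10.128.0.0/12 or 10.128.0.0/10.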
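The 500-node boundary follows from how the cluster network is carved into per-node subnets. The following sketch illustrates the arithmetic, assuming a hostPrefix of 23 for each node subnet; that value and the function name are assumptions for illustration and are not stated in this section.

```python
import ipaddress


def max_node_subnets(cluster_cidr: str, host_prefix: int = 23) -> int:
    """Count the per-node subnets of size /host_prefix inside cluster_cidr.

    host_prefix=23 is an assumed value, not taken from this document.
    """
    network = ipaddress.ip_network(cluster_cidr, strict=False)
    if host_prefix < network.prefixlen:
        raise ValueError("host prefix must not be shorter than the cluster CIDR prefix")
    # Each extra prefix bit doubles the number of subnets that fit.
    return 2 ** (host_prefix - network.prefixlen)


for cidr in ("10.128.0.0/14", "10.128.0.0/12", "10.128.0.0/10"):
    print(cidr, max_node_subnets(cidr))
```

Under that assumption, a /14 network yields 512 node subnets, which is consistent with the limit of roughly 500 nodes, while /12 and /10 yield 2048 and 8192.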

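Chapter 2. Recommended host practices

This topic provides recommended host practices for OpenShift Container Platform.

These guidelines apply to OpenShift Container Platform with software-defined networking (SDN), not Open Virtual Network (OVN).

2.1. Recommended node host practices

The OpenShift Container Platform node configuration file contains important options. For example, two parameters control the maximum number of pods that can be scheduled to a node: podsPerCore and maxPods.

When both options are in use, the lower of the two values limits the number of pods on a node. Exceeding these values can result in:

- Increased CPU utilization

- Slow pod scheduling

- Potential out-of-memory scenarios, depending on the amount of memory in the node

- Exhaustion of the pool of IP addresses

- Resource overcommitting, leading to poor user application performance

In Kubernetes, a pod that holds a single container actually uses two containers. The second container sets up networking before the actual container starts. Therefore, a system running 10 pods actually has 20 containers running.

Disk IOPS throttling from the cloud provider might have an impact on CRI-O and kubelet. They might get overloaded when a large number of I/O-intensive pods run on the nodes. It is recommended that you monitor the disk I/O on the nodes and use volumes with sufficient throughput for the workload.

podsPerCore sets the number of pods the node can run based on the number of processor cores on the node. For example, if podsPerCore is set to 10 on a node with 4 processor cores, the maximum number of pods allowed on the node is 40.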

kubeletConfig:
  podsPerCore: 10

Setting podsPerCore to 0 disables this limit. The default is 0. podsPerCore cannot exceed maxPods.
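The way the two limits combine can be sketched as a small function. This is only an illustration of the rule described in this section; the function name is hypothetical, and the default of 250 for the maxPods cap is borrowed from the example value used in this section.

```python
def effective_max_pods(cores: int, pods_per_core: int = 0, max_pods: int = 250) -> int:
    """Return the pod limit that applies to a node.

    pods_per_core == 0 means the per-core limit is disabled, so only
    max_pods applies. Otherwise the lower of the two limits wins.
    """
    if pods_per_core <= 0:
        return max_pods
    return min(cores * pods_per_core, max_pods)


# 4 cores with podsPerCore=10 gives 40, which is below the maxPods cap.
print(effective_max_pods(cores=4, pods_per_core=10))
# 32 cores with podsPerCore=10 would give 320, so the maxPods cap applies.
print(effective_max_pods(cores=32, pods_per_core=10))
```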

maxPods sets the number of pods the node can run to a fixed value, regardless of the properties of the node.

kubeletConfig:
  maxPods: 250

2.2. Creating a KubeletConfig CRD to edit kubelet parameters

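The kubelet configuration is currently serialized as an Ignition configuration, so it can be edited directly. However, a new kubelet-config-controller has also been added to the Machine Config Controller (MCC). It lets you use a KubeletConfig custom resource (CR) to edit the kubelet parameters.

Because the fields in the kubeletConfig object are passed directly to the kubelet from upstream Kubernetes, the kubelet validates those values directly. Invalid values in the kubeletConfig object might make cluster nodes unavailable. For valid values, see the Kubernetes documentation.

Consider the following guidance:

- Create one KubeletConfig CR for each machine config pool with all the configuration changes you want for that pool. If you are applying the same content to all of the pools, you need only one KubeletConfig CR for all of the pools.

- Edit an existing KubeletConfig CR to modify existing settings or add new settings, instead of creating a CR for each change. It is recommended that you create a new CR only to modify a different machine config pool, or for changes that are intended to be temporary, so that you can revert them.

- As needed, create multiple KubeletConfig CRs, with a limit of 10 per cluster. For the first KubeletConfig CR, the Machine Config Operator (MCO) creates a machine config appended with kubelet. With each subsequent CR, the controller creates another kubelet machine config with a numeric suffix. For example, if you have a kubelet machine config with a -2 suffix, the next kubelet machine config is appended with -3.

If you want to delete the machine configs, delete them in reverse order to avoid exceeding the limit. For example, delete the kubelet-3 machine config before you delete the kubelet-2 machine config.

If you have a machine config with a kubelet-9 suffix and you create another KubeletConfig CR, a new machine config is not created, even if there are fewer than 10 kubelet machine configs.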

Example KubeletConfig CR

$ oc get kubeletconfig

NAME AGE
set-max-pods 15m

Example showing a KubeletConfig machine config

$ oc get mc | grep kubelet

...
99-worker-generated-kubelet-1 b5c5119de007945b6fe6fb215db3b8e2ceb12511 3.2.0 26m
...

The following procedure is an example that shows how to configure the maximum number of pods per node on the worker nodes.

Prerequisites

Obtain the label associated with the static MachineConfigPool CR for the type of node you want to configure. Perform one of the following steps:

View the machine config pool:

$ oc describe machineconfigpool <name>

For example:

$ oc describe machineconfigpool worker

If a label has been added, it appears under labels in the output.

If the label is not present, add a key/value pair:

$ oc label machineconfigpool worker custom-kubelet=set-max-pods

Procedure

View the available machine configuration objects that you can select:

$ oc get machineconfig

By default, the two kubelet-related configs are 01-master-kubelet and 01-worker-kubelet.

Check the current value for the maximum pods per node:

$ oc describe node <node_name>

For example:

$ oc describe node ci-ln-5grqprb-f76d1-ncnqq-worker-a-mdv94

Look for value: pods: <value> in the Allocatable stanza.

To set the maximum pods per node on the worker nodes, create a custom resource file that contains the kubelet configuration.

Note: The rate at which the kubelet talks to the API server depends on queries per second (QPS) and burst values. The default values, 50 for kubeAPIQPS and 100 for kubeAPIBurst, are sufficient if a limited number of pods run on each node. It is recommended that you update the kubelet QPS and burst rates if there are enough CPU and memory resources on the node.

Update the machine config pool for workers with the label:

$ oc label machineconfigpool worker custom-kubelet=large-pods

Create the KubeletConfig object:

$ oc create -f change-maxPods-cr.yaml

Verify that the KubeletConfig object is created:

$ oc get kubeletconfig

Example output

NAME AGE
set-max-pods 15m

Depending on the number of worker nodes in the cluster, wait for the worker nodes to be rebooted one by one. For a cluster with 3 worker nodes, this could take about 10 to 15 minutes.

Verify that the changes are applied to the node.

Check on a worker node that the maxPods value changed:

$ oc describe node <node_name>

Locate the Allocatable stanza. The pods parameter should report the value you set in the KubeletConfig object.

Verify the change in the KubeletConfig object:

$ oc get kubeletconfigs set-max-pods -o yaml

The output should show a status of "true" and type:Success.

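2.4. Control plane node sizing

The control plane node resource requirements depend on the number of nodes in the cluster. The following control plane node size recommendations are based on the results of control plane density focused testing. The control plane tests create the following objects across the cluster in each of the namespaces, depending on the node counts:

- 12 image streams

- 3 build configurations

- 6 builds

- 1 deployment with 2 pod replicas mounting two secrets each

- 2 deployments with 1 pod replica mounting two secrets

- 3 services pointing to the previous deployments

- 3 routes pointing to the previous deployments

- 10 secrets, 2 of which are mounted by the previous deployments

- 10 config maps, 2 of which are mounted by the previous deployments

| Number of worker nodes | Cluster load (namespaces) | CPU cores | Memory (GB) |

|---|---|---|---|

| 25 | 500 | 4 | 16 |

| 100 | 1000 | 8 | 32 |

| 250 | 4000 | 16 | 96 |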
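When planning an installation, the table can be treated as a lookup keyed by worker node count. The following sketch encodes the rows above; picking the next larger row for counts that fall between rows, and returning None beyond 250 workers, are assumptions of this sketch rather than guidance from the table.

```python
# (worker nodes, namespaces, CPU cores, memory in GB), copied from the table.
CONTROL_PLANE_SIZES = [
    (25, 500, 4, 16),
    (100, 1000, 8, 32),
    (250, 4000, 16, 96),
]


def recommended_control_plane_size(worker_nodes: int):
    """Return (cpu_cores, memory_gb) for the smallest tested row that covers
    worker_nodes, or None when the count is beyond the tested range."""
    for max_workers, _namespaces, cores, memory_gb in CONTROL_PLANE_SIZES:
        if worker_nodes <= max_workers:
            return cores, memory_gb
    return None


print(recommended_control_plane_size(60))
```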

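On a large and dense cluster with three masters or control plane nodes, CPU and memory usage spikes when one of the nodes is stopped, rebooted, or fails. Failures can be caused by unexpected issues with power, network, or the underlying infrastructure, in addition to intentional cases where the cluster is shut down to save costs and then restarted. The remaining two control plane nodes must handle the load in order to remain highly available, which increases their resource usage. The same behavior is expected during upgrades, because the masters are cordoned, drained, and rebooted serially to apply the operating system updates and the control plane Operator updates. To avoid cascading failures, keep the overall CPU and memory resource usage on the control plane nodes to at most 60% of all available capacity so that the nodes can handle resource usage spikes. Increase the CPU and memory on the control plane nodes accordingly to avoid potential downtime due to a lack of resources.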
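The 60% guideline can be motivated with simple arithmetic. The sketch below assumes that the load of a lost control plane node is spread evenly across the surviving nodes, which is a simplification made for this illustration and not a statement about how the control plane actually rebalances.

```python
def utilization_after_node_loss(per_node_utilization: float,
                                nodes: int = 3, failed: int = 1) -> float:
    """Estimate per-node utilization after `failed` of `nodes` go away,
    assuming the total load is redistributed evenly (simplifying assumption)."""
    remaining = nodes - failed
    if remaining <= 0:
        raise ValueError("at least one node must remain")
    return per_node_utilization * nodes / remaining


# At 60% per node, losing one of three nodes pushes the other two to about 90%.
print(round(utilization_after_node_loss(0.60), 2))
# At 70% per node, the same failure would exceed the available capacity.
print(round(utilization_after_node_loss(0.70), 2))
```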

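The node sizing varies depending on the number of nodes and object counts in the cluster. It also depends on whether the objects are actively being created on the cluster. During object creation, the control plane is more active in terms of resource usage than when the objects are in the running phase.

If you used an installer-provisioned infrastructure installation method, you cannot modify the control plane node size in a running OpenShift Container Platform 4.7 cluster. Instead, you must estimate your total node count and use the suggested control plane node size during installation.

The recommendations are based on the data points captured on OpenShift Container Platform clusters with OpenShiftSDN as the network plug-in.

In OpenShift Container Platform 4.7, half of a CPU core (500 millicore) is reserved by the system by default, in contrast to OpenShift Container Platform 3.11 and previous versions. The sizes are determined with that in mind.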

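2.4.1. Increasing the flavor size of the Amazon Web Services (AWS) master instances

When you have overloaded AWS master nodes in a cluster and the master nodes require more resources, you can increase the flavor size of the master instances.

It is recommended that you back up etcd before increasing the flavor size of the AWS master instances.

Prerequisites

- You have an IPI (installer-provisioned infrastructure) or UPI (user-provisioned infrastructure) cluster on AWS.

Procedure

- Open the AWS console and fetch the master instances.

- Stop one master instance.

- Select the stopped instance, and click Actions → Instance Settings → Change instance type.

- Change the instance to a larger type, ensuring that the type is of the same base as the previous selection, and apply the changes. For example, you can change m5.xlarge to m5.2xlarge or m5.4xlarge.

- Bring the instance back up, and repeat the steps for the next master instance.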

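2.5. Recommended etcd practices

For large and dense clusters, etcd can suffer from poor performance if the keyspace grows too large and exceeds the space quota. Periodically maintain and defragment etcd to free up space in the data store. Monitor the etcd metrics in Prometheus and defragment when required. Otherwise, etcd can raise a cluster-wide alarm that puts the cluster into a maintenance mode that accepts only key reads and deletes.

Monitor these key metrics:

- etcd_server_quota_backend_bytes, which is the current quota limit

- etcd_mvcc_db_total_size_in_use_in_bytes, which indicates the actual database usage after a history compaction

- etcd_debugging_mvcc_db_total_size_in_bytes, which shows the database size including free space waiting for defragmentation

For more information about defragmenting etcd, see the "Defragmenting etcd data" section.

Because etcd writes data to disk and persists proposals on disk, its performance depends on disk performance. Slow disks and disk activity from other processes can cause long fsync latencies. During those latencies, etcd can miss heartbeats, fail to commit new proposals to disk on time, and ultimately experience request timeouts and temporary leader loss. Run etcd on machines that are backed by SSD or NVMe disks with low latency and high throughput. Single-level cell (SLC) SSDs, which provide 1 bit per memory cell, are durable and reliable and are ideal for write-intensive workloads.

Some key metrics to monitor on a deployed OpenShift Container Platform cluster are the 99th percentile of the etcd disk write-ahead log (WAL) fsync duration and the number of etcd leader changes. Use Prometheus to track these metrics.

- The etcd_disk_wal_fsync_duration_seconds_bucket metric reports the etcd disk fsync duration.

- The etcd_server_leader_changes_seen_total metric reports the leader changes.

- To rule out a slow disk and confirm that the disk is reasonably fast, verify that the 99th percentile of etcd_disk_wal_fsync_duration_seconds_bucket is less than 10 ms.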
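To make the 10 ms check concrete, the following sketch estimates a 99th percentile from cumulative histogram buckets by linear interpolation within the matching bucket, which is the general approach of a Prometheus-style histogram quantile. The bucket boundaries and counts are invented sample data, not measurements, and the function is an illustration rather than the Prometheus implementation.

```python
import math


def histogram_quantile(q: float, buckets):
    """Estimate quantile q from (upper_bound_seconds, cumulative_count) pairs.

    buckets must be sorted by upper bound and end with an infinite bound.
    """
    if not 0 < q <= 1:
        raise ValueError("q must be in (0, 1]")
    total = buckets[-1][1]
    if total == 0:
        return math.nan
    rank = q * total
    lower_bound, lower_count = 0.0, 0
    for upper_bound, count in buckets:
        if count >= rank:
            if math.isinf(upper_bound):
                # The quantile falls in the open-ended bucket: report the
                # highest finite bound instead of extrapolating.
                return lower_bound
            # Interpolate linearly inside the bucket that contains the rank.
            fraction = (rank - lower_count) / (count - lower_count)
            return lower_bound + (upper_bound - lower_bound) * fraction
        lower_bound, lower_count = upper_bound, count
    return lower_bound


# Hypothetical fsync duration buckets (seconds, cumulative observations).
SAMPLE_BUCKETS = [(0.001, 50), (0.002, 80), (0.004, 95), (0.008, 100), (math.inf, 100)]

p99 = histogram_quantile(0.99, SAMPLE_BUCKETS)
print(f"p99 fsync: {p99 * 1000:.1f} ms, fast enough: {p99 < 0.010}")
```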

To validate the hardware for etcd before or after you create the OpenShift Container Platform cluster, you can use an I/O benchmarking tool called fio.

Prerequisites

- A container runtime such as Podman or Docker is installed on the machine that you are testing.

- Data is written to the /var/lib/etcd path.

Procedure

Run fio and analyze the results:

If you use Podman, run this command:

$ sudo podman run --volume /var/lib/etcd:/var/lib/etcd:Z quay.io/openshift-scale/etcd-perf

If you use Docker, run this command:

$ sudo docker run --volume /var/lib/etcd:/var/lib/etcd:Z quay.io/openshift-scale/etcd-perf

The output reports whether the disk is fast enough to host etcd by checking whether the 99th percentile of the fsync metric captured from the run is less than 10 ms.

Because etcd replicates requests among all the members, its performance strongly depends on network input/output (I/O) latency. With high network latencies, etcd heartbeats take longer than the election timeout, which leads to leader elections that are disruptive to the cluster. A key metric to monitor on a deployed OpenShift Container Platform cluster is the 99th percentile of the etcd network peer latency on each etcd cluster member. Use Prometheus to track this metric.

The histogram_quantile(0.99, rate(etcd_network_peer_round_trip_time_seconds_bucket[2m])) metric reports the round trip time for etcd to finish replicating client requests between the members. Ensure that it is less than 50 ms.

2.6. Defragmenting etcd data

Manual defragmentation must be performed periodically to reclaim disk space after etcd history compaction and other events cause disk fragmentation.

History compaction is performed automatically every five minutes and leaves gaps in the back-end database. This fragmented space is available for use by etcd, but is not available to the host file system. You must defragment etcd to make this space available to the host file system.

Because etcd writes data to disk, its performance strongly depends on disk performance. Consider defragmenting etcd every month, twice a month, or as needed for your cluster. You can also monitor the etcd_db_total_size_in_bytes metric to determine whether defragmentation is necessary.

Defragmenting etcd is a blocking action. The etcd member does not respond until defragmentation is complete. For this reason, wait at least one minute between defragmentation actions on each of the pods to allow the cluster to recover.

Follow this procedure to defragment etcd data on each etcd member.

Prerequisites

- You have access to the cluster as a user with the cluster-admin role.

Procedure

Determine which etcd member is the leader, because the leader should be defragmented last.

Get the list of etcd pods:

$ oc get pods -n openshift-etcd -o wide | grep -v quorum-guard | grep etcd

Example output

etcd-ip-10-0-159-225.example.redhat.com 3/3 Running 0 175m 10.0.159.225 ip-10-0-159-225.example.redhat.com <none> <none>
etcd-ip-10-0-191-37.example.redhat.com 3/3 Running 0 173m 10.0.191.37 ip-10-0-191-37.example.redhat.com <none> <none>
etcd-ip-10-0-199-170.example.redhat.com 3/3 Running 0 176m 10.0.199.170 ip-10-0-199-170.example.redhat.com <none> <none>

Choose a pod and run the following command to determine which etcd member is the leader:

$ oc rsh -n openshift-etcd etcd-ip-10-0-159-225.example.redhat.com etcdctl endpoint status --cluster -w table

Based on the IS LEADER column of the output, the https://10.0.199.170:2379 endpoint is the leader. Matching this endpoint with the output of the previous step, the pod name of the leader is etcd-ip-10-0-199-170.example.redhat.com.

Defragment an etcd member.

Connect to the running etcd container, passing in the name of a pod that is not the leader:

$ oc rsh -n openshift-etcd etcd-ip-10-0-159-225.example.redhat.com

Unset the ETCDCTL_ENDPOINTS environment variable:

sh-4.4# unset ETCDCTL_ENDPOINTS

Defragment the etcd member:

sh-4.4# etcdctl --command-timeout=30s --endpoints=https://localhost:2379 defrag

Example output

Finished defragmenting etcd member[https://localhost:2379]

If a timeout error occurs, increase the value for --command-timeout until the command succeeds.

Verify that the database size was reduced:

sh-4.4# etcdctl endpoint status -w table --cluster

In this example, the database size for this etcd member is now 41 MB, as opposed to the starting size of 104 MB.

Repeat these steps to connect to each of the other etcd members and defragment them. Always defragment the leader last.

Wait at least one minute between defragmentation actions to allow the etcd pod to recover. Until the etcd pod recovers, the etcd member does not respond.
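The ordering rule in this procedure (non-leader members first, the leader last) can be sketched as a small planning helper. The function name is hypothetical and the code only computes the order; it does not connect to a cluster or run etcdctl.

```python
def defrag_order(members, leader):
    """Return the members in the order they should be defragmented:
    every non-leader first (in the given order), then the leader."""
    if leader not in members:
        raise ValueError("the leader must be one of the members")
    return [member for member in members if member != leader] + [leader]


# Pod names taken from the example output in this procedure.
pods = [
    "etcd-ip-10-0-159-225.example.redhat.com",
    "etcd-ip-10-0-199-170.example.redhat.com",
    "etcd-ip-10-0-191-37.example.redhat.com",
]
for pod in defrag_order(pods, leader="etcd-ip-10-0-199-170.example.redhat.com"):
    # In practice, wait at least one minute after each member before continuing.
    print(pod)
```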

If any NOSPACE alarms were triggered because the space quota was exceeded, clear them.

Check whether there are any NOSPACE alarms:

sh-4.4# etcdctl alarm list

Example output

memberID:12345678912345678912 alarm:NOSPACE

Clear the alarms:

sh-4.4# etcdctl alarm disarm

2.7. OpenShift Container Platform infrastructure components

The following infrastructure workloads do not incur OpenShift Container Platform worker subscriptions:

- Kubernetes and OpenShift Container Platform control plane services that run on masters

- The default router

- The integrated container image registry

- The HAProxy-based Ingress Controller

- The cluster metrics collection, or monitoring service, including components for monitoring user-defined projects

- Cluster aggregated logging

- Service brokers

- Red Hat Quay

- Red Hat OpenShift Container Storage

- Red Hat Advanced Cluster Manager

- Red Hat Advanced Cluster Security for Kubernetes

- Red Hat OpenShift GitOps

- Red Hat OpenShift Pipelines

Any node that runs any other container, pod, or component is a worker node that your subscription must cover.

2.8. Moving the monitoring solution

By default, the Prometheus Cluster Monitoring stack, which contains Prometheus, Grafana, and AlertManager, is deployed to provide cluster monitoring. It is managed by the Cluster Monitoring Operator. To move its components to different machines, you create and apply a custom config map.

Procedure

Save the ConfigMap definition as the cluster-monitoring-configmap.yaml file. Applying this config map forces the components of the monitoring stack to redeploy to infrastructure nodes.

Apply the new config map:

$ oc create -f cluster-monitoring-configmap.yaml

Watch the monitoring pods move to the new machines:

$ watch 'oc get pod -n openshift-monitoring -o wide'

If a component has not moved to the infra node, delete the pod with this component:

$ oc delete pod -n openshift-monitoring <pod>

The component from the deleted pod is re-created on the infra node.

2.9. Moving the default registry

You configure the registry Operator to deploy its pods to different nodes.

Prerequisites

- Configure additional machine sets in your OpenShift Container Platform cluster.

Procedure

View the config/instance object:

$ oc get configs.imageregistry.operator.openshift.io/cluster -o yaml

Edit the config/instance object:

$ oc edit configs.imageregistry.operator.openshift.io/cluster

Modify the spec section of the object so that the registry pods are placed on infrastructure nodes.

Verify that the registry pod has been moved to the infrastructure node.

Run the following command to identify the node where the registry pod is located:

$ oc get pods -o wide -n openshift-image-registry

Confirm that the node has the label you specified:

$ oc describe node <node_name>

Review the command output and confirm that node-role.kubernetes.io/infra is in the LABELS list.

2.10. Moving the router

You can deploy the router pod to a different machine set. By default, the pod is deployed to a worker node.

Prerequisites

- Configure additional machine sets in your OpenShift Container Platform cluster.

Procedure

View the IngressController custom resource for the router Operator:

$ oc get ingresscontroller default -n openshift-ingress-operator -o yaml

Edit the ingresscontroller resource and change the nodeSelector to use the infra label:

$ oc edit ingresscontroller default -n openshift-ingress-operator

Add the nodeSelector stanza that references the infra label to the spec section, as shown:

spec:
  nodePlacement:
    nodeSelector:
      matchLabels:
        node-role.kubernetes.io/infra: ""

Confirm that the router pod is running on the infra node.

View the list of router pods and note the node name of the running pod:

$ oc get pod -n openshift-ingress -o wide

Example output

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
router-default-86798b4b5d-bdlvd 1/1 Running 0 28s 10.130.2.4 ip-10-0-217-226.ec2.internal <none> <none>
router-default-955d875f4-255g8 0/1 Terminating 0 19h 10.129.2.4 ip-10-0-148-172.ec2.internal <none> <none>

In this example, the running pod is on the ip-10-0-217-226.ec2.internal node.

View the node status of the running pod, specifying the node name that you obtained from the list of pods:

$ oc get node <node_name>

Example output

NAME STATUS ROLES AGE VERSION
ip-10-0-217-226.ec2.internal Ready infra,worker 17h v1.20.0

Because the role list includes infra, the pod is running on the correct node.

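2.11. Infrastructure node sizing

The infrastructure node resource requirements depend on the cluster age, nodes, and objects in the cluster, because these factors can increase the number of metrics or time series in Prometheus. The following infrastructure node size recommendations are based on the results of cluster maximums and control plane density focused testing.

| Number of worker nodes | CPU cores | Memory (GB) |

|---|---|---|

| 25 | 4 | 16 |

| 100 | 8 | 32 |

| 250 | 16 | 128 |

| 500 | 32 | 128 |

These sizing recommendations are based on scale tests, which create a large number of objects across the cluster. These tests include reaching some of the cluster maximums. For 250 and 500 node counts on an OpenShift Container Platform 4.7 cluster, these maximums are 10,000 namespaces with 61,000 pods, 10,000 deployments, 181,000 secrets, 400 config maps, and so on. Prometheus is a highly memory-intensive application. Its resource usage depends on various factors, including the number of nodes, objects, the Prometheus metrics scraping interval, metrics or time series, and the age of the cluster. The disk size also depends on the retention period. You must take these factors into consideration and size the nodes accordingly.

These sizing recommendations apply only to the infrastructure components that are installed during cluster installation: Prometheus, the router, and the registry. Logging is a Day 2 operation and is not included in these recommendations.

In OpenShift Container Platform 4.7, half of a CPU core (500 millicore) is reserved by the system by default, in contrast to OpenShift Container Platform 3.11 and previous versions. This influences the stated sizing recommendations.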

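Chapter 3. Recommended host practices for IBM Z and LinuxONE environments

This topic provides recommended host practices for OpenShift Container Platform on IBM Z and LinuxONE.

The s390x architecture is unique in many aspects. Therefore, some recommendations made here might not apply to other platforms.

Unless stated otherwise, these practices apply to both z/VM and Red Hat Enterprise Linux (RHEL) KVM installations on IBM Z and LinuxONE.

3.1. Managing CPU overcommitment

In a highly virtualized IBM Z environment, you must carefully plan the infrastructure setup and sizing. One of the most important features of virtualization is the capability to overcommit resources, allocating more resources to the virtual machines than are actually available at the hypervisor level. This is very workload dependent, and there is no golden rule that can be applied to all setups.

Depending on your setup, consider these best practices regarding CPU overcommitment:

- At the LPAR level (PR/SM hypervisor), avoid assigning all available physical cores (IFLs) to each LPAR. For example, with four physical IFLs available, you should not define three LPARs with four logical IFLs each.

- Check and understand LPAR shares and weights.

- An excessive number of virtual CPUs can adversely affect performance. Do not define more virtual processors to a guest than logical processors are defined to the LPAR.

- Configure the number of virtual processors per guest for peak workload, not more.

- Start small and monitor the workload. Increase the vCPU number incrementally if necessary.

- Not all workloads are suitable for high overcommitment ratios. If the workload is CPU intensive, you will probably not be able to achieve high ratios without performance problems. Workloads that are more I/O intensive can keep consistent performance even with high overcommitment ratios.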
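The LPAR example in the first item above can be expressed as an overcommitment ratio: the sum of logical processors defined across LPARs divided by the physical processors available. The helper below is a minimal sketch with a hypothetical name; what ratio is acceptable remains workload dependent, as noted above.

```python
def cpu_overcommit_ratio(logical_ifls_per_lpar, physical_ifls: int) -> float:
    """Ratio of logical IFLs defined across all LPARs to physical IFLs."""
    if physical_ifls <= 0:
        raise ValueError("physical_ifls must be positive")
    return sum(logical_ifls_per_lpar) / physical_ifls


# Three LPARs with four logical IFLs each on four physical IFLs: ratio 3.0,
# the configuration that the guidance above advises against.
print(cpu_overcommit_ratio([4, 4, 4], physical_ifls=4))
```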

3.2. How to disable Transparent Huge Pages

Transparent Huge Pages (THP) attempt to automate most aspects of creating, managing, and using huge pages. Because THP manages the huge pages automatically, they are not always handled optimally for all types of workloads. THP can lead to performance regressions, because many applications handle huge pages on their own. Therefore, consider disabling THP. The following steps describe how to disable THP using a Node Tuning Operator (NTO) profile.

3.2.1. Disable THP with a Node Tuning Operator (NTO) profile

Procedure

Copy the NTO sample profile into a YAML file, for example thp-s390-tuned.yaml.

Create the NTO profile:

$ oc create -f thp-s390-tuned.yaml

Check the list of active profiles:

$ oc get tuned -n openshift-cluster-node-tuning-operator

To remove the profile, enter:

$ oc delete -f thp-s390-tuned.yaml

Verification

Log in to one of the nodes and do a regular THP check to verify that the node applied the profile successfully:

$ cat /sys/kernel/mm/transparent_hugepage/enabled

always madvise [never]

3.3. Boost networking performance with Receive Flow Steering

Receive Flow Steering (RFS) extends Receive Packet Steering (RPS) by further reducing network latency. RFS is technically based on RPS, and improves the efficiency of packet processing by increasing the CPU cache hit rate. RFS achieves this, and in addition considers queue length, by determining the most convenient CPU for computation so that cache hits are more likely to occur within the CPU. As a result, the CPU cache is invalidated less often and fewer cycles are needed to rebuild the cache. This can help reduce packet processing run time.

3.3.1. Use the Machine Config Operator (MCO) to activate RFS

Procedure

Copy the MCO sample profile into a YAML file, for example enable-rfs.yaml.

Create the MCO profile:

$ oc create -f enable-rfs.yaml

Verify that an entry named 50-enable-rfs is listed:

$ oc get mc

To deactivate, enter:

$ oc delete mc 50-enable-rfs

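3.4. Choose your networking setup

The networking stack is one of the most important components for a Kubernetes-based product like OpenShift Container Platform. For IBM Z setups, the networking setup depends on the hypervisor of your choice. Depending on the workload and the application, the best fit usually changes with the use case and the traffic pattern.

Depending on your setup, consider these best practices:

- Consider all options regarding networking devices to optimize your traffic pattern. Explore the advantages of OSA-Express, RoCE Express, HiperSockets, z/VM VSwitch, Linux Bridge (KVM), and others to decide which option brings the greatest benefit for your setup.

- Always use the latest available NIC version. For example, OSA Express 7S 10 GbE shows great improvement compared to OSA Express 6S 10 GbE with transactional workload types, although both are 10 GbE adapters.

- Each virtual switch adds an additional layer of latency.

- The load balancer plays an important role for network communication outside the cluster. Consider using a production-grade hardware load balancer if this is critical for your application.

- OpenShift Container Platform SDN introduces flows and rules that impact the networking performance. Consider pod affinities and placements to benefit from the locality of services where communication is critical.

- Balance the trade-off between performance and functionality.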

3.5. Ensure high disk performance with HyperPAV on z/VM

DASD and ECKD devices are commonly used disk types in IBM Z environments. In a typical OpenShift Container Platform setup in z/VM environments, DASD disks are commonly used to support the local storage for the nodes. You can set up HyperPAV alias devices to provide more throughput and overall better I/O performance for the DASD disks that support the z/VM guests.

Using HyperPAV for the local storage devices leads to a significant performance benefit. However, be aware that there is a trade-off between throughput and CPU costs.

For z/VM-based OpenShift Container Platform setups that use full-pack minidisks, you can take advantage of MCO profiles by activating HyperPAV aliases in all of the nodes. You must add YAML configurations for both control plane and compute nodes.

Procedure

Copy the MCO sample profile for the control plane nodes into a YAML file, for example 05-master-kernelarg-hpav.yaml.

Copy the MCO sample profile for the compute nodes into a YAML file, for example 05-worker-kernelarg-hpav.yaml.

Note: You must modify the rd.dasd arguments to fit the device IDs.

Create the MCO profiles:

$ oc create -f 05-master-kernelarg-hpav.yaml

$ oc create -f 05-worker-kernelarg-hpav.yaml

To deactivate, enter:

$ oc delete -f 05-master-kernelarg-hpav.yaml

$ oc delete -f 05-worker-kernelarg-hpav.yaml

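3.6. RHEL KVM on IBM Z host recommendations

Optimizing a KVM virtual server environment strongly depends on the workloads of the virtual servers and on the available resources. The same action that enhances performance in one environment can have adverse effects in another. Finding the best balance for a particular setting can be a challenge and often involves experimentation.

The following sections introduce some best practices when using OpenShift Container Platform with RHEL KVM on IBM Z and LinuxONE environments.

3.6.1. Use multiple queues for your VirtIO network interfaces

With multiple virtual CPUs, you can transfer packets in parallel if you provide multiple queues for incoming and outgoing packets. Use the queues attribute of the driver element to configure multiple queues. Specify an integer of at least 2 that does not exceed the number of virtual CPUs of the virtual server.

The following example specification configures two input and output queues for a network interface:

<interface type="direct">
    <source network="net01"/>
    <model type="virtio"/>
    <driver ... queues="2"/>
</interface>

Multiple queues are designed to provide enhanced performance for a network interface, but they also use memory and CPU resources. Start by defining two queues for busy interfaces. Next, try two queues for interfaces with less traffic, or more than two queues for busy interfaces.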
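The constraint that the queue count must not exceed the number of virtual CPUs can be checked against a domain definition. The sketch below parses a reduced domain XML with Python's standard library; the surrounding domain and vcpu elements and the name="vhost" attribute stand in for the elided parts of the example and are assumptions made only so that the XML is parseable.

```python
import xml.etree.ElementTree as ET

# Reduced, hypothetical domain definition built around the interface example.
DOMAIN_XML = """
<domain type="kvm">
  <vcpu>4</vcpu>
  <devices>
    <interface type="direct">
      <source network="net01"/>
      <model type="virtio"/>
      <driver name="vhost" queues="2"/>
    </interface>
  </devices>
</domain>
"""


def oversized_queue_counts(domain_xml: str):
    """Return the queue counts of interfaces that exceed the vCPU count."""
    root = ET.fromstring(domain_xml.strip())
    vcpus = int(root.findtext("vcpu"))
    oversized = []
    for interface in root.iterfind("./devices/interface"):
        driver = interface.find("driver")
        if driver is None:
            continue
        # An interface without a queues attribute is treated as single-queue.
        queues = int(driver.get("queues", "1"))
        if queues > vcpus:
            oversized.append(queues)
    return oversized


print(oversized_queue_counts(DOMAIN_XML))
```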

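3.6.2. Use I/O threads for your virtual block devices

To make virtual block devices use I/O threads, you must configure one or more I/O threads for the virtual server and configure each virtual block device to use one of these I/O threads.

For example, specifying <iothreads>3</iothreads> configures three I/O threads with consecutive decimal thread IDs 1, 2, and 3. Specifying the iothread="2" parameter in the driver element of a disk device makes that device use the I/O thread with ID 2.

Threads can increase the performance of I/O operations for disk devices, but they also use memory and CPU resources. You can configure multiple devices to use the same thread. The best mapping of threads to devices depends on the available resources and the workload.

Start with a small number of I/O threads. Often, a single I/O thread for all disk devices is sufficient. Do not configure more threads than the number of virtual CPUs, and do not configure idle threads.

You can use the virsh iothreadadd command to add I/O threads with specific thread IDs to a running virtual server.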

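3.6.3. Avoid virtual SCSI devices

Configure virtual SCSI devices only if you need to address the device through SCSI-specific interfaces. Configure disk space as virtual block devices rather than virtual SCSI devices, regardless of the backing on the host.

However, you might need SCSI-specific interfaces for:

- A LUN for a SCSI-attached tape drive on the host.

- A DVD ISO file on the host file system that is mounted on a virtual DVD drive.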

3.6.4. Configure guest caching for disk

Configure your disk devices to do caching by the guest and not by the host.

Ensure that the driver element of the disk device includes the cache="none" and io="native" parameters.

<disk type="block" device="disk">
    <driver name="qemu" type="raw" cache="none" io="native" iothread="1"/>
    ...
</disk>

3.6.5. Exclude the memory balloon device

Unless you need a dynamic memory size, do not define a memory balloon device, and ensure that libvirt does not create one for you. Include the memballoon parameter as a child of the devices element in your domain configuration XML file:

<memballoon model="none"/>

3.6.6. Tune the CPU migration algorithm of the host scheduler

Do not change the scheduler settings unless you are an expert who understands the implications. Do not apply changes to production systems without first testing them and confirming that they have the intended effect.

The kernel.sched_migration_cost_ns parameter specifies a time interval in nanoseconds. After the last execution of a task, the CPU cache is considered to have useful content until this interval expires. Increasing this interval results in fewer task migrations. The default value is 500000 ns.

If the CPU idle time is higher than expected when there are runnable processes, try reducing this interval. If tasks bounce between CPUs or nodes too often, try increasing it.

To dynamically set the interval to 60000 ns, enter the following command:

# sysctl kernel.sched_migration_cost_ns=60000

To persistently change the value to 60000 ns, add the following entry to /etc/sysctl.conf:

kernel.sched_migration_cost_ns=60000

3.6.7. Disable the cpuset cgroup controller

This setting applies only to KVM hosts with cgroups version 1. To enable CPU hotplug on the host, disable the cpuset cgroup controller.

Procedure

- Open /etc/libvirt/qemu.conf with an editor of your choice.

- Go to the cgroup_controllers line.

- Duplicate the entire line and remove the leading number sign (#) from the copy.

- Remove the cpuset entry, as follows:

cgroup_controllers = [ "cpu", "devices", "memory", "blkio", "cpuacct" ]

- For the new setting to take effect, you must restart the libvirtd daemon:

- Stop all virtual machines.

- Run the following command:

# systemctl restart libvirtd

- Restart the virtual machines.

This setting persists across host reboots.

3.6.8. Tune the polling period for idle virtual CPUs

When a virtual CPU becomes idle, KVM polls for wakeup conditions for the virtual CPU before allocating the host resource. You can specify the time interval during which polling takes place in sysfs at /sys/module/kvm/parameters/halt_poll_ns. During the specified time, polling reduces the wakeup latency for the virtual CPU at the expense of resource usage. Depending on the workload, a longer or shorter polling time can be beneficial. The time interval is specified in nanoseconds. The default is 50000 ns.

To optimize for low CPU consumption, enter a small value or write 0 to disable polling:

# echo 0 > /sys/module/kvm/parameters/halt_poll_ns

To optimize for low latency, for example for transactional workloads, enter a large value:

# echo 80000 > /sys/module/kvm/parameters/halt_poll_ns

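Chapter 4. Recommended cluster scaling practices

The guidance in this section is relevant only for installations with cloud provider integration.

These guidelines apply to OpenShift Container Platform with software-defined networking (SDN), not Open Virtual Network (OVN).

Apply the following best practices to scale the number of worker machines in your OpenShift Container Platform cluster. You scale the worker machines by increasing or decreasing the number of replicas that are defined in the worker machine set.

4.1. Recommended practices for scaling the cluster

When scaling up the cluster to higher node counts:

- Spread nodes across all of the available zones for higher availability.

- Scale up by no more than 25 to 50 machines at once.

- Consider creating new machine sets in each available zone with alternative instance types of similar size to help mitigate any periodic provider capacity constraints. For example, on AWS, use m5.large and m5d.large.

Cloud providers might implement a quota for API services. Therefore, scale the cluster gradually.

The controller might not be able to create the machines if the replicas in the machine sets are set to higher numbers all at once. The number of requests that the cloud platform on which OpenShift Container Platform is deployed can handle affects the process. The controller starts to query more while it tries to create, check, and update the machines with their status. The cloud platform has API request limits, and excessive queries might lead to machine creation failures because of those limits.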
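Gradual scaling can be planned as a sequence of intermediate replica targets. The following sketch computes such a sequence with a batch size of 50, the upper end of the range recommended above; the function name is hypothetical and the code does not call any cluster API.

```python
def scale_steps(current: int, target: int, batch: int = 50):
    """Return the intermediate replica counts for scaling up from `current`
    to `target`, adding at most `batch` machines per step."""
    if batch <= 0:
        raise ValueError("batch must be positive")
    steps = []
    replicas = current
    while replicas < target:
        replicas = min(replicas + batch, target)
        steps.append(replicas)
    return steps


# Scaling from 10 to 130 workers in batches of at most 50 machines.
print(scale_steps(10, 130))
```

Each value in the result would be applied as one replica count, waiting for the new machines to become ready before moving to the next step.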

노드 수가 많아지도록 스케일링하는 경우 머신 상태 점검을 활성화하십시오. 실패가 발생하면 상태 점검에서 상태를 모니터링하고 비정상 머신을 자동으로 복구합니다.

대규모 및 밀도가 높은 클러스터의 노드 수를 줄이는 경우 이 프로세스가 종료할 노드에서 실행되는 개체의 드레이닝 또는 제거가 동시에 실행되기 때문에 많은 시간이 걸릴 수 있습니다. 또한 제거할 개체가 너무 많으면 클라이언트 요청 처리에 병목 현상이 발생할 수 있습니다. 기본 클라이언트 QPS 및 버스트 비율은 현재 5 및 10으로 각각 설정되어 있으며 OpenShift Container Platform에서 수정할 수 없습니다.
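The advice to grow by no more than 25 to 50 machines at a time can be turned into a list of intermediate replica counts. The following is a minimal sketch, assuming a hypothetical helper named scale_steps. The driver loop is left commented out because it needs a live cluster and a real machine set name.

```shell
#!/bin/sh
# Prints the intermediate replica counts to pass through when growing a
# machine set from $1 to $2 replicas in batches of at most $3 machines.
scale_steps() {
    current="$1"; target="$2"; batch="$3"
    # A batch size of zero or less would never reach the target.
    [ "$batch" -gt 0 ] || return 1
    while [ "$current" -lt "$target" ]; do
        current=$(( current + batch ))
        # Clamp the last step so it does not overshoot the target.
        [ "$current" -gt "$target" ] && current="$target"
        echo "$current"
    done
}

# Example driver (requires cluster access; <machineset> is a placeholder):
# for n in $(scale_steps 10 120 50); do
#     oc scale --replicas="$n" machineset <machineset> -n openshift-machine-api
#     # Wait here until the new machines are running before the next step.
# done
```

Stepping through the counts one at a time keeps the number of concurrent cloud API requests bounded, which is the concern raised above.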

4.2. Modifying a machine set

To make changes to a machine set, edit the MachineSet YAML. Then, remove all machines associated with the machine set by deleting each machine or by scaling down the machine set to 0 replicas. Then, scale the replicas back to the desired number. Changes you make to a machine set do not affect existing machines.

If you need to scale a machine set without making other changes, you do not need to delete the machines.

By default, the OpenShift Container Platform router pods are deployed on workers. Because the router is required to access some cluster resources, including the web console, do not scale the worker machine set to 0 unless you first relocate the router pods.

Prerequisites

- Install an OpenShift Container Platform cluster and the oc command line.

- Log in to oc as a user with cluster-admin permission.

Procedure

- Edit the machine set:

oc edit machineset <machineset> -n openshift-machine-api

- Scale down the machine set to 0:

oc scale --replicas=0 machineset <machineset> -n openshift-machine-api

Or:

oc edit machineset <machineset> -n openshift-machine-api

Wait for the machines to be removed.

- Scale up the machine set as needed:

oc scale --replicas=2 machineset <machineset> -n openshift-machine-api

Or:

oc edit machineset <machineset> -n openshift-machine-api

Wait for the machines to start. The new machines contain the changes you made to the machine set.

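4.3. About machine health checks

Machine health checks automatically repair unhealthy machines in a particular machine pool.

To monitor machine health, create a resource to define the configuration for a controller. Set a condition to check, such as staying in the NotReady status for five minutes or displaying a permanent condition in the node-problem-detector, and a label for the set of machines to monitor.

You cannot apply a machine health check to a machine with the master role.

The controller that observes a MachineHealthCheck resource checks for the defined condition. If a machine fails the health check, the machine is automatically deleted and one is created to take its place. When a machine is deleted, you see a machine deleted event.

To limit the disruptive impact of machine deletion, the controller drains and deletes only one node at a time. If there are more unhealthy machines than the maxUnhealthy threshold allows for in the targeted pool of machines, remediation stops so that manual intervention can take place.

Consider the timeouts carefully, accounting for workloads and requirements.

- Long timeouts can result in long periods of downtime for the workload on the unhealthy machine.

- Timeouts that are too short can result in a remediation loop. For example, the timeout for checking the NotReady status must be long enough to allow the machine to complete the startup process.

To stop the check, remove the resource.

4.3.1. Limitations when deploying machine health checks

There are limitations to consider before deploying a machine health check:

- Only machines owned by a machine set are remediated by a machine health check.

- Control plane machines are not currently supported and are not remediated if they are unhealthy.

- If the node for a machine is removed from the cluster, a machine health check considers the machine to be unhealthy and remediates it immediately.

- If the corresponding node for a machine does not join the cluster after the nodeStartupTimeout, the machine is remediated.

- A machine is remediated immediately if the Machine resource phase is Failed.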

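4.4. Sample MachineHealthCheck resource

The MachineHealthCheck resource has the same general layout for all cloud-based installation types other than bare metal. The numbered notes describe the fields of the sample YAML file:

- 1: Specify the name of the machine health check to deploy.

- 2, 3: Specify a label for the machine pool that you want to check.

- 4: Specify the machine set to track in <cluster_name>-<label>-<zone> format. For example, prod-node-us-east-1a.

- 5, 6: Specify the timeout duration for a node condition. If a condition is met for the duration of the timeout, the machine is remediated. Long timeouts can result in long periods of downtime for a workload on an unhealthy machine.

- 7: Specify the number of machines allowed to be concurrently remediated in the targeted pool. This can be set as a percentage or an integer. If the number of unhealthy machines exceeds the limit set by maxUnhealthy, remediation is not performed.

- 8: Specify the timeout duration that a machine health check must wait for a node to join the cluster before a machine is determined to be unhealthy.

The matchLabels are examples only. You must map your machine groups based on your specific needs.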
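The numbered notes above refer to a sample definition. A minimal sketch of such a definition, written to healthcheck.yml, could look like the following. The resource name example and the values 300s, 40%, and 10m are illustrative assumptions. The angle-bracket tokens are placeholders to replace, and the trailing comments mark the corresponding note numbers.

```shell
#!/bin/sh
# Writes a sample MachineHealthCheck definition to healthcheck.yml.
# <role>, <cluster_name>, <label>, and <zone> are placeholders; the timeout and
# maxUnhealthy values are illustrative assumptions, not recommendations.
cat > healthcheck.yml <<'EOF'
apiVersion: machine.openshift.io/v1beta1
kind: MachineHealthCheck
metadata:
  name: example                      # note 1
  namespace: openshift-machine-api
spec:
  selector:
    matchLabels:
      machine.openshift.io/cluster-api-machine-role: <role>                        # note 2
      machine.openshift.io/cluster-api-machine-type: <role>                        # note 3
      machine.openshift.io/cluster-api-machineset: <cluster_name>-<label>-<zone>   # note 4
  unhealthyConditions:
  - type: "Ready"
    timeout: "300s"                  # note 5
    status: "False"
  - type: "Ready"
    timeout: "300s"                  # note 6
    status: "Unknown"
  maxUnhealthy: "40%"                # note 7
  nodeStartupTimeout: "10m"          # note 8
EOF
```

After the placeholders are replaced, the file can be applied with oc apply -f healthcheck.yml.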

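4.4.1. Short-circuiting machine health check remediation

Short-circuiting ensures that machine health checks remediate machines only when the cluster is healthy. Short-circuiting is configured through the maxUnhealthy field in the MachineHealthCheck resource.

If you define a value for the maxUnhealthy field, then before remediating any machines the MachineHealthCheck compares the value of maxUnhealthy with the number of machines within its target pool that it has determined to be unhealthy. Remediation is not performed if the number of unhealthy machines exceeds the maxUnhealthy limit.

If maxUnhealthy is not set, the value defaults to 100% and the machines are remediated regardless of the state of the cluster.

The appropriate maxUnhealthy value depends on the scale of the cluster you deploy and how many machines the MachineHealthCheck covers. For example, you can use the maxUnhealthy value to cover multiple machine sets across multiple availability zones so that if you lose an entire zone, your maxUnhealthy setting prevents further remediation within the cluster.

The maxUnhealthy field can be set as either an integer or a percentage. Remediation behaves differently depending on the maxUnhealthy value.

4.4.1.1. Setting maxUnhealthy by using an absolute value

If maxUnhealthy is set to 2:

- Remediation is performed if 2 or fewer nodes are unhealthy.

- Remediation is not performed if 3 or more nodes are unhealthy.

These values are independent of how many machines are being checked by the machine health check.

4.4.1.2. Setting maxUnhealthy by using percentages

If maxUnhealthy is set to 40% and there are 25 machines being checked:

- Remediation is performed if 10 or fewer nodes are unhealthy.

- Remediation is not performed if 11 or more nodes are unhealthy.

If maxUnhealthy is set to 40% and there are 6 machines being checked:

- Remediation is performed if 2 or fewer nodes are unhealthy.

- Remediation is not performed if 3 or more nodes are unhealthy.

When the percentage of maxUnhealthy machines does not yield a whole number, the allowed number of machines is rounded down.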
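The percentage thresholds above follow from integer arithmetic. The sketch below reproduces the two worked cases. The function names are hypothetical and the code only illustrates the arithmetic; it is not the controller's implementation.

```shell
#!/bin/sh
# Number of unhealthy machines that still allows remediation when
# maxUnhealthy is a percentage. Shell integer division rounds down.
max_unhealthy_allowed() {
    percent="$1"; machines="$2"
    echo $(( machines * percent / 100 ))
}

# Exits 0 when remediation would proceed, non-zero when it is short-circuited.
remediation_allowed() {
    unhealthy="$1"; percent="$2"; machines="$3"
    [ "$unhealthy" -le "$(max_unhealthy_allowed "$percent" "$machines")" ]
}
```

For example, max_unhealthy_allowed 40 6 evaluates 6 * 40 / 100 = 2.4 and rounds it down to 2, so a third unhealthy machine stops remediation.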

4.5. Creating a MachineHealthCheck resource

You can create a MachineHealthCheck resource for all MachineSets in your cluster. Do not create a MachineHealthCheck resource that targets control plane machines.

Prerequisites

- Install the oc command line interface.

Procedure

- Create a healthcheck.yml file that contains the definition of your machine health check.

- Apply the healthcheck.yml file to your cluster:

oc apply -f healthcheck.yml

Chapter 5. Using the Node Tuning Operator

Learn about the Node Tuning Operator and how you can use it to manage node-level tuning by orchestrating the Tuned daemon.

5.1. About the Node Tuning Operator

The Node Tuning Operator helps you manage node-level tuning by orchestrating the Tuned daemon. The majority of high-performance applications require some level of kernel tuning. The Node Tuning Operator provides a unified management interface to users of node-level sysctls and more flexibility to add custom tuning specified by user needs.

The Operator manages the containerized Tuned daemon for OpenShift Container Platform as a Kubernetes daemon set. It ensures the custom tuning specification is passed to all containerized Tuned daemons running in the cluster in the format that the daemons understand. The daemons run on all nodes in the cluster, one per node.

Node-level settings applied by the containerized Tuned daemon are rolled back on an event that triggers a profile change or when the containerized Tuned daemon is terminated gracefully by receiving and handling a termination signal.

The Node Tuning Operator is part of a standard OpenShift Container Platform installation in version 4.1 and later.

5.2. Accessing an example Node Tuning Operator specification

Use this process to access an example Node Tuning Operator specification.

Procedure

- Run:

oc get Tuned/default -o yaml -n openshift-cluster-node-tuning-operator

The default CR is meant for delivering standard node-level tuning for OpenShift Container Platform, and it can only be modified to set the Operator management state. Any other custom changes to the default CR are overwritten by the Operator. For custom tuning, create your own Tuned CRs. Newly created CRs are combined with the default CR, and custom tuning is applied to OpenShift Container Platform nodes based on node or pod labels and profile priorities.

While in certain situations the support for pod labels can be a convenient way of automatically delivering required tuning, this practice is discouraged and strongly advised against, especially in large-scale clusters. The default Tuned CR ships without pod label matching. If a custom profile is created with pod label matching, then the functionality is enabled at that time. The pod label functionality might be deprecated in future versions of the Node Tuning Operator.

5.3. Default profiles set on a cluster

The following are the default profiles set on a cluster.

5.4. Verifying that the Tuned profiles are applied

Use this procedure to check which Tuned profiles are applied on every node.

Procedure

- Check which Tuned pods are running on each node:

oc get pods -n openshift-cluster-node-tuning-operator -o wide

- Extract the profile applied from each pod and match them against the previous list:

for p in `oc get pods -n openshift-cluster-node-tuning-operator -l openshift-app=tuned -o=jsonpath='{range .items[*]}{.metadata.name} {end}'`; do printf "\n*** $p ***\n" ; oc logs pod/$p -n openshift-cluster-node-tuning-operator | grep applied; done

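5.5. Custom tuning specification

The custom resource (CR) for the Operator has two major sections. The first section, profile:, is a list of Tuned profiles and their names. The second, recommend:, defines the profile selection logic.

Multiple custom tuning specifications can co-exist as multiple CRs in the Operator's namespace. The existence of new CRs or the deletion of old CRs is detected by the Operator. All existing custom tuning specifications are merged and appropriate objects for the containerized Tuned daemons are updated.

Management state

The Operator management state is set by adjusting the default Tuned CR. By default, the Operator is in the Managed state and the spec.managementState field is not present in the default Tuned CR. Valid values for the Operator management state are as follows:

- Managed: the Operator updates its operands as configuration resources are updated.

- Unmanaged: the Operator ignores changes to the configuration resources.

- Removed: the Operator removes its operands and the resources that the Operator provisioned.

Profile data

The profile: section lists Tuned profiles and their names.

Recommended profiles

The profile: selection logic is defined by the recommend: section of the CR. The recommend: section is a list of items that recommend profiles based on selection criteria.

recommend:

<recommend-item-1>

# ...

<recommend-item-n>

The individual items of the list have the following properties:

- 1: Optional.

- 2: A dictionary of key/value MachineConfig labels. The keys must be unique.

- 3: If omitted, a profile match is assumed unless a profile with a higher priority matches first or machineConfigLabels is set.

- 4: An optional list.

- 5: Profile ordering priority. Lower numbers mean higher priority (0 is the highest priority).

- 6: A TuneD profile to apply on a match. For example, tuned_profile_1.

- 7: Optional operand configuration.

- 8: Turn debugging on or off for the TuneD daemon. Options are true for on or false for off. The default is false.

<match> is an optional list that is recursively defined as follows:

- label: <label_name>
  value: <label_value>
  type: <label_type>
  <match>

If <match> is not omitted, all nested <match> sections must also evaluate to true. Otherwise, false is assumed and the profile with the respective <match> section is not applied or recommended. Therefore, the nesting (child <match> sections) works as a logical AND operator. Conversely, if any item of the <match> list matches, the entire <match> list evaluates to true. Therefore, the list acts as a logical OR operator.

If machineConfigLabels is defined, machine config pool based matching is turned on for the given recommend: list item. <mcLabels> specifies the labels for a machine config. The machine config is created automatically to apply host settings, such as kernel boot parameters, for the profile <tuned_profile_name>. This involves finding all machine config pools with a machine config selector matching <mcLabels> and setting the profile <tuned_profile_name> on all nodes that are assigned to the machine config pools that were found. To target nodes that have both master and worker roles, you must use the master role.

The list items match and machineConfigLabels are connected by the logical OR operator. The match item is evaluated first in a short-circuit manner. Therefore, if it evaluates to true, the machineConfigLabels item is not considered.

When using machine config pool based matching, group nodes with the same hardware configuration into the same machine config pool. Not following this practice might result in Tuned operands calculating conflicting kernel parameters for two or more nodes sharing the same machine config pool.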

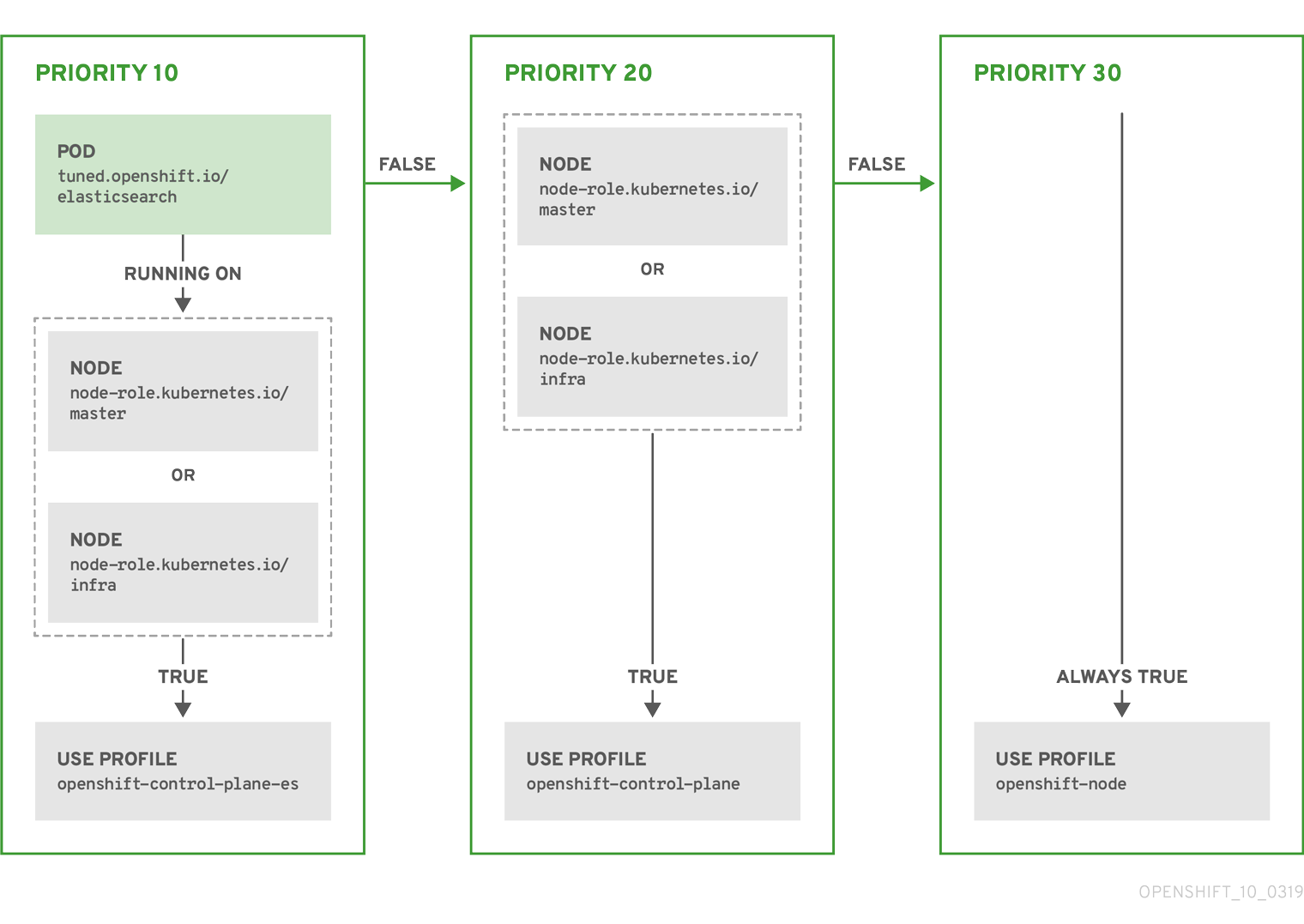

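Example: node or pod label based matching

The CR in this example is translated for the containerized Tuned daemon into its recommend.conf file based on the profile priorities. The profile with the highest priority (10) is openshift-control-plane-es and, therefore, it is considered first. The containerized Tuned daemon running on a given node checks whether there is a pod running on the same node with the tuned.openshift.io/elasticsearch label set. If not, the entire <match> section evaluates to false. If there is such a pod with the label, then for the <match> section to evaluate to true, the node label also needs to be node-role.kubernetes.io/master or node-role.kubernetes.io/infra.

If the labels for the profile with priority 10 match, the openshift-control-plane-es profile is applied and no other profile is considered. If the node/pod label combination does not match, the second highest priority profile (openshift-control-plane) is considered. This profile is applied if the containerized Tuned pod runs on a node with the label node-role.kubernetes.io/master or node-role.kubernetes.io/infra.

Finally, the profile openshift-node has the lowest priority of 30. It lacks the <match> section and, therefore, always matches. It acts as a catch-all profile that sets the openshift-node profile if no other profile with a higher priority matches on a given node.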
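The selection logic described above can be pictured as a recommend: fragment. The following sketch writes such a fragment to a file so that it can be inspected. The file name and the priority value 20 for openshift-control-plane are assumptions, because the text states only that it is the second highest priority. The profile names, labels, and priorities 10 and 30 come from the description.

```shell
#!/bin/sh
# Writes an illustrative recommend: fragment (not a complete Tuned CR).
# Priority 20 is an assumed value for the second-highest-priority profile.
cat > tuned-recommend-fragment.yaml <<'EOF'
recommend:
- match:
  - label: tuned.openshift.io/elasticsearch
    type: pod
    match:                       # nested match: logical AND with the parent
    - label: node-role.kubernetes.io/master
    - label: node-role.kubernetes.io/infra
  priority: 10
  profile: openshift-control-plane-es
- match:                         # list items: logical OR
  - label: node-role.kubernetes.io/master
  - label: node-role.kubernetes.io/infra
  priority: 20
  profile: openshift-control-plane
- priority: 30                   # no match section, so it always matches
  profile: openshift-node
EOF
```

Reading the fragment top to bottom mirrors the evaluation order: the first item whose match section evaluates to true determines the profile.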

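Example: machine config pool based matching

To minimize node reboots, label the target nodes with a label that the machine config pool's node selector will match, then create the Tuned CR, and finally create the custom machine config pool itself.

5.6. Custom tuning example

The following CR applies custom node-level tuning for OpenShift Container Platform nodes with the label tuned.openshift.io/ingress-node-label set to any value. As an administrator, use the following command to create a custom Tuned CR.

Custom tuning example

Custom profile writers are strongly encouraged to include the default Tuned daemon profiles shipped within the default Tuned CR. The example uses the default openshift-control-plane profile to accomplish this.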
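A custom Tuned CR of the kind described in this section could be sketched as follows. The CR name ingress, the profile name openshift-ingress, the file name, and the two sysctl settings are illustrative assumptions. The label, the namespace, and the inclusion of openshift-control-plane follow the text above.

```shell
#!/bin/sh
# Writes an illustrative custom Tuned CR to tuned-ingress.yaml.
# The sysctl values are placeholders for whatever tuning the workload needs.
cat > tuned-ingress.yaml <<'EOF'
apiVersion: tuned.openshift.io/v1
kind: Tuned
metadata:
  name: ingress
  namespace: openshift-cluster-node-tuning-operator
spec:
  profile:
  - name: openshift-ingress
    data: |
      [main]
      summary=A custom OpenShift ingress profile
      include=openshift-control-plane
      [sysctl]
      net.ipv4.ip_local_port_range="1024 65535"
      net.ipv4.tcp_tw_reuse=1
  recommend:
  - match:
    - label: tuned.openshift.io/ingress-node-label
    priority: 10
    profile: openshift-ingress
EOF

# Creating the CR requires cluster access, so the command is left commented out:
# oc create -f tuned-ingress.yaml
```

The include= line is what pulls in the default openshift-control-plane profile, so the custom profile only adds settings on top of it.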

5.7. Supported Tuned daemon plug-ins

Excluding the [main] section, the following Tuned plug-ins are supported when using custom profiles defined in the profile: section of the Tuned CR:

- audio

- cpu

- disk

- eeepc_she

- modules

- mounts

- net

- scheduler

- scsi_host

- selinux

- sysctl

- sysfs

- usb

- video

- vm

Some of the dynamic tuning functionality provided by some of these plug-ins is not supported. The following Tuned plug-ins are currently not supported:

- bootloader

- script

- systemd

See Available Tuned Plug-ins and Getting Started with Tuned for more information.

Chapter 6. Using Cluster Loader

Cluster Loader is a tool that deploys large numbers of various objects to a cluster, which creates user-defined cluster objects. Build, configure, and run Cluster Loader to measure performance metrics of your OpenShift Container Platform deployment at various cluster states.

6.1. Installing Cluster Loader

Procedure

- To pull the container image, run:

podman pull quay.io/openshift/origin-tests:4.7

6.2. Running Cluster Loader

Prerequisites

- The repository prompts you to authenticate. The registry credentials allow you to access the image, which is not publicly available. Use your existing authentication credentials from installation.

Procedure

- Execute Cluster Loader using the built-in test configuration, which deploys five template builds and waits for them to complete:

podman run -v ${LOCAL_KUBECONFIG}:/root/.kube/config:z -i \
quay.io/openshift/origin-tests:4.7 /bin/bash -c 'export KUBECONFIG=/root/.kube/config && \
openshift-tests run-test "[sig-scalability][Feature:Performance] Load cluster \
should populate the cluster [Slow][Serial] [Suite:openshift]"'

Alternatively, execute Cluster Loader with a user-defined configuration by setting the VIPERCONFIG environment variable.

In this example, ${LOCAL_KUBECONFIG} refers to the path to the kubeconfig on your local file system. There is also a directory called ${LOCAL_CONFIG_FILE_PATH}, which is mounted into the container and contains a configuration file called test.yaml. If test.yaml references any external template files or podspec files, those files must also be mounted into the container.

6.3. Configuring Cluster Loader

The tool creates multiple namespaces (projects), which contain multiple templates or pods.

6.3.1. Example Cluster Loader configuration file

Cluster Loader's configuration file is a basic YAML file.

This example assumes that references to any external template files or pod spec files are also mounted in the container.

If you are running Cluster Loader on Microsoft Azure, you must set the AZURE_AUTH_LOCATION variable to a file that contains the output of terraform.azure.auto.tfvars.json, which is present in the installer directory.

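6.3.2. Configuration fields

| Field | Description |
|---|---|
| | A sub-object with one or more definitions. |
| | A sub-object with one definition per configuration. |
| | An optional sub-object with one definition per configuration. Adds synchronization possibilities during object creation. |

| Field | Description |
|---|---|
| | An integer. One definition of the count of how many projects to create. |
| | A string. One definition of the base name for the project. To prevent collisions, the count of identical namespaces … |
| | A string. One definition of the tuning set to apply to the objects that you deploy inside this namespace. |
| | A list of key-value pairs. The key is the config map name and the value is a path to a file from which you create the config map. |
| | A list of key-value pairs. The key is the secret name and the value is a path to a file from which you create the secret. |
| | A sub-object with one or more definitions of pods to deploy. |
| | A sub-object with one or more definitions of templates to deploy. |

| Field | Description |
|---|---|
| | An integer. The number of pods or templates to deploy. |
| | A string. The docker image URL to a repository where the docker image can be pulled. |
| | A string. One definition of the base name for the template (or pod) that you want to create. |
| | A string. The path to a local file, which is either a pod spec or a template to be created. |
| | Key-value pairs. … |

| Field | Description |
|---|---|
| | A string. The name of the tuning set, which matches the name specified when defining a tuning in a project. |
| | … to apply to pods. |
| | … to apply to templates. |

| Field | Description |
|---|---|
| | A sub-object. A stepping configuration used if you want to create objects in a step creation pattern. |
| | A sub-object. A rate-limiting tuning set configuration to limit the object creation rate. |

| Field | Description |
|---|---|
| | An integer. How many objects to create before pausing object creation. |
| | An integer. … |
| | An integer. The number of seconds to wait before failure if the object creation is not successful. |
| | An integer. How many milliseconds to wait between creation requests. |

| Field | Description |
|---|---|
| | A boolean. … |
| | A boolean. … |
| | A string. … |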

6.4. Known issues

- Cluster Loader fails when called without configuration. (BZ#1761925)

- If the IDENTIFIER parameter is not defined in user templates, template creation fails with error: unknown parameter name "IDENTIFIER". If you deploy templates, add this parameter to your template to avoid this error:

{ "name": "IDENTIFIER", "description": "Number to append to the name of resources", "value": "1" }

If you deploy pods, adding the parameter is unnecessary.

Chapter 7. Using CPU Manager

CPU Manager manages groups of CPUs and constrains workloads to specific CPUs.

CPU Manager is useful for workloads that have some of these attributes:

- Require as much CPU time as possible.

- Are sensitive to processor cache misses.

- Are low-latency network applications.

- Coordinate with other processes and benefit from sharing a single processor cache.

7.1. Setting up CPU Manager

Procedure

- Optional: Label a node:

oc label node perf-node.example.com cpumanager=true

- Edit the MachineConfigPool of the nodes where CPU Manager should be enabled. In this example, all workers have CPU Manager enabled:

oc edit machineconfigpool worker

- Add a label to the worker machine config pool:

metadata:
  creationTimestamp: 2020-xx-xxx
  generation: 3
  labels:
    custom-kubelet: cpumanager-enabled

- Create a KubeletConfig custom resource (CR), cpumanager-kubeletconfig.yaml. Refer to the label created in the previous step so that the correct nodes are updated with the new kubelet config. See the machineConfigPoolSelector section.

- Create the dynamic kubelet config:

oc create -f cpumanager-kubeletconfig.yaml

This adds the CPU Manager feature to the kubelet config and, if needed, the Machine Config Operator (MCO) reboots the node. Enabling CPU Manager does not itself require a reboot.

- Check for the merged kubelet config:

oc get machineconfig 99-worker-XXXXXX-XXXXX-XXXX-XXXXX-kubelet -o json | grep ownerReference -A7

- Check the worker for the updated kubelet.conf:

oc debug node/perf-node.example.com

sh-4.2# cat /host/etc/kubernetes/kubelet.conf | grep cpuManager

Example output

cpuManagerPolicy: static

cpuManagerReconcilePeriod: 5s

- Create a pod that requests one or more cores. Both limits and requests must have their CPU value set to a whole integer. That number is the number of cores dedicated to this pod:

cat cpumanager-pod.yaml

- Create the pod:

oc create -f cpumanager-pod.yaml

- Verify that the pod is scheduled to the node that you labeled:

oc describe pod cpumanager

- Verify that the cgroups are set up correctly. Get the process ID (PID) of the pause process. Pods of quality of service (QoS) tier Guaranteed are placed within kubepods.slice. Pods of other QoS tiers end up in child cgroups of kubepods:

cd /sys/fs/cgroup/cpuset/kubepods.slice/kubepods-pod69c01f8e_6b74_11e9_ac0f_0a2b62178a22.slice/crio-b5437308f1ad1a7db0574c542bdf08563b865c0345c86e9585f8c0b0a655612c.scope

for i in `ls cpuset.cpus tasks` ; do echo -n "$i "; cat $i ; done

Example output

cpuset.cpus 1

tasks 32706

- Check the allowed CPU list for the task:

grep ^Cpus_allowed_list /proc/32706/status

Example output

Cpus_allowed_list: 1

- Verify that another pod on the system (in this case, the pod in the burstable QoS tier) cannot run on the core allocated for the Guaranteed pod:

cat /sys/fs/cgroup/cpuset/kubepods.slice/kubepods-besteffort.slice/kubepods-besteffort-podc494a073_6b77_11e9_98c0_06bba5c387ea.slice/crio-c56982f57b75a2420947f0afc6cafe7534c5734efc34157525fa9abbf99e3849.scope/cpuset.cpus

0

oc describe node perf-node.example.com

This VM has two CPU cores. The system-reserved setting reserves 500 millicores, meaning that half of one core is subtracted from the total capacity of the node to arrive at the Node Allocatable amount. You can see that Allocatable CPU is 1500 millicores. This means you can run one of the CPU Manager pods, because each takes one whole core. A whole core is equivalent to 1000 millicores. If you try to schedule a second pod, the system accepts the pod, but it is never scheduled:

NAME READY STATUS RESTARTS AGE

cpumanager-6cqz7 1/1 Running 0 33m

cpumanager-7qc2t 0/1 Pending 0 11s
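The procedure above refers to two files, cpumanager-kubeletconfig.yaml and cpumanager-pod.yaml. A minimal sketch of both follows. The policy values match the kubelet.conf output shown in the procedure and the labels match the earlier steps. The container image and the 1G memory figure are assumptions chosen only to make the pod Guaranteed with one whole core.

```shell
#!/bin/sh
# KubeletConfig that selects the machine config pool labeled in the procedure.
cat > cpumanager-kubeletconfig.yaml <<'EOF'
apiVersion: machineconfiguration.openshift.io/v1
kind: KubeletConfig
metadata:
  name: cpumanager-enabled
spec:
  machineConfigPoolSelector:
    matchLabels:
      custom-kubelet: cpumanager-enabled
  kubeletConfig:
    cpuManagerPolicy: static
    cpuManagerReconcilePeriod: 5s
EOF

# Pod with equal integer CPU requests and limits (Guaranteed QoS).
# The image and memory size are illustrative assumptions.
cat > cpumanager-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  generateName: cpumanager-
spec:
  containers:
  - name: cpumanager
    image: gcr.io/google_containers/pause-amd64:3.0
    resources:
      requests:
        cpu: 1
        memory: "1G"
      limits:
        cpu: 1
        memory: "1G"
  nodeSelector:
    cpumanager: "true"
EOF
```

Because requests and limits are identical and the CPU value is a whole number, the static policy can pin the container to a dedicated core.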

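Chapter 8. Using Topology Manager

Topology Manager collects hints from the CPU Manager, Device Manager, and other Hint Providers to align pod resources, such as CPU, SR-IOV VFs, and other device resources, for all Quality of Service (QoS) classes on the same non-uniform memory access (NUMA) node.

Topology Manager uses topology information from the collected hints to decide whether a pod can be accepted or rejected on a node, based on the configured Topology Manager policy and the pod resources requested.

Topology Manager is useful for workloads that use hardware accelerators to support latency-critical execution and high-throughput parallel computation.

To use Topology Manager, you must use CPU Manager with the static policy. For more information on CPU Manager, see Using CPU Manager.

8.1. Topology Manager policies

Topology Manager aligns pod resources of all Quality of Service (QoS) classes by collecting topology hints from Hint Providers, such as CPU Manager and Device Manager, and using the collected hints to align the pod resources.

To align CPU resources with other requested resources in a pod spec, CPU Manager must be enabled with the static CPU Manager policy.

Topology Manager supports four allocation policies, which you assign in the cpumanager-enabled custom resource (CR):

- none policy: This is the default policy and does not perform any topology alignment.

- best-effort policy: For each container in a pod with the best-effort topology management policy, kubelet calls each Hint Provider to discover its resource availability. Using this information, Topology Manager stores the preferred NUMA node affinity for that container. If the affinity is not preferred, Topology Manager stores this and admits the pod to the node.

- restricted policy: For each container in a pod with the restricted topology management policy, kubelet calls each Hint Provider to discover its resource availability. Using this information, Topology Manager stores the preferred NUMA node affinity for that container. If the affinity is not preferred, Topology Manager rejects the pod from the node, resulting in a pod in a Terminated state with a pod admission failure.

- single-numa-node policy: For each container in a pod with the single-numa-node topology management policy, kubelet calls each Hint Provider to discover its resource availability. Using this information, Topology Manager determines whether a single NUMA node affinity is possible. If it is, the pod is admitted to the node. If a single NUMA node affinity is not possible, Topology Manager rejects the pod from the node. This results in a pod in a Terminated state with a pod admission failure.

8.2. Setting up Topology Manager

To use Topology Manager, you must configure an allocation policy in the cpumanager-enabled custom resource (CR). This file might exist if you have set up CPU Manager. If the file does not exist, you can create it.

Prerequisites

- Configure the CPU Manager policy to be static. See Using CPU Manager in the Scalability and Performance section.

Procedure

To activate Topology Manager:

- Configure the Topology Manager allocation policy in the cpumanager-enabled custom resource (CR):

oc edit KubeletConfig cpumanager-enabled

8.3. Pod interactions with Topology Manager policies

The example pod specs below help illustrate pod interactions with Topology Manager.

The following pod runs in the BestEffort QoS class because no resource requests or limits are specified.

spec:
  containers:
  - name: nginx
    image: nginx

The next pod runs in the Burstable QoS class because requests are less than limits.

If the selected policy is anything other than none, Topology Manager does not consider either of these pod specifications.

The last example pod runs in the Guaranteed QoS class because requests are equal to limits.

Topology Manager considers this pod. Topology Manager consults the CPU Manager static policy, which returns the topology of available CPUs. Topology Manager also consults Device Manager to discover the topology of available devices for example.com/device.

Topology Manager uses this information to store the best topology for this container. In the case of this pod, CPU Manager and Device Manager use this stored information at the resource allocation stage.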
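The Burstable and Guaranteed cases discussed above differ only in how requests relate to limits. The following sketch writes one manifest of each kind. The pod names, file names, and the concrete memory and CPU figures are illustrative assumptions. The example.com/device resource name comes from the text.

```shell
#!/bin/sh
# Burstable: a memory request lower than the memory limit.
cat > burstable-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: nginx-burstable
spec:
  containers:
  - name: nginx
    image: nginx
    resources:
      limits:
        memory: "200Mi"
      requests:
        memory: "100Mi"
EOF

# Guaranteed: every request equals its limit, including the device resource.
cat > guaranteed-pod.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: nginx-guaranteed
spec:
  containers:
  - name: nginx
    image: nginx
    resources:
      limits:
        memory: "200Mi"
        cpu: "2"
        example.com/device: "1"
      requests:
        memory: "200Mi"
        cpu: "2"
        example.com/device: "1"
EOF
```

In the second manifest, the whole-number CPU request and the device request are what give CPU Manager and Device Manager topology hints to report.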

Chapter 9. Scaling the Cluster Monitoring Operator

OpenShift Container Platform exposes metrics that the Cluster Monitoring Operator collects and stores in the Prometheus-based monitoring stack. As an administrator, you can view system resource, container, and component metrics in one dashboard interface, Grafana.

If you are running cluster monitoring with an attached PVC for Prometheus, you might experience OOM kills during cluster upgrade. When persistent storage is in use for Prometheus, Prometheus memory usage doubles during cluster upgrade and for several hours after the upgrade is complete. To avoid the OOM kill issue, provide worker nodes with double the amount of memory that was available before the upgrade. For example, if you are running monitoring on the minimum recommended nodes, which have 2 cores with 8 GB of RAM, increase memory to 16 GB. For more information, see BZ#1925061.

9.1. Prometheus database storage requirements

Red Hat performed various tests for different scale sizes.

The Prometheus storage requirements below are not prescriptive. Higher resource consumption might be observed in your cluster depending on workload activity and resource use.

| Number of nodes | Number of pods | Prometheus storage growth per day | Prometheus storage growth per 15 days | RAM space (per scale size) | Network (per tsdb chunk) |
|---|---|---|---|---|---|
| 50 | 1800 | 6.3GB | 94GB | 6GB | 16MB |
| 100 | 3600 | 13GB | 195GB | 10GB | 26MB |
| 150 | 5400 | 19GB | 283GB | 12GB | 36MB |
| 200 | 7200 | 25GB | 375GB | 14GB | 46MB |

Approximately 20 percent of the expected size was added as overhead to ensure that the storage requirements do not exceed the calculated value.

The above calculation is for the default OpenShift Container Platform Cluster Monitoring Operator.

CPU utilization has a minor impact. The ratio is approximately 1 core out of 40 per 50 nodes and 1800 pods.

Recommendations for OpenShift Container Platform

- Use at least three infrastructure (infra) nodes.

- Use at least three openshift-container-storage nodes with non-volatile memory express (NVMe) drives.

9.2. Configuring cluster monitoring

You can increase the storage capacity for the Prometheus component in the cluster monitoring stack.

Procedure

To increase the storage capacity for Prometheus:

- Create a YAML configuration file, cluster-monitoring-config.yaml. The numbered notes describe its values:

- 1: A typical value is PROMETHEUS_RETENTION_PERIOD=15d. Units are measured in time using one of these suffixes: s, m, h, d.

- 2, 4: The storage class for your cluster.

- 3: A typical value is PROMETHEUS_STORAGE_SIZE=2000Gi. Storage values can be a plain integer or a fixed-point integer using one of these suffixes: E, P, T, G, M, K. You can also use the power-of-two equivalents: Ei, Pi, Ti, Gi, Mi, Ki.

- 5: A typical value is ALERTMANAGER_STORAGE_SIZE=20Gi. Storage values can be a plain integer or a fixed-point integer using one of these suffixes: E, P, T, G, M, K. You can also use the power-of-two equivalents: Ei, Pi, Ti, Gi, Mi, Ki.

- Add values for the retention period, storage class, and storage sizes.

- Save the file.

- Apply the changes by running:

oc create -f cluster-monitoring-config.yaml
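The configuration file from the procedure above can be generated from the variables that the notes mention. The following is a sketch under stated assumptions: the STORAGE_CLASS default of gp2 is a placeholder for whatever storage class your cluster provides, and the other defaults are the typical values quoted in the notes.

```shell
#!/bin/sh
# Defaults follow the typical values named in the notes; gp2 is only a
# placeholder storage class and must be replaced with one from your cluster.
PROMETHEUS_RETENTION_PERIOD="${PROMETHEUS_RETENTION_PERIOD:-15d}"
STORAGE_CLASS="${STORAGE_CLASS:-gp2}"
PROMETHEUS_STORAGE_SIZE="${PROMETHEUS_STORAGE_SIZE:-2000Gi}"
ALERTMANAGER_STORAGE_SIZE="${ALERTMANAGER_STORAGE_SIZE:-20Gi}"

# The unquoted heredoc delimiter lets the shell substitute the variables.
cat > cluster-monitoring-config.yaml <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: cluster-monitoring-config
  namespace: openshift-monitoring
data:
  config.yaml: |
    prometheusK8s:
      retention: ${PROMETHEUS_RETENTION_PERIOD}
      volumeClaimTemplate:
        spec:
          storageClassName: ${STORAGE_CLASS}
          resources:
            requests:
              storage: ${PROMETHEUS_STORAGE_SIZE}
    alertmanagerMain:
      volumeClaimTemplate:
        spec:
          storageClassName: ${STORAGE_CLASS}
          resources:
            requests:
              storage: ${ALERTMANAGER_STORAGE_SIZE}
EOF
```

Exporting any of the four variables before running the script overrides the corresponding default, for example PROMETHEUS_STORAGE_SIZE=500Gi.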

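Chapter 10. Planning your environment according to object maximums

Consider the following tested object maximums when you plan your OpenShift Container Platform cluster.

These guidelines are based on the largest possible cluster. For smaller clusters, the maximums are lower. Many factors influence the stated thresholds, including the etcd version and the storage data format.

These guidelines apply to OpenShift Container Platform with software-defined networking (SDN), not Open Virtual Network (OVN).

In most cases, exceeding these numbers results in lower overall performance. It does not necessarily mean that the cluster will fail.

10.1. OpenShift Container Platform tested cluster maximums for major releases

Tested cloud platforms for OpenShift Container Platform 3.x: Red Hat OpenStack Platform (RHOSP), Amazon Web Services, and Microsoft Azure. Tested cloud platforms for OpenShift Container Platform 4.x: Amazon Web Services, Microsoft Azure, and Google Cloud Platform.

| Maximum type | 3.x tested maximum | 4.x tested maximum |
|---|---|---|
| Number of nodes | 2,000 | 2,000 |
| Number of pods [1] | 150,000 | 150,000 |
| Number of pods per node | 250 | 500 [2] |
| Number of pods per core | There is no default value. | There is no default value. |
| Number of namespaces [3] | 10,000 | 10,000 |
| Number of builds | 10,000 (Default pod RAM 512 Mi) - Pipeline strategy | 10,000 (Default pod RAM 512 Mi) - Source-to-Image (S2I) build strategy |
| Number of pods per namespace [4] | 25,000 | 25,000 |
| Number of routes and back ends per Ingress Controller | 2,000 per router | 2,000 per router |
| Number of secrets | 80,000 | 80,000 |
| Number of config maps | 90,000 | 90,000 |
| Number of services [5] | 10,000 | 10,000 |
| Number of services per namespace | 5,000 | 5,000 |
| Number of back ends per service | 5,000 | 5,000 |
| Number of deployments per namespace [4] | 2,000 | 2,000 |
| Number of build configs | 12,000 | 12,000 |
| Number of secrets | 40,000 | 40,000 |
| Number of custom resource definitions (CRD) | There is no default value. | 512 [6] |

- [1] The pod count displayed here is the number of test pods. The actual number of pods depends on the application's memory, CPU, and storage requirements.

- [2] This was tested on a cluster with 100 worker nodes and 500 pods per worker node. The default maxPods is still 250. To get to 500 maxPods, the cluster must be created with maxPods set to 500 using a custom kubelet config. If you need 500 user pods, you need a hostPrefix of 22 because there are 10 to 15 system pods already running on the node. The maximum number of pods with attached persistent volume claims (PVC) depends on the storage backend from which the PVCs are allocated. In these tests, only OpenShift Container Storage (OCS v4) was able to satisfy the number of pods per node discussed in this document.

- [3] When there are a large number of active projects, etcd might suffer from poor performance if the keyspace grows excessively large and exceeds the space quota. Periodic maintenance of etcd, including defragmentation, is highly recommended to free etcd storage.

- [4] There are a number of control loops in the system that must iterate over all objects in a given namespace as a reaction to some changes in state. Having a large number of objects of a given type in a single namespace can make those loops expensive and slow down the processing of state changes. The limit assumes that the system has enough CPU, memory, and disk to satisfy the application requirements.

- [5] Each service port and each service back end has a corresponding entry in iptables. The number of back ends of a given service affects the size of the endpoints objects, which affects the size of the data that is sent throughout the system.

- [6] OpenShift Container Platform has a limit of 512 total custom resource definitions (CRD), including those installed by OpenShift Container Platform, products integrating with OpenShift Container Platform, and user-created CRDs. If more than 512 CRDs are created, oc command requests might be throttled.

Red Hat does not provide direct guidance on sizing your OpenShift Container Platform cluster. This is because determining whether your cluster is within the supported bounds of OpenShift Container Platform requires careful consideration of all the multidimensional factors that limit the cluster scale.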

10.2. OpenShift Container Platform environment and configuration on which the cluster maximums are tested

AWS cloud platform:

| Node | Flavor | vCPU | RAM (GiB) | Disk type | Disk size (GiB)/IOPS | Count | Region |
|---|---|---|---|---|---|---|---|
| Master/etcd [1] | r5.4xlarge | 16 | 128 | io1 | 220 / 3000 | 3 | us-west-2 |
| Infra [2] | m5.12xlarge | 48 | 192 | gp2 | 100 | 3 | us-west-2 |
| Workload [3] | m5.4xlarge | 16 | 64 | gp2 | 500 [4] | 1 | us-west-2 |
| Worker | m5.2xlarge | 8 | 32 | gp2 | 100 | 3/25/250/500 [5] | us-west-2 |

- [1] io1 disks with 3000 IOPS are used for master/etcd nodes because etcd is I/O intensive and latency sensitive.

- [2] Infra nodes are used to host the Monitoring, Ingress, and Registry components to ensure they have enough resources to run at scale.

- [3] The workload node is dedicated to running performance and scalability workload generators.

- [4] A larger disk size is used so that there is enough space to store the large amounts of data collected during the performance and scalability test run.

- [5] The cluster is scaled in iterations, and performance and scalability tests are executed at the specified node counts.

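10.3. How to plan your environment according to tested cluster maximums

Oversubscribing the physical resources on a node affects the resource guarantees that the Kubernetes scheduler makes during pod placement. Learn what measures you can take to avoid memory swapping.

Some of the tested maximums are stretched only in a single dimension. They vary when many objects are running on the cluster.

The numbers noted in this documentation are based on Red Hat's test methodology, setup, configuration, and tunings. These numbers can vary based on your own individual setup and environments.

While planning your environment, determine how many pods are expected to fit per node:

required pods per cluster / pods per node = total number of nodes needed

The current maximum number of pods per node is 250. However, the number of pods that fit on a node depends on the application itself. Consider the application's memory, CPU, and storage requirements, as described in How to plan your environment according to application requirements.

Example scenario

If you want to scope your cluster for 2,200 pods per cluster, you need at least five nodes, assuming that there are at most 500 pods per node:

2200 / 500 = 4.4

If you increase the number of nodes to 20, the pod distribution changes to 110 pods per node:

2200 / 20 = 110

Where: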

required pods per cluster / total number of nodes = expected pods per node
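Both formulas reduce to integer arithmetic, with the node count rounded up because a fractional node cannot be provisioned. The sketch below reproduces the example scenario. The function names are hypothetical.

```shell
#!/bin/sh
# Minimum node count: ceiling of (required pods / pods per node).
nodes_needed() {
    pods="$1"; per_node="$2"
    echo $(( (pods + per_node - 1) / per_node ))
}

# Expected pods per node (rounded down) for a given node count.
pods_per_node() {
    pods="$1"; nodes="$2"
    echo $(( pods / nodes ))
}
```

With the numbers from the scenario, nodes_needed 2200 500 rounds 4.4 up to 5, and pods_per_node 2200 20 gives 110.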

10.4. How to plan your environment according to application requirements

Consider an example application environment:

| Pod type | Pod quantity | Max memory | CPU cores | Persistent storage |
|---|---|---|---|---|
| apache | 100 | 500MB | 0.5 | 1GB |
| node.js | 200 | 1GB | 1 | 1GB |
| postgresql | 100 | 1GB | 2 | 10GB |
| JBoss EAP | 100 | 1GB | 1 | 1GB |

Extrapolated requirements: 550 CPU cores, 450GB RAM, and 1.4TB storage.
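The extrapolated totals are the per-pod figures multiplied by the pod quantity and summed over the rows. The following sketch redoes that sum with awk. It assumes that 500MB is treated as 0.5GB and that 1TB is treated as 1000GB. The function name totals is hypothetical.

```shell
#!/bin/sh
# Input columns: pod type, quantity, max memory (GB), CPU cores, storage (GB).
# Each per-pod figure is multiplied by the quantity and summed across rows.
totals() {
    awk '{ cpu += $2 * $4; ram += $2 * $3; disk += $2 * $5 }
         END { printf "%d CPU cores, %d GB RAM, %.1f TB storage\n", cpu, ram, disk / 1000 }'
}

totals <<'EOF'
apache     100 0.5 0.5 1
nodejs     200 1   1   1
postgresql 100 1   2   10
jboss-eap  100 1   1   1
EOF
```

Changing a quantity or a per-pod figure in the input and rerunning the script shows how sensitive the totals are to a single workload type.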

노드의 인스턴스 크기는 기본 설정에 따라 높게 또는 낮게 조정될 수 있습니다. 노드에서는 리소스 초과 커밋이 발생하는 경우가 많습니다. 이 배포 시나리오에서는 동일한 양의 리소스를 제공하는 데 더 작은 노드를 추가로 실행하도록 선택할 수도 있고 더 적은 수의 더 큰 노드를 실행하도록 선택할 수도 있습니다. 운영 민첩성 및 인스턴스당 비용과 같은 요인을 고려해야 합니다.

| 노드 유형 | 수량 | CPU | RAM(GB) |

|---|---|---|---|

| 노드(옵션 1) | 100 | 4 | 16 |

| 노드(옵션 2) | 50 | 8 | 32 |

| 노드(옵션 3) | 25 | 16 | 64 |

어떤 애플리케이션은 초과 커밋된 환경에 적합하지만 어떤 애플리케이션은 그렇지 않습니다. 대부분의 Java 애플리케이션과 대규모 페이지를 사용하는 애플리케이션은 초과 커밋에 적합하지 않은 애플리케이션의 예입니다. 해당 메모리는 다른 애플리케이션에 사용할 수 없습니다. 위의 예에 나온 환경에서는 초과 커밋이 약 30%이며, 이는 일반적으로 나타나는 비율입니다.

애플리케이션 Pod는 환경 변수 또는 DNS를 사용하여 서비스에 액세스할 수 있습니다. 환경 변수를 사용하는 경우 노드에서 Pod가 실행될 때 활성 서비스마다 kubelet을 통해 변수를 삽입합니다. 클러스터 인식 DNS 서버는 새로운 서비스의 Kubernetes API를 확인하고 각각에 대해 DNS 레코드 세트를 생성합니다. 클러스터 전체에서 DNS가 활성화된 경우 모든 Pod가 자동으로 해당 DNS 이름을 통해 서비스를 확인할 수 있어야 합니다. 서비스가 5,000개를 넘어야 하는 경우 DNS를 통한 서비스 검색을 사용할 수 있습니다. 서비스 검색에 환경 변수를 사용하는 경우 네임스페이스에서 서비스가 5,000개를 넘은 후 인수 목록이 허용되는 길이를 초과하면 Pod 및 배포가 실패하기 시작합니다. 이 문제를 해결하려면 배포의 서비스 사양 파일에서 서비스 링크를 비활성화하십시오.

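When environment variables are used for service discovery, the number of application pods that can run in a namespace depends on the number of services and the length of the service names. ARG_MAX defines the maximum argument length for a new process and is set to 2097152 bytes (2 MiB) by default. The kubelet injects environment variables into each pod scheduled to run in the namespace, including:

- <SERVICE_NAME>_SERVICE_HOST=<IP>

- <SERVICE_NAME>_SERVICE_PORT=<PORT>

- <SERVICE_NAME>_PORT=tcp://<IP>:<PORT>

- <SERVICE_NAME>_PORT_<PORT>_TCP=tcp://<IP>:<PORT>

- <SERVICE_NAME>_PORT_<PORT>_TCP_PROTO=tcp

- <SERVICE_NAME>_PORT_<PORT>_TCP_PORT=<PORT>

- <SERVICE_NAME>_PORT_<PORT>_TCP_ADDR=<ADDR>

The pods in the namespace start to fail if the argument length exceeds the allowed value, and the number of characters in each service name contributes to that length. For example, in a namespace with 5,000 services, the limit on the service name is 33 characters, which enables you to run 5,000 pods in the namespace.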
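To see why the service name length matters, the following sketch estimates how many bytes the seven variables occupy for a given number of services. It is a simplified model, not the kernel's exact accounting: the IP address `172.30.255.255` and port `8080` are assumed sample values, each string is counted with one terminating NUL byte, and other environment variables and arguments are ignored. The result therefore does not attempt to reproduce the 33-character figure exactly.

```python
ARG_MAX_BYTES = 2097152  # 2 MiB default discussed above

# The seven variable templates injected per service.
TEMPLATES = [
    "{name}_SERVICE_HOST={ip}",
    "{name}_SERVICE_PORT={port}",
    "{name}_PORT=tcp://{ip}:{port}",
    "{name}_PORT_{port}_TCP=tcp://{ip}:{port}",
    "{name}_PORT_{port}_TCP_PROTO=tcp",
    "{name}_PORT_{port}_TCP_PORT={port}",
    "{name}_PORT_{port}_TCP_ADDR={ip}",
]


def env_bytes_per_service(name_length, ip="172.30.255.255", port="8080"):
    """Estimate bytes for one service: each string plus its NUL terminator."""
    name = "X" * name_length  # placeholder name of the requested length
    return sum(len(t.format(name=name, ip=ip, port=port)) + 1 for t in TEMPLATES)


def total_env_bytes(services, name_length):
    """Estimate bytes injected for all services in a namespace."""
    return services * env_bytes_per_service(name_length)


if __name__ == "__main__":
    for length in (10, 20, 33, 40):
        total = total_env_bytes(5000, length)
        print(length, total, "exceeds ARG_MAX" if total > ARG_MAX_BYTES else "fits")
```

Because every service name appears in all seven variables, each extra character in a name costs seven bytes per service, which is why the limit tightens quickly as the number of services grows.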

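Chapter 11. Optimizing storage

Optimizing storage helps to minimize storage use across all resources. By optimizing storage, administrators help ensure that existing storage resources work efficiently.

11.1. Available persistent storage options

Understand your persistent storage options so that you can optimize your OpenShift Container Platform environment.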

| Storage type | Description | Examples |
|---|---|---|
| Block | | AWS EBS and VMware vSphere support dynamic persistent volume (PV) provisioning natively in OpenShift Container Platform. |
| File | | RHEL NFS, NetApp NFS [1], and Vendor NFS |
| Object | | AWS S3 |

[1] NetApp NFS supports dynamic PV provisioning when using the Trident plug-in.

Currently, CNS is not supported in OpenShift Container Platform 4.7.

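11.2. Recommended configurable storage technology

The following table summarizes the recommended and configurable storage technologies for the given OpenShift Container Platform cluster application.

| Storage type | ROX [1] | RWX [2] | Registry | Scaled registry | Metrics [3] | Logging | Apps |
|---|---|---|---|---|---|---|---|
| Block | Yes [4] | No | Configurable | Not configurable | Recommended | Recommended | Recommended |
| File | Yes [4] | Yes | Configurable | Configurable | Configurable [5] | Configurable [6] | Recommended |
| Object | Yes | Yes | Recommended | Recommended | Not configurable | Not configurable | Not configurable [7] |

[1] ReadOnlyMany

[2] ReadWriteMany

[3] Prometheus is the underlying technology used for metrics.

[4] This does not apply to physical disk, VM physical disk, VMDK, loopback over NFS, AWS EBS, and Azure Disk.

[5] For metrics, take care with the RWX access mode on file storage. File storage is not recommended for production metrics deployments (see 11.2.1.3).

[6] For logging, using any shared storage is an anti-pattern. One volume per Elasticsearch is required.

[7] Object storage is not consumed through OpenShift Container Platform PVs or PVCs. Apps must integrate with the object storage REST API.

A scaled registry is an OpenShift Container Platform registry where two or more pod replicas are running.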

11.2.1. Specific application storage recommendations

Testing shows issues with using the NFS server on Red Hat Enterprise Linux (RHEL) as a storage backend for core services. This includes the OpenShift Container Registry and Quay, Prometheus for monitoring storage, and Elasticsearch for logging storage. Therefore, using RHEL NFS to back PVs used by core services is not recommended.

Other NFS implementations on the marketplace might not have these issues. Contact the individual NFS implementation vendor for more information on any testing completed against these OpenShift Container Platform core components.

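11.2.1.1. Registry

In a non-scaled/high-availability (HA) OpenShift Container Platform registry cluster deployment:

- The storage technology does not have to support RWX access mode.

- The storage technology must ensure read-after-write consistency.

- The preferred storage technology is object storage, followed by block storage.

- File storage is not recommended for OpenShift Container Platform registry cluster deployments with production workloads.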

11.2.1.2. Scaled registry

In a scaled/HA OpenShift Container Platform registry cluster deployment:

- The storage technology must support RWX access mode.

- The storage technology must ensure read-after-write consistency.

- The preferred storage technology is object storage.

- Amazon Simple Storage Service (Amazon S3), Google Cloud Storage (GCS), Microsoft Azure Blob Storage, and OpenStack Swift are supported.

- Object storage should be S3 or Swift compliant.

- For non-cloud platforms, such as vSphere and bare metal installations, the only configurable technology is file storage.

- Block storage is not configurable.

11.2.1.3. Metrics

In an OpenShift Container Platform hosted metrics cluster deployment:

- The preferred storage technology is block storage.

- Object storage is not configurable.

File storage is not recommended for a hosted metrics cluster deployment with production workloads.

11.2.1.4. Logging

In an OpenShift Container Platform hosted logging cluster deployment:

- The preferred storage technology is block storage.

- Object storage is not configurable.

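11.2.1.5. Applications

Application use cases vary from application to application, as described in the following examples:

- Storage technologies that support dynamic PV provisioning have low mount time latencies and are not tied to nodes, which supports a healthy cluster.

- Application developers are responsible for knowing and understanding the storage requirements of their application, and how it works with the provided storage, to ensure that issues do not occur when the application scales or interacts with the storage layer.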

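11.2.2. Other specific application storage recommendations

RAID configurations are not recommended for write-intensive workloads, such as etcd. If you run etcd with a RAID configuration, you might encounter performance issues with your workloads.

- Red Hat OpenStack Platform (RHOSP) Cinder: RHOSP Cinder tends to be adept in ROX access mode use cases.

- Databases: Databases (RDBMSs, NoSQL DBs, and so on) tend to perform best with dedicated block storage.

- The etcd database must have enough storage and adequate performance capacity to enable a large cluster. Information about monitoring and benchmarking tools to establish ample storage and a high-performance environment is described in Recommended etcd practices.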

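11.3. Data storage management

The following table summarizes the main directories that OpenShift Container Platform components write data to.

| Directory | Notes | Sizing | Expected growth |
|---|---|---|---|
| /var/log | Log files for all components. | 10 to 30 GB. | Log files can grow quickly. Size can be managed by growing disks or by using log rotation. |
| /var/lib/etcd | Used for etcd storage when storing the database. | Less than 20 GB. The database can grow up to 8 GB. | Grows slowly with the environment. Stores only metadata. Additional 20 to 25 GB for every additional 8 GB of memory. |
| /var/lib/containers | Mount point for the CRI-O runtime. Storage used for active container runtimes, including pods, and storage of local images. Not used for registry storage. | 50 GB for a node with 16 GB of memory. Do not use this sizing to determine minimum cluster requirements. Additional 20 to 25 GB for every additional 8 GB of memory. | Growth is limited by the capacity for running containers. |
| /var/lib/kubelet | Ephemeral volume storage for pods. This includes anything external that is mounted into a container at runtime, such as environment variables, kube secrets, and data volumes not backed by persistent volumes. | Varies | Minimal if pods requiring storage use persistent volumes. Can grow quickly if ephemeral storage is used. |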
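The sizing guidance for /var/lib/containers (50 GB at 16 GB of node memory, plus 20 to 25 GB for every additional 8 GB) can be turned into a small estimator. This is a sketch only: the function name is hypothetical, and rounding a partial 8 GB block up to a full block is an assumption that the table does not state. As the table notes, the result is not a minimum cluster requirement.

```python
import math
from typing import Tuple


def containers_dir_size_gb(node_memory_gb: int) -> Tuple[int, int]:
    """Return a (low, high) size estimate in GB for /var/lib/containers."""
    base_memory_gb, base_size_gb = 16, 50
    if node_memory_gb < base_memory_gb:
        raise ValueError("the table only gives guidance from 16 GB of memory upward")
    # Assumption: each started block of 8 GB beyond the baseline counts in full.
    extra_blocks = math.ceil((node_memory_gb - base_memory_gb) / 8)
    return base_size_gb + 20 * extra_blocks, base_size_gb + 25 * extra_blocks


if __name__ == "__main__":
    for memory in (16, 32, 64):
        print(memory, containers_dir_size_gb(memory))
```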

Chapter 12. Optimizing routing

The OpenShift Container Platform HAProxy router scales to optimize performance.

12.1. Baseline Ingress Controller (router) performance

The OpenShift Container Platform Ingress Controller, or router, is the Ingress point for all external traffic destined for OpenShift Container Platform services.

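When evaluating the performance of a single HAProxy router in terms of HTTP requests handled per second, the result varies depending on many factors. In particular:

- HTTP keep-alive/close mode

- Route type

- TLS session resumption client support

- Number of concurrent connections per target route

- Number of target routes

- Back-end server page size

- Underlying infrastructure (network/SDN solution, CPU, and so on)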

Performance in your specific environment will vary. Red Hat lab tests were run on a public cloud instance of size 4 vCPU/16 GB RAM. A single HAProxy router handling 100 routes terminated by back ends serving 1 kB static pages can handle the following number of transactions per second.

In HTTP keep-alive mode scenarios:

| Encryption | LoadBalancerService | HostNetwork |
|---|---|---|
| none | 21515 | 29622 |
| edge | 16743 | 22913 |
| passthrough | 36786 | 53295 |
| re-encrypt | 21583 | 25198 |

In HTTP close (no keep-alive) scenarios:

| Encryption | LoadBalancerService | HostNetwork |
|---|---|---|
| none | 5719 | 8273 |
| edge | 2729 | 4069 |
| passthrough | 4121 | 5344 |
| re-encrypt | 2320 | 2941 |

The default Ingress Controller configuration with ROUTER_THREADS=4 was used, and two different endpoint publishing strategies (LoadBalancerService/HostNetwork) were tested. TLS session resumption was used for encrypted routes. With HTTP keep-alive, a single HAProxy router can saturate a 1 Gbit NIC at page sizes as small as 8 kB.
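The saturation claim can be put in perspective with a back-of-the-envelope calculation: how many requests per second are needed for the page payload alone to fill a link. The sketch below assumes 1 kB = 1,000 bytes and ignores HTTP headers and TCP/IP overhead, so the real threshold is somewhat lower. The function name is hypothetical. Note that the tables above were measured with 1 kB pages, so their figures are not directly comparable with an 8 kB workload.

```python
def requests_per_second_to_saturate(link_bits_per_second: float, page_bytes: int) -> float:
    """Return the request rate at which page payload alone fills the link."""
    if page_bytes <= 0:
        raise ValueError("page_bytes must be > 0")
    return link_bits_per_second / (page_bytes * 8)


if __name__ == "__main__":
    one_gbit = 1e9
    print(requests_per_second_to_saturate(one_gbit, 8000))  # 8 kB pages
    print(requests_per_second_to_saturate(one_gbit, 1000))  # 1 kB pages
```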

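When running on bare metal with modern processors, you can expect roughly twice the performance of the public cloud instance above. This overhead is introduced by the virtualization layer on public clouds and largely applies to private cloud-based virtualization as well. The following table is a guide to how many applications to use behind the router:

| Number of applications | Application type |
|---|---|
| 5-10 | Static file/web server or caching proxy |
| 100-1000 | Applications generating dynamic content |

In general, HAProxy can support routes for 5 to 1,000 applications, depending on the technology in use. Ingress Controller performance might be limited by the capabilities and performance of the applications behind it, such as the language used or static versus dynamic content.

Use Ingress, or router, sharding to serve more routes towards applications and to help horizontally scale the routing tier.

For more information on Ingress sharding, see Configuring Ingress Controller sharding by using route labels and Configuring Ingress Controller sharding by using namespace labels.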

12.2. Ingress Controller (router) performance optimizations

OpenShift Container Platform no longer supports modifying Ingress Controller deployments by setting environment variables such as ROUTER_THREADS, ROUTER_DEFAULT_TUNNEL_TIMEOUT, ROUTER_DEFAULT_CLIENT_TIMEOUT, ROUTER_DEFAULT_SERVER_TIMEOUT, and RELOAD_INTERVAL.

You can modify the Ingress Controller deployment, but if the Ingress Operator is enabled, the configuration is overwritten.

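Chapter 13. Optimizing networking

The OpenShift SDN uses OpenvSwitch, virtual extensible LAN (VXLAN) tunnels, OpenFlow rules, and iptables. This network can be tuned by using jumbo frames, network interface controller (NIC) offloads, multi-queue, and ethtool settings.

OVN-Kubernetes uses Geneve (Generic Network Virtualization Encapsulation) instead of VXLAN as the tunnel protocol.

VXLAN provides benefits over VLANs, such as an increase in networks from 4096 to over 16 million, and layer 2 connectivity across physical networks. This allows all pods behind a service to communicate with each other, even if they are running on different systems.

VXLAN encapsulates all tunneled traffic in user datagram protocol (UDP) packets. However, this leads to increased CPU utilization. Both the outer and inner packets are subject to normal checksumming rules to guarantee that data is not corrupted during transit. Depending on CPU performance, this additional processing overhead can reduce throughput and increase latency when compared to traditional, non-overlay networks.

Cloud, VM, and bare metal CPU performance can be capable of handling much more than 1 Gbps of network throughput. When using higher bandwidth links such as 10 or 40 Gbps, reduced performance can occur. This is a known issue in VXLAN-based environments and is not specific to containers or OpenShift Container Platform. Any network that relies on VXLAN tunnels performs similarly because of the VXLAN implementation.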

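If you are looking to push beyond 1 Gbps, you can:

- Evaluate network plug-ins that implement different routing techniques, such as border gateway protocol (BGP).

- Use VXLAN-offload capable network adapters. VXLAN-offload moves the packet checksum calculation and associated CPU overhead off the system CPU and onto dedicated hardware on the network adapter. This frees up CPU cycles for use by pods and applications, and allows users to utilize the full bandwidth of their network infrastructure.

VXLAN-offload does not reduce latency. However, CPU utilization is reduced even in latency tests.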

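13.1. Optimizing the MTU for your network

There are two important maximum transmission units (MTUs): the network interface controller (NIC) MTU and the cluster network MTU.

The NIC MTU is only configured at the time of OpenShift Container Platform installation. The MTU must be less than or equal to the maximum supported value of the NIC of your network. If you are optimizing for throughput, choose the largest possible value. If you are optimizing for lowest latency, choose a lower value.

The SDN overlay's MTU must be less than the NIC MTU by at least 50 bytes. This accounts for the SDN overlay header. On a normal Ethernet network, set this to 1450. On a jumbo frame Ethernet network, set this to 8950.

For OVN and Geneve, the MTU must be less than the NIC MTU by at least 100 bytes.

The 50-byte overlay header is specific to OpenShift SDN. Other SDN solutions might require a larger or smaller value.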
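The MTU rules above reduce to a subtraction. The following sketch encodes the two overheads given in the text (50 bytes for OpenShift SDN, 100 bytes for OVN with Geneve) and returns the largest cluster network MTU for a given NIC MTU. The function name and the dictionary keys are labels chosen for this illustration.

```python
# Overlay header overhead in bytes, as given in the text above.
OVERLAY_OVERHEAD_BYTES = {
    "OpenShiftSDN": 50,    # VXLAN-based overlay
    "OVNKubernetes": 100,  # Geneve-based overlay
}


def max_cluster_network_mtu(nic_mtu: int, network_type: str) -> int:
    """Return the largest cluster network MTU that fits inside the NIC MTU."""
    overhead = OVERLAY_OVERHEAD_BYTES[network_type]
    if nic_mtu <= overhead:
        raise ValueError("NIC MTU is too small to carry the overlay header")
    return nic_mtu - overhead


if __name__ == "__main__":
    for nic_mtu in (1500, 9000):
        for network_type in OVERLAY_OVERHEAD_BYTES:
            print(nic_mtu, network_type, max_cluster_network_mtu(nic_mtu, network_type))
```

The values 1500 and 9000 are the usual NIC MTUs for standard and jumbo frame Ethernet, which yield the 1450 and 8950 settings mentioned above for OpenShift SDN.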

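13.2. Recommended practices for installing large scale clusters

When installing large clusters or scaling the cluster to larger node counts, set the cluster network cidr accordingly in your install-config.yaml file before you install the cluster.

The default cluster network cidr 10.128.0.0/14 cannot be used if the cluster size is more than 500 nodes. To scale beyond 500 nodes, set it to 10.128.0.0/12 or 10.128.0.0/10.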
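The 500-node limit follows from how many per-node subnets fit inside the cluster network. The sketch below counts them with Python's standard `ipaddress` module. It assumes that each node is allocated a /23 subnet (a host prefix of 23), which is not stated in the text above, so treat that value as an assumption and adjust it to match your install-config.yaml file. The function name is hypothetical.

```python
import ipaddress


def max_node_subnets(cluster_cidr: str, host_prefix: int = 23) -> int:
    """Return how many /host_prefix node subnets fit inside cluster_cidr."""
    network = ipaddress.ip_network(cluster_cidr)
    if host_prefix < network.prefixlen:
        raise ValueError("host_prefix must not be shorter than the cluster prefix")
    # Each extra prefix bit doubles the number of available subnets.
    return 2 ** (host_prefix - network.prefixlen)


if __name__ == "__main__":
    for cidr in ("10.128.0.0/14", "10.128.0.0/12", "10.128.0.0/10"):
        print(cidr, max_node_subnets(cidr))
```

Under this assumption, the default /14 network provides 512 node subnets, which is consistent with the limit of roughly 500 nodes, while the /12 and /10 networks provide 2,048 and 8,192.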

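13.3. Impact of IPsec

Because encrypting and decrypting traffic on node hosts uses CPU power, enabling encryption affects both throughput and CPU usage on the nodes, regardless of the IP security system being used.

IPsec encrypts traffic at the IP payload level, before it reaches the NIC, which protects fields that would otherwise be used for NIC offloading. This means that some NIC acceleration features might not be usable when IPsec is enabled, which leads to decreased throughput and increased CPU usage.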

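Chapter 14. Managing bare metal hosts

When you install OpenShift Container Platform on a bare metal cluster, you can provision and manage bare metal nodes by using machine and machineset custom resources (CRs) for bare metal hosts that exist in the cluster.

14.1. About bare metal hosts and nodes

To provision a Red Hat Enterprise Linux CoreOS (RHCOS) bare metal host as a node in your cluster, first create a MachineSet custom resource (CR) object that corresponds to the bare metal host hardware. Bare metal host machine sets describe infrastructure components specific to your configuration. You apply specific Kubernetes labels to these machines and then update the infrastructure components to run only on those machines.

Machine CRs are created automatically when you scale up the relevant MachineSet that contains a metal3.io/autoscale-to-hosts annotation. OpenShift Container Platform uses Machine CRs to provision the bare metal node that corresponds to the host, as specified in the MachineSet CR.

14.2. Maintaining bare metal hosts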