Installing and using the Migration Toolkit for Virtualization

Migrating from VMware vSphere or Red Hat Virtualization to Red Hat OpenShift Virtualization

Abstract

Making open source more inclusive

Red Hat is committed to replacing problematic language in our code, documentation, and web properties. We are beginning with these four terms: master, slave, blacklist, and whitelist. Because of the enormity of this endeavor, these changes will be implemented gradually over several upcoming releases. For more details, see our CTO Chris Wright’s message.

Chapter 1. About the Migration Toolkit for Virtualization

You can use the Migration Toolkit for Virtualization (MTV) to migrate virtual machines from the following source providers to OpenShift Virtualization destination providers:

- VMware vSphere

- Red Hat Virtualization (RHV)

- OpenStack

- Open Virtual Appliances (OVAs) that were created by VMware vSphere

- Remote OpenShift Virtualization clusters

Additional resources

1.1. About cold and warm migration

MTV supports cold migration from:

- VMware vSphere

- Red Hat Virtualization (RHV)

- OpenStack

- Remote OpenShift Virtualization clusters

MTV supports warm migration from VMware vSphere and from RHV.

1.1.1. Cold migration

Cold migration is the default migration type. The source virtual machines are shut down while the data is copied.

1.1.2. Warm migration

Most of the data is copied during the precopy stage while the source virtual machines (VMs) are running.

Then the VMs are shut down and the remaining data is copied during the cutover stage.

Precopy stage

The VMs are not shut down during the precopy stage.

The VM disks are copied incrementally using changed block tracking (CBT) snapshots. The snapshots are created at one-hour intervals by default. You can change the snapshot interval by updating the forklift-controller deployment.

You must enable CBT for each source VM and each VM disk.

A VM can support up to 28 CBT snapshots. If the source VM has too many CBT snapshots and the Migration Controller service is not able to create a new snapshot, warm migration might fail. The Migration Controller service deletes each snapshot when the snapshot is no longer required.

The precopy stage runs until the cutover stage is started manually or is scheduled to start.

Cutover stage

The VMs are shut down during the cutover stage and the remaining data is migrated. Data stored in RAM is not migrated.

You can start the cutover stage manually by using the MTV console or you can schedule a cutover time in the Migration manifest.

Chapter 2. Prerequisites

Review the following prerequisites to ensure that your environment is prepared for migration.

2.1. Software requirements

You must install compatible versions of Red Hat OpenShift and OpenShift Virtualization.

2.2. Storage support and default modes

MTV uses the following default volume and access modes for supported storage.

| Provisioner | Volume mode | Access mode |

|---|---|---|

| kubernetes.io/aws-ebs | Block | ReadWriteOnce |

| kubernetes.io/azure-disk | Block | ReadWriteOnce |

| kubernetes.io/azure-file | Filesystem | ReadWriteMany |

| kubernetes.io/cinder | Block | ReadWriteOnce |

| kubernetes.io/gce-pd | Block | ReadWriteOnce |

| kubernetes.io/hostpath-provisioner | Filesystem | ReadWriteOnce |

| manila.csi.openstack.org | Filesystem | ReadWriteMany |

| openshift-storage.cephfs.csi.ceph.com | Filesystem | ReadWriteMany |

| openshift-storage.rbd.csi.ceph.com | Block | ReadWriteOnce |

| kubernetes.io/rbd | Block | ReadWriteOnce |

| kubernetes.io/vsphere-volume | Block | ReadWriteOnce |

If the OpenShift Virtualization storage does not support dynamic provisioning, you must apply the following settings:

Filesystemvolume modeFilesystemvolume mode is slower thanBlockvolume mode.ReadWriteOnceaccess modeReadWriteOnceaccess mode does not support live virtual machine migration.

See Enabling a statically-provisioned storage class for details on editing the storage profile.

If your migration uses block storage and persistent volumes created with an EXT4 file system, increase the file system overhead in CDI to be more than 10%. The default overhead that is assumed by CDI does not completely include the reserved place for the root partition. If you do not increase the file system overhead in CDI by this amount, your migration might fail.

When migrating from OpenStack or running a cold-migration from RHV to the OCP cluster that MTV is deployed on, the migration allocates persistent volumes without CDI. In these cases, you might need to adjust the file system overhead.

If the configured file system overhead, which has a default value of 10%, is too low, the disk transfer will fail due to lack of space. In such a case, you would want to increase the file system overhead.

In some cases, however, you might want to decrease the file system overhead to reduce storage consumption.

You can change the file system overhead by changing the value of the controller_filesystem_overhead in the spec portion of the forklift-controller CR, as described in Configuring the MTV Operator.

2.3. Network prerequisites

The following prerequisites apply to all migrations:

- IP addresses, VLANs, and other network configuration settings must not be changed before or during migration. The MAC addresses of the virtual machines are preserved during migration.

- The network connections between the source environment, the OpenShift Virtualization cluster, and the replication repository must be reliable and uninterrupted.

- If you are mapping more than one source and destination network, you must create a network attachment definition for each additional destination network.

2.3.1. Ports

The firewalls must enable traffic over the following ports:

| Port | Protocol | Source | Destination | Purpose |

|---|---|---|---|---|

| 443 | TCP | OpenShift nodes | VMware vCenter | VMware provider inventory Disk transfer authentication |

| 443 | TCP | OpenShift nodes | VMware ESXi hosts | Disk transfer authentication |

| 902 | TCP | OpenShift nodes | VMware ESXi hosts | Disk transfer data copy |

| Port | Protocol | Source | Destination | Purpose |

|---|---|---|---|---|

| 443 | TCP | OpenShift nodes | RHV Engine | RHV provider inventory Disk transfer authentication |

| 443 | TCP | OpenShift nodes | RHV hosts | Disk transfer authentication |

| 54322 | TCP | OpenShift nodes | RHV hosts | Disk transfer data copy |

2.4. Source virtual machine prerequisites

The following prerequisites apply to all migrations:

- ISO/CDROM disks must be unmounted.

- Each NIC must contain one IPv4 and/or one IPv6 address.

- The VM operating system must be certified and supported for use as a guest operating system with OpenShift Virtualization.

-

VM names must contain only lowercase letters (

a-z), numbers (0-9), or hyphens (-), up to a maximum of 253 characters. The first and last characters must be alphanumeric. The name must not contain uppercase letters, spaces, periods (.), or special characters. VM names must not duplicate the name of a VM in the OpenShift Virtualization environment.

NoteMigration Toolkit for Virtualization automatically assigns a new name to a VM that does not comply with the rules.

Migration Toolkit for Virtualization makes the following changes when it automatically generates a new VM name:

- Excluded characters are removed.

- Uppercase letters are switched to lowercase letters.

-

Any underscore (

_) is changed to a dash (-).

This feature allows a migration to proceed smoothly even if someone entered a VM name that does not follow the rules.

2.5. Red Hat Virtualization prerequisites

The following prerequisites apply to Red Hat Virtualization migrations:

-

To create a source provider, you must have at least the

UserRoleandReadOnlyAdminroles assigned to you. These are the minimum required permissions, however, any other administrator or superuser permissions will also work.

You must keep the UserRole and ReadOnlyAdmin roles until the virtual machines of the source provider have been migrated. Otherwise, the migration will fail.

To migrate virtual machines:

You must have one of the following:

- RHV admin permissions. These permissions allow you to migrate any virtual machine in the system.

-

DiskCreatorandUserVmManagerpermissions on every virtual machine you want to migrate.

- You must use a compatible version of Red Hat Virtualization.

You must have the Manager CA certificate, unless it was replaced by a third-party certificate, in which case, specify the Manager Apache CA certificate.

You can obtain the Manager CA certificate by navigating to https://<engine_host>/ovirt-engine/services/pki-resource?resource=ca-certificate&format=X509-PEM-CA in a browser.

- If you are migrating a virtual machine with a direct LUN disk, ensure that the nodes in the OpenShift Virtualization destination cluster that the VM is expected to run on can access the backend storage.

- Unlike disk images that are copied from a source provider to a target provider, LUNs are detached, but not removed, from virtual machines in the source provider and then attached to the virtual machines (VMs) that are created in the target provider.

- LUNs are not removed from the source provider during the migration in case fallback to the source provider is required. However, before re-attaching the LUNs to VMs in the source provider, ensure that the LUNs are not used by VMs on the target environment at the same time, which might lead to data corruption.

2.6. OpenStack prerequisites

The following prerequisites apply to OpenStack migrations:

- You must use a compatible version of OpenStack.

2.6.1. Additional authentication methods for migrations with OpenStack source providers

MTV versions 2.6 and later support the following authentication methods for migrations with OpenStack source providers in addition to the standard username and password credential set:

- Token authentication

- Application credential authentication

You can use these methods to migrate virtual machines with OpenStack source providers using the CLI the same way you migrate other virtual machines, except for how you prepare the Secret manifest.

2.6.1.1. Using token authentication with an OpenStack source provider

You can use token authentication, instead of username and password authentication, when you create an OpenStack source provider.

MTV supports both of the following types of token authentication:

- Token with user ID

- Token with user name

For each type of token authentication, you need to use data from OpenStack to create a Secret manifest.

Prerequisites

Have an OpenStack account.

Procedure

- In the dashboard of the OpenStack web console, click Project > API Access.

Expand Download OpenStack RC file and click OpenStack RC file.

The file that is downloaded, referred to here as

<openstack_rc_file>, includes the following fields used for token authentication:OS_AUTH_URL OS_PROJECT_ID OS_PROJECT_NAME OS_DOMAIN_NAME OS_USERNAME

To get the data needed for token authentication, run the following command:

$ openstack token issue

The output, referred to here as

<openstack_token_output>, includes thetoken,userID, andprojectIDthat you need for authentication using a token with user ID.Create a

Secretmanifest similar to the following:For authentication using a token with user ID:

cat << EOF | oc apply -f - apiVersion: v1 kind: Secret metadata: name: openstack-secret-tokenid namespace: openshift-mtv labels: createdForProviderType: openstack type: Opaque stringData: authType: token token: <token_from_openstack_token_output> projectID: <projectID_from_openstack_token_output> userID: <userID_from_openstack_token_output> url: <OS_AUTH_URL_from_openstack_rc_file> EOFFor authentication using a token with user name:

cat << EOF | oc apply -f - apiVersion: v1 kind: Secret metadata: name: openstack-secret-tokenname namespace: openshift-mtv labels: createdForProviderType: openstack type: Opaque stringData: authType: token token: <token_from_openstack_token_output> domainName: <OS_DOMAIN_NAME_from_openstack_rc_file> projectName: <OS_PROJECT_NAME_from_openstack_rc_file> username: <OS_USERNAME_from_openstack_rc_file> url: <OS_AUTH_URL_from_openstack_rc_file> EOF

-

Continue migrating your virtual machine according to the procedure in Migrating virtual machines, starting with step 2, "Create a

Providermanifest for the source provider."

2.6.1.2. Using application credential authentication with an OpenStack source provider

You can use application credential authentication, instead of username and password authentication, when you create an OpenStack source provider.

MTV supports both of the following types of application credential authentication:

- Application credential ID

- Application credential name

For each type of application credential authentication, you need to use data from OpenStack to create a Secret manifest.

Prerequisites

You have an OpenStack account.

Procedure

- In the dashboard of the OpenStack web console, click Project > API Access.

Expand Download OpenStack RC file and click OpenStack RC file.

The file that is downloaded, referred to here as

<openstack_rc_file>, includes the following fields used for application credential authentication:OS_AUTH_URL OS_PROJECT_ID OS_PROJECT_NAME OS_DOMAIN_NAME OS_USERNAME

To get the data needed for application credential authentication, run the following command:

$ openstack application credential create --role member --role reader --secret redhat forklift

The output, referred to here as

<openstack_credential_output>, includes:-

The

idandsecretthat you need for authentication using an application credential ID -

The

nameandsecretthat you need for authentication using an application credential name

-

The

Create a

Secretmanifest similar to the following:For authentication using the application credential ID:

cat << EOF | oc apply -f - apiVersion: v1 kind: Secret metadata: name: openstack-secret-appid namespace: openshift-mtv labels: createdForProviderType: openstack type: Opaque stringData: authType: applicationcredential applicationCredentialID: <id_from_openstack_credential_output> applicationCredentialSecret: <secret_from_openstack_credential_output> url: <OS_AUTH_URL_from_openstack_rc_file> EOF

For authentication using the application credential name:

cat << EOF | oc apply -f - apiVersion: v1 kind: Secret metadata: name: openstack-secret-appname namespace: openshift-mtv labels: createdForProviderType: openstack type: Opaque stringData: authType: applicationcredential applicationCredentialName: <name_from_openstack_credential_output> applicationCredentialSecret: <secret_from_openstack_credential_output> domainName: <OS_DOMAIN_NAME_from_openstack_rc_file> username: <OS_USERNAME_from_openstack_rc_file> url: <OS_AUTH_URL_from_openstack_rc_file> EOF

-

Continue migrating your virtual machine according to the procedure in Migrating virtual machines, starting with step 2, "Create a

Providermanifest for the source provider."

2.7. VMware prerequisites

It is strongly recommended to create a VDDK image to accelerate migrations. For more information, see Creating a VDDK image.

The following prerequisites apply to VMware migrations:

- You must use a compatible version of VMware vSphere.

- You must be logged in as a user with at least the minimal set of VMware privileges.

- To access the virtual machine using a pre-migration hook, VMware Tools must be installed on the source virtual machine.

-

The VM operating system must be certified and supported for use as a guest operating system with OpenShift Virtualization and for conversion to KVM with

virt-v2v. - If you are running a warm migration, you must enable changed block tracking (CBT) on the VMs and on the VM disks.

- If you are migrating more than 10 VMs from an ESXi host in the same migration plan, you must increase the NFC service memory of the host.

- It is strongly recommended to disable hibernation because Migration Toolkit for Virtualization (MTV) does not support migrating hibernated VMs.

In the event of a power outage, data might be lost for a VM with disabled hibernation. However, if hibernation is not disabled, migration will fail

Neither MTV nor OpenShift Virtualization support conversion of Btrfs for migrating VMs from VMWare.

VMware privileges

The following minimal set of VMware privileges is required to migrate virtual machines to OpenShift Virtualization with the Migration Toolkit for Virtualization (MTV).

| Privilege | Description |

|---|---|

|

| |

|

| Allows powering off a powered-on virtual machine. This operation powers down the guest operating system. |

|

| Allows powering on a powered-off virtual machine and resuming a suspended virtual machine. |

|

| Allows managing a virtual machine by the VMware VIX API. |

|

Note

All | |

|

| Allows opening a disk on a virtual machine for random read and write access. Used mostly for remote disk mounting. |

|

| Allows operations on files associated with a virtual machine, including VMX, disks, logs, and NVRAM. |

|

| Allows opening a disk on a virtual machine for random read access. Used mostly for remote disk mounting. |

|

| Allows read operations on files associated with a virtual machine, including VMX, disks, logs, and NVRAM. |

|

| Allows write operations on files associated with a virtual machine, including VMX, disks, logs, and NVRAM. |

|

| Allows cloning of a template. |

|

| Allows cloning of an existing virtual machine and allocation of resources. |

|

| Allows creation of a new template from a virtual machine. |

|

| Allows customization of a virtual machine’s guest operating system without moving the virtual machine. |

|

| Allows deployment of a virtual machine from a template. |

|

| Allows marking an existing powered-off virtual machine as a template. |

|

| Allows marking an existing template as a virtual machine. |

|

| Allows creation, modification, or deletion of customization specifications. |

|

| Allows promote operations on a virtual machine’s disks. |

|

| Allows reading a customization specification. |

|

| |

|

| Allows creation of a snapshot from the virtual machine’s current state. |

|

| Allows removal of a snapshot from the snapshot history. |

|

| |

|

| Allows exploring the contents of a datastore. |

|

| Allows performing low-level file operations - read, write, delete, and rename - in a datastore. |

|

| |

|

| Allows verification of the validity of a session. |

|

| |

|

| Allows decryption of an encrypted virtual machine. |

|

| Allows access to encrypted resources. |

2.7.1. Creating a VDDK image

The Migration Toolkit for Virtualization (MTV) can use the VMware Virtual Disk Development Kit (VDDK) SDK to accelerate transferring virtual disks from VMware vSphere.

Creating a VDDK image, although optional, is highly recommended.

To make use of this feature, you download the VMware Virtual Disk Development Kit (VDDK), build a VDDK image, and push the VDDK image to your image registry.

The VDDK package contains symbolic links, therefore, the procedure of creating a VDDK image must be performed on a file system that preserves symbolic links (symlinks).

Storing the VDDK image in a public registry might violate the VMware license terms.

Prerequisites

- Red Hat OpenShift image registry.

-

podmaninstalled. - You are working on a file system that preserves symbolic links (symlinks).

- If you are using an external registry, OpenShift Virtualization must be able to access it.

Procedure

Create and navigate to a temporary directory:

$ mkdir /tmp/<dir_name> && cd /tmp/<dir_name>

- In a browser, navigate to the VMware VDDK version 8 download page.

- Select version 8.0.1 and click Download.

In order to migrate to OpenShift Virtualization 4.12, download VDDK version 7.0.3.2 from the VMware VDDK version 7 download page.

- Save the VDDK archive file in the temporary directory.

Extract the VDDK archive:

$ tar -xzf VMware-vix-disklib-<version>.x86_64.tar.gz

Create a

Dockerfile:$ cat > Dockerfile <<EOF FROM registry.access.redhat.com/ubi8/ubi-minimal USER 1001 COPY vmware-vix-disklib-distrib /vmware-vix-disklib-distrib RUN mkdir -p /opt ENTRYPOINT ["cp", "-r", "/vmware-vix-disklib-distrib", "/opt"] EOF

Build the VDDK image:

$ podman build . -t <registry_route_or_server_path>/vddk:<tag>

Push the VDDK image to the registry:

$ podman push <registry_route_or_server_path>/vddk:<tag>

- Ensure that the image is accessible to your OpenShift Virtualization environment.

2.7.2. Increasing the NFC service memory of an ESXi host

If you are migrating more than 10 VMs from an ESXi host in the same migration plan, you must increase the NFC service memory of the host. Otherwise, the migration will fail because the NFC service memory is limited to 10 parallel connections.

Procedure

- Log in to the ESXi host as root.

Change the value of

maxMemoryto1000000000in/etc/vmware/hostd/config.xml:... <nfcsvc> <path>libnfcsvc.so</path> <enabled>true</enabled> <maxMemory>1000000000</maxMemory> <maxStreamMemory>10485760</maxStreamMemory> </nfcsvc> ...Restart

hostd:# /etc/init.d/hostd restart

You do not need to reboot the host.

2.8. Open Virtual Appliance (OVA) prerequisites

The following prerequisites apply to Open Virtual Appliance (OVA) file migrations:

- All OVA files are created by VMware vSphere.

Migration of OVA files that were not created by VMware vSphere but are compatible with vSphere might succeed. However, migration of such files is not supported by MTV. MTV supports only OVA files created by VMware vSphere.

The OVA files are in one or more folders under an NFS shared directory in one of the following structures:

In one or more compressed Open Virtualization Format (OVF) packages that hold all the VM information.

The filename of each compressed package must have the

.ovaextension. Several compressed packages can be stored in the same folder.When this structure is used, MTV scans the root folder and the first-level subfolders for compressed packages.

For example, if the NFS share is,

/nfs, then:

The folder/nfsis scanned.

The folder/nfs/subfolder1is scanned.

But,/nfs/subfolder1/subfolder2is not scanned.In extracted OVF packages.

When this structure is used, MTV scans the root folder, first-level subfolders, and second-level subfolders for extracted OVF packages. However, there can be only one

.ovffile in a folder. Otherwise, the migration will fail.For example, if the NFS share is,

/nfs, then:

The OVF file/nfs/vm.ovfis scanned.

The OVF file/nfs/subfolder1/vm.ovfis scanned.

The OVF file/nfs/subfolder1/subfolder2/vm.ovfis scanned.

But, the OVF file/nfs/subfolder1/subfolder2/subfolder3/vm.ovfis not scanned.

2.9. Software compatibility guidelines

You must install compatible software versions.

| Migration Toolkit for Virtualization | Red Hat OpenShift | OpenShift Virtualization | VMware vSphere | Red Hat Virtualization | OpenStack |

|---|---|---|---|---|---|

| 2.6.7 | 4.14 or later | 4.14 or later | 6.5 or later | 4.4 SP1 or later | 16.1 or later |

MTV 2.6 was tested only with Red Hat Virtualization (RHV) 4.4 SP1. Migration from Red Hat Virtualization (RHV) 4.3 has not been tested with MTV 2.6. While not supported, basic migrations from RHV 4.3 are expected to work.

As RHV 4.3 lacks the improvements that were introduced in RHV 4.4 for MTV, and new features were not tested with RHV 4.3, migrations from RHV 4.3 may not function at the same level as migrations from RHV 4.4, with some functionality may be missing.

Therefore, it is recommended to upgrade RHV to the supported version above before the migration to OpenShift Virtualization.

However, migrations from RHV 4.3.11 were tested with MTV 2.3, and may work in practice in many environments using MTV 2.6. In this case, we advise upgrading Red Hat Virtualization Manager (RHVM) to the previously mentioned supported version before the migration to OpenShift Virtualization.

2.9.1. OpenShift Operator Life Cycles

For more information about the software maintenance Life Cycle classifications for Operators shipped by Red Hat for use with OpenShift Container Platform, see OpenShift Operator Life Cycles.

Chapter 3. Installing and configuring the MTV Operator

You can install the MTV Operator by using the Red Hat OpenShift web console or the command line interface (CLI).

In Migration Toolkit for Virtualization (MTV) version 2.4 and later, the MTV Operator includes the MTV plugin for the Red Hat OpenShift web console.

After you install the MTV Operator by using either the Red Hat OpenShift web console or the CLI, you can configure the Operator.

3.1. Installing the MTV Operator by using the Red Hat OpenShift web console

You can install the MTV Operator by using the Red Hat OpenShift web console.

Prerequisites

- Red Hat OpenShift 4.14 or later installed.

- OpenShift Virtualization Operator installed on an OpenShift migration target cluster.

-

You must be logged in as a user with

cluster-adminpermissions.

Procedure

- In the Red Hat OpenShift web console, click Operators → OperatorHub.

- Use the Filter by keyword field to search for mtv-operator.

- Click Migration Toolkit for Virtualization Operator and then click Install.

- Click Create ForkliftController when the button becomes active.

Click Create.

Your ForkliftController appears in the list that is displayed.

- Click Workloads → Pods to verify that the MTV pods are running.

Click Operators → Installed Operators to verify that Migration Toolkit for Virtualization Operator appears in the openshift-mtv project with the status Succeeded.

When the plugin is ready you will be prompted to reload the page. The Migration menu item is automatically added to the navigation bar, displayed on the left of the Red Hat OpenShift web console.

3.2. Installing the MTV Operator from the command line interface

You can install the MTV Operator from the command line interface (CLI).

Prerequisites

- Red Hat OpenShift 4.14 or later installed.

- OpenShift Virtualization Operator installed on an OpenShift migration target cluster.

-

You must be logged in as a user with

cluster-adminpermissions.

Procedure

Create the openshift-mtv project:

$ cat << EOF | oc apply -f - apiVersion: project.openshift.io/v1 kind: Project metadata: name: openshift-mtv EOF

Create an

OperatorGroupCR calledmigration:$ cat << EOF | oc apply -f - apiVersion: operators.coreos.com/v1 kind: OperatorGroup metadata: name: migration namespace: openshift-mtv spec: targetNamespaces: - openshift-mtv EOFCreate a

SubscriptionCR for the Operator:$ cat << EOF | oc apply -f - apiVersion: operators.coreos.com/v1alpha1 kind: Subscription metadata: name: mtv-operator namespace: openshift-mtv spec: channel: release-v2.6 installPlanApproval: Automatic name: mtv-operator source: redhat-operators sourceNamespace: openshift-marketplace startingCSV: "mtv-operator.v2.6.7" EOF

Create a

ForkliftControllerCR:$ cat << EOF | oc apply -f - apiVersion: forklift.konveyor.io/v1beta1 kind: ForkliftController metadata: name: forklift-controller namespace: openshift-mtv spec: olm_managed: true EOF

Verify that the MTV pods are running:

$ oc get pods -n openshift-mtv

Example output

NAME READY STATUS RESTARTS AGE forklift-api-bb45b8db4-cpzlg 1/1 Running 0 6m34s forklift-controller-7649db6845-zd25p 2/2 Running 0 6m38s forklift-must-gather-api-78fb4bcdf6-h2r4m 1/1 Running 0 6m28s forklift-operator-59c87cfbdc-pmkfc 1/1 Running 0 28m forklift-ui-plugin-5c5564f6d6-zpd85 1/1 Running 0 6m24s forklift-validation-7d84c74c6f-fj9xg 1/1 Running 0 6m30s forklift-volume-populator-controller-85d5cb64b6-mrlmc 1/1 Running 0 6m36s

3.3. Configuring the MTV Operator

You can configure all of the following settings of the MTV Operator by modifying the ForkliftController CR, or in the Settings section of the Overview page, unless otherwise indicated.

- Maximum number of virtual machines (VMs) per plan that can be migrated simultaneously.

-

How long

must gatherreports are retained before being automatically deleted. - CPU limit allocated to the main controller container.

- Memory limit allocated to the main controller container.

- Interval at which a new snapshot is requested before initiating a warm migration.

- Frequency with which the system checks the status of snapshot creation or removal during a warm migration.

-

Percentage of space in persistent volumes allocated as file system overhead when the

storageclassisfilesystem(ForkliftControllerCR only). -

Fixed amount of additional space allocated in persistent block volumes. This setting is applicable for any

storageclassthat is block-based (ForkliftControllerCR only). -

Configuration map of operating systems to preferences for vSphere source providers (

ForkliftControllerCR only). -

Configuration map of operating systems to preferences for Red Hat Virtualization (RHV) source providers (

ForkliftControllerCR only).

The procedure for configuring these settings using the user interface is presented in Configuring MTV settings. The procedure for configuring these settings by modifying the ForkliftController CR is presented following.

Procedure

-

Change a parameter’s value in the

specportion of theForkliftControllerCR by adding the label and value as follows:

spec:

label: value 1- 1

- Labels you can configure using the CLI are shown in the table that follows, along with a description of each label and its default value.

| Label | Description | Default value |

|---|---|---|

|

| The maximum number of VMs per plan that can be migrated simultaneously. |

|

|

|

The duration in hours for retaining |

|

|

| The CPU limit allocated to the main controller container. |

|

|

| The memory limit allocated to the main controller container. |

|

|

| The interval in minutes at which a new snapshot is requested before initiating a warm migration. |

|

|

| The frequency in seconds with which the system checks the status of snapshot creation or removal during a warm migration. |

|

|

|

Percentage of space in persistent volumes allocated as file system overhead when the

|

|

|

|

Fixed amount of additional space allocated in persistent block volumes. This setting is applicable for any

|

|

|

| Configuration map for vSphere source providers. This configuration map maps the operating system of the incoming VM to a OpenShift Virtualization preference name. This configuration map needs to be in the namespace where the MTV Operator is deployed. To see the list of preferences in your OpenShift Virtualization environment, open the OpenShift web console and click Virtualization → Preferences.

You can add values to the configuration map when this label has the default value,

|

|

|

| Configuration map for RHV source providers. This configuration map maps the operating system of the incoming VM to a OpenShift Virtualization preference name. This configuration map needs to be in the namespace where the MTV Operator is deployed. To see the list of preferences in your OpenShift Virtualization environment, open the OpenShift web console and click Virtualization → Preferences.

You can add values to the configuration map when this label has the default value,

|

|

Chapter 4. Migrating virtual machines by using the Red Hat OpenShift web console

You can migrate virtual machines (VMs) by using the Red Hat OpenShift web console to:

You must ensure that all prerequisites are met.

VMware only: You must have the minimal set of VMware privileges.

VMware only: Creating a VMware Virtual Disk Development Kit (VDDK) image will increase migration speed.

4.1. The MTV user interface

The Migration Toolkit for Virtualization (MTV) user interface is integrated into the OpenShift web console.

In the left-hand panel, you can choose a page related to a component of the migration progress, for example, Providers for Migration, or, if you are an administrator, you can choose Overview, which contains information about migrations and lets you configure MTV settings.

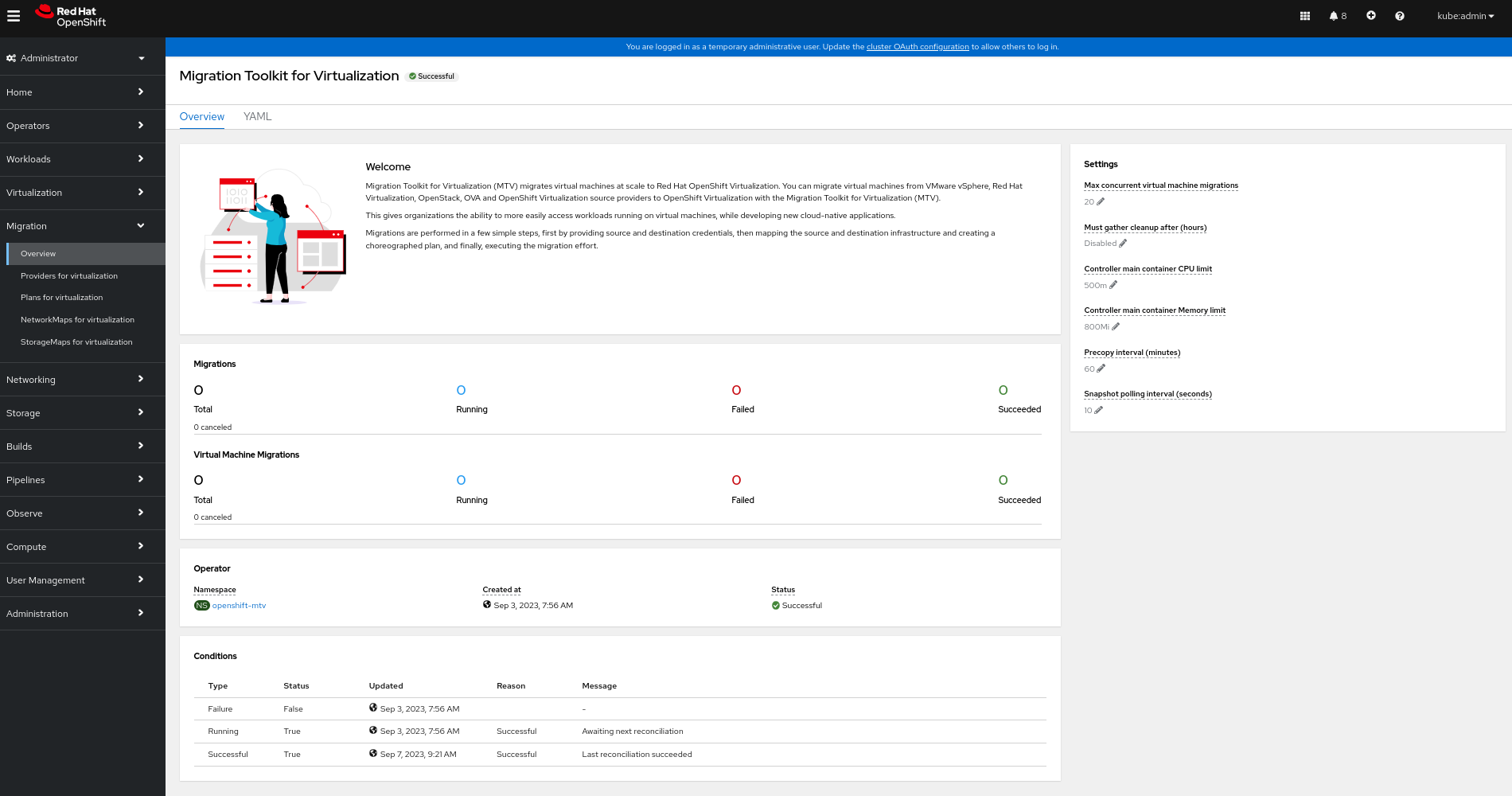

Figure 4.1. MTV extension interface

In pages related to components, you can click on the Projects list, which is in the upper-left portion of the page, and see which projects (namespaces) you are allowed to work with.

- If you are an administrator, you can see all projects.

- If you are a non-administrator, you can see only the projects that you have permissions to work with.

4.2. The MTV Overview page

The Migration Toolkit for Virtualization (MTV) Overview page displays system-wide information about migrations and a list of Settings you can change.

If you have Administrator privileges, you can access the Overview page by clicking Migration → Overview in the Red Hat OpenShift web console.

The Overview page has 3 tabs:

- Overview

- YAML

- Metrics

4.2.1. Overview tab

The Overview tab lets you see:

- Operator: The namespace on which the MTV Operator is deployed and the status of the Operator

- Pods: The name, status, and creation time of each pod that was deployed by the MTV Operator

Conditions: Status of the MTV Operator:

-

Failure: Last failure.

Falseindicates no failure since deployment. - Running: Whether the Operator is currently running and waiting for the next reconciliation.

- Successful: Last successful reconciliation.

-

Failure: Last failure.

4.2.2. YAML tab

The custom resource ForkliftController that defines the operation of the MTV Operator. You can modify the custom resource from this tab.

4.2.3. Metrics tab

The Metrics tab lets you see:

Migrations: The number of migrations performed using MTV:

- Total

- Running

- Failed

- Succeeded

- Canceled

Virtual Machine Migrations: The number of VMs migrated using MTV:

- Total

- Running

- Failed

- Succeeded

- Canceled

Since a single migration might involve many virtual machines, the number of migrations performed using MTV might vary significantly from the number of virtual machines that have been migrated using MTV.

- Chart showing the number of running, failed, and succeeded migrations performed using MTV for each of the last 7 days

- Chart showing the number of running, failed, and succeeded virtual machine migrations performed using MTV for each of the last 7 days

4.3. Configuring MTV settings

If you have Administrator privileges, you can access the Overview page and change the following settings in it:

| Setting | Description | Default value |

|---|---|---|

| Max concurrent virtual machine migrations | The maximum number of VMs per plan that can be migrated simultaneously | 20 |

| Must gather cleanup after (hours) |

The duration for retaining | Disabled |

| Controller main container CPU limit | The CPU limit allocated to the main controller container | 500 m |

| Controller main container Memory limit | The memory limit allocated to the main controller container | 800 Mi |

| Precopy internal (minutes) | The interval at which a new snapshot is requested before initiating a warm migration | 60 |

| Snapshot polling interval (seconds) | The frequency with which the system checks the status of snapshot creation or removal during a warm migration | 10 |

Procedure

- In the Red Hat OpenShift web console, click Migration → Overview. The Settings list is on the right-hand side of the page.

- In the Settings list, click the Edit icon of the setting you want to change.

- Choose a setting from the list.

- Click Save.

4.4. Adding providers

You can add source providers and destination providers for a virtual machine migration by using the Red Hat OpenShift web console.

4.4.1. Adding source providers

You can use MTV to migrate VMs from the following source providers:

- VMware vSphere

- Red Hat Virtualization

- OpenStack

- Open Virtual Appliances (OVAs) that were created by VMware vSphere

- OpenShift Virtualization

You can add a source provider by using the Red Hat OpenShift web console.

4.4.1.1. Adding a VMware vSphere source provider

You can migrate VMware vSphere VMs from VMware vCenter or from a VMWare ESX/ESXi server. In MTV versions 2.6 and later, you can migrate directly from an ESX/ESXi server, without going through vCenter, by specifying the SDK endpoint to that of an ESX/ESXi server.

EMS enforcement is disabled for migrations with VMware vSphere source providers in order to enable migrations from versions of vSphere that are supported by Migration Toolkit for Virtualization but do not comply with the 2023 FIPS requirements. Therefore, users should consider whether migrations from vSphere source providers risk their compliance with FIPS. Supported versions of vSphere are specified in Software compatibility guidelines.

Prerequisites

- It is strongly recommended to create a VMware Virtual Disk Development Kit (VDDK) image in a secure registry that is accessible to all clusters. A VDDK image accelerates migration. For more information, see Creating a VDDK image.

Procedure

- In the Red Hat OpenShift web console, click Migration → Providers for virtualization.

- Click Create Provider.

- Click vSphere.

- Specify the following fields:

Provider Details

- Provider resource name: Name of the source provider.

- Endpoint type: Select the vSphere provider endpoint type. Options: vCenter or ESXi. You can migrate virtual machines from vCenter, an ESX/ESXi server that is not managed by vCenter, or from an ESX/ESXi server that is managed by vCenter but does not go through vCenter.

-

URL: URL of the SDK endpoint of the vCenter on which the source VM is mounted. Ensure that the URL includes the

sdkpath, usually/sdk. For example,https://vCenter-host-example.com/sdk. If a certificate for FQDN is specified, the value of this field needs to match the FQDN in the certificate. -

VDDK init image:

VDDKInitImagepath. It is strongly recommended to create a VDDK init image to accelerate migrations. For more information, see Creating a VDDK image.

Provider details

-

Username: vCenter user or ESXi user. For example,

user@vsphere.local. Password: vCenter user password or ESXi user password.

Choose one of the following options for validating CA certificates:

- Use a custom CA certificate: Migrate after validating a custom CA certificate.

- Use the system CA certificate: Migrate after validating the system CA certificate.

Skip certificate validation : Migrate without validating a CA certificate.

- To use a custom CA certificate, leave the Skip certificate validation switch toggled to left, and either drag the CA certificate to the text box or browse for it and click Select.

- To use the system CA certificate, leave the Skip certificate validation switch toggled to the left, and leave the CA certificate text box empty.

- To skip certificate validation, toggle the Skip certificate validation switch to the right.

Optional: Ask MTV to fetch a custom CA certificate from the provider’s API endpoint URL.

- Click Fetch certificate from URL. The Verify certificate window opens.

If the details are correct, select the I trust the authenticity of this certificate checkbox, and then, click Confirm. If not, click Cancel, and then, enter the correct certificate information manually.

Once confirmed, the CA certificate will be used to validate subsequent communication with the API endpoint.

Click Create provider to add and save the provider.

The provider appears in the list of providers.

NoteIt might take a few minutes for the provider to have the status

Ready.

4.4.1.1.1. Selecting a migration network for a VMware source provider

You can select a migration network in the Red Hat OpenShift web console for a source provider to reduce risk to the source environment and to improve performance.

Using the default network for migration can result in poor performance because the network might not have sufficient bandwidth. This situation can have a negative effect on the source platform because the disk transfer operation might saturate the network.

You can also control the network from which disks are transferred from a host by using the Network File Copy (NFC) service in vSphere.

Prerequisites

- The migration network must have sufficient throughput, minimum speed of 10 Gbps, for disk transfer.

The migration network must be accessible to the OpenShift Virtualization nodes through the default gateway.

NoteThe source virtual disks are copied by a pod that is connected to the pod network of the target namespace.

- The migration network should have jumbo frames enabled.

Procedure

- In the Red Hat OpenShift web console, click Migration → Providers for virtualization.

- Click the host number in the Hosts column beside a provider to view a list of hosts.

- Select one or more hosts and click Select migration network.

Specify the following fields:

- Network: Network name

-

ESXi host admin username: For example,

root - ESXi host admin password: Password

- Click Save.

Verify that the status of each host is Ready.

If a host status is not Ready, the host might be unreachable on the migration network or the credentials might be incorrect. You can modify the host configuration and save the changes.

4.4.1.2. Adding a Red Hat Virtualization source provider

You can add a Red Hat Virtualization source provider by using the Red Hat OpenShift web console.

Prerequisites

- Manager CA certificate, unless it was replaced by a third-party certificate, in which case, specify the Manager Apache CA certificate

Procedure

- In the Red Hat OpenShift web console, click Migration → Providers for virtualization.

- Click Create Provider.

- Click Red Hat Virtualization

Specify the following fields:

- Provider resource name: Name of the source provider.

-

URL: URL of the API endpoint of the Red Hat Virtualization Manager (RHVM) on which the source VM is mounted. Ensure that the URL includes the path leading to the RHVM API server, usually

/ovirt-engine/api. For example,https://rhv-host-example.com/ovirt-engine/api. - Username: Username.

- Password: Password.

Choose one of the following options for validating CA certificates:

- Use a custom CA certificate: Migrate after validating a custom CA certificate.

- Use the system CA certificate: Migrate after validating the system CA certificate.

Skip certificate validation : Migrate without validating a CA certificate.

- To use a custom CA certificate, leave the Skip certificate validation switch toggled to left, and either drag the CA certificate to the text box or browse for it and click Select.

- To use the system CA certificate, leave the Skip certificate validation switch toggled to the left, and leave the CA certificate text box empty.

- To skip certificate validation, toggle the Skip certificate validation switch to the right.

Optional: Ask MTV to fetch a custom CA certificate from the provider’s API endpoint URL.

- Click Fetch certificate from URL. The Verify certificate window opens.

If the details are correct, select the I trust the authenticity of this certificate checkbox, and then, click Confirm. If not, click Cancel, and then, enter the correct certificate information manually.

Once confirmed, the CA certificate will be used to validate subsequent communication with the API endpoint.

Click Create provider to add and save the provider.

The provider appears in the list of providers.

4.4.1.3. Adding an OpenStack source provider

You can add an OpenStack source provider by using the Red Hat OpenShift web console.

When you migrate an image-based VM from an OpenStack provider, a snapshot is created for the image that is attached to the source VM and the data from the snapshot is copied over to the target VM. This means that the target VM will have the same state as that of the source VM at the time the snapshot was created.

Procedure

- In the Red Hat OpenShift web console, click Migration → Providers for virtualization.

- Click Create Provider.

- Click OpenStack.

Specify the following fields:

- Provider resource name: Name of the source provider.

-

URL: URL of the OpenStack Identity (Keystone) endpoint. For example,

http://controller:5000/v3. Authentication type: Choose one of the following methods of authentication and supply the information related to your choice. For example, if you choose Application credential ID as the authentication type, the Application credential ID and the Application credential secret fields become active, and you need to supply the ID and the secret.

Application credential ID

- Application credential ID: OpenStack application credential ID

-

Application credential secret: OpenStack https://github.com/kubev2v/forklift-documentation/pull/402pplication credential

Secret

Application credential name

- Application credential name: OpenStack application credential name

-

Application credential secret: : OpenStack application credential

Secret - Username: OpenStack username

- Domain: OpenStack domain name

Token with user ID

- Token: OpenStack token

- User ID: OpenStack user ID

- Project ID: OpenStack project ID

Token with user Name

- Token: OpenStack token

- Username: OpenStack username

- Project: OpenStack project

- Domain name: OpenStack domain name

Password

- Username: OpenStack username

- Password: OpenStack password

- Project: OpenStack project

- Domain: OpenStack domain name

Choose one of the following options for validating CA certificates:

- Use a custom CA certificate: Migrate after validating a custom CA certificate.

- Use the system CA certificate: Migrate after validating the system CA certificate.

Skip certificate validation : Migrate without validating a CA certificate.

- To use a custom CA certificate, leave the Skip certificate validation switch toggled to left, and either drag the CA certificate to the text box or browse for it and click Select.

- To use the system CA certificate, leave the Skip certificate validation switch toggled to the left, and leave the CA certificate text box empty.

- To skip certificate validation, toggle the Skip certificate validation switch to the right.

Optional: Ask MTV to fetch a custom CA certificate from the provider’s API endpoint URL.

- Click Fetch certificate from URL. The Verify certificate window opens.

If the details are correct, select the I trust the authenticity of this certificate checkbox, and then, click Confirm. If not, click Cancel, and then, enter the correct certificate information manually.

Once confirmed, the CA certificate will be used to validate subsequent communication with the API endpoint.

Click Create provider to add and save the provider.

The provider appears in the list of providers.

4.4.1.4. Adding an Open Virtual Appliance (OVA) source provider

You can add Open Virtual Appliance (OVA) files that were created by VMware vSphere as a source provider by using the Red Hat OpenShift web console.

Procedure

- In the Red Hat OpenShift web console, click Migration → Providers for virtualization.

- Click Create Provider.

- Click Open Virtual Appliance (OVA).

Specify the following fields:

- Provider resource name: Name of the source provider

- URL: URL of the NFS file share that serves the OVA

Click Create provider to add and save the provider.

The provider appears in the list of providers.

NoteAn error message might appear that states that an error has occurred. You can ignore this message.

4.4.1.5. Adding a Red Hat OpenShift Virtualization source provider

You can use a Red Hat OpenShift Virtualization provider as both a source provider and destination provider.

Specifically, the host cluster that is automatically added as a OpenShift Virtualization provider can be used as both a source provider and a destination provider.

You can migrate VMs from the cluster that MTV is deployed on to another cluster, or from a remote cluster to the cluster that MTV is deployed on.

The Red Hat OpenShift cluster version of the source provider must be 4.13 or later.

Procedure

- In the Red Hat OpenShift web console, click Migration → Providers for virtualization.

- Click Create Provider.

- Click OpenShift Virtualization.

Specify the following fields:

- Provider resource name: Name of the source provider

- URL: URL of the endpoint of the API server

Service account bearer token: Token for a service account with

cluster-adminprivilegesIf both URL and Service account bearer token are left blank, the local OpenShift cluster is used.

Choose one of the following options for validating CA certificates:

- Use a custom CA certificate: Migrate after validating a custom CA certificate.

- Use the system CA certificate: Migrate after validating the system CA certificate.

Skip certificate validation : Migrate without validating a CA certificate.

- To use a custom CA certificate, leave the Skip certificate validation switch toggled to left, and either drag the CA certificate to the text box or browse for it and click Select.

- To use the system CA certificate, leave the Skip certificate validation switch toggled to the left, and leave the CA certificate text box empty.

- To skip certificate validation, toggle the Skip certificate validation switch to the right.

Optional: Ask MTV to fetch a custom CA certificate from the provider’s API endpoint URL.

- Click Fetch certificate from URL. The Verify certificate window opens.

If the details are correct, select the I trust the authenticity of this certificate checkbox, and then, click Confirm. If not, click Cancel, and then, enter the correct certificate information manually.

Once confirmed, the CA certificate will be used to validate subsequent communication with the API endpoint.

Click Create provider to add and save the provider.

The provider appears in the list of providers.

4.4.2. Adding destination providers

You can add a OpenShift Virtualization destination provider by using the Red Hat OpenShift web console.

4.4.2.1. Adding an OpenShift Virtualization destination provider

You can use a Red Hat OpenShift Virtualization provider as both a source provider and destination provider.

Specifically, the host cluster that is automatically added as a OpenShift Virtualization provider can be used as both a source provider and a destination provider.

You can also add another OpenShift Virtualization destination provider to the Red Hat OpenShift web console in addition to the default OpenShift Virtualization destination provider, which is the cluster where you installed MTV.

You can migrate VMs from the cluster that MTV is deployed on to another cluster, or from a remote cluster to the cluster that MTV is deployed on.

Prerequisites

-

You must have an OpenShift Virtualization service account token with

cluster-adminprivileges.

Procedure

- In the Red Hat OpenShift web console, click Migration → Providers for virtualization.

- Click Create Provider.

- Click OpenShift Virtualization.

Specify the following fields:

- Provider resource name: Name of the source provider

- URL: URL of the endpoint of the API server

Service account bearer token: Token for a service account with

cluster-adminprivilegesIf both URL and Service account bearer token are left blank, the local OpenShift cluster is used.

Choose one of the following options for validating CA certificates:

- Use a custom CA certificate: Migrate after validating a custom CA certificate.

- Use the system CA certificate: Migrate after validating the system CA certificate.

Skip certificate validation : Migrate without validating a CA certificate.

- To use a custom CA certificate, leave the Skip certificate validation switch toggled to left, and either drag the CA certificate to the text box or browse for it and click Select.

- To use the system CA certificate, leave the Skip certificate validation switch toggled to the left, and leave the CA certificate text box empty.

- To skip certificate validation, toggle the Skip certificate validation switch to the right.

Optional: Ask MTV to fetch a custom CA certificate from the provider’s API endpoint URL.

- Click Fetch certificate from URL. The Verify certificate window opens.

If the details are correct, select the I trust the authenticity of this certificate checkbox, and then, click Confirm. If not, click Cancel, and then, enter the correct certificate information manually.

Once confirmed, the CA certificate will be used to validate subsequent communication with the API endpoint.

Click Create provider to add and save the provider.

The provider appears in the list of providers.

4.4.2.2. Selecting a migration network for an OpenShift Virtualization provider

You can select a default migration network for an OpenShift Virtualization provider in the Red Hat OpenShift web console to improve performance. The default migration network is used to transfer disks to the namespaces in which it is configured.

If you do not select a migration network, the default migration network is the pod network, which might not be optimal for disk transfer.

You can override the default migration network of the provider by selecting a different network when you create a migration plan.

Procedure

- In the Red Hat OpenShift web console, click Migration → Providers for virtualization.

-

On the right side of the provider, select Select migration network from the Options menu

.

.

- Select a network from the list of available networks and click Select.

4.5. Creating migration plans

You can create a migration plan by using the Red Hat OpenShift web console to specify a source provider, the virtual machines (VMs) you want to migrate, and other plan details.

For your convenience, there are two procedures to create migration plans, starting with either a source provider or with specific VMs:

- To start with a source provider, see Creating a migration plan starting with a source provider.

- To start with specific VMs, see Creating a migration plan starting with specific VMs.

Virtual machines with guest initiated storage connections, such as Internet Small Computer Systems Interface (iSCSI) connections or Network File System (NFS) mounts, are not handled by MTV and could require additional planning before or reconfiguration after the migration.

This is to ensure that no issues arise due to the addition or newly migrated VM accessing this storage.

4.5.1. Creating a migration plan starting with a source provider

You can create a migration plan based on a source provider, starting on the Plans for virtualization page. Note the specific options for migrations from VMware or RHV providers.

Procedure

In the Red Hat OpenShift web console, click Plans for virtualization and then click Create Plan.

The Create migration plan wizard opens to the Select source provider interface.

Select the source provider of the VMs you want to migrate.

The Select virtual machines interface opens.

Select the VMs you want to migrate and click Next.

The Create migration plan pane opens. It displays the source provider’s name and suggestions for a target provider and namespace, a network map, and a storage map.

- Enter the Plan name.

- Make any needed changes to the editable items.

- Click Add mapping to edit a suggested network mapping or a storage mapping, or to add one or more additional mappings.

Click Create migration plan.

MTV validates the migration plan and the Plan details page opens, indicating whether the plan is ready for use or contains an error. The details of the plan are listed, and you can edit the items you filled in on the previous page. If you make any changes, MTV validates the plan again.

VMware source providers only (All optional):

Preserving static IPs of VMs: By default, virtual network interface controllers (vNICs) change during the migration process. This results in vNICs that are set with a static IP in vSphere losing their IP. To avoid this by preserving static IPs of VMs, click the Edit icon next to Preserve static IPs and toggle the Whether to preserve the static IPs switch in the window that opens. Then click Save.

MTV then issues a warning message about any VMs with a Windows operating system for which vNIC properties are missing. To retrieve any missing vNIC properties, run those VMs in vSphere in order for the vNIC properties to be reported to MTV.

- Entering a list of decryption passphrases for disks encrypted using Linux Unified Key Setup (LUKS): To enter a list of decryption passphrases for LUKS-encrypted devices, in the Settings section, click the Edit icon next to Disk decryption passphrases, enter the passphrases, and then click Save. You do not need to enter the passphrases in a specific order - for each LUKS-encrypted device, MTV tries each passphrase until one unlocks the device.

Specifying a root device: Applies to multi-boot VM migrations only. By default, MTV uses the first bootable device detected as the root device.

To specify a different root device, in the Settings section, click the Edit icon next to Root device and choose a device from the list of commonly-used options, or enter a device in the text box.

MTV uses the following format for disk location:

/dev/sd<disk_identifier><disk_partition>. For example, if the second disk is the root device and the operating system is on the disk’s second partition, the format would be:/dev/sdb2. After you enter the boot device, click Save.If the conversion fails because the boot device provided is incorrect, it is possible to get the correct information by looking at the conversion pod logs.

RHV source providers only (Optional):

Preserving the CPU model of VMs that are migrated from RHV: Generally, the CPU model (type) for RHV VMs is set at the cluster level, but it can be set at the VM level, which is called a custom CPU model. By default, MTV sets the CPU model on the destination cluster as follows: MTV preserves custom CPU settings for VMs that have them, but, for VMs without custom CPU settings, MTV does not set the CPU model. Instead, the CPU model is later set by OpenShift Virtualization.

To preserve the cluster-level CPU model of your RHV VMs, in the Settings section, click the Edit icon next to Preserve CPU model. Toggle the Whether to preserve the CPU model switch, and then click Save.

If the plan is valid,

- You can run the plan now by clicking Start migration.

- You can run the plan later by selecting it on the Plans for virtualization page and following the procedure in Running a migration plan.

4.5.2. Creating a migration plan starting with specific VMs

You can create a migration plan based on specific VMs, starting on the Providers for virtualization page. Note the specific options for migrations from VMware or RHV providers.

Procedure

- In the Red Hat OpenShift web console, click Providers for virtualization.

In the row of the appropriate source provider, click VMs.

The Virtual Machines tab opens.

Select the VMs you want to migrate and click Create migration plan.

The Create migration plan pane opens. It displays the source provider’s name and suggestions for a target provider and namespace, a network map, and a storage map.

- Enter the Plan name.

- Make any needed changes to the editable items.

- Click Add mapping to edit a suggested network mapping or a storage mapping, or to add one or more additional mappings.

Click Create migration plan.

MTV validates the migration plan and the Plan details page opens, indicating whether the plan is ready for use or contains an error. The details of the plan are listed, and you can edit the items you filled in on the previous page. If you make any changes, MTV validates the plan again.

VMware source providers only (All optional):

Preserving static IPs of VMs: By default, virtual network interface controllers (vNICs) change during the migration process. This results in vNICs that are set with a static IP in vSphere losing their IP. To avoid this by preserving static IPs of VMs, click the Edit icon next to Preserve static IPs and toggle the Whether to preserve the static IPs switch in the window that opens. Then click Save.

MTV then issues a warning message about any VMs with a Windows operating system for which vNIC properties are missing. To retrieve any missing vNIC properties, run those VMs in vSphere in order for the vNIC properties to be reported to MTV.

- Entering a list of decryption passphrases for disks encrypted using Linux Unified Key Setup (LUKS): To enter a list of decryption passphrases for LUKS-encrypted devices, in the Settings section, click the Edit icon next to Disk decryption passphrases, enter the passphrases, and then click Save. You do not need to enter the passphrases in a specific order - for each LUKS-encrypted device, MTV tries each passphrase until one unlocks the device.

Specifying a root device: Applies to multi-boot VM migrations only. By default, MTV uses the first bootable device detected as the root device.

To specify a different root device, in the Settings section, click the Edit icon next to Root device and choose a device from the list of commonly-used options, or enter a device in the text box.

MTV uses the following format for disk location:

/dev/sd<disk_identifier><disk_partition>. For example, if the second disk is the root device and the operating system is on the disk’s second partition, the format would be:/dev/sdb2. After you enter the boot device, click Save.If the conversion fails because the boot device provided is incorrect, it is possible to get the correct information by looking at the conversion pod logs.

RHV source providers only (Optional):

Preserving the CPU model of VMs that are migrated from RHV: Generally, the CPU model (type) for RHV VMs is set at the cluster level, but it can be set at the VM level, which is called a custom CPU model. By default, MTV sets the CPU model on the destination cluster as follows: MTV preserves custom CPU settings for VMs that have them, but, for VMs without custom CPU settings, MTV does not set the CPU model. Instead, the CPU model is later set by OpenShift Virtualization.

To preserve the cluster-level CPU model of your RHV VMs, in the Settings section, click the Edit icon next to Preserve CPU model. Toggle the Whether to preserve the CPU model switch, and then click Save.

If the plan is valid,

- You can run the plan now by clicking Start migration.

- You can run the plan later by selecting it on the Plans for virtualization page and following the procedure in Running a migration plan.

4.6. Running a migration plan

You can run a migration plan and view its progress in the Red Hat OpenShift web console.

Prerequisites

- Valid migration plan.

Procedure

In the Red Hat OpenShift web console, click Migration → Plans for virtualization.

The Plans list displays the source and target providers, the number of virtual machines (VMs) being migrated, the status, and the description of each plan.

- Click Start beside a migration plan to start the migration.

Click Start in the confirmation window that opens.

The Migration details by VM screen opens, displaying the migration’s progress

Warm migration only:

- The precopy stage starts.

- Click Cutover to complete the migration.

If the migration fails:

- Click Get logs to retrieve the migration logs.

- Click Get logs in the confirmation window that opens.

- Wait until Get logs changes to Download logs and then click the button to download the logs.

Click a migration’s Status, whether it failed or succeeded or is still ongoing, to view the details of the migration.

The Migration details by VM screen opens, displaying the start and end times of the migration, the amount of data copied, and a progress pipeline for each VM being migrated.

- Expand an individual VM to view its steps and the elapsed time and state of each step.

4.7. Migration plan options

On the Plans for virtualization page of the Red Hat OpenShift web console, you can click the Options menu

![]() beside a migration plan to access the following options:

beside a migration plan to access the following options:

- Get logs: Retrieves the logs of a migration. When you click Get logs, a confirmation window opens. After you click Get logs in the window, wait until Get logs changes to Download logs and then click the button to download the logs.

- Edit: Edit the details of a migration plan. You cannot edit a migration plan while it is running or after it has completed successfully.

Duplicate: Create a new migration plan with the same virtual machines (VMs), parameters, mappings, and hooks as an existing plan. You can use this feature for the following tasks:

- Migrate VMs to a different namespace.

- Edit an archived migration plan.

- Edit a migration plan with a different status, for example, failed, canceled, running, critical, or ready.

Archive: Delete the logs, history, and metadata of a migration plan. The plan cannot be edited or restarted. It can only be viewed.

NoteThe Archive option is irreversible. However, you can duplicate an archived plan.

Delete: Permanently remove a migration plan. You cannot delete a running migration plan.

NoteThe Delete option is irreversible.

Deleting a migration plan does not remove temporary resources such as

importerpods,conversionpods, config maps, secrets, failed VMs, and data volumes. (BZ#2018974) You must archive a migration plan before deleting it in order to clean up the temporary resources.- View details: Display the details of a migration plan.

- Restart: Restart a failed or canceled migration plan.

- Cancel scheduled cutover: Cancel a scheduled cutover migration for a warm migration plan.

4.8. Canceling a migration

You can cancel the migration of some or all virtual machines (VMs) while a migration plan is in progress by using the Red Hat OpenShift web console.

Procedure

- In the Red Hat OpenShift web console, click Plans for virtualization.

- Click the name of a running migration plan to view the migration details.

- Select one or more VMs and click Cancel.

Click Yes, cancel to confirm the cancellation.

In the Migration details by VM list, the status of the canceled VMs is Canceled. The unmigrated and the migrated virtual machines are not affected.

You can restart a canceled migration by clicking Restart beside the migration plan on the Migration plans page.

Chapter 5. Migrating virtual machines from the command line

You can migrate virtual machines to OpenShift Virtualization from the command line.

You must ensure that all prerequisites are met.

5.1. Permissions needed by non-administrators to work with migration plan components

If you are an administrator, you can work with all components of migration plans (for example, providers, network mappings, and migration plans).

By default, non-administrators have limited ability to work with migration plans and their components. As an administrator, you can modify their roles to allow them full access to all components, or you can give them limited permissions.

For example, administrators can assign non-administrators one or more of the following cluster roles for migration plans:

| Role | Description |

|---|---|

|

| Can view migration plans but not to create, delete or modify them |

|

|

Can create, delete or modify (all parts of |

|

|

All |

Note that pre-defined cluster roles include a resource (for example, plans), an API group (for example, forklift.konveyor.io-v1beta1) and an action (for example, view, edit).

As a more comprehensive example, you can grant non-administrators the following set of permissions per namespace:

- Create and modify storage maps, network maps, and migration plans for the namespaces they have access to

- Attach providers created by administrators to storage maps, network maps, and migration plans

- Not be able to create providers or to change system settings

| Actions | API group | Resource |

|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| Empty string |

|

Non-administrators need to have the create permissions that are part of edit roles for network maps and for storage maps to create migration plans, even when using a template for a network map or a storage map.

5.2. Retrieving a VMware vSphere moRef

When you migrate VMs with a VMware vSphere source provider using Migration Toolkit for Virtualization (MTV) from the CLI, you need to know the managed object reference (moRef) of certain entities in vSphere, such as datastores, networks, and VMs.

You can retrieve the moRef of one or more vSphere entities from the Inventory service. You can then use each moRef as a reference for retrieving the moRef of another entity.

Procedure

Retrieve the routes for the project:

oc get route -n openshift-mtv

Retrieve the

Inventoryservice route:$ oc get route <inventory_service> -n openshift-mtv

Retrieve the access token:

$ TOKEN=$(oc whoami -t)

Retrieve the moRef of a VMware vSphere provider:

$ curl -H "Authorization: Bearer $TOKEN" https://<inventory_service_route>/providers/vsphere -k

Retrieve the datastores of a VMware vSphere source provider:

$ curl -H "Authorization: Bearer $TOKEN" https://<inventory_service_route>/providers/vsphere/<provider id>/datastores/ -k

Example output

[ { "id": "datastore-11", "parent": { "kind": "Folder", "id": "group-s5" }, "path": "/Datacenter/datastore/v2v_general_porpuse_ISCSI_DC", "revision": 46, "name": "v2v_general_porpuse_ISCSI_DC", "selfLink": "providers/vsphere/01278af6-e1e4-4799-b01b-d5ccc8dd0201/datastores/datastore-11" }, { "id": "datastore-730", "parent": { "kind": "Folder", "id": "group-s5" }, "path": "/Datacenter/datastore/f01-h27-640-SSD_2", "revision": 46, "name": "f01-h27-640-SSD_2", "selfLink": "providers/vsphere/01278af6-e1e4-4799-b01b-d5ccc8dd0201/datastores/datastore-730" }, ...

In this example, the moRef of the datastore v2v_general_porpuse_ISCSI_DC is datastore-11 and the moRef of the datastore f01-h27-640-SSD_2 is datastore-730.

5.3. Migrating virtual machines

You migrate virtual machines (VMs) from the command line (CLI) by creating MTV custom resources (CRs). The CRs and the migration procedure vary by source provider.

You must specify a name for cluster-scoped CRs.

You must specify both a name and a namespace for namespace-scoped CRs.

To migrate to or from an OpenShift cluster that is different from the one the migration plan is defined on, you must have an OpenShift Virtualization service account token with cluster-admin privileges.

5.3.1. Migrating from a VMware vSphere source provider

You can migrate from a VMware vSphere source provider by using the CLI.

Procedure

Create a

Secretmanifest for the source provider credentials:$ cat << EOF | oc apply -f - apiVersion: v1 kind: Secret metadata: name: <secret> namespace: <namespace> ownerReferences: 1 - apiVersion: forklift.konveyor.io/v1beta1 kind: Provider name: <provider_name> uid: <provider_uid> labels: createdForProviderType: vsphere createdForResourceType: providers type: Opaque stringData: user: <user> 2 password: <password> 3 insecureSkipVerify: <"true"/"false"> 4 cacert: | 5 <ca_certificate> url: <api_end_point> 6 EOF

- 1

- The

ownerReferencessection is optional. - 2

- Specify the vCenter user or the ESX/ESXi user.

- 3

- Specify the password of the vCenter user or the ESX/ESXi user.

- 4

- Specify

"true"to skip certificate verification, specify"false"to verify the certificate. Defaults to"false"if not specified. Skipping certificate verification proceeds with an insecure migration and then the certificate is not required. Insecure migration means that the transferred data is sent over an insecure connection and potentially sensitive data could be exposed. - 5

- When this field is not set and skip certificate verification is disabled, MTV attempts to use the system CA.

- 6

- Specify the API endpoint URL of the vCenter or the ESX/ESXi, for example,

https://<vCenter_host>/sdk.

Create a

Providermanifest for the source provider:$ cat << EOF | oc apply -f - apiVersion: forklift.konveyor.io/v1beta1 kind: Provider metadata: name: <source_provider> namespace: <namespace> spec: type: vsphere url: <api_end_point> 1 settings: vddkInitImage: <VDDK_image> 2 sdkEndpoint: vcenter 3 secret: name: <secret> 4 namespace: <namespace> EOF

- 1

- Specify the URL of the API endpoint, for example,

https://<vCenter_host>/sdk. - 2

- Optional, but it is strongly recommended to create a VDDK image to accelerate migrations. Follow OpenShift documentation to specify the VDDK image you created.

- 3

- Options:

vcenteroresxi. - 4

- Specify the name of the provider

SecretCR.

Create a

Hostmanifest:$ cat << EOF | oc apply -f - apiVersion: forklift.konveyor.io/v1beta1 kind: Host metadata: name: <vmware_host> namespace: <namespace> spec: provider: namespace: <namespace> name: <source_provider> 1 id: <source_host_mor> 2 ipAddress: <source_network_ip> 3 EOF- 1

- Specify the name of the VMware vSphere

ProviderCR. - 2

- Specify the Managed Object Reference (moRef) of the VMware vSphere host. To retrieve the moRef, see Retrieving a VMware vSphere moRef.

- 3

- Specify the IP address of the VMware vSphere migration network.

Create a

NetworkMapmanifest to map the source and destination networks:$ cat << EOF | oc apply -f - apiVersion: forklift.konveyor.io/v1beta1 kind: NetworkMap metadata: name: <network_map> namespace: <namespace> spec: map: - destination: name: <network_name> type: pod 1 source: 2 id: <source_network_id> name: <source_network_name> - destination: name: <network_attachment_definition> 3 namespace: <network_attachment_definition_namespace> 4 type: multus source: id: <source_network_id> name: <source_network_name> provider: source: name: <source_provider> namespace: <namespace> destination: name: <destination_provider> namespace: <namespace> EOF- 1

- Allowed values are

podandmultus. - 2

- You can use either the

idor thenameparameter to specify the source network. Forid, specify the VMware vSphere network Managed Object Reference (moRef). To retrieve the moRef, see Retrieving a VMware vSphere moRef. - 3

- Specify a network attachment definition for each additional OpenShift Virtualization network.

- 4

- Required only when

typeismultus. Specify the namespace of the OpenShift Virtualization network attachment definition.

Create a

StorageMapmanifest to map source and destination storage:$ cat << EOF | oc apply -f - apiVersion: forklift.konveyor.io/v1beta1 kind: StorageMap metadata: name: <storage_map> namespace: <namespace> spec: map: - destination: storageClass: <storage_class> accessMode: <access_mode> 1 source: id: <source_datastore> 2 provider: source: name: <source_provider> namespace: <namespace> destination: name: <destination_provider> namespace: <namespace> EOF- 1

- Allowed values are

ReadWriteOnceandReadWriteMany. - 2

- Specify the VMware vSphere datastore moRef. For example,

f2737930-b567-451a-9ceb-2887f6207009. To retrieve the moRef, see Retrieving a VMware vSphere moRef.

Optional: Create a