This documentation is for a release that is no longer maintained

See documentation for the latest supported version 3 or the latest supported version 4.

Configuring Clusters

OpenShift Container Platform 3.10 Installation and Configuration

Abstract

Chapter 1. Overview

This guide covers further configuration options available for your OpenShift Container Platform cluster post-installation.

Chapter 2. Setting up the Registry

2.1. Registry Overview

2.1.1. About the Registry

OpenShift Container Platform can build container images from your source code, deploy them, and manage their lifecycle. To enable this, OpenShift Container Platform provides an internal, integrated Docker registry that can be deployed in your OpenShift Container Platform environment to locally manage images.

2.1.2. Integrated or Stand-alone Registries

During an initial installation of a full OpenShift Container Platform cluster, it is likely that the registry was deployed automatically during the installation process. If it was not, or if you want to further customize the configuration of your registry, see Deploying a Registry on Existing Clusters.

While it can be deployed to run as an integrated part of your full OpenShift Container Platform cluster, the OpenShift Container Platform registry can alternatively be installed separately as a stand-alone container image registry.

To install a stand-alone registry, follow Installing a Stand-alone Registry. This installation path deploys an all-in-one cluster running a registry and specialized web console.

2.1.3. Red Hat Quay Registries

If you need an enterprise-quality container image registry, Red Hat Quay is available both as a hosted service and as software you can install in your own data center or cloud environment. Advanced registry features in Red Hat Quay include geo-replication, image scanning, and the ability to roll back images.

Visit the Quay.io site to set up your own hosted Quay registry account. After that, the Quay Tutorial helps you log in to the Quay registry and start managing your images. Alternatively, refer to Getting Started with Red Hat Quay for information on setting up your own Red Hat Quay registry.

At the moment, you access your Red Hat Quay registry from OpenShift as you would any remote container image registry. To learn how to set up credentials to access Red Hat Quay as a secured registry, refer to Allowing Pods to Reference Images from Other Secured Registries.

2.2. Deploying a Registry on Existing Clusters

2.2.1. Overview

If the integrated registry was not previously deployed automatically during the initial installation of your OpenShift Container Platform cluster, or if it is no longer running successfully and you need to redeploy it on your existing cluster, see the following sections for options on deploying a new registry.

This topic is not required if you installed a stand-alone registry.

2.2.2. Deploying the Registry

To deploy the integrated Docker registry, use the oc adm registry command as a user with cluster administrator privileges. For example:

$ oc adm registry --config=/etc/origin/master/admin.kubeconfig \
    --service-account=registry \
    --images='registry.access.redhat.com/openshift3/ose-${component}:${version}'

This creates a service and a deployment configuration, both called docker-registry. Once deployed successfully, a pod is created with a name similar to docker-registry-1-cpty9.

To see a full list of options that you can specify when creating the registry:

$ oc adm registry --help

The value for --fs-group must be permitted by the SCC used by the registry (typically, the restricted SCC).

2.2.3. Deploying the Registry as a DaemonSet

Use the oc adm registry command to deploy the registry as a DaemonSet with the --daemonset option.

DaemonSets ensure that each node, as it is created, runs a copy of a specified pod. When a node is removed, its pods are garbage collected.

For more information on DaemonSets, see Using Daemonsets.

2.2.4. Registry Compute Resources

By default, the registry is created with no settings for compute resource requests or limits. For production, it is highly recommended that the deployment configuration for the registry be updated to set resource requests and limits for the registry pod. Otherwise, the registry pod will be considered a BestEffort pod.

See Compute Resources for more information on configuring requests and limits.
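As a minimal sketch of the recommendation above, the following script composes an oc set resources command for the docker-registry deployment configuration and prints it for review instead of running it. The CPU and memory values are illustrative assumptions, not sizing guidance; pick values that match your own workload.

```shell
# Illustrative values only (assumptions); size these for your own cluster.
REQUESTS="cpu=100m,memory=256Mi"
LIMITS="cpu=500m,memory=1Gi"

# Compose the command as a string so it can be reviewed before it is run.
CMD="oc set resources dc/docker-registry --requests=${REQUESTS} --limits=${LIMITS}"

# Print the command; run it manually as a cluster administrator once reviewed.
echo "${CMD}"
```

Setting both requests and limits moves the registry pod out of the BestEffort class described above.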

2.2.5. Storage for the Registry

The registry stores container images and metadata. If you simply deploy a pod with the registry, it uses an ephemeral volume that is destroyed if the pod exits. Any images anyone has built or pushed into the registry would disappear.

This section lists the supported registry storage drivers. See the Docker registry documentation for more information.

The following list includes storage drivers that need to be configured in the registry’s configuration file:

- Filesystem. Filesystem is the default and does not need to be configured.

- S3. See the CloudFront configuration documentation for more information.

- OpenStack Swift

- Google Cloud Storage (GCS)

- Microsoft Azure

- Aliyun OSS

General registry storage configuration options are supported. See the Docker registry documentation for more information.

The following storage options need to be configured through the filesystem driver:

For more information on supported persistent storage drivers, see Configuring Persistent Storage and Persistent Storage Examples.

2.2.5.1. Production Use

For production use, attach a remote volume or define and use the persistent storage method of your choice.

For example, to use an existing persistent volume claim:

$ oc volume deploymentconfigs/docker-registry --add --name=registry-storage -t pvc \
    --claim-name=<pvc_name> --overwrite

Testing shows issues with using the RHEL NFS server as a storage backend for the container image registry. This includes the OpenShift Container Registry and Quay. Therefore, using the RHEL NFS server to back PVs used by core services is not recommended.

Other NFS implementations on the marketplace might not have these issues. Contact the individual NFS implementation vendor for more information on any testing that was possibly completed against these OpenShift core components.

2.2.5.1.1. Use Amazon S3 as a Storage Back-end

You can also use Amazon Simple Storage Service (S3) storage with the internal Docker registry. S3 is secure cloud storage that you manage through the AWS Management Console. To use it, you must manually edit the registry’s configuration file and mount it into the registry pod. Before you start the configuration, review upstream’s recommended steps.

Take a default YAML configuration file as a base and replace the filesystem entry in the storage section with an s3 entry. The resulting storage section may look like this:
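A minimal sketch of such a storage section, written to a scratch file with a shell here-document. The key names follow the upstream s3 storage driver; every value (keys, region, bucket, root directory) is a placeholder or an assumption, and the scratch file stands in for your real registry configuration file.

```shell
# Write an illustrative storage section to a scratch file (the path is an assumption).
CONFIG_FILE="$(mktemp)"

cat > "${CONFIG_FILE}" <<'EOF'
storage:
  cache:
    blobdescriptor: inmemory
  delete:
    enabled: true
  s3:
    accesskey: <aws_access_key>      # placeholder
    secretkey: <aws_secret_key>      # placeholder
    region: us-east-1                # assumed region
    bucket: <bucket_name>            # placeholder
    encrypt: true
    secure: true
    v4auth: true
    rootdirectory: /registry         # assumed key prefix inside the bucket
EOF

echo "Wrote ${CONFIG_FILE}"
```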

All of the s3 configuration options are documented in upstream’s driver reference documentation.

Overriding the registry configuration will take you through the additional steps on mounting the configuration file into the pod.

When the registry runs on the S3 storage back-end, there are reported issues.

If you want to use an S3 region that is not supported by the integrated registry you are using, see S3 Driver Configuration.

2.2.5.2. Non-Production Use

For non-production use, you can use the --mount-host=<path> option to specify a directory for the registry to use for persistent storage. The registry volume is then created as a host-mount at the specified <path>.

The --mount-host option mounts a directory from the node on which the registry container lives. If you scale up the docker-registry deployment configuration, it is possible that your registry pods and containers will run on different nodes, which can result in two or more registry containers, each with its own local storage. This will lead to unpredictable behavior, as subsequent requests to pull the same image repeatedly may not always succeed, depending on which container the request ultimately goes to.

The --mount-host option requires that the registry container run in privileged mode. This is automatically enabled when you specify --mount-host. However, not all pods are allowed to run privileged containers by default. If you still want to use this option, create the registry and specify that it use the registry service account that was created during installation:

$ oc adm registry --service-account=registry \
    --config=/etc/origin/master/admin.kubeconfig \
    --images='registry.access.redhat.com/openshift3/ose-${component}:${version}' \
    --mount-host=<path>

The Docker registry pod runs as user 1001. This user must be able to write to the host directory. You may need to change directory ownership to user ID 1001 with this command:

$ sudo chown 1001:root <path>

2.2.6. Enabling the Registry Console

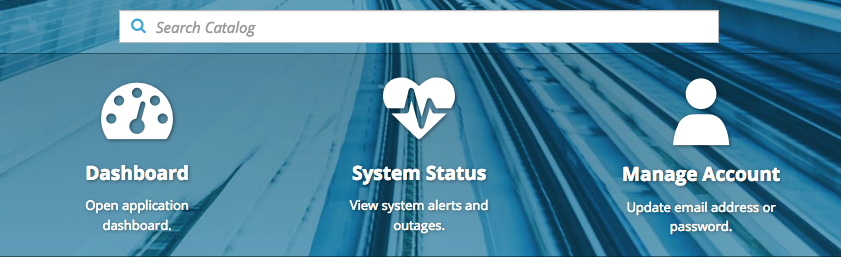

OpenShift Container Platform provides a web-based interface to the integrated registry. This registry console is an optional component for browsing and managing images. It is deployed as a stateless service running as a pod.

If you installed OpenShift Container Platform as a stand-alone registry, the registry console is already deployed and secured automatically during installation.

If Cockpit is already running, you’ll need to shut it down before proceeding in order to avoid a port conflict (9090 by default) with the registry console.

2.2.6.1. Deploying the Registry Console

You must first have exposed the registry.

Create a passthrough route in the default project. You will need this when creating the registry console application in the next step.

$ oc create route passthrough --service registry-console \
    --port registry-console \
    -n default

Deploy the registry console application. Replace <openshift_oauth_url> with the URL of the OpenShift Container Platform OAuth provider, which is typically the master.

$ oc new-app -n default --template=registry-console \
    -p OPENSHIFT_OAUTH_PROVIDER_URL="https://<openshift_oauth_url>:8443" \
    -p REGISTRY_HOST=$(oc get route docker-registry -n default --template='{{ .spec.host }}') \
    -p COCKPIT_KUBE_URL=$(oc get route registry-console -n default --template='https://{{ .spec.host }}')

Note: If the redirection URL is wrong when you are trying to log in to the registry console, check your OAuth client with oc get oauthclients.

Finally, use a web browser to view the console using the route URI.

2.2.6.2. Securing the Registry Console

By default, the registry console generates self-signed TLS certificates if deployed manually per the steps in Deploying the Registry Console. See Troubleshooting the Registry Console for more information.

Use the following steps to add your organization’s signed certificates as a secret volume. This assumes your certificates are available on the oc client host.

Create a .cert file containing the certificate and key. Format the file with:

- One or more BEGIN CERTIFICATE blocks for the server certificate and the intermediate certificate authorities

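- A block containing a BEGIN PRIVATE KEY or similar for the key. The key must not be encrypted.

For example:

-----BEGIN CERTIFICATE-----
<server certificate>
-----END CERTIFICATE-----
-----BEGIN CERTIFICATE-----
<intermediate certificate authority>
-----END CERTIFICATE-----
-----BEGIN PRIVATE KEY-----
<unencrypted private key>
-----END PRIVATE KEY-----

The secured registry should contain the following Subject Alternative Names (SAN) list: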

- Two service hostnames. For example:

docker-registry.default.svc.cluster.local
docker-registry.default.svc

- Service IP address. For example:

172.30.124.220

Use the following command to get the Docker registry service IP address:

$ oc get service docker-registry --template='{{.spec.clusterIP}}'

- Public hostname. For example:

docker-registry-default.apps.example.com

Use the following command to get the Docker registry public hostname:

$ oc get route docker-registry --template '{{.spec.host}}'

For example, the server certificate should contain SAN details similar to the following:

X509v3 Subject Alternative Name:
DNS:docker-registry-public.openshift.com, DNS:docker-registry.default.svc, DNS:docker-registry.default.svc.cluster.local, DNS:172.30.2.98, IP Address:172.30.2.98

The registry console loads a certificate from the /etc/cockpit/ws-certs.d directory. It uses the last file with a .cert extension in alphabetical order. Therefore, the .cert file should contain at least two PEM blocks formatted in the OpenSSL style.

If no certificate is found, a self-signed certificate is created using the openssl command and stored in the 0-self-signed.cert file.

Create the secret:

$ oc create secret generic console-secret \
    --from-file=/path/to/console.cert

Add the secrets to the registry-console deployment configuration:

$ oc volume dc/registry-console --add --type=secret \
    --secret-name=console-secret -m /etc/cockpit/ws-certs.d

This triggers a new deployment of the registry console to include your signed certificates.
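The .cert file described in the first step above can be assembled by concatenating PEM files in the order listed there: the server certificate, any intermediate certificate authorities, then the unencrypted key. The sketch below demonstrates this with stand-in files in a temporary directory. The file names and their dummy contents are assumptions for illustration only; substitute your organization’s real files.

```shell
# Work in a scratch directory so the sketch does not touch real certificates.
WORKDIR="$(mktemp -d)"

# Stand-in PEM files with dummy contents; replace with your real files.
printf '%s\n' '-----BEGIN CERTIFICATE-----' 'server-cert-data' \
    '-----END CERTIFICATE-----' > "${WORKDIR}/server.crt"
printf '%s\n' '-----BEGIN CERTIFICATE-----' 'intermediate-ca-data' \
    '-----END CERTIFICATE-----' > "${WORKDIR}/intermediate.crt"
printf '%s\n' '-----BEGIN PRIVATE KEY-----' 'key-data' \
    '-----END PRIVATE KEY-----' > "${WORKDIR}/server.key"

# Concatenate in the order listed above: certificates first, then the key.
cat "${WORKDIR}/server.crt" "${WORKDIR}/intermediate.crt" "${WORKDIR}/server.key" \
    > "${WORKDIR}/console.cert"

# Sanity check: count the certificate and key blocks in the combined file.
CERT_BLOCKS="$(grep -c -e '-----BEGIN CERTIFICATE-----' "${WORKDIR}/console.cert")"
KEY_BLOCKS="$(grep -c -e '-----BEGIN PRIVATE KEY-----' "${WORKDIR}/console.cert")"
echo "certificate blocks: ${CERT_BLOCKS}, key blocks: ${KEY_BLOCKS}"
```

The resulting console.cert is the file passed to --from-file when creating the secret.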

2.2.6.3. Troubleshooting the Registry Console

2.2.6.3.1. Debug Mode

The registry console debug mode is enabled using an environment variable. The following command redeploys the registry console in debug mode:

$ oc set env dc registry-console G_MESSAGES_DEBUG=cockpit-ws,cockpit-wrapper

Enabling debug mode allows more verbose logging to appear in the registry console’s pod logs.

2.2.6.3.2. Display SSL Certificate Path

To check which certificate the registry console is using, a command can be run from inside the console pod.

List the pods in the default project and find the registry console’s pod name:

$ oc get pods -n default
NAME                       READY     STATUS    RESTARTS   AGE
registry-console-1-rssrw   1/1       Running   0          1d

Using the pod name from the previous command, get the certificate path that the cockpit-ws process is using. This example shows the console using the auto-generated certificate:

$ oc exec registry-console-1-rssrw remotectl certificate
certificate: /etc/cockpit/ws-certs.d/0-self-signed.cert

2.3. Accessing the Registry

2.3.1. Viewing Logs

To view the logs for the Docker registry, use the oc logs command with the deployment configuration:

$ oc logs dc/docker-registry

2.3.2. File Storage

Tag and image metadata is stored in OpenShift Container Platform, but the registry stores layer and signature data in a volume that is mounted into the registry container at /registry. As oc exec does not work on privileged containers, to view a registry’s contents you must manually SSH into the node housing the registry pod’s container, then run docker exec on the container itself:

List the current pods to find the pod name of your Docker registry:

# oc get pods

Then, use oc describe to find the host name for the node running the container:

# oc describe pod <pod_name>

Log into the desired node:

# ssh node.example.com

List the running containers from the default project on the node host and identify the container ID for the Docker registry:

# docker ps --filter=name=registry_docker-registry.*_default_

List the registry contents using the oc rsh command:

- (1) This directory stores all layers and signatures as blobs.
- (2) This file contains the blob’s contents.
- (3) This directory stores all the image repositories.
- (4) This directory is for a single image repository p1/pause.
- (5) This directory contains signatures for a particular image manifest revision.
- (6) This file contains a reference back to a blob (which contains the signature data).
- (7) This directory contains any layers that are currently being uploaded and staged for the given repository.
- (8) This directory contains links to all the layers this repository references.
- (9) This file contains a reference to a specific layer that has been linked into this repository via an image.

2.3.3. Accessing the Registry Directly

For advanced usage, you can access the registry directly to invoke docker commands. This allows you to push images to or pull them from the integrated registry directly using operations like docker push or docker pull. To do so, you must be logged in to the registry using the docker login command. The operations you can perform depend on your user permissions, as described in the following sections.

2.3.3.1. User Prerequisites

To access the registry directly, the user that you use must satisfy the following, depending on your intended usage:

For any direct access, you must have a regular user for your preferred identity provider. A regular user can generate an access token required for logging in to the registry. System users, such as system:admin, cannot obtain access tokens and, therefore, cannot access the registry directly.

For example, if you are using HTPASSWD authentication, you can create one using the following command:

# htpasswd /etc/origin/master/htpasswd <user_name>

For pulling images, for example when using the docker pull command, the user must have the registry-viewer role. To add this role:

$ oc policy add-role-to-user registry-viewer <user_name>

For writing or pushing images, for example when using the docker push command, the user must have the registry-editor role. To add this role:

$ oc policy add-role-to-user registry-editor <user_name>

For more information on user permissions, see Managing Role Bindings.

2.3.3.2. Logging in to the Registry

Ensure your user satisfies the prerequisites for accessing the registry directly.

To log in to the registry directly:

Ensure you are logged in to OpenShift Container Platform as a regular user:

$ oc login

Log in to the Docker registry by using your access token:

$ docker login -u openshift -p $(oc whoami -t) <registry_ip>:<port>

You can pass any value for the username; the token contains all necessary information. However, passing a username that contains colons results in a login failure.
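The colon restriction can be checked before attempting a login. The sketch below uses two hypothetical helper functions (valid_registry_username and print_login_command are not part of oc or docker) to reject user names containing a colon and to print the login command for review. The registry address is the example service IP and port used in this chapter.

```shell
# Return 0 if the user name contains no colon, 1 otherwise.
valid_registry_username() {
  case "$1" in
    *:*) return 1 ;;
    *) return 0 ;;
  esac
}

# Hypothetical helper: print the docker login command for review.
# The token stays a command substitution so it is not echoed in clear text.
print_login_command() {
  user="$1"
  registry="$2"
  if ! valid_registry_username "${user}"; then
    echo "error: user name '${user}' contains a colon" >&2
    return 1
  fi
  echo "docker login -u ${user} -p \$(oc whoami -t) ${registry}"
}

print_login_command openshift 172.30.124.220:5000
```

A name such as system:admin is rejected by the check, which mirrors the login failure described above.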

2.3.3.3. Pushing and Pulling Images

After logging in to the registry, you can perform docker pull and docker push operations against your registry.

You can pull arbitrary images, but if you have the system:registry role added, you can only push images to the registry in your project.

In the following examples, we use:

| Component | Value |
| --- | --- |
| <registry_ip> | 172.30.124.220 |
| <port> | 5000 |
| <project> | openshift |
| <image> | busybox |
| <tag> | omitted (defaults to latest) |

Pull an arbitrary image:

$ docker pull docker.io/busybox

Tag the new image with the form <registry_ip>:<port>/<project>/<image>. The project name must appear in this pull specification for OpenShift Container Platform to correctly place and later access the image in the registry.

$ docker tag docker.io/busybox 172.30.124.220:5000/openshift/busybox

Note: Your regular user must have the system:image-builder role for the specified project, which allows the user to write or push an image. Otherwise, the docker push in the next step will fail. To test, you can create a new project to push the busybox image.

Push the newly-tagged image to your registry:

$ docker push 172.30.124.220:5000/openshift/busybox
...
cf2616975b4a: Image successfully pushed
Digest: sha256:3662dd821983bc4326bee12caec61367e7fb6f6a3ee547cbaff98f77403cab55
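The pull specification used in these steps can be composed from its components. The following sketch builds <registry_ip>:<port>/<project>/<image> from shell variables holding the example values, appends a tag only when one is set, and prints the tag and push commands for review without running them. The variable names are illustrative.

```shell
# Component values from the examples above.
REGISTRY_IP="172.30.124.220"
PORT="5000"
PROJECT="openshift"
IMAGE="busybox"
TAG=""   # left empty, so docker falls back to its default tag

# Build <registry_ip>:<port>/<project>/<image>, adding :<tag> only if TAG is set.
TARGET="${REGISTRY_IP}:${PORT}/${PROJECT}/${IMAGE}${TAG:+:${TAG}}"

# Print the commands for review.
echo "docker tag docker.io/${IMAGE} ${TARGET}"
echo "docker push ${TARGET}"
```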

2.3.4. Accessing Registry Metrics

The OpenShift Container Registry provides an endpoint for Prometheus metrics. Prometheus is a stand-alone, open source systems monitoring and alerting toolkit.

The metrics are exposed at the /extensions/v2/metrics path of the registry endpoint. However, this route must first be enabled; see Extended Registry Configuration for instructions.

The following is a simple example of a metrics query:
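As a hedged sketch, the script below composes the metrics URL from the registry address and prints a curl command for review. It assumes the endpoint was enabled with a secret as described in Extended Registry Configuration and that the endpoint accepts that secret through HTTP basic authentication. <user> and <secret> are placeholders, and the registry address is the example service IP and port used in this chapter.

```shell
# Example registry service address; replace with your own.
REGISTRY="172.30.124.220:5000"

# The metrics path comes from the text above.
METRICS_URL="https://${REGISTRY}/extensions/v2/metrics"

# Print the query for review; fill in <user> and <secret> before running it.
echo "curl -s -u <user>:<secret> ${METRICS_URL}"
```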

Another method to access the metrics is to use a cluster role. You still need to enable the endpoint, but you do not need to specify a <secret>. The part of the configuration file responsible for metrics should look like this:

openshift:
  version: 1.0
  metrics:
    enabled: true
...

You must create a cluster role if you do not already have one to access the metrics:
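A minimal sketch of such a cluster role, written to a scratch file for review. The role name prometheus-scraper matches the command that follows; the API group and resource names (image.openshift.io, registry/metrics) and the apiVersion are assumptions that you should verify against your cluster before creating the role.

```shell
# Write an illustrative cluster role definition to a scratch file.
ROLE_FILE="$(mktemp)"

cat > "${ROLE_FILE}" <<'EOF'
apiVersion: v1
kind: ClusterRole
metadata:
  name: prometheus-scraper
rules:
- apiGroups:
  - image.openshift.io      # assumed API group
  resources:
  - registry/metrics        # assumed resource name
  verbs:
  - get
EOF

# Print the command to create the role; run it manually as a cluster administrator.
echo "oc create -f ${ROLE_FILE}"
```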

To add this role to a user, run the following command:

$ oc adm policy add-cluster-role-to-user prometheus-scraper <username>

See the upstream Prometheus documentation for more advanced queries and recommended visualizers.

2.4. Securing and Exposing the Registry

2.4.1. Overview

By default, the OpenShift Container Platform registry is secured during cluster installation so that it serves traffic via TLS. A passthrough route is also created by default to expose the service externally.

If for any reason your registry has not been secured or exposed, see the following sections for steps on how to manually do so.

2.4.2. Manually Securing the Registry

To manually secure the registry to serve traffic via TLS:

- Deploy the registry.

Fetch the service IP and port of the registry:

$ oc get svc/docker-registry
NAME              LABELS                    SELECTOR                  IP(S)            PORT(S)
docker-registry   docker-registry=default   docker-registry=default   172.30.124.220   5000/TCP

You can use an existing server certificate, or create a key and server certificate valid for specified IPs and host names, signed by a specified CA. To create a server certificate for the registry service IP and the docker-registry.default.svc.cluster.local host name, run the following command from the first master listed in the Ansible host inventory file, by default /etc/ansible/hosts:

If the router will be exposed externally, add the public route host name in the --hostnames flag:

--hostnames='mydocker-registry.example.com,docker-registry.default.svc.cluster.local,172.30.124.220' \

See Redeploying Registry and Router Certificates for additional details on updating the default certificate so that the route is externally accessible.

Note: The oc adm ca create-server-cert command generates a certificate that is valid for two years. This can be altered with the --expire-days option, but for security reasons, it is recommended to not make it greater than this value.

Create the secret for the registry certificates:

$ oc create secret generic registry-certificates \
    --from-file=/etc/secrets/registry.crt \
    --from-file=/etc/secrets/registry.key

Add the secret to the registry pod’s service accounts (including the default service account):

$ oc secrets link registry registry-certificates
$ oc secrets link default registry-certificates

Note: Limiting secrets to only the service accounts that reference them is disabled by default. This means that if serviceAccountConfig.limitSecretReferences is set to false (the default setting) in the master configuration file, linking secrets to a service is not required.

Pause the docker-registry service:

$ oc rollout pause dc/docker-registry

Add the secret volume to the registry deployment configuration:

$ oc volume dc/docker-registry --add --type=secret \
    --secret-name=registry-certificates -m /etc/secrets

Enable TLS by adding the following environment variables to the registry deployment configuration:

$ oc set env dc/docker-registry \
    REGISTRY_HTTP_TLS_CERTIFICATE=/etc/secrets/registry.crt \
    REGISTRY_HTTP_TLS_KEY=/etc/secrets/registry.key

See the Configuring a registry section of the Docker documentation for more information.

Update the scheme used for the registry’s liveness probe from HTTP to HTTPS:

$ oc patch dc/docker-registry -p '{"spec": {"template": {"spec": {"containers":[{
    "name":"registry",
    "livenessProbe": {"httpGet": {"scheme":"HTTPS"}}
  }]}}}}'

If your registry was initially deployed on OpenShift Container Platform 3.2 or later, update the scheme used for the registry’s readiness probe from HTTP to HTTPS:

$ oc patch dc/docker-registry -p '{"spec": {"template": {"spec": {"containers":[{
    "name":"registry",
    "readinessProbe": {"httpGet": {"scheme":"HTTPS"}}
  }]}}}}'

Resume the docker-registry service:

$ oc rollout resume dc/docker-registry

Validate the registry is running in TLS mode. Wait until the latest docker-registry deployment completes and verify the Docker logs for the registry container. You should find an entry for listening on :5000, tls.

$ oc logs dc/docker-registry | grep tls
time="2015-05-27T05:05:53Z" level=info msg="listening on :5000, tls" instance.id=deeba528-c478-41f5-b751-dc48e4935fc2

Copy the CA certificate to the Docker certificates directory. This must be done on all nodes in the cluster:

The ca.crt file is a copy of /etc/origin/master/ca.crt on the master.

When using authentication, some versions of docker also require you to configure your cluster to trust the certificate at the OS level.

Copy the certificate:

$ cp /etc/origin/master/ca.crt /etc/pki/ca-trust/source/anchors/myregistrydomain.com.crt

Run:

$ update-ca-trust enable

Remove the --insecure-registry option only for this particular registry in the /etc/sysconfig/docker file. Then, reload the daemon and restart the docker service to reflect this configuration change:

$ sudo systemctl daemon-reload
$ sudo systemctl restart docker

Validate the docker client connection. Running docker push to the registry or docker pull from the registry should succeed. Make sure you have logged into the registry.

$ docker tag|push <registry/image> <internal_registry/project/image>

For example:

$ docker tag docker.io/busybox 172.30.124.220:5000/openshift/busybox
$ docker push 172.30.124.220:5000/openshift/busybox
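The certificate-creation step in the procedure above names the oc adm ca create-server-cert command and its --hostnames flag. The sketch below shows one way to build the comma-separated --hostnames value and print a full command for review, without running it. join_hostnames is a hypothetical helper. The signer key and serial paths under /etc/origin/master are assumptions based on a default installation, while the --cert and --key paths match the files used when creating the secret.

```shell
# Hypothetical helper: join all arguments with commas.
join_hostnames() {
  result=""
  for name in "$@"; do
    result="${result:+${result},}${name}"
  done
  printf '%s\n' "${result}"
}

# Service host names and service IP from the examples in this section.
HOSTNAMES="$(join_hostnames \
    docker-registry.default.svc.cluster.local \
    docker-registry.default.svc \
    172.30.124.220)"

# Print the command for review (signer paths are assumptions; verify them first).
cat <<EOF
oc adm ca create-server-cert \\
    --signer-cert=/etc/origin/master/ca.crt \\
    --signer-key=/etc/origin/master/ca.key \\
    --signer-serial=/etc/origin/master/ca.serial.txt \\
    --hostnames='${HOSTNAMES}' \\
    --cert=/etc/secrets/registry.crt \\
    --key=/etc/secrets/registry.key
EOF
```

If the registry is exposed externally, pass the public route host name as an additional argument to join_hostnames.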

2.4.3. Manually Exposing a Secure Registry

Instead of logging in to the OpenShift Container Platform registry from within the OpenShift Container Platform cluster, you can gain external access to it by first securing the registry and then exposing it with a route. This allows you to log in to the registry from outside the cluster using the route address, and to tag and push images using the route host.

Each of the following prerequisite steps is performed by default during a typical cluster installation. If any has not been, perform it manually:

A passthrough route should have been created by default for the registry during the initial cluster installation:

Verify whether the route exists:

Note: Re-encrypt routes are also supported for exposing the secure registry.

If it does not exist, create the route via the oc create route passthrough command, specifying the registry as the route’s service. By default, the name of the created route is the same as the service name:

Get the docker-registry service details:

$ oc get svc
NAME              CLUSTER_IP       EXTERNAL_IP   PORT(S)                 SELECTOR                  AGE
docker-registry   172.30.69.167    <none>        5000/TCP                docker-registry=default   4h
kubernetes        172.30.0.1       <none>        443/TCP,53/UDP,53/TCP   <none>                    4h
router            172.30.172.132   <none>        80/TCP                  router=router             4h

Create the route:

$ oc create route passthrough \
    --service=docker-registry \
    --hostname=<host>
route "docker-registry" created

Next, you must trust the certificates being used for the registry on your host system to allow the host to push and pull images. The certificates referenced were created when you secured your registry.

$ sudo mkdir -p /etc/docker/certs.d/<host>
$ sudo cp <ca_certificate_file> /etc/docker/certs.d/<host>
$ sudo systemctl restart docker

Log in to the registry using the information from securing the registry. However, this time point to the host name used in the route rather than your service IP. When logging in to a secured and exposed registry, make sure you specify the registry in the docker login command:

# docker login -e user@company.com \
    -u f83j5h6 \
    -p Ju1PeM47R0B92Lk3AZp-bWJSck2F7aGCiZ66aFGZrs2 \
    <host>

You can now tag and push images using the route host. For example, to tag and push a busybox image in a project called test:

Note: Your image streams will have the IP address and port of the registry service, not the route name and port. See oc get imagestreams for details.
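As a minimal sketch of tagging and pushing through the route host, assuming a route host of registry.example.com, a project called test, and a local busybox image. The function names route_image_ref and push_via_route are hypothetical helpers introduced here for illustration; they are not part of docker or oc.

```shell
#!/bin/sh
# Build the image reference for a registry exposed through a route.
# Arguments: route host, project, image name (all placeholders).
route_image_ref() {
    printf '%s/%s/%s\n' "$1" "$2" "$3"
}

# Tag a local image with the route-based reference and push it.
# Assumes `docker login` has already succeeded against the same host
# and that the local image is named exactly as the third argument.
push_via_route() {
    ref=$(route_image_ref "$1" "$2" "$3")
    docker tag "$3" "$ref" && docker push "$ref"
}

# Example (not executed here):
#   push_via_route registry.example.com test busybox
```

The reference is built separately from the push so that the same string can be reused for a later docker pull.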

2.4.4. Manually Exposing a Non-Secure Registry

Instead of securing the registry in order to expose the registry, you can simply expose a non-secure registry for non-production OpenShift Container Platform environments. This allows you to have an external route to the registry without using SSL certificates.

Only non-production environments should expose a non-secure registry to external access.

To expose a non-secure registry:

Expose the registry:

# oc expose service docker-registry --hostname=<hostname> -n default

This creates the following JSON file:

Verify that the route has been created successfully:

# oc get route
NAME              HOST/PORT              PATH   SERVICE           LABELS                    INSECURE POLICY   TLS TERMINATION
docker-registry   registry.example.com          docker-registry   docker-registry=default

Check the health of the registry:

$ curl -v http://registry.example.com/healthz

Expect an HTTP 200/OK message.

After exposing the registry, update your /etc/sysconfig/docker file by adding the port number to the OPTIONS entry. For example:

OPTIONS='--selinux-enabled --insecure-registry=172.30.0.0/16 --insecure-registry registry.example.com:80'

Important: The above options should be added on the client from which you are trying to log in.

Also, ensure that Docker is running on the client.

When logging in to the non-secured and exposed registry, make sure you specify the registry in the docker login command. For example:

# docker login -e user@company.com \
    -u f83j5h6 \
    -p Ju1PeM47R0B92Lk3AZp-bWJSck2F7aGCiZ66aFGZrs2 \
    <host>

2.5. Extended Registry Configuration

2.5.1. Maintaining the Registry IP Address

OpenShift Container Platform refers to the integrated registry by its service IP address, so if you decide to delete and recreate the docker-registry service, you can ensure a completely transparent transition by arranging to re-use the old IP address in the new service. If a new IP address cannot be avoided, you can minimize cluster disruption by rebooting only the masters.

- Re-using the Address

- To re-use the IP address, you must save the IP address of the old docker-registry service prior to deleting it, and arrange to replace the newly assigned IP address with the saved one in the new docker-registry service.

1. Make a note of the clusterIP for the service:

$ oc get svc/docker-registry -o yaml | grep clusterIP:

2. Delete the service:

$ oc delete svc/docker-registry dc/docker-registry

3. Create the registry definition in registry.yaml, replacing <options> with, for example, those used in step 3 of the instructions in the Non-Production Use section:

$ oc adm registry <options> -o yaml > registry.yaml

4. Edit registry.yaml, find the Service there, and change its clusterIP to the address noted in step 1.

5. Create the registry using the modified registry.yaml:

$ oc create -f registry.yaml

- Rebooting the Masters

- If you are unable to re-use the IP address, any operation that uses a pull specification that includes the old IP address will fail. To minimize cluster disruption, you must reboot the masters:

# master-restart api
# master-restart controllers

This ensures that the old registry URL, which includes the old IP address, is cleared from the cache.

We recommend against rebooting the entire cluster because that incurs unnecessary downtime for pods and does not actually clear the cache.
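The address re-use procedure above can be sketched as a small script. This is only an illustration under stated assumptions: the helper names set_cluster_ip and reuse_registry_ip are hypothetical, the generated registry.yaml is assumed to already contain a clusterIP: line in its Service, and the sed expression would rewrite every clusterIP: line it finds in the file.

```shell
#!/bin/sh
# Rewrite the value of every "clusterIP:" line in a YAML file.
# $1: path to the file, $2: the saved IP address.
set_cluster_ip() {
    sed "s/^\([[:space:]]*clusterIP:\).*/\1 $2/" "$1" > "$1.tmp" &&
        mv "$1.tmp" "$1"
}

# Save the old service IP, recreate the registry definition, and
# create the registry with the saved IP. Any arguments are passed
# through to `oc adm registry` as its <options>.
reuse_registry_ip() {
    old_ip=$(oc get svc/docker-registry -o jsonpath='{.spec.clusterIP}') || return 1
    oc delete svc/docker-registry dc/docker-registry || return 1
    oc adm registry "$@" -o yaml > registry.yaml || return 1
    set_cluster_ip registry.yaml "$old_ip" || return 1
    oc create -f registry.yaml
}
```

Review the edited registry.yaml before creating the registry, because the script does not check that the saved address is still free in the service network.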

2.5.2. Whitelisting Docker Registries

You can specify a whitelist of docker registries, allowing you to curate a set of images and templates that are available for download by OpenShift Container Platform users. This curated set can be placed in one or more docker registries, and then added to the whitelist. When using a whitelist, only the specified registries are accessible within OpenShift Container Platform, and all other registries are denied access by default.

To configure a whitelist:

Edit the /etc/sysconfig/docker file to block all registries:

BLOCK_REGISTRY='--block-registry=all'

You may need to uncomment the BLOCK_REGISTRY line.

In the same file, add registries to which you want to allow access:

ADD_REGISTRY='--add-registry=<registry1> --add-registry=<registry2>'

Allowing Access to Registries

ADD_REGISTRY='--add-registry=registry.access.redhat.com'

This example would restrict access to images available on the Red Hat Customer Portal.

Once the whitelist is configured, if a user tries to pull from a docker registry that is not on the whitelist, they will receive an error message stating that this registry is not allowed.

2.5.3. Setting the Registry Hostname

You can configure the hostname and port the registry is known by for both internal and external references. By doing this, image streams will provide hostname-based push and pull specifications for images, allowing consumers of the images to be isolated from changes to the registry service IP and potentially allowing image streams and their references to be portable between clusters.

To set the hostname used to reference the registry from within the cluster, set the internalRegistryHostname in the imagePolicyConfig section of the master configuration file. The external hostname is controlled by setting the externalRegistryHostname value in the same location.

Image Policy Configuration

imagePolicyConfig:
  internalRegistryHostname: docker-registry.default.svc.cluster.local:5000
  externalRegistryHostname: docker-registry.mycompany.com

The registry itself must be configured with the same internal hostname value. This can be accomplished by setting the REGISTRY_OPENSHIFT_SERVER_ADDR environment variable on the registry deployment configuration, or by setting the value in the OpenShift section of the registry configuration.

If you have enabled TLS for your registry, the server certificate must include the hostnames by which you expect the registry to be referenced. See securing the registry for instructions on adding hostnames to the server certificate.

2.5.4. Overriding the Registry Configuration

You can override the integrated registry’s default configuration, found by default at /config.yml in a running registry’s container, with your own custom configuration.

Upstream configuration options in this file may also be overridden using environment variables. The middleware section is an exception as there are just a few options that can be overridden using environment variables. Learn how to override specific configuration options.

To enable management of the registry configuration file directly and deploy an updated configuration using a ConfigMap:

- Deploy the registry.

Edit the registry configuration file locally as needed. The initial YAML file deployed on the registry is provided below. Review supported options.

Registry Configuration File

Create a ConfigMap holding the content of each file in this directory:

$ oc create configmap registry-config \
    --from-file=</path/to/custom/registry/config.yml>/

Add the registry-config ConfigMap as a volume to the registry’s deployment configuration to mount the custom configuration file at /etc/docker/registry/:

$ oc volume dc/docker-registry --add --type=configmap \
    --configmap-name=registry-config -m /etc/docker/registry/

Update the registry to reference the configuration path from the previous step by adding the following environment variable to the registry’s deployment configuration:

$ oc set env dc/docker-registry \
    REGISTRY_CONFIGURATION_PATH=/etc/docker/registry/config.yml

This may be performed as an iterative process to achieve the desired configuration. For example, during troubleshooting, the configuration may be temporarily updated to put it in debug mode.

To update an existing configuration:

This procedure will overwrite the currently deployed registry configuration.

- Edit the local registry configuration file, config.yml.

Delete the registry-config configmap:

$ oc delete configmap registry-config

Recreate the configmap to reference the updated configuration file:

$ oc create configmap registry-config \
    --from-file=</path/to/custom/registry/config.yml>/

Redeploy the registry to read the updated configuration:

$ oc rollout latest docker-registry

Maintain configuration files in a source control repository.

2.5.5. Registry Configuration Reference

There are many configuration options available in the upstream docker distribution library. Not all configuration options are supported or enabled. Use this section as a reference when overriding the registry configuration.

Upstream configuration options in this file may also be overridden using environment variables. However, only a few options in the middleware section can be overridden this way. Learn how to override specific configuration options.

2.5.5.1. Log

Upstream options are supported.

Example:

2.5.5.2. Hooks

Mail hooks are not supported.

2.5.5.3. Storage

This section lists the supported registry storage drivers. See the Docker registry documentation for more information.

The following list includes storage drivers that need to be configured in the registry’s configuration file:

- Filesystem. Filesystem is the default and does not need to be configured.

- S3. See the CloudFront configuration documentation for more information.

- OpenStack Swift

- Google Cloud Storage (GCS)

- Microsoft Azure

- Aliyun OSS

General registry storage configuration options are supported. See the Docker registry documentation for more information.

The following storage options need to be configured through the filesystem driver:

For more information on supported persistent storage drivers, see Configuring Persistent Storage and Persistent Storage Examples.

General Storage Configuration Options

- 1

- This entry is mandatory for image pruning to work properly.

2.5.5.4. Auth

Auth options should not be altered. The openshift extension is the only supported option.

auth:
  openshift:
    realm: openshift

2.5.5.5. Middleware

The repository middleware extension allows you to configure the OpenShift Container Platform middleware responsible for interaction with OpenShift Container Platform and image proxying.

- 1 2 9

- These entries are mandatory. Their presence ensures required components are loaded. These values should not be changed.

- 3

- Allows you to store manifest schema v2 during a push to the registry. See below for more details.

- 4

- Allows the registry to act as a proxy for remote blobs. See below for more details.

- 5

- Allows the registry to cache blobs served from remote registries for fast access later. The mirroring starts when the blob is accessed for the first time. The option has no effect if pullthrough is disabled.

- 6

- Prevents blob uploads that exceed the size limits defined in the targeted project.

- 7

- An expiration timeout for limits cached in the registry. The lower the value, the less time it takes for the limit changes to propagate to the registry. However, the registry will query limits from the server more frequently and, as a consequence, pushes will be slower.

- 8

- An expiration timeout for remembered associations between blob and repository. The higher the value, the higher the probability of a fast lookup and the more efficient the registry operation. On the other hand, memory usage rises, as does the risk of serving an image layer to a user who is no longer authorized to access it.

2.5.5.5.1. S3 Driver Configuration

If you want to use an S3 region that is not supported by the integrated registry you are using, you can specify a regionendpoint to avoid the region validation error.

For more information about using Amazon Simple Storage Service storage, see Amazon S3 as a Storage Back-end.

For example:

Verify that the region and regionendpoint fields are consistent with each other. Otherwise the integrated registry will start, but it cannot read or write anything to the S3 storage.

The regionendpoint can also be useful if you use an S3 storage service other than Amazon S3.

2.5.5.5.2. CloudFront Middleware

The CloudFront middleware extension can be added to support the AWS CloudFront CDN storage provider. CloudFront middleware speeds up distribution of image content internationally. The blobs are distributed to several edge locations around the world. The client is always directed to the edge with the lowest latency.

The CloudFront middleware extension can only be used with S3 storage. It is utilized only during blob serving. Therefore, only blob downloads can be sped up, not uploads.

The following is an example of minimal configuration of S3 storage driver with a CloudFront middleware:

- 1

- The S3 storage must be configured the same way regardless of CloudFront middleware.

- 2

- The CloudFront storage middleware needs to be listed before OpenShift middleware.

- 3

- The CloudFront base URL. In the AWS management console, this is listed as Domain Name of CloudFront distribution.

- 4

- The location of your AWS private key on the filesystem. This must not be confused with an Amazon EC2 key pair. See the AWS documentation on creating CloudFront key pairs for your trusted signers. The file needs to be mounted as a secret into the registry pod.

- 5

- The ID of your CloudFront key pair.

2.5.5.5.3. Overriding Middleware Configuration Options

The middleware section cannot be overridden using environment variables. There are a few exceptions, however. For example:

- 1

- A configuration option that can be overridden by the boolean environment variable REGISTRY_MIDDLEWARE_REPOSITORY_OPENSHIFT_ACCEPTSCHEMA2, which allows for the ability to accept manifest schema v2 on manifest put requests. Recognized values are true and false (which applies to all the other boolean variables below).

- 2

- A configuration option that can be overridden by the boolean environment variable REGISTRY_MIDDLEWARE_REPOSITORY_OPENSHIFT_PULLTHROUGH, which enables a proxy mode for remote repositories.

- 3

- A configuration option that can be overridden by the boolean environment variable REGISTRY_MIDDLEWARE_REPOSITORY_OPENSHIFT_MIRRORPULLTHROUGH, which instructs the registry to mirror blobs locally if serving remote blobs.

- 4

- A configuration option that can be overridden by the boolean environment variable REGISTRY_MIDDLEWARE_REPOSITORY_OPENSHIFT_ENFORCEQUOTA, which allows the ability to turn quota enforcement on or off. By default, quota enforcement is off.

- 5

- A configuration option that can be overridden by the environment variable REGISTRY_MIDDLEWARE_REPOSITORY_OPENSHIFT_PROJECTCACHETTL, specifying an eviction timeout for project quota objects. It takes a valid time duration string (for example, 2m). If empty, you get the default timeout. If zero (0m), caching is disabled.

- 6

- A configuration option that can be overridden by the environment variable REGISTRY_MIDDLEWARE_REPOSITORY_OPENSHIFT_BLOBREPOSITORYCACHETTL, specifying an eviction timeout for associations between blob and containing repository. The format of the value is the same as in the projectcachettl case.
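As a rough sketch of applying one of these overrides with oc set env: the helper names middleware_env_name and set_middleware_option are hypothetical, and the lowercase option names passed to them (such as acceptschema2 or projectcachettl) are assumed to match the keys used in the registry configuration file. The helper only builds a variable name; it does not check that the option is one of the six listed above.

```shell
#!/bin/sh
# Derive the environment variable name for an OpenShift repository
# middleware option by upper-casing it and adding the common prefix.
middleware_env_name() {
    printf 'REGISTRY_MIDDLEWARE_REPOSITORY_OPENSHIFT_%s\n' \
        "$(printf '%s' "$1" | tr '[:lower:]' '[:upper:]')"
}

# Set the override on the registry deployment configuration.
# $1: option name, $2: value (true/false or a duration such as 2m).
set_middleware_option() {
    oc set env dc/docker-registry "$(middleware_env_name "$1")=$2"
}

# Example (not executed here):
#   set_middleware_option acceptschema2 true
```

Changing the environment of the deployment configuration normally triggers a new registry deployment, so the override takes effect once the new pod is running.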

2.5.5.5.4. Image Pullthrough

If enabled, the registry will attempt to fetch a requested blob from a remote registry unless the blob exists locally. The remote candidates are calculated from the DockerImage entries stored in the status of the image stream that the client pulls from. All the unique remote registry references in such entries will be tried in turn until the blob is found.

Pullthrough will only occur if an image stream tag exists for the image being pulled. For example, if the image being pulled is docker-registry.default.svc:5000/yourproject/yourimage:prod then the registry will look for an image stream tag named yourimage:prod in the project yourproject. If it finds one, it will attempt to pull the image using the dockerImageReference associated with that image stream tag.

When performing pullthrough, the registry will use pull credentials found in the project associated with the image stream tag that is being referenced. This capability also makes it possible for you to pull images that reside on a registry you do not have credentials to access, as long as you have access to the image stream tag that references the image.

You must ensure that your registry has appropriate certificates to trust any external registries you do a pullthrough against. The certificates need to be placed in the /etc/pki/tls/certs directory on the pod. You can mount the certificates using a configuration map or secret. Note that the entire /etc/pki/tls/certs directory must be replaced. You must include the new certificates and replace the system certificates in your secret or configuration map that you mount.

Note that by default image stream tags use a reference policy type of Source, which means that when the image stream reference is resolved to an image pull specification, the specification used points to the source of the image. For images hosted on external registries, this is the external registry, so the resource references and pulls the image from the external registry (for example, registry.access.redhat.com/openshift3/jenkins-2-rhel7) and pullthrough does not apply. To ensure that resources referencing image streams use a pull specification that points to the internal registry, the image stream tag should use a reference policy type of Local. More information is available on Reference Policy.

This feature is on by default. However, it can be disabled using a configuration option.

By default, all the remote blobs served this way are stored locally for subsequent faster access unless mirrorpullthrough is disabled. The downside of this mirroring feature is an increased storage usage.

The mirroring starts when a client tries to fetch at least a single byte of the blob. To pre-fetch a particular image into the integrated registry before it is actually needed, you can run the following command:

$ oc get imagestreamtag/${IS}:${TAG} -o jsonpath='{ .image.dockerImageLayers[*].name }' | \
    xargs -n1 -I {} curl -H "Range: bytes=0-1" -u user:${TOKEN} \
    http://${REGISTRY_IP}:${PORT}/v2/default/mysql/blobs/{}

This OpenShift Container Platform mirroring feature should not be confused with the upstream registry pull through cache feature, which is a similar but distinct capability.

2.5.5.5.5. Manifest Schema v2 Support

Each image has a manifest describing its blobs, instructions for running it, and additional metadata. The manifest is versioned, with each version having a different structure and fields as it evolves over time. The same image can be represented by multiple manifest versions. Each version has a different digest, though.

The registry currently supports manifest v2 schema 1 (schema1) and manifest v2 schema 2 (schema2). The former is being obsoleted but will be supported for an extended amount of time.

You should be wary of compatibility issues with various Docker clients:

- Docker clients of version 1.9 or older support only schema1. Any manifest such a client pulls or pushes will be of this legacy schema.

- Docker clients of version 1.10 support both schema1 and schema2. By default, such a client pushes schema2 to the registry if the registry supports the newer schema.

A registry storing an image with schema1 will always return it unchanged to the client. Schema2 will be transferred unchanged only to a newer Docker client. For an older client, it will be converted on-the-fly to schema1.

This has significant consequences. For example, an image pushed to the registry by a newer Docker client cannot be pulled by an older Docker client by its digest. That is because the stored image’s manifest is of schema2 and its digest can be used to pull only this version of the manifest.
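To illustrate why a digest identifies only one manifest version: a digest is a hash of the manifest content, so two different serializations describing the same image hash differently. The sketch below uses two made-up, heavily shortened JSON strings as stand-ins for schema1 and schema2 manifests, and it assumes the sha256sum utility is available. Real manifests are much larger, and the registry computes real digests itself.

```shell
#!/bin/sh
# Print the SHA-256 hex digest of a string.
digest_of() {
    printf '%s' "$1" | sha256sum | cut -d' ' -f1
}

# Hypothetical, truncated stand-ins for the two manifest versions.
schema1='{"schemaVersion":1,"name":"test/busybox","tag":"latest"}'
schema2='{"schemaVersion":2,"mediaType":"application/vnd.docker.distribution.manifest.v2+json"}'

d1=$(digest_of "$schema1")
d2=$(digest_of "$schema2")

# The two digests differ, so a client holding the schema2 digest
# cannot use it to address the schema1 form of the same image.
echo "schema1 sha256:$d1"
echo "schema2 sha256:$d2"
```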

For this reason, the registry is configured by default not to store schema2. This ensures that any docker client will be able to pull from the registry any image pushed there regardless of client’s version.

Once you are confident that all the registry clients support schema2, it is safe to enable its support in the registry. See the middleware configuration reference above for the particular option.

2.5.5.6. OpenShift

This section reviews the configuration of global settings for features specific to OpenShift Container Platform. In a future release, openshift-related settings in the Middleware section will be obsoleted.

Currently, this section allows you to configure registry metrics collection:

- 1

- A mandatory entry specifying configuration version of this section. The only supported value is 1.0.

- 2

- The hostname of the registry. Should be set to the same value configured on the master. It can be overridden by the environment variable REGISTRY_OPENSHIFT_SERVER_ADDR.

- 3

- Can be set to true to enable metrics collection. It can be overridden by the boolean environment variable REGISTRY_OPENSHIFT_METRICS_ENABLED.

- 4

- A secret used to authorize client requests. Metrics clients must use it as a bearer token in Authorization header. It can be overridden by the environment variable REGISTRY_OPENSHIFT_METRICS_SECRET.

- 5

- Maximum number of simultaneous pull requests. It can be overridden by the environment variable REGISTRY_OPENSHIFT_REQUESTS_READ_MAXRUNNING. Zero indicates no limit.

- 6

- Maximum number of queued pull requests. It can be overridden by the environment variable REGISTRY_OPENSHIFT_REQUESTS_READ_MAXINQUEUE. Zero indicates no limit.

- 7

- Maximum time a pull request can wait in the queue before being rejected. It can be overridden by the environment variable REGISTRY_OPENSHIFT_REQUESTS_READ_MAXWAITINQUEUE. Zero indicates no limit.

- 8

- Maximum number of simultaneous push requests. It can be overridden by the environment variable REGISTRY_OPENSHIFT_REQUESTS_WRITE_MAXRUNNING. Zero indicates no limit.

- 9

- Maximum number of queued push requests. It can be overridden by the environment variable REGISTRY_OPENSHIFT_REQUESTS_WRITE_MAXINQUEUE. Zero indicates no limit.

- 10

- Maximum time a push request can wait in the queue before being rejected. It can be overridden by the environment variable REGISTRY_OPENSHIFT_REQUESTS_WRITE_MAXWAITINQUEUE. Zero indicates no limit.

See Accessing Registry Metrics for usage information.

2.5.5.7. Reporting

Reporting is unsupported.

2.5.5.8. HTTP

Upstream options are supported. Learn how to alter these settings via environment variables. Only the tls section should be altered. For example:

http:
  addr: :5000
  tls:
    certificate: /etc/secrets/registry.crt
    key: /etc/secrets/registry.key

2.5.5.9. Notifications

Upstream options are supported. The REST API Reference provides more comprehensive integration options.

Example:

2.5.5.10. Redis

Redis is not supported.

2.5.5.11. Health

Upstream options are supported. The registry deployment configuration provides an integrated health check at /healthz.

2.5.5.12. Proxy

Proxy configuration should not be enabled. This functionality is provided by the OpenShift Container Platform repository middleware extension, pullthrough: true.

2.5.5.13. Cache

The integrated registry actively caches data to reduce the number of calls to slow external resources. There are two caches:

- The storage cache, which is used to cache blob metadata. This cache does not have an expiration time, and the data stays there until it is explicitly deleted.

- The application cache, which contains associations between blobs and repositories. The data in this cache has an expiration time.

In order to completely turn off the cache, you need to change the configuration:

- 1

- Disables cache of metadata accessed in the storage backend. Without this cache, the registry server will constantly access the backend for metadata.

- 2

- Disables the cache that contains the blob and repository associations. Without this cache, the registry server will continually re-query the data from the master API and recompute the associations.

2.6. Known Issues

2.6.1. Overview

The following are the known issues when deploying or using the integrated registry.

2.6.2. Concurrent Build with Registry Pull-through

The local docker-registry deployment takes on additional load. By default, it now caches content from registry.access.redhat.com. The images from registry.access.redhat.com for STI builds are now stored in the local registry. Attempts to pull them result in pulls from the local docker-registry. As a result, there are circumstances where extreme numbers of concurrent builds can result in timeouts for the pulls and the build can possibly fail. To alleviate the issue, scale the docker-registry deployment to more than one replica. Check for timeouts in the builder pod’s logs.
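A minimal sketch of the mitigation described above, assuming the registry runs in the default project and that timeout messages in the builder pod's log contain the word "timeout" (the exact wording of those messages is an assumption). The helper names count_timeouts and scale_and_check are hypothetical.

```shell
#!/bin/sh
# Count log lines that mention a timeout (case-insensitive).
# Reads the log text on standard input and prints the count.
count_timeouts() {
    grep -ci 'timeout' || true
}

# Scale the registry and report timeouts seen by one builder pod.
# $1: builder pod name in the current project (placeholder),
# $2: desired number of registry replicas.
scale_and_check() {
    oc scale dc/docker-registry --replicas="$2" -n default || return 1
    oc logs "$1" | count_timeouts
}

# Example (not executed here):
#   scale_and_check <builder_pod> 2
```

If the registry uses a shared NFS volume, see the next known issue before scaling beyond one replica.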

2.6.3. Image Push Errors with Scaled Registry Using Shared NFS Volume

When using a scaled registry with a shared NFS volume, you may see one of the following errors during the push of an image:

- digest invalid: provided digest did not match uploaded content

- blob upload unknown

- blob upload invalid

These errors are returned by an internal registry service when Docker attempts to push the image. Their cause lies in the synchronization of file attributes across nodes. Factors such as NFS client side caching, network latency, and layer size can all contribute to potential errors that might occur when pushing an image using the default round-robin load balancing configuration.

You can perform the following steps to minimize the probability of such a failure:

Ensure that the sessionAffinity of your docker-registry service is set to ClientIP:

$ oc get svc/docker-registry --template='{{.spec.sessionAffinity}}'

This should return ClientIP, which is the default in recent OpenShift Container Platform versions. If not, change it:

$ oc patch svc/docker-registry -p '{"spec":{"sessionAffinity": "ClientIP"}}'

Ensure that the NFS export line of your registry volume on your NFS server has the no_wdelay option listed. The no_wdelay option prevents the server from delaying writes, which greatly improves read-after-write consistency, a requirement of the registry.

Testing shows issues with using the RHEL NFS server as a storage backend for the container image registry. This includes the OpenShift Container Registry and Quay. Therefore, using the RHEL NFS server to back PVs used by core services is not recommended.

Other NFS implementations on the marketplace might not have these issues. Contact the individual NFS implementation vendor for more information on any testing that was possibly completed against these OpenShift core components.

2.6.4. Pull of Internally Managed Image Fails with "not found" Error

This error occurs when the pulled image is pushed to an image stream different from the one it is being pulled from. This is caused by re-tagging a built image into an arbitrary image stream:

$ oc tag srcimagestream:latest anyproject/pullimagestream:latest

And subsequently pulling from it, using an image reference such as:

internal.registry.url:5000/anyproject/pullimagestream:latest

During a manual Docker pull, this will produce a similar error:

Error: image anyproject/pullimagestream:latest not found

To prevent this, avoid the tagging of internally managed images completely, or re-push the built image to the desired namespace manually.

2.6.5. Image Push Fails with "500 Internal Server Error" on S3 Storage

Problems have been reported when the registry runs on an S3 storage back end. Pushing to a Docker registry occasionally fails with the following error:

Received unexpected HTTP status: 500 Internal Server Error

To debug this, you need to view the registry logs. In the logs, look for error messages that occurred at the time of the failed push.

If you see such errors, contact your Amazon S3 support. There may be a problem in your region or with your particular bucket.

2.6.6. Image Pruning Fails

If you encounter the following error when pruning images:

BLOB sha256:49638d540b2b62f3b01c388e9d8134c55493b1fa659ed84e97cb59b87a6b8e6c error deleting blob

And your registry log contains the following information:

error deleting blob \"sha256:49638d540b2b62f3b01c388e9d8134c55493b1fa659ed84e97cb59b87a6b8e6c\": operation unsupported

It means that your custom configuration file lacks mandatory entries in the storage section, namely storage:delete:enabled set to true. Add them, re-deploy the registry, and repeat your image pruning operation.
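As a rough way to check a custom registry configuration file for the entry named above, the following sketch looks for an enabled: true line directly under a delete: key. It is a deliberately naive text match rather than a YAML parser, it does not verify that delete: is nested under storage:, and the helper name delete_enabled is hypothetical.

```shell
#!/bin/sh
# The entry the registry needs has this shape in the configuration file:
#
#   storage:
#     delete:
#       enabled: true
#
# Return success if the file given as $1 contains "enabled: true" on the
# line immediately after a "delete:" key. Naive text match, not a YAML parser.
delete_enabled() {
    awk '
        /^[ \t]*delete:[ \t]*$/ { in_delete = 1; next }
        in_delete && /^[ \t]*enabled:[ \t]*true[ \t]*$/ { found = 1 }
        { in_delete = 0 }
        END { exit !found }
    ' "$1"
}

# Example usage with a hypothetical file name:
#   delete_enabled registry-config.yml || echo "storage:delete:enabled is missing"
```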

Chapter 3. Setting up a Router

3.1. Router Overview

3.1.1. About Routers

There are many ways to get traffic into the cluster. The most common approach is to use the OpenShift Container Platform router as the ingress point for external traffic destined for services in your OpenShift Container Platform installation.

OpenShift Container Platform provides and supports the following router plug-ins:

- The HAProxy template router is the default plug-in. It uses the openshift3/ose-haproxy-router image to run an HAProxy instance alongside the template router plug-in inside a container on OpenShift Container Platform. It currently supports HTTP(S) traffic and TLS-enabled traffic via SNI. The router’s container listens on the host network interface, unlike most containers that listen only on private IPs. The router proxies external requests for route names to the IPs of actual pods identified by the service associated with the route.

- The F5 router integrates with an existing F5 BIG-IP® system in your environment to synchronize routes. F5 BIG-IP® version 11.4 or newer is required in order to have the F5 iControl REST API.

The F5 router plug-in is available starting in OpenShift Container Platform 3.0.2.

3.1.2. Router Service Account

Before deploying an OpenShift Container Platform cluster, you must have a service account for the router, which is automatically created during cluster installation. This service account has permissions to a security context constraint (SCC) that allows it to specify host ports.

3.1.2.1. Permission to Access Labels

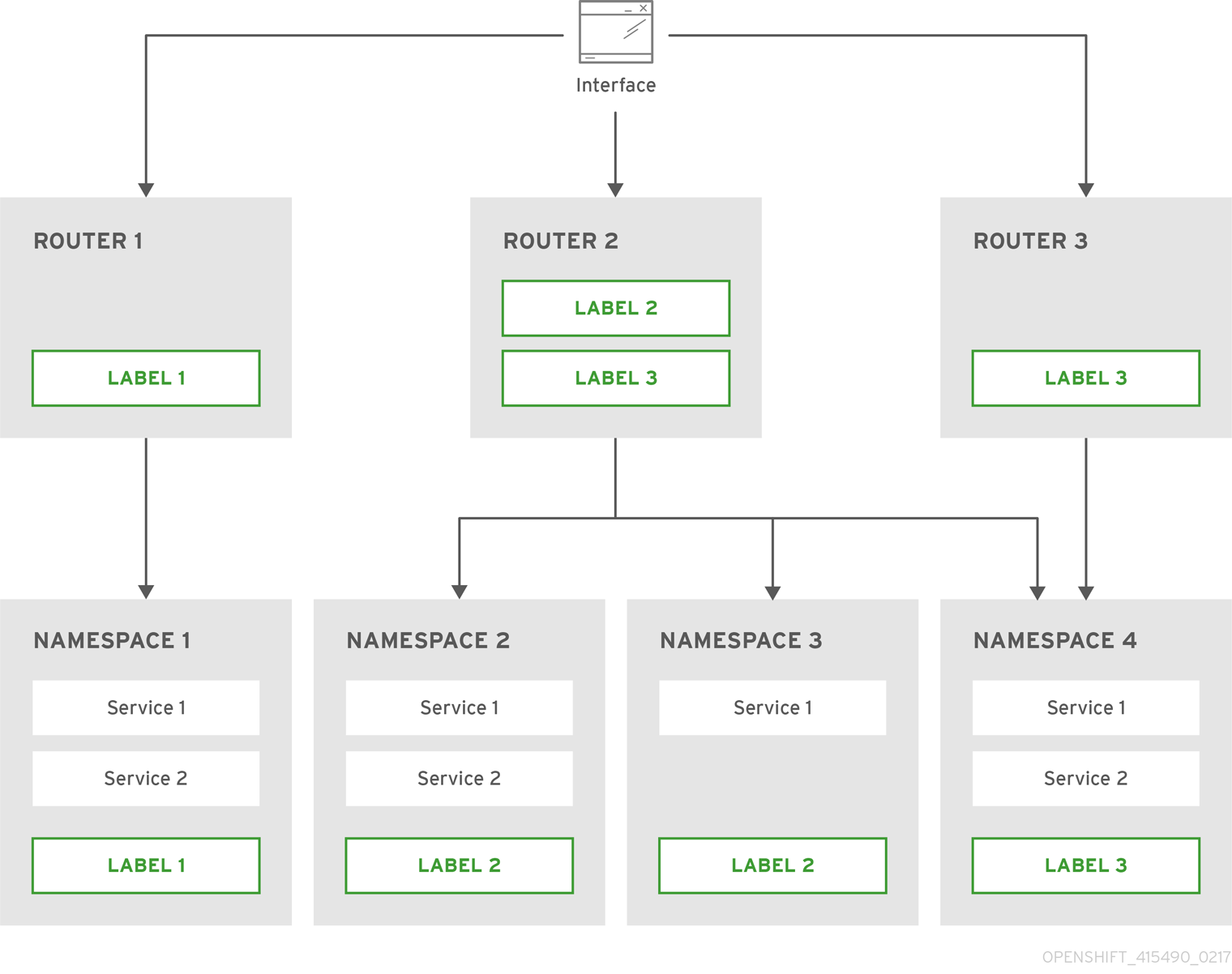

When namespace labels are used, for example in creating router shards, the service account for the router must have cluster-reader permission.

$ oc adm policy add-cluster-role-to-user \
    cluster-reader \
    system:serviceaccount:default:router

With a service account in place, you can proceed to installing a default HAProxy Router, a customized HAProxy Router or F5 Router.

3.2. Using the Default HAProxy Router

3.2.1. Overview

The oc adm router command is provided with the administrator CLI to simplify the tasks of setting up routers in a new installation. The oc adm router command creates the service and deployment configuration objects. Use the --service-account option to specify the service account the router will use to contact the master.

The router service account can be created in advance or created by the oc adm router --service-account command.

Every form of communication between OpenShift Container Platform components is secured by TLS and uses various certificates and authentication methods. You can supply a .pem format file with the --default-certificate option; otherwise, the oc adm router command creates one. When routes are created, the user can provide route certificates that the router will use when handling the route.

When deleting a router, ensure the deployment configuration, service, and secret are deleted as well.

Routers are deployed on specific nodes. This makes it easier for the cluster administrator and external network manager to coordinate which IP address will run a router and which traffic the router will handle. The routers are deployed on specific nodes by using node selectors.

Routers use host networking by default, and they directly attach to ports 80 and 443 on all interfaces on a host. Restrict routers to hosts where ports 80 and 443 are available and not consumed by another service, and set this using node selectors and the scheduler configuration. For example, you can dedicate infrastructure nodes to run services such as routers.
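One way to check whether ports 80 and 443 are already taken on a candidate host is to inspect its listening sockets. The following is a minimal sketch and not part of the product tooling: port_in_use is a hypothetical helper that reads the output of ss -tln (from iproute2) on standard input, and it assumes that the local address and port appear in the fourth column of that output.

```shell
#!/bin/sh
# Return success if the given TCP port appears as a listening local port
# in `ss -tln` output read from standard input.
port_in_use() {
    awk -v p="$1" '
        $1 == "LISTEN" {
            # The port is the last colon-separated field of Local Address:Port.
            n = split($4, a, ":")
            if (a[n] == p) found = 1
        }
        END { exit !found }
    '
}

# Example usage on the host you intend to run a router on:
#   for port in 80 443; do
#       ss -tln | port_in_use "$port" && echo "port $port is already in use"
#   done
```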

It is recommended to use a separate, distinct openshift-router service account with your router. This can be provided using the --service-account flag to the oc adm router command.

$ oc adm router --dry-run --service-account=router

Router pods created using oc adm router have default resource requests that a node must satisfy for the router pod to be deployed. To increase the reliability of infrastructure components, the default resource requests raise the QoS tier of the router pods above that of pods without resource requests. The default values represent the observed minimum resources required for a basic router to be deployed. You can edit them in the router's deployment configuration, and you may want to increase them based on the load of the router.

3.2.2. Creating a Router

If the router does not exist, run the following to create a router:

$ oc adm router <router_name> --replicas=<number> --service-account=router

--replicas is usually 1 unless a high availability configuration is being created.

To find the host IP address of the router:

$ oc get po <router-pod> --template={{.status.hostIP}}

You can also use router shards to ensure that the router is filtered to specific namespaces or routes, or set any environment variables after router creation. In this case create a router for each shard.

3.2.3. Other Basic Router Commands

- Checking the Default Router

- The default router service account, named router, is automatically created during cluster installations. To verify that this account already exists:

$ oc adm router --dry-run --service-account=router

- Viewing the Default Router

- To see what the default router would look like if created:

$ oc adm router --dry-run -o yaml --service-account=router

- Deploying the Router to a Labeled Node

- To deploy the router to any node(s) that match a specified node label:

$ oc adm router <router_name> --replicas=<number> --selector=<label> \
    --service-account=router

For example, if you want to create a router named router and have it placed on a node labeled with node-role.kubernetes.io/infra=true:

$ oc adm router router --replicas=1 --selector='node-role.kubernetes.io/infra=true' \
    --service-account=router

During cluster installation, the openshift_router_selector and openshift_registry_selector Ansible settings are set to node-role.kubernetes.io/infra=true by default. The default router and registry will only be automatically deployed if a node exists that matches the node-role.kubernetes.io/infra=true label.

For information on updating labels, see Updating Labels on Nodes.

Multiple instances are created on different hosts according to the scheduler policy.

- Using a Different Router Image

- To use a different router image and view the router configuration that would be used:

$ oc adm router <router_name> -o <format> --images=<image> \
    --service-account=router

For example:

$ oc adm router region-west -o yaml --images=myrepo/somerouter:mytag \
    --service-account=router

3.2.4. Filtering Routes to Specific Routers

Using the ROUTE_LABELS environment variable, you can filter routes so that they are used only by specific routers.

For example, if you have multiple routers, and 100 routes, you can attach labels to the routes so that a portion of them are handled by one router, whereas the rest are handled by another.

After creating a router, use the ROUTE_LABELS environment variable to tag the router:

$ oc env dc/<router_name> ROUTE_LABELS="key=value"

Add the label to the desired routes:

$ oc label route <route_name> key=value

To verify that the label has been attached to the route, check the route configuration:

$ oc describe route/<route_name>
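The effect of the steps above can be pictured with a small sketch: a router tagged with ROUTE_LABELS="key=value" handles a route only if that route carries the same label. The helper route_matches is hypothetical and covers only a single key=value pair compared against a comma-separated label list; it is an illustration of the idea, not the router's actual selection logic.

```shell
#!/bin/sh
# Return success if the comma-separated label list in $2
# (for example "app=web,key=value") contains the key=value pair in $1.
route_matches() {
    selector="$1"
    labels="$2"
    # Wrap both sides in commas so that only whole labels match.
    case ",$labels," in
        *",$selector,"*) return 0 ;;
        *) return 1 ;;
    esac
}

# Example usage with hypothetical labels:
#   route_matches 'key=value' 'app=web,key=value' && echo "handled by this router"
```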

- Setting the Maximum Number of Concurrent Connections

- The router can handle a maximum number of 20000 connections by default. You can change that limit depending on your needs. Having too few connections prevents the health check from working, which causes unnecessary restarts. You need to configure the system to support the maximum number of connections. The limits shown in 'sysctl fs.nr_open' and 'sysctl fs.file-max' must be large enough. Otherwise, HAProxy will not start.

When the router is created, the --max-connections= option sets the desired limit:

$ oc adm router --max-connections=10000 ....

Edit the ROUTER_MAX_CONNECTIONS environment variable in the router’s deployment configuration to change the value. The router pods are restarted with the new value. If ROUTER_MAX_CONNECTIONS is not present, the default value of 20000 is used.

A connection includes the frontend and internal backend, so each one counts as two connections. Be sure to set ROUTER_MAX_CONNECTIONS to double the number of connections you intend to create.
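The doubling rule and the kernel limit check can be sketched as follows. The helper names router_max_connections and limits_allow are hypothetical. The sketch treats "large enough" as "at least the requested value", which is an assumption made for illustration; HAProxy may need additional file descriptors beyond that.

```shell
#!/bin/sh
# Each intended connection counts twice (frontend plus internal backend),
# so the value for ROUTER_MAX_CONNECTIONS is double the intended number.
router_max_connections() {
    echo $(( $1 * 2 ))
}

# Return success if both kernel limits are at least the requested value.
# $1 = requested limit, $2 = fs.nr_open value, $3 = fs.file-max value.
# Assumption: "large enough" is read as a simple lower bound.
limits_allow() {
    wanted="$1"
    nr_open="$2"
    file_max="$3"
    [ "$nr_open" -ge "$wanted" ] && [ "$file_max" -ge "$wanted" ]
}

# Example usage on a node, for 10000 intended connections:
#   limit="$(router_max_connections 10000)"
#   limits_allow "$limit" "$(sysctl -n fs.nr_open)" "$(sysctl -n fs.file-max)" \
#       && oc env dc/<router_name> ROUTER_MAX_CONNECTIONS="$limit"
```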

3.2.5. HAProxy Strict SNI

The HAProxy strict-sni option can be controlled through the ROUTER_STRICT_SNI environment variable in the router’s deployment configuration. It can also be set when the router is created by using the --strict-sni command line option.

$ oc adm router --strict-sni

3.2.6. TLS Cipher Suites

Set the router cipher suite using the --ciphers option when creating a router:

$ oc adm router --ciphers=modern ....