Developer Guide

OpenShift Enterprise 3.2 Developer Reference

Abstract

- Monitor and browse projects with the web console

- Configure and utilize the CLI

- Generate configurations using templates

- Manage builds and webhooks

- Define and trigger deployments

- Integrate external services (databases, SaaS endpoints)

Chapter 1. Overview

This guide helps developers set up and configure a workstation to develop and deploy applications in an OpenShift Enterprise cloud environment with a command-line interface (CLI). This guide provides detailed instructions and examples to help developers:

- Monitor and browse projects with the web console.

- Configure and utilize the CLI.

- Generate configurations using templates.

- Manage builds and webhooks.

- Define and trigger deployments.

- Integrate external services (databases, SaaS endpoints).

Chapter 2. Application Life Cycle Examples

2.1. Overview

As a PaaS, OpenShift Enterprise is designed for building and deploying applications. Depending on how much you want to involve OpenShift Enterprise in the development process, you can choose to develop on OpenShift Enterprise and use it to continuously develop an application, or you can deploy a fully developed application onto an OpenShift Enterprise instance.

2.2. Developing on OpenShift Enterprise

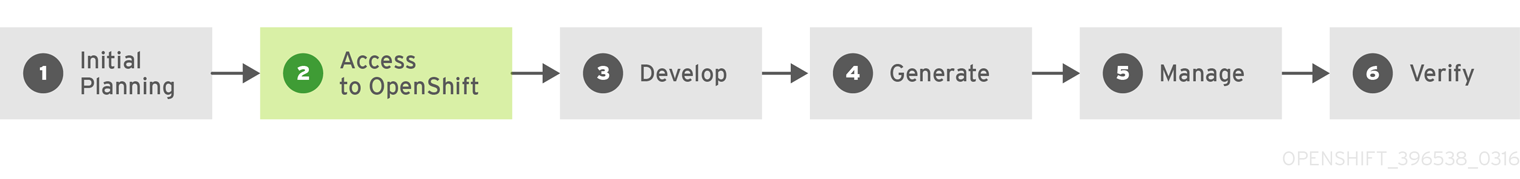

You can develop your application on OpenShift Enterprise directly. Use the following process if you plan to use OpenShift Enterprise as a method to build and deploy your application:

- Initial Planning

  - What does your application do?

  - What programming language will it be developed in?

- Access to OpenShift Enterprise

  - OpenShift Enterprise should be installed by this point, either by yourself or an administrator within your organization.

- Develop

  - Using your editor/IDE of choice, create a basic skeleton of an application. It should be developed enough to tell OpenShift Enterprise what kind of application it is.

  - Push the code to your Git repository.

- Generate

  - Create a basic application using the new-app command. OpenShift Enterprise generates build and deployment configurations.

- Manage

  - Start developing your application code.

  - Ensure your application builds successfully.

  - Continue to locally develop and polish your code.

  - Push your code to a Git repository.

  - Is any extra configuration needed? Explore the Developer Guide for more options.

- Verify

  - You can verify your application in a number of ways. You can push your changes to your application’s Git repository, and use OpenShift Enterprise to rebuild and redeploy your application. Alternatively, you can hot deploy using rsync to synchronize your code changes into a running pod.

2.3. Developing Then Deploying on OpenShift Enterprise

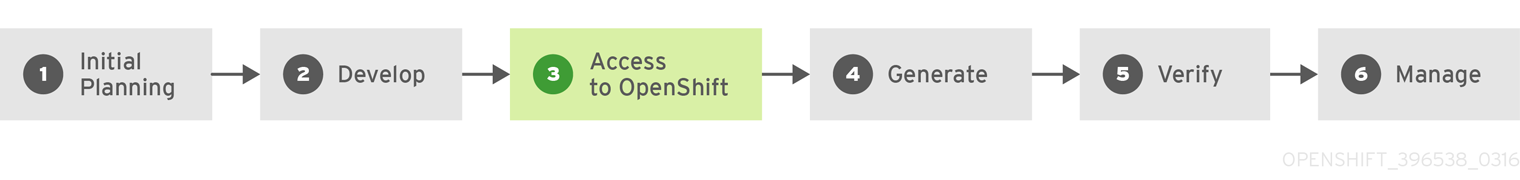

Another possible application life cycle is to develop locally, then use OpenShift Enterprise to deploy your fully developed application. Use the following process if you already have application code and want to build and deploy it onto an OpenShift Enterprise installation once it is complete:

- Initial Planning

  - What does your application do?

  - What programming language will it be developed in?

- Develop

  - Develop your application code using your editor/IDE of choice.

  - Build and test your application code locally.

  - Push your code to a Git repository.

- Access to OpenShift Enterprise

  - OpenShift Enterprise should be installed by this point, either by yourself or an administrator within your organization.

- Generate

  - Create a basic application using the new-app command. OpenShift Enterprise generates build and deployment configurations.

- Verify

  - Ensure that the application that you have built and deployed in the above Generate step is successfully running on OpenShift Enterprise.

- Manage

  - Continue to develop your application code until you are happy with the results.

  - Rebuild your application in OpenShift Enterprise to accept any newly pushed code.

  - Is any extra configuration needed? Explore the Developer Guide for more options.

Chapter 3. Authentication

3.1. Web Console Authentication

When accessing the web console from a browser at <master_public_addr>:8443, you are automatically redirected to a login page.

Review the browser versions and operating systems that can be used to access the web console.

You can provide your login credentials on this page to obtain a token to make API calls. After logging in, you can navigate your projects using the web console.

3.2. CLI Authentication

You can authenticate from the command line using the CLI command oc login. You can get started with the CLI by running this command without any options:

$ oc login

The command’s interactive flow helps you establish a session to an OpenShift Enterprise server with the provided credentials. If any information required to successfully log in to an OpenShift Enterprise server is not provided, the command prompts for user input as required. The configuration is automatically saved and is then used for every subsequent command.

All configuration options for the oc login command, listed in the oc login --help command output, are optional. The following example shows usage with some common options:

$ oc login [-u=<username>] \

[-p=<password>] \

[-s=<server>] \

[-n=<project>] \

[--certificate-authority=</path/to/file.crt>|--insecure-skip-tls-verify]

The following table describes these common options:

| Option | Syntax | Description |
|---|---|---|
| -s | $ oc login -s=<server> | Specifies the host name of the OpenShift Enterprise server. If a server is provided through this flag, the command does not ask for it interactively. This flag can also be used if you already have a CLI configuration file and want to log in and switch to another server. |
| -u and -p | $ oc login -u=<username> -p=<password> | Allows you to specify the credentials to log in to the OpenShift Enterprise server. If a user name or password is provided through these flags, the command does not ask for it interactively. These flags can also be used if you already have a configuration file with a session token established and want to log in and switch to another user name. |
| -n | $ oc login -u=<username> -p=<password> -n=<project> | A global CLI option which, when used with oc login, specifies the project to switch to when logging in as the given user. |
| --certificate-authority | $ oc login --certificate-authority=<path/to/file.crt> | Correctly and securely authenticates with an OpenShift Enterprise server that uses HTTPS. The path to a certificate authority file must be provided. |
| --insecure-skip-tls-verify | $ oc login --insecure-skip-tls-verify | Allows interaction with an HTTPS server while bypassing the server certificate checks; note that this is not secure. |

CLI configuration files allow you to easily manage multiple CLI profiles.

If you have access to administrator credentials but are no longer logged in as the default system user system:admin, you can log back in as this user at any time as long as the credentials are still present in your CLI configuration file. The following command logs in and switches to the default project:

$ oc login -u system:admin -n default

Chapter 4. Projects

4.1. Overview

A project allows a community of users to organize and manage their content in isolation from other communities.

4.2. Creating a Project

If allowed by your cluster administrator, you can create a new project using the CLI or the web console.

To create a new project using the CLI:

$ oc new-project <project_name> \

--description="<description>" --display-name="<display_name>"

For example:

$ oc new-project hello-openshift \

--description="This is an example project to demonstrate OpenShift v3" \

--display-name="Hello OpenShift"

The number of projects you are allowed to create may be limited by the system administrator. Once your limit is reached, you may need to delete an existing project in order to create a new one.

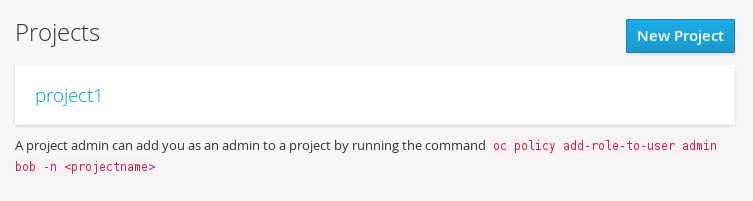

4.3. Viewing Projects

When viewing projects, you are restricted to seeing only the projects you have access to view based on the authorization policy.

To view a list of projects:

$ oc get projects

You can change from the current project to a different project for CLI operations. The specified project is then used in all subsequent operations that manipulate project-scoped content:

$ oc project <project_name>

You can also use the web console to view and change between projects. After authenticating and logging in, you are presented with a list of projects that you have access to:

If you use the CLI to create a new project, you can then refresh the page in the browser to see the new project.

Selecting a project brings you to the project overview for that project.

4.4. Checking Project Status

The oc status command provides a high-level overview of the current project, with its components and their relationships. This command takes no argument:

$ oc status

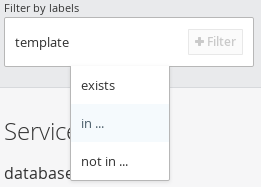

4.5. Filtering by Labels

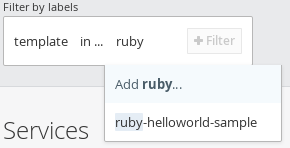

You can filter the contents of a project page in the web console by using the labels of a resource. You can pick from a suggested label name and values, or type in your own. Multiple filters can be added. When multiple filters are applied, resources must match all of the filters to remain visible.

To filter by labels:

Select a label type:

Select one of the following:

- exists: Verify that the label name exists, but ignore its value.

- in: Verify that the label name exists and is equal to one of the selected values.

- not in: Verify that the label name does not exist, or is not equal to any of the selected values.

If you selected in or not in, select a set of values then select Filter:

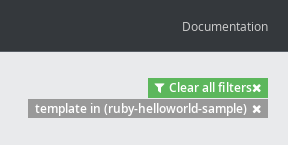

After adding filters, you can stop filtering by selecting Clear all filters or by clicking individual filters to remove them:
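The three filter types described above can be sketched as a small shell function. This is only an illustration of the matching rules, not the web console's implementation; the function name label_matches and the comma-separated key=value label format are assumptions made for the example.

```shell
# Hypothetical helper (not part of oc or the web console).
# Usage: label_matches LABELS OPERATOR NAME [VALUE...]
#   LABELS   comma-separated pairs, for example "app=web,tier=frontend"
#   OPERATOR exists | in | notin
label_matches() {
  local labels=$1 op=$2 name=$3
  shift 3
  local found=0 in_set=0 value="" pair candidate
  local IFS=,
  # Look up the label name and remember its value.
  for pair in $labels; do
    if [ "${pair%%=*}" = "$name" ]; then
      found=1
      value=${pair#*=}
    fi
  done
  # Check whether the value is one of the selected values.
  for candidate in "$@"; do
    if [ "$found" -eq 1 ] && [ "$candidate" = "$value" ]; then
      in_set=1
    fi
  done
  case $op in
    exists)          [ "$found" -eq 1 ] ;;   # name present, value ignored
    in)              [ "$in_set" -eq 1 ] ;;  # name present and value selected
    notin|"not in")  [ "$in_set" -eq 0 ] ;;  # name absent or value not selected
    *)               return 2 ;;             # unknown operator
  esac
}

# Example: a resource stays visible only if it matches every filter.
if label_matches "app=web,tier=frontend" exists app &&
   label_matches "app=web,tier=frontend" in tier frontend backend; then
  echo "resource is visible"
fi
```

Chaining the calls with && mirrors the rule that a resource must match all applied filters to remain visible.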

4.6. Deleting a Project

When you delete a project, the server updates the project status to Terminating from Active. The server then clears all content from a project that is Terminating before finally removing the project. While a project is in Terminating status, a user cannot add new content to the project. Projects can be deleted from the CLI or the web console.

To delete a project using the CLI:

$ oc delete project <project_name>

Chapter 5. Creating New Applications

5.1. Overview

You can create a new OpenShift Enterprise application from source code, images, or templates by using either the OpenShift CLI or web console.

5.2. Creating an Application Using the CLI

5.2.1. Creating an Application From Source Code

The new-app command allows you to create applications using source code in a local or remote Git repository.

To create an application using a Git repository in a local directory:

$ oc new-app /path/to/source/code

If using a local Git repository, the repository must have an origin remote that points to a URL accessible by the OpenShift Enterprise cluster.

You can use a subdirectory of your source code repository by specifying a --context-dir flag. To create an application using a remote Git repository and a context subdirectory:

$ oc new-app https://github.com/openshift/sti-ruby.git \

--context-dir=2.0/test/puma-test-app

Also, when specifying a remote URL, you can specify a Git branch to use by appending #<branch_name> to the end of the URL:

$ oc new-app https://github.com/openshift/ruby-hello-world.git#beta4

Using new-app results in a build configuration, which creates a new application image from your source code. It also constructs a deployment configuration to deploy the new image, and a service to provide load-balanced access to the deployment running your image.

OpenShift Enterprise automatically detects whether the Docker or Source build strategy is being used, and in the case of Source builds, detects an appropriate language builder image.

Build Strategy Detection

If a Dockerfile is in the repository when creating a new application, OpenShift Enterprise generates a Docker build strategy. Otherwise, it generates a Source strategy.

You can specify a strategy by setting the --strategy flag to either source or docker.

$ oc new-app /home/user/code/myapp --strategy=docker
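The detection rule above can be mirrored locally to predict which strategy a repository is likely to get. The following is a minimal sketch under the assumption that only a Dockerfile in the top-level directory matters; guess_strategy is a hypothetical helper name, not an oc subcommand.

```shell
# Hypothetical helper: print the build strategy that the rule above
# would select for a checked-out repository directory.
guess_strategy() {
  local repo_dir=${1:-.}
  if [ -f "$repo_dir/Dockerfile" ]; then
    echo docker
  else
    echo source
  fi
}

# Example: inspect the current directory.
guess_strategy .
```

If the printed value is not the one you want, pass --strategy explicitly as shown above.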

Language Detection

If creating a Source build, new-app attempts to determine the language builder to use by the presence of certain files in the root of the repository:

| Language | Files |
|---|---|
| ruby | Rakefile, Gemfile, config.ru |
| jee | pom.xml |
| nodejs | app.json, package.json |
| php | index.php, composer.json |
| python | requirements.txt, setup.py |
| perl | index.pl, cpanfile |

After a language is detected, new-app searches the OpenShift Enterprise server for image stream tags that have a supports annotation matching the detected language, or an image stream that matches the name of the detected language. If a match is not found, new-app searches the Docker Hub registry for an image that matches the detected language based on name.
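The file-based detection described above can be approximated with a short shell function. This is a sketch, not the logic new-app actually runs: the function name guess_language, the language identifiers, and the order in which languages are checked are all assumptions for illustration.

```shell
# Hypothetical helper: guess the language of a repository from marker
# files in its root directory. Prints the language and returns 0, or
# returns 1 when no marker file is found.
guess_language() {
  local repo_dir=${1:-.} entry lang file
  for entry in \
    "ruby:Rakefile Gemfile config.ru" \
    "jee:pom.xml" \
    "nodejs:app.json package.json" \
    "php:index.php composer.json" \
    "python:requirements.txt setup.py" \
    "perl:index.pl cpanfile"
  do
    lang=${entry%%:*}
    # Unquoted on purpose: split the file list on whitespace.
    for file in ${entry#*:}; do
      if [ -e "$repo_dir/$file" ]; then
        echo "$lang"
        return 0
      fi
    done
  done
  return 1
}

# Example: inspect the current directory.
guess_language . || echo "no language detected"
```

A repository that contains marker files for several languages is reported as the first match in this sketch; in that situation it is safer to name the builder image explicitly.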

You can override the image the builder uses for a particular source repository by specifying the image (either an image stream or container specification) and the repository, with a ~ as a separator.

For example, to use the myproject/my-ruby image stream with the source in a remote repository:

$ oc new-app myproject/my-ruby~https://github.com/openshift/ruby-hello-world.git

To use the openshift/ruby-20-centos7:latest container image stream with the source in a local repository:

$ oc new-app openshift/ruby-20-centos7:latest~/home/user/code/my-ruby-app
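To make the argument syntax concrete, the following sketch splits a new-app source argument into its parts: an optional builder image before the ~ separator, the repository location, and an optional #<branch_name> suffix. The function parse_source_spec is hypothetical and only illustrates the notation; the real command may treat edge cases differently.

```shell
# Hypothetical helper: split "[builder~]source[#ref]" into its parts.
parse_source_spec() {
  local spec=$1 builder="" src="" ref=""
  case $spec in
    *"~"*) builder=${spec%%"~"*}; src=${spec#*"~"} ;;  # builder image given
    *)     src=$spec ;;                                # source only
  esac
  case $src in
    *"#"*) ref=${src#*"#"}; src=${src%%"#"*} ;;        # branch suffix given
  esac
  printf 'builder=%s source=%s ref=%s\n' "$builder" "$src" "$ref"
}

# Examples taken from the commands in this section.
parse_source_spec "myproject/my-ruby~https://github.com/openshift/ruby-hello-world.git"
parse_source_spec "https://github.com/openshift/ruby-hello-world.git#beta4"
```

An empty builder field means that new-app is left to detect the builder image on its own.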

5.2.2. Creating an Application From an Image

You can deploy an application from an existing image. Images can come from image streams in the OpenShift Enterprise server, images in a specific registry or Docker Hub registry, or images in the local Docker server.

The new-app command attempts to determine the type of image specified in the arguments passed to it. However, you can explicitly tell new-app whether the image is a Docker image (using the --docker-image argument) or an image stream (using the -i|--image argument).

If you specify an image from your local Docker repository, you must ensure that the same image is available to the OpenShift Enterprise cluster nodes.

For example, to create an application from the Docker Hub MySQL image:

$ oc new-app mysql

To create an application using an image in a private registry, specify the full Docker image specification:

$ oc new-app myregistry:5000/example/myimage

If the registry containing the image is not secured with SSL, cluster administrators must ensure that the Docker daemon on the OpenShift Enterprise node hosts is run with the --insecure-registry flag pointing to that registry. You must also tell new-app that the image comes from an insecure registry with the --insecure-registry=true flag.

You can create an application from an existing image stream and tag (optional) for the image stream:

$ oc new-app my-stream:v1

5.2.3. Creating an Application From a Template

You can create an application from a previously stored template or from a template file, by specifying the name of the template as an argument. For example, you can store a sample application template and use it to create an application.

To create an application from a stored template:

$ oc create -f examples/sample-app/application-template-stibuild.json

$ oc new-app ruby-helloworld-sample

To directly use a template in your local file system, without first storing it in OpenShift Enterprise, use the -f|--file argument:

$ oc new-app -f examples/sample-app/application-template-stibuild.json

Template Parameters

When creating an application based on a template, use the -p|--param argument to set parameter values defined by the template:

$ oc new-app ruby-helloworld-sample \

-p ADMIN_USERNAME=admin,ADMIN_PASSWORD=mypassword

5.2.4. Further Modifying Application Creation

The new-app command generates OpenShift Enterprise objects that will build, deploy, and run the application being created. Normally, these objects are created in the current project using names derived from the input source repositories or the input images. However, new-app allows you to modify this behavior.

The set of objects created by new-app depends on the artifacts passed as input: source repositories, images, or templates.

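| Object | Description |
|---|---|
| BuildConfig | A build configuration is created for each source repository specified on the command line. It creates a new application image from your source code. |
| ImageStreams | For a build configuration, image streams are created to track the builder image and the image that the build produces. |
| DeploymentConfig | A deployment configuration is created to deploy the new image. |
| Service | The new-app command creates a service to provide load-balanced access to the deployment running your image. |
| Other | Other objects can be generated when instantiating templates. |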

5.2.4.1. Specifying Environment Variables

When generating applications from a source or an image, you can use the -e|--env argument to pass environment variables to the application container at run time:

$ oc new-app openshift/postgresql-92-centos7 \

-e POSTGRESQL_USER=user \

-e POSTGRESQL_DATABASE=db \

-e POSTGRESQL_PASSWORD=password

5.2.4.2. Specifying Labels

When generating applications from source, images, or templates, you can use the -l|--label argument to add labels to the created objects. Labels make it easy to collectively select, configure, and delete objects associated with the application.

$ oc new-app https://github.com/openshift/ruby-hello-world -l name=hello-world

5.2.4.3. Viewing the Output Without Creation

To see a dry-run of what new-app will create, you can use the -o|--output argument with a yaml or json value. You can then use the output to preview the objects that will be created, or redirect it to a file that you can edit. Once you are satisfied, you can use oc create to create the OpenShift Enterprise objects.

To output new-app artifacts to a file, edit them, then create them:

$ oc new-app https://github.com/openshift/ruby-hello-world \

-o yaml > myapp.yaml

$ vi myapp.yaml

$ oc create -f myapp.yaml

5.2.4.4. Creating Objects With Different Names

Objects created by new-app are normally named after the source repository, or the image used to generate them. You can set the name of the objects produced by adding a --name flag to the command:

$ oc new-app https://github.com/openshift/ruby-hello-world --name=myapp

5.2.4.5. Creating Objects in a Different Project

Normally, new-app creates objects in the current project. However, you can create objects in a different project that you have access to using the -n|--namespace argument:

$ oc new-app https://github.com/openshift/ruby-hello-world -n myproject

5.2.4.6. Creating Multiple Objects

The new-app command can create multiple applications at once when you pass it multiple arguments. Labels specified on the command line apply to all objects created by the single command. Environment variables apply to all components created from source or images.

To create an application from a source repository and a Docker Hub image:

$ oc new-app https://github.com/openshift/ruby-hello-world mysql

If a source code repository and a builder image are specified as separate arguments, new-app uses the builder image as the builder for the source code repository. If this is not the intent, simply specify a specific builder image for the source using the ~ separator.

5.2.4.7. Grouping Images and Source in a Single Pod

The new-app command allows deploying multiple images together in a single pod. In order to specify which images to group together, use the + separator. The --group command line argument can also be used to specify the images that should be grouped together. To group the image built from a source repository with other images, specify its builder image in the group:

$ oc new-app ruby+mysql

To deploy an image built from source and an external image together:

$ oc new-app \

ruby~https://github.com/openshift/ruby-hello-world \

mysql \

--group=ruby+mysql

5.2.4.8. Useful Edits

Following are some specific examples of useful edits to make in the myapp.yaml file.

These examples presume myapp.yaml was created as a result of the oc new-app … -o yaml command.

Example 5.1. Deploy to Selected Nodes

    apiVersion: v1
    items:
    - apiVersion: v1
      kind: Project  # 1
      metadata:
        name: myapp
        annotations:
          openshift.io/node-selector: region=west  # 2
    - apiVersion: v1
      kind: ImageStream
      ...
    kind: List
    metadata: {}

- 1: In myapp.yaml, the section that defines the myapp project has both kind: Project and metadata.name: myapp. If this section is missing, you should add it at the second level, as a new item of the list items, peer to the kind: ImageStream definitions.

- 2: Add this node selector annotation to the myapp project to cause its pods to be deployed only on nodes that have the label region=west.

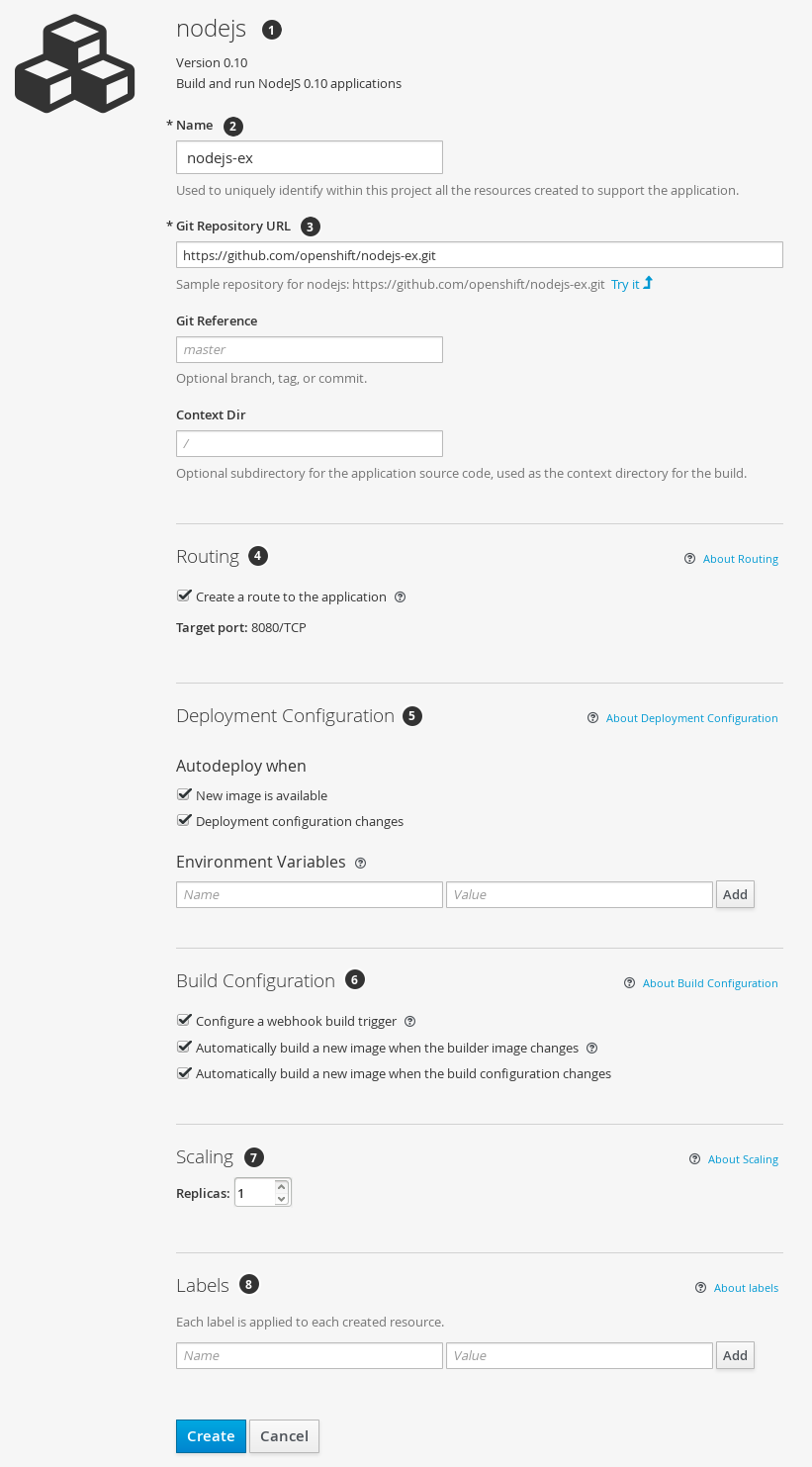

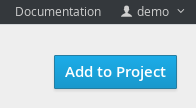

5.3. Creating an Application Using the Web Console

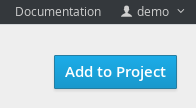

While in the desired project, click Add to Project:

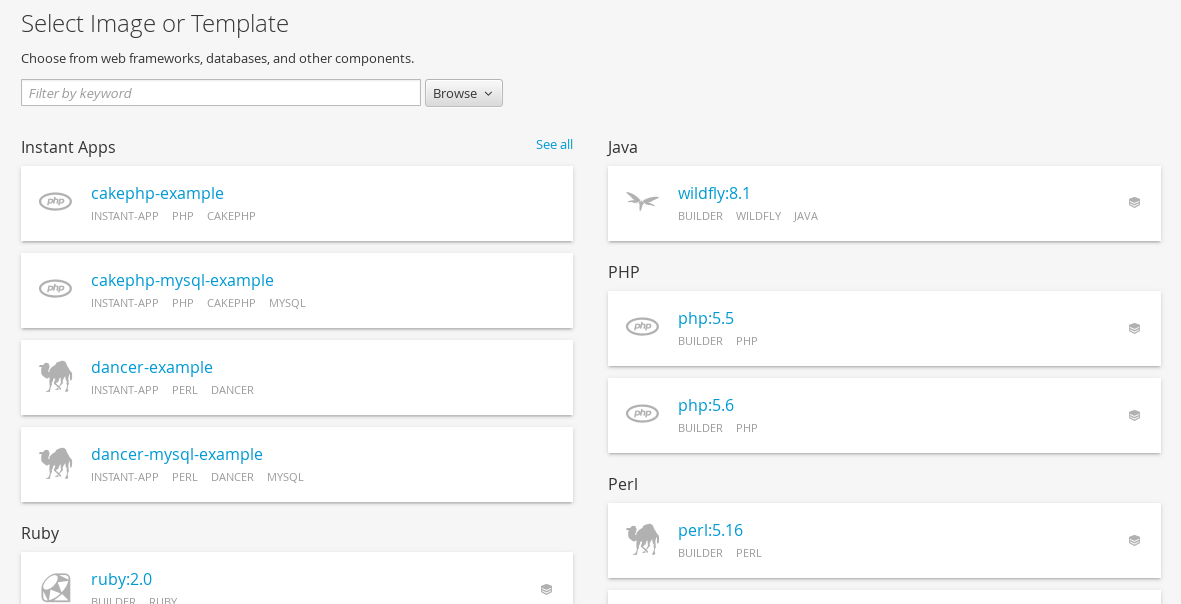

Select either a builder image from the list of images in your project, or from the global library:

Note

Only image stream tags that have the builder tag listed in their annotations appear in this list, as demonstrated here:

    kind: "ImageStream"
    apiVersion: "v1"
    metadata:
      name: "ruby"
      creationTimestamp: null
    spec:
      dockerImageRepository: "registry.access.redhat.com/openshift3/ruby-20-rhel7"
      tags:
        - name: "2.0"
          annotations:
            description: "Build and run Ruby 2.0 applications"
            iconClass: "icon-ruby"
            tags: "builder,ruby"  # 1
            supports: "ruby:2.0,ruby"
            version: "2.0"

- 1: Including builder here ensures this ImageStreamTag appears in the web console as a builder.

Modify the settings in the new application screen to configure the objects to support your application:

- The builder image name and description.

- The application name used for the generated OpenShift Enterprise objects.

- The Git repository URL, reference, and context directory for your source code.

- Routing configuration section for making this application publicly accessible.

- Deployment configuration section for customizing deployment triggers and image environment variables.

- Build configuration section for customizing build triggers.

- Replica scaling section for configuring the number of running instances of the application.

- The labels to assign to all items generated for the application. You can add and edit labels for all objects here.

Note

To see all of the configuration options, click the "Show advanced build and deployment options" link.

Chapter 6. Migrating Applications

6.1. Overview

This topic covers the migration procedure of OpenShift version 2 (v2) applications to OpenShift version 3 (v3).

This topic uses some terminology that is specific to OpenShift v2. Comparing OpenShift Enterprise 2 and OpenShift Enterprise 3 provides insight on the differences between the two versions and the language used.

To migrate OpenShift v2 applications to OpenShift Enterprise v3, record every cartridge in the v2 application. Each v2 cartridge corresponds to an image or template in OpenShift Enterprise v3, and the cartridges must be migrated individually. For each cartridge, also record all dependencies or required packages, because they must be included in the v3 images.

The general migration procedure is:

- Back up the v2 application.

  - Web cartridge: The source code can be backed up to a Git repository, for example by pushing to a repository on GitHub.

  - Database cartridge: The database can be backed up using a dump command (mongodump, mysqldump, pg_dump).

  - Web and database cartridges: The rhc client tool provides a snapshot ability to back up multiple cartridges:

    $ rhc snapshot save <app_name>

    The snapshot is a tar file that can be extracted; it contains the application source code and the database dump.

- If the application has a database cartridge, create a v3 database application, sync the database dump to the pod of the new v3 database application, then restore the v2 database in the v3 database application with database restore commands.

- For a web framework application, edit the application source code to make it v3 compatible. Then, add any dependencies or packages required in appropriate files in the Git repository. Convert v2 environment variables to corresponding v3 environment variables.

- Create a v3 application from source (your Git repository) or from a quickstart with your Git URL. Also, add the database service parameters to the new application to link the database application to the web application.

- In v2, there is an integrated Git environment and your applications automatically rebuild and restart whenever a change is pushed to your v2 Git repository. In v3, in order to have a build automatically triggered by source code changes pushed to your public Git repository, you must set up a webhook after the initial build in v3 is completed.

6.2. Migrating Database Applications

6.2.1. Overview

This topic reviews how to migrate MySQL, PostgreSQL, and MongoDB database applications from OpenShift version 2 (v2) to OpenShift version 3 (v3).

6.2.2. Supported Databases

| v2 | v3 |

|---|---|

| MongoDB: 2.4 | MongoDB: 2.4, 2.6 |

| MySQL: 5.5 | MySQL: 5.5, 5.6 |

| PostgreSQL: 9.2 | PostgreSQL: 9.2, 9.4 |

6.2.3. MySQL

Export all databases to a dump file and copy it to a local machine (into the current directory):

$ rhc ssh <v2_application_name>

$ mysqldump --skip-lock-tables -h $OPENSHIFT_MYSQL_DB_HOST -P ${OPENSHIFT_MYSQL_DB_PORT:-3306} -u ${OPENSHIFT_MYSQL_DB_USERNAME:-'admin'} \

--password="$OPENSHIFT_MYSQL_DB_PASSWORD" --all-databases > ~/app-root/data/all.sql

$ exit

Download dbdump to your local machine:

$ mkdir mysqldumpdir

$ rhc scp -a <v2_application_name> download mysqldumpdir app-root/data/all.sql

Create a v3 mysql-persistent pod from template:

$ oc new-app mysql-persistent \

-p MYSQL_USER=<your_v2_mysql_username> \

-p MYSQL_PASSWORD=<your_v2_mysql_password> \

-p MYSQL_DATABASE=<your_v2_database_name>

Check to see if the pod is ready to use:

$ oc get pods

When the pod is up and running, copy database archive files to your v3 MySQL pod:

$ oc rsync /local/mysqldumpdir <mysql_pod_name>:/var/lib/mysql/data

Restore the database in the v3 running pod:

$ oc rsh <mysql_pod_name>

$ cd /var/lib/mysql/data/mysqldumpdir

In v3, to restore databases you need to access MySQL as the root user.

In v2, the $OPENSHIFT_MYSQL_DB_USERNAME had full privileges on all databases. In v3, you must grant privileges to $MYSQL_USER for each database.

$ mysql -u root

$ source all.sql

Grant all privileges on <dbname> to <your_v2_username>@localhost, then flush privileges.

Remove the dump directory from the pod:

$ cd ../; rm -rf /var/lib/mysql/data/mysqldumpdir
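The grant step in the procedure above is stated only in words. As a hedged sketch, the statements could be generated as follows; grant_sql is a hypothetical helper, and granting on every table of the database (<dbname>.*) is an assumption about what "all privileges on <dbname>" is meant to cover.

```shell
# Hypothetical helper: print the SQL that grants a user all privileges
# on one database and then reloads the privilege tables.
# Arguments are inserted as-is, so pass only trusted names.
grant_sql() {
  local db=$1 user=$2
  printf "GRANT ALL PRIVILEGES ON \`%s\`.* TO '%s'@'localhost';\n" "$db" "$user"
  printf "FLUSH PRIVILEGES;\n"
}

# Example: review the statements before running them.
grant_sql mydb myuser
# Inside the pod they could then be piped to the client as root:
#   grant_sql mydb myuser | mysql -u root
```

Printing the statements first makes it easy to check the database and user names before anything is executed.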

Supported MySQL Environment Variables

| v2 | v3 |

|---|---|


6.2.4. PostgreSQL

Back up the v2 PostgreSQL database from the gear:

$ rhc ssh -a <v2_application_name>

$ mkdir ~/app-root/data/tmp

$ pg_dump <database_name> | gzip > ~/app-root/data/tmp/<database_name>.gz

Extract the backup file back to your local machine:

$ rhc scp -a <v2_application_name> download <local_dest> app-root/data/tmp/<database_name>.gz

$ gzip -d <database_name>.gz

Note

Save the backup file to a separate folder for the oc rsync step later in this procedure.

Create the PostgreSQL service using the v2 application database name, user name, and password:

$ oc new-app postgresql-persistent -p POSTGRESQL_DATABASE=dbname -p POSTGRESQL_PASSWORD=password -p POSTGRESQL_USER=username

Check to see if the pod is ready to use:

$ oc get pods

When the pod is up and running, sync the backup directory to pod:

$ oc rsync /local/path/to/dir <postgresql_pod_name>:/var/lib/pgsql/data

Remotely access the pod:

$ oc rsh <pod_name>

Restore the database:

$ psql dbname < /var/lib/pgsql/data/<database_backup_file>

Remove all backup files that are no longer needed:

$ rm /var/lib/pgsql/data/<database_backup_file>

Supported PostgreSQL Environment Variables

| v2 | v3 |

|---|---|


6.2.5. MongoDB

- For OpenShift v3: MongoDB shell version 3.2.6

- For OpenShift v2: MongoDB shell version 2.4.9

Remotely access the v2 application via the ssh command:

$ rhc ssh <v2_application_name>

Run mongodump, specifying a single database with -d <database_name> -c <collections>. Without those options, all databases are dumped. Each database is dumped in its own directory:

$ mongodump -h $OPENSHIFT_MONGODB_DB_HOST -o app-root/repo/mydbdump -u 'admin' -p $OPENSHIFT_MONGODB_DB_PASSWORD

$ cd app-root/repo/mydbdump/<database_name>; tar -cvzf dbname.tar.gz *

$ exit

Download dbdump to a local machine in the mongodump directory:

$ mkdir mongodump

$ rhc scp -a <v2_application_name> download mongodump \

app-root/repo/mydbdump/<dbname>/dbname.tar.gz

Start a MongoDB pod in v3. Because the latest image (3.2.6) does not include mongo-tools, to use the mongorestore or mongoimport commands you need to edit the default mongodb-persistent template to specify the image tag that contains mongo-tools, mongodb:2.4. For that reason, the following oc export command and edit are necessary:

$ oc export template mongodb-persistent -n openshift -o json > mongodb-24persistent.json

Edit line 80 of mongodb-24persistent.json; replace mongodb:latest with mongodb:2.4.

$ oc new-app --template=mongodb-persistent -n <project-name-that-template-was-created-in> \

-p MONGODB_USER=user_from_v2_app \

-p MONGODB_PASSWORD=password_from_v2_db \

-p MONGODB_DATABASE=v2_dbname \

-p MONGODB_ADMIN_PASSWORD=password_from_v2_db

$ oc get pods

When the mongodb pod is up and running, copy the database archive files to the v3 MongoDB pod:

$ oc rsync local/path/to/mongodump <mongodb_pod_name>:/var/lib/mongodb/data

$ oc rsh <mongodb_pod_name>

In the MongoDB pod, complete the following for each database you want to restore:

$ cd /var/lib/mongodb/data/mongodump

$ tar -xzvf dbname.tar.gz

$ mongorestore -u $MONGODB_USER -p $MONGODB_PASSWORD -d dbname -v /var/lib/mongodb/data/mongodump

Check if the database is restored:

$ mongo admin -u $MONGODB_USER -p $MONGODB_ADMIN_PASSWORD

$ use dbname

$ show collections

$ exit

Remove the mongodump directory from the pod:

$ rm -rf /var/lib/mongodb/data/mongodump

Supported MongoDB Environment Variables

| v2 | v3 |

|---|---|


6.3. Migrating Web Framework Applications

6.3.1. Overview

This topic reviews how to migrate Python, Ruby, PHP, Perl, Node.js, JBoss EAP, JBoss WS (Tomcat), and Wildfly 10 (JBoss AS) web framework applications from OpenShift version 2 (v2) to OpenShift version 3 (v3).

6.3.2. Python

Set up a new GitHub repository and add it as a remote to the current, local v2 Git repository:

$ git remote add <remote-name> https://github.com/<github-id>/<repo-name>.git

Push the local v2 source code to the new repository:

$ git push -u <remote-name> master

Ensure that all important files, such as setup.py, wsgi.py, and requirements.txt, are pushed to the new repository.

- Ensure all required packages for your application are included in requirements.txt.

- S2I-python no longer supports mod_wsgi. However, gunicorn is supported as an alternative to mod_wsgi, so add the gunicorn package to requirements.txt.

Use the oc command to launch a new Python application from the builder image and source code:

$ oc new-app --strategy=source python:3.3~https://github.com/<github-id>/<repo-name> --name=<app-name> -e <ENV_VAR_NAME>=<env_var_value>
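Adding gunicorn to requirements.txt, as the steps above require, can be scripted before the code is pushed. The following is a minimal sketch; ensure_gunicorn is a hypothetical helper, and it assumes one requirement per line with no version pinning needed for gunicorn.

```shell
# Hypothetical helper: append "gunicorn" to a requirements file unless a
# gunicorn requirement is already listed.
ensure_gunicorn() {
  local req=${1:-requirements.txt}
  # Create the file if it does not exist yet.
  touch "$req"
  # Nothing to do when a gunicorn requirement is already present.
  if grep -qiE '^[[:space:]]*gunicorn([^[:alnum:]_-]|$)' "$req"; then
    return 0
  fi
  # Terminate the last existing line before appending, if necessary.
  if [ -n "$(tail -c 1 "$req")" ]; then
    echo >> "$req"
  fi
  echo "gunicorn" >> "$req"
}

# Example (run in the root of the repository before committing):
#   ensure_gunicorn requirements.txt
```

Running the function more than once is harmless, because it only appends when no gunicorn line is found.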

Supported Python Versions

| v2 | v3 |

|---|---|

| Python: 2.6, 2.7, 3.3 | Python: 2.7, 3.3, 3.4 |

| Django | Django-psql-example (quickstart) |

6.3.3. Ruby

Set up a new GitHub repository and add it as a remote to the current, local v2 Git repository:

$ git remote add <remote-name> https://github.com/<github-id>/<repo-name>.git

Push the local v2 source code to the new repository:

$ git push -u <remote-name> master

If you do not have a Gemfile and are running a simple rack application, copy this Gemfile into the root of your source:

https://github.com/openshift/ruby-ex/blob/master/Gemfile

Note: The latest version of the rack gem that supports Ruby 2.0 is 1.6.4, so the Gemfile needs to be modified to gem 'rack', "1.6.4". For Ruby 2.2 or later, use the rack gem 2.0 or later.

Use the oc command to launch a new Ruby application from the builder image and source code:

$ oc new-app --strategy=source ruby:2.0~https://github.com/<github-id>/<repo-name>.git

Supported Ruby Versions

| v2 | v3 |

|---|---|

| Ruby: 1.8, 1.9, 2.0 | Ruby: 2.0, 2.2 |

| Ruby on Rails: 3, 4 | Rails-postgresql-example (quickstart) |

| Sinatra | |

6.3.4. PHP

Set up a new GitHub repository and add it as a remote to the current local v2 Git repository:

$ git remote add <remote-name> https://github.com/<github-id>/<repo-name>

Push the local v2 source code to the new repository:

$ git push -u <remote-name> master

Use the oc command to launch a new PHP application from the builder image and source code:

$ oc new-app https://github.com/<github-id>/<repo-name>.git --name=<app-name> -e <ENV_VAR_NAME>=<env_var_value>

Supported PHP Versions

| v2 | v3 |

|---|---|

| PHP: 5.3, 5.4 | PHP: 5.5, 5.6 |

| PHP 5.4 with Zend Server 6.1 | |

| CodeIgniter 2 | |

| HHVM | |

| Laravel 5.0 | |

| | cakephp-mysql-example (quickstart) |

6.3.5. Perl

Set up a new GitHub repository and add it as a remote to the current local v2 Git repository:

$ git remote add <remote-name> https://github.com/<github-id>/<repo-name>

Push the local v2 source code to the new repository:

$ git push -u <remote-name> master

Edit the local Git repository and push changes upstream to make it v3 compatible:

In v2, CPAN modules reside in .openshift/cpan.txt. In v3, the s2i builder looks for a file named cpanfile in the root directory of the source.

$ cd <local-git-repository>

$ mv .openshift/cpan.txt cpanfile

Edit cpanfile, as it has a slightly different format:

| Format of cpanfile | Format of cpan.txt |
|---|---|
| requires 'cpan::mod'; | cpan::mod |
| requires 'Dancer'; | Dancer |
| requires 'YAML'; | YAML |
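The format change from cpan.txt to cpanfile is mechanical, so it can be scripted. The following is a minimal sketch, assuming cpan.txt lists one module name per line; the function name and the handling of blank and `#` comment lines are illustrative assumptions, not part of the S2I builder.

```python
def cpan_txt_to_cpanfile(cpan_txt: str) -> str:
    """Convert v2 cpan.txt content (one module per line) to cpanfile syntax."""
    lines = []
    for raw in cpan_txt.splitlines():
        module = raw.strip()
        # Skipping blank and '#' lines is an assumption made for this sketch.
        if not module or module.startswith("#"):
            continue
        lines.append(f"requires '{module}';")
    # End with a newline only when there is something to write.
    return "\n".join(lines) + ("\n" if lines else "")


if __name__ == "__main__":
    print(cpan_txt_to_cpanfile("Dancer\nYAML\n"), end="")
```

Review the generated file before committing it, because version constraints or other cpanfile directives still have to be added by hand.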

Remove the .openshift directory.

Note: In v3, action_hooks and cron tasks are not supported in the same way. See Action Hooks for more information.

Use the oc command to launch a new Perl application from the builder image and source code:

$ oc new-app https://github.com/<github-id>/<repo-name>.git

Supported Perl Versions

| v2 | v3 |

|---|---|

| Perl: 5.10 | Perl: 5.16, 5.20 |

| | Dancer-mysql-example (quickstart) |

6.3.6. Node.js

Set up a new GitHub repository and add it as a remote to the current local Git repository:

$ git remote add <remote-name> https://github.com/<github-id>/<repo-name>

Push the local v2 source code to the new repository:

$ git push -u <remote-name> master

Edit the local Git repository and push changes upstream to make it v3 compatible:

Remove the .openshift directory.

Note: In v3, action_hooks and cron tasks are not supported in the same way. See Action Hooks for more information.

Edit server.js.

- L116 server.js: self.app = express();

- L25 server.js: self.ipaddress = '0.0.0.0';

- L26 server.js: self.port = 8080;

Note: Line numbers (L) refer to the base v2 cartridge server.js.

Use the oc command to launch a new Node.js application from the builder image and source code:

$ oc new-app https://github.com/<github-id>/<repo-name>.git --name=<app-name> -e <ENV_VAR_NAME>=<env_var_value>

Supported Node.js Versions

| v2 | v3 |

|---|---|

| Node.js: 0.10 | Node.js: 0.10 |

| | Nodejs-mongodb-example (quickstart) |

6.3.7. JBoss EAP

Set up a new GitHub repository and add it as a remote to the current local Git repository:

$ git remote add <remote-name> https://github.com/<github-id>/<repo-name>

Push the local v2 source code to the new repository:

$ git push -u <remote-name> master

- If the repository includes pre-built .war files, they need to reside in the deployments directory at the root of the repository.

Create the new application using the JBoss EAP 6 builder image (jboss-eap64-openshift) and the source code repository from GitHub:

$ oc new-app --strategy=source jboss-eap64-openshift~https://github.com/<github-id>/<repo-name>.git

6.3.8. JBoss WS (Tomcat)

Set up a new GitHub repository and add it as a remote to the current local Git repository:

$ git remote add <remote-name> https://github.com/<github-id>/<repo-name>

Push the local v2 source code to the new repository:

$ git push -u <remote-name> master

- If the repository includes pre-built .war files, they need to reside in the deployments directory at the root of the repository.

Create the new application using the JBoss Web Server 3 (Tomcat 7) builder image (jboss-webserver30-tomcat7-openshift) and the source code repository from GitHub:

$ oc new-app --strategy=source jboss-webserver30-tomcat7-openshift~https://github.com/<github-id>/<repo-name>.git --name=<app-name> -e <ENV_VAR_NAME>=<env_var_value>

6.3.9. JBoss AS (Wildfly 10)

Set up a new GitHub repository and add it as a remote to the current local Git repository:

$ git remote add <remote-name> https://github.com/<github-id>/<repo-name>

Push the local v2 source code to the new repository:

$ git push -u <remote-name> master

Edit the local Git repository and push the changes upstream to make it v3 compatible:

Remove the .openshift directory.

Note: In v3, action_hooks and cron tasks are not supported in the same way. See Action Hooks for more information.

- Add the deployments directory to the root of the source repository. Move the .war files to the deployments directory.

Use the oc command to launch a new WildFly application from the builder image and source code:

$ oc new-app https://github.com/<github-id>/<repo-name>.git --image-stream="openshift/wildfly:10.0" --name=<app-name> -e <ENV_VAR_NAME>=<env_var_value>

Note: The --name argument is optional and specifies the name of your application. The -e argument is optional and adds environment variables that are needed for the build and deployment processes, such as OPENSHIFT_PYTHON_DIR.
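The oc new-app invocations in this topic share one shape: an optional builder image joined to the source URL with a tilde, an optional --name, and zero or more -e pairs. The following sketch assembles that argument list for use in a migration script. The helper name new_app_args and its parameters are hypothetical; only the flags already shown in this topic are used.

```python
from typing import Dict, List, Optional


def new_app_args(source_url: str,
                 builder_image: Optional[str] = None,
                 name: Optional[str] = None,
                 env: Optional[Dict[str, str]] = None) -> List[str]:
    """Build the argument list for an `oc new-app` call (hypothetical helper)."""
    args = ["oc", "new-app"]
    if builder_image:
        # Builder image and source are combined as <image>~<source-url>.
        args.append("--strategy=source")
        args.append(f"{builder_image}~{source_url}")
    else:
        args.append(source_url)
    if name:
        args.append(f"--name={name}")
    for key, value in (env or {}).items():
        args += ["-e", f"{key}={value}"]
    return args


if __name__ == "__main__":
    print(" ".join(new_app_args("https://github.com/<github-id>/<repo-name>",
                                builder_image="python:3.3",
                                name="<app-name>")))
```

Returning a list instead of a single string means the result can be passed to a process launcher without shell quoting problems.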

6.3.10. Supported JBoss/XPaas Versions

| v2 | v3 |

|---|---|

| JBoss App Server 7 | |

| Tomcat 6 (JBoss EWS 1.0) | jboss-webserver30-tomcat7-openshift: 1.1 |

| Tomcat 7 (JBoss EWS 2.0) | |

| Vert.x 2.1 | |

| WildFly App Server 10 | |

| WildFly App Server 8.2.1.Final | |

| WildFly App Server 9 | |

| CapeDwarf | |

| JBoss Data Virtualization 6 | |

| JBoss Enterprise App Platform 6 | jboss-eap64-openshift: 1.2, 1.3 |

| JBoss Unified Push Server 1.0.0.Beta1, Beta2 | |

| JBoss BPM Suite | |

| JBoss BRMS | |

| | jboss-eap70-openshift: 1.3-Beta |

| | eap64-https-s2i |

| | eap64-mongodb-persistent-s2i |

| | eap64-mysql-persistent-s2i |

| | eap64-psql-persistent-s2i |

6.4. QuickStart Examples

6.4.1. Overview

Although there is no clear-cut migration path from a v2 quickstart to a v3 quickstart, the following quickstarts are currently available in v3. If your application uses a database, you do not need to run oc new-app once for the application and again for a separate database service, and then link the two with common environment variables. Instead, you can use one of the following templates to instantiate the linked application and database in one step from the GitHub repository that contains your source code. You can list all available templates with oc get templates -n openshift:

CakePHP MySQL https://github.com/openshift/cakephp-ex

- template: cakephp-mysql-example

Node.js MongoDB https://github.com/openshift/nodejs-ex

- template: nodejs-mongodb-example

Django PostgreSQL https://github.com/openshift/django-ex

- template: django-psql-example

Dancer MySQL https://github.com/openshift/dancer-ex

- template: dancer-mysql-example

Rails PostgreSQL https://github.com/openshift/rails-ex

- template: rails-postgresql-example

6.4.2. Workflow

Run git clone on one of the above template URLs locally. Add and commit your application source code, push it to a GitHub repository, and then start a v3 quickstart application from one of the templates listed above:

- Create a GitHub repository for your application.

Clone a quickstart template and add your GitHub repository as a remote:

$ git clone <one-of-the-template-URLs-listed-above>

$ cd <your local git repository>

$ git remote add upstream <https://github.com/<git-id>/<quickstart-repo>.git>

$ git push -u upstream master

Commit and push your source code to GitHub:

$ cd <your local repository>

$ git commit -am "added code for my app"

$ git push upstream master

Create a new application in v3:

$ oc new-app --template=<template> \
    -p SOURCE_REPOSITORY_URL=<https://github.com/<git-id>/<quickstart_repo>.git> \
    -p DATABASE_USER=<your_db_user> \
    -p DATABASE_NAME=<your_db_name> \
    -p DATABASE_PASSWORD=<your_db_password> \
    -p DATABASE_ADMIN_PASSWORD=<your_db_admin_password>

DATABASE_ADMIN_PASSWORD is only applicable for MongoDB.

You should now have two pods running: a web framework pod and a database pod. The web framework pod environment should match the database pod environment. You can list the environment variables with oc set env pod/<pod_name> --list:

- DATABASE_NAME is now <DB_SERVICE>_DATABASE

- DATABASE_USER is now <DB_SERVICE>_USER

- DATABASE_PASSWORD is now <DB_SERVICE>_PASSWORD

- DATABASE_ADMIN_PASSWORD is now MONGODB_ADMIN_PASSWORD (only applicable for MongoDB)

If no SOURCE_REPOSITORY_URL is specified, the template uses the template URL (https://github.com/openshift/<quickstart>-ex) listed above as the source repository, and a hello-welcome application is started.

If you are migrating a database, export the databases to a dump file and restore them in the new v3 database pod. Refer to the steps outlined in Database Applications, skipping the oc new-app step because the database pod is already up and running.
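The renaming of template parameters to pod environment variables can be written down as a small lookup, which helps when checking the output of the environment listing. This is a sketch under one assumption: that <DB_SERVICE> is the upper-case database service name, for example MYSQL or POSTGRESQL. The function name is hypothetical.

```python
def pod_env_names(db_service: str) -> dict:
    """Map quickstart template parameters to the pod environment variable names."""
    prefix = db_service.upper()  # assumption: <DB_SERVICE> is the upper-cased service name
    mapping = {
        "DATABASE_NAME": f"{prefix}_DATABASE",
        "DATABASE_USER": f"{prefix}_USER",
        "DATABASE_PASSWORD": f"{prefix}_PASSWORD",
    }
    if prefix == "MONGODB":
        # The admin password parameter is only applicable for MongoDB.
        mapping["DATABASE_ADMIN_PASSWORD"] = "MONGODB_ADMIN_PASSWORD"
    return mapping


if __name__ == "__main__":
    for parameter, variable in pod_env_names("mysql").items():
        print(f"{parameter} -> {variable}")
```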

6.5. Continuous Integration and Deployment (CI/CD)

6.5.1. Overview

This topic reviews the differences in continuous integration and deployment (CI/CD) applications between OpenShift version 2 (v2) and OpenShift version 3 (v3) and how to migrate these applications into the v3 environment.

6.5.2. Jenkins

The Jenkins applications in OpenShift version 2 (v2) and OpenShift version 3 (v3) are configured differently due to fundamental differences in architecture. For example, in v2, the application uses an integrated Git repository that is hosted in the gear to store the source code. In v3, the source code is located in a public or private Git repository that is hosted outside of the pod.

Furthermore, in OpenShift v3, Jenkins jobs can be triggered not only by source code changes but also by changes in an ImageStream, that is, changes to the images that are used to build the application along with its source code. As a result, it is highly recommended that you migrate the Jenkins application manually by creating a new Jenkins application in v3 and then re-creating jobs with configurations that suit the OpenShift v3 environment.

Consult these resources for more information on how to create a Jenkins application, configure jobs, and use Jenkins plug-ins properly:

6.6. Webhooks and Action Hooks

6.6.1. Overview

This topic reviews the differences in webhooks and action hooks between OpenShift version 2 (v2) and OpenShift version 3 (v3) and how to migrate these applications into the v3 environment.

6.6.2. Webhooks

After creating a BuildConfig from a GitHub repository, run:

$ oc describe bc/<name-of-your-BuildConfig>

This will output a webhook GitHub URL that looks like:

<https://api.dev-preview-int.openshift.com:443/oapi/v1/namespaces/nsname/buildconfigs/bcname/webhooks/secret/github>.

- Cut and paste this URL into GitHub, from the GitHub web console.

- In your GitHub repository, select Add Webhook from Settings → Webhooks & Services.

- Paste the URL output (similar to above) into the Payload URL field.

You should see a message from GitHub stating that your webhook was successfully configured.

Now, whenever you push a change to your GitHub repository, a new build will automatically start, and upon a successful build a new deployment will start.

If you delete or recreate your application, you will have to update the Payload URL field in GitHub with the new BuildConfig webhook URL.
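To make the parts of the webhook URL explicit, the sketch below rebuilds the example URL shown earlier in this section from its components. The path layout is inferred only from that single example, and the function and parameter names are hypothetical. In practice, copy the URL from the oc describe output instead of constructing it.

```python
def github_webhook_url(api_host: str, namespace: str, build_config: str,
                       secret: str, port: int = 443) -> str:
    """Assemble a GitHub webhook URL following the layout of the example above."""
    return (f"https://{api_host}:{port}/oapi/v1/namespaces/{namespace}"
            f"/buildconfigs/{build_config}/webhooks/{secret}/github")


if __name__ == "__main__":
    print(github_webhook_url("api.dev-preview-int.openshift.com",
                             "nsname", "bcname", "secret"))
```

Because the namespace and BuildConfig name are part of the path, a URL saved in GitHub stops matching as soon as either one changes.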

6.6.3. Action Hooks

In OpenShift version 2 (v2), there are build, deploy, post_deploy, and pre_build scripts or action_hooks that are located in the .openshift/action_hooks directory. While there is no one-to-one mapping of function for these in v3, the S2I tool in v3 does have the option of adding customizable scripts, either in a designated URL or in the .s2i/bin directory of your source repository.

OpenShift version 3 (v3) also offers a post-build hook for running basic testing of an image after it is built and before it is pushed to the registry. Deployment hooks are configured in the deployment configuration.

In v2, action_hooks are commonly used to set up environment variables. In v3, any environment variables should be passed with:

$ oc new-app <source-url> -e ENV_VAR=env_var

or:

$ oc new-app <template-name> -p ENV_VAR=env_var

Also, environment variables can be added or changed using:

$ oc set env dc/<name-of-dc> ENV_VAR1=env_var1 ENV_VAR2=env_var2

6.7. S2I Tool

6.7.1. Overview

The Source-to-Image (S2I) tool injects application source code into a container image. The final product is a new, ready-to-run container image that incorporates the builder image and the built source code. The S2I tool can be installed on your local machine without OpenShift Enterprise from the repository.

The S2I tool lets you test and verify your application and images locally before using them on OpenShift Enterprise.

6.7.2. Creating a Container Image

- Identify the builder image that is needed for the application. Red Hat offers multiple builder images for different languages including Python, Ruby, Perl, PHP, and Node.js. Other images are available from the community space.

S2I can build images from source code in a local file system or from a Git repository. To build a new container image from the builder image and the source code:

$ s2i build <source-location> <builder-image-name> <output-image-name>

Note: <source-location> can be either a Git repository URL or a path to a source code directory in a local file system.

Test the built image with the Docker daemon:

$ docker run -d --name <new-name> -p <port-number>:<port-number> <output-image-name>

$ curl localhost:<port-number>

- Push the new image to the OpenShift registry.

Create a new application from the image in the OpenShift registry using the oc command:

$ oc new-app <image-name>

6.8. Support Guide

6.8.1. Overview

This topic reviews supported languages, frameworks, databases, and markers for OpenShift version 2 (v2) and OpenShift version 3 (v3).

6.8.2. Supported Databases

See the Supported Databases section of the Database Applications topic.

6.8.3. Supported Languages

6.8.4. Supported Frameworks

| v2 | v3 |

|---|---|

| Jenkins Server | jenkins-persistent |

| Drupal 7 | |

| Ghost 0.7.5 | |

| WordPress 4 | |

| Ceylon | |

| Go | |

| MEAN | |

6.8.5. Supported Markers

| v2 | v3 |

|---|---|

| pip_install | If your repository contains requirements.txt, then pip is invoked by default. Otherwise, pip is not used. |

| v2 | v3 |

|---|---|

| disable_asset_compilation | This can be done by setting |

| v2 | v3 |

|---|---|

| enable_cpan_tests | This can be done by setting |

| v2 | v3 |

|---|---|

| use_composer | composer is always used if the source repository includes a composer.json in the root directory. |

| v2 | v3 |

|---|---|

| NODEJS_VERSION | N/A |

| use_npm | npm is always used to start the application, unless |

| v2 | v3 |

|---|---|

| enable_debugging | This option is controlled via the |

| skip_maven_build | If pom.xml is present, maven will be run. |

| java7 | N/A |

| java8 | JavaEE is using JDK8. |

| v2 | v3 |

|---|---|

| enable_debugging | N/A |

| v2 | v3 |

|---|---|

| force_clean_build | There is a similar concept in v3: the noCache field in the build configuration forces the container build to rerun each layer. In the S2I build, the incremental flag is false by default, which indicates a clean build. |

| hot_deploy | |

| enable_public_server_status | N/A |

| disable_auto_scaling | Autoscaling is off by default and can be turned on via pod auto-scaling. |

6.8.6. Supported Environment Variables

Chapter 7. Application Tutorials

7.1. Overview

This topic group includes information on how to get your application up and running in OpenShift Enterprise and covers different languages and their frameworks.

7.2. Quickstart Templates

7.2.1. Overview

A quickstart is a basic example of an application running on OpenShift Enterprise. Quickstarts come in a variety of languages and frameworks, and are defined in a template, which is constructed from a set of services, build configurations, and deployment configurations. This template references the necessary images and source repositories to build and deploy the application.

To explore a quickstart, create an application from a template. Your administrator may have already installed these templates in your OpenShift Enterprise cluster, in which case you can select them from the web console. See the template documentation for more information on how to upload, create from, and modify a template.

Quickstarts refer to a source repository that contains the application source code. To customize the quickstart, fork the repository and, when creating an application from the template, substitute the default source repository name with your forked repository. This results in builds that are performed using your source code instead of the provided example source. You can then update the code in your source repository and launch a new build to see the changes reflected in the deployed application.

7.2.2. Web Framework Quickstart Templates

These quickstarts provide a basic application of the indicated framework and language:

CakePHP: a PHP web framework (includes a MySQL database)

Dancer: a Perl web framework (includes a MySQL database)

Django: a Python web framework (includes a PostgreSQL database)

NodeJS: a NodeJS web application (includes a MongoDB database)

Rails: a Ruby web framework (includes a PostgreSQL database)

7.3. Ruby on Rails

7.3.1. Overview

Ruby on Rails is a popular web framework written in Ruby. This guide covers using Rails 4 on OpenShift Enterprise.

We strongly advise going through the whole tutorial to get an overview of all the steps necessary to run your application on OpenShift Enterprise. If you experience a problem, try reading through the entire tutorial and then going back to your issue. It can also be useful to review your previous steps to ensure that all the steps were executed correctly.

For this guide you will need:

- Basic Ruby/Rails knowledge

- Locally installed version of Ruby 2.0.0+, Rubygems, Bundler

- Basic Git knowledge

- Running instance of OpenShift Enterprise v3

7.3.2. Local Workstation Setup

First, make sure that an instance of OpenShift Enterprise is running and available. For more information on how to get OpenShift Enterprise up and running, check the installation methods. Also make sure that your oc CLI client is installed and that the command is accessible from your command shell, so you can use it to log in using your email address and password.

7.3.2.1. Setting Up the Database

Rails applications are almost always used with a database. For local development, this guide uses the PostgreSQL database. To install it, type:

$ sudo yum install -y postgresql postgresql-server postgresql-devel

Next you need to initialize the database with:

$ sudo postgresql-setup initdb

This command will create the /var/lib/pgsql/data directory, in which the data will be stored.

Start the database by typing:

$ sudo systemctl start postgresql.service

When the database is running, create your rails user:

$ sudo -u postgres createuser -s rails

Note that the user we created has no password.

7.3.3. Writing Your Application

If you are starting your Rails application from scratch, you need to install the Rails gem first.

$ gem install rails
Successfully installed rails-4.2.0
1 gem installed

After you install the Rails gem create a new application, with PostgreSQL as your database:

$ rails new rails-app --database=postgresql

Then change into your new application directory.

$ cd rails-app

If you already have an application, make sure the pg (postgresql) gem is present in your Gemfile. If not, edit your Gemfile by adding the gem:

gem 'pg'

To generate a new Gemfile.lock with all your dependencies run:

$ bundle install

In addition to using the postgresql database with the pg gem, you’ll also need to ensure the config/database.yml is using the postgresql adapter.

Make sure you update the default section in the config/database.yml file, so it looks like this:

default: &default
  adapter: postgresql
  encoding: unicode
  pool: 5
  host: localhost
  username: rails
  password:

Create your application’s development and test databases by using this rake command:

$ rake db:create

This will create development and test databases in your PostgreSQL server.

7.3.3.1. Creating a Welcome Page

Since Rails 4 no longer serves a static public/index.html page in production, we need to create a new root page.

To have a custom welcome page, complete the following steps:

- Create a controller with an index action

- Create a view page for the welcome controller index action

- Create a route that serves the application’s root page with the created controller and view

Rails offers a generator that performs all of these steps for you.

$ rails generate controller welcome index

All the necessary files have been created. Now edit line 2 in the config/routes.rb file to look like:

root 'welcome#index'

Run the rails server to verify the page is available.

$ rails server

You should see your page by visiting http://localhost:3000 in your browser. If you don’t see the page, check the logs that the server outputs to debug the problem.

7.3.3.2. Configuring the Application for OpenShift Enterprise

For your application to communicate with the PostgreSQL database service that will be running in OpenShift Enterprise, edit the default section in your config/database.yml to use environment variables, which you will define later when you create the database service.

The default section in your edited config/database.yml together with pre-defined variables should look like:

<% user = ENV.key?("POSTGRESQL_ADMIN_PASSWORD") ? "root" : ENV["POSTGRESQL_USER"] %>
<% password = ENV.key?("POSTGRESQL_ADMIN_PASSWORD") ? ENV["POSTGRESQL_ADMIN_PASSWORD"] : ENV["POSTGRESQL_PASSWORD"] %>
<% db_service = ENV.fetch("DATABASE_SERVICE_NAME","").upcase %>

default: &default
  adapter: postgresql
  encoding: unicode
  # For details on connection pooling, see rails configuration guide
  # http://guides.rubyonrails.org/configuring.html#database-pooling
  pool: <%= ENV["POSTGRESQL_MAX_CONNECTIONS"] || 5 %>
  username: <%= user %>
  password: <%= password %>
  host: <%= ENV["#{db_service}_SERVICE_HOST"] %>
  port: <%= ENV["#{db_service}_SERVICE_PORT"] %>
  database: <%= ENV["POSTGRESQL_DATABASE"] %>

For an example of how the final file should look, see Ruby on Rails example application config/database.yml.
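The lookup rules encoded in the ERB tags of this file can be easier to follow when written as a plain function. The following Python sketch mirrors them for illustration only. It is not part of the Rails application, and the function name and the sample host and port values are hypothetical.

```python
def resolve_database_settings(env: dict) -> dict:
    """Mirror the ERB lookups of config/database.yml against an environment mapping."""
    # If an admin password is present, connect as root with that password.
    has_admin = "POSTGRESQL_ADMIN_PASSWORD" in env
    user = "root" if has_admin else env.get("POSTGRESQL_USER")
    password = (env["POSTGRESQL_ADMIN_PASSWORD"] if has_admin
                else env.get("POSTGRESQL_PASSWORD"))
    # The service name selects which <NAME>_SERVICE_HOST and _PORT variables are read.
    db_service = env.get("DATABASE_SERVICE_NAME", "").upper()
    return {
        "adapter": "postgresql",
        "encoding": "unicode",
        "pool": env.get("POSTGRESQL_MAX_CONNECTIONS", 5),
        "username": user,
        "password": password,
        "host": env.get(f"{db_service}_SERVICE_HOST"),
        "port": env.get(f"{db_service}_SERVICE_PORT"),
        "database": env.get("POSTGRESQL_DATABASE"),
    }


if __name__ == "__main__":
    sample_env = {  # hypothetical values for illustration
        "POSTGRESQL_USER": "username",
        "POSTGRESQL_PASSWORD": "password",
        "POSTGRESQL_DATABASE": "db_name",
        "DATABASE_SERVICE_NAME": "postgresql",
        "POSTGRESQL_SERVICE_HOST": "172.30.0.10",
        "POSTGRESQL_SERVICE_PORT": "5432",
    }
    print(resolve_database_settings(sample_env))
```

The sketch shows why DATABASE_SERVICE_NAME matters: without it, the host and port lookups use the names _SERVICE_HOST and _SERVICE_PORT, which are not set, so both resolve to nothing.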

7.3.3.3. Storing Your Application in Git

Building an application in OpenShift Enterprise usually requires that the source code be stored in a git repository, so you will need to install git if you do not already have it.

Make sure you are in your Rails application directory by running the ls -1 command. The output of the command should look like:

$ ls -1
app
bin
config
config.ru
db
Gemfile
Gemfile.lock
lib
log
public
Rakefile
README.rdoc
test
tmp
vendor

Now run these commands in your Rails app directory to initialize and commit your code to git:

$ git init

$ git add .

$ git commit -m "initial commit"

Once your application is committed, you need to push it to a remote repository. For this, you need a GitHub account, in which you create a new repository.

Set the remote that points to your git repository:

$ git remote add origin git@github.com:<namespace/repository-name>.git

After that, push your application to your remote git repository.

$ git push -u origin master

7.3.4. Deploying Your Application to OpenShift Enterprise

To deploy your Ruby on Rails application, create a new Project for the application:

$ oc new-project rails-app --description="My Rails application" --display-name="Rails Application"

After creating the rails-app project, you are automatically switched to the new project namespace.

Deploying your application in OpenShift Enterprise involves three steps:

- Creating a database service from OpenShift Enterprise’s PostgreSQL image

- Creating a frontend service from OpenShift Enterprise’s Ruby 2.0 builder image and your Ruby on Rails source code, which we wire with the database service

- Creating a route for your application.

7.3.4.1. Creating the Database Service

Your Rails application expects a running database service. For this service, use the PostgreSQL database image.

To create the database service, use the oc new-app command. Pass this command the environment variables that are used inside the database container to set the username, password, and name of the database. You can change the values of these environment variables to anything you would like. The variables to set are as follows:

- POSTGRESQL_DATABASE

- POSTGRESQL_USER

- POSTGRESQL_PASSWORD

Setting these variables ensures:

- A database exists with the specified name

- A user exists with the specified name

- The user can access the specified database with the specified password

For example:

$ oc new-app postgresql -e POSTGRESQL_DATABASE=db_name -e POSTGRESQL_USER=username -e POSTGRESQL_PASSWORD=password

To also set the password for the database administrator, append to the previous command with:

-e POSTGRESQL_ADMIN_PASSWORD=admin_pw

To watch the progress of this command:

$ oc get pods --watch

7.3.4.2. Creating the Frontend Service

To bring your application to OpenShift Enterprise, specify the repository in which your application lives, again using the oc new-app command. Pass the database-related environment variables that you set up in Creating the Database Service:

$ oc new-app path/to/source/code --name=rails-app -e POSTGRESQL_USER=username -e POSTGRESQL_PASSWORD=password -e POSTGRESQL_DATABASE=db_name -e DATABASE_SERVICE_NAME=postgresql

With this command, OpenShift Enterprise fetches the source code, sets up the Builder image, builds your application image, and deploys the newly created image together with the specified environment variables. The application is named rails-app.

You can verify the environment variables have been added by viewing the JSON document of the rails-app DeploymentConfig:

$ oc get dc rails-app -o json

You should see the following section:

"env": [
    {
        "name": "POSTGRESQL_USER",
        "value": "username"
    },
    {
        "name": "POSTGRESQL_PASSWORD",
        "value": "password"
    },
    {
        "name": "POSTGRESQL_DATABASE",
        "value": "db_name"
    },
    {
        "name": "DATABASE_SERVICE_NAME",
        "value": "postgresql"
    }
],

To check the build process, follow the build logs with the oc logs command:

$ oc logs -f build/rails-app-1
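The check of the environment variables in the DeploymentConfig JSON shown earlier can also be scripted instead of read by eye. The following is a minimal sketch that takes the JSON text printed by the oc get dc command. It assumes the env list sits under spec.template.spec.containers in that document, and the function names are hypothetical.

```python
import json


def container_env(dc_json: str) -> dict:
    """Collect name/value pairs from every container in a DeploymentConfig JSON document."""
    dc = json.loads(dc_json)
    env = {}
    # Assumed layout: spec.template.spec.containers[].env[]
    for container in dc["spec"]["template"]["spec"]["containers"]:
        for item in container.get("env", []):
            env[item["name"]] = item.get("value")
    return env


def missing_variables(dc_json: str, expected: dict) -> list:
    """Return the expected variable names that are absent or have a different value."""
    actual = container_env(dc_json)
    return sorted(name for name, value in expected.items()
                  if actual.get(name) != value)
```

An empty list from missing_variables means every expected variable is present with the expected value.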

Once the build is complete, you can look at the running pods in OpenShift Enterprise:

$ oc get pods

You should see a line starting with rails-app-<number>-<hash>; that is your application running in OpenShift Enterprise.

Before your application will be functional, you need to initialize the database by running the database migration script. There are two ways you can do this:

- Manually from the running frontend container:

First, open a remote shell in the frontend container with the rsh command:

$ oc rsh <FRONTEND_POD_ID>

Run the migration from inside the container:

$ RAILS_ENV=production bundle exec rake db:migrate

If you are running your Rails application in a development or test environment you don’t have to specify the RAILS_ENV environment variable.

- By adding pre-deployment lifecycle hooks in your template. For an example, see the hooks in our Rails example application.

7.3.4.3. Creating a Route for Your Application

To expose a service by giving it an externally reachable hostname like www.example.com, use an OpenShift Enterprise route. In your case, you need to expose the frontend service by typing:

$ oc expose service rails-app --hostname=www.example.com

It is the user’s responsibility to ensure that the hostname they specify resolves to the IP address of the router. For more information, check the OpenShift Enterprise documentation on:

Chapter 8. Opening a Remote Shell to Containers

8.1. Overview

The oc rsh command allows you to locally access and manage tools that are on the system. The secure shell (SSH) is the underlying technology and industry standard that provides a secure connection to the application. Access to applications with the shell environment is protected and restricted with Security-Enhanced Linux (SELinux) policies.

8.2. Start a Secure Shell Session

Open a remote shell session to a container:

$ oc rsh <pod>

While in the remote shell, you can issue commands as if you are inside the container and perform local operations like monitoring, debugging, and using CLI commands specific to what is running in the container.

For example, in a MySQL container, you can count the number of records in the database by invoking the mysql command, then using the prompt to type in the SELECT command. You can also use commands like ps(1) and ls(1) for validation.

BuildConfigs and DeploymentConfigs map out how you want things to look, and pods (with containers inside) are created and dismantled as needed. Your changes are not persistent. If you make changes directly within the container and that container is destroyed and rebuilt, your changes will no longer exist.

oc exec can be used to execute a command remotely. However, the oc rsh command provides an easier way to keep a remote shell open persistently.

8.3. Secure Shell Session Help

For help with usage, options, and to see examples:

$ oc rsh -h

Chapter 9. Templates

9.1. Overview

A template describes a set of objects that can be parameterized and processed to produce a list of objects for creation by OpenShift Enterprise. A template can be processed to create anything you have permission to create within a project, for example services, build configurations, and deployment configurations. A template may also define a set of labels to apply to every object defined in the template.

You can create a list of objects from a template using the CLI or, if a template has been uploaded to your project or the global template library, using the web console.
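To make the idea of processing a template concrete, the sketch below substitutes parameter values into a template held as a Python dictionary and returns the resulting object list. It is a simplified illustration, not the OpenShift Enterprise implementation. The ${NAME} placeholder syntax and the parameters and objects field names are assumptions not shown in this section, and generated values are not handled.

```python
import re

_PLACEHOLDER = re.compile(r"\$\{([A-Za-z0-9_]+)\}")


def process_template(template: dict, overrides: dict = None) -> list:
    """Replace ${NAME} placeholders in the template objects with parameter values."""
    # Start from the defaults declared in the template, then apply overrides.
    values = {p["name"]: p.get("value", "") for p in template.get("parameters", [])}
    values.update(overrides or {})

    def replace(match):
        name = match.group(1)
        # Unknown placeholders are left untouched in this sketch.
        return str(values[name]) if name in values else match.group(0)

    def substitute(node):
        if isinstance(node, str):
            return _PLACEHOLDER.sub(replace, node)
        if isinstance(node, list):
            return [substitute(item) for item in node]
        if isinstance(node, dict):
            return {key: substitute(value) for key, value in node.items()}
        return node

    return substitute(template.get("objects", []))


if __name__ == "__main__":
    example = {  # hypothetical one-object template
        "parameters": [{"name": "APP_NAME", "value": "demo"}],
        "objects": [{"kind": "Service", "metadata": {"name": "${APP_NAME}"}}],
    }
    print(process_template(example, {"APP_NAME": "rails-app"}))
```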

9.2. Uploading a Template

If you have a JSON or YAML file that defines a template, for example as seen in this example, you can upload the template to projects using the CLI. This saves the template to the project for repeated use by any user with appropriate access to that project. Instructions on writing your own templates are provided later in this topic.

To upload a template to your current project’s template library, pass the JSON or YAML file with the following command:

$ oc create -f <filename>

You can upload a template to a different project using the -n option with the name of the project:

$ oc create -f <filename> -n <project>

The template is now available for selection using the web console or the CLI.

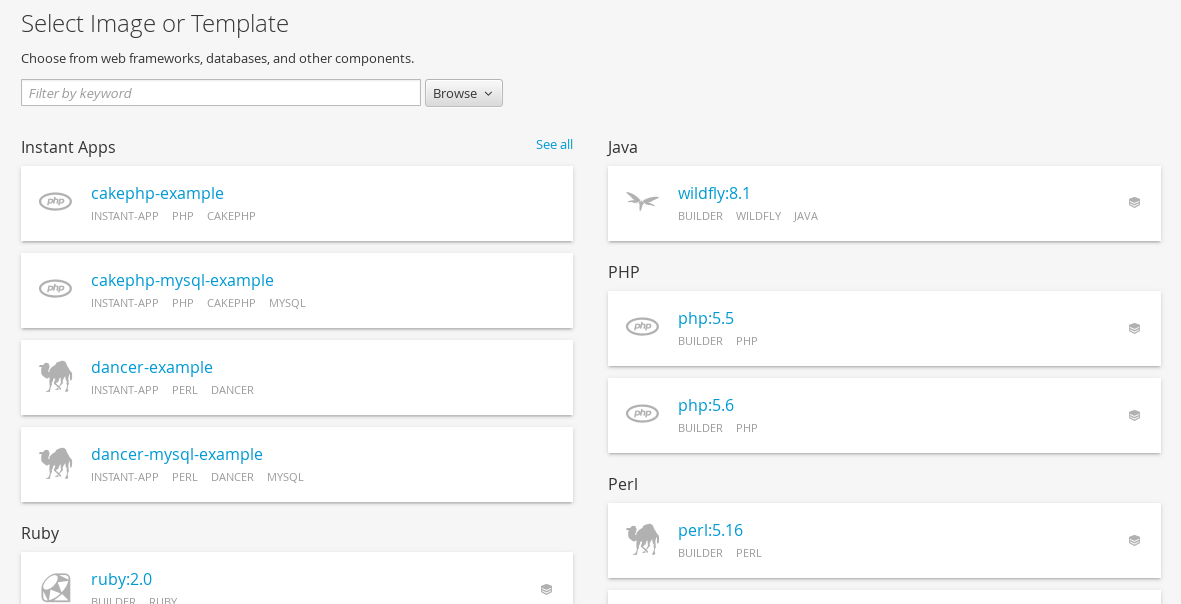

9.3. Creating from Templates Using the Web Console

To create the objects from an uploaded template using the web console:

While in the desired project, click Add to Project:

Select a template from the list of templates in your project, or provided by the global template library:

Modify template parameters in the template creation screen:

- Template name and description.

- Container images included in the template.

- Parameters defined by the template. You can edit values for parameters defined in the template here.

- Labels to assign to all items included in the template. You can add and edit labels for objects.

9.4. Creating from Templates Using the CLI

You can use the CLI to process templates and use the configuration that is generated to create objects.

9.4.1. Labels

Labels are used to manage and organize generated objects, such as pods. The labels specified in the template are applied to every object that is generated from the template.

You can also add labels to the template from the command line:

$ oc process -f <filename> -l name=otherLabel

9.4.2. Parameters

The parameters that you can override are listed in the parameters section of the template. You can list them with the CLI by using the following command and specifying the file to be used:

$ oc process --parameters -f <filename>

Alternatively, if the template is already uploaded:

$ oc process --parameters -n <project> <template_name>

For example, the following shows the output when listing the parameters for one of the Quickstart templates in the default openshift project:

$ oc process --parameters -n openshift rails-postgresql-example

| NAME | DESCRIPTION | GENERATOR | VALUE |
| --- | --- | --- | --- |
| SOURCE_REPOSITORY_URL | The URL of the repository with your application source code | | https://github.com/openshift/rails-ex.git |
| SOURCE_REPOSITORY_REF | Set this to a branch name, tag or other ref of your repository if you are not using the default branch | | |
| CONTEXT_DIR | Set this to the relative path to your project if it is not in the root of your repository | | |
| APPLICATION_DOMAIN | The exposed hostname that will route to the Rails service | | rails-postgresql-example.openshiftapps.com |
| GITHUB_WEBHOOK_SECRET | A secret string used to configure the GitHub webhook | expression | [a-zA-Z0-9]{40} |
| SECRET_KEY_BASE | Your secret key for verifying the integrity of signed cookies | expression | [a-z0-9]{127} |
| APPLICATION_USER | The application user that is used within the sample application to authorize access on pages | | openshift |
| APPLICATION_PASSWORD | The application password that is used within the sample application to authorize access on pages | | secret |
| DATABASE_SERVICE_NAME | Database service name | | postgresql |
| POSTGRESQL_USER | database username | expression | user[A-Z0-9]{3} |
| POSTGRESQL_PASSWORD | database password | expression | [a-zA-Z0-9]{8} |
| POSTGRESQL_DATABASE | database name | | root |
| POSTGRESQL_MAX_CONNECTIONS | database max connections | | 10 |
| POSTGRESQL_SHARED_BUFFERS | database shared buffers | | 12MB |

The output identifies several parameters that are generated with a regular expression-like generator when the template is processed.
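When a template defines many parameters, it can help to pull only the names, or only the generated parameters, out of the listing. The following is a minimal sketch that filters a saved copy of the listing with awk. The file name params.txt and the helper functions param_names and generated_params are assumptions made for illustration and are not part of oc. The sample rows are abridged from the output above.

```shell
# Sample listing, abridged from the output shown above. In practice you might
# capture it with:
#   oc process --parameters -n openshift rails-postgresql-example > params.txt
cat > params.txt <<'EOF'
NAME                    DESCRIPTION                                                   GENERATOR    VALUE
SOURCE_REPOSITORY_URL   The URL of the repository with your application source code                https://github.com/openshift/rails-ex.git
GITHUB_WEBHOOK_SECRET   A secret string used to configure the GitHub webhook          expression   [a-zA-Z0-9]{40}
POSTGRESQL_USER         database username                                             expression   user[A-Z0-9]{3}
POSTGRESQL_DATABASE     database name                                                              root
EOF

# Print every parameter name: skip the header row, keep the first column.
param_names() {
  awk 'NR > 1 && NF > 0 { print $1 }' "$1"
}

# Print the names of parameters whose row contains the word "expression".
# This is a heuristic: a description that contains the standalone word
# "expression" would also match.
generated_params() {
  awk 'NR > 1 { for (i = 2; i <= NF; i++) if ($i == "expression") { print $1; break } }' "$1"
}

param_names params.txt
generated_params params.txt
```

The names printed by generated_params are the parameters that receive a new value each time the template is processed, unless you override them.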

9.4.3. Generating a List of Objects

Using the CLI, you can process a file defining a template to return the list of objects to standard output:

$ oc process -f <filename>

Alternatively, if the template has already been uploaded to the current project:

$ oc process <template_name>

The process command also accepts several templates at once. In that case, every template is processed, and the resulting list contains the objects from all templates passed to the command:

$ cat <first_template> <second_template> | oc process -f -

You can create objects from a template by processing the template and piping the output to oc create:

$ oc process -f <filename> | oc create -f -

Alternatively, if the template has already been uploaded to the current project:

$ oc process <template> | oc create -f -

You can override any parameter values defined in the file by adding the -v option followed by a comma-separated list of <name>=<value> pairs. A parameter reference may appear in any text field inside the template items.
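Because -v expects a single comma-separated string, scripts that collect overrides one at a time need to join them first. The following is a small sketch of one way to do that. The join_params helper is hypothetical and not part of oc, and the template file name is borrowed from the example in this section. The command is printed rather than executed, so the sketch can be tried without cluster access.

```shell
# join_params: join NAME=value arguments with commas, the form -v expects.
# Hypothetical helper, not part of oc. Values that themselves contain a
# comma are not handled. The body runs in a subshell so the IFS change
# does not leak into the calling shell.
join_params() (
  IFS=,
  printf '%s\n' "$*"
)

overrides=$(join_params "POSTGRESQL_USER=bob" "POSTGRESQL_DATABASE=mydatabase")

# Print the command rather than run it.
echo "oc process -f my-rails-postgresql -v $overrides"
```

Keeping the pairs as separate arguments until the last moment makes it easier to add or remove an override without editing one long string.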

For example, in the following, the POSTGRESQL_USER and POSTGRESQL_DATABASE parameters of a template are overridden to output a configuration with customized environment variables:

Example 9.1. Creating a List of Objects from a Template

$ oc process -f my-rails-postgresql \
    -v POSTGRESQL_USER=bob,POSTGRESQL_DATABASE=mydatabase

The generated JSON can either be redirected to a file or applied directly, without uploading the template, by piping the processed output to the oc create command:

$ oc process -f my-rails-postgresql \
    -v POSTGRESQL_USER=bob,POSTGRESQL_DATABASE=mydatabase \
    | oc create -f -

9.5. Modifying an Uploaded Template

You can edit a template that has already been uploaded to your project by using the following command:

$ oc edit template <template>

9.6. Using the Instant App and Quickstart Templates

OpenShift Enterprise provides a number of default Instant App and Quickstart templates to make it easy to quickly get started creating a new application for different languages. Templates are provided for Rails (Ruby), Django (Python), Node.js, CakePHP (PHP), and Dancer (Perl). Your cluster administrator should have created these templates in the default, global openshift project so you have access to them. You can list the available default Instant App and Quickstart templates with:

$ oc get templates -n openshift

If they are not available, direct your cluster administrator to the Loading the Default Image Streams and Templates topic.

By default, the templates build using a public source repository on GitHub that contains the necessary application code. In order to be able to modify the source and build your own version of the application, you must:

- Fork the repository referenced by the template’s default SOURCE_REPOSITORY_URL parameter.

- Override the value of the SOURCE_REPOSITORY_URL parameter when creating from the template, specifying your fork instead of the default value.

By doing this, the build configuration created by the template will now point to your fork of the application code, and you can modify the code and rebuild the application at will. A walkthrough of this process using the web console is provided in Getting Started for Developers: Web Console.
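On the command line, the two steps above might look like the following. This is a sketch under assumptions: exampleuser is a placeholder GitHub account, fork_url is a hypothetical helper rather than part of oc, and the final command simply combines the oc process and oc create invocations shown earlier in this chapter. The command is printed rather than executed, so the sketch can be tried without cluster access.

```shell
# Default value of SOURCE_REPOSITORY_URL for the rails-postgresql-example
# template, as shown in the parameter listing earlier in this chapter.
default_url="https://github.com/openshift/rails-ex.git"

# fork_url URL ACCOUNT: swap the "openshift" GitHub organization for ACCOUNT.
# Hypothetical helper; it assumes the fork keeps the repository name.
fork_url() {
  printf '%s\n' "$1" | sed "s|github.com/openshift/|github.com/$2/|"
}

# "exampleuser" is a placeholder for your own GitHub account.
my_url=$(fork_url "$default_url" "exampleuser")

# Print the command rather than run it.
echo "oc process -n openshift rails-postgresql-example -v SOURCE_REPOSITORY_URL=$my_url | oc create -f -"
```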

Some of the Instant App and Quickstart templates define a database deployment configuration. The configuration they define uses ephemeral storage for the database content. These templates should be used for demonstration purposes only as all database data will be lost if the database pod restarts for any reason.

9.7. Writing Templates

You can define new templates to make it easy to recreate all the objects of your application. The template will define the objects it creates along with some metadata to guide the creation of those objects.

9.7.1. Description

The template description informs users what your template does and helps them find it when searching in the web console. In addition to general descriptive information, it includes a set of tags. Useful tags include the name of the language your template is related to (for example, java, php, or ruby). In addition, adding the special tag instant-app causes your template to be displayed in the list of Instant Apps on the template selection page of the web console.

kind: "Template"
apiVersion: "v1"
metadata:
  name: "cakephp-mysql-example" 1
  annotations:
    description: "An example CakePHP application with a MySQL database" 2
    tags: "instant-app,php,cakephp,mysql" 3
    iconClass: "icon-php" 4

9.7.2. Labels

Templates can include a set of labels. These labels will be added to each object created when the template is instantiated. Defining a label in this way makes it easy for users to find and manage all the objects created from a particular template.

kind: "Template"
apiVersion: "v1"
...
labels:
  template: "cakephp-mysql-example" 1

- 1

- A label that will be applied to all objects created from this template.

9.7.3. Parameters

Parameters allow a value to be supplied by the user or generated when the template is instantiated. This is useful for generating random passwords or allowing the user to supply a host name or other user-specific value that is required to customize the template. Parameters can be referenced by placing values in the form "${PARAMETER_NAME}" in place of any string field in the template.

kind: Template
apiVersion: v1
objects:
  - kind: BuildConfig
    apiVersion: v1
    metadata:
      name: cakephp-mysql-example
      annotations:
        description: Defines how to build the application
    spec:
      source:
        type: Git
        git:
          uri: "${SOURCE_REPOSITORY_URL}" 1
          ref: "${SOURCE_REPOSITORY_REF}"
        contextDir: "${CONTEXT_DIR}"
parameters:
  - name: SOURCE_REPOSITORY_URL 2
    description: The URL of the repository with your application source code 3
    value: https://github.com/openshift/cakephp-ex.git 4
    required: true 5
  - name: GITHUB_WEBHOOK_SECRET
    description: A secret string used to configure the GitHub webhook
    generate: expression 6
    from: "[a-zA-Z0-9]{40}" 7

- 1

- This value will be replaced with the value of the SOURCE_REPOSITORY_URL parameter when the template is instantiated.

- 2

- The name of the parameter. This value is displayed to users and used to reference the parameter within the template.

- 3

- A description of the parameter.

- 4

- A default value for the parameter which will be used if the user does not override the value when instantiating the template.

- 5

- Indicates this parameter is required, meaning the user cannot override it with an empty value. If the parameter does not provide a default or generated value, the user must supply a value.

- 6

- A parameter which has its value generated via a regular expression-like syntax.

- 7

- The input to the generator. In this case, the generator will produce a 40-character alphanumeric value including upper and lowercase characters.
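To get a feel for what the generated value and the parameter substitution look like, the following sketch approximates both with standard shell tools. It is an illustration only and not how OpenShift Enterprise processes templates. The helpers gen_alnum and substitute are hypothetical names, and gen_alnum covers only the simple character-class-plus-length form used in the example above.

```shell
# gen_alnum N: print N random characters from [a-zA-Z0-9], similar in spirit
# to a parameter declared with  from: "[a-zA-Z0-9]{N}".
# Reads 4096 random bytes and keeps the alphanumeric ones, so N should stay
# well below a few hundred.
gen_alnum() {
  head -c 4096 /dev/urandom | LC_ALL=C tr -dc 'a-zA-Z0-9' | cut -c "1-$1"
}

# substitute NAME VALUE: replace every ${NAME} on standard input with VALUE.
# VALUE must not contain "|", "&", or backslashes, which sed treats specially.
substitute() {
  sed "s|\\\${$1}|$2|g"
}

secret=$(gen_alnum 40)
echo "generated value is ${#secret} characters long"

# Substitute the default repository URL into one line of the template.
printf 'uri: "${SOURCE_REPOSITORY_URL}"\n' |
  substitute SOURCE_REPOSITORY_URL "https://github.com/openshift/cakephp-ex.git"
```

A value produced this way could be supplied as an override for GITHUB_WEBHOOK_SECRET if you prefer to choose the secret yourself instead of letting the template generate it.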

9.7.4. Object List

The main portion of the template is the list of objects which will be created when the template is instantiated. This can be any valid API object, such as a BuildConfig, DeploymentConfig, Service, etc. The object will be created exactly as defined here, with any parameter values substituted in prior to creation. The definition of these objects can reference parameters defined earlier.

kind: "Template"

apiVersion: "v1"

objects:

- kind: "Service" 1

apiVersion: "v1"

metadata: