Networking

Configuring and managing cluster networking

Abstract

Chapter 1. Understanding networking

Cluster Administrators have several options for exposing applications that run inside a cluster to external traffic and securing network connections:

- Service types, such as node ports or load balancers

- API resources, such as Ingress and Route

By default, Kubernetes allocates each pod an internal IP address for applications running within the pod. Pods and their containers can communicate with each other over the cluster network, but clients outside the cluster cannot reach them directly. Because each pod has its own IP address, pods can be treated like physical hosts or virtual machines in terms of port allocation, networking, naming, service discovery, load balancing, application configuration, and migration when you expose your application to external traffic.

Some cloud platforms offer metadata APIs that listen on the 169.254.169.254 IP address, a link-local IP address in the IPv4 169.254.0.0/16 CIDR block.

This CIDR block is not reachable from the pod network. Pods that need access to these IP addresses must be given host network access by setting the spec.hostNetwork field in the pod spec to true.

If you allow a pod host network access, you grant the pod privileged access to the underlying network infrastructure.
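As a minimal sketch of the setting described above, the following pod spec requests host network access. The pod name, container name, and image are hypothetical placeholders; only the spec.hostNetwork field comes from the text. Depending on your cluster's security settings, the pod's service account might also need permission to use host networking.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: metadata-client            # hypothetical pod name
spec:
  hostNetwork: true                # use the node's network so that 169.254.169.254 is reachable
  containers:
  - name: client                   # hypothetical container name
    image: registry.example.com/tools/curl:latest   # placeholder image
    command: ["sleep", "infinity"]
```

Because this grants privileged access to the underlying network, it is best reserved for the few pods that genuinely need the metadata API.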

1.1. OpenShift Container Platform DNS

If you are running multiple services, such as front-end and back-end services for use with multiple pods, environment variables are created for user names, service IPs, and more so the front-end pods can communicate with the back-end services. If the service is deleted and recreated, it can be assigned a new IP address, and the front-end pods must then be recreated to pick up the updated value of the service IP environment variable. Additionally, the back-end service must be created before any of the front-end pods so that the service IP is generated and can be provided to the front-end pods as an environment variable.

For this reason, OpenShift Container Platform has a built-in DNS so that the services can be reached by the service DNS as well as the service IP/port.
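To illustrate, assume a hypothetical back-end service named backend in a project named demo. With a service like the following sketch, front-end pods can typically address the back end by a stable DNS name of the form <service>.<namespace>.svc.cluster.local instead of an IP address that might change. The name, namespace, label, and ports are all illustrative.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: backend          # hypothetical service name
  namespace: demo        # hypothetical project
spec:
  selector:
    app: backend         # assumed label on the back-end pods
  ports:
  - port: 8080
    targetPort: 8080
# Front-end pods could then connect to backend.demo.svc.cluster.local:8080.
# The name stays valid even if the service is recreated with a new IP address.
```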

1.2. OpenShift Container Platform Ingress Operator

When you create your OpenShift Container Platform cluster, pods and services running on the cluster are each allocated their own IP addresses. The IP addresses are accessible to other pods and services running nearby but are not accessible to outside clients. The Ingress Operator implements the IngressController API and is the component responsible for enabling external access to OpenShift Container Platform cluster services.

The Ingress Operator makes it possible for external clients to access your service by deploying and managing one or more HAProxy-based Ingress Controllers to handle routing. You can use the Ingress Operator to route traffic by specifying OpenShift Container Platform Route and Kubernetes Ingress resources. Configurations within the Ingress Controller, such as the ability to define endpointPublishingStrategy type and internal load balancing, provide ways to publish Ingress Controller endpoints.
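For illustration only, an additional Ingress Controller that publishes its endpoint through an internal load balancer might look like the following sketch. The controller name and domain are hypothetical, and the field layout is assumed from the IngressController API in the operator.openshift.io group, so check it against your cluster version.

```yaml
apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  name: internal                         # hypothetical controller name
  namespace: openshift-ingress-operator
spec:
  domain: apps-internal.example.com      # hypothetical domain served by this controller
  endpointPublishingStrategy:
    type: LoadBalancerService            # publish through a load balancer service
    loadBalancer:
      scope: Internal                    # internal rather than external load balancer
```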

1.2.1. Comparing routes and Ingress

The Kubernetes Ingress resource in OpenShift Container Platform implements the Ingress Controller with a shared router service that runs as a pod inside the cluster. The most common way to manage Ingress traffic is with the Ingress Controller. You can scale and replicate this pod like any other regular pod. This router service is based on HAProxy, which is an open source load balancer solution.

The OpenShift Container Platform route provides Ingress traffic to services in the cluster. Routes provide advanced features that might not be supported by standard Kubernetes Ingress Controllers, such as TLS re-encryption, TLS passthrough, and split traffic for blue-green deployments.

Ingress traffic accesses services in the cluster through a route. Routes and Ingress are the main resources for handling Ingress traffic. Ingress provides features similar to a route, such as accepting external requests and delegating them based on the route. However, with Ingress you can only allow certain types of connections: HTTP/2, HTTPS and Server Name Indication (SNI), and TLS with certificate. In OpenShift Container Platform, routes are generated to meet the conditions specified by the Ingress resource.
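The difference is easier to see side by side. The following sketch exposes a hypothetical frontend service in two alternative ways: as a route that uses TLS passthrough, and as a Kubernetes Ingress resource. The two objects are not meant to be applied together. Host names, service names, and ports are placeholders, and the apiVersion values are assumptions that depend on the cluster release.

```yaml
# Alternative 1: a route. TLS passthrough is one of the route-specific features.
apiVersion: route.openshift.io/v1
kind: Route
metadata:
  name: frontend
spec:
  host: www.example.com
  to:
    kind: Service
    name: frontend
  port:
    targetPort: 8443
  tls:
    termination: passthrough
---
# Alternative 2: an Ingress resource. A route is generated to satisfy it.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: frontend
spec:
  rules:
  - host: www.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: frontend
            port:
              number: 8080
```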

1.3. Glossary of common terms for OpenShift Container Platform networking

This glossary defines common terms that are used in the networking content.

- authentication

- To control access to an OpenShift Container Platform cluster, a cluster administrator can configure user authentication and ensure only approved users access the cluster. To interact with an OpenShift Container Platform cluster, you must authenticate to the OpenShift Container Platform API. You can authenticate by providing an OAuth access token or an X.509 client certificate in your requests to the OpenShift Container Platform API.

- AWS Load Balancer Operator

- The AWS Load Balancer (ALB) Operator deploys and manages an instance of the aws-load-balancer-controller.

- Cluster Network Operator

- The Cluster Network Operator (CNO) deploys and manages the cluster network components in an OpenShift Container Platform cluster. This includes deployment of the Container Network Interface (CNI) default network provider plug-in selected for the cluster during installation.

- config map

- A config map provides a way to inject configuration data into pods. You can reference the data stored in a config map in a volume of type ConfigMap. Applications running in a pod can use this data.

- custom resource (CR)

- A CR is an extension of the Kubernetes API. You can create custom resources.

- DNS

- Cluster DNS is a DNS server which serves DNS records for Kubernetes services. Containers started by Kubernetes automatically include this DNS server in their DNS searches.

- DNS Operator

- The DNS Operator deploys and manages CoreDNS to provide a name resolution service to pods. This enables DNS-based Kubernetes Service discovery in OpenShift Container Platform.

- deployment

- A Kubernetes resource object that maintains the life cycle of an application.

- domain

- Domain is a DNS name serviced by the Ingress Controller.

- egress

- The process of data sharing externally through a network’s outbound traffic from a pod.

- External DNS Operator

- The External DNS Operator deploys and manages ExternalDNS to provide the name resolution for services and routes from the external DNS provider to OpenShift Container Platform.

- HTTP-based route

- An HTTP-based route is an unsecured route that uses the basic HTTP routing protocol and exposes a service on an unsecured application port.

- Ingress

- The Kubernetes Ingress resource in OpenShift Container Platform implements the Ingress Controller with a shared router service that runs as a pod inside the cluster.

- Ingress Controller

- The Ingress Operator manages Ingress Controllers. Using an Ingress Controller is the most common way to allow external access to an OpenShift Container Platform cluster.

- installer-provisioned infrastructure

- The installation program deploys and configures the infrastructure that the cluster runs on.

- kubelet

- A primary node agent that runs on each node in the cluster to ensure that containers are running in a pod.

- Kubernetes NMState Operator

- The Kubernetes NMState Operator provides a Kubernetes API for performing state-driven network configuration across the OpenShift Container Platform cluster’s nodes with NMState.

- kube-proxy

- Kube-proxy is a proxy service that runs on each node and helps make services available to external hosts. It forwards requests to the correct containers and can perform primitive load balancing.

- load balancers

- OpenShift Container Platform uses load balancers for communicating from outside the cluster with services running in the cluster.

- MetalLB Operator

- As a cluster administrator, you can add the MetalLB Operator to your cluster so that when a service of type LoadBalancer is added to the cluster, MetalLB can add an external IP address for the service.

- multicast

- With IP multicast, data is broadcast to many IP addresses simultaneously.

- namespaces

- A namespace isolates specific system resources that are visible to all processes. Inside a namespace, only processes that are members of that namespace can see those resources.

- networking

- Network information of an OpenShift Container Platform cluster.

- node

- A worker machine in the OpenShift Container Platform cluster. A node is either a virtual machine (VM) or a physical machine.

- OpenShift Container Platform Ingress Operator

- The Ingress Operator implements the IngressController API and is the component responsible for enabling external access to OpenShift Container Platform services.

- pod

- One or more containers with shared resources, such as volume and IP addresses, running in your OpenShift Container Platform cluster. A pod is the smallest compute unit defined, deployed, and managed.

- PTP Operator

- The PTP Operator creates and manages the linuxptp services.

- route

- The OpenShift Container Platform route provides Ingress traffic to services in the cluster. Routes provide advanced features that might not be supported by standard Kubernetes Ingress Controllers, such as TLS re-encryption, TLS passthrough, and split traffic for blue-green deployments.

- scaling

- Increasing or decreasing the resource capacity.

- service

- Exposes a running application on a set of pods.

- Single Root I/O Virtualization (SR-IOV) Network Operator

- The Single Root I/O Virtualization (SR-IOV) Network Operator manages the SR-IOV network devices and network attachments in your cluster.

- software-defined networking (SDN)

- OpenShift Container Platform uses a software-defined networking (SDN) approach to provide a unified cluster network that enables communication between pods across the OpenShift Container Platform cluster.

- Stream Control Transmission Protocol (SCTP)

- SCTP is a reliable, message-based protocol that runs on top of an IP network.

- taint

- Taints and tolerations ensure that pods are scheduled onto appropriate nodes. You can apply one or more taints on a node.

- toleration

- You can apply tolerations to pods. Tolerations allow the scheduler to schedule pods with matching taints.

- web console

- A user interface (UI) to manage OpenShift Container Platform.

Chapter 2. Accessing hosts

Learn how to create a bastion host to access OpenShift Container Platform instances and access the control plane nodes with secure shell (SSH) access.

2.1. Accessing hosts on Amazon Web Services in an installer-provisioned infrastructure cluster

The OpenShift Container Platform installer does not create any public IP addresses for any of the Amazon Elastic Compute Cloud (Amazon EC2) instances that it provisions for your OpenShift Container Platform cluster. To be able to SSH to your OpenShift Container Platform hosts, you must follow this procedure.

Procedure

- Create a security group that allows SSH access into the virtual private cloud (VPC) created by the openshift-install command.

- Create an Amazon EC2 instance on one of the public subnets the installer created.

- Associate a public IP address with the Amazon EC2 instance that you created.

  Unlike with the OpenShift Container Platform installation, you should associate the Amazon EC2 instance you created with an SSH keypair. It does not matter what operating system you choose for this instance, as it will simply serve as an SSH bastion to bridge the internet into your OpenShift Container Platform cluster’s VPC. The Amazon Machine Image (AMI) you use does matter. With Red Hat Enterprise Linux CoreOS (RHCOS), for example, you can provide keys via Ignition, like the installer does.

- After you provision your Amazon EC2 instance and can SSH into it, add the SSH key that you associated with your OpenShift Container Platform installation. This key can be different from the key for the bastion instance, but does not have to be.

  Note: Direct SSH access is only recommended for disaster recovery. When the Kubernetes API is responsive, run privileged pods instead.

- Run oc get nodes, inspect the output, and choose one of the nodes that is a master. The hostname looks similar to ip-10-0-1-163.ec2.internal.

- From the bastion SSH host you manually deployed into Amazon EC2, SSH into that control plane host. Ensure that you use the same SSH key you specified during the installation:

  $ ssh -i <ssh-key-path> core@<master-hostname>

Chapter 3. Networking Operators overview

OpenShift Container Platform supports multiple types of networking Operators. You can manage the cluster networking using these networking Operators.

3.1. Cluster Network Operator

The Cluster Network Operator (CNO) deploys and manages the cluster network components in an OpenShift Container Platform cluster. This includes deployment of the Container Network Interface (CNI) default network provider plugin selected for the cluster during installation. For more information, see Cluster Network Operator in OpenShift Container Platform.

3.2. DNS Operator

The DNS Operator deploys and manages CoreDNS to provide a name resolution service to pods. This enables DNS-based Kubernetes Service discovery in OpenShift Container Platform. For more information, see DNS Operator in OpenShift Container Platform.

3.3. Ingress Operator

When you create your OpenShift Container Platform cluster, pods and services running on the cluster are each allocated IP addresses. The IP addresses are accessible to other pods and services running nearby but are not accessible to external clients. The Ingress Operator implements the Ingress Controller API and is responsible for enabling external access to OpenShift Container Platform cluster services. For more information, see Ingress Operator in OpenShift Container Platform.

3.4. External DNS Operator

The External DNS Operator deploys and manages ExternalDNS to provide the name resolution for services and routes from the external DNS provider to OpenShift Container Platform. For more information, see Understanding the External DNS Operator.

3.5. Network Observability Operator

The Network Observability Operator is an optional Operator that allows cluster administrators to observe the network traffic for OpenShift Container Platform clusters. The Network Observability Operator uses the eBPF technology to create network flows. The network flows are then enriched with OpenShift Container Platform information and stored in Loki. You can view and analyze the stored network flows information in the OpenShift Container Platform console for further insight and troubleshooting. For more information, see About Network Observability Operator.

Chapter 4. Cluster Network Operator in OpenShift Container Platform

The Cluster Network Operator (CNO) deploys and manages the cluster network components on an OpenShift Container Platform cluster, including the Container Network Interface (CNI) default network provider plugin selected for the cluster during installation.

4.1. Cluster Network Operator

The Cluster Network Operator implements the network API from the operator.openshift.io API group. The Operator deploys the OpenShift SDN default Container Network Interface (CNI) network provider plugin, or the default network provider plugin that you selected during cluster installation, by using a daemon set.

Procedure

The Cluster Network Operator is deployed during installation as a Kubernetes Deployment.

- Run the following command to view the Deployment status:

  $ oc get -n openshift-network-operator deployment/network-operator

  Example output

  NAME               READY   UP-TO-DATE   AVAILABLE   AGE
  network-operator   1/1     1            1           56m

- Run the following command to view the state of the Cluster Network Operator:

  $ oc get clusteroperator/network

  Example output

  NAME      VERSION   AVAILABLE   PROGRESSING   DEGRADED   SINCE
  network   4.5.4     True        False         False      50m

  The following fields provide information about the status of the operator: AVAILABLE, PROGRESSING, and DEGRADED. The AVAILABLE field is True when the Cluster Network Operator reports an available status condition.

4.2. Viewing the cluster network configuration

Every new OpenShift Container Platform installation has a network.config object named cluster.

Procedure

Use the oc describe command to view the cluster network configuration:

  $ oc describe network.config/cluster
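As a rough guide to what the object holds, a network.config object named cluster could resemble the following YAML. This is an illustrative sketch, not captured output: the address ranges and provider are assumed typical values that differ per cluster, and oc describe prints the same information in its own text layout instead of YAML.

```yaml
apiVersion: config.openshift.io/v1
kind: Network
metadata:
  name: cluster
spec:
  clusterNetwork:
  - cidr: 10.128.0.0/14      # assumed pod address pool
    hostPrefix: 23           # assumed per-node subnet prefix length
  networkType: OpenShiftSDN  # assumed cluster network provider
  serviceNetwork:
  - 172.30.0.0/16            # assumed service address pool
```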

4.3. Viewing Cluster Network Operator status

You can inspect the status and view the details of the Cluster Network Operator using the oc describe command.

Procedure

Run the following command to view the status of the Cluster Network Operator:

  $ oc describe clusteroperators/network

4.4. Viewing Cluster Network Operator logs

You can view Cluster Network Operator logs by using the oc logs command.

Procedure

Run the following command to view the logs of the Cluster Network Operator:

  $ oc logs --namespace=openshift-network-operator deployment/network-operator

4.5. Cluster Network Operator configuration

The configuration for the cluster network is specified as part of the Cluster Network Operator (CNO) configuration and stored in a custom resource (CR) object that is named cluster. The CR specifies the fields for the Network API in the operator.openshift.io API group.

The CNO configuration inherits the following fields during cluster installation from the Network API in the Network.config.openshift.io API group and these fields cannot be changed:

- clusterNetwork: IP address pools from which pod IP addresses are allocated.

- serviceNetwork: IP address pool for services.

- defaultNetwork.type: Cluster network provider, such as OpenShift SDN or OVN-Kubernetes.

After cluster installation, you cannot modify the fields listed in the previous section.

You can specify the cluster network provider configuration for your cluster by setting the fields for the defaultNetwork object in the CNO object named cluster.

4.5.1. Cluster Network Operator configuration object

The fields for the Cluster Network Operator (CNO) are described in the following table:

| Field | Type | Description |
|---|---|---|
| | | The name of the CNO object. This name is always cluster. |
| clusterNetwork | | A list specifying the blocks of IP addresses from which pod IP addresses are allocated and the subnet prefix length assigned to each individual node in the cluster. This value is read-only and inherited from the Network API in the Network.config.openshift.io API group during cluster installation. |
| serviceNetwork | | A block of IP addresses for services. The OpenShift SDN and OVN-Kubernetes Container Network Interface (CNI) network providers support only a single IP address block for the service network. For example, a serviceNetwork list with the single entry 172.30.0.0/14. This value is read-only and inherited from the Network API in the Network.config.openshift.io API group during cluster installation. |
| defaultNetwork | | Configures the Container Network Interface (CNI) cluster network provider for the cluster network. |
| kubeProxyConfig | | The fields for this object specify the kube-proxy configuration. If you are using the OVN-Kubernetes cluster network provider, the kube-proxy configuration has no effect. |

defaultNetwork object configuration

The values for the defaultNetwork object are defined in the following table:

| Field | Type | Description |
|---|---|---|
| type | | Either OpenShift SDN or OVN-Kubernetes. Note: OpenShift Container Platform uses the OpenShift SDN Container Network Interface (CNI) cluster network provider by default. |
| | | This object is only valid for the OpenShift SDN cluster network provider. |
| | | This object is only valid for the OVN-Kubernetes cluster network provider. |

Configuration for the OpenShift SDN CNI cluster network provider

The following table describes the configuration fields for the OpenShift SDN Container Network Interface (CNI) cluster network provider.

| Field | Type | Description |
|---|---|---|
| | | The network isolation mode for OpenShift SDN. |
| | | The maximum transmission unit (MTU) for the VXLAN overlay network. This value is normally configured automatically. |
| | | The port to use for all VXLAN packets. The default value is |

You can only change the configuration for your cluster network provider during cluster installation.

Example OpenShift SDN configuration
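A minimal sketch of such a configuration follows. The field names (openshiftSDNConfig, mode, mtu, vxlanPort) are assumed from the Network API in the operator.openshift.io group, and the values are illustrative, not recommendations.

```yaml
defaultNetwork:
  type: OpenShiftSDN
  openshiftSDNConfig:
    mode: NetworkPolicy   # network isolation mode (assumed value)
    mtu: 1450             # MTU for the VXLAN overlay; normally configured automatically
    vxlanPort: 4789       # port for all VXLAN packets (assumed value)
```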

Configuration for the OVN-Kubernetes CNI cluster network provider

The following table describes the configuration fields for the OVN-Kubernetes CNI cluster network provider.

| Field | Type | Description |
|---|---|---|
| | | The maximum transmission unit (MTU) for the Geneve (Generic Network Virtualization Encapsulation) overlay network. This value is normally configured automatically. |
| | | The UDP port for the Geneve overlay network. |
| | | If the field is present, IPsec is enabled for the cluster. |
| | | Specify a configuration object for customizing network policy audit logging. If unset, the default audit log settings are used. |
| gatewayConfig | | Optional: Specify a configuration object for customizing how egress traffic is sent to the node gateway. Note: While migrating egress traffic, you can expect some disruption to workloads and service traffic until the Cluster Network Operator (CNO) successfully rolls out the changes. |

Network policy audit logging object configuration

| Field | Type | Description |
|---|---|---|
| | integer | The maximum number of messages to generate every second per node. The default value is |
| | integer | The maximum size for the audit log in bytes. The default value is |
| | string | One of the following additional audit log targets: |
| | string | The syslog facility, such as |

gatewayConfig object configuration

| Field | Type | Description |
|---|---|---|
| | | Set this field to This field has an interaction with the Open vSwitch hardware offloading feature. If you set this field to |

You can only change the configuration for your cluster network provider during cluster installation, except for the gatewayConfig field that can be changed at runtime as a post-installation activity.

Example OVN-Kubernetes configuration with IPSec enabled
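A minimal sketch of such a configuration follows. The field names (ovnKubernetesConfig, mtu, genevePort, ipsecConfig) are assumed from the Network API in the operator.openshift.io group, and the values are illustrative. As the table above notes, IPsec is enabled by the mere presence of the IPsec field, so an empty object is enough.

```yaml
defaultNetwork:
  type: OVNKubernetes
  ovnKubernetesConfig:
    mtu: 1400          # MTU for the Geneve overlay; normally configured automatically
    genevePort: 6081   # UDP port for the Geneve overlay (assumed value)
    ipsecConfig: {}    # presence of this field enables IPsec for the cluster
```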

kubeProxyConfig object configuration

The values for the kubeProxyConfig object are defined in the following table:

| Field | Type | Description |
|---|---|---|
| | | The refresh period for Note: Because of performance improvements introduced in OpenShift Container Platform 4.3 and greater, adjusting the |
| proxyArguments.iptables-min-sync-period | | The minimum duration before refreshing kubeProxyConfig: proxyArguments: iptables-min-sync-period: - 0s |

4.5.2. Cluster Network Operator example configuration

A complete CNO configuration is specified in the following example:

Example Cluster Network Operator object
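The following sketch shows how the pieces described in this section could fit together in one object. It assumes the OpenShift SDN provider. The address ranges, MTU, and port values are illustrative, and field names that do not appear in the text above (such as hostPrefix, openshiftSDNConfig, and iptablesSyncPeriod) are assumptions based on the Network API in the operator.openshift.io group.

```yaml
apiVersion: operator.openshift.io/v1
kind: Network
metadata:
  name: cluster                # the CNO object is always named cluster
spec:
  clusterNetwork:              # inherited at installation; read-only afterwards
  - cidr: 10.128.0.0/14        # assumed pod address pool
    hostPrefix: 23             # assumed per-node subnet prefix length
  serviceNetwork:              # inherited at installation; read-only afterwards
  - 172.30.0.0/16
  defaultNetwork:
    type: OpenShiftSDN
    openshiftSDNConfig:
      mode: NetworkPolicy
      mtu: 1450
      vxlanPort: 4789
  kubeProxyConfig:             # has no effect with OVN-Kubernetes
    iptablesSyncPeriod: 30s    # assumed field name and value
    proxyArguments:
      iptables-min-sync-period:
      - 0s
```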

Chapter 5. DNS Operator in OpenShift Container Platform

The DNS Operator deploys and manages CoreDNS to provide a name resolution service to pods, enabling DNS-based Kubernetes Service discovery in OpenShift Container Platform.

5.1. DNS Operator

The DNS Operator implements the dns API from the operator.openshift.io API group. The Operator deploys CoreDNS using a daemon set, creates a service for the daemon set, and configures the kubelet to instruct pods to use the CoreDNS service IP address for name resolution.

Procedure

The DNS Operator is deployed during installation with a Deployment object.

- Use the oc get command to view the deployment status:

  $ oc get -n openshift-dns-operator deployment/dns-operator

  Example output

  NAME           READY   UP-TO-DATE   AVAILABLE   AGE
  dns-operator   1/1     1            1           23h

- Use the oc get command to view the state of the DNS Operator:

  $ oc get clusteroperator/dns

  Example output

  NAME   VERSION      AVAILABLE   PROGRESSING   DEGRADED   SINCE
  dns    4.1.0-0.11   True        False         False      92m

  AVAILABLE, PROGRESSING, and DEGRADED provide information about the status of the operator. AVAILABLE is True when at least 1 pod from the CoreDNS daemon set reports an Available status condition.

5.2. Changing the DNS Operator managementState

The DNS Operator manages the CoreDNS component to provide a name resolution service for pods and services in the cluster. The managementState of the DNS Operator is set to Managed by default, which means that the DNS Operator is actively managing its resources. You can change it to Unmanaged, which means the DNS Operator is not managing its resources.

The following are use cases for changing the DNS Operator managementState:

- You are a developer and want to test a configuration change to see if it fixes an issue in CoreDNS. You can stop the DNS Operator from overwriting the fix by setting the managementState to Unmanaged.

- You are a cluster administrator and have reported an issue with CoreDNS, but need to apply a workaround until the issue is fixed. You can set the managementState field of the DNS Operator to Unmanaged to apply the workaround.

Procedure

Change the managementState of the DNS Operator:

  $ oc patch dns.operator.openshift.io default --type merge --patch '{"spec":{"managementState":"Unmanaged"}}'

5.3. Controlling DNS pod placement

The DNS Operator has two daemon sets: one for CoreDNS and one for managing the /etc/hosts file. The daemon set for /etc/hosts must run on every node host to add an entry for the cluster image registry to support pulling images. Security policies can prohibit communication between pairs of nodes, which prevents the daemon set for CoreDNS from running on every node.

As a cluster administrator, you can use a custom node selector to configure the daemon set for CoreDNS to run or not run on certain nodes.

Prerequisites

- You installed the oc CLI.

- You are logged in to the cluster with a user with cluster-admin privileges.

Procedure

- To prevent communication between certain nodes, configure the spec.nodePlacement.nodeSelector API field:

  - Modify the DNS Operator object named default:

    $ oc edit dns.operator/default

  - Specify a node selector in the spec.nodePlacement.nodeSelector API field. The following example selects only worker nodes:

    spec:
      nodePlacement:
        nodeSelector:
          node-role.kubernetes.io/worker: ""

- To allow the daemon set for CoreDNS to run on nodes, configure a taint and toleration:

  - Modify the DNS Operator object named default:

    $ oc edit dns.operator/default

  - Specify a taint key and a toleration for the taint:

    - 1: If the taint is dns-only, it can be tolerated indefinitely. You can omit tolerationSeconds.
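The taint-and-toleration step can be sketched as follows. Only the dns-only key and the tolerationSeconds field are named in the text. The effect, operator, value, and duration shown here are illustrative assumptions that follow the usual Kubernetes toleration fields.

```yaml
spec:
  nodePlacement:
    tolerations:
    - effect: NoExecute          # assumed taint effect
      key: "dns-only"            # taint key named in the note above
      operator: Equal
      value: abc                 # hypothetical taint value
      tolerationSeconds: 3600    # optional; omit to tolerate the taint indefinitely
```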

5.4. View the default DNS

Every new OpenShift Container Platform installation has a dns.operator named default.

Procedure

- Use the oc describe command to view the default dns:

  $ oc describe dns.operator/default

- To find the service CIDR of your cluster, use the oc get command:

  $ oc get networks.config/cluster -o jsonpath='{$.status.serviceNetwork}'

  Example output

  [172.30.0.0/16]

5.5. Using DNS forwarding

You can use DNS forwarding to override the default forwarding configuration in the /etc/resolv.conf file in the following ways:

- Specify name servers for every zone. If the forwarded zone is the Ingress domain managed by OpenShift Container Platform, then the upstream name server must be authorized for the domain.

- Provide a list of upstream DNS servers.

- Change the default forwarding policy.

A DNS forwarding configuration for the default domain can have both the default servers specified in the /etc/resolv.conf file and the upstream DNS servers.

Procedure

- Modify the DNS Operator object named default:

  $ oc edit dns.operator/default

  This allows the Operator to create and update the ConfigMap named dns-default with additional server configuration blocks based on Server. If none of the servers has a zone that matches the query, then name resolution falls back to the upstream DNS servers.

  Sample DNS

  - 1: Must comply with the rfc6335 service name syntax.

  - 2: Must conform to the definition of a subdomain in rfc1123. The cluster domain, cluster.local, is an invalid subdomain for zones.

  - 3: Defines the policy to select upstream resolvers. Default value is Random. You can also use RoundRobin and Sequential.

  - 4: A maximum of 15 upstreams is allowed per forwardPlugin.

  - 5: Optional. You can use it to override the default policy and forward DNS resolution to the specified DNS resolvers (upstream resolvers) for the default domain. If you do not provide any upstream resolvers, the DNS name queries go to the servers in /etc/resolv.conf.

  - 6: Determines the order in which upstream servers are selected for querying. You can specify one of these values: Random, RoundRobin, or Sequential. The default value is Sequential.

  - 7: Optional. You can use it to provide upstream resolvers.

  - 8: You can specify two types of upstreams: SystemResolvConf and Network. SystemResolvConf configures the upstream to use /etc/resolv.conf and Network defines a Network resolver. You can specify one or both.

  - 9: If the specified type is Network, you must provide an IP address. address must be a valid IPv4 or IPv6 address.

  - 10: If the specified type is Network, you can optionally provide a port. port must be between 1 and 65535.
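As a sketch of the kind of DNS Operator object that the numbered notes describe, the following YAML marks each note with a comment. The server name, zone, and IP addresses are hypothetical placeholders, and the field layout is assumed from the dns API in the operator.openshift.io group.

```yaml
apiVersion: operator.openshift.io/v1
kind: DNS
metadata:
  name: default
spec:
  servers:
  - name: example-server        # (1) hypothetical server name
    zones:
    - example.com               # (2) hypothetical zone
    forwardPlugin:
      policy: Random            # (3)
      upstreams:                # (4)
      - 1.1.1.1
      - 2.2.2.2:5353
  upstreamResolvers:            # (5)
    policy: Random              # (6)
    upstreams:                  # (7)
    - type: SystemResolvConf    # (8)
    - type: Network
      address: 1.2.3.4          # (9)
      port: 53                  # (10)
```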

Note: If servers is undefined or invalid, the ConfigMap only contains the default server.

- View the ConfigMap:

  $ oc get configmap/dns-default -n openshift-dns -o yaml

  Sample DNS ConfigMap based on previous sample DNS

  - 1: Changes to the forwardPlugin trigger a rolling update of the CoreDNS daemon set.

5.6. DNS Operator status

You can inspect the status and view the details of the DNS Operator using the oc describe command.

Procedure

View the status of the DNS Operator:

$ oc describe clusteroperators/dns

5.7. DNS Operator logs

You can view DNS Operator logs by using the oc logs command.

Procedure

View the logs of the DNS Operator:

$ oc logs -n openshift-dns-operator deployment/dns-operator -c dns-operator

5.8. Setting the CoreDNS log level

You can configure the CoreDNS log level to determine the amount of detail in logged error messages. The valid values for CoreDNS log level are Normal, Debug, and Trace. The default logLevel is Normal.

The errors plugin is always enabled. The following logLevel settings report different error responses:

- logLevel: Normal enables the "errors" class: log . { class error }.
- logLevel: Debug enables the "denial" class: log . { class denial error }.
- logLevel: Trace enables the "all" class: log . { class all }.

Procedure

To set logLevel to Debug, enter the following command:

$ oc patch dnses.operator.openshift.io/default -p '{"spec":{"logLevel":"Debug"}}' --type=merge

To set logLevel to Trace, enter the following command:

$ oc patch dnses.operator.openshift.io/default -p '{"spec":{"logLevel":"Trace"}}' --type=merge

Verification

To ensure the desired log level was set, check the config map:

$ oc get configmap/dns-default -n openshift-dns -o yaml

5.9. Setting the CoreDNS Operator log level

Cluster administrators can configure the Operator log level to more quickly track down OpenShift DNS issues. The valid values for operatorLogLevel are Normal, Debug, and Trace. Trace has the most detailed information. The default operatorLogLevel is Normal. There are seven logging levels for issues: Trace, Debug, Info, Warning, Error, Fatal, and Panic. After the logging level is set, log entries with that severity or anything above it are logged.

- operatorLogLevel: "Normal" sets logrus.SetLogLevel("Info").
- operatorLogLevel: "Debug" sets logrus.SetLogLevel("Debug").
- operatorLogLevel: "Trace" sets logrus.SetLogLevel("Trace").

Procedure

To set operatorLogLevel to Debug, enter the following command:

$ oc patch dnses.operator.openshift.io/default -p '{"spec":{"operatorLogLevel":"Debug"}}' --type=merge

To set operatorLogLevel to Trace, enter the following command:

$ oc patch dnses.operator.openshift.io/default -p '{"spec":{"operatorLogLevel":"Trace"}}' --type=merge
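The two log level settings patched in sections 5.8 and 5.9 live in the same DNS object. As a minimal declarative sketch, assuming you prefer to apply a manifest rather than run oc patch, the fields might be set together like this:

```yaml
# Illustrative sketch of the same fields that the oc patch commands modify.
apiVersion: operator.openshift.io/v1
kind: DNS
metadata:
  name: default
spec:
  logLevel: Debug            # CoreDNS log level: Normal, Debug, or Trace
  operatorLogLevel: Normal   # DNS Operator log level: Normal, Debug, or Trace
```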

Chapter 6. Ingress Operator in OpenShift Container Platform

6.1. OpenShift Container Platform Ingress Operator

When you create your OpenShift Container Platform cluster, pods and services running on the cluster are each allocated their own IP addresses. The IP addresses are accessible to other pods and services running nearby but are not accessible to outside clients. The Ingress Operator implements the IngressController API and is the component responsible for enabling external access to OpenShift Container Platform cluster services.

The Ingress Operator makes it possible for external clients to access your service by deploying and managing one or more HAProxy-based Ingress Controllers to handle routing. You can use the Ingress Operator to route traffic by specifying OpenShift Container Platform Route and Kubernetes Ingress resources. Configurations within the Ingress Controller, such as the ability to define endpointPublishingStrategy type and internal load balancing, provide ways to publish Ingress Controller endpoints.

6.2. The Ingress configuration asset

The installation program generates an asset with an Ingress resource in the config.openshift.io API group, cluster-ingress-02-config.yml.

YAML Definition of the Ingress resource

The installation program stores this asset in the cluster-ingress-02-config.yml file in the manifests/ directory. This Ingress resource defines the cluster-wide configuration for Ingress. This Ingress configuration is used as follows:

- The Ingress Operator uses the domain from the cluster Ingress configuration as the domain for the default Ingress Controller.
- The OpenShift API Server Operator uses the domain from the cluster Ingress configuration. This domain is also used when generating a default host for a Route resource that does not specify an explicit host.
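For orientation, an Ingress resource in the config.openshift.io API group might look roughly like the sketch below. The domain value is a placeholder, not a value taken from this document.

```yaml
# Illustrative sketch; the domain is a placeholder.
apiVersion: config.openshift.io/v1
kind: Ingress
metadata:
  name: cluster
spec:
  domain: apps.example.com   # used as the default Ingress Controller domain
```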

6.3. Ingress Controller configuration parameters

The ingresscontrollers.operator.openshift.io resource offers the following configuration parameters.

| Parameter | Description |

|---|---|

|

|

The

If empty, the default value is |

|

|

|

|

|

If not set, the default value is based on

For most platforms, the

|

|

|

The

The secret must contain the following keys and data: *

If not set, a wildcard certificate is automatically generated and used. The certificate is valid for the Ingress Controller The in-use certificate, whether generated or user-specified, is automatically integrated with OpenShift Container Platform built-in OAuth server. |

|

|

|

|

|

|

|

|

If not set, the default values are used. Note

The |

|

|

If not set, the default value is based on the

When using the

The minimum TLS version for Ingress Controllers is Note

Ciphers and the minimum TLS version of the configured security profile are reflected in the Important

The Ingress Operator converts the TLS |

|

|

The

The |

|

|

|

|

|

|

|

|

By setting the

By default, the policy is set to

By setting These adjustments are only applied to cleartext, edge-terminated, and re-encrypt routes, and only when using HTTP/1.

For request headers, these adjustments are applied only for routes that have the |

|

|

|

|

|

|

|

|

For any cookie that you want to capture, the following parameters must be in your

For example: httpCaptureCookies:

- matchType: Exact

maxLength: 128

name: MYCOOKIE

|

|

|

|

|

|

|

|

|

The

|

|

|

The

These connections come from load balancer health probes or web browser speculative connections (preconnect) and can be safely ignored. However, these requests can be caused by network errors, so setting this field to |

All parameters are optional.

6.3.1. Ingress Controller TLS security profiles

TLS security profiles provide a way for servers to regulate which ciphers a connecting client can use when connecting to the server.

6.3.1.1. Understanding TLS security profiles

You can use a TLS (Transport Layer Security) security profile to define which TLS ciphers are required by various OpenShift Container Platform components. The OpenShift Container Platform TLS security profiles are based on Mozilla recommended configurations.

You can specify one of the following TLS security profiles for each component:

| Profile | Description |
|---|---|
| Old | This profile is intended for use with legacy clients or libraries. The profile is based on the Old backward compatibility recommended configuration. The Old profile requires a minimum TLS version of 1.0. Note: For the Ingress Controller, the minimum TLS version is converted from 1.0 to 1.1. |
| Intermediate | This profile is the recommended configuration for the majority of clients. It is the default TLS security profile for the Ingress Controller, kubelet, and control plane. The profile is based on the Intermediate compatibility recommended configuration. The Intermediate profile requires a minimum TLS version of 1.2. |
| Modern | This profile is intended for use with modern clients that have no need for backwards compatibility. This profile is based on the Modern compatibility recommended configuration. The Modern profile requires a minimum TLS version of 1.3. |
| Custom | This profile allows you to define the TLS version and ciphers to use. Warning: Use caution when using a Custom profile. |

When using one of the predefined profile types, the effective profile configuration is subject to change between releases. For example, given a specification to use the Intermediate profile deployed on release X.Y.Z, an upgrade to release X.Y.Z+1 might cause a new profile configuration to be applied, resulting in a rollout.

6.3.1.2. Configuring the TLS security profile for the Ingress Controller

To configure a TLS security profile for an Ingress Controller, edit the IngressController custom resource (CR) to specify a predefined or custom TLS security profile. If a TLS security profile is not configured, the default value is based on the TLS security profile set for the API server.

Sample IngressController CR that configures the Old TLS security profile

The TLS security profile defines the minimum TLS version and the TLS ciphers for TLS connections for Ingress Controllers.
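As a minimal sketch of the sample described above, an IngressController CR that selects the Old profile might look like this. The metadata assumes the default Ingress Controller.

```yaml
# Illustrative sketch; assumes the default Ingress Controller.
apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  name: default
  namespace: openshift-ingress-operator
spec:
  tlsSecurityProfile:
    type: Old
    old: {}
```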

You can see the ciphers and the minimum TLS version of the configured TLS security profile in the IngressController custom resource (CR) under Status.Tls Profile and the configured TLS security profile under Spec.Tls Security Profile. For the Custom TLS security profile, the specific ciphers and minimum TLS version are listed under both parameters.

The HAProxy Ingress Controller image supports TLS 1.3 and the Modern profile.

The Ingress Operator also converts the TLS 1.0 of an Old or Custom profile to 1.1.

Prerequisites

- You have access to the cluster as a user with the cluster-admin role.

Procedure

Edit the IngressController CR in the openshift-ingress-operator project to configure the TLS security profile:

$ oc edit IngressController default -n openshift-ingress-operator

Add the spec.tlsSecurityProfile field:

Sample IngressController CR for a Custom profile

Save the file to apply the changes.
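The spec.tlsSecurityProfile field added in this procedure could, for a Custom profile, be sketched as follows. The cipher list and minimum TLS version are example choices for illustration, not recommendations.

```yaml
# Illustrative sketch; the ciphers and minTLSVersion are example values.
apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  name: default
  namespace: openshift-ingress-operator
spec:
  tlsSecurityProfile:
    type: Custom
    custom:
      ciphers:
      - ECDHE-ECDSA-CHACHA20-POLY1305
      - ECDHE-RSA-CHACHA20-POLY1305
      - ECDHE-RSA-AES128-GCM-SHA256
      - ECDHE-ECDSA-AES128-GCM-SHA256
      minTLSVersion: VersionTLS11
```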

Verification

Verify that the profile is set in the IngressController CR:

$ oc describe IngressController default -n openshift-ingress-operator

Example output

6.3.1.3. Configuring mutual TLS authentication

You can configure the Ingress Controller to enable mutual TLS (mTLS) authentication by setting a spec.clientTLS value. The clientTLS value configures the Ingress Controller to verify client certificates. This configuration includes setting a clientCA value, which is a reference to a config map. The config map contains the PEM-encoded CA certificate bundle that is used to verify a client’s certificate. Optionally, you can also configure a list of certificate subject filters.

If the clientCA value specifies an X509v3 certificate revocation list (CRL) distribution point, the Ingress Operator downloads and manages a CRL config map based on the HTTP URI X509v3 CRL Distribution Point specified in each provided certificate. The Ingress Controller uses this config map during mTLS/TLS negotiation. Requests that do not provide valid certificates are rejected.

Prerequisites

- You have access to the cluster as a user with the cluster-admin role.
- You have a PEM-encoded CA certificate bundle.
- If your CA bundle references a CRL distribution point, you must also include the end-entity or leaf certificate in the client CA bundle. This certificate must include an HTTP URI under CRL Distribution Points, as described in RFC 5280. For example:

Issuer: C=US, O=Example Inc, CN=Example Global G2 TLS RSA SHA256 2020 CA1
Subject: SOME SIGNED CERT
X509v3 CRL Distribution Points:
    Full Name:
      URI:http://crl.example.com/example.crl

Procedure

In the openshift-config namespace, create a config map from your CA bundle:

$ oc create configmap \
   router-ca-certs-default \
   --from-file=ca-bundle.pem=client-ca.crt \
   -n openshift-config

In the --from-file argument, the config map data key must be ca-bundle.pem, and the data value must be a CA certificate in PEM format.

Edit the IngressController resource in the openshift-ingress-operator project:

$ oc edit IngressController default -n openshift-ingress-operator

Add the spec.clientTLS field and subfields to configure mutual TLS:

Sample IngressController CR for a clientTLS profile that specifies filtering patterns
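A clientTLS configuration of the kind described in this procedure might be sketched as follows. The config map name matches the one created in the first step, while the subject pattern is a made-up example.

```yaml
# Illustrative sketch; the subject pattern is a hypothetical example.
apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  name: default
  namespace: openshift-ingress-operator
spec:
  clientTLS:
    clientCertificatePolicy: Required   # reject clients without a valid certificate
    clientCA:
      name: router-ca-certs-default     # config map in openshift-config
    allowedSubjectPatterns:             # optional certificate subject filters
    - "^/CN=example.com/ST=NC/C=US/O=Security/OU=OpenShift$"
```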

6.4. View the default Ingress Controller

The Ingress Operator is a core feature of OpenShift Container Platform and is enabled out of the box.

Every new OpenShift Container Platform installation has an ingresscontroller named default. It can be supplemented with additional Ingress Controllers. If the default ingresscontroller is deleted, the Ingress Operator will automatically recreate it within a minute.

Procedure

View the default Ingress Controller:

$ oc describe --namespace=openshift-ingress-operator ingresscontroller/default

6.5. View Ingress Operator status

You can view and inspect the status of your Ingress Operator.

Procedure

View your Ingress Operator status:

$ oc describe clusteroperators/ingress

6.6. View Ingress Controller logs

You can view your Ingress Controller logs.

Procedure

View your Ingress Controller logs:

$ oc logs --namespace=openshift-ingress-operator deployments/ingress-operator -c <container_name>

6.7. View Ingress Controller status

You can view the status of a particular Ingress Controller.

Procedure

View the status of an Ingress Controller:

$ oc describe --namespace=openshift-ingress-operator ingresscontroller/<name>

6.8. Configuring the Ingress Controller

6.8.1. Setting a custom default certificate

As an administrator, you can configure an Ingress Controller to use a custom certificate by creating a Secret resource and editing the IngressController custom resource (CR).

Prerequisites

- You must have a certificate/key pair in PEM-encoded files, where the certificate is signed by a trusted certificate authority or by a private trusted certificate authority that you configured in a custom PKI.
- Your certificate meets the following requirements:
  - The certificate is valid for the ingress domain.
  - The certificate uses the subjectAltName extension to specify a wildcard domain, such as *.apps.ocp4.example.com.
- You must have an IngressController CR. You may use the default one:

$ oc --namespace openshift-ingress-operator get ingresscontrollers

Example output

NAME      AGE
default   10m

If you have intermediate certificates, they must be included in the tls.crt file of the secret containing a custom default certificate. Order matters when specifying a certificate; list your intermediate certificate(s) after any server certificate(s).

Procedure

The following assumes that the custom certificate and key pair are in the tls.crt and tls.key files in the current working directory. Substitute the actual path names for tls.crt and tls.key. You also may substitute another name for custom-certs-default when creating the Secret resource and referencing it in the IngressController CR.

This action will cause the Ingress Controller to be redeployed, using a rolling deployment strategy.

Create a Secret resource containing the custom certificate in the openshift-ingress namespace using the tls.crt and tls.key files.

$ oc --namespace openshift-ingress create secret tls custom-certs-default --cert=tls.crt --key=tls.key

Update the IngressController CR to reference the new certificate secret:

$ oc patch --type=merge --namespace openshift-ingress-operator ingresscontrollers/default \
  --patch '{"spec":{"defaultCertificate":{"name":"custom-certs-default"}}}'

Verify the update was effective:

$ echo Q |\
  openssl s_client -connect console-openshift-console.apps.<domain>:443 -showcerts 2>/dev/null |\
  openssl x509 -noout -subject -issuer -enddate

where:

<domain>: Specifies the base domain name for your cluster.

Example output

subject=C = US, ST = NC, L = Raleigh, O = RH, OU = OCP4, CN = *.apps.example.com
issuer=C = US, ST = NC, L = Raleigh, O = RH, OU = OCP4, CN = example.com
notAfter=May 10 08:32:45 2022 GM

Tip: You can alternatively apply the following YAML to set a custom default certificate:
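A manifest equivalent to the oc patch command in this procedure might be sketched as follows, assuming the default Ingress Controller and the secret name custom-certs-default used above.

```yaml
# Illustrative sketch; assumes the secret created earlier in this procedure.
apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  name: default
  namespace: openshift-ingress-operator
spec:
  defaultCertificate:
    name: custom-certs-default   # secret in the openshift-ingress namespace
```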

The certificate secret name should match the value used to update the CR.

Once the IngressController CR has been modified, the Ingress Operator updates the Ingress Controller’s deployment to use the custom certificate.

6.8.2. Removing a custom default certificate

As an administrator, you can remove a custom certificate that you configured an Ingress Controller to use.

Prerequisites

- You have access to the cluster as a user with the cluster-admin role.
- You have installed the OpenShift CLI (oc).
- You previously configured a custom default certificate for the Ingress Controller.

Procedure

To remove the custom certificate and restore the certificate that ships with OpenShift Container Platform, enter the following command:

$ oc patch -n openshift-ingress-operator ingresscontrollers/default \
  --type json -p $'- op: remove\n  path: /spec/defaultCertificate'

There can be a delay while the cluster reconciles the new certificate configuration.

Verification

To confirm that the original cluster certificate is restored, enter the following command:

$ echo Q | \
  openssl s_client -connect console-openshift-console.apps.<domain>:443 -showcerts 2>/dev/null | \
  openssl x509 -noout -subject -issuer -enddate

where:

<domain>: Specifies the base domain name for your cluster.

Example output

subject=CN = *.apps.<domain>
issuer=CN = ingress-operator@1620633373
notAfter=May 10 10:44:36 2023 GMT

6.8.3. Scaling an Ingress Controller

Manually scale an Ingress Controller to meet routing performance or availability requirements, such as the requirement to increase throughput. Use oc commands to scale the IngressController resource. The following procedure provides an example for scaling up the default IngressController.

Scaling is not an immediate action, as it takes time to create the desired number of replicas.

Procedure

View the current number of available replicas for the default IngressController:

$ oc get -n openshift-ingress-operator ingresscontrollers/default -o jsonpath='{$.status.availableReplicas}'

Example output

2

Scale the default IngressController to the desired number of replicas using the oc patch command. The following example scales the default IngressController to 3 replicas:

$ oc patch -n openshift-ingress-operator ingresscontroller/default --patch '{"spec":{"replicas": 3}}' --type=merge

Example output

ingresscontroller.operator.openshift.io/default patched

Verify that the default IngressController scaled to the number of replicas that you specified:

$ oc get -n openshift-ingress-operator ingresscontrollers/default -o jsonpath='{$.status.availableReplicas}'

Example output

3

Tip: You can alternatively apply the following YAML to scale an Ingress Controller to three replicas:

- 1: If you need a different number of replicas, change the replicas value.
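The declarative alternative to the scaling patch might be sketched as follows, assuming the default Ingress Controller.

```yaml
# Illustrative sketch; assumes the default Ingress Controller.
apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  name: default
  namespace: openshift-ingress-operator
spec:
  replicas: 3   # (1) change this value for a different number of replicas
```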

6.8.4. Configuring Ingress access logging

You can configure the Ingress Controller to enable access logs. If you have clusters that do not receive much traffic, then you can log to a sidecar. If you have high traffic clusters, to avoid exceeding the capacity of the logging stack or to integrate with a logging infrastructure outside of OpenShift Container Platform, you can forward logs to a custom syslog endpoint. You can also specify the format for access logs.

Container logging is useful to enable access logs on low-traffic clusters when there is no existing Syslog logging infrastructure, or for short-term use while diagnosing problems with the Ingress Controller.

Syslog is needed for high-traffic clusters where access logs could exceed the OpenShift Logging stack’s capacity, or for environments where any logging solution needs to integrate with an existing Syslog logging infrastructure. The Syslog use-cases can overlap.

Prerequisites

- Log in as a user with cluster-admin privileges.

Procedure

Configure Ingress access logging to a sidecar.

To configure Ingress access logging, you must specify a destination using spec.logging.access.destination. To specify logging to a sidecar container, you must specify Container for spec.logging.access.destination.type. The following example is an Ingress Controller definition that logs to a Container destination:

When you configure the Ingress Controller to log to a sidecar, the operator creates a container named logs inside the Ingress Controller Pod:

$ oc -n openshift-ingress logs deployment.apps/router-default -c logs

Example output

2020-05-11T19:11:50.135710+00:00 router-default-57dfc6cd95-bpmk6 router-default-57dfc6cd95-bpmk6 haproxy[108]: 174.19.21.82:39654 [11/May/2020:19:11:50.133] public be_http:hello-openshift:hello-openshift/pod:hello-openshift:hello-openshift:10.128.2.12:8080 0/0/1/0/1 200 142 - - --NI 1/1/0/0/0 0/0 "GET / HTTP/1.1"
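An Ingress Controller definition that logs to a Container destination, as described in this step, might be sketched like this. The metadata assumes the default Ingress Controller.

```yaml
# Illustrative sketch; assumes the default Ingress Controller.
apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  name: default
  namespace: openshift-ingress-operator
spec:
  logging:
    access:
      destination:
        type: Container   # access logs go to the sidecar container named logs
```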

Configure Ingress access logging to a Syslog endpoint.

To configure Ingress access logging, you must specify a destination using spec.logging.access.destination. To specify logging to a Syslog endpoint destination, you must specify Syslog for spec.logging.access.destination.type. If the destination type is Syslog, you must also specify a destination endpoint using spec.logging.access.destination.syslog.endpoint and you can specify a facility using spec.logging.access.destination.syslog.facility. The following example is an Ingress Controller definition that logs to a Syslog destination:

Note: The syslog destination port must be UDP.
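A Syslog destination might be sketched as follows. This sketch assumes the endpoint is expressed through address and port subfields under syslog. The address and port reuse the example values that appear later in this section, and the facility value is only an example.

```yaml
# Illustrative sketch; the address/port field names are an assumption,
# and the facility is an example value.
apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  name: default
  namespace: openshift-ingress-operator
spec:
  logging:
    access:
      destination:
        type: Syslog
        syslog:
          address: 1.2.3.4   # syslog endpoint IP address
          port: 10514        # UDP port of the syslog endpoint
          facility: local1   # optional
```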

Configure Ingress access logging with a specific log format.

You can specify spec.logging.access.httpLogFormat to customize the log format. The following example is an Ingress Controller definition that logs to a syslog endpoint with IP address 1.2.3.4 and port 10514:
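A definition with a custom log format might be sketched as follows. The format string uses HAProxy log-format variables and is an example only, and the address and port subfields are assumed as in the Syslog case.

```yaml
# Illustrative sketch; the format string is an example, not a recommendation.
apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  name: default
  namespace: openshift-ingress-operator
spec:
  logging:
    access:
      destination:
        type: Syslog
        syslog:
          address: 1.2.3.4
          port: 10514
      httpLogFormat: '%ci:%cp [%t] %ft %b/%s %B %bq %HM %HU %HV'
```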

Disable Ingress access logging.

To disable Ingress access logging, leave spec.logging or spec.logging.access empty:

6.8.5. Setting Ingress Controller thread count

A cluster administrator can set the thread count to increase the number of incoming connections a cluster can handle. You can patch an existing Ingress Controller to increase the number of threads.

Prerequisites

- The following assumes that you already created an Ingress Controller.

Procedure

Update the Ingress Controller to increase the number of threads:

$ oc -n openshift-ingress-operator patch ingresscontroller/default --type=merge -p '{"spec":{"tuningOptions": {"threadCount": 8}}}'

Note: If you have a node that is capable of running large amounts of resources, you can configure spec.nodePlacement.nodeSelector with labels that match the capacity of the intended node, and configure spec.tuningOptions.threadCount to an appropriately high value.
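Combining the thread count with node placement, as the note suggests, might be sketched like this. The node label is hypothetical and stands in for whatever label identifies your high-capacity nodes.

```yaml
# Illustrative sketch; the node label is a hypothetical example.
apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  name: default
  namespace: openshift-ingress-operator
spec:
  nodePlacement:
    nodeSelector:
      matchLabels:
        example.com/router-capacity: large   # hypothetical label
  tuningOptions:
    threadCount: 8
```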

6.8.6. Ingress Controller sharding

As the primary mechanism for traffic to enter the cluster, the demands on the Ingress Controller, or router, can be significant. As a cluster administrator, you can shard the routes to:

- Balance Ingress Controllers, or routers, with several routes to speed up responses to changes.

- Allocate certain routes to have different reliability guarantees than other routes.

- Allow certain Ingress Controllers to have different policies defined.

- Allow only specific routes to use additional features.

- Expose different routes on different addresses so that internal and external users can see different routes, for example.

An Ingress Controller can use either route labels or namespace labels as a sharding method.

6.8.6.1. Configuring Ingress Controller sharding by using route labels

Ingress Controller sharding by using route labels means that the Ingress Controller serves any route in any namespace that is selected by the route selector.

Ingress Controller sharding is useful when balancing incoming traffic load among a set of Ingress Controllers and when isolating traffic to a specific Ingress Controller. For example, company A goes to one Ingress Controller and company B to another.

Procedure

Edit the router-internal.yaml file:

- 1: Specify a domain to be used by the Ingress Controller. This domain must be different from the default Ingress Controller domain.
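A router-internal.yaml file that shards by route label might be sketched as follows. The Ingress Controller name sharded and the worker node selector are assumptions for illustration. The domain matches the one used in the oc expose example in this procedure.

```yaml
# Illustrative sketch; the name and node selector are assumptions.
apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  name: sharded
  namespace: openshift-ingress-operator
spec:
  domain: apps-sharded.basedomain.example.net   # (1) must differ from the default domain
  nodePlacement:
    nodeSelector:
      matchLabels:
        node-role.kubernetes.io/worker: ""
  routeSelector:
    matchLabels:
      type: sharded   # routes with this label are served by this controller
```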

Apply the Ingress Controller router-internal.yaml file:

# oc apply -f router-internal.yaml

The Ingress Controller selects routes in any namespace that have the label type: sharded.

Create a new route using the domain configured in the router-internal.yaml:

$ oc expose svc <service-name> --hostname <route-name>.apps-sharded.basedomain.example.net

6.8.6.2. Configuring Ingress Controller sharding by using namespace labels

Ingress Controller sharding by using namespace labels means that the Ingress Controller serves any route in any namespace that is selected by the namespace selector.

Ingress Controller sharding is useful when balancing incoming traffic load among a set of Ingress Controllers and when isolating traffic to a specific Ingress Controller. For example, company A goes to one Ingress Controller and company B to another.

If you deploy the Keepalived Ingress VIP, do not deploy a non-default Ingress Controller with value HostNetwork for the endpointPublishingStrategy parameter. Doing so might cause issues. Use value NodePort instead of HostNetwork for endpointPublishingStrategy.

Procedure

Edit the router-internal.yaml file:

# cat router-internal.yaml

Example output

- 1: Specify a domain to be used by the Ingress Controller. This domain must be different from the default Ingress Controller domain.
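For sharding by namespace label, the router-internal.yaml file might be sketched as follows. As before, the name sharded and the worker node selector are assumptions for illustration.

```yaml
# Illustrative sketch; the name and node selector are assumptions.
apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  name: sharded
  namespace: openshift-ingress-operator
spec:
  domain: apps-sharded.basedomain.example.net   # (1) must differ from the default domain
  nodePlacement:
    nodeSelector:
      matchLabels:
        node-role.kubernetes.io/worker: ""
  namespaceSelector:
    matchLabels:
      type: sharded   # routes in namespaces with this label are served
```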

Apply the Ingress Controller router-internal.yaml file:

# oc apply -f router-internal.yaml

The Ingress Controller selects routes in any namespace that has the label type: sharded and is therefore matched by the namespace selector.

Create a new route using the domain configured in the router-internal.yaml:

$ oc expose svc <service-name> --hostname <route-name>.apps-sharded.basedomain.example.net

6.8.7. Configuring an Ingress Controller to use an internal load balancer

When creating an Ingress Controller on cloud platforms, the Ingress Controller is published by a public cloud load balancer by default. As an administrator, you can create an Ingress Controller that uses an internal cloud load balancer.

If your cloud provider is Microsoft Azure, you must have at least one public load balancer that points to your nodes. If you do not, all of your nodes will lose egress connectivity to the internet.

If you want to change the scope for an IngressController, you can change the .spec.endpointPublishingStrategy.loadBalancer.scope parameter after the custom resource (CR) is created.

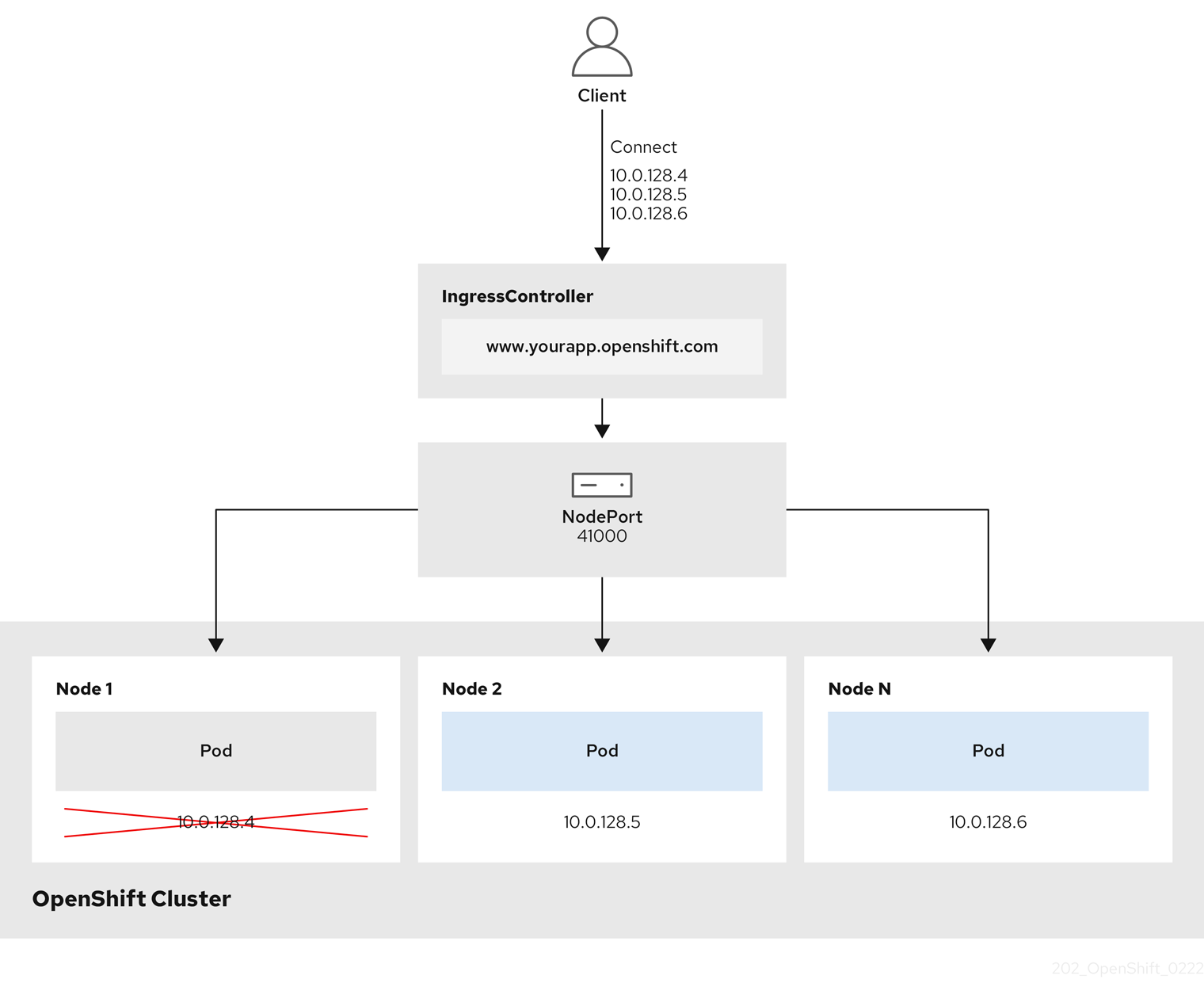

Figure 6.1. Diagram of LoadBalancer

The preceding graphic shows the following concepts pertaining to the OpenShift Container Platform Ingress LoadBalancerService endpoint publishing strategy:

- You can load balance externally, using the cloud provider load balancer, or internally, using the OpenShift Ingress Controller Load Balancer.

- You can use the single IP address of the load balancer and more familiar ports, such as 8080 and 4200 as shown on the cluster depicted in the graphic.

- Traffic from the external load balancer is directed at the pods, and managed by the load balancer, as depicted in the instance of a down node. See the Kubernetes Services documentation for implementation details.

Prerequisites

- Install the OpenShift CLI (oc).

- Log in as a user with cluster-admin privileges.

Procedure

Create an IngressController custom resource (CR) in a file named <name>-ingress-controller.yaml.

Create the Ingress Controller defined in the previous step by running the following command:

$ oc create -f <name>-ingress-controller.yaml

Replace <name> with the name of the IngressController object.

Optional: Confirm that the Ingress Controller was created by running the following command:

$ oc --all-namespaces=true get ingresscontrollers
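The custom resource created in the first step of this procedure is not shown above. A minimal sketch of what <name>-ingress-controller.yaml might contain follows. The <domain> placeholder is an assumption added for illustration; the scope field is the same .spec.endpointPublishingStrategy.loadBalancer.scope parameter mentioned earlier in this section.

```yaml
# Illustrative sketch of an Ingress Controller published by an internal load balancer.
apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  namespace: openshift-ingress-operator
  name: <name>                 # name of the IngressController object
spec:
  domain: <domain>             # placeholder: DNS domain served by this controller
  endpointPublishingStrategy:
    type: LoadBalancerService
    loadBalancer:
      scope: Internal          # request an internal rather than a public load balancer
```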

6.8.8. Configuring global access for an Ingress Controller on GCP

An Ingress Controller created on GCP with an internal load balancer generates an internal IP address for the service. A cluster administrator can specify the global access option, which enables clients in any region within the same VPC network as the load balancer to reach the workloads running on your cluster.

For more information, see the GCP documentation for global access.

Prerequisites

- You deployed an OpenShift Container Platform cluster on GCP infrastructure.

- You configured an Ingress Controller to use an internal load balancer.

- You installed the OpenShift CLI (oc).

Procedure

Configure the Ingress Controller resource to allow global access.

Note: You can also create an Ingress Controller and specify the global access option.

Configure the Ingress Controller resource:

$ oc -n openshift-ingress-operator edit ingresscontroller/default

Edit the YAML file and set gcp.clientAccess to Global.

Save the file to apply the changes.

Run the following command to verify that the service allows global access:

$ oc -n openshift-ingress edit svc/router-default -o yaml

The output shows that global access is enabled for GCP with the annotation networking.gke.io/internal-load-balancer-allow-global-access.
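The sample clientAccess configuration is not reproduced in the procedure above. As a hedged sketch, the relevant portion of the Ingress Controller spec might look like the following after the edit. The nesting under providerParameters is written from the IngressController API as understood here and should be checked against your cluster's API version.

```yaml
# Illustrative fragment of the IngressController spec; only the fields relevant to global access are shown.
spec:
  endpointPublishingStrategy:
    type: LoadBalancerService
    loadBalancer:
      scope: Internal            # global access applies to an internal load balancer
      providerParameters:
        type: GCP
        gcp:
          clientAccess: Global   # set gcp.clientAccess to Global
```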

6.8.9. Configuring the default Ingress Controller for your cluster to be internal

You can configure the default Ingress Controller for your cluster to be internal by deleting and recreating it.

If your cloud provider is Microsoft Azure, you must have at least one public load balancer that points to your nodes. If you do not, all of your nodes will lose egress connectivity to the internet.

If you want to change the scope for an IngressController, you can change the .spec.endpointPublishingStrategy.loadBalancer.scope parameter after the custom resource (CR) is created.

Prerequisites

- Install the OpenShift CLI (oc).

- Log in as a user with cluster-admin privileges.

Procedure

Configure the default Ingress Controller for your cluster to be internal by deleting and recreating it.
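The command for this step is not reproduced above. As a sketch under stated assumptions, the recreated default Ingress Controller might be defined by a manifest like the following. One way to delete and recreate the object in a single operation is to pass such a manifest to oc replace with the --force and --wait options; treat that invocation as an assumption and confirm it against your version of the CLI.

```yaml
# Illustrative manifest for an internal default Ingress Controller.
apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  namespace: openshift-ingress-operator
  name: default                # the default Ingress Controller
spec:
  endpointPublishingStrategy:
    type: LoadBalancerService
    loadBalancer:
      scope: Internal          # publish through an internal cloud load balancer
```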

6.8.10. Configuring the route admission policy

Administrators and application developers can run applications in multiple namespaces with the same domain name. This is for organizations where multiple teams develop microservices that are exposed on the same hostname.

Allowing claims across namespaces should only be enabled for clusters with trust between namespaces; otherwise, a malicious user could take over a hostname. For this reason, the default admission policy disallows hostname claims across namespaces.

Prerequisites

- Cluster administrator privileges.

Procedure

Edit the .spec.routeAdmission field of the ingresscontroller resource using the following command:

$ oc -n openshift-ingress-operator patch ingresscontroller/default --patch '{"spec":{"routeAdmission":{"namespaceOwnership":"InterNamespaceAllowed"}}}' --type=merge

Sample Ingress Controller configuration

    spec:
      routeAdmission:
        namespaceOwnership: InterNamespaceAllowed
    ...

Tip: You can alternatively apply a YAML manifest that sets the same routeAdmission field to configure the route admission policy.
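The YAML alternative mentioned in the tip is not reproduced in this section. A minimal sketch of such a manifest, assuming it targets the default Ingress Controller as the patch command does, might be:

```yaml
# Illustrative manifest; equivalent in intent to the patch command in the procedure.
apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  name: default
  namespace: openshift-ingress-operator
spec:
  routeAdmission:
    # Allow routes in different namespaces to claim the same hostname.
    namespaceOwnership: InterNamespaceAllowed
```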

6.8.11. Using wildcard routes

The HAProxy Ingress Controller has support for wildcard routes. The Ingress Operator uses wildcardPolicy to configure the ROUTER_ALLOW_WILDCARD_ROUTES environment variable of the Ingress Controller.

The default behavior of the Ingress Controller is to admit routes with a wildcard policy of None, which is backwards compatible with existing IngressController resources.

Procedure

Configure the wildcard policy.

Use the following command to edit the IngressController resource:

$ oc edit IngressController

Under spec, set the wildcardPolicy field to WildcardsDisallowed or WildcardsAllowed:

    spec:
      routeAdmission:
        wildcardPolicy: WildcardsDisallowed # or WildcardsAllowed

6.8.12. Using X-Forwarded headers

You can configure the HAProxy Ingress Controller to specify a policy for how to handle HTTP headers, including Forwarded and X-Forwarded-For. The Ingress Operator uses the HTTPHeaders field to configure the ROUTER_SET_FORWARDED_HEADERS environment variable of the Ingress Controller.

Procedure

Configure the HTTPHeaders field for the Ingress Controller.

Use the following command to edit the IngressController resource:

$ oc edit IngressController

Under spec, set the HTTPHeaders policy field to Append, Replace, IfNone, or Never.
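The YAML for this step is not reproduced above. As a sketch, the policy might be set as follows. The field names httpHeaders and forwardedHeaderPolicy are written from the IngressController API as understood here, and the choice of the default Ingress Controller and the Append value are assumptions for illustration.

```yaml
# Illustrative manifest; choose one of Append, Replace, IfNone, or Never.
apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  name: default
  namespace: openshift-ingress-operator
spec:
  httpHeaders:
    forwardedHeaderPolicy: Append
```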

Example use cases

As a cluster administrator, you can:

- Configure an external proxy that injects the X-Forwarded-For header into each request before forwarding it to an Ingress Controller.

  To configure the Ingress Controller to pass the header through unmodified, you specify the never policy. The Ingress Controller then never sets the headers, and applications receive only the headers that the external proxy provides.

- Configure the Ingress Controller to pass the X-Forwarded-For header that your external proxy sets on external cluster requests through unmodified.

  To configure the Ingress Controller to set the X-Forwarded-For header on internal cluster requests, which do not go through the external proxy, specify the if-none policy. If an HTTP request already has the header set through the external proxy, then the Ingress Controller preserves it. If the header is absent because the request did not come through the proxy, then the Ingress Controller adds the header.

As an application developer, you can:

- Configure an application-specific external proxy that injects the X-Forwarded-For header.

  To configure an Ingress Controller to pass the header through unmodified for an application’s Route, without affecting the policy for other Routes, add an annotation haproxy.router.openshift.io/set-forwarded-headers: if-none or haproxy.router.openshift.io/set-forwarded-headers: never on the Route for the application.

  Note: You can set the haproxy.router.openshift.io/set-forwarded-headers annotation on a per route basis, independent from the globally set value for the Ingress Controller.
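As an illustration of the per-route annotation described above, a Route might carry it as follows. The route and service name example-app is hypothetical, and only the fields needed to show the annotation are included.

```yaml
# Illustrative Route; "example-app" is a hypothetical name.
apiVersion: route.openshift.io/v1
kind: Route
metadata:
  name: example-app
  annotations:
    # Overrides the Ingress Controller policy for this route only.
    haproxy.router.openshift.io/set-forwarded-headers: if-none
spec:
  to:
    kind: Service
    name: example-app
```

Setting the value to never instead would leave any X-Forwarded-For header from the application-specific proxy untouched and would not add one when it is absent.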

6.8.13. Enabling HTTP/2 Ingress connectivity

You can enable transparent end-to-end HTTP/2 connectivity in HAProxy. It allows application owners to make use of HTTP/2 protocol capabilities, including single connection, header compression, binary streams, and more.

You can enable HTTP/2 connectivity for an individual Ingress Controller or for the entire cluster.

To enable the use of HTTP/2 for the connection from the client to HAProxy, a route must specify a custom certificate. A route that uses the default certificate cannot use HTTP/2. This restriction is necessary to avoid problems from connection coalescing, where the client re-uses a connection for different routes that use the same certificate.

The connection from HAProxy to the application pod can use HTTP/2 only for re-encrypt routes and not for edge-terminated or insecure routes. This restriction is because HAProxy uses Application-Level Protocol Negotiation (ALPN), which is a TLS extension, to negotiate the use of HTTP/2 with the back-end. The implication is that end-to-end HTTP/2 is possible with passthrough and re-encrypt and not with insecure or edge-terminated routes.

Using WebSockets with a re-encrypt route and with HTTP/2 enabled on an Ingress Controller requires WebSocket support over HTTP/2. WebSockets over HTTP/2 is a feature of HAProxy 2.4, which is unsupported in OpenShift Container Platform at this time.

For non-passthrough routes, the Ingress Controller negotiates its connection to the application independently of the connection from the client. This means a client may connect to the Ingress Controller and negotiate HTTP/1.1, and the Ingress Controller may then connect to the application, negotiate HTTP/2, and forward the request from the client HTTP/1.1 connection using the HTTP/2 connection to the application. This poses a problem if the client subsequently tries to upgrade its connection from HTTP/1.1 to the WebSocket protocol, because the Ingress Controller cannot forward WebSocket to HTTP/2 and cannot upgrade its HTTP/2 connection to WebSocket. Consequently, if you have an application that is intended to accept WebSocket connections, it must not allow negotiating the HTTP/2 protocol or else clients will fail to upgrade to the WebSocket protocol.

Procedure

Enable HTTP/2 on a single Ingress Controller.

To enable HTTP/2 on an Ingress Controller, enter the oc annotate command:

$ oc -n openshift-ingress-operator annotate ingresscontrollers/<ingresscontroller_name> ingress.operator.openshift.io/default-enable-http2=true

Replace <ingresscontroller_name> with the name of the Ingress Controller to annotate.

Enable HTTP/2 on the entire cluster.