Postinstallation configuration

Day 2 operations for OpenShift Container Platform

Abstract

Chapter 1. Postinstallation configuration overview

After installing OpenShift Container Platform, a cluster administrator can configure and customize the following components:

- Machine

- Bare metal

- Cluster

- Node

- Network

- Storage

- Users

- Alerts and notifications

1.1. Post-installation configuration tasks

You can perform post-installation configuration tasks to configure your environment to meet your needs.

The following list details these configurations:

- Configure operating system features: The Machine Config Operator (MCO) manages MachineConfig objects. By using the MCO, you can configure nodes and custom resources.

- Configure bare metal nodes: You can use the Bare Metal Operator (BMO) to manage bare metal hosts. The BMO can complete the following operations:

- Inspect hardware details of the host and report them to the bare metal host.

- Inspect firmware and configure BIOS settings.

- Provision hosts with a desired image.

- Clean disk contents for the host before or after provisioning the host.

- Configure cluster features: You can modify the following features of an OpenShift Container Platform cluster:

- Image registry

- Networking configuration

- Image build behavior

- Identity provider

- The etcd configuration

- Machine set creation to handle the workloads

- Cloud provider credential management

- Configure a private cluster: By default, the installation program provisions OpenShift Container Platform by using a publicly accessible DNS and endpoints. To make your cluster accessible only from within an internal network, configure the following components to make them private:

- DNS

- Ingress Controller

- API server

- Perform node operations: By default, OpenShift Container Platform uses Red Hat Enterprise Linux CoreOS (RHCOS) compute machines. You can perform the following node operations:

- Add and remove compute machines.

- Add and remove taints and tolerations.

- Configure the maximum number of pods per node.

- Enable Device Manager.

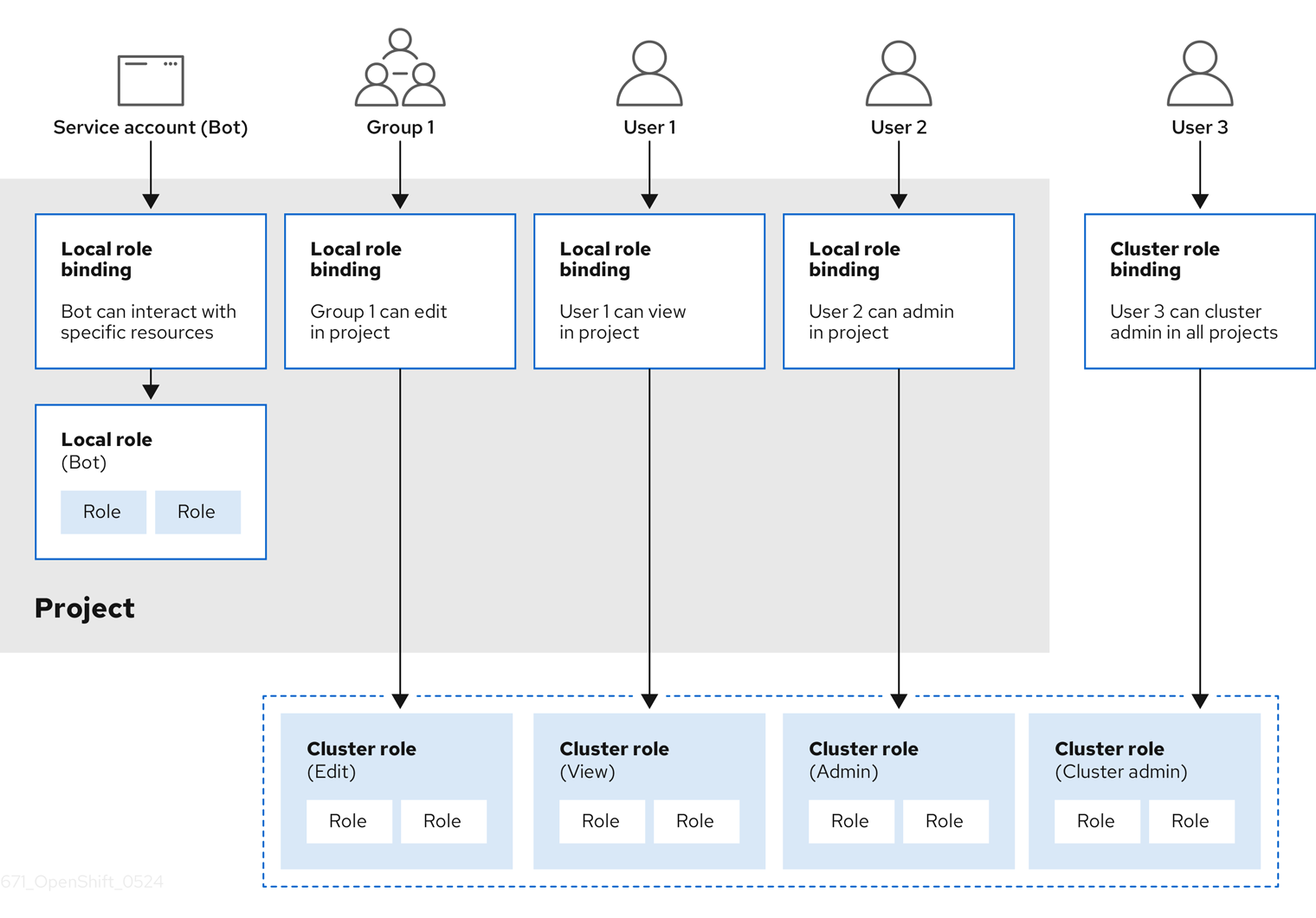

- Configure users: OAuth access tokens allow users to authenticate themselves to the API. You can configure OAuth to perform the following tasks:

- Specify an identity provider

- Use role-based access control to define and supply permissions to users

- Install an Operator from OperatorHub

- Configure alert notifications: By default, firing alerts are displayed on the Alerting UI of the web console. You can also configure OpenShift Container Platform to send alert notifications to external systems.

Chapter 2. Configuring a private cluster

After you install an OpenShift Container Platform version 4.15 cluster, you can set some of its core components to be private.

2.1. About private clusters

By default, OpenShift Container Platform is provisioned by using publicly accessible DNS and endpoints. You can set the DNS, Ingress Controller, and API server to private after you deploy your cluster.

If the cluster has any public subnets, load balancer services created by administrators might be publicly accessible. To ensure cluster security, verify that these services are explicitly annotated as private.

2.1.1. DNS

If you install OpenShift Container Platform on installer-provisioned infrastructure, the installation program creates records in a pre-existing public zone and, where possible, creates a private zone for the cluster’s own DNS resolution. In both the public zone and the private zone, the installation program or cluster creates DNS entries for *.apps, for the Ingress object, and api, for the API server.

The *.apps records in the public and private zone are identical, so when you delete the public zone, the private zone seamlessly provides all DNS resolution for the cluster.

2.1.2. Ingress Controller

Because the default Ingress object is created as public, the load balancer is internet-facing and in the public subnets.

The Ingress Operator generates a default certificate for an Ingress Controller to serve as a placeholder until you configure a custom default certificate. Do not use Operator-generated default certificates in production clusters. The Ingress Operator does not rotate its own signing certificate or the default certificates that it generates. Operator-generated default certificates are intended as placeholders for custom default certificates that you configure.

2.1.3. API server

By default, the installation program creates appropriate network load balancers for the API server to use for both internal and external traffic.

On Amazon Web Services (AWS), separate public and private load balancers are created. The load balancers are identical except that an additional port is available on the internal one for use within the cluster. Although the installation program automatically creates or destroys the load balancer based on API server requirements, the cluster does not manage or maintain them. As long as you preserve the cluster’s access to the API server, you can manually modify or move the load balancers. For the public load balancer, port 6443 is open and the health check is configured for HTTPS against the /readyz path.

On Google Cloud, a single load balancer is created to manage both internal and external API traffic, so you do not need to modify the load balancer.

On Microsoft Azure, both public and private load balancers are created. However, because of limitations in the current implementation, you must retain both load balancers in a private cluster.

2.2. Configuring DNS records to be published in a private zone

For all OpenShift Container Platform clusters, whether public or private, DNS records are published in a public zone by default.

You can remove the public zone from the cluster DNS configuration to avoid exposing DNS records to the public. You might want to avoid exposing sensitive information, such as internal domain names, internal IP addresses, or the number of clusters at an organization, or you might simply have no need to publish records publicly. If all the clients that should be able to connect to services within the cluster use a private DNS service that has the DNS records from the private zone, then there is no need to have a public DNS record for the cluster.

After you deploy a cluster, you can modify its DNS to use only a private zone by modifying the DNS custom resource (CR). Modifying the DNS CR in this way means that any DNS records that are subsequently created are not published to public DNS servers, which keeps knowledge of the DNS records isolated to internal users. This can be done when you configure the cluster to be private, or if you never want DNS records to be publicly resolvable.

Alternatively, even in a private cluster, you might keep the public zone for DNS records because it allows clients to resolve DNS names for applications running on that cluster. For example, an organization can have machines that connect to the public internet and then establish VPN connections for certain private IP ranges in order to connect to private IP addresses. The DNS lookups from these machines use the public DNS to determine the private addresses of those services, and then connect to the private addresses over the VPN.

Procedure

Review the DNS CR for your cluster by running the following command and observing the output:

$ oc get dnses.config.openshift.io/cluster -o yaml

In the output, note that the spec section contains both a private and a public zone.

Patch the DNS CR to remove the public zone by running the following command:

$ oc patch dnses.config.openshift.io/cluster --type=merge --patch='{"spec": {"publicZone": null}}'

Example output

dns.config.openshift.io/cluster patched

The Ingress Operator consults the DNS CR definition when it creates DNS records for IngressController objects. If only private zones are specified, only private records are created.

Important: Existing DNS records are not modified when you remove the public zone. You must manually delete previously published public DNS records if you no longer want them to be published publicly.

Verification

Review the DNS CR for your cluster and confirm that the public zone was removed, by running the following command and observing the output:

$ oc get dnses.config.openshift.io/cluster -o yaml
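The verification can also be scripted. The following is a minimal sketch, assuming the DNS CR is piped in as YAML and that a remaining public zone appears as a `publicZone:` key; `has_public_zone` is a hypothetical helper name, not part of `oc`.

```shell
# Reads a DNS CR in YAML form on stdin and reports whether a publicZone key
# is still present. has_public_zone is a hypothetical helper, not an oc command.
has_public_zone() {
  # Match "publicZone:" only when it starts the (indented) line, so that
  # entries such as "f:publicZone:" in managedFields are not counted.
  if grep -q '^[[:space:]]*publicZone:'; then
    echo "public zone present"
  else
    echo "public zone removed"
  fi
}

# Usage against a live cluster (requires oc and cluster access):
#   oc get dnses.config.openshift.io/cluster -o yaml | has_public_zone
```

The helper only inspects text, so it can be tried on a saved copy of the CR before it is run against a cluster.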

2.3. Setting the Ingress Controller to private

After you deploy a cluster, you can modify its Ingress Controller to use only a private zone.

Procedure

Modify the default Ingress Controller to use only an internal endpoint:

Example output

ingresscontroller.operator.openshift.io "default" deleted
ingresscontroller.operator.openshift.io/default replaced

The public DNS entry is removed, and the private zone entry is updated.
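As an illustration of the step above, the following sketch prints a manifest for the default Ingress Controller with an internal load balancer scope. It assumes the `operator.openshift.io/v1` `IngressController` API accepts a `LoadBalancerService` publishing strategy with `scope: Internal`; those field names are not confirmed by this section, and `internal_ingress_manifest` is a hypothetical helper name.

```shell
# Prints an IngressController manifest whose load balancer scope is Internal.
# The field names are an assumption about the IngressController API; verify
# them against your cluster version before applying.
internal_ingress_manifest() {
  cat <<'EOF'
apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  namespace: openshift-ingress-operator
  name: default
spec:
  endpointPublishingStrategy:
    type: LoadBalancerService
    loadBalancer:
      scope: Internal
EOF
}

# To apply, pipe the manifest to oc. A forced replace deletes and re-creates
# the default Ingress Controller, which is consistent with the
# "deleted" / "replaced" example output:
#   internal_ingress_manifest | oc replace --force --wait --filename -
```

Printing the manifest first makes it possible to review the exact object before the existing Ingress Controller is deleted and replaced.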

2.4. Restricting the API server to private

After you deploy a cluster to Amazon Web Services (AWS) or Microsoft Azure, you can reconfigure the API server to use only the private zone.

Prerequisites

- Install the OpenShift CLI (oc).

- Have access to the web console as a user with admin privileges.

Procedure

In the web portal or console for your cloud provider, take the following actions:

Locate and delete the appropriate load balancer component:

- For AWS, delete the external load balancer. The API DNS entry in the private zone already points to the internal load balancer, which uses an identical configuration, so you do not need to modify the internal load balancer.

- For Azure, delete the api-internal-v4 rule for the public load balancer.

- For Azure, configure the Ingress Controller endpoint publishing scope to Internal. For more information, see "Configuring the Ingress Controller endpoint publishing scope to Internal".

- For the Azure public load balancer, if you configure the Ingress Controller endpoint publishing scope to Internal and there are no existing inbound rules in the public load balancer, you must create an outbound rule explicitly to provide outbound traffic for the backend address pool. For more information, see the Microsoft Azure documentation about adding outbound rules.

- Delete the api.$clustername.$yourdomain or api.$clustername DNS entry in the public zone.

AWS clusters: Remove the external load balancers:

Important: You can run the following steps only for an installer-provisioned infrastructure (IPI) cluster. For a user-provisioned infrastructure (UPI) cluster, you must manually remove or disable the external load balancers.

If your cluster uses a control plane machine set, delete the lines in the control plane machine set custom resource that configure your public or external load balancer:

If your cluster does not use a control plane machine set, you must delete the external load balancers from each control plane machine.

From your terminal, list the cluster machines by running the following command:

$ oc get machine -n openshift-machine-api

The control plane machines contain master in the name.

Remove the external load balancer from each control plane machine:

Edit a control plane machine object by running the following command:

$ oc edit machines -n openshift-machine-api <control_plane_name>

Where <control_plane_name> is the name of the control plane machine object to modify.

Remove the lines that describe the external load balancer.

Save your changes and exit the object specification.

Repeat this process for each of the control plane machines.
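Because the control plane machines are identified by master in their names, the list can be narrowed before editing. The following is a minimal sketch; `control_plane_machines` is a hypothetical helper name, and it assumes machine names arrive one per line on stdin.

```shell
# Keeps only the machine names that contain "master".
# control_plane_machines is a hypothetical helper, not an oc subcommand.
control_plane_machines() {
  # "|| true" keeps the exit status at 0 when no name matches.
  grep 'master' || true
}

# Usage against a live cluster (requires oc and cluster access):
#   oc get machine -n openshift-machine-api -o name | control_plane_machines
# Each name printed is one machine object to open with "oc edit".
```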

2.5. Configuring a private storage endpoint on Azure

You can leverage the Image Registry Operator to use private endpoints on Azure, which enables seamless configuration of private storage accounts when OpenShift Container Platform is deployed on private Azure clusters. This allows you to deploy the image registry without exposing public-facing storage endpoints.

Do not configure a private storage endpoint on Microsoft Azure Red Hat OpenShift (ARO), because the endpoint can put your Microsoft Azure Red Hat OpenShift cluster in an unrecoverable state.

You can configure the Image Registry Operator to use private storage endpoints on Azure in one of two ways:

- By configuring the Image Registry Operator to discover the VNet and subnet names

- With user-provided Azure Virtual Network (VNet) and subnet names

2.5.1. Limitations for configuring a private storage endpoint on Azure

The following limitations apply when configuring a private storage endpoint on Azure:

- When configuring the Image Registry Operator to use a private storage endpoint, public network access to the storage account is disabled. Consequently, pulling images from the registry outside of OpenShift Container Platform only works by setting disableRedirect: true in the registry Operator configuration. With redirect enabled, the registry redirects the client to pull images directly from the storage account, which will no longer work due to disabled public network access. For more information, see "Disabling redirect when using a private storage endpoint on Azure".

- This operation cannot be undone by the Image Registry Operator.

2.5.2. Configuring a private storage endpoint on Azure by enabling the Image Registry Operator to discover VNet and subnet names

The following procedure shows you how to set up a private storage endpoint on Azure by configuring the Image Registry Operator to discover VNet and subnet names.

Prerequisites

- You have configured the image registry to run on Azure.

- Your network has been set up using the installer-provisioned infrastructure installation method.

For users with a custom network setup, see "Configuring a private storage endpoint on Azure with user-provided VNet and subnet names".

Procedure

Edit the Image Registry Operator config object and set networkAccess.type to Internal:

$ oc edit configs.imageregistry/cluster

Optional: Enter the following command to confirm that the Operator has completed provisioning. This might take a few minutes.

$ oc get configs.imageregistry/cluster -o=jsonpath="{.spec.storage.azure.privateEndpointName}" -w

Optional: If the registry is exposed by a route, and you are configuring your storage account to be private, you must disable redirect if you want pulls external to the cluster to continue to work. Enter the following command to disable redirect on the Image Registry Operator configuration:

$ oc patch configs.imageregistry cluster --type=merge -p '{"spec":{"disableRedirect": true}}'

Note: When redirect is enabled, pulling images from outside of the cluster will not work.
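The networkAccess.type setting could also be applied non-interactively with a merge patch instead of an editor session. The following is a sketch under the assumption that networkAccess sits under spec.storage.azure, alongside the privateEndpointName field queried above; `network_access_patch` is a hypothetical helper name.

```shell
# Builds a JSON merge patch that sets spec.storage.azure.networkAccess.type.
# The field location is an assumption; confirm it with
# "oc get configs.imageregistry/cluster -o yaml" before patching.
network_access_patch() {
  printf '{"spec":{"storage":{"azure":{"networkAccess":{"type":"%s"}}}}}\n' "$1"
}

# Usage against a live cluster (requires oc and cluster access):
#   oc patch configs.imageregistry cluster --type=merge -p "$(network_access_patch Internal)"
```

A merge patch changes only the keys it names, so the rest of the storage configuration is left as it is.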

Verification

Fetch the registry service name by running the following command:

$ oc registry info --internal=true

Example output

image-registry.openshift-image-registry.svc:5000

Enter debug mode by running the following command:

$ oc debug node/<node_name>

Run the suggested chroot command. For example:

$ chroot /host

Enter the following command to log in to your container registry:

$ podman login --tls-verify=false -u unused -p $(oc whoami -t) image-registry.openshift-image-registry.svc:5000

Example output

Login Succeeded!

Enter the following command to verify that you can pull an image from the registry:

$ podman pull --tls-verify=false image-registry.openshift-image-registry.svc:5000/openshift/tools

2.5.3. Configuring a private storage endpoint on Azure with user-provided VNet and subnet names

Use the following procedure to configure a storage account that has public network access disabled and is exposed behind a private storage endpoint on Azure.

Prerequisites

- You have configured the image registry to run on Azure.

- You must know the VNet and subnet names used for your Azure environment.

- If your network was configured in a separate resource group in Azure, you must also know its name.

Procedure

Edit the Image Registry Operator config object and configure the private endpoint using your VNet and subnet names:

$ oc edit configs.imageregistry/cluster

Optional: Enter the following command to confirm that the Operator has completed provisioning. This might take a few minutes.

$ oc get configs.imageregistry/cluster -o=jsonpath="{.spec.storage.azure.privateEndpointName}" -w

Note: When redirect is enabled, pulling images from outside of the cluster will not work.

Verification

Fetch the registry service name by running the following command:

$ oc registry info --internal=true

Example output

image-registry.openshift-image-registry.svc:5000

Enter debug mode by running the following command:

$ oc debug node/<node_name>

Run the suggested chroot command. For example:

$ chroot /host

Enter the following command to log in to your container registry:

$ podman login --tls-verify=false -u unused -p $(oc whoami -t) image-registry.openshift-image-registry.svc:5000

Example output

Login Succeeded!

Enter the following command to verify that you can pull an image from the registry:

$ podman pull --tls-verify=false image-registry.openshift-image-registry.svc:5000/openshift/tools

2.5.4. Optional: Disabling redirect when using a private storage endpoint on Azure

By default, redirect is enabled when using the image registry. Redirect allows off-loading of traffic from the registry pods into the object storage, which makes pull faster. When redirect is enabled and the storage account is private, users from outside of the cluster are unable to pull images from the registry.

In some cases, users might want to disable redirect so that users from outside of the cluster can pull images from the registry.

Use the following procedure to disable redirect.

Prerequisites

- You have configured the image registry to run on Azure.

- You have configured a route.

Procedure

Enter the following command to disable redirect on the image registry configuration:

$ oc patch configs.imageregistry cluster --type=merge -p '{"spec":{"disableRedirect": true}}'

Verification

Fetch the registry service name by running the following command:

$ oc registry info

Example output

default-route-openshift-image-registry.<cluster_dns>

Enter the following command to log in to your container registry:

$ podman login --tls-verify=false -u unused -p $(oc whoami -t) default-route-openshift-image-registry.<cluster_dns>

Example output

Login Succeeded!

Enter the following command to verify that you can pull an image from the registry:

$ podman pull --tls-verify=false default-route-openshift-image-registry.<cluster_dns>/openshift/tools

Chapter 3. Bare metal configuration

When deploying OpenShift Container Platform on bare metal hosts, there are times when you need to make changes to the host either before or after provisioning. This can include inspecting the host’s hardware and firmware details. It can also include formatting disks or changing modifiable firmware settings.

3.1. About the Bare Metal Operator

Use the Bare Metal Operator (BMO) to provision, manage, and inspect bare-metal hosts in your cluster.

The BMO uses three resources to complete these tasks:

- BareMetalHost

- HostFirmwareSettings

- FirmwareSchema

The BMO maintains an inventory of the physical hosts in the cluster by mapping each bare-metal host to an instance of the BareMetalHost custom resource definition. Each BareMetalHost resource features hardware, software, and firmware details. The BMO continually inspects the bare-metal hosts in the cluster to ensure each BareMetalHost resource accurately details the components of the corresponding host.

The BMO also uses the HostFirmwareSettings resource and the FirmwareSchema resource to detail firmware specifications for the bare-metal host.

The BMO interfaces with bare-metal hosts in the cluster by using the Ironic API service. The Ironic service uses the Baseboard Management Controller (BMC) on the host to interface with the machine.

Some common tasks you can complete by using the BMO include the following:

- Provision bare-metal hosts to the cluster with a specific image

- Format a host’s disk contents before provisioning or after deprovisioning

- Turn on or off a host

- Change firmware settings

- View the host’s hardware details

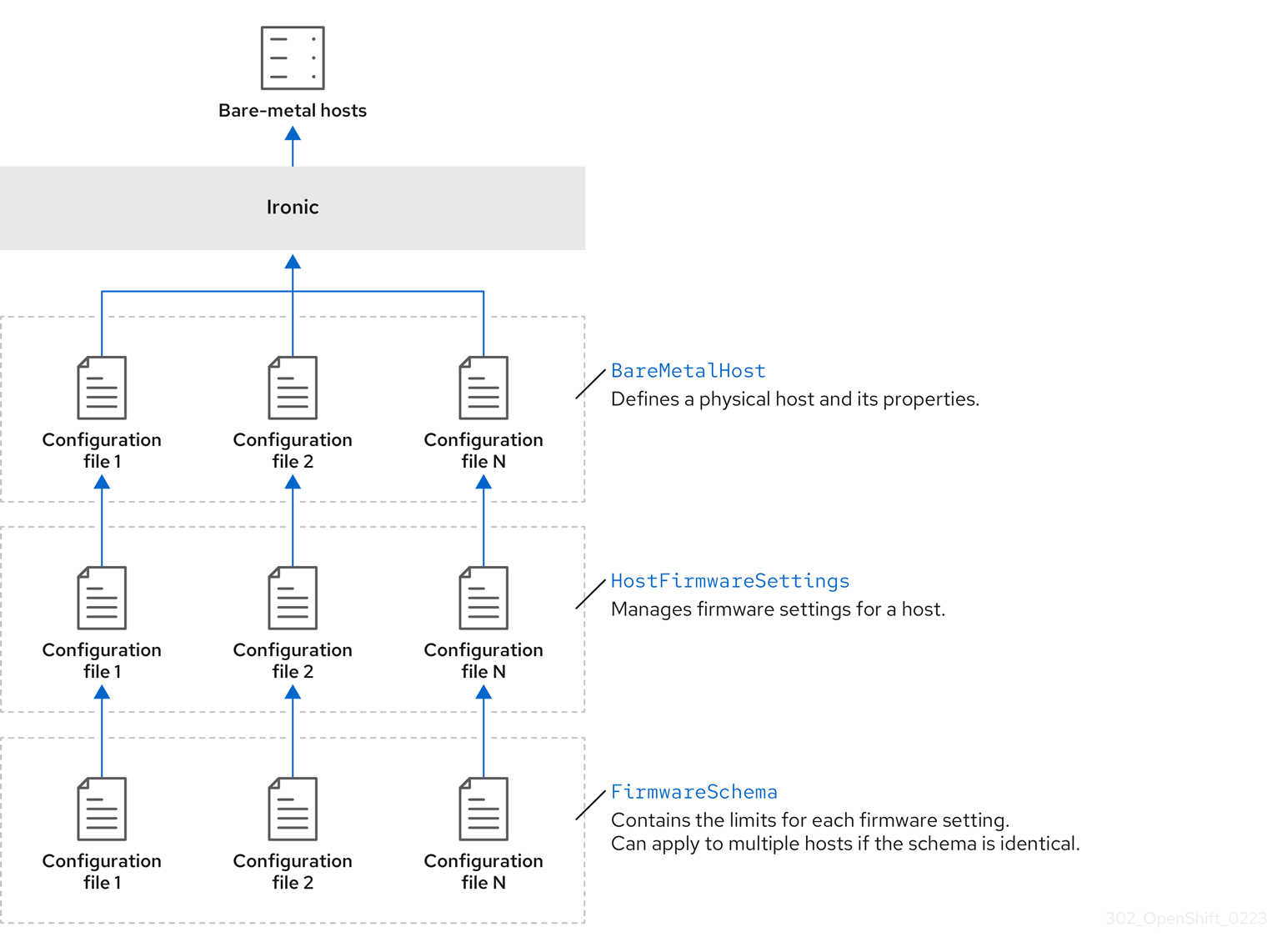

3.1.1. Bare Metal Operator architecture

The Bare Metal Operator (BMO) uses three resources to provision, manage, and inspect bare-metal hosts in your cluster. The following diagram illustrates the architecture of these resources:

BareMetalHost

The BareMetalHost resource defines a physical host and its properties. When you provision a bare-metal host to the cluster, you must define a BareMetalHost resource for that host. For ongoing management of the host, you can inspect the information in the BareMetalHost or update this information.

The BareMetalHost resource features provisioning information such as the following:

- Deployment specifications such as the operating system boot image or the custom RAM disk

- Provisioning state

- Baseboard Management Controller (BMC) address

- Desired power state

The BareMetalHost resource features hardware information such as the following:

- Number of CPUs

- MAC address of a NIC

- Size of the host’s storage device

- Current power state

HostFirmwareSettings

You can use the HostFirmwareSettings resource to retrieve and manage the firmware settings for a host. When a host moves to the Available state, the Ironic service reads the host’s firmware settings and creates the HostFirmwareSettings resource. There is a one-to-one mapping between the BareMetalHost resource and the HostFirmwareSettings resource.

You can use the HostFirmwareSettings resource to inspect the firmware specifications for a host or to update a host’s firmware specifications.

You must adhere to the schema specific to the vendor firmware when you edit the spec field of the HostFirmwareSettings resource. This schema is defined in the read-only FirmwareSchema resource.

FirmwareSchema

Firmware settings vary among hardware vendors and host models. A FirmwareSchema resource is a read-only resource that contains the types and limits for each firmware setting on each host model. The data comes directly from the BMC by using the Ironic service. The FirmwareSchema resource enables you to identify valid values you can specify in the spec field of the HostFirmwareSettings resource.

A FirmwareSchema resource can apply to many BareMetalHost resources if the schema is the same.

3.2. About the BareMetalHost resource

Metal3 introduces the concept of the BareMetalHost resource, which defines a physical host and its properties. The BareMetalHost resource contains two sections:

- The BareMetalHost spec

- The BareMetalHost status

3.2.1. The BareMetalHost spec

The spec section of the BareMetalHost resource defines the desired state of the host.

| Parameters | Description |
|---|---|
| | An interface to enable or disable automated cleaning during provisioning and de-provisioning. When set to |
| bmc: address: credentialsName: disableCertificateVerification: | The |
| | The MAC address of the NIC used for provisioning the host. |
| | The boot mode of the host. It defaults to |
| | A reference to another resource that is using the host. It could be empty if another resource is not currently using the host. For example, a |
| | A human-provided string to help identify the host. |
| | A boolean indicating whether the host provisioning and deprovisioning are managed externally. When set: |
| | Contains information about the BIOS configuration of bare-metal hosts. Currently, |
| image: url: checksum: checksumType: format: | The |
| | A reference to the secret containing the network configuration data and its namespace, so that it can be attached to the host before the host boots to set up the network. |
| | A boolean indicating whether the host should be powered on ( |
| raid: hardwareRAIDVolumes: softwareRAIDVolumes: | (Optional) Contains the information about the RAID configuration for bare-metal hosts. If not specified, it retains the current configuration. Note: OpenShift Container Platform 4.15 supports hardware RAID on the installation drive for BMCs, including: OpenShift Container Platform 4.15 does not support software RAID on the installation drive. See the following configuration settings: You can set the spec: raid: hardwareRAIDVolume: [] If you receive an error message indicating that the driver does not support RAID, set the |
| | The |

3.2.2. The BareMetalHost status

The BareMetalHost status represents the host’s current state, and includes tested credentials, current hardware details, and other information.

| Parameters | Description |
|---|---|
| | A reference to the secret and its namespace holding the last set of baseboard management controller (BMC) credentials the system was able to validate as working. |
| | Details of the last error reported by the provisioning backend, if any. |
| | Indicates the class of problem that has caused the host to enter an error state. The error types are: |
| | The |
| hardware: firmware: | Contains BIOS firmware information. For example, the hardware vendor and version. |
| | The |
| hardware: ramMebibytes: | The host’s amount of memory in Mebibytes (MiB). |
| | The |
| hardware: systemVendor: manufacturer: productName: serialNumber: | Contains information about the host’s |
| | The timestamp of the last time the status of the host was updated. |
| | The status of the server. The status is one of the following: |
| | Boolean indicating whether the host is powered on. |
| | The |
| | A reference to the secret and its namespace holding the last set of BMC credentials that were sent to the provisioning backend. |

3.3. Getting the BareMetalHost resource

The BareMetalHost resource contains the properties of a physical host. You must get the BareMetalHost resource for a physical host to review its properties.

Procedure

Get the list of BareMetalHost resources:

$ oc get bmh -n openshift-machine-api -o yaml

Note: You can use baremetalhost as the long form of bmh with the oc get command.

Get the list of hosts:

$ oc get bmh -n openshift-machine-api

Get the BareMetalHost resource for a specific host:

$ oc get bmh <host_name> -n openshift-machine-api -o yaml

Where <host_name> is the name of the host.

3.4. About the HostFirmwareSettings resource

You can use the HostFirmwareSettings resource to retrieve and manage the BIOS settings for a host. When a host moves to the Available state, Ironic reads the host’s BIOS settings and creates the HostFirmwareSettings resource. The resource contains the complete BIOS configuration returned from the baseboard management controller (BMC). Whereas the firmware field in the BareMetalHost resource returns three vendor-independent fields, the HostFirmwareSettings resource typically comprises many vendor-specific BIOS settings per host.

The HostFirmwareSettings resource contains two sections:

- The HostFirmwareSettings spec.

- The HostFirmwareSettings status.

3.4.1. The HostFirmwareSettings spec

The spec section of the HostFirmwareSettings resource defines the desired state of the host’s BIOS, and it is empty by default. Ironic uses the settings in the spec.settings section to update the baseboard management controller (BMC) when the host is in the Preparing state. Use the FirmwareSchema resource to ensure that you do not send invalid name/value pairs to hosts. See "About the FirmwareSchema resource" for additional details.

Example

spec:
  settings:
    ProcTurboMode: Disabled

In the foregoing example, the spec.settings section contains a name/value pair that sets the ProcTurboMode BIOS setting to Disabled.

Integer parameters listed in the status section appear as strings. For example, "1". When setting integers in the spec.settings section, the values should be set as integers without quotes. For example, 1.

3.4.2. The HostFirmwareSettings status

The status represents the current state of the host’s BIOS.

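| Parameters | Description |
|---|---|
| status: schema: name: namespace: lastUpdated: | The FirmwareSchema resource for the firmware settings, identified by its name and namespace. |
| status: settings: | The name/value pairs of the host’s current BIOS settings. |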

3.5. Getting the HostFirmwareSettings resource

The HostFirmwareSettings resource contains the vendor-specific BIOS properties of a physical host. You must get the HostFirmwareSettings resource for a physical host to review its BIOS properties.

Procedure

Get the detailed list of HostFirmwareSettings resources:

$ oc get hfs -n openshift-machine-api -o yaml

Note: You can use hostfirmwaresettings as the long form of hfs with the oc get command.

Get the list of HostFirmwareSettings resources:

$ oc get hfs -n openshift-machine-api

Get the HostFirmwareSettings resource for a particular host:

$ oc get hfs <host_name> -n openshift-machine-api -o yaml

Where <host_name> is the name of the host.

3.6. Editing the HostFirmwareSettings resource

You can edit the HostFirmwareSettings of provisioned hosts.

You can edit hosts only when they are in the provisioned state, and you cannot change read-only values. You cannot edit hosts in the externally provisioned state.

Procedure

Get the list of HostFirmwareSettings resources:

$ oc get hfs -n openshift-machine-api

Edit a host’s HostFirmwareSettings resource:

$ oc edit hfs <host_name> -n openshift-machine-api

Where <host_name> is the name of a provisioned host. The HostFirmwareSettings resource opens in the default editor for your terminal.

Add name/value pairs to the spec.settings section:

Example

spec:
  settings:
    name: value

Use the FirmwareSchema resource to identify the available settings for the host. You cannot set values that are read-only.

Save the changes and exit the editor.

Get the host’s machine name:

$ oc get bmh <host_name> -n openshift-machine-api

Where <host_name> is the name of the host. The machine name appears under the CONSUMER field.

Annotate the machine to delete it from the machineset:

$ oc annotate machine <machine_name> machine.openshift.io/delete-machine=true -n openshift-machine-api

Where <machine_name> is the name of the machine to delete.

Get a list of nodes and count the number of worker nodes:

$ oc get nodes

Get the machineset:

$ oc get machinesets -n openshift-machine-api

Scale the machineset:

$ oc scale machineset <machineset_name> -n openshift-machine-api --replicas=<n-1>

Where <machineset_name> is the name of the machineset and <n-1> is the decremented number of worker nodes.

When the host enters the Available state, scale up the machineset to make the HostFirmwareSettings resource changes take effect:

$ oc scale machineset <machineset_name> -n openshift-machine-api --replicas=<n>

Where <machineset_name> is the name of the machineset and <n> is the number of worker nodes.
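The scale-down and scale-up steps above can be sketched as two small shell helpers. This is a minimal sketch, not part of the product: the function names decrement_replicas and scale_machineset are hypothetical, and the sketch assumes that the replica count of the machineset equals the number of worker nodes you counted.

```shell
# Hypothetical helpers; the names are illustrative and not part of oc.

# Print the replica count after removing one machine (never below zero).
decrement_replicas() {
  if [ "$1" -gt 0 ]; then
    echo $(( $1 - 1 ))
  else
    echo 0
  fi
}

# Scale the named machineset to the given replica count.
# Requires a logged-in oc session; it is only defined here, not run.
scale_machineset() {
  oc scale machineset "$1" -n openshift-machine-api --replicas="$2"
}
```

With three worker nodes, scale_machineset <machineset_name> "$(decrement_replicas 3)" scales down to two replicas, and scale_machineset <machineset_name> 3 restores the original count after the host reaches the Available state.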

3.7. Verifying the HostFirmwareSettings resource is valid

When the user edits the spec.settings section to make a change to the HostFirmwareSettings (HFS) resource, the Bare Metal Operator (BMO) validates the change against the FirmwareSchema resource, which is a read-only resource. If the setting is invalid, the BMO sets the Type value of the status.Condition setting to False, generates an event, and stores the event in the HFS resource. Use the following procedure to verify that the resource is valid.

Procedure

Get a list of HostFirmwareSettings resources:

$ oc get hfs -n openshift-machine-api

Verify that the HostFirmwareSettings resource for a particular host is valid:

$ oc describe hfs <host_name> -n openshift-machine-api

Where <host_name> is the name of the host.

Example output

Events:
  Type    Reason            Age    From                                    Message
  ----    ------            ----   ----                                    -------
  Normal  ValidationFailed  2m49s  metal3-hostfirmwaresettings-controller  Invalid BIOS setting: Setting ProcTurboMode is invalid, unknown enumeration value - Foo

Important: If the response returns ValidationFailed, there is an error in the resource configuration and you must update the values to conform to the FirmwareSchema resource.
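If you want to script this check, one possible approach is to search the oc describe output for the ValidationFailed reason. The following is a rough sketch; the function name hfs_validation_failed is hypothetical, and matching on plain text is an assumption that might need adjusting if the event format differs in your version.

```shell
# Hypothetical helper: reads `oc describe hfs` output on stdin and
# returns 0 if any line mentions a ValidationFailed event.
hfs_validation_failed() {
  grep -q 'ValidationFailed'
}
```

For example, oc describe hfs <host_name> -n openshift-machine-api | hfs_validation_failed && echo "Update spec.settings to conform to the FirmwareSchema resource" prints the reminder only when a validation failure is present.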

3.8. About the FirmwareSchema resource

BIOS settings vary among hardware vendors and host models. A FirmwareSchema resource is a read-only resource that contains the types and limits for each BIOS setting on each host model. The data comes directly from the BMC through Ironic. The FirmwareSchema enables you to identify valid values you can specify in the spec field of the HostFirmwareSettings resource. The FirmwareSchema resource has a unique identifier derived from its settings and limits. Identical host models use the same FirmwareSchema identifier. It is likely that multiple instances of HostFirmwareSettings use the same FirmwareSchema.

| Parameters | Description |

|---|---|

|

|

The

|

3.9. Getting the FirmwareSchema resource

Each host model from each vendor has different BIOS settings. When editing the HostFirmwareSettings resource’s spec section, the name/value pairs you set must conform to that host’s firmware schema. To ensure you are setting valid name/value pairs, get the FirmwareSchema for the host and review it.

Procedure

To get a list of FirmwareSchema resource instances, execute the following:

$ oc get firmwareschema -n openshift-machine-api

To get a particular FirmwareSchema instance, execute:

$ oc get firmwareschema <instance_name> -n openshift-machine-api -o yaml

Where <instance_name> is the name of the schema instance stated in the HostFirmwareSettings resource (see Table 3).
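The two lookups can be chained so that you do not have to copy the schema name by hand. The following sketch assumes that the schema name is exposed at status.schema.name in the HostFirmwareSettings resource, as the status table in this chapter suggests; the function names are hypothetical.

```shell
# Hypothetical helpers; both require a logged-in oc session when called.

# Print the name of the FirmwareSchema referenced by a host's
# HostFirmwareSettings resource (assumes the status.schema.name field).
schema_name_for_host() {
  oc get hfs "$1" -n openshift-machine-api -o jsonpath='{.status.schema.name}'
}

# Print the FirmwareSchema that applies to the given host as YAML.
get_schema_for_host() {
  oc get firmwareschema "$(schema_name_for_host "$1")" -n openshift-machine-api -o yaml
}
```

Calling get_schema_for_host <host_name> then shows the valid settings and limits for that host before you edit spec.settings.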

Chapter 4. Configuring multi-architecture compute machines on an OpenShift cluster

4.1. About clusters with multi-architecture compute machines

An OpenShift Container Platform cluster with multi-architecture compute machines is a cluster that supports compute machines with different architectures. Clusters with multi-architecture compute machines are available only on Amazon Web Services (AWS) or Microsoft Azure installer-provisioned infrastructures and bare metal, IBM Power®, and IBM Z® user-provisioned infrastructures with 64-bit x86 control plane machines.

When there are nodes with multiple architectures in your cluster, the architecture of your image must be consistent with the architecture of the node. You need to ensure that the pod is assigned to the node with the appropriate architecture and that it matches the image architecture. For more information on assigning pods to nodes, see Assigning pods to nodes.

The Cluster Samples Operator is not supported on clusters with multi-architecture compute machines. Your cluster can be created without this capability. For more information, see Cluster capabilities.

For information on migrating your single-architecture cluster to a cluster that supports multi-architecture compute machines, see Migrating to a cluster with multi-architecture compute machines.

4.1.1. Configuring your cluster with multi-architecture compute machines

To create a cluster with multi-architecture compute machines with different installation options and platforms, you can use the documentation in the following table:

| Documentation section | Platform | User-provisioned installation | Installer-provisioned installation | Control Plane | Compute node |
|---|---|---|---|---|---|
| Creating a cluster with multi-architecture compute machines on Azure | Microsoft Azure | | ✓ | | |
| Creating a cluster with multi-architecture compute machines on AWS | Amazon Web Services (AWS) | | ✓ | | |
| Creating a cluster with multi-architecture compute machines on Google Cloud | Google Cloud | | ✓ | | |
| Creating a cluster with multi-architecture compute machines on bare metal, IBM Power, or IBM Z | Bare metal | ✓ | | | |
| | IBM Power | ✓ | | | |
| | IBM Z | ✓ | | | |
| Creating a cluster with multi-architecture compute machines on IBM Z® and IBM® LinuxONE with z/VM | IBM Z® and IBM® LinuxONE | ✓ | | | |
| | IBM Z® and IBM® LinuxONE | ✓ | | | |
| Creating a cluster with multi-architecture compute machines on IBM Power® | IBM Power® | ✓ | | | |

Autoscaling from zero is currently not supported on Google Cloud.

4.2. Creating a cluster with multi-architecture compute machines on Azure

To deploy an Azure cluster with multi-architecture compute machines, you must first create a single-architecture Azure installer-provisioned cluster that uses the multi-architecture installer binary. For more information on Azure installations, see Installing a cluster on Azure with customizations. You can then add an ARM64 compute machine set to your cluster to create a cluster with multi-architecture compute machines.

The following procedures explain how to generate an ARM64 boot image and create an Azure compute machine set that uses the ARM64 boot image. This adds ARM64 compute nodes to your cluster and deploys the number of ARM64 virtual machines (VMs) that you need.

4.2.1. Verifying cluster compatibility

Before you can start adding compute nodes of different architectures to your cluster, you must verify that your cluster is multi-architecture compatible.

Prerequisites

- You installed the OpenShift CLI (oc).

Procedure

Check whether your cluster uses the multi-architecture payload by running the following command:

$ oc adm release info -o jsonpath="{ .metadata.metadata}"

Verification

If you see the following output, then your cluster is using the multi-architecture payload:

{
  "release.openshift.io/architecture": "multi",
  "url": "https://access.redhat.com/errata/<errata_version>"
}

You can then begin adding multi-arch compute nodes to your cluster.

If you see the following output, then your cluster is not using the multi-architecture payload:

{
  "url": "https://access.redhat.com/errata/<errata_version>"
}

Important: To migrate your cluster so the cluster supports multi-architecture compute machines, follow the procedure in Migrating to a cluster with multi-architecture compute machines.
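The comparison of the two outputs can be automated. The following is a minimal sketch that assumes the command prints JSON in the form shown above; the function name is_multi_payload is hypothetical, and the text match is a convenience rather than a full JSON parse.

```shell
# Hypothetical helper: reads the release metadata JSON on stdin and
# returns 0 only if the architecture key is present with the value "multi".
is_multi_payload() {
  grep -Eq '"release\.openshift\.io/architecture"[[:space:]]*:[[:space:]]*"multi"'
}
```

For example, oc adm release info -o jsonpath="{ .metadata.metadata}" | is_multi_payload && echo "multi-architecture payload" prints the message only for a compatible cluster. The same check applies to the identical verification steps for the other platforms in this chapter.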

4.2.2. Creating an ARM64 boot image using the Azure image gallery

The following procedure describes how to manually generate an ARM64 boot image.

Prerequisites

- You installed the Azure CLI (az).
- You created a single-architecture Azure installer-provisioned cluster with the multi-architecture installer binary.

Procedure

Log in to your Azure account:

$ az login

Create a storage account and upload the arm64 virtual hard disk (VHD) to your storage account. The OpenShift Container Platform installation program creates a resource group; however, the boot image can also be uploaded to a custom named resource group:

$ az storage account create -n ${STORAGE_ACCOUNT_NAME} -g ${RESOURCE_GROUP} -l westus --sku Standard_LRS

The westus value is an example region.

Create a storage container using the storage account you generated:

$ az storage container create -n ${CONTAINER_NAME} --account-name ${STORAGE_ACCOUNT_NAME}

You must use the OpenShift Container Platform installation program JSON file to extract the URL and aarch64 VHD name:

Extract the URL field and store it in the RHCOS_VHD_ORIGIN_URL variable by running the following command:

$ RHCOS_VHD_ORIGIN_URL=$(oc -n openshift-machine-config-operator get configmap/coreos-bootimages -o jsonpath='{.data.stream}' | jq -r '.architectures.aarch64."rhel-coreos-extensions"."azure-disk".url')

Extract the aarch64 VHD name and store it in the BLOB_NAME variable by running the following command:

$ BLOB_NAME=rhcos-$(oc -n openshift-machine-config-operator get configmap/coreos-bootimages -o jsonpath='{.data.stream}' | jq -r '.architectures.aarch64."rhel-coreos-extensions"."azure-disk".release')-azure.aarch64.vhd

Generate a shared access signature (SAS) token. Use this token to upload the RHCOS VHD to your storage container with the following commands:

$ end=`date -u -d "30 minutes" '+%Y-%m-%dT%H:%MZ'`

$ sas=`az storage container generate-sas -n ${CONTAINER_NAME} --account-name ${STORAGE_ACCOUNT_NAME} --https-only --permissions dlrw --expiry $end -o tsv`

Copy the RHCOS VHD into the storage container:

$ az storage blob copy start --account-name ${STORAGE_ACCOUNT_NAME} --sas-token "$sas" \
    --source-uri "${RHCOS_VHD_ORIGIN_URL}" \
    --destination-blob "${BLOB_NAME}" --destination-container ${CONTAINER_NAME}

You can check the status of the copying process with the following command:

$ az storage blob show -c ${CONTAINER_NAME} -n ${BLOB_NAME} --account-name ${STORAGE_ACCOUNT_NAME} | jq .properties.copy

If the status parameter displays success, the copying process is complete.

Create an image gallery using the following command:

$ az sig create --resource-group ${RESOURCE_GROUP} --gallery-name ${GALLERY_NAME}

Use the image gallery to create an image definition. In the following example command, rhcos-arm64 is the name of the image definition.

$ az sig image-definition create --resource-group ${RESOURCE_GROUP} --gallery-name ${GALLERY_NAME} --gallery-image-definition rhcos-arm64 --publisher RedHat --offer arm --sku arm64 --os-type linux --architecture Arm64 --hyper-v-generation V2

To get the URL of the VHD and store it in the RHCOS_VHD_URL variable, run the following command:

$ RHCOS_VHD_URL=$(az storage blob url --account-name ${STORAGE_ACCOUNT_NAME} -c ${CONTAINER_NAME} -n "${BLOB_NAME}" -o tsv)

Use the RHCOS_VHD_URL variable, your storage account, resource group, and image gallery to create an image version. In the following example, 1.0.0 is the image version.

$ az sig image-version create --resource-group ${RESOURCE_GROUP} --gallery-name ${GALLERY_NAME} --gallery-image-definition rhcos-arm64 --gallery-image-version 1.0.0 --os-vhd-storage-account ${STORAGE_ACCOUNT_NAME} --os-vhd-uri ${RHCOS_VHD_URL}

Your arm64 boot image is now generated. You can access the ID of your image with the following command:

$ az sig image-version show -r $GALLERY_NAME -g $RESOURCE_GROUP -i rhcos-arm64 -e 1.0.0

The following example image ID is used in the resourceID parameter of the compute machine set:

Example resourceID

/resourceGroups/${RESOURCE_GROUP}/providers/Microsoft.Compute/galleries/${GALLERY_NAME}/images/rhcos-arm64/versions/1.0.0
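The blob name and the resourceID value both follow fixed string patterns, so they can be assembled by small helpers. This is a sketch under assumptions: the function names are hypothetical, and the release string, resource group, and gallery names in the usage note are placeholders rather than values from a real cluster.

```shell
# Hypothetical helpers that only build strings; they do not call az or oc.

# Build the VHD blob name from an RHCOS release string,
# following the rhcos-<release>-azure.aarch64.vhd pattern.
rhcos_blob_name() {
  printf 'rhcos-%s-azure.aarch64.vhd\n' "$1"
}

# Build the image version path used for the resourceID parameter.
# Arguments: resource group, gallery name, image definition, image version.
gallery_image_id() {
  printf '/resourceGroups/%s/providers/Microsoft.Compute/galleries/%s/images/%s/versions/%s\n' \
    "$1" "$2" "$3" "$4"
}
```

For example, gallery_image_id "${RESOURCE_GROUP}" "${GALLERY_NAME}" rhcos-arm64 1.0.0 prints the path in the same form as the example resourceID.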

4.2.3. Adding a multi-architecture compute machine set to your cluster

To add ARM64 compute nodes to your cluster, you must create an Azure compute machine set that uses the ARM64 boot image. To create your own custom compute machine set on Azure, see "Creating a compute machine set on Azure".

Prerequisites

- You installed the OpenShift CLI (oc).

Procedure

Create a compute machine set, with the resourceID and vmSize parameters modified for your environment, by running the following command. This compute machine set controls the arm64 worker nodes in your cluster:

$ oc create -f arm64-machine-set-0.yaml

Verification

Verify that the new ARM64 machines are running by entering the following command:

$ oc get machineset -n openshift-machine-api

Example output

NAME                                      DESIRED   CURRENT   READY   AVAILABLE   AGE
<infrastructure_id>-arm64-machine-set-0   2         2         2       2           10m

You can check that the nodes are ready and schedulable with the following command:

$ oc get nodes

4.3. Creating a cluster with multi-architecture compute machines on AWS

To create an AWS cluster with multi-architecture compute machines, you must first create a single-architecture AWS installer-provisioned cluster with the multi-architecture installer binary. For more information on AWS installations, refer to Installing a cluster on AWS with customizations. You can then add an ARM64 compute machine set to your AWS cluster.

4.3.1. Verifying cluster compatibility

Before you can start adding compute nodes of different architectures to your cluster, you must verify that your cluster is multi-architecture compatible.

Prerequisites

- You installed the OpenShift CLI (oc).

Procedure

Check whether your cluster uses the multi-architecture payload by running the following command:

$ oc adm release info -o jsonpath="{ .metadata.metadata}"

Verification

If you see the following output, then your cluster is using the multi-architecture payload:

{
  "release.openshift.io/architecture": "multi",
  "url": "https://access.redhat.com/errata/<errata_version>"
}

You can then begin adding multi-arch compute nodes to your cluster.

If you see the following output, then your cluster is not using the multi-architecture payload:

{
  "url": "https://access.redhat.com/errata/<errata_version>"
}

Important: To migrate your cluster so the cluster supports multi-architecture compute machines, follow the procedure in Migrating to a cluster with multi-architecture compute machines.

4.3.2. Adding an ARM64 compute machine set to your cluster

To configure a cluster with multi-architecture compute machines, you must create an AWS ARM64 compute machine set. This adds ARM64 compute nodes to your cluster so that your cluster has multi-architecture compute machines.

Prerequisites

- You installed the OpenShift CLI (oc).
- You used the installation program to create an AMD64 single-architecture AWS cluster with the multi-architecture installer binary.

Procedure

Create and modify a compute machine set. This compute machine set controls the ARM64 compute nodes in your cluster:

$ oc create -f aws-arm64-machine-set-0.yaml

In the YAML for a compute machine set that deploys an ARM64 compute node, specify the following values:

- Specify the infrastructure ID that is based on the cluster ID that you set when you provisioned the cluster. If you have the OpenShift CLI installed, you can obtain the infrastructure ID by running the following command:

  $ oc get -o jsonpath='{.status.infrastructureName}{"\n"}' infrastructure cluster

- Specify the infrastructure ID, role node label, and zone.
- Specify the role node label to add.
- Specify an ARM64 supported Red Hat Enterprise Linux CoreOS (RHCOS) Amazon Machine Image (AMI) for your AWS zone for your OpenShift Container Platform nodes:

  $ oc get configmap/coreos-bootimages \
      -n openshift-machine-config-operator \
      -o jsonpath='{.data.stream}' | jq \
      -r '.architectures.<arch>.images.aws.regions."<region>".image'

- Specify an ARM64 supported machine type. For more information, refer to "Tested instance types for AWS 64-bit ARM".
- Specify the zone, for example, us-east-1a. Ensure that the zone you select offers 64-bit ARM machines.
- Specify the region, for example, us-east-1. Ensure that the region you select offers 64-bit ARM machines.
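The <arch> and <region> placeholders in the jq filter for the AMI lookup must be replaced before you run the command. One way to avoid quoting mistakes is to generate the filter string; the following sketch uses a hypothetical function name, aws_ami_filter, and the aarch64 and us-east-1 values in the usage note are examples only.

```shell
# Hypothetical helper: print the jq filter that selects the RHCOS AMI
# for the given architecture and AWS region.
# Arguments: architecture (for example, aarch64), region (for example, us-east-1).
aws_ami_filter() {
  printf '.architectures.%s.images.aws.regions."%s".image\n' "$1" "$2"
}
```

You can then pass the generated filter to jq, for example: oc get configmap/coreos-bootimages -n openshift-machine-config-operator -o jsonpath='{.data.stream}' | jq -r "$(aws_ami_filter aarch64 us-east-1)".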

Verification

View the list of compute machine sets by entering the following command:

$ oc get machineset -n openshift-machine-api

You can then see your created ARM64 machine set.

Example output

NAME                                          DESIRED   CURRENT   READY   AVAILABLE   AGE
<infrastructure_id>-aws-arm64-machine-set-0   2         2         2       2           10m

You can check that the nodes are ready and schedulable with the following command:

$ oc get nodes

4.4. Creating a cluster with multi-architecture compute machines on Google Cloud

To create a Google Cloud cluster with multi-architecture compute machines, you must first create a single-architecture Google Cloud installer-provisioned cluster with the multi-architecture installer binary. For more information on Google Cloud installations, refer to Installing a cluster on Google Cloud with customizations. You can then add ARM64 compute machine sets to your Google Cloud cluster.

Secure booting is currently not supported on ARM64 machines for Google Cloud.

4.4.1. Verifying cluster compatibility

Before you can start adding compute nodes of different architectures to your cluster, you must verify that your cluster is multi-architecture compatible.

Prerequisites

- You installed the OpenShift CLI (oc).

Procedure

Check whether your cluster uses the multi-architecture payload by running the following command:

$ oc adm release info -o jsonpath="{ .metadata.metadata}"

Verification

If you see the following output, then your cluster is using the multi-architecture payload:

{
  "release.openshift.io/architecture": "multi",
  "url": "https://access.redhat.com/errata/<errata_version>"
}

You can then begin adding multi-arch compute nodes to your cluster.

If you see the following output, then your cluster is not using the multi-architecture payload:

{
  "url": "https://access.redhat.com/errata/<errata_version>"
}

Important: To migrate your cluster so the cluster supports multi-architecture compute machines, follow the procedure in Migrating to a cluster with multi-architecture compute machines.

4.4.2. Adding an ARM64 compute machine set to your Google Cloud cluster

To configure a cluster with multi-architecture compute machines, you must create a Google Cloud ARM64 compute machine set. This adds ARM64 compute nodes to your cluster.

Prerequisites

- You installed the OpenShift CLI (oc).
- You used the installation program to create an AMD64 single-architecture Google Cloud cluster with the multi-architecture installer binary.

Procedure

Create and modify a compute machine set. This compute machine set controls the ARM64 compute nodes in your cluster:

$ oc create -f gcp-arm64-machine-set-0.yaml

In the Google Cloud YAML for a compute machine set that deploys an ARM64 compute node, specify the following values:

- Specify the infrastructure ID that is based on the cluster ID that you set when you provisioned the cluster. You can obtain the infrastructure ID by running the following command:

  $ oc get -o jsonpath='{.status.infrastructureName}{"\n"}' infrastructure cluster

- Specify the role node label to add.
- Specify the path to the image that is used in current compute machine sets. You need the project and image name for the path to the image.

  To access the project and image name, run the following command:

  $ oc get configmap/coreos-bootimages \
      -n openshift-machine-config-operator \
      -o jsonpath='{.data.stream}' | jq \
      -r '.architectures.aarch64.images.gcp'

  Example output

  "gcp": {
      "release": "415.92.202309142014-0",
      "project": "rhcos-cloud",
      "name": "rhcos-415-92-202309142014-0-gcp-aarch64"
  }

  Use the project and name parameters from the output to build the path to the image in your machine set. The path to the image must use the following format:

  projects/<project>/global/images/<image_name>

- Optional: Specify custom metadata in the form of a key:value pair. For example use cases, see the Google Cloud documentation for setting custom metadata.
- Specify an ARM64 supported machine type. For more information, refer to Tested instance types for Google Cloud on 64-bit ARM infrastructures in "Additional resources".
- Specify the name of the Google Cloud project that you use for your cluster.
- Specify the region, for example, us-central1. Ensure that the region you select offers 64-bit ARM machines.
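The path to the image is a plain concatenation of the project and name values from the command output. The following sketch shows one way to assemble it; the function name gcp_image_path is hypothetical.

```shell
# Hypothetical helper: build the image path for the machine set in the
# projects/<project>/global/images/<image_name> format.
# Arguments: project, image name.
gcp_image_path() {
  printf 'projects/%s/global/images/%s\n' "$1" "$2"
}
```

With the values from the example output, gcp_image_path rhcos-cloud rhcos-415-92-202309142014-0-gcp-aarch64 prints projects/rhcos-cloud/global/images/rhcos-415-92-202309142014-0-gcp-aarch64.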

Verification

View the list of compute machine sets by entering the following command:

$ oc get machineset -n openshift-machine-api

You can then see your created ARM64 machine set.

Example output

NAME                                          DESIRED   CURRENT   READY   AVAILABLE   AGE
<infrastructure_id>-gcp-arm64-machine-set-0   2         2         2       2           10m

You can check that the nodes are ready and schedulable with the following command:

$ oc get nodes

Additional resources

4.5. Creating a cluster with multi-architecture compute machines on bare metal, IBM Power, or IBM Z

To create a cluster with multi-architecture compute machines on bare metal (x86_64), IBM Power® (ppc64le), or IBM Z® (s390x), you must have an existing single-architecture cluster on one of these platforms. Follow the installation procedure for your platform:

- Installing a user provisioned cluster on bare metal. You can then add 64-bit ARM compute machines to your OpenShift Container Platform cluster on bare metal.

- Installing a cluster on IBM Power®. You can then add x86_64 compute machines to your OpenShift Container Platform cluster on IBM Power®.

- Installing a cluster on IBM Z® and IBM® LinuxONE. You can then add x86_64 compute machines to your OpenShift Container Platform cluster on IBM Z® and IBM® LinuxONE.

Before you can add additional compute nodes to your cluster, you must upgrade your cluster to one that uses the multi-architecture payload. For more information on migrating to the multi-architecture payload, see Migrating to a cluster with multi-architecture compute machines.

The following procedures explain how to create a RHCOS compute machine using an ISO image or network PXE booting. This will allow you to add additional nodes to your cluster and deploy a cluster with multi-architecture compute machines.

4.5.1. Verifying cluster compatibility

Before you can start adding compute nodes of different architectures to your cluster, you must verify that your cluster is multi-architecture compatible.

Prerequisites

- You installed the OpenShift CLI (oc).

Procedure

You can check that your cluster uses the multi-architecture payload by running the following command:

$ oc adm release info -o jsonpath="{ .metadata.metadata}"

Verification

If you see the following output, then your cluster is using the multi-architecture payload:

{
  "release.openshift.io/architecture": "multi",
  "url": "https://access.redhat.com/errata/<errata_version>"
}

You can then begin adding multi-arch compute nodes to your cluster.

If you see the following output, then your cluster is not using the multi-architecture payload:

{
  "url": "https://access.redhat.com/errata/<errata_version>"
}

Important: To migrate your cluster so the cluster supports multi-architecture compute machines, follow the procedure in Migrating to a cluster with multi-architecture compute machines.
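The check in this section can also be scripted. The following sketch tests the release metadata for the release.openshift.io/architecture key with the value multi; the function name is_multi_arch_payload is hypothetical, and the pattern match assumes the metadata is printed as JSON, as in the example outputs.

```shell
# Return success (exit status 0) only when the release metadata passed as
# the first argument marks the payload architecture as "multi".
is_multi_arch_payload() {
  printf '%s' "$1" |
    grep -Eq '"release\.openshift\.io/architecture"[[:space:]]*:[[:space:]]*"multi"'
}

# Usage against a live cluster (requires a logged-in oc session):
#   metadata=$(oc adm release info -o jsonpath="{ .metadata.metadata}")
#   if is_multi_arch_payload "$metadata"; then
#     echo "multi-architecture payload in use"
#   else
#     echo "single-architecture payload; migrate before adding nodes"
#   fi
```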

4.5.2. Creating RHCOS machines using an ISO image

You can create more Red Hat Enterprise Linux CoreOS (RHCOS) compute machines for your bare metal cluster by using an ISO image to create the machines.

Prerequisites

- Obtain the URL of the Ignition config file for the compute machines for your cluster. You uploaded this file to your HTTP server during installation.

- You must have the OpenShift CLI (oc) installed.

Procedure

Extract the Ignition config file from the cluster by running the following command:

$ oc extract -n openshift-machine-api secret/worker-user-data-managed --keys=userData --to=- > worker.ign

Upload the worker.ign Ignition config file you exported from your cluster to your HTTP server. Note the URL of this file. You can validate that the Ignition config file is available at the URL. The following example gets the Ignition config file for the compute node:

$ curl -k http://<HTTP_server>/worker.ign

You can access the ISO image for booting your new machine by running the following command:

RHCOS_VHD_ORIGIN_URL=$(oc -n openshift-machine-config-operator get configmap/coreos-bootimages -o jsonpath='{.data.stream}' | jq -r '.architectures.<architecture>.artifacts.metal.formats.iso.disk.location')

Use the ISO file to install RHCOS on more compute machines. Use the same method that you used when you created machines before you installed the cluster:

- Burn the ISO image to a disk and boot it directly.

- Use ISO redirection with a LOM interface.

Boot the RHCOS ISO image without specifying any options, or interrupting the live boot sequence. Wait for the installer to boot into a shell prompt in the RHCOS live environment.

Note: You can interrupt the RHCOS installation boot process to add kernel arguments. However, for this ISO procedure you must use the coreos-installer command as outlined in the following steps, instead of adding kernel arguments.

Run the coreos-installer command and specify the options that meet your installation requirements. At a minimum, you must specify the URL that points to the Ignition config file for the node type, and the device that you are installing to:

$ sudo coreos-installer install --ignition-url=http://<HTTP_server>/<node_type>.ign <device> --ignition-hash=sha512-<digest>

- 1

- You must run the coreos-installer command by using sudo, because the core user does not have the required root privileges to perform the installation.

- 2

- The --ignition-hash option is required when the Ignition config file is obtained through an HTTP URL to validate the authenticity of the Ignition config file on the cluster node. <digest> is the Ignition config file SHA512 digest obtained in a preceding step.
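The <digest> value for the --ignition-hash option is the SHA512 digest of the Ignition config file. One way to compute it, sketched here with the standard sha512sum utility, is shown below; the helper name ignition_hash is hypothetical, and the sketch assumes the file is available locally on the machine where you run it.

```shell
# Print the value for --ignition-hash (sha512-<digest>) for a local file.
# sha512sum prints "<digest>  <file>"; awk keeps only the digest field.
ignition_hash() {
  printf 'sha512-%s\n' "$(sha512sum "$1" | awk '{ print $1 }')"
}

# Usage, assuming worker.ign is in the current directory:
#   ignition_hash worker.ign
```

Compute the digest from the same file that you uploaded to the HTTP server, because any difference in content changes the digest and causes the installer to reject the file.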

Note: If you want to provide your Ignition config files through an HTTPS server that uses TLS, you can add the internal certificate authority (CA) to the system trust store before running coreos-installer.

The following example initializes a compute node installation to the /dev/sda device. The Ignition config file for the compute node is obtained from an HTTP web server with the IP address 192.168.1.2:

$ sudo coreos-installer install --ignition-url=http://192.168.1.2:80/installation_directory/worker.ign /dev/sda --ignition-hash=sha512-a5a2d43879223273c9b60af66b44202a1d1248fc01cf156c46d4a79f552b6bad47bc8cc78ddf0116e80c59d2ea9e32ba53bc807afbca581aa059311def2c3e3b

Monitor the progress of the RHCOS installation on the console of the machine.

Important: Ensure that the installation is successful on each node before commencing with the OpenShift Container Platform installation. Observing the installation process can also help to determine the cause of RHCOS installation issues that might arise.

- Continue to create more compute machines for your cluster.

4.5.3. Creating RHCOS machines by PXE or iPXE booting

You can create more Red Hat Enterprise Linux CoreOS (RHCOS) compute machines for your bare metal cluster by using PXE or iPXE booting.

Prerequisites

- Obtain the URL of the Ignition config file for the compute machines for your cluster. You uploaded this file to your HTTP server during installation.

- Obtain the URLs of the RHCOS ISO image, compressed metal BIOS, kernel, and initramfs files that you uploaded to your HTTP server during cluster installation.

- You have access to the PXE booting infrastructure that you used to create the machines for your OpenShift Container Platform cluster during installation. The machines must boot from their local disks after RHCOS is installed on them.

- If you use UEFI, you have access to the grub.conf file that you modified during OpenShift Container Platform installation.

Procedure

Confirm that your PXE or iPXE installation for the RHCOS images is correct.

For PXE:

- 1

- Specify the location of the live kernel file that you uploaded to your HTTP server.

- 2

- Specify locations of the RHCOS files that you uploaded to your HTTP server. The initrd parameter value is the location of the live initramfs file, the coreos.inst.ignition_url parameter value is the location of the worker Ignition config file, and the coreos.live.rootfs_url parameter value is the location of the live rootfs file. The coreos.inst.ignition_url and coreos.live.rootfs_url parameters only support HTTP and HTTPS.
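A PXELINUX menu entry consistent with the two callouts above might look like the output of the following sketch. The helper name pxe_menu_entry, the DEFAULT, TIMEOUT, PROMPT, and LABEL values, and the /dev/sda install device are assumptions for illustration; the file names follow the naming pattern used in the iPXE example in this procedure.

```shell
# Write a PXELINUX menu entry to standard output.
# $1: base URL of the HTTP server, $2: RHCOS version, $3: architecture.
pxe_menu_entry() {
  base=$1
  version=$2
  arch=$3
  # KERNEL points at the live kernel file (callout 1); APPEND carries the
  # initramfs, Ignition config, and rootfs locations (callout 2).
  cat <<EOF
DEFAULT pxeboot
TIMEOUT 20
PROMPT 0
LABEL pxeboot
    KERNEL ${base}/rhcos-${version}-live-kernel-${arch}
    APPEND initrd=${base}/rhcos-${version}-live-initramfs.${arch}.img coreos.inst.install_dev=/dev/sda coreos.inst.ignition_url=${base}/worker.ign coreos.live.rootfs_url=${base}/rhcos-${version}-live-rootfs.${arch}.img
EOF
}

# Usage with placeholder values:
#   pxe_menu_entry "http://<HTTP_server>" "<version>" "<architecture>"
```

Generating the entry from a single base URL keeps the kernel, initramfs, rootfs, and Ignition locations consistent with each other.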

Note: This configuration does not enable serial console access on machines with a graphical console. To configure a different console, add one or more console= arguments to the APPEND line. For example, add console=tty0 console=ttyS0 to set the first PC serial port as the primary console and the graphical console as a secondary console. For more information, see How does one set up a serial terminal and/or console in Red Hat Enterprise Linux?.

For iPXE (x86_64 + aarch64):

kernel http://<HTTP_server>/rhcos-<version>-live-kernel-<architecture> initrd=main coreos.live.rootfs_url=http://<HTTP_server>/rhcos-<version>-live-rootfs.<architecture>.img coreos.inst.install_dev=/dev/sda coreos.inst.ignition_url=http://<HTTP_server>/worker.ign
initrd --name main http://<HTTP_server>/rhcos-<version>-live-initramfs.<architecture>.img
boot

- 1

- Specify the locations of the RHCOS files that you uploaded to your HTTP server. The kernel parameter value is the location of the kernel file, the initrd=main argument is needed for booting on UEFI systems, the coreos.live.rootfs_url parameter value is the location of the rootfs file, and the coreos.inst.ignition_url parameter value is the location of the worker Ignition config file.

- 2

- If you use multiple NICs, specify a single interface in the ip option. For example, to use DHCP on a NIC that is named eno1, set ip=eno1:dhcp.

- 3

- Specify the location of the initramfs file that you uploaded to your HTTP server.

Note: This configuration does not enable serial console access on machines with a graphical console. To configure a different console, add one or more console= arguments to the kernel line. For example, add console=tty0 console=ttyS0 to set the first PC serial port as the primary console and the graphical console as a secondary console. For more information, see How does one set up a serial terminal and/or console in Red Hat Enterprise Linux? and "Enabling the serial console for PXE and ISO installation" in the "Advanced RHCOS installation configuration" section.

Note: To network boot the CoreOS kernel on aarch64 architecture, you need to use a version of iPXE built with the IMAGE_GZIP option enabled. See IMAGE_GZIP option in iPXE.

For PXE (with UEFI and GRUB as second stage) on