Authentication and authorization

Configuring user authentication and access controls for users and services

Abstract

Chapter 1. Overview of authentication and authorization

1.1. Glossary of common terms for OpenShift Container Platform authentication and authorization

This glossary defines common terms that are used in OpenShift Container Platform authentication and authorization.

- authentication

- Authentication determines access to an OpenShift Container Platform cluster and ensures that only authenticated users can access it.

- authorization

- Authorization determines whether the identified user has permissions to perform the requested action.

- bearer token

- A bearer token is used to authenticate to the API with the header Authorization: Bearer <token>.

- Cloud Credential Operator

- The Cloud Credential Operator (CCO) manages cloud provider credentials as custom resource definitions (CRDs).

- config map

- A config map provides a way to inject configuration data into pods. You can reference the data stored in a config map in a volume of type ConfigMap. Applications running in a pod can use this data.

- containers

- Lightweight and executable images that consist of software and all its dependencies. Because containers virtualize the operating system, you can run containers in a data center, public or private cloud, or your local host.

- Custom Resource (CR)

- A CR is an extension of the Kubernetes API.

- group

- A group is a set of users. A group is useful for granting permissions to multiple users one time.

- HTPasswd

- HTPasswd updates the files that store usernames and passwords for authentication of HTTP users.

- Keystone

- Keystone is a Red Hat OpenStack Platform (RHOSP) project that provides identity, token, catalog, and policy services.

- Lightweight directory access protocol (LDAP)

- LDAP is a protocol that queries user information.

- manual mode

- In manual mode, a user manages cloud credentials instead of the Cloud Credential Operator (CCO).

- mint mode

- In mint mode, the Cloud Credential Operator (CCO) uses the provided administrator-level cloud credential to create new credentials for components in the cluster with only the specific permissions that are required.

Note: Mint mode is the default and the preferred setting for the CCO to use on the platforms for which it is supported.

- namespace

- A namespace isolates specific system resources that are visible to all processes. Inside a namespace, only processes that are members of that namespace can see those resources.

- node

- A node is a worker machine in the OpenShift Container Platform cluster. A node is either a virtual machine (VM) or a physical machine.

- OAuth client

- An OAuth client is used to get a bearer token.

- OAuth server

- The OpenShift Container Platform control plane includes a built-in OAuth server that determines the user’s identity from the configured identity provider and creates an access token.

- OpenID Connect

- OpenID Connect is a protocol that authenticates users so that they can use single sign-on (SSO) to access sites that use OpenID Providers.

- passthrough mode

- In passthrough mode, the Cloud Credential Operator (CCO) passes the provided cloud credential to the components that request cloud credentials.

- pod

- A pod is the smallest logical unit in Kubernetes. A pod consists of one or more containers that run on a worker node.

- regular users

- Users that are created automatically in the cluster upon first login or via the API.

- request header

- A request header is an HTTP header that is used to provide information about HTTP request context, so that the server can track the response of the request.

- role-based access control (RBAC)

- A key security control to ensure that cluster users and workloads have access to only the resources required to execute their roles.

- service accounts

- Service accounts are used by the cluster components or applications.

- system users

- Users that are created automatically when the cluster is installed.

- users

- A user is an entity that can make requests to the API.

1.2. About authentication in OpenShift Container Platform

To control access to an OpenShift Container Platform cluster, a cluster administrator can configure user authentication and ensure only approved users access the cluster.

To interact with an OpenShift Container Platform cluster, users must first authenticate to the OpenShift Container Platform API in some way. You can authenticate by providing an OAuth access token or an X.509 client certificate in your requests to the OpenShift Container Platform API.

If you do not present a valid access token or certificate, your request is unauthenticated and you receive an HTTP 401 error.
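As a minimal sketch of the token-based option described above, the following Python snippet builds (but does not send) an API request that carries an OAuth access token in the Authorization header. The host name and token value are hypothetical placeholders, and the helper name build_api_request is introduced here for illustration only.

```python
import urllib.request


def build_api_request(api_url: str, token: str) -> urllib.request.Request:
    """Return a GET request that carries the token in the Authorization header."""
    if not token:
        raise ValueError("an OAuth access token is required")
    return urllib.request.Request(
        api_url,
        headers={"Authorization": f"Bearer {token}"},
        method="GET",
    )


# Hypothetical API URL and token, for illustration only.
request = build_api_request(
    "https://api.example.com:6443/apis/user.openshift.io/v1/users/~",
    "sha256~example-token",
)
print(request.get_header("Authorization"))
```

Sending such a request with a missing or invalid token is what results in the HTTP 401 error mentioned above.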

An administrator can configure authentication through the following tasks:

- Configuring an identity provider: You can define any supported identity provider in OpenShift Container Platform and add it to your cluster.

- Configuring the internal OAuth server: The OpenShift Container Platform control plane includes a built-in OAuth server that determines the user’s identity from the configured identity provider and creates an access token. You can configure the token duration and inactivity timeout, and customize the internal OAuth server URL.

Note: Users can view and manage OAuth tokens owned by them.

- Registering an OAuth client: OpenShift Container Platform includes several default OAuth clients. You can register and configure additional OAuth clients.

Note: When users send a request for an OAuth token, they must specify either a default or custom OAuth client that receives and uses the token.

- Managing cloud provider credentials using the Cloud Credentials Operator: Cluster components use cloud provider credentials to get permissions required to perform cluster-related tasks.

- Impersonating a system admin user: You can grant cluster administrator permissions to a user by impersonating a system admin user.

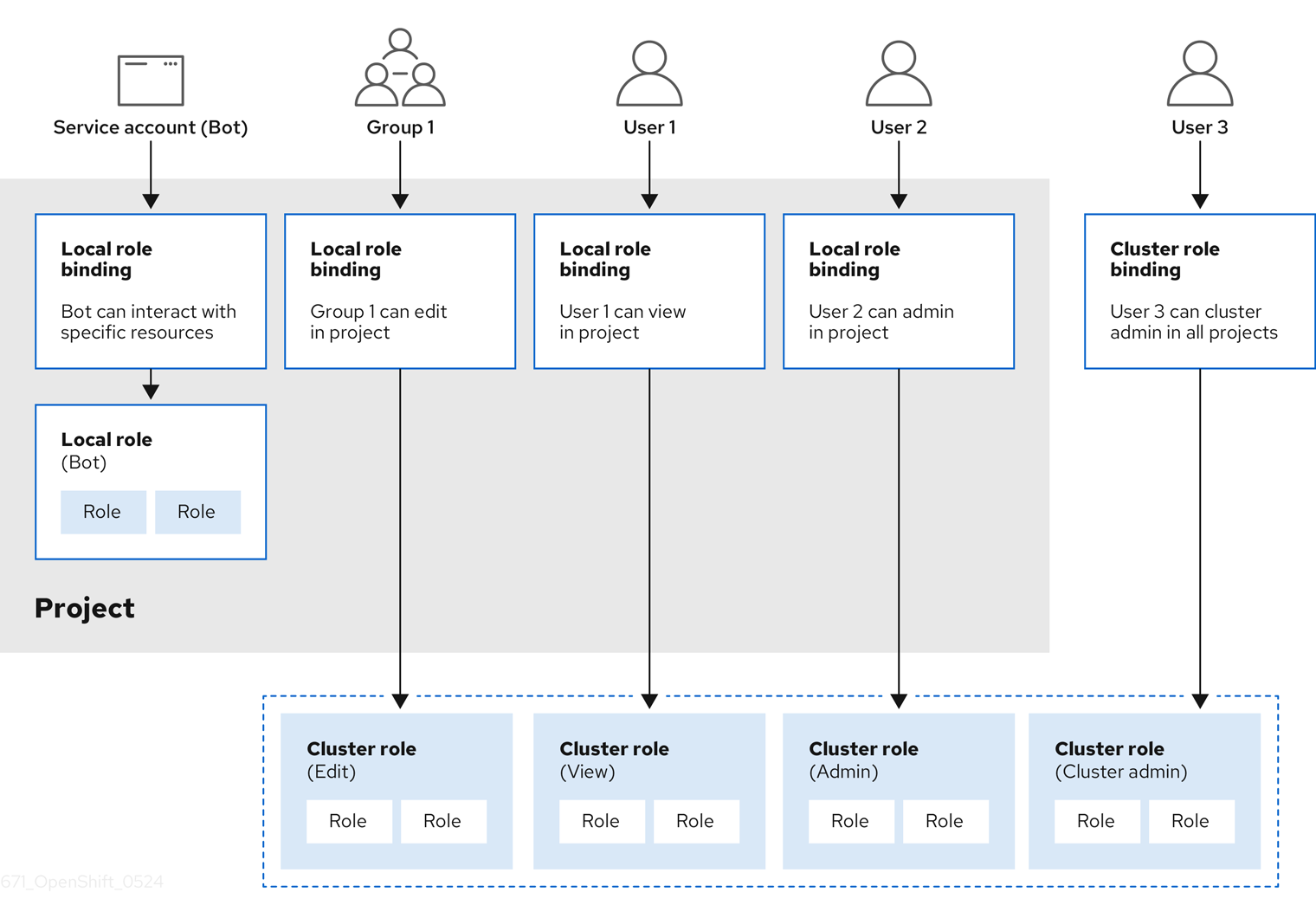

1.3. About authorization in OpenShift Container Platform

Authorization involves determining whether the identified user has permissions to perform the requested action.

Administrators can define permissions and assign them to users using the RBAC objects, such as rules, roles, and bindings. To understand how authorization works in OpenShift Container Platform, see Evaluating authorization.

You can also control access to an OpenShift Container Platform cluster through projects and namespaces.

Along with controlling user access to a cluster, you can also control the actions a pod can perform and the resources it can access using security context constraints (SCCs).

You can manage authorization for OpenShift Container Platform through the following tasks:

- Viewing local and cluster roles and bindings.

- Creating a local role and assigning it to a user or group.

- Creating a cluster role and assigning it to a user or group: OpenShift Container Platform includes a set of default cluster roles. You can create additional cluster roles and add them to a user or group.

- Creating a cluster-admin user: By default, your cluster has only one cluster administrator called kubeadmin. You can create another cluster administrator. Before creating a cluster administrator, ensure that you have configured an identity provider.

Note: After creating the cluster admin user, delete the existing kubeadmin user to improve cluster security.

- Creating service accounts: Service accounts provide a flexible way to control API access without sharing a regular user’s credentials. A user can create and use a service account in applications and also as an OAuth client.

- Scoping tokens: A scoped token is a token that identifies as a specific user who can perform only specific operations. You can create scoped tokens to delegate some of your permissions to another user or a service account.

- Syncing LDAP groups: You can manage user groups in one place by syncing the groups stored in an LDAP server with the OpenShift Container Platform user groups.

Chapter 2. Understanding authentication

For users to interact with OpenShift Container Platform, they must first authenticate to the cluster. The authentication layer identifies the user associated with requests to the OpenShift Container Platform API. The authorization layer then uses information about the requesting user to determine if the request is allowed.

As an administrator, you can configure authentication for OpenShift Container Platform.

2.1. Users

A user in OpenShift Container Platform is an entity that can make requests to the OpenShift Container Platform API. An OpenShift Container Platform User object represents an actor which can be granted permissions in the system by adding roles to them or to their groups. Typically, this represents the account of a developer or administrator that is interacting with OpenShift Container Platform.

Several types of users can exist:

| User type | Description |

|---|---|

| Regular users | This is the way most interactive OpenShift Container Platform users are represented. Regular users are created automatically in the system upon first login or can be created via the API. Regular users are represented with the User object. |

| System users | Many of these are created automatically when the infrastructure is defined, mainly for the purpose of enabling the infrastructure to interact with the API securely. They include a cluster administrator (with access to everything), a per-node user, users for use by routers and registries, and various others. Finally, there is an anonymous system user that is used by default for unauthenticated requests. |

| Service accounts | These are special system users associated with projects; some are created automatically when the project is first created, while project administrators can create more for the purpose of defining access to the contents of each project. Service accounts are represented with the ServiceAccount object. |

Each user must authenticate in some way to access OpenShift Container Platform. API requests with no authentication or invalid authentication are authenticated as requests by the anonymous system user. After authentication, policy determines what the user is authorized to do.

2.2. Groups

A user can be assigned to one or more groups, each of which represents a certain set of users. Groups are useful when managing authorization policies to grant permissions to multiple users at once, for example allowing access to objects within a project, instead of granting them to users individually.

In addition to explicitly defined groups, there are also system groups, or virtual groups, that are automatically provisioned by the cluster.

The following default virtual groups are most important:

| Virtual group | Description |

|---|---|

| system:authenticated | Automatically associated with all authenticated users. |

| system:authenticated:oauth | Automatically associated with all users authenticated with an OAuth access token. |

| system:unauthenticated | Automatically associated with all unauthenticated users. |

2.3. API authentication

Requests to the OpenShift Container Platform API are authenticated using the following methods:

- OAuth access tokens

- Obtained from the OpenShift Container Platform OAuth server using the <namespace_route>/oauth/authorize and <namespace_route>/oauth/token endpoints.

- Sent as an Authorization: Bearer… header.

- Sent as a websocket subprotocol header in the form base64url.bearer.authorization.k8s.io.<base64url-encoded-token> for websocket requests.

- X.509 client certificates

- Requires an HTTPS connection to the API server.

- Verified by the API server against a trusted certificate authority bundle.

- The API server creates and distributes certificates to controllers to authenticate themselves.

Any request with an invalid access token or an invalid certificate is rejected by the authentication layer with a 401 error.

If no access token or certificate is presented, the authentication layer assigns the system:anonymous virtual user and the system:unauthenticated virtual group to the request. This allows the authorization layer to determine which requests, if any, an anonymous user is allowed to make.
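The websocket subprotocol form listed above can be sketched in Python as follows. The helper name websocket_bearer_subprotocol is hypothetical, and stripping the trailing = padding from the base64url value is an assumption about what the API server expects rather than something this document states.

```python
import base64

PREFIX = "base64url.bearer.authorization.k8s.io."


def websocket_bearer_subprotocol(token: str) -> str:
    """Encode a bearer token as a websocket subprotocol value."""
    encoded = base64.urlsafe_b64encode(token.encode("utf-8")).decode("ascii")
    # Assumption: the server expects base64url without "=" padding.
    return PREFIX + encoded.rstrip("=")


# Hypothetical token value, for illustration only.
print(websocket_bearer_subprotocol("token"))
```

A websocket client would offer the returned string as one of its subprotocols in the Sec-WebSocket-Protocol header.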

2.3.1. OpenShift Container Platform OAuth server

The OpenShift Container Platform master includes a built-in OAuth server. Users obtain OAuth access tokens to authenticate themselves to the API.

When a person requests a new OAuth token, the OAuth server uses the configured identity provider to determine the identity of the person making the request.

It then determines what user that identity maps to, creates an access token for that user, and returns the token for use.

2.3.1.1. OAuth token requests

Every request for an OAuth token must specify the OAuth client that will receive and use the token. The following OAuth clients are automatically created when starting the OpenShift Container Platform API:

| OAuth client | Usage |

|---|---|

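| openshift-browser-client | Requests tokens at <namespace_route>/oauth/token/request with a user-agent that can handle interactive logins. |

| openshift-challenging-client | Requests tokens with a user-agent that can handle WWW-Authenticate challenges. |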

<namespace_route> refers to the namespace route. This is found by running the following command:

$ oc get route oauth-openshift -n openshift-authentication -o json | jq .spec.host

All requests for OAuth tokens involve a request to <namespace_route>/oauth/authorize. Most authentication integrations place an authenticating proxy in front of this endpoint, or configure OpenShift Container Platform to validate credentials against a backing identity provider. Requests to <namespace_route>/oauth/authorize can come from user-agents that cannot display interactive login pages, such as the CLI. Therefore, OpenShift Container Platform supports authenticating using a WWW-Authenticate challenge in addition to interactive login flows.

If an authenticating proxy is placed in front of the <namespace_route>/oauth/authorize endpoint, it sends unauthenticated, non-browser user-agents WWW-Authenticate challenges rather than displaying an interactive login page or redirecting to an interactive login flow.

To prevent cross-site request forgery (CSRF) attacks against browser clients, only send Basic authentication challenges if an X-CSRF-Token header is on the request. Clients that expect to receive Basic WWW-Authenticate challenges must set this header to a non-empty value.

If the authenticating proxy cannot support WWW-Authenticate challenges, or if OpenShift Container Platform is configured to use an identity provider that does not support WWW-Authenticate challenges, you must use a browser to manually obtain a token from <namespace_route>/oauth/token/request.
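The following Python sketch shows how a non-browser client might prepare a request to the authorize endpoint so that it is eligible for Basic challenges. It only builds the request and does not send it. The route host is a hypothetical placeholder, the helper name is introduced for illustration, and the response_type=token and client_id values assume the implicit grant flow with the openshift-challenging-client client.

```python
import urllib.parse
import urllib.request


def build_challenge_request(namespace_route: str) -> urllib.request.Request:
    """Build an /oauth/authorize request that opts in to Basic challenges."""
    query = urllib.parse.urlencode(
        {
            "response_type": "token",
            "client_id": "openshift-challenging-client",
        }
    )
    url = f"https://{namespace_route}/oauth/authorize?{query}"
    # Any non-empty X-CSRF-Token value signals that this is not a browser.
    return urllib.request.Request(url, headers={"X-CSRF-Token": "1"})


# Hypothetical route host, for illustration only.
request = build_challenge_request("oauth-openshift.apps.example.com")
print(request.full_url)
```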

2.3.1.2. API impersonation

You can configure a request to the OpenShift Container Platform API to act as though it originated from another user. For more information, see User impersonation in the Kubernetes documentation.

2.3.1.3. Authentication metrics for Prometheus

OpenShift Container Platform captures the following Prometheus system metrics during authentication attempts:

- openshift_auth_basic_password_count counts the number of oc login user name and password attempts.

- openshift_auth_basic_password_count_result counts the number of oc login user name and password attempts by result, success or error.

- openshift_auth_form_password_count counts the number of web console login attempts.

- openshift_auth_form_password_count_result counts the number of web console login attempts by result, success or error.

- openshift_auth_password_total counts the total number of oc login and web console login attempts.

Chapter 3. Configuring the internal OAuth server

3.1. OpenShift Container Platform OAuth server

The OpenShift Container Platform master includes a built-in OAuth server. Users obtain OAuth access tokens to authenticate themselves to the API.

When a person requests a new OAuth token, the OAuth server uses the configured identity provider to determine the identity of the person making the request.

It then determines what user that identity maps to, creates an access token for that user, and returns the token for use.

3.2. OAuth token request flows and responses

The OAuth server supports the standard authorization code grant and implicit grant OAuth authorization flows.

When requesting an OAuth token using the implicit grant flow (response_type=token) with a client_id configured to request WWW-Authenticate challenges (like openshift-challenging-client), these are the possible server responses from /oauth/authorize, and how they should be handled:

| Status | Content | Client response |

|---|---|---|

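| 302 | Location header containing an access_token parameter in the URL fragment | Use the access_token value as the OAuth token. |

| 302 | Location header containing an error query parameter | Fail, optionally surfacing the error (and optional error_description) query values to the user. |

| 302 | Other Location header | Follow the redirect, and process the result using these rules. |

| 401 | WWW-Authenticate header present | Respond to challenge if type is recognized (e.g. Basic, Negotiate), resubmit request, and process the result using these rules. |

| 401 | WWW-Authenticate header missing | No challenge authentication is possible. Fail and show response body (which might contain links or details on alternate methods to obtain an OAuth token). |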

| Other | Other | Fail, optionally surfacing response body to the user. |
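The response-handling rules in the table above can be sketched as a small Python function. This is an illustrative outline, not the logic of any particular client: the function name and the action labels are hypothetical, and it assumes that a successful redirect carries access_token in the URL fragment of the Location header while a failed one carries error as a query parameter.

```python
from urllib.parse import parse_qs, urlsplit


def classify_authorize_response(status: int, headers: dict) -> tuple:
    """Map a response from /oauth/authorize to an (action, detail) pair."""
    headers = {name.lower(): value for name, value in headers.items()}
    if status == 302:
        raw_location = headers.get("location", "")
        location = urlsplit(raw_location)
        fragment = parse_qs(location.fragment)
        query = parse_qs(location.query)
        if "access_token" in fragment:
            return ("use_token", fragment["access_token"][0])
        if "error" in query:
            return ("fail", query["error"][0])
        # Any other redirect is followed and classified again.
        return ("follow_redirect", raw_location)
    if status == 401:
        challenge = headers.get("www-authenticate")
        if challenge:
            return ("answer_challenge", challenge)
        return ("fail", "no challenge offered")
    return ("fail", f"unexpected status {status}")


print(classify_authorize_response(
    302, {"Location": "https://example.com/cb#access_token=abc&expires_in=86400"}
))
```

A real client would loop: answer a recognized challenge or follow a redirect, resubmit, and classify the next response with the same rules.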

3.3. Options for the internal OAuth server

Several configuration options are available for the internal OAuth server.

3.3.1. OAuth token duration options

The internal OAuth server generates two kinds of tokens:

| Token | Description |

|---|---|

| Access tokens | Longer-lived tokens that grant access to the API. |

| Authorize codes | Short-lived tokens whose only use is to be exchanged for an access token. |

You can configure the default duration for both types of token. If necessary, you can override the duration of the access token by using an OAuthClient object definition.

3.3.2. OAuth grant options

When the OAuth server receives token requests for a client to which the user has not previously granted permission, the action that the OAuth server takes is dependent on the OAuth client’s grant strategy.

The OAuth client requesting the token must provide its own grant strategy.

You can apply the following default methods:

| Grant option | Description |

|---|---|

| auto | Auto-approve the grant and retry the request. |

| prompt | Prompt the user to approve or deny the grant. |

3.4. Configuring the internal OAuth server’s token duration

You can configure default options for the internal OAuth server’s token duration.

By default, tokens are only valid for 24 hours. Existing sessions expire after this time elapses.

If the default duration is insufficient, you can change it by using the following procedure.

Procedure

- Create a configuration file that contains the token duration options. In this example, accessTokenMaxAgeSeconds in the token configuration of the OAuth object is set to 172800 seconds (48 hours), twice the default.

Set accessTokenMaxAgeSeconds to control the lifetime of access tokens. The default lifetime is 24 hours, or 86400 seconds. This attribute cannot be negative. If set to zero, the default lifetime is used.

- Apply the new configuration file:

Note: Because you update the existing OAuth server, you must use the oc apply command to apply the change.

$ oc apply -f </path/to/file.yaml>

- Confirm that the changes are in effect:

$ oc describe oauth.config.openshift.io/cluster

Example output

...
Spec:
  Token Config:
    Access Token Max Age Seconds: 172800
...
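As a rough illustration of the configuration file used in this procedure, the following Python sketch builds the OAuth object as a dictionary and prints it as JSON, which oc apply -f should accept in place of YAML. The helper name oauth_token_config is hypothetical, and the object layout (apiVersion config.openshift.io/v1, kind OAuth, name cluster) is an assumption based on the oc describe oauth.config.openshift.io/cluster command shown above.

```python
import json


def oauth_token_config(hours: int) -> dict:
    """Build an OAuth cluster object with the given access token lifetime."""
    if hours < 0:
        raise ValueError("the token lifetime cannot be negative")
    return {
        "apiVersion": "config.openshift.io/v1",
        "kind": "OAuth",
        "metadata": {"name": "cluster"},
        "spec": {
            # 0 would fall back to the default lifetime of 86400 seconds.
            "tokenConfig": {"accessTokenMaxAgeSeconds": hours * 3600},
        },
    }


manifest = oauth_token_config(48)
print(json.dumps(manifest, indent=2))
```

Note that applying a file that contains only these fields replaces the rest of the spec, so an existing identity provider configuration would need to be included as well.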

3.5. Configuring token inactivity timeout for the internal OAuth server

You can configure OAuth tokens to expire after a set period of inactivity. By default, no token inactivity timeout is set.

If the token inactivity timeout is also configured in your OAuth client, that value overrides the timeout that is set in the internal OAuth server configuration.

Prerequisites

- You have access to the cluster as a user with the cluster-admin role.

- You have configured an identity provider (IDP).

Procedure

- Update the OAuth configuration to set a token inactivity timeout.

- Edit the OAuth object:

$ oc edit oauth cluster

- Add the spec.tokenConfig.accessTokenInactivityTimeout field and set your timeout value. Set a value with the appropriate units, for example 400s for 400 seconds, or 30m for 30 minutes. The minimum allowed timeout value is 300s.

- Save the file to apply the changes.

- Check that the OAuth server pods have restarted:

$ oc get clusteroperators authentication

Do not continue to the next step until PROGRESSING is listed as False, as shown in the following output:

Example output

NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE
authentication 4.17.0 True False False 145m

- Check that a new revision of the Kubernetes API server pods has rolled out. This will take several minutes.

$ oc get clusteroperators kube-apiserver

Do not continue to the next step until PROGRESSING is listed as False, as shown in the following output:

Example output

NAME VERSION AVAILABLE PROGRESSING DEGRADED SINCE
kube-apiserver 4.17.0 True False False 145m

If PROGRESSING is showing True, wait a few minutes and try again.
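Before editing the object, it can help to check a candidate timeout value against the 300s minimum. The following Python sketch does that for simple single-unit values such as 400s or 30m and builds the corresponding fragment for spec.tokenConfig. Both helper names are hypothetical, and the parser deliberately handles only the s, m, and h units; other duration forms that the cluster might accept are out of scope here.

```python
import re

MINIMUM_SECONDS = 300
UNIT_SECONDS = {"s": 1, "m": 60, "h": 3600}


def timeout_seconds(value: str) -> int:
    """Convert a value such as '400s' or '30m' to seconds and validate it."""
    match = re.fullmatch(r"(\d+)([smh])", value)
    if match is None:
        raise ValueError(f"unsupported timeout format: {value!r}")
    seconds = int(match.group(1)) * UNIT_SECONDS[match.group(2)]
    if seconds < MINIMUM_SECONDS:
        raise ValueError("the minimum allowed timeout value is 300s")
    return seconds


def inactivity_timeout_fragment(value: str) -> dict:
    """Return the spec fragment that sets the token inactivity timeout."""
    timeout_seconds(value)  # raises ValueError for values that are too small
    return {"spec": {"tokenConfig": {"accessTokenInactivityTimeout": value}}}


print(timeout_seconds("30m"))
print(inactivity_timeout_fragment("400s"))
```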

Verification

- Log in to the cluster with an identity from your IDP.

- Execute a command and verify that it was successful.

- Wait longer than the configured timeout without using the identity. In this procedure’s example, wait longer than 400 seconds.

- Try to execute a command from the same identity’s session. This command should fail because the token should have expired due to inactivity longer than the configured timeout.

Example output

error: You must be logged in to the server (Unauthorized)

3.6. Customizing the internal OAuth server URL

You can customize the internal OAuth server URL by setting the custom hostname and TLS certificate in the spec.componentRoutes field of the cluster Ingress configuration.

If you update the internal OAuth server URL, you might break trust from components in the cluster that need to communicate with the OpenShift OAuth server to retrieve OAuth access tokens. Components that need to trust the OAuth server will need to include the proper CA bundle when calling OAuth endpoints. For example:

$ oc login -u <username> -p <password> --certificate-authority=<path_to_ca.crt>

For self-signed certificates, the ca.crt file must contain the custom CA certificate, otherwise the login will not succeed.

The Cluster Authentication Operator publishes the OAuth server’s serving certificate in the oauth-serving-cert config map in the openshift-config-managed namespace. You can find the certificate in the data.ca-bundle.crt key of the config map.

Prerequisites

- You have logged in to the cluster as a user with administrative privileges.

- You have created a secret in the openshift-config namespace containing the TLS certificate and key. This is required if the domain for the custom hostname suffix does not match the cluster domain suffix. The secret is optional if the suffix matches.

Tip: You can create a TLS secret by using the oc create secret tls command.

Procedure

- Edit the cluster Ingress configuration:

$ oc edit ingress.config.openshift.io cluster

- Set the custom hostname and optionally the serving certificate and key in the spec.componentRoutes field.

- Save the file to apply the changes.

3.7. OAuth server metadata

Applications running in OpenShift Container Platform might have to discover information about the built-in OAuth server. For example, they might have to discover what the address of the <namespace_route> is without manual configuration. To aid in this, OpenShift Container Platform implements the IETF OAuth 2.0 Authorization Server Metadata draft specification.

Thus, any application running inside the cluster can issue a GET request to https://openshift.default.svc/.well-known/oauth-authorization-server to fetch the following information:

- issuer: The authorization server’s issuer identifier, which is a URL that uses the https scheme and has no query or fragment components. This is the location where .well-known RFC 5785 resources containing information about the authorization server are published.

- authorization_endpoint: URL of the authorization server’s authorization endpoint. See RFC 6749.

- token_endpoint: URL of the authorization server’s token endpoint. See RFC 6749.

- scopes_supported: JSON array containing a list of the OAuth 2.0 RFC 6749 scope values that this authorization server supports. Note that not all supported scope values are advertised.

- response_types_supported: JSON array containing a list of the OAuth 2.0 response_type values that this authorization server supports. The array values used are the same as those used with the response_types parameter defined by "OAuth 2.0 Dynamic Client Registration Protocol" in RFC 7591.

- grant_types_supported: JSON array containing a list of the OAuth 2.0 grant type values that this authorization server supports. The array values used are the same as those used with the grant_types parameter defined by "OAuth 2.0 Dynamic Client Registration Protocol" in RFC 7591.

- code_challenge_methods_supported: JSON array containing a list of PKCE RFC 7636 code challenge methods supported by this authorization server. Code challenge method values are used in the code_challenge_method parameter defined in Section 4.3 of RFC 7636. The valid code challenge method values are those registered in the IANA "PKCE Code Challenge Methods" registry. See IANA OAuth Parameters.
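An application might consume the discovery document roughly as in the following Python sketch, which parses a metadata response and checks the endpoints it relies on. The sample document, its host names, and the helper name parse_oauth_metadata are hypothetical. The field names follow the IETF authorization server metadata specification and are assumed, not confirmed by this document, to match what the cluster returns.

```python
import json

# Fields this sketch expects to find before it trusts the document.
EXPECTED_FIELDS = ("issuer", "authorization_endpoint", "token_endpoint")


def parse_oauth_metadata(raw: str) -> dict:
    """Parse a discovery response and check the fields this sketch relies on."""
    metadata = json.loads(raw)
    missing = [field for field in EXPECTED_FIELDS if field not in metadata]
    if missing:
        raise ValueError(f"metadata is missing fields: {missing}")
    if not metadata["issuer"].startswith("https://"):
        raise ValueError("the issuer must use the https scheme")
    return metadata


# Hypothetical response body, for illustration only.
sample = json.dumps(
    {
        "issuer": "https://oauth-openshift.apps.example.com",
        "authorization_endpoint": "https://oauth-openshift.apps.example.com/oauth/authorize",
        "token_endpoint": "https://oauth-openshift.apps.example.com/oauth/token",
        "response_types_supported": ["code", "token"],
    }
)
metadata = parse_oauth_metadata(sample)
print(metadata["token_endpoint"])
```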

3.8. Troubleshooting OAuth API events

In some cases the API server returns an unexpected condition error message that is difficult to debug without direct access to the API master log. The underlying reason for the error is purposely obscured in order to avoid providing an unauthenticated user with information about the server’s state.

A subset of these errors is related to service account OAuth configuration issues. These issues are captured in events that can be viewed by non-administrator users. When encountering an unexpected condition server error during OAuth, run oc get events to view these events under ServiceAccount.

The following example warns of a service account that is missing a proper OAuth redirect URI:

$ oc get events | grep ServiceAccount

Example output

1m 1m 1 proxy ServiceAccount Warning NoSAOAuthRedirectURIs service-account-oauth-client-getter system:serviceaccount:myproject:proxy has no redirectURIs; set serviceaccounts.openshift.io/oauth-redirecturi.<some-value>=<redirect> or create a dynamic URI using serviceaccounts.openshift.io/oauth-redirectreference.<some-value>=<reference>

Running oc describe sa/<service_account_name> reports any OAuth events associated with the given service account name.

$ oc describe sa/proxy | grep -A5 Events

Example output

Events:
FirstSeen LastSeen Count From SubObjectPath Type Reason Message
--------- -------- ----- ---- ------------- -------- ------ -------
3m 3m 1 service-account-oauth-client-getter Warning NoSAOAuthRedirectURIs system:serviceaccount:myproject:proxy has no redirectURIs; set serviceaccounts.openshift.io/oauth-redirecturi.<some-value>=<redirect> or create a dynamic URI using serviceaccounts.openshift.io/oauth-redirectreference.<some-value>=<reference>

The following is a list of the possible event errors:

No redirect URI annotations or an invalid URI is specified

Reason Message
NoSAOAuthRedirectURIs system:serviceaccount:myproject:proxy has no redirectURIs; set serviceaccounts.openshift.io/oauth-redirecturi.<some-value>=<redirect> or create a dynamic URI using serviceaccounts.openshift.io/oauth-redirectreference.<some-value>=<reference>

Invalid route specified

Reason Message
NoSAOAuthRedirectURIs [routes.route.openshift.io "<name>" not found, system:serviceaccount:myproject:proxy has no redirectURIs; set serviceaccounts.openshift.io/oauth-redirecturi.<some-value>=<redirect> or create a dynamic URI using serviceaccounts.openshift.io/oauth-redirectreference.<some-value>=<reference>]

Invalid reference type specified

Reason Message
NoSAOAuthRedirectURIs [no kind "<name>" is registered for version "v1", system:serviceaccount:myproject:proxy has no redirectURIs; set serviceaccounts.openshift.io/oauth-redirecturi.<some-value>=<redirect> or create a dynamic URI using serviceaccounts.openshift.io/oauth-redirectreference.<some-value>=<reference>]

Missing SA tokens

Reason Message
NoSAOAuthTokens system:serviceaccount:myproject:proxy has no tokens

Chapter 4. Configuring OAuth clients

Several OAuth clients are created by default in OpenShift Container Platform. You can also register and configure additional OAuth clients.

4.1. Default OAuth clients

The following OAuth clients are automatically created when starting the OpenShift Container Platform API:

| OAuth client | Usage |

|---|---|

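| openshift-browser-client | Requests tokens at <namespace_route>/oauth/token/request with a user-agent that can handle interactive logins. |

| openshift-challenging-client | Requests tokens with a user-agent that can handle WWW-Authenticate challenges. |

| openshift-cli-client | Requests tokens by using a local HTTP server fetching an authorization code grant. |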

<namespace_route> refers to the namespace route. This is found by running the following command:

$ oc get route oauth-openshift -n openshift-authentication -o json | jq .spec.host

4.2. Registering an additional OAuth client

If you need an additional OAuth client to manage authentication for your OpenShift Container Platform cluster, you can register one.

Procedure

To register additional OAuth clients:

- 1

- The name of the OAuth client is used as the client_id parameter when making requests to <namespace_route>/oauth/authorize and <namespace_route>/oauth/token.

- 2

- The secret is used as the client_secret parameter when making requests to <namespace_route>/oauth/token.

- 3

- The redirect_uri parameter specified in requests to <namespace_route>/oauth/authorize and <namespace_route>/oauth/token must be equal to or prefixed by one of the URIs listed in the redirectURIs parameter value.

- 4

- The grantMethod is used to determine what action to take when this client requests tokens and has not yet been granted access by the user. Specify auto to automatically approve the grant and retry the request, or prompt to prompt the user to approve or deny the grant.
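The redirect_uri rule described for the redirectURIs parameter can be sketched as a small check. This is a minimal illustration in Python, not the OAuth server's implementation: the function name redirect_uri_allowed is hypothetical, and the sketch assumes that "prefixed by" means a plain string-prefix comparison.

```python
from typing import Iterable


def redirect_uri_allowed(redirect_uri: str, registered_uris: Iterable[str]) -> bool:
    """Return True if redirect_uri equals, or starts with, a registered URI."""
    # Empty entries are skipped so that they cannot match every request.
    return any(uri and redirect_uri.startswith(uri) for uri in registered_uris)


# Hypothetical redirectURIs value for an example client.
registered = ["https://www.example.com/"]
print(redirect_uri_allowed("https://www.example.com/callback", registered))
print(redirect_uri_allowed("https://other.example.net/callback", registered))
```

Because the comparison is prefix-based, a registered URI that ends at a path boundary (for example, with a trailing slash) matches fewer unintended URIs than a bare host name would.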

4.3. Configuring token inactivity timeout for an OAuth client

You can configure OAuth clients to expire OAuth tokens after a set period of inactivity. By default, no token inactivity timeout is set.

If the token inactivity timeout is also configured in the internal OAuth server configuration, the timeout that is set in the OAuth client overrides that value.
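The precedence between the two settings can be sketched as follows. This is a simplified model in Python rather than the server's logic: the function name is hypothetical, None stands for "not set", and the 300-second minimum is the value that this procedure states for the OAuth client field.

```python
from typing import Optional

MIN_CLIENT_TIMEOUT_SECONDS = 300  # minimum stated for the OAuth client field


def effective_inactivity_timeout(client_timeout: Optional[int],
                                 server_timeout: Optional[int]) -> Optional[int]:
    """Return the timeout that applies, or None if no timeout is set anywhere."""
    if client_timeout is not None:
        if client_timeout < MIN_CLIENT_TIMEOUT_SECONDS:
            raise ValueError("client timeout must be at least 300 seconds")
        # A value set on the OAuth client overrides the OAuth server value.
        return client_timeout
    return server_timeout


print(effective_inactivity_timeout(600, 400))
print(effective_inactivity_timeout(None, 400))
print(effective_inactivity_timeout(None, None))
```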

Prerequisites

- You have access to the cluster as a user with the cluster-admin role.

- You have configured an identity provider (IDP).

Procedure

Update the OAuthClient configuration to set a token inactivity timeout.

Edit the OAuthClient object:

$ oc edit oauthclient <oauth_client>

Replace <oauth_client> with the OAuth client to configure, for example, console.

Add the accessTokenInactivityTimeoutSeconds field and set your timeout value. The minimum allowed timeout value in seconds is 300.

- Save the file to apply the changes.

Verification

- Log in to the cluster with an identity from your IDP. Be sure to use the OAuth client that you just configured.

- Perform an action and verify that it was successful.

- Wait longer than the configured timeout without using the identity. In this procedure’s example, wait longer than 600 seconds.

- Try to perform an action from the same identity’s session. This attempt should fail because the token should have expired due to inactivity longer than the configured timeout.

Chapter 5. Managing user-owned OAuth access tokens

Users can review their own OAuth access tokens and delete any that are no longer needed.

5.1. Listing user-owned OAuth access tokens

You can list your user-owned OAuth access tokens. Token names are not sensitive and cannot be used to log in.

Procedure

List all user-owned OAuth access tokens:

$ oc get useroauthaccesstokens

Example output

NAME       CLIENT NAME                    CREATED                EXPIRES                         REDIRECT URI                                                       SCOPES
<token1>   openshift-challenging-client   2021-01-11T19:25:35Z   2021-01-12 19:25:35 +0000 UTC   https://oauth-openshift.apps.example.com/oauth/token/implicit      user:full
<token2>   openshift-browser-client       2021-01-11T19:27:06Z   2021-01-12 19:27:06 +0000 UTC   https://oauth-openshift.apps.example.com/oauth/token/display       user:full
<token3>   console                        2021-01-11T19:26:29Z   2021-01-12 19:26:29 +0000 UTC   https://console-openshift-console.apps.example.com/auth/callback   user:full

List user-owned OAuth access tokens for a particular OAuth client:

$ oc get useroauthaccesstokens --field-selector=clientName="console"

Example output

NAME       CLIENT NAME   CREATED                EXPIRES                         REDIRECT URI                                                       SCOPES
<token3>   console       2021-01-11T19:26:29Z   2021-01-12 19:26:29 +0000 UTC   https://console-openshift-console.apps.example.com/auth/callback   user:full

5.2. Viewing the details of a user-owned OAuth access token

You can view the details of a user-owned OAuth access token.

Procedure

Describe the details of a user-owned OAuth access token:

$ oc describe useroauthaccesstokens <token_name>

Example output

- 1

- The token name, which is the sha256 hash of the token. Token names are not sensitive and cannot be used to log in.

- 2

- The client name, which describes where the token originated from.

- 3

- The value in seconds from the creation time before this token expires.

- 4

- If there is a token inactivity timeout set for the OAuth server, this is the value in seconds from the creation time before this token can no longer be used.

- 5

- The scopes for this token.

- 6

- The user name associated with this token.
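To illustrate why a token name cannot be used to log in, the following Python sketch derives a name from a token with a one-way SHA-256 hash. The exact encoding is an assumption that this section does not state: the sketch supposes that tokens carry a sha256~ prefix and that the name is that prefix followed by the unpadded URL-safe base64 encoding of the digest. Treat it as an illustration of the hashing idea and verify the format against your own cluster.

```python
import base64
import hashlib

PREFIX = "sha256~"  # assumed prefix, not confirmed by this section


def token_object_name(token: str) -> str:
    """Derive a non-sensitive object name from a bearer token (assumed scheme)."""
    secret = token[len(PREFIX):] if token.startswith(PREFIX) else token
    digest = hashlib.sha256(secret.encode("utf-8")).digest()
    # URL-safe base64 without '=' padding keeps the name usable in API paths.
    encoded = base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")
    return PREFIX + encoded


# "not-a-real-token" is a placeholder value, not a token from any cluster.
print(token_object_name("sha256~not-a-real-token"))
```

Because SHA-256 is one-way, knowing the name does not reveal the token, which is consistent with the statement that token names are not sensitive.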

5.3. Deleting user-owned OAuth access tokens

The oc logout command only invalidates the OAuth token for the active session. You can use the following procedure to delete any user-owned OAuth tokens that are no longer needed.

Deleting an OAuth access token logs out the user from all sessions that use the token.

Procedure

Delete the user-owned OAuth access token:

$ oc delete useroauthaccesstokens <token_name>

Example output

useroauthaccesstoken.oauth.openshift.io "<token_name>" deleted

5.4. Adding unauthenticated groups to cluster roles

As a cluster administrator, you can add unauthenticated users to certain cluster roles in OpenShift Container Platform by creating a cluster role binding. Unauthenticated users do not have access to non-public cluster roles. Add them only in specific use cases when necessary.

You can add unauthenticated users to the following cluster roles:

- system:scope-impersonation

- system:webhook

- system:oauth-token-deleter

- self-access-reviewer

Always verify compliance with your organization’s security standards when modifying unauthenticated access.

Prerequisites

- You have access to the cluster as a user with the cluster-admin role.

- You have installed the OpenShift CLI (oc).

Procedure

Create a YAML file named add-<cluster_role>-unauth.yaml and add the following content:

Apply the configuration by running the following command:

$ oc apply -f add-<cluster_role>-unauth.yaml
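A cluster role binding of this kind can be sketched by generating the manifest programmatically. The Python sketch below is an illustration under stated assumptions, not the manifest from this procedure: the binding name suffix -unauth and the function name are hypothetical, and the subject is the standard Kubernetes system:unauthenticated group. The output is JSON, which oc apply accepts as well as YAML.

```python
import json

# Cluster roles that this section lists as eligible for unauthenticated access.
ALLOWED_ROLES = {
    "system:scope-impersonation",
    "system:webhook",
    "system:oauth-token-deleter",
    "self-access-reviewer",
}


def unauthenticated_binding(cluster_role: str) -> dict:
    """Build a ClusterRoleBinding that grants cluster_role to unauthenticated users."""
    if cluster_role not in ALLOWED_ROLES:
        raise ValueError(f"{cluster_role} is not in the list of eligible roles")
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRoleBinding",
        "metadata": {"name": f"{cluster_role}-unauth"},  # hypothetical name
        "roleRef": {
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "ClusterRole",
            "name": cluster_role,
        },
        "subjects": [{
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "Group",
            "name": "system:unauthenticated",
        }],
    }


print(json.dumps(unauthenticated_binding("system:webhook"), indent=2))
```

Rejecting roles outside the listed set keeps the sketch from producing a binding that would expose a non-public cluster role.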

Chapter 6. Understanding identity provider configuration

The OpenShift Container Platform master includes a built-in OAuth server. Developers and administrators obtain OAuth access tokens to authenticate themselves to the API.

As an administrator, you can configure OAuth to specify an identity provider after you install your cluster.

6.1. About identity providers in OpenShift Container Platform

By default, only a kubeadmin user exists on your cluster. To specify an identity provider, you must create a custom resource (CR) that describes that identity provider and add it to the cluster.

OpenShift Container Platform user names containing /, :, and % are not supported.

6.2. Supported identity providers

You can configure the following types of identity providers:

| Identity provider | Description |
|---|---|
| | Configure the |
| | Configure the |
| | Configure the |
| | Configure a |
| | Configure a |
| | Configure a |
| | Configure a |
| | Configure a |
| | Configure an |

Once an identity provider has been defined, you can use RBAC to define and apply permissions.

6.3. Removing the kubeadmin user

After you define an identity provider and create a new cluster-admin user, you can remove the kubeadmin user to improve cluster security.

If you follow this procedure before another user is a cluster-admin, then OpenShift Container Platform must be reinstalled. It is not possible to undo this command.

Prerequisites

- You must have configured at least one identity provider.

- You must have added the cluster-admin role to a user.

- You must be logged in as an administrator.

Procedure

Remove the kubeadmin secrets:

$ oc delete secrets kubeadmin -n kube-system

6.4. Identity provider parameters

The following parameters are common to all identity providers:

| Parameter | Description |
|---|---|
| | The provider name is prefixed to provider user names to form an identity name. |
| | Defines how new identities are mapped to users when they log in. Enter one of the following values: |

When adding or changing identity providers, you can map identities from the new provider to existing users by setting the mappingMethod parameter to add.

6.5. Sample identity provider CR

The following custom resource (CR) shows the parameters and default values that you use to configure an identity provider. This example uses the htpasswd identity provider.

Sample identity provider CR

6.6. Manually provisioning a user when using the lookup mapping method

Typically, identities are automatically mapped to users during login. The lookup mapping method disables this automatic mapping, which requires you to provision users manually. If you are using the lookup mapping method, use the following procedure for each user after configuring the identity provider.

Prerequisites

- You have installed the OpenShift CLI (oc).

Procedure

Create an OpenShift Container Platform user:

$ oc create user <username>

Create an OpenShift Container Platform identity:

$ oc create identity <identity_provider>:<identity_provider_user_id>

Where <identity_provider_user_id> is a name that uniquely represents the user in the identity provider.

Create a user identity mapping for the created user and identity:

$ oc create useridentitymapping <identity_provider>:<identity_provider_user_id> <username>
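When many users must be provisioned, the three commands can be generated per user. The following Python sketch only builds the argument lists and does not run them. The function name and the sample values are hypothetical, and the character check reflects the statement in this chapter that user names containing /, :, and % are not supported.

```python
from typing import List

UNSUPPORTED_CHARS = set("/:%")  # not supported in user names


def provisioning_commands(provider: str, provider_user_id: str,
                          username: str) -> List[List[str]]:
    """Return the oc commands that create a user, an identity, and their mapping."""
    if UNSUPPORTED_CHARS & set(username):
        raise ValueError("user names containing /, :, or % are not supported")
    # The identity name is the provider name prefixed to the provider user ID.
    identity = f"{provider}:{provider_user_id}"
    return [
        ["oc", "create", "user", username],
        ["oc", "create", "identity", identity],
        ["oc", "create", "useridentitymapping", identity, username],
    ]


# Sample values for illustration only.
for command in provisioning_commands("my_ldap_provider", "bob-id", "bob"):
    print(" ".join(command))
```

Each list can be passed to subprocess.run if you want to execute the commands from a script.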

Chapter 7. Configuring identity providers

7.1. Configuring an htpasswd identity provider

Configure the htpasswd identity provider to allow users to log in to OpenShift Container Platform with credentials from an htpasswd file.

To define an htpasswd identity provider, perform the following tasks:

- Create an htpasswd file to store the user and password information.

- Create a secret to represent the htpasswd file.

- Define an htpasswd identity provider resource that references the secret.

- Apply the resource to the default OAuth configuration to add the identity provider.

7.1.1. About identity providers in OpenShift Container Platform

By default, only a kubeadmin user exists on your cluster. To specify an identity provider, you must create a custom resource (CR) that describes that identity provider and add it to the cluster.

OpenShift Container Platform user names containing /, :, and % are not supported.

7.1.2. About htpasswd authentication

Using htpasswd authentication in OpenShift Container Platform allows you to identify users based on an htpasswd file. An htpasswd file is a flat file that contains the user name and hashed password for each user. You can use the htpasswd utility to create this file.
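The flat-file layout can be illustrated with a short parser. This Python sketch assumes the usual one-entry-per-line user:hash layout of htpasswd files. The function name is hypothetical and the hash strings in the sample are placeholders, not real bcrypt output.

```python
from typing import Dict


def parse_htpasswd(text: str) -> Dict[str, str]:
    """Map each user name in an htpasswd file to its hashed password."""
    users: Dict[str, str] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue  # skip blank lines
        # Split on the first colon only; the hash itself may contain colons.
        name, separator, hashed = line.partition(":")
        if not separator or not name or not hashed:
            raise ValueError(f"malformed htpasswd line: {line!r}")
        users[name] = hashed
    return users


sample = "user1:<hashed_password_1>\nuser2:<hashed_password_2>\n"
print(sorted(parse_htpasswd(sample)))
```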

Do not use htpasswd authentication in OpenShift Container Platform for production environments. Use htpasswd authentication only for development environments.

7.1.3. Creating the htpasswd file

See one of the following sections for instructions about how to create the htpasswd file:

7.1.3.1. Creating an htpasswd file using Linux

To use the htpasswd identity provider, you must generate a flat file that contains the user names and passwords for your cluster by using htpasswd.

Prerequisites

- Have access to the htpasswd utility. On Red Hat Enterprise Linux this is available by installing the httpd-tools package.

Procedure

Create or update your flat file with a user name and hashed password:

$ htpasswd -c -B -b </path/to/users.htpasswd> <username> <password>

The command generates a hashed version of the password.

For example:

$ htpasswd -c -B -b users.htpasswd <username> <password>

Example output

Adding password for user user1

Continue to add or update credentials to the file:

$ htpasswd -B -b </path/to/users.htpasswd> <user_name> <password>

7.1.3.2. Creating an htpasswd file using Windows

To use the htpasswd identity provider, you must generate a flat file that contains the user names and passwords for your cluster by using htpasswd.

Prerequisites

- Have access to htpasswd.exe. This file is included in the \bin directory of many Apache httpd distributions.

Procedure

Create or update your flat file with a user name and hashed password:

> htpasswd.exe -c -B -b <\path\to\users.htpasswd> <username> <password>

The command generates a hashed version of the password.

For example:

> htpasswd.exe -c -B -b users.htpasswd <username> <password>

Example output

Adding password for user user1

Continue to add or update credentials to the file:

> htpasswd.exe -b <\path\to\users.htpasswd> <username> <password>

7.1.4. Creating the htpasswd secret

To use the htpasswd identity provider, you must define a secret that contains the htpasswd user file.

Prerequisites

- Create an htpasswd file.

Procedure

Create a Secret object that contains the htpasswd users file:

$ oc create secret generic htpass-secret --from-file=htpasswd=<path_to_users.htpasswd> -n openshift-config

The secret key containing the users file for the --from-file argument must be named htpasswd, as shown in the above command.

Tip

You can alternatively apply the following YAML to create the secret:
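As an illustration of what such a secret contains, the following Python sketch builds an equivalent manifest from the contents of an htpasswd file and prints it as JSON, which oc apply accepts as well as YAML. It is a sketch under stated assumptions: the function name is hypothetical, the sample file contents are placeholders, and the manifest uses the standard Kubernetes Secret layout with the required htpasswd key.

```python
import base64
import json


def htpasswd_secret(htpasswd_contents: bytes, name: str = "htpass-secret") -> dict:
    """Build a Secret manifest whose htpasswd key holds the users file."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": "openshift-config"},
        "type": "Opaque",
        # Values under "data" must be base64-encoded.
        "data": {"htpasswd": base64.b64encode(htpasswd_contents).decode("ascii")},
    }


# Placeholder contents; a real file holds user names and hashed passwords.
manifest = htpasswd_secret(b"user1:<hashed_password>\n")
print(json.dumps(manifest, indent=2))
```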

7.1.5. Sample htpasswd CR

The following custom resource (CR) shows the parameters and acceptable values for an htpasswd identity provider.

htpasswd CR

7.1.6. Adding an identity provider to your cluster

After you install your cluster, add an identity provider to it so your users can authenticate.

Prerequisites

- Create an OpenShift Container Platform cluster.

- Create the custom resource (CR) for your identity providers.

- You must be logged in as an administrator.

Procedure

Apply the defined CR:

$ oc apply -f </path/to/CR>

Note

If a CR does not exist, oc apply creates a new CR and might trigger the following warning: Warning: oc apply should be used on resources created by either oc create --save-config or oc apply. In this case you can safely ignore this warning.

Log in to the cluster as a user from your identity provider, entering the password when prompted.

$ oc login -u <username>

Confirm that the user logged in successfully, and display the user name.

$ oc whoami

7.1.7. Updating users for an htpasswd identity provider

You can add or remove users from an existing htpasswd identity provider.

Prerequisites

- You have created a Secret object that contains the htpasswd user file. This procedure assumes that it is named htpass-secret.

- You have configured an htpasswd identity provider. This procedure assumes that it is named my_htpasswd_provider.

- You have access to the htpasswd utility. On Red Hat Enterprise Linux this is available by installing the httpd-tools package.

- You have cluster administrator privileges.

Procedure

Retrieve the htpasswd file from the htpass-secret Secret object and save the file to your file system:

$ oc get secret htpass-secret -ojsonpath={.data.htpasswd} -n openshift-config | base64 --decode > users.htpasswd

Add or remove users from the users.htpasswd file.

To add a new user:

$ htpasswd -bB users.htpasswd <username> <password>

Example output

Adding password for user <username>

To remove an existing user:

$ htpasswd -D users.htpasswd <username>

Example output

Deleting password for user <username>

Replace the htpass-secret Secret object with the updated users in the users.htpasswd file:

$ oc create secret generic htpass-secret --from-file=htpasswd=users.htpasswd --dry-run=client -o yaml -n openshift-config | oc replace -f -

Tip

You can alternatively apply the following YAML to replace the secret:

If you removed one or more users, you must additionally remove existing resources for each user.

Delete the User object:

$ oc delete user <username>

Example output

user.user.openshift.io "<username>" deleted

Be sure to remove the user, otherwise the user can continue using their token as long as it has not expired.

Delete the Identity object for the user:

$ oc delete identity my_htpasswd_provider:<username>

Example output

identity.user.openshift.io "my_htpasswd_provider:<username>" deleted

7.1.8. Configuring identity providers using the web console

Configure your identity provider (IDP) through the web console instead of the CLI.

Prerequisites

- You must be logged in to the web console as a cluster administrator.

Procedure

- Navigate to Administration → Cluster Settings.

- Under the Configuration tab, click OAuth.

- Under the Identity Providers section, select your identity provider from the Add drop-down menu.

You can specify multiple IDPs through the web console without overwriting existing IDPs.

7.2. Configuring a Keystone identity provider

Configure the keystone identity provider to integrate your OpenShift Container Platform cluster with Keystone to enable shared authentication with an OpenStack Keystone v3 server configured to store users in an internal database. This configuration allows users to log in to OpenShift Container Platform with their Keystone credentials.

7.2.1. About identity providers in OpenShift Container Platform

By default, only a kubeadmin user exists on your cluster. To specify an identity provider, you must create a custom resource (CR) that describes that identity provider and add it to the cluster.

OpenShift Container Platform user names containing /, :, and % are not supported.

7.2.2. About Keystone authentication

Keystone is an OpenStack project that provides identity, token, catalog, and policy services.

You can configure the integration with Keystone so that the new OpenShift Container Platform users are based on either the Keystone user names or unique Keystone IDs. With both methods, users log in by entering their Keystone user name and password. Basing the OpenShift Container Platform users on the Keystone ID is more secure: if users are based on Keystone user names and you delete a Keystone user and then create a new Keystone user with the same user name, the new user might have access to the old user’s resources.

7.2.3. Creating the secret

Identity providers use OpenShift Container Platform Secret objects in the openshift-config namespace to contain the client secret, client certificates, and keys.

Procedure

Create a Secret object that contains the key and certificate by using the following command:

$ oc create secret tls <secret_name> --key=key.pem --cert=cert.pem -n openshift-config

Tip

You can alternatively apply the following YAML to create the secret:

7.2.4. Creating a config map

Identity providers use OpenShift Container Platform ConfigMap objects in the openshift-config namespace to contain the certificate authority bundle. These are primarily used to contain certificate bundles needed by the identity provider.

Procedure

Define an OpenShift Container Platform ConfigMap object containing the certificate authority by using the following command. The certificate authority must be stored in the ca.crt key of the ConfigMap object.

$ oc create configmap ca-config-map --from-file=ca.crt=/path/to/ca -n openshift-config

Tip

You can alternatively apply the following YAML to create the config map:

7.2.5. Sample Keystone CR

The following custom resource (CR) shows the parameters and acceptable values for a Keystone identity provider.

Keystone CR

- 1

- This provider name is prefixed to provider user names to form an identity name.

- 2

- Controls how mappings are established between this provider’s identities and User objects.

- 3

- Keystone domain name. In Keystone, usernames are domain-specific. Only a single domain is supported.

- 4

- The URL to use to connect to the Keystone server (required). This must use https.

- 5

- Optional: Reference to an OpenShift Container Platform ConfigMap object containing the PEM-encoded certificate authority bundle to use in validating server certificates for the configured URL.

- 6

- Optional: Reference to an OpenShift Container Platform Secret object containing the client certificate to present when making requests to the configured URL.

- 7

- Reference to an OpenShift Container Platform Secret object containing the key for the client certificate. Required if tlsClientCert is specified.

7.2.6. Adding an identity provider to your cluster

After you install your cluster, add an identity provider to it so your users can authenticate.

Prerequisites

- Create an OpenShift Container Platform cluster.

- Create the custom resource (CR) for your identity providers.

- You must be logged in as an administrator.

Procedure

Apply the defined CR:

$ oc apply -f </path/to/CR>

Note

If a CR does not exist, oc apply creates a new CR and might trigger the following warning: Warning: oc apply should be used on resources created by either oc create --save-config or oc apply. In this case you can safely ignore this warning.

Log in to the cluster as a user from your identity provider, entering the password when prompted.

$ oc login -u <username>

Confirm that the user logged in successfully, and display the user name.

$ oc whoami

7.3. Configuring an LDAP identity provider

Configure the ldap identity provider to validate user names and passwords against an LDAPv3 server, using simple bind authentication.

7.3.1. About identity providers in OpenShift Container Platform

By default, only a kubeadmin user exists on your cluster. To specify an identity provider, you must create a custom resource (CR) that describes that identity provider and add it to the cluster.

OpenShift Container Platform user names containing /, :, and % are not supported.

7.3.2. About LDAP authentication

During authentication, the LDAP directory is searched for an entry that matches the provided user name. If a single unique match is found, a simple bind is attempted using the distinguished name (DN) of the entry plus the provided password.

These are the steps taken:

- Generate a search filter by combining the attribute and filter in the configured url with the user-provided user name.

- Search the directory using the generated filter. If the search does not return exactly one entry, deny access.

- Attempt to bind to the LDAP server using the DN of the entry retrieved from the search, and the user-provided password.

- If the bind is unsuccessful, deny access.

- If the bind is successful, build an identity using the configured attributes as the identity, email address, display name, and preferred user name.
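The search-then-bind decision in the steps above can be sketched with the directory calls abstracted away. This Python sketch is a model of the control flow only, not an LDAP client: the search and bind callables are hypothetical stand-ins for real directory operations, and building the identity from attributes (the last step) is left out.

```python
from typing import Callable, List, Optional


def authenticate(search: Callable[[], List[str]],
                 bind: Callable[[str, str], bool],
                 password: str) -> Optional[str]:
    """Return the DN of the authenticated entry, or None if access is denied."""
    entries = search()
    if len(entries) != 1:
        return None  # zero or multiple matches: deny access
    dn = entries[0]
    if not bind(dn, password):
        return None  # bind failed: deny access
    return dn


# In-memory stand-in for a directory, for illustration only.
passwords = {"cn=bob,o=Acme": "s3cret"}
result = authenticate(
    search=lambda: ["cn=bob,o=Acme"],
    bind=lambda dn, pw: passwords.get(dn) == pw,
    password="s3cret",
)
print(result)
```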

The configured url is an RFC 2255 URL, which specifies the LDAP host and search parameters to use. The syntax of the URL is:

ldap://host:port/basedn?attribute?scope?filter

For this URL:

| URL component | Description |
|---|---|
| ldap | For regular LDAP, use the string |
| host:port | The name and port of the LDAP server. Defaults to |
| basedn | The DN of the branch of the directory where all searches should start from. At the very least, this must be the top of your directory tree, but it could also specify a subtree in the directory. |
| attribute | The attribute to search for. Although RFC 2255 allows a comma-separated list of attributes, only the first attribute will be used, no matter how many are provided. If no attributes are provided, the default is to use |
| scope | The scope of the search. Can be either |
| filter | A valid LDAP search filter. If not provided, defaults to |

When doing searches, the attribute, filter, and provided user name are combined to create a search filter that looks like:

(&(<filter>)(<attribute>=<username>))

For example, consider a URL of:

ldap://ldap.example.com/o=Acme?cn?sub?(enabled=true)

When a client attempts to connect using a user name of bob, the resulting search filter will be (&(enabled=true)(cn=bob)).
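The way the filter is assembled can be sketched in Python. This is an illustration, not the provider's code: the function names are hypothetical, the sketch requires the attribute, scope, and filter components to be present rather than applying defaults, and escaping the user name with RFC 4515 rules is an added assumption about safe handling of special characters.

```python
from urllib.parse import urlsplit


def escape_filter_value(value: str) -> str:
    """Escape characters that are special in LDAP filters (RFC 4515)."""
    return "".join(
        "\\%02x" % ord(ch) if ch in "\\*()\x00" else ch for ch in value
    )


def build_search_filter(url: str, username: str) -> str:
    """Combine the URL's attribute and filter with the provided user name."""
    fields = urlsplit(url).query.split("?")
    if len(fields) != 3 or not fields[0] or not fields[2]:
        raise ValueError("expected ldap://host:port/basedn?attribute?scope?filter")
    attribute, _scope, base_filter = fields
    attribute = attribute.split(",")[0]  # only the first attribute is used
    if not base_filter.startswith("("):
        base_filter = f"({base_filter})"
    return f"(&{base_filter}({attribute}={escape_filter_value(username)}))"


url = "ldap://ldap.example.com/o=Acme?cn?sub?(enabled=true)"
print(build_search_filter(url, "bob"))
```

For the example URL and the user name bob, the function returns the same filter as the text describes, (&(enabled=true)(cn=bob)).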

If the LDAP directory requires authentication to search, specify a bindDN and bindPassword to use to perform the entry search.

7.3.3. Creating the LDAP secret

To use the identity provider, you must define an OpenShift Container Platform Secret object that contains the bindPassword field.

Procedure

Create a Secret object that contains the bindPassword field:

$ oc create secret generic ldap-secret --from-literal=bindPassword=<secret> -n openshift-config

The secret key containing the bindPassword for the --from-literal argument must be called bindPassword.

Tip

You can alternatively apply the following YAML to create the secret:

7.3.4. Creating a config map

Identity providers use OpenShift Container Platform ConfigMap objects in the openshift-config namespace to contain the certificate authority bundle. These are primarily used to contain certificate bundles needed by the identity provider.

Procedure

Define an OpenShift Container Platform ConfigMap object containing the certificate authority by using the following command. The certificate authority must be stored in the ca.crt key of the ConfigMap object.

$ oc create configmap ca-config-map --from-file=ca.crt=/path/to/ca -n openshift-config

Tip

You can alternatively apply the following YAML to create the config map:

7.3.5. Sample LDAP CR

The following custom resource (CR) shows the parameters and acceptable values for an LDAP identity provider.

LDAP CR

- 1

- This provider name is prefixed to the returned user ID to form an identity name.

- 2

- Controls how mappings are established between this provider’s identities and User objects.

- 3

- List of attributes to use as the identity. First non-empty attribute is used. At least one attribute is required. If none of the listed attributes have a value, authentication fails. Defined attributes are retrieved as raw, allowing for binary values to be used.

- 4

- List of attributes to use as the email address. First non-empty attribute is used.

- 5

- List of attributes to use as the display name. First non-empty attribute is used.

- 6

- List of attributes to use as the preferred user name when provisioning a user for this identity. First non-empty attribute is used.

- 7

- Optional DN to use to bind during the search phase. Must be set if bindPassword is defined.

- 8

- Optional reference to an OpenShift Container Platform Secret object containing the bind password. Must be set if bindDN is defined.

- 9

- Optional: Reference to an OpenShift Container Platform ConfigMap object containing the PEM-encoded certificate authority bundle to use in validating server certificates for the configured URL. Only used when insecure is false.

- 10

- When true, no TLS connection is made to the server. When false, ldaps:// URLs connect using TLS, and ldap:// URLs are upgraded to TLS. This must be set to false when ldaps:// URLs are in use, as these URLs always attempt to connect using TLS.

- 11

- An RFC 2255 URL which specifies the LDAP host and search parameters to use.

To restrict an LDAP integration to an approved list of users, use the lookup mapping method. Before a login from LDAP is allowed, a cluster administrator must create an Identity object and a User object for each LDAP user.

7.3.6. Adding an identity provider to your cluster

After you install your cluster, add an identity provider to it so your users can authenticate.

Prerequisites

- Create an OpenShift Container Platform cluster.

- Create the custom resource (CR) for your identity providers.

- You must be logged in as an administrator.

Procedure

Apply the defined CR:

$ oc apply -f </path/to/CR>

Note: If a CR does not exist, oc apply creates a new CR and might trigger the following warning: "Warning: oc apply should be used on resources created by either oc create --save-config or oc apply". In this case you can safely ignore this warning.

Log in to the cluster as a user from your identity provider, entering the password when prompted.

$ oc login -u <username>

Confirm that the user logged in successfully, and display the user name.

$ oc whoami

7.4. Configuring a basic authentication identity provider

Configure the basic-authentication identity provider for users to log in to OpenShift Container Platform with credentials validated against a remote identity provider. Basic authentication is a generic back-end integration mechanism.

7.4.1. About identity providers in OpenShift Container Platform

By default, only a kubeadmin user exists on your cluster. To specify an identity provider, you must create a custom resource (CR) that describes that identity provider and add it to the cluster.

OpenShift Container Platform user names containing /, :, and % are not supported.

7.4.2. About basic authentication

Basic authentication is a generic back-end integration mechanism that allows users to log in to OpenShift Container Platform with credentials validated against a remote identity provider.

Because basic authentication is generic, you can use this identity provider for advanced authentication configurations.

Basic authentication must use an HTTPS connection to the remote server to prevent potential snooping of the user ID and password and man-in-the-middle attacks.

With basic authentication configured, users send their user name and password to OpenShift Container Platform, which then validates those credentials against a remote server by making a server-to-server request, passing the credentials as a basic authentication header. This requires users to send their credentials to OpenShift Container Platform during login.

This only works for user name/password login mechanisms, and OpenShift Container Platform must be able to make network requests to the remote authentication server.

User names and passwords are validated against a remote URL that is protected by basic authentication and returns JSON.

A 401 response indicates failed authentication.

A non-200 status, or the presence of a non-empty "error" key, indicates an error:

{"error":"Error message"}

A 200 status with a sub (subject) key indicates success:

{"sub":"userid"}

The subject must be unique to the authenticated user and must not be able to be modified.

A successful response can optionally provide additional data, such as:

- A display name using the name key. For example:

{"sub":"userid", "name": "User Name", ...}

- An email address using the email key. For example:

{"sub":"userid", "email":"user@example.com", ...}

- A preferred user name using the preferred_username key. This is useful when the unique, unchangeable subject is a database key or UID, and a more human-readable name exists. This is used as a hint when provisioning the OpenShift Container Platform user for the authenticated identity. For example:

{"sub":"014fbff9a07c", "preferred_username":"bob", ...}
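The response rules above can be summarized in a small shell function. This is an illustrative sketch, not how OpenShift Container Platform implements the check: the function name classify_idp_response and its three result labels are made up for this example, and the grep patterns are a rough stand-in for real JSON parsing. Treating a 200 response that has neither key as an error is an assumption.

```shell
#!/bin/bash
# classify_idp_response STATUS BODY
# Prints "authentication-failed", "error", or "success" for a response from
# the remote basic authentication server. Hypothetical helper; the grep
# patterns only approximate JSON parsing and suit flat responses.
classify_idp_response() {
  local status="$1" body="$2"
  if [ "$status" = "401" ]; then
    echo "authentication-failed"
  elif [ "$status" != "200" ]; then
    echo "error"
  elif printf '%s' "$body" | grep -Eq '"error"[[:space:]]*:[[:space:]]*"[^"]'; then
    # A non-empty "error" key signals an error even with a 200 status.
    echo "error"
  elif printf '%s' "$body" | grep -Eq '"sub"[[:space:]]*:[[:space:]]*"[^"]'; then
    echo "success"
  else
    # Assumption: a 200 response without a usable sub key is not a success.
    echo "error"
  fi
}

classify_idp_response 200 '{"sub":"userid", "name": "User Name"}'
classify_idp_response 200 '{"error":"Error message"}'
classify_idp_response 401 ''
```

Run as written, the three calls print success, error, and authentication-failed, in that order.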

7.4.3. Creating the secret

Identity providers use OpenShift Container Platform Secret objects in the openshift-config namespace to contain the client secret, client certificates, and keys.

Procedure

Create a Secret object that contains the key and certificate by using the following command:

$ oc create secret tls <secret_name> --key=key.pem --cert=cert.pem -n openshift-config

Tip: You can alternatively create the secret by applying an equivalent YAML manifest.
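A manifest equivalent to the oc create secret tls command could look like the sketch below. It assumes the standard kubernetes.io/tls secret layout with tls.crt and tls.key entries; <secret_name>, <base64_encoded_cert>, and <base64_encoded_key> are placeholders, and the file name idp-tls-secret.yaml is arbitrary.

```shell
#!/bin/bash
# Write a TLS Secret manifest to a local file (the file name is an assumption).
# Replace the three placeholders; the data values must be base64-encoded.
cat > idp-tls-secret.yaml <<'EOF'
apiVersion: v1
kind: Secret
metadata:
  name: <secret_name>
  namespace: openshift-config
type: kubernetes.io/tls
data:
  tls.crt: <base64_encoded_cert>
  tls.key: <base64_encoded_key>
EOF

# After replacing the placeholders, apply the manifest with:
#   oc apply -f idp-tls-secret.yaml
echo "wrote idp-tls-secret.yaml"
```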

7.4.4. Creating a config map

Identity providers use OpenShift Container Platform ConfigMap objects in the openshift-config namespace to contain the certificate authority bundle. These are primarily used to contain certificate bundles needed by the identity provider.

Procedure

Define an OpenShift Container Platform ConfigMap object containing the certificate authority by using the following command. The certificate authority must be stored in the ca.crt key of the ConfigMap object.

$ oc create configmap ca-config-map --from-file=ca.crt=/path/to/ca -n openshift-config

Tip: You can alternatively create the config map by applying an equivalent YAML manifest.
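If you prefer a manifest over the oc create configmap command, one way to generate it from an existing certificate file is sketched below. The helper name render_ca_config_map is hypothetical, and the sketch assumes the certificate file is PEM text that can be embedded as a YAML block scalar.

```shell
#!/bin/bash
# render_ca_config_map CA_FILE
# Prints a ConfigMap manifest whose ca.crt key holds the contents of CA_FILE.
# Hypothetical helper; the name ca-config-map matches the command above.
render_ca_config_map() {
  local ca_file="$1"
  [ -r "$ca_file" ] || { echo "cannot read $ca_file" >&2; return 1; }
  cat <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: ca-config-map
  namespace: openshift-config
data:
  ca.crt: |
EOF
  # Indent every certificate line by four spaces so it stays inside the block scalar.
  sed 's/^/    /' "$ca_file"
}

# Example usage (the path is a placeholder):
#   render_ca_config_map /path/to/ca > ca-config-map.yaml
#   oc apply -f ca-config-map.yaml
```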

7.4.5. Sample basic authentication CR

The following custom resource (CR) shows the parameters and acceptable values for a basic authentication identity provider.

Basic authentication CR
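A CR of this kind might look like the following sketch. It is illustrative rather than authoritative: the provider name basicidp, the secret names client-cert-secret and client-key-secret, and the file name basic-auth-cr.yaml are assumptions, and the URL reuses the example host from this document. The (1) to (6) comments correspond to the numbered descriptions that follow.

```shell
#!/bin/bash
# Write an illustrative OAuth CR with a basic authentication identity provider.
# All names and values below are placeholders, not recommended settings.
cat > basic-auth-cr.yaml <<'EOF'
apiVersion: config.openshift.io/v1
kind: OAuth
metadata:
  name: cluster
spec:
  identityProviders:
  - name: basicidp               # (1)
    mappingMethod: claim         # (2)
    type: BasicAuth
    basicAuth:
      url: https://www.example.com/remote-idp   # (3)
      ca:                        # (4)
        name: ca-config-map
      tlsClientCert:             # (5)
        name: client-cert-secret
      tlsClientKey:              # (6)
        name: client-key-secret
EOF
echo "wrote basic-auth-cr.yaml"
```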

- 1

- This provider name is prefixed to the returned user ID to form an identity name.

- 2

- Controls how mappings are established between this provider’s identities and User objects.

- 3

- URL accepting credentials in Basic authentication headers.

- 4

- Optional: Reference to an OpenShift Container Platform ConfigMap object containing the PEM-encoded certificate authority bundle to use in validating server certificates for the configured URL.

- 5

- Optional: Reference to an OpenShift Container Platform Secret object containing the client certificate to present when making requests to the configured URL.

- 6

- Reference to an OpenShift Container Platform Secret object containing the key for the client certificate. Required if tlsClientCert is specified.

7.4.6. Adding an identity provider to your cluster

After you install your cluster, add an identity provider to it so your users can authenticate.

Prerequisites

- Create an OpenShift Container Platform cluster.

- Create the custom resource (CR) for your identity providers.

- You must be logged in as an administrator.

Procedure

Apply the defined CR:

$ oc apply -f </path/to/CR>

Note: If a CR does not exist, oc apply creates a new CR and might trigger the following warning: "Warning: oc apply should be used on resources created by either oc create --save-config or oc apply". In this case you can safely ignore this warning.

Log in to the cluster as a user from your identity provider, entering the password when prompted.

$ oc login -u <username>

Confirm that the user logged in successfully, and display the user name.

$ oc whoami

7.4.7. Example Apache HTTPD configuration for basic identity providers

The basic identity provider (IDP) configuration in OpenShift Container Platform 4 requires that the IDP server respond with JSON for success and failures. You can use CGI scripting in Apache HTTPD to accomplish this. This section provides examples.

Example /etc/httpd/conf.d/login.conf
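A virtual host along the following lines could serve the two CGI scripts in this section. This is a sketch under assumptions, not a verified production configuration: the certificate paths, the password file /etc/httpd/conf/passwords, and the /basic URL prefix are illustrative choices. The script writes the configuration to a local login.conf file so that you can review it before copying it into place.

```shell
#!/bin/bash
# Write an illustrative Apache HTTPD virtual host to ./login.conf.
# Copy it to /etc/httpd/conf.d/login.conf only after adapting the paths.
cat > login.conf <<'EOF'
<VirtualHost *:443>
  # CGI scripts live here
  DocumentRoot /var/www/cgi-bin

  # TLS settings (certificate paths are assumptions)
  SSLEngine on
  SSLCertificateFile /etc/pki/tls/certs/localhost.crt
  SSLCertificateKeyFile /etc/pki/tls/private/localhost.key

  # Execute the scripts in cgi-bin under the /basic URL path
  ScriptAlias /basic /var/www/cgi-bin

  # Serve fail.cgi when authentication fails
  ErrorDocument 401 /basic/fail.cgi

  # Require basic authentication before login.cgi runs
  <Location /basic/login.cgi>
    AuthType Basic
    AuthName "Please Log In"
    AuthBasicProvider file
    AuthUserFile /etc/httpd/conf/passwords
    Require valid-user
  </Location>
</VirtualHost>
EOF
echo "wrote login.conf"
```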

Example /var/www/cgi-bin/login.cgi

#!/bin/bash
# Runs only after the user has logged in successfully; returns the success JSON.
echo "Content-Type: application/json"
echo ""
# Quote REMOTE_USER so that the shell does not split or glob its value.
echo '{"sub":"userid", "name":"'"$REMOTE_USER"'"}'
exit 0

Example /var/www/cgi-bin/fail.cgi

#!/bin/bash
# Runs when the login fails; returns the error JSON.
echo "Content-Type: application/json"
echo ""
echo '{"error": "Login failure"}'
exit 0

7.4.7.1. File requirements

These are the requirements for the files you create on an Apache HTTPD web server:

- login.cgi and fail.cgi must be executable (chmod +x).

- login.cgi and fail.cgi must have proper SELinux contexts if SELinux is enabled: restorecon -RFv /var/www/cgi-bin, or ensure that the context is httpd_sys_script_exec_t using ls -laZ.

- login.cgi is only executed if your user successfully logs in per the Require and Auth directives.

- fail.cgi is executed if the user fails to log in, resulting in an HTTP 401 response.
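The first requirement can be checked with a short script. The sketch below uses a hypothetical helper named check_cgi_scripts; it only verifies that both files exist and are executable, and it does not inspect SELinux contexts.

```shell
#!/bin/bash
# check_cgi_scripts DIR
# Reports whether login.cgi and fail.cgi exist in DIR and are executable.
# Hypothetical helper; it does not check SELinux contexts.
check_cgi_scripts() {
  local dir="$1" script status=0
  for script in login.cgi fail.cgi; do
    if [ ! -f "$dir/$script" ]; then
      echo "$script: missing"
      status=1
    elif [ ! -x "$dir/$script" ]; then
      echo "$script: not executable (run chmod +x)"
      status=1
    else
      echo "$script: ok"
    fi
  done
  # Non-zero when at least one script is missing or not executable.
  return "$status"
}

# Example usage:
#   check_cgi_scripts /var/www/cgi-bin
```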

7.4.8. Basic authentication troubleshooting

The most common issue relates to network connectivity to the backend server. For simple debugging, run curl commands on the master. To test for a successful login, replace the <user> and <password> in the following example command with valid credentials. To test an invalid login, replace them with false credentials.

$ curl --cacert /path/to/ca.crt --cert /path/to/client.crt --key /path/to/client.key -u <user>:<password> -v https://www.example.com/remote-idp

Successful responses

A 200 status with a sub (subject) key indicates success:

{"sub":"userid"}

The subject must be unique to the authenticated user, and must not be able to be modified.

A successful response can optionally provide additional data, such as:

- A display name using the name key:

{"sub":"userid", "name": "User Name", ...}

- An email address using the email key:

{"sub":"userid", "email":"user@example.com", ...}

- A preferred user name using the preferred_username key:

{"sub":"014fbff9a07c", "preferred_username":"bob", ...}

The