Security

Read more to learn about the governance policy framework, which helps harden cluster security by using policies.

Abstract

Chapter 1. Security

Manage your security and role-based access control (RBAC) of Red Hat Advanced Cluster Management for Kubernetes components. Govern your cluster with defined policies and processes to identify and minimize risks. Use policies to define rules and set controls.

Prerequisite: You must configure authentication service requirements for Red Hat Advanced Cluster Management for Kubernetes to onboard workloads to Identity and Access Management (IAM). For more information, see Understanding authentication in the OpenShift Container Platform documentation.

Review the following topics to learn more about securing your cluster:

1.1. Role-based access control

Red Hat Advanced Cluster Management for Kubernetes supports role-based access control (RBAC). Your role determines the actions that you can perform. RBAC is based on the authorization mechanisms in Kubernetes, similar to Red Hat OpenShift Container Platform. For more information about RBAC, see the OpenShift RBAC overview in the OpenShift Container Platform documentation.

Note: Action buttons are disabled in the console if your role does not permit the action.

View the following sections for details of supported RBAC by component:

1.1.1. Overview of roles

Some product resources are cluster-wide and some are namespace-scoped. You must apply cluster role bindings and namespace role bindings to your users for consistent access controls. View the following table of role definitions that are supported in Red Hat Advanced Cluster Management for Kubernetes:

| Role | Definition |

|---|---|

| cluster-admin | A user with cluster-wide binding to the |

| open-cluster-management:cluster-manager-admin | A user with cluster-wide binding to the |

| open-cluster-management:managed-cluster-x (admin) | A user with cluster binding to the |

| open-cluster-management:managed-cluster-x (viewer) | A user with cluster-wide binding to the |

| open-cluster-management:subscription-admin | A user with the |

| admin, edit, view | Admin, edit, and view are OpenShift Container Platform default roles. A user with a namespace-scoped binding to these roles has access to |

Important:

- Any user can create projects from OpenShift Container Platform, which gives administrator role permissions for the namespace.

- If a user does not have role access to a cluster, the cluster name is not visible. The cluster name is displayed with the following symbol: -.

1.1.2. RBAC implementation

RBAC is validated at the console level and at the API level. Actions in the console can be enabled or disabled based on user access role permissions. View the following sections for more information on RBAC for specific lifecycles in the product.

1.1.2.1. Cluster lifecycle RBAC

View the following cluster lifecycle RBAC operations.

To create and administer all managed clusters:

Create a cluster role binding to the cluster role open-cluster-management:cluster-manager-admin. This role is a super user, which has access to all resources and actions. This role allows you to create cluster-scoped managedcluster resources, the namespace for the resources that manage the managed cluster, and the resources in the namespace. This role also allows access to provider connections and to bare metal assets that are used to create managed clusters.

oc create clusterrolebinding <role-binding-name> --clusterrole=open-cluster-management:cluster-manager-admin

To administer a managed cluster named cluster-name:

Create a cluster role binding to the cluster role open-cluster-management:admin:<cluster-name>. This role allows read/write access to the cluster-scoped managedcluster resource. This is needed because the managedcluster is a cluster-scoped resource and not a namespace-scoped resource.

oc create clusterrolebinding <role-binding-name> --clusterrole=open-cluster-management:admin:<cluster-name>

Create a namespace role binding to the cluster role admin. This role allows read/write access to the resources in the namespace of the managed cluster.

oc create rolebinding <role-binding-name> -n <cluster-name> --clusterrole=admin

To view a managed cluster named cluster-name:

Create a cluster role binding to the cluster role open-cluster-management:view:<cluster-name>. This role allows read access to the cluster-scoped managedcluster resource. This is needed because the managedcluster is a cluster-scoped resource and not a namespace-scoped resource.

oc create clusterrolebinding <role-binding-name> --clusterrole=open-cluster-management:view:<cluster-name>

Create a namespace role binding to the cluster role view. This role allows read-only access to the resources in the namespace of the managed cluster.

oc create rolebinding <role-binding-name> -n <cluster-name> --clusterrole=view
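The binding commands above do not name a subject. As a minimal sketch, assuming the subject is a single user that is passed with the standard --user flag of oc create clusterrolebinding and oc create rolebinding, the two bindings for administering or viewing one managed cluster could be wrapped in a helper. The function name grant_cluster_access and the binding-name pattern are hypothetical and are not part of the product.

```shell
# Hypothetical helper: bind one user to a managed cluster as "admin" or "view".
# Usage: grant_cluster_access <user> <cluster-name> <admin|view>
grant_cluster_access() {
  gca_user=$1
  gca_cluster=$2
  gca_level=$3

  # Only the two per-cluster levels described above are accepted.
  case "$gca_level" in
    admin|view) ;;
    *) echo "level must be admin or view" >&2; return 1 ;;
  esac

  # Cluster-scoped access to the managedcluster resource itself.
  oc create clusterrolebinding "${gca_user}-${gca_cluster}-${gca_level}" \
    --clusterrole="open-cluster-management:${gca_level}:${gca_cluster}" \
    --user="$gca_user" || return 1

  # Namespace-scoped access to the resources in the managed cluster namespace.
  oc create rolebinding "${gca_user}-${gca_level}" \
    -n "$gca_cluster" \
    --clusterrole="$gca_level" \
    --user="$gca_user"
}
```

For example, grant_cluster_access alice cluster1 view would create both view bindings for the user alice. Binding names that are derived from user names might need adjusting if the user name contains characters that are not valid in a resource name.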

View the following console and API RBAC tables for cluster lifecycle:

| Action | Admin | Edit | View |

|---|---|---|---|

| Clusters | read, update, delete | read, update | read |

| Provider connections | create, read, update, and delete | create, read, update, and delete | read |

| Bare metal asset | create, read, update, delete | read, update | read |

| API | Admin | Edit | View |

|---|---|---|---|

| managedclusters.cluster.open-cluster-management.io | create, read, update, delete | read, update | read |

| baremetalassets.inventory.open-cluster-management.io | create, read, update, delete | read, update | read |

| klusterletaddonconfigs.agent.open-cluster-management.io | create, read, update, delete | read, update | read |

| managedclusteractions.action.open-cluster-management.io | create, read, update, delete | read, update | read |

| managedclusterviews.view.open-cluster-management.io | create, read, update, delete | read, update | read |

| managedclusterinfos.internal.open-cluster-management.io | create, read, update, delete | read, update | read |

| manifestworks.work.open-cluster-management.io | create, read, update, delete | read, update | read |

1.1.2.2. Application lifecycle RBAC

When you create an application, the subscription namespace is created and the configuration map is created in the subscription namespace. You must also have access to the channel namespace. When you want to apply a subscription, you must be a subscription administrator. For more information on managing applications, see Creating and managing subscriptions.

To perform application lifecycle tasks, users with the admin role must have access to the application namespace where the application is created, and to the managed cluster namespace. For example, the required access to create applications in namespace "N" is a namespace-scoped binding to the admin role for namespace "N".

View the following console and API RBAC tables for Application lifecycle:

| Action | Admin | Edit | View |

|---|---|---|---|

| Application | create, read, update, delete | create, read, update, delete | read |

| Channel | create, read, update, delete | create, read, update, delete | read |

| Subscription | create, read, update, delete | create, read, update, delete | read |

| Placement rule | create, read, update, delete | create, read, update, delete | read |

| API | Admin | Edit | View |

|---|---|---|---|

| applications.app.k8s.io | create, read, update, delete | create, read, update, delete | read |

| channels.apps.open-cluster-management.io | create, read, update, delete | create, read, update, delete | read |

| deployables.apps.open-cluster-management.io | create, read, update, delete | create, read, update, delete | read |

| helmreleases.apps.open-cluster-management.io | create, read, update, delete | create, read, update, delete | read |

| placementrules.apps.open-cluster-management.io | create, read, update, delete | create, read, update, delete | read |

| subscriptions.apps.open-cluster-management.io | create, read, update, delete | create, read, update, delete | read |

| configmaps | create, read, update, delete | create, read, update, delete | read |

| secrets | create, read, update, delete | create, read, update, delete | read |

| namespaces | create, read, update, delete | create, read, update, delete | read |

1.1.2.3. Governance lifecycle RBAC

To perform governance lifecycle operations, users must have access to the namespace where the policy is created, along with access to the managedcluster namespace where the policy is applied.

View the following examples:

To view policies in namespace "N", the following role is required:

- A namespace-scoped binding to the view role for namespace "N".

To create a policy in namespace "N" and apply it on managedcluster "X", the following roles are required:

- A namespace-scoped binding to the admin role for namespace "N".

- A namespace-scoped binding to the admin role for namespace "X".
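The policy-creation example above can be sketched as two namespace-scoped bindings. This is an illustration only: it assumes that the subject is a single user passed with the --user flag, and the function name grant_policy_author and the binding name are hypothetical.

```shell
# Hypothetical helper: let one user create policies in a policy namespace
# and apply them to one managed cluster.
# Usage: grant_policy_author <user> <policy-namespace> <managed-cluster-namespace>
grant_policy_author() {
  gpa_user=$1
  for gpa_ns in "$2" "$3"; do
    # A namespace-scoped binding to the admin role in each namespace.
    oc create rolebinding "${gpa_user}-policy-admin" \
      -n "$gpa_ns" \
      --clusterrole=admin \
      --user="$gpa_user" || return 1
  done
}
```

Calling grant_policy_author alice N X would correspond to the two admin bindings that are listed for namespaces "N" and "X".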

View the following console and API RBAC tables for Governance lifecycle:

| Action | Admin | Edit | View |

|---|---|---|---|

| Policies | create, read, update, delete | read, update | read |

| PlacementBindings | create, read, update, delete | read, update | read |

| PlacementRules | create, read, update, delete | read, update | read |

| API | Admin | Edit | View |

|---|---|---|---|

| policies.policy.open-cluster-management.io | create, read, update, delete | read, update | read |

| placementbindings.policy.open-cluster-management.io | create, read, update, delete | read, update | read |

1.1.2.4. Observability RBAC

To view the observability metrics for a managed cluster, you must have view access to that managed cluster on the hub cluster. View the following list of observability features:

- Access managed cluster metrics.

Users are denied access to managed cluster metrics if they are not assigned to the view role for the managed cluster on the hub cluster.

- Search for resources.

To view observability data in Grafana, you must have a RoleBinding resource in the same namespace of the managed cluster. View the following RoleBinding example:

See Role binding policy for more information. See Customizing observability to configure observability.

- Use the Visual Web Terminal if you have access to the managed cluster.

To create, update, and delete the MultiClusterObservability custom resource, you must have the access that is listed in the following RBAC table:

| API | Admin | Edit | View |

|---|---|---|---|

| multiclusterobservabilities.observability.open-cluster-management.io | create, read, update, and delete | - | - |

To continue to learn more about securing your cluster, see Security.

1.2. Credentials

You can rotate your credentials for your Red Hat Advanced Cluster Management for Kubernetes clusters when your cloud provider access credentials have changed. Continue reading for the procedure to manually propagate your updated cloud provider credentials.

Required access: Cluster administrator

1.2.1. Provider credentials

Connection secrets for a cloud provider can be rotated. See the following list of provider credentials:

1.2.1.1. Amazon Web Services

- aws_access_key_id: Your provisioned cluster access key.

- aws_secret_access_key: Your provisioned secret access key.

- View the resources in the namespace that has the same name as the cluster with the expired credential.

- Find the secret name <cluster_name>-<cloud_provider>-creds. For example: my_cluster-aws-creds.

- Edit the secret to replace the existing value with the updated value.
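The rotation steps above can be sketched as a small script. It is a hedged illustration, not the documented procedure: it assumes that the connection secret lives in the namespace named after the cluster and that updating the two AWS keys with a merge patch on stringData is acceptable in your environment. The function names are hypothetical.

```shell
# Build the connection secret name: <cluster_name>-<cloud_provider>-creds.
creds_secret_name() {
  printf '%s-%s-creds\n' "$1" "$2"
}

# Hypothetical helper: write new AWS keys into the connection secret.
# Usage: rotate_aws_creds <cluster-name> <access-key-id> <secret-access-key>
rotate_aws_creds() {
  rac_secret=$(creds_secret_name "$1" aws)
  # stringData lets the API server handle base64 encoding of the values.
  oc patch secret "$rac_secret" -n "$1" --type merge \
    -p "{\"stringData\":{\"aws_access_key_id\":\"$2\",\"aws_secret_access_key\":\"$3\"}}"
}
```

Passing keys as arguments exposes them in shell history and process listings, so an interactive oc edit secret, as described in the steps, might be preferable outside of a test environment.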

1.2.2. Agents

Agents are responsible for connections. See how you can rotate the following credentials:

- registration-agent: Connects the registration agent to the hub cluster.

- work-agent: Connects the work agent to the hub cluster.

To rotate credentials, delete the hub-kubeconfig secret to restart the registration pods.

- APIServer: Connects agents and add-ons to the hub cluster.

On the hub cluster, display the import command by entering the following command:

oc get secret -n ${CLUSTER_NAME} ${CLUSTER_NAME}-import -ojsonpath='{.data.import\.yaml}' | base64 --decode > import.yaml

On the managed cluster, apply the import.yaml file. Run the following command:

oc apply -f import.yaml

1.3. Certificates

Various certificates are created and used throughout Red Hat Advanced Cluster Management for Kubernetes.

You can bring your own certificates. You must create a Kubernetes TLS Secret for your certificate. After you create your certificates, you can replace certain certificates that are created by the Red Hat Advanced Cluster Management installer.

Required access: Cluster administrator or team administrator.

Note: Replacing certificates is supported only on native Red Hat Advanced Cluster Management installations.

All certificates required by services that run on Red Hat Advanced Cluster Management are created during the installation of Red Hat Advanced Cluster Management. Certificates are created and managed by the Red Hat Advanced Cluster Management Certificate manager (cert-manager) service. The Red Hat Advanced Cluster Management Root Certificate Authority (CA) certificate is stored within the Kubernetes Secret multicloud-ca-cert in the hub cluster namespace. The certificate can be imported into your client truststores to access Red Hat Advanced Cluster Management Platform APIs.

See the following topics to replace certificates:

1.3.1. List managed certificates

You can view a list of managed certificates that use cert-manager internally by running the following command:

oc get certificates.certmanager.k8s.io -n open-cluster-management

Note: If observability is enabled, there are additional namespaces where certificates are created.

1.3.2. Refresh a managed certificate

To refresh a managed certificate, first run the command in the List managed certificates section to identify the certificate that you need to refresh. Then delete the secret that is associated with the certificate. For example, you can delete a secret by running the following command:

oc delete secret grc-0c925-grc-secrets -n open-cluster-management

1.3.3. Refresh managed certificates for Red Hat Advanced Cluster Management for Kubernetes

You can refresh all managed certificates that are issued by the Red Hat Advanced Cluster Management CA. During the refresh, the Kubernetes secret that is associated with each cert-manager certificate is deleted. The service restarts automatically to use the certificate. Run the following command:

oc delete secret -n open-cluster-management $(oc get certificates.certmanager.k8s.io -n open-cluster-management -o wide | grep multicloud-ca-issuer | awk '{print $3}')

The Red Hat OpenShift Container Platform certificate is not included in the Red Hat Advanced Cluster Management for Kubernetes management ingress. For more information, see the Security known issues.
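Because the refresh command deletes every secret that the inner pipeline prints, it can help to see what that pipeline selects before you run it. The following sketch applies the same grep and awk filter to a hypothetical listing. The sample rows, names, and column headers are invented for illustration; the only assumption taken from the command is that the secret name is the third column.

```shell
# Select the secret names (third column) of certificates issued by the
# Red Hat Advanced Cluster Management CA issuer from "oc get ... -o wide" output.
select_ca_issued_secrets() {
  grep multicloud-ca-issuer | awk '{print $3}'
}

# Hypothetical sample listing; real names and columns come from your cluster.
sample_listing='NAME          READY   SECRET               ISSUER                 STATUS   AGE
example-a     True    example-a-secret     multicloud-ca-issuer   ok       1d
example-b     True    example-b-secret     some-other-issuer      ok       1d'

# Prints only the secret that belongs to the multicloud-ca-issuer row.
printf '%s\n' "$sample_listing" | select_ca_issued_secrets
```

To preview the real selection, pipe the output of oc get certificates.certmanager.k8s.io -n open-cluster-management -o wide into select_ca_issued_secrets and review the names before you delete anything.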

1.3.4. Refresh internal certificates

You can refresh internal certificates, which are certificates that are used by Red Hat Advanced Cluster Management webhooks and the proxy server.

Complete the following steps to refresh internal certificates:

Delete the secret that is associated with the internal certificate by running the following command:

oc delete secret -n open-cluster-management ocm-webhook-secret

Note: Some services might not have a secret that needs to be deleted.

Restart the services that are associated with the internal certificate(s) by running the following command:

oc delete po -n open-cluster-management ocm-webhook-679444669c-5cg76

Remember: There are replicas of many services; each service must be restarted.

View the following table for a summarized list of the pods that contain certificates and whether a secret needs to be deleted prior to restarting the pod:

| Service name | Namespace | Sample pod name | Secret name (if applicable) |

|---|---|---|---|

| channels-apps-open-cluster-management-webhook-svc | open-cluster-management | multicluster-operators-application-8c446664c-5lbfk | - |

| multicluster-operators-application-svc | open-cluster-management | multicluster-operators-application-8c446664c-5lbfk | - |

| multiclusterhub-operator-webhook | open-cluster-management | multiclusterhub-operator-bfd948595-mnhjc | - |

| ocm-webhook | open-cluster-management | ocm-webhook-679444669c-5cg76 | ocm-webhook-secret |

| cluster-manager-registration-webhook | open-cluster-management-hub | cluster-manager-registration-webhook-fb7b99c-d8wfc | registration-webhook-serving-cert |

| cluster-manager-work-webhook | open-cluster-management-hub | cluster-manager-work-webhook-89b8d7fc-f4pv8 | work-webhook-serving-cert |
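Because pod name suffixes differ on every system and a service can have several replicas, one option is to restart pods by name prefix instead of by full name. The following is a minimal sketch under that assumption. The function name restart_pods_with_prefix is hypothetical, and the prefix match also deletes any unrelated pod whose name starts with the same text.

```shell
# Hypothetical helper: delete every pod in a namespace whose name starts
# with the given prefix, so that all replicas of a service restart.
# Usage: restart_pods_with_prefix <namespace> <pod-name-prefix>
restart_pods_with_prefix() {
  rpp_ns=$1
  rpp_prefix=$2
  # "-o name" prints one "pod/<name>" entry per line.
  oc get pods -n "$rpp_ns" -o name |
    grep "^pod/${rpp_prefix}" |
    while read -r rpp_pod; do
      oc delete -n "$rpp_ns" "$rpp_pod"
    done
}
```

For example, restart_pods_with_prefix open-cluster-management ocm-webhook- would restart the ocm-webhook replicas after the ocm-webhook-secret secret is deleted.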

1.3.4.1. Rotating the gatekeeper webhook certificate

Complete the following steps to rotate the gatekeeper webhook certificate:

Edit the secret that contains the certificate with the following command:

oc edit secret -n openshift-gatekeeper-system gatekeeper-webhook-server-cert

Delete the following content in the data section: ca.crt, ca.key, tls.crt, and tls.key.

Restart the gatekeeper webhook service by deleting the gatekeeper-controller-manager pods with the following command:

oc delete po -n openshift-gatekeeper-system -l control-plane=controller-manager

The gatekeeper webhook certificate is rotated.

1.3.4.2. Rotating the integrity shield webhook certificate (Technology preview)

Complete the following steps to rotate the integrity shield webhook certificate:

Edit the IntegrityShield custom resource and add the integrity-shield-operator-system namespace to the excluded list of namespaces in the inScopeNamespaceSelector setting. Run the following command to edit the resource:

oc edit integrityshield integrity-shield-server -n integrity-shield-operator-system

Delete the secret that contains the integrity shield certificate by running the following command:

oc delete secret -n integrity-shield-operator-system ishield-server-tls

Delete the operator pod so that the secret is recreated. Be sure that the operator pod name matches the pod name on your system. Run the following command:

oc delete po -n integrity-shield-operator-system integrity-shield-operator-controller-manager-64549569f8-v4pz6

Delete the integrity shield server pod to begin using the new certificate with the following command:

oc delete po -n integrity-shield-operator-system integrity-shield-server-5fbdfbbbd4-bbfbz

1.3.4.3. Observability certificates

When Red Hat Advanced Cluster Management is installed, there are additional namespaces where certificates are managed. The open-cluster-management-observability namespace and the managed cluster namespaces contain certificates managed by cert-manager for the observability service.

Observability certificates are automatically refreshed upon expiration. View the following list to understand the effects when certificates are automatically renewed:

- Components on your hub cluster automatically restart to retrieve the refreshed certificate.

- Red Hat Advanced Cluster Management sends the refreshed certificates to managed clusters.

- The metrics-collector restarts to mount the renewed certificates.

Note: metrics-collector can push metrics to the hub cluster before and after certificates are removed. For more information about refreshing certificates across your clusters, see the Refresh internal certificates section. Be sure to specify the appropriate namespace when you refresh a certificate.

1.3.4.4. Channel certificates

CA certificates can be associated with Git channels that are part of the Red Hat Advanced Cluster Management application management. See Using custom CA certificates for a secure HTTPS connection for more details.

Helm channels allow you to disable certificate validation. Use Helm channels that have certificate validation disabled only in development environments. Disabling certificate validation introduces security risks.

1.3.4.5. Managed cluster certificates

Certificates are used to authenticate managed clusters with the hub. Therefore, it is important to be aware of troubleshooting scenarios associated with these certificates. View Troubleshooting imported clusters offline after certificate change for more details.

The managed cluster certificates are refreshed automatically.

Use the certificate policy controller to create and manage certificate policies on managed clusters. See Policy controllers to learn more about controllers. Return to the Security page for more information.

1.3.5. Replacing the root CA certificate

You can replace the root CA certificate.

1.3.5.1. Prerequisites for root CA certificate

Verify that your Red Hat Advanced Cluster Management for Kubernetes cluster is running.

Back up the existing Red Hat Advanced Cluster Management for Kubernetes certificate resource by running the following command:

oc get cert multicloud-ca-cert -n open-cluster-management -o yaml > multicloud-ca-cert-backup.yaml

1.3.5.2. Creating the root CA certificate with OpenSSL

Complete the following steps to create a root CA certificate with OpenSSL:

Generate your certificate authority (CA) RSA private key by running the following command:

openssl genrsa -out ca.key 4096

Generate a self-signed CA certificate by using your CA key. Run the following command:

openssl req -x509 -new -nodes -key ca.key -days 400 -out ca.crt -config req.cnf

Your req.cnf file might resemble the following file:
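As a hedged sketch of what a req.cnf file for a self-signed root CA could contain, the following helper writes a minimal OpenSSL configuration. Every subject value is a placeholder, the section names dn and v3_ca are choices made for this illustration, and the function name write_req_cnf is hypothetical. Adjust the values to your organization before you use the file.

```shell
# Hypothetical helper: write a minimal OpenSSL configuration for a
# self-signed root CA. All subject values are placeholders.
# Usage: write_req_cnf <output-path>
write_req_cnf() {
  cat > "$1" <<'EOF'
[ req ]
prompt = no
default_md = sha256
distinguished_name = dn
x509_extensions = v3_ca

[ dn ]
C = US
O = Example Organization
CN = example-root-ca

[ v3_ca ]
basicConstraints = critical,CA:TRUE
keyUsage = critical,keyCertSign,cRLSign
EOF
}
```

After running write_req_cnf req.cnf, the openssl req command that is shown in this section can read the file through its -config option. The basicConstraints line marks the certificate as a CA certificate, which is what allows it to sign other certificates.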

1.3.5.3. Replacing root CA certificates

Create a new secret with the CA certificate by running the following command:

kubectl -n open-cluster-management create secret tls byo-ca-cert --cert ./ca.crt --key ./ca.key

Edit the CA issuer to point to the BYO certificate. Run the following command:

oc edit issuer -n open-cluster-management multicloud-ca-issuer

- Replace the string multicloud-ca-cert with byo-ca-cert. Save the issuer and quit the editor.

Edit the management ingress deployment to reference the Bring Your Own (BYO) CA certificate. Run the following command:

oc edit deployment management-ingress-435ab

- Replace the multicloud-ca-cert string with byo-ca-cert. Save your deployment and quit the editor.

- Validate that the custom CA is in use by logging in to the console and viewing the details of the certificate that is being used.

1.3.5.4. Refreshing cert-manager certificates

After the root CA is replaced, all certificates that are signed by the root CA must be refreshed, and the services that use those certificates must be restarted. Cert-manager creates the default Issuer from the root CA, so all of the certificates that are issued by cert-manager and signed by the default ClusterIssuer must also be refreshed.

Delete the Kubernetes secrets associated with each cert-manager certificate to refresh the certificate and restart the services that use the certificate. Run the following command:

oc delete secret -n open-cluster-management $(oc get cert -n open-cluster-management -o wide | grep multicloud-ca-issuer | awk '{print $3}')

1.3.5.5. Restoring root CA certificates

To restore the root CA certificate, update the CA issuer by completing the following steps:

Edit the CA issuer. Run the following command:

oc edit issuer -n open-cluster-management multicloud-ca-issuer

- Replace the byo-ca-cert string with multicloud-ca-cert in the editor. Save the issuer and quit the editor.

Edit the management ingress deployment to reference the original CA certificate. Run the following command:

oc edit deployment management-ingress-435ab

- Replace the byo-ca-cert string with the multicloud-ca-cert string. Save your deployment and quit the editor.

Delete the BYO CA certificate. Run the following command:

oc delete secret -n open-cluster-management byo-ca-cert

Refresh all cert-manager certificates that use the CA. For more information, see the preceding section, Refreshing cert-manager certificates.

See Certificates for more information about certificates that are created and managed by Red Hat Advanced Cluster Management for Kubernetes.

1.3.6. Replacing the management ingress certificates

You can replace management ingress certificates.

1.3.6.1. Prerequisites to replace management ingress certificate

Prepare and have your management-ingress certificates and private keys ready. If needed, you can generate a TLS certificate by using OpenSSL. Set the common name parameter, CN, on the certificate to management-ingress. If you are generating the certificate, include the following entries in your certificate Subject Alternative Name (SAN) list:

- The service name for the management ingress: management-ingress.

- The route name for Red Hat Advanced Cluster Management for Kubernetes. Receive the route name by running the following command:

oc get route -n open-cluster-management

You might receive the following response:

multicloud-console.apps.grchub2.dev08.red-chesterfield.com

- The localhost IP address: 127.0.0.1.

- The localhost entry: localhost.

1.3.6.1.1. Example configuration file for generating a certificate

The following example configuration file and OpenSSL commands provide an example for how to generate a TLS certificate by using OpenSSL. View the following csr.cnf configuration file, which defines the configuration settings for generating certificates with OpenSSL.

Note: Be sure to update the SAN labeled DNS.2 with the correct hostname for your management ingress.
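As a hedged sketch of a csr.cnf file that follows the SAN settings from the prerequisites, the following helper writes a configuration with the route hostname in DNS.2. The key usage lines and the function name write_csr_cnf are assumptions made for this illustration. The v3_ext section name is chosen to match the -extensions v3_ext option that the signing command in the next section uses.

```shell
# Hypothetical helper: write a csr.cnf whose SAN list follows the
# prerequisites: service name, route hostname, localhost, and 127.0.0.1.
# Usage: write_csr_cnf <route-hostname> <output-path>
write_csr_cnf() {
  cat > "$2" <<EOF
[ req ]
prompt = no
default_md = sha256
distinguished_name = dn
req_extensions = v3_ext

[ dn ]
CN = management-ingress

[ v3_ext ]
keyUsage = critical,digitalSignature,keyEncipherment
extendedKeyUsage = serverAuth
subjectAltName = @alt_names

[ alt_names ]
DNS.1 = management-ingress
DNS.2 = $1
DNS.3 = localhost
IP.1 = 127.0.0.1
EOF
}
```

For example, write_csr_cnf multicloud-console.apps.example.com csr.cnf writes the file with a placeholder route hostname; substitute the hostname that oc get route -n open-cluster-management returns on your hub cluster.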

1.3.6.1.2. OpenSSL commands for generating a certificate

The following OpenSSL commands are used with the preceding configuration file to generate the required TLS certificate.

Generate your certificate authority (CA) RSA private key:

openssl genrsa -out ca.key 4096

Generate a self-signed CA certificate by using your CA key:

openssl req -x509 -new -nodes -key ca.key -subj "/C=US/ST=North Carolina/L=Raleigh/O=Red Hat OpenShift" -days 400 -out ca.crt

Generate the RSA private key for your certificate:

openssl genrsa -out ingress.key 4096

Generate the certificate signing request (CSR) by using the private key:

openssl req -new -key ingress.key -out ingress.csr -config csr.cnf

Generate a signed certificate by using your CA certificate, CA key, and CSR:

openssl x509 -req -in ingress.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out ingress.crt -sha256 -days 300 -extensions v3_ext -extfile csr.cnf

Examine the certificate contents:

openssl x509 -noout -text -in ./ingress.crt

1.3.6.2. Replace the Bring Your Own (BYO) ingress certificate

Complete the following steps to replace your BYO ingress certificate:

Create the byo-ingress-tls-secret secret by using your certificate and private key. Run the following command:

kubectl -n open-cluster-management create secret tls byo-ingress-tls-secret --cert ./ingress.crt --key ./ingress.key

Verify that the secret is created in the correct namespace with the following command:

kubectl get secret -n open-cluster-management | grep -e byo-ingress-tls-secret -e byo-ca-cert

Create a secret containing the CA certificate by running the following command:

kubectl -n open-cluster-management create secret tls byo-ca-cert --cert ./ca.crt --key ./ca.key

Get the name of the management ingress deployment and edit the deployment with the following commands:

export MANAGEMENT_INGRESS=`oc get deployment -o custom-columns=:.metadata.name | grep management-ingress`
oc edit deployment $MANAGEMENT_INGRESS -n open-cluster-management

- Replace the multicloud-ca-cert string with byo-ca-cert.

- Replace the $MANAGEMENT_INGRESS-tls-secret string with byo-ingress-tls-secret.

- Save your deployment and close the editor. The management ingress automatically restarts.

- Verify that the current certificate is your certificate, and that all console access and login functionality remain the same.
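The string replacements in the editor can also be expressed as a stream filter, which lets you review the resulting manifest before you change anything. This is a sketch only: the function names `use_byo_certs` and `use_default_certs` are hypothetical, and the sketch assumes that the secret names appear literally in the deployment YAML.

```shell
# Sketch: rewrite the secret references in a deployment manifest read from stdin.
# Argument: the management ingress deployment name (the value of $MANAGEMENT_INGRESS).
# Both function names are hypothetical helpers.

# Switch from the default secrets to the BYO secrets.
use_byo_certs() {
  sed -e "s/multicloud-ca-cert/byo-ca-cert/g" \
      -e "s/$1-tls-secret/byo-ingress-tls-secret/g"
}

# Switch from the BYO secrets back to the default secrets.
use_default_certs() {
  sed -e "s/byo-ca-cert/multicloud-ca-cert/g" \
      -e "s/byo-ingress-tls-secret/$1-tls-secret/g"
}
```

For example, `oc get deployment $MANAGEMENT_INGRESS -n open-cluster-management -o yaml | use_byo_certs "$MANAGEMENT_INGRESS"` prints the modified manifest for review.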

1.3.6.3. Restore the default self-signed certificate for management ingress

Get the name of the management ingress deployment and edit the deployment with the following commands:

export MANAGEMENT_INGRESS=`oc get deployment -o custom-columns=:.metadata.name | grep management-ingress`
oc edit deployment $MANAGEMENT_INGRESS -n open-cluster-management

- Replace the byo-ca-cert string with multicloud-ca-cert.

- Replace the byo-ingress-tls-secret string with $MANAGEMENT_INGRESS-tls-secret.

- Save your deployment and close the editor. The management ingress automatically restarts.

- After all pods are restarted, navigate to the Red Hat Advanced Cluster Management for Kubernetes console from your browser.

- Verify that the current certificate is your certificate, and that all console access and login functionality remain the same.

Delete the Bring Your Own (BYO) ingress secret and ingress CA certificate by running the following commands:

oc delete secret -n open-cluster-management byo-ingress-tls-secret
oc delete secret -n open-cluster-management byo-ca-cert

See Certificates for more information about certificates that are created and managed by Red Hat Advanced Cluster Management. Return to the Security page for more information on securing your cluster.

Chapter 2. Governance and risk

Enterprises must meet internal standards for software engineering, secure engineering, resiliency, security, and regulatory compliance for workloads hosted on private, multi and hybrid clouds. Red Hat Advanced Cluster Management for Kubernetes governance provides an extensible policy framework for enterprises to introduce their own security policies.

2.1. Governance architecture

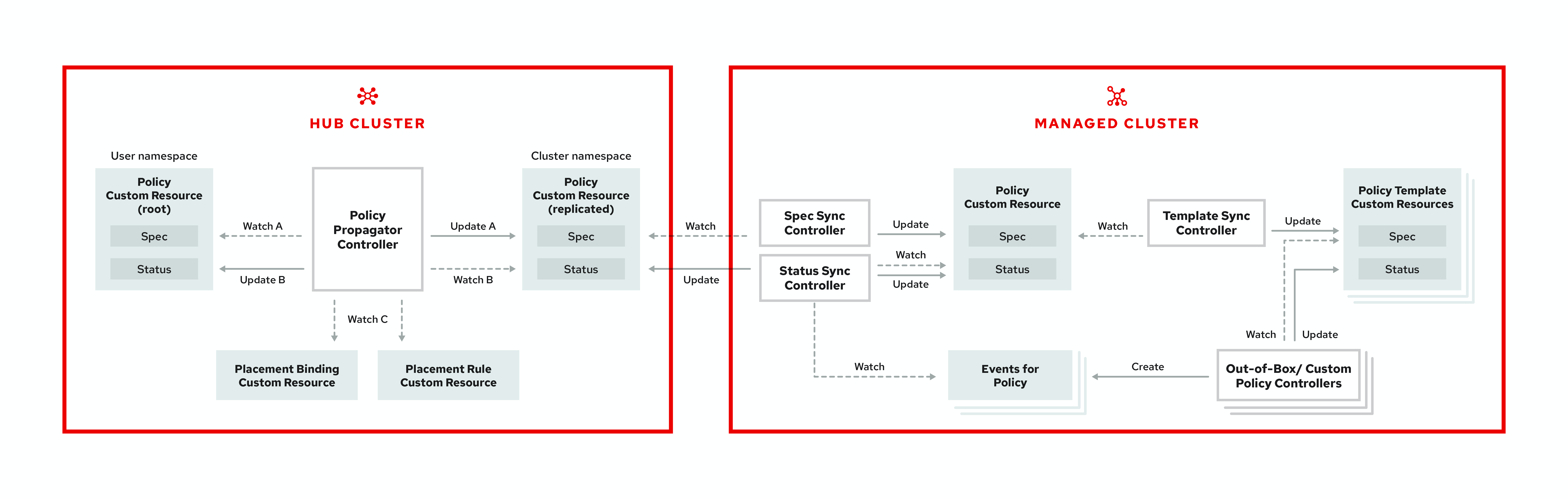

Enhance the security for your cluster with the Red Hat Advanced Cluster Management for Kubernetes governance lifecycle. The product governance lifecycle is based on defined policies, processes, and procedures to manage security and compliance from a central interface page. View the following diagram of the governance architecture:

The governance architecture is composed of the following components:

- Governance and risk dashboard: Provides a summary of your cloud governance and risk details, which include policy and cluster violations.

  Notes:

  - When a policy is propagated to a managed cluster, the replicated policy is named namespaceName.policyName. When you create a policy, make sure that the length of namespaceName.policyName does not exceed 63 characters due to the Kubernetes limit for object names.

  - When you search for a policy in the hub cluster, you might also receive the name of the replicated policy on your managed cluster. For example, if you search for policy-dhaz-cert, the following policy name from the hub cluster might appear: default.policy-dhaz-cert.

- Policy-based governance framework: Supports policy creation and deployment to various managed clusters based on attributes associated with clusters, such as a geographical region. See the policy-collection repository to view examples of the predefined policies, and instructions on deploying policies to your cluster. You can also contribute custom policy controllers and policies.

- Policy controller: Evaluates one or more policies on the managed cluster against your specified control and generates Kubernetes events for violations. Violations are propagated to the hub cluster. Policy controllers that are included in your installation are the following: Kubernetes configuration, Certificate, and IAM. You can also create a custom policy controller.

- Open source community: Supports community contributions with a foundation of the Red Hat Advanced Cluster Management policy framework. Policy controllers and third-party policies are also a part of the open-cluster-management/policy-collection repository. Learn how to contribute and deploy policies using GitOps. For more information, see Deploy policies using GitOps. Learn how to integrate third-party policies with Red Hat Advanced Cluster Management for Kubernetes. For more information, see Integrate third-party policy controllers.
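The 63-character limit on the replicated policy name can be checked before you create a policy. The following is a minimal sketch; the function name `replicated_policy_name_ok` is hypothetical, and the check only joins the namespace and the policy name with a period and measures the result:

```shell
# Sketch: succeed when namespaceName.policyName fits within 63 characters.
# replicated_policy_name_ok is a hypothetical helper.
replicated_policy_name_ok() {
  replicated="$1.$2"            # namespaceName.policyName
  [ "${#replicated}" -le 63 ]
}
```

For example, `replicated_policy_name_ok default policy-dhaz-cert` succeeds because `default.policy-dhaz-cert` is 24 characters long.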

Learn about the structure of a Red Hat Advanced Cluster Management for Kubernetes policy framework, and how to use the Red Hat Advanced Cluster Management for Kubernetes Governance and risk dashboard.

2.2. Policy overview

Use the Red Hat Advanced Cluster Management for Kubernetes security policy framework to create custom policy controllers and other policies. Kubernetes custom resource definition (CRD) instances are used to create policies. For more information about CRDs, see Extend the Kubernetes API with CustomResourceDefinitions.

Each Red Hat Advanced Cluster Management for Kubernetes policy can have one or more templates. For more details about the policy elements, view the Policy YAML table section on this page.

The policy requires a PlacementRule that defines the clusters that the policy document is applied to, and a PlacementBinding that binds the Red Hat Advanced Cluster Management for Kubernetes policy to the placement rule.

Important:

- You must create a PlacementRule to apply your policies to the managed cluster, and bind the PlacementRule with a PlacementBinding.

- You can create a policy in any namespace on the hub cluster except the cluster namespace. If you create a policy in the cluster namespace, it is deleted by Red Hat Advanced Cluster Management for Kubernetes.

- Each client and provider is responsible for ensuring that their managed cloud environment meets internal enterprise security standards for software engineering, secure engineering, resiliency, security, and regulatory compliance for workloads hosted on Kubernetes clusters. Use the governance and security capability to gain visibility and remediate configurations to meet standards.
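The relationship between a policy, a PlacementRule, and a PlacementBinding can be sketched as a pair of manifests. Everything in the following example is an assumption for illustration: the resource names, the `default` namespace, the `environment: dev` cluster label, and the API versions, which follow the samples commonly found in the policy-collection repository. The helper name `write_placement` is hypothetical.

```shell
# Sketch: write a PlacementRule and a PlacementBinding to a file.
# All names, labels, and API versions below are illustrative assumptions.
write_placement() {
  cat > "$1" <<'EOF'
apiVersion: apps.open-cluster-management.io/v1
kind: PlacementRule
metadata:
  name: placement-example
  namespace: default
spec:
  clusterConditions:
    - status: "True"
      type: ManagedClusterConditionAvailable
  clusterSelector:
    matchExpressions:
      - key: environment
        operator: In
        values:
          - dev
---
apiVersion: policy.open-cluster-management.io/v1
kind: PlacementBinding
metadata:
  name: binding-example
  namespace: default
placementRef:
  name: placement-example
  kind: PlacementRule
  apiGroup: apps.open-cluster-management.io
subjects:
  - name: policy-example
    kind: Policy
    apiGroup: policy.open-cluster-management.io
EOF
}
```

After you review the generated file, for example with `write_placement placement.yaml`, you might apply it to the hub cluster with `kubectl apply -f placement.yaml`. The binding connects the policy named in `subjects` to the clusters that the rule in `placementRef` selects.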

2.2.1. Policy YAML structure

When you create a policy, you must include required parameter fields and values. Depending on your policy controller, you might need to include other optional fields and values. View the following YAML structure for the explained parameter fields:

2.2.2. Policy YAML table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name for identifying the policy resource. |
| metadata.annotations | Optional. Used to specify a set of security details that describes the set of standards the policy is trying to validate. All annotations documented here are represented as a string that contains a comma-separated list. Note: You can view policy violations based on the standards and categories that you define for your policy on the Policies page, from the console. |
| annotations.policy.open-cluster-management.io/standards | The name or names of security standards the policy is related to. For example, National Institute of Standards and Technology (NIST) and Payment Card Industry (PCI). |
| annotations.policy.open-cluster-management.io/categories | A security control category represent specific requirements for one or more standards. For example, a System and Information Integrity category might indicate that your policy contains a data transfer protocol to protect personal information, as required by the HIPAA and PCI standards. |
| annotations.policy.open-cluster-management.io/controls | The name of the security control that is being checked. For example, the certificate policy controller. |
| spec.policy-templates | Required. Used to create one or more policies to apply to a managed cluster. |
| spec.disabled | Required. Set the value to |
| spec.remediationAction | Optional. Specifies the remediation of your policy. The parameter values are |

2.2.3. Policy sample file

See Managing security policies to create and update a policy. You can also enable and update Red Hat Advanced Cluster Management policy controllers to validate the compliance of your policies. Refer to Policy controllers. For more policy topics, see Governance and risk.

2.3. Policy controllers

Policy controllers monitor and report whether your cluster is compliant with a policy. Use the Red Hat Advanced Cluster Management for Kubernetes policy framework with the out-of-the-box policy templates to apply predefined policy controllers and policies. The policy controllers are Kubernetes custom resource definition (CRD) instances. For more information about CRDs, see Extend the Kubernetes API with CustomResourceDefinitions. Policy controllers remediate policy violations to make the cluster status compliant.

You can create custom policies and policy controllers with the product policy framework. See Creating a custom policy controller for more information.

Important: Only the configuration policy controller supports the enforce feature. You must manually remediate policies where the policy controller does not support the enforce feature.

View the following topics to learn more about the following Red Hat Advanced Cluster Management for Kubernetes policy controllers:

Refer to Governance and risk for more topics about managing your policies.

2.3.1. Kubernetes configuration policy controller

The configuration policy controller can be used to configure any Kubernetes resource and apply security policies across your clusters.

The configuration policy controller communicates with the local Kubernetes API server to get the list of your configurations that are in your cluster. For more information about CRDs, see Extend the Kubernetes API with CustomResourceDefinitions.

The configuration policy controller is created on the hub cluster during installation. The configuration policy controller supports the enforce feature and monitors the compliance of the following policies:

When the remediationAction for the configuration policy is set to enforce, the controller creates a replicated policy on the target managed clusters.

2.3.1.1. Configuration policy controller YAML structure

2.3.1.2. Configuration policy sample

2.3.1.3. Configuration policy YAML table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name of the policy. |
| spec | Required. Specifications of which configuration policy to monitor and how to remediate them. |
| spec.namespace | Required for namespaced objects or resources. The namespaces in the hub cluster that the policy is applied to. Enter at least one namespace for the |
| spec.remediationAction | Required. Specifies the remediation of your policy. Enter |
| spec.remediationAction.severity | Required. Specifies the severity when the policy is non-compliant. Use the following parameter values: |
| spec.remediationAction.complianceType | Required. Used to list expected behavior for roles and other Kubernetes object that must be evaluated or applied to the managed clusters. You must use the following verbs as parameter values: |

See the policy samples in the CM-Configuration-Management folder, which use NIST Special Publication 800-53 (Rev. 4) and are supported by Red Hat Advanced Cluster Management. To learn how policies are applied on your hub cluster, see Supported policies.

To learn how to create and customize policies, see Manage security policies. Refer to Policy controllers for more details about controllers.

2.3.2. Certificate policy controller

The certificate policy controller can be used to detect certificates that are close to expiring, certificates whose durations (in hours) are too long, and certificates that contain DNS names that fail to match specified patterns.

Configure and customize the certificate policy controller by updating the following parameters in your controller policy:

- minimumDuration

- minimumCADuration

- maximumDuration

- maximumCADuration

- allowedSANPattern

- disallowedSANPattern

Your policy might become non-compliant due to either of the following scenarios:

- When a certificate expires in less than the minimum duration of time or exceeds the maximum time.

- When DNS names fail to match the specified pattern.

The certificate policy controller is created on your managed cluster. The controller communicates with the local Kubernetes API server to get the list of secrets that contain certificates and determine all non-compliant certificates. For more information about CRDs, see Extend the Kubernetes API with CustomResourceDefinitions.

Certificate policy controller does not support the enforce feature.
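To get a feel for the minimum duration check outside the controller, you can approximate it locally with `openssl x509 -checkend`. The following is a sketch, not the controller implementation. The helper names are hypothetical, and the duration parser handles only integer `h`, `m`, and `s` units, which is a small subset of the Golang duration format:

```shell
# Sketch: convert a simple Go-style duration ("100h", "45m", "1h30m") to seconds.
# Only integer values with h, m, or s units are handled. Hypothetical helper.
duration_to_seconds() {
  rest="$1"
  total=0
  while [ -n "$rest" ]; do
    num=$(printf '%s' "$rest" | sed -n 's/^\([0-9][0-9]*\)[hms].*/\1/p')
    [ -n "$num" ] || return 1          # unsupported or malformed duration
    unit=$(printf '%s' "$rest" | sed -n 's/^[0-9][0-9]*\([hms]\).*/\1/p')
    case "$unit" in
      h) total=$((total + num * 3600)) ;;
      m) total=$((total + num * 60)) ;;
      s) total=$((total + num)) ;;
    esac
    rest=${rest#"$num$unit"}           # drop the component that was just parsed
  done
  echo "$total"
}

# Succeed when the certificate is still valid after the given duration.
# Arguments: certificate file, duration such as "100h". Hypothetical helper.
cert_valid_for() {
  secs=$(duration_to_seconds "$2") || return 2
  openssl x509 -checkend "$secs" -noout -in "$1" >/dev/null
}
```

For example, `cert_valid_for ./ingress.crt 100h` returns a non-zero status when the certificate expires within the next 100 hours, which is the situation that the minimum duration parameter is meant to flag.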

2.3.2.1. Certificate policy controller YAML structure

View the following example of a certificate policy and review the element in the YAML table:

2.3.2.1.1. Certificate policy controller YAML table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name to identify the policy. |
| metadata.namespace | Required. The namespaces within the managed cluster where the policy is created. |
| metadata.labels | Optional. In a certificate policy, the |
| spec | Required. Specifications of which certificates to monitor and refresh. |
| spec.namespaceSelector | Required. Managed cluster namespace to which you want to apply the policy. Enter parameter values for • When you create multiple certificate policies and apply them to the same managed cluster, each policy • If the |
| spec.remediationAction | Required. Specifies the remediation of your policy. Set the parameter value to |
| spec.severity | Optional. Informs the user of the severity when the policy is non-compliant. Use the following parameter values: |
| spec.minimumDuration | Required. When a value is not specified, the default value is |
| spec.minimumCADuration | Optional. Set a value to identify signing certificates that might expire soon with a different value from other certificates. If the parameter value is not specified, the CA certificate expiration is the value used for the |
| spec.maximumDuration | Optional. Set a value to identify certificates that have been created with a duration that exceeds your desired limit. The parameter uses the time duration format from Golang. See Golang Parse Duration for more information. |
| spec.maximumCADuration | Optional. Set a value to identify signing certificates that have been created with a duration that exceeds your defined limit. The parameter uses the time duration format from Golang. See Golang Parse Duration for more information. |
| spec.allowedSANPattern | Optional. A regular expression that must match every SAN entry that you have defined in your certificates. This parameter checks DNS names against patterns. See the Golang Regular Expression syntax for more information. |
| spec.disallowedSANPattern | Optional. A regular expression that must not match any SAN entries you have defined in your certificates. This parameter checks DNS names against patterns. See the Golang Regular Expression syntax for more information. |
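The two SAN pattern parameters combine as follows: every DNS name must match the allowed pattern, and no DNS name may match the disallowed pattern. The sketch below illustrates that rule with `grep -E`. It is an approximation, because POSIX extended regular expressions differ from the Golang syntax for anything beyond simple patterns, and the function name `sans_compliant` is hypothetical:

```shell
# Sketch: succeed when all DNS names satisfy both pattern rules.
# Arguments: allowed pattern, disallowed pattern, then one or more DNS names.
# An empty pattern disables that rule. sans_compliant is a hypothetical helper.
sans_compliant() {
  allowed="$1"; disallowed="$2"; shift 2
  for name in "$@"; do
    # Every name must match the allowed pattern.
    if [ -n "$allowed" ] && ! printf '%s\n' "$name" | grep -Eq -- "$allowed"; then
      return 1
    fi
    # No name may match the disallowed pattern.
    if [ -n "$disallowed" ] && printf '%s\n' "$name" | grep -Eq -- "$disallowed"; then
      return 1
    fi
  done
  return 0
}
```

For example, `sans_compliant '\.example\.com$' '^\*' api.example.com www.example.com` succeeds, while adding a wildcard name such as `*.example.com` to the list makes it fail on the disallowed pattern.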

2.3.2.2. Certificate policy sample

When your certificate policy controller is created on your hub cluster, a replicated policy is created on your managed cluster. See policy-certificate.yaml to view the certificate policy sample.

To learn how to manage a certificate policy, see Managing certificate policies. Refer to Policy controllers for more topics.

2.3.3. IAM policy controller

Identity and Access Management (IAM) policy controller can be used to receive notifications about IAM policies that are non-compliant. The compliance check is based on the parameters that you configure in the IAM policy.

The IAM policy controller checks for compliance of the number of cluster administrators that you allow in your cluster. IAM policy controller communicates with the local Kubernetes API server. For more information, see Extend the Kubernetes API with CustomResourceDefinitions.

The IAM policy controller runs on your managed cluster.

2.3.3.1. IAM policy YAML structure

View the following example of an IAM policy and review the parameters in the YAML table:

2.3.3.2. IAM policy YAML table

View the following parameter table for descriptions:

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name for identifying the policy resource. |
| spec | Required. Add configuration details for your policy. |
| spec.severity | Optional. Informs the user of the severity when the policy is non-compliant. Use the following parameter values: |
| spec.namespaceSelector | Required. The namespaces within the hub cluster that the policy is applied to. Enter at least one namespace for the |
| spec.remediationAction | Optional. Specifies the remediation of your policy. Enter |
| spec.maxClusterRoleBindingUsers | Required. Maximum number of IAM role bindings that are available before a policy is considered non-compliant. |

2.3.3.3. IAM policy sample

See policy-limitclusteradmin.yaml to view the IAM policy sample. To learn how to manage an IAM policy, see Managing IAM policies. Refer to Policy controllers for more topics.

2.3.4. Integrate third-party policy controllers

Integrate third-party policies to create custom annotations within the policy templates to specify one or more compliance standards, control categories, and controls.

You can also use the third-party policies from the policy-collection/community folder.

Learn to integrate the following third-party policies:

2.3.5. Creating a custom policy controller

Learn to write, apply, view, and update your custom policy controllers. You can create a YAML file for your policy controller to deploy onto your cluster. View the following sections to create a policy controller:

2.3.5.1. Writing a policy controller

Use the policy controller framework that is in the governance-policy-framework repository. Complete the following steps to create a policy controller:

Clone the governance-policy-framework repository by running the following command:

git clone git@github.com:stolostron/governance-policy-framework.git

Customize the controller policy by updating the policy schema definition. Your policy might resemble the following content:

Update the policy controller to watch for the SamplePolicy kind. Run the following commands:

for file in $(find . -name "*.go" -type f); do sed -i "" "s/SamplePolicy/g" $file; done
for file in $(find . -name "*.go" -type f); do sed -i "" "s/samplepolicy-controller/samplepolicy-controller/g" $file; done

Recompile and run the policy controller by completing the following steps:

- Log in to your cluster.

- Select the user icon, then click Configure client.

- Copy and paste the configuration information into your command line, and press Enter.

Run the following commands to apply your policy CRD and start the controller:

You might receive the following output that indicates that your controller runs:

{"level":"info","ts":1578503280.511274,"logger":"controller-runtime.manager","msg":"starting metrics server","path":"/metrics"}
{"level":"info","ts":1578503281.215883,"logger":"controller-runtime.controller","msg":"Starting Controller","controller":"samplepolicy-controller"}
{"level":"info","ts":1578503281.3203468,"logger":"controller-runtime.controller","msg":"Starting workers","controller":"samplepolicy-controller","worker count":1}
Waiting for policies to be available for processing…

Create a policy and verify that the controller retrieves it and applies the policy onto your cluster. Run the following command:

kubectl apply -f deploy/crds/policy.open-cluster-management.io_samplepolicies_crd.yaml

When the policy is applied, a message appears to indicate that the policy is monitored and detected by your custom controller. The message might resemble the following contents:

Check the status field for compliance details by running the following command:

kubectl describe SamplePolicy example-samplepolicy -n default

Your output might resemble the following contents:

Change the policy rules and policy logic to introduce new rules for your policy controller. Complete the following steps:

- Add new fields in your YAML file by updating the SamplePolicySpec. Your specification might resemble the following content:

- Update the SamplePolicySpec structure in the samplepolicy_controller.go file with new fields.

- Update the PeriodicallyExecSamplePolicies function in the samplepolicy_controller.go file with new logic to run the policy controller. To view an example of the PeriodicallyExecSamplePolicies function, see stolostron/multicloud-operators-policy-controller.

- Recompile and run the policy controller. See Writing a policy controller.

Your policy controller is functional.

2.3.5.2. Deploying your controller to the cluster

Deploy your custom policy controller to your cluster and integrate the policy controller with the Governance and risk dashboard. Complete the following steps:

Build the policy controller image by running the following commands:

make build
docker build . -f build/Dockerfile -t <username>/multicloud-operators-policy-controller:latest

Push the image to a repository of your choice. For example, run the following commands to push the image to Docker Hub:

docker login
docker push <username>/multicloud-operators-policy-controller

Configure kubectl to point to a cluster managed by Red Hat Advanced Cluster Management for Kubernetes.

Update the operator manifest to use the name of the image that you built, and update the namespace to watch for policies. The namespace must be the cluster namespace. Run the following commands:

sed -i "" 's|open-cluster-management/multicloud-operators-policy-controller|<username>/multicloud-operators-policy-controller|g' deploy/operator.yaml
sed -i "" 's|value: default|value: <namespace>|g' deploy/operator.yaml

Update the RBAC role by running the following commands:

sed -i "" 's|samplepolicies|testpolicies|g' deploy/cluster_role.yaml
sed -i "" 's|namespace: default|namespace: <namespace>|g' deploy/cluster_role_binding.yaml

Deploy your policy controller to your cluster:

- Set up a service account for the cluster by running the following command:

kubectl apply -f deploy/service_account.yaml -n <namespace>

- Set up RBAC for the operator by running the following commands:

kubectl apply -f deploy/role.yaml -n <namespace>
kubectl apply -f deploy/role_binding.yaml -n <namespace>

- Set up RBAC for your policy controller. Run the following commands:

kubectl apply -f deploy/cluster_role.yaml
kubectl apply -f deploy/cluster_role_binding.yaml

- Set up a custom resource definition (CRD) by running the following command:

kubectl apply -f deploy/crds/policies.open-cluster-management.io_samplepolicies_crd.yaml

- Deploy the multicloud-operator-policy-controller by running the following command:

kubectl apply -f deploy/operator.yaml -n <namespace>

- Verify that the controller is functional by running the following command:

kubectl get pod -n <namespace>

You must integrate your policy controller by creating a policy-template for the controller to monitor. For more information, see Creating a cluster security policy from the console.
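The `sed -i ""` form used in the preceding commands is the BSD (macOS) syntax. GNU sed treats the empty string as a separate argument, so the same commands typically fail on Linux. One portable alternative is to write the result to a temporary file and copy it back. The following is a sketch, and the helper name `replace_in_file` is hypothetical:

```shell
# Sketch: portable in-place substitution that works with both BSD and GNU sed.
# Arguments: a sed expression, then the file to edit.
# replace_in_file is a hypothetical helper.
replace_in_file() {
  expr="$1"; file="$2"
  tmp=$(mktemp) || return 1
  if sed "$expr" "$file" > "$tmp"; then
    cat "$tmp" > "$file"    # overwrite the contents, keep the file's permissions
    rm -f "$tmp"
  else
    rm -f "$tmp"
    return 1
  fi
}
```

For example, `replace_in_file 's|value: default|value: <namespace>|g' deploy/operator.yaml` performs the same substitution as the corresponding `sed -i ""` command.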

2.3.5.2.1. Scaling your controller deployment

Policy controller deployments do not support deletion or removal. You can scale your deployment to update which pods the deployment is applied to. Complete the following steps:

- Log in to your managed cluster.

- Navigate to the deployment for your custom policy controller.

- Scale the deployment. When you scale your deployment to zero pods, the policy controller deployment is disabled.
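From the command line, the scaling step can be sketched with `kubectl scale`. The wrapper name `scale_policy_controller` and the `KUBECTL` override variable are hypothetical conveniences, and the deployment name and namespace are values that you supply:

```shell
# Sketch: scale a policy controller deployment; the replica count defaults to 0.
# Arguments: deployment name, namespace, optional replica count.
# Set KUBECTL=echo to preview the command without running it.
# scale_policy_controller and KUBECTL are hypothetical names.
scale_policy_controller() {
  deployment="$1"; namespace="$2"; replicas="${3:-0}"
  "${KUBECTL:-kubectl}" scale deployment "$deployment" --replicas="$replicas" -n "$namespace"
}
```

For example, `scale_policy_controller <deployment-name> <namespace>` scales the deployment to zero pods, and passing `1` as a third argument scales it back up.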

For more information on deployments, see OpenShift Container Platform Deployments.

Your policy controller is deployed and integrated on your cluster. To view the product policy controllers, see Policy controllers.

2.4. Supported policies

View the supported policies to learn how to define rules, processes, and controls on the hub cluster when you create and manage policies in Red Hat Advanced Cluster Management for Kubernetes.

Note: You can copy and paste an existing policy into the Policy YAML. The values for the parameter fields are automatically entered when you paste your existing policy. You can also search the contents in your policy YAML file with the search feature.

View the following policy samples to learn how specific policies are applied:

Refer to Governance and risk for more topics.

2.4.1. Memory usage policy

Kubernetes configuration policy controller monitors the status of the memory usage policy. Use the memory usage policy to limit or restrict your memory and compute usage. For more information, see Limit Ranges in the Kubernetes documentation. Learn more details about the memory usage policy structure in the following sections.

2.4.1.1. Memory usage policy YAML structure

Your memory usage policy might resemble the following YAML file:

2.4.1.2. Memory usage policy table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name for identifying the policy resource. |
| metadata.namespaces | Optional. |
| spec.namespace | Required. The namespaces within the hub cluster that the policy is applied to. Enter parameter values for |
| remediationAction | Optional. Specifies the remediation of your policy. The parameter values are |
| disabled | Required. Set the value to |
| spec.complianceType | Required. Set the value to |
| spec.object-template | Optional. Used to list any other Kubernetes object that must be evaluated or applied to the managed clusters. |

2.4.1.3. Memory usage policy sample

See the policy-limitmemory.yaml to view a sample of the policy. View Managing memory usage policies for more information. Refer to Kubernetes configuration policy controller to view other configuration policies that are monitored by the controller.

2.4.2. Namespace policy

Kubernetes configuration policy controller monitors the status of your namespace policy. Apply the namespace policy to define specific rules for your namespace. Learn more details about the namespace policy structure in the following sections.

2.4.2.1. Namespace policy YAML structure

2.4.2.2. Namespace policy YAML table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name for identifying the policy resource. |
| metadata.namespaces | Optional. |
| spec.namespace | Required. The namespaces within the hub cluster that the policy is applied to. Enter parameter values for |
| remediationAction | Optional. Specifies the remediation of your policy. The parameter values are |
| disabled | Required. Set the value to |
| spec.complianceType | Required. Set the value to |
| spec.object-template | Optional. Used to list any other Kubernetes object that must be evaluated or applied to the managed clusters. |

2.4.2.3. Namespace policy sample

See policy-namespace.yaml to view the policy sample.

View Managing namespace policies for more information. Refer to Kubernetes configuration policy controller to learn about other configuration policies.

2.4.3. Image vulnerability policy

Apply the image vulnerability policy to detect if container images have vulnerabilities by leveraging the Container Security Operator. The policy installs the Container Security Operator on your managed cluster if it is not installed.

The image vulnerability policy is checked by the Kubernetes configuration policy controller. For more information about the Security Operator, see the Container Security Operator from the Quay repository.

Note: Image vulnerability policy is not functional during a disconnected installation.

2.4.3.1. Image vulnerability policy YAML structure
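
As a minimal sketch, an image vulnerability policy might combine two configuration policies: one that requires the Container Security Operator subscription, and one that reports any `ImageManifestVuln` object. The policy names, the `openshift-operators` namespace, and the `redhat-operators` catalog source are assumptions for illustration and are not taken from this document. Verify them against your cluster catalog.

```yaml
# Sketch only: names, namespaces, and the catalog source are assumptions.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-imagemanifestvuln    # hypothetical policy name
  namespace: default                # hypothetical hub cluster namespace
spec:
  remediationAction: inform
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: policy-imagemanifestvuln-sub
        spec:
          remediationAction: inform
          severity: high
          object-templates:
            - complianceType: musthave      # the operator subscription must exist
              objectDefinition:
                apiVersion: operators.coreos.com/v1alpha1
                kind: Subscription
                metadata:
                  name: container-security-operator
                  namespace: openshift-operators
                spec:
                  installPlanApproval: Automatic
                  name: container-security-operator
                  source: redhat-operators
                  sourceNamespace: openshift-marketplace
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: policy-imagemanifestvuln-example
        spec:
          remediationAction: inform
          severity: high
          namespaceSelector:
            include: ["*"]
            exclude: ["kube-*"]
          object-templates:
            - complianceType: mustnothave   # any reported vulnerability is a violation
              objectDefinition:
                apiVersion: secscan.quay.redhat.com/v1alpha1
                kind: ImageManifestVuln
```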

2.4.3.2. Image vulnerability policy YAML table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name for identifying the policy resource. |
| metadata.namespaces | Optional. |
| spec.namespace | Required. The namespaces within the hub cluster that the policy is applied to. Enter parameter values for |
| remediationAction | Optional. Specifies the remediation of your policy. The parameter values are |
| disabled | Required. Set the value to |
| spec.complianceType | Required. Set the value to |
| spec.object-template | Optional. Used to list any other Kubernetes object that must be evaluated or applied to the managed clusters. |

2.4.3.3. Image vulnerability policy sample

See policy-imagemanifestvuln.yaml to view the policy sample. View Managing image vulnerability policies for more information. Refer to Kubernetes configuration policy controller to view other configuration policies that are monitored by the configuration controller.

2.4.4. Pod policy

Kubernetes configuration policy controller monitors the status of your pod policies. Apply the pod policy to define the container rules for your pods. A pod must exist in your cluster to use this information.

2.4.4.1. Pod policy YAML structure
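
As a minimal sketch, a pod policy might resemble the following YAML, which requires a pod with a specific container definition. The names `policy-pod`, `policy-pod-example`, and `sample-nginx-pod`, the `nginx:1.18.0` image, and the namespace selector values are illustrative assumptions. The field nesting follows a `Policy` that wraps a `ConfigurationPolicy` and might differ from the table for your release.

```yaml
# Sketch only: names, image, and selector values are illustrative assumptions.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-pod                  # hypothetical policy name
  namespace: default                # hypothetical hub cluster namespace
spec:
  remediationAction: inform
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: policy-pod-example
        spec:
          remediationAction: inform
          severity: low
          namespaceSelector:
            include: ["default"]
            exclude: ["kube-*"]
          object-templates:
            - complianceType: musthave    # a matching pod must exist
              objectDefinition:
                apiVersion: v1
                kind: Pod
                metadata:
                  name: sample-nginx-pod  # hypothetical pod name
                spec:
                  containers:
                    - name: nginx
                      image: nginx:1.18.0
                      ports:
                        - containerPort: 80
```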

2.4.4.2. Pod policy table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name for identifying the policy resource. |
| metadata.namespaces | Optional. |
| spec.namespace | Required. The namespaces within the hub cluster that the policy is applied to. Enter parameter values for |
| remediationAction | Optional. Specifies the remediation of your policy. The parameter values are |
| disabled | Required. Set the value to |
| spec.complianceType | Required. Set the value to |
| spec.object-template | Optional. Used to list any other Kubernetes object that must be evaluated or applied to the managed clusters. |

2.4.4.3. Pod policy sample

See policy-pod.yaml to view the policy sample. To learn how to manage a pod policy, see Managing pod policies.

Refer to Kubernetes configuration policy controller to view other configuration policies that are monitored by the configuration controller. See Manage security policies to manage other policies.

2.4.5. Pod security policy

Kubernetes configuration policy controller monitors the status of the pod security policy. Apply a pod security policy to secure pods and containers. For more information, see Pod Security Policies in the Kubernetes documentation. Learn more details about the pod security policy structure in the following sections.

2.4.5.1. Pod security policy YAML structure
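
As a minimal sketch, a pod security policy might resemble the following YAML. The names `policy-podsecuritypolicy` and `sample-restricted-psp` and every rule value are illustrative assumptions, not recommendations. The example uses the `policy/v1beta1` `PodSecurityPolicy` API, which exists only on Kubernetes versions earlier than 1.25.

```yaml
# Sketch only: names and rule values are illustrative assumptions.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-podsecuritypolicy    # hypothetical policy name
  namespace: default                # hypothetical hub cluster namespace
spec:
  remediationAction: inform
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: policy-podsecuritypolicy-example
        spec:
          remediationAction: inform
          severity: high
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: policy/v1beta1   # removed in Kubernetes 1.25
                kind: PodSecurityPolicy
                metadata:
                  name: sample-restricted-psp
                spec:
                  privileged: false          # disallow privileged pods
                  allowPrivilegeEscalation: false
                  hostNetwork: false
                  hostIPC: false
                  hostPID: false
                  volumes: ["configMap", "emptyDir", "secret"]
                  runAsUser:
                    rule: MustRunAsNonRoot
                  seLinux:
                    rule: RunAsAny
                  supplementalGroups:
                    rule: RunAsAny
                  fsGroup:
                    rule: RunAsAny
```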

2.4.5.2. Pod security policy table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name for identifying the policy resource. |
| metadata.namespaces | Optional. |
| spec.namespace | Required. The namespaces within the hub cluster that the policy is applied to. Enter parameter values for |
| remediationAction | Optional. Specifies the remediation of your policy. The parameter values are |
| disabled | Required. Set the value to |
| spec.complianceType | Required. Set the value to |
| spec.object-template | Optional. Used to list any other Kubernetes object that must be evaluated or applied to the managed clusters. |

2.4.5.3. Pod security policy sample

See policy-psp.yaml to view the sample policy. View Managing pod security policies for more information. Refer to Kubernetes configuration policy controller to view other configuration policies that are monitored by the controller.

2.4.6. Role policy

Kubernetes configuration policy controller monitors the status of role policies. Define roles in the object-template to set rules and permissions for specific roles in your cluster. Learn more details about the role policy structure in the following sections.

2.4.6.1. Role policy YAML structure
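
As a minimal sketch, a role policy might resemble the following YAML, which defines the exact rules that a role is expected to have. The names `policy-role`, `policy-role-example`, and `sample-role`, the rule contents, and the namespace selector values are illustrative assumptions. The `mustonlyhave` compliance type is assumed here so that extra rules on the role count as a violation.

```yaml
# Sketch only: names, rules, and selector values are illustrative assumptions.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-role                 # hypothetical policy name
  namespace: default                # hypothetical hub cluster namespace
spec:
  remediationAction: inform
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: policy-role-example
        spec:
          remediationAction: inform
          severity: high
          namespaceSelector:
            include: ["default"]
            exclude: ["kube-*"]
          object-templates:
            - complianceType: mustonlyhave   # role must match exactly
              objectDefinition:
                apiVersion: rbac.authorization.k8s.io/v1
                kind: Role
                metadata:
                  name: sample-role          # hypothetical role name
                rules:
                  - apiGroups: ["apps"]
                    resources: ["deployments"]
                    verbs: ["get", "list", "watch"]
```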

2.4.6.2. Role policy table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name for identifying the policy resource. |
| metadata.namespaces | Optional. |
| spec.namespace | Required. The namespaces within the hub cluster that the policy is applied to. Enter parameter values for |
| remediationAction | Optional. Specifies the remediation of your policy. The parameter values are |
| disabled | Required. Set the value to |
| spec.complianceType | Required. Set the value to |
| spec.object-template | Optional. Used to list any other Kubernetes object that must be evaluated or applied to the managed clusters. |

2.4.6.3. Role policy sample

Apply a role policy to set rules and permissions for specific roles in your cluster. For more information on roles, see Role-based access control. To view a sample of a role policy, see policy-role.yaml.

To learn how to manage role policies, see Managing role policies. See the Kubernetes configuration policy controller to view other configuration policies that are monitored by the controller.

2.4.7. Role binding policy

Kubernetes configuration policy controller monitors the status of your role binding policy. Apply a role binding policy to bind a policy to a namespace in your managed cluster. Learn more details about the role binding policy structure in the following sections.

2.4.7.1. Role binding policy YAML structure
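
As a minimal sketch, a role binding policy might resemble the following YAML, which requires a binding between a role and a user. The names `policy-rolebinding`, `policy-rolebinding-example`, `sample-rolebinding`, `sample-role`, and the `admin` user, along with the namespace selector values, are illustrative assumptions.

```yaml
# Sketch only: names, subjects, and selector values are illustrative assumptions.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-rolebinding          # hypothetical policy name
  namespace: default                # hypothetical hub cluster namespace
spec:
  remediationAction: inform
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: policy-rolebinding-example
        spec:
          remediationAction: inform
          severity: high
          namespaceSelector:
            include: ["default"]
            exclude: ["kube-*"]
          object-templates:
            - complianceType: musthave     # the binding must exist
              objectDefinition:
                apiVersion: rbac.authorization.k8s.io/v1
                kind: RoleBinding
                metadata:
                  name: sample-rolebinding
                roleRef:
                  apiGroup: rbac.authorization.k8s.io
                  kind: Role
                  name: sample-role        # hypothetical role to bind
                subjects:
                  - apiGroup: rbac.authorization.k8s.io
                    kind: User
                    name: admin            # hypothetical user
```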

2.4.7.2. Role binding policy table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name to identify the policy resource. |
| metadata.namespaces | Required. The namespace within the managed cluster where the policy is created. |
| spec | Required. Specifications of how compliance violations are identified and fixed. |
| metadata.name | Required. The name for identifying the policy resource. |
| metadata.namespaces | Optional. |
| spec.complianceType | Required. Set the value to |
| spec.namespace | Required. Managed cluster namespace to which you want to apply the policy. Enter parameter values for |
| spec.remediationAction | Required. Specifies the remediation of your policy. The parameter values are |
| spec.object-template | Required. Used to list any other Kubernetes object that must be evaluated or applied to the managed clusters. |

2.4.7.3. Role binding policy sample

See policy-rolebinding.yaml to view the policy sample. To learn how to manage a role binding policy, see Managing role binding policies. Refer to Kubernetes configuration policy controller to learn about other configuration policies. See Manage security policies to manage other policies.

2.4.8. Security Context Constraints policy

Kubernetes configuration policy controller monitors the status of your Security Context Constraints (SCC) policy. Apply an SCC policy to control permissions for pods by defining conditions in the policy. Learn more details about SCC policies in the following sections.

2.4.8.1. SCC policy YAML structure
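
As a minimal sketch, an SCC policy might resemble the following YAML, which requires a restrictive `SecurityContextConstraints` object on the managed cluster. The names `policy-scc`, `policy-scc-example`, and `sample-restricted-scc` and every constraint value are illustrative assumptions, not recommendations.

```yaml
# Sketch only: names and constraint values are illustrative assumptions.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-scc                  # hypothetical policy name
  namespace: default                # hypothetical hub cluster namespace
spec:
  remediationAction: inform
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: policy-scc-example
        spec:
          remediationAction: inform
          severity: high
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: security.openshift.io/v1
                kind: SecurityContextConstraints   # cluster-scoped; fields are top-level
                metadata:
                  name: sample-restricted-scc
                allowPrivilegedContainer: false
                allowPrivilegeEscalation: false
                allowHostNetwork: false
                allowHostPorts: false
                allowHostPID: false
                allowHostIPC: false
                allowHostDirVolumePlugin: false
                readOnlyRootFilesystem: false
                requiredDropCapabilities: ["KILL", "MKNOD", "SETUID", "SETGID"]
                runAsUser:
                  type: MustRunAsRange
                seLinuxContext:
                  type: MustRunAs
                fsGroup:
                  type: MustRunAs
                supplementalGroups:
                  type: RunAsAny
                volumes: ["configMap", "emptyDir", "persistentVolumeClaim", "secret"]
```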

2.4.8.2. SCC policy table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name to identify the policy resource. |
| metadata.namespace | Required. The namespace within the managed cluster where the policy is created. |
| spec.complianceType | Required. Set the value to |
| spec.remediationAction | Required. Specifies the remediation of your policy. The parameter values are |
| spec.namespace | Required. Managed cluster namespace to which you want to apply the policy. Enter parameter values for |
| spec.object-template | Required. Used to list any other Kubernetes object that must be evaluated or applied to the managed clusters. |

For explanations on the contents of an SCC policy, see Managing Security Context Constraints from the OpenShift Container Platform documentation.

2.4.8.3. SCC policy sample

Apply a Security Context Constraints (SCC) policy to control permissions for pods by defining conditions in the policy. For more information, see Managing Security Context Constraints (SCC).

See policy-scc.yaml to view the policy sample. To learn how to manage an SCC policy, see Managing Security Context Constraints policies.

Refer to Kubernetes configuration policy controller to learn about other configuration policies. See Manage security policies to manage other policies.

2.4.9. ETCD encryption policy

Apply the etcd-encryption policy to detect or enable encryption of sensitive data in the ETCD data store. Kubernetes configuration policy controller monitors the status of the etcd-encryption policy. For more information, see Encrypting etcd data in the OpenShift Container Platform documentation.

Note: The ETCD encryption policy only supports Red Hat OpenShift Container Platform 4 and later.

Learn more details about the etcd-encryption policy structure in the following sections:

2.4.9.1. ETCD encryption policy YAML structure

Your etcd-encryption policy might resemble the following YAML file:
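
The following minimal sketch assumes a `Policy` that wraps a `ConfigurationPolicy`, which in turn requires the cluster `APIServer` resource to use `aescbc` encryption. The names `policy-etcdencryption` and `enable-etcd-encryption` and the `default` namespace are illustrative assumptions.

```yaml
# Sketch only: policy names and the hub namespace are illustrative assumptions.
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-etcdencryption       # hypothetical policy name
  namespace: default                # hypothetical hub cluster namespace
spec:
  remediationAction: inform         # use enforce to enable encryption
  disabled: false
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: enable-etcd-encryption
        spec:
          remediationAction: inform
          severity: high
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: config.openshift.io/v1
                kind: APIServer
                metadata:
                  name: cluster     # the singleton OpenShift API server configuration
                spec:
                  encryption:
                    type: aescbc
```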

2.4.9.2. ETCD encryption policy table

| Field | Description |
|---|---|
| apiVersion | Required. Set the value to |
| kind | Required. Set the value to |
| metadata.name | Required. The name for identifying the policy resource. |
| metadata.namespaces | Optional. |
| spec.namespace | Required. The namespaces within the hub cluster that the policy is applied to. Enter parameter values for |
| remediationAction | Optional. Specifies the remediation of your policy. The parameter values are |
| disabled | Required. Set the value to |
| spec.complianceType | Required. Set the value to |
| spec.object-template | Optional. Used to list any other Kubernetes object that must be evaluated or applied to the managed clusters. See Encrypting etcd data in the OpenShift Container Platform documentation. |

2.4.9.3. ETCD encryption policy sample

See policy-etcdencryption.yaml for the policy sample. View Managing ETCD encryption policies for more information. Refer to Kubernetes configuration policy controller to view other configuration policies that are monitored by the controller.

2.4.10. Integrating gatekeeper constraints and constraint templates

Gatekeeper is a validating webhook that enforces custom resource definition (CRD) based policies that are run with the Open Policy Agent (OPA). You can install gatekeeper on your cluster by using the gatekeeper operator policy. Gatekeeper policy can be used to evaluate Kubernetes resource compliance. You can leverage OPA as the policy engine, and use Rego as the policy language.

The gatekeeper policy is created as a Kubernetes configuration policy in Red Hat Advanced Cluster Management. Gatekeeper policies include constraint templates (ConstraintTemplates) and Constraints, audit templates, and admission templates. For more information, see the Gatekeeper upstream repository.

Red Hat Advanced Cluster Management applies the following constraint templates in your Red Hat Advanced Cluster Management gatekeeper policy: