Clusters

Read more to learn how to create, import, and manage clusters across cloud providers.

Abstract

Chapter 1. Managing your clusters

Learn how to create, import, and manage clusters across cloud providers by using the Red Hat Advanced Cluster Management for Kubernetes console. Learn how to manage clusters across providers in the following topics:

- Supported providers

- Scaling managed clusters

- Release images

- Creating and modifying bare metal assets

- Creating an infrastructure environment

- Managing credentials overview

- Creating a cluster

- Importing a target managed cluster to the hub cluster

- Creating a cluster in a proxy environment

- Enabling cluster proxy add-ons

- Configuring a specific cluster management role

- Managing cluster labels

- Creating and managing ManagedClusterSets (Technology Preview)

- Using ManagedClusterSets with Placement

- Managing cluster pools (Technology Preview)

- Configuring Ansible Tower tasks to run on managed clusters

- Claiming clusters from cluster pools

- Using hosted control plane clusters (Technology Preview)

- Discovery introduction

- Upgrading your cluster

- Removing a cluster from management

- Cluster backup and restore operator

1.1. Cluster lifecycle architecture

Red Hat Advanced Cluster Management for Kubernetes has two main types of clusters: hub clusters and managed clusters.

The hub cluster is the main cluster with Red Hat Advanced Cluster Management for Kubernetes installed on it. You can create, manage and monitor other Kubernetes clusters with the hub cluster.

The managed clusters are Kubernetes clusters that are managed by the hub cluster. You can create some clusters by using the Red Hat Advanced Cluster Management hub cluster, while you can also import existing clusters to be managed by the hub cluster.

When you create a managed cluster using Red Hat Advanced Cluster Management, the cluster is created using the Red Hat OpenShift Container Platform cluster installer with the Hive resource. You can find more information about the process of installing clusters with the OpenShift Container Platform installer by reading OpenShift Container Platform installation overview in the OpenShift Container Platform documentation.

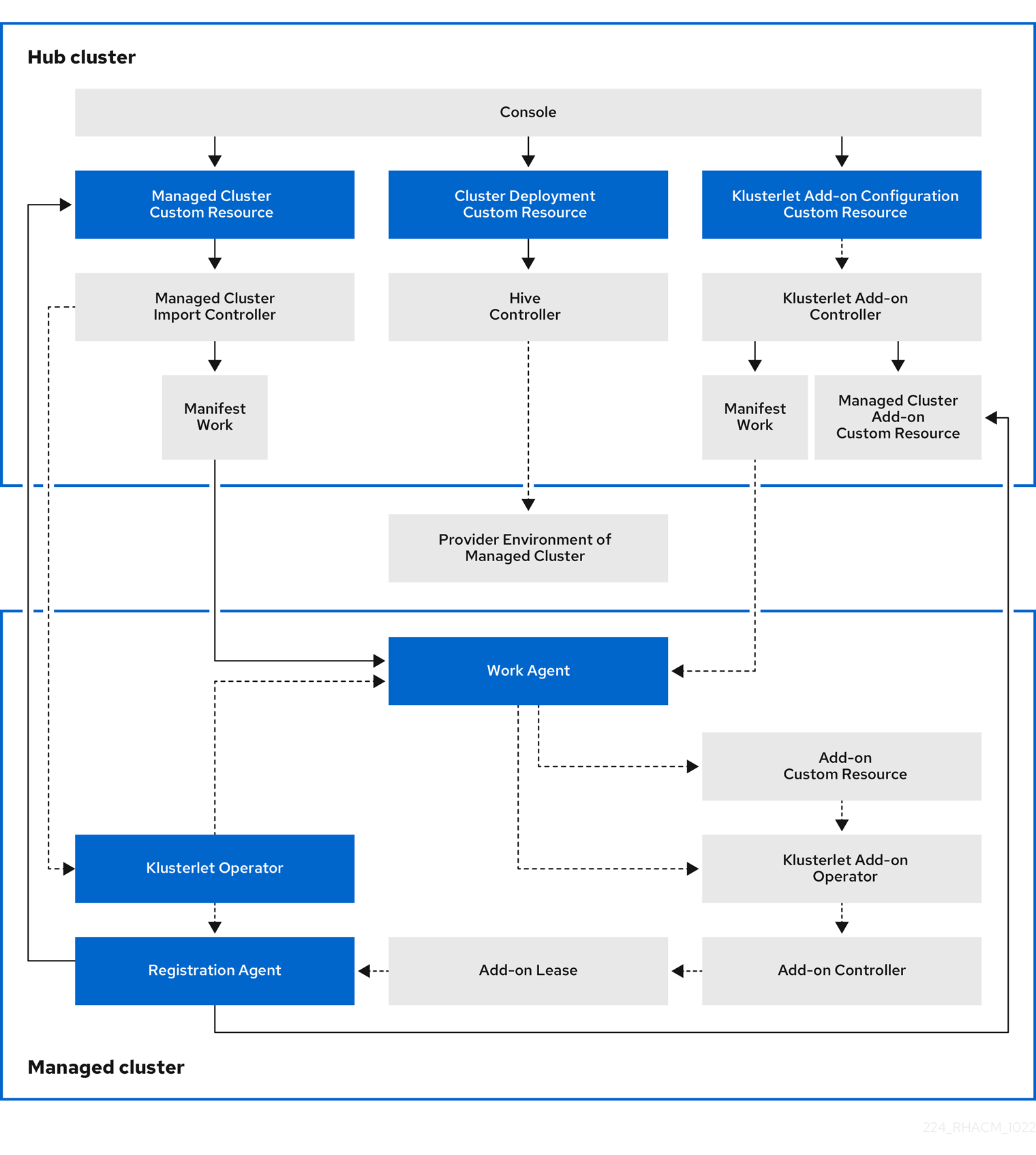

The following diagram shows the components that are installed with Red Hat Advanced Cluster Management for cluster management:

The components of the cluster lifecycle management architecture include the following items:

Components on the hub cluster:

- Console: Provides a web-based interface to manage the cluster lifecycle of the Red Hat Advanced Cluster Management managed clusters.

- Hive Controller: Provisions the clusters that you create with Red Hat Advanced Cluster Management. The Hive Controller also detaches and destroys managed clusters that were created by Red Hat Advanced Cluster Management.

- Managed Cluster Import Controller: Deploys the klusterlet operator to the managed clusters.

- Klusterlet Add-on Controller: Deploys the klusterlet add-on operator to managed clusters.

Components on the managed cluster:

- Klusterlet Operator: Deploys the registration and work controllers on the managed cluster.

Registration Agent: Registers the managed cluster with the hub cluster. The following permissions are automatically created to allow the managed cluster to access the hub cluster:

Clusterrole

- Allows the agent to rotate its certificate

-

Allows the agent to

get/list/update/watchthe clusters that the hub cluster manages - Allows the agent to update the status of the clusters that the hub cluster manages

Role created in the hub cluster namespace of the hub cluster

-

Allows the managed cluster Registration Agent to

getorupdatethecoordination.k8s.iolease -

Allows the agent to

get/list/watchthe managed cluster add-on - Allows the agent to update the status of managed cluster add-ons

-

Allows the managed cluster Registration Agent to

Work Agent: Applies the manifest work to the managed cluster. The following permission is automatically created to allow the managed cluster to access the hub cluster:

Role created in the hub cluster namespace of the hub cluster

- Allows the Work Agent to send events to the hub cluster

-

Allows the agent to

get/list/watch/updatethemanifestworksresource -

Allows the agent to update the status of

manifestworksresource

1.2. Scaling managed clusters (Technology Preview)

For clusters that were created by Red Hat Advanced Cluster Management, you can customize and resize your managed cluster specifications, such as virtual machine sizes and number of nodes. To scale managed clusters that were imported from other providers, see Provider managed clusters scaling.

Technology Preview: Many clusters that are managed by Red Hat Advanced Cluster Management for Kubernetes can be scaled by using Red Hat Advanced Cluster Management console or command line, and the MachinePool resource.

-

Using the

MachinePoolresource is a feature that is not supported for bare metal clusters that were created by Red Hat Advanced Cluster Management. -

A

MachinePoolresource is a Kubernetes resource on the hub cluster that groups theMachineSetresources together on the managed cluster. -

The

MachinePoolresource uniformly configures a set of machine resources, including zone configurations, instance type, and root storage. -

With

MachinePool, you can manually configure the desired number of nodes or configure auto-scaling of nodes on the managed cluster.

1.2.1. Autoscaling

Configuring autoscaling provides the flexibility of your cluster to scale as needed to lower your cost of resources by scaling down when traffic is low, and by scaling up to ensure that there are enough resources when there is a higher demand for resources.

1.2.1.1. Enabling autoscaling

To enable autoscaling on your

MachinePoolresources using the Red Hat Advanced Cluster Management console, complete the following steps:- In the Red Hat Advanced Cluster Management navigation, select Infrastructure > Clusters.

- Click the name of your target cluster and select the Machine pools tab.

- From the machine pools page, select Enable autoscale from the Options menu for the target machine pool.

Select the minimum and maximum number of machine set replicas. A machine set replica maps directly to a node on the cluster.

The changes might take several minutes to reflect on the console after you click Scale. You can view the status of the scaling operation by clicking View machines if the notification of the Machine pools tab.

To enable autoscaling on your

MachinePoolresources using the command line, complete the following steps:Enter the following command to view your list of machine pools:

oc get machinepools -n <managed-cluster-namespace>

oc get machinepools -n <managed-cluster-namespace>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

managed-cluster-namespacewith the namespace of your target managed cluster.Enter the following command to edit the YAML file for the machine pool:

oc edit machinepool <name-of-MachinePool-resource> -n <namespace-of-managed-cluster>

oc edit machinepool <name-of-MachinePool-resource> -n <namespace-of-managed-cluster>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

name-of-MachinePool-resourcewith the name of yourMachinePoolresource.Replace

namespace-of-managed-clusterwith the name of the namespace of your managed cluster.-

Delete the

spec.replicasfield from the YAML file. -

Add the

spec.autoscaling.minReplicassetting andspec.autoscaling.maxReplicasfields to the resource YAML. -

Add the minimum number of replicas to the

minReplicassetting. -

Add the maximum number of replicas into the

maxReplicassetting. - Save the file to submit the changes.

Autoscaling is enabled for the machine pool.

1.2.1.2. Disabling autoscaling

You can disable autoscaling by using the console or the command line.

To disable autoscaling by using the Red Hat Advanced Cluster Management console, complete the following steps:

- In the Red Hat Advanced Cluster Management navigation, select Infrastructure > Clusters.

- Click the name of your target cluster and select the Machine pools tab.

- From the machine pools page, select Disable autoscale from the Options menu for the target machine pool.

Select the number of machine set replicas that you want. A machine set replica maps directly with a node on the cluster.

It might take several minutes to display in the console after you click Scale. You can view the status of the scaling by clicking View machines in the notification on the Machine pools tab.

To disable autoscaling by using the command line, complete the following steps:

Enter the following command to view your list of machine pools:

oc get machinepools -n <managed-cluster-namespace>

oc get machinepools -n <managed-cluster-namespace>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

managed-cluster-namespacewith the namespace of your target managed cluster.Enter the following command to edit the YAML file for the machine pool:

oc edit machinepool <name-of-MachinePool-resource> -n <namespace-of-managed-cluster>

oc edit machinepool <name-of-MachinePool-resource> -n <namespace-of-managed-cluster>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

name-of-MachinePool-resourcewith the name of yourMachinePoolresource.Replace

namespace-of-managed-clusterwith the name of the namespace of your managed cluster.-

Delete the

spec.autoscalingfield from the YAML file. -

Add the

spec.replicasfield to the resource YAML. -

Add the number of replicas to the

replicassetting. - Save the file to submit the changes.

Autoscaling is disabled.

1.2.2. Manually scaling your cluster

If you do not want to enable autoscaling of your cluster, you can use the Red Hat Advanced Cluster Management console or the command line to change the static number of replicas that you want your cluster to maintain. This can help to increase or decrease the size, as needed.

To scale your

MachinePoolresources manually using the Red Hat Advanced Cluster Management console, complete the following steps:- In the Red Hat Advanced Cluster Management navigation, select Infrastructure > Clusters.

Click the name of your target cluster and select the Machine pools tab.

Note: If the value in the Autoscale field is

Enabledyou must first disable the autoscaling feature by completing the steps in Disabling autoscaling before continuing.- From the Options menu for the machine pool, select Scale machine pool.

- Adjust the number of machine set replicas to scale the machine pool.

To scale your

MachinePoolresources using the command line, complete the following steps:Enter the following command to view your list of machine pools:

oc get machinepools -n <managed-cluster-namespace>

oc get machinepools -n <managed-cluster-namespace>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

managed-cluster-namespacewith the namespace of your target managed cluster.Enter the following command to edit the YAML file for the machine pool:

oc edit machinepool <name-of-MachinePool-resource> -n <namespace-of-managed-cluster>

oc edit machinepool <name-of-MachinePool-resource> -n <namespace-of-managed-cluster>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

name-of-MachinePool-resourcewith the name of yourMachinePoolresource.Replace

namespace-of-managed-clusterwith the name of the namespace of your managed cluster.-

Update the

spec.replicasconfiguration in the YAML to the number of replicas. - Save the file to submit the changes.

Note: Imported managed clusters do not have the same resources as clusters that were created by Red Hat Advanced Cluster Management. For that reason, the procedures for scaling the clusters is different. See the product documentation for your provider, which contains information about how to scale the clusters for imported clusters.

For example, you can see Recommended cluster scaling practices and Manually scaling a MachineSet in the OpenShift Container Platform documentation that applies to the version that you are using.

1.3. Release images

When you create a cluster on a provider by using Red Hat Advanced Cluster Management for Kubernetes, you must specify a release image to use for the new cluster. The release image specifies which version of Red Hat OpenShift Container Platform is used to build the cluster.

The files that reference the release images are YAML files that are maintained in the acm-hive-openshift-releases GitHub repository. Red Hat Advanced Cluster Management uses those files to create the list of the available release images in the console. This includes the latest fast channel images from OpenShift Container Platform. The console only displays the latest release images for the three latest versions of OpenShift Container Platform. For example, you might see the following release images displayed in the console options:

- quay.io/openshift-release-dev/ocp-release:4.6.23-x86_64

- quay.io/openshift-release-dev/ocp-release:4.10.1-x86_64

Note: Only release images with the label of: visible: 'true' are available to select when creating clusters in the console. An example of this label in a ClusterImageSet resource is provided in the following content:

Additional release images are stored, but are not visible in the console. To view all of the available release images, run kubectl get clusterimageset in your CLI. Only the latest versions are in the console to encourage the creation of clusters with the latest release images. In some cases, you might need to create a cluster that is a specific version, which is why the older versions are available. Red Hat Advanced Cluster Management uses those files to create the list of the available release images in the console. This includes the latest fast channel images from OpenShift Container Platform.

The repository contains the clusterImageSets directory and the subscription directory, which are the directories that you use when working with the release images.

The clusterImageSets directory contains the following directories:

- Fast: Contains files that reference the latest versions of the release images for each OpenShift Container Platform version that is supported. The release images in this folder are tested, verified, and supported.

- Releases: Contains files that reference all of the release images for each OpenShift Container Platform version (stable, fast, and candidate channels) Note: These releases have not all been tested and determined to be stable.

- Stable: Contains files that reference the latest two stable versions of the release images for each OpenShift Container Platform version that is supported.

Note: By default, the current list of release images is updated one time an hour. After upgrading the product, it may take up to an hour for the list to reflect the recommended release image versions for the new version of the product.

You can curate your own ClusterImageSets in three ways:

The first step for any of the three ways is to disable the included subscription that automatically updates the latest fast channel images. The automatic curation of the latest fast ClusterImageSets can be disabled by using an installer parameter on the multiclusterhub resource. By toggling the spec.disableUpdateClusterImageSets parameter between true and false, the subscription installed with Red Hat Advanced Cluster Management is disabled or enabled, respectively. If you want to curate your own images, set the spec.disableUpdateClusterImageSets to true to disable the subscription.

Option 1: Specify the image reference for the specific ClusterImageSet that you want to use in the console when creating a cluster. Each new entry you specify persists and is available for all future cluster provisions. An example of an entry is: quay.io/openshift-release-dev/ocp-release:4.6.8-x86_64.

Option 2: Manually create and apply a ClusterImageSets YAML file from the acm-hive-openshift-releases GitHub repository.

Option 3: Follow the README.md in the acm-hive-openshift-releases GitHub repository to enable automatic updates of ClusterImageSets from a forked GitHub repository.

The subscription directory contains files that specify where the list of release images is pulled from.

The default release images for Red Hat Advanced Cluster Management are provided in a Quay.io directory.

The images are referenced by the files in the acm-hive-openshift-releases GitHub repository for release 2.5.

1.3.1. Creating a release image to deploy a cluster on a different architecture

You can create a cluster on an architecture that is different from the architecture of the hub cluster by manually creating a release image that contains the files for both architectures.

For example, you might need to create an x86_64 cluster from a hub cluster that is running on the ppc64le, aarch64, or s390x architecture. If you create the release image with both sets of files, the cluster creation succeeds because the new release image enables the OpenShift Container Platform release registry to provide a multi-architecture image manifest.

To create the release image, complete steps similar to the following example for your architecture type:

From the OpenShift Container Platform release registry, create a manifest list that includes

x86_64,s390x,aarch64, andppc64lerelease images.Pull the manifest lists for both architectures in your environment from the Quay repository using the following example commands:

podman pull quay.io/openshift-release-dev/ocp-release:4.10.1-x86_64 podman pull quay.io/openshift-release-dev/ocp-release:4.10.1-ppc64le podman pull quay.io/openshift-release-dev/ocp-release:4.10.1-s390x podman pull quay.io/openshift-release-dev/ocp-release:4.10.1-aarch64

podman pull quay.io/openshift-release-dev/ocp-release:4.10.1-x86_64 podman pull quay.io/openshift-release-dev/ocp-release:4.10.1-ppc64le podman pull quay.io/openshift-release-dev/ocp-release:4.10.1-s390x podman pull quay.io/openshift-release-dev/ocp-release:4.10.1-aarch64Copy to Clipboard Copied! Toggle word wrap Toggle overflow Log in to your private repository where you maintain your images:

podman login <private-repo>

podman login <private-repo>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

private-repowith the path to your repository.Add the release image manifest to your private repository by running the following commands that apply to your environment:

podman push quay.io/openshift-release-dev/ocp-release:4.10.1-x86_64 <private-repo>/ocp-release:4.10.1-x86_64 podman push quay.io/openshift-release-dev/ocp-release:4.10.1-ppc64le <private-repo>/ocp-release:4.10.1-ppc64le podman push quay.io/openshift-release-dev/ocp-release:4.10.1-s390x <private-repo>/ocp-release:4.10.1-s390x podman push quay.io/openshift-release-dev/ocp-release:4.10.1-aarch64 <private-repo>/ocp-release:4.10.1-aarch64

podman push quay.io/openshift-release-dev/ocp-release:4.10.1-x86_64 <private-repo>/ocp-release:4.10.1-x86_64 podman push quay.io/openshift-release-dev/ocp-release:4.10.1-ppc64le <private-repo>/ocp-release:4.10.1-ppc64le podman push quay.io/openshift-release-dev/ocp-release:4.10.1-s390x <private-repo>/ocp-release:4.10.1-s390x podman push quay.io/openshift-release-dev/ocp-release:4.10.1-aarch64 <private-repo>/ocp-release:4.10.1-aarch64Copy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

private-repowith the path to your repository.Create a manifest for the new information:

podman manifest create mymanifest

podman manifest create mymanifestCopy to Clipboard Copied! Toggle word wrap Toggle overflow Add references to both release images to the manifest list:

podman manifest add mymanifest <private-repo>/ocp-release:4.10.1-x86_64 podman manifest add mymanifest <private-repo>/ocp-release:4.10.1-ppc64le podman manifest add mymanifest <private-repo>/ocp-release:4.10.1-s390x podman manifest add mymanifest <private-repo>/ocp-release:4.10.1-aarch64

podman manifest add mymanifest <private-repo>/ocp-release:4.10.1-x86_64 podman manifest add mymanifest <private-repo>/ocp-release:4.10.1-ppc64le podman manifest add mymanifest <private-repo>/ocp-release:4.10.1-s390x podman manifest add mymanifest <private-repo>/ocp-release:4.10.1-aarch64Copy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

private-repowith the path to your repository.Merge the list in your manifest list with the existing manifest:

podman manifest push mymanifest docker://<private-repo>/ocp-release:4.10.1

podman manifest push mymanifest docker://<private-repo>/ocp-release:4.10.1Copy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

private-repowith the path to your repository.

On the hub cluster, create a release image that references the manifest in your repository.

Create a YAML file that contains information that is similar to the following example:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

private-repowith the path to your repository.Run the following command on your hub cluster to apply the changes:

oc apply -f <file-name>.yaml

oc apply -f <file-name>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

file-namewith the name of the YAML file that you just created.

- Select the new release image when you create your OpenShift Container Platform cluster.

- If you deploy the managed cluster using the Red Hat Advanced Cluster Management console, specify the architecture for the managed cluster in the Architecture field during the cluster creation process.

The creation process uses the merged release images to create the cluster.

1.3.2. Synchronizing available release images

The release images are updated frequently, so you might want to synchronize the list of release images to ensure that you can select the latest available versions. The release images are available in the acm-hive-openshift-releases GitHub repository for release 2.5.

There are three levels of stability of the release images:

| Category | Description |

| stable | Fully tested images that are confirmed to install and build clusters correctly. |

| fast | Partially tested, but likely less stable than a stable version. |

| candidate | Not tested, but the most current image. Might have some bugs. |

Complete the following steps to refresh the list:

-

If the installer-managed

acm-hive-openshift-releasessubscription is enabled, disable the subscription by setting the value ofdisableUpdateClusterImageSetstotruein themulticlusterhubresource. - Clone the acm-hive-openshift-releases GitHub repository for release 2.5.

Remove the subscription by entering a command that is similar to the following command:

oc delete -f subscribe/subscription-fast

oc delete -f subscribe/subscription-fastCopy to Clipboard Copied! Toggle word wrap Toggle overflow Connect to the stable release images and synchronize your Red Hat Advanced Cluster Management for Kubernetes hub cluster by entering the following command:

make subscribe-stable

make subscribe-stableCopy to Clipboard Copied! Toggle word wrap Toggle overflow Note: You can only run this

makecommand when you are using the Linux or MacOS operating system.After about one minute, the latest list of

stablerelease images is available.To synchronize and display the fast release images, enter the following command:

make subscribe-fast

make subscribe-fastCopy to Clipboard Copied! Toggle word wrap Toggle overflow Note: You can only run this

makecommand when you are using the Linux or MacOS operating system.About one minute after running the command, the list of available

stableandfastrelease images updates with the currently available images.To synchronize and display the

candidaterelease images, enter the following command:make subscribe-candidate

make subscribe-candidateCopy to Clipboard Copied! Toggle word wrap Toggle overflow Note: You can only run this

makecommand when you are using the Linux or MacOS operating system.About one minute after running the command, the list of available

stable,fast, andcandidaterelease images updates with the currently available images.

- View the list of currently available release images in the Red Hat Advanced Cluster Management console when you are creating a cluster.

You can unsubscribe from any of these channels to stop viewing the updates by entering a command in the following format:

oc delete -f subscribe/subscription-fast

oc delete -f subscribe/subscription-fastCopy to Clipboard Copied! Toggle word wrap Toggle overflow

1.3.3. Maintaining a custom list of release images when connected

You might want to ensure that you use the same release image for all of your clusters. To simplify, you can create your own custom list of release images that are available when creating a cluster. Complete the following steps to manage your available release images:

-

If the installer-managed

acm-hive-openshift-releasessubscription is enabled, disable it by setting the value ofdisableUpdateClusterImageSetstotruein themulticlusterhubresource. - Fork the acm-hive-openshift-releases GitHub repository 2.5 branch.

Update the

./subscribe/channel.yamlfile by changing thespec: pathnameto access your the GitHub name for your forked repository, instead ofstolostron. This step specifies where the hub cluster retrieves the release images. Your updated content should look similar to the following example:spec: type: Git pathname: https://github.com/<forked_content>/acm-hive-openshift-releases.git

spec: type: Git pathname: https://github.com/<forked_content>/acm-hive-openshift-releases.gitCopy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

forked_contentwith the path to your forked repository.Add the YAML files for the images that you want available when you create a cluster by using the Red Hat Advanced Cluster Management for Kubernetes console to the ./clusterImageSets/stable/* or ./clusterImageSets/fast/* directory.

Tip: You can retrieve the available YAML files from the main repository by merging changes into your forked repository.

- Commit and merge your changes to your forked repository.

To synchronize your list of fast release images after you have cloned the

acm-hive-openshift-releasesrepository, enter the following command to update the fast images:make subscribe-fast

make subscribe-fastCopy to Clipboard Copied! Toggle word wrap Toggle overflow Note: You can only run this

makecommand when you are using the Linux or MacOS operating system.After running this command, the list of available fast release images updates with the currently available images in about one minute.

By default, only the fast images are listed. To synchronize and display the stable release images, enter the following command:

make subscribe-stable

make subscribe-stableCopy to Clipboard Copied! Toggle word wrap Toggle overflow Note: You can only run this

makecommand when you are using the Linux or MacOS operating system.After running this command, the list of available stable release images updates with the currently available images in about 1 minute.

By default, Red Hat Advanced Cluster Management pre-loads a few ClusterImageSets. You can use the following commands to list what is available and remove the defaults.

oc get clusterImageSets oc delete clusterImageSet <clusterImageSet_NAME>

oc get clusterImageSets oc delete clusterImageSet <clusterImageSet_NAME>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Note: If you have not disabled the installer-managed automatic updates of the

ClusterImageSetsby setting the value ofdisableUpdateClusterImageSetstotruein themulticlusterhubresource, any images that you delete are recreated automatically.- View the list of currently available release images in the Red Hat Advanced Cluster Management console when you are creating a cluster.

1.3.4. Maintaining a custom list of release images while disconnected

In some cases, you need to maintain a custom list of release images when the hub cluster has no Internet connection. You can create your own custom list of release images that are available when creating a cluster. Complete the following steps to manage your available release images while disconnected:

- While you are on a connected system, navigate to the acm-hive-openshift-releases GitHub repository to access the cluster image sets that are available for version 2.5.

-

Copy the

clusterImageSetsdirectory to a system that can access the disconnected Red Hat Advanced Cluster Management for Kubernetes hub cluster. Add the mapping between the managed cluster and the disconnected repository with your cluster image sets by completing the following steps that fits your managed cluster:

-

For an OpenShift Container Platform managed cluster, see Configuring image registry repository mirroring for information about using your

ImageContentSourcePolicyobject to complete the mapping. -

For a managed cluster that is not an OpenShift Container Platform cluster, use the

ManageClusterImageRegistryCRD to override the location of the image sets. See Importing a cluster with a custom ManagedClusterImageRegistry CRD for information about how to override the cluster for the mapping.

-

For an OpenShift Container Platform managed cluster, see Configuring image registry repository mirroring for information about using your

-

Add the YAML files for the images that you want available when you create a cluster by using the Red Hat Advanced Cluster Management console by manually adding the

clusterImageSetYAML content. Modify the

clusterImageSetYAML files for the remaining OpenShift Container Platform release images to reference the correct offline repository where you store the images. Your updates should resemble the following example:Copy to Clipboard Copied! Toggle word wrap Toggle overflow Ensure that the images are loaded in the offline image registry that is referenced in the YAML file.

Create each of the

clusterImageSetsby entering the following command for each YAML file:oc create -f <clusterImageSet_FILE>

oc create -f <clusterImageSet_FILE>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

clusterImageSet_FILEwith the name of the cluster image set file. For example:oc create -f img4.9.9-x86_64.yaml

oc create -f img4.9.9-x86_64.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow After running this command for each resource you want to add, the list of available release images will be available.

- Alternately you can paste the image URL directly in the create cluster console in Red Hat Advanced Cluster Management. Adding the image URL creates new clusterImageSets if they do not exist.

- View the list of currently available release images in the Red Hat Advanced Cluster Management console when you are creating a cluster.

1.4. Creating and modifying bare metal assets

Deprecation notice: The procedure for creating bare metal clusters using bare metal assets is deprecated. See Creating a cluster in an on-premises environment for the recommended process.

Bare metal assets are virtual or physical servers that you configure to run your OpenShift Container Platform clusters. Red Hat Advanced Cluster Management for Kubernetes connects to a bare metal asset that your administrator creates. Then you can deploy the bare metal asset in a managed cluster.

The hub cluster inventory controller defines a custom resource definition (CRD) called BareMetalAsset, that holds the bare metal asset inventory record. When provisioning a managed cluster, the inventory controller reconciles BareMetalAsset inventory records with corresponding BareMetalHost resources in the managed cluster.

Red Hat Advanced Cluster Management uses BareMetalAsset CRs to provision cluster hardware based on records entered in a configuration management database (CMDB), or similar system. An external tool or automation polls the CMDB and uses the Red Hat Advanced Cluster Management API to create corresponding BareMetalAsset and corresponding Secret resources in a hub cluster for subsequent deployment in a managed cluster.

Use the following procedures to create and manage bare metal assets for clusters managed by Red Hat Advanced Cluster Management.

1.4.1. Prerequisites

You need the following prerequisites before creating a bare metal asset:

- A deployed Red Hat Advanced Cluster Management hub cluster on OpenShift Container Platform version 4.6, or later.

- Access for your Red Hat Advanced Cluster Management hub cluster to connect to the bare metal asset.

A configured bare metal asset, and log in credentials with the required permissions to log in and manage it.

Note: Credentials for your bare metal asset include the following items for the asset that are provided by your administrator: user name password Baseboard Management Controller (BMC) address boot NIC MAC address

1.4.2. Creating a bare metal asset with the console

To create a bare metal asset using the Red Hat Advanced Cluster Management for Kubernetes console, navigate to Infrastructure > Bare metal assets. Select Create bare metal asset and complete the procedure in the console.

The name for your bare metal asset identifies it when you create a cluster.

The bare metal asset, managed bare metal cluster, and its related secret must be in the same namespace.

+ Users who have access to this namespace can associate this asset to the cluster when creating a cluster.

The Baseboard Management Controller address is the controller that enables communication with the host. The following protocols are supported:

- IPMI, see IPMI 2.0 Specification for more information.

- iDRAC, see Support for Integrated Dell Remote Access Controller 9 (iDRAC9) for more information.

- iRMC, see Data Sheet: FUJITSU Software ServerView Suite integrated Remote Management Controller - iRMC S5 for more information.

- Redfish, see Redfish specification for more information.

The boot NIC MAC address is the MAC address of the network-connected NIC of the host, which is used to provision the host on the bare metal asset.

You can continue with Creating a cluster on bare metal.

1.4.3. Creating a bare metal asset using the CLI

Use a BareMetalAsset CR to create a bare metal asset for a particular namespace in the cluster. Each BareMetalAsset also has a corresponding Secret that contains the Baseboard Management Controller (BMC) credentials and the secret name in the same namespace.

1.4.3.1. Prerequisites

- Install Red Hat Advanced Cluster Management for Kubernetes on a hub cluster.

- Install the Red Hat OpenShift CLI (oc).

-

Log in as a user with

cluster-adminprivileges.

1.4.3.2. Create the bare metal asset

- Install and provision a bare metal asset in your environment.

- Power on the BMC, and note the IPMI or Redfish BMC address and MAC address for the hardware.

Create the following

BareMetalAssetandSecretCR, and save the file asbaremetalasset-cr.yaml:Copy to Clipboard Copied! Toggle word wrap Toggle overflow -

Replace

baremetalasset-machinewith the name of the machine where the bare metal asset is located. When created, theBareMetalHoston the managed cluster gets the same name as the correspondingBareMetalAsseton the hub cluster. TheBareMetalHostname should always match the correspondingBareMetalAssetname. -

Replace

baremetalasset-namespacewith the cluster namespace where the bare metal asset is created. -

Replace

out_of_band_ipandportwith the address and port for your bare metal asset. For Redfish addressing, use the following address format:redfish://<out-of-band-ip>/redfish/v1/Systems/1. -

Replace

rolewithworker,master, or leave it empty, depending on the machine role type. Therolesetting is used to match a bare metal asset to specific machine role types in the cluster.BareMetalAssetresources with a specified machine role type should not be used to fill another role. Therolevalue is used as the value for a label with keyinventory.open-cluster-management.io/role. This enables a cluster management application or user to query for inventory that is intended for a particular role. -

Replace

cluster_namewith the name of your cluster, which is used by the cluster management application or user to query for inventory that is associated with a particular cluster. Leave this value empty to create the bare metal asset without adding it to a cluster deployment. -

Replace

usernamewith the username for your secret. -

Replace

passwordwith the password for your secret.

-

Replace

Run the following command to create the

BareMetalAssetCR:oc create -f baremetalasset-cr.yaml

oc create -f baremetalasset-cr.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Check that the

BareMetalAssetis created successfully:oc get baremetalassets -A

oc get baremetalassets -ACopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output:

NAMESPACE NAME AGE ocp-example-bm baremetalasset-machine 2m ocp-example-bm csv-f24-h27-000-r630-master-1-1 4d21h

NAMESPACE NAME AGE ocp-example-bm baremetalasset-machine 2m ocp-example-bm csv-f24-h27-000-r630-master-1-1 4d21hCopy to Clipboard Copied! Toggle word wrap Toggle overflow

1.4.4. Bulk importing bare metal assets with the console

You can import bare metal assets in bulk using the Red Hat Advanced Cluster Management for Kubernetes console using a CSV formatted list.

1.4.4.1. Prerequisites

- Install Red Hat Advanced Cluster Management on a hub cluster that manages one or more spoke clusters.

- Install the OpenShift Container Platform CLI (oc).

-

Log in as a user with

cluster-adminprivileges.

1.4.4.2. Import the assets

To import a set of bare metal assets, complete the following steps:

- From the Red Hat Advanced Cluster Management console, select Cluster management > Bare metal assets in the navigation menu.

Select Import assets, and import the CSV file that contains the bare metal assets data. The CSV file must have the following header columns:

hostName, hostNamespace, bmcAddress, macAddress, role (optional), username, password

hostName, hostNamespace, bmcAddress, macAddress, role (optional), username, passwordCopy to Clipboard Copied! Toggle word wrap Toggle overflow

1.4.5. Modifying a bare metal asset

If you need to modify the settings for a bare metal asset, complete the following steps:

- In the Red Hat Advanced Cluster Management for Kubernetes console navigation, select: Infrastructure > Bare metal assets.

- Select the options menu for the asset that you want to modify in the table.

- Select Edit asset.

1.4.6. Removing a bare metal asset

When a bare metal asset is no longer used for any of the clusters, you can remove it from the list of available bare metal assets. Removing unused assets both simplifies your list of available assets, and prevents the accidental selection of that asset.

To remove a bare metal asset in the console, complete the following steps:

- In the Red Hat Advanced Cluster Management for Kubernetes console navigation, select: Infrastructure > Bare metal assets.

- Select the options menu for the asset that you want to remove in the table.

- Select Delete asset.

1.4.7. Using the REST API to create a bare metal asset

You can use the OpenShift Container Platform REST API to manage bare metal assets for use in your Red Hat Advanced Cluster Management cluster. This is useful when you have a separate CMDB application or database to manage the bare metal assets in your environment.

1.4.7.1. Prerequisites

- Install Red Hat Advanced Cluster Management for Kubernetes on a hub cluster.

- Install the OpenShift Container Platform CLI (oc).

-

Log in as a user with

cluster-adminprivileges.

1.4.7.2. Create the bare metal asset

To use the REST API to create a bare metal asset, do the following:

Obtain a login token for your hub cluster, and login to the cluster at the command line. For example:

oc login --token=<login_token> --server=https://<hub_cluster_api_url>:6443

oc login --token=<login_token> --server=https://<hub_cluster_api_url>:6443Copy to Clipboard Copied! Toggle word wrap Toggle overflow Modify the following curl command with the details of the bare metal asset that you want to add to the cluster, and run the command.

Copy to Clipboard Copied! Toggle word wrap Toggle overflow -

Replace

baremetalasset-namewith the name of the bare metal asset. When created, theBareMetalHoston the managed cluster gets the same name as the correspondingBareMetalAsseton the hub cluster. TheBareMetalHostname should always match the correspondingBareMetalAssetname. -

Replace

baremetalasset-namespacewith the cluster namespace where the bare metal asset is created. -

Replace

out_of_band_ipandportwith the address and port for your bare metal asset. For Redfish addressing, use the following address format:redfish://<out-of-band-ip>/redfish/v1/Systems/1. -

Replace

rolewithworker,master, or leave it empty, depending on the machine role type. Therolesetting is used to match a bare metal asset to specific machine role types in the cluster.BareMetalAssetresources with a specified machine role type should not be used to fill another role. Therolevalue is used as the value for a label with keyinventory.open-cluster-management.io/role. This enables a cluster management application or user to query for inventory that is intended for a particular role. Replace

cluster_namewith the name of your cluster, which is used by the cluster management application or user to query for inventory that is associated with a particular cluster. Leave this value empty to create the bare metal asset without adding it to a cluster deployment.Note: For the previous curl command, it is assumed that the API server is served over HTTPS and is accessed securely. In a development or test environment, you can pass the

--insecureparameter.

-

Replace

Tip: You can append --v=9 to an oc command to see the raw output of the resulting action. This can be useful for ascertaining the REST API route for an oc command.

1.5. Creating an infrastructure environment

You can use the Red Hat Advanced Cluster Management for Kubernetes console to create an infrastructure environment to manage your hosts and create clusters on those hosts.

Infrastructure environments support the following features:

- Zero-touch provisioning of clusters: Deploy clusters using a script. See Deploying distributed units at scale in a disconnected environment in the Red Hat OpenShift Container Platform documentation for more information.

- Late binding: Enable the host to be booted by an infrastructure administrator, and the creator of a cluster can bind a cluster to that host at a later time. The cluster creator does not have to have administrator privileges to the infrastructure when using late binding.

-

Dual stack: Deploy clusters that have both IPv4 and IPv6 addresses. Dual stack uses the

OVN-Kubernetesnetworking implementation to support multiple subnets. - Add remote worker nodes: Add remote worker nodes to your clusters after they are created and running, which provides flexibility of adding nodes in other locations for backup purposes.

- Static IP using NMState: Use the NMState API to define static IP addresses for your environment.

1.5.1. Prerequisites

See the following prerequisites before creating an infrastructure environment:

- You must have OpenShift Container Platform deployed on your hub cluster.

- You need Internet access for your Red Hat Advanced Cluster Management hub cluster (connected), or a connection to an internal or mirror registry that has a connection to the Internet (disconnected) to retrieve the required images for creating the environment.

- You need a configured instance of the Central Infrastructure Management (CIM) feature on your hub cluster. See Enabling the Central Infrastructure Management service for the procedure.

- You need an OpenShift Container Platform pull secret. See Using image pull secrets for more information.

-

You need your SSH key that is in your

~/.ssh/id_rsa.pubfile, by default. - You need a configured storage class.

- Disconnected environment only: Complete the procedure for Preparing the disconnected environment in the OpenShift Container Platform documentation.

1.5.2. Enabling the Central Infrastructure Management service

The Central Infrastructure Management service is provided with the {mce-short} and deploys OpenShift Container Platform clusters. CIM is deployed when you enable the MultiClusterHub Operator on the hub cluster, but must be enabled.

To enable the CIM service, complete the following steps:

Important: Only if your hub cluster is installed on one of the following platforms: bare metal, Red Hat OpenStack Platform, VMware vSphere, or was installed by using the user-provisioned infrastructure (UPI) method and the platform is None, complete the following step. Skip this step if your hub cluster is on any other platform.

Modify the

Provisioningresource to allow the Bare Metal Operator to watch all namespaces by running the following command:oc patch provisioning provisioning-configuration --type merge -p '{"spec":{"watchAllNamespaces": true }}'oc patch provisioning provisioning-configuration --type merge -p '{"spec":{"watchAllNamespaces": true }}'Copy to Clipboard Copied! Toggle word wrap Toggle overflow For disconnected environments: Create a

ConfigMapin the same namespace as your infrastructure operator to specify the values forca-bundle.crtandregistries.conffor your mirror registry. Your fileConfigMapshould resemble the following example:Copy to Clipboard Copied! Toggle word wrap Toggle overflow

1.5.2.1. Creating the AgentServiceConfig custom resource

Create the AgentServiceConfig custom resource by completing the following steps:

For disconnected environments only: Save the following

YAMLcontent in theagent_service_config.yamlfile and replace the values as needed:Copy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

mirror_configwith the name of theConfigMapthat contains your mirror registry configuration details.Include the optional

unauthenticated_registryparameter if you are using a mirror registry that does not require authentication. Entries on this list are not validated or required to have an entry in the pull secret.For connected environments only: Save the following

YAMLcontent in theagent_service_config.yamlfile:Copy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

db_volume_sizewith the volume size for thedatabaseStoragefield, for example10G. This value specifies how much storage is is allocated for storing files such as database tables and database views for the clusters. You might need to use a higher value if there are many clusters.Replace

fs_volume_sizewith the size of the volume for thefilesystemStoragefield, for example200Mper cluster and2-3Gper supported OpenShift Container Platform version. The minimum value that is required is100G. This value specifies how much storage is allocated for storing logs, manifests, andkubeconfigfiles for the clusters. You might need to use a higher value if there are many clusters.Replace

img_volume_sizewith the size of the volume for theimageStoragefield, for example2Gper operating system image. The minimum size is50G. This value specifies how much storage is allocated for the images of the clusters. You need to allow 1 GB of image storage for each instance of Red Hat Enterprise Linux CoreOS that is running. You might need to use a higher value if there are many clusters and instances of Red Hat Enterprise Linux CoreOS.Replace

ocp_versionwith the OpenShift Container Platform version to install, for example,4.9.Replace

ocp_release_versionwith the specific install version, for example,49.83.202103251640-0.Replace

iso_urlwith the ISO url, for example,https://mirror.openshift.com/pub/openshift-v4/x86_64/dependencies/rhcos/4.10/4.10.3/rhcos-4.10.3-x86_64-live.x86_64.iso. You can find other values at: https://mirror.openshift.com/pub/openshift-v4/x86_64/dependencies/rhcos/4.10/4.10.3/.Replace

root_fs_urlwith the root FS image URL, for example,https://mirror.openshift.com/pub/openshift-v4/x86_64/dependencies/rhcos/4.10/4.10.3/rhcos-4.10.3-x86_64-live-rootfs.x86_64.img. You can find other values at: https://mirror.openshift.com/pub/openshift-v4/x86_64/dependencies/rhcos/4.10/4.10.3/.Create the

AgentServiceConfigcustom resource by running the following command:oc create -f agent_service_config.yaml

oc create -f agent_service_config.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow The output might resemble the following example:

agentserviceconfig.agent-install.openshift.io/agent created

agentserviceconfig.agent-install.openshift.io/agent createdCopy to Clipboard Copied! Toggle word wrap Toggle overflow

You can verify that it is healthy by checking the assisted-service and assisted-image-service deployments and ensuring that their pods are ready and running. Continue with Creating your infrastructure environment with the console.

1.5.2.2. Manually create the Provisioning custom resource (CR)

Manually create a Provisioning CR to enable services for automated provisioning by using the following command:

oc create -f provisioning-configuration.yaml

oc create -f provisioning-configuration.yamlYour CR might resemble the following sample:

1.5.2.3. Enabling Central Infrastructure Management on Amazon Web Services

If you are running your hub cluster on Amazon Web Services and want to enable the CIM service, complete the following additional steps after Enabling CIM:

Make sure you are logged in at the hub and find the unique domain configured on the

assisted-image-serviceby running the following command:oc get routes --all-namespaces | grep assisted-image-service

oc get routes --all-namespaces | grep assisted-image-serviceCopy to Clipboard Copied! Toggle word wrap Toggle overflow Your domain might resemble the following example:

assisted-image-service-multicluster-engine.apps.<yourdomain>.comMake sure you are logged in at the hub and create a new

IngressControllerwith a unique domain using theNLBtypeparameter. See the following example:Copy to Clipboard Copied! Toggle word wrap Toggle overflow -

Add

<yourdomain>to thedomainparameter inIngressControllerby replacing<domain>innlb-apps.<domain>.comwith<yourdomain>. Apply the new

IngressControllerby using the following command:oc apply -f ingresscontroller.yaml

oc apply -f ingresscontroller.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Run the following command to edit the

assisted-image-serviceroute to use thenlb-appslocation:oc edit route assisted-image-service -n <namespace>

oc edit route assisted-image-service -n <namespace>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Tip: The default namespace is where you installed the :mce:.

Add the following lines to the

assisted-image-serviceroute:metadata: labels: router-type: nlb name: assisted-image-servicemetadata: labels: router-type: nlb name: assisted-image-serviceCopy to Clipboard Copied! Toggle word wrap Toggle overflow In the

assisted-image-serviceroute, find the URL value ofspec.host. The URL might resemble the following example:assisted-image-service-multicluster-engine.apps.<yourdomain>.com-

Replace

appsin the URL withnlb-appsto match the domain configured in the newIngressController.

To verify that the CIM service is enabled on Amazon Web Services, complete the following steps:

Run the following command to verify that the pods are healthy:

oc get pods -n multicluster-engine | grep assist

oc get pods -n multicluster-engine | grep assistCopy to Clipboard Copied! Toggle word wrap Toggle overflow -

Create a new infrastructure environment and ensure that the download URL uses the new

nlb-appsURL.

1.5.3. Creating your infrastructure environment with the console

To create an infrastructure environment from the Red Hat Advanced Cluster Management console, complete the following steps:

- From the navigation menu, navigate to Infrastructure > Infrastructure environments and click Create infrastructure environment.

Add the following information to your infrastructure environment settings:

- Name: A unique name for your environment.

- Network type: Specifies which types of hosts can be added to your environment. You can only use the static IP option when you are using bare metal hosts.

- Location: Specifies the geographic location of the host. The geographic location can be used to easily determine where your data on a cluster is stored when you are creating the cluster.

- Labels: Optional field where you can add labels to the infrastructure environment so you can more easily find and group the environment with other environments that share a characteristic. The selections that you made for the network type and location are automatically added to the list of labels.

- Pull secret: Your OpenShift Container Platform pull secret that enables you to access the OpenShift Container Platform resources.

-

SSH public key: The SSH key that enables the secure communication with the hosts. This is generally in your

~/.ssh/id_rsa.pubfile, by default. If you want to enable proxy settings across all of your clusters, select the setting to enable it. This requires that you enter the following information:

- HTTP Proxy URL: The URL that should be used when accessing the discovery service.

-

HTTPS Proxy URL: The secure proxy URL that should be used when accessing the discovery service. Note that the format must be

http, ashttpsis not yet supported. -

No Proxy domains: A comma-separated list of domains that should bypass the proxy. Begin a domain name with a period

.to include all of the subdomains that are in that domain. Add and asterisk*to bypass the proxy for all destinations.

You can now continue by adding hosts to your infrastructure environment.

To access an infrastructure environment, select Infrastructure > Host inventory in the console. Select your infrastructure environment from the list to view the details and hosts for that infrastructure environment.

1.5.4. Adding hosts to an infrastructure environment

You can use the Red Hat Advanced Cluster Management for Kubernetes console to add hosts to an infrastructure environment. Adding the hosts makes it easier to select the already-configured hosts when you are creating a cluster.

Complete the following steps to add a host:

- From the Red Hat Advanced Cluster Management navigation, select Infrastructure > Infrastructure environments.

- Select the infrastructure environment where you want to add the host to view its settings.

- Select the Hosts tab to view the hosts that are already added to that environment and to add a host. Available hosts might take a few minutes to appear in the table.

- Select Discovery ISO or Baseboard Management Controller (BMC) to enter the information for your host.

If you select the Discovery ISO option, complete the following steps:

- Copy the command that is provided in the console to download the ISO or select Download Discovery ISO.

- Run the command on a bootable device to start each host.

- For added security, select Approve host for each of the discovered hosts. This additional step offers some protection in case your ISO file is changed and run by an unauthorized person.

-

Rename the hosts that are named,

localhostto unique names.

If you select Baseboard Management Controller (BMC) option, complete the following steps:

Note: The BMC option for adding hosts can only be used when the platform of your Red Hat Advanced Cluster Management hub cluster is bare metal, Red Hat OpenStack Platform, VMware vSphere, or was installed using the user-provisioned infrastructure (UPI) method and the platform is

None.- Add the connection details for the BMC of your host.

Select Add host to start the boot process. The host is automatically booted by using the discovery ISO image, and is added to the list of hosts when it is started.

When you add a host by using the BMC option, the host is automatically approved.

You can now create an on-premises cluster on this infrastructure environment. See Creating a cluster in an on-premises environment for more information about creating a cluster.

1.6. Creating a cluster

Learn how to create Red Hat OpenShift Container Platform clusters across cloud providers with Red Hat Advanced Cluster Management for Kubernetes.

Multicluster-engine uses the Hive operator that is provided with OpenShift Container Platform to provision clusters for all providers except the on-premises clusters and hosted control planes. When provisioning the on-premises clusters, multicluster-engine uses the Central Infrastructure Management (CIM) and Assisted Installer function that are provided with OpenShift Container Platform. The hosted clusters for hosted control planes are provisioned by using the HyperShift operator.

- Configuring additional manifests during cluster creation

- Creating a cluster on Amazon Web Services

- Creating a cluster on Microsoft Azure

- Creating a cluster on Google Cloud Platform

- Creating a cluster on VMware vSphere

- Creating a cluster on Red Hat OpenStack Platform

- Creating a cluster on Red Hat Virtualization

- Creating a cluster on bare metal

- Creating a cluster in an on-premises environment

1.6.1. Configuring additional manifests during cluster creation

You can configure additional Kubernetes resource manifests during the installation process of creating your cluster. This can help if you need to configure additional manifests for scenarios such as configuring networking or setting up a load balancer.

Before you create your cluster, you need to add a reference to the ClusterDeployment resource that specifies a ConfigMap that contains the additional resource manifests.

Note: The ClusterDeployment resource and the ConfigMap must be in the same namespace. The following examples show how your content might look.

ConfigMap with resource manifests

ConfigMapthat contains a manifest with anotherConfigMapresource. The resource manifestConfigMapcan contain multiple keys with resource configurations added in adata.<resource_name>\.yamlpattern.Copy to Clipboard Copied! Toggle word wrap Toggle overflow ClusterDeployment with resource manifest

ConfigMapreferencedThe resource manifest

ConfigMapis referenced underspec.provisioning.manifestsConfigMapRef.Copy to Clipboard Copied! Toggle word wrap Toggle overflow

1.6.2. Creating a cluster on Amazon Web Services

You can use the Red Hat Advanced Cluster Management for Kubernetes console to create a Red Hat OpenShift Container Platform cluster on Amazon Web Services (AWS).

When you create a cluster, the creation process uses the OpenShift Container Platform installer with the Hive resource. If you have questions about cluster creation after completing this procedure, see Installing on AWS in the OpenShift Container Platform documentation for more information about the process.

1.6.2.1. Prerequisites

See the following prerequisites before creating a cluster on AWS:

- You must have a deployed Red Hat Advanced Cluster Management for Kubernetes hub cluster.

- You need Internet access for your Red Hat Advanced Cluster Management for Kubernetes hub cluster so it can create the Kubernetes cluster on Amazon Web Services.

- You need an AWS credential. See Creating a credential for Amazon Web Services for more information.

- You need a configured domain in AWS. See Configuring an AWS account for instructions on how to configure a domain.

- You must have Amazon Web Services (AWS) login credentials, which include user name, password, access key ID, and secret access key. See Understanding and Getting Your Security Credentials.

- You must have an OpenShift Container Platform image pull secret. See Using image pull secrets.

Note: If you change your cloud provider access key, you must manually update the provisioned cluster access key. For more information, see the known issue, Automatic secret updates for provisioned clusters is not supported.

1.6.2.2. Creating your cluster with the console

To create a cluster from the Red Hat Advanced Cluster Management console, navigate to Infrastructure > Clusters. On the Clusters page, click Create cluster and complete the steps in the console.

Note: This procedure is for creating a cluster. If you have an existing cluster that you want to import, see Importing a target managed cluster to the hub cluster for those steps.

If you need to create a credential, see Creating a credential for Amazon Web Services for more information.

The name of the cluster is used in the hostname of the cluster.

Important: When you create a cluster, the Red Hat Advanced Cluster Management controller creates a namespace for the cluster and its resources. Ensure that you include only resources for that cluster instance in that namespace. Destroying the cluster deletes the namespace and all of the resources in it.

Tip: Select YAML: On to view content updates as you enter the information in the console.

1.6.2.3. Adding your cluster to an existing cluster set

If you want to add your cluster to an existing cluster set, you must have the correct permissions on the cluster set to add it. If you do not have cluster-admin privileges when you are creating the cluster, you must select a cluster set on which you have clusterset-admin permissions. If you do not have the correct permissions on the specified cluster set, the cluster creation fails. Contact your cluster administrator to provide you with clusterset-admin permissions to a cluster set if you do not have any cluster set options to select.

Every managed cluster must be associated with a managed cluster set. If you do not assign the managed cluster to a ManagedClusterSet, it is automatically added to the default managed cluster set.

If there is already a base DNS domain that is associated with the selected credential that you configured with your AWS account, that value is populated in the field. You can change the value by overwriting it. This name is used in the hostname of the cluster. See Configuring an AWS account for more information.

The release image identifies the version of the OpenShift Container Platform image that is used to create the cluster. If the version that you want to use is available, you can select the image from the list of images. If the image that you want to use is not a standard image, you can enter the URL to the image that you want to use. See Release images for more information about release images.

The node pools include the control plane pool and the worker pools. The control plane nodes share the management of the cluster activity. The information includes the following fields:

- Architecture: If the architecture type of the managed cluster is not the same as the architecture of your hub cluster, enter a value for the instruction set architecture of the machines in the pool. Valid values are amd64, ppc64le, s390x, and arm64.

- Zones: Specify where you want to run your control plane pools. You can select multiple zones within the region for a more distributed group of control plane nodes. A closer zone might provide faster performance, but a more distant zone might be more distributed.

- Instance type: Specify the instance type for your control plane node. You can change the type and size of your instance after it is created.

- Root storage: Specify the amount of root storage to allocate for the cluster.

You can create zero or more worker nodes in a worker pool to run the container workloads for the cluster. They can be in a single worker pool, or distributed across multiple worker pools. If zero worker nodes are specified, the control plane nodes also function as worker nodes. The optional information includes the following fields:

- Zones: Specify where you want to run your worker pools. You can select multiple zones within the region for a more distributed group of nodes. A closer zone might provide faster performance, but a more distant zone might be more distributed.

- Instance type: Specify the instance type of your worker pools. You can change the type and size of your instance after it is created.

- Node count: Specify the node count of your worker pool. This setting is required when you define a worker pool.

- Root storage: Specify the amount of root storage allocated for your worker pool. This setting is required when you define a worker pool.

Networking details are required for your cluster, and multiple networks are required for using IPv6. You can add an additional network by clicking Add network.

Proxy information that is provided in the credential is automatically added to the proxy fields. You can use the information as it is, overwrite it, or add the information if you want to enable a proxy. The following list contains the required information for creating a proxy:

-

HTTP proxy URL: Specify the URL that should be used as a proxy for

HTTPtraffic. -

HTTPS proxy URL: Specify the secure proxy URL that should be used for

HTTPStraffic. If no value is provided, the same value as theHTTP Proxy URLis used for bothHTTPandHTTPS. -

No proxy domains: A comma-separated list of domains that should bypass the proxy. Begin a domain name with a period

.to include all of the subdomains that are in that domain. Add an asterisk*to bypass the proxy for all destinations. - Additional trust bundle: Specify the contents of the certificate file that is required to access the mirror registry.

When you review your information and optionally customize it before creating the cluster, you can select YAML: On to view the install-config.yaml file content in the panel. You can edit the YAML file with your custom settings, if you have any updates.

Note: You do not have to run the kubectl command that is provided with the cluster details to import the cluster. When you create the cluster, it is automatically configured under the management of Red Hat Advanced Cluster Management.

Continue with Accessing your cluster for instructions for accessing your cluster.

1.6.3. Creating a cluster on Microsoft Azure

You can use the Red Hat Advanced Cluster Management for Kubernetes console to deploy a Red Hat OpenShift Container Platform cluster on Microsoft Azure or on Microsoft Azure Government.

When you create a cluster, the creation process uses the OpenShift Container Platform installer with the Hive resource. If you have questions about cluster creation after completing this procedure, see Installing on Azure in the OpenShift Container Platform documentation for more information about the process.

1.6.3.1. Prerequisites

See the following prerequisites before creating a cluster on Azure:

- You must have a deployed Red Hat Advanced Cluster Management for Kubernetes hub cluster.

- You need Internet access for your Red Hat Advanced Cluster Management for Kubernetes hub cluster so it can create the Kubernetes cluster on Azure or Azure Government

- You need an Azure credential. See Creating a credential for Microsoft Azure for more information.

- You need a configured domain in Azure or Azure Government. See Configuring a custom domain name for an Azure cloud service for instructions on how to configure a domain.

- You need Azure login credentials, which include user name and password. See the Microsoft Azure Portal.

-

You need Azure service principals, which include

clientId,clientSecret, andtenantId. See azure.microsoft.com. - You need an OpenShift Container Platform image pull secret. See Using image pull secrets.

Note: If you change your cloud provider access key, you must manually update the provisioned cluster access key. For more information, see the known issue, Automatic secret updates for provisioned clusters is not supported.

1.6.3.2. Creating your cluster with the console

To create a cluster from the Red Hat Advanced Cluster Management for Kubernetes console, navigate to Infrastructure > Clusters. On the Clusters page, click Create cluster and complete the steps in the console.

Note: This procedure is for creating a cluster. If you have an existing cluster that you want to import, see Importing a target managed cluster to the hub cluster for those steps.

If you need to create a credential, see Creating a credential for Microsoft Azure for more information.

The name of the cluster is used in the hostname of the cluster.

Important: When you create a cluster, the Red Hat Advanced Cluster Management controller creates a namespace for the cluster and its resources. Ensure that you include only resources for that cluster instance in that namespace. Destroying the cluster deletes the namespace and all of the resources in it.

Tip: Select YAML: On to view content updates as you enter the information in the console.

1.6.3.3. Adding your cluster to an existing cluster set

If you want to add your cluster to an existing cluster set, you must have the correct permissions on the cluster set to add it. If you do not have cluster-admin privileges when you are creating the cluster, you must select a cluster set on which you have clusterset-admin permissions. If you do not have the correct permissions on the specified cluster set, the cluster creation fails. Contact your cluster administrator to provide you with clusterset-admin permissions to a cluster set if you do not have any cluster set options to select.

Every managed cluster must be associated with a managed cluster set. If you do not assign the managed cluster to a ManagedClusterSet, it is automatically added to the default managed cluster set.

If there is already a base DNS domain that is associated with the selected credential that you configured for your Azure account, that value is populated in that field. You can change the value by overwriting it. See Configuring a custom domain name for an Azure cloud service for more information. This name is used in the hostname of the cluster.

The release image identifies the version of the OpenShift Container Platform image that is used to create the cluster. If the version that you want to use is available, you can select the image from the list of images. If the image that you want to use is not a standard image, you can enter the URL to the image that you want to use. See Release images for more information about release images.

The Node pools include the control plane pool and the worker pools. The control plane nodes share the management of the cluster activity. The information includes the following optional fields:

- Region: Specify a region where you want to run your node pools. You can select multiple zones within the region for a more distributed group of control plane nodes. A closer zone might provide faster performance, but a more distant zone might be more distributed.

- Architecture: If the architecture type of the managed cluster is not the same as the architecture of your hub cluster, enter a value for the instruction set architecture of the machines in the pool. Valid values are amd64, ppc64le, s390x, and arm64.

- Instance type and Root storage allocation (required) for your control plane pool. You can change the type and size of your instance after it is created.

You can create one or more worker nodes in a worker pool to run the container workloads for the cluster. They can be in a single worker pool, or distributed across multiple worker pools. If zero worker nodes are specified, the control plane nodes also function as worker nodes. The information includes the following fields:

- Zones: Specifies here you want to run your worker pools. You can select multiple zones within the region for a more distributed group of nodes. A closer zone might provide faster performance, but a more distant zone might be more distributed.

- Instance type: You can change the type and size of your instance after it is created.

You can add an additional network by clicking Add network. You must have more than one network if you are using IPv6 addresses.

Proxy information that is provided in the credential is automatically added to the proxy fields. You can use the information as it is, overwrite it, or add the information if you want to enable a proxy. The following list contains the required information for creating a proxy:

-

HTTP proxy URL: The URL that should be used as a proxy for

HTTPtraffic. -

HTTPS proxy URL: The secure proxy URL that should be used for

HTTPStraffic. If no value is provided, the same value as theHTTP Proxy URLis used for bothHTTPandHTTPS. -

No proxy domains: A comma-separated list of domains that should bypass the proxy. Begin a domain name with a period

.to include all of the subdomains that are in that domain. Add an asterisk*to bypass the proxy for all destinations. - Additional trust bundle: The contents of the certificate file that is required to access the mirror registry.

When you review your information and optionally customize it before creating the cluster, you can click the YAML switch On to view the install-config.yaml file content in the panel. You can edit the YAML file with your custom settings, if you have any updates.

Note: You do not have to run the kubectl command that is provided with the cluster details to import the cluster. When you create the cluster, it is automatically configured under the management of Red Hat Advanced Cluster Management.

Continue with Accessing your cluster for instructions for accessing your cluster.

1.6.4. Creating a cluster on Google Cloud Platform

Follow the procedure to create a Red Hat OpenShift Container Platform cluster on Google Cloud Platform (GCP). For more information about GCP, see Google Cloud Platform.

When you create a cluster, the creation process uses the OpenShift Container Platform installer with the Hive resource. If you have questions about cluster creation after completing this procedure, see Installing on GCP in the OpenShift Container Platform documentation for more information about the process.

1.6.4.1. Prerequisites

See the following prerequisites before creating a cluster on GCP:

- You must have a deployed Red Hat Advanced Cluster Management for Kubernetes hub cluster.

- You need Internet access for your Red Hat Advanced Cluster Management for Kubernetes hub cluster so it can create the Kubernetes cluster on GCP.

- You must have a GCP credential. See Creating a credential for Google Cloud Platform for more information.

- You must have a configured domain in GCP. See Setting up a custom domain for instructions on how to configure a domain.

- You need your GCP login credentials, which include user name and password.

- You must have an OpenShift Container Platform image pull secret. See Using image pull secrets.

Note: If you change your cloud provider access key, you must manually update the provisioned cluster access key. For more information, see the known issue, Automatic secret updates for provisioned clusters is not supported.

1.6.4.2. Creating your cluster with the console

To create clusters from the Red Hat Advanced Cluster Management for Kubernetes console, navigate to Infrastructure > Clusters. On the Clusters page, click Create cluster and complete the steps in the console.

Note: This procedure is for creating a cluster. If you have an existing cluster that you want to import, see Importing a target managed cluster to the hub cluster for those steps.

If you need to create a credential, see Creating a credential for Google Cloud Platform for more information.

The name of your cluster is used in the hostname of the cluster. There are some restrictions that apply to naming your GCP cluster. These restrictions include not beginning the name with goog or containing a group of letters and numbers that resemble google anywhere in the name. See Bucket naming guidelines for the complete list of restrictions.

Important: When you create a cluster, the Red Hat Advanced Cluster Management controller creates a namespace for the cluster and its resources. Ensure that you include only resources for that cluster instance in that namespace. Destroying the cluster deletes the namespace and all of the resources in it.

Tip: Select YAML: On to view content updates as you enter the information in the console.

1.6.4.3. Adding your cluster to an existing cluster set