Governance

Read more to learn about the governance policy framework, which helps harden cluster security by using policies.

Abstract

Chapter 1. Risk and compliance

Manage the security of your Red Hat Advanced Cluster Management for Kubernetes components. Govern your cluster with defined policies and processes to identify and minimize risks. Use policies to define rules and set controls.

Prerequisite: You must configure authentication service requirements for Red Hat Advanced Cluster Management for Kubernetes. See Access control for more information.

Review the following topics to learn more about securing your cluster:

1.1. Certificates

Various certificates are created and used throughout Red Hat Advanced Cluster Management for Kubernetes.

You can bring your own certificates. You must create a Kubernetes TLS Secret for your certificate. After you create your certificates, you can replace certain certificates that are created by the Red Hat Advanced Cluster Management installer.

Required access: Cluster administrator

All certificates required by services that run on Red Hat Advanced Cluster Management are created during the installation of Red Hat Advanced Cluster Management. Certificates are created and managed by the following components:

- OpenShift Service Serving Certificates

- Red Hat Advanced Cluster Management webhook controllers

- Kubernetes Certificates API

- OpenShift default ingress

Continue reading to learn more about certificate management:

- Red Hat Advanced Cluster Management hub cluster certificates

- Red Hat Advanced Cluster Management component certificates

- Red Hat Advanced Cluster Management managed certificates

Note: Users are responsible for certificate rotations and updates.

1.1.1. Red Hat Advanced Cluster Management hub cluster certificates

1.1.1.1. Observability certificates

After Red Hat Advanced Cluster Management is installed, observability certificates are created and used by the observability components to provide mutual TLS on the traffic between the hub cluster and managed cluster. The following Kubernetes secrets are associated with the observability certificates.

The open-cluster-management-observability namespace contains the following certificates:

- observability-server-ca-certs: Has the CA certificate to sign server-side certificates

- observability-client-ca-certs: Has the CA certificate to sign client-side certificates

- observability-server-certs: Has the server certificate used by the observability-observatorium-api deployment

- observability-grafana-certs: Has the client certificate used by the observability-rbac-query-proxy deployment

The open-cluster-management-addon-observability namespace contains the following certificates on managed clusters:

- observability-managed-cluster-certs: Has the same server CA certificate as observability-server-ca-certs in the hub server

- observability-controller-open-cluster-management.io-observability-signer-client-cert: Has the client certificate used by the metrics-collector-deployment

The CA certificates are valid for five years and other certificates are valid for one year. All observability certificates are automatically refreshed upon expiration.

View the following list to understand the effects when certificates are automatically renewed:

- Non-CA certificates are renewed automatically when the remaining valid time is no more than 73 days. After the certificate is renewed, the pods in the related deployments restart automatically to use the renewed certificates.

- CA certificates are renewed automatically when the remaining valid time is no more than one year. After a CA certificate is renewed, the old CA certificate is not deleted but coexists with the renewed one. Both the old and renewed certificates are used by the related deployments and continue to work. The old CA certificates are deleted when they expire.

- When a certificate is renewed, the traffic between the hub cluster and managed cluster is not interrupted.
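The renewal thresholds in the preceding list can be checked by hand. The following is a minimal sketch, not the product's renewal logic: the helper name expires_within_days and the ./tls.crt path are hypothetical, and it assumes that you have already extracted the certificate from its secret into a PEM file. It relies on the openssl x509 -checkend option, which reports whether a certificate expires within a given number of seconds.

```shell
#!/bin/sh
# Hypothetical helper (not part of the product): succeeds (exit 0) when the
# PEM certificate in $1 expires within $2 days.
expires_within_days() {
  cert_file=$1
  days=$2
  # -checkend N exits 0 if the certificate is still valid N seconds from now
  # and non-zero otherwise, so the result is inverted here.
  # Note: an unreadable or invalid file is also reported as expiring.
  if openssl x509 -checkend "$((days * 86400))" -noout -in "$cert_file" >/dev/null 2>&1; then
    return 1
  fi
  return 0
}

# 73 days is the documented renewal threshold for non-CA certificates.
# ./tls.crt is an assumed path to a certificate extracted from a secret.
if [ -f ./tls.crt ]; then
  if expires_within_days ./tls.crt 73; then
    echo "within the 73-day renewal window"
  else
    echo "more than 73 days of validity remain"
  fi
fi
```

For CA certificates, the same helper can be called with 365 days to match the one-year threshold.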

1.1.1.2. Bring Your Own (BYO) observability certificate authority (CA) certificates

If you do not want to use the default observability CA certificates generated by Red Hat Advanced Cluster Management, you can choose to use the BYO observability CA certificates before you enable observability.

1.1.1.2.1. OpenSSL commands to generate CA certificate

Observability requires two CA certificates; one is for the server-side and the other is for the client-side.

Generate your CA RSA private keys with the following commands:

openssl genrsa -out serverCAKey.pem 2048

openssl genrsa -out clientCAKey.pem 2048

Generate the self-signed CA certificates using the private keys. Run the following commands:

openssl req -x509 -sha256 -new -nodes -key serverCAKey.pem -days 1825 -out serverCACert.pem

openssl req -x509 -sha256 -new -nodes -key clientCAKey.pem -days 1825 -out clientCACert.pem

1.1.1.2.2. Create the secrets associated with the BYO observability CA certificates

Complete the following steps to create the secrets:

Create the observability-server-ca-certs secret by using your certificate and private key. Run the following command:

oc -n open-cluster-management-observability create secret tls observability-server-ca-certs --cert ./serverCACert.pem --key ./serverCAKey.pem

Create the observability-client-ca-certs secret by using your certificate and private key. Run the following command:

oc -n open-cluster-management-observability create secret tls observability-client-ca-certs --cert ./clientCACert.pem --key ./clientCAKey.pem

1.1.1.2.3. Replacing certificates for alertmanager route

You can replace alertmanager certificates by updating the alertmanager route, if you do not want to use the OpenShift default ingress certificate. Complete the following steps:

Examine the observability certificate with the following command:

openssl x509 -noout -text -in ./observability.crt

- Change the common name (CN) on the certificate to alertmanager.

- Change the SAN in the csr.cnf configuration file with the hostname for your alertmanager route.

Create the two following secrets in the open-cluster-management-observability namespace. Run the following commands:

oc -n open-cluster-management-observability create secret tls alertmanager-byo-ca --cert ./ca.crt --key ./ca.key

oc -n open-cluster-management-observability create secret tls alertmanager-byo-cert --cert ./ingress.crt --key ./ingress.key
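The steps above refer to a csr.cnf file without showing one. The following is a minimal sketch of what such a file might contain, followed by a certificate signing request that uses it. The route hostname alertmanager.apps.example.com, the section names, and the ingress.csr file name are assumptions for illustration. Signing the request with your CA to produce ingress.crt is not shown.

```shell
#!/bin/sh
# Sketch only: the route hostname below is a placeholder, not a real value.
cat > csr.cnf <<'EOF'
[ req ]
default_bits       = 2048
prompt             = no
default_md         = sha256
distinguished_name = dn
req_extensions     = req_ext

[ dn ]
CN = alertmanager

[ req_ext ]
subjectAltName = @alt_names

[ alt_names ]
DNS.1 = alertmanager.apps.example.com
EOF

# Create a private key and a certificate signing request from the config.
openssl req -new -newkey rsa:2048 -nodes \
  -keyout ingress.key -out ingress.csr -config csr.cnf

# Confirm the CN and SAN before you send the request to your CA.
openssl req -in ingress.csr -noout -text | grep -E 'Subject:|DNS:'
```

Replace the DNS.1 value with the hostname of your own alertmanager route before you generate the request.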

1.1.2. Red Hat Advanced Cluster Management component certificates

1.1.2.1. List hub cluster managed certificates

You can view a list of hub cluster managed certificates that use the OpenShift Service Serving Certificates service internally. Run the following command to list the certificates:

for ns in multicluster-engine open-cluster-management ; do echo "$ns:" ; oc get secret -n $ns -o custom-columns=Name:.metadata.name,Expiration:.metadata.annotations.service\\.beta\\.openshift\\.io/expiry | grep -v '<none>' ; echo ""; done

Note: If observability is enabled, there are additional namespaces where certificates are created.

1.1.2.2. Refresh hub cluster managed certificates

You can refresh a hub cluster managed certificate by deleting the secret that is associated with it. Use the command in the List hub cluster managed certificates section to identify the certificate that you need to refresh, then delete its secret. For example, you can delete a secret by running the following command:

oc delete secret grc-0c925-grc-secrets -n open-cluster-management

Note: After you delete the secret, a new one is created. However, you must manually restart the pods that use the secret so that they can begin to use the new certificate.

1.1.2.3. Refresh a Red Hat Advanced Cluster Management webhook certificate

You can refresh OpenShift Container Platform managed certificates, which are certificates that are used by Red Hat Advanced Cluster Management webhooks.

Complete the following steps to refresh a Red Hat Advanced Cluster Management webhook certificate:

Delete the secret that is associated with the OpenShift Container Platform managed certificate by running the following command:

oc delete secret -n open-cluster-management ocm-webhook-secret

Note: Some services might not have a secret that needs to be deleted.

Restart the services that are associated with the OpenShift Container Platform managed certificate(s) by running the following command:

oc delete po -n open-cluster-management ocm-webhook-679444669c-5cg76

Important: There are replicas of many services; each service must be restarted.

View the following table for a summarized list of the pods that contain certificates and whether a secret needs to be deleted prior to restarting the pod:

| Service name | Namespace | Sample pod name | Secret name (if applicable) |
|---|---|---|---|
| channels-apps-open-cluster-management-webhook-svc | open-cluster-management | multicluster-operators-application-8c446664c-5lbfk | channels-apps-open-cluster-management-webhook-svc-ca |
| multicluster-operators-application-svc | open-cluster-management | multicluster-operators-application-8c446664c-5lbfk | multicluster-operators-application-svc-ca |
| cluster-manager-registration-webhook | open-cluster-management-hub | cluster-manager-registration-webhook-fb7b99c-d8wfc | registration-webhook-serving-cert |
| cluster-manager-work-webhook | open-cluster-management-hub | cluster-manager-work-webhook-89b8d7fc-f4pv8 | work-webhook-serving-cert |

1.1.3. Red Hat Advanced Cluster Management managed certificates

1.1.3.1. Channel certificates

CA certificates can be associated with Git channels that are part of Red Hat Advanced Cluster Management application management. See Using custom CA certificates for a secure HTTPS connection for more details.

Helm channels allow you to disable certificate validation. Configure Helm channels with certificate validation disabled only in development environments, because disabling certificate validation introduces security risks.

1.1.3.2. Managed cluster certificates

Certificates are used to authenticate managed clusters with the hub. Therefore, it is important to be aware of troubleshooting scenarios associated with these certificates. View Troubleshooting imported clusters offline after certificate change for more details.

The managed cluster certificates are refreshed automatically.

1.1.4. Third-party certificates

1.1.4.1. Rotating the gatekeeper webhook certificate

Complete the following steps to rotate the gatekeeper webhook certificate:

Edit the secret that contains the certificate with the following command:

oc edit secret -n openshift-gatekeeper-system gatekeeper-webhook-server-cert

Delete the following content in the data section: ca.crt, ca.key, tls.crt, and tls.key.

Restart the gatekeeper webhook service by deleting the gatekeeper-controller-manager pods with the following command:

oc delete po -n openshift-gatekeeper-system -l control-plane=controller-manager

The gatekeeper webhook certificate is rotated.

Use the certificate policy controller to create and manage certificate policies on managed clusters. See Policy controllers to learn more about controllers. Return to the Risk and compliance page for more information.

Chapter 2. Governance

Enterprises must meet internal standards for software engineering, secure engineering, resiliency, security, and regulatory compliance for workloads hosted on private, multi and hybrid clouds. Red Hat Advanced Cluster Management for Kubernetes governance provides an extensible policy framework for enterprises to introduce their own security policies.

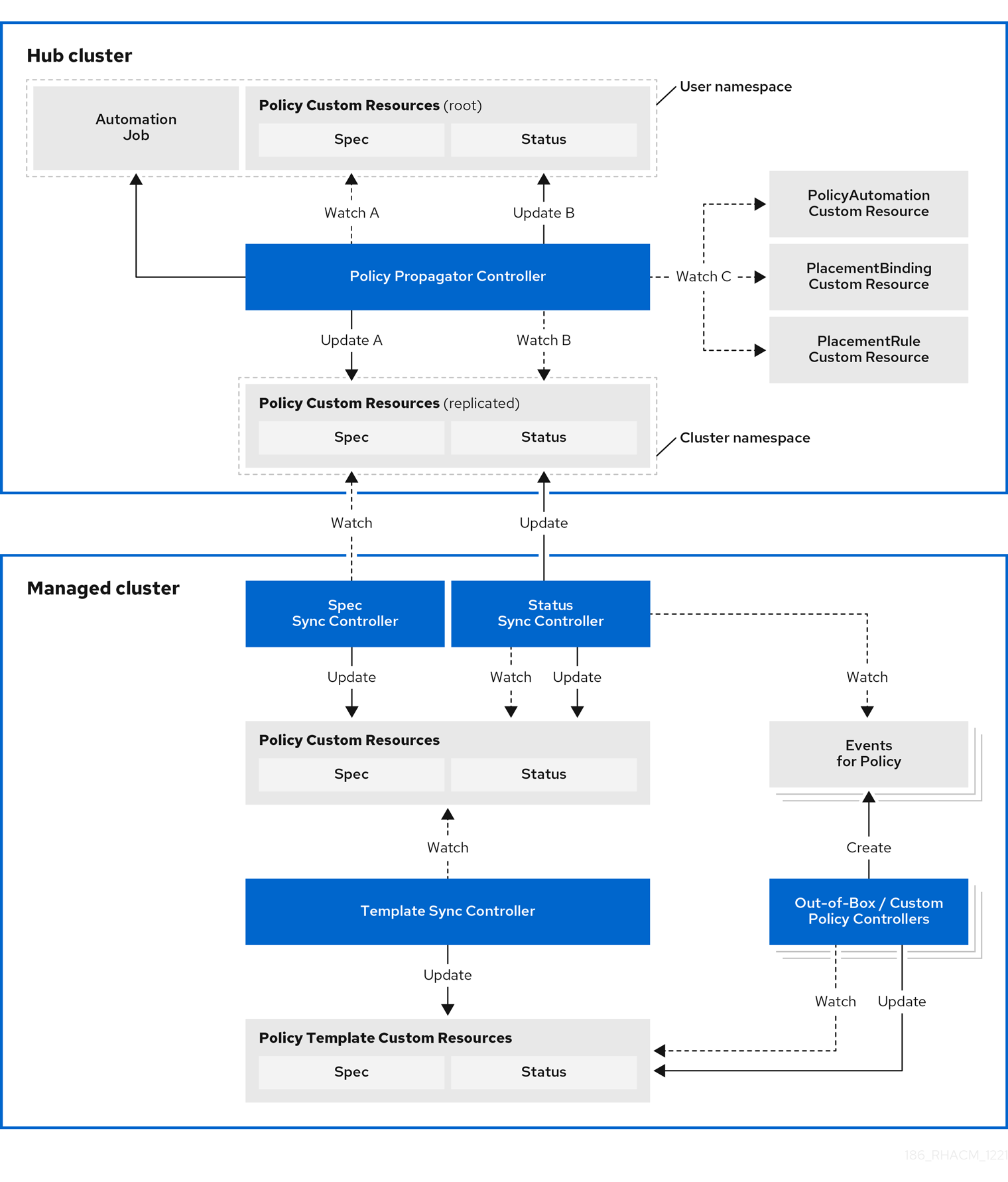

2.1. Governance architecture

Enhance the security for your cluster with the Red Hat Advanced Cluster Management for Kubernetes governance lifecycle. The product governance lifecycle is based on defined policies, processes, and procedures to manage security and compliance from a central interface page. View the following diagram of the governance architecture:

The governance architecture is composed of the following components:

- Governance dashboard: Provides a summary of your cloud governance and risk details, which include policy and cluster violations. Refer to the Manage security policies section to learn about the structure of a Red Hat Advanced Cluster Management for Kubernetes policy framework, and how to use the Red Hat Advanced Cluster Management for Kubernetes Governance dashboard.

  Notes:

  - When a policy is propagated to a managed cluster, it is first replicated to the cluster namespace on the hub cluster, and is named and labeled using namespaceName.policyName. When you create a policy, make sure that the length of namespaceName.policyName does not exceed 63 characters due to the Kubernetes length limit for label values.

  - When you search for a policy in the hub cluster, you might also receive the name of the replicated policy in the managed cluster namespace. For example, if you search for policy-dhaz-cert in the default namespace, the following policy name from the hub cluster might also appear in the managed cluster namespace: default.policy-dhaz-cert.

- Policy-based governance framework: Supports policy creation and deployment to various managed clusters based on attributes associated with clusters, such as a geographical region. There are examples of the predefined policies and instructions on deploying policies to your cluster in the open source community. Additionally, when policies are violated, automations can be configured to run and take any action that the user chooses.

- Policy controller: Evaluates one or more policies on the managed cluster against your specified control and generates Kubernetes events for violations. Violations are propagated to the hub cluster. The following policy controllers are included in your installation: Kubernetes configuration, Certificate, and IAM. Customize your policy controllers using advanced configurations.

- Open source community: Supports community contributions with a foundation of the Red Hat Advanced Cluster Management policy framework. Policy controllers and third-party policies are also a part of the stolostron/policy-collection repository. You can contribute and deploy policies using GitOps. For more information, see Deploying policies using GitOps in the Manage security policies section. Learn how to integrate third-party policies with Red Hat Advanced Cluster Management for Kubernetes.
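The 63-character limit on namespaceName.policyName that is noted for the Governance dashboard can be checked before you create a policy. The following is a minimal sketch under the assumption that names are plain ASCII. The helper name replicated_name_fits is hypothetical and is not part of the product.

```shell
#!/bin/sh
# Hypothetical helper: succeeds when "<namespace>.<policy>" fits in a
# Kubernetes label value, which is limited to 63 characters.
replicated_name_fits() {
  replicated="$1.$2"
  [ "${#replicated}" -le 63 ]
}

# Uses the namespace and policy name from the search example in the notes.
if replicated_name_fits default policy-dhaz-cert; then
  echo "default.policy-dhaz-cert fits in a label value"
else
  echo "default.policy-dhaz-cert is too long for a label value"
fi
```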

Continue reading the related topics:

2.2. Policy overview

Use the Red Hat Advanced Cluster Management for Kubernetes security policy framework to create and manage policies. Kubernetes custom resource definition instances are used to create policies.

Each Red Hat Advanced Cluster Management policy can have one or more templates. For more details about the policy elements, view the Policy YAML table section on this page.

The policy requires a PlacementRule or Placement that defines the clusters that the policy document is applied to, and a PlacementBinding that binds the Red Hat Advanced Cluster Management for Kubernetes policy to the placement rule. For more on how to define a PlacementRule, see Placement rules in the Application lifecycle documentation. For more on how to define a Placement, see Placement overview in the Cluster lifecycle documentation.

Important:

- You must create the PlacementBinding to bind your policy with either a PlacementRule or a Placement in order to propagate the policy to the managed clusters.

  Best practice: Use the command line interface (CLI) to make updates to the policies when you use the Placement resource.

- You can create a policy in any namespace on the hub cluster except the managed cluster namespaces. Every managed cluster has a namespace on the hub cluster that matches the name of the managed cluster. If you create a policy in a managed cluster namespace, it is deleted by Red Hat Advanced Cluster Management for Kubernetes. Instead, use a namespace such as policy.

- Each client and provider is responsible for ensuring that their managed cloud environment meets internal enterprise security standards for software engineering, secure engineering, resiliency, security, and regulatory compliance for workloads hosted on Kubernetes clusters. Use the governance and security capability to gain visibility and remediate configurations to meet standards.

Learn more details about the policy components in the following sections:

2.2.1. Policy YAML structure

When you create a policy, you must include required parameter fields and values. Depending on your policy controller, you might need to include other optional fields and values. View the following YAML structure for the explained parameter fields:

2.2.2. Policy YAML table

| Field | Optional or required | Description |
|---|---|---|
| | Required | Set the value to |
| | Required | Set the value to |
| | Required | The name for identifying the policy resource. |
| | Optional | Used to specify a set of security details that describes the set of standards the policy is trying to validate. All annotations documented here are represented as a string that contains a comma-separated list. Note: You can view policy violations based on the standards and categories that you define for your policy on the Policies page, from the console. |
| | Optional | The name or names of security standards the policy is related to. For example, National Institute of Standards and Technology (NIST) and Payment Card Industry (PCI). |
| | Optional | A security control category represents specific requirements for one or more standards. For example, a System and Information Integrity category might indicate that your policy contains a data transfer protocol to protect personal information, as required by the HIPAA and PCI standards. |
| | Optional | The name of the security control that is being checked. For example, Access Control or System and Information Integrity. |
| | Optional | Used to create a list of dependency objects detailed with extra considerations for compliance. |
| | Required | Used to create one or more policies to apply to a managed cluster. |
| | Optional | For policy templates, this is used to create a list of dependency objects detailed with extra considerations for compliance. |
| | Optional | Used to mark a policy template as compliant until the dependency criteria is verified. |
| | Required | Set the value to |
| | Optional | Specifies the remediation of your policy. The parameter values are Important: Some policy kinds might not support the enforce feature. |

2.2.3. Policy sample file
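The following is a minimal sketch of a policy file, not the official sample for this section. It writes a Policy that wraps one ConfigurationPolicy template to a local file. The resource names, the policy namespace, the prod namespace that the template checks for, and the annotation values are all placeholders chosen for illustration.

```shell
#!/bin/sh
# Sketch only: names, namespaces, and annotation values are placeholders.
cat > policy-sketch.yaml <<'EOF'
apiVersion: policy.open-cluster-management.io/v1
kind: Policy
metadata:
  name: policy-sketch
  namespace: policy
  annotations:
    policy.open-cluster-management.io/standards: NIST SP 800-53
    policy.open-cluster-management.io/categories: CM Configuration Management
    policy.open-cluster-management.io/controls: CM-2 Baseline Configuration
spec:
  disabled: false
  remediationAction: inform
  policy-templates:
    - objectDefinition:
        apiVersion: policy.open-cluster-management.io/v1
        kind: ConfigurationPolicy
        metadata:
          name: policy-sketch-namespace
        spec:
          remediationAction: inform
          severity: low
          object-templates:
            - complianceType: musthave
              objectDefinition:
                apiVersion: v1
                kind: Namespace
                metadata:
                  name: prod
EOF

# On a hub cluster, you might then run: oc apply -f policy-sketch.yaml
```

The policy is not propagated to any managed cluster until a placement and a PlacementBinding select it.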

2.2.4. Placement YAML sample file

The PlacementBinding and Placement resources can be combined with the previous policy example to deploy the policy using the cluster Placement API instead of the PlacementRule API.
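As a hedged illustration of that combination, the following sketch writes a Placement and a PlacementBinding to a local file. The names placement-sketch, binding-sketch, and policy-sketch, the policy namespace, and the environment=dev cluster label are assumptions. The binding subject must match the name of a policy that exists in the same namespace.

```shell
#!/bin/sh
# Sketch only: all names and the cluster label selector are placeholders.
cat > placement-sketch.yaml <<'EOF'
apiVersion: cluster.open-cluster-management.io/v1beta1
kind: Placement
metadata:
  name: placement-sketch
  namespace: policy
spec:
  predicates:
    - requiredClusterSelector:
        labelSelector:
          matchExpressions:
            - key: environment
              operator: In
              values:
                - dev
---
apiVersion: policy.open-cluster-management.io/v1
kind: PlacementBinding
metadata:
  name: binding-sketch
  namespace: policy
placementRef:
  name: placement-sketch
  apiGroup: cluster.open-cluster-management.io
  kind: Placement
subjects:
  - name: policy-sketch
    apiGroup: policy.open-cluster-management.io
    kind: Policy
EOF

# On a hub cluster, you might then run: oc apply -f placement-sketch.yaml
```

A Placement selects only from managed cluster sets that are bound to its namespace, so the namespace must also be bound to a managed cluster set before any cluster is selected.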

- Refer to Policy controllers.

- See Managing security policies to create and update a policy. You can also enable and update Red Hat Advanced Cluster Management policy controllers to validate the compliance of your policies.

- Return to the Governance documentation.

2.3. Policy controllers

Policy controllers monitor and report whether your cluster is compliant with a policy. Use the Red Hat Advanced Cluster Management for Kubernetes policy framework by using the out-of-the-box policy templates to apply policies managed by these controllers. The policy controllers manage Kubernetes custom resource definition (CRD) instances.

Policy controllers monitor for policy violations, and can make the cluster status compliant if the controller supports the enforcement feature. View the following topics to learn more about the following Red Hat Advanced Cluster Management for Kubernetes policy controllers:

Important: Only the configuration policy controller policies support the enforce feature. You must manually remediate policies where the policy controller does not support the enforce feature.

Refer to Governance for more topics about managing your policies.

2.3.1. Kubernetes configuration policy controller

The configuration policy controller can be used to configure any Kubernetes resource and apply security policies across your clusters. The configuration policy is provided in the policy-templates field of the policy on the hub cluster, and is propagated to the selected managed clusters by the governance framework. See the Policy overview for more details on the hub cluster policy.

A Kubernetes object is defined (in whole or in part) in the object-templates array in the configuration policy, which tells the configuration policy controller which fields to compare with objects on the managed cluster. The configuration policy controller communicates with the local Kubernetes API server to get the list of configurations that are in your cluster.

The configuration policy controller is created on the managed cluster during installation. The configuration policy controller supports the enforce feature to remediate when the configuration policy is non-compliant. When the remediationAction for the configuration policy is set to enforce, the controller applies the specified configuration to the target managed cluster. Note: Configuration policies that specify an object without a name can only be inform.

You can also use templated values within configuration policies. For more information, see Support for templates in configuration policies.

If you have existing Kubernetes manifests that you want to put in a policy, the Policy Generator is a useful tool to accomplish this.

Continue reading to learn more about the configuration policy controller:

2.3.1.1. Configuration policy sample
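The following is a minimal sketch of a standalone configuration policy, not the official sample. It writes a ConfigurationPolicy to a local file that checks for a pod in selected namespaces. The policy name, the pod definition, the namespace filters, and the interval values are placeholders for illustration.

```shell
#!/bin/sh
# Sketch only: the policy name, pod, namespaces, and intervals are placeholders.
cat > configuration-policy-sketch.yaml <<'EOF'
apiVersion: policy.open-cluster-management.io/v1
kind: ConfigurationPolicy
metadata:
  name: policy-pod-sketch
spec:
  remediationAction: inform
  severity: low
  namespaceSelector:
    include:
      - default
    exclude:
      - "kube-*"
  evaluationInterval:
    compliant: 10m
    noncompliant: 10s
  object-templates:
    - complianceType: musthave
      objectDefinition:
        apiVersion: v1
        kind: Pod
        metadata:
          name: sample-nginx-pod
        spec:
          containers:
            - name: nginx
              image: nginx:1.18.0
              ports:
                - containerPort: 80
EOF
```

On the hub cluster, a definition like this is normally embedded in the policy-templates field of a policy rather than applied on its own.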

2.3.1.2. Configuration policy YAML table

| Field | Optional or required | Description |
|---|---|---|
| | Required | Set the value to |
| | Required | Set the value to |
| | Required | The name of the policy. |
| | Required for namespaced objects that do not have a namespace specified | Determines namespaces in the managed cluster that the object is applied to. The |
| | Required | Specifies the action to take when the policy is non-compliant. Use the following parameter values: |
| | Required | Specifies the severity when the policy is non-compliant. Use the following parameter values: |
| | Optional | Used to define how often the policy is evaluated when it is in the compliant state. The values must be in the format of a duration which is a sequence of numbers with time unit suffixes. For example, By default, the minimum time between evaluations for configuration policies is approximately 10 seconds when the |
| | Optional | Used to define how often the policy is evaluated when it is in the non-compliant state. Similar to the |
| | Optional | The array of Kubernetes objects (either fully defined or containing a subset of fields) for the controller to compare with objects on the managed cluster. Note: While |
| | Optional | Used to set object templates with a raw YAML string. Note: While |
| | Optional | Used to specify conditions for the object templates. If else statements are supported values. For example, add the following value to avoid duplication in your |
| | Required | Used to define the desired state of the Kubernetes object on the managed clusters. You must use one of the following verbs as the parameter value: |
| | Optional | Overrides |
| | Required | A Kubernetes object (either fully defined or containing a subset of fields) for the controller to compare with objects on the managed cluster. |
| | Optional | Determines whether to clean up resources related to the policy when the policy is removed from a managed cluster. |

2.3.1.3. Additional resources

See the following topics for more information:

- See the Policy overview for more details on the hub cluster policy.

- See the policy samples that use NIST Special Publication 800-53 (Rev. 4), and are supported by Red Hat Advanced Cluster Management from the CM-Configuration-Management folder.

- To learn how policies are applied on your hub cluster, see Supported policies.

- Refer to Policy controllers for more details about controllers.

- Customize your policy controller configuration. See Policy controller advanced configuration.

- Learn about cleaning up resources and other topics in the Cleaning up resources that are created by policies documentation.

- Refer to Policy Generator.

- To learn how to create and customize policies, see Manage security policies.

- See Support for templates in configuration policies.

2.3.2. Certificate policy controller

The certificate policy controller can be used to detect certificates that are close to expiring, that have durations (in hours) that are too long, or that contain DNS names that fail to match specified patterns. The certificate policy is provided in the policy-templates field of the policy on the hub cluster and is propagated to the selected managed clusters by the governance framework. See the Policy overview documentation for more details on the hub cluster policy.

Configure and customize the certificate policy controller by updating the following parameters in your controller policy:

- minimumDuration

- minimumCADuration

- maximumDuration

- maximumCADuration

- allowedSANPattern

- disallowedSANPattern

Your policy might become non-compliant due to either of the following scenarios:

- When a certificate expires in less than the minimum duration of time or exceeds the maximum time.

- When DNS names fail to match the specified pattern.

The certificate policy controller is created on your managed cluster. The controller communicates with the local Kubernetes API server to get the list of secrets that contain certificates and determine all non-compliant certificates.

The certificate policy controller does not support the enforce feature.

Note: The certificate policy controller automatically looks for a certificate in a secret in only the tls.crt key. If a secret is stored under a different key, add a label named certificate_key_name with a value set to the key to let the certificate policy controller know to look in a different key. For example, if a secret contains a certificate stored in the key named sensor-cert.pem, add the following label to the secret: certificate_key_name: sensor-cert.pem.

2.3.2.1. Certificate policy controller YAML structure

View the following example of a certificate policy and review the elements in the YAML table:
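The following is a minimal sketch of a certificate policy, not the official example. It writes a CertificatePolicy to a local file and sets each of the six parameters that are listed earlier in this section. The policy name, the namespace, the duration values, and the example.com SAN pattern are placeholders for illustration.

```shell
#!/bin/sh
# Sketch only: the name, namespace, durations, and SAN patterns are placeholders.
cat > certificate-policy-sketch.yaml <<'EOF'
apiVersion: policy.open-cluster-management.io/v1
kind: CertificatePolicy
metadata:
  name: certificate-policy-sketch
spec:
  namespaceSelector:
    include:
      - default
  remediationAction: inform
  severity: low
  minimumDuration: 300h
  minimumCADuration: 400h
  maximumDuration: 9528h
  maximumCADuration: 45000h
  allowedSANPattern: ".*\\.example\\.com"
  disallowedSANPattern: "[\\*]"
EOF
```

The duration values use the Go duration format, and the SAN patterns use Go regular expression syntax. The remediationAction is inform because this controller does not support the enforce feature.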

2.3.2.1.1. Certificate policy controller YAML table

| Field | Optional or required | Description |
|---|---|---|
| | Required | Set the value to |
| | Required | Set the value to |
| | Required | The name to identify the policy. |
| | Optional | In a certificate policy, the |
| | Required | Determines namespaces in the managed cluster where secrets are monitored. The Note: If the |
| | Optional | Specifies identifying attributes of objects. See the Kubernetes labels and selectors documentation. |
| | Required | Specifies the remediation of your policy. Set the parameter value to |
| | Optional | Informs the user of the severity when the policy is non-compliant. Use the following parameter values: |
| | Required | When a value is not specified, the default value is |
| | Optional | Set a value to identify signing certificates that might expire soon with a different value from other certificates. If the parameter value is not specified, the CA certificate expiration is the value used for the |
| | Optional | Set a value to identify certificates that have been created with a duration that exceeds your desired limit. The parameter uses the time duration format from Golang. See Golang Parse Duration for more information. |
| | Optional | Set a value to identify signing certificates that have been created with a duration that exceeds your defined limit. The parameter uses the time duration format from Golang. See Golang Parse Duration for more information. |
| | Optional | A regular expression that must match every SAN entry that you have defined in your certificates. This parameter checks DNS names against patterns. See the Golang Regular Expression syntax for more information. |
| | Optional | A regular expression that must not match any SAN entries you have defined in your certificates. This parameter checks DNS names against patterns. Note: To detect wild-card certificate, use the following SAN pattern: See the Golang Regular Expression syntax for more information. |

2.3.2.2. Certificate policy sample

When your certificate policy controller is created on your hub cluster, a replicated policy is created on your managed cluster. See policy-certificate.yaml to view the certificate policy sample.

To learn how to manage a certificate policy, see Managing security policies. Refer to Policy controllers for more topics.

2.3.3. IAM policy controller

The Identity and Access Management (IAM) policy controller can be used to receive notifications about IAM policies that are non-compliant. The compliance check is based on the parameters that you configure in the IAM policy. The IAM policy is provided in the policy-templates field of the policy on the hub cluster and is propagated to the selected managed clusters by the governance framework. See the Policy YAML structure documentation for more details on the hub cluster policy.

The IAM policy controller monitors for the desired maximum number of users with a particular cluster role (i.e. ClusterRole) in your cluster. The default cluster role to monitor is cluster-admin. The IAM policy controller communicates with the local Kubernetes API server.

The IAM policy controller runs on your managed cluster. View the following sections to learn more:

2.3.3.1. IAM policy YAML structure

View the following example of an IAM policy and review the parameters in the YAML table:
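The following is a minimal sketch of an IAM policy, not the official example. It writes an IamPolicy to a local file. The policy name and the values are placeholders, and the field names reflect the IamPolicy API as an assumption here, so verify them against the API reference for your release.

```shell
#!/bin/sh
# Sketch only: the name and values are placeholders. The field names are an
# assumption about the IamPolicy API and should be checked for your release.
cat > iam-policy-sketch.yaml <<'EOF'
apiVersion: policy.open-cluster-management.io/v1
kind: IamPolicy
metadata:
  name: iam-policy-sketch
spec:
  clusterRole: cluster-admin
  severity: medium
  remediationAction: inform
  maxClusterRoleBindingUsers: 5
  ignoreClusterRoleBindings:
    - "^system:.*$"
EOF
```

With these values, the policy would be reported as non-compliant when more than five users hold the cluster-admin role through bindings that are not ignored.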

2.3.3.2. IAM policy YAML table

View the following parameter table for descriptions:

| Field | Optional or required | Description |
|---|---|---|
| | Required | Set the value to |
| | Required | Set the value to |
| | Required | The name for identifying the policy resource. |
| | Optional | The cluster role (i.e. |
| | Optional | Informs the user of the severity when the policy is non-compliant. Use the following parameter values: |
| | Optional | Specifies the remediation of your policy. Enter |
| | Optional | A list of regular expression (regex) values that indicate which cluster role binding names to ignore. These regular expression values must follow Go regexp syntax. By default, all cluster role bindings that have a name that starts with |
| | Required | Maximum number of IAM role bindings that are available before a policy is considered non-compliant. |
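The compliance check that the parameters describe can be pictured with a short Python sketch. It is a simplified model, not the controller code: the function name, the dictionary keys, and the sample bindings are hypothetical, counting distinct users is an assumption based on the controller description, and Python's `re` module stands in for Go regexp syntax.

```python
import re

def iam_policy_compliant(bindings, cluster_role, max_users, ignore_patterns=()):
    """Return (compliant, users) for a simplified IAM policy check.

    bindings: list of dicts with hypothetical keys 'name', 'role', 'users'.
    users is the sorted list of distinct users bound to cluster_role through
    bindings whose names do not match any ignore pattern.
    """
    ignored = [re.compile(pattern) for pattern in ignore_patterns]
    users = set()
    for binding in bindings:
        if binding["role"] != cluster_role:
            continue  # binding grants a different role
        if any(rx.search(binding["name"]) for rx in ignored):
            continue  # binding name is on the ignore list
        users.update(binding["users"])
    return len(users) <= max_users, sorted(users)

# Made-up sample data for illustration.
bindings = [
    {"name": "admins", "role": "cluster-admin", "users": ["alice", "bob"]},
    {"name": "ops-admins", "role": "cluster-admin", "users": ["bob", "carol"]},
    {"name": "skip-me", "role": "cluster-admin", "users": ["dave"]},
    {"name": "viewers", "role": "view", "users": ["erin"]},
]
compliant, users = iam_policy_compliant(
    bindings, "cluster-admin", max_users=2, ignore_patterns=[r"^skip-"]
)
print(compliant, users)
```

With these sample values, three distinct users hold the role after the ignored binding is excluded, so a maximum of 2 is exceeded and the sketch reports non-compliance.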

2.3.3.3. IAM policy sample

See policy-limitclusteradmin.yaml to view the IAM policy sample. See Managing security policies for more information. Refer to Policy controllers for more topics.

2.3.4. Policy set controller

The policy set controller aggregates the policy status scoped to policies that are defined in the same namespace. Create a policy set (PolicySet) to group policies that are in the same namespace. All policies in the PolicySet are placed together in a selected cluster by creating a PlacementBinding to bind the PolicySet and Placement. The policy set is deployed to the hub cluster.

Additionally, when a policy is a part of multiple policy sets, existing and new Placement resources remain in the policy. When a user removes a policy from the policy set, the policy is not applied to the cluster that is selected in the policy set, but the placements remain. The policy set controller only checks for violations in clusters that include the policy set placement.

Note: The Red Hat Advanced Cluster Management hardening sample policy set uses cluster placement. If you use cluster placement, bind the namespace containing the policy to the managed cluster set. See Deploying policies to your cluster for more details on using cluster placement.

Learn more details about the policy set structure in the following sections:

2.3.4.1. Policy set YAML structure

Your policy set might resemble the following YAML file:

2.3.4.2. Policy set table

View the following parameter table for descriptions:

| Field | Optional or required | Description |
|---|---|---|
| | Required | Set the value to |
| | Required | Set the value to |
| | Required | The name for identifying the policy resource. |
| | Required | Add configuration details for your policy. |
| | Optional | The list of policies that you want to group together in the policy set. |

2.3.4.3. Policy set sample

See the Creating policy sets section in the Managing security policies topic. Also view the stable PolicySets in PolicySets -- Stable, which require the Policy Generator for deployment. See the Policy Generator documentation.

2.4. Policy controller advanced configuration

You can customize policy controller configurations on your managed clusters by using the ManagedClusterAddOn custom resources. The following ManagedClusterAddOn resources configure the policy framework and policy controllers: governance-policy-framework, config-policy-controller, cert-policy-controller, and iam-policy-controller.

Required access: Cluster administrator

2.4.1. Configure the concurrency

You can configure the concurrency of the configuration policy controller for each managed cluster to change how many configuration policies it can evaluate at the same time. To change the default value of 2, set the policy-evaluation-concurrency annotation with a non-zero integer within quotes. You can set the value on the ManagedClusterAddOn object called config-policy-controller in the managed cluster namespace of the hub cluster.

Note: Higher concurrency values increase CPU and memory utilization on the config-policy-controller pod, Kubernetes API server, and OpenShift API server.

In the following YAML example, concurrency is set to 5 on the managed cluster called cluster1:
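As a minimal sketch of where the annotation sits, the following Python builds the metadata of a ManagedClusterAddOn as a plain dictionary and prints it as JSON. The helper name is hypothetical, and the API version string is an assumption that you should verify against your hub cluster. The resource name, namespace, and annotation key come from the description above.

```python
import json

def addon_with_annotations(name, cluster_namespace, annotations):
    """Build the metadata portion of a ManagedClusterAddOn as a dict."""
    return {
        # Assumed API group and version; confirm it on your hub cluster.
        "apiVersion": "addon.open-cluster-management.io/v1alpha1",
        "kind": "ManagedClusterAddOn",
        "metadata": {
            "name": name,
            "namespace": cluster_namespace,
            # Annotation values are strings, so integers are converted.
            "annotations": {key: str(value) for key, value in annotations.items()},
        },
    }

manifest = addon_with_annotations(
    "config-policy-controller", "cluster1", {"policy-evaluation-concurrency": 5}
)
print(json.dumps(manifest, indent=2))
```

The printed output shows only the shape of the metadata and omits any spec fields that the resource might need. The same helper can carry other annotations, such as log-level.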

2.4.2. Configure debug log

Configure debug logs for each policy controller. To receive the second level of debugging information for the Kubernetes configuration controller, add the log-level annotation with the value of 2 to the ManagedClusterAddOn custom resource. By default, the log-level is set to 0, which means you receive informative messages. View the following example:

2.4.3. Governance metric

The policy framework exposes metrics that show policy distribution and compliance. Use the policy_governance_info metric on the hub cluster to view trends and analyze any policy failures. See the following topics for an overview of metrics:

2.4.3.1. Metric: policy_governance_info

The policy_governance_info metric is collected by OpenShift Container Platform monitoring, and some aggregate data is collected by Red Hat Advanced Cluster Management observability, if it is enabled.

Note: If observability is enabled, you can enter a query for the metric from the Grafana Explore page.

When you create a policy, you are creating a root policy. The framework watches for root policies as well as PlacementRules and PlacementBindings, to determine where to create propagated policies in order to distribute the policy to managed clusters. For both root and propagated policies, a metric of 0 is recorded if the policy is compliant, and 1 if it is non-compliant.

The policy_governance_info metric uses the following labels:

- type: The label values are root or propagated.
- policy: The name of the associated root policy.
- policy_namespace: The namespace on the hub cluster where the root policy was defined.
- cluster_namespace: The namespace for the cluster where the policy is distributed.

These labels and values enable queries that show how policies are distributed and which clusters are non-compliant, which might otherwise be difficult to track.
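To make the label model concrete, the following Python sketch aggregates a handful of made-up policy_governance_info samples and counts the non-compliant propagated policies for each cluster namespace. The sample data and the function name are hypothetical. Only the label names and the 0 and 1 values come from the description above.

```python
from collections import Counter

def noncompliant_by_cluster(samples):
    """Count non-compliant propagated policies per cluster namespace."""
    counts = Counter()
    for labels, value in samples:
        # A value of 1 means non-compliant; root policies are skipped here.
        if labels.get("type") == "propagated" and value == 1:
            counts[labels["cluster_namespace"]] += 1
    return dict(counts)

# Made-up samples: (labels, value).
samples = [
    ({"type": "root", "policy": "policy-pod", "policy_namespace": "policies"}, 1),
    ({"type": "propagated", "policy": "policy-pod",
      "policy_namespace": "policies", "cluster_namespace": "cluster1"}, 0),
    ({"type": "propagated", "policy": "policy-pod",
      "policy_namespace": "policies", "cluster_namespace": "cluster2"}, 1),
    ({"type": "root", "policy": "policy-role", "policy_namespace": "policies"}, 1),
    ({"type": "propagated", "policy": "policy-role",
      "policy_namespace": "policies", "cluster_namespace": "cluster2"}, 1),
]
summary = noncompliant_by_cluster(samples)
print(summary)
```

In PromQL, a comparable aggregation might be written as `sum by (cluster_namespace) (policy_governance_info{type="propagated"})`, because each non-compliant propagated policy contributes a value of 1.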

Note: If the metrics are not needed, and there are any concerns about performance or security, you can disable this feature. Set the DISABLE_REPORT_METRICS environment variable to true in the propagator deployment. You can also add the policy_governance_info metric to the observability allowlist as a custom metric. See Adding custom metrics for more details.

2.4.3.2. Metric: config_policies_evaluation_duration_seconds

The config_policies_evaluation_duration_seconds histogram tracks the number of seconds it takes to process all configuration policies that are ready to be evaluated on the cluster. Use the following metrics to query the histogram:

- config_policies_evaluation_duration_seconds_bucket: The buckets are cumulative and represent seconds with the following possible entries: 1, 3, 9, 10.5, 15, 30, 60, 90, 120, 180, 300, 450, 600, and greater.
- config_policies_evaluation_duration_seconds_count: The count of all events.
- config_policies_evaluation_duration_seconds_sum: The sum of all values.
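Because the buckets are cumulative, each bucket count includes every observation that fits in the smaller buckets as well. The following Python sketch converts cumulative counts into per-interval counts. The function name and the sample counts are made up for illustration. Only the bucket bounds come from the list above.

```python
def per_bucket_counts(cumulative):
    """Convert cumulative histogram counts into per-interval counts.

    cumulative: list of (upper_bound_seconds, cumulative_count) pairs,
    sorted by upper bound.
    """
    counts = []
    previous = 0
    for upper_bound, total in cumulative:
        # Observations that landed between the previous bound and this one.
        counts.append((upper_bound, total - previous))
        previous = total
    return counts

# Made-up cumulative counts for the first few documented bounds.
cumulative = [(1, 4), (3, 10), (9, 15), (10.5, 15), (15, 18)]
intervals = per_bucket_counts(cumulative)
print(intervals)
```

In this sample, 10 evaluation cycles finished within 3 seconds, of which 6 took longer than 1 second.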

Use the config_policies_evaluation_duration_seconds metric to determine if the ConfigurationPolicy evaluationInterval setting needs to be changed for resource-intensive policies that do not need frequent evaluation. You can also increase the concurrency at the cost of higher resource utilization on the Kubernetes API server. See the Configure the concurrency section for more details.

To receive information about the time used to evaluate configuration policies, perform a Prometheus query that resembles the following expression:

rate(config_policies_evaluation_duration_seconds_sum[10m]) / rate(config_policies_evaluation_duration_seconds_count[10m])
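The arithmetic behind this kind of query can be sketched in Python. Dividing the rate of the sum by the rate of the count over the same window cancels the window length, which leaves the increase in total seconds divided by the increase in the number of evaluation cycles. The function name and the sample counter values are hypothetical, and the sketch ignores the extrapolation that Prometheus applies at window edges.

```python
def average_evaluation_seconds(sum_start, sum_end, count_start, count_end):
    """Average seconds per evaluation cycle over a window, from counter deltas."""
    evaluations = count_end - count_start
    if evaluations == 0:
        return None  # no evaluation cycle completed in the window
    return (sum_end - sum_start) / evaluations

# Made-up counter readings at the start and end of a 10 minute window.
average = average_evaluation_seconds(120.0, 180.0, 40, 60)
print(average)
```

Here 20 evaluation cycles added 60 seconds to the sum, so the average is 3 seconds per cycle.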

The config-policy-controller pod running on managed clusters in the open-cluster-management-agent-addon namespace calculates the metric. The config-policy-controller does not send the metric to observability by default.

2.4.4. Verify configuration changes

When the controller applies the new configuration, the ManifestApplied condition is updated in the ManagedClusterAddOn. You can use the timestamp of that condition to verify that the configuration was applied. For example, the following command shows when the cert-policy-controller on the local-cluster was updated:

oc get -n local-cluster managedclusteraddon cert-policy-controller -o yaml | grep -B4 'type: ManifestApplied'

You might receive the following output:

- lastTransitionTime: "2023-01-26T15:42:22Z"
  message: manifests of addon are applied successfully
  reason: AddonManifestApplied
  status: "True"
  type: ManifestApplied
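If you prefer to check the condition programmatically, the following Python sketch reads the same information from the resource in JSON form, for example as returned with the `-o json` output option. The function name is hypothetical and the embedded JSON is a trimmed, hand-written stand-in for real command output.

```python
import json

def manifest_applied_time(addon):
    """Return lastTransitionTime of a true ManifestApplied condition, or None."""
    for condition in addon.get("status", {}).get("conditions", []):
        if condition.get("type") == "ManifestApplied" and condition.get("status") == "True":
            return condition.get("lastTransitionTime")
    return None

# Hand-written stand-in for the JSON form of the ManagedClusterAddOn.
raw = """
{
  "status": {
    "conditions": [
      {
        "lastTransitionTime": "2023-01-26T15:42:22Z",
        "message": "manifests of addon are applied successfully",
        "reason": "AddonManifestApplied",
        "status": "True",
        "type": "ManifestApplied"
      }
    ]
  }
}
"""
applied_at = manifest_applied_time(json.loads(raw))
print(applied_at)
```

Comparing the returned timestamp with the time of your change gives a quick indication of whether the new configuration was picked up.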

Return to the Governance page for more topics.

2.5. Supported policies

View the supported policies to learn how to define rules, processes, and controls on the hub cluster when you create and manage policies in Red Hat Advanced Cluster Management for Kubernetes.

2.5.1. Table of sample configuration policies

View the following sample configuration policies:

| Policy sample | Description |
|---|---|
| | Ensure consistent environment isolation and naming with Namespaces. See the Kubernetes Namespace documentation. |
| | Ensure cluster workload configuration. See the Kubernetes Pod documentation. |
| | Limit workload resource usage by using Limit Ranges. See the Limit Range documentation. |
| Pod security policy (Deprecated) | Ensure consistent workload security. See the Kubernetes Pod security policy documentation. |
| | Manage role permissions and bindings by using Roles and Role Bindings. See the Kubernetes RBAC documentation. |
| | Manage workload permissions with Security Context Constraints. See the OpenShift Security Context Constraints documentation. |
| | Ensure data security with etcd encryption. See the OpenShift etcd encryption documentation. |
| | Deploy the Compliance Operator to scan and enforce the compliance state of clusters leveraging OpenSCAP. See the OpenShift Compliance Operator documentation. |
| | After applying the Compliance Operator policy, deploy an Essential 8 (E8) scan to check for compliance with E8 security profiles. See the OpenShift Compliance Operator documentation. |
| | After applying the Compliance Operator policy, deploy a Center for Internet Security (CIS) scan to check for compliance with CIS security profiles. See the OpenShift Compliance Operator documentation. |
| | Deploy the Container Security Operator and detect known image vulnerabilities in pods running on the cluster. See the Container Security Operator GitHub. |
| | Gatekeeper is an admission webhook that enforces custom resource definition-based policies that are run by the Open Policy Agent (OPA) policy engine. See the Gatekeeper documentation. |
| | After deploying Gatekeeper to the clusters, deploy this sample Gatekeeper policy that ensures namespaces that are created on the cluster are labeled as specified. |

2.5.2. Support matrix for out-of-box policies

| Policy | Red Hat OpenShift Container Platform 3.11 | Red Hat OpenShift Container Platform 4 |
|---|---|---|
| Memory usage policy | x | x |
| Namespace policy | x | x |
| Image vulnerability policy | x | x |
| Pod policy | x | x |
| Pod security policy (deprecated) | | |
| Role policy | x | x |
| Role binding policy | x | x |
| Security Context Constraints policy (SCC) | x | x |
| ETCD encryption policy | | x |
| Gatekeeper policy | x | |
| Compliance operator policy | | x |
| E8 scan policy | | x |
| OpenShift CIS scan policy | | x |
| Policy set | x | |

View the following policy samples to view how specific policies are applied:

Refer to Governance for more topics.

2.5.3. Memory usage policy

The Kubernetes configuration policy controller monitors the status of the memory usage policy. Use the memory usage policy to limit or restrict your memory and compute usage. For more information, see Limit Ranges in the Kubernetes documentation.

Learn more details about the memory usage policy structure in the following sections:

2.5.3.1. Memory usage policy YAML structure

Your memory usage policy might resemble the following YAML file:

2.5.3.2. Memory usage policy table

| Field | Optional or required | Description |
|---|---|---|
| | Required | Set the value to |
| | Required | Set the value to |
| | Required | The name for identifying the policy resource. |
| | Required | The namespace of the policy. |
| | Optional | Specifies the remediation of your policy. The parameter values are |
| | Required | Set the value to |
| | Required | Used to list configuration policies containing Kubernetes objects that must be evaluated or applied to the managed clusters. |

2.5.3.3. Memory usage policy sample

See the policy-limitmemory.yaml to view a sample of the policy. See Managing security policies for more details. Refer to the Policy overview documentation, and to Kubernetes configuration policy controller to view other configuration policies that are monitored by the controller.

2.5.4. Namespace policy

The Kubernetes configuration policy controller monitors the status of your namespace policy. Apply the namespace policy to define specific rules for your namespace.

Learn more details about the namespace policy structure in the following sections:

2.5.4.1. Namespace policy YAML structure

2.5.4.2. Namespace policy YAML table

| Field | Optional or required | Description |
|---|---|---|
| | Required | Set the value to |
| | Required | Set the value to |
| | Required | The name for identifying the policy resource. |
| | Required | The namespace of the policy. |
| | Optional | Specifies the remediation of your policy. The parameter values are |
| | Required | Set the value to |
| | Required | Used to list configuration policies containing Kubernetes objects that must be evaluated or applied to the managed clusters. |

2.5.4.3. Namespace policy sample

See policy-namespace.yaml to view the policy sample.

See Managing security policies for more details. Refer to Policy overview documentation, and to the Kubernetes configuration policy controller to learn about other configuration policies.

2.5.5. Image vulnerability policy

Apply the image vulnerability policy to detect if container images have vulnerabilities by leveraging the Container Security Operator. The policy installs the Container Security Operator on your managed cluster if it is not installed.

The image vulnerability policy is checked by the Kubernetes configuration policy controller. For more information about the Security Operator, see the Container Security Operator from the Quay repository.

Notes:

- Image vulnerability policy is not functional during a disconnected installation.
- The Image vulnerability policy is not supported on the IBM Power and IBM Z architectures. It relies on the Quay Container Security Operator. There are no ppc64le or s390x images in the container-security-operator registry.

View the following sections to learn more:

2.5.5.1. Image vulnerability policy YAML structure

When you create the container security operator policy, it involves the following policies:

- A policy that creates the subscription (container-security-operator) to reference the name and channel. This configuration policy must have spec.remediationAction set to enforce to create the resources. The subscription pulls the profile, as a container, that the subscription supports. View the following example:
- An inform configuration policy to audit the ClusterServiceVersion to ensure that the container security operator installation succeeded. View the following example:
- An inform configuration policy to audit whether any ImageManifestVuln objects were created by the image vulnerability scans. View the following example:

2.5.5.2. Image vulnerability policy sample

See policy-imagemanifestvuln.yaml. See Managing security policies for more information. Refer to Kubernetes configuration policy controller to view other configuration policies that are monitored by the configuration controller.

2.5.6. Pod policy

The Kubernetes configuration policy controller monitors the status of your pod policies. Apply the pod policy to define the container rules for your pods. A pod must exist in your cluster to use this information.

Learn more details about the pod policy structure in the following sections:

2.5.6.1. Pod policy YAML structure

2.5.6.2. Pod policy table

| Field | Optional or required | Description |
|---|---|---|
| | Required | Set the value to |
| | Required | Set the value to |
| | Required | The name for identifying the policy resource. |
| | Required | The namespace of the policy. |
| | Optional | Specifies the remediation of your policy. The parameter values are |
| | Required | Set the value to |
| | Required | Used to list configuration policies containing Kubernetes objects that must be evaluated or applied to the managed clusters. |

2.5.6.3. Pod policy sample

See policy-pod.yaml to view the policy sample.

Refer to Kubernetes configuration policy controller to view other configuration policies that are monitored by the configuration controller, and see the Policy overview documentation to see a full description of the policy YAML structure and additional fields. Return to Managing configuration policies documentation to manage other policies.

2.5.7. Pod security policy (Deprecated)

The Kubernetes configuration policy controller monitors the status of the pod security policy. Apply a pod security policy to secure pods and containers.

Learn more details about the pod security policy structure in the following sections:

2.5.7.1. Pod security policy YAML structure

2.5.7.2. Pod security policy table

| Field | Optional or required | Description |
|---|---|---|
| | Required | Set the value to |
| | Required | Set the value to |
| | Required | The name for identifying the policy resource. |
| | Required | The namespace of the policy. |
| | Optional | Specifies the remediation of your policy. The parameter values are |
| | Required | Set the value to |
| | Required | Used to list configuration policies containing Kubernetes objects that must be evaluated or applied to the managed clusters. |

2.5.7.3. Pod security policy sample

The support of pod security policies is removed from OpenShift Container Platform 4.12 and later, and from Kubernetes v1.25 and later. If you apply a PodSecurityPolicy resource, you might receive the following non-compliant message:

violation - couldn't find mapping resource with kind PodSecurityPolicy, please check if you have CRD deployed

- For more information including the deprecation notice, see Pod Security Policies in the Kubernetes documentation.
- See policy-psp.yaml to view the sample policy. View Managing configuration policies for more information.
- Refer to the Policy overview documentation for a full description of the policy YAML structure, and Kubernetes configuration policy controller to view other configuration policies that are monitored by the controller.

2.5.8. Role policy

The Kubernetes configuration policy controller monitors the status of role policies. Define roles in the object-template to set rules and permissions for specific roles in your cluster.

Learn more details about the role policy structure in the following sections:

2.5.8.1. Role policy YAML structure

2.5.8.2. Role policy table

| Field | Optional or required | Description |
|---|---|---|
| | Required | Set the value to |
| | Required | Set the value to |
| | Required | The name for identifying the policy resource. |
| | Required | The namespace of the policy. |
| | Optional | Specifies the remediation of your policy. The parameter values are |
| | Required | Set the value to |
| | Required | Used to list configuration policies containing Kubernetes objects that must be evaluated or applied to the managed clusters. |

2.5.8.3. Role policy sample

Apply a role policy to set rules and permissions for specific roles in your cluster. For more information on roles, see Role-based access control. To view a sample of a role policy, see policy-role.yaml.

To learn how to manage role policies, refer to Managing configuration policies. See the Kubernetes configuration policy controller to view other configuration policies that are monitored by the controller.

2.5.9. Role binding policy

The Kubernetes configuration policy controller monitors the status of your role binding policy. Apply a role binding policy to bind a policy to a namespace in your managed cluster.

Learn more details about the role binding policy structure in the following sections:

2.5.9.1. Role binding policy YAML structure

2.5.9.2. Role binding policy table

| Field | Optional or required | Description |
|---|---|---|
| | Required | Set the value to |
| | Required | Set the value to |
| | Required | The name for identifying the policy resource. |
| | Required | The namespace of the policy. |
| | Optional | Specifies the remediation of your policy. The parameter values are |
| | Required | Set the value to |
| | Required | Used to list configuration policies containing Kubernetes objects that must be evaluated or applied to the managed clusters. |

2.5.9.3. Role binding policy sample

See policy-rolebinding.yaml to view the policy sample. For a full description of the policy YAML structure and additional fields, see the Policy overview documentation. Refer to Kubernetes configuration policy controller documentation to learn about other configuration policies.

2.5.10. Security Context Constraints policy

The Kubernetes configuration policy controller monitors the status of your Security Context Constraints (SCC) policy. Apply an SCC policy to control permissions for pods by defining conditions in the policy.

Learn more details about SCC policies in the following sections:

2.5.10.1. SCC policy YAML structure

2.5.10.2. SCC policy table

| Field | Optional or required | Description |
|---|---|---|
| | Required | Set the value to |
| | Required | Set the value to |
| | Required | The name for identifying the policy resource. |
| | Required | The namespace of the policy. |
| | Optional | Specifies the remediation of your policy. The parameter values are |
| | Required | Set the value to |
| | Required | Used to list configuration policies containing Kubernetes objects that must be evaluated or applied to the managed clusters. |

For explanations of the contents of an SCC policy, see Managing Security Context Constraints in the OpenShift Container Platform documentation.

2.5.10.3. SCC policy sample

Apply a Security Context Constraints (SCC) policy to control permissions for pods by defining conditions in the policy. For more information, see Managing Security Context Constraints (SCC).

See policy-scc.yaml to view the policy sample. For a full description of the policy YAML structure and additional fields, see the Policy overview documentation. Refer to Kubernetes configuration policy controller documentation to learn about other configuration policies.

2.5.11. ETCD encryption policy

Apply the etcd-encryption policy to detect or enable encryption of sensitive data in the ETCD data store. The Kubernetes configuration policy controller monitors the status of the etcd-encryption policy. For more information, see Encrypting etcd data in the OpenShift Container Platform documentation.

Note: The ETCD encryption policy only supports Red Hat OpenShift Container Platform 4 and later.

Learn more details about the etcd-encryption policy structure in the following sections:

2.5.11.1. ETCD encryption policy YAML structure

Your etcd-encryption policy might resemble the following YAML file:

2.5.11.2. ETCD encryption policy table

| Field | Optional or required | Description |
|---|---|---|
| | Required | Set the value to |
| | Required | Set the value to |
| | Required | The name for identifying the policy resource. |
| | Required | The namespace of the policy. |
| | Optional | Specifies the remediation of your policy. The parameter values are |
| | Required | Set the value to |
| | Required | Used to list configuration policies containing Kubernetes objects that must be evaluated or applied to the managed clusters. |

2.5.11.3. ETCD encryption policy sample

See policy-etcdencryption.yaml for the policy sample. See the Policy overview documentation and the Kubernetes configuration policy controller to view additional details on policy and configuration policy fields.

2.5.12. Compliance Operator policy

You can use the Compliance Operator to automate the inspection of numerous technical implementations and compare those against certain aspects of industry standards, benchmarks, and baselines. The Compliance Operator is not an auditor. To be compliant or certified with these various standards, you need to engage an authorized auditor such as a Qualified Security Assessor (QSA), Joint Authorization Board (JAB), or other industry recognized regulatory authority to assess your environment.

Recommendations that are generated from the Compliance Operator are based on generally available information and practices regarding such standards, and might assist you with remediations, but actual compliance is your responsibility. Work with an authorized auditor to achieve compliance with a standard.

For the latest updates, see the Compliance Operator release notes.

2.5.12.1. Compliance Operator policy overview

You can install the Compliance Operator on your managed cluster by using the Compliance Operator policy. The Compliance Operator policy is created as a Kubernetes configuration policy in Red Hat Advanced Cluster Management. OpenShift Container Platform supports the Compliance Operator policy.

Note: The Compliance operator policy relies on the OpenShift Container Platform Compliance Operator, which is not supported on the IBM Power or IBM Z architectures. See Understanding the Compliance Operator in the OpenShift Container Platform documentation for more information about the Compliance Operator.

2.5.12.2. Compliance operator resources

When you create a Compliance Operator policy, the following resources are created:

- A Compliance Operator namespace (openshift-compliance) for the operator installation:
- An operator group (compliance-operator) to specify the target namespace:
- A subscription (comp-operator-subscription) to reference the name and channel. The subscription pulls the profile, as a container, that it supports:

2.5.12.3. Additional resources

- For more information, see Managing the Compliance Operator in the OpenShift Container Platform documentation.
- After you install the Compliance Operator policy, the following pods are created: compliance-operator, ocp4, and rhcos4. See a sample of the policy-compliance-operator-install.yaml.
- You can also create and apply the E8 scan policy and OpenShift CIS scan policy, after you have installed the Compliance Operator. For more information, see E8 scan policy and OpenShift CIS scan policy.
- To learn about managing Compliance Operator policies, see Managing security policies. Refer to Kubernetes configuration policy controller for more topics about configuration policies.

2.5.13. E8 scan policy

An Essential 8 (E8) scan policy deploys a scan that checks the master and worker nodes for compliance with the E8 security profiles. You must install the compliance operator to apply the E8 scan policy.

The E8 scan policy is created as a Kubernetes configuration policy in Red Hat Advanced Cluster Management. OpenShift Container Platform 4.7 and 4.6 support the E8 scan policy. For more information, see Understanding the Compliance Operator in the OpenShift Container Platform documentation.

2.5.13.1. E8 scan policy resources

When you create an E8 scan policy, the following resources are created:

- A ScanSettingBinding resource (e8) to identify which profiles to scan:
- A ComplianceSuite resource (compliance-suite-e8) to verify if the scan is complete by checking the status field:
- A ComplianceCheckResult resource (compliance-suite-e8-results) which reports the results of the scan suite by checking the ComplianceCheckResult custom resources (CR):

Note: Automatic remediation is supported. Set the remediation action to enforce to create the ScanSettingBinding resource.

See a sample of the policy-compliance-operator-e8-scan.yaml. See Managing security policies for more information. Note: After your E8 policy is deleted, it is removed from your target cluster or clusters.

2.5.14. OpenShift CIS scan policy

An OpenShift CIS scan policy deploys a scan that checks the master and worker nodes for compliance with the OpenShift CIS security benchmark. You must install the compliance operator to apply the OpenShift CIS policy.

The OpenShift CIS scan policy is created as a Kubernetes configuration policy in Red Hat Advanced Cluster Management. OpenShift Container Platform 4.9, 4.7, and 4.6 support the OpenShift CIS scan policy. For more information, see Understanding the Compliance Operator in the OpenShift Container Platform documentation.

2.5.14.1. OpenShift CIS resources

When you create an OpenShift CIS scan policy, the following resources are created:

- A ScanSettingBinding resource (cis) to identify which profiles to scan:
- A ComplianceSuite resource (compliance-suite-cis) to verify if the scan is complete by checking the status field:
- A ComplianceCheckResult resource (compliance-suite-cis-results) which reports the results of the scan suite by checking the ComplianceCheckResult custom resources (CR):

See a sample of the policy-compliance-operator-cis-scan.yaml file. For more information on creating policies, see Managing security policies.

2.6. Manage security policies

Use the Governance dashboard to create, view, and manage your security policies and policy violations. You can create YAML files for your policies from the CLI and console.

2.6.1. Governance page

The following tabs are displayed on the Governance page:

Overview

View the following summary cards from the Overview tab: Policy set violations, Policy violations, Clusters, Categories, Controls, and Standards.

Policy sets

Create and manage hub cluster policy sets.

Policies

Create and manage security policies. The table of policies lists the following details of a policy: Name, Namespace, Status, Remediation, Policy set, Cluster violations, Source, Automation, and Created.

You can edit, enable or disable, set remediation to inform or enforce, or remove a policy by selecting the Actions icon. You can view the categories and standards of a specific policy by selecting the drop-down arrow to expand the row.

Complete bulk actions by selecting multiple policies and clicking the Actions button. You can also customize your policy table by clicking the Filter button.

When you select a policy in the table list, the following tabs of information are displayed from the console:

- Details: Select the Details tab to view policy details and placement details. In the Placement table, the Compliance column provides links to view the compliance of the clusters that are displayed.

- Results: Select the Results tab to view a table list of all clusters that are associated with the policy.

From the Message column, click the View details link to view the template details, template YAML, and related resources. Click the View history link to view the violation message and the time of the last report.

2.6.2. Governance automation configuration

If there is a configured automation for a specific policy, you can select the automation to view more details. View the following descriptions of the schedule frequency options for your automation:

- Manual run: Manually set this automation to run once. After the automation runs, it is set to disabled. Note: You can only select Manual run mode when the schedule frequency is disabled.

- Run once mode: When a policy is violated, the automation runs one time. After the automation runs, it is set to disabled. After the automation is set to disabled, you must continue to run the automation manually. When you use once mode, the target_clusters extra variable is automatically supplied with the list of clusters that violated the policy. The Ansible Automation Platform Job template must have PROMPT ON LAUNCH enabled for the EXTRA VARIABLES section (also known as extra_vars).

- Run everyEvent mode: When a policy is violated, the automation runs every time for each unique policy violation per managed cluster. Use the DelayAfterRunSeconds parameter to set the minimum number of seconds before an automation can be restarted on the same cluster. If the policy is violated multiple times during the delay period and remains in the violated state, the automation runs one time after the delay period. The default is 0 seconds and is only applicable for the everyEvent mode. When you use everyEvent mode, the target_clusters extra variable and the Ansible Automation Platform Job template requirement are the same as in once mode.

- Disable automation: When the scheduled automation is set to disabled, the automation does not run until the setting is updated.

The following variables are automatically provided in the extra_vars of the Ansible Automation Platform Job:

- policy_name: The name of the non-compliant root policy that initiates the Ansible Automation Platform job on the hub cluster.

- policy_namespace: The namespace of the root policy.

- hub_cluster: The name of the hub cluster, which is determined by the value in the clusters DNS object.

- policy_sets: This parameter contains all associated policy set names of the root policy. If the policy is not within a policy set, the policy_sets parameter is empty.

- policy_violations: This parameter contains a list of non-compliant cluster names, and the value is the policy status field for each non-compliant cluster.
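To picture the variables described above, the following sketch prints what the extra_vars of a job might look like when the automation runs in once mode. Every value (policy name, namespace, hub name, policy set, cluster name) is hypothetical, and the exact shape of the policy_violations entry is an assumption based on the descriptions in the list, not captured output from a real job.

```shell
# Illustrative extra_vars payload. All values are hypothetical, and the
# layout of policy_violations is an assumption drawn from the list above.
extra_vars=$(cat <<'EOF'
policy_name: policy-example
policy_namespace: policies
hub_cluster: hub.example.com
policy_sets:
  - example-policy-set
policy_violations:
  managed-cluster-1:
    compliant: NonCompliant
target_clusters:
  - managed-cluster-1
EOF
)

# Show the payload so it can be compared with a real job's variables.
printf '%s\n' "$extra_vars"
```

Compare this with the Extra variables that are recorded on a real job run before you rely on any field in a playbook.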

Review the following topics to learn more about creating and updating your security policies:

2.6.3. Configuring Ansible Automation Platform for governance

Red Hat Advanced Cluster Management for Kubernetes governance can be integrated with Red Hat Ansible Automation Platform to create policy violation automations. You can configure the automation from the Red Hat Advanced Cluster Management console.

2.6.3.1. Prerequisites

- Red Hat OpenShift Container Platform 4.5 or later

- You must have Ansible Automation Platform version 3.7.3 or a later version installed. It is best practice to install the latest supported version of Ansible Automation Platform. See Red Hat Ansible Automation Platform documentation for more details.

- Install the Ansible Automation Platform Resource Operator from the Operator Lifecycle Manager. In the Update Channel section, select stable-2.x-cluster-scoped. Select the All namespaces on the cluster (default) installation mode.

Note: Ensure that the Ansible Automation Platform job template is idempotent when you run it. If you do not have Ansible Automation Platform Resource Operator, you can find it from the Red Hat OpenShift Container Platform OperatorHub page.

For more information about installing and configuring Red Hat Ansible Automation Platform, see Setting up Ansible tasks.

2.6.3.2. Creating a policy violation automation from the console

After you log in to your Red Hat Advanced Cluster Management hub cluster, select Governance from the navigation menu, and then click the Policies tab to view the policy tables.

Configure an automation for a specific policy by clicking Configure in the Automation column. You can create automation when the policy automation panel appears. From the Ansible credential section, click the drop-down menu to select an Ansible credential. If you need to add a credential, see Managing credentials overview.

Note: This credential is copied to the same namespace as the policy. The credential is used by the AnsibleJob resource that is created to initiate the automation. Changes to the Ansible credential in the Credentials section of the console are automatically applied to the copied credential.

After a credential is selected, click the Ansible job drop-down list to select a job template. In the Extra variables section, add the parameter values from the extra_vars section of the PolicyAutomation. Select the frequency of the automation. You can select Run once mode, Run everyEvent mode, or Disable automation.

Save your policy violation automation by selecting Submit. When you select the View Job link from the Ansible job details side panel, the link directs you to the job template on the Search page. After you successfully create the automation, it is displayed in the Automation column.

Note: When you delete a policy that has an associated policy automation, the policy automation is automatically deleted as part of clean up.

Your policy violation automation is created from the console.

2.6.3.3. Creating a policy violation automation from the CLI

Complete the following steps to configure a policy violation automation from the CLI:

- From your terminal, log in to your Red Hat Advanced Cluster Management hub cluster using the oc login command.

- Find or create a policy that you want to add an automation to. Note the policy name and namespace.

- Create a PolicyAutomation resource definition, using the sample PolicyAutomation resource as a guide.

- The Automation template name in the sample is Policy Compliance Template. Change that value to match your job template name.

- In the extra_vars section, add any parameters you need to pass to the Automation template.

- Set the mode to either once, everyEvent, or disabled.

- Set the policyRef to the name of your policy.

- Create a secret in the same namespace as this PolicyAutomation resource that contains the Ansible Automation Platform credential. In the sample, the secret name is ansible-tower. Use the sample from application lifecycle to see how to create the secret.

- Create the PolicyAutomation resource.

Notes:

- An immediate run of the policy automation can be initiated by adding the following annotation to the PolicyAutomation resource:

  metadata:
    annotations:
      policy.open-cluster-management.io/rerun: "true"

- When the policy automation is in once mode, the automation runs when the policy is non-compliant. The target_clusters variable is added to extra_vars, and its value is an array of each managed cluster name where the policy is non-compliant.

- When the policy automation is in everyEvent mode, the policy is non-compliant, and the time since the last run exceeds the DelayAfterRunSeconds value, the automation runs for every policy violation.
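The steps above can be sketched as one shell session. The manifest is a reconstruction built from the names that the steps mention (Policy Compliance Template, ansible-tower, extra_vars, mode, policyRef). The policy name my-policy, the namespace policies, the resource name suffix, the your_var entry, the type value AnsibleJob, and the apiVersion policy.open-cluster-management.io/v1beta1 are assumptions, so check them against the CRD that is installed on your hub cluster.

```shell
# Hypothetical names; replace them with your own policy and namespace.
POLICY_NAME="my-policy"
POLICY_NAMESPACE="policies"
MANIFEST="policy-automation.yaml"

# Write a PolicyAutomation manifest that points at the policy.
cat > "$MANIFEST" <<EOF
apiVersion: policy.open-cluster-management.io/v1beta1  # assumed API version
kind: PolicyAutomation
metadata:
  name: ${POLICY_NAME}-policy-automation
  namespace: ${POLICY_NAMESPACE}
spec:
  automationDef:
    extra_vars:
      your_var: your_value            # hypothetical parameter for the job template
    name: Policy Compliance Template  # change to match your job template name
    secret: ansible-tower             # secret with the Ansible Automation Platform credential
    type: AnsibleJob
  mode: disabled                      # once, everyEvent, or disabled
  policyRef: ${POLICY_NAME}
EOF

# Apply the manifest only when the oc client is available.
# This assumes you already ran oc login against the hub cluster.
if command -v oc >/dev/null 2>&1; then
  oc apply -f "$MANIFEST"
else
  echo "oc not found; apply $MANIFEST manually"
fi

# To request an immediate run, set the rerun annotation from the notes above:
# oc annotate policyautomation "${POLICY_NAME}-policy-automation" \
#   -n "$POLICY_NAMESPACE" policy.open-cluster-management.io/rerun=true --overwrite
```

Keeping the manifest in a file, instead of piping it straight to oc, makes it easy to commit the automation next to the policy that it references.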

2.6.4. Deploying policies using GitOps

You can deploy a set of policies across a fleet of managed clusters with the governance framework. You can also contribute to and use the policies in the open source community repository, policy-collection. For more information, see Contributing a custom policy. Policies in each of the stable and community folders from the open source community are further organized according to NIST Special Publication 800-53.

Continue reading to learn best practices to use GitOps to automate and track policy updates and creation through a Git repository.

Prerequisite: Before you begin, be sure to fork the policy-collection repository.

2.6.4.1. Customizing your local repository

Customize your local repository by consolidating the stable and community policies into a single folder. Remove the policies you do not want to use. Complete the following steps to customize your local repository:

- Create a new directory in the repository to hold the policies that you want to deploy. Be sure that you are in your local policy-collection repository on your main default branch for GitOps. Run the following command:

  mkdir my-policies

- Copy all of the stable and community policies into your my-policies directory. Start with the community policies first, in case the stable folder contains duplicates of what is available in the community. Run the following commands:

  cp -R community/* my-policies/
  cp -R stable/* my-policies/

Now that you have all of the policies in a single parent directory structure, you can edit the policies in your fork.

Tips:

- It is best practice to remove the policies you are not planning to use.

- Learn about policies and the definition of the policies from the following list:

  - Purpose: Understand what the policy does.

  - Remediation Action: Does the policy only inform you of compliance, or enforce the policy and make changes? See the spec.remediationAction parameter. If changes are enforced, make sure you understand the functional expectation. Remember to check which policies support enforcement. For more information, view the Validate section.

    Note: The spec.remediationAction set for the policy overrides any remediation action that is set in the individual spec.policy-templates.

  - Placement: What clusters is the policy deployed to? By default, most policies target the clusters with the environment: dev label. Some policies may target OpenShift Container Platform clusters or another label. You can update or add additional labels to include other clusters. When there is no specific value, the policy is applied to all of your clusters. You can also create multiple copies of a policy and customize each one if you want to use a policy that is configured one way for one set of clusters and configured another way for another set of clusters.
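To review the remediation action of each policy before you deploy, you can search the consolidated directory. The following sketch assumes the my-policies directory from the earlier steps, assumes that the policy files use the .yaml extension, and relies on the --include option that GNU and BSD grep provide. The helper name list_remediation_actions is hypothetical.

```shell
# Print every remediationAction setting found under a policy directory,
# prefixed with the file name and line number of the match.
list_remediation_actions() {
  dir="$1"
  grep -rn --include='*.yaml' 'remediationAction:' "$dir"
}

# Run the search against the consolidated directory when it exists.
if [ -d my-policies ]; then
  list_remediation_actions my-policies
fi
```

A file can report more than one match because the remediation action can be set for the policy and in the individual policy templates.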

2.6.4.2. Committing to your local repository

After you are satisfied with the changes you have made to your directory, commit and push your changes to Git so that they can be accessed by your cluster.

Note: This example is used to show the basics of how to use policies with GitOps, so you might have a different workflow to get changes to your branch.

Complete the following steps:

- From your terminal, run git status to view your recent changes in your directory that you previously created. Add your new directory to the list of changes to be committed with the following command:

  git add my-policies/

- Commit the changes and customize your message. Run the following command:

  git commit -m "Policies to deploy to the hub cluster"

- Push the changes to the branch of your forked repository that is used for GitOps. Run the following command: