Clusters

Cluster management

Abstract

Chapter 1. Cluster lifecycle with multicluster engine operator overview

The multicluster engine operator is the cluster lifecycle operator that provides cluster management capabilities for OpenShift Container Platform and Red Hat Advanced Cluster Management hub clusters. From the hub cluster, you can create and manage clusters, as well as destroy any clusters that you created. You can also hibernate, resume, and detach clusters. Learn more about the cluster lifecycle capabilities from the following documentation.

Access the Support matrix to learn about hub cluster and managed cluster requirements and support.

Information:

- Your cluster is created by using the OpenShift Container Platform cluster installer with the Hive resource. You can find more information about the process of installing OpenShift Container Platform clusters at Installing and configuring OpenShift Container Platform clusters in the OpenShift Container Platform documentation.

- With your OpenShift Container Platform cluster, you can use multicluster engine operator as a standalone cluster manager for cluster lifecycle function, or you can use it as part of a Red Hat Advanced Cluster Management hub cluster.

- If you are using OpenShift Container Platform only, the operator is included with subscription. Visit About multicluster engine for Kubernetes operator from the OpenShift Container Platform documentation.

- If you subscribe to Red Hat Advanced Cluster Management, you also receive the operator with installation. You can create, manage, and monitor other Kubernetes clusters with the Red Hat Advanced Cluster Management hub cluster. See the Red Hat Advanced Cluster Management Installing and upgrading documentation.

Release images are the version of OpenShift Container Platform that you use when you create a cluster. For clusters that are created using Red Hat Advanced Cluster Management, you can enable automatic upgrading of your release images. For more information about release images in Red Hat Advanced Cluster Management, see Release images.

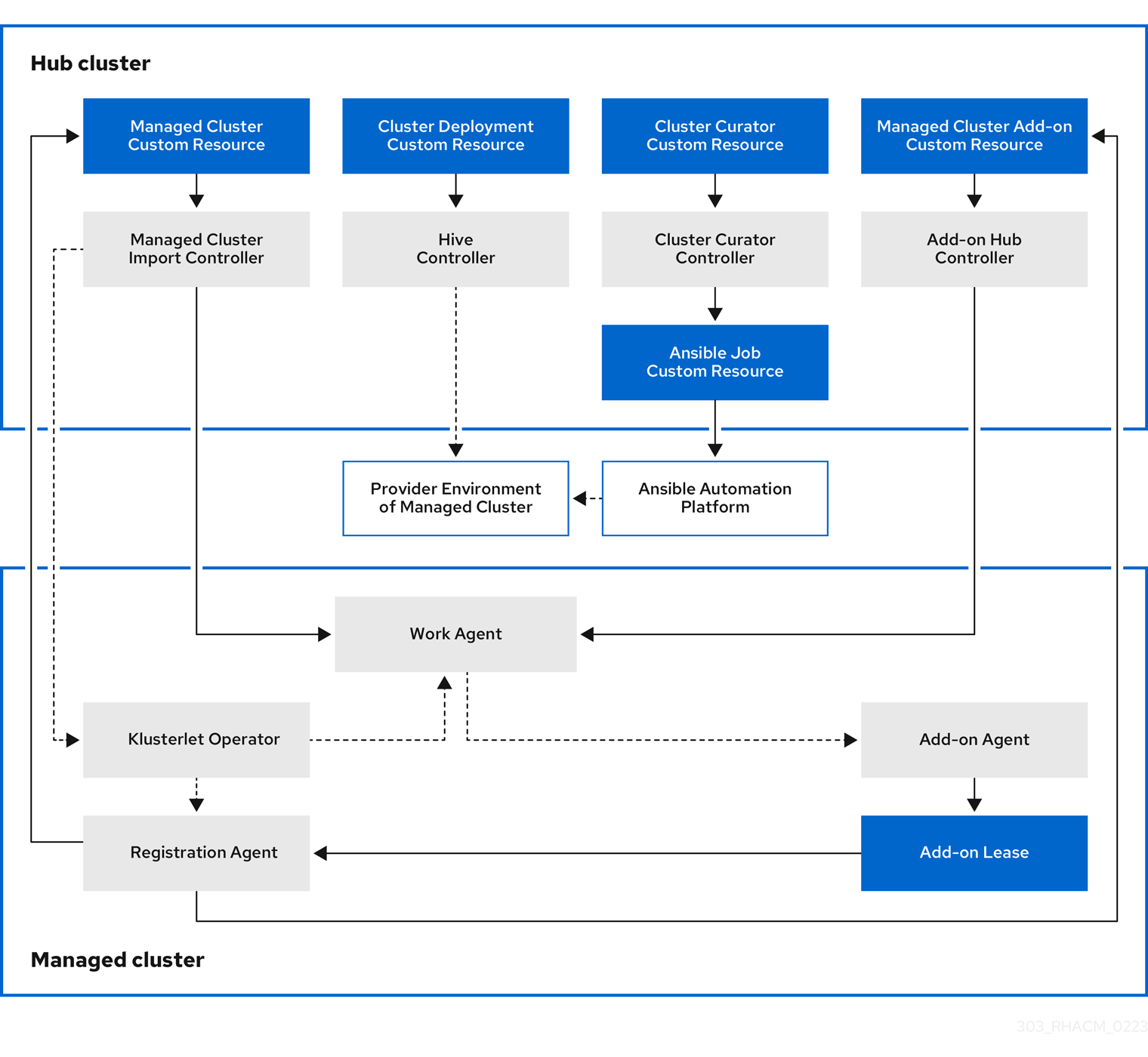

The components of the cluster lifecycle management architecture are included in the Cluster lifecycle architecture.

1.1. Release notes

Learn about the current release.

Deprecated: multicluster engine operator 2.3 and earlier versions are no longer supported. The documentation might remain available, but without any Errata or other updates.

Best practice: Upgrade to the most recent version.

If you experience issues with one of the currently supported releases, or the product documentation, go to Red Hat Support where you can troubleshoot, view Knowledgebase articles, connect with the Support Team, or open a case. You must log in with your credentials.

You can also learn more about the Customer Portal documentation at Red Hat Customer Portal FAQ.

The documentation references the earliest supported OpenShift Container Platform version, unless a specific component or function is introduced and tested only on a more recent version of OpenShift Container Platform.

For full support information, see the Support matrix. For lifecycle information, see Red Hat OpenShift Container Platform Life Cycle policy.

1.1.1. What’s new in cluster lifecycle with the multicluster engine operator

Important: Some features and components are identified and released as Technology Preview.

Learn more about what is new this release:

- The open source Open Cluster Management repository is ready for interaction, growth, and contributions from the open community. To get involved, see open-cluster-management.io. You can access the GitHub repository for more information, as well.

- Cluster lifecycle

- Hosted control planes

1.1.1.1. Cluster lifecycle

Learn about what’s new relating to Cluster lifecycle with multicluster engine operator.

Use the integrated console to create a credential and cluster that runs in the Nutanix environment. See Managing credentials and Cluster creation introduction for more information.

1.1.1.2. Hosted control planes

Hosted control planes for OpenShift Container Platform is now generally available on bare metal and OpenShift Virtualization. For more information, see the following topics:

Technology Preview: As a Technology Preview feature, you can provision a hosted control plane cluster on the following platforms:

- Amazon Web Services: For more information, see Configuring hosted control plane clusters on AWS (Technology Preview).

-

IBM Z: For more information, see Configuring the hosting cluster on

x86bare metal for IBM Z compute nodes (Technology Preview). - IBM Power: For more information, see Configuring the hosting cluster on a 64-bit x86 OpenShift Container Platform cluster to create hosted control planes for IBM Power compute nodes (Technology Preview).

- The hosted control planes feature is now enabled by default. If you want to disable the hosted control planes feature, or if you disabled it and want to manually enable it, see Enabling or disabling the hosted control planes feature.

- Hosted clusters are now automatically imported to your multicluster engine operator cluster. If you want to disable this feature, see Disabling the automatic import of hosted clusters into multicluster engine operator.

1.1.2. Cluster lifecycle known issues

Review the known issues for cluster lifecycle with multicluster engine operator. The following list contains known issues for this release, or known issues that continued from the previous release. For your OpenShift Container Platform cluster, see OpenShift Container Platform release notes.

1.1.2.1. Cluster management

Cluster lifecycle known issues and limitations are part of the Cluster lifecycle with multicluster engine operator documentation.

1.1.2.1.1. Limitation with nmstate

Develop quicker by configuring copy and paste features. To configure the copy-from-mac feature in the assisted-installer, you must add the mac-address to the nmstate definition interface and the mac-mapping interface. The mac-mapping interface is provided outside the nmstate definition interface. As a result, you must provide the same mac-address twice.

1.1.2.1.2. A StorageVersionMigration error

When you upgrade from multicluster engine operator from 2.4.0 to 2.4.1, you might find a StorageVersionMigration error in the log of the cluster-manager pods that run in the multicluster-engine namespace. These error messages have no impact on multicluster engine operator. Therefore, you do not need to take any action to resolve these errors.

See the following sample of the error message:

"CRDMigrationController" controller failed to sync "cluster-manager", err: ClusterManager.operator.open-cluster-management.io "cluster-manager" is invalid: status.conditions[5].reason: Invalid value: "StorageVersionMigration Failed. ": status.conditions[5].reason in body should match '^[A-Za-z]([A-Za-z0-9_,:]*[A-Za-z0-9_])?$'

"CRDMigrationController" controller failed to sync "cluster-manager", err: ClusterManager.operator.open-cluster-management.io "cluster-manager" is invalid: status.conditions[5].reason: Invalid value: "StorageVersionMigration Failed. ": status.conditions[5].reason in body should match '^[A-Za-z]([A-Za-z0-9_,:]*[A-Za-z0-9_])?$'

You might also find an error in the condition in the status of StorageVersionMigration managedclustersetbindings.v1beta1.cluster.open-cluster-management.io and managedclustersets.v1beta1.cluster.open-cluster-management.io. See the following sample of the error message:

1.1.2.1.3. Lack of support for cluster proxy add-on

The cluster proxy add-on does not support proxy settings between the multicluster engine operator hub cluster and the managed cluster. If there is a proxy server between the multicluster engine operator hub cluster and the managed cluster, you can disable the cluster proxy add-on.

1.1.2.1.4. Prehook failure does not fail the hosted cluster creation

If you use the automation template for the hosted cluster creation and the prehook job fails, then it looks like the hosted cluster creation is still progressing. This is normal because the hosted cluster was designed with no complete failure state, and therefore, it keeps trying to create the cluster.

1.1.2.1.5. Manual removal of the VolSync CSV required on managed cluster when removing the add-on

When you remove the VolSync ManagedClusterAddOn from the hub cluster, it removes the VolSync operator subscription on the managed cluster but does not remove the cluster service version (CSV). To remove the CSV from the managed clusters, run the following command on each managed cluster from which you are removing VolSync:

oc delete csv -n openshift-operators volsync-product.v0.6.0

oc delete csv -n openshift-operators volsync-product.v0.6.0

If you have a different version of VolSync installed, replace v0.6.0 with your installed version.

1.1.2.1.6. Deleting a managed cluster set does not automatically remove its label

After you delete a ManagedClusterSet, the label that is added to each managed cluster that associates the cluster to the cluster set is not automatically removed. Manually remove the label from each of the managed clusters that were included in the deleted managed cluster set. The label resembles the following example: cluster.open-cluster-management.io/clusterset:<ManagedClusterSet Name>.

1.1.2.1.7. ClusterClaim error

If you create a Hive ClusterClaim against a ClusterPool and manually set the ClusterClaimspec lifetime field to an invalid golang time value, the product stops fulfilling and reconciling all ClusterClaims, not just the malformed claim.

If this error occurs. you see the following content in the clusterclaim-controller pod logs, which is a specific example with the pool name and invalid lifetime included:

E0203 07:10:38.266841 1 reflector.go:138] sigs.k8s.io/controller-runtime/pkg/cache/internal/informers_map.go:224: Failed to watch *v1.ClusterClaim: failed to list *v1.ClusterClaim: v1.ClusterClaimList.Items: []v1.ClusterClaim: v1.ClusterClaim.v1.ClusterClaim.Spec: v1.ClusterClaimSpec.Lifetime: unmarshalerDecoder: time: unknown unit "w" in duration "1w", error found in #10 byte of ...|time":"1w"}},{"apiVe|..., bigger context ...|clusterPoolName":"policy-aas-hubs","lifetime":"1w"}},{"apiVersion":"hive.openshift.io/v1","kind":"Cl|...

E0203 07:10:38.266841 1 reflector.go:138] sigs.k8s.io/controller-runtime/pkg/cache/internal/informers_map.go:224: Failed to watch *v1.ClusterClaim: failed to list *v1.ClusterClaim: v1.ClusterClaimList.Items: []v1.ClusterClaim: v1.ClusterClaim.v1.ClusterClaim.Spec: v1.ClusterClaimSpec.Lifetime: unmarshalerDecoder: time: unknown unit "w" in duration "1w", error found in #10 byte of ...|time":"1w"}},{"apiVe|..., bigger context ...|clusterPoolName":"policy-aas-hubs","lifetime":"1w"}},{"apiVersion":"hive.openshift.io/v1","kind":"Cl|...You can delete the invalid claim.

If the malformed claim is deleted, claims begin successfully reconciling again without any further interaction.

1.1.2.1.8. The product channel out of sync with provisioned cluster

The clusterimageset is in fast channel, but the provisioned cluster is in stable channel. Currently the product does not sync the channel to the provisioned OpenShift Container Platform cluster.

Change to the right channel in the OpenShift Container Platform console. Click Administration > Cluster Settings > Details Channel.

1.1.2.1.9. Restoring the connection of a managed cluster with custom CA certificates to its restored hub cluster might fail

After you restore the backup of a hub cluster that managed a cluster with custom CA certificates, the connection between the managed cluster and the hub cluster might fail. This is because the CA certificate was not backed up on the restored hub cluster. To restore the connection, copy the custom CA certificate information that is in the namespace of your managed cluster to the <managed_cluster>-admin-kubeconfig secret on the restored hub cluster.

Tip: If you copy this CA certificate to the hub cluster before creating the backup copy, the backup copy includes the secret information. When the backup copy is used to restore in the future, the connection between the hub and managed clusters will automatically complete.

1.1.2.1.10. The local-cluster might not be automatically recreated

If the local-cluster is deleted while disableHubSelfManagement is set to false, the local-cluster is recreated by the MulticlusterHub operator. After you detach a local-cluster, the local-cluster might not be automatically recreated.

To resolve this issue, modify a resource that is watched by the

MulticlusterHuboperator. See the following example:oc delete deployment multiclusterhub-repo -n <namespace>

oc delete deployment multiclusterhub-repo -n <namespace>Copy to Clipboard Copied! Toggle word wrap Toggle overflow -

To properly detach the local-cluster, set the

disableHubSelfManagementto true in theMultiClusterHub.

1.1.2.1.11. Selecting a subnet is required when creating an on-premises cluster

When you create an on-premises cluster using the console, you must select an available subnet for your cluster. It is not marked as a required field.

1.1.2.1.12. Cluster provisioning with Infrastructure Operator fails

When creating OpenShift Container Platform clusters using the Infrastructure Operator, the file name of the ISO image might be too long. The long image name causes the image provisioning and the cluster provisioning to fail. To determine if this is the problem, complete the following steps:

View the bare metal host information for the cluster that you are provisioning by running the following command:

oc get bmh -n <cluster_provisioning_namespace>

oc get bmh -n <cluster_provisioning_namespace>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Run the

describecommand to view the error information:oc describe bmh -n <cluster_provisioning_namespace> <bmh_name>

oc describe bmh -n <cluster_provisioning_namespace> <bmh_name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow An error similar to the following example indicates that the length of the filename is the problem:

Status: Error Count: 1 Error Message: Image provisioning failed: ... [Errno 36] File name too long ...

Status: Error Count: 1 Error Message: Image provisioning failed: ... [Errno 36] File name too long ...Copy to Clipboard Copied! Toggle word wrap Toggle overflow

If this problem occurs, it is typically on the following versions of OpenShift Container Platform, because the infrastructure operator was not using image service:

- 4.8.17 and earlier

- 4.9.6 and earlier

To avoid this error, upgrade your OpenShift Container Platform to version 4.8.18 or later, or 4.9.7 or later.

1.1.2.1.13. Cannot use host inventory to boot with the discovery image and add hosts automatically

You cannot use a host inventory, or InfraEnv custom resource, to both boot with the discovery image and add hosts automatically. If you used your previous InfraEnv resource for the BareMetalHost resource, and you want to boot the image yourself, you can work around the issue by creating a new InfraEnv resource.

1.1.2.1.14. Local-cluster status offline after reimporting with a different name

When you accidentally try to reimport the cluster named local-cluster as a cluster with a different name, the status for local-cluster and for the reimported cluster display offline.

To recover from this case, complete the following steps:

Run the following command on the hub cluster to edit the setting for self-management of the hub cluster temporarily:

oc edit mch -n open-cluster-management multiclusterhub

oc edit mch -n open-cluster-management multiclusterhubCopy to Clipboard Copied! Toggle word wrap Toggle overflow -

Add the setting

spec.disableSelfManagement=true. Run the following command on the hub cluster to delete and redeploy the local-cluster:

oc delete managedcluster local-cluster

oc delete managedcluster local-clusterCopy to Clipboard Copied! Toggle word wrap Toggle overflow Enter the following command to remove the

local-clustermanagement setting:oc edit mch -n open-cluster-management multiclusterhub

oc edit mch -n open-cluster-management multiclusterhubCopy to Clipboard Copied! Toggle word wrap Toggle overflow -

Remove

spec.disableSelfManagement=truethat you previously added.

1.1.2.1.15. Cluster provision with Ansible automation fails in proxy environment

An Automation template that is configured to automatically provision a managed cluster might fail when both of the following conditions are met:

- The hub cluster has cluster-wide proxy enabled.

- The Ansible Automation Platform can only be reached through the proxy.

1.1.2.1.16. Version of the klusterlet operator must be the same as the hub cluster

If you import a managed cluster by installing the klusterlet operator, the version of the klusterlet operator must be the same as the version of the hub cluster or the klusterlet operator will not work.

1.1.2.1.17. Cannot delete managed cluster namespace manually

You cannot delete the namespace of a managed cluster manually. The managed cluster namespace is automatically deleted after the managed cluster is detached. If you delete the managed cluster namespace manually before the managed cluster is detached, the managed cluster shows a continuous terminating status after you delete the managed cluster. To delete this terminating managed cluster, manually remove the finalizers from the managed cluster that you detached.

1.1.2.1.18. Hub cluster and managed clusters clock not synced

Hub cluster and manage cluster time might become out-of-sync, displaying in the console unknown and eventually available within a few minutes. Ensure that the OpenShift Container Platform hub cluster time is configured correctly. See Customizing nodes.

1.1.2.1.19. Importing certain versions of IBM OpenShift Container Platform Kubernetes Service clusters is not supported

You cannot import IBM OpenShift Container Platform Kubernetes Service version 3.11 clusters. Later versions of IBM OpenShift Kubernetes Service are supported.

1.1.2.1.20. Automatic secret updates for provisioned clusters is not supported

When you change your cloud provider access key on the cloud provider side, you also need to update the corresponding credential for this cloud provider on the console of multicluster engine operator. This is required when your credentials expire on the cloud provider where the managed cluster is hosted and you try to delete the managed cluster.

1.1.2.1.21. Node information from the managed cluster cannot be viewed in search

Search maps RBAC for resources in the hub cluster. Depending on user RBAC settings, users might not see node data from the managed cluster. Results from search might be different from what is displayed on the Nodes page for a cluster.

1.1.2.1.22. Process to destroy a cluster does not complete

When you destroy a managed cluster, the status continues to display Destroying after one hour, and the cluster is not destroyed. To resolve this issue complete the following steps:

- Manually ensure that there are no orphaned resources on your cloud, and that all of the provider resources that are associated with the managed cluster are cleaned up.

Open the

ClusterDeploymentinformation for the managed cluster that is being removed by entering the following command:oc edit clusterdeployment/<mycluster> -n <namespace>

oc edit clusterdeployment/<mycluster> -n <namespace>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

myclusterwith the name of the managed cluster that you are destroying.Replace

namespacewith the namespace of the managed cluster.-

Remove the

hive.openshift.io/deprovisionfinalizer to forcefully stop the process that is trying to clean up the cluster resources in the cloud. -

Save your changes and verify that

ClusterDeploymentis gone. Manually remove the namespace of the managed cluster by running the following command:

oc delete ns <namespace>

oc delete ns <namespace>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Replace

namespacewith the namespace of the managed cluster.

1.1.2.1.23. Cannot upgrade OpenShift Container Platform managed clusters on OpenShift Container Platform Dedicated with the console

You cannot use the Red Hat Advanced Cluster Management console to upgrade OpenShift Container Platform managed clusters that are in the OpenShift Container Platform Dedicated environment.

1.1.2.1.24. Work manager add-on search details

The search details page for a certain resource on a certain managed cluster might fail. You must ensure that the work-manager add-on in the managed cluster is in Available status before you can search.

1.1.2.1.25. Non-Red Hat OpenShift Container Platform managed clusters must have LoadBalancer enabled

Both Red Hat OpenShift Container Platform and non-OpenShift Container Platform clusters support the pod log feature, however non-OpenShift Container Platform clusters require LoadBalancer to be enabled to use the feature. Complete the following steps to enable LoadBalancer:

-

Cloud providers have different

LoadBalancerconfigurations. Visit your cloud provider documentation for more information. -

Verify if

LoadBalanceris enabled on your Red Hat Advanced Cluster Management by checking theloggingEndpointin the status ofmanagedClusterInfo. Run the following command to check if the

loggingEndpoint.IPorloggingEndpoint.Hosthas a valid IP address or host name:oc get managedclusterinfo <clusterName> -n <clusterNamespace> -o json | jq -r '.status.loggingEndpoint'

oc get managedclusterinfo <clusterName> -n <clusterNamespace> -o json | jq -r '.status.loggingEndpoint'Copy to Clipboard Copied! Toggle word wrap Toggle overflow

For more information about the LoadBalancer types, see the Service page in the Kubernetes documentation.

1.1.2.1.26. OpenShift Container Platform 4.10.z does not support hosted control plane clusters with proxy configuration

When you create a hosting service cluster with a cluster-wide proxy configuration on OpenShift Container Platform 4.10.z, the nodeip-configuration.service service does not start on the worker nodes.

1.1.2.1.27. Cannot provision OpenShift Container Platform 4.11 cluster on Azure

Provisioning an OpenShift Container Platform 4.11 cluster on Azure fails due to an authentication operator timeout error. To work around the issue, use a different worker node type in the install-config.yaml file or set the vmNetworkingType parameter to Basic. See the following install-config.yaml example:

1.1.2.1.28. Client cannot reach iPXE script

iPXE is an open source network boot firmware. See iPXE for more details.

When booting a node, the URL length limitation in some DHCP servers cuts off the ipxeScript URL in the InfraEnv custom resource definition, resulting in the following error message in the console:

no bootable devices

To work around the issue, complete the following steps:

Apply the

InfraEnvcustom resource definition when using an assisted installation to expose thebootArtifacts, which might resemble the following file:Copy to Clipboard Copied! Toggle word wrap Toggle overflow -

Create a proxy server to expose the

bootArtifactswith short URLs. Copy the

bootArtifactsand add them them to the proxy by running the following commands:for artifact in oc get infraenv qe2 -ojsonpath="{.status.bootArtifacts}" | jq ". | keys[]" | sed "s/\"//g" do curl -k oc get infraenv qe2 -ojsonpath="{.status.bootArtifacts.${artifact}}"` -o $artifactfor artifact in oc get infraenv qe2 -ojsonpath="{.status.bootArtifacts}" | jq ". | keys[]" | sed "s/\"//g" do curl -k oc get infraenv qe2 -ojsonpath="{.status.bootArtifacts.${artifact}}"` -o $artifactCopy to Clipboard Copied! Toggle word wrap Toggle overflow -

Add the

ipxeScriptartifact proxy URL to thebootpparameter inlibvirt.xml.

1.1.2.1.29. Cannot delete ClusterDeployment after upgrading Red Hat Advanced Cluster Management

If you are using the removed BareMetalAssets API in Red Hat Advanced Cluster Management 2.6, the ClusterDeployment cannot be deleted after upgrading to Red Hat Advanced Cluster Management 2.7 because the BareMetalAssets API is bound to the ClusterDeployment.

To work around the issue, run the following command to remove the finalizers before upgrading to Red Hat Advanced Cluster Management 2.7:

oc patch clusterdeployment <clusterdeployment-name> -p '{"metadata":{"finalizers":null}}' --type=merge

oc patch clusterdeployment <clusterdeployment-name> -p '{"metadata":{"finalizers":null}}' --type=merge1.1.2.1.30. A cluster deployed in a disconnected environment by using the central infrastructure management service might not install

When you deploy a cluster in a disconnected environment by using the central infrastructure management service, the cluster nodes might not start installing.

This issue occurs because the cluster uses a discovery ISO image that is created from the Red Hat Enterprise Linux CoreOS live ISO image that is shipped with OpenShift Container Platform versions 4.12.0 through 4.12.2. The image contains a restrictive /etc/containers/policy.json file that requires signatures for images sourcing from registry.redhat.io and registry.access.redhat.com. In a disconnected environment, the images that are mirrored might not have the signatures mirrored, which results in the image pull failing for cluster nodes at discovery. The Agent image fails to connect with the cluster nodes, which causes communication with the assisted service to fail.

To work around this issue, apply an ignition override to the cluster that sets the /etc/containers/policy.json file to unrestrictive. The ignition override can be set in the InfraEnv custom resource definition. The following example shows an InfraEnv custom resource definition with the override:

The following example shows the unrestrictive file that is created:

After this setting is changed, the clusters install.

1.1.2.1.31. Managed cluster stuck in Pending status after deployment

If the Assisted Installer agent starts slowly and you deploy a managed cluster, the managed cluster might become stuck in the Pending status and not have any agent resources. You can work around the issue by disabling converged flow. Complete the following steps:

Create the following ConfigMap on the hub cluster:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Apply the ConfigMap by running the following command:

oc annotate --overwrite AgentServiceConfig agent unsupported.agent-install.openshift.io/assisted-service-configmap=my-assisted-service-config

oc annotate --overwrite AgentServiceConfig agent unsupported.agent-install.openshift.io/assisted-service-configmap=my-assisted-service-configCopy to Clipboard Copied! Toggle word wrap Toggle overflow

1.1.2.1.32. ManagedClusterSet API specification limitation

The selectorType: LaberSelector setting is not supported when using the Clustersets API. The selectorType: ExclusiveClusterSetLabel setting is supported.

1.1.2.1.33. Hub cluster communication limitations

The following limitations occur if the hub cluster is not able to reach or communicate with the managed cluster:

- You cannot create a new managed cluster by using the console. You are still able to import a managed cluster manually by using the command line interface or by using the Run import commands manually option in the console.

- If you deploy an Application or ApplicationSet by using the console, or if you import a managed cluster into Argo CD, the hub cluster Argo CD controller calls the managed cluster API server. You can use AppSub or the Argo CD pull model to work around the issue.

The console page for pod logs does not work, and an error message that resembles the following appears:

Error querying resource logs: Service unavailable

Error querying resource logs: Service unavailableCopy to Clipboard Copied! Toggle word wrap Toggle overflow

1.1.2.1.34. Managed Service Account add-on limitations

The following are known issues and limitations for the managed-serviceaccount add-on:

1.1.2.1.34.1. The managed-serviceaccount does not support OpenShift Container Platform 3.11

The managed-serviceaccount add-on crashes if you run it on OpenShift Container Platform 3.11.

1.1.2.1.34.2. installNamespace field can only have one value

When enabling the managed-serviceaccount add-on, the installNamespace field in the ManagedClusterAddOn resource must have open-cluster-management-agent-addon as the value. Other values are ignored. The managed-serviceaccount add-on agent is always deployed in the open-cluster-management-agent-addon namespace on the managed cluster.

1.1.2.1.34.3. tolerations and nodeSelector settings do not affect the managed-serviceaccount agent

The tolerations and nodeSelector settings configured on the MultiClusterEngine and MultiClusterHub resources do not affect the managed-serviceaccount agent deployed on the local cluster. The managed-serviceaccount add-on is not always required on the local cluster.

If the managed-serviceaccount add-on is required, you can work around the issue by completing the following steps:

-

Create the

addonDeploymentConfigcustom resource. -

Set the

tolerationsandnodeSelectorvalues for the local cluster andmanaged-serviceaccountagent. -

Update the

managed-serviceaccountManagedClusterAddonin the local cluster namespace to use theaddonDeploymentConfigcustom resource you created.

See Configuring nodeSelectors and tolerations for klusterlet add-ons to learn more about how to use the addonDeploymentConfig custom resource to configure tolerations and nodeSelector for add-ons.

1.1.2.1.35. Cluster proxy add-on limitations

The cluster-proxy-addon does not work when there is another proxy configuration on your hub cluster or managed clusters. The following scenarios causes this issue:

- There is a cluster-wide-proxy configuration on your hub cluster and managed cluster.

-

There is a proxy configuration in the agent

AddOnDeploymentConfigresource.

1.1.2.1.36. Custom ingress domain is not applied correctly

You can specify a custom ingress domain by using the ClusterDeployment resource while installing a managed cluster, but the change is only applied after the installation by using the SyncSet resource. As a result, the spec field in the clusterdeployment.yaml file displays the custom ingress domain you specified, but the status still displays the default domain.

1.1.2.2. Hosted control planes

1.1.2.2.1. Console displays hosted cluster as Pending import

If the annotation and ManagedCluster name do not match, the console displays the cluster as Pending import. The cluster cannot be used by the multicluster engine operator. The same issue happens when there is no annotation and the ManagedCluster name does not match the Infra-ID value of the HostedCluster resource."

1.1.2.2.2. Console might list the same version multiple times when adding a node pool to a hosted cluster

When you use the console to add a new node pool to an existing hosted cluster, the same version of OpenShift Container Platform might appear more than once in the list of options. You can select any instance in the list for the version that you want.

1.1.2.2.3. The web console lists nodes even after they are removed from the cluster and returned to the infrastructure environment

When a node pool is scaled down to 0 workers, the list of hosts in the console still shows nodes in a Ready state. You can verify the number of nodes in two ways:

- In the console, go to the node pool and verify that it has 0 nodes.

On the command line interface, run the following commands:

Verify that 0 nodes are in the node pool by running the following command:

oc get nodepool -A

oc get nodepool -ACopy to Clipboard Copied! Toggle word wrap Toggle overflow Verify that 0 nodes are in the cluster by running the following command:

oc get nodes --kubeconfig

oc get nodes --kubeconfigCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Verify that 0 agents are reported as bound to the cluster by running the following command:

oc get agents -A

oc get agents -ACopy to Clipboard Copied! Toggle word wrap Toggle overflow

1.1.2.2.4. Potential DNS issues in hosted clusters configured for a dual-stack network

When you create a hosted cluster in an environment that uses the dual-stack network, you might encounter the following DNS-related issues:

-

CrashLoopBackOffstate in theservice-ca-operatorpod: When the pod tries to reach the Kubernetes API server through the hosted control plane, the pod cannot reach the server because the data plane proxy in thekube-systemnamespace cannot resolve the request. This issue occurs because in the HAProxy setup, the front end uses an IP address and the back end uses a DNS name that the pod cannot resolve. -

Pods stuck in

ContainerCreatingstate: This issue occurs because theopenshift-service-ca-operatorcannot generate themetrics-tlssecret that the DNS pods need for DNS resolution. As a result, the pods cannot resolve the Kubernetes API server.

To resolve those issues, configure the DNS server settings by following the guidelines in Configuring DNS for a dual stack network.

1.1.2.2.5. On bare metal platforms, Agent resources might fail to pull ignition

On the bare metal (Agent) platform, the hosted control planes feature periodically rotates the token that the Agent uses to pull ignition. A bug causes the new token to not be propagated. As a result, if you have an Agent resource that was created some time ago, it might fail to pull ignition.

As a workaround, in the Agent specification, delete the secret that the IgnitionEndpointTokenReference property refers to, and then add or modify any label on the Agent resource. The system can then detect that the Agent resource was modified and re-create the secret with the new token.

1.1.3. Errata updates

For multicluster engine operator, the Errata updates are automatically applied when released.

Important: For reference, Errata links and GitHub numbers might be added to the content and used internally. Links that require access might not be available for the user.

1.1.3.1. Errata 2.4.9

- Delivers updates to one or more product container images.

1.1.3.2. Errata 2.4.8

- Delivers updates to one or more product container images.

1.1.3.3. Errata 2.4.7

- Delivers updates to one or more product container images.

1.1.3.4. Errata 2.4.6

- Delivers updates to one or more of the product container images.

1.1.3.5. Errata 2.4.5

-

Fixes the CA bundle comparison logic of the import controller that resolves an issue with the controller refreshing the bootstrap hub cluster

kubeconfigwhen a custom API server certificate is used with a blank line between certificates. (ACM-10999) -

Fixes agent components that were using

open-cluster-management-image-pull-credentialsas the default image pull secret without checking whether the secret exists. Without a secret, the pods reported a warning message. This update delivers an empty secret whenopen-cluster-management-image-pull-credentialsis not set. (ACM-9982)

1.1.3.6. Errata 2.4.4

- Delivers updates to one or more of the product container images.

1.1.3.7. Errata 2.4.3

- Delivers updates to one or more of the product container images.

-

Fixes an issue where the klusterlet agent did not update when the

external-managed-kubeconfigsecret changed in the hosted mode. (ACM-8799) - Fixes an issue where the node regions and zones were incorrectly displayed from the Topology view. (ACM-8996)

- Fixes an issue where deprecated node labels were used. (ACM-9002)

1.1.3.8. Errata 2.4.2

- Delivers updates to one or more of the product container images.

-

Fixes an issue where the

AppliedManifestWorkresource was deleted. (ACM-8926)

1.1.3.9. Errata 2.4.1

- Delivers updates to one or more of the product container images.

1.1.4. Deprecations and removals Cluster lifecycle

Learn when parts of the product are deprecated or removed from multicluster engine operator. Consider the alternative actions in the Recommended action and details, which display in the tables for the current release and for two prior releases.

Deprecated: multicluster engine operator 2.3 and earlier versions are no longer supported. The documentation might remain available, but without any Errata or other updates.

1.1.4.1. API deprecations and removals

multicluster engine operator follows the Kubernetes deprecation guidelines for APIs. See the Kubernetes Deprecation Policy for more details about that policy. multicluster engine operator APIs are only deprecated or removed outside of the following timelines:

-

All

V1APIs are generally available and supported for 12 months or three releases, whichever is greater. V1 APIs are not removed, but can be deprecated outside of that time limit. -

All

betaAPIs are generally available for nine months or three releases, whichever is greater. Beta APIs are not removed outside of that time limit. -

All

alphaAPIs are not required to be supported, but might be listed as deprecated or removed if it benefits users.

1.1.4.1.1. API deprecations

| Product or category | Affected item | Version | Recommended action | More details and links |

|---|---|---|---|---|

| ManagedServiceAccount |

The | 2.9 |

Use | None |

1.1.4.1.2. API removals

| Product or category | Affected item | Version | Recommended action | More details and links |

1.1.4.2. Deprecations

A deprecated component, feature, or service is supported, but no longer recommended for use and might become obsolete in future releases. Consider the alternative actions in the Recommended action and details that are provided in the following table:

| Product or category | Affected item | Version | Recommended action | More details and links |

|---|---|---|---|---|

| Cluster lifecycle | Create cluster on Red Hat Virtualization | 2.9 | None | None |

| Cluster lifecycle | Klusterlet OLM Operator | 2.4 | None | None |

1.1.4.3. Removals

A removed item is typically function that was deprecated in previous releases and is no longer available in the product. You must use alternatives for the removed function. Consider the alternative actions in the Recommended action and details that are provided in the following table:

| Product or category | Affected item | Version | Recommended action | More details and links |

1.2. About cluster lifecycle with multicluster engine operator

The multicluster engine for Kubernetes operator is the cluster lifecycle operator that provides cluster management capabilities for Red Hat OpenShift Container Platform and Red Hat Advanced Cluster Management hub clusters. If you installed Red Hat Advanced Cluster Management, you do not need to install multicluster engine operator, as it is automatically installed.

See the Support matrix to learn about hub cluster and managed cluster requirements and support. for support information, as well as the following documentation:

To continue, see the remaining cluster lifecyle documentation at Cluster lifecycle with multicluster engine operator overview.

1.2.1. Console overview

OpenShift Container Platform console plug-ins are available with the OpenShift Container Platform web console and can be integrated. To use this feature, the console plug-ins must remain enabled. The multicluster engine operator displays certain console features from Infrastructure and Credentials navigation items. If you install Red Hat Advanced Cluster Management, you see more console capability.

Note: With the plug-ins enabled, you can access Red Hat Advanced Cluster Management within the OpenShift Container Platform console from the cluster switcher by selecting All Clusters from the drop-down menu.

- To disable the plug-in, be sure you are in the Administrator perspective in the OpenShift Container Platform console.

- Find Administration in the navigation and click Cluster Settings, then click Configuration tab.

-

From the list of Configuration resources, click the Console resource with the

operator.openshift.ioAPI group, which contains cluster-wide configuration for the web console. -

Click on the Console plug-ins tab. The

mceplug-in is listed. Note: If Red Hat Advanced Cluster Management is installed, it is also listed asacm. - Modify plug-in status from the table. In a few moments, you are prompted to refresh the console.

1.2.2. multicluster engine operator role-based access control

RBAC is validated at the console level and at the API level. Actions in the console can be enabled or disabled based on user access role permissions. View the following sections for more information on RBAC for specific lifecycles in the product:

1.2.2.1. Overview of roles

Some product resources are cluster-wide and some are namespace-scoped. You must apply cluster role bindings and namespace role bindings to your users for consistent access controls. View the table list of the following role definitions that are supported:

1.2.2.1.1. Table of role definition

| Role | Definition |

|---|---|

|

|

This is an OpenShift Container Platform default role. A user with cluster binding to the |

|

|

A user with cluster binding to the |

|

|

A user with cluster binding to the |

|

|

A user with cluster binding to the |

|

|

A user with cluster binding to the |

|

|

A user with cluster binding to the |

|

|

Admin, edit, and view are OpenShift Container Platform default roles. A user with a namespace-scoped binding to these roles has access to |

Important:

- Any user can create projects from OpenShift Container Platform, which gives administrator role permissions for the namespace.

-

If a user does not have role access to a cluster, the cluster name is not visible. The cluster name is displayed with the following symbol:

-.

RBAC is validated at the console level and at the API level. Actions in the console can be enabled or disabled based on user access role permissions. View the following sections for more information on RBAC for specific lifecycles in the product.

1.2.2.2. Cluster lifecycle RBAC

View the following cluster lifecycle RBAC operations:

Create and administer cluster role bindings for all managed clusters. For example, create a cluster role binding to the cluster role

open-cluster-management:cluster-manager-adminby entering the following command:oc create clusterrolebinding <role-binding-name> --clusterrole=open-cluster-management:cluster-manager-admin --user=<username>

oc create clusterrolebinding <role-binding-name> --clusterrole=open-cluster-management:cluster-manager-admin --user=<username>Copy to Clipboard Copied! Toggle word wrap Toggle overflow This role is a super user, which has access to all resources and actions. You can create cluster-scoped

managedclusterresources, the namespace for the resources that manage the managed cluster, and the resources in the namespace with this role. You might need to add theusernameof the ID that requires the role association to avoid permission errors.Run the following command to administer a cluster role binding for a managed cluster named

cluster-name:oc create clusterrolebinding (role-binding-name) --clusterrole=open-cluster-management:admin:<cluster-name> --user=<username>

oc create clusterrolebinding (role-binding-name) --clusterrole=open-cluster-management:admin:<cluster-name> --user=<username>Copy to Clipboard Copied! Toggle word wrap Toggle overflow This role has read and write access to the cluster-scoped

managedclusterresource. This is needed because themanagedclusteris a cluster-scoped resource and not a namespace-scoped resource.Create a namespace role binding to the cluster role

adminby entering the following command:oc create rolebinding <role-binding-name> -n <cluster-name> --clusterrole=admin --user=<username>

oc create rolebinding <role-binding-name> -n <cluster-name> --clusterrole=admin --user=<username>Copy to Clipboard Copied! Toggle word wrap Toggle overflow This role has read and write access to the resources in the namespace of the managed cluster.

Create a cluster role binding for the

open-cluster-management:view:<cluster-name>cluster role to view a managed cluster namedcluster-nameEnter the following command:oc create clusterrolebinding <role-binding-name> --clusterrole=open-cluster-management:view:<cluster-name> --user=<username>

oc create clusterrolebinding <role-binding-name> --clusterrole=open-cluster-management:view:<cluster-name> --user=<username>Copy to Clipboard Copied! Toggle word wrap Toggle overflow This role has read access to the cluster-scoped

managedclusterresource. This is needed because themanagedclusteris a cluster-scoped resource.Create a namespace role binding to the cluster role

viewby entering the following command:oc create rolebinding <role-binding-name> -n <cluster-name> --clusterrole=view --user=<username>

oc create rolebinding <role-binding-name> -n <cluster-name> --clusterrole=view --user=<username>Copy to Clipboard Copied! Toggle word wrap Toggle overflow This role has read-only access to the resources in the namespace of the managed cluster.

View a list of the managed clusters that you can access by entering the following command:

oc get managedclusters.clusterview.open-cluster-management.io

oc get managedclusters.clusterview.open-cluster-management.ioCopy to Clipboard Copied! Toggle word wrap Toggle overflow This command is used by administrators and users without cluster administrator privileges.

View a list of the managed cluster sets that you can access by entering the following command:

oc get managedclustersets.clusterview.open-cluster-management.io

oc get managedclustersets.clusterview.open-cluster-management.ioCopy to Clipboard Copied! Toggle word wrap Toggle overflow This command is used by administrators and users without cluster administrator privileges.

1.2.2.2.1. Cluster pools RBAC

View the following cluster pool RBAC operations:

As a cluster administrator, use cluster pool provision clusters by creating a managed cluster set and grant administrator permission to roles by adding the role to the group. View the following examples:

Grant

adminpermission to theserver-foundation-clustersetmanaged cluster set with the following command:oc adm policy add-cluster-role-to-group open-cluster-management:clusterset-admin:server-foundation-clusterset server-foundation-team-admin

oc adm policy add-cluster-role-to-group open-cluster-management:clusterset-admin:server-foundation-clusterset server-foundation-team-adminCopy to Clipboard Copied! Toggle word wrap Toggle overflow Grant

viewpermission to theserver-foundation-clustersetmanaged cluster set with the following command:oc adm policy add-cluster-role-to-group open-cluster-management:clusterset-view:server-foundation-clusterset server-foundation-team-user

oc adm policy add-cluster-role-to-group open-cluster-management:clusterset-view:server-foundation-clusterset server-foundation-team-userCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Create a namespace for the cluster pool,

server-foundation-clusterpool. View the following examples to grant role permissions:Grant

adminpermission toserver-foundation-clusterpoolfor theserver-foundation-team-adminby running the following commands:oc adm new-project server-foundation-clusterpool oc adm policy add-role-to-group admin server-foundation-team-admin --namespace server-foundation-clusterpool

oc adm new-project server-foundation-clusterpool oc adm policy add-role-to-group admin server-foundation-team-admin --namespace server-foundation-clusterpoolCopy to Clipboard Copied! Toggle word wrap Toggle overflow

As a team administrator, create a cluster pool named

ocp46-aws-clusterpoolwith a cluster set label,cluster.open-cluster-management.io/clusterset=server-foundation-clustersetin the cluster pool namespace:-

The

server-foundation-webhookchecks if the cluster pool has the cluster set label, and if the user has permission to create cluster pools in the cluster set. -

The

server-foundation-controllergrantsviewpermission to theserver-foundation-clusterpoolnamespace forserver-foundation-team-user.

-

The

When a cluster pool is created, the cluster pool creates a

clusterdeployment. Continue reading for more details:-

The

server-foundation-controllergrantsadminpermission to theclusterdeploymentnamespace forserver-foundation-team-admin. The

server-foundation-controllergrantsviewpermissionclusterdeploymentnamespace forserver-foundation-team-user.Note: As a

team-adminandteam-user, you haveadminpermission to theclusterpool,clusterdeployment, andclusterclaim.

-

The

1.2.2.2.2. Console and API RBAC table for cluster lifecycle

View the following console and API RBAC tables for cluster lifecycle:

| Resource | Admin | Edit | View |

|---|---|---|---|

| Clusters | read, update, delete | - | read |

| Cluster sets | get, update, bind, join | edit role not mentioned | get |

| Managed clusters | read, update, delete | no edit role mentioned | get |

| Provider connections | create, read, update, and delete | - | read |

| API | Admin | Edit | View |

|---|---|---|---|

|

You can use | create, read, update, delete | read, update | read |

|

You can use | read | read | read |

|

| update | update | |

|

You can use | create, read, update, delete | read, update | read |

|

| read | read | read |

|

You can use | create, read, update, delete | read, update | read |

|

| create, read, update, delete | read, update | read |

|

| create, read, update, delete | read, update | read |

|

| create, read, update, delete | read, update | read |

|

| create, read, update, delete | read, update | read |

|

| create, read, update, delete | read, update | read |

|

| create, read, update, delete | read, update | read |

|

| create, read, update, delete | read, update | read |

1.2.2.2.3. Credentials role-based access control

The access to credentials is controlled by Kubernetes. Credentials are stored and secured as Kubernetes secrets. The following permissions apply to accessing secrets in Red Hat Advanced Cluster Management for Kubernetes:

- Users with access to create secrets in a namespace can create credentials.

- Users with access to read secrets in a namespace can also view credentials.

-

Users with the Kubernetes cluster roles of

adminandeditcan create and edit secrets. -

Users with the Kubernetes cluster role of

viewcannot view secrets because reading the contents of secrets enables access to service account credentials.

1.2.3. Network configuration

Configure your network settings to allow the connections.

Important: The trusted CA bundle is available in the multicluster engine operator namespace, but that enhancement requires changes to your network. The trusted CA bundle ConfigMap uses the default name of trusted-ca-bundle. You can change this name by providing it to the operator in an environment variable named TRUSTED_CA_BUNDLE. See Configuring the cluster-wide proxy in the Networking section of Red Hat OpenShift Container Platform for more information.

Note: Registration Agent and Work Agent on the managed cluster do not support proxy settings because they communicate with apiserver on the hub cluster by establishing an mTLS connection, which cannot pass through the proxy.

For the multicluster engine operator cluster networking requirements, see the following table:

| Direction | Protocol | Connection | Port (if specified) |

|---|---|---|---|

| Outbound | Kubernetes API server of the provisioned managed cluster | 6443 | |

| Outbound from the OpenShift Container Platform managed cluster to the hub cluster | TCP | Communication between the ironic agent and the bare metal operator on the hub cluster | 6180, 6183, 6385, and 5050 |

| Outbound from the hub cluster to the Ironic Python Agent (IPA) on the managed cluster | TCP | Communication between the bare metal node where IPA is running and the Ironic conductor service | 9999 |

| Outbound and inbound |

The | 443 | |

| Inbound | The Kubernetes API server of the multicluster engine for Kubernetes operator cluster from the managed cluster | 6443 |

Note: The managed cluster must be able to reach the hub cluster control plane node IP addresses.

1.3. Installing and upgrading multicluster engine operator

The multicluster engine operator is a software operator that enhances cluster fleet management. The multicluster engine operator supportsRed Hat OpenShift Container Platform and Kubernetes cluster lifecycle management across clouds and data centers.

The documentation references the earliest supported OpenShift Container Platform version, unless a specific component or function is introduced and tested only on a more recent version of OpenShift Container Platform.

For full support information, see the Support matrix. For life cycle information, see Red Hat OpenShift Container Platform Life Cycle policy.

Important: If you are using Red Hat Advanced Cluster Management version 2.5 or later, then multicluster engine for Kubernetes operator is already installed on the cluster.

See the following documentation:

1.3.1. Installing while connected online

The multicluster engine operator is installed with Operator Lifecycle Manager, which manages the installation, upgrade, and removal of the components that encompass the multicluster engine operator.

Required access: Cluster administrator

Deprecated: multicluster engine operator 2.3 and earlier versions are no longer supported. The documentation might remain available, but without any Errata or other updates.

Best practice: Upgrade to the most recent version.

Important:

-

For OpenShift Container Platform Dedicated environment, you must have

cluster-adminpermissions. By defaultdedicated-adminrole does not have the required permissions to create namespaces in the OpenShift Container Platform Dedicated environment. - By default, the multicluster engine operator components are installed on worker nodes of your OpenShift Container Platform cluster without any additional configuration. You can install multicluster engine operator onto worker nodes by using the OpenShift Container Platform OperatorHub web console interface, or by using the OpenShift Container Platform CLI.

- If you have configured your OpenShift Container Platform cluster with infrastructure nodes, you can install multicluster engine operator onto those infrastructure nodes by using the OpenShift Container Platform CLI with additional resource parameters. See the Installing multicluster engine on infrastructure nodes section for those details.

If you plan to import Kubernetes clusters that were not created by OpenShift Container Platform or multicluster engine for Kubernetes operator, you will need to configure an image pull secret. For information on how to configure an image pull secret and other advanced configurations, see options in the Advanced configuration section of this documentation.

1.3.1.1. Prerequisites

Before you install multicluster engine for Kubernetes operator, see the following requirements:

- Your Red Hat OpenShift Container Platform cluster must have access to the multicluster engine operator in the OperatorHub catalog from the OpenShift Container Platform console.

- You need access to the catalog.redhat.com.

OpenShift Container Platform 4.12 or later, must be deployed in your environment, and you must be logged into with the OpenShift Container Platform CLI. See the following install documentation for OpenShift Container Platform:

-

Your OpenShift Container Platform command line interface (CLI) must be configured to run

occommands. See Getting started with the CLI for information about installing and configuring the OpenShift Container Platform CLI. - Your OpenShift Container Platform permissions must allow you to create a namespace.

- You must have an Internet connection to access the dependencies for the operator.

To install in a OpenShift Container Platform Dedicated environment, see the following:

- You must have the OpenShift Container Platform Dedicated environment configured and running.

-

You must have

cluster-adminauthority to the OpenShift Container Platform Dedicated environment where you are installing the engine.

- If you plan to create managed clusters by using the Assisted Installer that is provided with Red Hat OpenShift Container Platform, see Preparing to install with the Assisted Installer topic in the OpenShift Container Platform documentation for the requirements.

1.3.1.2. Confirm your OpenShift Container Platform installation

You must have a supported OpenShift Container Platform version, including the registry and storage services, installed and working. For more information about installing OpenShift Container Platform, see the OpenShift Container Platform documentation.

- Verify that multicluster engine operator is not already installed on your OpenShift Container Platform cluster. The multicluster engine operator allows only one single installation on each OpenShift Container Platform cluster. Continue with the following steps if there is no installation.

To ensure that the OpenShift Container Platform cluster is set up correctly, access the OpenShift Container Platform web console with the following command:

kubectl -n openshift-console get route console

kubectl -n openshift-console get route consoleCopy to Clipboard Copied! Toggle word wrap Toggle overflow See the following example output:

console console-openshift-console.apps.new-coral.purple-chesterfield.com console https reencrypt/Redirect None

console console-openshift-console.apps.new-coral.purple-chesterfield.com console https reencrypt/Redirect NoneCopy to Clipboard Copied! Toggle word wrap Toggle overflow -

Open the URL in your browser and check the result. If the console URL displays

console-openshift-console.router.default.svc.cluster.local, set the value foropenshift_master_default_subdomainwhen you install OpenShift Container Platform. See the following example of a URL:https://console-openshift-console.apps.new-coral.purple-chesterfield.com.

You can proceed to install multicluster engine operator.

1.3.1.3. Installing from the OperatorHub web console interface

Best practice: From the Administrator view in your OpenShift Container Platform navigation, install the OperatorHub web console interface that is provided with OpenShift Container Platform.

- Select Operators > OperatorHub to access the list of available operators, and select multicluster engine for Kubernetes operator.

-

Click

Install. On the Operator Installation page, select the options for your installation:

Namespace:

- The multicluster engine operator engine must be installed in its own namespace, or project.

-

By default, the OperatorHub console installation process creates a namespace titled

multicluster-engine. Best practice: Continue to use themulticluster-enginenamespace if it is available. -

If there is already a namespace named

multicluster-engine, select a different namespace.

- Channel: The channel that you select corresponds to the release that you are installing. When you select the channel, it installs the identified release, and establishes that the future errata updates within that release are obtained.

Approval strategy: The approval strategy identifies the human interaction that is required for applying updates to the channel or release to which you subscribed.

- Select Automatic, which is selected by default, to ensure any updates within that release are automatically applied.

- Select Manual to receive a notification when an update is available. If you have concerns about when the updates are applied, this might be best practice for you.

Note: To upgrade to the next minor release, you must return to the OperatorHub page and select a new channel for the more current release.

- Select Install to apply your changes and create the operator.

See the following process to create the MultiClusterEngine custom resource.

- In the OpenShift Container Platform console navigation, select Installed Operators > multicluster engine for Kubernetes.

- Select the MultiCluster Engine tab.

- Select Create MultiClusterEngine.

Update the default values in the YAML file. See options in the MultiClusterEngine advanced configuration section of the documentation.

- The following example shows the default template that you can copy into the editor:

apiVersion: multicluster.openshift.io/v1 kind: MultiClusterEngine metadata: name: multiclusterengine spec: {}apiVersion: multicluster.openshift.io/v1 kind: MultiClusterEngine metadata: name: multiclusterengine spec: {}Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Select Create to initialize the custom resource. It can take up to 10 minutes for the multicluster engine operator engine to build and start.

After the MultiClusterEngine resource is created, the status for the resource is

Availableon the MultiCluster Engine tab.

1.3.1.4. Installing from the OpenShift Container Platform CLI

Create a multicluster engine operator engine namespace where the operator requirements are contained. Run the following command, where

namespaceis the name for your multicluster engine for Kubernetes operator namespace. The value fornamespacemight be referred to as Project in the OpenShift Container Platform environment:oc create namespace <namespace>

oc create namespace <namespace>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Switch your project namespace to the one that you created. Replace

namespacewith the name of the multicluster engine for Kubernetes operator namespace that you created in step 1.oc project <namespace>

oc project <namespace>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create a YAML file to configure an

OperatorGroupresource. Each namespace can have only one operator group. Replacedefaultwith the name of your operator group. Replacenamespacewith the name of your project namespace. See the following example:Copy to Clipboard Copied! Toggle word wrap Toggle overflow Run the following command to create the

OperatorGroupresource. Replaceoperator-groupwith the name of the operator group YAML file that you created:oc apply -f <path-to-file>/<operator-group>.yaml

oc apply -f <path-to-file>/<operator-group>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Create a YAML file to configure an OpenShift Container Platform Subscription. Your file should look similar to the following example:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Note: For installing the multicluster engine for Kubernetes operator on infrastructure nodes, the see Operator Lifecycle Manager Subscription additional configuration section.

Run the following command to create the OpenShift Container Platform Subscription. Replace

subscriptionwith the name of the subscription file that you created:oc apply -f <path-to-file>/<subscription>.yaml

oc apply -f <path-to-file>/<subscription>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Create a YAML file to configure the

MultiClusterEnginecustom resource. Your default template should look similar to the following example:apiVersion: multicluster.openshift.io/v1 kind: MultiClusterEngine metadata: name: multiclusterengine spec: {}apiVersion: multicluster.openshift.io/v1 kind: MultiClusterEngine metadata: name: multiclusterengine spec: {}Copy to Clipboard Copied! Toggle word wrap Toggle overflow Note: For installing the multicluster engine operator on infrastructure nodes, see the MultiClusterEngine custom resource additional configuration section:

Run the following command to create the

MultiClusterEnginecustom resource. Replacecustom-resourcewith the name of your custom resource file:oc apply -f <path-to-file>/<custom-resource>.yaml

oc apply -f <path-to-file>/<custom-resource>.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow If this step fails with the following error, the resources are still being created and applied. Run the command again in a few minutes when the resources are created:

error: unable to recognize "./mce.yaml": no matches for kind "MultiClusterEngine" in version "operator.multicluster-engine.io/v1"

error: unable to recognize "./mce.yaml": no matches for kind "MultiClusterEngine" in version "operator.multicluster-engine.io/v1"Copy to Clipboard Copied! Toggle word wrap Toggle overflow Run the following command to get the custom resource. It can take up to 10 minutes for the

MultiClusterEnginecustom resource status to display asAvailablein thestatus.phasefield after you run the following command:oc get mce -o=jsonpath='{.items[0].status.phase}'oc get mce -o=jsonpath='{.items[0].status.phase}'Copy to Clipboard Copied! Toggle word wrap Toggle overflow

If you are reinstalling the multicluster engine operator and the pods do not start, see Troubleshooting reinstallation failure for steps to work around this problem.

Notes:

-

A

ServiceAccountwith aClusterRoleBindingautomatically gives cluster administrator privileges to multicluster engine operator and to any user credentials with access to the namespace where you install multicluster engine operator.

1.3.1.5. Installing on infrastructure nodes

An OpenShift Container Platform cluster can be configured to contain infrastructure nodes for running approved management components. Running components on infrastructure nodes avoids allocating OpenShift Container Platform subscription quota for the nodes that are running those management components.

After adding infrastructure nodes to your OpenShift Container Platform cluster, follow the Installing from the OpenShift Container Platform CLI instructions and add the following configurations to the Operator Lifecycle Manager Subscription and MultiClusterEngine custom resource.

1.3.1.5.1. Add infrastructure nodes to the OpenShift Container Platform cluster

Follow the procedures that are described in Creating infrastructure machine sets in the OpenShift Container Platform documentation. Infrastructure nodes are configured with a Kubernetes taint and label to keep non-management workloads from running on them.

To be compatible with the infrastructure node enablement provided by multicluster engine operator, ensure your infrastructure nodes have the following taint and label applied:

1.3.1.5.2. Operator Lifecycle Manager Subscription additional configuration

Add the following additional configuration before applying the Operator Lifecycle Manager Subscription:

1.3.1.5.3. MultiClusterEngine custom resource additional configuration

Add the following additional configuration before applying the MultiClusterEngine custom resource:

spec:

nodeSelector:

node-role.kubernetes.io/infra: ""

spec:

nodeSelector:

node-role.kubernetes.io/infra: ""1.3.2. Install on disconnected networks

You might need to install the multicluster engine operator on Red Hat OpenShift Container Platform clusters that are not connected to the Internet. The procedure to install on a disconnected engine requires some of the same steps as the connected installation.

Important: You must install multicluster engine operator on a cluster that does not have Red Hat Advanced Cluster Management for Kubernetes earlier than 2.5 installed. The multicluster engine operator cannot co-exist with Red Hat Advanced Cluster Management for Kubernetes on versions earlier than 2.5 because they provide some of the same management components. It is recommended that you install multicluster engine operator on a cluster that has never previously installed Red Hat Advanced Cluster Management. If you are using Red Hat Advanced Cluster Management for Kubernetes at version 2.5.0 or later then multicluster engine operator is already installed on the cluster with it.

You must download copies of the packages to access them during the installation, rather than accessing them directly from the network during the installation.

1.3.2.1. Prerequisites

You must meet the following requirements before you install The multicluster engine operator:

- Red Hat OpenShift Container Platform version 4.12 or later must be deployed in your environment, and you must be logged in with the command line interface (CLI).

You need access to catalog.redhat.com.

Note: For managing bare metal clusters, you must have OpenShift Container Platform version 4.12 or later.

-

Your Red Hat OpenShift Container Platform CLI must be version 4.12 or later, and configured to run

occommands. - Your Red Hat OpenShift Container Platform permissions must allow you to create a namespace.

- You must have a workstation with Internet connection to download the dependencies for the operator.

1.3.2.2. Confirm your OpenShift Container Platform installation

- You must have a supported OpenShift Container Platform version, including the registry and storage services, installed and working in your cluster. For information about OpenShift Container Platform version 4.12, see OpenShift Container Platform documentation.

When and if you are connected, you can ensure that the OpenShift Container Platform cluster is set up correctly by accessing the OpenShift Container Platform web console with the following command:

kubectl -n openshift-console get route console

kubectl -n openshift-console get route consoleCopy to Clipboard Copied! Toggle word wrap Toggle overflow See the following example output:

console console-openshift-console.apps.new-coral.purple-chesterfield.com console https reencrypt/Redirect None

console console-openshift-console.apps.new-coral.purple-chesterfield.com console https reencrypt/Redirect NoneCopy to Clipboard Copied! Toggle word wrap Toggle overflow The console URL in this example is:

https:// console-openshift-console.apps.new-coral.purple-chesterfield.com. Open the URL in your browser and check the result.If the console URL displays

console-openshift-console.router.default.svc.cluster.local, set the value foropenshift_master_default_subdomainwhen you install OpenShift Container Platform.

1.3.2.3. Installing in a disconnected environment

Important: You need to download the required images to a mirroring registry to install the operators in a disconnected environment. Without the download, you might receive ImagePullBackOff errors during your deployment.

Follow these steps to install the multicluster engine operator in a disconnected environment:

Create a mirror registry. If you do not already have a mirror registry, create one by completing the procedure in the Disconnected installation mirroring topic of the Red Hat OpenShift Container Platform documentation.

If you already have a mirror registry, you can configure and use your existing one.

Note: For bare metal only, you need to provide the certificate information for the disconnected registry in your

install-config.yamlfile. To access the image in a protected disconnected registry, you must provide the certificate information so the multicluster engine operator can access the registry.- Copy the certificate information from the registry.

-

Open the

install-config.yamlfile in an editor. -

Find the entry for

additionalTrustBundle: |. Add the certificate information after the

additionalTrustBundleline. The resulting content should look similar to the following example:additionalTrustBundle: | -----BEGIN CERTIFICATE----- certificate_content -----END CERTIFICATE----- sshKey: >-

additionalTrustBundle: | -----BEGIN CERTIFICATE----- certificate_content -----END CERTIFICATE----- sshKey: >-Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Important: Additional mirrors for disconnected image registries are needed if the following Governance policies are required:

-

Container Security Operator policy: Locate the images in the

registry.redhat.io/quaysource. -

Compliance Operator policy: Locate the images in the

registry.redhat.io/compliancesource. Gatekeeper Operator policy: Locate the images in the

registry.redhat.io/gatekeepersource.See the following example of mirrors lists for all three operators:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow -

Container Security Operator policy: Locate the images in the

-

Save the

install-config.yamlfile. Create a YAML file that contains the

ImageContentSourcePolicywith the namemce-policy.yaml. Note: If you modify this on a running cluster, it causes a rolling restart of all nodes.Copy to Clipboard Copied! Toggle word wrap Toggle overflow Apply the ImageContentSourcePolicy file by entering the following command:

oc apply -f mce-policy.yaml

oc apply -f mce-policy.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Enable the disconnected Operator Lifecycle Manager Red Hat Operators and Community Operators.

the multicluster engine operator is included in the Operator Lifecycle Manager Red Hat Operator catalog.

- Configure the disconnected Operator Lifecycle Manager for the Red Hat Operator catalog. Follow the steps in the Using Operator Lifecycle Manager on restricted networks topic of theRed Hat OpenShift Container Platform documentation.

- Now that you have the image in the disconnected Operator Lifecycle Manager, continue to install the multicluster engine operator for Kubernetes from the Operator Lifecycle Manager catalog.

See Installing while connected online for the required steps.

1.3.3. Advanced configuration

The multicluster engine operator is installed using an operator that deploys all of the required components. The multicluster engine operator can be further configured during or after installation. Learn more about the advanced configuration options.

1.3.3.1. Deployed components

Add one or more of the following attributes to the MultiClusterEngine custom resource:

| Name | Description | Enabled |

| assisted-service | Installs OpenShift Container Platform with minimal infrastructure prerequisites and comprehensive pre-flight validations | True |

| cluster-lifecycle | Provides cluster management capabilities for OpenShift Container Platform and Kubernetes hub clusters | True |

| cluster-manager | Manages various cluster-related operations within the cluster environment | True |

| cluster-proxy-addon |

Automates the installation of | True |

| console-mce | Enables the multicluster engine operator console plug-in | True |

| discovery | Discovers and identifies new clusters within the OpenShift Cluster Manager | True |

| hive | Provisions and performs initial configuration of OpenShift Container Platform clusters | True |

| hypershift | Hosts OpenShift Container Platform control planes at scale with cost and time efficiency, and cross-cloud portability | True |

| hypershift-local-hosting | Enables local hosting capabilities for within the local cluster environment | True |

| local-cluster | Enables the import and self-management of the local hub cluster where the multicluster engine operator is deployed | True |

| managedserviceacccount | Syncronizes service accounts to managed clusters and collects tokens as secret resources back to the hub cluster | False |

| server-foundation | Provides foundational services for server-side operations within the multicluster environment | True |

When you install multicluster engine operator on to the cluster, not all of the listed components are enabled by default.

You can further configure multicluster engine operator during or after installation by adding one or more attributes to the MultiClusterEngine custom resource. Continue reading for information about the attributes that you can add.

1.3.3.2. Console and component configuration

The following example displays the spec.overrides default template that you can use to enable or disable the component:

- 1

- Replace

namewith the name of the component.

Alternatively, you can run the following command. Replace namespace with the name of your project and name with the name of the component:

oc patch MultiClusterEngine <multiclusterengine-name> --type=json -p='[{"op": "add", "path": "/spec/overrides/components/-","value":{"name":"<name>","enabled":true}}]'

oc patch MultiClusterEngine <multiclusterengine-name> --type=json -p='[{"op": "add", "path": "/spec/overrides/components/-","value":{"name":"<name>","enabled":true}}]'1.3.3.3. Local-cluster enablement

By default, the cluster that is running multicluster engine operator manages itself. To install multicluster engine operator without the cluster managing itself, specify the following values in the spec.overrides.components settings in the MultiClusterEngine section:

-

The

namevalue identifies the hub cluster as alocal-cluster. -

The