Deploying AMQ Interconnect on OpenShift

For Use with AMQ Interconnect 1.10


Chapter 1. Getting started with AMQ Interconnect on OpenShift Container Platform

AMQ Interconnect is a lightweight AMQP 1.0 message router for building large, highly resilient messaging networks for hybrid cloud and IoT/edge deployments. AMQ Interconnect automatically learns the addresses of messaging endpoints (such as clients, servers, and message brokers) and flexibly routes messages between them.

This document describes how to deploy AMQ Interconnect on OpenShift Container Platform by using the AMQ Interconnect Operator and the Interconnect Custom Resource Definition (CRD) that it provides. The CRD defines an AMQ Interconnect deployment, and the Operator creates and manages the deployment in OpenShift Container Platform.

1.1. What Operators are

Operators are a method of packaging, deploying, and managing a Kubernetes application. They take human operational knowledge and encode it into software that is more easily shared with consumers to automate common or complex tasks.

In OpenShift Container Platform 4.0, the Operator Lifecycle Manager (OLM) helps users install, update, and generally manage the life cycle of all Operators and their associated services running across their clusters. It is part of the Operator Framework, an open source toolkit designed to manage Kubernetes native applications (Operators) in an effective, automated, and scalable way.

The OLM runs by default in OpenShift Container Platform 4.0, which aids cluster administrators in installing, upgrading, and granting access to Operators running on their cluster. The OpenShift Container Platform web console provides management screens for cluster administrators to install Operators, as well as grant specific projects access to use the catalog of Operators available on the cluster.

OperatorHub is the graphical interface that OpenShift Container Platform cluster administrators use to discover, install, and upgrade Operators. With one click, these Operators can be pulled from OperatorHub, installed on the cluster, and managed by the OLM, ready for engineering teams to self-service manage the software in development, test, and production environments.

Additional resources

- For more information about Operators, see the OpenShift documentation.

1.2. Provided Custom Resources

The AMQ Interconnect Operator provides the Interconnect Custom Resource Definition (CRD), which allows you to interact with an AMQ Interconnect deployment running on OpenShift Container Platform just like other OpenShift Container Platform API objects.

The Interconnect CRD represents a deployment of AMQ Interconnect routers. The CRD provides elements for defining many different aspects of a router deployment in OpenShift Container Platform such as:

- Number of AMQ Interconnect routers

- Deployment topology

- Connectivity

- Address semantics

Chapter 2. Preparing to deploy AMQ Interconnect on OpenShift Container Platform

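Before deploying AMQ Interconnect on OpenShift Container Platform, perform one of the following procedures:

- Section 2.1, “Creating secrets for SSL/TLS authentication”

- Section 2.2, “Adding the Red Hat Integration - AMQ Certificate Manager Operator”

If you are evaluating AMQ Interconnect, you can skip these steps. However, Red Hat recommends always securing AMQ Interconnect communication.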

2.1. Creating secrets for SSL/TLS authentication

If you installed the Red Hat Integration - AMQ Certificate Manager Operator, you can skip this procedure. Instructions for securing your network with AMQ Certificate Manager are included in the associated procedures.

OpenShift uses objects called Secrets to hold sensitive information such as SSL/TLS certificates. If you want to secure inter-router traffic, client traffic, or both, you must create the SSL/TLS certificates and private keys and provide them to OpenShift as secrets.

Procedure

If you do not have an existing certificate authority (CA) certificate for inter-router connections, create one.

These commands create a self-signed CA certificate for inter-router connections:

# Create a new directory for the inter-router certificates.
$ mkdir internal-certs

# Create a private key for the CA.
$ openssl genrsa -out internal-certs/ca-key.pem 2048

# Create a certificate signing request for the CA.
$ openssl req -new -batch -key internal-certs/ca-key.pem -out internal-certs/ca-csr.pem

# Self sign the CA certificate.
$ openssl x509 -req -in internal-certs/ca-csr.pem -signkey internal-certs/ca-key.pem -out internal-certs/ca.crt

Create a certificate for the router signed by the CA.

These commands create a private key and a certificate, and sign the certificate using the CA created in the previous step:

# Create a private key.
$ openssl genrsa -out internal-certs/tls.key 2048

# Create a certificate signing request for the router.
$ openssl req -new -batch -subj "/CN=amq-interconnect.<project_name>.svc.cluster.local" -key internal-certs/tls.key -out internal-certs/server-csr.pem

# Sign the certificate using the CA.
$ openssl x509 -req -in internal-certs/server-csr.pem -CA internal-certs/ca.crt -CAkey internal-certs/ca-key.pem -out internal-certs/tls.crt -CAcreateserial

where <project_name> is the name of the current OpenShift project.

Create a secret containing the private key, router certificate, and CA certificate.

This command creates the secret using the key and certificates that were created in the previous steps:

$ oc create secret generic inter-router-certs-secret --from-file=tls.crt=internal-certs/tls.crt --from-file=tls.key=internal-certs/tls.key --from-file=ca.crt=internal-certs/ca.crt

If you want to use SSL/TLS to authenticate client connections (as opposed to authenticating clients using SASL), create a CA certificate for client connections.

These commands create a self-signed CA certificate for client connections:

# Create a new directory for the client certificates.
$ mkdir client-certs

# Create a private key for the CA.
$ openssl genrsa -out client-certs/ca-key.pem 2048

# Create a certificate signing request for the CA.
$ openssl req -new -batch -key client-certs/ca-key.pem -out client-certs/ca-csr.pem

# Self sign the certificate.
$ openssl x509 -req -in client-certs/ca-csr.pem -signkey client-certs/ca-key.pem -out client-certs/ca.crt

Create a certificate for client connections signed by the CA.

These commands create a private key and a certificate, and then sign the certificate using the CA created in the previous step:

# Create a private key.
$ openssl genrsa -out client-certs/tls.key 2048

# Create a certificate signing request for the client connections.
$ openssl req -new -batch -subj "/CN=<client_name>" -key client-certs/tls.key -out client-certs/client-csr.pem

# Sign the certificate using the CA.
$ openssl x509 -req -in client-certs/client-csr.pem -CA client-certs/ca.crt -CAkey client-certs/ca-key.pem -out client-certs/tls.crt -CAcreateserial

where <client_name> is unique for each router client.

Create a secret containing the CA certificate used to sign client certificates using the certificate that was created in the previous steps:

$ oc create secret generic client-ca-secret --from-file=ca.crt=client-certs/ca.crt --from-file=tls.crt=client-certs/ca.crt --from-file=tls.key=client-certs/ca-key.pem
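Before running either oc create secret command in this procedure, it can help to confirm that every file passed with --from-file actually exists. The following is a minimal sketch of such a check. The function name check_cert_files is hypothetical and is not part of the oc CLI or AMQ Interconnect.

```shell
# check_cert_files is a hypothetical helper (not part of oc or AMQ Interconnect).
# Usage: check_cert_files <directory> <file>...
# It fails if any named file is missing or empty in the given directory.
check_cert_files() {
    dir="$1"
    shift
    for name in "$@"; do
        if [ ! -s "$dir/$name" ]; then
            echo "missing or empty: $dir/$name" >&2
            return 1
        fi
    done
    return 0
}
```

For example, running check_cert_files internal-certs tls.crt tls.key ca.crt before creating inter-router-certs-secret reports the first file that is absent instead of letting the secret creation fail.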

2.2. Adding the Red Hat Integration - AMQ Certificate Manager Operator

The Red Hat Integration - AMQ Certificate Manager Operator (cert-manager) is an optional Kubernetes add-on that issues and manages TLS certificates. The Red Hat Integration - AMQ Interconnect Operator uses it to automatically create the TLS certificates needed to secure the router network.

You use OperatorHub to add the Operator to your OpenShift Container Platform cluster.

Installing an Operator requires administrator-level privileges for your OpenShift cluster.

The Red Hat Integration - AMQ Certificate Manager Operator is not supported on OpenShift Container Platform 4.9 or later. As an alternative, you can create and manage TLS certificates as described in Section 2.1, “Creating secrets for SSL/TLS authentication”.

When installed, the operator is available to all users and projects in the cluster.
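Because the support statement above depends on the cluster version, a script that automates this choice needs a version comparison. The sketch below shows one way to test whether a version string is earlier than 4.9. The function name is_before_4_9 is an assumption made for illustration, and the version string must be supplied by the caller.

```shell
# is_before_4_9 is a hypothetical helper used only for illustration.
# It takes a version string such as "4.8" or "4.9.9" and succeeds only
# when the version is earlier than 4.9.
is_before_4_9() {
    major=${1%%.*}      # text before the first dot
    rest=${1#*.}        # text after the first dot
    minor=${rest%%.*}   # second component only
    if [ "$major" -lt 4 ]; then
        return 0
    fi
    if [ "$major" -eq 4 ] && [ "$minor" -lt 9 ]; then
        return 0
    fi
    return 1
}
```

A caller could use the result to decide between installing the AMQ Certificate Manager Operator and creating the secrets manually as described in Section 2.1.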

Prerequisites

- Access to an OpenShift Container Platform 4.6, 4.7, 4.8, 4.9 or 4.10 cluster using a cluster-admin account.

Procedure

- In the OpenShift Container Platform web console, navigate to → .

- Choose Red Hat Integration - AMQ Certificate Manager Operator from the list of available Operators, and then click .

- On the Operator Installation page, select All namespaces on the cluster (default), and then click .

The Installed Operators page appears displaying the status of the Operator installation.

- Verify that the Red Hat Integration - AMQ Certificate Manager Operator is displayed and wait until the Status changes to Succeeded.

If the installation is not successful, troubleshoot the error:

- Click Red Hat Integration - AMQ Certificate Manager Operator on the Installed Operators page.

- Select the Subscription tab and view any failures or errors.

Additional resources

- For more information about cert-manager, see the cert-manager documentation.

Chapter 3. Adding the Red Hat Integration - AMQ Interconnect Operator

The Red Hat Integration - AMQ Interconnect Operator creates and manages AMQ Interconnect router networks in OpenShift Container Platform. This Operator must be installed separately for each project that uses it.

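The options for installing the Operator are:

- Section 3.1, “Installing the Operator using the CLI”

- Section 3.2, “Installing the Operator using the Operator Lifecycle Manager”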

Installing an Operator requires administrator-level privileges for your OpenShift cluster.

3.1. Installing the Operator using the CLI

The procedures in this section show how to use the OpenShift command-line interface (CLI) to install and deploy the latest version of the Red Hat Integration - AMQ Interconnect Operator in a given OpenShift project.

3.1.1. Getting the Operator code

This procedure shows how to access and prepare the code you need to install the latest version of the Operator for AMQ Interconnect 1.10.

Procedure

- In your web browser, navigate to the Software Downloads page for AMQ Interconnect releases.

- Ensure that the value of the Version drop-down list is set to 1.10.7 and the Releases tab is selected.

Next to AMQ Interconnect 1.10.7 Operator Installation and Example Files, click Download.

Download of the amq-interconnect-operator-1.10.7-ocp-install-examples.zip compressed archive automatically begins.

When the download has completed, move the archive to your chosen installation directory. The following example moves the archive to a directory called ~/router.

$ mkdir ~/router
$ mv amq-interconnect-operator-1.10.7-ocp-install-examples.zip ~/router

In your chosen installation directory, extract the contents of the archive. For example:

$ cd ~/router
$ unzip amq-interconnect-operator-1.10.7-ocp-install-examples.zip

Switch to the directory that was created when you extracted the archive. For example:

$ cd operator

Log in to OpenShift Container Platform as a cluster administrator. For example:

$ oc login -u system:admin

Specify the project in which you want to install the Operator. You can create a new project or switch to an existing one.

Create a new project:

$ oc new-project <project-name>

Or, switch to an existing project:

$ oc project <project-name>

Create a service account to use with the Operator.

$ oc create -f deploy/service_account.yaml

Create a role for the Operator.

$ oc create -f deploy/role.yaml

Create a role binding for the Operator. The role binding binds the previously-created service account to the Operator role, based on the names you specified.

$ oc create -f deploy/role_binding.yaml
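The three oc create commands above must succeed in order, so a script that automates them should stop at the first failure. The following sketch wraps them in a single function. The name create_operator_rbac is hypothetical, and the function assumes that the current directory is the one that contains the deploy directory.

```shell
# create_operator_rbac is a hypothetical wrapper around the three
# "oc create -f" commands shown in this procedure. It creates the service
# account, role, and role binding in order and stops at the first failure.
create_operator_rbac() {
    for manifest in \
        deploy/service_account.yaml \
        deploy/role.yaml \
        deploy/role_binding.yaml
    do
        oc create -f "$manifest" || {
            echo "failed to create $manifest" >&2
            return 1
        }
    done
}
```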

In the procedure that follows, you deploy the Operator in your project.

3.1.2. Deploying the Operator using the CLI

The procedure in this section shows how to use the OpenShift command-line interface (CLI) to deploy the latest version of the Operator for AMQ Interconnect 1.10 in your OpenShift project.

Prerequisites

- You must have already prepared your OpenShift project for the Operator deployment. See Section 3.1.1, “Getting the Operator code”.

- Before you can follow the procedure in this section, you must first complete the steps described in Red Hat Container Registry Authentication.

Procedure

In the OpenShift command-line interface (CLI), log in to OpenShift Container Platform as a cluster administrator. For example:

$ oc login -u system:admin

Switch to the project that you previously prepared for the Operator deployment. For example:

$ oc project <project-name>

Switch to the directory that was created when you previously extracted the Operator installation archive. For example:

$ cd ~/router/operator/qdr-operator-1.10-ocp-install-examples

Deploy the CRD that is included with the Operator. You must install the CRD in your OpenShift cluster before deploying and starting the Operator.

$ oc create -f deploy/crds/interconnectedcloud_v1alpha1_interconnect_crd.yaml

Link the pull secret associated with the account used for authentication in the Red Hat Ecosystem Catalog with the default, deployer, and builder service accounts for your OpenShift project.

$ oc secrets link --for=pull default <secret-name>
$ oc secrets link --for=pull deployer <secret-name>
$ oc secrets link --for=pull builder <secret-name>

Note: In OpenShift Container Platform 4.1 or later, you can also use the web console to associate a pull secret with a project in which you want to deploy container images such as the AMQ Interconnect Operator. To do this, click → . Specify the pull secret associated with the account that you use for authentication in the Red Hat Container Registry.

Deploy the Operator.

$ oc create -f deploy/operator.yaml

Verify that the Operator is running:

$ oc get pods -l name=qdr-operator

If the output does not report the pod is running, use the following command to determine the issue that prevented it from running:

$ oc describe pod -l name=qdr-operator

Verify that the CRD is registered in the cluster and review the CRD details:

$ oc get crd
$ oc describe crd interconnects.interconnectedcloud.github.io

It is recommended that you deploy only a single instance of the AMQ Interconnect Operator in a given OpenShift project. Setting the replicas element of your Operator deployment to a value greater than 1, or deploying the Operator more than once in the same project is not recommended.
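The verification step above can also be scripted. The sketch below queries the phase of the Pods selected by the name=qdr-operator label used in this procedure and succeeds if at least one of them is Running. The function name operator_is_running is an assumption made for illustration.

```shell
# operator_is_running is a hypothetical helper. It asks oc for the phase of
# every Pod labeled name=qdr-operator and succeeds if any phase is "Running".
operator_is_running() {
    phases=$(oc get pods -l name=qdr-operator \
        -o jsonpath='{.items[*].status.phase}') || return 1
    case " $phases " in
        *" Running "*) return 0 ;;
        *) return 1 ;;
    esac
}
```

If the function fails, the oc describe pod command shown earlier in this procedure is the next place to look.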

Additional resources

- For an alternative method of installing the AMQ Interconnect Operator that uses the OperatorHub graphical interface, see Section 3.2, “Installing the Operator using the Operator Lifecycle Manager”.

3.2. Installing the Operator using the Operator Lifecycle Manager

The procedures in this section show how to use the OperatorHub to install and deploy the latest version of the Red Hat Integration - AMQ Interconnect Operator in a given OpenShift project.

In OpenShift Container Platform 4.1 and later, the Operator Lifecycle Manager (OLM) helps users install, update, and generally manage the lifecycle of all Operators and their associated services running across their clusters. It is part of the Operator Framework, an open source toolkit designed to manage Kubernetes native applications (Operators) in an effective, automated, and scalable way.

Prerequisites

- Access to an OpenShift Container Platform 4.6, 4.7, 4.8, 4.9 or 4.10 cluster using a cluster-admin account.

- Red Hat Integration - AMQ Certificate Manager Operator is installed in the OpenShift Container Platform cluster if required.

Procedure

- In the OpenShift Container Platform web console, navigate to → .

- Choose Red Hat Integration - AMQ Interconnect Operator from the list of available Operators, and then click .

- On the Operator Installation page, select the namespace into which you want to install the Operator, and then click .

The Installed Operators page appears displaying the status of the Operator installation.

- Verify that the AMQ Interconnect Operator is displayed and wait until the Status changes to Succeeded.

If the installation is not successful, troubleshoot the error:

- Click Red Hat Integration - AMQ Interconnect Operator on the Installed Operators page.

- Select the Subscription tab and view any failures or errors.

Chapter 4. Installing the Red Hat Integration - AMQ Interconnect Operator in a restricted environment

In a production environment which has no or limited internet access, installing the Red Hat Integration - AMQ Interconnect Operator as described in Chapter 3, Adding the Red Hat Integration - AMQ Interconnect Operator is not possible. This section explains how to install Red Hat Integration - AMQ Interconnect Operator in a restricted environment by mirroring the required components to the cluster.

Prerequisites

- An OpenShift Container Platform cluster version 4.6, 4.7, 4.8, 4.9 or 4.10

- A RHEL machine with:

  - podman version 1.9.3 or later

  - The opm CLI as described in the OpenShift documentation

  - The oc CLI version 4.9.9 or later

- Network access:

  - network access to the Red Hat Container Registry

  - network access to the OpenShift Container Platform cluster

You only need access to the Red Hat Container Registry while mirroring. You do not need simultaneous access to the Red Hat Container Registry and the OpenShift Container Platform cluster.

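The steps required are described in the following sections:

- Section 4.1, “Setting up the OpenShift Container Platform cluster”

- Section 4.2, “Creating the AMQ Interconnect images on a RHEL machine”

- Section 4.3, “Pushing images to the OpenShift Container Platform cluster”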

4.1. Setting up the OpenShift Container Platform cluster

Complete the following steps on the OpenShift Container Platform cluster to prepare for the mirroring process:

- Log in to the cluster as cluster-admin.

- Disable the sources for the default catalogs using either the CLI or the OpenShift console:

  - For the CLI, set disableAllDefaultSources: true for OperatorHub:

    $ oc patch OperatorHub cluster --type json \
        -p '[{"op": "add", "path": "/spec/disableAllDefaultSources", "value": true}]'

  - For the OpenShift console, navigate to Administration → Cluster Settings → Configuration → OperatorHub.

    On the OperatorHub page, click the Sources tab, and disable the sources.
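After disabling the default sources, you may want to confirm that the setting took effect before you start mirroring. The sketch below reads back the same field that the oc patch command sets. The function name default_sources_disabled is hypothetical.

```shell
# default_sources_disabled is a hypothetical helper. It reads
# spec.disableAllDefaultSources from the OperatorHub object named "cluster"
# and succeeds only when the value is "true".
default_sources_disabled() {
    value=$(oc get OperatorHub cluster \
        -o jsonpath='{.spec.disableAllDefaultSources}') || return 1
    [ "$value" = "true" ]
}
```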

4.2. Creating the AMQ Interconnect images on a RHEL machine

Complete the following steps on the RHEL machine to prepare for the mirroring process:

Prerequisites

- Access to registry.redhat.io

Log in to registry.redhat.io from the RHEL machine.

$ podman login -u USERNAME -p PASSWORD registry.redhat.io

Keep only the Interconnect Operator in the list of operators:

$ opm index prune -f registry.redhat.io/redhat/redhat-operator-index:v<openshift-version> -p amq7-interconnect-operator -t <cluster-domain>:<registry-port>/iib:my-operator-iib

where

- <openshift-version> is the version of OpenShift Container Platform, for example, 4.9.

- <cluster-domain> is the domain name for the OpenShift Container Platform cluster, for example, mycluster.example.com.

- <registry-port> is the port number used by the registry in the OpenShift Container Platform cluster. The default is 5000.

Verify that you have only created a podman image of the Interconnect Operator:

$ podman images | grep my-operator-iib
<cluster-domain>:<registry-port>/iib   my-operator-iib   39b6148e6981   3 days ago   138 MB

4.3. Pushing images to the OpenShift Container Platform cluster

Prerequisites

Access from the RHEL machine to the OpenShift Container Platform cluster.

From the RHEL machine, push the image to the cluster registry:

$ podman push <cluster-domain>:<registry-port>/iib:my-operator-iib

Create the three files required for the mirroring process:

$ /usr/local/bin/oc adm catalog mirror \
    <cluster-domain>:<registry-port>/iib:my-operator-iib \
    <cluster-domain>:<registry-port> \
    -a /home/customer-user/.docker/config.json \
    --insecure=true \
    --registry-config /home/customer-user/.docker/config.json \
    --index-filter-by-os=linux/amd64 \
    --manifests-only

Make sure that the following files exist:

- catalogSource.yaml - A YAML file describing the catalogSource.

- imageContentSourcePolicy.yaml - A YAML file that maps the images in the internal registry to the addresses from Red Hat registries.

- mapping.txt - A text file that drives the mirroring process of the images to the internal registry.

Edit mapping.txt to list only the images you want to mirror.

The file has the following format:

[ Operator address on Red Hat registry : Operator SHA ] = [ Operator address on internal mirror registry : tag ]

Example of a mapping.txt file:

registry.redhat.io/amq7/amq-interconnect@sha256:6101cc735e4d19cd67c6d80895c425ecf6f1d2604d88f999fa0cae57a7d6abaf=<cluster-domain>:<registry-port>/amq7-amq-interconnect:f793b0cc
registry.redhat.io/amq7/amq-interconnect-operator@sha256:8dd53290c909589590b88a1544d854b4ad9f8b4a639189597c0a59579bc60c40=<cluster-domain>:<registry-port>/amq7-amq-interconnect-operator:73c142ff
registry.redhat.io/amq7/amq-interconnect-operator-metadata@sha256:799ce48905d5d2a91b42e2a7943ce9b756aa9da80f6924be06b2a6275ac90214=<cluster-domain>:<registry-port>/amq7-amq-interconnect-operator-metadata:14cc4a4e
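Instead of editing mapping.txt by hand, the file can be filtered. The sketch below keeps only the lines whose source image (the part before the = sign) contains a given string. The function name filter_mapping and the output file name in the usage note are assumptions made for illustration.

```shell
# filter_mapping is a hypothetical helper. It reads mapping.txt-style lines
# ("source=destination") on standard input and prints only the lines whose
# source part contains the fixed string given as the first argument.
filter_mapping() {
    awk -F= -v pat="$1" 'index($1, pat) > 0'
}
```

For example, filter_mapping amq7/amq-interconnect < mapping.txt > mapping.filtered.txt writes the matching lines to a new file so that the original file is preserved for comparison.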

Mirror the required images:

$ /usr/local/bin/oc image mirror \
    -f mapping-ic.yaml \
    -a /home/customer-user/.docker/config.json \
    --insecure=true \
    --registry-config /home/customer-user/.docker/config.json \
    --keep-manifest-list=true

Configure the ImageContentSourcePolicy (ICSP) name:

Set the name field in the imageContentSourcePolicy.yaml file, for example, my-operator-icsp.

Example of an ICSP snippet:

---
apiVersion: operator.openshift.io/v1alpha1
kind: ImageContentSourcePolicy
metadata:
  labels:
    operators.openshift.org/catalog: "true"
  name: my-operator-icsp
spec:
  repositoryDigestMirrors:
  - mirrors:
    - <cluster-domain>:<registry-port>/amq7-amq-interconnect-operator
    source: registry.redhat.io/amq7/amq-interconnect-operator

Apply the policy (ICSP) file:

$ /usr/local/bin/oc create -f imageContentSourcePolicy.yaml

After you apply this file, all cluster nodes are reset automatically. You can check the status of the nodes by using oc get nodes or, in the OpenShift console, by navigating to Compute → Nodes.

Note: Make sure all nodes are in the Ready state before you continue.
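The node check can be scripted rather than read by eye. The sketch below inspects the STATUS column of oc get nodes and succeeds only when every listed node reports exactly Ready. The function name all_nodes_ready is hypothetical. A status such as Ready,SchedulingDisabled is deliberately treated as not ready.

```shell
# all_nodes_ready is a hypothetical helper. It reads the second column
# (STATUS) of "oc get nodes --no-headers" and succeeds only if at least one
# node is listed and every node has the status "Ready".
all_nodes_ready() {
    oc get nodes --no-headers | awk '
        { seen = 1 }
        $2 != "Ready" { bad = 1 }
        END { if (seen && !bad) exit 0; exit 1 }
    '
}
```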

Configure the catalogSource name:

Set the name field in the catalogSource.yaml file, for example, my-operator-catalog.

Example of a catalogSource.yaml file:

apiVersion: operators.coreos.com/v1alpha1
kind: CatalogSource
metadata:
  name: iib
  namespace: openshift-marketplace
spec:
  image: <cluster-domain>:<registry-port>/iib:my-operator-iib
  sourceType: grpc

Apply the catalog source configuration to complete the installation of the Red Hat Integration - AMQ Interconnect Operator:

$ /usr/local/bin/oc apply -f catalogSource.yaml

- Make sure the installation is working by deploying a router as described in Section 5.1, “Creating an interior router deployment”.

Chapter 5. Creating a router network

To create a network of AMQ Interconnect routers, you define a deployment in an Interconnect Custom Resource, and then apply it. The AMQ Interconnect Operator creates the deployment by scheduling the necessary Pods and creating any needed Resources.

The procedures in this section demonstrate the following router network topologies:

- Interior router mesh

- Interior router mesh with edge routers for scalability

- Inter-cluster router network that connects two OpenShift clusters

Prerequisites

- The AMQ Interconnect Operator is installed in your OpenShift Container Platform project.

5.1. Creating an interior router deployment

Interior routers establish connections with each other and automatically compute the lowest cost paths across the network.

Procedure

This procedure creates an interior router network of three routers. The routers automatically connect to each other in a mesh topology, and their connections are secured with mutual SSL/TLS authentication.

Create an Interconnect Custom Resource YAML file that describes the interior router deployment.

Sample router-mesh.yaml file

apiVersion: interconnectedcloud.github.io/v1alpha1
kind: Interconnect
metadata:
  name: router-mesh
spec:
  deploymentPlan:
    role: interior  # (1)
    size: 3  # (2)
    placement: Any  # (3)

(1) The operating mode of the routers in the deployment. The Operator will automatically connect interior routers in a mesh topology.

(2) The number of routers to create.

(3) Each router runs in a separate Pod. The placement defines where in the cluster the Operator should schedule and place the Pods. You can choose the following placement options:

- Any: The Pods can run on any node in the OpenShift Container Platform cluster.

- Every: The Operator places a router Pod on each node in the cluster. If you choose this option, the size property is not needed because the number of routers corresponds to the number of nodes in the cluster.

- AntiAffinity: The Operator ensures that multiple router Pods do not run on the same node in the cluster. If the size is greater than the number of nodes in the cluster, the extra Pods that cannot be scheduled remain in a Pending state.

Create the router deployment described in the YAML file.

$ oc apply -f router-mesh.yaml

The Operator creates a deployment of interior routers in a mesh topology that uses default address semantics. It also creates a Service through which the routers can be accessed, and a Route through which you can access the web console.

Verify that the router mesh was created and the Pods are running.

Each router runs in a separate Pod. They connect to each other automatically using the Service that the Operator created.

$ oc get pods
NAME                                     READY   STATUS    RESTARTS   AGE
interconnect-operator-587f94784b-4bzdx   1/1     Running   0          52m
router-mesh-6b48f89bd-588r5              1/1     Running   0          40m
router-mesh-6b48f89bd-bdjc4              1/1     Running   0          40m
router-mesh-6b48f89bd-h6d5r              1/1     Running   0          40m

Review the router deployment.

$ oc get interconnect/router-mesh -o yaml
apiVersion: interconnectedcloud.github.io/v1alpha1
kind: Interconnect
...
spec:
  addresses:  # (1)
  - distribution: closest
    prefix: closest
  - distribution: multicast
    prefix: multicast
  - distribution: closest
    prefix: unicast
  - distribution: closest
    prefix: exclusive
  - distribution: multicast
    prefix: broadcast
  deploymentPlan:  # (2)
    livenessPort: 8888
    placement: Any
    resources: {}
    role: interior
    size: 3
  edgeListeners:  # (3)
  - port: 45672
  interRouterListeners:  # (4)
  - authenticatePeer: true
    expose: true
    port: 55671
    saslMechanisms: EXTERNAL
    sslProfile: inter-router
  listeners:  # (5)
  - port: 5672
  - authenticatePeer: true
    expose: true
    http: true
    port: 8080
  - port: 5671
    sslProfile: default
  sslProfiles:  # (6)
  - credentials: router-mesh-default-tls
    name: default
  - caCert: router-mesh-inter-router-tls
    credentials: router-mesh-inter-router-tls
    mutualAuth: true
    name: inter-router
  users: router-mesh-users  # (7)

(1) The default address configuration. All messages sent to an address that does not match any of these prefixes are distributed in a balanced anycast pattern.

(2) A router mesh of three interior routers was deployed.

(3) Each interior router listens on port 45672 for connections from edge routers.

(4) The interior routers connect to each other on port 55671. These inter-router connections are secured with SSL/TLS mutual authentication. The inter-router SSL Profile contains the details of the certificates that the Operator generated.

(5) Each interior router listens for connections from external clients on the following ports:

- 5672 - Unsecured connections from messaging applications.

- 5671 - Secure connections from messaging applications.

- 8080 - AMQ Interconnect web console access. Default user name/password security is applied.

(6) Using the Red Hat Integration - AMQ Certificate Manager Operator, the Red Hat Integration - AMQ Interconnect Operator automatically creates two SSL profiles:

- inter-router - The Operator secures the inter-router network with mutual TLS authentication by creating a Certificate Authority (CA) and generating certificates signed by the CA for each interior router.

- default - The Operator creates TLS certificates for messaging applications to connect to the interior routers on port 5671.

(7) The AMQ Interconnect web console is secured with user name/password authentication. The Operator automatically generates the credentials and stores them in the router-mesh-users Secret.
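To confirm from a script that the expected number of routers is running, the Pod listing shown earlier in this procedure can be counted by name prefix. The following sketch assumes the default column order of oc get pods (NAME, READY, STATUS, RESTARTS, AGE). The function name count_running_pods is hypothetical.

```shell
# count_running_pods is a hypothetical helper. It prints the number of Pods
# in the current project whose name starts with the given prefix and whose
# STATUS column (third column of "oc get pods") is "Running".
count_running_pods() {
    oc get pods --no-headers | awk -v prefix="$1" '
        index($1, prefix) == 1 && $3 == "Running" { n++ }
        END { print n + 0 }
    '
}
```

For the router-mesh deployment in this procedure, comparing the output of count_running_pods router-mesh with the configured size of 3 shows whether all routers started.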

5.2. Creating an edge router deployment

You can efficiently scale your router network by adding an edge router deployment. Edge routers act as connection concentrators for messaging applications. Each edge router maintains a single uplink connection to an interior router, and messaging applications connect to the edge routers to send and receive messages.

Prerequisites

- The interior router mesh is deployed. For more information, see Section 5.1, “Creating an interior router deployment”.

Procedure

This procedure creates an edge router on each node of the OpenShift Container Platform cluster and connects them to the previously created interior router mesh.

Create an Interconnect Custom Resource YAML file that describes the edge router deployment.

Sample edge-routers.yaml file

apiVersion: interconnectedcloud.github.io/v1alpha1
kind: Interconnect
metadata:
  name: edge-routers
spec:
  deploymentPlan:
    role: edge
    placement: Every  # (1)
  edgeConnectors:  # (2)
    - host: router-mesh  # (3)
      port: 45672  # (4)

(1) An edge router Pod will be deployed on each node in the OpenShift Container Platform cluster. This placement helps to balance messaging application traffic across the cluster. The Operator will create a DaemonSet to ensure that the number of Pods scheduled always corresponds to the number of nodes in the cluster.

(2) Edge connectors define the connections from the edge routers to the interior routers.

(3) The name of the Service that was created for the interior routers.

(4) The port on which the interior routers listen for edge connections. The default is 45672.

Create the edge routers described in the YAML file:

$ oc apply -f edge-routers.yaml

The Operator deploys an edge router on each node of the OpenShift Container Platform cluster, and connects them to the interior routers.

Verify that the edge routers were created and the Pods are running.

Each router runs in a separate Pod. Each edge router connects to any of the previously created interior routers.

$ oc get pods
NAME                                     READY   STATUS    RESTARTS   AGE
edge-routers-2jz5j                       1/1     Running   0          33s
edge-routers-fhlxv                       1/1     Running   0          33s
edge-routers-gg2qb                       1/1     Running   0          33s
edge-routers-hj72t                       1/1     Running   0          33s
interconnect-operator-587f94784b-4bzdx   1/1     Running   0          54m
router-mesh-6b48f89bd-588r5              1/1     Running   0          42m
router-mesh-6b48f89bd-bdjc4              1/1     Running   0          42m
router-mesh-6b48f89bd-h6d5r              1/1     Running   0          42m
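If you deploy edge routers against more than one interior router Service, generating the Custom Resource from a template avoids copy errors. The sketch below prints the same edge-routers Custom Resource used in this procedure with the Service name and port filled in. The function name write_edge_routers_cr is an assumption made for illustration, and the port defaults to 45672 as stated above.

```shell
# write_edge_routers_cr is a hypothetical helper. It prints an Interconnect
# Custom Resource for an edge router deployment to standard output.
#   $1: name of the Service created for the interior routers
#   $2: port on which the interior routers listen for edge connections
#       (optional, defaults to 45672)
write_edge_routers_cr() {
    host="$1"
    port="${2:-45672}"
    cat <<EOF
apiVersion: interconnectedcloud.github.io/v1alpha1
kind: Interconnect
metadata:
  name: edge-routers
spec:
  deploymentPlan:
    role: edge
    placement: Every
  edgeConnectors:
    - host: $host
      port: $port
EOF
}
```

For example, write_edge_routers_cr router-mesh > edge-routers.yaml produces a file that can then be applied with oc apply -f edge-routers.yaml.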

5.3. Creating an inter-cluster router network

Depending on whether you are using AMQ Certificate Manager, there are different procedures for creating an inter-cluster router network.

5.3.1. Creating an inter-cluster router network using a Certificate Authority

You can create a router network from routers running in different OpenShift Container Platform clusters. This enables you to connect applications running in separate clusters.

Prerequisites

- You have already created secrets defining an existing certificate for each router.

Procedure

This procedure creates router deployments in two different OpenShift Container Platform clusters (cluster1 and cluster2) and connects them together to form an inter-cluster router network. The connection between the router deployments is secured with SSL/TLS mutual authentication.

In the first OpenShift Container Platform cluster (cluster1), create an Interconnect Custom Resource YAML file that describes the interior router deployment.

This example creates a single interior router with a default configuration.

Sample cluster1-router-mesh.yaml file

apiVersion: interconnectedcloud.github.io/v1alpha1
kind: Interconnect
metadata:
  name: cluster1-router-mesh
spec:
  interRouterListeners:
    - authenticatePeer: true  # (1)
      host: 0.0.0.0  # (2)
      port: 55672  # (3)
      saslMechanisms: EXTERNAL  # (4)
      sslProfile: inter-router-profile  # (5)
      expose: true  # (6)
  sslProfiles:
    - caCert: inter-router-certs-secret  # (7)
      credentials: inter-router-certs-secret  # (8)
      name: inter-router-profile  # (9)

(1) authenticatePeer must be set to true to authenticate using TLS certificates.

(2) The listener host.

(3) The listener port.

(4) The SASL mechanism to use for authentication. Use EXTERNAL for TLS certificates.

(5) The name of the SSL profile to use for authenticating clients.

(6) Exposes a route so that the port is accessible from outside the cluster.

(7) The name of the cluster secret that contains your CA certificate. If this is the same secret that is used in credentials, it must contain a ca.crt entry. Otherwise, it must contain a tls.crt entry.

(8) The name of the cluster secret with the CA certificate that contains the tls.crt and tls.key files.

(9) The name of the SSL profile to use for the interRouterListener.

Create the router deployment described in the YAML file.

$ oc apply -f cluster1-router-mesh.yaml

The Red Hat Integration - AMQ Interconnect Operator creates an interior router with a default configuration and a listener to authenticate other routers.

Log in to the second OpenShift Container Platform cluster (cluster2), and switch to the project where you want to create the second router deployment.

In cluster2, create an Interconnect Custom Resource YAML file to describe the router deployment.

    apiVersion: interconnectedcloud.github.io/v1alpha1
    kind: Interconnect
    metadata:
      name: cluster2-router-mesh
    spec:
      sslProfiles:
        - name: inter-router-profile
          credentials: inter-router-certs-secret
          caCert: inter-router-certs-secret
      interRouterConnectors:
        - host: cluster1-router-mesh-port-55672-myproject.cluster1.openshift.com
          port: 443
          verifyHostname: false
          sslProfile: inter-router-profile
          name: cluster1

Create the router deployment described in the YAML file.

$ oc apply -f cluster2-router-mesh.yaml

Verify that the routers are connected.

This example displays the connections from the router in cluster2 to the router in cluster1.

    $ oc exec cluster2-fb6bc5797-crvb6 -it -- qdstat -c
    Connections
      id  host                                                                  container                              role          dir  security                                authentication  tenant
      ====================================================================================================================================================================================================
      1   cluster1-router-mesh-port-55672-myproject.cluster1.openshift.com:443  cluster1-router-mesh-54cffd9967-9h4vq  inter-router  out  TLSv1/SSLv3(DHE-RSA-AES256-GCM-SHA384)  x.509
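The verification step can also be scripted. The following Python sketch parses captured qdstat -c output and checks for an inter-router connection to the expected host. It is a minimal illustration, not part of the product: the function names are hypothetical, and the parser assumes that fields are separated by whitespace and contain no embedded spaces.

```python
from typing import Dict, List

# Column names taken from the qdstat -c header shown in this procedure.
COLUMNS = ["id", "host", "container", "role", "dir", "security", "authentication"]


def parse_connections(output: str) -> List[Dict[str, str]]:
    """Turn captured qdstat -c text into one dict per connection row."""
    rows = []
    for line in output.splitlines():
        fields = line.split()
        # Data rows start with a numeric connection id; skip titles and rulers.
        if not fields or not fields[0].isdigit():
            continue
        rows.append(dict(zip(COLUMNS, fields)))
    return rows


def has_inter_router_connection(rows: List[Dict[str, str]], host_prefix: str) -> bool:
    """True if any row is an inter-router connection to a host with this prefix."""
    return any(
        row.get("role") == "inter-router" and row.get("host", "").startswith(host_prefix)
        for row in rows
    )


SAMPLE = """Connections
  id  host  container  role  dir  security  authentication  tenant
  ====
  1   cluster1-router-mesh-port-55672-myproject.cluster1.openshift.com:443  cluster1-router-mesh-54cffd9967-9h4vq  inter-router  out  TLSv1/SSLv3(DHE-RSA-AES256-GCM-SHA384)  x.509
"""

rows = parse_connections(SAMPLE)
print(has_inter_router_connection(rows, "cluster1-router-mesh"))
```

In practice, the text passed to parse_connections would be the captured output of the oc exec command used in the verification step.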

5.3.2. Creating an inter-cluster router network using AMQ Certificate Manager

You can create a router network from routers running in different OpenShift Container Platform clusters. This enables you to connect applications running in separate clusters.

Procedure

This procedure creates router deployments in two different OpenShift Container Platform clusters (cluster1 and cluster2) and connects them together to form an inter-cluster router network. The connection between the router deployments is secured with SSL/TLS mutual authentication.

In the first OpenShift Container Platform cluster (cluster1), create an Interconnect Custom Resource YAML file that describes the interior router deployment.

This example creates a single interior router with a default configuration.

Sample cluster1-router-mesh.yaml file

    apiVersion: interconnectedcloud.github.io/v1alpha1
    kind: Interconnect
    metadata:
      name: cluster1-router-mesh
    spec: {}

Create the router deployment described in the YAML file.

$ oc apply -f cluster1-router-mesh.yaml

The Red Hat Integration - AMQ Interconnect Operator creates an interior router with a default configuration. It uses the Red Hat Integration - AMQ Certificate Manager Operator to create a Certificate Authority (CA) and generate a certificate signed by the CA.

Generate an additional certificate for the router deployment in the second OpenShift Container Platform cluster (cluster2).

The router deployment in cluster2 requires a certificate issued by the CA of cluster1.

Create a Certificate Custom Resource YAML file to request a certificate.

Sample certificate-request.yaml file

    apiVersion: certmanager.k8s.io/v1alpha1
    kind: Certificate
    metadata:
      name: cluster2-inter-router-tls
    spec:
      commonName: cluster1-router-mesh-myproject.cluster2.openshift.com
      issuerRef:
        name: cluster1-router-mesh-inter-router-ca  # (1)
      secretName: cluster2-inter-router-tls-secret

(1) The name of the Issuer that created the inter-router CA for cluster1. By default, the name of the Issuer is <application-name>-inter-router-ca.

Create the certificate described in the YAML file.

$ oc apply -f certificate-request.yaml

Extract the certificate that you generated.

    $ mkdir /tmp/cluster2-inter-router-tls
    $ oc extract secret/cluster2-inter-router-tls-secret --to=/tmp/cluster2-inter-router-tls

Log in to the second OpenShift Container Platform cluster (cluster2), and switch to the project where you want to create the second router deployment.

In cluster2, create a Secret containing the certificate that you generated.

$ oc create secret generic cluster2-inter-router-tls-secret --from-file=/tmp/cluster2-inter-router-tls

In cluster2, create an Interconnect Custom Resource YAML file to describe the router deployment.

    apiVersion: interconnectedcloud.github.io/v1alpha1
    kind: Interconnect
    metadata:
      name: cluster2-router-mesh
    spec:
      sslProfiles:
        - name: inter-cluster-tls
          credentials: cluster2-inter-router-tls-secret
          caCert: cluster2-inter-router-tls-secret
      interRouterConnectors:
        - host: cluster1-router-mesh-port-55671-myproject.cluster1.openshift.com
          port: 443
          verifyHostname: false
          sslProfile: inter-cluster-tls

Create the router deployment described in the YAML file.

$ oc apply -f cluster2-router-mesh.yaml

Verify that the routers are connected.

This example displays the connections from the router in cluster2 to the router in cluster1.

    $ oc exec cluster2-fb6bc5797-crvb6 -it -- qdstat -c
    Connections
      id  host                                                                  container                              role          dir  security                                authentication  tenant
      ====================================================================================================================================================================================================
      1   cluster1-router-mesh-port-55671-myproject.cluster1.openshift.com:443  cluster1-router-mesh-54cffd9967-9h4vq  inter-router  out  TLSv1/SSLv3(DHE-RSA-AES256-GCM-SHA384)  x.509
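The connector host names in the cluster2 samples appear to follow the shape <deployment>-port-<port>-<project>.<cluster domain>. The following Python sketch assembles a host name of that shape and a matching interRouterConnectors entry. The naming pattern is only inferred from the sample values in this section, and the function names are hypothetical; confirm the actual Route host name in cluster1 (for example, with oc get routes) before relying on it.

```python
def route_host(deployment: str, port: int, project: str, cluster_domain: str) -> str:
    """Assumed Route host naming pattern, inferred from the samples only."""
    return f"{deployment}-port-{port}-{project}.{cluster_domain}"


def inter_router_connector(host: str, ssl_profile: str) -> dict:
    """Build one interRouterConnectors entry that dials an exposed Route on port 443."""
    return {
        "host": host,
        "port": 443,
        "verifyHostname": False,
        "sslProfile": ssl_profile,
    }


host = route_host("cluster1-router-mesh", 55671, "myproject", "cluster1.openshift.com")
connector = inter_router_connector(host, "inter-cluster-tls")
print(connector)
```

The connector always targets port 443 because the connection goes through the Route rather than directly to the inter-router listener port.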

Chapter 6. Connecting clients to the router network

After creating a router network, you can connect clients (messaging applications) to it so that they can begin sending and receiving messages.

By default, the Red Hat Integration - AMQ Interconnect Operator creates a Service for the router deployment and configures the following ports for client access:

- 5672 for plain AMQP traffic without authentication

- 5671 for AMQP traffic secured with TLS authentication

To connect clients to the router network, you can do the following:

- If any clients are outside of the OpenShift cluster, expose the ports so that they can connect to the router network.

- Configure your clients to connect to the router network.

6.1. Exposing ports for clients outside of OpenShift Container Platform

You expose ports to enable clients outside of the OpenShift Container Platform cluster to connect to the router network.

Procedure

Start editing the Interconnect Custom Resource YAML file that describes the router deployment for which you want to expose ports.

$ oc edit -f router-mesh.yaml

In the spec.listeners section, expose each port that you want clients outside of the cluster to be able to access.

In this example, port 5671 is exposed. This enables clients outside of the cluster to authenticate with and connect to the router network.

Sample router-mesh.yaml file

    apiVersion: interconnectedcloud.github.io/v1alpha1
    kind: Interconnect
    metadata:
      name: router-mesh
    spec:
      ...
      listeners:
        - port: 5672
        - authenticatePeer: true
          expose: true
          http: true
          port: 8080
        - port: 5671
          sslProfile: default
          expose: true
      ...

The Red Hat Integration - AMQ Interconnect Operator creates a Route, which clients from outside the cluster can use to connect to the router network.
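If you manage the Custom Resource programmatically rather than with oc edit, the same change amounts to setting expose to true on the listener for the chosen port. The following Python sketch shows that transformation on a dictionary shaped like the Interconnect resource. The expose_listener helper is hypothetical and only illustrates the edit; it does not talk to the cluster.

```python
import copy
import json


def expose_listener(resource: dict, port: int) -> dict:
    """Return a copy of the resource with expose: true on the listener for `port`."""
    updated = copy.deepcopy(resource)
    listeners = updated.setdefault("spec", {}).setdefault("listeners", [])
    for listener in listeners:
        if listener.get("port") == port:
            listener["expose"] = True
            break
    else:
        # No listener for this port yet: add a bare one.
        # (A TLS port would also need an sslProfile; omitted in this sketch.)
        listeners.append({"port": port, "expose": True})
    return updated


router_mesh = {
    "apiVersion": "interconnectedcloud.github.io/v1alpha1",
    "kind": "Interconnect",
    "metadata": {"name": "router-mesh"},
    "spec": {"listeners": [{"port": 5672}, {"port": 5671, "sslProfile": "default"}]},
}

print(json.dumps(expose_listener(router_mesh, 5671)["spec"]["listeners"], indent=2))
```

The helper works on a copy, so the original dictionary stays unchanged and can be compared against the result.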

6.2. Authentication for client connections

When you create a router deployment, the Red Hat Integration - AMQ Interconnect Operator uses the Red Hat Integration - AMQ Certificate Manager Operator to create default SSL/TLS certificates for client authentication, and configures port 5671 for SSL encryption.

6.3. Configuring clients to connect to the router network

You can connect messaging clients running in the same OpenShift cluster as the router network, a different cluster, or outside of OpenShift altogether so that they can exchange messages.

Prerequisites

- If the client is outside of the OpenShift Container Platform cluster, a connecting port must be exposed. For more information, see Section 6.1, “Exposing ports for clients outside of OpenShift Container Platform”.

Procedure

To connect a client to the router network, use the following connection URL format:

<scheme>://[<username>@]<host>[:<port>]

- <scheme>

  Use one of the following:

  - amqp: unencrypted TCP from within the same OpenShift cluster

  - amqps: secure connections using SSL/TLS authentication

  - amqpws: AMQP over WebSockets for unencrypted connections from outside the OpenShift cluster

- <username>

  If you deployed the router mesh with user name/password authentication, provide the client’s user name.

- <host>

  If the client is in the same OpenShift cluster as the router network, use the OpenShift Service host name. Otherwise, use the host name of the Route.

- <port>

  If you are connecting to a Route, you must specify the port. To connect on an unsecured connection, use port 80. Otherwise, to connect on a secured connection, use port 443.

  Note: To connect on an unsecured connection (port 80), the client must use AMQP over WebSockets (amqpws).
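The rules above can be captured in a small helper. The following Python sketch builds a connection URL from its parts and applies the Route port rules (443 for amqps, 80 for amqpws). The connection_url function and the host names in the usage lines are hypothetical and serve only as an illustration.

```python
from typing import Optional

# Ports to use when connecting through a Route, per the rules above.
ROUTE_PORTS = {"amqps": 443, "amqpws": 80}


def connection_url(
    scheme: str,
    host: str,
    port: Optional[int] = None,
    username: Optional[str] = None,
    via_route: bool = False,
) -> str:
    """Build <scheme>://[<username>@]<host>[:<port>] for a router connection."""
    if scheme not in ("amqp", "amqps", "amqpws"):
        raise ValueError(f"unsupported scheme: {scheme}")
    if via_route:
        if scheme == "amqp":
            # Plain amqp is for clients inside the same cluster only.
            raise ValueError("use amqps or amqpws when connecting through a Route")
        port = ROUTE_PORTS[scheme]
    user = f"{username}@" if username else ""
    suffix = f":{port}" if port is not None else ""
    return f"{scheme}://{user}{host}{suffix}"


# Hypothetical Service and Route host names.
print(connection_url("amqp", "router-mesh", port=5672, username="admin"))
print(connection_url("amqps", "router-mesh.apps.example.com", via_route=True))
print(connection_url("amqpws", "router-mesh.apps.example.com", via_route=True))
```

When via_route is set, the helper overrides any port argument, because a Route accepts connections only on ports 80 and 443.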

The following table shows some example connection URLs.

| URL | Description |
|---|---|
| | The client and router network are both in the same OpenShift cluster, so the Service host name is used for the connection URL. In this case, user name/password authentication is implemented, which requires the user name to be provided. |
| | The client is outside of OpenShift, so the Route host name is used for the connection URL. In this case, SSL/TLS authentication is implemented, which requires the amqps scheme and port 443. |
| | The client is outside of OpenShift, so the Route host name is used for the connection URL. In this case, no authentication is implemented, which means the client must use the amqpws scheme and port 80. |

Chapter 7. Connecting to external services

You can connect a router to an external service such as a message broker. The services may be running in the same OpenShift cluster as the router network, or running outside of OpenShift.

Prerequisites

- You must have access to a message broker.

Procedure

This procedure describes how to connect a router to a broker and configure a link route to connect messaging clients to it.

Start editing the Interconnect Custom Resource YAML file that describes the router deployment that you want to connect to a broker.

$ oc edit -f router-mesh.yaml

In the spec section, configure the connection and link route.

Sample router-mesh.yaml file

    apiVersion: interconnectedcloud.github.io/v1alpha1
    kind: Interconnect
    metadata:
      name: router-mesh
    spec:
      ...
      connectors:                 # (1)
        - name: my-broker
          host: broker
          port: 5672
          routeContainer: true
      linkRoutes:                 # (2)
        - prefix: q1
          direction: in
          connection: my-broker
        - prefix: q1
          direction: out
          connection: my-broker

(1) The connection to be used to connect this router to the message broker. The Operator will configure this connection from every router defined in this router deployment to the broker. If you only want a single connection between the router network and the broker, then configure a listener instead of a connector and have the broker establish the connection.

(2) The link route configuration. It defines the incoming and outgoing links and connection to be used to connect messaging applications to the message broker.

Verify that the router has established the link route to the message broker.

    $ oc exec router-mesh-fb6bc5797-crvb6 -it -- qdstat --linkroutes
    Link Routes
      address  dir  distrib       status
      ====================================
      q1       in   linkBalanced  active
      q1       out  linkBalanced  active
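A link route only becomes active if its connection value names a connection that actually exists, so a mistyped name is an easy error to make. The following Python sketch checks a spec dictionary for link routes that reference a connector that is not defined. The undefined_connections helper is hypothetical, and it covers only the connector case shown in the sample, not connections that the broker establishes to a listener.

```python
from typing import List


def undefined_connections(spec: dict, section: str = "linkRoutes") -> List[str]:
    """List connection names used in `section` that no connector defines."""
    defined = {c["name"] for c in spec.get("connectors", []) if "name" in c}
    missing = []
    for entry in spec.get(section, []):
        name = entry.get("connection")
        if name not in defined and name not in missing:
            missing.append(name)
    return missing


spec = {
    "connectors": [
        {"name": "my-broker", "host": "broker", "port": 5672, "routeContainer": True}
    ],
    "linkRoutes": [
        {"prefix": "q1", "direction": "in", "connection": "my-broker"},
        {"prefix": "q1", "direction": "out", "connection": "my-broker"},
    ],
}

print(undefined_connections(spec))
```

An empty list means that every link route refers to a connector defined in the same spec.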

Additional resources

- For more information about link routes, see Creating link routes.

Chapter 8. Configuring the address space for message routing

AMQ Interconnect provides flexible application-layer addressing and delivery semantics. By configuring addresses, you can route messages in anycast (closest or balanced) or multicast patterns.

8.1. Routing messages between clients

By default, AMQ Interconnect distributes messages in a balanced anycast pattern (each message is delivered to a single consumer, and AMQ Interconnect attempts to balance the traffic load across the network). This means you only need to change the address configuration if you want to apply non-default semantics to an address or range of addresses.

Procedure

This procedure configures an address to use multicast distribution. The router network will distribute a copy of each message sent to this address to every consumer that is subscribed to the address.

Start editing the Interconnect Custom Resource YAML file that describes the router deployment.

$ oc edit -f router-mesh.yaml

In the spec section, define the semantics to be applied to addresses. The pattern is quoted because an unquoted value that begins with an asterisk is read by YAML parsers as an alias reference.

Sample router-mesh.yaml file

    apiVersion: interconnectedcloud.github.io/v1alpha1
    kind: Interconnect
    metadata:
      name: router-mesh
    spec:
      ...
      addresses:
        - pattern: "*/orders"       # (1)
          distribution: multicast

(1) Messages sent to any address that ends with “orders” will be distributed in a multicast pattern.

The Operator applies the changes to the router network and restarts each Pod.

If you have additional router deployment Custom Resources that define routers in the router network, repeat this procedure for each CR.

Each router in the router network must have the same address configuration.
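To reason about which addresses a pattern covers, it can help to model the matching. The following Python sketch assumes the Qpid Dispatch Router wildcard rules, in which an address is split into tokens on "." or "/", "*" stands for exactly one token, and "#" stands for zero or more tokens. It is a simplified model for illustration, not the router's implementation, and the function names are hypothetical.

```python
import re
from typing import List


def _tokens(text: str) -> List[str]:
    """Split an address or pattern into tokens on '.' or '/'."""
    return [t for t in re.split(r"[./]", text) if t]


def _match(pattern: List[str], address: List[str]) -> bool:
    if not pattern:
        return not address
    head, rest = pattern[0], pattern[1:]
    if head == "#":
        # '#' may absorb zero or more address tokens.
        return any(_match(rest, address[i:]) for i in range(len(address) + 1))
    if not address:
        return False
    if head == "*" or head == address[0]:
        # '*' consumes exactly one token; a literal must match exactly.
        return _match(rest, address[1:])
    return False


def matches(pattern: str, address: str) -> bool:
    """True if the address matches the pattern under the assumed rules."""
    return _match(_tokens(pattern), _tokens(address))


for candidate in ("eu/orders", "eu/west/orders", "orders"):
    print(candidate, matches("*/orders", candidate), matches("#/orders", candidate))
```

Under these assumed rules, */orders covers only two-token addresses such as eu/orders, while #/orders covers any depth. Check the address pattern reference for your router version if the distinction matters for your address space.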

Additional resources

- For more information about address semantics that you can configure, see Configuring message routing.

8.2. Routing messages through brokers

If you need to store and forward messages, you can route them through a queue on a message broker. In this scenario, message producers send messages to a router, and the router sends the messages to a broker queue. When a consumer connects to the router to receive the messages, the router retrieves them from the broker queue.

You can route messages to brokers running in the same OpenShift cluster as the router network, or to brokers that are running outside of the cluster.

Prerequisites

- You must have access to a message broker.

Procedure

Start editing the Interconnect Custom Resource YAML file that describes the router deployment.

$ oc edit -f router-mesh.yaml

In the spec section, add a connector to connect to the broker, a waypoint address to point to the broker queue, and autolinks to create the links to the queue.

Sample router-mesh.yaml file

    apiVersion: interconnectedcloud.github.io/v1alpha1
    kind: Interconnect
    metadata:
      name: router-mesh
    spec:
      ...
      addresses:
        - prefix: my-queue        # (1)
          waypoint: true
      autoLinks:                  # (2)
        - address: my-queue
          direction: in
          connection: my-broker
        - address: my-queue
          direction: out
          connection: my-broker
      connectors:                 # (3)
        - name: my-broker
          host: broker
          port: 5672
          routeContainer: true

(1) The address (or set of addresses) for which messages should be stored on a broker queue.

(2) The autolink configuration. It defines the incoming and outgoing links and connection to be used to send and receive the messages on the broker.

(3) The connection to be used to connect the routers to the message broker.

The Operator applies the changes to the router network and restarts each Pod.

Verify that the router has established the autolinks to the message broker.

    $ oc exec router-mesh-6d6dccb57f-x5cqf -it -- qdstat --autolinks
    AutoLinks
      addr      dir  phs  extAddr  link  status  lastErr
      ====================================================
      my-queue  in   1             26    active
      my-queue  out  0             27    active

If you have additional router deployment Custom Resources that define routers in the router network, repeat this procedure for each CR.

Each router in the router network must have the same address configuration.
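The sample pairs each waypoint address with one incoming and one outgoing autolink. The following Python sketch checks a spec dictionary for waypoint addresses that lack one of the two directions. It mirrors the shape of the sample only; the missing_autolink_directions helper is hypothetical, and a deployment that deliberately uses a single direction would be reported as well.

```python
from typing import Dict, List


def missing_autolink_directions(spec: dict) -> Dict[str, List[str]]:
    """Map each waypoint prefix to the autolink directions it lacks."""
    gaps = {}
    for address in spec.get("addresses", []):
        if not address.get("waypoint"):
            continue
        prefix = address.get("prefix", "")
        present = {
            link.get("direction")
            for link in spec.get("autoLinks", [])
            if link.get("address", "").startswith(prefix)
        }
        missing = sorted({"in", "out"} - present)
        if missing:
            gaps[prefix] = missing
    return gaps


spec = {
    "addresses": [{"prefix": "my-queue", "waypoint": True}],
    "autoLinks": [
        {"address": "my-queue", "direction": "in", "connection": "my-broker"},
        {"address": "my-queue", "direction": "out", "connection": "my-broker"},
    ],
}

print(missing_autolink_directions(spec))
```

Running the same check against every Custom Resource in the router network is one way to spot routers whose address configuration has drifted from the others.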

Additional resources

- For more information about routing messages to and from broker queues, see Routing Messages through broker queues.

Chapter 9. Using Prometheus and Grafana to monitor the router network

Prometheus is container-native software built for storing historical data and for monitoring large, scalable systems such as AMQ Interconnect. It gathers data over an extended time, rather than just for the currently running session.

You use Prometheus and Alertmanager to monitor and store AMQ Interconnect data so that you can use a graphical tool, such as Grafana, to visualize and run queries on the data.

9.1. Setting up Prometheus and Grafana

Before you can view AMQ Interconnect dashboards, you must deploy and configure Prometheus, Alertmanager, and Grafana in the OpenShift project in which AMQ Interconnect is deployed. All of the required configuration files are provided in a GitHub repository.

Procedure

Clone the qdr-monitoring GitHub repository.

This repository contains example configuration files needed to set up Prometheus and Grafana to monitor AMQ Interconnect.

$ git clone https://github.com/interconnectedcloud/qdr-monitoring

Set the NAMESPACE environment variable to the name of the project where you deployed AMQ Interconnect.

For example, if you deployed AMQ Interconnect in the example project, set the NAMESPACE environment variable as follows:

$ export NAMESPACE=example

Run the deploy-monitoring.sh script.

This script creates and configures the OpenShift resources needed to deploy Prometheus, Alertmanager, and Grafana in your OpenShift project. It also configures two dashboards that provide metrics for the router network.

$ ./deploy-monitoring.sh

Alternatively, you can specify the target project as a parameter when you run the script. For example:

$ ./deploy-monitoring.sh example

Additional resources

- For more information about Prometheus, see the Prometheus documentation.

- For more information about Grafana, see the Grafana documentation.

9.2. Viewing AMQ Interconnect dashboards in Grafana

After setting up Prometheus and Grafana, you can visualize the AMQ Interconnect data on the following Grafana dashboards:

- Qpid Dispatch Router

  Shows metrics for:

  - Deliveries ingress

  - Deliveries egress

  - Deliveries ingress route container

  - Deliveries egress route container

  - Deliveries redirected to fallback destination

  - Dropped presettled deliveries

  - Presettled deliveries

  - Auto links

  - Link routes

  - Address count

  - Connection count

  - Link count

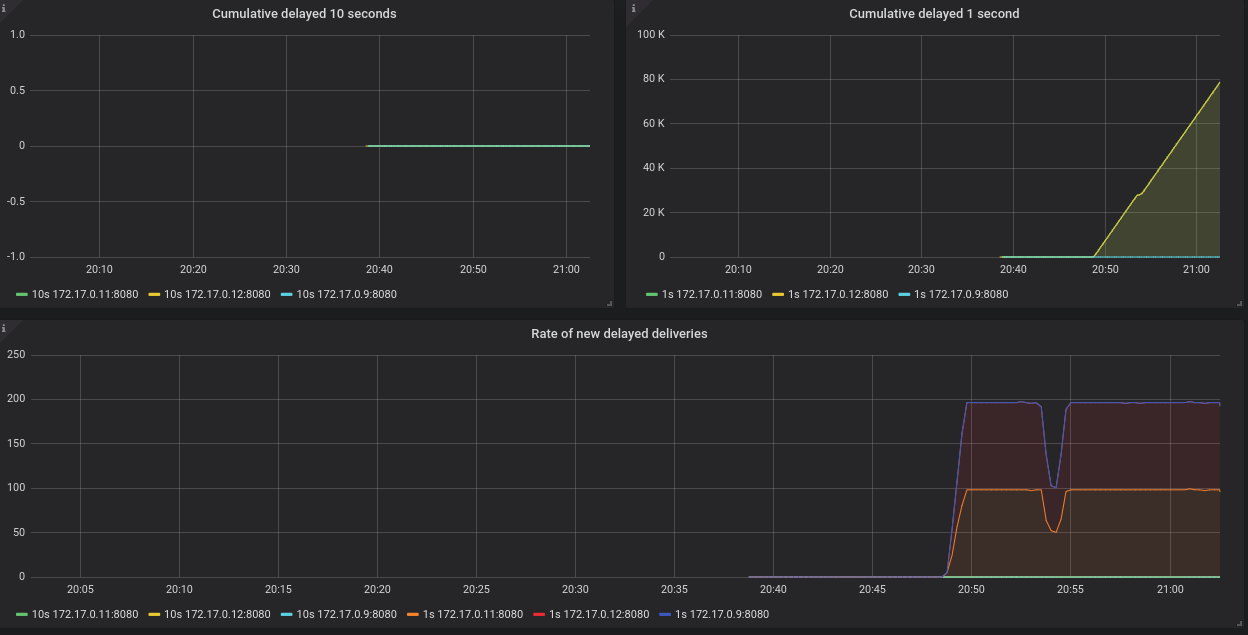

- Qpid Dispatch Router - Delayed Deliveries

  Shows metrics for:

  - Cumulative delayed 10 seconds

  - Cumulative delayed 1 second

  - Rate of new delayed deliveries

For more information about these metrics, see Section 9.3, “Router metrics”.

Procedure

In the OpenShift web console, switch to → , and click the URL for the grafana Route.

The Grafana Log In page appears.

Enter your user name and password, and then click .

The default Grafana user name and password are both admin. After logging in for the first time, you can change the password.

On the top header, click the dashboard drop-down menu, and then select the Qpid Dispatch Router or Qpid Dispatch Router - Delayed Deliveries dashboard.

Figure 9.1. Delayed Deliveries dashboard

9.3. Router metrics

The following metrics are available in Prometheus:

qdr_connections_total - The total number of network connections to the router. This includes connections from and to any AMQP route container.

qdr_links_total - The total number of incoming and outgoing links attached to the router.

qdr_addresses_total - The total number of addresses known to the router.

qdr_routers_total - The total number of routers known to the router.

qdr_link_routes_total - The total number of active and inactive link routes configured for the router. See Understanding link routing for more details.

qdr_auto_links_total - The total number of incoming and outgoing auto links configured for the router. See Configuring brokered messaging for more details about autolinks.

qdr_presettled_deliveries_total - The total number of presettled deliveries arriving at the router. The router settles the incoming deliveries and propagates the settlement to the message destination, also known as fire and forget.

qdr_dropped_presettled_deliveries_total - The total number of presettled deliveries that the router dropped due to congestion. The router settles the incoming deliveries and propagates the settlement to the message destination, also known as fire and forget.

qdr_accepted_deliveries_total - The total number of deliveries accepted at the router. See Understanding message routing for more information on accepted deliveries.

qdr_released_deliveries_total - The total number of deliveries released at the router. See Understanding message routing for more information on released deliveries.

qdr_rejected_deliveries_total - The total number of deliveries rejected at the router. See Understanding message routing for more information on rejected deliveries.

qdr_modified_deliveries_total - The total number of deliveries modified at the router. See Understanding message routing for more information on modified deliveries.

qdr_deliveries_ingress_total - The total number of messages delivered to the router from clients. This includes management messages, but not route control messages.

qdr_deliveries_egress_total - The total number of messages sent from the router to clients. This includes management messages, but not route control messages.

qdr_deliveries_transit_total - The total number of messages passing through the router for delivery to a different router.

qdr_deliveries_ingress_route_container_total - The total number of deliveries received at the router from AMQP route containers. This includes messages from an AMQ Broker instance and management messages, but not route control messages.

qdr_deliveries_egress_route_container_total - The total number of deliveries sent to AMQP route containers from the router. This includes messages to an AMQ Broker instance and management messages, but not route control messages.

qdr_deliveries_delayed_1sec_total - The total number of deliveries forwarded by the router that were unsettled for more than one second.

qdr_deliveries_delayed_10sec_total - The total number of deliveries forwarded by the router that were unsettled for more than ten seconds.

qdr_deliveries_stuck_total - The total number of deliveries that cannot be delivered. Typically, deliveries cannot be delivered due to lack of credit, as described in Message routing flow control.

qdr_links_blocked_total - The total number of links that are blocked.

qdr_deliveries_redirected_to_fallback_total - The total number of deliveries that were forwarded to a fallback destination. See Handling undeliverable messages for more information.
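As a rough illustration of how these counters can be combined, the following Python sketch parses a snippet in the Prometheus text exposition format and computes the share of egress deliveries that were delayed by more than one second. The sample values and the router label are invented, and the helper names are hypothetical; treat the ratio as an example calculation, not as a recommended health indicator.

```python
from typing import Dict


def parse_metrics(text: str) -> Dict[str, float]:
    """Sum sample values per metric name from Prometheus exposition text."""
    totals: Dict[str, float] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        if "{" in line:
            name = line[: line.index("{")]
            rest = line[line.rindex("}") + 1 :].split()
        else:
            name, *rest = line.split()
        totals[name] = totals.get(name, 0.0) + float(rest[0])
    return totals


def delayed_share(metrics: Dict[str, float]) -> float:
    """Fraction of egress deliveries that were unsettled for over one second."""
    egress = metrics.get("qdr_deliveries_egress_total", 0.0)
    if egress == 0:
        return 0.0
    return metrics.get("qdr_deliveries_delayed_1sec_total", 0.0) / egress


# Invented sample data; the "router" label is an assumption for illustration.
SAMPLE = """
# TYPE qdr_connections_total gauge
qdr_connections_total{router="a"} 7
qdr_connections_total{router="b"} 5
qdr_deliveries_egress_total 200
qdr_deliveries_delayed_1sec_total 5
"""

metrics = parse_metrics(SAMPLE)
print(metrics["qdr_connections_total"], delayed_share(metrics))
```

Values with the same metric name but different labels are added together, which gives a network-wide total when several routers report the same counter.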

Additional information

See Section 9.2, “Viewing AMQ Interconnect dashboards in Grafana”.

Chapter 10. Using the AMQ Interconnect web console to monitor the router network

You can use the AMQ Interconnect web console to monitor the status and performance of your router network. By default, when you create a router deployment, the AMQ Interconnect Operator generates the credentials to access the console and stores them in a Secret.

Procedure

In OpenShift, switch to → , and click the console Route.

The web console opens in a new tab.

To connect to the web console, complete the following fields:

- Port

  Enter 443.

- User name

  Enter the user name.

  To find the user name and password for accessing the web console, navigate to → . The Secret containing the web console credentials is called <application-name>-users (for example, router-mesh-users).

  The syntax for the user name is <user>@<domain> (the domain is the OpenShift application name, which is the name of the Custom Resource that describes the router deployment). For example, guest@router-mesh.

- Password

  Enter the password defined in the <application-name>-users Secret.

Click .

The Routers page is displayed showing all of the routers in the router network.

Use the web console tabs to monitor the router network.

| This tab… | Provides… |
|---|---|
| Overview | Aggregated information about routers, addresses, links, connections, and logs. |
| Entities | Detailed information about each AMQP management entity for each router in the router network. Some of the attributes have charts that you can add to the Charts tab. |
| Topology | A graphical view of the router network, including routers, clients, and brokers. The topology shows how the routers are connected, and how messages are flowing through the network. |
| Charts | Graphs of the information selected on the Entities tab. |
| Message Flow | A chord diagram showing the real-time message flow by address. |
| Schema | The management schema that controls each of the routers in the router network. |
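The console login name combines a user from the <application-name>-users Secret with the application name, in the form <user>@<domain>. The following Python sketch derives the login names from a Secret exported as JSON. It assumes that each key in the Secret's data map is a user name and each value is the base64-encoded password; that layout is an assumption for illustration, as are the console_logins helper and the sample password.

```python
import base64
import json
from typing import Dict


def console_logins(secret_json: str, application: str) -> Dict[str, str]:
    """Map <user>@<application> login names to decoded passwords."""
    secret = json.loads(secret_json)
    logins = {}
    for user, encoded in secret.get("data", {}).items():
        # Values in a Secret's data map are base64 encoded.
        password = base64.b64decode(encoded).decode("utf-8")
        logins[f"{user}@{application}"] = password
    return logins


# Invented Secret content for illustration only.
SAMPLE_SECRET = json.dumps(
    {
        "kind": "Secret",
        "metadata": {"name": "router-mesh-users"},
        "data": {"guest": base64.b64encode(b"example-password").decode("ascii")},
    }
)

print(list(console_logins(SAMPLE_SECRET, "router-mesh")))
```

Avoid printing or logging the decoded passwords outside of a throwaway session like this one.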

Chapter 11. Migrating from Red Hat Integration - AMQ Certificate Manager Operator

Red Hat Integration - AMQ Certificate Manager Operator is not required to secure your connections, and will not be supported with OpenShift Container Platform 4.9. To secure connections on OpenShift Container Platform 4.9, you can remove the Red Hat Integration - AMQ Certificate Manager Operator as described below.

After removing the Red Hat Integration - AMQ Certificate Manager Operator, the certificates that were previously issued continue to be valid and your connections are still secured.

Prerequisites

- You are logged into the cluster using a cluster-admin account.

Procedure

- Make sure you have upgraded the Red Hat Integration - AMQ Interconnect Operator to the latest version.

Remove the Red Hat Integration - AMQ Certificate Manager Operator:

- In the OpenShift Container Platform web console, navigate to → .

- Choose Red Hat Integration - AMQ Certificate Manager Operator from the list of installed Operators, and then click . Click when the following message is displayed:

Operator Red Hat Integration - AMQ Certificate Manager will be removed from all-namespaces, but any of its custom resource definitions or managed resources will remain. If your Operator deployed applications on the cluster or configured off-cluster resources, these will continue to run and require manual cleanup.

Remove the Red Hat Integration - AMQ Certificate Manager Operator CRD:

    $ oc delete crd issuers.certmanager.k8s.io
    customresourcedefinition.apiextensions.k8s.io "issuers.certmanager.k8s.io" deleted

- Verify that all current connections are working.

If you create new connections after removing the Red Hat Integration - AMQ Certificate Manager Operator, you must follow the procedure described in Section 2.1, “Creating secrets for SSL/TLS authentication” to secure the new connections.

Revised on 2023-01-04 15:56:30 UTC