Using AMQ Streams on RHEL

For use with AMQ Streams 1.8 on Red Hat Enterprise Linux

Abstract

Making open source more inclusive

Red Hat is committed to replacing problematic language in our code, documentation, and web properties. We are beginning with these four terms: master, slave, blacklist, and whitelist. Because of the enormity of this endeavor, these changes will be implemented gradually over several upcoming releases. For more details, see our CTO Chris Wright’s message.

Chapter 1. Overview of AMQ Streams

Red Hat AMQ Streams is a massively scalable, distributed, and high-performance data streaming platform based on the Apache ZooKeeper and Apache Kafka projects.

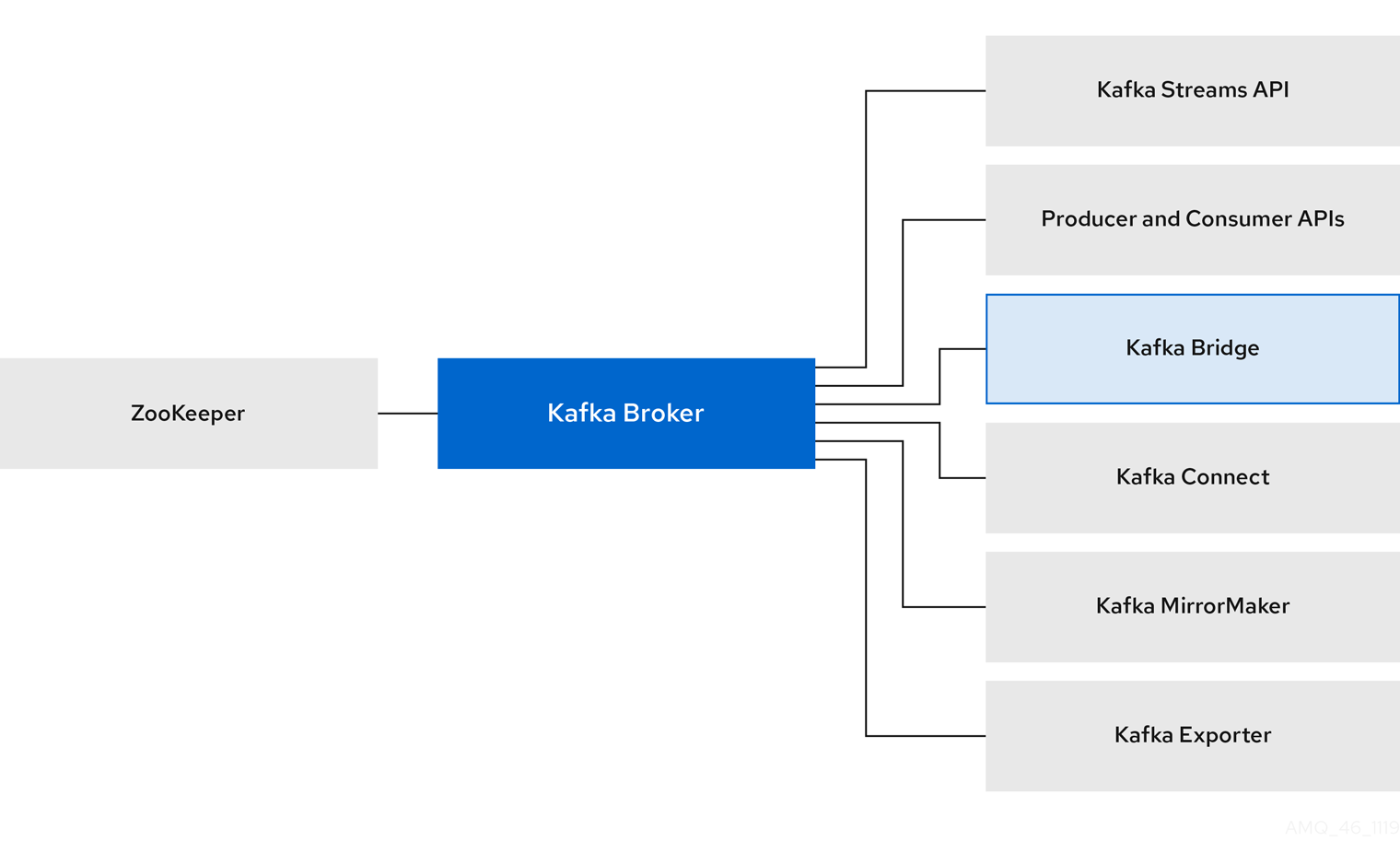

The main components comprise:

- Kafka Broker

- Messaging broker responsible for delivering records from producing clients to consuming clients. Apache ZooKeeper is a core dependency for Kafka, providing a cluster coordination service for highly reliable distributed coordination.

- Kafka Streams API

- API for writing stream processor applications.

- Producer and Consumer APIs

- Java-based APIs for producing and consuming messages to and from Kafka brokers.

- Kafka Bridge

- AMQ Streams Kafka Bridge provides a RESTful interface that allows HTTP-based clients to interact with a Kafka cluster.

- Kafka Connect

- A toolkit for streaming data between Kafka brokers and other systems using Connector plugins.

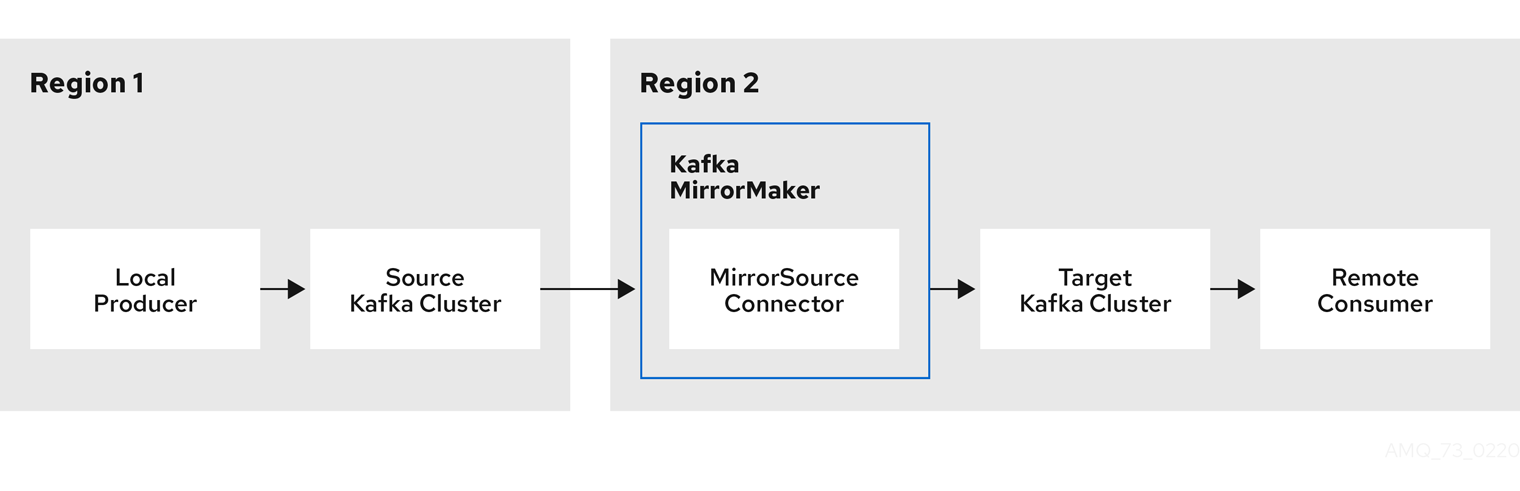

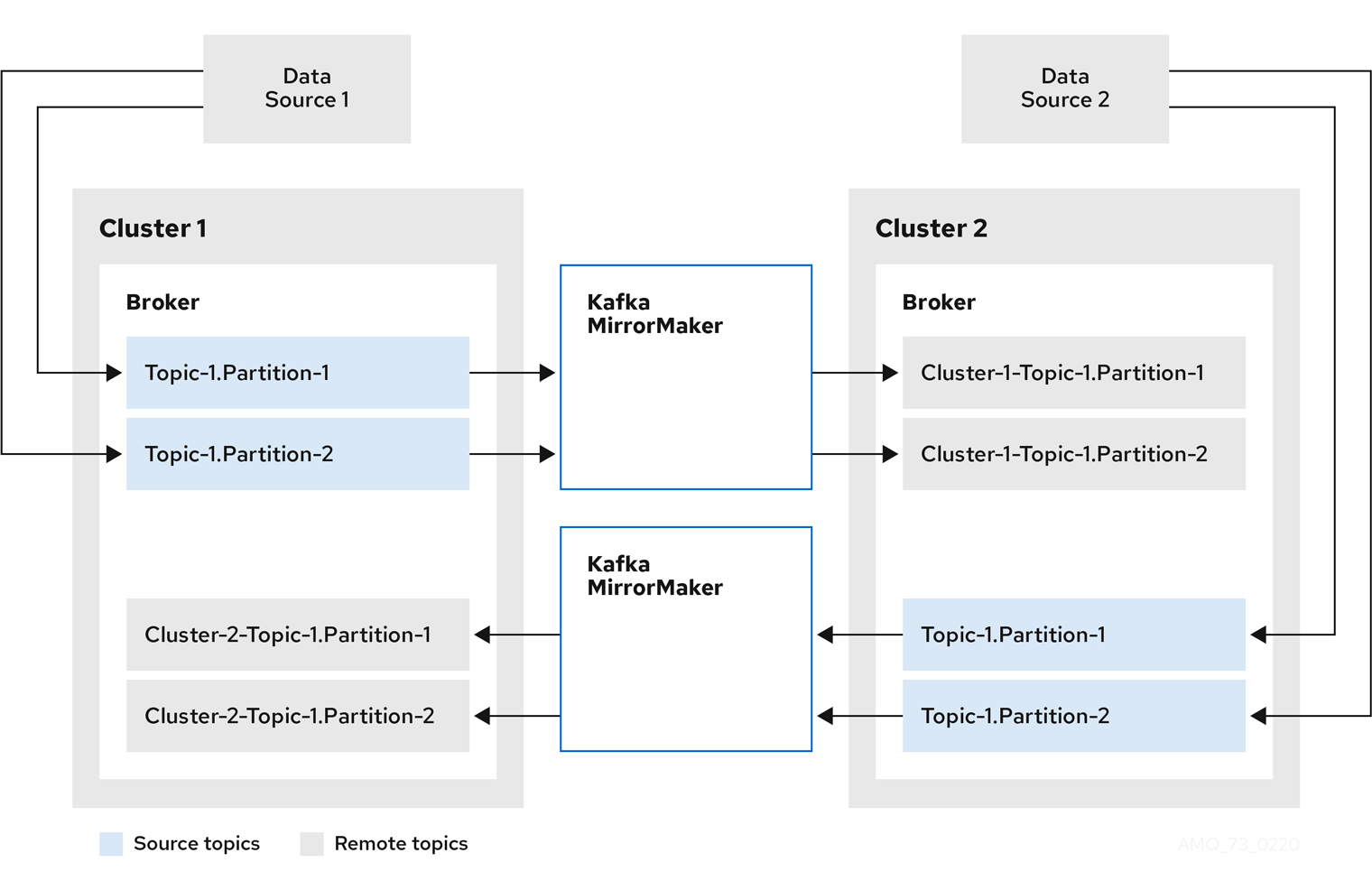

- Kafka MirrorMaker

- Replicates data between two Kafka clusters, within or across data centers.

- Kafka Exporter

- An exporter used in the extraction of Kafka metrics data for monitoring.

A cluster of Kafka brokers is the hub connecting all these components. The broker uses Apache ZooKeeper for storing configuration data and for cluster coordination. Before running Apache Kafka, an Apache ZooKeeper cluster has to be ready.

Figure 1.1. AMQ Streams architecture

1.1. Kafka capabilities

The underlying data stream-processing capabilities and component architecture of Kafka can deliver:

- Data sharing between microservices and other applications with extremely high throughput and low latency

- Message ordering guarantees

- Message rewind/replay from data storage to reconstruct an application state

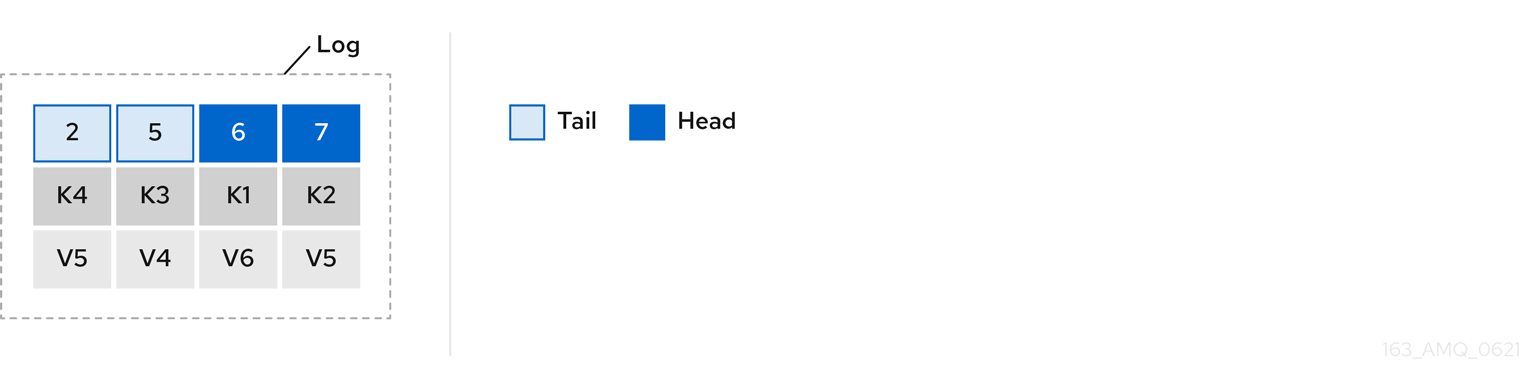

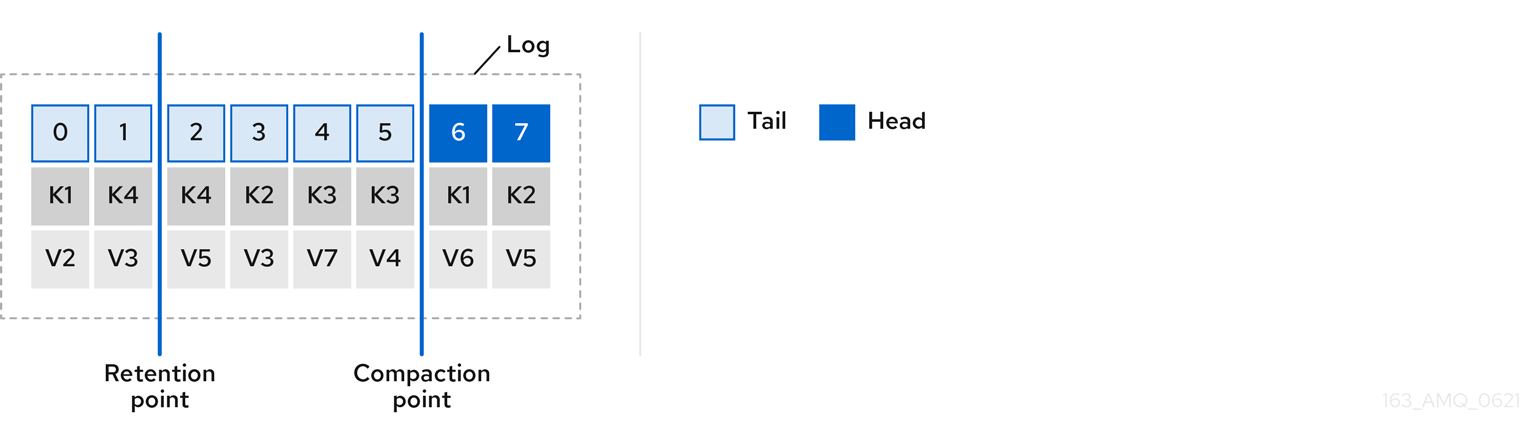

- Message compaction to remove old records when using a key-value log

- Horizontal scalability in a cluster configuration

- Replication of data to control fault tolerance

- Retention of high volumes of data for immediate access

1.2. Kafka use cases

Kafka’s capabilities make it suitable for:

- Event-driven architectures

- Event sourcing to capture changes to the state of an application as a log of events

- Message brokering

- Website activity tracking

- Operational monitoring through metrics

- Log collection and aggregation

- Commit logs for distributed systems

- Stream processing so that applications can respond to data in real time

1.3. Supported Configurations

To run in a supported configuration, AMQ Streams must run on one of the following JVM versions and on one of the supported operating systems.

| Java Virtual Machine | Version |
|---|---|
| OpenJDK | 1.8, 11 |
| OracleJDK | 1.8, 11 |
| IBM JDK | 1.8 |

| Operating System | Architecture | Version |
|---|---|---|
| Red Hat Enterprise Linux | x86_64 | 7.x, 8.x |

1.4. Document conventions

Replaceables

In this document, replaceable text is styled in monospace, with italics, uppercase, and hyphens.

For example, in the following code, you will want to replace BOOTSTRAP-ADDRESS and TOPIC-NAME with your own address and topic name:

bin/kafka-console-consumer.sh --bootstrap-server BOOTSTRAP-ADDRESS --topic TOPIC-NAME --from-beginning

Chapter 2. Getting started

2.1. AMQ Streams distribution

AMQ Streams is distributed as a single ZIP file. This ZIP file contains the following AMQ Streams components:

- Apache ZooKeeper

- Apache Kafka

- Apache Kafka Connect

- Apache Kafka MirrorMaker

- Kafka Bridge

- Kafka Exporter

2.2. Downloading an AMQ Streams Archive

An archived distribution of AMQ Streams is available for download from the Red Hat website. You can download a copy of the distribution by following the steps below.

Procedure

- Download the latest version of the Red Hat AMQ Streams archive from the Customer Portal.

2.3. Installing AMQ Streams

Follow this procedure to install the latest version of AMQ Streams on Red Hat Enterprise Linux.

For instructions on upgrading an existing cluster to AMQ Streams 1.8, see AMQ Streams and Kafka upgrades.

Prerequisites

- Download the installation archive.

- Review Section 1.3, “Supported Configurations”.

Procedure

- Add new kafka user and group.

sudo groupadd kafka

sudo useradd -g kafka kafka

sudo passwd kafka

- Create directory /opt/kafka.

sudo mkdir /opt/kafka

- Create a temporary directory and extract the contents of the AMQ Streams ZIP file.

mkdir /tmp/kafka

unzip amq-streams_y.y-x.x.x.zip -d /tmp/kafka

- Move the extracted contents into /opt/kafka directory and delete the temporary directory.

sudo mv /tmp/kafka/kafka_y.y-x.x.x/* /opt/kafka/

rm -r /tmp/kafka

- Change the ownership of the /opt/kafka directory to the kafka user.

sudo chown -R kafka:kafka /opt/kafka

- Create directory /var/lib/zookeeper for storing ZooKeeper data and set its ownership to the kafka user.

sudo mkdir /var/lib/zookeeper

sudo chown -R kafka:kafka /var/lib/zookeeper

- Create directory /var/lib/kafka for storing Kafka data and set its ownership to the kafka user.

sudo mkdir /var/lib/kafka

sudo chown -R kafka:kafka /var/lib/kafka

2.4. Data storage considerations

An efficient data storage infrastructure is essential to the optimal performance of AMQ Streams.

AMQ Streams requires block storage and works well with cloud-based block storage solutions, such as Amazon Elastic Block Store (EBS). The use of file storage is not recommended.

Choose local storage when possible. If local storage is not available, you can use a Storage Area Network (SAN) accessed by a protocol such as Fibre Channel or iSCSI.

2.4.1. Apache Kafka and ZooKeeper storage support

Use separate disks for Apache Kafka and ZooKeeper.

Kafka supports JBOD (Just a Bunch of Disks) storage, a data storage configuration of multiple disks or volumes. JBOD provides increased data storage for Kafka brokers. It can also improve performance.

Solid-state drives (SSDs), though not essential, can improve the performance of Kafka in large clusters where data is sent to and received from multiple topics asynchronously. SSDs are particularly effective with ZooKeeper, which requires fast, low-latency data access.

You do not need to provision replicated storage because Kafka and ZooKeeper both have built-in data replication.

2.4.2. File systems

It is recommended that you configure your storage system to use the XFS file system. AMQ Streams is also compatible with the ext4 file system, but this might require additional configuration for best results.

Additional resources

- For more information about XFS, see The XFS File System.

2.5. Running a single node AMQ Streams cluster

This procedure shows how to run a basic AMQ Streams cluster consisting of a single Apache ZooKeeper node and a single Apache Kafka node, both running on the same host. The default configuration files are used for ZooKeeper and Kafka.

A single node AMQ Streams cluster does not provide reliability and high availability and is suitable only for development purposes.

Prerequisites

- AMQ Streams is installed on the host

Running the cluster

- Edit the ZooKeeper configuration file /opt/kafka/config/zookeeper.properties. Set the dataDir option to /var/lib/zookeeper/:

dataDir=/var/lib/zookeeper/

- Edit the Kafka configuration file /opt/kafka/config/server.properties. Set the log.dirs option to /var/lib/kafka/:

log.dirs=/var/lib/kafka/

- Switch to the kafka user:

su - kafka

- Start ZooKeeper:

/opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties

- Check that ZooKeeper is running:

jcmd | grep zookeeper

Returns:

number org.apache.zookeeper.server.quorum.QuorumPeerMain /opt/kafka/config/zookeeper.properties

- Start Kafka:

/opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties

- Check that Kafka is running:

jcmd | grep kafka

Returns:

number kafka.Kafka /opt/kafka/config/server.properties
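The two configuration edits in this procedure can also be scripted. The following is a minimal sketch rather than a documented AMQ Streams tool: set_property is a hypothetical helper name, and the script works on scratch copies whose starting values (/tmp/zookeeper and /tmp/kafka-logs) are assumed to match the defaults shipped with Kafka.

```shell
#!/bin/sh
# Sketch: set a key in a Java properties file without opening an editor.
# set_property is a hypothetical helper, not part of AMQ Streams.
set_property() {
  file=$1 key=$2 value=$3
  # Rewrite the line that starts with "key="; all other lines pass through unchanged.
  sed "s|^${key}=.*|${key}=${value}|" "$file" > "$file.new" && mv "$file.new" "$file"
}

# Scratch copies stand in for the files under /opt/kafka/config (assumed default values).
workdir=$(mktemp -d)
printf 'dataDir=/tmp/zookeeper\nclientPort=2181\n' > "$workdir/zookeeper.properties"
printf 'broker.id=0\nlog.dirs=/tmp/kafka-logs\n' > "$workdir/server.properties"

set_property "$workdir/zookeeper.properties" dataDir /var/lib/zookeeper/
set_property "$workdir/server.properties" log.dirs /var/lib/kafka/

# Show the resulting files.
cat "$workdir/zookeeper.properties" "$workdir/server.properties"
```

To apply the same change to a real installation, point the helper at the files under /opt/kafka/config instead of the scratch directory.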

Additional resources

- For more information about installing AMQ Streams, see Section 2.3, “Installing AMQ Streams”.

- For more information about configuring AMQ Streams, see Section 2.8, “Configuring AMQ Streams”.

2.6. Using the cluster

This procedure describes how to start the Kafka console producer and consumer clients and use them to send and receive several messages.

A new topic is automatically created in step one. Topic auto-creation is controlled using the auto.create.topics.enable configuration property (set to true by default). Alternatively, you can configure and create topics before using the cluster. For more information, see Topics.

Procedure

- Start the Kafka console producer and configure it to send messages to a new topic:

/opt/kafka/bin/kafka-console-producer.sh --broker-list <bootstrap-address> --topic <topic-name>

For example:

/opt/kafka/bin/kafka-console-producer.sh --broker-list localhost:9092 --topic my-topic

- Enter several messages into the console. Press Enter to send each individual message to your new topic:

>message 1

>message 2

>message 3

>message 4

When Kafka creates a new topic automatically, you might receive a warning that the topic does not exist:

WARN Error while fetching metadata with correlation id 39 : {4-3-16-topic1=LEADER_NOT_AVAILABLE} (org.apache.kafka.clients.NetworkClient)

The warning should not reappear after you send further messages.

- In a new terminal window, start the Kafka console consumer and configure it to read messages from the beginning of your new topic.

/opt/kafka/bin/kafka-console-consumer.sh --bootstrap-server <bootstrap-address> --topic <topic-name> --from-beginning

For example:

/opt/kafka/bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic my-topic --from-beginning

The incoming messages display in the consumer console.

- Switch to the producer console and send additional messages. Check that they display in the consumer console.

- Stop the Kafka console producer and then the consumer by pressing Ctrl+C.
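The same round trip can be run without typing into the console, because the console producer reads one message per line from standard input. The following is a hedged sketch: the KAFKA_HOME, BOOTSTRAP, and TOPIC variables are names introduced here for illustration, and the --max-messages and --timeout-ms consumer options are used so that the consumer exits on its own. The script only contacts a broker if the Kafka scripts are found.

```shell
#!/bin/sh
# Sketch: send four messages and read them back non-interactively.
# Variable names and default values are illustrative assumptions.
KAFKA_HOME=${KAFKA_HOME:-/opt/kafka}
BOOTSTRAP=${BOOTSTRAP:-localhost:9092}
TOPIC=${TOPIC:-my-topic}

# One message per line; each line becomes one Kafka record.
messages=$(printf 'message %s\n' 1 2 3 4)

if [ -x "$KAFKA_HOME/bin/kafka-console-producer.sh" ]; then
  # The producer exits when standard input is closed.
  printf '%s\n' "$messages" |
    "$KAFKA_HOME/bin/kafka-console-producer.sh" --broker-list "$BOOTSTRAP" --topic "$TOPIC"
  # Stop after four records, or after ten seconds without new data.
  "$KAFKA_HOME/bin/kafka-console-consumer.sh" --bootstrap-server "$BOOTSTRAP" \
    --topic "$TOPIC" --from-beginning --max-messages 4 --timeout-ms 10000
else
  echo "Kafka scripts not found under $KAFKA_HOME; nothing sent" >&2
fi
```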

2.7. Stopping the AMQ Streams services

You can stop the Kafka and ZooKeeper services by running a script. All connections to the Kafka and ZooKeeper services will be terminated.

Prerequisites

- AMQ Streams is installed on the host

- ZooKeeper and Kafka are up and running

Procedure

- Stop the Kafka broker.

su - kafka

/opt/kafka/bin/kafka-server-stop.sh

- Confirm that the Kafka broker is stopped.

jcmd | grep kafka

- Stop ZooKeeper.

su - kafka

/opt/kafka/bin/zookeeper-server-stop.sh

2.8. Configuring AMQ Streams

Prerequisites

- AMQ Streams is downloaded and installed on the host

Procedure

- Open the ZooKeeper and Kafka broker configuration files in a text editor. The configuration files are located at:

  - ZooKeeper: /opt/kafka/config/zookeeper.properties

  - Kafka: /opt/kafka/config/server.properties

- Edit the configuration options. The configuration files are in the Java properties format. Every configuration option should be on a separate line in the following format:

<option> = <value>

Lines starting with # or ! will be treated as comments and will be ignored by AMQ Streams components.

# This is a comment

Values can be split into multiple lines by using \ directly before the newline / carriage return.

sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required \
    username="bob" \
    password="bobs-password";

- Save the changes

- Restart the ZooKeeper or Kafka broker

- Repeat this procedure on all the nodes of the cluster.
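To illustrate the format rules in this procedure, the following sketch writes a small sample file and prints the options as a properties reader would see them, with comments dropped and continued lines joined. The awk program is a rough approximation written for this example, not a complete Java properties parser, and the sample file contents are assumptions.

```shell
#!/bin/sh
# Sketch: show the effective options in a properties file.
workdir=$(mktemp -d)
cat > "$workdir/sample.properties" <<'EOF'
# This is a comment
! This is also a comment
broker.id=0
sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required \
    username="bob" \
    password="bobs-password";
EOF

effective=$(awk '
  # Skip comment lines, unless we are in the middle of a continued value.
  /^[ \t]*[#!]/ && !cont { next }
  {
    line = $0
    # Leading whitespace on a continuation line is not part of the value.
    if (cont) sub(/^[ \t]+/, "", line)
    # A trailing backslash means the value continues on the next line.
    if (line ~ /\\$/) { sub(/\\$/, "", line); buf = buf line; cont = 1; next }
    print buf line
    buf = ""; cont = 0
  }
' "$workdir/sample.properties")

printf '%s\n' "$effective"
```

The three-line sasl.jaas.config value is printed as a single line, which is how the broker reads it.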

Chapter 3. Configuring ZooKeeper

Kafka uses ZooKeeper to store configuration data and for cluster coordination. It is strongly recommended to run a cluster of replicated ZooKeeper instances.

3.1. Basic configuration

The most important ZooKeeper configuration options are:

- tickTime: ZooKeeper’s basic time unit in milliseconds. It is used for heartbeats and session timeouts. For example, minimum session timeout will be two ticks.

- dataDir: The directory where ZooKeeper stores its transaction logs and snapshots of its in-memory database. This should be set to the /var/lib/zookeeper/ directory that was created during installation.

- clientPort: Port number where clients can connect. Defaults to 2181.

An example ZooKeeper configuration file named config/zookeeper.properties is located in the AMQ Streams installation directory. It is recommended to place the dataDir directory on a separate disk device to minimize the latency in ZooKeeper.

The ZooKeeper configuration file should be located at /opt/kafka/config/zookeeper.properties. A basic example of the configuration file can be found below. The configuration file has to be readable by the kafka user.

tickTime=2000

dataDir=/var/lib/zookeeper/

clientPort=2181

3.2. ZooKeeper cluster configuration

In most production environments, we recommend you deploy a cluster of replicated ZooKeeper instances. A stable and highly available ZooKeeper cluster is important for running a reliable ZooKeeper service. ZooKeeper clusters are also referred to as ensembles.

ZooKeeper clusters usually consist of an odd number of nodes. ZooKeeper requires that a majority of the nodes in the cluster are up and running. For example:

- In a cluster with three nodes, at least two of the nodes must be up and running. This means it can tolerate one node being down.

- In a cluster consisting of five nodes, at least three nodes must be available. This means it can tolerate two nodes being down.

- In a cluster consisting of seven nodes, at least four nodes must be available. This means it can tolerate three nodes being down.

Having more nodes in the ZooKeeper cluster delivers better resiliency and reliability of the whole cluster.

ZooKeeper can run in clusters with an even number of nodes. The additional node, however, does not increase the resiliency of the cluster. A cluster with four nodes requires at least three nodes to be available and can tolerate only one node being down. Therefore it has exactly the same resiliency as a cluster with only three nodes.
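The majority rule described above reduces to simple integer arithmetic: a cluster of n nodes needs n / 2 + 1 nodes available (integer division), and tolerates the remainder being down. A small sketch, with the function names quorum and tolerated chosen here for illustration:

```shell
#!/bin/sh
# Sketch: majority size and tolerated failures for a ZooKeeper cluster of N nodes.
quorum() { echo $(( $1 / 2 + 1 )); }               # smallest majority of N nodes
tolerated() { echo $(( $1 - ($1 / 2 + 1) )); }     # nodes that may be down

for n in 3 4 5 7; do
  echo "nodes=$n majority=$(quorum "$n") tolerated_failures=$(tolerated "$n")"
done
```

Running the loop shows that three and four nodes both tolerate a single failure, which is why the fourth node adds no resiliency.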

Ideally, the different ZooKeeper nodes should be located in different data centers or network segments. Increasing the number of ZooKeeper nodes increases the workload spent on cluster synchronization. For most Kafka use cases, a ZooKeeper cluster with 3, 5 or 7 nodes should be sufficient.

A ZooKeeper cluster with 3 nodes can tolerate only 1 unavailable node. This means that if a cluster node crashes while you are doing maintenance on another node your ZooKeeper cluster will be unavailable.

Replicated ZooKeeper configuration supports all configuration options supported by the standalone configuration. Additional options are added for the clustering configuration:

- initLimit: Amount of time to allow followers to connect and sync to the cluster leader. The time is specified as a number of ticks (see the tickTime option for more details).

- syncLimit: Amount of time for which followers can be behind the leader. The time is specified as a number of ticks (see the tickTime option for more details).

- reconfigEnabled: Enables or disables dynamic reconfiguration. Must be enabled in order to add or remove servers to a ZooKeeper cluster.

- standaloneEnabled: Enables or disables standalone mode, where ZooKeeper runs with only one server.

In addition to the options above, every configuration file should contain a list of servers which should be members of the ZooKeeper cluster. The server records should be specified in the format server.id=hostname:port1:port2, where:

- id: The ID of the ZooKeeper cluster node.

- hostname: The hostname or IP address where the node listens for connections.

- port1: The port number used for intra-cluster communication.

- port2: The port number used for leader election.

The following is an example configuration file of a ZooKeeper cluster with three nodes:

In ZooKeeper 3.5.7, the four letter word commands must be added to the allow list before they can be used. For more information, see the ZooKeeper documentation.

myid files

Each node in the ZooKeeper cluster must be assigned a unique ID. Each node’s ID must be configured in a myid file and stored in the dataDir folder, like /var/lib/zookeeper/. The myid files should contain only a single line with the written ID as text. The ID can be any integer from 1 to 255. You must manually create this file on each cluster node. Using this file, each ZooKeeper instance will use the configuration from the corresponding server. line in the configuration file to configure its listeners. It will also use all other server. lines to identify other cluster members.

In the above example, there are three nodes, so each one will have a different myid with values 1, 2, and 3 respectively.
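As a hedged sketch of how the pieces of this section fit together, the following script writes a configuration file and a myid file for one member of a three-node cluster. The hostnames, the ports 2888 and 3888, the initLimit and syncLimit values, and the NODE_ID variable are assumptions made for illustration, and the files are written to a scratch directory rather than to /opt/kafka/config and /var/lib/zookeeper.

```shell
#!/bin/sh
# Sketch: zookeeper.properties and myid for one member of a three-node cluster.
# Hostnames, ports, and limit values below are illustrative assumptions.
NODE_ID=${NODE_ID:-1}
workdir=$(mktemp -d)   # stands in for /opt/kafka/config and /var/lib/zookeeper

cat > "$workdir/zookeeper.properties" <<EOF
tickTime=2000
dataDir=/var/lib/zookeeper/
clientPort=2181
# initLimit and syncLimit are counted in ticks: 5 ticks = 10 seconds here.
initLimit=5
syncLimit=2
reconfigEnabled=true
standaloneEnabled=false
# server.id=hostname:port1:port2, one line per cluster member, including this node.
server.1=zoo1.example.com:2888:3888
server.2=zoo2.example.com:2888:3888
server.3=zoo3.example.com:2888:3888
EOF

# The myid file holds only this node's ID, matching one of the server.<id> lines.
echo "$NODE_ID" > "$workdir/myid"

cat "$workdir/zookeeper.properties"
echo "myid: $(cat "$workdir/myid")"
```

The configuration file is identical on every node; only the content of the myid file differs.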

3.3. Running multi-node ZooKeeper cluster

This procedure will show you how to configure and run ZooKeeper as a multi-node cluster.

In ZooKeeper 3.5.7, the four letter word commands must be added to the allow list before they can be used. For more information, see the ZooKeeper documentation.

Prerequisites

- AMQ Streams is installed on all hosts which will be used as ZooKeeper cluster nodes.

Running the cluster

- Create the myid file in /var/lib/zookeeper/. Enter ID 1 for the first ZooKeeper node, 2 for the second ZooKeeper node, and so on.

su - kafka

echo "<NodeID>" > /var/lib/zookeeper/myid

For example:

su - kafka

echo "1" > /var/lib/zookeeper/myid

- Edit the ZooKeeper /opt/kafka/config/zookeeper.properties configuration file for the following:

  - Set the option dataDir to /var/lib/zookeeper/.

  - Configure the initLimit and syncLimit options.

  - Configure the reconfigEnabled and standaloneEnabled options.

  - Add a list of all ZooKeeper nodes. The list should also include the current node.

- Start ZooKeeper with the default configuration file.

su - kafka

/opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties

- Verify that ZooKeeper is running.

jcmd | grep zookeeper

- Repeat this procedure on all the nodes of the cluster.

- Once all nodes of the cluster are up and running, verify that all nodes are members of the cluster by sending a stat command to each of the nodes using the ncat utility.

Use ncat stat to check the node status

echo stat | ncat localhost 2181

In the output you should see information that the node is either leader or follower.
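To check several nodes at once, the stat check can be wrapped in a loop. This sketch assumes that the stat output contains a line of the form "Mode: leader" or "Mode: follower", that the stat command has been added to the allow list (in upstream ZooKeeper this is the 4lw.commands.whitelist option), and that ZK_HOSTS is a space-separated host list. Both zk_mode and ZK_HOSTS are names introduced here.

```shell
#!/bin/sh
# Sketch: report the role of each ZooKeeper node.
# zk_mode reads stat output on standard input and prints the value of the Mode line.
zk_mode() { sed -n 's/^Mode: //p'; }

# ZK_HOSTS is a hypothetical variable, for example: ZK_HOSTS="zoo1 zoo2 zoo3"
for host in ${ZK_HOSTS:-}; do
  printf '%s: ' "$host"
  echo stat | ncat "$host" 2181 | zk_mode
done
```

In a healthy cluster exactly one node reports leader and the others report follower.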

Additional resources

- For more information about installing AMQ Streams, see Section 2.3, “Installing AMQ Streams”.

- For more information about configuring AMQ Streams, see Section 2.8, “Configuring AMQ Streams”.

3.4. Authentication

By default, ZooKeeper does not use any form of authentication and allows anonymous connections. However, it supports Java Authentication and Authorization Service (JAAS) which can be used to set up authentication using Simple Authentication and Security Layer (SASL). ZooKeeper supports authentication using the DIGEST-MD5 SASL mechanism with locally stored credentials.

3.4.1. Authentication with SASL

JAAS is configured using a separate configuration file. It is recommended to place the JAAS configuration file in the same directory as the ZooKeeper configuration (/opt/kafka/config/). The recommended file name is zookeeper-jaas.conf. When using a ZooKeeper cluster with multiple nodes, the JAAS configuration file has to be created on all cluster nodes.

JAAS is configured using contexts. Separate parts such as the server and client are always configured with a separate context. The context is a configuration option and has the following format:

ContextName {

param1

param2;

};

SASL Authentication is configured separately for server-to-server communication (communication between ZooKeeper instances) and client-to-server communication (communication between Kafka and ZooKeeper). Server-to-server authentication is relevant only for ZooKeeper clusters with multiple nodes.

Server-to-Server authentication

For server-to-server authentication, the JAAS configuration file contains two parts:

- The server configuration

- The client configuration

When using DIGEST-MD5 SASL mechanism, the QuorumServer context is used to configure the authentication server. It must contain all the usernames to be allowed to connect together with their passwords in an unencrypted form. The second context, QuorumLearner, has to be configured for the client which is built into ZooKeeper. It also contains the password in an unencrypted form. An example of the JAAS configuration file for DIGEST-MD5 mechanism can be found below:

In addition to the JAAS configuration file, you must enable the server-to-server authentication in the regular ZooKeeper configuration file by specifying the following options:

Use the KAFKA_OPTS environment variable to pass the JAAS configuration file to the ZooKeeper server as a Java property:

su - kafka

export KAFKA_OPTS="-Djava.security.auth.login.config=/opt/kafka/config/zookeeper-jaas.conf"; /opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties

For more information about server-to-server authentication, see ZooKeeper wiki.
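The server-to-server pieces described above can be sketched as follows. This is an illustration under stated assumptions, not the configuration shipped with AMQ Streams: the user name zookeeper and the password change-me are placeholders, the quorum.auth.* option names are taken from upstream ZooKeeper's quorum SASL support, and the files are written to a scratch directory instead of /opt/kafka/config.

```shell
#!/bin/sh
# Sketch: JAAS contexts and ZooKeeper options for server-to-server DIGEST-MD5.
workdir=$(mktemp -d)          # stands in for /opt/kafka/config
QUORUM_USER=zookeeper         # placeholder user name (assumption)
QUORUM_PASSWORD=change-me     # placeholder password (assumption)

# QuorumServer lists the accepted users; QuorumLearner holds the credentials
# this node presents to its peers. Both must use the same user and password.
cat > "$workdir/zookeeper-jaas.conf" <<EOF
QuorumServer {
    org.apache.zookeeper.server.auth.DigestLoginModule required
    user_${QUORUM_USER}="${QUORUM_PASSWORD}";
};

QuorumLearner {
    org.apache.zookeeper.server.auth.DigestLoginModule required
    username="${QUORUM_USER}"
    password="${QUORUM_PASSWORD}";
};
EOF

# Options assumed from upstream ZooKeeper; appended to the regular configuration file.
cat >> "$workdir/zookeeper.properties" <<EOF
quorum.auth.enableSasl=true
quorum.auth.learnerRequireSasl=true
quorum.auth.serverRequireSasl=true
quorum.auth.learner.loginContext=QuorumLearner
quorum.auth.server.loginContext=QuorumServer
EOF

cat "$workdir/zookeeper-jaas.conf" "$workdir/zookeeper.properties"
```

Because the passwords are stored unencrypted, the JAAS file should be readable only by the kafka user.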

Client-to-Server authentication

Client-to-server authentication is configured in the same JAAS file as the server-to-server authentication. However, unlike the server-to-server authentication, it contains only the server configuration. The client part of the configuration has to be done in the client. For information on how to configure a Kafka broker to connect to ZooKeeper using authentication, see the Kafka installation section.

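Add the Server context to the JAAS configuration file to configure client-to-server authentication. For DIGEST-MD5 mechanism it configures all usernames and passwords:

Server {

org.apache.zookeeper.server.auth.DigestLoginModule required

user_super="123456"

user_kafka="123456";

};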

After configuring the JAAS context, enable the client-to-server authentication in the ZooKeeper configuration file by adding the following lines:

requireClientAuthScheme=sasl

authProvider.1=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

authProvider.2=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

authProvider.3=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

You must add the authProvider.<ID> property for every server that is part of the ZooKeeper cluster.

Use the KAFKA_OPTS environment variable to pass the JAAS configuration file to the ZooKeeper server as a Java property:

su - kafka

export KAFKA_OPTS="-Djava.security.auth.login.config=/opt/kafka/config/zookeeper-jaas.conf"; /opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties

For more information about configuring ZooKeeper authentication in Kafka brokers, see Section 4.6, “ZooKeeper authentication”.

3.4.2. Enabling Server-to-server authentication using DIGEST-MD5

This procedure describes how to enable authentication using the SASL DIGEST-MD5 mechanism between the nodes of the ZooKeeper cluster.

Prerequisites

- AMQ Streams is installed on the host

- ZooKeeper cluster is configured with multiple nodes.

Enabling SASL DIGEST-MD5 authentication

- On all ZooKeeper nodes, create or edit the /opt/kafka/config/zookeeper-jaas.conf JAAS configuration file and add the QuorumServer and QuorumLearner contexts described in Section 3.4.1, “Authentication with SASL”. The username and password must be the same in both JAAS contexts.

- On all ZooKeeper nodes, edit the /opt/kafka/config/zookeeper.properties ZooKeeper configuration file and set the options that enable server-to-server authentication.

- Restart all ZooKeeper nodes one by one. To pass the JAAS configuration to ZooKeeper, use the KAFKA_OPTS environment variable.

su - kafka

export KAFKA_OPTS="-Djava.security.auth.login.config=/opt/kafka/config/zookeeper-jaas.conf"; /opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties

Additional resources

- For more information about installing AMQ Streams, see Section 2.3, “Installing AMQ Streams”.

- For more information about configuring AMQ Streams, see Section 2.8, “Configuring AMQ Streams”.

- For more information about running a ZooKeeper cluster, see Section 3.3, “Running multi-node ZooKeeper cluster”.

3.4.3. Enabling Client-to-server authentication using DIGEST-MD5

This procedure describes how to enable authentication using the SASL DIGEST-MD5 mechanism between ZooKeeper clients and ZooKeeper.

Prerequisites

- AMQ Streams is installed on the host

- ZooKeeper cluster is configured and running.

Enabling SASL DIGEST-MD5 authentication

On all ZooKeeper nodes, create or edit the

/opt/kafka/config/zookeeper-jaas.conf JAAS configuration file and add the following context:

Server {
    org.apache.zookeeper.server.auth.DigestLoginModule required
    user_super="<SuperUserPassword>"
    user_<Username1>="<Password1>"
    user_<Username2>="<Password2>";
};

The super user automatically has administrator privileges. The file can contain multiple users, but only one additional user is required by the Kafka brokers. The recommended name for the Kafka user is kafka.

The following example shows the Server context for client-to-server authentication:

Server {
    org.apache.zookeeper.server.auth.DigestLoginModule required
    user_super="123456"
    user_kafka="123456";
};

On all ZooKeeper nodes, edit the /opt/kafka/config/zookeeper.properties ZooKeeper configuration file and set the following options:

requireClientAuthScheme=sasl
authProvider.<IdOfBroker1>=org.apache.zookeeper.server.auth.SASLAuthenticationProvider
authProvider.<IdOfBroker2>=org.apache.zookeeper.server.auth.SASLAuthenticationProvider
authProvider.<IdOfBroker3>=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

The authProvider.<ID> property has to be added for every node which is part of the ZooKeeper cluster. An example three-node ZooKeeper cluster configuration looks like the following:

requireClientAuthScheme=sasl
authProvider.1=org.apache.zookeeper.server.auth.SASLAuthenticationProvider
authProvider.2=org.apache.zookeeper.server.auth.SASLAuthenticationProvider
authProvider.3=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

Restart all ZooKeeper nodes one by one. To pass the JAAS configuration to ZooKeeper, use the KAFKA_OPTS environment variable.

su - kafka
export KAFKA_OPTS="-Djava.security.auth.login.config=/opt/kafka/config/zookeeper-jaas.conf"; /opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties
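Because one authProvider.<ID> line is needed per ZooKeeper node, the option block can be generated rather than typed by hand. The following is a minimal sketch, assuming the node IDs run from 1 to N as in the three-node example; the function name gen_zk_sasl_options is hypothetical and not part of AMQ Streams.

```shell
#!/bin/sh
# Hypothetical helper: print the SASL options for a ZooKeeper ensemble.
# Assumption: node IDs are the consecutive integers 1..N.
gen_zk_sasl_options() {
    node_count="$1"
    echo "requireClientAuthScheme=sasl"
    i=1
    while [ "$i" -le "$node_count" ]; do
        echo "authProvider.$i=org.apache.zookeeper.server.auth.SASLAuthenticationProvider"
        i=$((i + 1))
    done
}

# Print the options for a three-node ensemble.
gen_zk_sasl_options 3
```

The output can be reviewed and then appended to zookeeper.properties on each node.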

Additional resources

- For more information about installing AMQ Streams, see Section 2.3, “Installing AMQ Streams”.

- For more information about configuring AMQ Streams, see Section 2.8, “Configuring AMQ Streams”.

- For more information about running a ZooKeeper cluster, see Section 3.3, “Running multi-node ZooKeeper cluster”.

3.5. Authorization

ZooKeeper supports access control lists (ACLs) to protect data stored inside it. Kafka brokers can automatically configure the ACL rights for all ZooKeeper records they create so no other ZooKeeper user can modify them.

For more information about enabling ZooKeeper ACLs in Kafka brokers, see Section 4.8, “ZooKeeper authorization”.

3.6. TLS

ZooKeeper supports TLS for encryption or authentication.

3.7. Additional configuration options

You can set the following additional ZooKeeper configuration options based on your use case:

- maxClientCnxns: The maximum number of concurrent client connections to a single member of the ZooKeeper cluster.

- autopurge.snapRetainCount: Number of snapshots of ZooKeeper’s in-memory database which will be retained. Default value is 3.

- autopurge.purgeInterval: The time interval in hours for purging snapshots. The default value is 0 and this option is disabled.

All available configuration options can be found in the ZooKeeper documentation.
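As an illustration of setting these options, the sketch below updates a properties file so that each key appears exactly once. It works on a scratch file, not on /opt/kafka/config/zookeeper.properties; the helper name set_property, the starting file content, and the values 100 and 24 are assumptions chosen only for the example.

```shell
#!/bin/sh
# Hypothetical helper: set KEY=VALUE in a properties file, replacing any
# existing line for the same key and keeping all other lines.
set_property() {
    file="$1"
    key="$2"
    value="$3"
    tmp="$(mktemp)"
    # Keep every line whose key (text before the first '=') differs.
    awk -F= -v k="$key" '$1 != k' "$file" > "$tmp"
    printf '%s=%s\n' "$key" "$value" >> "$tmp"
    # Write back in place so the file keeps its owner and permissions.
    cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Scratch copy with illustrative starting content.
conf="$(mktemp)"
printf 'clientPort=2181\nmaxClientCnxns=0\n' > "$conf"

set_property "$conf" maxClientCnxns 100
set_property "$conf" autopurge.snapRetainCount 3
set_property "$conf" autopurge.purgeInterval 24

cat "$conf"
```

A non-zero autopurge.purgeInterval enables purging; with the default of 0, autopurge.snapRetainCount has no effect.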

3.8. Logging

ZooKeeper uses log4j as its logging infrastructure. By default, the logging configuration is read from the log4j.properties configuration file, which should be placed either in the /opt/kafka/config/ directory or on the classpath. The location and name of the configuration file can be changed using the Java property log4j.configuration, which can be passed to ZooKeeper using the KAFKA_LOG4J_OPTS environment variable:

su - kafka
export KAFKA_LOG4J_OPTS="-Dlog4j.configuration=file:/my/path/to/log4j.properties"; /opt/kafka/bin/zookeeper-server-start.sh -daemon /opt/kafka/config/zookeeper.properties

For more information about Log4j configurations, see Log4j documentation.

Chapter 4. Configuring Kafka

Kafka uses a properties file to store static configuration. The recommended location for the configuration file is /opt/kafka/config/server.properties. The configuration file has to be readable by the kafka user.

AMQ Streams ships an example configuration file that highlights various basic and advanced features of the product. It can be found under config/server.properties in the AMQ Streams installation directory.

This chapter explains the most important configuration options. For a complete list of supported Kafka broker configuration options, see Appendix A, Broker configuration parameters.

4.1. ZooKeeper

Kafka brokers need ZooKeeper to store some parts of their configuration and to coordinate the cluster (for example, to decide which node is the leader for which partition). Connection details for the ZooKeeper cluster are stored in the configuration file. The zookeeper.connect field contains a comma-separated list of hostnames and ports of the members of the ZooKeeper cluster.

For example:

zookeeper.connect=zoo1.my-domain.com:2181,zoo2.my-domain.com:2181,zoo3.my-domain.com:2181


Kafka will use these addresses to connect to the ZooKeeper cluster. With this configuration, all Kafka znodes will be created directly in the root of the ZooKeeper database. Therefore, such a ZooKeeper cluster can be used only for a single Kafka cluster. To configure multiple Kafka clusters to use a single ZooKeeper cluster, specify a base (prefix) path at the end of the ZooKeeper connection string in the Kafka configuration file:

zookeeper.connect=zoo1.my-domain.com:2181,zoo2.my-domain.com:2181,zoo3.my-domain.com:2181/my-cluster-1
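The connection string can also be assembled from a host list and an optional base path. The following sketch assumes every ZooKeeper node listens on port 2181, as in the examples here; the function name build_zk_connect is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: build a zookeeper.connect line.
# $1 is the base path (may be empty); the remaining arguments are hostnames.
# Assumption: all nodes use port 2181.
build_zk_connect() {
    base_path="$1"
    shift
    joined=""
    for host in "$@"; do
        # Add a comma only between entries, never before the first one.
        joined="${joined:+$joined,}$host:2181"
    done
    printf 'zookeeper.connect=%s%s\n' "$joined" "$base_path"
}

build_zk_connect /my-cluster-1 zoo1.my-domain.com zoo2.my-domain.com zoo3.my-domain.com
```

The base path is appended once, after the last host, and not after each host.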

4.2. Listeners

Listeners are used to connect to Kafka brokers. Each Kafka broker can be configured to use multiple listeners. Each listener requires a different configuration so it can listen on a different port or network interface.

To configure listeners, edit the listeners property in the configuration file (/opt/kafka/config/server.properties). Add listeners to the listeners property as a comma-separated list. Configure each listener as follows:

<listenerName>://<hostname>:<port>


If <hostname> is empty, Kafka uses the value returned by the java.net.InetAddress.getCanonicalHostName() method as the hostname.

Example configuration for multiple listeners

listeners=internal-1://:9092,internal-2://:9093,replication://:9094


When a Kafka client wants to connect to a Kafka cluster, it first connects to the bootstrap server, which is one of the cluster nodes. The bootstrap server provides the client with a list of all the brokers in the cluster, and the client connects to each one individually. The list of brokers is based on the configured listeners.

Advertised listeners

Optionally, you can use the advertised.listeners property to provide the client with a different set of listener addresses than those given in the listeners property. This is useful if additional network infrastructure, such as a proxy, is between the client and the broker, or an external DNS name is being used instead of an IP address.

The advertised.listeners property is formatted in the same way as the listeners property.

Example configuration for advertised listeners


listeners=internal-1://:9092,internal-2://:9093

advertised.listeners=internal-1://my-broker-1.my-domain.com:1234,internal-2://my-broker-1.my-domain.com:1235

The names of the advertised listeners must match those listed in the listeners property.
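The name-matching rule can be checked before a broker is restarted. The sketch below is one possible check, assuming the two property values are passed in as plain strings; the function names listener_names and check_advertised are hypothetical. It verifies that every advertised listener name is also defined in listeners.

```shell
#!/bin/sh
# Print the listener names found in a listeners-style value, one per line.
listener_names() {
    printf '%s\n' "$1" | tr ',' '\n' | sed 's|://.*$||'
}

# Succeed only if every advertised listener name is also defined in listeners.
check_advertised() {
    listeners="$1"
    advertised="$2"
    for name in $(listener_names "$advertised"); do
        if ! listener_names "$listeners" | grep -qxF -e "$name"; then
            echo "advertised listener '$name' is not defined in listeners"
            return 1
        fi
    done
    echo "ok"
}

check_advertised \
    "internal-1://:9092,internal-2://:9093" \
    "internal-1://my-broker-1.my-domain.com:1234,internal-2://my-broker-1.my-domain.com:1235"
```

Only the names are compared; the hostnames and ports are expected to differ between the two properties.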

Inter-broker listeners

Inter-broker listeners are used for communication between Kafka brokers. Inter-broker communication is required for:

- Coordinating workloads between different brokers

- Replicating messages between partitions stored on different brokers

- Handling administrative tasks from the controller, such as partition leadership changes

The inter-broker listener can be assigned to a port of your choice. When multiple listeners are configured, you can define the name of the inter-broker listener in the inter.broker.listener.name property.

Here, the inter-broker listener is named REPLICATION:

listeners=REPLICATION://0.0.0.0:9091
inter.broker.listener.name=REPLICATION

Control plane listeners

By default, communication between the controller and other brokers uses the inter-broker listener. The controller is responsible for coordinating administrative tasks, such as partition leadership changes.

You can enable a dedicated control plane listener for controller connections. The control plane listener can be assigned to a port of your choice.

To enable the control plane listener, configure the control.plane.listener.name property with a listener name:

listeners=CONTROLLER://0.0.0.0:9090,REPLICATION://0.0.0.0:9091
...
control.plane.listener.name=CONTROLLER

Enabling the control plane listener might improve cluster performance because controller communications are not delayed by data replication across brokers. Data replication continues through the inter-broker listener.

If control.plane.listener.name is not configured, controller connections use the inter-broker listener.

For more information, see Appendix A, Broker configuration parameters.

4.3. Commit logs

Apache Kafka stores all records it receives from producers in commit logs. The commit logs contain the actual data, in the form of records, that Kafka needs to deliver. These are not the application log files which record what the broker is doing.

Log directories

You can configure log directories using the log.dirs property to store commit logs in one or multiple log directories. It should be set to the /var/lib/kafka directory created during installation:

log.dirs=/var/lib/kafka

For performance reasons, you can configure log.dirs to multiple directories and place each of them on a different physical device to improve disk I/O performance. For example:

log.dirs=/var/lib/kafka1,/var/lib/kafka2,/var/lib/kafka3

4.4. Broker ID

Broker ID is a unique identifier for each broker in the cluster. You can assign an integer greater than or equal to 0 as broker ID. The broker ID is used to identify the brokers after restarts or crashes and it is therefore important that the id is stable and does not change over time. The broker ID is configured in the broker properties file:

broker.id=1

4.5. Running a multi-node Kafka cluster

This procedure describes how to configure and run Kafka as a multi-node cluster.

Prerequisites

- AMQ Streams is installed on all hosts which will be used as Kafka brokers.

- A ZooKeeper cluster is configured and running.

Running the cluster

For each Kafka broker in your AMQ Streams cluster:

Edit the /opt/kafka/config/server.properties Kafka configuration file as follows:

- Set the broker.id field to 0 for the first broker, 1 for the second broker, and so on.

- Configure the details for connecting to ZooKeeper in the zookeeper.connect option.

- Configure the Kafka listeners.

- Set the directories where the commit logs should be stored in the log.dirs property.

Here we see an example configuration for a Kafka broker:

broker.id=0
zookeeper.connect=zoo1.my-domain.com:2181,zoo2.my-domain.com:2181,zoo3.my-domain.com:2181
listeners=REPLICATION://:9091,PLAINTEXT://:9092
inter.broker.listener.name=REPLICATION
log.dirs=/var/lib/kafka

In a typical installation where each Kafka broker is running on identical hardware, only the broker.id configuration property will differ between each broker config.

Start the Kafka broker with the default configuration file.

su - kafka
/opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties

Verify that the Kafka broker is running.

jcmd | grep Kafka
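Since only broker.id differs between brokers on identical hardware, the per-broker files can be produced from one template. The following sketch writes three files into a scratch directory; the function name gen_broker_config and the server-<id>.properties file names are assumptions for illustration, and the values are taken from the example configuration in this procedure.

```shell
#!/bin/sh
# Hypothetical helper: print a broker configuration for the given broker ID.
gen_broker_config() {
    broker_id="$1"
    cat <<EOF
broker.id=$broker_id
zookeeper.connect=zoo1.my-domain.com:2181,zoo2.my-domain.com:2181,zoo3.my-domain.com:2181
listeners=REPLICATION://:9091,PLAINTEXT://:9092
inter.broker.listener.name=REPLICATION
log.dirs=/var/lib/kafka
EOF
}

# Write one file per broker into a scratch directory for review.
out_dir="$(mktemp -d)"
for id in 0 1 2; do
    gen_broker_config "$id" > "$out_dir/server-$id.properties"
done

ls "$out_dir"
```

Each generated file would then be copied to /opt/kafka/config/server.properties on the matching host.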

Verifying the brokers

Once all nodes of the cluster are up and running, verify that all nodes are members of the Kafka cluster by sending a dump command to one of the ZooKeeper nodes using the ncat utility. The command prints all Kafka brokers registered in ZooKeeper.

Use ncat stat to check the node status.

echo dump | ncat zoo1.my-domain.com 2181

The output should contain all Kafka brokers you just configured and started.

Additional resources

- For more information about installing AMQ Streams, see Section 2.3, “Installing AMQ Streams”.

- For more information about configuring AMQ Streams, see Section 2.8, “Configuring AMQ Streams”.

- For more information about running a ZooKeeper cluster, see Section 3.3, “Running multi-node ZooKeeper cluster”.

- For a complete list of supported Kafka broker configuration options, see Appendix A, Broker configuration parameters.

4.6. ZooKeeper authentication

By default, connections between ZooKeeper and Kafka are not authenticated. However, Kafka and ZooKeeper support Java Authentication and Authorization Service (JAAS) which can be used to set up authentication using Simple Authentication and Security Layer (SASL). ZooKeeper supports authentication using the DIGEST-MD5 SASL mechanism with locally stored credentials.

4.6.1. JAAS Configuration

SASL authentication for ZooKeeper connections has to be configured in the JAAS configuration file. By default, Kafka will use the JAAS context named Client for connecting to ZooKeeper. The Client context should be configured in the /opt/kafka/config/jaas.conf file. The context has to enable the PLAIN SASL authentication, as in the following example:

Client {

org.apache.kafka.common.security.plain.PlainLoginModule required

username="kafka"

password="123456";

};

4.6.2. Enabling ZooKeeper authentication

This procedure describes how to enable authentication using the SASL DIGEST-MD5 mechanism when connecting to ZooKeeper.

Prerequisites

- Client-to-server authentication is enabled in ZooKeeper

Enabling SASL DIGEST-MD5 authentication

On all Kafka broker nodes, create or edit the /opt/kafka/config/jaas.conf JAAS configuration file and add the following context:

Client {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="<Username>"
    password="<Password>";
};

The username and password should be the same as configured in ZooKeeper.

The following example shows the Client context:

Client {
    org.apache.kafka.common.security.plain.PlainLoginModule required
    username="kafka"
    password="123456";
};

Restart all Kafka broker nodes one by one. To pass the JAAS configuration to Kafka brokers, use the KAFKA_OPTS environment variable.

su - kafka
export KAFKA_OPTS="-Djava.security.auth.login.config=/opt/kafka/config/jaas.conf"; /opt/kafka/bin/kafka-server-start.sh -daemon /opt/kafka/config/server.properties

Additional resources

- For more information about configuring client-to-server authentication in ZooKeeper, see Section 3.4, “Authentication”.

4.7. Authorization

Authorization in Kafka brokers is implemented using authorizer plugins.

In this section we describe how to use the AclAuthorizer plugin provided with Kafka.

Alternatively, you can use your own authorization plugins. For example, if you are using OAuth 2.0 token-based authentication, you can use OAuth 2.0 authorization.

4.7.1. Simple ACL authorizer

Authorizer plugins, including AclAuthorizer, are enabled through the authorizer.class.name property:

authorizer.class.name=kafka.security.auth.SimpleAclAuthorizer


A fully-qualified name is required for the chosen authorizer. For AclAuthorizer, the fully-qualified name is kafka.security.auth.SimpleAclAuthorizer.

4.7.1.1. ACL rules

AclAuthorizer uses ACL rules to manage access to Kafka brokers.

ACL rules are defined in the format:

Principal P is allowed / denied operation O on Kafka resource R from host H

For example, a rule might be set so that user:

John can view the topic comments from host 127.0.0.1

Host is the IP address of the machine that John is connecting from.

In most cases, the user is a producer or consumer application:

Consumer01 can write to the consumer group accounts from host 127.0.0.1

If ACL rules are not present

If ACL rules are not present for a given resource, all actions are denied. This behavior can be changed by setting the property allow.everyone.if.no.acl.found to true in the Kafka configuration file /opt/kafka/config/server.properties.

4.7.1.2. Principals

A principal represents the identity of a user. The format of the ID depends on the authentication mechanism used by clients to connect to Kafka:

- User:ANONYMOUS when connected without authentication.

- User:<username> when connected using simple authentication mechanisms, such as PLAIN or SCRAM.

  For example User:admin or User:user1.

- User:<DistinguishedName> when connected using TLS client authentication.

  For example User:CN=user1,O=MyCompany,L=Prague,C=CZ.

- User:<Kerberos username> when connected using Kerberos.

The DistinguishedName is the distinguished name from the client certificate.

The Kerberos username is the primary part of the Kerberos principal, which is used by default when connecting using Kerberos. You can use the sasl.kerberos.principal.to.local.rules property to configure how the Kafka principal is built from the Kerberos principal.

4.7.1.3. Authentication of users

To use authorization, you need to have authentication enabled and used by your clients. Otherwise, all connections will have the principal User:ANONYMOUS.

For more information on methods of authentication, see Encryption and authentication.

4.7.1.4. Super users

Super users are allowed to take all actions regardless of the ACL rules.

Super users are defined in the Kafka configuration file using the property super.users.

For example:

super.users=User:admin,User:operator

4.7.1.5. Replica broker authentication

When authorization is enabled, it is applied to all listeners and all connections. This includes the inter-broker connections used for replication of data between brokers. If you enable authorization, therefore, ensure that you use authentication for inter-broker connections and give the users used by the brokers sufficient rights. For example, if authentication between brokers uses the kafka-broker user, then the super user configuration must include that user: super.users=User:kafka-broker.

4.7.1.6. Supported resources

You can apply Kafka ACLs to these types of resource:

- Topics

- Consumer groups

- The cluster

- TransactionId

- DelegationToken

4.7.1.7. Supported operations

AclAuthorizer authorizes operations on resources.

Fields with X in the following table mark the supported operations for each resource.

| | Topics | Consumer Groups | Cluster |
|---|---|---|---|
| Read | X | X | |
| Write | X | | |
| Create | | | X |
| Delete | X | | |
| Alter | X | | |
| Describe | X | X | X |
| ClusterAction | | | X |
| All | X | X | X |

4.7.1.8. ACL management options

ACL rules are managed using the bin/kafka-acls.sh utility, which is provided as part of the Kafka distribution package.

Use kafka-acls.sh parameter options to add, list and remove ACL rules, and perform other functions.

The parameters require a double-hyphen convention, such as --add.

| Option | Type | Description | Default |
|---|---|---|---|
| add | Action | Add ACL rule. | |
| remove | Action | Remove ACL rule. | |
| list | Action | List ACL rules. | |
| authorizer | Action | Fully-qualified class name of the authorizer. | |
| authorizer-properties | Configuration | Key/value pairs passed to the authorizer for initialization. For AclAuthorizer, an example value is zookeeper.connect=zoo1.my-domain.com:2181. | |
| bootstrap-server | Resource | Host/port pairs to connect to the Kafka cluster. | Use this option or the authorizer option, not both. |
| command-config | Resource | Configuration property file to pass to the Admin Client, which is used in conjunction with the bootstrap-server parameter. | |
| cluster | Resource | Specifies a cluster as an ACL resource. | |
| topic | Resource | Specifies a topic name as an ACL resource. An asterisk (*) used as a wildcard matches all topics. A single command can specify multiple topic options. | |
| group | Resource | Specifies a consumer group name as an ACL resource. A single command can specify multiple group options. | |
| transactional-id | Resource | Specifies a transactional ID as an ACL resource. Transactional delivery means that all messages sent by a producer to multiple partitions must be successfully delivered or none of them. An asterisk (*) used as a wildcard matches all IDs. | |
| delegation-token | Resource | Specifies a delegation token as an ACL resource. An asterisk (*) used as a wildcard matches all tokens. | |
| resource-pattern-type | Configuration | Specifies a type of resource pattern for the add parameter, or a resource pattern filter value for the list and remove parameters. Use literal or prefixed as the resource pattern type for a resource name. Use any or match as resource pattern filter values. | literal |
| allow-principal | Principal | Principal added to an allow ACL rule. A single command can specify multiple allow-principal options. | |
| deny-principal | Principal | Principal added to a deny ACL rule. A single command can specify multiple deny-principal options. | |
| principal | Principal | Principal name used with the list option to return the ACLs for the principal. A single command can specify multiple principal options. | |
| allow-host | Host | IP address that allows access to the principals listed in allow-principal. Hostnames or CIDR ranges are not supported. | If allow-principal is specified, defaults to * meaning "all hosts". |
| deny-host | Host | IP address that denies access to the principals listed in deny-principal. Hostnames or CIDR ranges are not supported. | If deny-principal is specified, defaults to * meaning "all hosts". |
| operation | Operation | Allows or denies an operation. A single command can specify multiple operation options. | All |
| producer | Shortcut | A shortcut to allow or deny all operations needed by a message producer (WRITE and DESCRIBE on topic, CREATE on cluster). | |
| consumer | Shortcut | A shortcut to allow or deny all operations needed by a message consumer (READ and DESCRIBE on topic, READ on consumer group). | |
| idempotent | Shortcut | A shortcut to enable idempotence when used with the producer option. Idempotence is enabled automatically if the producer is authorized to send messages based on a specific transactional ID. | |
| force | Shortcut | A shortcut to accept all queries and not prompt. | |

4.7.2. Enabling authorization

This procedure describes how to enable the AclAuthorizer plugin for authorization in Kafka brokers.

Prerequisites

- AMQ Streams is installed on all hosts used as Kafka brokers.

Procedure

Edit the /opt/kafka/config/server.properties Kafka configuration file to use the AclAuthorizer.

authorizer.class.name=kafka.security.auth.SimpleAclAuthorizer

(Re)start the Kafka brokers.

Additional resources

- For more information about configuring AMQ Streams, see Section 2.8, “Configuring AMQ Streams”.

- For more information about running a Kafka cluster, see Section 4.5, “Running a multi-node Kafka cluster”.

4.7.3. Adding ACL rules

AclAuthorizer uses Access Control Lists (ACLs), which define a set of rules describing what users can and cannot do.

This procedure describes how to add ACL rules when using the AclAuthorizer plugin in Kafka brokers.

Rules are added using the kafka-acls.sh utility and stored in ZooKeeper.

Prerequisites

- AMQ Streams is installed on all hosts used as Kafka brokers.

- Authorization is enabled in Kafka brokers.

Procedure

Run kafka-acls.sh with the --add option.

Examples:

Allow user1 and user2 access to read from myTopic using the MyConsumerGroup consumer group.

bin/kafka-acls.sh --authorizer-properties zookeeper.connect=zoo1.my-domain.com:2181 --add --operation Read --topic myTopic --allow-principal User:user1 --allow-principal User:user2
bin/kafka-acls.sh --authorizer-properties zookeeper.connect=zoo1.my-domain.com:2181 --add --operation Describe --topic myTopic --allow-principal User:user1 --allow-principal User:user2
bin/kafka-acls.sh --authorizer-properties zookeeper.connect=zoo1.my-domain.com:2181 --add --operation Read --operation Describe --group MyConsumerGroup --allow-principal User:user1 --allow-principal User:user2

Deny user1 access to read myTopic from IP address host 127.0.0.1.

bin/kafka-acls.sh --authorizer-properties zookeeper.connect=zoo1.my-domain.com:2181 --add --operation Describe --operation Read --topic myTopic --group MyConsumerGroup --deny-principal User:user1 --deny-host 127.0.0.1

Add user1 as the consumer of myTopic with MyConsumerGroup.

bin/kafka-acls.sh --authorizer-properties zookeeper.connect=zoo1.my-domain.com:2181 --add --consumer --topic myTopic --group MyConsumerGroup --allow-principal User:user1
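When the same rule is granted to several users, the command line grows by one --allow-principal option per user. The sketch below only prints the command for review and does not run kafka-acls.sh; the function name acl_add_cmd is hypothetical, and the ZooKeeper address is the one used in the examples here.

```shell
#!/bin/sh
# Hypothetical dry-run helper: print a kafka-acls.sh --add command that
# allows one operation on one topic for any number of users.
acl_add_cmd() {
    operation="$1"
    topic="$2"
    shift 2
    cmd="bin/kafka-acls.sh --authorizer-properties zookeeper.connect=zoo1.my-domain.com:2181 --add --operation $operation --topic $topic"
    for user in "$@"; do
        cmd="$cmd --allow-principal User:$user"
    done
    printf '%s\n' "$cmd"
}

# Prints the first command from the examples in this procedure.
acl_add_cmd Read myTopic user1 user2
```

Printing the command first makes it easier to check principals and operations before any rule is stored in ZooKeeper.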

Additional resources

- For a list of all kafka-acls.sh options, see Section 4.7.1, “Simple ACL authorizer”.

4.7.4. Listing ACL rules

This procedure describes how to list existing ACL rules when using the AclAuthorizer plugin in Kafka brokers.

Rules are listed using the kafka-acls.sh utility.

Prerequisites

- AMQ Streams is installed on all hosts used as Kafka brokers.

- Authorization is enabled in Kafka brokers

- ACLs have been added.

Procedure

Run kafka-acls.sh with the --list option.

Additional resources

- For a list of all kafka-acls.sh options, see Section 4.7.1, “Simple ACL authorizer”.

4.7.5. Removing ACL rules

This procedure describes how to remove ACL rules when using the AclAuthorizer plugin in Kafka brokers.

Rules are removed using the kafka-acls.sh utility.

Prerequisites

- AMQ Streams is installed on all hosts used as Kafka brokers.

- Authorization is enabled in Kafka brokers.

- ACLs have been added.

Procedure

Run kafka-acls.sh with the --remove option.

Examples:

Remove the ACL allowing user1 and user2 access to read from myTopic using the MyConsumerGroup consumer group.

bin/kafka-acls.sh --authorizer-properties zookeeper.connect=zoo1.my-domain.com:2181 --remove --operation Read --topic myTopic --allow-principal User:user1 --allow-principal User:user2
bin/kafka-acls.sh --authorizer-properties zookeeper.connect=zoo1.my-domain.com:2181 --remove --operation Describe --topic myTopic --allow-principal User:user1 --allow-principal User:user2
bin/kafka-acls.sh --authorizer-properties zookeeper.connect=zoo1.my-domain.com:2181 --remove --operation Read --operation Describe --group MyConsumerGroup --allow-principal User:user1 --allow-principal User:user2

Remove the ACL adding user1 as the consumer of myTopic with MyConsumerGroup.

bin/kafka-acls.sh --authorizer-properties zookeeper.connect=zoo1.my-domain.com:2181 --remove --consumer --topic myTopic --group MyConsumerGroup --allow-principal User:user1

Remove the ACL denying user1 access to read myTopic from IP address host 127.0.0.1.

bin/kafka-acls.sh --authorizer-properties zookeeper.connect=zoo1.my-domain.com:2181 --remove --operation Describe --operation Read --topic myTopic --group MyConsumerGroup --deny-principal User:user1 --deny-host 127.0.0.1

Additional resources

- For a list of all kafka-acls.sh options, see Section 4.7.1, “Simple ACL authorizer”.

- For more information about enabling authorization, see Section 4.7.2, “Enabling authorization”.

4.8. ZooKeeper authorization

When authentication is enabled between Kafka and ZooKeeper, you can use ZooKeeper Access Control List (ACL) rules to automatically control access to Kafka’s metadata stored in ZooKeeper.

4.8.1. ACL Configuration

Enforcement of ZooKeeper ACL rules is controlled by the zookeeper.set.acl property in the config/server.properties Kafka configuration file.

The property is disabled by default and enabled by setting to true:

zookeeper.set.acl=true


If ACL rules are enabled, when a znode is created in ZooKeeper only the Kafka user who created it can modify or delete it. All other users have read-only access.

Kafka sets ACL rules only for newly created ZooKeeper znodes. If the ACLs are only enabled after the first start of the cluster, the zookeeper-security-migration.sh tool can set ACLs on all existing znodes.

Confidentiality of data in ZooKeeper

Data stored in ZooKeeper includes:

- Topic names and their configuration

- Salted and hashed user credentials when SASL SCRAM authentication is used.

But ZooKeeper does not store any records sent and received using Kafka. The data stored in ZooKeeper is assumed to be non-confidential.

If the data is to be regarded as confidential (for example because topic names contain customer IDs), the only option available for protection is isolating ZooKeeper on the network level and allowing access only to Kafka brokers.

4.8.2. Enabling ZooKeeper ACLs for a new Kafka cluster

This procedure describes how to enable ZooKeeper ACLs in Kafka configuration for a new Kafka cluster. Use this procedure only before the first start of the Kafka cluster. For enabling ZooKeeper ACLs in a cluster that is already running, see Section 4.8.3, “Enabling ZooKeeper ACLs in an existing Kafka cluster”.

Prerequisites

- AMQ Streams is installed on all hosts which will be used as Kafka brokers.

- ZooKeeper cluster is configured and running.

- Client-to-server authentication is enabled in ZooKeeper.

- ZooKeeper authentication is enabled in the Kafka brokers.

- Kafka brokers have not yet been started.

Procedure

- Edit the /opt/kafka/config/server.properties Kafka configuration file to set the zookeeper.set.acl field to true on all cluster nodes.

zookeeper.set.acl=true

- Start the Kafka brokers.

4.8.3. Enabling ZooKeeper ACLs in an existing Kafka cluster

This procedure describes how to enable ZooKeeper ACLs in Kafka configuration for a Kafka cluster that is running. Use the zookeeper-security-migration.sh tool to set ZooKeeper ACLs on all existing znodes. The zookeeper-security-migration.sh tool is available as part of AMQ Streams and can be found in the bin directory.

Prerequisites

- Kafka cluster is configured and running.

Enabling the ZooKeeper ACLs

- Edit the /opt/kafka/config/server.properties Kafka configuration file to set the zookeeper.set.acl field to true on all cluster nodes.

zookeeper.set.acl=true

- Restart all Kafka brokers one by one.

- Set the ACLs on all existing ZooKeeper znodes using the zookeeper-security-migration.sh tool.

su - kafka
cd /opt/kafka
export KAFKA_OPTS="-Djava.security.auth.login.config=./config/jaas.conf"; ./bin/zookeeper-security-migration.sh --zookeeper.acl=secure --zookeeper.connect=<ZooKeeperURL>
exit

For example:

su - kafka
cd /opt/kafka
export KAFKA_OPTS="-Djava.security.auth.login.config=./config/jaas.conf"; ./bin/zookeeper-security-migration.sh --zookeeper.acl=secure --zookeeper.connect=zoo1.my-domain.com:2181
exit

4.9. Encryption and authentication

AMQ Streams supports encryption and authentication, which is configured as part of the listener configuration.

4.9.1. Listener configuration

Encryption and authentication in Kafka brokers is configured per listener. For more information about Kafka listener configuration, see Section 4.2, “Listeners”.

Each listener in the Kafka broker is configured with its own security protocol. The configuration property listener.security.protocol.map defines which listener uses which security protocol. It maps each listener name to its security protocol. Supported security protocols are:

- PLAINTEXT: Listener without any encryption or authentication.

- SSL: Listener using TLS encryption and, optionally, authentication using TLS client certificates.

- SASL_PLAINTEXT: Listener without encryption but with SASL-based authentication.

- SASL_SSL: Listener with TLS-based encryption and SASL-based authentication.

Given the following listeners configuration:

listeners=INT1://:9092,INT2://:9093,REPLICATION://:9094

the listener.security.protocol.map might look like this:

listener.security.protocol.map=INT1:SASL_PLAINTEXT,INT2:SASL_SSL,REPLICATION:SSL

This would configure the listener INT1 to use unencrypted connections with SASL authentication, the listener INT2 to use encrypted connections with SASL authentication, and the listener REPLICATION to use TLS encryption (possibly with TLS client authentication). The same security protocol can be used multiple times. The following example is also a valid configuration:

listener.security.protocol.map=INT1:SSL,INT2:SSL,REPLICATION:SSL

Such a configuration would use TLS encryption and TLS authentication for all listeners. The following sections explain in more detail how to configure TLS and SASL.

4.9.2. TLS Encryption

Kafka supports TLS for encrypting communication with Kafka clients.

In order to use TLS encryption and server authentication, a keystore containing private and public keys has to be provided. This is usually done using a file in the Java Keystore (JKS) format. A path to this file is set in the ssl.keystore.location property. The ssl.keystore.password property should be used to set the password protecting the keystore. For example:

ssl.keystore.location=/path/to/keystore/server-1.jks

ssl.keystore.password=123456

In some cases, an additional password is used to protect the private key. Any such password can be set using the ssl.key.password property.
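As a minimal sketch, a broker whose private key has its own password might combine the three properties as follows. The key password shown is a placeholder, not a value from this guide.

```properties
ssl.keystore.location=/path/to/keystore/server-1.jks
ssl.keystore.password=123456
# Placeholder: password protecting the private key inside the keystore
ssl.key.password=<PrivateKeyPassword>
```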

Kafka is able to use keys signed by certification authorities as well as self-signed keys. Using keys signed by certification authorities should always be the preferred method. In order to allow clients to verify the identity of the Kafka broker they are connecting to, the certificate should always contain the advertised hostname(s) as its Common Name (CN) or in the Subject Alternative Names (SAN).

It is possible to use different SSL configurations for different listeners. All options starting with ssl. can be prefixed with listener.name.<NameOfTheListener>., where the name of the listener must always be in lower case. This overrides the default SSL configuration for that specific listener. The following example shows how to use different SSL configurations for different listeners:
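The fragment below is a sketch of such a configuration, reusing the listener names from Section 4.9.1. The second keystore path is hypothetical and chosen only to show that the REPLICATION listener can use its own keystore.

```properties
listeners=INT1://:9092,INT2://:9093,REPLICATION://:9094
listener.security.protocol.map=INT1:SSL,INT2:SSL,REPLICATION:SSL

# Default SSL configuration, used by listeners INT1 and INT2
ssl.keystore.location=/path/to/keystore/server-1.jks
ssl.keystore.password=123456

# Override for the REPLICATION listener (listener name in lower case)
# The keystore path below is a hypothetical example
listener.name.replication.ssl.keystore.location=/path/to/keystore/server-1-replication.jks
listener.name.replication.ssl.keystore.password=123456
```

Any listener without a listener.name.<NameOfTheListener>. override falls back to the unprefixed ssl. options.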

Additional TLS configuration options

In addition to the main TLS configuration options described above, Kafka supports many options for fine-tuning the TLS configuration. For example, to enable or disable TLS / SSL protocols or cipher suites:

- ssl.cipher.suites: List of enabled cipher suites. Each cipher suite is a combination of authentication, encryption, MAC and key exchange algorithms used for the TLS connection. By default, all available cipher suites are enabled.

- ssl.enabled.protocols: List of enabled TLS / SSL protocols. Defaults to TLSv1.2,TLSv1.1,TLSv1.
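As an illustration, the following sketch restricts the broker to TLSv1.2 and to two specific cipher suites. The cipher suite names are standard JSSE names picked as examples; whether they are available depends on the JVM in use, so treat the list as an assumption to verify in your environment.

```properties
# Accept only TLSv1.2 connections
ssl.enabled.protocols=TLSv1.2
# Example cipher suites; confirm that your JVM supports them
ssl.cipher.suites=TLS_ECDHE_RSA_WITH_AES_256_GCM_SHA384,TLS_ECDHE_RSA_WITH_AES_128_GCM_SHA256
```

Clients must support at least one of the listed protocols and cipher suites, otherwise the TLS handshake fails.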

For a complete list of supported Kafka broker configuration options, see Appendix A, Broker configuration parameters.

4.9.3. Enabling TLS encryption

This procedure describes how to enable encryption in Kafka brokers.

Prerequisites

- AMQ Streams is installed on all hosts which will be used as Kafka brokers.

Procedure

- Generate TLS certificates for all Kafka brokers in your cluster. The certificates should have their advertised and bootstrap addresses in their Common Name or Subject Alternative Name.

- Edit the /opt/kafka/config/server.properties Kafka configuration file on all cluster nodes for the following:

  - Change the listener.security.protocol.map field to specify the SSL protocol for the listener where you want to use TLS encryption.

  - Set the ssl.keystore.location option to the path to the JKS keystore with the broker certificate.

  - Set the ssl.keystore.password option to the password you used to protect the keystore.

For example:

listeners=UNENCRYPTED://:9092,ENCRYPTED://:9093,REPLICATION://:9094
listener.security.protocol.map=UNENCRYPTED:PLAINTEXT,ENCRYPTED:SSL,REPLICATION:PLAINTEXT
ssl.keystore.location=/path/to/keystore/server-1.jks
ssl.keystore.password=123456

- (Re)start the Kafka brokers.

Additional resources

- For more information about configuring AMQ Streams, see Section 2.8, “Configuring AMQ Streams”.

- For more information about running a Kafka cluster, see Section 4.5, “Running a multi-node Kafka cluster”.

For more information about configuring TLS encryption in clients, see:

4.9.4. Authentication

For authentication, you can use:

- TLS client authentication based on X.509 certificates on encrypted connections

- A supported Kafka SASL (Simple Authentication and Security Layer) mechanism

- OAuth 2.0 token based authentication

4.9.4.1. TLS client authentication

TLS client authentication can be used only on connections which are already using TLS encryption. To use TLS client authentication, provide the broker with a truststore containing public keys. These keys are used to authenticate clients connecting to the broker. The truststore should be provided in Java Keystore (JKS) format and should contain the public keys of the certification authorities. All clients with public and private keys signed by one of the certification authorities included in the truststore will be authenticated. The location of the truststore is set using the ssl.truststore.location property. If the truststore is password protected, the password should be set in the ssl.truststore.password property. For example:

ssl.truststore.location=/path/to/keystore/server-1.jks

ssl.truststore.password=123456

Once the truststore is configured, TLS client authentication has to be enabled using the ssl.client.auth property. This property can be set to one of three different values:

- none: TLS client authentication is switched off. (Default value)

- requested: TLS client authentication is optional. Clients will be asked to authenticate using a TLS client certificate but they can choose not to.

- required: Clients are required to authenticate using a TLS client certificate.

When a client authenticates using TLS client authentication, the authenticated principal name is the distinguished name from the authenticated client certificate. For example, a user with a certificate which has a distinguished name CN=someuser will be authenticated with the following principal CN=someuser,OU=Unknown,O=Unknown,L=Unknown,ST=Unknown,C=Unknown. When TLS client authentication is not used and SASL is disabled, the principal name will be ANONYMOUS.
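Putting these settings together, a broker that requires client certificates on its TLS listeners could be configured roughly as follows. This is a sketch that reuses the example path and password from above; it assumes the listener already uses the SSL security protocol.

```properties
# Truststore with the public keys of the certification authorities
ssl.truststore.location=/path/to/keystore/server-1.jks
ssl.truststore.password=123456
# Reject clients that do not present a certificate signed by a trusted authority
ssl.client.auth=required
```

With ssl.client.auth=requested instead, clients without a certificate can still connect and are assigned the ANONYMOUS principal when SASL is disabled.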

4.9.4.2. SASL authentication

SASL authentication is configured using Java Authentication and Authorization Service (JAAS). JAAS is also used for authentication of connections between Kafka and ZooKeeper. JAAS uses its own configuration file. The recommended location for this file is /opt/kafka/config/jaas.conf. The file has to be readable by the kafka user. When running Kafka, the location of this file is specified using Java system property java.security.auth.login.config. This property has to be passed to Kafka when starting the broker nodes:

export KAFKA_OPTS="-Djava.security.auth.login.config=/path/to/my/jaas.config"; bin/kafka-server-start.sh

SASL authentication is supported both through plain unencrypted connections as well as through TLS connections. SASL can be enabled individually for each listener. To enable it, the security protocol in listener.security.protocol.map has to be either SASL_PLAINTEXT or SASL_SSL.

SASL authentication in Kafka supports several different mechanisms:

- PLAIN: Implements authentication based on username and passwords. Usernames and passwords are stored locally in Kafka configuration.

- SCRAM-SHA-256 and SCRAM-SHA-512: Implements authentication using Salted Challenge Response Authentication Mechanism (SCRAM). SCRAM credentials are stored centrally in ZooKeeper. SCRAM can be used in situations where ZooKeeper cluster nodes are running isolated in a private network.

- GSSAPI: Implements authentication against a Kerberos server.
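As an illustrative sketch, a broker offering SCRAM-SHA-512 on one unencrypted and one TLS listener might contain the following. The sasl.enabled.mechanisms broker property is not described in this section and is included here as an assumption about the rest of the broker configuration; the listener names are reused from Section 4.9.1.

```properties
listeners=INT1://:9092,INT2://:9093
# SASL is enabled per listener through the security protocol
listener.security.protocol.map=INT1:SASL_PLAINTEXT,INT2:SASL_SSL
# Assumption: mechanism offered to clients on the SASL listeners
sasl.enabled.mechanisms=SCRAM-SHA-512
```

This fragment does not replace the JAAS configuration file passed through java.security.auth.login.config.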