Using AMQ Streams on OpenShift

For use with AMQ Streams 1.4 on OpenShift Container Platform

Abstract

Chapter 1. Overview of AMQ Streams

AMQ Streams simplifies the process of running Apache Kafka in an OpenShift cluster.

1.1. Kafka capabilities

The underlying data stream-processing capabilities and component architecture of Kafka can deliver:

- Data sharing between microservices and other applications with extremely high throughput and low latency

- Message ordering guarantees

- Message rewind/replay from data storage to reconstruct an application state

- Message compaction to remove old records when using a key-value log

- Horizontal scalability in a cluster configuration

- Replication of data to control fault tolerance

- Retention of high volumes of data for immediate access

1.2. Kafka use cases

Kafka’s capabilities make it suitable for:

- Event-driven architectures

- Event sourcing to capture changes to the state of an application as a log of events

- Message brokering

- Website activity tracking

- Operational monitoring through metrics

- Log collection and aggregation

- Commit logs for distributed systems

- Stream processing so that applications can respond to data in real time

1.3. How AMQ Streams supports Kafka

AMQ Streams provides container images and Operators for running Kafka on OpenShift. AMQ Streams Operators are fundamental to the running of AMQ Streams. The Operators provided with AMQ Streams are purpose-built with specialist operational knowledge to effectively manage Kafka.

Operators simplify the process of:

- Deploying and running Kafka clusters

- Deploying and running Kafka components

- Configuring access to Kafka

- Securing access to Kafka

- Upgrading Kafka

- Managing brokers

- Creating and managing topics

- Creating and managing users

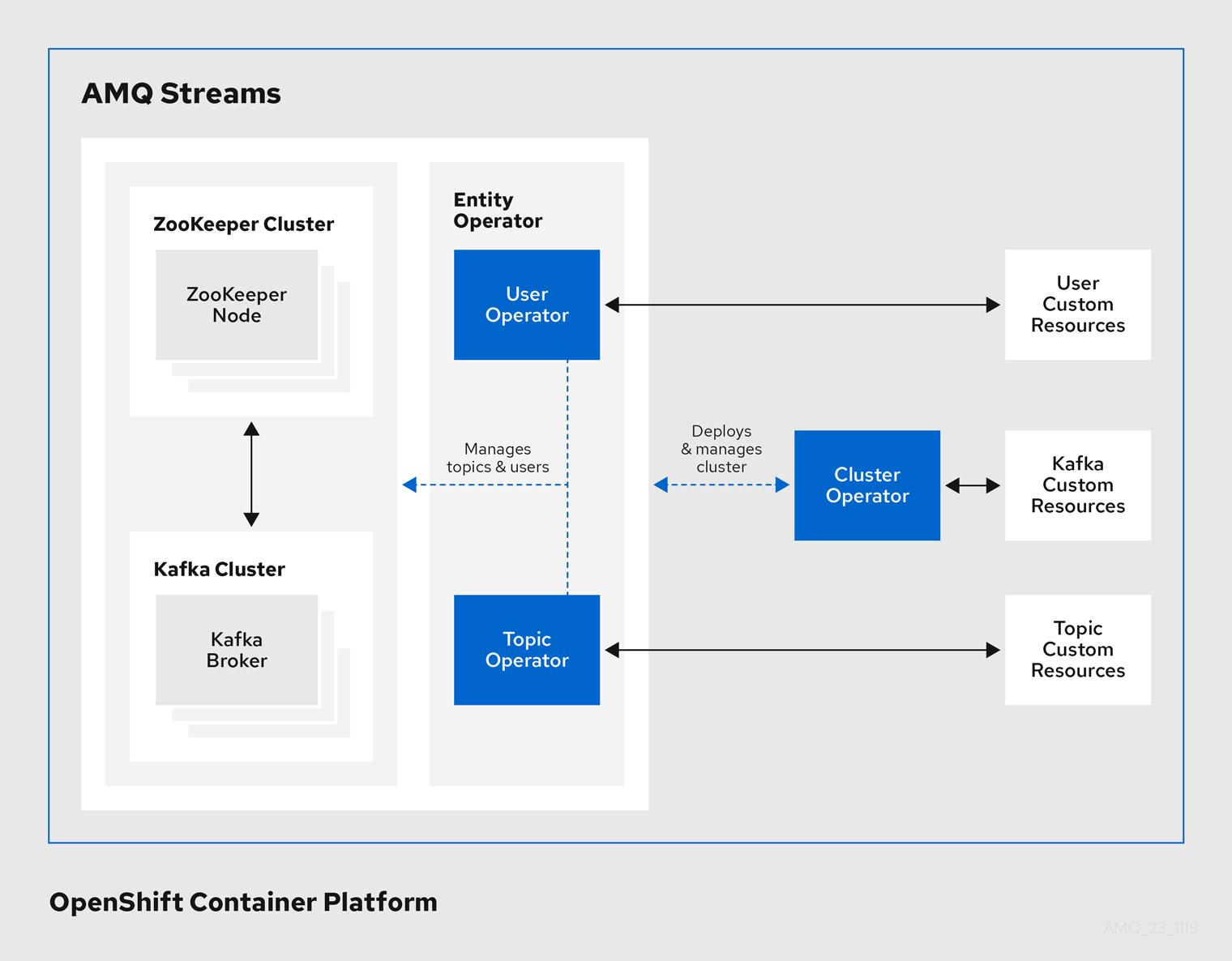

1.4. Operators

AMQ Streams provides Operators for managing a Kafka cluster running within an OpenShift cluster.

- Cluster Operator: Deploys and manages Apache Kafka clusters, Kafka Connect, Kafka MirrorMaker, Kafka Bridge, Kafka Exporter, and the Entity Operator

- Entity Operator: Comprises the Topic Operator and User Operator

- Topic Operator: Manages Kafka topics

- User Operator: Manages Kafka users

The Cluster Operator can deploy the Topic Operator and User Operator as part of an Entity Operator configuration at the same time as a Kafka cluster.

Operators within the AMQ Streams architecture

1.5. AMQ Streams installation methods

There are two ways to install AMQ Streams on OpenShift.

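| Installation method | Description | Supported versions |
|---|---|---|
| Installation artifacts (YAML files) | Download the amq-streams-x.y.z-ocp-install-examples.zip file from the AMQ Streams download site, then deploy the YAML installation artifacts to your OpenShift cluster using oc. | OpenShift 3.11 and later |
| OperatorHub | Use the AMQ Streams Operator in the OperatorHub to deploy the Cluster Operator to a single namespace or all namespaces. | OpenShift 4.x only |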

For the greatest flexibility, choose the installation artifacts method. Choose the OperatorHub method if you want to install AMQ Streams to OpenShift 4 in a standard configuration using the OpenShift 4 web console. The OperatorHub also allows you to take advantage of automatic updates.

In the case of both methods, the Cluster Operator is deployed to your OpenShift cluster, ready for you to deploy the other components of AMQ Streams, starting with a Kafka cluster, using the YAML example files provided.

AMQ Streams installation artifacts

The AMQ Streams installation artifacts contain various YAML files that can be deployed to OpenShift, using oc, to create custom resources, including:

- Deployments

- Custom resource definitions (CRDs)

- Roles and role bindings

- Service accounts

YAML installation files are provided for the Cluster Operator, Topic Operator, User Operator, and the Strimzi Admin role.

OperatorHub

In OpenShift 4, the Operator Lifecycle Manager (OLM) helps cluster administrators to install, update, and manage the lifecycle of all Operators and their associated services running across their clusters. The OLM is part of the Operator Framework, an open source toolkit designed to manage Kubernetes-native applications (Operators) in an effective, automated, and scalable way.

The OperatorHub is part of the OpenShift 4 web console. Cluster administrators can use it to discover, install, and upgrade Operators. Operators can be pulled from the OperatorHub, installed on the OpenShift cluster to a single (project) namespace or all (projects) namespaces, and managed by the OLM. Engineering teams can then independently manage the software in development, test, and production environments using the OLM.

The OperatorHub is not available in versions of OpenShift earlier than version 4.

AMQ Streams Operator

The AMQ Streams Operator is available to install from the OperatorHub. Once installed, the AMQ Streams Operator deploys the Cluster Operator to your OpenShift cluster, along with the necessary CRDs and role-based access control (RBAC) resources.

Additional resources

Installing AMQ Streams using the installation artifacts:

Installing AMQ Streams from the OperatorHub:

- Section 2.3.6, “Deploying the Cluster Operator from the OperatorHub”

- Operators guide in the OpenShift documentation.

1.6. Document Conventions

Replaceables

In this document, replaceable text is styled in monospace and italics.

For example, in the following code, you will want to replace my-namespace with the name of your namespace:

sed -i 's/namespace: .*/namespace: my-namespace/' install/cluster-operator/*RoleBinding*.yaml

Chapter 2. Getting started with AMQ Streams

AMQ Streams is designed to work on all types of OpenShift cluster regardless of distribution, from public and private clouds to local deployments intended for development. AMQ Streams supports a few features which are specific to OpenShift, where such integration benefits OpenShift users and cannot be implemented equivalently using standard Kubernetes.

This guide assumes that an OpenShift cluster is available and the oc command-line tool is installed and configured to connect to the running cluster.

AMQ Streams is based on Strimzi 0.17.x. This chapter describes the procedures to deploy AMQ Streams on OpenShift 3.11 and later.

To run the commands in this guide, your cluster user must have the rights to manage role-based access control (RBAC) and CRDs.

2.1. Installing AMQ Streams and deploying components

To install AMQ Streams, download and extract the amq-streams-x.y.z-ocp-install-examples.zip file from the AMQ Streams download site.

The folder contains several YAML files to help you deploy the components of AMQ Streams to OpenShift, perform common operations, and configure your Kafka cluster. The YAML files are referenced throughout this documentation.

The remainder of this chapter provides an overview of each component and instructions for deploying the components to OpenShift using the YAML files provided.

Although container images for AMQ Streams are available in the Red Hat Container Catalog, we recommend that you use the YAML files provided instead.

2.2. Custom resources

Custom resources allow you to configure and introduce changes to a default AMQ Streams deployment. In order to use custom resources, custom resource definitions must first be defined.

Custom resource definitions (CRDs) extend the Kubernetes API, providing definitions to add custom resources to an OpenShift cluster. Custom resources are created as instances of the APIs added by CRDs.

In AMQ Streams, CRDs introduce custom resources specific to AMQ Streams to an OpenShift cluster, such as Kafka, Kafka Connect, Kafka MirrorMaker, and users and topics custom resources. CRDs provide configuration instructions, defining the schemas used to instantiate and manage the AMQ Streams-specific resources. CRDs also allow AMQ Streams resources to benefit from native OpenShift features like CLI accessibility and configuration validation.

CRDs require a one-time installation in a cluster. Depending on the cluster setup, installation typically requires cluster admin privileges.

Access to manage custom resources is limited to AMQ Streams administrators.

CRDs and custom resources are defined as YAML files.

A CRD defines a new kind of resource, such as kind:Kafka, within an OpenShift cluster.

The Kubernetes API server allows custom resources to be created based on the kind and understands from the CRD how to validate and store the custom resource when it is added to the OpenShift cluster.

When CRDs are deleted, custom resources of that type are also deleted. Additionally, the resources created by the custom resource, such as pods and statefulsets, are also deleted.

Additional resources

2.2.1. AMQ Streams custom resource example

Each AMQ Streams-specific custom resource conforms to the schema defined by the CRD for the resource’s kind.

To understand the relationship between a CRD and a custom resource, let’s look at a sample of the CRD for a Kafka topic.

Kafka topic CRD

1. The metadata for the topic CRD, its name and a label to identify the CRD.

2. The specification for this CRD, including the group (domain) name, the plural name and the supported schema version, which are used in the URL to access the API of the topic. The other names are used to identify instance resources in the CLI. For example, oc get kafkatopic my-topic or oc get kafkatopics.

3. The shortname can be used in CLI commands. For example, oc get kt can be used as an abbreviation instead of oc get kafkatopic.

4. The information presented when using a get command on the custom resource.

5. The current status of the CRD as described in the schema reference for the resource.

6. openAPIV3Schema validation provides validation for the creation of topic custom resources. For example, a topic requires at least one partition and one replica.

You can identify the CRD YAML files supplied with the AMQ Streams installation files, because the file names contain an index number followed by ‘Crd’.

Here is a corresponding example of a KafkaTopic custom resource.

Kafka topic custom resource

1. The kind and apiVersion identify the CRD of which the custom resource is an instance.

2. A label, applicable only to KafkaTopic and KafkaUser resources, that defines the name of the Kafka cluster (which is the same as the name of the Kafka resource) to which a topic or user belongs. The name is used by the Topic Operator and User Operator to identify the Kafka cluster when creating a topic or user.

3. The spec shows the number of partitions and replicas for the topic as well as the configuration parameters for the topic itself. In this example, the retention period for a message to remain in the topic and the segment file size for the log are specified.

4. Status conditions for the KafkaTopic resource. The type condition changed to Ready at the lastTransitionTime.

Custom resources can be applied to a cluster through the platform CLI. When the custom resource is created, it uses the same validation as the built-in resources of the Kubernetes API.

After a KafkaTopic custom resource is created, the Topic Operator is notified and corresponding Kafka topics are created in AMQ Streams.
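As a minimal sketch of what such a resource might look like, the following script writes a KafkaTopic manifest to a temporary file and applies it only when oc is available. The topic name my-topic, the cluster name my-cluster, the namespace my-namespace, and the retention and segment values are illustrative assumptions, not values taken from your deployment.

```shell
#!/bin/sh
# Write an illustrative KafkaTopic manifest to a temporary file.
# All names and values below are assumptions for the sake of the example.
manifest="$(mktemp)"
cat > "$manifest" <<'EOF'
apiVersion: kafka.strimzi.io/v1beta1
kind: KafkaTopic
metadata:
  name: my-topic
  labels:
    # Must match the name of the Kafka resource the topic belongs to
    strimzi.io/cluster: my-cluster
spec:
  partitions: 1
  replicas: 1
  config:
    retention.ms: 7200000
    segment.bytes: 1073741824
EOF

# Apply the manifest if the oc client is installed; otherwise just print it.
if command -v oc >/dev/null 2>&1; then
  oc apply -f "$manifest" -n my-namespace
else
  cat "$manifest"
fi
```

The status section is not part of the manifest because it is written by the operator, not by the user.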

2.2.2. AMQ Streams custom resource status

The status property of an AMQ Streams custom resource publishes information about the resource to users and tools that need it.

Several resources have a status property, as described in the following table.

| AMQ Streams resource | Schema reference | Publishes status information on… |
|---|---|---|
| Kafka | | The Kafka cluster. |
| KafkaConnect | | The Kafka Connect cluster, if deployed. |
| KafkaConnectS2I | | The Kafka Connect cluster with Source-to-Image support, if deployed. |
| KafkaConnector | | KafkaConnector resources, if deployed. |
| KafkaMirrorMaker | | The Kafka MirrorMaker tool, if deployed. |
| KafkaTopic | | Kafka topics in your Kafka cluster. |
| KafkaUser | | Kafka users in your Kafka cluster. |
| KafkaBridge | | The AMQ Streams Kafka Bridge, if deployed. |

The status property of a resource provides information on the resource’s:

- Current state, in the status.conditions property

- Last observed generation, in the status.observedGeneration property

The status property also provides resource-specific information. For example:

- KafkaConnectStatus provides the REST API endpoint for Kafka Connect connectors.

- KafkaUserStatus provides the user name of the Kafka user and the Secret in which their credentials are stored.

- KafkaBridgeStatus provides the HTTP address at which external client applications can access the Bridge service.

A resource’s current state is useful for tracking progress related to the resource achieving its desired state, as defined by the spec property. The status conditions provide the time and reason the state of the resource changed and details of events preventing or delaying the operator from realizing the resource’s desired state.

The last observed generation is the generation of the resource that was last reconciled by the Cluster Operator. If the value of observedGeneration is different from the value of metadata.generation, the operator has not yet processed the latest update to the resource. If these values are the same, the status information reflects the most recent changes to the resource.
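The comparison described above can be sketched as a small shell helper. The function name is_reconciled, the resource name my-cluster, and the printed messages are assumptions for illustration; the jsonpath queries read the two fields named in the text.

```shell
#!/bin/sh
# Succeeds only when the observed generation is set and equals the
# resource's current metadata.generation.
is_reconciled() {
  generation="$1"
  observed="$2"
  [ -n "$observed" ] && [ "$generation" = "$observed" ]
}

# Query a Kafka resource named my-cluster (hypothetical) if oc is installed.
if command -v oc >/dev/null 2>&1; then
  gen="$(oc get kafka my-cluster -o jsonpath='{.metadata.generation}')"
  obs="$(oc get kafka my-cluster -o jsonpath='{.status.observedGeneration}')"
  if is_reconciled "$gen" "$obs"; then
    echo "status reflects the latest change"
  else
    echo "latest change not yet processed"
  fi
fi
```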

AMQ Streams creates and maintains the status of custom resources, periodically evaluating the current state of the custom resource and updating its status accordingly. When performing an update on a custom resource using oc edit, for example, its status is not editable. Moreover, changing the status would not affect the configuration of the Kafka cluster.

Here we see the status property specified for a Kafka custom resource.

Kafka custom resource with status

1. Status conditions describe criteria related to the status that cannot be deduced from the existing resource information, or are specific to the instance of a resource.

2. The Ready condition indicates whether the Cluster Operator currently considers the Kafka cluster able to handle traffic.

3. The observedGeneration indicates the generation of the Kafka custom resource that was last reconciled by the Cluster Operator.

4. The listeners describe the current Kafka bootstrap addresses by type.

Important: The address in the custom resource status for external listeners with type nodeport is currently not supported.

The Kafka bootstrap addresses listed in the status do not signify that those endpoints or the Kafka cluster is in a ready state.

Accessing status information

You can access status information for a resource from the command line. For more information, see Section 16.1, “Checking the status of a custom resource”.

2.3. Cluster Operator

The Cluster Operator is responsible for deploying and managing Apache Kafka clusters within an OpenShift cluster.

2.3.1. Cluster Operator

AMQ Streams uses the Cluster Operator to deploy and manage clusters for:

- Kafka (including ZooKeeper, Entity Operator and Kafka Exporter)

- Kafka Connect

- Kafka MirrorMaker

- Kafka Bridge

Custom resources are used to deploy the clusters.

For example, to deploy a Kafka cluster:

- A Kafka resource with the cluster configuration is created within the OpenShift cluster.

- The Cluster Operator deploys a corresponding Kafka cluster, based on what is declared in the Kafka resource.

The Cluster Operator can also deploy (through configuration of the Kafka resource):

- A Topic Operator to provide operator-style topic management through KafkaTopic custom resources

- A User Operator to provide operator-style user management through KafkaUser custom resources

The Topic Operator and User Operator function within the Entity Operator on deployment.

Example architecture for the Cluster Operator

2.3.2. Watch options for a Cluster Operator deployment

When the Cluster Operator is running, it starts to watch for updates of Kafka resources.

Depending on the deployment, the Cluster Operator can watch Kafka resources from:

- A single namespace

- Multiple namespaces

- All namespaces

AMQ Streams provides example YAML files to make the deployment process easier.

The Cluster Operator watches for changes to the following resources:

- Kafka for the Kafka cluster.

- KafkaConnect for the Kafka Connect cluster.

- KafkaConnectS2I for the Kafka Connect cluster with Source-to-Image support.

- KafkaConnector for creating and managing connectors in a Kafka Connect cluster.

- KafkaMirrorMaker for the Kafka MirrorMaker instance.

- KafkaBridge for the Kafka Bridge instance.

When one of these resources is created in the OpenShift cluster, the operator gets the cluster description from the resource and starts creating a new cluster for the resource by creating the necessary OpenShift resources, such as StatefulSets, Services and ConfigMaps.

Each time a Kafka resource is updated, the operator performs corresponding updates on the OpenShift resources that make up the cluster for the resource.

Resources are either patched, or deleted and then recreated, so that the cluster for the resource reflects the desired state. This operation might cause a rolling update, which might lead to service disruption.

When a resource is deleted, the operator undeploys the cluster and deletes all related OpenShift resources.

2.3.3. Deploying the Cluster Operator to watch a single namespace

Prerequisites

- This procedure requires use of an OpenShift user account which is able to create CustomResourceDefinitions, ClusterRoles and ClusterRoleBindings. Use of Role-Based Access Control (RBAC) in the OpenShift cluster usually means that permission to create, edit, and delete these resources is limited to OpenShift cluster administrators, such as system:admin.

- Modify the installation files according to the namespace the Cluster Operator is going to be installed in.

On Linux, use:

sed -i 's/namespace: .*/namespace: my-namespace/' install/cluster-operator/*RoleBinding*.yaml

On macOS, use:

sed -i '' 's/namespace: .*/namespace: my-namespace/' install/cluster-operator/*RoleBinding*.yaml

Procedure

Deploy the Cluster Operator:

oc apply -f install/cluster-operator -n my-namespace
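To see what the namespace substitution does before touching the real installation files, it can be tried on a small sample. The sample content below, including the myproject placeholder, is an assumption for illustration and is much shorter than a real RoleBinding file. Writing the result to a new file instead of using -i avoids the difference between the Linux and macOS forms of the command.

```shell
#!/bin/sh
# Hypothetical excerpt of a RoleBinding file; real files contain more fields.
sample="$(mktemp)"
cat > "$sample" <<'EOF'
subjects:
  - kind: ServiceAccount
    name: strimzi-cluster-operator
    namespace: myproject
EOF

# Same substitution as in the prerequisites, written to a separate file
# so that the input is left unchanged.
patched="$(mktemp)"
sed 's/namespace: .*/namespace: my-namespace/' "$sample" > "$patched"

cat "$patched"
```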

2.3.4. Deploying the Cluster Operator to watch multiple namespaces

Prerequisites

- This procedure requires use of an OpenShift user account which is able to create CustomResourceDefinitions, ClusterRoles and ClusterRoleBindings. Use of Role-Based Access Control (RBAC) in the OpenShift cluster usually means that permission to create, edit, and delete these resources is limited to OpenShift cluster administrators, such as system:admin.

- Edit the installation files according to the namespace the Cluster Operator is going to be installed in.

On Linux, use:

sed -i 's/namespace: .*/namespace: my-namespace/' install/cluster-operator/*RoleBinding*.yaml

On macOS, use:

sed -i '' 's/namespace: .*/namespace: my-namespace/' install/cluster-operator/*RoleBinding*.yaml

Procedure

- Edit the file install/cluster-operator/050-Deployment-strimzi-cluster-operator.yaml and, in the environment variable STRIMZI_NAMESPACE, list all the namespaces where the Cluster Operator should watch for resources, separated by commas. For example: watched-namespace-1,watched-namespace-2,watched-namespace-3

- For all namespaces which should be watched by the Cluster Operator (watched-namespace-1, watched-namespace-2, watched-namespace-3 in the above example), install the RoleBindings. Replace watched-namespace with the namespace used in the previous step.

This can be done using oc apply:

oc apply -f install/cluster-operator/020-RoleBinding-strimzi-cluster-operator.yaml -n watched-namespace

oc apply -f install/cluster-operator/031-RoleBinding-strimzi-cluster-operator-entity-operator-delegation.yaml -n watched-namespace

oc apply -f install/cluster-operator/032-RoleBinding-strimzi-cluster-operator-topic-operator-delegation.yaml -n watched-namespace

- Deploy the Cluster Operator.

This can be done using oc apply:

oc apply -f install/cluster-operator -n my-namespace
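Because the three RoleBinding files have to be applied once per watched namespace, the step can be scripted. The following is a sketch only: the function names and the WATCHED variable are hypothetical, and the namespace list is assumed to match the value set in STRIMZI_NAMESPACE. The script prints the oc commands rather than running them, so they can be reviewed first.

```shell
#!/bin/sh
# Hypothetical list of namespaces; keep it identical to STRIMZI_NAMESPACE
# in 050-Deployment-strimzi-cluster-operator.yaml.
WATCHED="watched-namespace-1,watched-namespace-2,watched-namespace-3"

# Print the oc commands that install the three RoleBindings in one namespace.
rolebinding_commands() {
  ns="$1"
  for f in \
    020-RoleBinding-strimzi-cluster-operator.yaml \
    031-RoleBinding-strimzi-cluster-operator-entity-operator-delegation.yaml \
    032-RoleBinding-strimzi-cluster-operator-topic-operator-delegation.yaml
  do
    echo "oc apply -f install/cluster-operator/$f -n $ns"
  done
}

# Split the comma-separated list and print the commands for every namespace.
all_commands() {
  echo "$1" | tr ',' '\n' | while read -r ns; do
    rolebinding_commands "$ns"
  done
}

all_commands "$WATCHED"
```

To run the commands instead of printing them, the output can be piped to a shell, for example all_commands "$WATCHED" | sh.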

2.3.5. Deploying the Cluster Operator to watch all namespaces

You can configure the Cluster Operator to watch AMQ Streams resources across all namespaces in your OpenShift cluster. When running in this mode, the Cluster Operator automatically manages clusters in any new namespaces that are created.

Prerequisites

- This procedure requires use of an OpenShift user account which is able to create CustomResourceDefinitions, ClusterRoles and ClusterRoleBindings. Use of Role-Based Access Control (RBAC) in the OpenShift cluster usually means that permission to create, edit, and delete these resources is limited to OpenShift cluster administrators, such as system:admin.

- Your OpenShift cluster is running.

Procedure

- Configure the Cluster Operator to watch all namespaces:

  - Edit the 050-Deployment-strimzi-cluster-operator.yaml file.

  - Set the value of the STRIMZI_NAMESPACE environment variable to *.

- Create ClusterRoleBindings that grant cluster-wide access to all namespaces to the Cluster Operator.

Use the oc create clusterrolebinding command:

oc create clusterrolebinding strimzi-cluster-operator-namespaced --clusterrole=strimzi-cluster-operator-namespaced --serviceaccount my-namespace:strimzi-cluster-operator

oc create clusterrolebinding strimzi-cluster-operator-entity-operator-delegation --clusterrole=strimzi-entity-operator --serviceaccount my-namespace:strimzi-cluster-operator

oc create clusterrolebinding strimzi-cluster-operator-topic-operator-delegation --clusterrole=strimzi-topic-operator --serviceaccount my-namespace:strimzi-cluster-operator

Replace my-namespace with the namespace in which you want to install the Cluster Operator.

- Deploy the Cluster Operator to your OpenShift cluster.

Use the oc apply command:

oc apply -f install/cluster-operator -n my-namespace

2.3.6. Deploying the Cluster Operator from the OperatorHub

You can deploy the Cluster Operator to your OpenShift cluster by installing the AMQ Streams Operator from the OperatorHub. The OperatorHub is available in OpenShift 4 only.

Prerequisites

- The Red Hat Operators OperatorSource is enabled in your OpenShift cluster. If you can see Red Hat Operators in the OperatorHub, the correct OperatorSource is enabled. For more information, see the Operators guide.

- Installation requires a user with sufficient privileges to install Operators from the OperatorHub.

Procedure

- In the OpenShift 4 web console, click Operators > OperatorHub.

- Search or browse for the AMQ Streams Operator, in the Streaming & Messaging category.

- Click the AMQ Streams tile and then, in the sidebar on the right, click Install.

- On the Create Operator Subscription screen, choose from the following installation and update options:

  - Installation Mode: Choose to install the AMQ Streams Operator to all (projects) namespaces in the cluster (the default option) or a specific (project) namespace. It is good practice to use namespaces to separate functions. We recommend that you install the Operator to its own namespace, separate from the namespace that will contain the Kafka cluster and other AMQ Streams components.

  - Approval Strategy: By default, the AMQ Streams Operator is automatically upgraded to the latest AMQ Streams version by the Operator Lifecycle Manager (OLM). Optionally, select Manual if you want to manually approve future upgrades. For more information, see the Operators guide in the OpenShift documentation.

- Click Subscribe; the AMQ Streams Operator is installed to your OpenShift cluster.

  The AMQ Streams Operator deploys the Cluster Operator, CRDs, and role-based access control (RBAC) resources to the selected namespace, or to all namespaces.

- On the Installed Operators screen, check the progress of the installation. The AMQ Streams Operator is ready to use when its status changes to InstallSucceeded.

Next, you can deploy the other components of AMQ Streams, starting with a Kafka cluster, using the YAML example files.

2.4. Kafka cluster

You can use AMQ Streams to deploy an ephemeral or persistent Kafka cluster to OpenShift. When installing Kafka, AMQ Streams also installs a ZooKeeper cluster and adds the necessary configuration to connect Kafka with ZooKeeper.

You can also use it to deploy Kafka Exporter.

- Ephemeral cluster: In general, an ephemeral (that is, temporary) Kafka cluster is suitable for development and testing purposes, not for production. This deployment uses emptyDir volumes for storing broker information (for ZooKeeper) and topics or partitions (for Kafka). Using an emptyDir volume means that its content is strictly related to the pod life cycle and is deleted when the pod goes down.

- Persistent cluster: A persistent Kafka cluster uses PersistentVolumes to store ZooKeeper and Kafka data. The PersistentVolume is acquired using a PersistentVolumeClaim to make it independent of the actual type of the PersistentVolume. For example, it can use Amazon EBS volumes in Amazon AWS deployments without any changes in the YAML files. The PersistentVolumeClaim can use a StorageClass to trigger automatic volume provisioning.

AMQ Streams includes several examples for deploying a Kafka cluster.

- kafka-persistent.yaml deploys a persistent cluster with three ZooKeeper and three Kafka nodes.

- kafka-jbod.yaml deploys a persistent cluster with three ZooKeeper and three Kafka nodes (each using multiple persistent volumes).

- kafka-persistent-single.yaml deploys a persistent cluster with a single ZooKeeper node and a single Kafka node.

- kafka-ephemeral.yaml deploys an ephemeral cluster with three ZooKeeper and three Kafka nodes.

- kafka-ephemeral-single.yaml deploys an ephemeral cluster with three ZooKeeper nodes and a single Kafka node.

The example clusters are named my-cluster by default. The cluster name is defined by the name of the resource and cannot be changed after the cluster has been deployed. To change the cluster name before you deploy the cluster, edit the Kafka.metadata.name property of the resource in the relevant YAML file.

apiVersion: kafka.strimzi.io/v1beta1
kind: Kafka
metadata:
  name: my-cluster
# ...

2.4.1. Deploying the Kafka cluster

You can deploy an ephemeral or persistent Kafka cluster to OpenShift on the command line.

Prerequisites

- The Cluster Operator is deployed.

Procedure

- If you plan to use the cluster for development or testing purposes, you can create and deploy an ephemeral cluster using oc apply.

oc apply -f examples/kafka/kafka-ephemeral.yaml

- If you plan to use the cluster in production, create and deploy a persistent cluster using oc apply.

oc apply -f examples/kafka/kafka-persistent.yaml
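Since the cluster name cannot be changed after deployment, one possible workflow is to rename the cluster in a copy of the example file, apply the copy, and then wait for the Ready condition. This is a sketch under assumptions: the name my-kafka, the namespace my-namespace, and the 300-second timeout are hypothetical, and the inline sample is used only when the example file is not present in the current directory.

```shell
#!/bin/sh
# Hypothetical cluster name chosen before the first deployment.
CLUSTER_NAME="my-kafka"
src="examples/kafka/kafka-ephemeral.yaml"
out="$(mktemp)"

# Fall back to a minimal inline sample when the example file is absent,
# so the substitution can be tried without the installation archive.
if [ ! -f "$src" ]; then
  src="$(mktemp)"
  cat > "$src" <<'EOF'
apiVersion: kafka.strimzi.io/v1beta1
kind: Kafka
metadata:
  name: my-cluster
# ...
EOF
fi

# Replace only the metadata.name line and leave the original file untouched.
sed "s/^  name: my-cluster\$/  name: $CLUSTER_NAME/" "$src" > "$out"

# Deploy and wait for the Ready condition if the oc client is installed.
if command -v oc >/dev/null 2>&1; then
  oc apply -f "$out" -n my-namespace
  oc wait kafka/"$CLUSTER_NAME" --for=condition=Ready --timeout=300s -n my-namespace
fi
```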

Additional resources

- For more information on deploying the Cluster Operator, see Section 2.3, “Cluster Operator”.

- For more information on the different configuration options supported by the Kafka resource, see Section 3.1, “Kafka cluster configuration”.

2.5. Kafka Connect

Kafka Connect is a tool for streaming data between Apache Kafka and external systems. It provides a framework for moving large amounts of data into and out of your Kafka cluster while maintaining scalability and reliability. Kafka Connect is typically used to integrate Kafka with external databases and storage and messaging systems.

In Kafka Connect, a source connector is a runtime entity that fetches data from an external system and feeds it to Kafka as messages. A sink connector is a runtime entity that fetches messages from Kafka topics and feeds them to an external system. The workload of connectors is divided into tasks. Tasks are distributed among nodes (also called workers), which form a Connect cluster. This allows the message flow to be highly scalable and reliable.

Each connector is an instance of a particular connector class that knows how to communicate with the relevant external system in terms of messages. Connectors are available for many external systems, or you can develop your own.

The term connector is used interchangeably to mean a connector instance running within a Kafka Connect cluster, or a connector class. This guide uses the term connector when the meaning is clear from the context.

AMQ Streams allows you to:

- Create a Kafka Connect image containing the connectors you want

- Deploy and manage a Kafka Connect cluster running within OpenShift using a KafkaConnect resource

- Run connectors within your Kafka Connect cluster, optionally managed using KafkaConnector resources

Kafka Connect includes the following built-in connectors for moving file-based data into and out of your Kafka cluster.

| File Connector | Description |
|---|---|
| FileStreamSourceConnector | Transfers data to your Kafka cluster from a file (the source). |
| FileStreamSinkConnector | Transfers data from your Kafka cluster to a file (the sink). |

To use other connector classes, you need to prepare connector images by following one of these procedures:

The Cluster Operator can use images that you create to deploy a Kafka Connect cluster to your OpenShift cluster.

A Kafka Connect cluster is implemented as a Deployment with a configurable number of workers.

You can create and manage connectors using KafkaConnector resources or manually using the Kafka Connect REST API, which is available on port 8083 as the <connect-cluster-name>-connect-api service. The operations supported by the REST API are described in the Apache Kafka documentation.
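The service name and port given above determine the base URL of the REST API inside the cluster. As a small sketch, the helper below builds that URL; the function name connect_api_url and the cluster name my-connect-cluster are assumptions, and the curl call is left as a comment because it only works from a pod that can reach the service.

```shell
#!/bin/sh
# Build the in-cluster base URL of the Kafka Connect REST API from the
# name of the Kafka Connect cluster.
connect_api_url() {
  echo "http://$1-connect-api:8083"
}

url="$(connect_api_url my-connect-cluster)"

# The /connectors endpoint lists the connectors that are currently defined.
echo "$url/connectors"

# From a pod inside the OpenShift cluster, the list could be fetched with:
# curl -s "$url/connectors"
```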

2.5.1. Deploying Kafka Connect to your cluster

You can deploy a Kafka Connect cluster to your OpenShift cluster by using the Cluster Operator.

Prerequisites

Procedure

Use the oc apply command to create a KafkaConnect resource based on the kafka-connect.yaml file:

oc apply -f examples/kafka-connect/kafka-connect.yaml

Additional resources

2.5.2. Extending Kafka Connect with connector plug-ins

The AMQ Streams container images for Kafka Connect include the two built-in file connectors: FileStreamSourceConnector and FileStreamSinkConnector. You can add your own connectors by:

- Creating a container image from the Kafka Connect base image, either manually or, for example, as part of your continuous integration (CI) process.

- Creating a container image using OpenShift builds and Source-to-Image (S2I) - available only on OpenShift.

2.5.2.1. Creating a Docker image from the Kafka Connect base image

You can use the Kafka container image on Red Hat Container Catalog as a base image for creating your own custom image with additional connector plug-ins.

The following procedure explains how to create your custom image and add your connector plug-ins to the /opt/kafka/plugins directory. At startup, the AMQ Streams version of Kafka Connect loads any third-party connector plug-ins contained in the /opt/kafka/plugins directory.

Prerequisites

Procedure

Create a new Dockerfile using registry.redhat.io/amq7/amq-streams-kafka-24-rhel7:1.4.0 as the base image:

    FROM registry.redhat.io/amq7/amq-streams-kafka-24-rhel7:1.4.0
    USER root:root
    COPY ./my-plugins/ /opt/kafka/plugins/
    USER 1001

- Build the container image.

- Push your custom image to your container registry.

Point to the new container image.

You can either:

- Edit the KafkaConnect.spec.image property of the KafkaConnect custom resource. If set, this property overrides the STRIMZI_KAFKA_CONNECT_IMAGES variable in the Cluster Operator.

or

- In the install/cluster-operator/050-Deployment-strimzi-cluster-operator.yaml file, edit the STRIMZI_KAFKA_CONNECT_IMAGES variable to point to the new container image, and then reinstall the Cluster Operator.
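As a minimal sketch of the first option, the fragment below sets the image property on a KafkaConnect resource. The resource name my-connect-cluster and the image reference are hypothetical placeholders, not values taken from this guide, and the apiVersion is assumed to be the one used by this release.

```yaml
apiVersion: kafka.strimzi.io/v1beta1   # assumed API version for this release
kind: KafkaConnect
metadata:
  name: my-connect-cluster             # hypothetical cluster name
spec:
  # ...
  # Hypothetical image reference: replace with the image you pushed
  # to your own container registry in the previous step.
  image: my-registry.example.com/my-org/my-connect-with-plugins:latest
  # ...
```

Setting the property on the resource affects only that Kafka Connect cluster, whereas editing the Cluster Operator variable changes the default for every cluster the operator manages.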

Additional resources

- For more information on the KafkaConnect.spec.image property, see Section 3.2.11, “Container images”.

- For more information on the STRIMZI_KAFKA_CONNECT_IMAGES variable, see Section 4.1.7, “Cluster Operator Configuration”.

2.5.2.2. Creating a container image using OpenShift builds and Source-to-Image

You can use OpenShift builds and the Source-to-Image (S2I) framework to create new container images. An OpenShift build takes a builder image with S2I support, together with source code and binaries provided by the user, and uses them to build a new container image. Once built, container images are stored in OpenShift’s local container image repository and are available for use in deployments.

A Kafka Connect builder image with S2I support is provided on the Red Hat Container Catalog as part of the registry.redhat.io/amq7/amq-streams-kafka-24-rhel7:1.4.0 image. This S2I image takes your binaries (with plug-ins and connectors) and stores them in the /tmp/kafka-plugins/s2i directory. It creates a new Kafka Connect image from this directory, which can then be used with the Kafka Connect deployment. When started using the enhanced image, Kafka Connect loads any third-party plug-ins from the /tmp/kafka-plugins/s2i directory.

Procedure

On the command line, use the oc apply command to create and deploy a Kafka Connect S2I cluster:

    oc apply -f examples/kafka-connect/kafka-connect-s2i.yaml

Create a directory with Kafka Connect plug-ins.

Use the oc start-build command to start a new build of the image using the prepared directory:

    oc start-build my-connect-cluster-connect --from-dir ./my-plugins/

Note: The name of the build is the same as the name of the deployed Kafka Connect cluster.

- Once the build has finished, the new image is used automatically by the Kafka Connect deployment.

2.5.3. Creating and managing connectors

When you have created a container image for your connector plug-in, you need to create a connector instance in your Kafka Connect cluster. You can then configure, monitor, and manage a running connector instance.

AMQ Streams provides two APIs for creating and managing connectors:

- KafkaConnector resources (referred to as KafkaConnectors)

- Kafka Connect REST API

Using the APIs, you can:

- Check the status of a connector instance

- Reconfigure a running connector

- Increase or decrease the number of tasks for a connector instance

- Restart failed tasks (not supported by KafkaConnector resource)

- Pause a connector instance

- Resume a previously paused connector instance

- Delete a connector instance

2.5.3.1. KafkaConnector resources

KafkaConnectors allow you to create and manage connector instances for Kafka Connect in an OpenShift-native way, so an HTTP client such as cURL is not required. Like other Kafka resources, you declare a connector’s desired state in a KafkaConnector YAML file that is deployed to your OpenShift cluster to create the connector instance.

You manage a running connector instance by updating its corresponding KafkaConnector, and then applying the updates. You remove a connector by deleting its corresponding KafkaConnector.

To ensure compatibility with earlier versions of AMQ Streams, KafkaConnectors are disabled by default. To enable them for a Kafka Connect cluster, you must use annotations on the KafkaConnect resource. For instructions, see Section 3.2.14, “Enabling KafkaConnector resources”.
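As a rough sketch of what enabling looks like, the fragment below adds the strimzi.io/use-connector-resources annotation to a KafkaConnect resource. The resource name and the omitted spec are illustrative assumptions; Section 3.2.14 remains the authoritative procedure.

```yaml
apiVersion: kafka.strimzi.io/v1beta1   # assumed API version for this release
kind: KafkaConnect
metadata:
  name: my-connect-cluster             # hypothetical cluster name
  annotations:
    # Tells the Cluster Operator to manage connectors in this
    # Kafka Connect cluster through KafkaConnector resources.
    strimzi.io/use-connector-resources: "true"
spec:
  # ...
```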

When KafkaConnectors are enabled, the Cluster Operator begins to watch for them. It updates the configurations of running connector instances to match the configurations defined in their KafkaConnectors.

AMQ Streams includes an example KafkaConnector, named examples/connector/source-connector.yaml. You can use this example to create and manage a FileStreamSourceConnector.

2.5.3.2. Availability of the Kafka Connect REST API

The Kafka Connect REST API is available on port 8083 as the <connect-cluster-name>-connect-api service.

If KafkaConnectors are enabled, manual changes made directly using the Kafka Connect REST API are reverted by the Cluster Operator.

2.5.4. Deploying a KafkaConnector resource to Kafka Connect

Deploy the example KafkaConnector to a Kafka Connect cluster. The example YAML will create a FileStreamSourceConnector to send each line of the license file to Kafka as a message in a topic named my-topic.

Prerequisites

- A Kafka Connect deployment in which KafkaConnectors are enabled

- A running Cluster Operator

Procedure

Edit the examples/connector/source-connector.yaml file:

- 1

- Enter a name for the KafkaConnector resource. This will be used as the name of the connector within Kafka Connect. You can choose any name that is valid for an OpenShift resource.

- 2

- Enter the name of the Kafka Connect cluster in which to create the connector.

- 3

- The name or alias of the connector class. This should be present in the image being used by the Kafka Connect cluster.

- 4

- The maximum number of tasks that the connector can create.

- 5

- Configuration settings for the connector. Available configuration options depend on the connector class.
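The callouts above could map onto a resource along the following lines. This is a sketch, not a copy of the shipped example file: the resource name, the file path, and the placement of the numbered comments are assumptions based on the callout descriptions and on the behavior described for this example (sending the license file to my-topic).

```yaml
apiVersion: kafka.strimzi.io/v1alpha1   # assumed API version for KafkaConnector in this release
kind: KafkaConnector
metadata:
  name: my-source-connector             # (1) hypothetical connector name
  labels:
    strimzi.io/cluster: my-connect-cluster   # (2) Kafka Connect cluster to create the connector in
spec:
  class: org.apache.kafka.connect.file.FileStreamSourceConnector   # (3) connector class
  tasksMax: 2                           # (4) maximum number of tasks
  config:                               # (5) connector-specific settings
    file: "/opt/kafka/LICENSE"          # assumed location of the license file in the image
    topic: my-topic
```

The strimzi.io/cluster label is what the oc get kctr --selector command later in this procedure filters on.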

Create the KafkaConnector in your OpenShift cluster:

    oc apply -f examples/connector/source-connector.yaml

Check that the resource was created:

    oc get kctr --selector strimzi.io/cluster=my-connect-cluster -o name

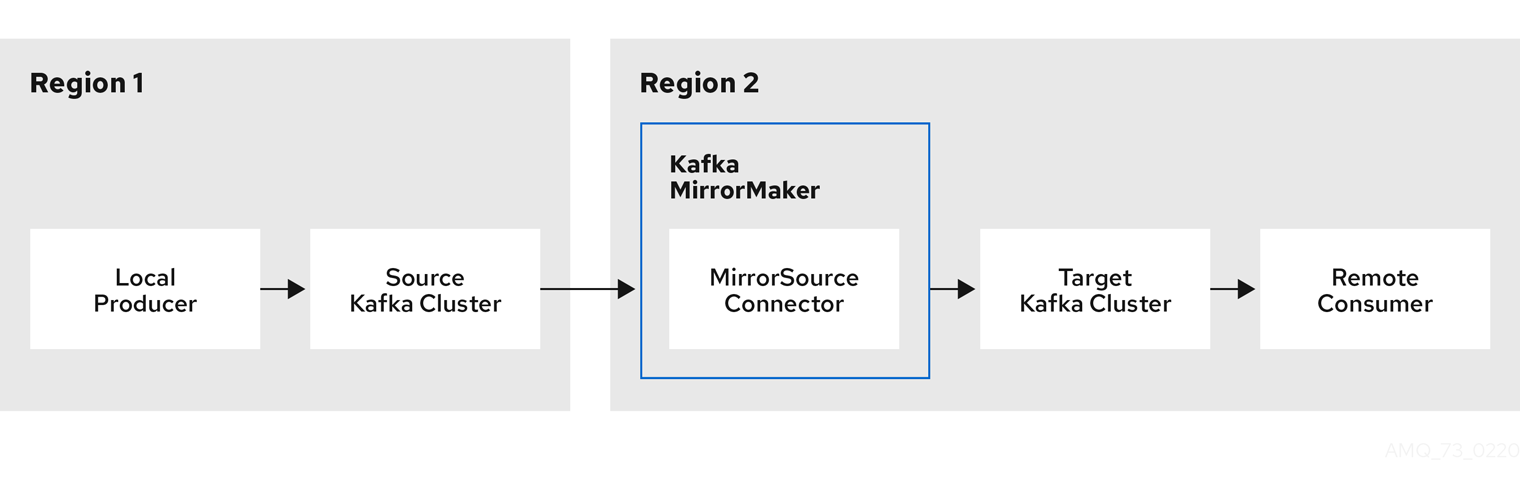

2.6. Kafka MirrorMaker

The Cluster Operator deploys one or more Kafka MirrorMaker replicas to replicate data between Kafka clusters. This process is called mirroring to avoid confusion with Kafka's concept of partition replication. MirrorMaker consumes messages from the source cluster and republishes those messages to the target cluster.

For information about example resources and the format for deploying Kafka MirrorMaker, see Kafka MirrorMaker configuration.
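For orientation, a KafkaMirrorMaker resource might look like the sketch below. The cluster names, bootstrap addresses, and consumer group ID are hypothetical, and the apiVersion is assumed for this release; consult the configuration section for the full set of options.

```yaml
apiVersion: kafka.strimzi.io/v1beta1   # assumed API version for this release
kind: KafkaMirrorMaker
metadata:
  name: my-mirror-maker                # hypothetical name
spec:
  version: 2.4.0
  replicas: 1                          # number of MirrorMaker replicas
  consumer:
    # Source cluster that messages are consumed from (hypothetical address)
    bootstrapServers: my-source-cluster-kafka-bootstrap:9092
    groupId: my-source-group-id
  producer:
    # Target cluster that messages are republished to (hypothetical address)
    bootstrapServers: my-target-cluster-kafka-bootstrap:9092
  whitelist: ".*"                      # regular expression selecting the topics to mirror
```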

2.6.1. Deploying Kafka MirrorMaker

Prerequisites

- Before deploying Kafka MirrorMaker, the Cluster Operator must be deployed.

Procedure

Create a Kafka MirrorMaker cluster from the command line:

    oc apply -f examples/kafka-mirror-maker/kafka-mirror-maker.yaml

Additional resources

- For more information about deploying the Cluster Operator, see Section 2.3, “Cluster Operator”.

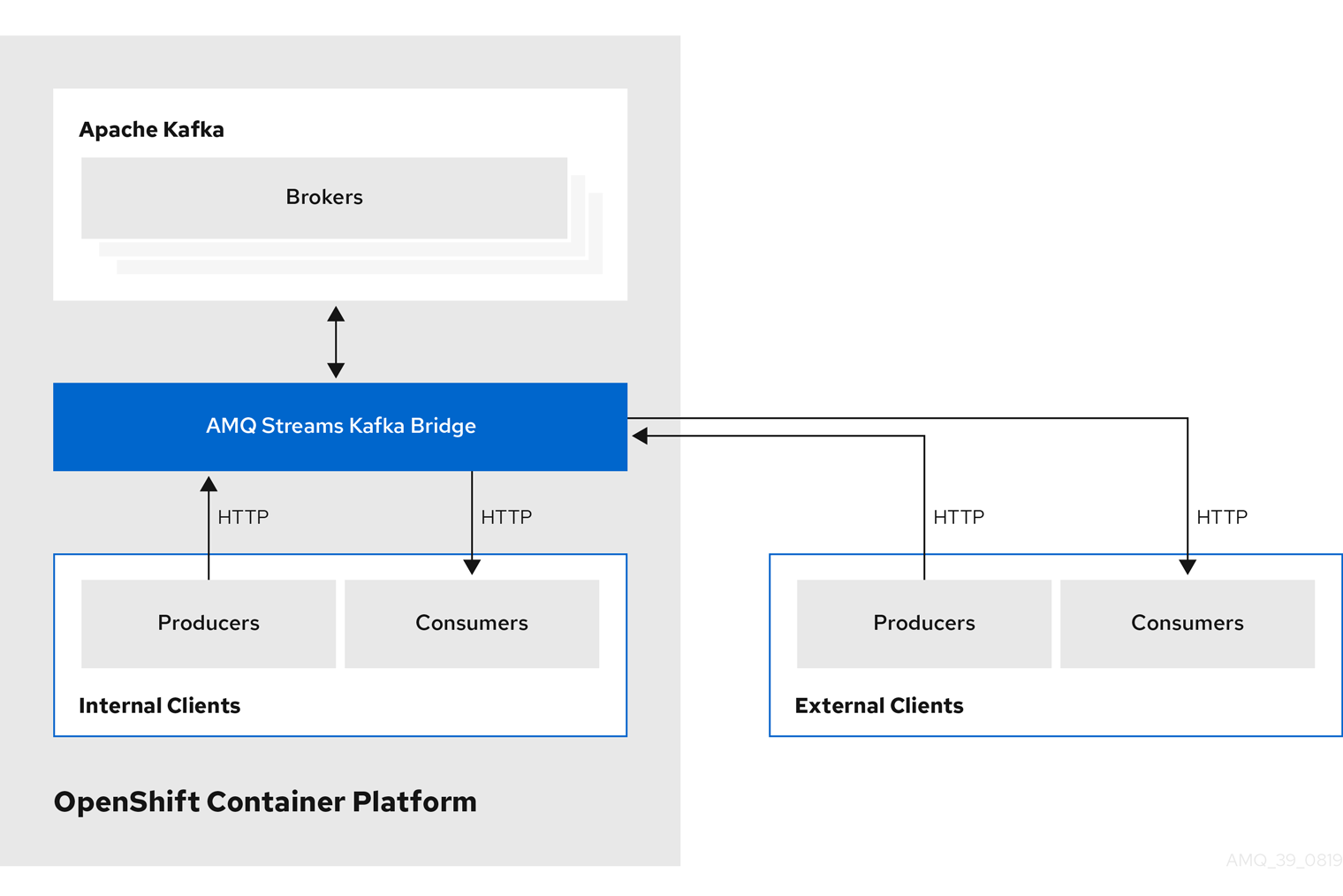

2.7. Kafka Bridge

The Cluster Operator deploys one or more Kafka Bridge replicas to send data between Kafka clusters and clients through an HTTP API.

For information about example resources and the format for deploying Kafka Bridge, see Kafka Bridge configuration.
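As an illustration only, a KafkaBridge resource could take roughly the following shape. The resource name, bootstrap address, and port are assumptions, as is the apiVersion for this release.

```yaml
apiVersion: kafka.strimzi.io/v1alpha1  # assumed API version for KafkaBridge in this release
kind: KafkaBridge
metadata:
  name: my-bridge                      # hypothetical name
spec:
  replicas: 1                          # number of Kafka Bridge replicas
  # Kafka cluster the bridge connects to (hypothetical address)
  bootstrapServers: my-cluster-kafka-bootstrap:9092
  http:
    port: 8080                         # port on which the HTTP API is served
```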

2.7.1. Deploying Kafka Bridge to your OpenShift cluster

You can deploy a Kafka Bridge cluster to your OpenShift cluster by using the Cluster Operator.

Prerequisites

Procedure

Use the oc apply command to create a KafkaBridge resource based on the kafka-bridge.yaml file:

    oc apply -f examples/kafka-bridge/kafka-bridge.yaml

Additional resources

2.8. Deploying example clients

Prerequisites

- An existing Kafka cluster for the client to connect to.

Procedure

Deploy the producer.

Use oc run:

    oc run kafka-producer -ti --image=registry.redhat.io/amq7/amq-streams-kafka-24-rhel7:1.4.0 --rm=true --restart=Never -- bin/kafka-console-producer.sh --broker-list cluster-name-kafka-bootstrap:9092 --topic my-topic

- Type your message into the console where the producer is running.

- Press Enter to send the message.

Deploy the consumer.

Use oc run:

    oc run kafka-consumer -ti --image=registry.redhat.io/amq7/amq-streams-kafka-24-rhel7:1.4.0 --rm=true --restart=Never -- bin/kafka-console-consumer.sh --bootstrap-server cluster-name-kafka-bootstrap:9092 --topic my-topic --from-beginning

- Confirm that you see the incoming messages in the consumer console.

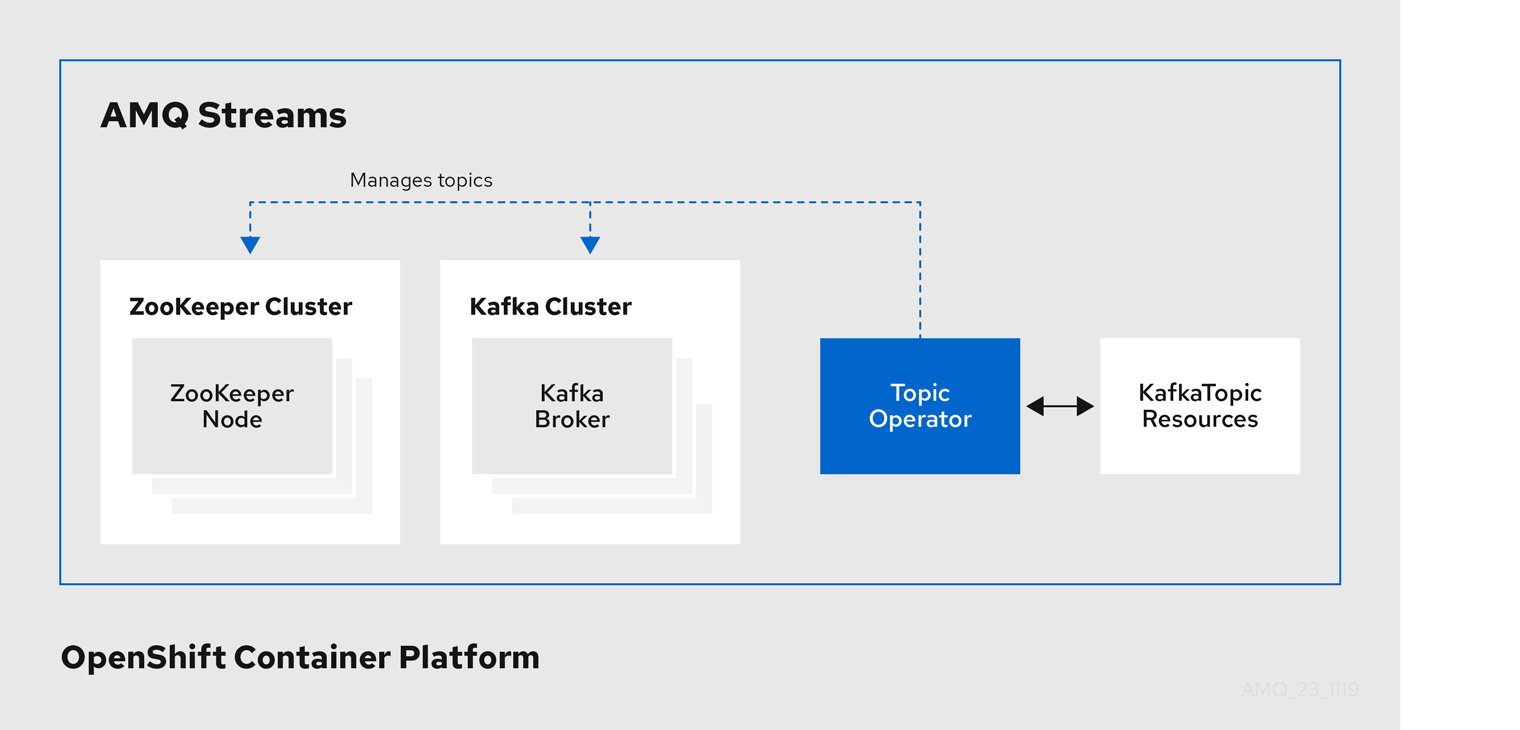

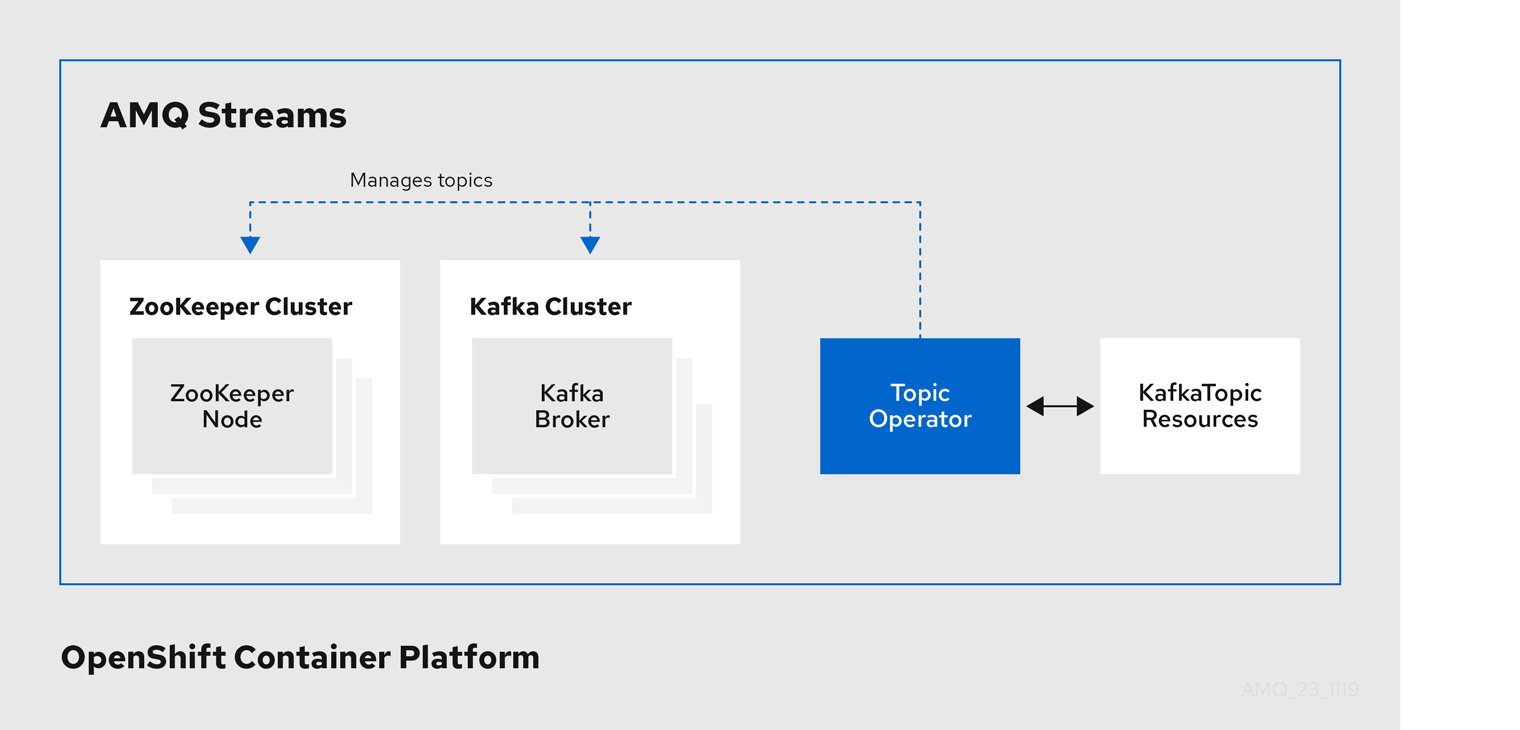

2.9. Topic Operator

The Topic Operator is responsible for managing Kafka topics within a Kafka cluster running within an OpenShift cluster.

2.9.1. Topic Operator

The Topic Operator provides a way of managing topics in a Kafka cluster through OpenShift resources.

Example architecture for the Topic Operator

The role of the Topic Operator is to keep a set of KafkaTopic OpenShift resources, which describe Kafka topics, in sync with the corresponding Kafka topics.

Specifically, if a KafkaTopic is:

- Created, the Topic Operator creates the topic

- Deleted, the Topic Operator deletes the topic

- Changed, the Topic Operator updates the topic

Working in the other direction, if a topic is:

- Created within the Kafka cluster, the Operator creates a KafkaTopic

- Deleted from the Kafka cluster, the Operator deletes the KafkaTopic

- Changed in the Kafka cluster, the Operator updates the KafkaTopic

This allows you to declare a KafkaTopic as part of your application’s deployment, and the Topic Operator takes care of creating the topic for you. Your application only needs to produce to or consume from the necessary topics.

If the topic is reconfigured or reassigned to different Kafka nodes, the KafkaTopic will always be up to date.
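To make the declarative approach concrete, a KafkaTopic resource might look like the sketch below. The topic name, cluster label value, and settings are illustrative assumptions rather than values from this guide.

```yaml
apiVersion: kafka.strimzi.io/v1beta1   # assumed API version for this release
kind: KafkaTopic
metadata:
  name: my-topic                       # hypothetical topic name
  labels:
    strimzi.io/cluster: my-cluster     # hypothetical name of the Kafka cluster that owns the topic
spec:
  partitions: 1
  replicas: 1
  config:
    # Standard Kafka topic-level settings
    retention.ms: 7200000
    segment.bytes: 1073741824
```

Applying, editing, or deleting this resource is what triggers the create, update, and delete behavior described above.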

2.9.2. Deploying the Topic Operator using the Cluster Operator

This procedure describes how to deploy the Topic Operator using the Cluster Operator. If you want to use the Topic Operator with a Kafka cluster that is not managed by AMQ Streams, you must deploy the Topic Operator as a standalone component. For more information, see Section 4.2.6, “Deploying the standalone Topic Operator”.

Prerequisites

- A running Cluster Operator

- A Kafka resource to be created or updated

Procedure

Ensure that the Kafka.spec.entityOperator object exists in the Kafka resource. This configures the Entity Operator.

- Configure the Topic Operator using the properties described in Section B.62, “EntityTopicOperatorSpec schema reference”.

Create or update the Kafka resource in OpenShift.

Use oc apply:

    oc apply -f your-file
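The first step of this procedure can be sketched as follows, assuming a cluster named my-cluster (a hypothetical name). Empty objects are used here so that the operators run with their default settings.

```yaml
apiVersion: kafka.strimzi.io/v1beta1   # assumed API version for this release
kind: Kafka
metadata:
  name: my-cluster                     # hypothetical cluster name
spec:
  # ...
  entityOperator:
    topicOperator: {}                  # deploy the Topic Operator with default settings
    userOperator: {}                   # deploy the User Operator with default settings
```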

Additional resources

- For more information about deploying the Cluster Operator, see Section 2.3, “Cluster Operator”.

- For more information about deploying the Entity Operator, see Section 3.1.11, “Entity Operator”.

- For more information about the Kafka.spec.entityOperator object used to configure the Topic Operator when deployed by the Cluster Operator, see Section B.61, “EntityOperatorSpec schema reference”.

2.10. User Operator

The User Operator is responsible for managing Kafka users within a Kafka cluster running within an OpenShift cluster.

2.10.1. User Operator

The User Operator manages Kafka users for a Kafka cluster by watching for KafkaUser resources that describe Kafka users, and ensuring that they are configured properly in the Kafka cluster.

For example, if a KafkaUser is:

- Created, the User Operator creates the user it describes

- Deleted, the User Operator deletes the user it describes

- Changed, the User Operator updates the user it describes

Unlike the Topic Operator, the User Operator does not sync any changes from the Kafka cluster with the OpenShift resources. Kafka topics can be created by applications directly in Kafka, but it is not expected that the users will be managed directly in the Kafka cluster in parallel with the User Operator.

The User Operator allows you to declare a KafkaUser resource as part of your application’s deployment. You can specify the authentication and authorization mechanism for the user. You can also configure user quotas that control usage of Kafka resources to ensure, for example, that a user does not monopolize access to a broker.

When the user is created, the user credentials are created in a Secret. Your application needs to use the user and its credentials for authentication and to produce or consume messages.

In addition to managing credentials for authentication, the User Operator also manages authorization rules by including a description of the user’s access rights in the KafkaUser declaration.
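As a hedged illustration of such a declaration, the KafkaUser below combines TLS client authentication with a single access rule. The user name, cluster label, and topic name are assumptions chosen for the example.

```yaml
apiVersion: kafka.strimzi.io/v1beta1   # assumed API version for this release
kind: KafkaUser
metadata:
  name: my-user                        # hypothetical user name
  labels:
    strimzi.io/cluster: my-cluster     # hypothetical name of the Kafka cluster
spec:
  authentication:
    type: tls                          # credentials are generated and stored in a Secret
  authorization:
    type: simple
    acls:
      # Allow this user to read from one topic
      - resource:
          type: topic
          name: my-topic
          patternType: literal
        operation: Read
        host: "*"
```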

2.10.2. Deploying the User Operator using the Cluster Operator

Prerequisites

- A running Cluster Operator

- A Kafka resource to be created or updated

Procedure

- Edit the Kafka resource, ensuring that it has a Kafka.spec.entityOperator.userOperator object that configures the User Operator how you want.

Create or update the Kafka resource in OpenShift.

This can be done using oc apply:

    oc apply -f your-file

Additional resources

- For more information about deploying the Cluster Operator, see Section 2.3, “Cluster Operator”.

- For more information about the Kafka.spec.entityOperator object used to configure the User Operator when deployed by the Cluster Operator, see EntityOperatorSpec schema reference.

2.11. Strimzi Administrators

AMQ Streams includes several custom resources. By default, permission to create, edit, and delete these resources is limited to OpenShift cluster administrators. If you want to allow non-cluster administrators to manage AMQ Streams resources, you must assign them the Strimzi Administrator role.

2.11.1. Designating Strimzi Administrators

Prerequisites

- AMQ Streams CustomResourceDefinitions are installed.

Procedure

Create the strimzi-admin cluster role in OpenShift.

Use oc apply:

    oc apply -f install/strimzi-admin

Assign the strimzi-admin ClusterRole to one or more existing users in the OpenShift cluster.

Use oc create:

    oc create clusterrolebinding strimzi-admin --clusterrole=strimzi-admin --user=user1 --user=user2
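If you prefer to keep role assignments in version control, the binding created by the oc create command above can also be written declaratively. The following is a sketch of an equivalent ClusterRoleBinding, reusing the user1 and user2 placeholders from the command.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: strimzi-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: strimzi-admin                  # the cluster role created in the first step
subjects:
  # Placeholder user names, as in the command above
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: user1
  - apiGroup: rbac.authorization.k8s.io
    kind: User
    name: user2
```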

2.12. Container images

Container images for AMQ Streams are available in the Red Hat Container Catalog. The installation YAML files provided by AMQ Streams will pull the images directly from the Red Hat Container Catalog.

If you do not have access to the Red Hat Container Catalog or want to use your own container repository:

- Pull all container images listed here

- Push them into your own registry

- Update the image names in the installation YAML files

Each Kafka version supported for the release has a separate image.

| Container image | Namespace/Repository | Description |
|---|---|---|
| Kafka | | AMQ Streams image for running Kafka |
| Operator | | AMQ Streams image for running the operators |
| Kafka Bridge | | AMQ Streams image for running the AMQ Streams Kafka Bridge |

Chapter 3. Deployment configuration

This chapter describes how to configure different aspects of the supported deployments:

- Kafka clusters

- Kafka Connect clusters

- Kafka Connect clusters with Source2Image support

- Kafka MirrorMaker

- Kafka Bridge

- OAuth 2.0 token-based authentication

- OAuth 2.0 token-based authorization

3.1. Kafka cluster configuration

The full schema of the Kafka resource is described in Section B.1, “Kafka schema reference”. All labels that are applied to the desired Kafka resource will also be applied to the OpenShift resources making up the Kafka cluster. This provides a convenient mechanism for resources to be labeled as required.

3.1.1. Sample Kafka YAML configuration

For help in understanding the configuration options available for your Kafka deployment, refer to the sample YAML file provided here.

The sample shows only some of the possible configuration options, but those that are particularly important include:

- Resource requests (CPU / Memory)

- JVM options for maximum and minimum memory allocation

- Listeners (and authentication)

- Authentication

- Storage

- Rack awareness

- Metrics

- 1

- Replicas specifies the number of broker nodes.

- 2

- Kafka version, which can be changed by following the upgrade procedure.

- 3

- Resource requests specify the resources to reserve for a given container.

- 4

- Resource limits specify the maximum resources that can be consumed by a container.

- 5

- 6

- Listeners configure how clients connect to the Kafka cluster via bootstrap addresses. Listeners are configured as plain (without encryption), tls or external.

- 7

- Listener authentication mechanisms may be configured for each listener, and specified as mutual TLS or SCRAM-SHA.

- 8

- External listener configuration specifies how the Kafka cluster is exposed outside OpenShift, such as through a route, loadbalancer or nodeport.

- 9

- Optional configuration for a Kafka listener certificate managed by an external Certificate Authority. The brokerCertChainAndKey property specifies a Secret that holds a server certificate and a private key. Kafka listener certificates can also be configured for TLS listeners.

- 10

- 11

- Config specifies the broker configuration. Standard Apache Kafka configuration may be provided, restricted to those properties not managed directly by AMQ Streams.

- 12

- 13

- Storage size for persistent volumes may be increased and additional volumes may be added to JBOD storage.

- 14

- Persistent storage has additional configuration options, such as a storage id and class for dynamic volume provisioning.

- 15

- Rack awareness is configured to spread replicas across different racks. A topology key must match the label of a cluster node.

- 16

- 17

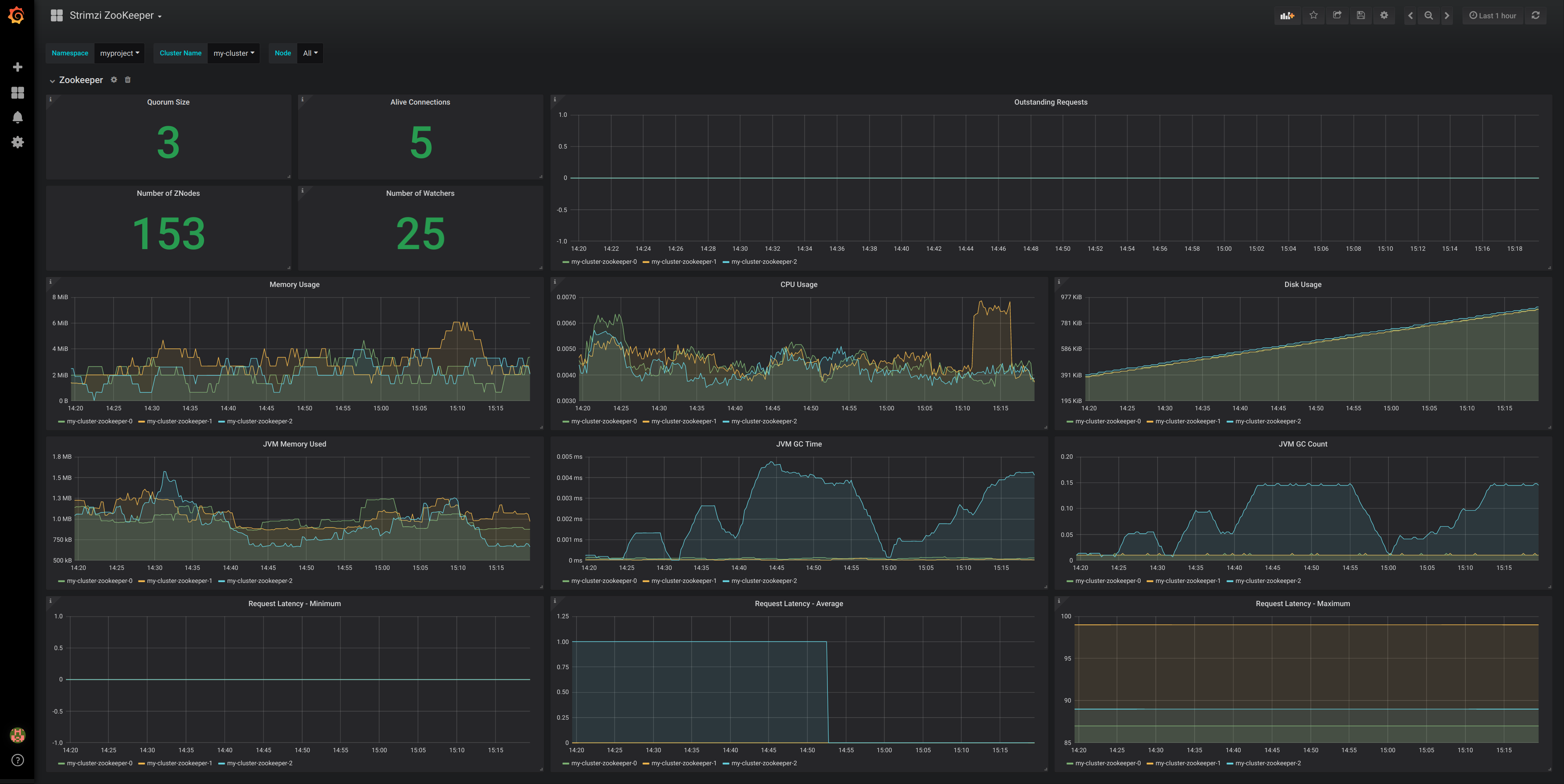

- Kafka rules for exporting metrics to a Grafana dashboard through the JMX Exporter. A set of rules provided with AMQ Streams may be copied to your Kafka resource configuration.

- 18

- ZooKeeper-specific configuration, which contains properties similar to the Kafka configuration.

- 19

- Entity Operator configuration, which specifies the configuration for the Topic Operator and User Operator.

- 20

- Kafka Exporter configuration, which is used to expose data as Prometheus metrics.
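The following is a condensed sketch of a Kafka resource that touches most of the options described by the callouts. It is not the sample file referred to above: the names, sizes, and values are illustrative assumptions, the comments are not aligned with the callout numbers, and some options (such as externally managed listener certificates) are left out.

```yaml
apiVersion: kafka.strimzi.io/v1beta1   # assumed API version for this release
kind: Kafka
metadata:
  name: my-cluster                     # hypothetical cluster name
spec:
  kafka:
    replicas: 3                        # number of broker nodes
    version: 2.4.0                     # Kafka version
    resources:
      requests:                        # resources reserved for the container
        memory: 64Gi
        cpu: "8"
      limits:                          # maximum resources the container can consume
        memory: 64Gi
        cpu: "12"
    jvmOptions:                        # minimum and maximum JVM memory allocation
      -Xms: 8192m
      -Xmx: 8192m
    listeners:
      tls:                             # encrypted listener with mutual TLS authentication
        authentication:
          type: tls
      external:                        # exposes the cluster outside OpenShift
        type: route
        authentication:
          type: tls
    config:                            # broker configuration not managed by AMQ Streams
      auto.create.topics.enable: "false"
      offsets.topic.replication.factor: 3
      transaction.state.log.replication.factor: 3
      transaction.state.log.min.isr: 2
    storage:
      type: persistent-claim
      size: 1000Gi
      class: my-storage-class          # hypothetical storage class
    rack:
      # Must match a label on the cluster nodes (label name is an assumption)
      topologyKey: failure-domain.beta.kubernetes.io/zone
    metrics:                           # JMX Exporter configuration
      lowercaseOutputName: true
  zookeeper:
    replicas: 3
    storage:
      type: persistent-claim
      size: 100Gi
  entityOperator:
    topicOperator: {}
    userOperator: {}
  kafkaExporter:
    topicRegex: ".*"
    groupRegex: ".*"
```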

3.1.2. Data storage considerations

An efficient data storage infrastructure is essential to the optimal performance of AMQ Streams.

Block storage is required. File storage, such as NFS, does not work with Kafka.

For your block storage, you can choose, for example:

- Cloud-based block storage solutions, such as Amazon Elastic Block Store (EBS)

- Local persistent volumes

- Storage Area Network (SAN) volumes accessed by a protocol such as Fibre Channel or iSCSI

AMQ Streams does not require OpenShift raw block volumes.

3.1.2.1. File systems

It is recommended that you configure your storage system to use the XFS file system. AMQ Streams is also compatible with the ext4 file system, but this might require additional configuration for best results.

3.1.2.2. Apache Kafka and ZooKeeper storage

Use separate disks for Apache Kafka and ZooKeeper.

Three types of data storage are supported:

- Ephemeral (Recommended for development only)

- Persistent

- JBOD (Just a Bunch of Disks, suitable for Kafka only)

For more information, see Kafka and ZooKeeper storage.

Solid-state drives (SSDs), though not essential, can improve the performance of Kafka in large clusters where data is sent to and received from multiple topics asynchronously. SSDs are particularly effective with ZooKeeper, which requires fast, low-latency data access.

You do not need to provision replicated storage because Kafka and ZooKeeper both have built-in data replication.

3.1.3. Kafka and ZooKeeper storage types

As stateful applications, Kafka and ZooKeeper need to store data on disk. AMQ Streams supports three storage types for this data:

- Ephemeral

- Persistent

- JBOD storage

JBOD storage is supported only for Kafka, not for ZooKeeper.

When configuring a Kafka resource, you can specify the type of storage used by the Kafka broker and its corresponding ZooKeeper node. You configure the storage type using the storage property in the following resources:

- Kafka.spec.kafka

- Kafka.spec.zookeeper

The storage type is configured in the type field.

The storage type cannot be changed after a Kafka cluster is deployed.

Additional resources

- For more information about ephemeral storage, see ephemeral storage schema reference.

- For more information about persistent storage, see persistent storage schema reference.

- For more information about JBOD storage, see JBOD schema reference.

- For more information about the schema for Kafka, see Kafka schema reference.

3.1.3.1. Ephemeral storage

Ephemeral storage uses `emptyDir` volumes to store data. To use ephemeral storage, the type field should be set to ephemeral.

emptyDir volumes are not persistent and the data stored in them will be lost when the Pod is restarted. After the new pod is started, it has to recover all data from other nodes of the cluster. Ephemeral storage is not suitable for use with single-node ZooKeeper clusters or for Kafka topics with a replication factor of 1, because it will lead to data loss.

An example of Ephemeral storage
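A minimal sketch of such a configuration, assuming a cluster named my-cluster (a hypothetical name), sets the storage type to ephemeral for both Kafka and ZooKeeper:

```yaml
apiVersion: kafka.strimzi.io/v1beta1   # assumed API version for this release
kind: Kafka
metadata:
  name: my-cluster                     # hypothetical cluster name
spec:
  kafka:
    # ...
    storage:
      type: ephemeral                  # data is kept in an emptyDir volume
    # ...
  zookeeper:
    # ...
    storage:
      type: ephemeral
    # ...
```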

3.1.3.1.1. Log directories

The ephemeral volume will be used by the Kafka brokers as log directories mounted into the following path:

/var/lib/kafka/data/kafka-log<idx>

Where <idx> is the Kafka broker pod index. For example, /var/lib/kafka/data/kafka-log0.

3.1.3.2. Persistent storage

Persistent storage uses Persistent Volume Claims to provision persistent volumes for storing data. Persistent Volume Claims can be used to provision volumes of many different types, depending on the Storage Class that provisions the volume. The storage types that can be used with Persistent Volume Claims include many types of SAN storage as well as local persistent volumes.

To use persistent storage, the type has to be set to persistent-claim. Persistent storage supports additional configuration options:

- id (optional): Storage identification number. This option is mandatory for storage volumes defined in a JBOD storage declaration. Default is 0.

- size (required): Defines the size of the persistent volume claim, for example, "1000Gi".

- class (optional): The OpenShift Storage Class to use for dynamic volume provisioning.

- selector (optional): Allows selecting a specific persistent volume to use. It contains key:value pairs representing labels for selecting such a volume.

- deleteClaim (optional): Boolean value which specifies if the Persistent Volume Claim has to be deleted when the cluster is undeployed. Default is false.

Increasing the size of persistent volumes in an existing AMQ Streams cluster is only supported in OpenShift versions that support persistent volume resizing. The persistent volume to be resized must use a storage class that supports volume expansion. For other versions of OpenShift and storage classes which do not support volume expansion, you must decide the necessary storage size before deploying the cluster. Decreasing the size of existing persistent volumes is not possible.

Example fragment of persistent storage configuration with 1000Gi size

    # ...
    storage:
      type: persistent-claim
      size: 1000Gi
    # ...

The following example demonstrates the use of a storage class.

Example fragment of persistent storage configuration with specific Storage Class
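A fragment of this kind might look like the following sketch, where my-storage-class is a hypothetical Storage Class name and the size is illustrative:

```yaml
# ...
storage:
  type: persistent-claim
  size: 1Gi
  class: my-storage-class              # hypothetical Storage Class used for dynamic provisioning
# ...
```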

Finally, a selector can be used to select a specific labeled persistent volume to provide needed features such as an SSD.

Example fragment of persistent storage configuration with selector
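As a sketch of the selector option, the fragment below requests a volume carrying a particular label. The label key and value (hdd-type: ssd) are assumptions; use whatever labels your persistent volumes actually carry.

```yaml
# ...
storage:
  type: persistent-claim
  size: 1Gi
  selector:
    hdd-type: ssd                      # hypothetical label identifying SSD-backed volumes
  deleteClaim: true                    # delete the claim when the cluster is undeployed
# ...
```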

3.1.3.2.1. Storage class overrides

You can specify a different storage class for one or more Kafka brokers, instead of using the default storage class. This is useful if, for example, storage classes are restricted to different availability zones or data centers. You can use the overrides field for this purpose.

In this example, the default storage class is named my-storage-class:

Example AMQ Streams cluster using storage class overrides
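A configuration consistent with the broker-to-class mapping described in this section could be sketched as follows. The cluster name, namespace, and volume size are illustrative assumptions.

```yaml
apiVersion: kafka.strimzi.io/v1beta1   # assumed API version for this release
kind: Kafka
metadata:
  name: my-cluster                     # hypothetical cluster name
  namespace: myproject                 # hypothetical namespace
spec:
  # ...
  kafka:
    replicas: 3
    storage:
      type: persistent-claim
      size: 100Gi
      deleteClaim: true
      class: my-storage-class          # default class for brokers without an override
      overrides:
        # One entry per broker that needs a different storage class
        - broker: 0
          class: my-storage-class-zone-1a
        - broker: 1
          class: my-storage-class-zone-1b
        - broker: 2
          class: my-storage-class-zone-1c
  # ...
```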

As a result of the configured overrides property, the broker volumes use the following storage classes:

- The persistent volumes of broker 0 will use my-storage-class-zone-1a.

- The persistent volumes of broker 1 will use my-storage-class-zone-1b.

- The persistent volumes of broker 2 will use my-storage-class-zone-1c.

The overrides property is currently used only to override storage class configurations. Overriding other storage configuration fields is not currently supported.

3.1.3.2.2. Persistent Volume Claim naming

When persistent storage is used, it creates Persistent Volume Claims with the following names:

- data-<cluster-name>-kafka-<idx>: Persistent Volume Claim for the volume used for storing data for the Kafka broker pod <idx>.

- data-<cluster-name>-zookeeper-<idx>: Persistent Volume Claim for the volume used for storing data for the ZooKeeper node pod <idx>.

3.1.3.2.3. Log directories

The persistent volume will be used by the Kafka brokers as log directories mounted into the following path:

/var/lib/kafka/data/kafka-log<idx>

Where <idx> is the Kafka broker pod index. For example, /var/lib/kafka/data/kafka-log0.

3.1.3.3. Resizing persistent volumes

You can provision increased storage capacity by increasing the size of the persistent volumes used by an existing AMQ Streams cluster. Resizing persistent volumes is supported in clusters that use either a single persistent volume or multiple persistent volumes in a JBOD storage configuration.

You can increase but not decrease the size of persistent volumes. Decreasing the size of persistent volumes is not currently supported in OpenShift.

Prerequisites

- An OpenShift cluster with support for volume resizing.

- The Cluster Operator is running.

- A Kafka cluster using persistent volumes created using a storage class that supports volume expansion.

Procedure

In a Kafka resource, increase the size of the persistent volume allocated to the Kafka cluster, the ZooKeeper cluster, or both.

- To increase the volume size allocated to the Kafka cluster, edit the spec.kafka.storage property.

- To increase the volume size allocated to the ZooKeeper cluster, edit the spec.zookeeper.storage property.

For example, you can increase the volume size from 1000Gi to 2000Gi.

Create or update the resource.

Use oc apply:

    oc apply -f your-file

OpenShift increases the capacity of the selected persistent volumes in response to a request from the Cluster Operator. When the resizing is complete, the Cluster Operator restarts all pods that use the resized persistent volumes. This happens automatically.
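The size increase in the first step can be sketched as follows for the Kafka cluster. The cluster name and storage class are hypothetical; the storage class must be one that supports volume expansion.

```yaml
apiVersion: kafka.strimzi.io/v1beta1   # assumed API version for this release
kind: Kafka
metadata:
  name: my-cluster                     # hypothetical cluster name
spec:
  kafka:
    # ...
    storage:
      type: persistent-claim
      size: 2000Gi                     # previously 1000Gi
      class: my-storage-class          # hypothetical class that supports volume expansion
    # ...
  zookeeper:
    # ...
```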

Additional resources

For more information about resizing persistent volumes in OpenShift, see Resizing Persistent Volumes using Kubernetes.

3.1.3.4. JBOD storage overview

You can configure AMQ Streams to use JBOD, a data storage configuration of multiple disks or volumes. JBOD is one approach to providing increased data storage for Kafka brokers. It can also improve performance.

A JBOD configuration is described by one or more volumes, each of which can be either ephemeral or persistent. The rules and constraints for JBOD volume declarations are the same as those for ephemeral and persistent storage. For example, you cannot decrease the size of a persistent storage volume after it has been provisioned.

3.1.3.4.1. JBOD configuration

To use JBOD with AMQ Streams, the storage type must be set to jbod. The volumes property allows you to describe the disks that make up your JBOD storage array or configuration. The following fragment shows an example JBOD configuration:
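A JBOD declaration with two persistent volumes might be sketched like this. The sizes are illustrative assumptions; each volume needs its own id.

```yaml
storage:
  type: jbod
  volumes:
    # Each entry describes one disk of the JBOD configuration
    - id: 0
      type: persistent-claim
      size: 100Gi
      deleteClaim: false
    - id: 1
      type: persistent-claim
      size: 100Gi
      deleteClaim: false
```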

The ids cannot be changed once the JBOD volumes are created.

Users can add or remove volumes from the JBOD configuration.

3.1.3.4.2. JBOD and Persistent Volume Claims

When persistent storage is used to declare JBOD volumes, the naming scheme of the resulting Persistent Volume Claims is as follows:

data-<id>-<cluster-name>-kafka-<idx>

Where <id> is the ID of the volume used for storing data for Kafka broker pod <idx>.

3.1.3.4.3. Log directories

The JBOD volumes will be used by the Kafka brokers as log directories mounted into the following path:

/var/lib/kafka/data-<id>/kafka-log<idx>

Where <id> is the ID of the volume used for storing data for Kafka broker pod <idx>. For example, /var/lib/kafka/data-0/kafka-log0.

3.1.3.5. Adding volumes to JBOD storage

This procedure describes how to add volumes to a Kafka cluster configured to use JBOD storage. It cannot be applied to Kafka clusters configured to use any other storage type.

When adding a new volume under an id which was already used in the past and removed, you have to make sure that the previously used PersistentVolumeClaims have been deleted.

Prerequisites

- An OpenShift cluster

- A running Cluster Operator

- A Kafka cluster with JBOD storage

Procedure

- Edit the spec.kafka.storage.volumes property in the Kafka resource. Add the new volumes to the volumes array. For example, add the new volume with id 2.

- Create or update the resource.

This can be done using oc apply:

oc apply -f your-file

- Create new topics or reassign existing partitions to the new disks.
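The edit in the first step of this procedure might look like the following sketch. It assumes a cluster named my-cluster (a hypothetical name) that already has volumes with ids 0 and 1; the apiVersion and the volume sizes are assumptions for illustration:

```yaml
apiVersion: kafka.strimzi.io/v1beta1   # assumed API version for AMQ Streams 1.4
kind: Kafka
metadata:
  name: my-cluster                     # hypothetical cluster name
spec:
  kafka:
    # ...
    storage:
      type: jbod
      volumes:
      - id: 0
        type: persistent-claim
        size: 100Gi
        deleteClaim: false
      - id: 1
        type: persistent-claim
        size: 100Gi
        deleteClaim: false
      - id: 2                          # the newly added volume
        type: persistent-claim
        size: 100Gi
        deleteClaim: false
    # ...
  zookeeper:
    # ...
```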

Additional resources

For more information about reassigning topics, see Section 3.1.25.2, “Partition reassignment”.

3.1.3.6. Removing volumes from JBOD storage

This procedure describes how to remove volumes from a Kafka cluster configured to use JBOD storage. It cannot be applied to Kafka clusters configured to use any other storage type. The JBOD storage always has to contain at least one volume.

To avoid data loss, you have to move all partitions before removing the volumes.

Prerequisites

- An OpenShift cluster

- A running Cluster Operator

- A Kafka cluster with JBOD storage with two or more volumes

Procedure

- Reassign all partitions from the disks which you are going to remove. Any data in partitions still assigned to the disks which are going to be removed might be lost.

- Edit the spec.kafka.storage.volumes property in the Kafka resource. Remove one or more volumes from the volumes array. For example, remove the volumes with ids 1 and 2.

- Create or update the resource.

This can be done using oc apply:

oc apply -f your-file
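As a sketch of the edited resource, assume a cluster named my-cluster (a hypothetical name) that previously had volumes with ids 0, 1, and 2. After the volumes with ids 1 and 2 are removed, only the volume with id 0 remains; the apiVersion and size are assumptions for illustration:

```yaml
apiVersion: kafka.strimzi.io/v1beta1   # assumed API version for AMQ Streams 1.4
kind: Kafka
metadata:
  name: my-cluster                     # hypothetical cluster name
spec:
  kafka:
    # ...
    storage:
      type: jbod
      volumes:
      - id: 0                          # at least one volume must remain
        type: persistent-claim
        size: 100Gi
        deleteClaim: false
    # ...
  zookeeper:
    # ...
```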

Additional resources

For more information about reassigning topics, see Section 3.1.25.2, “Partition reassignment”.

3.1.4. Kafka broker replicas

A Kafka cluster can run with many brokers. You can configure the number of brokers used for the Kafka cluster in Kafka.spec.kafka.replicas. The best number of brokers for your cluster has to be determined based on your specific use case.

3.1.4.1. Configuring the number of broker nodes

This procedure describes how to configure the number of Kafka broker nodes in a new cluster. It only applies to new clusters with no partitions. If your cluster already has topics defined, see Section 3.1.25, “Scaling clusters”.

Prerequisites

- An OpenShift cluster

- A running Cluster Operator

- A Kafka cluster with no topics defined yet

Procedure

- Edit the replicas property in the Kafka resource.

- Create or update the resource.

This can be done using oc apply:

oc apply -f your-file
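The edited resource might look like the following sketch. The cluster name, the apiVersion, and the replica count of 3 are assumptions chosen for illustration:

```yaml
apiVersion: kafka.strimzi.io/v1beta1   # assumed API version for AMQ Streams 1.4
kind: Kafka
metadata:
  name: my-cluster                     # hypothetical cluster name
spec:
  kafka:
    # ...
    replicas: 3                        # number of Kafka broker nodes (illustrative)
    # ...
  zookeeper:
    # ...
```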

Additional resources

If your cluster already has topics defined, see Section 3.1.25, “Scaling clusters”.

3.1.5. Kafka broker configuration

AMQ Streams allows you to customize the configuration of the Kafka brokers in your Kafka cluster. You can specify and configure most of the options listed in the "Broker Configs" section of the Apache Kafka documentation. You cannot configure options that are related to the following areas:

- Security (Encryption, Authentication, and Authorization)

- Listener configuration

- Broker ID configuration

- Configuration of log data directories

- Inter-broker communication

- ZooKeeper connectivity

These options are automatically configured by AMQ Streams.

3.1.5.1. Kafka broker configuration

The config property in Kafka.spec.kafka contains Kafka broker configuration options as keys with values in one of the following JSON types:

- String

- Number

- Boolean

You can specify and configure all of the options in the "Broker Configs" section of the Apache Kafka documentation apart from those managed directly by AMQ Streams. Specifically, you are prevented from modifying all configuration options with keys equal to or starting with one of the following strings:

- listeners

- advertised.

- broker.

- listener.

- host.name

- port

- inter.broker.listener.name

- sasl.

- ssl.

- security.

- password.

- principal.builder.class

- log.dir

- zookeeper.connect

- zookeeper.set.acl

- authorizer.

- super.user

If the config property specifies a restricted option, it is ignored and a warning message is printed to the Cluster Operator log file. All other supported options are passed to Kafka.

An example Kafka broker configuration
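A minimal sketch of such a configuration. The option names are standard Kafka broker options that do not match any restricted prefix; the values and the apiVersion are assumptions for illustration, not tuning advice:

```yaml
apiVersion: kafka.strimzi.io/v1beta1   # assumed API version for AMQ Streams 1.4
kind: Kafka
spec:
  kafka:
    # ...
    config:
      num.partitions: 1                            # Number
      default.replication.factor: 3                # Number
      offsets.topic.replication.factor: 3          # Number
      transaction.state.log.replication.factor: 3  # Number
      transaction.state.log.min.isr: 1             # Number
      log.retention.hours: 168                     # Number
      auto.create.topics.enable: false             # Boolean
      compression.type: producer                   # String
    # ...
  zookeeper:
    # ...
```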

3.1.5.2. Configuring Kafka brokers

You can configure an existing Kafka broker, or create a new Kafka broker with a specified configuration.

Prerequisites

- An OpenShift cluster is available.

- The Cluster Operator is running.

Procedure

- Open the YAML configuration file that contains the Kafka resource specifying the cluster deployment.

- In the spec.kafka.config property in the Kafka resource, enter one or more Kafka configuration settings, for example default.replication.factor or log.retention.hours.

- Apply the new configuration to create or update the resource.

Use oc apply:

oc apply -f kafka.yaml

where kafka.yaml is the YAML configuration file for the resource that you want to configure; for example, kafka-persistent.yaml.

3.1.6. Kafka broker listeners

You can configure the listeners enabled in Kafka brokers. The following types of listeners are supported:

- Plain listener on port 9092 (without TLS encryption)

- TLS listener on port 9093 (with TLS encryption)

- External listener on port 9094 for access from outside of OpenShift

OAuth 2.0

If you are using OAuth 2.0 token-based authentication, you can configure the listeners to connect to your authorization server. For more information, see Using OAuth 2.0 token-based authentication.

Listener certificates

You can provide your own server certificates, called Kafka listener certificates, for TLS listeners or external listeners which have TLS encryption enabled. For more information, see Section 13.8, “Kafka listener certificates”.

3.1.6.1. Kafka listeners

You can configure Kafka broker listeners using the listeners property in the Kafka.spec.kafka resource. The listeners property contains three sub-properties:

- plain

- tls

- external

Each listener will only be defined when the listeners object has the given property.

An example of listeners property with all listeners enabled
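A sketch of such a fragment. The external listener needs a type; loadbalancer is used here as an illustrative choice, not a recommendation:

```yaml
# Fragment of spec.kafka in a Kafka resource
# ...
listeners:
  plain: {}              # plain listener on port 9092
  tls: {}                # TLS listener on port 9093
  external:              # external listener on port 9094
    type: loadbalancer   # illustrative choice of external listener type
# ...
```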

An example of listeners property with only the plain listener enabled

# ...
listeners:
  plain: {}
# ...

3.1.6.2. Configuring Kafka listeners

Prerequisites

- An OpenShift cluster

- A running Cluster Operator

Procedure

- Edit the listeners property in the Kafka.spec.kafka resource. For example, to configure the plain (unencrypted) listener without authentication, set plain: {} under listeners.

- Create or update the resource.

This can be done using oc apply:

oc apply -f your-file

Additional resources

- For more information about the schema, see KafkaListeners schema reference.

3.1.6.3. Listener authentication

The listener authentication property is used to specify an authentication mechanism specific to that listener:

- Mutual TLS authentication (only on the listeners with TLS encryption)

- SCRAM-SHA authentication

If no authentication property is specified then the listener does not authenticate clients which connect through that listener.

Authentication must be configured when using the User Operator to manage KafkaUsers.

3.1.6.3.1. Authentication configuration for a listener

The following example shows:

- A plain listener configured for SCRAM-SHA authentication

- A tls listener with mutual TLS authentication

- An external listener with mutual TLS authentication

An example showing listener authentication configuration
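A sketch of a listeners fragment matching the three cases listed above. The external listener type (loadbalancer) is an assumption chosen for illustration:

```yaml
# Fragment of spec.kafka in a Kafka resource
# ...
listeners:
  plain:
    authentication:
      type: scram-sha-512   # SCRAM-SHA authentication on the unencrypted listener
  tls:
    authentication:
      type: tls             # mutual TLS authentication
  external:
    type: loadbalancer      # illustrative choice of external listener type
    tls: true               # mutual TLS requires TLS encryption on the listener
    authentication:
      type: tls             # mutual TLS authentication
# ...
```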

3.1.6.3.2. Mutual TLS authentication

Mutual TLS authentication is always used for the communication between Kafka brokers and ZooKeeper pods.

Mutual authentication or two-way authentication is when both the server and the client present certificates. AMQ Streams can configure Kafka to use TLS (Transport Layer Security) to provide encrypted communication between Kafka brokers and clients either with or without mutual authentication. When you configure mutual authentication, the broker authenticates the client and the client authenticates the broker.

TLS authentication is more commonly one-way, with one party authenticating the identity of another. For example, when HTTPS is used between a web browser and a web server, the browser obtains proof of the identity of the web server.

3.1.6.3.2.1. When to use mutual TLS authentication for clients

Mutual TLS authentication is recommended for authenticating Kafka clients when:

- The client supports mutual TLS authentication

- It is necessary to use the TLS certificates rather than passwords

- You can reconfigure and restart client applications periodically so that they do not use expired certificates

3.1.6.3.3. SCRAM-SHA authentication

SCRAM (Salted Challenge Response Authentication Mechanism) is an authentication protocol that can establish mutual authentication using passwords. AMQ Streams can configure Kafka to use SASL (Simple Authentication and Security Layer) SCRAM-SHA-512 to provide authentication on both unencrypted and TLS-encrypted client connections. TLS authentication is always used internally between Kafka brokers and ZooKeeper nodes. When used with a TLS client connection, the TLS protocol provides encryption, but is not used for authentication.

The following properties of SCRAM make it safe to use SCRAM-SHA even on unencrypted connections:

- The passwords are not sent in the clear over the communication channel. Instead the client and the server are each challenged by the other to offer proof that they know the password of the authenticating user.

- The server and client each generate a new challenge for each authentication exchange. This means that the exchange is resilient against replay attacks.

3.1.6.3.3.1. Supported SCRAM credentials

AMQ Streams supports SCRAM-SHA-512 only. When KafkaUser.spec.authentication.type is set to scram-sha-512, the User Operator generates a random 12-character password consisting of uppercase and lowercase ASCII letters and numbers.
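A minimal sketch of a KafkaUser resource configured this way. The user name, the cluster name in the label, and the apiVersion are assumptions for illustration:

```yaml
apiVersion: kafka.strimzi.io/v1beta1   # assumed API version for AMQ Streams 1.4
kind: KafkaUser
metadata:
  name: my-user                        # hypothetical user name
  labels:
    strimzi.io/cluster: my-cluster     # hypothetical name of the Kafka cluster the user belongs to
spec:
  authentication:
    type: scram-sha-512                # the User Operator generates the password
```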

3.1.6.3.3.2. When to use SCRAM-SHA authentication for clients

SCRAM-SHA is recommended for authenticating Kafka clients when:

- The client supports authentication using SCRAM-SHA-512

- It is necessary to use passwords rather than the TLS certificates

- Authentication for unencrypted communication is required

3.1.6.4. External listeners

Use an external listener to expose your AMQ Streams Kafka cluster to a client outside an OpenShift environment.

Additional resources

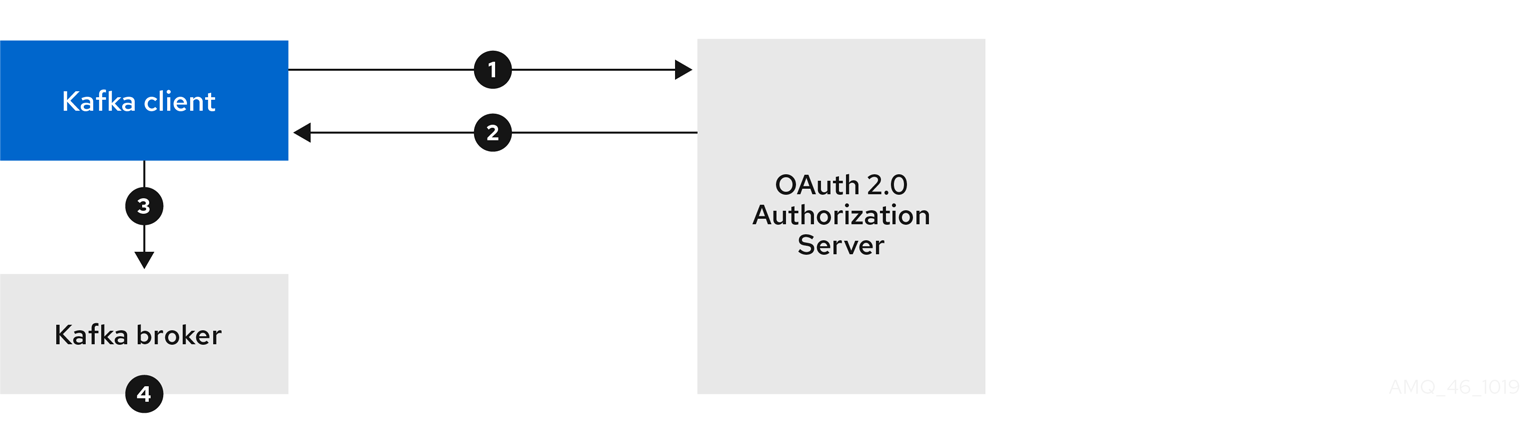

3.1.6.4.1. Customizing advertised addresses on external listeners