Configuring automation execution

Learn how to manage, monitor, and use automation controller

Abstract

Preface

This guide describes the administration of automation controller through custom scripts, management jobs, and more. Written for DevOps engineers and administrators, the Configuring automation execution guide assumes a basic understanding of the systems requiring management with automation controller's easy-to-use graphical interface.

Providing feedback on Red Hat documentation

If you have a suggestion to improve this documentation, or find an error, you can contact technical support at https://access.redhat.com to open a request.

Chapter 1. Start, stop, and restart automation controller

Automation controller includes an administrator utility script, automation-controller-service. The script can start, stop, and restart all automation controller services running on the current single automation controller node. The script includes the message queue components and the database if it is an integrated installation.

External databases must be explicitly managed by the administrator. You can find the services script in /usr/bin/automation-controller-service, which can be invoked with the following command:

root@localhost:~$ automation-controller-service restart


In clustered installs, the automation-controller-service restart does not include PostgreSQL as part of the services that are restarted. This is because it exists external to automation controller, and PostgreSQL does not always need to be restarted. Use systemctl restart automation-controller to restart services on clustered environments instead.

You must also restart each cluster node for certain changes to persist as opposed to a single node for a localhost install.

For more information on clustered environments, see the Clustering section.

You can also invoke the services script using distribution-specific service management commands. Distribution packages often provide a similar script, sometimes as an init script, to manage services. For more information, see your distribution-specific service management system.

When running automation controller in a container, do not use the automation-controller-service script. Restart the pod using the container environment instead.
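The choice of restart command described in this chapter can be summarized in a short sketch. The function name `restart_command` and the deployment labels `single_node`, `clustered`, and `container` are hypothetical and exist only for illustration; the two command lines are the ones given in the text above.

```python
def restart_command(deployment: str) -> list[str]:
    """Return the restart command for a deployment type as an argument list."""
    if deployment == "single_node":
        # Restarts all controller services on the current node.
        return ["automation-controller-service", "restart"]
    if deployment == "clustered":
        # Run on each cluster node; PostgreSQL is managed separately.
        return ["systemctl", "restart", "automation-controller"]
    if deployment == "container":
        # The services script must not be used inside a container.
        raise ValueError("restart the pod through the container environment instead")
    raise ValueError(f"unknown deployment type: {deployment!r}")
```

The returned list can be passed to `subprocess.run` by an administrator with the required privileges on the node.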

Chapter 2. Automation controller configuration

You can configure some automation controller options by using the Settings menu of the User Interface.

Save applies the changes you make, but it does not exit the edit dialog.

To return to the Settings page, from the navigation panel select Settings or use the breadcrumbs at the top of the current view.

2.1. Configuring system settings

You can use the System menu to define automation controller system settings.

Procedure

- From the navigation panel, select → → . The System Settings page is displayed.

- Click .

You can configure the following options:

- Base URL of the service: This setting is used by services such as notifications to render a valid URL to the service.

- Proxy IP allowed list: If the service is behind a reverse proxy or load balancer, use this setting to configure the proxy IP addresses from which the service should trust custom REMOTE_HOST_HEADERS header values. If this setting is an empty list (the default), the headers specified by REMOTE_HOST_HEADERS are trusted unconditionally.

- CSRF Trusted Origins List: If the service is behind a reverse proxy or load balancer, use this setting to configure the schema://addresses from which the service should trust Origin header values.

- Red Hat customer username: This username is used to send data to Automation Analytics.

- Red Hat customer password: This password is used to send data to Automation Analytics.

- Red Hat or Satellite username: This username is used to send data to Automation Analytics.

- Red Hat or Satellite password: This password is used to send data to Automation Analytics.

- Global default execution environment: The execution environment to be used when one has not been configured for a job template.

- Custom virtual environment paths: Paths where automation controller looks for custom virtual environments. Enter one path per line.

- Last gather date for Automation Analytics: Set the date and time.

- Automation Analytics Gather Interval: Interval (in seconds) between data gathering. If Gather data for Automation Analytics is set to false, this value is ignored.

- Remote Host Headers: HTTP headers and meta keys to search to decide remote hostname or IP. Add additional items to this list, such as HTTP_X_FORWARDED_FOR, if behind a reverse proxy. For more information, see Configuring proxy support for Red Hat Ansible Automation Platform.

- Automation Analytics upload URL: This value has been set manually in a settings file. This setting is used to configure the upload URL for data collection for Automation Analytics.

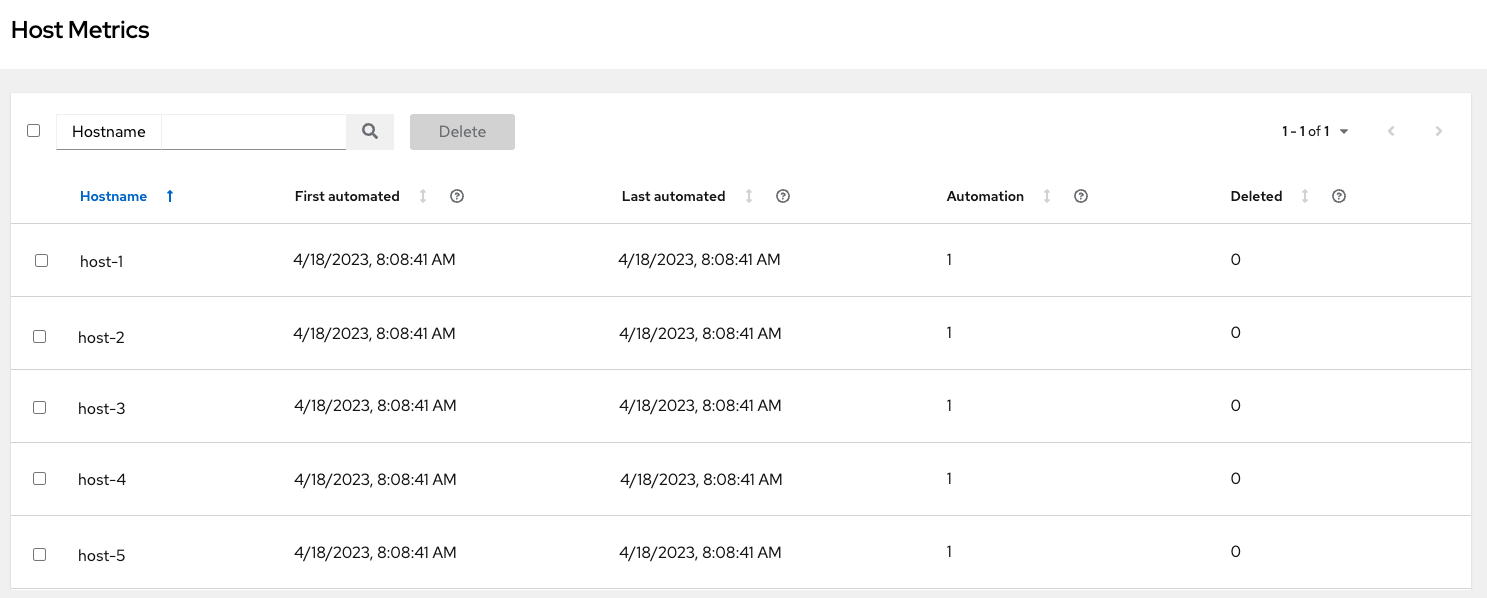

Defines subscription usage model and shows Host Metrics:

You can set the following options:

- No Subscriptions. Deletion of host_metrics will not be considered for purposes of management host counting

- Usage based on unique managed nodes in a large historical time frame and delete functionality for no longer used managed nodes

You can also select the following options:

- Enable Activity Stream: Set to enable capturing activity for the activity stream.

- Enable Activity Stream for Inventory Sync: Set to enable capturing activity for the activity stream when running inventory sync.

- All Users Visible to Organization Admins: Set to control whether any organization administrator can view all users and teams, even those not associated with their organization.

- Organization Admins Can Manage Users and Teams: Set to control whether any organization administrator has the privileges to create and manage users and teams. You might want to disable this ability if you are using an LDAP or SAML integration.

- Gather data for Automation Analytics: Set to enable the service to gather data on automation and send it to Automation Analytics.

- Click

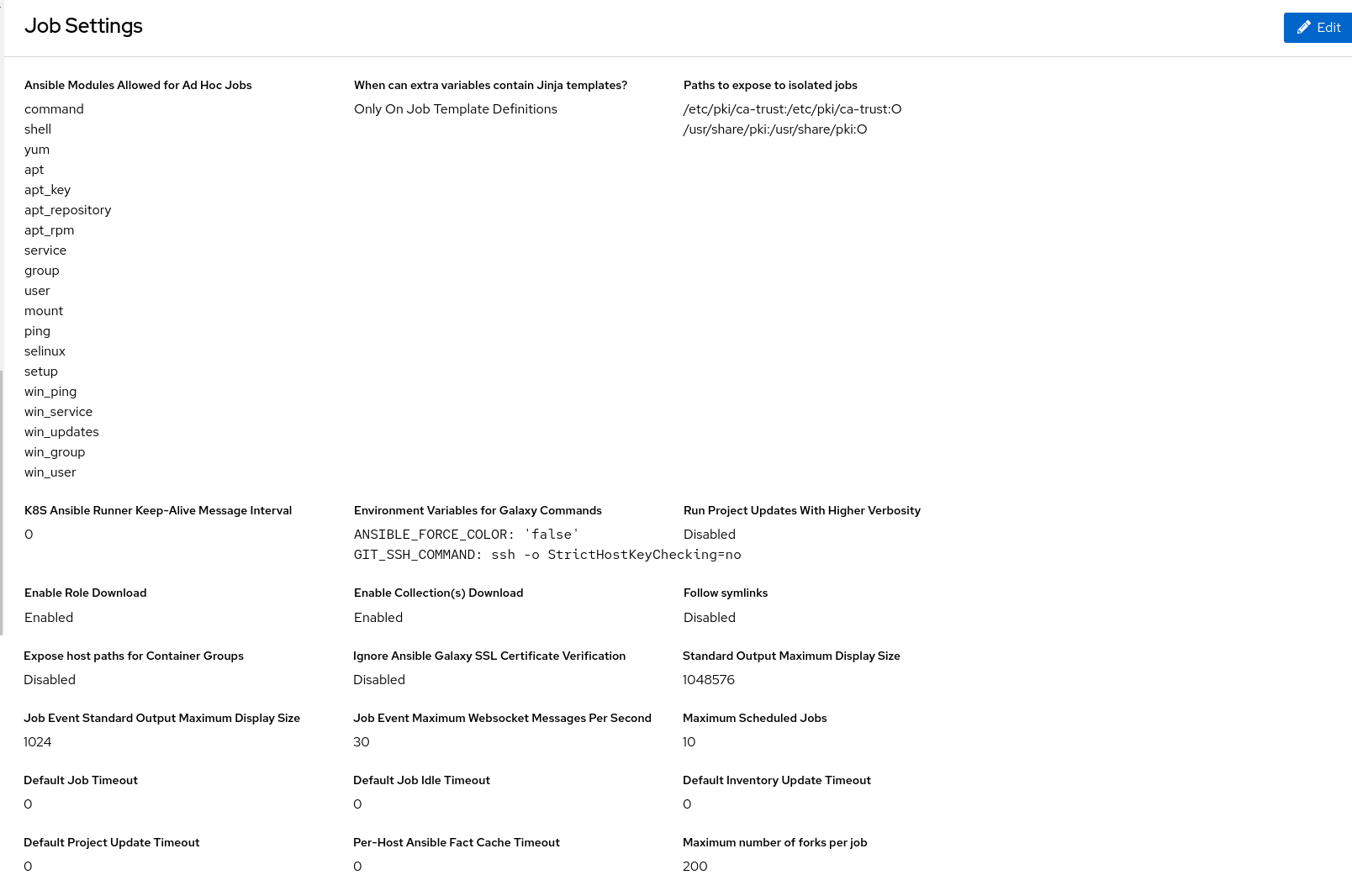

2.2. Configuring jobs

You can use the Job option to define the operation of Jobs in automation controller.

Procedure

- From the navigation panel, select → → .

- On the Job Settings page, click .

You can configure the following options:

- Ansible Modules Allowed For Ad Hoc Jobs: List of modules allowed to be used by ad hoc jobs.

- The directory in which the service creates new temporary directories for job execution and isolation (such as credential files).

- When can extra variables contain Jinja templates?: Ansible allows variable substitution through the Jinja2 templating language for --extra-vars. This poses a potential security risk where users with the ability to specify extra vars at job launch time can use Jinja2 templates to run arbitrary Python. Set this value to either template or never.

- Paths to expose to isolated jobs: List of paths that would otherwise be hidden to expose to isolated jobs. Enter one path per line. If a path to a specific file is entered, then the entire directory containing that file will be mounted inside the execution environment. Volumes are mounted from the execution node to the container. The supported format is HOST-DIR[:CONTAINER-DIR[:OPTIONS]].

- Extra Environment Variables: Additional environment variables set for playbook runs, inventory updates, project updates, and notification sending.

- K8S Ansible Runner Keep-Alive Message Interval: Only applies to jobs running in a Container Group. If not 0, send a message every specified number of seconds to keep the connection open.

- Environment Variables for Galaxy Commands: Additional environment variables set for invocations of ansible-galaxy within project updates. Useful if you must use a proxy server for ansible-galaxy but not git.

- Standard Output Maximum Display Size: Maximum Size of Standard Output in bytes to display before requiring the output be downloaded.

- Job Event Standard Output Maximum Display Size: Maximum Size of Standard Output in bytes to display for a single job or ad hoc command event. stdout ends with … when truncated.

- Job Event Maximum Websocket Messages Per Second: The maximum number of messages to update the UI live job output with per second. A value of 0 means no limit.

- Maximum Scheduled Jobs: Maximum number of the same job template that can be waiting to run when launching from a schedule before no more are created.

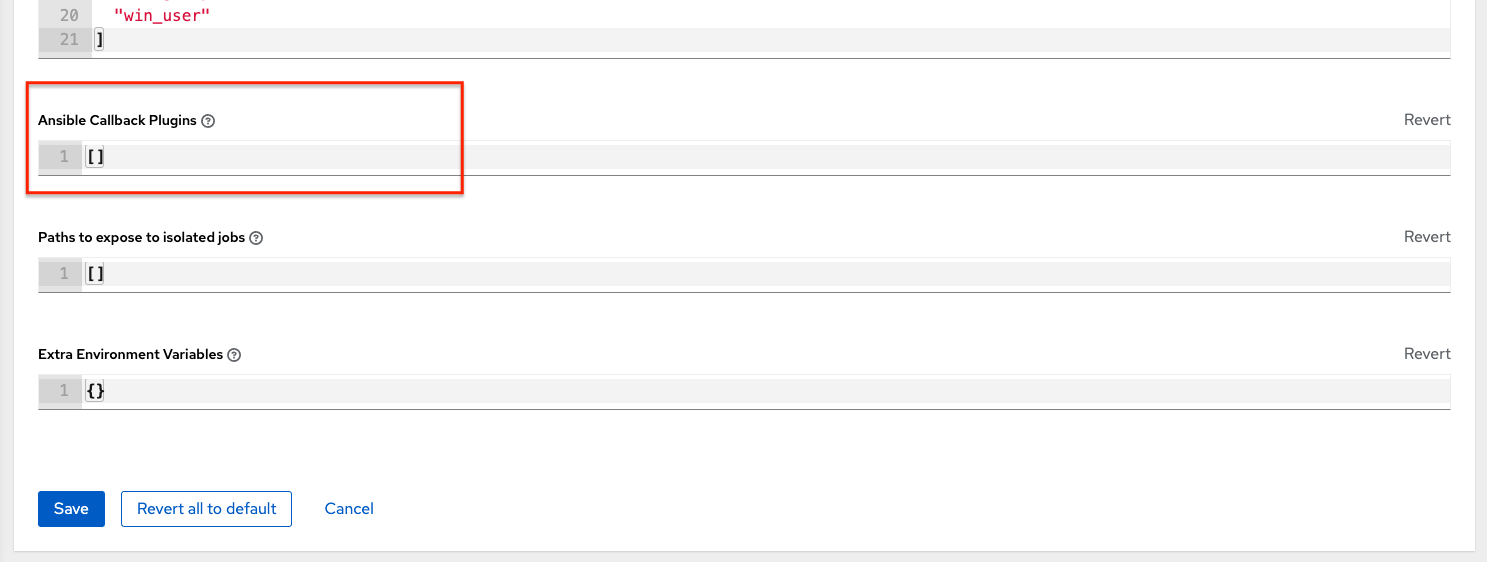

- Ansible Callback Plugins: List of paths to search for extra callback plugins to be used when running jobs. Enter one path per line.

- Default Job Timeout: Maximum time in seconds to allow jobs to run. Use a value of 0 to indicate that no timeout should be imposed. A timeout set on an individual job template will override this.

- Default Job Idle Timeout: If no output is detected from ansible in this number of seconds the execution will be terminated. Use a value of 0 to indicate that no idle timeout should be imposed.

- Default Inventory Update Timeout: Maximum time in seconds to allow inventory updates to run. Use a value of 0 to indicate that no timeout should be imposed. A timeout set on an individual inventory source will override this.

- Default Project Update Timeout: Maximum time in seconds to allow project updates to run. Use a value of 0 to indicate that no timeout should be imposed. A timeout set on an individual project will override this.

- Per-Host Ansible Fact Cache Timeout: Maximum time, in seconds, that stored Ansible facts are considered valid since the last time they were modified. Only valid, non-stale, facts are accessible by a playbook. This does not influence the deletion of ansible_facts from the database. Use a value of 0 to indicate that no timeout should be imposed.

- Maximum number of forks per job: Saving a Job Template with more than this number of forks results in an error. When set to 0, no limit is applied.

- Job execution path: Only available in operator-based installations.

- Container Run Options: Only available in operator-based installations. List of options to pass to Podman run. Example: ['--network', 'slirp4netns:enable_ipv6=true', '--log-level', 'debug'].

You can set the following options:

- Run Project Updates With Higher Verbosity: Select to add the CLI -vvv flag to playbook runs of project_update.yml used for project updates.

- Enable Role Download: Select to allow roles to be dynamically downloaded from a requirements.yml file for SCM projects.

- Enable Collection(s) Download: Select to allow collections to be dynamically downloaded from a requirements.yml file for SCM projects.

- Follow symlinks: Select to follow symbolic links when scanning for playbooks. Be aware that setting this to True can lead to infinite recursion if a link points to a parent directory of itself.

- Expose host paths for Container Groups: Select to expose paths through hostPath for the Pods created by a Container Group. HostPath volumes present many security risks, and it is best practice to avoid the use of HostPaths when possible.

- Ignore Ansible Galaxy SSL Certificate Verification: If set to true, certificate validation is not done when installing content from any Galaxy server.

Click the tooltip icon next to the field that you need additional information about.

For more information about configuring Galaxy settings, see the Ansible Galaxy Support section of Using automation execution.

Note: The values for all timeouts are in seconds.

- Click to apply the settings.

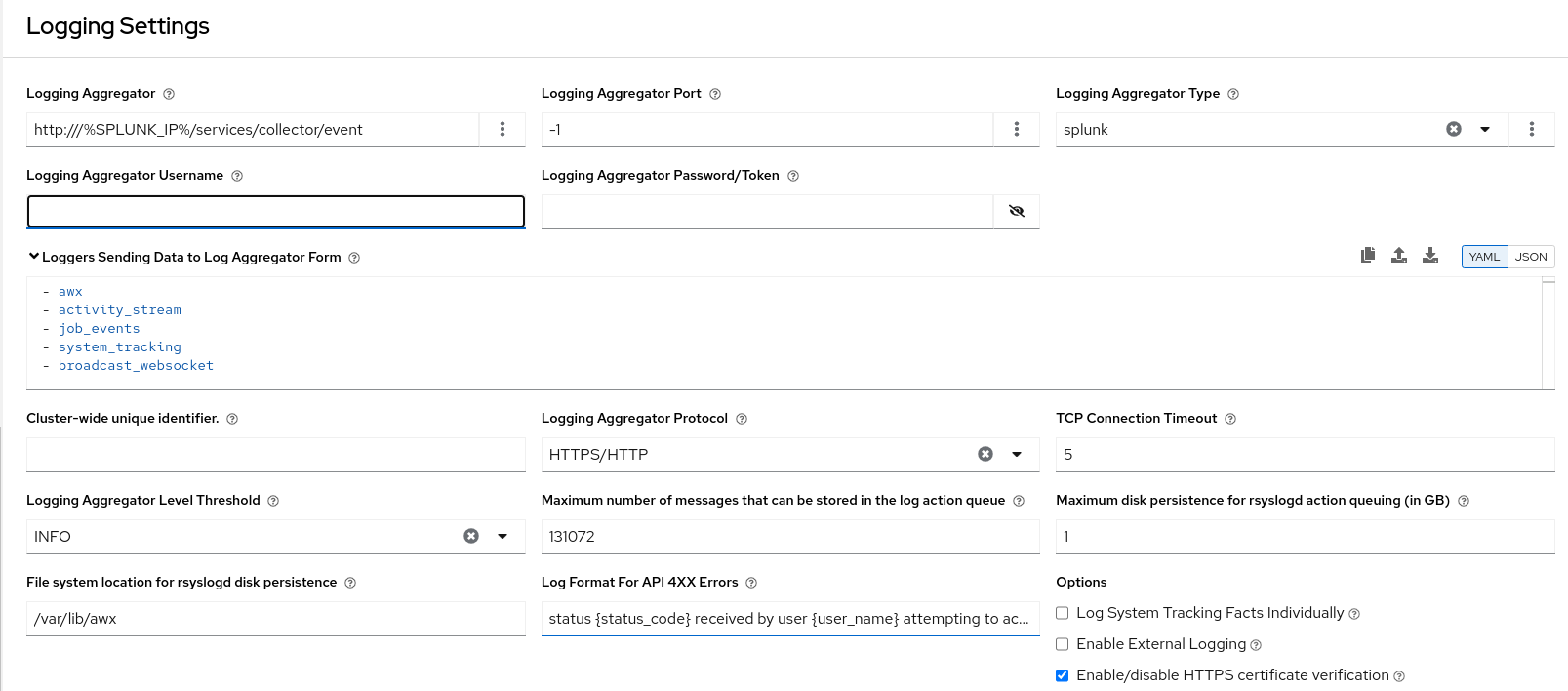

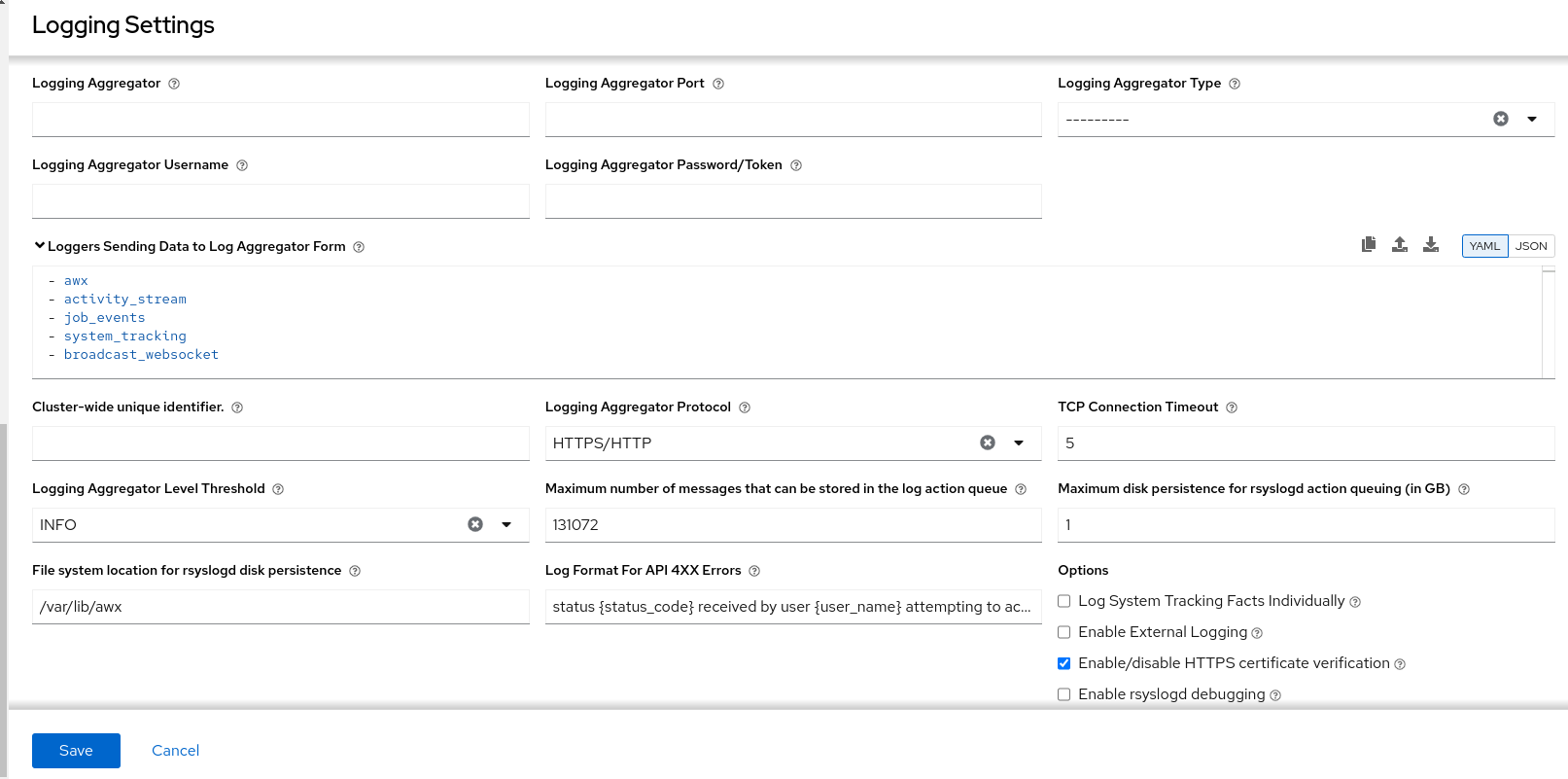

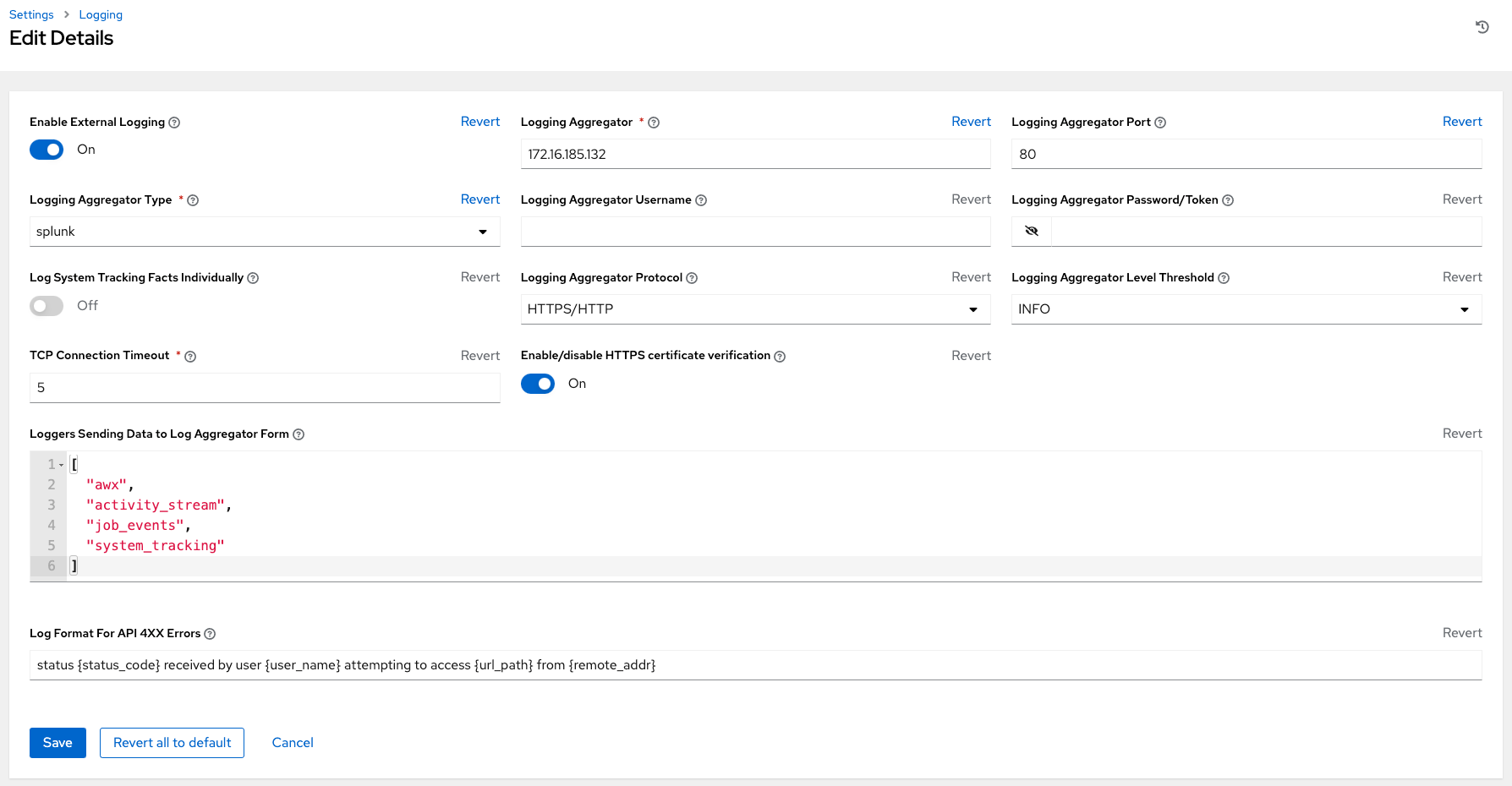
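The HOST-DIR[:CONTAINER-DIR[:OPTIONS]] format described for Paths to expose to isolated jobs can be illustrated with a small parser. This is a minimal sketch for checking entries before pasting them into the setting, not the controller's own validation logic. The names `ExposedPath`, `parse_exposed_path`, and `parse_exposed_paths` are hypothetical.

```python
from typing import NamedTuple, Optional


class ExposedPath(NamedTuple):
    host_dir: str
    container_dir: Optional[str]
    options: Optional[str]


def parse_exposed_path(spec: str) -> ExposedPath:
    """Split one HOST-DIR[:CONTAINER-DIR[:OPTIONS]] entry into its parts."""
    parts = spec.strip().split(":")
    if not parts[0] or len(parts) > 3:
        raise ValueError(f"expected HOST-DIR[:CONTAINER-DIR[:OPTIONS]], got {spec!r}")
    host_dir = parts[0]
    # The container directory and the options are both optional.
    container_dir = parts[1] if len(parts) > 1 else None
    options = parts[2] if len(parts) > 2 else None
    return ExposedPath(host_dir, container_dir, options)


def parse_exposed_paths(text: str) -> list[ExposedPath]:
    """Parse a multi-line value with one path per line, skipping blank lines."""
    return [parse_exposed_path(line) for line in text.splitlines() if line.strip()]
```

For example, `parse_exposed_path("/opt/data:/data:ro")` yields a host directory of `/opt/data`, a container directory of `/data`, and an options string of `ro`. The paths and the option string here are placeholders.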

2.3. Logging and aggregation settings

For information about these settings, see Setting up logging.

2.4. Configuring Automation Analytics

When you imported your license for the first time, you were automatically opted in for the collection of data that powers Automation Analytics, a cloud service that is part of the Ansible Automation Platform subscription.

Prerequisites

- A service account created with the Automation Analytics Viewer role in console.redhat.com. For more information, see Creating a service account.

Procedure

- From the navigation panel, select → → .

- Click .

- In the field labeled Red Hat Client ID for Analytics, enter the client ID you received when you created your service account to retrieve subscription and content information.

- In the field labeled Red Hat Client Secret for Analytics, enter the client secret you received when you created your service account to send data to Automation Analytics.

- In the Options list select the checkbox to Gather data for Automation Analytics.

- Click .

Verification

After configuring the service account, run a test job to ensure everything is set up correctly.

- From the navigation panel, select → to launch a job.

- Check analytics at console.redhat.com to confirm that the data is being posted.

2.5. Additional settings for automation controller

There are additional advanced settings that can affect automation controller behavior that are not available in the automation controller UI.

For traditional virtual machine based deployments, these settings can be provided to automation controller by creating a file in /etc/tower/conf.d/custom.py. When settings are provided to automation controller through file-based settings, the settings file must be present on all control plane nodes. These include all of the hybrid or control type nodes in the automationcontroller group in the installer inventory.

For these settings to be effective, restart the service with automation-controller-service restart on each node with the settings file. If the settings provided in this file are also visible in the automation controller UI, then they are marked as "Read only" in the UI.

For container-based installations, use controller_extra_settings in the Automation controller variables. The containerized version does not support custom.py.
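As an illustration of file-based settings on a virtual machine based deployment, the following sketch shows what a custom.py file might contain. REMOTE_HOST_HEADERS is named earlier in this guide. PROXY_IP_ALLOWED_LIST is assumed here to be the setting behind the Proxy IP allowed list option, and the IP addresses are hypothetical placeholders. Verify setting names against your installed version before using them.

```python
# /etc/tower/conf.d/custom.py (path taken from the text above)
# Placeholder values only; replace them with values for your environment.

# HTTP headers and meta keys searched to decide the remote host name or IP.
REMOTE_HOST_HEADERS = ["HTTP_X_FORWARDED_FOR", "REMOTE_ADDR", "REMOTE_HOST"]

# Assumed setting name for "Proxy IP allowed list"; addresses are hypothetical.
PROXY_IP_ALLOWED_LIST = ["10.0.0.10", "10.0.0.11"]
```

Because both options also appear on the System Settings page, they would be marked "Read only" in the UI after the file is in place on every control plane node and the service has been restarted.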

Chapter 3. Performance tuning for automation controller

Tune your automation controller to optimize performance and scalability. When planning your workload, ensure that you identify your performance and scaling needs, adjust for any limitations, and monitor your deployment.

Automation controller is a distributed system with many components that you can tune, including the following:

- Task system in charge of scheduling jobs

- Control Plane in charge of controlling jobs and processing output

- Execution plane where jobs run

- Web server in charge of serving the API

- WebSocket system that serves and broadcasts WebSocket connections and data

- Database used by many components

3.1. Websocket configuration for automation controller

You can configure automation controller to align the websocket configuration with your nginx or load balancer configuration.

Automation controller nodes are interconnected through websockets to distribute all websocket-emitted messages throughout your system. This configuration setup enables any browser client websocket to subscribe to any job that might be running on any automation controller node. Websocket clients are not routed to specific automation controller nodes. Instead, any automation controller node can handle any websocket request and each automation controller node must know about all websocket messages destined for all clients.

You can configure websockets at /etc/tower/conf.d/websocket_config.py in all of your automation controller nodes and the changes become effective after the service restarts.

Automation controller automatically handles discovery of other automation controller nodes through the Instance record in the database.

Your automation controller nodes are designed to broadcast websocket traffic across a private, trusted subnet (and not the open Internet). Therefore, if you turn off HTTPS for websocket broadcasting, the websocket traffic, composed mostly of Ansible Playbook stdout, is sent unencrypted between automation controller nodes.

3.1.1. Configuring automatic discovery of other automation controller nodes

You can configure websocket connections to enable automation controller to automatically handle discovery of other automation controller nodes through the Instance record in the database.

Edit automation controller websocket information for port and protocol, and confirm whether to verify certificates with True or False when establishing the websocket connections:

BROADCAST_WEBSOCKET_PROTOCOL = 'http'
BROADCAST_WEBSOCKET_PORT = 80
BROADCAST_WEBSOCKET_VERIFY_CERT = False

Restart automation controller with the following command:

$ automation-controller-service restart

3.2. Capacity planning for deploying automation controller

Capacity planning for automation controller is planning the scale and characteristics of your deployment so that it has the capacity to run the planned workload. Capacity planning includes the following phases:

- Characterizing your workload

- Reviewing the capabilities of different node types

- Planning the deployment based on the requirements of your workload

3.2.1. Characteristics of your workload

Before planning your deployment, establish the workload that you want to support. Consider the following factors to characterize an automation controller workload:

- Managed hosts

- Tasks per hour per host

- Maximum number of concurrent jobs that you want to support

- Maximum number of forks set on jobs. Forks determine the number of hosts that a job acts on concurrently.

- Maximum API requests per second

- Node size that you prefer to deploy (CPU/Memory/Disk)

3.2.2. Types of nodes in automation controller

You can configure four types of nodes in an automation controller deployment:

- Control nodes

- Hybrid nodes

- Execution nodes

- Hop nodes

However, for an operator-based environment, there are no hybrid or control nodes. Instead, container groups, which are made up of containers running on the Kubernetes cluster, comprise the control plane. That control plane is local to the Kubernetes cluster in which Red Hat Ansible Automation Platform is deployed.

3.2.2.1. Benefits of scaling control nodes

Control and hybrid nodes provide control capacity. They provide the ability to start jobs and process their output into the database. Every job is assigned a control node. In the default configuration, each job requires one capacity unit to control. For example, a control node with 100 capacity units can control a maximum of 100 jobs.

Vertically scaling a control node by deploying a larger virtual machine with more resources increases the following capabilities of the control plane:

- The number of jobs that a control node can perform control tasks for, which requires both more CPU and memory.

- The number of job events a control node can process concurrently.

Scaling CPU and memory in the same proportion is recommended, for example, 1 CPU: 4 GB RAM. Even when memory consumption is high, increasing the CPU of an instance can often relieve pressure. The majority of the memory that control nodes consume is from unprocessed events that are stored in a memory-based queue.

Vertically scaling a control node does not automatically increase the number of workers that handle web requests.

An alternative to vertically scaling is horizontally scaling by deploying more control nodes. This spreads control tasks across more nodes and, provided that you provision a load balancer to distribute requests, also spreads web traffic across more nodes. Horizontally scaling by deploying more control nodes can be preferable in many ways, because it also provides more redundancy and workload isolation when a control node goes down or experiences higher than normal load.

3.2.2.2. Benefits of scaling execution nodes

Execution and hybrid nodes provide execution capacity. The capacity consumed by a job is equal to the number of forks set on the job template or the number of hosts in the inventory, whichever is less, plus one additional capacity unit to account for the main ansible process. For example, a job template with the default forks value of 5 acting on an inventory with 50 hosts consumes 6 capacity units from the execution node it is assigned to.
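The capacity rule in the preceding paragraph can be written as a one-line function. This is a sketch of the arithmetic as described, and the function name `execution_capacity_consumed` is hypothetical.

```python
def execution_capacity_consumed(forks: int, inventory_hosts: int) -> int:
    """Capacity units for one job: the smaller of forks and hosts, plus one
    unit for the main ansible process."""
    return min(forks, inventory_hosts) + 1


print(execution_capacity_consumed(forks=5, inventory_hosts=50))  # 6
print(execution_capacity_consumed(forks=5, inventory_hosts=3))   # 4
```

The second call shows the case where the inventory is smaller than the forks value, so the host count determines the capacity consumed.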

Vertically scaling an execution node by deploying a larger virtual machine with more resources provides more forks for job execution. This increases the number of concurrent jobs that an instance can run.

In general, scaling CPU alongside memory in the same proportion is recommended. Like control and hybrid nodes, there is a capacity adjustment on each execution node that you can use to align actual use with the estimation of capacity consumption that the automation controller makes. By default, all nodes are set to the top of that range. If actual monitoring data reveals the node to be over-used, decreasing the capacity adjustment can help bring this in line with actual usage.

An alternative to vertically scaling execution nodes is horizontally scaling the execution plane by deploying more virtual machines to be execution nodes. Horizontally scaling can provide additional isolation of workloads, because you can assign different instances to different instance groups. You can then assign these instance groups to organizations, inventories, or job templates. For example, you can configure an instance group that can only be used for running jobs against a certain Inventory. In this scenario, by horizontally scaling the execution plane, you can ensure that lower-priority jobs do not block higher-priority jobs.

3.2.2.3. Benefits of scaling hop nodes

Because hop nodes use very low memory and CPU, vertically scaling these nodes does not impact capacity. Monitor the network bandwidth of any hop node that serves as the sole connection between many execution nodes and the control plane. If bandwidth use is saturated, consider changing the network.

Horizontally scaling by adding more hop nodes could provide redundancy when one hop node goes down, which can allow traffic to continue to flow between the control plane and the execution nodes.

3.2.2.4. Ratio of control to execution capacity

Assuming default configuration, the maximum recommended ratio of control capacity to execution capacity is 1:5 in traditional VM deployments. This ensures that there is enough control capacity to run jobs on all the execution capacity available and process the output. Any less control capacity in relation to the execution capacity, and it would not be able to launch enough jobs to use the execution capacity.

There are cases in which you might want to modify this ratio closer to 1:1. For example, in cases where a job produces a high level of job events, reducing the amount of execution capacity in relation to the control capacity helps relieve pressure on the control nodes to process that output.
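To make the ratio concrete, the following sketch estimates how many control nodes keep a deployment at or below a chosen ratio. It assumes that control and execution nodes are of equal size, so that the ratio can be counted in nodes. The function name `control_nodes_needed` and its parameters are hypothetical.

```python
import math


def control_nodes_needed(execution_nodes: int, execution_per_control: int = 5) -> int:
    """Smallest number of equally sized control nodes that keeps the ratio of
    control to execution nodes at or below 1:execution_per_control."""
    if execution_nodes < 0 or execution_per_control < 1:
        raise ValueError("execution_nodes must be >= 0 and execution_per_control >= 1")
    return math.ceil(execution_nodes / execution_per_control)


print(control_nodes_needed(10))                           # 2, at the 1:5 ratio
print(control_nodes_needed(10, execution_per_control=1))  # 10, at a 1:1 ratio
```

Lowering `execution_per_control` toward 1 models the event-heavy case, where more control capacity is reserved for processing job output.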

3.3. Example capacity planning exercise

After you have determined the workload capacity that you want to support, you must plan your deployment based on the requirements of the workload. To help you with your deployment, review the following planning exercise.

For this example, the cluster must support the following capacity:

- 300 managed hosts

- 1,000 tasks per hour per host, or approximately 16.7 tasks per minute per host

- 10 concurrent jobs

- Forks set to 5 on playbooks. This is the default.

- Average event size is 1 Mb

The virtual machines have 4 CPU and 16 GB RAM, and disks that have 3000 IOPS.

3.3.1. Example workload requirements

For this example capacity planning exercise, use the following workload requirements:

Execution capacity

To run the 10 concurrent jobs requires at least 60 units of execution capacity.

- You calculate this by using the following equation: (10 jobs * 5 forks) + (10 jobs * 1 base task impact of a job) = 60 execution capacity

Control capacity

- To control 10 concurrent jobs requires at least 10 units of control capacity.

To calculate the number of events per hour that you need to support 300 managed hosts and 1,000 tasks per hour per host, use the following equation:

- 1000 tasks * 300 managed hosts per hour = 300,000 events per hour at minimum.

- You must run the job to see exactly how many events it produces, because this is dependent on the specific task and verbosity. For example, a debug task printing “Hello World” produces 6 job events with the verbosity of 1 on one host. With a verbosity of 3, it produces 34 job events on one host. Therefore, you must estimate that the task produces at least 6 events. This would produce closer to 3,000,000 events per hour, or approximately 833 events per second.
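The arithmetic behind these workload requirements can be reproduced in a few lines. The variable and function names are illustrative only. The sketch also computes the event rate at exactly 6 events per task, for comparison with the planning figure of about 3,000,000 events per hour used above.

```python
concurrent_jobs = 10
forks = 5
managed_hosts = 300
tasks_per_hour_per_host = 1000

# Execution capacity: forks per job plus one base unit per job.
execution_capacity = concurrent_jobs * forks + concurrent_jobs * 1
# Control capacity: one unit per concurrent job in the default configuration.
control_capacity = concurrent_jobs * 1
# Lower bound on events: one event per task per host.
min_events_per_hour = tasks_per_hour_per_host * managed_hosts


def events_per_second(events_per_hour: float) -> float:
    """Convert an hourly event count to an average per-second rate."""
    return events_per_hour / 3600


print(execution_capacity)                                 # 60
print(control_capacity)                                   # 10
print(min_events_per_hour)                                # 300000
print(round(events_per_second(3_000_000)))                # 833
print(round(events_per_second(min_events_per_hour * 6)))  # 500
```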

Determining quantity of execution and control nodes needed

To determine how many execution and control nodes you need, refer to the experimental results in the following table, which shows the API capacity and the observed event processing rate of a single control node with 5 execution nodes of equal size. The default “forks” setting of job templates is 5. With this default, the maximum number of jobs that a control node can dispatch to execution nodes causes 5 execution nodes of equal CPU and RAM to use 100% of their capacity, which gives the previously mentioned 1:5 ratio of control to execution capacity.

| Node | API capacity | Default execution capacity | Default control capacity | Mean event processing rate at 100% capacity usage | Mean event processing rate at 50% capacity usage | Mean event processing rate at 40% capacity usage |
|---|---|---|---|---|---|---|
| 4 CPU at 2.5 GHz, 16 GB RAM control node, a maximum of 3000 IOPS disk | about 10 requests per second | n/a | 137 jobs | 1100 per second | 1400 per second | 1630 per second |
| 4 CPU at 2.5 GHz, 16 GB RAM execution node, a maximum of 3000 IOPS disk | n/a | 137 | n/a | n/a | n/a | n/a |
| 4 CPU at 2.5 GHz, 16 GB RAM database node, a maximum of 3000 IOPS disk | n/a | n/a | n/a | n/a | n/a | n/a |

Because controlling jobs competes with job event processing on the control node, over-provisioning control capacity can reduce processing times. When processing times are high, you can experience a delay between when the job runs and when you can view the output in the API or UI.

For this example, for a workload on 300 managed hosts, executing 1000 tasks per hour per host, 10 concurrent jobs with forks set to 5 on playbooks, and an average event size of 1 Mb, use the following procedure:

- Deploy 1 execution node, 1 control node, and 1 database node, each with 4 CPU at 2.5 GHz, 16 GB RAM, and disks that have about 3000 IOPS.

- Keep the default fork setting of 5 on job templates.

- Use the capacity change feature in the instance view of the UI on the control node to reduce the capacity down to 16, the lowest value, to reserve more of the control node’s capacity for processing events.
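The reasoning behind deploying a single node of each type can be checked against the table figures. This sketch compares the example requirements with the measured capacity of one 4 CPU, 16 GB RAM node. It uses the event rate at 100% capacity usage, the lowest rate in the table, as the conservative bound. The constant and function names are hypothetical.

```python
# Figures from the table above for a 4 CPU, 16 GB RAM node.
EXECUTION_CAPACITY_PER_NODE = 137
CONTROL_CAPACITY_PER_NODE = 137
EVENT_RATE_AT_FULL_CAPACITY = 1100  # events per second at 100% capacity usage


def single_node_is_enough(required_execution: int,
                          required_control: int,
                          required_event_rate: float) -> bool:
    """True if one execution node and one control node cover the workload."""
    return (required_execution <= EXECUTION_CAPACITY_PER_NODE
            and required_control <= CONTROL_CAPACITY_PER_NODE
            and required_event_rate <= EVENT_RATE_AT_FULL_CAPACITY)


# Requirements from the example workload: 60 execution units,
# 10 control units, and approximately 833 events per second.
print(single_node_is_enough(60, 10, 833))  # True
```

Reducing the control node's capacity adjustment, as in the last step, moves the node toward the higher event processing rates shown in the 50% and 40% columns.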

For more information about workloads with high levels of API interaction, see Scaling Automation Controller for API Driven Workloads. For more information about managing capacity with instances, see Managing capacity with Instances. For more information about operator-based deployments, see Red Hat Ansible Automation Platform considerations for operator environments.

3.4. Performance troubleshooting for automation controller

Users experience many request timeouts (504 or 503 errors), or in general high API latency. In the UI, clients face slow login and long wait times for pages to load. What system is the likely culprit?

- If these issues occur only on login, and you use external authentication, the problem is likely with the integration of your external authentication provider and you should seek Red Hat support.

- For other issues with timeouts or high API latency, see Web server tuning.

Long wait times for job output to load.

- Job output streams from the execution node where the ansible-playbook is actually run to the associated control node. Then the callback receiver serializes this data and writes it to the database. Relevant settings to observe and tune can be found in Settings for managing job event processing and PostgreSQL database configuration and maintenance for automation controller.

- In general, to resolve this symptom it is important to observe the CPU and memory use of the control nodes. If CPU or memory use is very high, you can either horizontally scale the control plane by deploying more virtual machines to be control nodes, which naturally spreads out the work, or modify the number of jobs that a control node manages at a time. For more information, see Capacity settings for control and execution nodes.

- Job output delay can occur on initial job runs that use execution environments that have not been pulled into the platform. The output becomes visible after the job run completes.

What can you do to increase the number of jobs that automation controller can run concurrently?

Factors that cause jobs to remain in “pending” state are:

- Waiting for “dependencies” to finish: this includes project updates and inventory updates when “update on launch” behavior is enabled.

- The “allow_simultaneous” setting of the job template: if multiple jobs of the same job template are in “pending” status, check the “allow_simultaneous” setting of the job template (“Concurrent Jobs” checkbox in the UI). If this is not enabled, only one job from a job template can run at a time.

- The “forks” value of your job template: the default value is 5. The amount of capacity required to run the job is roughly the forks value (some small overhead is accounted for). If the forks value is set to a very large number, this limits which nodes are able to run the job.

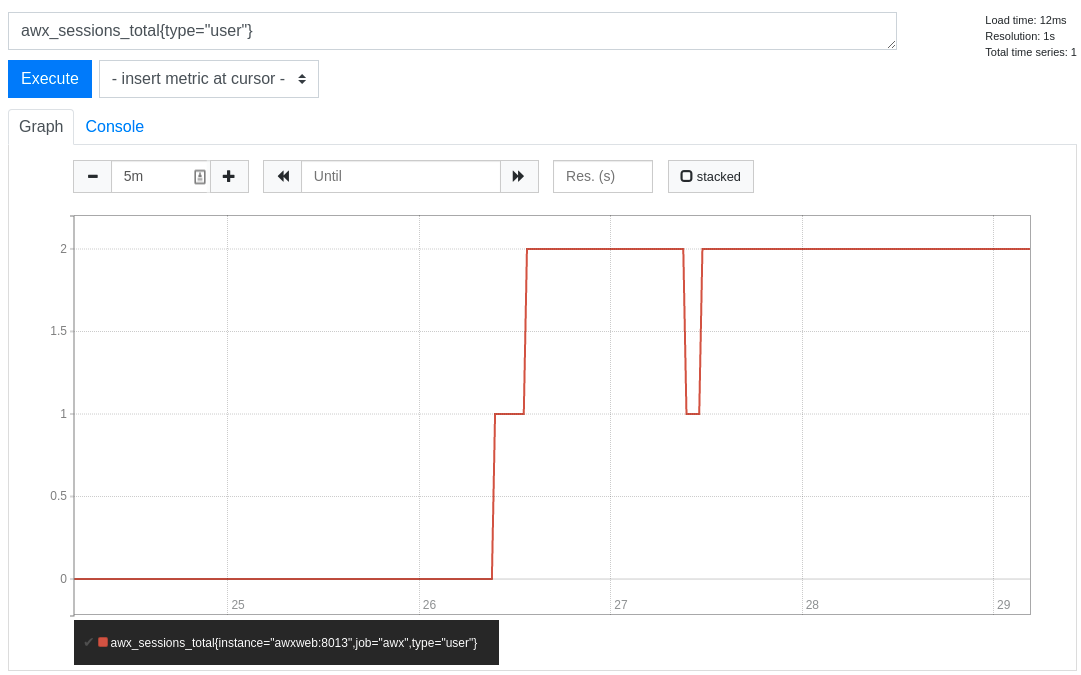

- Lack of either control or execution capacity: see “awx_instance_remaining_capacity” metric from the application metrics available on /api/v2/metrics. See Metrics for monitoring automation controller application for more information about how to check metrics. See Capacity planning for deploying automation controller for information about how to plan your deployment to handle the number of jobs you are interested in.

Jobs run more slowly on automation controller than on a local machine.

- Some additional overhead is expected, because automation controller might be dispatching your job to a separate node. In this case, automation controller is starting a container and running ansible-playbook there, serializing all output and writing it to a database.

- Project update on launch and inventory update on launch behavior can cause additional delays at job start time.

- Size of projects can impact how long it takes to start the job, as the project is updated on the control node and transferred to the execution node. Internal cluster routing can impact network performance. For more information, see Internal cluster routing.

- Container pull settings can impact job start time. The execution environment is a container that is used to run jobs within it. Container pull settings can be set to “Always”, “Never” or “If not present”. If the container is always pulled, this can cause delays.

- Ensure that all cluster nodes, including execution, control, and the database, have been deployed in instances with storage rated to the minimum required IOPS, because the manner in which automation controller runs ansible and caches event data involves significant disk I/O. For more information, see System requirements.

Database storage does not stop growing.

- Automation controller has a management job titled “Cleanup Job Details”. By default, it is set to keep 120 days of data and to run once a week. To reduce the amount of data in the database, you can shorten the retention time. For more information, see Removing old activity stream data.

- Running the cleanup job deletes the data in the database. However, the database must at some point perform its vacuuming operation which reclaims storage. See PostgreSQL database configuration and maintenance for automation controller for more information about database vacuuming.

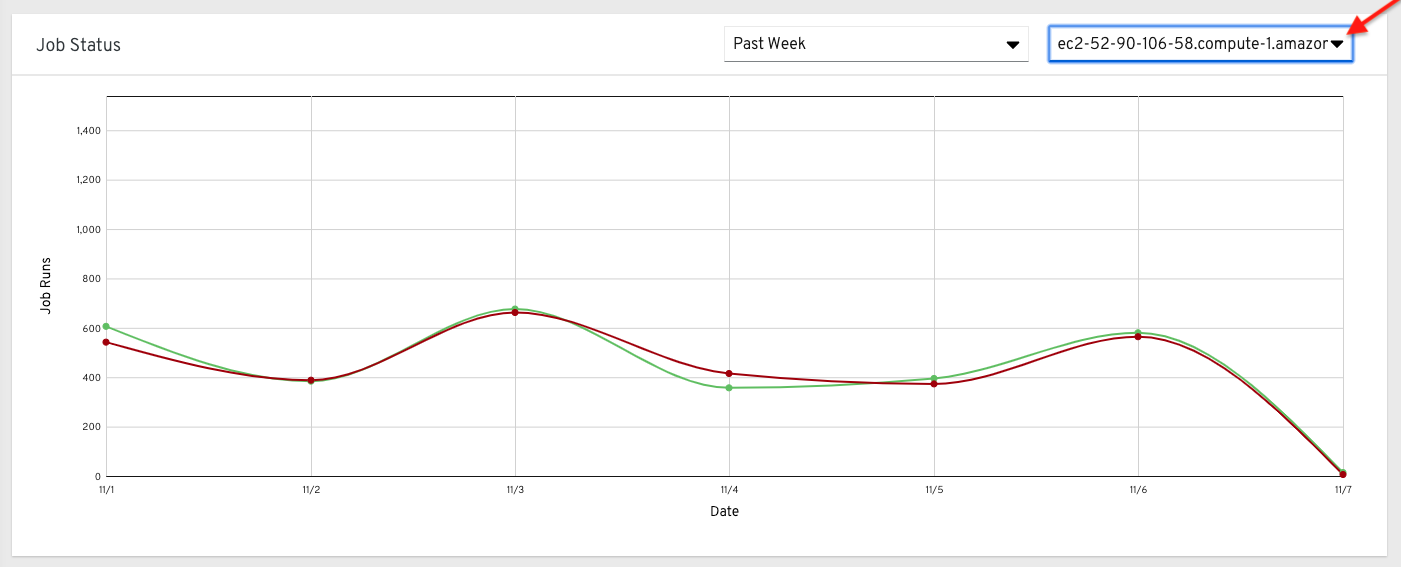

3.5. Metrics to monitor automation controller

Monitor your automation controller hosts at the system and application levels.

System level monitoring includes the following information:

- Disk I/O

- RAM use

- CPU use

- Network traffic

Application level metrics provide data that the application knows about the system. This data includes the following information:

- How many jobs are running in a given instance

- Capacity information about instances in the cluster

- How many inventories are present

- How many hosts are in those inventories

Using system and application metrics can help you identify what was happening in the application when a service degradation occurred. Information about automation controller’s performance over time helps when diagnosing problems or doing capacity planning for future growth.

3.5.1. Metrics for monitoring automation controller application

For application level monitoring, automation controller provides Prometheus-style metrics on an API endpoint /api/v2/metrics. Use these metrics to monitor aggregate data about job status and subsystem performance, such as for job output processing or job scheduling.

The metrics endpoint includes descriptions of each metric. Metrics of particular interest for performance include:

- awx_status_total: Current total of jobs in each status. Helps correlate other events to activity in the system. You can use it to monitor upticks in errored or failed jobs.

- awx_instance_remaining_capacity: Amount of capacity remaining for running additional jobs.

- callback_receiver_event_processing_avg_seconds: Colloquially called “job events lag”. Running average of the lag time between when a task occurred in Ansible and when the user is able to see it. This indicates how far behind the callback receiver is in processing events. When this number is very high, consider scaling up the control plane or using the capacity adjustment feature to reduce the number of jobs a control node controls.

- callback_receiver_events_insert_db: Counter of events that have been inserted by a node. Can be used to calculate the job event insertion rate over a given time period.

- callback_receiver_events_queue_size_redis: Indicator of how far behind the callback receiver is in processing events. If too high, Redis can cause the control node to run out of memory (OOM).
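As an illustration of how these metrics might be consumed, the following minimal sketch parses Prometheus-style text and flags instances whose remaining capacity falls below a threshold. The sample payload, host names, values, and the helper names `parse_metrics` and `low_capacity_nodes` are assumptions made for this example. Fetching the real /api/v2/metrics endpoint (which requires authentication) is not shown, and the parser is deliberately simple and does not handle label values that contain spaces.

```python
from collections import defaultdict

# Hypothetical excerpt of a metrics response; names of hosts and all values are illustrative.
SAMPLE_METRICS = """\
# HELP awx_instance_remaining_capacity Remaining capacity of each node
# TYPE awx_instance_remaining_capacity gauge
awx_instance_remaining_capacity{hostname="controller1"} 42.0
awx_instance_remaining_capacity{hostname="controller2"} 3.0
awx_status_total{status="failed"} 7.0
awx_status_total{status="successful"} 120.0
"""


def parse_metrics(text):
    """Parse Prometheus text lines into {metric_name: {label_string: value}}."""
    metrics = defaultdict(dict)
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and HELP/TYPE comments
        name_and_labels, _, value = line.rpartition(" ")
        name, _, labels = name_and_labels.partition("{")
        metrics[name][labels.rstrip("}")] = float(value)
    return dict(metrics)


def low_capacity_nodes(metrics, threshold):
    """Return the label strings of instances with remaining capacity below threshold."""
    remaining = metrics.get("awx_instance_remaining_capacity", {})
    return sorted(labels for labels, value in remaining.items() if value < threshold)


parsed = parse_metrics(SAMPLE_METRICS)
print(low_capacity_nodes(parsed, threshold=10))
```

In practice a Prometheus server would usually scrape the endpoint and evaluate alert rules itself; the sketch only shows the shape of the data.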

3.5.2. System level monitoring

Monitoring the CPU and memory use of your cluster hosts is important because capacity management for instances does not introspect into the actual resource usage of hosts. The resource impact of automation jobs depends on what the playbooks are doing. For example, many cloud or networking modules do most of the processing on the execution node, which runs the Ansible Playbook. The impact on automation controller is very different from running a native module such as “yum”, where the work is performed on the target hosts and the execution node spends much of the time waiting on results.

If CPU or memory usage is very high, consider lowering the capacity adjustment (available on the instance detail page) on affected instances in the automation controller. This limits how many jobs are run on or controlled by this instance.

Monitor the disk I/O and use of your system. The manner in which an automation controller node runs Ansible and caches output on the file system, and eventually saves it in the database, creates high levels of disk reads and writes. Identifying poor disk performance early can help prevent poor user experience and system degradation.

3.6. PostgreSQL database configuration and maintenance for automation controller

To improve the performance of automation controller, you can configure the following configuration parameters in the database:

Maintenance

The VACUUM and ANALYZE tasks are important maintenance activities that can impact performance. In normal PostgreSQL operation, tuples that are deleted or obsoleted by an update are not physically removed from their table; they remain present until a VACUUM is done. Therefore, it is necessary to run VACUUM periodically, especially on frequently updated tables.

ANALYZE collects statistics about the contents of tables in the database, and stores the results in the pg_statistic system catalog. Subsequently, the query planner uses these statistics to help determine the most efficient execution plans for queries.

The autovacuum PostgreSQL configuration parameter automates the execution of VACUUM and ANALYZE commands. Enabling autovacuum is a good practice. However, autovacuuming does not occur if there is never any idle time on the database. If you observe that autovacuuming is not sufficiently cleaning up space on the database disk, scheduling specific vacuum tasks during specific maintenance windows can be a solution.

Configuration parameters

To improve the performance of the PostgreSQL server, configure the following Grand Unified Configuration (GUC) parameters that manage database memory. You can find these parameters inside the $PGDATA directory in the postgresql.conf file, which manages the configurations of the database server.

- shared_buffers: determines how much memory is dedicated to the server for caching data. The default value for this parameter is 128 MB. When you modify this value, you must set it between 15% and 25% of the machine’s total RAM.

You must restart the database server after changing the value for shared_buffers.

If you are compiling Postgres against OpenSSL 3.2, your system regresses to remove the parameter for User during startup. You can rectify this by using the BIO_get_app_data call instead of open_get_data. Only an administrator can make these changes, but it impacts all users connected to the PostgreSQL database.

If you update your systems without the OpenSSL patch, you are not impacted, and you do not need to take action.

- work_mem: provides the amount of memory to be used by internal sort operations and hash tables before disk-swapping. Sort operations are used for order by, distinct, and merge join operations. Hash tables are used in hash joins and hash-based aggregation. The default value for this parameter is 4 MB. Setting the correct value of the work_mem parameter improves the speed of a search by reducing disk-swapping.

Use the following formula to calculate the optimal value of the work_mem parameter for the database server:

Total RAM * 0.25 / max_connections

Setting a large work_mem can cause the PostgreSQL server to go out of memory (OOM) if there are too many open connections to the database.

- max_connections: specifies the maximum number of concurrent connections to the database server.

- maintenance_work_mem: provides the maximum amount of memory to be used by maintenance operations, such as vacuum, create index, and alter table add foreign key operations. The default value for this parameter is 64 MB. Use the following equation to calculate a value for this parameter:

Total RAM * 0.05

Set maintenance_work_mem higher than work_mem to improve performance for vacuuming.
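The formulas above can be worked through with a short calculation. The following is a minimal sketch, assuming a hypothetical database host with 16 GB of RAM and max_connections set to 200; the function name `suggest_postgres_memory` and the example figures are illustrative and not part of the product.

```python
def suggest_postgres_memory(total_ram_mb, max_connections):
    """Apply the sizing formulas from this section; all results are in MB."""
    if max_connections < 1:
        raise ValueError("max_connections must be at least 1")
    return {
        # shared_buffers: between 15% and 25% of total RAM
        "shared_buffers_mb": (int(total_ram_mb * 0.15), int(total_ram_mb * 0.25)),
        # work_mem: Total RAM * 0.25 / max_connections
        "work_mem_mb": int(total_ram_mb * 0.25 / max_connections),
        # maintenance_work_mem: Total RAM * 0.05
        "maintenance_work_mem_mb": int(total_ram_mb * 0.05),
    }


settings = suggest_postgres_memory(total_ram_mb=16384, max_connections=200)
for name, value in settings.items():
    print(name, value)
```

With these inputs the sketch suggests a shared_buffers range of roughly 2457 MB to 4096 MB, a work_mem of 20 MB, and a maintenance_work_mem of 819 MB. Treat the results as starting points to validate against your own workload.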

3.6.1. Encrypting plain text passwords in automation controller configuration files

Passwords stored in automation controller configuration files are stored in plain text. A user with access to the /etc/tower/conf.d/ directory can view the passwords used to access the database. Access to the directories is controlled with permissions, so they are protected, but some security findings deem this protection to be inadequate. The solution is to encrypt the passwords individually.

3.6.1.1. Creating PostgreSQL password hashes

Supply the hash values that replace the plain text passwords within the automation controller configuration files.

Procedure

On your automation controller node, run the following:

    awx-manage shell_plus

Then run the following from the python prompt:

    >>> from awx.main.utils import encrypt_value, get_encryption_key
    >>> postgres_secret = encrypt_value('$POSTGRES_PASS')
    >>> print(postgres_secret)

Note: Replace the $POSTGRES_PASS variable with the actual plain text password you want to encrypt.

The output should resemble the following:

    $encrypted$UTF8$AESCBC$Z0FBQUFBQmtLdGNRWXFjZGtkV1ZBR3hkNGVVbFFIU3hhY21UT081eXFkR09aUWZLcG9TSmpndmZYQXFyRHVFQ3ZYSE15OUFuM1RHZHBqTFU3S0MyNEo2Y2JWUURSYktsdmc9PQ==

Copy the full values of these hashes and save them.

The hash value begins with $encrypted$, and is not just the string of characters, as shown in the following example:

    $encrypted$AESCBC$Z0FBQUFBQmNONU9BbGQ1VjJyNDJRVTRKaFRIR09Ib2U5TGdaYVRfcXFXRjlmdmpZNjdoZVpEZ21QRWViMmNDOGJaM0dPeHN2b194NUxvQ1M5X3dSc1gxQ29TdDBKRkljWHc9PQ==

Note that the $*_PASS values are already in plain text in your inventory file.

3.6.1.2. Encrypting the Postgres password

The following procedure replaces the plain text passwords with encrypted values. Perform the following steps on each node in the cluster:

Procedure

Edit /etc/tower/conf.d/postgres.py using:

    vim /etc/tower/conf.d/postgres.py

Add the following line to the top of the file.

    from awx.main.utils import decrypt_value, get_encryption_key

Remove the password value listed after 'PASSWORD': and replace it with the following line, replacing the supplied value of $encrypted.. with your own hash value:

    decrypt_value(get_encryption_key('value'),'$encrypted$AESCBC$Z0FBQUFBQmNONU9BbGQ1VjJyNDJRVTRKaFRIR09Ib2U5TGdaYVRfcXFXRjlmdmpZNjdoZVpEZ21QRWViMmNDOGJaM0dPeHN2b194NUxvQ1M5X3dSc1gxQ29TdDBKRkljWHc9PQ=='),

Note: The hash value in this step is the output value of postgres_secret.

The full postgres.py resembles the following:

    # Ansible Automation platform controller database settings.
    from awx.main.utils import decrypt_value, get_encryption_key

    DATABASES = {
       'default': {
           'ATOMIC_REQUESTS': True,
           'ENGINE': 'django.db.backends.postgresql',
           'NAME': 'awx',
           'USER': 'awx',
           'PASSWORD': decrypt_value(get_encryption_key('value'),'$encrypted$AESCBC$Z0FBQUFBQmNONU9BbGQ1VjJyNDJRVTRKaFRIR09Ib2U5TGdaYVRfcXFXRjlmdmpZNjdoZVpEZ21QRWViMmNDOGJaM0dPeHN2b194NUxvQ1M5X3dSc1gxQ29TdDBKRkljWHc9PQ=='),
           'HOST': '127.0.0.1',
           'PORT': 5432,
       }
    }

3.7. Automation controller tuning

You can configure many automation controller settings by using the UI, API, and file settings including:

- Live events in the automation controller UI

- Job event processing and scheduling

- Control and execution node capacity

- Instance and container groups capacity

- Internal cluster routing

- Web server tuning

3.7.1. Managing live events in the automation controller UI

Events are sent to any node where there is a UI client subscribed to a job. This task is expensive, and becomes more expensive as the number of events that the cluster is producing increases and the number of control nodes increases, because all events are broadcast to all nodes regardless of how many clients are subscribed to particular jobs.

To reduce the overhead of displaying live events in the UI, administrators can choose to either:

- Disable live streaming events.

- Reduce the number of events shown per second or before truncating or hiding events in the UI.

When you disable live streaming of events, they are only loaded on hard refresh to a job’s output detail page. When you reduce the number of events shown per second, this limits the overhead of showing live events, but still provides live updates in the UI without a hard refresh.

3.7.1.1. Disabling live streaming events

Procedure

Disable live streaming events by using one of the following methods:

- In the API, set UI_LIVE_UPDATES_ENABLED to False.

- Go to your automation controller. Open the Miscellaneous System Settings window. Set the Enable Activity Stream toggle to Off.

3.7.1.2. Settings to modify rate and size of events

If you cannot disable live streaming of events because of their size, reduce the number of events that are displayed in the UI. You can use the following settings to manage how many events are displayed:

Settings available for editing in the UI or API:

- EVENT_STDOUT_MAX_BYTES_DISPLAY: Maximum amount of stdout to display (as measured in bytes). This truncates the size displayed in the UI.

- MAX_WEBSOCKET_EVENT_RATE: Number of events to send to clients per second.

Settings available by using file based settings:

- MAX_UI_JOB_EVENTS: Number of events to display. This setting hides the rest of the events in the list.

- MAX_EVENT_RES_DATA: The maximum size of the Ansible callback event’s "res" data structure. The "res" is the full "result" of the module. When the maximum size of Ansible callback events is reached, the remaining output is truncated. The default value is 700000 bytes.

- LOCAL_STDOUT_EXPIRE_TIME: The amount of time before a stdout file is expired and removed locally.

3.7.2. Settings for managing job event processing

The callback receiver processes all the output of jobs and writes this output as job events to the automation controller database. The callback receiver has a pool of workers that processes events in batches. The number of workers automatically increases with the number of CPU available on an instance.

Administrators can override the number of callback receiver workers with the setting JOB_EVENT_WORKERS. There must be at least 1 worker, and you should not set more than 1 worker per CPU. Greater values make more workers available to clear the Redis queue as events stream to the automation controller, but the additional workers can compete with other processes such as the web server for CPU seconds, use more database connections (1 per worker), and reduce the batch size of events each worker commits.

Each worker builds up a buffer of events to write in a batch. The default amount of time to wait before writing a batch is 1 second. This is controlled by the JOB_EVENT_BUFFER_SECONDS setting. Increasing the amount of time the worker waits between batches can result in larger batch sizes.

3.7.3. Capacity settings for control and execution nodes

The following settings impact capacity calculations on the cluster. Set them to the same value on all control nodes by using the following file-based settings.

- AWX_CONTROL_NODE_TASK_IMPACT: Sets the impact of controlling jobs. You can use it when your control plane exceeds desired CPU or memory usage to control the number of jobs that your control plane can run at the same time.

- SYSTEM_TASK_FORKS_CPU and SYSTEM_TASK_FORKS_MEM: Influence how many resources are estimated to be consumed by each fork of Ansible. By default, 1 fork of Ansible is estimated to use 0.25 of a CPU and 100 MB of memory.
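To make the default per-fork estimates concrete, the following rough sketch divides a node's resources by the stated defaults of 0.25 CPU and 100 MB per fork. It is not the capacity algorithm that automation controller implements, which may reserve memory for the system and apply the capacity adjustment. The function name `estimate_fork_capacity` and the example node size are assumptions for illustration.

```python
def estimate_fork_capacity(cpu_count, memory_mb, cpu_per_fork=0.25, mem_mb_per_fork=100):
    """Return (forks supported by CPU, forks supported by memory) for one node.

    The defaults mirror the documented estimates of 0.25 CPU and 100 MB per fork.
    """
    forks_by_cpu = int(cpu_count / cpu_per_fork)
    forks_by_mem = int(memory_mb / mem_mb_per_fork)
    return forks_by_cpu, forks_by_mem


# Hypothetical node with 4 CPUs and 16 GB of RAM.
by_cpu, by_mem = estimate_fork_capacity(cpu_count=4, memory_mb=16384)
print(by_cpu, by_mem)
```

For this hypothetical node the CPU-based estimate (16 forks) is far lower than the memory-based estimate (163 forks), which shows why the two settings are tuned separately.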

3.7.4. Capacity settings for instance group and container group

Use the max_concurrent_jobs and max_forks settings available on instance groups to limit how many jobs and forks can be consumed across an instance group or container group.

- To calculate the max_concurrent_jobs you need on a container group, consider the pod_spec setting for that container group. In the pod_spec, you can see the resource requests and limits for the automation job pod. Use the following equation to calculate the maximum concurrent jobs that you need:

((number of worker nodes in kubernetes cluster) * (CPU available on each worker)) / (CPU request on pod_spec) = maximum number of concurrent jobs

For example, if your pod_spec indicates that a pod will request 250 mcpu and your Kubernetes cluster has 1 worker node with 2 CPU, the maximum number of jobs that you need to start with is 8.

- You can also consider the memory consumption of the forks in the jobs. Calculate the appropriate setting of max_forks with the following equation:

((number of worker nodes in kubernetes cluster) * (memory available on each worker)) / (memory request on pod_spec) = maximum number of forks

For example, given a single worker node with 8 GB of memory, we determine that the max_forks we want to run is 81. This way, either 39 jobs with 1 fork can run (task impact is always forks + 1), or 2 jobs with forks set to 39 can run.

- You might have other business requirements that motivate using max_forks or max_concurrent_jobs to limit the number of jobs launched in a container group.
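The two equations in this section can be checked with a few lines of arithmetic. This is a minimal sketch: the function names are hypothetical, CPU is expressed in millicores to avoid fractions, and the 100 MB memory request is an assumption chosen because it yields the value of 81 used in the memory example (the source does not state the request size).

```python
def max_concurrent_jobs(worker_nodes, cpu_per_worker_millicores, pod_cpu_request_millicores):
    """(workers * CPU per worker) / CPU request on the pod_spec, rounded down."""
    return (worker_nodes * cpu_per_worker_millicores) // pod_cpu_request_millicores


def max_forks(worker_nodes, memory_per_worker_mb, pod_memory_request_mb):
    """(workers * memory per worker) / memory request on the pod_spec, rounded down."""
    return (worker_nodes * memory_per_worker_mb) // pod_memory_request_mb


# 1 worker node with 2 CPU (2000 millicores) and a 250 mcpu request per pod.
jobs = max_concurrent_jobs(worker_nodes=1, cpu_per_worker_millicores=2000,
                           pod_cpu_request_millicores=250)

# 1 worker node with 8 GB (8192 MB) of memory and an assumed 100 MB request.
forks = max_forks(worker_nodes=1, memory_per_worker_mb=8192, pod_memory_request_mb=100)

print(jobs, forks)
```

Rounding down keeps the result conservative, so the group is never asked to schedule more pods than the requests allow.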

3.7.5. Settings for scheduling jobs

The task manager periodically collects tasks that need to be scheduled and determines what instances have capacity and are eligible for running them. The task manager has the following workflow:

- Find and assign the control and execution instances.

- Update the job’s status to waiting.

- Message the control node through pg_notify for the dispatcher to pick up the task and start running it.

If the scheduling task is not completed within TASK_MANAGER_TIMEOUT seconds (default 300 seconds), the task is terminated early. Timeout issues generally arise when there are thousands of pending jobs.

One way the task manager limits how much work it can do in a single run is the START_TASK_LIMIT setting. This limits how many jobs it can start in a single run. The default is 100 jobs. If more jobs are pending, a new scheduler task is scheduled to run immediately after. Users who are willing to have potentially longer latency between when a job is launched and when it starts, to have greater overall throughput, can consider increasing the START_TASK_LIMIT. To see how long individual runs of the task manager take, use the Prometheus metric task_manager__schedule_seconds, available in /api/v2/metrics.

Jobs elected to begin running by the task manager do not do so until the task manager process exits and commits its changes. The TASK_MANAGER_TIMEOUT setting determines how long a single run of the task manager will run for before committing its changes. When the task manager reaches its timeout, it attempts to commit any progress it made. The task is not actually forced to exit until after a grace period (determined by TASK_MANAGER_TIMEOUT_GRACE_PERIOD) has passed.
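To get a feel for how START_TASK_LIMIT interacts with a backlog, the following simplified sketch estimates how many task manager runs are needed to start a given number of pending jobs. It assumes every run starts the full limit and ignores capacity constraints and timeouts, so it is a lower bound rather than a prediction. The function name `scheduler_runs_needed` is hypothetical.

```python
import math


def scheduler_runs_needed(pending_jobs, start_task_limit=100):
    """Lower bound on task manager runs needed to start all pending jobs.

    The default of 100 mirrors the documented START_TASK_LIMIT default.
    """
    if start_task_limit < 1:
        raise ValueError("start_task_limit must be at least 1")
    return math.ceil(pending_jobs / start_task_limit)


print(scheduler_runs_needed(2500))                        # default limit of 100
print(scheduler_runs_needed(2500, start_task_limit=500))  # raised limit
```

Raising the limit reduces the number of runs, but each run does more work before it commits, which is the latency trade-off described above.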

3.7.6. Internal Cluster Routing

Automation controller cluster hosts communicate across the network within the cluster. In the inventory file for the traditional VM installer, you can indicate multiple routes to the cluster nodes that are used in different ways:

Example:

[automationcontroller]

controller1 ansible_user=ec2-user ansible_host=10.10.12.11 node_type=hybrid routable_hostname=somehost.somecompany.org

- controller1 is the inventory hostname for the automation controller host. The inventory hostname is what is shown as the instance hostname in the application. This can be useful when preparing for disaster recovery scenarios where you want to use the backup/restore method to restore the cluster to a new set of hosts that have different IP addresses. In this case you can have entries in /etc/hosts that map these inventory hostnames to IP addresses, and you can use internal IP addresses to mitigate any DNS issues when it comes to resolving public DNS names.

- ansible_host=10.10.12.11 indicates how the installer reaches the host, which in this case is an internal IP address. This is not used outside of the installer.

- routable_hostname=somehost.somecompany.org indicates the hostname that is resolvable for the peers that connect to this node on the receptor mesh. Since it may cross multiple networks, we are using a hostname that will map to an IP address resolvable for the receptor peers.

3.7.7. Web server tuning

Control and Hybrid nodes each serve the UI and API of automation controller. WSGI traffic is served by the uwsgi web server on a local socket. ASGI traffic is served by Daphne. NGINX listens on port 443 and proxies traffic as needed.

To scale automation controller’s web service, follow these best practices:

- Deploy multiple control nodes and use a load balancer to spread web requests over multiple servers.

- Set max connections per automation controller to 100.

To optimize automation controller’s web service on the client side, follow these guidelines:

- Direct users to use dynamic inventory sources instead of individually creating inventory hosts by using the API.

- Use webhook notifications instead of polling for job status.

- Use the bulk APIs for host creation and job launching to batch requests.

- Use token authentication. For automation clients that must make many requests very quickly, using tokens is a best practice, because depending on the type of user, there might be additional overhead when using Basic authentication.

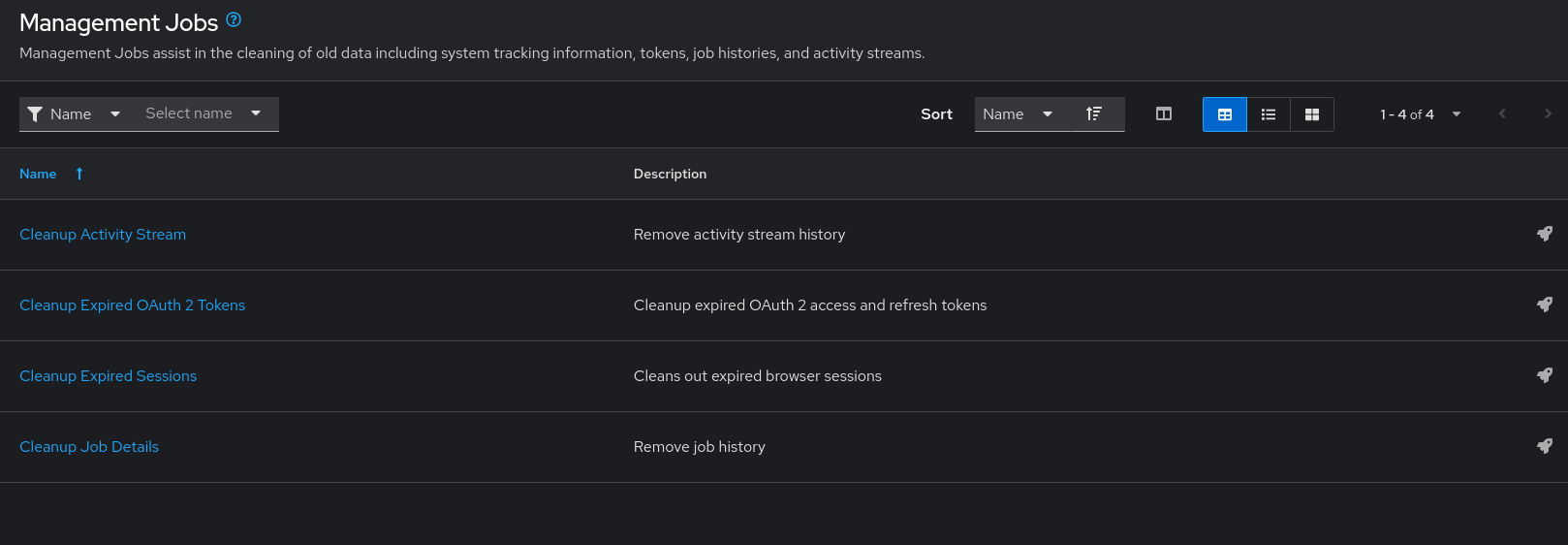

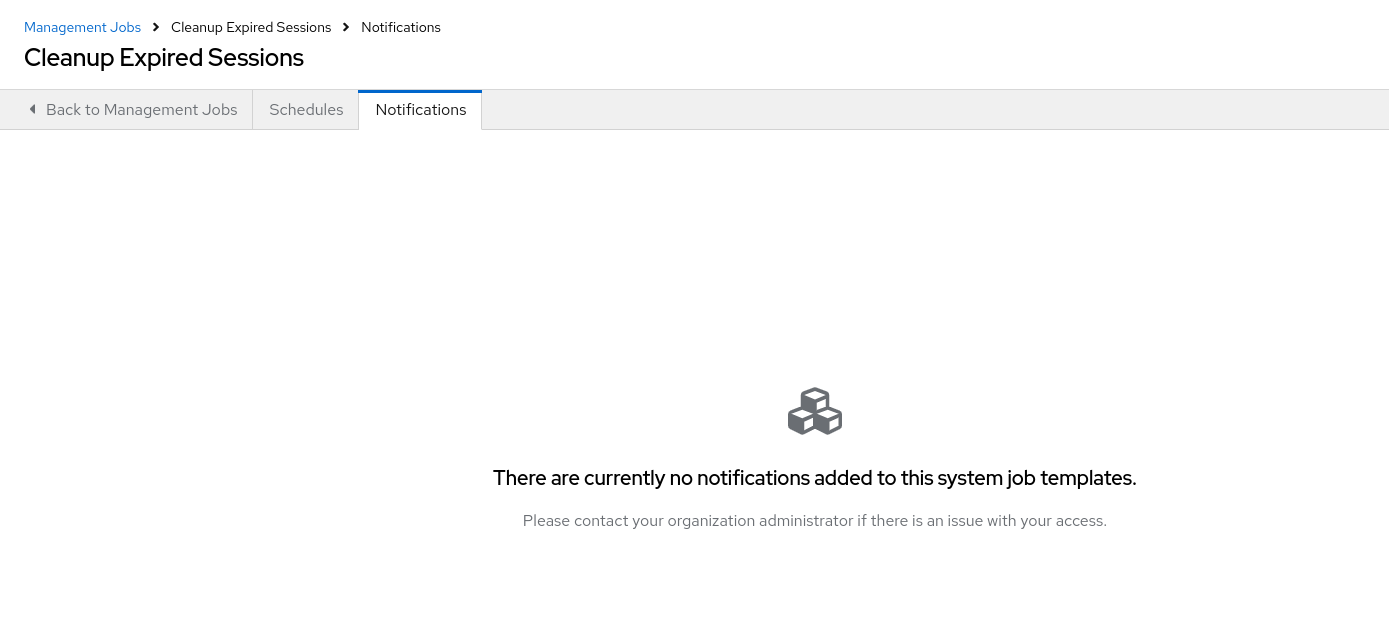

Chapter 4. Management Jobs

Management Jobs assist in the cleaning of old data from automation controller, including system tracking information, tokens, job histories, and activity streams.

You can use this if you have specific retention policies or need to decrease the storage used by your automation controller database.

From the navigation panel, select → → .

The following job types are available for you to schedule and launch:

- Cleanup Activity Stream: Remove activity stream history older than a specified number of days

- Cleanup Expired Sessions: Remove expired browser sessions from the database

- Cleanup Job Details: Remove job history older than a specified number of days

4.1. Removing old activity stream data

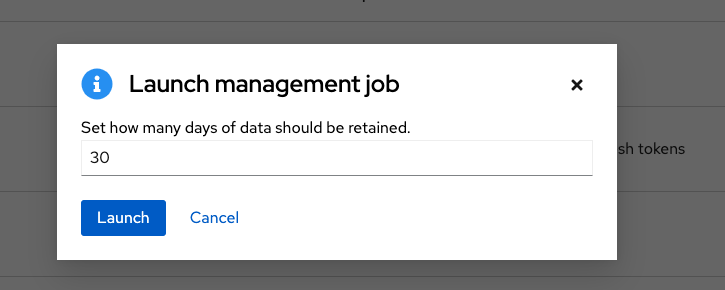

To remove older activity stream data, click the launch icon beside Cleanup Activity Stream.

Enter the number of days of data you want to save and click .

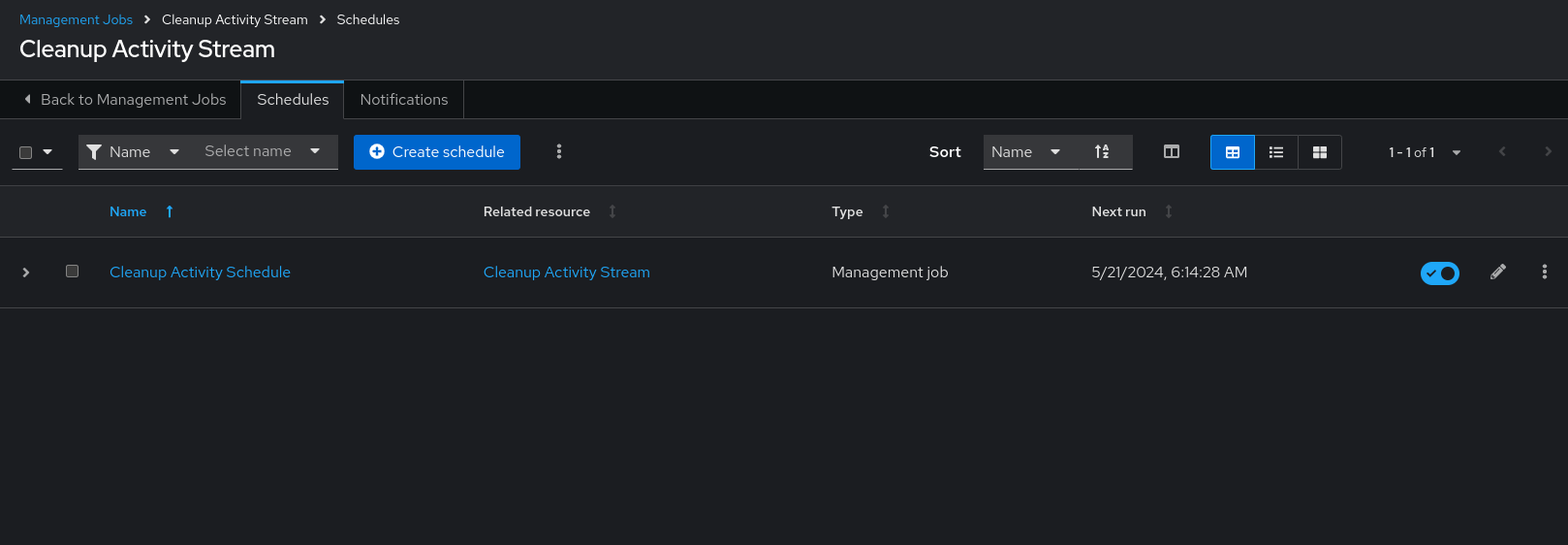

4.1.1. Scheduling deletion

Use the following procedure to review or set a schedule for purging data marked for deletion:

Procedure

For a particular cleanup job, click the Schedules tab.

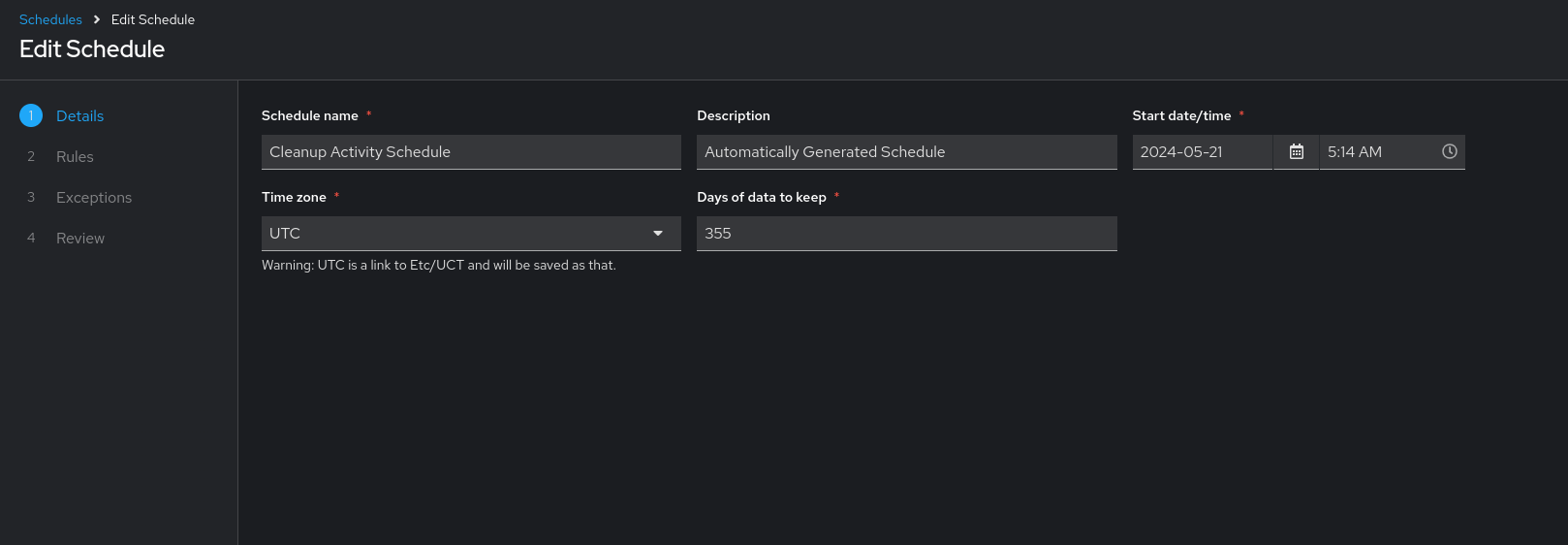

- Click the name of the job, Cleanup Activity Schedule in this example, to review the schedule settings.

Click to change them. You can also click to create a new schedule for this management job.

Enter the appropriate details into the following fields and click Next:

- Schedule name: required.

- Start date/time: required.

- Time zone: the entered Start Time should be in this time zone.

- Repeat frequency: the appropriate options display as the update frequency is modified, including exceptions that specify data you do not want to include.

- Days of data to keep: required. Specify how much data you want to retain.

The Details tab displays a description of the schedule and a list of the scheduled occurrences in the selected Local Time Zone.

Jobs are scheduled in UTC. Repeating jobs that run at a specific time of day can move relative to a local time zone when Daylight Saving Time shifts occur.

4.1.2. Setting notifications

Use the following procedure to review or set notifications associated with a management job:

Procedure

- For a particular cleanup job, select the Notifications tab.

If none exist, see Creating a notification template in Using automation execution.

4.1.3. Cleanup Expired Sessions

To remove expired sessions, click the launch icon beside Cleanup Expired Sessions.

You can review or set a schedule for cleaning up expired sessions by performing the same procedure described for activity stream management jobs. For more information, see Scheduling deletion.

You can also set or review notifications associated with this management job the same way as described in Notifications for activity stream management jobs.

For more information, see Notifiers in Using automation execution.

4.1.4. Removing Old Job History

To remove job history older than a specified number of days, click the launch icon beside Cleanup Job Details.

Enter the number of days of data you want to save and click .

The initial job run for an automation controller resource, such as Projects or Job Templates, is excluded from Cleanup Job Details, regardless of retention value.

You can review or set a schedule for cleaning up old job history by performing the same procedure described for activity stream management jobs.

For more information, see Scheduling deletion.

You can also set or review notifications associated with this management job in the same way as described in Setting notifications for activity stream management jobs. For more information, see Notifiers (https://docs.redhat.com/en/documentation/red_hat_ansible_automation_platform/2.5/html/using_automation_execution/controller-notifications) in Using automation execution.

Chapter 5. Inventory File Importing

With automation controller you can select an inventory file from source control, rather than creating one from scratch. The files are non-editable, and as inventories are updated at the source, the inventories within the projects are also updated accordingly, including the group_vars and host_vars files or directory associated with them. SCM types can consume both inventory files and scripts. Both inventory files and custom inventory types use scripts.

Imported hosts have a description of imported by default. This can be overridden by setting the _awx_description variable on a given host. For example, if importing from a sourced .ini file, you can add the following host variables:

[main]

127.0.0.1 _awx_description="my host 1"

127.0.0.2 _awx_description="my host 2"

Similarly, group descriptions also default to imported, but can also be overridden by _awx_description.

To use old inventory scripts in source control, see Export old inventory scripts in Using automation execution.

5.1. Source control management Inventory Source Fields

The source fields used are:

- source_project: the project to use.

- source_path: the relative path inside the project indicating a directory or a file. If left blank, "" is still a relative path indicating the root directory of the project.

- source_vars: if set on a "file" type inventory source, these are passed to the environment variables when running.

Additionally:

- An update of the project automatically triggers an inventory update where it is used.

- An update of the project is scheduled immediately after creation of the inventory source.

- Neither inventory nor project updates are blocked while a related job is running.

- In cases where you have a large project (around 10 GB), disk space on /tmp can be an issue.

You can specify a location manually in the automation controller UI from the Add source page of an inventory. Refer to Adding a source for instructions on creating an inventory source.

When you update a project, refresh the listing to use the latest source control management (SCM) information. If no inventory sources use a project as an SCM inventory source, then the inventory listing might not be refreshed on update.

For inventories with SCM sources, the job Details page for inventory updates displays a status indicator for the project update and the name of the project.

The status indicator links to the project update job.

The project name links to the project.

You can perform an inventory update while a related job is running.

5.1.1. Supported File Syntax

Automation controller uses the ansible-inventory module from Ansible to process inventory files, and supports all valid inventory syntax that automation controller requires.

You do not need to write inventory scripts in Python. You can enter any executable file in the source field, but you must run chmod +x on that file and check it into Git.

The following is a working example of JSON output that automation controller can read for the import:

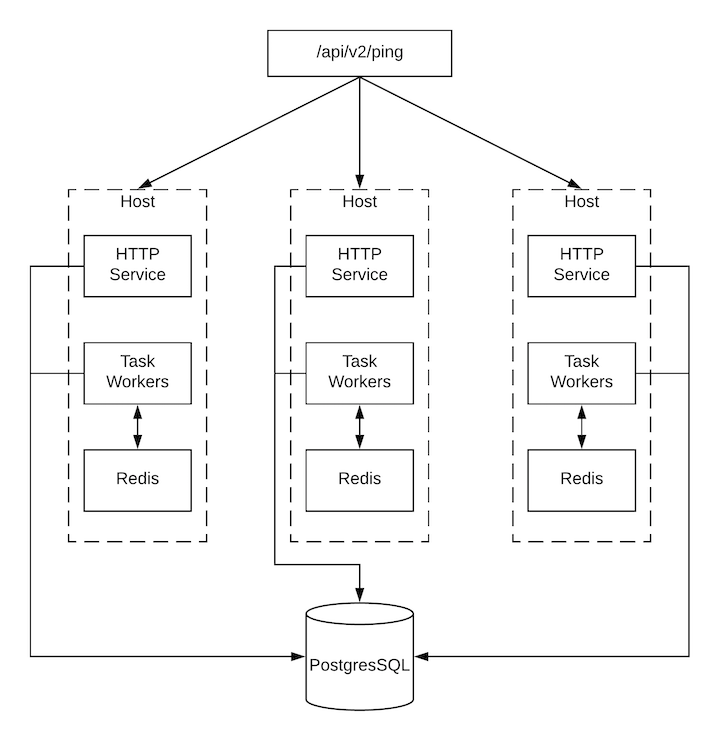
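Because the example above is available only as an image, the following is a hedged sketch of an executable inventory script that prints JSON in the standard Ansible dynamic inventory layout (groups with hosts and vars, plus a _meta.hostvars section). The group name, host names, and variable values are invented for illustration; only the _awx_description variable comes from this guide.

```python
#!/usr/bin/env python3
"""Illustrative inventory script; all hosts and groups here are hypothetical."""
import json


def build_inventory():
    """Return an inventory in the JSON layout used by Ansible inventory scripts."""
    return {
        "webservers": {
            "hosts": ["web1.example.com", "web2.example.com"],
            "vars": {"http_port": 8080},
        },
        # Supplying _meta.hostvars lets Ansible read all host variables in one call.
        "_meta": {
            "hostvars": {
                "web1.example.com": {"_awx_description": "my host 1"},
                "web2.example.com": {"_awx_description": "my host 2"},
            }
        },
    }


if __name__ == "__main__":
    # Ansible invokes inventory scripts with --list; this sketch always prints the full inventory.
    print(json.dumps(build_inventory(), indent=2))
```

Remember to make the file executable with chmod +x before you commit it to source control.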

Chapter 6. Clustering

Clustering is sharing load between hosts. Each instance must be able to act as an entry point for UI and API access. This enables automation controller administrators to use load balancers in front of as many instances as they want and keep good data visibility.

Load balancing is optional, and it is entirely possible to have ingress on one or all instances as needed.

Each instance must be able to join the automation controller cluster and expand its ability to run jobs. This is a simple system where jobs can run anywhere rather than be directed on where to run. Also, you can group clustered instances into different pools or queues, called Instance groups as described in Using automation execution.

Ansible Automation Platform supports container-based clusters by using Kubernetes, meaning you can install new automation controller instances on this platform without any variation or diversion in functionality. You can create instance groups to point to a Kubernetes container. For more information, see the Instance and container groups section in Using automation execution.

Supported operating systems

The following operating systems are supported for establishing a clustered environment:

- Red Hat Enterprise Linux 8 or later

Isolated instances are not supported in conjunction with running automation controller in OpenShift.

6.1. Setup considerations

Learn about the initial setup of clusters. To upgrade an existing cluster, see Upgrade Planning in the Ansible Automation Platform Upgrade and Migration Guide.

Note the following important considerations in the new clustering environment:

- PostgreSQL is a standalone instance and is not clustered. Automation controller does not manage replica configuration or database failover (if the user configures standby replicas).

- When you start a cluster, the database node must be a standalone server, and PostgreSQL must not be installed on one of the automation controller nodes.

- PgBouncer is not recommended for connection pooling with automation controller. Automation controller relies on pg_notify for sending messages across various components, and therefore, PgBouncer cannot readily be used in transaction pooling mode.

- All instances must be reachable from all other instances and they must be able to reach the database. It is also important for the hosts to have a stable address or hostname (depending on how the automation controller host is configured).

- All instances must be geographically collocated, with reliable low-latency connections between instances.

- To upgrade to a clustered environment, your primary instance must be part of the default group in the inventory and it needs to be the first host listed in the default group.

- Manual projects must be manually synced to all instances by the customer, and updated on all instances at once.

- The inventory file for platform deployments should be saved or persisted. If new instances are to be provisioned, the passwords, configuration options, and host names must be made available to the installation program.

6.2. Install and configure

Provisioning new instances for a VM-based install involves updating the inventory file and re-running the setup playbook. The inventory file must contain all passwords and information used when installing the cluster; otherwise, other instances might be reconfigured. The inventory file has a single inventory group, automationcontroller.

All instances are responsible for various housekeeping tasks related to task scheduling, such as determining where jobs are supposed to be launched and processing playbook events, as well as periodic cleanup.

If no groups are selected for a resource, then the automationcontroller group is used, but if any other group is selected, then the automationcontroller group is not used in any way.

The database group remains for specifying an external PostgreSQL. If the database host is provisioned separately, this group must be empty.

When a playbook runs on an individual controller instance in a cluster, the output of that playbook is broadcast to all of the other nodes as part of automation controller’s WebSocket-based streaming output functionality. Handle this data broadcast through internal addressing by specifying a private routable address for each node in your inventory:

[automationcontroller]
hostA routable_hostname=10.1.0.2
hostB routable_hostname=10.1.0.3
hostC routable_hostname=10.1.0.4

For more information about routable_hostname, see General variables in the RPM installation.

Earlier versions of automation controller used the variable name rabbitmq_host. If you are upgrading from an earlier version of the platform, and you previously specified rabbitmq_host in your inventory, rename rabbitmq_host to routable_hostname before upgrading.
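
The rename described above can be scripted. The following is a minimal sketch, assuming the inventory is a plain INI-style text file in which the variable appears as rabbitmq_host=value. The function name and the sample inventory text are hypothetical. Review the result before re-running the setup playbook.

```python
import re


def rename_rabbitmq_host(inventory_text: str) -> str:
    """Return the inventory text with rabbitmq_host renamed to routable_hostname."""
    # \b prevents matches inside longer names such as my_rabbitmq_host, and
    # the lookahead restricts the change to variable assignments.
    return re.sub(r"\brabbitmq_host(?=\s*=)", "routable_hostname", inventory_text)


old = "[automationcontroller]\nhostA rabbitmq_host=10.1.0.2\nhostB rabbitmq_host=10.1.0.3\n"
print(rename_rabbitmq_host(old))
```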

6.2.1. Instances and ports used by automation controller and automation hub

Ports and instances used by automation controller and also required by the on-premises automation hub node are as follows:

- Port 80, 443 (normal automation controller and automation hub ports)

- Port 22 (SSH - ingress only required)

- Port 5432 (database instance - if the database is installed on an external instance, it must be opened to automation controller instances)

6.3. Status and monitoring by browser API

Automation controller reports as much status as it can using the browser API at /api/v2/ping to validate the health of the cluster. This includes the following:

- The instance servicing the HTTP request

- The timestamps of the last heartbeat of all other instances in the cluster

- Instance Groups and Instance membership in those groups

View more details about Instances and Instance Groups, including running jobs and membership information at /api/v2/instances/ and /api/v2/instance_groups/.
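
As a sketch of how the heartbeat information might be used for monitoring, the following function inspects an already-parsed /api/v2/ping response and lists instances whose last heartbeat is older than a threshold. The field names (instances, node, heartbeat), the ISO 8601 timestamp format, the two-minute threshold, and the sample data are assumptions for illustration. Check them against the response from your own deployment.

```python
from datetime import datetime, timedelta, timezone


def stale_instances(ping, now, max_age=timedelta(minutes=2)):
    """Return the names of instances whose last heartbeat is older than max_age."""
    stale = []
    for inst in ping.get("instances", []):
        # Assumed format: ISO 8601, with a trailing "Z" meaning UTC.
        beat = datetime.fromisoformat(inst["heartbeat"].replace("Z", "+00:00"))
        if now - beat > max_age:
            stale.append(inst["node"])
    return stale


# Hypothetical, trimmed-down ping payload.
sample = {
    "instances": [
        {"node": "hostA", "heartbeat": "2024-05-01T12:00:00Z"},
        {"node": "hostB", "heartbeat": "2024-05-01T11:50:00Z"},
    ]
}
now = datetime(2024, 5, 1, 12, 0, 30, tzinfo=timezone.utc)
print(stale_instances(sample, now))
```

Passing the current time in as an argument keeps the check deterministic and easy to test. In production use you would pass datetime.now(timezone.utc).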

6.4. Instance services and failure behavior

Each automation controller instance is made up of the following different services working collaboratively:

- HTTP services: This includes the automation controller application itself and external web services.

- Callback receiver: Receives job events from running Ansible jobs.

- Dispatcher: The worker queue that processes and runs all jobs.

- Redis: This key value store is used as a queue for event data propagated from ansible-playbook to the application.

- Rsyslog: The log processing service used to deliver logs to various external logging services.

Automation controller is configured so that if any of these services or their components fail, then all services are restarted. If these fail often in a short span of time, then the entire instance is placed offline in an automated fashion to allow remediation without causing unexpected behavior.

For backing up and restoring a clustered environment, see the Backup and restore clustered environments section.

6.5. Job runtime behavior

The way jobs are run and reported to a normal user of automation controller does not change. On the system side, note the following differences:

- When a job is submitted from the API, it is pushed into the dispatcher queue. Each automation controller instance connects to and receives jobs from that queue using a scheduling algorithm. Any instance in the cluster is just as likely to receive the work and run the task. If an instance fails while executing jobs, then the work is marked as permanently failed.

- Project updates run successfully on any instance that could potentially run a job. Projects synchronize themselves to the correct version on the instance immediately before running the job. If the required revision is already locally checked out and Galaxy or Collections updates are not required, then a sync might not be performed.

- When the synchronization happens, it is recorded in the database as a project update with a launch_type = sync and job_type = run. Project syncs do not change the status or version of the project; instead, they update the source tree only on the instance where they run.

- If updates are required from Galaxy or Collections, a sync is performed that downloads the required roles, consuming more space in your /tmp directory. In cases where you have a large project (around 10 GB), disk space on /tmp can be an issue.

6.5.1. Job runs

By default, when a job is submitted to the automation controller queue, it can be picked up by any of the workers. However, you can control where a particular job runs, such as by restricting the instances on which a job can run.

To support taking an instance offline temporarily, there is a property enabled defined on each instance. When this property is disabled, no jobs are assigned to that instance. Existing jobs finish, but no new work is assigned.
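
The following is a minimal sketch of how the enabled property might be switched off through the API. It only builds the HTTP request and does not send it. It assumes that the instance detail endpoint under /api/v2/instances/ accepts a PATCH with an enabled field and that bearer-token authentication is in use. The base URL, instance ID, and token are hypothetical placeholders.

```python
import json
import urllib.request


def build_disable_request(base_url, instance_id, token):
    """Build (but do not send) a request that sets enabled to false on one instance."""
    body = json.dumps({"enabled": False}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/v2/instances/{instance_id}/",
        data=body,
        method="PATCH",
        headers={
            "Content-Type": "application/json",
            # Assumption: the deployment accepts OAuth2 bearer tokens.
            "Authorization": f"Bearer {token}",
        },
    )


req = build_disable_request("https://controller.example.com", 3, "hypothetical-token")
print(req.get_method(), req.full_url)
```

To send the request, pass it to urllib.request.urlopen. Sending the same request with enabled set to true would make the instance eligible for new work again.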

Troubleshooting

When you issue a cancel request on a running automation controller job, automation controller issues a SIGINT to the ansible-playbook process. While this causes Ansible to stop dispatching new tasks and exit, in many cases, module tasks that were already dispatched to remote hosts will run to completion. This behavior is similar to pressing Ctrl-c during a command-line Ansible run.

With respect to software dependencies, if a running job is canceled, the job is removed but the dependencies remain.

6.6. Deprovisioning instances

Re-running the setup playbook does not automatically deprovision instances since clusters do not currently distinguish between an instance that was taken offline intentionally or due to failure. Instead, shut down all services on the automation controller instance and then run the deprovisioning tool from any other instance.

Procedure

- Shut down the instance or stop the service with the command: automation-controller-service stop.

- Run the following deprovision command from another instance to remove it from the automation controller cluster:

$ awx-manage deprovision_instance --hostname=<name used in inventory file>

The following is an example deprovision command:

$ awx-manage deprovision_instance --hostname=hostB

Deprovisioning instances in automation controller does not automatically deprovision or remove instance groups. For more information, see the Deprovisioning instance groups section in Using automation execution.

Chapter 7. Implementing policy enforcement

Policy enforcement at automation runtime is a feature that uses encoded rules to define, manage, and enforce policies that govern how your users interact with your Ansible Automation Platform instance. Policy enforcement automates policy management, improving security, compliance, and efficiency.

OPA, or Open Policy Agent, is a policy engine that offloads policy decisions from your Ansible instance. When it is triggered, the policy enforcement feature connects to OPA to retrieve policies specified in your configuration, and applies policy rules to your automation content. If OPA detects a policy violation, it will stop the action and give your user information about the policy violation.

Prerequisites

Before you can implement policy enforcement in your Ansible Automation Platform instance, you must have:

- Access to an OPA server that is reachable from your Ansible Automation Platform deployment.

- Configured Ansible Automation Platform with settings required for authenticating to your OPA server.

- Some familiarity with OPA and the Rego language, which is the language policies are written in.

For policy enforcement to work correctly, you must both configure the OPA server in your policy settings and associate a specific policy with a particular resource, for example, a particular organization, inventory, or job template.

OPA API V1 is the only version currently supported in Ansible Automation Platform.

7.1. Configuring policy enforcement settings

You can specify how your Ansible Automation Platform instance interacts with OPA by modifying your global settings.

Prerequisites

- To configure policy enforcement, you must have administrative privileges.

If you do not configure the OPA server in your policy settings, policy evaluation will not occur when you run the job.

Procedure

- From the navigation panel, select → → .

- Click Edit policy settings.

On the Policy Settings page, fill out the following fields:

- OPA Server hostname: Enter the name of the host that connects to the OPA service.

- OPA server port: Enter the port that connects to the OPA service.

- OPA authentication type: Select the OPA authentication type.

- OPA custom authentication header: Enter a custom header to append to request headers for OPA authentication.

- OPA request timeout: Enter the number of seconds until the connection times out.

- OPA request retry count: Enter the number of times a request can attempt to connect to the OPA service before failing.

Depending on your authentication type, you might need to fill out the following fields.

If you selected Token as your authentication type:

- OPA authentication token: Enter the OPA authentication token.

If you selected Certificate as your authentication type:

- OPA client certificate content: Enter the content of the client certificate for mTLS authentication.

- OPA client key content: Enter the client key for mTLS authentication.

- OPA CA certificate content: Enter the content of the CA certificate for mTLS authentication.

Beneath the heading labeled Options:

- Use SSL for OPA connection: Check this box to enable an SSL connection to the OPA service.

- Click .

7.2. Understanding OPA packages and rules

An OPA policy is organized in packages, which are namespaced collections of rules. The basic structure of an OPA policy looks like this:

The key components of the rule’s structure are:

- Package declaration: This defines the namespace for your policy.

- Rules: This defines the policy’s logic and the decision that it returns.
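
To show how the package namespace and rule name identify a decision, the following sketch builds a query against the OPA Data API (v1), where a rule is addressed at /v1/data/ followed by the package path and the rule name. It only builds the request and does not send it. The host name, the package name aap_policy_examples, the rule name allowed_false, the dotted input convention, and the input document are hypothetical. Port 8181 is the OPA default listening port.

```python
import json
import urllib.request


def opa_query_url(host, port, policy_path, use_ssl=False):
    """Map a dotted package.rule path to the matching OPA Data API URL."""
    scheme = "https" if use_ssl else "http"
    # Example: "aap_policy_examples.allowed_false"
    #       -> "aap_policy_examples/allowed_false"
    path = policy_path.replace(".", "/").strip("/")
    return f"{scheme}://{host}:{port}/v1/data/{path}"


def build_opa_request(url, input_doc):
    """Build (but do not send) a POST whose body wraps the data under "input"."""
    body = json.dumps({"input": input_doc}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/json"},
    )


url = opa_query_url("opa.example.com", 8181, "aap_policy_examples.allowed_false")
request = build_opa_request(url, {"name": "hypothetical job template"})
print(request.get_method(), request.full_url)
```

OPA answers such a query with a JSON object whose result key holds the value that the rule evaluates to, which is the decision the rule returns.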