Node.js Runtime Guide

Use Node.js 10 to develop scalable network applications that run on OpenShift and on stand-alone RHEL

Abstract

Preface

This guide covers concepts as well as practical details needed by developers to use the Node.js runtime.

Chapter 1. What is Node.js

Node.js is based on the V8 JavaScript engine from Google and allows you to write server-side JavaScript applications. It provides an I/O model based on events and non-blocking operations that enables you to write efficient applications. Node.js also provides a large module ecosystem called npm. Check out Additional Resources for further reading on Node.js.
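The event-driven, non-blocking model can be illustrated with a small sketch that uses only Node.js core APIs. It is not taken from the guide's example applications, and the `order` array exists purely for illustration: a callback scheduled with `setImmediate` runs only after the current synchronous code has finished, so the main flow is never blocked while waiting for it.

```javascript
'use strict';

// Records the order in which the steps below actually execute.
const order = [];

order.push('schedule callback');

// setImmediate queues the callback on the event loop; it runs only after
// the currently executing synchronous code has completed.
setImmediate(() => {
  order.push('callback ran');
  console.log(order.join(' -> '));
});

order.push('synchronous code done');
```

When run with `node`, the callback entry is appended last, after both synchronous entries.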

The Node.js runtime enables you to run Node.js applications and services on OpenShift while providing all the advantages and conveniences of the OpenShift platform such as rolling updates, continuous delivery pipelines, service discovery, and canary deployments. OpenShift also makes it easier for your applications to implement common microservice patterns such as externalized configuration, health check, circuit breaker, and failover.

Red Hat provides different supported releases of Node.js. For more information about how to get support, see Getting Node.js and support from Red Hat.

Chapter 2. Supported Architectures by Node.js

Node.js supports the following architectures:

- x86_64 (AMD64)

- IBM Z (s390x) in the OpenShift environment

Different images are supported for different architectures. The code examples in this guide demonstrate the commands for the x86_64 architecture. If you are using another architecture, specify the relevant image name in the commands.

Chapter 3. Introduction to example applications

Examples are working applications that demonstrate how to build cloud native applications and services. They demonstrate prescriptive architectures, design patterns, tools, and best practices that should be used when you develop your applications. The example applications can be used as templates to create your cloud-native microservices. You can update and redeploy these examples using the deployment process explained in this guide.

The examples implement microservice patterns such as:

- Creating REST APIs

- Interoperating with a database

- Implementing the health check pattern

- Externalizing the configuration of your applications to make them more secure and easier to scale

You can use the example applications as:

- Working demonstration of the technology

- Learning tool or a sandbox to understand how to develop applications for your project

- Starting point for updating or extending your own use case

Each example application is implemented in one or more runtimes. For example, the REST API Level 0 example is available for the following runtimes:

The subsequent sections explain the example applications implemented for the Node.js runtime.

You can download and deploy all the example applications on:

- x86_64 architecture - The example applications in this guide demonstrate how to build and deploy example applications on x86_64 architecture.

- s390x architecture - To deploy the example applications on OpenShift environments provisioned on IBM Z infrastructure, specify the relevant IBM Z image name in the commands.

Some of the example applications also require other products, such as Red Hat Data Grid to demonstrate the workflows. In this case, you must also change the image names of these products to their relevant IBM Z image names in the YAML file of the example applications.

Chapter 4. Available examples for Node.js

The Node.js runtime provides example applications. When you start developing applications on OpenShift, you can use the example applications as templates.

You can access these example applications on Developer Launcher.

4.1. REST API Level 0 example for Node.js

The following example is not meant to be run in a production environment.

Example proficiency level: Foundational.

What the REST API Level 0 example does

The REST API Level 0 example shows how to map business operations to a remote procedure call endpoint over HTTP using a REST framework. This corresponds to Level 0 in the Richardson Maturity Model. Creating an HTTP endpoint using REST and its underlying principles to define your API lets you quickly prototype and design the API flexibly.

This example introduces the mechanics of interacting with a remote service using the HTTP protocol. It allows you to:

- Execute an HTTP GET request on the api/greeting endpoint.

- Receive a response in JSON format with a payload consisting of the Hello, World! String.

- Execute an HTTP GET request on the api/greeting endpoint while passing in a String argument. This uses the name request parameter in the query string.

- Receive a response in JSON format with a payload of Hello, $name! with $name replaced by the value of the name parameter passed into the request.
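The behavior listed above can be sketched with Node.js core modules only. This is a minimal illustration, not the example application's actual source: the function names `greeting` and `handleRequest` and the port mentioned in the comment are assumptions.

```javascript
'use strict';
const http = require('http');
const { URL } = require('url');

// Build the JSON payload; fall back to "World" when no name is supplied.
function greeting(name) {
  return { content: `Hello, ${name || 'World'}!` };
}

// Answer GET /api/greeting[?name=...] with JSON; anything else gets a 404.
function handleRequest(req, res) {
  const url = new URL(req.url, 'http://localhost');
  if (req.method === 'GET' && url.pathname === '/api/greeting') {
    res.writeHead(200, { 'Content-Type': 'application/json' });
    res.end(JSON.stringify(greeting(url.searchParams.get('name'))));
  } else {
    res.writeHead(404);
    res.end();
  }
}

// The server is created but not started here.
// Call server.listen(8080) to serve requests (the port is an assumption).
const server = http.createServer(handleRequest);
```

Keeping the payload logic in a separate function from the HTTP handling makes it straightforward to check the greeting without starting a server.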

4.1.1. REST API Level 0 design tradeoffs

| Pros | Cons |

|---|---|

|

|

4.1.2. Deploying the REST API Level 0 example application to OpenShift Online

Use one of the following options to execute the REST API Level 0 example application on OpenShift Online.

Although each method uses the same oc commands to deploy your application, using developers.redhat.com/launch provides an automated deployment workflow that executes the oc commands for you.

4.1.2.1. Deploying the example application using developers.redhat.com/launch

Prerequisites

- An account at OpenShift Online.

Procedure

- Navigate to the developers.redhat.com/launch URL in a browser and log in.

- Follow on-screen instructions to create and launch your example application in Node.js.

4.1.2.2. Authenticating the oc CLI client

To work with example applications on OpenShift Online using the oc command-line client, you must authenticate the client using the token provided by the OpenShift Online web interface.

Prerequisites

- An account at OpenShift Online.

Procedure

- Navigate to the OpenShift Online URL in a browser.

- Click on the question mark icon in the top right-hand corner of the Web console, next to your user name.

- Select Command Line Tools in the drop-down menu.

- Find the text box that contains the oc login … command with the hidden token, and click the button next to it to copy its content to your clipboard.

- Paste the command into a terminal application. The command uses your authentication token to authenticate your oc CLI client with your OpenShift Online account.

    $ oc login OPENSHIFT_URL --token=MYTOKEN

4.1.2.3. Deploying the REST API Level 0 example application using the oc CLI client

Prerequisites

- The example application created using developers.redhat.com/launch. For more information, see Section 4.1.2.1, “Deploying the example application using developers.redhat.com/launch”.

- The oc client authenticated. For more information, see Section 4.1.2.2, “Authenticating the oc CLI client”.

Procedure

- Clone your project from GitHub.

    $ git clone git@github.com:USERNAME/MY_PROJECT_NAME.git

  Alternatively, if you downloaded a ZIP file of your project, extract it.

    $ unzip MY_PROJECT_NAME.zip

- Create a new project in OpenShift.

    $ oc new-project MY_PROJECT_NAME

- Navigate to the root directory of your application.

- Use npm to start the deployment to OpenShift.

    $ npm install && npm run openshift

  These commands install any missing module dependencies and then use the Nodeshift module to deploy the example application on OpenShift.

- Check the status of your application and ensure your pod is running.

    $ oc get pods -w
    NAME                      READY   STATUS      RESTARTS   AGE
    MY_APP_NAME-1-aaaaa       1/1     Running     0          58s
    MY_APP_NAME-s2i-1-build   0/1     Completed   0          2m

  The MY_APP_NAME-1-aaaaa pod should have a status of Running once it is fully deployed and started. Your specific pod name will vary. The number in the middle will increase with each new build. The letters at the end are generated when the pod is created.

- After your example application is deployed and started, determine its route.

  Example Route Information

    $ oc get routes
    NAME          HOST/PORT                                        PATH   SERVICES      PORT   TERMINATION
    MY_APP_NAME   MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME          MY_APP_NAME   8080

  The route information of a pod gives you the base URL which you use to access it. In the example above, you would use http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME as the base URL to access the application.

4.1.3. Deploying the REST API Level 0 example application to Minishift or CDK

Use one of the following options to execute the REST API Level 0 example application locally on Minishift or CDK:

Although each method uses the same oc commands to deploy your application, using Fabric8 Launcher provides an automated deployment workflow that executes the oc commands for you.

4.1.3.1. Getting the Fabric8 Launcher tool URL and credentials

You need the Fabric8 Launcher tool URL and user credentials to create and deploy example applications on Minishift or CDK. This information is provided when the Minishift or CDK is started.

Prerequisites

- The Fabric8 Launcher tool installed, configured, and running.

Procedure

- Navigate to the console where you started Minishift or CDK.

- Check the console output for the URL and user credentials you can use to access the running Fabric8 Launcher:

Example Console Output from a Minishift or CDK Startup


4.1.3.2. Deploying the example application using the Fabric8 Launcher tool

Prerequisites

- The URL of your running Fabric8 Launcher instance and the user credentials of your Minishift or CDK. For more information, see Section 4.1.3.1, “Getting the Fabric8 Launcher tool URL and credentials”.

Procedure

- Navigate to the Fabric8 Launcher URL in a browser.

- Follow the on-screen instructions to create and launch your example application in Node.js.

4.1.3.3. Authenticating the oc CLI client

To work with example applications on Minishift or CDK using the oc command-line client, you must authenticate the client using the token provided by the Minishift or CDK web interface.

Prerequisites

- The URL of your running Fabric8 Launcher instance and the user credentials of your Minishift or CDK. For more information, see Section 4.1.3.1, “Getting the Fabric8 Launcher tool URL and credentials”.

Procedure

- Navigate to the Minishift or CDK URL in a browser.

- Click on the question mark icon in the top right-hand corner of the Web console, next to your user name.

- Select Command Line Tools in the drop-down menu.

- Find the text box that contains the oc login … command with the hidden token, and click the button next to it to copy its content to your clipboard.

- Paste the command into a terminal application. The command uses your authentication token to authenticate your oc CLI client with your Minishift or CDK account.

    $ oc login OPENSHIFT_URL --token=MYTOKEN

4.1.3.4. Deploying the REST API Level 0 example application using the oc CLI client

Prerequisites

- The example application created using Fabric8 Launcher tool on a Minishift or CDK. For more information, see Section 4.1.3.2, “Deploying the example application using the Fabric8 Launcher tool”.

- Your Fabric8 Launcher tool URL.

- The oc client authenticated. For more information, see Section 4.1.3.3, “Authenticating the oc CLI client”.

Procedure

- Clone your project from GitHub.

    $ git clone git@github.com:USERNAME/MY_PROJECT_NAME.git

  Alternatively, if you downloaded a ZIP file of your project, extract it.

    $ unzip MY_PROJECT_NAME.zip

- Create a new project in OpenShift.

    $ oc new-project MY_PROJECT_NAME

- Navigate to the root directory of your application.

- Use npm to start the deployment to OpenShift.

    $ npm install && npm run openshift

  These commands install any missing module dependencies and then use the Nodeshift module to deploy the example application on OpenShift.

- Check the status of your application and ensure your pod is running.

    $ oc get pods -w
    NAME                      READY   STATUS      RESTARTS   AGE
    MY_APP_NAME-1-aaaaa       1/1     Running     0          58s
    MY_APP_NAME-s2i-1-build   0/1     Completed   0          2m

  The MY_APP_NAME-1-aaaaa pod should have a status of Running once it is fully deployed and started. Your specific pod name will vary. The number in the middle will increase with each new build. The letters at the end are generated when the pod is created.

- After your example application is deployed and started, determine its route.

  Example Route Information

    $ oc get routes
    NAME          HOST/PORT                                        PATH   SERVICES      PORT   TERMINATION
    MY_APP_NAME   MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME          MY_APP_NAME   8080

  The route information of a pod gives you the base URL which you use to access it. In the example above, you would use http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME as the base URL to access the application.

4.1.4. Deploying the REST API Level 0 example application to OpenShift Container Platform

The process of creating and deploying example applications to OpenShift Container Platform is similar to OpenShift Online:

Prerequisites

- The example application created using developers.redhat.com/launch.

Procedure

- Follow the instructions in Section 4.1.2, “Deploying the REST API Level 0 example application to OpenShift Online”, but use the URL and user credentials from the OpenShift Container Platform Web Console.

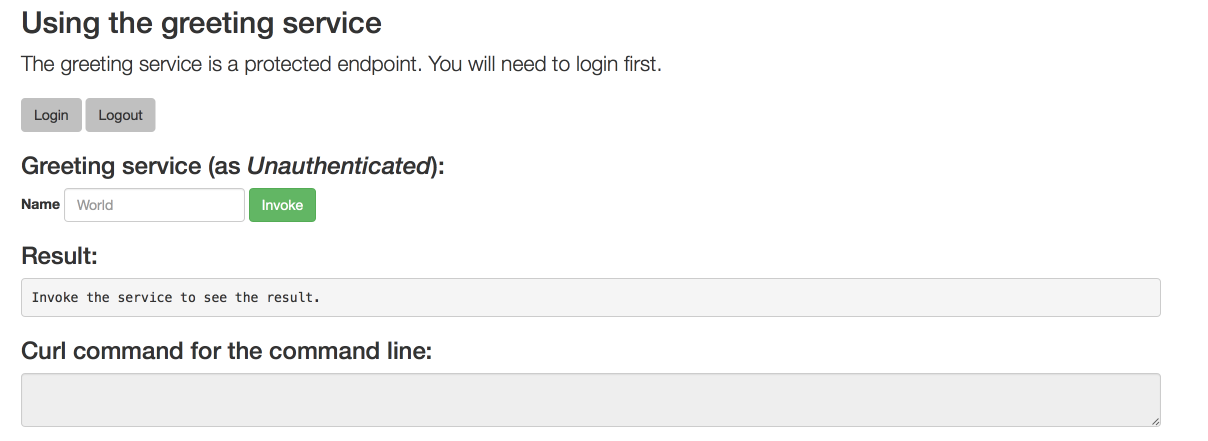

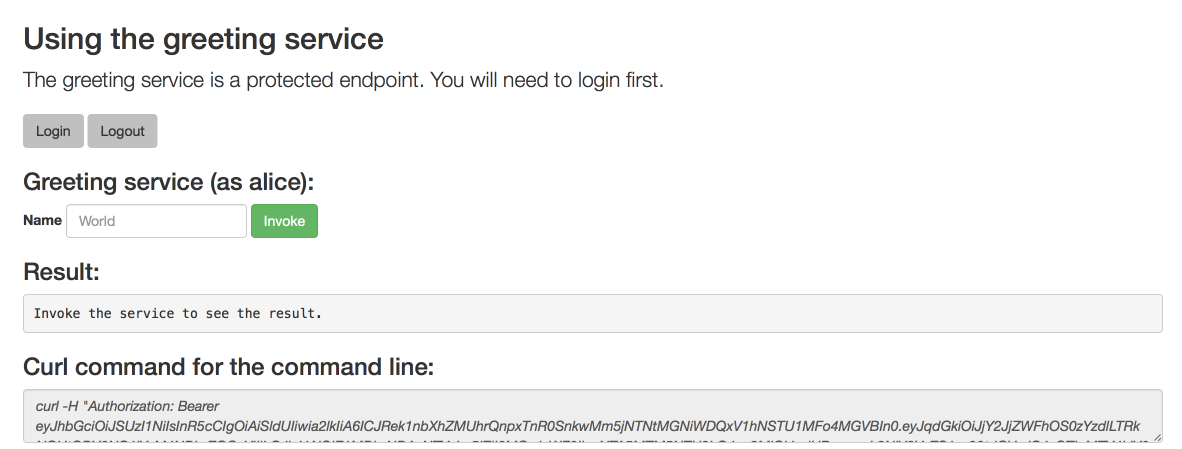

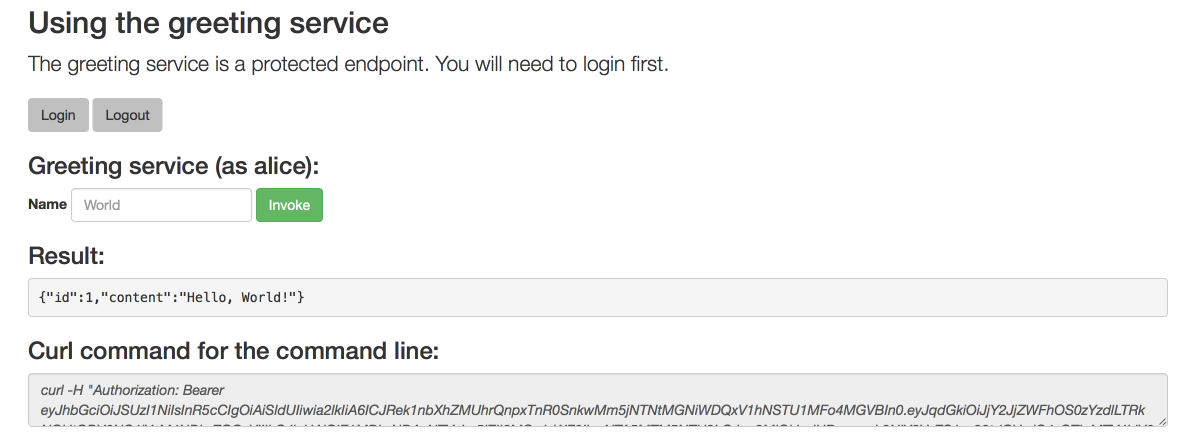

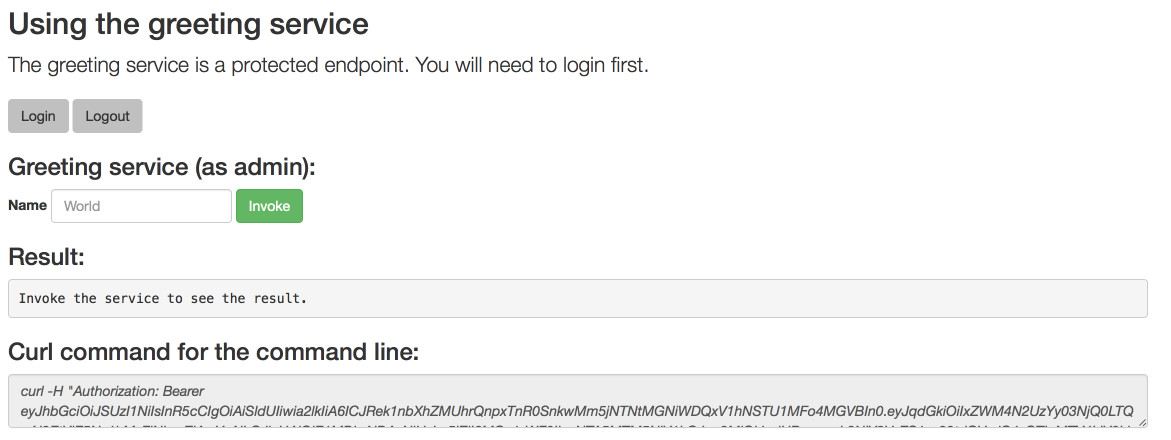

4.1.5. Interacting with the unmodified REST API Level 0 example application for Node.js

The example provides a default HTTP endpoint that accepts GET requests.

Prerequisites

- Your application running

- The curl binary or a web browser

Procedure

- Use curl to execute a GET request against the example. You can also use a browser to do this.

    $ curl http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME/api/greeting
    {"content":"Hello, World!"}

- Use curl to execute a GET request with the name URL parameter against the example. You can also use a browser to do this.

    $ curl http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME/api/greeting?name=Sarah
    {"content":"Hello, Sarah!"}

From a browser, you can also use a form provided by the example to perform these same interactions. The form is located at the root of the project http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME.
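The same requests can also be issued from a small Node.js script. The following is a sketch under stated assumptions rather than part of the example application: the helper names `greetingUrl` and `fetchGreeting` are hypothetical, and the base URL must be replaced with the route of your own deployment.

```javascript
'use strict';
const http = require('http');
const { URL } = require('url');

// Build the request URL from the route's base URL; `name` is optional.
function greetingUrl(baseUrl, name) {
  const url = new URL('/api/greeting', baseUrl);
  if (name) {
    url.searchParams.set('name', name); // takes care of URL encoding
  }
  return url.toString();
}

// Send the GET request and resolve with the parsed JSON response body.
function fetchGreeting(baseUrl, name) {
  return new Promise((resolve, reject) => {
    http.get(greetingUrl(baseUrl, name), (res) => {
      let body = '';
      res.setEncoding('utf8');
      res.on('data', (chunk) => { body += chunk; });
      res.on('end', () => {
        try {
          resolve(JSON.parse(body));
        } catch (err) {
          reject(err);
        }
      });
    }).on('error', reject);
  });
}
```

For example, `fetchGreeting('http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME', 'Sarah').then(console.log)` would print the parsed response once the application is reachable.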

4.1.6. REST resources

More background and related information on REST can be found here:

4.2. Externalized Configuration example for Node.js

The following example is not meant to be run in a production environment.

Example proficiency level: Foundational.

Externalized Configuration provides a basic example of using a ConfigMap to externalize configuration. A ConfigMap is an object used by OpenShift to inject configuration data as simple key and value pairs into one or more Linux containers while keeping the containers independent of OpenShift.

This example shows you how to:

- Set up and configure a ConfigMap.

- Use the configuration provided by the ConfigMap within an application.

- Deploy changes to the ConfigMap configuration of running applications.

4.2.1. The externalized configuration design pattern

Whenever possible, externalize the application configuration and separate it from the application code. This allows the application configuration to change as it moves through different environments, but leaves the code unchanged. Externalizing the configuration also keeps sensitive or internal information out of your code base and version control. Many languages and application servers provide environment variables to support externalizing an application’s configuration.

Microservices architectures and multi-language (polyglot) environments add a layer of complexity to managing an application’s configuration. Applications consist of independent, distributed services, and each can have its own configuration. Keeping all configuration data synchronized and accessible creates a maintenance challenge.

ConfigMaps enable the application configuration to be externalized and used in individual Linux containers and pods on OpenShift. You can create a ConfigMap object in a variety of ways, including using a YAML file, and inject it into the Linux container. ConfigMaps also allow you to group and scale sets of configuration data. This lets you configure a large number of environments beyond the basic Development, Stage, and Production. You can find more information about ConfigMaps in the OpenShift documentation.
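As a minimal sketch of the pattern, the snippet below reads settings from environment variables and falls back to defaults when they are absent. The variable names `GREETING_MESSAGE` and `PORT` and the `loadConfig` helper are hypothetical, and this is only one way to consume externalized values; the example application in this guide reads its ConfigMap through the OpenShift API instead.

```javascript
'use strict';

// Read configuration from the given environment object, with defaults.
// GREETING_MESSAGE and PORT are hypothetical variable names.
function loadConfig(env) {
  return {
    message: env.GREETING_MESSAGE || 'Hello, %s!',
    port: Number.parseInt(env.PORT, 10) || 8080,
  };
}

// The same code runs unchanged in every environment; only the values differ.
const config = loadConfig(process.env);
```

Passing the environment in as an argument, rather than reading `process.env` inside the function, keeps the loader easy to exercise with different settings.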

4.2.2. Externalized Configuration design tradeoffs

| Pros | Cons |

|---|---|

|

|

4.2.3. Deploying the Externalized Configuration example application to OpenShift Online

Use one of the following options to execute the Externalized Configuration example application on OpenShift Online.

Although each method uses the same oc commands to deploy your application, using developers.redhat.com/launch provides an automated deployment workflow that executes the oc commands for you.

4.2.3.1. Deploying the example application using developers.redhat.com/launch

Prerequisites

- An account at OpenShift Online.

Procedure

- Navigate to the developers.redhat.com/launch URL in a browser and log in.

- Follow on-screen instructions to create and launch your example application in Node.js.

4.2.3.2. Authenticating the oc CLI client

To work with example applications on OpenShift Online using the oc command-line client, you must authenticate the client using the token provided by the OpenShift Online web interface.

Prerequisites

- An account at OpenShift Online.

Procedure

- Navigate to the OpenShift Online URL in a browser.

- Click on the question mark icon in the top right-hand corner of the Web console, next to your user name.

- Select Command Line Tools in the drop-down menu.

- Find the text box that contains the oc login … command with the hidden token, and click the button next to it to copy its content to your clipboard.

- Paste the command into a terminal application. The command uses your authentication token to authenticate your oc CLI client with your OpenShift Online account.

    $ oc login OPENSHIFT_URL --token=MYTOKEN

4.2.3.3. Deploying the Externalized Configuration example application using the oc CLI client

Prerequisites

- The example application created using developers.redhat.com/launch. For more information, see Section 4.2.3.1, “Deploying the example application using developers.redhat.com/launch”.

- The oc client authenticated. For more information, see Section 4.2.3.2, “Authenticating the oc CLI client”.

Procedure

- Clone your project from GitHub.

    $ git clone git@github.com:USERNAME/MY_PROJECT_NAME.git

  Alternatively, if you downloaded a ZIP file of your project, extract it.

    $ unzip MY_PROJECT_NAME.zip

- Create a new OpenShift project.

    $ oc new-project MY_PROJECT_NAME

- Assign view access rights to the service account before deploying your example application, so that the application can access the OpenShift API in order to read the contents of the ConfigMap.

    $ oc policy add-role-to-user view -n $(oc project -q) -z default

- Navigate to the root directory of your application.

- Deploy your ConfigMap configuration to OpenShift using app-config.yml.

    $ oc create configmap app-config --from-file=app-config.yml

- Verify your ConfigMap configuration has been deployed.

- Use npm to start the deployment to OpenShift.

    $ npm install && npm run openshift

  These commands install any missing module dependencies and then use the Nodeshift module to deploy the example application on OpenShift.

- Check the status of your application and ensure your pod is running.

    $ oc get pods -w
    NAME                      READY   STATUS      RESTARTS   AGE
    MY_APP_NAME-1-aaaaa       1/1     Running     0          58s
    MY_APP_NAME-s2i-1-build   0/1     Completed   0          2m

  The MY_APP_NAME-1-aaaaa pod should have a status of Running once it is fully deployed and started. Your specific pod name will vary. The number in the middle will increase with each new build. The letters at the end are generated when the pod is created.

- After your example application is deployed and started, determine its route.

  Example Route Information

    $ oc get routes
    NAME          HOST/PORT                                        PATH   SERVICES      PORT   TERMINATION
    MY_APP_NAME   MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME          MY_APP_NAME   8080

  The route information of a pod gives you the base URL which you use to access it. In the example above, you would use http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME as the base URL to access the application.

4.2.4. Deploying the Externalized Configuration example application to Minishift or CDK

Use one of the following options to execute the Externalized Configuration example application locally on Minishift or CDK:

Although each method uses the same oc commands to deploy your application, using Fabric8 Launcher provides an automated deployment workflow that executes the oc commands for you.

4.2.4.1. Getting the Fabric8 Launcher tool URL and credentials

You need the Fabric8 Launcher tool URL and user credentials to create and deploy example applications on Minishift or CDK. This information is provided when the Minishift or CDK is started.

Prerequisites

- The Fabric8 Launcher tool installed, configured, and running.

Procedure

- Navigate to the console where you started Minishift or CDK.

- Check the console output for the URL and user credentials you can use to access the running Fabric8 Launcher:

Example Console Output from a Minishift or CDK Startup


4.2.4.2. Deploying the example application using the Fabric8 Launcher tool

Prerequisites

- The URL of your running Fabric8 Launcher instance and the user credentials of your Minishift or CDK. For more information, see Section 4.2.4.1, “Getting the Fabric8 Launcher tool URL and credentials”.

Procedure

- Navigate to the Fabric8 Launcher URL in a browser.

- Follow the on-screen instructions to create and launch your example application in Node.js.

4.2.4.3. Authenticating the oc CLI client

To work with example applications on Minishift or CDK using the oc command-line client, you must authenticate the client using the token provided by the Minishift or CDK web interface.

Prerequisites

- The URL of your running Fabric8 Launcher instance and the user credentials of your Minishift or CDK. For more information, see Section 4.2.4.1, “Getting the Fabric8 Launcher tool URL and credentials”.

Procedure

- Navigate to the Minishift or CDK URL in a browser.

- Click on the question mark icon in the top right-hand corner of the Web console, next to your user name.

- Select Command Line Tools in the drop-down menu.

- Find the text box that contains the oc login … command with the hidden token, and click the button next to it to copy its content to your clipboard.

- Paste the command into a terminal application. The command uses your authentication token to authenticate your oc CLI client with your Minishift or CDK account.

    $ oc login OPENSHIFT_URL --token=MYTOKEN

4.2.4.4. Deploying the Externalized Configuration example application using the oc CLI client

Prerequisites

- The example application created using Fabric8 Launcher tool on a Minishift or CDK. For more information, see Section 4.2.4.2, “Deploying the example application using the Fabric8 Launcher tool”.

- Your Fabric8 Launcher tool URL.

- The oc client authenticated. For more information, see Section 4.2.4.3, “Authenticating the oc CLI client”.

Procedure

- Clone your project from GitHub.

    $ git clone git@github.com:USERNAME/MY_PROJECT_NAME.git

  Alternatively, if you downloaded a ZIP file of your project, extract it.

    $ unzip MY_PROJECT_NAME.zip

- Create a new OpenShift project.

    $ oc new-project MY_PROJECT_NAME

- Assign view access rights to the service account before deploying your example application, so that the application can access the OpenShift API in order to read the contents of the ConfigMap.

    $ oc policy add-role-to-user view -n $(oc project -q) -z default

- Navigate to the root directory of your application.

- Deploy your ConfigMap configuration to OpenShift using app-config.yml.

    $ oc create configmap app-config --from-file=app-config.yml

- Verify your ConfigMap configuration has been deployed.

- Use npm to start the deployment to OpenShift.

    $ npm install && npm run openshift

  These commands install any missing module dependencies and then use the Nodeshift module to deploy the example application on OpenShift.

- Check the status of your application and ensure your pod is running.

    $ oc get pods -w
    NAME                      READY   STATUS      RESTARTS   AGE
    MY_APP_NAME-1-aaaaa       1/1     Running     0          58s
    MY_APP_NAME-s2i-1-build   0/1     Completed   0          2m

  The MY_APP_NAME-1-aaaaa pod should have a status of Running once it is fully deployed and started. Your specific pod name will vary. The number in the middle will increase with each new build. The letters at the end are generated when the pod is created.

- After your example application is deployed and started, determine its route.

  Example Route Information

    $ oc get routes
    NAME          HOST/PORT                                        PATH   SERVICES      PORT   TERMINATION
    MY_APP_NAME   MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME          MY_APP_NAME   8080

  The route information of a pod gives you the base URL which you use to access it. In the example above, you would use http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME as the base URL to access the application.

4.2.5. Deploying the Externalized Configuration example application to OpenShift Container Platform

The process of creating and deploying example applications to OpenShift Container Platform is similar to OpenShift Online:

Prerequisites

- The example application created using developers.redhat.com/launch.

Procedure

- Follow the instructions in Section 4.2.3, “Deploying the Externalized Configuration example application to OpenShift Online”, but use the URL and user credentials from the OpenShift Container Platform Web Console.

4.2.6. Interacting with the unmodified Externalized Configuration example application for Node.js

The example provides a default HTTP endpoint that accepts GET requests.

Prerequisites

- Your application running

- The curl binary or a web browser

Procedure

- Use curl to execute a GET request against the example. You can also use a browser to do this.

    $ curl http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME/api/greeting
    {"content":"Hello, World from a ConfigMap !"}

- Update the deployed ConfigMap configuration.

    $ oc edit configmap app-config

  Change the value for the message key to Bonjour, %s from a ConfigMap ! and save the file.

- The application should read the updated ConfigMap within an acceptable time (a few seconds) without requiring a restart.

- Execute a GET request using curl against the example with the updated ConfigMap configuration to see your updated greeting. You can also do this from your browser using the web form provided by the application.

    $ curl http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME/api/greeting
    {"content":"Bonjour, World from a ConfigMap !"}
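The %s placeholder in the message value suggests printf-style substitution. The following sketch assumes the placeholder is filled with Node's `util.format`; the `renderGreeting` name is hypothetical, and the example application may implement the substitution differently.

```javascript
'use strict';
const util = require('util');

// Replace the %s placeholder in the configured message with the caller's
// name, defaulting to "World" when no name is given.
function renderGreeting(template, name) {
  return { content: util.format(template, name || 'World') };
}

console.log(JSON.stringify(renderGreeting('Bonjour, %s from a ConfigMap !')));
```

Because the template is supplied as data, changing the ConfigMap value changes the response without any change to the code.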

4.2.7. Externalized Configuration resources

More background and related information on Externalized Configuration and ConfigMap can be found here:

4.3. Relational Database Backend example for Node.js

The following example is not meant to be run in a production environment.

Limitation: Run this example application on a Minishift or CDK. You can also use a manual workflow to deploy this example to OpenShift Online Pro and OpenShift Container Platform. This example is not currently available on OpenShift Online Starter.

Example proficiency level: Foundational.

What the Relational Database Backend example does

The Relational Database Backend example expands on the REST API Level 0 application to provide a basic example of performing create, read, update and delete (CRUD) operations on a PostgreSQL database using a simple HTTP API. CRUD operations are the four basic functions of persistent storage, widely used when developing an HTTP API dealing with a database.

The example also demonstrates the ability of the HTTP application to locate and connect to a database in OpenShift. Each runtime shows how to implement the connectivity solution best suited in the given case. The runtime can choose between options such as using JDBC, JPA, or accessing ORM APIs directly.

The example application exposes an HTTP API, which provides endpoints that allow you to manipulate data by performing CRUD operations over HTTP. The CRUD operations are mapped to HTTP Verbs. The API uses JSON formatting to receive requests and return responses to the user. The user can also use a user interface provided by the example to use the application. Specifically, this example provides an application that allows you to:

- Navigate to the application web interface in your browser. This exposes a simple website allowing you to perform CRUD operations on the data in the my_data database.

- Execute an HTTP GET request on the api/fruits endpoint.

- Receive a response formatted as a JSON array containing the list of all fruits in the database.

- Execute an HTTP GET request on the api/fruits/* endpoint while passing in a valid item ID as an argument.

- Receive a response in JSON format containing the name of the fruit with the given ID. If no item matches the specified ID, the call results in an HTTP error 404.

- Execute an HTTP POST request on the api/fruits endpoint passing in a valid name value to create a new entry in the database.

- Execute an HTTP PUT request on the api/fruits/* endpoint passing in a valid ID and a name as an argument. This updates the name of the item with the given ID to match the name specified in your request.

- Execute an HTTP DELETE request on the api/fruits/* endpoint, passing in a valid ID as an argument. This removes the item with the specified ID from the database and returns an HTTP code 204 (No Content) as a response. If you pass in an invalid ID, the call results in an HTTP error 404.

This example does not showcase a fully matured RESTful model (level 3), but it does use compatible HTTP verbs and status, following the recommended HTTP API practices.
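The mapping of CRUD operations to HTTP verbs described above can be sketched as a small dispatcher. This is an illustration only, not the example application's code: it assumes an in-memory Map in place of the PostgreSQL database, and the handle function, the 201 status for POST, the 200 status for PUT, and the 405 status for unsupported verbs are assumptions. The 204 and 404 codes follow the description above.

```javascript
// In-memory stand-in for the database table (assumption for illustration).
const fruits = new Map([[1, { id: 1, name: 'Apple', stock: 10 }]]);
let nextId = 2;

// Hypothetical dispatcher: maps an HTTP verb and path to a CRUD operation
// and returns the status code and body that a handler would send back.
function handle(method, path, body) {
  const match = /^\/api\/fruits(?:\/(\d+))?$/.exec(path);
  if (!match) return { status: 404 };
  const id = match[1] === undefined ? undefined : Number(match[1]);

  if (id === undefined) {
    if (method === 'GET') {
      return { status: 200, body: [...fruits.values()] }; // read all
    }
    if (method === 'POST') {
      const fruit = { ...body, id: nextId++ }; // create
      fruits.set(fruit.id, fruit);
      return { status: 201, body: fruit };
    }
    return { status: 405 };
  }

  if (!fruits.has(id)) return { status: 404 }; // no item with this ID
  if (method === 'GET') {
    return { status: 200, body: fruits.get(id) }; // read one
  }
  if (method === 'PUT') {
    const fruit = { ...fruits.get(id), ...body, id }; // update
    fruits.set(id, fruit);
    return { status: 200, body: fruit };
  }
  if (method === 'DELETE') {
    fruits.delete(id); // delete
    return { status: 204 };
  }
  return { status: 405 };
}

console.log(handle('POST', '/api/fruits', { name: 'Peach', stock: 1 }));
console.log(handle('DELETE', '/api/fruits/1').status);
```

Keeping the dispatcher free of network and database code makes the verb-to-operation mapping easy to read. A real service would replace the Map with database queries.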

4.3.1. Relational Database Backend design tradeoffs

| Pros | Cons |

|---|---|

|

|

4.3.2. Deploying the Relational Database Backend example application to OpenShift Online

Use one of the following options to execute the Relational Database Backend example application on OpenShift Online.

Although each method uses the same oc commands to deploy your application, using developers.redhat.com/launch provides an automated deployment workflow that executes the oc commands for you.

4.3.2.1. Deploying the example application using developers.redhat.com/launch

Prerequisites

- An account at OpenShift Online.

Procedure

- Navigate to the developers.redhat.com/launch URL in a browser and log in.

- Follow the on-screen instructions to create and launch your example application in Node.js.

4.3.2.2. Authenticating the oc CLI client

To work with example applications on OpenShift Online using the oc command-line client, you must authenticate the client using the token provided by the OpenShift Online web interface.

Prerequisites

- An account at OpenShift Online.

Procedure

- Navigate to the OpenShift Online URL in a browser.

- Click on the question mark icon in the top right-hand corner of the Web console, next to your user name.

- Select Command Line Tools in the drop-down menu.

- Find the text box that contains the oc login … command with the hidden token, and click the button next to it to copy its content to your clipboard.

- Paste the command into a terminal application. The command uses your authentication token to authenticate your oc CLI client with your OpenShift Online account.

      $ oc login OPENSHIFT_URL --token=MYTOKEN

4.3.2.3. Deploying the Relational Database Backend example application using the oc CLI client

Prerequisites

- The example application created using developers.redhat.com/launch. For more information, see Section 4.3.2.1, “Deploying the example application using developers.redhat.com/launch”.

- The oc client authenticated. For more information, see Section 4.3.2.2, “Authenticating the oc CLI client”.

Procedure

- Clone your project from GitHub.

      $ git clone git@github.com:USERNAME/MY_PROJECT_NAME.git

  Alternatively, if you downloaded a ZIP file of your project, extract it.

      $ unzip MY_PROJECT_NAME.zip

- Create a new OpenShift project.

      $ oc new-project MY_PROJECT_NAME

- Navigate to the root directory of your application.

- Deploy the PostgreSQL database to OpenShift. Ensure that you use the following values for user name, password, and database name when creating your database application. The example application is pre-configured to use these values. Using different values prevents your application from integrating with the database.

      $ oc new-app -e POSTGRESQL_USER=luke -ePOSTGRESQL_PASSWORD=secret -ePOSTGRESQL_DATABASE=my_data registry.access.redhat.com/rhscl/postgresql-10-rhel7 --name=my-database

- Check the status of your database and ensure the pod is running.

      $ oc get pods -w
      my-database-1-aaaaa    1/1   Running     0   45s
      my-database-1-deploy   0/1   Completed   0   53s

  The my-database-1-aaaaa pod should have a status of Running and should be indicated as ready once it is fully deployed and started. Your specific pod name will vary. The number in the middle will increase with each new build. The letters at the end are generated when the pod is created.

- Use npm to start the deployment to OpenShift.

      $ npm install && npm run openshift

  These commands install any missing module dependencies, then using the Nodeshift module, deploy the example application on OpenShift.

- Check the status of your application and ensure your pod is running.

      $ oc get pods -w
      NAME                      READY   STATUS      RESTARTS   AGE
      MY_APP_NAME-1-aaaaa       1/1     Running     0          58s
      MY_APP_NAME-s2i-1-build   0/1     Completed   0          2m

  Your MY_APP_NAME-1-aaaaa pod should have a status of Running and should be indicated as ready once it is fully deployed and started.

- After your example application is deployed and started, determine its route.

  Example Route Information

      $ oc get routes
      NAME          HOST/PORT                                        PATH   SERVICES      PORT   TERMINATION
      MY_APP_NAME   MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME          MY_APP_NAME   8080

  The route information of a pod gives you the base URL which you use to access it. In the example above, you would use http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME as the base URL to access the application.

4.3.3. Deploying the Relational Database Backend example application to Minishift or CDK

Use one of the following options to execute the Relational Database Backend example application locally on Minishift or CDK:

Although each method uses the same oc commands to deploy your application, using Fabric8 Launcher provides an automated deployment workflow that executes the oc commands for you.

4.3.3.1. Getting the Fabric8 Launcher tool URL and credentials

You need the Fabric8 Launcher tool URL and user credentials to create and deploy example applications on Minishift or CDK. This information is provided when the Minishift or CDK is started.

Prerequisites

- The Fabric8 Launcher tool installed, configured, and running.

Procedure

- Navigate to the console where you started Minishift or CDK.

- Check the console output for the URL and user credentials you can use to access the running Fabric8 Launcher:

Example Console Output from a Minishift or CDK Startup


4.3.3.2. Deploying the example application using the Fabric8 Launcher tool

Prerequisites

- The URL of your running Fabric8 Launcher instance and the user credentials of your Minishift or CDK. For more information, see Section 4.3.3.1, “Getting the Fabric8 Launcher tool URL and credentials”.

Procedure

- Navigate to the Fabric8 Launcher URL in a browser.

- Follow the on-screen instructions to create and launch your example application in Node.js.

4.3.3.3. Authenticating the oc CLI client

To work with example applications on Minishift or CDK using the oc command-line client, you must authenticate the client using the token provided by the Minishift or CDK web interface.

Prerequisites

- The URL of your running Fabric8 Launcher instance and the user credentials of your Minishift or CDK. For more information, see Section 4.3.3.1, “Getting the Fabric8 Launcher tool URL and credentials”.

Procedure

- Navigate to the Minishift or CDK URL in a browser.

- Click on the question mark icon in the top right-hand corner of the Web console, next to your user name.

- Select Command Line Tools in the drop-down menu.

- Find the text box that contains the oc login … command with the hidden token, and click the button next to it to copy its content to your clipboard.

- Paste the command into a terminal application. The command uses your authentication token to authenticate your oc CLI client with your Minishift or CDK account.

      $ oc login OPENSHIFT_URL --token=MYTOKEN

4.3.3.4. Deploying the Relational Database Backend example application using the oc CLI client

Prerequisites

- The example application created using Fabric8 Launcher tool on a Minishift or CDK. For more information, see Section 4.3.3.2, “Deploying the example application using the Fabric8 Launcher tool”.

- Your Fabric8 Launcher tool URL.

- The oc client authenticated. For more information, see Section 4.3.3.3, “Authenticating the oc CLI client”.

Procedure

- Clone your project from GitHub.

      $ git clone git@github.com:USERNAME/MY_PROJECT_NAME.git

  Alternatively, if you downloaded a ZIP file of your project, extract it.

      $ unzip MY_PROJECT_NAME.zip

- Create a new OpenShift project.

      $ oc new-project MY_PROJECT_NAME

- Navigate to the root directory of your application.

- Deploy the PostgreSQL database to OpenShift. Ensure that you use the following values for user name, password, and database name when creating your database application. The example application is pre-configured to use these values. Using different values prevents your application from integrating with the database.

      $ oc new-app -e POSTGRESQL_USER=luke -ePOSTGRESQL_PASSWORD=secret -ePOSTGRESQL_DATABASE=my_data registry.access.redhat.com/rhscl/postgresql-10-rhel7 --name=my-database

- Check the status of your database and ensure the pod is running.

      $ oc get pods -w
      my-database-1-aaaaa    1/1   Running     0   45s
      my-database-1-deploy   0/1   Completed   0   53s

  The my-database-1-aaaaa pod should have a status of Running and should be indicated as ready once it is fully deployed and started. Your specific pod name will vary. The number in the middle will increase with each new build. The letters at the end are generated when the pod is created.

- Use npm to start the deployment to OpenShift.

      $ npm install && npm run openshift

  These commands install any missing module dependencies, then using the Nodeshift module, deploy the example application on OpenShift.

- Check the status of your application and ensure your pod is running.

      $ oc get pods -w
      NAME                      READY   STATUS      RESTARTS   AGE
      MY_APP_NAME-1-aaaaa       1/1     Running     0          58s
      MY_APP_NAME-s2i-1-build   0/1     Completed   0          2m

  Your MY_APP_NAME-1-aaaaa pod should have a status of Running and should be indicated as ready once it is fully deployed and started.

- After your example application is deployed and started, determine its route.

  Example Route Information

      $ oc get routes
      NAME          HOST/PORT                                        PATH   SERVICES      PORT   TERMINATION
      MY_APP_NAME   MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME          MY_APP_NAME   8080

  The route information of a pod gives you the base URL which you use to access it. In the example above, you would use http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME as the base URL to access the application.

4.3.4. Deploying the Relational Database Backend example application to OpenShift Container Platform

The process of creating and deploying example applications to OpenShift Container Platform is similar to OpenShift Online:

Prerequisites

- The example application created using developers.redhat.com/launch.

Procedure

- Follow the instructions in Section 4.3.2, “Deploying the Relational Database Backend example application to OpenShift Online”, but use the URL and user credentials from the OpenShift Container Platform Web Console.

4.3.5. Interacting with the Relational Database Backend API on Node.js

When you have finished creating your example application, you can interact with it in the following way:

Prerequisites

- Your application running

- The curl binary or a web browser

Procedure

- Obtain the URL of your application by executing the following command:

      $ oc get route MY_APP_NAME
      NAME          HOST/PORT                                        PATH   SERVICES      PORT   TERMINATION
      MY_APP_NAME   MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME          MY_APP_NAME   8080

- To access the web interface of the database application, navigate to the application URL in your browser:

      http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME

  Alternatively, you can make requests directly on the api/fruits/* endpoint using curl:

  - List all entries in the database:

        $ curl http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME/api/fruits

  - Retrieve an entry with a specific ID:

        $ curl http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME/api/fruits/3
        { "id" : 3, "name" : "Pear", "stock" : 10 }

  - Create a new entry:

        $ curl -H "Content-Type: application/json" -X POST -d '{"name":"Peach","stock":1}' http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME/api/fruits
        { "id" : 4, "name" : "Peach", "stock" : 1 }

  - Update an entry:

        $ curl -H "Content-Type: application/json" -X PUT -d '{"name":"Apple","stock":100}' http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME/api/fruits/1
        { "id" : 1, "name" : "Apple", "stock" : 100 }

  - Delete an entry:

        $ curl -X DELETE http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME/api/fruits/1

Troubleshooting

- If you receive an HTTP Error code 503 as a response after executing these commands, it means that the application is not ready yet.

4.3.6. Relational database resources

More background and related information on running relational databases in OpenShift, CRUD, HTTP API and REST can be found here:

- HTTP Verbs

- Architectural Styles and the Design of Network-based Software Architectures - Representational State Transfer (REST)

- The never ending REST API design debate

- REST APIs must be Hypertext driven

- Richardson Maturity Model

- Express Web Framework

- Relational Database Backend for Spring Boot

- Relational Database Backend for Eclipse Vert.x

- Relational Database Backend for Thorntail

4.4. Health Check example for Node.js

The following example is not meant to be run in a production environment.

Example proficiency level: Foundational.

When you deploy an application, it is important to know if it is available and if it can start handling incoming requests. Implementing the health check pattern allows you to monitor the health of an application, including whether it is available and whether it is able to service requests.

If you are not familiar with the health check terminology, see Section 4.4.1, “Health check concepts” first.

The purpose of this use case is to demonstrate the health check pattern through the use of probing. Probing is used to report the liveness and readiness of an application. In this use case, you configure an application that exposes an HTTP health endpoint, which the platform probes with HTTP requests. If the container is alive, according to the liveness probe on the health HTTP endpoint, the management platform receives 200 as the return code and no further action is required. If the health HTTP endpoint does not return a response, for example if the thread is blocked, then the application is not considered alive according to the liveness probe. In that case, the platform kills the pod corresponding to that application and creates a new pod to restart the application.

This use case also allows you to demonstrate and use a readiness probe. In cases where the application is running but is unable to handle requests, such as when the application returns an HTTP 503 response code during restart, this application is not considered ready according to the readiness probe. Requests are not routed to that application until the readiness probe considers it ready again.

4.4.1. Health check concepts

In order to understand the health check pattern, you need to first understand the following concepts:

- Liveness

- Liveness defines whether an application is running or not. Sometimes a running application moves into an unresponsive or stopped state and needs to be restarted. Checking for liveness helps determine whether or not an application needs to be restarted.

- Readiness

- Readiness defines whether a running application can service requests. Sometimes a running application moves into an error or broken state where it can no longer service requests. Checking readiness helps determine whether or not requests should continue to be routed to that application.

- Fail-over

- Fail-over enables failures in servicing requests to be handled gracefully. If an application fails to service a request, that request and future requests can then fail-over or be routed to another application, which is usually a redundant copy of that same application.

- Resilience and Stability

- Resilience and Stability enable failures in servicing requests to be handled gracefully. If an application fails to service a request due to connection loss, in a resilient system that request can be retried after the connection is re-established.

- Probe

- A probe is a Kubernetes action that periodically performs diagnostics on a running container.
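The difference between liveness and readiness can be sketched as two checks over the same application state. This is a simplified model for illustration, not how Kubernetes or the example application is implemented. The state object, the function names, and the action strings are all hypothetical, and an unresponsive application is modeled as a 503 status rather than a missing response.

```javascript
// Liveness: can the process respond at all? A failed check leads to a restart.
function livenessStatus(state) {
  return state.responsive ? 200 : 503;
}

// Readiness: can the running process service requests right now?
function readinessStatus(state) {
  return state.responsive && state.acceptingRequests ? 200 : 503;
}

// Simplified model of how the platform reacts to the two probe results.
function platformAction(state) {
  if (livenessStatus(state) !== 200) return 'restart container';
  if (readinessStatus(state) !== 200) return 'stop routing requests';
  return 'route requests';
}

console.log(platformAction({ responsive: true, acceptingRequests: true }));
console.log(platformAction({ responsive: true, acceptingRequests: false }));
console.log(platformAction({ responsive: false, acceptingRequests: false }));
```

In this model, a failed readiness check only removes the application from routing, while a failed liveness check causes a restart.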

4.4.2. Deploying the Health Check example application to OpenShift Online

Use one of the following options to execute the Health Check example application on OpenShift Online.

Although each method uses the same oc commands to deploy your application, using developers.redhat.com/launch provides an automated deployment workflow that executes the oc commands for you.

4.4.2.1. Deploying the example application using developers.redhat.com/launch

Prerequisites

- An account at OpenShift Online.

Procedure

- Navigate to the developers.redhat.com/launch URL in a browser and log in.

- Follow the on-screen instructions to create and launch your example application in Node.js.

4.4.2.2. Authenticating the oc CLI client

To work with example applications on OpenShift Online using the oc command-line client, you must authenticate the client using the token provided by the OpenShift Online web interface.

Prerequisites

- An account at OpenShift Online.

Procedure

- Navigate to the OpenShift Online URL in a browser.

- Click on the question mark icon in the top right-hand corner of the Web console, next to your user name.

- Select Command Line Tools in the drop-down menu.

- Find the text box that contains the oc login … command with the hidden token, and click the button next to it to copy its content to your clipboard.

- Paste the command into a terminal application. The command uses your authentication token to authenticate your oc CLI client with your OpenShift Online account.

      $ oc login OPENSHIFT_URL --token=MYTOKEN

4.4.2.3. Deploying the Health Check example application using the oc CLI client

Prerequisites

- The example application created using developers.redhat.com/launch. For more information, see Section 4.4.2.1, “Deploying the example application using developers.redhat.com/launch”.

- The oc client authenticated. For more information, see Section 4.4.2.2, “Authenticating the oc CLI client”.

Procedure

- Clone your project from GitHub.

      $ git clone git@github.com:USERNAME/MY_PROJECT_NAME.git

  Alternatively, if you downloaded a ZIP file of your project, extract it.

      $ unzip MY_PROJECT_NAME.zip

- Create a new OpenShift project.

      $ oc new-project MY_PROJECT_NAME

- Navigate to the root directory of your application.

- Use npm to start the deployment to OpenShift.

      $ npm install && npm run openshift

  These commands install any missing module dependencies, then using the Nodeshift module, deploy the example application on OpenShift.

- Check the status of your application and ensure your pod is running.

      $ oc get pods -w
      NAME                      READY   STATUS      RESTARTS   AGE
      MY_APP_NAME-1-aaaaa       1/1     Running     0          58s
      MY_APP_NAME-s2i-1-build   0/1     Completed   0          2m

  The MY_APP_NAME-1-aaaaa pod should have a status of Running once it is fully deployed and started. You should also wait for your pod to be ready before proceeding, which is shown in the READY column. For example, MY_APP_NAME-1-aaaaa is ready when the READY column is 1/1. Your specific pod name will vary. The number in the middle will increase with each new build. The letters at the end are generated when the pod is created.

- After your example application is deployed and started, determine its route.

  Example Route Information

      $ oc get routes
      NAME          HOST/PORT                                        PATH   SERVICES      PORT   TERMINATION
      MY_APP_NAME   MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME          MY_APP_NAME   8080

  The route information of a pod gives you the base URL which you use to access it. In the example above, you would use http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME as the base URL to access the application.

4.4.3. Deploying the Health Check example application to Minishift or CDK

Use one of the following options to execute the Health Check example application locally on Minishift or CDK:

Although each method uses the same oc commands to deploy your application, using Fabric8 Launcher provides an automated deployment workflow that executes the oc commands for you.

4.4.3.1. Getting the Fabric8 Launcher tool URL and credentials

You need the Fabric8 Launcher tool URL and user credentials to create and deploy example applications on Minishift or CDK. This information is provided when the Minishift or CDK is started.

Prerequisites

- The Fabric8 Launcher tool installed, configured, and running.

Procedure

- Navigate to the console where you started Minishift or CDK.

- Check the console output for the URL and user credentials you can use to access the running Fabric8 Launcher:

Example Console Output from a Minishift or CDK Startup


4.4.3.2. Deploying the example application using the Fabric8 Launcher tool

Prerequisites

- The URL of your running Fabric8 Launcher instance and the user credentials of your Minishift or CDK. For more information, see Section 4.4.3.1, “Getting the Fabric8 Launcher tool URL and credentials”.

Procedure

- Navigate to the Fabric8 Launcher URL in a browser.

- Follow the on-screen instructions to create and launch your example application in Node.js.

4.4.3.3. Authenticating the oc CLI client

To work with example applications on Minishift or CDK using the oc command-line client, you must authenticate the client using the token provided by the Minishift or CDK web interface.

Prerequisites

- The URL of your running Fabric8 Launcher instance and the user credentials of your Minishift or CDK. For more information, see Section 4.4.3.1, “Getting the Fabric8 Launcher tool URL and credentials”.

Procedure

- Navigate to the Minishift or CDK URL in a browser.

- Click on the question mark icon in the top right-hand corner of the Web console, next to your user name.

- Select Command Line Tools in the drop-down menu.

- Find the text box that contains the oc login … command with the hidden token, and click the button next to it to copy its content to your clipboard.

- Paste the command into a terminal application. The command uses your authentication token to authenticate your oc CLI client with your Minishift or CDK account.

      $ oc login OPENSHIFT_URL --token=MYTOKEN

4.4.3.4. Deploying the Health Check example application using the oc CLI client

Prerequisites

- The example application created using Fabric8 Launcher tool on a Minishift or CDK. For more information, see Section 4.4.3.2, “Deploying the example application using the Fabric8 Launcher tool”.

- Your Fabric8 Launcher tool URL.

- The oc client authenticated. For more information, see Section 4.4.3.3, “Authenticating the oc CLI client”.

Procedure

- Clone your project from GitHub.

      $ git clone git@github.com:USERNAME/MY_PROJECT_NAME.git

  Alternatively, if you downloaded a ZIP file of your project, extract it.

      $ unzip MY_PROJECT_NAME.zip

- Create a new OpenShift project.

      $ oc new-project MY_PROJECT_NAME

- Navigate to the root directory of your application.

- Use npm to start the deployment to OpenShift.

      $ npm install && npm run openshift

  These commands install any missing module dependencies, then using the Nodeshift module, deploy the example application on OpenShift.

- Check the status of your application and ensure your pod is running.

      $ oc get pods -w
      NAME                      READY   STATUS      RESTARTS   AGE
      MY_APP_NAME-1-aaaaa       1/1     Running     0          58s
      MY_APP_NAME-s2i-1-build   0/1     Completed   0          2m

  The MY_APP_NAME-1-aaaaa pod should have a status of Running once it is fully deployed and started. You should also wait for your pod to be ready before proceeding, which is shown in the READY column. For example, MY_APP_NAME-1-aaaaa is ready when the READY column is 1/1. Your specific pod name will vary. The number in the middle will increase with each new build. The letters at the end are generated when the pod is created.

- After your example application is deployed and started, determine its route.

  Example Route Information

      $ oc get routes
      NAME          HOST/PORT                                        PATH   SERVICES      PORT   TERMINATION
      MY_APP_NAME   MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME          MY_APP_NAME   8080

  The route information of a pod gives you the base URL which you use to access it. In the example above, you would use http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME as the base URL to access the application.

4.4.4. Deploying the Health Check example application to OpenShift Container Platform

The process of creating and deploying example applications to OpenShift Container Platform is similar to OpenShift Online:

Prerequisites

- The example application created using developers.redhat.com/launch.

Procedure

- Follow the instructions in Section 4.4.2, “Deploying the Health Check example application to OpenShift Online”, only use the URL and user credentials from the OpenShift Container Platform Web Console.

4.4.5. Interacting with the unmodified Health Check example application

After you deploy the example application, you will have the MY_APP_NAME service running. The MY_APP_NAME service exposes the following REST endpoints:

- /api/greeting: Returns a JSON response containing a greeting for the name parameter (or World as the default value).

- /api/stop: Forces the service to become unresponsive as a means to simulate a failure.
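To make the behavior of the two endpoints concrete, the following is a minimal sketch of a request handler with the same observable responses. It is not the source code of the example application; the `createApp` name and the internal `online` flag are assumptions made for illustration.

```javascript
// Minimal sketch of a handler exposing /api/greeting and /api/stop.
// NOT the example application's source; createApp and the `online`
// flag are hypothetical.
function createApp() {
  let online = true;

  return function handle(req, res) {
    const url = new URL(req.url, 'http://localhost');

    if (url.pathname === '/api/stop') {
      online = false; // simulate an internal service failure
      res.writeHead(200, { 'Content-Type': 'text/plain' });
      return res.end('Stopping HTTP server, Bye bye world !');
    }

    if (!online) {
      // After /api/stop, every other request reports the service as unavailable.
      res.writeHead(503, { 'Content-Type': 'text/plain' });
      return res.end('Not online');
    }

    if (url.pathname === '/api/greeting') {
      const name = url.searchParams.get('name') || 'World';
      res.writeHead(200, { 'Content-Type': 'application/json' });
      return res.end(JSON.stringify({ content: `Hello, ${name}!` }));
    }

    res.writeHead(404, { 'Content-Type': 'text/plain' });
    return res.end('Not found');
  };
}
```

To serve the handler locally, it can be passed to the built-in `http` module, for example `require('http').createServer(createApp()).listen(8080)`. In this sketch the service stays offline until the process is restarted, which is the role OpenShift plays when it re-creates the container.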

The following steps demonstrate how to verify the service availability and simulate a failure. The failure of an available service triggers the OpenShift self-healing capabilities for that service.

Alternatively, you can use the web interface to perform these steps.

- Use curl to execute a GET request against the MY_APP_NAME service. You can also use a browser to do this.

$ curl http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME/api/greeting

{"content":"Hello, World!"}

- Invoke the /api/stop endpoint and verify the availability of the /api/greeting endpoint shortly after that.

Invoking the /api/stop endpoint simulates an internal service failure and triggers the OpenShift self-healing capabilities. When invoking /api/greeting after simulating the failure, the service should return an HTTP status 503.

$ curl http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME/api/stop

Stopping HTTP server, Bye bye world !

(followed by)

$ curl http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME/api/greeting

Not online

- Use oc get pods -w to continuously watch the self-healing capabilities in action.

While invoking the service failure, you can watch the self-healing capabilities in action on the OpenShift console, or with the oc client tools. You should see the number of pods in the READY state move to zero (0/1) and after a short period (less than one minute) move back up to one (1/1). In addition, the RESTARTS count increases every time you invoke the service failure.

$ oc get pods -w
NAME                  READY   STATUS    RESTARTS   AGE
MY_APP_NAME-1-26iy7   0/1     Running   5          18m
MY_APP_NAME-1-26iy7   1/1     Running   5          19m

- Optional: Use the web interface to invoke the service.

As an alternative to using the terminal window, you can use the web interface provided by the service to invoke the different methods and watch the service move through the life cycle phases.

http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME

- Optional: Use the web console to view the log output generated by the application at each stage of the self-healing process.

- Navigate to your project.

- On the sidebar, click on Monitoring.

- In the upper right-hand corner of the screen, click on Events to display the log messages.

- Optional: Click View Details to display a detailed view of the Event log.

The health check application generates the following messages:

| Message | Status |
|---|---|
| Unhealthy | Readiness probe failed. This message is expected and indicates that the simulated failure of the /api/greeting endpoint has been detected and the self-healing process starts. |
| Killing | The unavailable Docker container running the service is being killed before being re-created. |
| Pulling | Downloading the latest version of the Docker image to re-create the container. |
| Pulled | Docker image downloaded successfully. |
| Created | Docker container has been successfully created. |
| Started | Docker container is ready to handle requests. |
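The recovery cycle described in this section (the service returns 503, then answers normally again once the container is re-created) can also be observed from a client. The following is a minimal sketch under stated assumptions: `waitUntilReady` and `greetingIsUp` are hypothetical helpers, and the attempt count and delay are arbitrary illustrative defaults, not values taken from the example application.

```javascript
const http = require('http');

const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Hypothetical helper: poll an async check until it resolves to true
// or the attempts run out. Resolves with the number of attempts used.
async function waitUntilReady(check, { attempts = 30, delayMs = 2000 } = {}) {
  for (let attempt = 1; attempt <= attempts; attempt += 1) {
    let ok = false;
    try {
      ok = await check();
    } catch (err) {
      ok = false; // connection errors count as "not ready yet"
    }
    if (ok) {
      return attempt;
    }
    if (attempt < attempts) {
      await sleep(delayMs);
    }
  }
  throw new Error(`service not ready after ${attempts} attempts`);
}

// Hypothetical check: resolves to true when /api/greeting answers with HTTP 200.
function greetingIsUp(baseUrl) {
  return new Promise((resolve, reject) => {
    http
      .get(`${baseUrl}/api/greeting`, (res) => {
        res.resume(); // discard the body, only the status code matters here
        resolve(res.statusCode === 200);
      })
      .on('error', reject);
  });
}
```

A call such as `waitUntilReady(() => greetingIsUp('http://MY_APP_NAME-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME'))` would keep polling while the service reports 503 and resolve once the greeting endpoint responds again.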

4.4.6. Health check resources

More background and related information on health checking can be found here:

4.5. Circuit Breaker example for Node.js

The following example is not meant to be run in a production environment.

Limitation: Run this example application on a Minishift or CDK. You can also use a manual workflow to deploy this example to OpenShift Online Pro and OpenShift Container Platform. This example is not currently available on OpenShift Online Starter.

Example proficiency level: Foundational.

The Circuit Breaker example demonstrates a generic pattern for reporting the failure of a service and then limiting access to the failed service until it becomes available to handle requests. This helps prevent cascading failure in other services that depend on the failed services for functionality.

This example shows you how to implement a Circuit Breaker and Fallback pattern in your services.

4.5.1. The circuit breaker design pattern

The Circuit Breaker is a pattern intended to:

- Reduce the impact of network failure and high latency on service architectures where services synchronously invoke other services.

  If one of the services:

  - becomes unavailable due to network failure, or

  - incurs unusually high latency values due to overwhelming traffic,

  other services attempting to call its endpoint may end up exhausting critical resources in an attempt to reach it, rendering themselves unusable.

- Prevent the condition also known as cascading failure, which can render the entire microservice architecture unusable.

- Act as a proxy between a protected function and a remote function, which monitors for failures.

- Trip once the failures reach a certain threshold, and all further calls to the circuit breaker return an error or a predefined fallback response, without the protected call being made at all.

The Circuit Breaker usually also contains an error reporting mechanism that notifies you when the Circuit Breaker trips.

Circuit breaker implementation

- With the Circuit Breaker pattern implemented, a service client invokes a remote service endpoint via a proxy at regular intervals.

- If the calls to the remote service endpoint fail repeatedly and consistently, the Circuit Breaker trips: for a set timeout period, all calls to the service fail immediately and return a predefined fallback response.

When the timeout period expires, a limited number of test calls are allowed to pass through to the remote service to determine whether it has healed, or remains unavailable.

- If the test calls fail, the Circuit Breaker keeps the service unavailable and keeps returning the fallback responses to incoming calls.

- If the test calls succeed, the Circuit Breaker closes, fully enabling traffic to reach the remote service again.
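The trip, timeout, and test-call cycle described above can be sketched in a few lines. This is a minimal illustration, not the implementation used by the example application: the `CircuitBreaker` class, its option names (`failureThreshold`, `resetTimeoutMs`, `fallback`, `now`), and the default values are all assumptions. For brevity the sketch does not cap the number of concurrent test calls while the circuit is half-open.

```javascript
// Minimal circuit breaker sketch; names and defaults are hypothetical.
class CircuitBreaker {
  constructor(action, { failureThreshold = 3, resetTimeoutMs = 10000, fallback, now = Date.now } = {}) {
    this.action = action;                     // the protected (remote) call
    this.failureThreshold = failureThreshold; // consecutive failures before tripping
    this.resetTimeoutMs = resetTimeoutMs;     // how long the circuit stays open
    this.fallback = fallback;                 // optional predefined fallback response
    this.now = now;                           // injectable clock, useful in tests
    this.state = 'closed';
    this.failures = 0;
    this.openedAt = 0;
  }

  async fire(...args) {
    if (this.state === 'open') {
      if (this.now() - this.openedAt < this.resetTimeoutMs) {
        // Fail fast: the protected call is not made at all.
        return this.reject(new Error('circuit open'));
      }
      this.state = 'half-open'; // timeout expired: let a test call through
    }
    try {
      const result = await this.action(...args);
      this.state = 'closed'; // a successful call closes the circuit
      this.failures = 0;
      return result;
    } catch (err) {
      this.failures += 1;
      if (this.state === 'half-open' || this.failures >= this.failureThreshold) {
        this.state = 'open'; // trip (or re-trip) the breaker
        this.openedAt = this.now();
      }
      return this.reject(err);
    }
  }

  // Return the fallback when one is configured, otherwise propagate the error.
  reject(err) {
    if (typeof this.fallback === 'function') {
      return this.fallback(err);
    }
    throw err;
  }
}
```

A caller would wrap the remote request once, for example `new CircuitBreaker(callNameService, { fallback: () => 'Fallback' })` where `callNameService` is a hypothetical async function, and then invoke `breaker.fire()` wherever it previously called the remote service directly.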

4.5.2. Circuit Breaker design tradeoffs

| Pros | Cons |

|---|---|

|

|

4.5.3. Deploying the Circuit Breaker example application to OpenShift Online

Use one of the following options to execute the Circuit Breaker example application on OpenShift Online.

Although each method uses the same oc commands to deploy your application, using developers.redhat.com/launch provides an automated deployment workflow that executes the oc commands for you.

4.5.3.1. Deploying the example application using developers.redhat.com/launch

Prerequisites

- An account at OpenShift Online.

Procedure

- Navigate to the developers.redhat.com/launch URL in a browser and log in.

- Follow on-screen instructions to create and launch your example application in Node.js.

4.5.3.2. Authenticating the oc CLI client

To work with example applications on OpenShift Online using the oc command-line client, you must authenticate the client using the token provided by the OpenShift Online web interface.

Prerequisites

- An account at OpenShift Online.

Procedure

- Navigate to the OpenShift Online URL in a browser.

- Click on the question mark icon in the top right-hand corner of the Web console, next to your user name.

- Select Command Line Tools in the drop-down menu.

- Find the text box that contains the oc login … command with the hidden token, and click the button next to it to copy its content to your clipboard.

- Paste the command into a terminal application. The command uses your authentication token to authenticate your oc CLI client with your OpenShift Online account.

$ oc login OPENSHIFT_URL --token=MYTOKEN

4.5.3.3. Deploying the Circuit Breaker example application using the oc CLI client

Prerequisites

- The example application created using developers.redhat.com/launch. For more information, see Section 4.5.3.1, “Deploying the example application using developers.redhat.com/launch”.

- The oc client authenticated. For more information, see Section 4.5.3.2, “Authenticating the oc CLI client”.

Procedure

- Clone your project from GitHub.

$ git clone git@github.com:USERNAME/MY_PROJECT_NAME.git

Alternatively, if you downloaded a ZIP file of your project, extract it.

$ unzip MY_PROJECT_NAME.zip

- Create a new OpenShift project.

$ oc new-project MY_PROJECT_NAME

- Navigate to the root directory of your application.

- Use the provided start-openshift.sh script to start the deployment to OpenShift.

$ chmod +x start-openshift.sh
$ ./start-openshift.sh

These commands use the Nodeshift npm module to install your dependencies, launch the S2I build process on OpenShift, and start the services.

- Check the status of your application and ensure your pods are running.

$ oc get pods -w

Both the MY_APP_NAME-greeting-1-aaaaa and MY_APP_NAME-name-1-aaaaa pods should have a status of Running once they are fully deployed and started. You should also wait for your pods to be ready before proceeding, which is shown in the READY column. For example, MY_APP_NAME-greeting-1-aaaaa is ready when the READY column is 1/1. Your specific pod names will vary. The number in the middle will increase with each new build. The letters at the end are generated when the pod is created.

- After your example application is deployed and started, determine its route.

Example Route Information

$ oc get routes
NAME                   HOST/PORT                                                 PATH   SERVICES               PORT   TERMINATION
MY_APP_NAME-greeting   MY_APP_NAME-greeting-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME          MY_APP_NAME-greeting   8080   None
MY_APP_NAME-name       MY_APP_NAME-name-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME              MY_APP_NAME-name       8080   None

The route information of a pod gives you the base URL which you use to access it. In the example above, you would use http://MY_APP_NAME-greeting-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME as the base URL to access the application.
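Because this example deploys two services, it can be convenient to derive both base URLs from the route listing in one step. The following is a minimal sketch that assumes the plain-text layout of the `oc get routes` output shown above and plain HTTP routes; `routeBaseUrls` is a hypothetical helper, not part of the `oc` client or Nodeshift.

```javascript
// Hypothetical helper: map each route name to its base URL, assuming the
// text layout printed by `oc get routes` in this guide.
function routeBaseUrls(output) {
  const lines = output.trim().split('\n').map((line) => line.trim()).filter(Boolean);
  const urls = {};
  for (const line of lines.slice(1)) {       // skip the header row
    const [name, host] = line.split(/\s+/);  // first two columns: NAME, HOST/PORT
    urls[name] = `http://${host}`;
  }
  return urls;
}

const sampleRouteOutput = `
NAME                   HOST/PORT                                                 PATH   SERVICES               PORT   TERMINATION
MY_APP_NAME-greeting   MY_APP_NAME-greeting-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME          MY_APP_NAME-greeting   8080   None
MY_APP_NAME-name       MY_APP_NAME-name-MY_PROJECT_NAME.OPENSHIFT_HOSTNAME              MY_APP_NAME-name       8080   None
`;

const baseUrls = routeBaseUrls(sampleRouteOutput);
console.log(baseUrls['MY_APP_NAME-greeting']);
```

Only the first two columns are read, so the empty PATH column in the listing does not affect the result.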

4.5.4. Deploying the Circuit Breaker example application to Minishift or CDK