Block Device Guide

Managing, creating, configuring, and using Red Hat Ceph Storage Block Devices

Abstract

Chapter 1. Overview

A block is a sequence of bytes, for example, a 512-byte block of data. Block-based storage interfaces are the most common way to store data with rotating media such as:

- hard disks,

- CDs,

- floppy disks,

- and even traditional 9-track tape.

The ubiquity of block device interfaces makes a virtual block device an ideal candidate to interact with a mass data storage system like Red Hat Ceph Storage.

Ceph Block Devices, also known as Reliable Autonomic Distributed Object Store (RADOS) Block Devices (RBDs), are thin-provisioned, resizable and store data striped over multiple Object Storage Devices (OSD) in a Ceph Storage Cluster. Ceph Block Devices leverage RADOS capabilities such as:

- creating snapshots,

- replication,

- and consistency.

Ceph Block Devices interact with OSDs by using the librbd library.

Ceph Block Devices deliver high performance with infinite scalability to Kernel Virtual Machines (KVMs) such as Quick Emulator (QEMU), and cloud-based computing systems like OpenStack and CloudStack that rely on the libvirt and QEMU utilities to integrate with Ceph Block Devices. You can use the same cluster to operate the Ceph Object Gateway and Ceph Block Devices simultaneously.

To use Ceph Block Devices, you must have access to a running Ceph Storage Cluster. For details on installing the Red Hat Ceph Storage, see the Installation Guide for Red Hat Enterprise Linux or Installation Guide for Ubuntu.

Chapter 2. Block Device Commands

The rbd command enables you to create, list, introspect, and remove block device images. You can also use it to clone images, create snapshots, rollback an image to a snapshot, view a snapshot, and so on.

2.1. Prerequisites

There are two prerequisites that you must meet before you can use the Ceph Block Devices and the rbd command:

- You must have access to a running Ceph Storage Cluster. For details, see the Red Hat Ceph Storage 3 Installation Guide for Red Hat Enterprise Linux or Installation Guide for Ubuntu.

- You must install the Ceph Block Device client. For details, see the Red Hat Ceph Storage 3 Installation Guide for Red Hat Enterprise Linux or Installation Guide for Ubuntu.

The Manually Installing Ceph Block Device chapter also provides information on mounting and using Ceph Block Devices on client nodes. Execute these steps on client nodes only after creating an image for the Block Device in the Ceph Storage Cluster. See Section 2.4, “Creating Block Device Images” for details.

2.2. Displaying Help

Use the rbd help command to display help for a particular rbd command and its subcommand:

rbd help <command> <subcommand>

[root@rbd-client ~]# rbd help <command> <subcommand>Example

To display help for the snap list command:

rbd help snap list

[root@rbd-client ~]# rbd help snap list

The -h option still displays help for all available commands.

2.3. Creating Block Device Pools

Before using the block device client, ensure a pool for rbd exists and is enabled and initialized. To create an rbd pool, execute the following:

ceph osd pool create {pool-name} {pg-num} {pgp-num}

ceph osd pool application enable {pool-name} rbd

rbd pool init -p {pool-name}

[root@rbd-client ~]# ceph osd pool create {pool-name} {pg-num} {pgp-num}

[root@rbd-client ~]# ceph osd pool application enable {pool-name} rbd

[root@rbd-client ~]# rbd pool init -p {pool-name}You MUST create a pool first before you can specify it as a source. See the Pools chapter in the Storage Strategies guide for Red Hat Ceph Storage 3 for additional details.

2.4. Creating Block Device Images

Before adding a block device to a node, create an image for it in the Ceph storage cluster. To create a block device image, execute the following command:

rbd create <image-name> --size <megabytes> --pool <pool-name>

[root@rbd-client ~]# rbd create <image-name> --size <megabytes> --pool <pool-name>

For example, to create a 1GB image named data that stores information in a pool named stack, run:

rbd create data --size 1024 --pool stack

[root@rbd-client ~]# rbd create data --size 1024 --pool stack- NOTE

-

Ensure a pool for

rbdexists before creating an image. See Creating Block Device Pools for additional details.

2.5. Listing Block Device Images

To list block devices in the rbd pool, execute the following (rbd is the default pool name):

rbd ls

[root@rbd-client ~]# rbd ls

To list block devices in a particular pool, execute the following, but replace {poolname} with the name of the pool:

rbd ls {poolname}

[root@rbd-client ~]# rbd ls {poolname}For example:

rbd ls swimmingpool

[root@rbd-client ~]# rbd ls swimmingpool2.6. Retrieving Image Information

To retrieve information from a particular image, execute the following, but replace {image-name} with the name for the image:

rbd --image {image-name} info

[root@rbd-client ~]# rbd --image {image-name} infoFor example:

rbd --image foo info

[root@rbd-client ~]# rbd --image foo info

To retrieve information from an image within a pool, execute the following, but replace {image-name} with the name of the image and replace {pool-name} with the name of the pool:

rbd --image {image-name} -p {pool-name} info

[root@rbd-client ~]# rbd --image {image-name} -p {pool-name} infoFor example:

rbd --image bar -p swimmingpool info

[root@rbd-client ~]# rbd --image bar -p swimmingpool info2.7. Resizing Block Device Images

Ceph block device images are thin provisioned. They do not actually use any physical storage until you begin saving data to them. However, they do have a maximum capacity that you set with the --size option.

To increase or decrease the maximum size of a Ceph block device image:

rbd resize --image <image-name> --size <size>

[root@rbd-client ~]# rbd resize --image <image-name> --size <size>2.8. Removing Block Device Images

To remove a block device, execute the following, but replace {image-name} with the name of the image you want to remove:

rbd rm {image-name}

[root@rbd-client ~]# rbd rm {image-name}For example:

rbd rm foo

[root@rbd-client ~]# rbd rm foo

To remove a block device from a pool, execute the following, but replace {image-name} with the name of the image to remove and replace {pool-name} with the name of the pool:

rbd rm {image-name} -p {pool-name}

[root@rbd-client ~]# rbd rm {image-name} -p {pool-name}For example:

rbd rm bar -p swimmingpool

[root@rbd-client ~]# rbd rm bar -p swimmingpool2.9. Moving Block Device Images to the Trash

RADOS Block Device (RBD) images can be moved to the trash using the rbd trash command. This command provides more options than the rbd rm command.

Once an image is moved to the trash, it can be removed from the trash at a later time. This helps to avoid accidental deletion.

To move an image to the trash execute the following:

rbd trash move {image-spec}

[root@rbd-client ~]# rbd trash move {image-spec}

Once an image is in the trash, it is assigned a unique image ID. You will need this image ID to specify the image later if you need to use any of the trash options. Execute the rbd trash list for a list of IDs of the images in the trash. This command also returns the image’s pre-deletion name.

In addition, there is an optional --image-id argument that can be used with rbd info and rbd snap commands. Use --image-id with the rbd info command to see the properties of an image in the trash, and with rbd snap to remove an image’s snapshots from the trash.

Remove an Image from the Trash

To remove an image from the trash execute the following:

rbd trash remove [{pool-name}/] {image-id}

[root@rbd-client ~]# rbd trash remove [{pool-name}/] {image-id}Once an image is removed from the trash, it cannot be restored.

Delay Trash Removal

Use the --delay option to set an amount of time before an image can be removed from the trash. Execute the following, except replace {time} with the number of seconds to wait before the image can be removed (defaults to 0):

rbd trash move [--delay {time}] {image-spec}

[root@rbd-client ~]# rbd trash move [--delay {time}] {image-spec}

Once the --delay option is enabled, an image cannot be removed from the trash within the specified timeframe unless forced.

Restore an Image from the Trash

As long as an image has not been removed from the trash, it can be restored using the rbd trash restore command.

Execute the rbd trash restore command to restore the image:

rbd trash restore [{pool-name}/] {image-id}

[root@rbd-client ~]# rbd trash restore [{pool-name}/] {image-id}2.10. Enabling and Disabling Image Features

You can enable or disable image features, such as fast-diff, exclusive-lock, object-map, or journaling, on already existing images.

To enable a feature:

rbd feature enable <pool-name>/<image-name> <feature-name>

[root@rbd-client ~]# rbd feature enable <pool-name>/<image-name> <feature-name>To disable a feature:

rbd feature disable <pool-name>/<image-name> <feature-name>

[root@rbd-client ~]# rbd feature disable <pool-name>/<image-name> <feature-name>Examples

To enable the

exclusive-lockfeature on theimage1image in thedatapool:rbd feature enable data/image1 exclusive-lock

[root@rbd-client ~]# rbd feature enable data/image1 exclusive-lockCopy to Clipboard Copied! Toggle word wrap Toggle overflow To disable the

fast-difffeature on theimage2image in thedatapool:rbd feature disable data/image2 fast-diff

[root@rbd-client ~]# rbd feature disable data/image2 fast-diffCopy to Clipboard Copied! Toggle word wrap Toggle overflow

After enabling the fast-diff and object-map features, rebuild the object map:

rbd object-map rebuild <pool-name>/<image-name>

[root@rbd-client ~]# rbd object-map rebuild <pool-name>/<image-name>

The deep flatten feature can be only disabled on already existing images but not enabled. To use deep flatten, enable it when creating images.

2.11. Working with Image Metadata

Ceph supports adding custom image metadata as key-value pairs. The pairs do not have any strict format.

Also, by using metadata, you can set the RBD configuration parameters for particular images. See Overriding the Default Configuration for Particular Images for details.

Use the rbd image-meta commands to work with metadata.

Setting Image Metadata

To set a new metadata key-value pair:

rbd image-meta set <pool-name>/<image-name> <key> <value>

[root@rbd-client ~]# rbd image-meta set <pool-name>/<image-name> <key> <value>Example

To set the

last_updatekey to the2016-06-06value on thedatasetimage in thedatapool:rbd image-meta set data/dataset last_update 2016-06-06

[root@rbd-client ~]# rbd image-meta set data/dataset last_update 2016-06-06Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Removing Image Metadata

To remove a metadata key-value pair:

rbd image-meta remove <pool-name>/<image-name> <key>

[root@rbd-client ~]# rbd image-meta remove <pool-name>/<image-name> <key>Example

To remove the

last_updatekey-value pair from thedatasetimage in thedatapool:rbd image-meta remove data/dataset last_update

[root@rbd-client ~]# rbd image-meta remove data/dataset last_updateCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Getting a Value for a Key

To view a value of a key:

rbd image-meta get <pool-name>/<image-name> <key>

[root@rbd-client ~]# rbd image-meta get <pool-name>/<image-name> <key>Example

To view the value of the

last_updatekey:rbd image-meta get data/dataset last_update

[root@rbd-client ~]# rbd image-meta get data/dataset last_updateCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Listing Image Metadata

To show all metadata on an image:

rbd image-meta list <pool-name>/<image-name>

[root@rbd-client ~]# rbd image-meta list <pool-name>/<image-name>Example

To list metadata set on the

datasetimage in thedatapool:rbd data/dataset image-meta list

[root@rbd-client ~]# rbd data/dataset image-meta listCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Overriding the Default Configuration for Particular Images

To override the RBD image configuration settings set in the Ceph configuration file for a particular image, set the configuration parameters with the conf_ prefix as image metadata:

rbd image-meta set <pool-name>/<image-name> conf_<parameter> <value>

[root@rbd-client ~]# rbd image-meta set <pool-name>/<image-name> conf_<parameter> <value>Example

To disable the RBD cache for the

datasetimage in thedatapool:rbd image-meta set data/dataset conf_rbd_cache false

[root@rbd-client ~]# rbd image-meta set data/dataset conf_rbd_cache falseCopy to Clipboard Copied! Toggle word wrap Toggle overflow

See Block Device Configuration Reference for a list of possible configuration options.

Chapter 3. Snapshots

A snapshot is a read-only copy of the state of an image at a particular point in time. One of the advanced features of Ceph block devices is that you can create snapshots of the images to retain a history of an image’s state. Ceph also supports snapshot layering, which allows you to clone images (for example a VM image) quickly and easily. Ceph supports block device snapshots using the rbd command and many higher level interfaces, including QEMU, libvirt,OpenStack and CloudStack.

To use RBD snapshots, you must have a running Ceph cluster.

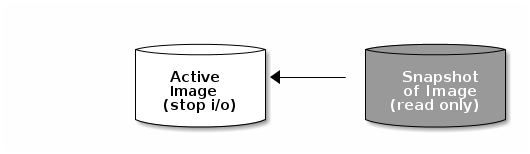

If a snapshot is taken while I/O is still in progress in a image, the snapshot might not get the exact or latest data of the image and the snapshot may have to be cloned to a new image to be mountable. So, we recommend to stop I/O before taking a snapshot of an image. If the image contains a filesystem, the filesystem must be in a consistent state before taking a snapshot. To stop I/O you can use fsfreeze command. See fsfreeze(8) man page for more details. For virtual machines, qemu-guest-agent can be used to automatically freeze filesystems when creating a snapshot.

3.1. Cephx Notes

When cephx is enabled (it is by default), you must specify a user name or ID and a path to the keyring containing the corresponding key for the user. You may also add the CEPH_ARGS environment variable to avoid re-entry of the following parameters:

rbd --id {user-ID} --keyring=/path/to/secret [commands]

rbd --name {username} --keyring=/path/to/secret [commands]

[root@rbd-client ~]# rbd --id {user-ID} --keyring=/path/to/secret [commands]

[root@rbd-client ~]# rbd --name {username} --keyring=/path/to/secret [commands]For example:

rbd --id admin --keyring=/etc/ceph/ceph.keyring [commands] rbd --name client.admin --keyring=/etc/ceph/ceph.keyring [commands]

[root@rbd-client ~]# rbd --id admin --keyring=/etc/ceph/ceph.keyring [commands]

[root@rbd-client ~]# rbd --name client.admin --keyring=/etc/ceph/ceph.keyring [commands]

Add the user and secret to the CEPH_ARGS environment variable so that you don’t need to enter them each time.

3.2. Snapshot Basics

The following procedures demonstrate how to create, list, and remove snapshots using the rbd command on the command line.

3.2.1. Creating Snapshots

To create a snapshot with rbd, specify the snap create option, the pool name and the image name:

rbd --pool {pool-name} snap create --snap {snap-name} {image-name}

rbd snap create {pool-name}/{image-name}@{snap-name}

[root@rbd-client ~]# rbd --pool {pool-name} snap create --snap {snap-name} {image-name}

[root@rbd-client ~]# rbd snap create {pool-name}/{image-name}@{snap-name}For example:

rbd --pool rbd snap create --snap snapname foo rbd snap create rbd/foo@snapname

[root@rbd-client ~]# rbd --pool rbd snap create --snap snapname foo

[root@rbd-client ~]# rbd snap create rbd/foo@snapname3.2.2. Listing Snapshots

To list snapshots of an image, specify the pool name and the image name:

rbd --pool {pool-name} snap ls {image-name}

rbd snap ls {pool-name}/{image-name}

[root@rbd-client ~]# rbd --pool {pool-name} snap ls {image-name}

[root@rbd-client ~]# rbd snap ls {pool-name}/{image-name}For example:

rbd --pool rbd snap ls foo rbd snap ls rbd/foo

[root@rbd-client ~]# rbd --pool rbd snap ls foo

[root@rbd-client ~]# rbd snap ls rbd/foo3.2.3. Rollbacking Snapshots

To rollback to a snapshot with rbd, specify the snap rollback option, the pool name, the image name and the snap name:

rbd --pool {pool-name} snap rollback --snap {snap-name} {image-name}

rbd snap rollback {pool-name}/{image-name}@{snap-name}

rbd --pool {pool-name} snap rollback --snap {snap-name} {image-name}

rbd snap rollback {pool-name}/{image-name}@{snap-name}For example:

rbd --pool rbd snap rollback --snap snapname foo rbd snap rollback rbd/foo@snapname

rbd --pool rbd snap rollback --snap snapname foo

rbd snap rollback rbd/foo@snapnameRolling back an image to a snapshot means overwriting the current version of the image with data from a snapshot. The time it takes to execute a rollback increases with the size of the image. It is faster to clone from a snapshot than to rollback an image to a snapshot, and it is the preferred method of returning to a pre-existing state.

3.2.4. Deleting Snapshots

To delete a snapshot with rbd, specify the snap rm option, the pool name, the image name and the snapshot name:

rbd --pool <pool-name> snap rm --snap <snap-name> <image-name> rbd snap rm <pool-name-/<image-name>@<snap-name>

[root@rbd-client ~]# rbd --pool <pool-name> snap rm --snap <snap-name> <image-name>

[root@rbd-client ~]# rbd snap rm <pool-name-/<image-name>@<snap-name>For example:

rbd --pool rbd snap rm --snap snapname foo rbd snap rm rbd/foo@snapname

[root@rbd-client ~]# rbd --pool rbd snap rm --snap snapname foo

[root@rbd-client ~]# rbd snap rm rbd/foo@snapnameIf an image has any clones, the cloned images retain reference to the parent image snapshot. To delete the parent image snapshot, you must flatten the child images first. See Flattening a Cloned Image for details.

Ceph OSD daemons delete data asynchronously, so deleting a snapshot does not free up the disk space immediately.

3.2.5. Purging Snapshots

To delete all snapshots for an image with rbd, specify the snap purge option and the image name:

rbd --pool {pool-name} snap purge {image-name}

rbd snap purge {pool-name}/{image-name}

[root@rbd-client ~]# rbd --pool {pool-name} snap purge {image-name}

[root@rbd-client ~]# rbd snap purge {pool-name}/{image-name}For example:

rbd --pool rbd snap purge foo rbd snap purge rbd/foo

[root@rbd-client ~]# rbd --pool rbd snap purge foo

[root@rbd-client ~]# rbd snap purge rbd/foo3.2.6. Renaming Snapshots

To rename a snapshot:

rbd snap rename <pool-name>/<image-name>@<original-snapshot-name> <pool-name>/<image-name>@<new-snapshot-name>

[root@rbd-client ~]# rbd snap rename <pool-name>/<image-name>@<original-snapshot-name> <pool-name>/<image-name>@<new-snapshot-name>Example

To rename the snap1 snapshot of the dataset image on the data pool to snap2:

rbd snap rename data/dataset@snap1 data/dataset@snap2

[root@rbd-client ~]# rbd snap rename data/dataset@snap1 data/dataset@snap2

Execute the rbd help snap rename command to display additional details on renaming snapshots.

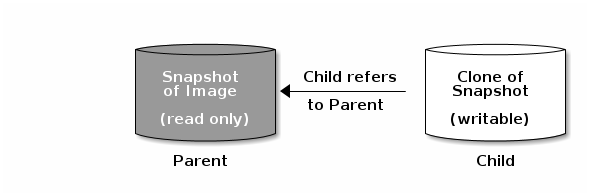

3.3. Layering

Ceph supports the ability to create many copy-on-write (COW) or copy-on-read (COR) clones of a block device snapshot. Snapshot layering enables Ceph block device clients to create images very quickly. For example, you might create a block device image with a Linux VM written to it; then, snapshot the image, protect the snapshot, and create as many clones as you like. A snapshot is read-only, so cloning a snapshot simplifies semantics—making it possible to create clones rapidly.

The terms parent and child mean a Ceph block device snapshot (parent), and the corresponding image cloned from the snapshot (child). These terms are important for the command line usage below.

Each cloned image (child) stores a reference to its parent image, which enables the cloned image to open the parent snapshot and read it. This reference is removed when the clone is flattened that is, when information from the snapshot is completely copied to the clone. For more information on flattening see Section 3.3.6, “Flattening Cloned Images”.

A clone of a snapshot behaves exactly like any other Ceph block device image. You can read to, write from, clone, and resize cloned images. There are no special restrictions with cloned images. However, the clone of a snapshot refers to the snapshot, so you MUST protect the snapshot before you clone it.

A clone of a snapshot can be a copy-on-write (COW) or copy-on-read (COR) clone. Copy-on-write (COW) is always enabled for clones while copy-on-read (COR) has to be enabled explicitly. Copy-on-write (COW) copies data from the parent to the clone when it writes to an unallocated object within the clone. Copy-on-read (COR) copies data from the parent to the clone when it reads from an unallocated object within the clone. Reading data from a clone will only read data from the parent if the object does not yet exist in the clone. Rados block device breaks up large images into multiple objects (defaults to 4 MB) and all copy-on-write (COW) and copy-on-read (COR) operations occur on a full object (that is writing 1 byte to a clone will result in a 4 MB object being read from the parent and written to the clone if the destination object does not already exist in the clone from a previous COW/COR operation).

Whether or not copy-on-read (COR) is enabled, any reads that cannot be satisfied by reading an underlying object from the clone will be rerouted to the parent. Since there is practically no limit to the number of parents (meaning that you can clone a clone), this reroute continues until an object is found or you hit the base parent image. If copy-on-read (COR) is enabled, any reads that fail to be satisfied directly from the clone result in a full object read from the parent and writing that data to the clone so that future reads of the same extent can be satisfied from the clone itself without the need of reading from the parent.

This is essentially an on-demand, object-by-object flatten operation. This is specially useful when the clone is in a high-latency connection away from it’s parent (parent in a different pool in another geographical location). Copy-on-read (COR) reduces the amortized latency of reads. The first few reads will have high latency because it will result in extra data being read from the parent (for example, you read 1 byte from the clone but now 4 MB has to be read from the parent and written to the clone), but all future reads will be served from the clone itself.

To create copy-on-read (COR) clones from snapshot you have to explicitly enable this feature by adding rbd_clone_copy_on_read = true under [global] or [client] section in your ceph.conf file.

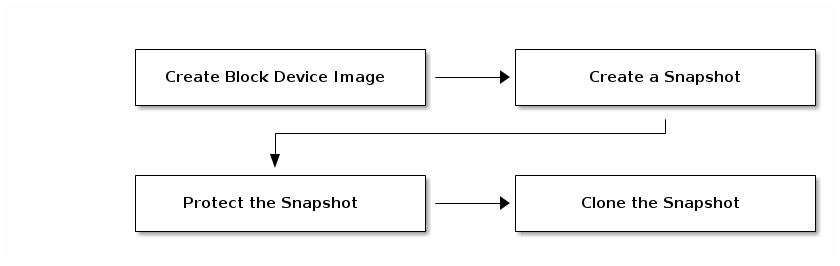

3.3.1. Getting Started with Layering

Ceph block device layering is a simple process. You must have an image. You must create a snapshot of the image. You must protect the snapshot. Once you have performed these steps, you can begin cloning the snapshot.

The cloned image has a reference to the parent snapshot, and includes the pool ID, image ID and snapshot ID. The inclusion of the pool ID means that you may clone snapshots from one pool to images in another pool.

-

Image Template: A common use case for block device layering is to create a master image and a snapshot that serves as a template for clones. For example, a user may create an image for a RHEL7 distribution and create a snapshot for it. Periodically, the user may update the image and create a new snapshot (for example

yum update,yum upgrade, followed byrbd snap create). As the image matures, the user can clone any one of the snapshots. - Extended Template: A more advanced use case includes extending a template image that provides more information than a base image. For example, a user may clone an image (for example, a VM template) and install other software (for example, a database, a content management system, an analytics system, and so on) and then snapshot the extended image, which itself may be updated just like the base image.

- Template Pool: One way to use block device layering is to create a pool that contains master images that act as templates, and snapshots of those templates. You may then extend read-only privileges to users so that they may clone the snapshots without the ability to write or execute within the pool.

- Image Migration/Recovery: One way to use block device layering is to migrate or recover data from one pool into another pool.

3.3.2. Protecting Snapshots

Clones access the parent snapshots. All clones would break if a user inadvertently deleted the parent snapshot. To prevent data loss, you MUST protect the snapshot before you can clone it. To do so, run the following commands:

rbd --pool {pool-name} snap protect --image {image-name} --snap {snapshot-name}

rbd snap protect {pool-name}/{image-name}@{snapshot-name}

[root@rbd-client ~]# rbd --pool {pool-name} snap protect --image {image-name} --snap {snapshot-name}

[root@rbd-client ~]# rbd snap protect {pool-name}/{image-name}@{snapshot-name}For example:

rbd --pool rbd snap protect --image my-image --snap my-snapshot rbd snap protect rbd/my-image@my-snapshot

[root@rbd-client ~]# rbd --pool rbd snap protect --image my-image --snap my-snapshot

[root@rbd-client ~]# rbd snap protect rbd/my-image@my-snapshotYou cannot delete a protected snapshot.

3.3.3. Cloning Snapshots

To clone a snapshot, you need to specify the parent pool, image and snapshot; and the child pool and image name. You must protect the snapshot before you can clone it. To do so, run the following commands:

rbd --pool {pool-name} --image {parent-image} --snap {snap-name} --dest-pool {pool-name} --dest {child-image}

rbd clone {pool-name}/{parent-image}@{snap-name} {pool-name}/{child-image-name}

[root@rbd-client ~]# rbd --pool {pool-name} --image {parent-image} --snap {snap-name} --dest-pool {pool-name} --dest {child-image}

[root@rbd-client ~]# rbd clone {pool-name}/{parent-image}@{snap-name} {pool-name}/{child-image-name}For example:

rbd clone rbd/my-image@my-snapshot rbd/new-image

[root@rbd-client ~]# rbd clone rbd/my-image@my-snapshot rbd/new-imageYou may clone a snapshot from one pool to an image in another pool. For example, you may maintain read-only images and snapshots as templates in one pool, and writable clones in another pool.

3.3.4. Unprotecting Snapshots

Before you can delete a snapshot, you must unprotect it first. Additionally, you may NOT delete snapshots that have references from clones. You must flatten each clone of a snapshot, before you can delete the snapshot. To do so, run the following commands:

rbd --pool {pool-name} snap unprotect --image {image-name} --snap {snapshot-name}

rbd snap unprotect {pool-name}/{image-name}@{snapshot-name}

[root@rbd-client ~]#rbd --pool {pool-name} snap unprotect --image {image-name} --snap {snapshot-name}

[root@rbd-client ~]# rbd snap unprotect {pool-name}/{image-name}@{snapshot-name}For example:

rbd --pool rbd snap unprotect --image my-image --snap my-snapshot rbd snap unprotect rbd/my-image@my-snapshot

[root@rbd-client ~]# rbd --pool rbd snap unprotect --image my-image --snap my-snapshot

[root@rbd-client ~]# rbd snap unprotect rbd/my-image@my-snapshot3.3.5. Listing Children of a Snapshot

To list the children of a snapshot, execute the following:

rbd --pool {pool-name} children --image {image-name} --snap {snap-name}

rbd children {pool-name}/{image-name}@{snapshot-name}

rbd --pool {pool-name} children --image {image-name} --snap {snap-name}

rbd children {pool-name}/{image-name}@{snapshot-name}For example:

rbd --pool rbd children --image my-image --snap my-snapshot rbd children rbd/my-image@my-snapshot

rbd --pool rbd children --image my-image --snap my-snapshot

rbd children rbd/my-image@my-snapshot3.3.6. Flattening Cloned Images

Cloned images retain a reference to the parent snapshot. When you remove the reference from the child clone to the parent snapshot, you effectively "flatten" the image by copying the information from the snapshot to the clone.The time it takes to flatten a clone increases with the size of the snapshot.

To delete a parent image snapshot associated with child images, you must flatten the child images first:

rbd --pool <pool-name> flatten --image <image-name> rbd flatten <pool-name>/<image-name>

[root@rbd-client ~]# rbd --pool <pool-name> flatten --image <image-name>

[root@rbd-client ~]# rbd flatten <pool-name>/<image-name>For example:

rbd --pool rbd flatten --image my-image rbd flatten rbd/my-image

[root@rbd-client ~]# rbd --pool rbd flatten --image my-image

[root@rbd-client ~]# rbd flatten rbd/my-imageBecause a flattened image contains all the information from the snapshot, a flattened image will use more storage space than a layered clone.

If the deep flatten feature is enabled on an image, the image clone is dissociated from its parent by default.

Chapter 4. Block Device Mirroring

RADOS Block Device (RBD) mirroring is a process of asynchronous replication of Ceph block device images between two or more Ceph clusters. Mirroring ensures point-in-time consistent replicas of all changes to an image, including reads and writes, block device resizing, snapshots, clones and flattening.

Mirroring uses mandatory exclusive locks and the RBD journaling feature to record all modifications to an image in the order in which they occur. This ensures that a crash-consistent mirror of an image is available. Before an image can be mirrored to a peer cluster, you must enable journaling. See Section 4.1, “Enabling Journaling” for details.

Since it is the images stored in the primary and secondary pools associated to the block device that get mirrored, the CRUSH hierarchy for the primary and secondary pools should have the same storage capacity and performance characteristics. Additionally, the network connection between the primary and secondary sites should have sufficient bandwidth to ensure mirroring happens without too much latency.

The CRUSH hierarchies supporting primary and secondary pools that mirror block device images must have the same capacity and performance characteristics, and must have adequate bandwidth to ensure mirroring without excess latency. For example, if you have X MiB/s average write throughput to images in the primary cluster, the network must support N * X throughput in the network connection to the secondary site plus a safety factor of Y% to mirror N images.

Mirroring serves primarily for recovery from a disaster. Depending on which type of mirroring you use, see either Recovering from a disaster with one-way mirroring or Recovering from a disaster with two-way mirroring, for details.

The rbd-mirror Daemon

The rbd-mirror daemon is responsible for synchronizing images from one Ceph cluster to another.

Depending on the type of replication, rbd-mirror runs either on a single cluster or on all clusters that participate in mirroring:

One-way Replication

-

When data is mirrored from a primary cluster to a secondary cluster that serves as a backup,

rbd-mirrorruns ONLY on the secondary cluster. RBD mirroring may have multiple secondary sites.

-

When data is mirrored from a primary cluster to a secondary cluster that serves as a backup,

Two-way Replication

-

Two-way replication adds an

rbd-mirrordaemon on the primary cluster so images can be demoted on it and promoted on the secondary cluster. Changes can then be made to the images on the secondary cluster and they will be replicated in the reverse direction, from secondary to primary. Both clusters must haverbd-mirrorrunning to allow promoting and demoting images on either cluster. Currently, two-way replication is only supported between two sites.

-

Two-way replication adds an

The rbd-mirror package provides rbd-mirror.

In two-way replication, each instance of rbd-mirror must be able to connect to the other Ceph cluster simultaneously. Additionally, the network must have sufficient bandwidth between the two data center sites to handle mirroring.

Only run a single rbd-mirror daemon per a Ceph cluster.

Mirroring Modes

Mirroring is configured on a per-pool basis within peer clusters. Ceph supports two modes, depending on what images in a pool are mirrored:

- Pool Mode

- All images in a pool with the journaling feature enabled are mirrored. See Configuring Pool Mirroring for details.

- Image Mode

- Only a specific subset of images within a pool is mirrored and you must enable mirroring for each image separately. See Configuring Image Mirroring for details.

Image States

Whether or not an image can be modified depends on its state:

- Images in the primary state can be modified

- Images in the non-primary state cannot be modified

Images are automatically promoted to primary when mirroring is first enabled on an image. The promotion can happen:

- implicitly by enabling mirroring in pool mode (see Section 4.2, “Pool Configuration”)

- explicitly by enabling mirroring of a specific image (see Section 4.3, “Image Configuration”)

It is possible to demote primary images and promote non-primary images. See Section 4.3, “Image Configuration” for details.

4.1. Enabling Journaling

You can enable the RBD journaling feature:

- when an image is created

- dynamically on already existing images

Journaling depends on the exclusive-lock feature which must be enabled too. See the following steps.

To enable journaling when creating an image, use the --image-feature option:

rbd create <image-name> --size <megabytes> --pool <pool-name> --image-feature <feature>

rbd create <image-name> --size <megabytes> --pool <pool-name> --image-feature <feature>For example:

rbd create image1 --size 1024 --pool data --image-feature exclusive-lock,journaling

# rbd create image1 --size 1024 --pool data --image-feature exclusive-lock,journaling

To enable journaling on previously created images, use the rbd feature enable command:

rbd feature enable <pool-name>/<image-name> <feature-name>

rbd feature enable <pool-name>/<image-name> <feature-name>For example:

rbd feature enable data/image1 exclusive-lock rbd feature enable data/image1 journaling

# rbd feature enable data/image1 exclusive-lock

# rbd feature enable data/image1 journalingTo enable journaling on all new images by default, add the following setting to the Ceph configuration file:

rbd default features = 125

rbd default features = 1254.2. Pool Configuration

This chapter shows how to do the following tasks:

Execute the following commands on both peer clusters.

Enabling Mirroring on a Pool

To enable mirroring on a pool:

rbd mirror pool enable <pool-name> <mode>

rbd mirror pool enable <pool-name> <mode>Examples

To enable mirroring of the whole pool named data:

rbd mirror pool enable data pool

# rbd mirror pool enable data pool

To enable image mode mirroring on the pool named data:

rbd mirror pool enable data image

# rbd mirror pool enable data imageSee Mirroring Modes for details.

Disabling Mirroring on a Pool

To disable mirroring on a pool:

rbd mirror pool disable <pool-name>

rbd mirror pool disable <pool-name>Example

To disable mirroring of a pool named data:

rbd mirror pool disable data

# rbd mirror pool disable dataBefore disabling mirroring, remove the peer clusters. See Section 4.2, “Pool Configuration” for details.

When you disable mirroring on a pool, you also disable it on any images within the pool for which mirroring was enabled separately in image mode. See Image Configuration for details.

Adding a Cluster Peer

In order for the rbd-mirror daemon to discover its peer cluster, you must register the peer to the pool:

rbd --cluster <cluster-name> mirror pool peer add <pool-name> <peer-client-name>@<peer-cluster-name> -n <client-name>

rbd --cluster <cluster-name> mirror pool peer add <pool-name> <peer-client-name>@<peer-cluster-name> -n <client-name>Example

To add the site-a cluster as a peer to the site-b cluster run the following command from the client node in the site-b cluster:

rbd --cluster site-b mirror pool peer add data client.site-a@site-a -n client.site-b

# rbd --cluster site-b mirror pool peer add data client.site-a@site-a -n client.site-bViewing Information about Peers

To view information about the peers:

rbd mirror pool info <pool-name>

rbd mirror pool info <pool-name>Example

rbd mirror pool info data Mode: pool Peers: UUID NAME CLIENT 7e90b4ce-e36d-4f07-8cbc-42050896825d site-a client.site-a

# rbd mirror pool info data

Mode: pool

Peers:

UUID NAME CLIENT

7e90b4ce-e36d-4f07-8cbc-42050896825d site-a client.site-aRemoving a Cluster Peer

To remove a mirroring peer cluster:

rbd mirror pool peer remove <pool-name> <peer-uuid>

rbd mirror pool peer remove <pool-name> <peer-uuid>

Specify the pool name and the peer Universally Unique Identifier (UUID). To view the peer UUID, use the rbd mirror pool info command.

Example

rbd mirror pool peer remove data 7e90b4ce-e36d-4f07-8cbc-42050896825d

# rbd mirror pool peer remove data 7e90b4ce-e36d-4f07-8cbc-42050896825dGetting Mirroring Status for a Pool

To get the mirroring pool summary:

rbd mirror pool status <pool-name>

rbd mirror pool status <pool-name>Example

To get the status of the data pool:

rbd mirror pool status data health: OK images: 1 total

# rbd mirror pool status data

health: OK

images: 1 total

To output status details for every mirroring image in a pool, use the --verbose option.

4.3. Image Configuration

This chapter shows how to do the following tasks:

Execute the following commands on a single cluster only.

Enabling Image Mirroring

To enable mirroring of a specific image:

- Enable mirroring of the whole pool in image mode on both peer clusters. See Section 4.2, “Pool Configuration” for details.

Then explicitly enable mirroring for a specific image within the pool:

rbd mirror image enable <pool-name>/<image-name>

rbd mirror image enable <pool-name>/<image-name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Example

To enable mirroring for the image2 image in the data pool:

rbd mirror image enable data/image2

# rbd mirror image enable data/image2Disabling Image Mirroring

To disable mirroring for a specific image:

rbd mirror image disable <pool-name>/<image-name>

rbd mirror image disable <pool-name>/<image-name>Example

To disable mirroring of the image2 image in the data pool:

rbd mirror image disable data/image2

# rbd mirror image disable data/image2Image Promotion and Demotion

To demote an image to non-primary:

rbd mirror image demote <pool-name>/<image-name>

rbd mirror image demote <pool-name>/<image-name>Example

To demote the image2 image in the data pool:

rbd mirror image demote data/image2

# rbd mirror image demote data/image2To promote an image to primary:

rbd mirror image promote <pool-name>/<image-name>

rbd mirror image promote <pool-name>/<image-name>Example

To promote the image2 image in the data pool:

rbd mirror image promote data/image2

# rbd mirror image promote data/image2Depending on which type of mirroring you use, see either Recovering from a disaster with one-way mirroring or Recovering from a disaster with two-way mirroring, for details.

Use the --force option to force promote a non-primary image:

rbd mirror image promote --force data/image2

# rbd mirror image promote --force data/image2Use forced promotion when the demotion cannot be propagated to the peer Ceph cluster, for example because of cluster failure or communication outage. See Failover After a Non-Orderly Shutdown for details.

Do not force promote non-primary images that are still syncing, because the images will not be valid after the promotion.

Image Resynchronization

To request a resynchronization to the primary image:

rbd mirror image resync <pool-name>/<image-name>

rbd mirror image resync <pool-name>/<image-name>Example

To request resynchronization of the image2 image in the data pool:

rbd mirror image resync data/image2

# rbd mirror image resync data/image2

In case of an inconsistent state between the two peer clusters, the rbd-mirror daemon does not attempt to mirror the image that is causing the inconsistency. For details on fixing this issue, see the section on recovering from a disaster. Depending on which type of mirroring you use, see either Recovering from a disaster with one-way mirroring or Recovering from a disaster with two-way mirroring, for details.

Getting Mirroring Status for a Single Image

To get the status of a mirrored image:

rbd mirror image status <pool-name>/<image-name>

rbd mirror image status <pool-name>/<image-name>Example

To get the status of the image2 image in the data pool:

4.4. Configuring One-Way Mirroring

One-way mirroring implies that a primary image in one cluster gets replicated in a secondary cluster. In the secondary cluster, the replicated image is non-primary; that is, block device clients cannot write to the image.

One-way mirroring supports multiple secondary sites. To configure one-way mirroring on multiple secondary sites, repeat the following procedures on each secondary cluster.

One-way mirroring is appropriate for maintaining a crash-consistent copy of an image. One-way mirroring may not be appropriate for all situations, such as using the secondary image for automatic failover and failback with OpenStack, since the cluster cannot failback when using one-way mirroring. In those scenarios, use two-way mirroring. See Section 4.5, “Configuring Two-Way Mirroring” for details.

The following procedures assume:

-

You have two clusters and you want to replicate images from a primary cluster to a secondary cluster. For the purposes of this procedure, we will distinguish the two clusters by referring to the cluster with the primary images as the

site-acluster and the cluster you want to replicate the images to as thesite-bcluster. For information on installing a Ceph Storage Cluster see the Installation Guide for Red Hat Enterprise Linux or the Installation Guide for Ubuntu. -

The

site-bcluster has a client node attached to it where therbd-mirrordaemon will run. This daemon will connect to thesite-acluster to sync images to thesite-bcluster. For information on installing Ceph clients, see the Installation Guide for Red Hat Enterprise Linux or the Installation Guide for Ubuntu -

A pool with the same name is created on both clusters. In the examples below the pool is named

data. See the Pools chapter in the Storage Strategies Guide or Red Hat Ceph Storage 3 for details. -

The pool contains images you want to mirror and journaling is enabled on them. In the examples below, the images are named

image1andimage2. See Enabling Journaling for details.

There are two ways to configure block device mirroring:

- Pool Mirroring: To mirror all images within a pool, use the Configuring Pool Mirroring procedure.

- Image Mirroring: To mirror select images within a pool, use the Configuring Image Mirroring procedure.

Configuring Pool Mirroring

-

Ensure that all images within the

datapool have exclusive lock and journaling enabled. See Section 4.1, “Enabling Journaling” for details. On the client node of the

site-bcluster, install therbd-mirrorpackage. The package is provided by the Red Hat Ceph Storage 3 Tools repository.Red Hat Enterprise Linux

yum install rbd-mirror

# yum install rbd-mirrorCopy to Clipboard Copied! Toggle word wrap Toggle overflow Ubuntu

sudo apt-get install rbd-mirror

$ sudo apt-get install rbd-mirrorCopy to Clipboard Copied! Toggle word wrap Toggle overflow On the client node of the

site-bcluster, specify the cluster name by adding theCLUSTERoption to the appropriate file. On Red Hat Enterprise Linux, update the/etc/sysconfig/cephfile, and on Ubuntu, update the/etc/default/cephfile accordingly:CLUSTER=site-b

CLUSTER=site-bCopy to Clipboard Copied! Toggle word wrap Toggle overflow On both clusters, create users with permissions to access the

datapool and output their keyrings to a<cluster-name>.client.<user-name>.keyringfile.On the monitor host in the

site-acluster, create theclient.site-auser and output the keyring to thesite-a.client.site-a.keyringfile:ceph auth get-or-create client.site-a mon 'profile rbd' osd 'profile rbd pool=data' -o /etc/ceph/site-a.client.site-a.keyring

# ceph auth get-or-create client.site-a mon 'profile rbd' osd 'profile rbd pool=data' -o /etc/ceph/site-a.client.site-a.keyringCopy to Clipboard Copied! Toggle word wrap Toggle overflow On the monitor host in the

site-bcluster, create theclient.site-buser and output the keyring to thesite-b.client.site-b.keyringfile:ceph auth get-or-create client.site-b mon 'profile rbd' osd 'profile rbd pool=data' -o /etc/ceph/site-b.client.site-b.keyring

# ceph auth get-or-create client.site-b mon 'profile rbd' osd 'profile rbd pool=data' -o /etc/ceph/site-b.client.site-b.keyringCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Copy the Ceph configuration file and the newly created RBD keyring file from the

site-amonitor node to thesite-bmonitor and client nodes:scp /etc/ceph/ceph.conf <user>@<site-b_mon-host-name>:/etc/ceph/site-a.conf scp /etc/ceph/site-a.client.site-a.keyring <user>@<site-b_mon-host-name>:/etc/ceph/ scp /etc/ceph/ceph.conf <user>@<site-b_client-host-name>:/etc/ceph/site-a.conf scp /etc/ceph/site-a.client.site-a.keyring <user>@<site-b_client-host-name>:/etc/ceph/

# scp /etc/ceph/ceph.conf <user>@<site-b_mon-host-name>:/etc/ceph/site-a.conf # scp /etc/ceph/site-a.client.site-a.keyring <user>@<site-b_mon-host-name>:/etc/ceph/ # scp /etc/ceph/ceph.conf <user>@<site-b_client-host-name>:/etc/ceph/site-a.conf # scp /etc/ceph/site-a.client.site-a.keyring <user>@<site-b_client-host-name>:/etc/ceph/Copy to Clipboard Copied! Toggle word wrap Toggle overflow NoteThe

scpcommands that transfer the Ceph configuration file from thesite-amonitor node to thesite-bmonitor and client nodes rename the file tosite-a.conf. The keyring file name stays the same.Create a symbolic link named

site-b.confpointing toceph.confon thesite-bcluster client node:cd /etc/ceph ln -s ceph.conf site-b.conf

# cd /etc/ceph # ln -s ceph.conf site-b.confCopy to Clipboard Copied! Toggle word wrap Toggle overflow Enable and start the

rbd-mirrordaemon on thesite-bclient node:systemctl enable ceph-rbd-mirror.target systemctl enable ceph-rbd-mirror@<client-id> systemctl start ceph-rbd-mirror@<client-id>

systemctl enable ceph-rbd-mirror.target systemctl enable ceph-rbd-mirror@<client-id> systemctl start ceph-rbd-mirror@<client-id>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Change

<client-id>to the Ceph Storage cluster user that therbd-mirrordaemon will use. The user must have the appropriatecephxaccess to the cluster. For detailed information, see the User Management chapter in the Administration Guide for Red Hat Ceph Storage 3.Based on the preceeding examples using

site-b, run the following commands:systemctl enable ceph-rbd-mirror.target systemctl enable ceph-rbd-mirror@site-b systemctl start ceph-rbd-mirror@site-b

# systemctl enable ceph-rbd-mirror.target # systemctl enable ceph-rbd-mirror@site-b # systemctl start ceph-rbd-mirror@site-bCopy to Clipboard Copied! Toggle word wrap Toggle overflow Enable pool mirroring of the

datapool residing on thesite-acluster by running the following command on a monitor node in thesite-acluster:rbd mirror pool enable data pool

# rbd mirror pool enable data poolCopy to Clipboard Copied! Toggle word wrap Toggle overflow And ensure that mirroring has been successfully enabled:

rbd mirror pool info data Mode: pool Peers: none

# rbd mirror pool info data Mode: pool Peers: noneCopy to Clipboard Copied! Toggle word wrap Toggle overflow Add the

site-acluster as a peer of thesite-bcluster by running the following command from the client node in thesite-bcluster:rbd --cluster site-b mirror pool peer add data client.site-a@site-a -n client.site-b

# rbd --cluster site-b mirror pool peer add data client.site-a@site-a -n client.site-bCopy to Clipboard Copied! Toggle word wrap Toggle overflow And ensure that the peer was successfully added:

rbd mirror pool info data Mode: pool Peers: UUID NAME CLIENT 7e90b4ce-e36d-4f07-8cbc-42050896825d site-a client.site-a

# rbd mirror pool info data Mode: pool Peers: UUID NAME CLIENT 7e90b4ce-e36d-4f07-8cbc-42050896825d site-a client.site-aCopy to Clipboard Copied! Toggle word wrap Toggle overflow After some time, check the status of the

image1andimage2images. If they are in stateup+replaying, mirroring is functioning properly. Run the following commands from a monitor node in thesite-bcluster:Copy to Clipboard Copied! Toggle word wrap Toggle overflow Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Configuring Image Mirroring

-

Ensure the selected images to be mirrored within the

datapool have exclusive lock and journaling enabled. See Section 4.1, “Enabling Journaling” for details. - Follow steps 2 - 7 in the Configuring Pool Mirroring procedure.

From a monitor node on the

site-acluster, enable image mirroring of thedatapool:rbd mirror pool enable data image

# rbd mirror pool enable data imageCopy to Clipboard Copied! Toggle word wrap Toggle overflow And ensure that mirroring has been successfully enabled:

rbd mirror pool info data Mode: image Peers: none

# rbd mirror pool info data Mode: image Peers: noneCopy to Clipboard Copied! Toggle word wrap Toggle overflow From the client node on the

site-bcluster, add thesite-acluster as a peer:rbd --cluster site-b mirror pool peer add data client.site-a@site-a -n client.site-b

# rbd --cluster site-b mirror pool peer add data client.site-a@site-a -n client.site-bCopy to Clipboard Copied! Toggle word wrap Toggle overflow And ensure that the peer was successfully added:

rbd mirror pool info data Mode: image Peers: UUID NAME CLIENT 9c1da891-b9f4-4644-adee-6268fe398bf1 site-a client.site-a

# rbd mirror pool info data Mode: image Peers: UUID NAME CLIENT 9c1da891-b9f4-4644-adee-6268fe398bf1 site-a client.site-aCopy to Clipboard Copied! Toggle word wrap Toggle overflow From a monitor node on the

site-acluster, explicitly enable image mirroring of theimage1andimage2images:rbd mirror image enable data/image1 Mirroring enabled rbd mirror image enable data/image2 Mirroring enabled

# rbd mirror image enable data/image1 Mirroring enabled # rbd mirror image enable data/image2 Mirroring enabledCopy to Clipboard Copied! Toggle word wrap Toggle overflow After some time, check the status of the

image1andimage2images. If they are in stateup+replaying, mirroring is functioning properly. Run the following commands from a monitor node in thesite-bcluster:Copy to Clipboard Copied! Toggle word wrap Toggle overflow Copy to Clipboard Copied! Toggle word wrap Toggle overflow

4.5. Configuring Two-Way Mirroring

Two-way mirroring allows you to replicate images in either direction between two clusters. It does not allow you to write changes to the same image from either cluster and have the changes propogate back and forth. An image is promoted or demoted from a cluster to change where it is writable from, and where it syncs to.

The following procedures assume that:

-

You have two clusters and you want to be able to replicate images between them in either direction. In the examples below, the clusters are referred to as the

site-aandsite-bclusters. For information on installing a Ceph Storage Cluster see the Installation Guide for Red Hat Enterprise Linux or the Installation Guide for Ubuntu. -

Both clusters have a client node attached to them where the

rbd-mirrordaemon will run. The daemon on thesite-bcluster will connect to thesite-acluster to sync images tosite-b, and the daemon on thesite-acluster will connect to thesite-bcluster to sync images tosite-a. For information on installing Ceph clients, see the Installation Guide for Red Hat Enterprise Linux or Installation Guide for Ubuntu. -

A pool with the same name is created on both clusters. In the examples below the pool is named

data. See the Pools chapter in the Storage Strategies Guide or Red Hat Ceph Storage 3 for details. -

The pool contains images you want to mirror and journaling is enabled on them. In the examples below the images are named

image1andimage2. See Enabling Journaling for details.

There are two ways to configure block device mirroring:

- Pool Mirroring: To mirror all images within a pool, follow Configuring Pool Mirroring immediately below.

- Image Mirroring: To mirror select images within a pool, follow Configuring Image Mirroring below.

Configuring Pool Mirroring

-

Ensure that all images within the

datapool have exclusive lock and journaling enabled. See Section 4.1, “Enabling Journaling” for details. - Set up one way mirroring by following steps 2 - 7 in the equivalent Configuring Pool Mirroring section of Configuring One-Way Mirroring

On the client node of the

site-acluster, install therbd-mirrorpackage. The package is provided by the Red Hat Ceph Storage 3 Tools repository.Red Hat Enterprise Linux

yum install rbd-mirror

# yum install rbd-mirrorCopy to Clipboard Copied! Toggle word wrap Toggle overflow Ubuntu

sudo apt-get install rbd-mirror

$ sudo apt-get install rbd-mirrorCopy to Clipboard Copied! Toggle word wrap Toggle overflow On the client node of the

site-acluster specify the cluster name by adding theCLUSTERoption to the appropriate file. On Red Hat Enterprise Linux, update the/etc/sysconfig/cephfile, and on Ubuntu, update the/etc/default/cephfile accordingly:CLUSTER=site-a

CLUSTER=site-aCopy to Clipboard Copied! Toggle word wrap Toggle overflow Copy the

site-bCeph configuration file and RBD keyring file from thesite-bmonitor to thesite-amonitor and client nodes:scp /etc/ceph/ceph.conf <user>@<site-a_mon-host-name>:/etc/ceph/site-b.conf scp /etc/ceph/site-b.client.site-b.keyring root@<site-a_mon-host-name>:/etc/ceph/ scp /etc/ceph/ceph.conf user@<site-a_client-host-name>:/etc/ceph/site-b.conf scp /etc/ceph/site-b.client.site-b.keyring user@<site-a_client-host-name>:/etc/ceph/

# scp /etc/ceph/ceph.conf <user>@<site-a_mon-host-name>:/etc/ceph/site-b.conf # scp /etc/ceph/site-b.client.site-b.keyring root@<site-a_mon-host-name>:/etc/ceph/ # scp /etc/ceph/ceph.conf user@<site-a_client-host-name>:/etc/ceph/site-b.conf # scp /etc/ceph/site-b.client.site-b.keyring user@<site-a_client-host-name>:/etc/ceph/Copy to Clipboard Copied! Toggle word wrap Toggle overflow NoteThe

scpcommands that transfer the Ceph configuration file from thesite-bmonitor node to thesite-amonitor and client nodes rename the file tosite-b.conf. The keyring file name stays the same.Copy the

site-aRBD keyring file from thesite-amonitor node to thesite-aclient node:scp /etc/ceph/site-a.client.site-a.keyring <user>@<site-a_client-host-name>:/etc/ceph/

# scp /etc/ceph/site-a.client.site-a.keyring <user>@<site-a_client-host-name>:/etc/ceph/Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create a symbolic link named

site-a.confpointing toceph.confon thesite-acluster client node:cd /etc/ceph ln -s ceph.conf site-a.conf

# cd /etc/ceph # ln -s ceph.conf site-a.confCopy to Clipboard Copied! Toggle word wrap Toggle overflow Enable and start the

rbd-mirrordaemon on thesite-aclient node:systemctl enable ceph-rbd-mirror.target systemctl enable ceph-rbd-mirror@<client-id> systemctl start ceph-rbd-mirror@<client-id>

systemctl enable ceph-rbd-mirror.target systemctl enable ceph-rbd-mirror@<client-id> systemctl start ceph-rbd-mirror@<client-id>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Where

<client-id>is the Ceph Storage cluster user that therbd-mirrordaemon will use. The user must have the appropriatecephxaccess to the cluster. For detailed information, see the User Management chapter in the Administration Guide for Red Hat Ceph Storage 3.Based on the preceeding examples using

site-a, run the following commands:systemctl enable ceph-rbd-mirror.target systemctl enable ceph-rbd-mirror@site-a systemctl start ceph-rbd-mirror@site-a

# systemctl enable ceph-rbd-mirror.target # systemctl enable ceph-rbd-mirror@site-a # systemctl start ceph-rbd-mirror@site-aCopy to Clipboard Copied! Toggle word wrap Toggle overflow Enable pool mirroring of the

datapool residing on thesite-bcluster by running the following command on a monitor node in thesite-bcluster:rbd mirror pool enable data pool

# rbd mirror pool enable data poolCopy to Clipboard Copied! Toggle word wrap Toggle overflow And ensure that mirroring has been successfully enabled:

rbd mirror pool info data Mode: pool Peers: none

# rbd mirror pool info data Mode: pool Peers: noneCopy to Clipboard Copied! Toggle word wrap Toggle overflow Add the

site-bcluster as a peer of thesite-acluster by running the following command from the client node in thesite-acluster:rbd --cluster site-a mirror pool peer add data client.site-b@site-b -n client.site-a

# rbd --cluster site-a mirror pool peer add data client.site-b@site-b -n client.site-aCopy to Clipboard Copied! Toggle word wrap Toggle overflow And ensure that the peer was successfully added:

rbd mirror pool info data Mode: pool Peers: UUID NAME CLIENT dc97bd3f-869f-48a5-9f21-ff31aafba733 site-b client.site-b

# rbd mirror pool info data Mode: pool Peers: UUID NAME CLIENT dc97bd3f-869f-48a5-9f21-ff31aafba733 site-b client.site-bCopy to Clipboard Copied! Toggle word wrap Toggle overflow Check the mirroring status from the client node on the

site-acluster.Copy to Clipboard Copied! Toggle word wrap Toggle overflow Copy to Clipboard Copied! Toggle word wrap Toggle overflow The images should be in state

up+stopped. Here,upmeans therbd-mirrordaemon is running andstoppedmeans the image is not a target for replication from another cluster. This is because the images are primary on this cluster.NotePreviously, when setting up one-way mirroring the images were configured to replicate to

site-b. That was achieved by installingrbd-mirroron thesite-bclient node so it could "pull" updates fromsite-atosite-b. At this point thesite-acluster is ready to be mirrored to but the images are not in a state that requires it. Mirroring in the other direction will start if the images onsite-aare demoted and the images on onsite-bare promoted. For information on how to promote and demote images, see Image Configuration.

Configuring Image Mirroring

Set up one way mirroring if it is not already set up.

- Follow steps 2 - 7 in the Configuring Pool Mirroring section of Configuring One-Way Mirroring

- Follow steps 3 - 5 in the Configuring Image Mirroring section of Configuring One-Way Mirroring

- Follow steps 3 - 7 in the Configuring Pool Mirroring section of Configuring Two-Way Mirroring. This section is immediately above.

Add the

site-bcluster as a peer of thesite-acluster by running the following command from the client node in thesite-acluster:rbd --cluster site-a mirror pool peer add data client.site-b@site-b -n client.site-a

# rbd --cluster site-a mirror pool peer add data client.site-b@site-b -n client.site-aCopy to Clipboard Copied! Toggle word wrap Toggle overflow And ensure that the peer was successfully added:

rbd mirror pool info data Mode: pool Peers: UUID NAME CLIENT dc97bd3f-869f-48a5-9f21-ff31aafba733 site-b client.site-b

# rbd mirror pool info data Mode: pool Peers: UUID NAME CLIENT dc97bd3f-869f-48a5-9f21-ff31aafba733 site-b client.site-bCopy to Clipboard Copied! Toggle word wrap Toggle overflow Check the mirroring status from the client node on the

site-acluster.Copy to Clipboard Copied! Toggle word wrap Toggle overflow Copy to Clipboard Copied! Toggle word wrap Toggle overflow The images should be in state

up+stopped. Here,upmeans therbd-mirrordaemon is running andstoppedmeans the image is not a target for replication from another cluster. This is because the images are primary on this cluster.NotePreviously, when setting up one-way mirroring the images were configured to replicate to

site-b. That was achieved by installingrbd-mirroron thesite-bclient node so it could "pull" updates fromsite-atosite-b. At this point thesite-acluster is ready to be mirrored to but the images are not in a state that requires it. Mirroring in the other direction will start if the images onsite-aare demoted and the images on onsite-bare promoted. For information on how to promote and demote images, see Image Configuration.

4.6. Delayed Replication

Whether you are using one- or two-way replication, you can delay replication between RADOS Block Device (RBD) mirroring images. You may want to implement delayed replication if you want a window of cushion time in case an unwanted change to the primary image needs to be reverted before being replicated to the secondary image.

To implement delayed replication, the rbd-mirror daemon within the destination cluster should set the rbd mirroring replay delay = <minimum delay in seconds> configuration setting. This setting can either be applied globally within the ceph.conf file utilized by the rbd-mirror daemons, or on an individual image basis.

To utilize delayed replication for a specific image, on the primary image, run the following rbd CLI command:

rbd image-meta set <image-spec> conf_rbd_mirroring_replay_delay <minimum delay in seconds>

rbd image-meta set <image-spec> conf_rbd_mirroring_replay_delay <minimum delay in seconds>For example, to set a 10 minute minimum replication delay on image vm-1 in the pool vms:

rbd image-meta set vms/vm-1 conf_rbd_mirroring_replay_delay 600

rbd image-meta set vms/vm-1 conf_rbd_mirroring_replay_delay 6004.7. Recovering from a disaster with one-way mirroring

To recover from a disaster when using one-way mirroring use the following procedures. They show how to fail over to the secondary cluster after the primary cluster terminates, and how to failback. The shutdown can be orderly or non-orderly.

In the below examples, the primary cluster is known as the site-a cluster, and the secondary cluster is known as the site-b cluster. Additionally, the clusters both have a data pool with two images, image1 and image2.

One-way mirroring supports multiple secondary sites. If you are using additional secondary clusters, choose one of the secondary clusters to fail over to. Synchronize from the same cluster during failback.

Prerequisites

- At least two running clusters.

- Pool mirroring or image mirroring configured with one way mirroring.

Failover After an Orderly Shutdown

- Stop all clients that use the primary image. This step depends on which clients use the image. For example, detach volumes from any OpenStack instances that use the image. See the Block Storage and Volumes chapter in the Storage Guide for Red Hat OpenStack Platform 13.

Demote the primary images located on the

site-acluster by running the following commands on a monitor node in thesite-acluster:rbd mirror image demote data/image1 rbd mirror image demote data/image2

# rbd mirror image demote data/image1 # rbd mirror image demote data/image2Copy to Clipboard Copied! Toggle word wrap Toggle overflow Promote the non-primary images located on the

site-bcluster by running the following commands on a monitor node in thesite-bcluster:rbd mirror image promote data/image1 rbd mirror image promote data/image2

# rbd mirror image promote data/image1 # rbd mirror image promote data/image2Copy to Clipboard Copied! Toggle word wrap Toggle overflow After some time, check the status of the images from a monitor node in the

site-bcluster. They should show a state ofup+stoppedand the description should sayprimary:Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Failover After a Non-Orderly Shutdown

- Verify that the primary cluster is down.

- Stop all clients that use the primary image. This step depends on which clients use the image. For example, detach volumes from any OpenStack instances that use the image. See the Block Storage and Volumes chapter in the Storage Guide for Red Hat OpenStack Platform 10.

Promote the non-primary images from a monitor node in the

site-bcluster. Use the--forceoption, because the demotion cannot be propagated to thesite-acluster:rbd mirror image promote --force data/image1 rbd mirror image promote --force data/image2

# rbd mirror image promote --force data/image1 # rbd mirror image promote --force data/image2Copy to Clipboard Copied! Toggle word wrap Toggle overflow Check the status of the images from a monitor node in the

site-bcluster. They should show a state ofup+stopping_replayand the description should sayforce promoted:Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Prepare for failback

When the formerly primary cluster recovers, failback to it.

If two clusters were originally configured only for one-way mirroring, in order to failback, the primary cluster must be configured for mirroring as well in order to replicate the images in the opposite direction.

On the client node of the

site-acluster, install therbd-mirrorpackage. The package is provided by the Red Hat Ceph Storage 3 Tools repository.Red Hat Enterprise Linux

yum install rbd-mirror

# yum install rbd-mirrorCopy to Clipboard Copied! Toggle word wrap Toggle overflow Ubuntu

sudo apt-get install rbd-mirror

$ sudo apt-get install rbd-mirrorCopy to Clipboard Copied! Toggle word wrap Toggle overflow On the client node of the

site-acluster, specify the cluster name by adding theCLUSTERoption to the appropriate file. On Red Hat Enterprise Linux, update the/etc/sysconfig/cephfile, and on Ubuntu, update the/etc/default/cephfile accordingly:CLUSTER=site-b

CLUSTER=site-bCopy to Clipboard Copied! Toggle word wrap Toggle overflow Copy the

site-bCeph configuration file and RBD keyring file from thesite-bmonitor to thesite-amonitor and client nodes:scp /etc/ceph/ceph.conf <user>@<site-a_mon-host-name>:/etc/ceph/site-b.conf scp /etc/ceph/site-b.client.site-b.keyring root@<site-a_mon-host-name>:/etc/ceph/ scp /etc/ceph/ceph.conf user@<site-a_client-host-name>:/etc/ceph/site-b.conf scp /etc/ceph/site-b.client.site-b.keyring user@<site-a_client-host-name>:/etc/ceph/

# scp /etc/ceph/ceph.conf <user>@<site-a_mon-host-name>:/etc/ceph/site-b.conf # scp /etc/ceph/site-b.client.site-b.keyring root@<site-a_mon-host-name>:/etc/ceph/ # scp /etc/ceph/ceph.conf user@<site-a_client-host-name>:/etc/ceph/site-b.conf # scp /etc/ceph/site-b.client.site-b.keyring user@<site-a_client-host-name>:/etc/ceph/Copy to Clipboard Copied! Toggle word wrap Toggle overflow NoteThe

scpcommands that transfer the Ceph configuration file from thesite-bmonitor node to thesite-amonitor and client nodes rename the file tosite-a.conf. The keyring file name stays the same.Copy the

site-aRBD keyring file from thesite-amonitor node to thesite-aclient node:scp /etc/ceph/site-a.client.site-a.keyring <user>@<site-a_client-host-name>:/etc/ceph/

# scp /etc/ceph/site-a.client.site-a.keyring <user>@<site-a_client-host-name>:/etc/ceph/Copy to Clipboard Copied! Toggle word wrap Toggle overflow Enable and start the

rbd-mirrordaemon on thesite-aclient node:systemctl enable ceph-rbd-mirror.target systemctl enable ceph-rbd-mirror@<client-id> systemctl start ceph-rbd-mirror@<client-id>

systemctl enable ceph-rbd-mirror.target systemctl enable ceph-rbd-mirror@<client-id> systemctl start ceph-rbd-mirror@<client-id>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Change

<client-id>to the Ceph Storage cluster user that therbd-mirrordaemon will use. The user must have the appropriatecephxaccess to the cluster. For detailed information, see the User Management chapter in the Administration Guide for Red Hat Ceph Storage 3.Based on the preceeding examples using

site-a, the commands would be:systemctl enable ceph-rbd-mirror.target systemctl enable ceph-rbd-mirror@site-a systemctl start ceph-rbd-mirror@site-a

# systemctl enable ceph-rbd-mirror.target # systemctl enable ceph-rbd-mirror@site-a # systemctl start ceph-rbd-mirror@site-aCopy to Clipboard Copied! Toggle word wrap Toggle overflow From the client node on the

site-acluster, add thesite-bcluster as a peer:rbd --cluster site-a mirror pool peer add data client.site-b@site-b -n client.site-a

# rbd --cluster site-a mirror pool peer add data client.site-b@site-b -n client.site-aCopy to Clipboard Copied! Toggle word wrap Toggle overflow If you are using multiple secondary clusters, only the secondary cluster chosen to fail over to, and failback from, must be added.

From a monitor node in the

site-acluster, verify thesite-bcluster was successfully added as a peer:rbd mirror pool info -p data Mode: image Peers: UUID NAME CLIENT d2ae0594-a43b-4c67-a167-a36c646e8643 site-b client.site-b

# rbd mirror pool info -p data Mode: image Peers: UUID NAME CLIENT d2ae0594-a43b-4c67-a167-a36c646e8643 site-b client.site-bCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Failback

When the formerly primary cluster recovers, failback to it.

From a monitor node on the

site-acluster determine if the images are still primary:rbd info data/image1 rbd info data/image2

# rbd info data/image1 # rbd info data/image2Copy to Clipboard Copied! Toggle word wrap Toggle overflow In the output from the commands, look for

mirroring primary: trueormirroring primary: false, to determine the state.Demote any images that are listed as primary by running a command like the following from a monitor node in the

site-acluster:rbd mirror image demote data/image1

# rbd mirror image demote data/image1Copy to Clipboard Copied! Toggle word wrap Toggle overflow Resynchronize the images ONLY if there was a non-orderly shutdown. Run the following commands on a monitor node in the

site-acluster to resynchronize the images fromsite-btosite-a:rbd mirror image resync data/image1 Flagged image for resync from primary rbd mirror image resync data/image2 Flagged image for resync from primary

# rbd mirror image resync data/image1 Flagged image for resync from primary # rbd mirror image resync data/image2 Flagged image for resync from primaryCopy to Clipboard Copied! Toggle word wrap Toggle overflow After some time, ensure resynchronization of the images is complete by verifying they are in the

up+replayingstate. Check their state by running the following commands on a monitor node in thesite-acluster:rbd mirror image status data/image1 rbd mirror image status data/image2

# rbd mirror image status data/image1 # rbd mirror image status data/image2Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Demote the images on the

site-bcluster by running the following commands on a monitor node in thesite-bcluster:rbd mirror image demote data/image1 rbd mirror image demote data/image2

# rbd mirror image demote data/image1 # rbd mirror image demote data/image2Copy to Clipboard Copied! Toggle word wrap Toggle overflow NoteIf there are multiple secondary clusters, this only needs to be done from the secondary cluster where it was promoted.

Promote the formerly primary images located on the

site-acluster by running the following commands on a monitor node in thesite-acluster:rbd mirror image promote data/image1 rbd mirror image promote data/image2

# rbd mirror image promote data/image1 # rbd mirror image promote data/image2Copy to Clipboard Copied! Toggle word wrap Toggle overflow Check the status of the images from a monitor node in the

site-acluster. They should show a status ofup+stoppedand the description should saylocal image is primary:Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Remove two-way mirroring

In the Prepare for failback section above, functions for two-way mirroring were configured to enable synchronization from the site-b cluster to the site-a cluster. After failback is complete these functions can be disabled.

Remove the

site-bcluster as a peer from thesite-acluster:rbd mirror pool peer remove data client.remote@remote --cluster local rbd --cluster site-a mirror pool peer remove data client.site-b@site-b -n client.site-a

$ rbd mirror pool peer remove data client.remote@remote --cluster local # rbd --cluster site-a mirror pool peer remove data client.site-b@site-b -n client.site-aCopy to Clipboard Copied! Toggle word wrap Toggle overflow Stop and disable the

rbd-mirrordaemon on thesite-aclient:systemctl stop ceph-rbd-mirror@<client-id> systemctl disable ceph-rbd-mirror@<client-id> systemctl disable ceph-rbd-mirror.target

systemctl stop ceph-rbd-mirror@<client-id> systemctl disable ceph-rbd-mirror@<client-id> systemctl disable ceph-rbd-mirror.targetCopy to Clipboard Copied! Toggle word wrap Toggle overflow For example:

systemctl stop ceph-rbd-mirror@site-a systemctl disable ceph-rbd-mirror@site-a systemctl disable ceph-rbd-mirror.target

# systemctl stop ceph-rbd-mirror@site-a # systemctl disable ceph-rbd-mirror@site-a # systemctl disable ceph-rbd-mirror.targetCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Additional Resources

- For details on demoting, promoting, and resyncing images, see Image configuration in the Block device guide.

4.8. Recovering from a disaster with two-way mirroring

To recover from a disaster when using two-way mirroring use the following procedures. They show how to fail over to the mirrored data on the secondary cluster after the primary cluster terminates, and how to failback. The shutdown can be orderly or non-orderly.

In the below examples, the primary cluster is known as the site-a cluster, and the secondary cluster is known as the site-b cluster. Additionally, the clusters both have a data pool with two images, image1 and image2.

Prerequisites

- At least two running clusters.

- Pool mirroring or image mirroring configured with one way mirroring.

Failover After an Orderly Shutdown

- Stop all clients that use the primary image. This step depends on which clients use the image. For example, detach volumes from any OpenStack instances that use the image. See the Block Storage and Volumes chapter in the Storage Guide for Red Hat OpenStack Platform 10.

Demote the primary images located on the

site-acluster by running the following commands on a monitor node in thesite-acluster:rbd mirror image demote data/image1 rbd mirror image demote data/image2

# rbd mirror image demote data/image1 # rbd mirror image demote data/image2Copy to Clipboard Copied! Toggle word wrap Toggle overflow Promote the non-primary images located on the

site-bcluster by running the following commands on a monitor node in thesite-bcluster:rbd mirror image promote data/image1 rbd mirror image promote data/image2

# rbd mirror image promote data/image1 # rbd mirror image promote data/image2Copy to Clipboard Copied! Toggle word wrap Toggle overflow After some time, check the status of the images from a monitor node in the

site-bcluster. They should show a state ofup+stoppedand be listed as primary:Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Resume the access to the images. This step depends on which clients use the image.

Failover After a Non-Orderly Shutdown

- Verify that the primary cluster is down.

- Stop all clients that use the primary image. This step depends on which clients use the image. For example, detach volumes from any OpenStack instances that use the image. See the Block Storage and Volumes chapter in the Storage Guide for Red Hat OpenStack Platform 10.

Promote the non-primary images from a monitor node in the

site-bcluster. Use the--forceoption, because the demotion cannot be propagated to thesite-acluster:rbd mirror image promote --force data/image1 rbd mirror image promote --force data/image2

# rbd mirror image promote --force data/image1 # rbd mirror image promote --force data/image2Copy to Clipboard Copied! Toggle word wrap Toggle overflow Check the status of the images from a monitor node in the

site-bcluster. They should show a state ofup+stopping_replayand the description should sayforce promoted:Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Failback

When the formerly primary cluster recovers, failback to it.

Check the status of the images from a monitor node in the

site-bcluster again. They should show a state ofup-stoppedand the description should saylocal image is primary:Copy to Clipboard Copied! Toggle word wrap Toggle overflow From a monitor node on the

site-acluster determine if the images are still primary:rbd info data/image1 rbd info data/image2

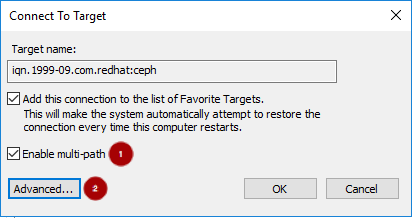

# rbd info data/image1 # rbd info data/image2Copy to Clipboard Copied! Toggle word wrap Toggle overflow In the output from the commands, look for