Configuration Guide

Configuration settings for Red Hat Ceph Storage

Abstract

Chapter 1. Configuration Reference

All Ceph clusters have a configuration, which defines:

- Cluster identity

- Authentication settings

- Ceph daemon membership in the cluster

- Network configuration

- Host names and addresses

- Paths to keyrings

- Paths to data (including journals)

- Other runtime options

A deployment tool such as Red Hat Storage Console or Ansible will typically create an initial Ceph configuration file for you. However, you can create one yourself if you prefer to bootstrap a cluster without using a deployment tool.

For your convenience, each daemon has a series of default values, that is, many are set by the ceph/src/common/config_opts.h script. You can override these settings with a Ceph configuration file or at runtime by using the monitor tell command or connecting directly to a daemon socket on a Ceph node.

1.1. General Recommendations

You may maintain a Ceph configuration file anywhere you like, but Red Hat recommends having an administration node where you maintain a master copy of the Ceph configuration file.

When you make changes to the Ceph configuration file, it is a good practice to push the updated configuration file to your Ceph nodes to maintain consistency.

1.2. Configuration File Structure

The Ceph configuration file configures Ceph daemons at start time—overriding default values. Ceph configuration files use an ini style syntax. You can add comments by preceding comments with a pound sign (#) or a semi-colon (;). For example:

# <--A number (#) sign precedes a comment. ; A comment may be anything. # Comments always follow a semi-colon (;) or a pound (#) on each line. # The end of the line terminates a comment. # We recommend that you provide comments in your configuration file(s).

# <--A number (#) sign precedes a comment.

; A comment may be anything.

# Comments always follow a semi-colon (;) or a pound (#) on each line.

# The end of the line terminates a comment.

# We recommend that you provide comments in your configuration file(s).The configuration file can configure all Ceph daemons in a Ceph storage cluster or all Ceph daemons of a particular type at start time. To configure a series of daemons, the settings must be included under the processes that will receive the configuration as follows:

- [global]

- Description

-

Settings under

[global]affect all daemons in a Ceph Storage Cluster. - Example

-

auth supported = cephx

- [osd]

- Description

-

Settings under

[osd]affect allceph-osddaemons in the Ceph storage cluster, and override the same setting in[global]. - Example

-

osd journal size = 1000

- [mon]

- Description

-

Settings under

[mon]affect allceph-mondaemons in the Ceph storage cluster, and override the same setting in[global]. - Example

-

mon host = hostname1,hostname2,hostname3mon addr = 10.0.0.101:6789

- [client]

- Description

-

Settings under

[client]affect all Ceph clients (for example, mounted Ceph block devices, Ceph object gateways, and so on). - Example

-

log file = /var/log/ceph/radosgw.log

Global settings affect all instances of all daemon in the Ceph storage cluster. Use the [global] setting for values that are common for all daemons in the Ceph storage cluster. You can override each [global] setting by:

-

Changing the setting in a particular process type (for example,

[osd],[mon]). -

Changing the setting in a particular process (for example,

[osd.1]).

Overriding a global setting affects all child processes, except those that you specifically override in a particular daemon.

A typical global setting involves activating authentication. For example:

[global] #Enable authentication between hosts within the cluster. auth_cluster_required = cephx auth_service_required = cephx auth_client_required = cephx

[global]

#Enable authentication between hosts within the cluster.

auth_cluster_required = cephx

auth_service_required = cephx

auth_client_required = cephx

You can specify settings that apply to a particular type of daemon. When you specify settings under [osd] or [mon] without specifying a particular instance, the setting will apply to all OSD or monitor daemons respectively.

A typical daemon-wide setting involves setting journal sizes, filestore settings, and so on For example:

[osd] osd_journal_size = 1000

[osd]

osd_journal_size = 1000You can specify settings for particular instances of a daemon. You may specify an instance by entering its type, delimited by a period (.) and by the instance ID. The instance ID for a Ceph OSD daemons is always numeric, but it may be alphanumeric for Ceph monitors.

[osd.1] # settings affect osd.1 only. [mon.a] # settings affect mon.a only.

[osd.1]

# settings affect osd.1 only.

[mon.a]

# settings affect mon.a only.The default Ceph configuration file locations in sequential order include:

-

$CEPH_CONF(the path following the$CEPH_CONFenvironment variable) -

-c path/path(the-ccommand line argument) -

/etc/ceph/ceph.conf -

~/.ceph/config -

./ceph.conf(in the current working directory)

A typical Ceph configuration file has at least the following settings:

1.3. Metavariables

Metavariables simplify Ceph storage cluster configuration dramatically. When a metavariable is set in a configuration value, Ceph expands the metavariable into a concrete value.

Metavariables are very powerful when used within the [global], [osd], [mon], or [client] sections of the Ceph configuration file. However, you can also use them with the administration socket. Ceph metavariables are similar to Bash shell expansion.

Ceph supports the following metavariables:

- $cluster

- Description

- Expands to the Ceph storage cluster name. Useful when running multiple Ceph storage clusters on the same hardware.

- Example

-

/etc/ceph/$cluster.keyring - Default

-

ceph

- $type

- Description

-

Expands to one of

osdormon, depending on the type of the instant daemon. - Example

-

/var/lib/ceph/$type

- $id

- Description

-

Expands to the daemon identifier. For

osd.0, this would be0. - Example

-

/var/lib/ceph/$type/$cluster-$id

- $host

- Description

- Expands to the host name of the instant daemon.

- $name

- Description

-

Expands to

$type.$id. - Example

-

/var/run/ceph/$cluster-$name.asok

1.4. Viewing the Ceph Runtime Configuration

To view a runtime configuration, log in to a Ceph node and execute:

ceph daemon {daemon-type}.{id} config show

ceph daemon {daemon-type}.{id} config show

For example, if you want to see the configuration for osd.0, log into the node containing osd.0 and execute:

ceph daemon osd.0 config show

ceph daemon osd.0 config show

For additional options, specify a daemon and help. For example:

ceph daemon osd.0 help

ceph daemon osd.0 help1.5. Getting a Specific Configuration Setting at Runtime

To get a specific configuration setting at runtime, log in to a Ceph node and execute:

ceph daemon {daemon-type}.{id} config get {parameter}

ceph daemon {daemon-type}.{id} config get {parameter}

For example to retrieve the public address of osd.0, execute:

ceph daemon osd.0 config get public_addr

ceph daemon osd.0 config get public_addr1.6. Setting a Specific Configuration Setting at Runtime

There are two general ways to set a runtime configuration:

- by using the Ceph monitor

- by using the administration socket

You can set a Ceph runtime configuration setting by contacting the monitor using the tell and injectargs command. To use this approach, the monitors and the daemon you are trying to modify must be running:

ceph tell {daemon-type}.{daemon id or *} injectargs --{name} {value} [--{name} {value}]

ceph tell {daemon-type}.{daemon id or *} injectargs --{name} {value} [--{name} {value}]

Replace {daemon-type} with one of osd or mon. You can apply the runtime setting to all daemons of a particular type with *, or specify a specific daemon’s ID (that is, its number or name). For example, to change the debug logging for a ceph-osd daemon named osd.0 to 0/5, execute the following command:

ceph tell osd.0 injectargs '--debug-osd 0/5'

ceph tell osd.0 injectargs '--debug-osd 0/5'

The tell command takes multiple arguments, so each argument for tell must be within single quotes, and the configuration prepended with two dashes ('--{config_opt} {opt-val}' ['-{config_opt} {opt-val}']). Quotes are not necessary for the daemon command, because it only takes one argument.

The ceph tell command goes through the monitors. If you cannot bind to the monitor, you can still make the change by logging into the host of the daemon whose configuration you want to change using ceph daemon. For example:

sudo ceph osd.0 config set debug_osd 0/5

sudo ceph osd.0 config set debug_osd 0/51.7. General Configuration Reference

General settings typically get set automatically by deployment tools.

- fsid

- Description

- The file system ID. One per cluster.

- Type

- UUID

- Required

- No.

- Default

- N/A. Usually generated by deployment tools.

- admin_socket

- Description

- The socket for executing administrative commands on a daemon, irrespective of whether Ceph monitors have established a quorum.

- Type

- String

- Required

- No

- Default

-

/var/run/ceph/$cluster-$name.asok

- pid_file

- Description

-

The file in which the monitor or OSD will write its PID. For instance,

/var/run/$cluster/$type.$id.pidwill create /var/run/ceph/mon.a.pid for themonwith idarunning in thecephcluster. Thepid fileis removed when the daemon stops gracefully. If the process is not daemonized (meaning it runs with the-for-doption), thepid fileis not created. - Type

- String

- Required

- No

- Default

- No

- chdir

- Description

-

The directory Ceph daemons change to once they are up and running. Default

/directory recommended. - Type

- String

- Required

- No

- Default

-

/

- max_open_files

- Description

-

If set, when the Red Hat Ceph Storage cluster starts, Ceph sets the

max_open_fdsat the OS level (that is, the max # of file descriptors). It helps prevents Ceph OSDs from running out of file descriptors. - Type

- 64-bit Integer

- Required

- No

- Default

-

0

- fatal_signal_handlers

- Description

- If set, we will install signal handlers for SEGV, ABRT, BUS, ILL, FPE, XCPU, XFSZ, SYS signals to generate a useful log message.

- Type

- Boolean

- Default

-

true

1.8. OSD Memory Target

BlueStore keeps OSD heap memory usage under a designated target size with the osd_memory_target configuration option.

The option osd_memory_target sets OSD memory based upon the available RAM in the system. By default, Anisble sets the value to 4 GB. You can change the value, expressed in bytes, in the /usr/share/ceph-ansible/group_vars/all.yml file when deploying the daemon.

Example: Set the osd_memory_target to 6000000000 bytes

ceph_conf_overrides:

osd:

osd_memory_target=6000000000

ceph_conf_overrides:

osd:

osd_memory_target=6000000000Ceph OSD memory caching is more important when the block device is slow, for example, traditional hard drives, because the benefit of a cache hit is much higher than it would be with a solid state drive. However, this has to be weighed-in to co-locate OSDs with other services, such as in a hyper-converged infrastructure (HCI), or other applications.

The value of osd_memory_target is one OSD per device for traditional hard drive device, and two OSDs per device for NVMe SSD devices. The osds_per_device is defined in group_vars/osds.yml file.

Additional Resources

-

Setting

osd_memory_targetSetting OSD Memory Target

1.9. MDS Cache Memory Limit

MDS servers keep their metadata in a separate storage pool, named cephfs_metadata, and are the users of Ceph OSDs. For Ceph File Systems, MDS servers have to support an entire Red Hat Ceph Storage cluster, not just a single storage device within the storage cluster, so their memory requirements can be significant, particularly if the workload consists of small-to-medium-size files, where the ratio of metadata to data is much higher.

Example:Set the mds_cache_memory_limit to 2000000000 bytes

ceph_conf_overrides:

osd:

mds_cache_memory_limit=2000000000

ceph_conf_overrides:

osd:

mds_cache_memory_limit=2000000000For a large Red Hat Ceph Storage cluster with a metadata-intensive workload, do not put an MDS server on the same node as other memory-intensive services, doing so gives you the option to allocate more memory to MDS, for example, sizes greater than 100 GB.

Additional Resources

Chapter 2. Network Configuration Reference

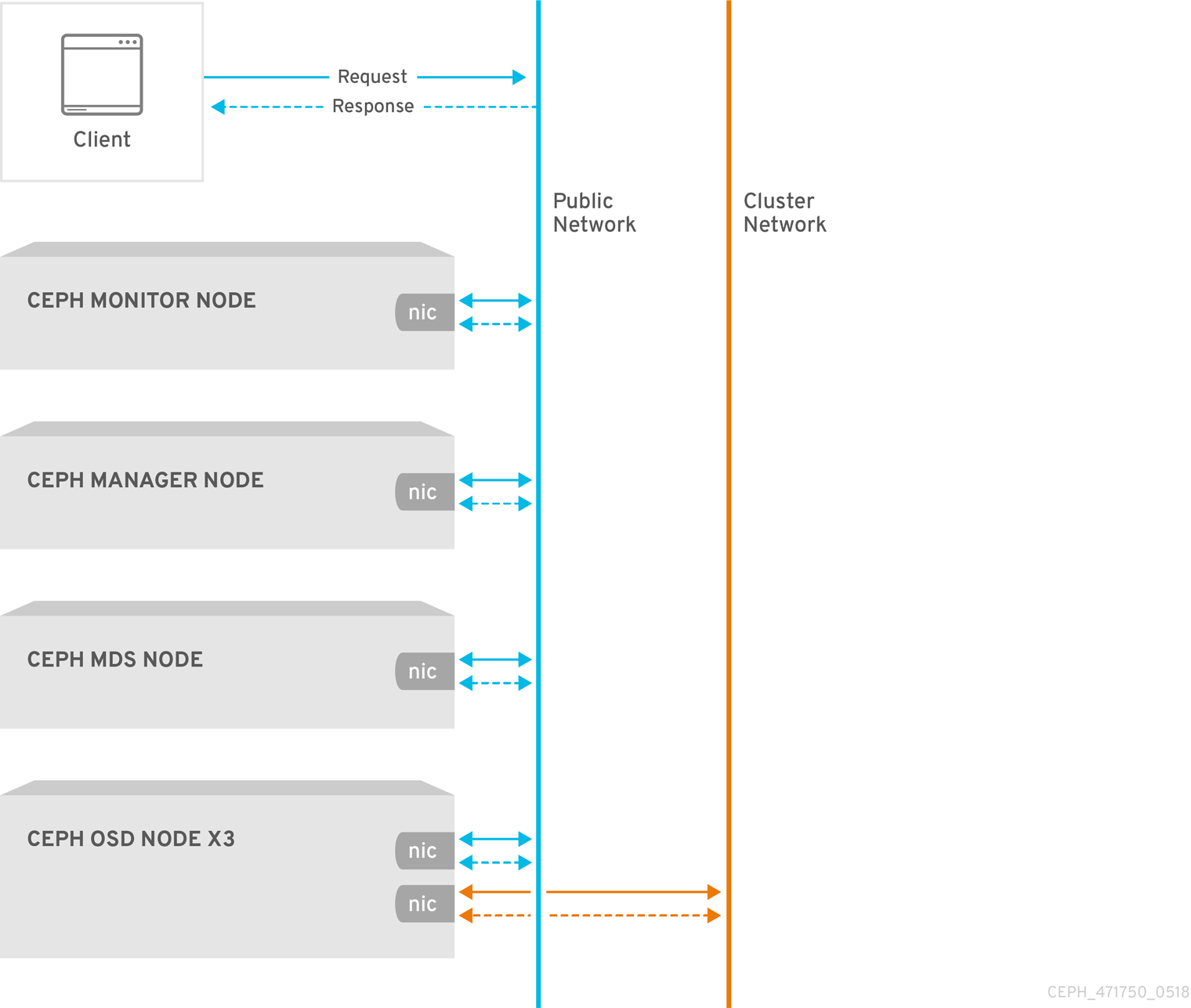

Network configuration is critical for building a high performance Red Hat Ceph Storage cluster. The Ceph storage cluster does not perform request routing or dispatching on behalf of the Ceph client. Instead, Ceph clients make requests directly to Ceph OSD daemons. Ceph OSDs perform data replication on behalf of Ceph clients, which means replication and other factors impose additional loads on the networks of Ceph storage clusters.

All Ceph clusters must use a public network. However, unless you specify a cluster (internal) network, Ceph assumes a single public network. Ceph can function with a public network only, but you will see significant performance improvement with a second "cluster" network in a large cluster.

Red Hat recommends running a Ceph storage cluster with two networks:

- a public network

- and a cluster network.

To support two networks, each Ceph Node will need to have more than one network interface card (NIC).

There are several reasons to consider operating two separate networks:

- Performance: Ceph OSDs handle data replication for the Ceph clients. When Ceph OSDs replicate data more than once, the network load between Ceph OSDs easily dwarfs the network load between Ceph clients and the Ceph storage cluster. This can introduce latency and create a performance problem. Recovery and rebalancing can also introduce significant latency on the public network.

-

Security: While most people are generally civil, some actors will engage in what is known as a Denial of Service (DoS) attack. When traffic between Ceph OSDs gets disrupted, peering may fail and placement groups may no longer reflect an

active + cleanstate, which may prevent users from reading and writing data. A great way to defeat this type of attack is to maintain a completely separate cluster network that does not connect directly to the internet.

2.1. Network Configuration Settings

Network configuration settings are not required. Ceph can function with a public network only, assuming a public network is configured on all hosts running a Ceph daemon. However, Ceph allows you to establish much more specific criteria, including multiple IP networks and subnet masks for your public network. You can also establish a separate cluster network to handle OSD heartbeat, object replication, and recovery traffic.

Do not confuse the IP addresses you set in the configuration with the public-facing IP addresses network clients might use to access your service. Typical internal IP networks are often 192.168.0.0 or 10.0.0.0.

If you specify more than one IP address and subnet mask for either the public or the cluster network, the subnets within the network must be capable of routing to each other. Additionally, make sure you include each IP address/subnet in your IP tables and open ports for them as necessary.

Ceph uses CIDR notation for subnets (for example, 10.0.0.0/24).

When you configured the networks, you can restart the cluster or restart each daemon. Ceph daemons bind dynamically, so you do not have to restart the entire cluster at once if you change the network configuration.

2.1.1. Public Network

To configure a public network, add the following option to the [global] section of the Ceph configuration file.

[global]

...

public_network = <public-network/netmask>

[global]

...

public_network = <public-network/netmask>

The public network configuration allows you specifically define IP addresses and subnets for the public network. You may specifically assign static IP addresses or override public network settings using the public addr setting for a specific daemon.

- public_network

- Description

-

The IP address and netmask of the public (front-side) network (for example,

192.168.0.0/24). Set in[global]. You can specify comma-delimited subnets. - Type

-

<ip-address>/<netmask> [, <ip-address>/<netmask>] - Required

- No

- Default

- N/A

- public_addr

- Description

- The IP address for the public (front-side) network. Set for each daemon.

- Type

- IP Address

- Required

- No

- Default

- N/A

2.1.2. Cluster Network

If you declare a cluster network, OSDs will route heartbeat, object replication, and recovery traffic over the cluster network. This can improve performance compared to using a single network. To configure a cluster network, add the following option to the [global] section of the Ceph configuration file.

[global]

...

cluster_network = <cluster-network/netmask>

[global]

...

cluster_network = <cluster-network/netmask>It is preferable, that the cluster network is not reachable from the public network or the Internet for added security.

The cluster network configuration allows you to declare a cluster network, and specifically define IP addresses and subnets for the cluster network. You can specifically assign static IP addresses or override cluster network settings using the cluster addr setting for specific OSD daemons.

- cluster_network

- Description

-

The IP address and netmask of the cluster network (for example,

10.0.0.0/24). Set in[global]. You can specify comma-delimited subnets. - Type

-

<ip-address>/<netmask> [, <ip-address>/<netmask>] - Required

- No

- Default

- N/A

- cluster_addr

- Description

- The IP address for the cluster network. Set for each daemon.

- Type

- Address

- Required

- No

- Default

- N/A

2.1.3. Verifying and configuring the MTU value

The maximum transmission unit (MTU) value is the size, in bytes, of the largest packet sent on the link layer. The default MTU value is 1500 bytes. Red Hat recommends using jumbo frames, a MTU value of 9000 bytes, for a Red Hat Ceph Storage cluster.

Red Hat Ceph Storage requires the same MTU value throughout all networking devices in the communication path, end-to-end for both public and cluster networks. Verify that the MTU value is the same on all nodes and networking equipment in the environment before using a Red Hat Ceph Storage cluster in production.

When bonding network interfaces together, the MTU value only needs to be set on the bonded interface. The new MTU value propagates from the bonding device to the underlying network devices.

Prerequisites

- Root-level access to the node.

Procedure

Verify the current MTU value:

Example

ip link list 1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000 link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00 2: enp22s0f0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP mode DEFAULT group default qlen 1000[root@mon ~]# ip link list 1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000 link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00 2: enp22s0f0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP mode DEFAULT group default qlen 1000Copy to Clipboard Copied! Toggle word wrap Toggle overflow For this example, the network interface is

enp22s0f0and it has a MTU value of1500.To temporarily change the MTU value online:

Syntax

ip link set dev NET_INTERFACE mtu NEW_MTU_VALUE

ip link set dev NET_INTERFACE mtu NEW_MTU_VALUECopy to Clipboard Copied! Toggle word wrap Toggle overflow Example

ip link set dev enp22s0f0 mtu 9000

[root@mon ~]# ip link set dev enp22s0f0 mtu 9000Copy to Clipboard Copied! Toggle word wrap Toggle overflow To permanently change the MTU value.

Open for editing the network configuration file for that particular network interface:

Syntax

vim /etc/sysconfig/network-scripts/ifcfg-NET_INTERFACE

vim /etc/sysconfig/network-scripts/ifcfg-NET_INTERFACECopy to Clipboard Copied! Toggle word wrap Toggle overflow Example

vim /etc/sysconfig/network-scripts/ifcfg-enp22s0f0

[root@mon ~]# vim /etc/sysconfig/network-scripts/ifcfg-enp22s0f0Copy to Clipboard Copied! Toggle word wrap Toggle overflow On a new line, add the

MTU=9000option:Example

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Restart the network service:

Example

systemctl restart network

[root@mon ~]# systemctl restart networkCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Additional Resources

- For more details, see the Networking Guide for Red Hat Enterprise Linux 7.

2.1.4. Messaging

Messenger is the Ceph network layer implementation. Red Hat supports two messenger types:

-

simple -

async

In RHCS 2 and earlier releases, simple is the default messenger type. In RHCS 3, async is the default messenger type. To change the messenger type, specify the ms_type configuration setting in the [global] section of the Ceph configuration file.

For the async messenger, Red Hat supports the posix transport type, but does not currently support rdma or dpdk. By default, the ms_type setting in RHCS 3 should reflect async+posix, where async is the messenger type and posix is the transport type.

About SimpleMessenger

The SimpleMessenger implementation uses TCP sockets with two threads per socket. Ceph associates each logical session with a connection. A pipe handles the connection, including the input and output of each message. While SimpleMessenger is effective for the posix transport type, it is not effective for other transport types such as rdma or dpdk. Consequently, AsyncMessenger is the default messenger type for RHCS 3 and later releases.

About AsyncMessenger

For RHCS 3, the AsyncMessenger implementation uses TCP sockets with a fixed-size thread pool for connections, which should be equal to the highest number of replicas or erasure-code chunks. The thread count can be set to a lower value if performance degrades due to a low CPU count or a high number of OSDs per server.

Red Hat does not support other transport types such as rdma or dpdk at this time.

Messenger Type Settings

- ms_type

- Description

-

The messenger type for the network transport layer. Red Hat supports the

simpleand theasyncmessenger type usingposixsemantics. - Type

- String.

- Required

- No.

- Default

-

async+posix

- ms_public_type

- Description

-

The messenger type for the network transport layer of the public network. It operates identically to

ms_type, but is applicable only to the public or front-side network. This setting enables Ceph to use a different messenger type for the public or front-side and cluster or back-side networks. - Type

- String.

- Required

- No.

- Default

- None.

- ms_cluster_type

- Description

-

The messenger type for the network transport layer of the cluster network. It operates identically to

ms_type, but is applicable only to the cluster or back-side network. This setting enables Ceph to use a different messenger type for the public or front-side and cluster or back-side networks. - Type

- String.

- Required

- No.

- Default

- None.

2.1.5. AsyncMessenger Settings

- ms_async_transport_type

- Description

-

Transport type used by the

AsyncMessenger. Red Hat supports theposixsetting, but does not support thedpdkorrdmasettings at this time. POSIX uses standard TCP/IP networking and is the default value. Other transport types are experimental and are NOT supported. - Type

- String

- Required

- No

- Default

-

posix

- ms_async_op_threads

- Description

-

Initial number of worker threads used by each

AsyncMessengerinstance. This configuration setting SHOULD equal the number of replicas or erasure code chunks. but it may be set lower if the CPU core count is low or the number of OSDs on a single server is high. - Type

- 64-bit Unsigned Integer

- Required

- No

- Default

-

3

- ms_async_max_op_threads

- Description

-

The maximum number of worker threads used by each

AsyncMessengerinstance. Set to lower values if the OSD host has limited CPU count, and increase if Ceph is underutilizing CPUs are underutilized. - Type

- 64-bit Unsigned Integer

- Required

- No

- Default

-

5

- ms_async_set_affinity

- Description

-

Set to

trueto bindAsyncMessengerworkers to particular CPU cores. - Type

- Boolean

- Required

- No

- Default

-

true

- ms_async_affinity_cores

- Description

-

When

ms_async_set_affinityistrue, this string specifies howAsyncMessengerworkers are bound to CPU cores. For example,0,2will bind workers #1 and #2 to CPU cores #0 and #2, respectively. NOTE: When manually setting affinity, make sure to not assign workers to virtual CPUs created as an effect of hyper threading or similar technology, because they are slower than physical CPU cores. - Type

- String

- Required

- No

- Default

-

(empty)

- ms_async_send_inline

- Description

-

Send messages directly from the thread that generated them instead of queuing and sending from the

AsyncMessengerthread. This option is known to decrease performance on systems with a lot of CPU cores, so it’s disabled by default. - Type

- Boolean

- Required

- No

- Default

-

false

2.1.6. Bind

Bind settings set the default port ranges Ceph OSD daemons use. The default range is 6800:7100. Ensure that the firewall configuration allows you to use the configured port range.

You can also enable Ceph daemons to bind to IPv6 addresses.

- ms_bind_port_min

- Description

- The minimum port number to which an OSD daemon will bind.

- Type

- 32-bit Integer

- Default

-

6800 - Required

- No

- ms_bind_port_max

- Description

- The maximum port number to which an OSD daemon will bind.

- Type

- 32-bit Integer

- Default

-

7300 - Required

- No.

- ms_bind_ipv6

- Description

- Enables Ceph daemons to bind to IPv6 addresses.

- Type

- Boolean

- Default

-

false - Required

- No

2.1.7. Hosts

Ceph expects at least one monitor declared in the Ceph configuration file, with a mon addr setting under each declared monitor. Ceph expects a host setting under each declared monitor, metadata server and OSD in the Ceph configuration file.

- mon_addr

- Description

-

A list of

<hostname>:<port>entries that clients can use to connect to a Ceph monitor. If not set, Ceph searches[mon.*]sections. - Type

- String

- Required

- No

- Default

- N/A

- host

- Description

-

The host name. Use this setting for specific daemon instances (for example,

[osd.0]). - Type

- String

- Required

- Yes, for daemon instances.

- Default

-

localhost

Do not use localhost. To get your host name, execute the hostname -s command and use the name of your host to the first period, not the fully-qualified domain name.

Do not specify any value for host when using a third party deployment system that retrieves the host name for you.

2.1.8. TCP

Ceph disables TCP buffering by default.

- ms_tcp_nodelay

- Description

-

Ceph enables

ms_tcp_nodelayso that each request is sent immediately (no buffering). Disabling Nagle’s algorithm increases network traffic, which can introduce congestion. If you experience large numbers of small packets, you may try disablingms_tcp_nodelay, but be aware that disabling it will generally increase latency. - Type

- Boolean

- Required

- No

- Default

-

true

- ms_tcp_rcvbuf

- Description

- The size of the socket buffer on the receiving end of a network connection. Disable by default.

- Type

- 32-bit Integer

- Required

- No

- Default

-

0

- ms_tcp_read_timeout

- Description

-

If a client or daemon makes a request to another Ceph daemon and does not drop an unused connection, the

tcp read timeoutdefines the connection as idle after the specified number of seconds. - Type

- Unsigned 64-bit Integer

- Required

- No

- Default

-

90015 minutes.

2.1.9. Firewall

By default, daemons bind to ports within the 6800:7100 range. You can configure this range at your discretion. Before configuring the firewall, check the default firewall configuration. You can configure this range at your discretion.

sudo iptables -L

sudo iptables -L

For the firewalld daemon, execute the following command as root:

firewall-cmd --list-all-zones

# firewall-cmd --list-all-zonesSome Linux distributions include rules that reject all inbound requests except SSH from all network interfaces. For example:

REJECT all -- anywhere anywhere reject-with icmp-host-prohibited

REJECT all -- anywhere anywhere reject-with icmp-host-prohibited2.1.9.1. Monitor Firewall

Ceph monitors listen on port 6789 by default. Additionally, Ceph monitors always operate on the public network. When you add the rule using the example below, make sure you replace <iface> with the public network interface (for example, eth0, eth1, and so on), <ip-address> with the IP address of the public network and <netmask> with the netmask for the public network.

sudo iptables -A INPUT -i <iface> -p tcp -s <ip-address>/<netmask> --dport 6789 -j ACCEPT

sudo iptables -A INPUT -i <iface> -p tcp -s <ip-address>/<netmask> --dport 6789 -j ACCEPT

For the firewalld daemon, execute the following commands as root:

firewall-cmd --zone=public --add-port=6789/tcp firewall-cmd --zone=public --add-port=6789/tcp --permanent

# firewall-cmd --zone=public --add-port=6789/tcp

# firewall-cmd --zone=public --add-port=6789/tcp --permanent2.1.9.2. OSD Firewall

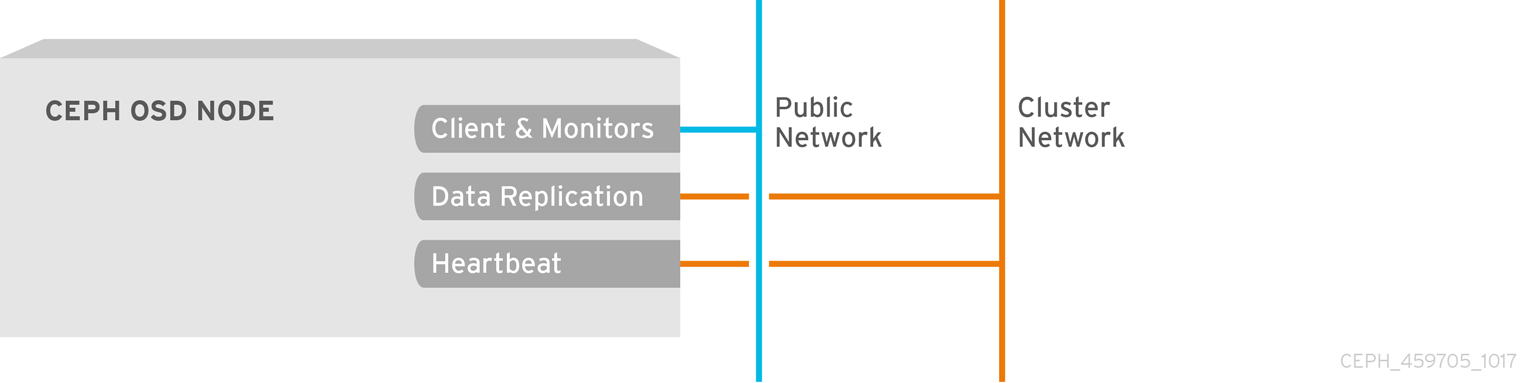

By default, Ceph OSDs bind to the first available ports on a Ceph node beginning at port 6800. Ensure to open at least three ports beginning at port 6800 for each OSD that runs on the host:

- One for talking to clients and monitors (public network).

- One for sending data to other OSDs (cluster network).

- One for sending heartbeat packets (cluster network).

Ports are node-specific. However, you might need to open more ports than the number of ports needed by Ceph daemons running on that Ceph node in the event that processes get restarted and the bound ports do not get released. Consider to open a few additional ports in case a daemon fails and restarts without releasing the port such that the restarted daemon binds to a new port. Also, consider opening the port range of 6800:7300 on each OSD host.

If you set separate public and cluster networks, you must add rules for both the public network and the cluster network, because clients will connect using the public network and other Ceph OSD Daemons will connect using the cluster network.

When you add the rule using the example below, make sure you replace <iface> with the network interface (for example, eth0 or eth1), `<ip-address> with the IP address and <netmask> with the netmask of the public or cluster network. For example:

sudo iptables -A INPUT -i <iface> -m multiport -p tcp -s <ip-address>/<netmask> --dports 6800:6810 -j ACCEPT

sudo iptables -A INPUT -i <iface> -m multiport -p tcp -s <ip-address>/<netmask> --dports 6800:6810 -j ACCEPT

For the firewalld daemon, execute the following commands as root:

firewall-cmd --zone=public --add-port=6800-6810/tcp firewall-cmd --zone=public --add-port=6800-6810/tcp --permanent

# firewall-cmd --zone=public --add-port=6800-6810/tcp

# firewall-cmd --zone=public --add-port=6800-6810/tcp --permanentIf you put the cluster network into another zone, open the ports within that zone as appropriate.

2.2. Ceph Daemons

Ceph has one network configuration requirement that applies to all daemons. The Ceph configuration file must specify the host for each daemon. Ceph no longer requires that a Ceph configuration file specify the monitor IP address and its port.

Some deployment utilities might create a configuration file for you. Do not set these values if the deployment utility does it for you.

The host setting is the short name of the host (that is, not an FQDN). It is not an IP address either. Use the hostname -s command to retrieve the name of the host.

You do not have to set the host IP address for a daemon. If you have a static IP configuration and both public and cluster networks running, the Ceph configuration file might specify the IP address of the host for each daemon. To set a static IP address for a daemon, the following option(s) should appear in the daemon instance sections of the Ceph configuration file.

[osd.0]

public_addr = <host-public-ip-address>

cluster_addr = <host-cluster-ip-address>

[osd.0]

public_addr = <host-public-ip-address>

cluster_addr = <host-cluster-ip-address>One NIC OSD in a Two Network Cluster

Generally, Red Hat does not recommend deploying an OSD host with a single NIC in a cluster with two networks. However, you cam accomplish this by forcing the OSD host to operate on the public network by adding a public addr entry to the [osd.n] section of the Ceph configuration file, where n refers to the number of the OSD with one NIC. Additionally, the public network and cluster network must be able to route traffic to each other, which Red Hat does not recommend for security reasons.

Chapter 3. Monitor Configuration Reference

Understanding how to configure a Ceph monitor is an important part of building a reliable Red Hat Ceph Storage cluster. All clusters have at least one monitor. A monitor configuration usually remains fairly consistent, but you can add, remove or replace a monitor in a cluster.

3.1. Background

Ceph monitors maintain a "master copy" of the cluster map. That means a Ceph client can determine the location of all Ceph monitors and Ceph OSDs just by connecting to one Ceph monitor and retrieving a current cluster map.

Before Ceph clients can read from or write to Ceph OSDs, they must connect to a Ceph monitor first. With a current copy of the cluster map and the CRUSH algorithm, a Ceph client can compute the location for any object. The ability to compute object locations allows a Ceph client to talk directly to Ceph OSDs, which is a very important aspect of Ceph high scalability and performance.

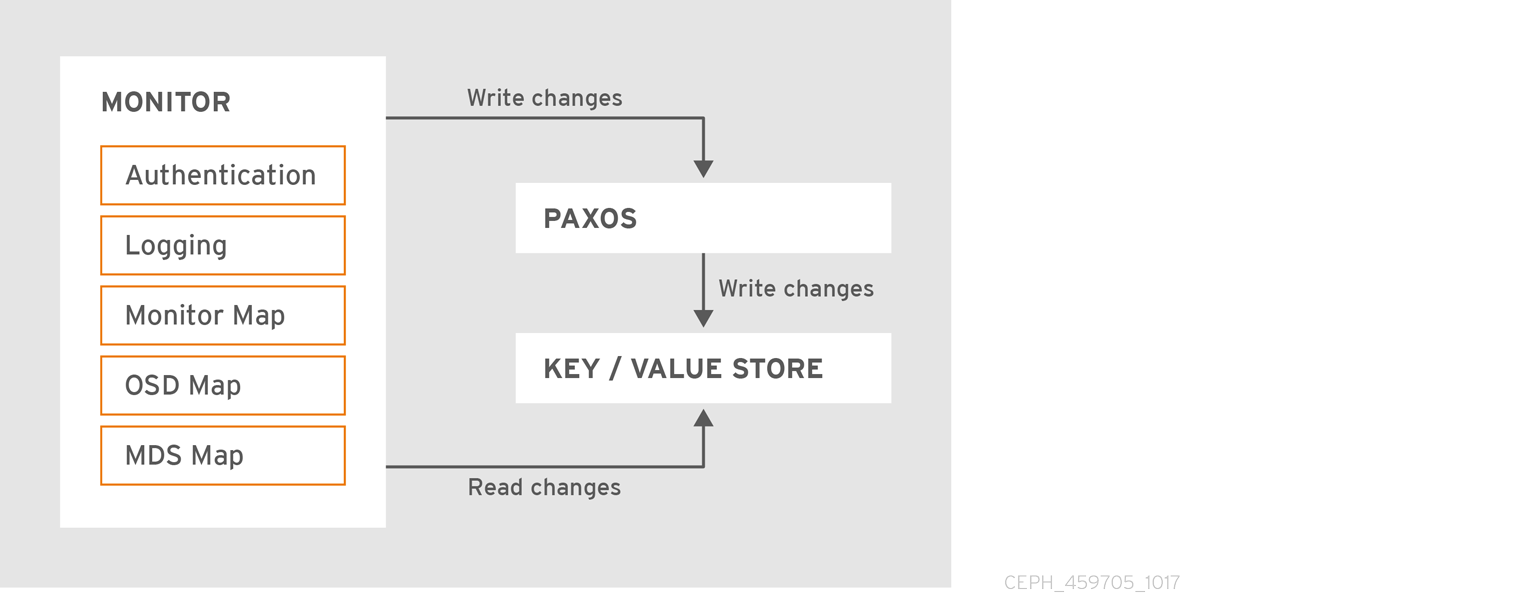

The primary role of the Ceph monitor is to maintain a master copy of the cluster map. Ceph monitors also provide authentication and logging services. Ceph monitors write all changes in the monitor services to a single Paxos instance, and Paxos writes the changes to a key-value store for strong consistency. Ceph monitors can query the most recent version of the cluster map during synchronization operations. Ceph monitors leverage the key-value store’s snapshots and iterators (using the leveldb database) to perform store-wide synchronization.

3.1.1. Cluster Maps

The cluster map is a composite of maps, including the monitor map, the OSD map, and the placement group map. The cluster map tracks a number of important events:

-

Which processes are

inthe Red Hat Ceph Storage cluster -

Which processes that are

inthe Red Hat Ceph Storage cluster areupand running ordown. -

Whether, the placement groups are

activeorinactive, andcleanor in some other state. other details that reflect the current state of the cluster such as:

- the total amount of storage space or

- the amount of storage used.

When there is a significant change in the state of the cluster for example, a Ceph OSD goes down, a placement group falls into a degraded state, and so on, the cluster map gets updated to reflect the current state of the cluster. Additionally, the Ceph monitor also maintains a history of the prior states of the cluster. The monitor map, OSD map, and placement group map each maintain a history of their map versions. Each version is called an epoch.

When operating the Red Hat Ceph Storage cluster, keeping track of these states is an important part of the cluster administration.

3.1.2. Monitor Quorum

A cluster will run sufficiently with a single monitor. However, a single monitor is a single-point-of-failure. To ensure high availability in a production Ceph storage cluster, run Ceph with multiple monitors so that the failure of a single monitor will not cause a failure of the entire cluster.

When a Ceph storage cluster runs multiple Ceph monitors for high availability, Ceph monitors use the Paxos algorithm to establish consensus about the master cluster map. A consensus requires a majority of monitors running to establish a quorum for consensus about the cluster map (for example, 1; 2 out of 3; 3 out of 5; 4 out of 6; and so on).

mon_force_quorum_join

- Description

- Force monitor to join quorum even if it has been previously removed from the map

- Type

- Boolean

- Default

-

False

3.1.3. Consistency

When you add monitor settings to the Ceph configuration file, you need to be aware of some of the architectural aspects of Ceph monitors. Ceph imposes strict consistency requirements for a Ceph monitor when discovering another Ceph monitor within the cluster. Whereas, Ceph clients and other Ceph daemons use the Ceph configuration file to discover monitors, monitors discover each other using the monitor map (monmap), not the Ceph configuration file.

A Ceph monitor always refers to the local copy of the monitor map when discovering other Ceph monitors in the Red Hat Ceph Storage cluster. Using the monitor map instead of the Ceph configuration file avoids errors that could break the cluster, for example, typos in the Ceph configuration file when specifying a monitor address or port). Since monitors use monitor maps for discovery and they share monitor maps with clients and other Ceph daemons, the monitor map provides monitors with a strict guarantee that their consensus is valid.

Strict consistency also applies to updates to the monitor map. As with any other updates on the Ceph monitor, changes to the monitor map always run through a distributed consensus algorithm called Paxos. The Ceph monitors must agree on each update to the monitor map, such as adding or removing a Ceph monitor, to ensure that each monitor in the quorum has the same version of the monitor map. Updates to the monitor map are incremental so that Ceph monitors have the latest agreed upon version, and a set of previous versions. Maintaining a history enables a Ceph monitor that has an older version of the monitor map to catch up with the current state of the Red Hat Ceph Storage cluster.

If Ceph monitors discovered each other through the Ceph configuration file instead of through the monitor map, it would introduce additional risks because the Ceph configuration files are not updated and distributed automatically. Ceph monitors might inadvertently use an older Ceph configuration file, fail to recognize a Ceph monitor, fall out of a quorum, or develop a situation where Paxos is not able to determine the current state of the system accurately.

3.1.4. Bootstrapping Monitors

In most configuration and deployment cases, tools that deploy Ceph might help bootstrap the Ceph monitors by generating a monitor map for you, for example, Red Hat Storage Console or Ansible. A Ceph monitor requires a few explicit settings:

-

File System ID: The

fsidis the unique identifier for your object store. Since you can run multiple clusters on the same hardware, you must specify the unique ID of the object store when bootstrapping a monitor. Using deployment tools for example, Red Hat Storage Console or Ansible will generate a file system identifier, but you can specify thefsidmanually too. -

Monitor ID: A monitor ID is a unique ID assigned to each monitor within the cluster. It is an alphanumeric value, and by convention the identifier usually follows an alphabetical increment (for example,

a,b, and so on). This can be set in the Ceph configuration file (for example,[mon.a],[mon.b], and so on), by a deployment tool, or using thecephcommand. - Keys: The monitor must have secret keys.

3.2. Configuring Monitors

To apply configuration settings to the entire cluster, enter the configuration settings under the [global] section. To apply configuration settings to all monitors in the cluster, enter the configuration settings under the [mon] section. To apply configuration settings to specific monitors, specify the monitor instance (for example, [mon.a]). By convention, monitor instance names use alpha notation.

3.2.1. Minimum Configuration

The bare minimum monitor settings for a Ceph monitor in the Ceph configuration file includes a host name for each monitor if it is not configured for DNS and the monitor address. You can configure these under [mon] or under the entry for a specific monitor.

[mon] mon_host = hostname1,hostname2,hostname3 mon_addr = 10.0.0.10:6789,10.0.0.11:6789,10.0.0.12:6789

[mon]

mon_host = hostname1,hostname2,hostname3

mon_addr = 10.0.0.10:6789,10.0.0.11:6789,10.0.0.12:6789Or

[mon.a] host = hostname1 mon_addr = 10.0.0.10:6789

[mon.a]

host = hostname1

mon_addr = 10.0.0.10:6789

This minimum configuration for monitors assumes that a deployment tool generates the fsid and the mon. key for you.

Once you deploy a Ceph cluster, do not change the IP address of the monitors.

As of RHCS 2.4, Ceph does not require the mon_host when the cluster is configured to look up a monitor via the DNS server. To configure the Ceph cluster for DNS lookup, set the mon_dns_srv_name setting in the Ceph configuration file.

- mon_dns_srv_name

- Description

- The service name used for querying the DNS for the monitor hosts/addresses.

- Type

- String

- Default

-

ceph-mon

Once set, configure the DNS. Create records either IPv4 (A) or IPv6 (AAAA) for the monitors in the DNS zone. For example:

Where: example.com is the DNS search domain.

Then, create the SRV TCP records with the name mon_dns_srv_name configuration setting pointing to the three Monitors. The following example uses the default ceph-mon value.

_ceph-mon._tcp.example.com. 60 IN SRV 10 60 6789 mon1.example.com. _ceph-mon._tcp.example.com. 60 IN SRV 10 60 6789 mon2.example.com. _ceph-mon._tcp.example.com. 60 IN SRV 10 60 6789 mon3.example.com.

_ceph-mon._tcp.example.com. 60 IN SRV 10 60 6789 mon1.example.com.

_ceph-mon._tcp.example.com. 60 IN SRV 10 60 6789 mon2.example.com.

_ceph-mon._tcp.example.com. 60 IN SRV 10 60 6789 mon3.example.com.

Monitors run on port 6789 by default, and their priority and weight are all set to 10 and 60 respectively in the foregoing example.

3.2.2. Cluster ID

Each Red Hat Ceph Storage cluster has a unique identifier (fsid). If specified, it usually appears under the [global] section of the configuration file. Deployment tools usually generate the fsid and store it in the monitor map, so the value may not appear in a configuration file. The fsid makes it possible to run daemons for multiple clusters on the same hardware.

- fsid

- Description

- The cluster ID. One per cluster.

- Type

- UUID

- Required

- Yes.

- Default

- N/A. May be generated by a deployment tool if not specified.

Do not set this value if you use a deployment tool that does it for you.

3.2.3. Initial Members

Red Hat recommends running a production Red Hat Ceph Storage cluster with at least three Ceph monitors to ensure high availability. When you run multiple monitors, you can specify the initial monitors that must be members of the cluster in order to establish a quorum. This may reduce the time it takes for the cluster to come online.

[mon] mon_initial_members = a,b,c

[mon]

mon_initial_members = a,b,c- mon_initial_members

- Description

- The IDs of initial monitors in a cluster during startup. If specified, Ceph requires an odd number of monitors to form an initial quorum (for example, 3).

- Type

- String

- Default

- None

A majority of monitors in your cluster must be able to reach each other in order to establish a quorum. You can decrease the initial number of monitors to establish a quorum with this setting.

3.2.4. Data

Ceph provides a default path where Ceph monitors store data. For optimal performance in a production Red Hat Ceph Storage cluster, Red Hat recommends running Ceph monitors on separate hosts and drives from Ceph OSDs. Ceph monitors call the fsync() function often, which can interfere with Ceph OSD workloads.

Ceph monitors store their data as key-value pairs. Using a data store prevents recovering Ceph monitors from running corrupted versions through Paxos, and it enables multiple modification operations in one single atomic batch, among other advantages.

Red Hat does not recommend changing the default data location. If you modify the default location, make it uniform across Ceph monitors by setting it in the [mon] section of the configuration file.

- mon_data

- Description

- The monitor’s data location.

- Type

- String

- Default

-

/var/lib/ceph/mon/$cluster-$id

- mon_data_size_warn

- Description

-

Ceph issues a

HEALTH_WARNstatus in the cluster log when the monitor’s data store reaches this threshold. The default value is 15GB. - Type

- Integer

- Default

-

15*1024*1024*1024*`

- mon_data_avail_warn

- Description

-

Ceph issues a

HEALTH_WARNstatus in cluster log when the available disk space of the monitor’s data store is lower than or equal to this percentage. - Type

- Integer

- Default

-

30

- mon_data_avail_crit

- Description

-

Ceph issues a

HEALTH_ERRstatus in cluster log when the available disk space of the monitor’s data store is lower or equal to this percentage. - Type

- Integer

- Default

-

5

- mon_warn_on_cache_pools_without_hit_sets

- Description

-

Ceph issues a

HEALTH_WARNstatus in cluster log if a cache pool does not have thehit_set_typeparamater set. See Pool Values for more details. - Type

- Boolean

- Default

- True

- mon_warn_on_crush_straw_calc_version_zero

- Description

-

Ceph issues a

HEALTH_WARNstatus in the cluster log if the CRUSH’sstraw_calc_versionis zero. See CRUSH tunables for details. - Type

- Boolean

- Default

- True

- mon_warn_on_legacy_crush_tunables

- Description

-

Ceph issues a

HEALTH_WARNstatus in the cluster log if CRUSH tunables are too old (older thanmon_min_crush_required_version). - Type

- Boolean

- Default

- True

- mon_crush_min_required_version

- Description

- This setting defines the minimum tunable profile version required by the cluster. See CRUSH tunables for details.

- Type

- String

- Default

-

firefly

- mon_warn_on_osd_down_out_interval_zero

- Description

-

Ceph issues a

HEALTH_WARNstatus in the cluster log if themon_osd_down_out_intervalsetting is zero, because the Leader behaves in a similar manner when thenooutflag is set. Administrators find it easier to troubleshoot a cluster by setting thenooutflag. Ceph issues the warning to ensure administrators know that the setting is zero. - Type

- Boolean

- Default

- True

- mon_cache_target_full_warn_ratio

- Description

-

Ceph issues a warning when between the ratio of

cache_target_fullandtarget_max_object. - Type

- Float

- Default

-

0.66

- mon_health_data_update_interval

- Description

- How often (in seconds) a monitor in the quorum shares its health status with its peers. A negative number disables health updates.

- Type

- Float

- Default

-

60

- mon_health_to_clog

- Description

- This setting enable Ceph to send a health summary to the cluster log periodically.

- Type

- Boolean

- Default

- True

- mon_health_to_clog_tick_interval

- Description

- How often (in seconds) the monitor sends a health summary to the cluster log. A non-positive number disables it. If the current health summary is empty or identical to the last time, the monitor will not send the status to the cluster log.

- Type

- Integer

- Default

- 3600

- mon_health_to_clog_interval

- Description

- How often (in seconds) the monitor sends a health summary to the cluster log. A non-positive number disables it. The monitor will always send the summary to cluster log.

- Type

- Integer

- Default

- 60

3.2.5. Storage Capacity

When a Red Hat Ceph Storage cluster gets close to its maximum capacity (specifies by the mon_osd_full_ratio parameter), Ceph prevents you from writing to or reading from Ceph OSDs as a safety measure to prevent data loss. Therefore, letting a production Red Hat Ceph Storage cluster approach its full ratio is not a good practice, because it sacrifices high availability. The default full ratio is .95, or 95% of capacity. This a very aggressive setting for a test cluster with a small number of OSDs.

When monitoring a cluster, be alert to warnings related to the nearfull ratio. This means that a failure of some OSDs could result in a temporary service disruption if one or more OSDs fails. Consider adding more OSDs to increase storage capacity.

A common scenario for test clusters involves a system administrator removing a Ceph OSD from the Red Hat Ceph Storage cluster to watch the cluster re-balance. Then, removing another Ceph OSD, and so on until the Red Hat Ceph Storage cluster eventually reaches the full ratio and locks up.

Red Hat recommends a bit of capacity planning even with a test cluster. Planning enables you to gauge how much spare capacity you will need in order to maintain high availability. Ideally, you want to plan for a series of Ceph OSD failures where the cluster can recover to an active + clean state without replacing those Ceph OSDs immediately. You can run a cluster in an active + degraded state, but this is not ideal for normal operating conditions.

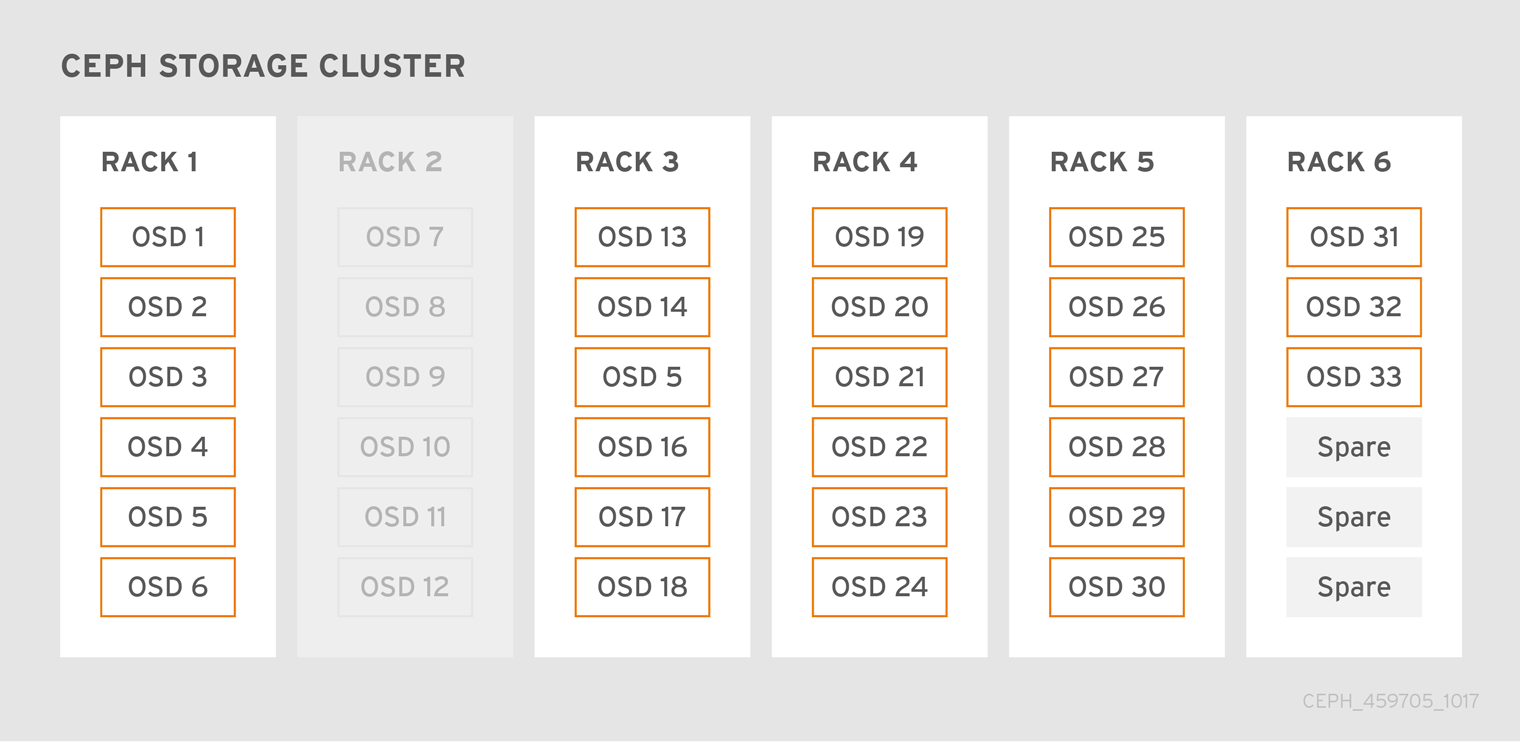

The following diagram depicts a simplistic Red Hat Ceph Storage cluster containing 33 Ceph Nodes with one Ceph OSD per host, each Ceph OSD Daemon reading from and writing to a 3TB drive. So this exemplary Red Hat Ceph Storage cluster has a maximum actual capacity of 99TB. With a mon osd full ratio of 0.95, if the Red Hat Ceph Storage cluster falls to 5 TB of remaining capacity, the cluster will not allow Ceph clients to read and write data. So the Red Hat Ceph Storage cluster’s operating capacity is 95 TB, not 99 TB.

It is normal in such a cluster for one or two OSDs to fail. A less frequent but reasonable scenario involves a rack’s router or power supply failing, which brings down multiple OSDs simultaneously (for example, OSDs 7-12). In such a scenario, you should still strive for a cluster that can remain operational and achieve an active + clean state, even if that means adding a few hosts with additional OSDs in short order. If your capacity utilization is too high, you might not lose data, but you could still sacrifice data availability while resolving an outage within a failure domain if capacity utilization of the cluster exceeds the full ratio. For this reason, Red Hat recommends at least some rough capacity planning.

Identify two numbers for your cluster:

- the number of OSDs

- the total capacity of the cluster

To determine the mean average capacity of an OSD within a cluster, divide the total capacity of the cluster by the number of OSDs in the cluster. Consider multiplying that number by the number of OSDs you expect to fail simultaneously during normal operations (a relatively small number). Finally, multiply the capacity of the cluster by the full ratio to arrive at a maximum operating capacity. Then, subtract the number of amount of data from the OSDs you expect to fail to arrive at a reasonable full ratio. Repeat the foregoing process with a higher number of OSD failures (for example, a rack of OSDs) to arrive at a reasonable number for a near full ratio.

[global] ... mon_osd_full_ratio = .80 mon_osd_nearfull_ratio = .70

[global]

...

mon_osd_full_ratio = .80

mon_osd_nearfull_ratio = .70- mon_osd_full_ratio

- Description

-

The percentage of disk space used before an OSD is considered

full. - Type

- Float:

- Default

-

.95

- mon_osd_nearfull_ratio

- Description

-

The percentage of disk space used before an OSD is considered

nearfull. - Type

- Float

- Default

-

.85

If some OSDs are nearfull, but others have plenty of capacity, you might have a problem with the CRUSH weight for the nearfull OSDs.

3.2.6. Heartbeat

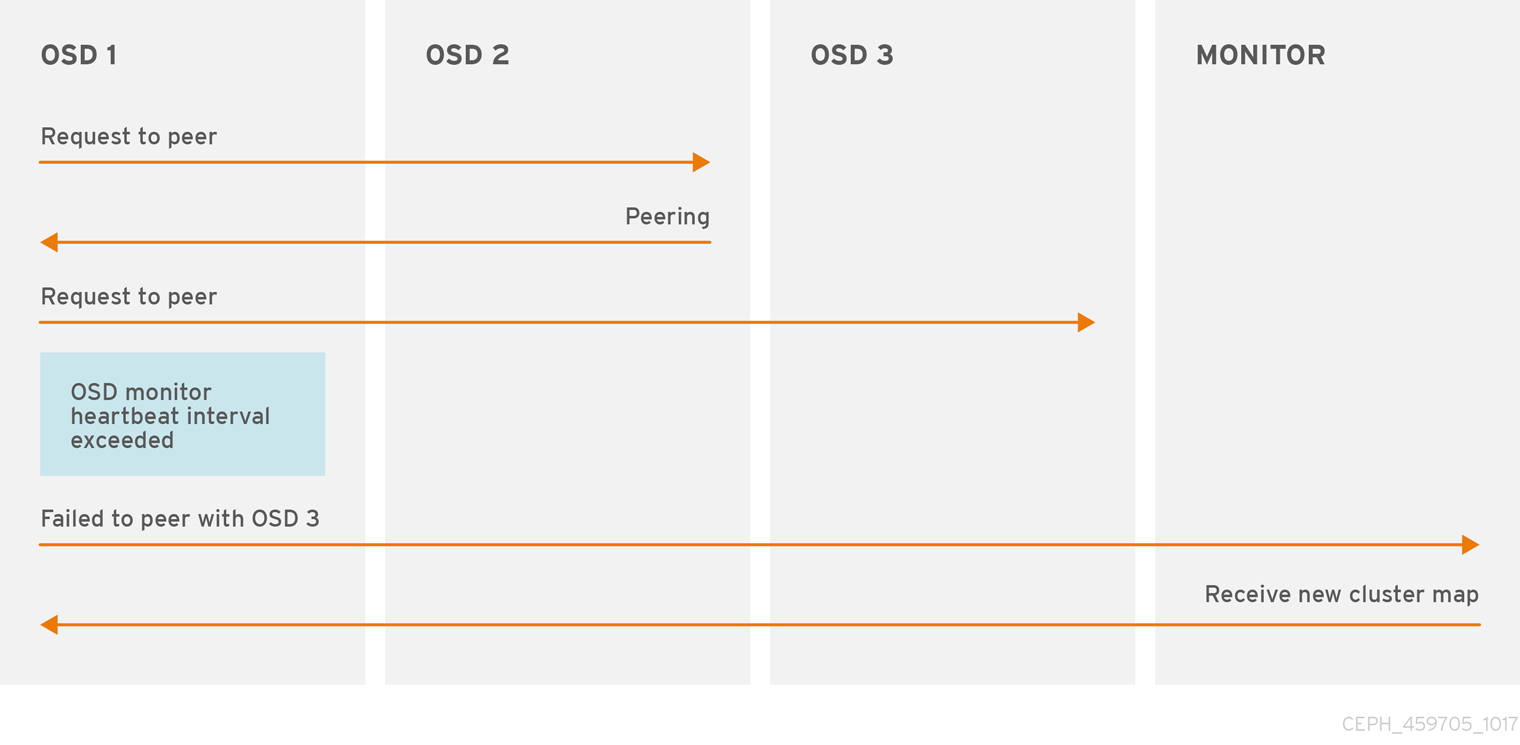

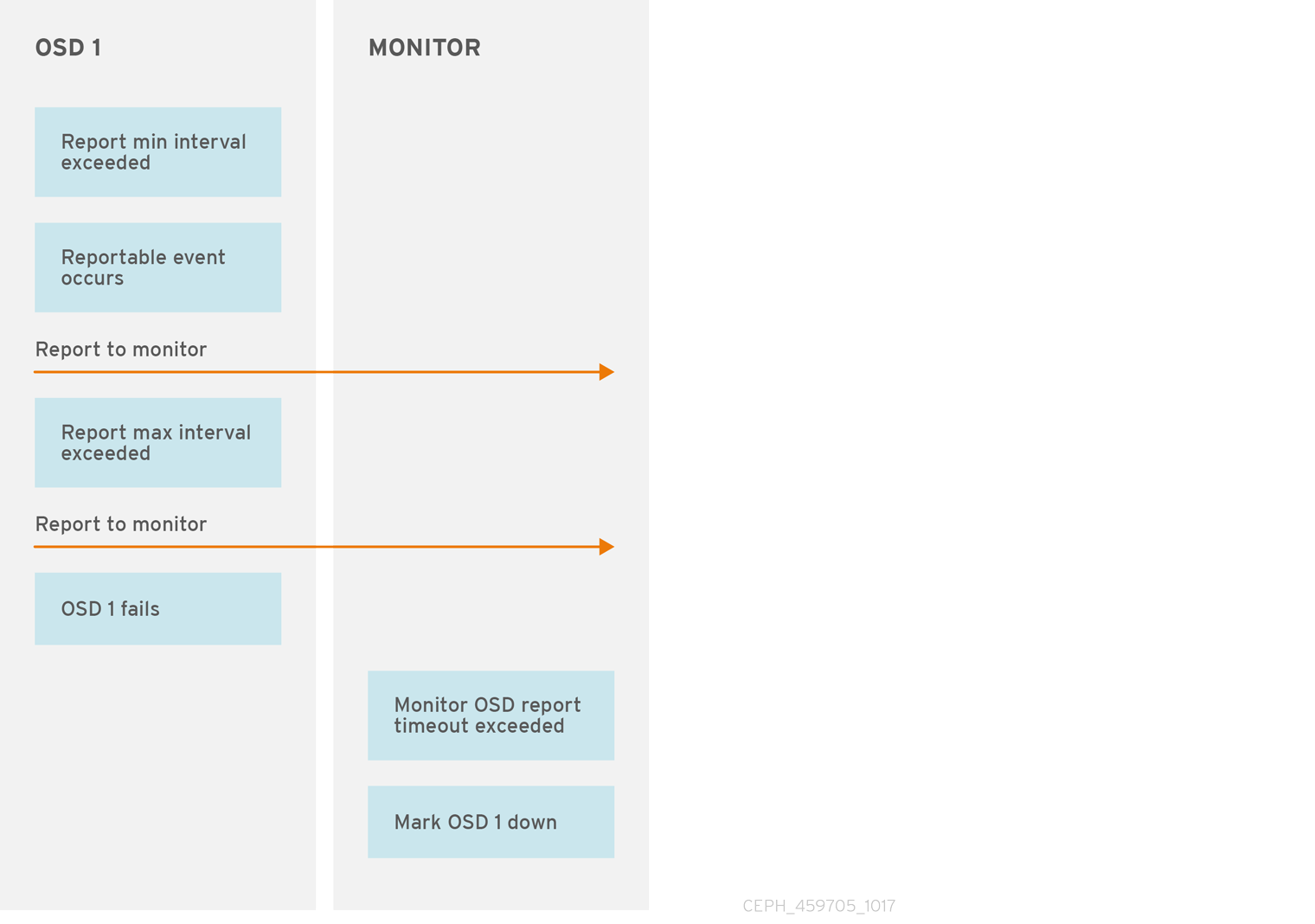

Ceph monitors know about the cluster by requiring reports from each OSD, and by receiving reports from OSDs about the status of their neighboring OSDs. Ceph provides reasonable default settings for interaction between monitor and OSD, however, you can modify them as needed.

3.2.7. Monitor Store Synchronization

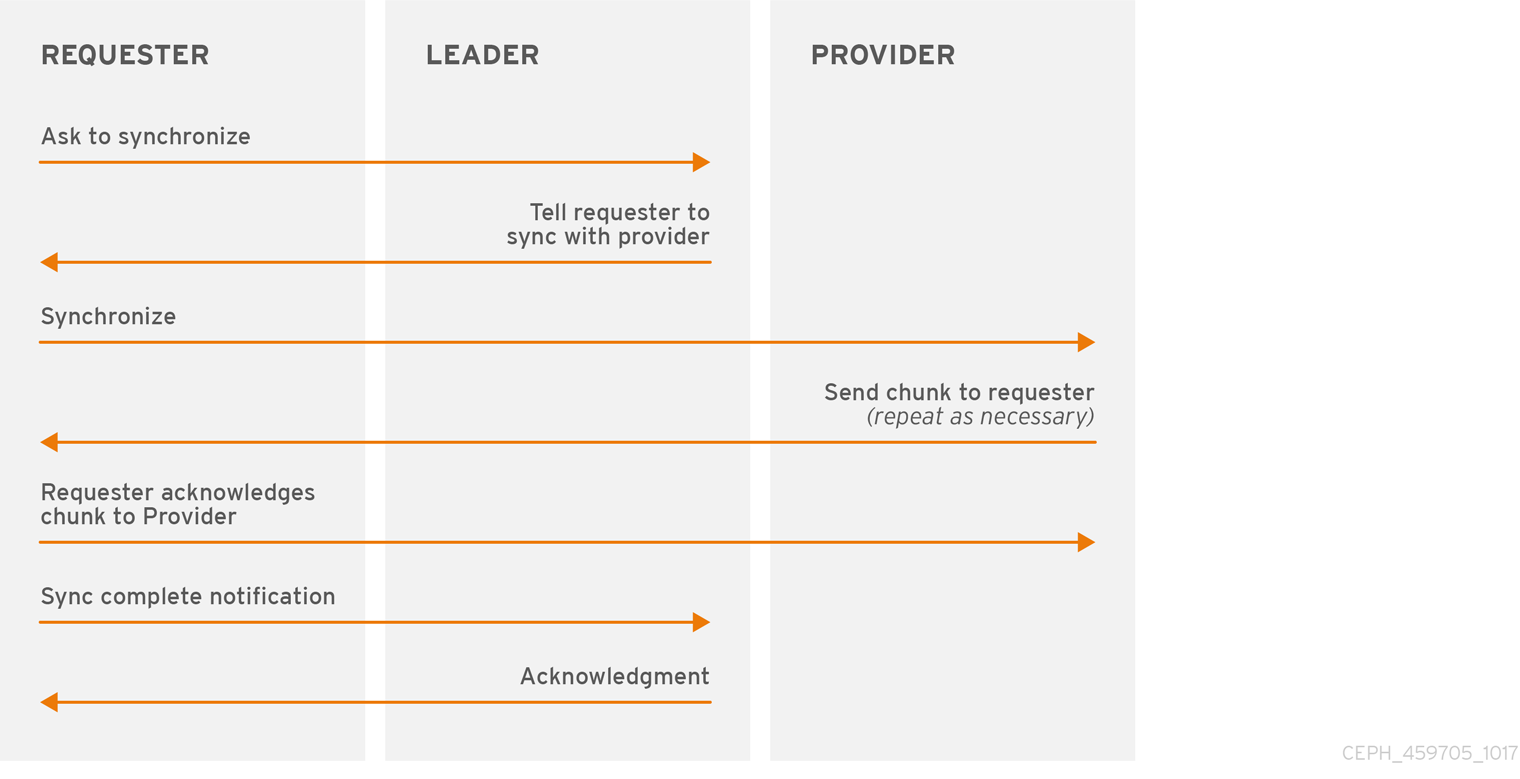

When you run a production cluster with multiple monitors which is recommended, each monitor checks to see if a neighboring monitor has a more recent version of the cluster map. For example, a map in a neighboring monitor with one or more epoch numbers higher than the most current epoch in the map of the instant monitor. Periodically, one monitor in the cluster might fall behind the other monitors to the point where it must leave the quorum, synchronize to retrieve the most current information about the cluster, and then rejoin the quorum. For the purposes of synchronization, monitors can assume one of three roles:

- Leader: The Leader is the first monitor to achieve the most recent Paxos version of the cluster map.

- Provider: The Provider is a monitor that has the most recent version of the cluster map, but was not the first to achieve the most recent version.

- Requester: The Requester is a monitor that has fallen behind the leader and must synchronize in order to retrieve the most recent information about the cluster before it can rejoin the quorum.

These roles enable a leader to delegate synchronization duties to a provider, which prevents synchronization requests from overloading the leader and improving performance. In the following diagram, the requester has learned that it has fallen behind the other monitors. The requester asks the leader to synchronize, and the leader tells the requester to synchronize with a provider.

Synchronization always occurs when a new monitor joins the cluster. During runtime operations, monitors can receive updates to the cluster map at different times. This means the leader and provider roles may migrate from one monitor to another. If this happens while synchronizing (for example, a provider falls behind the leader), the provider can terminate synchronization with a requester.

Once synchronization is complete, Ceph requires trimming across the cluster. Trimming requires that the placement groups are active + clean.

- mon_sync_trim_timeout

- Description, Type

- Double

- Default

-

30.0

- mon_sync_heartbeat_timeout

- Description, Type

- Double

- Default

-

30.0

- mon_sync_heartbeat_interval

- Description, Type

- Double

- Default

-

5.0

- mon_sync_backoff_timeout

- Description, Type

- Double

- Default

-

30.0

- mon_sync_timeout

- Description

- Number of seconds the monitor will wait for the next update message from its sync provider before it gives up and bootstraps again.

- Type

- Double

- Default

-

30.0

- mon_sync_max_retries

- Description, Type

- Integer

- Default

-

5

- mon_sync_max_payload_size

- Description

- The maximum size for a sync payload (in bytes).

- Type

- 32-bit Integer

- Default

-

1045676

- paxos_max_join_drift

- Description

- The maximum Paxos iterations before we must first sync the monitor data stores. When a monitor finds that its peer is too far ahead of it, it will first sync with data stores before moving on.

- Type

- Integer

- Default

-

10

- paxos_stash_full_interval

- Description

-

How often (in commits) to stash a full copy of the PaxosService state. Current this setting only affects

mds,mon,authandmgrPaxosServices. - Type

- Integer

- Default

- 25

- paxos_propose_interval

- Description

- Gather updates for this time interval before proposing a map update.

- Type

- Double

- Default

-

1.0

- paxos_min

- Description

- The minimum number of paxos states to keep around

- Type

- Integer

- Default

- 500

- paxos_min_wait

- Description

- The minimum amount of time to gather updates after a period of inactivity.

- Type

- Double

- Default

-

0.05

- paxos_trim_min

- Description

- Number of extra proposals tolerated before trimming

- Type

- Integer

- Default

- 250

- paxos_trim_max

- Description

- The maximum number of extra proposals to trim at a time

- Type

- Integer

- Default

- 500

- paxos_service_trim_min

- Description

- The minimum amount of versions to trigger a trim (0 disables it)

- Type

- Integer

- Default

- 250

- paxos_service_trim_max

- Description

- The maximum amount of versions to trim during a single proposal (0 disables it)

- Type

- Integer

- Default

- 500

- mon_max_log_epochs

- Description

- The maximum amount of log epochs to trim during a single proposal

- Type

- Integer

- Default

- 500

- mon_max_pgmap_epochs

- Description

- The maximum amount of pgmap epochs to trim during a single proposal

- Type

- Integer

- Default

- 500

- mon_mds_force_trim_to

- Description

- Force monitor to trim mdsmaps to this point (0 disables it. dangerous, use with care)

- Type

- Integer

- Default

- 0

- mon_osd_force_trim_to

- Description

- Force monitor to trim osdmaps to this point, even if there is PGs not clean at the specified epoch (0 disables it. dangerous, use with care)

- Type

- Integer

- Default

- 0

- mon_osd_cache_size

- Description

- The size of osdmaps cache, not to rely on underlying store’s cache

- Type

- Integer

- Default

- 10

- mon_election_timeout

- Description

- On election proposer, maximum waiting time for all ACKs in seconds.

- Type

- Float

- Default

-

5

- mon_lease

- Description

- The length (in seconds) of the lease on the monitor’s versions.

- Type

- Float

- Default

-

5

- mon_lease_renew_interval_factor

- Description

-

mon lease*mon lease renew interval factorwill be the interval for the Leader to renew the other monitor’s leases. The factor should be less than1.0. - Type

- Float

- Default

-

0.6

- mon_lease_ack_timeout_factor

- Description

-

The Leader will wait

mon lease*mon lease ack timeout factorfor the Providers to acknowledge the lease extension. - Type

- Float

- Default

-

2.0

- mon_accept_timeout_factor

- Description

-

The Leader will wait

mon lease*mon accept timeout factorfor the Requester(s) to accept a Paxos update. It is also used during the Paxos recovery phase for similar purposes. - Type

- Float

- Default

-

2.0

- mon_min_osdmap_epochs

- Description

- Minimum number of OSD map epochs to keep at all times.

- Type

- 32-bit Integer

- Default

-

500

- mon_max_pgmap_epochs

- Description

- Maximum number of PG map epochs the monitor should keep.

- Type

- 32-bit Integer

- Default

-

500

- mon_max_log_epochs

- Description

- Maximum number of Log epochs the monitor should keep.

- Type

- 32-bit Integer

- Default

-

500

3.2.8. Clock

Ceph daemons pass critical messages to each other, which must be processed before daemons reach a timeout threshold. If the clocks in Ceph monitors are not synchronized, it can lead to a number of anomalies. For example:

- Daemons ignoring received messages (for example, timestamps outdated).

- Timeouts triggered too soon or late when a message was not received in time.

See Monitor Store Synchronization for details.

Install NTP on the Ceph monitor hosts to ensure that the monitor cluster operates with synchronized clocks.

Clock drift may still be noticeable with NTP even though the discrepancy is not yet harmful. Ceph clock drift and clock skew warnings can get triggered even though NTP maintains a reasonable level of synchronization. Increasing your clock drift may be tolerable under such circumstances. However, a number of factors such as workload, network latency, configuring overrides to default timeouts and the Monitor Store Synchronization settings may influence the level of acceptable clock drift without compromising Paxos guarantees.

Ceph provides the following tunable options to allow you to find acceptable values.

- clock_offset

- Description

-

How much to offset the system clock. See

Clock.ccfor details. - Type

- Double

- Default

-

0

- mon_tick_interval

- Description

- A monitor’s tick interval in seconds.

- Type

- 32-bit Integer

- Default

-

5

- mon_clock_drift_allowed

- Description

- The clock drift in seconds allowed between monitors.

- Type

- Float

- Default

-

.050

- mon_clock_drift_warn_backoff

- Description

- Exponential backoff for clock drift warnings.

- Type

- Float

- Default

-

5

- mon_timecheck_interval

- Description

- The time check interval (clock drift check) in seconds for the leader.

- Type

- Float

- Default

-

300.0

- mon_timecheck_skew_interval

- Description

- The time check interval (clock drift check) in seconds when in the presence of a skew in seconds for the Leader.

- Type

- Float

- Default

-

30.0

3.2.9. Client

- mon_client_hunt_interval

- Description

-

The client will try a new monitor every

Nseconds until it establishes a connection. - Type

- Double

- Default

-

3.0

- mon_client_ping_interval

- Description

-

The client will ping the monitor every

Nseconds. - Type

- Double

- Default

-

10.0

- mon_client_max_log_entries_per_message

- Description

- The maximum number of log entries a monitor will generate per client message.

- Type

- Integer

- Default

-

1000

- mon_client_bytes

- Description

- The amount of client message data allowed in memory (in bytes).

- Type

- 64-bit Integer Unsigned

- Default

-

100ul << 20

3.3. Miscellaneous

- mon_max_osd

- Description

- The maximum number of OSDs allowed in the cluster.

- Type

- 32-bit Integer

- Default

-

10000

- mon_globalid_prealloc

- Description

- The number of global IDs to pre-allocate for clients and daemons in the cluster.

- Type

- 32-bit Integer

- Default

-

100

- mon_sync_fs_threshold

- Description

-

Synchronize with the filesystem when writing the specified number of objects. Set it to

0to disable it. - Type

- 32-bit Integer

- Default

-

5

- mon_subscribe_interval

- Description

- The refresh interval (in seconds) for subscriptions. The subscription mechanism enables obtaining the cluster maps and log information.

- Type

- Double

- Default

-

300

- mon_stat_smooth_intervals

- Description

-

Ceph will smooth statistics over the last

NPG maps. - Type

- Integer

- Default

-

2

- mon_probe_timeout

- Description

- Number of seconds the monitor will wait to find peers before bootstrapping.

- Type

- Double

- Default

-

2.0

- mon_daemon_bytes

- Description

- The message memory cap for metadata server and OSD messages (in bytes).

- Type

- 64-bit Integer Unsigned

- Default

-

400ul << 20

- mon_max_log_entries_per_event

- Description

- The maximum number of log entries per event.

- Type

- Integer

- Default

-

4096

- mon_osd_prime_pg_temp

- Description

-

Enables or disable priming the PGMap with the previous OSDs when an out OSD comes back into the cluster. With the

truesetting the clients will continue to use the previous OSDs until the newly in OSDs as that PG peered. - Type

- Boolean

- Default

-

true

- mon_osd_prime_pg_temp_max_time

- Description

- How much time in seconds the monitor should spend trying to prime the PGMap when an out OSD comes back into the cluster.

- Type

- Float

- Default

-

0.5

- mon_osd_prime_pg_temp_max_time_estimate

- Description

- Maximum estimate of time spent on each PG before we prime all PGs in parallel.

- Type

- Float

- Default

-

0.25

- mon_osd_allow_primary_affinity

- Description

-

allow

primary_affinityto be set in the osdmap. - Type

- Boolean

- Default

- False

- mon_osd_pool_ec_fast_read

- Description

-

Whether turn on fast read on the pool or not. It will be used as the default setting of newly created erasure pools if

fast_readis not specified at create time. - Type

- Boolean

- Default

- False

- mon_mds_skip_sanity

- Description

- Skip safety assertions on FSMap (in case of bugs where we want to continue anyway). Monitor terminates if the FSMap sanity check fails, but we can disable it by enabling this option.

- Type

- Boolean

- Default

- False

- mon_max_mdsmap_epochs

- Description

- The maximum amount of mdsmap epochs to trim during a single proposal.

- Type

- Integer

- Default

- 500

- mon_config_key_max_entry_size

- Description

- The maximum size of config-key entry (in bytes)

- Type

- Integer

- Default

- 4096

- mon_scrub_interval

- Description

- How often (in seconds) the monitor scrub its store by comparing the stored checksums with the computed ones of all the stored keys.

- Type

- Integer

- Default

- 3600*24

- mon_scrub_max_keys

- Description

- The maximum number of keys to scrub each time.

- Type

- Integer

- Default

- 100

- mon_compact_on_start

- Description

-

Compact the database used as Ceph Monitor store on

ceph-monstart. A manual compaction helps to shrink the monitor database and improve the performance of it if the regular compaction fails to work. - Type

- Boolean

- Default

- False

- mon_compact_on_bootstrap

- Description

- Compact the database used as Ceph Monitor store on on bootstrap. Monitor starts probing each other for creating a quorum after bootstrap. If it times out before joining the quorum, it will start over and bootstrap itself again.

- Type

- Boolean

- Default

- False

- mon_compact_on_trim

- Description

- Compact a certain prefix (including paxos) when we trim its old states.

- Type

- Boolean

- Default

- True

- mon_cpu_threads

- Description

- Number of threads for performing CPU intensive work on monitor.

- Type

- Boolean

- Default

- True

- mon_osd_mapping_pgs_per_chunk

- Description

- We calculate the mapping from placement group to OSDs in chunks. This option specifies the number of placement groups per chunk.

- Type

- Integer

- Default

- 4096

- mon_osd_max_split_count

- Description

-

Largest number of PGs per "involved" OSD to let split create. When we increase the

pg_numof a pool, the placement groups will be splitted on all OSDs serving that pool. We want to avoid extreme multipliers on PG splits. - Type

- Integer

- Default

- 300

- mon_session_timeout

- Description

- Monitor will terminate inactive sessions stay idle over this time limit.

- Type

- Integer

- Default

- 300

- rados_mon_op_timeout

- Description

- Number of seconds that RADOS waits for a response from the Ceph Monitor before returning an error from a RADOS operation. A value of 0 means no limit.

- Type

- Double

- Default

- 0

Chapter 4. Cephx Configuration Reference

The cephx protocol is enabled by default. Cryptographic authentication has some computational costs, though they are generally quite low. If the network environment connecting a client and server hosts is very safe and you cannot afford authentication, you can disable it. However, Red Hat recommends using authentication.

If you disable authentication, you are at risk of a man-in-the-middle attack altering client and server messages, which could lead to significant security issues.

4.1. Manual

When you deploy a cluster manually, you have to bootstrap the monitor manually and create the client.admin user and keyring. To deploy Ceph manually, see our Knowledgebase article. The steps for monitor bootstrapping are the logical steps you must perform when using third party deployment tools like Chef, Puppet, Juju, and so on.

4.2. Enabling and Disabling Cephx

Enabling Cephx requires that you have deployed keys for your monitors and OSDs. If you are simply toggling Cephx on / off, you do not have to repeat the bootstrapping procedures.

4.2.1. Enabling Cephx

When cephx is enabled, Ceph will look for the keyring in the default search path, which includes /etc/ceph/$cluster.$name.keyring. You can override this location by adding a keyring option in the [global] section of the Ceph configuration file, but this is not recommended.

Execute the following procedures to enable cephx on a cluster with authentication disabled. If you or your deployment utility have already generated the keys, you may skip the steps related to generating keys.

Create a

client.adminkey, and save a copy of the key for your client host:ceph auth get-or-create client.admin mon 'allow *' osd 'allow *' -o /etc/ceph/ceph.client.admin.keyring

ceph auth get-or-create client.admin mon 'allow *' osd 'allow *' -o /etc/ceph/ceph.client.admin.keyringCopy to Clipboard Copied! Toggle word wrap Toggle overflow WarningThis will erase the contents of any existing

/etc/ceph/client.admin.keyringfile. Do not perform this step if a deployment tool has already done it for you.Create a keyring for the monitor cluster and generate a monitor secret key:

ceph-authtool --create-keyring /tmp/ceph.mon.keyring --gen-key -n mon. --cap mon 'allow *'

ceph-authtool --create-keyring /tmp/ceph.mon.keyring --gen-key -n mon. --cap mon 'allow *'Copy to Clipboard Copied! Toggle word wrap Toggle overflow Copy the monitor keyring into a

ceph.mon.keyringfile in every monitormon datadirectory. For example, to copy it tomon.ain clusterceph, use the following:cp /tmp/ceph.mon.keyring /var/lib/ceph/mon/ceph-a/keyring

cp /tmp/ceph.mon.keyring /var/lib/ceph/mon/ceph-a/keyringCopy to Clipboard Copied! Toggle word wrap Toggle overflow Generate a secret key for every OSD, where

{$id}is the OSD number:ceph auth get-or-create osd.{$id} mon 'allow rwx' osd 'allow *' -o /var/lib/ceph/osd/ceph-{$id}/keyringceph auth get-or-create osd.{$id} mon 'allow rwx' osd 'allow *' -o /var/lib/ceph/osd/ceph-{$id}/keyringCopy to Clipboard Copied! Toggle word wrap Toggle overflow By default the

cephxauthentication protocol is enabled.NoteIf the

cephxauthentication protocol was disabled previously by setting the authentication options tonone, then by removing the following lines under the[global]section in the Ceph configuration file (/etc/ceph/ceph.conf) will reenable thecephxauthentication protocol:auth_cluster_required = none auth_service_required = none auth_client_required = none

auth_cluster_required = none auth_service_required = none auth_client_required = noneCopy to Clipboard Copied! Toggle word wrap Toggle overflow Start or restart the Ceph cluster.

ImportantEnabling

cephxrequires downtime because the cluster needs to be completely restarted, or it needs to be shut down and then started while client I/O is disabled.These flags need to be set before restarting or shutting down the storage cluster:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Once

cephxis enabled and all PGs are active and clean, unset the flags:Copy to Clipboard Copied! Toggle word wrap Toggle overflow

4.2.2. Disabling Cephx

The following procedure describes how to disable Cephx. If your cluster environment is relatively safe, you can offset the computation expense of running authentication. Red Hat recommends enabling authentication. However, it may be easier during setup or troubleshooting to temporarily disable authentication.

Disable

cephxauthentication by setting the following options in the[global]section of the Ceph configuration file:auth_cluster_required = none auth_service_required = none auth_client_required = none

auth_cluster_required = none auth_service_required = none auth_client_required = noneCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Start or restart the Ceph cluster.

4.3. Configuration Settings

4.3.1. Enablement

- auth_cluster_required

- Description

-

If enabled, the Red Hat Ceph Storage cluster daemons (that is,

ceph-monandceph-osd) must authenticate with each other. Valid settings arecephxornone. - Type

- String

- Required

- No

- Default

-

cephx.

- auth_service_required

- Description

-

If enabled, the Red Hat Ceph Storage cluster daemons require Ceph clients to authenticate with the Red Hat Ceph Storage cluster in order to access Ceph services. Valid settings are

cephxornone. - Type

- String

- Required

- No

- Default

-

cephx.

- auth_client_required

- Description

-

If enabled, the Ceph client requires the Red Hat Ceph Storage cluster to authenticate with the Ceph client. Valid settings are

cephxornone. - Type

- String

- Required

- No

- Default

-

cephx.

4.3.2. Keys

When you run Ceph with authentication enabled, the ceph administrative commands and Ceph clients require authentication keys to access the Ceph storage cluster.