Installation Guide

Installing Red Hat Ceph Storage on Red Hat Enterprise Linux

Abstract

Chapter 1. What is Red Hat Ceph Storage?

Red Hat Ceph Storage is a scalable, open, software-defined storage platform that combines an enterprise-hardened version of the Ceph storage system with a Ceph management platform, deployment utilities, and support services. Red Hat Ceph Storage is designed for cloud infrastructure and web-scale object storage. Red Hat Ceph Storage clusters consist of the following types of nodes:

Red Hat Ceph Storage Ansible administration

The Ansible administration node replaces the traditional Ceph administration node used in previous versions of Red Hat Ceph Storage. The Ansible administration node provides the following functions:

- Centralized storage cluster management.

- The Ceph configuration files and keys.

- Optionally, local repositories for installing Ceph on nodes that cannot access the Internet for security reasons.

Ceph Monitor

Each Ceph Monitor node runs the ceph-mon daemon, which maintains a master copy of the storage cluster map. The storage cluster map includes the storage cluster topology. A client connecting to the Ceph storage cluster retrieves the current copy of the storage cluster map from the Ceph Monitor, which enables the client to read from and write data to the storage cluster.

The storage cluster can run with only one Ceph Monitor; however, to ensure high availability in a production storage cluster, Red Hat will only support deployments with at least three Ceph Monitor nodes. Red Hat recommends deploying a total of 5 Ceph Monitors for storage clusters exceeding 750 Ceph OSDs.

Ceph OSD

Each Ceph Object Storage Device (OSD) node runs the ceph-osd daemon, which interacts with logical disks attached to the node. The storage cluster stores data on these Ceph OSD nodes.

Ceph can run with very few OSD nodes (the default is three), but production storage clusters realize better performance beginning at modest scales, for example, 50 Ceph OSDs in a storage cluster. Ideally, a Ceph storage cluster has multiple OSD nodes, which makes it possible to isolate failure domains by configuring the CRUSH map accordingly.

Ceph MDS

Each Ceph Metadata Server (MDS) node runs the ceph-mds daemon, which manages metadata related to files stored on the Ceph File System (CephFS). The Ceph MDS daemon also coordinates access to the shared storage cluster.

Ceph Object Gateway

Each Ceph Object Gateway node runs the ceph-radosgw daemon, an object storage interface built on top of librados that provides applications with a RESTful access point to the Ceph storage cluster. The Ceph Object Gateway supports two interfaces:

S3

Provides object storage functionality with an interface that is compatible with a large subset of the Amazon S3 RESTful API.

Swift

Provides object storage functionality with an interface that is compatible with a large subset of the OpenStack Swift API.

Additional Resources

- For details on the Ceph architecture, see the Red Hat Ceph Storage Architecture Guide.

- For the minimum hardware recommendations, see the Red Hat Ceph Storage Hardware Selection Guide.

Chapter 2. Red Hat Ceph Storage considerations and recommendations

As a storage administrator, you should have a basic understanding of what to consider before running a Red Hat Ceph Storage cluster, such as the hardware and network requirements, the types of workloads that work well with a Red Hat Ceph Storage cluster, and Red Hat’s recommendations. Red Hat Ceph Storage can be used for different workloads based on a particular business need or set of requirements. Doing the necessary planning before installing Red Hat Ceph Storage is critical to running a Ceph storage cluster efficiently and meeting the business requirements.

Want help with planning a Red Hat Ceph Storage cluster for a specific use case? Please contact your Red Hat representative for assistance.

2.1. Prerequisites

- Time to understand, consider, and plan a storage solution.

2.2. Basic Red Hat Ceph Storage considerations

The first consideration for using Red Hat Ceph Storage is developing a storage strategy for the data. A storage strategy is a method of storing data that serves a particular use case. If you need to store volumes and images for a cloud platform like OpenStack, you can choose to store data on faster Serial Attached SCSI (SAS) drives with Solid State Drives (SSD) for journals. By contrast, if you need to store object data for an S3- or Swift-compliant gateway, you can choose to use something more economical, like traditional Serial Advanced Technology Attachment (SATA) drives. Red Hat Ceph Storage can accommodate both scenarios in the same storage cluster, but you need a means of providing the fast storage strategy to the cloud platform, and a means of providing more traditional storage for your object store.

One of the most important steps in a successful Ceph deployment is identifying a price-to-performance profile suitable for the storage cluster’s use case and workload. It is important to choose the right hardware for the use case. For example, choosing IOPS-optimized hardware for a cold storage application increases hardware costs unnecessarily, whereas choosing capacity-optimized hardware for its more attractive price point in an IOPS-intensive workload will likely lead to unhappy users complaining about slow performance.

Red Hat Ceph Storage can support multiple storage strategies. Use cases, cost versus benefit performance tradeoffs, and data durability are the primary considerations that help develop a sound storage strategy.

Use Cases

Ceph provides massive storage capacity, and it supports numerous use cases, such as:

- The Ceph Block Device client is a leading storage backend for cloud platforms that provides limitless storage for volumes and images with high performance features like copy-on-write cloning.

- The Ceph Object Gateway client is a leading storage backend for cloud platforms that provides RESTful S3-compliant and Swift-compliant object storage for objects like audio, bitmap, video, and other data.

- The Ceph File System for traditional file storage.

Cost vs. Benefit of Performance

Faster is better. Bigger is better. High durability is better. However, there is a price for each superlative quality, and a corresponding cost versus benefit tradeoff. Consider the following use cases from a performance perspective:

- SSDs can provide very fast storage for relatively small amounts of data and journaling. Storing a database or object index can benefit from a pool of very fast SSDs, but proves too expensive for other data.

- SAS drives with SSD journaling provide fast performance at an economical price for volumes and images.

- SATA drives without SSD journaling provide cheap storage with lower overall performance.

When you create a CRUSH hierarchy of OSDs, you need to consider the use case and an acceptable cost versus performance tradeoff.

Data Durability

In large scale storage clusters, hardware failure is an expectation, not an exception. However, data loss and service interruption remain unacceptable. For this reason, data durability is very important. Ceph addresses data durability with multiple replica copies of an object or with erasure coding and multiple coding chunks. Multiple copies or multiple coding chunks present an additional cost versus benefit tradeoff: it is cheaper to store fewer copies or coding chunks, but it can lead to the inability to service write requests in a degraded state. Generally, one object with two additional copies, or two coding chunks can allow a storage cluster to service writes in a degraded state while the storage cluster recovers.

Replication stores one or more redundant copies of the data across failure domains in case of a hardware failure. However, redundant copies of data can become expensive at scale. For example, to store 1 petabyte of data with triple replication would require a cluster with at least 3 petabytes of storage capacity.

Erasure coding stores data as data chunks and coding chunks. In the event of a lost data chunk, erasure coding can recover the lost data chunk with the remaining data chunks and coding chunks. Erasure coding is substantially more economical than replication. For example, using erasure coding with 8 data chunks and 3 coding chunks provides the same redundancy as 3 copies of the data. However, such an encoding scheme uses approximately 1.5x of the initial data stored compared to 3x with replication.
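The capacity arithmetic behind replication and erasure coding can be sketched as follows. This is a minimal illustration, not part of Ceph: the function names are hypothetical, and the sketch assumes that raw overhead is simply the number of copies for replication and (data chunks + coding chunks) / data chunks for erasure coding, ignoring any other on-disk overhead.

```python
def replication_overhead(copies: int) -> float:
    """Raw capacity consumed per unit of user data with N full copies."""
    if copies < 1:
        raise ValueError("copies must be at least 1")
    return float(copies)


def erasure_coding_overhead(data_chunks: int, coding_chunks: int) -> float:
    """Raw capacity consumed per unit of user data with k data + m coding chunks."""
    if data_chunks < 1 or coding_chunks < 0:
        raise ValueError("need at least one data chunk and no negative coding chunks")
    return (data_chunks + coding_chunks) / data_chunks


def raw_capacity(usable: float, overhead: float) -> float:
    """Raw capacity needed to hold `usable` units of user data."""
    return usable * overhead


if __name__ == "__main__":
    # 1 PB of user data with triple replication
    print(raw_capacity(1.0, replication_overhead(3)))
    # 1 PB of user data with 8 data chunks and 3 coding chunks
    print(raw_capacity(1.0, erasure_coding_overhead(8, 3)))
```

Under these assumptions, triple replication needs 3 PB of raw capacity for 1 PB of data, while the 8+3 erasure coding profile works out to a factor of 1.375, in the neighborhood of the approximate 1.5x figure quoted above.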

The CRUSH algorithm aids this process by ensuring that Ceph stores additional copies or coding chunks in different locations within the storage cluster. This ensures that the failure of a single storage device or node does not lead to a loss of all of the copies or coding chunks necessary to preclude data loss. You can plan a storage strategy with cost versus benefit tradeoffs, and data durability in mind, then present it to a Ceph client as a storage pool.

ONLY the data storage pool can use erasure coding. Pools storing service data and bucket indexes use replication.

Ceph’s object copies or coding chunks make RAID solutions obsolete. Do not use RAID, because Ceph already handles data durability, a degraded RAID has a negative impact on performance, and recovering data using RAID is substantially slower than using deep copies or erasure coding chunks.

2.3. Red Hat Ceph Storage workload considerations

One of the key benefits of a Ceph storage cluster is the ability to support different types of workloads within the same storage cluster using performance domains. Different hardware configurations can be associated with each performance domain. Storage administrators can deploy storage pools on the appropriate performance domain, providing applications with storage tailored to specific performance and cost profiles. Selecting appropriately sized and optimized servers for these performance domains is an essential aspect of designing a Red Hat Ceph Storage cluster.

To the Ceph client interface that reads and writes data, a Ceph storage cluster appears as a simple pool where the client stores data. However, the storage cluster performs many complex operations in a manner that is completely transparent to the client interface. Ceph clients and Ceph object storage daemons, referred to as Ceph OSDs, or simply OSDs, both use the Controlled Replication Under Scalable Hashing (CRUSH) algorithm for storage and retrieval of objects. Ceph OSDs can run on bare-metal servers or virtual machines within the storage cluster, using containers or RPM based deployments.

A CRUSH map describes a topography of cluster resources, and the map exists both on client nodes as well as Ceph Monitor nodes within the cluster. Ceph clients and Ceph OSDs both use the CRUSH map and the CRUSH algorithm. Ceph clients communicate directly with OSDs, eliminating a centralized object lookup and a potential performance bottleneck. With awareness of the CRUSH map and communication with their peers, OSDs can handle replication, backfilling, and recovery—allowing for dynamic failure recovery.

Ceph uses the CRUSH map to implement failure domains. Ceph also uses the CRUSH map to implement performance domains, which simply take the performance profile of the underlying hardware into consideration. The CRUSH map describes how Ceph stores data, and it is implemented as a simple hierarchy, specifically an acyclic graph, and a ruleset. The CRUSH map can support multiple hierarchies to separate one type of hardware performance profile from another. Ceph implements performance domains with device "classes".

For example, you can have these performance domains coexisting in the same Red Hat Ceph Storage cluster:

- Hard disk drives (HDDs) are typically appropriate for cost- and capacity-focused workloads.

- Throughput-sensitive workloads typically use HDDs with Ceph write journals on solid state drives (SSDs).

- IOPS-intensive workloads such as MySQL and MariaDB often use SSDs.

Workloads

Red Hat Ceph Storage is optimized for three primary workloads:

IOPS optimized: Input/output operations per second (IOPS) optimized deployments are suitable for cloud computing operations, such as running MySQL or MariaDB instances as virtual machines on OpenStack. IOPS-optimized deployments require higher performance storage such as 15k RPM SAS drives and separate SSD journals to handle frequent write operations. Some high IOPS scenarios use all flash storage to improve IOPS and total throughput.

An IOPS-optimized storage cluster has the following properties:

- Lowest cost per IOPS.

- Highest IOPS per GB.

- 99th percentile latency consistency.

Uses for an IOPS-optimized storage cluster are:

- Typically block storage.

- 3x replication for hard disk drives (HDDs) or 2x replication for solid state drives (SSDs).

- MySQL on OpenStack clouds.

Throughput optimized: Throughput-optimized deployments are suitable for serving up significant amounts of data, such as graphic, audio and video content. Throughput-optimized deployments require high bandwidth networking hardware, controllers and hard disk drives with fast sequential read and write characteristics. If fast data access is a requirement, then use a throughput-optimized storage strategy. Also, if fast write performance is a requirement, using Solid State Disks (SSD) for journals will substantially improve write performance.

A throughput-optimized storage cluster has the following properties:

- Lowest cost per MBps (throughput).

- Highest MBps per TB.

- Highest MBps per BTU.

- Highest MBps per Watt.

- 97th percentile latency consistency.

Uses for a throughput-optimized storage cluster are:

- Block or object storage.

- 3x replication.

- Active performance storage for video, audio, and images.

- Streaming media, such as 4k video.

Capacity optimized: Capacity-optimized deployments are suitable for storing significant amounts of data as inexpensively as possible. Capacity-optimized deployments typically trade performance for a more attractive price point. For example, capacity-optimized deployments often use slower and less expensive SATA drives and co-locate journals rather than using SSDs for journaling.

A cost- and capacity-optimized storage cluster has the following properties:

- Lowest cost per TB.

- Lowest BTU per TB.

- Lowest Watts required per TB.

Uses for a cost- and capacity-optimized storage cluster are:

- Typically object storage.

- Erasure coding for maximizing usable capacity.

- Object archive.

- Video, audio, and image object repositories.

Carefully consider the workload being run by a Red Hat Ceph Storage cluster before considering what hardware to purchase, because it can significantly impact the price and performance of the storage cluster. For example, if the workload is capacity-optimized and the hardware is better suited to a throughput-optimized workload, then the hardware will be more expensive than necessary. Conversely, if the workload is throughput-optimized and the hardware is better suited to a capacity-optimized workload, then the storage cluster can suffer from poor performance.

2.4. Network considerations for Red Hat Ceph Storage

An important aspect of a cloud storage solution is that storage clusters can run out of IOPS due to network latency, and other factors. Also, the storage cluster can run out of throughput due to bandwidth constraints long before the storage clusters run out of storage capacity. This means that the network hardware configuration must support the chosen workloads in order to meet price versus performance requirements.

Storage administrators prefer that a storage cluster recovers as quickly as possible. Carefully consider bandwidth requirements for the storage cluster network, be mindful of network link oversubscription, and segregate the intra-cluster traffic from the client-to-cluster traffic. Also consider that network performance is increasingly important when considering the use of Solid State Disks (SSD), flash, NVMe, and other high performing storage devices.

Ceph supports a public network and a storage cluster network. The public network handles client traffic and communication with Ceph Monitors. The storage cluster network handles Ceph OSD heartbeats, replication, backfilling, and recovery traffic. At a minimum, use a single 10 Gb Ethernet link for storage hardware; you can add more 10 Gb Ethernet links for connectivity and throughput.

Red Hat recommends allocating bandwidth to the storage cluster network such that it is a multiple of the public network bandwidth, using osd_pool_default_size as the basis for the multiple on replicated pools. Red Hat also recommends running the public and storage cluster networks on separate network cards.

Red Hat recommends using 10 Gb Ethernet for Red Hat Ceph Storage deployments in production. A 1 Gb Ethernet network is not suitable for production storage clusters.

In the case of a drive failure, replicating 1 TB of data across a 1 Gb Ethernet network takes 3 hours, and replicating 3 TB, a typical drive configuration, takes 9 hours. By contrast, with a 10 Gb Ethernet network, the replication times would be 20 minutes and 1 hour respectively. Remember that when a Ceph OSD fails, the storage cluster will recover by replicating the data it contained to other Ceph OSDs within the pool.
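The replication times above can be sanity-checked with a simple line-rate calculation. The sketch below is an illustration only: it assumes that the link speeds are in gigabits per second and that 1 TB is 10^12 bytes, and the `efficiency` parameter is a hypothetical knob for protocol overhead, not a Ceph setting.

```python
def transfer_seconds(terabytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Estimate the time to move `terabytes` of data over one network link.

    `efficiency` is the fraction of the nominal line rate actually achieved
    (1.0 means ideal line rate). It is an assumption of this sketch.
    """
    if link_gbps <= 0:
        raise ValueError("link_gbps must be positive")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in the range (0, 1]")
    bits_to_move = terabytes * 1e12 * 8          # decimal terabytes to bits
    usable_bits_per_second = link_gbps * 1e9 * efficiency
    return bits_to_move / usable_bits_per_second


if __name__ == "__main__":
    for terabytes in (1, 3):
        for gbps in (1, 10):
            hours = transfer_seconds(terabytes, gbps) / 3600
            print(f"{terabytes} TB over {gbps} Gb/s: {hours:.2f} hours at ideal line rate")
```

At ideal line rate, 1 TB takes roughly 2.2 hours over a 1 Gb/s link and roughly 13 minutes over a 10 Gb/s link. The figures quoted above are somewhat higher, which is consistent with real transfers running below line rate; passing an `efficiency` below 1.0 models that.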

The failure of a larger domain such as a rack means that the storage cluster will utilize considerably more bandwidth. When building a storage cluster consisting of multiple racks, which is common for large storage implementations, consider using as much network bandwidth as possible between switches in a "fat tree" design for optimal performance. A typical 10 Gb Ethernet switch has 48 10 Gb ports and four 40 Gb ports. Use the 40 Gb ports on the spine for maximum throughput. Alternatively, consider aggregating unused 10 Gb ports with QSFP+ and SFP+ cables into more 40 Gb ports to connect to other rack and spine routers. Also, consider using LACP mode 4 to bond network interfaces. Additionally, use jumbo frames, with a maximum transmission unit (MTU) of 9000, especially on the backend or cluster network.

Before installing and testing a Red Hat Ceph Storage cluster, verify the network throughput. Most performance-related problems in Ceph begin with a networking issue. Simple network issues like a kinked or bent Cat-6 cable could result in degraded bandwidth. Use a minimum of 10 Gb Ethernet for the front side network. For large clusters, consider using 40 Gb Ethernet for the backend or cluster network.

For network optimization, Red Hat recommends using jumbo frames for a better CPU per bandwidth ratio, and a non-blocking network switch back-plane. Red Hat Ceph Storage requires the same MTU value throughout all networking devices in the communication path, end-to-end for both public and cluster networks. Verify that the MTU value is the same on all nodes and networking equipment in the environment before using a Red Hat Ceph Storage cluster in production.
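One way to check MTU consistency on a node is sketched below. This is an illustrative helper, not a Red Hat tool: it assumes a Linux host that exposes interface MTUs under /sys/class/net, and the function names and the default expected value of 9000 are choices made for this example. Switches and other network equipment still need to be checked with their own tooling.

```python
from pathlib import Path


def local_mtus(sysfs_root: str = "/sys/class/net") -> dict:
    """Return {interface_name: mtu} for the local node, read from Linux sysfs."""
    mtus = {}
    root = Path(sysfs_root)
    if not root.is_dir():
        return mtus  # not a Linux host, or sysfs is not mounted
    for iface in sorted(root.iterdir()):
        try:
            mtus[iface.name] = int((iface / "mtu").read_text().strip())
        except (OSError, ValueError):
            continue  # entry is not an interface or has no readable MTU
    return mtus


def mtu_mismatches(mtus: dict, expected: int = 9000) -> dict:
    """Return only the entries whose MTU differs from the expected value."""
    return {name: mtu for name, mtu in mtus.items() if mtu != expected}


if __name__ == "__main__":
    for name, mtu in mtu_mismatches(local_mtus()).items():
        print(f"{name}: MTU {mtu} differs from the expected value")
```

Interfaces that are not part of the public or cluster network, such as the loopback interface, will normally show up as mismatches and can be ignored.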

2.5. Tuning considerations for the Linux kernel when running Ceph

Production Red Hat Ceph Storage clusters generally benefit from tuning the operating system, specifically around limits and memory allocation. Ensure that adjustments are set for all nodes within the storage cluster. You can also open a case with Red Hat support asking for additional guidance.

Reserving Free Memory for Ceph OSDs

To help prevent insufficient memory-related errors during Ceph OSD memory allocation requests, set the specific amount of physical memory to keep in reserve. Red Hat recommends the following settings based on the amount of system RAM.

For 64 GB, reserve 1 GB:

vm.min_free_kbytes = 1048576

For 128 GB, reserve 2 GB:

vm.min_free_kbytes = 2097152

For 256 GB, reserve 3 GB:

vm.min_free_kbytes = 3145728
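The values above follow from converting the reserved amount to kilobytes, treating 1 GB as 1024 x 1024 KB. The following sketch reproduces that arithmetic. The lookup table mirrors the three recommendations in this section; the function name is hypothetical, and the sketch deliberately does not extrapolate to other RAM sizes.

```python
KBYTES_PER_GB = 1024 * 1024

# Reserve sizes from the recommendations in this section: system RAM (GB) -> reserve (GB)
RESERVE_GB_BY_RAM_GB = {64: 1, 128: 2, 256: 3}


def min_free_kbytes_line(ram_gb: int) -> str:
    """Build the sysctl setting line for a documented RAM size."""
    try:
        reserve_gb = RESERVE_GB_BY_RAM_GB[ram_gb]
    except KeyError:
        raise ValueError(f"no documented recommendation for {ram_gb} GB of RAM") from None
    return f"vm.min_free_kbytes = {reserve_gb * KBYTES_PER_GB}"


if __name__ == "__main__":
    for ram_gb in sorted(RESERVE_GB_BY_RAM_GB):
        print(f"{ram_gb} GB RAM: {min_free_kbytes_line(ram_gb)}")
```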

Increase the File Descriptors

The Ceph Object Gateway can hang if it runs out of file descriptors. You can modify the /etc/security/limits.conf file on Ceph Object Gateway nodes to increase the file descriptors for the Ceph Object Gateway.

ceph soft nofile unlimited

Adjusting the ulimit value for Large Storage Clusters

When running Ceph administrative commands on large storage clusters, for example, with 1024 Ceph OSDs or more, create an /etc/security/limits.d/50-ceph.conf file on each node that runs administrative commands with the following contents:

USER_NAME soft nproc unlimited

Replace USER_NAME with the name of the non-root user account that runs the Ceph administrative commands.

The root user’s ulimit value is already set to unlimited by default on Red Hat Enterprise Linux.

2.6. Considerations for using a RAID controller with OSD nodes

Optionally, you can consider using a RAID controller on the OSD nodes. Here are some things to consider:

- If an OSD node has a RAID controller with 1-2 GB of cache installed, enabling the write-back cache might result in increased small I/O write throughput. However, the cache must be non-volatile.

- Most modern RAID controllers have super capacitors that provide enough power to drain volatile memory to non-volatile NAND memory during a power-loss event. It is important to understand how a particular controller and its firmware behave after power is restored.

- Some RAID controllers require manual intervention. Hard drives typically advertise to the operating system whether their disk caches should be enabled or disabled by default. However, certain RAID controllers and some firmware do not provide such information. Verify that disk level caches are disabled to avoid file system corruption.

- Create a single RAID 0 volume for each Ceph OSD data drive, with write-back cache enabled.

- If Serial Attached SCSI (SAS) or SATA connected Solid-state Drive (SSD) disks are also present on the RAID controller, then investigate whether the controller and firmware support pass-through mode. Enabling pass-through mode helps avoid caching logic, and generally results in much lower latency for fast media.

2.7. Considerations for using NVMe with Object Gateway

Optionally, you can consider using NVMe for the Ceph Object Gateway.

If you plan to use the object gateway feature of Red Hat Ceph Storage and the OSD nodes are using NVMe-based SSDs, then consider following the procedures found in the Using NVMe with LVM optimally section of the Ceph Object Gateway for Production Guide. These procedures explain how to use specially designed Ansible playbooks which will place journals and bucket indexes together on SSDs, which can increase performance compared to having all journals on one device.

2.8. Minimum hardware considerations for Red Hat Ceph Storage

Red Hat Ceph Storage can run on non-proprietary commodity hardware. Small production clusters and development clusters can run without performance optimization with modest hardware.

Red Hat Ceph Storage has slightly different requirements depending on whether the deployment is bare-metal or containerized.

Disk space requirements are based on the Ceph daemons' default path under the /var/lib/ceph/ directory.

| Process | Criteria | Minimum Recommended |
|---|---|---|
| | Processor | 1x AMD64 or Intel 64 |
| | RAM | For |
| | OS Disk | 1x OS disk per host |
| | Volume Storage | 1x storage drive per daemon |
| | | Optional, but Red Hat recommended, 1x SSD or NVMe or Optane partition or logical volume per daemon. Sizing is 4% of |
| | | Optional, 1x SSD or NVMe or Optane partition or logical volume per daemon. Use a small size, for example 10 GB, and only if it’s faster than the |
| | Network | 2x 10 Gb Ethernet NICs |
| | Processor | 1x AMD64 or Intel 64 |
| | RAM | 1 GB per daemon |
| | Disk Space | 15 GB per daemon |
| | Monitor Disk | Optionally, 1x SSD disk for |
| | Network | 2x 1 Gb Ethernet NICs |
| | Processor | 1x AMD64 or Intel 64 |
| | RAM | 1 GB per daemon |
| | Network | 2x 1 Gb Ethernet NICs |
| | Processor | 1x AMD64 or Intel 64 |
| | RAM | 1 GB per daemon |
| | Disk Space | 5 GB per daemon |
| | Network | 1x 1 Gb Ethernet NICs |
| | Processor | 1x AMD64 or Intel 64 |
| | RAM | 2 GB per daemon. This number is highly dependent on the configurable MDS cache size. The RAM requirement is typically twice as much as the amount set in the |
| | Disk Space | 2 MB per daemon, plus any space required for logging, which might vary depending on the configured log levels. |
| | Network | 2x 1 Gb Ethernet NICs. Note that this is the same network as the OSDs. If you have a 10 Gb network on your OSDs, you should use the same on your MDS so that the MDS is not disadvantaged when it comes to latency. |

| Process | Criteria | Minimum Recommended |
|---|---|---|
| | Processor | 1x AMD64 or Intel 64 CPU core per OSD container |
| | RAM | Minimum of 5 GB of RAM per OSD container |
| | OS Disk | 1x OS disk per host |
| | OSD Storage | 1x storage drive per OSD container. Cannot be shared with OS Disk. |
| | | Optional, but Red Hat recommended, 1x SSD or NVMe or Optane partition or lvm per daemon. Sizing is 4% of |
| | | Optionally, 1x SSD or NVMe or Optane partition or logical volume per daemon. Use a small size, for example 10 GB, and only if it’s faster than the |
| | Network | 2x 10 Gb Ethernet NICs, 10 Gb Recommended |
| | Processor | 1x AMD64 or Intel 64 CPU core per mon-container |
| | RAM | 3 GB per |
| | Disk Space | 10 GB per |
| | Monitor Disk | Optionally, 1x SSD disk for |
| | Network | 2x 1 Gb Ethernet NICs, 10 Gb Recommended |
| | Processor | 1x AMD64 or Intel 64 CPU core per |
| | RAM | 3 GB per |
| | Network | 2x 1 Gb Ethernet NICs, 10 Gb Recommended |
| | Processor | 1x AMD64 or Intel 64 CPU core per radosgw-container |
| | RAM | 1 GB per daemon |
| | Disk Space | 5 GB per daemon |
| | Network | 1x 1 Gb Ethernet NICs |
| | Processor | 1x AMD64 or Intel 64 CPU core per mds-container |
| | RAM | 3 GB per This number is highly dependent on the configurable MDS cache size. The RAM requirement is typically twice as much as the amount set in the |
| | Disk Space | 2 GB per |
| | Network | 2x 1 Gb Ethernet NICs, 10 Gb Recommended. Note that this is the same network as the OSD containers. If you have a 10 Gb network on your OSDs, you should use the same on your MDS so that the MDS is not disadvantaged when it comes to latency. |

Chapter 3. Requirements for Installing Red Hat Ceph Storage

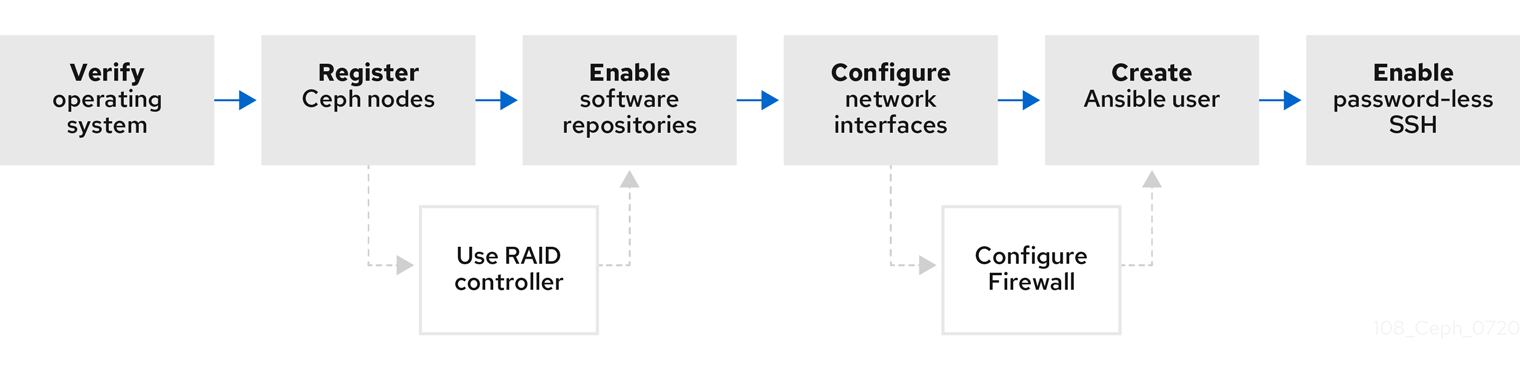

Figure 3.1. Prerequisite Workflow

Before installing Red Hat Ceph Storage, review the following requirements and prepare each Monitor, OSD, Metadata Server, and client node accordingly.

For information about Red Hat Ceph Storage releases and the corresponding Red Hat Ceph Storage package versions, see the What are the Red Hat Ceph Storage releases and corresponding Ceph package versions article on the Red Hat Customer Portal.

3.1. Prerequisites

- Verify the hardware meets the minimum requirements for Red Hat Ceph Storage 4.

3.2. Requirements checklist for installing Red Hat Ceph Storage

| Task | Required | Section | Recommendation |
|---|---|---|---|
| Verifying the operating system version | Yes | Section 3.3, “Operating system requirements for Red Hat Ceph Storage” | |
| Registering Ceph nodes | Yes | Section 3.4, “Registering Red Hat Ceph Storage nodes to the CDN and attaching subscriptions” | |
| Enabling Ceph software repositories | Yes | Section 3.5, “Enabling the Red Hat Ceph Storage repositories” | |
| Using a RAID controller with OSD nodes | No | Section 2.6, “Considerations for using a RAID controller with OSD nodes” | Enabling write-back caches on a RAID controller might result in increased small I/O write throughput for OSD nodes. |
| Configuring the network | Yes | Section 3.6, “Verifying the network configuration for Red Hat Ceph Storage” | At minimum, a public network is required. However, a private network for cluster communication is recommended. |
| Configuring a firewall | No | Section 3.7, “Configuring a firewall for Red Hat Ceph Storage” | A firewall can increase the level of trust for a network. |
| Creating an Ansible user | Yes | | Creating the Ansible user is required on all Ceph nodes. |
| Enabling password-less SSH | Yes | | Required for Ansible. |

By default, ceph-ansible installs NTP/chronyd as a requirement. If NTP/chronyd is customized, see Configuring the Network Time Protocol for Red Hat Ceph Storage in the Manually Installing Red Hat Ceph Storage section to understand how NTP/chronyd must be configured to function properly with Ceph.

3.3. Operating system requirements for Red Hat Ceph Storage

Red Hat Enterprise Linux entitlements are included in the Red Hat Ceph Storage subscription.

The initial release of Red Hat Ceph Storage 4 is supported on Red Hat Enterprise Linux 7.7 or Red Hat Enterprise Linux 8.1. The current version of Red Hat Ceph Storage 4.3 is supported on Red Hat Enterprise Linux 7.9, 8.2 EUS, 8.4 EUS, 8.5, 8.6, 8.7, and 8.8.

Red Hat Ceph Storage 4 is supported on RPM-based deployments or container-based deployments.

Deploying Red Hat Ceph Storage 4 in containers on Red Hat Enterprise Linux 7 deploys Red Hat Ceph Storage 4 running on a Red Hat Enterprise Linux 8 container image.

Use the same operating system version, architecture, and deployment type across all nodes. For example, do not use a mixture of nodes with both AMD64 and Intel 64 architectures, a mixture of nodes with both Red Hat Enterprise Linux 7 and Red Hat Enterprise Linux 8 operating systems, or a mixture of nodes with both RPM-based deployments and container-based deployments.

Red Hat does not support clusters with heterogeneous architectures, operating system versions, or deployment types.

SELinux

By default, SELinux is set to Enforcing mode and the ceph-selinux packages are installed. For additional information on SELinux please see the Data Security and Hardening Guide, Red Hat Enterprise Linux 7 SELinux User’s and Administrator’s Guide, and Red Hat Enterprise Linux 8 Using SELinux Guide.

Additional Resources

- The documentation set for Red Hat Enterprise Linux 8 is available at https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/8/

- The documentation set for Red Hat Enterprise Linux 7 is available at https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/7/.

3.4. Registering Red Hat Ceph Storage nodes to the CDN and attaching subscriptions

Register each Red Hat Ceph Storage node to the Content Delivery Network (CDN) and attach the appropriate subscription so that the node has access to software repositories. Each Red Hat Ceph Storage node must be able to access the full Red Hat Enterprise Linux 8 base content and the extras repository content. Perform the following steps on all bare-metal and container nodes in the storage cluster, unless otherwise noted.

For bare-metal Red Hat Ceph Storage nodes that cannot access the Internet during the installation, provide the software content by using the Red Hat Satellite server. Alternatively, mount a local Red Hat Enterprise Linux 8 Server ISO image and point the Red Hat Ceph Storage nodes to the ISO image. For additional details, contact Red Hat Support.

For more information on registering Ceph nodes with the Red Hat Satellite server, see the How to Register Ceph with Satellite 6 and How to Register Ceph with Satellite 5 articles on the Red Hat Customer Portal.

Prerequisites

- A valid Red Hat subscription.

- Red Hat Ceph Storage nodes must be able to connect to the Internet.

- Root-level access to the Red Hat Ceph Storage nodes.

Procedure

For container deployments only, when the Red Hat Ceph Storage nodes do NOT have access to the Internet during deployment, you must first follow these steps on a node with Internet access:

Start a local container registry:

Red Hat Enterprise Linux 7

docker run -d -p 5000:5000 --restart=always --name registry registry:2

Red Hat Enterprise Linux 8

podman run -d -p 5000:5000 --restart=always --name registry registry:2

Verify that registry.redhat.io is in the container registry search path.

Open the /etc/containers/registries.conf file for editing:

[registries.search]
registries = [ 'registry.access.redhat.com', 'registry.fedoraproject.org', 'registry.centos.org', 'docker.io']

If registry.redhat.io is not included in the file, add it:

[registries.search]
registries = ['registry.redhat.io', 'registry.access.redhat.com', 'registry.fedoraproject.org', 'registry.centos.org', 'docker.io']

Pull the Red Hat Ceph Storage 4 image, Prometheus image, and Dashboard image from the Red Hat Customer Portal:

Red Hat Enterprise Linux 7

docker pull registry.redhat.io/rhceph/rhceph-4-rhel8:latest
docker pull registry.redhat.io/openshift4/ose-prometheus-node-exporter:v4.6
docker pull registry.redhat.io/rhceph/rhceph-4-dashboard-rhel8:latest
docker pull registry.redhat.io/openshift4/ose-prometheus:v4.6
docker pull registry.redhat.io/openshift4/ose-prometheus-alertmanager:v4.6

Red Hat Enterprise Linux 8

podman pull registry.redhat.io/rhceph/rhceph-4-rhel8:latest
podman pull registry.redhat.io/openshift4/ose-prometheus-node-exporter:v4.6
podman pull registry.redhat.io/rhceph/rhceph-4-dashboard-rhel8:latest
podman pull registry.redhat.io/openshift4/ose-prometheus:v4.6
podman pull registry.redhat.io/openshift4/ose-prometheus-alertmanager:v4.6

Note: Red Hat Enterprise Linux 7 and 8 both use the same container image, based on Red Hat Enterprise Linux 8.

Tag the image:

The Prometheus image tag version is v4.6 for Red Hat Ceph Storage 4.2.

Red Hat Enterprise Linux 7

docker tag registry.redhat.io/rhceph/rhceph-4-rhel8:latest LOCAL_NODE_FQDN:5000/rhceph/rhceph-4-rhel8:latest
docker tag registry.redhat.io/openshift4/ose-prometheus-node-exporter:v4.6 LOCAL_NODE_FQDN:5000/openshift4/ose-prometheus-node-exporter:v4.6
docker tag registry.redhat.io/rhceph/rhceph-4-dashboard-rhel8:latest LOCAL_NODE_FQDN:5000/rhceph/rhceph-4-dashboard-rhel8:latest
docker tag registry.redhat.io/openshift4/ose-prometheus-alertmanager:v4.6 LOCAL_NODE_FQDN:5000/openshift4/ose-prometheus-alertmanager:v4.6
docker tag registry.redhat.io/openshift4/ose-prometheus:v4.6 LOCAL_NODE_FQDN:5000/openshift4/ose-prometheus:v4.6

Replace LOCAL_NODE_FQDN with your local host FQDN.

Red Hat Enterprise Linux 8

podman tag registry.redhat.io/rhceph/rhceph-4-rhel8:latest LOCAL_NODE_FQDN:5000/rhceph/rhceph-4-rhel8:latest
podman tag registry.redhat.io/openshift4/ose-prometheus-node-exporter:v4.6 LOCAL_NODE_FQDN:5000/openshift4/ose-prometheus-node-exporter:v4.6
podman tag registry.redhat.io/rhceph/rhceph-4-dashboard-rhel8:latest LOCAL_NODE_FQDN:5000/rhceph/rhceph-4-dashboard-rhel8:latest
podman tag registry.redhat.io/openshift4/ose-prometheus-alertmanager:v4.6 LOCAL_NODE_FQDN:5000/openshift4/ose-prometheus-alertmanager:v4.6
podman tag registry.redhat.io/openshift4/ose-prometheus:v4.6 LOCAL_NODE_FQDN:5000/openshift4/ose-prometheus:v4.6

Replace LOCAL_NODE_FQDN with your local host FQDN.

Edit the /etc/containers/registries.conf file and add the node’s FQDN with the port in the file, and save:

[registries.insecure]
registries = ['LOCAL_NODE_FQDN:5000']

Note: This step must be done on all storage cluster nodes that access the local Docker registry.

Push the image to the local Docker registry you started:

Red Hat Enterprise Linux 7

docker push --remove-signatures LOCAL_NODE_FQDN:5000/rhceph/rhceph-4-rhel8
docker push --remove-signatures LOCAL_NODE_FQDN:5000/openshift4/ose-prometheus-node-exporter:v4.6
docker push --remove-signatures LOCAL_NODE_FQDN:5000/rhceph/rhceph-4-dashboard-rhel8
docker push --remove-signatures LOCAL_NODE_FQDN:5000/openshift4/ose-prometheus-alertmanager:v4.6
docker push --remove-signatures LOCAL_NODE_FQDN:5000/openshift4/ose-prometheus:v4.6

Replace LOCAL_NODE_FQDN with your local host FQDN.

Red Hat Enterprise Linux 8

podman push --remove-signatures LOCAL_NODE_FQDN:5000/rhceph/rhceph-4-rhel8
podman push --remove-signatures LOCAL_NODE_FQDN:5000/openshift4/ose-prometheus-node-exporter:v4.6
podman push --remove-signatures LOCAL_NODE_FQDN:5000/rhceph/rhceph-4-dashboard-rhel8
podman push --remove-signatures LOCAL_NODE_FQDN:5000/openshift4/ose-prometheus-alertmanager:v4.6
podman push --remove-signatures LOCAL_NODE_FQDN:5000/openshift4/ose-prometheus:v4.6

Replace LOCAL_NODE_FQDN with your local host FQDN.

For Red Hat Enterprise Linux 7, restart the docker service:

systemctl restart docker

Note: See the Installing a Red Hat Ceph Storage cluster section for an example of the all.yml file when the Red Hat Ceph Storage nodes do NOT have access to the Internet during deployment.
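The pull, tag, and push steps above repeat the same three commands for each of the five images, so they can be generated in a loop. The following is a minimal sketch, not an official tool: the function name mirror_cmds and the IMAGES variable are hypothetical, and the function only prints the commands so that they can be reviewed before anything is run.

```shell
# Images named in the procedure above, one per line.
IMAGES='rhceph/rhceph-4-rhel8:latest
rhceph/rhceph-4-dashboard-rhel8:latest
openshift4/ose-prometheus-node-exporter:v4.6
openshift4/ose-prometheus:v4.6
openshift4/ose-prometheus-alertmanager:v4.6'

# mirror_cmds TOOL LOCAL_REGISTRY
#   TOOL            docker (RHEL 7) or podman (RHEL 8)
#   LOCAL_REGISTRY  for example LOCAL_NODE_FQDN:5000
# Print (do not run) the pull, tag, and push command for every image.
mirror_cmds() {
    for image in $IMAGES; do
        echo "$1 pull registry.redhat.io/$image"
        echo "$1 tag registry.redhat.io/$image $2/$image"
        echo "$1 push --remove-signatures $2/$image"
    done
}
```

For example, mirror_cmds podman LOCAL_NODE_FQDN:5000 prints fifteen commands; after review, the output could be piped to sh on the node with Internet access.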

For all deployments, bare-metal or in containers:

Register the node, and when prompted, enter the appropriate Red Hat Customer Portal credentials:

subscription-manager register

Pull the latest subscription data from the CDN:

subscription-manager refresh

List all available subscriptions for Red Hat Ceph Storage:

subscription-manager list --available --all --matches="*Ceph*"

Copy the Pool ID from the list of available subscriptions for Red Hat Ceph Storage.

Attach the subscription:

subscription-manager attach --pool=POOL_ID

Replace POOL_ID with the Pool ID identified in the previous step.

Disable the default software repositories, and enable the server and the extras repositories on the respective version of Red Hat Enterprise Linux:

Red Hat Enterprise Linux 7

subscription-manager repos --disable=*
subscription-manager repos --enable=rhel-7-server-rpms
subscription-manager repos --enable=rhel-7-server-extras-rpms

Red Hat Enterprise Linux 8

subscription-manager repos --disable=*
subscription-manager repos --enable=rhel-8-for-x86_64-baseos-rpms
subscription-manager repos --enable=rhel-8-for-x86_64-appstream-rpms

Update the system to receive the latest packages.

For Red Hat Enterprise Linux 7:

yum update

For Red Hat Enterprise Linux 8:

dnf update
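When many nodes are registered, copying the Pool ID by hand becomes tedious. The sketch below assumes that the listing produced earlier in this procedure contains lines of the form "Pool ID: VALUE"; the helper name pool_ids is hypothetical.

```shell
# pool_ids: read subscription listing text on stdin and print the value
# of every line that starts with "Pool ID:".
# Hypothetical helper; assumes the "Pool ID: VALUE" line format.
pool_ids() {
    awk -F': *' '/^Pool ID:/ { print $2 }'
}
```

A possible use is subscription-manager list --available --all --matches="*Ceph*" | pool_ids, followed by passing the chosen value to subscription-manager attach --pool=POOL_ID.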

Additional Resources

- See the Using and Configuring Red Hat Subscription Manager guide for Red Hat Subscription Management.

- See the Enabling the Red Hat Ceph Storage repositories section.

3.5. Enabling the Red Hat Ceph Storage repositories

Before you can install Red Hat Ceph Storage, you must choose an installation method. Red Hat Ceph Storage supports two installation methods:

Content Delivery Network (CDN)

For Ceph Storage clusters with Ceph nodes that can connect directly to the internet, use Red Hat Subscription Manager to enable the required Ceph repository.

Local Repository

For Ceph Storage clusters where security measures preclude nodes from accessing the internet, install Red Hat Ceph Storage 4 from a single software build delivered as an ISO image, which will allow you to install local repositories.

Prerequisites

- Valid customer subscription.

For CDN installations:

- Red Hat Ceph Storage nodes must be able to connect to the internet.

- Register the cluster nodes with CDN.

If enabled, then disable the Extra Packages for Enterprise Linux (EPEL) software repository:

[root@monitor ~]# yum install yum-utils vim -y
[root@monitor ~]# yum-config-manager --disable epel

Procedure

For CDN installations:

On the Ansible administration node, enable the Red Hat Ceph Storage 4 Tools repository and Ansible repository:

Red Hat Enterprise Linux 7

[root@admin ~]# subscription-manager repos --enable=rhel-7-server-rhceph-4-tools-rpms --enable=rhel-7-server-ansible-2.9-rpms

Red Hat Enterprise Linux 8

[root@admin ~]# subscription-manager repos --enable=rhceph-4-tools-for-rhel-8-x86_64-rpms --enable=ansible-2.9-for-rhel-8-x86_64-rpms

By default, Red Hat Ceph Storage repositories are enabled by ceph-ansible on the respective nodes. To manually enable the repositories:

Note: Do not enable these repositories on containerized deployments as they are not needed.

On the Ceph Monitor nodes, enable the Red Hat Ceph Storage 4 Monitor repository:

Red Hat Enterprise Linux 7

[root@monitor ~]# subscription-manager repos --enable=rhel-7-server-rhceph-4-mon-rpms

Red Hat Enterprise Linux 8

[root@monitor ~]# subscription-manager repos --enable=rhceph-4-mon-for-rhel-8-x86_64-rpms

On the Ceph OSD nodes, enable the Red Hat Ceph Storage 4 OSD repository:

Red Hat Enterprise Linux 7

[root@osd ~]# subscription-manager repos --enable=rhel-7-server-rhceph-4-osd-rpms

Red Hat Enterprise Linux 8

[root@osd ~]# subscription-manager repos --enable=rhceph-4-osd-for-rhel-8-x86_64-rpms

Enable the Red Hat Ceph Storage 4 Tools repository on the following node types: RBD mirroring, Ceph clients, Ceph Object Gateways, Metadata Servers, NFS, iSCSI gateways, and Dashboard servers.

Red Hat Enterprise Linux 7

[root@client ~]# subscription-manager repos --enable=rhel-7-server-rhceph-4-tools-rpms

Red Hat Enterprise Linux 8

[root@client ~]# subscription-manager repos --enable=rhceph-4-tools-for-rhel-8-x86_64-rpms

For ISO installations:

- Log in to the Red Hat Customer Portal.

- Click Downloads to visit the Software & Download center.

- In the Red Hat Ceph Storage area, click Download Software to download the latest version of the software.
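For CDN installations, the Monitor, OSD, and Tools repository IDs shown earlier in this procedure follow a regular naming pattern for each Red Hat Enterprise Linux release. The sketch below captures that pattern in a small lookup; the function name ceph_repo is hypothetical, and only the repository IDs that appear in this section are covered.

```shell
# ceph_repo ROLE RHEL_MAJOR
#   ROLE        mon, osd, or tools
#   RHEL_MAJOR  7 or 8
# Print the Red Hat Ceph Storage 4 repository ID used in this section.
# Hypothetical helper for illustration.
ceph_repo() {
    case "$1" in
        mon|osd|tools) ;;
        *) echo "unknown role: $1" >&2; return 1 ;;
    esac
    case "$2" in
        7) echo "rhel-7-server-rhceph-4-$1-rpms" ;;
        8) echo "rhceph-4-$1-for-rhel-8-x86_64-rpms" ;;
        *) echo "unsupported release: $2" >&2; return 1 ;;
    esac
}
```

For example, on a Red Hat Enterprise Linux 8 OSD node the result could be passed along as subscription-manager repos --enable="$(ceph_repo osd 8)".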

Additional Resources

- The Using and Configuring Red Hat Subscription Manager guide for Red Hat Subscription Management

3.6. Verifying the network configuration for Red Hat Ceph Storage

All Red Hat Ceph Storage nodes require a public network. You must have a network interface card configured to a public network where Ceph clients can reach Ceph monitors and Ceph OSD nodes.

You might have a network interface card for a cluster network so that Ceph can conduct heart-beating, peering, replication, and recovery on a network separate from the public network.

Configure the network interface settings and make sure that the changes are persistent.

Red Hat does not recommend using a single network interface card for both a public and private network.

Prerequisites

- Network interface card connected to the network.

Procedure

Do the following steps on all Red Hat Ceph Storage nodes in the storage cluster, as the root user.

Verify the following settings are in the /etc/sysconfig/network-scripts/ifcfg-* file corresponding to the public-facing network interface card:

- The BOOTPROTO parameter is set to none for static IP addresses.

- The ONBOOT parameter must be set to yes. If it is set to no, the Ceph storage cluster might fail to peer on reboot.

- If you intend to use IPv6 addressing, you must set the IPv6 parameters such as IPV6INIT to yes, except the IPV6_FAILURE_FATAL parameter. Also, edit the Ceph configuration file, /etc/ceph/ceph.conf, to instruct Ceph to use IPv6, otherwise, Ceph uses IPv4.
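The first two settings can be checked with a short script. This is a minimal sketch that assumes static addressing (so BOOTPROTO is expected to be none) and a file in the usual KEY=value format; the function name check_ifcfg is hypothetical, and the IPv6 parameters are not checked.

```shell
# check_ifcfg FILE
# Succeed only if FILE sets BOOTPROTO=none and ONBOOT=yes.
# Values may optionally be double-quoted, as in ONBOOT="yes".
# Hypothetical helper; assumes static IP addressing.
check_ifcfg() {
    grep -Eq '^BOOTPROTO="?none"?[[:space:]]*$' "$1" &&
    grep -Eq '^ONBOOT="?yes"?[[:space:]]*$' "$1"
}
```

It could be called once per interface file, for example check_ifcfg /etc/sysconfig/network-scripts/ifcfg-INTERFACE_NAME, where INTERFACE_NAME is a placeholder for the public-facing interface.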

Additional Resources

- For details on configuring network interface scripts for Red Hat Enterprise Linux 8, see the Configuring ip networking with ifcfg files chapter in the Configuring and managing networking guide for Red Hat Enterprise Linux 8.

- For more information on network configuration see the Ceph network configuration section in the Configuration Guide for Red Hat Ceph Storage 4.

3.7. Configuring a firewall for Red Hat Ceph Storage

Red Hat Ceph Storage uses the firewalld service. The firewalld service contains the list of ports for each daemon.

The Ceph Monitor daemons use ports 3300 and 6789 for communication within the Ceph storage cluster.

On each Ceph OSD node, the OSD daemons use several ports in the range 6800-7300:

- One for communicating with clients and monitors over the public network

- One for sending data to other OSDs over a cluster network, if available; otherwise, over the public network

- One for exchanging heartbeat packets over a cluster network, if available; otherwise, over the public network

The Ceph Manager (ceph-mgr) daemons use ports in the range 6800-7300. Consider colocating the ceph-mgr daemons with Ceph Monitors on the same nodes.

The Ceph Metadata Server nodes (ceph-mds) use ports in the range 6800-7300.

The Ceph Object Gateway nodes are configured by Ansible to use port 8080 by default. However, you can change the default port, for example to port 80.

To use the SSL/TLS service, open port 443.

The following steps are optional if firewalld is enabled. By default, ceph-ansible includes the below setting in group_vars/all.yml, which automatically opens the appropriate ports:

configure_firewall: True

Prerequisite

- Network hardware is connected.

- Having root or sudo access to all nodes in the storage cluster.

Procedure

On all nodes in the storage cluster, start the firewalld service. Enable it to run on boot, and ensure that it is running:

systemctl enable firewalld
systemctl start firewalld
systemctl status firewalld

On all monitor nodes, open ports 3300 and 6789 on the public network:

[root@monitor ~]# firewall-cmd --zone=public --add-port=3300/tcp
[root@monitor ~]# firewall-cmd --zone=public --add-port=3300/tcp --permanent
[root@monitor ~]# firewall-cmd --zone=public --add-port=6789/tcp
[root@monitor ~]# firewall-cmd --zone=public --add-port=6789/tcp --permanent

To limit access based on the source address:

firewall-cmd --zone=public --add-rich-rule='rule family=ipv4 source address=IP_ADDRESS/NETMASK_PREFIX port protocol=tcp port=6789 accept' --permanent

Replace:

- IP_ADDRESS with the network address of the Monitor node.

- NETMASK_PREFIX with the netmask in CIDR notation.

Example

[root@monitor ~]# firewall-cmd --zone=public --add-rich-rule='rule family=ipv4 source address=192.168.0.11/24 port protocol=tcp port=6789 accept' --permanent
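Because the same rich rule is needed for more than one port, it can help to build the command from its parts. The following sketch only prints the command; the function name rich_rule_cmd is hypothetical, and the rule text uses the quoted-value form of the firewalld rich language.

```shell
# rich_rule_cmd SOURCE_CIDR PORT [ZONE]
# Print a permanent firewall-cmd rich rule that accepts TCP traffic to
# PORT from SOURCE_CIDR. ZONE defaults to public. Nothing is executed.
# Hypothetical helper for illustration.
rich_rule_cmd() {
    printf "firewall-cmd --zone=%s --add-rich-rule='rule family=\"ipv4\" source address=\"%s\" port protocol=\"tcp\" port=\"%s\" accept' --permanent\n" \
        "${3:-public}" "$1" "$2"
}
```

For example, rich_rule_cmd 192.168.0.11/24 3300 prints the matching rule for the second Monitor port. Keeping the whole rule inside one pair of single quotes on a single line avoids embedding a backslash or newline in the rule text.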

On all OSD nodes, open ports 6800-7300 on the public network:

[root@osd ~]# firewall-cmd --zone=public --add-port=6800-7300/tcp
[root@osd ~]# firewall-cmd --zone=public --add-port=6800-7300/tcp --permanent
[root@osd ~]# firewall-cmd --permanent --add-service=ceph
[root@osd ~]# firewall-cmd --add-service=ceph

If you have a separate cluster network, repeat the commands with the appropriate zone.

On all Ceph Manager (ceph-mgr) nodes, open ports 6800-7300 on the public network:

[root@monitor ~]# firewall-cmd --zone=public --add-port=6800-7300/tcp
[root@monitor ~]# firewall-cmd --zone=public --add-port=6800-7300/tcp --permanent

If you have a separate cluster network, repeat the commands with the appropriate zone.

On all Ceph Metadata Server (ceph-mds) nodes, open ports 6800-7300 on the public network:

[root@monitor ~]# firewall-cmd --zone=public --add-port=6800-7300/tcp
[root@monitor ~]# firewall-cmd --zone=public --add-port=6800-7300/tcp --permanent

If you have a separate cluster network, repeat the commands with the appropriate zone.

On all Ceph Object Gateway nodes, open the relevant port or ports on the public network.

To open the default Ansible configured port of 8080:

[root@gateway ~]# firewall-cmd --zone=public --add-port=8080/tcp
[root@gateway ~]# firewall-cmd --zone=public --add-port=8080/tcp --permanent

To limit access based on the source address:

firewall-cmd --zone=public --add-rich-rule='rule family="ipv4" source address="IP_ADDRESS/NETMASK_PREFIX" port protocol="tcp" port="8080" accept'
firewall-cmd --zone=public --add-rich-rule='rule family="ipv4" source address="IP_ADDRESS/NETMASK_PREFIX" port protocol="tcp" port="8080" accept' --permanent

Replace:

- IP_ADDRESS with the network address of the Monitor node.

- NETMASK_PREFIX with the netmask in CIDR notation.

Example

[root@gateway ~]# firewall-cmd --zone=public --add-rich-rule='rule family="ipv4" source address="192.168.0.31/24" port protocol="tcp" port="8080" accept'
[root@gateway ~]# firewall-cmd --zone=public --add-rich-rule='rule family="ipv4" source address="192.168.0.31/24" port protocol="tcp" port="8080" accept' --permanent

Optionally, if you installed Ceph Object Gateway using Ansible and changed the default port that Ansible configures the Ceph Object Gateway to use from 8080, for example, to port 80, then open this port:

[root@gateway ~]# firewall-cmd --zone=public --add-port=80/tcp
[root@gateway ~]# firewall-cmd --zone=public --add-port=80/tcp --permanent

To limit access based on the source address, run the following commands:

firewall-cmd --zone=public --add-rich-rule='rule family="ipv4" source address="IP_ADDRESS/NETMASK_PREFIX" port protocol="tcp" port="80" accept'
firewall-cmd --zone=public --add-rich-rule='rule family="ipv4" source address="IP_ADDRESS/NETMASK_PREFIX" port protocol="tcp" port="80" accept' --permanent

Replace:

- IP_ADDRESS with the network address of the Monitor node.

- NETMASK_PREFIX with the netmask in CIDR notation.

Example

[root@gateway ~]# firewall-cmd --zone=public --add-rich-rule='rule family="ipv4" source address="192.168.0.31/24" port protocol="tcp" port="80" accept'
[root@gateway ~]# firewall-cmd --zone=public --add-rich-rule='rule family="ipv4" source address="192.168.0.31/24" port protocol="tcp" port="80" accept' --permanent

Optional. To use SSL/TLS, open port 443:

[root@gateway ~]# firewall-cmd --zone=public --add-port=443/tcp
[root@gateway ~]# firewall-cmd --zone=public --add-port=443/tcp --permanent

To limit access based on the source address, run the following commands:

firewall-cmd --zone=public --add-rich-rule='rule family="ipv4" source address="IP_ADDRESS/NETMASK_PREFIX" port protocol="tcp" port="443" accept'
firewall-cmd --zone=public --add-rich-rule='rule family="ipv4" source address="IP_ADDRESS/NETMASK_PREFIX" port protocol="tcp" port="443" accept' --permanent

Replace:

- IP_ADDRESS with the network address of the Monitor node.

- NETMASK_PREFIX with the netmask in CIDR notation.

Example

[root@gateway ~]# firewall-cmd --zone=public --add-rich-rule='rule family="ipv4" source address="192.168.0.31/24" port protocol="tcp" port="443" accept'
[root@gateway ~]# firewall-cmd --zone=public --add-rich-rule='rule family="ipv4" source address="192.168.0.31/24" port protocol="tcp" port="443" accept' --permanent
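The port assignments described in this section can be summarized as a lookup from daemon type to ports, from which the runtime and permanent firewall-cmd lines follow mechanically. The sketch below is illustrative only: the function names ceph_ports and open_port_cmds and the short role names (mon, osd, mgr, mds, rgw) are assumptions, the rgw entry reflects the Ansible default of 8080, and the commands are printed rather than executed.

```shell
# ceph_ports ROLE: print the default TCP ports described in this section.
# Hypothetical helper; role names are illustrative.
ceph_ports() {
    case "$1" in
        mon)         echo "3300 6789" ;;
        osd|mgr|mds) echo "6800-7300" ;;
        rgw)         echo "8080" ;;
        *)           echo "unknown role: $1" >&2; return 1 ;;
    esac
}

# open_port_cmds ROLE [ZONE]
# Print the runtime and permanent firewall-cmd lines that would open the
# ports for ROLE in ZONE (default: public). Nothing is executed.
open_port_cmds() {
    ports=$(ceph_ports "$1") || return 1
    for port in $ports; do
        echo "firewall-cmd --zone=${2:-public} --add-port=$port/tcp"
        echo "firewall-cmd --zone=${2:-public} --add-port=$port/tcp --permanent"
    done
}
```

For a separate cluster network, the second argument would name the zone bound to that network. Printing first keeps the sketch safe to run on any machine; the output can be reviewed and then executed as root on the relevant node.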

Additional Resources

- For more information about public and cluster network, see Verifying the Network Configuration for Red Hat Ceph Storage.

- For additional details on firewalld, see the Using and configuring firewalls chapter in the Securing networks guide for Red Hat Enterprise Linux 8.

3.8. Creating an Ansible user with sudo access

Ansible must be able to log into all the Red Hat Ceph Storage (RHCS) nodes as a user that has root privileges to install software and create configuration files without prompting for a password. You must create an Ansible user with password-less root access on all nodes in the storage cluster when deploying and configuring a Red Hat Ceph Storage cluster with Ansible.

Prerequisite

- Having root or sudo access to all nodes in the storage cluster.

Procedure

Log into the node as the root user:

ssh root@HOST_NAME

Replace HOST_NAME with the host name of the Ceph node.

Example

ssh root@mon01

Enter the root password when prompted.

Create a new Ansible user:

adduser USER_NAME

Replace USER_NAME with the new user name for the Ansible user.

Example

adduser admin

Important: Do not use ceph as the user name. The ceph user name is reserved for the Ceph daemons. A uniform user name across the cluster can improve ease of use, but avoid using obvious user names, because intruders typically use them for brute-force attacks.

Set a new password for this user:

passwd USER_NAME

Replace USER_NAME with the new user name for the Ansible user.

Example

passwd admin

Enter the new password twice when prompted.

Configure sudo access for the newly created user:

cat << EOF >/etc/sudoers.d/USER_NAME
USER_NAME ALL = (root) NOPASSWD:ALL
EOF

Replace USER_NAME with the new user name for the Ansible user.

Example

cat << EOF >/etc/sudoers.d/admin
admin ALL = (root) NOPASSWD:ALL
EOF

Assign the correct file permissions to the new file:

chmod 0440 /etc/sudoers.d/USER_NAME

Replace USER_NAME with the new user name for the Ansible user.

Example

chmod 0440 /etc/sudoers.d/admin
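When the same user is created on many nodes, the sudoers line from this procedure can be produced by a small helper that also guards against the reserved ceph user name. This is a sketch under that assumption; the function name sudoers_entry is hypothetical.

```shell
# sudoers_entry USER_NAME
# Print the sudoers line used in the procedure above. Refuse an empty
# name and the user name "ceph", which is reserved for the Ceph daemons.
# Hypothetical helper for illustration.
sudoers_entry() {
    if [ -z "$1" ] || [ "$1" = "ceph" ]; then
        echo "refusing user name: '$1'" >&2
        return 1
    fi
    echo "$1 ALL = (root) NOPASSWD:ALL"
}
```

As root, the output could be redirected to /etc/sudoers.d/USER_NAME and the file then given mode 0440 as described above.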

Additional Resources

- The Managing user accounts section in the Configuring basic system settings guide for Red Hat Enterprise Linux 8.

3.9. Enabling password-less SSH for Ansible

Generate an SSH key pair on the Ansible administration node and distribute the public key to each node in the storage cluster so that Ansible can access the nodes without being prompted for a password.

This procedure is not required if installing Red Hat Ceph Storage using the Cockpit web-based interface. This is because the Cockpit Ceph Installer generates its own SSH key. Instructions for copying the Cockpit SSH key to all nodes in the cluster are in the chapter Installing Red Hat Ceph Storage using the Cockpit web interface.

Prerequisites

- Access to the Ansible administration node.

- Creating an Ansible user with sudo access.

Procedure

Generate the SSH key pair, accept the default file name and leave the passphrase empty:

ssh-keygen

[ansible@admin ~]$ ssh-keygenCopy to Clipboard Copied! Toggle word wrap Toggle overflow Copy the public key to all nodes in the storage cluster:

ssh-copy-id USER_NAME@HOST_NAME

Replace:

- USER_NAME with the new user name for the Ansible user.

- HOST_NAME with the host name of the Ceph node.

Example

ssh-copy-id ceph-admin@ceph-mon01


Create the user’s SSH config file:

touch ~/.ssh/config

Open the config file for editing. For each node in the storage cluster, set the Hostname option to HOST_NAME and the User option to USER_NAME, where:

- HOST_NAME is the host name of the Ceph node.

- USER_NAME is the new user name for the Ansible user.

Important

By configuring the ~/.ssh/config file, you do not have to specify the -u USER_NAME option each time you execute the ansible-playbook command.

Set the correct file permissions for the ~/.ssh/config file:

chmod 600 ~/.ssh/config
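The config file described in this procedure holds one stanza per node with the Hostname and User options. As a hedged illustration only, the sketch below generates such stanzas for a short list of nodes and writes them to a scratch file instead of ~/.ssh/config. The user ceph-admin and the node ceph-mon01 come from the ssh-copy-id example above; the other node names, the variable names, and the use of the node name as the Host alias are assumptions.

```shell
#!/bin/sh
# Sketch: generate Host/Hostname/User stanzas for a list of nodes.
# NODES and ANSIBLE_USER are placeholders. The output goes to a scratch
# file rather than ~/.ssh/config so that nothing is overwritten.
ANSIBLE_USER="ceph-admin"
NODES="ceph-mon01 ceph-mon02 ceph-osd01"
SSH_CONFIG="$(mktemp)"

for node in $NODES; do
    # One stanza per node; the alias and the host name are the same here.
    printf 'Host %s\n   Hostname %s\n   User %s\n\n' \
        "$node" "$node" "$ANSIBLE_USER"
done > "$SSH_CONFIG"

# Same permissions as the procedure sets on ~/.ssh/config.
chmod 600 "$SSH_CONFIG"
cat "$SSH_CONFIG"
```

To try the generated file without replacing ~/.ssh/config, ssh accepts an alternative configuration file through its -F option.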

Additional Resources

- The ssh_config(5) manual page.

- See the Using secure communications between two systems with OpenSSH chapter in the Securing networks guide for Red Hat Enterprise Linux 8.

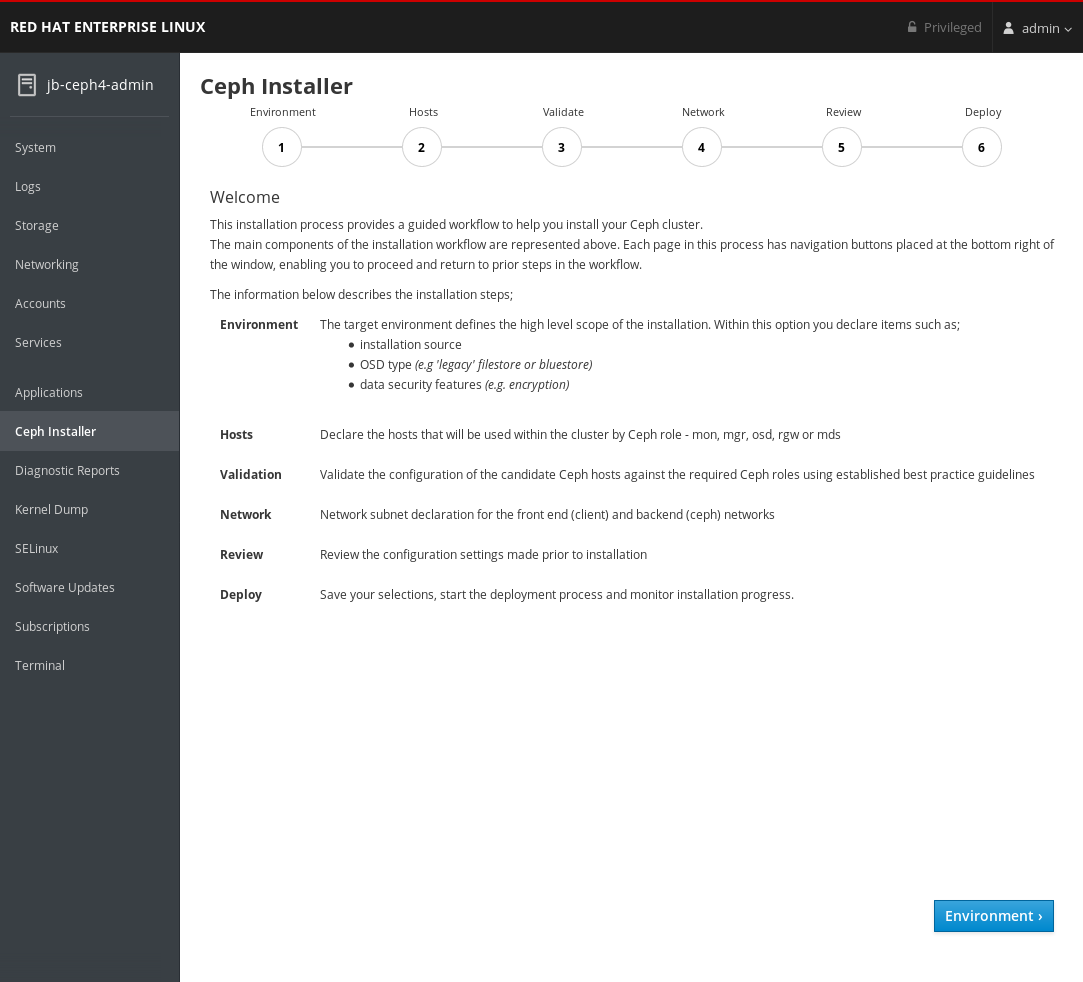

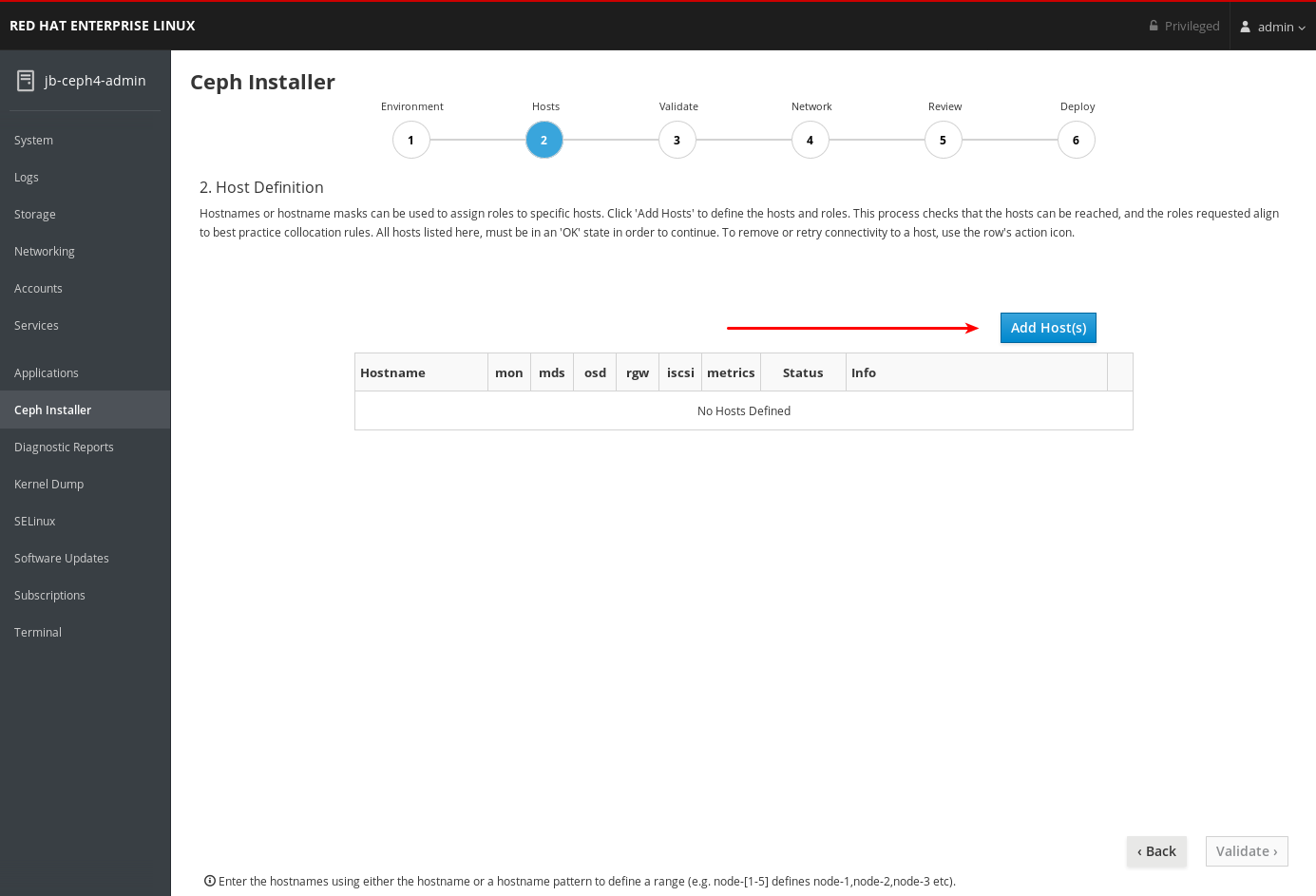

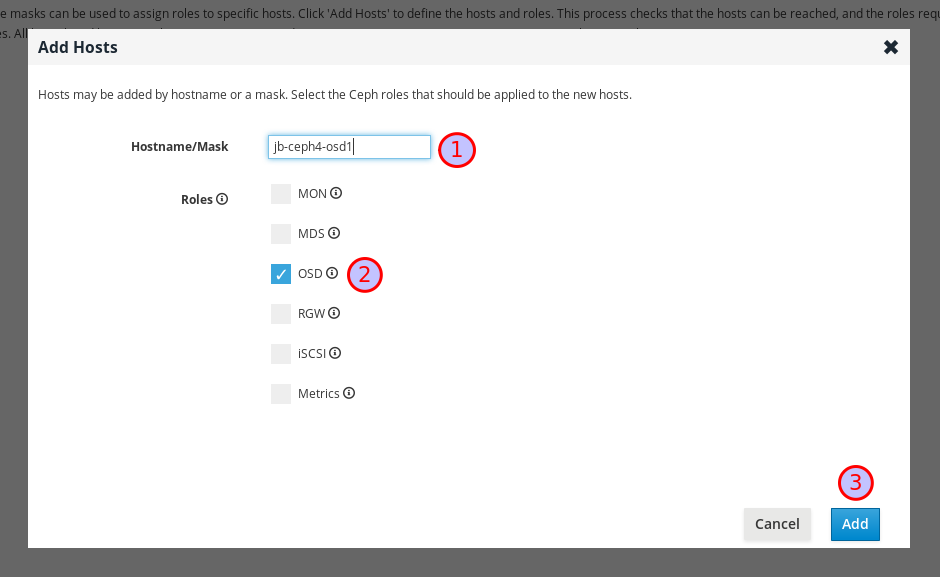

Chapter 4. Installing Red Hat Ceph Storage using the Cockpit web interface

This chapter describes how to use the Cockpit web-based interface to install a Red Hat Ceph Storage cluster and other components, such as Metadata Servers, the Ceph client, or the Ceph Object Gateway.

The process consists of installing the Cockpit Ceph Installer, logging into Cockpit, and configuring and starting the cluster install using different pages within the installer.

The Cockpit Ceph Installer uses Ansible and the Ansible playbooks provided by the ceph-ansible RPM to perform the actual install. It is still possible to use these playbooks to install Ceph without Cockpit. That process is relevant to this chapter and is referred to as a direct Ansible install, or using the Ansible playbooks directly.

The Cockpit Ceph Installer does not currently support IPv6 networking. If you require IPv6 networking, install Ceph using the Ansible playbooks directly.

The dashboard web interface, used for administration and monitoring of Ceph, is installed by default by the Ansible playbooks in the ceph-ansible RPM, which Cockpit uses on the back-end. Therefore, whether you use Ansible playbooks directly, or use Cockpit to install Ceph, the dashboard web interface will be installed as well.

4.1. Prerequisites

- Complete the general prerequisites required for direct Ansible Red Hat Ceph Storage installs.

- A recent version of Firefox or Chrome.

- If using multiple networks to segment intra-cluster traffic, client-to-cluster traffic, RADOS Gateway traffic, or iSCSI traffic, ensure the relevant networks are already configured on the hosts. For more information, see network considerations in the Hardware Guide and the section in this chapter on completing the Network page of the Cockpit Ceph Installer.

- Ensure the default port for the Cockpit web-based interface, 9090, is accessible.
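One way to check the last prerequisite from another machine is a plain TCP connection test. The sketch below is an assumption-laden illustration rather than a documented procedure: it relies on the /dev/tcp feature of bash and on the coreutils timeout command, and the port_open function and the COCKPIT_HOST variable are hypothetical names.

```shell
#!/bin/sh
# Sketch: report whether a TCP port accepts connections.
# Relies on bash's /dev/tcp pseudo-device and the coreutils timeout command.
port_open() {
    # $1 = host, $2 = port; returns 0 when the connection succeeds.
    timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Hypothetical default; set COCKPIT_HOST to the Ansible administration node.
COCKPIT_HOST="${COCKPIT_HOST:-localhost}"

if port_open "$COCKPIT_HOST" 9090; then
    echo "port 9090 on $COCKPIT_HOST is reachable"
else
    echo "port 9090 on $COCKPIT_HOST is not reachable"
fi
```

If the port is not reachable and the node uses firewalld, opening it typically involves firewall-cmd --permanent --add-port=9090/tcp followed by firewall-cmd --reload; check the firewall policy for your environment first.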

4.2. Installation requirements

- One node to act as the Ansible administration node.

- One node to provide the performance metrics and alerting platform. This may be colocated with the Ansible administration node.

- One or more nodes to form the Ceph cluster. The installer supports an all-in-one installation called Development/POC. In this mode all Ceph services can run from the same node, and data replication defaults to disk-level rather than host-level protection.

4.3. Install and configure the Cockpit Ceph Installer

Before you can use the Cockpit Ceph Installer to install a Red Hat Ceph Storage cluster, you must install the Cockpit Ceph Installer on the Ansible administration node.

Prerequisites

- Root-level access to the Ansible administration node.

- The ansible user account for use with the Ansible application.

Procedure

Verify Cockpit is installed.

rpm -q cockpit

Example:

[admin@jb-ceph4-admin ~]$ rpm -q cockpit
cockpit-196.3-1.el8.x86_64

If you see similar output to the example above, skip to the step Verify Cockpit is running. If the output is package cockpit is not installed, continue to the step Install Cockpit.

Optional: Install Cockpit.

For Red Hat Enterprise Linux 8:

dnf install cockpit

For Red Hat Enterprise Linux 7:

yum install cockpit

Verify Cockpit is running.

systemctl status cockpit.socket

If you see Active: active (listening) in the output, skip to the step Install the Cockpit Ceph Installer for Red Hat Ceph Storage. If instead you see Active: inactive (dead), continue to the step Enable Cockpit.

Optional: Enable Cockpit.

Use the systemctl command to enable Cockpit:

systemctl enable --now cockpit.socket

You will see a line like the following:

Created symlink /etc/systemd/system/sockets.target.wants/cockpit.socket → /usr/lib/systemd/system/cockpit.socket.

Verify Cockpit is running:

systemctl status cockpit.socket

You will see a line like the following:

Active: active (listening) since Tue 2020-01-07 18:49:07 EST; 7min ago

Install the Cockpit Ceph Installer for Red Hat Ceph Storage.

For Red Hat Enterprise Linux 8:

dnf install cockpit-ceph-installer

For Red Hat Enterprise Linux 7:

yum install cockpit-ceph-installer

As the Ansible user, log in to the container catalog using sudo:

Note

By default, the Cockpit Ceph Installer uses the root user to install Ceph. To use the Ansible user created as a part of the prerequisites to install Ceph, run the rest of the commands in this procedure with sudo as the Ansible user.

Red Hat Enterprise Linux 7

sudo docker login -u CUSTOMER_PORTAL_USERNAME https://registry.redhat.io

Example

[admin@jb-ceph4-admin ~]$ sudo docker login -u myusername https://registry.redhat.io
Password:
Login Succeeded!

Red Hat Enterprise Linux 8

sudo podman login -u CUSTOMER_PORTAL_USERNAME https://registry.redhat.io

Example

[admin@jb-ceph4-admin ~]$ sudo podman login -u myusername https://registry.redhat.io
Password:
Login Succeeded!

Verify registry.redhat.io is in the container registry search path.

Open the /etc/containers/registries.conf file for editing:

[registries.search]
registries = [ 'registry.access.redhat.com', 'registry.fedoraproject.org', 'registry.centos.org', 'docker.io']

If registry.redhat.io is not included in the file, add it:

[registries.search]
registries = ['registry.redhat.io', 'registry.access.redhat.com', 'registry.fedoraproject.org', 'registry.centos.org', 'docker.io']

As the Ansible user, start the ansible-runner-service using sudo.

sudo ansible-runner-service.sh -s

The last line of output includes the URL to the Cockpit Ceph Installer, for example https://jb-ceph4-admin:9090/cockpit-ceph-installer. Take note of the URL printed in your environment.
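The registry search path check in this procedure can also be done with a quick text search instead of reading the file by eye. The following is a rough sketch under stated assumptions: it works on a scratch copy that contains the sample content shown in the procedure, the has_registry helper is a hypothetical name, and the match is a simple fixed-string search that does not verify which section of the file the entry belongs to.

```shell
#!/bin/sh
# Sketch: check a registries.conf-style file for registry.redhat.io.
# A scratch copy with the sample content from the procedure is used;
# on a real node CONF would be /etc/containers/registries.conf.
CONF="$(mktemp)"
cat > "$CONF" << 'EOF'
[registries.search]
registries = [ 'registry.access.redhat.com', 'registry.fedoraproject.org', 'registry.centos.org', 'docker.io']
EOF

has_registry() {
    # $1 = file, $2 = registry host name.
    # Fixed-string match on the quoted entry, for example 'docker.io'.
    grep -qF "'$2'" "$1"
}

if has_registry "$CONF" registry.redhat.io; then
    echo "registry.redhat.io is already in the search path"
else
    echo "registry.redhat.io is missing; add it to the registries list"
fi
```

With the sample content, the script reports that the entry is missing, which corresponds to the case where the file must be edited.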

4.4. Copy the Cockpit Ceph Installer SSH key to all nodes in the cluster

The Cockpit Ceph Installer uses SSH to connect to and configure the nodes in the cluster. To do this automatically, the installer generates an SSH key pair so that it can access the nodes without being prompted for a password. The SSH public key must be transferred to all nodes in the cluster.

Prerequisites

- An Ansible user with sudo access has been created.

- The Cockpit Ceph Installer is installed and configured.

Procedure

Log in to the Ansible administration node as the Ansible user.

ssh ANSIBLE_USER@HOST_NAME

Example:

ssh admin@jb-ceph4-admin

Copy the SSH public key to the first node:

sudo ssh-copy-id -f -i /usr/share/ansible-runner-service/env/ssh_key.pub ANSIBLE_USER@HOST_NAME

Repeat this step for all nodes in the cluster.
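Repeating the copy step by hand becomes tedious on larger clusters. As a hedged sketch, the loop below only prints the ssh-copy-id command for each node so that the list can be reviewed before anything is run. The key path is the one given in the step above and the user admin follows the login example; the node names, the NODES variable, and the copy_key_commands function are hypothetical.

```shell
#!/bin/sh
# Sketch: print the ssh-copy-id command for every node in the cluster.
# NODES and ANSIBLE_USER are placeholders. Nothing is executed here:
# review the printed commands, then run them on the administration node.
KEY=/usr/share/ansible-runner-service/env/ssh_key.pub
ANSIBLE_USER="admin"
NODES="jb-ceph4-mon jb-ceph4-osd1 jb-ceph4-osd2"

copy_key_commands() {
    for node in $NODES; do
        echo "sudo ssh-copy-id -f -i $KEY $ANSIBLE_USER@$node"
    done
}

copy_key_commands
```

Printing instead of executing keeps the sketch safe to run anywhere; each printed line matches the command form used in the step above.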

4.5. Log in to Cockpit

You can view the Cockpit Ceph Installer web interface by logging into Cockpit.

Prerequisites

- The Cockpit Ceph Installer is installed and configured.

- You have the URL printed as a part of configuring the Cockpit Ceph Installer.

Procedure

Open the URL in a web browser.

Enter the Ansible user name and its password.

Click the radio button for Reuse my password for privileged tasks.

Click Log In.

Review the welcome page to understand how the installer works and the overall flow of the installation process.

Click the Environment button at the bottom right corner of the web page after you have reviewed the information in the welcome page.

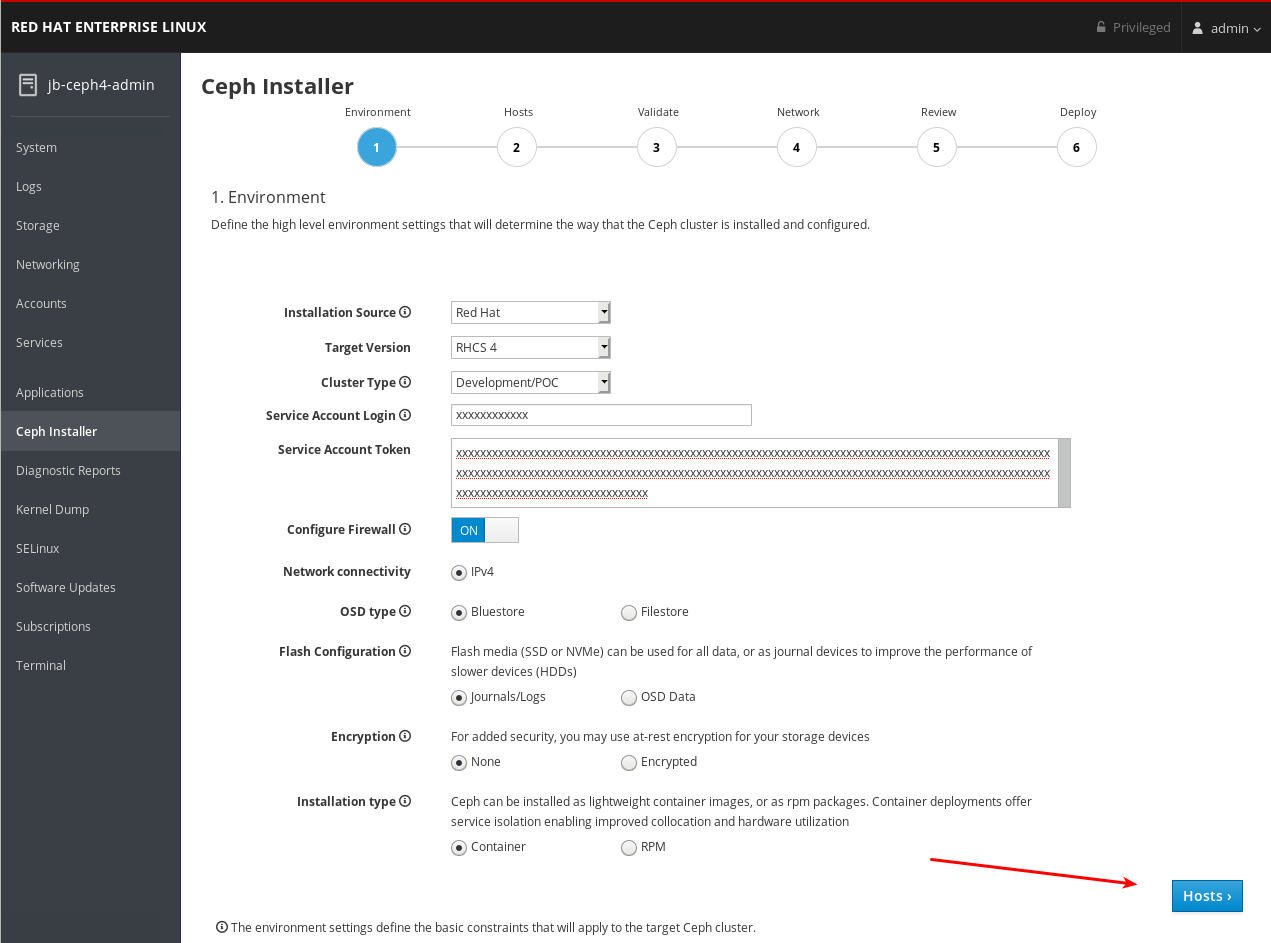

4.6. Complete the Environment page of the Cockpit Ceph Installer

The Environment page allows you to configure overall aspects of the cluster, like what installation source to use and how to use Hard Disk Drives (HDDs) and Solid State Drives (SSDs) for storage.

Prerequisites

- The Cockpit Ceph Installer is installed and configured.

- You have the URL printed as a part of configuring the Cockpit Ceph Installer.

- You have created a registry service account.

In the dialogs to follow, there are tooltips to the right of some of the settings. To view them, hover the mouse cursor over the icon that looks like an i with a circle around it.

Procedure

Select the Installation Source. Choose Red Hat to use repositories from Red Hat Subscription Manager, or ISO to use a CD image downloaded from the Red Hat Customer Portal.

If you choose Red Hat, Target Version will be set to RHCS 4 without any other options. If you choose ISO, Target Version will be set to the ISO image file.

Important

If you choose ISO, the image file must be in the /usr/share/ansible-runner-service/iso directory and its SELinux context must be set to container_file_t.

Important

The Community and Distribution options for Installation Source are not supported.

Select the Cluster Type. The Production selection prohibits the install from proceeding if certain resource requirements like CPU number and memory size are not met. To allow the cluster installation to proceed even if the resource requirements are not met, select Development/POC.

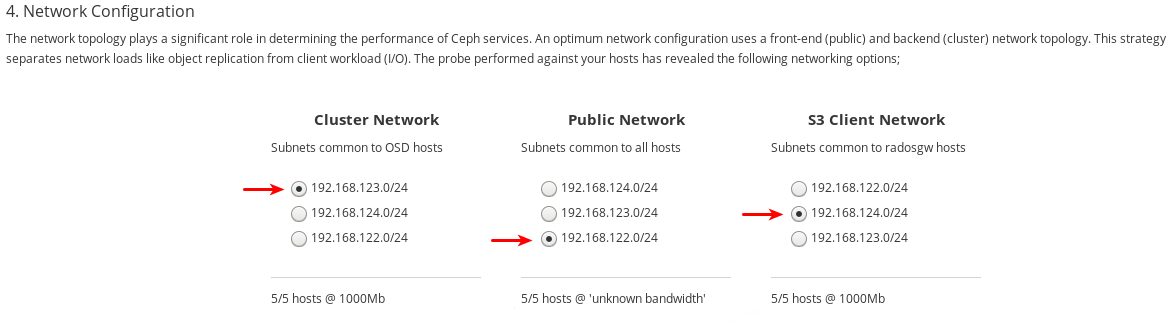

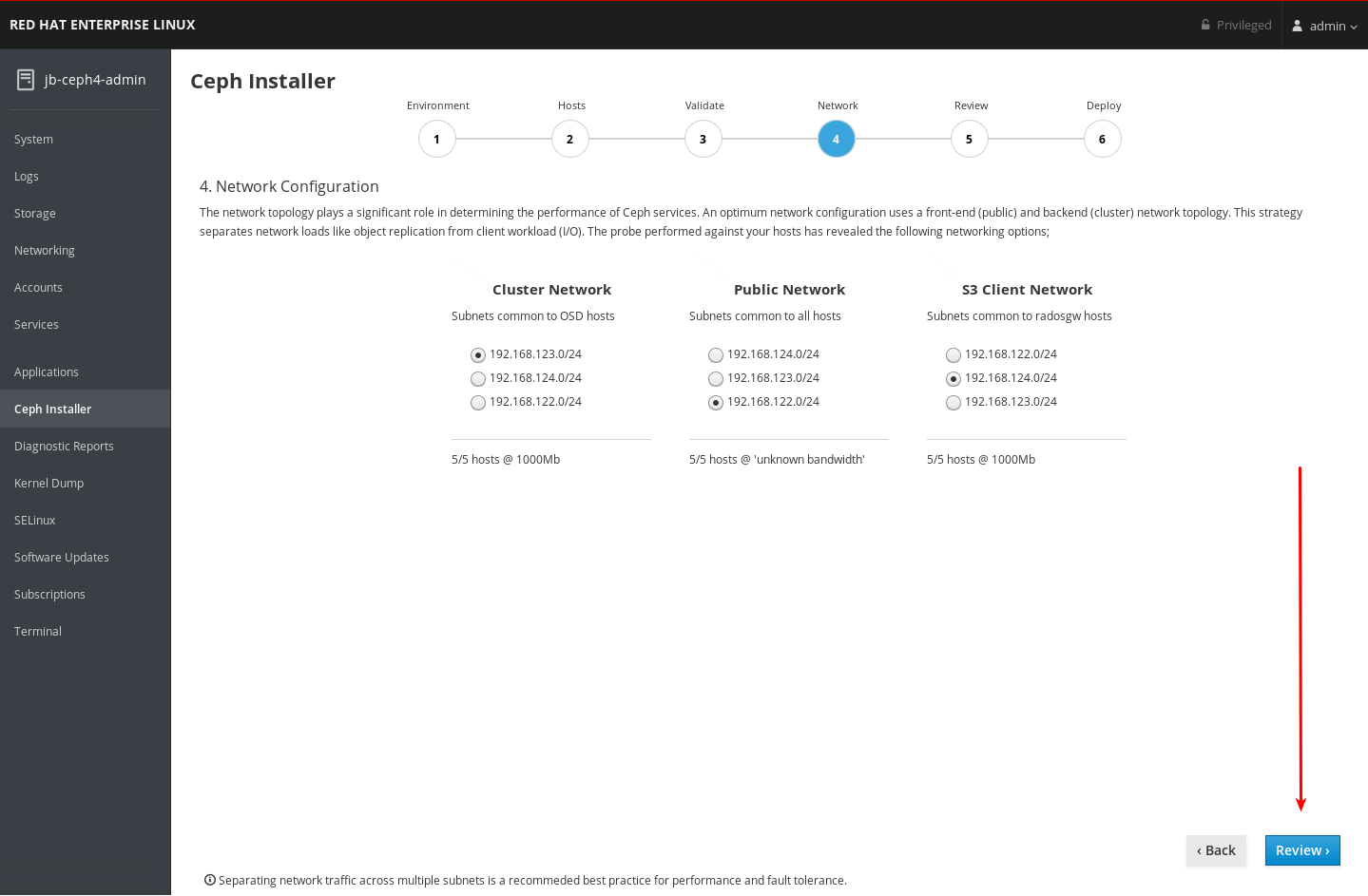

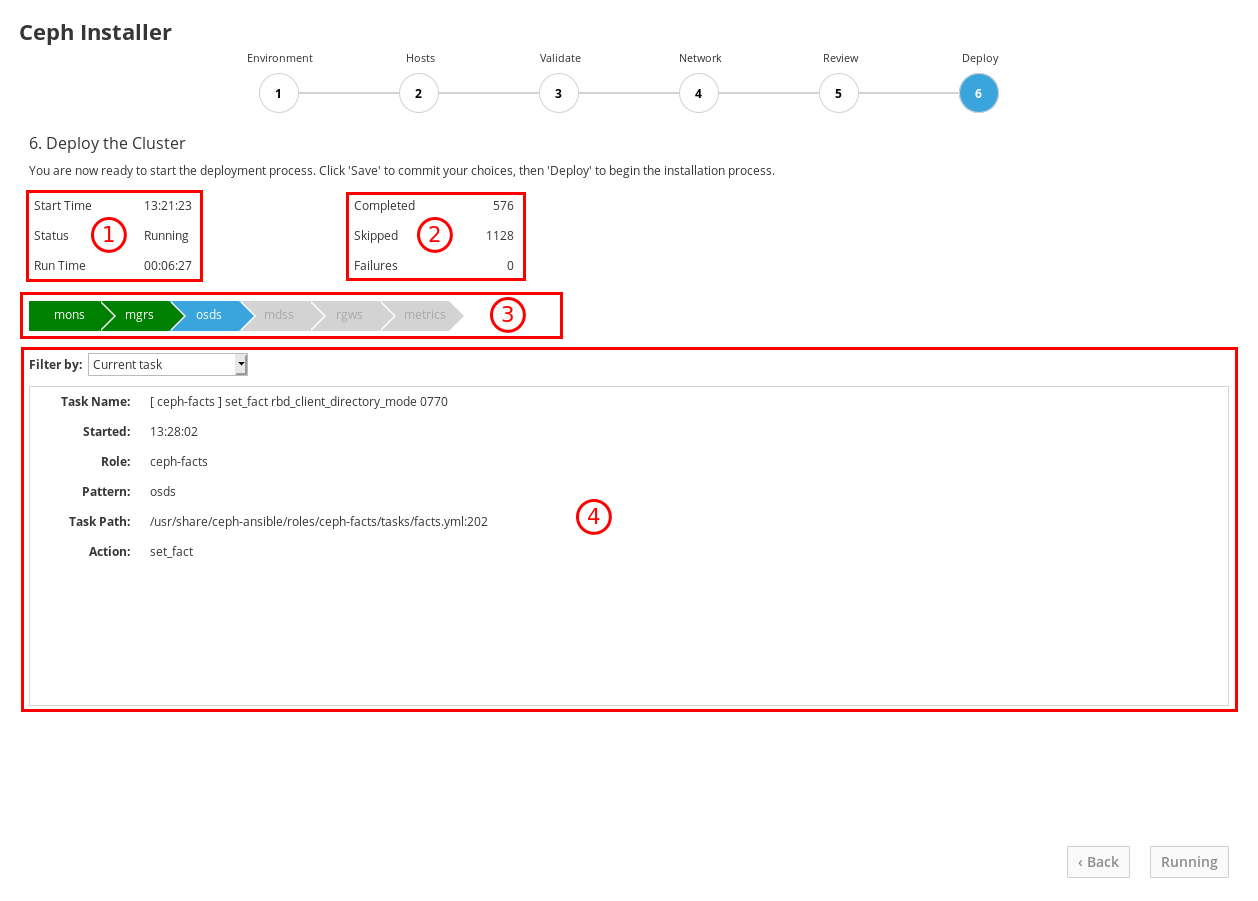

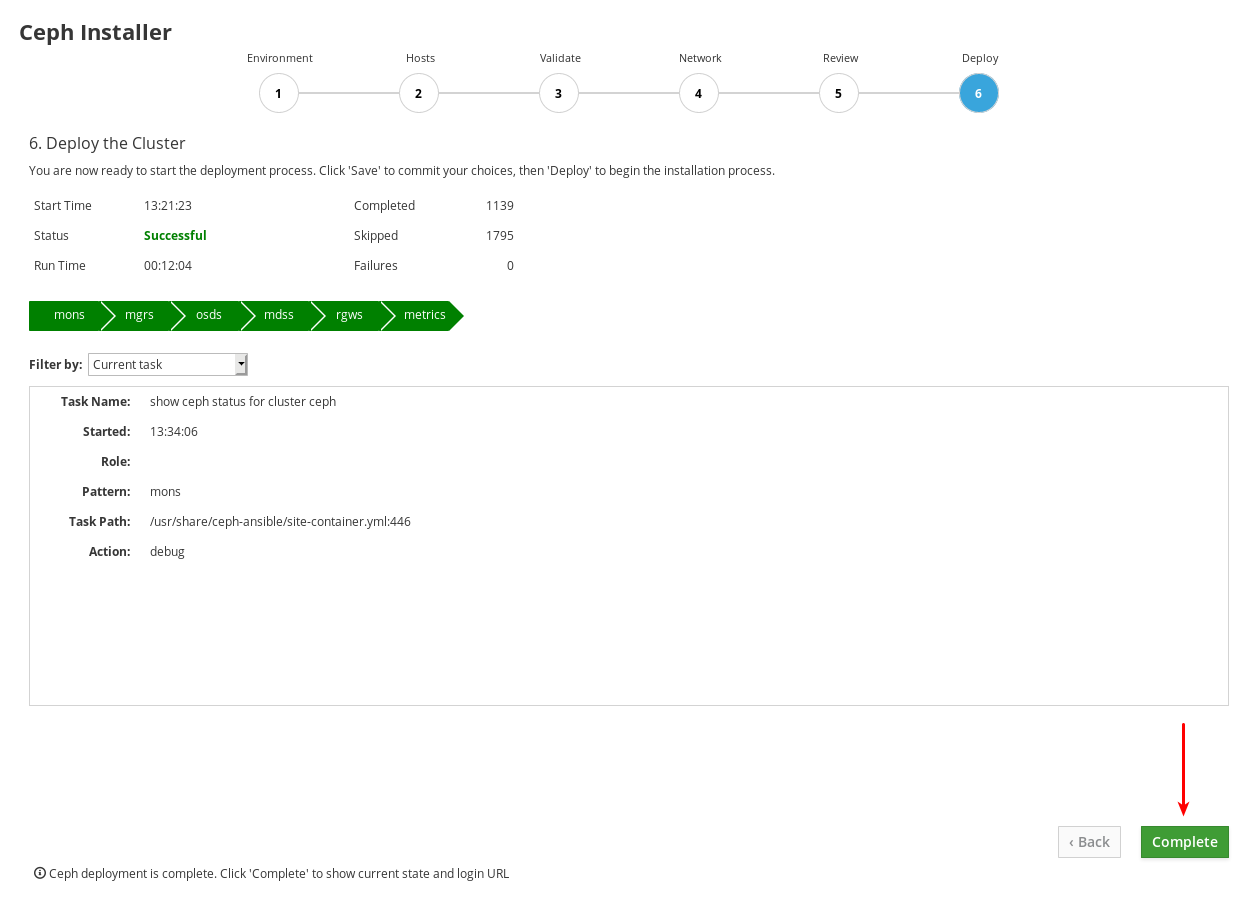

Important

Do not use Development/POC mode to install a Ceph cluster that will be used in production.