Installation Guide

Installing Red Hat Ceph Storage on Red Hat Enterprise Linux

Abstract

Chapter 1. Red Hat Ceph Storage

Red Hat Ceph Storage is a scalable, open, software-defined storage platform that combines an enterprise-hardened version of the Ceph storage system with a Ceph management platform, deployment utilities, and support services.

Red Hat Ceph Storage is designed for cloud infrastructure and web-scale object storage. Red Hat Ceph Storage clusters consist of the following types of nodes:

Ceph Monitor

Each Ceph Monitor node runs the ceph-mon daemon, which maintains a master copy of the storage cluster map. The storage cluster map includes the storage cluster topology. A client connecting to the Ceph storage cluster retrieves the current copy of the storage cluster map from the Ceph Monitor, which enables the client to read from and write data to the storage cluster.

The storage cluster can run with only one Ceph Monitor; however, to ensure high availability in a production storage cluster, Red Hat will only support deployments with at least three Ceph Monitor nodes. Red Hat recommends deploying a total of 5 Ceph Monitors for storage clusters exceeding 750 Ceph OSDs.
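The monitor guidance above can be written as a small rule. The following is a minimal sketch, not an official sizing tool; the function name `recommended_monitor_count` is an assumption made for illustration, and the only inputs are the two thresholds stated in the text (three monitors as the supported minimum, five for clusters exceeding 750 Ceph OSDs).

```python
def recommended_monitor_count(osd_count: int) -> int:
    """Return the Ceph Monitor count suggested by the guidance above."""
    if osd_count < 0:
        raise ValueError("osd_count must be non-negative")
    # Three monitors is the supported production minimum; five are
    # recommended once the cluster exceeds 750 Ceph OSDs.
    return 5 if osd_count > 750 else 3


print(recommended_monitor_count(120))
print(recommended_monitor_count(900))
```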

Ceph Manager

The Ceph Manager daemon, ceph-mgr, co-exists with the Ceph Monitor daemons running on Ceph Monitor nodes to provide additional services. The Ceph Manager provides an interface for other monitoring and management systems using Ceph Manager modules. Running the Ceph Manager daemons is a requirement for normal storage cluster operations.

Ceph OSD

Each Ceph Object Storage Device (OSD) node runs the ceph-osd daemon, which interacts with logical disks attached to the node. The storage cluster stores data on these Ceph OSD nodes.

Ceph can run with very few OSD nodes, of which the default is three, but production storage clusters realize better performance beginning at modest scales, for example, 50 Ceph OSDs in a storage cluster. Ideally, a Ceph storage cluster has multiple OSD nodes, which makes it possible to isolate failure domains by configuring the CRUSH map accordingly.

Ceph MDS

Each Ceph Metadata Server (MDS) node runs the ceph-mds daemon, which manages metadata related to files stored on the Ceph File System (CephFS). The Ceph MDS daemon also coordinates access to the shared storage cluster.

Ceph Object Gateway

Each Ceph Object Gateway node runs the ceph-radosgw daemon, an object storage interface built on top of librados that provides applications with a RESTful access point to the Ceph storage cluster. The Ceph Object Gateway supports two interfaces:

S3

Provides object storage functionality with an interface that is compatible with a large subset of the Amazon S3 RESTful API.

Swift

Provides object storage functionality with an interface that is compatible with a large subset of the OpenStack Swift API.

Additional Resources

- For details on the Ceph architecture, see the Red Hat Ceph Storage Architecture Guide.

- For the minimum hardware recommendations, see the Red Hat Ceph Storage Hardware Selection Guide.

Chapter 2. Red Hat Ceph Storage considerations and recommendations

As a storage administrator, you should have a basic understanding of what to consider before running a Red Hat Ceph Storage cluster, including the hardware and network requirements, the types of workloads that work well with a Red Hat Ceph Storage cluster, and Red Hat’s recommendations. Red Hat Ceph Storage can be used for different workloads based on a particular business need or set of requirements. Doing the necessary planning before installing Red Hat Ceph Storage is critical to running a Ceph storage cluster efficiently and achieving the business requirements.

Want help with planning a Red Hat Ceph Storage cluster for a specific use case? Contact your Red Hat representative for assistance.

2.1. Basic Red Hat Ceph Storage considerations

The first consideration for using Red Hat Ceph Storage is developing a storage strategy for the data. A storage strategy is a method of storing data that serves a particular use case. If you need to store volumes and images for a cloud platform like OpenStack, you can choose to store data on faster Serial Attached SCSI (SAS) drives with Solid State Drives (SSD) for journals. By contrast, if you need to store object data for an S3- or Swift-compliant gateway, you can choose to use something more economical, like traditional Serial Advanced Technology Attachment (SATA) drives. Red Hat Ceph Storage can accommodate both scenarios in the same storage cluster, but you need a means of providing the fast storage strategy to the cloud platform, and a means of providing more traditional storage for your object store.

One of the most important steps in a successful Ceph deployment is identifying a price-to-performance profile suitable for the storage cluster’s use case and workload. It is important to choose the right hardware for the use case. For example, choosing IOPS-optimized hardware for a cold storage application increases hardware costs unnecessarily, whereas choosing capacity-optimized hardware for its more attractive price point in an IOPS-intensive workload will likely lead to unhappy users complaining about slow performance.

Red Hat Ceph Storage can support multiple storage strategies. Use cases, cost versus benefit performance tradeoffs, and data durability are the primary considerations that help develop a sound storage strategy.

Use Cases

Ceph provides massive storage capacity, and it supports numerous use cases, such as:

- The Ceph Block Device client is a leading storage backend for cloud platforms that provides limitless storage for volumes and images with high performance features like copy-on-write cloning.

- The Ceph Object Gateway client is a leading storage backend for cloud platforms that provides RESTful S3-compliant and Swift-compliant object storage for objects like audio, bitmap, video, and other data.

- The Ceph File System for traditional file storage.

Cost vs. Benefit of Performance

Faster is better. Bigger is better. High durability is better. However, there is a price for each superlative quality, and a corresponding cost versus benefit tradeoff. Consider the following use cases from a performance perspective:

- SSDs can provide very fast storage for relatively small amounts of data and journaling. Storing a database or object index can benefit from a pool of very fast SSDs, but proves too expensive for other data.

- SAS drives with SSD journaling provide fast performance at an economical price for volumes and images.

- SATA drives without SSD journaling provide cheap storage with lower overall performance.

When you create a CRUSH hierarchy of OSDs, you need to consider the use case and an acceptable cost versus performance tradeoff.

Data Durability

In large scale storage clusters, hardware failure is an expectation, not an exception. However, data loss and service interruption remain unacceptable. For this reason, data durability is very important. Ceph addresses data durability with multiple replica copies of an object or with erasure coding and multiple coding chunks. Multiple copies or multiple coding chunks present an additional cost versus benefit tradeoff: it is cheaper to store fewer copies or coding chunks, but it can lead to the inability to service write requests in a degraded state. Generally, one object with two additional copies, or two coding chunks can allow a storage cluster to service writes in a degraded state while the storage cluster recovers.

Replication stores one or more redundant copies of the data across failure domains in case of a hardware failure. However, redundant copies of data can become expensive at scale. For example, to store 1 petabyte of data with triple replication would require a cluster with at least 3 petabytes of storage capacity.

Erasure coding stores data as data chunks and coding chunks. In the event of a lost data chunk, erasure coding can recover the lost data chunk with the remaining data chunks and coding chunks. Erasure coding is substantially more economical than replication. For example, using erasure coding with 8 data chunks and 3 coding chunks provides the same redundancy as 3 copies of the data. However, such an encoding scheme uses approximately 1.5x the initial data stored compared to 3x with replication.
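The capacity tradeoff between replication and erasure coding can be checked with a short calculation. The sketch below assumes the usual raw-capacity ratios: `copies` times the data for replication and `(k + m) / k` times the data for erasure coding with `k` data chunks and `m` coding chunks. The function names are illustrative, and the calculation ignores any additional space a real cluster keeps free.

```python
def replication_raw_capacity(usable: float, copies: int) -> float:
    """Raw capacity needed to hold `usable` data with `copies` replicas."""
    return usable * copies


def erasure_coding_raw_capacity(usable: float, k: int, m: int) -> float:
    """Raw capacity needed with k data chunks and m coding chunks."""
    # Every k data chunks are stored alongside m coding chunks,
    # so the overhead factor is (k + m) / k.
    return usable * (k + m) / k


usable_pb = 1.0  # 1 petabyte of data, as in the replication example
print(replication_raw_capacity(usable_pb, copies=3))
print(erasure_coding_raw_capacity(usable_pb, k=8, m=3))
```

For the 8 + 3 profile, the factor is 11 / 8 = 1.375, which is in line with the approximate figure quoted above and well below the 3x required for triple replication.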

The CRUSH algorithm aids this process by ensuring that Ceph stores additional copies or coding chunks in different locations within the storage cluster. This ensures that the failure of a single storage device or host does not lead to a loss of all of the copies or coding chunks necessary to preclude data loss. You can plan a storage strategy with cost versus benefit tradeoffs, and data durability in mind, then present it to a Ceph client as a storage pool.

ONLY the data storage pool can use erasure coding. Pools storing service data and bucket indexes use replication.

Ceph’s object copies or coding chunks make RAID solutions obsolete. Do not use RAID, because Ceph already handles data durability, a degraded RAID has a negative impact on performance, and recovering data using RAID is substantially slower than using deep copies or erasure coding chunks.

2.2. Red Hat Ceph Storage workload considerations

One of the key benefits of a Ceph storage cluster is the ability to support different types of workloads within the same storage cluster using performance domains. Different hardware configurations can be associated with each performance domain. Storage administrators can deploy storage pools on the appropriate performance domain, providing applications with storage tailored to specific performance and cost profiles. Selecting appropriately sized and optimized servers for these performance domains is an essential aspect of designing a Red Hat Ceph Storage cluster.

To the Ceph client interface that reads and writes data, a Ceph storage cluster appears as a simple pool where the client stores data. However, the storage cluster performs many complex operations in a manner that is completely transparent to the client interface. Ceph clients and Ceph object storage daemons, referred to as Ceph OSDs, or simply OSDs, both use the Controlled Replication Under Scalable Hashing (CRUSH) algorithm for the storage and retrieval of objects. Ceph OSDs can run in containers within the storage cluster.

A CRUSH map describes a topography of cluster resources, and the map exists both on client hosts as well as Ceph Monitor hosts within the cluster. Ceph clients and Ceph OSDs both use the CRUSH map and the CRUSH algorithm. Ceph clients communicate directly with OSDs, eliminating a centralized object lookup and a potential performance bottleneck. With awareness of the CRUSH map and communication with their peers, OSDs can handle replication, backfilling, and recovery—allowing for dynamic failure recovery.
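To illustrate why a shared map removes the need for a central lookup service, the following toy sketch has every client compute an object's placement from the map alone. It is not the CRUSH algorithm: it swaps in a much simpler technique (highest-random-weight hashing), and the function names, host names, and OSD names are all hypothetical.

```python
import hashlib


def _score(object_name: str, osd: str) -> int:
    """Deterministic pseudo-random score for an (object, OSD) pair."""
    digest = hashlib.sha256(f"{object_name}/{osd}".encode()).digest()
    return int.from_bytes(digest[:8], "big")


def place(object_name: str, hosts: dict[str, list[str]], replicas: int) -> list[str]:
    """Choose `replicas` OSDs for an object, at most one per host."""
    # Best-scoring OSD on each host that has at least one OSD.
    candidates = [
        max(osds, key=lambda osd: _score(object_name, osd))
        for osds in hosts.values()
        if osds
    ]
    if replicas > len(candidates):
        raise ValueError("not enough hosts for the requested replica count")
    # Rank hosts by their best score and keep the top `replicas`.
    candidates.sort(key=lambda osd: _score(object_name, osd), reverse=True)
    return candidates[:replicas]


# Hypothetical three-host map; any client holding the same map
# computes the same placement without asking a central service.
cluster_map = {
    "host1": ["osd.0", "osd.1"],
    "host2": ["osd.2", "osd.3"],
    "host3": ["osd.4", "osd.5"],
}
print(place("rbd_data.1234", cluster_map, replicas=2))
```

Treating each host as the failure domain keeps two copies of an object from landing on the same host, which is the same kind of guarantee the CRUSH map expresses through its hierarchy and rules.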

Ceph uses the CRUSH map to implement failure domains. Ceph also uses the CRUSH map to implement performance domains, which simply take the performance profile of the underlying hardware into consideration. The CRUSH map describes how Ceph stores data, and it is implemented as a simple hierarchy, specifically an acyclic graph, and a ruleset. The CRUSH map can support multiple hierarchies to separate one type of hardware performance profile from another. Ceph implements performance domains with device "classes".

For example, you can have these performance domains coexisting in the same Red Hat Ceph Storage cluster:

- Hard disk drives (HDDs) are typically appropriate for cost and capacity-focused workloads.

- Throughput-sensitive workloads typically use HDDs with Ceph write journals on solid state drives (SSDs).

- IOPS-intensive workloads, such as MySQL and MariaDB, often use SSDs.

Figure 2.1. Performance and Failure Domains

Workloads

Red Hat Ceph Storage is optimized for three primary workloads.

Carefully consider the workload being run by Red Hat Ceph Storage clusters BEFORE considering what hardware to purchase, because it can significantly impact the price and performance of the storage cluster. For example, if the workload is capacity-optimized and the hardware is better suited to a throughput-optimized workload, then hardware will be more expensive than necessary. Conversely, if the workload is throughput-optimized and the hardware is better suited to a capacity-optimized workload, then the storage cluster can suffer from poor performance.

IOPS optimized: Input/output operations per second (IOPS) optimized deployments are suitable for cloud computing operations, such as running MySQL or MariaDB instances as virtual machines on OpenStack. IOPS optimized deployments require higher performance storage such as 15k RPM SAS drives and separate SSD journals to handle frequent write operations. Some high IOPS scenarios use all flash storage to improve IOPS and total throughput.

An IOPS-optimized storage cluster has the following properties:

- Lowest cost per IOPS.

- Highest IOPS per GB.

- 99th percentile latency consistency.

Uses for an IOPS-optimized storage cluster are:

- Typically block storage.

- 3x replication for hard disk drives (HDDs) or 2x replication for solid state drives (SSDs).

- MySQL on OpenStack clouds.

Throughput optimized: Throughput-optimized deployments are suitable for serving up significant amounts of data, such as graphic, audio, and video content. Throughput-optimized deployments require high bandwidth networking hardware, controllers, and hard disk drives with fast sequential read and write characteristics. If fast data access is a requirement, then use a throughput-optimized storage strategy. Also, if fast write performance is a requirement, using Solid State Disks (SSD) for journals will substantially improve write performance.

A throughput-optimized storage cluster has the following properties:

- Lowest cost per MBps (throughput).

- Highest MBps per TB.

- Highest MBps per BTU.

- Highest MBps per Watt.

- 97th percentile latency consistency.

Uses for a throughput-optimized storage cluster are:

- Block or object storage.

- 3x replication.

- Active performance storage for video, audio, and images.

- Streaming media, such as 4k video.

Capacity optimized: Capacity-optimized deployments are suitable for storing significant amounts of data as inexpensively as possible. Capacity-optimized deployments typically trade performance for a more attractive price point. For example, capacity-optimized deployments often use slower and less expensive SATA drives and co-locate journals rather than using SSDs for journaling.

A cost and capacity-optimized storage cluster has the following properties:

- Lowest cost per TB.

- Lowest BTU per TB.

- Lowest Watts required per TB.

Uses for a cost and capacity-optimized storage cluster are:

- Typically object storage.

- Erasure coding for maximizing usable capacity.

- Object archive.

- Video, audio, and image object repositories.

2.3. Network considerations for Red Hat Ceph Storage

An important aspect of a cloud storage solution is that storage clusters can run out of IOPS due to network latency, and other factors. Also, the storage cluster can run out of throughput due to bandwidth constraints long before the storage clusters run out of storage capacity. This means that the network hardware configuration must support the chosen workloads to meet price versus performance requirements.

Storage administrators prefer that a storage cluster recovers as quickly as possible. Carefully consider bandwidth requirements for the storage cluster network, be mindful of network link oversubscription, and segregate the intra-cluster traffic from the client-to-cluster traffic. Also consider that network performance is increasingly important when considering the use of Solid State Disks (SSD), flash, NVMe, and other high performing storage devices.

Ceph supports a public network and a storage cluster network. The public network handles client traffic and communication with Ceph Monitors. The storage cluster network handles Ceph OSD heartbeats, replication, backfilling, and recovery traffic. At a minimum, a single 10 Gb/s Ethernet link should be used for storage hardware, and you can add additional 10 Gb/s Ethernet links for connectivity and throughput.

Red Hat recommends allocating bandwidth to the storage cluster network, such that it is a multiple of the public network using the osd_pool_default_size as the basis for the multiple on replicated pools. Red Hat also recommends running the public and storage cluster networks on separate network cards.
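As a worked example of this recommendation, the sketch below multiplies the public network bandwidth by the replica count. It assumes the multiple is exactly `osd_pool_default_size`, which is one straightforward reading of the guidance; the function name is illustrative.

```python
def cluster_network_gbps(public_gbps: float, osd_pool_default_size: int) -> float:
    """Suggested storage cluster network bandwidth for replicated pools."""
    if osd_pool_default_size < 1:
        raise ValueError("osd_pool_default_size must be at least 1")
    # Each client write on the public network is copied to the other
    # replicas over the cluster network, so scale by the replica count.
    return public_gbps * osd_pool_default_size


# A 10 Gb/s public network with three replicas.
print(cluster_network_gbps(10, 3))
```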

Red Hat recommends using 10 Gb/s Ethernet for Red Hat Ceph Storage deployments in production. A 1 Gb/s Ethernet network is not suitable for production storage clusters.

In the case of a drive failure, replicating 1 TB of data across a 1 Gb/s network takes 3 hours and replicating 10 TB across a 1 Gb/s network takes 30 hours. 10 TB is a typical drive capacity. By contrast, with a 10 Gb/s Ethernet network, the replication times would be 20 minutes for 1 TB and 1 hour for 10 TB. Remember that when a Ceph OSD fails, the storage cluster will recover by replicating the data it contained to other Ceph OSDs within the pool.
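For a rough sense of scale, the time to move a given amount of data over a single link can be estimated as follows. This is an idealized sketch: it assumes decimal terabytes, one fully used link, and an optional `efficiency` factor that is purely an assumption. Real recovery is spread across many OSDs and links and carries protocol overhead, so the figures quoted above are not expected to match this calculation exactly.

```python
def transfer_time_hours(data_tb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Hours needed to move `data_tb` terabytes over one link."""
    if link_gbps <= 0 or not 0 < efficiency <= 1:
        raise ValueError("link_gbps must be positive and efficiency in (0, 1]")
    bits = data_tb * 1e12 * 8  # decimal terabytes to bits
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 3600


for data_tb in (1, 10):
    for link_gbps in (1, 10):
        hours = transfer_time_hours(data_tb, link_gbps)
        print(f"{data_tb} TB over {link_gbps} Gb/s: {hours:.2f} h at line rate")
```

The useful takeaway is the scaling: ten times the data takes ten times as long, and ten times the bandwidth cuts the time to a tenth.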

The failure of a larger domain such as a rack means that the storage cluster utilizes considerably more bandwidth. When building a storage cluster consisting of multiple racks, which is common for large storage implementations, consider utilizing as much network bandwidth between switches as possible in a "fat tree" design for optimal performance. A typical 10 Gb/s Ethernet switch has 48 10 Gb/s ports and four 40 Gb/s ports. Use the 40 Gb/s ports on the spine for maximum throughput. Alternatively, consider aggregating unused 10 Gb/s ports with QSFP+ and SFP+ cables into more 40 Gb/s ports to connect to other rack and spine routers. Also, consider using LACP mode 4 to bond network interfaces. Additionally, use jumbo frames, with a maximum transmission unit (MTU) of 9000, especially on the backend or cluster network.
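Link oversubscription for the switch described above can be estimated with a simple ratio. The sketch assumes that all 48 of the 10 Gb/s ports face hosts and that all four 40 Gb/s ports are used as uplinks to the spine; both are assumptions for the sake of the arithmetic, and the function name is illustrative.

```python
def oversubscription_ratio(down_ports: int, down_gbps: float,
                           up_ports: int, up_gbps: float) -> float:
    """Ratio of host-facing bandwidth to uplink bandwidth on one switch."""
    uplink = up_ports * up_gbps
    if uplink <= 0:
        raise ValueError("uplink bandwidth must be positive")
    return (down_ports * down_gbps) / uplink


# 48 x 10 Gb/s host ports against 4 x 40 Gb/s uplinks.
print(oversubscription_ratio(48, 10, 4, 40))
```

Under these assumptions the ratio is 480 / 160, or 3:1. Aggregating unused 10 Gb/s ports into additional uplinks lowers the ratio.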

Before installing and testing a Red Hat Ceph Storage cluster, verify the network throughput. Most performance-related problems in Ceph begin with a networking issue. Simple network issues like a kinked or bent Cat-6 cable could result in degraded bandwidth. Use a minimum of 10 Gb/s Ethernet for the front side network. For large clusters, consider using 40 Gb/s Ethernet for the backend or cluster network.

For network optimization, Red Hat recommends using jumbo frames for a better CPU per bandwidth ratio, and a non-blocking network switch back-plane. Red Hat Ceph Storage requires the same MTU value throughout all networking devices in the communication path, end-to-end for both public and cluster networks. Verify that the MTU value is the same on all hosts and networking equipment in the environment before using a Red Hat Ceph Storage cluster in production.
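The end-to-end MTU check described above amounts to comparing every device in the path against one expected value. The following minimal sketch works on an inventory that you collect yourself; the device names and values are hypothetical, and the sketch does not query any host or switch.

```python
def find_mtu_mismatches(observed: dict[str, int], expected_mtu: int = 9000) -> dict[str, int]:
    """Return the devices whose MTU differs from the expected value."""
    return {device: mtu for device, mtu in observed.items() if mtu != expected_mtu}


# Hypothetical inventory: device name -> configured MTU.
observed = {
    "host1:eth1": 9000,
    "host2:eth1": 9000,
    "switch-a:port12": 1500,
}
for device, mtu in find_mtu_mismatches(observed).items():
    print(f"{device}: MTU {mtu} does not match the expected value")
```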

2.4. Considerations for using a RAID controller with OSD hosts

Optionally, you can consider using a RAID controller on the OSD hosts. Here are some things to consider:

- If an OSD host has a RAID controller with 1-2 GB of cache installed, enabling the write-back cache might result in increased small I/O write throughput. However, the cache must be non-volatile.

- Most modern RAID controllers have super capacitors that provide enough power to drain volatile memory to non-volatile NAND memory during a power-loss event. It is important to understand how a particular controller and its firmware behave after power is restored.

- Some RAID controllers require manual intervention. Hard drives typically advertise to the operating system whether their disk caches should be enabled or disabled by default. However, certain RAID controllers and some firmware do not provide such information. Verify that disk level caches are disabled to avoid file system corruption.

- Create a single RAID 0 volume for each Ceph OSD data drive with write-back cache enabled.

- If Serial Attached SCSI (SAS) or SATA connected Solid-state Drive (SSD) disks are also present on the RAID controller, then investigate whether the controller and firmware support pass-through mode. Enabling pass-through mode helps avoid caching logic, and generally results in much lower latency for fast media.

2.5. Tuning considerations for the Linux kernel when running Ceph

Production Red Hat Ceph Storage clusters generally benefit from tuning the operating system, specifically around limits and memory allocation. Ensure that adjustments are set for all hosts within the storage cluster. You can also open a case with Red Hat support asking for additional guidance.

Increase the File Descriptors

The Ceph Object Gateway can hang if it runs out of file descriptors. You can modify the /etc/security/limits.conf file on Ceph Object Gateway hosts to increase the file descriptors for the Ceph Object Gateway.

ceph soft nofile unlimited

Adjusting the ulimit value for Large Storage Clusters

When running Ceph administrative commands on large storage clusters, for example, with 1024 Ceph OSDs or more, create an /etc/security/limits.d/50-ceph.conf file on each host that runs administrative commands with the following contents:

USER_NAME soft nproc unlimited

Replace USER_NAME with the name of the non-root user account that runs the Ceph administrative commands.

The root user’s ulimit value is already set to unlimited by default on Red Hat Enterprise Linux.
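To confirm which limits a process actually runs with after changing these files, you can read them from inside a session started by the relevant user. The sketch below uses Python's standard `resource` module on Linux; the helper name `describe_limit` is illustrative. It only reports the limits of the current process and does not modify any configuration.

```python
import resource


def describe_limit(name: str, which: int) -> str:
    """Format the soft and hard values of one resource limit."""
    def fmt(value: int) -> str:
        return "unlimited" if value == resource.RLIM_INFINITY else str(value)

    soft, hard = resource.getrlimit(which)
    return f"{name}: soft={fmt(soft)} hard={fmt(hard)}"


# nofile is the open file descriptor limit; nproc is the process limit.
print(describe_limit("nofile", resource.RLIMIT_NOFILE))
print(describe_limit("nproc", resource.RLIMIT_NPROC))
```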

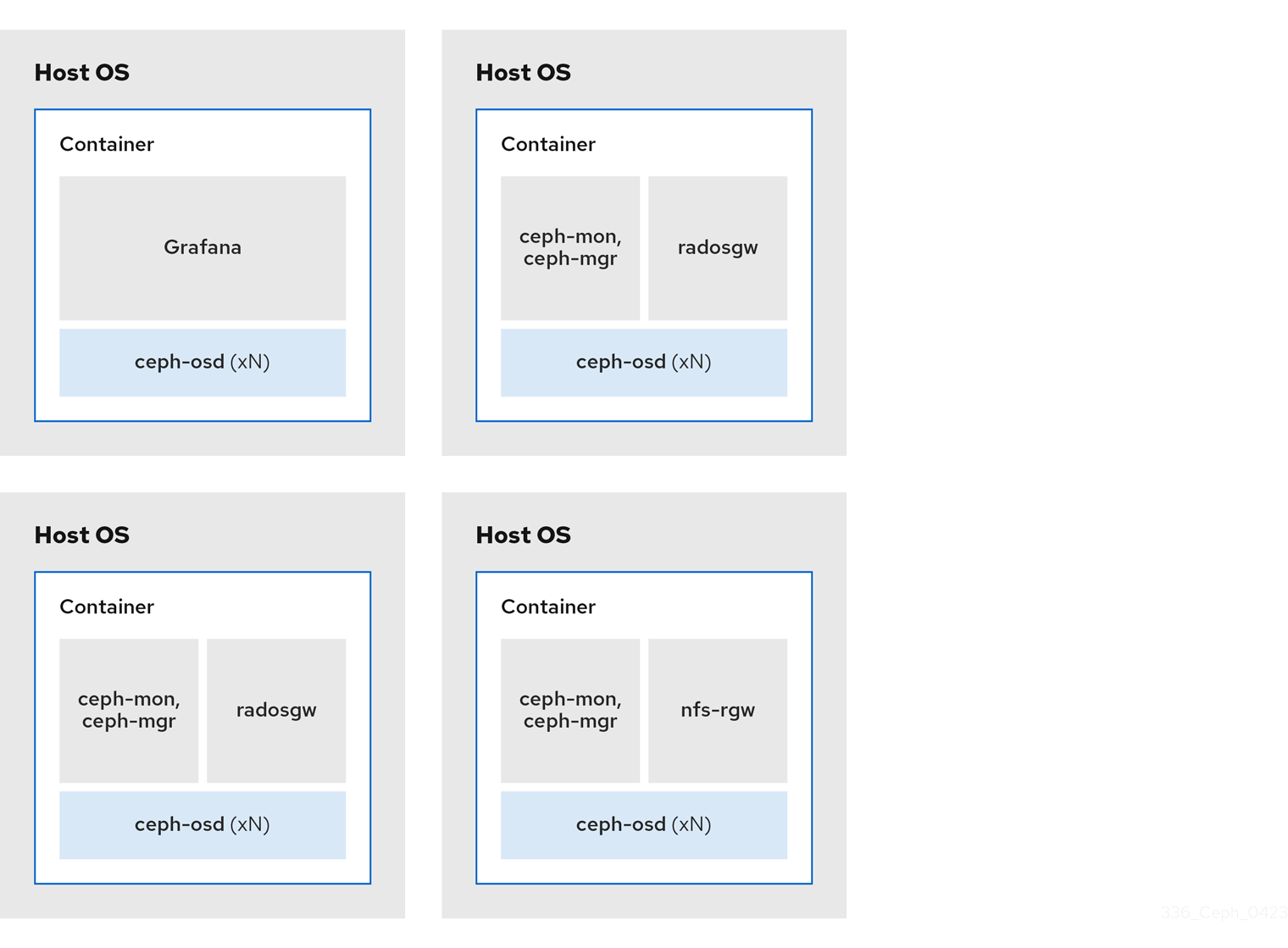

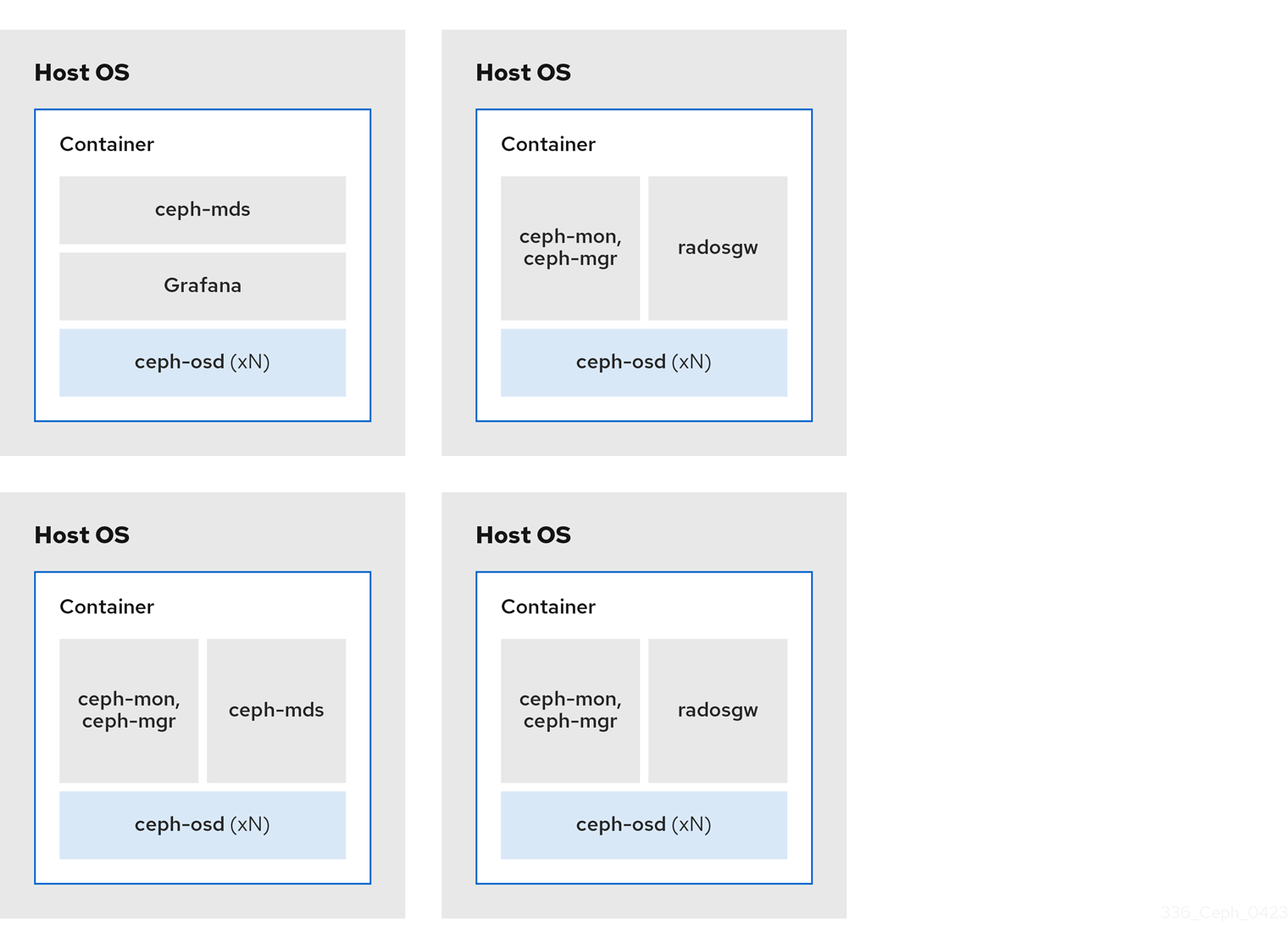

2.6. How colocation works and its advantages

You can colocate containerized Ceph daemons on the same host. Here are the advantages of colocating some of Ceph’s services:

- Significant improvement in total cost of ownership (TCO) at small scale

- Reduction from six hosts to three for the minimum configuration

- Easier upgrade

- Better resource isolation

How Colocation Works

With the help of the Cephadm orchestrator, you can colocate one daemon from the following list with one or more OSD daemons (ceph-osd):

- Ceph Monitor (ceph-mon) and Ceph Manager (ceph-mgr) daemons

- NFS Ganesha (nfs-ganesha) for Ceph Object Gateway (nfs-ganesha)

- RBD Mirror (rbd-mirror)

- Observability Stack (Grafana)

Additionally, for Ceph Object Gateway (radosgw), also referred to as RGW, and Ceph File System (ceph-mds), you can colocate either one with an OSD daemon plus a daemon from the above list, excluding RBD Mirror.

Colocating two of the same kind of daemons on a given node is not supported.

Because ceph-mon and ceph-mgr work together closely, they do not count as two separate daemons for the purposes of colocation.

Red Hat recommends colocating the Ceph Object Gateway with Ceph OSD containers to increase performance.

With the colocation rules shared above, the following minimum cluster sizes comply with these rules:

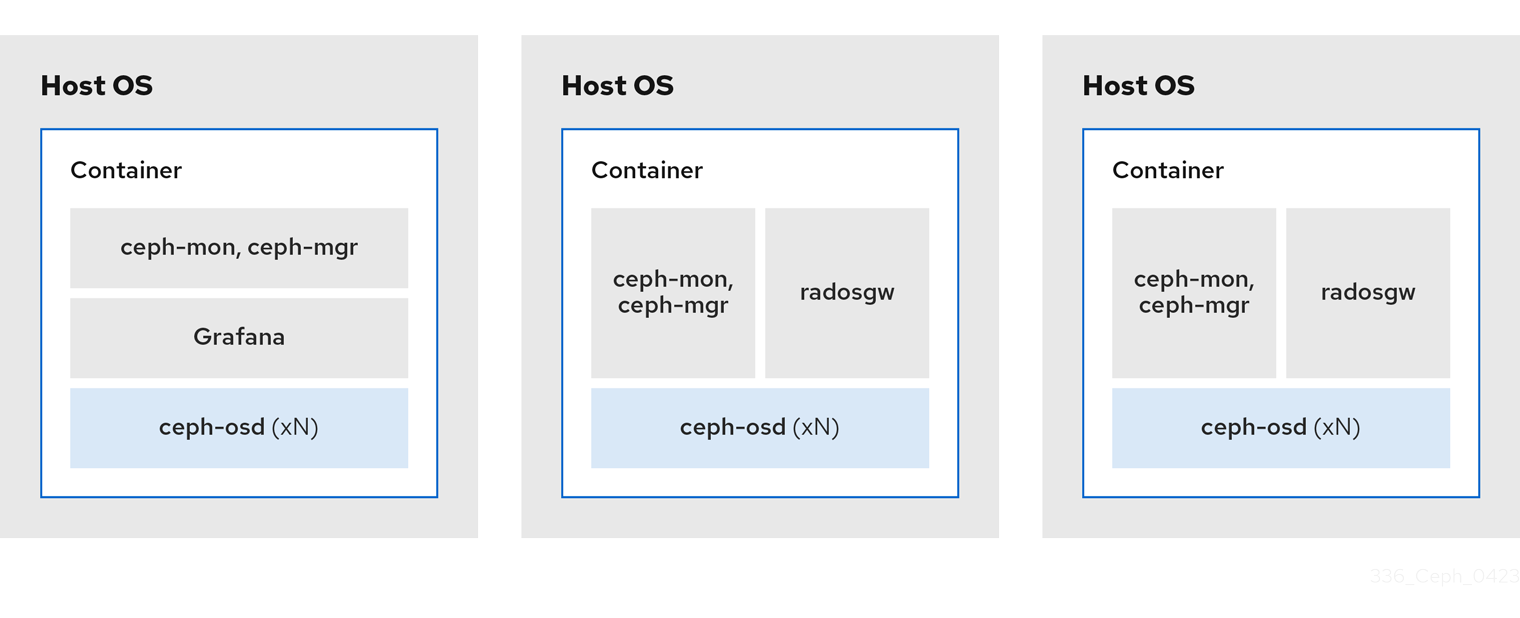

Example 1

- Media: Full flash systems (SSDs)

- Use case: Block (RBD) and File (CephFS), or Object (Ceph Object Gateway)

- Number of nodes: 3

- Replication scheme: 2

| Host | Daemon | Daemon | Daemon |
|---|---|---|---|
| host1 | OSD | Monitor/Manager | Grafana |
| host2 | OSD | Monitor/Manager | RGW or CephFS |
| host3 | OSD | Monitor/Manager | RGW or CephFS |

The minimum size for a storage cluster with three replicas is four nodes. Similarly, the size of a storage cluster with two replicas is a three node cluster. It is a requirement to have a certain number of nodes for the replication factor with an extra node in the cluster to avoid extended periods with the cluster in a degraded state.
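The sizing rule in the preceding paragraph is the replica count plus one spare node. A minimal sketch follows; the function name is illustrative.

```python
def minimum_nodes(replica_count: int) -> int:
    """Minimum node count: one node per replica plus one extra node."""
    if replica_count < 1:
        raise ValueError("replica_count must be at least 1")
    # The extra node lets the cluster re-replicate after a node failure
    # instead of staying degraded for an extended period.
    return replica_count + 1


print(minimum_nodes(2))
print(minimum_nodes(3))
```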

Figure 2.2. Colocated Daemons Example 1

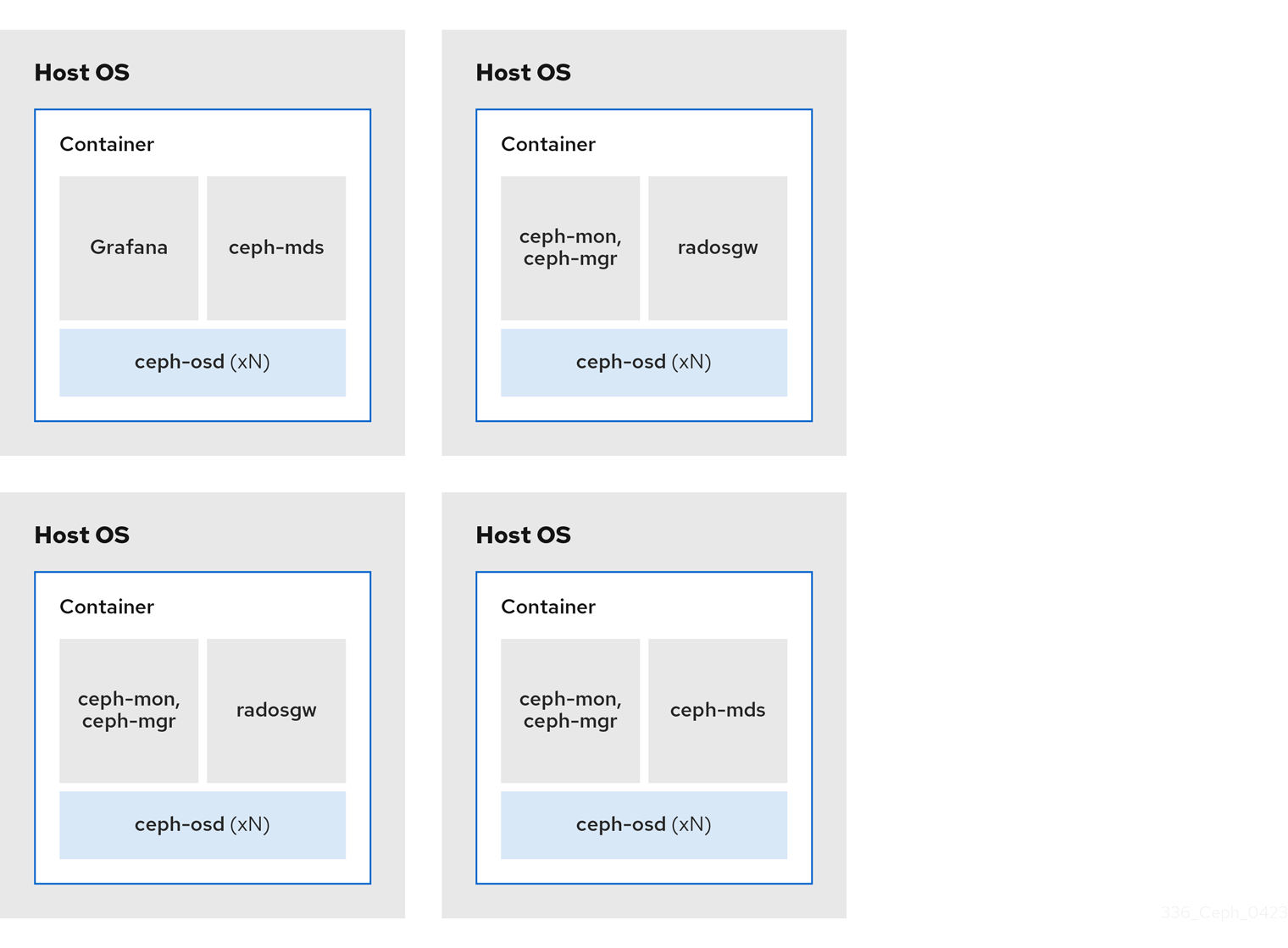

Example 2

- Media: Full flash systems (SSDs) or spinning devices (HDDs)

- Use case: Block (RBD), File (CephFS), and Object (Ceph Object Gateway)

- Number of nodes: 4

- Replication scheme: 3

| Host | Daemon | Daemon | Daemon |
|---|---|---|---|
| host1 | OSD | Grafana | CephFS |
| host2 | OSD | Monitor/Manager | RGW |
| host3 | OSD | Monitor/Manager | RGW |
| host4 | OSD | Monitor/Manager | CephFS |

Figure 2.3. Colocated Daemons Example 2

Example 3

- Media: Full flash systems (SSDs) or spinning devices (HDDs)

- Use case: Block (RBD), Object (Ceph Object Gateway), and NFS for Ceph Object Gateway

- Number of nodes: 4

- Replication scheme: 3

| Host | Daemon | Daemon | Daemon |
|---|---|---|---|
| host1 | OSD | Grafana | |
| host2 | OSD | Monitor/Manager | RGW |
| host3 | OSD | Monitor/Manager | RGW |
| host4 | OSD | Monitor/Manager | NFS (RGW) |

Figure 2.4. Colocated Daemons Example 3

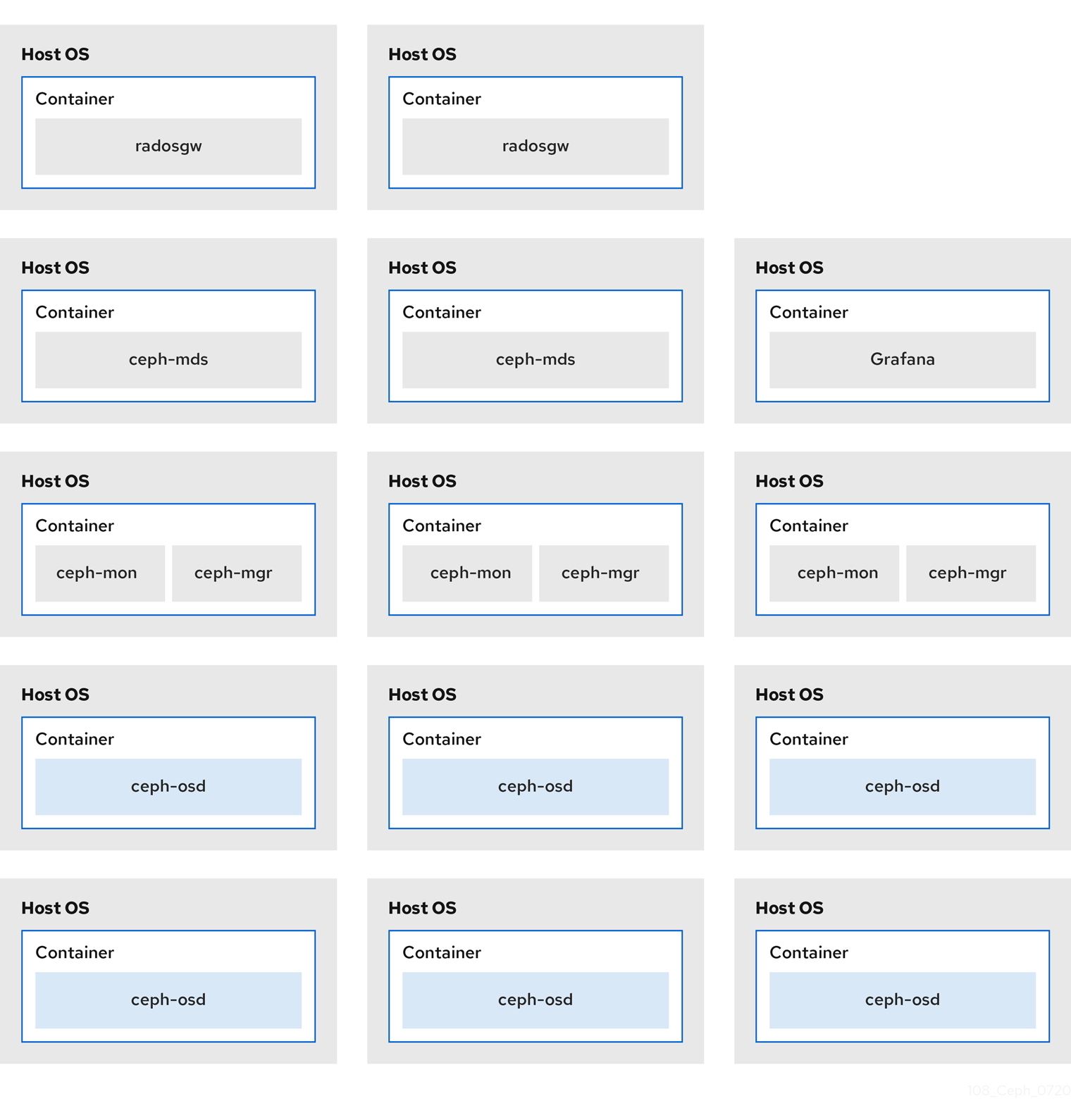

The diagrams below show the differences between storage clusters with colocated and non-colocated daemons.

Figure 2.5. Colocated Daemons

Figure 2.6. Non-colocated Daemons

2.7. Operating system requirements for Red Hat Ceph Storage

Red Hat Enterprise Linux entitlements are included in the Red Hat Ceph Storage subscription.

For full compatibility information, see the Compatibility Guide.

Red Hat Ceph Storage 7 is supported on container-based deployments only.

Use the same architecture and deployment type across all nodes. For example, do not use a mixture of nodes with both AMD64 and Intel 64 architectures, or a mixture of nodes with container-based deployments.

Red Hat does not support clusters with heterogeneous architectures or deployment types.

SELinux

By default, SELinux is set to Enforcing mode and the ceph-selinux packages are installed. For additional information on SELinux, see the Data Security and Hardening Guide and the Red Hat Enterprise Linux 9 Using SELinux Guide.

2.8. Minimum hardware considerations for Red Hat Ceph Storage

Red Hat Ceph Storage can run on non-proprietary commodity hardware. Small production clusters and development clusters can run without performance optimization with modest hardware.

Disk space requirements are based on the Ceph daemons' default path under the /var/lib/ceph/ directory.

| Process | Criteria | Minimum Recommended |
|---|---|---|
| OSD container | Processor | 1x AMD64 or Intel 64 CPU core per OSD container. |
| OSD container | RAM | Minimum of 5 GB of RAM per OSD container. |
| OSD container | Number of nodes | Minimum of 3 nodes required. |
| OSD container | OS Disk | 1x OS disk per host. |
| OSD container | OSD Storage | 1x storage drive per OSD container. Cannot be shared with OS Disk. |
| OSD container | | Optional, but Red Hat recommended, 1x SSD or NVMe or Optane partition or lvm per daemon. Sizing is 4% of |
| OSD container | | Optionally, 1x SSD or NVMe or Optane partition or logical volume per daemon. Use a small size, for example 10 GB, and only if it’s faster than the |
| OSD container | Network | 2x 10 Gb/s Ethernet NICs |
| mon-container | Processor | 1x AMD64 or Intel 64 CPU core per mon-container |
| mon-container | RAM | 3 GB per |
| mon-container | Disk Space | 10 GB per mon-container, 50 GB recommended |
| mon-container | Monitor Disk | Optionally, 1x SSD disk for |
| mon-container | Network | 2x 1 Gb/s Ethernet NICs, 10 Gb/s recommended |
| mon-container | Prometheus | 20 GB to 50 GB under |
| | Processor | 1x AMD64 or Intel 64 CPU core per |
| | RAM | 3 GB per |
| | Network | 2x 1 Gb/s Ethernet NICs, 10 Gb/s recommended |
| radosgw-container | Processor | 1x AMD64 or Intel 64 CPU core per radosgw-container |
| radosgw-container | RAM | 1 GB per daemon |
| radosgw-container | Disk Space | 5 GB per daemon |
| radosgw-container | Network | 1x 1 Gb/s Ethernet NICs |
| mds-container | Processor | 1x AMD64 or Intel 64 CPU core per mds-container |
| mds-container | RAM | 3 GB per This number is highly dependent on the configurable MDS cache size. The RAM requirement is typically twice as much as the amount set in the |
| mds-container | Disk Space | 2 GB per mds-container, plus any additional space required for possible debug logging. 20 GB is a good start. |
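When daemons are colocated, the per-container minimums in the table add up per host. The sketch below sums the processor and RAM figures for the OSD, Monitor, Ceph Object Gateway, and MDS containers. The short keys (`osd`, `mon`, `rgw`, `mds`) are labels chosen for this sketch, and the result covers only the listed containers, not the operating system or any other daemon.

```python
# (cpu_cores, ram_gb) per container, taken from the table above.
# The short keys are labels chosen for this sketch.
PER_DAEMON_MINIMUMS = {
    "osd": (1, 5),
    "mon": (1, 3),
    "rgw": (1, 1),
    "mds": (1, 3),  # depends heavily on the configured MDS cache size
}


def host_minimums(daemons: dict[str, int]) -> tuple[int, int]:
    """Return (cpu_cores, ram_gb) needed for the given daemon counts."""
    cores = 0
    ram_gb = 0
    for kind, count in daemons.items():
        per_core, per_ram = PER_DAEMON_MINIMUMS[kind]
        cores += per_core * count
        ram_gb += per_ram * count
    return cores, ram_gb


# A host with 12 OSDs, one Monitor, and one Ceph Object Gateway.
print(host_minimums({"osd": 12, "mon": 1, "rgw": 1}))  # (14, 64)
```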

Chapter 3. Red Hat Ceph Storage installation

As a storage administrator, you can use the cephadm utility to deploy new Red Hat Ceph Storage clusters.

The cephadm utility manages the entire life cycle of a Ceph cluster. Installation and management tasks comprise two types of operations:

- Day One operations involve installing and bootstrapping a bare-minimum, containerized Ceph storage cluster, running on a single node. Day One also includes deploying the Monitor and Manager daemons and adding Ceph OSDs.

- Day Two operations use the Ceph orchestration interface, ceph orch, or the Red Hat Ceph Storage Dashboard to expand the storage cluster by adding other Ceph services to the storage cluster.

Prerequisites

- At least one running virtual machine (VM) or bare-metal server with an active internet connection.

- Red Hat Enterprise Linux 9.2 with ansible-core bundled into AppStream.

- A valid Red Hat subscription with the appropriate entitlements.

- Root-level access to all nodes.

- An active Red Hat Network (RHN) or service account to access the Red Hat Registry.

- Remove problematic iptables configurations so that refreshing the iptables service does not cause issues for the cluster. For an example, refer to the Verifying firewall rules are configured for default Ceph ports section of the Red Hat Ceph Storage Configuration Guide.

3.1. The cephadm utility

The cephadm utility deploys and manages a Ceph storage cluster. It is tightly integrated with both the command-line interface (CLI) and the Red Hat Ceph Storage Dashboard web interface so that you can manage storage clusters from either environment. cephadm uses SSH to connect to hosts from the manager daemon to add, remove, or update Ceph daemon containers. It does not rely on external configuration or orchestration tools such as Ansible or Rook.

The cephadm utility is available after running the preflight playbook on a host.

The cephadm utility consists of two main components:

- The cephadm shell.

- The cephadm orchestrator.

The cephadm shell

The cephadm shell starts a bash shell within a container. Use the shell to complete “Day One” cluster setup tasks, such as installation and bootstrapping, and to use ceph commands.

For more information about how to start the cephadm shell, see Starting the cephadm shell.

The cephadm orchestrator

Use the cephadm orchestrator to perform “Day Two” Ceph functions, such as expanding the storage cluster and provisioning Ceph daemons and services. You can use the cephadm orchestrator through either the command-line interface (CLI) or the web-based Red Hat Ceph Storage Dashboard. Orchestrator commands take the form ceph orch.

The cephadm script interacts with the Ceph orchestration module used by the Ceph Manager.

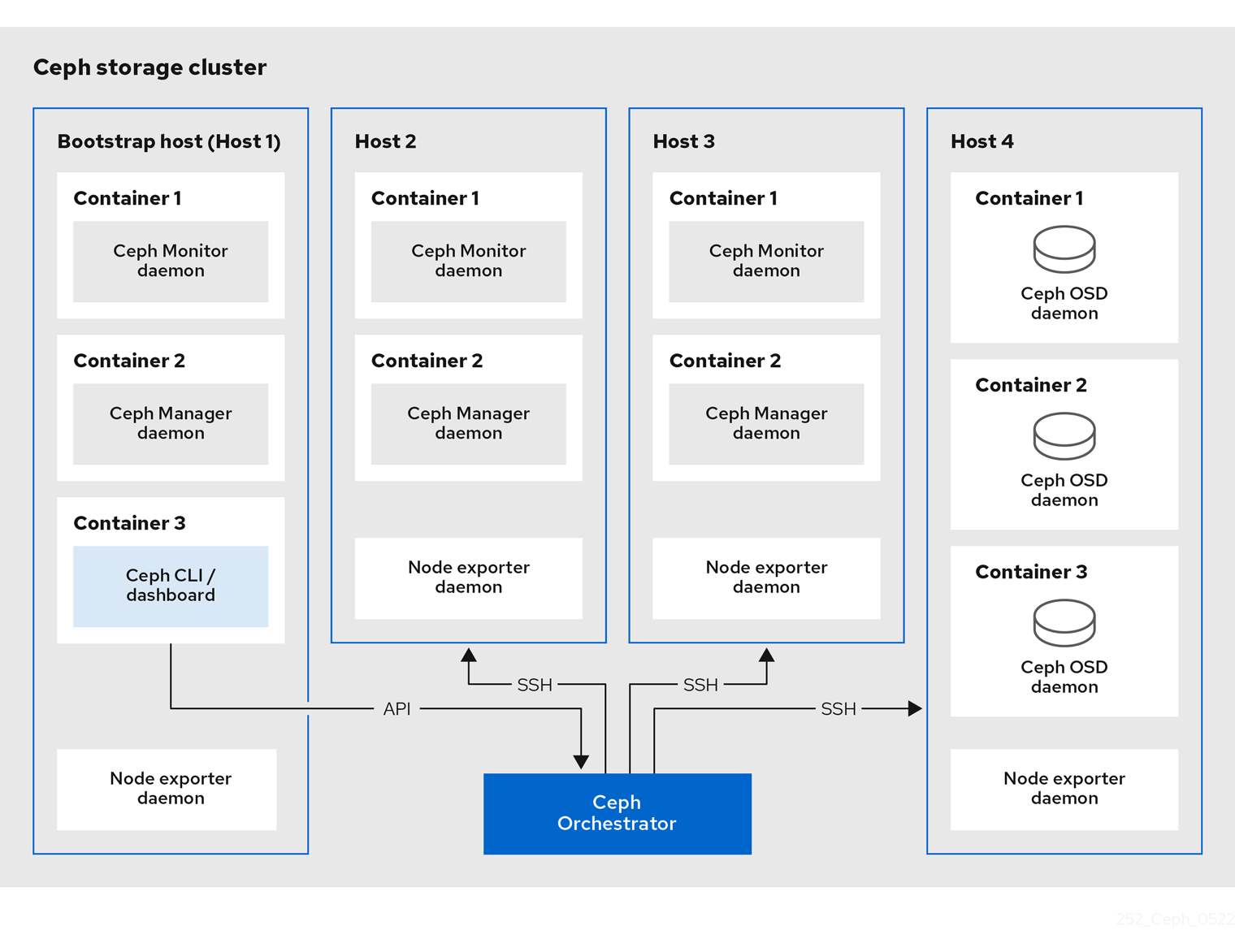

3.2. How cephadm works

The cephadm command manages the full lifecycle of a Red Hat Ceph Storage cluster. The cephadm command can perform the following operations:

- Bootstrap a new Red Hat Ceph Storage cluster.

- Launch a containerized shell that works with the Red Hat Ceph Storage command-line interface (CLI).

- Aid in debugging containerized daemons.

The cephadm command uses ssh to communicate with the nodes in the storage cluster. This allows you to add, remove, or update Red Hat Ceph Storage containers without using external tools. Generate the ssh key pair during the bootstrapping process, or use your own ssh key.

The cephadm bootstrapping process creates a small storage cluster on a single node, consisting of one Ceph Monitor and one Ceph Manager, as well as any required dependencies. You then use the orchestrator CLI or the Red Hat Ceph Storage Dashboard to expand the storage cluster to include nodes, and to provision all of the Red Hat Ceph Storage daemons and services. You can perform management functions through the CLI or from the Red Hat Ceph Storage Dashboard web interface.

3.3. The cephadm-ansible playbooks

The cephadm-ansible package is a collection of Ansible playbooks to simplify workflows that are not covered by cephadm. After installation, the playbooks are located in /usr/share/cephadm-ansible/.

The cephadm-ansible package includes the following playbooks:

- cephadm-preflight.yml

- cephadm-clients.yml

- cephadm-purge-cluster.yml

The cephadm-preflight playbook

Use the cephadm-preflight playbook to set up hosts before bootstrapping the storage cluster and before adding new nodes or clients to your storage cluster. This playbook configures the Ceph repository and installs prerequisites such as podman, lvm2, chrony, and cephadm.

The cephadm-clients playbook

Use the cephadm-clients playbook to set up client hosts. This playbook handles the distribution of configuration and keyring files to a group of Ceph clients.

The cephadm-purge-cluster playbook

Use the cephadm-purge-cluster playbook to remove a Ceph cluster. This playbook purges a Ceph cluster managed with cephadm.

3.4. Registering the Red Hat Ceph Storage nodes to the CDN and attaching subscriptions

When using Red Hat Enterprise Linux 8.x, the Admin node must be running a supported Red Hat Enterprise Linux 9.x version for your Red Hat Ceph Storage.

For full compatibility information, see Compatibility Guide.

Prerequisites

- At least one running virtual machine (VM) or bare-metal server with an active internet connection.

- For Red Hat Ceph Storage 7.0, Red Hat Enterprise Linux 9.2 with ansible-core bundled into AppStream. For Red Hat Ceph Storage 7.1, Red Hat Enterprise Linux 8.10, 9.4, 9.5, or 9.6 with ansible-core bundled into AppStream.

- A valid Red Hat subscription with the appropriate entitlements.

- Root-level access to all nodes.

Procedure

Register the node, and when prompted, enter your Red Hat Customer Portal credentials:

Syntax

subscription-manager register

Pull the latest subscription data from the CDN:

Syntax

subscription-manager refresh

List all available subscriptions for Red Hat Ceph Storage:

Syntax

subscription-manager list --available --matches 'Red Hat Ceph Storage'

- Identify the appropriate subscription and retrieve its Pool ID.

Attach a pool ID to gain access to the software entitlements. Use the Pool ID you identified in the previous step.

Syntax

subscription-manager attach --pool=POOL_ID

Disable the default software repositories, and then enable the BaseOS and AppStream repositories on the respective version of Red Hat Enterprise Linux:

Red Hat Enterprise Linux 9

subscription-manager repos --disable=*
subscription-manager repos --enable=rhel-9-for-x86_64-baseos-rpms
subscription-manager repos --enable=rhel-9-for-x86_64-appstream-rpms

Update the system to receive the latest packages for Red Hat Enterprise Linux:

Syntax

# dnf update

- Subscribe to Red Hat Ceph Storage 7 content. Follow the instructions in How to Register Ceph with Red Hat Satellite 6.

Enable the ceph-tools repository:

Red Hat Enterprise Linux 9

subscription-manager repos --enable=rhceph-7-tools-for-rhel-9-x86_64-rpms

- Repeat the above steps on all nodes you are adding to the cluster.

Install cephadm-ansible:

Syntax

dnf install cephadm-ansible
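Because the same steps are repeated on every node, it can help to collect them in one script. The following is a minimal sketch, not a supported tool: the `run` and `register_node` helpers and the `DRY_RUN` variable are illustrative names, and `POOL_ID` stands for the Pool ID identified earlier. By default the script only prints each command. The interactive listing of subscriptions and the Satellite step are left out.

```shell
#!/bin/sh
# Sketch: the registration steps as one script.
# DRY_RUN=1 (the default here) only prints each command;
# set DRY_RUN=0 to execute them as root on the node.
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# register_node POOL_ID
register_node() {
    pool_id="$1"
    run subscription-manager register
    run subscription-manager refresh
    run subscription-manager attach --pool="$pool_id"
    # The asterisk is quoted so the shell passes it through unchanged.
    run subscription-manager repos --disable='*'
    run subscription-manager repos --enable=rhel-9-for-x86_64-baseos-rpms
    run subscription-manager repos --enable=rhel-9-for-x86_64-appstream-rpms
    run dnf update
    run subscription-manager repos --enable=rhceph-7-tools-for-rhel-9-x86_64-rpms
    run dnf install cephadm-ansible
}

register_node POOL_ID
```

Reviewing the dry-run output first makes it easier to confirm the repository names for your release before any change is made.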

3.5. Configuring Ansible inventory location

You can configure inventory location files for the cephadm-ansible staging and production environments. The Ansible inventory hosts file contains all the hosts that are part of the storage cluster. You can list nodes individually in the inventory hosts file, or you can create groups such as [mons], [osds], and [rgws] to clarify your inventory and make it easier to use the --limit option to target a group or node when running a playbook.

If deploying clients, client nodes must be defined in a dedicated [clients] group.

Prerequisites

- An Ansible administration node.

- Root-level access to the Ansible administration node.

- The cephadm-ansible package is installed on the node.

Procedure

Navigate to the /usr/share/cephadm-ansible/ directory:

[root@admin ~]# cd /usr/share/cephadm-ansible

Optional: Create subdirectories for staging and production:

[root@admin cephadm-ansible]# mkdir -p inventory/staging inventory/production

Optional: Edit the ansible.cfg file and add the following line to assign a default inventory location:

[defaults]
inventory = ./inventory/staging

Optional: Create an inventory hosts file for each environment:

[root@admin cephadm-ansible]# touch inventory/staging/hosts
[root@admin cephadm-ansible]# touch inventory/production/hosts

Open and edit each hosts file and add the nodes and [admin] group:

NODE_NAME_1
NODE_NAME_2

[admin]
ADMIN_NODE_NAME_1

- Replace NODE_NAME_1 and NODE_NAME_2 with the Ceph nodes such as monitors, OSDs, MDSs, and gateway nodes.

Replace ADMIN_NODE_NAME_1 with the name of the node where the admin keyring is stored.

Note

If you set the inventory location in the ansible.cfg file to staging, you need to run the playbooks in the staging environment as follows:

Syntax

ansible-playbook -i inventory/staging/hosts PLAYBOOK.yml

To run the playbooks in the production environment:

Syntax

ansible-playbook -i inventory/production/hosts PLAYBOOK.yml
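The inventory layout can also be generated rather than edited by hand. The sketch below assumes hypothetical node names (host01 through host04) and writes into a scratch directory. In practice the base directory would be /usr/share/cephadm-ansible. The helper `write_inventory` is an illustrative name, not part of cephadm-ansible.

```shell
#!/bin/sh
# Sketch: create staging and production inventories under a base directory.
# Node names are hypothetical placeholders.

# write_inventory FILE ADMIN_NODE NODE...
write_inventory() {
    file="$1"; admin="$2"; shift 2
    mkdir -p "$(dirname "$file")"
    {
        # Ungrouped nodes first, one per line.
        for node in "$@"; do
            echo "$node"
        done
        echo
        # The node where the admin keyring is stored.
        echo "[admin]"
        echo "$admin"
    } > "$file"
}

base="$(mktemp -d)"
write_inventory "$base/inventory/staging/hosts" host01 host02 host03
write_inventory "$base/inventory/production/hosts" host01 host02 host03 host04
cat "$base/inventory/staging/hosts"
```

Keeping one hosts file per environment means the same playbook invocation differs only in the path passed to the -i option.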

3.6. Enabling SSH login as root user on Red Hat Enterprise Linux 9

Red Hat Enterprise Linux 9 does not support SSH login as a root user even if the PermitRootLogin parameter is set to yes in the /etc/ssh/sshd_config file. You get the following error:

Example

[root@host01 ~]# ssh root@myhostname
root@myhostname password:
Permission denied, please try again.

You can use one of the following methods to enable login as a root user:

- Use the "Allow root SSH login with password" flag while setting the root password during installation of Red Hat Enterprise Linux 9.

- Manually set the PermitRootLogin parameter after Red Hat Enterprise Linux 9 installation.

This section describes manual setting of the PermitRootLogin parameter.

Prerequisites

- Root-level access to all nodes.

Procedure

Open the /etc/ssh/sshd_config file and set PermitRootLogin to yes:

Example

[root@admin ~]# echo 'PermitRootLogin yes' >> /etc/ssh/sshd_config.d/01-permitrootlogin.conf

Restart the SSH service:

Example

[root@admin ~]# systemctl restart sshd.service

Log in to the node as the root user:

Syntax

ssh root@HOST_NAME

Replace HOST_NAME with the host name of the Ceph node.

Example

[root@admin ~]# ssh root@host01

Enter the root password when prompted.
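The example above appends to the drop-in file, so running it more than once leaves duplicate lines. The following sketch shows one way to make the step repeatable. The helper name `set_permit_root_login` is illustrative, and the function takes the drop-in directory as a parameter so that it can be tried against a scratch directory before touching /etc/ssh/sshd_config.d.

```shell
#!/bin/sh
# Sketch: write the PermitRootLogin drop-in so that repeated runs do not
# append duplicate lines.

# set_permit_root_login DROPIN_DIR
set_permit_root_login() {
    dropin_dir="$1"
    conf="$dropin_dir/01-permitrootlogin.conf"
    mkdir -p "$dropin_dir"
    # ">" truncates the file, unlike ">>", which appends on every run.
    echo 'PermitRootLogin yes' > "$conf"
    # Keep the file readable and writable by its owner only.
    chmod 600 "$conf"
}

scratch="$(mktemp -d)"
set_permit_root_login "$scratch"
set_permit_root_login "$scratch"   # the second run still leaves one line
cat "$scratch/01-permitrootlogin.conf"

# On a real node (as root), the target would be /etc/ssh/sshd_config.d.
# Running "sshd -t" afterwards checks the configuration before the
# sshd service is restarted.
```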

3.7. Creating an Ansible user with sudo access

You can create an Ansible user with password-less root access on all nodes in the storage cluster to run the cephadm-ansible playbooks. The Ansible user must be able to log into all the Red Hat Ceph Storage nodes as a user that has root privileges to install software and create configuration files without prompting for a password.

Prerequisites

- Root-level access to all nodes.

- For Red Hat Enterprise Linux 9, to log in as a root user, see Enabling SSH login as root user on Red Hat Enterprise Linux 9.

Procedure

Log in to the node as the root user:

Syntax

ssh root@HOST_NAME

Replace HOST_NAME with the host name of the Ceph node.

Example

[root@admin ~]# ssh root@host01

Enter the root password when prompted.

Create a new Ansible user:

Syntax

adduser USER_NAME

Replace USER_NAME with the new user name for the Ansible user.

Example

[root@host01 ~]# adduser ceph-admin

Important

Do not use ceph as the user name. The ceph user name is reserved for the Ceph daemons. A uniform user name across the cluster can improve ease of use, but avoid using obvious user names, because intruders typically use them for brute-force attacks.

Set a new password for this user:

Syntax

passwd USER_NAME

Replace USER_NAME with the new user name for the Ansible user.

Example

[root@host01 ~]# passwd ceph-admin

Enter the new password twice when prompted.

Configure sudo access for the newly created user:

Syntax

cat << EOF >/etc/sudoers.d/USER_NAME
USER_NAME ALL = (root) NOPASSWD:ALL
EOF

Replace USER_NAME with the new user name for the Ansible user.

Example

[root@host01 ~]# cat << EOF >/etc/sudoers.d/ceph-admin
ceph-admin ALL = (root) NOPASSWD:ALL
EOF

Assign the correct file permissions to the new file:

Syntax

chmod 0440 /etc/sudoers.d/USER_NAME

Replace USER_NAME with the new user name for the Ansible user.

Example

[root@host01 ~]# chmod 0440 /etc/sudoers.d/ceph-admin

- Repeat the above steps on all nodes in the storage cluster.
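The sudoers step can be wrapped in a small function so that the user name is supplied once. This is a sketch under stated assumptions: `write_sudoers` is an illustrative helper, and the target directory is a parameter so that the result can be inspected in a scratch directory. On a real node the directory would be /etc/sudoers.d and the script would run as root.

```shell
#!/bin/sh
# Sketch: generate the sudoers drop-in for the Ansible user.

# write_sudoers DIR USER_NAME
write_sudoers() {
    dir="$1"; user="$2"
    mkdir -p "$dir"
    # Remove any earlier copy, which would be read-only after chmod 0440.
    rm -f "$dir/$user"
    # The heredoc delimiter is unquoted, so ${user} is expanded by the shell.
    cat << EOF > "$dir/$user"
${user} ALL = (root) NOPASSWD:ALL
EOF
    chmod 0440 "$dir/$user"
}

scratch="$(mktemp -d)"
write_sudoers "$scratch" ceph-admin
cat "$scratch/ceph-admin"

# On a real node, "visudo -cf /etc/sudoers.d/ceph-admin" checks the syntax
# of the generated file.
```

Because the heredoc is unquoted, any `$NAME` inside it is treated as a shell variable. A literal placeholder must therefore be written without a leading dollar sign.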

3.8. Enabling password-less SSH for Ansible

Generate an SSH key pair on the Ansible administration node and distribute the public key to each node in the storage cluster so that Ansible can access the nodes without being prompted for a password.

Prerequisites

- Access to the Ansible administration node.

- Ansible user with sudo access to all nodes in the storage cluster.

- For Red Hat Enterprise Linux 9, to log in as a root user, see Enabling SSH login as root user on Red Hat Enterprise Linux 9.

Procedure

Generate the SSH key pair, accept the default file name, and leave the passphrase empty:

[ceph-admin@admin ~]$ ssh-keygen

Copy the public key to all nodes in the storage cluster:

ssh-copy-id USER_NAME@HOST_NAME

Replace USER_NAME with the new user name for the Ansible user. Replace HOST_NAME with the host name of the Ceph node.

Example

[ceph-admin@admin ~]$ ssh-copy-id ceph-admin@host01

Create the user’s SSH config file:

[ceph-admin@admin ~]$ touch ~/.ssh/config

Open the config file for editing. For each node in the storage cluster, set the Hostname option to the host name of the Ceph node and the User option to the user name of the Ansible user.

Important

By configuring the ~/.ssh/config file you do not have to specify the -u USER_NAME option each time you execute the ansible-playbook command.

Set the correct file permissions for the ~/.ssh/config file:

[ceph-admin@admin ~]$ chmod 600 ~/.ssh/config
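As an illustration of the config file described in this procedure, the following sketch generates one entry per node with the Hostname and User options set. The host names and the ceph-admin user are hypothetical, `write_ssh_config` is an illustrative helper, and the file is written to a scratch directory rather than to ~/.ssh/config.

```shell
#!/bin/sh
# Sketch: generate SSH client configuration entries for the Ansible user.
# Host names and the user name are hypothetical placeholders.

# write_ssh_config FILE USER_NAME HOST...
write_ssh_config() {
    file="$1"; user="$2"; shift 2
    mkdir -p "$(dirname "$file")"
    : > "$file"                      # start from an empty file
    for host in "$@"; do
        {
            echo "Host $host"
            echo "   Hostname $host"
            echo "   User $user"
        } >> "$file"
    done
    # Match the permissions set in the last step of the procedure.
    chmod 600 "$file"
}

scratch="$(mktemp -d)"
write_ssh_config "$scratch/.ssh/config" ceph-admin host01 host02 host03
cat "$scratch/.ssh/config"
```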

3.9. Running the preflight playbook

This Ansible playbook configures the Ceph repository and prepares the storage cluster for bootstrapping. It also installs some prerequisites, such as podman, lvm2, chrony, and cephadm. The default location for cephadm-ansible and cephadm-preflight.yml is /usr/share/cephadm-ansible.

The preflight playbook uses the cephadm-ansible inventory file to identify the admin node and all other nodes in the storage cluster.

The default location for the inventory file is /usr/share/cephadm-ansible/hosts. The following example shows the structure of a typical inventory file:

Example

The [admin] group in the inventory file contains the name of the node where the admin keyring is stored. On a new storage cluster, the node in the [admin] group will be the bootstrap node. To add additional admin hosts after bootstrapping the cluster, see Setting up the admin node in the Installation Guide.
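Since the playbook depends on the [admin] group, a quick check of the inventory before running it can catch an omission early. The sketch below is one possible check, not part of cephadm-ansible: `admin_hosts` is an illustrative helper, and the inventory content (host01 to host03, client01) is a hypothetical example that follows the group names used in this guide.

```shell
#!/bin/sh
# Sketch: check that an inventory file defines a non-empty [admin] group.

# admin_hosts FILE: print the host entries listed under [admin].
admin_hosts() {
    awk '
        # Any group header switches the flag on or off.
        /^\[/ { in_admin = ($0 == "[admin]"); next }
        # Inside [admin], print the first field of non-empty, non-comment lines.
        in_admin && NF && $0 !~ /^#/ { print $1 }
    ' "$1"
}

inventory="$(mktemp)"
cat > "$inventory" << 'EOF'
host02
host03

[admin]
host01

[clients]
client01
EOF

if [ -n "$(admin_hosts "$inventory")" ]; then
    echo "admin node(s): $(admin_hosts "$inventory")"
else
    echo "no [admin] group found" >&2
fi
```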

Run the preflight playbook before you bootstrap the initial host.

If you are performing a disconnected installation, see Running the preflight playbook for a disconnected installation.

Prerequisites

- Root-level access to the Ansible administration node.

- Ansible user with sudo and passwordless ssh access to all nodes in the storage cluster.

Note

In the below example, host01 is the bootstrap node.

Procedure

- Navigate to the /usr/share/cephadm-ansible directory.

Open and edit the hosts file and add your nodes.

Run the preflight playbook:

Syntax

ansible-playbook -i INVENTORY_FILE cephadm-preflight.yml --extra-vars "ceph_origin=rhcs"

Example

[ceph-admin@admin cephadm-ansible]$ ansible-playbook -i hosts cephadm-preflight.yml --extra-vars "ceph_origin=rhcs"

After installation is complete, cephadm resides in the /usr/sbin/ directory.

Use the --limit option to run the preflight playbook on a selected set of hosts in the storage cluster:

Syntax

ansible-playbook -i INVENTORY_FILE cephadm-preflight.yml --extra-vars "ceph_origin=rhcs" --limit GROUP_NAME|NODE_NAME

Replace GROUP_NAME with a group name from your inventory file. Replace NODE_NAME with a specific node name from your inventory file.

Note

Optionally, you can group your nodes in your inventory file by group name such as [mons], [osds], and [mgrs]. However, admin nodes must be added to the [admin] group and clients must be added to the [clients] group.

Example

[ceph-admin@admin cephadm-ansible]$ ansible-playbook -i hosts cephadm-preflight.yml --extra-vars "ceph_origin=rhcs" --limit clients
[ceph-admin@admin cephadm-ansible]$ ansible-playbook -i hosts cephadm-preflight.yml --extra-vars "ceph_origin=rhcs" --limit host01

When you run the preflight playbook, cephadm-ansible automatically installs chrony and ceph-common on the client nodes.

The preflight playbook installs chrony but configures it for a single NTP source. If you want to configure multiple sources or if you have a disconnected environment, see the following documentation for more information:

3.10. Bootstrapping a new storage cluster

The cephadm utility performs the following tasks during the bootstrap process:

- Installs and starts a Ceph Monitor daemon and a Ceph Manager daemon for a new Red Hat Ceph Storage cluster on the local node as containers.

- Creates the /etc/ceph directory.

- Writes a copy of the public key to /etc/ceph/ceph.pub for the Red Hat Ceph Storage cluster and adds the SSH key to the root user’s /root/.ssh/authorized_keys file.

- Applies the _admin label to the bootstrap node.

- Writes a minimal configuration file needed to communicate with the new cluster to /etc/ceph/ceph.conf.

- Writes a copy of the client.admin administrative secret key to /etc/ceph/ceph.client.admin.keyring.

- Deploys a basic monitoring stack with Prometheus, Grafana, and other tools including node-exporter and Alertmanager.

If you are performing a disconnected installation, see Performing a disconnected installation.

If you have existing Prometheus services that you want to run with the new storage cluster, or if you are deploying Ceph with Rook, pass the --skip-monitoring-stack option to the cephadm bootstrap command. This option bypasses the basic monitoring stack so that you can manually configure it later.

If you are deploying the monitoring stack, see Deploying the monitoring stack using the Ceph Orchestrator in the Red Hat Ceph Storage Operations Guide.

Bootstrapping provides the default user name and password for the initial login to the Dashboard. Bootstrap requires you to change the password after you log in.

Before you begin the bootstrapping process, make sure that the container image that you want to use has the same version of Red Hat Ceph Storage as cephadm. If the two versions do not match, bootstrapping fails at the Creating initial admin user stage.

Before you begin the bootstrapping process, you must create a username and password for the registry.redhat.io container registry. For more information about Red Hat container registry authentication, see the knowledge base article Red Hat Container Registry Authentication.

Prerequisites

- An IP address for the first Ceph Monitor container, which is also the IP address for the first node in the storage cluster.

- Login access to registry.redhat.io.

- A minimum of 10 GB of free space for /var/lib/containers/.

- Root-level access to all nodes.
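The free-space prerequisite can be checked from the shell before bootstrapping. The following is a minimal sketch that uses only standard tools. The helper names `free_gb` and `has_free_space` are illustrative, and the figure is reported in units of 1024 x 1024 KB, which is slightly stricter than decimal gigabytes.

```shell
#!/bin/sh
# Sketch: check for at least 10 GB of free space before bootstrapping.

# free_gb PATH: print the free space, in whole GB, of the file system
# that holds PATH.
free_gb() {
    # -P selects the POSIX single-line format; -k reports 1024-byte blocks.
    df -Pk "$1" | awk 'NR == 2 { print int($4 / 1024 / 1024) }'
}

# has_free_space PATH MIN_GB: succeed if PATH has at least MIN_GB free.
has_free_space() {
    [ "$(free_gb "$1")" -ge "$2" ]
}

# /var/lib/containers may not exist before podman is installed,
# so fall back to its parent file system.
target=/var/lib/containers
[ -d "$target" ] || target=/var

if has_free_space "$target" 10; then
    echo "OK: $(free_gb "$target") GB free under $target"
else
    echo "WARNING: less than 10 GB free under $target" >&2
fi
```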

If the storage cluster includes multiple networks and interfaces, be sure to choose a network that is accessible by any node that uses the storage cluster.

If the local node uses fully-qualified domain names (FQDN), then add the --allow-fqdn-hostname option to cephadm bootstrap on the command line.

Run cephadm bootstrap on the node that you want to be the initial Monitor node in the cluster. The IP_ADDRESS option should be the IP address of the node you are using to run cephadm bootstrap.

If you want to deploy a storage cluster using IPv6 addresses, then use the IPv6 address format for the --mon-ip IP_ADDRESS option. For example: cephadm bootstrap --mon-ip 2620:52:0:880:225:90ff:fefc:2536 --registry-json /etc/mylogin.json

Procedure

Bootstrap a storage cluster:

Syntax

cephadm bootstrap --cluster-network NETWORK_CIDR --mon-ip IP_ADDRESS --registry-url registry.redhat.io --registry-username USER_NAME --registry-password PASSWORD --yes-i-know

Example

[root@host01 ~]# cephadm bootstrap --cluster-network 10.10.128.0/24 --mon-ip 10.10.128.68 --registry-url registry.redhat.io --registry-username myuser1 --registry-password mypassword1 --yes-i-know

Note

If you want internal cluster traffic routed over the public network, you can omit the --cluster-network NETWORK_CIDR option.

The script takes a few minutes to complete. Once the script completes, it provides the credentials to the Red Hat Ceph Storage Dashboard URL, a command to access the Ceph command-line interface (CLI), and a request to enable telemetry.

Additional Resources

- For more information about the recommended bootstrap command options, see Recommended cephadm bootstrap command options.

- For more information about the options available for the bootstrap command, see Bootstrap command options.

- For information about using a JSON file to contain login credentials for the bootstrap process, see Using a JSON file to protect login information.

3.10.1. Recommended cephadm bootstrap command options

The cephadm bootstrap command has multiple options that allow you to specify file locations, configure ssh settings, set passwords, and perform other initial configuration tasks.

Red Hat recommends that you use a basic set of command options for cephadm bootstrap. You can configure additional options after your initial cluster is up and running.

The following examples show how to specify the recommended options.

Syntax

cephadm bootstrap --ssh-user USER_NAME --mon-ip IP_ADDRESS --allow-fqdn-hostname --registry-json REGISTRY_JSON

Example

[root@host01 ~]# cephadm bootstrap --ssh-user ceph --mon-ip 10.10.128.68 --allow-fqdn-hostname --registry-json /etc/mylogin.json

3.10.2. Using a JSON file to protect login information

As a storage administrator, you might choose to add login and password information to a JSON file, and then refer to the JSON file for bootstrapping. This protects the login credentials from exposure.

You can also use a JSON file with the cephadm registry-login command.

Prerequisites

- An IP address for the first Ceph Monitor container, which is also the IP address for the first node in the storage cluster.

- Login access to registry.redhat.io.

- A minimum of 10 GB of free space for /var/lib/containers/.

- Root-level access to all nodes.

Procedure

Create the JSON file. In this example, the file is named mylogin.json.

Syntax

{
 "url":"REGISTRY_URL",
 "username":"USER_NAME",
 "password":"PASSWORD"
}

Example

{
 "url":"registry.redhat.io",
 "username":"myuser1",
 "password":"mypassword1"
}

Bootstrap a storage cluster:

Syntax

cephadm bootstrap --mon-ip IP_ADDRESS --registry-json /etc/mylogin.json

Example

[root@host01 ~]# cephadm bootstrap --mon-ip 10.10.128.68 --registry-json /etc/mylogin.json
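A JSON file only protects the credentials if other users cannot read it. The sketch below shows one way to create the file with owner-only permissions. The helper `write_registry_json` is an illustrative name, the values are the placeholder credentials from the example above, and the file is written to a scratch directory instead of /etc. The function does not escape quotes or backslashes, so it is only suitable for simple values.

```shell
#!/bin/sh
# Sketch: create the registry login file with owner-only permissions.

# write_registry_json FILE URL USER_NAME PASSWORD
write_registry_json() {
    file="$1"
    # Remove any earlier copy so that the umask below decides the mode.
    rm -f "$file"
    (
        # Subshell: the umask change does not leak to the caller.
        # With umask 077 the new file is created with mode 600.
        umask 077
        cat > "$file" << EOF
{
 "url":"$2",
 "username":"$3",
 "password":"$4"
}
EOF
    )
}

scratch="$(mktemp -d)"
write_registry_json "$scratch/mylogin.json" registry.redhat.io myuser1 mypassword1
ls -l "$scratch/mylogin.json"
```

Passing the file with the --registry-json option also keeps the password out of the shell history and the process list, which the --registry-password option does not.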

3.10.3. Bootstrapping a storage cluster using a service configuration file

To bootstrap the storage cluster and configure additional hosts and daemons using a service configuration file, use the --apply-spec option with the cephadm bootstrap command. The configuration file is a .yaml file that contains the service type, placement, and designated nodes for services that you want to deploy.

If you want to use a non-default realm or zone for applications such as multi-site, configure your Ceph Object Gateway daemons after you bootstrap the storage cluster, instead of adding them to the configuration file and using the --apply-spec option. This gives you the opportunity to create the realm or zone you need for the Ceph Object Gateway daemons before deploying them. See the Red Hat Ceph Storage Operations Guide for more information.

If deploying an NFS-Ganesha gateway or a Metadata Server (MDS) service, configure them after bootstrapping the storage cluster.

- To deploy a Ceph NFS-Ganesha gateway, you must create a RADOS pool first.

- To deploy the MDS service, you must create a CephFS volume first.

See the Red Hat Ceph Storage Operations Guide for more information.

Prerequisites

- At least one running virtual machine (VM) or server.

- For Red Hat Ceph Storage 7.0, Red Hat Enterprise Linux 9.2 with ansible-core bundled into AppStream. For Red Hat Ceph Storage 7.1, Red Hat Enterprise Linux 8.10, 9.4, 9.5, or 9.6 with ansible-core bundled into AppStream.

- Root-level access to all nodes.

- Login access to registry.redhat.io.

- Passwordless ssh is set up on all hosts in the storage cluster.

- cephadm is installed on the node that you want to be the initial Monitor node in the storage cluster.

Procedure

- Log in to the bootstrap host.

Create the service configuration .yaml file for your storage cluster. For example, the file can direct cephadm bootstrap to configure the initial host and two additional hosts, and specify that OSDs be created on all available disks.

Bootstrap the storage cluster with the --apply-spec option:

Syntax

cephadm bootstrap --apply-spec CONFIGURATION_FILE_NAME --mon-ip MONITOR_IP_ADDRESS --registry-url registry.redhat.io --registry-username USER_NAME --registry-password PASSWORD

Example

[root@host01 ~]# cephadm bootstrap --apply-spec initial-config.yaml --mon-ip 10.10.128.68 --registry-url registry.redhat.io --registry-username myuser1 --registry-password mypassword1

The script takes a few minutes to complete. Once the script completes, it provides the credentials to the Red Hat Ceph Storage Dashboard URL, a command to access the Ceph command-line interface (CLI), and a request to enable telemetry.

- Once your storage cluster is up and running, see the Red Hat Ceph Storage Operations Guide for more information about configuring additional daemons and services.
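To make the service configuration file from this procedure more concrete, the following sketch writes a specification for an initial host, two additional hosts, and an OSD service that uses all available disks. The host names, addresses, and the service_id value are hypothetical, `write_spec` is an illustrative helper, and the exact fields accepted can vary between releases, so treat the output as a starting point rather than a reference.

```shell
#!/bin/sh
# Sketch: write a service specification for several hosts plus an OSD service.
# Host names, addresses, and the service_id are hypothetical placeholders.

# write_spec FILE "HOSTNAME ADDRESS"...
write_spec() {
    file="$1"; shift
    : > "$file"
    for entry in "$@"; do
        name="${entry%% *}"   # text before the first space
        addr="${entry#* }"    # text after the first space
        cat >> "$file" << EOF
service_type: host
addr: $addr
hostname: $name
---
EOF
    done
    # One OSD service that consumes every available device on every host.
    cat >> "$file" << 'EOF'
service_type: osd
service_id: all_available_devices
placement:
  host_pattern: "*"
spec:
  data_devices:
    all: true
EOF
}

scratch="$(mktemp -d)"
write_spec "$scratch/initial-config.yaml" \
    "host01 10.10.128.68" "host02 10.10.128.69" "host03 10.10.128.70"
cat "$scratch/initial-config.yaml"
```

Each YAML document, separated by `---`, describes one host or service, which is why adding a host only means appending another block.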

3.10.4. Bootstrapping the storage cluster as a non-root user

You can bootstrap the storage cluster as a non-root user if you have passwordless sudo privileges.

To bootstrap the Red Hat Ceph Storage cluster as a non-root user on the bootstrap node, use the --ssh-user option with the cephadm bootstrap command. --ssh-user specifies a user for SSH connections to cluster nodes.

Non-root users must have passwordless sudo access.

Prerequisites

- An IP address for the first Ceph Monitor container, which is also the IP address for the initial Monitor node in the storage cluster.

- Login access to registry.redhat.io.

- A minimum of 10 GB of free space for /var/lib/containers/.

- Optional: SSH public and private keys.

- Passwordless sudo access to the bootstrap node.

- Non-root users have passwordless sudo access on all nodes intended to be part of the cluster.

- cephadm installed on the node that you want to be the initial Monitor node in the storage cluster.

Procedure

Switch to the SSH user, a non-root user with passwordless sudo access, on the bootstrap node:

Syntax

su - SSH_USER_NAME

Example

[root@host01 ~]# su - ceph
Last login: Tue Sep 14 12:00:29 EST 2021 on pts/0

Check the SSH connection to the bootstrap node:

Example

[ceph@host01 ~]$ ssh host01
Last login: Tue Sep 14 12:03:29 EST 2021 on pts/0

Optional: Invoke the cephadm bootstrap command.

Note

Using private and public keys is optional. If SSH keys have not previously been created, these can be created during this step.

Include the --ssh-private-key and --ssh-public-key options:

Syntax

sudo cephadm bootstrap --ssh-user USER_NAME --mon-ip IP_ADDRESS --ssh-private-key PRIVATE_KEY --ssh-public-key PUBLIC_KEY --registry-url registry.redhat.io --registry-username USER_NAME --registry-password PASSWORD

Example

sudo cephadm bootstrap --ssh-user ceph --mon-ip 10.10.128.68 --ssh-private-key /home/ceph/.ssh/id_rsa --ssh-public-key /home/ceph/.ssh/id_rsa.pub --registry-url registry.redhat.io --registry-username myuser1 --registry-password mypassword1
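Because the SSH key options are optional, the command line differs depending on whether keys are supplied. The sketch below assembles the command either way and prints it without running it. The helper `bootstrap_command` is an illustrative name, and the sketch assumes a registry JSON file such as /etc/mylogin.json in place of the user name and password options.

```shell
#!/bin/sh
# Sketch: assemble the non-root bootstrap command, adding the SSH key
# options only when both key files are supplied. The command is printed,
# not executed.

# bootstrap_command SSH_USER MON_IP REGISTRY_JSON [PRIVATE_KEY PUBLIC_KEY]
bootstrap_command() {
    user="$1"; mon_ip="$2"; registry_json="$3"
    cmd="sudo cephadm bootstrap --ssh-user $user --mon-ip $mon_ip"
    # Both key paths must be present for the key options to be added.
    if [ -n "${4:-}" ] && [ -n "${5:-}" ]; then
        cmd="$cmd --ssh-private-key $4 --ssh-public-key $5"
    fi
    echo "$cmd --registry-json $registry_json"
}

# Without keys: cephadm generates the SSH key pair during bootstrap.
bootstrap_command ceph 10.10.128.68 /etc/mylogin.json
# With existing keys.
bootstrap_command ceph 10.10.128.68 /etc/mylogin.json \
    /home/ceph/.ssh/id_rsa /home/ceph/.ssh/id_rsa.pub
```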

3.10.5. Bootstrap command options

The cephadm bootstrap command bootstraps a Ceph storage cluster on the local host. It deploys a MON daemon and a MGR daemon on the bootstrap node, automatically deploys the monitoring stack on the local host, and calls ceph orch host add HOSTNAME.

The following table lists the available options for cephadm bootstrap.

| cephadm bootstrap option | Description |
|---|---|
| --config CONFIG_FILE, -c CONFIG_FILE | CONFIG_FILE is the |
| --cluster-network NETWORK_CIDR | Use the subnet defined by NETWORK_CIDR for internal cluster traffic. This is specified in CIDR notation. For example: |
| --mon-id MON_ID | Bootstraps on the host named MON_ID. Default value is the local host. |
| --mon-addrv MON_ADDRV | mon IPs (e.g., [v2:localipaddr:3300,v1:localipaddr:6789]) |
| --mon-ip IP_ADDRESS | IP address of the node you are using to run |
| --mgr-id MGR_ID | Host ID where a MGR node should be installed. Default: randomly generated. |
| --fsid FSID | Cluster FSID. |
| --output-dir OUTPUT_DIR | Use this directory to write config, keyring, and pub key files. |
| --output-keyring OUTPUT_KEYRING | Use this location to write the keyring file with the new cluster admin and mon keys. |
| --output-config OUTPUT_CONFIG | Use this location to write the configuration file to connect to the new cluster. |
| --output-pub-ssh-key OUTPUT_PUB_SSH_KEY | Use this location to write the public SSH key for the cluster. |
| --skip-ssh | Skip the setup of the SSH key on the local host. |
| --initial-dashboard-user INITIAL_DASHBOARD_USER | Initial user for the dashboard. |
| --initial-dashboard-password INITIAL_DASHBOARD_PASSWORD | Initial password for the initial dashboard user. |
| --ssl-dashboard-port SSL_DASHBOARD_PORT | Port number used to connect with the dashboard using SSL. |
| --dashboard-key DASHBOARD_KEY | Dashboard key. |
| --dashboard-crt DASHBOARD_CRT | Dashboard certificate. |
| --ssh-config SSH_CONFIG | SSH config. |
| --ssh-private-key SSH_PRIVATE_KEY | SSH private key. |
| --ssh-public-key SSH_PUBLIC_KEY | SSH public key. |
| --ssh-user SSH_USER | Sets the user for SSH connections to cluster hosts. Passwordless sudo is needed for non-root users. |
| --skip-mon-network | Do not set mon public_network based on the bootstrap mon IP. |
| --skip-dashboard | Do not enable the Ceph Dashboard. |
| --dashboard-password-noupdate | Disable forced dashboard password change. |
| --no-minimize-config | Do not assimilate and minimize the configuration file. |
| --skip-ping-check | Do not verify that the mon IP is pingable. |
| --skip-pull | Do not pull the latest image before bootstrapping. |
| --skip-firewalld | Do not configure firewalld. |
| --allow-overwrite | Allow the overwrite of existing --output-* config/keyring/ssh files. |
| --allow-fqdn-hostname | Allow fully qualified host name. |
| --skip-prepare-host | Do not prepare host. |
| --orphan-initial-daemons | Do not create initial mon, mgr, and crash service specs. |
| --skip-monitoring-stack | Do not automatically provision the monitoring stack (prometheus, grafana, alertmanager, node-exporter). |
| --apply-spec APPLY_SPEC | Apply cluster spec file after bootstrap (copy ssh key, add hosts and apply services). |
| --registry-url REGISTRY_URL | Specifies the URL of the custom registry to log into. For example: |
| --registry-username REGISTRY_USERNAME | User name of the login account to the custom registry. |
| --registry-password REGISTRY_PASSWORD | Password of the login account to the custom registry. |
| --registry-json REGISTRY_JSON | JSON file containing registry login information. |
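To illustrate the --registry-json option, the following minimal sketch writes a login file and prints a matching bootstrap command for review. It assumes the file uses the url, username, and password keys. The temporary directory, the file name, and the credential values are placeholders chosen for this sketch, not values prescribed by this guide.

```shell
# Sketch only: the working directory and file name are hypothetical.
WORKDIR=$(mktemp -d)
REGISTRY_JSON="$WORKDIR/registry-login.json"

# Assumed layout: url, username, and password keys. Values are placeholders.
cat > "$REGISTRY_JSON" <<'EOF'
{
  "url": "registry.redhat.io",
  "username": "myuser1",
  "password": "mypassword1"
}
EOF
chmod 600 "$REGISTRY_JSON"   # restrict access, because the file holds a password

# Print the command for review instead of running it from this sketch.
BOOTSTRAP_CMD="cephadm bootstrap --mon-ip 10.10.128.68 --registry-json $REGISTRY_JSON"
echo "$BOOTSTRAP_CMD"
```

Keeping the credentials in a file with restricted permissions avoids exposing the password in the shell history and process list, which is the case when --registry-password is used directly.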

3.10.6. Configuring a private registry for a disconnected installation

You can use a disconnected installation procedure to install cephadm and bootstrap your storage cluster on a private network. A disconnected installation uses a private registry for installation. Use this procedure when the Red Hat Ceph Storage nodes do NOT have access to the Internet during deployment.

Follow this procedure to set up a secure private registry using authentication and a self-signed certificate. Perform these steps on a node that has both Internet access and access to the local cluster.

Using an insecure registry for production is not recommended.

Prerequisites

- At least one running virtual machine (VM) or server with an active internet connection.

- For Red Hat Ceph Storage 7.0, Red Hat Enterprise Linux 9.2 with ansible-core bundled into AppStream. For Red Hat Ceph Storage 7.1, Red Hat Enterprise Linux 8.10, 9.4, 9.5, or 9.6 with ansible-core bundled into AppStream.

- Login access to registry.redhat.io.

- Root-level access to all nodes.

Procedure

- Log in to the node that has access to both the public network and the cluster nodes.

Register the node, and when prompted, enter the appropriate Red Hat Customer Portal credentials:

Example

[root@admin ~]# subscription-manager register

Pull the latest subscription data:

Example

[root@admin ~]# subscription-manager refresh

List all available subscriptions for Red Hat Ceph Storage:

Example

[root@admin ~]# subscription-manager list --available --all --matches="*Ceph*"

Copy the Pool ID from the list of available subscriptions for Red Hat Ceph Storage.

Attach the subscription to get access to the software entitlements:

Syntax

subscription-manager attach --pool=POOL_ID

Replace POOL_ID with the Pool ID identified in the previous step.

Disable the default software repositories, and enable the BaseOS and AppStream repositories:

Red Hat Enterprise Linux 9

[root@admin ~]# subscription-manager repos --disable=*
[root@admin ~]# subscription-manager repos --enable=rhel-9-for-x86_64-baseos-rpms
[root@admin ~]# subscription-manager repos --enable=rhel-9-for-x86_64-appstream-rpms

Install the podman and httpd-tools packages:

Example

[root@admin ~]# dnf install -y podman httpd-tools

Create folders for the private registry:

Example

[root@admin ~]# mkdir -p /opt/registry/{auth,certs,data}

The registry will be stored in /opt/registry and the directories are mounted in the container running the registry.

- The auth directory stores the htpasswd file the registry uses for authentication.

- The certs directory stores the certificates the registry uses for authentication.

- The data directory stores the registry images.

Create credentials for accessing the private registry:

Syntax

htpasswd -bBc /opt/registry/auth/htpasswd PRIVATE_REGISTRY_USERNAME PRIVATE_REGISTRY_PASSWORD

- The b option provides the password from the command line.

- The B option hashes the password using bcrypt before storing it.

- The c option creates the htpasswd file.

- Replace PRIVATE_REGISTRY_USERNAME with the username to create for the private registry.

- Replace PRIVATE_REGISTRY_PASSWORD with the password to create for the private registry username.

Example

[root@admin ~]# htpasswd -bBc /opt/registry/auth/htpasswd myregistryusername myregistrypassword1

Create a self-signed certificate:

Syntax

openssl req -newkey rsa:4096 -nodes -sha256 -keyout /opt/registry/certs/domain.key -x509 -days 365 -out /opt/registry/certs/domain.crt -addext "subjectAltName = DNS:LOCAL_NODE_FQDN"

Replace LOCAL_NODE_FQDN with the fully qualified host name of the private registry node.

Note: You will be prompted for the respective options for your certificate. The CN= value is the host name of your node and should be resolvable by DNS or the /etc/hosts file.

Example

[root@admin ~]# openssl req -newkey rsa:4096 -nodes -sha256 -keyout /opt/registry/certs/domain.key -x509 -days 365 -out /opt/registry/certs/domain.crt -addext "subjectAltName = DNS:admin.lab.redhat.com"

Note: When creating a self-signed certificate, be sure to create a certificate with a proper Subject Alternative Name (SAN). Podman commands that require TLS verification fail for certificates that do not include a proper SAN, and return the following error: x509: certificate relies on legacy Common Name field, use SANs or temporarily enable Common Name matching with GODEBUG=x509ignoreCN=0
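Because a missing SAN only surfaces later as a Podman TLS error, it can help to inspect the certificate right after creating it. The following is a minimal sketch of such a check, not part of the documented procedure. It generates a throwaway certificate in a temporary directory (an assumption made so that the sketch is self-contained), uses a 2048-bit key and the -subj option only to keep the run short and non-interactive, and then prints the SAN extension.

```shell
# Sketch only: generate a throwaway certificate in a temporary directory.
WORKDIR=$(mktemp -d)
FQDN="admin.lab.redhat.com"   # example host name used in this procedure

# -subj supplies the subject so that openssl does not prompt interactively.
openssl req -newkey rsa:2048 -nodes -sha256 -x509 -days 365 \
    -keyout "$WORKDIR/domain.key" -out "$WORKDIR/domain.crt" \
    -subj "/CN=$FQDN" -addext "subjectAltName = DNS:$FQDN" 2>/dev/null

# Print the SAN extension header and the line that follows it.
SAN=$(openssl x509 -in "$WORKDIR/domain.crt" -noout -text \
    | grep -A1 "Subject Alternative Name")
echo "$SAN"
```

To check the certificate created in this procedure, point the openssl x509 command at /opt/registry/certs/domain.crt instead. An empty result indicates that the certificate has no SAN and should be regenerated.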

Create a symbolic link to domain.cert to allow skopeo to locate the certificate with the file extension .cert:

Example

[root@admin ~]# ln -s /opt/registry/certs/domain.crt /opt/registry/certs/domain.cert

Add the certificate to the trusted list on the private registry node:

Syntax

cp /opt/registry/certs/domain.crt /etc/pki/ca-trust/source/anchors/
update-ca-trust
trust list | grep -i "LOCAL_NODE_FQDN"

Replace LOCAL_NODE_FQDN with the FQDN of the private registry node.

Example

[root@admin ~]# cp /opt/registry/certs/domain.crt /etc/pki/ca-trust/source/anchors/
[root@admin ~]# update-ca-trust
[root@admin ~]# trust list | grep -i "admin.lab.redhat.com"
label: admin.lab.redhat.com

Copy the certificate to any nodes that will access the private registry for installation and update the trusted list:

Example
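The example commands for this step are not reproduced in this text. As a rough sketch of what the step describes, the following loop copies the certificate to each node and refreshes the trust store there. The node names host01 and host02, the root SSH login, and the run helper are assumptions made for illustration. By default the helper only prints the commands, so nothing is executed unless DRY_RUN=0 is set.

```shell
# Hypothetical node names; replace them with the hosts that use the registry.
NODES="host01 host02"
CERT=/opt/registry/certs/domain.crt
ANCHORS=/etc/pki/ca-trust/source/anchors/

# Print each command unless DRY_RUN=0 is set, so the sketch is safe to try.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Copy the certificate to every node, then rebuild the trust store remotely.
distribute_cert() {
    for node in $NODES; do
        run scp "$CERT" "root@$node:$ANCHORS"
        run ssh "root@$node" update-ca-trust
    done
}

distribute_cert
```

After a real run, the trust list command shown for the registry node can be repeated on each target node to confirm that the certificate is trusted.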

Start the local secure private registry:

Syntax

Replace NAME_OF_CONTAINER with a name to assign to the container.

Example

This starts the private registry on port 5000 and mounts the volumes of the registry directories in the container running the registry.
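The syntax and example for starting the registry are not reproduced in this text. The following is a minimal sketch of a podman invocation that matches the description above: port 5000, the three /opt/registry directories mounted as volumes, htpasswd authentication, and the self-signed certificate. It assumes the upstream registry:2 image and that image's REGISTRY_* environment variables. The container name myprivateregistry and the run helper are hypothetical. The helper only prints the command unless DRY_RUN=0 is set.

```shell
# Print the command unless DRY_RUN=0 is set, so the sketch is safe to try.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

start_registry() {
    # $1 = name to assign to the container (NAME_OF_CONTAINER)
    run podman run --restart=always --name "$1" \
        -p 5000:5000 \
        -v /opt/registry/data:/var/lib/registry:z \
        -v /opt/registry/auth:/auth:z \
        -v /opt/registry/certs:/certs:z \
        -e REGISTRY_AUTH=htpasswd \
        -e "REGISTRY_AUTH_HTPASSWD_REALM=Registry Realm" \
        -e REGISTRY_AUTH_HTPASSWD_PATH=/auth/htpasswd \
        -e REGISTRY_HTTP_TLS_CERTIFICATE=/certs/domain.crt \
        -e REGISTRY_HTTP_TLS_KEY=/certs/domain.key \
        -d registry:2   # assumed image: the upstream registry, version 2
}

start_registry myprivateregistry
```

The :z suffix on each volume asks podman to relabel the directory for SELinux so that the container can read and write it.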

On the local registry node, verify that registry.redhat.io is in the container registry search path.

Open the /etc/containers/registries.conf file for editing, and add registry.redhat.io to the unqualified-search-registries list, if it does not exist:

Example

unqualified-search-registries = ["registry.redhat.io", "registry.access.redhat.com", "registry.fedoraproject.org", "registry.centos.org", "docker.io"]
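Before editing the file, a quick check can show whether the entry is already present. The following is a minimal sketch that runs against a temporary stand-in file rather than the real /etc/containers/registries.conf. The has_registry helper is hypothetical, and it assumes the list is written on a single line as in the example above.

```shell
# Sketch only: a temporary file stands in for /etc/containers/registries.conf.
WORKDIR=$(mktemp -d)
CONF="$WORKDIR/registries.conf"
cat > "$CONF" <<'EOF'
unqualified-search-registries = ["registry.redhat.io", "registry.access.redhat.com"]
EOF

# has_registry FILE HOST: succeed if HOST is quoted on the search list line.
# Assumes a single-line list; a multi-line TOML array would not be matched.
has_registry() {
    grep -E '^[[:space:]]*unqualified-search-registries' "$1" \
        | grep -Fq "\"$2\""
}

if has_registry "$CONF" registry.redhat.io; then
    echo "present"
else
    echo "missing"
fi
```

With the stand-in file above, the check prints present. On a real node, pass /etc/containers/registries.conf as the first argument.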


Log in to registry.redhat.io with your Red Hat Customer Portal credentials:

Syntax

podman login registry.redhat.io

Copy the following Red Hat Ceph Storage 7 image, Prometheus images, and Dashboard image from the Red Hat Customer Portal to the private registry:

Note: Find the latest available supported container images on the Red Hat Ecosystem Catalog.

Table 3.1. Custom image details for monitoring stack

| Monitoring stack component | Image details for Red Hat Ceph Storage 7.0 | Image details for Red Hat Ceph Storage 7.1 |
|---|---|---|
| Prometheus | registry.redhat.io/openshift4/ose-prometheus:v4.12 | registry.redhat.io/openshift4/ose-prometheus:v4.15 |
| Grafana | registry.redhat.io/rhceph/grafana-rhel9:latest | registry.redhat.io/rhceph/grafana-rhel9:latest |
| Node-exporter | registry.redhat.io/openshift4/ose-prometheus-node-exporter:v4.12 | registry.redhat.io/openshift4/ose-prometheus-node-exporter:v4.15 |
| AlertManager | registry.redhat.io/openshift4/ose-prometheus-alertmanager:v4.12 | registry.redhat.io/openshift4/ose-prometheus-alertmanager:v4.15 |
| HAProxy | registry.redhat.io/rhceph/rhceph-haproxy-rhel9:latest | registry.redhat.io/rhceph/rhceph-haproxy-rhel9:latest |
| Keepalived | registry.redhat.io/rhceph/keepalived-rhel9:latest | registry.redhat.io/rhceph/keepalived-rhel9:latest |
| SNMP Gateway | registry.redhat.io/rhceph/snmp-notifier-rhel9:latest | registry.redhat.io/rhceph/snmp-notifier-rhel9:latest |
| Loki | registry.redhat.io/openshift-logging/logging-loki-rhel8:v2.6.1 | registry.redhat.io/openshift-logging/logging-loki-rhel8:v2.6.1 |

Promtail