Dashboard Guide

Monitoring Ceph Cluster with Ceph Dashboard

Abstract

Chapter 1. Ceph dashboard overview

As a storage administrator, you can use the management and monitoring capabilities of the Red Hat Ceph Storage Dashboard to administer and configure the cluster, and to visualize information and performance statistics related to it. The dashboard uses a web server hosted by the ceph-mgr daemon.

The dashboard is accessible from a web browser and includes many useful management and monitoring features, for example, to configure manager modules and monitor the state of OSDs.

The Ceph dashboard provides the following features:

- Multi-user and role management

The dashboard supports multiple user accounts with different permissions and roles. User accounts and roles can be managed using both the command line and the web user interface. The dashboard supports various methods to enhance password security. You can configure password complexity rules and require users to change their password after the first login or after a configurable time period.

For more information, see Managing roles on the Ceph Dashboard and Managing users on the Ceph dashboard.

- Single Sign-On (SSO)

The dashboard supports authentication with an external identity provider using the SAML 2.0 protocol.

For more information, see Enabling single sign-on for the Ceph dashboard.

- Auditing

The dashboard backend can be configured to log all PUT, POST and DELETE API requests in the Ceph manager log.

For more information about using the manager modules with the dashboard, see Viewing and editing the manager modules of the Ceph cluster on the dashboard.

Management features

The Red Hat Ceph Storage Dashboard includes various management features.

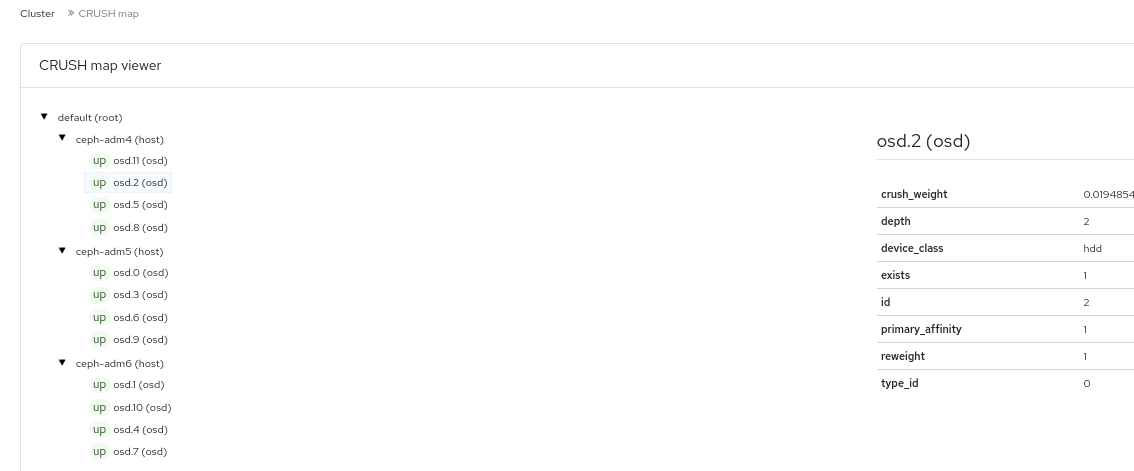

- Viewing cluster hierarchy

You can view the CRUSH map, for example, to determine which host a specific OSD ID is running on. This is helpful if an issue with an OSD occurs.

For more information, see Viewing the CRUSH map of the Ceph cluster on the dashboard.

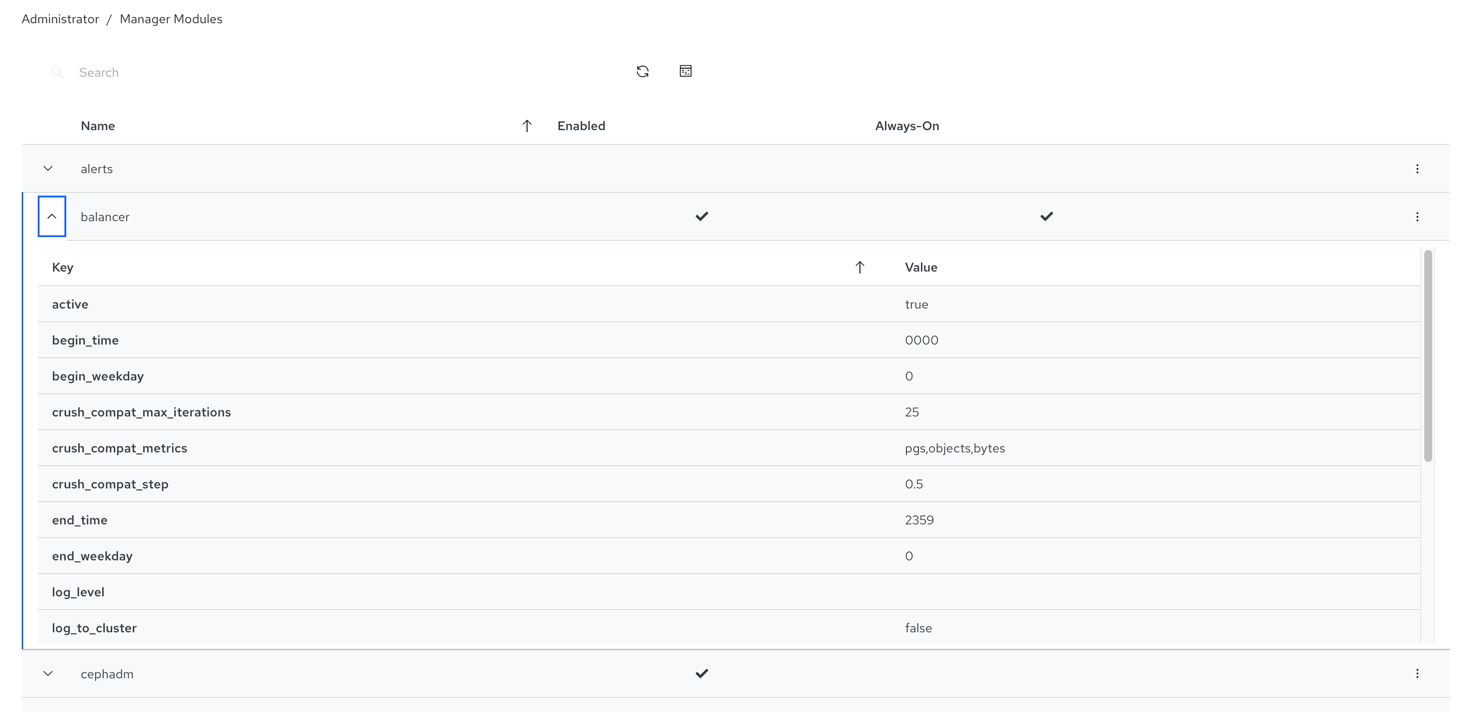

- Configuring manager modules

You can view and change parameters for Ceph manager modules.

For more information, see Viewing and editing the manager modules of the Ceph cluster on the dashboard.

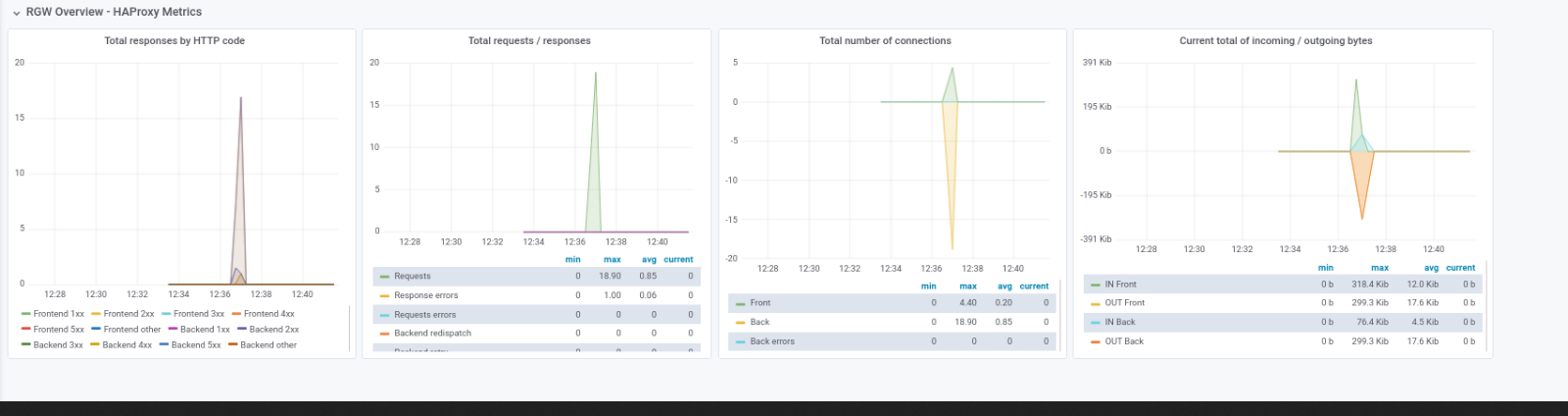

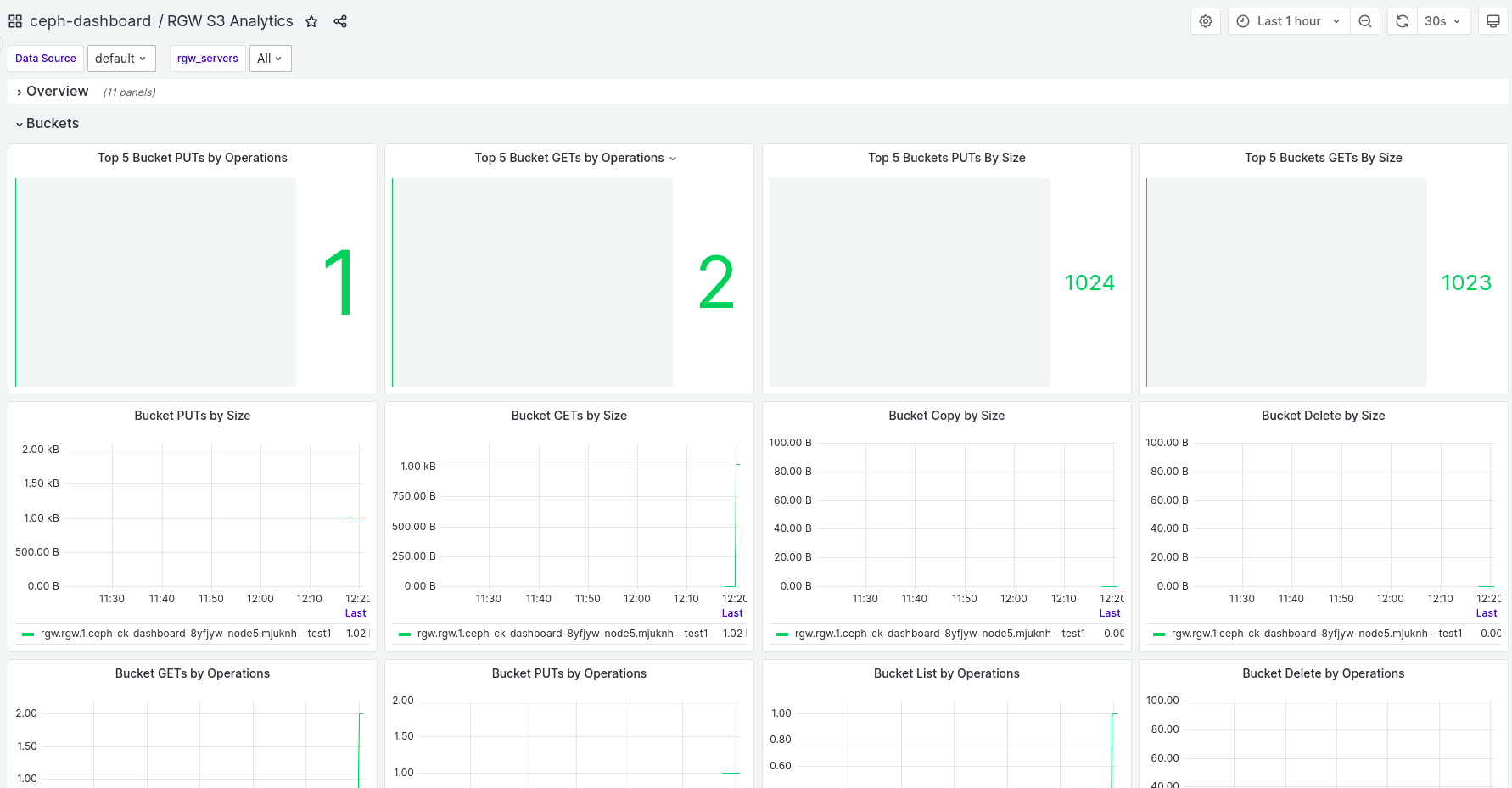

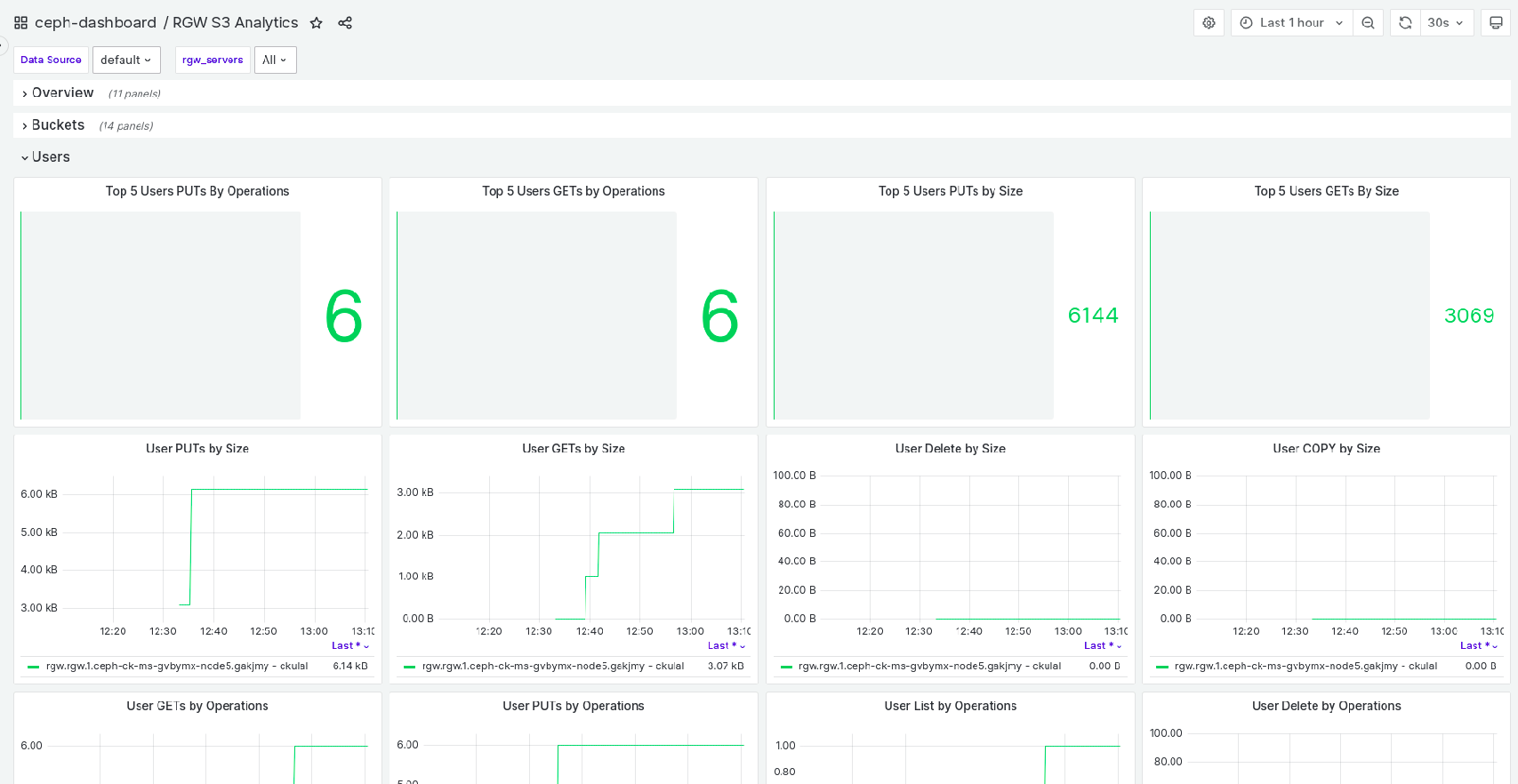

- Embedded Grafana dashboards

Ceph Dashboard Grafana dashboards can be embedded in external applications and web pages to surface information, with the Prometheus modules gathering the performance metrics.

For more information, see Ceph Dashboard components.

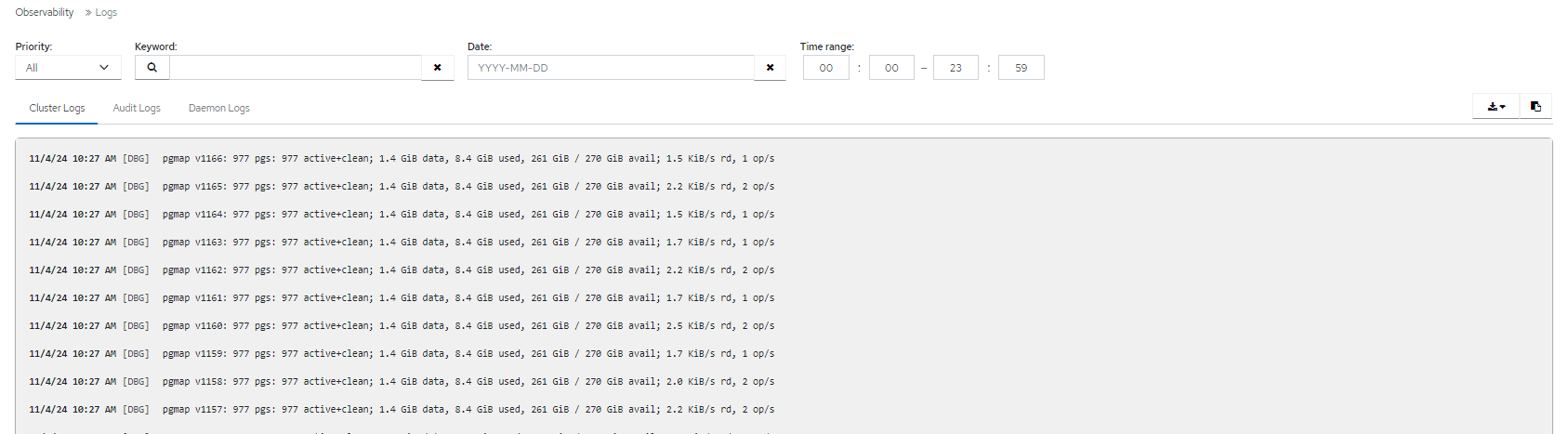

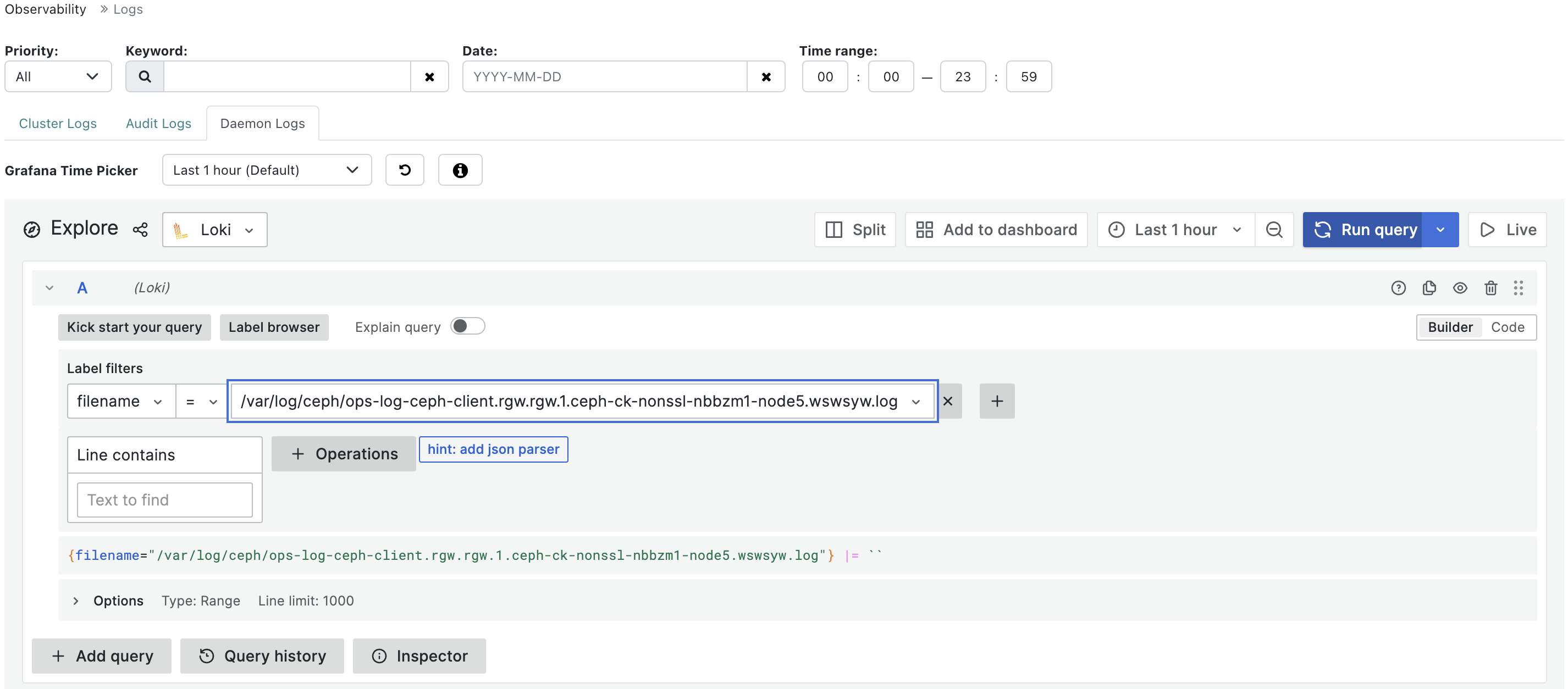

- Viewing and filtering logs

You can view event and audit cluster logs and filter them based on priority, keyword, date, or time range.

For more information, see Filtering logs of the Ceph cluster on the dashboard.

- Toggling dashboard components

You can enable and disable dashboard components so only the features you need are available.

For more information, see Toggling Ceph dashboard features.

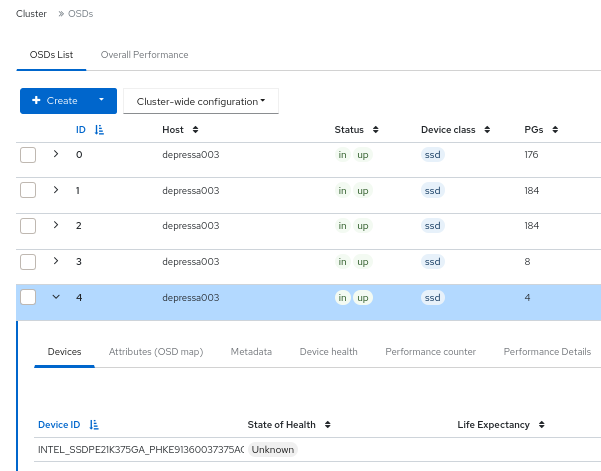

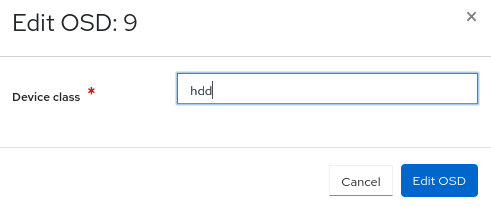

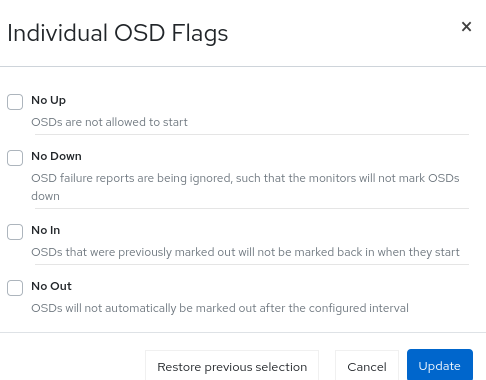

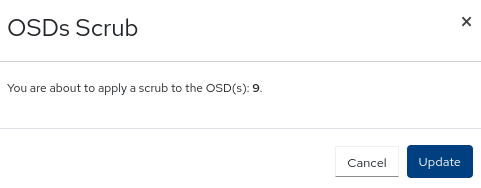

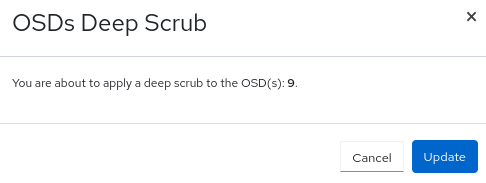

- Managing OSD settings

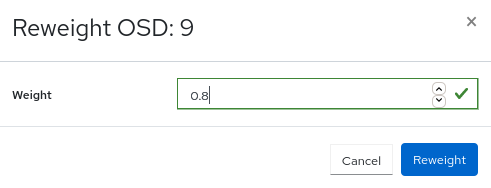

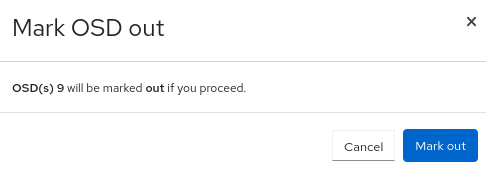

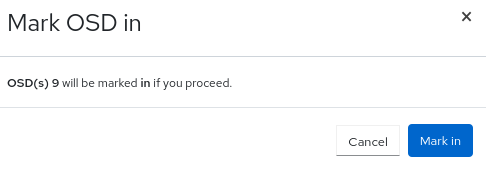

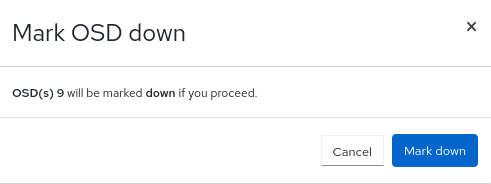

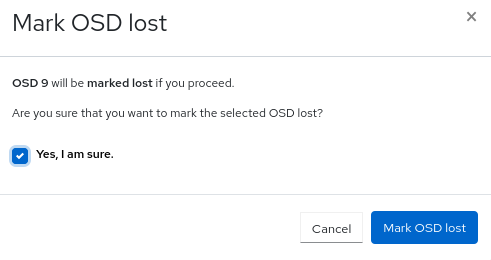

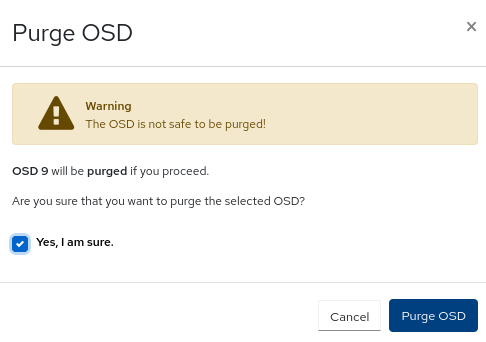

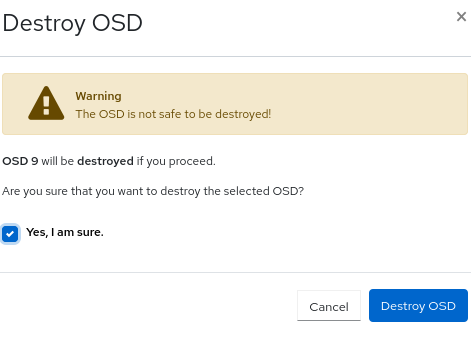

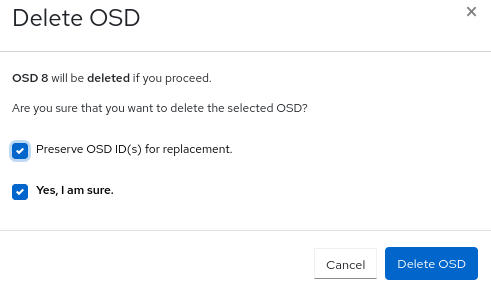

You can set cluster-wide OSD flags using the dashboard. You can also mark OSDs up, down, or out, purge and reweight OSDs, perform scrub operations, modify various scrub-related configuration options, and select profiles to adjust the level of backfilling activity. You can set and change the device class of an OSD, and display and sort OSDs by device class. You can deploy OSDs on new drives and hosts.

For more information, see Managing Ceph OSDs on the dashboard.

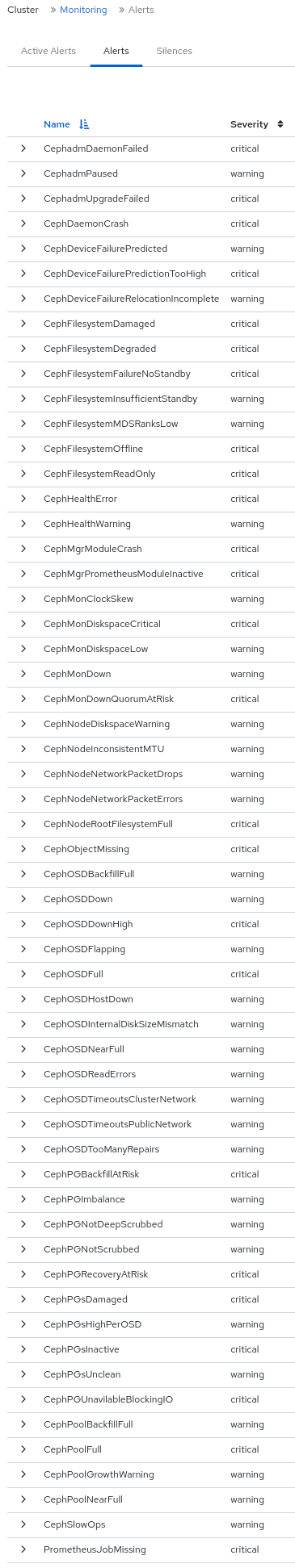

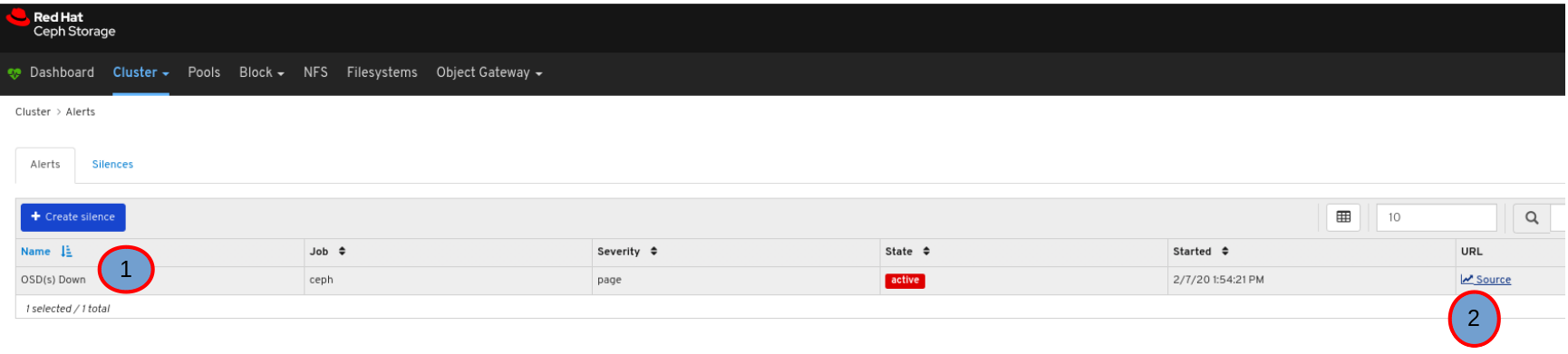

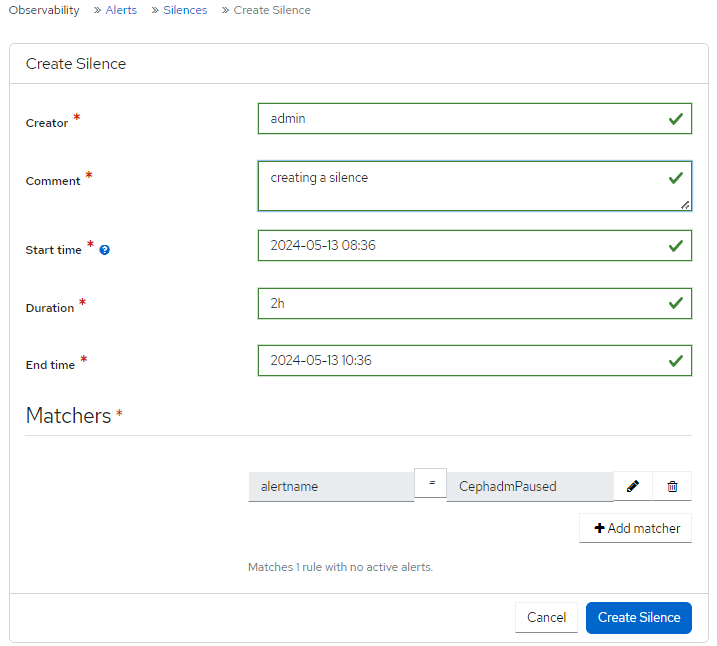

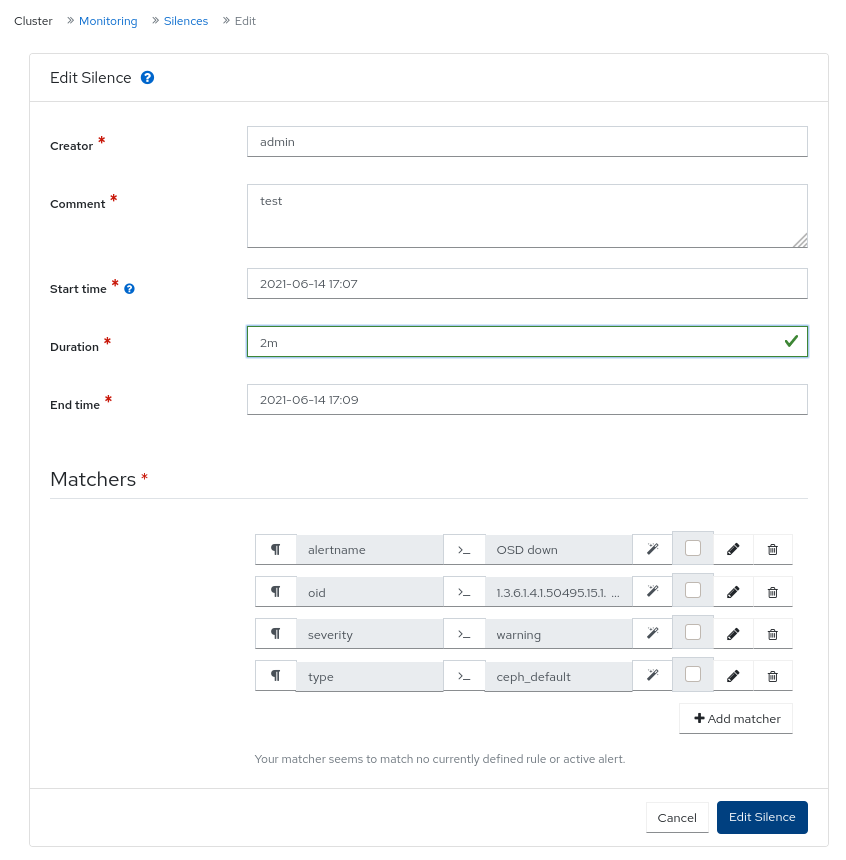

- Viewing alerts

The alerts page allows you to see details of current alerts.

For more information, see Viewing alerts on the Ceph dashboard.

- Upgrading

You can upgrade the Ceph cluster version using the dashboard.

For more information, see Upgrading a cluster.

- Quality of service for images

You can set performance limits on images, for example limiting IOPS or read BPS burst rates.

For more information, see Managing block device images on the Ceph dashboard.

Monitoring features

Monitor different features from within the Red Hat Ceph Storage Dashboard.

- Username and password protection

You can access the dashboard only by providing a configurable username and password.

For more information, see Managing users on the Ceph dashboard.

- Overall cluster health

Displays performance and capacity metrics, along with the overall cluster status and storage utilization, for example, the number of objects, raw capacity, usage per pool, and a list of pools with their status and usage statistics.

For more information, see Viewing and editing the configuration of the Ceph cluster on the dashboard.

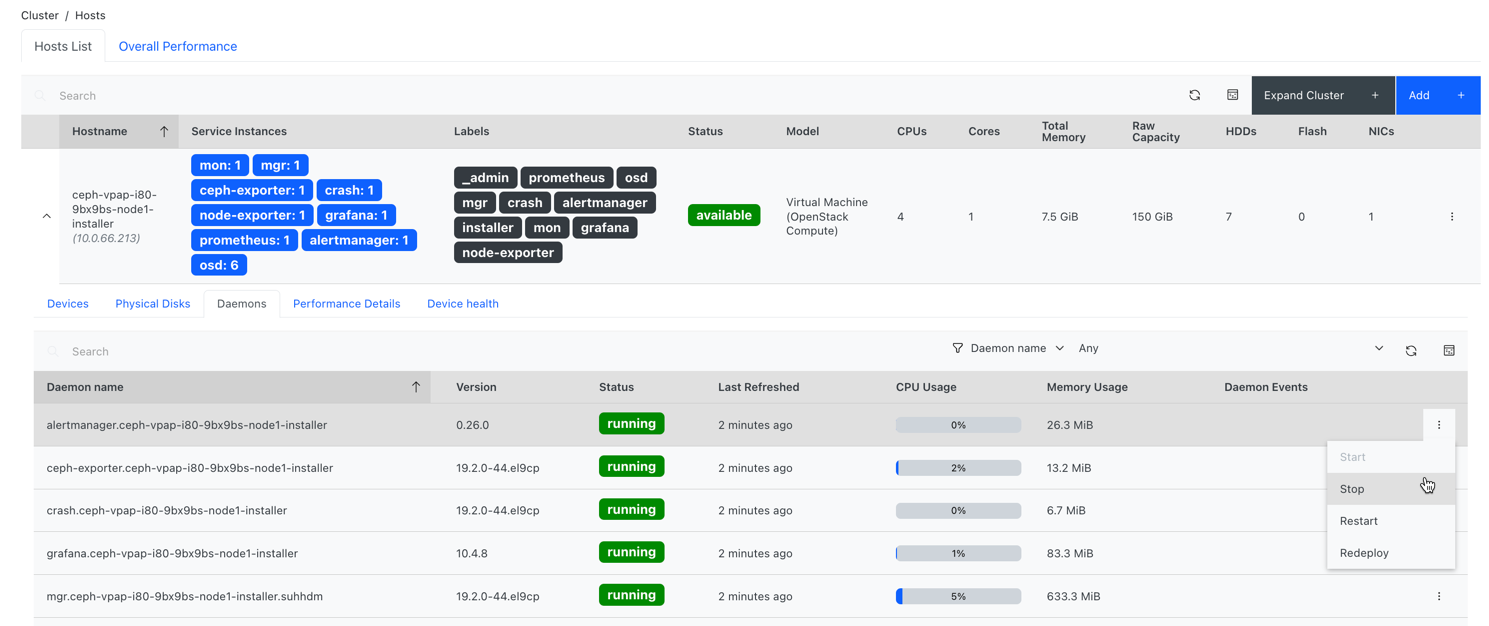

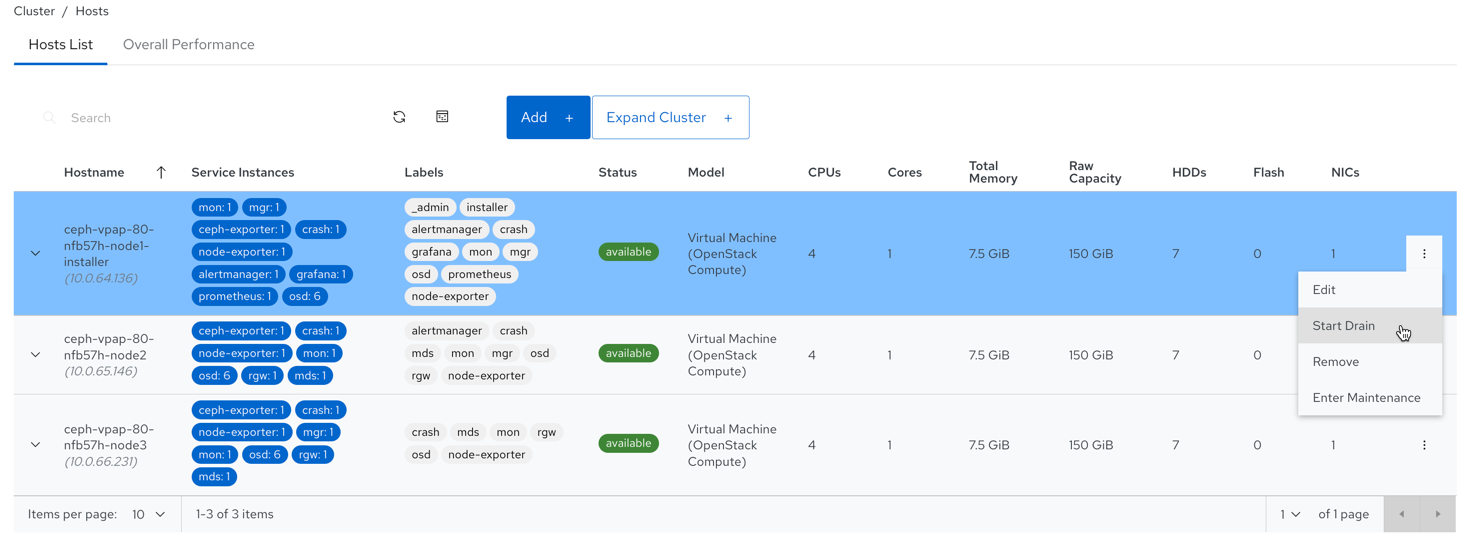

- Hosts

Provides a list of all hosts associated with the cluster along with the running services and the installed Ceph version.

For more information, see Monitoring hosts of the Ceph cluster on the dashboard.

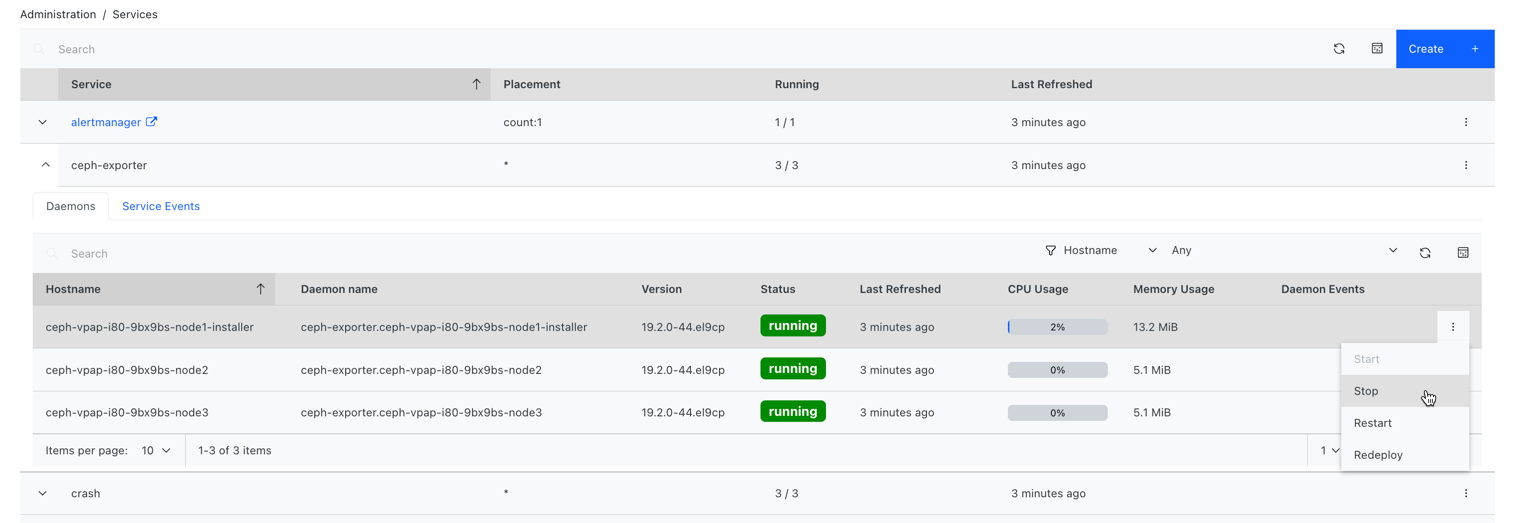

- Performance counters

Displays detailed statistics for each running service.

For more information, see Monitoring services of the Ceph cluster on the dashboard.

- Monitors

Lists all Monitors, their quorum status and open sessions.

For more information, see Monitoring monitors of the Ceph cluster on the dashboard.

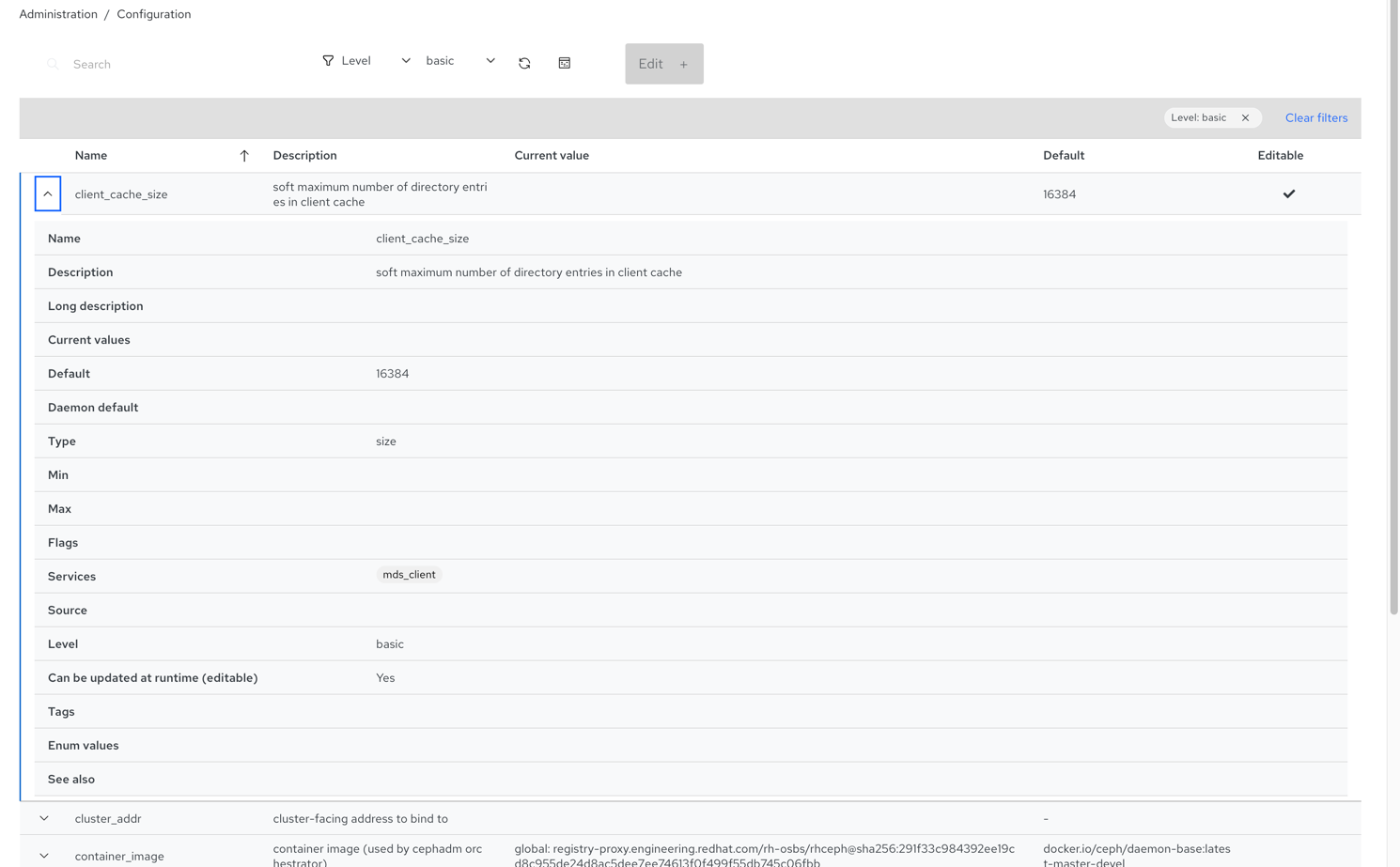

- Configuration editor

Displays all the available configuration options, their descriptions, types, default, and currently set values. These values are editable.

For more information, see Viewing and editing the configuration of the Ceph cluster on the dashboard.

- Cluster logs

Displays and filters the latest updates to the cluster’s event and audit log files by priority, date, or keyword.

For more information, see Filtering logs of the Ceph cluster on the dashboard.

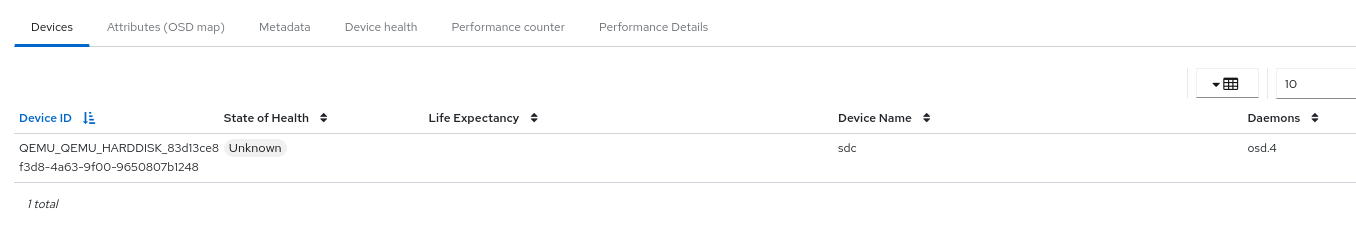

- Device management

Lists all hosts known by the Orchestrator. Lists all drives attached to a host and their properties. Displays drive health predictions and SMART data, and lets you blink enclosure LEDs.

For more information, see Monitoring hosts of the Ceph cluster on the dashboard.

- View storage cluster capacity

You can view raw storage capacity of the Red Hat Ceph Storage cluster in the Capacity pages of the Ceph dashboard.

For more information, see Understanding the landing page of the Ceph dashboard.

- Pools

Lists and manages all Ceph pools and their details. For example: applications, placement groups, replication size, EC profile, quotas, and CRUSH ruleset.

For more information, see Understanding the landing page of the Ceph dashboard and Monitoring pools of the Ceph cluster on the dashboard.

- OSDs

Lists and manages all OSDs, their status, and usage statistics. OSDs also lists detailed information, for example, attributes, OSD map, metadata, and performance counters for read and write operations. OSDs also lists all drives that are associated with an OSD.

For more information, see Monitoring Ceph OSDs on the dashboard.

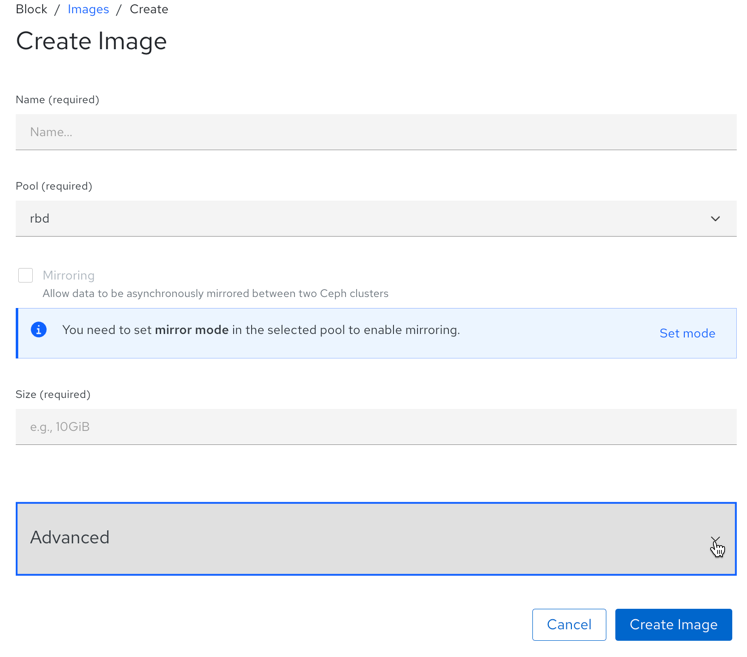

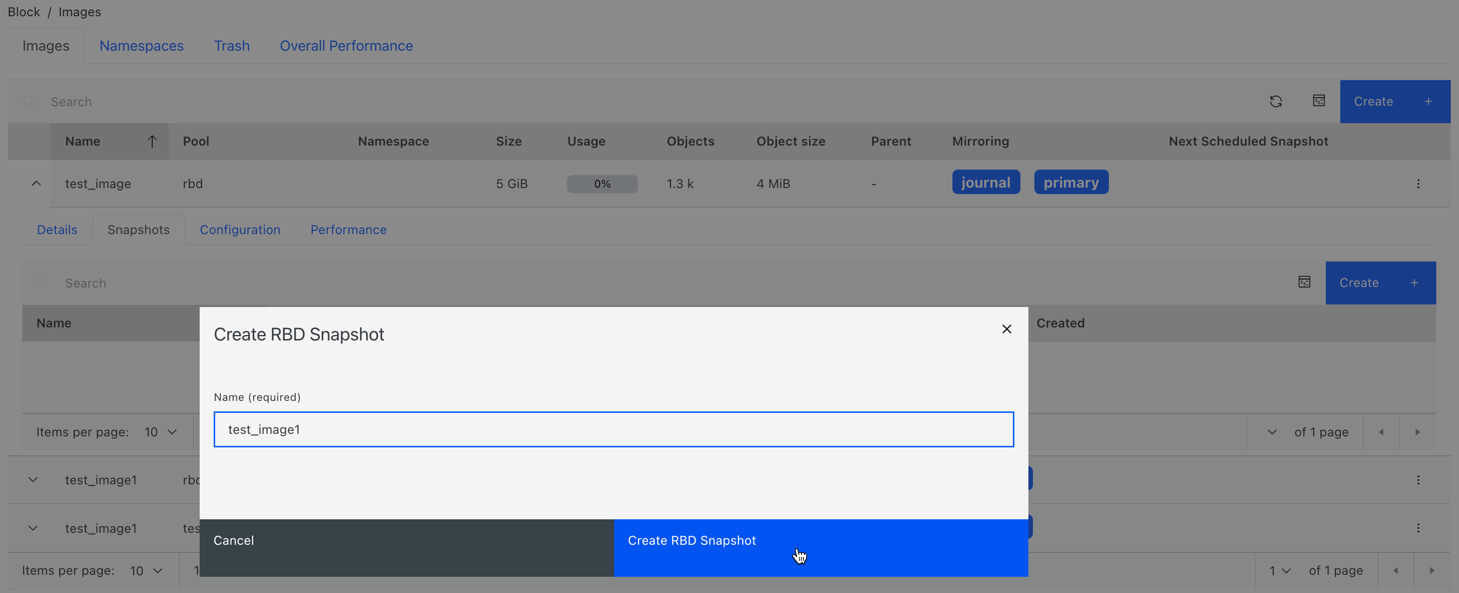

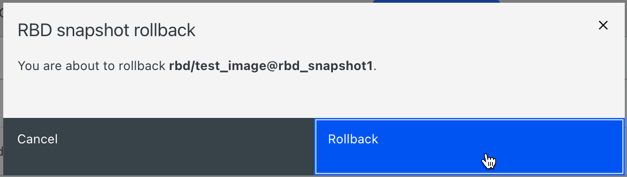

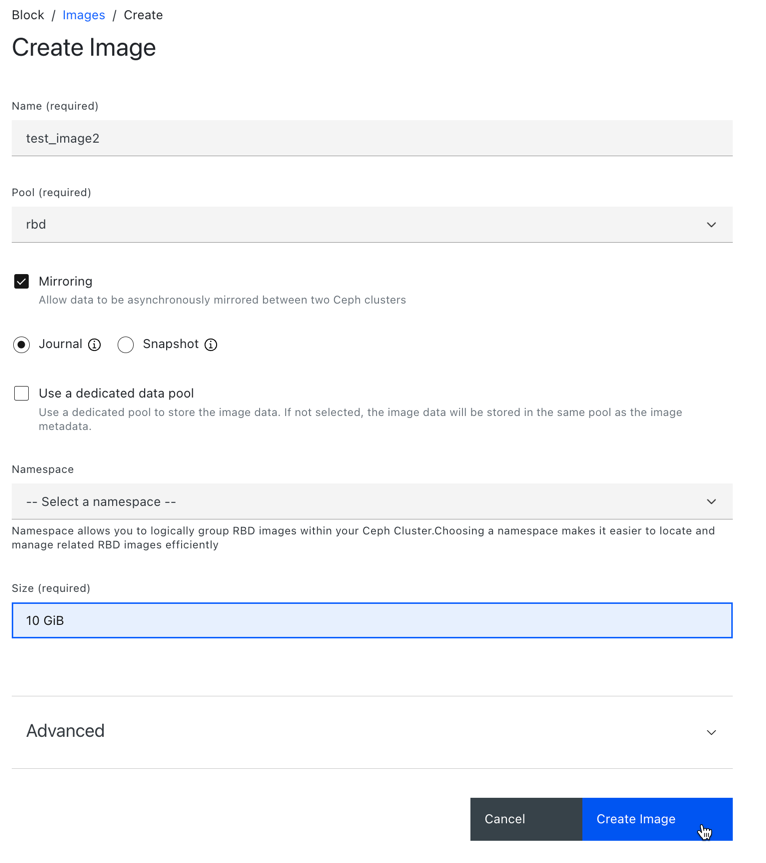

- Images

Lists all Ceph Block Device (RBD) images and their properties such as size, objects, and features. Create, copy, modify, and delete RBD images. Create, delete, and roll back snapshots of selected images, and protect or unprotect these snapshots against modification. Copy or clone snapshots, and flatten cloned images.

Note: The performance graph for I/O changes in the Overall Performance tab for a specific image shows values only after specifying the pool that includes that image by setting the rbd_stats_pool parameter in Cluster→Manager modules→Prometheus.

For more information, see Monitoring block device images on the Ceph dashboard.

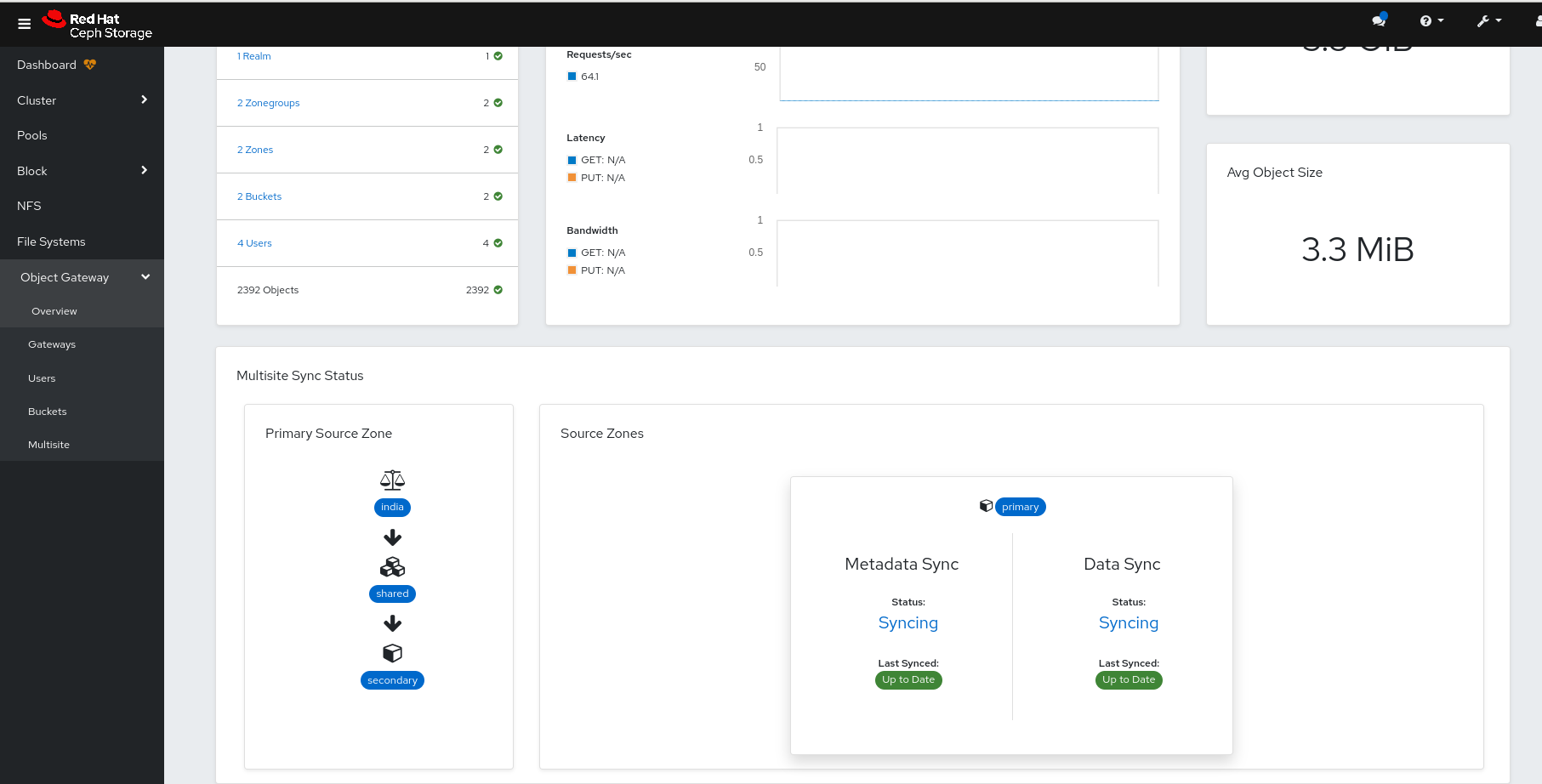

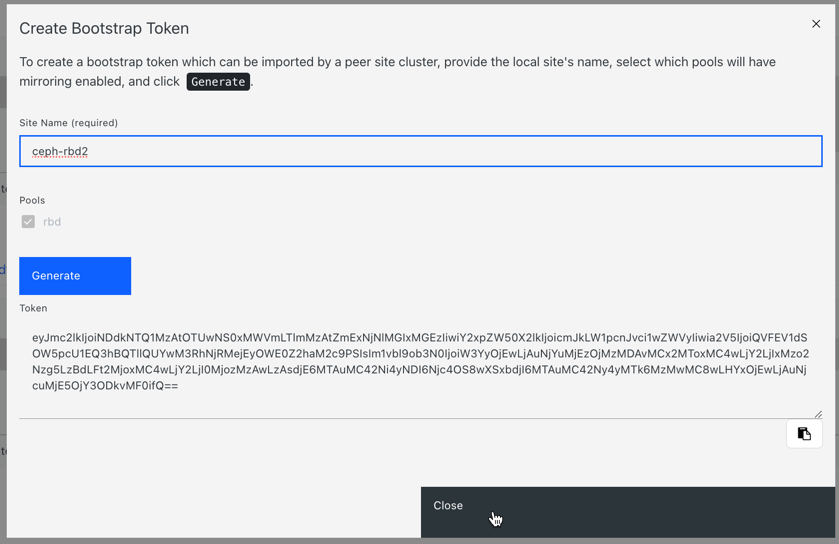

- Block device mirroring

Enables and configures Ceph Block Device (RBD) mirroring to a remote Ceph server. Lists all active sync daemons and their status, pools and RBD images including their synchronization state.

For more information, see Mirroring view on the Ceph dashboard.

- Ceph File Systems

Lists all active Ceph File System (CephFS) clients and associated pools, including their usage statistics. Evict active CephFS clients, manage CephFS quotas and snapshots, and browse a CephFS directory structure.

For more information, see Monitoring Ceph file systems on the dashboard.

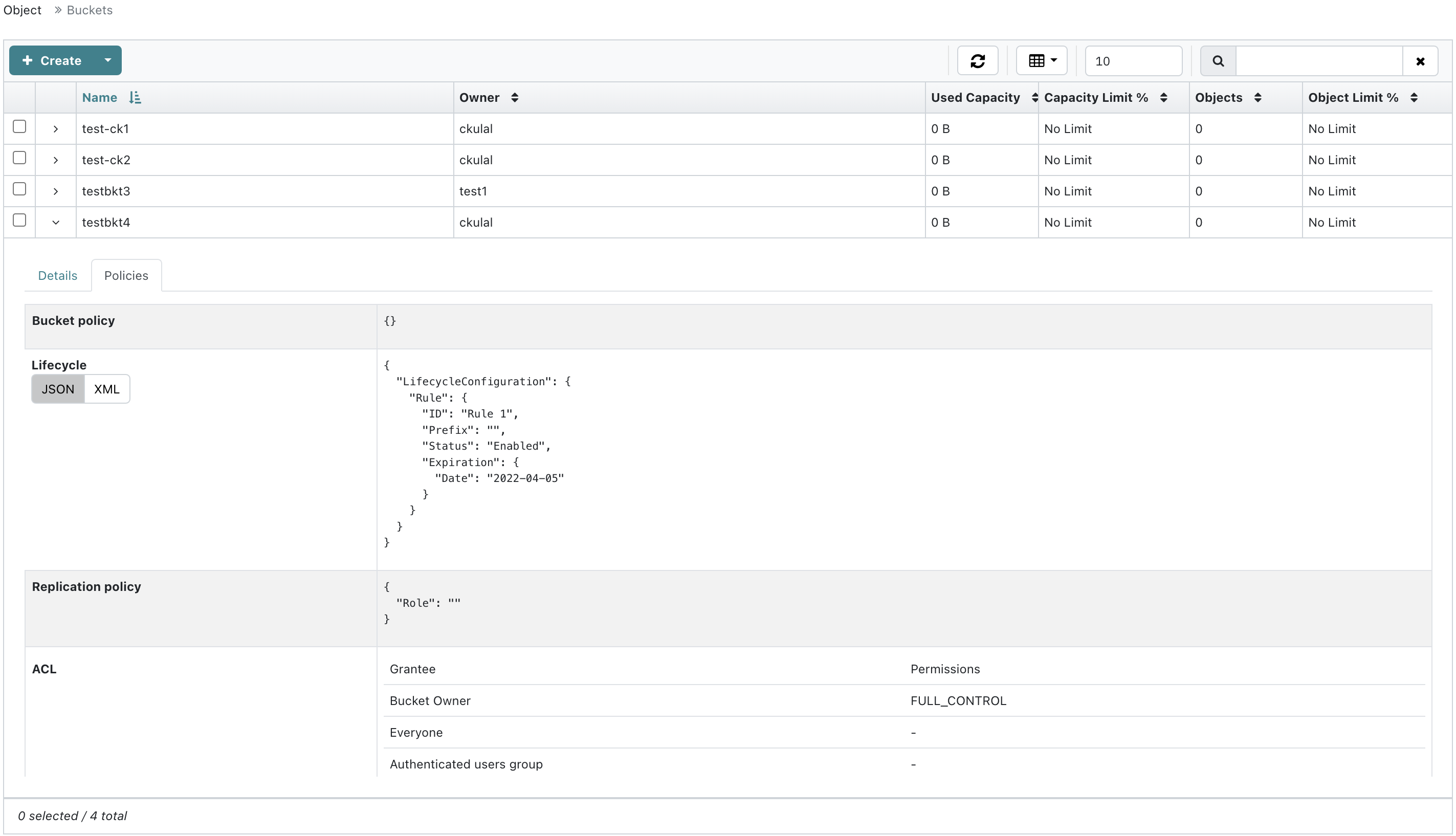

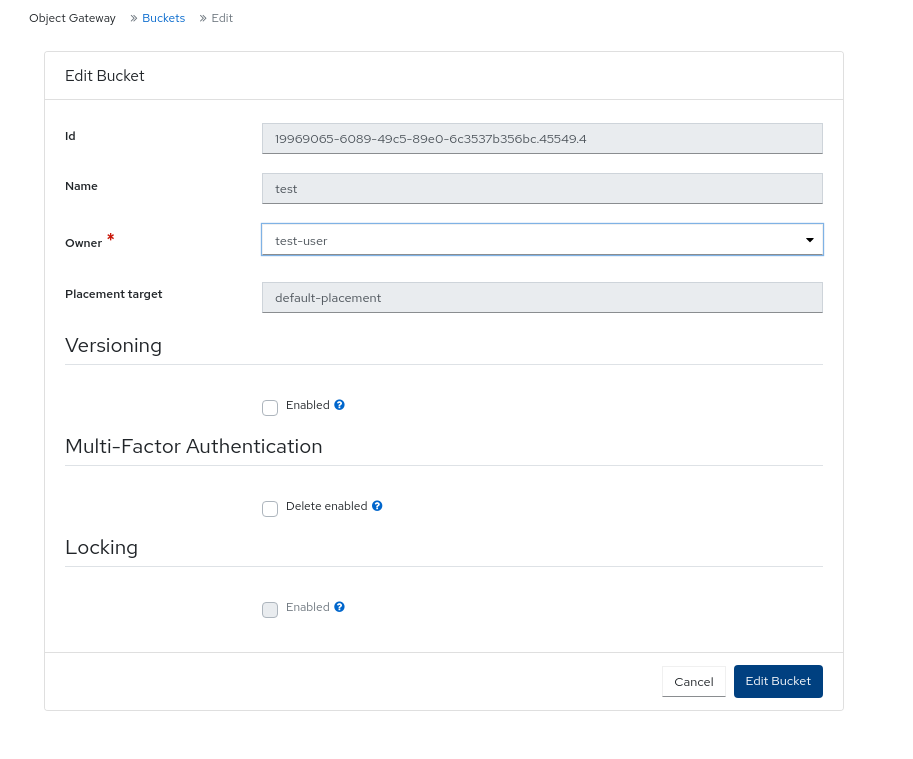

- Object Gateway (RGW)

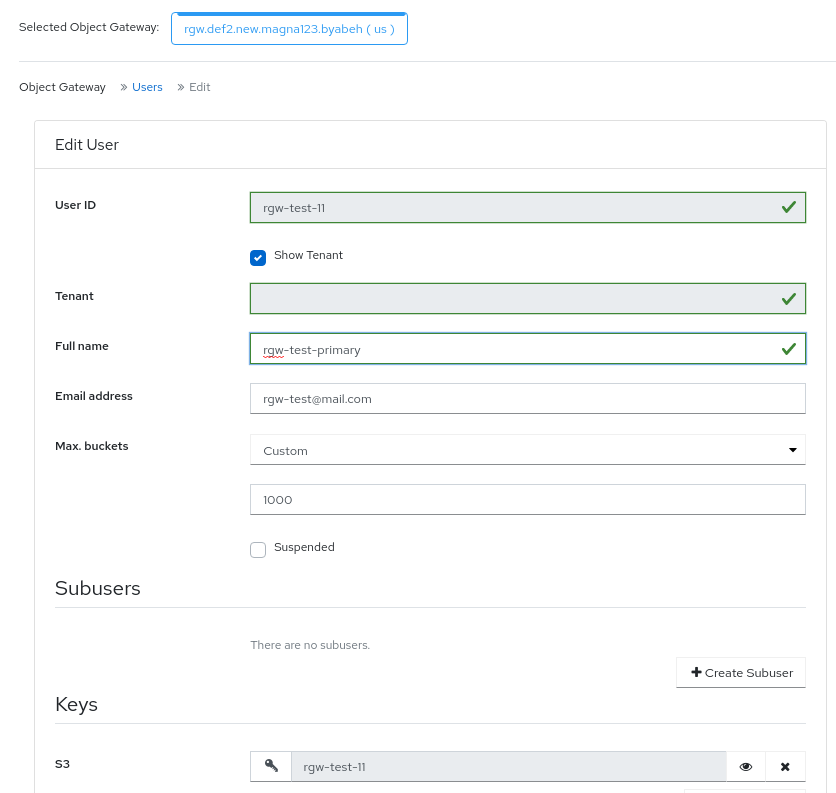

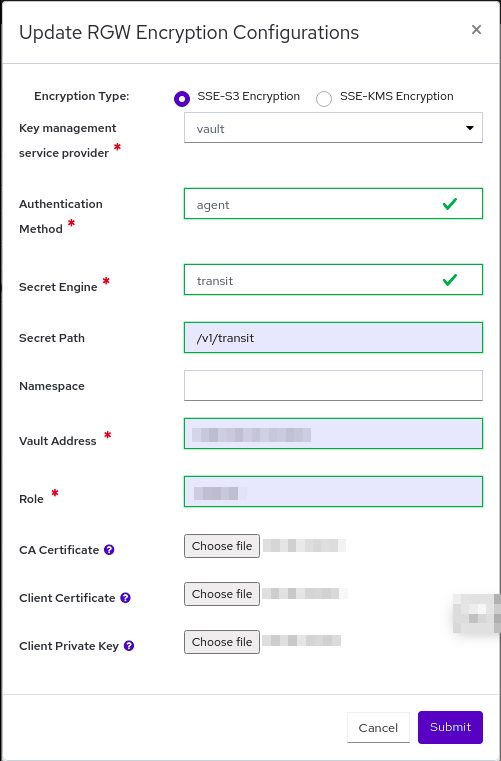

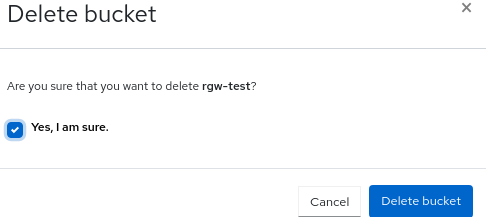

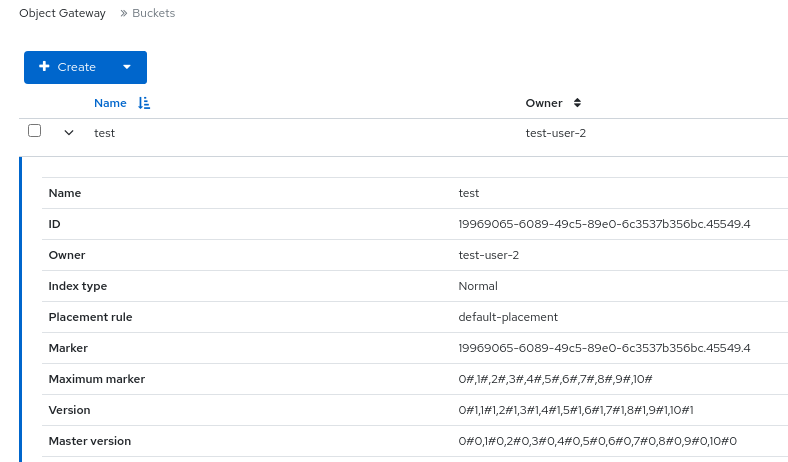

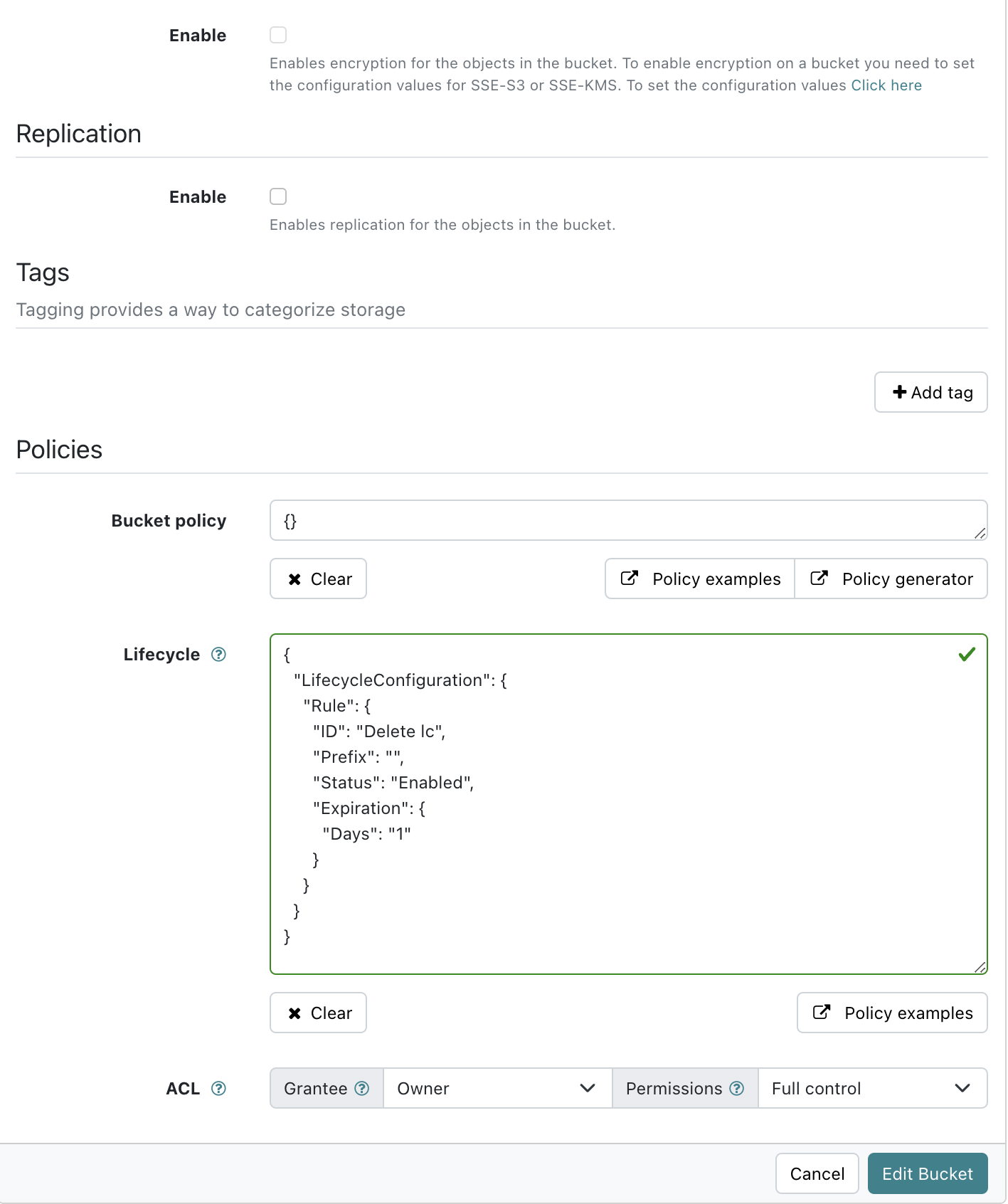

Lists all active object gateways and their performance counters. Displays and manages (adds, edits, and deletes) Ceph Object Gateway users and their details, for example, quotas, as well as the users’ buckets and their details, for example, owner or quotas.

For more information, see Monitoring Ceph Object Gateway daemons on the dashboard.

Security features

The dashboard provides the following security features.

- SSL and TLS support

All HTTP communication between the web browser and the dashboard is secured via SSL. A self-signed certificate can be created with a built-in command, but it is also possible to import custom certificates signed and issued by a Certificate Authority (CA).

For more information, see Ceph Dashboard installation and access.

Prerequisites

- System administrator level experience.

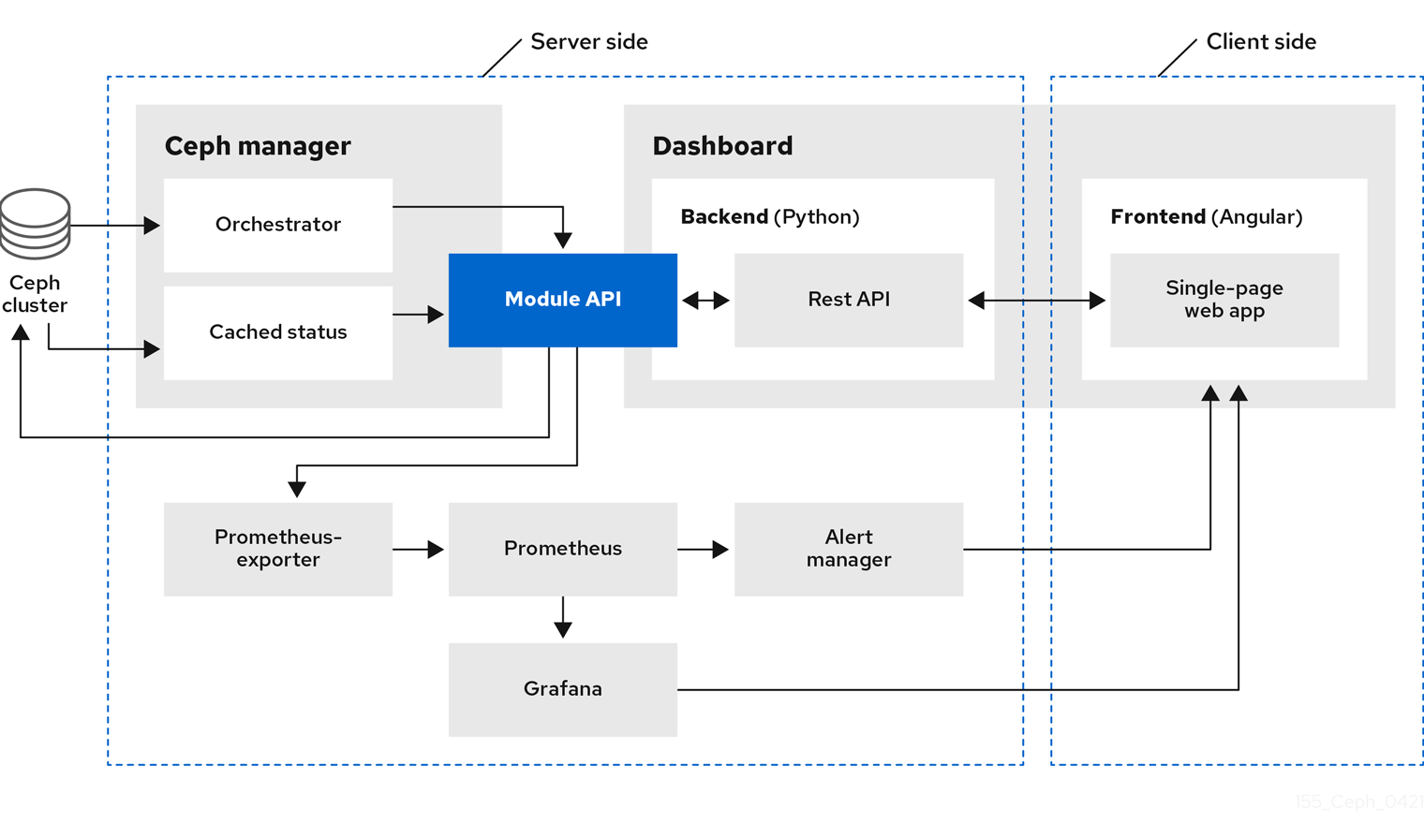

1.1. Ceph Dashboard components

The functionality of the dashboard is provided by multiple components.

- The Cephadm application for deployment.

- The embedded dashboard ceph-mgr module.

- The embedded Prometheus ceph-mgr module.

- The Prometheus time-series database.

- The Prometheus node-exporter daemon, running on each host of the storage cluster.

- The Grafana platform to provide monitoring user interface and alerting.

1.2. Red Hat Ceph Storage Dashboard architecture

The Dashboard architecture depends on the Ceph manager dashboard plugin and other components. See the following diagram to understand how the Ceph manager and dashboard work together.

Chapter 2. Ceph Dashboard installation and access

As a system administrator, you can access the dashboard with the credentials provided on bootstrapping the cluster.

Cephadm installs the dashboard by default. Following is an example of the dashboard URL:

URL: https://host01:8443/

User: admin

Password: zbiql951ar

Update the browser and clear the cookies prior to accessing the dashboard URL.

The following are the Cephadm bootstrap options that are available for the Ceph dashboard configurations:

- [--initial-dashboard-user INITIAL_DASHBOARD_USER] - Use this option while bootstrapping to set the initial dashboard user.

- [--initial-dashboard-password INITIAL_DASHBOARD_PASSWORD] - Use this option while bootstrapping to set the initial dashboard password.

- [--ssl-dashboard-port SSL_DASHBOARD_PORT] - Use this option while bootstrapping to set a custom dashboard port other than the default 8443.

- [--dashboard-key DASHBOARD_KEY] - Use this option while bootstrapping to set a custom key for SSL.

- [--dashboard-crt DASHBOARD_CRT] - Use this option while bootstrapping to set a custom certificate for SSL.

- [--skip-dashboard] - Use this option while bootstrapping to deploy Ceph without the dashboard.

- [--dashboard-password-noupdate] - Use this option while bootstrapping if you used the --initial-dashboard-user and --initial-dashboard-password options and do not want to reset the password at the first login.

- [--allow-fqdn-hostname] - Use this option while bootstrapping to allow a fully qualified hostname.

- [--skip-prepare-host] - Use this option while bootstrapping to skip preparing the host.

To avoid connectivity issues with dashboard related external URL, use the fully qualified domain names (FQDN) for hostnames, for example, host01.ceph.redhat.com.

Open the Grafana URL directly in the client internet browser and accept the security exception to see the graphs on the Ceph dashboard. Reload the browser to view the changes.

Example

[root@host01 ~]# cephadm bootstrap --mon-ip 127.0.0.1 --registry-json cephadm.txt --initial-dashboard-user admin --initial-dashboard-password zbiql951ar --dashboard-password-noupdate --allow-fqdn-hostname
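The dashboard-related bootstrap options can be combined in a small wrapper. The following is a minimal sketch, not part of cephadm: the function name build_bootstrap_cmd and its argument order are hypothetical, and the function only prints the command line so that you can review it before running it yourself. It uses only options listed in this section.

```shell
# Hypothetical helper: prints a cephadm bootstrap command line; it does not run it.
# Arguments: MON_IP DASHBOARD_USER DASHBOARD_PASSWORD [SSL_DASHBOARD_PORT]
build_bootstrap_cmd() {
    mon_ip=$1
    user=$2
    password=$3
    port=${4:-8443}

    cmd="cephadm bootstrap --mon-ip $mon_ip"
    cmd="$cmd --initial-dashboard-user $user"
    cmd="$cmd --initial-dashboard-password $password"
    cmd="$cmd --dashboard-password-noupdate --allow-fqdn-hostname"

    # Add the port option only when it differs from the default 8443.
    if [ "$port" != "8443" ]; then
        cmd="$cmd --ssl-dashboard-port $port"
    fi

    printf '%s\n' "$cmd"
}

# Usage (prints the command for review):
#   build_bootstrap_cmd 10.0.0.1 admin EXAMPLE_PASSWORD 9443
```

Printing instead of executing is a deliberate choice here, because bootstrapping is a one-time operation that is worth reviewing by eye.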

While bootstrapping the storage cluster using cephadm, you can use the --image option for either custom container images or local container images.

If you do not use the --dashboard-password-noupdate option while bootstrapping, you must change the password the first time you log in to the dashboard with the credentials provided on bootstrapping. You can find the Ceph dashboard credentials in the /var/log/ceph/cephadm.log file. Search for the "Ceph Dashboard is now available at" string.
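The log search described above can be sketched with grep. The function name show_dashboard_credentials is hypothetical, and the number of context lines (5) is an assumption about how many lines follow the announcement in your log; adjust it if the URL, user, and password are not all shown.

```shell
# Print the line announcing the dashboard and the lines that follow it.
# Argument: path to the cephadm log (defaults to /var/log/ceph/cephadm.log).
show_dashboard_credentials() {
    log_file=${1:-/var/log/ceph/cephadm.log}
    # -A 5 is an assumed context size, not a documented value.
    grep -A 5 "Ceph Dashboard is now available at" "$log_file"
}

# Usage on the bootstrap host:
#   show_dashboard_credentials
```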

This section covers the following tasks:

- Network port requirements for Ceph dashboard.

- Accessing the Ceph dashboard.

- Expanding the cluster on the Ceph dashboard.

- Upgrading a cluster.

- Toggling Ceph dashboard features.

- Understanding the landing page of the Ceph dashboard.

- Enabling Red Hat Ceph Storage Dashboard manually.

- Changing the dashboard password using the Ceph dashboard.

- Changing the Ceph dashboard password using the command line interface.

- Setting admin user password for Grafana.

- Creating an admin account for syncing users to the Ceph dashboard.

- Syncing users to the Ceph dashboard using the Red Hat Single Sign-On.

- Enabling single sign-on for the Ceph dashboard.

- Disabling single sign-on for the Ceph dashboard.

2.1. Network port requirements for Ceph Dashboard

The Ceph dashboard components use certain TCP network ports which must be accessible. By default, the network ports are automatically opened in firewalld during installation of Red Hat Ceph Storage.

| Port | Use | Originating Host | Destination Host |
|---|---|---|---|
| 8443 | The dashboard web interface | IP addresses that need access to Ceph Dashboard UI and the host under Grafana server, since the AlertManager service can also initiate connections to the Dashboard for reporting alerts. | The Ceph Manager hosts. |
| 3000 | Grafana | IP addresses that need access to Grafana Dashboard UI and all Ceph Manager hosts and Grafana server. | The host or hosts running Grafana server. |
| 2049 | NFS-Ganesha | IP addresses that need access to NFS. | The IP addresses that provide NFS services. |
| 9095 | Default Prometheus server for basic Prometheus graphs | IP addresses that need access to Prometheus UI and all Ceph Manager hosts and Grafana server or Hosts running Prometheus. | The host or hosts running Prometheus. |
| 9093 | Prometheus Alertmanager | IP addresses that need access to Alertmanager Web UI and all Ceph Manager hosts and Grafana server or Hosts running Prometheus. | All Ceph Manager hosts and the host under Grafana server. |
| 9094 | Prometheus Alertmanager for configuring a highly available cluster made from multiple instances | All Ceph Manager hosts and the host under Grafana server. | Prometheus Alertmanager High Availability (peer daemon sync). |
| 9100 | The Prometheus node-exporter daemon | Hosts running Prometheus that need to view Node Exporter metrics Web UI and All Ceph Manager hosts and Grafana server or Hosts running Prometheus. | All storage cluster hosts, including MONs, OSDs, Grafana server host. |
| 9283 | Ceph Manager Prometheus exporter module | Hosts running Prometheus that need access to Ceph Exporter metrics Web UI and Grafana server. | All Ceph Manager hosts. |
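To confirm that firewalld allows the ports in the table on a given host, a loop over firewall-cmd --query-port can help. This is a sketch under assumptions: the function name check_dashboard_ports and the FIREWALL_CMD override variable are hypothetical, and not every host needs every port, because the required ports depend on which services run on that host.

```shell
# TCP ports taken from the table above.
DASHBOARD_PORTS="8443 3000 2049 9095 9093 9094 9100 9283"

# Report, for each port, whether firewalld currently allows it.
# FIREWALL_CMD can be overridden for dry runs; it defaults to firewall-cmd.
check_dashboard_ports() {
    for port in $DASHBOARD_PORTS; do
        if ${FIREWALL_CMD:-firewall-cmd} --query-port="$port/tcp" >/dev/null 2>&1; then
            echo "$port/tcp open"
        else
            echo "$port/tcp closed"
        fi
    done
}

# Usage as root on a cluster host:
#   check_dashboard_ports
```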

2.2. Accessing the Ceph dashboard

You can access the Ceph dashboard to administer and monitor your Red Hat Ceph Storage cluster.

Prerequisites

- Successful installation of Red Hat Ceph Storage Dashboard.

- NTP is synchronizing clocks properly.

Procedure

Enter the following URL in a web browser:

Syntax

https://HOST_NAME:PORT

Replace:

- HOST_NAME with the fully qualified domain name (FQDN) of the active manager host.

- PORT with port 8443

Example

https://host01:8443

You can also get the URL of the dashboard by running the following command in the Cephadm shell:

Example

[ceph: root@host01 /]# ceph mgr services

This command shows all endpoints that are currently configured. Look for the dashboard key to obtain the URL for accessing the dashboard.

- On the login page, enter the username admin and the default password provided during bootstrapping.

- You have to change the password the first time you log in to the Red Hat Ceph Storage dashboard.

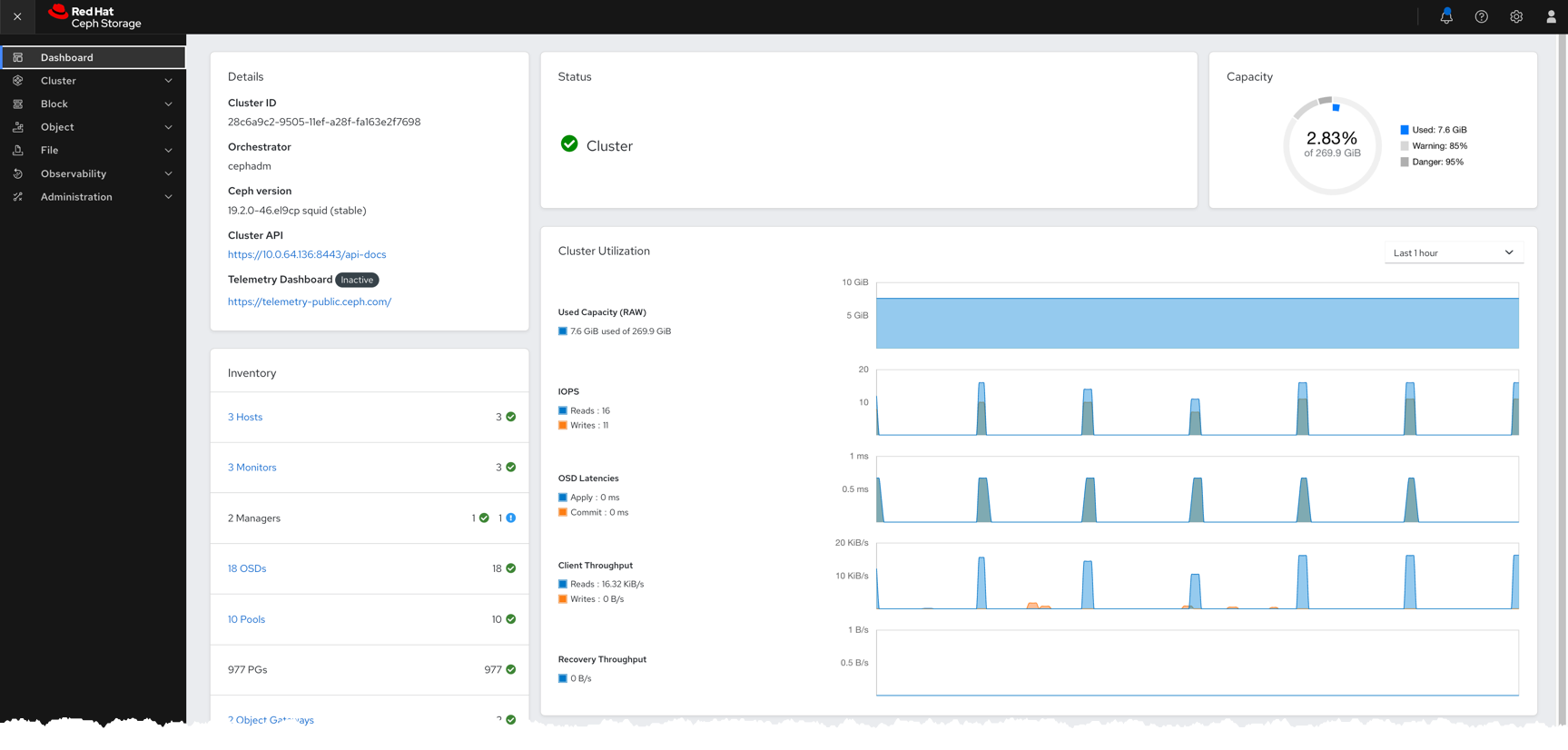

After logging in, the dashboard default landing page is displayed, which provides cluster details and a high-level overview of status, performance, inventory, and capacity metrics of the Red Hat Ceph Storage cluster.

Figure 2.1. Ceph dashboard landing page

- Click the menu icon on the dashboard landing page to collapse or display the options in the vertical menu.
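The lookup of the dashboard key in the ceph mgr services output, mentioned in the procedure above, can be scripted. The sketch below assumes the command prints JSON in which the dashboard key maps to a quoted URL; the function name dashboard_url is hypothetical, and the sed expression is a simple text match rather than a full JSON parser.

```shell
# Read ceph mgr services output on stdin and print the value of the dashboard key.
dashboard_url() {
    sed -n 's/.*"dashboard"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Usage inside the Cephadm shell:
#   ceph mgr services | dashboard_url
```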

2.3. Expanding the cluster on the Ceph dashboard

You can use the dashboard to expand the Red Hat Ceph Storage cluster by adding hosts, adding OSDs, and creating services such as Alertmanager, Cephadm-exporter, CephFS-mirror, Grafana, ingress, MDS, NFS, node-exporter, Prometheus, RBD-mirror, and Ceph Object Gateway.

Once you bootstrap a new storage cluster, the Ceph Monitor and Ceph Manager daemons are created and the cluster is in HEALTH_WARN state. After creating all the services for the cluster on the dashboard, the health of the cluster changes from HEALTH_WARN to HEALTH_OK status.

Prerequisites

- Bootstrapped storage cluster. See Bootstrapping a new storage cluster section in the Red Hat Ceph Storage Installation Guide for more details.

- At least cluster-manager role for the user on the Red Hat Ceph Storage Dashboard. See the User roles and permissions on the Ceph dashboard section in the Red Hat Ceph Storage Dashboard Guide for more details.

Procedure

Copy the admin key from the bootstrapped host to other hosts:

Syntax

ssh-copy-id -f -i /etc/ceph/ceph.pub root@HOST_NAME

Example

[ceph: root@host01 /]# ssh-copy-id -f -i /etc/ceph/ceph.pub root@host02
[ceph: root@host01 /]# ssh-copy-id -f -i /etc/ceph/ceph.pub root@host03

- Log in to the dashboard with the default credentials provided during bootstrap.

- Change the password and log in to the dashboard with the new password.

On the landing page, click Expand Cluster.

Note: Clicking Expand Cluster opens a wizard that takes you through the expansion steps. To skip the wizard and add hosts and services separately, click Skip.

Figure 2.2. Expand cluster

Add hosts. This needs to be done for each host in the storage cluster.

- In the Add Hosts step, click Add.

Provide the hostname. This is the same as the hostname that was provided while copying the key from the bootstrapped host.

Note: Add multiple hosts by using a comma-separated list of host names, a range expression, or a comma-separated range expression.

- Optional: Provide the respective IP address of the host.

- Optional: Select the labels for the hosts on which the services are going to be created. Click the pencil icon to select or add new labels.

Click Add Host.

The new host is displayed in the Add Hosts pane.

- Click Next.

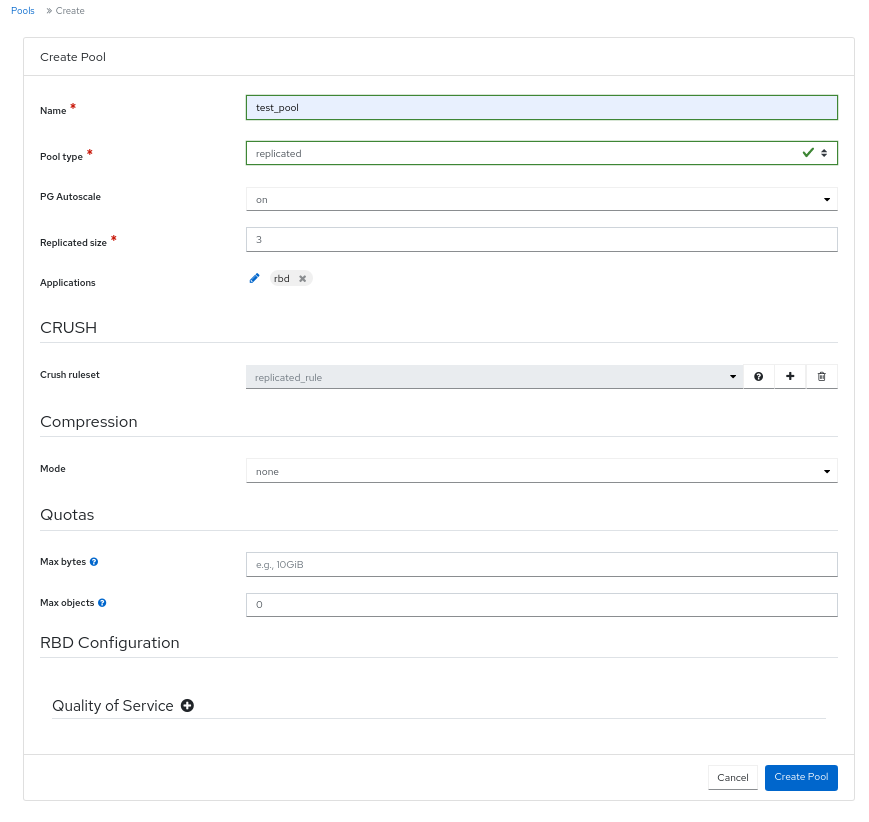

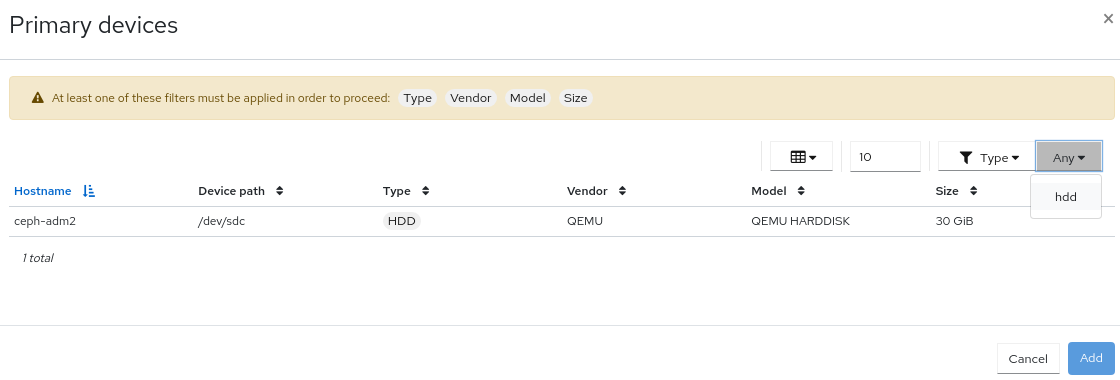

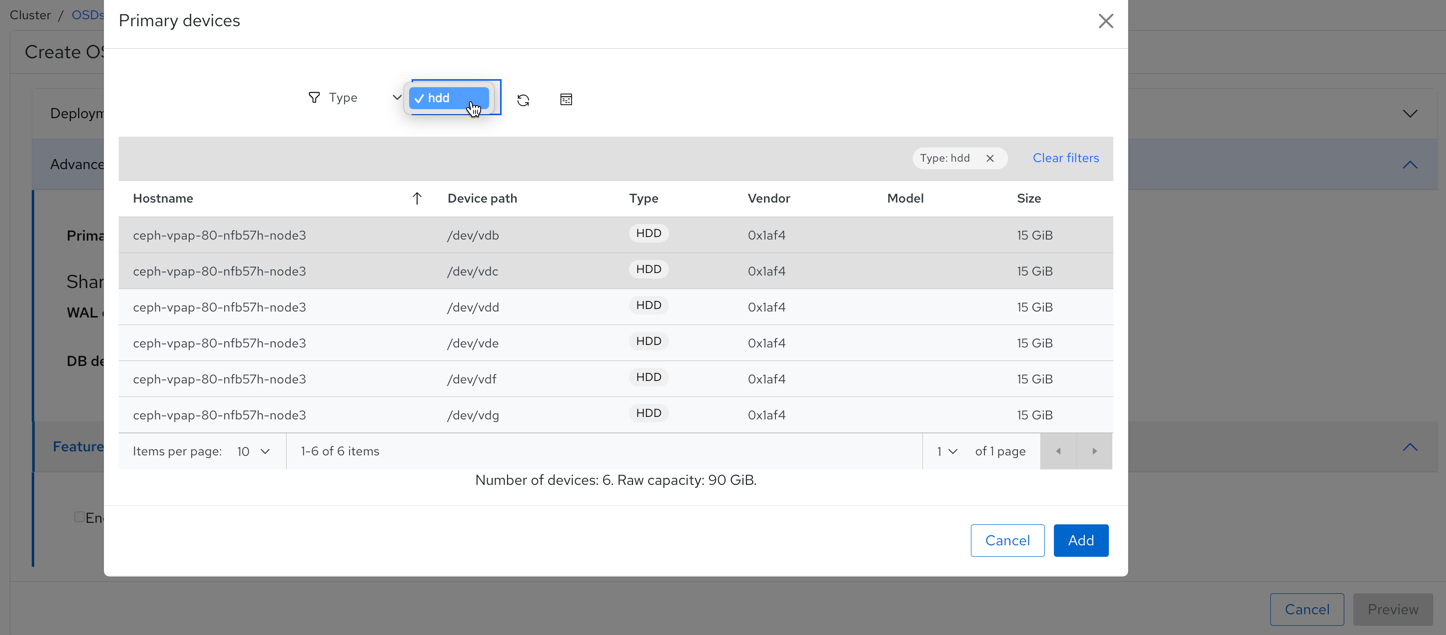

Create OSDs:

- In the Create OSDs step, for Primary devices, click Add.

- In the Primary Devices window, filter for the device and select the device.

- Click Add.

- Optional: In the Create OSDs window, if you have any shared devices such as WAL or DB devices, then add the devices.

- Optional: In the Features section, select Encryption to encrypt the features.

- Click Next.

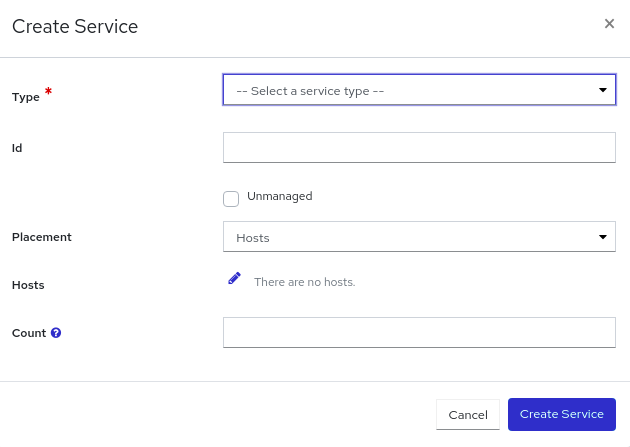

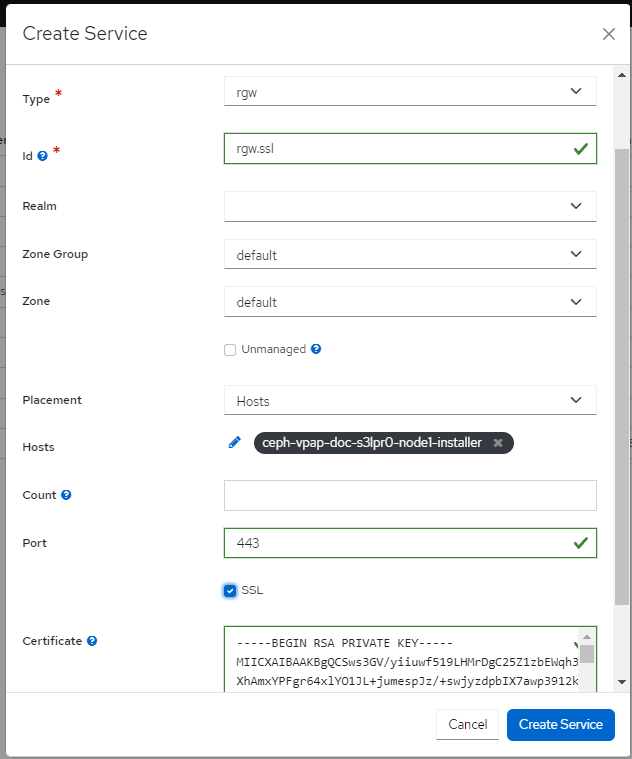

Create services:

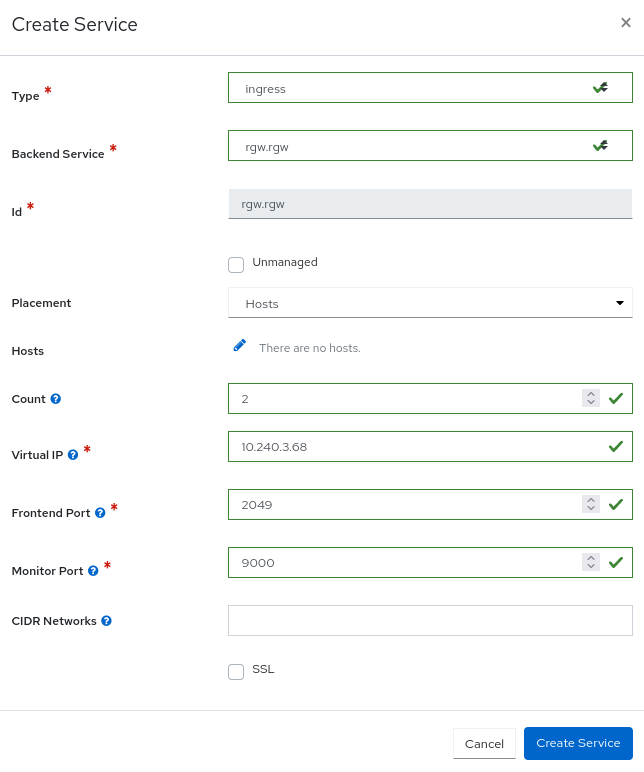

- In the Create Services step, click Create.

In the Create Service form:

- Select a service type.

- Provide the service ID. The ID is a unique name for the service. This ID is used in the service name, which is service_type.service_id.

- Optional: Select if the service is Unmanaged.

When Unmanaged services is selected, the orchestrator will not start or stop any daemon associated with this service. Placement and all other properties are ignored.

- Select if the placement is by hosts or label.

- Select the hosts.

In the Count field, provide the number of daemons or services that need to be deployed.

Click Create Service.

The new service is displayed in the Create Services pane.

- In the Create Service window, click Next.

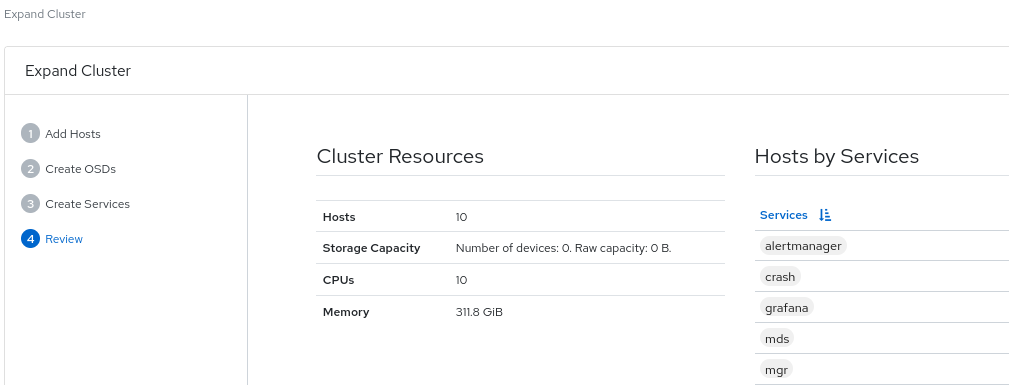

Review the cluster expansion details.

Review the Cluster Resources, Hosts by Services, Host Details. To edit any parameters, click Back and follow the previous steps.

Figure 2.3. Review cluster

Click Expand Cluster.

The Cluster expansion displayed notification is displayed and the cluster status changes to HEALTH_OK on the dashboard.

Verification

Log in to the cephadm shell:

Example

[root@host01 ~]# cephadm shell

Run the ceph -s command.

Example

[ceph: root@host01 /]# ceph -s

The health of the cluster is HEALTH_OK.
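The verification step can also be checked from a script. The sketch below assumes that the ceph health command prints a line starting with the health status, such as HEALTH_OK or HEALTH_WARN; the function name cluster_is_healthy is hypothetical.

```shell
# Succeeds only when the given status text starts with HEALTH_OK.
cluster_is_healthy() {
    case "$1" in
        HEALTH_OK*) return 0 ;;
        *) return 1 ;;
    esac
}

# Usage inside the Cephadm shell:
#   if cluster_is_healthy "$(ceph health)"; then
#       echo "cluster expansion verified"
#   fi
```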

2.4. Upgrading a cluster

Upgrade Ceph clusters using the dashboard.

Cluster images are pulled automatically from registry.redhat.io. Optionally, use custom images for upgrade.

Prerequisites

Verify that your upgrade version path and operating system are supported before starting the upgrade process. For more information, see Compatibility Matrix for Red Hat Ceph Storage 8.0.

Before you begin, make sure that you have the following prerequisites in place:

These items cannot be done through the dashboard and must be completed manually, through the command-line interface, before continuing to upgrade the cluster from the dashboard.

For detailed information, see Upgrading the Red Hat Ceph Storage cluster in a disconnected environment and complete steps 1 through 7.

The latest cephadm.

Syntax

dnf update cephadm

The latest cephadm-ansible.

Syntax

dnf update cephadm-ansible

The latest cephadm pre-flight playbook.

Syntax

ansible-playbook -i INVENTORY_FILE cephadm-preflight.yml --extra-vars "ceph_origin=custom upgrade_ceph_packages=true"

Run the following Ceph commands to avoid alerts and rebalancing of the data during the cluster upgrade:

Syntax

ceph health mute DAEMON_OLD_VERSION --sticky
ceph osd set noout
ceph osd set noscrub
ceph osd set nodeep-scrub

Procedure

View if cluster upgrades are available and upgrade as needed from Administration > Upgrade on the dashboard.

Note: If the dashboard displays the Not retrieving upgrades message, check if the registries were added to the container configuration files with the appropriate login credentials to Podman or docker.

Click Pause or Stop during the upgrade process, if needed. The upgrade progress is shown in the progress bar along with information messages during the upgrade.

Note: When you stop the upgrade, the upgrade is first paused and you are then prompted to stop it.

- Optional. View cluster logs during the upgrade process from the Cluster logs section of the Upgrade page.

- Verify that the upgrade is completed successfully by confirming that the cluster status displays OK state.

After verifying that the upgrade is complete, unset the noout, noscrub, and nodeep-scrub flags.

Example

[ceph: root@host01 /]# ceph osd unset noout
[ceph: root@host01 /]# ceph osd unset noscrub
[ceph: root@host01 /]# ceph osd unset nodeep-scrub
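Because the same three OSD flags are set before the upgrade and unset afterwards, the two steps can share one helper. This is a minimal sketch: the function name toggle_upgrade_flags and the CEPH override variable are hypothetical, and the helper only wraps the ceph osd set and ceph osd unset commands shown in this section.

```shell
# CEPH can be overridden (for example with echo) to preview the commands.
CEPH=${CEPH:-ceph}
UPGRADE_FLAGS="noout noscrub nodeep-scrub"

# Argument: "set" before the upgrade, "unset" after it.
toggle_upgrade_flags() {
    action=$1
    case "$action" in
        set|unset) ;;
        *)
            echo "usage: toggle_upgrade_flags set|unset" >&2
            return 2
            ;;
    esac
    for flag in $UPGRADE_FLAGS; do
        # Stop at the first failing command so a partial change is noticed.
        $CEPH osd "$action" "$flag" || return 1
    done
}

# Usage inside the Cephadm shell:
#   toggle_upgrade_flags set      # before starting the upgrade
#   toggle_upgrade_flags unset    # after verifying the upgrade
```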

2.5. Toggling Ceph dashboard features

You can customize the Red Hat Ceph Storage dashboard components by enabling or disabling features on demand. All features are enabled by default. When disabling a feature, the web-interface elements become hidden and the associated REST API end-points reject any further requests for that feature. Enabling and disabling dashboard features can be done from the command-line interface or the web interface.

Available features:

- Ceph Block Devices:

  - Image management, rbd

  - Mirroring, mirroring

- Ceph File System, cephfs

- Ceph Object Gateway, rgw

- NFS Ganesha gateway, nfs

By default, the Ceph Manager is collocated with the Ceph Monitor.

You can disable multiple features at once.

Once a feature is disabled, it can take up to 20 seconds to reflect the change in the web interface.

Prerequisites

- Installation and configuration of the Red Hat Ceph Storage dashboard software.

- User access to the Ceph Manager host or the dashboard web interface.

- Root level access to the Ceph Manager host.

Procedure

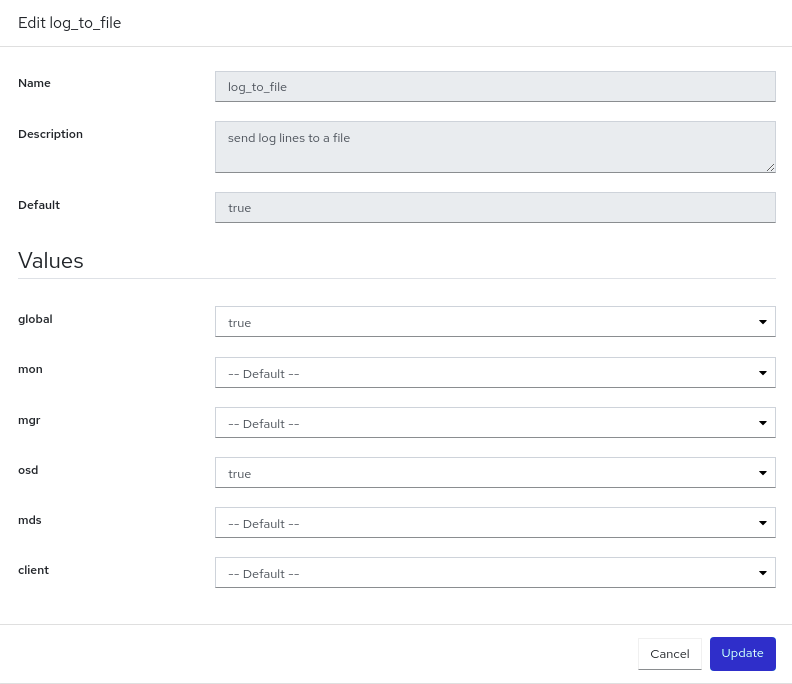

To toggle the dashboard features from the dashboard web interface:

- On the dashboard landing page, go to Administration→Manager Modules and select the dashboard module.

- Click Edit.

- In the Edit Manager module form, you can enable or disable the dashboard features by selecting or clearing the check boxes next to the different feature names.

- After the selections are made, click Update.

To toggle the dashboard features from the command-line interface:

Log in to the Cephadm shell:

Example

[root@host01 ~]# cephadm shell

List the feature status:

Example

[ceph: root@host01 /]# ceph dashboard feature status

Disable a feature:

[ceph: root@host01 /]# ceph dashboard feature disable rgw

This example disables the Ceph Object Gateway feature.

Enable a feature:

[ceph: root@host01 /]# ceph dashboard feature enable cephfs

This example enables the Ceph Filesystem feature.
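Since several features can be toggled at once, a wrapper can validate the feature names before calling the CLI. The sketch below is an illustration only: the function names is_known_feature and set_features and the CEPH override variable are hypothetical, the feature names come from the list of available features in this section, and passing several names to a single ceph dashboard feature command is assumed to match the behavior described above.

```shell
# CEPH can be overridden (for example with echo) to preview the command.
CEPH=${CEPH:-ceph}
KNOWN_FEATURES="rbd mirroring cephfs rgw nfs"

# Succeeds when the argument is one of the feature names listed in this section.
is_known_feature() {
    for known in $KNOWN_FEATURES; do
        [ "$known" = "$1" ] && return 0
    done
    return 1
}

# Usage: set_features enable|disable FEATURE...
set_features() {
    action=$1
    shift
    # Reject the whole request if any name is not in the list.
    for feature in "$@"; do
        if ! is_known_feature "$feature"; then
            echo "unknown feature: $feature" >&2
            return 1
        fi
    done
    $CEPH dashboard feature "$action" "$@"
}

# Usage inside the Cephadm shell:
#   set_features disable rbd mirroring
```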

2.6. Understanding the landing page of the Ceph dashboard

The landing page displays an overview of the entire Ceph cluster using navigation bars and individual panels.

The menu bar provides the following options:

- Tasks and Notifications

- Provides task and notification messages.

- Help

- Provides links to the product and REST API documentation, details about the Red Hat Ceph Storage Dashboard, and a form to report an issue.

- Dashboard Settings

- Gives access to user management and telemetry configuration.

- User

- Use this menu to see log in status, to change a password, and to sign out of the dashboard.

Figure 2.4. Menu bar

The navigation menu can be opened or hidden by clicking the navigation menu icon.

Dashboard

The main dashboard displays specific information about the state of the cluster.

The main dashboard can be accessed at any time by clicking Dashboard from the navigation menu.

The dashboard landing page organizes the panes into different categories.

Figure 2.5. Ceph dashboard landing page

- Details

- Displays specific cluster information and if telemetry is active or inactive.

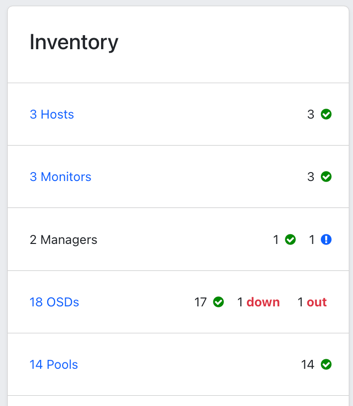

- Inventory

Displays the different parts of the cluster, how many are available, and their status.

Link directly from Inventory to specific inventory items, where available.

- Hosts

- Displays the total number of hosts in the Ceph storage cluster.

- Monitors

- Displays the number of Ceph Monitors and the quorum status.

- Managers

- Displays the number and status of the Manager Daemons.

- OSDs

- Displays the total number of OSDs in the Ceph Storage cluster and the number that are up and in.

- Pools

- Displays the number of storage pools in the Ceph cluster.

- PGs

Displays the total number of placement groups (PGs). The PG states are divided into Working and Warning to simplify the display. Each one encompasses multiple states.

The Working state includes PGs with any of the following states:

- activating

- backfill_wait

- backfilling

- creating

- deep

- degraded

- forced_backfill

- forced_recovery

- peering

- peered

- recovering

- recovery_wait

- repair

- scrubbing

- snaptrim

- snaptrim_wait

The Warning state includes PGs with any of the following states:

- backfill_toofull

- backfill_unfound

- down

- incomplete

- inconsistent

- recovery_toofull

- recovery_unfound

- remapped

- snaptrim_error

- stale

- undersized

- Object Gateways

- Displays the number of Object Gateways in the Ceph storage cluster.

- Metadata Servers

- Displays the number and status of metadata servers for Ceph File Systems (CephFS).

- Status

- Displays the health of the cluster and host and daemon states. The current health status of the Ceph storage cluster is displayed. Danger and warning alerts are displayed directly on the landing page. Click View alerts for a full list of alerts.

- Capacity

- Displays storage usage metrics. This is displayed as a graph of used, warning, and danger. The numbers are in percentages and in GiB.

- Cluster Utilization

- The Cluster Utilization pane displays information related to data transfer speeds. Select the time range for the data output from the list, from the last 5 minutes to the last 24 hours.

- Used Capacity (RAW)

- Displays usage in GiB.

- IOPS

- Displays total I/O read and write operations per second.

- OSD Latencies

- Displays total apply and commit latencies in milliseconds.

- Client Throughput

- Displays total client read and write throughput in KiB per second.

- Recovery Throughput

- Displays the rate of cluster healing and balancing operations. For example, the status of any background data that may be moving due to a loss of disk is displayed. The information is displayed in bytes per second.
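The Working and Warning groupings listed for the PGs pane can be expressed as a small classifier. This is a sketch under stated assumptions: the function names are hypothetical, a combined state such as active+undersized+degraded is split on the plus sign, a Warning component is assumed to take precedence over a Working one, and the label other (for states in neither list, such as active+clean) is invented for illustration. The dashboard's own logic may differ.

```shell
# State names copied from the two lists in the PGs description above.
WORKING_STATES="activating backfill_wait backfilling creating deep degraded forced_backfill forced_recovery peering peered recovering recovery_wait repair scrubbing snaptrim snaptrim_wait"
WARNING_STATES="backfill_toofull backfill_unfound down incomplete inconsistent recovery_toofull recovery_unfound remapped snaptrim_error stale undersized"

# in_list WORD ITEM...: succeeds when WORD equals one of the items.
in_list() {
    word=$1
    shift
    for item in "$@"; do
        [ "$item" = "$word" ] && return 0
    done
    return 1
}

# Print Warning, Working, or other for a combined PG state string.
classify_pg_state() {
    result=other
    old_ifs=$IFS
    IFS=+
    set -- $1          # split the combined state on "+"
    IFS=$old_ifs
    for part in "$@"; do
        if in_list "$part" $WARNING_STATES; then
            result=Warning   # assumed precedence: Warning wins
            break
        elif in_list "$part" $WORKING_STATES; then
            result=Working
        fi
    done
    echo "$result"
}

# Usage:
#   classify_pg_state active+recovering+degraded
```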

2.7. Changing the dashboard password using the Ceph dashboard

By default, the password for accessing the dashboard is randomly generated by the system while bootstrapping the cluster. You have to change the password the first time you log in to the Red Hat Ceph Storage dashboard. You can change the password for the admin user using the dashboard.

Prerequisites

- A running Red Hat Ceph Storage cluster.

Procedure

Log in to the dashboard:

Syntax

https://HOST_NAME:8443

- Go to User→Change password on the menu bar.

- Enter the old password, for verification.

- In the New password field, enter a new password. Passwords must contain a minimum of 8 characters and cannot be the same as the last one.

- In the Confirm password field, enter the new password again to confirm.

Click Change Password.

You will be logged out and redirected to the login screen. A notification appears confirming the password is changed.
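The two password rules named in this procedure can be checked before the form is submitted. The following is a minimal sketch that covers only those two rules; the function name `password_problems` is hypothetical, and the dashboard may enforce further complexity rules that are not modeled here.

```python
MIN_LENGTH = 8  # minimum length stated in the procedure above


def password_problems(new_password: str, old_password: str) -> list[str]:
    """Return the rule violations for a proposed password (empty list if none)."""
    problems = []
    if len(new_password) < MIN_LENGTH:
        problems.append(f"must contain at least {MIN_LENGTH} characters")
    if new_password == old_password:
        problems.append("cannot be the same as the last password")
    return problems


if __name__ == "__main__":
    print(password_problems("short", "old-secret"))
    print(password_problems("a-new-passphrase", "old-secret"))
```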

2.8. Changing the Ceph dashboard password using the command line interface

If you have forgotten your Ceph dashboard password, you can change the password using the command line interface.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Root-level access to the host on which the dashboard is installed.

Procedure

Log into the Cephadm shell:

Example

[root@host01 ~]# cephadm shell

Create the dashboard_password.yml file:

Example

[ceph: root@host01 /]# touch dashboard_password.yml

Edit the file and add the new dashboard password:

Example

[ceph: root@host01 /]# vi dashboard_password.yml

Reset the dashboard password:

Syntax

ceph dashboard ac-user-set-password DASHBOARD_USERNAME -i PASSWORD_FILE

Example

[ceph: root@host01 /]# ceph dashboard ac-user-set-password admin -i dashboard_password.yml
{"username": "admin", "password": "$2b$12$i5RmvN1PolR61Fay0mPgt.GDpcga1QpYsaHUbJfoqaHd1rfFFx7XS", "roles": ["administrator"], "name": null, "email": null, "lastUpdate": , "enabled": true, "pwdExpirationDate": null, "pwdUpdateRequired": false}

Verification

- Log in to the dashboard with your new password.
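The file-based steps above can also be scripted. The following is a minimal sketch, assuming it runs where the `ceph` command is available (for example inside the Cephadm shell). It only writes the password file and prints the command; it does not run it. The helper names and the placeholder password are hypothetical.

```python
import os
import tempfile


def write_password_file(path: str, password: str) -> None:
    """Write the password to a file; a newly created file gets owner-only permissions."""
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w") as handle:
        handle.write(password)  # written without a trailing newline


def set_password_argv(username: str, password_file: str) -> list[str]:
    """Argument list that mirrors the Syntax line in the procedure above."""
    return ["ceph", "dashboard", "ac-user-set-password", username, "-i", password_file]


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as workdir:
        path = os.path.join(workdir, "dashboard_password.yml")
        write_password_file(path, "example-Passw0rd")  # placeholder value
        print(" ".join(set_password_argv("admin", path)))
```

Passing the password through a file keeps it out of the process list and the shell history, which is presumably why the command takes `-i PASSWORD_FILE` rather than the password itself.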

2.9. Setting admin user password for Grafana

By default, cephadm does not create an admin user for Grafana. With the Ceph Orchestrator, you can create an admin user and set the password.

With these credentials, you can log in to the storage cluster’s Grafana URL with the given password for the admin user.

Prerequisites

- A running Red Hat Ceph Storage cluster with the monitoring stack installed.

- Root-level access to the cephadm host.

- The dashboard module enabled.

Procedure

As a root user, create a grafana.yml file and provide the following details:

Syntax

service_type: grafana
spec:
  initial_admin_password: PASSWORD

Example

service_type: grafana
spec:
  initial_admin_password: mypassword

Mount the grafana.yml file under a directory in the container:

Example

[root@host01 ~]# cephadm shell --mount grafana.yml:/var/lib/ceph/grafana.yml

Note: Every time you exit the shell, you have to mount the file in the container before deploying the daemon.

Optional: Check if the dashboard Ceph Manager module is enabled:

Example

[ceph: root@host01 /]# ceph mgr module ls

Optional: Enable the dashboard Ceph Manager module:

Example

[ceph: root@host01 /]# ceph mgr module enable dashboard

Apply the specification using the orch command:

Syntax

ceph orch apply -i FILE_NAME.yml

Example

[ceph: root@host01 /]# ceph orch apply -i /var/lib/ceph/grafana.yml

Redeploy the grafana service:

Example

[ceph: root@host01 /]# ceph orch redeploy grafana

This creates an admin user called admin with the given password and the user can log in to the Grafana URL with these credentials.

Verification:

Log in to Grafana with the credentials:

Syntax

https://HOST_NAME:PORT

Example

https://host01:3000/
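The grafana.yml specification from this procedure is short enough to generate from a script. The following is a minimal sketch that renders the same three fields as text, without a YAML library. The function name `grafana_spec` is hypothetical. Quoting the password with `json.dumps` is a choice of this sketch rather than something the procedure requires.

```python
import json


def grafana_spec(initial_admin_password: str) -> str:
    """Render the Grafana service specification from the procedure as YAML text."""
    # json.dumps yields a double-quoted string, which YAML also accepts,
    # so a password containing ':' or '#' is not misread as YAML syntax.
    quoted = json.dumps(initial_admin_password)
    return (
        "service_type: grafana\n"
        "spec:\n"
        f"  initial_admin_password: {quoted}\n"
    )


if __name__ == "__main__":
    print(grafana_spec("mypassword"), end="")
```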

2.10. Enabling Red Hat Ceph Storage Dashboard manually

If you installed a Red Hat Ceph Storage cluster by using the --skip-dashboard option during bootstrap, the dashboard URL and credentials are not available in the bootstrap output. You can enable the dashboard manually using the command-line interface. Although the monitoring stack components such as Prometheus, Grafana, Alertmanager, and node-exporter are deployed, they are disabled and you have to enable them manually.

Prerequisites

- A running Red Hat Ceph Storage cluster installed with the --skip-dashboard option during bootstrap.

- Root-level access to the host on which the dashboard needs to be enabled.

Procedure

Log into the Cephadm shell:

Example

[root@host01 ~]# cephadm shell

Check the Ceph Manager services:

Example

[ceph: root@host01 /]# ceph mgr services
{ "prometheus": "http://10.8.0.101:9283/" }

You can see that the Dashboard URL is not configured.

Enable the dashboard module:

Example

[ceph: root@host01 /]# ceph mgr module enable dashboard

Create the self-signed certificate for the dashboard access:

Example

[ceph: root@host01 /]# ceph dashboard create-self-signed-cert

Note: You can disable the certificate verification to avoid certification errors.

Check the Ceph Manager services:

Example

[ceph: root@host01 /]# ceph mgr services

Create the admin user and password to access the Red Hat Ceph Storage dashboard:

Syntax

echo -n "PASSWORD" > PASSWORD_FILE
ceph dashboard ac-user-create admin -i PASSWORD_FILE administrator

Example

[ceph: root@host01 /]# echo -n "p@ssw0rd" > password.txt
[ceph: root@host01 /]# ceph dashboard ac-user-create admin -i password.txt administrator

- Enable the monitoring stack. See the Enabling monitoring stack section in the Red Hat Ceph Storage Dashboard Guide for details.
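The "Check the Ceph Manager services" steps can be automated by reading the JSON that `ceph mgr services` prints. The following is a minimal sketch, assuming that the dashboard appears under a `dashboard` key once the module is enabled. That key name and the second sample string are assumptions, because the procedure only shows the output before the module is enabled.

```python
import json
from typing import Optional


def dashboard_url(mgr_services_output: str) -> Optional[str]:
    """Return the dashboard URL from `ceph mgr services` output, or None if it is absent."""
    services = json.loads(mgr_services_output)
    return services.get("dashboard")


if __name__ == "__main__":
    before = '{ "prometheus": "http://10.8.0.101:9283/" }'  # output shown in the procedure
    after = '{ "dashboard": "https://host01:8443/" }'  # hypothetical output, for illustration
    print(dashboard_url(before))
    print(dashboard_url(after))
```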

2.11. Using single sign-on with the dashboard

The Ceph Dashboard supports external authentication of users with the choice of either the Security Assertion Markup Language (SAML) 2.0 protocol or with the OAuth2 Proxy (oauth2-proxy). Before using single sign-on (SSO) with the Ceph Dashboard, create the dashboard user accounts and assign any required roles. The Ceph Dashboard completes user authorization and then the existing Identity Provider (IdP) completes the authentication process. You can enable single sign-on using the SAML protocol or oauth2-proxy.

Red Hat Ceph Storage supports dashboard SSO and Multi-Factor Authentication with RHSSO (Keycloak).

To connect your SAML IdP with the Ceph Dashboard, use the following Service Provider (SP) configuration details. In each of the following configuration URLs, replace DASHBOARD_HOST with the fully qualified domain name (FQDN) and port of your dashboard. For example, ceph-dashboard.example.com:8443.

- Entity ID (Audience URI)

- The unique identifier for the Ceph Dashboard service provider.

https://DASHBOARD_HOST/auth/saml2/metadata

- Assertion Consumer Service (ACS)

- The endpoint where the IdP sends the SAML assertion (using POST binding).

https://DASHBOARD_HOST/auth/saml2/

- Default Relay State (Start URL)

- The page that users are directed to after a successful login.

https://DASHBOARD_HOST/#/dashboard

- Target URL

-

https://DASHBOARD_HOST/#/dashboard

Some IdPs can automatically configure these fields from the service provider metadata. To provide the metadata, give your IdP the following URL:

OAuth2 SSO uses the oauth2-proxy service to work with the Ceph Management gateway (mgmt-gateway), providing unified access and improved user experience.

The OAuth2 SSO, mgmt-gateway, and oauth2-proxy services are Technology Preview.

For more information about the Ceph Management gateway and the OAuth2 Proxy service, see Using the Ceph Management gateway (mgmt-gateway) and Using the OAuth2 Proxy (oauth2-proxy) service.

For more information about Red Hat build of Keycloak, see Red Hat build of Keycloak on the Red Hat Customer Portal.
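The Service Provider URLs listed earlier in this section all derive from the same DASHBOARD_HOST value. The following is a minimal sketch that expands them for one host; the function name `saml_sp_urls` and the dictionary keys are hypothetical labels, and only the URL patterns come from this section.

```python
def saml_sp_urls(dashboard_host: str) -> dict[str, str]:
    """Expand the Service Provider URL patterns for a dashboard FQDN and port."""
    base = f"https://{dashboard_host}"
    return {
        "entity_id": f"{base}/auth/saml2/metadata",
        "assertion_consumer_service": f"{base}/auth/saml2/",
        "default_relay_state": f"{base}/#/dashboard",
        "target_url": f"{base}/#/dashboard",
    }


if __name__ == "__main__":
    for name, url in saml_sp_urls("ceph-dashboard.example.com:8443").items():
        print(f"{name}: {url}")
```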

2.11.1. Creating an admin account for syncing users to the Ceph dashboard

You have to create an admin account to synchronize users to the Ceph dashboard.

After creating the account, use Red Hat Single Sign-on (SSO) to synchronize users to the Ceph dashboard. See the Syncing users to the Ceph dashboard using Red Hat Single Sign-On section in the Red Hat Ceph Storage Dashboard Guide.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Dashboard is installed.

- Admin level access to the dashboard.

- Users are added to the dashboard.

- Root-level access on all the hosts.

- Java OpenJDK installed. For more information, see the Installing a JRE on RHEL by using yum section of the Installing and using OpenJDK 8 for RHEL guide for OpenJDK on the Red Hat Customer Portal.

- Red Hat Single Sign-On installed from a ZIP file. See the Installing RH-SSO from a ZIP File section of the Server Installation and Configuration Guide for Red Hat Single Sign-On on the Red Hat Customer Portal.

Procedure

- Download the Red Hat Single Sign-On 7.4.0 Server on the system where Red Hat Ceph Storage is installed.

Unzip the folder:

[root@host01 ~]# unzip rhsso-7.4.0.zip

Navigate to the standalone/configuration directory and open the standalone.xml file for editing:

[root@host01 ~]# cd standalone/configuration
[root@host01 configuration]# vi standalone.xml

From the bin directory of the newly created rhsso-7.4.0 folder, run the add-user-keycloak script to add the initial administrator user:

[root@host01 bin]# ./add-user-keycloak.sh -u admin

- In the standalone.xml file, replace all instances of localhost and two instances of 127.0.0.1 with the IP address of the machine where Red Hat SSO is installed.

Start the server. From the bin directory of the rh-sso-7.4 folder, run the standalone boot script:

[root@host01 bin]# ./standalone.sh

Create the admin account in https://IP_ADDRESS:8080/auth with a username and password:

Note: You have to create an admin account only the first time that you log into the console.

- Log into the admin console with the credentials created.

2.11.2. Syncing users to the Ceph dashboard using Red Hat Single Sign-On

You can use Red Hat Single Sign-on (SSO) with Lightweight Directory Access Protocol (LDAP) integration to synchronize users to the Red Hat Ceph Storage Dashboard.

The users are added to specific realms in which they can access the dashboard through SSO without any additional requirements of a password.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Dashboard is installed.

- Admin level access to the dashboard.

- Users are added to the dashboard. See the Creating users on Ceph dashboard section in the Red Hat Ceph Storage Dashboard Guide.

- Root-level access on all the hosts.

- Admin account created for syncing users. See the Creating an admin account for syncing users to the Ceph dashboard section in the Red Hat Ceph Storage Dashboard Guide.

Procedure

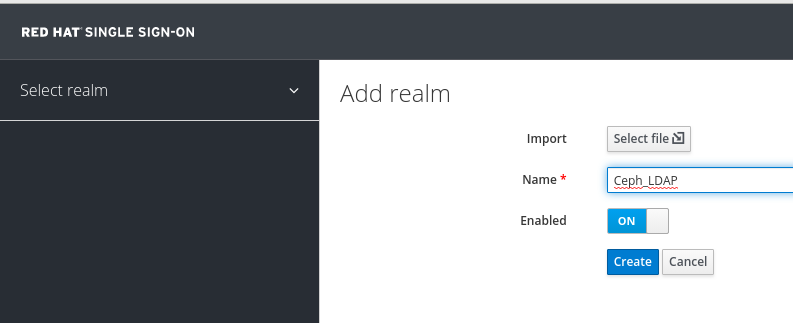

- To create a realm, click the Master drop-down menu. In this realm, you can provide access to users and applications.

In the Add Realm window, enter a case-sensitive realm name and set the parameter Enabled to ON and click Create:

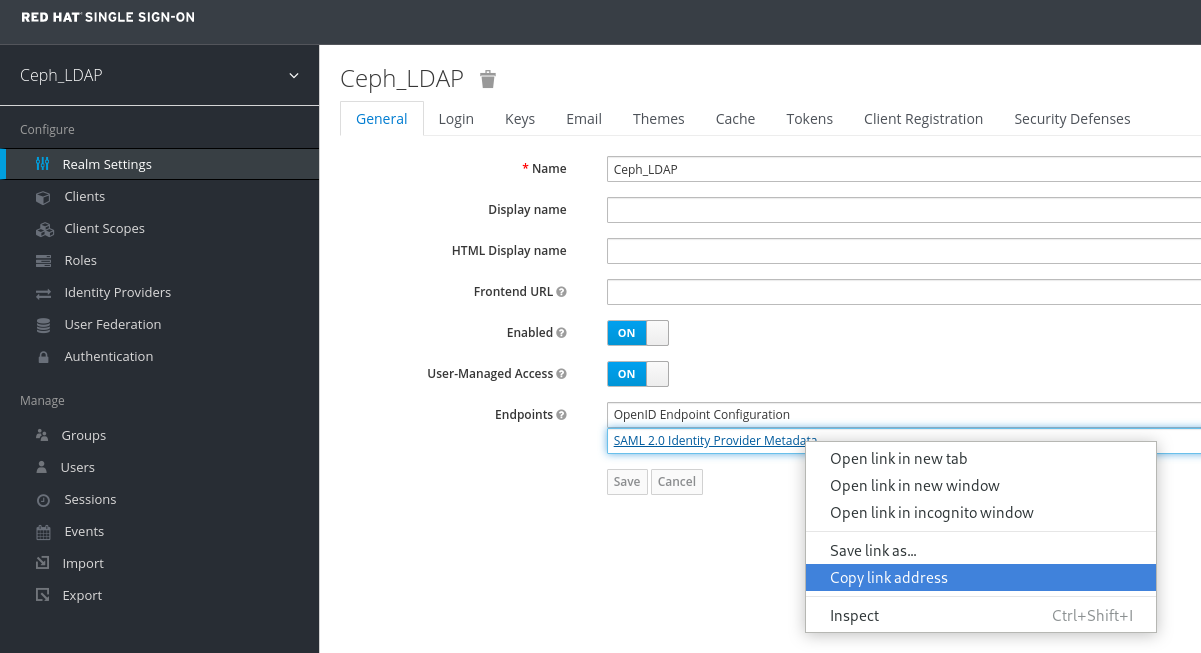

In the Realm Settings tab, set the following parameters and click Save:

- Enabled - ON

- User-Managed Access - ON

Make a note of the link address of SAML 2.0 Identity Provider Metadata to paste in Client Settings.

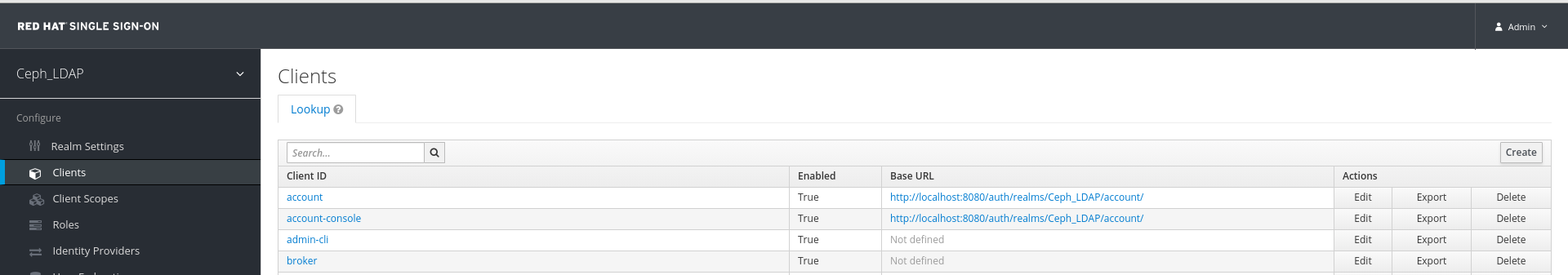

In the Clients tab, click Create:

In the Add Client window, set the following parameters and click Save:

Client ID - BASE_URL:8443/auth/saml2/metadata

Example

https://example.ceph.redhat.com:8443/auth/saml2/metadata

- Client Protocol - saml

In the Client window, under Settings tab, set the following parameters:

Table 2.2. Client Settings tab

| Name of the parameter | Syntax | Example |
| --- | --- | --- |
| Client ID | BASE_URL:8443/auth/saml2/metadata | https://example.ceph.redhat.com:8443/auth/saml2/metadata |
| Enabled | ON | ON |
| Client Protocol | saml | saml |
| Include AuthnStatement | ON | ON |
| Sign Documents | ON | ON |
| Signature Algorithm | RSA_SHA1 | RSA_SHA1 |
| SAML Signature Key Name | KEY_ID | KEY_ID |
| Valid Redirect URLs | BASE_URL:8443/* | https://example.ceph.redhat.com:8443/* |
| Base URL | BASE_URL:8443 | https://example.ceph.redhat.com:8443/ |
| Master SAML Processing URL | https://localhost:8080/auth/realms/REALM_NAME/protocol/saml/descriptor | https://localhost:8080/auth/realms/Ceph_LDAP/protocol/saml/descriptor |

Note: Paste the link of SAML 2.0 Identity Provider Metadata from the Realm Settings tab.

Under Fine Grain SAML Endpoint Configuration, set the following parameters and click Save:

Table 2.3. Fine Grain SAML configuration

| Name of the parameter | Syntax | Example |
| --- | --- | --- |
| Assertion Consumer Service POST Binding URL | BASE_URL:8443/#/dashboard | https://example.ceph.redhat.com:8443/#/dashboard |
| Assertion Consumer Service Redirect Binding URL | BASE_URL:8443/#/dashboard | https://example.ceph.redhat.com:8443/#/dashboard |
| Logout Service Redirect Binding URL | BASE_URL:8443/ | https://example.ceph.redhat.com:8443/ |

In the Clients window, Mappers tab, set the following parameters and click Save:

Table 2.4. Client Mappers tab

| Name of the parameter | Value |
| --- | --- |
| Protocol | saml |
| Name | username |
| Mapper Property | User Property |
| Property | username |
| SAML Attribute name | username |

In the Clients Scope tab, select role_list:

- In Mappers tab, select role list, set the Single Role Attribute to ON.

Select User_Federation tab:

- In User Federation window, select ldap from the drop-down menu:

In User_Federation window, Settings tab, set the following parameters and click Save:

Table 2.5. User Federation Settings tab

| Name of the parameter | Value |
| --- | --- |
| Console Display Name | rh-ldap |
| Import Users | ON |
| Edit_Mode | READ_ONLY |
| Username LDAP attribute | username |
| RDN LDAP attribute | username |
| UUID LDAP attribute | nsuniqueid |
| User Object Classes | inetOrgPerson, organizationalPerson, rhatPerson |
| Connection URL | Example: ldap://ldap.corp.redhat.com Click Test Connection. You will get a notification that the LDAP connection is successful. |
| Users DN | ou=users, dc=example, dc=com |
| Bind Type | simple |

Click Test authentication. You will get a notification that the LDAP authentication is successful.

In the Mappers tab, select the first name row, edit the following parameter, and click Save:

- LDAP Attribute - givenName

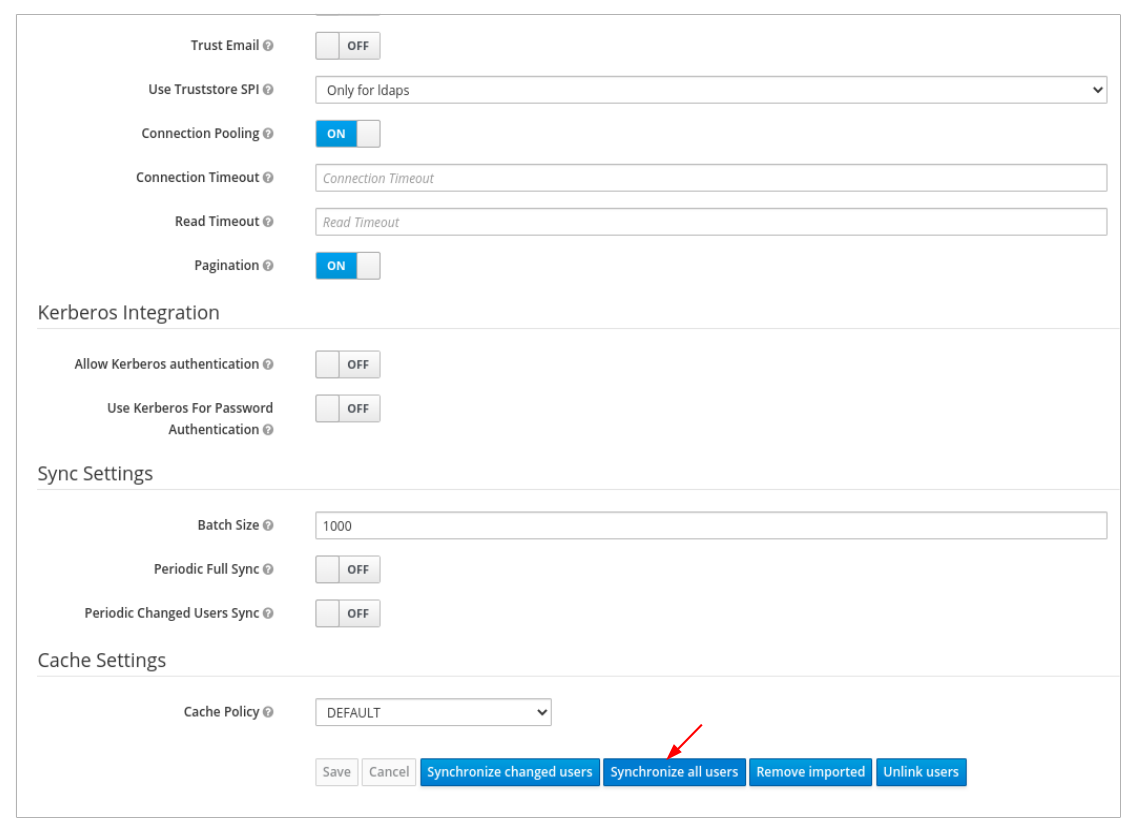

In the User_Federation tab, Settings tab, click Synchronize all users:

You will get a notification that the sync of users is finished successfully.

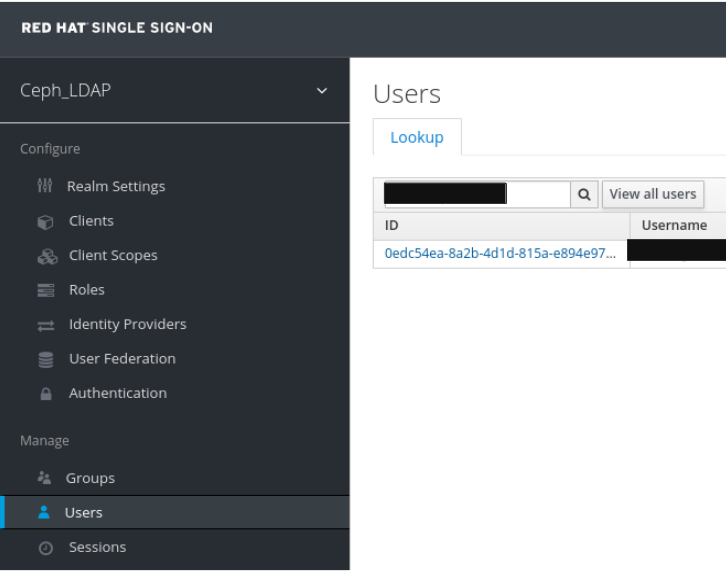

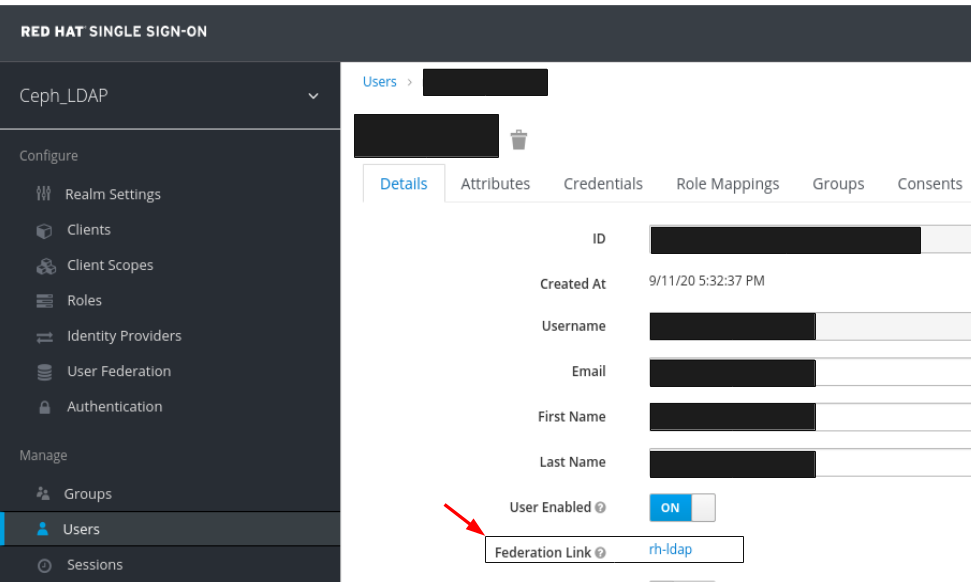

In the Users tab, search for the user added to the dashboard and click the Search icon:

To view the user, click the specific row. You should see the federation link as the name provided for the User Federation.

Important: Do not add users manually, because manually added users are not synchronized by LDAP. If a user was added manually, delete the user by clicking Delete.

Note: If Red Hat SSO is currently being used within your work environment, be sure to first enable SSO. For more information, see the Enabling Single Sign-On with SAML 2.0 for the Ceph Dashboard section in the Red Hat Ceph Storage Dashboard Guide.

Verification

Users added to the realm and the dashboard can access the Ceph dashboard with their mail address and password.

Example

https://example.ceph.redhat.com:8443
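The URL-valued rows of Tables 2.2 and 2.3 follow directly from BASE_URL and port 8443. The following is a minimal sketch that fills them in for one base URL; the function name `client_settings` is hypothetical, and the remaining rows (toggles, algorithm, key name) are constants that are left out.

```python
def client_settings(base_url: str, port: int = 8443) -> dict[str, str]:
    """Derive the URL-valued client settings from the dashboard base URL."""
    root = f"{base_url.rstrip('/')}:{port}"
    return {
        "Client ID": f"{root}/auth/saml2/metadata",
        "Valid Redirect URLs": f"{root}/*",
        "Base URL": root,
        "Assertion Consumer Service POST Binding URL": f"{root}/#/dashboard",
        "Assertion Consumer Service Redirect Binding URL": f"{root}/#/dashboard",
        "Logout Service Redirect Binding URL": f"{root}/",
    }


if __name__ == "__main__":
    for name, value in client_settings("https://example.ceph.redhat.com").items():
        print(f"{name}: {value}")
```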

2.11.3. Enabling Single Sign-On with SAML 2.0 for the Ceph Dashboard

The Ceph Dashboard supports external authentication of users with the Security Assertion Markup Language (SAML) 2.0 protocol. Before using single sign-on (SSO) with the Ceph Dashboard, create the dashboard user accounts and assign the desired roles. The Ceph Dashboard authorizes the users, and an existing Identity Provider (IdP) authenticates them. You can enable single sign-on using the SAML protocol.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Installation of the Ceph Dashboard.

- Root-level access to the Ceph Manager hosts.

Procedure

To configure SSO on Ceph Dashboard, run the following command:

Syntax

cephadm shell CEPH_MGR_HOST ceph dashboard sso setup saml2 CEPH_DASHBOARD_BASE_URL IDP_METADATA IDP_USERNAME_ATTRIBUTE IDP_ENTITY_ID SP_X_509_CERT SP_PRIVATE_KEY

Example

[root@host01 ~]# cephadm shell host01 ceph dashboard sso setup saml2 https://dashboard_hostname.ceph.redhat.com:8443 idp-metadata.xml username https://10.70.59.125:8080/auth/realms/realm_name /home/certificate.txt /home/private-key.txt

Replace

- CEPH_MGR_HOST with the Ceph mgr host. For example, host01.

- CEPH_DASHBOARD_BASE_URL with the base URL where Ceph Dashboard is accessible.

- IDP_METADATA with the URL to remote or local path or content of the IdP metadata XML. The supported URL types are http, https, and file.

- Optional: IDP_USERNAME_ATTRIBUTE with the attribute used to get the username from the authentication response. Defaults to uid.

- Optional: IDP_ENTITY_ID with the IdP entity ID when more than one entity ID exists on the IdP metadata.

- Optional: SP_X_509_CERT with the file path of the certificate used by Ceph Dashboard for signing and encryption.

- Optional: SP_PRIVATE_KEY with the file path of the private key used by Ceph Dashboard for signing and encryption.
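Because the optional values in the setup command are positional, a script that assembles it has to keep them in order. The following is a minimal sketch that mirrors the Syntax line above and only builds the argument list. The function name `sso_setup_argv` is hypothetical. Rejecting a skipped optional value is an assumption that follows from positional ordering and is not a documented rule.

```python
def sso_setup_argv(mgr_host, base_url, idp_metadata,
                   username_attribute=None, entity_id=None,
                   sp_cert=None, sp_private_key=None):
    """Build the argument list for the SAML 2.0 setup command shown above."""
    argv = ["cephadm", "shell", mgr_host, "ceph", "dashboard", "sso", "setup",
            "saml2", base_url, idp_metadata]
    optional = [username_attribute, entity_id, sp_cert, sp_private_key]
    # Trailing optional values that were not supplied are simply omitted.
    while optional and optional[-1] is None:
        optional.pop()
    # A gap in the middle would shift later values into the wrong position.
    if any(value is None for value in optional):
        raise ValueError("an optional argument cannot be skipped when a later one is set")
    return argv + optional


if __name__ == "__main__":
    print(" ".join(sso_setup_argv(
        "host01",
        "https://dashboard_hostname.ceph.redhat.com:8443",
        "idp-metadata.xml",
        "username",
    )))
```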

Verify the current SAML 2.0 configuration:

Syntax

cephadm shell CEPH_MGR_HOST ceph dashboard sso show saml2

Example

[root@host01 ~]# cephadm shell host01 ceph dashboard sso show saml2

To enable SSO, run the following command:

Syntax

cephadm shell CEPH_MGR_HOST ceph dashboard sso enable saml2
SSO is "enabled" with "SAML2" protocol.

Example

[root@host01 ~]# cephadm shell host01 ceph dashboard sso enable saml2

Open your dashboard URL.

Example

https://dashboard_hostname.ceph.redhat.com:8443

- On the SSO page, enter the login credentials. SSO redirects to the dashboard web interface.

2.11.4. Configuring Red Hat SSO as the identity provider using OAuth2 Protocol

You can enable single sign-on (SSO) for the IBM Storage Ceph Dashboard by configuring Red Hat SSO as the Identity Provider (IdP).

Prerequisites

- A running Red Hat Ceph Storage cluster.

- A subvolume group with corresponding subvolumes.

- Enable the Ceph Management gateway (mgmt-gateway) service. For more information, see Enabling the Ceph Management gateway.

- Enable the OAuth2 Proxy service (oauth2-proxy). For more information, see Enabling the OAuth2 Proxy service.

- Ensure that oauth2-proxy and the identity provider are set up before you configure the Identity Provider.

Procedure

Create a realm in Red Hat SSO.

- Click the Master drop-down menu and select Add Realm.

- Enter a case-sensitive name for the realm.

- Set Enabled to ON and click Create.

Configure realm settings.

- Go to the Realm Settings tab.

- Set Enabled to ON and User-Managed Access to ON.

- Click Save.

- Copy the OpenID Endpoint Configuration URL. Use the issuer endpoint from this configuration for OAuth2 Proxy setup.

Example

Issuer: http://<your-ip>:<port>/realms/<realm-name>

Create and configure the OIDC client.

- Create a client of type OpenID Connect.

- Enable client authentication and authorization.

Set redirect URLs.

- Valid redirect URL: https://<host_name|IP_address>/oauth2/callback

- Valid logout redirect URL: https://<host_name|IP_address>/oauth2/sign_out

- Go to the Credentials tab and note the values for client_id and client_secret. These credentials are required to configure the oauth2-proxy service.

Create a role for dashboard access.

- Navigate to the Roles tab.

- Create a role and assign it to the OIDC client.

- Use a role name supported by the IBM Storage Ceph dashboard.

Create a user and assign roles.

- Create a new user.

- Define user credentials.

- Open the Role Mappings tab and assign the previously created role to the user.

Enable SSO for the dashboard.

Syntax

ceph dashboard sso enable

SSO is now enabled for the Ceph management stack. When users access the dashboard, they are redirected to the Red Hat SSO login page. After successful authentication, users are granted access across all monitoring stacks, including dashboard, Grafana, and Prometheus, based on the roles assigned in Red Hat SSO.
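The issuer and redirect URL patterns from this procedure can be generated from the host names involved. The following is a minimal sketch; the function names, the example host names, the port, and the realm name are hypothetical, and only the URL patterns come from the steps above.

```python
def issuer_url(idp_host: str, port: int, realm: str, scheme: str = "http") -> str:
    """Issuer endpoint in the form shown in the realm settings step."""
    return f"{scheme}://{idp_host}:{port}/realms/{realm}"


def oauth2_redirect_urls(gateway_host: str) -> dict[str, str]:
    """Redirect URLs to enter on the OIDC client, as listed in the procedure."""
    return {
        "valid_redirect": f"https://{gateway_host}/oauth2/callback",
        "valid_logout_redirect": f"https://{gateway_host}/oauth2/sign_out",
    }


if __name__ == "__main__":
    print(issuer_url("sso.example.com", 8080, "ceph"))  # hypothetical values
    for name, url in oauth2_redirect_urls("gateway.example.com").items():
        print(f"{name}: {url}")
```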

2.11.5. Configuring IBM Security Verify as the identity provider using OAuth2 protocol

You can enable single sign-on (SSO) for the IBM Storage Ceph Dashboard by configuring IBM Security Verify as the Identity Provider (IdP).

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Installation of the Ceph Dashboard.

- Enable the Ceph Management gateway (mgmt-gateway) service. For more information, see Enabling the Ceph Management gateway.

- Enable the OAuth2 Proxy service (oauth2-proxy). For more information, see Enabling the OAuth2 Proxy service.

Procedure

- From the IBM Security Verify application, navigate to Applications > Application and click Add An Application.

- Under Application Type, select Custom Application and click Add Application.

- Add the company name under the General tab.

- Navigate to Sign-on tab and select Open ID Connect 1.0 from the Sign-on Method drop-down list.

Enter the mgmt-gateway URL under the Application URL section.

Example

https://<HOSTNAME/IP_OF_THE_MGMT-GATEWAY_HOST>

- Clear the PKCE verification checkbox.

From the Redirect URIs section, fill in the redirect URIs. Use the following redirect URL format.

From the Token settings, select JWT as the access token format. For each of the following attributes, enter the details in the respective fields and then select the corresponding attribute source from the drop-down list:

- name | preferred_username

- email | email

- From the Consent Settings, select Do not ask for consent from the drop-down list.

- From the Custom Scopes, clear the Restrict Custom Scopes checkbox and click Save.

Add a user.

- Navigate to Directory > Users & Groups and click Add User.

- Provide the desired username and a valid email address. The initial user credentials are sent to the email address provided.

- Click Save.

Navigate to the Directory > Attributes and click Add Attribute.

- Select Custom Attribute, and then select the Single Sign On (SSO) checkbox. Click Next.

- In the Attribute Name field, enter roles.

- Under Data Type, select Multivalue String from the drop-down list.

- Click Additional settings, select Lowercase from the Apply Transformation drop-down list and click Next.

- Click Add Attribute.

Navigate to Directory > Users & Groups and edit the newly added user.

- Select View Extended Profile.

- From Custom User Attributes, add your desired dashboard role. For example, administrator, read-only, block-manager, and so on. This user inherits the dashboard roles automatically.

- Click Save settings.

Go to Applications > Application and click the Settings icon for the custom application created in step 2.

- From the Sign-on tab, go to Token settings.

- For the roles attribute, enter the detail in the respective fields and then select the corresponding attribute source from the drop-down list: roles | roles

- From the Entitlements tab add the newly created user and click Save.
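The roles attribute configured above is a multivalue string with a Lowercase transformation, and its values are expected to match dashboard role names. The following is a minimal sketch that lowercases candidate values and separates the eight system role names from anything else. The function name `split_roles` is hypothetical. A value reported as "other" may still be a valid custom role, which this sketch cannot know about.

```python
# System role names as listed in the roles chapter of this guide.
SYSTEM_ROLES = frozenset({
    "administrator", "block-manager", "cephfs-manager", "cluster-manager",
    "ganesha-manager", "pool-manager", "read-only", "rgw-manager",
})


def split_roles(values: list[str]) -> tuple[list[str], list[str]]:
    """Lowercase the values and split them into system roles and other names."""
    lowered = [value.strip().lower() for value in values]
    system = [role for role in lowered if role in SYSTEM_ROLES]
    other = [role for role in lowered if role not in SYSTEM_ROLES]
    return system, other


if __name__ == "__main__":
    print(split_roles(["Administrator", "Read-Only", "storage-auditor"]))
```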

2.11.6. Enabling OAuth2 single sign-on

Enable OAuth2 single sign-on (SSO) for the Ceph Dashboard. OAuth2 SSO uses the oauth2-proxy service.

Enabling OAuth2 single sign-on is Technology Preview for Red Hat Ceph Storage 8.0.

Technology Preview features are not supported with Red Hat production service level agreements (SLAs), might not be functionally complete, and Red Hat does not recommend using them for production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process. See the support scope for Red Hat Technology Preview features for more details.

Prerequisites

Before you begin, make sure that you have the following prerequisites in place:

- A running Red Hat Ceph Storage cluster.

- Installation of the Ceph Dashboard.

- Root-level access to the Ceph Manager hosts.

- An admin account with Red Hat Single Sign-On 7.6.0. For more information, see Creating an admin account with Red Hat Single Sign-On 7.6.0.

- Enable the Ceph Management gateway (mgmt-gateway) service. For more information, see Enabling the Ceph Management gateway.

- Enable the OAuth2 Proxy service (oauth2-proxy). For more information, see Enabling the OAuth2 Proxy service.

- You should have either an admin account with Red Hat Single Sign-On 7.6.0 with the OAuth2 protocol running or IBM Security Verify with the OAuth2 protocol running. For more information, see Configuring IBM Security Verify as the identity provider using OAuth2 protocol.

Procedure

Enable Ceph Dashboard OAuth2 SSO access.

Syntax

ceph dashboard sso enable oauth2

Example

[ceph: root@host01 /]# ceph dashboard sso enable oauth2
SSO is "enabled" with "oauth2" protocol.

Set the valid redirect URL.

Syntax

https://HOST_NAME|IP_ADDRESS/oauth2/callback

Note: This URL must be the same redirect URL as configured in the OAuth2 Proxy service.

Configure a valid user role.

Note: For the Administrator role, configure the IDP user with an administrator or read-only access.

Open your dashboard URL.

Example

https://<hostname/ip address of mgmt-gateway host>

- On the SSO page, enter the Identity Provider (IdP) credentials. The SSO redirects to the dashboard web interface.

Verification

Check the SSO status at any time with the cephadm shell ceph dashboard sso status command.

Example

[root@host01 ~]# cephadm shell ceph dashboard sso status
SSO is "enabled" with "oauth2" protocol.

2.11.7. Disabling Single Sign-On for the Ceph Dashboard

You can disable SAML 2.0 and OAuth2 SSO for the Ceph Dashboard at any time.

Prerequisites

Before you begin, make sure that you have the following prerequisites in place:

- A running Red Hat Ceph Storage cluster.

- Installation of the Ceph Dashboard.

- Root-level access to the Ceph Manager hosts.

- Single sign-on enabled for the Ceph Dashboard.

Procedure

To view status of SSO, run the following command:

Syntax

cephadm shell CEPH_MGR_HOST ceph dashboard sso status

Example

[root@host01 ~]# cephadm shell host01 ceph dashboard sso status
SSO is "enabled" with "SAML2" protocol.

To disable SSO, run the following command:

Syntax

cephadm shell CEPH_MGR_HOST ceph dashboard sso disable
SSO is "disabled".

Example

[root@host01 ~]# cephadm shell host01 ceph dashboard sso disable
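The status lines shown in these procedures have a fixed shape, so a script can read them before and after disabling SSO. The following is a minimal sketch that parses only the messages that appear in this guide; the function name `parse_sso_status` is hypothetical, and other message formats are not handled.

```python
import re

# Matches: SSO is "enabled" with "SAML2" protocol.   and   SSO is "disabled".
_STATUS = re.compile(r'SSO is "(enabled|disabled)"(?: with "([^"]+)" protocol)?\.')


def parse_sso_status(line: str):
    """Return (enabled, protocol); protocol is None when the line does not name one."""
    match = _STATUS.search(line)
    if match is None:
        raise ValueError(f"unrecognized SSO status line: {line!r}")
    return match.group(1) == "enabled", match.group(2)


if __name__ == "__main__":
    print(parse_sso_status('SSO is "enabled" with "SAML2" protocol.'))
    print(parse_sso_status('SSO is "disabled".'))
```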

Chapter 3. Managing roles on the Ceph dashboard

As a storage administrator, you can create, edit, clone, and delete roles on the dashboard.

By default, there are eight system roles. You can create custom roles and give permissions to those roles. These roles can be assigned to users based on the requirements.

This section covers the following administrative tasks:

3.1. User roles and permissions on the Ceph dashboard

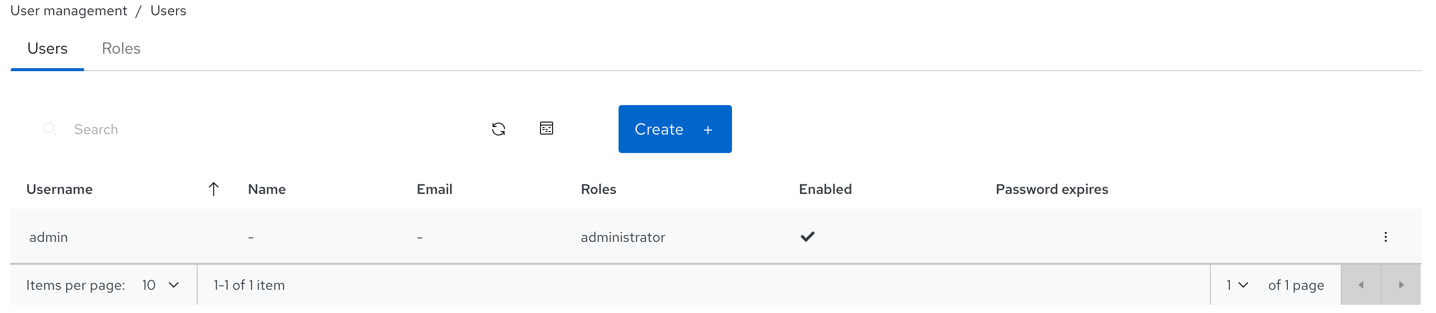

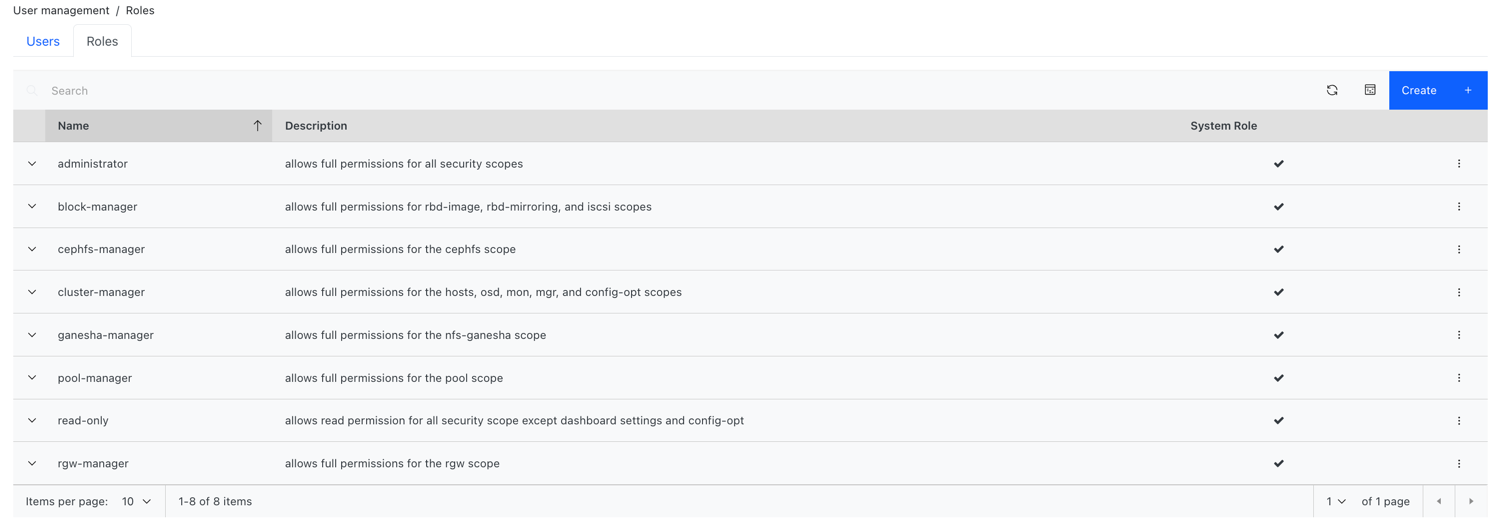

User accounts are associated with a set of roles that define the specific dashboard functionality which can be accessed. View user roles and permissions by going to Dashboard settings→User management.

The Red Hat Ceph Storage dashboard functionality or modules are grouped within a security scope. Security scopes are predefined and static. The current available security scopes on the Red Hat Ceph Storage dashboard are:

- cephfs: Includes all features related to CephFS management.

- config-opt: Includes all features related to management of Ceph configuration options.

- dashboard-settings: Allows editing of the dashboard settings.

- grafana: Includes all features related to Grafana proxy.

- hosts: Includes all features related to the Hosts menu entry.

- log: Includes all features related to Ceph logs management.

- manager: Includes all features related to Ceph manager management.

- monitor: Includes all features related to Ceph monitor management.

- nfs-ganesha: Includes all features related to NFS-Ganesha management.

- osd: Includes all features related to OSD management.

- pool: Includes all features related to pool management.

- prometheus: Includes all features related to Prometheus alert management.

- rbd-image: Includes all features related to RBD image management.

- rbd-mirroring: Includes all features related to RBD mirroring management.

- rgw: Includes all features related to Ceph object gateway (RGW) management.

A role specifies a set of mappings between a security scope and a set of permissions. There are four types of permissions:

- Read

- Create

- Update

- Delete

The system roles are:

- administrator: Allows full permissions for all security scopes.

- block-manager: Allows full permissions for the rbd-image and rbd-mirroring scopes.

- cephfs-manager: Allows full permissions for the cephfs scope.

- cluster-manager: Allows full permissions for the hosts, osd, monitor, manager, and config-opt scopes.

- ganesha-manager: Allows full permissions for the nfs-ganesha scope.

- pool-manager: Allows full permissions for the pool scope.

- read-only: Allows read permission for all security scopes except the dashboard-settings and config-opt scopes.

- rgw-manager: Allows full permissions for the rgw scope.

For example, to let users perform all Ceph Object Gateway operations, assign them the rgw-manager role.
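The relationship between roles, security scopes, and permissions described above can be illustrated with a small model. The following is a minimal sketch, not the dashboard's actual implementation: the `Role` class and the `full` and `user_can` helpers are hypothetical names introduced for illustration, and only two of the system roles are transcribed from the list above.

```python
from dataclasses import dataclass, field

# The four permission types and the security scopes listed above.
PERMISSIONS = frozenset({"read", "create", "update", "delete"})
SCOPES = frozenset({
    "cephfs", "config-opt", "dashboard-settings", "grafana", "hosts", "log",
    "manager", "monitor", "nfs-ganesha", "osd", "pool", "prometheus",
    "rbd-image", "rbd-mirroring", "rgw",
})


@dataclass
class Role:
    """Hypothetical model: a role maps security scopes to sets of permissions."""
    name: str
    scope_permissions: dict = field(default_factory=dict)

    def __post_init__(self):
        # Reject scopes or permissions that are not in the predefined lists.
        for scope, perms in self.scope_permissions.items():
            if scope not in SCOPES:
                raise ValueError(f"unknown scope: {scope}")
            unknown = set(perms) - PERMISSIONS
            if unknown:
                raise ValueError(f"unknown permissions: {sorted(unknown)}")

    def allows(self, scope, permission):
        return permission in self.scope_permissions.get(scope, ())


def full(*scopes):
    """Grant all four permissions on each given scope."""
    return {scope: set(PERMISSIONS) for scope in scopes}


# Two system roles, transcribed from the list above.
RGW_MANAGER = Role("rgw-manager", full("rgw"))
BLOCK_MANAGER = Role("block-manager", full("rbd-image", "rbd-mirroring"))


def user_can(roles, scope, permission):
    """A user may act if any one of the assigned roles grants the permission."""
    return any(role.allows(scope, permission) for role in roles)
```

In this model, a user holding only `RGW_MANAGER` can delete within the rgw scope but cannot read the pool scope, which mirrors how assigning several roles to one user widens what that user can access.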

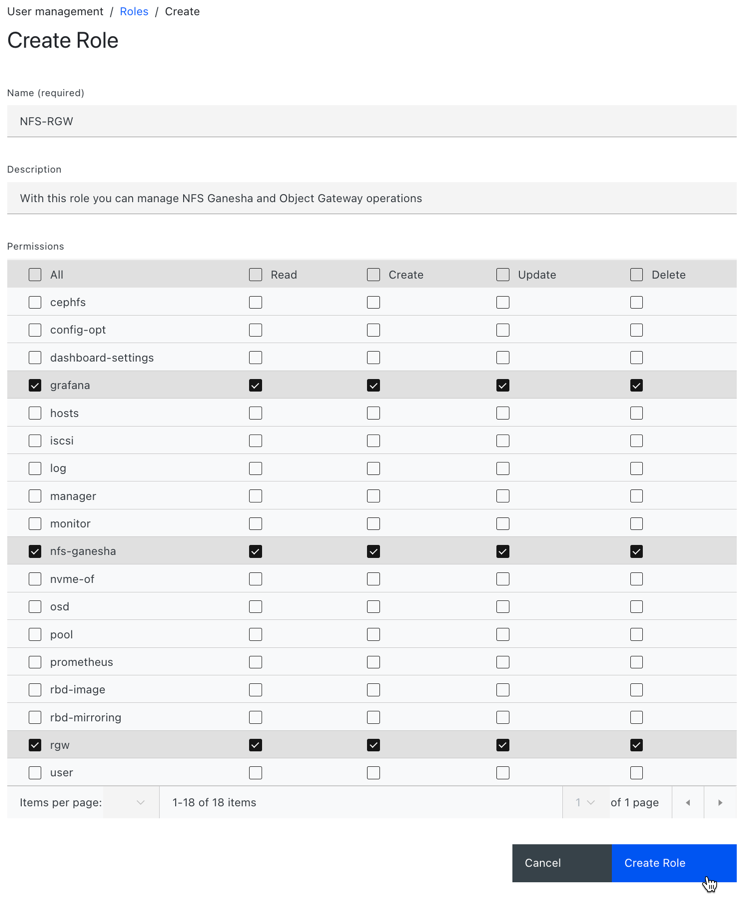

3.2. Creating roles on the Ceph dashboard

You can create custom roles on the dashboard and assign them to users based on their responsibilities.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Dashboard is installed.

- Admin-level access to the dashboard.

Procedure

- Log in to the Dashboard.

- Click the Dashboard Settings icon and then click User management.

- On the Roles tab, click Create.

- In the Create Role window, set the Name, Description, and select the Permissions for this role, and then click the Create Role button.

In this example, the user assigned the ganesha-manager and rgw-manager roles can manage all NFS-Ganesha gateway and Ceph Object Gateway operations.

- You get a notification that the role was created successfully.

- Click on the Expand/Collapse icon of the row to view the details and permissions given to the roles.

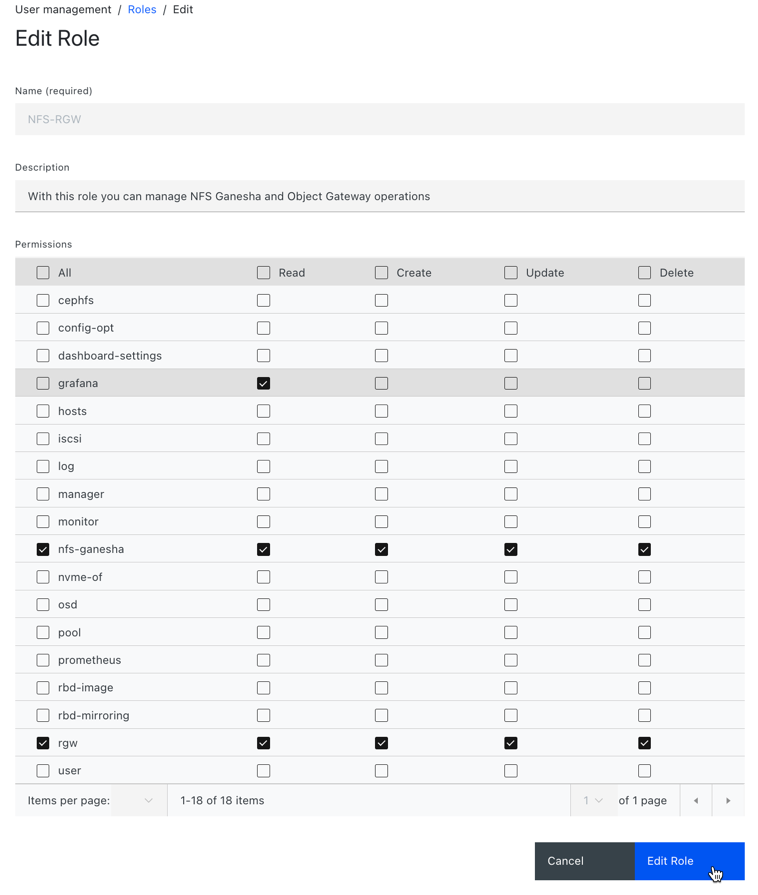

3.3. Editing roles on the Ceph dashboard

You can edit roles on the dashboard.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Dashboard is installed.

- Admin-level access to the dashboard.

- A role is created on the dashboard.

Procedure

- Log in to the Dashboard.

- Click the Dashboard Settings icon and then click User management.

- On the Roles tab, click the role you want to edit.

- In the Edit Role window, edit the parameters, and then click Edit Role.

- You get a notification that the role was updated successfully.

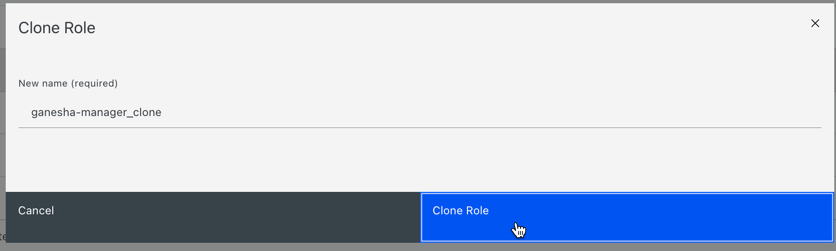

3.4. Cloning roles on the Ceph dashboard

To assign additional permissions to an existing role, you can clone the system role and edit the clone on the Red Hat Ceph Storage Dashboard.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Dashboard is installed.

- Admin-level access to the dashboard.

- Roles are created on the dashboard.

Procedure

- Log in to the Dashboard.

- Click the Dashboard Settings icon and then click User management.

- On the Roles tab, click the role you want to clone.

- Select Clone from the Edit drop-down menu.

- In the Clone Role dialog box, enter the details for the role, and then click Clone Role.

- Once you clone the role, you can customize the permissions as per the requirements.

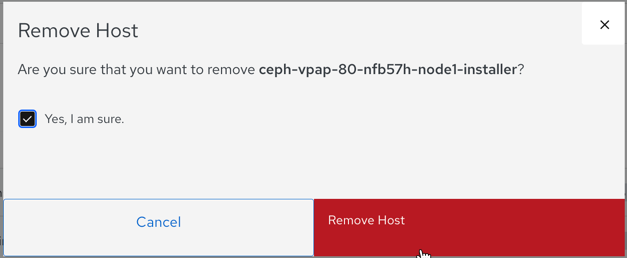

3.5. Deleting roles on the Ceph dashboard

You can delete the custom roles that you have created on the Red Hat Ceph Storage dashboard.

You cannot delete the system roles of the Ceph Dashboard.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Dashboard is installed.

- Admin-level access to the dashboard.

- A custom role is created on the dashboard.

Procedure

- Log in to the Dashboard.

- Click the Dashboard Settings icon and then select User management.

- On the Roles tab, click the role you want to delete and select Delete from the action drop-down.

- In the Delete Role notification, select Yes, I am sure and click Delete Role.

Chapter 4. Managing users on the Ceph dashboard

As a storage administrator, you can create, edit, and delete users with specific roles on the Red Hat Ceph Storage dashboard. Role-based access control is given to each user based on their roles and the requirements.

You can also create, edit, import, export, and delete Ceph client authentication keys on the dashboard. Once you create the authentication keys, you can rotate them by using the command-line interface (CLI). Key rotation helps meet current industry and security compliance requirements.

This section covers the following administrative tasks:

4.1. Creating users on the Ceph dashboard

You can create users on the Red Hat Ceph Storage dashboard with roles and permissions that match their responsibilities. For example, if you want the user to manage Ceph Object Gateway operations, assign the rgw-manager role to the user.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Dashboard is installed.

- Admin-level access to the dashboard.

The Red Hat Ceph Storage Dashboard does not support any email verification when changing a user's password. This behavior is intentional, because the Dashboard supports Single Sign-On (SSO) and this feature can be delegated to the SSO provider.

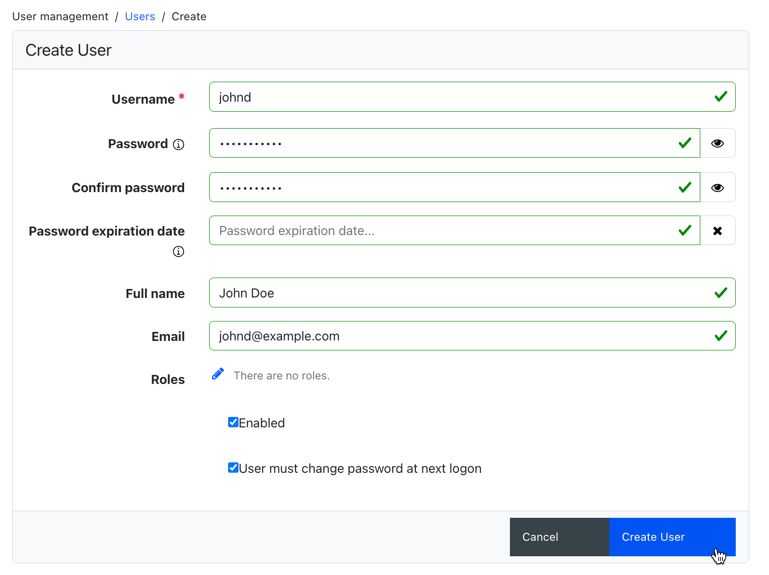

Procedure

- Log in to the Dashboard.

- Click the Dashboard Settings icon and then click User management.

- On the Users tab, click Create.

- In the Create User window, set the Username and other parameters including the roles, and then click Create User.

- You get a notification that the user was created successfully.

4.2. Editing users on the Ceph dashboard

You can edit the users on the Red Hat Ceph Storage dashboard. You can modify the user’s password and roles based on the requirements.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Dashboard is installed.

- Admin-level access to the dashboard.

- User created on the dashboard.

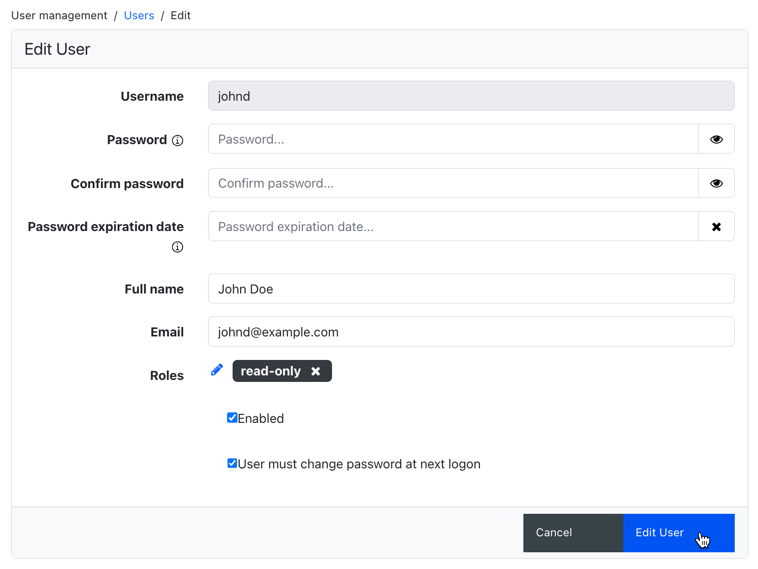

Procedure

- Log in to the Dashboard.

- Click the Dashboard Settings icon and then click User management.

- On the Users tab, click the row of the user you want to edit.

- Select Edit from the Edit drop-down menu.

- In the Edit User window, edit parameters such as the password and roles, and then click Edit User.

Note: If you want to disable any user's access to the Ceph dashboard, clear the Enabled option in the Edit User window.

- You get a notification that the user was updated successfully.

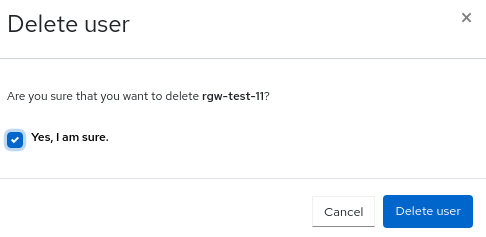

4.3. Deleting users on the Ceph dashboard

You can delete users on the Ceph dashboard. For example, when a user is removed from the system, you can also delete that user's access from the Ceph dashboard.

Prerequisites

- A running Red Hat Ceph Storage cluster.

- Dashboard is installed.

- Admin-level access to the dashboard.

- User created on the dashboard.

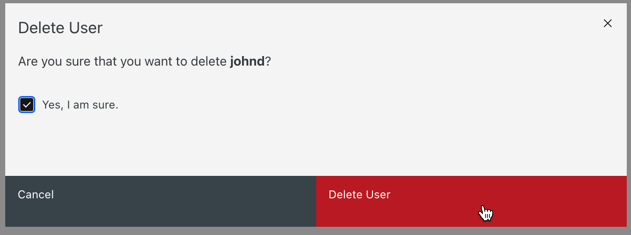

Procedure

- Log in to the Dashboard.

- Click the Dashboard Settings icon and then click User management.

- On the Users tab, click the user you want to delete.

- Select Delete from the Edit drop-down menu.

- In the Delete User notification, select Yes, I am sure and click Delete User.

4.4. User capabilities

Ceph stores data as RADOS objects within pools, irrespective of the Ceph client used. Ceph users must have access to a given pool to read and write data, and must have execute permissions to use Ceph's administrative commands. Creating users allows you to control their access to your Red Hat Ceph Storage cluster, its pools, and the data within the pools.

Ceph has the concept of a user type, which for users is always client. Define the user in the form TYPE.ID, where ID is the user ID, for example, client.admin. User typing exists because the Cephx protocol is used not only by clients but also by non-clients, such as Ceph Monitors, OSDs, and Metadata Servers. Distinguishing the user type helps to distinguish between client users and other users. This distinction streamlines access control, user monitoring, and traceability.
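The TYPE.ID naming convention can be illustrated with a short helper. This is a minimal sketch for illustration only: `parse_entity` and `is_client_user` are hypothetical names and are not part of any Ceph library. It assumes that everything after the first dot belongs to the ID.

```python
def parse_entity(name):
    """Split an entity name such as 'client.admin' into (type, id)."""
    # Split on the first dot only, so an ID may itself contain dots.
    entity_type, sep, entity_id = name.partition(".")
    if not sep or not entity_type or not entity_id:
        raise ValueError(f"expected TYPE.ID, got {name!r}")
    return entity_type, entity_id


def is_client_user(name):
    """Return True for client users, False for daemon entities such as OSDs."""
    return parse_entity(name)[0] == "client"
```

For instance, `is_client_user("client.admin")` is true, while an entity named `osd.0` is identified as a non-client.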

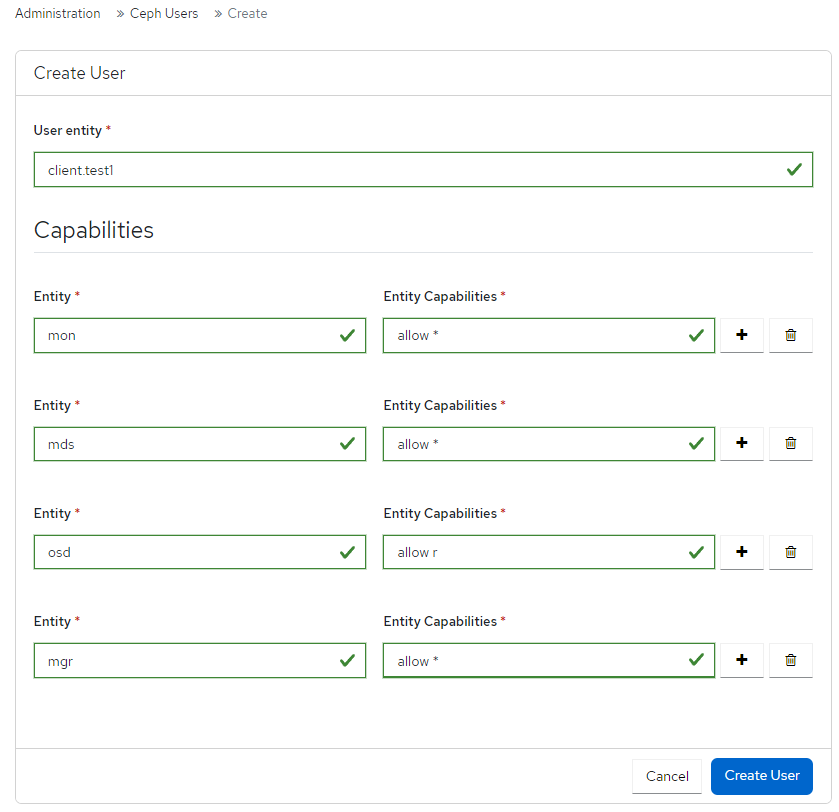

4.4.1. Capabilities

Ceph uses capabilities (caps) to describe the permissions granted to an authenticated user to exercise the functionality of the monitors, OSDs, and metadata servers. The capabilities restrict access to data within a pool, a namespace within a pool, or a set of pools based on their application tags. A Ceph administrative user specifies the capabilities of a user when creating or updating the user.

You can set the capabilities for monitors, managers, OSDs, and metadata servers.

- The Ceph Monitor capabilities include r, w, and x access settings. These can be applied in aggregate from pre-defined profiles with profile NAME.

- The OSD capabilities include r, w, x, class-read, and class-write access settings. These can be applied in aggregate from pre-defined profiles with profile NAME.

- The Ceph Manager capabilities include r, w, and x access settings. These can be applied in aggregate from pre-defined profiles with profile NAME.

- For administrators, the metadata server (MDS) capabilities include allow *.

The Ceph Object Gateway daemon (radosgw) is a client of the Red Hat Ceph Storage cluster and is not represented as a Ceph storage cluster daemon type.

4.5. Access capabilities

This section describes the different access or entity capabilities that can be given to a Ceph user or a Ceph client such as Block Device, Object Storage, File System, and native API.

Additionally, you can describe the capability profiles while assigning roles to clients.

- allow: Precedes access settings for a daemon. Implies rw for MDS only.

- r: Gives the user read access. Required with monitors to retrieve the CRUSH map.

- w: Gives the user write access to objects.

- x: Gives the user the capability to call class methods, that is, both read and write, and to conduct auth operations on monitors.

- class-read: Gives the user the capability to call class read methods. Subset of x.

- class-write: Gives the user the capability to call class write methods. Subset of x.

- *, all: Gives the user read, write, and execute permissions for a particular daemon or a pool, as well as the ability to execute admin commands.
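The access settings above combine in a predictable way, which the following sketch makes explicit. It is a simplified illustration and not Ceph's actual capability parser: it handles only a single grant of the form `allow ACCESS [pool=NAME]`. The function names, the `admin` marker, and the pool name `mypool` are hypothetical.

```python
def expand_access(spec):
    """Expand an access setting such as 'rw', 'x', or '*' into a set of rights."""
    if spec in ("*", "all"):
        # Read, write, execute, plus the ability to run admin commands.
        return {"r", "w", "x", "class-read", "class-write", "admin"}
    if spec in ("class-read", "class-write"):
        return {spec}
    granted = set()
    for letter in spec:
        if letter not in "rwx":
            raise ValueError(f"unknown access setting: {spec!r}")
        granted.add(letter)
    if "x" in granted:
        # class-read and class-write are subsets of x.
        granted |= {"class-read", "class-write"}
    return granted


def parse_grant(grant):
    """Parse one simplified grant of the form 'allow ACCESS [pool=NAME]'."""
    words = grant.split()
    if len(words) < 2 or words[0] != "allow":
        raise ValueError(f"unsupported grant in this sketch: {grant!r}")
    pool = None
    for word in words[2:]:
        if word.startswith("pool="):
            pool = word[len("pool="):]
    return {"access": expand_access(words[1]), "pool": pool}
```

For example, `parse_grant("allow rw pool=mypool")` yields read and write access restricted to the hypothetical pool `mypool`, and `expand_access("x")` also includes both class method rights.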

The following entries describe valid capability profiles:

- profile osd: This is applicable to Ceph Monitor only. Gives a user permissions to connect as an OSD to other OSDs or monitors. Conferred on OSDs to enable OSDs to handle replication heartbeat traffic and status reporting.

- profile mds: This is applicable to Ceph Monitor only. Gives a user permissions to connect as an MDS to other MDSs or monitors.

- profile bootstrap-osd: This is applicable to Ceph Monitor only. Gives a user permissions to bootstrap an OSD. Conferred on deployment tools, such as ceph-volume and cephadm, so that they have permissions to add keys when bootstrapping an OSD.

- profile bootstrap-mds: This is applicable to Ceph Monitor only. Gives a user permissions to bootstrap a metadata server. Conferred on deployment tools, such as cephadm, so that they have permissions to add keys when bootstrapping a metadata server.

- profile bootstrap-rbd: This is applicable to Ceph Monitor only. Gives a user permissions to bootstrap an RBD user. Conferred on deployment tools, such as cephadm, so that they have permissions to add keys when bootstrapping an RBD user.

- profile bootstrap-rbd-mirror:

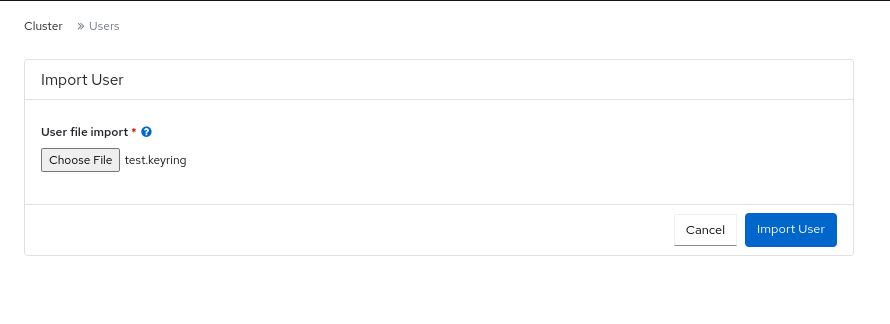

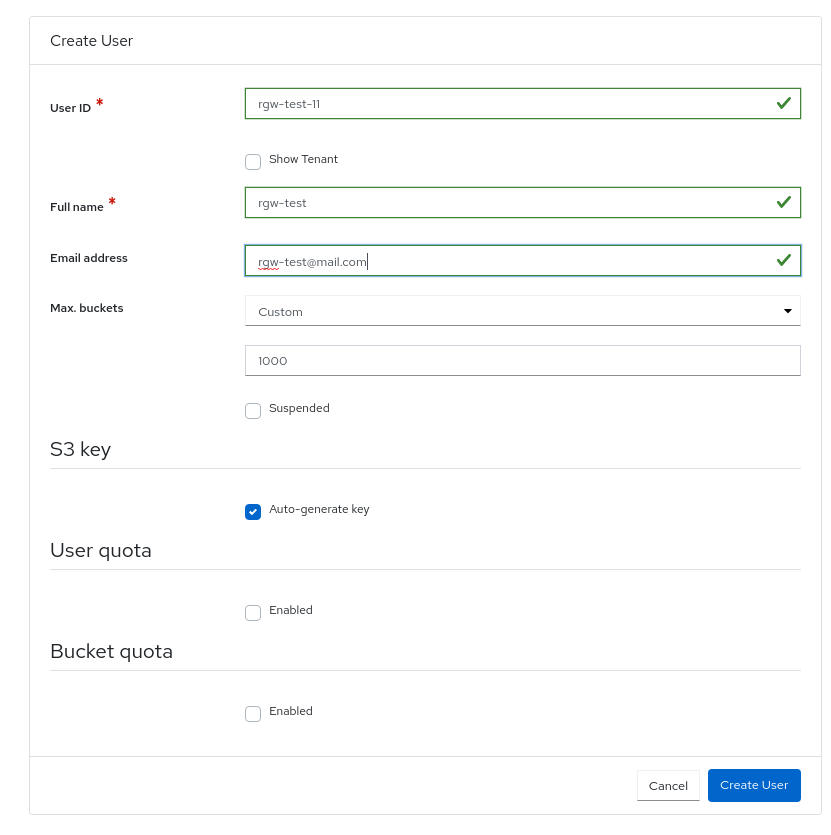

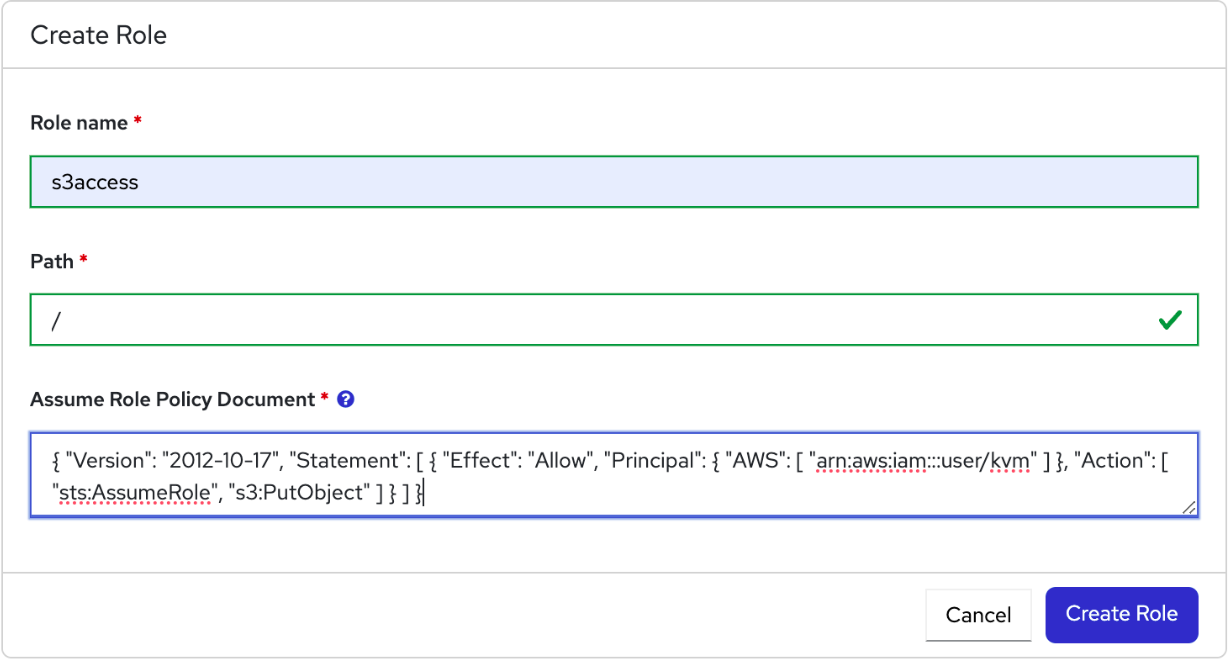

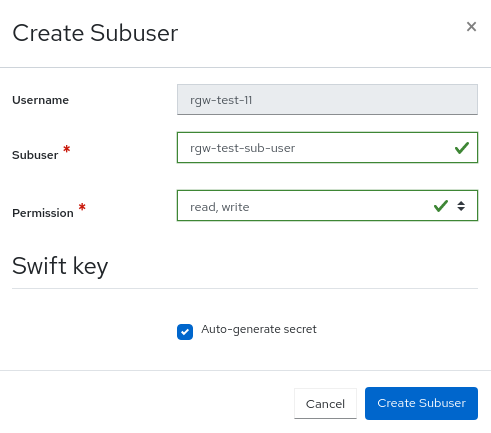

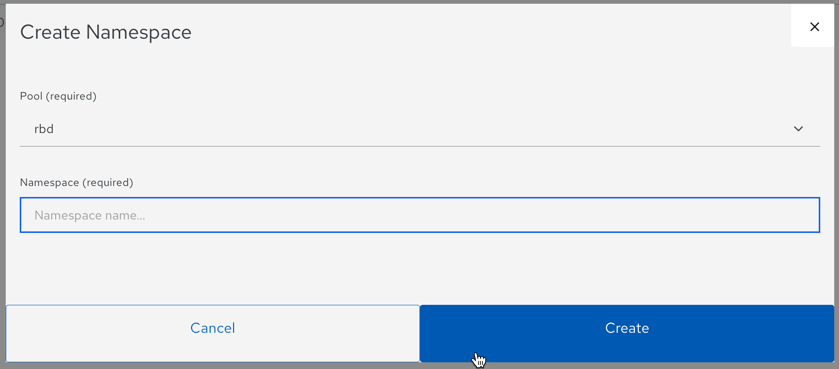

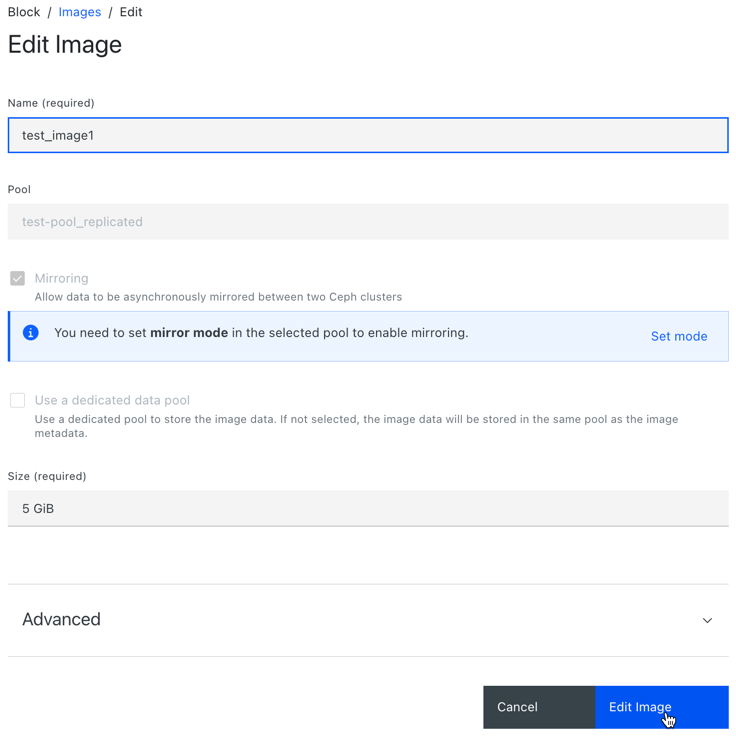

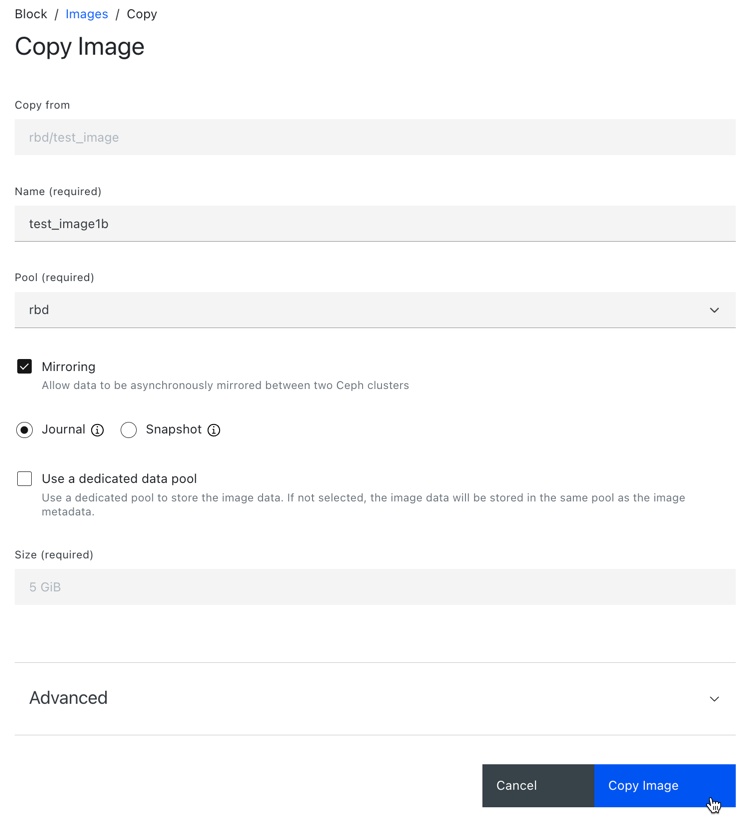

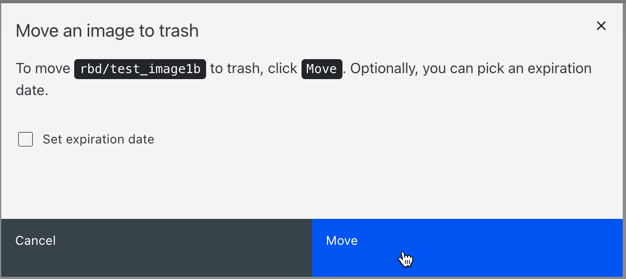

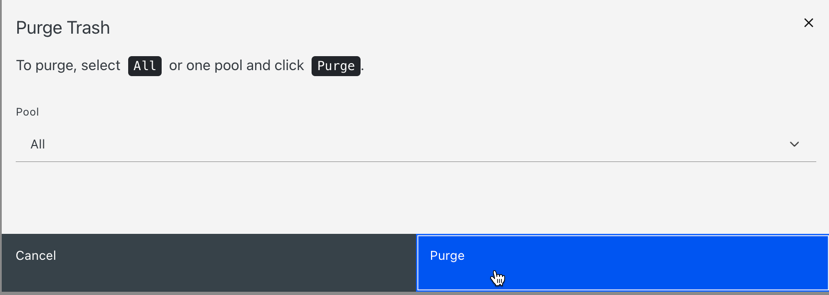

This is applicable to Ceph Monitor only. Gives a user permissions to bootstrap an