Administration Guide

Installing and administering Red Hat CodeReady Workspaces 1.2

Abstract

Chapter 1. Understanding Red Hat CodeReady Workspaces

Red Hat CodeReady Workspaces is a developer workspace server and cloud IDE. Workspaces are defined as project code files and all of their dependencies necessary to edit, build, run, and debug them. Each workspace has its own private IDE hosted within it. The IDE is accessible through a browser. The browser downloads the IDE as a single-page web application.

Red Hat CodeReady Workspaces provides:

- Workspaces that include runtimes and IDEs

- RESTful workspace server

- A browser-based IDE

- Plugins for languages, frameworks, and tools

- An SDK for creating plugins and assemblies

Additional resources

See the Red Hat CodeReady Workspaces 1.2 Release Notes and Known Issues for more details about the current version.

Chapter 2. Installing CodeReady Workspaces on OpenShift v3

This section describes how to obtain installation files for Red Hat CodeReady Workspaces and how to use them to deploy the product on an instance of OpenShift (such as Red Hat OpenShift Container Platform) v3.

Prerequisites

Minimum hardware requirements

CodeReady Workspaces requires a minimum of 5 GB of RAM. The Red Hat Single Sign-On (Red Hat SSO) authorization server and the PostgreSQL database require extra RAM. CodeReady Workspaces uses RAM in the following distribution:

- The CodeReady Workspaces server: Approximately 750 MB

- Red Hat SSO: Approximately 1 GB

- PostgreSQL: Approximately 515 MB

- Workspaces: 2 GB of RAM per workspace. The total workspace RAM depends on the size of the workspace runtime(s) and the number of concurrent workspace pods.
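The figures above can be added up to estimate the memory a cluster needs for a given number of concurrent workspaces. The following shell sketch is illustrative only: the function name `estimate_ram_mb` is hypothetical, and it assumes 1 GB = 1024 MB and exactly 2 GB per workspace. Real workspace runtimes may use more or less, so treat the result as a rough estimate, not a sizing guarantee.

```shell
#!/bin/sh
# Hypothetical sizing helper: estimates total RAM in MB for the bundled
# components plus N concurrent workspaces, using the approximate figures above.
# Assumptions: 1 GB = 1024 MB and 2 GB (2048 MB) per workspace.
estimate_ram_mb() {
  case "$1" in
    ''|*[!0-9]*) echo "usage: estimate_ram_mb <number-of-workspaces>" >&2; return 1 ;;
  esac
  local server_mb=750       # CodeReady Workspaces server
  local sso_mb=1024         # Red Hat SSO (about 1 GB)
  local postgres_mb=515     # PostgreSQL
  local workspace_mb=2048   # per workspace (2 GB)
  echo $((server_mb + sso_mb + postgres_mb + $1 * workspace_mb))
}
```

For example, `estimate_ram_mb 3` prints `8433`, roughly 8.2 GB for three concurrent workspaces.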

Software requirements

- CodeReady Workspaces deployment script and configuration file

Container images required for deployment:

Important: The container images are now published on the registry.redhat.io registry and are available only from registry.redhat.io. For details, see Red Hat Container Registry Authentication.

To use this registry, you must be logged in to it.

- To authorize from the local Docker daemon, see Using Authentication.

- To authorize from an OpenShift cluster, see Allowing Pods to Reference Images from Other Secured Registries.
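When authorizing from an OpenShift cluster, the referenced procedure creates a pull secret for registry.redhat.io and links it to a service account. The sketch below wraps those two `oc` commands for the current project. The function name, the default secret name `redhat-registry`, and linking to the `default` service account are assumptions for illustration; use the credentials described in Red Hat Container Registry Authentication.

```shell
#!/bin/sh
# Hypothetical helper: creates a pull secret for registry.redhat.io in the
# current project and links it to a service account for image pulls.
# The secret name and service account are assumptions; adjust as needed.
create_redhat_pull_secret() {
  local user="$1" password="$2"
  local secret="${3:-redhat-registry}"   # hypothetical secret name
  local sa="${4:-default}"               # service account that pulls images
  oc create secret docker-registry "$secret" \
    --docker-server=registry.redhat.io \
    --docker-username="$user" \
    --docker-password="$password" || return 1
  oc secrets link "$sa" "$secret" --for=pull
}
```

For example: `create_redhat_pull_secret '<username>' '<password>'`.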

It is not necessary to download any of the referenced images manually.

- All container images required for deployment are automatically downloaded by the CodeReady Workspaces deployment script.

- Stack images are automatically downloaded by CodeReady Workspaces when new workspaces are created.

- registry.redhat.io/codeready-workspaces/server-rhel8:1.2

- registry.redhat.io/codeready-workspaces/server-operator-rhel8:1.2

- registry.redhat.io/rhscl/postgresql-96-rhel7:1-40

- registry.redhat.io/redhat-sso-7/sso73-openshift:1.0-11

- registry.redhat.io/ubi8-minimal:8.0-127

Container images with preconfigured stacks for creating workspaces:

- registry.redhat.io/codeready-workspaces/stacks-java-rhel8:1.2

- registry.redhat.io/codeready-workspaces/stacks-node-rhel8:1.2

- registry.redhat.io/codeready-workspaces/stacks-php-rhel8:1.2

- registry.redhat.io/codeready-workspaces/stacks-python-rhel8:1.2

- registry.redhat.io/codeready-workspaces/stacks-dotnet-rhel8:1.2

- registry.redhat.io/codeready-workspaces/stacks-golang-rhel8:1.2

- registry.redhat.io/codeready-workspaces/stacks-cpp-rhel8:1.2

- registry.redhat.io/codeready-workspaces/stacks-node:1.2

Other

To be able to download the CodeReady Workspaces deployment script, Red Hat asks that you register for the free Red Hat Developer Program. This allows you to agree to the license conditions of the product. For instructions on how to obtain the deployment script, see Section 2.2, “Downloading the CodeReady Workspaces deployment script”.

2.1. Downloading the Red Hat OpenShift Origin Client Tools

This procedure describes steps to obtain and unpack the archive with the Red Hat OpenShift Origin Client Tools.

The CodeReady Workspaces deployment and migration scripts require OpenShift Origin Client Tools 3.11. Later versions may be supported in the future. However, because CodeReady Workspaces can be deployed to OpenShift Container Platform 4 and OpenShift Dedicated 4 through the embedded OperatorHub, a deployment script is not necessary on those platforms.

Procedure

Change to a temporary directory. Create it if necessary. For example:

$ mkdir ~/tmp
$ cd ~/tmp

Download the archive with the oc file from: oc.tar.gz.

Unpack the downloaded archive. The oc executable file is unpacked in your current directory:

$ tar xf oc.tar.gz && ./oc version

Add the oc file to your path.
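To confirm that the `oc` found on your `PATH` is the 3.11 client, you can inspect the output of `oc version`. This sketch assumes the 3.11 client reports itself on a line of the form `oc v3.11.<patch>+<commit>`; treat that pattern as an assumption if your build prints something different. The function names are hypothetical.

```shell
#!/bin/sh
# Succeeds if the `oc version` output read on stdin comes from a 3.11 client.
# Assumption: 3.11 clients print a line such as "oc v3.11.0+0cbc58b".
is_oc_311() {
  grep -q '^oc v3\.11\.'
}

# Prepends a directory (default: current directory) to PATH for this shell
# and checks that the oc found there is a 3.11 client.
check_oc_client() {
  local dir="${1:-$PWD}"
  PATH="$dir:$PATH"
  export PATH
  if ! command -v oc >/dev/null 2>&1; then
    echo "oc not found in $dir or on PATH" >&2
    return 1
  fi
  if ! oc version 2>/dev/null | is_oc_311; then
    echo "oc on PATH is not a 3.11 client" >&2
    return 1
  fi
}
```

Because `check_oc_client` changes `PATH` only in the current shell, source the file that contains it rather than executing it, or add the directory to your shell profile for a permanent change.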

2.2. Downloading the CodeReady Workspaces deployment script

This procedure describes how to obtain and unpack the archive with the CodeReady Workspaces deployment shell script.

The CodeReady Workspaces deployment script uses the OpenShift Operator to deploy Red Hat Single Sign-On, the PostgreSQL database, and the CodeReady Workspaces server container images on an instance of Red Hat OpenShift Container Platform. The images are available in the Red Hat Container Catalog.

Procedure

Change to a temporary directory. Create it if necessary. For example:

$ mkdir ~/tmp
$ cd ~/tmp

Download the archive with the deployment script and the custom-resource.yaml file using the browser with which you logged in to the Red Hat Developer Portal: codeready-workspaces-1.2.2.GA-operator-installer.tar.gz.

Unpack the downloaded archive and change to the created directory:

$ tar xvf codeready-workspaces-1.2.2.GA-operator-installer.tar.gz \
  && cd codeready-workspaces-operator-installer/
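Before continuing, you may want to confirm that the archive contains the two files used in the following sections. This minimal sketch, with a hypothetical function name, only checks that `deploy.sh` and `custom-resource.yaml` exist. It does not validate their contents.

```shell
#!/bin/sh
# Checks that an unpacked installer directory contains deploy.sh and
# custom-resource.yaml. Prints each missing file and returns 1 if any is absent.
check_installer_dir() {
  local dir="${1:-.}" missing=0 f
  for f in deploy.sh custom-resource.yaml; do
    if [ ! -f "$dir/$f" ]; then
      echo "missing: $dir/$f" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Run `check_installer_dir` from inside `codeready-workspaces-operator-installer/`, or pass the directory as an argument.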

Next steps

Continue by configuring and running the deployment script. See Section 2.3, “Running the CodeReady Workspaces deployment script”.

2.3. Running the CodeReady Workspaces deployment script

The CodeReady Workspaces deployment script uses command-line arguments and the custom-resource.yaml file to populate a set of configuration environment variables for the OpenShift Operator used for the actual deployment.

Prerequisites

- Downloaded and unpacked deployment script and the configuration file. See Section 2.2, “Downloading the CodeReady Workspaces deployment script”.

- A running instance of Red Hat OpenShift Container Platform 3.11 or OpenShift Dedicated 3.11. To install OpenShift Container Platform, see the Getting Started with OpenShift Container Platform guide.

- The OpenShift Origin Client Tools 3.11, oc, is in the path. See Section 2.1, “Downloading the Red Hat OpenShift Origin Client Tools”.

- The user is logged in to the OpenShift instance (using, for example, oc login).

- CodeReady Workspaces is supported for use with Google Chrome 70.0.3538.110 (Official Build) (64-bit).

- cluster-admin rights, which are required to deploy CodeReady Workspaces using the deploy script. The following table lists the objects and the required permissions:

| Type of object | Name of the object that the installer creates | Description | Permission required |
|---|---|---|---|
| CRD | - | Custom Resource Definition - CheCluster | cluster-admin |
| CR | codeready | Custom Resource of the CheCluster type of object | cluster-admin. Alternatively, you can create a clusterrole. |
| ServiceAccount | codeready-operator | Operator uses this service account to reconcile CodeReady Workspaces objects | The edit role in a target namespace. |
| Role | codeready-operator | Scope of permissions for the operator-service account | cluster-admin |
| RoleBinding | codeready-operator | Assignment of a role to the service account | The edit role in a target namespace. |
| Deployment | codeready-operator | Deployment with operator image in the template specification | The edit role in a target namespace. |
| ClusterRole | codeready-operator | ClusterRole allows you to create, update, delete oAuthClients | cluster-admin |
| ClusterRoleBinding | ${NAMESPACE}-codeready-operator | ClusterRoleBinding allows you to create, update, delete oAuthClients | cluster-admin |
| Role | secret-reader | Role allows you to read secrets in the router namespace | cluster-admin |
| RoleBinding | ${NAMESPACE}-codeready-operator | RoleBinding allows you to read secrets in the router namespace | cluster-admin |

By default, the operator-service account gets privileges to list, get, watch, create, update, and delete ingresses, routes, service accounts, roles, rolebindings, PVCs, deployments, configMaps, and secrets. It also has privileges to run exec commands in pods, watch events, and read pod logs in a target namespace.

With self-signed certificates support enabled, the operator-service account gets privileges to read secrets in an OpenShift router namespace.

With OpenShift OAuth enabled, the operator-service account gets privileges to get, list, create, update, and delete oAuthClients at a cluster scope.
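Before running the script, you can check from the command line that you are logged in and that your user holds some of the cluster-scoped permissions listed above. The sketch below uses `oc whoami` and `oc auth can-i`. The function name is hypothetical, and checking only three `create` permissions is a simplification, not a full audit of the table.

```shell
#!/bin/sh
# Hypothetical pre-flight check to run before ./deploy.sh --deploy.
# Confirms an active oc session and that the current user may create a few of
# the cluster-scoped objects from the table above (not an exhaustive audit).
preflight_check() {
  local user resource
  if ! user=$(oc whoami 2>/dev/null); then
    echo "not logged in; run 'oc login' first" >&2
    return 1
  fi
  echo "logged in as $user"
  for resource in customresourcedefinitions clusterroles clusterrolebindings; do
    # oc auth can-i exits with a non-zero status when the answer is "no".
    if ! oc auth can-i create "$resource" >/dev/null 2>&1; then
      echo "cannot create $resource; cluster-admin rights are required" >&2
      return 1
    fi
  done
  echo "permission checks passed"
}
```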

2.3.1. Deploying CodeReady Workspaces with default settings

Run the following command:

$ ./deploy.sh --deploy

Note: Run the ./deploy.sh --help command to get a list of all available arguments. For a description of all the options, see Section 2.6, “CodeReady Workspaces deployment script parameters”.

The script prints progress messages while CodeReady Workspaces is being installed. The CodeReady Workspaces successfully deployed and available at <URL> message confirms that the deployment is successful.

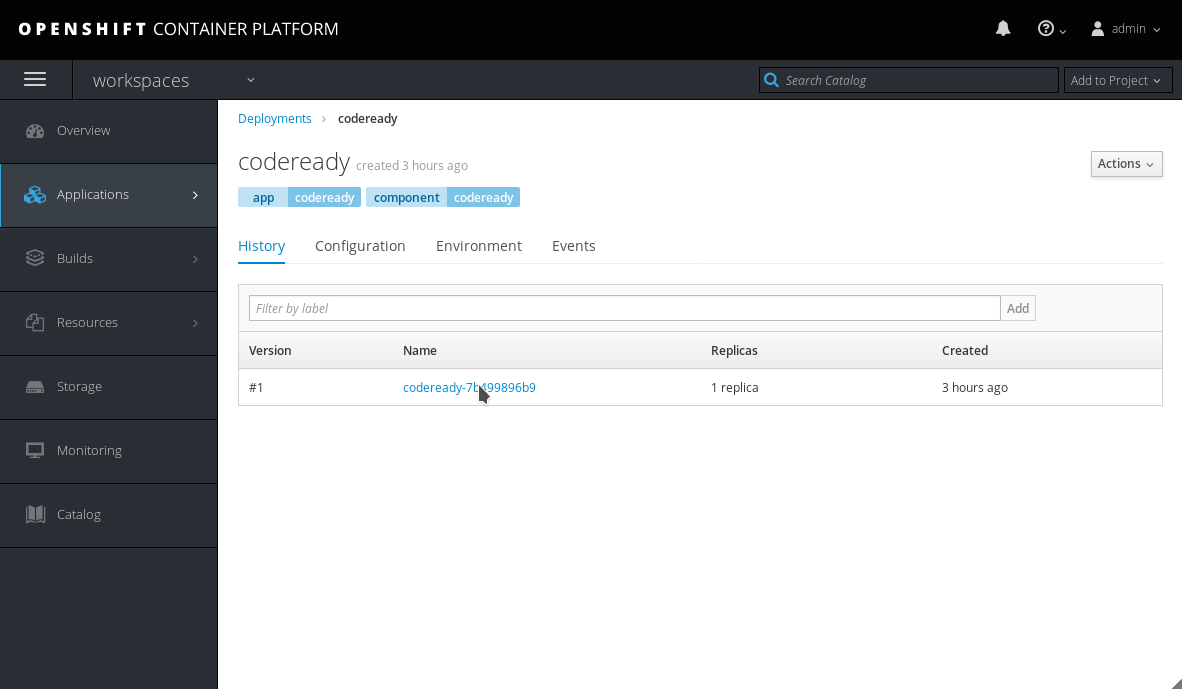

- Open the OpenShift web console.

- In the My Projects pane, click workspaces.

- Click Applications > Pods. The pods are shown running.

Figure 2.1. Pods for codeready shown running

2.3.2. Deploying CodeReady Workspaces with a self-signed certificate and OpenShift OAuth

To deploy CodeReady Workspaces with a self-signed certificate and OpenShift OAuth, run the following command:

$ ./deploy.sh --deploy --oauth

If you use the TLS mode with a self-signed certificate, ensure that your browser trusts the certificate. If it does not trust the certificate, the Authorization token is missed error is displayed on the login page and the running workspace may not work as intended.

2.3.3. Deploying CodeReady Workspaces with a public certificate

To deploy CodeReady Workspaces to a cluster configured with public certificates, run the following command:

$ ./deploy.sh --deploy --public-certs

2.3.4. Deploying CodeReady Workspaces with external Red Hat Single Sign-On

To deploy CodeReady Workspaces with an external Red Hat Single Sign-On (Red Hat SSO) instance, take the following steps:

Update the following values in the custom-resource.yaml file:

auth:
  externalIdentityProvider: 'true'                   # (1)
  identityProviderURL: 'https://my-red-hat-sso.com'  # (2)
  identityProviderRealm: 'myrealm'                   # (3)
  identityProviderClientId: 'myClient'               # (4)

(1) Instructs the operator on whether or not to deploy the Red Hat SSO instance. When set to true, the operator does not deploy Red Hat SSO and uses the connection details provided in the custom resource.

(2) The Red Hat SSO URL. Retrieved from the respective route or ingress unless explicitly specified in the custom resource (CR). Specify it explicitly when externalIdentityProvider is true.

(3) The name of a Red Hat SSO realm. This realm is created when externalIdentityProvider is false. Otherwise, it is passed to the CodeReady Workspaces server.

(4) The ID of a Red Hat SSO client. This client is created when externalIdentityProvider is false. Otherwise, it is passed to the CodeReady Workspaces server.

Run the deploy script:

$ ./deploy.sh --deploy
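Before deploying, it can help to confirm that the URL and realm in custom-resource.yaml point to a reachable realm. Red Hat SSO 7.x serves the standard OpenID Connect discovery document under `/auth/realms/<realm>/.well-known/openid-configuration`. The sketch below builds that URL and fetches it with `curl`. It assumes `identityProviderURL` is the server root without the `/auth` path; the function names are hypothetical.

```shell
#!/bin/sh
# Builds the OpenID Connect discovery URL for a Red Hat SSO realm.
# Assumes the base URL is the server root and Red Hat SSO uses the /auth path.
sso_discovery_url() {
  local base="${1%/}" realm="$2"   # strip one trailing slash from the base URL
  echo "$base/auth/realms/$realm/.well-known/openid-configuration"
}

# Fails unless the discovery document can be fetched.
check_sso_realm() {
  curl -fsS "$(sso_discovery_url "$1" "$2")" >/dev/null
}
```

For example: `check_sso_realm https://my-red-hat-sso.com myrealm && echo "realm reachable"`. If the server uses a self-signed certificate, `curl` needs that certificate (for example, through its `--cacert` option) to succeed.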

2.3.5. Deploying CodeReady Workspaces with external Red Hat SSO and PostgreSQL

The deploy script supports the following combinations of external Red Hat SSO and PostgreSQL:

- PostgreSQL and Red Hat SSO

- Red Hat SSO only

Currently, the deploy script does not support combining an external database with the bundled Red Hat SSO. Provisioning of the database and of the Red Hat SSO realm with the client happens only with bundled resources. If you are connecting your own database or Red Hat SSO, create these resources (the database, the realm, and the client) in advance.

To deploy with the external PostgreSQL database and Red Hat SSO, take the following steps:

Update the following PostgreSQL database-related values in the custom-resource.yaml file:

(1) When set to true, the operator skips deploying PostgreSQL and passes the connection details of the existing database to the CodeReady Workspaces server. Otherwise, a PostgreSQL deployment is created.

(2) The PostgreSQL database hostname that the CodeReady Workspaces server uses to connect to. Defaults to postgres.

(3) The PostgreSQL database port that the CodeReady Workspaces server uses to connect to. Defaults to 5432.

(4) The PostgreSQL user that the CodeReady Workspaces server uses when making a database connection. Defaults to pgche.

(5) The password of a PostgreSQL user. Auto-generated when left blank.

(6) The PostgreSQL database name that the CodeReady Workspaces server uses to connect to. Defaults to dbche.

Update the following Red Hat SSO-related values in the custom-resource.yaml file:

auth:
  externalIdentityProvider: 'true'                   # (1)
  identityProviderURL: 'https://my-red-hat-sso.com'  # (2)
  identityProviderRealm: 'myrealm'                   # (3)
  identityProviderClientId: 'myClient'               # (4)

(1) Instructs the operator on whether or not to deploy the Red Hat SSO instance. When set to true, the operator does not deploy Red Hat SSO and uses the connection details provided in the custom resource.

(2) The Red Hat SSO URL. Retrieved from the respective route or ingress unless explicitly specified in the custom resource (CR). Specify it explicitly when externalIdentityProvider is true.

(3) The name of a Red Hat SSO realm. This realm is created when externalIdentityProvider is false. Otherwise, it is passed to the CodeReady Workspaces server.

(4) The ID of a Red Hat SSO client. This client is created when externalIdentityProvider is false. Otherwise, it is passed to the CodeReady Workspaces server.

Run the deploy script:

$ ./deploy.sh --deploy
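For an external database, a quick way to confirm that the values you entered are usable is to connect with `psql` from a host or pod that can reach the database. The sketch below builds a libpq connection string from the same settings, falling back to the defaults described above (`postgres`, `5432`, `pgche`, `dbche`). It assumes `psql` is installed and that the password is supplied through the standard `PGPASSWORD` environment variable; the function names are hypothetical.

```shell
#!/bin/sh
# Builds a libpq keyword/value connection string. Empty or missing arguments
# fall back to the documented defaults.
pg_conninfo() {
  local host="${1:-postgres}" port="${2:-5432}" user="${3:-pgche}" db="${4:-dbche}"
  echo "host=$host port=$port user=$user dbname=$db"
}

# Runs a trivial query to prove the server accepts the connection settings.
# Usage: PGPASSWORD=<password> check_pg_connection <host> <port> <user> <db>
check_pg_connection() {
  psql -d "$(pg_conninfo "$@")" -c 'SELECT 1' >/dev/null
}
```

Note that the default host name `postgres` refers to the bundled database service and typically resolves only inside the cluster.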

Additional resources

- See Section 2.6, “CodeReady Workspaces deployment script parameters” for definitions of the deployment script parameters.

2.4. Viewing CodeReady Workspaces installation logs

You can view the installation logs in the terminal or from the OpenShift console.

2.4.1. Viewing CodeReady Workspaces installation logs in the terminal

To view the installation logs on the terminal, take the following steps:

To obtain the names of the pods, list the pods in the project where CodeReady Workspaces is installed:

$ oc get pods -n=<OpenShift-project-name>

Following is an example output.

NAME                                  READY     STATUS    RESTARTS   AGE
codeready-76d985c5d8-4sqmm            1/1       Running   2          1d
codeready-operator-54b58f8ff7-fc88p   1/1       Running   3          1d
keycloak-7668cdb5f5-ss29s             1/1       Running   2          1d
postgres-7d94b544dc-nmhwp             1/1       Running   1          1d

To view the logs for a pod, run:

$ oc logs <pod-name>
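To check all pods at once rather than reading the listing by eye, you can filter the `oc get pods` output for pods that are not yet fully ready. This sketch parses the column layout shown in the example output above (NAME, READY, STATUS, and so on); that layout is an assumption and may differ between `oc` versions. The function name is hypothetical.

```shell
#!/bin/sh
# Reads `oc get pods` output (header included) on stdin and prints the names
# of pods that are not Running or whose READY column is not n/n.
# Exits with status 1 if any such pod is found.
not_ready_pods() {
  awk 'NR > 1 {
         split($2, r, "/")
         if ($3 != "Running" || r[1] != r[2]) { print $1; bad = 1 }
       }
       END { exit bad }'
}
```

For example, `oc get pods -n=<OpenShift-project-name> | not_ready_pods` prints nothing when every pod is ready. To stream the log of one pod, similar to Follow in the console, use `oc logs -f <pod-name>`.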

2.4.2. Viewing CodeReady Workspaces installation logs in the OpenShift console

To view installation logs in OpenShift console, take the following steps:

- Navigate to the OpenShift web console.

- In the My Projects pane, click workspaces.

- Click Applications > Pods. Click the name of the pod for which you want to view the logs.

- Click Logs and click Follow.

2.5. Configuring CodeReady Workspaces to work behind a proxy server

This procedure describes how to configure CodeReady Workspaces for use in a deployment behind a proxy server. To access external resources (for example, to download Maven artifacts to build Java projects), change the workspace configuration.

Prerequisites

- OpenShift with a logged-in oc client.

- Deployment script. See Section 2.2, “Downloading the CodeReady Workspaces deployment script”.

Procedure

Update the following values in the custom-resource.yaml file:

(1) Replace http://172.19.20.128 with the protocol and hostname of your proxy server.

(2) Replace 3128 with the port of your proxy server.

(3) Replace 172.30.0.1 with the value of the $KUBERNETES_SERVICE_HOST environment variable (run echo $KUBERNETES_SERVICE_HOST in any container running in the cluster to obtain this value).

You may also have to add a custom nonProxyHosts value as required by your network. In this example, this value is *.172.19.20.240.nip.io (the routing suffix of the OpenShift Container Platform installation).

Important:

- Use correct YAML indentation when editing the custom-resource.yaml file.

- Use the bar sign (|) as the delimiter for multiple nonProxyHosts values. You may need to list a domain in both its wildcard and abbreviated forms. For example:

nonProxyHosts: 'localhost | 127.0.0.1 | *.nip.io | .nip.io | *.example.com | .example.com'

Run the following command:

$ ./deploy.sh --deploy
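Two of the values above can be produced from the command line. The sketch below reads `KUBERNETES_SERVICE_HOST` from a running pod with `oc exec` (any running pod will do; the pod name and project are yours to supply) and joins a list of hosts into the bar-delimited nonProxyHosts format shown in the example. The function names are illustrative only.

```shell
#!/bin/sh
# Prints the Kubernetes API service address as seen from inside a pod.
# Usage: k8s_service_host <pod-name> [project]
k8s_service_host() {
  if [ -n "$2" ]; then
    oc exec "$1" -n "$2" -- printenv KUBERNETES_SERVICE_HOST
  else
    oc exec "$1" -- printenv KUBERNETES_SERVICE_HOST
  fi
}

# Joins its arguments with " | " for use as the nonProxyHosts value.
# Quote wildcard entries such as '*.nip.io' so the shell does not expand them.
join_non_proxy_hosts() {
  local out="" host
  for host in "$@"; do
    if [ -z "$out" ]; then out="$host"; else out="$out | $host"; fi
  done
  printf '%s\n' "$out"
}
```

For example, `join_non_proxy_hosts localhost 127.0.0.1 '*.nip.io' '.nip.io'` prints `localhost | 127.0.0.1 | *.nip.io | .nip.io`.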

Additional resources

2.6. CodeReady Workspaces deployment script parameters

The custom-resource.yaml file contains default values for the installation parameters. Parameters that take environment variables as values can be overridden from the command line. Not all installation parameters are available as flags.

Before running the deployment script in a fast mode, review the custom-resource.yaml file. Run the ./deploy.sh --help command to get a list of all available arguments.

The following is an annotated example of the custom-resource.yaml file with all available parameters:

Server settings:

(1) Defaults to che. When set to codeready, CodeReady Workspaces is deployed. The difference is in images, labels, and exec commands.

(2) The server image used in the Che deployment.

(3) The tag of an image used in the Che deployment.

(4) TLS mode for Che. Ensure that you either have a public certificate or set the selfSignedCert environment variable to true. If you use the TLS mode with a self-signed certificate, ensure that your browser trusts the certificate. If it does not trust the certificate, the Authorization token is missed error is displayed on the login page and the running workspace may not work as intended.

(5) When set to true, the operator attempts to get a secret in the OpenShift router namespace to add it to the Java trust store of the CodeReady Workspaces server. Requires cluster-administrator privileges for the operator service account.

(6) The protocol and hostname of a proxy server. Automatically added to the JAVA_OPTS variable and to the http(s)_proxy variables of the CodeReady Workspaces server and workspace containers.

(7) The port of a proxy server.

(8) A list of non-proxy hosts. Use | as a delimiter. Example: localhost|my.host.com|123.42.12.32.

(9) The username for a proxy server.

(10) The password for a proxy user.

Storage settings:

storage:
  pvcStrategy: 'common'   # (1)
  pvcClaimSize: '1Gi'     # (2)

(1) The persistent volume claim strategy for the CodeReady Workspaces server. Can be common (all workspace PVCs in one volume), per-workspace (one PVC per workspace for all the declared volumes), or unique (one PVC per declared volume). Defaults to common.

(2) The size of a persistent volume claim for workspaces. Defaults to 1Gi.
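Because pvcStrategy accepts only the three values described above, a small check before deploying can catch typos. This sketch, with hypothetical function names, validates the strategy name and a claim size written as a positive integer followed by `Mi` or `Gi`. Restricting sizes to those two suffixes is a simplification; Kubernetes accepts other quantity formats.

```shell
#!/bin/sh
# Returns 0 if the argument is one of the supported pvcStrategy values.
valid_pvc_strategy() {
  case "$1" in
    common|per-workspace|unique) return 0 ;;
    *) return 1 ;;
  esac
}

# Returns 0 for sizes such as 1Gi or 512Mi (simplified quantity check).
valid_claim_size() {
  printf '%s\n' "$1" | grep -Eq '^[1-9][0-9]*(Mi|Gi)$'
}
```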

Database settings:

(1) When set to true, the operator skips deploying PostgreSQL and passes the connection details of the existing database to the CodeReady Workspaces server. Otherwise, a PostgreSQL deployment is created.

(2) The PostgreSQL database hostname that the CodeReady Workspaces server uses to connect to. Defaults to postgres.

(3) The PostgreSQL database port that the CodeReady Workspaces server uses to connect to. Defaults to 5432.

(4) The PostgreSQL user that the CodeReady Workspaces server uses when making a database connection. Defaults to pgche.

(5) The password of a PostgreSQL user. Auto-generated when left blank.

(6) The PostgreSQL database name that the CodeReady Workspaces server uses to connect to. Defaults to dbche.

Auth settings:

(1) Instructs the operator to enable the OpenShift v3 identity provider in Red Hat SSO, create the respective oAuthClient, and configure the Che configMap accordingly.

(2) Instructs the operator on whether or not to deploy the Red Hat SSO instance. When set to true, the operator does not deploy Red Hat SSO and uses the connection details provided in the custom resource.

(3) The desired username of the Red Hat SSO administrator (applicable only when externalIdentityProvider is false).

(4) The desired password of the Red Hat SSO administrator (applicable only when externalIdentityProvider is false).

(5) The Red Hat SSO URL. Retrieved from the respective route or ingress unless explicitly specified in the custom resource (CR). Specify it explicitly when externalIdentityProvider is true.

(6) The name of a Red Hat SSO realm. This realm is created when externalIdentityProvider is false. Otherwise, it is passed to the CodeReady Workspaces server.

(7) The ID of a Red Hat SSO client. This client is created when externalIdentityProvider is false. Otherwise, it is passed to the CodeReady Workspaces server.

Chapter 3. Installing CodeReady Workspaces on OpenShift 4 from OperatorHub

CodeReady Workspaces is now compatible with OpenShift 4.1 and has a dedicated operator compatible with the Operator Lifecycle Manager (OLM), which allows for easy installation and automated updates.

OLM is a management framework for extending Kubernetes with Operators. The OLM project is a component of the Operator Framework, which is an open-source toolkit to manage Kubernetes-native applications, called operators, in an effective, automated, and scalable way.

When using CodeReady Workspaces on OpenShift 4, updates are performed explicitly via OperatorHub. This is different from CodeReady Workspaces on OpenShift 3.11, where updates are performed using the migrate*.sh script or manual steps.

Procedure

To install CodeReady Workspaces 1.2 from OperatorHub:

- Launch the OpenShift Web Console.

In the console, click CodeReady Workspaces in the OperatorHub tab. The CodeReady Workspaces 1.2 window is shown.

Figure 3.1. CodeReady Workspaces 1.2 listed on OperatorHub

Click Install.

Figure 3.2. Install button on the CodeReady Workspaces 1.2 window

In the Create Operator Subscription window:

- From the A specific namespace in the cluster drop-down list, select the openshift-codeready-workspaces namespace to install the Operator to.

- Choose the appropriate approval strategy in the Approval Strategy field.

Click Subscribe.

Figure 3.3. Selections in the Create Operator Subscription window

A subscription is created in the Operator Lifecycle Manager (OLM), and the operator is installed in the chosen namespace. Successful installation implies that the following requirements in the Cluster Service Version (CSV) are created:

- Service account (SA)

- Role-based access control (RBAC)

- Deployment

By default, a deployment creates only one instance of an application, scaled to 1. After the operator installation completes, the operator is marked as installed in the OperatorHub window.

Navigate to Catalog > Installed Operators. The CodeReady Workspaces operator with an InstallSucceeded message is displayed.

Figure 3.4. Installed Operators on OperatorHub

To deploy CodeReady Workspaces 1.2 on the operator:

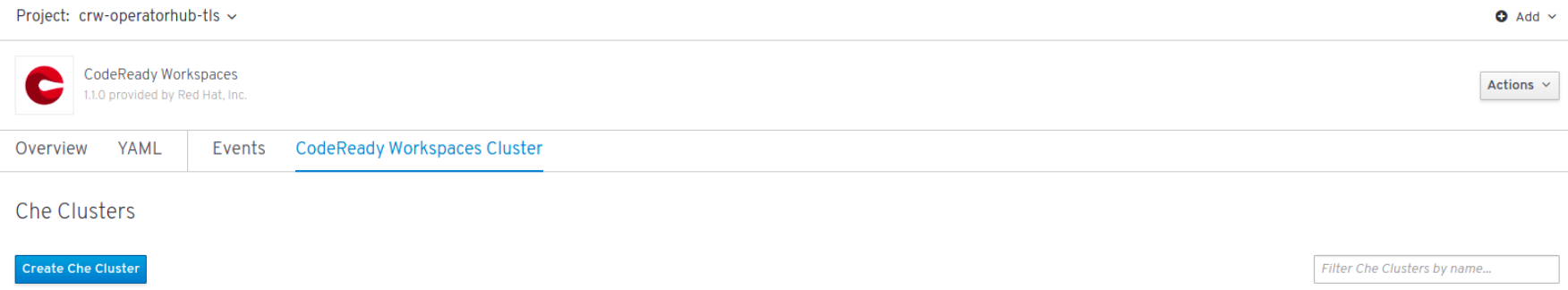

- In the selected namespace, create a custom resource Che Cluster that the operator will watch. To do so, click Operator > CodeReady Workspaces Cluster.

Click Create Che Cluster.

Figure 3.5. Clicking Create Che Cluster

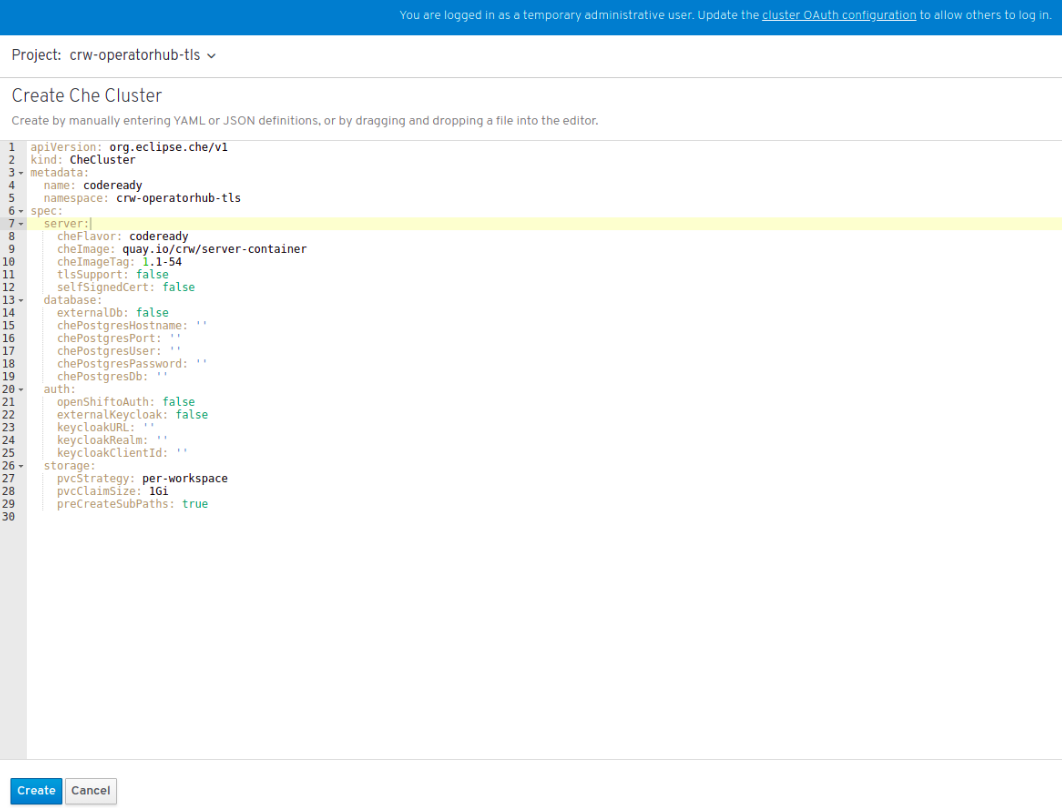

A template for creating a Che-cluster type custom resource is available. When using OpenShift OAuth or a self-signed certificate, grant cluster administrator privileges to the codeready-operator service account. For instructions, see the operator description when you initially install the operator.

Click Create.

Figure 3.6. Clicking Create to create the Che cluster

After the custom resource is created, the operator starts executing the following controller business logic:

- Creates the Kubernetes and OpenShift resources

- Provisions the database and Red Hat SSO resources

- Updates the resource status while the installation is in progress

To track the progress in the OLM UI, navigate to the resource details window.

Figure 3.7. Display of route for CodeReady Workspaces

- Wait for the status to become Available. The CodeReady Workspaces route is displayed.

To track the installation progress, follow the operator logs.

Note that the codeready-operator pod runs in the same namespace. Follow the logs and wait until the operator updates the resource status to Available and sets the URLs.

Figure 3.8. CodeReady Operator pod shown running
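The same progress can be followed from a terminal. The sketch below looks up the operator pod by its `codeready-operator` name prefix in the openshift-codeready-workspaces namespace selected during subscription, then streams its log with `oc logs -f`. Relying on the name prefix is an assumption based on the pod name shown in the console; the function names are hypothetical.

```shell
#!/bin/sh
# Prints the name of the first pod whose name starts with "codeready-operator-",
# reading `oc get pods` output (header included) on stdin.
operator_pod_name() {
  awk 'NR > 1 && $1 ~ /^codeready-operator-/ { print $1; exit }'
}

# Streams the operator log. Usage: follow_operator_log [namespace]
follow_operator_log() {
  local ns="${1:-openshift-codeready-workspaces}" pod
  pod=$(oc get pods -n "$ns" | operator_pod_name)
  if [ -z "$pod" ]; then
    echo "no codeready-operator pod found in $ns" >&2
    return 1
  fi
  oc logs -f "$pod" -n "$ns"
}
```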

Additional resources

Chapter 4. Installing CodeReady Workspaces in restricted environments

To install CodeReady Workspaces in a restricted environment that has no direct connection to the Internet available, all requisite container images must be downloaded prior to the deployment of the application and then used from a local container registry.

The installation of CodeReady Workspaces consists of the following three deployments:

- PostgreSQL (database)

- Red Hat Single Sign-On (Red Hat SSO)

- CodeReady Workspaces

Each of these deployments uses a container image in the container specification. Normally, the images are pulled from the Red Hat Container Catalog at Red Hat CodeReady Workspaces. When the OpenShift Container Platform cluster used for the CodeReady Workspaces deployment does not have access to the Internet, the installation fails. To allow the installation to proceed, override the default image specification to point to your local registry.

Prerequisites

- The CodeReady Workspaces deployment script. See Section 2.2, “Downloading the CodeReady Workspaces deployment script” for detailed instructions on how to obtain the script.

- A local (internal) container registry that can be accessed by the OpenShift instance where CodeReady Workspaces is to be deployed.

- Container images required for CodeReady Workspaces deployment imported to the local registry.

- The required images downloaded from registry.redhat.io. See Section 4.1, “Preparing CodeReady Workspaces deployment from a local registry” for the list of images and detailed instructions.

4.1. Preparing CodeReady Workspaces deployment from a local registry

To install CodeReady Workspaces in a restricted environment without access to the Internet, the product deployment container images need to be imported from an external container registry into a local (internal) registry.

CodeReady Workspaces deployment requires the following images from the Red Hat Container Catalog at registry.redhat.io:

- codeready-workspaces/server-rhel8:1.2: CodeReady Workspaces server

- codeready-workspaces/server-operator-rhel8:1.2: operator that installs and manages CodeReady Workspaces

- rhscl/postgresql-96-rhel7:1-40: PostgreSQL database for persisting data

- redhat-sso-7/sso73-openshift:1.0-11: Red Hat SSO for authentication

- ubi8-minimal:8.0-127: utility image used in preparing the PVCs (Persistent Volume Claims)

Prerequisites

To import container images (create image streams) in your OpenShift Container Platform cluster, you need:

- cluster-admin rights

Procedure

- Import the required images from an external registry to a local registry that your OpenShift Container Platform cluster can reach. See Section 4.4, “Making CodeReady Workspaces images available from a local registry” for example instructions on how to do this.

Edit the custom-resource.yaml configuration file to specify that the CodeReady Workspaces deployment script should use images from your internal registry. Add the following specification fields to the respective blocks. Use the address of your internal registry and the name of the OpenShift project into which you imported the images. For example:

Important: Make sure to use correct YAML indentation.

- Replace 172.0.0.30:5000 with the actual address of your local registry.

- Replace openshift with the name of the OpenShift project into which you imported the images.
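In the deploy.sh example in Section 4.2, each local image reference is the original registry.redhat.io path with the registry host replaced by `<registry-address>/<project>`. The sketch below computes that mapping for the five deployment images and mirrors them with `docker pull`, `docker tag`, and `docker push`. It assumes a connected host with Docker that can reach both registries and is logged in to both. The function and variable names are hypothetical, and Section 4.4 remains the reference for transferring images.

```shell
#!/bin/sh
# Images required for a CodeReady Workspaces 1.2 deployment.
DEPLOYMENT_IMAGES="
registry.redhat.io/codeready-workspaces/server-rhel8:1.2
registry.redhat.io/codeready-workspaces/server-operator-rhel8:1.2
registry.redhat.io/rhscl/postgresql-96-rhel7:1-40
registry.redhat.io/redhat-sso-7/sso73-openshift:1.0-11
registry.redhat.io/ubi8-minimal:8.0-127
"

# Maps a registry.redhat.io reference to <registry>/<project>/<path>:<tag>.
local_image_ref() {
  local image="$1" registry="$2" project="$3"
  echo "$registry/$project/${image#registry.redhat.io/}"
}

# Pulls each image, retags it for the local registry, and pushes it.
# Usage: mirror_deployment_images <registry-address> <project>
mirror_deployment_images() {
  local image target
  for image in $DEPLOYMENT_IMAGES; do
    target=$(local_image_ref "$image" "$1" "$2")
    docker pull "$image" && docker tag "$image" "$target" && docker push "$target" || return 1
  done
}
```

For example, `mirror_deployment_images 172.0.0.30:5000 openshift` pushes `172.0.0.30:5000/openshift/codeready-workspaces/server-rhel8:1.2` and the other four images.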

Additional resources

- See Section 4.4, “Making CodeReady Workspaces images available from a local registry” for example instructions on how to transfer container images required for CodeReady Workspaces deployment to a restricted environment.

- See Section 2.6, “CodeReady Workspaces deployment script parameters” for an overview of all available configuration options in the custom-resource.yaml configuration file.

4.2. Running the CodeReady Workspaces deployment script in a restricted environment

To deploy CodeReady Workspaces in a restricted environment without access to the Internet, it is necessary to use container images from a local (internal) registry. The deployment script (deploy.sh) allows you to specify a custom image to be used for installation.

Specification fields from the custom-resource.yaml configuration file and arguments supplied to the deploy.sh script are passed to the operator. The operator then constructs the deployment of CodeReady Workspaces with the images in the container specification.

Prerequisites

- Imported container images required by CodeReady Workspaces to a local registry.

- Local addresses of imported images specified in the custom-resource.yaml file.

See Section 4.1, “Preparing CodeReady Workspaces deployment from a local registry” for detailed instructions.

Procedure

To deploy CodeReady Workspaces from an internal registry, run the ./deploy.sh --deploy command and specify custom (locally available) server and operator images.

Use the --server-image and --version parameters to specify the server image and the --operator-image parameter to specify the operator image. For example:

$ ./deploy.sh --deploy \
    --server-image=172.0.0.30:5000/openshift/codeready-workspaces/server-rhel8 \
    --version=1.2 \
    --operator-image=172.0.0.30:5000/openshift/codeready-workspaces/server-operator-rhel8:1.2

Important

- Substitute 172.0.0.30:5000 for the actual address of your local registry.

- Substitute openshift for the name of the OpenShift project into which you imported the images.
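Keeping the registry address, project, and version in variables makes both substitutions in one place. The following is a minimal sketch, assuming bash and the example address and project used above; the variable names, the run helper, and the DRY_RUN switch are illustrative assumptions. By default it only prints the deploy.sh command; set DRY_RUN=0 to execute it.

```shell
#!/usr/bin/env bash
# Sketch: build the deploy.sh invocation for images in a local registry.
# Assumption: DRY_RUN=1 (default) prints the command; DRY_RUN=0 runs it.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"
LOCAL_REGISTRY="${LOCAL_REGISTRY:-172.0.0.30:5000}"  # example address; use your registry
PROJECT="${PROJECT:-openshift}"                     # project the images were imported into
VERSION="${VERSION:-1.2}"

# Image paths follow the example above; adjust them to match what you pushed.
SERVER_IMAGE="${LOCAL_REGISTRY}/${PROJECT}/codeready-workspaces/server-rhel8"
OPERATOR_IMAGE="${LOCAL_REGISTRY}/${PROJECT}/codeready-workspaces/server-operator-rhel8:${VERSION}"

# Print the command, or execute it when DRY_RUN=0.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

run ./deploy.sh --deploy \
  --server-image="$SERVER_IMAGE" \
  --version="$VERSION" \
  --operator-image="$OPERATOR_IMAGE"
```

Running it with LOCAL_REGISTRY and PROJECT exported first shows the exact command before anything is deployed.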

Additional resources

- See Section 2.3, “Running the CodeReady Workspaces deployment script” for instructions on how to run the deploy.sh script in other situations.

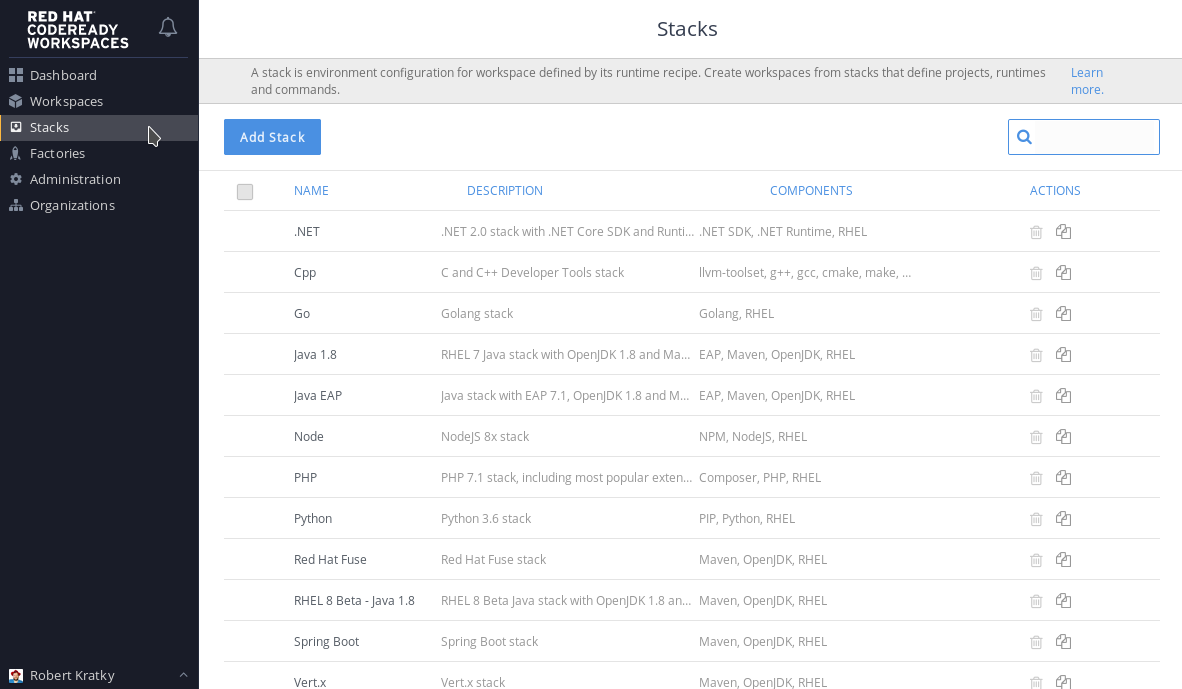

4.3. Starting workspaces in restricted environments

Starting a workspace in CodeReady Workspaces involves creating a new deployment. Different stacks use different images. All of these stack images come from the Red Hat Container Catalog at Red Hat CodeReady Workspaces. For more information on stacks, see the Stacks chapter in this guide.

It is not possible to override stack images during the installation of CodeReady Workspaces. You need to manually edit preconfigured stacks. See Creating stacks.

Procedure

To start a workspace in a restricted environment:

Import all of the following images that you need to an internal registry:

- registry.redhat.io/codeready-workspaces/stacks-java-rhel8:1.2

- registry.redhat.io/codeready-workspaces/stacks-node-rhel8:1.2

- registry.redhat.io/codeready-workspaces/stacks-node:1.2

- registry.redhat.io/codeready-workspaces/stacks-php-rhel8:1.2

- registry.redhat.io/codeready-workspaces/stacks-python-rhel8:1.2

- registry.redhat.io/codeready-workspaces/stacks-dotnet-rhel8:1.2

- registry.redhat.io/codeready-workspaces/stacks-golang-rhel8:1.2

- registry.redhat.io/codeready-workspaces/stacks-cpp-rhel8:1.2

See Section 4.4, “Making CodeReady Workspaces images available from a local registry” for example instructions on how to transfer container images required for CodeReady Workspaces deployment to a restricted environment.

Modify the preconfigured stacks:

- Log in to CodeReady Workspaces as an administrator. (The default login credentials are username: admin and password: admin.)

- In the left pane, click Stacks.

From the list of stacks, select a stack to edit.

Figure 4.1. Selecting a stack to edit

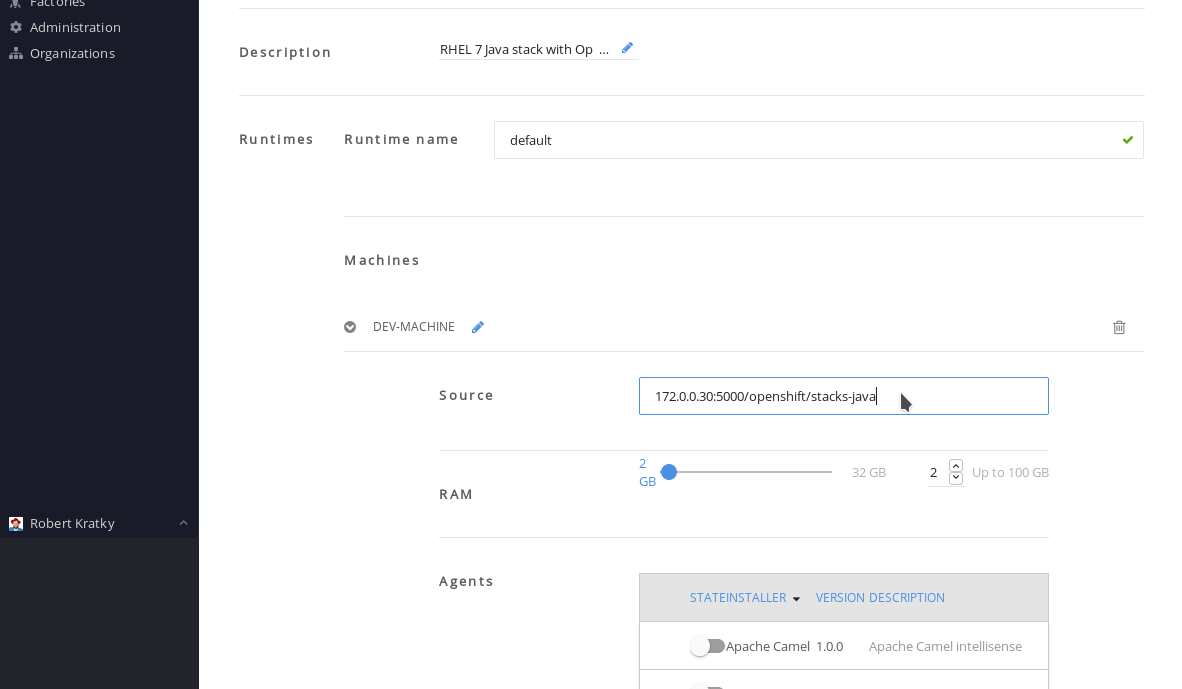

- Click and expand DEV-MACHINE.

In the Source field, replace the default Red Hat Container Catalog image with a local image that you pushed to an internal registry.

Figure 4.2. Editing a stack

Click Save.

Repeat step 2 for all the stacks that you want to use.


By default, the CodeReady Workspaces server is configured to overwrite default stacks on application start. To ensure that the changes persist between restarts, edit the custom config map:

In the OpenShift Web Console, navigate to the Resources > Config Maps tab, and select the custom config map:

Figure 4.3. Navigate to the custom Config Map

- From the Actions drop-down menu in the top-right corner, select Edit.

Scroll to the CHE_PREDEFINED_STACKS_RELOAD__ON__START key, and enter false:

Figure 4.4. Set the CHE_PREDEFINED_STACKS_RELOAD__ON__START key to false

- Save the config map.

Forcibly start a new CodeReady Workspaces deployment, either by scaling the current deployment to 0 and then back to 1, or by deleting the CodeReady Workspaces server pod:

In the OpenShift Web Console, navigate to the Applications > Deployments tab, and select the codeready deployment:

Figure 4.5. Select the codeready deployment

- Click the name of the running codeready pod.

Click the down arrow next to the number of pods, and confirm by clicking the Scale Down button:

Figure 4.6. Scale down the codeready deployment

- Scale the deployment back up by clicking the up arrow.
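The command-line parts of the procedure above can also be scripted. The following is a minimal sketch, assuming bash, the example registry address and openshift project from Section 4.4, the default workspaces project, and that the stack images were pushed with their upstream names unchanged. The config map name custom is taken from the web console step and is an assumption to verify in your cluster; the run helper and DRY_RUN switch are illustrative. The script prints the local reference to paste into each stack's Source field, sets CHE_PREDEFINED_STACKS_RELOAD__ON__START to false with oc patch, and restarts the server by scaling the codeready deployment to 0 and back to 1.

```shell
#!/usr/bin/env bash
# Sketch of the command-line parts of the stack procedure.
# Assumption: DRY_RUN=1 (default) prints the oc commands; DRY_RUN=0 runs them.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"
LOCAL_REGISTRY="${LOCAL_REGISTRY:-172.0.0.30:5000}"  # example registry address
PROJECT="${PROJECT:-openshift}"                     # project holding the stack images
NAMESPACE="${NAMESPACE:-workspaces}"                # project running CodeReady Workspaces
CONFIG_MAP="${CONFIG_MAP:-custom}"                  # assumption: verify the name in your cluster

STACK_IMAGES=(
  registry.redhat.io/codeready-workspaces/stacks-java-rhel8:1.2
  registry.redhat.io/codeready-workspaces/stacks-node-rhel8:1.2
  registry.redhat.io/codeready-workspaces/stacks-node:1.2
  registry.redhat.io/codeready-workspaces/stacks-php-rhel8:1.2
  registry.redhat.io/codeready-workspaces/stacks-python-rhel8:1.2
  registry.redhat.io/codeready-workspaces/stacks-dotnet-rhel8:1.2
  registry.redhat.io/codeready-workspaces/stacks-golang-rhel8:1.2
  registry.redhat.io/codeready-workspaces/stacks-cpp-rhel8:1.2
)

# Print the command, or execute it when DRY_RUN=0.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Local reference for a stack image, keeping its upstream name and tag.
local_ref() {
  printf '%s/%s/%s\n' "$LOCAL_REGISTRY" "$PROJECT" "${1##*/}"
}

# 1. Values to paste into the Source field of each stack's DEV-MACHINE.
for image in "${STACK_IMAGES[@]}"; do
  printf '%s -> %s\n' "$image" "$(local_ref "$image")"
done

# 2. Stop the server from overwriting edited stacks on start.
run oc patch configmap "$CONFIG_MAP" -n "$NAMESPACE" --type merge \
  -p '{"data":{"CHE_PREDEFINED_STACKS_RELOAD__ON__START":"false"}}'

# 3. Restart the server so that the new pod reads the updated value.
run oc scale deployment/codeready -n "$NAMESPACE" --replicas=0
run oc scale deployment/codeready -n "$NAMESPACE" --replicas=1
```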

4.4. Making CodeReady Workspaces images available from a local registry

This procedure describes one possible way of making the CodeReady Workspaces container images that are required for deployment available from a local (internal) registry. First, download the images on a machine connected to the Internet. Then transfer them to the restricted environment where you intend to deploy the application by some means other than a network connection. Finally, upload (push) the images to the local registry that will be accessible during the deployment phase.

Prerequisites

- A machine with access to the Internet that can be used to pull and save container images from registry.redhat.io.

- A means of transferring the downloaded images to the restricted environment where you intend to deploy CodeReady Workspaces.

- An account with the rights to push to a local registry within the restricted environment. The local registry must be accessible for the deployment.

At minimum, the following images need to be made locally available:

- registry.redhat.io/codeready-workspaces/server-rhel8:1.2

- registry.redhat.io/codeready-workspaces/server-operator-rhel8:1.2

- registry.redhat.io/rhscl/postgresql-96-rhel7:1-40

- registry.redhat.io/redhat-sso-7/sso73-openshift:1.0-11

- registry.redhat.io/ubi8-minimal:8.0-127

You also need to follow these image-import steps for every stack image that you want to use within CodeReady Workspaces.

Procedure

Use a tool for container management, such as Podman, to both download the container images and subsequently push them to a local registry within the restricted environment.

The podman tool is available from the podman package in Red Hat Enterprise Linux starting with version 7.6. On earlier versions of Red Hat Enterprise Linux, the docker tool can be used with the same command syntax as suggested below for podman.

Steps to perform on a machine with connection to the Internet

Pull the required images from registry.redhat.io. For example, for the codeready-workspaces/server-rhel8 image, run:

# podman pull registry.redhat.io/codeready-workspaces/server-rhel8:1.2

Repeat the command for all the images you need.

Save all the pulled images to a tar file in your current directory on the disk. For example, for the codeready-workspaces/server-rhel8 image, run:

# podman save --output codeready-server.tar \
    registry.redhat.io/codeready-workspaces/server-rhel8:1.2

Repeat the command for all the images you need.

Transfer the saved tar image files to a machine connected to the restricted environment.
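The pull and save steps above are repeated once per image, so a loop avoids mistakes. The following is a minimal sketch, assuming bash and the minimum image set from this section (append the stack images you plan to use). The run helper, the DRY_RUN switch, and the tar_name naming scheme (one archive per image, named after the image and tag) are illustrative assumptions rather than part of the documented procedure. By default it only prints the podman commands; set DRY_RUN=0 to execute them.

```shell
#!/usr/bin/env bash
# Sketch: pull and save the required images on the Internet-connected machine.
# Assumption: DRY_RUN=1 (default) prints the podman commands; DRY_RUN=0 runs them.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"

# Minimum image set from this section; append the stack images you plan to use.
IMAGES=(
  registry.redhat.io/codeready-workspaces/server-rhel8:1.2
  registry.redhat.io/codeready-workspaces/server-operator-rhel8:1.2
  registry.redhat.io/rhscl/postgresql-96-rhel7:1-40
  registry.redhat.io/redhat-sso-7/sso73-openshift:1.0-11
  registry.redhat.io/ubi8-minimal:8.0-127
)

# Print the command, or execute it when DRY_RUN=0.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical naming scheme: keep the last path component and replace ':' with '_',
# for example registry.redhat.io/rhscl/postgresql-96-rhel7:1-40 -> postgresql-96-rhel7_1-40.tar
tar_name() {
  local ref="${1##*/}"
  printf '%s.tar\n' "${ref/:/_}"
}

for image in "${IMAGES[@]}"; do
  run podman pull "$image"
  run podman save --output "$(tar_name "$image")" "$image"
done
```

Saving one archive per image keeps each transfer small and lets you re-copy a single image if one file is damaged in transit.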

Steps to perform on a machine within the restricted environment

Load all the required images to the local container repository, from which they can be uploaded to OpenShift. For example, for the codeready-workspaces/server-rhel8 image, run:

# podman load --input codeready-server.tar

Repeat the command for all the images you need.

Optionally, check that the images have been successfully loaded to your local container repository. For example, to check for the codeready images, run:

# podman images */codeready/*
REPOSITORY                                                      TAG
registry.redhat.io/codeready-workspaces/server-rhel8            1.2
registry.redhat.io/codeready-workspaces/server-operator-rhel8   1.2

Log in to the instance of OpenShift Container Platform where you intend to deploy CodeReady Workspaces as a user with the cluster-admin role. For example, to log in as the user admin, run:

$ oc login --username <admin> --password <password>

In the above command, substitute <admin> for the username of the account you intend to use to deploy CodeReady Workspaces and <password> for the associated password.

Log in with podman to the local OpenShift registry that CodeReady Workspaces will be deployed from:

# podman login --username <admin> \
    --password $(oc whoami --show-token) 172.0.0.30:5000

In the above command:

- Substitute <admin> for the username of the account you used to log in to OpenShift.

- Substitute 172.0.0.30:5000 for the URL of the local OpenShift registry to which you are logging in.

Tag the required images to prepare them to be pushed to the local OpenShift registry. For example, for the codeready-workspaces/server-rhel8 image, run:

# podman tag registry.redhat.io/codeready-workspaces/server-rhel8:1.2 \
    172.0.0.30:5000/openshift/codeready-server:1.2

Repeat the command for all the images you need.

In the above command, substitute 172.0.0.30:5000 for the URL of the local OpenShift registry to which you will be pushing the images.

Optionally, check that the images have been successfully tagged for pushing to the local OpenShift registry. For example, to check for the codeready images, run:

# podman images */openshift/codeready-*
REPOSITORY                                     TAG
172.0.0.30:5000/openshift/codeready-operator   1.2
172.0.0.30:5000/openshift/codeready-server     1.2

Push the required images to the local OpenShift registry. For example, for the codeready-workspaces/server-rhel8 image, run:

# podman push 172.0.0.30:5000/openshift/codeready-server:1.2

Repeat the command for all the images you need.

In the above command, substitute

172.0.0.30:5000for the URL of the local OpenShift registry to which you will be pushing the images.Switch to the

openshiftproject:oc project openshift

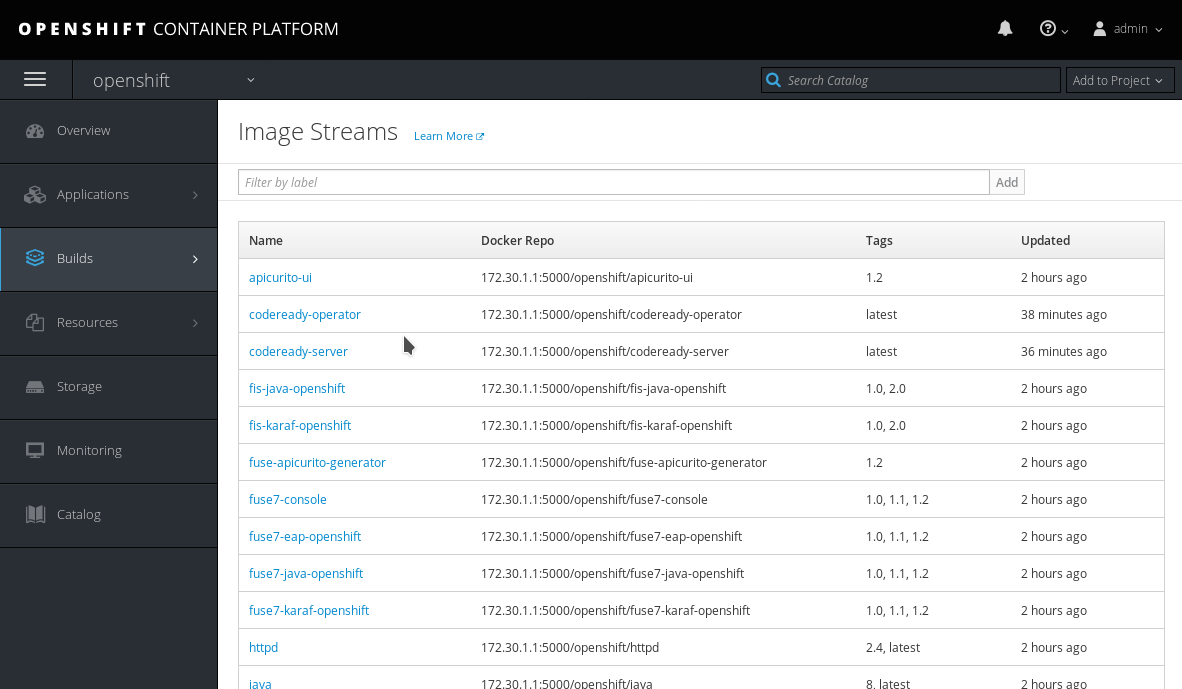

$ oc project openshiftCopy to Clipboard Copied! Toggle word wrap Toggle overflow Optionally, check that the images have been successfully pushed to the local OpenShift registry. For example, to check for the

codereadyimages, run:oc get imagestream codeready-* NAME DOCKER REPO TAGS codeready-operator 172.0.0.30:5000/openshift/codeready-operator 1.2 codeready-server 172.0.0.30:5000/openshift/codeready-server 1.2

$ oc get imagestream codeready-* NAME DOCKER REPO TAGS codeready-operator 172.0.0.30:5000/openshift/codeready-operator 1.2 codeready-server 172.0.0.30:5000/openshift/codeready-server 1.2Copy to Clipboard Copied! Toggle word wrap Toggle overflow You can also verify that the images have been successfully pushed in the OpenShift Console. Navigate to the Builds > Images tab, and look for image streams available in the

openshiftproject:Figure 4.7. Confirming images have been pushed to the

openshiftproject

The required CodeReady Workspaces container images are now available for use in your restricted environment.
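The load, tag, and push steps above are also repeated once per image. The following is a minimal sketch, assuming bash, that you have already run oc login and podman login as described, and that each archive is named image_tag.tar (a hypothetical scheme; adjust tar_name to match your files). Unlike the codeready-server example name used above, this sketch keeps each upstream image name (for example server-rhel8) under the local registry and project, which is a choice you would need to reflect when passing image names to deploy.sh. The run helper and DRY_RUN switch are illustrative; by default the script only prints the podman commands.

```shell
#!/usr/bin/env bash
# Sketch: load, tag, and push the saved images inside the restricted environment.
# Assumptions: oc login and podman login are already done; DRY_RUN=1 (default)
# prints the podman commands, DRY_RUN=0 runs them.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"
LOCAL_REGISTRY="${LOCAL_REGISTRY:-172.0.0.30:5000}"  # example address used in this section
PROJECT="${PROJECT:-openshift}"

IMAGES=(
  registry.redhat.io/codeready-workspaces/server-rhel8:1.2
  registry.redhat.io/codeready-workspaces/server-operator-rhel8:1.2
  registry.redhat.io/rhscl/postgresql-96-rhel7:1-40
  registry.redhat.io/redhat-sso-7/sso73-openshift:1.0-11
  registry.redhat.io/ubi8-minimal:8.0-127
)

# Print the command, or execute it when DRY_RUN=0.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Hypothetical archive name: last path component with ':' replaced by '_'.
tar_name() {
  local ref="${1##*/}"
  printf '%s.tar\n' "${ref/:/_}"
}

# Keep the upstream name and tag under <registry>/<project>/.
local_ref() {
  printf '%s/%s/%s\n' "$LOCAL_REGISTRY" "$PROJECT" "${1##*/}"
}

for image in "${IMAGES[@]}"; do
  target="$(local_ref "$image")"
  run podman load --input "$(tar_name "$image")"
  run podman tag "$image" "$target"
  run podman push "$target"
done
```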

Additional resources

- Continue with Section 4.2, “Running the CodeReady Workspaces deployment script in a restricted environment”.

- See Importing Tag and Image Metadata in the OpenShift Container Platform Developer Guide for detailed information about importing images.

Chapter 5. Installing CodeReady Workspaces on OpenShift Dedicated

CodeReady Workspaces deployment requires cluster-admin OpenShift privileges. To install CodeReady Workspaces on an OpenShift Dedicated cluster, follow the special procedure described in this section.

- It is not possible to use OpenShift OAuth for CodeReady Workspaces authentication when deployed on OpenShift Dedicated using the method described in this section.

- If your OpenShift Dedicated cluster is compatible with 4.1, the installation of CodeReady Workspaces 1.2 is handled from the OperatorHub. This installation does not require the cluster-admin rights. For detailed steps to install CodeReady Workspaces from the OperatorHub, see Chapter 3, Installing CodeReady Workspaces on OpenShift 4 from OperatorHub.

Prerequisites

- OpenShift Dedicated cluster with recommended resources. See Chapter 2, Installing CodeReady Workspaces on OpenShift v3 for detailed information about cluster sizing.

Procedure

To install CodeReady Workspaces on OpenShift Dedicated, you need to use the deployment script distributed with CodeReady Workspaces 1.0.2 and manually specify CodeReady Workspaces container images from version 1.2.

- Download and unpack the deployment script from CodeReady Workspaces 1.0.2 from developers.redhat.com. See Section 2.2, “Downloading the CodeReady Workspaces deployment script” for detailed instructions. Ensure that you are downloading the 1.0.2 version (direct link: codeready-workspaces-1.0.2.GA-operator-installer.tar.gz).

- Run the deployment script and specify:

  - the 1.2 codeready-workspaces/server-rhel8 image

  - the 1.0 codeready-workspaces/server-operator image

  $ ./deploy.sh --deploy \
      --operator-image=registry.redhat.io/codeready-workspaces/server-operator:1.0 \
      --server-image=registry.redhat.io/codeready-workspaces/server-rhel8:1.2

  See Running the CodeReady Workspaces deployment script for a description of other available parameters that can be used with the deployment script to customize the CodeReady Workspaces deployment.
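Because this procedure deliberately mixes a 1.0 operator with a 1.2 server, it can be worth checking which images the deployments actually run after the script finishes. The following is a minimal sketch, assuming bash, a logged-in oc session, the default workspaces project, and that the codeready and codeready-operator deployments each have a single container; image_of and verify_image are hypothetical helper names. The exact image string stored in a deployment depends on how deploy.sh combines its parameters, so treat a mismatch as a prompt to inspect the deployment rather than as proof of failure.

```shell
#!/usr/bin/env bash
# Sketch: report which image a deployment runs and compare it with an expected value.
# Assumptions: logged-in oc session, default project "workspaces", one container per pod.
set -euo pipefail

NAMESPACE="${NAMESPACE:-workspaces}"

# Print the image of the first container in the deployment's pod template.
image_of() {
  oc get deployment "$1" -n "$NAMESPACE" \
    -o jsonpath='{.spec.template.spec.containers[0].image}'
}

# Succeed if the deployment runs the expected image; otherwise report the difference.
verify_image() {
  local deployment="$1" expected="$2" actual
  actual="$(image_of "$deployment")"
  if [ "$actual" = "$expected" ]; then
    printf 'OK   %s: %s\n' "$deployment" "$actual"
  else
    printf 'DIFF %s: expected %s, found %s\n' "$deployment" "$expected" "$actual" >&2
    return 1
  fi
}

# Example usage:
#   verify_image codeready registry.redhat.io/codeready-workspaces/server-rhel8:1.2
#   verify_image codeready-operator registry.redhat.io/codeready-workspaces/server-operator:1.0
```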

Chapter 6. Upgrading CodeReady Workspaces

CodeReady Workspaces 1.2 introduces an operator that uses a controller to watch custom resources. This chapter lists steps to upgrade CodeReady Workspaces 1.1 to CodeReady Workspaces 1.2. For steps to upgrade from CodeReady Workspaces 1.0 to CodeReady Workspaces 1.1, see https://access.redhat.com/documentation/en-us/red_hat_codeready_workspaces/1.1/html/administration_guide/upgrading-codeready-workspaces.

6.1. Upgrading CodeReady Workspaces using a migration script

The upgrade process using the migrate_*.sh scripts automates the manual steps listed in Section 6.3, “Upgrading CodeReady Workspaces manually”. The downloaded codeready-workspaces-operator-installer archive contains the following two migrate_*.sh scripts:

- migrate_1.0_to_1.1.sh: Use to upgrade from CodeReady Workspaces 1.0 to CodeReady Workspaces 1.1

- migrate_1.1_to_1.2.sh: Use to upgrade from CodeReady Workspaces 1.1 to CodeReady Workspaces 1.2

Prerequisites

- You must have a running instance of Red Hat OpenShift Container Platform 3.11 or OpenShift Dedicated 3.11.

- The OpenShift Origin Client Tools 3.11 (oc) are in the path. See Section 2.1, “Downloading the Red Hat OpenShift Origin Client Tools”.

- You must be logged in to the OpenShift instance using the following command:

  $ oc login https://<your-IP-address>:8443 --username <your-username> --password <your-password>

- You must have obtained the Red Hat login credentials. For details, see https://access.redhat.com/RegistryAuthentication#getting-a-red-hat-login-2.

Procedure

To upgrade from CodeReady Workspaces 1.1 to CodeReady Workspaces 1.2, run the following command (the default project namespace is workspaces):

$ ./migrate_1.1_to_1.2.sh -n=<OpenShift-project-name>

Important: Substitute <OpenShift-project-name> for the project namespace that you are using.

6.2. Running the CodeReady Workspaces migration script in restricted environments

The upgrade process using the migrate_*.sh scripts automates the manual steps listed in Section 6.3, “Upgrading CodeReady Workspaces manually”. The downloaded codeready-workspaces-operator-installer archive contains the following two migrate_*.sh scripts:

- migrate_1.0_to_1.1.sh: Use to upgrade from CodeReady Workspaces 1.0 to CodeReady Workspaces 1.1

- migrate_1.1_to_1.2.sh: Use to upgrade from CodeReady Workspaces 1.1 to CodeReady Workspaces 1.2

Prerequisites

- A local (internal) container registry that can be accessed by the OpenShift instance where CodeReady Workspaces is to be deployed.

- The following images downloaded from registry.redhat.io:

  - registry.redhat.io/codeready-workspaces/server-rhel8:1.2
  - registry.redhat.io/codeready-workspaces/server-operator-rhel8:1.2
  - registry.redhat.io/rhscl/postgresql-96-rhel7:1-40
  - registry.redhat.io/redhat-sso-7/sso73-openshift:1.0-11
  - registry.redhat.io/ubi8-minimal:8.0-127

- Any of the following container images, with pre-configured stacks for creating workspaces, downloaded as required. Note that for most of the images the container name now contains a -rhel8 suffix, but the Node.js 8 container continues to be based on RHEL 7.

  - registry.redhat.io/codeready-workspaces/stacks-java-rhel8:1.2
  - registry.redhat.io/codeready-workspaces/stacks-node-rhel8:1.2
  - registry.redhat.io/codeready-workspaces/stacks-php-rhel8:1.2
  - registry.redhat.io/codeready-workspaces/stacks-python-rhel8:1.2
  - registry.redhat.io/codeready-workspaces/stacks-dotnet-rhel8:1.2
  - registry.redhat.io/codeready-workspaces/stacks-golang-rhel8:1.2
  - registry.redhat.io/codeready-workspaces/stacks-cpp-rhel8:1.2
  - registry.redhat.io/codeready-workspaces/stacks-node:1.2

- To obtain the above images, you must have Red Hat login credentials. For details, see https://access.redhat.com/RegistryAuthentication#getting-a-red-hat-login-2.

- The OpenShift Origin Client Tools 3.11 (oc) are in the path. See Section 2.1, “Downloading the Red Hat OpenShift Origin Client Tools”.

- To perform the upgrade procedure, you must have a running instance of Red Hat OpenShift Container Platform 3.11 or OpenShift Dedicated 3.11, and must be logged in to the OpenShift instance using the following command:

  $ oc login https://<your-IP-address>:8443 --username <your-username> --password <your-password>

Procedure

Use a tool for container management, such as Podman, to push the images to a local registry within the restricted environment.

The podman tool is available from the podman package in Red Hat Enterprise Linux starting with version 7.6. On earlier versions of Red Hat Enterprise Linux, the docker tool can be used with the same command syntax as suggested below for podman.

Pull the required images from registry.redhat.io. For example, to pull the codeready-workspaces/server-rhel8 image, run:

# podman pull registry.redhat.io/codeready-workspaces/server-rhel8:1.2

Repeat the command for all the images that you want to pull.

After pulling images, it may be necessary to rename and retag them so that they are accessible from your local registry. For example:

# podman tag registry.redhat.io/codeready-workspaces/server-rhel8:1.2 registry.yourcompany.com:5000/crw-server-rhel8:1.2

To upgrade from CodeReady Workspaces 1.1 to CodeReady Workspaces 1.2, run the migrate_1.1_to_1.2.sh script, using the correct registry and image names from the previous steps. (The default project namespace is workspaces. Your registry, container names, and tag versions may vary.)
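The pull and re-tag steps in this procedure are repeated for every image listed in the prerequisites. The following is a minimal sketch, assuming bash, the example registry registry.yourcompany.com:5000, and that the crw-name:tag naming from the podman tag example is applied to every image (an assumption; use whatever names you pass to the migration script). It also pushes each retagged image to the local registry, as the procedure's opening step describes. The run helper and DRY_RUN switch are illustrative; by default the script only prints the podman commands.

```shell
#!/usr/bin/env bash
# Sketch: pull, retag, and push images for a restricted-environment migration.
# Assumption: DRY_RUN=1 (default) prints the podman commands; DRY_RUN=0 runs them.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"
TARGET_REGISTRY="${TARGET_REGISTRY:-registry.yourcompany.com:5000}"  # example registry

# Required images; append the stack images you need.
IMAGES=(
  registry.redhat.io/codeready-workspaces/server-rhel8:1.2
  registry.redhat.io/codeready-workspaces/server-operator-rhel8:1.2
  registry.redhat.io/rhscl/postgresql-96-rhel7:1-40
  registry.redhat.io/redhat-sso-7/sso73-openshift:1.0-11
  registry.redhat.io/ubi8-minimal:8.0-127
)

# Print the command, or execute it when DRY_RUN=0.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Assumed naming scheme: <registry>/crw-<name>:<tag>, as in the podman tag example.
target_ref() {
  printf '%s/crw-%s\n' "$TARGET_REGISTRY" "${1##*/}"
}

for image in "${IMAGES[@]}"; do
  target="$(target_ref "$image")"
  run podman pull "$image"
  run podman tag "$image" "$target"
  run podman push "$target"
done
```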

6.3. Upgrading CodeReady Workspaces manually

This section walks you through the manual upgrade steps from CodeReady Workspaces 1.1 to CodeReady Workspaces 1.2. For steps to manually upgrade from CodeReady Workspaces 1.0 to CodeReady Workspaces 1.1, see https://access.redhat.com/documentation/en-us/red_hat_codeready_workspaces/1.1/html/administration_guide/upgrading-codeready-workspaces#upgrading-codeready-workspaces-manually.

Prerequisites

- You must have a running instance of Red Hat OpenShift Container Platform 3.11 or OpenShift Dedicated 3.11.

- The OpenShift Origin Client Tools 3.11 (oc) are in the path. See Section 2.1, “Downloading the Red Hat OpenShift Origin Client Tools”.

- You must be logged in to the OpenShift instance using the following command:

  $ oc login https://<your-IP-address>:8443 --username <your-username> --password <your-password>

- You must have obtained the Red Hat login credentials. For details, see https://access.redhat.com/RegistryAuthentication#getting-a-red-hat-login-2.

Procedure

- Log in to the registry.redhat.io registry. For details, see https://access.redhat.com/RegistryAuthentication#using-authentication-3.

To keep your login secret for the registry.redhat.io registry in a separate file, run the following command:

$ docker --config /tmp/CRW.docker.config.json login https://registry.redhat.io

If you do not keep the secret in a separate file, the secret is stored in the ~/.docker/config.json file and all your secrets are imported to OpenShift in the following step.

To add your secret to OpenShift, run the following commands:

$ oc create secret generic registryredhatio --type=kubernetes.io/dockerconfigjson --from-file=.dockerconfigjson=/tmp/CRW.docker.config.json && \
    oc secrets link default registryredhatio --for=pull && \
    oc secrets link builder registryredhatio

If the secret is added successfully, the following query shows your new secret:

$ oc get secret registryredhatio

If unsuccessful, see https://access.redhat.com/RegistryAuthentication#allowing-pods-to-reference-images-from-other-secured-registries-9.

Select your CodeReady Workspaces deployment (the default project namespace is workspaces):

$ oc project <OpenShift-project-name>

Important: Substitute <OpenShift-project-name> for the project namespace that you are using.

Obtain the DB_PASSWORD from the running CodeReady Workspaces instance:

$ DB_PASSWORD=$(oc get deployment keycloak -o=jsonpath={'.spec.template.spec.containers[0].env[?(@.name=="DB_PASSWORD")].value'})

Create the JSON patch:

Patch the running cluster:

$ oc patch checluster codeready -p "${PATCH_JSON}" --type merge

Scale down the running deployments:

$ oc scale deployment/codeready --replicas=0 && \
    oc scale deployment/keycloak --replicas=0

Update the operator image and wait until the update is complete:

$ oc set image deployment/codeready-operator "*=registry.redhat.io/codeready-workspaces/server-operator-rhel8:1.2"

After the operator is redeployed, scale up the keycloak deployment (RH SSO) and wait until the update is complete:

$ oc scale deployment/keycloak --replicas=1

After keycloak is redeployed, scale up the CodeReady Workspaces deployment (Che Server) and wait until the update is complete:

$ oc scale deployment/codeready --replicas=1

After CodeReady Workspaces is redeployed, set the new image for PostgreSQL:

$ oc set image deployment/postgres "*=registry.redhat.io/rhscl/postgresql-96-rhel7:1-40"

Scale down the PostgreSQL deployment and then scale it back up. Wait until the update is complete:

$ oc scale deployment/postgres --replicas=0 && \
    oc scale deployment/postgres --replicas=1
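The scale and image steps above must run in order, each one waiting for the previous deployment to finish updating. The following is a minimal sketch of that ordering, assuming bash and the default workspaces project. It uses oc rollout status as one way to wait until each deployment is complete; that choice is an assumption rather than part of the documented procedure. It covers only the steps from scaling down the deployments onward, so log in to the registry, add the pull secret, and patch the checluster resource first. The run helper and DRY_RUN switch are illustrative; by default the script only prints the oc commands.

```shell
#!/usr/bin/env bash
# Sketch: scale and image-update steps of the manual 1.1 -> 1.2 upgrade, in order.
# Assumptions: DRY_RUN=1 (default) prints the commands; "oc rollout status" is used
# as one way to wait for each deployment to finish updating.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"
NAMESPACE="${NAMESPACE:-workspaces}"
OPERATOR_IMAGE="registry.redhat.io/codeready-workspaces/server-operator-rhel8:1.2"
POSTGRES_IMAGE="registry.redhat.io/rhscl/postgresql-96-rhel7:1-40"

# Print the command, or execute it when DRY_RUN=0.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

upgrade_deployments() {
  run oc project "$NAMESPACE"
  # Stop the server and RH SSO before the operator is replaced.
  run oc scale deployment/codeready --replicas=0
  run oc scale deployment/keycloak --replicas=0
  # Quote the '*=' argument so that the shell does not try to glob it.
  run oc set image deployment/codeready-operator "*=${OPERATOR_IMAGE}"
  run oc rollout status deployment/codeready-operator
  # Bring back RH SSO first, then the CodeReady Workspaces server.
  run oc scale deployment/keycloak --replicas=1
  run oc rollout status deployment/keycloak
  run oc scale deployment/codeready --replicas=1
  run oc rollout status deployment/codeready
  # Finally, move PostgreSQL to the new image and restart it.
  run oc set image deployment/postgres "*=${POSTGRES_IMAGE}"
  run oc scale deployment/postgres --replicas=0
  run oc scale deployment/postgres --replicas=1
  run oc rollout status deployment/postgres
}

upgrade_deployments
```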

Chapter 7. Uninstalling CodeReady Workspaces

There is no dedicated function in the deploy.sh script to uninstall CodeReady Workspaces.

However, you can delete a custom resource, which deletes all the associated objects.

Note that in all the commands in this section, <OpenShift-project-name> is an OpenShift project with deployed CodeReady Workspaces (workspaces is the OpenShift project by default).

Procedure

To delete CodeReady Workspaces and its components:

$ oc delete checluster/codeready -n=<OpenShift-project-name>
$ oc delete namespace <OpenShift-project-name>

To delete only particular deployments and their associated objects, use selectors.

To remove all CodeReady Workspaces server-related objects:

$ oc delete all -l=app=che -n=<OpenShift-project-name>

To remove all Keycloak-related objects:

$ oc delete all -l=app=keycloak -n=<OpenShift-project-name>

To remove all PostgreSQL-related objects:

$ oc delete all -l=app=postgres -n=<OpenShift-project-name>

PVCs, service accounts, and role bindings must be deleted separately because the oc delete all command does not delete them.

To delete the CodeReady Workspaces server PVC, ServiceAccount, and RoleBinding:

$ oc delete sa -l=app=che -n=<OpenShift-project-name>
$ oc delete rolebinding -l=app=che -n=<OpenShift-project-name>
$ oc delete pvc -l=app=che -n=<OpenShift-project-name>

To delete Keycloak and PostgreSQL PVCs:

$ oc delete pvc -l=app=keycloak -n=<OpenShift-project-name>
$ oc delete pvc -l=app=postgres -n=<OpenShift-project-name>
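The selector-based commands above follow the same pattern for each component, so they can be grouped per app label. The following is a minimal sketch, assuming bash, the default workspaces project, and the che, keycloak, and postgres labels used above; delete_component, the run helper, and the DRY_RUN switch are illustrative names. By default it only prints the oc commands, which is a useful safeguard for destructive operations.

```shell
#!/usr/bin/env bash
# Sketch: delete CodeReady Workspaces components by their app label.
# Assumption: DRY_RUN=1 (default) prints the oc commands; DRY_RUN=0 runs them.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"
NAMESPACE="${NAMESPACE:-workspaces}"

# Print the command, or execute it when DRY_RUN=0.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Delete the objects of one component (che, keycloak, or postgres).
delete_component() {
  local app="$1"
  run oc delete all -l "app=${app}" -n "$NAMESPACE"
  # "oc delete all" skips PVCs, service accounts, and role bindings.
  run oc delete pvc -l "app=${app}" -n "$NAMESPACE"
  if [ "$app" = "che" ]; then
    run oc delete sa -l "app=${app}" -n "$NAMESPACE"
    run oc delete rolebinding -l "app=${app}" -n "$NAMESPACE"
  fi
}

for app in che keycloak postgres; do
  delete_component "$app"
done
```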

Chapter 8. Viewing CodeReady Workspaces operation logs

After the CodeReady Workspaces pods are created, you can view the operation logs of the application in the terminal or through the OpenShift console.

8.1. Viewing CodeReady Workspaces operation logs in the terminal

To view the operation logs on the terminal, run the following commands:

Switch to the project where CodeReady Workspaces is installed, because the oc logs commands below run against the current project:

$ oc project <OpenShift-project-name>

To obtain the names of the pods, run:

$ oc get pods -n=<OpenShift-project-name>

This command shows the pods that have been created:

NAME                                  READY   STATUS    RESTARTS   AGE
codeready-6b4876f56c-qdlll            1/1     Running   0          24m
codeready-operator-56bc9599cc-pkqkn   1/1     Running   0          25m
keycloak-666c5f9f4b-zz88z             1/1     Running   0          24m
postgres-96875bcbd-tfxr4              1/1     Running   0          25m

To view the operation log for a specific pod, run:

$ oc logs <pod-name>

For example, to view the log of the codeready-6b4876f56c-qdlll pod, run oc logs codeready-6b4876f56c-qdlll.

For operation logs of the other pods, run:

- For the codeready-operator-56bc9599cc-pkqkn pod:

  $ oc logs codeready-operator-56bc9599cc-pkqkn

- For the keycloak-666c5f9f4b-zz88z pod:

  $ oc logs keycloak-666c5f9f4b-zz88z

- For the postgres-96875bcbd-tfxr4 pod:

  $ oc logs postgres-96875bcbd-tfxr4
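Pod names change on every redeployment, so instead of copying them by hand you can feed the output of oc get pods into oc logs. The following is a minimal sketch, assuming bash, the default workspaces project, and single-container pods; logs_for_pods, the run helper, and the DRY_RUN switch are illustrative names. In the default dry-run mode it uses the pod names from the example listing above and only prints the commands; with DRY_RUN=0 it lists the real pods with oc get pods -o name, which prints entries such as pod/codeready-6b4876f56c-qdlll that oc logs accepts directly.

```shell
#!/usr/bin/env bash
# Sketch: print or fetch the logs of every CodeReady Workspaces pod.
# Assumption: DRY_RUN=1 (default) prints the commands; DRY_RUN=0 queries the cluster.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"
NAMESPACE="${NAMESPACE:-workspaces}"

# Print the command, or execute it when DRY_RUN=0.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Read pod names (one per line) from stdin and run "oc logs" for each.
logs_for_pods() {
  local pod
  while IFS= read -r pod; do
    [ -n "$pod" ] || continue
    run oc logs "$pod" -n "$NAMESPACE"
  done
}

if [ "$DRY_RUN" = "1" ]; then
  # Pod names from the example listing above.
  printf '%s\n' codeready-6b4876f56c-qdlll codeready-operator-56bc9599cc-pkqkn \
    keycloak-666c5f9f4b-zz88z postgres-96875bcbd-tfxr4 | logs_for_pods
else
  oc get pods -n "$NAMESPACE" -o name | logs_for_pods
fi
```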

8.2. Viewing CodeReady Workspaces operation logs in the OpenShift console

To view the operation logs in the OpenShift console, take the following steps:

- Navigate to the OpenShift web console.

- In the My Projects pane, click workspaces.

- In the Overview tab, click the application that you want to view the logs for (example: codeready-operator, #1).

- In the Deployments > <application-name> window, click the name of the pod.

- Scroll down to the Pods section and click the <pod-name>.

Click Logs

Figure 8.1. Clicking View Log

Click Follow to follow the log.


Chapter 9. Using the Che 7 IDE in CodeReady Workspaces

CodeReady Workspaces 1.2 is based on upstream Che 6. The next version of Che, Che 7, is under development. To try this upcoming version of Che 7 in CodeReady Workspaces, follow the instructions in this section.

Che 7-based stacks are not included by default in CodeReady Workspaces. However, you can use a Che 7-based workspace configuration in CodeReady Workspaces. To use the Che 7-based stacks in CodeReady Workspaces, take the following steps:

- In the Dashboard, click Workspaces and then click Add Workspace.

- Select any stack from the list and click the dropdown icon next to CREATE & OPEN.

- Click Create & Proceed Editing.

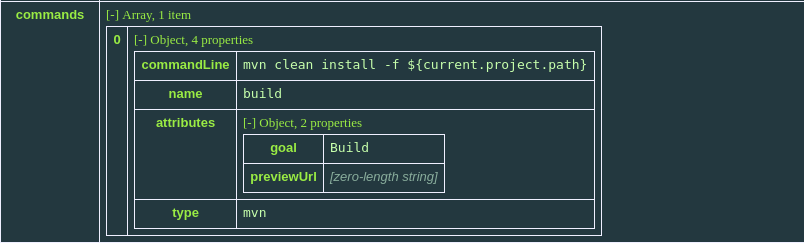

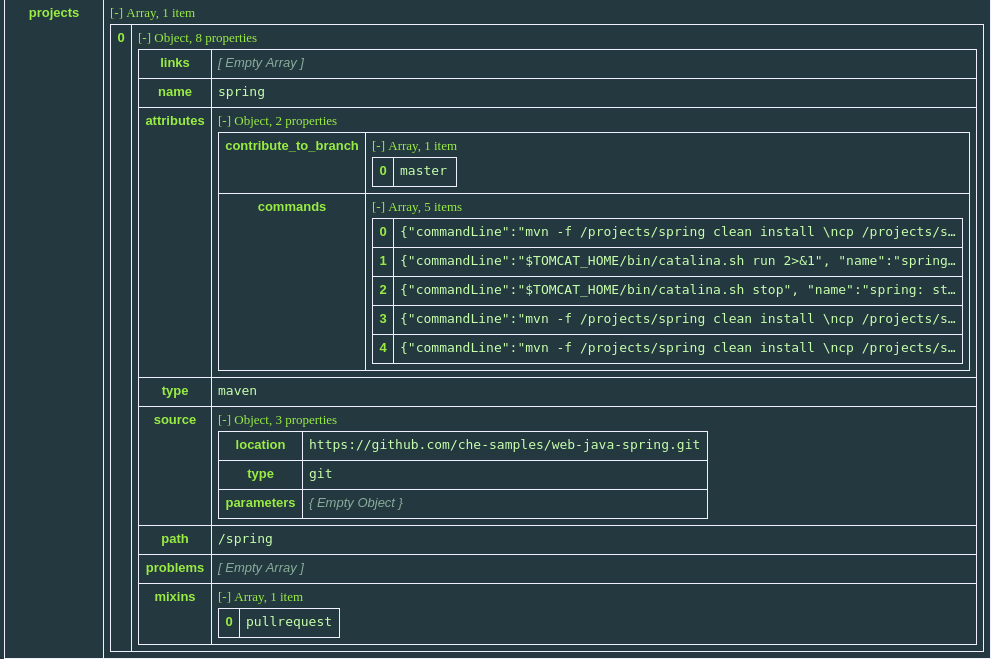

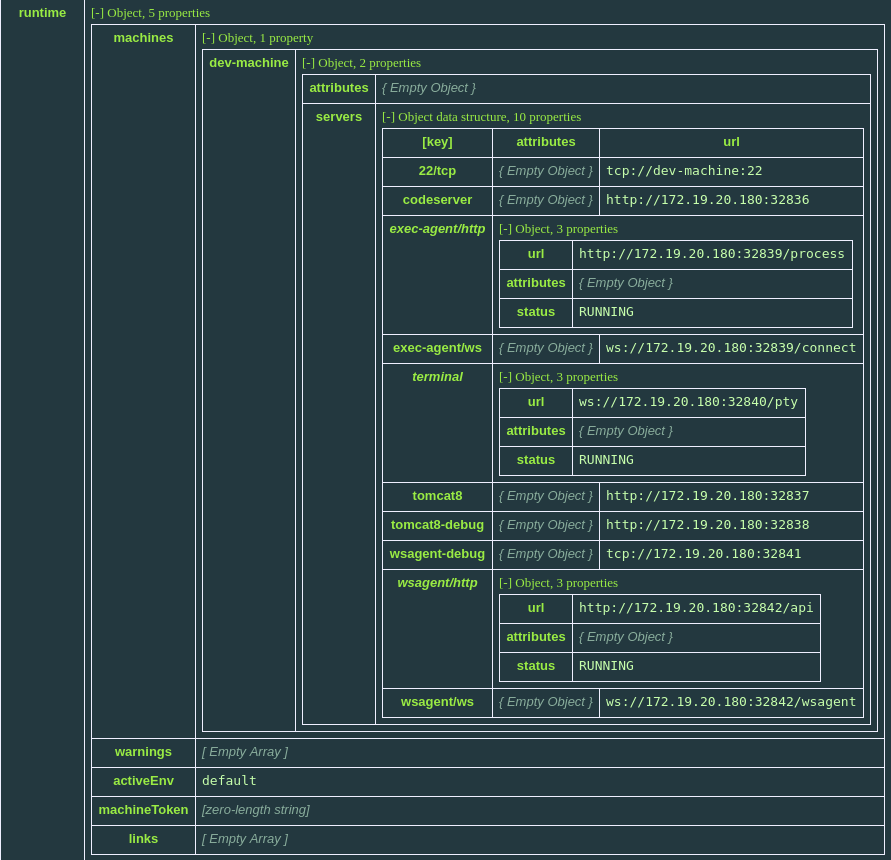

Click the Config tab and replace the default configuration content with the following content:

- To add additional Che plugins (for example, to add support for a particular language), add them to the attributes#plugins list in the workspace configuration. For a list of available plugins, see https://github.com/eclipse/che-plugin-registry/.

To add a runtime environment (for example Java runtime environment), add the following content in the Config tab:

To define it as the default environment, add the following content:

"defaultEnv": "default",
"environments": {
  "default": {...}
}

- Click the Open button and run the Che 7-based workspace that you just created.

Chapter 10. Using the Analytics plug-in in CodeReady Workspaces

The Analytics plug-in provides insights about application dependencies: security vulnerabilities, license compatibility, and AI-based guidance for additional, alternative dependencies.

The Analytics plug-in is enabled by default in the Java and Node.js stacks in CodeReady Workspaces. When a user opens a pom.xml or package.json file, its dependencies are analyzed, and the editor shows warnings for known CVEs and other issues with any dependency.

Chapter 11. Using version control

CodeReady Workspaces natively supports the Git version control system (VCS), which is provided by the JGit library. Versioning functionality is available in the IDE and in the terminal.

The following sections describe how to connect and authenticate users to a remote Git repository. Any operation that requires authentication on the remote repository must be performed through the IDE, unless authentication is configured separately for the workspace machine (terminal, commands).

Private repositories require a secure SSH connection. To connect to Git repositories over SSH, generate an SSH key pair. SSH keys are saved in user preferences, so you can use the same key in all workspaces.

HTTPS Git URLs can only be used to push changes when OAuth authentication is enabled. See Enabling authentication with social accounts and brokering.

11.1. Generating and uploading SSH keys

SSH keys can be generated in the CodeReady Workspaces user interface.

- Go to Profile > Preferences > SSH > VCS, and click the button.

- When prompted to provide the host name for your repository, use the bare host name (do not include www or https), as in the example below.

- Save the resulting key to your Git-hosting provider account.

The host name is the actual host name of your VCS provider, for example github.com or bitbucket.org.

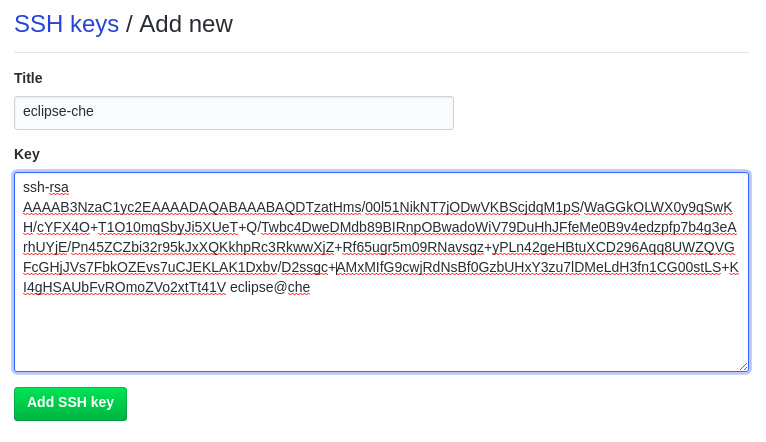

11.1.1. Using existing SSH keys

You can upload an existing public key instead of creating a new SSH key. When uploading a key, add the host name without www or https, as in the example below. Note that the Public key > View button is not available when you use this option, because the public key file already exists.

The following examples are specific to GitHub and GitLab, but a similar procedure can be used with all Git or SVN repository providers that use SSH authentication. See documentation provided by other providers for additional assistance.

SSH keys in the default format do not work in CodeReady Workspaces: the git pull command fails on authorization. To work around the issue, use an SSH key in the PEM format.

To convert an existing SSH key to the PEM format, run the following command and replace <your_key_file> with the path to your key file.

$ ssh-keygen -p -f <your_key_file> -m PEM

Or, to generate a new PEM SSH key instead of the default RFC 4716/SSH2 key, omit the -p flag. Replace <new_key_file> with the path to the new file.

$ ssh-keygen -f <new_key_file> -m PEM
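The two commands above can be combined into a short script. The following is a minimal sketch, assuming OpenSSH's ssh-keygen is available in your terminal; the temporary directory and the file name id_rsa_crw are placeholders. It creates a stand-in for an existing key, converts it to PEM in place, and prints the public key that you would upload to CodeReady Workspaces or to your Git provider.

```shell
#!/bin/sh
# Minimal sketch: convert an RSA key to PEM and print its public half.
# KEY_DIR and id_rsa_crw are placeholders; point KEY_FILE at your own key.
KEY_DIR=$(mktemp -d)
KEY_FILE="$KEY_DIR/id_rsa_crw"

# Stand-in for an existing key: generate one in ssh-keygen's default format.
# -N "" sets an empty passphrase so that the example runs non-interactively.
ssh-keygen -q -t rsa -b 3072 -N "" -f "$KEY_FILE"

# Rewrite the private key in place in PEM format.
# -p changes the passphrase; -P is the old one and -N the new one (both empty here).
ssh-keygen -q -p -P "" -N "" -m PEM -f "$KEY_FILE"

# A PEM private key typically starts with "-----BEGIN RSA PRIVATE KEY-----"
# rather than "-----BEGIN OPENSSH PRIVATE KEY-----".
head -n 1 "$KEY_FILE"

# Print the matching public key (the same content as "$KEY_FILE.pub").
ssh-keygen -y -f "$KEY_FILE"
```

For a real key, skip the generation step and run only the conversion against your existing file. If the key is protected by a passphrase, omit -P and -N so that ssh-keygen prompts for them.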

Example 11.1. GitHub example

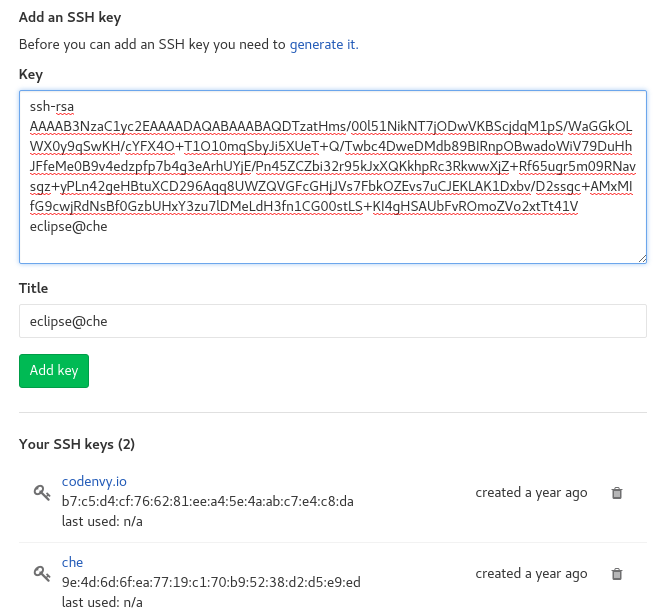

When not using GitHub OAuth, you can manually upload a key. To add the associated public key to a repository or account on github.com:

- Click your user icon (top right).

- Go to Settings > SSH and GPG keys and click the New SSH key button.

- Enter a title and paste the public key copied from CodeReady Workspaces to the Key text field.

Example 11.2. GitLab example

To add the associated public key to a Git repository or account on gitlab.com:

- Click your user icon (top right).

- Go to Settings > SSH Keys.

- Enter a title and paste the public key copied from CodeReady Workspaces to the Key text field.

11.2. Configuring GitHub OAuth

OAuth for GitHub allows users to import projects using SSH addresses (git@), push to repositories, and use the pull request panel. To enable automatic SSH key upload to GitHub for users:

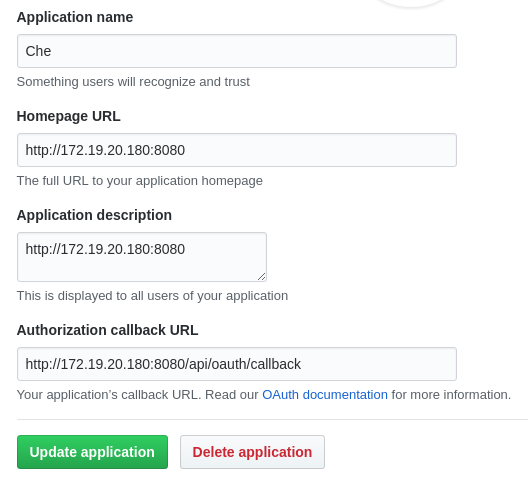

- On github.com, click your user icon (top right).

- Go to Settings > Developer settings > OAuth Apps.

- Click the button.

- In the Application name field, enter, for example, CodeReady Workspaces.

- In the Homepage URL field, enter http://${CHE_HOST}:${CHE_PORT}.

- In the Authorization callback URL field, enter http://${CHE_HOST}:${CHE_PORT}/api/oauth/callback.

- On OpenShift, update the deployment configuration with the following environment variables:

CHE_OAUTH_GITHUB_CLIENTID=<your-github-client-id>
CHE_OAUTH_GITHUB_CLIENTSECRET=<your-github-secret>
CHE_OAUTH_GITHUB_AUTHURI=https://github.com/login/oauth/authorize
CHE_OAUTH_GITHUB_TOKENURI=https://github.com/login/oauth/access_token
CHE_OAUTH_GITHUB_REDIRECTURIS=http://${CHE_HOST}:${CHE_PORT}/api/oauth/callback

- Replace all occurrences of ${CHE_HOST} and ${CHE_PORT} with the host name and port of your CodeReady Workspaces installation.

- Replace <your-github-client-id> and <your-github-secret> with your GitHub client ID and secret.
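The substitutions above are mechanical, so they can be scripted. The following minimal sketch uses a hypothetical helper, github_oauth_env (not part of CodeReady Workspaces), that prints the five variables with the client ID, secret, host, and port filled in. The example values are placeholders.

```shell
#!/bin/sh
# Hypothetical helper: print the GitHub OAuth variables for CodeReady
# Workspaces, one KEY=VALUE pair per line, with the values substituted.
github_oauth_env() {
  client_id=$1
  client_secret=$2
  che_host=$3
  che_port=$4
  echo "CHE_OAUTH_GITHUB_CLIENTID=${client_id}"
  echo "CHE_OAUTH_GITHUB_CLIENTSECRET=${client_secret}"
  echo "CHE_OAUTH_GITHUB_AUTHURI=https://github.com/login/oauth/authorize"
  echo "CHE_OAUTH_GITHUB_TOKENURI=https://github.com/login/oauth/access_token"
  # Must match the Authorization callback URL registered on GitHub.
  echo "CHE_OAUTH_GITHUB_REDIRECTURIS=http://${che_host}:${che_port}/api/oauth/callback"
}

# Example with placeholder values.
github_oauth_env my-client-id my-client-secret codeready.example.com 80
```

To apply the values, you could pass the output to oc set env, for example oc set env dc/che $(github_oauth_env CLIENT_ID SECRET HOST PORT), assuming the deployment configuration is named che as in the RAM example in Chapter 12. The unquoted command substitution relies on none of the values containing spaces.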

Once OAuth is configured, users can generate SSH keys and upload them to GitHub automatically by clicking the Octocat icon in Profile > Preferences > SSH > VCS in the IDE. Users can also connect to their GitHub account and choose repositories to clone, rather than manually typing or pasting GitHub project URLs.

11.3. Configuring GitLab OAuth

OAuth integration with GitLab is not supported. Although GitLab supports OAuth for clone operations, pushes are not supported. A feature request to add support exists in the GitLab issue management system: Allow runners to push via their CI token.

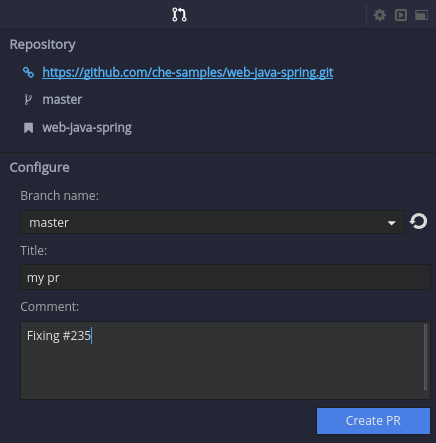

11.4. Submitting pull requests using the built-in Pull Request panel

CodeReady Workspaces provides a Pull Request panel to simplify the creation of pull requests for GitHub, Bitbucket, and Microsoft VSTS (with Git) repositories.

11.5. Saving committer name and email

Committer name and email are set in Profile > Preferences > Git > Committer. Once set, every commit will include this information.
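The Preferences setting covers commits made through the IDE. If you also commit from the workspace terminal, which section 11.6 describes as a separate Git setup, you may need to set the same identity there, assuming the Preferences values are not passed to terminal Git. The following is a minimal sketch with standard git config commands; the name, address, and throwaway repository are placeholders.

```shell
#!/bin/sh
# Minimal sketch: record a committer name and email for terminal Git.
# The identity is a placeholder. The example uses a throwaway repository;
# in a workspace you would more likely use git config --global instead.
REPO=$(mktemp -d)
git -C "$REPO" init -q
git -C "$REPO" config user.name "Jane Developer"
git -C "$REPO" config user.email "jane.developer@example.com"

# Every commit made in this repository now records that identity.
git -C "$REPO" commit -q --allow-empty -m "Check committer identity"
git -C "$REPO" log -1 --format='%cn <%ce>'
```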

11.6. Interacting with Git from a workspace

After importing a repository, you can perform the most common Git operations using interactive menus or as terminal commands.

Terminal Git commands require their own authentication setup: keys generated in the IDE work only when Git is used through the IDE menus, because Git in the terminal is a separate Git installation. To authenticate terminal Git commands, generate keys in ~/.ssh in the terminal.

Use keyboard shortcuts to access the most frequently used Git functionality faster:

| Action | Shortcut |
|---|---|
| Commit | Alt+C |
| Push to remote | Alt+Shift+C |
| Pull from remote | Alt+P |
| Work with branches | Ctrl+B |
| Compare current changes with the latest repository version | Ctrl+Alt+D |
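The operations behind these shortcuts have close counterparts among standard Git commands. The following minimal sketch maps each shortcut to a terminal command; the mapping is an approximation (the IDE dialogs expose more options), and the example runs against a throwaway local "remote" so that it needs no authentication. A real remote requires terminal Git to be authenticated as described above.

```shell
#!/bin/sh
# Minimal sketch: terminal counterparts of the IDE Git shortcuts, run
# against a throwaway bare repository that stands in for the remote.
WORK=$(mktemp -d)
git init -q --bare "$WORK/remote.git"
# Silence the "cloned an empty repository" warning.
git clone -q "$WORK/remote.git" "$WORK/project" 2>/dev/null
cd "$WORK/project" || exit 1
git config user.name "Jane Developer"              # placeholder identity
git config user.email "jane.developer@example.com"

echo "hello" > README.md
git add README.md
git commit -q -m "Add README"    # Commit (Alt+C)
git push -q -u origin HEAD       # Push to remote (Alt+Shift+C); -u sets the upstream
git pull -q                      # Pull from remote (Alt+P)
git branch -a                    # Work with branches (Ctrl+B): local and remote
echo "world" >> README.md
git diff HEAD                    # Compare changes with the latest commit (Ctrl+Alt+D)
```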

11.7. Git status highlighting in the project tree and editor

Files in the project explorer and in editor tabs can be colored according to their Git status:

- Green: new files that are staged in index

- Blue: files that contain changes

- Yellow: files that are not staged in index

The editor displays change markers according to file edits:

- Yellow marker: modified line(s)

- Green marker: new line(s)

- White triangle: removed line(s)
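From the terminal, git status --porcelain prints a two-character status code for each file, which can give a similar overview. The following sketch maps those codes to the IDE colors using a hypothetical helper, git_status_color. The mapping is an assumption for illustration (new files added to the index as green, untracked files as yellow, any other change as blue), not the IDE's actual rules.

```shell
#!/bin/sh
# Hypothetical helper: approximate the IDE's Git status colors from
# `git status --porcelain` output read on stdin. Each input line has the
# form "XY path", where X is the index status and Y the work-tree status.
git_status_color() {
  while IFS= read -r line; do
    code=$(printf '%s\n' "$line" | cut -c1-2)
    path=$(printf '%s\n' "$line" | cut -c4-)
    case "$code" in
      A?)   color=green ;;   # new file added to the index
      "??") color=yellow ;;  # untracked file, not in the index
      *)    color=blue ;;    # any other change (modified, deleted, renamed)
    esac
    echo "$color $path"
  done
}

# Usage inside a repository:
#   git status --porcelain | git_status_color
```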

11.8. Performing Git operations

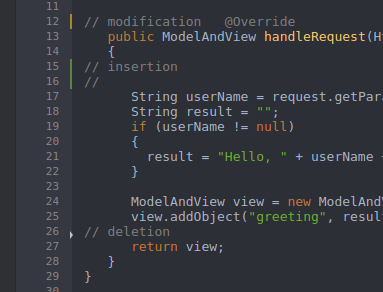

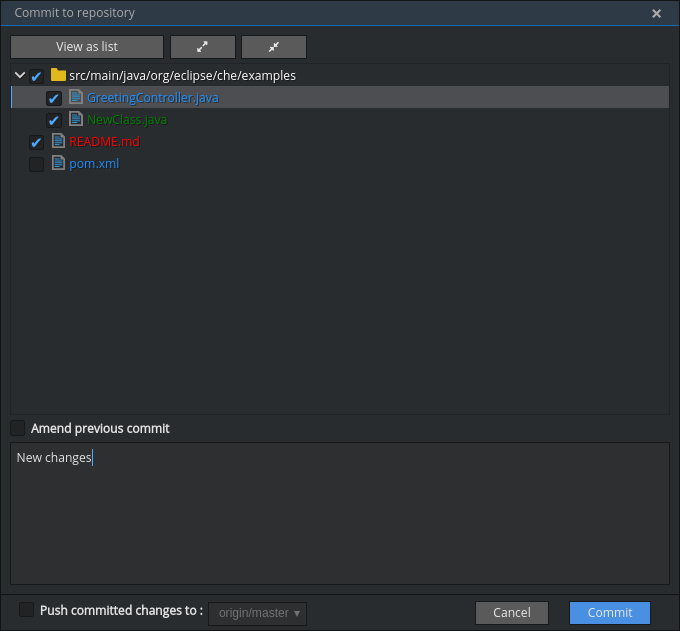

11.8.1. Committing

Commit your changes by navigating to Git > Commit… in the main menu, or use the Alt+C shortcut.

- Select files that will be added to index and committed. All files in the selected package or folder in the project explorer are checked by default.

- Type your commit message. Optionally, you can select Amend previous commit to modify the previous commit (for more details, see Git commit documentation).

- To push your commit to a remote repository, select the Push committed changes to check box and select a remote branch.

- Click to proceed (the button is active when at least one file is selected and a commit message is present, or Amend previous commit is checked).

Behavior for files in the list view is the same as in the Compare window (see the Reviewing changed files section). Double-clicking a file opens a diff window for it.
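The Amend previous commit option corresponds to Git's commit --amend. The following minimal sketch shows the effect in a throwaway repository with placeholder identity and file names: the fix is folded into the previous commit, so the history still contains a single commit, now with the new content and message.

```shell
#!/bin/sh
# Minimal sketch: the effect of "Amend previous commit", reproduced with
# git commit --amend in a throwaway repository.
REPO=$(mktemp -d)
git -C "$REPO" init -q
git -C "$REPO" config user.name "Jane Developer"
git -C "$REPO" config user.email "jane.developer@example.com"

echo "first draft" > "$REPO/notes.txt"
git -C "$REPO" add notes.txt
git -C "$REPO" commit -q -m "Add notes"

# Fix the file and fold the fix into the previous commit with a new message.
echo "final text" > "$REPO/notes.txt"
git -C "$REPO" add notes.txt
git -C "$REPO" commit -q --amend -m "Add project notes"

# Still one commit, now with the updated content and message.
git -C "$REPO" log --oneline
```

Amending rewrites the commit, so amending a commit that was already pushed requires a force push to update the remote branch.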

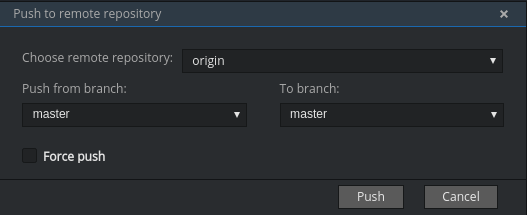

11.8.2. Pushing and pulling

Push your commits by navigating to Git > Remotes… > Push in the main menu, or use the Alt+Shift+C shortcut.

- Choose the remote repository.

- Choose the local and remote branch.

- Optionally, select Force push.

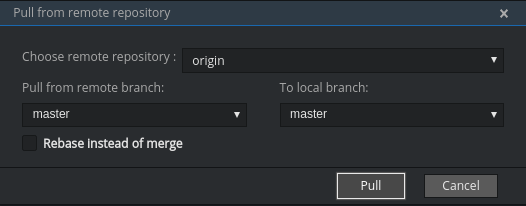

Get changes from a remote repository by navigating to Git > Remotes… > Pull in the main menu, or use the Alt+P shortcut.

You can use Rebase instead of merge to keep your local commits on top (for more information, see Git pull documentation).
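The Rebase option corresponds to git pull --rebase. The following minimal sketch illustrates the difference from a merge: two throwaway clones (the hypothetical users Alice and Bob) of one bare repository commit independently, and Bob's rebasing pull replays his local commit on top of Alice's, so the history stays linear with no merge commit.

```shell
#!/bin/sh
# Minimal sketch: "Rebase instead of merge" reproduced with git pull --rebase.
# Two throwaway clones of one bare repository stand in for two developers.
WORK=$(mktemp -d)
git init -q --bare "$WORK/remote.git"
git clone -q "$WORK/remote.git" "$WORK/alice" 2>/dev/null
git -C "$WORK/alice" config user.name "Alice"
git -C "$WORK/alice" config user.email "alice@example.com"
echo "base" > "$WORK/alice/base.txt"
git -C "$WORK/alice" add base.txt
git -C "$WORK/alice" commit -q -m "Initial commit"
git -C "$WORK/alice" push -q -u origin HEAD

# Bob clones once the first commit exists.
git clone -q "$WORK/remote.git" "$WORK/bob"
git -C "$WORK/bob" config user.name "Bob"
git -C "$WORK/bob" config user.email "bob@example.com"

# Both commit independently; Alice pushes first.
echo "a" > "$WORK/alice/alice.txt"
git -C "$WORK/alice" add alice.txt
git -C "$WORK/alice" commit -q -m "Alice's change"
git -C "$WORK/alice" push -q

echo "b" > "$WORK/bob/bob.txt"
git -C "$WORK/bob" add bob.txt
git -C "$WORK/bob" commit -q -m "Bob's change"

# Bob's local commit is replayed on top of Alice's: no merge commit.
git -C "$WORK/bob" pull -q --rebase
git -C "$WORK/bob" log --oneline
```

From the terminal, git push --force-with-lease is a safer variant of a force push: it refuses to overwrite remote commits that you have not fetched.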

11.8.3. Managing branches

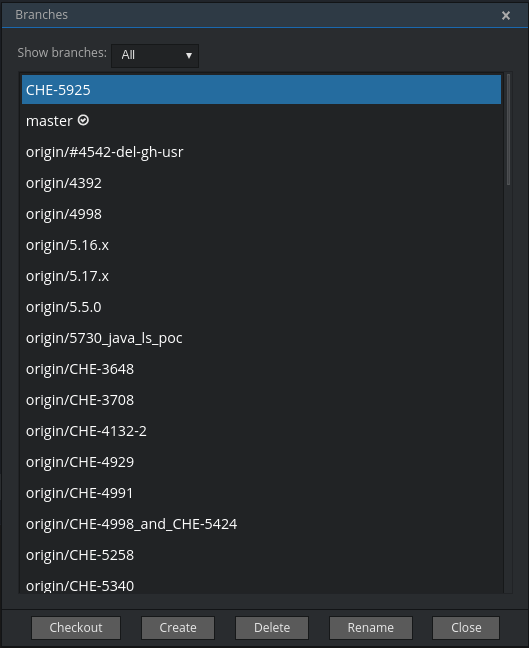

Manage your Git branches by navigating to Git > Branches… in the main menu, or use the Ctrl+B shortcut.

You can filter the branches view by choosing to see only local or remote branches.
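In the terminal, the same local and remote filters correspond to git branch and git branch -r. The following minimal sketch creates a throwaway clone with a placeholder branch name, feature/login, and lists each kind of branch.

```shell
#!/bin/sh
# Minimal sketch: local versus remote branch listings, as in the Branches
# dialog filters, shown in a throwaway clone (placeholder names).
WORK=$(mktemp -d)
git init -q --bare "$WORK/remote.git"
git clone -q "$WORK/remote.git" "$WORK/project" 2>/dev/null
git -C "$WORK/project" config user.name "Jane Developer"
git -C "$WORK/project" config user.email "jane.developer@example.com"
git -C "$WORK/project" commit -q --allow-empty -m "Initial commit"
git -C "$WORK/project" push -q -u origin HEAD

# Create and switch to a new local branch (not pushed).
git -C "$WORK/project" checkout -q -b feature/login

git -C "$WORK/project" branch      # local branches only
git -C "$WORK/project" branch -r   # remote-tracking branches only
```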

11.9. Reviewing changed files

The Git Compare window is used to show files that have changed.

To compare the current state of the code with the latest local commit, navigate to Git > Compare > Select-to-what in the main menu, or use the Ctrl+Alt+D shortcut. Alternatively, select an item in the project tree and choose Git > Select-to-what from its context menu.

Listing changed files

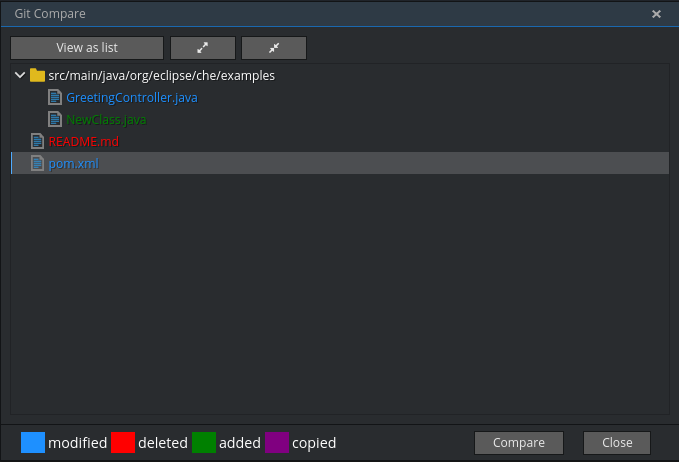

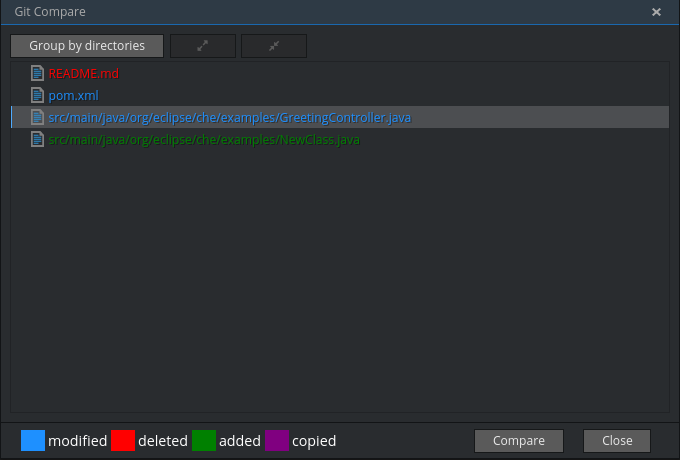

The Git Compare window shows changed files in the selected object in the project explorer. To see all changes, select a project folder. If only one file has changed, a diff window is shown instead of the compare window.

By default, affected files are listed as a tree.

The Expand all directories and Collapse all directories options help to get a better view. The button switches the view of changed files to a list, where each file is shown with its full path. To return to the tree view, click .

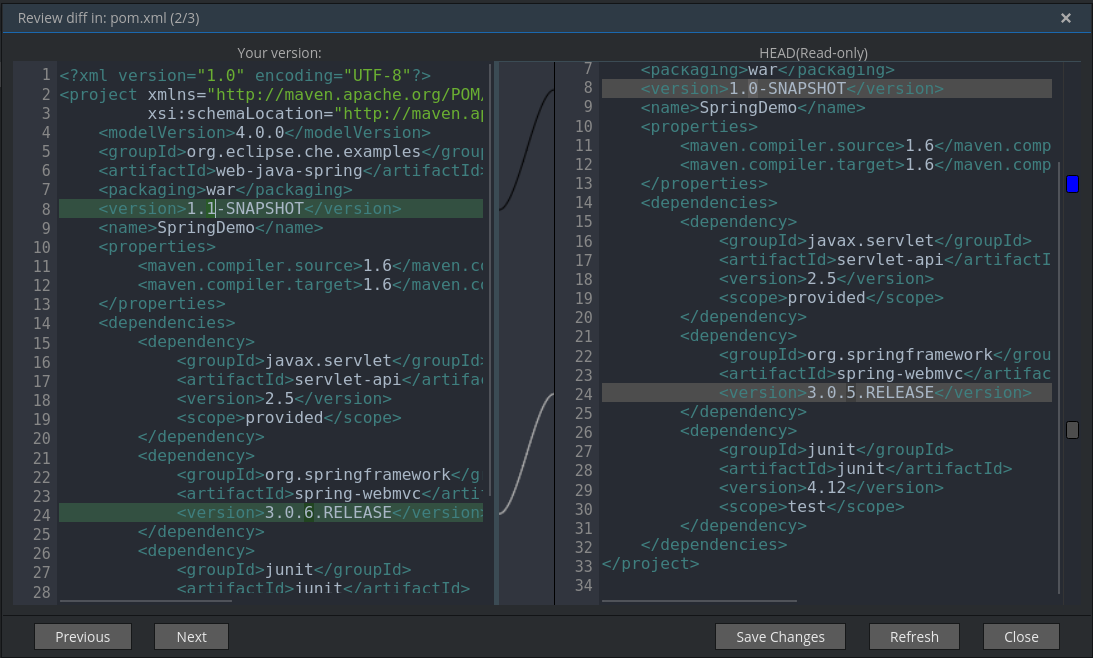

Viewing diffs

To view a diff for a file, select the file and click Compare, or double-click the file name.

You can review changes between two states of code. To view the diff, go to Git > Compare > Select-to-what in the main menu. If more than one file has changed, a list of the changed files opens first. To select a file to compare, double-click it, or select it and then click Compare. You can also open a diff by selecting a file in the project explorer and choosing Git > Select-to-what from its context menu, or directly from the context menu in the editor.

Your changes are shown on the left, and the file being compared to is on the right. The left pane can be used for editing and fixing your changes.

To review multiple files, you can navigate between them using the (or Alt+.) and (or Alt+,) buttons. The number of files for review is displayed in the title of the diff window.

The button updates the difference links between the two panes.
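The same comparison against the latest local commit is available from the terminal with git diff, which prints a unified diff rather than two side-by-side panes. The following minimal sketch uses a throwaway repository with placeholder files: it lists the changed files, as the list view does, and then shows the diff for one of them.

```shell
#!/bin/sh
# Minimal sketch: terminal counterparts of the Git Compare window, comparing
# the working tree with the latest local commit (HEAD). Placeholder files.
REPO=$(mktemp -d)
git -C "$REPO" init -q
git -C "$REPO" config user.name "Jane Developer"
git -C "$REPO" config user.email "jane.developer@example.com"
printf 'one\n' > "$REPO/a.txt"
printf 'two\n' > "$REPO/b.txt"
git -C "$REPO" add a.txt b.txt
git -C "$REPO" commit -q -m "Initial commit"

# Change one file after the commit.
printf 'one, edited\n' > "$REPO/a.txt"

# List changed files with their full paths.
git -C "$REPO" diff --name-only HEAD

# Show the diff for a single file.
git -C "$REPO" diff HEAD -- a.txt
```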

Chapter 12. CodeReady Workspaces Administration Guide

12.1. RAM prerequisites

You must have at least 5 GB of RAM to run CodeReady Workspaces. The Keycloak authorization server and PostgreSQL database require the extra RAM. CodeReady Workspaces uses RAM as follows:

- CodeReady Workspaces server: approximately 750 MB

- Keycloak: approximately 1 GB

- PostgreSQL: approximately 515 MB

- Workspaces: 2 GB of RAM per workspace. The total workspace RAM depends on the size of the workspace runtime(s) and the number of concurrent workspace pods.
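The figures above add up to a rough estimate for a given number of concurrently running workspaces. The following minimal sketch uses a hypothetical helper, estimate_ram_mb, and assumes 1 GB = 1024 MB for Keycloak and the 2 GB (2048 MB) per-workspace figure; actual usage depends on your workspace runtimes.

```shell
#!/bin/sh
# Hypothetical helper: rough RAM estimate in MB from the figures listed above.
# Assumes 1024 MB for Keycloak and 2048 MB per concurrently running workspace.
estimate_ram_mb() {
  workspaces=$1
  server_mb=750
  keycloak_mb=1024
  postgres_mb=515
  per_workspace_mb=2048
  echo $(( server_mb + keycloak_mb + postgres_mb + workspaces * per_workspace_mb ))
}

estimate_ram_mb 1   # 750 + 1024 + 515 + 2048 = 4337
estimate_ram_mb 3   # 750 + 1024 + 515 + 3 * 2048 = 8433
```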

12.1.1. Setting default workspace RAM limits

The default workspace RAM limit and the RAM allocation request can be configured by passing the CHE_WORKSPACE_DEFAULT__MEMORY__LIMIT__MB and CHE_WORKSPACE_DEFAULT__MEMORY__REQUEST__MB parameters to a CodeReady Workspaces deployment.

For example, use the following configuration to limit the amount of RAM used by workspaces to 2048 MB and to request the allocation of 1024 MB of RAM:

oc set env dc/che CHE_WORKSPACE_DEFAULT__MEMORY__LIMIT__MB=2048 \

CHE_WORKSPACE_DEFAULT__MEMORY__REQUEST__MB=1024

$ oc set env dc/che CHE_WORKSPACE_DEFAULT__MEMORY__LIMIT__MB=2048 \

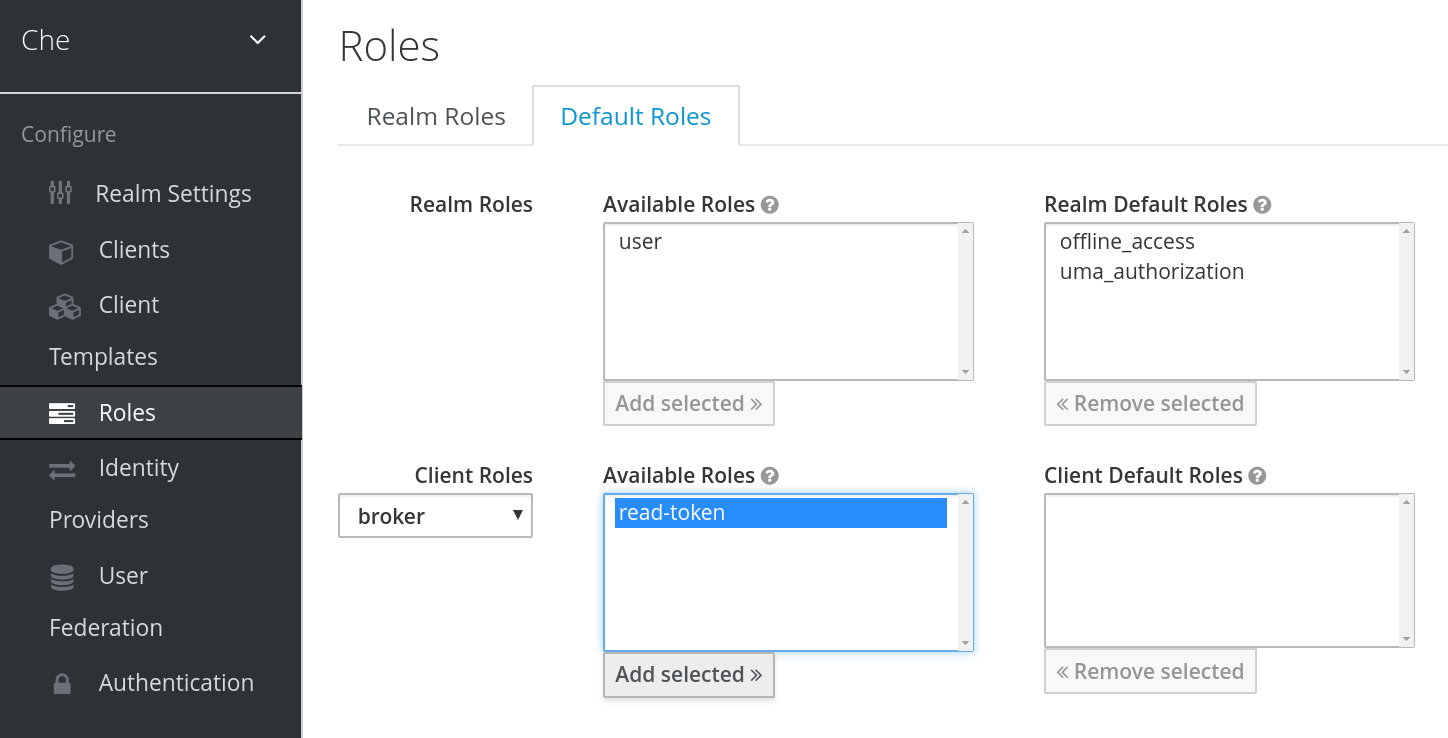

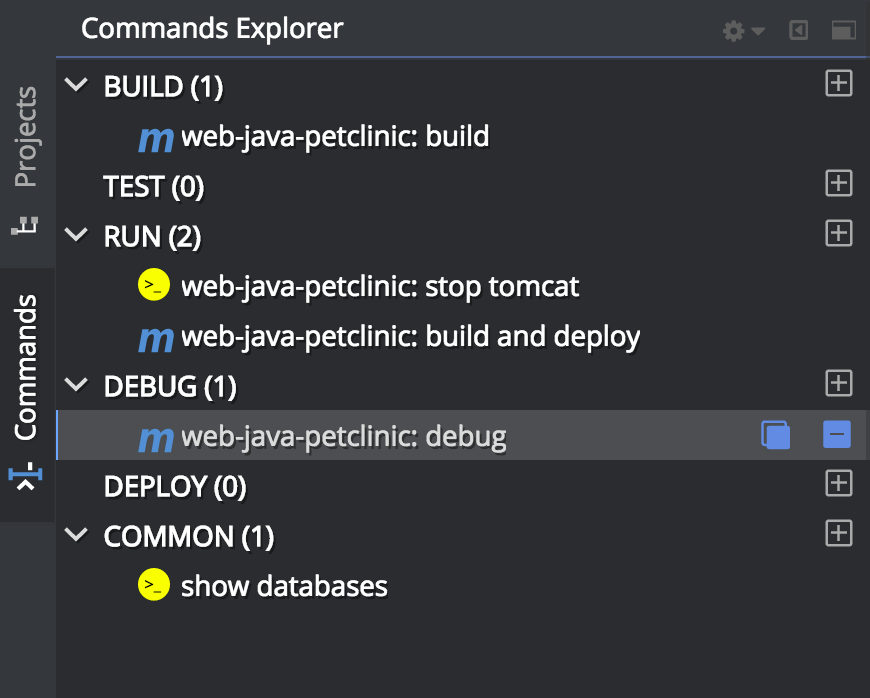

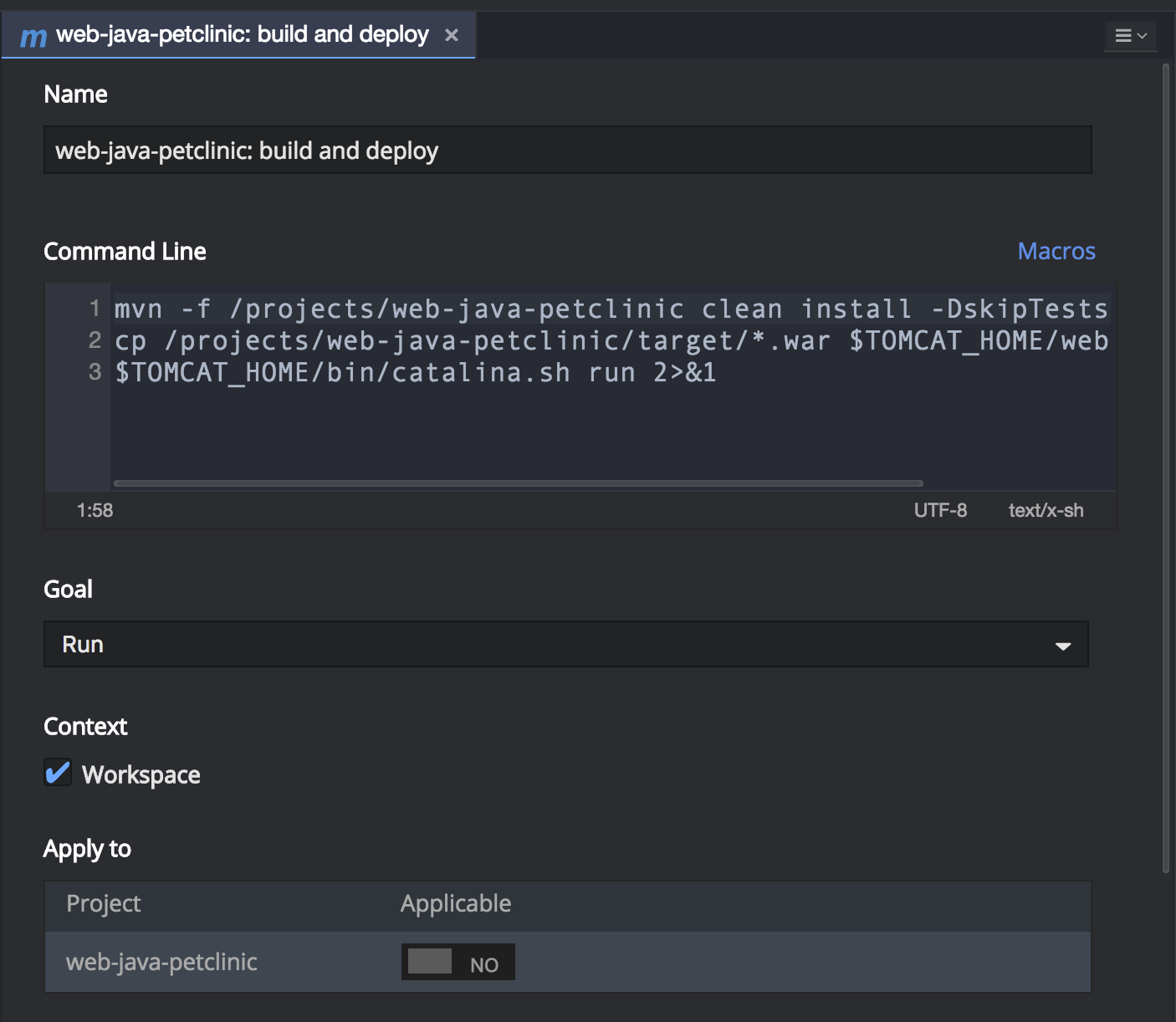

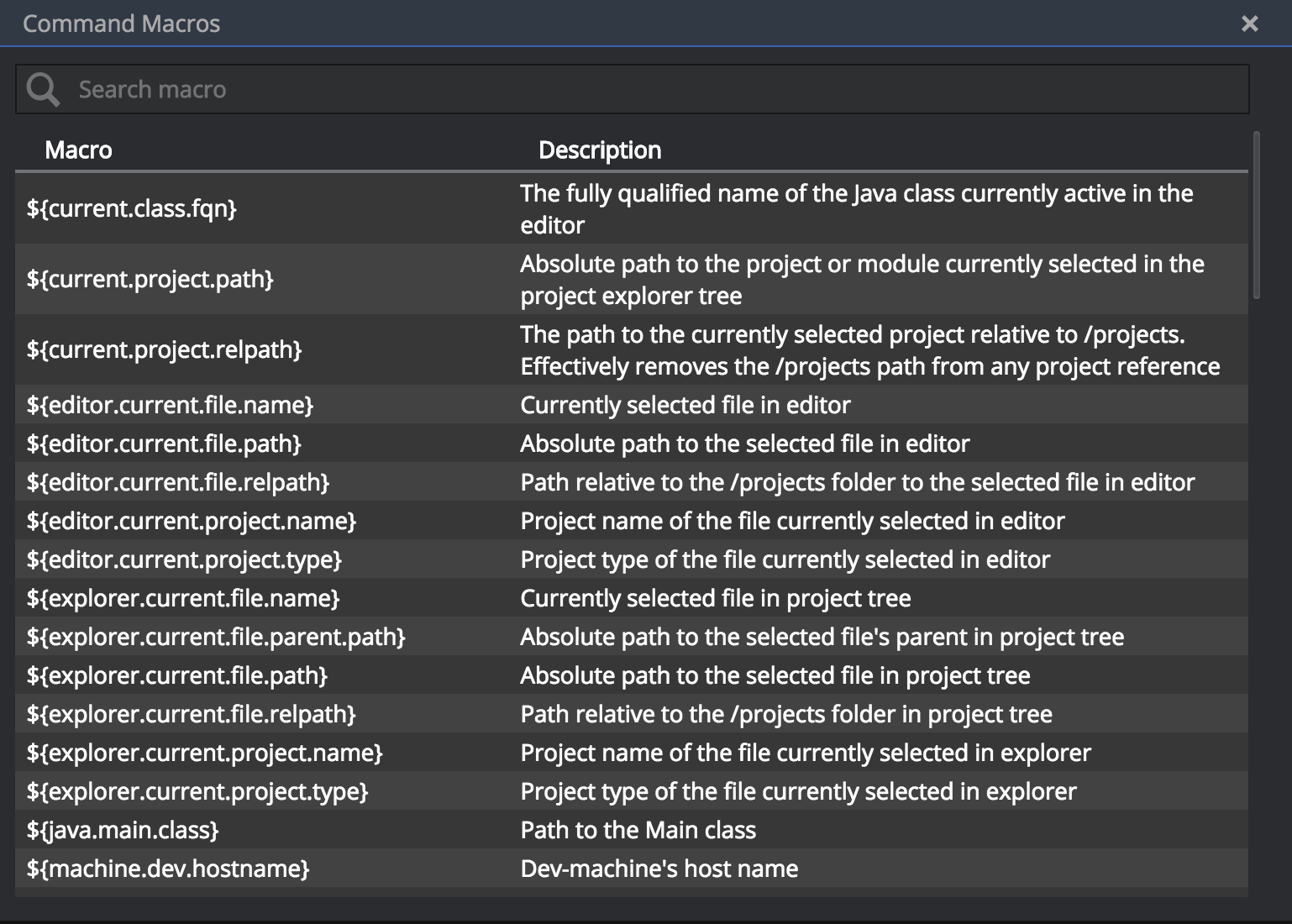

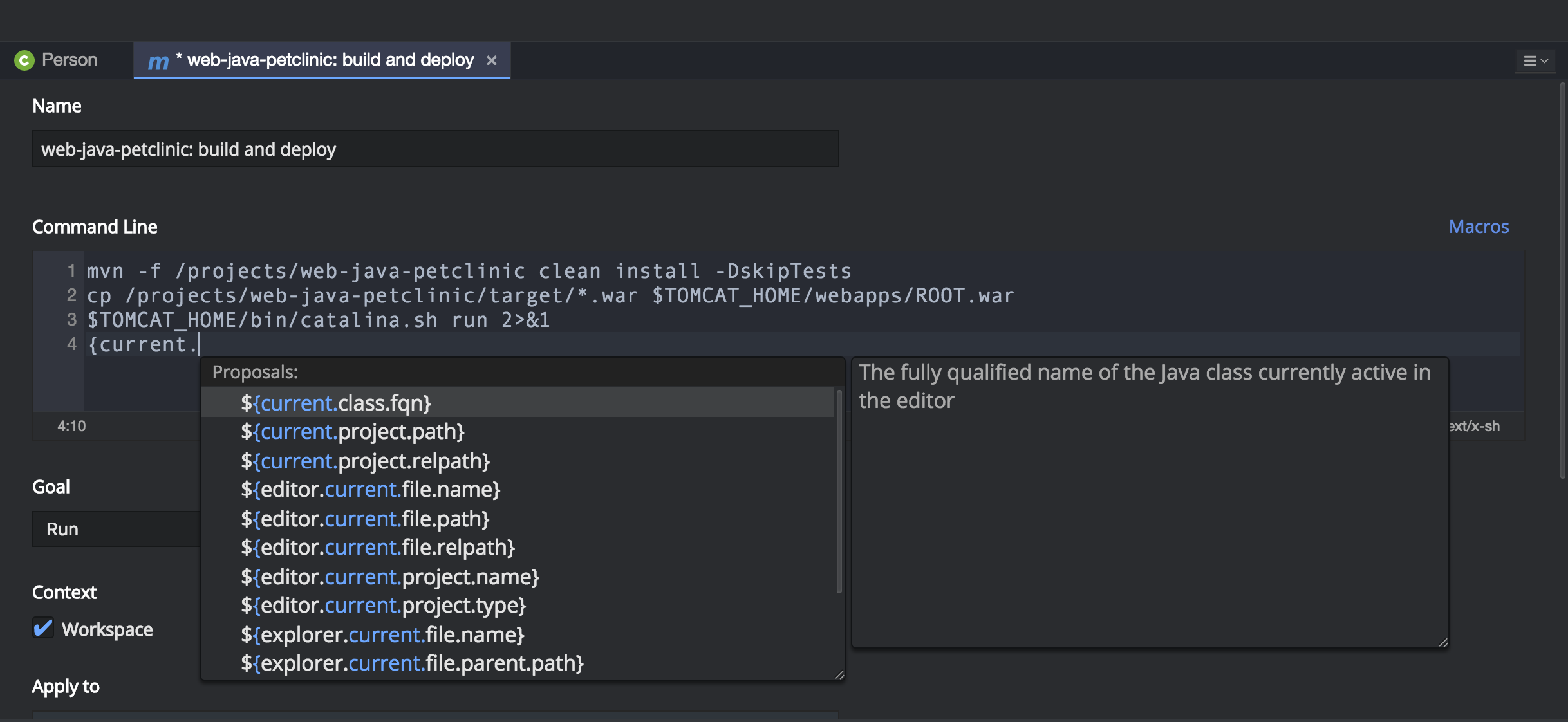

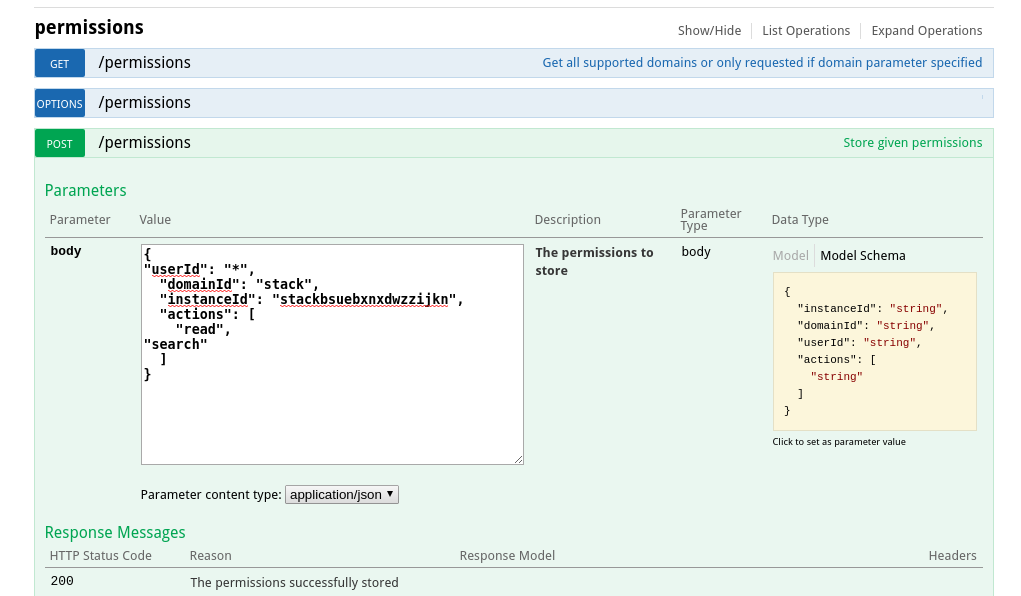

CHE_WORKSPACE_DEFAULT__MEMORY__REQUEST__MB=1024- The user can override the default values when creating a workspace.
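Kubernetes, on which OpenShift is built, rejects a container whose memory request is greater than its memory limit, so it can help to check the two values before applying them. The following minimal sketch wraps the oc set env command shown above in a hypothetical helper, set_workspace_ram_defaults, which assumes the deployment configuration is named che as in that example. Setting DRY_RUN=1 prints the command instead of running it.

```shell
#!/bin/sh
# Hypothetical helper: set the default workspace RAM limit and request on
# dc/che after checking that the request does not exceed the limit.
# With DRY_RUN set to a non-empty value, the oc command is printed, not run.
set_workspace_ram_defaults() {
  limit_mb=$1
  request_mb=$2
  if [ "$request_mb" -gt "$limit_mb" ]; then
    echo "request (${request_mb} MB) exceeds limit (${limit_mb} MB)" >&2
    return 1
  fi
  # ${DRY_RUN:+echo} expands to "echo" only when DRY_RUN is set and non-empty.
  ${DRY_RUN:+echo} oc set env dc/che \
    "CHE_WORKSPACE_DEFAULT__MEMORY__LIMIT__MB=${limit_mb}" \
    "CHE_WORKSPACE_DEFAULT__MEMORY__REQUEST__MB=${request_mb}"
}

# Print the command for the values used in the example above.
DRY_RUN=1 set_workspace_ram_defaults 2048 1024
```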