Administration Guide

Administering Red Hat CodeReady Workspaces 2.15

Abstract

Making open source more inclusive

Red Hat is committed to replacing problematic language in our code, documentation, and web properties. We are beginning with these four terms: master, slave, blacklist, and whitelist. Because of the enormity of this endeavor, these changes will be implemented gradually over several upcoming releases. For more details, see our CTO Chris Wright’s message.

Chapter 1. Architecture overview

CodeReady Workspaces needs a workspace engine to manage the lifecycle of the workspaces. Two workspace engines are available. The choice of a workspace engine defines the architecture.

- Section 1.1, “CodeReady Workspaces architecture with CodeReady Workspaces server”

CodeReady Workspaces server is the default workspace engine.

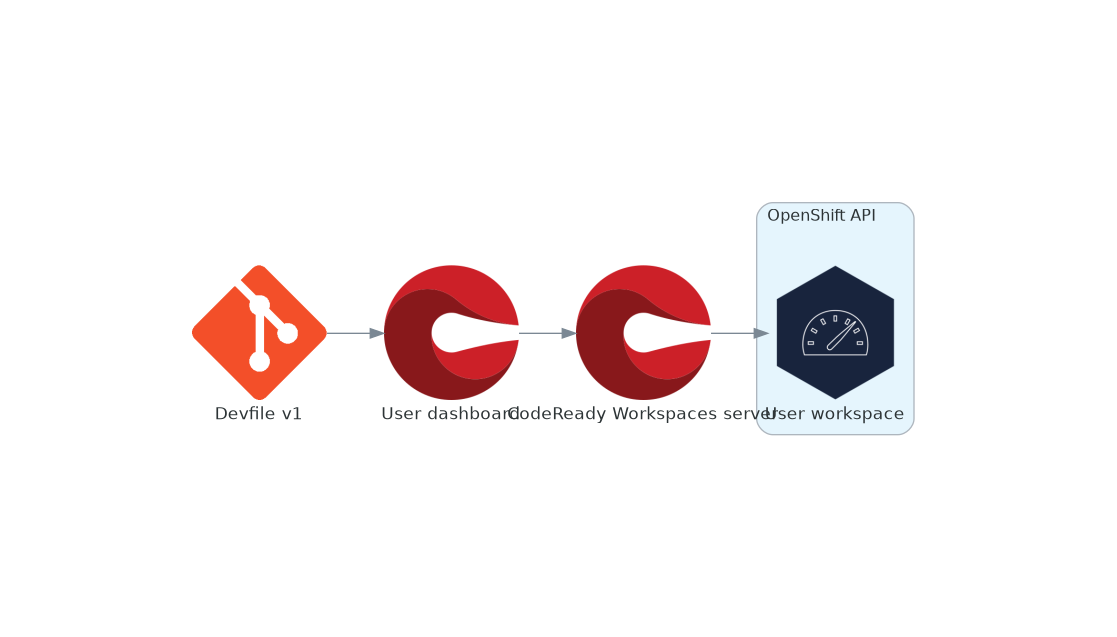

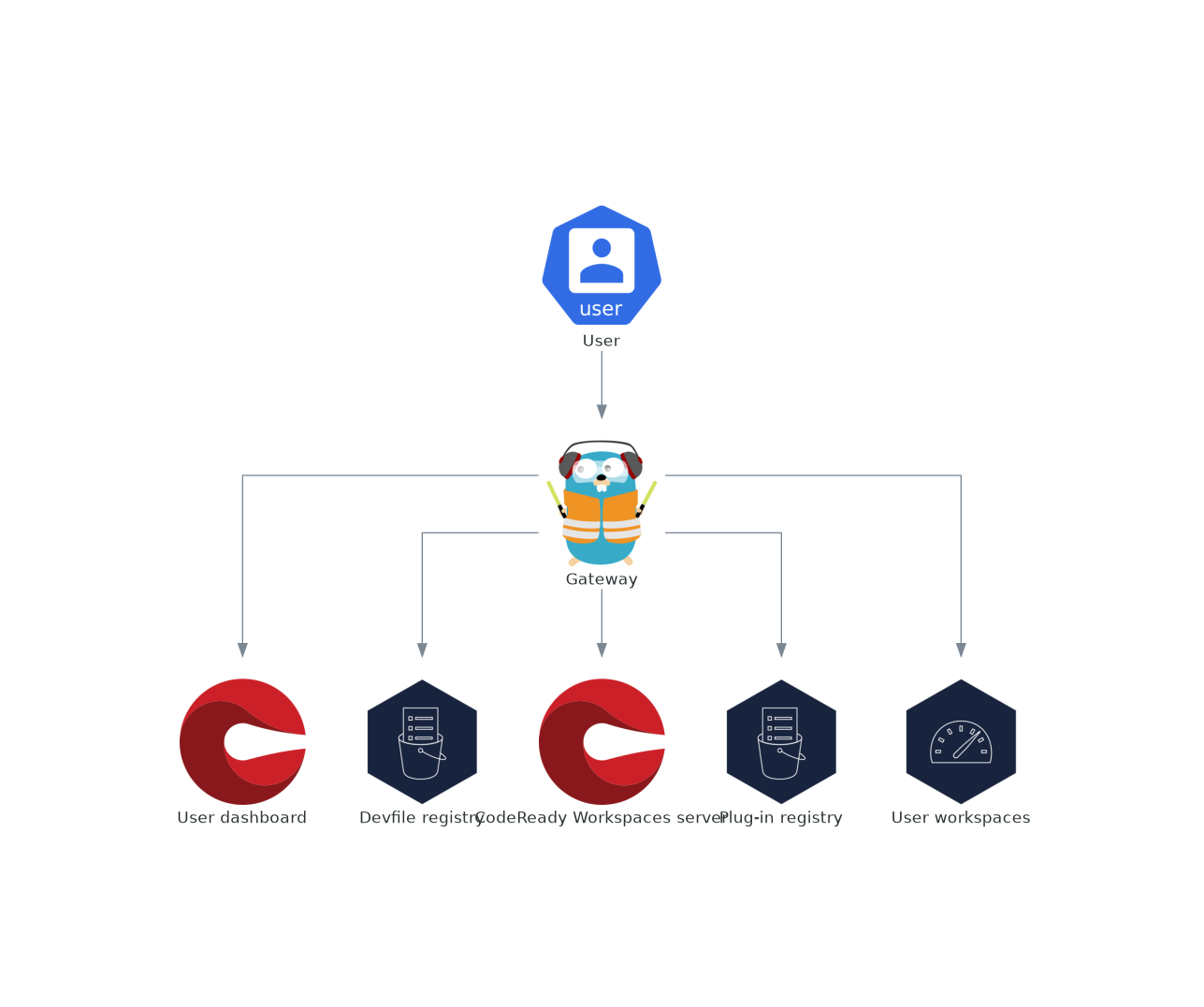

Figure 1.1. High-level CodeReady Workspaces architecture with the CodeReady Workspaces server engine

- Section 1.4, “CodeReady Workspaces architecture with Dev Workspace”

The Dev Workspace Operator is a new workspace engine.

Technology preview feature: Managing workspaces with the Dev Workspace engine is an experimental feature. Don’t use this workspace engine in production.

Known limitations

Workspaces are not secured. Whoever knows the URL of a workspace can have access to it and leak the user credentials.

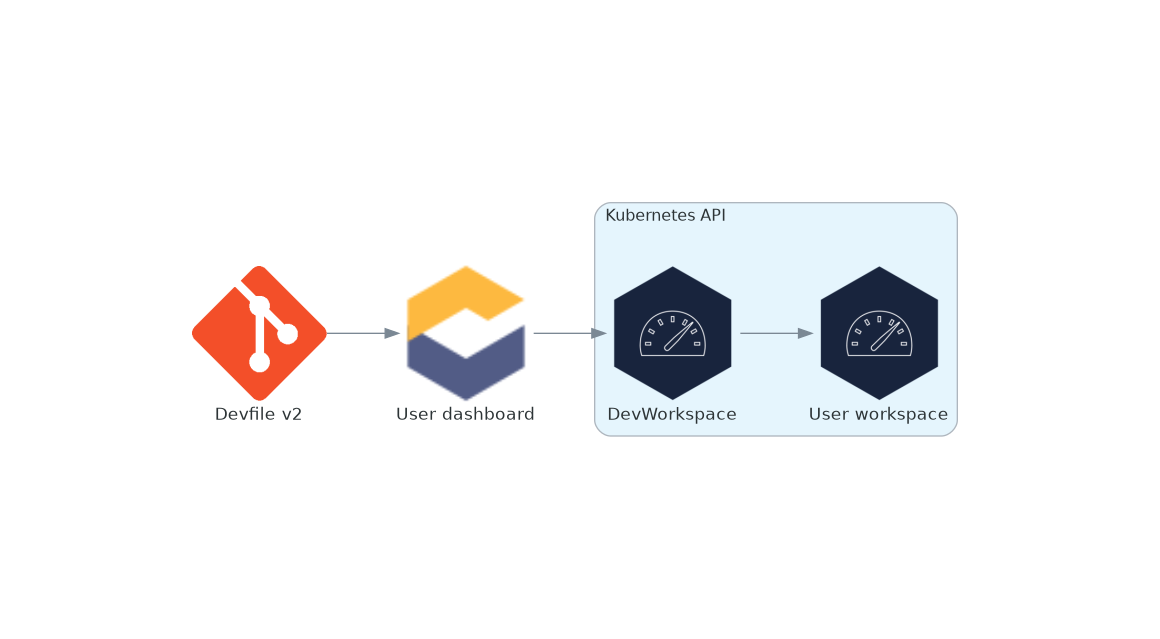

Figure 1.2. High-level CodeReady Workspaces architecture with the Dev Workspace operator

Additional resources

- Section 1.1, “CodeReady Workspaces architecture with CodeReady Workspaces server”

- Section 1.4, “CodeReady Workspaces architecture with Dev Workspace”

- https://access.redhat.com/documentation/en-us/red_hat_codeready_workspaces/2.15/html-single/installation_guide/index#enabling-dev-workspace-operator.adoc

- Dev Workspace Operator GitHub repository

1.1. CodeReady Workspaces architecture with CodeReady Workspaces server

CodeReady Workspaces server is the default workspace engine.

Figure 1.3. High-level CodeReady Workspaces architecture with the CodeReady Workspaces server engine

Red Hat CodeReady Workspaces components are:

- CodeReady Workspaces server

- An always-running service that manages user workspaces with the OpenShift API.

- User workspaces

- Container-based IDEs running on user requests.

1.2. Understanding CodeReady Workspaces server

This section describes the CodeReady Workspaces controller and the services that are a part of the controller.

1.2.1. CodeReady Workspaces server

The workspaces controller manages the container-based development environments: CodeReady Workspaces workspaces. To secure the development environments with authentication, the deployment is always multiuser and multitenant.

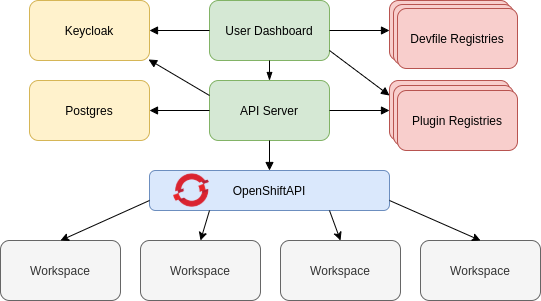

The following diagram shows the different services that are a part of the CodeReady Workspaces workspaces controller.

Figure 1.4. CodeReady Workspaces workspaces controller

Additional resources

1.2.2. CodeReady Workspaces server

The CodeReady Workspaces server is the central service of CodeReady Workspaces server-side components. It is a Java web service exposing an HTTP REST API to manage CodeReady Workspaces workspaces and users. It is the default workspace engine.

1.2.3. CodeReady Workspaces user dashboard

The user dashboard is the landing page of Red Hat CodeReady Workspaces. It is a React application. CodeReady Workspaces users navigate the user dashboard from their browsers to create, start, and manage CodeReady Workspaces workspaces.

1.2.4. CodeReady Workspaces devfile registry

The CodeReady Workspaces devfile registry is a service that provides a list of CodeReady Workspaces samples to create ready-to-use workspaces. This list of samples is used in the Dashboard → Create Workspace window. The devfile registry runs in a container and can be deployed wherever the user dashboard can connect.

1.2.5. CodeReady Workspaces plug-in registry

The CodeReady Workspaces plug-in registry is a service that provides the list of plug-ins and editors for CodeReady Workspaces workspaces. A devfile can reference only plug-ins that are published in a CodeReady Workspaces plug-in registry. The plug-in registry runs in a container and can be deployed wherever the CodeReady Workspaces server can connect to it.
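To illustrate how a devfile refers to registry entries, the following is a minimal sketch of a devfile (version 1, the format used with the CodeReady Workspaces server engine) that selects an editor and a plug-in by their registry identifiers. The identifiers shown are assumptions; use the `<publisher>/<name>/<version>` identifiers that your plug-in registry actually publishes.

```yaml
# Hypothetical devfile v1: both ids are assumed to be published in the plug-in registry
apiVersion: 1.0.0
metadata:
  name: registry-example
components:
  # Editor definition, resolved from the plug-in registry
  - type: cheEditor
    id: eclipse/che-theia/latest
  # Plug-in definition, resolved from the plug-in registry
  - type: chePlugin
    id: redhat/vscode-yaml/latest
```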

1.2.6. CodeReady Workspaces and PostgreSQL

The PostgreSQL database is a prerequisite for CodeReady Workspaces server and RH-SSO.

The CodeReady Workspaces administrator can choose to:

- Connect CodeReady Workspaces to an existing PostgreSQL instance.

- Let the CodeReady Workspaces deployment start a new dedicated PostgreSQL instance.

Services use the database for the following purposes:

- CodeReady Workspaces server

- Persist user configurations such as workspaces metadata and Git credentials.

- RH-SSO

- Persist user information.
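The choice between an existing and a dedicated PostgreSQL instance is made in the CheCluster custom resource. The following sketch assumes the `org.eclipse.che/v1` CheCluster API and its `spec.database` fields; the host name, database name, and Secret name are hypothetical, so verify the field names against the CRD installed in your cluster.

```yaml
apiVersion: org.eclipse.che/v1
kind: CheCluster
metadata:
  name: codeready-workspaces
spec:
  database:
    # Connect to an existing PostgreSQL instance instead of starting a dedicated one
    externalDb: true
    chePostgresHostName: postgres.example.com   # hypothetical host
    chePostgresPort: "5432"
    chePostgresDb: codeready                    # hypothetical database name
    # Hypothetical Secret that holds the database user and password
    chePostgresSecret: codeready-postgres-credentials
```

Leaving `externalDb` unset or `false` keeps the default behavior, in which the deployment starts its own PostgreSQL instance.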

1.2.7. CodeReady Workspaces and RH-SSO

RH-SSO is a prerequisite to configure CodeReady Workspaces. The CodeReady Workspaces administrator can choose to connect CodeReady Workspaces to an existing RH-SSO instance or let the CodeReady Workspaces deployment start a new dedicated RH-SSO instance.

The CodeReady Workspaces server uses RH-SSO as an OpenID Connect (OIDC) provider to authenticate CodeReady Workspaces users and secure access to CodeReady Workspaces resources.
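Connecting to an existing RH-SSO instance is likewise configured in the CheCluster custom resource. This is a sketch assuming the `spec.auth` fields of the `org.eclipse.che/v1` API; the URL, realm, and client ID are hypothetical values for an OIDC client that you would have to create in RH-SSO first.

```yaml
apiVersion: org.eclipse.che/v1
kind: CheCluster
metadata:
  name: codeready-workspaces
spec:
  auth:
    # Use an existing RH-SSO instance instead of starting a dedicated one
    externalIdentityProvider: true
    identityProviderURL: https://sso.example.com   # hypothetical RH-SSO URL
    identityProviderRealm: codeready               # hypothetical realm
    identityProviderClientId: codeready-public     # hypothetical OIDC client
```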

Additional resources

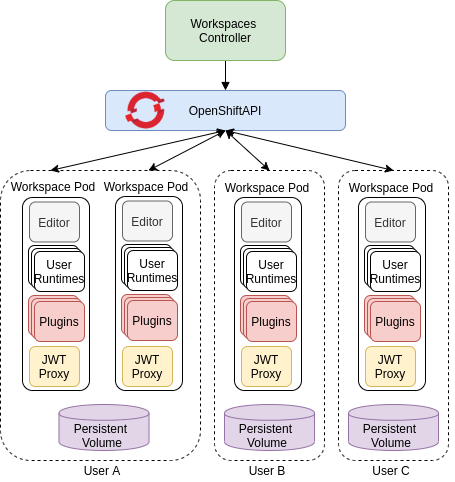

1.3. Understanding CodeReady Workspaces workspaces architecture

This section describes the architecture and components of CodeReady Workspaces workspaces.

1.3.1. CodeReady Workspaces workspaces architecture

A CodeReady Workspaces deployment on the cluster consists of the CodeReady Workspaces server component, a database for storing user profile and preferences, and several additional deployments hosting workspaces. The CodeReady Workspaces server orchestrates the creation of workspaces, which consist of a deployment containing the workspace containers and enabled plug-ins, plus the related components, such as:

- ConfigMaps

- services

- endpoints

- ingresses or routes

- secrets

- persistent volumes (PVs)

The CodeReady Workspaces workspace is a web application. It is composed of microservices running in containers that provide all the services of a modern IDE, such as an editor, language auto-completion, and debugging tools. The IDE services are deployed together with the development tools, which are packaged in containers, and with the user runtime applications, which are defined as OpenShift resources.

The source code of the projects of a CodeReady Workspaces workspace is persisted in an OpenShift PersistentVolume. The microservices that run in containers (IDE services and development tools) and the runtime applications all have read-write access to this shared directory.

The following diagram shows the detailed components of a CodeReady Workspaces workspace.

Figure 1.5. CodeReady Workspaces workspace components

In the diagram, there are four running workspaces: two belonging to User A, one to User B and one to User C.

Use the devfile format to specify the tools and runtime applications of a CodeReady Workspaces workspace.
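A minimal sketch of such a devfile (version 1) follows. It declares one project, which is persisted on the workspace persistent volume, and one tools container that mounts the project sources. The repository URL and image are hypothetical placeholders.

```yaml
apiVersion: 1.0.0
metadata:
  name: web-app-workspace
projects:
  # Cloned into the workspace persistent volume
  - name: web-app
    source:
      type: git
      location: https://github.com/example/web-app.git   # hypothetical repository
components:
  # Development tools container with read-write access to the project sources
  - type: dockerimage
    alias: tools
    image: quay.io/example/dev-tools:latest              # hypothetical image
    memoryLimit: 512Mi
    mountSources: true
```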

1.3.2. CodeReady Workspaces workspace components

This section describes the components of a CodeReady Workspaces workspace.

1.3.2.1. Che Editor plug-in

A Che Editor plug-in is a CodeReady Workspaces workspace plug-in. It defines the web application that is used as an editor in a workspace. The default CodeReady Workspaces workspace editor is Che-Theia. It is a web-based source-code editor similar to Visual Studio Code. It has a plug-in system that supports Visual Studio Code extensions.

| Source code | |
|---|---|
| Container image | |
| Endpoints | |

Additional resources

1.3.2.2. CodeReady Workspaces user runtimes

Use any non-terminating user container as a user runtime. An application that can be defined as a container image or as a set of OpenShift resources can be included in a CodeReady Workspaces workspace. This makes it easy to test applications in the CodeReady Workspaces workspace.

To test an application in the CodeReady Workspaces workspace, include in the workspace specification the application YAML definition that is used in stage or production. This follows the twelve-factor app principle of development/production parity.

Examples of user runtimes are Node.js, Spring Boot, MongoDB, and MySQL.
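For example, a devfile (version 1) can add a database as a non-terminating runtime container and reuse the OpenShift manifest of the application itself. In this sketch, the MongoDB image and the `deploy/app.yaml` path are hypothetical placeholders.

```yaml
components:
  # Runtime container: it must keep running for the lifetime of the workspace
  - type: dockerimage
    alias: mongo
    image: quay.io/example/mongodb:latest   # hypothetical MongoDB image
    memoryLimit: 512Mi
  # The same OpenShift manifest that is used in stage or production
  - type: openshift
    reference: deploy/app.yaml              # hypothetical path in the project
```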

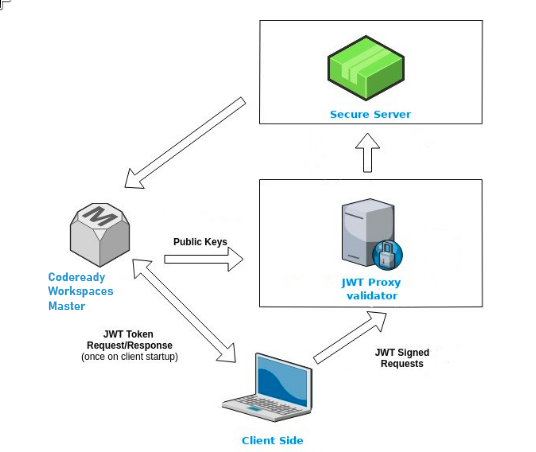

1.3.2.3. CodeReady Workspaces workspace JWT proxy

The JWT proxy is responsible for securing the communication of the CodeReady Workspaces workspace services.

An HTTP proxy is used to sign outgoing requests from a workspace service to the CodeReady Workspaces server and to authenticate incoming requests from the IDE client running in a browser.

| Source code | |
|---|---|
| Container image | |

1.3.2.4. CodeReady Workspaces plug-ins broker

Plug-in brokers are special services that, given a plug-in meta.yaml file:

- Gather all the information to provide a plug-in definition that the CodeReady Workspaces server knows.

- Perform preparation actions in the workspace project (download, unpack files, process configuration).

The main goal of the plug-in broker is to decouple the CodeReady Workspaces plug-ins definitions from the actual plug-ins that CodeReady Workspaces can support. With brokers, CodeReady Workspaces can support different plug-ins without updating the CodeReady Workspaces server.

The CodeReady Workspaces server starts the plug-in broker. The plug-in broker runs in the same OpenShift project as the workspace. It has access to the plug-ins and project persistent volumes.

A plug-ins broker is defined as a container image (for example, eclipse/che-plugin-broker). The plug-in type determines the type of the broker that is started. Two types of plug-ins are supported: Che Plugin and Che Editor.
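The plug-in type is declared in the meta.yaml file of the plug-in. The following is a hypothetical sketch of a meta.yaml for a plug-in of type Che Plugin, assuming the `apiVersion: v2` meta.yaml layout used by the plug-in registry; the publisher, name, image, and URLs are placeholders.

```yaml
apiVersion: v2
publisher: example                      # hypothetical publisher
name: my-tool                           # hypothetical plug-in name
version: latest
# The plug-in type determines which broker processes this plug-in
type: Che Plugin
displayName: My tool
title: Example sidecar plug-in
description: Hypothetical plug-in that runs a tool in a sidecar container
icon: https://www.eclipse.org/che/images/logo-eclipseche.svg
repository: https://github.com/example/my-tool
category: Other
spec:
  containers:
    # Sidecar container started next to the editor
    - name: my-tool
      image: quay.io/example/my-tool:latest
      memoryLimit: 256Mi
```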

| Source code | |
|---|---|
| Container image | |

1.3.3. CodeReady Workspaces workspace creation flow

The following is a CodeReady Workspaces workspace creation flow:

- A user starts a CodeReady Workspaces workspace defined by:

  - An editor (the default is Che-Theia)

  - A list of plug-ins (for example, Java and OpenShift tools)

  - A list of runtime applications

- CodeReady Workspaces server retrieves the editor and plug-in metadata from the plug-in registry.

- For every plug-in type, CodeReady Workspaces server starts a specific plug-in broker.

- The CodeReady Workspaces plug-ins broker transforms the plug-in metadata into a Che Plugin definition. It executes the following steps:

  - Downloads a plug-in and extracts its content.

  - Processes the plug-in meta.yaml file and sends it back to CodeReady Workspaces server in the format of a Che Plugin.

- CodeReady Workspaces server starts the editor and the plug-in sidecars.

- The editor loads the plug-ins from the plug-in persistent volume.

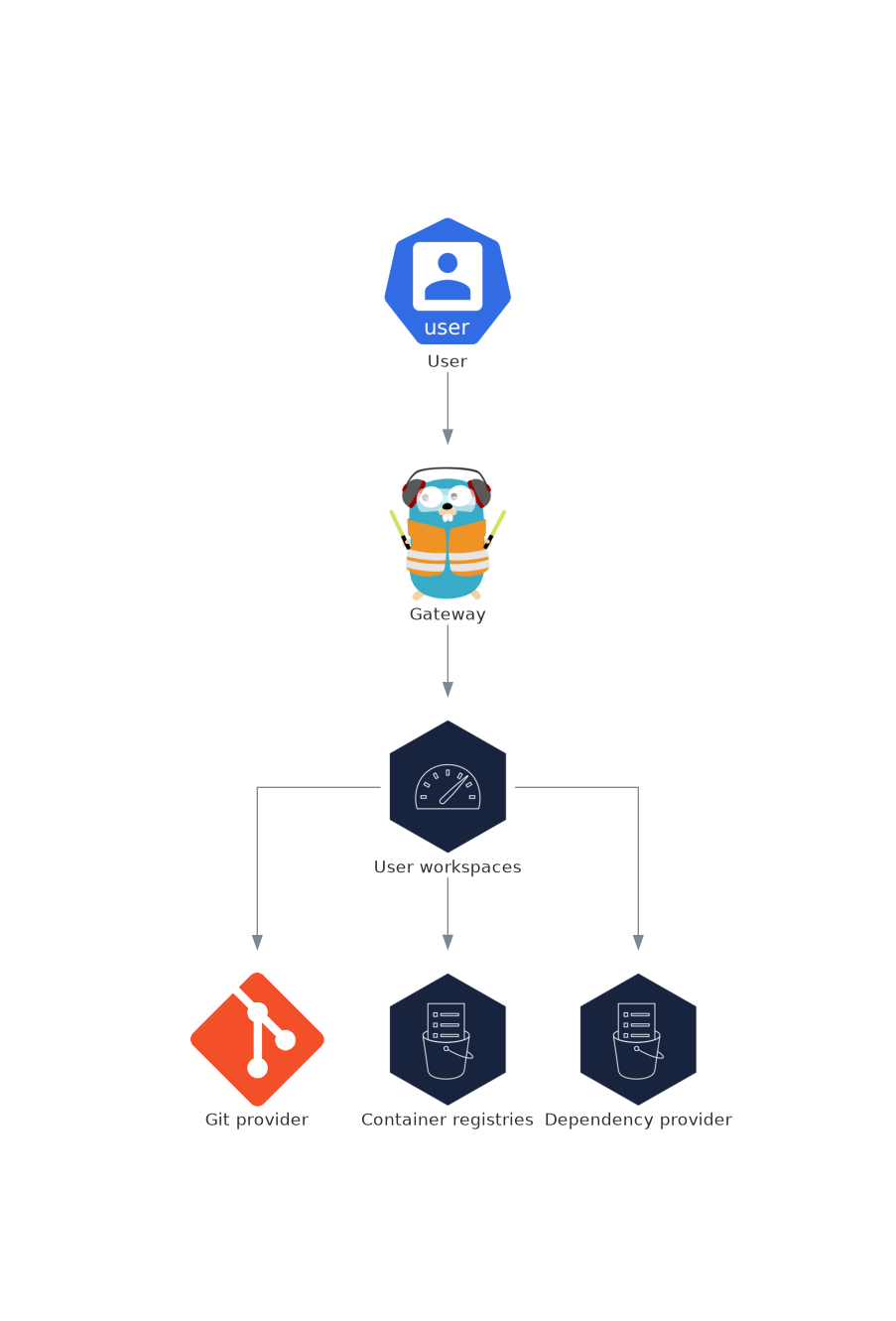

1.4. CodeReady Workspaces architecture with Dev Workspace

Managing workspaces with the Dev Workspace engine is an experimental feature. Don’t use this workspace engine in production.

Known limitations

Workspaces are not secured. Whoever knows the URL of a workspace can have access to it and leak the user credentials.

Figure 1.6. High-level CodeReady Workspaces architecture with the Dev Workspace operator

When CodeReady Workspaces is running with the Dev Workspace operator, it runs on three groups of components:

- CodeReady Workspaces server components

- Manage user projects and workspaces. The main component is the user dashboard, from which users control their workspaces.

- Dev Workspace operator

- Creates and controls the necessary OpenShift objects to run user workspaces, including Pods, Services, and PersistentVolumes.

- User workspaces

- Container-based development environments, the IDE included.

The role of these OpenShift features is central:

- Dev Workspace Custom Resources

- Valid OpenShift objects that represent the user workspaces and are manipulated by CodeReady Workspaces. They are the communication channel for the three groups of components.

- OpenShift role-based access control (RBAC)

- Controls access to all resources.
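Because Dev Workspace custom resources are ordinary OpenShift objects, access to them can be expressed with standard RBAC rules. The following Role is a sketch only: its name and the user project are hypothetical, and it does not necessarily match the permissions that CodeReady Workspaces grants.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: devworkspace-user            # hypothetical Role name
  namespace: user1-codeready         # hypothetical user project
rules:
  # Allow management of Dev Workspace custom resources in this project only
  - apiGroups: ["workspace.devfile.io"]
    resources: ["devworkspaces"]
    verbs: ["get", "list", "watch", "create", "update", "patch", "delete"]
```

Binding this Role to a user with a RoleBinding in the same project would let that user manage workspaces there and nowhere else.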

Additional resources

- Section 1.5, “CodeReady Workspaces server components”

- Section 1.5.2, “Dev Workspace operator”

- Section 1.6, “User workspaces”

- https://access.redhat.com/documentation/en-us/red_hat_codeready_workspaces/2.15/html-single/installation_guide/index#enabling-dev-workspace-operator.adoc

- Dev Workspace Operator repository

- Kubernetes documentation - Custom Resources

1.5. CodeReady Workspaces server components

Managing workspaces with the Dev Workspace engine is an experimental feature. Don’t use this workspace engine in production.

Known limitations

Workspaces are not secured. Whoever knows the URL of a workspace can have access to it and leak the user credentials.

The CodeReady Workspaces server components ensure multi-tenancy and workspaces management.

Figure 1.7. CodeReady Workspaces server components interacting with the Dev Workspace operator

Additional resources

1.5.1. CodeReady Workspaces operator

The CodeReady Workspaces operator ensures full lifecycle management of the CodeReady Workspaces server components. It introduces:

- CheCluster custom resource definition (CRD)

- Defines the CheCluster OpenShift object.

- CodeReady Workspaces controller

- Creates and controls the necessary OpenShift objects to run a CodeReady Workspaces instance, such as pods, services, and persistent volumes.

- CheCluster custom resource (CR)

- On a cluster with the CodeReady Workspaces operator, it is possible to create a CheCluster custom resource (CR). The CodeReady Workspaces operator ensures the full lifecycle management of the CodeReady Workspaces server components on this CodeReady Workspaces instance:

Additional resources

1.5.2. Dev Workspace operator

Managing workspaces with the Dev Workspace engine is an experimental feature. Don’t use this workspace engine in production.

Known limitations

Workspaces are not secured. Whoever knows the URL of a workspace can have access to it and leak the user credentials.

The Dev Workspace operator extends OpenShift to provide Dev Workspace support. It introduces:

- Dev Workspace custom resource definition

- Defines the Dev Workspace OpenShift object from the Devfile v2 specification.

- Dev Workspace controller

- Creates and controls the necessary OpenShift objects to run a Dev Workspace, such as pods, services, and persistent volumes.

- Dev Workspace custom resource

- On a cluster with the Dev Workspace operator, it is possible to create Dev Workspace custom resources (CR). A Dev Workspace CR is an OpenShift representation of a devfile. It defines a user workspace in an OpenShift cluster.
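As a sketch of what such a resource looks like, the following Dev Workspace CR embeds a small devfile in `spec.template` and asks the controller to start it. It assumes the `workspace.devfile.io/v1alpha2` API; the names, project, repository, and image are hypothetical.

```yaml
apiVersion: workspace.devfile.io/v1alpha2
kind: DevWorkspace
metadata:
  name: web-app                        # hypothetical workspace name
  namespace: user1-codeready           # hypothetical user project
spec:
  # When true, the Dev Workspace controller creates the pods, services, and volumes
  started: true
  template:
    projects:
      - name: web-app
        git:
          remotes:
            origin: https://github.com/example/web-app.git
    components:
      - name: tools
        container:
          image: quay.io/example/dev-tools:latest
          memoryLimit: 512Mi
          mountSources: true
```

Setting `started: false` on the same resource stops the workspace while keeping its definition.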

1.5.3. Gateway

The CodeReady Workspaces gateway has the following roles:

- Routing requests. It uses Traefik.

- Authenticating users with OpenID Connect (OIDC). It uses OpenShift OAuth2 proxy.

- Applying OpenShift Role based access control (RBAC) policies to control access to any CodeReady Workspaces resource. It uses `kube-rbac-proxy`.

The CodeReady Workspaces operator manages it as the che-gateway Deployment.

It controls access to:

Figure 1.8. CodeReady Workspaces gateway interactions with other components

Additional resources

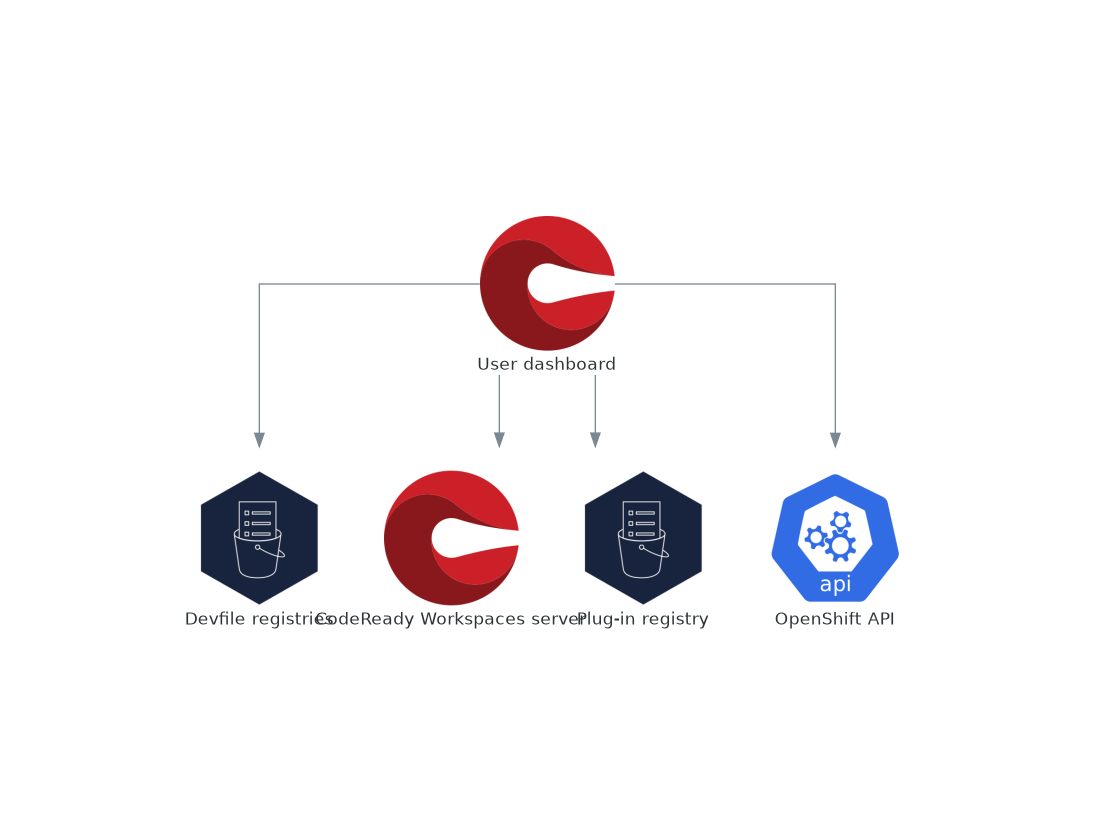

1.5.4. User dashboard

The user dashboard is the landing page of Red Hat CodeReady Workspaces. CodeReady Workspaces end users browse the user dashboard to access and manage their workspaces. It is a React application. The CodeReady Workspaces deployment starts it in the codeready-dashboard Deployment.

It needs access to:

Figure 1.9. User dashboard interactions with other components

When the user requests the user dashboard to start a workspace, the user dashboard executes this sequence of actions:

- Collects the devfile from the Section 1.5.5, “Devfile registries”, when the user is Creating a workspace from a code sample.

- Sends the repository URL to Section 1.5.6, “CodeReady Workspaces server” and expects a devfile in return, when the user is Creating a workspace from remote devfile.

- Reads the devfile describing the workspace.

- Collects the additional metadata from the Section 1.5.8, “Plug-in registry”.

- Converts the information into a Dev Workspace Custom Resource.

- Creates the Dev Workspace Custom Resource in the user project using the OpenShift API.

- Watches the Dev Workspace Custom Resource status.

- Redirects the user to the running workspace IDE.

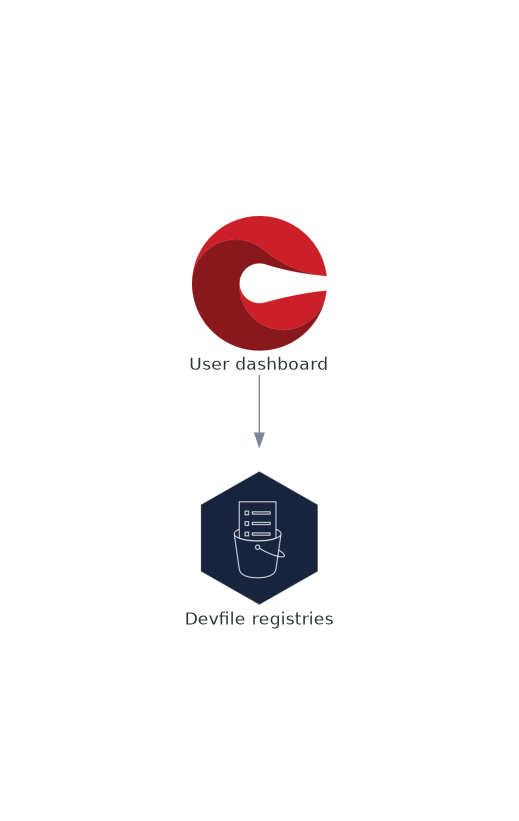

1.5.5. Devfile registries

The CodeReady Workspaces devfile registries are services providing a list of sample devfiles to create ready-to-use workspaces. The user dashboard (Section 1.5.4, “User dashboard”) displays the samples list on the Dashboard → Create Workspace page. Each sample includes a Devfile v2. The CodeReady Workspaces deployment starts one devfile registry instance in the devfile-registry deployment.

Figure 1.10. Devfile registries interactions with other components

Additional resources

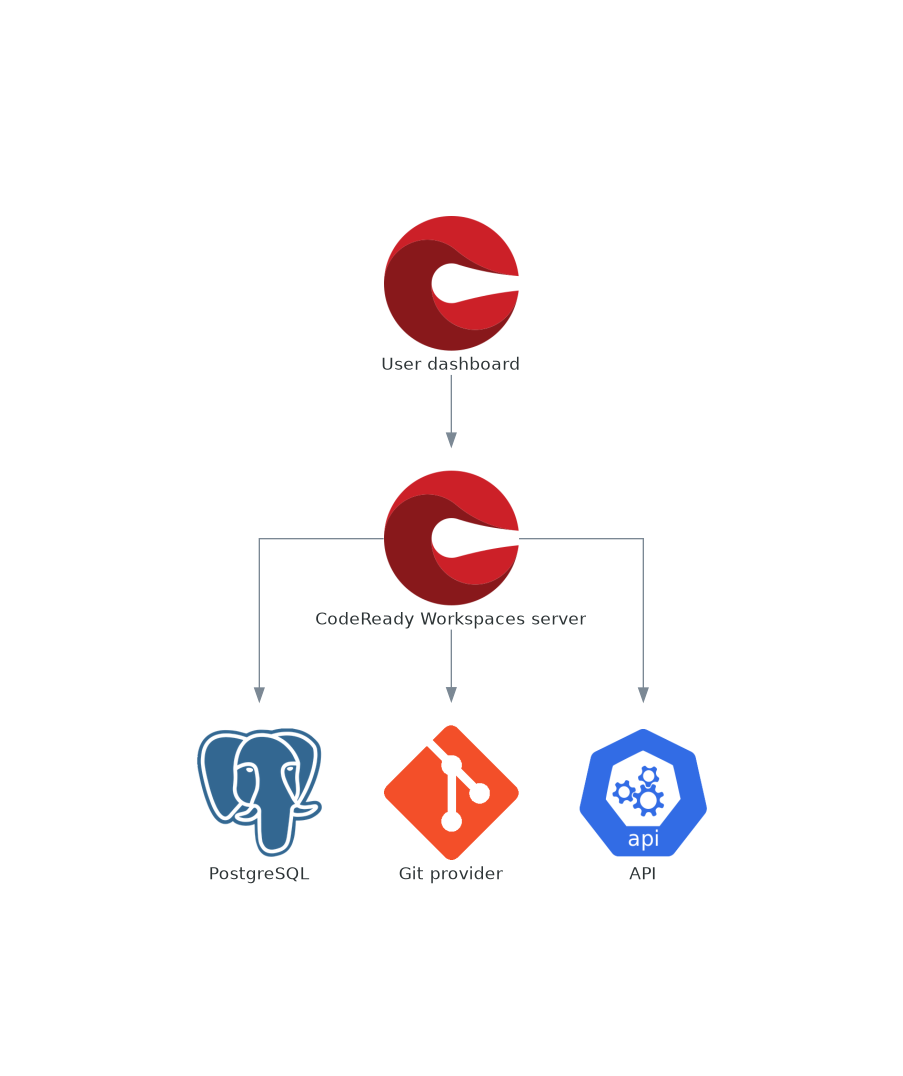

1.5.6. CodeReady Workspaces server

The CodeReady Workspaces server main functions are:

- Creating user namespaces.

- Provisioning user namespaces with required secrets and config maps.

- Integrating with Git service providers, to fetch and validate devfiles and to handle authentication.

The CodeReady Workspaces server is a Java web service exposing an HTTP REST API and needs access to:

- Section 1.5.7, “PostgreSQL”

- Git service providers

- OpenShift API

Figure 1.11. CodeReady Workspaces server interactions with other components

1.5.7. PostgreSQL

CodeReady Workspaces server uses the PostgreSQL database to persist user configurations such as workspaces metadata.

The CodeReady Workspaces deployment starts a dedicated PostgreSQL instance in the postgres Deployment. You can use an external database instead.

Figure 1.12. PostgreSQL interactions with other components

1.5.8. Plug-in registry

Each CodeReady Workspaces workspace starts with a specific editor and set of associated extensions. The CodeReady Workspaces plug-in registry provides the list of available editors and editor extensions. A Devfile v2 describes each editor or extension.

The user dashboard (Section 1.5.4, “User dashboard”) reads the content of the registry.

Figure 1.13. Plug-in registries interactions with other components

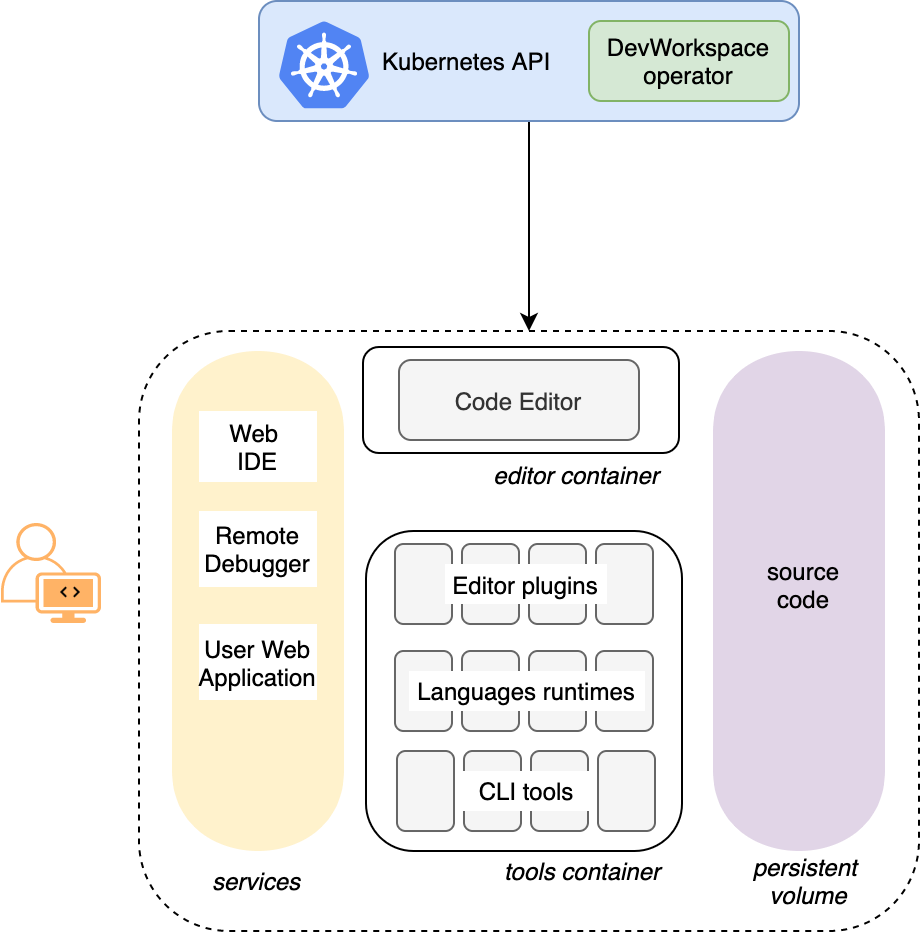

1.6. User workspaces

Figure 1.14. User workspaces interactions with other components

User workspaces are web IDEs running in containers.

A User workspace is a web application. It consists of microservices running in containers providing all the services of a modern IDE running in your browser:

- Editor

- Language auto-completion

- Language server

- Debugging tools

- Plug-ins

- Application runtimes

A workspace is one OpenShift Deployment containing the workspace containers and enabled plug-ins, plus related OpenShift components:

- Containers

- ConfigMaps

- Services

- Endpoints

- Ingresses or Routes

- Secrets

- Persistent Volumes (PVs)

A CodeReady Workspaces workspace contains the source code of the projects, persisted in an OpenShift Persistent Volume (PV). Microservices have read-write access to this shared directory.

Use the devfile v2 format to specify the tools and runtime applications of a CodeReady Workspaces workspace.
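A minimal devfile v2 sketch follows, assuming that schema version 2.1.0 is accepted by your installation. It defines one project, one tools container, and one command that runs in that container; the repository, image, and `npm install` command are hypothetical.

```yaml
schemaVersion: 2.1.0
metadata:
  name: web-app
projects:
  - name: web-app
    git:
      remotes:
        origin: https://github.com/example/web-app.git   # hypothetical repository
components:
  # Tools container; the project sources are mounted into it
  - name: tools
    container:
      image: quay.io/example/dev-tools:latest           # hypothetical image
      memoryLimit: 1Gi
      mountSources: true
commands:
  # Runs in the tools container, in the project directory
  - id: install
    exec:
      component: tools
      commandLine: npm install
      workingDir: ${PROJECT_SOURCE}
```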

The following diagram shows one running CodeReady Workspaces workspace and its components.

Figure 1.15. CodeReady Workspaces workspace components

In the diagram, there is one running workspace.

Chapter 2. Calculating CodeReady Workspaces resource requirements

Additional resources

This section describes how to calculate resources, such as memory and CPU, required to run Red Hat CodeReady Workspaces.

Both the CodeReady Workspaces central controller and user workspaces consist of a set of containers. Those containers contribute to the resource consumption in terms of CPU and RAM limits and requests.

2.1. Controller requirements

The Workspace Controller consists of a set of five services running in five distinct containers. The following table presents the default resource requirements of each of these services.

| Pod | Container name | Default memory limit | Default memory request |
|---|---|---|---|
| CodeReady Workspaces Server and Dashboard | che | 1 GiB | 512 MiB |
| PostgreSQL | | 1 GiB | 512 MiB |
| RH-SSO | | 2 GiB | 512 MiB |
| Devfile registry | | 256 MiB | 16 MiB |
| Plug-in registry | | 256 MiB | 16 MiB |

These default values are sufficient when the CodeReady Workspaces Workspace Controller manages a small number of CodeReady Workspaces workspaces. For larger deployments, increase the memory limit. See the https://access.redhat.com/documentation/en-us/red_hat_codeready_workspaces/2.15/html-single/installation_guide/index#advanced-configuration-options-for-the-che-server-component.adoc article for instructions on how to override the default requests and limits. For example, the Eclipse Che instance hosted by Red Hat at https://workspaces.openshift.com uses 1 GB of memory.
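As an illustration, the memory of the CodeReady Workspaces server container could be raised in the CheCluster custom resource. This sketch assumes the `serverMemoryRequest` and `serverMemoryLimit` fields of the `org.eclipse.che/v1` API; the 2Gi limit is an arbitrary example, not a sizing recommendation.

```yaml
apiVersion: org.eclipse.che/v1
kind: CheCluster
metadata:
  name: codeready-workspaces
spec:
  server:
    # Raise the CodeReady Workspaces server limits for a larger deployment
    serverMemoryRequest: 512Mi
    serverMemoryLimit: 2Gi
```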

Additional resources

2.2. Workspaces requirements

This section describes how to calculate the resources required for a workspace. It is the sum of the resources required for each component of this workspace.

These examples demonstrate the necessity of a proper calculation:

- A workspace with ten active plug-ins requires more resources than the same workspace with fewer plug-ins.

- A standard Java workspace requires more resources than a standard Node.js workspace because running builds, tests, and application debugging requires more resources.

Procedure

- Identify the workspace components explicitly specified in the components section of the devfile. See https://access.redhat.com/documentation/en-us/red_hat_codeready_workspaces/2.15/html-single/end-user_guide/index#authoring-devfiles-version-2.adoc.

- Identify the implicit workspace components:

  - CodeReady Workspaces implicitly loads the default cheEditor, che-theia, and the chePlugin that allows commands execution, che-machine-exec-plugin. To change the default editor, add a cheEditor component section in the devfile.

  - The JWT Proxy component is responsible for the authentication and authorization of the external communications of the workspace components.

- Calculate the requirements for each component:

Default values:

The following table displays the default requirements for all workspace components, and the corresponding CodeReady Workspaces server properties. Use the CodeReady Workspaces server properties to modify the defaults cluster-wide.

Table 2.2. Default requirements of workspace components by type

| Component types | CodeReady Workspaces server property | Default memory limit | Default memory request |
|---|---|---|---|
| chePlugin | che.workspace.sidecar.default_memory_limit_mb | 128 MiB | 64 MiB |
| cheEditor | che.workspace.sidecar.default_memory_limit_mb | 128 MiB | 64 MiB |
| kubernetes, openshift, dockerimage | che.workspace.default_memory_limit_mb, che.workspace.default_memory_request_mb | 1 GiB | 200 MiB |
| JWT Proxy | che.server.secure_exposer.jwtproxy.memory_limit, che.server.secure_exposer.jwtproxy.memory_request | 128 MiB | 15 MiB |
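To change these defaults cluster-wide, the server properties can be passed through the CheCluster custom resource. This sketch assumes the `spec.server.customCheProperties` field and the usual property-to-environment-variable convention (uppercase, `.` becomes `_`, and `_` becomes `__`); the values, in MB, are arbitrary examples.

```yaml
spec:
  server:
    customCheProperties:
      # che.workspace.sidecar.default_memory_limit_mb (chePlugin and cheEditor sidecars)
      CHE_WORKSPACE_SIDECAR_DEFAULT__MEMORY__LIMIT__MB: "256"
      # che.workspace.default_memory_limit_mb (kubernetes, openshift, dockerimage)
      CHE_WORKSPACE_DEFAULT__MEMORY__LIMIT__MB: "2048"
      # che.workspace.default_memory_request_mb
      CHE_WORKSPACE_DEFAULT__MEMORY__REQUEST__MB: "512"
```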

Custom requirements for chePlugins and cheEditors components:

Custom memory limit and request:

Define the memoryLimit and memoryRequest attributes of the containers section of the meta.yaml file to configure the memory limit of the chePlugins or cheEditors components. CodeReady Workspaces automatically sets the memory request to match the memory limit if it is not specified explicitly.

Example 2.1. The chePlugin che-incubator/typescript/latest meta.yaml spec section:

This results in a container with the following memory limit and request:

| Memory limit | Memory request |
|---|---|
| 512 MiB | 256 MiB |
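The following hypothetical spec section shows the shape of such a configuration. It is not the actual content of the che-incubator/typescript/latest meta.yaml: the container name and image are placeholders, and the values are chosen to produce the 512 MiB limit and 256 MiB request listed above.

```yaml
spec:
  containers:
    - name: typescript-sidecar                          # hypothetical container name
      image: quay.io/example/typescript-sidecar:latest  # hypothetical image
      memoryLimit: 512Mi
      # If memoryRequest is omitted, the request is set equal to the limit
      memoryRequest: 256Mi
```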

Note: For IBM Power (ppc64le), the memory limit for some plug-ins has been increased by up to 1.5 GB to allow pods sufficient RAM to run. For example, on IBM Power (ppc64le), the Theia editor pod requires 2 GB, and the OpenShift connector pod requires 2.5 GB. For AMD64 and Intel 64 (x86_64) and IBM Z (s390x), memory requirements remain lower at 512 MB and 1500 MB respectively. However, some devfiles may still set the lower limits that are valid for AMD64 and Intel 64 (x86_64) and IBM Z (s390x). To work around this, edit the devfiles of workspaces that are crashing to increase the default memoryLimit by at least 1 to 1.5 GB.

Note: How to find the meta.yaml file of chePlugin

Community plug-ins are available in the CodeReady Workspaces plug-ins registry repository in the folder v3/plugins/${organization}/${name}/${version}/.

For non-community or customized plug-ins, the meta.yaml files are available on the local OpenShift cluster at ${pluginRegistryEndpoint}/v3/plugins/${organization}/${name}/${version}/meta.yaml.

Custom CPU limit and request:

CodeReady Workspaces does not set CPU limits and requests by default. However, it is possible to configure CPU limits for the chePlugin and cheEditor types in the meta.yaml file or in the devfile in the same way as it is done for memory limits.

Example 2.2. The chePlugin che-incubator/typescript/latest meta.yaml spec section:

It results in a container with the following CPU limit and request:

| CPU limit | CPU request |
|---|---|
| 2 cores | 0.5 cores |
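A hypothetical spec section with CPU values follows. It assumes the attributes are named cpuLimit and cpuRequest by analogy with memoryLimit and memoryRequest; the container name and image are placeholders, and the values match the 2 cores and 0.5 cores listed above.

```yaml
spec:
  containers:
    - name: typescript-sidecar                          # hypothetical container name
      image: quay.io/example/typescript-sidecar:latest  # hypothetical image
      # CPU values in cores; CodeReady Workspaces sets none unless configured
      cpuLimit: 2
      cpuRequest: 0.5
```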

To set CPU limits and requests globally, use the following dedicated environment variables:

|

|

|

|

|

|

Note that the LimitRange object of the OpenShift project may specify defaults for CPU limits and requests set by cluster administrators. To prevent start errors due to resource overruns, limits at the application or workspace level must comply with those settings.
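For reference, a LimitRange of the kind a cluster administrator might define is sketched below. The name, project, and values are hypothetical; only the structure follows the standard OpenShift (Kubernetes) LimitRange API.

```yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: workspace-limits          # hypothetical name
  namespace: user1-codeready      # hypothetical user project
spec:
  limits:
    - type: Container
      # Limits applied to containers that do not set their own limits
      default:
        cpu: "1"
        memory: 1Gi
      # Requests applied to containers that do not set their own requests
      defaultRequest:
        cpu: 250m
        memory: 256Mi
      # Containers that ask for more than this cannot be created
      max:
        cpu: "2"
        memory: 4Gi
```

With this LimitRange in place, a plug-in whose meta.yaml sets a CPU limit of 3 cores would fail to start, because 3 cores exceed the 2-core maximum.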

Custom requirements for dockerimage components:

Define the memoryLimit and memoryRequest attributes of the devfile to configure the memory limit of a dockerimage container. CodeReady Workspaces automatically sets the memory request to match the memory limit if it is not specified explicitly.

```yaml
- alias: maven
  type: dockerimage
  image: eclipse/maven-jdk8:latest
  memoryLimit: 1536M
```

Custom requirements for kubernetes or openshift components:

The referenced manifest may define the memory requirements and limits.
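For example, the manifest referenced by an openshift component could set the memory values in the standard `resources` field of each container. The following Deployment is a hypothetical sketch; its name and image are placeholders.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-app                                  # hypothetical name
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web-app
  template:
    metadata:
      labels:
        app: web-app
    spec:
      containers:
        - name: web-app
          image: quay.io/example/web-app:latest  # hypothetical image
          # These values count toward the workspace requirements
          resources:
            requests:
              memory: 256Mi
            limits:
              memory: 512Mi
```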

- Add all previously calculated requirements.

Additional resources

2.3. A workspace example

This section describes a CodeReady Workspaces workspace example.

The following devfile defines the CodeReady Workspaces workspace:

This table provides the memory requirements for each workspace component:

| Pod | Container name | Default memory limit | Default memory request |
|---|---|---|---|
| Workspace | | 512 MiB | 512 MiB |
| Workspace | | 128 MiB | 32 MiB |
| Workspace | | 512 MiB | 512 MiB |
| Workspace | | 1 GiB | 512 MiB |
| JWT Proxy | verifier | 128 MiB | 128 MiB |
| Total | | 2.25 GiB | 1.38 GiB |

- The theia-ide and machine-exec components are implicitly added to the workspace, even when not included in the devfile.

- The resources required by machine-exec are the default for chePlugin.

- The resources for theia-ide are specifically set in the cheEditor meta.yaml to 512 MiB as memoryLimit.

- The TypeScript Visual Studio Code extension has also overridden the default memory limits. In its meta.yaml file, the limits are explicitly specified to 512 MiB.

- CodeReady Workspaces applies the defaults for the dockerimage component type: a memory limit of 1 GiB and a memory request of 512 MiB.

- The JWT container requires 128 MiB of memory.

Adding all together results in 1.38 GiB of memory requests with a 2.25 GiB limit.

Additional resources

- Chapter 1, Architecture overview

- https://access.redhat.com/documentation/en-us/red_hat_codeready_workspaces/2.15/html-single/installation_guide/index#configuring-the-che-installation.adoc

- https://access.redhat.com/documentation/en-us/red_hat_codeready_workspaces/2.15/html-single/installation_guide/index#advanced-configuration-options-for-the-che-server-component.adoc

- https://access.redhat.com/documentation/en-us/red_hat_codeready_workspaces/2.15/html-single/end-user_guide/index#authoring-devfiles-version-2.adoc

- Section 12.1, “Authenticating users”

- CodeReady Workspaces plug-ins registry repository

Chapter 3. Customizing the registries

This chapter describes how to build and run custom registries for CodeReady Workspaces.

3.1. Understanding the CodeReady Workspaces registries

CodeReady Workspaces uses two registries: the plug-ins registry and the devfile registry. They are static websites publishing the metadata of CodeReady Workspaces plug-ins and devfiles. When built in offline mode, they also include artifacts.

The devfile and plug-in registries run in two separate Pods. Their deployment is part of the CodeReady Workspaces installation.

The devfile and plug-in registries

- The devfile registry

- The devfile registry holds the definitions of the CodeReady Workspaces stacks. Stacks are available on the CodeReady Workspaces user dashboard when selecting Create Workspace. It contains the list of CodeReady Workspaces technological stack samples with example projects. When built in offline mode, it also contains all sample projects referenced in devfiles as zip files.

- The plug-in registry

- The plug-in registry makes it possible to share a plug-in definition across all the users of the same instance of CodeReady Workspaces. When built in offline mode, it also contains all plug-in or extension artifacts.

Additional resources

3.2. Building custom registry images

3.2.1. Building a custom devfile registry image

This section describes how to build a custom devfile registry image. The procedure explains how to add a devfile. The image contains all sample projects referenced in devfiles.

Prerequisites

- A running installation of podman or docker.

- Valid content for the devfile to add. See: https://access.redhat.com/documentation/en-us/red_hat_codeready_workspaces/2.15/html-single/end-user_guide/index#authoring-devfiles-version-2.adoc.

Procedure

Clone the devfile registry repository and check out the version to deploy:

git clone git@github.com:redhat-developer/codeready-workspaces.git cd codeready-workspaces git checkout crw-2.15-rhel-8

$ git clone git@github.com:redhat-developer/codeready-workspaces.git $ cd codeready-workspaces $ git checkout crw-2.15-rhel-8Copy to Clipboard Copied! Toggle word wrap Toggle overflow In the

./dependencies/che-devfile-registry/devfiles/directory, create a subdirectory<devfile-name>/and add thedevfile.yamlandmeta.yamlfiles.Example 3.1. File organization for a devfile

./dependencies/che-devfile-registry/devfiles/ └── <devfile-name> ├── devfile.yaml └── meta.yaml./dependencies/che-devfile-registry/devfiles/ └── <devfile-name> ├── devfile.yaml └── meta.yamlCopy to Clipboard Copied! Toggle word wrap Toggle overflow -

Add valid content in the

devfile.yamlfile. For a detailed description of the devfile format, see https://access.redhat.com/documentation/en-us/red_hat_codeready_workspaces/2.15/html-single/end-user_guide/index#authoring-devfiles-version-2.adoc. Ensure that the

meta.yamlfile conforms to the following structure:Expand Table 3.1. Parameters for a devfile meta.yaml Attribute Description descriptionDescription as it appears on the user dashboard.

displayNameName as it appears on the user dashboard.

iconLink to an

.svgfile that is displayed on the user dashboard.tagsList of tags. Tags typically include the tools included in the stack.

globalMemoryLimitOptional parameter: the sum of the expected memory consumed by all the components launched by the devfile. This number will be visible on the user dashboard. It is informative and is not taken into account by the CodeReady Workspaces server.

Example 3.2. Example devfile meta.yaml

displayName: Rust
description: Rust Stack with Rust 1.39
tags: ["Rust"]
icon: https://www.eclipse.org/che/images/logo-eclipseche.svg
globalMemoryLimit: 1686Mi

Build a custom devfile registry image:

$ cd dependencies/che-devfile-registry
$ ./build.sh --organization <my-org> \
    --registry <my-registry> \
    --tag <my-tag>

Note: To display full options for the build.sh script, use the --help parameter.
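The directory and meta.yaml steps above can also be scripted. The following is a minimal sketch, assuming it runs against the root of a codeready-workspaces checkout; the function names scaffold_devfile and check_meta are hypothetical and are not part of the repository. The check treats every meta.yaml attribute except globalMemoryLimit as expected, which is an assumption based on Table 3.1 marking only globalMemoryLimit as optional.

```shell
# Hypothetical helper: create the layout from Example 3.1 under a checkout root.
scaffold_devfile() {
  local root="$1" name="$2" display="$3" description="$4" tag="$5" icon="$6"
  local dir="${root}/dependencies/che-devfile-registry/devfiles/${name}"
  mkdir -p "$dir" || return 1
  # Empty placeholder: add valid devfile content before building the registry.
  : > "${dir}/devfile.yaml"
  # meta.yaml with the Table 3.1 attributes; the optional globalMemoryLimit is left out.
  cat > "${dir}/meta.yaml" <<EOF
displayName: ${display}
description: ${description}
tags: ["${tag}"]
icon: ${icon}
EOF
}

# Hypothetical check: report every expected top-level attribute missing from a
# meta.yaml file. Returns 1 if at least one attribute is missing.
check_meta() {
  local meta="$1" key missing=0
  for key in displayName description tags icon; do
    if ! grep -q "^${key}:" "$meta"; then
      echo "missing attribute: ${key}" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, scaffold_devfile . rust Rust "Rust Stack with Rust 1.39" Rust https://www.eclipse.org/che/images/logo-eclipseche.svg creates the layout shown in Example 3.1. The devfile.yaml file still needs valid devfile content before build.sh is run.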

3.2.2. Building a custom plug-ins registry image

This section describes how to build a custom plug-ins registry image. The procedure explains how to add a plug-in. The image contains metadata for plug-ins or extensions.

Prerequisites

- Node.js 12.x

- A running version of yarn. See: Installing Yarn.

- ./node_modules/.bin is in the PATH environment variable.

- A running installation of podman or docker.

Procedure

Clone the plug-ins registry repository and check out the version to deploy:

$ git clone git@github.com:redhat-developer/codeready-workspaces.git
$ cd codeready-workspaces
$ git checkout crw-2.15-rhel-8

In the ./dependencies/che-plugin-registry/ directory, edit the che-theia-plugins.yaml file.

Add valid content to the che-theia-plugins.yaml file. For detailed information, see: https://access.redhat.com/documentation/en-us/red_hat_codeready_workspaces/2.15/html-single/end-user_guide/index#adding-a-vs-code-extension-to-the-che-plugin-registry.adoc.

Build a custom plug-ins registry image:

$ cd dependencies/che-plugin-registry
$ ./build.sh --organization <my-org> \
    --registry <my-registry> \
    --tag <my-tag>

Note: To display full options for the build.sh script, use the --help parameter. To include the plug-in binaries in the registry image, add the --offline parameter.

After building the registry, inspect the contents of ./dependencies/che-plugin-registry/v3/plugins/ in the container. All meta.yaml files resulting from a successful plug-ins registry build are located there.

Additional resources

3.3. Running custom registries

Prerequisites

The my-plug-in-registry and my-devfile-registry images used in this section are built using the docker command. This section assumes that these images are available on the OpenShift cluster where CodeReady Workspaces is deployed.

These images can then be pushed to:

- A public container registry, such as quay.io or Docker Hub.

- A private registry.

3.3.1. Deploying registries in OpenShift

Procedure

An OpenShift template to deploy the plug-in registry is available in the deploy/openshift/ directory of the GitHub repository.

To deploy the plug-in registry using the OpenShift template, run the following command:

- 1

- If installed using crwctl, the default CodeReady Workspaces project is openshift-workspaces. The OperatorHub installation method deploys CodeReady Workspaces to the user's current project.

The devfile registry has an OpenShift template in the deploy/openshift/ directory of the GitHub repository. To deploy it, run the following command:

- 1

- If installed using crwctl, the default CodeReady Workspaces project is openshift-workspaces. The OperatorHub installation method deploys CodeReady Workspaces to the user's current project.

Verification steps

The <plug-in> plug-in is available in the plug-in registry.

Example 3.3. Find <plug-in> by requesting the plug-in registry API.

$ URL=$(oc get route -l app=che,component=plugin-registry \
    -o 'custom-columns=URL:.spec.host' --no-headers)
$ INDEX_JSON=$(curl -sSL http://${URL}/v3/plugins/index.json)
$ echo "${INDEX_JSON}" | jq '.[] | select(.name == "<plug-in>")'

The <devfile> devfile is available in the devfile registry.

Example 3.4. Find <devfile> by requesting the devfile registry API.

$ URL=$(oc get route -l app=che,component=devfile-registry \
    -o 'custom-columns=URL:.spec.host' --no-headers)
$ INDEX_JSON=$(curl -sSL http://${URL}/devfiles/index.json)
$ echo "${INDEX_JSON}" | jq '.[] | select(.displayName == "<devfile>")'

CodeReady Workspaces server points to the URL of the plug-in registry.

Example 3.5. Compare the value of the CHE_WORKSPACE_PLUGIN__REGISTRY__URL parameter in the che ConfigMap with the URL of the plug-in registry route.

Get the value of the CHE_WORKSPACE_PLUGIN__REGISTRY__URL parameter in the che ConfigMap:

$ oc get cm/che \
    -o "custom-columns=URL:.data['CHE_WORKSPACE_PLUGIN__REGISTRY__URL']" \
    --no-headers

Get the URL of the plug-in registry route:

$ oc get route -l app=che,component=plugin-registry \
    -o 'custom-columns=URL:.spec.host' --no-headers

CodeReady Workspaces server points to the URL of the devfile registry.

Example 3.6. Compare the value of the CHE_WORKSPACE_DEVFILE__REGISTRY__URL parameter in the che ConfigMap with the URL of the devfile registry route.

Get the value of the CHE_WORKSPACE_DEVFILE__REGISTRY__URL parameter in the che ConfigMap:

$ oc get cm/che \
    -o "custom-columns=URL:.data['CHE_WORKSPACE_DEVFILE__REGISTRY__URL']" \
    --no-headers

Get the URL of the devfile registry route:

$ oc get route -l app=che,component=devfile-registry \
    -o 'custom-columns=URL:.spec.host' --no-headers

If the values do not match, update the ConfigMap and restart the CodeReady Workspaces server.

$ oc edit cm/che
(...)
$ oc scale --replicas=0 deployment/codeready
$ oc scale --replicas=1 deployment/codeready

The plug-ins are available in:

- Completion for chePlugin components in the Devfile tab of the workspace details.

- The Plugin view of Che-Theia in a workspace.

The devfiles are available in the Quick Add and Custom Workspace tabs of the Create Workspace page on the user dashboard.
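The comparisons in Examples 3.5 and 3.6 can be scripted. The ConfigMap value is a full URL, while the route column printed by oc get route holds only .spec.host, so the following sketch compares host names only. The function names are hypothetical, and the sketch assumes registry URLs of the form scheme://host[:port][/path].

```shell
# Hypothetical helper: reduce a URL or a bare host name to its host part.
url_host() {
  local url="$1"
  url="${url#*://}"            # drop the scheme, if present
  url="${url%%/*}"             # drop the path, if present
  printf '%s\n' "${url%%:*}"   # drop the port, if present
}

# Hypothetical check: succeed when the registry URL taken from the che
# ConfigMap points at the host of the corresponding registry route.
registry_url_matches_route() {
  local cm_url="$1" route_host="$2"
  [ -n "$cm_url" ] && [ "$(url_host "$cm_url")" = "$(url_host "$route_host")" ]
}
```

For example, pass the outputs of the two oc get commands from Example 3.5 as the first and second arguments; a non-zero exit status means the ConfigMap needs updating as described above.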

3.3.2. Adding a custom plug-in registry in an existing CodeReady Workspaces workspace

The following section describes two methods of adding a custom plug-in registry in an existing CodeReady Workspaces workspace:

- Adding a custom plug-in registry using the Command Palette: for quickly adding a new custom plug-in registry by entering text in the prompts of a Command Palette command. This method does not allow a user to edit existing information, such as the plug-in registry URL or name.

- Adding a custom plug-in registry using the settings.json file: for adding a new custom plug-in registry and editing existing entries.

3.3.2.1. Adding a custom plug-in registry using Command Palette

Prerequisites

- An instance of CodeReady Workspaces

Procedure

In the CodeReady Workspaces IDE, press F1 to open the Command Palette, or navigate to View → Find Command in the top menu.

The Command Palette can also be opened by pressing Ctrl+Shift+P (or Cmd+Shift+P on macOS).

- Enter the Add Registry command in the search box and press Enter. Enter the registry name and registry URL in the next two command prompts.

- After adding a new plug-in registry, the list of plug-ins in the Plug-ins view is refreshed, and if the new plug-in registry is not valid, the user is notified by a warning message.

3.3.2.2. Adding a custom plug-in registry using the settings.json file

The following section describes the use of the main CodeReady Workspaces Settings menu to edit and add a new plug-in registry using the settings.json file.

Prerequisites

- An instance of CodeReady Workspaces

Procedure

- From the main CodeReady Workspaces screen, select Open Preferences by pressing Ctrl+, or using the gear wheel icon on the left bar.

Select Che Plug-ins and click the Edit in settings.json link. The settings.json file is displayed.

Add a new plug-in registry using the chePlugins.repositories attribute as shown below:

{
  "application.confirmExit": "never",
  "chePlugins.repositories": {"test": "https://test.com"}
}

Save the changes to add a custom plug-in registry in an existing CodeReady Workspaces workspace.

- A newly added plug-in validation tool checks the correctness of URL values set in the chePlugins.repositories field of the settings.json file.

- After adding a new plug-in registry, the list of plug-ins in the Plug-ins view is refreshed, and if the new plug-in registry is not valid, the user is notified by a warning message. This check also applies to plug-in registries added using the Add plugin registry command in the Command Palette.

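Before editing settings.json, a registry URL can be pre-checked and the matching entry printed with the following minimal sketch. It is not the validation tool described above, whose exact rules are not documented here; it only assumes that a registry URL must use the http or https scheme followed by a non-empty remainder. The function names are hypothetical, and the name and URL are inserted without JSON escaping, so they must not contain double quotes or backslashes.

```shell
# Hypothetical pre-check: accept only http:// or https:// URLs that have at
# least one character after the scheme.
is_valid_registry_url() {
  case "$1" in
    http://?*|https://?*) return 0 ;;
    *) return 1 ;;
  esac
}

# Hypothetical helper: print a chePlugins.repositories fragment for
# settings.json using straight ASCII double quotes, so the result is valid JSON.
registry_settings_fragment() {
  local name="$1" url="$2"
  is_valid_registry_url "$url" || { echo "invalid registry URL: ${url}" >&2; return 1; }
  printf '"chePlugins.repositories": {"%s": "%s"}\n' "$name" "$url"
}
```

For example, registry_settings_fragment test https://test.com prints the chePlugins.repositories entry used in the settings.json example of Section 3.3.2.2.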

Chapter 4. Retrieving CodeReady Workspaces logs

For information about obtaining various types of logs in CodeReady Workspaces, see the following sections:

4.1. Configuring server logging

It is possible to fine-tune the log levels of individual loggers available in the CodeReady Workspaces server.

The log level of the whole CodeReady Workspaces server is configured globally using the cheLogLevel configuration property of the Operator. See https://access.redhat.com/documentation/en-us/red_hat_codeready_workspaces/2.15/html-single/installation_guide/index#checluster-custom-resource-fields-reference.adoc. To set the global log level in installations not managed by the Operator, specify the CHE_LOG_LEVEL environment variable in the che ConfigMap.

It is possible to configure the log levels of the individual loggers in the CodeReady Workspaces server using the CHE_LOGGER_CONFIG environment variable.

4.1.1. Configuring log levels

The format of the value of the CHE_LOGGER_CONFIG property is a list of comma-separated key-value pairs, where keys are the names of the loggers as seen in the CodeReady Workspaces server log output and values are the required log levels.

In Operator-based deployments, the CHE_LOGGER_CONFIG variable is specified under the customCheProperties of the custom resource.

For example, the following snippet makes the WorkspaceManager produce DEBUG log messages.

...
server:
  customCheProperties:
    CHE_LOGGER_CONFIG: "org.eclipse.che.api.workspace.server.WorkspaceManager=DEBUG"

4.1.2. Logger naming

The names of the loggers follow the class names of the internal server classes that use those loggers.
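Because CHE_LOGGER_CONFIG is a single comma-separated string, it is easy to mistype when several loggers are combined. The following minimal sketch builds the value from logger=LEVEL arguments and wraps it in a JSON merge patch for spec.server.customCheProperties. The function names are hypothetical, and the accepted level names assume Logback-style levels.

```shell
# Hypothetical helper: join logger=LEVEL pairs into one CHE_LOGGER_CONFIG value.
# Accepted level names are an assumption (Logback-style levels).
build_logger_config() {
  local pair out=""
  for pair in "$@"; do
    case "$pair" in
      *=TRACE|*=DEBUG|*=INFO|*=WARN|*=ERROR|*=OFF|*=ALL) ;;
      *) echo "invalid logger=LEVEL pair: ${pair}" >&2; return 1 ;;
    esac
    # Append with a comma separator, except before the first pair.
    out="${out:+${out},}${pair}"
  done
  printf '%s\n' "$out"
}

# Hypothetical helper: wrap a CHE_LOGGER_CONFIG value in a JSON merge patch for
# spec.server.customCheProperties of the CheCluster custom resource.
logger_config_patch() {
  printf '{"spec":{"server":{"customCheProperties":{"CHE_LOGGER_CONFIG":"%s"}}}}\n' "$1"
}
```

One possible way to apply the result, assuming a CheCluster named codeready-workspaces in the openshift-workspaces project, is oc patch checluster/codeready-workspaces -n openshift-workspaces --type=merge -p "$(logger_config_patch "$(build_logger_config che.infra.request-logging=TRACE)")". A merge patch changes only the CHE_LOGGER_CONFIG key and leaves the other customCheProperties entries in place.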

4.1.3. Logging HTTP traffic

It is possible to log the HTTP traffic between the CodeReady Workspaces server and the API server of the Kubernetes or OpenShift cluster. To do so, set the che.infra.request-logging logger to the TRACE level.

...
server:
  customCheProperties:
    CHE_LOGGER_CONFIG: "che.infra.request-logging=TRACE"

4.2. Accessing OpenShift events on OpenShift

For high-level monitoring of OpenShift projects, view the OpenShift events that the project performs.

This section describes how to access these events in the OpenShift web console.

Prerequisites

- A running OpenShift web console.

Procedure

- In the left panel of the OpenShift web console, click Home → Events.

- To view the list of all events for a particular project, select the project from the list.

- The details of the events for the current project are displayed.

Additional resources

- For a list of OpenShift events, see Comprehensive List of Events in OpenShift documentation.

4.3. Viewing the state of the CodeReady Workspaces cluster deployment using OpenShift 4 CLI tools

This section describes how to view the state of the CodeReady Workspaces cluster deployment using OpenShift 4 CLI tools.

Prerequisites

- An instance of Red Hat CodeReady Workspaces running on OpenShift.

- An installation of the OpenShift command-line tool, oc.

Procedure

Run the following command to select the crw project:

$ oc project <project_name>

Run the following command to get the name and status of the Pods running in the selected project:

$ oc get pods

Check that the status of all the Pods is Running.

Example 4.1. Pods with status Running

NAME                                  READY   STATUS    RESTARTS   AGE
codeready-8495f4946b-jrzdc            0/1     Running   0          86s
codeready-operator-578765d954-99szc   1/1     Running   0          42m
keycloak-74fbfb9654-g9vp5             1/1     Running   0          4m32s
postgres-5d579c6847-w6wx5             1/1     Running   0          5m14s
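The Running check in the previous step can be automated with a small filter over the oc get pods output. This is a sketch; the function name pods_not_running is hypothetical. It inspects only the STATUS column, as the procedure does, so a Pod such as codeready-8495f4946b-jrzdc in Example 4.1, which is Running but not yet ready (0/1), is not reported.

```shell
# Hypothetical helper: read `oc get pods` output (with its header line) on stdin
# and print the name and status of every Pod whose STATUS is not "Running".
# Returns 1 if at least one such Pod is found, 0 otherwise.
pods_not_running() {
  awk 'NR > 1 && $3 != "Running" { print $1, $3; bad = 1 }
       END { exit (bad ? 1 : 0) }'
}
```

For example: oc get pods | pods_not_running && echo "all Pods are Running".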

To see the state of the CodeReady Workspaces cluster deployment, run:

$ oc logs --tail=10 -f $(oc get pods -o name | grep operator)

Example 4.2. Logs of the Operator:

4.4. Viewing CodeReady Workspaces server logs

This section describes how to view the CodeReady Workspaces server logs using the command line.

4.4.1. Viewing the CodeReady Workspaces server logs using the OpenShift CLI

This section describes how to view the CodeReady Workspaces server logs using the OpenShift CLI (command line interface).

Procedure

In the terminal, run the following command to get the Pods:

$ oc get pods

Example

$ oc get pods
NAME                 READY   STATUS    RESTARTS   AGE
codeready-11-j4w2b   1/1     Running   0          3m

To get the logs of a Pod, run the following command:

$ oc logs <name-of-pod>

Example

$ oc logs codeready-11-j4w2b
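Instead of copying the Pod name by hand, the server Pod can be selected from the oc get pods -o name output. This is a minimal sketch, assuming that the server Pod name starts with codeready- as in the examples above; the function name server_pod_name is hypothetical, and the Operator Pod (codeready-operator-...) is skipped explicitly.

```shell
# Hypothetical helper: read `oc get pods -o name` output on stdin and print the
# first CodeReady Workspaces server Pod, skipping the Operator Pod.
server_pod_name() {
  grep '^pod/codeready-' | grep -v '^pod/codeready-operator-' | head -n 1
}
```

For example: oc logs "$(oc get pods -o name | server_pod_name)". The oc logs command accepts the pod/<name> form that -o name prints.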

4.5. Viewing external service logs

This section describes how to view the logs from external services related to the CodeReady Workspaces server.

4.5.1. Viewing RH-SSO logs

The RH-SSO OpenID provider consists of two parts: Server and IDE. It writes its diagnostics or error information to several logs.

4.5.1.1. Viewing the RH-SSO server logs

This section describes how to view the RH-SSO OpenID provider server logs.

Procedure

- In the OpenShift Web Console, click Deployments.

- In the Filter by label search field, type keycloak to see the RH-SSO logs.

- In the Deployment Configs section, click the keycloak link to open it.

- In the History tab, click the View log link for the active RH-SSO deployment.

- The RH-SSO logs are displayed.

Additional resources

- See Section 4.4, “Viewing CodeReady Workspaces server logs” for diagnostics and error messages related to the RH-SSO IDE Server.

4.5.1.2. Viewing the RH-SSO client logs on Mozilla Firefox

This section describes how to view the RH-SSO IDE client diagnostics or error information in the Mozilla Firefox Web Console.

Procedure

- Click Menu > Web Developer > Web Console.

4.5.1.3. Viewing the RH-SSO client logs on Google Chrome

This section describes how to view the RH-SSO IDE client diagnostics or error information in the Google Chrome Console tab.

Procedure

- Click Menu > More Tools > Developer Tools.

- Click the Console tab.

4.5.2. Viewing the CodeReady Workspaces database logs

This section describes how to view the database logs in CodeReady Workspaces, such as PostgreSQL server logs.

Procedure

- In the OpenShift Web Console, click Deployments.

In the Find by label search field, type:

- app=che and press Enter.

- component=postgres and press Enter.

The OpenShift Web Console searches based on those two labels and displays the PostgreSQL deployment.

- Click the postgres deployment to open it.

- Click the View log link for the active PostgreSQL deployment.

The OpenShift Web Console displays the database logs.

Additional resources

- Some diagnostics or error messages related to the PostgreSQL server can be found in the active CodeReady Workspaces deployment log. For details about accessing the active CodeReady Workspaces deployment logs, see Section 4.4, “Viewing CodeReady Workspaces server logs”.

4.6. Viewing the plug-in broker logs

This section describes how to view the plug-in broker logs.

The che-plugin-broker Pod itself is deleted when its work is complete. Therefore, its event logs are only available while the workspace is starting.

Procedure

To see logged events from temporary Pods:

- Start a CodeReady Workspaces workspace.

- From the main OpenShift Container Platform screen, go to Workload → Pods.

- Use the OpenShift terminal console located in the Pod’s Terminal tab.

Verification step

- The OpenShift terminal console displays the plug-in broker logs while the workspace is starting.

4.7. Collecting logs using crwctl

It is possible to get all Red Hat CodeReady Workspaces logs from an OpenShift cluster using the crwctl tool.

- crwctl server:deploy automatically starts collecting Red Hat CodeReady Workspaces server logs during installation of Red Hat CodeReady Workspaces.

- crwctl server:logs collects existing Red Hat CodeReady Workspaces server logs.

- crwctl workspace:logs collects workspace logs.

Chapter 5. Monitoring CodeReady Workspaces

This chapter describes how to configure CodeReady Workspaces to expose metrics and how to build an example monitoring stack with external tools to process data exposed as metrics by CodeReady Workspaces.

5.1. Enabling and exposing CodeReady Workspaces metrics

This section describes how to enable and expose CodeReady Workspaces metrics.

Procedure

- Set the CHE_METRICS_ENABLED=true environment variable, which will expose the 8087 port as a service on the che-master host.

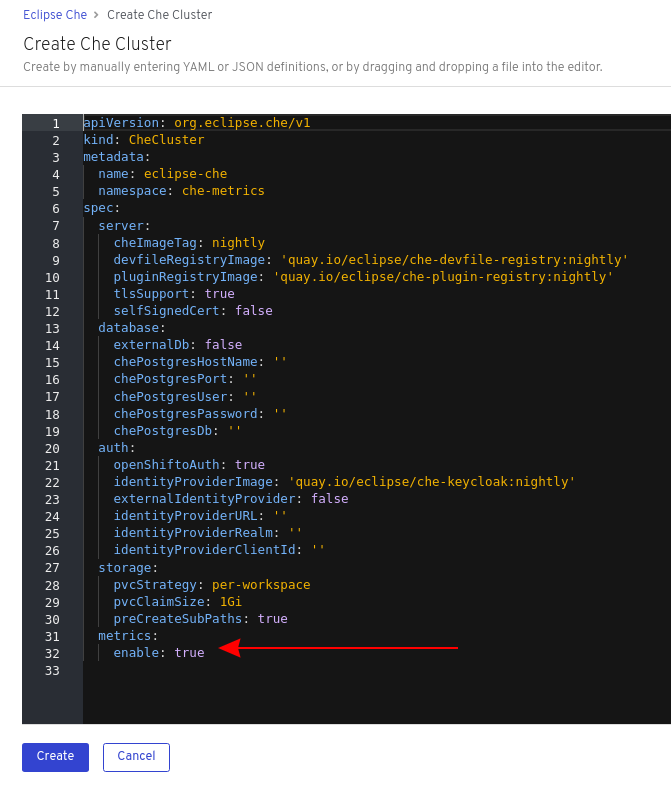

When Red Hat CodeReady Workspaces is installed from the OperatorHub, the environment variable is set automatically if the default CheCluster CR is used:

spec:
  metrics:
    enable: true

5.2. Collecting CodeReady Workspaces metrics with Prometheus

This section describes how to use the Prometheus monitoring system to collect, store, and query metrics about CodeReady Workspaces.

Prerequisites

- CodeReady Workspaces is exposing metrics on port 8087. See Enabling and exposing CodeReady Workspaces metrics.

- Prometheus 2.9.1 or later is running. The Prometheus console is running on port 9090 with a corresponding service and route. See First steps with Prometheus.

Procedure

Configure Prometheus to scrape metrics from the 8087 port:

Example 5.1. Prometheus configuration example

- 1

- Rate at which a target is scraped.

- 2

- Rate at which recording and alerting rules are re-checked (not used in the system at the moment).

- 3

- Resources Prometheus monitors. In the default configuration, a single job called che scrapes the time series data exposed by the CodeReady Workspaces server.

- 4

- Scrape metrics from the 8087 port.
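The following illustrative sketch prints a prometheus.yml whose parts correspond to the callouts above: a scrape interval, a rule evaluation interval, a single che job, and a target on port 8087. The 5s intervals and the che_host argument are assumptions; pass the host name under which Prometheus can reach the CodeReady Workspaces server. The function name che_prometheus_config is hypothetical.

```shell
# Hypothetical helper: print a minimal prometheus.yml that scrapes the
# CodeReady Workspaces server metrics port 8087. Interval values are illustrative.
che_prometheus_config() {
  local che_host="$1"
  cat <<EOF
global:
  scrape_interval: 5s       # rate at which a target is scraped
  evaluation_interval: 5s   # rate at which recording and alerting rules are re-checked
scrape_configs:
  - job_name: 'che'
    static_configs:
      - targets: ['${che_host}:8087']
EOF
}
```

For example, che_prometheus_config <che-host> > prometheus.yml writes the file, and promtool check config prometheus.yml validates it before Prometheus loads it.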

Verification steps

Use the Prometheus console to query and view metrics.

Metrics are available at: http://<che-server-url>:9090/metrics.

For more information, see Using the expression browser.

Additional resources

Chapter 6. Monitoring the Dev Workspace operator

This chapter describes how to configure an example monitoring stack to process metrics exposed by the Dev Workspace operator. You must enable the Dev Workspace operator to follow the instructions in this chapter. See https://access.redhat.com/documentation/en-us/red_hat_codeready_workspaces/2.15/html-single/installation_guide/index#enabling-dev-workspace-operator.adoc.

6.1. Collecting Dev Workspace operator metrics with Prometheus

This section describes how to use Prometheus to collect, store, and query metrics about the Dev Workspace operator.

Prerequisites

- The devworkspace-controller-metrics service is exposing metrics on port 8443.

- The devworkspace-webhookserver service is exposing metrics on port 9443. By default, the service exposes metrics on port 9443.

- Prometheus 2.26.0 or later is running. The Prometheus console is running on port 9090 with a corresponding service and route. See First steps with Prometheus.

Procedure

Create a ClusterRoleBinding to bind the ServiceAccount associated with Prometheus to the devworkspace-controller-metrics-reader ClusterRole. Without the ClusterRoleBinding, you cannot access Dev Workspace metrics because they are protected with role-based access control (RBAC).

Example 6.1. ClusterRole example

Example 6.2. ClusterRoleBinding example

Configure Prometheus to scrape metrics from the 8443 port exposed by the devworkspace-controller-metrics service, and the 9443 port exposed by the devworkspace-webhookserver service.

Example 6.3. Prometheus configuration example

- 1

- Rate at which a target is scraped.

- 2

- Rate at which recording and alerting rules are re-checked.

- 3

- Resources that Prometheus monitors. In the default configuration, two jobs (DevWorkspace and DevWorkspace webhooks) scrape the time series data exposed by the devworkspace-controller-metrics and devworkspace-webhookserver services.

- 4

- Scrape metrics from the 8443 port.

- 5

- Scrape metrics from the 9443 port.
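The following minimal sketch prints a ClusterRoleBinding and the two scrape jobs described in the callouts above. The binding name, the ServiceAccount and namespace arguments, the Dev Workspace Operator project passed to dw_scrape_configs, and the function names are all assumptions. The scrape jobs assume HTTPS with the Prometheus Pod's ServiceAccount token sent through the authorization block of newer Prometheus releases (assumed available in 2.26.0 and later), and they skip TLS certificate verification, which suits a test setup only.

```shell
# Hypothetical helper: print a ClusterRoleBinding that binds the Prometheus
# ServiceAccount to the devworkspace-controller-metrics-reader ClusterRole.
dw_metrics_rolebinding() {
  local sa="$1" ns="$2"
  cat <<EOF
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: devworkspace-controller-metrics-binding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: devworkspace-controller-metrics-reader
subjects:
  - kind: ServiceAccount
    name: ${sa}
    namespace: ${ns}
EOF
}

# Hypothetical helper: print the two scrape jobs for ports 8443 and 9443.
dw_scrape_configs() {
  local dwo_ns="$1"
  cat <<EOF
scrape_configs:
  - job_name: 'DevWorkspace'
    scheme: https
    authorization:
      type: Bearer
      credentials_file: /var/run/secrets/kubernetes.io/serviceaccount/token
    tls_config:
      insecure_skip_verify: true
    static_configs:
      - targets: ['devworkspace-controller-metrics.${dwo_ns}:8443']
  - job_name: 'DevWorkspace webhooks'
    scheme: https
    authorization:
      type: Bearer
      credentials_file: /var/run/secrets/kubernetes.io/serviceaccount/token
    tls_config:
      insecure_skip_verify: true
    static_configs:
      - targets: ['devworkspace-webhookserver.${dwo_ns}:9443']
EOF
}
```

For example, dw_metrics_rolebinding <prometheus-service-account> <prometheus-namespace> | oc apply -f - creates the binding, and the dw_scrape_configs output can be merged into the scrape_configs section of prometheus.yml.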

Verification steps

Use the Prometheus console to view targets and metrics.

For more information, see Using the expression browser.

6.2. Dev Workspace-specific metrics

This section describes the Dev Workspace-specific metrics exposed by the devworkspace-controller-metrics service.

| Name | Type | Description | Labels |
|---|---|---|---|
|  | Counter | Number of Dev Workspace starting events. |  |
|  | Counter | Number of Dev Workspaces successfully entering the … |  |
|  | Counter | Number of failed Dev Workspaces. |  |
|  | Histogram | Total time taken to start a Dev Workspace, in seconds. |  |

| Name | Description | Values |
|---|---|---|
|  | The … |  |
|  | The … |  |
|  | The workspace startup failure reason. |  |

| Name | Description |
|---|---|
|  | Startup failure due to an invalid devfile used to create a Dev Workspace. |
|  | Startup failure due to the following errors: … |
|  | Unknown failure reason. |

Chapter 7. Tracing CodeReady Workspaces

Tracing helps gather timing data to troubleshoot latency problems in microservice architectures and helps to understand a complete transaction or workflow as it propagates through a distributed system. Tracing every transaction can reveal performance anomalies early, when independent teams introduce new services.

Tracing the CodeReady Workspaces application helps analyze the execution of various operations, such as workspace creation and workspace startup. It breaks down the duration of sub-operations, which helps find bottlenecks and improve the overall state of the platform.

Tracers live in applications. They record timing and metadata about operations that take place. They often instrument libraries, so that their use is indiscernible to users. For example, an instrumented web server records when it received a request and when it sent a response. The trace data collected is called a span. A span has a context that contains information such as trace and span identifiers and other kinds of data that can be propagated down the line.

7.1. Tracing API

CodeReady Workspaces uses the OpenTracing API, a vendor-neutral framework for instrumentation. This means that if a developer wants to try a different tracing back end, then rather than repeating the whole instrumentation process for the new distributed tracing system, the developer can change the configuration of the tracer back end.

7.2. Tracing back end

By default, CodeReady Workspaces uses Jaeger as the tracing back end. Jaeger was inspired by Dapper and OpenZipkin, and it is a distributed tracing system released as open source by Uber Technologies. Jaeger has a more complex architecture, designed to handle a larger volume of requests with better performance.

7.3. Installing the Jaeger tracing tool

The following sections describe the installation methods for the Jaeger tracing tool. Jaeger can then be used for gathering metrics in CodeReady Workspaces.

Installation methods available:

For tracing a CodeReady Workspaces instance using Jaeger, version 1.12.0 or above is required. For additional information about Jaeger, see the Jaeger website.

7.3.1. Installing Jaeger using OperatorHub on OpenShift 4

This section provides information about using the Jaeger tracing tool for testing and evaluation purposes in production.

To install the Jaeger tracing tool from the OperatorHub interface in OpenShift Container Platform, follow the instructions below.

Prerequisites

- The user is logged in to the OpenShift Container Platform Web Console.

- A CodeReady Workspaces instance is available in a project.

Procedure

- Open the OpenShift Container Platform console.

- From the left menu of the main OpenShift Container Platform screen, navigate to Operators → OperatorHub.

- In the Search by keyword search bar, type Jaeger Operator.

- Click the Jaeger Operator tile.

- Click the button in the Jaeger Operator pop-up window.

- Select the installation method A specific project on the cluster, choose the project where CodeReady Workspaces is deployed, and leave the remaining options at their default values.

- Click the Subscribe button.

- From the left menu of the main OpenShift Container Platform screen, navigate to the Operators → Installed Operators section.

- Jaeger Operator is displayed as an Installed Operator, as indicated by the InstallSucceeded status.

- Click the Jaeger Operator name in the list of installed Operators.

- Navigate to the Overview tab.

- In the Conditions section at the bottom of the page, wait for this message: install strategy completed with no errors.

- Jaeger Operator and the additional Elasticsearch Operator are installed.

- Navigate to the Operators → Installed Operators section.

- Click Jaeger Operator in the list of installed Operators.

- The Jaeger Cluster page is displayed.

- In the lower left corner of the window, click Create Instance.

- Click Save.

- OpenShift creates the Jaeger cluster jaeger-all-in-one-inmemory.

- Follow the steps in Enabling metrics collection to finish the procedure.

7.3.2. Installing Jaeger using CLI on OpenShift 4

This section provides information about using the Jaeger tracing tool for testing and evaluation purposes.

To install the Jaeger tracing tool from a CodeReady Workspaces project in OpenShift Container Platform, follow the instructions in this section.

Prerequisites

- The user is logged in to the OpenShift Container Platform web console.

- An instance of CodeReady Workspaces in an OpenShift Container Platform cluster.

Procedure

In the CodeReady Workspaces installation project of the OpenShift Container Platform cluster, use the oc client to create a new application for the Jaeger deployment.

- Using Workloads → Deployments from the left menu of the main OpenShift Container Platform screen, monitor the Jaeger deployment until it finishes successfully.

- Select Networking → Routes from the left menu of the main OpenShift Container Platform screen, and click the URL link to access the Jaeger dashboard.

- Follow the steps in Enabling metrics collection to finish the procedure.

7.4. Enabling metrics collection

Prerequisites

- Installed Jaeger v1.12.0 or above. See instructions at Section 7.3, “Installing the Jaeger tracing tool”

Procedure

For Jaeger tracing to work, enable the following environment variables in your CodeReady Workspaces deployment:

To enable the following environment variables:

In the YAML source of the CodeReady Workspaces deployment, add the following configuration variables under spec.server.customCheProperties.

Edit the JAEGER_ENDPOINT value to match the name of the Jaeger collector service in your deployment. From the left menu of the main OpenShift Container Platform screen, obtain the value of JAEGER_ENDPOINT by navigating to Networking → Services. Alternatively, run the following oc command:

$ oc get services

The requested value is included in the name of the service that contains the collector string.
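Reading the collector service name from the oc get services output and turning it into a JAEGER_ENDPOINT value can be sketched as follows. The function name is hypothetical. The http://<service>:14268/api/traces form assumes the Jaeger collector's default HTTP port and path for client spans, and a collector service in the same project as CodeReady Workspaces; adjust both if your Jaeger deployment differs.

```shell
# Hypothetical helper: read `oc get services` output (with its header line) on
# stdin, pick the first service whose name contains "collector", and print a
# JAEGER_ENDPOINT value. Port 14268 and /api/traces are assumed defaults.
jaeger_endpoint_from_services() {
  local svc
  svc="$(awk 'NR > 1 && $1 ~ /collector/ { print $1; exit }')"
  [ -n "$svc" ] || { echo "no collector service found" >&2; return 1; }
  printf 'http://%s:14268/api/traces\n' "$svc"
}
```

For example: oc get services | jaeger_endpoint_from_services. Because oc get services lists names in sorted order, a jaeger-all-in-one-inmemory-collector service is picked before its -collector-headless counterpart.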

Additional resources

- For additional information about custom environment properties and how to define them in CheCluster Custom Resource, see https://access.redhat.com/documentation/en-us/red_hat_codeready_workspaces/2.15/html-single/installation_guide/index#advanced-configuration-options-for-the-che-server-component.adoc.

- For custom configuration of Jaeger, see the list of Jaeger client environment variables.

7.5. Viewing CodeReady Workspaces traces in Jaeger UI

This section demonstrates how to use the Jaeger UI to overview traces of CodeReady Workspaces operations.

Procedure

In this example, the CodeReady Workspaces instance has been running for some time and one workspace start has occurred.

To inspect the trace of the workspace start:

In the Search panel on the left, filter spans by the operation name (span name), tags, or time and duration.

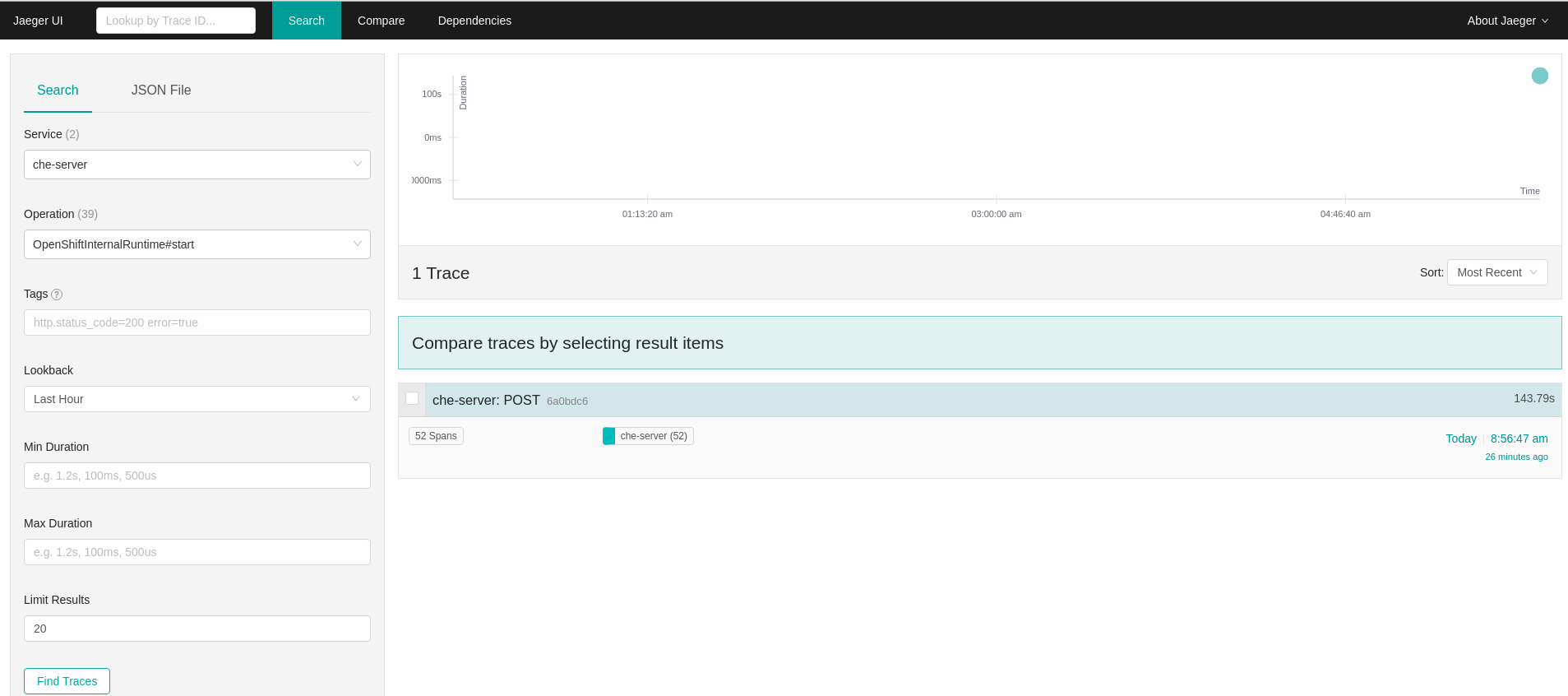

Figure 7.1. Using Jaeger UI to trace CodeReady Workspaces

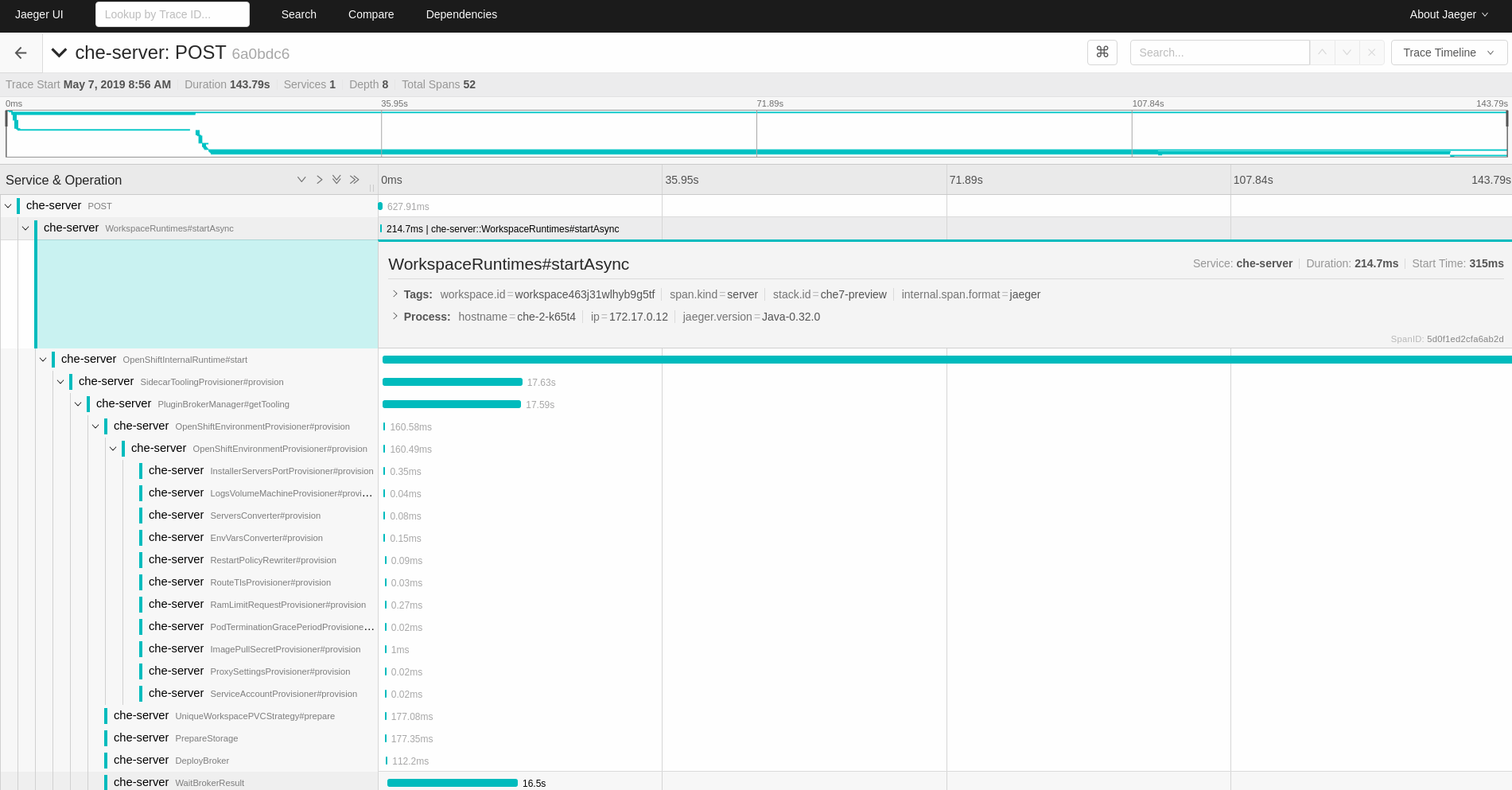

Select the trace to expand it and show the tree of nested spans and additional information about the highlighted span, such as tags or durations.

Figure 7.2. Expanded tracing tree

7.6. CodeReady Workspaces tracing codebase overview and extension guide

The core of the tracing implementation for CodeReady Workspaces is in the che-core-tracing-core and che-core-tracing-web modules.

All HTTP requests to the tracing API have their own trace. This is done by TracingFilter from the OpenTracing library, which is bound to the whole server application. Adding a @Traced annotation to methods causes the TracingInterceptor to add tracing spans for them.

7.6.1. Tagging

Spans may contain standard tags, such as operation name, span origin, error, and other tags that may help users with querying and filtering spans. Workspace-related operations (such as starting or stopping workspaces) have additional tags, including userId, workspaceID, and stackId. Spans created by TracingFilter also have an HTTP status code tag.

Declaring tags in a traced method is done statically by setting fields from the TracingTags class:

TracingTags.WORKSPACE_ID.set(workspace.getId());


TracingTags is the class in which all commonly used tags are declared, each as an AnnotationAware tag implementation.

Additional resources

For more information about how to use the Jaeger UI, see the Jaeger documentation: Jaeger Getting Started Guide.

Chapter 8. Backup and recovery

Backing up CodeReady Workspaces involves a combination of the following processes that back up different data:

- Use the CodeReady Workspaces Operator and crwctl to back up and restore CodeReady Workspaces instances.

  Note: Use the internal backup server to test this process.

- Use backups of persistent volumes to back up and restore the source code stored in users' workspaces.

  Tip: Whether or not you implement backups of persistent volumes, advise users to commit and push their changes to avoid losing their work.

- Use backups of the external PostgreSQL database to back up and restore persistent data about the state of CodeReady Workspaces.

8.1. Supported Restic-compatible backup servers

CodeReady Workspaces uses the CodeReady Workspaces Operator and integrated Restic to back up and restore CodeReady Workspaces instances from backup snapshots on a configured backup server. The CodeReady Workspaces Operator automates the creation of a Restic backup repository on the backup server. To back up data, the CodeReady Workspaces Operator gathers the data required for a backup snapshot and uses Restic to create and manage the snapshot. To restore data, the CodeReady Workspaces Operator uses Restic to retrieve and decrypt the snapshot, and then the CodeReady Workspaces Operator applies the retrieved data to perform the recovery.

CodeReady Workspaces can use the following backup servers that are compatible with the integrated Restic:

- SFTP

  - See the documentation for the SFTP server solution you plan to use (OpenSSH or a derived commercial product) and the Restic Docs on SFTP.

- Amazon S3

  - See the documentation for Amazon S3 (or the chosen S3 API compatible storage) and the Restic Docs on Amazon S3.

- REST

  - See the README for Rest Server and the Restic Docs on Rest Server.

To test backing up and restoring CodeReady Workspaces instances, CodeReady Workspaces offers an internal backup server.
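Restic identifies the backend type by the prefix of the repository URL (`sftp:`, `s3:`, and `rest:` in the Restic documentation). As an illustrative sketch, a helper such as the hypothetical `backup_server_type` below can sanity-check a URL before you pass it to crwctl. It only inspects the prefix and does not validate the rest of the URL.

```shell
#!/usr/bin/env bash
# Sketch: report which Restic-compatible backend a repository URL targets.
# Assumption: prefixes follow the Restic repository URL syntax (sftp:, s3:, rest:).
# The function name is hypothetical; only the prefix is checked.
backup_server_type() {
  case "$1" in
    sftp:*) echo "SFTP" ;;
    s3:*)   echo "Amazon S3 or S3 API compatible storage" ;;
    rest:*) echo "REST" ;;
    *)
      echo "unsupported repository URL: $1" >&2
      return 1 ;;
  esac
}
```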

8.2. Backing up CodeReady Workspaces instances to an SFTP backup server

You can send backups of CodeReady Workspaces instances to an SFTP backup server by using custom resources or crwctl.

8.2.1. Backing up a CodeReady Workspaces instance to an SFTP backup server by using custom resources

Backing up a CodeReady Workspaces instance to an SFTP backup server by using custom resources requires two custom objects:

- First you create a custom object to configure CodeReady Workspaces to use an SFTP backup server.

- Then you create a custom object to make and send a backup snapshot of a CodeReady Workspaces instance to the configured SFTP backup server.

8.2.1.1. Configuring CodeReady Workspaces with custom resources to use an SFTP backup server

To configure CodeReady Workspaces to use an SFTP backup server:

Prerequisites

- Configured SFTP backup server. See Section 8.1, “Supported Restic-compatible backup servers”.

Procedure

1. Create a Secret containing the repo-password key with a password. The CodeReady Workspaces Operator sets this password for the backup repository that it creates on the backup server from the CheBackupServerConfiguration custom object.

   Warning: The backup repository password is used to encrypt the backup data. If you lose this password, you lose the backup data.

2. Create a Secret (for example, name: ssh-key-secret) containing the ssh-privatekey key with a private SSH key for logging in to the SFTP server without a password.

3. Create the CheBackupServerConfiguration custom object. The callouts in the example custom object describe the following:

   - (1) Must only contain one section (such as sftp).
   - (2) User name on the remote server to log in using the SSH protocol.
   - (3) Remote server hostname.
   - (4) Optional property that specifies the port on which an SFTP server is running. The default value is 22.
   - (5) Absolute or relative path on the server where backup snapshots are stored.
   - (6) Secret created in step 1.
   - (7) Secret created in step 2.

4. Optional: To configure multiple backup servers, create a separate CheBackupServerConfiguration custom object for each backup server.

The CodeReady Workspaces Operator automatically backs up the CodeReady Workspaces instance before every CodeReady Workspaces update, which permits rollback to the previous CodeReady Workspaces version if needed. If you configure only one backup server, it is used for pre-update backups by default. If you configure multiple backup servers, add the che.eclipse.org/backup-before-update: true annotation to the custom object of exactly one of them to make it the default backup server for pre-update backups. If none of the multiple backup servers has this annotation, or if more than one has it, the CodeReady Workspaces Operator uses the internal backup server for pre-update backups.
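The two Secrets and the pre-update annotation can be scripted with oc. The following is a minimal sketch, not a supported tool. The Secret name `restic-repo-password`, generating the password from `/dev/urandom`, and the function names are assumptions; the `ssh-key-secret` name, the `repo-password` and `ssh-privatekey` keys, the annotation, and the `openshift-workspaces` namespace come from this guide. The sketch does not create the CheBackupServerConfiguration custom object itself.

```shell
#!/usr/bin/env bash
# Sketch: prepare the Secrets referenced by an SFTP CheBackupServerConfiguration
# and choose the default backup server for pre-update backups.
# Assumptions: Secret name "restic-repo-password", password generation from
# /dev/urandom, and all function names are hypothetical choices for this example.

NAMESPACE=openshift-workspaces

# 32 random bytes, base64-encoded on a single line (44 characters).
generate_repo_password() {
  head -c 32 /dev/urandom | base64 | tr -d '\n'
}

# Step 1: Secret holding the "repo-password" key.
create_repo_password_secret() {
  local password=$1
  oc create secret generic restic-repo-password \
    --from-literal=repo-password="$password" -n "$NAMESPACE"
}

# Step 2: Secret holding the "ssh-privatekey" key (a passwordless SSH key file).
create_ssh_key_secret() {
  local key_file=$1
  oc create secret generic ssh-key-secret \
    --from-file=ssh-privatekey="$key_file" -n "$NAMESPACE"
}

# With several backup servers, annotate exactly one of them for pre-update backups.
mark_pre_update_backup_server() {
  local name=$1
  oc annotate CheBackupServerConfiguration "$name" \
    che.eclipse.org/backup-before-update=true --overwrite -n "$NAMESPACE"
}
```

Store the output of `generate_repo_password` somewhere safe before passing it to `create_repo_password_secret`, because the backup data cannot be decrypted without it.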

8.2.1.2. Backing up a CodeReady Workspaces instance to an SFTP backup server by using the CheClusterBackup custom object

You can use a CheClusterBackup custom object to make a backup snapshot of a CodeReady Workspaces instance and to send the snapshot to the configured backup server. Each backup snapshot requires a new CheClusterBackup custom object: editing a CheClusterBackup custom object that has already been consumed, during or after the backup, has no effect.

This procedure does not back up the source code stored in users' workspaces. To back up the source code stored in users' workspaces, see Backups of persistent volumes.

Prerequisites

- Configured backup server. See Section 8.1, “Supported Restic-compatible backup servers”.

- Created CheBackupServerConfiguration custom object. See Section 8.2.1.1, “Configuring CodeReady Workspaces with custom resources to use an SFTP backup server”.

Procedure

1. Create the CheClusterBackup custom object, which creates a backup snapshot. The callouts in the example custom object describe the following:

   - (1) Name of the CheBackupServerConfiguration custom object that defines which backup server to use.
   - (2) Configures the Operator through this custom resource to use the CodeReady Workspaces-managed internal backup server or an administrator-managed external backup server (SFTP, Amazon S3 or S3 API compatible storage, or REST).

   Tip: If you intend to reuse a name for CheClusterBackup custom objects, first delete any existing custom object with the same name. To delete it on the command line, use oc:

   $ oc delete CheClusterBackup <name> -n openshift-workspaces

2. Read the status section of the CheClusterBackup custom object to verify the backup process, for example:

   status:
     message: 'Backup is in progress. Start time: <timestamp>'
     stage: Collecting CodeReady Workspaces installation data
     state: InProgress

   The CodeReady Workspaces instance is backed up in a snapshot when state is Succeeded.
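Instead of reading the status section by hand, you can poll the state field with `oc get ... -o jsonpath`. A minimal sketch, assuming the object lives in the `openshift-workspaces` namespace; the function name, the attempt count, and the interval are arbitrary choices for this example. An empty state is treated as "not reported yet", and any state other than InProgress or Succeeded stops the loop.

```shell
#!/usr/bin/env bash
# Sketch: poll status.state of a CheClusterBackup until it reports Succeeded.
# Assumptions: namespace openshift-workspaces; hypothetical function name;
# defaults of 60 attempts, 10 seconds apart, are arbitrary.
wait_for_backup() {
  local name=$1 attempts=${2:-60} interval=${3:-10}
  local state i=0
  while [ "$i" -lt "$attempts" ]; do
    state=$(oc get CheClusterBackup "$name" -n openshift-workspaces \
      -o jsonpath='{.status.state}')
    case "$state" in
      Succeeded)
        echo "Backup $name succeeded"
        return 0 ;;
      ""|InProgress)
        # Status not reported yet, or backup still running: try again later.
        sleep "$interval" ;;
      *)
        echo "Backup $name stopped in state: $state" >&2
        return 1 ;;
    esac
    i=$((i + 1))
  done
  echo "Timed out waiting for backup $name" >&2
  return 1
}
```

For example, `wait_for_backup <name>` returns 0 once the named CheClusterBackup custom object reports Succeeded.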

8.2.2. Backing up a CodeReady Workspaces instance to an SFTP backup server by using crwctl

You can use crwctl to make a backup snapshot of a CodeReady Workspaces instance and send the snapshot to a configured SFTP backup server. To do so, enter crwctl with the command-line options or set the environment variables.

8.2.2.1. Backing up a CodeReady Workspaces instance to an SFTP backup server by using crwctl with command-line options

To make a backup snapshot of a CodeReady Workspaces instance and send the snapshot to a configured SFTP backup server, run crwctl with the command-line options.

This procedure does not back up the source code stored in users' workspaces. To back up the source code stored in users' workspaces, see Backups of persistent volumes.

Prerequisites

- Installed crwctl.

- Configured SFTP backup server. See Section 8.1, “Supported Restic-compatible backup servers”.

Procedure

1. Enter the crwctl server:backup command with the following arguments:

   $ crwctl server:backup \
       --repository-url=<repository_url> \
       --repository-password=<repository_password> \
       --ssh-key-file=<ssh_key_file>

   where:

   - --repository-url (or -r) is the backup repository URL. Syntax for the backup repository URL: sftp:<user>@<host>:/<repository_path_on_sftp_server>. An example of a repository path on the SFTP server: /srv/restic-repo. For more details about repository URL syntax, see the Restic documentation.
   - --repository-password (or -p) is the backup repository password.
   - --ssh-key-file is the path to a private SSH key file for authenticating on the SFTP server.

   Tip: Information about the last used backup server is stored in a Secret inside the CodeReady Workspaces cluster. When you consistently use the same backup server, you can enter the --repository-url and --repository-password options with the crwctl server:backup command just once and omit them afterward when entering crwctl server:backup or crwctl server:restore.

2. Verify the output of the entered command. For example:

   ...
   ✔ Scheduling backup...OK
   ✔ Waiting until backup process finishes...OK
   Backup snapshot ID: 9f0adce2
   Command server:backup has completed successfully in 00:10.
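The repository URL and the snapshot ID can also be handled in a script. The following sketch assumes plain-text crwctl output that contains a `Backup snapshot ID: <id>` line as in the example output; the helper names, the user `backup`, the host `sftp.example.com`, the key path, and the `REPO_PASSWORD` variable are hypothetical. The path is passed with its leading slash, so `/srv/restic-repo` yields `sftp:backup@sftp.example.com:/srv/restic-repo`.

```shell
#!/usr/bin/env bash
# Sketch: helpers around "crwctl server:backup" for an SFTP backup server.
# Assumption: all function names and example values are hypothetical.

# Build a repository URL of the form sftp:<user>@<host>:<path>.
sftp_repository_url() {
  local user=$1 host=$2 path=$3
  printf 'sftp:%s@%s:%s\n' "$user" "$host" "$path"
}

# Print the snapshot ID from a line such as "Backup snapshot ID: 9f0adce2".
extract_snapshot_id() {
  sed -n 's/^.*Backup snapshot ID: \([0-9a-f][0-9a-f]*\).*$/\1/p'
}

# Usage (requires crwctl and a reachable SFTP server):
#   url=$(sftp_repository_url backup sftp.example.com /srv/restic-repo)
#   crwctl server:backup --repository-url="$url" \
#     --repository-password="$REPO_PASSWORD" \
#     --ssh-key-file="$HOME/.ssh/id_rsa" | tee backup.log
#   extract_snapshot_id < backup.log
```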

You can back up a CodeReady Workspaces instance by entering the name of a CheBackupServerConfiguration custom object as an argument with the crwctl server:backup command.

Prerequisites

- Installed crwctl.

- Configured backup server. See Section 8.1, “Supported Restic-compatible backup servers”.

- Created CheBackupServerConfiguration custom object.

Procedure

Run the following command on a command line:

crwctl server:backup \ --backup-server-config-name=<name_of_CheBackupServerConfiguration>

$ crwctl server:backup \ --backup-server-config-name=<name_of_CheBackupServerConfiguration>1 Copy to Clipboard Copied! Toggle word wrap Toggle overflow - 1

- This option points crwctl to a

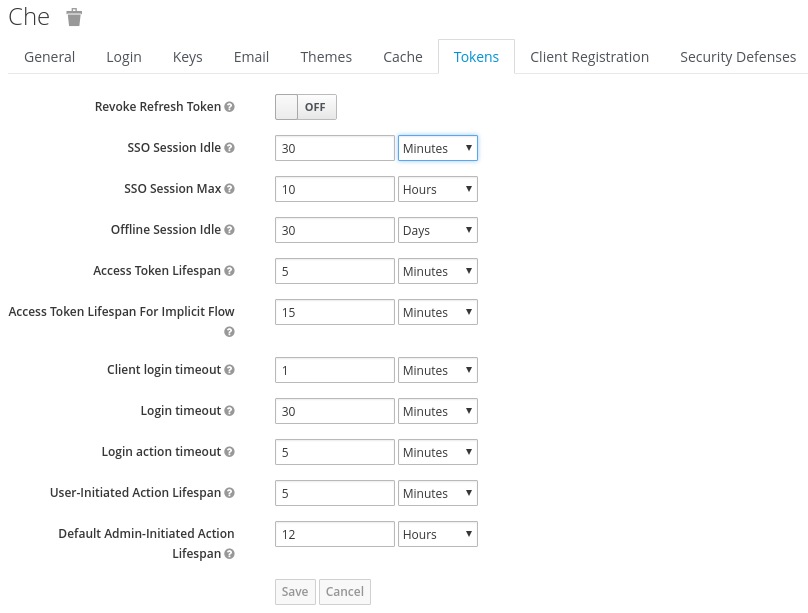

CheBackupServerConfigurationcustom object. You can find thenameof theCheBackupServerConfigurationcustom object undermetadatain the custom object.
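To avoid passing a wrong name, you can list the metadata.name values with oc and check the argument before calling crwctl. A minimal sketch, assuming the custom objects live in the `openshift-workspaces` namespace; the function names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: back up by referencing a CheBackupServerConfiguration by name.
# Assumptions: namespace openshift-workspaces; hypothetical function names.

# Print the metadata.name of every CheBackupServerConfiguration, one per line.
list_backup_server_configs() {
  oc get CheBackupServerConfiguration -n openshift-workspaces \
    -o jsonpath='{range .items[*]}{.metadata.name}{"\n"}{end}'
}

# Run a backup with the named configuration; refuse names that do not exist.
backup_with_config() {
  local name=$1
  if ! list_backup_server_configs | grep -qxF -- "$name"; then
    echo "No CheBackupServerConfiguration named $name" >&2
    return 1
  fi
  crwctl server:backup --backup-server-config-name="$name"
}
```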