Administration and Configuration Guide

For use with Red Hat JBoss Data Grid 7.2

Abstract

Part I. Introduction

Chapter 1. Setting up Red Hat JBoss Data Grid

1.1. Prerequisites

The only prerequisites for setting up Red Hat JBoss Data Grid are a Java Virtual Machine and the most recent supported version of the product installed on your system.

1.2. Steps to Set up Red Hat JBoss Data Grid

The following steps outline the necessary (and, where stated, optional) steps for a first-time basic configuration of Red Hat JBoss Data Grid. Follow the steps in the order specified, and do not skip any step unless it is identified as optional.

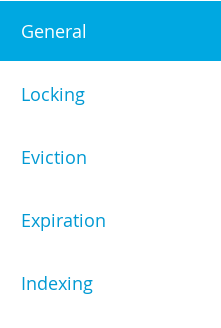

Set Up JBoss Data Grid

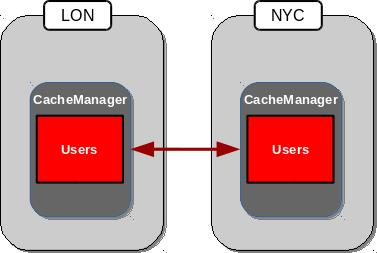

Set Up the Cache Manager

The foundation of a JBoss Data Grid configuration is a cache manager. Cache managers can retrieve cache instances and create cache instances quickly and easily using previously specified configuration templates. For details about setting up a cache manager, refer to the Cache Manager section in the JBoss Data Grid Getting Started Guide.

Set Up JVM Memory Management

An important step in configuring your JBoss Data Grid is to set up memory management for your Java Virtual Machine (JVM). JBoss Data Grid offers features such as eviction and expiration to help manage the JVM memory.

Set Up Eviction

Use eviction to specify the logic used to remove entries from the in-memory cache implementation based on how often they are used. JBoss Data Grid offers different eviction strategies for finer control over entry eviction in your data grid. Eviction strategies and instructions to configure them are available in Configuring Eviction.

Set Up Expiration

To set upper limits to an entry’s time in the cache, attach expiration information to each entry. Use expiration to set up the maximum period an entry is allowed to remain in the cache and how long the retrieved entry can remain idle before being removed from the cache. For details, see Configuring Expiration.

Monitor Your Cache

JBoss Data Grid uses logging via JBoss Logging to help users monitor their caches.

Set Up Logging

It is not mandatory to set up logging for your JBoss Data Grid, but it is highly recommended. JBoss Data Grid uses JBoss Logging, which allows the user to easily set up automated logging for operations in the data grid. Logs can subsequently be used to troubleshoot errors and identify the cause of an unexpected failure. For details, see Set Up Logging.

Set Up Cache Modes

Cache modes specify whether a cache is local (a simple, in-memory cache) or clustered (a cache that replicates state changes over a small subset of nodes). If a cache is clustered, you must also apply replication, distribution, or invalidation mode to determine how changes propagate across that subset of nodes. For details, see Set Up Cache Modes.

Set Up Locking for the Cache

When replication or distribution is in effect, copies of entries are accessible across multiple nodes. As a result, copies of the data can be accessed or modified concurrently by different threads. To maintain consistency for all copies across nodes, configure locking. For details, see Set Up Locking for the Cache and Set Up Isolation Levels.

Set Up and Configure a Cache Store

JBoss Data Grid offers the passivation feature (or cache writing strategies if passivation is turned off) to temporarily store entries removed from memory in a persistent, external cache store. To set up passivation or a cache writing strategy, you must first set up a cache store.

Set Up a Cache Store

The cache store serves as a connection to the persistent store. Cache stores are primarily used to fetch entries from the persistent store and to push changes back to the persistent store. For details, see Set Up and Configure a Cache Store.

Set Up Passivation

Passivation stores entries evicted from memory in a cache store. This feature allows entries to remain available despite not being present in memory and prevents potentially expensive write operations to the persistent cache. For details, see Set Up Passivation.

Set Up a Cache Writing Strategy

If passivation is disabled, every attempt to write to the cache results in writing to the cache store. This is the default Write-Through cache writing strategy. Set the cache writing strategy to determine whether these cache store writes occur synchronously or asynchronously. For details, see Set Up Cache Writing.
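To make the difference between synchronous and asynchronous cache store writes concrete, here is a minimal plain-Java sketch. It is a simplified model, not JBoss Data Grid code: the WritingCache class, its Mode enum, and the in-memory map that stands in for the cache store are hypothetical. With Write-Through, the store is updated before put() returns; with the asynchronous variant (commonly called write-behind), the store write is queued on a background thread.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical model of the two cache writing strategies; not JBoss Data Grid code.
final class WritingCache {
    enum Mode { WRITE_THROUGH, WRITE_BEHIND }

    private final Map<String, String> memory = new ConcurrentHashMap<>();
    // Stand-in for the persistent cache store.
    final Map<String, String> store = new ConcurrentHashMap<>();
    private final Mode mode;
    // Single background thread that applies store writes in submission order.
    private final ExecutorService storeWriter = Executors.newSingleThreadExecutor();

    WritingCache(Mode mode) {
        this.mode = mode;
    }

    void put(String key, String value) {
        memory.put(key, value);
        if (mode == Mode.WRITE_THROUGH) {
            store.put(key, value);                            // caller waits for the store write
        } else {
            storeWriter.execute(() -> store.put(key, value)); // caller returns immediately
        }
    }

    // Lets queued asynchronous store writes finish, then stops the writer thread.
    void shutdown() {
        storeWriter.shutdown();
        try {
            storeWriter.awaitTermination(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}
```

In the asynchronous mode the caller does not wait for the store, so in this model an update that is still queued when the process dies never reaches the store; that is the usual trade-off against the lower write latency.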

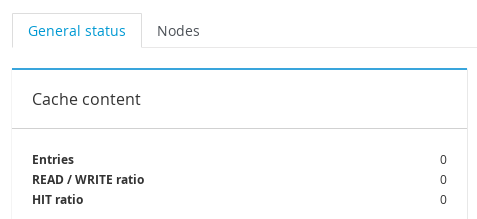

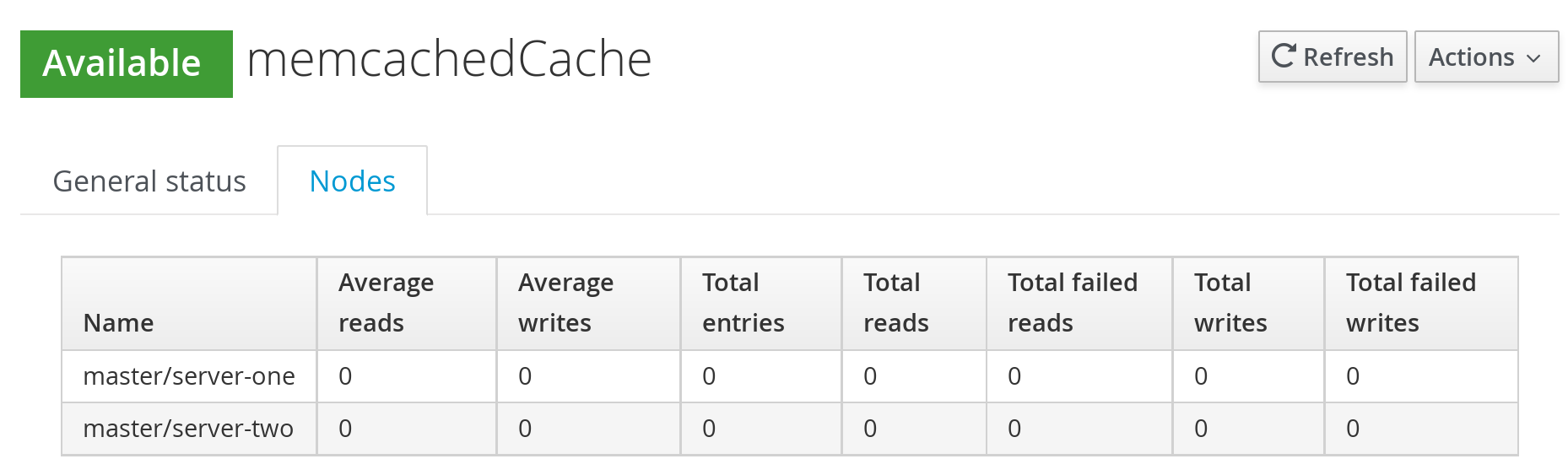

Monitor Caches and Cache Managers

JBoss Data Grid includes three primary tools to monitor the cache and cache managers once the data grid is up and running.

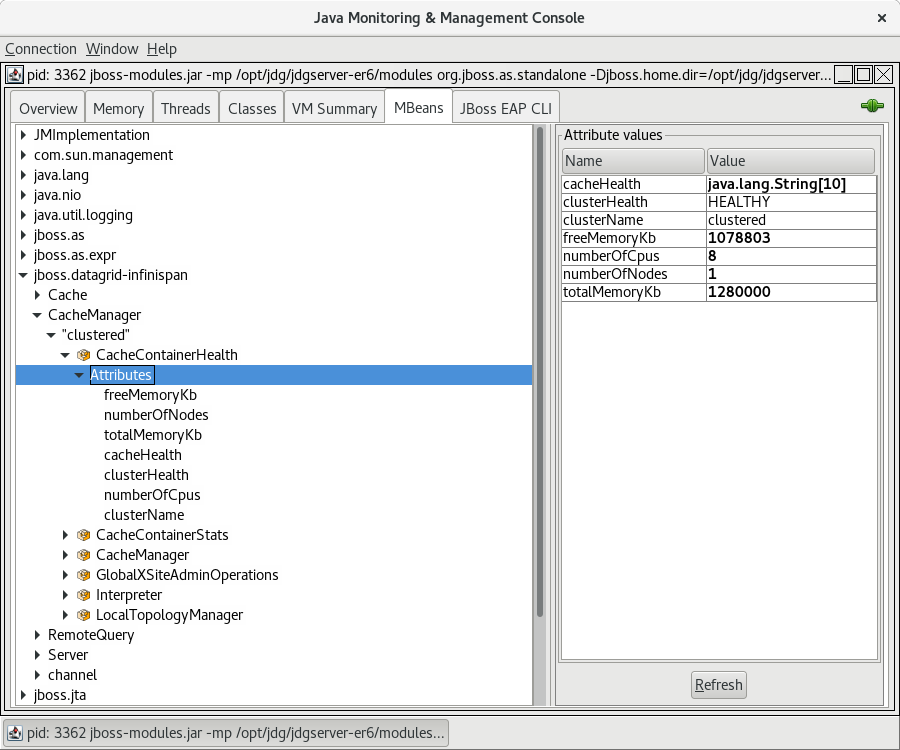

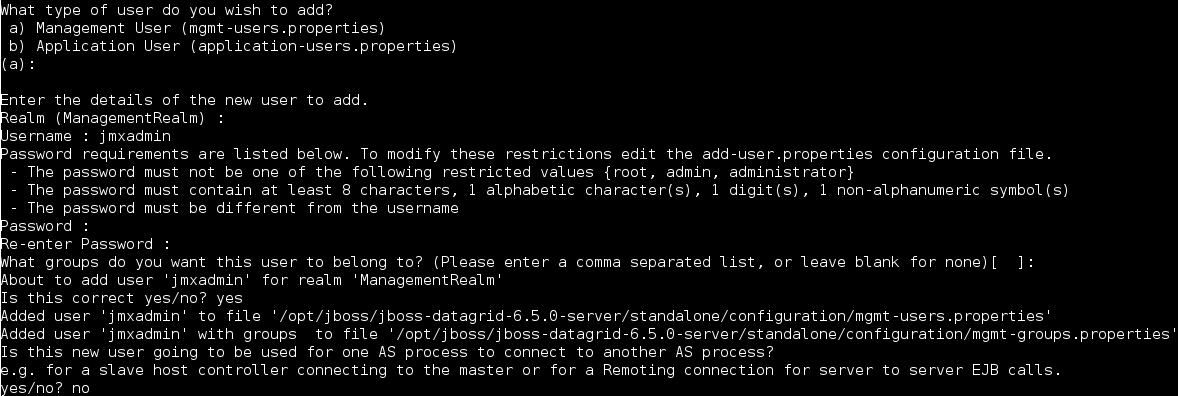

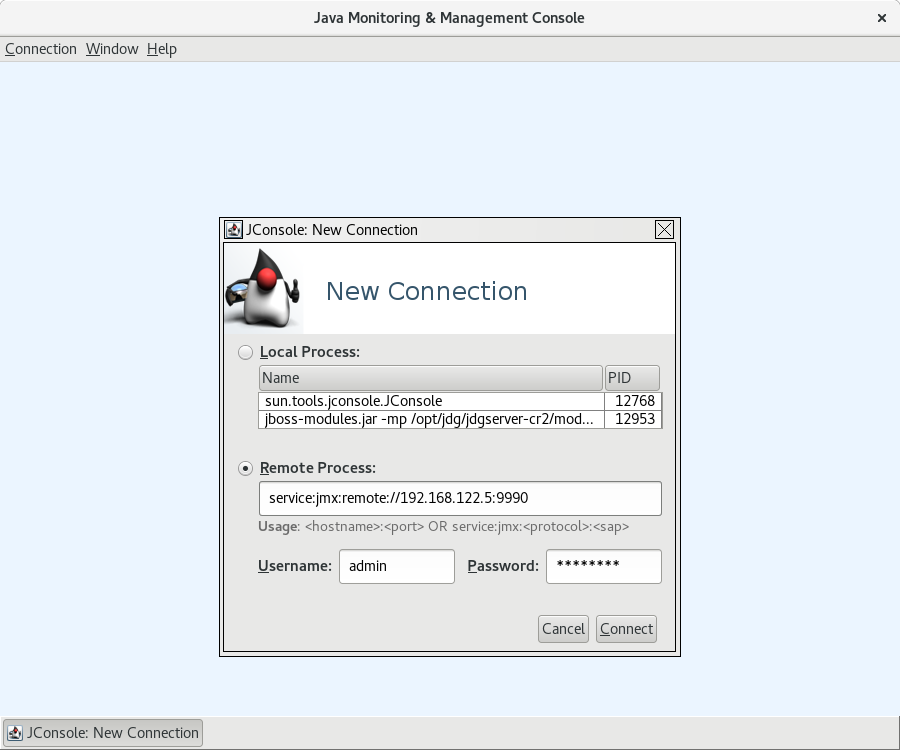

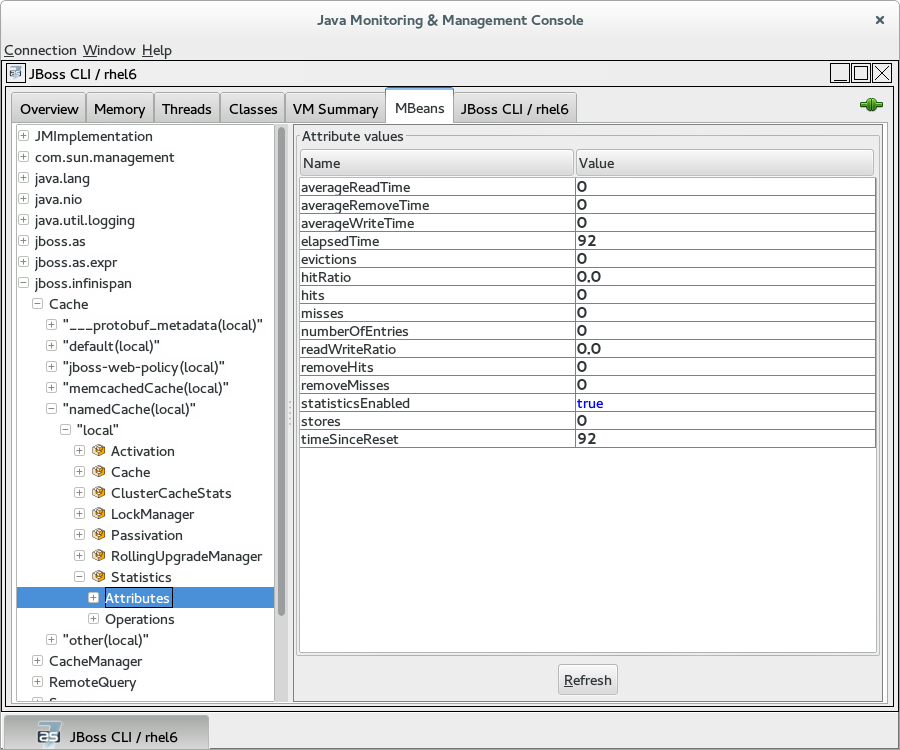

Set Up JMX

JMX is the standard statistics and management tool used for JBoss Data Grid. Depending on the use case, JMX can be configured at a cache level or a cache manager level or both. For details, see Set Up Java Management Extensions (JMX).

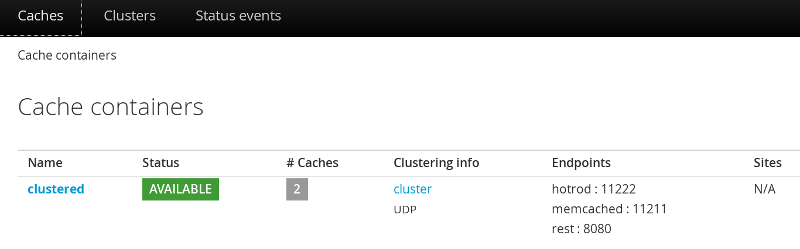

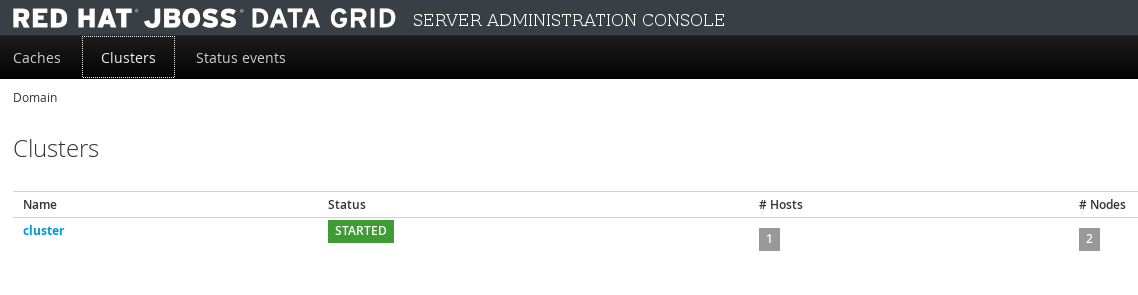

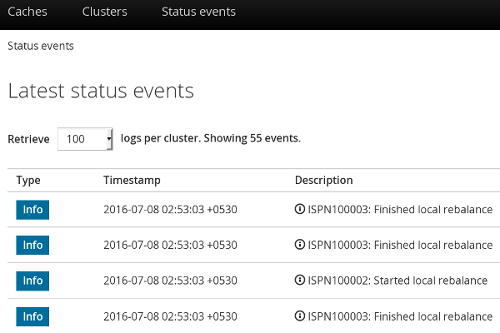

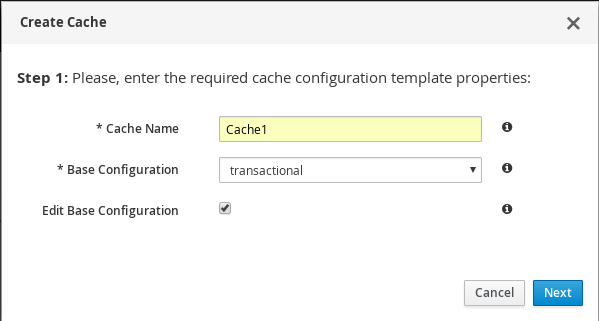

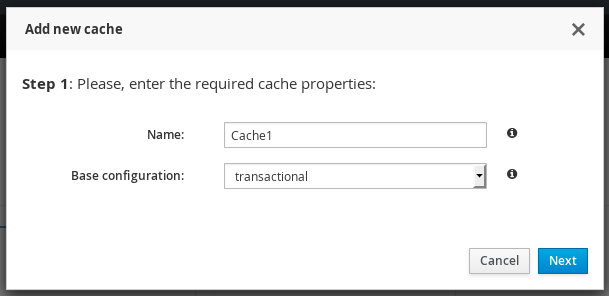

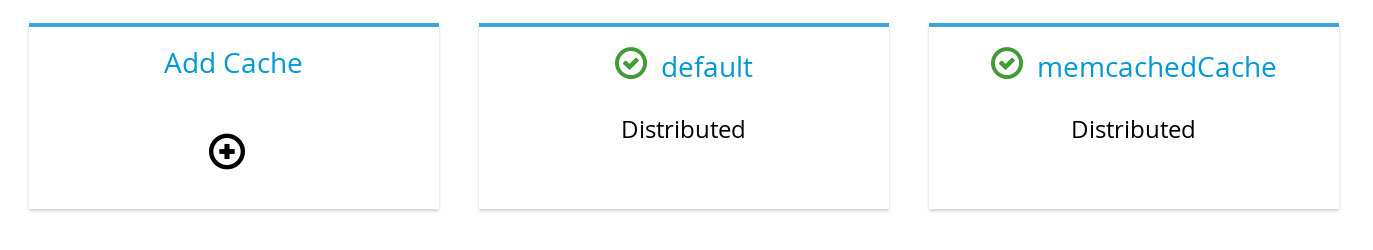

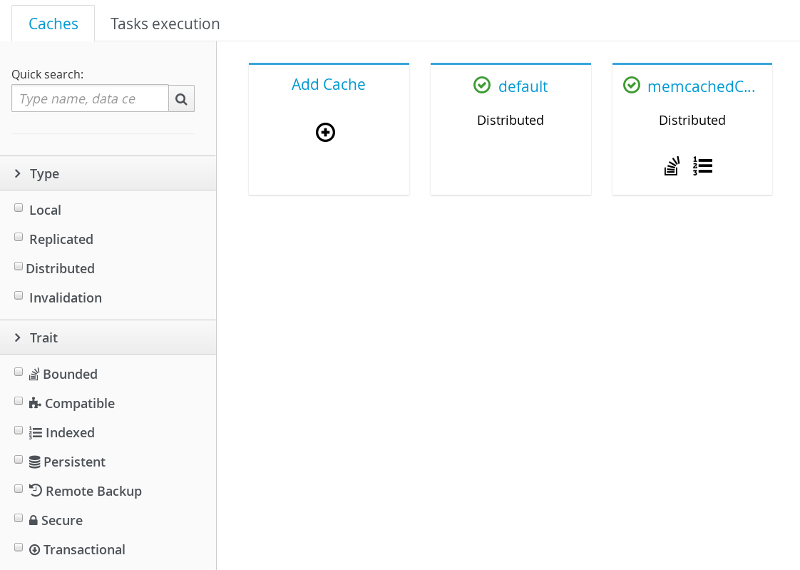

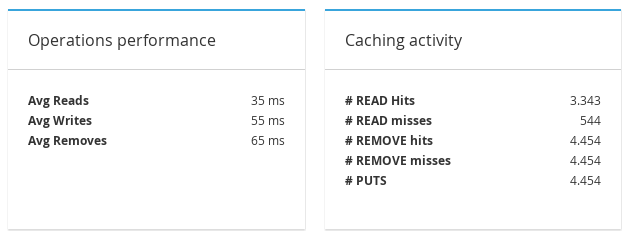

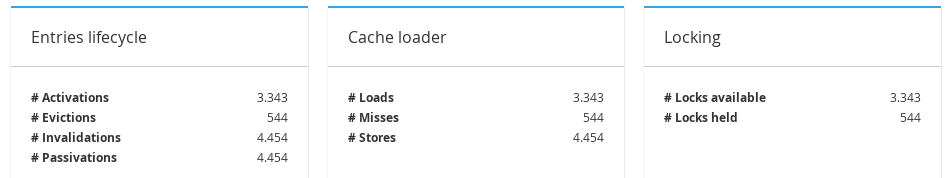

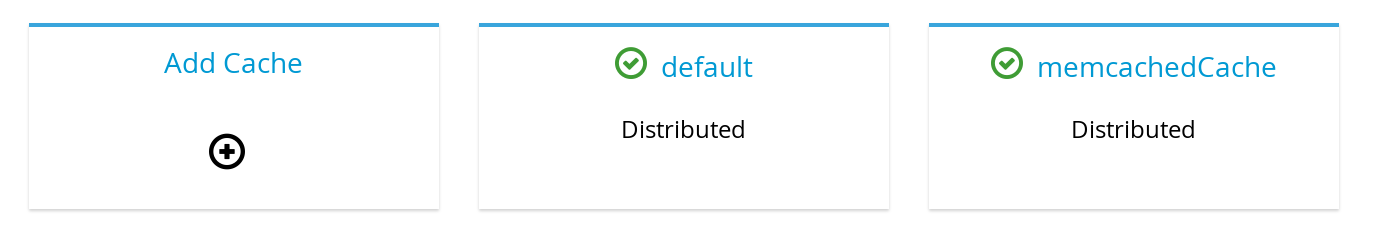

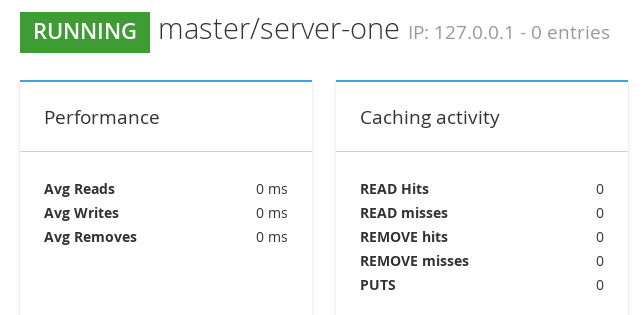

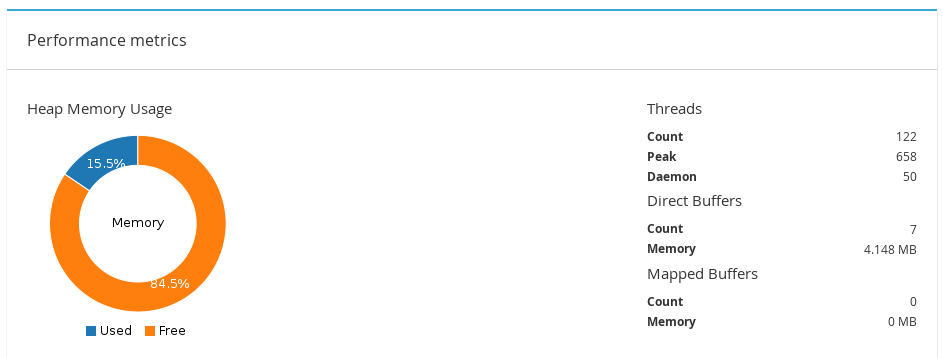

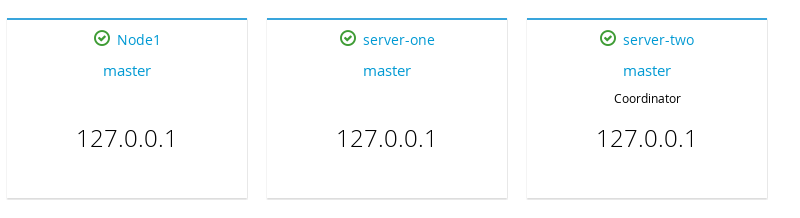

Access the Administration Console

Red Hat JBoss Data Grid 7.2.1 introduces an Administration Console, allowing for web-based monitoring and management of caches and cache managers. For usage details, refer to Red Hat JBoss Data Grid Administration Console Getting Started.

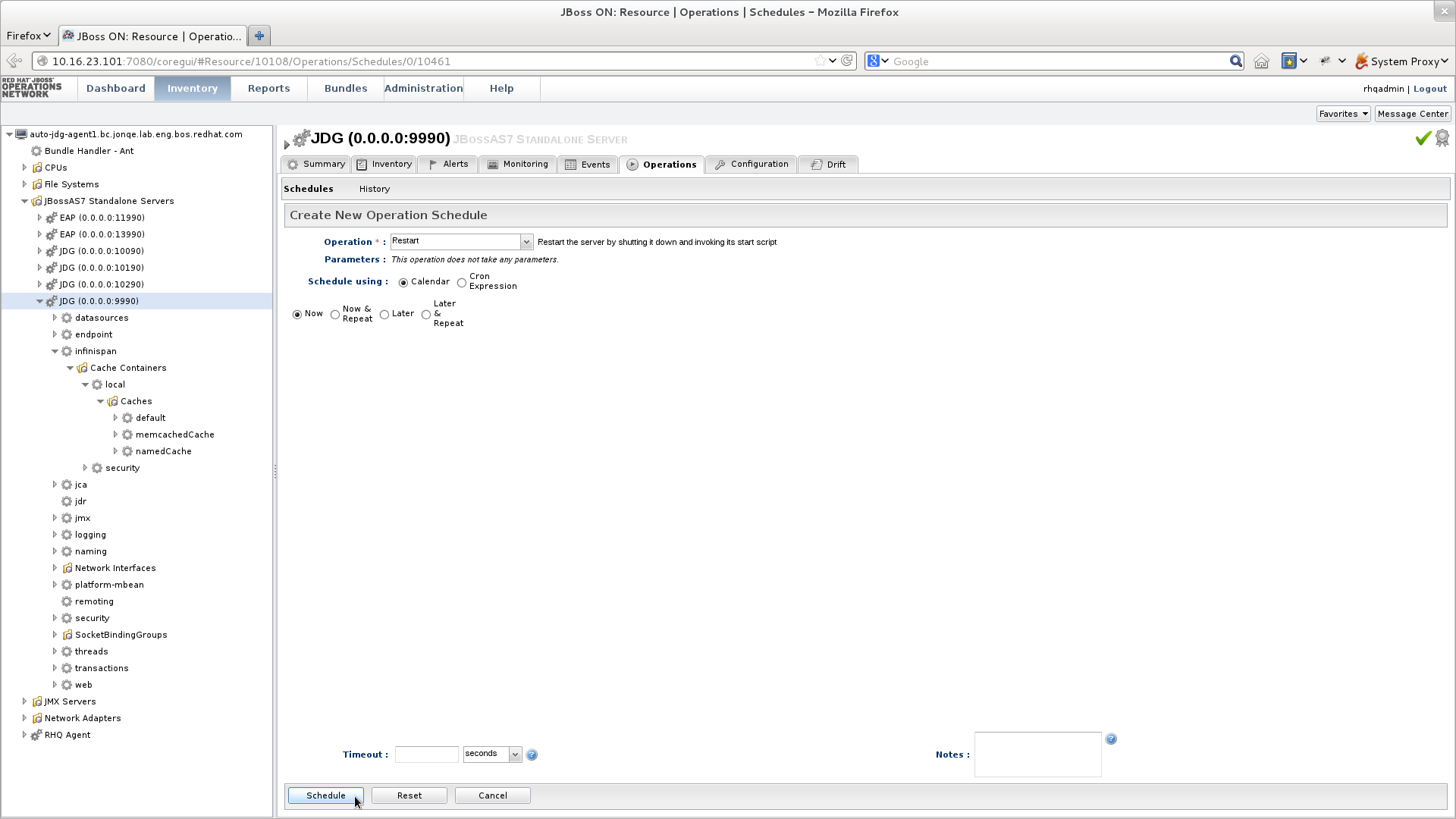

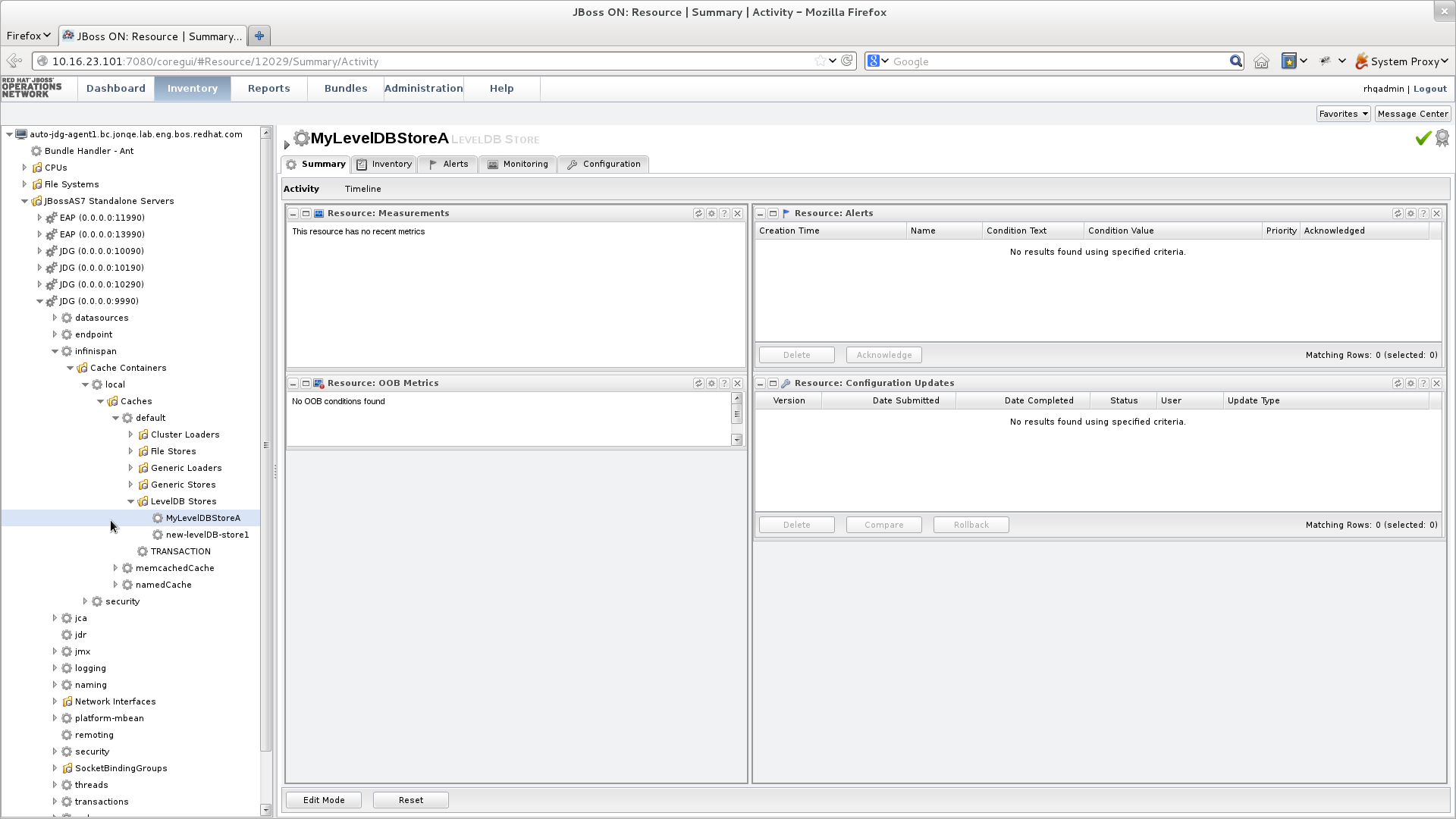

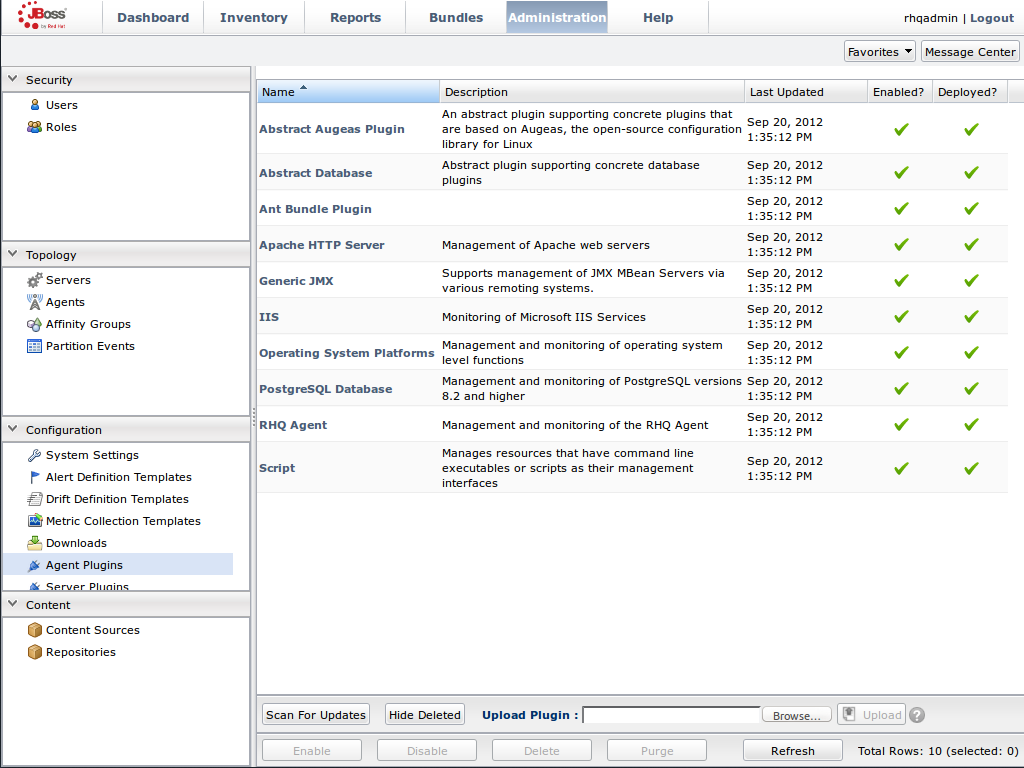

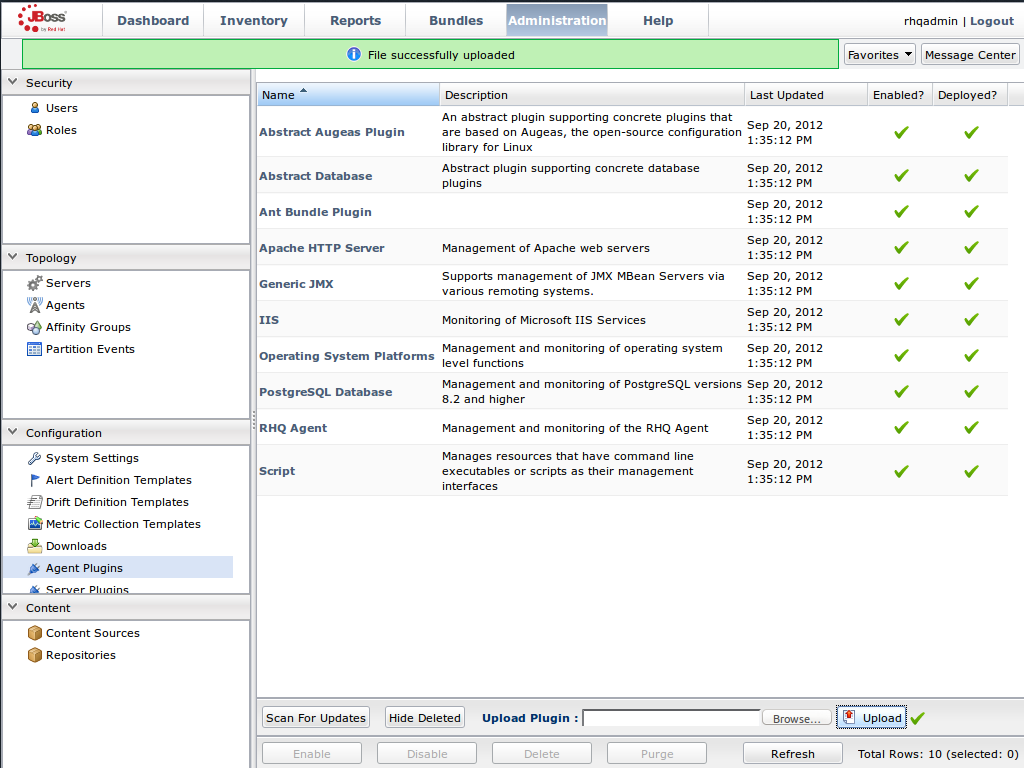

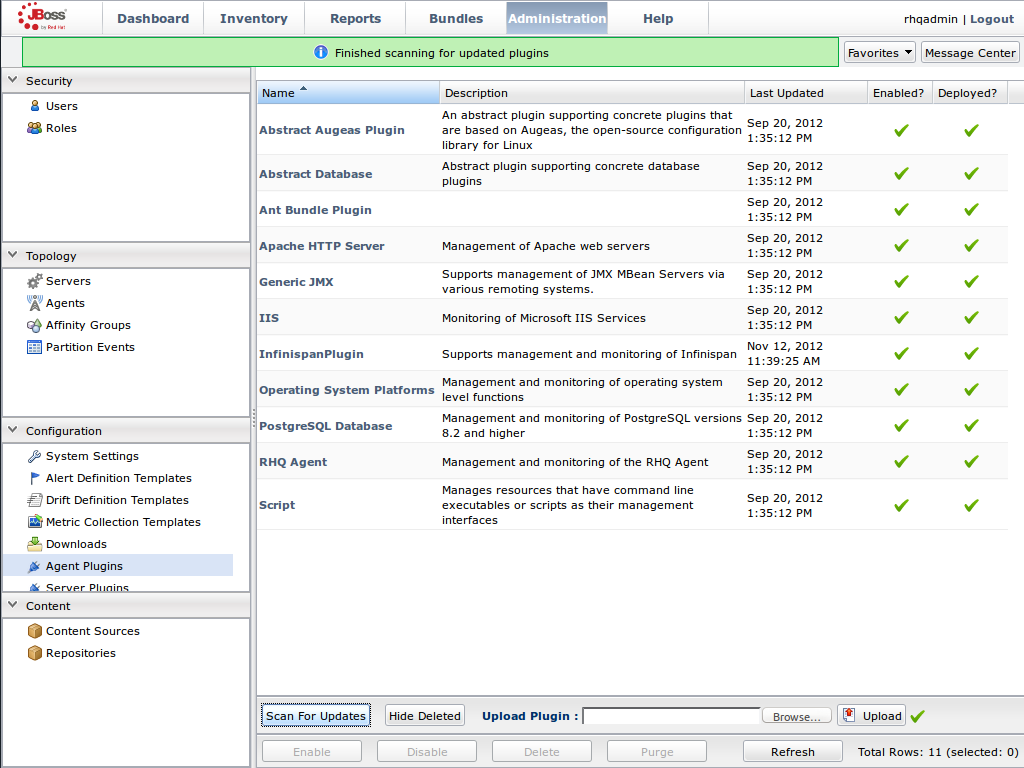

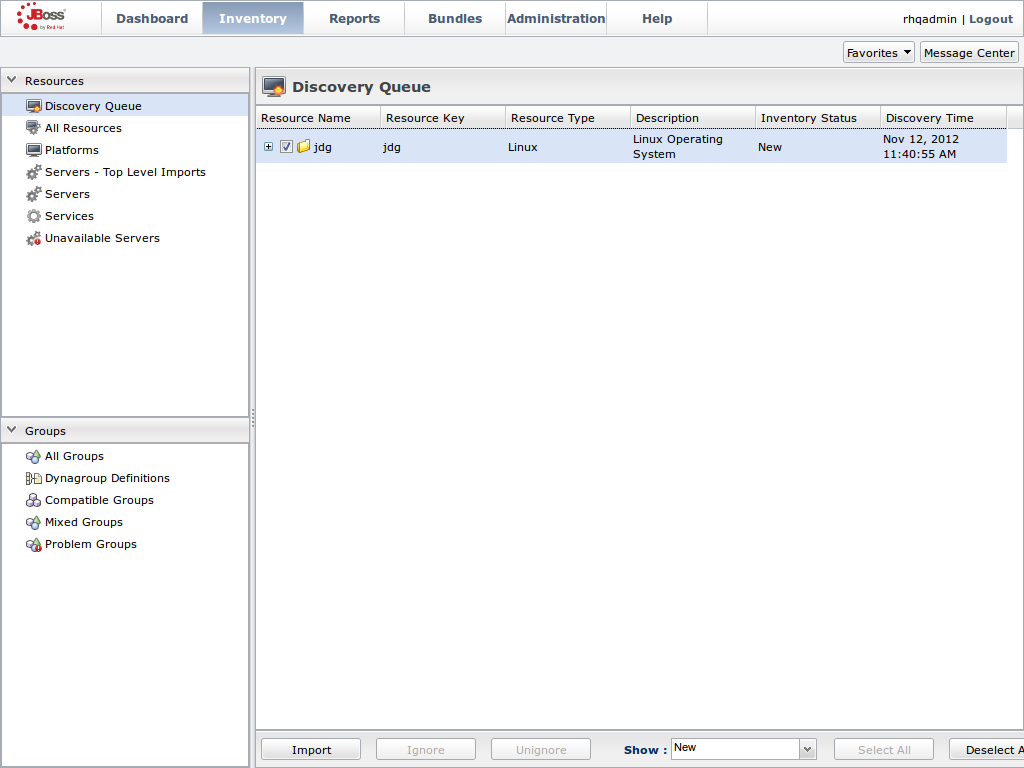

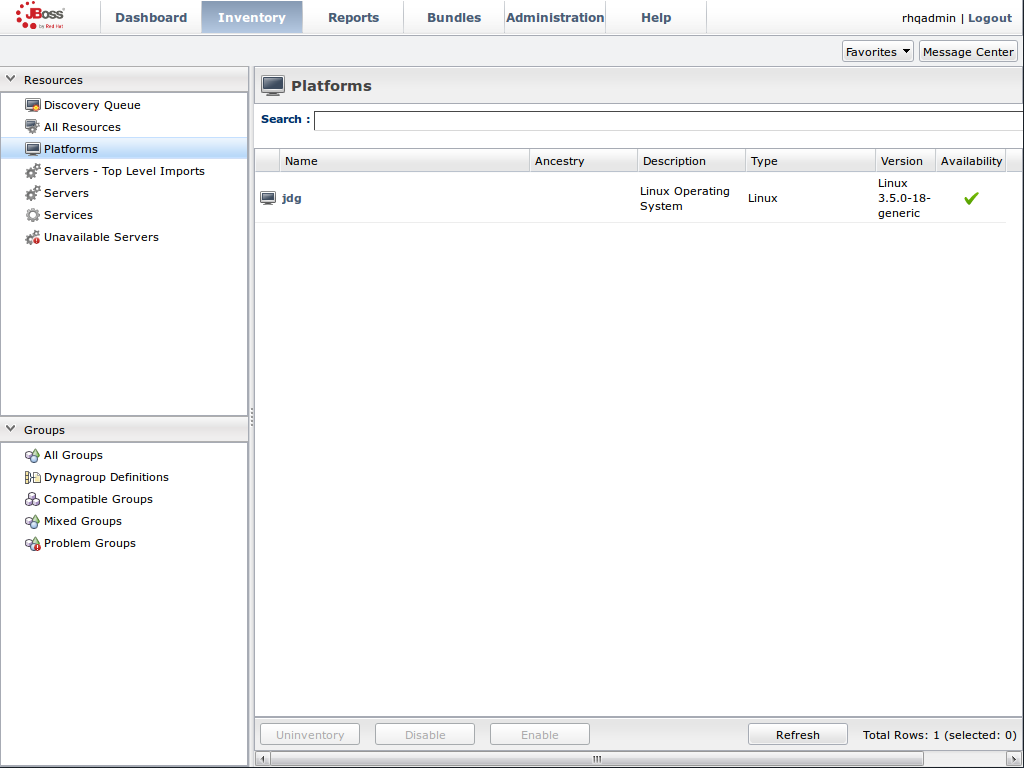

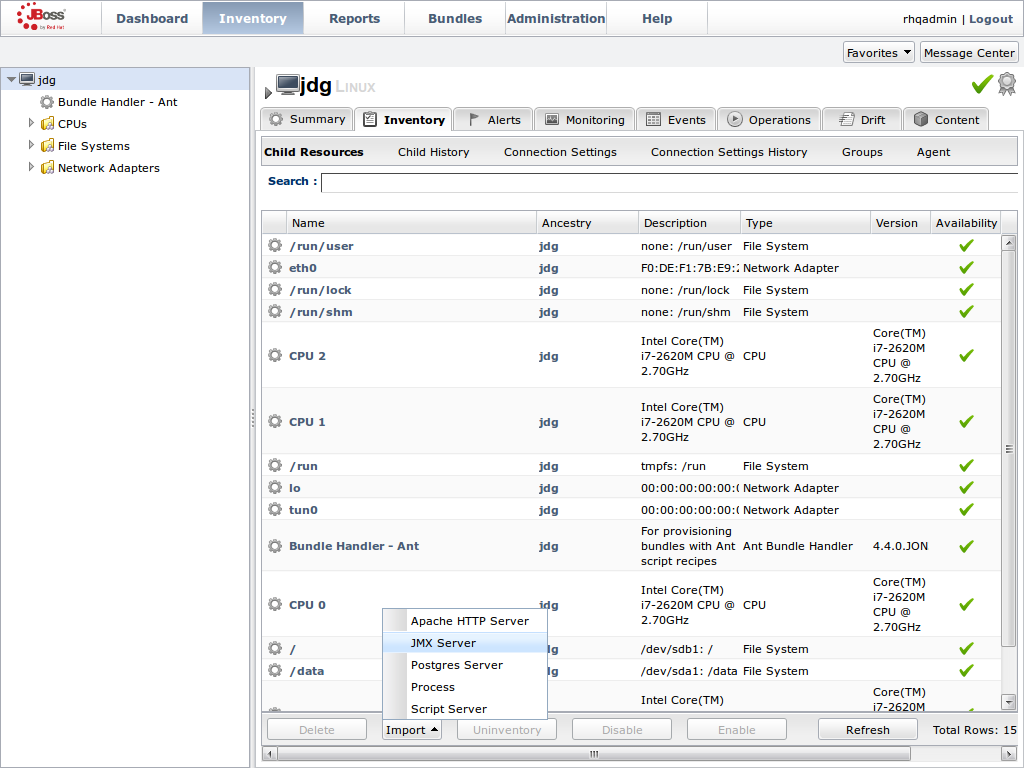

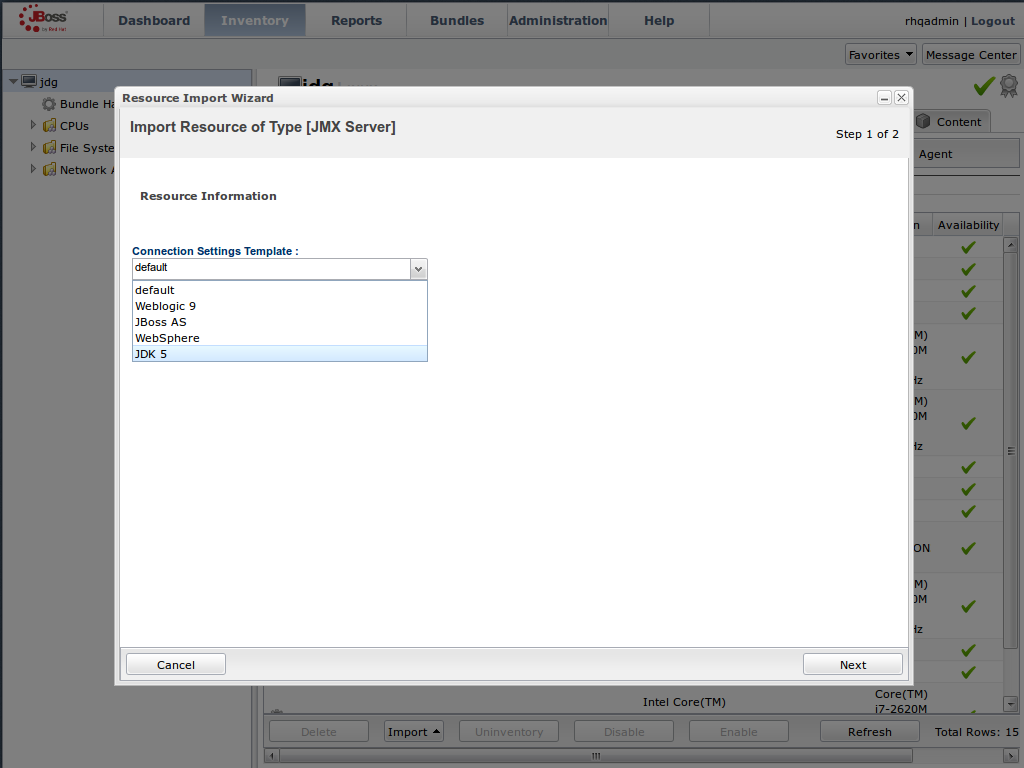

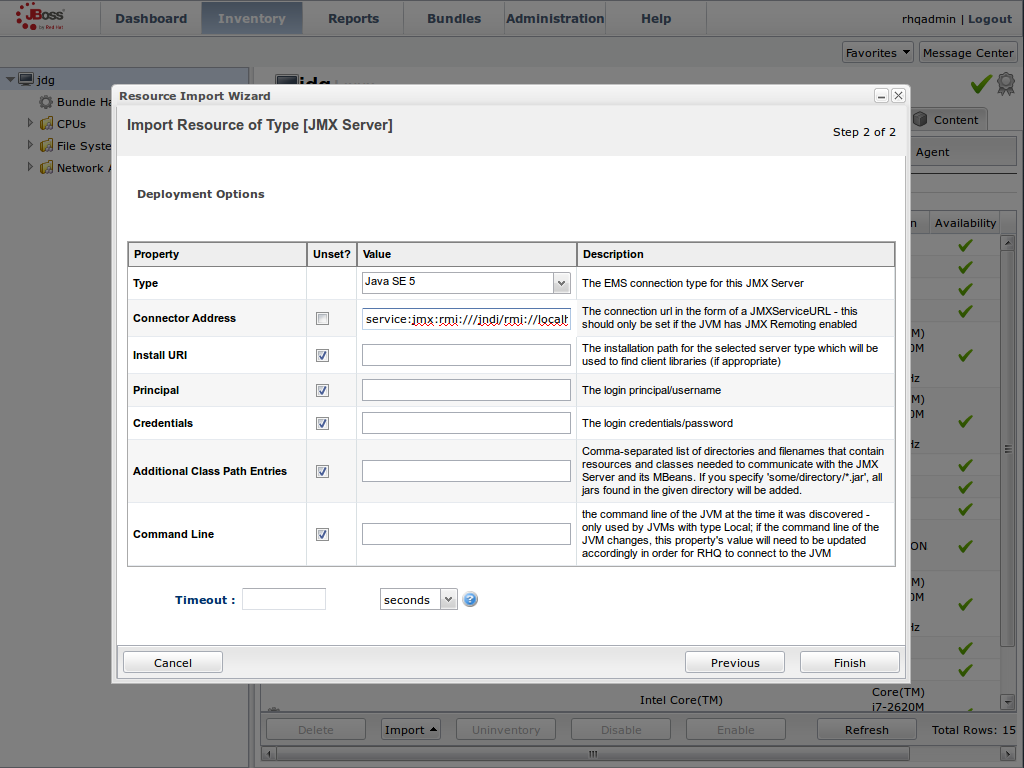

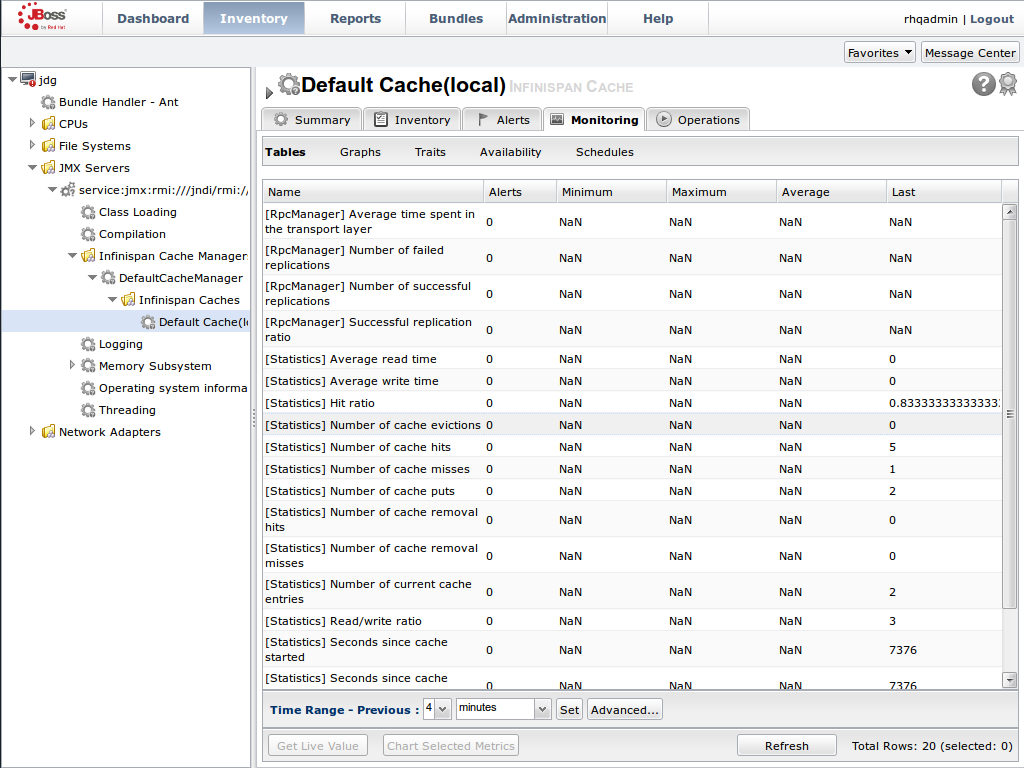

Set Up Red Hat JBoss Operations Network (JON)

Red Hat JBoss Operations Network (JON) is another monitoring solution available for JBoss Data Grid. JON offers a graphical interface to monitor runtime parameters and statistics for caches and cache managers. For details, see Set Up JBoss Operations Network (JON).

Note: The JON plugin has been deprecated in JBoss Data Grid 7.2 and is expected to be removed in a subsequent version.

Introduce Topology Information

Optionally, introduce topology information to your data grid to specify where specific types of information or objects in your data grid are located. Server hinting is one of the ways to introduce topology information in JBoss Data Grid.

Set Up Server Hinting

When set up, server hinting provides high availability by ensuring that the original and backup copies of data are not stored on the same physical server, rack or data center. This is optional in cases such as a replicated cache, where all data is backed up on all servers, racks and data centers. For details, see High Availability Using Server Hinting.
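The placement rule behind server hinting can be illustrated with a small sketch: given the node that holds the original copy, choose a backup node that shares as little physical infrastructure with it as possible (a different site first, then a different rack, then a different machine). This is a simplified, hypothetical model; JBoss Data Grid's actual data placement is more involved, and the ServerHinting class and its scoring are assumptions for illustration only.

```java
import java.util.Comparator;
import java.util.List;

// Hypothetical topology-aware backup selection; not JBoss Data Grid's placement algorithm.
final class ServerHinting {
    static final class Node {
        final String name, site, rack, machine;

        Node(String name, String site, String rack, String machine) {
            this.name = name;
            this.site = site;
            this.rack = rack;
            this.machine = machine;
        }
    }

    // 3 = different site, 2 = different rack, 1 = different machine, 0 = same machine.
    static int separation(Node a, Node b) {
        if (!a.site.equals(b.site)) return 3;
        if (!a.rack.equals(b.rack)) return 2;
        if (!a.machine.equals(b.machine)) return 1;
        return 0;
    }

    // Chooses the candidate that shares the least physical infrastructure with the primary.
    static Node chooseBackup(Node primary, List<Node> candidates) {
        return candidates.stream()
                .filter(n -> n != primary)
                .max(Comparator.comparingInt(n -> separation(primary, n)))
                .orElseThrow(IllegalStateException::new);
    }
}
```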

The subsequent chapters detail each of these steps towards setting up a standard JBoss Data Grid configuration.

Part II. Managing JVM Memory

Chapter 2. Eviction and Expiration

Eviction and expiration are strategies for preventing OutOfMemoryError exceptions in the Java heap space. In other words, eviction and expiration ensure that Red Hat JBoss Data Grid does not run out of memory.

2.1. Overview of Eviction and Expiration

- Eviction
  - Removes unused entries from memory after the number of entries in the cache reaches a maximum limit.
  - The operation is local to a single cache instance. It removes entries from memory only.
  - Executes each time an entry is added or updated in the cache.
- Expiration
  - Removes entries from memory after a certain amount of time.
  - The operation is cluster-wide. It removes entries from memory across all cache instances and also removes entries from the cache store.
  - Expiration operations are processed by threads that you can configure with the ExpirationManager interface.

2.2. Configuring Eviction

You configure Red Hat JBoss Data Grid to perform eviction with the <memory /> element in your cache configuration. Alternatively, you can use the MemoryConfigurationBuilder class to configure eviction programmatically.

2.2.1. Eviction Types

Eviction types define the maximum limit for entries in the cache.

COUNT - Measures the number of entries in the cache. When the count exceeds the maximum, JBoss Data Grid evicts unused entries.

MEMORY - Measures the amount of memory that all entries in the cache take up. When the total amount of memory exceeds the maximum, JBoss Data Grid evicts unused entries.
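The following sketch shows what each eviction type measures. It is a hypothetical bounded cache, not JBoss Data Grid code: COUNT compares the number of entries with the limit, and MEMORY compares the total size of the stored values in bytes. For simplicity it evicts the oldest-inserted entries and counts only value bytes; a real implementation chooses unused entries and would also account for keys and per-entry overhead.

```java
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical bounded cache illustrating the COUNT and MEMORY eviction types.
final class BoundedCache {
    enum EvictionType { COUNT, MEMORY }

    private final EvictionType type;
    private final long maxSize; // entries for COUNT, bytes for MEMORY
    private final LinkedHashMap<String, byte[]> entries = new LinkedHashMap<>();
    private long totalBytes;

    BoundedCache(EvictionType type, long maxSize) {
        this.type = type;
        this.maxSize = maxSize;
    }

    // Current size as measured by the configured eviction type.
    long measuredSize() {
        return type == EvictionType.COUNT ? entries.size() : totalBytes;
    }

    void put(String key, byte[] value) {
        byte[] old = entries.remove(key);
        if (old != null) totalBytes -= old.length;
        entries.put(key, value);
        totalBytes += value.length;
        // Evict the oldest entries until the cache is back within its limit.
        Iterator<Map.Entry<String, byte[]>> it = entries.entrySet().iterator();
        while (measuredSize() > maxSize && it.hasNext()) {
            totalBytes -= it.next().getValue().length;
            it.remove();
        }
    }

    boolean containsKey(String key) {
        return entries.containsKey(key);
    }

    int count() {
        return entries.size();
    }
}
```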

2.2.2. Storage Types

Storage types define how JBoss Data Grid stores entries in the cache.

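| Storage Type | Description | Eviction Type | Policy |
|---|---|---|---|
| OBJECT | Stores entries as objects in the Java heap. This is the default storage type. | COUNT | TinyLFU |
| BINARY | Stores entries as bytes[] in the Java heap. | COUNT or MEMORY | TinyLFU |
| OFFHEAP | Stores entries as bytes[] in native memory outside the Java heap. | COUNT or MEMORY | LRU |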

The BINARY and OFFHEAP storage types both violate object equality. This occurs because equality is determined by the equivalence of the resulting bytes[] that the storage types generate instead of by the object instances.
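The equality caveat matters for key classes whose equals() method is looser than their serialized form. In the sketch below, CaseInsensitiveKey instances for "abc" and "ABC" are equal as objects but serialize to different bytes, so a byte-based store would treat them as two different keys. Plain Java serialization stands in for the marshaller that JBoss Data Grid actually uses; that substitution and both class names are assumptions.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.io.UncheckedIOException;
import java.util.Arrays;
import java.util.Locale;

// Hypothetical key whose equals() ignores case, so "abc" and "ABC" are equal objects.
final class CaseInsensitiveKey implements Serializable {
    private static final long serialVersionUID = 1L;
    final String value;

    CaseInsensitiveKey(String value) {
        this.value = value;
    }

    @Override
    public boolean equals(Object o) {
        return o instanceof CaseInsensitiveKey
                && ((CaseInsensitiveKey) o).value.equalsIgnoreCase(value);
    }

    @Override
    public int hashCode() {
        return value.toLowerCase(Locale.ROOT).hashCode();
    }
}

final class ByteEquality {
    // Java serialization stands in for the marshaller that produces the stored bytes.
    static byte[] toBytes(Serializable obj) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            out.writeObject(obj);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    // Byte-level comparison, as used when entries are stored as bytes[].
    static boolean bytesEqual(Serializable a, Serializable b) {
        return Arrays.equals(toBytes(a), toBytes(b));
    }
}
```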

Red Hat JBoss Data Grid includes the Caffeine caching library, which implements a variation of the Least Frequently Used (LFU) cache replacement algorithm known as TinyLFU. For OFFHEAP, JBoss Data Grid uses a custom implementation of the Least Recently Used (LRU) algorithm.
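As a point of reference for the LRU policy, the JDK's LinkedHashMap can express a minimal LRU cache: in access order, every read moves an entry to the end, and removeEldestEntry drops the least recently used entry once the limit is exceeded. This only illustrates the policy; it is not JBoss Data Grid's custom off-heap implementation, and TinyLFU additionally takes into account how frequently entries are accessed.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Minimal LRU cache: a LinkedHashMap in access order that drops its eldest entry.
final class LruCache<K, V> extends LinkedHashMap<K, V> {
    private static final long serialVersionUID = 1L;
    private final int maxEntries;

    LruCache(int maxEntries) {
        super(16, 0.75f, true); // accessOrder = true: get() moves an entry to the end
        this.maxEntries = maxEntries;
    }

    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > maxEntries; // evict the least recently used entry
    }
}
```

For example, with a limit of two, putting "a" and "b", reading "a", and then putting "c" evicts "b", because "a" was used more recently.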

2.2.3. Adding the Memory Element

The <memory> element controls how Red Hat JBoss Data Grid stores entries in memory.

For example, as a starting point to configure eviction for a standalone cache, add the <memory> element as follows:

<local-cache ...>
  <memory>
  </memory>
</local-cache>

2.2.4. Specifying the Storage Type

Define the storage type as a child element under <memory>, as follows:

OBJECT

<memory>
  <object/>
</memory>

BINARY

<memory>
  <binary/>
</memory>

OFFHEAP

<memory>
  <offheap/>
</memory>

2.2.5. Specifying the Eviction Type

Include the eviction attribute with the value set to COUNT or MEMORY.

OBJECT

<memory>
  <object/>
</memory>

Tip: The OBJECT storage type supports COUNT only, so you do not need to explicitly set the eviction type.

BINARY

<memory>
  <binary eviction="COUNT"/>
</memory>

OFFHEAP

<memory>
  <offheap eviction="MEMORY"/>
</memory>

2.2.6. Setting the Cache Size

Include the size attribute with a value set to a number greater than zero.

- For COUNT, the size attribute sets the maximum number of entries the cache can hold before eviction starts.
- For MEMORY, the size attribute sets the maximum number of bytes the cache can take from memory before eviction starts. For example, a value of 10000000000 is 10 GB.

Try different cache sizes to determine the optimal setting. A cache size that is too large can cause Red Hat JBoss Data Grid to run out of memory. At the same time, a cache size that is too small wastes available memory.
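The MEMORY size is given in bytes, and the 10 GB in the example above uses decimal units. Because JVM heap settings are usually expressed in binary units, it can help to convert the same value:

```latex
10\,000\,000\,000\ \text{bytes} = 10^{10}\ \text{B} = 10\ \text{GB}
= \frac{10^{10}}{2^{30}}\ \text{GiB} \approx 9.31\ \text{GiB}
```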

OBJECT

<memory>
  <object size="100000"/>
</memory>

BINARY

<memory>
  <binary eviction="COUNT" size="100000"/>
</memory>

OFFHEAP

<memory>
  <offheap eviction="MEMORY" size="10000000000"/>
</memory>

2.2.7. Tuning the Off Heap Configuration

Include the address-count attribute when using OFFHEAP storage to prevent collisions that might decrease performance. This attribute specifies the number of pointers that are available in the hash map.

Set the value of the address-count attribute to a number that is greater than the number of cache entries. By default address-count is 2^20, or 1048576. The parameter is always rounded up to a power of 2.
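The rounding rule can be checked with a short sketch. The bit manipulation below is a standard way to round a number up to the next power of two; the source does not show how JBoss Data Grid performs this rounding internally, so treat the hypothetical AddressCount class as illustrative only.

```java
// Rounds a requested address-count up to the next power of two.
final class AddressCount {
    static long roundUpToPowerOfTwo(long requested) {
        if (requested <= 1) return 1;
        // highestOneBit(n - 1) << 1 is the smallest power of two >= n, for n >= 2.
        return Long.highestOneBit(requested - 1) << 1;
    }
}
```

For example, a requested value of 1000000 rounds up to 1048576 (2^20), which is also the default, while 1048577 rounds up to 2097152.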

<memory>
  <offheap eviction="MEMORY" size="10000000000" address-count="1048576"/>
</memory>

2.2.8. Setting the Eviction Strategy

Eviction strategies control how Red Hat JBoss Data Grid performs eviction. You set eviction strategies with the strategy attribute.

The default strategy is NONE, which disables eviction unless you explicitly configure it. For example, here are two configurations that have the same effect:

<memory/>

<memory>
  <object strategy="NONE"/>
</memory>

When you configure eviction, you implicitly use the REMOVE strategy. For example, the following two configurations have the same effect:

<memory>
  <object/>
</memory>

<memory>
  <object strategy="REMOVE"/>
</memory>

2.2.8.1. Eviction Strategies

| Strategy | Description |
|---|---|
| NONE | JBoss Data Grid does not evict entries. This is the default setting unless you configure eviction. |
| REMOVE | JBoss Data Grid removes entries from memory so that the cache does not exceed the configured size. This is the default setting when you configure eviction. |
| … | JBoss Data Grid does not perform eviction. Eviction takes place manually by invoking the … |
| … | JBoss Data Grid does not write new entries to the cache if doing so would exceed the configured size. Instead of writing new entries to the cache, JBoss Data Grid throws a … |

2.2.9. Configuring Passivation

Passivation configures Red Hat JBoss Data Grid to write entries to a persistent cache store when it removes those entries from memory. In this way, passivation ensures that only a single copy of an entry is maintained, either in-memory or in a cache store but not both.

For more information, see Setting Up Passivation.
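The single-copy rule of passivation can be modelled in a few lines. In this hypothetical sketch, which is not JBoss Data Grid code, an entry removed from memory is moved to a map standing in for the cache store, and reading it back moves it into memory and deletes it from the store (a step commonly called activation).

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical model of passivation: each entry lives in memory or in the store, never both.
final class PassivatingCache {
    private final int maxInMemory;
    final LinkedHashMap<String, String> memory = new LinkedHashMap<>();
    final Map<String, String> store = new HashMap<>(); // stand-in for the cache store

    PassivatingCache(int maxInMemory) {
        this.maxInMemory = maxInMemory;
    }

    void put(String key, String value) {
        store.remove(key); // the in-memory copy becomes the only copy
        memory.remove(key);
        memory.put(key, value);
        if (memory.size() > maxInMemory) {
            // Passivate: move the oldest in-memory entry to the store.
            String eldest = memory.keySet().iterator().next();
            store.put(eldest, memory.remove(eldest));
        }
    }

    String get(String key) {
        String value = memory.get(key);
        if (value == null && store.containsKey(key)) {
            // Activate: remove the entry from the store and load it back into memory.
            value = store.remove(key);
            put(key, value);
        }
        return value;
    }
}
```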

2.3. Configuring Expiration

You configure Red Hat JBoss Data Grid to perform expiration at either the entry or cache level.

If you configure expiration for the cache, all entries in that cache inherit that configuration. However, configuring expiration for specific entries takes precedence over configuration for the cache.

You configure expiration for a cache with the <expiration /> element. Alternatively, you can use the ExpirationConfigurationBuilder class to programmatically configure expiration for a cache.

You configure expiration for specific entries with the Cache API.

2.3.1. Expiration Parameters

Expiration parameters configure the amount of time entries can remain in the cache.

lifespan - Specifies how long entries can remain in the cache before they expire. The default value is -1, which is unlimited time.

max-idle - Specifies how long entries can remain idle before they expire. An entry in the cache is idle when no operation is performed with the key. The default value is -1, which is unlimited time.

interval - Specifies the amount of time between expiration operations. The default value is 60000 milliseconds.
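The semantics of lifespan and max-idle, including -1 as unlimited and the precedence of entry-level settings over the cache configuration, can be written as a small predicate. This is a hypothetical sketch with all times in milliseconds; whether JBoss Data Grid treats the exact boundary instant as expired is an assumption here.

```java
// Hypothetical expiration check using the lifespan and max-idle semantics described above.
final class ExpirationCheck {
    static final long UNLIMITED = -1;

    static boolean isExpired(long now, long createdAt, long lastUsedAt,
                             long lifespan, long maxIdle) {
        boolean lifespanExceeded = lifespan != UNLIMITED && now - createdAt > lifespan;
        boolean idleTooLong = maxIdle != UNLIMITED && now - lastUsedAt > maxIdle;
        return lifespanExceeded || idleTooLong;
    }

    // An entry-level setting, when present, takes precedence over the cache-level one.
    static long effective(Long entrySetting, long cacheSetting) {
        return entrySetting != null ? entrySetting : cacheSetting;
    }
}
```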

Although both expiration parameters, lifespan and max-idle, are replicated across the cluster, only the value of the lifespan parameter is replicated along with cache entries. For this reason, you should not use the max-idle parameter with clustered caches. For more information on the limitations of using max-idle in clusters, see the Red Hat knowledgebase workaround.

2.3.2. Configuring Expiration

Configure Red Hat JBoss Data Grid to perform expiration for a cache as follows:

- Add the <expiration /> element:

<expiration />

- Configure the lifespan attribute. Specify the amount of time, in milliseconds, that an entry can remain in memory as the value, for example:

<expiration lifespan="1000" />

- Configure the max-idle attribute. Specify the amount of time, in milliseconds, that an entry can remain idle as the value, for example:

<expiration lifespan="1000" max-idle="1000" />

- Configure the interval attribute. Specify the amount of time, in milliseconds, that Red Hat JBoss Data Grid waits between expiration operations, for example:

<expiration lifespan="1000" max-idle="1000" interval="120000" />

Tip: Set a value of -1 to disable periodic expiration.

2.3.3. Expiration Behavior

Red Hat JBoss Data Grid cannot always expire entries immediately when they reach the time limit. Instead, JBoss Data Grid marks entries as expired and removes them when:

- It writes entries to the cache store.

- The maintenance thread that processes expiration identifies the entries as expired.

As a result, it might appear that JBoss Data Grid is not performing expiration as expected. In fact, the entries are marked as expired but not yet removed from either memory or the cache store.

To ensure that users cannot receive expired entries, JBoss Data Grid returns null values for entries that are marked as expired but not yet removed.
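This lazy behavior can be modelled as follows: a read checks the expiration time and returns null, while the stored entry is only dropped when a periodic purge, standing in for the maintenance thread, runs. The LazyExpiringCache class and its injectable clock are hypothetical and exist only to make the example testable.

```java
import java.util.HashMap;
import java.util.Iterator;
import java.util.Map;
import java.util.function.LongSupplier;

// Hypothetical model of lazy expiration: expired entries may linger until purged,
// but reads never return them.
final class LazyExpiringCache {
    private static final class Entry {
        final String value;
        final long expiresAt;

        Entry(String value, long expiresAt) {
            this.value = value;
            this.expiresAt = expiresAt;
        }
    }

    private final Map<String, Entry> entries = new HashMap<>();
    private final LongSupplier clock; // injected so the example can control time

    LazyExpiringCache(LongSupplier clock) {
        this.clock = clock;
    }

    // A negative lifespan (such as -1) means the entry never expires.
    void put(String key, String value, long lifespanMillis) {
        long expiresAt = lifespanMillis < 0 ? Long.MAX_VALUE : clock.getAsLong() + lifespanMillis;
        entries.put(key, new Entry(value, expiresAt));
    }

    // Returns null for an entry that has expired, even if it has not been removed yet.
    String get(String key) {
        Entry e = entries.get(key);
        return (e == null || clock.getAsLong() > e.expiresAt) ? null : e.value;
    }

    // What a periodic maintenance task would do on each interval.
    int purgeExpired() {
        long now = clock.getAsLong();
        int removed = 0;
        for (Iterator<Entry> it = entries.values().iterator(); it.hasNext(); ) {
            if (now > it.next().expiresAt) {
                it.remove();
                removed++;
            }
        }
        return removed;
    }

    int storedEntries() {
        return entries.size();
    }
}
```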

Part III. Monitoring Your Cache

Chapter 3. Set Up Logging

3.1. About Logging

Red Hat JBoss Data Grid provides highly configurable logging facilities for both its own internal use and for use by deployed applications. The logging subsystem is based on JBoss LogManager, and it supports several third-party application logging frameworks in addition to JBoss Logging.

The logging subsystem is configured using a system of log categories and log handlers. Log categories define what messages to capture, and log handlers define how to deal with those messages (write to disk, send to console, etc.).

After a JBoss Data Grid cache is configured with operations such as eviction and expiration, logging tracks relevant activity (including errors or failures).

When set up correctly, logging provides a detailed account of what occurred in the environment and when. Logging also helps track activity that occurred just before a crash or problem in the environment. This information is useful when troubleshooting or when attempting to identify the source of a crash or error.

3.2. Supported Application Logging Frameworks

3.2.1. Supported Application Logging Frameworks

Red Hat JBoss LogManager supports the following logging frameworks:

- JBoss Logging, which is included with Red Hat JBoss Data Grid 7.

- Apache Commons Logging

- Simple Logging Facade for Java (SLF4J)

- Apache log4j

- Java SE Logging (java.util.logging)

3.2.2. About JBoss Logging

JBoss Logging is the application logging framework that is included in JBoss Enterprise Application Platform 7. As a result of this inclusion, Red Hat JBoss Data Grid 7 also uses JBoss Logging.

JBoss Logging provides an easy way to add logging to an application. Add code to the application that uses the framework to send log messages in a defined format. When the application is deployed to an application server, these messages can be captured by the server and displayed and/or written to file according to the server’s configuration.

3.2.3. JBoss Logging Features

JBoss Logging includes the following features:

- Provides an innovative, easy-to-use typed logger.

- Full support for internationalization and localization. Translators work with message bundles in properties files while developers can work with interfaces and annotations.

- Build-time tooling to generate typed loggers for production, and runtime generation of typed loggers for development.

3.3. Boot Logging

3.3.1. Boot Logging

The boot log is the record of events that occur while the server is starting up (or booting). Red Hat JBoss Data Grid also includes a server log, which includes log entries generated after the server concludes the boot process.

3.3.2. Configure Boot Logging

Edit the logging.properties file to configure the boot log. This file is a standard Java properties file and can be edited in a text editor. Each line in the file has the format of property=value.

In Red Hat JBoss Data Grid, the logging.properties file is available in the $JDG_HOME/standalone/configuration folder.
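Because logging.properties is a standard Java properties file, the JDK's java.util.Properties class reads it directly, which can be handy for checking an edited file. The BootLoggingProperties helper is hypothetical, and the property names used below are illustrative assumptions; check the logging.properties file shipped in $JDG_HOME/standalone/configuration for the actual names.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

// Reads property=value lines the same way any Java properties file is read.
final class BootLoggingProperties {
    static Properties parse(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text)); // '#' lines are treated as comments
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }
}
```

For example, parsing the line logger.level=INFO yields a property named logger.level with the value INFO.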

3.3.3. Default Log File Locations

The following table provides a list of log files in Red Hat JBoss Data Grid and their locations:

| Log File | Location | Description |
|---|---|---|
| boot.log | $JDG_HOME/standalone/log/ | The Server Boot Log. Contains log messages related to the startup of the server. By default this file is prepended to the server.log. This file may be created independently of the server.log by defining the … |
| server.log | $JDG_HOME/standalone/log/ | The Server Log. Contains all log messages once the server has launched. |

3.4. Logging Attributes

3.4.1. About Log Levels

Log levels are an ordered set of enumerated values that indicate the nature and severity of a log message. The level of a given log message is specified by the developer using the appropriate methods of their chosen logging framework to send the message.

Red Hat JBoss Data Grid supports all the log levels used by the supported application logging frameworks. The six most commonly used log levels are (ordered by lowest to highest severity):

- TRACE
- DEBUG
- INFO
- WARN
- ERROR
- FATAL

Log levels are used by log categories and handlers to limit the messages they are responsible for. Each log level has an assigned numeric value which indicates its order relative to other log levels. Log categories and handlers are assigned a log level, and they only process log messages of that numeric value or higher. For example, a log handler with the level of WARN only records messages of the levels WARN, ERROR, and FATAL.
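The threshold rule can be expressed directly in terms of the numeric values. The hypothetical enum below uses the values from the Supported Log Levels table in the next section; a category or handler processes a message when the message's value is greater than or equal to its own.

```java
// The six common levels, with the numeric values from the Supported Log Levels table.
enum CommonLevel {
    TRACE(400), DEBUG(500), INFO(800), WARN(900), ERROR(1000), FATAL(1100);

    final int value;

    CommonLevel(int value) {
        this.value = value;
    }

    // A category or handler set to `threshold` processes messages at that value or higher.
    static boolean isProcessed(CommonLevel threshold, CommonLevel message) {
        return message.value >= threshold.value;
    }
}
```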

3.4.2. Supported Log Levels

The following table lists log levels that are supported in Red Hat JBoss Data Grid. Each entry includes the log level, its value, and its description. The log level values indicate each log level's relative value to other log levels. Additionally, log levels in different frameworks may be named differently, but have a log value consistent with the provided list.

| Log Level | Value | Description |
|---|---|---|
| FINEST | 300 | - |
| FINER | 400 | - |
| TRACE | 400 | Used for messages that provide detailed information about the running state of an application. |
| DEBUG | 500 | Used for messages that indicate the progress of individual requests or activities of an application. |
| FINE | 500 | - |
| CONFIG | 700 | - |
| INFO | 800 | Used for messages that indicate the overall progress of the application. Used for application start up, shut down and other major lifecycle events. |
| WARN | 900 | Used to indicate a situation that is not in error but is not considered ideal. Indicates circumstances that can lead to errors in the future. |
| WARNING | 900 | - |
| ERROR | 1000 | Used to indicate an error that has occurred that could prevent the current activity or request from completing but will not prevent the application from running. |
| SEVERE | 1000 | - |
| FATAL | 1100 | Used to indicate events that could cause critical service failure and application shutdown and possibly cause JBoss Data Grid to shut down. |

3.4.3. About Log Categories

Log categories define a set of log messages to capture and one or more log handlers which will process the messages.

The log messages to capture are defined by their Java package of origin and log level. Messages from classes in that package and of that log level or higher (with greater or equal numeric value) are captured by the log category and sent to the specified log handlers. For example, a log category with the WARNING level captures messages with the values 900, 1000, and 1100.

Log categories can optionally use the log handlers of the root logger instead of their own handlers.
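The java.util.logging API, one of the supported frameworks, uses the same package-based hierarchy, so it can illustrate how a category behaves. In this sketch the package name com.example.app and the CategoryDemo class are hypothetical: a level of WARNING (900) set on that logger applies to loggers for classes beneath it, and setUseParentHandlers corresponds to sending messages to the root logger's handlers as well. This shows the concept with the JDK API, not how JBoss Data Grid configures categories.

```java
import java.util.logging.Level;
import java.util.logging.Logger;

// A log category keyed by package name, modelled with java.util.logging loggers.
final class CategoryDemo {
    // Static references keep the loggers from being garbage collected.
    static final Logger CATEGORY = Logger.getLogger("com.example.app");
    static final Logger CHILD = Logger.getLogger("com.example.app.service");

    static {
        CATEGORY.setLevel(Level.WARNING);    // capture 900 and higher
        CATEGORY.setUseParentHandlers(true); // also use the root logger's handlers
    }
}
```

Because CHILD has no level of its own, it inherits WARNING from the category, so an INFO (800) message from a class in com.example.app.service is not captured.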

3.4.4. About the Root Logger

The root logger captures all log messages sent to the server (of a specified level) that are not captured by a log category. These messages are then sent to one or more log handlers.

By default the root logger is configured to use a console and a periodic log handler. The periodic log handler is configured to write to the file server.log. This file is sometimes referred to as the server log.

3.4.5. About Log Handlers

Log handlers define how captured log messages are recorded by Red Hat JBoss Data Grid. The six types of log handlers configurable in JBoss Data Grid are:

- Console
- File
- Periodic
- Size
- Async
- Custom

Log handlers direct specified log objects to a variety of outputs (including the console or specified log files). Some log handlers used in JBoss Data Grid are wrapper log handlers, used to direct other log handlers' behavior.

Log handlers are used to direct log output to specific files for easier sorting or to write logs for specific intervals of time. They are primarily used to specify which logs are required, where they are stored or displayed, and how logging behaves in JBoss Data Grid.

3.4.6. Log Handler Types

The following table lists the different types of log handlers available in Red Hat JBoss Data Grid:

| Log Handler Type | Description | Use Case |
|---|---|---|
| Console | Console log handlers write log messages to either the host operating system's standard out or standard error stream. | The Console log handler is preferred when JBoss Data Grid is administered using the command line. In such a case, the messages from a Console log handler are not saved unless the operating system is configured to capture the standard out or standard error stream. |
| File | File log handlers are the simplest log handlers. Their primary use is to write log messages to a specified file. | File log handlers are most useful if the requirement is to store all log entries according to the time in one place. |
| Periodic | Periodic file handlers write log messages to a named file until a specified period of time has elapsed. Once the time period has elapsed, the specified time stamp is appended to the file name. The handler then continues to write into the newly created log file with the original name. | The Periodic file handler can be used to accumulate log messages on a weekly, daily, hourly or other basis depending on the requirements of the environment. |
| Size | Size log handlers write log messages to a named file until the file reaches a specified size. When the file reaches the specified size, it is renamed with a numeric suffix and the handler continues to write into a newly created log file with the original name. Each size log handler must specify the maximum number of files to be kept in this fashion. | The Size handler is best suited to an environment where the log file size must be consistent. |
| Async | Async log handlers are wrapper log handlers that provide asynchronous behavior for one or more other log handlers. These are useful for log handlers that have high latency or other performance problems such as writing a log file to a network file system. | The Async log handlers are best suited to an environment where high latency is a problem or when writing to a network file system. |
| Custom | Custom log handlers enable you to configure new types of log handlers that have been implemented. A custom handler must be implemented as a Java class that extends … | Custom log handlers create customized log handler types and are recommended for advanced users. |
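A custom handler is ordinary Java code. The hypothetical InMemoryHandler below extends the standard java.util.logging.Handler class and keeps formatted messages in a list; whether this is the base class your JBoss Data Grid version requires is an assumption to verify against the product documentation, and registering the class with the server is not shown.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.logging.Handler;
import java.util.logging.LogRecord;

// Hypothetical custom handler that keeps messages in memory (for example, for tests).
final class InMemoryHandler extends Handler {
    final List<String> messages = new ArrayList<>();

    @Override
    public synchronized void publish(LogRecord record) {
        if (!isLoggable(record)) return; // applies this handler's level and filter
        messages.add(record.getLevel() + ": " + record.getMessage());
    }

    @Override
    public void flush() {
        // Nothing is buffered, so there is nothing to flush.
    }

    @Override
    public void close() {
        // No resources to release.
    }
}
```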

3.4.7. Selecting Log Handlers

The following are the most common uses for each of the log handler types available for Red Hat JBoss Data Grid:

- The Console log handler is preferred when JBoss Data Grid is administered using the command line. In such a case, errors and log messages appear on the console window and are not saved unless separately configured to do so.
- The File log handler is used to direct log entries into a specified file. This simplicity is useful if the requirement is to store all log entries according to the time in one place.
- The Periodic log handler is similar to the File handler but creates files according to the specified period. For example, this handler can be used to accumulate log messages on a weekly, daily, hourly, or other basis depending on the requirements of the environment.
- The Size log handler also writes log messages to a specified file, but only while the log file size is within a specified limit. Once the file size reaches the specified limit, log messages are written to a new log file. This handler is best suited to an environment where the log file size must be consistent.
- The Async log handler is a wrapper that forces other log handlers to operate asynchronously. This is best suited to an environment where high latency is a problem or when writing to a network file system.
- The Custom log handler creates new, customized types of log handlers. This is an advanced log handler.
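The idea behind an Async wrapper can be sketched with a single background thread: publish() queues the record and returns immediately, and the wrapped, possibly slow, handler does the work later. AsyncWrapperHandler is a hypothetical class built on java.util.logging, not the async handler that JBoss Data Grid provides.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.logging.ErrorManager;
import java.util.logging.Handler;
import java.util.logging.LogRecord;

// Hypothetical async wrapper: publish() returns at once and a single background
// thread forwards each record, in order, to the wrapped handler.
final class AsyncWrapperHandler extends Handler {
    private final Handler delegate;
    private final ExecutorService worker = Executors.newSingleThreadExecutor();

    AsyncWrapperHandler(Handler delegate) {
        this.delegate = delegate;
    }

    @Override
    public void publish(LogRecord record) {
        if (isLoggable(record)) {
            worker.execute(() -> delegate.publish(record));
        }
    }

    // Queues a flush behind all pending records and waits for it to finish.
    @Override
    public void flush() {
        try {
            worker.submit(delegate::flush).get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } catch (ExecutionException e) {
            reportError("Flush of wrapped handler failed", e, ErrorManager.FLUSH_FAILURE);
        }
    }

    @Override
    public void close() {
        flush();
        worker.shutdown();
        delegate.close();
    }
}
```

Using one worker thread preserves message order, and flush() can wait for everything queued before it simply by submitting its own task behind those records.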

3.4.8. About Log Formatters

A log formatter is a configuration property of a log handler. The log formatter defines the appearance of log messages that originate from the relevant log handler. The log formatter is a string that uses the same syntax as the java.util.Formatter class.

See http://docs.oracle.com/javase/6/docs/api/java/util/Formatter.html for more information.
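As an illustration of java.util.Formatter-style format strings applied to log records, the hypothetical PatternFormatter below passes the level, logger name, and message to String.format in that order. The argument order is an assumption of this sketch, not a description of JBoss Data Grid's formatter configuration.

```java
import java.util.logging.Formatter;
import java.util.logging.LogRecord;

// Formats each record with a java.util.Formatter-style pattern string.
final class PatternFormatter extends Formatter {
    private final String pattern; // e.g. "%s %s: %s%n" -> level, logger name, message

    PatternFormatter(String pattern) {
        this.pattern = pattern;
    }

    @Override
    public String format(LogRecord record) {
        return String.format(pattern, record.getLevel(), record.getLoggerName(), formatMessage(record));
    }
}
```

With the pattern "%s %s: %s%n", a WARNING record with the message "disk low" from the logger "demo" is rendered as WARNING demo: disk low, followed by the platform line separator.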

3.5. Logging Sample Configurations

3.5.1. Logging Sample Configuration Location

All of the sample configurations presented in this section should be placed inside the server’s configuration file, typically either standalone.xml or clustered.xml for standalone instances, or domain.xml for managed domain instances.

3.5.2. Sample XML Configuration for the Root Logger

The following procedure demonstrates a sample configuration for the root logger.

Procedure: Configure the Root Logger

- Set the level property. The level property sets the lowest level of log message that the root logger records; messages of that level or higher are recorded.

<subsystem xmlns="urn:jboss:domain:logging:3.0">
   <root-logger>
      <level name="INFO"/>
   </root-logger>
</subsystem>

- List the handlers. The handlers element is a list of log handlers that are used by the root logger.

3.5.3. Sample XML Configuration for a Log Category

The following procedure demonstrates a sample configuration for a log category.

Configure a Log Category

- Use the category property to specify the log category from which log messages will be captured. The use-parent-handlers property is set to "true" by default. When set to "true", this category uses the log handlers of the root logger in addition to any other assigned handlers.
- Use the level property to set the lowest level of log message that the log category records.
- The handlers element contains a list of log handlers.

3.5.4. Sample XML Configuration for a Console Log Handler

The following procedure demonstrates a sample configuration for a console log handler.

Configure the Console Log Handler

Add the Log Handler Identifier Information

The name property sets the unique identifier for this log handler.

When autoflush is set to "true", log messages are sent to the handler's target immediately upon request.

Set the level Property

The level property sets the maximum level of log messages recorded.

Set the encoding Output

Use encoding to set the character encoding scheme to be used for the output.

Define the target Value

The target property defines the system output stream where the output of the log handler goes. This can be System.err for the system error stream, or System.out for the standard out stream.

Define the filter-spec Property

The filter-spec property is an expression value that defines a filter. For example, not(match("JBAS.*")) defines a filter that accepts only messages that do not match the pattern JBAS.*.

Specify the formatter

Use formatter to list the log formatter used by the log handler.
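The steps above combine into a console handler similar to the following sketch. The handler name CONSOLE and the pattern-formatter pattern are illustrative values, not required settings. The filter expression contains double quotes, so they are escaped as &quot; inside the XML attribute.

```xml
<!-- Placed inside the logging subsystem; names and pattern are illustrative -->
<console-handler name="CONSOLE" autoflush="true">
    <level name="INFO"/>
    <encoding value="UTF-8"/>
    <!-- Write to standard out; use System.err for the error stream -->
    <target name="System.out"/>
    <!-- Accept only messages that do not match JBAS.* -->
    <filter-spec value="not(match(&quot;JBAS.*&quot;))"/>
    <formatter>
        <pattern-formatter pattern="%d{HH:mm:ss,SSS} %-5p [%c] (%t) %s%E%n"/>
    </formatter>
</console-handler>
```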

3.5.5. Sample XML Configuration for a File Log Handler

The following procedure demonstrates a sample configuration for a file log handler.

Configure the File Log Handler

Add the File Log Handler Identifier Information

The name property sets the unique identifier for this log handler.

When autoflush is set to "true", log messages are sent to the handler's target immediately upon request.

Set the level Property

The level property sets the maximum level of log message that this handler records.

Set the encoding Output

Use encoding to set the character encoding scheme to be used for the output.

Set the file Object

The file object represents the file that the output of this log handler is written to. It has two configuration properties: relative-to and path.

The relative-to property is the directory where the log file is written. JBoss Enterprise Application Platform file path variables can be specified here. The jboss.server.log.dir variable points to the log/ directory of the server.

The path property is the name of the file where the log messages are written. It is a relative path name that is appended to the value of the relative-to property to determine the complete path.

Specify the formatter

Use formatter to list the log formatter used by the log handler.

Set the append Property

When the append property is set to "true", all messages written by this handler are appended to an existing file. If set to "false", a new file is created each time the application server launches. Changes to append require a server restart to take effect.
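A file handler that follows these steps might look like the following sketch. The handler name and file name are hypothetical.

```xml
<!-- Placed inside the logging subsystem; names are hypothetical -->
<file-handler name="accounts-rec-trail" autoflush="true">
    <level name="INFO"/>
    <encoding value="UTF-8"/>
    <!-- Resolves to the server log/ directory plus accounts-rec-trail.log -->
    <file relative-to="jboss.server.log.dir" path="accounts-rec-trail.log"/>
    <formatter>
        <pattern-formatter pattern="%d{HH:mm:ss,SSS} %-5p [%c] (%t) %s%E%n"/>
    </formatter>
    <!-- Append to an existing file rather than creating a new one at startup -->
    <append value="true"/>
</file-handler>
```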

3.5.6. Sample XML Configuration for a Periodic Log Handler

The following procedure demonstrates a sample configuration for a periodic log handler.

Configure the Periodic Log Handler

Add the Periodic Log Handler Identifier Information

The name property sets the unique identifier for this log handler.

When autoflush is set to "true", log messages are sent to the handler's target immediately upon request.

Set the level Property

The level property sets the maximum level of log message that this handler records.

Set the encoding Output

Use encoding to set the character encoding scheme to be used for the output.

Specify the formatter

Use formatter to list the log formatter used by the log handler.

Set the file Object

The file object represents the file that the output of this log handler is written to. It has two configuration properties: relative-to and path.

The relative-to property is the directory where the log file is written. JBoss Enterprise Application Platform file path variables can be specified here. The jboss.server.log.dir variable points to the log/ directory of the server.

The path property is the name of the file where the log messages are written. It is a relative path name that is appended to the value of the relative-to property to determine the complete path.

Set the suffix Value

The suffix is appended to the filename of the rotated logs and is used to determine the frequency of rotation. The format of the suffix is a dot (.) followed by a date string that can be parsed by the java.text.SimpleDateFormat class. The log is rotated on the basis of the smallest time unit defined by the suffix. For example, .yyyy-MM-dd results in daily log rotation. See http://docs.oracle.com/javase/6/docs/api/index.html?java/text/SimpleDateFormat.html

Set the append Property

When the append property is set to "true", all messages written by this handler are appended to an existing file. If set to "false", a new file is created each time the application server launches. Changes to append require a server restart to take effect.
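The following sketch shows a periodic handler that rotates server.log daily. The handler name FILE and the file name are illustrative.

```xml
<!-- Placed inside the logging subsystem; names are illustrative -->
<periodic-rotating-file-handler name="FILE" autoflush="true">
    <level name="INFO"/>
    <encoding value="UTF-8"/>
    <formatter>
        <pattern-formatter pattern="%d{HH:mm:ss,SSS} %-5p [%c] (%t) %s%E%n"/>
    </formatter>
    <file relative-to="jboss.server.log.dir" path="server.log"/>
    <!-- The smallest unit in the pattern is the day, so rotation is daily -->
    <suffix value=".yyyy-MM-dd"/>
    <append value="true"/>
</periodic-rotating-file-handler>
```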

3.5.7. Sample XML Configuration for a Size Log Handler

The following procedure demonstrates a sample configuration for a size log handler.

Configure the Size Log Handler

Add the Size Log Handler Identifier Information

The name property sets the unique identifier for this log handler.

When autoflush is set to "true", log messages are sent to the handler's target immediately upon request.

Set the level Property

The level property sets the maximum level of log message that this handler records.

Set the encoding Output

Use encoding to set the character encoding scheme to be used for the output.

Set the file Object

The file object represents the file that the output of this log handler is written to. It has two configuration properties: relative-to and path.

The relative-to property is the directory where the log file is written. JBoss Enterprise Application Platform file path variables can be specified here. The jboss.server.log.dir variable points to the log/ directory of the server.

The path property is the name of the file where the log messages are written. It is a relative path name that is appended to the value of the relative-to property to determine the complete path.

Specify the rotate-size Value

The rotate-size value is the maximum size that the log file can reach before it is rotated. A single character appended to the number indicates the size units: b for bytes, k for kilobytes, m for megabytes, g for gigabytes. For example, 50m means 50 megabytes.

Set the max-backup-index Number

The max-backup-index value is the maximum number of rotated logs that are kept. When this number is reached, the oldest log is reused.

Specify the formatter

Use formatter to list the log formatter used by the log handler.

Set the append Property

When the append property is set to "true", all messages written by this handler are appended to an existing file. If set to "false", a new file is created each time the application server launches. Changes to append require a server restart to take effect.
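This sketch shows a size handler that rotates at 500 kilobytes and keeps five rotated files. The handler name, level, and file name are hypothetical.

```xml
<!-- Placed inside the logging subsystem; names are hypothetical -->
<size-rotating-file-handler name="accounts_debug" autoflush="false">
    <level name="DEBUG"/>
    <encoding value="UTF-8"/>
    <file relative-to="jboss.server.log.dir" path="accounts-debug.log"/>
    <!-- Rotate when the file reaches 500 KB; keep at most 5 rotated logs -->
    <rotate-size value="500k"/>
    <max-backup-index value="5"/>
    <formatter>
        <pattern-formatter pattern="%d{HH:mm:ss,SSS} %-5p [%c] (%t) %s%E%n"/>
    </formatter>
    <append value="true"/>
</size-rotating-file-handler>
```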

3.5.8. Sample XML Configuration for an Async Log Handler

The following procedure demonstrates a sample configuration for an async log handler.

Configure the Async Log Handler

- The name property sets the unique identifier for this log handler.

- The level property sets the maximum level of log message that this handler records.

- The queue-length property defines the maximum number of log messages that this handler holds while waiting for sub-handlers to respond.

- The overflow-action property defines how this handler responds when its queue length is exceeded. This can be set to BLOCK or DISCARD. BLOCK makes the logging application wait until space is available in the queue; this is the same behavior as a non-async log handler. DISCARD allows the logging application to continue, but the log message is discarded.

- The subhandlers element is the list of log handlers to which this async handler passes its log messages.
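An async handler that wraps an existing handler could be declared as in the following sketch. The handler name ASYNC is hypothetical, and the sketch assumes that a handler named FILE already exists in the subsystem.

```xml
<!-- Placed inside the logging subsystem; names are hypothetical -->
<async-handler name="ASYNC">
    <level name="INFO"/>
    <!-- Hold up to 512 messages while the sub-handlers catch up -->
    <queue-length value="512"/>
    <!-- BLOCK waits for queue space; DISCARD drops the message instead -->
    <overflow-action value="BLOCK"/>
    <subhandlers>
        <handler name="FILE"/>
    </subhandlers>
</async-handler>
```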

Part IV. Set Up Cache Modes

Chapter 4. Cache Modes

4.1. Cache Modes

Red Hat JBoss Data Grid provides two modes:

- Local mode is the only non-clustered cache mode offered in JBoss Data Grid. In local mode, JBoss Data Grid operates as a simple single-node in-memory data cache. Local mode is most effective when scalability and failover are not required and provides high performance in comparison with clustered modes.

- Clustered mode replicates state changes to a subset of nodes. The subset size should be sufficient for fault tolerance purposes, but not large enough to hinder scalability. Before attempting to use clustered mode, it is important to first configure JGroups for a clustered configuration. For details about configuring JGroups, see Configure JGroups (Library Mode).

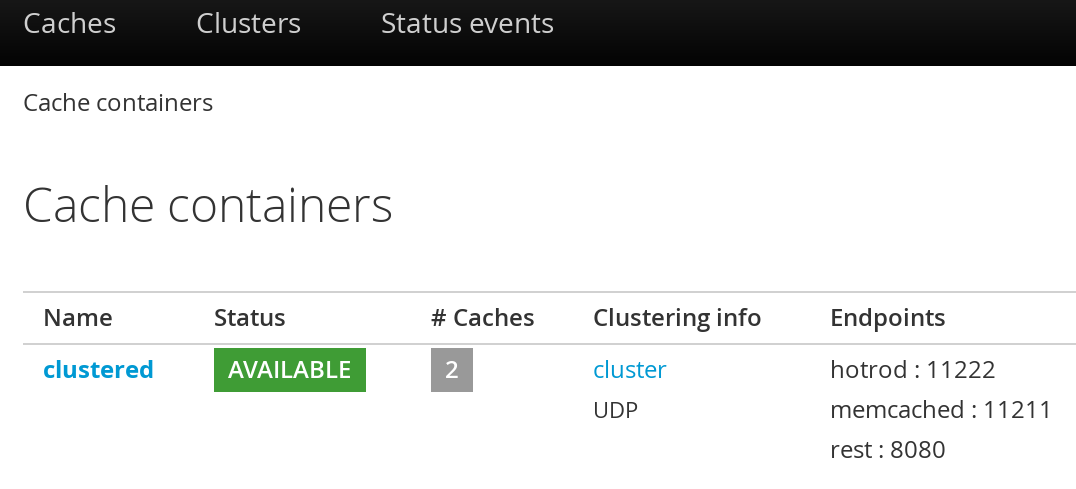

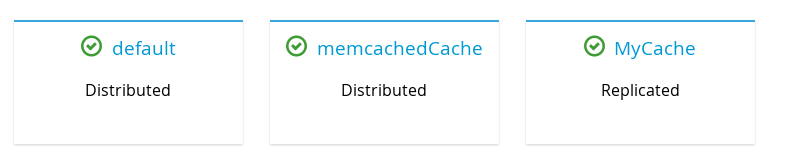

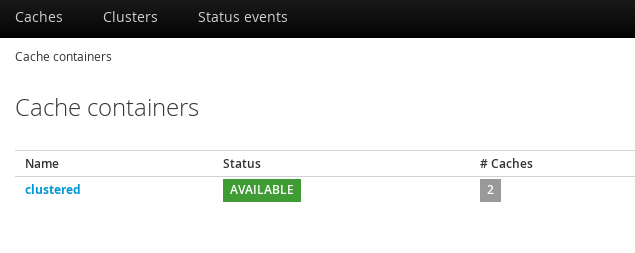

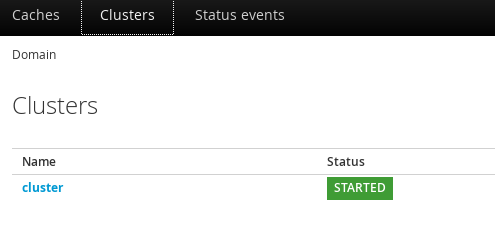

4.2. About Cache Containers

Cache containers are used in Red Hat JBoss Data Grid's Remote Client-Server mode as a starting point for a cache. The cache-container element acts as a parent of one or more (local or clustered) caches. To add clustered caches to the container, a transport must be defined.

The following procedure demonstrates a sample cache container configuration:

How to Configure the Cache Container

Configure the Cache Container

The cache-container element specifies information about the cache container using the following parameters:

- The name parameter defines the name of the cache container.

- The default-cache parameter defines the name of the default cache used with the cache container.

- The statistics attribute is optional and is true by default. Statistics are useful for monitoring JBoss Data Grid through JMX or JBoss Operations Network; however, they adversely affect performance. Set this attribute to false if statistics are not required.

- The start parameter indicates when the cache container starts: lazily, when it is first requested, or eagerly, when the server starts up. Valid values for this parameter are EAGER and LAZY.

Configure Per-cache Statistics

If statistics are enabled at the container level, per-cache statistics can be selectively disabled for caches that do not require monitoring by setting the statistics attribute of the cache to false.
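The following sketch puts the cache container parameters together for Remote Client-Server mode. The container and cache names are hypothetical, and the element layout assumes the server configuration schema used by this release.

```xml
<!-- Remote Client-Server mode sketch; names are hypothetical -->
<cache-container name="clustered" default-cache="default" statistics="true" start="EAGER">
    <!-- A transport must be defined before clustered caches can be added -->
    <transport/>
    <distributed-cache name="default"/>
    <!-- Container-level statistics are on, but this cache opts out -->
    <distributed-cache name="unmonitored" statistics="false"/>
</cache-container>
```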

4.3. Local Mode

4.3.1. Local Mode

Using Red Hat JBoss Data Grid’s local mode instead of a map provides a number of benefits.

Caches offer features that are unmatched by simple maps, such as:

- Write-through and write-behind caching to persist data.

- Entry eviction to prevent the Java Virtual Machine (JVM) running out of memory.

- Support for entries that expire after a defined period.

JBoss Data Grid is built around a high performance, read-based data container that uses techniques such as optimistic and pessimistic locking to manage lock acquisitions.

JBoss Data Grid also uses compare-and-swap and other lock-free algorithms, resulting in high throughput in multi-CPU or multi-core environments. Additionally, JBoss Data Grid's Cache API extends the JDK's ConcurrentMap, resulting in a simple migration process from a map to JBoss Data Grid.

4.3.2. Configure Local Mode

A local cache can be added to any cache container in both Library Mode and Remote Client-Server Mode. The following example demonstrates how to add the local-cache element.

The local-cache Element

The local-cache element specifies information about the local cache used with the cache container using the following parameters:

- The name parameter specifies the name of the local cache to use.

- If statistics are enabled at the container level, per-cache statistics can be selectively disabled for caches that do not require monitoring by setting the statistics attribute to false.

Local and clustered caches can coexist in the same cache container; however, a container without a <transport/> element can only contain local caches. The container used in the example can only contain local caches because it does not have a <transport/> element.
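As a sketch, a container that holds only local caches omits the transport element. The names local, default, and scratch are hypothetical.

```xml
<!-- No <transport/> element: this container can contain only local caches -->
<cache-container name="local" default-cache="default" statistics="true">
    <local-cache name="default"/>
    <!-- Per-cache statistics disabled for a cache that is not monitored -->
    <local-cache name="scratch" statistics="false"/>
</cache-container>
```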

The cache interface extends the ConcurrentMap and is compatible with multiple cache systems.

4.4. Clustered Modes

4.4.1. Clustered Modes

Red Hat JBoss Data Grid offers the following clustered modes:

- Replication Mode replicates any entry that is added across all cache instances in the cluster.

- Invalidation Mode does not share any data, but signals remote caches to initiate the removal of invalid entries.

- Distribution Mode stores each entry on a subset of nodes instead of on all nodes in the cluster.

The clustered modes can be further configured to use synchronous or asynchronous transport for network communications.

4.4.2. Asynchronous and Synchronous Operations

When a clustered mode (such as invalidation, replication or distribution) is used, data is propagated to other nodes in either a synchronous or asynchronous manner.

If synchronous mode is used, the sender waits for responses from receivers before allowing the thread to continue, whereas asynchronous mode transmits data but does not wait for responses from other nodes in the cluster to continue operations.

JBoss Data Grid clusters are configured to use synchronous operations by default.

4.4.3. About Asynchronous Communications

Asynchronous mode prioritizes speed over consistency, which is ideal for use cases such as HTTP session replication with sticky sessions enabled. Such a session (or data for other use cases) is always accessed on the same cluster node, unless this node fails. If data consistency is required for your use case, you should use synchronous operations.

Additionally, it is not possible for JBoss Data Grid nodes to determine if asynchronous operations succeed because receiving nodes do not send status for operations back to the originating nodes.

In JBoss Data Grid, distributed and replicated caches are represented by the distributed-cache and replicated-cache elements.

Each of these elements contains a mode property, the value of which can be set to SYNC for synchronous, which is the default, or ASYNC for asynchronous communications.

Asynchronous Communications Example Configuration

<replicated-cache name="default"
                  statistics="true"
                  mode="ASYNC">
    <!-- Additional configuration information here -->
</replicated-cache>


The preceding configuration is valid for both JBoss Data Grid usage modes (Library mode and Remote Client-Server mode). However, this configuration does not apply to local caches (local-cache) because they are not clustered and do not communicate with other nodes.

4.4.4. Cache Mode Troubleshooting

4.4.4.1. Invalid Data in ReadExternal

If invalid data is passed to readExternal, the cause may be that the object was modified while it was being serialized after a call to Cache.putAsync(). Because serialization starts asynchronously, concurrent changes to the object can corrupt the data stream passed to readExternal. Synchronizing access to the object resolves this.

4.4.4.2. Cluster Physical Address Retrieval

How can the physical addresses of the cluster be retrieved?

The physical address can be retrieved using an instance method call. For example: AdvancedCache.getRpcManager().getTransport().getPhysicalAddresses() .

Chapter 5. Set Up Distribution Mode

5.1. About Distribution Mode

In distribution mode, Red Hat JBoss Data Grid stores cache entries across a subset of nodes in the cluster instead of replicating entries on each node. This improves JBoss Data Grid scalability.

5.2. Consistent Hashing in Distribution Mode

Red Hat JBoss Data Grid uses an algorithm based on consistent hashing to distribute cache entries across the nodes of a cluster. JBoss Data Grid maps keys in distributed caches to a fixed number of hash space segments, using MurmurHash3 by default.

Segments are distributed across the cluster to nodes that act as primary and backup owners. Primary owners coordinate locking and write operations for the keys in each segment. Backup owners provide redundancy in the event the primary owner becomes unavailable.

You configure the number of owners with the owners attribute. This attribute defines how many copies of each entry are available across the cluster. The default value is 2, a primary owner and one backup owner.

You can configure the number of hash space segments with the segments attribute. This attribute defines the hash space segments for the named cache across the cluster. The cache always has the configured number of hash segments available across the JBoss Data Grid cluster, no matter how many nodes join or leave.

Additionally, the key-to-segment mapping is fixed. In other words, keys always map to the same segments, regardless of changes to the cluster topology.

The default number of segments is 256, which is suitable for JBoss Data Grid clusters of 25 nodes or fewer. The recommended value is 20 * the number of nodes for each cluster, which allows you to add nodes and still have capacity.

However, any value within the range of 10 * the number of nodes and 100 * the number of nodes per cluster is fine.

With a perfect segment-to-node mapping, each node is:

- the primary owner of (number of segments / number of nodes) segments.

- an owner of any kind for (number of owners * number of segments / number of nodes) segments.

However, JBoss Data Grid does not always distribute segments evenly and can map more segments to some nodes than others.

Consider a scenario where a cluster has 10 nodes and there are 20 segments in total. If segments are distributed evenly across the cluster, each node is the primary owner for 2 segments. If segments are not distributed evenly, some nodes are primary owners for 3 segments, which represents an increase of 50% over the planned capacity.

Likewise, if the number of owners is 2, each node should own 4 segments. However, some nodes could be owners for 5 segments, which represents a 25% increase over the planned capacity.
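The capacity figures in this scenario can be checked with the per-node formulas above. S, N, and R are shorthand introduced only for this calculation: S is the total number of segments (20 here), N the number of nodes (10), and R the number of owners (2).

$$
\begin{aligned}
\text{primary segments per node} &= \frac{S}{N} = \frac{20}{10} = 2, &\qquad \frac{3}{2} &= 1.5 \;(+50\%)\\
\text{owned segments per node} &= \frac{R\,S}{N} = \frac{2 \cdot 20}{10} = 4, &\qquad \frac{5}{4} &= 1.25 \;(+25\%)
\end{aligned}
$$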

You must restart the JBoss Data Grid cluster for changes to the number of segments to take effect.

5.3. Locating Entries in Distribution Mode

The consistent hash algorithm used in Red Hat JBoss Data Grid’s distribution mode can locate entries deterministically, without multicasting a request or maintaining expensive metadata.

A PUT operation can result in as many remote calls as specified by the owners parameter. A GET operation executed on any node in the cluster behaves, from the caller's point of view, like a single remote call. In the background, the GET operation sends the same number of remote calls as a PUT operation (the value of the owners parameter), but these calls occur in parallel and the entry is returned to the caller as soon as the first response arrives.

5.4. Return Values in Distribution Mode

In Red Hat JBoss Data Grid’s distribution mode, a synchronous request is used to retrieve the previous return value if it cannot be found locally. A synchronous request is used for this task irrespective of whether distribution mode is using asynchronous or synchronous processes.

5.5. Configure Distribution Mode

Distribution mode is a clustered mode in Red Hat JBoss Data Grid. Distribution mode can be added to any cache container, in both Library Mode and Remote Client-Server Mode, using the following procedure:

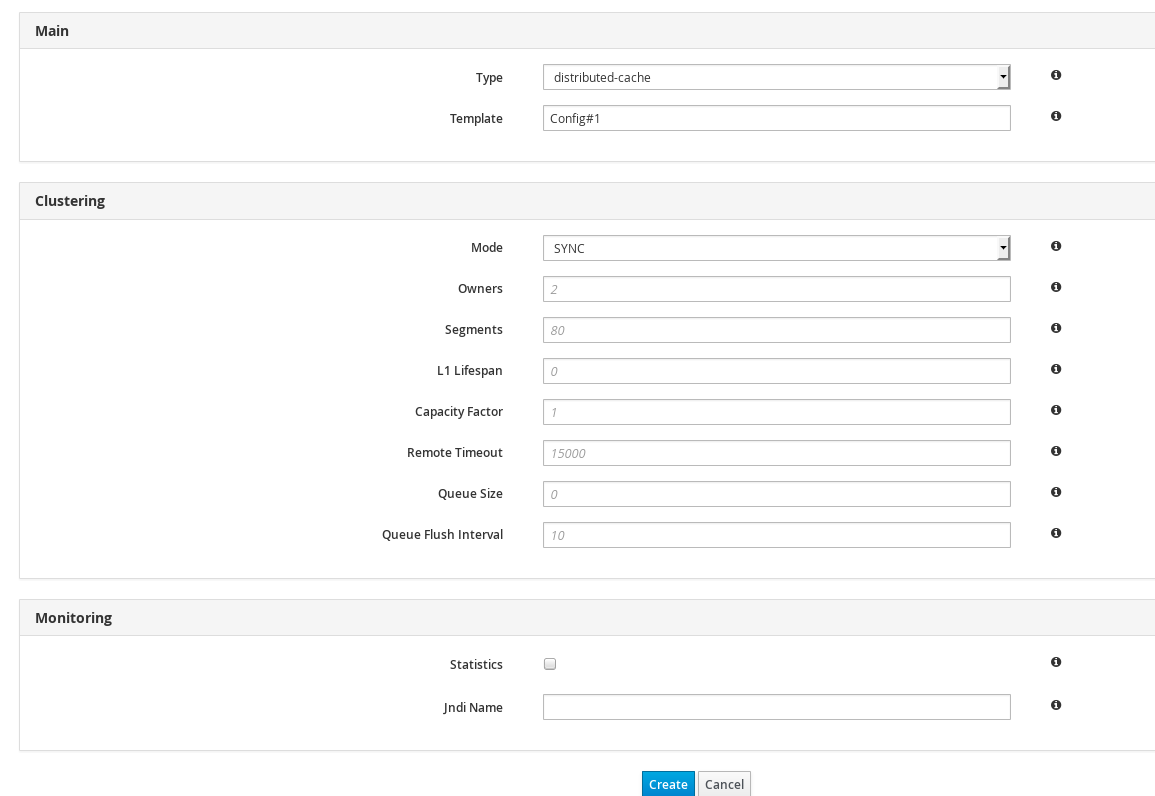

The distributed-cache Element

The distributed-cache element configures settings for the distributed cache using the following parameters:

- The name parameter provides a unique identifier for the cache.

- If statistics are enabled at the container level, per-cache statistics can be selectively disabled for caches that do not require monitoring by setting the statistics attribute to false.

JGroups must be appropriately configured for clustered mode before attempting to load this configuration.
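A minimal distributed cache declaration might look like the following sketch. The owners and segments values are the defaults described in Consistent Hashing in Distribution Mode, written out explicitly. The cache name is hypothetical, and the enclosing cache-container is assumed to define a transport.

```xml
<!-- Assumes the enclosing cache-container defines a <transport/> -->
<distributed-cache name="distCache"
                   mode="SYNC"
                   owners="2"
                   segments="256"
                   statistics="true">
    <!-- Additional configuration information here -->
</distributed-cache>
```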

5.6. Synchronous and Asynchronous Distribution

To elicit meaningful return values from certain public API methods, it is essential to use synchronous communication when using distribution mode.

Communication Mode example

For example, consider three nodes in a cluster, node A, B and C, and a key K that maps to nodes A and B. An operation performed on node C that requires a return value, for example Cache.remove(K), must first synchronously forward the call to both node A and node B, and then wait for a result returned from either node A or node B. If asynchronous communication were used, the usefulness of the returned values could not be guaranteed, even though the operation behaves as expected.

Chapter 6. Set Up Replication Mode

6.1. About Replication Mode

Red Hat JBoss Data Grid’s replication mode is a simple clustered mode. Cache instances automatically discover neighboring instances on other Java Virtual Machines (JVM) on the same network and subsequently form a cluster with the discovered instances. Any entry added to a cache instance is replicated across all cache instances in the cluster and can be retrieved locally from any cluster cache instance.

In JBoss Data Grid’s replication mode, return values are locally available before the replication occurs.

6.2. Optimized Replication Mode Usage

Replication mode is used for state sharing across a cluster. However, if you have a replicated cache and a large number of nodes, many writes to the replicated cache are needed to keep all of the nodes synchronized. The amount of work performed depends on many factors and on the specific use case, so test each workload thoroughly to determine whether replication mode is beneficial with the number of planned nodes. In many situations, replication mode is not recommended once there are ten servers; however, in some workloads, such as those where read performance is important, this mode may be beneficial.

Red Hat JBoss Data Grid can be configured to use UDP multicast, which improves performance to a limited degree for larger clusters.

6.3. Configure Replication Mode

Replication mode is a clustered cache mode in Red Hat JBoss Data Grid. Replication mode can be added to any cache container, in both Library Mode and Remote Client-Server Mode, using the following procedure.

The replicated-cache Element

JGroups must be appropriately configured for clustered mode before attempting to load this configuration.

The replicated-cache element configures settings for the replicated cache using the following parameters:

- The name parameter provides a unique identifier for the cache.

- If statistics are enabled at the container level, per-cache statistics can be selectively disabled for caches that do not require monitoring by setting the statistics attribute to false.

For details about the cache-container and locking, see the appropriate chapter.
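A replicated cache inside a clustered container might be declared as in the following sketch. The names are hypothetical, and mode="SYNC" (the default) is written out only for clarity.

```xml
<!-- Names are hypothetical -->
<cache-container name="clustered" default-cache="replCache">
    <!-- Replication is a clustered mode, so a transport is required -->
    <transport/>
    <!-- mode="SYNC" is the default and is shown only for clarity -->
    <replicated-cache name="replCache" mode="SYNC" statistics="true">
        <!-- Additional configuration information here -->
    </replicated-cache>
</cache-container>
```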

6.4. Synchronous and Asynchronous Replication

6.4.1. Synchronous and Asynchronous Replication

Replication mode can be synchronous or asynchronous depending on the problem being addressed.

- Synchronous replication blocks a thread or caller (for example, on a put() operation) until the modifications are replicated across all nodes in the cluster. By waiting for acknowledgments, synchronous replication ensures that all replications are successfully applied before the operation is concluded.

- Asynchronous replication operates significantly faster than synchronous replication because it does not need to wait for responses from nodes. Asynchronous replication performs the replication in the background and the call returns immediately. Errors that occur during asynchronous replication are written to a log. As a result, a transaction can complete successfully even if replication of the transaction did not succeed on all the cache instances in the cluster.

6.4.2. Troubleshooting Asynchronous Replication Behavior

In some instances, a cache configured for asynchronous replication or distribution may wait for responses, which is synchronous behavior. This occurs because caches behave synchronously when both state transfers and asynchronous modes are configured. This synchronous behavior is a prerequisite for state transfer to operate as expected.

Use one of the following to remedy this problem:

- Disable state transfer and use a ClusteredCacheLoader to lazily look up remote state as and when needed.

- Enable state transfer and REPL_SYNC. Use the Asynchronous API (for example, cache.putAsync(k, v)) to activate 'fire-and-forget' capabilities.

- Enable state transfer and REPL_ASYNC. All RPCs end up becoming synchronous, but client threads are not held up if a replication queue is enabled (which is recommended for asynchronous mode).

6.5. The Replication Queue

6.5.1. The Replication Queue

In replication mode, Red Hat JBoss Data Grid uses a replication queue to replicate changes across nodes based on the following:

- Previously set intervals.

- The queue size exceeding a configured number of elements.

- A combination of previously set intervals and the queue size exceeding a configured number of elements.

The replication queue ensures that during replication, cache operations are transmitted in batches instead of individually. As a result, a lower number of replication messages are transmitted and fewer envelopes are used, resulting in improved JBoss Data Grid performance.

A disadvantage of using the replication queue is that the queue is periodically flushed based on the time or the queue size. Such flushing operations delay the realization of replication, distribution, or invalidation operations across cluster nodes. When the replication queue is disabled, the data is directly transmitted and therefore the data arrives at the cluster nodes faster.

A replication queue is used in conjunction with asynchronous mode.

6.5.2. Replication Queue Usage

When using the replication queue, do one of the following:

- Disable asynchronous marshalling.

Set the

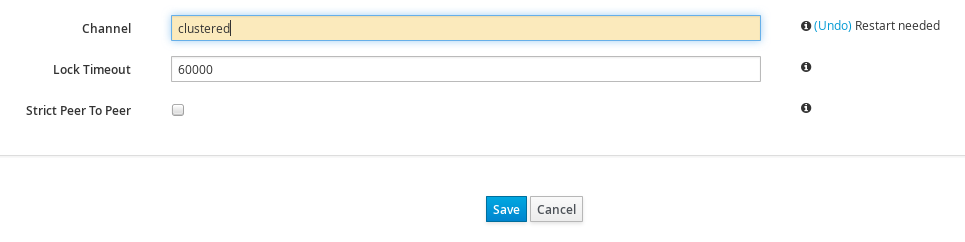

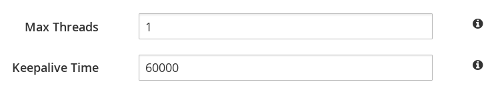

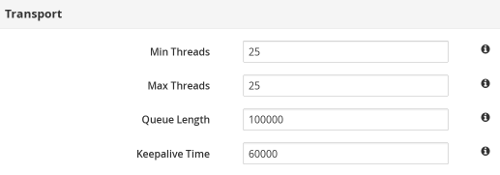

max-threadscount value to1for theexecutorattribute of thetransportelement. Theexecutoris only available in Library Mode, and is therefore defined in its configuration file as follows:<transport executor="infinispan-transport"/>

<transport executor="infinispan-transport"/>Copy to Clipboard Copied! Toggle word wrap Toggle overflow

To implement either of these solutions, the replication queue must be in use in asynchronous mode. Asynchronous mode can be set by defining mode="ASYNC", as seen in the following example:

Replication Queue in Asynchronous Mode

<replicated-cache name="asyncCache"
                  mode="ASYNC"
                  statistics="true">
    <!-- Additional configuration information here -->
</replicated-cache>

The replication queue allows requests to return to the client faster, so using the replication queue together with asynchronous marshalling does not present any significant advantage.

6.6. About Replication Guarantees

In a clustered cache, the user can receive synchronous replication guarantees as well as the parallelism associated with asynchronous replication. Red Hat JBoss Data Grid provides an asynchronous API for this purpose.

The asynchronous methods used in the API return Futures, which can be queried. The queries block the thread until a confirmation is received about the success of any network calls used.

6.7. Replication Traffic on Internal Networks

Some cloud providers charge less for traffic over internal IP addresses than for traffic over public IP addresses, or do not charge at all for internal network traffic (for example, ). To take advantage of lower rates, you can configure Red Hat JBoss Data Grid to transfer replication traffic using the internal network. With such a configuration, it is difficult to know the internal IP address you are assigned. JBoss Data Grid uses JGroups interfaces to solve this problem.
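One possible way to keep replication traffic on the internal network in Remote Client-Server mode is to bind the JGroups socket to a site-local (private) address. This is a sketch only. It assumes the server's standard interfaces and socket-binding-group elements. The interface name private is hypothetical, and the socket binding name jgroups-tcp and its port assume the default clustered configuration. The site-local-address criterion selects an address in the private IPv4 ranges, which is what many cloud providers assign for internal traffic.

```xml
<interfaces>
    <!-- Hypothetical interface that resolves to a site-local (private) address -->
    <interface name="private">
        <site-local-address/>
    </interface>
</interfaces>

<socket-binding-group name="standard-sockets" default-interface="public">
    <!-- Bind the JGroups TCP transport to the private interface only -->
    <socket-binding name="jgroups-tcp" interface="private" port="7600"/>
</socket-binding-group>
```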

Chapter 7. Set Up Invalidation Mode

7.1. About Invalidation Mode

Invalidation is a clustered mode that does not share any data, but instead removes potentially obsolete data from remote caches. Using this cache mode requires another, more permanent store for the data such as a database.

In such a situation, Red Hat JBoss Data Grid is used as an optimization for a system that performs many read operations, avoiding database access each time a state is needed.

When invalidation mode is in use, data changes in a cache prompt other caches in the cluster to evict their outdated data from memory.

7.2. Configure Invalidation Mode

Invalidation mode is a clustered mode in Red Hat JBoss Data Grid. Invalidation mode can be added to any cache container, in both Library Mode and Remote Client-Server Mode, using the following procedure:

The invalidation-cache Element

The invalidation-cache element configures settings for the invalidation cache using the following parameters:

- The name parameter provides a unique identifier for the cache.

- If statistics are enabled at the container level, per-cache statistics can be selectively disabled for caches that do not require monitoring by setting the statistics attribute to false.

JGroups must be appropriately configured for clustered mode before attempting to load this configuration.

For details about the cache-container see the appropriate chapter.
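An invalidation cache might be declared as in the following sketch. The cache name is hypothetical, and the enclosing cache-container is assumed to define a transport. The mode attribute selects the synchronous or asynchronous invalidation behavior described in the next section.

```xml
<!-- Assumes the enclosing cache-container defines a <transport/> -->
<!-- SYNC waits until remote caches have evicted the obsolete entry;
     ASYNC broadcasts the invalidation message without waiting -->
<invalidation-cache name="invCache" mode="SYNC" statistics="true">
    <!-- Additional configuration information here -->
</invalidation-cache>
```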

7.3. Synchronous/Asynchronous Invalidation

In Red Hat JBoss Data Grid’s Library mode, invalidation operates either asynchronously or synchronously.

- Synchronous invalidation blocks the thread until all caches in the cluster have received invalidation messages and evicted the obsolete data.

- Asynchronous invalidation operates in a fire-and-forget mode that allows invalidation messages to be broadcast without blocking a thread to wait for responses.

7.4. The L1 Cache and Invalidation

An invalidation message is generated each time a key is updated. This message is multicast to each node that contains data that corresponds to current L1 cache entries. The invalidation message ensures that each of these nodes marks the relevant entry as invalidated.
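L1 caching applies to distributed caches. The following is a sketch that assumes the l1-lifespan attribute of the distributed-cache element, where a positive value enables L1 and sets how long L1 entries live, in milliseconds. The cache name and lifespan value are hypothetical.

```xml
<!-- Hypothetical cache name; l1-lifespan is in milliseconds -->
<distributed-cache name="distCache" owners="2" l1-lifespan="60000">
    <!-- Additional configuration information here -->
</distributed-cache>
```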

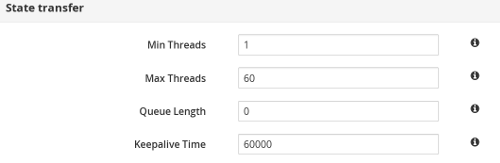

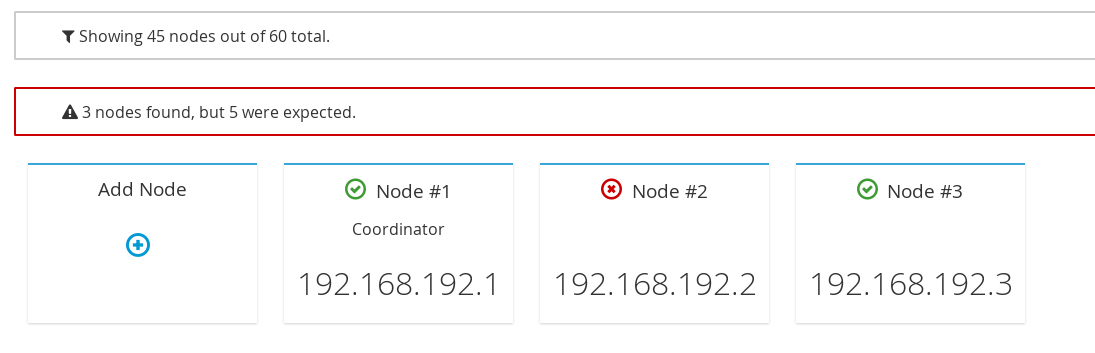

Chapter 8. State Transfer

8.1. State Transfer

State transfer is a basic data grid or clustered cache functionality. Without state transfer, data would be lost as nodes are added to or removed from the cluster.

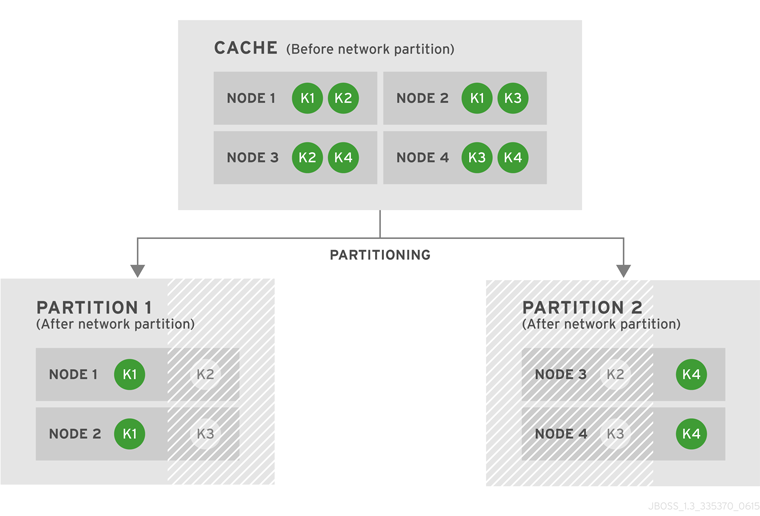

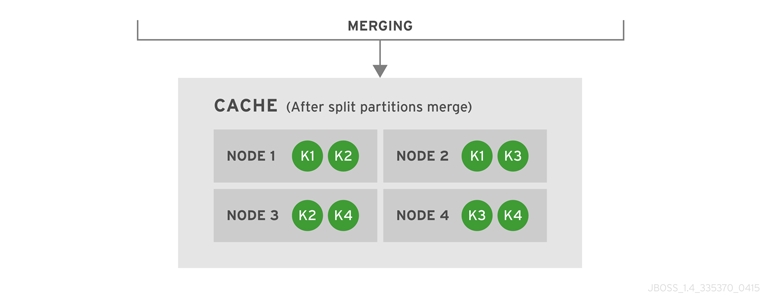

State transfer adjusts the cache’s internal state in response to a change in a cache membership. The change can be when a node joins or leaves, when two or more cluster partitions merge, or a combination of joins, leaves, and merges. State transfer occurs automatically in Red Hat JBoss Data Grid whenever a node joins or leaves the cluster.

In Red Hat JBoss Data Grid’s replication mode, a new node joining the cache receives the entire cache state from the existing nodes. In distribution mode, the new node receives only a part of the state from the existing nodes, and the existing nodes remove some of their state in order to keep owners copies of each key in the cache (as determined through consistent hashing). In invalidation mode the initial state transfer is similar to replication mode, the only difference being that the nodes are not guaranteed to have the same state. When a node leaves, a replicated mode or invalidation mode cache does not perform any state transfer. A distributed cache needs to make additional copies of the keys that were stored on the leaving nodes, again to keep owners copies of each key.

State transfer transfers both in-memory and persistent state by default, but both can be disabled in the configuration. When state transfer is disabled, a ClusterLoader must be configured; otherwise, a node becomes the owner or backup owner of a key without the data being loaded into its cache. In addition, if state transfer is disabled in distributed mode, a key will occasionally have fewer than owners copies.
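As a Library Mode sketch, disabling state transfer together with a cluster loader might look like the following. The element and attribute names (persistence, cluster-loader, remote-timeout, state-transfer enabled) assume the Library Mode configuration schema. The cache name and timeout value are hypothetical.

```xml
<!-- Library Mode sketch; the cache name is hypothetical -->
<distributed-cache name="lazyCache">
    <persistence>
        <!-- Look up missing entries on other nodes when they are requested -->
        <cluster-loader remote-timeout="15000"/>
    </persistence>
    <!-- Joining nodes receive no state; the cluster loader fills the gap -->
    <state-transfer enabled="false"/>
</distributed-cache>
```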

8.2. Non-Blocking State Transfer

Non-Blocking State Transfer in Red Hat JBoss Data Grid minimizes the time in which a cluster or node is unable to respond due to a state transfer in progress. Non-blocking state transfer is a core architectural improvement with the following goals:

- Minimize the interval(s) where the entire cluster cannot respond to requests because of a state transfer in progress.

- Minimize the interval(s) where an existing member stops responding to requests because of a state transfer in progress.

- Allow state transfer to occur with a drop in the performance of the cluster. However, the drop in the performance during the state transfer does not throw any exception, and allows processes to continue.

- Allow a GET operation to successfully retrieve a key from another node without returning a null value during a progressive state transfer.

For simplicity, the total order-based commit protocol uses a blocking version of the currently implemented state transfer mechanism. The main differences between the regular state transfer and the total order state transfer are:

- The blocking protocol queues the transaction delivery during the state transfer.

- State transfer control messages (such as CacheTopologyControlCommand) are sent according to the total order information.

The total order-based commit protocol assumes that all transactions are delivered in the same order and see the same data set. Therefore, no transactions are validated during the state transfer, because all nodes must have the most recent keys and values in memory.

Using the state transfer and blocking protocol in this manner allows the state transfer and transaction delivery on all the nodes to be synchronized. However, transactions that are already involved in a state transfer (sent before the state transfer began and delivered after it concluded) must be resent. When resent, these transactions are treated as new joiners and assigned a new total order value.
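The resend rule can be modeled with a sequencer that tags each transaction with the topology in which it was sent. This is a hypothetical simplification (the class, field, and method names are assumptions), not the product's total order protocol. In this model, a transaction sent under an older topology but delivered after the state transfer has concluded is resent and receives a new total order value.

```java
// Hypothetical model of total order values and resends across a state transfer.
final class TotalOrderSequencer {
    static final class Tx {
        final String id;
        final long orderValue;    // position in the total order
        final int sentInTopology; // topology that was current when the transaction was sent

        Tx(String id, long orderValue, int sentInTopology) {
            this.id = id;
            this.orderValue = orderValue;
            this.sentInTopology = sentInTopology;
        }
    }

    private long nextOrderValue = 0;
    private int currentTopology = 0;

    Tx send(String id) {
        return new Tx(id, nextOrderValue++, currentTopology);
    }

    // In this model, a concluded state transfer installs a new topology.
    void stateTransferConcluded() {
        currentTopology++;
    }

    // A transaction sent before the transfer is resent as a new one with a fresh order value.
    Tx deliver(Tx tx) {
        return tx.sentInTopology < currentTopology ? send(tx.id) : tx;
    }
}
```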

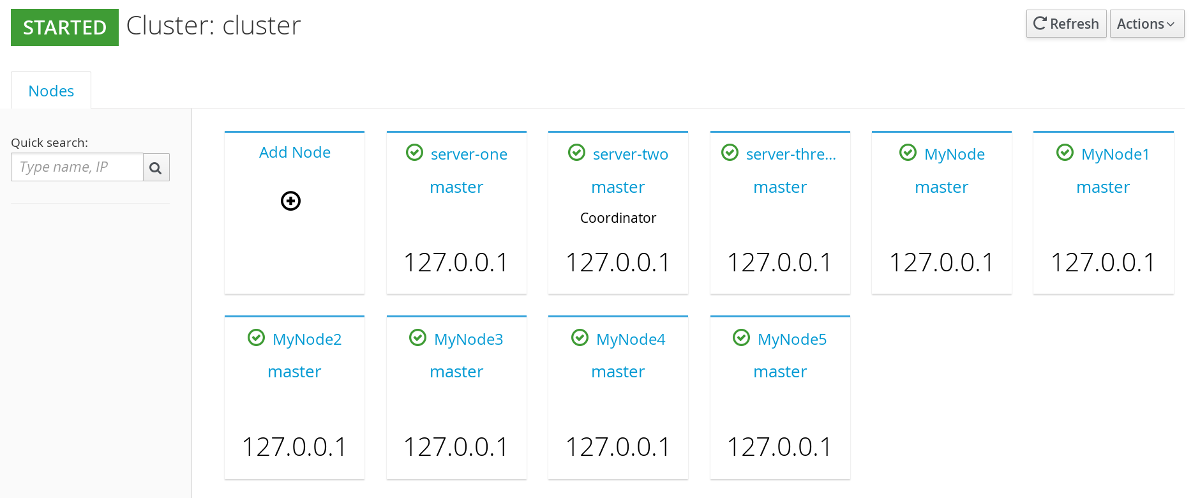

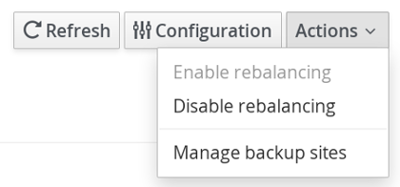

8.3. Suppress State Transfer via JMX

State transfer can be suppressed using JMX in order to bring down and relaunch a cluster for maintenance. This operation permits a more efficient cluster shutdown and startup, and removes the risk of out-of-memory errors when bringing down a grid.

When a new node joins the cluster while rebalancing is suspended, the getCache() call times out after stateTransfer.timeout expires, unless rebalancing is re-enabled or stateTransfer.awaitInitialTransfer is set to false.
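The interaction between suspended rebalancing, stateTransfer.timeout, and stateTransfer.awaitInitialTransfer can be sketched with a latch. The JoinModel class is hypothetical. It only mimics the waiting behavior described above and does not call the product's API. In this model the initial transfer finishes as soon as rebalancing is allowed.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;

// Hypothetical model of a node joining while rebalancing may be suspended.
final class JoinModel {
    private final CountDownLatch initialTransfer = new CountDownLatch(1);

    JoinModel(boolean rebalancingEnabled) {
        // In this model the initial transfer completes as soon as rebalancing is allowed.
        if (rebalancingEnabled) {
            initialTransfer.countDown();
        }
    }

    // Re-enabling rebalancing lets the pending initial transfer finish.
    void enableRebalancing() {
        initialTransfer.countDown();
    }

    // Mimics getCache() on the joining node.
    String getCache(boolean awaitInitialTransfer, long timeoutMillis)
            throws InterruptedException, TimeoutException {
        // With awaitInitialTransfer=false the call returns without waiting for the transfer.
        if (awaitInitialTransfer && !initialTransfer.await(timeoutMillis, TimeUnit.MILLISECONDS)) {
            throw new TimeoutException("initial state transfer did not finish within " + timeoutMillis + " ms");
        }
        return "started";
    }
}
```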

Disabling state transfer and rebalancing can be used for a partial cluster shutdown or restart; however, data may be lost in a partial cluster shutdown because state transfer is disabled.

8.4. The rebalancingEnabled Attribute

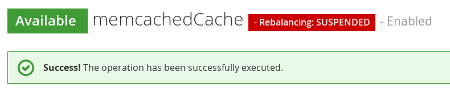

Suppressing rebalancing can only be triggered via the rebalancingEnabled JMX attribute, and requires no specific configuration.

The rebalancingEnabled attribute can be modified for the entire cluster from the LocalTopologyManager JMX MBean on any node. This attribute is true by default, and is configurable programmatically.
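Toggling the attribute is a plain JMX attribute write. The sketch below uses only the standard javax.management API. Because it must run without a JBoss Data Grid server, it registers a hypothetical stand-in MBean (RebalancingStandIn) on the local platform MBean server. Against a real server, you would instead obtain an MBeanServerConnection through a JMX connector and pass the ObjectName of the LocalTopologyManager MBean as shown in your JMX console; that name is not reproduced here.

```java
import javax.management.Attribute;
import javax.management.AttributeList;
import javax.management.AttributeNotFoundException;
import javax.management.DynamicMBean;
import javax.management.MBeanAttributeInfo;
import javax.management.MBeanInfo;
import javax.management.MBeanServerConnection;
import javax.management.ObjectName;
import javax.management.ReflectionException;

// Reads and writes the rebalancingEnabled attribute over any JMX connection.
final class RebalancingToggle {
    static final String ATTRIBUTE = "rebalancingEnabled";

    static void setRebalancing(MBeanServerConnection conn, ObjectName topologyManager, boolean enabled)
            throws Exception {
        conn.setAttribute(topologyManager, new Attribute(ATTRIBUTE, enabled));
    }

    static boolean isRebalancing(MBeanServerConnection conn, ObjectName topologyManager) throws Exception {
        return (Boolean) conn.getAttribute(topologyManager, ATTRIBUTE);
    }
}

// Hypothetical stand-in exposing one boolean attribute, so the sketch runs without a server.
final class RebalancingStandIn implements DynamicMBean {
    private boolean rebalancingEnabled = true; // true by default, as the guide states

    @Override
    public Object getAttribute(String name) throws AttributeNotFoundException {
        if (!RebalancingToggle.ATTRIBUTE.equals(name)) throw new AttributeNotFoundException(name);
        return rebalancingEnabled;
    }

    @Override
    public void setAttribute(Attribute attribute) throws AttributeNotFoundException {
        if (!RebalancingToggle.ATTRIBUTE.equals(attribute.getName())) {
            throw new AttributeNotFoundException(attribute.getName());
        }
        rebalancingEnabled = (Boolean) attribute.getValue();
    }

    @Override
    public AttributeList getAttributes(String[] names) {
        AttributeList result = new AttributeList();
        for (String name : names) {
            try {
                result.add(new Attribute(name, getAttribute(name)));
            } catch (AttributeNotFoundException ignored) {
                // Unknown attributes are skipped.
            }
        }
        return result;
    }

    @Override
    public AttributeList setAttributes(AttributeList attributes) {
        AttributeList applied = new AttributeList();
        for (Attribute attribute : attributes.asList()) {
            try {
                setAttribute(attribute);
                applied.add(attribute);
            } catch (AttributeNotFoundException ignored) {
                // Unknown attributes are skipped.
            }
        }
        return applied;
    }

    @Override
    public Object invoke(String action, Object[] params, String[] signature) throws ReflectionException {
        throw new ReflectionException(new NoSuchMethodException(action));
    }

    @Override
    public MBeanInfo getMBeanInfo() {
        MBeanAttributeInfo attr = new MBeanAttributeInfo(
                RebalancingToggle.ATTRIBUTE, "boolean", "Whether rebalancing is enabled", true, true, false);
        return new MBeanInfo(getClass().getName(), "Stand-in topology manager",
                new MBeanAttributeInfo[] {attr}, null, null, null);
    }
}
```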

Servers such as Hot Rod attempt to start all caches declared in the configuration during startup. If rebalancing is disabled, the cache will fail to start. Therefore, it is mandatory to use the following setting in a server environment:

<state-transfer enabled="true" await-initial-transfer="false"/>

Part V. Enabling APIs

Chapter 9. Enabling APIs Declaratively

9.1. Enabling APIs Declaratively

The various APIs that JBoss Data Grid provides are fully documented in the JBoss Data Grid Developer Guide; however, administrators can enable these APIs declaratively by adding elements to the configuration file. The following sections describe how to enable each API.

9.2. Batching API

Batching allows atomicity and some characteristics of a transaction, but does not allow full-blown JTA or XA capabilities. Batching is typically lighter and cheaper than a full-blown transaction, and should be used whenever the only participant in the transaction is the JBoss Data Grid cluster. If the transaction involves multiple systems then JTA Transactions should be used. For example, consider a transaction which transfers money from one bank account to another. If both accounts are stored within the JBoss Data Grid cluster then batching could be used; however, if only one account is inside the cluster, with the second being in an external database, then distributed transactions are required.

Transaction batching is only available in JBoss Data Grid’s Library Mode.

Enabling the Batching API

Batching may be enabled on a per-cache basis by defining a transaction mode of BATCH. The following example demonstrates this:

<local-cache name="batchingCache">
    <transaction mode="BATCH"/>
</local-cache>

By default, invocation batching is disabled; in addition, a transaction manager is not required to use batching.
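The bank-transfer example above can be modeled with a small single-threaded stand-in that buffers writes until the batch ends. BatchingMap is hypothetical. Its startBatch() and endBatch(boolean) methods only mirror the names of the batching methods on the Library Mode Cache interface, and it provides none of the cache's locking or cluster behavior.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical, single-threaded stand-in for a cache with invocation batching.
// Method names mirror the Cache batching methods; locking and clustering are not modeled.
final class BatchingMap<K, V> {
    private final Map<K, V> committed = new HashMap<>();
    private Map<K, V> pending; // writes of the active batch, or null when no batch is active

    // Returns false if a batch is already running, true if a new batch was started.
    boolean startBatch() {
        if (pending != null) {
            return false;
        }
        pending = new HashMap<>();
        return true;
    }

    void put(K key, V value) {
        if (pending != null) {
            pending.put(key, value); // buffered until endBatch
        } else {
            committed.put(key, value);
        }
    }

    // Reads inside a batch see the batch's own uncommitted writes first.
    V get(K key) {
        if (pending != null && pending.containsKey(key)) {
            return pending.get(key);
        }
        return committed.get(key);
    }

    // Applies all buffered writes together on success; discards them otherwise.
    void endBatch(boolean successful) {
        if (pending == null) {
            return;
        }
        if (successful) {
            committed.putAll(pending);
        }
        pending = null;
    }

    // Value as seen outside any batch.
    V committedValue(K key) {
        return committed.get(key);
    }
}
```

Both account updates become visible together when endBatch(true) is called, and neither is applied if the batch ends with endBatch(false).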

9.3. Grouping API

The grouping API allows a group of entries to be co-located on the same node, instead of the default behavior of storing each entry on a node determined by the hash code of its key. By default, JBoss Data Grid takes the hash code of each key when it is stored and maps that key to a hash segment. An algorithm can then determine the node that contains the key, so each node in the cluster knows which node contains the key without distributing ownership information. This behavior reduces overhead and improves redundancy, because the ownership information does not need to be replicated if a node fails.

When the grouping API is enabled, the hash of the key is ignored when deciding which node stores the entry. Instead, a hash of the group is obtained and used in its place, while the hash of the key is still used internally to prevent performance degradation. Because every node must still be able to determine the owners of a key while the grouping API is in use, the group cannot be specified manually. A group is either intrinsic to the entry (generated by the key class) or extrinsic to the entry (generated by an external function).
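The routing rule can be sketched as follows. The segment that decides ownership is computed from the group when the key has one, and from the key otherwise, while the key's own hashCode() remains in use for ordinary lookups. GroupRouting and OrderLineKey are hypothetical. In Library Mode, an intrinsic group is usually declared with the @Group annotation on a method of the key class; this sketch replaces the annotation with a plain method to stay dependency-free.

```java
import java.util.Objects;

final class GroupRouting {
    // Hypothetical key class with an intrinsic group: all lines of one order share a group.
    static final class OrderLineKey {
        final String orderId;
        final int lineNumber;

        OrderLineKey(String orderId, int lineNumber) {
            this.orderId = orderId;
            this.lineNumber = lineNumber;
        }

        String group() {
            return orderId;
        }

        // The key's own equality and hash are still used for lookups within a node.
        @Override
        public boolean equals(Object o) {
            if (!(o instanceof OrderLineKey)) return false;
            OrderLineKey other = (OrderLineKey) o;
            return lineNumber == other.lineNumber && orderId.equals(other.orderId);
        }

        @Override
        public int hashCode() {
            return Objects.hash(orderId, lineNumber);
        }
    }

    // Ownership is decided by the group's hash when a group exists, otherwise by the key's hash.
    static int segmentFor(Object key, String group, int numSegments) {
        Object basis = (group != null) ? group : key;
        return Math.floorMod(basis.hashCode(), numSegments);
    }

    static int segmentFor(OrderLineKey key, int numSegments) {
        return segmentFor(key, key.group(), numSegments);
    }
}
```

Two lines of the same order therefore land in the same segment, and so on the same owners, even though their keys are different and hash differently.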

Enabling the Grouping API

The grouping API may be enabled on a per-cache basis by adding the groups element as seen in the following example:

<distributed-cache name="groupingCache">
    <groups enabled="true"/>
</distributed-cache>

Defining an Extrinsic Group

Assuming a custom Grouper exists, it can be defined by passing in its class name as shown below:

<distributed-cache name="groupingCache">
    <groups enabled="true">
        <grouper class="com.acme.KXGrouper" />
    </groups>
</distributed-cache>
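A class such as com.acme.KXGrouper computes the group from the key, instead of the key class providing it. The sketch below is hypothetical. KeyGrouper is a dependency-free stand-in loosely modeled on the product's Grouper contract: compute a group for a key, given any group already computed, and report the key type the grouper applies to. CustomerPrefixGrouper is an invented example that groups keys of the form customer:item by customer. Check the Developer Guide for the exact Grouper interface before implementing one.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical stand-in for the product's Grouper contract.
interface KeyGrouper<T> {
    // Returns the group for the key, or the incoming group if this grouper does not apply.
    String computeGroup(T key, String group);

    Class<T> getKeyType();
}

// Hypothetical extrinsic grouper: "c42:order-1" and "c42:order-2" both belong to group "c42".
final class CustomerPrefixGrouper implements KeyGrouper<String> {
    private static final Pattern KEY = Pattern.compile("^([^:]+):.+$");

    @Override
    public String computeGroup(String key, String group) {
        Matcher m = KEY.matcher(key);
        // Keys that do not follow the "customer:item" pattern keep whatever group they already had.
        return m.matches() ? m.group(1) : group;
    }

    @Override
    public Class<String> getKeyType() {
        return String.class;
    }
}
```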

9.4. Externalizable API

9.4.1. The Externalizable API

An Externalizer is a class that can:

- Marshall a given object type to a byte array.

- Unmarshall the contents of a byte array into an instance of the object type.

Externalizers are used by Red Hat JBoss Data Grid and allow users to specify how their object types are serialized. The marshalling infrastructure used in Red Hat JBoss Data Grid builds upon JBoss Marshalling and provides efficient payload delivery and allows the stream to be cached. The stream caching allows data to be accessed multiple times, whereas normally a stream can only be read once.

The Externalizable interface uses and extends serialization. This interface is used to control serialization and deserialization in Red Hat JBoss Data Grid.
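The two operations an externalizer provides, writing an object to bytes and reading it back, can be sketched with the JDK alone. In the product, the externalizer would implement the AdvancedExternalizer interface, which additionally reports the handled type classes and an ID. This sketch keeps only the two marshalling methods so it runs without JBoss Data Grid on the classpath. Book and its fields are hypothetical; the nested class name matches the Book$BookExternalizer value used in the registration steps.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInput;
import java.io.ObjectInputStream;
import java.io.ObjectOutput;
import java.io.ObjectOutputStream;

// Hypothetical value type, used only to illustrate an externalizer.
final class Book {
    final String name;
    final String author;
    final int publicationYear;

    Book(String name, String author, int publicationYear) {
        this.name = name;
        this.author = author;
        this.publicationYear = publicationYear;
    }

    // Nested class, so its binary name is Book$BookExternalizer.
    static final class BookExternalizer {
        void writeObject(ObjectOutput output, Book book) throws IOException {
            output.writeUTF(book.name);
            output.writeUTF(book.author);
            output.writeInt(book.publicationYear);
        }

        Book readObject(ObjectInput input) throws IOException {
            // Fields are read back in exactly the order they were written.
            String name = input.readUTF();
            String author = input.readUTF();
            int year = input.readInt();
            return new Book(name, author, year);
        }
    }

    // Marshal: object to byte array.
    static byte[] marshal(Book book) throws IOException {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
            new BookExternalizer().writeObject(out, book);
        }
        return bytes.toByteArray();
    }

    // Unmarshal: byte array back to an object.
    static Book unmarshal(byte[] data) throws IOException {
        try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(data))) {
            return new BookExternalizer().readObject(in);
        }
    }
}
```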

9.4.2. Register the Advanced Externalizer (Declaratively)

After the advanced externalizer is set up, register it for use with Red Hat JBoss Data Grid. This registration is done declaratively (via XML) as follows:

Register the Advanced Externalizer

- Add the serialization element to the cache-container element.

- Add the advanced-externalizer element, defining the custom Externalizer with the class attribute. Replace the Book$BookExternalizer values as required.

9.4.3. Configuring the Deserialization Whitelist

For security reasons, the Red Hat JBoss Data Grid server does not deserialize objects of an arbitrary class. JBoss Data Grid allows deserialization only for strings and primitives. If you want JBoss Data Grid to deserialize objects for other Java class instances, you must configure a deserialization whitelist.

Add the following system properties to the JVM at start up:

- -Dinfinispan.deserialization.whitelist.classes: Specifies the fully qualified names of one or more Java classes. JBoss Data Grid deserializes objects that belong to those classes.

- -Dinfinispan.deserialization.whitelist.regexps: Specifies one or more regular expressions. JBoss Data Grid deserializes objects that belong to any class that matches those expressions.

Both system properties are optional. You can specify a combination of both properties or specify either property by itself.

For example, the following system properties enable deserialization for the com.foo.bar.spotprice.Price and com.foo.bar.spotprice.Currency classes as well as for any classes that match the .*SpotPrice.* expression:

-Dinfinispan.deserialization.whitelist.classes=com.foo.bar.spotprice.Price,com.foo.bar.spotprice.Currency
-Dinfinispan.deserialization.whitelist.regexps=.*SpotPrice.*

If you want to configure the whitelist so that JBoss Data Grid allows deserialization for any Java class, specify the following:

-Dinfinispan.deserialization.whitelist.regexps=.*

For information on configuring clients to restrict deserialization to specific Java classes, see Restricting Deserialization to Specific Java Classes in the Developer Guide.
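The combined effect of the two properties can be sketched as a simple check: a class is accepted if it is listed by name or matches any of the expressions. This models the documented behavior and is not the product's implementation. The class name DeserializationWhitelist, the comma separator for the regexps property, whole-name regular expression matching, and the set of always-allowed built-in types are all assumptions.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Set;
import java.util.regex.Pattern;

// Hypothetical model of the deserialization whitelist check (not the product's code).
final class DeserializationWhitelist {
    // Assumption: strings and boxed primitives are always accepted, per the guide's default.
    private static final Set<String> BUILT_IN = Set.of(
            "java.lang.String", "java.lang.Boolean", "java.lang.Byte", "java.lang.Character",
            "java.lang.Short", "java.lang.Integer", "java.lang.Long", "java.lang.Float", "java.lang.Double");

    private final List<String> classes;
    private final List<Pattern> regexps = new ArrayList<>();

    DeserializationWhitelist(String classesProperty, String regexpsProperty) {
        this.classes = split(classesProperty);
        for (String expression : split(regexpsProperty)) {
            regexps.add(Pattern.compile(expression));
        }
    }

    // Reads the two system properties described above; either may be absent.
    static DeserializationWhitelist fromSystemProperties() {
        return new DeserializationWhitelist(
                System.getProperty("infinispan.deserialization.whitelist.classes"),
                System.getProperty("infinispan.deserialization.whitelist.regexps"));
    }

    boolean isAllowed(String className) {
        if (BUILT_IN.contains(className) || classes.contains(className)) {
            return true;
        }
        for (Pattern pattern : regexps) {
            // Assumption: an expression must match the whole class name.
            if (pattern.matcher(className).matches()) {
                return true;
            }
        }
        return false;
    }

    // Assumption: both properties are comma-separated lists.
    private static List<String> split(String value) {
        List<String> parts = new ArrayList<>();
        if (value == null) {
            return parts;
        }
        for (String part : value.split(",")) {
            String trimmed = part.trim();
            if (!trimmed.isEmpty()) {
                parts.add(trimmed);
            }
        }
        return parts;
    }
}
```

With the example properties above, com.foo.bar.spotprice.Price is accepted by name, while a class such as com.example.SpotPriceHistory is accepted only through the .*SpotPrice.* expression.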

9.4.4. Custom Externalizer ID Values

9.4.4.1. Custom Externalizer ID Values

Advanced externalizers can be assigned custom IDs if desired. Some ID ranges are reserved for other modules or frameworks and must be avoided:

| ID Range | Reserved For |
|---|---|
| 1000-1099 | The Infinispan Tree Module |
| 1100-1199 | Red Hat JBoss Data Grid Server modules |
| 1200-1299 | Hibernate Infinispan Second Level Cache |
| 1300-1399 | JBoss Data Grid Lucene Directory |
| 1400-1499 | Hibernate OGM |
| 1500-1599 | Hibernate Search |
| 1600-1699 | Infinispan Query Module |
| 1700-1799 | Infinispan Remote Query Module |
| 1800-1849 | JBoss Data Grid Scripting Module |
| 1850-1899 | JBoss Data Grid Server Event Logger Module |
| 1900-1999 | JBoss Data Grid Remote Store |
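Before assigning a custom ID, a simple check against the reserved ranges can catch collisions early. ExternalizerIds is a hypothetical helper, not part of the product; its ranges are copied from the reserved-ID table.

```java
// Hypothetical helper that rejects externalizer IDs inside the reserved ranges.
final class ExternalizerIds {
    // Inclusive ranges copied from the reserved-ID table.
    private static final int[][] RESERVED = {
        {1000, 1099}, // Infinispan Tree Module
        {1100, 1199}, // JBoss Data Grid Server modules
        {1200, 1299}, // Hibernate Infinispan Second Level Cache
        {1300, 1399}, // JBoss Data Grid Lucene Directory
        {1400, 1499}, // Hibernate OGM
        {1500, 1599}, // Hibernate Search
        {1600, 1699}, // Infinispan Query Module
        {1700, 1799}, // Infinispan Remote Query Module
        {1800, 1849}, // JBoss Data Grid Scripting Module
        {1850, 1899}, // JBoss Data Grid Server Event Logger Module
        {1900, 1999}, // JBoss Data Grid Remote Store
    };

    static boolean isReserved(int id) {
        for (int[] range : RESERVED) {
            if (id >= range[0] && id <= range[1]) {
                return true;
            }
        }
        return false;
    }

    // Returns the ID unchanged, or throws if it falls inside a reserved range.
    static int requireUnreserved(int id) {
        if (isReserved(id)) {
            throw new IllegalArgumentException("Externalizer ID " + id + " is reserved");
        }
        return id;
    }
}
```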

9.4.4.2. Customize the Externalizer ID (Declaratively)

Customize the advanced externalizer ID declaratively (via XML) as follows:

Customizing the Externalizer ID (Declaratively)

- Add the serialization element to the cache-container element.

- Add the advanced-externalizer element to add information about the new advanced externalizer.

- Define the externalizer ID using the id attribute. Ensure that the selected ID is not from the range of IDs reserved for other modules.

- Define the externalizer class using the class attribute. Replace the Book$BookExternalizer values as required.

Chapter 10. Set Up and Configure the Infinispan Query API

10.1. Set Up Infinispan Query

10.1.1. Infinispan Query Dependencies in Library Mode

To use the JBoss Data Grid Infinispan Query via Maven, add the following dependency:

<dependency>
    <groupId>org.infinispan</groupId>
    <artifactId>infinispan-embedded-query</artifactId>
    <version>${infinispan.version}</version>
</dependency>

Non-Maven users must install all of the infinispan-embedded-query.jar and infinispan-embedded.jar files from the JBoss Data Grid distribution.

The Infinispan query API directly exposes the Hibernate Search and Lucene APIs, so these cannot be embedded within the infinispan-embedded-query.jar file. Do not include other versions of Hibernate Search and Lucene in the same deployment as infinispan-embedded-query; doing so causes classpath conflicts and results in unexpected behavior.

10.2. Directory Providers

10.2.1. Directory Providers

The following directory providers are supported in Infinispan Query:

- RAM Directory Provider

- Filesystem Directory Provider

- Infinispan Directory Provider

10.2.2. RAM Directory Provider

Storing the global index locally in Red Hat JBoss Data Grid’s Query Module allows each node to:

- maintain its own index.

- use Lucene's in-memory or filesystem-based index directory.

The following example demonstrates an in-memory, RAM-based index store:

<local-cache name="indexesInMemory">
    <indexing index="LOCAL">
        <property name="default.directory_provider">ram</property>
    </indexing>
</local-cache>

10.2.3. Filesystem Directory Provider

To configure the storage of indexes, set the appropriate properties when enabling indexing in the JBoss Data Grid configuration.

This example shows a disk-based index store:

Disk-based Index Store

10.2.4. Infinispan Directory Provider

In addition to the Lucene directory implementations, Red Hat JBoss Data Grid also ships with an infinispan-directory module.

Red Hat JBoss Data Grid only supports infinispan-directory in the context of the Querying feature, not as a standalone feature.