Configuring Data Grid Caches

Configure Data Grid caches to customize your deployment

Abstract

Red Hat Data Grid

Data Grid is a high-performance, distributed in-memory data store.

- Schemaless data structure

- Flexibility to store different objects as key-value pairs.

- Grid-based data storage

- Designed to distribute and replicate data across clusters.

- Elastic scaling

- Dynamically adjust the number of nodes to meet demand without service disruption.

- Data interoperability

- Store, retrieve, and query data in the grid from different endpoints.

Data Grid documentation

Documentation for Data Grid is available on the Red Hat customer portal.

Data Grid downloads

Access the Data Grid Software Downloads on the Red Hat customer portal.

You must have a Red Hat account to access and download Data Grid software.

Making open source more inclusive

Red Hat is committed to replacing problematic language in our code, documentation, and web properties. We are beginning with these four terms: master, slave, blacklist, and whitelist. Because of the enormity of this endeavor, these changes will be implemented gradually over several upcoming releases. For more details, see our CTO Chris Wright’s message.

Chapter 1. Data Grid caches

Data Grid caches provide flexible, in-memory data stores that you can configure to suit use cases such as:

- Boosting application performance with high-speed local caches.

- Optimizing databases by decreasing the volume of write operations.

- Providing resiliency and durability for consistent data across clusters.

1.1. Cache API

Cache<K,V> is the central interface for Data Grid and extends java.util.concurrent.ConcurrentMap.

Cache entries are highly concurrent data structures in key:value format that support a wide and configurable range of data types, from simple strings to much more complex objects.
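Because Cache<K,V> extends ConcurrentMap, code written against the standard map interface can also run against a cache. The following is a minimal sketch of that idea rather than Data Grid code: a ConcurrentHashMap stands in for the cache so the example runs with the JDK alone, and the class name and keys are hypothetical.

```java
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

final class ConcurrentMapSketch {

    // Runs the atomic ConcurrentMap operations that Cache<K,V> inherits.
    // Assumption: a ConcurrentHashMap stands in for a real cache instance.
    static ConcurrentMap<String, String> demo() {
        ConcurrentMap<String, String> cache = new ConcurrentHashMap<>();
        cache.put("user:1", "alice");               // plain write
        cache.putIfAbsent("user:1", "bob");         // no effect, the key is already present
        cache.putIfAbsent("user:2", "carol");       // inserted, the key was absent
        cache.replace("user:2", "carol", "dave");   // conditional replace, expected value matches
        cache.remove("user:1", "someone-else");     // conditional remove, value differs so nothing happens
        return cache;
    }

    public static void main(String[] args) {
        System.out.println(demo());
    }
}
```

Code that only depends on the ConcurrentMap type, as this method does, should not need to change when the map is replaced by a cache.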

1.2. Cache managers

The CacheManager API is the starting point for interacting with Data Grid caches. Cache managers control the cache lifecycle: creating, modifying, and deleting cache instances.

Data Grid provides two CacheManager implementations:

- EmbeddedCacheManager

- Entry point for caches when running Data Grid inside the same Java Virtual Machine (JVM) as the client application.

- RemoteCacheManager

- Entry point for caches when running Data Grid Server in its own JVM. When you instantiate a RemoteCacheManager, it establishes a persistent TCP connection to Data Grid Server through the Hot Rod endpoint.

Both embedded and remote CacheManager implementations share some methods and properties. However, semantic differences do exist between EmbeddedCacheManager and RemoteCacheManager.

1.3. Cache modes

Data Grid cache managers can create and control multiple caches that use different modes. For example, you can use the same cache manager for local caches, distributed caches, and caches with invalidation mode.

- Local

- Data Grid runs as a single node and never replicates read or write operations on cache entries.

- Replicated

- Data Grid replicates all cache entries on all nodes in a cluster and performs local read operations only.

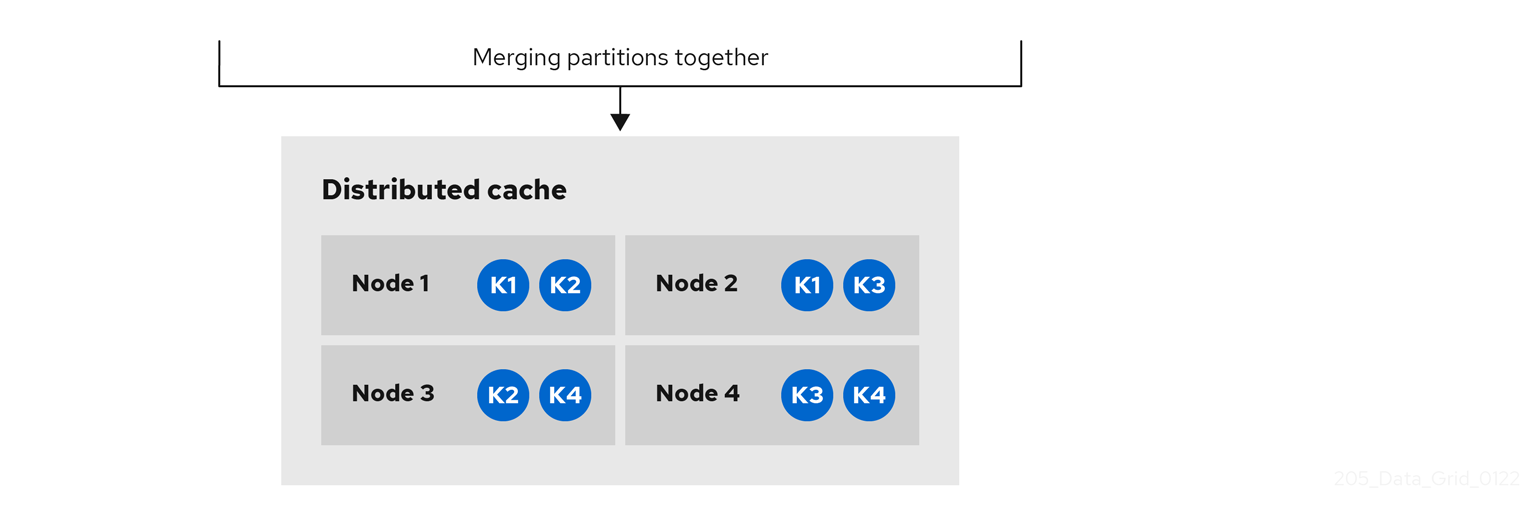

- Distributed

- Data Grid replicates cache entries on a subset of nodes in a cluster and assigns entries to fixed owner nodes. Data Grid requests read operations from owner nodes to ensure it returns the correct value.

- Invalidation

- Data Grid evicts stale data from all nodes whenever operations modify entries in the cache. Data Grid performs local read operations only.

- Scattered

- Data Grid stores cache entries across a subset of nodes. By default, Data Grid assigns a primary owner and a backup owner to each cache entry in scattered caches. Data Grid assigns primary owners in the same way as with distributed caches, while backup owners are always the nodes that initiate the write operations. Data Grid requests read operations from at least one owner node to ensure it returns the correct value.

1.3.1. Comparison of cache modes

The cache mode that you should choose depends on the qualities and guarantees you need for your data.

The following table summarizes the primary differences between cache modes:

| Cache mode | Clustered? | Read performance | Write performance | Capacity | Availability | Capabilities |
|---|---|---|---|---|---|---|
| Local | No | High (local) | High (local) | Single node | Single node | Complete |
| Simple | No | Highest (local) | Highest (local) | Single node | Single node | Partial: no transactions, persistence, or indexing. |
| Invalidation | Yes | High (local) | Low (all nodes, no data) | Single node | Single node | Partial: no indexing. |
| Replicated | Yes | High (local) | Lowest (all nodes) | Smallest node | All nodes | Complete |
| Distributed | Yes | Medium (owners) | Medium (owner nodes) | Sum of all nodes capacity divided by the number of owners. | Owner nodes | Complete |
| Scattered | Yes | Medium (primary) | Higher (single RPC) | Sum of all nodes capacity divided by 2. | Owner nodes | Partial: no transactions. |
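The Capacity column can be read as simple arithmetic. The sketch below uses hypothetical names and made-up node sizes; it assumes capacity is expressed in one unit (for example, gigabytes available for entries) and ignores per-entry overhead.

```java
final class CapacitySketch {

    // Replicated cache: every node stores every entry, so the smallest node sets the limit.
    static long replicatedCapacity(long[] nodeCapacities) {
        if (nodeCapacities.length == 0) {
            throw new IllegalArgumentException("at least one node is required");
        }
        long smallest = Long.MAX_VALUE;
        for (long capacity : nodeCapacities) {
            smallest = Math.min(smallest, capacity);
        }
        return smallest;
    }

    // Distributed cache: each entry is stored numOwners times across the cluster.
    static long distributedCapacity(long[] nodeCapacities, int numOwners) {
        if (numOwners < 1) {
            throw new IllegalArgumentException("numOwners must be at least 1");
        }
        long total = 0;
        for (long capacity : nodeCapacities) {
            total += capacity;
        }
        return total / numOwners;
    }

    // Scattered cache: two copies of each entry (primary and backup).
    static long scatteredCapacity(long[] nodeCapacities) {
        return distributedCapacity(nodeCapacities, 2);
    }

    public static void main(String[] args) {
        long[] nodes = {4, 8, 8}; // illustrative sizes only
        System.out.println("replicated:  " + replicatedCapacity(nodes));
        System.out.println("distributed: " + distributedCapacity(nodes, 2));
        System.out.println("scattered:   " + scatteredCapacity(nodes));
    }
}
```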

1.4. Local caches

Data Grid offers a local cache mode that is similar to a ConcurrentHashMap.

Caches offer more capabilities than simple maps, including write-through and write-behind to persistent storage as well as management capabilities such as eviction and expiration.

The Data Grid Cache API extends the ConcurrentMap API in Java, making it easy to migrate from a map to a Data Grid cache.

Local cache configuration

XML

    <local-cache name="mycache"
                 statistics="true">
      <encoding media-type="application/x-protostream"/>
    </local-cache>

YAML

    localCache:
      name: "mycache"
      statistics: "true"
      encoding:
        mediaType: "application/x-protostream"

1.4.1. Simple caches

A simple cache is a type of local cache that disables support for the following capabilities:

- Transactions and invocation batching

- Persistent storage

- Custom interceptors

- Indexing

- Transcoding

However, you can use other Data Grid capabilities with simple caches such as expiration, eviction, statistics, and security features. If you configure a capability that is not compatible with a simple cache, Data Grid throws an exception.

Simple cache configuration

XML

    <local-cache simple-cache="true" />

JSON

    {
      "local-cache" : {
        "simple-cache" : "true"
      }
    }

YAML

    localCache:
      simpleCache: "true"

Chapter 2. Clustered caches

You can create embedded and remote caches on Data Grid clusters that replicate data across nodes.

2.1. Replicated caches

Data Grid replicates all entries in the cache to all nodes in the cluster. Each node can perform read operations locally.

Replicated caches provide a quick and easy way to share state across a cluster, but are suitable only for clusters of fewer than ten nodes. Because the number of replication requests scales linearly with the number of nodes in the cluster, using replicated caches with larger clusters reduces performance. However, you can use UDP multicasting for replication requests to improve performance.

Each key has a primary owner, which serializes data container updates in order to provide consistency.

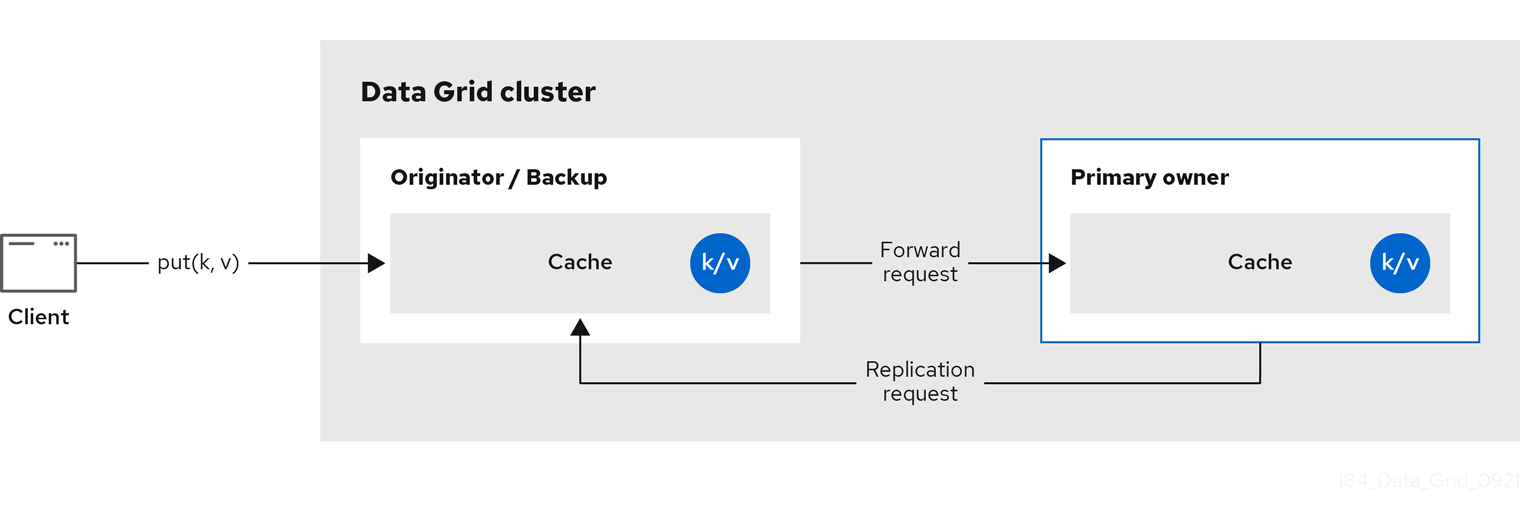

Figure 2.1. Replicated cache

Synchronous or asynchronous replication

- Synchronous replication blocks the caller (for example, on a cache.put(key, value)) until the modifications have been replicated successfully to all the nodes in the cluster.

- Asynchronous replication performs replication in the background, and write operations return immediately. Asynchronous replication is not recommended because communication errors, or errors that happen on remote nodes, are not reported to the caller.

Transactions

If transactions are enabled, write operations are not replicated through the primary owner.

With pessimistic locking, each write triggers a lock message, which is broadcast to all the nodes. During transaction commit, the originator broadcasts a one-phase prepare message and an unlock message (optional). Either the one-phase prepare or the unlock message is fire-and-forget.

With optimistic locking, the originator broadcasts a prepare message, a commit message, and an unlock message (optional). Again, either the one-phase prepare or the unlock message is fire-and-forget.

2.2. Distributed caches

Data Grid attempts to keep a fixed number of copies of any entry in the cache, configured as numOwners. This allows distributed caches to scale linearly, storing more data as nodes are added to the cluster.

As nodes join and leave the cluster, there are times when a key has more or fewer than numOwners copies. In particular, if numOwners nodes leave in quick succession, some entries are lost, so a distributed cache is said to tolerate numOwners - 1 node failures.

The number of copies represents a trade-off between performance and durability of data. The more copies you maintain, the lower performance will be, but also the lower the risk of losing data due to server or network failures.

Data Grid splits the owners of a key into one primary owner, which coordinates writes to the key, and zero or more backup owners.

The following diagram shows a write operation that a client sends to a backup owner. In this case the backup node forwards the write to the primary owner, which then replicates the write to the backup.

Figure 2.2. Cluster replication

Figure 2.3. Distributed cache

Read operations

Read operations request the value from the primary owner. If the primary owner does not respond in a reasonable amount of time, Data Grid requests the value from the backup owners as well.

A read operation may require 0 messages if the key is present in the local cache, or up to 2 * numOwners messages if all the owners are slow.

Write operations

Write operations result in at most 2 * numOwners messages: one message from the originator to the primary owner and numOwners - 1 messages from the primary to the backup nodes, along with the corresponding acknowledgment messages.

Cache topology changes may cause retries and additional messages for both read and write operations.
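The message counts above follow directly from numOwners. The following sketch of the arithmetic uses hypothetical method names and assumes that no retries are caused by topology changes.

```java
final class MessageCountSketch {

    // Upper bound for a write: one request to the primary owner, numOwners - 1 requests
    // from the primary to the backups, and one acknowledgment for each request.
    static int maxWriteMessages(int numOwners) {
        int requests = 1 + (numOwners - 1);
        int acknowledgments = requests;
        return requests + acknowledgments;
    }

    // Upper bound for a read when all owners are slow: one request and one response per owner.
    static int maxReadMessages(int numOwners) {
        return 2 * numOwners;
    }

    // Node failures in quick succession that a distributed cache tolerates without losing entries.
    static int toleratedFailures(int numOwners) {
        return numOwners - 1;
    }

    public static void main(String[] args) {
        for (int owners = 1; owners <= 3; owners++) {
            System.out.println("numOwners=" + owners
                    + " write<=" + maxWriteMessages(owners)
                    + " read<=" + maxReadMessages(owners)
                    + " toleratedFailures=" + toleratedFailures(owners));
        }
    }
}
```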

Synchronous or asynchronous replication

Asynchronous replication is not recommended because it can lose updates. In addition to losing updates, asynchronous distributed caches can also see a stale value when a thread writes to a key and then immediately reads the same key.

Transactions

Transactional distributed caches send lock/prepare/commit/unlock messages to the affected nodes only, meaning all nodes that own at least one key affected by the transaction. As an optimization, if the transaction writes to a single key and the originator is the primary owner of the key, lock messages are not replicated.

2.2.1. Read consistency

Even with synchronous replication, distributed caches are not linearizable. Transactional distributed caches do not support serializable or snapshot isolation.

For example, a thread is carrying out a single put request:

    cache.get(k) -> v1
    cache.put(k, v2)
    cache.get(k) -> v2

But another thread might see the values in a different order:

    cache.get(k) -> v2
    cache.get(k) -> v1

The reason is that a read can return the value from any owner, depending on how fast the primary owner replies. The write is not atomic across all the owners. In fact, the primary commits the update only after it receives a confirmation from the backup. While the primary is waiting for the confirmation message from the backup, reads from the backup see the new value, but reads from the primary see the old one.

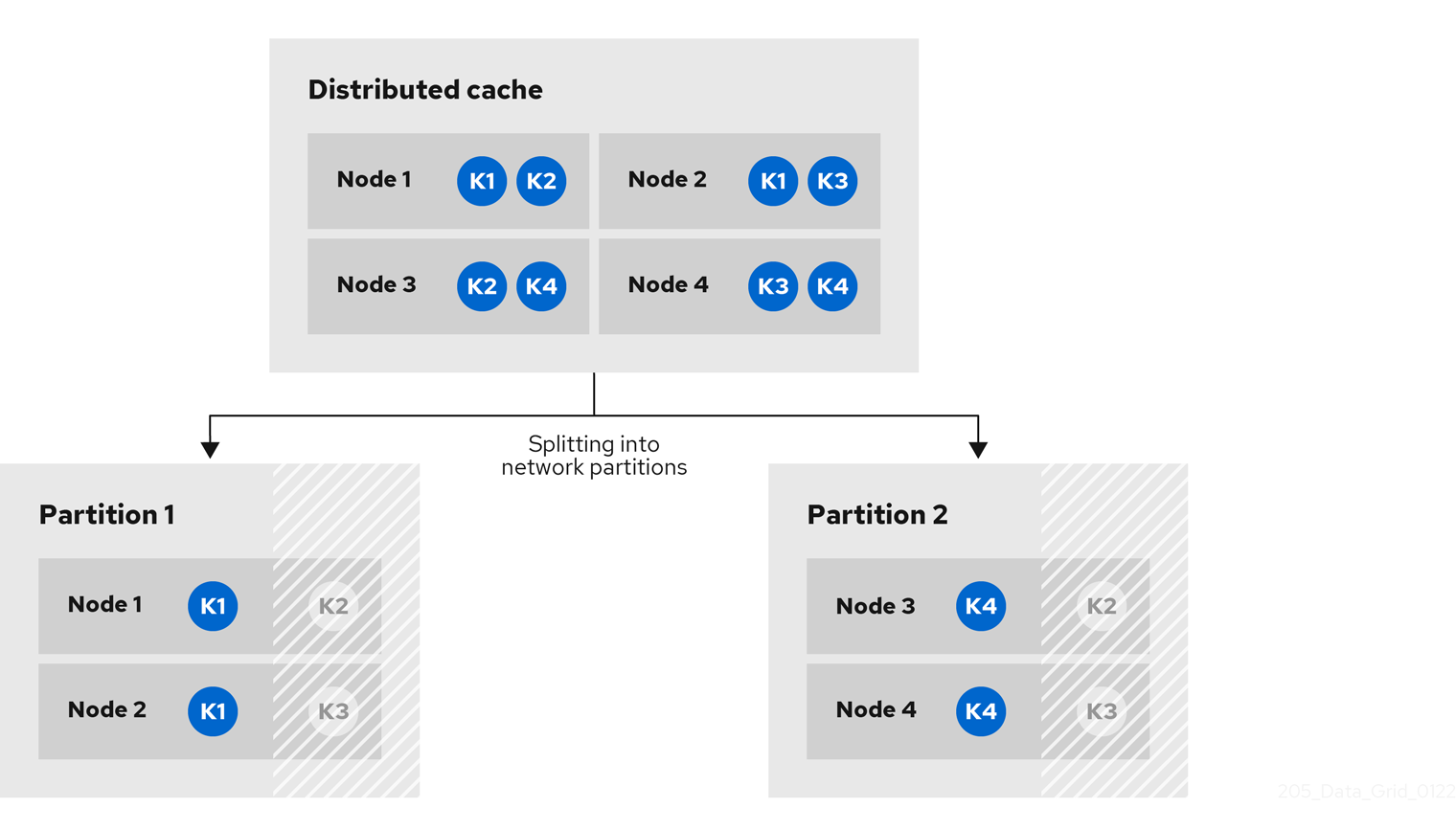
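The window in which the owners disagree can be replayed step by step. This is a single-threaded simulation with hypothetical names, not Data Grid code: two fields stand in for the copies of one key held by the primary and backup owners.

```java
final class ReadConsistencySketch {

    private String primaryCopy = "v1";
    private String backupCopy = "v1";

    // Step 1 of a write: the primary forwards the update and the backup applies it.
    void applyOnBackup(String value) {
        backupCopy = value;
    }

    // Step 2 of a write: the primary commits only after the backup has confirmed.
    void commitOnPrimary(String value) {
        primaryCopy = value;
    }

    String readFromPrimary() {
        return primaryCopy;
    }

    String readFromBackup() {
        return backupCopy;
    }

    public static void main(String[] args) {
        ReadConsistencySketch key = new ReadConsistencySketch();
        key.applyOnBackup("v2");
        // Between the two steps the owners hold different values.
        System.out.println("backup:  " + key.readFromBackup());
        System.out.println("primary: " + key.readFromPrimary());
        key.commitOnPrimary("v2");
        System.out.println("primary after commit: " + key.readFromPrimary());
    }
}
```

A reader whose first read is answered by the backup and whose second read is answered by the primary during that window observes the new value before the old one.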

2.2.2. Key ownership

Distributed caches split entries into a fixed number of segments and assign each segment to a list of owner nodes. Replicated caches do the same, with the exception that every node is an owner.

The first node in the list of owners is the primary owner. The other nodes in the list are backup owners. When the cache topology changes, because a node joins or leaves the cluster, the segment ownership table is broadcast to every node. This allows nodes to locate keys without making multicast requests or maintaining metadata for each key.

The numSegments property configures the number of segments available. However, the number of segments cannot change unless the cluster is restarted.

Likewise the key-to-segment mapping cannot change. Keys must always map to the same segments regardless of cluster topology changes. It is important that the key-to-segment mapping evenly distributes the number of segments allocated to each node while minimizing the number of segments that must move when the cluster topology changes.
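The split between a fixed key-to-segment mapping and a changing ownership table can be sketched as follows. This is an illustration under stated assumptions, not the Data Grid implementation: hashCode() with floorMod stands in for the real hash function, and the class, node names, and tables are hypothetical.

```java
import java.util.List;
import java.util.Map;

final class SegmentSketch {

    // The key-to-segment mapping depends only on the key and the number of segments,
    // so it stays the same when nodes join or leave.
    // Assumption: hashCode() with floorMod replaces the real hash function.
    static int segmentOf(Object key, int numSegments) {
        return Math.floorMod(key.hashCode(), numSegments);
    }

    // The ownership table maps each segment to its owners; the first owner is the primary.
    static String primaryOwner(Object key, int numSegments, Map<Integer, List<String>> owners) {
        return owners.get(segmentOf(key, numSegments)).get(0);
    }

    public static void main(String[] args) {
        int numSegments = 4;
        Map<Integer, List<String>> before = Map.of(
                0, List.of("A", "B"), 1, List.of("B", "C"),
                2, List.of("C", "A"), 3, List.of("A", "C"));
        // Node B leaves: only the ownership table changes, not the segment of any key.
        Map<Integer, List<String>> after = Map.of(
                0, List.of("A", "C"), 1, List.of("C", "A"),
                2, List.of("C", "A"), 3, List.of("A", "C"));
        Integer key = 5;
        System.out.println("segment: " + segmentOf(key, numSegments));
        System.out.println("primary before: " + primaryOwner(key, numSegments, before));
        System.out.println("primary after:  " + primaryOwner(key, numSegments, after));
    }
}
```

Because every node holds the same table, each node can locate the owners of a key locally, without per-key metadata.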

| Consistent hash factory implementation | Description |
|---|---|
| | Uses an algorithm based on consistent hashing. Selected by default when server hinting is disabled. This implementation always assigns keys to the same nodes in every cache as long as the cluster is symmetric. In other words, all caches run on all nodes. This implementation does have some negative points in that the load distribution is slightly uneven. It also moves more segments than strictly necessary on a join or leave. |
| | Equivalent to |
| | Achieves a more even distribution than |
| | Equivalent to |
| | Used internally to implement replicated caches. You should never explicitly select this algorithm in a distributed cache. |

Hashing configuration

You can configure ConsistentHashFactory implementations, including custom ones, with embedded caches only.

XML

    <distributed-cache name="distributedCache"
                       owners="2"
                       segments="100"
                       capacity-factor="2" />

2.2.3. Capacity factors

Capacity factors allocate the number of segments based on resources available to each node in the cluster.

The capacity factor for a node applies to segments for which that node is both the primary owner and backup owner. In other words, the capacity factor specifies the total capacity that a node has in comparison to other nodes in the cluster.

The default value is 1 which means that all nodes in the cluster have an equal capacity and Data Grid allocates the same number of segments to all nodes in the cluster.

However, if nodes have different amounts of memory available to them, you can configure the capacity factor so that the Data Grid hashing algorithm assigns each node a number of segments weighted by its capacity.

The value for the capacity factor configuration must be a positive number and can be a fraction such as 1.5. You can also configure a capacity factor of 0, but this is recommended only for nodes that join the cluster temporarily, and you should use the zero capacity configuration instead.
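As a rough illustration of the weighting, the expected number of segment copies per node can be computed in proportion to each node's capacity factor. This sketch uses hypothetical names and only computes the proportional expectation; the actual allocation places whole segments and also has to respect ownership constraints, so real numbers can differ.

```java
final class CapacityFactorSketch {

    // Expected number of segment copies (primary plus backup) held by each node,
    // proportional to its capacity factor.
    static double[] expectedSegmentCopies(double[] capacityFactors, int numSegments, int numOwners) {
        double totalFactor = 0;
        for (double factor : capacityFactors) {
            if (factor < 0) {
                throw new IllegalArgumentException("capacity factors must not be negative");
            }
            totalFactor += factor;
        }
        if (totalFactor == 0) {
            throw new IllegalArgumentException("at least one node needs a capacity factor above 0");
        }
        double totalCopies = (double) numSegments * numOwners;
        double[] expected = new double[capacityFactors.length];
        for (int i = 0; i < capacityFactors.length; i++) {
            expected[i] = totalCopies * capacityFactors[i] / totalFactor;
        }
        return expected;
    }

    public static void main(String[] args) {
        // Three nodes; the third has twice the capacity of the others (illustrative values).
        double[] expected = expectedSegmentCopies(new double[] {1, 1, 2}, 100, 2);
        for (int i = 0; i < expected.length; i++) {
            System.out.println("node " + i + ": " + expected[i] + " segment copies");
        }
    }
}
```

A node with a capacity factor of 0 gets an expected share of zero in this calculation, which matches the idea that it holds no data.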

2.2.3.1. Zero capacity nodes

You can configure nodes where the capacity factor is 0 for every cache, including user-defined caches and internal caches. A zero capacity node does not hold any data.

Zero capacity node configuration

XML

    <infinispan>
      <cache-container zero-capacity-node="true" />
    </infinispan>

YAML

    infinispan:
      cacheContainer:
        zeroCapacityNode: "true"

ConfigurationBuilder

    new GlobalConfigurationBuilder().zeroCapacityNode(true);

2.2.4. Level one (L1) caches

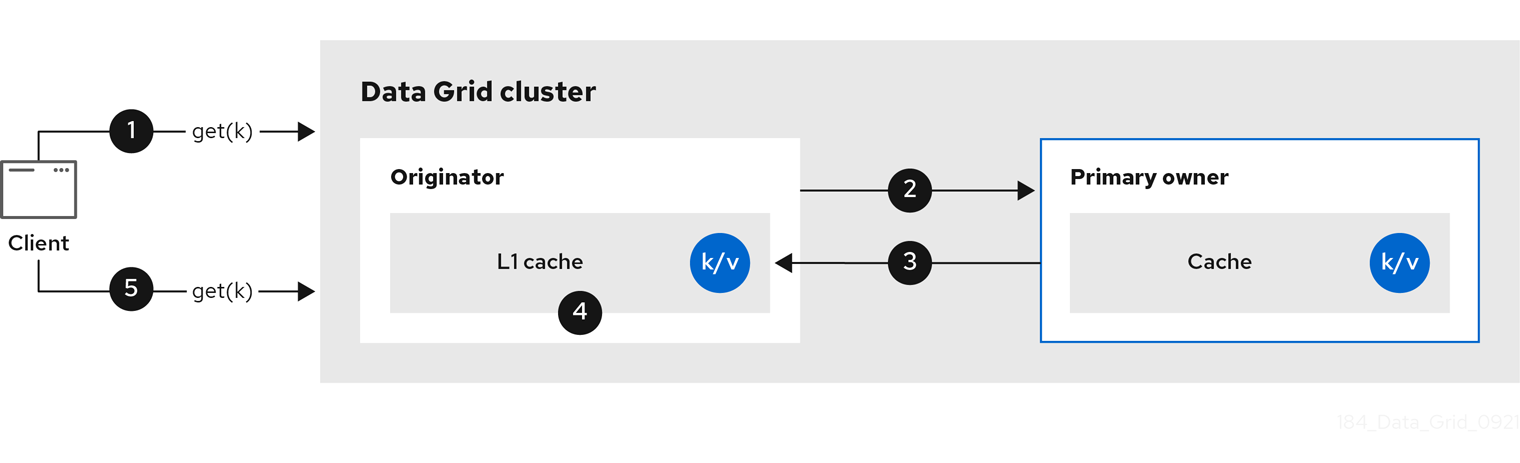

Data Grid nodes create local replicas when they retrieve entries from another node in the cluster. L1 caches avoid repeatedly looking up entries on primary owner nodes and improve performance.

The following diagram illustrates how L1 caches work:

Figure 2.4. L1 cache

In the "L1 cache" diagram:

- A client invokes cache.get() to read an entry for which another node in the cluster is the primary owner.

- The originator node forwards the read operation to the primary owner.

- The primary owner returns the key/value entry.

- The originator node creates a local copy.

- Subsequent cache.get() invocations return the local entry instead of forwarding to the primary owner.

L1 caching performance

Enabling L1 improves performance for read operations but requires primary owner nodes to broadcast invalidation messages when entries are modified. This ensures that Data Grid removes any out-of-date replicas across the cluster. However, this also decreases the performance of write operations and increases memory usage, reducing the overall capacity of caches.

Data Grid evicts and expires local replicas, or L1 entries, like any other cache entry.
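The read path and the invalidation on write can be sketched with two maps. This is a single-threaded simulation with hypothetical names, not Data Grid code: one map stands in for the data on the primary owner and the other for the L1 replicas on the originator node.

```java
import java.util.HashMap;
import java.util.Map;

final class L1Sketch {

    final Map<String, String> ownerData = new HashMap<>(); // stands in for the primary owner node
    final Map<String, String> l1 = new HashMap<>();        // local replicas on the originator node
    int remoteReads = 0;                                   // counts reads forwarded to the owner

    // Read: serve from L1 when possible, otherwise ask the owner and keep a local copy.
    String get(String key) {
        String local = l1.get(key);
        if (local != null) {
            return local;
        }
        remoteReads++;
        String value = ownerData.get(key);
        if (value != null) {
            l1.put(key, value);
        }
        return value;
    }

    // Write: update the owner and invalidate the now out-of-date local replica.
    void put(String key, String value) {
        ownerData.put(key, value);
        l1.remove(key);
    }

    public static void main(String[] args) {
        L1Sketch node = new L1Sketch();
        node.put("k", "v1");
        node.get("k"); // forwarded to the owner
        node.get("k"); // served from L1
        System.out.println("remote reads so far: " + node.remoteReads);
        node.put("k", "v2"); // invalidates the L1 entry
        System.out.println("value after write: " + node.get("k"));
    }
}
```

The extra map is also where the memory cost of L1 comes from: every replica is an entry that the node stores in addition to the segments it owns.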

L1 cache configuration

XML

    <distributed-cache l1-lifespan="5000"
                       l1-cleanup-interval="60000">
    </distributed-cache>

YAML

    distributedCache:
      l1Lifespan: "5000"
      l1-cleanup-interval: "60000"

ConfigurationBuilder

    ConfigurationBuilder builder = new ConfigurationBuilder();
    builder.clustering().cacheMode(CacheMode.DIST_SYNC)
           .l1()
           .lifespan(5000, TimeUnit.MILLISECONDS)
           .cleanupTaskFrequency(60000, TimeUnit.MILLISECONDS);

2.2.5. Server hinting

Server hinting increases availability of data in distributed caches by replicating entries across as many servers, racks, and data centers as possible.

Server hinting applies only to distributed caches.

When Data Grid distributes the copies of your data, it follows the order of precedence: site, rack, machine, and node. All of the configuration attributes are optional. For example, when you specify only the rack IDs, then Data Grid distributes the copies across different racks and nodes.

Server hinting can impact cluster rebalancing operations by moving more segments than necessary if the number of segments for the cache is too low.

An alternative for clusters in multiple data centers is cross-site replication.


2.2.6. Key affinity service

In a distributed cache, a key is allocated to a list of nodes with an opaque algorithm. There is no easy way to reverse the computation and generate a key that maps to a particular node. However, Data Grid can generate a sequence of (pseudo-)random keys, see what their primary owner is, and hand them out to the application when it needs a key mapping to a particular node.

The following code snippet shows how to obtain and use a reference to this service.

The service is started at step 2: after this point it uses the supplied Executor to generate and queue keys. At step 3, we obtain a key from the service, and at step 4 we use it.

Lifecycle

KeyAffinityService extends Lifecycle, which allows stopping and (re)starting it:

    public interface Lifecycle {
       void start();
       void stop();
    }

The service is instantiated through KeyAffinityServiceFactory. All the factory methods have an Executor parameter, which is used for asynchronous key generation (so that it does not happen in the caller's thread). It is the user's responsibility to handle the shutdown of this Executor.

The KeyAffinityService, once started, needs to be explicitly stopped. This stops the background key generation and releases other held resources.

The only situation in which KeyAffinityService stops by itself is when the cache manager with which it was registered is shut down.

Topology changes

When the cache topology changes, the ownership of the keys generated by the KeyAffinityService might change. The key affinity service keeps track of these topology changes and does not return keys that would currently map to a different node, but it does not do anything about keys generated earlier.

As such, applications should treat KeyAffinityService purely as an optimization, and they should not rely on the location of a generated key for correctness.

In particular, applications should not rely on keys generated by KeyAffinityService for the same address to always be located together. Collocation of keys is only provided by the Grouping API.

2.2.7. Grouping API

Complementary to the Key affinity service, the Grouping API allows you to co-locate a group of entries on the same nodes, but without being able to select the actual nodes.

By default, the segment of a key is computed using the key’s hashCode(). If you use the Grouping API, Data Grid will compute the segment of the group and use that as the segment of the key.

When the Grouping API is in use, it is important that every node can still compute the owners of every key without contacting other nodes. For this reason, the group cannot be specified manually. The group can either be intrinsic to the entry (generated by the key class) or extrinsic (generated by an external function).

To use the Grouping API, you must enable groups.

    Configuration c = new ConfigurationBuilder()
       .clustering().hash().groups().enabled()
       .build();

    <distributed-cache>
      <groups enabled="true"/>
    </distributed-cache>

If you have control of the key class (you can alter the class definition and it is not part of an unmodifiable library), then we recommend using an intrinsic group. The intrinsic group is specified by adding the @Group annotation to a method. The group method must return a String.

If you don’t have control over the key class, or the determination of the group is an orthogonal concern to the key class, we recommend using an extrinsic group. An extrinsic group is specified by implementing the Grouper interface.

    public interface Grouper<T> {
       String computeGroup(T key, String group);
       Class<T> getKeyType();
    }

If multiple Grouper classes are configured for the same key type, all of them will be called, receiving the value computed by the previous one. If the key class also has a @Group annotation, the first Grouper will receive the group computed by the annotated method. This allows you even greater control over the group when using an intrinsic group.

Example Grouper implementation
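A possible implementation is sketched below. It is illustrative only: the Grouper contract shown above is re-declared locally so that the sketch compiles without the Data Grid library, and the grouping rule (keys such as "k7" are grouped by the parity of their numeric suffix) is a hypothetical choice. In an application you would implement the Grouper interface provided by Data Grid instead of the local copy.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical grouper: keys of the form "k<number>" are grouped by the parity of the number.
final class KXGrouper implements Grouper<String> {

    private static final Pattern KEY = Pattern.compile("^k(\\d{1,9})$");

    @Override
    public String computeGroup(String key, String group) {
        Matcher matcher = KEY.matcher(key);
        if (matcher.matches()) {
            return String.valueOf(Integer.parseInt(matcher.group(1)) % 2);
        }
        // Not a key this grouper understands: keep the group computed so far.
        return group;
    }

    @Override
    public Class<String> getKeyType() {
        return String.class;
    }

    public static void main(String[] args) {
        KXGrouper grouper = new KXGrouper();
        System.out.println(grouper.computeGroup("k7", null));
        System.out.println(grouper.computeGroup("k10", null));
    }
}

// Local copy of the Grouper contract from the text above (assumption: same method signatures).
interface Grouper<T> {
    String computeGroup(T key, String group);
    Class<T> getKeyType();
}
```

Returning the incoming group for keys that do not match keeps the grouper usable in a chain, where each Grouper receives the value computed by the previous one.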

Grouper implementations must be registered explicitly in the cache configuration. If you are configuring Data Grid programmatically:

    Configuration c = new ConfigurationBuilder()
       .clustering().hash().groups().enabled().addGrouper(new KXGrouper())
       .build();

Or, if you are using XML:

    <distributed-cache>
      <groups enabled="true">
        <grouper class="com.example.KXGrouper" />
      </groups>
    </distributed-cache>

Advanced API

AdvancedCache has two group-specific methods:

- getGroup(groupName) retrieves all keys in the cache that belong to a group.

- removeGroup(groupName) removes all the keys in the cache that belong to a group.

Both methods iterate over the entire data container and store (if present), so they can be slow when a cache contains lots of small groups.

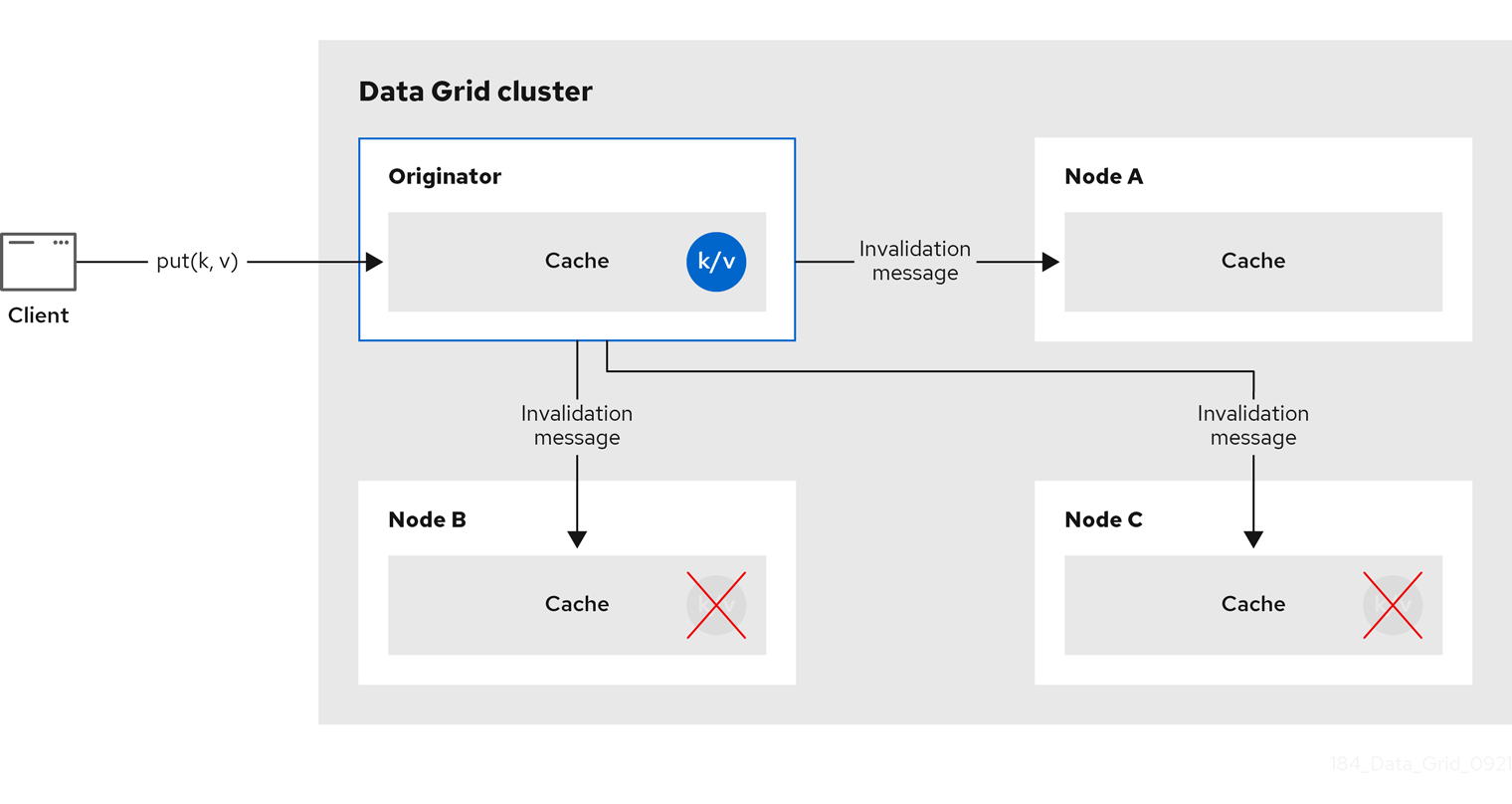

2.3. Invalidation caches

You can use Data Grid in invalidation mode to optimize systems that perform high volumes of read operations. A good example is to use invalidation to prevent lots of database writes when state changes occur.

This cache mode only makes sense if you have another, permanent store for your data such as a database and are only using Data Grid as an optimization in a read-heavy system, to prevent hitting the database for every read. If a cache is configured for invalidation, every time data is changed in a cache, other caches in the cluster receive a message informing them that their data is now stale and should be removed from memory and from any local store.

Figure 2.5. Invalidation cache

Sometimes the application reads a value from the external store and wants to write it to the local cache, without removing it from the other nodes. To do this, it must call Cache.putForExternalRead(key, value) instead of Cache.put(key, value).

Invalidation mode can be used with a shared cache store. A write operation both updates the shared store and removes the stale values from the memory of the other nodes. The benefit of this is twofold: network traffic is minimized because invalidation messages are very small compared to replicating the entire value, and other caches in the cluster look up modified data lazily, only when needed.
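The interaction between a regular write, an invalidation, and putForExternalRead can be sketched as a small simulation. The names are hypothetical and the code is single-threaded; a map stands in for the shared store and each node has its own in-memory map.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

final class InvalidationSketch {

    static final class Node {
        final Map<String, String> memory = new HashMap<>();
    }

    final Map<String, String> sharedStore = new HashMap<>(); // stands in for the database
    final List<Node> nodes = new ArrayList<>();

    Node addNode() {
        Node node = new Node();
        nodes.add(node);
        return node;
    }

    // Regular write: update the shared store, keep the value on the writer,
    // and remove the stale value from the memory of every other node.
    void put(Node writer, String key, String value) {
        sharedStore.put(key, value);
        writer.memory.put(key, value);
        for (Node other : nodes) {
            if (other != writer) {
                other.memory.remove(key);
            }
        }
    }

    // Caches a value that was read from the store, without invalidating other nodes.
    void putForExternalRead(Node reader, String key, String value) {
        reader.memory.putIfAbsent(key, value);
    }

    // Read: use local memory first, otherwise load lazily from the shared store.
    String get(Node reader, String key) {
        String value = reader.memory.get(key);
        if (value == null) {
            value = sharedStore.get(key);
            if (value != null) {
                putForExternalRead(reader, key, value);
            }
        }
        return value;
    }

    public static void main(String[] args) {
        InvalidationSketch cluster = new InvalidationSketch();
        Node a = cluster.addNode();
        Node b = cluster.addNode();
        cluster.put(a, "k", "v1");
        System.out.println("b reads: " + cluster.get(b, "k"));
        cluster.put(a, "k", "v2"); // removes the stale copy on b
        System.out.println("b reads: " + cluster.get(b, "k"));
    }
}
```

Only the key travels in an invalidation, which is why the messages stay small regardless of the size of the value.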

Never use invalidation mode with a local, non-shared, cache store. The invalidation message will not remove entries in the local store, and some nodes will keep seeing the stale value.

An invalidation cache can also be configured with a special cache loader, ClusterLoader. When ClusterLoader is enabled, read operations that do not find the key on the local node request it from all the other nodes first, and store it in memory locally. In certain situations it stores stale values, so only use it if you have a high tolerance for stale values.

Synchronous or asynchronous replication

When synchronous, a write blocks until all nodes in the cluster have evicted the stale value. When asynchronous, the originator broadcasts invalidation messages but does not wait for responses. That means other nodes still see the stale value for a while after the write completed on the originator.

Transactions

Transactions can be used to batch the invalidation messages. Transactions acquire the key lock on the primary owner.

With pessimistic locking, each write triggers a lock message, which is broadcast to all the nodes. During transaction commit, the originator broadcasts a one-phase prepare message (optionally fire-and-forget) which invalidates all affected keys and releases the locks.

With optimistic locking, the originator broadcasts a prepare message, a commit message, and an unlock message (optional). Either the one-phase prepare or the unlock message is fire-and-forget, and the last message always releases the locks.

2.4. Scattered caches

Scattered caches are very similar to distributed caches as they allow linear scaling of the cluster. Scattered caches tolerate a single node failure by maintaining two copies of the data (numOwners=2). Unlike distributed caches, the location of data is not fixed; while the same Consistent Hash algorithm is used to locate the primary owner, the backup copy is stored on the node that wrote the data last time. When the write originates on the primary owner, the backup copy is stored on any other node (the exact location of this copy is not important).

This has the advantage of a single Remote Procedure Call (RPC) for any write (distributed caches require one or two RPCs), but reads always have to target the primary owner. That results in faster writes but possibly slower reads, and therefore this mode is more suitable for write-intensive applications.

Storing multiple backup copies also results in slightly higher memory consumption. In order to remove out-of-date backup copies, invalidation messages are broadcast in the cluster, which generates some overhead. This lowers the performance of scattered caches in clusters with a large number of nodes.

When a node crashes, the primary copy may be lost. Therefore, the cluster has to reconcile the backups and find out the last written backup copy. This process results in more network traffic during state transfer.

Since the writer of data is also a backup, even if we specify machine/rack/site IDs on the transport level the cluster cannot be resilient to more than one failure on the same machine/rack/site.

You cannot use scattered caches with transactions or asynchronous replication.

The cache is configured in a similar way to the other cache modes. Here is an example of declarative configuration:

    <scattered-cache name="scatteredCache" />

You can do the same programmatically:

    Configuration c = new ConfigurationBuilder()
       .clustering().cacheMode(CacheMode.SCATTERED_SYNC)
       .build();

Scattered mode is not exposed in the server configuration because the server is usually accessed through the Hot Rod protocol. The protocol automatically selects the primary owner for writes, so a write in distributed mode with two owners also requires a single RPC inside the cluster. Therefore, a scattered cache would not bring a performance benefit.

2.5. Asynchronous replication

All clustered cache modes can be configured to use asynchronous communications with the mode="ASYNC" attribute on the <replicated-cache/>, <distributed-cache/>, or <invalidation-cache/> element.

With asynchronous communications, the originator node does not receive any acknowledgement from the other nodes about the status of the operation, so there is no way to check if it succeeded on other nodes.

We do not recommend asynchronous communications in general, as they can cause inconsistencies in the data, and the results are hard to reason about. Nevertheless, sometimes speed is more important than consistency, and the option is available for those cases.

Asynchronous API

The Asynchronous API allows you to use synchronous communications, but without blocking the user thread.

There is one caveat: The asynchronous operations do NOT preserve the program order. If a thread calls cache.putAsync(k, v1); cache.putAsync(k, v2), the final value of k may be either v1 or v2. The advantage over using asynchronous communications is that the final value can’t be v1 on one node and v2 on another.
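When the order of two asynchronous writes matters, one option is to start the second write only after the first has completed. The sketch below shows that chaining with CompletableFuture. It is not Data Grid code: the putAsync helper is a hypothetical stand-in backed by a ConcurrentHashMap, chosen so that the example runs with the JDK alone.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentMap;

final class AsyncOrderSketch {

    // Hypothetical stand-in for an asynchronous put that completes with the previous value.
    static CompletableFuture<String> putAsync(ConcurrentMap<String, String> cache,
                                              String key, String value) {
        return CompletableFuture.supplyAsync(() -> cache.put(key, value));
    }

    // Starts the second write only after the first one has completed, so v2 is the final value.
    static String orderedWrites() {
        ConcurrentMap<String, String> cache = new ConcurrentHashMap<>();
        putAsync(cache, "k", "v1")
                .thenCompose(previous -> putAsync(cache, "k", "v2"))
                .join();
        return cache.get("k");
    }

    public static void main(String[] args) {
        System.out.println(orderedWrites());
    }
}
```

Calling the two writes back to back without chaining them leaves the final value open to either outcome, which is the caveat described above.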

2.5.1. Return values with asynchronous replication

Because the Cache interface extends java.util.Map, write methods like put(key, value) and remove(key) return the previous value by default.

In some cases, the return value may not be correct:

- When using AdvancedCache.withFlags() with Flag.IGNORE_RETURN_VALUE, Flag.SKIP_REMOTE_LOOKUP, or Flag.SKIP_CACHE_LOAD.

- When the cache is configured with unreliable-return-values="true".

- When using asynchronous communications.

- When there are multiple concurrent writes to the same key, and the cache topology changes. The topology change will make Data Grid retry the write operations, and a retried operation's return value is not reliable.

Transactional caches return the correct previous value in cases 3 and 4. However, transactional caches also have a gotcha: in distributed mode, the read-committed isolation level is implemented as repeatable-read. That means this example of "double-checked locking" won’t work:

The correct way to implement this is to use cache.getAdvancedCache().withFlags(Flag.FORCE_WRITE_LOCK).get(k).

In caches with optimistic locking, writes can also return stale previous values. Write skew checks can avoid stale previous values.

2.6. Configuring initial cluster size

Data Grid handles cluster topology changes dynamically. This means that nodes do not need to wait for other nodes to join the cluster before Data Grid initializes the caches.

If your applications require a specific number of nodes in the cluster before caches start, you can configure the initial cluster size as part of the transport.

Procedure

- Open your Data Grid configuration for editing.

- Set the minimum number of nodes required before caches start with the initial-cluster-size attribute or initialClusterSize() method.

- Set the timeout, in milliseconds, after which the cache manager does not start with the initial-cluster-timeout attribute or initialClusterTimeout() method.

- Save and close your Data Grid configuration.
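The two settings behave like a bounded wait: startup proceeds once the required number of nodes has joined, and fails if the timeout elapses first. The following JDK-only sketch models that behavior with a `CountDownLatch`. It is an analogy, not Data Grid code, and the class and method names are hypothetical.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

final class InitialClusterSizeSketch {

    // Hypothetical model: each joining node counts the latch down once.
    // Returns true if the required number of nodes joined before the timeout.
    static boolean awaitInitialCluster(CountDownLatch joins, long timeoutMillis) {
        try {
            return joins.await(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return false;
        }
    }

    public static void main(String[] args) {
        CountDownLatch joins = new CountDownLatch(4);   // initial cluster size of 4
        for (int node = 0; node < 4; node++) {
            joins.countDown();                          // simulate a node joining
        }
        System.out.println(awaitInitialCluster(joins, 30_000));
    }
}
```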

Initial cluster size configuration

XML

JSON

YAML

infinispan:
  cacheContainer:
    transport:
      initialClusterSize: "4"
      initialClusterTimeout: "30000"

ConfigurationBuilder

GlobalConfiguration global = GlobalConfigurationBuilder.defaultClusteredBuilder()
   .transport()
   .initialClusterSize(4)
   .initialClusterTimeout(30000, TimeUnit.MILLISECONDS)
   .build();

Chapter 3. Data Grid cache configuration

Cache configuration controls how Data Grid stores your data.

As part of your cache configuration, you declare the cache mode you want to use. For instance, you can configure Data Grid clusters to use replicated caches or distributed caches.

Your configuration also defines the characteristics of your caches and enables the Data Grid capabilities that you want to use when handling data. For instance, you can configure how Data Grid encodes entries in your caches, whether replication requests happen synchronously or asynchronously between nodes, if entries are mortal or immortal, and so on.

3.1. Declarative cache configuration

You can configure caches declaratively, in XML, JSON, or YAML format, according to the Data Grid schema.

Declarative cache configuration has the following advantages over programmatic configuration:

- Portability

- Define each configuration in a standalone file that you can use to create embedded and remote caches. You can also use declarative configuration to create caches with Data Grid Operator for clusters running on OpenShift.

- Simplicity

- Keep markup languages separate from programming languages. For example, to create remote caches it is generally better to not add complex XML directly to Java code.

Data Grid Server configuration extends infinispan.xml to include cluster transport mechanisms, security realms, and endpoint configuration. If you declare caches as part of your Data Grid Server configuration you should use management tooling, such as Ansible or Chef, to keep it synchronized across the cluster.

To dynamically synchronize remote caches across Data Grid clusters, create them at runtime.

3.1.1. Cache configuration

You can create declarative cache configuration in XML, JSON, and YAML format.

All declarative caches must conform to the Data Grid schema. Configuration in JSON format must follow the structure of an XML configuration: elements correspond to objects and attributes correspond to fields.

Data Grid limits cache names and cache template names to a maximum of 255 characters. If you exceed this limit, Data Grid Server might stop abruptly without issuing an exception message.

A file system might also limit the length of file names, so ensure that cache names do not exceed that limit. If a cache name exceeds the file system's naming limit, general operations or initialization operations on that cache might fail. Write succinct cache names and cache template names.

Distributed caches

XML

JSON

YAML

Replicated caches

XML

JSON

YAML

Multiple caches

XML

YAML

JSON

3.2. Adding cache templates

The Data Grid schema includes *-cache-configuration elements that you can use to create templates. You can then create caches on demand, using the same configuration multiple times.

Procedure

- Open your Data Grid configuration for editing.

- Add the cache configuration with the appropriate *-cache-configuration element or object to the cache manager.

- Save and close your Data Grid configuration.

Cache template example

XML

JSON

YAML

3.2.1. Creating caches from templates

Create caches from configuration templates.

Templates for remote caches are available from the Cache templates menu in Data Grid Console.

Prerequisites

- Add at least one cache template to the cache manager.

Procedure

- Open your Data Grid configuration for editing.

- Specify the template from which the cache inherits with the configuration attribute or field.

- Save and close your Data Grid configuration.

Cache configuration inherited from a template

XML

<distributed-cache configuration="my-dist-template" />

JSON

{
  "distributed-cache": {
    "configuration": "my-dist-template"
  }
}

YAML

distributedCache:
  configuration: "my-dist-template"

3.2.2. Cache template inheritance

Cache configuration templates can inherit from other templates to extend and override settings.

Cache template inheritance is hierarchical. For a child configuration template to inherit from a parent, you must include it after the parent template.

Additionally, template inheritance is additive for elements that have multiple values. A cache that inherits from another template merges the values from that template, which can override properties.

Template inheritance example

XML

JSON

YAML

3.2.3. Cache template wildcards

You can add wildcards to cache configuration template names. If you then create caches where the name matches the wildcard, Data Grid applies the configuration template.

Data Grid throws exceptions if cache names match more than one wildcard.

Template wildcard example

XML

JSON

YAML

Using the preceding example, if you create a cache named "async-dist-cache-prod" then Data Grid uses the configuration from the async-dist-cache-* template.
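The matching rule can be sketched in plain Java as follows. This is an illustration only, not Data Grid's implementation: it assumes that `*` is the only wildcard character, and the class name, method names, and any template names other than `async-dist-cache-*` are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.regex.Pattern;

final class TemplateWildcardSketch {

    // Converts a template name such as "async-dist-cache-*" into a regex,
    // treating '*' as "any sequence of characters" and everything else literally.
    static Pattern toPattern(String wildcard) {
        String[] literals = wildcard.split("\\*", -1);
        StringBuilder regex = new StringBuilder();
        for (int i = 0; i < literals.length; i++) {
            if (i > 0) {
                regex.append(".*");
            }
            regex.append(Pattern.quote(literals[i]));
        }
        return Pattern.compile(regex.toString());
    }

    // Returns the single matching template name, or null if none matches.
    // Throws if the cache name matches more than one wildcard.
    static String resolveTemplate(String cacheName, List<String> templateNames) {
        List<String> matches = new ArrayList<>();
        for (String template : templateNames) {
            if (toPattern(template).matcher(cacheName).matches()) {
                matches.add(template);
            }
        }
        if (matches.size() > 1) {
            throw new IllegalStateException(
                    "Cache name " + cacheName + " matches more than one template: " + matches);
        }
        return matches.isEmpty() ? null : matches.get(0);
    }
}
```

To avoid the ambiguity error, keep wildcard templates narrow enough that no cache name can match two of them.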

3.2.4. Cache templates from multiple XML files

Split cache configuration templates into multiple XML files for granular flexibility and reference them with XML inclusions (XInclude).

Data Grid provides minimal support for the XInclude specification. This means you cannot use the xpointer attribute, the xi:fallback element, text processing, or content negotiation.

You must also add the xmlns:xi="http://www.w3.org/2001/XInclude" namespace to infinispan.xml to use XInclude.

XInclude cache template

Data Grid also provides an infinispan-config-fragment-13.0.xsd schema that you can use with configuration fragments.

Configuration fragment schema

<local-cache xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="urn:infinispan:config:13.0 https://infinispan.org/schemas/infinispan-config-fragment-13.0.xsd"
             xmlns="urn:infinispan:config:13.0"
             name="mycache"/>

3.3. Creating remote caches

When you create remote caches at runtime, Data Grid Server synchronizes your configuration across the cluster so that all nodes have a copy. For this reason you should always create remote caches dynamically with the following mechanisms:

- Data Grid Console

- Data Grid Command Line Interface (CLI)

- Hot Rod or HTTP clients

3.3.1. Default Cache Manager

Data Grid Server provides a default Cache Manager that controls the lifecycle of remote caches. Starting Data Grid Server automatically instantiates the Cache Manager so you can create and delete remote caches and other resources like Protobuf schema.

After you start Data Grid Server and add user credentials, you can view details about the Cache Manager and get cluster information from Data Grid Console.

- Open 127.0.0.1:11222 in any browser.

You can also get information about the Cache Manager through the Command Line Interface (CLI) or REST API:

- CLI

- Run the describe command in the default container.

[//containers/default]> describe

- REST

- Open 127.0.0.1:11222/rest/v2/cache-managers/default/ in any browser.

Default Cache Manager configuration

XML

JSON

YAML

3.3.2. Creating caches with Data Grid Console

Use Data Grid Console to create remote caches in an intuitive visual interface from any web browser.

Prerequisites

- Create a Data Grid user with admin permissions.

- Start at least one Data Grid Server instance.

- Have a Data Grid cache configuration.

Procedure

- Open 127.0.0.1:11222/console/ in any browser.

- Select Create Cache and follow the steps as Data Grid Console guides you through the process.

3.3.3. Creating remote caches with the Data Grid CLI

Use the Data Grid Command Line Interface (CLI) to add remote caches on Data Grid Server.

Prerequisites

- Create a Data Grid user with admin permissions.

- Start at least one Data Grid Server instance.

- Have a Data Grid cache configuration.

Procedure

- Start the CLI and enter your credentials when prompted.

bin/cli.sh

- Use the create cache command to create remote caches.

For example, create a cache named "mycache" from a file named mycache.xml as follows:

create cache --file=mycache.xml mycache

Verification

- List all remote caches with the ls command.

ls caches
mycache

- View cache configuration with the describe command.

describe caches/mycache

3.3.4. Creating remote caches from Hot Rod clients

Use the Data Grid Hot Rod API to create remote caches on Data Grid Server from Java, C++, .NET/C#, JS clients and more.

This procedure shows you how to use Hot Rod Java clients that create remote caches on first access. You can find code examples for other Hot Rod clients in the Data Grid Tutorials.

Prerequisites

- Create a Data Grid user with admin permissions.

- Start at least one Data Grid Server instance.

- Have a Data Grid cache configuration.

Procedure

- Invoke the remoteCache() method as part of your ConfigurationBuilder.

- Set the configuration or configuration_uri properties in the hotrod-client.properties file on your classpath.

ConfigurationBuilder

hotrod-client.properties

infinispan.client.hotrod.cache.another-cache.configuration=<distributed-cache name=\"another-cache\"/>
infinispan.client.hotrod.cache.[my.other.cache].configuration_uri=file:///path/to/infinispan.xml

If the name of your remote cache contains the . character, you must enclose it in square brackets when using hotrod-client.properties files.
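If you generate these property keys in code, the bracket rule is easy to overlook. The following helper is a small sketch of that rule. The class and method names are hypothetical, and only the key prefix and the two property names come from the example above.

```java
final class HotRodPropertyKeySketch {

    private static final String PREFIX = "infinispan.client.hotrod.cache.";

    // Builds the per-cache property key, enclosing cache names that contain
    // the '.' character in square brackets.
    static String cacheProperty(String cacheName, String property) {
        String name = cacheName.contains(".") ? "[" + cacheName + "]" : cacheName;
        return PREFIX + name + "." + property;
    }

    public static void main(String[] args) {
        System.out.println(cacheProperty("another-cache", "configuration"));
        System.out.println(cacheProperty("my.other.cache", "configuration_uri"));
    }
}
```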

3.3.5. Creating remote caches with the REST API

Use the Data Grid REST API to create remote caches on Data Grid Server from any suitable HTTP client.

Prerequisites

- Create a Data Grid user with admin permissions.

- Start at least one Data Grid Server instance.

- Have a Data Grid cache configuration.

Procedure

- Invoke POST requests to /rest/v2/caches/<cache_name> with cache configuration in the payload.
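As a rough illustration, the request can be built with the JDK HTTP client (Java 11 or later). The sketch below only constructs the request and does not send it. The host, port, cache name, and XML payload are placeholder assumptions, and authentication, which a secured server requires, is left out.

```java
import java.net.URI;
import java.net.http.HttpRequest;

final class CreateCacheRequestSketch {

    // Builds (but does not send) a POST request that carries an XML cache
    // configuration in the payload. Base URL and cache name are illustrative.
    static HttpRequest createCacheRequest(String baseUrl, String cacheName, String xmlConfig) {
        return HttpRequest.newBuilder(URI.create(baseUrl + "/rest/v2/caches/" + cacheName))
                .header("Content-Type", "application/xml")
                .POST(HttpRequest.BodyPublishers.ofString(xmlConfig))
                .build();
    }

    public static void main(String[] args) {
        HttpRequest request = createCacheRequest(
                "http://127.0.0.1:11222", "mycache", "<distributed-cache/>");
        System.out.println(request.method() + " " + request.uri());
    }
}
```

To send the request, pass it to `java.net.http.HttpClient.send()` together with a body handler, after adding whatever credentials your server expects.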

3.4. Creating embedded caches

Data Grid provides an EmbeddedCacheManager API that lets you control both the Cache Manager and embedded cache lifecycles programmatically.

3.4.1. Adding Data Grid to your project

Add Data Grid to your project to create embedded caches in your applications.

Prerequisites

- Configure your project to get Data Grid artifacts from the Maven repository.

Procedure

- Add the infinispan-core artifact as a dependency in your pom.xml as follows:

3.4.2. Configuring embedded caches

Data Grid provides a GlobalConfigurationBuilder API that controls the cache manager and a ConfigurationBuilder API that configures embedded caches.

Prerequisites

- Add the infinispan-core artifact as a dependency in your pom.xml.

Procedure

- Initialize the default cache manager so you can add embedded caches.

- Add at least one embedded cache with the ConfigurationBuilder API.

- Invoke the getOrCreateCache() method that either creates embedded caches on all nodes in the cluster or returns caches that already exist.

Chapter 4. Enabling and configuring Data Grid statistics and JMX monitoring

Data Grid can provide Cache Manager and cache statistics as well as export JMX MBeans.

4.1. Configuring Data Grid metrics

Data Grid generates metrics that are compatible with the MicroProfile Metrics API.

- Gauges provide values such as the average number of nanoseconds for write operations or JVM uptime.

- Histograms provide details about operation execution times such as read, write, and remove times.

By default, Data Grid generates gauges when you enable statistics but you can also configure it to generate histograms.

Procedure

- Open your Data Grid configuration for editing.

- Add the metrics element or object to the cache container.

- Enable or disable gauges with the gauges attribute or field.

- Enable or disable histograms with the histograms attribute or field.

- Save and close your Data Grid configuration.

Metrics configuration

XML

JSON

YAML

4.2. Registering JMX MBeans

Data Grid can register JMX MBeans that you can use to collect statistics and perform administrative operations. You must also enable statistics; otherwise, Data Grid provides 0 values for all statistic attributes in JMX MBeans.

Procedure

- Open your Data Grid configuration for editing.

- Add the jmx element or object to the cache container and specify true as the value for the enabled attribute or field.

- Add the domain attribute or field and specify the domain where JMX MBeans are exposed, if required.

- Save and close your Data Grid configuration.

JMX configuration

XML

JSON

YAML

4.2.1. Enabling JMX remote ports

Provide unique remote JMX ports to expose Data Grid MBeans through connections in JMXServiceURL format.

You can enable remote JMX ports using one of the following approaches:

- Enable remote JMX ports that require authentication to one of the Data Grid Server security realms.

- Enable remote JMX ports manually using the standard Java management configuration options.

Prerequisites

- For remote JMX with authentication, define user roles using the default security realm. Users must have controlRole with read/write access or the monitorRole with read-only access to access any JMX resources.

Procedure

Start Data Grid Server with a remote JMX port enabled using one of the following ways:

- Enable remote JMX through port 9999.

bin/server.sh --jmx 9999

Warning

Using remote JMX with SSL disabled is not intended for production environments.

- Pass the following system properties to Data Grid Server at startup.

bin/server.sh -Dcom.sun.management.jmxremote.port=9999 -Dcom.sun.management.jmxremote.authenticate=false -Dcom.sun.management.jmxremote.ssl=false

Warning

Enabling remote JMX with no authentication or SSL is not secure and not recommended in any environment. Disabling authentication and SSL allows unauthorized users to connect to your server and access the data hosted there.

4.2.2. Data Grid MBeans

Data Grid exposes JMX MBeans that represent manageable resources.

- org.infinispan:type=Cache

- Attributes and operations available for cache instances.

- org.infinispan:type=CacheManager

- Attributes and operations available for cache managers, including Data Grid cache and cluster health statistics.

For a complete list of available JMX MBeans along with descriptions and available operations and attributes, see the Data Grid JMX Components documentation.
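A JMX client needs two things to find these MBeans: a service address and an object name pattern. The following sketch builds both with standard JDK classes. It assumes the JVM's built-in remote agent (the com.sun.management.jmxremote.port approach) and the default org.infinispan domain. The class and method names are hypothetical, and the address used by the --jmx option may differ.

```java
import java.net.MalformedURLException;
import javax.management.MalformedObjectNameException;
import javax.management.ObjectName;
import javax.management.remote.JMXServiceURL;

final class JmxAddressSketch {

    // Standard JMX-over-RMI address used by the JVM's built-in remote agent.
    static JMXServiceURL serviceUrl(String host, int port) {
        try {
            return new JMXServiceURL(
                    "service:jmx:rmi:///jndi/rmi://" + host + ":" + port + "/jmxrmi");
        } catch (MalformedURLException e) {
            throw new IllegalArgumentException(e);
        }
    }

    // Pattern that matches every MBean of the given type in the
    // org.infinispan domain, for example type "Cache" or "CacheManager".
    static ObjectName mbeansOfType(String type) {
        try {
            return new ObjectName("org.infinispan:type=" + type + ",*");
        } catch (MalformedObjectNameException e) {
            throw new IllegalArgumentException(e);
        }
    }
}
```

A client would typically pass the URL to `JMXConnectorFactory.connect()` and the pattern to `MBeanServerConnection.queryNames()`. If you set a custom `domain` in the JMX configuration, use that domain in the pattern instead.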

4.2.3. Registering MBeans in custom MBean servers

Data Grid includes an MBeanServerLookup interface that you can use to register MBeans in custom MBeanServer instances.

Prerequisites

- Create an implementation of MBeanServerLookup so that the getMBeanServer() method returns the custom MBeanServer instance.

- Configure Data Grid to register JMX MBeans.

Procedure

- Open your Data Grid configuration for editing.

- Add the mbean-server-lookup attribute or field to the JMX configuration for the cache manager.

- Specify the fully qualified name (FQN) of your MBeanServerLookup implementation.

- Save and close your Data Grid configuration.

JMX MBean server lookup configuration

XML

JSON

YAML

Chapter 5. Configuring JVM memory usage

Control how Data Grid stores data in JVM memory by:

- Managing JVM memory usage with eviction that automatically removes data from caches.

- Adding lifespan and maximum idle times to expire entries and prevent stale data.

- Configuring Data Grid to store data in off-heap, native memory.

5.1. Default memory configuration

By default Data Grid stores cache entries as objects in the JVM heap. Over time, as applications add entries, the size of caches can exceed the amount of memory that is available to the JVM. Likewise, if Data Grid is not the primary data store, then entries become out of date which means your caches contain stale data.

XML

<distributed-cache>
  <memory storage="HEAP"/>
</distributed-cache>

JSON

YAML

distributedCache:
  memory:
    storage: "HEAP"

5.2. Eviction and expiration

Eviction and expiration are two strategies for cleaning the data container by removing old, unused entries. Although eviction and expiration are similar, they have some important differences.

- ✓ Eviction lets Data Grid control the size of the data container by removing entries when the container becomes larger than a configured threshold.

- ✓ Expiration limits the amount of time entries can exist. Data Grid uses a scheduler to periodically remove expired entries. Entries that are expired but not yet removed are immediately removed on access; in this case get() calls for expired entries return "null" values.

- ✓ Eviction is local to Data Grid nodes.

- ✓ Expiration takes place across Data Grid clusters.

- ✓ You can use eviction and expiration together or independently of each other.

- ✓ You can configure eviction and expiration declaratively in infinispan.xml to apply cache-wide defaults for entries.

- ✓ You can explicitly define expiration settings for specific entries but you cannot define eviction on a per-entry basis.

- ✓ You can manually evict entries and manually trigger expiration.

5.3. Eviction with Data Grid caches

Eviction lets you control the size of the data container by removing entries from memory in one of two ways:

- Total number of entries (max-count).

- Maximum amount of memory (max-size).

Eviction drops one entry from the data container at a time and is local to the node on which it occurs.

Eviction removes entries from memory but not from persistent cache stores. To ensure that entries remain available after Data Grid evicts them, and to prevent inconsistencies with your data, you should configure persistent storage.

When you configure memory, Data Grid approximates the current memory usage of the data container. When entries are added or modified, Data Grid compares the current memory usage of the data container to the maximum size. If the size exceeds the maximum, Data Grid performs eviction.

Eviction happens immediately in the thread that adds an entry that exceeds the maximum size.
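The idea of evicting one entry in the writing thread as soon as the container exceeds its limit can be illustrated with a JDK `LinkedHashMap`. This is only an analogy under stated assumptions: it uses plain LRU ordering rather than the algorithms Data Grid uses, and the class name is hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

final class MaxCountEvictionSketch<K, V> extends LinkedHashMap<K, V> {

    private final int maxCount;

    MaxCountEvictionSketch(int maxCount) {
        // Access order: the eldest entry is the least recently used one.
        super(16, 0.75f, true);
        this.maxCount = maxCount;
    }

    // Invoked by put() in the calling thread after an insertion. Returning
    // true drops exactly one entry, the eldest, when the limit is exceeded.
    @Override
    protected boolean removeEldestEntry(Map.Entry<K, V> eldest) {
        return size() > maxCount;
    }
}
```

With a limit of 2, adding a third entry removes whichever of the first two entries was used least recently.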

5.3.1. Eviction strategies

When you configure Data Grid eviction you specify:

- The maximum size of the data container.

- A strategy for removing entries when the cache reaches the threshold.

You can either perform eviction manually or configure Data Grid to do one of the following:

- Remove old entries to make space for new ones.

- Throw ContainerFullException and prevent new entries from being created.

The exception eviction strategy works only with transactional caches that use 2 phase commits; not with 1 phase commits or synchronization optimizations.

Refer to the schema reference for more details about the eviction strategies.

Data Grid includes the Caffeine caching library that implements a variation of the Least Frequently Used (LFU) cache replacement algorithm known as TinyLFU. For off-heap storage, Data Grid uses a custom implementation of the Least Recently Used (LRU) algorithm.

5.3.2. Configuring maximum count eviction

Limit the size of Data Grid caches to a total number of entries.

Procedure

- Open your Data Grid configuration for editing.

- Specify the total number of entries that caches can contain before Data Grid performs eviction with either the max-count attribute or maxCount() method.

- Set one of the following as the eviction strategy to control how Data Grid removes entries with the when-full attribute or whenFull() method.

  - REMOVE: Data Grid performs eviction. This is the default strategy.

  - MANUAL: You perform eviction manually for embedded caches.

  - EXCEPTION: Data Grid throws an exception instead of evicting entries.

- Save and close your Data Grid configuration.

Maximum count eviction

In the following example, Data Grid removes an entry when the cache contains a total of 500 entries and a new entry is created:

XML

<distributed-cache>
  <memory max-count="500" when-full="REMOVE"/>
</distributed-cache>

JSON

YAML

distributedCache:
  memory:
    maxCount: "500"
    whenFull: "REMOVE"

ConfigurationBuilder

ConfigurationBuilder builder = new ConfigurationBuilder();
builder.memory().maxCount(500).whenFull(EvictionStrategy.REMOVE);

5.3.3. Configuring maximum size eviction

Limit the size of Data Grid caches to a maximum amount of memory.

Procedure

- Open your Data Grid configuration for editing.

- Specify application/x-protostream as the media type for cache encoding.

You must specify a binary media type to use maximum size eviction.

- Configure the maximum amount of memory, in bytes, that caches can use before Data Grid performs eviction with the max-size attribute or maxSize() method.

- Optionally specify a byte unit of measurement.

The default is B (bytes). Refer to the configuration schema for supported units.

- Set one of the following as the eviction strategy to control how Data Grid removes entries with either the when-full attribute or whenFull() method.

  - REMOVE: Data Grid performs eviction. This is the default strategy.

  - MANUAL: You perform eviction manually for embedded caches.

  - EXCEPTION: Data Grid throws an exception instead of evicting entries.

- Save and close your Data Grid configuration.

Maximum size eviction

In the following example, Data Grid removes an entry when the size of the cache reaches 1.5 GB (gigabytes) and a new entry is created:

XML

<distributed-cache>
  <encoding media-type="application/x-protostream"/>
  <memory max-size="1.5GB" when-full="REMOVE"/>
</distributed-cache>

JSON

YAML

ConfigurationBuilder

ConfigurationBuilder builder = new ConfigurationBuilder();
builder.encoding().mediaType("application/x-protostream")
       .memory()
         .maxSize("1.5GB")
         .whenFull(EvictionStrategy.REMOVE);

5.3.4. Manual eviction

If you choose the manual eviction strategy, Data Grid does not perform eviction. You must do so manually with the evict() method.

You should use manual eviction with embedded caches only. For remote caches, you should always configure Data Grid with the REMOVE or EXCEPTION eviction strategy.

This configuration prevents a warning message when you enable passivation but do not configure eviction.

XML

<distributed-cache>
  <memory max-count="500" when-full="MANUAL"/>
</distributed-cache>

JSON

YAML

distributedCache:
  memory:
    maxCount: "500"
    whenFull: "MANUAL"

ConfigurationBuilder

ConfigurationBuilder builder = new ConfigurationBuilder();
builder.memory().maxCount(500).whenFull(EvictionStrategy.MANUAL);

5.3.5. Passivation with eviction

Passivation persists data to cache stores when Data Grid evicts entries. You should always enable eviction if you enable passivation, as in the following examples:

XML

JSON

YAML

distributedCache:
  memory:
    maxCount: "100"
  persistence:
    passivation: "true"

ConfigurationBuilder

ConfigurationBuilder builder = new ConfigurationBuilder();
builder.memory().maxCount(100);
builder.persistence().passivation(true); //Persistent storage configuration

5.4. Expiration with lifespan and maximum idle

Expiration configures Data Grid to remove entries from caches when they reach one of the following time limits:

- Lifespan

- Sets the maximum amount of time that entries can exist.

- Maximum idle

- Specifies how long entries can remain idle. If operations do not occur for entries, they become idle.

Maximum idle expiration does not currently support caches with persistent storage.

If you use expiration and eviction with the EXCEPTION eviction strategy, entries that are expired, but not yet removed from the cache, count towards the size of the data container.

5.4.1. How expiration works

When you configure expiration, Data Grid stores keys with metadata that determines when entries expire.

- Lifespan uses a creation timestamp and the value for the lifespan configuration property.

- Maximum idle uses a last used timestamp and the value for the max-idle configuration property.

Data Grid checks if lifespan or maximum idle metadata is set and then compares the values with the current time.

If (creation + lifespan < currentTime) or (lastUsed + maxIdle < currentTime) then Data Grid detects that the entry is expired.

Expiration occurs whenever entries are accessed or found by the expiration reaper.

For example, k1 reaches the maximum idle time and a client makes a Cache.get(k1) request. In this case, Data Grid detects that the entry is expired and removes it from the data container. The Cache.get(k1) request returns null.
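The expiration check described above can be written as a small predicate. This is a sketch of the stated formula rather than Data Grid's code. The class and parameter names are hypothetical, and treating a negative value as "not set" is an assumption of the sketch.

```java
final class ExpirationCheckSketch {

    // Returns true if either time limit has passed. All values are in
    // milliseconds; a negative lifespan or maxIdle means "not set" here.
    static boolean isExpired(long created, long lifespan,
                             long lastUsed, long maxIdle, long now) {
        boolean lifespanExpired = lifespan >= 0 && created + lifespan < now;
        boolean idleExpired = maxIdle >= 0 && lastUsed + maxIdle < now;
        return lifespanExpired || idleExpired;
    }
}
```

For example, with a lifespan of 5000 and a maximum idle time of 1000, an entry created and last used at time 0 is expired at time 1001 because it has been idle for too long, even though its lifespan has not elapsed.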

Data Grid also expires entries from cache stores, but only with lifespan expiration. Maximum idle expiration does not work with cache stores. In the case of cache loaders, Data Grid cannot expire entries because loaders can only read from external storage.

Data Grid adds expiration metadata as long primitive data types to cache entries. This can increase the size of keys by as much as 32 bytes.

5.4.2. Expiration reaper

Data Grid uses a reaper thread that runs periodically to detect and remove expired entries. The expiration reaper ensures that expired entries that are no longer accessed are removed.

The Data Grid ExpirationManager interface handles the expiration reaper and exposes the processExpiration() method.

In some cases, you can disable the expiration reaper and manually expire entries by calling processExpiration(); for instance, if you are using local cache mode with a custom application where a maintenance thread runs periodically.

If you use clustered cache modes, you should never disable the expiration reaper.

Data Grid always uses the expiration reaper when using cache stores. In this case you cannot disable it.

5.4.3. Maximum idle and clustered caches

Because maximum idle expiration relies on the last access time for cache entries, it has some limitations with clustered cache modes.

With lifespan expiration, the creation time for cache entries provides a value that is consistent across clustered caches. For example, the creation time for k1 is always the same on all nodes.

For maximum idle expiration with clustered caches, last access time for entries is not always the same on all nodes. To ensure that entries have the same relative access times across clusters, Data Grid sends touch commands to all owners when keys are accessed.

The touch commands that Data Grid sends have the following considerations:

- Cache.get() requests do not return until all touch commands complete. This synchronous behavior increases latency of client requests.

- The touch command also updates the "recently accessed" metadata for cache entries on all owners, which Data Grid uses for eviction.

- With scattered cache mode, Data Grid sends touch commands to all nodes, not just primary and backup owners.

Additional information

- Maximum idle expiration does not work with invalidation mode.

- Iteration across a clustered cache can return expired entries that have exceeded the maximum idle time limit. This behavior ensures performance because no remote invocations are performed during the iteration. Also note that iteration does not refresh any expired entries.

5.4.4. Configuring lifespan and maximum idle times for caches

Set lifespan and maximum idle times for all entries in a cache.

Procedure

- Open your Data Grid configuration for editing.

- Specify the amount of time, in milliseconds, that entries can stay in the cache with the lifespan attribute or lifespan() method.

- Specify the amount of time, in milliseconds, that entries can remain idle after last access with the max-idle attribute or maxIdle() method.

- Save and close your Data Grid configuration.

Expiration for Data Grid caches

In the following example, Data Grid expires all cache entries after 5 seconds or 1 second after the last access time, whichever happens first:

XML

<replicated-cache>
  <expiration lifespan="5000" max-idle="1000" />
</replicated-cache>

JSON

YAML

replicatedCache:
  expiration:
    lifespan: "5000"
    maxIdle: "1000"

ConfigurationBuilder

ConfigurationBuilder builder = new ConfigurationBuilder();
builder.expiration().lifespan(5000, TimeUnit.MILLISECONDS)
                    .maxIdle(1000, TimeUnit.MILLISECONDS);

5.4.5. Configuring lifespan and maximum idle times per entry

Specify lifespan and maximum idle times for individual entries. When you add lifespan and maximum idle times to entries, those values take priority over expiration configuration for caches.

When you explicitly define lifespan and maximum idle time values for cache entries, Data Grid replicates those values across the cluster along with the cache entries. Likewise, Data Grid writes expiration values along with the entries to persistent storage.

Procedure

- For remote caches, you can add lifespan and maximum idle times to entries interactively with the Data Grid Console.

  With the Data Grid Command Line Interface (CLI), use the --max-idle= and --ttl= arguments with the put command.

- For both remote and embedded caches, you can add lifespan and maximum idle times with cache.put() invocations.
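The way per-entry values interact with cache-wide expiration can be sketched in plain Java. This is a minimal model of the rules described in this section, not Data Grid code: the class ExpirationSketch and its methods are hypothetical names, and the use of OptionalLong for "no per-entry value" is a convention of the sketch only.

```java
import java.util.OptionalLong;

class ExpirationSketch {

    // A per-entry value, when present, takes priority over the cache-wide value.
    public static long effective(OptionalLong perEntryMs, long cacheDefaultMs) {
        return perEntryMs.orElse(cacheDefaultMs);
    }

    // An entry expires at whichever comes first:
    // creation time + lifespan, or last access time + maximum idle time.
    public static long expiresAt(long createdMs, long lastAccessMs,
                                 long lifespanMs, long maxIdleMs) {
        return Math.min(createdMs + lifespanMs, lastAccessMs + maxIdleMs);
    }

    public static boolean isExpired(long nowMs, long createdMs, long lastAccessMs,
                                    long lifespanMs, long maxIdleMs) {
        return nowMs > expiresAt(createdMs, lastAccessMs, lifespanMs, maxIdleMs);
    }

    public static void main(String[] args) {
        // Cache-wide values from the expiration example: lifespan 5000 ms, max idle 1000 ms.
        long cacheLifespan = 5000;
        long cacheMaxIdle = 1000;

        // Entry written with its own lifespan of 2000 ms and no per-entry max idle.
        long lifespan = effective(OptionalLong.of(2000), cacheLifespan);
        long maxIdle = effective(OptionalLong.empty(), cacheMaxIdle);

        // Created at t=0 and last read at t=1500: lifespan ends first, at t=2000.
        System.out.println(expiresAt(0, 1500, lifespan, maxIdle));
    }
}
```

In this model an entry that is read often enough never hits the maximum idle limit, so the lifespan decides when it expires. An entry that is never read expires as soon as the maximum idle time passes.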

5.5. JVM heap and off-heap memory

Data Grid stores cache entries in JVM heap memory by default. You can configure Data Grid to use off-heap storage, which means that your data occupies native memory outside the managed JVM memory space.

The following diagram is a simplified illustration of the memory space for a JVM process where Data Grid is running:

Figure 5.1. JVM memory space

JVM heap memory

The heap is divided into young and old generations that help keep referenced Java objects and other application data in memory. The garbage collection (GC) process reclaims space from unreachable objects, running more frequently on the young generation memory pool.

When Data Grid stores cache entries in JVM heap memory, GC runs can take longer to complete as you start adding data to your caches. Because GC is an intensive process, longer and more frequent runs can degrade application performance.

Off-heap memory

Off-heap memory is native system memory that is available outside JVM memory management. The JVM memory space diagram shows the Metaspace memory pool that holds class metadata and is allocated from native memory. The diagram also represents a section of native memory that holds Data Grid cache entries.

Off-heap memory:

- Uses less memory per entry.

- Improves overall JVM performance by avoiding Garbage Collector (GC) runs.

One disadvantage, however, is that JVM heap dumps do not show entries stored in off-heap memory.

5.5.1. Off-heap data storage

When you add entries to off-heap caches, Data Grid dynamically allocates native memory to your data.

Data Grid hashes the serialized byte[] for each key into buckets that are similar to a standard Java HashMap. Buckets include address pointers that Data Grid uses to locate entries that you store in off-heap memory.

Even though Data Grid stores cache entries in native memory, run-time operations require JVM heap representations of those objects. For instance, cache.get() operations read objects into heap memory before returning. Likewise, state transfer operations hold subsets of objects in heap memory while they take place.

Object equality

Data Grid determines equality of Java objects in off-heap storage using the serialized byte[] representation of each object instead of the object instance.
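A short sketch can make byte-based bucketing and equality concrete. It assumes UTF-8 bytes as a stand-in for the serialized form, which is not how Data Grid marshals objects, and the class OffHeapKeySketch, its methods, and the hash spreading step are illustrative choices rather than Data Grid internals.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

class OffHeapKeySketch {

    // Maps serialized key bytes to a bucket index.
    // bucketCount must be a power of two so the mask works.
    public static int bucketFor(byte[] keyBytes, int bucketCount) {
        int h = Arrays.hashCode(keyBytes);
        h ^= (h >>> 16); // mix high bits into low bits, similar to java.util.HashMap
        return h & (bucketCount - 1);
    }

    // Equality compares serialized content, not object identity.
    public static boolean sameKey(byte[] a, byte[] b) {
        return Arrays.equals(a, b);
    }

    public static void main(String[] args) {
        // Two distinct String instances with the same content.
        String first = new String("user-1");
        String second = new String("user-1");

        byte[] firstBytes = first.getBytes(StandardCharsets.UTF_8);
        byte[] secondBytes = second.getBytes(StandardCharsets.UTF_8);

        System.out.println(first == second);                  // false: different instances
        System.out.println(sameKey(firstBytes, secondBytes)); // true: identical bytes
        System.out.println(bucketFor(firstBytes, 16) == bucketFor(secondBytes, 16)); // true
    }
}
```

One practical consequence is that two objects are treated as the same key only if they serialize to exactly the same bytes, regardless of how their equals() methods are implemented.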

Data consistency

Data Grid uses an array of locks to protect off-heap address spaces. The number of locks is twice the number of cores and then rounded to the nearest power of two. This ensures that there is an even distribution of ReadWriteLock instances to prevent write operations from blocking read operations.
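The lock array can be sketched as a striped set of ReadWriteLock instances. This sketch assumes that "rounded to the nearest power of two" means rounding up, which the text does not state explicitly. The class StripedLocksSketch and its methods are hypothetical and do not reproduce the Data Grid implementation.

```java
import java.util.Arrays;
import java.util.concurrent.locks.ReadWriteLock;
import java.util.concurrent.locks.ReentrantReadWriteLock;

class StripedLocksSketch {
    private final ReadWriteLock[] locks;

    public StripedLocksSketch(int cores) {
        locks = new ReadWriteLock[lockCount(cores)];
        for (int i = 0; i < locks.length; i++) {
            locks[i] = new ReentrantReadWriteLock();
        }
    }

    // Twice the number of cores, rounded up to a power of two (assumption).
    public static int lockCount(int cores) {
        int doubled = cores * 2;
        int highest = Integer.highestOneBit(doubled);
        return highest == doubled ? doubled : highest << 1;
    }

    // Selects the lock that guards the address space for these key bytes.
    public ReadWriteLock lockFor(byte[] keyBytes) {
        int h = Arrays.hashCode(keyBytes);
        return locks[(h ^ (h >>> 16)) & (locks.length - 1)];
    }

    public int size() {
        return locks.length;
    }

    public static void main(String[] args) {
        StripedLocksSketch striped =
            new StripedLocksSketch(Runtime.getRuntime().availableProcessors());
        ReadWriteLock lock = striped.lockFor(new byte[] {1, 2, 3});

        lock.readLock().lock(); // many readers can hold the same stripe at once
        try {
            System.out.println("locks: " + striped.size());
        } finally {
            lock.readLock().unlock();
        }
    }
}
```

Because the array length is a power of two, a bit mask selects a stripe without a modulo operation, and keys that hash to different stripes never contend with each other.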

5.5.2. Configuring off-heap memory

Configure Data Grid to store cache entries in native memory outside the JVM heap space.

Procedure

- Open your Data Grid configuration for editing.

- Set OFF_HEAP as the value for the storage attribute or storage() method.

- Set a boundary for the size of the cache by configuring eviction.

- Save and close your Data Grid configuration.

Off-heap storage

Data Grid stores cache entries as bytes in native memory. Eviction happens when there are 500 entries in the data container and Data Grid gets a request to create a new entry:

XML

<replicated-cache>
  <memory storage="OFF_HEAP" max-count="500"/>
</replicated-cache>

JSON

YAML

replicatedCache:
  memory:
    storage: "OFF_HEAP"
    maxCount: "500"

ConfigurationBuilder

ConfigurationBuilder builder = new ConfigurationBuilder();
builder.memory().storage(StorageType.OFF_HEAP).maxCount(500);

Chapter 6. Configuring persistent storage

Data Grid uses cache stores and loaders to interact with persistent storage.

- Durability

- Adding cache stores allows you to persist data to non-volatile storage so it survives restarts.

- Write-through caching

- Configuring Data Grid as a caching layer in front of persistent storage simplifies data access for applications because Data Grid handles all interactions with the external storage.

- Data overflow

- Using eviction and passivation techniques ensures that Data Grid keeps only frequently used data in-memory and writes older entries to persistent storage.

6.1. Passivation

Passivation configures Data Grid to write entries to cache stores when it evicts those entries from memory. In this way, passivation ensures that only a single copy of an entry is maintained, either in-memory or in a cache store, which prevents unnecessary and potentially expensive writes to persistent storage.

Activation is the process of restoring entries to memory from the cache store when there is an attempt to access passivated entries. For this reason, when you enable passivation, you must configure cache stores that implement both CacheWriter and CacheLoader interfaces so they can write and load entries from persistent storage.

When Data Grid evicts an entry from the cache, it notifies cache listeners that the entry is passivated and then stores the entry in the cache store. When Data Grid gets an access request for an evicted entry, it lazily loads the entry from the cache store into memory and then notifies cache listeners that the entry is activated.

- Passivation uses the first cache loader in the Data Grid configuration and ignores all others.

Passivation is not supported with:

- Transactional stores. Passivation writes and removes entries from the store outside the scope of the actual Data Grid commit boundaries.

- Shared stores. Shared cache stores require entries to always exist in the store for other owners. For this reason, passivation is not supported because entries cannot be removed.

If you enable passivation with transactional stores or shared stores, Data Grid throws an exception.

6.1.1. How passivation works

Passivation disabled

Writes to data in memory result in writes to persistent storage.

If Data Grid evicts data from memory, then data in persistent storage includes entries that are evicted from memory. In this way persistent storage is a superset of the in-memory cache.

If you do not configure eviction, then data in persistent storage provides a copy of data in memory.

Passivation enabled

Data Grid adds data to persistent storage only when it evicts data from memory.

When Data Grid activates entries, it restores data in memory and deletes data from persistent storage. In this way, data in memory and data in persistent storage form separate subsets of the entire data set, with no intersection between the two.

Entries in persistent storage can become stale when using shared cache stores. This occurs because Data Grid does not delete passivated entries from shared cache stores when they are activated.

Values are updated in memory but previously passivated entries remain in persistent storage with out-of-date values.

The following table shows data in memory and in persistent storage after a series of operations:

| Operation | Passivation disabled | Passivation enabled | Passivation enabled with shared cache store |
|---|---|---|---|
| Insert k1. | Memory: k1 | Memory: k1 | Memory: k1 |
| Insert k2. | Memory: k1, k2 | Memory: k1, k2 | Memory: k1, k2 |
| Eviction thread runs and evicts k1. | Memory: k2 | Memory: k2 | Memory: k2 |
| Read k1. | Memory: k1, k2 | Memory: k1, k2 | Memory: k1, k2 |
| Eviction thread runs and evicts k2. | Memory: k1 | Memory: k1 | Memory: k1 |
| Remove k2. | Memory: k1 | Memory: k1 | Memory: k1 |
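The rules in this section can be replayed with a small simulation that tracks both memory and the cache store for the same sequence of operations. This is a sketch derived from the prose above, not Data Grid code. The class PassivationSketch is hypothetical, and it assumes that removing an entry deletes it from the store in every mode, including the shared store case.

```java
import java.util.Set;
import java.util.TreeSet;

class PassivationSketch {
    public enum Mode { DISABLED, ENABLED, ENABLED_SHARED }

    public final Mode mode;
    public final Set<String> memory = new TreeSet<>();
    public final Set<String> store = new TreeSet<>();

    public PassivationSketch(Mode mode) {
        this.mode = mode;
    }

    public void insert(String key) {
        memory.add(key);
        if (mode == Mode.DISABLED) {
            store.add(key); // without passivation, every write also goes to the store
        }
    }

    public void evict(String key) {
        if (memory.remove(key) && mode != Mode.DISABLED) {
            store.add(key); // passivation: write to the store only on eviction
        }
    }

    public void read(String key) {
        if (memory.contains(key) || !store.contains(key)) {
            return;
        }
        memory.add(key); // load the entry back into memory
        if (mode == Mode.ENABLED) {
            store.remove(key); // activation deletes the stored copy (not for shared stores)
        }
    }

    public void remove(String key) {
        memory.remove(key);
        store.remove(key); // assumption: removal reaches the store in every mode
    }

    // Replays the operations from the table for one mode.
    public static PassivationSketch run(Mode mode) {
        PassivationSketch cache = new PassivationSketch(mode);
        cache.insert("k1");
        cache.insert("k2");
        cache.evict("k1");
        cache.read("k1");
        cache.evict("k2");
        cache.remove("k2");
        return cache;
    }

    public static void main(String[] args) {
        for (Mode mode : Mode.values()) {
            PassivationSketch cache = run(mode);
            System.out.println(mode + " memory=" + cache.memory + " store=" + cache.store);
        }
    }
}
```

Under these assumptions, the store ends up holding k1 when passivation is disabled, nothing when passivation is enabled, and a stale copy of k1 when passivation is enabled with a shared store.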

6.2. Write-through cache stores

Write-through is a cache writing mode where writes to memory and writes to cache stores are synchronous. When a client application updates a cache entry, in most cases by invoking Cache.put(), Data Grid does not return the call until it updates the cache store. This cache writing mode results in updates to the cache store concluding within the boundaries of the client thread.

The primary advantage of write-through mode is that the cache and cache store are updated simultaneously, which ensures that the cache store is always consistent with the cache.

However, write-through mode can potentially decrease performance because the need to access and update cache stores directly adds latency to cache operations.

Write-through configuration

Data Grid uses write-through mode unless you explicitly add write-behind configuration to your caches. There is no separate element or method for configuring write-through mode.

For example, the following configuration adds a file-based store to the cache that implicitly uses write-through mode:

6.3. Write-behind cache stores

Write-behind is a cache writing mode where writes to memory are synchronous and writes to cache stores are asynchronous.

When clients send write requests, Data Grid adds those operations to a modification queue. Data Grid processes operations as they join the queue so that the calling thread is not blocked and the operation completes immediately.

If the number of write operations in the modification queue increases beyond the size of the queue, Data Grid adds those additional operations to the queue. However, those operations do not complete until Data Grid processes operations that are already in the queue.

For example, calling Cache.putAsync returns immediately and the Stage also completes immediately if the modification queue is not full. If the modification queue is full, or if Data Grid is currently processing a batch of write operations, then Cache.putAsync returns immediately and the Stage completes later.

Write-behind mode provides a performance advantage over write-through mode because cache operations do not need to wait for updates to the underlying cache store to complete. However, data in the cache store remains inconsistent with data in the cache until the modification queue is processed. For this reason, write-behind mode is suitable for cache stores with low latency, such as unshared and local file-based cache stores, where the time between the write to the cache and the write to the cache store is as small as possible.
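The difference between the two writing modes can be illustrated with a plain Java sketch that uses two maps and a single background thread as the modification queue. The class WriteModeSketch and its methods are hypothetical. The returned CompletableFuture is only a handle for waiting on the queued store write in this demo and does not model the Stage behavior described above.

```java
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class WriteModeSketch {
    public final Map<String, String> memory = new ConcurrentHashMap<>();
    public final Map<String, String> store = new ConcurrentHashMap<>();

    // One daemon worker drains queued store writes in submission order.
    private final ExecutorService storeWriter = Executors.newSingleThreadExecutor(r -> {
        Thread t = new Thread(r, "store-writer");
        t.setDaemon(true);
        return t;
    });

    // Write-through: the call returns only after the store is updated.
    public void putWriteThrough(String key, String value) {
        memory.put(key, value);
        store.put(key, value);
    }

    // Write-behind: memory is updated in the calling thread,
    // the store write is queued and applied later.
    public CompletableFuture<Void> putWriteBehind(String key, String value) {
        memory.put(key, value);
        return CompletableFuture.runAsync(() -> store.put(key, value), storeWriter);
    }

    public static void main(String[] args) {
        WriteModeSketch cache = new WriteModeSketch();

        cache.putWriteThrough("a", "1");
        System.out.println(cache.store.containsKey("a")); // true: store already updated

        CompletableFuture<Void> pending = cache.putWriteBehind("b", "2");
        System.out.println(cache.memory.containsKey("b")); // true: memory updated synchronously
        pending.join(); // wait for the queued store write
        System.out.println(cache.store.containsKey("b")); // true once the queue is drained
    }
}
```

Between the return of putWriteBehind() and the completion of the queued task, the store can lag behind memory, which is the window of inconsistency that write-behind mode accepts in exchange for lower latency.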

Write-behind configuration

XML

JSON

YAML

ConfigurationBuilder

ConfigurationBuilder builder = new ConfigurationBuilder();

builder.persistence()

.async()