Deployment Guide

Deployment, configuration and administration of Red Hat Enterprise Linux 5

Edition 11

Abstract

Introduction

- Setting up a network interface card (NIC)

- Configuring a Virtual Private Network (VPN)

- Configuring Samba shares

- Managing your software with RPM

- Determining information about your system

- Upgrading your kernel

- File systems

- Package management

- Network-related configuration

- System configuration

- System monitoring

- Kernel and Driver Configuration

- Security and Authentication

- Red Hat Training and Certification

1. Document Conventions

- command

- Linux commands (and other operating system commands, when used) are represented this way. This style should indicate to you that you can type the word or phrase on the command line and press Enter to invoke a command. Sometimes a command contains words that would be displayed in a different style on their own (such as file names). In these cases, they are considered to be part of the command, so the entire phrase is displayed as a command. For example: Use the cat testfile command to view the contents of a file, named testfile, in the current working directory.

- file name

- File names, directory names, paths, and RPM package names are represented this way. This style indicates that a particular file or directory exists with that name on your system. Examples: The .bashrc file in your home directory contains bash shell definitions and aliases for your own use. The /etc/fstab file contains information about different system devices and file systems. Install the webalizer RPM if you want to use a Web server log file analysis program.

- application

- This style indicates that the program is an end-user application (as opposed to system software). For example: Use Mozilla to browse the Web.

- key

- A key on the keyboard is shown in this style. For example: To use Tab completion to list particular files in a directory, type ls, then a character, and finally the Tab key. Your terminal displays the list of files in the working directory that begin with that character.

- key+combination

- A combination of keystrokes is represented in this way. For example: The Ctrl+Alt+Backspace key combination exits your graphical session and returns you to the graphical login screen or the console.

- text found on a GUI interface

- A title, word, or phrase found on a GUI interface screen or window is shown in this style. Text shown in this style indicates a particular GUI screen or an element on a GUI screen (such as text associated with a checkbox or field). Example: Select the Require Password checkbox if you would like your screensaver to require a password before stopping.

- A word in this style indicates that the word is the top level of a pulldown menu. If you click on the word on the GUI screen, the rest of the menu should appear. For example:Under on a GNOME terminal, the option allows you to open multiple shell prompts in the same window.Instructions to type in a sequence of commands from a GUI menu look like the following example:Go to (the main menu on the panel) > > to start the Emacs text editor.

- This style indicates that the text can be found on a clickable button on a GUI screen. For example:Click on the button to return to the webpage you last viewed.

- computer output

- Text in this style indicates text displayed to a shell prompt such as error messages and responses to commands. For example: The ls command displays the contents of a directory. For example:

Desktop about.html logs paulwesterberg.png Mail backupfiles mail reports

The output returned in response to the command (in this case, the contents of the directory) is shown in this style.

- prompt

- A prompt, which is a computer's way of signifying that it is ready for you to input something, is shown in this style. Examples:

$

#

[stephen@maturin stephen]$

leopard login:

- user input

- Text that the user types, either on the command line or into a text box on a GUI screen, is displayed in this style. In the following example, text is displayed in this style: To boot your system into the text based installation program, you must type in the text command at the boot: prompt.

- <replaceable>

- Text used in examples that is meant to be replaced with data provided by the user is displayed in this style. In the following example, <version-number> is displayed in this style: The directory for the kernel source is /usr/src/kernels/<version-number>/, where <version-number> is the version and type of kernel installed on this system.

Note

Note

The directory /usr/share/doc/ contains additional documentation for packages installed on your system.

Important

Warning

Warning

2. Send in Your Feedback

http://bugzilla.redhat.com/bugzilla/) against the component Deployment_Guide.

Part I. File Systems

parted utility to manage partitions and access control lists (ACLs) to customize file permissions.

Chapter 1. File System Structure

1.1. Why Share a Common Structure?

- Shareable vs. unshareable files

- Variable vs. static files

1.2. Overview of File System Hierarchy Standard (FHS)

/usr/ partition as read-only. This second point is important because the directory contains common executables and should not be changed by users. Also, since the /usr/ directory is mounted as read-only, it can be mounted from the CD-ROM or from another machine via a read-only NFS mount.

1.2.1. FHS Organization

1.2.1.1. The /boot/ Directory

The /boot/ directory contains static files required to boot the system, such as the Linux kernel. These files are essential for the system to boot properly.

Warning

Do not remove the /boot/ directory. Doing so renders the system unbootable.

1.2.1.2. The /dev/ Directory

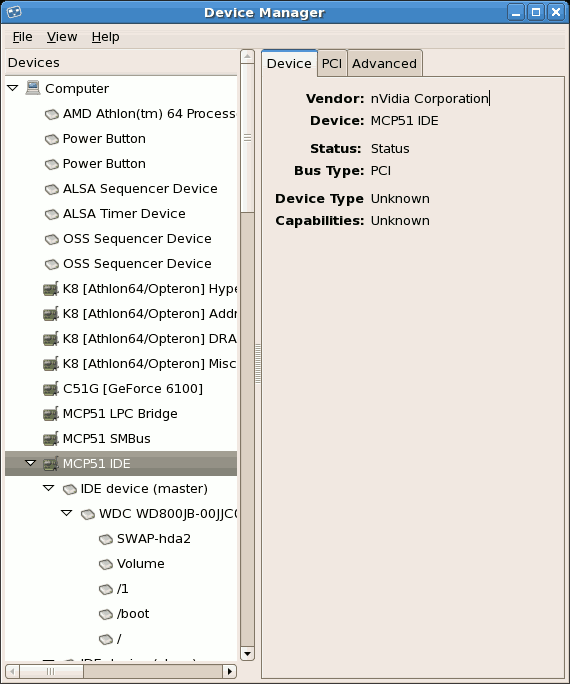

The /dev/ directory contains device nodes that represent either devices attached to the system or virtual devices provided by the kernel. These device nodes are essential for the system to function properly. The udev daemon creates and removes all of these device nodes in /dev/.

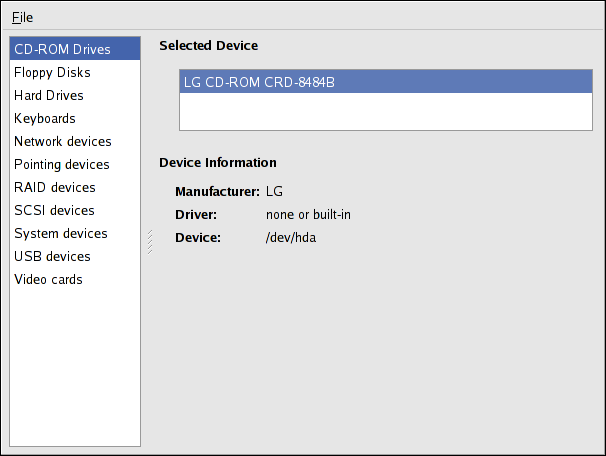

Devices in the /dev directory and its subdirectories are either character devices (providing only a serial stream of input/output) or block devices (accessible randomly). Character devices include the mouse, keyboard, and modem, while block devices include hard disks and floppy drives. If you have GNOME or KDE installed on your system, devices such as external drives or CDs are automatically detected when connected (for example, via USB) or inserted (for example, into a CD or DVD drive), and a window displaying the contents opens automatically. Files in the /dev directory are essential for the system to function properly.

| File | Description |
|---|---|
| /dev/hda | The master device on primary IDE channel. |
| /dev/hdb | The slave device on primary IDE channel. |
| /dev/tty0 | The first virtual console. |
| /dev/tty1 | The second virtual console. |
| /dev/sda | The first device on primary SCSI or SATA channel. |
| /dev/lp0 | The first parallel port. |
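The character/block distinction described above can be checked from a script. The following is a minimal sketch, assuming a POSIX shell; the function name dev_kind is made up for illustration, and /dev/sda is only an example node that may not exist on a given machine.

```shell
#!/bin/sh
# dev_kind (hypothetical helper): classify a path as a character device,
# a block device, or anything else, using the standard test operators.
dev_kind() {
    if [ -c "$1" ]; then
        echo "character"
    elif [ -b "$1" ]; then
        echo "block"
    else
        echo "other"
    fi
}

dev_kind /dev/null    # /dev/null is a character device
dev_kind /dev/sda     # example disk node; reported as "other" if it is absent
```

The -c and -b tests only inspect the file type of the node, so the check does not open the device or require root privileges.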

1.2.1.3. The /etc/ Directory

The /etc/ directory is reserved for configuration files that are local to the machine. No binaries are to be placed in /etc/. Any binaries that were once located in /etc/ should be placed into /sbin/ or /bin/.

Examples of directories in /etc are X11/ and skel/:

/etc
|- X11/
|- skel/

The /etc/X11/ directory is for X Window System configuration files, such as xorg.conf. The /etc/skel/ directory is for "skeleton" user files, which are used to populate a home directory when a user is first created. Applications also store their configuration files in this directory and may reference them when they are executed.

1.2.1.4. The /lib/ Directory

The /lib/ directory should contain only those libraries needed to execute the binaries in /bin/ and /sbin/. These shared library images are particularly important for booting the system and executing commands within the root file system.

1.2.1.5. The /media/ Directory

The /media/ directory contains subdirectories used as mount points for removable media such as USB storage media, DVDs, CD-ROMs, and Zip disks.

1.2.1.6. The /mnt/ Directory

The /mnt/ directory is reserved for temporarily mounted file systems, such as NFS file system mounts. For all removable media, use the /media/ directory. Automatically detected removable media are mounted in the /media directory.

Note

The /mnt directory must not be used by installation programs.

1.2.1.7. The /opt/ Directory

The /opt/ directory provides storage for most application software packages.

A package placing files in the /opt/ directory creates a directory bearing the same name as the package. This directory, in turn, holds files that otherwise would be scattered throughout the file system, giving the system administrator an easy way to determine the role of each file within a particular package.

For example, if sample is the name of a particular software package located within the /opt/ directory, then all of its files are placed in directories inside the /opt/sample/ directory, such as /opt/sample/bin/ for binaries and /opt/sample/man/ for manual pages.

Large packages that encompass many different sub-packages are also located in the /opt/ directory, giving that large package a way to organize itself. In this way, our sample package may have different tools that each go in their own sub-directories, such as /opt/sample/tool1/ and /opt/sample/tool2/, each of which can have its own bin/, man/, and other similar directories.
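The layout described for the sample package can be sketched as a short script. This is only an illustration: it builds the tree under a temporary scratch directory rather than the real /opt/, and the function name make_opt_layout is hypothetical.

```shell
#!/bin/sh
# make_opt_layout (hypothetical helper): create the per-package directory
# tree described above under <root>/opt/<package>.
make_opt_layout() {
    root=$1
    pkg=$2
    for dir in bin man tool1/bin tool1/man tool2/bin tool2/man; do
        mkdir -p "$root/opt/$pkg/$dir"
    done
}

# Work in a scratch directory so the real /opt/ is never touched.
scratch=$(mktemp -d)
make_opt_layout "$scratch" sample
find "$scratch/opt" -type d | sort
rm -rf "$scratch"
```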

1.2.1.8. The /proc/ Directory

The /proc/ directory contains special files that either extract information from or send information to the kernel. Examples include system memory, CPU information, and hardware configuration.

Due to the great variety of data available within /proc/ and the many ways this directory can be used to communicate with the kernel, an entire chapter has been devoted to the subject. For more information, refer to Chapter 5, The proc File System.

1.2.1.9. The /sbin/ Directory

The /sbin/ directory stores executables used by the root user. The executables in /sbin/ are used at boot time, for system administration, and to perform system recovery operations. Of this directory, the FHS says:

/sbin contains binaries essential for booting, restoring, recovering, and/or repairing the system in addition to the binaries in /bin. Programs executed after /usr/ is known to be mounted (when there are no problems) are generally placed into /usr/sbin. Locally-installed system administration programs should be placed into /usr/local/sbin.

/sbin/:

1.2.1.10. The /srv/ Directory

The /srv/ directory contains site-specific data served by your system running Red Hat Enterprise Linux. This directory gives users the location of data files for a particular service, such as FTP, WWW, or CVS. Data that only pertains to a specific user should go in the /home/ directory.

1.2.1.11. The /sys/ Directory

The /sys/ directory utilizes the new sysfs virtual file system specific to the 2.6 kernel. With the increased support for hot plug hardware devices in the 2.6 kernel, the /sys/ directory contains information similar to that held in /proc/, but displays a hierarchical view of specific device information with regard to hot plug devices.

1.2.1.12. The /usr/ Directory

The /usr/ directory is for files that can be shared across multiple machines. The /usr/ directory is often on its own partition and is mounted read-only. At a minimum, the following directories should be subdirectories of /usr/:

Under the /usr/ directory, the bin/ subdirectory contains executables, etc/ contains system-wide configuration files, games/ is for games, include/ contains C header files, kerberos/ contains binaries and other Kerberos-related files, and lib/ contains object files and libraries that are not designed to be directly utilized by users or shell scripts. The libexec/ directory contains small helper programs called by other programs, sbin/ is for system administration binaries (those that do not belong in the /sbin/ directory), share/ contains files that are not architecture-specific, and src/ is for source code.

1.2.1.13. The /usr/local/ Directory

The /usr/local hierarchy is for use by the system administrator when installing software locally. It needs to be safe from being overwritten when the system software is updated. It may be used for programs and data that are shareable among a group of hosts, but not found in /usr.

The /usr/local/ directory is similar in structure to the /usr/ directory. It has the following subdirectories, which are similar in purpose to those in the /usr/ directory:

In Red Hat Enterprise Linux, the intended use for the /usr/local/ directory is slightly different from that specified by the FHS. The FHS says that /usr/local/ should be where software that is to remain safe from system software upgrades is stored. Since software upgrades can be performed safely with RPM Package Manager (RPM), it is not necessary to protect files by putting them in /usr/local/. Instead, the /usr/local/ directory is used for software that is local to the machine.

For instance, if the /usr/ directory is mounted as a read-only NFS share from a remote host, it is still possible to install a package or program under the /usr/local/ directory.

1.2.1.14. The /var/ Directory

Since the FHS requires Linux to mount /usr/ as read-only, any programs that write log files or need spool/ or lock/ directories should write them to the /var/ directory. The FHS states /var/ is for:

...variable data files. This includes spool directories and files, administrative and logging data, and transient and temporary files.

/var/ directory:

System log files, such as messages and lastlog, go in the /var/log/ directory. The /var/lib/rpm/ directory contains RPM system databases. Lock files go in the /var/lock/ directory, usually in directories for the program using the file. The /var/spool/ directory has subdirectories for programs in which data files are stored.

1.3. Special File Locations Under Red Hat Enterprise Linux

Most files pertaining to RPM are kept in the /var/lib/rpm/ directory. For more information on RPM, refer to Chapter 12, Package Management with RPM.

The /var/cache/yum/ directory contains files used by the Package Updater, including RPM header information for the system. This location may also be used to temporarily store RPMs downloaded while updating the system. For more information about Red Hat Network, refer to Chapter 15, Registering a System and Managing Subscriptions.

Another location specific to Red Hat Enterprise Linux is the /etc/sysconfig/ directory. This directory stores a variety of configuration information. Many scripts that run at boot time use the files in this directory. Refer to Chapter 32, The sysconfig Directory for more information about what is within this directory and the role these files play in the boot process.

Chapter 2. Using the mount Command

To attach or detach a file system, use the mount or umount command respectively. This chapter describes the basic usage of these commands, and covers some advanced topics such as moving a mount point or creating shared subtrees.

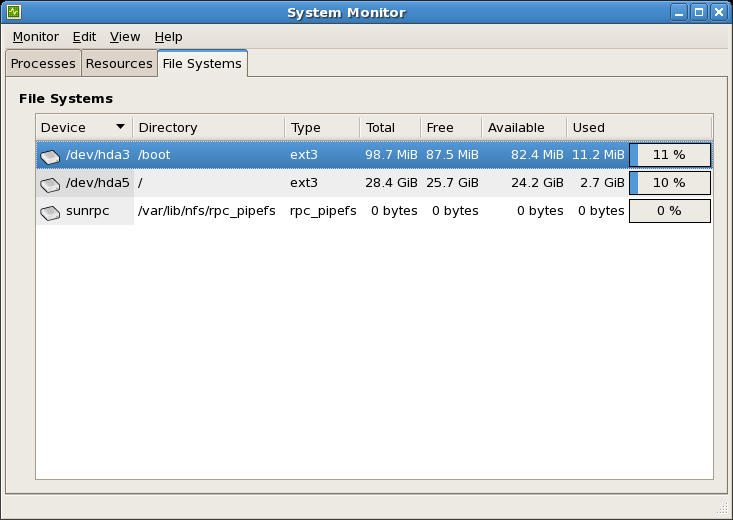

2.1. Listing Currently Mounted File Systems

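To display all currently attached file systems, run the mount command with no additional arguments:

mount

Each line of the output describes one mount point in the following form:

device on directory type type (options)

By default, the output includes various virtual file systems such as sysfs, tmpfs, and others. To display only the devices with a certain file system type, supply the -t option on the command line:

mount -t type

For an example of using the mount command to list the mounted file systems, see Example 2.1, “Listing Currently Mounted ext3 File Systems”.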
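Because every line follows the "device on directory type type (options)" form, the listing is easy to post-process. The sketch below is an illustration only: the function name mount_points_of_type is hypothetical, the sample lines stand in for real mount output, and mount points containing spaces are not handled.

```shell
#!/bin/sh
# mount_points_of_type (hypothetical helper): read mount-style lines on
# standard input and print the directory of each line whose file system
# type equals the first argument.
mount_points_of_type() {
    awk -v want="$1" '$2 == "on" && $4 == "type" && $5 == want { print $3 }'
}

# Sample input in the documented form; on a live system, pipe the output
# of the mount command into the function instead.
printf '%s\n' \
    '/dev/mapper/VolGroup00-LogVol00 on / type ext3 (rw)' \
    'sysfs on /sys type sysfs (rw)' \
    '/dev/vda1 on /boot type ext3 (rw)' |
    mount_points_of_type ext3
```

For simple cases mount -t type gives the same result; a filter like this is mainly useful when the listing has already been captured to a file.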

Example 2.1. Listing Currently Mounted ext3 File Systems

Usually, both the / and /boot partitions are formatted to use ext3. To display only the mount points that use this file system, type the following at a shell prompt:

~]$ mount -t ext3
/dev/mapper/VolGroup00-LogVol00 on / type ext3 (rw)
/dev/vda1 on /boot type ext3 (rw)

2.2. Mounting a File System

To attach a certain file system, use the mount command in the following form:

mount [option…] device directory

When the mount command is run, it reads the content of the /etc/fstab configuration file to see if the given file system is listed. This file contains a list of device names and the directory in which the selected file systems should be mounted, as well as the file system type and mount options. Because of this, when you are mounting a file system that is specified in this file, you can use one of the following variants of the command:

mount [option…] directory
mount [option…] device

Note that unless you are logged in as root, you must have permissions to mount the file system (see Section 2.2.2, “Specifying the Mount Options”).
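To illustrate the kind of lookup that makes the short variants possible, the following sketch searches an fstab-format file for a directory and prints the device, type, and options recorded for it. It is not how mount itself is implemented; the function name fstab_entry and the sample file contents are assumptions made for the example.

```shell
#!/bin/sh
# fstab_entry (hypothetical helper): print "device type options" for the
# entry whose mount directory is $1. $2 names the fstab-format file to
# read and defaults to /etc/fstab.
fstab_entry() {
    awk -v dir="$1" '
        /^[[:space:]]*#/ || NF < 4 { next }   # skip comments and short lines
        $2 == dir { print $1, $3, $4 }
    ' "${2:-/etc/fstab}"
}

# Made-up sample file, so the sketch runs without reading /etc/fstab.
sample=$(mktemp)
cat > "$sample" <<'EOF'
# device                  directory         type  options      dump pass
/dev/VolGroup00/LogVol00  /                 ext3  defaults     1 1
LABEL=/boot               /boot             ext3  defaults     1 2
/dev/sdc1                 /media/flashdisk  vfat  noauto,user  0 0
EOF
fstab_entry /media/flashdisk "$sample"
rm -f "$sample"
```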

2.2.1. Specifying the File System Type

In most cases, mount detects the file system automatically. However, there are certain file systems, such as NFS (Network File System) or CIFS (Common Internet File System), that are not recognized, and need to be specified manually. To specify the file system type, use the mount command in the following form:

mount -t type device directory

The following table lists common file system types that can be used with the mount command. For a complete list of all available file system types, consult the relevant manual page as referred to in Section 2.4.1, “Installed Documentation”.

| Type | Description |
|---|---|
| ext2 | The ext2 file system. |
| ext3 | The ext3 file system. |
| ext4 | The ext4 file system. |
| iso9660 | The ISO 9660 file system. It is commonly used by optical media, typically CDs. |
| jfs | The JFS file system created by IBM. |
| nfs | The NFS file system. It is commonly used to access files over the network. |
| nfs4 | The NFSv4 file system. It is commonly used to access files over the network. |
| ntfs | The NTFS file system. It is commonly used on machines that are running the Windows operating system. |
| udf | The UDF file system. It is commonly used by optical media, typically DVDs. |
| vfat | The FAT file system. It is commonly used on machines that are running the Windows operating system, and on certain digital media such as USB flash drives or floppy disks. |

Example 2.2. Mounting a USB Flash Drive

Assuming that a USB flash drive formatted with the FAT file system uses the /dev/sdc1 device and that the /media/flashdisk/ directory exists, you can mount it to this directory by typing the following at a shell prompt as root:

~]# mount -t vfat /dev/sdc1 /media/flashdisk

2.2.2. Specifying the Mount Options

To specify additional mount options, use the command in the following form:

mount -o options

When supplying multiple options, do not insert a space after a comma, or mount will incorrectly interpret the values following spaces as additional parameters.

| Option | Description |
|---|---|
| async | Allows the asynchronous input/output operations on the file system. |
| auto | Allows the file system to be mounted automatically using the mount -a command. |
| defaults | Provides an alias for async,auto,dev,exec,nouser,rw,suid. |
| exec | Allows the execution of binary files on the particular file system. |
| loop | Mounts an image as a loop device. |
| noauto | Disallows the automatic mount of the file system using the mount -a command. |
| noexec | Disallows the execution of binary files on the particular file system. |
| nouser | Disallows an ordinary user (that is, other than root) to mount and unmount the file system. |
| remount | Remounts the file system in case it is already mounted. |
| ro | Mounts the file system for reading only. |
| rw | Mounts the file system for both reading and writing. |
| user | Allows an ordinary user (that is, other than root) to mount and unmount the file system. |
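When the option list is assembled in a script, the rule about commas without spaces is easy to get wrong. The following is a minimal sketch of one way to build the string; the function name join_opts is hypothetical, and the final command is only printed, with image.iso as a placeholder file name.

```shell
#!/bin/sh
# join_opts (hypothetical helper): join the arguments with commas and no
# spaces, which is the form expected after mount -o.
join_opts() {
    # The subshell keeps the IFS change from leaking into the caller.
    ( IFS=,; printf '%s\n' "$*" )
}

opts=$(join_opts ro loop)
# Print the command rather than running it, since mounting needs root.
echo "mount -o $opts image.iso /media/cdrom"
```

The quoted "$*" expansion joins the arguments with the first character of IFS, so no manual string concatenation is needed.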

Example 2.3. Mounting an ISO Image

Assuming that the Fedora-14-x86_64-Live-Desktop.iso image is present in the current working directory and that the /media/cdrom/ directory exists, you can mount the image to this directory by running the following command as root:

~]# mount -o ro,loop Fedora-14-x86_64-Live-Desktop.iso /media/cdrom

2.2.3. Sharing Mounts

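The mount command implements the --bind option that provides a means for duplicating certain mounts. Its usage is as follows:

mount --bind old_directory new_directory

The --bind option does not duplicate file systems that are mounted within the original directory. To include these mounts as well, use the recursive variant:

mount --rbind old_directory new_directory

In addition, shared subtrees allow a mount point to be marked with one of the following mount types:

- Shared Mount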

- A shared mount allows you to create an exact replica of a given mount point. When a shared mount is created, any mount within the original mount point is reflected in it, and vice versa. To create a shared mount, type the following at a shell prompt:

mount --make-shared mount_point

Alternatively, you can change the mount type for the selected mount point and all mount points under it:

mount --make-rshared mount_point

See Example 2.4, “Creating a Shared Mount Point” for an example usage.

- Slave Mount

- A slave mount allows you to create a limited duplicate of a given mount point. When a slave mount is created, any mount within the original mount point is reflected in it, but no mount within a slave mount is reflected in its original. To create a slave mount, type the following at a shell prompt:

mount --make-slave mount_point

Alternatively, you can change the mount type for the selected mount point and all mount points under it:

mount --make-rslave mount_point

See Example 2.5, “Creating a Slave Mount Point” for an example usage.

Example 2.5. Creating a Slave Mount Point

Imagine you want the content of the /media directory to appear in /mnt as well, but you do not want any mounts in the /mnt directory to be reflected in /media. To do so, as root, first mark the /media directory as “shared”:

~]# mount --bind /media /media
~]# mount --make-shared /media

Then create its duplicate in /mnt, but mark it as “slave”:

~]# mount --bind /media /mnt
~]# mount --make-slave /mnt

You can now verify that a mount within /media also appears in /mnt. For example, if you have non-empty media in your CD-ROM drive and the /media/cdrom/ directory exists, run the following commands:

~]# mount /dev/cdrom /media/cdrom
~]# ls /media/cdrom
EFI GPL isolinux LiveOS
~]# ls /mnt/cdrom
EFI GPL isolinux LiveOS

You can also verify that file systems mounted in the /mnt directory are not reflected in /media. For instance, if you have a non-empty USB flash drive that uses the /dev/sdc1 device plugged in and the /mnt/flashdisk/ directory is present, type:

~]# mount /dev/sdc1 /mnt/flashdisk
~]# ls /media/flashdisk
~]# ls /mnt/flashdisk
en-US publican.cfg

- Private Mount

- A private mount allows you to create an ordinary mount. When a private mount is created, no subsequent mounts within the original mount point are reflected in it, and no mount within a private mount is reflected in its original. To create a private mount, type the following at a shell prompt:

mount --make-private mount_point

Alternatively, you can change the mount type for the selected mount point and all mount points under it:

mount --make-rprivate mount_point

See Example 2.6, “Creating a Private Mount Point” for an example usage.

Example 2.6. Creating a Private Mount Point

Taking into account the scenario in Example 2.4, “Creating a Shared Mount Point”, assume that you have previously created a shared mount point by using the following commands as root:

~]# mount --bind /media /media
~]# mount --make-shared /media
~]# mount --bind /media /mnt

To mark the /mnt directory as “private”, type:

~]# mount --make-private /mnt

You can now verify that none of the mounts within /media appears in /mnt. For example, if you have non-empty media in your CD-ROM drive and the /media/cdrom/ directory exists, run the following commands:

~]# mount /dev/cdrom /media/cdrom
~]# ls /media/cdrom
EFI GPL isolinux LiveOS
~]# ls /mnt/cdrom
~]#

You can also verify that file systems mounted in the /mnt directory are not reflected in /media. For instance, if you have a non-empty USB flash drive that uses the /dev/sdc1 device plugged in and the /mnt/flashdisk/ directory is present, type:

~]# mount /dev/sdc1 /mnt/flashdisk
~]# ls /media/flashdisk
~]# ls /mnt/flashdisk
en-US publican.cfg

- Unbindable Mount

- An unbindable mount allows you to prevent a given mount point from being duplicated whatsoever. To create an unbindable mount, type the following at a shell prompt:

mount --make-unbindable mount_point

Alternatively, you can change the mount type for the selected mount point and all mount points under it:

mount --make-runbindable mount_point

See Example 2.7, “Creating an Unbindable Mount Point” for an example usage.

Example 2.7. Creating an Unbindable Mount Point

To prevent the /media directory from being shared, as root, type the following at a shell prompt:

~]# mount --bind /media /media
~]# mount --make-unbindable /media

This way, any subsequent attempt to make a duplicate of this mount will fail with an error:

~]# mount --bind /media /mnt
mount: wrong fs type, bad option, bad superblock on /media/,
missing code page or other error
In some cases useful info is found in syslog - try
dmesg | tail or so
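On kernels that provide /proc/self/mountinfo, the mount type assigned to a mount point can be read back from the optional fields of that file (shared:N, master:N, or unbindable). This is an assumption about the running kernel: the file was added in kernel releases later than the 2.6 series base that Red Hat Enterprise Linux 5 ships, so it may be absent there. The sketch below uses a made-up sample file and a hypothetical function name, propagation_of.

```shell
#!/bin/sh
# propagation_of (hypothetical helper): report the propagation type of the
# mount point $1 by scanning the optional fields of a mountinfo-format
# file ($2, defaulting to /proc/self/mountinfo). The optional fields start
# at field 7 and end at the "-" separator. A mount that is both shared and
# a slave is reported by the last tag seen.
propagation_of() {
    awk -v mp="$1" '
        $5 == mp {
            kind = "private"
            for (i = 7; i <= NF && $i != "-"; i++) {
                if ($i ~ /^shared:/)         kind = "shared"
                else if ($i ~ /^master:/)    kind = "slave"
                else if ($i == "unbindable") kind = "unbindable"
            }
            print kind
        }
    ' "${2:-/proc/self/mountinfo}"
}

# Made-up sample data in the mountinfo format.
sample=$(mktemp)
cat > "$sample" <<'EOF'
20 1 8:1 / /media rw,relatime shared:1 - ext3 /dev/sda1 rw
30 20 8:1 / /mnt rw,relatime master:1 - ext3 /dev/sda1 rw
EOF
propagation_of /media "$sample"
propagation_of /mnt "$sample"
rm -f "$sample"
```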

2.2.4. Moving a Mount Point

To change the directory in which a file system is mounted, use the following command:

mount --move old_directory new_directory

Example 2.8. Moving an Existing NFS Mount Point

Assuming that an NFS storage containing user directories is already mounted in /mnt/userdirs/, as root, you can move this mount point to /home by using the following command:

~]# mount --move /mnt/userdirs /home

To verify that the mount point has been moved, list the content of both directories:

~]# ls /mnt/userdirs
~]# ls /home
jill joe

2.3. Unmounting a File System

To detach a previously mounted file system, use either of the following variants of the umount command:

umount directory
umount device

Note that unless you are logged in as root, you must have permissions to unmount the file system (see Section 2.2.2, “Specifying the Mount Options”). See Example 2.9, “Unmounting a CD” for an example usage.

Important

When a file system is in use (for example, when a process is reading a file on this file system), running the umount command will fail with an error. To determine which processes are accessing the file system, use the fuser command in the following form:

fuser -m directory

For example, to list the processes that are accessing a file system mounted to the /media/cdrom/ directory, type:

~]$ fuser -m /media/cdrom
/media/cdrom: 1793 2013 2022 2435 10532c 10672c

Example 2.9. Unmounting a CD

To unmount a CD that was previously mounted to the /media/cdrom/ directory, type the following at a shell prompt:

~]$ umount /media/cdrom

2.4. Additional Resources

2.4.1. Installed Documentation

- man 8 mount — The manual page for the mount command that provides full documentation on its usage.

- man 8 umount — The manual page for the umount command that provides full documentation on its usage.

- man 5 fstab — The manual page providing a thorough description of the /etc/fstab file format.

2.4.2. Useful Websites

- Shared subtrees — An LWN article covering the concept of shared subtrees.

- sharedsubtree.txt — Extensive documentation that is shipped with the shared subtrees patches.

Chapter 3. The ext3 File System

3.1. Features of ext3

- Availability

- After an unexpected power failure or system crash (also called an unclean system shutdown), each mounted ext2 file system on the machine must be checked for consistency by the e2fsck program. This is a time-consuming process that can delay system boot time significantly, especially with large volumes containing a large number of files. During this time, any data on the volumes is unreachable.

The journaling provided by the ext3 file system means that this sort of file system check is no longer necessary after an unclean system shutdown. The only time a consistency check occurs using ext3 is in certain rare hardware failure cases, such as hard drive failures. The time to recover an ext3 file system after an unclean system shutdown does not depend on the size of the file system or the number of files; rather, it depends on the size of the journal used to maintain consistency. The default journal size takes about a second to recover, depending on the speed of the hardware.

- Data Integrity

- The ext3 file system prevents loss of data integrity in the event that an unclean system shutdown occurs. The ext3 file system allows you to choose the type and level of protection that your data receives. By default, the ext3 volumes are configured to keep a high level of data consistency with regard to the state of the file system.

- Speed

- Despite writing some data more than once, ext3 has a higher throughput in most cases than ext2 because ext3's journaling optimizes hard drive head motion. You can choose from three journaling modes to optimize speed, but doing so means trade-offs with regard to data integrity if the system were to fail.

- Easy Transition

- It is easy to migrate from ext2 to ext3 and gain the benefits of a robust journaling file system without reformatting. Refer to Section 3.3, “Converting to an ext3 File System” for more on how to perform this task.

3.2. Creating an ext3 File System

- Format the partition with the ext3 file system using mkfs.

- Label the partition using e2label.

3.3. Converting to an ext3 File System

The tune2fs utility allows you to convert an ext2 file system to ext3.

Note

Always use the e2fsck utility to check your file system before and after using tune2fs. A default installation of Red Hat Enterprise Linux uses ext3 for all file systems.

To convert an ext2 file system to ext3, log in as root and type the following command in a terminal:

tune2fs -j <block_device>

where <block_device> contains the ext2 file system you wish to convert. A valid block device can be one of two types of entries:

- A mapped device — A logical volume in a volume group, for example, /dev/mapper/VolGroup00-LogVol02.

- A static device — A traditional storage volume, for example, /dev/hdbX, where hdb is a storage device name and X is the partition number.

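Issue the df command to display mounted file systems.

The sample commands in the remainder of this section use the following value for the block device:

/dev/mapper/VolGroup00-LogVol02

If you are converting your root file system, you must use an initrd image (or RAM disk) to boot. To create this, run the mkinitrd program. For information on using the mkinitrd command, type man mkinitrd. Also, make sure your GRUB configuration loads the initrd.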

3.4. Reverting to an ext2 File System

To revert a partition from ext3 to ext2, first unmount the partition by logging in as root and typing:

umount /dev/mapper/VolGroup00-LogVol02

Next, change the file system type to ext2 by removing the journal:

tune2fs -O ^has_journal /dev/mapper/VolGroup00-LogVol02

Check the partition for errors:

e2fsck -y /dev/mapper/VolGroup00-LogVol02

Then mount the partition again as an ext2 file system:

mount -t ext2 /dev/mapper/VolGroup00-LogVol02 /mount/point

Next, remove the .journal file at the root level of the partition by changing to the directory where it is mounted and typing:

rm -f .journal

If you want to permanently change the partition to ext2, remember to update the /etc/fstab file.
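Because the order of these commands matters, it can help to review the whole sequence before running anything as root. The following dry-run sketch only prints the commands from this section for a given device and mount directory; the function name ext2_revert_plan is hypothetical, and nothing is executed.

```shell
#!/bin/sh
# ext2_revert_plan (hypothetical helper): print, without running, the
# commands used in this section to revert device $1, mounted on
# directory $2, from ext3 to ext2.
ext2_revert_plan() {
    dev=$1
    dir=$2
    echo "umount $dev"                    # detach the file system
    echo "tune2fs -O ^has_journal $dev"   # remove the journal feature
    echo "e2fsck -y $dev"                 # check the partition for errors
    echo "mount -t ext2 $dev $dir"        # mount again as ext2
    echo "rm -f $dir/.journal"            # remove the leftover journal file
}

ext2_revert_plan /dev/mapper/VolGroup00-LogVol02 /mount/point
```

Updating /etc/fstab is left out on purpose, since that edit depends on how the entry is written on the particular system.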

Chapter 4. The ext4 File System

4.1. Features of ext4

- Main Features

- The ext4 file system uses extents (as opposed to the traditional block mapping scheme used by ext2 and ext3), which improves performance when using large files and reduces metadata overhead for large files. In addition, ext4 also labels unallocated block groups and inode table sections accordingly, which allows them to be skipped during a file system check. This makes for quicker file system checks, which becomes more beneficial as the file system grows in size.

- Allocation Features

- The ext4 file system features the following allocation schemes:

- Persistent pre-allocation

- Delayed allocation

- Multi-block allocation

- Stripe-aware allocation

Because of delayed allocation and other performance optimizations, ext4's behavior of writing files to disk is different from ext3. In ext4, a program's writes to the file system are not guaranteed to be on-disk unless the program issues an fsync() call afterwards.

By default, ext3 automatically forces newly created files to disk almost immediately even without fsync(). This behavior hid bugs in programs that did not use fsync() to ensure that written data was on-disk. The ext4 file system, on the other hand, often waits several seconds to write out changes to disk, allowing it to combine and reorder writes for better disk performance than ext3.

Warning

Unlike ext3, the ext4 file system does not force data to disk on transaction commit. As such, it takes longer for buffered writes to be flushed to disk. As with any file system, use data integrity calls such as fsync() to ensure that data is written to permanent storage.

- Other ext4 Features

- The ext4 file system also supports the following:

- Extended attributes (xattr), which allow the system to associate several additional name/value pairs per file.

- Quota journaling, which avoids the need for lengthy quota consistency checks after a crash.

Note

The only supported journaling mode in ext4 is data=ordered (default).

- Subsecond timestamps, which allow inode timestamp fields to be specified with nanosecond resolution.
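The advice above about fsync() applies to shell scripts as well. The following is a minimal sketch, assuming GNU dd (whose conv=fsync conversion flushes the output file to disk before dd exits). The durable_write function name and the scratch file are hypothetical and used only for illustration.

```shell
# durable_write FILE: copy standard input to FILE and ask dd to flush
# the file data and metadata to disk (conv=fsync) before it exits.
# Assumes GNU dd; durable_write is a hypothetical helper name.
durable_write() {
    dd of="$1" conv=fsync 2>/dev/null
}

demo_file=$(mktemp)    # hypothetical scratch file for the demonstration
printf 'important data\n' | durable_write "$demo_file"
cat "$demo_file"
```

A script that simply redirects output with > gives no such guarantee on ext4 until the kernel writes the data out on its own schedule.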

4.2. Managing an ext4 File System

To create and manage ext4 file systems, install the e4fsprogs package as root:

~]# yum install e4fsprogs

The e4fsprogs package provides the following utilities:

- mke4fs: A utility used to create an ext4 file system.

- mkfs.ext4: Another command used to create an ext4 file system.

- e4fsck: A utility used to repair inconsistencies of an ext4 file system.

- tune4fs: A utility used to modify ext4 file system attributes.

- resize4fs: A utility used to resize an ext4 file system.

- e4label: A utility used to display or modify the label of the ext4 file system.

- dumpe4fs: A utility used to display the super block and block group information for the ext4 file system.

- debuge4fs: An interactive file system debugger, used to examine ext4 file systems, manually repair corrupted file systems, and create test cases for e4fsck.

4.3. Creating an ext4 File System

See the manual pages of the mke4fs and mkfs.ext4 commands for available options. Also, you may want to examine and modify the configuration file of mke4fs, /etc/mke4fs.conf, if you plan to create ext4 file systems often.

- Format the partition with the ext4 file system using the mkfs.ext4 or mke4fs command:

~]# mkfs.ext4 block_device

~]# mke4fs -t ext4 block_device

where block_device is a partition which will contain the ext4 filesystem you wish to create.

- Label the partition using the e4label command:

~]# e4label <block_device> new-label

- Create a mount point and mount the new file system to that mount point:

~]# mkdir /mount/point

~]# mount block_device /mount/point

A valid block device can be one of two types of entries:

- A mapped device: a logical volume in a volume group, for example, /dev/mapper/VolGroup00-LogVol02.

- A static device: a traditional storage volume, for example, /dev/hdbX, where hdb is a storage device name and X is the partition number.

When creating file systems on LVM or md volumes, mkfs.ext4 chooses an optimal geometry. This may also be true on some hardware RAIDs which export geometry information to the operating system.

To specify stripe geometry manually, use the -E option of mkfs.ext4 (that is, extended file system options) with the following sub-options:

- stride=value

- Specifies the RAID chunk size.

- stripe-width=value

- Specifies the number of data disks in a RAID device, or the number of stripe units in the stripe.

For both sub-options, value must be specified in file system block units. For example, to create a file system with a 64k stride (that is, 16 x 4096) on a 4k-block file system, use the following command:

~]# mkfs.ext4 -E stride=16,stripe-width=64 block_device

For more information about creating file systems, refer to man mkfs.ext4.
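The arithmetic behind the stride and stripe-width values can be sketched as a small shell function. This is an illustration only: the function name ext4_raid_opts is hypothetical, and it assumes the common convention that stripe-width equals stride multiplied by the number of data-bearing disks.

```shell
# ext4_raid_opts CHUNK_KIB BLOCK_BYTES DATA_DISKS
# Prints the -E argument for mkfs.ext4 (hypothetical helper).
#   stride       = RAID chunk size expressed in file system blocks
#   stripe-width = stride multiplied by the number of data disks
ext4_raid_opts() {
    chunk_kib=$1
    block_bytes=$2
    data_disks=$3
    stride=$(( chunk_kib * 1024 / block_bytes ))
    stripe_width=$(( stride * data_disks ))
    printf 'stride=%d,stripe-width=%d\n' "$stride" "$stripe_width"
}

# 64 KiB chunks, 4096-byte blocks, 4 data disks
ext4_raid_opts 64 4096 4
```

With these inputs the function reproduces the values used in the command above, which implies an array with four data disks.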

4.4. Mounting an ext4 File System

~]# mount block_device /mount/point

Mount options such as acl, noacl, data, quota, noquota, user_xattr, nouser_xattr, and many others that were already used with the ext2 and ext3 file systems are backward compatible and have the same usage and functionality. In addition, several new ext4-specific mount options have been added, for example:

- barrier / nobarrier

- By default, ext4 uses write barriers to ensure file system integrity even when power is lost to a device with write caches enabled. For devices without write caches, or with battery-backed write caches, you can disable barriers using the nobarrier option:

~]# mount -o nobarrier block_device /mount/point

- stripe=value

- This option allows you to specify the number of file system blocks allocated for a single file operation. For RAID5, this number should be equal to the RAID chunk size multiplied by the number of disks.

- journal_ioprio=value

- This option allows you to set the priority of I/O operations submitted during a commit operation. The option can have a value from 7 to 0 (0 is the highest priority), and is set to 3 by default, which is slightly higher priority than the default I/O priority.

Default mount options can also be stored in the file system itself using the tune4fs utility. For example, the following command sets the file system on the /dev/mapper/VolGroup00-LogVol02 device to be mounted by default with debugging disabled and user-specified extended attributes and POSIX access control lists enabled:

~]# tune4fs -o ^debug,user_xattr,acl /dev/mapper/VolGroup00-LogVol02

For more information, refer to the tune4fs(8) manual page.

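An ext3 file system can also be mounted using the ext4 driver:

~]# mount -t ext4 block_device /mount/point

This allows the file system to use ext4 features that do not require a change of the on-disk format, such as delayed allocation and multi-block allocation, and excludes features such as extent mapping.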

Warning

For more information on mount options, refer to the mount(8) manual page.

Note

To mount the file system persistently, update the /etc/fstab file accordingly. For example:

/dev/mapper/VolGroup00-LogVol02 /test ext4 defaults 0 0

4.5. Resizing an ext4 File System

To resize an ext4 file system, use the resize4fs command:

~]# resize4fs block_device new_size

The resize4fs utility reads the size in units of file system block size, unless a suffix indicating a specific unit is used. The following suffixes indicate specific units:

- s: 512 byte sectors

- K: kilobytes

- M: megabytes

- G: gigabytes

The size parameter is optional (and often redundant) when expanding. The resize4fs utility automatically expands to fill all available space of the container, usually a logical volume or partition. For more information about resizing an ext4 file system, refer to the resize4fs(8) manual page.
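To make the size suffixes concrete, the following sketch converts a size argument into bytes. It is not part of e4fsprogs: the function name resize_arg_to_bytes is hypothetical, the K, M, and G suffixes are assumed to be power-of-two units, and a 4096-byte block size is assumed when no suffix and no explicit block size are given.

```shell
# resize_arg_to_bytes SIZE [BLOCK_BYTES]
# Hypothetical helper: translate a size argument such as 20G into bytes.
# A bare number is treated as a count of file system blocks.
resize_arg_to_bytes() {
    arg=$1
    num=${arg%[sKMG]}              # strip one trailing unit suffix, if any
    case $arg in
        *s) echo $(( num * 512 )) ;;
        *K) echo $(( num * 1024 )) ;;
        *M) echo $(( num * 1024 * 1024 )) ;;
        *G) echo $(( num * 1024 * 1024 * 1024 )) ;;
        *)  echo $(( num * ${2:-4096} )) ;;   # assumed default block size
    esac
}

resize_arg_to_bytes 20G
resize_arg_to_bytes 2048s
resize_arg_to_bytes 1000 4096
```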

Chapter 5. The proc File System

The /proc/ directory, also called the proc file system, contains a hierarchy of special files which represent the current state of the kernel, allowing applications and users to peer into the kernel's view of the system.

Within the /proc/ directory, one can find a wealth of information detailing the system hardware and any processes currently running. In addition, some of the files within the /proc/ directory tree can be manipulated by users and applications to communicate configuration changes to the kernel.

5.1. A Virtual File System

The /proc/ directory contains a special type of file called a virtual file. It is for this reason that /proc/ is often referred to as a virtual file system.

Virtual files such as /proc/interrupts, /proc/meminfo, /proc/mounts, and /proc/partitions provide an up-to-the-moment glimpse of the system's hardware. Others, like the /proc/filesystems file and the /proc/sys/ directory, provide system configuration information and interfaces.

Related files are grouped into virtual directories. For example, /proc/ide/ contains information for all physical IDE devices. Likewise, process directories contain information about each running process on the system.

5.1.1. Viewing Virtual Files

By using the cat, more, or less commands on files within the /proc/ directory, users can immediately access enormous amounts of information about the system. For example, to display the type of CPU a computer has, type cat /proc/cpuinfo to receive output similar to the following:

When viewing different virtual files in the /proc/ file system, some of the information is easily understandable while some is not human-readable. This is in part why utilities exist to pull data from virtual files and display it in a useful way. Examples of these utilities include lspci, apm, free, and top.

Note

Some of the virtual files in the /proc/ directory are readable only by the root user.

5.1.2. Changing Virtual Files

As a general rule, most virtual files within the /proc/ directory are read-only. However, some can be used to adjust settings in the kernel. This is especially true for files in the /proc/sys/ subdirectory.

To change the value of a virtual file, use the echo command and a greater than symbol (>) to redirect the new value to the file. For example, to change the hostname on the fly, type:

echo www.example.com > /proc/sys/kernel/hostname

Other files act as binary switches. Typing cat /proc/sys/net/ipv4/ip_forward returns either a 0 or a 1. A 0 indicates that the kernel is not forwarding network packets. Using the echo command to change the value of the ip_forward file to 1 immediately turns packet forwarding on.

Note

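Another command used to alter settings in the /proc/sys/ subdirectory is /sbin/sysctl. For more information on this command, refer to Section 5.4, “Using the sysctl Command”.

For a listing of some of the kernel configuration files available in the /proc/sys/ subdirectory, refer to Section 5.3.9, “ /proc/sys/ ”.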
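The sysctl command names each setting after its path below /proc/sys/, with the slashes replaced by dots. The following sketch shows that mapping. The function name proc_to_sysctl_key is hypothetical, and the function only rewrites the string; it does not read or change any kernel setting.

```shell
# proc_to_sysctl_key PATH
# Hypothetical helper: turn a path under /proc/sys/ into the dotted key
# name used by sysctl, for example net.ipv4.ip_forward.
proc_to_sysctl_key() {
    printf '%s\n' "$1" | sed -e 's|^/proc/sys/||' -e 's|/|.|g'
}

proc_to_sysctl_key /proc/sys/net/ipv4/ip_forward
proc_to_sysctl_key /proc/sys/kernel/hostname
```

The resulting key could then be passed to /sbin/sysctl, for example as sysctl -w net.ipv4.ip_forward=1, which has the same effect as echoing 1 into the corresponding file.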

5.1.3. Restricting Access to Process Directories

On multi-user systems, it can be useful to restrict the process directories in /proc/ so that they can be viewed only by the root user. You can restrict the access to these directories with the use of the hidepid option.

To change the file system parameters, use the mount command with the -o remount option. As root, type:

mount -o remount,hidepid=value /proc

Here, the value passed to hidepid is one of:

- 0 (default): every user can read all world-readable files stored in a process directory.

- 1: users can access only their own process directories. This protects sensitive files such as cmdline, sched, or status from access by non-root users. This setting does not affect the actual file permissions.

- 2: process files are invisible to non-root users. The existence of a process can be learned by other means, but its effective UID and GID are hidden. Hiding these IDs complicates an intruder's task of gathering information about running processes.

Example 5.1. Restricting access to process directories

As the root user, type:

~]# mount -o remount,hidepid=1 /proc

With hidepid=1, a non-root user cannot access the contents of other users' process directories. An attempt to do so fails with the following message:

~]$ ls /proc/1/

ls: /proc/1/: Operation not permitted

With hidepid=2 enabled, process directories are made invisible to non-root users:

~]$ ls /proc/1/

ls: /proc/1/: No such file or directory

You can also specify a user group that has access to process files even when hidepid is set to 1 or 2. To do this, use the gid option. As root, type:

mount -o remount,hidepid=value,gid=gid /proc

Replace gid with the ID of the group. Members of the group can read process files as if hidepid was set to 0. However, users who are not supposed to monitor the tasks in the whole system should not be added to the group. For more information on managing users and groups, see Chapter 37, Users and Groups.

5.2. Top-level Files within the proc File System

Below is a list of some of the more useful virtual files in the top level of the /proc/ directory.

Note

5.2.1. /proc/apm

This file provides information about the state of the Advanced Power Management (APM) system and is used by the apm command. If a system with no battery is connected to an AC power source, this virtual file would look similar to the following:

1.16 1.2 0x07 0x01 0xff 0x80 -1% -1 ?

Running the apm -v command on such a system results in output similar to the following:

APM BIOS 1.2 (kernel driver 1.16ac) AC on-line, no system battery

For systems which do not use a battery as a power source, apm is able to do little more than put the machine in standby mode. The apm command is much more useful on laptops. For example, the following output is from the command cat /proc/apm on a laptop while plugged into a power outlet:

1.16 1.2 0x03 0x01 0x03 0x09 100% -1 ?

When the same laptop is unplugged from its power source for a few minutes, the content of the apm file changes to something like the following:

1.16 1.2 0x03 0x00 0x00 0x01 99% 1792 min

The apm -v command now yields more useful data, such as the following:

APM BIOS 1.2 (kernel driver 1.16) AC off-line, battery status high: 99% (1 day, 5:52)

5.2.2. /proc/buddyinfo

The DMA row references the first 16 MB on a system, the HighMem row references all memory greater than 4 GB on a system, and the Normal row references all memory in between.

The following is an example of the output typical of /proc/buddyinfo:

Node 0, zone DMA 90 6 2 1 1 ...

Node 0, zone Normal 1650 310 5 0 0 ...

Node 0, zone HighMem 2 0 0 1 1 ...

5.2.3. /proc/cmdline

This file shows the parameters passed to the kernel at the time it is started. A sample /proc/cmdline file looks like the following:

ro root=/dev/VolGroup00/LogVol00 rhgb quiet 3

- ro

- The root device is mounted read-only at boot time. The presence of ro on the kernel boot line overrides any instances of rw.

- root=/dev/VolGroup00/LogVol00

- This tells us on which disk device or, in this case, on which logical volume, the root filesystem image is located. With our sample /proc/cmdline output, the root filesystem image is located on the first logical volume (LogVol00) of the first LVM volume group (VolGroup00). On a system not using Logical Volume Management, the root file system might be located on /dev/sda1 or /dev/sda2, meaning on either the first or second partition of the first SCSI or SATA disk drive, depending on whether we have a separate (preceding) boot or swap partition on that drive.

For more information on LVM used in Red Hat Enterprise Linux, refer to http://www.tldp.org/HOWTO/LVM-HOWTO/index.html.

- rhgb

- A short lowercase acronym that stands for Red Hat Graphical Boot. Providing "rhgb" on the kernel command line signals that graphical booting is supported, assuming that /etc/inittab shows that the default runlevel is set to 5 with a line like this:

id:5:initdefault:

- quiet

- Indicates that all verbose kernel messages except those which are extremely serious should be suppressed at boot time.
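Scripts sometimes need a single parameter from the kernel command line. The following is a minimal sketch that extracts the value of a key=value parameter from a string in the /proc/cmdline format. The function name cmdline_value is hypothetical, and the sample string is the one shown above rather than the live /proc/cmdline of any system.

```shell
# cmdline_value KEY CMDLINE
# Hypothetical helper: print the value of the first KEY=value word in a
# kernel command line; return 1 if KEY is not present.
cmdline_value() {
    key=$1
    shift
    for word in $*; do              # unquoted on purpose: split on spaces
        case $word in
            "$key"=*) printf '%s\n' "${word#*=}"; return 0 ;;
        esac
    done
    return 1
}

sample='ro root=/dev/VolGroup00/LogVol00 rhgb quiet 3'
cmdline_value root "$sample"
```

On a running system, the second argument could be "$(cat /proc/cmdline)" instead of the sample string.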

5.2.4. /proc/cpuinfo

This virtual file identifies the type of processor used by the system. Typical fields in /proc/cpuinfo include:

- processor: Provides each processor with an identifying number. On systems that have one processor, only a 0 is present.

- cpu family: Authoritatively identifies the type of processor in the system. For an Intel-based system, place the number in front of "86" to determine the value. This is particularly helpful for those attempting to identify the architecture of an older system such as a 586, 486, or 386. Because some RPM packages are compiled for each of these particular architectures, this value also helps users determine which packages to install.

- model name: Displays the common name of the processor, including its project name.

- cpu MHz: Shows the precise speed in megahertz for the processor to the thousandths decimal place.

- cache size: Displays the amount of level 2 memory cache available to the processor.

- siblings: Displays the number of sibling CPUs on the same physical CPU for architectures which use hyper-threading.

- flags: Defines a number of different qualities about the processor, such as the presence of a floating point unit (FPU) and the ability to process MMX instructions.

5.2.5. /proc/crypto

This file lists the cryptographic ciphers available to the Linux kernel. A sample /proc/crypto file looks like the following:

5.2.6. /proc/devices

The output from /proc/devices includes the major number and name of the device, and is broken into two major sections: Character devices and Block devices.

- Character devices do not require buffering. Block devices have a buffer available, allowing them to order requests before addressing them. This is important for devices designed to store information — such as hard drives — because the ability to order the information before writing it to the device allows it to be placed in a more efficient order.

- Character devices send data with no preconfigured size. Block devices can send and receive information in blocks of a size configured per device.

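For more information about devices, refer to the following installed documentation:

/usr/share/doc/kernel-doc-<version>/Documentation/devices.txt

5.2.7. /proc/dma

This file contains a list of the registered ISA DMA channels in use. A sample /proc/dma file looks like the following:

4: cascade

5.2.8. /proc/execdomains

This file lists the execution domains currently supported by the Linux kernel, along with the range of personalities they support:

0-0 Linux [kernel]

Except for the PER_LINUX execution domain, different personalities can be implemented as dynamically loadable modules.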

5.2.9. /proc/fb

This file contains a list of frame buffer devices. Typical output of /proc/fb for systems which contain frame buffer devices looks similar to the following:

0 VESA VGA

5.2.10. /proc/filesystems

A sample /proc/filesystems file looks similar to the following:

The first column signifies whether the file system is mounted on a block device. Those beginning with nodev are not mounted on a device. The second column lists the names of the file systems supported.

The mount command cycles through the file systems listed here when one is not specified as an argument.

5.2.11. /proc/interrupts

A sample /proc/interrupts looks similar to the following:

- XT-PIC: These are the old AT computer interrupts.

- IO-APIC-edge: The voltage signal on this interrupt transitions from low to high, creating an edge, where the interrupt occurs and is only signaled once. This kind of interrupt, as well as the IO-APIC-level interrupt, is only seen on systems with processors from the 586 family and higher.

- IO-APIC-level: Generates interrupts when its voltage signal is high until the signal is low again.

5.2.12. /proc/iomem

5.2.13. /proc/ioports

The output of /proc/ioports provides a list of currently registered port regions used for input or output communication with a device. This file can be quite long. The following is a partial listing:

5.2.14. /proc/kcore

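This file represents the physical memory of the system. Unlike most /proc/ files, kcore displays a size. This value is given in bytes and is equal to the size of the physical memory (RAM) used plus 4 KB.

The contents of this file are designed to be examined by a debugger, such as gdb, and are not human readable.

Warning

Do not view the /proc/kcore virtual file. The contents of the file scramble text output on the terminal. If this file is accidentally viewed, press Ctrl+C to stop the process and then type reset to bring back the command line prompt.

5.2.15. /proc/kmsg

This file is used to hold messages generated by the kernel. These messages are then picked up by other programs, such as /sbin/klogd or /bin/dmesg.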

5.2.16. /proc/loadavg

This file provides the system load average over time, as well as additional data used by uptime and other commands. A sample /proc/loadavg file looks similar to the following:

0.20 0.18 0.12 1/80 11206
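The first three columns of this file are the load averages over the last one, five, and fifteen minutes. The fourth column shows the number of currently running processes and the total number of processes, and the last column is the most recently used process ID. The following sketch splits a line in this format into named fields. The function name parse_loadavg is hypothetical, and the input is the sample line above rather than a live system.

```shell
# parse_loadavg: read one line in /proc/loadavg format from standard
# input and print its fields by name (hypothetical helper).
parse_loadavg() {
    read -r one five fifteen procs last_pid
    printf '1min=%s 5min=%s 15min=%s running=%s total=%s last_pid=%s\n' \
        "$one" "$five" "$fifteen" "${procs%/*}" "${procs#*/}" "$last_pid"
}

printf '0.20 0.18 0.12 1/80 11206\n' | parse_loadavg
```

On a running system, the same function can be fed with parse_loadavg < /proc/loadavg.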

5.2.17. /proc/locks

This file displays the files currently locked by the kernel. A sample /proc/locks file for a lightly loaded system looks similar to the following:

Each lock has its own line which starts with a unique number. The second column refers to the class of lock used, with FLOCK signifying the older-style UNIX file locks from a flock system call and POSIX representing the newer POSIX locks from the lockf system call.

The third column can have two values: ADVISORY or MANDATORY. ADVISORY means that the lock does not prevent other people from accessing the data; it only prevents other attempts to lock it. MANDATORY means that no other access to the data is permitted while the lock is held. The fourth column reveals whether the lock is allowing the holder READ or WRITE access to the file. The fifth column shows the ID of the process holding the lock. The sixth column shows the ID of the file being locked, in the format of MAJOR-DEVICE:MINOR-DEVICE:INODE-NUMBER. The seventh and eighth columns show the start and end of the file's locked region.
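The column layout described above can be picked apart with awk. The following is a sketch only: the function name locks_summary is hypothetical, the input line is an illustrative entry written to match the described columns, and lines for blocked lock requests, which some kernels print with an extra marker column, are not handled.

```shell
# locks_summary [FILE...]
# Hypothetical helper: for each line in /proc/locks format, print the
# holder's PID, the lock class, the access type, and the inode number
# taken from the MAJOR-DEVICE:MINOR-DEVICE:INODE-NUMBER field.
locks_summary() {
    awk '{
        split($6, id, ":")
        printf "pid=%s class=%s access=%s inode=%s\n", $5, $2, $4, id[3]
    }' "$@"
}

# Illustrative line, not taken from a real system
printf '1: POSIX  ADVISORY  WRITE 3568 fd:00:2531452 0 EOF\n' | locks_summary
```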

5.2.18. /proc/mdstat

This file contains the current information for multiple-disk, RAID configurations. If the system does not contain such a configuration, then /proc/mdstat looks similar to the following:

Personalities : read_ahead not set unused devices: <none>

This file remains in the same state as seen above unless a software RAID or md device is present. In that case, view /proc/mdstat to find the current status of mdX RAID devices.

The /proc/mdstat file below shows a system with its md0 configured as a RAID 1 device, while it is currently re-syncing the disks:

Personalities : [linear] [raid1] read_ahead 1024 sectors

md0: active raid1 sda2[1] sdb2[0] 9940 blocks [2/2] [UU] resync=1% finish=12.3min algorithm 2 [3/3] [UUU]

unused devices: <none>

5.2.19. /proc/meminfo

This is one of the more commonly used files in the /proc/ directory, as it reports a large amount of valuable information about the system's RAM usage.

The following sample /proc/meminfo virtual file is from a system with 256 MB of RAM and 512 MB of swap space:

Much of the information here is used by the free, top, and ps commands. In fact, the output of the free command is similar in appearance to the contents and structure of /proc/meminfo. But by looking directly at /proc/meminfo, more details are revealed:

- MemTotal: Total amount of physical RAM, in kilobytes.

- MemFree: The amount of physical RAM, in kilobytes, left unused by the system.

- Buffers: The amount of physical RAM, in kilobytes, used for file buffers.

- Cached: The amount of physical RAM, in kilobytes, used as cache memory.

- SwapCached: The amount of swap, in kilobytes, used as cache memory.

- Active: The total amount of buffer or page cache memory, in kilobytes, that is in active use. This is memory that has been recently used and is usually not reclaimed for other purposes.

- Inactive: The total amount of buffer or page cache memory, in kilobytes, that is free and available. This is memory that has not been recently used and can be reclaimed for other purposes.

- HighTotal and HighFree: The total and free amount of memory, in kilobytes, that is not directly mapped into kernel space. The HighTotal value can vary based on the type of kernel used.

- LowTotal and LowFree: The total and free amount of memory, in kilobytes, that is directly mapped into kernel space. The LowTotal value can vary based on the type of kernel used.

- SwapTotal: The total amount of swap available, in kilobytes.

- SwapFree: The total amount of swap free, in kilobytes.

- Dirty: The total amount of memory, in kilobytes, waiting to be written back to the disk.

- Writeback: The total amount of memory, in kilobytes, actively being written back to the disk.

- Mapped: The total amount of memory, in kilobytes, which has been used to map devices, files, or libraries using the mmap system call.

- Slab: The total amount of memory, in kilobytes, used by the kernel to cache data structures for its own use.

- Committed_AS: The total amount of memory, in kilobytes, estimated to complete the workload. This value represents the worst case scenario value, and also includes swap memory.

- PageTables: The total amount of memory, in kilobytes, dedicated to the lowest page table level.

- VMallocTotal: The total amount of memory, in kilobytes, of total allocated virtual address space.

- VMallocUsed: The total amount of memory, in kilobytes, of used virtual address space.

- VMallocChunk: The largest contiguous block of memory, in kilobytes, of available virtual address space.

- HugePages_Total: The total number of hugepages for the system. The number is derived by dividing the megabytes set aside for hugepages, specified in /proc/sys/vm/hugetlb_pool, by Hugepagesize. This statistic only appears on the x86, Itanium, and AMD64 architectures.

- HugePages_Free: The total number of hugepages available for the system. This statistic only appears on the x86, Itanium, and AMD64 architectures.

- Hugepagesize: The size for each hugepages unit in kilobytes. By default, the value is 4096 KB on uniprocessor kernels for 32 bit architectures. For SMP, hugemem kernels, and AMD64, the default is 2048 KB. For Itanium architectures, the default is 262144 KB. This statistic only appears on the x86, Itanium, and AMD64 architectures.
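A script that needs just one of these values can select it by field name. The following is a minimal sketch, assuming the usual "Name: value kB" line layout of /proc/meminfo. The function name meminfo_kb is hypothetical, and the two sample lines use illustrative numbers written to a scratch file so that the example does not depend on the machine it runs on.

```shell
# meminfo_kb FIELD [FILE]
# Hypothetical helper: print the numeric value (in kilobytes) of one
# field from a file in /proc/meminfo format; FILE defaults to
# /proc/meminfo.
meminfo_kb() {
    awk -v field="$1:" '$1 == field { print $2 }' "${2:-/proc/meminfo}"
}

sample=$(mktemp)    # scratch file holding illustrative values
printf 'MemTotal:       255908 kB\nMemFree:         69936 kB\n' > "$sample"
meminfo_kb MemFree "$sample"
```

Calling meminfo_kb MemFree without a second argument reads the live file instead.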

5.2.20. /proc/misc

63 device-mapper

175 agpgart

135 rtc

134 apm_bios

5.2.21. /proc/modules

This file displays a list of all modules loaded into the kernel. The following is sample /proc/modules file output:

Note

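To view this information in a more readable format, use the /sbin/lsmod command.

The fifth column lists the load state of the module: Live, Loading, or Unloading are the only possible values.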

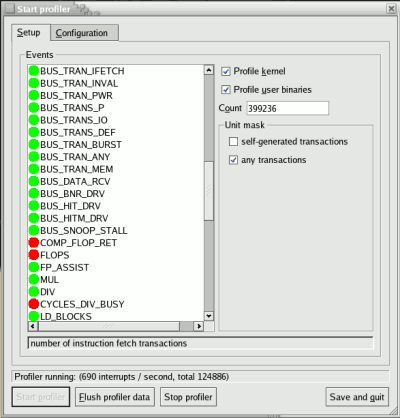

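The sixth column lists the current kernel memory offset for the loaded module. This information can be useful for debugging purposes, or for profiling tools such as oprofile.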

5.2.22. /proc/mounts

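This file provides a list of all mounts in use by the system. The output found here is similar to the contents of /etc/mtab, except that /proc/mounts is more up-to-date.

The first column specifies the device that is mounted, the second column reveals the mount point, the third column tells the file system type, and the fourth column tells you if it is mounted read-only (ro) or read-write (rw). The fifth and sixth columns are dummy values designed to match the format used in /etc/mtab.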

5.2.23. /proc/mtrr

This file refers to the current Memory Type Range Registers (MTRRs) in use with the system. If the system architecture supports MTRRs, then the /proc/mtrr file may look similar to the following:

reg00: base=0x00000000 ( 0MB), size= 256MB: write-back, count=1

reg01: base=0xe8000000 (3712MB), size= 32MB: write-combining, count=1

MTRRs control processor access to memory ranges. When using a video card on a PCI or AGP bus, a properly configured /proc/mtrr file can increase performance more than 150%.

For more information, refer to the following installed documentation:

/usr/share/doc/kernel-doc-<version>/Documentation/mtrr.txt

5.2.24. /proc/partitions

- major: The major number of the device with this partition. The major number in /proc/partitions (3) corresponds with the block device ide0 in /proc/devices.

- minor: The minor number of the device with this partition. This serves to separate the partitions into different physical devices and relates to the number at the end of the name of the partition.

- #blocks: Lists the number of physical disk blocks contained in a particular partition.

- name: The name of the partition.

5.2.25. /proc/pci

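This file contains a listing of the PCI devices on the system. Depending on the number of PCI devices, /proc/pci can be rather long. A sampling of this file from a basic system looks similar to the following:

Note

To get a more readable version of this information, type:

lspci -vb

5.2.26. /proc/slabinfo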

Instead of parsing the highly verbose /proc/slabinfo file manually, use the /usr/bin/slabtop program, which displays kernel slab cache information in real time. This program allows for custom configurations, including column sorting and screen refreshing.

Output from /usr/bin/slabtop usually looks like the following example:

Some of the more commonly used statistics in /proc/slabinfo that are included in /usr/bin/slabtop are:

- OBJS: The total number of objects (memory blocks), including those in use (allocated), and some spares not in use.

- ACTIVE: The number of objects (memory blocks) that are in use (allocated).

- USE: Percentage of total objects that are active, that is, (ACTIVE / OBJS) × 100.

- OBJ SIZE: The size of the objects.

- SLABS: The total number of slabs.

- OBJ/SLAB: The number of objects that fit into a slab.

- CACHE SIZE: The cache size of the slab.

- NAME: The name of the slab.

For more information on the /usr/bin/slabtop program, refer to the slabtop man page.

5.2.27. /proc/stat

This file keeps track of a variety of different statistics about the system since it was last restarted. The contents of /proc/stat, which can be quite long, usually begin like the following example:

Some of the more commonly used statistics include:

- cpu: Measures the number of jiffies (1/100 of a second for x86 systems) that the system has been in user mode, user mode with low priority (nice), system mode, idle task, I/O wait, IRQ (hardirq), and softirq respectively. The IRQ (hardirq) is the direct response to a hardware event. The IRQ takes minimal work for queuing the "heavy" work up for the softirq to execute. The softirq runs at a lower priority than the IRQ and therefore may be interrupted more frequently. The total for all CPUs is given at the top, while each individual CPU is listed below with its own statistics. The following example is a 4-way Intel Pentium Xeon configuration with multi-threading enabled, therefore showing four physical processors and four virtual processors totaling eight processors.

- page: The number of memory pages the system has written in and out to disk.

- swap: The number of swap pages the system has brought in and out.

- intr: The number of interrupts the system has experienced.

- btime: The boot time, measured in the number of seconds since January 1, 1970, otherwise known as the epoch.

5.2.28. /proc/swaps

This file measures swap space and its utilization. For a system with only one swap partition, the output of /proc/swaps may look similar to the following:

Filename Type Size Used Priority

/dev/mapper/VolGroup00-LogVol01 partition 524280 0 -1

While some of this information can be found in other files in the /proc/ directory, /proc/swaps provides a snapshot of every swap file name, the type of swap space, the total size, and the amount of space in use (in kilobytes). The priority column is useful when multiple swap files are in use. The higher the priority, the more likely the swap file is to be used.
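When several swap areas are configured, the per-area sizes can be added up with awk. The following is a minimal sketch, assuming the five-column layout shown above with one header line. The function name swap_totals is hypothetical, and the input is the sample above written to a scratch file.

```shell
# swap_totals [FILE]
# Hypothetical helper: sum the Size and Used columns (in kilobytes) of a
# file in /proc/swaps format, skipping the header line; FILE defaults to
# /proc/swaps.
swap_totals() {
    awk 'NR > 1 { size += $3; used += $4 }
         END { printf "size_kb=%d used_kb=%d\n", size, used }' "${1:-/proc/swaps}"
}

sample=$(mktemp)    # scratch file holding the sample shown above
printf 'Filename Type Size Used Priority\n' > "$sample"
printf '/dev/mapper/VolGroup00-LogVol01 partition 524280 0 -1\n' >> "$sample"
swap_totals "$sample"
```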

5.2.29. /proc/sysrq-trigger

Using the echo command to write to this file, a remote root user can execute most System Request Key commands remotely as if at the local terminal. To echo values to this file, the /proc/sys/kernel/sysrq must be set to a value other than 0. For more information about the System Request Key, refer to Section 5.3.9.3, “ /proc/sys/kernel/ ”.

5.2.30. /proc/uptime

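This file contains information detailing how long the system has been on since its last restart. The output of /proc/uptime is quite minimal: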

350735.47 234388.90
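The first value is the total number of seconds the system has been up, and the second value is the time spent idle, in seconds. The following sketch converts the first value into days, hours, and minutes. The function name format_uptime is hypothetical, and the input is the sample line above.

```shell
# format_uptime: read one line in /proc/uptime format from standard
# input and print the uptime as days, hours, and minutes (hypothetical
# helper). The fractional part of the seconds value is discarded.
format_uptime() {
    read -r up idle
    secs=${up%%.*}
    printf '%dd %dh %dm\n' \
        $(( secs / 86400 )) $(( secs % 86400 / 3600 )) $(( secs % 3600 / 60 ))
}

printf '350735.47 234388.90\n' | format_uptime
```

On a running system, the same function can be fed with format_uptime < /proc/uptime.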

5.2.31. /proc/version

This file specifies the version of the Linux kernel, the version of gcc in use, as well as the version of Red Hat Enterprise Linux installed on the system:

Linux version 2.6.8-1.523 (user@foo.redhat.com) (gcc version 3.4.1 20040714 \ (Red Hat Enterprise Linux 3.4.1-7)) #1 Mon Aug 16 13:27:03 EDT 2004

5.3. Directories within /proc/

Common groups of information concerning the kernel are grouped into directories and subdirectories within the /proc/ directory.

5.3.1. Process Directories

The /proc/ directory contains a number of directories with numerical names. A listing of them may be similar to the following:

These directories are called process directories, because each is named after a process ID and contains information specific to that process. When a process terminates, its /proc/ process directory vanishes.

Each process directory contains files such as the following:

- cmdline: Contains the command issued when starting the process.

- cwd: A symbolic link to the current working directory for the process.

- environ: A list of the environment variables for the process. The environment variable is given in all upper-case characters, and the value is in lower-case characters.

- exe: A symbolic link to the executable of this process.

- fd: A directory containing all of the file descriptors for a particular process. These are given in numbered links:

- maps: A list of memory maps to the various executables and library files associated with this process. This file can be rather long, depending upon the complexity of the process, but sample output from the sshd process begins like the following:

- mem: The memory held by the process. This file cannot be read by the user.

- root: A link to the root directory of the process.

- stat: The status of the process.

- statm: The status of the memory in use by the process. Below is a sample /proc/statm file:

263 210 210 5 0 205 0

The seven columns relate to different memory statistics for the process. From left to right, they report the following aspects of the memory used:

- Total program size, in kilobytes.

- Size of memory portions, in kilobytes.

- Number of pages that are shared.

- Number of pages that are code.

- Number of pages of data/stack.

- Number of library pages.

- Number of dirty pages.

- status: The status of the process in a more readable form than stat or statm. Sample output for sshd looks similar to the following:

The information in this output includes the process name and ID, the state (such as S (sleeping) or R (running)), user/group ID running the process, and detailed data regarding memory usage.

5.3.1.1. /proc/self/

The /proc/self/ directory is a link to the currently running process. This allows a process to look at itself without having to know its process ID.

Within a shell environment, a listing of the /proc/self/ directory produces the same contents as listing the process directory for that process.

5.3.2. /proc/bus/

/proc/bus/ by the same name, such as /proc/bus/pci/.

/proc/bus/ vary depending on the devices connected to the system. However, each bus type has at least one directory. Within these bus directories are normally at least one subdirectory with a numerical name, such as 001, which contain binary files.

/proc/bus/usb/ subdirectory contains files that track the various devices on any USB buses, as well as the drivers required for them. The following is a sample listing of a /proc/bus/usb/ directory:

total 0 dr-xr-xr-x 1 root root 0 May 3 16:25 001 -r--r--r-- 1 root root 0 May 3 16:25 devices -r--r--r-- 1 root root 0 May 3 16:25 drivers

total 0 dr-xr-xr-x 1 root root 0 May 3 16:25 001

-r--r--r-- 1 root root 0 May 3 16:25 devices

-r--r--r-- 1 root root 0 May 3 16:25 drivers/proc/bus/usb/001/ directory contains all devices on the first USB bus and the devices file identifies the USB root hub on the motherboard.

The following is an example of a /proc/bus/usb/devices file:

5.3.3. /proc/driver/

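This directory contains information for specific drivers in use by the kernel. A common file found here is rtc, which provides output from the driver for the system's Real Time Clock (RTC), the device that keeps the time while the system is switched off. Sample output from /proc/driver/rtc looks like the following:

For more information about the RTC, refer to the following installed documentation: /usr/share/doc/kernel-doc-<version>/Documentation/rtc.txt.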

5.3.4. /proc/fs

This directory shows which file systems are exported. If running an NFS server, typing cat /proc/fs/nfsd/exports displays the file systems being shared and the permissions granted for those file systems. For more on file system sharing with NFS, refer to Chapter 21, Network File System (NFS).

5.3.5. /proc/ide/

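This directory contains information about IDE devices on the system. Each IDE channel is represented as a separate directory, such as /proc/ide/ide0 and /proc/ide/ide1. In addition, a drivers file is available, providing the version number of the various drivers used on the IDE channels: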

ide-floppy version 0.99.newide
ide-cdrom version 4.61
ide-disk version 1.18

Some chipsets also provide an informational file in this directory, such as the /proc/ide/piix file, which reveals whether DMA or UDMA is enabled for the devices on the IDE channels:

The directory for an IDE channel, such as ide0, provides additional information. The channel file provides the channel number, while the model file identifies the bus type for the channel (such as pci).

5.3.5.1. Device Directories

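Within each IDE channel directory is a device directory. The name of the device directory corresponds to the drive letter in the /dev/ directory. For instance, the first IDE drive on ide0 would be hda.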

Note

There is a symbolic link to each of these device directories in the /proc/ide/ directory.

- cache: The device cache.
- capacity: The capacity of the device, in 512 byte blocks.
- driver: The driver and version used to control the device.
- geometry: The physical and logical geometry of the device.
- media: The type of device, such as a disk.
- model: The model name or number of the device.
- settings: A collection of current device parameters. This file usually contains quite a bit of useful, technical information. A sample settings file for a standard IDE hard disk looks similar to the following:

5.3.6. /proc/irq/

The /proc/irq/prof_cpu_mask file is a bitmask that contains the default values for the smp_affinity file in the IRQ directory. The values in smp_affinity specify which CPUs handle that particular IRQ.
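Since smp_affinity is a hexadecimal bitmask in which bit N corresponds to CPU N, it can help to decode a mask into a list of CPU numbers. The following Python sketch shows one way to do this; the function name cpus_from_mask is an assumption for the example, and the handling of comma-separated groups reflects how larger systems commonly print wide masks.

```python
def cpus_from_mask(mask):
    """Return the CPU numbers whose bits are set in a hexadecimal affinity mask."""
    # Wide masks may be printed as comma-separated 32-bit groups, most
    # significant group first; removing the commas yields one hex number.
    value = int(mask.strip().replace(",", ""), 16)
    return [cpu for cpu in range(value.bit_length()) if (value >> cpu) & 1]


print(cpus_from_mask("3"))         # bits 0 and 1 are set
print(cpus_from_mask("00000005"))  # bits 0 and 2 are set
```

For example, a mask of 3 selects CPUs 0 and 1, while a mask of 1 restricts the IRQ to CPU 0.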

For more information about the /proc/irq/ directory, refer to the following installed documentation:

/usr/share/doc/kernel-doc-<version>/Documentation/filesystems/proc.txt

5.3.7. /proc/net/

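This directory provides a comprehensive look at various networking parameters and statistics. Below is a partial list of the contents of the /proc/net/ directory: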

- arp: Lists the kernel's ARP table. This file is particularly useful for connecting a hardware address to an IP address on a system.
- atm/ directory: The files within this directory contain Asynchronous Transfer Mode (ATM) settings and statistics. This directory is primarily used with ATM networking and ADSL cards.
- dev: Lists the various network devices configured on the system, complete with transmit and receive statistics. This file displays the number of bytes each interface has sent and received, the number of packets inbound and outbound, the number of errors seen, the number of packets dropped, and more.
- dev_mcast: Lists Layer 2 multicast groups on which each device is listening.
- igmp: Lists the IP multicast addresses which this system joined.
- ip_conntrack: Lists tracked network connections for machines that are forwarding IP connections.
- ip_tables_names: Lists the types of iptables in use. This file is only present if iptables is active on the system and contains one or more of the following values: filter, mangle, or nat.
- ip_mr_cache: Lists the multicast routing cache.
- ip_mr_vif: Lists multicast virtual interfaces.
- netstat: Contains a broad yet detailed collection of networking statistics, including TCP timeouts, SYN cookies sent and received, and much more.
- psched: Lists global packet scheduler parameters.
- raw: Lists raw device statistics.
- route: Lists the kernel's routing table.
- rt_cache: Contains the current routing cache.
- snmp: List of Simple Network Management Protocol (SNMP) data for various networking protocols in use.
- sockstat: Provides socket statistics.
- tcp: Contains detailed TCP socket information.
- tr_rif: Lists the token ring RIF routing table.
- udp: Contains detailed UDP socket information.
- unix: Lists UNIX domain sockets currently in use.
- wireless: Lists wireless interface data.
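As an illustration of how the dev file in the list above can be consumed, the following Python sketch extracts the received and transmitted byte counters for each interface. The function name parse_net_dev and the sample text are assumptions for the example; the sample values are made up and only follow the usual layout of two header lines followed by one line per interface.

```python
def parse_net_dev(text):
    """Map each interface name to a (bytes received, bytes transmitted) tuple."""
    counters = {}
    for line in text.splitlines()[2:]:  # the first two lines are column headers
        name, sep, stats = line.partition(":")
        if not sep:
            continue
        values = stats.split()
        # Column 0 holds received bytes; column 8 holds transmitted bytes.
        counters[name.strip()] = (int(values[0]), int(values[8]))
    return counters


# Illustrative input only (not captured from a real system).
sample = (
    "Inter-|   Receive                                      |  Transmit\n"
    " face |bytes packets errs drop fifo frame compressed multicast"
    "|bytes packets errs drop fifo colls carrier compressed\n"
    "    lo:   1200   10 0 0 0 0 0 0   1200   10 0 0 0 0 0 0\n"
    "  eth0: 987654 4321 0 0 0 0 0 0 123456 2100 0 0 0 0 0 0\n"
)
print(parse_net_dev(sample))
```

Splitting on the colon rather than on whitespace keeps the parser working when a large counter is printed directly after the interface name with no space in between.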

5.3.8. /proc/scsi/

This directory is analogous to the /proc/ide/ directory, but it is for connected SCSI devices.

The primary file in this directory is /proc/scsi/scsi, which contains a list of every recognized SCSI device. From this listing, the type of device, as well as the model name, vendor, SCSI channel, and ID data are available.

Each SCSI driver used by the system has its own directory within /proc/scsi/, which contains files specific to each SCSI controller using that driver. From the previous example, aic7xxx/ and megaraid/ directories are present, since two drivers are in use. The files in each of the directories typically contain an I/O address range, IRQ information, and statistics for the SCSI controller using that driver. Each controller can report a different type and amount of information. The Adaptec AIC-7880 Ultra SCSI host adapter's file in this example system produces the following output:

5.3.9. /proc/sys/

The /proc/sys/ directory is different from others in /proc/ because it not only provides information about the system but also allows the system administrator to immediately enable and disable kernel features.

Warning

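Use caution when changing settings on a production system using the various files in the /proc/sys/ directory. Changing the wrong setting may render the kernel unstable, requiring a system reboot.

For this reason, be sure the options are valid for that file before attempting to change any value in /proc/sys/.

A good way to determine whether a particular file can be configured, or whether it is only designed to provide information, is to list it with the -l option at the shell prompt. If the file is writable, it may be used to configure the kernel. For example, a partial listing of /proc/sys/fs looks like the following: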

-r--r--r-- 1 root root 0 May 10 16:14 dentry-state
-rw-r--r-- 1 root root 0 May 10 16:14 dir-notify-enable
-r--r--r-- 1 root root 0 May 10 16:14 dquot-nr
-rw-r--r-- 1 root root 0 May 10 16:14 file-max
-r--r--r-- 1 root root 0 May 10 16:14 file-nr

In this listing, the files dir-notify-enable and file-max can be written to and, therefore, can be used to configure the kernel. The other files only provide feedback on current settings.
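The same check can be scripted by inspecting the permission bits of each file instead of reading a listing by eye. The following Python sketch is one possible approach; the function name writable_entries is an assumption for the example.

```python
import os
import stat


def writable_entries(directory):
    """Return the names of regular files in `directory` whose owner-write bit is set."""
    names = []
    for name in sorted(os.listdir(directory)):
        mode = os.stat(os.path.join(directory, name)).st_mode
        if stat.S_ISREG(mode) and mode & stat.S_IWUSR:
            names.append(name)
    return names
```

Calling writable_entries("/proc/sys/fs") on a system like the one listed above would be expected to include dir-notify-enable and file-max, but not dentry-state, dquot-nr, or file-nr.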

Changing a value within a /proc/sys/ file is done by echoing the new value into the file. For example, to enable the System Request Key on a running kernel, type the command:

echo 1 > /proc/sys/kernel/sysrq

This changes the value for sysrq from 0 (off) to 1 (on).

A few /proc/sys/ configuration files contain more than one value. To correctly send new values to them, place a space character between each value passed with the echo command, as in this example:

echo 4 2 45 > /proc/sys/kernel/acct

Note

Any configuration changes made using the echo command disappear when the system is restarted. To make configuration changes take effect after the system is rebooted, refer to Section 5.4, “Using the sysctl Command”.
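The echo technique described above amounts to writing a single line of space-separated values to the file. A program can do the same thing directly, as in the following Python sketch; the function name write_values is an assumption for the example, and writing to a real /proc/sys/ file requires root privileges and the same caution as the echo command.

```python
def write_values(path, *values):
    """Write one or more values to a file as a single space-separated line."""
    with open(path, "w") as handle:
        handle.write(" ".join(str(value) for value in values) + "\n")
```

For instance, write_values("/proc/sys/kernel/acct", 4, 2, 45) would mirror the echo 4 2 45 example, and the change would likewise be lost at the next reboot.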

The /proc/sys/ directory contains several subdirectories controlling different aspects of a running kernel.

5.3.9.1. /proc/sys/dev/

This directory provides parameters for particular devices on the system. Most systems have at least two directories, cdrom/ and raid/. Customized kernels can have other directories, such as parport/, which provides the ability to share one parallel port between multiple device drivers.

The cdrom/ directory contains a file called info, which reveals a number of important CD-ROM parameters:

Various files in /proc/sys/dev/cdrom, such as autoclose and checkmedia, can be used to control the system's CD-ROM. Use the echo command to enable or disable these features.

If RAID support is compiled into the kernel, the /proc/sys/dev/raid/ directory becomes available with at least two files in it: speed_limit_min and speed_limit_max. These settings determine the acceleration of RAID devices for I/O intensive tasks, such as resyncing the disks.

5.3.9.2. /proc/sys/fs/

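This directory contains options and information concerning various aspects of the file system. The binfmt_misc/ directory is used to provide kernel support for miscellaneous binary formats.

The important files in /proc/sys/fs/ include: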

- dentry-state: Provides the status of the directory cache. The file looks similar to the following:

  57411 52939 45 0 0 0

  The first number reveals the total number of directory cache entries, while the second number displays the number of unused entries. The third number tells the number of seconds between when a directory has been freed and when it can be reclaimed, and the fourth measures the pages currently requested by the system. The last two numbers are not used and display only zeros.
- dquot-nr: Lists the maximum number of cached disk quota entries.
- file-max: Lists the maximum number of file handles that the kernel allocates. Raising the value in this file can resolve errors caused by a lack of available file handles.
- file-nr: Lists the number of allocated file handles, used file handles, and the maximum number of file handles.
- overflowgid and overflowuid: Defines the fixed group ID and user ID, respectively, for use with file systems that only support 16-bit group and user IDs.
- super-max: Controls the maximum number of superblocks available.
- super-nr: Displays the current number of superblocks in use.
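The six columns of dentry-state described above can be given names when read from a script. The following Python sketch uses the sample line from this section; the field names (total, unused, age_limit, want_pages, and the two unused placeholders) are labels chosen for the example, not names taken from this guide.

```python
from collections import namedtuple

# Illustrative labels for the six columns, in the order described above.
DentryState = namedtuple(
    "DentryState", "total unused age_limit want_pages unused1 unused2"
)


def parse_dentry_state(text):
    """Convert one line of dentry-state output into a named tuple of integers."""
    return DentryState(*(int(field) for field in text.split()))


state = parse_dentry_state("57411 52939 45 0 0 0")
print(state.total - state.unused)  # directory cache entries currently in use
```

Subtracting the second column from the first gives the number of directory cache entries in active use at the time the file was read.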

5.3.9.3. /proc/sys/kernel/

- acct: Controls the suspension of process accounting based on the percentage of free space available on the file system containing the log. By default, the file looks like the following:

  4 2 30

  The first value dictates the percentage of free space required for logging to resume, while the second value sets the threshold percentage of free space when logging is suspended. The third value sets the interval, in seconds, that the kernel polls the file system to see if logging should be suspended or resumed.
- cap-bound: Controls the capability bounding settings, which provides a list of capabilities for any process on the system. If a capability is not listed here, then no process, no matter how privileged, can do it. The idea is to make the system more secure by ensuring that certain things cannot happen, at least beyond a certain point in the boot process. For a valid list of values for this virtual file, refer to the following installed documentation: /lib/modules/<kernel-version>/build/include/linux/capability.h.
- ctrl-alt-del: Controls whether Ctrl+Alt+Delete gracefully restarts the computer using init (0) or forces an immediate reboot without syncing the dirty buffers to disk (1).
- domainname: Configures the system domain name, such as example.com.
- exec-shield: Configures the Exec Shield feature of the kernel. Exec Shield provides protection against certain types of buffer overflow attacks. There are two possible values for this virtual file:
  - 0: Disables Exec Shield.
  - 1: Enables Exec Shield. This is the default value.

Important

If a system is running security-sensitive applications that were started while Exec Shield was disabled, these applications must be restarted when Exec Shield is enabled in order for Exec Shield to take effect.

- exec-shield-randomize: Enables location randomization of various items in memory. This helps deter potential attackers from locating programs and daemons in memory. Each time a program or daemon starts, it is put into a different memory location, never in a static or absolute memory address. There are two possible values for this virtual file:
  - 0: Disables randomization of Exec Shield. This may be useful for application debugging purposes.
  - 1: Enables randomization of Exec Shield. This is the default value. Note: The exec-shield file must also be set to 1 for exec-shield-randomize to be effective.