Virtualization Guide

Virtualization Documentation

Abstract

Preface

1. About this book

1.1. Overview

- Requirements and Limitations

- Installation

- Configuration

- Administration

- Storage

- Reference

- Tips and Tricks

- Troubleshooting

2. What is Virtualization?

3. Types of Virtualization

3.1. Full Virtualization

3.2. Para-Virtualization

3.3. Para-virtualized drivers

4. How CIOs should think about virtualization

In essence, virtualization increases flexibility by decoupling an operating system and the services and applications supported by that system from a specific physical hardware platform. It allows the establishment of multiple virtual environments on a shared hardware platform.

Virtualization can also be used to lower costs. One obvious benefit comes from the consolidation of servers into a smaller set of more powerful hardware platforms running a collection of virtual environments. Not only can costs be reduced by reducing the amount of hardware and reducing the amount of unused capacity, but application performance can actually be improved since the virtual guests execute on more powerful hardware.

Regardless of the specific needs of your enterprise, you should be investigating virtualization as part of your system and application portfolio as the technology is likely to become pervasive. We expect operating system vendors to include virtualization as a standard component, hardware vendors to build virtual capabilities into their platforms, and virtualization vendors to expand the scope of their offerings.

Part I. Requirements and Limitations for Virtualization with Red Hat Enterprise Linux

System requirements, support restrictions and limitations

Chapter 1. System requirements

Minimum system requirements

- 6GB free disk space.

- 2GB of RAM.

Recommended system requirements

- 6GB plus the required disk space recommended by the guest operating system per guest. For most operating systems more than 6GB of disk space is recommended.

- One processor core or thread for each virtualized CPU and one for the hypervisor.

- 2GB of RAM plus additional RAM for guests.

Note

Para-virtualized guests require a Red Hat Enterprise Linux 5 installation tree available over the network using the NFS, FTP or HTTP protocols.

Full virtualization with the Xen Hypervisor requires:

- an Intel processor with the Intel VT extensions, or

- an AMD processor with the AMD-V extensions, or

- an Intel Itanium processor.

The KVM hypervisor requires:

- an Intel processor with the Intel VT and the Intel 64 extensions, or

- an AMD processor with the AMD-V and the AMD64 extensions.
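The processor requirements above can be checked from the CPU flags that Linux reports. The following is a minimal sketch, not an official tool: the function name `virt_cpu_support` is hypothetical, and it assumes the usual flag names `vmx` (Intel VT), `svm` (AMD-V) and `lm` (64-bit long mode, that is Intel 64 or AMD64). It does not cover Itanium and does not detect extensions that are disabled in firmware.

```shell
#!/bin/sh
# virt_cpu_support [CPUINFO_FILE]
# Reads a cpuinfo-style file (default: /proc/cpuinfo) and prints whether the
# flags allow Xen full virtualization and KVM.
virt_cpu_support() {
    cpuinfo=${1:-/proc/cpuinfo}
    hw=no   # hardware virtualization extensions (vmx or svm)
    lm=no   # 64-bit extensions
    if grep -E -q '^flags[[:space:]]*:.*[[:space:]](vmx|svm)([[:space:]]|$)' "$cpuinfo"; then
        hw=yes
    fi
    if grep -E -q '^flags[[:space:]]*:.*[[:space:]]lm([[:space:]]|$)' "$cpuinfo"; then
        lm=yes
    fi
    # KVM needs both the virtualization and the 64-bit extensions.
    kvm=no
    if [ "$hw" = yes ] && [ "$lm" = yes ]; then
        kvm=yes
    fi
    echo "xen-fv=$hw kvm=$kvm"
}
```

Calling `virt_cpu_support` with no argument inspects the running host; passing a saved copy of another machine's `/proc/cpuinfo` checks that machine instead.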

The supported guest storage methods are:

- Files on local storage

- Physical disk partitions

- Locally connected physical LUNs

- LVM partitions

- iSCSI and Fibre Channel based LUNs

Important

Guest image files are stored in the /var/lib/libvirt/images/ directory by default. If you choose to use a different directory you must label the new directory according to SELinux policy. See Section 19.2, “SELinux and virtualization” for details.

Chapter 2. Xen restrictions and support

- For host systems: http://www.redhat.com/products/enterprise-linux/server/compare.html

Note

Note

yum for more information.

Chapter 3. KVM restrictions and support

- For host systems: http://www.redhat.com/products/enterprise-linux/server/compare.html

Chapter 4. Hyper-V restrictions and support

4.1. Hyper-V drivers

- Hyper-V vmbus driver (hv_vmbus) - Provides the infrastructure for other Hyper-V drivers to communicate with the hypervisor.

- Utility driver (hv_utils) - Provides Hyper-V integration services such as shutdown, time synchronization, heartbeat and Key-Value Pair Exchange.

- Network driver (hv_netvsc) - Provides network performance improvements.

- Storage driver (hv_storvsc) - Increases performance when accessing storage (IDE and SCSI) devices.

- Mouse driver (hid_hyperv) - Improves user experience by allowing mouse focus changes for a virtualized guest.

- Clocksource driver - This driver provides a stable clock source for Red Hat Enterprise Linux 5.11 running within the Hyper-V platform.

Note

Chapter 5. Virtualization limitations

5.1. General limitations for virtualization

There is no support for converting Xen-based guests to KVM or KVM-based guests to Xen.

See the Red Hat Enterprise Linux Release Notes at https://access.redhat.com/site/documentation/ for your version. The Release Notes cover new features, known issues and limitations as they are updated or discovered.

You should test for the maximum anticipated system and network load before deploying heavy I/O applications such as database servers. Load testing and planning are important as virtualization performance can suffer under high I/O.

5.2. KVM limitations

- Constant TSC bit

- Systems without a Constant Time Stamp Counter require additional configuration. See Chapter 17, KVM guest timing management to determine whether you have a Constant Time Stamp Counter and what additional configuration may be required.

- Memory overcommit

- KVM supports memory overcommit and can store the memory of guests in swap space. A guest will run slower if it is swapped frequently. When Kernel SamePage Merging (KSM) is used, make sure that the swap size is equivalent to the size of the overcommit ratio.

- CPU overcommit

- No support exists for having more than 10 virtual CPUs per physical processor core. A CPU overcommit configuration exceeding this limitation is unsupported and can cause problems with some guests. Overcommitting CPUs has some risk and can lead to instability. See Section 33.4, “Overcommitting Resources” for tips and recommendations on overcommitting CPUs.

- Virtualized SCSI devices

- SCSI emulation is presently not supported. Virtualized SCSI devices are disabled in KVM.

- Virtualized IDE devices

- KVM is limited to a maximum of four virtualized (emulated) IDE devices per guest.

- Para-virtualized devices

- Para-virtualized devices, which use the virtio drivers, are PCI devices. Presently, guests are limited to a maximum of 32 PCI devices. Some PCI devices are critical for the guest to run and these devices cannot be removed. The default, required devices are:

- the host bridge,

- the ISA bridge and usb bridge (the usb and ISA bridges are the same device),

- the graphics card (using either the Cirrus or qxl driver), and

- the memory balloon device.

Hence, of the 32 available PCI devices for a guest, 4 are not removable. This means there are 28 PCI slots available for additional devices per guest. Every para-virtualized network or block device uses one slot. Each guest can use up to 28 additional devices made up of any combination of para-virtualized network, para-virtualized disk devices, or other PCI devices using VT-d.

- Migration limitations

- Live migration is only possible with CPUs from the same vendor (that is, Intel to Intel or AMD to AMD only). The No eXecution (NX) bit must be set to on or off for both CPUs for live migration. See Chapter 21, Xen live migration and Chapter 22, KVM live migration for more details on live migration.

- Storage limitations

- The host should not use disk labels to identify file systems in the /etc/fstab file, the initrd file or in the kernel command line. A security weakness exists if less privileged users or guests have write access to entire partitions or LVM volumes. Guests should not be given write access to whole disks or block devices (for example, /dev/sdb). Guests with access to block devices may be able to access other block devices on the system or modify volume labels which can be used to compromise the host system. Instead, you should use partitions (for example, /dev/sdb1) or LVM volumes.
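The PCI slot arithmetic described under para-virtualized devices (32 devices per guest, 4 of them required, one slot per additional device) can be sketched as a small helper. The function name `pci_slots_free` and its argument order are hypothetical; the constants come from the limits stated above.

```shell
#!/bin/sh
# pci_slots_free NET_DEVICES BLOCK_DEVICES [OTHER_PCI_DEVICES]
# Prints how many PCI slots remain for a KVM guest, or fails when the
# planned devices exceed the limit.
pci_slots_free() {
    total=32     # maximum PCI devices per guest
    reserved=4   # host bridge, ISA/USB bridge, graphics card, memory balloon
    used=$(( $1 + $2 + ${3:-0} ))
    free=$(( total - reserved - used ))
    if [ "$free" -lt 0 ]; then
        echo "over the PCI device limit by $(( 0 - free ))" >&2
        return 1
    fi
    echo "$free"
}
```

For example, a guest planned with 3 para-virtualized network devices and 2 para-virtualized disks leaves 23 slots: `pci_slots_free 3 2`.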

5.3. Xen limitations

Note

Xen host (dom0) limitations

- A limit of 254 para-virtualized block devices per host exists. The total number of block devices attached to guests cannot exceed 254.

Note

phy devices it can have if it has sufficient resources.

Xen Para-virtualization limitations

- For x86 guests, a maximum of 16GB memory per guest.

- For x86_64 guests, a maximum of 168GB memory per guest.

- A maximum of 254 devices per guest.

- A maximum of 15 network devices per guest.

Xen full virtualization limitations

- For x86 guests, a maximum of 16GB memory per guest.

- A maximum of four virtualized (emulated) IDE devices per guest. Devices using the para-virtualized drivers for fully-virtualized guests do not have this limitation.

- Virtualized (emulated) IDE devices are limited by the total number of loopback devices supported by the system. The default number of available loopback devices on Red Hat Enterprise Linux 5.11 is 8. That is, by default, all guests on the system can each have no more than 8 virtualized (emulated) IDE devices. For more information on loopback devices, their creation and use, see the Red Hat Knowledge Solution 1721.

Note

The number of available loopback devices can be raised by modifying the kernel limit. In the /etc/modprobe.conf file, add the following line:

options loop max_loop=64

Reboot the machine or run the following commands to update the kernel with this new limit:

# rmmod loop

# modprobe loop

- A limit of 254 para-virtualized block devices per host. The total number of block devices (using the tap:aio driver) attached to guests cannot exceed 254 devices.

- A maximum of 254 block devices using the para-virtualized drivers per guest.

- A maximum of 15 network devices per guest.

- A maximum of 15 virtualized SCSI devices per guest.

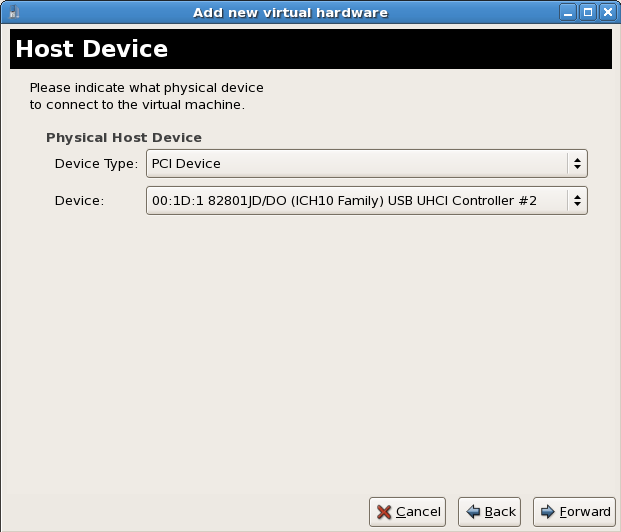

PCI passthrough limitations

- PCI passthrough (attaching PCI devices to guests) is presently only supported on the following architectures:

- 32 bit (x86) systems.

- Intel 64 systems.

- Intel Itanium 2 systems.

5.4. Application limitations

- kdump server

- netdump server

Part II. Installation

Virtualization installation topics

Chapter 6. Installing the virtualization packages

yum command.

6.1. Installing Xen with a new Red Hat Enterprise Linux installation

Note

- Start an interactive Red Hat Enterprise Linux installation from the Red Hat Enterprise Linux Installation CD-ROM, DVD or PXE.

- You must enter a valid installation number when prompted to receive access to the virtualization packages. Installation numbers can be obtained from Red Hat Customer Service.

- Complete all steps until you see the package selection step.

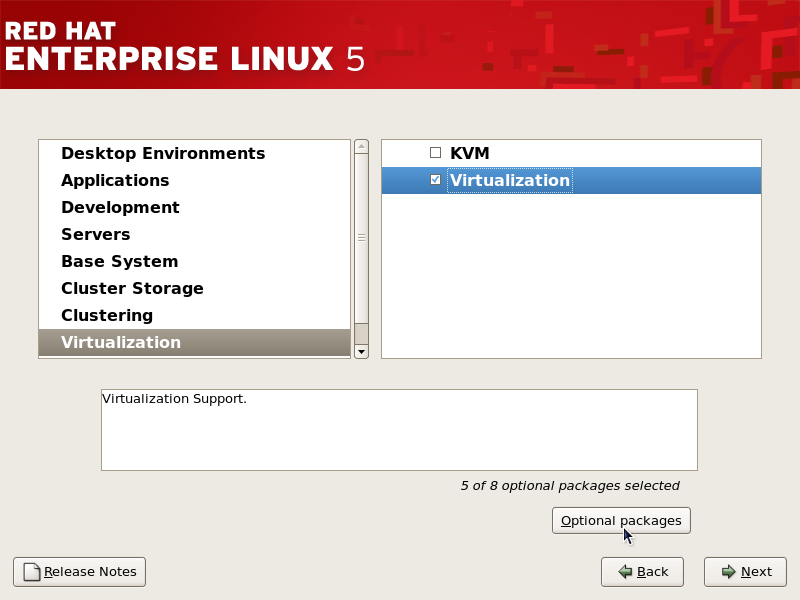

Select the Virtualization package group and the Customize Now radio button.

- Select the Virtualization package group. The Virtualization package group selects the Xen hypervisor, virt-manager, libvirt and virt-viewer and all dependencies for installation.

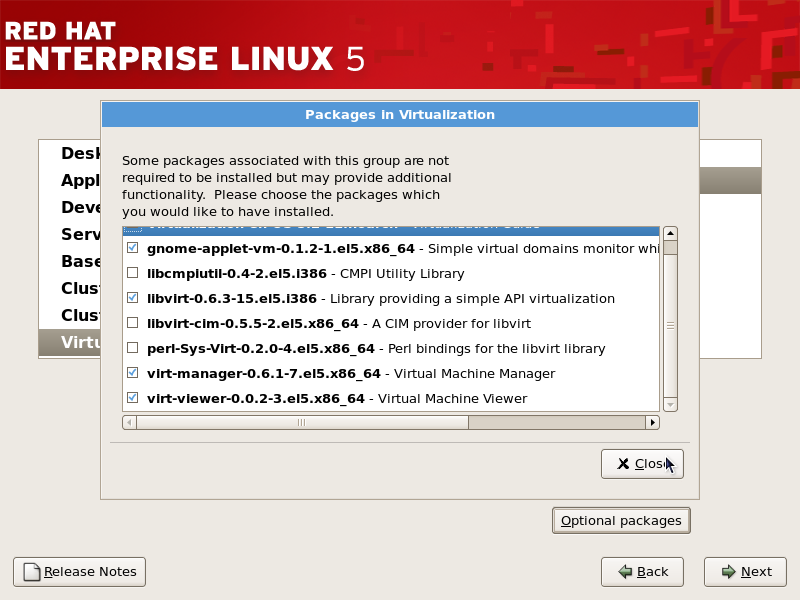

Customize the packages (if required)

Customize the Virtualization group if you require other virtualization packages. Press the Close button then the Forward button to continue the installation.


Important

This section describes how to use a Kickstart file to install Red Hat Enterprise Linux with the Xen hypervisor packages. Kickstart files allow for large, automated installations without a user manually installing each individual system. The steps in this section will assist you in creating and using a Kickstart file to install Red Hat Enterprise Linux with the virtualization packages.

In the %packages section of your Kickstart file, append the following package group:

%packages
@xen

Note

xen-ia64-guest-firmware


6.2. Installing Xen packages on an existing Red Hat Enterprise Linux system

Your machines must be registered on your Red Hat account to receive packages and updates. To register an unregistered installation of Red Hat Enterprise Linux, run the subscription-manager register command and follow the prompts.

yum

To use virtualization on Red Hat Enterprise Linux you need the xen and kernel-xen packages. The xen package contains the hypervisor and basic virtualization tools. The kernel-xen package contains a modified Linux kernel which runs as a virtual machine guest on the hypervisor.

To install the xen and kernel-xen packages, run:

# yum install xen kernel-xen

Recommended virtualization packages:

- python-virtinst

- Provides the virt-install command for creating virtual machines.

- libvirt

- libvirt is an API library for interacting with hypervisors. libvirt uses the xm virtualization framework and the virsh command line tool to manage and control virtual machines.

- libvirt-python

- The libvirt-python package contains a module that permits applications written in the Python programming language to use the interface supplied by the libvirt API.

- virt-manager

- virt-manager, also known as Virtual Machine Manager, provides a graphical tool for administering virtual machines. It uses the libvirt library as the management API.

# yum install virt-manager libvirt libvirt-python python-virtinst

6.3. Installing KVM with a new Red Hat Enterprise Linux installation

Note

Important

- Start an interactive Red Hat Enterprise Linux installation from the Red Hat Enterprise Linux Installation CD-ROM, DVD or PXE.

- You must enter a valid installation number when prompted to receive access to the virtualization and other Advanced Platform packages.

- Complete all steps up to the package selection step.

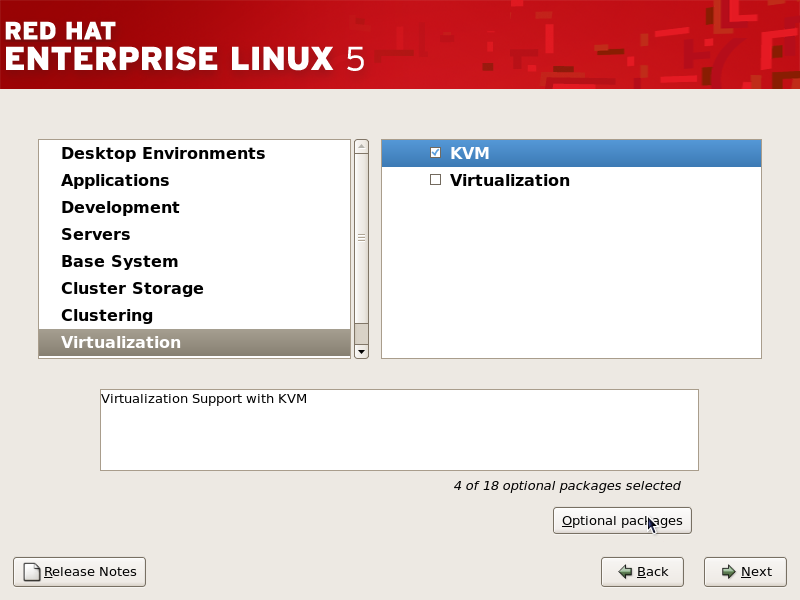

Select the Virtualization package group and the Customize Now radio button.

- Select the KVM package group. Deselect the Virtualization package group. This selects the KVM hypervisor, virt-manager, libvirt and virt-viewer for installation.

Customize the packages (if required)

Customize the Virtualization group if you require other virtualization packages. Press the Close button then the Forward button to continue the installation.


Important

This section describes how to use a Kickstart file to install Red Hat Enterprise Linux with the KVM hypervisor packages. Kickstart files allow for large, automated installations without a user manually installing each individual system. The steps in this section will assist you in creating and using a Kickstart file to install Red Hat Enterprise Linux with the virtualization packages.

In the %packages section of your Kickstart file, append the following package group:

%packages
@kvm

6.4. Installing KVM packages on an existing Red Hat Enterprise Linux system

This section describes how to enable entitlements in your Red Hat account for the virtualization packages. You need these entitlements enabled to install and update the virtualization packages on Red Hat Enterprise Linux. You require a valid Red Hat account in order to install virtualization packages on Red Hat Enterprise Linux.

yum

To use virtualization on Red Hat Enterprise Linux you require the kvm package. The kvm package contains the KVM kernel module providing the KVM hypervisor on the default Red Hat Enterprise Linux kernel.

To install the kvm package, run:

# yum install kvm

Recommended virtualization packages:

- python-virtinst

- Provides the virt-install command for creating virtual machines.

- libvirt

- libvirt is an API library for interacting with hypervisors. libvirt uses the xm virtualization framework and the virsh command line tool to manage and control virtual machines.

- libvirt-python

- The libvirt-python package contains a module that permits applications written in the Python programming language to use the interface supplied by the libvirt API.

- virt-manager

- virt-manager, also known as Virtual Machine Manager, provides a graphical tool for administering virtual machines. It uses the libvirt library as the management API.

# yum install virt-manager libvirt libvirt-python python-virtinst

Chapter 7. Guest installation overview

virt-install. Both methods are covered by this chapter.

7.1. Creating guests with virt-install

You can use the virt-install command to create guests from the command line. virt-install is used either interactively or as part of a script to automate the creation of virtual machines. Using virt-install with Kickstart files allows for unattended installation of virtual machines.

The virt-install tool provides a number of options that can be passed on the command line. To see a complete list of options run:

$ virt-install --help

The virt-install man page also documents each command option and important variables.

qemu-img is a related command which may be used before virt-install to configure storage options.

--vnc option which opens a graphical window for the guest's installation.

Example 7.1. Using virt-install with KVM to create a Red Hat Enterprise Linux 3 guest

rhel3support, from a CD-ROM, with virtual networking and with a 5 GB file-based block device image. This example uses the KVM hypervisor.

Example 7.2. Using virt-install to create a Fedora 11 guest

# virt-install --name fedora11 --ram 512 --file=/var/lib/libvirt/images/fedora11.img \
--file-size=3 --vnc --cdrom=/var/lib/libvirt/images/fedora11.iso

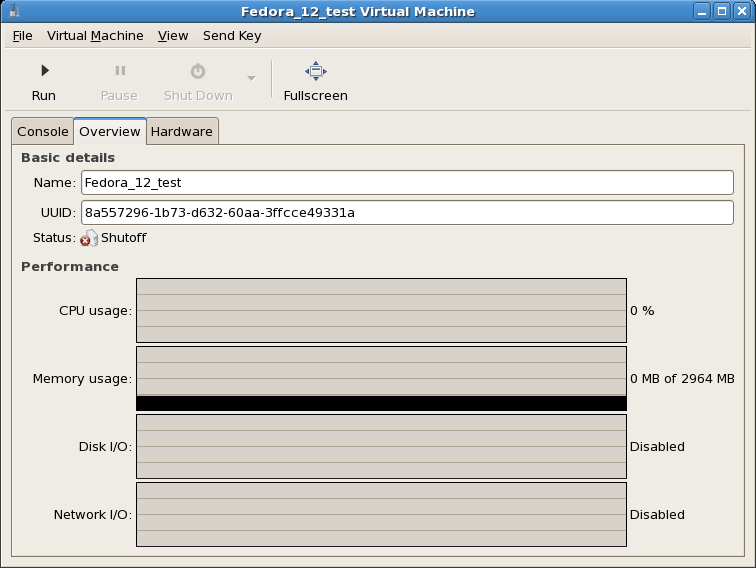

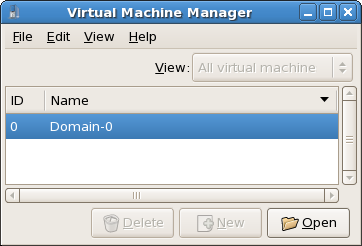

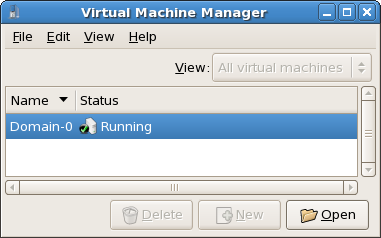

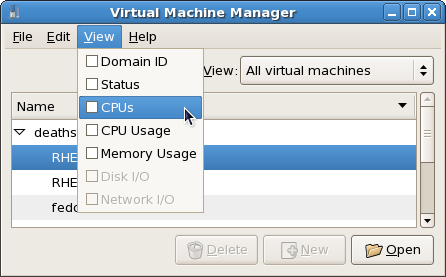

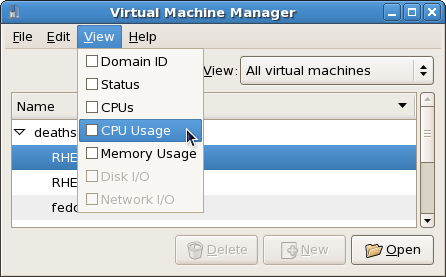

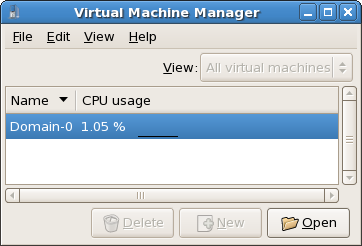

7.2. Creating guests with virt-manager

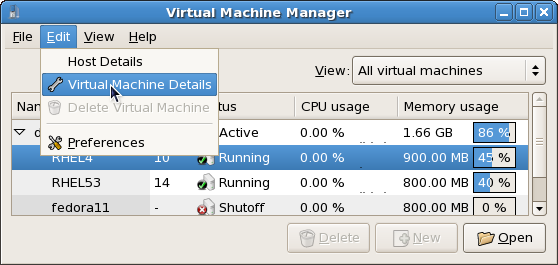

virt-manager, also known as Virtual Machine Manager, is a graphical tool for creating and managing guests.

Procedure 7.1. Creating a guest with virt-manager

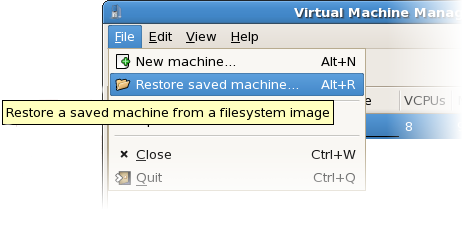

Open virt-manager

Start virt-manager. Launch the application from the menu and submenu. Alternatively, run the virt-manager command as root.

Optional: Open a remote hypervisor

Open the File -> Add Connection. The dialog box below appears. Select a hypervisor and click the button:

Create a new guest

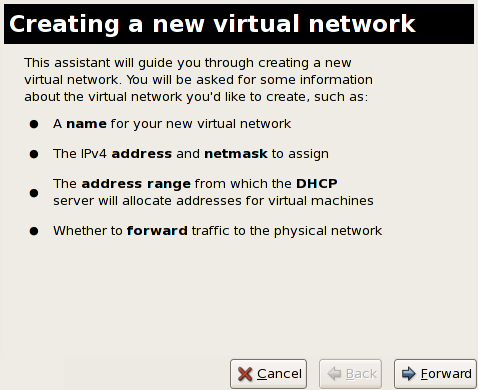

The virt-manager window allows you to create a new virtual machine. Click the button to create a new guest. This opens the wizard shown in the screenshot.

New guest wizard

The Create a new virtual machine window provides a summary of the information you must provide in order to create a virtual machine: Review the information for your installation and click the button.

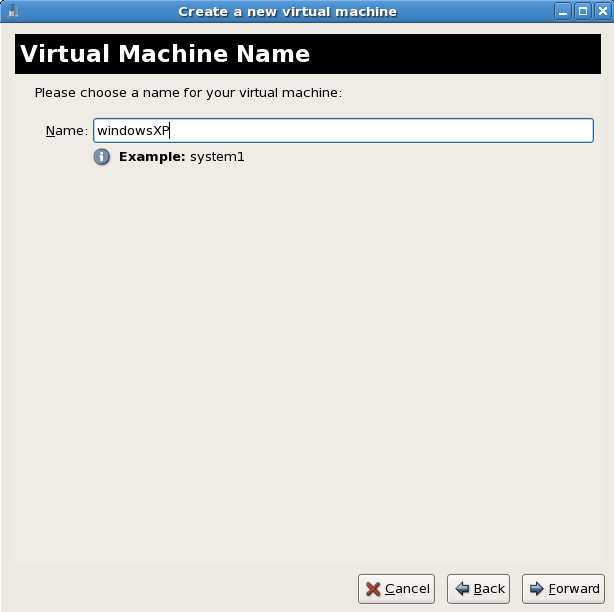

Name the virtual machine

Provide a name for your guest. Punctuation and whitespace characters are not permitted in versions before Red Hat Enterprise Linux 5.5. Red Hat Enterprise Linux 5.5 adds support for '_', '.' and '-' characters. Press to continue.

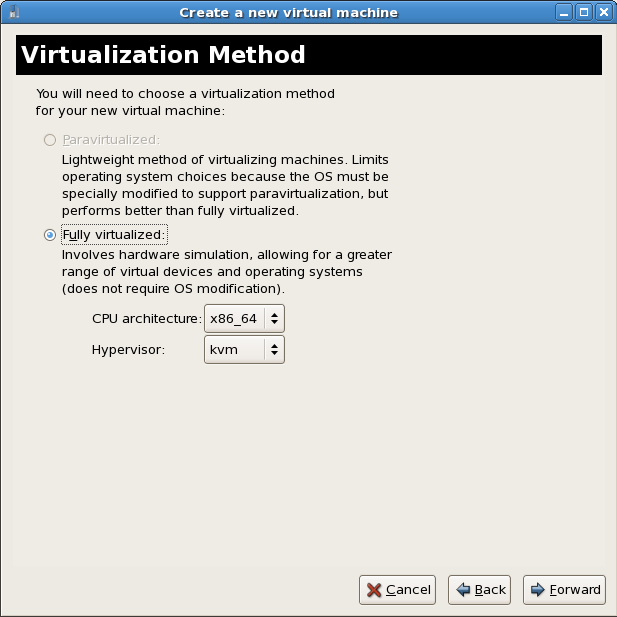

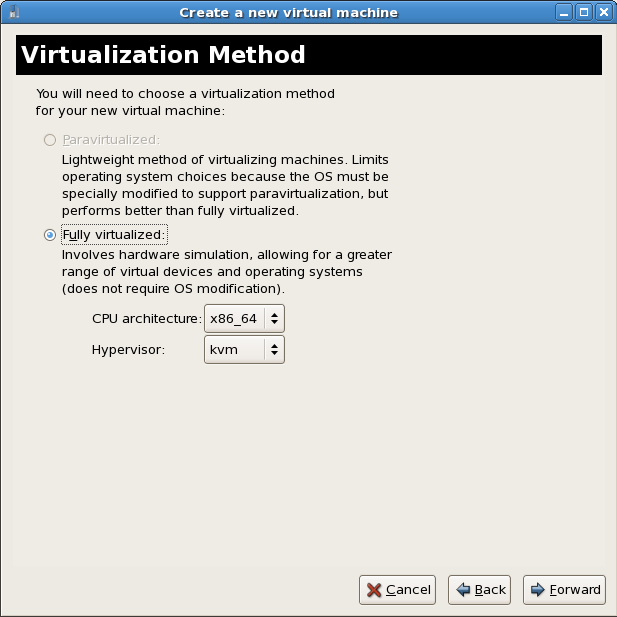

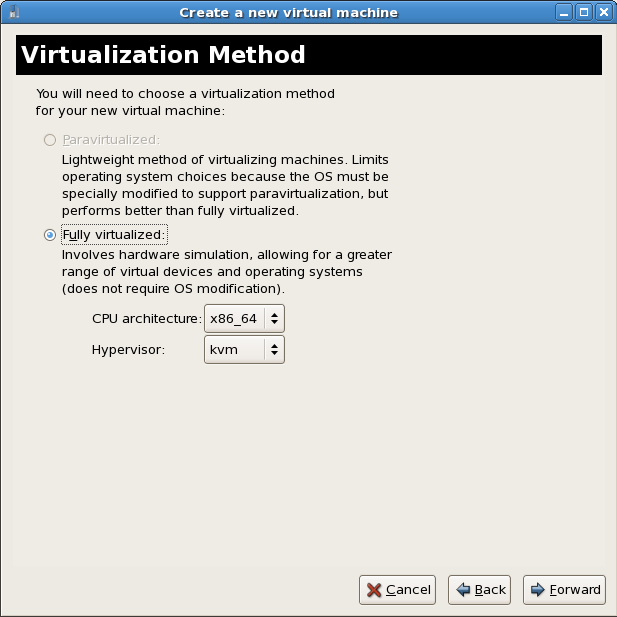

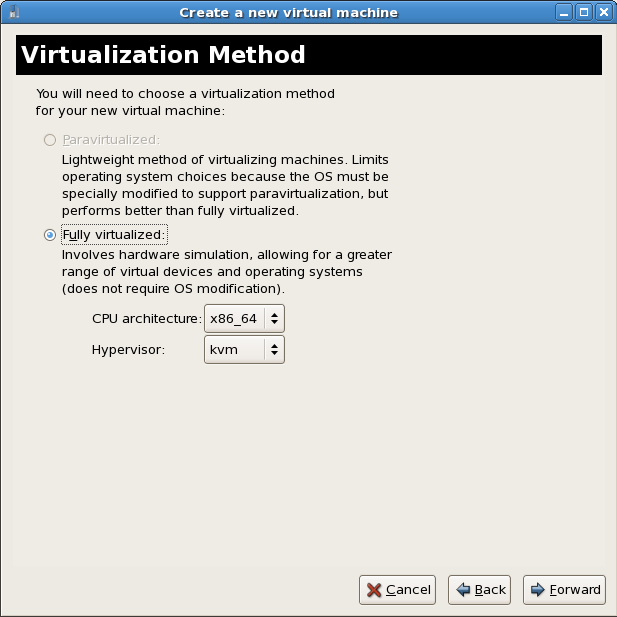

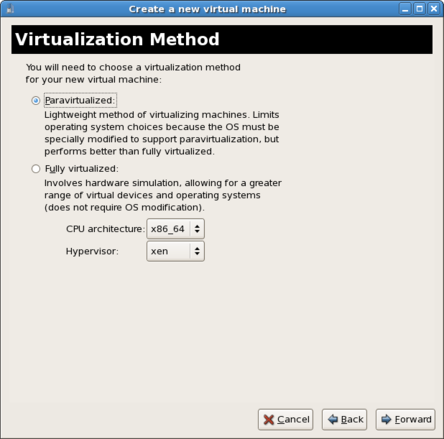

Choose virtualization method

The Choosing a virtualization method window appears. Choose between Para-virtualized or Fully virtualized.

Full virtualization requires a system with Intel® VT or AMD-V processor. If the virtualization extensions are not present the fully virtualized radio button or the Enable kernel/hardware acceleration will not be selectable. The Para-virtualized option will be grayed out if kernel-xen is not the kernel running presently.

If you connected to a KVM hypervisor, only full virtualization is available. Choose the virtualization type and click the button.

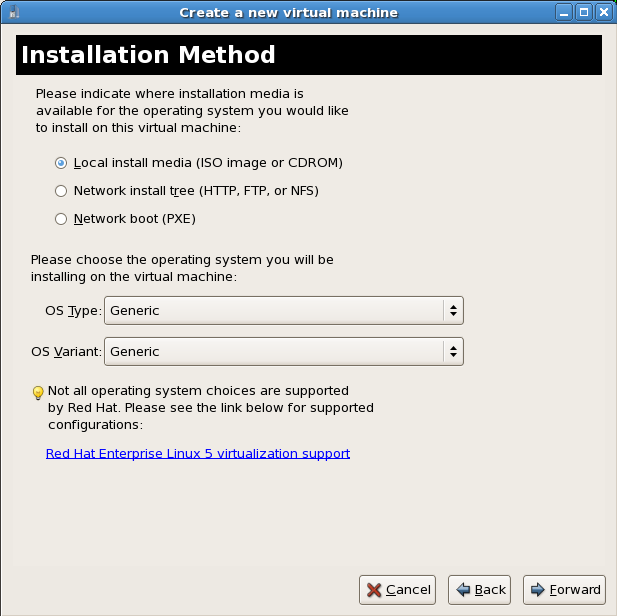

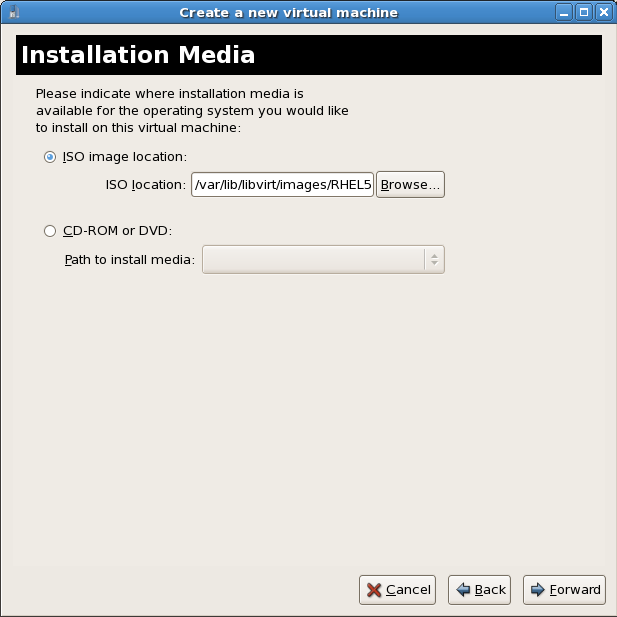

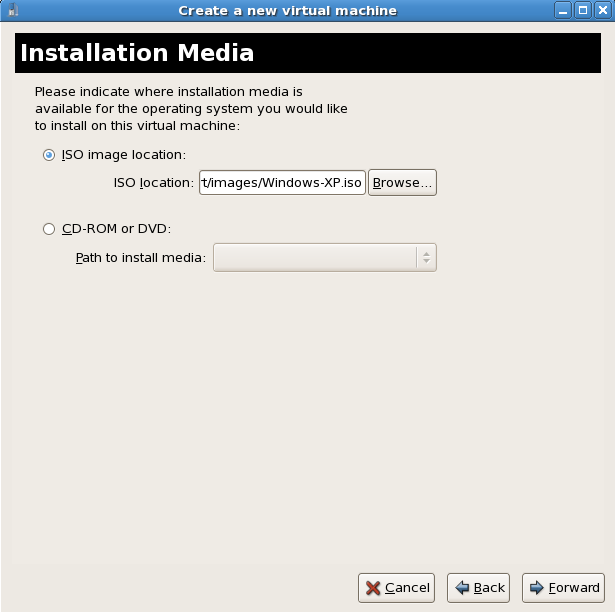

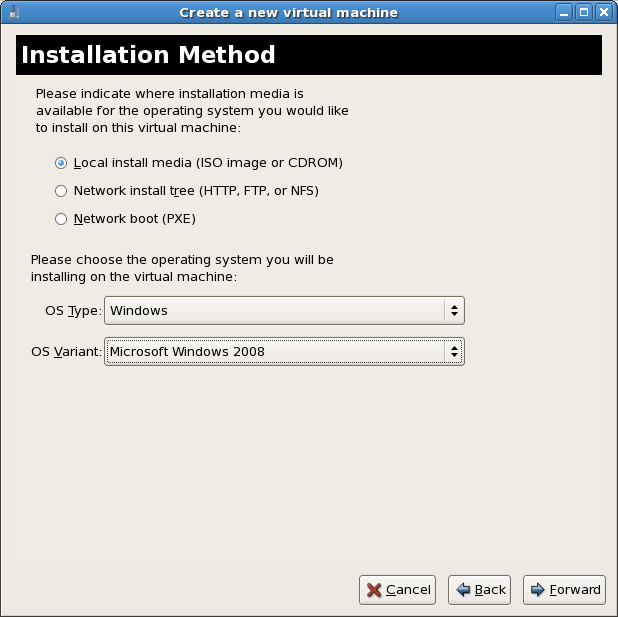

Select the installation method

The Installation Method window asks for the type of installation you selected.

Guests can be installed using one of the following methods:

- Local media installation

- This method uses a CD-ROM or DVD or an image of an installation CD-ROM or DVD (an .iso file).

- Network installation tree

- This method uses a mirrored Red Hat Enterprise Linux installation tree to install guests. The installation tree must be accessible using one of the following network protocols: HTTP, FTP or NFS. The network services and files can be hosted using network services on the host or another mirror.

- Network boot

- This method uses a Preboot eXecution Environment (PXE) server to install the guest. Setting up a PXE server is covered in the Red Hat Enterprise Linux Deployment Guide. Using this method requires a guest with a routable IP address or shared network device. See Chapter 10, Network Configuration for information on the required networking configuration for PXE installation.

Set the OS type and OS variant. Choose the installation method and click Forward to proceed.

Important

Para-virtualized guests must be installed from a network installation tree. The installation tree must be accessible using one of the following network protocols: HTTP, FTP or NFS. The installation media URL must contain a Red Hat Enterprise Linux installation tree.

Installation media selection

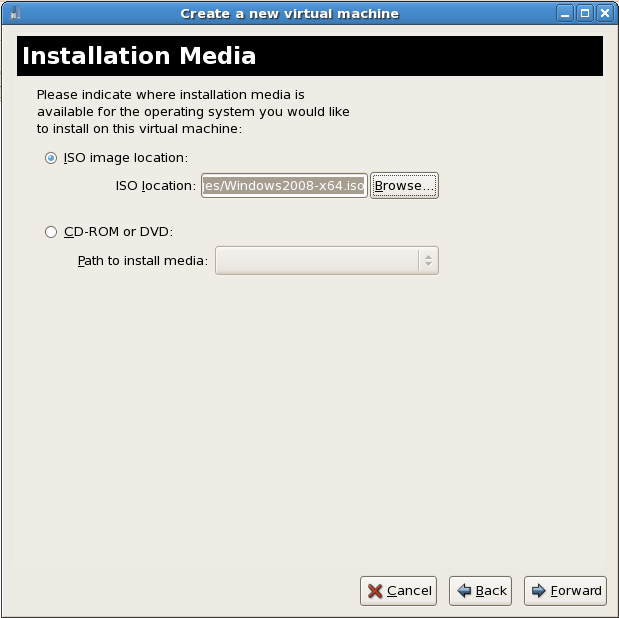

This window is dependent on what was selected in the previous step.

ISO image or physical media installation

If Local install media was selected in the previous step this screen is called Install Media. Select the location of an ISO image or select a DVD or CD-ROM from the dropdown list. Click the button to proceed.

Network install tree installation

If Network install tree was selected in the previous step this screen is called Installation Source. Network installation requires the address of a mirror of a Linux installation tree using NFS, FTP or HTTP. Optionally, a kickstart file can be specified to automate the installation. Kernel parameters can also be specified if required. Click the button to proceed.

Network boot (PXE)

PXE installation does not have an additional step.

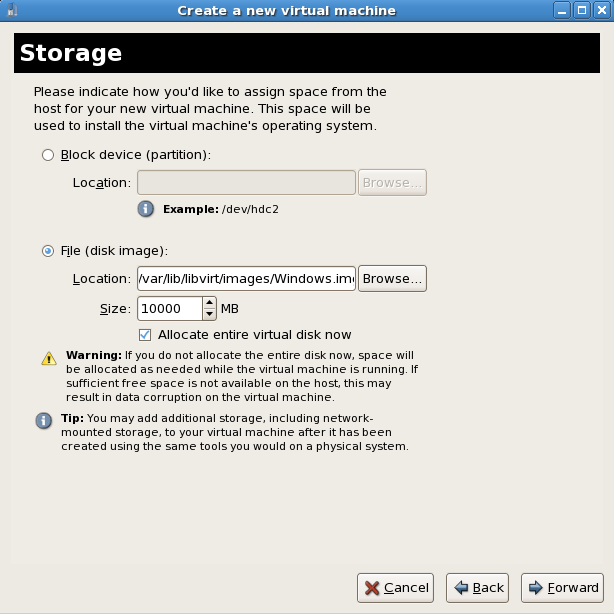

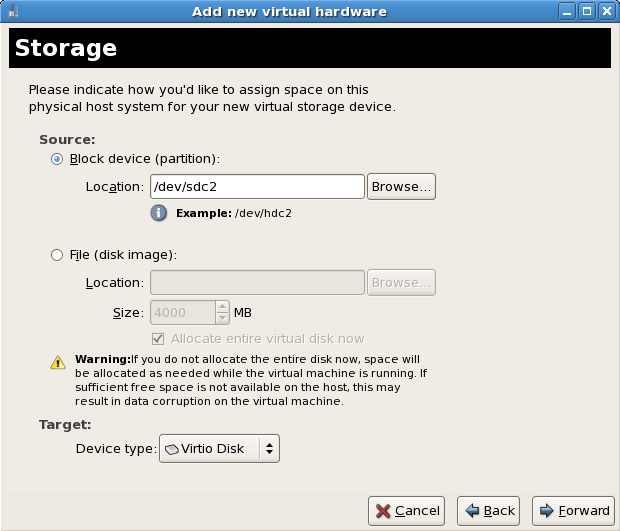

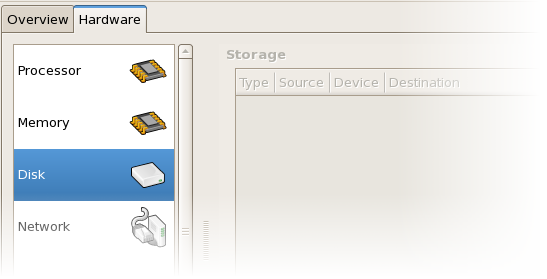

Storage setup

The Storage window displays. Choose a disk partition, LUN or create a file-based image for the guest storage.

All image files are stored in the /var/lib/libvirt/images/ directory by default. In the default configuration, other directory locations for file-based images are prohibited by SELinux. If you use a different directory you must label the new directory according to SELinux policy. See Section 19.2, “SELinux and virtualization” for details.

Your guest storage image should be larger than the combined size of the installation, any additional packages and applications, and the guest's swap file. The installation process will choose the size of the guest's swap based on the size of the RAM allocated to the guest.

Allocate extra space if the guest needs additional space for applications or other data. For example, web servers require additional space for log files. Choose the appropriate size for the guest on your selected storage type and click the button.
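The sizing guidance above is a simple sum, and a small helper makes the components explicit when planning several guests. This is a sketch only: the function name `guest_image_min_gb` is hypothetical, all values are whole gigabytes, and the swap size is passed in because this guide does not state the exact rule the installer uses to derive it from guest RAM.

```shell
#!/bin/sh
# guest_image_min_gb INSTALL_GB APPS_GB SWAP_GB [EXTRA_GB]
# Prints the smallest whole-GB image size that covers every component.
guest_image_min_gb() {
    install_gb=$1      # size of the base installation
    apps_gb=$2         # additional packages and applications
    swap_gb=$3         # guest swap, chosen by the installer from guest RAM
    extra_gb=${4:-0}   # optional headroom, for example web server logs
    echo $(( install_gb + apps_gb + swap_gb + extra_gb ))
}
```

A guest with a 3 GB installation, 1 GB of applications, 1 GB of swap and 2 GB reserved for logs would need at least `guest_image_min_gb 3 1 1 2` gigabytes.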

Note

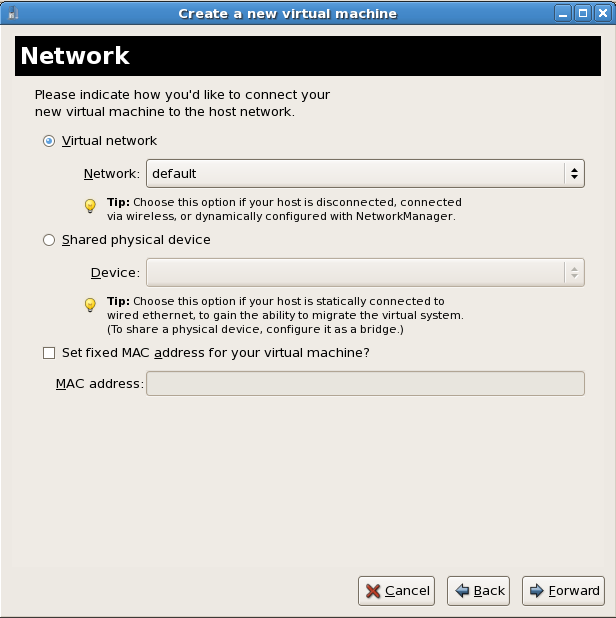

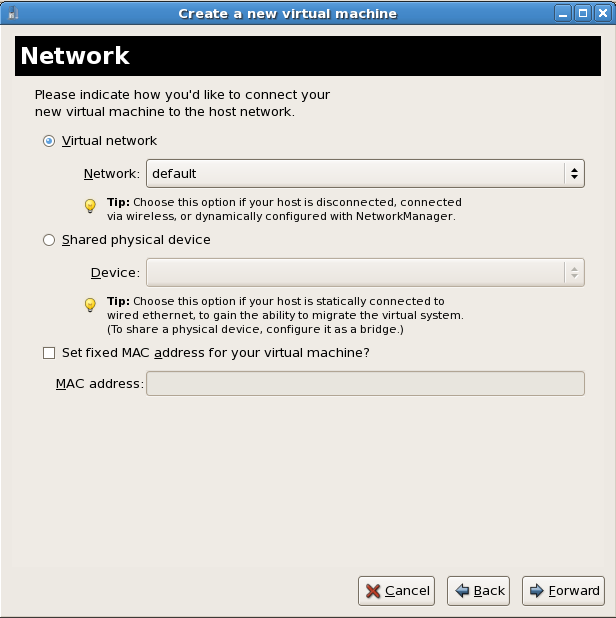

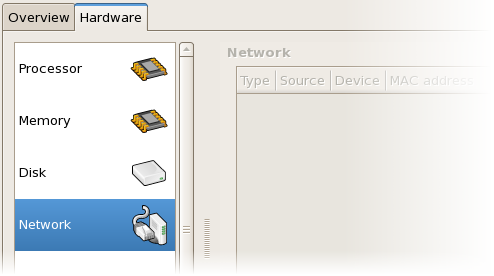

It is recommended that you use the default directory for virtual machine images, /var/lib/libvirt/images/. If you are using a different location (such as /images/ in this example) make sure it is labeled according to SELinux policy before you continue with the installation. See Section 19.2, “SELinux and virtualization” for details.

Network setup

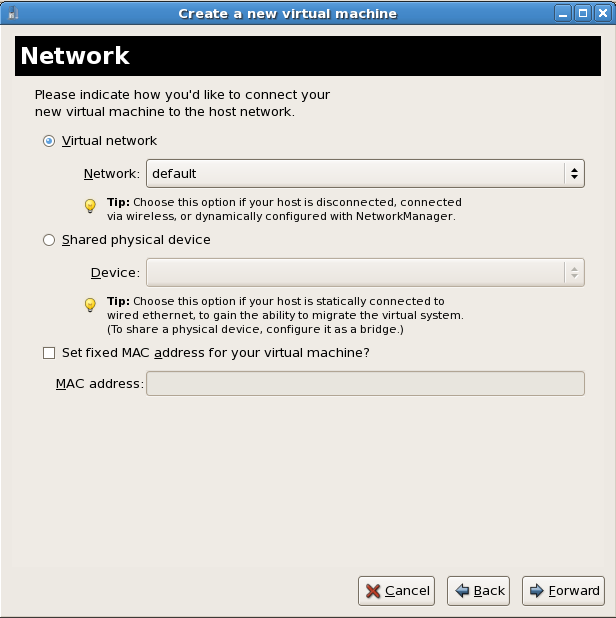

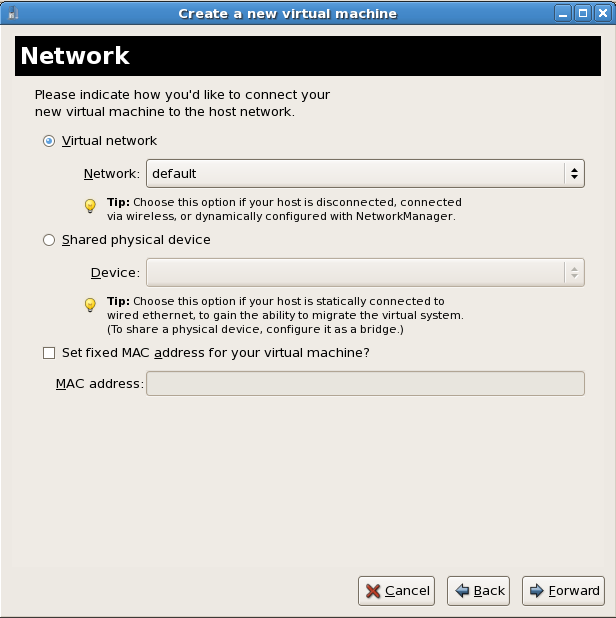

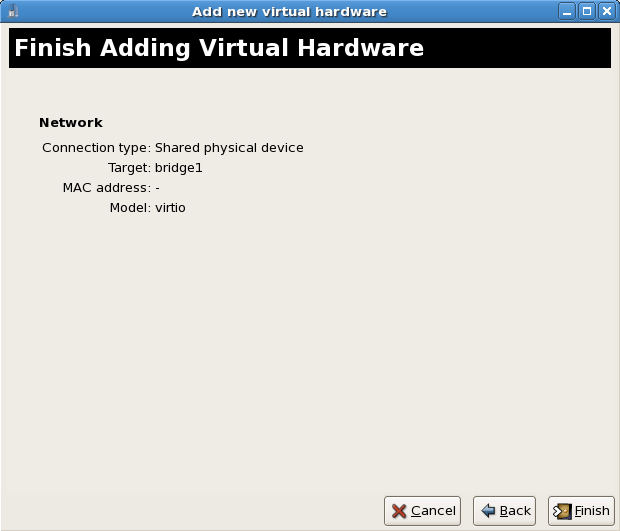

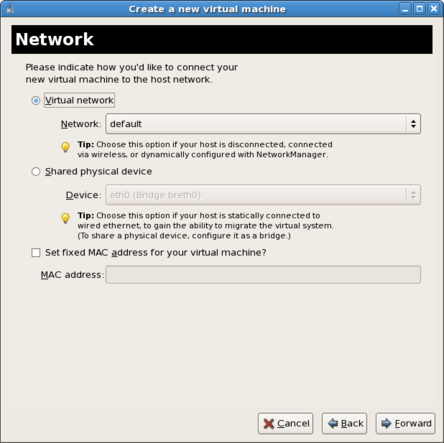

Select either Virtual network or Shared physical device.

The virtual network option uses Network Address Translation (NAT) to share the default network device with the guest. Use the virtual network option for wireless networks.

The shared physical device option uses a network bond to give the guest full access to a network device. Press to continue.

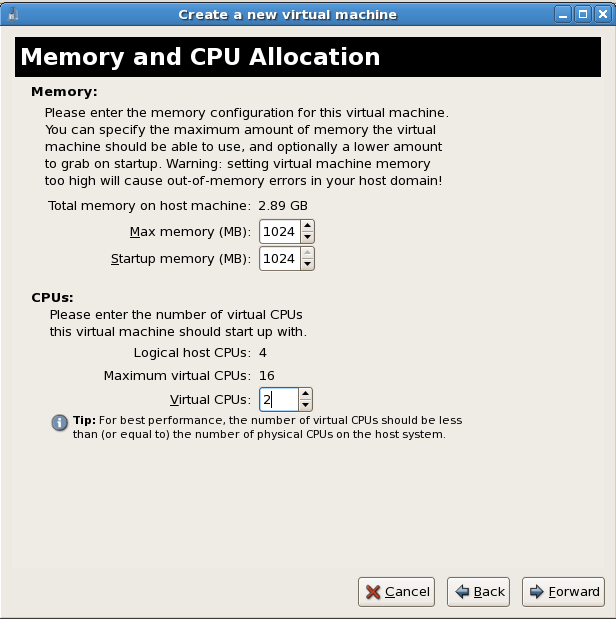

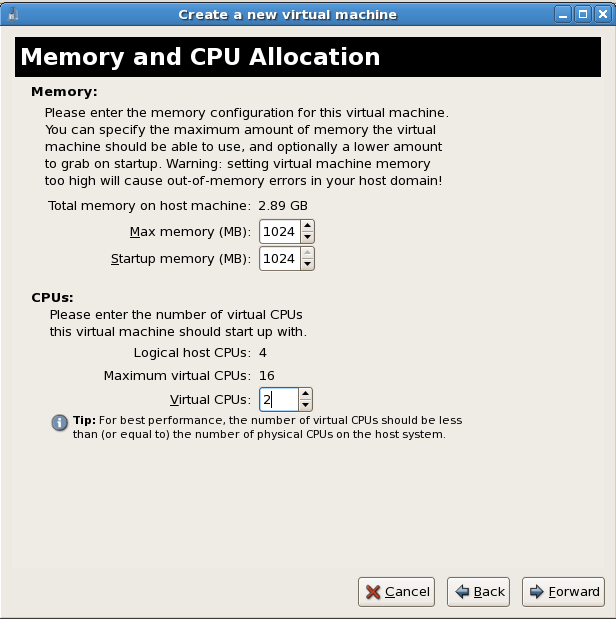

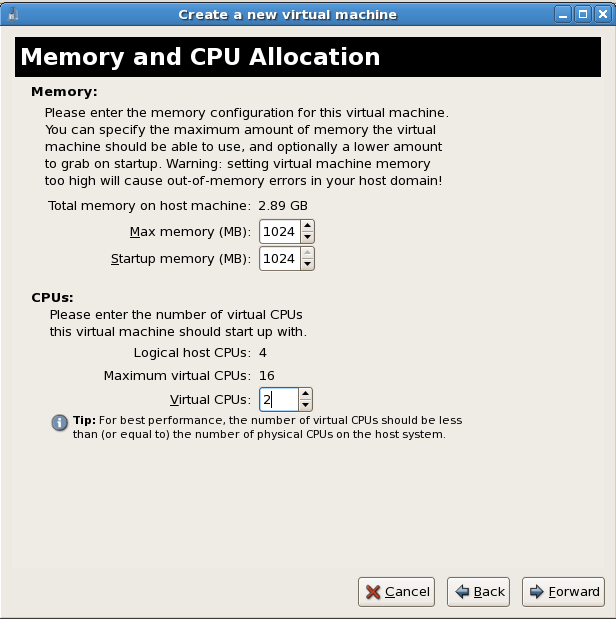

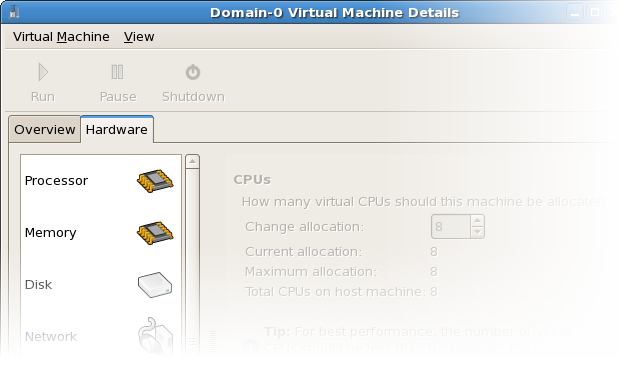

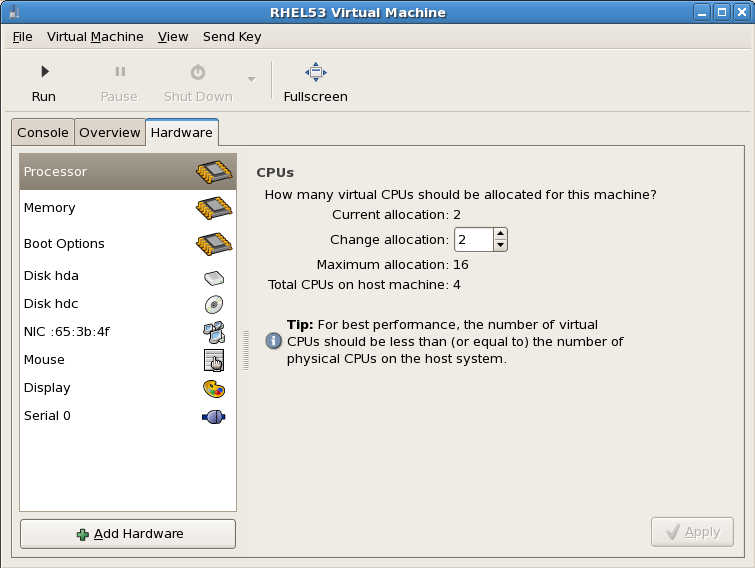

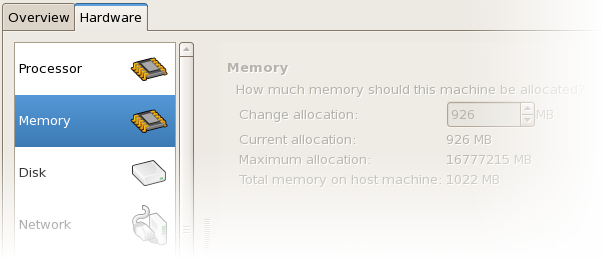

Memory and CPU allocation

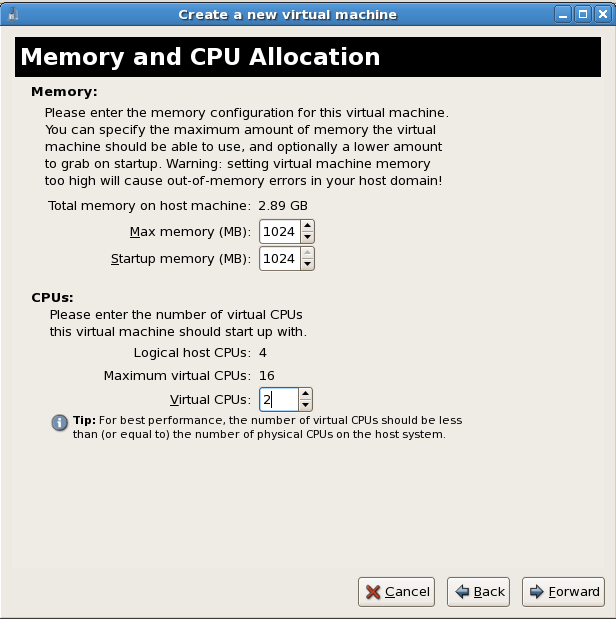

The Memory and CPU Allocation window displays. Choose appropriate values for the virtualized CPUs and RAM allocation. These values affect the host's and guest's performance.

Guests require sufficient physical memory (RAM) to run efficiently and effectively. Choose a memory value which suits your guest operating system and application requirements. Most operating systems require at least 512MB of RAM to work responsively. Remember, guests use physical RAM. Running too many guests or leaving insufficient memory for the host system results in significant usage of virtual memory. Virtual memory is significantly slower, causing degraded system performance and responsiveness. Ensure that you allocate sufficient memory for all guests and the host to operate effectively.

Assign sufficient virtual CPUs for the guest. If the guest runs a multithreaded application, assign the number of virtualized CPUs the guest will require to run efficiently. Do not assign more virtual CPUs than there are physical processors or threads available on the host system. It is possible to over allocate virtual processors; however, over allocating has a significant, negative effect on guest and host performance due to processor context switching overheads. Press to continue.
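The two rules above (leave enough physical RAM for the host and all guests, and do not give a guest more virtual CPUs than the host has processors or threads) can be checked before creating guests. The sketch below is illustrative: the function name `check_allocation` and the `RAM_MB:VCPUS` argument format are hypothetical, and the host reserve is whatever value you consider safe, for example the 2GB minimum from the system requirements.

```shell
#!/bin/sh
# check_allocation HOST_RAM_MB HOST_RESERVE_MB HOST_CPUS GUEST_RAM_MB:VCPUS...
# Prints "ok" when the plan fits, otherwise prints warnings and returns 1.
check_allocation() {
    host_ram=$1
    reserve=$2
    host_cpus=$3
    shift 3
    ram_sum=0
    status=0
    for guest in "$@"; do
        ram=${guest%%:*}     # text before the colon
        vcpus=${guest##*:}   # text after the colon
        ram_sum=$(( ram_sum + ram ))
        if [ "$vcpus" -gt "$host_cpus" ]; then
            echo "warning: guest with $vcpus vCPUs exceeds $host_cpus host CPUs"
            status=1
        fi
    done
    if [ $(( ram_sum + reserve )) -gt "$host_ram" ]; then
        echo "warning: guests need ${ram_sum}MB plus ${reserve}MB for the host, only ${host_ram}MB installed"
        status=1
    fi
    if [ "$status" -eq 0 ]; then
        echo "ok"
    fi
    return "$status"
}
```

For instance, `check_allocation 8192 2048 4 2048:2 1024:1` describes an 8GB, four-thread host running two guests and reports that the plan fits.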

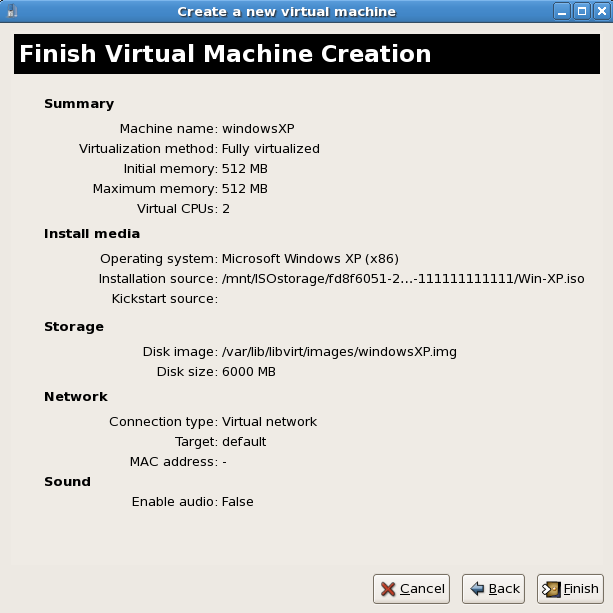

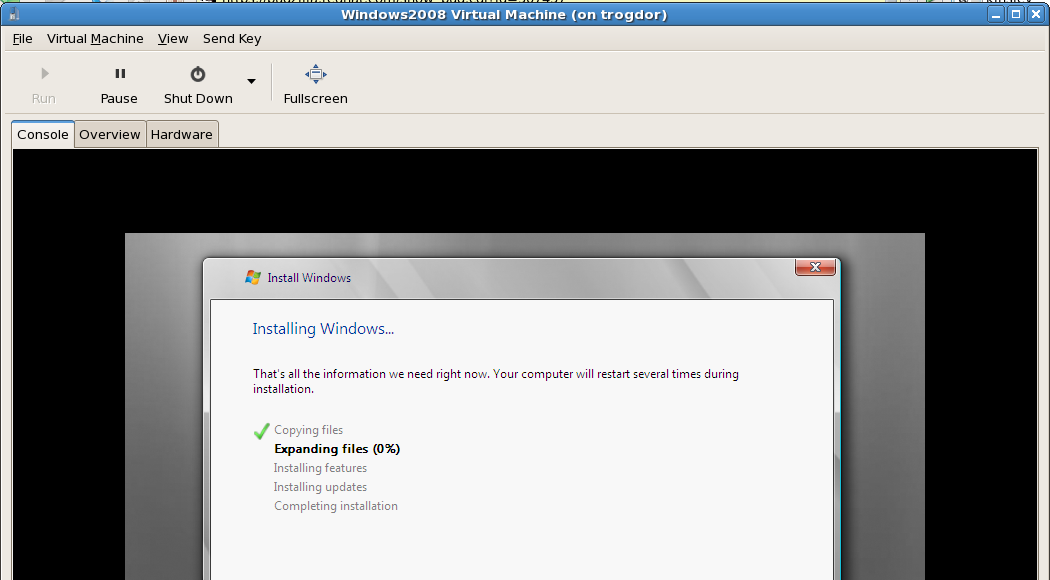

Verify and start guest installation

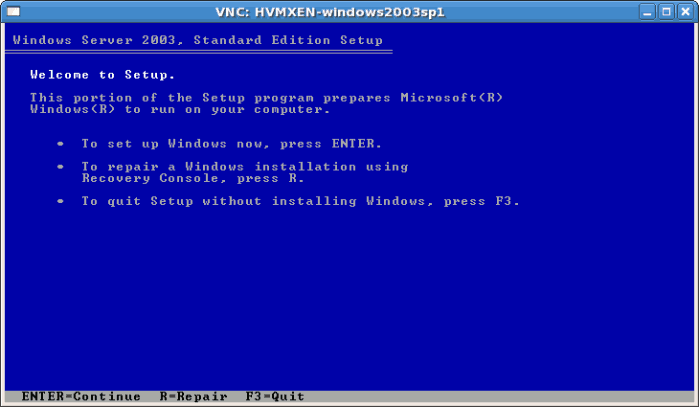

The Finish Virtual Machine Creation window presents a summary of all configuration information you entered. Review the information presented and use the button to make changes, if necessary. Once you are satisfied, click the button to start the installation process. A VNC window opens showing the start of the guest operating system installation process.

virt-manager. Chapter 8, Guest operating system installation procedures contains step-by-step instructions for installing a variety of common operating systems.

7.3. Installing guests with PXE

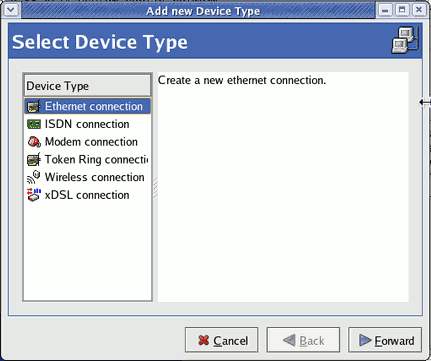

Create a new bridge

- Create a new network script file in the /etc/sysconfig/network-scripts/ directory. This example creates a file named ifcfg-installation which makes a bridge named installation.

Warning

The line, TYPE=Bridge, is case-sensitive. It must have uppercase 'B' and lower case 'ridge'.

Important

Prior to the release of Red Hat Enterprise Linux 5.9, a segmentation fault can occur when the bridge name contains only uppercase characters. Please upgrade to 5.9 or newer if uppercase names are required.

- Start the new bridge by restarting the network service. The ifup installation command can start the individual bridge but it is safer to test that the entire network restarts properly.

# service network restart

- There are no interfaces added to the new bridge yet. Use the brctl show command to view details about network bridges on the system.

# brctl show
bridge name     bridge id               STP enabled     interfaces
installation    8000.000000000000       no
virbr0          8000.000000000000       yes

The virbr0 bridge is the default bridge used by libvirt for Network Address Translation (NAT) on the default Ethernet device.

Add an interface to the new bridge

Edit the configuration file for the interface. Add the BRIDGE parameter to the configuration file with the name of the bridge created in the previous steps.

After editing the configuration file, restart networking or reboot.

# service network restart

Verify the interface is attached with the brctl show command:

# brctl show
bridge name     bridge id               STP enabled     interfaces
installation    8000.001320f76e8e       no              eth1
virbr0          8000.000000000000       yes

Security configuration

Configure iptables to allow all traffic to be forwarded across the bridge.

# iptables -I FORWARD -m physdev --physdev-is-bridged -j ACCEPT
# service iptables save
# service iptables restart

Note

Alternatively, prevent bridged traffic from being processed by iptables rules. In /etc/sysctl.conf append the following lines:

net.bridge.bridge-nf-call-ip6tables = 0
net.bridge.bridge-nf-call-iptables = 0
net.bridge.bridge-nf-call-arptables = 0

Reload the kernel parameters configured with sysctl.

# sysctl -p /etc/sysctl.conf

Restart libvirt before the installation

Restart the libvirt daemon.

# service libvirtd restart
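The two file edits in the procedure above (creating the bridge script and adding the BRIDGE parameter to an interface) could be scripted along the following lines. This is a sketch under assumptions: only TYPE=Bridge, the file name pattern and the BRIDGE parameter come from the text, while the DEVICE, BOOTPROTO=dhcp and ONBOOT=yes lines are typical values assumed for illustration. The function names are hypothetical, and the optional directory argument exists so the functions can be tried in a scratch directory first.

```shell
#!/bin/sh
# write_bridge_ifcfg NAME [DIR]
# Writes ifcfg-NAME describing a bridge called NAME.
write_bridge_ifcfg() {
    name=$1
    dir=${2:-/etc/sysconfig/network-scripts}
    # TYPE=Bridge is case-sensitive; the other settings are assumed defaults.
    cat > "$dir/ifcfg-$name" <<EOF
DEVICE=$name
TYPE=Bridge
BOOTPROTO=dhcp
ONBOOT=yes
EOF
}

# attach_to_bridge IFACE BRIDGE [DIR]
# Appends BRIDGE=BRIDGE to ifcfg-IFACE unless a BRIDGE line already exists.
attach_to_bridge() {
    file="${3:-/etc/sysconfig/network-scripts}/ifcfg-$1"
    grep -q '^BRIDGE=' "$file" 2>/dev/null || echo "BRIDGE=$2" >> "$file"
}
```

Running `write_bridge_ifcfg installation` and `attach_to_bridge eth1 installation` as root would correspond to the example bridge and interface used in this section; restart the network service afterwards as described in the procedure.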

For virt-install append the --network=bridge:installation parameter, where installation is the name of your bridge. For PXE installations use the --pxe parameter.

Example 7.3. PXE installation with virt-install
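As an illustration of how the --network and --pxe parameters combine with the options used earlier in this chapter, the sketch below only prints a candidate command line so that it can be reviewed before being run. The function name `pxe_install_cmd` and the guest values in the usage note are hypothetical, and a real installation may need further options, such as the hypervisor connection, that are not shown here.

```shell
#!/bin/sh
# pxe_install_cmd NAME RAM_MB BRIDGE IMAGE_PATH SIZE_GB
# Prints (does not run) a virt-install command for a PXE-booted guest
# attached to the given bridge.
pxe_install_cmd() {
    echo "virt-install --name $1 --ram $2 --network=bridge:$3 --pxe --file=$4 --file-size=$5 --vnc"
}
```

For example, `pxe_install_cmd guest1 512 installation /var/lib/libvirt/images/guest1.img 6` prints a command for a 512MB guest on the installation bridge with a 6GB file-based image.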

The steps below are those that vary from the standard virt-manager installation procedures. For the standard installations see Chapter 8, Guest operating system installation procedures.

Select PXE

Select PXE as the installation method.

Select the bridge

Select Shared physical device and select the bridge created in the previous procedure.

Start the installation

The installation is ready to start.

Chapter 8. Guest operating system installation procedures

Important

virsh update-device Guest1 ~/Guest1.xml (substituting your guest's name and XML file), and select OK to continue past this step.

8.1. Installing Red Hat Enterprise Linux 5 as a para-virtualized guest

kernel-xen kernel.

Important

virt-manager, see Section 7.2, “Creating guests with virt-manager”.

virt-install tool. The --vnc option shows the graphical installation. The name of the guest in the example is rhel5PV, the disk image file is rhel5PV.dsk and a local mirror of the Red Hat Enterprise Linux 5 installation tree is ftp://10.1.1.1/trees/RHEL5-B2-Server-i386/. Replace those values with values accurate for your system and network.

# virt-install -n rhel5PV -r 500 \
-f /var/lib/libvirt/images/rhel5PV.dsk -s 3 --vnc -p \
-l ftp://10.1.1.1/trees/RHEL5-B2-Server-i386/

Note

Procedure 8.1. Para-virtualized Red Hat Enterprise Linux guest installation procedure

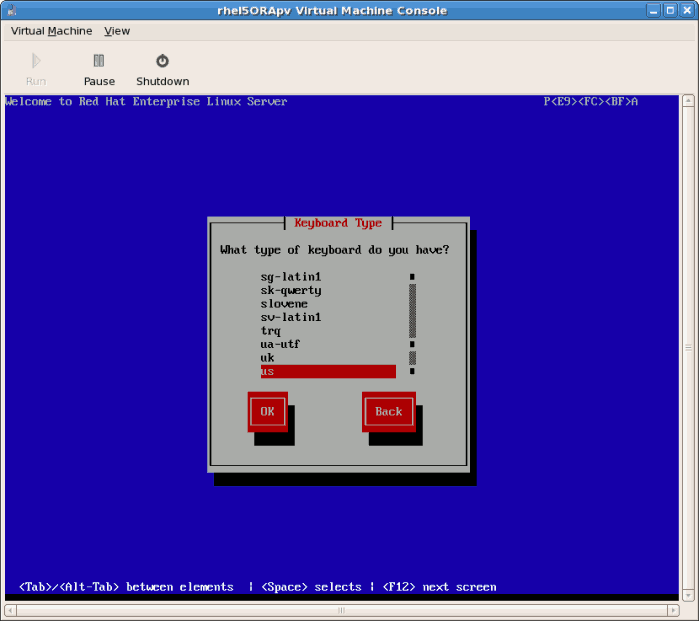

- Select the language and click .

- Select the keyboard layout and click .

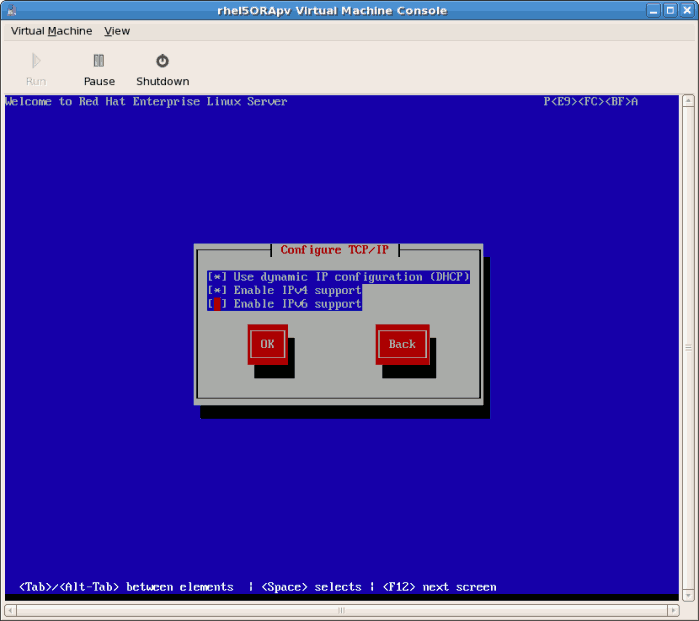

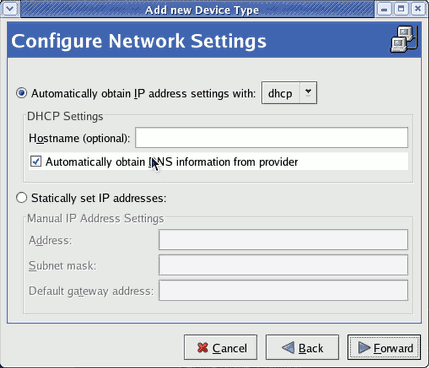

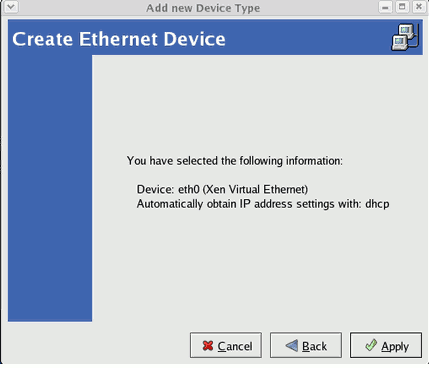

- Assign the guest's network address. Choose to use DHCP (as shown below) or a static IP address:

- If you select DHCP the installation process will now attempt to acquire an IP address:

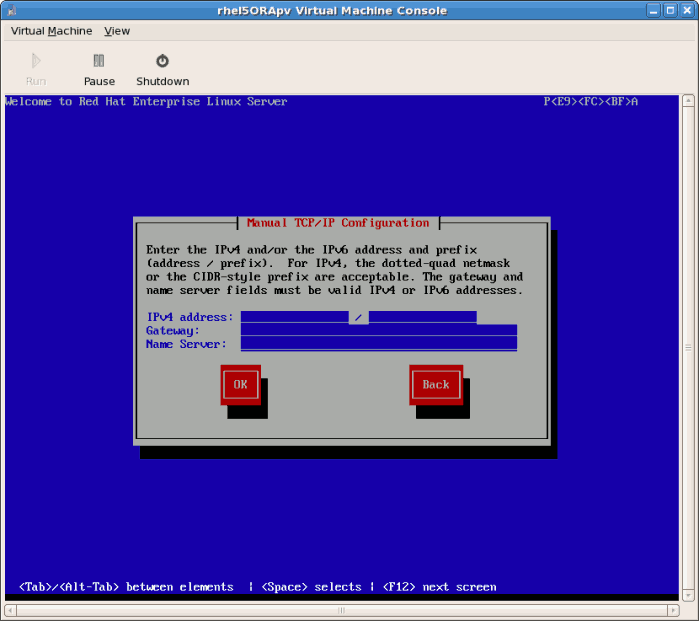

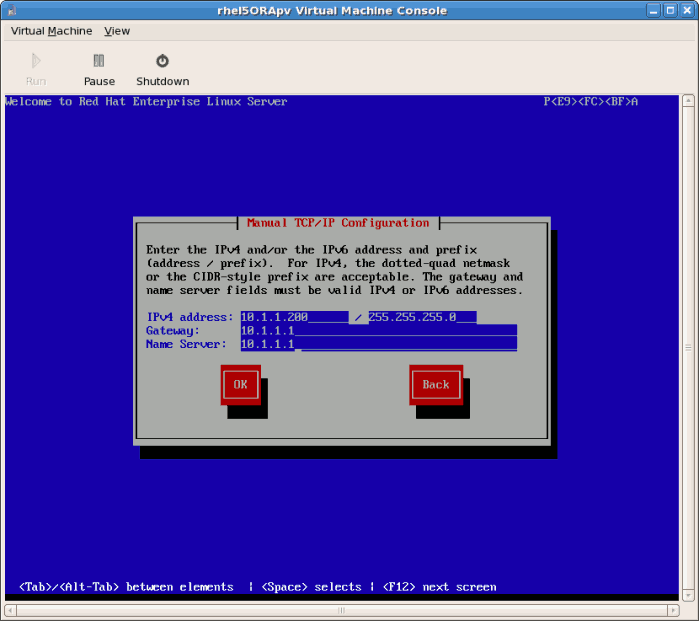

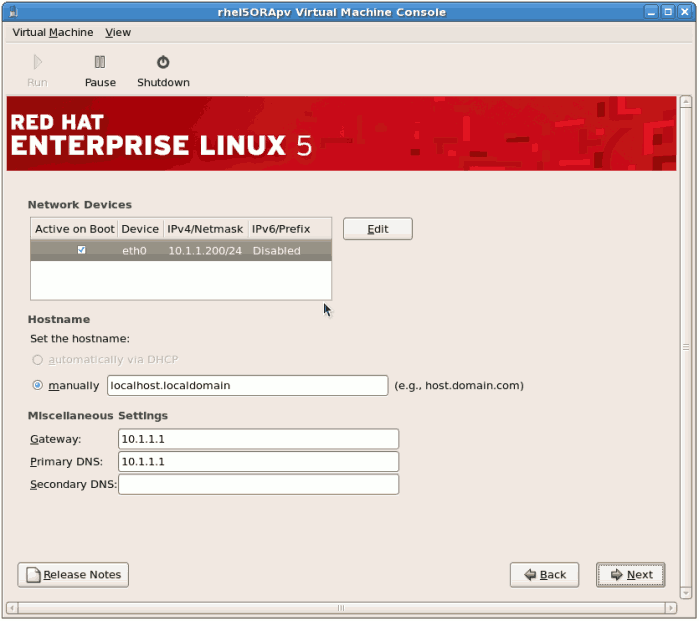

- If you chose a static IP address for your guest this prompt appears. Enter the details on the guest's networking configuration:

- Enter a valid IP address. Ensure the IP address you enter can reach the server with the installation tree.

- Enter a valid Subnet mask, default gateway and name server address.


- This is an example of a static IP address configuration:

- The installation process now retrieves the files it needs from the server:

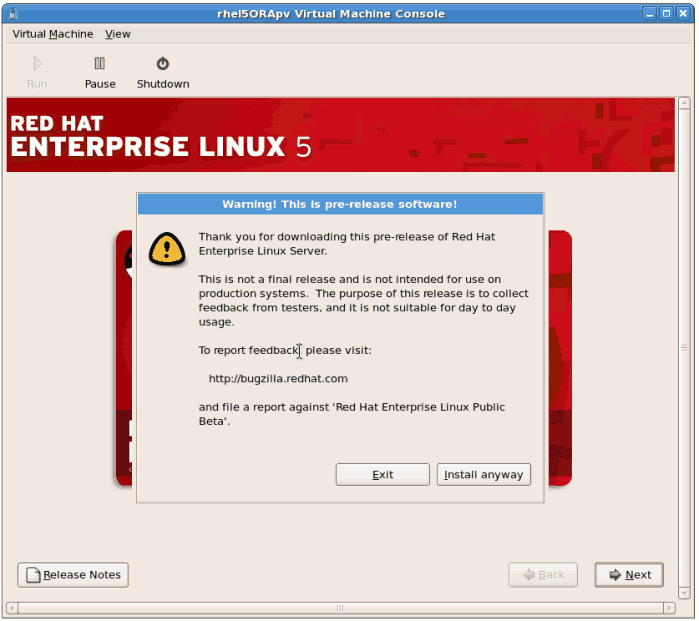

Procedure 8.2. The graphical installation process

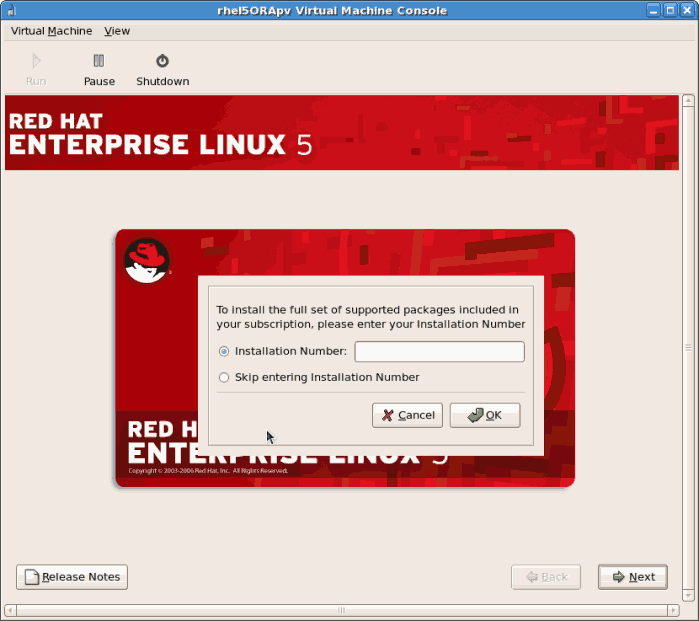

- Enter a valid registration code. If you have a valid Red Hat subscription key please enter in the

Installation Numberfield:

Note

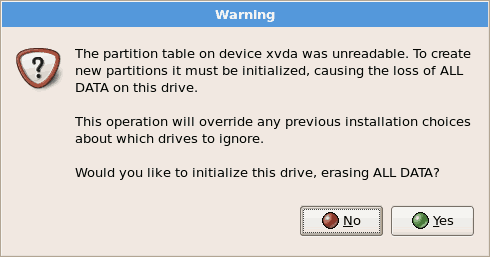

If you skip the registration step, confirm your Red Hat account details after the installation with therhn_registercommand. Therhn_registercommand requires root access. - The installation prompts you to confirm erasure of all data on the storage you selected for the installation:

Click to continue.

Click to continue. - Review the storage configuration and partition layout. You can chose to select the advanced storage configuration if you want to use iSCSI for the guest's storage.

Make your selections then click .

Make your selections then click . - Confirm the selected storage for the installation.

Click to continue.

Click to continue. - Configure networking and hostname settings. These settings are populated with the data entered earlier in the installation process. Change these settings if necessary.

Click to continue.

Click to continue. - Select the appropriate time zone for your environment.

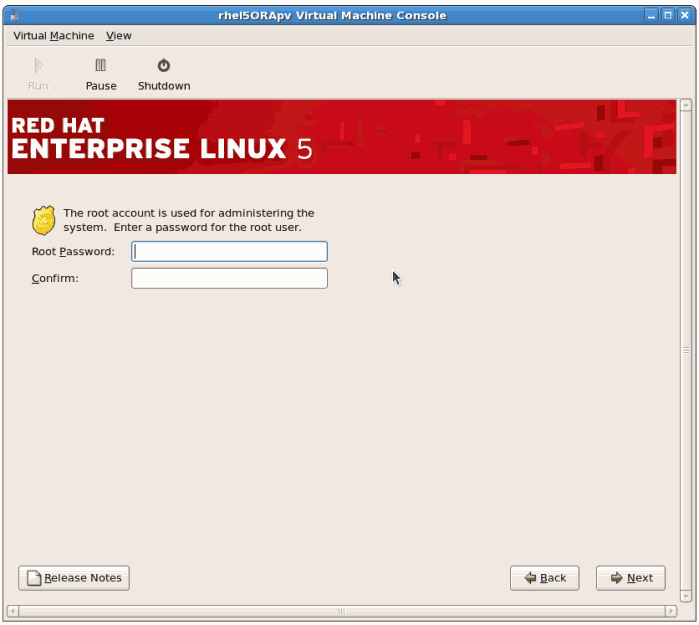

- Enter the root password for the guest.

Click to continue.

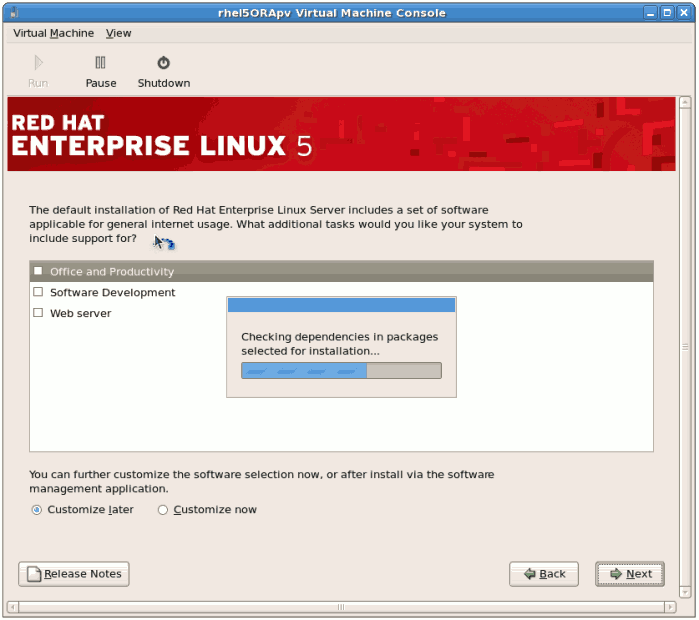

Click to continue. - Select the software packages to install. Select the button. You must install the kernel-xen package in the System directory. The kernel-xen package is required for para-virtualization.

Click .

Click . - Dependencies and space requirements are calculated.

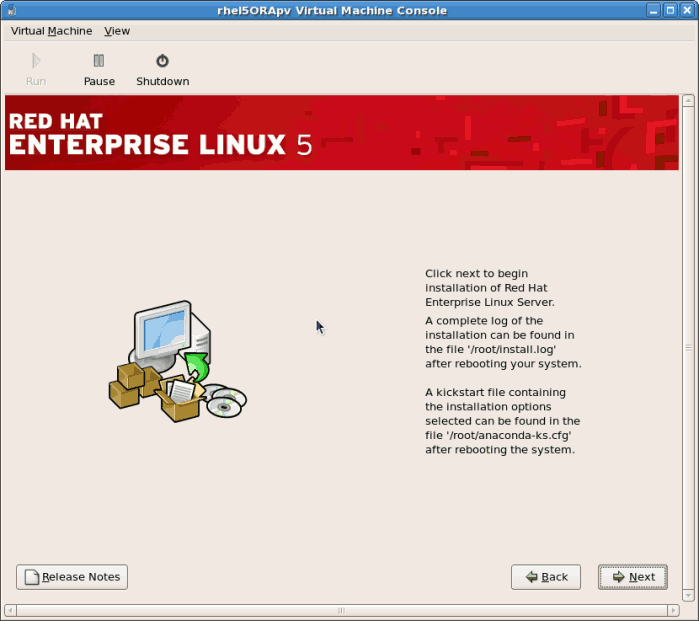

- After the installation dependencies and space requirements have been verified click to start the actual installation.

- All of the selected software packages are installed automatically.

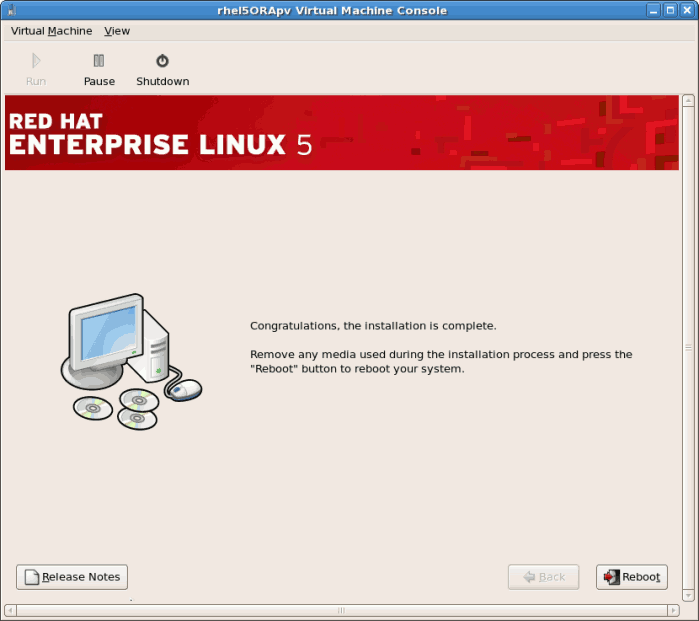

- After the installation has finished, reboot your guest:

- The guest does not reboot; instead it shuts down.

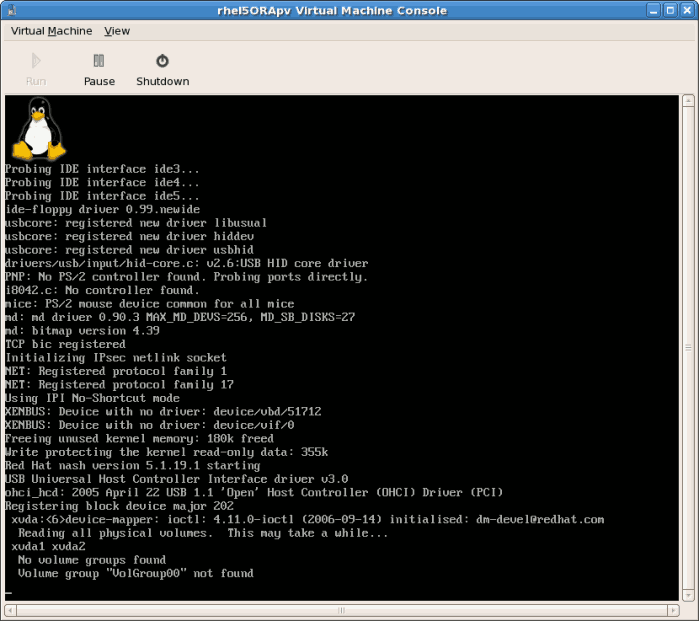

- Boot the guest. The guest's name was chosen when you used virt-install in Section 8.1, “Installing Red Hat Enterprise Linux 5 as a para-virtualized guest”. If you used the default example the name is rhel5PV.

Use virsh to reboot the guest:

# virsh reboot rhel5PV

Alternatively, open virt-manager, select the name of your guest, and boot it from there.

A VNC window displaying the guest's boot processes now opens.

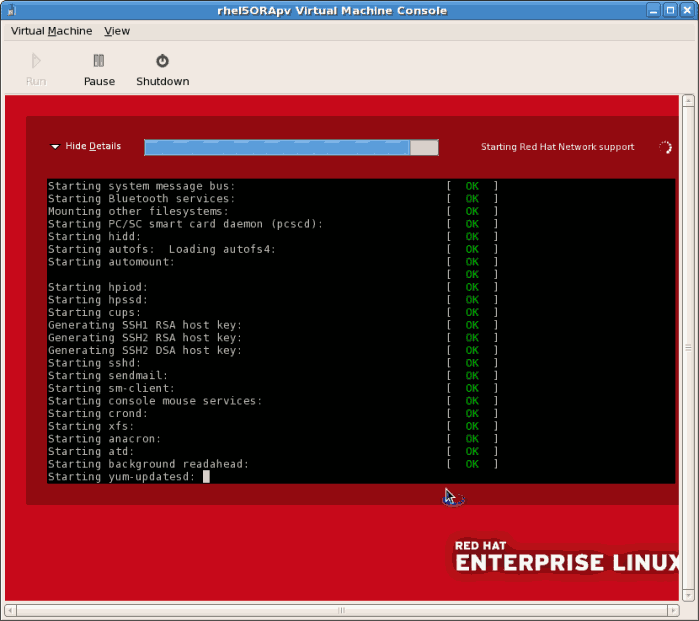

- Booting the guest starts the First Boot configuration screen. This wizard prompts you for some basic configuration choices for your guest.

- Read and agree to the license agreement.

Click on the license agreement windows.

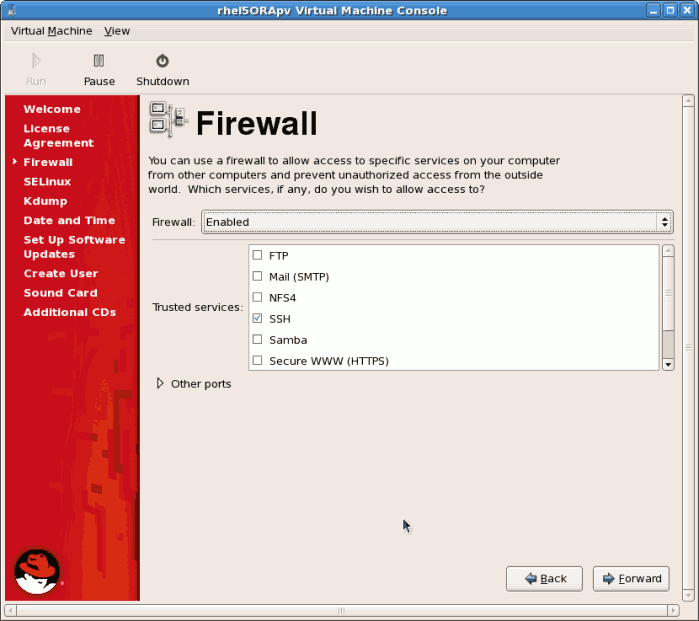

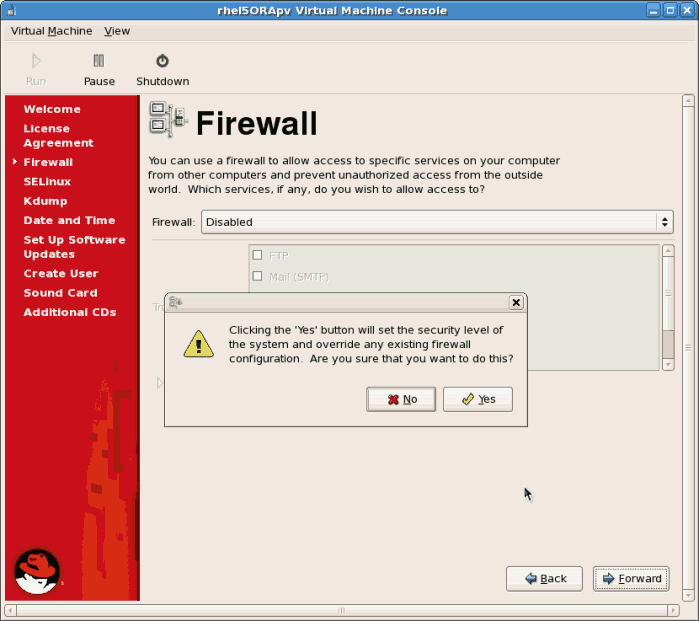

Click on the license agreement windows. - Configure the firewall.

Click to continue.

Click to continue.- If you disable the firewall you will be prompted to confirm your choice. Click to confirm and continue. It is not recommended to disable your firewall.

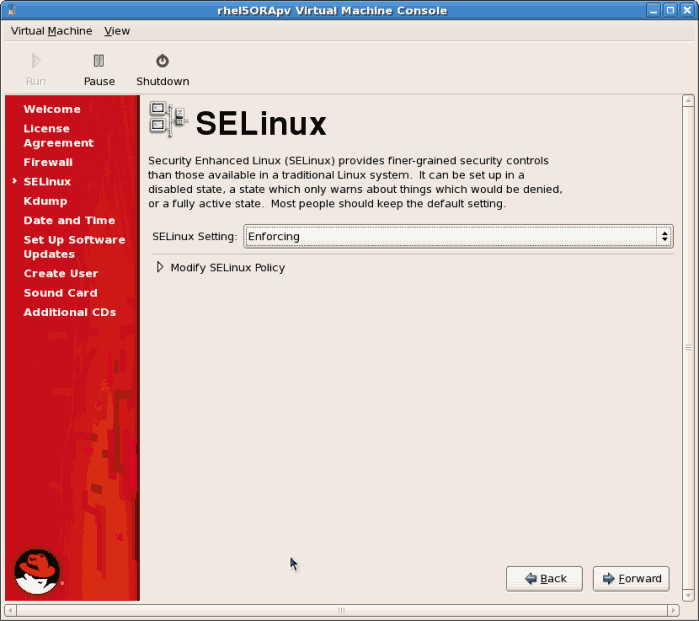

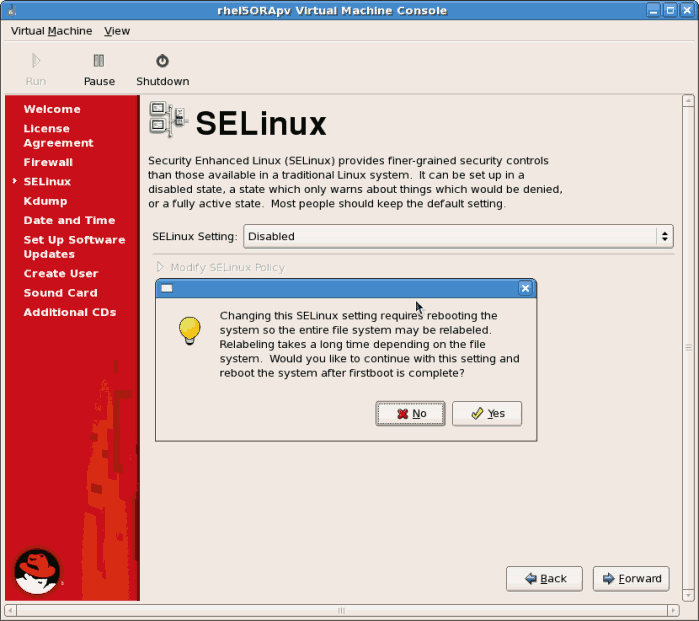

- Configure SELinux. It is strongly recommended you run SELinux in enforcing mode. You can choose to either run SELinux in permissive mode or completely disable it.

Click to continue.

Click to continue.- If you choose to disable SELinux this warning displays. Click to disable SELinux.

- Disable

kdump. The use ofkdumpis unsupported on para-virtualized guests. Click to continue.

Click to continue. - Confirm time and date are set correctly for your guest. If you install a para-virtualized guest time and date should synchronize with the hypervisor.If the users sets the time or date during the installation it is ignored and the hypervisor's time is used.

Click to continue.

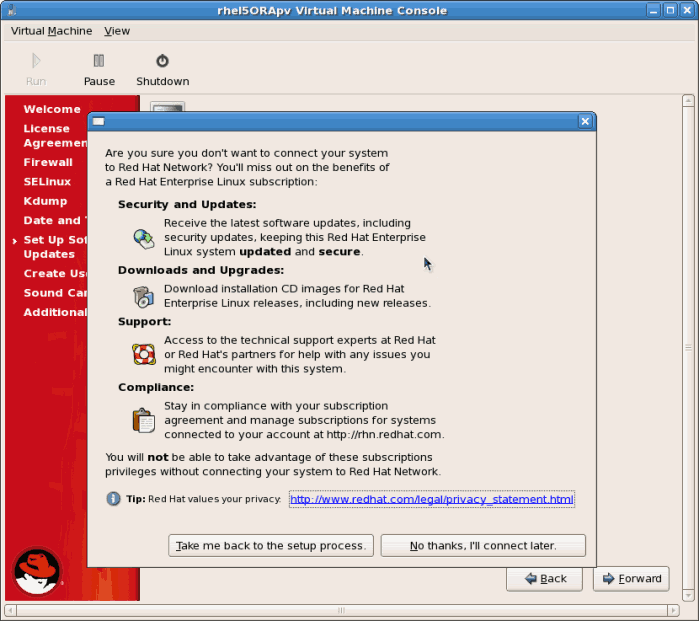

Click to continue. - Set up software updates. If you have a Red Hat account or want to trial one use the screen below to register your newly installed guest.

Click to continue.

Click to continue.- Confirm your choices for RHN.

- You may see an additional screen if you did not configure RHN access. If RHN access is not enabled, you will not receive software updates.

Click the button.

Click the button.

- Create a non root user account. It is advised to create a non root user for normal usage and enhanced security. Enter the Username, Name and password.

Click the button.

Click the button. - If a sound device is detected and you require sound, calibrate it. Complete the process and click .

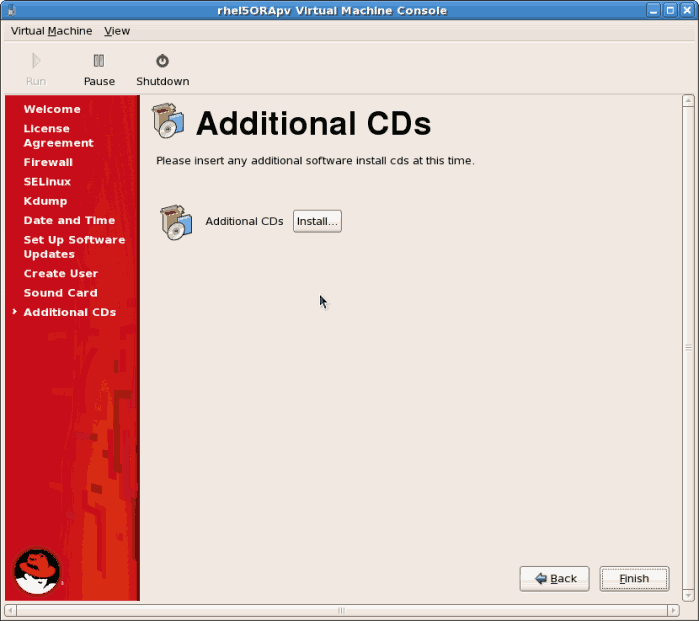

- You can install additional packages from a CD or another repository using this screen. It is often more efficient to not install any additional software at this point but add packages later using the yum command or RHN. Then continue.

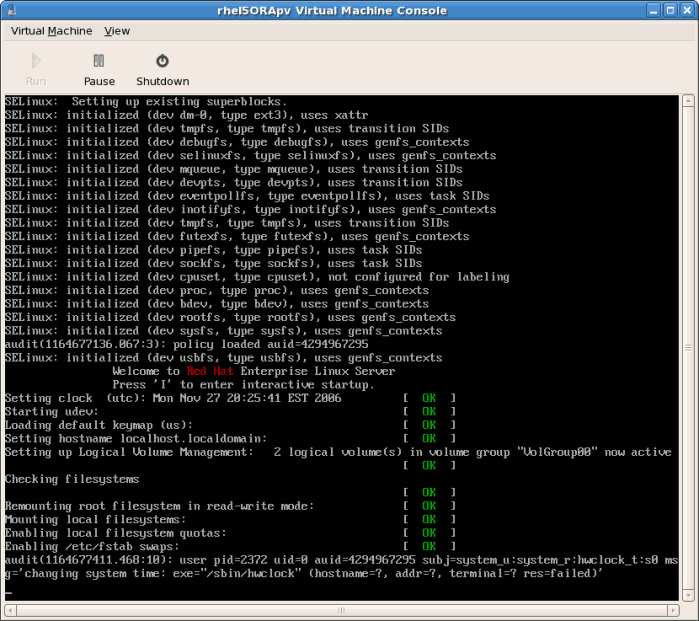

- The guest now configures any settings you changed and continues the boot process.

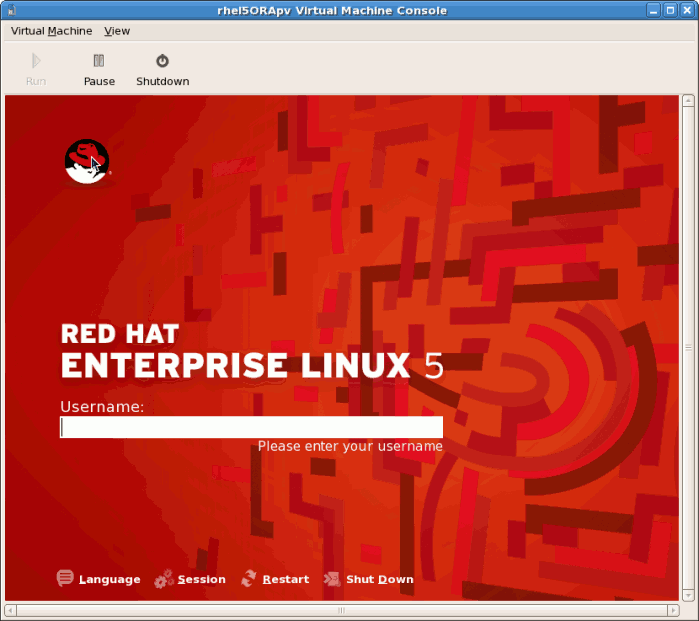

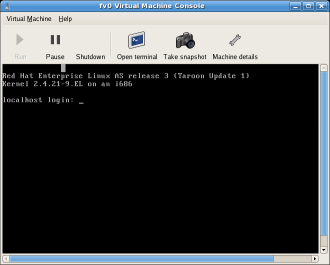

- The Red Hat Enterprise Linux 5 login screen displays. Log in using the username created in the previous steps.

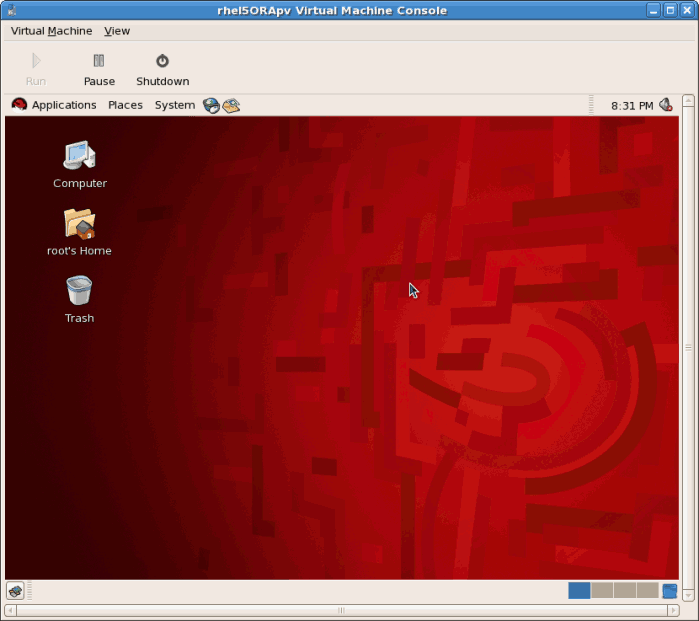

- You have now successfully installed a para-virtualized Red Hat Enterprise Linux guest.

8.2. Installing Red Hat Enterprise Linux as a fully virtualized guest

Procedure 8.3. Creating a fully virtualized Red Hat Enterprise Linux 5 guest with virt-manager

Open virt-manager

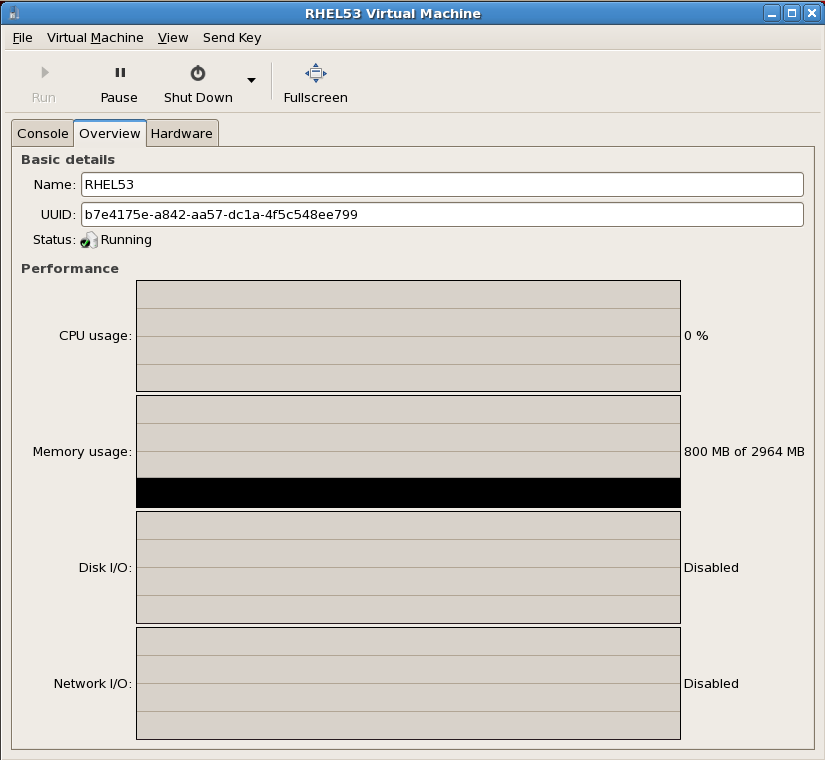

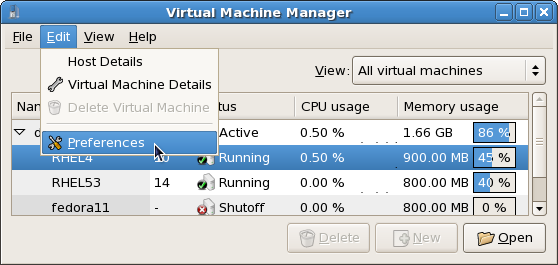

Startvirt-manager. Launch the application from the menu and submenu. Alternatively, run thevirt-managercommand as root.Select the hypervisor

Select the hypervisor. If installed, select Xen or KVM. For this example, select KVM. Note that presently KVM is namedqemu.Connect to a hypervisor if you have not already done so. Open the File menu and select the Add Connection... option. See Section 27.1, “The Add Connection window”.Once a hypervisor connection is selected the New button becomes available. Press the New button.Start the new virtual machine wizard

Pressing the New button starts the virtual machine creation wizard.Press to continue.

Name the virtual machine

Provide a name for your guest. Punctuation and whitespace characters are not permitted in versions before Red Hat Enterprise Linux 5.5. Red Hat Enterprise Linux 5.5 adds support for '_', '.' and '-' characters.

Press to continue.

Choose a virtualization method

Choose the virtualization method for the guest. Note you can only select an installed virtualization method. If you selected KVM or Xen earlier (Step 4) you must use the hypervisor you selected. This example uses the KVM hypervisor.

Press to continue.

Select the installation method

Red Hat Enterprise Linux can be installed using one of the following methods:

- Local install media, either an ISO image or physical optical media.

- Select Network install tree if you have the installation tree for Red Hat Enterprise Linux hosted somewhere on your network via HTTP, FTP or NFS.

- PXE can be used if you have a PXE server configured for booting Red Hat Enterprise Linux installation media. Configuring a server to PXE boot a Red Hat Enterprise Linux installation is not covered by this guide. However, most of the installation steps are the same after the media boots.

Set OS Type to Linux and OS Variant to Red Hat Enterprise Linux 5 as shown in the screenshot.

Press to continue.

Locate installation media

Select ISO image location or CD-ROM or DVD device. This example uses an ISO file image of the Red Hat Enterprise Linux installation DVD.

- Press the button.

- Browse to the location of the ISO file and select the ISO image. Press to confirm your selection.

- The file is selected and ready to install.

Press to continue.

Warning

For ISO image files and guest storage images the recommended directory is /var/lib/libvirt/images/. Any other location may require additional configuration for SELinux, see Section 19.2, “SELinux and virtualization” for details.

Storage setup

Assign a physical storage device (Block device) or a file-based image (File). File-based images must be stored in the /var/lib/libvirt/images/ directory. Assign sufficient space for your guest and any applications the guest requires.

Press to continue.

Note

Live and offline migrations require guests to be installed on shared network storage. For information on setting up shared storage for guests see Part V, “Virtualization Storage Topics”.

Network setup

Select either Virtual network or Shared physical device.

The virtual network option uses Network Address Translation (NAT) to share the default network device with the guest. Use the virtual network option for wireless networks.

The shared physical device option uses a network bond to give the guest full access to a network device.

Press to continue.

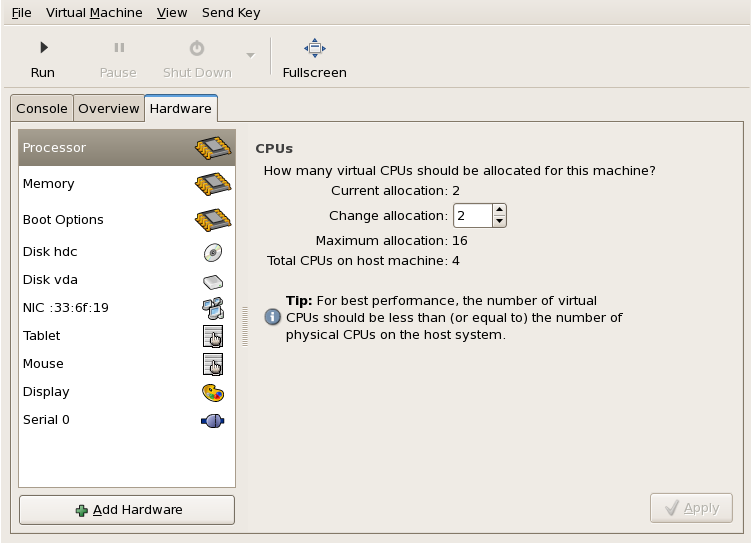

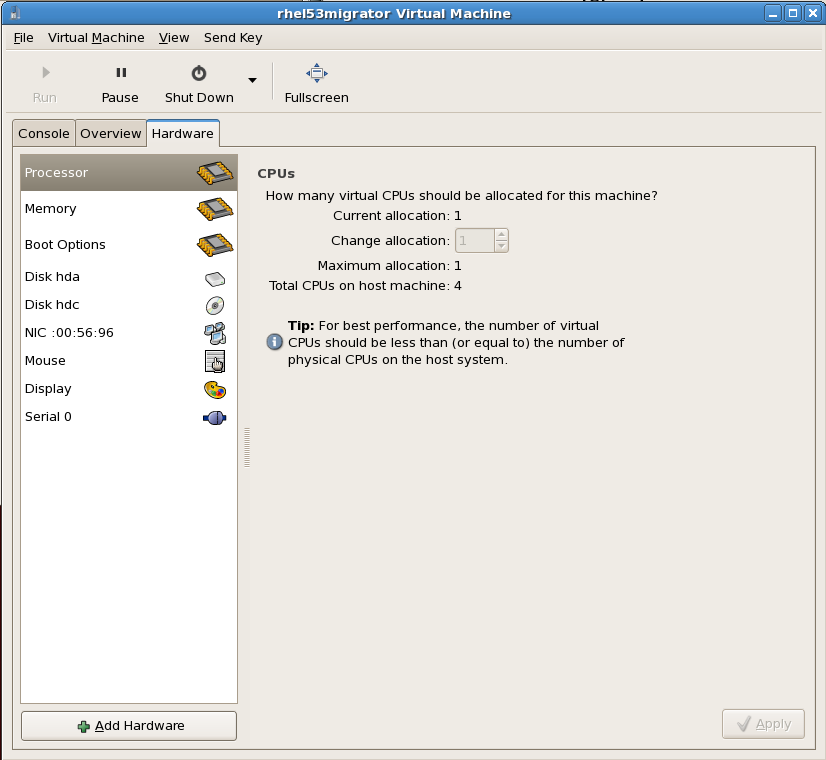

Memory and CPU allocation

The Memory and CPU Allocation window displays. Choose appropriate values for the virtualized CPUs and RAM allocation. These values affect the host's and guest's performance.

Guests require sufficient physical memory (RAM) to run efficiently and effectively. Choose a memory value which suits your guest operating system and application requirements. Remember, guests use physical RAM. Running too many guests or leaving insufficient memory for the host system results in significant usage of virtual memory and swapping. Virtual memory is significantly slower which causes degraded system performance and responsiveness. Ensure you allocate sufficient memory for all guests and the host to operate effectively.

Assign sufficient virtual CPUs for the guest. If the guest runs a multithreaded application, assign the number of virtualized CPUs the guest will require to run efficiently. Do not assign more virtual CPUs than there are physical processors (or hyper-threads) available on the host system. It is possible to over-allocate virtual processors; however, over-allocating has a significant, negative effect on guest and host performance due to processor context switching overheads.

Press to continue.

Verify and start guest installation

Verify the configuration.

Press to start the guest installation procedure.

Installing Red Hat Enterprise Linux

Complete the Red Hat Enterprise Linux 5 installation sequence. The installation sequence is covered by the Installation Guide, see Red Hat Documentation for the Red Hat Enterprise Linux Installation Guide.
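The CPU guidance in the Memory and CPU allocation step (do not assign more virtual CPUs than the host has physical processors or hyper-threads) can be checked on the host before the wizard is started. The following is a minimal sketch under stated assumptions: check_vcpus is a hypothetical helper name, and the host's processor count is read with getconf _NPROCESSORS_ONLN, which is assumed to be available on the host.

```shell
#!/bin/sh
# Warn when a guest would be given more virtual CPUs than the host has
# logical processors. check_vcpus is a hypothetical helper name.
check_vcpus() {
    want="$1"    # virtual CPUs planned for the guest
    have="$2"    # logical processors available on the host
    if [ "$want" -gt "$have" ]; then
        echo "over-allocated: $want vCPUs requested, host has $have"
        return 1
    fi
    echo "ok: $want vCPUs requested, host has $have"
}

# Count the host's online logical processors (hyper-threads included).
host_cpus=$(getconf _NPROCESSORS_ONLN)
check_vcpus 1 "$host_cpus"
```

The check only compares one guest against the host. It does not account for virtual CPUs already assigned to other running guests.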

8.3. Installing Windows XP as a fully virtualized guest

Important

Starting virt-manager

Open . Open a connection to a host (click ). Click the button to create a new virtual machine.

Naming your virtual system

Enter the System Name and click the button.

Choosing a virtualization method

If you selected KVM or Xen earlier (Step 1) you must use the hypervisor you selected. This example uses the KVM hypervisor.

Windows can only be installed using full virtualization.

Choosing an installation method

This screen enables you to specify the installation method and the type of operating system.

Select Windows from the OS Type list and Microsoft Windows XP from the OS Variant list.

Installing guests with PXE is supported in Red Hat Enterprise Linux 5.2. PXE installation is not covered by this chapter.

Warning

For ISO image files and guest storage images it is recommended to use the /var/lib/libvirt/images/ directory. Any other location will require additional configuration for SELinux, see Section 19.2, “SELinux and virtualization” for details.

Press to continue.

Choose installation image

Choose the installation image or CD-ROM. For CD-ROM or DVD installation select the device with the Windows installation disc in it. If you chose ISO Image Location enter the path to a Windows installation .iso image.

Press to continue.

- The Storage window displays. Choose a disk partition, LUN or create a file-based image for the guest's storage.

All image files are stored in the /var/lib/libvirt/images/ directory by default. In the default configuration, other directory locations for file-based images are prohibited by SELinux. If you use a different directory you must label the new directory according to SELinux policy. See Section 19.2, “SELinux and virtualization” for details.

Allocate extra space if the guest needs additional space for applications or other data. For example, web servers require additional space for log files.

Choose the appropriate size for the guest on your selected storage type and click the button.

Note

It is recommended that you use the default directory for virtual machine images, /var/lib/libvirt/images/. If you are using a different location (such as /images/ in this example) make sure it is added to your SELinux policy and relabeled before you continue with the installation (later in the document you will find information on how to modify your SELinux policy).

Network setup

Select either Virtual network or Shared physical device.

The virtual network option uses Network Address Translation (NAT) to share the default network device with the guest. Use the virtual network option for wireless networks.

The shared physical device option uses a network bond to give the guest full access to a network device.

Press to continue.

- The Memory and CPU Allocation window displays. Choose appropriate values for the virtualized CPUs and RAM allocation. These values affect the host's and guest's performance.

Guests require sufficient physical memory (RAM) to run efficiently and effectively. Choose a memory value which suits your guest operating system and application requirements. Most operating systems require at least 512MB of RAM to work responsively. Remember, guests use physical RAM. Running too many guests or leaving insufficient memory for the host system results in significant usage of virtual memory and swapping. Virtual memory is significantly slower, causing degraded system performance and responsiveness. Ensure you allocate sufficient memory for all guests and the host to operate effectively.

Assign sufficient virtual CPUs for the guest. If the guest runs a multithreaded application, assign the number of virtualized CPUs the guest will require to run efficiently. Do not assign more virtual CPUs than there are physical processors (or hyper-threads) available on the host system. It is possible to over-allocate virtual processors; however, over-allocating has a significant, negative effect on guest and host performance due to processor context switching overheads.

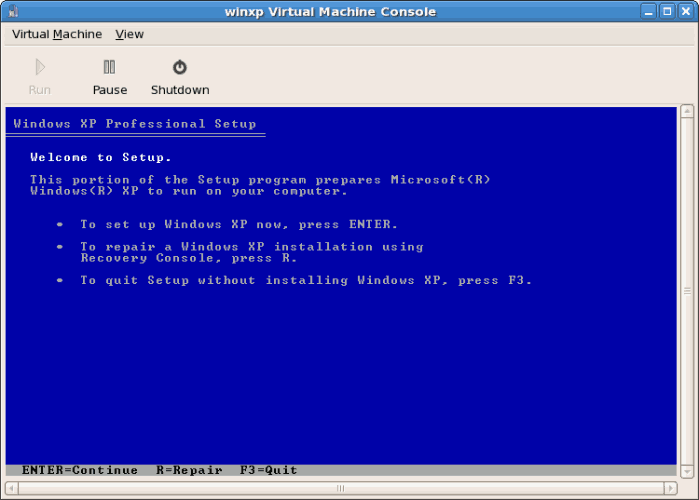

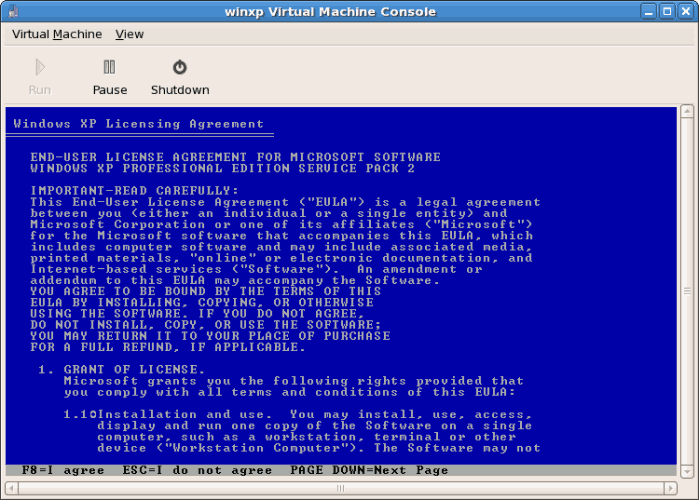

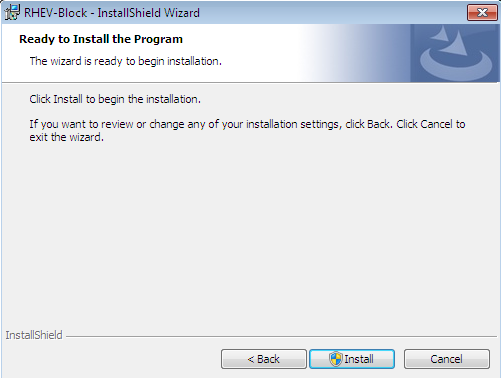

- Before the installation continues you will see the summary screen. Press to proceed to the guest installation:

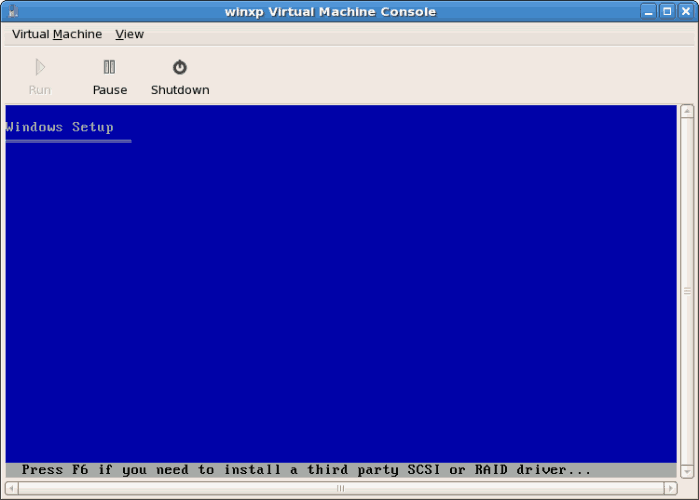

- You must make a hardware selection, so open a console window quickly after the installation starts. After starting the installation, switch to the virt-manager summary window and select your newly started Windows guest. Double click on the system name and the console window opens. Quickly and repeatedly press F5 to select a new HAL. Once you get the dialog box in the Windows install, select the 'Generic i486 Platform' tab. Scroll through selections with the Up and Down arrows.

- The installation continues with the standard Windows installation.

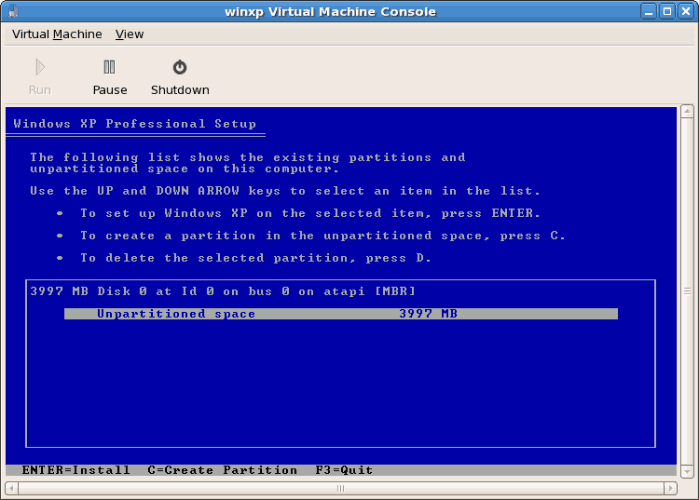

- Partition the hard drive when prompted.

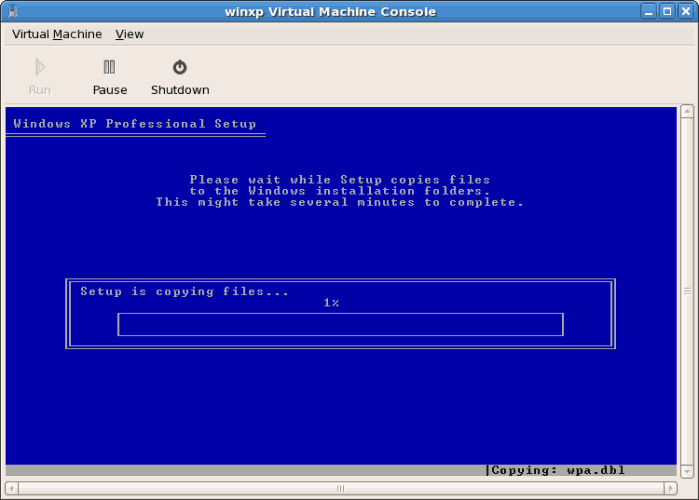

- After the drive is formatted, Windows starts copying the files to the hard drive.

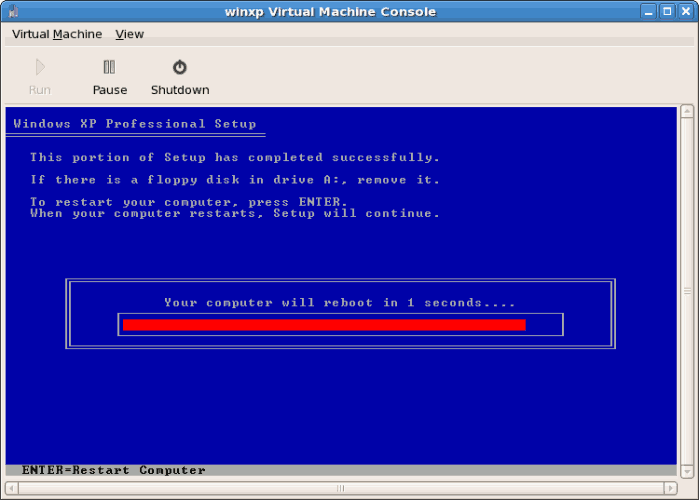

- The files are copied to the storage device, Windows now reboots.

- Restart your Windows guest:

virsh start WindowsGuest

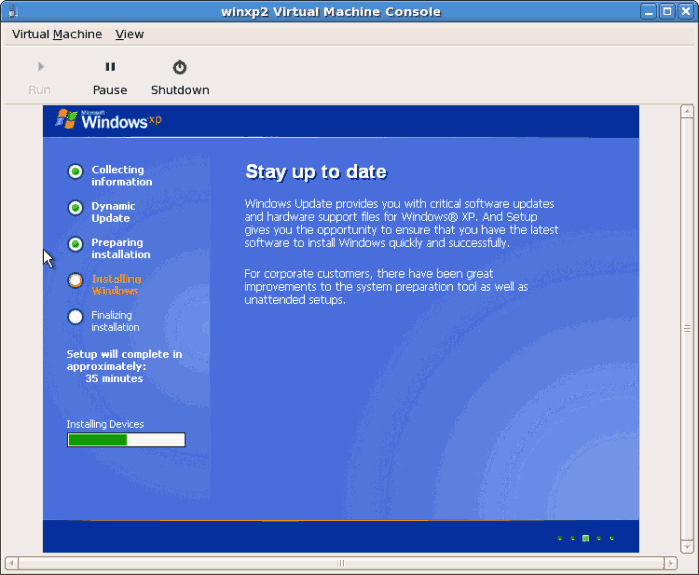

# virsh start WindowsGuestCopy to Clipboard Copied! Toggle word wrap Toggle overflow Where WindowsGuest is the name of your virtual machine. - When the console window opens, you will see the setup phase of the Windows installation.

- If your installation seems to get stuck during the setup phase, restart the guest with

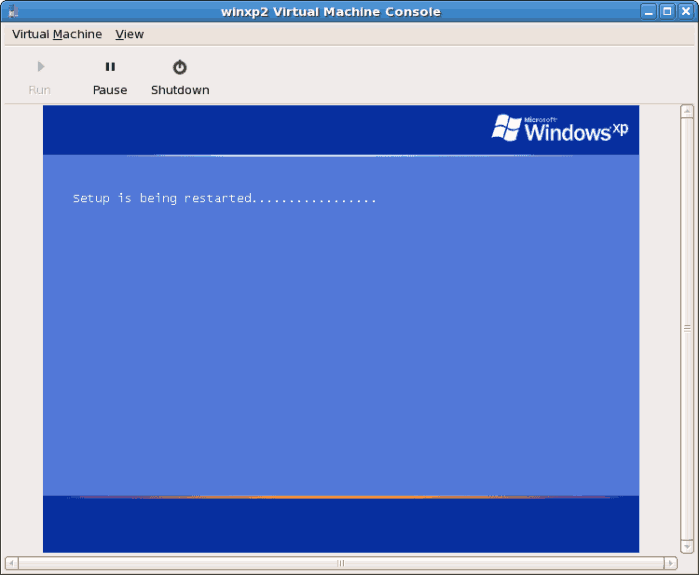

virsh reboot WindowsGuestName. When you restart the virtual machine, theSetup is being restartedmessage displays:

- After setup has finished you will see the Windows boot screen:

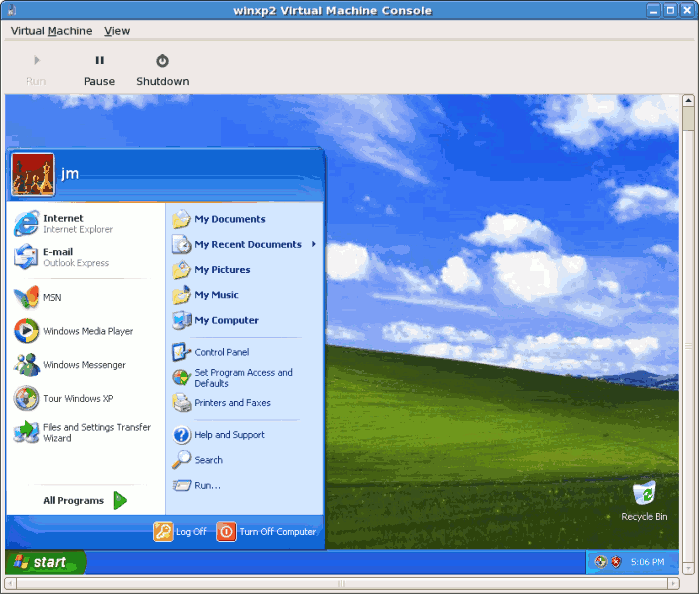

- Now you can continue with the standard setup of your Windows installation:

- The setup process is complete.

8.4. Installing Windows Server 2003 as a fully virtualized guest

virt-install command. virt-install can be used instead of virt-manager. This process is similar to the Windows XP installation covered in Section 8.3, “Installing Windows XP as a fully virtualized guest”.

Note

- Using virt-install for installing Windows Server 2003 as the console for the Windows guest opens the virt-viewer window promptly. The examples below install a Windows Server 2003 guest with the virt-install command.

Xen virt-install

KVM virt-install

- Once the guest boots into the installation you must quickly press F5. If you do not press F5 at the right time you will need to restart the installation. Pressing F5 allows you to select a different HAL or Computer Type. Choose Standard PC as the Computer Type. Changing the Computer Type is required for Windows Server 2003 guests.

- Complete the rest of the installation.

- Windows Server 2003 is now installed as a fully virtualized guest.

8.5. Installing Windows Server 2008 as a fully virtualized guest

Procedure 8.4. Installing Windows Server 2008 with virt-manager

Open virt-manager

Start virt-manager. Launch the application from the menu and submenu. Alternatively, run the virt-manager command as root.

Select the hypervisor

Select the hypervisor. If installed, select Xen or KVM. For this example, select KVM. Note that presently KVM is named qemu.

Once the option is selected the New button becomes available. Press the New button.

Start the new virtual machine wizard

Pressing the New button starts the virtual machine creation wizard.

Press to continue.

Name the virtual machine

Provide a name for your guest. Punctuation and whitespace characters are not permitted in versions before Red Hat Enterprise Linux 5.5. Red Hat Enterprise Linux 5.5 adds support for '_', '.' and '-' characters.

Press to continue.

Choose a virtualization method

Choose the virtualization method for the guest. Note you can only select an installed virtualization method. If you selected KVM or Xen earlier (step 2) you must use the hypervisor you selected. This example uses the KVM hypervisor.

Press to continue.

Select the installation method

For all versions of Windows you must use local install media, either an ISO image or physical optical media.

PXE may be used if you have a PXE server configured for Windows network installation. PXE Windows installation is not covered by this guide.

Set OS Type to Windows and OS Variant to Microsoft Windows 2008 as shown in the screenshot.

Press to continue.

Locate installation media

Select ISO image location or CD-ROM or DVD device. This example uses an ISO file image of the Windows Server 2008 installation CD.

- Press the button.

- Browse to the location of the ISO file and select it. Press to confirm your selection.

- The file is selected and ready to install.

Press to continue.

Warning

For ISO image files and guest storage images, the recommended directory to use is the /var/lib/libvirt/images/ directory. Any other location may require additional configuration for SELinux, see Section 19.2, “SELinux and virtualization” for details.

Storage setup

Assign a physical storage device (Block device) or a file-based image (File). File-based images must be stored in the /var/lib/libvirt/images/ directory. Assign sufficient space for your guest and any applications the guest requires.

Press to continue.

Network setup

Select either Virtual network or Shared physical device.

The virtual network option uses Network Address Translation (NAT) to share the default network device with the guest. Use the virtual network option for wireless networks.

The shared physical device option uses a network bond to give the guest full access to a network device.

Press to continue.

Memory and CPU allocation

The Memory and CPU Allocation window displays. Choose appropriate values for the virtualized CPUs and RAM allocation. These values affect the host's and guest's performance.

Guests require sufficient physical memory (RAM) to run efficiently and effectively. Choose a memory value which suits your guest operating system and application requirements. Remember, guests use physical RAM. Running too many guests or leaving insufficient memory for the host system results in significant usage of virtual memory and swapping. Virtual memory is significantly slower which causes degraded system performance and responsiveness. Ensure you allocate sufficient memory for all guests and the host to operate effectively.

Assign sufficient virtual CPUs for the guest. If the guest runs a multithreaded application, assign the number of virtualized CPUs the guest will require to run efficiently. Do not assign more virtual CPUs than there are physical processors (or hyper-threads) available on the host system. It is possible to over-allocate virtual processors; however, over-allocating has a significant, negative effect on guest and host performance due to processor context switching overheads.

Press to continue.

Verify and start guest installation

Verify the configuration.

Press to start the guest installation procedure.

Installing Windows

Complete the Windows Server 2008 installation sequence. The installation sequence is not covered by this guide, see Microsoft's documentation for information on installing Windows.

Part III. Configuration

Configuring Virtualization in Red Hat Enterprise Linux

Chapter 9. Virtualized storage devices

Important

- /dev/xvd[a to z][1 to 15] Example: /dev/xvdb13

- /dev/xvd[a to i][a to z][1 to 15] Example: /dev/xvdbz13

- /dev/sd[a to p][1 to 15] Example: /dev/sda1

- /dev/hd[a to t][1 to 63] Example: /dev/hdd3

9.1. Creating a virtualized floppy disk controller

dd command. Replace /dev/fd0 with the name of a floppy device and name the disk image appropriately.

# dd if=/dev/fd0 of=/tmp/legacydrivers.img

Note

virt-manager running a fully virtualized Red Hat Enterprise Linux installation with an image located in /var/lib/libvirt/images/rhel5FV.img. The Xen hypervisor is used in the example.

- Create the XML configuration file for your guest image using the virsh command on a running guest.

# virsh dumpxml rhel5FV > rhel5FV.xml

This saves the configuration settings as an XML file which can be edited to customize the operations and devices used by the guest. For more information on using the virsh XML configuration files, see Chapter 34, Creating custom libvirt scripts.

- Create a floppy disk image for the guest.

# dd if=/dev/zero of=/var/lib/libvirt/images/rhel5FV-floppy.img bs=512 count=2880

- Add the content below, changing where appropriate, to your guest's configuration XML file. This example is an emulated floppy device using a file-based image.

<disk type='file' device='floppy'>
    <source file='/var/lib/libvirt/images/rhel5FV-floppy.img'/>
    <target dev='fda'/>
</disk>

- Force the guest to stop. To shut down the guest gracefully, use the virsh shutdown command instead.

# virsh destroy rhel5FV

- Restart the guest using the XML configuration file.

# virsh create rhel5FV.xml
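The dd parameters used for the floppy image correspond to a standard 1.44MB floppy: 2880 sectors of 512 bytes each. The sketch below checks that arithmetic by creating the image and comparing its size. It writes to a temporary file rather than the libvirt images directory so it can be run without root access. The temporary path is illustrative only.

```shell
#!/bin/sh
# Create a blank floppy image in a temporary location and confirm its size.
# The temporary path is illustrative; the procedure above uses
# /var/lib/libvirt/images/rhel5FV-floppy.img.
img=$(mktemp)
dd if=/dev/zero of="$img" bs=512 count=2880 2>/dev/null

expected=$((512 * 2880))                  # 2880 sectors of 512 bytes
actual=$(wc -c < "$img" | tr -d ' ')      # size of the file dd produced
echo "expected=$expected actual=$actual"
rm -f "$img"
```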

9.2. Adding storage devices to guests

- local hard drive partitions,

- logical volumes,

- Fibre Channel or iSCSI directly connected to the host.

- File containers residing in a file system on the host.

- NFS file systems mounted directly by the virtual machine.

- iSCSI storage directly accessed by the guest.

- Cluster File Systems (GFS).

File-based storage or file-based containers are files on the host's file system which act as virtualized hard drives for virtual machines. To add a file-based container perform the following steps:

- Create an empty container file or use an existing file container (such as an ISO file).

  - Create a sparse file using the dd command. Sparse files are not recommended due to data integrity and performance issues. Sparse files are created much faster and can be used for testing but should not be used in production environments.

# dd if=/dev/zero of=/var/lib/libvirt/images/FileName.img bs=1M seek=4096 count=0

  - Non-sparse, pre-allocated files are recommended for file-based storage images. To create a non-sparse file, execute:

# dd if=/dev/zero of=/var/lib/libvirt/images/FileName.img bs=1M count=4096

Both commands create a 4GB file which can be used as additional storage for a virtual machine.

- Dump the configuration for the guest. In this example the guest is called Guest1 and the file is saved in the user's home directory.

# virsh dumpxml Guest1 > ~/Guest1.xml

- Open the configuration file (Guest1.xml in this example) in a text editor. Find the <disk> elements; these elements describe storage devices. The following is an example disk element:

- Add the additional storage by duplicating or writing a new <disk> element. Ensure you specify a device name for the virtual block device attributes. These attributes must be unique for each guest configuration file. The following example is a configuration file section which contains an additional file-based storage container named FileName.img.

- Restart the guest from the updated configuration file.

# virsh create Guest1.xml

- The following steps are Linux guest specific. Other operating systems handle new storage devices in different ways. For other systems, see your operating system's documentation.

The guest now uses the file FileName.img as the device called /dev/sdb. This device requires formatting from the guest. On the guest, partition the device into one primary partition for the entire device then format the device.

  - Press n for a new partition.

# fdisk /dev/sdb
Command (m for help):

  - Press p for a primary partition.

Command action
   e   extended
   p   primary partition (1-4)

  - Choose an available partition number. In this example the first partition is chosen by entering 1.

Partition number (1-4): 1

  - Enter the default first cylinder by pressing Enter.

First cylinder (1-400, default 1):

  - Select the size of the partition. In this example the entire disk is allocated by pressing Enter.

Last cylinder or +size or +sizeM or +sizeK (2-400, default 400):

  - Set the type of partition by pressing t.

Command (m for help): t

  - Choose the partition you created in the previous steps. In this example, the partition number is 1.

Partition number (1-4): 1

  - Enter 83 for a Linux partition.

Hex code (type L to list codes): 83

  - Write changes to disk and quit.

Command (m for help): w
Command (m for help): q

  - Format the new partition with the ext3 file system.

# mke2fs -j /dev/sdb1

- Mount the disk on the guest.

# mount /dev/sdb1 /myfiles
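The difference between the sparse and pre-allocated dd commands in the first step can be observed directly: both files report the same apparent size, but a sparse file normally occupies far fewer blocks on disk. The sketch below is scaled down to 4MB and writes to a temporary directory. It assumes GNU dd (for the bs=1M suffix) and a file system that supports sparse files. On other file systems the allocated sizes may be equal.

```shell
#!/bin/sh
# Compare a sparse and a pre-allocated image of the same apparent size.
# Sizes are scaled down to 4MB and written to a temporary directory.
dir=$(mktemp -d)

# Sparse: seek to the end without writing any data blocks.
dd if=/dev/zero of="$dir/sparse.img" bs=1M seek=4 count=0 2>/dev/null
# Pre-allocated: write every block.
dd if=/dev/zero of="$dir/full.img" bs=1M count=4 2>/dev/null

# Apparent size in bytes, as reported by reading the file.
sparse_bytes=$(wc -c < "$dir/sparse.img" | tr -d ' ')
full_bytes=$(wc -c < "$dir/full.img" | tr -d ' ')
# Space actually allocated on disk, in KB.
sparse_kb=$(du -k "$dir/sparse.img" | cut -f1)
full_kb=$(du -k "$dir/full.img" | cut -f1)

echo "apparent size: sparse=$sparse_bytes full=$full_bytes"
echo "allocated KB:  sparse=$sparse_kb full=$full_kb"
rm -rf "$dir"
```

A sparse image only consumes host storage as the guest writes to it, so the host can run out of space later without warning. This is one reason the procedure recommends pre-allocated files.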

System administrators use additional hard drives to provide more storage space or to separate system data from user data. This procedure, Procedure 9.1, “Adding physical block devices to virtual machines”, describes how to add a hard drive on the host to a guest.

Warning

fstab file, the initrd file or used by the kernel command line. If less privileged users, especially virtual machines, have write access to whole partitions or LVM volumes the host system could be compromised.

/dev/sdb). Virtual machines with access to block devices may be able to access other block devices on the system or modify volume labels which can be used to compromise the host system. Use partitions (for example, /dev/sdb1) or LVM volumes to prevent this issue.

Procedure 9.1. Adding physical block devices to virtual machines

- Physically attach the hard disk device to the host. Configure the host if the drive is not accessible by default.

- Configure the device with multipath and persistence on the host if required.

- Use the virsh attach-disk command. Replace: myguest with your guest's name, /dev/sdb1 with the device to add, and sdc with the location for the device on the guest. The sdc must be an unused device name. Use the sd* notation for Windows guests as well; the guest will recognize the device correctly.

Append the --type cdrom parameter to the command for CD-ROM or DVD devices.

Append the --type floppy parameter to the command for floppy devices.

# virsh attach-disk myguest /dev/sdb1 sdc --driver tap --mode readonly

- The guest now has a new hard disk device called /dev/sdb on Linux or D: drive, or similar, on Windows. This device may require formatting.
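The warning before this procedure advises passing partitions (for example, /dev/sdb1) rather than whole disks (for example, /dev/sdb) to guests. The following is a minimal sketch of a guard for that rule, under stated assumptions: is_partition is a hypothetical helper that inspects only the device name, and it assumes sd*, hd* or xvd* naming where partition names end in a digit. LVM volume paths would need an additional case. The attach command is printed for review instead of being run.

```shell
#!/bin/sh
# Refuse whole-disk names such as /dev/sdb before attaching a device.
# is_partition is a hypothetical helper; it only inspects the name and
# assumes sd*/hd*/xvd* style naming where partitions end in a digit.
is_partition() {
    case "$1" in
        /dev/sd[a-z]*[0-9]|/dev/hd[a-z]*[0-9]|/dev/xvd[a-z]*[0-9]) return 0 ;;
        *) return 1 ;;
    esac
}

guest=myguest
source_dev=/dev/sdb1
target=sdc

if is_partition "$source_dev"; then
    # Print the command for review instead of running it.
    echo "virsh attach-disk $guest $source_dev $target --driver tap --mode readonly"
else
    echo "refusing to attach whole disk $source_dev" >&2
fi
```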

9.3. Configuring persistent storage in Red Hat Enterprise Linux 5

multipath must use Single path configuration. Systems running multipath can use Multiple path configuration.

This procedure implements LUN device persistence using udev. Only use this procedure for hosts which are not using multipath.

- Edit the /etc/scsi_id.config file. Ensure the options=-b line is commented out:

# options=-b

Then add the following line:

options=-g

This option configures udev to assume all attached SCSI devices return a UUID.

- To display the UUID for a given device run the scsi_id -g -s /block/sd* command. For example:

# scsi_id -g -s /block/sd*

3600a0b800013275100000015427b625e

The output may vary from the example above. The output displays the UUID of the device /dev/sdc.

- Verify the UUID output by the scsi_id -g -s /block/sd* command is identical on every computer which accesses the device.

- Create a rule to name the device. Create a file named 20-names.rules in the /etc/udev/rules.d directory. Add new rules to this file. All rules are added to the same file using the same format. Rules follow this format:

KERNEL=="sd[a-z]", BUS=="scsi", PROGRAM="/sbin/scsi_id -g -s /block/%k", RESULT="UUID", NAME="devicename"

Replace UUID and devicename with the UUID retrieved above, and a name for the device. This is a rule for the example above:

KERNEL=="sd[a-z]", BUS=="scsi", PROGRAM="/sbin/scsi_id -g -s /block/%k", RESULT="3600a0b800013275100000015427b625e", NAME="rack4row16"

The udev daemon now searches all devices named /dev/sd* for the UUID in the rule. Once a matching device is connected to the system the device is assigned the name from the rule. In the example, a device with a UUID of 3600a0b800013275100000015427b625e would appear as /dev/rack4row16.

- Append this line to /etc/rc.local:

/sbin/start_udev

- Copy the changes in the /etc/scsi_id.config, /etc/udev/rules.d/20-names.rules, and /etc/rc.local files to all relevant hosts.

/sbin/start_udev
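When several LUNs need names, writing each rule by hand is error-prone. The sketch below assumes a hypothetical helper named make_udev_rule; it fills a UUID and a device name into the rule format given in the procedure and prints the result, which can then be appended to /etc/udev/rules.d/20-names.rules.

```shell
#!/bin/sh
# Hypothetical helper: print a udev rule in the format given in the
# procedure for a UUID (as reported by scsi_id) and the name to assign.
make_udev_rule() {
    uuid=$1
    name=$2
    # %%k is a literal %k, which udev expands to the kernel device name.
    printf 'KERNEL=="sd[a-z]", BUS=="scsi", PROGRAM="/sbin/scsi_id -g -s /block/%%k", RESULT="%s", NAME="%s"\n' "$uuid" "$name"
}

# Example using the UUID and name from the procedure.
make_udev_rule 3600a0b800013275100000015427b625e rack4row16
```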

The multipath package is used for systems with more than one physical path from the computer to storage devices. multipath provides fault tolerance, fail-over and enhanced performance for network storage devices attached to Red Hat Enterprise Linux systems.

A multipath environment requires defined alias names for your multipath devices. Each storage device has a UUID which acts as a key for the aliased names. Identify a device's UUID using the scsi_id command.

# scsi_id -g -s /block/sdc

The multipath devices are created in the /dev/mpath directory. In the example below, 4 devices are defined in /etc/multipath.conf:

The four devices appear as /dev/mpath/oramp1, /dev/mpath/oramp2, /dev/mpath/oramp3 and /dev/mpath/oramp4. Once entered, the mapping of the devices' WWIDs to their new names is persistent across reboots.

9.4. Add a virtualized CD-ROM or DVD device to a guest

To attach a CD-ROM or DVD device to a guest, use virsh with the attach-disk parameter.

# virsh attach-disk [domain-id] [source] [target] --driver file --type cdrom --mode readonly

The source parameter can be a path to an ISO file or a device from the /dev directory.

Chapter 10. Network Configuration

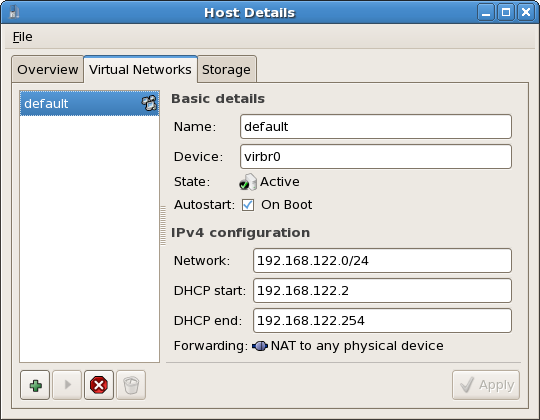

10.1. Network Address Translation (NAT) with libvirt

Every standard libvirt installation provides NAT based connectivity to virtual machines out of the box. This is the so called 'default virtual network'. Verify that it is available with the virsh net-list --all command.

# virsh net-list --all

Name State Autostart

-----------------------------------------

default active yes

# virsh net-define /usr/share/libvirt/networks/default.xml

The default network is defined from /usr/share/libvirt/networks/default.xml

# virsh net-autostart default

Network default marked as autostarted

# virsh net-start default

Network default started

Once the libvirt default network is running, you will see an isolated bridge device. This device does not have any physical interfaces added, since it uses NAT and IP forwarding to connect to the outside world. Do not add new interfaces.

# brctl show

bridge name bridge id STP enabled interfaces

virbr0 8000.000000000000 yes

libvirt adds iptables rules which allow traffic to and from guests attached to the virbr0 device in the INPUT, FORWARD, OUTPUT and POSTROUTING chains. libvirt then attempts to enable the ip_forward parameter. Some other applications may disable ip_forward, so the best option is to add the following to /etc/sysctl.conf:

net.ipv4.ip_forward = 1
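Because other applications may switch ip_forward off again, it can be useful to check the running value. The following is a minimal sketch, assuming a hypothetical helper named ip_forward_enabled; it reads the kernel's current setting from /proc/sys/net/ipv4/ip_forward, and the optional argument exists only so the check can be pointed at another file.

```shell
#!/bin/sh
# Hypothetical helper: succeed if IP forwarding is switched on.
# The optional argument overrides the file to read.
ip_forward_enabled() {
    file=${1:-/proc/sys/net/ipv4/ip_forward}
    [ "$(cat "$file" 2>/dev/null)" = "1" ]
}

if ip_forward_enabled; then
    echo "ip_forward is enabled"
else
    echo "ip_forward is disabled; check /etc/sysctl.conf"
fi
```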

Once the host configuration is complete, a guest can be connected to the virtual network based on its name. To connect a guest to the 'default' virtual network, the following XML can be used in the guest:

<interface type='network'>

<source network='default'/>

</interface>

Note

<interface type='network'>

<source network='default'/>

<mac address='00:16:3e:1a:b3:4a'/>

</interface>
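The two interface definitions above differ only in the optional mac element. As a sketch, a hypothetical helper named make_interface_xml can print either form; the function name and its arguments are assumptions for illustration and are not part of libvirt.

```shell
#!/bin/sh
# Hypothetical helper: print an <interface> element for a libvirt
# virtual network. The MAC address (second argument) is optional.
make_interface_xml() {
    network=$1
    mac=${2:-}
    printf "<interface type='network'>\n"
    printf "  <source network='%s'/>\n" "$network"
    if [ -n "$mac" ]; then
        printf "  <mac address='%s'/>\n" "$mac"
    fi
    printf "</interface>\n"
}

# Example: the definition with the MAC address used above.
make_interface_xml default 00:16:3e:1a:b3:4a
```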

10.2. Bridged networking with libvirt

If your system was using a Xen bridge, it is recommended to disable the default Xen network bridge by editing /etc/xen/xend-config.sxp and changing the line:

(network-script network-bridge)

to:

(network-script /bin/true)

NetworkManager does not support bridging. Running NetworkManager will overwrite any manual bridge configuration. Because of this, NetworkManager should be disabled in order to use networking via the network scripts (located in the /etc/sysconfig/network-scripts/ directory):

# chkconfig NetworkManager off

# chkconfig network on

# service NetworkManager stop

# service network start

Note

Instead of turning off NetworkManager, you can add "NM_CONTROLLED=no" to the ifcfg-* scripts used in the examples. If you do not either set this parameter or disable NetworkManager entirely, any bridge configuration will be overwritten and lost when NetworkManager next starts.

Create or edit the following two network configuration files. This step can be repeated (with different names) for additional network bridges.

Change to the /etc/sysconfig/network-scripts directory:

# cd /etc/sysconfig/network-scripts

ifcfg-eth0 defines the physical network interface which is set as part of a bridge:

Note

You can configure the device's Maximum Transfer Unit (MTU) by appending an MTU variable to the end of the configuration file.

MTU=9000

Create a new network script in the /etc/sysconfig/network-scripts directory called ifcfg-br0 or similar. The br0 is the name of the bridge; this name can be anything as long as the name of the file is the same as the DEVICE parameter.

Note

Configure IP address details, whether dynamic or static, on the bridge itself (for example, in the ifcfg-br0 file). Network access will not function as expected if IP address details are configured on the physical interface that the bridge is connected to.

Warning

The line, TYPE=Bridge, is case-sensitive. It must have an uppercase 'B' and lowercase 'ridge'.
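As an illustration of the two files described above, the sketch below prints a minimal ifcfg-eth0 and ifcfg-br0 pair. The function names are hypothetical, and the specific values (DHCP addressing on the bridge, DELAY=0, no HWADDR line) are assumptions chosen for the example rather than required settings; adjust them for your network before writing the files.

```shell
#!/bin/sh
# Sketch only: print example contents for the two network scripts.
# The physical interface carries no IP settings; it only joins the bridge.
print_ifcfg_eth0() {
    cat <<'EOF'
DEVICE=eth0
ONBOOT=yes
BRIDGE=br0
EOF
}

# The bridge holds the IP configuration (DHCP is assumed here).
# TYPE=Bridge is case-sensitive.
print_ifcfg_br0() {
    cat <<'EOF'
DEVICE=br0
TYPE=Bridge
BOOTPROTO=dhcp
ONBOOT=yes
DELAY=0
EOF
}

# Review the output, then redirect it into
# /etc/sysconfig/network-scripts/ifcfg-eth0 and ifcfg-br0 as root.
print_ifcfg_eth0
print_ifcfg_br0
```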

# service network restart

Configure iptables to allow all traffic to be forwarded across the bridge.

# iptables -I FORWARD -m physdev --physdev-is-bridged -j ACCEPT

# service iptables save

# service iptables restart

Note

Alternatively, prevent bridged traffic from being processed by iptables rules. In /etc/sysctl.conf append the following lines:

net.bridge.bridge-nf-call-ip6tables = 0

net.bridge.bridge-nf-call-iptables = 0

net.bridge.bridge-nf-call-arptables = 0

Reload the kernel parameters configured with sysctl.

# sysctl -p /etc/sysctl.conf
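Before reloading, it can help to confirm that all three bridge settings are present in the file. The following is a minimal sketch, assuming a hypothetical helper named bridge_nf_disabled; it only inspects the configuration file and does not query the running kernel.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if all three bridge-nf-call-*
# settings are set to 0 in the given file (default: /etc/sysctl.conf).
bridge_nf_disabled() {
    conf=${1:-/etc/sysctl.conf}
    for key in ip6tables iptables arptables; do
        grep -Eq "^[[:space:]]*net\.bridge\.bridge-nf-call-$key[[:space:]]*=[[:space:]]*0[[:space:]]*\$" "$conf" 2>/dev/null || return 1
    done
    return 0
}

if bridge_nf_disabled; then
    echo "bridged traffic bypasses iptables in /etc/sysctl.conf"
else
    echo "one or more bridge-nf-call settings are missing from /etc/sysctl.conf"
fi
```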

Reload the libvirt daemon.

# service libvirtd reload

# brctl show

bridge name bridge id STP enabled interfaces

virbr0 8000.000000000000 yes

br0 8000.000e0cb30550 no eth0

The br0 bridge is completely independent of the virbr0 bridge. Do not attempt to attach a physical device to virbr0. The virbr0 bridge is only for Network Address Translation (NAT) connectivity.

Chapter 11. Pre-Red Hat Enterprise Linux 5.4 Xen networking

Network configuration is also covered in the tool-specific reference chapters for virsh (Chapter 26, Managing guests with virsh) and virt-manager (Chapter 27, Managing guests with the Virtual Machine Manager (virt-manager)). Those chapters provide a detailed description of the networking configuration tasks using both tools.

Note

11.1. Configuring multiple guest network bridges to use multiple Ethernet cards

- Configure another network interface using the system-config-network application. Alternatively, create a new configuration file named ifcfg-ethX in the /etc/sysconfig/network-scripts/ directory where X is any number not already in use. Below is an example configuration file for a second network interface called eth1:

- Copy the file /etc/xen/scripts/network-bridge to /etc/xen/scripts/network-bridge.xen.

- Comment out any existing network scripts in /etc/xen/xend-config.sxp and add the line (network-xen-multi-bridge). A typical xend-config.sxp file should have the following line. Comment this line out. Use the # symbol to comment out lines.

network-script network-bridge

Below is the commented out line and the new line, containing the network-xen-multi-bridge parameter to enable multiple network bridges:

#network-script network-bridge

network-script network-xen-multi-bridge

- Create a script to create multiple network bridges. This example creates a script called network-xen-multi-bridge.sh in the /etc/xen/scripts/ directory. The following example script will create two Xen network bridges (xenbr0 and xenbr1); one will be attached to eth1 and the other one to eth0. To create additional bridges, follow the example in the script and copy and paste the lines as required:

- Make the script executable.

# chmod +x /etc/xen/scripts/network-xen-multi-bridge.sh

- Restart networking or restart the system to activate the bridges.

# service network restart
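The multiple-bridge script created in the procedure above might look like the following sketch. It assumes the copied script at /etc/xen/scripts/network-bridge.xen accepts the vifnum, bridge and netdev arguments, and it wraps the logic in a hypothetical function, multi_bridge, whose optional second argument replaces the bridge script so the calls can be printed without touching the network.

```shell
#!/bin/sh
# Sketch of /etc/xen/scripts/network-xen-multi-bridge.sh.
# multi_bridge OP [SCRIPT]: run OP (start, stop or status) for each bridge.
# SCRIPT defaults to the copy of network-bridge made in the procedure.
multi_bridge() {
    op=$1
    script=${2:-/etc/xen/scripts/network-bridge.xen}
    case "$op" in
        start|stop|status)
            # One line per bridge; copy a line to add further bridges.
            "$script" "$op" vifnum=1 bridge=xenbr1 netdev=eth1 &&
            "$script" "$op" vifnum=0 bridge=xenbr0 netdev=eth0
            ;;
        *)
            echo "Unknown command: $op" >&2
            echo "Valid commands are: start, stop, status" >&2
            return 1
            ;;
    esac
}

# Dry run: pass echo as the script to print the calls instead of running them.
multi_bridge start echo
```

In a real script the last line would instead be multi_bridge "$1", so that xend can pass the operation as the first argument.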

11.2. Red Hat Enterprise Linux 5.0 laptop network configuration

Important

virt-manager. NetworkManager works with virtual network devices by default in Red Hat Enterprise Linux 5.1 and newer.

In xm configuration files, virtual network devices are labeled "vif".

ifup or ifdown calls to the network interface it is using. In addition wireless network cards do not work well in a virtualization environment due to Xen's (default) bridged network usage.

- You will be configuring a 'dummy' network interface which will be used by Xen. In this example the interface is called dummy0. This will also allow you to use a hidden IP address space for your guests.

- You will need to use static IP addresses as DHCP will not listen on the dummy interface for DHCP requests. You can compile your own version of DHCP to listen on dummy interfaces, however you may want to look into using dnsmasq for DNS, DHCP and tftpboot services in a Xen environment. Setup and configuration are explained further down in this section/chapter.

- You can also configure NAT and IP masquerading in order to enable access to the network from your guests.

Perform the following configuration steps on your host:

- Create a dummy0 network interface and assign it a static IP address. In this example, 10.1.1.1 is used to avoid routing problems in our environment. To enable dummy device support add the following lines to /etc/modprobe.conf:

alias dummy0 dummy

options dummy numdummies=1

- To configure networking for dummy0, edit or create /etc/sysconfig/network-scripts/ifcfg-dummy0:

- Bind xenbr0 to dummy0, so you can use networking even when not connected to a physical network. Edit /etc/xen/xend-config.sxp to include the netdev=dummy0 entry:

(network-script 'network-bridge bridge=xenbr0 netdev=dummy0')

- Open /etc/sysconfig/network in the guest and modify the default gateway to point to dummy0. If you are using a static IP, set the guest's IP address to exist on the same subnet as dummy0.

- Setting up NAT in the host will allow the guests to access the Internet, including with wireless, solving the Xen and wireless card issues. The script below will enable NAT based on the interface currently used for your network connection.

Network address translation (NAT) allows multiple network addresses to connect through a single IP address by intercepting packets and passing them to the private IP addresses. You can copy the following script to /etc/init.d/xenLaptopNAT and create a soft link to /etc/rc3.d/S99xenLaptopNAT. This automatically starts NAT at boot time.

Note

One of the challenges in running virtualization on a laptop (or any other computer which is not connected by a single or stable network connection) is the change in network interfaces and availability. Using a dummy network interface helps to build a more stable environment but it also brings up new challenges in providing DHCP, DNS and tftpboot services to your guest virtual machines. The default DHCP daemon shipped with Red Hat Enterprise Linux and Fedora Core will not listen on dummy interfaces, and your DNS forwarded information may change as you connect to different networks and VPNs.

One solution to these challenges is to use dnsmasq, which can provide all of the above services in a single package, and also allows you to configure services to be available only to requests from your dummy interface. Below is a short write-up on how to configure dnsmasq on a laptop running virtualization: