Cluster Administration

Configuring and Managing the High Availability Add-On

Abstract

Introduction

- Red Hat Enterprise Linux Installation Guide — Provides information regarding installation of Red Hat Enterprise Linux 6.

- Red Hat Enterprise Linux Deployment Guide — Provides information regarding the deployment, configuration and administration of Red Hat Enterprise Linux 6.

- High Availability Add-On Overview — Provides a high-level overview of the Red Hat High Availability Add-On.

- Logical Volume Manager Administration — Provides a description of the Logical Volume Manager (LVM), including information on running LVM in a clustered environment.

- Global File System 2: Configuration and Administration — Provides information about installing, configuring, and maintaining Red Hat GFS2 (Red Hat Global File System 2), which is included in the Resilient Storage Add-On.

- DM Multipath — Provides information about using the Device-Mapper Multipath feature of Red Hat Enterprise Linux 6.

- Load Balancer Administration — Provides information on configuring high-performance systems and services with the Load Balancer Add-On, a set of integrated software components that provide Linux Virtual Servers (LVS) for balancing IP load across a set of real servers.

- Release Notes — Provides information about the current release of Red Hat products.

1. Feedback

Cluster_Administration(EN)-6 (2017-3-07T16:26)

Cluster_Administration(EN)-6 (2017-3-07T16:26)

Chapter 1. Red Hat High Availability Add-On Configuration and Management Overview

Note

1.1. New and Changed Features

1.1.1. New and Changed Features for Red Hat Enterprise Linux 6.1

- As of the Red Hat Enterprise Linux 6.1 release and later, the Red Hat High Availability Add-On provides support for SNMP traps. For information on configuring SNMP traps with the Red Hat High Availability Add-On, see Chapter 11, SNMP Configuration with the Red Hat High Availability Add-On.

- As of the Red Hat Enterprise Linux 6.1 release and later, the Red Hat High Availability Add-On provides support for the

ccscluster configuration command. For information on theccscommand, see Chapter 6, Configuring Red Hat High Availability Add-On With the ccs Command and Chapter 7, Managing Red Hat High Availability Add-On With ccs. - The documentation for configuring and managing Red Hat High Availability Add-On software using Conga has been updated to reflect updated Conga screens and feature support.

- For the Red Hat Enterprise Linux 6.1 release and later, using

riccirequires a password the first time you propagate updated cluster configuration from any particular node. For information onriccisee Section 3.13, “Considerations forricci”. - You can now specify a Restart-Disable failure policy for a service, indicating that the system should attempt to restart the service in place if it fails, but if restarting the service fails the service will be disabled instead of being moved to another host in the cluster. This feature is documented in Section 4.10, “Adding a Cluster Service to the Cluster” and Appendix B, HA Resource Parameters.

- You can now configure an independent subtree as non-critical, indicating that if the resource fails then only that resource is disabled. For information on this feature see Section 4.10, “Adding a Cluster Service to the Cluster” and Section C.4, “Failure Recovery and Independent Subtrees”.

- This document now includes the new chapter Chapter 10, Diagnosing and Correcting Problems in a Cluster.

1.1.2. New and Changed Features for Red Hat Enterprise Linux 6.2

- Red Hat Enterprise Linux now provides support for running Clustered Samba in an active/active configuration. For information on clustered Samba configuration, see Chapter 12, Clustered Samba Configuration.

- Any user able to authenticate on the system that is hosting luci can log in to luci. As of Red Hat Enterprise Linux 6.2, only the root user on the system that is running luci can access any of the luci components until an administrator (the root user or a user with administrator permission) sets permissions for that user. For information on setting luci permissions for users, see Section 4.3, “Controlling Access to luci”.

- The nodes in a cluster can communicate with each other using the UDP unicast transport mechanism. For information on configuring UDP unicast, see Section 3.12, “UDP Unicast Traffic”.

- You can now configure some aspects of luci's behavior by means of the

/etc/sysconfig/lucifile. For example, you can specifically configure the only IP address luci is being served at. For information on configuring the only IP address luci is being served at, see Table 3.2, “Enabled IP Port on a Computer That Runs luci”. For information on the/etc/sysconfig/lucifile in general, see Section 3.4, “Configuring luci with/etc/sysconfig/luci”. - The

ccscommand now includes the--lsfenceoptsoption, which prints a list of available fence devices, and the--lsfenceoptsfence_type option, which prints each available fence type. For information on these options, see Section 6.6, “Listing Fence Devices and Fence Device Options”. - The

ccscommand now includes the--lsserviceoptsoption, which prints a list of cluster services currently available for your cluster, and the--lsserviceoptsservice_type option, which prints a list of the options you can specify for a particular service type. For information on these options, see Section 6.11, “Listing Available Cluster Services and Resources”. - The Red Hat Enterprise Linux 6.2 release provides support for the VMware (SOAP Interface) fence agent. For information on fence device parameters, see Appendix A, Fence Device Parameters.

- The Red Hat Enterprise Linux 6.2 release provides support for the RHEV-M REST API fence agent, against RHEV 3.0 and later. For information on fence device parameters, see Appendix A, Fence Device Parameters.

- As of the Red Hat Enterprise Linux 6.2 release, when you configure a virtual machine in a cluster with the

ccscommand you can use the--addvmoption (rather than theaddserviceoption). This ensures that thevmresource is defined directly under thermconfiguration node in the cluster configuration file. For information on configuring virtual machine resources with theccscommand, see Section 6.12, “Virtual Machine Resources”. - This document includes a new appendix, Appendix D, Modifying and Enforcing Cluster Service Resource Actions. This appendix describes how

rgmanagermonitors the status of cluster resources, and how to modify the status check interval. The appendix also describes the__enforce_timeoutsservice parameter, which indicates that a timeout for an operation should cause a service to fail. - This document includes a new section, Section 3.3.3, “Configuring the iptables Firewall to Allow Cluster Components”. This section shows the filtering you can use to allow multicast traffic through the

iptablesfirewall for the various cluster components.

1.1.3. New and Changed Features for Red Hat Enterprise Linux 6.3

- The Red Hat Enterprise Linux 6.3 release provides support for the

condorresource agent. For information on HA resource parameters, see Appendix B, HA Resource Parameters. - This document includes a new appendix, Appendix F, High Availability LVM (HA-LVM).

- Information throughout this document clarifies which configuration changes require a cluster restart. For a summary of these changes, see Section 10.1, “Configuration Changes Do Not Take Effect”.

- The documentation now notes that there is an idle timeout for luci that logs you out after 15 minutes of inactivity. For information on starting luci, see Section 4.2, “Starting luci”.

- The

fence_ipmilanfence device supports a privilege level parameter. For information on fence device parameters, see Appendix A, Fence Device Parameters. - This document includes a new section, Section 3.14, “Configuring Virtual Machines in a Clustered Environment”.

- This document includes a new section, Section 5.6, “Backing Up and Restoring the luci Configuration”.

- This document includes a new section, Section 10.4, “Cluster Daemon crashes”.

- This document provides information on setting debug options in Section 6.14.4, “Logging”, Section 8.7, “Configuring Debug Options”, and Section 10.13, “Debug Logging for Distributed Lock Manager (DLM) Needs to be Enabled”.

- As of Red Hat Enterprise Linux 6.3, the root user or a user who has been granted luci administrator permissions can also use the luci interface to add users to the system, as described in Section 4.3, “Controlling Access to luci”.

- As of the Red Hat Enterprise Linux 6.3 release, the

ccscommand validates the configuration according to the cluster schema at/usr/share/cluster/cluster.rngon the node that you specify with the-hoption. Previously theccscommand always used the cluster schema that was packaged with theccscommand itself,/usr/share/ccs/cluster.rngon the local system. For information on configuration validation, see Section 6.1.6, “Configuration Validation”. - The tables describing the fence device parameters in Appendix A, Fence Device Parameters and the tables describing the HA resource parameters in Appendix B, HA Resource Parameters now include the names of those parameters as they appear in the

cluster.conffile.

1.1.4. New and Changed Features for Red Hat Enterprise Linux 6.4

- The Red Hat Enterprise Linux 6.4 release provides support for the Eaton Network Power Controller (SNMP Interface) fence agent, the HP BladeSystem fence agent, and the IBM iPDU fence agent. For information on fence device parameters, see Appendix A, Fence Device Parameters.

- Appendix B, HA Resource Parameters now provides a description of the NFS Server resource agent.

- As of Red Hat Enterprise Linux 6.4, the root user or a user who has been granted luci administrator permissions can also use the luci interface to delete users from the system. This is documented in Section 4.3, “Controlling Access to luci”.

- Appendix B, HA Resource Parameters provides a description of the new

nfsrestartparameter for the Filesystem and GFS2 HA resources. - This document includes a new section, Section 6.1.5, “Commands that Overwrite Previous Settings”.

- Section 3.3, “Enabling IP Ports” now includes information on filtering the

iptablesfirewall forigmp. - The IPMI LAN fence agent now supports a parameter to configure the privilege level on the IPMI device, as documented in Appendix A, Fence Device Parameters.

- In addition to Ethernet bonding mode 1, bonding modes 0 and 2 are now supported for inter-node communication in a cluster. Troubleshooting advice in this document that suggests you ensure that you are using only supported bonding modes now notes this.

- VLAN-tagged network devices are now supported for cluster heartbeat communication. Troubleshooting advice indicating that this is not supported has been removed from this document.

- The Red Hat High Availability Add-On now supports the configuration of redundant ring protocol. For general information on using this feature and configuring the

cluster.confconfiguration file, see Section 8.6, “Configuring Redundant Ring Protocol”. For information on configuring redundant ring protocol with luci, see Section 4.5.4, “Configuring Redundant Ring Protocol”. For information on configuring redundant ring protocol with theccscommand, see Section 6.14.5, “Configuring Redundant Ring Protocol”.

1.1.5. New and Changed Features for Red Hat Enterprise Linux 6.5

- This document includes a new section, Section 8.8, “Configuring nfsexport and nfsserver Resources”.

- The tables of fence device parameters in Appendix A, Fence Device Parameters have been updated to reflect small updates to the luci interface.

1.1.6. New and Changed Features for Red Hat Enterprise Linux 6.6

- The tables of fence device parameters in Appendix A, Fence Device Parameters have been updated to reflect small updates to the luci interface.

- The tables of resource agent parameters in Appendix B, HA Resource Parameters have been updated to reflect small updates to the luci interface.

- Table B.3, “Bind Mount (

bind-mountResource) (Red Hat Enterprise Linux 6.6 and later)” documents the parameters for the Bind Mount resource agent. - As of Red Hat Enterprise Linux 6.6 release, you can use the

--noenableoption of theccs --startallcommand to prevent cluster services from being enabled, as documented in Section 7.2, “Starting and Stopping a Cluster” - Table A.26, “Fence kdump” documents the parameters for the kdump fence agent.

- As of the Red Hat Enterprise Linux 6.6 release, you can sort the columns in a resource list on the luci display by clicking on the header for the sort category, as described in Section 4.9, “Configuring Global Cluster Resources”.

1.1.7. New and Changed Features for Red Hat Enterprise Linux 6.7

- This document now includes a new chapter, Chapter 2, Getting Started: Overview, which provides a summary procedure for setting up a basic Red Hat High Availability cluster.

- Appendix A, Fence Device Parameters now includes a table listing the parameters for the Emerson Network Power Switch (SNMP interface).

- Appendix A, Fence Device Parameters now includes a table listing the parameters for the

fence_xvmfence agent, titled as "Fence virt (Multicast Mode"). The table listing the parameters for thefence_virtfence agent is now titled "Fence virt ((Serial/VMChannel Mode)". Both tables have been updated to reflect the luci display. - Appendix A, Fence Device Parameters now includes a table listing the parameters for the

fence_xvmfence agent, titled as "Fence virt (Multicast Mode"). The table listing the parameters for thefence_virtfence agent is now titled "Fence virt ((Serial/VMChannel Mode)". Both tables have been updated to reflect the luci display. - The troubleshooting procedure described in Section 10.10, “Quorum Disk Does Not Appear as Cluster Member” has been updated.

1.1.8. New and Changed Features for Red Hat Enterprise Linux 6.8

- Appendix A, Fence Device Parameters now includes a table listing the parameters for the

fence_mpathfence agent, titled as "Multipath Persistent Reservation Fencing". The table listing the parameters for thefence_ipmilan,fence_idrac,fence_imm,fence_ilo3, andfence_ilo4fence agents has been updated to reflect the luci display. - Section F.3, “Creating New Logical Volumes for an Existing Cluster” now provides a procedure for creating new logical volumes in an existing cluster when using HA-LVM.

1.1.9. New and Changed Features for Red Hat Enterprise Linux 6.9

- As of Red Hat Enterprise Linux 6.9, after you have entered a node name on the luci dialog box or the screen, the fingerprint of the certificate of the ricci host is displayed for confirmation, as described in Section 4.4, “Creating a Cluster” and Section 5.1, “Adding an Existing Cluster to the luci Interface”.Similarly, the fingerprint of the certificate of the ricci host is displayed for confirmation when you add a new node to a running cluster, as described in Section 5.3.3, “Adding a Member to a Running Cluster”.

- The luci display for a selected service group now includes a table showing the actions that have been configured for each resource in that service group. For information on resource actions, see Appendix D, Modifying and Enforcing Cluster Service Resource Actions.

1.2. Configuration Basics

- Setting up hardware. Refer to Section 1.3, “Setting Up Hardware”.

- Installing Red Hat High Availability Add-On software. Refer to Section 1.4, “Installing Red Hat High Availability Add-On software”.

- Configuring Red Hat High Availability Add-On Software. Refer to Section 1.5, “Configuring Red Hat High Availability Add-On Software”.

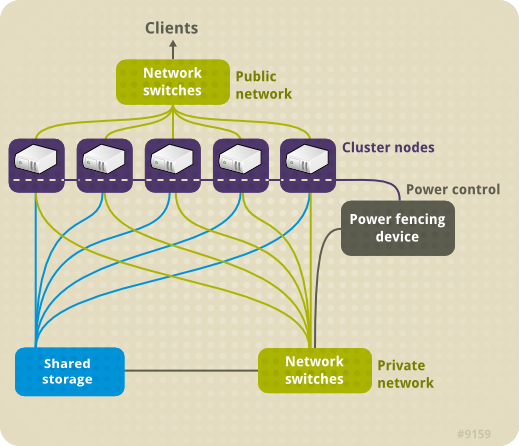

1.3. Setting Up Hardware

- Cluster nodes — Computers that are capable of running Red Hat Enterprise Linux 6 software, with at least 1GB of RAM.

- Network switches for public network — This is required for client access to the cluster.

- Network switches for private network — This is required for communication among the cluster nodes and other cluster hardware such as network power switches and Fibre Channel switches.

- Fencing device — A fencing device is required. A network power switch is recommended to perform fencing in an enterprise-level cluster. For information about supported fencing devices, see Appendix A, Fence Device Parameters.

- Storage — Some type of storage is required for a cluster. Figure 1.1, “Red Hat High Availability Add-On Hardware Overview” shows shared storage, but shared storage may not be required for your specific use.

Figure 1.1. Red Hat High Availability Add-On Hardware Overview

1.4. Installing Red Hat High Availability Add-On software

yum install command to install the Red Hat High Availability Add-On software packages:

yum install rgmanager lvm2-cluster gfs2-utils

# yum install rgmanager lvm2-cluster gfs2-utilsrgmanager will pull in all necessary dependencies to create an HA cluster from the HighAvailability channel. The lvm2-cluster and gfs2-utils packages are part of ResilientStorage channel and may not be needed by your site.

Warning

Upgrading Red Hat High Availability Add-On Software

- Shut down all cluster services on a single cluster node. For instructions on stopping cluster software on a node, see Section 9.1.2, “Stopping Cluster Software”. It may be desirable to manually relocate cluster-managed services and virtual machines off of the host prior to stopping

rgmanager. - Execute the

yum updatecommand to update installed packages. - Reboot the cluster node or restart the cluster services manually. For instructions on starting cluster software on a node, see Section 9.1.1, “Starting Cluster Software”.

1.5. Configuring Red Hat High Availability Add-On Software

- Conga — This is a comprehensive user interface for installing, configuring, and managing Red Hat High Availability Add-On. Refer to Chapter 4, Configuring Red Hat High Availability Add-On With Conga and Chapter 5, Managing Red Hat High Availability Add-On With Conga for information about configuring and managing High Availability Add-On with Conga.

- The

ccscommand — This command configures and manages Red Hat High Availability Add-On. Refer to Chapter 6, Configuring Red Hat High Availability Add-On With the ccs Command and Chapter 7, Managing Red Hat High Availability Add-On With ccs for information about configuring and managing High Availability Add-On with theccscommand. - Command-line tools — This is a set of command-line tools for configuring and managing Red Hat High Availability Add-On. Refer to Chapter 8, Configuring Red Hat High Availability Manually and Chapter 9, Managing Red Hat High Availability Add-On With Command Line Tools for information about configuring and managing a cluster with command-line tools. Refer to Appendix E, Command Line Tools Summary for a summary of preferred command-line tools.

Note

system-config-cluster is not available in Red Hat Enterprise Linux 6.

Chapter 2. Getting Started: Overview

2.1. Installation and System Setup

- Ensure that your Red Hat account includes the following support entitlements:

- RHEL: Server

- Red Hat Applications: High availability

- Red Hat Applications: Resilient Storage, if using the Clustered Logical Volume Manager (CLVM) and GFS2 file systems.

- Register the cluster systems for software updates, using either Red Hat Subscriptions Manager (RHSM) or RHN Classic.

- On each node in the cluster, configure the iptables firewall. The iptables firewall can be disabled, or it can be configured to allow cluster traffic to pass through.To disable the iptables system firewall, execute the following commands.

service iptables stop chkconfig iptables off

# service iptables stop # chkconfig iptables offCopy to Clipboard Copied! Toggle word wrap Toggle overflow For information on configuring the iptables firewall to allow cluster traffic to pass through, see Section 3.3, “Enabling IP Ports”. - On each node in the cluster, configure SELinux. SELinux is supported on Red Hat Enterprise Linux 6 cluster nodes in Enforcing or Permissive mode with a targeted policy, or it can be disabled. To check the current SELinux state, run the

getenforce:getenforce Permissive

# getenforce PermissiveCopy to Clipboard Copied! Toggle word wrap Toggle overflow For information on enabling and disabling SELinux, see the Security-Enhanced Linux user guide. - Install the cluster packages and package groups.

- On each node in the cluster, install the

High AvailabilityandResiliant Storagepackage groups.yum groupinstall 'High Availability' 'Resilient Storage'

# yum groupinstall 'High Availability' 'Resilient Storage'Copy to Clipboard Copied! Toggle word wrap Toggle overflow - On the node that will be hosting the web management interface, install the luci package.

yum install luci

# yum install luciCopy to Clipboard Copied! Toggle word wrap Toggle overflow

2.2. Starting Cluster Services

- On both nodes in the cluster, start the

ricciservice and set a password for userricci.Copy to Clipboard Copied! Toggle word wrap Toggle overflow - On the node that will be hosting the web management interface, start the luci service. This will provide the link from which to access luci on this node.

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

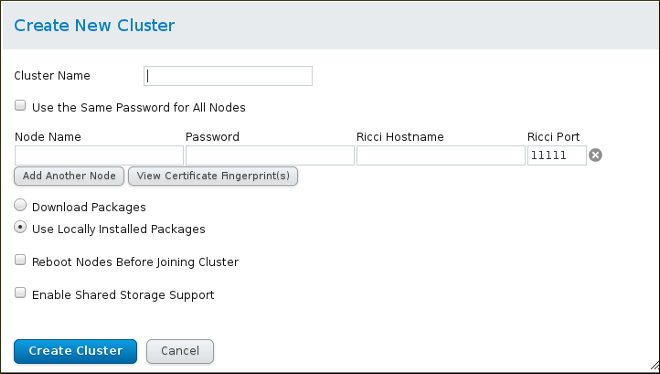

2.3. Creating the Cluster

- To access the High Availability management web interface, point your browser to the link provided by the luci service and log in using the root account on the node hosting luci. Logging in to luci displays the luci page.

- To create a cluster, click on from the menu on the left navigation pane of the page. This displays the page.

- From the page, click the button. This displays the screen.

Figure 2.1. Clusters menu

- On the screen, enter the parameters for the cluster you are creating. The field will be the

riccipassword you defined for the indicated node. For more detailed information about the parameters on this screen and information about verifying the certificate fingerprint of thericciserver, see Section 4.4, “Creating a Cluster”.Figure 2.2. Create New Cluster screen

- After you have completed entering the parameters for the cluster, click the button. A progress bar is displayed with the cluster is formed. Once cluster creation has completed, luci displays the cluster general properties.

- Verify the cluster status by running the

clustatcommand on either node of the cluster.Copy to Clipboard Copied! Toggle word wrap Toggle overflow If you cannot create the cluster, double check the firewall settings, as described in Section 3.3.3, “Configuring the iptables Firewall to Allow Cluster Components”.If you can create the cluster (there is an/etc/cluster/cluster.confon each node) but the cluster will not form, you may have multicast issues. To test this, change the transport mode from UDP multicast to UDP unicast, as described in Section 3.12, “UDP Unicast Traffic”. Note, however, that in unicast mode there is a traffic increase compared to multicast mode, which adds to the processing load of the node.

2.4. Configuring Fencing

2.5. Configuring a High Availability Application

- Configure shared storage and file systems required by your application. For information on high availability logical volumes, see Appendix F, High Availability LVM (HA-LVM). For information on the GFS2 clustered file system, see the Global File System 2 manual.

- Optionally, you can customize your cluster's behavior by configuring a failover domain. A failover domain determines which cluster nodes an application will run on in what circumstances, determined by a set of failover domain configuration options. For information on failover domain options and how they determine a cluster's behavior, see the High Availability Add-On Overview. For information on configuring failover domains, see Section 4.8, “Configuring a Failover Domain”.

- Configure cluster resources for your system. Cluster resources are the individual components of the applications running on a cluster node. For information on configuring cluster resources, see Section 4.9, “Configuring Global Cluster Resources”.

- Configure the cluster services for your cluster. A cluster service is the collection of cluster resources required by an application running on a cluster node that can fail over to another node in a high availability cluster. You can configure the startup and recovery policies for a cluster service, and you can configure resource trees for the resources that constitute the service, which determine startup and shutdown order for the resources as well as the relationships between the resources. For information on service policies, resource trees, service operations, and resource actions, see the High Availability Add-On Overview. For information on configuring cluster services, see Section 4.10, “Adding a Cluster Service to the Cluster”.

2.6. Testing the Configuration

- Verify that the service you created is running with the

clustatcommand, which you can run on either cluster node. In this example, the serviceexample_apacheis running onnode1.example.com.Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Check whether the service is operational. How you do this depends on the application. For example, if you are running an Apache web server, point a browser to the URL you defined for the server to see if the display is correct.

- Shut down the cluster software on the node on which the service is running, which you can determine from the

clustatdisplay.- Click on the cluster name beneath from the menu on the left side of the luci page. This displays the nodes that constitute the cluster.

- Select the node you want to leave the cluster by clicking the check box for that node.

- Select the function from the menu at the top of the page. This causes a message to appear at the top of the page indicating that the node is being stopped.

- Refresh the page to see the updated status of the node.

- Run the

clustatcommand again to see if the service is now running on the other cluster node. If it is, check again to see if the service is still operational. For example, if you are running an apache web server, check whether you can still display the website. - Make sure you have the node you disabled rejoin the cluster by returning to the cluster node display, selecting the node, and selecting .

Chapter 3. Before Configuring the Red Hat High Availability Add-On

Important

3.1. General Configuration Considerations

- Number of cluster nodes supported

- The maximum number of cluster nodes supported by the High Availability Add-On is 16.

- Single site clusters

- Only single site clusters are fully supported at this time. Clusters spread across multiple physical locations are not formally supported. For more details and to discuss multi-site clusters, speak to your Red Hat sales or support representative.

- GFS2

- Although a GFS2 file system can be implemented in a standalone system or as part of a cluster configuration, Red Hat does not support the use of GFS2 as a single-node file system. Red Hat does support a number of high-performance single-node file systems that are optimized for single node, which have generally lower overhead than a cluster file system. Red Hat recommends using those file systems in preference to GFS2 in cases where only a single node needs to mount the file system. Red Hat will continue to support single-node GFS2 file systems for existing customers.When you configure a GFS2 file system as a cluster file system, you must ensure that all nodes in the cluster have access to the shared file system. Asymmetric cluster configurations in which some nodes have access to the file system and others do not are not supported.This does not require that all nodes actually mount the GFS2 file system itself.

- No-single-point-of-failure hardware configuration

- Clusters can include a dual-controller RAID array, multiple bonded network channels, multiple paths between cluster members and storage, and redundant un-interruptible power supply (UPS) systems to ensure that no single failure results in application down time or loss of data.Alternatively, a low-cost cluster can be set up to provide less availability than a no-single-point-of-failure cluster. For example, you can set up a cluster with a single-controller RAID array and only a single Ethernet channel.Certain low-cost alternatives, such as host RAID controllers, software RAID without cluster support, and multi-initiator parallel SCSI configurations are not compatible or appropriate for use as shared cluster storage.

- Data integrity assurance

- To ensure data integrity, only one node can run a cluster service and access cluster-service data at a time. The use of power switches in the cluster hardware configuration enables a node to power-cycle another node before restarting that node's HA services during a failover process. This prevents two nodes from simultaneously accessing the same data and corrupting it. Fence devices (hardware or software solutions that remotely power, shutdown, and reboot cluster nodes) are used to guarantee data integrity under all failure conditions.

- Ethernet channel bonding

- Cluster quorum and node health is determined by communication of messages among cluster nodes by means of Ethernet. In addition, cluster nodes use Ethernet for a variety of other critical cluster functions (for example, fencing). With Ethernet channel bonding, multiple Ethernet interfaces are configured to behave as one, reducing the risk of a single-point-of-failure in the typical switched Ethernet connection among cluster nodes and other cluster hardware.As of Red Hat Enterprise Linux 6.4, bonding modes 0, 1, and 2 are supported.

- IPv4 and IPv6

- The High Availability Add-On supports both IPv4 and IPv6 Internet Protocols. Support of IPv6 in the High Availability Add-On is new for Red Hat Enterprise Linux 6.

3.2. Compatible Hardware

3.3. Enabling IP Ports

iptables rules for enabling IP ports needed by the Red Hat High Availability Add-On:

3.3.1. Enabling IP Ports on Cluster Nodes

system-config-firewall to enable the IP ports.

| IP Port Number | Protocol | Component |

|---|---|---|

| 5404, 5405 | UDP | corosync/cman (Cluster Manager) |

| 11111 | TCP | ricci (propagates updated cluster information) |

| 21064 | TCP | dlm (Distributed Lock Manager) |

| 16851 | TCP | modclusterd |

3.3.2. Enabling the IP Port for luci

Note

| IP Port Number | Protocol | Component |

|---|---|---|

| 8084 | TCP | luci (Conga user interface server) |

/etc/sysconfig/luci file, you can specifically configure the only IP address luci is being served at. You can use this capability if your server infrastructure incorporates more than one network and you want to access luci from the internal network only. To do this, uncomment and edit the line in the file that specifies host. For example, to change the host setting in the file to 10.10.10.10, edit the host line as follows:

host = 10.10.10.10

host = 10.10.10.10

/etc/sysconfig/luci file, see Section 3.4, “Configuring luci with /etc/sysconfig/luci”.

3.3.3. Configuring the iptables Firewall to Allow Cluster Components

cman (Cluster Manager), use the following filtering.

iptables -I INPUT -m state --state NEW -m multiport -p udp -s 192.168.1.0/24 -d 192.168.1.0/24 --dports 5404,5405 -j ACCEPT iptables -I INPUT -m addrtype --dst-type MULTICAST -m state --state NEW -m multiport -p udp -s 192.168.1.0/24 --dports 5404,5405 -j ACCEPT

$ iptables -I INPUT -m state --state NEW -m multiport -p udp -s 192.168.1.0/24 -d 192.168.1.0/24 --dports 5404,5405 -j ACCEPT

$ iptables -I INPUT -m addrtype --dst-type MULTICAST -m state --state NEW -m multiport -p udp -s 192.168.1.0/24 --dports 5404,5405 -j ACCEPTdlm (Distributed Lock Manager):

iptables -I INPUT -m state --state NEW -p tcp -s 192.168.1.0/24 -d 192.168.1.0/24 --dport 21064 -j ACCEPT

$ iptables -I INPUT -m state --state NEW -p tcp -s 192.168.1.0/24 -d 192.168.1.0/24 --dport 21064 -j ACCEPT ricci (part of Conga remote agent):

iptables -I INPUT -m state --state NEW -p tcp -s 192.168.1.0/24 -d 192.168.1.0/24 --dport 11111 -j ACCEPT

$ iptables -I INPUT -m state --state NEW -p tcp -s 192.168.1.0/24 -d 192.168.1.0/24 --dport 11111 -j ACCEPTmodclusterd (part of Conga remote agent):

iptables -I INPUT -m state --state NEW -p tcp -s 192.168.1.0/24 -d 192.168.1.0/24 --dport 16851 -j ACCEPT

$ iptables -I INPUT -m state --state NEW -p tcp -s 192.168.1.0/24 -d 192.168.1.0/24 --dport 16851 -j ACCEPTluci (Conga User Interface server):

iptables -I INPUT -m state --state NEW -p tcp -s 192.168.1.0/24 -d 192.168.1.0/24 --dport 8084 -j ACCEPT

$ iptables -I INPUT -m state --state NEW -p tcp -s 192.168.1.0/24 -d 192.168.1.0/24 --dport 8084 -j ACCEPTigmp (Internet Group Management Protocol):

iptables -I INPUT -p igmp -j ACCEPT

$ iptables -I INPUT -p igmp -j ACCEPTservice iptables save ; service iptables restart

$ service iptables save ; service iptables restart3.4. Configuring luci with /etc/sysconfig/luci

/etc/sysconfig/luci file. The parameters you can change with this file include auxiliary settings of the running environment used by the init script as well as server configuration. In addition, you can edit this file to modify some application configuration parameters. There are instructions within the file itself describing which configuration parameters you can change by editing this file.

/etc/sysconfig/luci file when you edit the file. Additionally, you should take care to follow the required syntax for this file, particularly for the INITSCRIPT section which does not allow for white spaces around the equal sign and requires that you use quotation marks to enclose strings containing white spaces.

/etc/sysconfig/luci file.

- Uncomment the following line in the

/etc/sysconfig/lucifile:#port = 4443

#port = 4443Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Replace 4443 with the desired port number, which must be higher than or equal to 1024 (not a privileged port). For example, you can edit that line of the file as follows to set the port at which luci is being served to 8084 (commenting the line out again would have the same affect, as this is the default value).

port = 8084

port = 8084Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Restart the luci service for the changes to take effect.

ssl_cipher_list configuration parameter in /etc/sysconfig/luci. This parameter can be used to impose restrictions as expressed with OpenSSL cipher notation.

Important

/etc/sysconfig/luci file to redefine a default value, you should take care to use the new value in place of the documented default value. For example, when you modify the port at which luci is being served, make sure that you specify the modified value when you enable an IP port for luci, as described in Section 3.3.2, “Enabling the IP Port for luci”.

/etc/sysconfig/luci file, refer to the documentation within the file itself.

3.5. Configuring ACPI For Use with Integrated Fence Devices

shutdown -h now). Otherwise, if ACPI Soft-Off is enabled, an integrated fence device can take four or more seconds to turn off a node (refer to note that follows). In addition, if ACPI Soft-Off is enabled and a node panics or freezes during shutdown, an integrated fence device may not be able to turn off the node. Under those circumstances, fencing is delayed or unsuccessful. Consequently, when a node is fenced with an integrated fence device and ACPI Soft-Off is enabled, a cluster recovers slowly or requires administrative intervention to recover.

Note

- Use

chkconfigmanagement and verify that the node turns off immediately when fenced, as described in Section 3.5.2, “Disabling ACPI Soft-Off withchkconfigManagement”. This is the first alternate method. - Appending

acpi=offto the kernel boot command line of the/boot/grub/grub.conffile, as described in Section 3.5.3, “Disabling ACPI Completely in thegrub.confFile”. This is the second alternate method.Important

This method completely disables ACPI; some computers do not boot correctly if ACPI is completely disabled. Use this method only if the other methods are not effective for your cluster.

3.5.1. Disabling ACPI Soft-Off with the BIOS

Note

- Reboot the node and start the

BIOS CMOS Setup Utilityprogram. - Navigate to the menu (or equivalent power management menu).

- At the menu, set the function (or equivalent) to (or the equivalent setting that turns off the node by means of the power button without delay). Example 3.1, “

BIOS CMOS Setup Utility: set to ” shows a menu with set to and set to .Note

The equivalents to , , and may vary among computers. However, the objective of this procedure is to configure the BIOS so that the computer is turned off by means of the power button without delay. - Exit the

BIOS CMOS Setup Utilityprogram, saving the BIOS configuration. - When the cluster is configured and running, verify that the node turns off immediately when fenced.

Note

You can fence the node with thefence_nodecommand or Conga.

Example 3.1. BIOS CMOS Setup Utility: set to

3.5.2. Disabling ACPI Soft-Off with chkconfig Management

chkconfig management to disable ACPI Soft-Off either by removing the ACPI daemon (acpid) from chkconfig management or by turning off acpid.

Note

chkconfig management at each cluster node as follows:

- Run either of the following commands:

chkconfig --del acpid— This command removesacpidfromchkconfigmanagement.— OR —chkconfig --level 345 acpid off— This command turns offacpid.

- Reboot the node.

- When the cluster is configured and running, verify that the node turns off immediately when fenced.

Note

You can fence the node with thefence_nodecommand or Conga.

3.5.3. Disabling ACPI Completely in the grub.conf File

chkconfig management (Section 3.5.2, “Disabling ACPI Soft-Off with chkconfig Management”). If the preferred method is not effective for your cluster, you can disable ACPI Soft-Off with the BIOS power management (Section 3.5.1, “Disabling ACPI Soft-Off with the BIOS”). If neither of those methods is effective for your cluster, you can disable ACPI completely by appending acpi=off to the kernel boot command line in the grub.conf file.

Important

grub.conf file of each cluster node as follows:

- Open

/boot/grub/grub.confwith a text editor. - Append

acpi=offto the kernel boot command line in/boot/grub/grub.conf(see Example 3.2, “Kernel Boot Command Line withacpi=offAppended to It”). - Reboot the node.

- When the cluster is configured and running, verify that the node turns off immediately when fenced.

Note

You can fence the node with thefence_nodecommand or Conga.

Example 3.2. Kernel Boot Command Line with acpi=off Appended to It

acpi=off has been appended to the kernel boot command line — the line starting with "kernel /vmlinuz-2.6.32-193.el6.x86_64.img".

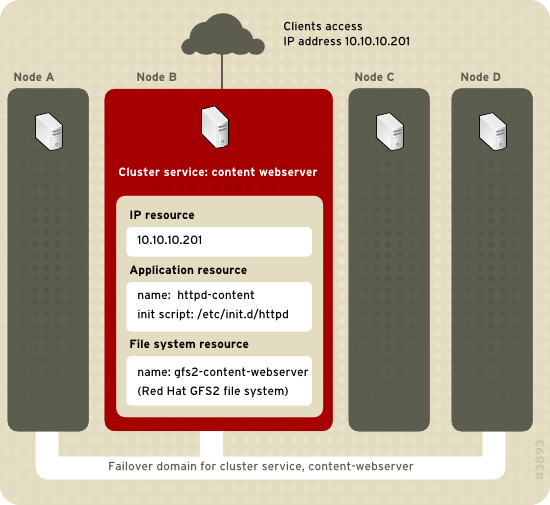

3.6. Considerations for Configuring HA Services

rgmanager, implements cold failover for off-the-shelf applications. In the Red Hat High Availability Add-On, an application is configured with other cluster resources to form an HA service that can fail over from one cluster node to another with no apparent interruption to cluster clients. HA-service failover can occur if a cluster node fails or if a cluster system administrator moves the service from one cluster node to another (for example, for a planned outage of a cluster node).

- IP address resource — IP address 10.10.10.201.

- An application resource named "httpd-content" — a web server application init script

/etc/init.d/httpd(specifyinghttpd). - A file system resource — Red Hat GFS2 named "gfs2-content-webserver".

Figure 3.1. Web Server Cluster Service Example

Note

/etc/cluster/cluster.conf (in each cluster node). In the cluster configuration file, each resource tree is an XML representation that specifies each resource, its attributes, and its relationship among other resources in the resource tree (parent, child, and sibling relationships).

Note

- The types of resources needed to create a service

- Parent, child, and sibling relationships among resources

3.7. Configuration Validation

/usr/share/cluster/cluster.rng during startup time and when a configuration is reloaded. Also, you can validate a cluster configuration any time by using the ccs_config_validate command. For information on configuration validation when using the ccs command, see Section 6.1.6, “Configuration Validation”.

/usr/share/doc/cman-X.Y.ZZ/cluster_conf.html (for example /usr/share/doc/cman-3.0.12/cluster_conf.html).

- XML validity — Checks that the configuration file is a valid XML file.

- Configuration options — Checks to make sure that options (XML elements and attributes) are valid.

- Option values — Checks that the options contain valid data (limited).

- Valid configuration — Example 3.3, “

cluster.confSample Configuration: Valid File” - Invalid option — Example 3.5, “

cluster.confSample Configuration: Invalid Option” - Invalid option value — Example 3.6, “

cluster.confSample Configuration: Invalid Option Value”

Example 3.3. cluster.conf Sample Configuration: Valid File

Example 3.4. cluster.conf Sample Configuration: Invalid XML

<cluster> instead of </cluster>.

Example 3.5. cluster.conf Sample Configuration: Invalid Option

loging instead of logging.

Example 3.6. cluster.conf Sample Configuration: Invalid Option Value

nodeid in the clusternode line for node-01.example.com. The value is a negative value ("-1") instead of a positive value ("1"). For the nodeid attribute, the value must be a positive value.

3.8. Considerations for NetworkManager

Note

cman service will not start if NetworkManager is either running or has been configured to run with the chkconfig command.

3.9. Considerations for Using Quorum Disk

qdiskd, that provides supplemental heuristics to determine node fitness. With heuristics you can determine factors that are important to the operation of the node in the event of a network partition. For example, in a four-node cluster with a 3:1 split, ordinarily, the three nodes automatically "win" because of the three-to-one majority. Under those circumstances, the one node is fenced. With qdiskd however, you can set up heuristics that allow the one node to win based on access to a critical resource (for example, a critical network path). If your cluster requires additional methods of determining node health, then you should configure qdiskd to meet those needs.

Note

qdiskd is not required unless you have special requirements for node health. An example of a special requirement is an "all-but-one" configuration. In an all-but-one configuration, qdiskd is configured to provide enough quorum votes to maintain quorum even though only one node is working.

Important

qdiskd parameters for your deployment depend on the site environment and special requirements needed. To understand the use of heuristics and other qdiskd parameters, see the qdisk(5) man page. If you require assistance understanding and using qdiskd for your site, contact an authorized Red Hat support representative.

qdiskd, you should take into account the following considerations:

- Cluster node votes

- When using Quorum Disk, each cluster node must have one vote.

- CMAN membership timeout value

- The

qdiskdmembership timeout value is automatically configured based on the CMAN membership timeout value (the time a node needs to be unresponsive before CMAN considers that node to be dead, and not a member).qdiskdalso performs extra sanity checks to guarantee that it can operate within the CMAN timeout. If you find that you need to reset this value, you must take the following into account:The CMAN membership timeout value should be at least two times that of theqdiskdmembership timeout value. The reason is because the quorum daemon must detect failed nodes on its own, and can take much longer to do so than CMAN. Other site-specific conditions may affect the relationship between the membership timeout values of CMAN andqdiskd. For assistance with adjusting the CMAN membership timeout value, contact an authorized Red Hat support representative. - Fencing

- To ensure reliable fencing when using

qdiskd, use power fencing. While other types of fencing can be reliable for clusters not configured withqdiskd, they are not reliable for a cluster configured withqdiskd. - Maximum nodes

- A cluster configured with

qdiskdsupports a maximum of 16 nodes. The reason for the limit is because of scalability; increasing the node count increases the amount of synchronous I/O contention on the shared quorum disk device. - Quorum disk device

- A quorum disk device should be a shared block device with concurrent read/write access by all nodes in a cluster. The minimum size of the block device is 10 Megabytes. Examples of shared block devices that can be used by

qdiskdare a multi-port SCSI RAID array, a Fibre Channel RAID SAN, or a RAID-configured iSCSI target. You can create a quorum disk device withmkqdisk, the Cluster Quorum Disk Utility. For information about using the utility see the mkqdisk(8) man page.Note

Using JBOD as a quorum disk is not recommended. A JBOD cannot provide dependable performance and therefore may not allow a node to write to it quickly enough. If a node is unable to write to a quorum disk device quickly enough, the node is falsely evicted from a cluster.

3.10. Red Hat High Availability Add-On and SELinux

enforcing state with the SELinux policy type set to targeted.

Note

fenced_can_network_connect is persistently set to on. This allows the fence_xvm fencing agent to work properly, enabling the system to fence virtual machines.

3.11. Multicast Addresses

Note

3.12. UDP Unicast Traffic

cman transport="udpu" parameter in the cluster.conf configuration file. You can also specify Unicast from the page of the Conga user interface, as described in Section 4.5.3, “Network Configuration”.

3.13. Considerations for ricci

ricci replaces ccsd. Therefore, it is necessary that ricci is running in each cluster node to be able to propagate updated cluster configuration whether it is by means of the cman_tool version -r command, the ccs command, or the luci user interface server. You can start ricci by using service ricci start or by enabling it to start at boot time by means of chkconfig. For information on enabling IP ports for ricci, see Section 3.3.1, “Enabling IP Ports on Cluster Nodes”.

ricci requires a password the first time you propagate updated cluster configuration from any particular node. You set the ricci password as root after you install ricci on your system. To set this password, execute the passwd ricci command, for user ricci.

3.14. Configuring Virtual Machines in a Clustered Environment

rgmanager tools to start and stop the virtual machines. Using virsh to start the machine can result in the virtual machine running in more than one place, which can cause data corruption in the virtual machine.

virsh, as the configuration file will be unknown out of the box to virsh.

path attribute of a virtual machine resource. Note that the path attribute is a directory or set of directories separated by the colon ':' character, not a path to a specific file.

Warning

libvirt-guests service should be disabled on all the nodes that are running rgmanager. If a virtual machine autostarts or resumes, this can result in the virtual machine running in more than one place, which can cause data corruption in the virtual machine.

vm Resource)”.

Chapter 4. Configuring Red Hat High Availability Add-On With Conga

Note

4.1. Configuration Tasks

- Configuring and running the Conga configuration user interface — the luci server. Refer to Section 4.2, “Starting luci”.

- Creating a cluster. Refer to Section 4.4, “Creating a Cluster”.

- Configuring global cluster properties. Refer to Section 4.5, “Global Cluster Properties”.

- Configuring fence devices. Refer to Section 4.6, “Configuring Fence Devices”.

- Configuring fencing for cluster members. Refer to Section 4.7, “Configuring Fencing for Cluster Members”.

- Creating failover domains. Refer to Section 4.8, “Configuring a Failover Domain”.

- Creating resources. Refer to Section 4.9, “Configuring Global Cluster Resources”.

- Creating cluster services. Refer to Section 4.10, “Adding a Cluster Service to the Cluster”.

4.2. Starting luci

Note

luci to configure a cluster requires that ricci be installed and running on the cluster nodes, as described in Section 3.13, “Considerations for ricci”. As noted in that section, using ricci requires a password which luci requires you to enter for each cluster node when you create a cluster, as described in Section 4.4, “Creating a Cluster”.

- Select a computer to host luci and install the luci software on that computer. For example:

yum install luci

# yum install luciCopy to Clipboard Copied! Toggle word wrap Toggle overflow Note

Typically, a computer in a server cage or a data center hosts luci; however, a cluster computer can host luci. - Start luci using

service luci start. For example:Copy to Clipboard Copied! Toggle word wrap Toggle overflow Note

As of Red Hat Enterprise Linux release 6.1, you can configure some aspects of luci's behavior by means of the/etc/sysconfig/lucifile, including the port and host parameters, as described in Section 3.4, “Configuring luci with/etc/sysconfig/luci”. Modified port and host parameters will automatically be reflected in the URL displayed when the luci service starts. - At a Web browser, place the URL of the luci server into the URL address box and click

Go(or the equivalent). The URL syntax for the luci server ishttps://luci_server_hostname:luci_server_port. The default value of luci_server_port is8084.The first time you access luci, a web browser specific prompt regarding the self-signed SSL certificate (of the luci server) is displayed. Upon acknowledging the dialog box or boxes, your Web browser displays the luci login page. - Any user able to authenticate on the system that is hosting luci can log in to luci. As of Red Hat Enterprise Linux 6.2 only the root user on the system that is running luci can access any of the luci components until an administrator (the root user or a user with administrator permission) sets permissions for that user. For information on setting luci permissions for users, see Section 4.3, “Controlling Access to luci”.

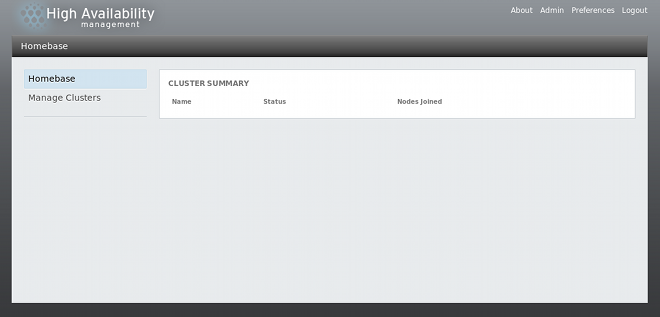

Figure 4.1. luci Homebase page

Note

4.3. Controlling Access to luci

- As of Red Hat Enterprise Linux 6.2, the root user or a user who has been granted luci administrator permissions on a system running luci can control access to the various luci components by setting permissions for the individual users on a system.

- As of Red Hat Enterprise Linux 6.3, the root user or a user who has been granted luci administrator permissions can add users to the luci interface and then set the user permissions for that user. You will still need to add that user to the system and set up a password for that user, but this feature allows you to configure permissions for the user before the user has logged in to luci for the first time.

- As of Red Hat Enterprise Linux 6.4, the root user or a user who has been granted luci administrator permissions can also use the luci interface to delete users from the luci interface, which resets any permissions you have configured for that user.

Note

/etc/pam.d/luci file on the system. For information on using Linux-PAM, see the pam(8) man page.

root or as a user who has previously been granted administrator permissions and click the selection in the upper right corner of the luci screen. This brings up the page, which displays the existing users.

- Grants the user the same permissions as the root user, with full permissions on all clusters and the ability to set or remove permissions on all other users except root, whose permissions cannot be restricted.

- Allows the user to create new clusters, as described in Section 4.4, “Creating a Cluster”.

- Allows the user to add an existing cluster to the luci interface, as described in Section 5.1, “Adding an Existing Cluster to the luci Interface”.

- Allows the user to view the specified cluster.

- Allows the user to modify the configuration for the specified cluster, with the exception of adding and removing cluster nodes.

- Allows the user to manage high-availability services, as described in Section 5.5, “Managing High-Availability Services”.

- Allows the user to manage the individual nodes of a cluster, as described in Section 5.3, “Managing Cluster Nodes”.

- Allows the user to add and delete nodes from a cluster, as described in Section 4.4, “Creating a Cluster”.

- Allows the user to remove a cluster from the luci interface, as described in Section 5.4, “Starting, Stopping, Restarting, and Deleting Clusters”.

4.4. Creating a Cluster

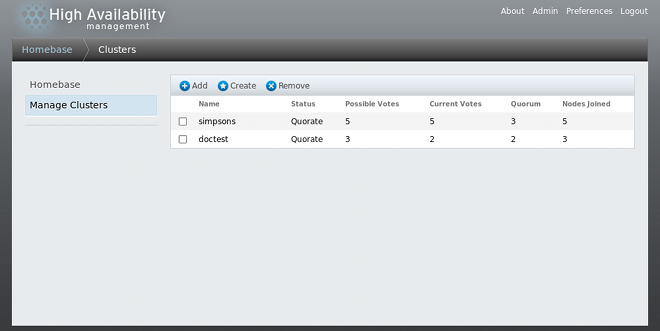

- Click from the menu on the left side of the luci page. The screen appears, as shown in Figure 4.2, “luci cluster management page”.

Figure 4.2. luci cluster management page

- Click . The dialog box appears, as shown in Figure 4.3, “luci cluster creation dialog box”.

Figure 4.3. luci cluster creation dialog box

- Enter the following parameters on the dialog box, as necessary:

- At the text box, enter a cluster name. The cluster name cannot exceed 15 characters.

- If each node in the cluster has the same ricci password, you can check to autofill the field as you add nodes.

- Enter the node name for a node in the cluster in the column. A node name can be up to 255 bytes in length.

- After you have entered the node name, the node name is reused as the ricci host name. If your system is configured with a dedicated private network that is used only for cluster traffic, you may want to configure luci to communicate with ricci on an address that is different from the address to which the cluster node name resolves. You can do this by entering that address as the .

- As of Red Hat Enterprise Linux 6.9, after you have entered the node name and, if necessary, adjusted the ricci host name, the fingerprint of the certificate of the ricci host is displayed for confirmation. You can verify whether this matches the expected fingerprint. If it is legitimate, enter the ricci password and add the next node. You can remove the fingerprint display by clicking on the display window, and you can restore this display (or enforce it at any time) by clicking the button.

Important

It is strongly advised that you verify the certificate fingerprint of the ricci server you are going to authenticate against. Providing an unverified entity on the network with the ricci password may constitute a confidentiality breach, and communication with an unverified entity may cause an integrity breach. - If you are using a different port for the ricci agent than the default of 11111, you can change that parameter.

- Click and enter the node name and ricci password for each additional node in the cluster.Figure 4.4, “luci cluster creation with certificate fingerprint display”. shows the dialog box after two nodes have been entered, showing the certificate fingerprints of the ricci hosts (Red Hat Enterprise Linux 6.9 and later).

Figure 4.4. luci cluster creation with certificate fingerprint display

- If you do not want to upgrade the cluster software packages that are already installed on the nodes when you create the cluster, leave the option selected. If you want to upgrade all cluster software packages, select the option.

Note

Whether you select the or the option, if any of the base cluster components are missing (cman,rgmanager,modclusterand all their dependencies), they will be installed. If they cannot be installed, the node creation will fail. - Check if desired.

- Select if clustered storage is required; this downloads the packages that support clustered storage and enables clustered LVM. You should select this only when you have access to the Resilient Storage Add-On or the Scalable File System Add-On.

- Click . Clicking causes the following actions:

- If you have selected , the cluster software packages are downloaded onto the nodes.

- Cluster software is installed onto the nodes (or it is verified that the appropriate software packages are installed).

- The cluster configuration file is updated and propagated to each node in the cluster.

- The added nodes join the cluster.

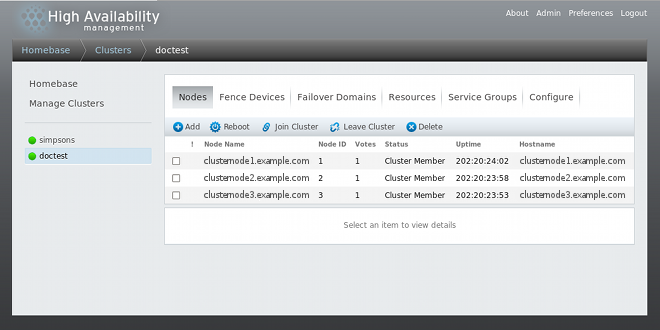

A message is displayed indicating that the cluster is being created. When the cluster is ready, the display shows the status of the newly created cluster, as shown in Figure 4.5, “Cluster node display”. Note that if ricci is not running on any of the nodes, the cluster creation will fail.Figure 4.5. Cluster node display

- After clicking , you can add or delete nodes from the cluster by clicking the or function from the menu at the top of the cluster node display page. Unless you are deleting an entire cluster, nodes must be stopped before being deleted. For information on deleting a node from an existing cluster that is currently in operation, see Section 5.3.4, “Deleting a Member from a Cluster”.

Warning

Removing a cluster node from the cluster is a destructive operation that cannot be undone.

4.5. Global Cluster Properties

4.5.1. Configuring General Properties

- The text box displays the cluster name; it does not accept a cluster name change. The only way to change the name of a cluster is to create a new cluster configuration with the new name.

- The value is set to

1at the time of cluster creation and is automatically incremented each time you modify your cluster configuration. However, if you need to set it to another value, you can specify it at the text box.

4.5.2. Configuring Fence Daemon Properties

- The parameter is the number of seconds the fence daemon (

fenced) waits before fencing a node (a member of the fence domain) after the node has failed. The default value is0. Its value may be varied to suit cluster and network performance. - The parameter is the number of seconds the fence daemon (

fenced) waits before fencing a node after the node joins the fence domain. luci sets the value to6. A typical setting for is between 20 and 30 seconds, but can vary according to cluster and network performance.

Note

4.5.3. Network Configuration

- This is the default setting. With this option selected, the Red Hat High Availability Add-On software creates a multicast address based on the cluster ID. It generates the lower 16 bits of the address and appends them to the upper portion of the address according to whether the IP protocol is IPv4 or IPv6:

- For IPv4 — The address formed is 239.192. plus the lower 16 bits generated by Red Hat High Availability Add-On software.

- For IPv6 — The address formed is FF15:: plus the lower 16 bits generated by Red Hat High Availability Add-On software.

Note

The cluster ID is a unique identifier thatcmangenerates for each cluster. To view the cluster ID, run thecman_tool statuscommand on a cluster node. - If you need to use a specific multicast address, select this option enter a multicast address into the text box.If you do specify a multicast address, you should use the 239.192.x.x series (or FF15:: for IPv6) that

cmanuses. Otherwise, using a multicast address outside that range may cause unpredictable results. For example, using 224.0.0.x (which is "All hosts on the network") may not be routed correctly, or even routed at all by some hardware.If you specify or modify a multicast address, you must restart the cluster for this to take effect. For information on starting and stopping a cluster with Conga, see Section 5.4, “Starting, Stopping, Restarting, and Deleting Clusters”.Note

If you specify a multicast address, make sure that you check the configuration of routers that cluster packets pass through. Some routers may take a long time to learn addresses, seriously impacting cluster performance. - As of the Red Hat Enterprise Linux 6.2 release, the nodes in a cluster can communicate with each other using the UDP Unicast transport mechanism. It is recommended, however, that you use IP multicasting for the cluster network. UDP Unicast is an alternative that can be used when IP multicasting is not available. For GFS2 deployments using UDP Unicast is not recommended.

4.5.4. Configuring Redundant Ring Protocol

4.5.5. Quorum Disk Configuration

Note

| Parameter | Description | ||||

|---|---|---|---|---|---|

Specifies the quorum disk label created by the mkqdisk utility. If this field is used, the quorum daemon reads the /proc/partitions file and checks for qdisk signatures on every block device found, comparing the label against the specified label. This is useful in configurations where the quorum device name differs among nodes. | |||||

| |||||

The minimum score for a node to be considered "alive". If omitted or set to 0, the default function, floor((n+1)/2), is used, where n is the sum of the heuristics scores. The value must never exceed the sum of the heuristic scores; otherwise, the quorum disk cannot be available. |

Note

/etc/cluster/cluster.conf) in each cluster node. However, for the quorum disk to operate or for any modifications you have made to the quorum disk parameters to take effect, you must restart the cluster (see Section 5.4, “Starting, Stopping, Restarting, and Deleting Clusters”), ensuring that you have restarted the qdiskd daemon on each node.

4.5.6. Logging Configuration

- Checking enables debugging messages in the log file.

- Checking enables messages to

syslog. You can select the and the . The setting indicates that messages at the selected level and higher are sent tosyslog. - Checking enables messages to the log file. You can specify the name. The setting indicates that messages at the selected level and higher are written to the log file.

syslog and log file settings for that daemon.

4.6. Configuring Fence Devices

Note

- Creating fence devices — Refer to Section 4.6.1, “Creating a Fence Device”. Once you have created and named a fence device, you can configure the fence devices for each node in the cluster, as described in Section 4.7, “Configuring Fencing for Cluster Members”.

- Updating fence devices — Refer to Section 4.6.2, “Modifying a Fence Device”.

- Deleting fence devices — Refer to Section 4.6.3, “Deleting a Fence Device”.

Note

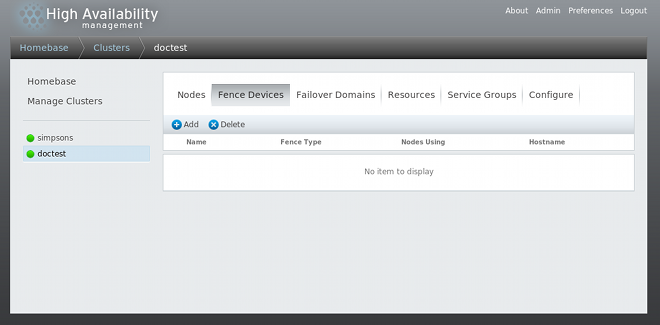

Figure 4.6. luci fence devices configuration page

4.6.1. Creating a Fence Device

- From the configuration page, click . Clicking displays the Add Fence Device (Instance) dialog box. From this dialog box, select the type of fence device to configure.

- Specify the information in the Add Fence Device (Instance) dialog box according to the type of fence device. Refer to Appendix A, Fence Device Parameters for more information about fence device parameters. In some cases you will need to specify additional node-specific parameters for the fence device when you configure fencing for the individual nodes, as described in Section 4.7, “Configuring Fencing for Cluster Members”.

- Click .

4.6.2. Modifying a Fence Device

- From the configuration page, click on the name of the fence device to modify. This displays the dialog box for that fence device, with the values that have been configured for the device.

- To modify the fence device, enter changes to the parameters displayed. Refer to Appendix A, Fence Device Parameters for more information.

- Click and wait for the configuration to be updated.

4.6.3. Deleting a Fence Device

Note

- From the configuration page, check the box to the left of the fence device or devices to select the devices to delete.

- Click and wait for the configuration to be updated. A message appears indicating which devices are being deleted.

4.7. Configuring Fencing for Cluster Members

4.7.1. Configuring a Single Fence Device for a Node

- From the cluster-specific page, you can configure fencing for the nodes in the cluster by clicking on along the top of the cluster display. This displays the nodes that constitute the cluster. This is also the default page that appears when you click on the cluster name beneath from the menu on the left side of the luci page.

- Click on a node name. Clicking a link for a node causes a page to be displayed for that link showing how that node is configured.The node-specific page displays any services that are currently running on the node, as well as any failover domains of which this node is a member. You can modify an existing failover domain by clicking on its name. For information on configuring failover domains, see Section 4.8, “Configuring a Failover Domain”.

- On the node-specific page, under , click . This displays the dialog box.

- Enter a for the fencing method that you are configuring for this node. This is an arbitrary name that will be used by Red Hat High Availability Add-On; it is not the same as the DNS name for the device.

- Click . This displays the node-specific screen that now displays the method you have just added under .

- Configure a fence instance for this method by clicking the button that appears beneath the fence method. This displays the drop-down menu from which you can select a fence device you have previously configured, as described in Section 4.6.1, “Creating a Fence Device”.

- Select a fence device for this method. If this fence device requires that you configure node-specific parameters, the display shows the parameters to configure. For information on fencing parameters, see Appendix A, Fence Device Parameters.

Note

For non-power fence methods (that is, SAN/storage fencing), is selected by default on the node-specific parameters display. This ensures that a fenced node's access to storage is not re-enabled until the node has been rebooted. When you configure a device that requires unfencing, the cluster must first be stopped and the full configuration including devices and unfencing must be added before the cluster is started. For information on unfencing a node, see thefence_node(8) man page. - Click . This returns you to the node-specific screen with the fence method and fence instance displayed.

4.7.2. Configuring a Backup Fence Device

- Use the procedure provided in Section 4.7.1, “Configuring a Single Fence Device for a Node” to configure the primary fencing method for a node.

- Beneath the display of the primary method you defined, click .

- Enter a name for the backup fencing method that you are configuring for this node and click . This displays the node-specific screen that now displays the method you have just added, below the primary fence method.

- Configure a fence instance for this method by clicking . This displays a drop-down menu from which you can select a fence device you have previously configured, as described in Section 4.6.1, “Creating a Fence Device”.

- Select a fence device for this method. If this fence device requires that you configure node-specific parameters, the display shows the parameters to configure. For information on fencing parameters, see Appendix A, Fence Device Parameters.

- Click . This returns you to the node-specific screen with the fence method and fence instance displayed.

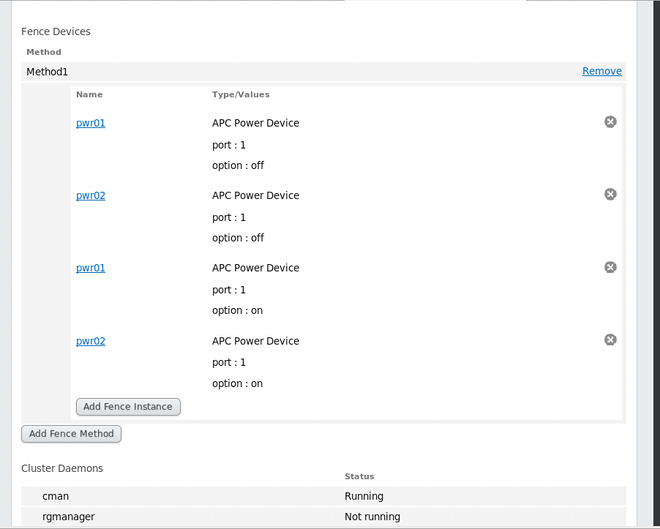

4.7.3. Configuring a Node with Redundant Power

- Before you can configure fencing for a node with redundant power, you must configure each of the power switches as a fence device for the cluster. For information on configuring fence devices, see Section 4.6, “Configuring Fence Devices”.

- From the cluster-specific page, click on along the top of the cluster display. This displays the nodes that constitute the cluster. This is also the default page that appears when you click on the cluster name beneath from the menu on the left side of the luci page.

- Click on a node name. Clicking a link for a node causes a page to be displayed for that link showing how that node is configured.

- On the node-specific page, click .

- Enter a name for the fencing method that you are configuring for this node.

- Click . This displays the node-specific screen that now displays the method you have just added under .

- Configure the first power supply as a fence instance for this method by clicking . This displays a drop-down menu from which you can select one of the power fencing devices you have previously configured, as described in Section 4.6.1, “Creating a Fence Device”.

- Select one of the power fence devices for this method and enter the appropriate parameters for this device.

- Click . This returns you to the node-specific screen with the fence method and fence instance displayed.

- Under the same fence method for which you have configured the first power fencing device, click . This displays a drop-down menu from which you can select the second power fencing devices you have previously configured, as described in Section 4.6.1, “Creating a Fence Device”.

- Select the second of the power fence devices for this method and enter the appropriate parameters for this device.

- Click . This returns you to the node-specific screen with the fence methods and fence instances displayed, showing that each device will power the system off in sequence and power the system on in sequence. This is shown in Figure 4.7, “Dual-Power Fencing Configuration”.

Figure 4.7. Dual-Power Fencing Configuration

4.7.4. Testing the Fence Configuration

fence_check utility.

fence_check(8) man page.

4.8. Configuring a Failover Domain

- Unrestricted — Allows you to specify that a subset of members are preferred, but that a cluster service assigned to this domain can run on any available member.

- Restricted — Allows you to restrict the members that can run a particular cluster service. If none of the members in a restricted failover domain are available, the cluster service cannot be started (either manually or by the cluster software).

- Unordered — When a cluster service is assigned to an unordered failover domain, the member on which the cluster service runs is chosen from the available failover domain members with no priority ordering.

- Ordered — Allows you to specify a preference order among the members of a failover domain. The member at the top of the list is the most preferred, followed by the second member in the list, and so on.

- Failback — Allows you to specify whether a service in the failover domain should fail back to the node that it was originally running on before that node failed. Configuring this characteristic is useful in circumstances where a node repeatedly fails and is part of an ordered failover domain. In that circumstance, if a node is the preferred node in a failover domain, it is possible for a service to fail over and fail back repeatedly between the preferred node and another node, causing severe impact on performance.

Note

The failback characteristic is applicable only if ordered failover is configured.

Note

Note

httpd), which requires you to set up the configuration identically on all members that run the cluster service. Instead of setting up the entire cluster to run the cluster service, you can set up only the members in the restricted failover domain that you associate with the cluster service.

Note

4.8.1. Adding a Failover Domain

- From the cluster-specific page, you can configure failover domains for that cluster by clicking on along the top of the cluster display. This displays the failover domains that have been configured for this cluster.

- Click . Clicking causes the display of the Add Failover Domain to Cluster dialog box, as shown in Figure 4.8, “luci failover domain configuration dialog box”.

Figure 4.8. luci failover domain configuration dialog box

- In the Add Failover Domain to Cluster dialog box, specify a failover domain name at the text box.

Note

The name should be descriptive enough to distinguish its purpose relative to other names used in your cluster. - To enable setting failover priority of the members in the failover domain, click the check box. With checked, you can set the priority value, , for each node selected as members of the failover domain.

Note

The priority value is applicable only if ordered failover is configured. - To restrict failover to members in this failover domain, click the check box. With checked, services assigned to this failover domain fail over only to nodes in this failover domain.

- To specify that a node does not fail back in this failover domain, click the check box. With checked, if a service fails over from a preferred node, the service does not fail back to the original node once it has recovered.

- Configure members for this failover domain. Click the check box for each node that is to be a member of the failover domain. If is checked, set the priority in the text box for each member of the failover domain.

- Click . This displays the Failover Domains page with the newly-created failover domain displayed. A message indicates that the new domain is being created. Refresh the page for an updated status.

4.8.2. Modifying a Failover Domain

- From the cluster-specific page, you can configure Failover Domains for that cluster by clicking on along the top of the cluster display. This displays the failover domains that have been configured for this cluster.

- Click on the name of a failover domain. This displays the configuration page for that failover domain.

- To modify the , , or properties for the failover domain, click or unclick the check box next to the property and click .

- To modify the failover domain membership, click or unclick the check box next to the cluster member. If the failover domain is prioritized, you can also modify the priority setting for the cluster member. Click .

4.8.3. Deleting a Failover Domain

- From the cluster-specific page, you can configure Failover Domains for that cluster by clicking on along the top of the cluster display. This displays the failover domains that have been configured for this cluster.

- Select the check box for the failover domain to delete.

- Click .

4.9. Configuring Global Cluster Resources

- From the cluster-specific page, you can add resources to that cluster by clicking on along the top of the cluster display. This displays the resources that have been configured for that cluster.

- Click . This displays the drop-down menu.

- Click the drop-down box under and select the type of resource to configure.

- Enter the resource parameters for the resource you are adding. Appendix B, HA Resource Parameters describes resource parameters.

- Click . Clicking returns to the resources page that displays the display of Resources, which displays the added resource (and other resources).

- From the luci page, click on the name of the resource to modify. This displays the parameters for that resource.

- Edit the resource parameters.

- Click .

- From the luci page, click the check box for any resources to delete.

- Click .

- Clicking on the header once sorts the resources alphabetically, according to resource name. Clicking on the header a second time sourts the resources in reverse alphabetic order, according to resource name.

- Clicking on the header once sorts the resources alphabetically, according to resource type. Clicking on the header a second time sourts the resources in reverse alphabetic order, according to resource type.

- Clicking on the header once sorts the resources so that they are grouped according to whether they are in use or not.

4.10. Adding a Cluster Service to the Cluster

- From the cluster-specific page, you can add services to that cluster by clicking on along the top of the cluster display. This displays the services that have been configured for that cluster. (From the page, you can also start, restart, and disable a service, as described in Section 5.5, “Managing High-Availability Services”.)

- Click . This displays the dialog box.

- On the Add Service Group to Cluster dialog box, at the text box, type the name of the service.

Note

Use a descriptive name that clearly distinguishes the service from other services in the cluster. - Check the check box if you want the service to start automatically when a cluster is started and running. If the check box is not checked, the service must be started manually any time the cluster comes up from the stopped state.

- Check the check box to set a policy wherein the service only runs on nodes that have no other services running on them.

- If you have configured failover domains for the cluster, you can use the drop-down menu of the parameter to select a failover domain for this service. For information on configuring failover domains, see Section 4.8, “Configuring a Failover Domain”.