Performance Tuning Guide

Optimizing subsystem throughput in Red Hat Enterprise Linux 6

Abstract

Chapter 1. Overview

1.1. How to read this book

- Features

- Each subsystem chapter describes performance features unique to (or implemented differently in) Red Hat Enterprise Linux 6. These chapters also discuss Red Hat Enterprise Linux 6 updates that significantly improved the performance of specific subsystems over Red Hat Enterprise Linux 5.

- Analysis

- The book also enumerates performance indicators for each specific subsystem. Typical values for these indicators are described in the context of specific services, helping you understand their significance in real-world, production systems.In addition, the Performance Tuning Guide also shows different ways of retrieving performance data (that is, profiling) for a subsystem. Note that some of the profiling tools showcased here are documented elsewhere with more detail.

- Configuration

- Perhaps the most important information in this book are instructions on how to adjust the performance of a specific subsystem in Red Hat Enterprise Linux 6. The Performance Tuning Guide explains how to fine-tune a Red Hat Enterprise Linux 6 subsystem for specific services.

1.1.1. Audience

- System/Business Analyst

- This book enumerates and explains Red Hat Enterprise Linux 6 performance features at a high level, providing enough information on how subsystems perform for specific workloads (both by default and when optimized). The level of detail used in describing Red Hat Enterprise Linux 6 performance features helps potential customers and sales engineers understand the suitability of this platform in providing resource-intensive services at an acceptable level.The Performance Tuning Guide also provides links to more detailed documentation on each feature whenever possible. At that detail level, readers can understand these performance features enough to form a high-level strategy in deploying and optimizing Red Hat Enterprise Linux 6. This allows readers to both develop and evaluate infrastructure proposals.This feature-focused level of documentation is suitable for readers with a high-level understanding of Linux subsystems and enterprise-level networks.

- System Administrator

- The procedures enumerated in this book are suitable for system administrators with RHCE [1] skill level (or its equivalent, that is, 3-5 years experience in deploying and managing Linux). The Performance Tuning Guide aims to provide as much detail as possible about the effects of each configuration; this means describing any performance trade-offs that may occur.The underlying skill in performance tuning lies not in knowing how to analyze and tune a subsystem. Rather, a system administrator adept at performance tuning knows how to balance and optimize a Red Hat Enterprise Linux 6 system for a specific purpose. This means also knowing which trade-offs and performance penalties are acceptable when attempting to implement a configuration designed to boost a specific subsystem's performance.

1.2. Release overview

1.2.1. New features in Red Hat Enterprise Linux 6

1.2.2. Horizontal Scalability

1.2.2.1. Parallel Computing

1.2.3. Distributed Systems

- Communication

- Horizontal scalability requires many tasks to be performed simultaneously (in parallel). As such, these tasks must have interprocess communication to coordinate their work. Further, a platform with horizontal scalability should be able to share tasks across multiple systems.

- Storage

- Storage via local disks is not sufficient in addressing the requirements of horizontal scalability. Some form of distributed or shared storage is needed, one with a layer of abstraction that allows a single storage volume's capacity to grow seamlessly with the addition of new storage hardware.

- Management

- The most important duty in distributed computing is the management layer. This management layer coordinates all software and hardware components, efficiently managing communication, storage, and the usage of shared resources.

1.2.3.1. Communication

- Hardware

- Software

The most common way of communicating between computers is over Ethernet. Today, Gigabit Ethernet (GbE) is provided by default on systems, and most servers include 2-4 ports of Gigabit Ethernet. GbE provides good bandwidth and latency. This is the foundation of most distributed systems in use today. Even when systems include faster network hardware, it is still common to use GbE for a dedicated management interface.

Ten Gigabit Ethernet (10GbE) is rapidly growing in acceptance for high end and even mid-range servers. 10GbE provides ten times the bandwidth of GbE. One of its major advantages is with modern multi-core processors, where it restores the balance between communication and computing. You can compare a single core system using GbE to an eight core system using 10GbE. Used in this way, 10GbE is especially valuable for maintaining overall system performance and avoiding communication bottlenecks.

Infiniband offers even higher performance than 10GbE. In addition to TCP/IP and UDP network connections used with Ethernet, Infiniband also supports shared memory communication. This allows Infiniband to work between systems via remote direct memory access (RDMA).

RDMA over Converged Ethernet (RoCE) implements Infiniband-style communications (including RDMA) over a 10GbE infrastructure. Given the cost improvements associated with the growing volume of 10GbE products, it is reasonable to expect wider usage of RDMA and RoCE in a wide range of systems and applications.

1.2.3.2. Storage

- Multiple systems storing data in a single location

- A storage unit (e.g. a volume) composed of multiple storage appliances

Network File System (NFS) allows multiple servers or users to mount and use the same instance of remote storage via TCP or UDP. NFS is commonly used to hold data shared by multiple applications. It is also convenient for bulk storage of large amounts of data.

Storage Area Networks (SANs) use either Fibre Channel or iSCSI protocol to provide remote access to storage. Fibre Channel infrastructure (such as Fibre Channel host bus adapters, switches, and storage arrays) combines high performance, high bandwidth, and massive storage. SANs separate storage from processing, providing considerable flexibility in system design.

- Controlling access to storage

- Managing large amounts of data

- Provisioning systems

- Backing up and replicating data

- Taking snapshots

- Supporting system failover

- Ensuring data integrity

- Migrating data

The Red Hat Global File System 2 (GFS2) file system provides several specialized capabilities. The basic function of GFS2 is to provide a single file system, including concurrent read/write access, shared across multiple members of a cluster. This means that each member of the cluster sees exactly the same data "on disk" in the GFS2 filesystem.

1.2.3.3. Converged Networks

With FCoE, standard fibre channel commands and data packets are transported over a 10GbE physical infrastructure via a single converged network adapter (CNA). Standard TCP/IP ethernet traffic and fibre channel storage operations can be transported via the same link. FCoE uses one physical network interface card (and one cable) for multiple logical network/storage connections.

- Reduced number of connections

- FCoE reduces the number of network connections to a server by half. You can still choose to have multiple connections for performance or availability; however, a single connection provides both storage and network connectivity. This is especially helpful for pizza box servers and blade servers, since they both have very limited space for components.

- Lower cost

- Reduced number of connections immediately means reduced number of cables, switches, and other networking equipment. Ethernet's history also features great economies of scale; the cost of networks drops dramatically as the number of devices in the market goes from millions to billions, as was seen in the decline in the price of 100Mb Ethernet and gigabit Ethernet devices.Similarly, 10GbE will also become cheaper as more businesses adapt to its use. Also, as CNA hardware is integrated into a single chip, widespread use will also increase its volume in the market, which will result in a significant price drop over time.

Internet SCSI (iSCSI) is another type of converged network protocol; it is an alternative to FCoE. Like fibre channel, iSCSI provides block-level storage over a network. However, iSCSI does not provide a complete management environment. The main advantage of iSCSI over FCoE is that iSCSI provides much of the capability and flexibility of fibre channel, but at a lower cost.

Chapter 2. Red Hat Enterprise Linux 6 Performance Features

2.1. 64-Bit Support

- Huge pages and transparent huge pages

- Non-Uniform Memory Access improvements

The implementation of huge pages in Red Hat Enterprise Linux 6 allows the system to manage memory use efficiently across different memory workloads. Huge pages dynamically utilize 2 MB pages compared to the standard 4 KB page size, allowing applications to scale well while processing gigabytes and even terabytes of memory.

Many new systems now support Non-Uniform Memory Access (NUMA). NUMA simplifies the design and creation of hardware for large systems; however, it also adds a layer of complexity to application development. For example, NUMA implements both local and remote memory, where remote memory can take several times longer to access than local memory. This feature has performance implications for operating systems and applications, and should be configured carefully.

2.2. Ticket Spinlocks

2.3. Dynamic List Structure

2.4. Tickless Kernel

2.5. Control Groups

- A list of tasks assigned to the cgroup

- Resources allocated to those tasks

- CPUsets

- Memory

- I/O

- Network (bandwidth)

2.6. Storage and File System Improvements

Ext4 is the default file system for Red Hat Enterprise Linux 6. It is the fourth generation version of the EXT file system family, supporting a theoretical maximum file system size of 1 exabyte, and single file maximum size of 16TB. Red Hat Enterprise Linux 6 supports a maximum file system size of 16TB, and a single file maximum size of 16TB. Other than a much larger storage capacity, ext4 also includes several new features, such as:

- Extent-based metadata

- Delayed allocation

- Journal check-summing

XFS is a robust and mature 64-bit journaling file system that supports very large files and file systems on a single host. This file system was originally developed by SGI, and has a long history of running on extremely large servers and storage arrays. XFS features include:

- Delayed allocation

- Dynamically-allocated inodes

- B-tree indexing for scalability of free space management

- Online defragmentation and file system growing

- Sophisticated metadata read-ahead algorithms

Traditional BIOS supports a maximum disk size of 2.2TB. Red Hat Enterprise Linux 6 systems using BIOS can support disks larger than 2.2TB by using a new disk structure called Global Partition Table (GPT). GPT can only be used for data disks; it cannot be used for boot drives with BIOS; therefore, boot drives can only be a maximum of 2.2TB in size. The BIOS was originally created for the IBM PC; while BIOS has evolved considerably to adapt to modern hardware, Unified Extensible Firmware Interface (UEFI) is designed to support new and emerging hardware.

Important

Important

Chapter 3. Monitoring and Analyzing System Performance

3.1. The proc File System

proc "file system" is a directory that contains a hierarchy of files that represent the current state of the Linux kernel. It allows applications and users to see the kernel's view of the system.

proc directory also contains information about the hardware of the system, and any currently running processes. Most of these files are read-only, but some files (primarily those in /proc/sys) can be manipulated by users and applications to communicate configuration changes to the kernel.

proc directory, see the Deployment Guide, available at http://access.redhat.com/site/documentation/Red_Hat_Enterprise_Linux/.

3.2. GNOME and KDE System Monitors

The GNOME System Monitor displays basic system information and allows you to monitor system processes, and resource or file system usage. Open it with the gnome-system-monitor command in the Terminal, or click on the menu, and select > .

- Displays basic information about the computer's hardware and software.

- Shows active processes, and the relationships between those processes, as well as detailed information about each process. It also lets you filter the processes displayed, and perform certain actions on those processes (start, stop, kill, change priority, etc.).

- Displays the current CPU time usage, memory and swap space usage, and network usage.

- Lists all mounted file systems alongside some basic information about each, such as the file system type, mount point, and memory usage.

The KDE System Guard allows you to monitor current system load and processes that are running. It also lets you perform actions on processes. Open it with the ksysguard command in the Terminal, or click on the and select > > .

- Displays a list of all running processes, alphabetically by default. You can also sort processes by a number of other properties, including total CPU usage, physical or shared memory usage, owner, and priority. You can also filter the visible results, search for specific processes, or perform certain actions on a process.

- Displays historical graphs of CPU usage, memory and swap space usage, and network usage. Hover over the graphs for detailed analysis and graph keys.

3.3. Performance Co-Pilot (PCP)

pmcd) is responsible for collecting performance data on the host system, and various client tools, such as pminfo or pmstat, can be used to retrieve, display, archive, and process this data on the same host or over the network. The pcp package provides the command-line tools and underlying functionality. The graphical tool also requires the pcp-gui package.

Resources

- For information on PCP, see the Index of Performance Co-Pilot (PCP) articles, solutions, tutorials and white papers on the Red Hat Customer Portal.

- The manual page named PCPIntro serves as an introduction to Performance Co-Pilot. It provides a list of available tools as well as a description of available configuration options and a list of related manual pages. By default, comprehensive documentation is installed in the

/usr/share/doc/pcp-doc/directory, notably the Performance Co-Pilot User's and Administrator's Guide and Performance Co-Pilot Programmer's Guide. - If you need to determine what PCP tool has the functionality of an older tool you are already familiar with, see the Side-by-side comparison of PCP tools with legacy tools Red Hat Knowledgebase article.

- See the official PCP documentation for an in-depth description of the Performance Co-Pilot and its usage. If you want to start using PCP on Red Hat Enterprise Linux quickly, see the PCP Quick Reference Guide. The official PCP website also contains a list of frequently asked questions.

Overview of System Services and Tools Provided by PCP

3.4. irqbalance

--oneshot option.

- --powerthresh

- Sets the number of CPUs that can idle before a CPU is placed into powersave mode. If more CPUs than the threshold are more than 1 standard deviation below the average softirq workload and no CPUs are more than one standard deviation above the average, and have more than one irq assigned to them, a CPU is placed into powersave mode. In powersave mode, a CPU is not part of irq balancing so that it is not woken unnecessarily.

- --hintpolicy

- Determines how irq kernel affinity hinting is handled. Valid values are

exact(irq affinity hint is always applied),subset(irq is balanced, but the assigned object is a subset of the affinity hint), orignore(irq affinity hint is ignored completely). - --policyscript

- Defines the location of a script to execute for each interrupt request, with the device path and irq number passed as arguments, and a zero exit code expected by irqbalance. The script defined can specify zero or more key value pairs to guide irqbalance in managing the passed irq.The following are recognized as valid key value pairs.

- ban

- Valid values are

true(exclude the passed irq from balancing) orfalse(perform balancing on this irq). - balance_level

- Allows user override of the balance level of the passed irq. By default the balance level is based on the PCI device class of the device that owns the irq. Valid values are

none,package,cache, orcore. - numa_node

- Allows user override of the NUMA node that is considered local to the passed irq. If information about the local node is not specified in ACPI, devices are considered equidistant from all nodes. Valid values are integers (starting from 0) that identify a specific NUMA node, and

-1, which specifies that an irq should be considered equidistant from all nodes.

- --banirq

- The interrupt with the specified interrupt request number is added to the list of banned interrupts.

IRQBALANCE_BANNED_CPUS environment variable to specify a mask of CPUs that are ignored by irqbalance.

man irqbalance

$ man irqbalance3.5. Built-in Command-line Monitoring Tools

top

man top.

ps

man ps.

vmstat

man vmstat.

sar

man sar.

3.5.1. Getting Information about Pages paged in and Pages paged out

vmstat utility and the sar utility to get the information about Pages paged in (PGPGIN) and Pages paged out (PGPGOUT). Pages paged in are the blocks of data recorded to the memory. Pages paged out are the blocks of data recorded from the memory.

vmstat, use the -s option:

vmstat -s

~]$ vmstat -s/proc/vmstat file on the lines beginning with pgpgin and pgpgout.

- PGPGIN are the blocks of data recorded from any location to the memory

- PGPGOUT are the blocks of data recorded from the memory to any location

sar, use the -B option:

sar -B

~]$ sar -B- PGPGIN are the blocks of data recorded from disks to the memory

- PGPGOUT are the blocks of data recorded from the memory to disks

/var/log/sa/sadd file, where dd is the day in the month. For example, you can choose to get the average values for all 10-minute periods from the system start to the current moment. The resulting values are in kilobytes per second.

3.6. Tuned and ktune

default- The default power-saving profile. This is the most basic power-saving profile. It enables only the disk and CPU plug-ins. Note that this is not the same as turning tuned-adm off, where both tuned and ktune are disabled.

latency-performance- A server profile for typical latency performance tuning. This profile disables dynamic tuning mechanisms and transparent hugepages. It uses the

performancegoverner for p-states throughcpuspeed, and sets the I/O scheduler todeadline. Additionally, in Red Hat Enterprise Linux 6.5 and later, the profile requests acpu_dma_latencyvalue of1. In Red Hat Enterprise Linux 6.4 and earlier,cpu_dma_latencyrequested a value of0. throughput-performance- A server profile for typical throughput performance tuning. This profile is recommended if the system does not have enterprise-class storage. throughput-performance disables power saving mechanisms and enables the

deadlineI/O scheduler. The CPU governor is set toperformance.kernel.sched_min_granularity_ns(scheduler minimal preemption granularity) is set to10milliseconds,kernel.sched_wakeup_granularity_ns(scheduler wake-up granularity) is set to15milliseconds,vm.dirty_ratio(virtual memory dirty ratio) is set to 40%, and transparent huge pages are enabled. enterprise-storage- This profile is recommended for enterprise-sized server configurations with enterprise-class storage, including battery-backed controller cache protection and management of on-disk cache. It is the same as the

throughput-performanceprofile, with one addition: file systems are re-mounted withbarrier=0. virtual-guest- This profile is optimized for virtual machines. It is based on the

enterprise-storageprofile, but also decreases the swappiness of virtual memory. This profile is available in Red Hat Enterprise Linux 6.3 and later. virtual-host- Based on the

enterprise-storageprofile,virtual-hostdecreases the swappiness of virtual memory and enables more aggressive writeback of dirty pages. Non-root and non-boot file systems are mounted withbarrier=0. Additionally, as of Red Hat Enterprise Linux 6.5, thekernel.sched_migration_costparameter is set to5milliseconds. Prior to Red Hat Enterprise Linux 6.5,kernel.sched_migration_costused the default value of0.5milliseconds. This profile is available in Red Hat Enterprise Linux 6.3 and later.

3.7. Application Profilers

3.7.1. SystemTap

/usr/share/doc/systemtap-client-version/examples directory.

Network monitoring scripts (in examples/network)

nettop.stp- Every 5 seconds, prints a list of processes (process identifier and command) with the number of packets sent and received and the amount of data sent and received by the process during that interval.

socket-trace.stp- Instruments each of the functions in the Linux kernel's

net/socket.cfile, and prints trace data. tcp_connections.stp- Prints information for each new incoming TCP connection accepted by the system. The information includes the UID, the command accepting the connection, the process identifier of the command, the port the connection is on, and the IP address of the originator of the request.

dropwatch.stp- Every 5 seconds, prints the number of socket buffers freed at locations in the kernel. Use the

--all-modulesoption to see symbolic names.

Storage monitoring scripts (in examples/io)

disktop.stp- Checks the status of reading/writing disk every 5 seconds and outputs the top ten entries during that period.

iotime.stp- Prints the amount of time spent on read and write operations, and the number of bytes read and written.

traceio.stp- Prints the top ten executables based on cumulative I/O traffic observed, every second.

traceio2.stp- Prints the executable name and process identifier as reads and writes to the specified device occur.

inodewatch.stp- Prints the executable name and process identifier each time a read or write occurs to the specified inode on the specified major/minor device.

inodewatch2.stp- Prints the executable name, process identifier, and attributes each time the attributes are changed on the specified inode on the specified major/minor device.

latencytap.stp script records the effect that different types of latency have on one or more processes. It prints a list of latency types every 30 seconds, sorted in descending order by the total time the process or processes spent waiting. This can be useful for identifying the cause of both storage and network latency. Red Hat recommends using the --all-modules option with this script to better enable the mapping of latency events. By default, this script is installed to the /usr/share/doc/systemtap-client-version/examples/profiling directory.

3.7.2. OProfile

- Performance monitoring samples may not be precise - because the processor may execute instructions out of order, a sample may be recorded from a nearby instruction, instead of the instruction that triggered the interrupt.

- Because OProfile is system-wide and expects processes to start and stop multiple times, samples from multiple runs are allowed to accumulate. This means you may need to clear sample data from previous runs.

- It focuses on identifying problems with CPU-limited processes, and therefore does not identify processes that are sleeping while they wait on locks for other events.

/usr/share/doc/oprofile-<version>.

3.7.3. Valgrind

man valgrind command when the valgrind package is installed. Accompanying documentation can also be found in:

/usr/share/doc/valgrind-<version>/valgrind_manual.pdf/usr/share/doc/valgrind-<version>/html/index.html

3.7.4. Perf

perf stat- This command provides overall statistics for common performance events, including instructions executed and clock cycles consumed. You can use the option flags to gather statistics on events other than the default measurement events. As of Red Hat Enterprise Linux 6.4, it is possible to use

perf statto filter monitoring based on one or more specified control groups (cgroups). For further information, read the man page:man perf-stat. perf record- This command records performance data into a file which can be later analyzed using

perf report. For further details, read the man page:man perf-record.As of Red Hat Enterprise Linux 6.6, the-band-joptions are provided to allow statistical sampling of taken branches. The-boption samples any branches taken, while the-joption can be adjusted to sample branches of different types, such as user level or kernel level branches. perf report- This command reads the performance data from a file and analyzes the recorded data. For further details, read the man page:

man perf-report. perf list- This command lists the events available on a particular machine. These events will vary based on the performance monitoring hardware and the software configuration of the system. For further information, read the man page:

man perf-list. perf mem- Available as of Red Hat Enterprise Linux 6.6. This command profiles the frequency of each type of memory access operation performed by a specified application or command. This allows a user to see the frequency of load and store operations performed by the profiled application. For further information, read the man page:

man perf-mem. perf top- This command performs a similar function to the top tool. It generates and displays a performance counter profile in realtime. For further information, read the man page:

man perf-top. perf trace- This command performs a similar function to the strace tool. It monitors the system calls used by a specified thread or process and all signals received by that application. Additional trace targets are available; refer to the man page for a full list.

3.8. Red Hat Enterprise MRG

- BIOS parameters related to power management, error detection, and system management interrupts;

- network settings, such as interrupt coalescing, and the use of TCP;

- journaling activity in journaling file systems;

- system logging;

- whether interrupts and user processes are handled by a specific CPU or range of CPUs;

- whether swap space is used; and

- how to deal with out-of-memory exceptions.

Chapter 4. CPU

Topology

Threads

Interrupts

4.1. CPU Topology

4.1.1. CPU and NUMA Topology

- Serial buses

- NUMA topologies

- What is the topology of the system?

- Where is the application currently executing?

- Where is the closest memory bank?

4.1.2. Tuning CPU Performance

- A CPU (any of 0-3) presents the memory address to the local memory controller.

- The memory controller sets up access to the memory address.

- The CPU performs read or write operations on that memory address.

Figure 4.1. Local and Remote Memory Access in NUMA Topology

- A CPU (any of 0-3) presents the remote memory address to the local memory controller.

- The CPU's request for that remote memory address is passed to a remote memory controller, local to the node containing that memory address.

- The remote memory controller sets up access to the remote memory address.

- The CPU performs read or write operations on that remote memory address.

- the topology of the system (how its components are connected),

- the core on which the application executes, and

- the location of the closest memory bank.

4.1.2.1. Setting CPU Affinity with taskset

0x00000001 represents processor 0, and 0x00000003 represents processors 0 and 1.

taskset -p mask pid

# taskset -p mask pidtaskset mask -- program

# taskset mask -- program-c option to provide a comma-delimited list of separate processors, or a range of processors, like so:

taskset -c 0,5,7-9 -- myprogram

# taskset -c 0,5,7-9 -- myprogramman taskset.

4.1.2.2. Controlling NUMA Policy with numactl

numactl runs processes with a specified scheduling or memory placement policy. The selected policy is set for that process and all of its children. numactl can also set a persistent policy for shared memory segments or files, and set the CPU affinity and memory affinity of a process. It uses the /sys file system to determine system topology.

/sys file system contains information about how CPUs, memory, and peripheral devices are connected via NUMA interconnects. Specifically, the /sys/devices/system/cpu directory contains information about how a system's CPUs are connected to one another. The /sys/devices/system/node directory contains information about the NUMA nodes in the system, and the relative distances between those nodes.

--show- Display the NUMA policy settings of the current process. This parameter does not require further parameters, and can be used like so:

numactl --show. --hardware- Displays an inventory of the available nodes on the system.

--membind- Only allocate memory from the specified nodes. When this is in use, allocation will fail if memory on these nodes is insufficient. Usage for this parameter is

numactl --membind=nodes program, where nodes is the list of nodes you want to allocate memory from, and program is the program whose memory requirements should be allocated from that node. Node numbers can be given as a comma-delimited list, a range, or a combination of the two. Further details are available on the numactl man page:man numactl. --cpunodebind- Only execute a command (and its child processes) on CPUs belonging to the specified node(s). Usage for this parameter is

numactl --cpunodebind=nodes program, where nodes is the list of nodes to whose CPUs the specified program (program) should be bound. Node numbers can be given as a comma-delimited list, a range, or a combination of the two. Further details are available on the numactl man page:man numactl. --physcpubind- Only execute a command (and its child processes) on the specified CPUs. Usage for this parameter is

numactl --physcpubind=cpu program, where cpu is a comma-delimited list of physical CPU numbers as displayed in the processor fields of/proc/cpuinfo, and program is the program that should execute only on those CPUs. CPUs can also be specified relative to the currentcpuset. Refer to the numactl man page for further information:man numactl. --localalloc- Specifies that memory should always be allocated on the current node.

--preferred- Where possible, memory is allocated on the specified node. If memory cannot be allocated on the node specified, fall back to other nodes. This option takes only a single node number, like so:

numactl --preferred=node. Refer to the numactl man page for further information:man numactl.

man numa(7).

4.1.3. Hardware performance policy (x86_energy_perf_policy)

CPUID.06H.ECX.bit3.

x86_energy_perf_policy requires root privileges, and operates on all CPUs by default.

x86_energy_perf_policy -r

# x86_energy_perf_policy -rx86_energy_perf_policy profile_name

# x86_energy_perf_policy profile_nameperformance- The processor is unwilling to sacrifice any performance for the sake of saving energy. This is the default value.

normal- The processor tolerates minor performance compromises for potentially significant energy savings. This is a reasonable setting for most desktops and servers.

powersave- The processor accepts potentially significant hits to performance in order to maximise energy efficiency.

man x86_energy_perf_policy.

4.1.4. turbostat

root privileges to run. It also requires processor support for invariant time stamp counters, and APERF and MPERF model-specific registers.

- pkg

- The processor package number.

- core

- The processor core number.

- CPU

- The Linux CPU (logical processor) number.

- %c0

- The percentage of the interval for which the CPU retired instructions.

- GHz

- The average clock speed while the CPU was in the c0 state. When this number is higher than the value in TSC, the CPU is in turbo mode.

- TSC

- The average clock speed over the course of the entire interval. When this number is lower than the value in TSC, the CPU is in turbo mode.

- %c1, %c3, and %c6

- The percentage of the interval for which the processor was in the c1, c3, or c6 state, respectively.

- %pc3 or %pc6

- The percentage of the interval for which the processor was in the pc3 or pc6 state, respectively.

-i option, for example, run turbostat -i 10 to print results every 10 seconds instead.

Note

man turbostat.

4.1.5. numastat

Important

numastat, with no options or parameters) maintains strict compatibility with the previous version of the tool, note that supplying options or parameters to this command significantly changes both the output content and its format.

numastat displays how many pages of memory are occupied by the following event categories for each node.

numa_miss and numa_foreign values.

Default Tracking Categories

- numa_hit

- The number of attempted allocations to this node that were successful.

- numa_miss

- The number of attempted allocations to another node that were allocated on this node because of low memory on the intended node. Each

numa_missevent has a correspondingnuma_foreignevent on another node. - numa_foreign

- The number of allocations initially intended for this node that were allocated to another node instead. Each

numa_foreignevent has a correspondingnuma_missevent on another node. - interleave_hit

- The number of attempted interleave policy allocations to this node that were successful.

- local_node

- The number of times a process on this node successfully allocated memory on this node.

- other_node

- The number of times a process on another node allocated memory on this node.

-c- Horizontally condenses the displayed table of information. This is useful on systems with a large number of NUMA nodes, but column width and inter-column spacing are somewhat unpredictable. When this option is used, the amount of memory is rounded to the nearest megabyte.

-m- Displays system-wide memory usage information on a per-node basis, similar to the information found in

/proc/meminfo. -n- Displays the same information as the original

numastatcommand (numa_hit, numa_miss, numa_foreign, interleave_hit, local_node, and other_node), with an updated format, using megabytes as the unit of measurement. -p pattern- Displays per-node memory information for the specified pattern. If the value for pattern is comprised of digits, numastat assumes that it is a numerical process identifier. Otherwise, numastat searches process command lines for the specified pattern.Command line arguments entered after the value of the

-poption are assumed to be additional patterns for which to filter. Additional patterns expand, rather than narrow, the filter. -s- Sorts the displayed data in descending order so that the biggest memory consumers (according to the

totalcolumn) are listed first.Optionally, you can specify a node, and the table will be sorted according to the node column. When using this option, the node value must follow the-soption immediately, as shown here:numastat -s2

numastat -s2Copy to Clipboard Copied! Toggle word wrap Toggle overflow Do not include white space between the option and its value. -v- Displays more verbose information. Namely, process information for multiple processes will display detailed information for each process.

-V- Displays numastat version information.

-z- Omits table rows and columns with only zero values from the displayed information. Note that some near-zero values that are rounded to zero for display purposes will not be omitted from the displayed output.

4.1.6. NUMA Affinity Management Daemon (numad)

/proc file system to monitor available system resources on a per-node basis. The daemon then attempts to place significant processes on NUMA nodes that have sufficient aligned memory and CPU resources for optimum NUMA performance. Current thresholds for process management are at least 50% of one CPU and at least 300 MB of memory. numad attempts to maintain a resource utilization level, and rebalances allocations when necessary by moving processes between NUMA nodes.

-w option for pre-placement advice: man numad.

4.1.6.1. Benefits of numad

4.1.6.2. Modes of operation

Note

/sys/kernel/mm/ksm/merge_nodes tunable to 0 to avoid merging pages across NUMA nodes. Kernel memory accounting statistics can eventually contradict each other after large amounts of cross-node merging. As such, numad can become confused after the KSM daemon merges large amounts of memory. If your system has a large amount of free memory, you may achieve higher performance by turning off and disabling the KSM daemon.

- as a service

- as an executable

4.1.6.2.1. Using numad as a service

service numad start

# service numad startchkconfig numad on

# chkconfig numad on4.1.6.2.2. Using numad as an executable

numad

# numad/var/log/numad.log.

numad -S 0 -p pid

# numad -S 0 -p pid-p pid- Adds the specified pid to an explicit inclusion list. The process specified will not be managed until it meets the numad process significance threshold.

-S mode- The

-Sparameter specifies the type of process scanning. Setting it to0as shown limits numad management to explicitly included processes.

numad -i 0

# numad -i 0man numad.

4.1.7. Dynamic Resource Affinity on Power Architecture

powerpc-utils and ppc64-diag packages process the event and update the system with the new CPU or memory affinity information.

4.2. CPU Scheduling

- Realtime policies

- SCHED_FIFO

- SCHED_RR

- Normal policies

- SCHED_OTHER

- SCHED_BATCH

- SCHED_IDLE

4.2.1. Realtime scheduling policies

SCHED_FIFO- This policy is also referred to as static priority scheduling, because it defines a fixed priority (between 1 and 99) for each thread. The scheduler scans a list of SCHED_FIFO threads in priority order and schedules the highest priority thread that is ready to run. This thread runs until it blocks, exits, or is preempted by a higher priority thread that is ready to run.Even the lowest priority realtime thread will be scheduled ahead of any thread with a non-realtime policy; if only one realtime thread exists, the

SCHED_FIFOpriority value does not matter. SCHED_RR- A round-robin variant of the

SCHED_FIFOpolicy.SCHED_RRthreads are also given a fixed priority between 1 and 99. However, threads with the same priority are scheduled round-robin style within a certain quantum, or time slice. Thesched_rr_get_interval(2)system call returns the value of the time slice, but the duration of the time slice cannot be set by a user. This policy is useful if you need multiple thread to run at the same priority.

SCHED_FIFO threads run until they block, exit, or are pre-empted by a thread with a higher priority. Setting a priority of 99 is therefore not recommended, as this places your process at the same priority level as migration and watchdog threads. If these threads are blocked because your thread goes into a computational loop, they will not be able to run. Uniprocessor systems will eventually lock up in this situation.

SCHED_FIFO policy includes a bandwidth cap mechanism. This protects realtime application programmers from realtime tasks that might monopolize the CPU. This mechanism can be adjusted through the following /proc file system parameters:

/proc/sys/kernel/sched_rt_period_us- Defines the time period to be considered one hundred percent of CPU bandwidth, in microseconds ('us' being the closest equivalent to 'µs' in plain text). The default value is 1000000µs, or 1 second.

/proc/sys/kernel/sched_rt_runtime_us- Defines the time period to be devoted to running realtime threads, in microseconds ('us' being the closest equivalent to 'µs' in plain text). The default value is 950000µs, or 0.95 seconds.

4.2.2. Normal scheduling policies

SCHED_OTHER, SCHED_BATCH and SCHED_IDLE. However, the SCHED_BATCH and SCHED_IDLE policies are intended for very low priority jobs, and as such are of limited interest in a performance tuning guide.

SCHED_OTHER, orSCHED_NORMAL- The default scheduling policy. This policy uses the Completely Fair Scheduler (CFS) to provide fair access periods for all threads using this policy. CFS establishes a dynamic priority list partly based on the

nicenessvalue of each process thread. (Refer to the Deployment Guide for more details about this parameter and the/procfile system.) This gives users some indirect level of control over process priority, but the dynamic priority list can only be directly changed by the CFS.

4.2.3. Policy Selection

SCHED_OTHER and let the system manage CPU utilization for you.

SCHED_FIFO. If you have a small number of threads, consider isolating a CPU socket and moving your threads onto that socket's cores so that there are no other threads competing for time on the cores.

4.3. Interrupts and IRQ Tuning

/proc/interrupts file lists the number of interrupts per CPU per I/O device. It displays the IRQ number, the number of that interrupt handled by each CPU core, the interrupt type, and a comma-delimited list of drivers that are registered to receive that interrupt. (Refer to the proc(5) man page for further details: man 5 proc)

smp_affinity, which defines the CPU cores that are allowed to execute the ISR for that IRQ. This property can be used to improve application performance by assigning both interrupt affinity and the application's thread affinity to one or more specific CPU cores. This allows cache line sharing between the specified interrupt and application threads.

/proc/irq/IRQ_NUMBER/smp_affinity file, which can be viewed and modified by the root user. The value stored in this file is a hexadecimal bit-mask representing all CPU cores in the system.

grep eth0 /proc/interrupts 32: 0 140 45 850264 PCI-MSI-edge eth0

# grep eth0 /proc/interrupts

32: 0 140 45 850264 PCI-MSI-edge eth0smp_affinity file:

cat /proc/irq/32/smp_affinity f

# cat /proc/irq/32/smp_affinity

ff, meaning that the IRQ can be serviced on any of the CPUs in the system. Setting this value to 1, as follows, means that only CPU 0 can service this interrupt:

echo 1 >/proc/irq/32/smp_affinity cat /proc/irq/32/smp_affinity 1

# echo 1 >/proc/irq/32/smp_affinity

# cat /proc/irq/32/smp_affinity

1smp_affinity values for discrete 32-bit groups. This is required on systems with more than 32 cores. For example, the following example shows that IRQ 40 is serviced on all cores of a 64-core system:

cat /proc/irq/40/smp_affinity ffffffff,ffffffff

# cat /proc/irq/40/smp_affinity

ffffffff,ffffffffecho 0xffffffff,00000000 > /proc/irq/40/smp_affinity cat /proc/irq/40/smp_affinity ffffffff,00000000

# echo 0xffffffff,00000000 > /proc/irq/40/smp_affinity

# cat /proc/irq/40/smp_affinity

ffffffff,00000000Note

smp_affinity of an IRQ sets up the hardware so that the decision to service an interrupt with a particular CPU is made at the hardware level, with no intervention from the kernel.

4.4. CPU Frequency Governors

cpufreq governors determine the frequency of a CPU at any given time based on a set of rules about the kind of behavior that prompts a change to a higher or lower frequency.

cpufreq_ondemand governor for most situations, as this governor provides better performance through a higher CPU frequency when the system is under load, and power savings through a lower CPU frequency when the system is not being heavily used.

Note

sysfs parameter io_is_busy. If this is set to 1, Input/Output (I/O) activity is included in CPU activity calculations, and if set to 0 it is excluded. The default setting is 1. If I/O activity is excluded, the ondemand governor may reduce the CPU frequency to reduce power usage, resulting in slower I/O operations.

io_is_busy parameter is /sys/devices/system/cpu/cpufreq/ondemand/io_is_busy.

cpufreq_performance governor. This governor uses the highest possible CPU frequency to ensure that tasks execute as quickly as possible. This governor does not make use of power saving mechanisms such as sleep or idle, and is therefore not recommended for data centers or similar large deployments.

Procedure 4.1. Enabling and configuring a governor

- Ensure that cpupowerutils is installed:

yum install cpupowerutils

# yum install cpupowerutilsCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Check that the driver you want to use is available.

cpupower frequency-info --governors

# cpupower frequency-info --governorsCopy to Clipboard Copied! Toggle word wrap Toggle overflow - If the driver you want is not available, use the

modprobecommand to add it to your system. For example, to add theondemandgovernor, run:modprobe cpufreq_ondemand

# modprobe cpufreq_ondemandCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Set the governor temporarily by using the cpupower command line tool. For example, to set the

ondemandgovernor, run:cpupower frequency-set --governor ondemand

# cpupower frequency-set --governor ondemandCopy to Clipboard Copied! Toggle word wrap Toggle overflow

4.5. Enhancements to NUMA in Red Hat Enterprise Linux 6

4.5.1. Bare-metal and Scalability Optimizations

4.5.1.1. Enhancements in topology-awareness

- enhanced topology detection

- This allows the operating system to detect low-level hardware details (such as logical CPUs, hyper threads, cores, sockets, NUMA nodes and access times between nodes) at boot time, and optimize processing on your system.

- completely fair scheduler

- This new scheduling mode ensures that runtime is shared evenly between eligible processes. Combining this with topology detection allows processes to be scheduled onto CPUs within the same socket to avoid the need for expensive remote memory access, and ensure that cache content is preserved wherever possible.

mallocmallocis now optimized to ensure that the regions of memory that are allocated to a process are as physically close as possible to the core on which the process is executing. This increases memory access speeds.- skbuff I/O buffer allocation

- Similarly to

malloc, this is now optimized to use memory that is physically close to the CPU handling I/O operations such as device interrupts. - device interrupt affinity

- Information recorded by device drivers about which CPU handles which interrupts can be used to restrict interrupt handling to CPUs within the same physical socket, preserving cache affinity and limiting high-volume cross-socket communication.

4.5.1.2. Enhancements in Multi-processor Synchronization

- Read-Copy-Update (RCU) locks

- Typically, 90% of locks are acquired for read-only purposes. RCU locking removes the need to obtain an exclusive-access lock when the data being accessed is not being modified. This locking mode is now used in page cache memory allocation: locking is now used only for allocation or deallocation operations.

- per-CPU and per-socket algorithms

- Many algorithms have been updated to perform lock coordination among cooperating CPUs on the same socket to allow for more fine-grained locking. Numerous global spinlocks have been replaced with per-socket locking methods, and updated memory allocator zones and related memory page lists allow memory allocation logic to traverse a more efficient subset of the memory mapping data structures when performing allocation or deallocation operations.

4.5.2. Virtualization Optimizations

- CPU pinning

- Virtual guests can be bound to run on a specific socket in order to optimize local cache use and remove the need for expensive inter-socket communications and remote memory access.

- transparent hugepages (THP)

- With THP enabled, the system automatically performs NUMA-aware memory allocation requests for large contiguous amounts of memory, reducing both lock contention and the number of translation lookaside buffer (TLB) memory management operations required and yielding a performance increase of up to 20% in virtual guests.

- kernel-based I/O implementation

- The virtual guest I/O subsystem is now implemented in the kernel, greatly reducing the expense of inter-node communication and memory access by avoiding a significant amount of context switching, and synchronization and communication overhead.

Chapter 5. Memory

5.1. Huge Translation Lookaside Buffer (HugeTLB)

/usr/share/doc/kernel-doc-version/Documentation/vm/hugetlbpage.txt

5.2. Huge Pages and Transparent Huge Pages

- Increase the number of page table entries in the hardware memory management unit

- Increase the page size

5.2.1. Configure Huge Pages

Huge pages kernel options

- hugepages

- Defines the number of persistent huge pages configured in the kernel at boot time. The default value is

0. It is only possible to allocate (or deallocate) huge pages if there are sufficient physically contiguous free pages in the system. Pages reserved by this parameter cannot be used for other purposes.Default size huge pages can be dynamically allocated or deallocated by changing the value of the/proc/sys/vm/nr_hugepagesfile.In a NUMA system, huge pages assigned with this parameter are divided equally between nodes. You can assign huge pages to specific nodes at runtime by changing the value of the node's/sys/devices/system/node/node_id/hugepages/hugepages-1048576kB/nr_hugepagesfile.For more information, read the relevant kernel documentation, which is installed in/usr/share/doc/kernel-doc-kernel_version/Documentation/vm/hugetlbpage.txtby default. This documentation is available only if the kernel-doc package is installed. - hugepagesz

- Defines the size of persistent huge pages configured in the kernel at boot time. Valid values are 2 MB and 1 GB. The default value is 2 MB.

- default_hugepagesz

- Defines the default size of persistent huge pages configured in the kernel at boot time. Valid values are 2 MB and 1 GB. The default value is 2 MB.

5.3. Using Valgrind to Profile Memory Usage

valgrind --tool=toolname program

valgrind --tool=toolname programmemcheck, massif, or cachegrind), and program with the program you wish to profile with Valgrind. Be aware that Valgrind's instrumentation will cause your program to run more slowly than it would normally.

man valgrind command when the valgrind package is installed, or found in the following locations:

/usr/share/doc/valgrind-version/valgrind_manual.pdf, and/usr/share/doc/valgrind-version/html/index.html.

5.3.1. Profiling Memory Usage with Memcheck

valgrind program, without specifying --tool=memcheck. It detects and reports on a number of memory errors that can be difficult to detect and diagnose, such as memory access that should not occur, the use of undefined or uninitialized values, incorrectly freed heap memory, overlapping pointers, and memory leaks. Programs run ten to thirty times more slowly with Memcheck than when run normally.

/usr/share/doc/valgrind-version/valgrind_manual.pdf.

--leak-check- When enabled, Memcheck searches for memory leaks when the client program finishes. The default value is

summary, which outputs the number of leaks found. Other possible values areyesandfull, both of which give details of each individual leak, andno, which disables memory leak checking. --undef-value-errors- When enabled (set to

yes), Memcheck reports errors when undefined values are used. When disabled (set tono), undefined value errors are not reported. This is enabled by default. Disabling it speeds up Memcheck slightly. --ignore-ranges- Allows the user to specify one or more ranges that Memcheck should ignore when checking for addressability. Multiple ranges are delimited by commas, for example,

--ignore-ranges=0xPP-0xQQ,0xRR-0xSS.

/usr/share/doc/valgrind-version/valgrind_manual.pdf.

5.3.2. Profiling Cache Usage with Cachegrind

valgrind --tool=cachegrind program

# valgrind --tool=cachegrind program- first-level instruction cache reads (or instructions executed) and read misses, and last-level cache instruction read misses;

- data cache reads (or memory reads), read misses, and last-level cache data read misses;

- data cache writes (or memory writes), write misses, and last-level cache write misses;

- conditional branches executed and mispredicted; and

- indirect branches executed and mispredicted.

cachegrind.out.pid by default, where pid is the process ID of the program on which you ran Cachegrind). This file can be further processed by the accompanying cg_annotate tool, like so:

cg_annotate cachegrind.out.pid

# cg_annotate cachegrind.out.pidNote

cg_diff first second

# cg_diff first second--I1- Specifies the size, associativity, and line size of the first-level instruction cache, separated by commas:

--I1=size,associativity,line size. --D1- Specifies the size, associativity, and line size of the first-level data cache, separated by commas:

--D1=size,associativity,line size. --LL- Specifies the size, associativity, and line size of the last-level cache, separated by commas:

--LL=size,associativity,line size. --cache-sim- Enables or disables the collection of cache access and miss counts. The default value is

yes(enabled).Note that disabling both this and--branch-simleaves Cachegrind with no information to collect. --branch-sim- Enables or disables the collection of branch instruction and misprediction counts. This is set to

no(disabled) by default, since it slows Cachegrind by approximately 25 per-cent.Note that disabling both this and--cache-simleaves Cachegrind with no information to collect.

/usr/share/doc/valgrind-version/valgrind_manual.pdf.

5.3.3. Profiling Heap and Stack Space with Massif

massif as the Valgrind tool you wish to use:

valgrind --tool=massif program

# valgrind --tool=massif programmassif.out.pid, where pid is the process ID of the specified program.

ms_print command, like so:

ms_print massif.out.pid

# ms_print massif.out.pid--heap- Specifies whether to perform heap profiling. The default value is

yes. Heap profiling can be disabled by setting this option tono. --heap-admin- Specifies the number of bytes per block to use for administration when heap profiling is enabled. The default value is

8bytes per block. --stacks- Specifies whether to perform stack profiling. The default value is

no(disabled). To enable stack profiling, set this option toyes, but be aware that doing so will greatly slow Massif. Also note that Massif assumes that the main stack has size zero at start-up in order to better indicate the size of the stack portion over which the program being profiled has control. --time-unit- Specifies the unit of time used for the profiling. There are three valid values for this option: instructions executed (

i), the default value, which is useful in most cases; real time (ms, in milliseconds), which can be useful in certain instances; and bytes allocated/deallocated on the heap and/or stack (B), which is useful for very short-run programs, and for testing purposes, because it is the most reproducible across different machines. This option is useful when graphing Massif output withms_print.

/usr/share/doc/valgrind-version/valgrind_manual.pdf.

5.4. Capacity Tuning

overcommit_memory temporarily to 1, run:

echo 1 > /proc/sys/vm/overcommit_memory

# echo 1 > /proc/sys/vm/overcommit_memorysysctl command. For information on how to use sysctl, see E.4. Using the sysctl Command in the Red Hat Enterprise Linux 6 Deployment Guide.

/proc/meminfo file provides the MemAvailable field. To determine how much memory is available, run:

cat /proc/meminfo | grep MemAvailable

# cat /proc/meminfo | grep MemAvailable

Capacity-related Memory Tunables

/proc/sys/vm/ in the proc file system.

overcommit_memory- Defines the conditions that determine whether a large memory request is accepted or denied. There are three possible values for this parameter:

0— The default setting. The kernel performs heuristic memory overcommit handling by estimating the amount of memory available and failing requests that are blatantly invalid. Unfortunately, since memory is allocated using a heuristic rather than a precise algorithm, this setting can sometimes allow available memory on the system to be overloaded.1— The kernel performs no memory overcommit handling. Under this setting, the potential for memory overload is increased, but so is performance for memory-intensive tasks.2— The kernel denies requests for memory equal to or larger than the sum of total available swap and the percentage of physical RAM specified inovercommit_ratio. This setting is best if you want a lesser risk of memory overcommitment.Note

This setting is only recommended for systems with swap areas larger than their physical memory.

overcommit_ratio- Specifies the percentage of physical RAM considered when

overcommit_memoryis set to2. The default value is50. max_map_count- Defines the maximum number of memory map areas that a process may use. In most cases, the default value of

65530is appropriate. Increase this value if your application needs to map more than this number of files. nr_hugepages- Defines the number of hugepages configured in the kernel. The default value is 0. It is only possible to allocate (or deallocate) hugepages if there are sufficient physically contiguous free pages in the system. Pages reserved by this parameter cannot be used for other purposes. Further information is available from the installed documentation:

/usr/share/doc/kernel-doc-kernel_version/Documentation/vm/hugetlbpage.txt.For an Oracle database workload, Red Hat recommends configuring a number of hugepages equivalent to slightly more than the total size of the system global area of all databases running on the system. 5 additional hugepages per database instance is sufficient.

Capacity-related Kernel Tunables

/proc/sys/kernel/ directory, can be calculated by the kernel at boot time depending on available system resources.

getconf PAGE_SIZE

# getconf PAGE_SIZEgrep Hugepagesize /proc/meminfo

# grep Hugepagesize /proc/meminfomsgmax- Defines the maximum allowable size in bytes of any single message in a message queue. This value must not exceed the size of the queue (

msgmnb). To determine the currentmsgmaxvalue on your system, enter:sysctl kernel.msgmax

# sysctl kernel.msgmaxCopy to Clipboard Copied! Toggle word wrap Toggle overflow msgmnb- Defines the maximum size in bytes of a single message queue. To determine the current

msgmnbvalue on your system, enter:sysctl kernel.msgmnb

# sysctl kernel.msgmnbCopy to Clipboard Copied! Toggle word wrap Toggle overflow msgmni- Defines the maximum number of message queue identifiers (and therefore the maximum number of queues). To determine the current

msgmnivalue on your system, enter:sysctl kernel.msgmni

# sysctl kernel.msgmniCopy to Clipboard Copied! Toggle word wrap Toggle overflow - sem

- Semaphores, counters that help synchronize processes and threads, are generally configured to assist with database workloads. Recommended values vary between databases. See your database documentation for details about semaphore values.This parameter takes four values, separated by spaces, that represent SEMMSL, SEMMNS, SEMOPM, and SEMMNI respectively.

shmall- Defines the total number of shared memory pages that can be used on the system at one time. For database workloads, Red Hat recommends that this value is set to the result of

shmmaxdivided by the hugepage size. However, Red Hat recommends checking your vendor documentation for recommended values. To determine the currentshmallvalue on your system, enter:sysctl kernel.shmall

sysctl kernel.shmallCopy to Clipboard Copied! Toggle word wrap Toggle overflow shmmax- Defines the maximum shared memory segment allowed by the kernel, in bytes. For database workloads, Red Hat recommends a value no larger than 75% of the total memory on the system. However, Red Hat recommends checking your vendor documentation for recommended values. To determine the current

shmmaxvalue on your system, enter:sysctl kernel.shmmax

# sysctl kernel.shmmaxCopy to Clipboard Copied! Toggle word wrap Toggle overflow shmmni- Defines the system-wide maximum number of shared memory segments. The default value is

4096on all systems. threads-max- Defines the system-wide maximum number of threads (tasks) to be used by the kernel at one time. To determine the current

threads-maxvalue on your system, enter:sysctl kernel.threads-max

# sysctl kernel.threads-maxCopy to Clipboard Copied! Toggle word wrap Toggle overflow The default value is the result of:mempages / (8 * THREAD_SIZE / PAGE_SIZE )

mempages / (8 * THREAD_SIZE / PAGE_SIZE )Copy to Clipboard Copied! Toggle word wrap Toggle overflow The minimum value ofthreads-maxis20.

Capacity-related File System Tunables

/proc/sys/fs/ in the proc file system.

aio-max-nr- Defines the maximum allowed number of events in all active asynchronous I/O contexts. The default value is

65536. Note that changing this value does not pre-allocate or resize any kernel data structures. file-max- Lists the maximum number of file handles that the kernel allocates. The default value matches the value of

files_stat.max_filesin the kernel, which is set to the largest value out of either(mempages * (PAGE_SIZE / 1024)) / 10, orNR_FILE(8192 in Red Hat Enterprise Linux). Raising this value can resolve errors caused by a lack of available file handles.

Out-of-Memory Kill Tunables

/proc/sys/vm/panic_on_oom parameter to 0 instructs the kernel to call the oom_killer function when OOM occurs. Usually, oom_killer can kill rogue processes and the system survives.

oom_killer function. It is located under /proc/pid/ in the proc file system, where pid is the process ID number.

oom_adj- Defines a value from

-16to15that helps determine theoom_scoreof a process. The higher theoom_scorevalue, the more likely the process will be killed by theoom_killer. Setting aoom_adjvalue of-17disables theoom_killerfor that process.Important

Any processes spawned by an adjusted process will inherit that process'soom_score. For example, if ansshdprocess is protected from theoom_killerfunction, all processes initiated by that SSH session will also be protected. This can affect theoom_killerfunction's ability to salvage the system if OOM occurs.

5.5. Tuning Virtual Memory

swappiness- A value from 0 to 100 which controls the degree to which the system favors anonymous memory or the page cache. A high value improves file-system performance, while aggressively swapping less active processes out of physical memory. A low value avoids swapping processes out of memory, which usually decreases latency, at the cost of I/O performance. The default value is 60.

Warning

Since RHEL 6.4, settingswappiness=0more aggressively avoids swapping out, which increases the risk of OOM killing under strong memory and I/O pressure.A lowswappinessvalue is recommended for database workloads. For example, for Oracle databases, Red Hat recommends aswappinessvalue of10.vm.swappiness=10

vm.swappiness=10Copy to Clipboard Copied! Toggle word wrap Toggle overflow min_free_kbytes- The minimum number of kilobytes to keep free across the system. This value is used to compute a watermark value for each low memory zone, which are then assigned a number of reserved free pages proportional to their size.

Warning

Be cautious when setting this parameter, as both too-low and too-high values can be damaging and break your system.Settingmin_free_kbytestoo low prevents the system from reclaiming memory. This can result in system hangs and OOM-killing multiple processes.However, setting this parameter to a value that is too high (5-10% of total system memory) will cause your system to become out-of-memory immediately. Linux is designed to use all available RAM to cache file system data. Setting a highmin_free_kbytesvalue results in the system spending too much time reclaiming memory. dirty_ratio- Defines a percentage value. Writeout of dirty data begins (via pdflush) when dirty data comprises this percentage of total system memory. The default value is

20.Red Hat recommends a slightly lower value of15for database workloads. dirty_background_ratio- Defines a percentage value. Writeout of dirty data begins in the background (via pdflush) when dirty data comprises this percentage of total memory. The default value is

10. For database workloads, Red Hat recommends a lower value of3. dirty_expire_centisecs- Specifies the number of centiseconds (hundredths of a second) dirty data remains in the page cache before it is eligible to be written back to disk. Red Hat does not recommend tuning this parameter.

dirty_writeback_centisecs- Specifies the length of the interval between kernel flusher threads waking and writing eligible data to disk, in centiseconds (hundredths of a second). Setting this to

0disables periodic write behavior. Red Hat does not recommend tuning this parameter. drop_caches- Setting this value to

1,2, or3causes the kernel to drop various combinations of page cache and slab cache.- 1

- The system invalidates and frees all page cache memory.

- 2

- The system frees all unused slab cache memory.

- 3

- The system frees all page cache and slab cache memory.

This is a non-destructive operation. Since dirty objects cannot be freed, runningsyncbefore setting this parameter's value is recommended.Important

Using thedrop_cachesto free memory is not recommended in a production environment.

swappiness temporarily to 50, run:

echo 50 > /proc/sys/vm/swappiness

# echo 50 > /proc/sys/vm/swappinesssysctl command. For further information, refer to the Deployment Guide, available from http://access.redhat.com/site/documentation/Red_Hat_Enterprise_Linux/.

5.6. KSM

ksm service starts and stops the KSM thread, and the ksmtuned service controls and tunes the ksm service. For information on KSM and its tuning, see the KSM chapter of the Red Hat Enterprise Linux 6 Virtualization Administration Guide.

Chapter 6. Input/Output

6.1. Features

- Solid state disks (SSDs) are now recognized automatically, and the performance of the I/O scheduler is tuned to take advantage of the high I/Os per second (IOPS) that these devices can perform. For information on tuning SSDs, see the Solid State Disk Deployment Guidelines chapter in the Red Hat Enterprise Linux 6 Storage Administration Guide.

- Discard support has been added to the kernel to report unused block ranges to the underlying storage. This helps SSDs with their wear-leveling algorithms. It also helps storage that supports logical block provisioning (a sort of virtual address space for storage) by keeping closer tabs on the actual amount of storage in-use.

- The file system barrier implementation was overhauled in Red Hat Enterprise Linux 6.1 to make it more performant.

pdflushhas been replaced by per-backing-device flusher threads, which greatly improves system scalability on configurations with large LUN counts.

6.2. Analysis

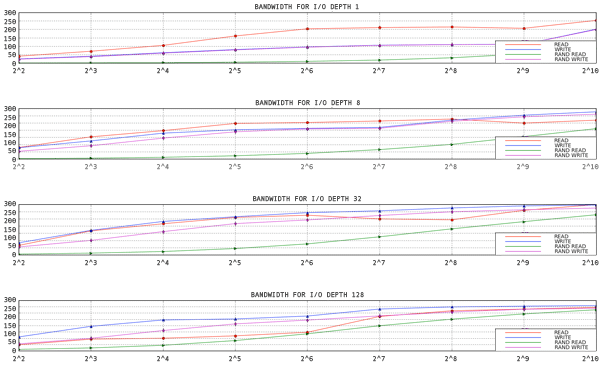

Figure 6.1. aio-stress output for 1 thread, 1 file

- aio-stress

- iozone

- fio

6.3. Tools

si (swap in), so (swap out), bi (block in), bo (block out), and wa (I/O wait time). si and so are useful when your swap space is on the same device as your data partition, and as an indicator of overall memory pressure. si and bi are read operations, while so and bo are write operations. Each of these categories is reported in kilobytes. wa is idle time; it indicates what portion of the run queue is blocked waiting for I/O complete.

free, buff, and cache columns are also worth noting. The cache value increasing alongside the bo value, followed by a drop in cache and an increase in free indicates that the system is performing write-back and invalidation of the page cache.

avgqu-sz), you can make some estimations about how the storage should perform using the graphs you generated when characterizing the performance of your storage. Some generalizations apply: for example, if the average request size is 4KB and the average queue size is 1, throughput is unlikely to be extremely performant.

- Q — A block I/O is Queued

- G — Get RequestA newly queued block I/O was not a candidate for merging with any existing request, so a new block layer request is allocated.

- M — A block I/O is Merged with an existing request.

- I — A request is Inserted into the device's queue.

- D — A request is issued to the Device.

- C — A request is Completed by the driver.

- P — The block device queue is Plugged, to allow the aggregation of requests.

- U — The device queue is Unplugged, allowing the aggregated requests to be issued to the device.

- Q2Q — time between requests sent to the block layer

- Q2G — how long it takes from the time a block I/O is queued to the time it gets a request allocated for it

- G2I — how long it takes from the time a request is allocated to the time it is Inserted into the device's queue

- Q2M — how long it takes from the time a block I/O is queued to the time it gets merged with an existing request

- I2D — how long it takes from the time a request is inserted into the device's queue to the time it is actually issued to the device

- M2D — how long it takes from the time a block I/O is merged with an exiting request until the request is issued to the device

- D2C — service time of the request by the device

- Q2C — total time spent in the block layer for a request

Figure 6.2. Example seekwatcher output

6.4. Configuration

6.4.1. Completely Fair Queuing (CFQ)

ionice command, or programmatically assigned via the ioprio_set system call. By default, processes are placed in the best-effort scheduling class. The real-time and best-effort scheduling classes are further subdivided into eight I/O priorities within each class, priority 0 being the highest and 7 the lowest. Processes in the real-time scheduling class are scheduled much more aggressively than processes in either best-effort or idle, so any scheduled real-time I/O is always performed before best-effort or idle I/O. This means that real-time priority I/O can starve out both the best-effort and idle classes. Best effort scheduling is the default scheduling class, and 4 is the default priority within this class. Processes in the idle scheduling class are only serviced when there is no other I/O pending in the system. Thus, it is very important to only set the I/O scheduling class of a process to idle if I/O from the process is not at all required for making forward progress.

/sys/block/device/queue/iosched/:

slice_idle = 0 quantum = 64 group_idle = 1

slice_idle = 0

quantum = 64

group_idle = 1group_idle is set to 1, there is still the potential for I/O stalls (whereby the back-end storage is not busy due to idling). However, these stalls will be less frequent than idling on every queue in the system.

Tunables

back_seek_max- Backward seeks are typically bad for performance, as they can incur greater delays in repositioning the heads than forward seeks do. However, CFQ will still perform them, if they are small enough. This tunable controls the maximum distance in KB the I/O scheduler will allow backward seeks. The default is

16KB. back_seek_penalty- Because of the inefficiency of backward seeks, a penalty is associated with each one. The penalty is a multiplier; for example, consider a disk head position at 1024KB. Assume there are two requests in the queue, one at 1008KB and another at 1040KB. The two requests are equidistant from the current head position. However, after applying the back seek penalty (default: 2), the request at the later position on disk is now twice as close as the earlier request. Thus, the head will move forward.

fifo_expire_async- This tunable controls how long an async (buffered write) request can go unserviced. After the expiration time (in milliseconds), a single starved async request will be moved to the dispatch list. The default is

250ms. fifo_expire_sync- This is the same as the fifo_expire_async tunable, for for synchronous (read and O_DIRECT write) requests. The default is

125ms. group_idle- When set, CFQ will idle on the last process issuing I/O in a cgroup. This should be set to

1when using proportional weight I/O cgroups and settingslice_idleto0(typically done on fast storage). group_isolation- If group isolation is enabled (set to

1), it provides a stronger isolation between groups at the expense of throughput. Generally speaking, if group isolation is disabled, fairness is provided for sequential workloads only. Enabling group isolation provides fairness for both sequential and random workloads. The default value is0(disabled). Refer toDocumentation/cgroups/blkio-controller.txtfor further information. low_latency- When low latency is enabled (set to

1), CFQ attempts to provide a maximum wait time of 300 ms for each process issuing I/O on a device. This favors fairness over throughput. Disabling low latency (setting it to0) ignores target latency, allowing each process in the system to get a full time slice. Low latency is enabled by default. quantum- The quantum controls the number of I/Os that CFQ will send to the storage at a time, essentially limiting the device queue depth. By default, this is set to

8. The storage may support much deeper queue depths, but increasingquantumwill also have a negative impact on latency, especially in the presence of large sequential write workloads. slice_async- This tunable controls the time slice allotted to each process issuing asynchronous (buffered write) I/O. By default it is set to

40ms. slice_idle- This specifies how long CFQ should idle while waiting for further requests. The default value in Red Hat Enterprise Linux 6.1 and earlier is

8ms. In Red Hat Enterprise Linux 6.2 and later, the default value is0. The zero value improves the throughput of external RAID storage by removing all idling at the queue and service tree level. However, a zero value can degrade throughput on internal non-RAID storage, because it increases the overall number of seeks. For non-RAID storage, we recommend aslice_idlevalue that is greater than 0. slice_sync- This tunable dictates the time slice allotted to a process issuing synchronous (read or direct write) I/O. The default is

100ms.

6.4.2. Deadline I/O Scheduler

Tunables

fifo_batch- This determines the number of reads or writes to issue in a single batch. The default is

16. Setting this to a higher value may result in better throughput, but will also increase latency. front_merges- You can set this tunable to

0if you know your workload will never generate front merges. Unless you have measured the overhead of this check, it is advisable to leave it at its default setting (1). read_expire- This tunable allows you to set the number of milliseconds in which a read request should be serviced. By default, this is set to

500ms (half a second). write_expire- This tunable allows you to set the number of milliseconds in which a write request should be serviced. By default, this is set to

5000ms (five seconds). writes_starved- This tunable controls how many read batches can be processed before processing a single write batch. The higher this is set, the more preference is given to reads.

6.4.3. Noop

/sys/block/sdX/queue tunables

- add_random

- In some cases, the overhead of I/O events contributing to the entropy pool for

/dev/randomis measurable. In such cases, it may be desirable to set this value to 0. max_sectors_kb- By default, the maximum request size sent to disk is

512KB. This tunable can be used to either raise or lower that value. The minimum value is limited by the logical block size; the maximum value is limited bymax_hw_sectors_kb. There are some SSDs which perform worse when I/O sizes exceed the internal erase block size. In such cases, it is recommended to tunemax_hw_sectors_kbdown to the erase block size. You can test for this using an I/O generator such as iozone or aio-stress, varying the record size from, for example,512bytes to1MB. nomerges- This tunable is primarily a debugging aid. Most workloads benefit from request merging (even on faster storage such as SSDs). In some cases, however, it is desirable to disable merging, such as when you want to see how many IOPS a storage back-end can process without disabling read-ahead or performing random I/O.

nr_requests- Each request queue has a limit on the total number of request descriptors that can be allocated for each of read and write I/Os. By default, the number is