Virtualization Administration Guide

Managing your virtual environment

Abstract

Note

Chapter 1. Server Best Practices

- Run SELinux in enforcing mode. Set SELinux to run in enforcing mode with the

setenforcecommand.setenforce 1

# setenforce 1Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Remove or disable any unnecessary services such as

AutoFS,NFS,FTP,HTTP,NIS,telnetd,sendmailand so on. - Only add the minimum number of user accounts needed for platform management on the server and remove unnecessary user accounts.

- Avoid running any unessential applications on your host. Running applications on the host may impact virtual machine performance and can affect server stability. Any application which may crash the server will also cause all virtual machines on the server to go down.

- Use a central location for virtual machine installations and images. Virtual machine images should be stored under

/var/lib/libvirt/images/. If you are using a different directory for your virtual machine images make sure you add the directory to your SELinux policy and relabel it before starting the installation. Use of shareable, network storage in a central location is highly recommended.

Chapter 2. sVirt

In a non-virtualized environment, host physical machines are separated from each other physically and each host physical machine has a self-contained environment, consisting of services such as a web server, or a DNS server. These services communicate directly to their own user space, host physical machine's kernel and physical hardware, offering their services directly to the network. The following image represents a non-virtualized environment:

|

User Space - memory area where all user mode applications and some drivers execute.

|

|

Web App (web application server) - delivers web content that can be accessed through the a browser.

|

|

Host Kernel - is strictly reserved for running the host physical machine's privileged kernel, kernel extensions, and most device drivers.

|

|

DNS Server - stores DNS records allowing users to access web pages using logical names instead of IP addresses.

|

In a virtualized environment, several virtual operating systems can run on a single kernel residing on a host physical machine. The following image represents a virtualized environment:

2.1. Security and Virtualization

2.2. sVirt Labeling

ps -eZ | grep qemu system_u:system_r:svirt_t:s0:c87,c520 27950 ? 00:00:17 qemu-kvm

# ps -eZ | grep qemu

system_u:system_r:svirt_t:s0:c87,c520 27950 ? 00:00:17 qemu-kvm

ls -lZ /var/lib/libvirt/images/* system_u:object_r:svirt_image_t:s0:c87,c520 image1

# ls -lZ /var/lib/libvirt/images/*

system_u:object_r:svirt_image_t:s0:c87,c520 image1

| SELinux Context | Type / Description |

|---|---|

| system_u:system_r:svirt_t:MCS1 | Guest virtual machine processes. MCS1 is a random MCS field. Approximately 500,000 labels are supported. |

| system_u:object_r:svirt_image_t:MCS1 | Guest virtual machine images. Only svirt_t processes with the same MCS fields can read/write these images. |

| system_u:object_r:svirt_image_t:s0 | Guest virtual machine shared read/write content. All svirt_t processes can write to the svirt_image_t:s0 files. |

Chapter 3. Cloning Virtual Machines

- Clones are instances of a single virtual machine. Clones can be used to set up a network of identical virtual machines, and they can also be distributed to other destinations.

- Templates are instances of a virtual machine that are designed to be used as a source for cloning. You can create multiple clones from a template and make minor modifications to each clone. This is useful in seeing the effects of these changes on the system.

- Platform level information and configurations include anything assigned to the virtual machine by the virtualization solution. Examples include the number of Network Interface Cards (NICs) and their MAC addresses.

- Guest operating system level information and configurations include anything configured within the virtual machine. Examples include SSH keys.

- Application level information and configurations include anything configured by an application installed on the virtual machine. Examples include activation codes and registration information.

Note

This chapter does not include information about removing the application level, because the information and approach is specific to each application.

3.1. Preparing Virtual Machines for Cloning

Procedure 3.1. Preparing a virtual machine for cloning

Setup the virtual machine

- Build the virtual machine that is to be used for the clone or template.

- Install any software needed on the clone.

- Configure any non-unique settings for the operating system.

- Configure any non-unique application settings.

Remove the network configuration

- Remove any persistent udev rules using the following command:

rm -f /etc/udev/rules.d/70-persistent-net.rules

# rm -f /etc/udev/rules.d/70-persistent-net.rulesCopy to Clipboard Copied! Toggle word wrap Toggle overflow Note

If udev rules are not removed, the name of the first NIC may be eth1 instead of eth0. - Remove unique network details from ifcfg scripts by making the following edits to

/etc/sysconfig/network-scripts/ifcfg-eth[x]:- Remove the HWADDR and Static lines

Note

If the HWADDR does not match the new guest's MAC address, the ifcfg will be ignored. Therefore, it is important to remove the HWADDR from the file.Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Ensure that a DHCP configuration remains that does not include a HWADDR or any unique information.

DEVICE=eth[x] BOOTPROTO=dhcp ONBOOT=yes

DEVICE=eth[x] BOOTPROTO=dhcp ONBOOT=yesCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Ensure that the file includes the following lines:

DEVICE=eth[x] ONBOOT=yes

DEVICE=eth[x] ONBOOT=yesCopy to Clipboard Copied! Toggle word wrap Toggle overflow

- If the following files exist, ensure that they contain the same content:

/etc/sysconfig/networking/devices/ifcfg-eth[x]/etc/sysconfig/networking/profiles/default/ifcfg-eth[x]

Note

If NetworkManager or any special settings were used with the virtual machine, ensure that any additional unique information is removed from the ifcfg scripts.

Remove registration details

- Remove registration details using one of the following:

- For Red Hat Network (RHN) registered guest virtual machines, run the following command:

rm /etc/sysconfig/rhn/systemid

# rm /etc/sysconfig/rhn/systemidCopy to Clipboard Copied! Toggle word wrap Toggle overflow - For Red Hat Subscription Manager (RHSM) registered guest virtual machines:

- If the original virtual machine will not be used, run the following commands:

subscription-manager unsubscribe --all subscription-manager unregister subscription-manager clean

# subscription-manager unsubscribe --all # subscription-manager unregister # subscription-manager cleanCopy to Clipboard Copied! Toggle word wrap Toggle overflow - If the original virtual machine will be used, run only the following command:

subscription-manager clean

# subscription-manager cleanCopy to Clipboard Copied! Toggle word wrap Toggle overflow Note

The original RHSM profile remains in the portal.

Removing other unique details

- Remove any sshd public/private key pairs using the following command:

rm -rf /etc/ssh/ssh_host_*

# rm -rf /etc/ssh/ssh_host_*Copy to Clipboard Copied! Toggle word wrap Toggle overflow Note

Removing ssh keys prevents problems with ssh clients not trusting these hosts. - Remove any other application-specific identifiers or configurations that may cause conflicts if running on multiple machines.

Configure the virtual machine to run configuration wizards on the next boot

- Configure the virtual machine to run the relevant configuration wizards the next time it is booted by doing one of the following:

- For Red Hat Enterprise Linux 6 and below, create an empty file on the root file system called .unconfigured using the following command:

touch /.unconfigured

# touch /.unconfiguredCopy to Clipboard Copied! Toggle word wrap Toggle overflow - For Red Hat Enterprise Linux 7, enable the first boot and initial-setup wizards by running the following commands:

sed -ie 's/RUN_FIRSTBOOT=NO/RUN_FIRSTBOOT=YES/' /etc/sysconfig/firstboot systemctl enable firstboot-graphical systemctl enable initial-setup-graphical

# sed -ie 's/RUN_FIRSTBOOT=NO/RUN_FIRSTBOOT=YES/' /etc/sysconfig/firstboot # systemctl enable firstboot-graphical # systemctl enable initial-setup-graphicalCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Note

The wizards that run on the next boot depend on the configurations that have been removed from the virtual machine. In addition, on the first boot of the clone, it is recommended that you change the host name.

3.2. Cloning a Virtual Machine

virt-clone or virt-manager.

3.2.1. Cloning Guests with virt-clone

virt-clone to clone virtual machines from the command line.

virt-clone to complete successfully.

virt-clone command provides a number of options that can be passed on the command line. These include general options, storage configuration options, networking configuration options, and miscellaneous options. Only the --original is required. To see a complete list of options, enter the following command:

virt-clone --help

# virt-clone --helpvirt-clone man page also documents each command option, important variables, and examples.

Example 3.1. Using virt-clone to clone a guest

virt-clone --original demo --auto-clone

# virt-clone --original demo --auto-cloneExample 3.2. Using virt-clone to clone a guest

virt-clone --connect qemu:///system --original demo --name newdemo --file /var/lib/xen/images/newdemo.img --file /var/lib/xen/images/newdata.img

# virt-clone --connect qemu:///system --original demo --name newdemo --file /var/lib/xen/images/newdemo.img --file /var/lib/xen/images/newdata.img3.2.2. Cloning Guests with virt-manager

Procedure 3.2. Cloning a Virtual Machine with virt-manager

Open virt-manager

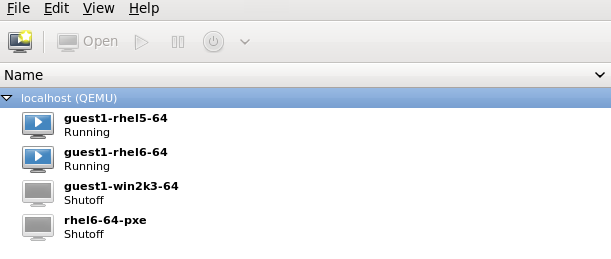

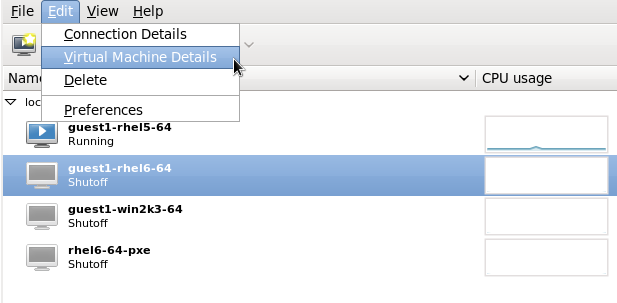

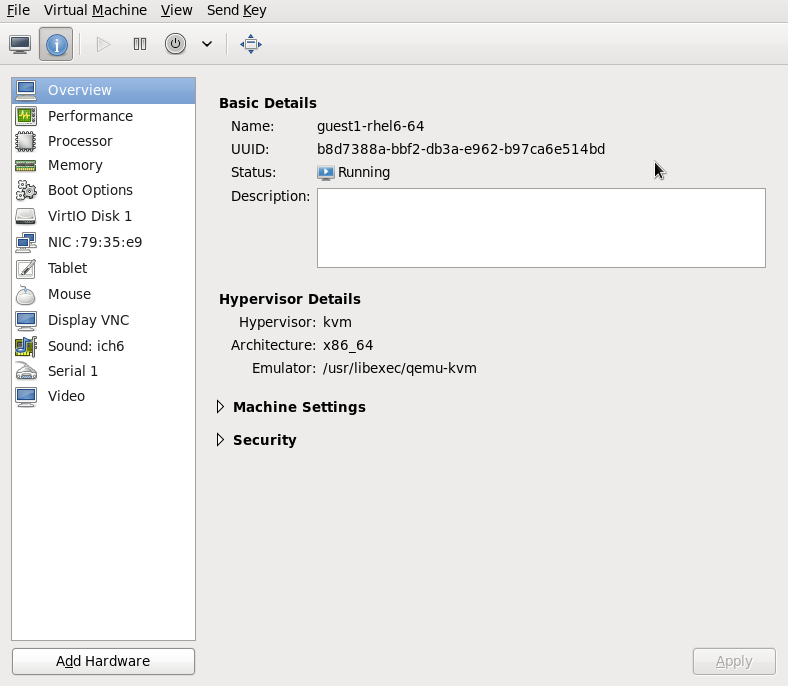

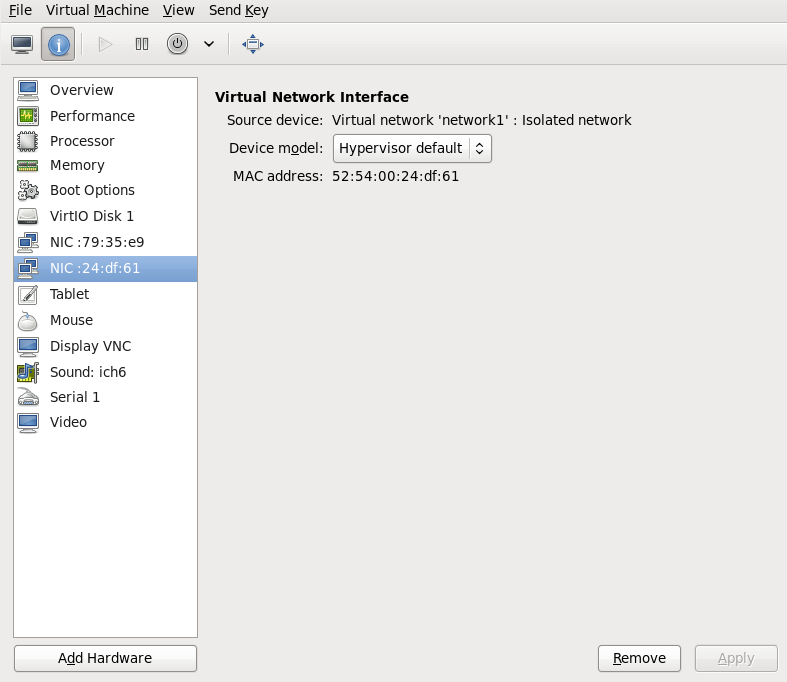

Start virt-manager. Launch the application from the menu and submenu. Alternatively, run thevirt-managercommand as root.Select the guest virtual machine you want to clone from the list of guest virtual machines in Virtual Machine Manager.Right-click the guest virtual machine you want to clone and select . The Clone Virtual Machine window opens.

Figure 3.1. Clone Virtual Machine window

Configure the clone

- To change the name of the clone, enter a new name for the clone.

- To change the networking configuration, click Details.Enter a new MAC address for the clone.Click OK.

Figure 3.2. Change MAC Address window

- For each disk in the cloned guest virtual machine, select one of the following options:

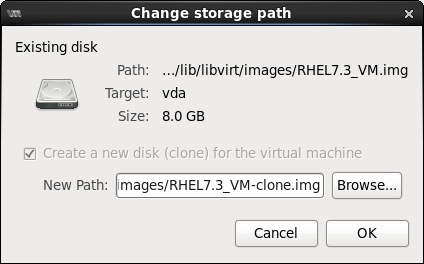

Clone this disk- The disk will be cloned for the cloned guest virtual machineShare disk with guest virtual machine name- The disk will be shared by the guest virtual machine that will be cloned and its cloneDetails- Opens the Change storage path window, which enables selecting a new path for the disk

Figure 3.3. Change

storage pathwindow

Clone the guest virtual machine

Click Clone.

Chapter 4. KVM Live Migration

- Load balancing - guest virtual machines can be moved to host physical machines with lower usage when their host physical machine becomes overloaded, or another host physical machine is under-utilized.

- Hardware independence - when we need to upgrade, add, or remove hardware devices on the host physical machine, we can safely relocate guest virtual machines to other host physical machines. This means that guest virtual machines do not experience any downtime for hardware improvements.

- Energy saving - guest virtual machines can be redistributed to other host physical machines and can thus be powered off to save energy and cut costs in low usage periods.

- Geographic migration - guest virtual machines can be moved to another location for lower latency or in serious circumstances.

4.1. Live Migration Requirements

Migration requirements

- A guest virtual machine installed on shared storage using one of the following protocols:

- Fibre Channel-based LUNs

- iSCSI

- FCoE

- NFS

- GFS2

- SCSI RDMA protocols (SCSI RCP): the block export protocol used in Infiniband and 10GbE iWARP adapters

- The migration platforms and versions should be checked against table Table 4.1, “Live Migration Compatibility”. It should also be noted that Red Hat Enterprise Linux 6 supports live migration of guest virtual machines using raw and qcow2 images on shared storage.

- Both systems must have the appropriate TCP/IP ports open. In cases where a firewall is used, refer to the Red Hat Enterprise Linux Virtualization Security Guide which can be found at https://access.redhat.com/site/documentation/ for detailed port information.

- A separate system exporting the shared storage medium. Storage should not reside on either of the two host physical machines being used for migration.

- Shared storage must mount at the same location on source and destination systems. The mounted directory names must be identical. Although it is possible to keep the images using different paths, it is not recommended. Note that, if you are intending to use virt-manager to perform the migration, the path names must be identical. If however you intend to use virsh to perform the migration, different network configurations and mount directories can be used with the help of

--xmloption or pre-hooks when doing migrations. Even without shared storage, migration can still succeed with the option--copy-storage-all(deprecated). For more information onprehooks, refer to libvirt.org, and for more information on the XML option, refer to Chapter 20, Manipulating the Domain XML. - When migration is attempted on an existing guest virtual machine in a public bridge+tap network, the source and destination host physical machines must be located in the same network. Otherwise, the guest virtual machine network will not operate after migration.

- In Red Hat Enterprise Linux 5 and 6, the default cache mode of KVM guest virtual machines is set to

none, which prevents inconsistent disk states. Setting the cache option tonone(usingvirsh attach-disk cache none, for example), causes all of the guest virtual machine's files to be opened using theO_DIRECTflag (when calling theopensyscall), thus bypassing the host physical machine's cache, and only providing caching on the guest virtual machine. Setting the cache mode tononeprevents any potential inconsistency problems, and when used makes it possible to live-migrate virtual machines. For information on setting cache tonone, refer to Section 13.3, “Adding Storage Devices to Guests”.

libvirtd service is enabled (# chkconfig libvirtd on) and running (# service libvirtd start). It is also important to note that the ability to migrate effectively is dependent on the parameter settings in the /etc/libvirt/libvirtd.conf configuration file.

Procedure 4.1. Configuring libvirtd.conf

- Opening the

libvirtd.confrequires running the command as root:vim /etc/libvirt/libvirtd.conf

# vim /etc/libvirt/libvirtd.confCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Change the parameters as needed and save the file.

- Restart the

libvirtdservice:service libvirtd restart

# service libvirtd restartCopy to Clipboard Copied! Toggle word wrap Toggle overflow

4.2. Live Migration and Red Hat Enterprise Linux Version Compatibility

| Migration Method | Release Type | Example | Live Migration Support | Notes |

|---|---|---|---|---|

| Forward | Major release | 5.x → 6.y | Not supported | |

| Forward | Minor release | 5.x → 5.y (y>x, x>=4) | Fully supported | Any issues should be reported |

| Forward | Minor release | 6.x → 6.y (y>x, x>=0) | Fully supported | Any issues should be reported |

| Backward | Major release | 6.x → 5.y | Not supported | |

| Backward | Minor release | 5.x → 5.y (x>y,y>=4) | Supported | Refer to Troubleshooting problems with migration for known issues |

| Backward | Minor release | 6.x → 6.y (x>y, y>=0) | Supported | Refer to Troubleshooting problems with migration for known issues |

Troubleshooting problems with migration

- Issues with SPICE — It has been found that SPICE has an incompatible change when migrating from Red Hat Enterprise Linux 6.0 → 6.1. In such cases, the client may disconnect and then reconnect, causing a temporary loss of audio and video. This is only temporary and all services will resume.

- Issues with USB — Red Hat Enterprise Linux 6.2 added USB functionality which included migration support, but not without certain caveats which reset USB devices and caused any application running over the device to abort. This problem was fixed in Red Hat Enterprise Linux 6.4, and should not occur in future versions. To prevent this from happening in a version prior to 6.4, abstain from migrating while USB devices are in use.

- Issues with the migration protocol — If backward migration ends with "unknown section error", repeating the migration process can repair the issue as it may be a transient error. If not, please report the problem.

Configure shared storage and install a guest virtual machine on the shared storage.

4.4. Live KVM Migration with virsh

virsh command. The migrate command accepts parameters in the following format:

virsh migrate --live GuestName DestinationURL

# virsh migrate --live GuestName DestinationURL--live option may be eliminated when live migration is not desired. Additional options are listed in Section 4.4.2, “Additional Options for the virsh migrate Command”.

GuestName parameter represents the name of the guest virtual machine which you want to migrate.

DestinationURL parameter is the connection URL of the destination host physical machine. The destination system must run the same version of Red Hat Enterprise Linux, be using the same hypervisor and have libvirt running.

Note

DestinationURL parameter for normal migration and peer-to-peer migration has different semantics:

- normal migration: the

DestinationURLis the URL of the target host physical machine as seen from the source guest virtual machine. - peer-to-peer migration:

DestinationURLis the URL of the target host physical machine as seen from the source host physical machine.

Important

/etc/hosts file on the source server is required for migration to succeed. Enter the IP address and host name for the destination host physical machine in this file as shown in the following example, substituting your destination host physical machine's IP address and host name:

10.0.0.20 host2.example.com

10.0.0.20 host2.example.com

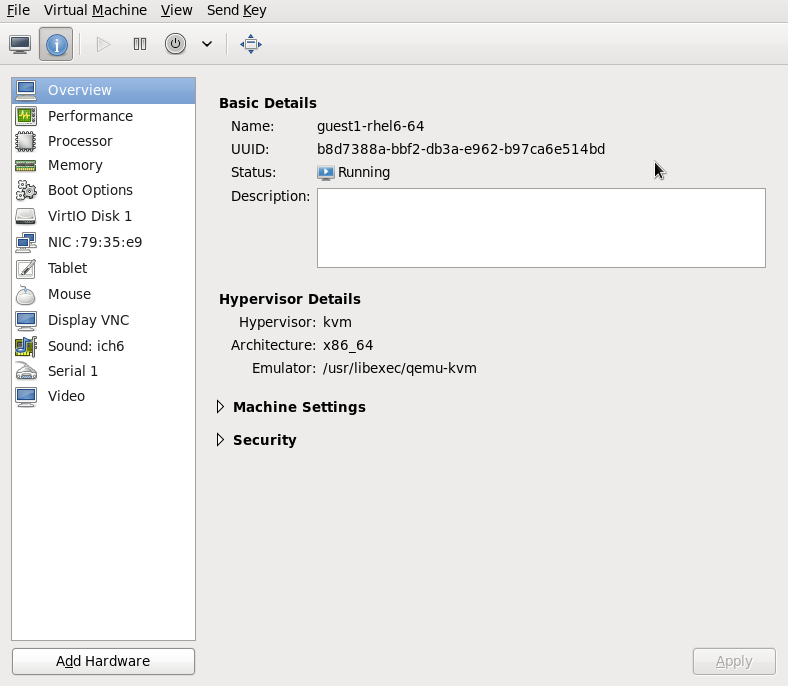

This example migrates from host1.example.com to host2.example.com. Change the host physical machine names for your environment. This example migrates a virtual machine named guest1-rhel6-64.

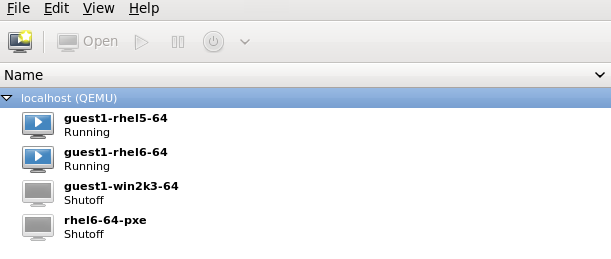

Verify the guest virtual machine is running

From the source system,host1.example.com, verifyguest1-rhel6-64is running:virsh list Id Name State ---------------------------------- 10 guest1-rhel6-64 running

[root@host1 ~]# virsh list Id Name State ---------------------------------- 10 guest1-rhel6-64 runningCopy to Clipboard Copied! Toggle word wrap Toggle overflow Migrate the guest virtual machine

Execute the following command to live migrate the guest virtual machine to the destination,host2.example.com. Append/systemto the end of the destination URL to tell libvirt that you need full access.virsh migrate --live guest1-rhel6-64 qemu+ssh://host2.example.com/system

# virsh migrate --live guest1-rhel6-64 qemu+ssh://host2.example.com/systemCopy to Clipboard Copied! Toggle word wrap Toggle overflow Once the command is entered you will be prompted for the root password of the destination system.Wait

The migration may take some time depending on load and the size of the guest virtual machine.virshonly reports errors. The guest virtual machine continues to run on the source host physical machine until fully migrated.Note

During the migration, the completion percentage indicator number is likely to decrease multiple times before the process finishes. This is caused by a recalculation of the overall progress, as source memory pages that are changed after the migration starts need to be be copied again. Therefore, this behavior is expected and does not indicate any problems with the migration.Verify the guest virtual machine has arrived at the destination host

From the destination system,host2.example.com, verifyguest1-rhel6-64is running:virsh list Id Name State ---------------------------------- 10 guest1-rhel6-64 running

[root@host2 ~]# virsh list Id Name State ---------------------------------- 10 guest1-rhel6-64 runningCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Note

Note

virsh migrate command. To migrate a non-running guest virtual machine, the following script should be used:

virsh dumpxml Guest1 > Guest1.xml virsh -c qemu+ssh://<target-system-FQDN> define Guest1.xml virsh undefine Guest1

virsh dumpxml Guest1 > Guest1.xml

virsh -c qemu+ssh://<target-system-FQDN> define Guest1.xml

virsh undefine Guest1

4.4.1. Additional Tips for Migration with virsh

- Open the libvirtd.conf file as described in Procedure 4.1, “Configuring libvirtd.conf”.

- Look for the Processing controls section.

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Change the

max_clientsandmax_workersparameters settings. It is recommended that the number be the same in both parameters. Themax_clientswill use 2 clients per migration (one per side) andmax_workerswill use 1 worker on the source and 0 workers on the destination during the perform phase and 1 worker on the destination during the finish phase.Important

Themax_clientsandmax_workersparameters settings are effected by all guest virtual machine connections to the libvirtd service. This means that any user that is using the same guest virtual machine and is performing a migration at the same time will also beholden to the limits set in themax_clientsandmax_workersparameters settings. This is why the maximum value needs to be considered carefully before performing a concurrent live migration. - Save the file and restart the service.

Note

There may be cases where a migration connection drops because there are too many ssh sessions that have been started, but not yet authenticated. By default,sshdallows only 10 sessions to be in a "pre-authenticated state" at any time. This setting is controlled by theMaxStartupsparameter in the sshd configuration file (located here:/etc/ssh/sshd_config), which may require some adjustment. Adjusting this parameter should be done with caution as the limitation is put in place to prevent DoS attacks (and over-use of resources in general). Setting this value too high will negate its purpose. To change this parameter, edit the file/etc/ssh/sshd_config, remove the#from the beginning of theMaxStartupsline, and change the10(default value) to a higher number. Remember to save the file and restart thesshdservice. For more information, refer to thesshd_configman page.

4.4.2. Additional Options for the virsh migrate Command

--live, virsh migrate accepts the following options:

--direct- used for direct migration--p2p- used for peer-to-peer migration--tunnelled- used for tunneled migration--persistent- leaves the domain in a persistent state on the destination host physical machine--undefinesource- removes the guest virtual machine on the source host physical machine--suspend- leaves the domain in a paused state on the destination host physical machine--change-protection- enforces that no incompatible configuration changes will be made to the domain while the migration is underway; this option is implicitly enabled when supported by the hypervisor, but can be explicitly used to reject the migration if the hypervisor lacks change protection support.--unsafe- forces the migration to occur, ignoring all safety procedures.--verbose- displays the progress of migration as it is occurring--abort-on-error- cancels the migration if a soft error (such as an I/O error) happens during the migration process.--migrateuri- the migration URI which is usually omitted.--domain[string]- domain name, id or uuid--desturi[string]- connection URI of the destination host physical machine as seen from the client(normal migration) or source(p2p migration)--migrateuri- migration URI, usually can be omitted--timeout[seconds]- forces a guest virtual machine to suspend when the live migration counter exceeds N seconds. It can only be used with a live migration. Once the timeout is initiated, the migration continues on the suspended guest virtual machine.--dname[string] - changes the name of the guest virtual machine to a new name during migration (if supported)--xml- the filename indicated can be used to supply an alternative XML file for use on the destination to supply a larger set of changes to any host-specific portions of the domain XML, such as accounting for naming differences between source and destination in accessing underlying storage. This option is usually omitted.

4.5. Migrating with virt-manager

virt-manager from one host physical machine to another.

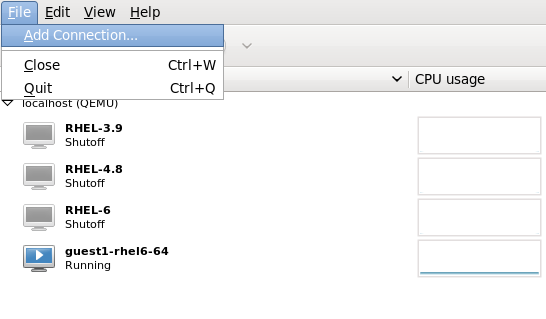

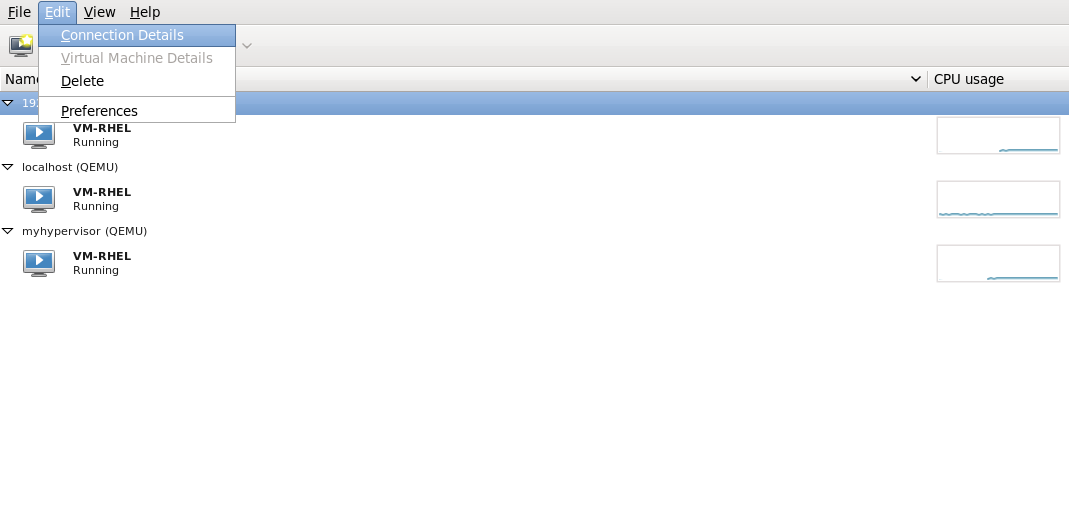

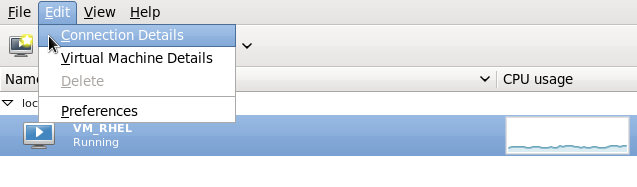

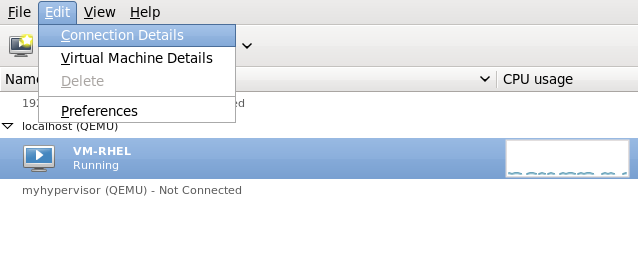

Open virt-manager

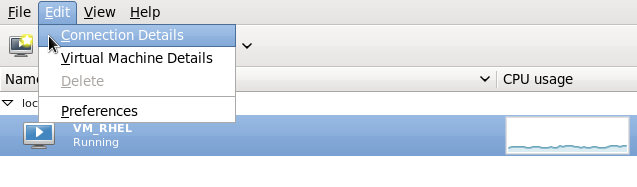

Openvirt-manager. Choose → → from the main menu bar to launchvirt-manager.

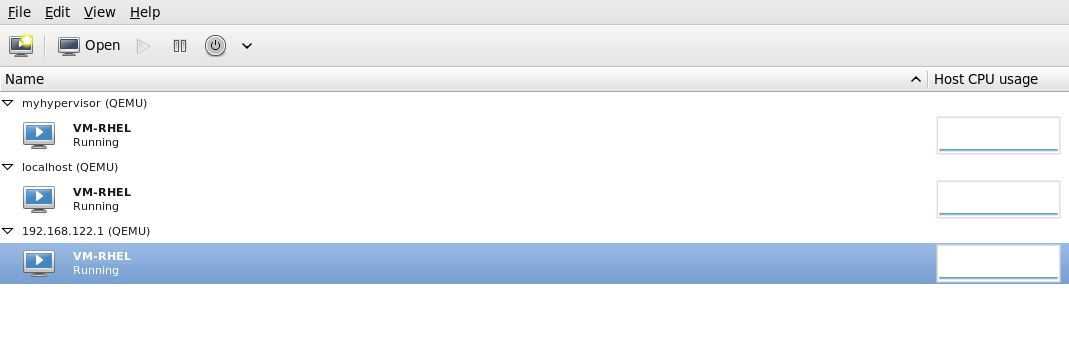

Figure 4.1. Virt-Manager main menu

Connect to the target host physical machine

Connect to the target host physical machine by clicking on the menu, then click .

Figure 4.2. Open Add Connection window

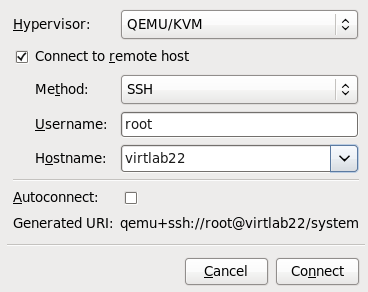

Add connection

The Add Connection window appears.

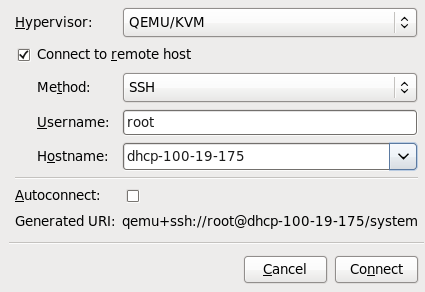

Figure 4.3. Adding a connection to the target host physical machine

Enter the following details:- Hypervisor: Select .

- Method: Select the connection method.

- Username: Enter the user name for the remote host physical machine.

- Hostname: Enter the host name for the remote host physical machine.

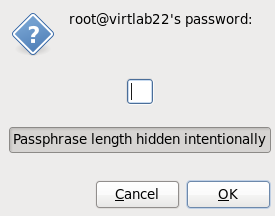

Click the button. An SSH connection is used in this example, so the specified user's password must be entered in the next step.

Figure 4.4. Enter password

Migrate guest virtual machines

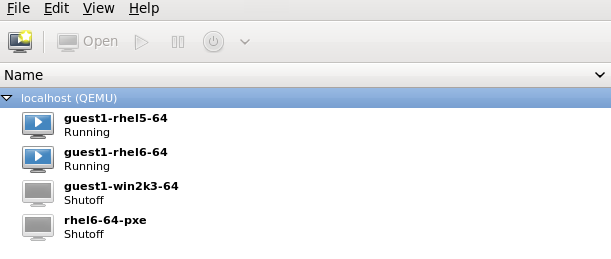

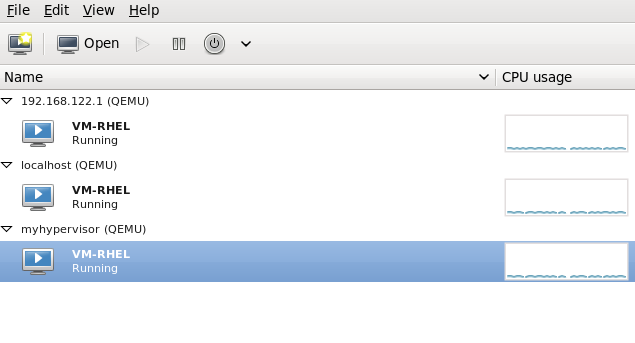

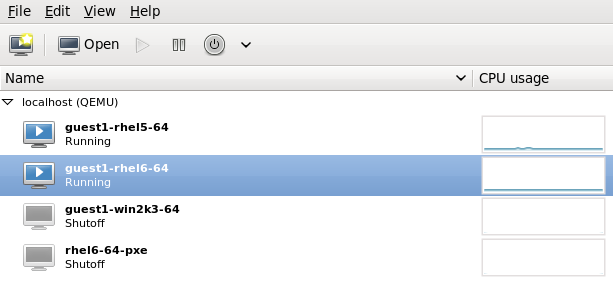

Open the list of guests inside the source host physical machine (click the small triangle on the left of the host name) and right click on the guest that is to be migrated (guest1-rhel6-64 in this example) and click .

Figure 4.5. Choosing the guest to be migrated

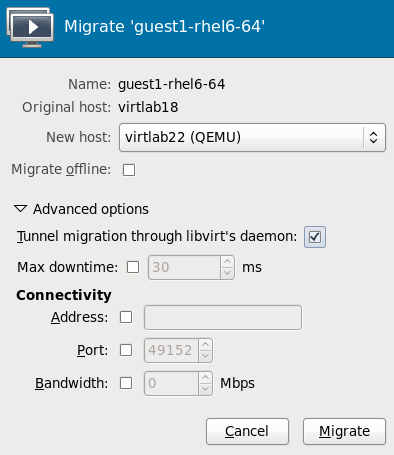

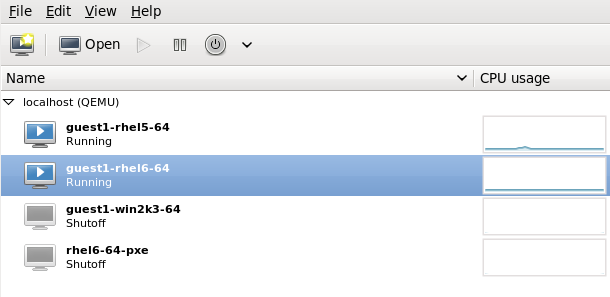

In the field, use the drop-down list to select the host physical machine you wish to migrate the guest virtual machine to and click .

Figure 4.6. Choosing the destination host physical machine and starting the migration process

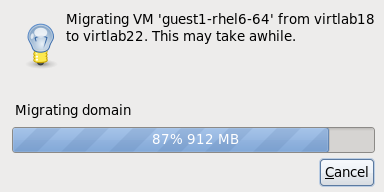

A progress window will appear.

Figure 4.7. Progress window

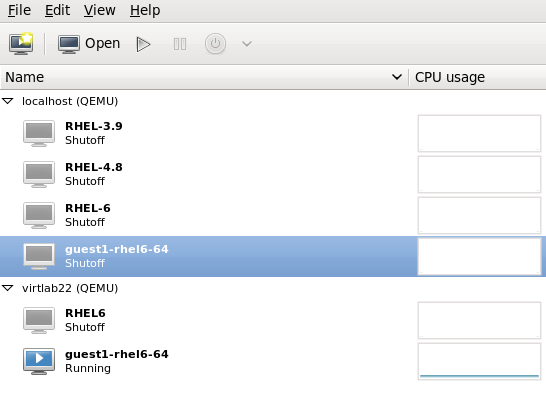

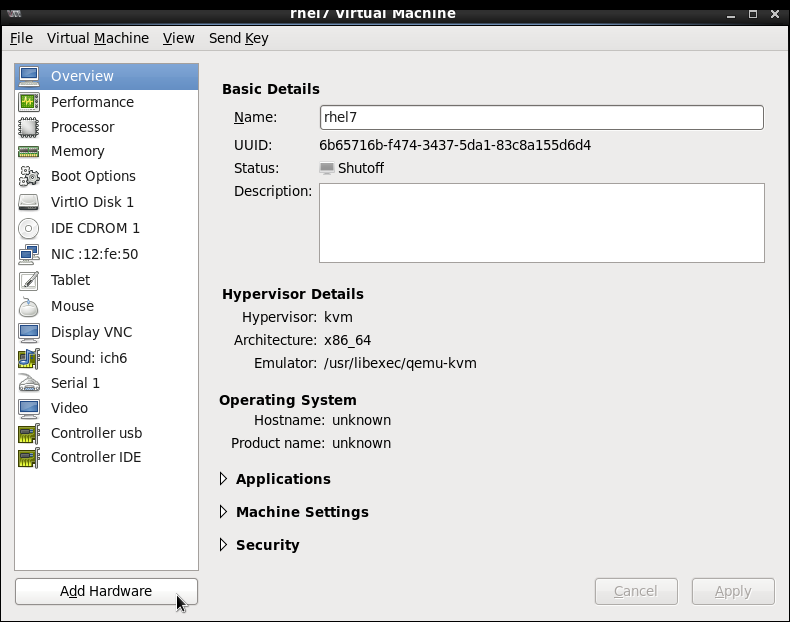

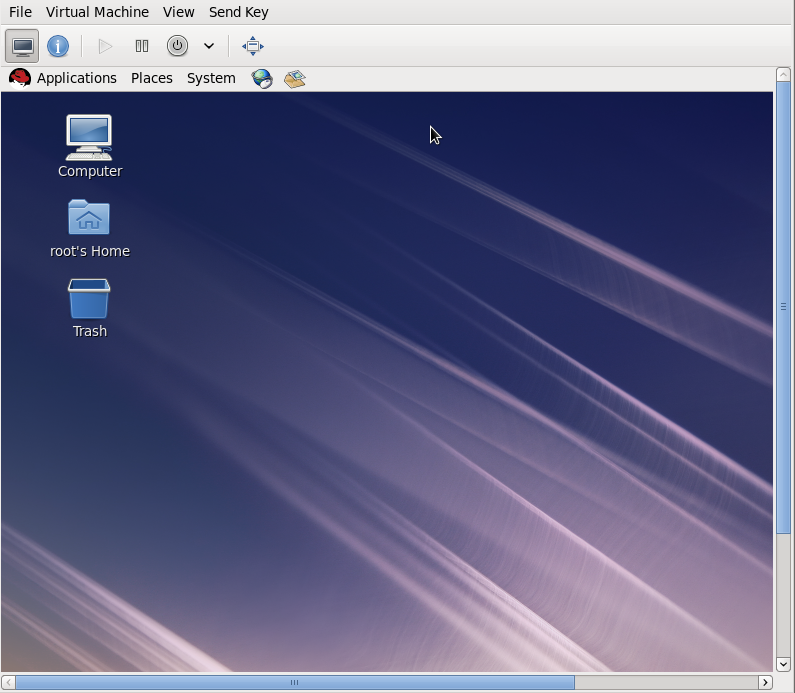

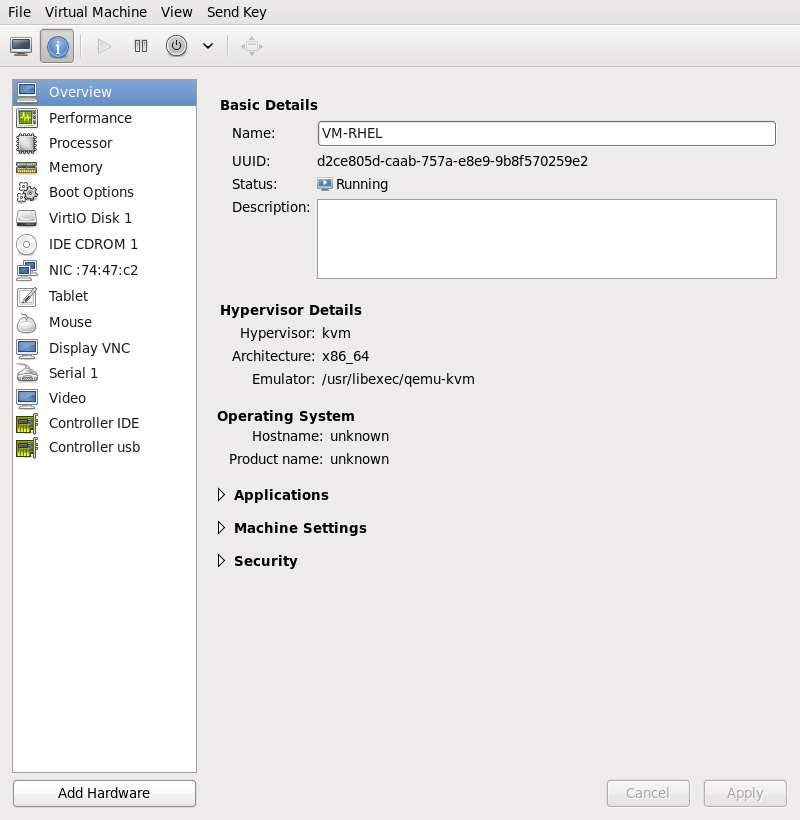

virt-manager now displays the newly migrated guest virtual machine running in the destination host. The guest virtual machine that was running in the source host physical machine is now listed inthe Shutoff state.

Figure 4.8. Migrated guest virtual machine running in the destination host physical machine

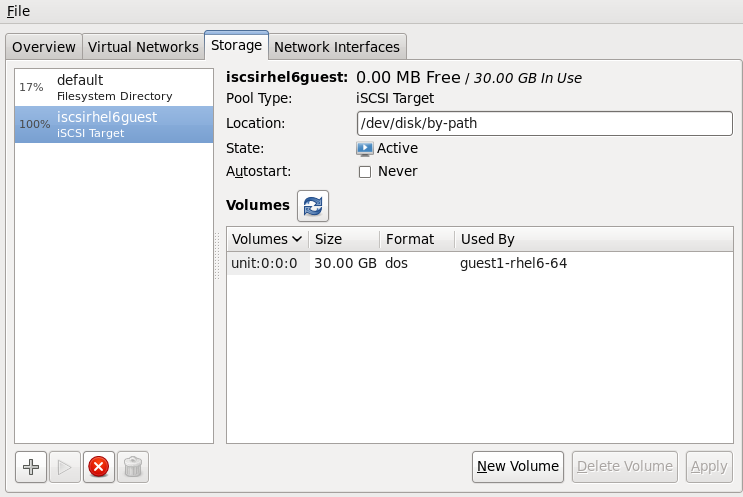

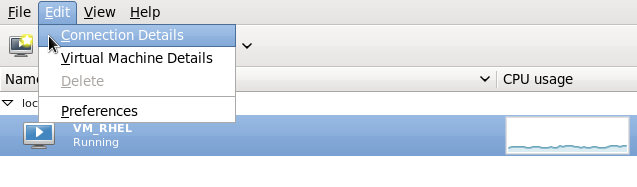

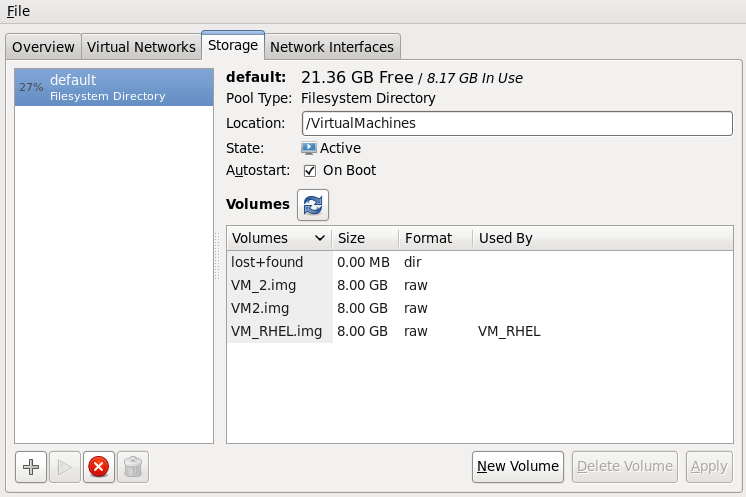

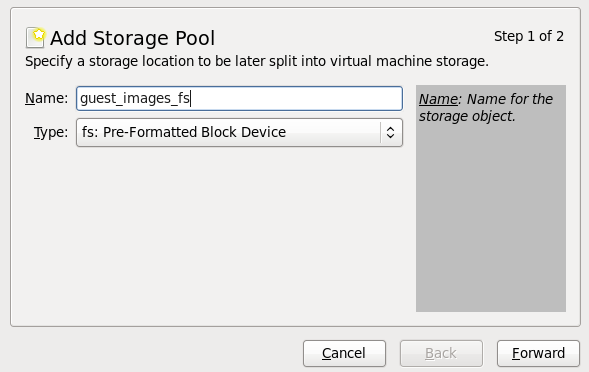

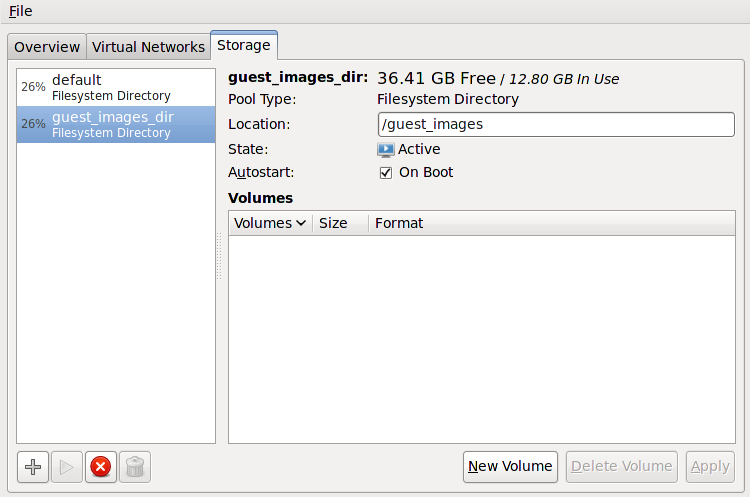

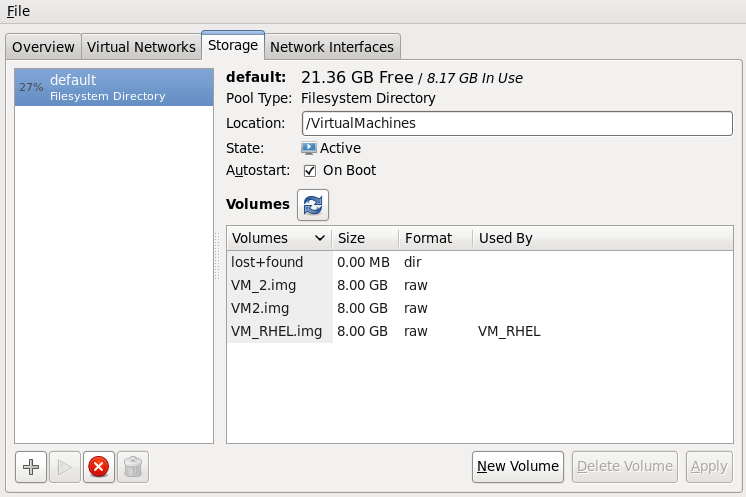

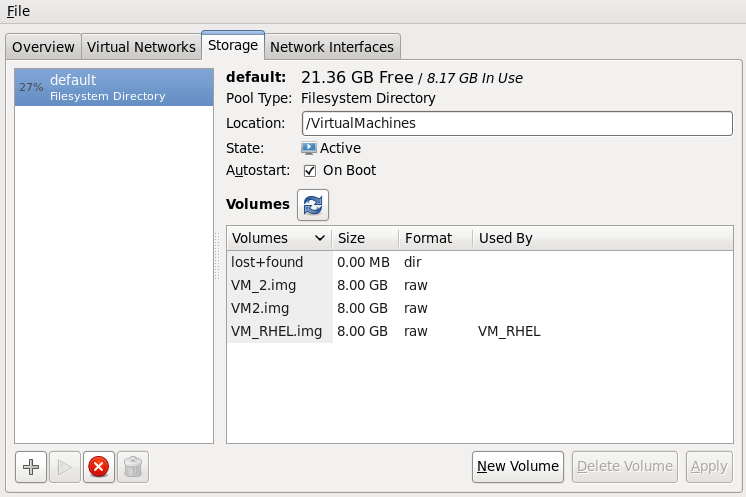

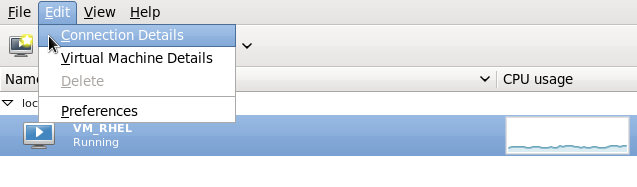

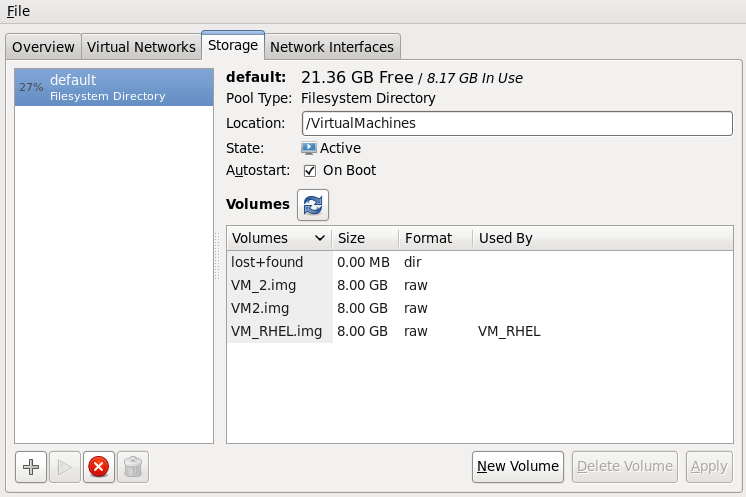

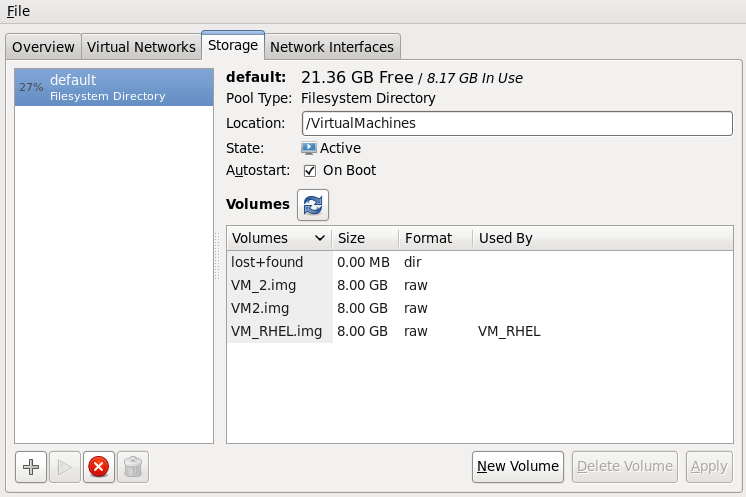

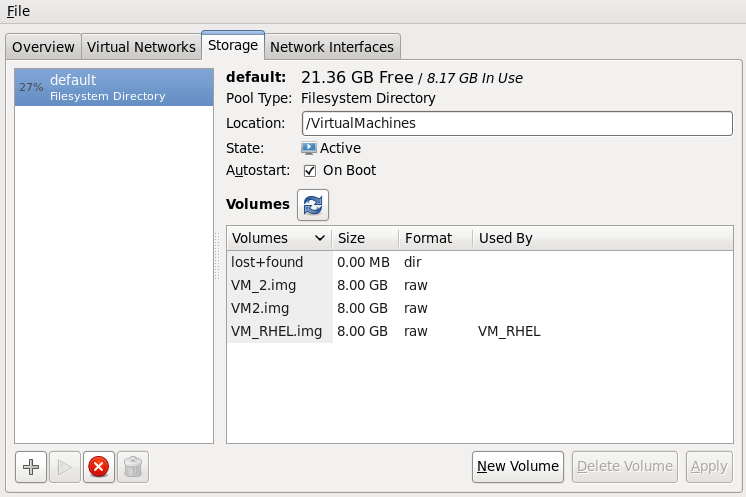

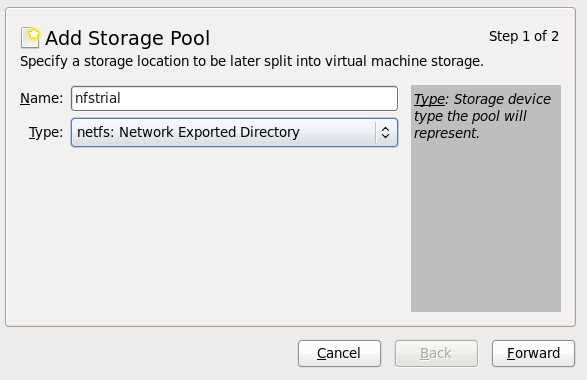

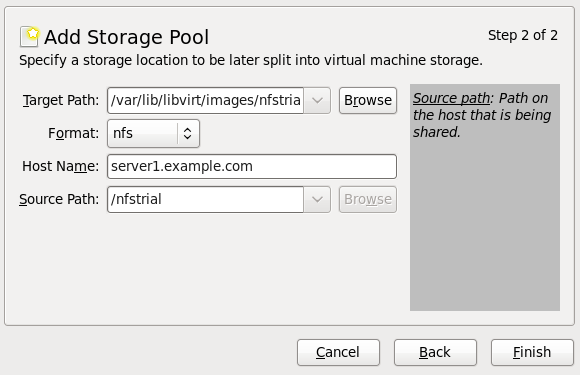

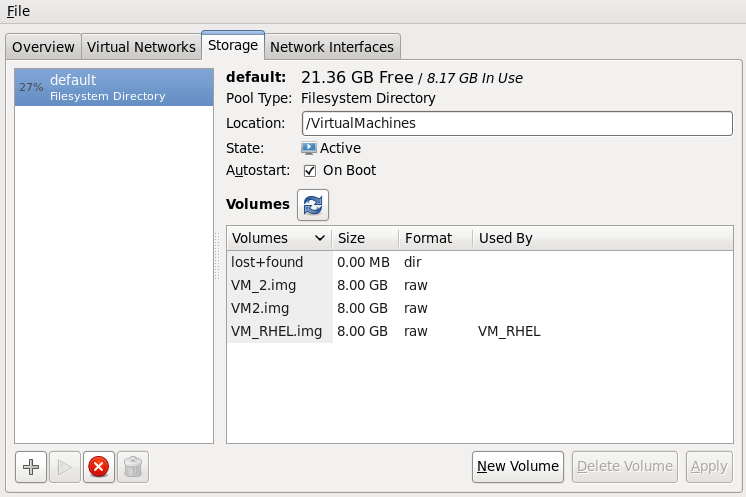

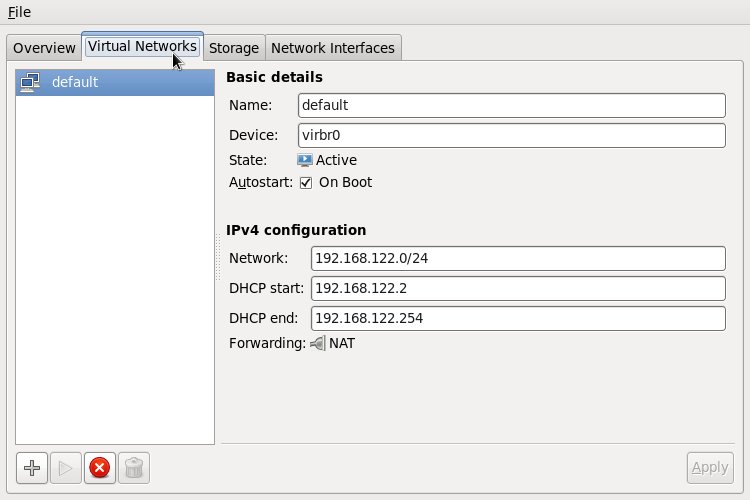

Optional - View the storage details for the host physical machine

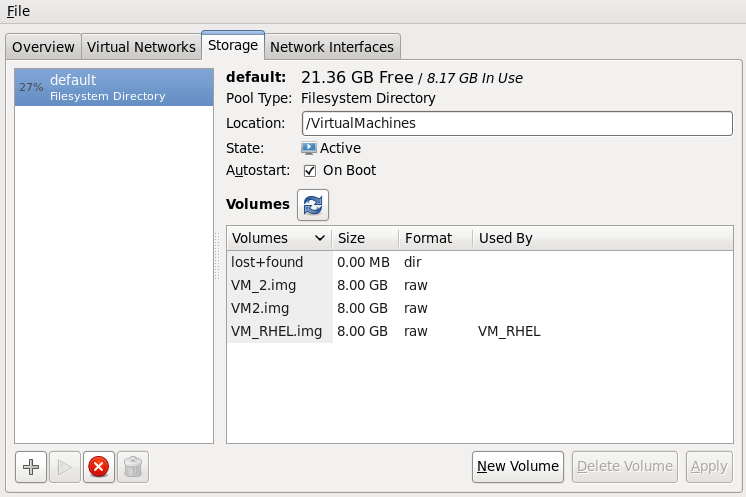

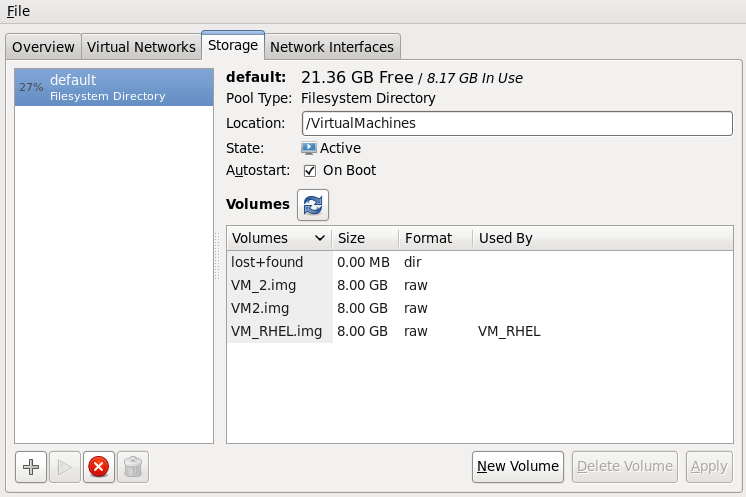

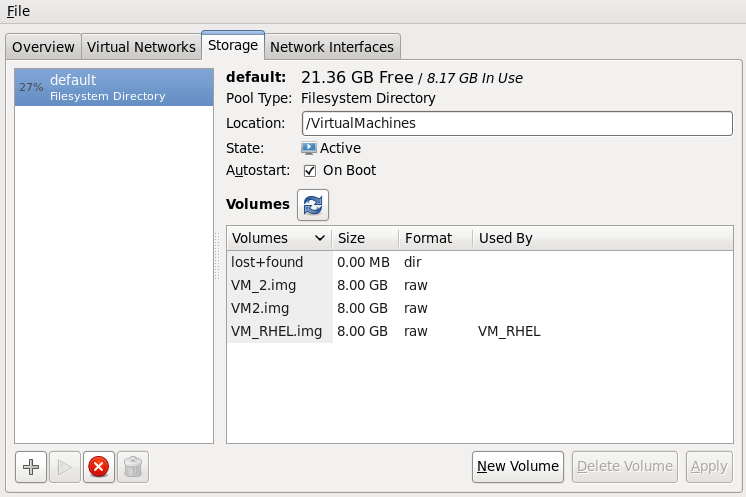

In the menu, click , the Connection Details window appears.Click the tab. The iSCSI target details for the destination host physical machine is shown. Note that the migrated guest virtual machine is listed as using the storage

Figure 4.9. Storage details

This host was defined by the following XML configuration:Copy to Clipboard Copied! Toggle word wrap Toggle overflow Figure 4.10. XML configuration for the destination host physical machine

Chapter 5. Remote Management of Guests

ssh or TLS and SSL. More information on SSH can be found in the Red Hat Enterprise Linux Deployment Guide.

5.1. Remote Management with SSH

libvirt management connection securely tunneled over an SSH connection to manage the remote machines. All the authentication is done using SSH public key cryptography and passwords or passphrases gathered by your local SSH agent. In addition the VNC console for each guest is tunneled over SSH.

- you require root log in access to the remote machine for managing virtual machines,

- the initial connection setup process may be slow,

- there is no standard or trivial way to revoke a user's key on all hosts or guests, and

- ssh does not scale well with larger numbers of remote machines.

Note

- openssh

- openssh-askpass

- openssh-clients

- openssh-server

virt-manager The following instructions assume you are starting from scratch and do not already have SSH keys set up. If you have SSH keys set up and copied to the other systems you can skip this procedure.

Important

virt-manager must be run by the user who owns the keys to connect to the remote host. That means, if the remote systems are managed by a non-root user virt-manager must be run in unprivileged mode. If the remote systems are managed by the local root user then the SSH keys must be owned and created by root.

virt-manager.

Optional: Changing user

Change user, if required. This example uses the local root user for remotely managing the other hosts and the local host.su -

$ su -Copy to Clipboard Copied! Toggle word wrap Toggle overflow Generating the SSH key pair

Generate a public key pair on the machinevirt-manageris used. This example uses the default key location, in the~/.ssh/directory.ssh-keygen -t rsa

# ssh-keygen -t rsaCopy to Clipboard Copied! Toggle word wrap Toggle overflow Copying the keys to the remote hosts

Remote login without a password, or with a passphrase, requires an SSH key to be distributed to the systems being managed. Use thessh-copy-idcommand to copy the key to root user at the system address provided (in the example,root@host2.example.com).ssh-copy-id -i ~/.ssh/id_rsa.pub root@host2.example.com root@host2.example.com's password:

# ssh-copy-id -i ~/.ssh/id_rsa.pub root@host2.example.com root@host2.example.com's password:Copy to Clipboard Copied! Toggle word wrap Toggle overflow Now try logging into the machine, with thessh root@host2.example.comcommand and check in the.ssh/authorized_keysfile to make sure unexpected keys have not been added.Repeat for other systems, as required.Optional: Add the passphrase to the ssh-agent

The instructions below describe how to add a passphrase to an existing ssh-agent. It will fail to run if the ssh-agent is not running. To avoid errors or conflicts make sure that your SSH parameters are set correctly. Refer to the Red Hat Enterprise Linux Deployment Guide for more information.Add the passphrase for the SSH key to thessh-agent, if required. On the local host, use the following command to add the passphrase (if there was one) to enable password-less login.ssh-add ~/.ssh/id_rsa

# ssh-add ~/.ssh/id_rsaCopy to Clipboard Copied! Toggle word wrap Toggle overflow The SSH key is added to the remote system.

libvirt Daemon (libvirtd)

The libvirt daemon provides an interface for managing virtual machines. You must have the libvirtd daemon installed and running on every remote host that needs managing.

ssh root@somehost chkconfig libvirtd on service libvirtd start

$ ssh root@somehost

# chkconfig libvirtd on

# service libvirtd startlibvirtd and SSH are configured you should be able to remotely access and manage your virtual machines. You should also be able to access your guests with VNC at this point.

Remote hosts can be managed with the virt-manager GUI tool. SSH keys must belong to the user executing virt-manager for password-less login to work.

- Start virt-manager.

- Open the -> menu.

Figure 5.1. Add connection menu

- Use the drop down menu to select hypervisor type, and click the check box to open the Connection (in this case Remote tunnel over SSH), and enter the desired and , then click .

5.2. Remote Management Over TLS and SSL

libvirt management connection opens a TCP port for incoming connections, which is securely encrypted and authenticated based on x509 certificates. The procedures that follow provide instructions on creating and deploying authentication certificates for TLS and SSL management.

Procedure 5.1. Creating a certificate authority (CA) key for TLS management

- Before you begin, confirm that the

certtoolutility is installed. If not:yum install gnutls-utils

# yum install gnutls-utilsCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Generate a private key, using the following command:

certtool --generate-privkey > cakey.pem

# certtool --generate-privkey > cakey.pemCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Once the key generates, the next step is to create a signature file so the key can be self-signed. To do this, create a file with signature details and name it

ca.info. This file should contain the following:vim ca.info

# vim ca.infoCopy to Clipboard Copied! Toggle word wrap Toggle overflow cn = Name of your organization ca cert_signing_key

cn = Name of your organization ca cert_signing_keyCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Generate the self-signed key with the following command:

certtool --generate-self-signed --load-privkey cakey.pem --template ca.info --outfile cacert.pem

# certtool --generate-self-signed --load-privkey cakey.pem --template ca.info --outfile cacert.pemCopy to Clipboard Copied! Toggle word wrap Toggle overflow Once the file generates, the ca.info file may be deleted using thermcommand. The file that results from the generation process is namedcacert.pem. This file is the public key (certificate). The loaded filecakey.pemis the private key. This file should not be kept in a shared space. Keep this key private. - Install the

cacert.pemCertificate Authority Certificate file on all clients and servers in the/etc/pki/CA/cacert.pemdirectory to let them know that the certificate issued by your CA can be trusted. To view the contents of this file, run:certtool -i --infile cacert.pem

# certtool -i --infile cacert.pemCopy to Clipboard Copied! Toggle word wrap Toggle overflow This is all that is required to set up your CA. Keep the CA's private key safe as you will need it in order to issue certificates for your clients and servers.

Procedure 5.2. Issuing a server certificate

qemu://mycommonname/system, so the CN field should be identical, ie mycommoname.

- Create a private key for the server.

certtool --generate-privkey > serverkey.pem

# certtool --generate-privkey > serverkey.pemCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Generate a signature for the CA's private key by first creating a template file called

server.info. Make sure that the CN is set to be the same as the server's host name:Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Create the certificate with the following command:

certtool --generate-certificate --load-privkey serverkey.pem --load-ca-certificate cacert.pem --load-ca-privkey cakey.pem \ --template server.info --outfile servercert.pem

# certtool --generate-certificate --load-privkey serverkey.pem --load-ca-certificate cacert.pem --load-ca-privkey cakey.pem \ --template server.info --outfile servercert.pemCopy to Clipboard Copied! Toggle word wrap Toggle overflow - This results in two files being generated:

- serverkey.pem - The server's private key

- servercert.pem - The server's public key

Make sure to keep the location of the private key secret. To view the contents of the file, perform the following command:certtool -i --inifile servercert.pem

# certtool -i --inifile servercert.pemCopy to Clipboard Copied! Toggle word wrap Toggle overflow When opening this file theCN=parameter should be the same as the CN that you set earlier. For example,mycommonname. - Install the two files in the following locations:

serverkey.pem- the server's private key. Place this file in the following location:/etc/pki/libvirt/private/serverkey.pemservercert.pem- the server's certificate. Install it in the following location on the server:/etc/pki/libvirt/servercert.pem

Procedure 5.3. Issuing a client certificate

- For every client (ie. any program linked with libvirt, such as virt-manager), you need to issue a certificate with the X.509 Distinguished Name (DN) set to a suitable name. This needs to be decided on a corporate level.For example purposes the following information will be used:

C=USA,ST=North Carolina,L=Raleigh,O=Red Hat,CN=name_of_client

C=USA,ST=North Carolina,L=Raleigh,O=Red Hat,CN=name_of_clientCopy to Clipboard Copied! Toggle word wrap Toggle overflow This process is quite similar to Procedure 5.2, “Issuing a server certificate”, with the following exceptions noted. - Make a private key with the following command:

certtool --generate-privkey > clientkey.pem

# certtool --generate-privkey > clientkey.pemCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Generate a signature for the CA's private key by first creating a template file called

client.info. The file should contain the following (fields should be customized to reflect your region/location):Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Sign the certificate with the following command:

certtool --generate-certificate --load-privkey clientkey.pem --load-ca-certificate cacert.pem \ --load-ca-privkey cakey.pem --template client.info --outfile clientcert.pem

# certtool --generate-certificate --load-privkey clientkey.pem --load-ca-certificate cacert.pem \ --load-ca-privkey cakey.pem --template client.info --outfile clientcert.pemCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Install the certificates on the client machine:

cp clientkey.pem /etc/pki/libvirt/private/clientkey.pem cp clientcert.pem /etc/pki/libvirt/clientcert.pem

# cp clientkey.pem /etc/pki/libvirt/private/clientkey.pem # cp clientcert.pem /etc/pki/libvirt/clientcert.pemCopy to Clipboard Copied! Toggle word wrap Toggle overflow

5.3. Transport Modes

libvirt supports the following transport modes:

Transport Layer Security TLS 1.0 (SSL 3.1) authenticated and encrypted TCP/IP socket, usually listening on a public port number. To use this you will need to generate client and server certificates. The standard port is 16514.

UNIX domain sockets are only accessible on the local machine. Sockets are not encrypted, and use UNIX permissions or SELinux for authentication. The standard socket names are /var/run/libvirt/libvirt-sock and /var/run/libvirt/libvirt-sock-ro (for read-only connections).

Transported over a Secure Shell protocol (SSH) connection. Requires Netcat (the nc package) installed. The libvirt daemon (libvirtd) must be running on the remote machine. Port 22 must be open for SSH access. You should use some sort of SSH key management (for example, the ssh-agent utility) or you will be prompted for a password.

The ext parameter is used for any external program which can make a connection to the remote machine by means outside the scope of libvirt. This parameter is unsupported.

Unencrypted TCP/IP socket. Not recommended for production use, this is normally disabled, but an administrator can enable it for testing or use over a trusted network. The default port is 16509.

A Uniform Resource Identifier (URI) is used by virsh and libvirt to connect to a remote host. URIs can also be used with the --connect parameter for the virsh command to execute single commands or migrations on remote hosts. Remote URIs are formed by taking ordinary local URIs and adding a host name or transport name. As a special case, using a URI scheme of 'remote', will tell the remote libvirtd server to probe for the optimal hypervisor driver. This is equivalent to passing a NULL URI for a local connection

driver[+transport]://[username@][hostname][:port]/path[?extraparameters]

driver[+transport]://[username@][hostname][:port]/path[?extraparameters]- qemu://hostname/

- xen://hostname/

- xen+ssh://hostname/

Examples of remote management parameters

- Connect to a remote KVM host named

host2, using SSH transport and the SSH user namevirtuser.The connect command for each isconnect [<name>] [--readonly], where<name>is a valid URI as explained here. For more information about thevirsh connectcommand refer to Section 14.1.5, “connect”qemu+ssh://virtuser@hot2/

qemu+ssh://virtuser@hot2/Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Connect to a remote KVM hypervisor on the host named

host2using TLS.qemu://host2/

qemu://host2/Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Testing examples

- Connect to the local KVM hypervisor with a non-standard UNIX socket. The full path to the UNIX socket is supplied explicitly in this case.

qemu+unix:///system?socket=/opt/libvirt/run/libvirt/libvirt-sock

qemu+unix:///system?socket=/opt/libvirt/run/libvirt/libvirt-sockCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Connect to the libvirt daemon with an unencrypted TCP/IP connection to the server with the IP address 10.1.1.10 on port 5000. This uses the test driver with default settings.

test+tcp://10.1.1.10:5000/default

test+tcp://10.1.1.10:5000/defaultCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Extra parameters can be appended to remote URIs. The table below Table 5.1, “Extra URI parameters” covers the recognized parameters. All other parameters are ignored. Note that parameter values must be URI-escaped (that is, a question mark (?) is appended before the parameter and special characters are converted into the URI format).

| Name | Transport mode | Description | Example usage |

|---|---|---|---|

| name | all modes | The name passed to the remote virConnectOpen function. The name is normally formed by removing transport, host name, port number, user name and extra parameters from the remote URI, but in certain very complex cases it may be better to supply the name explicitly. | name=qemu:///system |

| command | ssh and ext | The external command. For ext transport this is required. For ssh the default is ssh. The PATH is searched for the command. | command=/opt/openssh/bin/ssh |

| socket | unix and ssh | The path to the UNIX domain socket, which overrides the default. For ssh transport, this is passed to the remote netcat command (see netcat). | socket=/opt/libvirt/run/libvirt/libvirt-sock |

| netcat | ssh |

The

netcat command can be used to connect to remote systems. The default netcat parameter uses the nc command. For SSH transport, libvirt constructs an SSH command using the form below:

command -p port [-l username] hostname

netcat -U socket

The

port, username and hostname parameters can be specified as part of the remote URI. The command, netcat and socket come from other extra parameters.

| netcat=/opt/netcat/bin/nc |

| no_verify | tls | If set to a non-zero value, this disables client checks of the server's certificate. Note that to disable server checks of the client's certificate or IP address you must change the libvirtd configuration. | no_verify=1 |

| no_tty | ssh | If set to a non-zero value, this stops ssh from asking for a password if it cannot log in to the remote machine automatically . Use this when you do not have access to a terminal . | no_tty=1 |

Chapter 6. Overcommitting with KVM

6.1. Overcommitting Memory

Important

Important

6.2. Overcommitting Virtualized CPUs

Important

Chapter 7. KSM

qemu-kvm process. Once the guest virtual machine is running, the contents of the guest virtual machine operating system image can be shared when guests are running the same operating system or applications.

Note

Note

/sys/kernel/mm/ksm/merge_across_nodes tunable to 0 to avoid merging pages across NUMA nodes. Kernel memory accounting statistics can eventually contradict each other after large amounts of cross-node merging. As such, numad can become confused after the KSM daemon merges large amounts of memory. If your system has a large amount of free memory, you may achieve higher performance by turning off and disabling the KSM daemon. Refer to the Red Hat Enterprise Linux Performance Tuning Guide for more information on NUMA.

- The

ksmservice starts and stops the KSM kernel thread. - The

ksmtunedservice controls and tunes theksm, dynamically managing same-page merging. Theksmtunedservice startsksmand stops theksmservice if memory sharing is not necessary. Theksmtunedservice must be told with theretuneparameter to run when new guests are created or destroyed.

The ksm service is included in the qemu-kvm package. KSM is off by default on Red Hat Enterprise Linux 6. When using Red Hat Enterprise Linux 6 as a KVM host physical machine, however, it is likely turned on by the ksm/ksmtuned services.

ksm service is not started, KSM shares only 2000 pages. This default is low and provides limited memory saving benefits.

ksm service is started, KSM will share up to half of the host physical machine system's main memory. Start the ksm service to enable KSM to share more memory.

service ksm start Starting ksm: [ OK ]

# service ksm start

Starting ksm: [ OK ]ksm service can be added to the default startup sequence. Make the ksm service persistent with the chkconfig command.

chkconfig ksm on

# chkconfig ksm on

The ksmtuned service does not have any options. The ksmtuned service loops and adjusts ksm. The ksmtuned service is notified by libvirt when a guest virtual machine is created or destroyed.

service ksmtuned start Starting ksmtuned: [ OK ]

# service ksmtuned start

Starting ksmtuned: [ OK ]ksmtuned service can be tuned with the retune parameter. The retune parameter instructs ksmtuned to run tuning functions manually.

thres- Activation threshold, in kbytes. A KSM cycle is triggered when thethresvalue added to the sum of allqemu-kvmprocesses RSZ exceeds total system memory. This parameter is the equivalent in kbytes of the percentage defined inKSM_THRES_COEF.

/etc/ksmtuned.conf file is the configuration file for the ksmtuned service. The file output below is the default ksmtuned.conf file.

KSM stores monitoring data in the /sys/kernel/mm/ksm/ directory. Files in this directory are updated by the kernel and are an accurate record of KSM usage and statistics.

/etc/ksmtuned.conf file as noted below.

The /sys/kernel/mm/ksm/ files

- full_scans

- Full scans run.

- pages_shared

- Total pages shared.

- pages_sharing

- Pages presently shared.

- pages_to_scan

- Pages not scanned.

- pages_unshared

- Pages no longer shared.

- pages_volatile

- Number of volatile pages.

- run

- Whether the KSM process is running.

- sleep_millisecs

- Sleep milliseconds.

/var/log/ksmtuned log file if the DEBUG=1 line is added to the /etc/ksmtuned.conf file. The log file location can be changed with the LOGFILE parameter. Changing the log file location is not advised and may require special configuration of SELinux settings.

KSM has a performance overhead which may be too large for certain environments or host physical machine systems.

ksmtuned and the ksm service. Stopping the services deactivates KSM but does not persist after restarting.

chkconfig command. To turn off the services, run the following commands:

chkconfig ksm off chkconfig ksmtuned off

# chkconfig ksm off

# chkconfig ksmtuned offImportant

Chapter 8. Advanced Guest Virtual Machine Administration

8.1. Control Groups (cgroups)

8.2. Huge Page Support

x86 CPUs usually address memory in 4kB pages, but they are capable of using larger pages known as huge pages. KVM guests can be deployed with huge page memory support in order to improve performance by increasing CPU cache hits against the Transaction Lookaside Buffer (TLB). Huge pages can significantly increase performance, particularly for large memory and memory-intensive workloads. Red Hat Enterprise Linux 6 is able to more effectively manage large amounts of memory by increasing the page size through the use of huge pages.

Transparent huge pages (THP) is a kernel feature that reduces TLB entries needed for an application. By also allowing all free memory to be used as cache, performance is increased.

qemu.conf file is required. Huge pages are used by default if /sys/kernel/mm/redhat_transparent_hugepage/enabled is set to always.

hugetlbfs feature. However, when hugetlbfs is not used, KVM will use transparent huge pages instead of the regular 4kB page size.

Note

8.3. Running Red Hat Enterprise Linux as a Guest Virtual Machine on a Hyper-V Hypervisor

- Upgraded VMBUS protocols - VMBUS protocols have been upgraded to Windows 8 level. As part of this work, now VMBUS interrupts can be processed on all available virtual CPUs in the guest. Furthermore, the signaling protocol between the Red Hat Enterprise Linux guest virtual machine and the Windows host physical machine has been optimized.

- Synthetic frame buffer driver - Provides enhanced graphics performance and superior resolution for Red Hat Enterprise Linux desktop users.

- Live Virtual Machine Backup support - Provisions uninterrupted backup support for live Red Hat Enterprise Linux guest virtual machines.

- Dynamic expansion of fixed size Linux VHDs - Allows expansion of live mounted fixed size Red Hat Enterprise Linux VHDs.

Note

parted). This is a known limit of Hyper-V. As a workaround, it is possible to manually restore the secondary GPT header after shrinking the GPT disk by using the expert menu in gdisk and the e command. Furthermore, using the "expand" option in the Hyper-V manager also places the GPT secondary header in a location other than at the end of disk, but this can be moved with parted. See the gdisk and parted man pages for more information on these commands.

8.4. Guest Virtual Machine Memory Allocation

borbytesfor bytesKBfor kilobytes (103 or blocks of 1,000 bytes)korKiBfor kibibytes (210 or blocks of 1024 bytes)MBfor megabytes (106 or blocks of 1,000,000 bytes)MorMiBfor mebibytes (220 or blocks of 1,048,576 bytes)GBfor gigabytes (109 or blocks of 1,000,000,000 bytes)GorGiBfor gibibytes (230 or blocks of 1,073,741,824 bytes)TBfor terabytes (1012 or blocks of 1,000,000,000,000 bytes)TorTiBfor tebibytes (240 or blocks of 1,099,511,627,776 bytes)

memory unit, which defaults to the kibibytes (KiB) as a unit of measure where the value given is multiplied by 210 or blocks of 1024 bytes.

dumpCore can be used to control whether the guest virtual machine's memory should be included in the generated coredump (dumpCore='on') or not included (dumpCore='off'). Note that the default setting is on so if the parameter is not set to off, the guest virtual machine memory will be included in the coredump file.

currentMemory attribute determines the actual memory allocation for a guest virtual machine. This value can be less than the maximum allocation, to allow for ballooning up the guest virtual machines memory on the fly. If this is omitted, it defaults to the same value as the memory element. The unit attribute behaves the same as for memory.

8.5. Automatically Starting Guest Virtual Machines

virsh to set a guest virtual machine, TestServer, to automatically start when the host physical machine boots.

virsh autostart TestServer Domain TestServer marked as autostarted

# virsh autostart TestServer

Domain TestServer marked as autostarted

--disable parameter

virsh autostart --disable TestServer Domain TestServer unmarked as autostarted

# virsh autostart --disable TestServer

Domain TestServer unmarked as autostarted

8.6. Disable SMART Disk Monitoring for Guest Virtual Machines

service smartd stop chkconfig --del smartd

# service smartd stop

# chkconfig --del smartd

8.7. Configuring a VNC Server

vino-preferences command.

~/.vnc/xstartup file to start a GNOME session whenever vncserver is started. The first time you run the vncserver script it will ask you for a password you want to use for your VNC session. For more information on vnc server files refer to the Red Hat Enterprise Linux Installation Guide.

8.8. Generating a New Unique MAC Address

macgen.py. Now from that directory you can run the script using ./macgen.py and it will generate a new MAC address. A sample output would look like the following:

./macgen.py 00:16:3e:20:b0:11

$ ./macgen.py

00:16:3e:20:b0:118.8.1. Another Method to Generate a New MAC for Your Guest Virtual Machine

python-virtinst to generate a new MAC address and UUID for use in a guest virtual machine configuration file:

echo 'import virtinst.util ; print\ virtinst.util.uuidToString(virtinst.util.randomUUID())' | python echo 'import virtinst.util ; print virtinst.util.randomMAC()' | python

# echo 'import virtinst.util ; print\

virtinst.util.uuidToString(virtinst.util.randomUUID())' | python

# echo 'import virtinst.util ; print virtinst.util.randomMAC()' | python

8.9. Improving Guest Virtual Machine Response Time

- Severely overcommitted memory.

- Overcommitted memory with high processor usage

- Other (not

qemu-kvmprocesses) busy or stalled processes on the host physical machine.

Warning

8.10. Virtual Machine Timer Management with libvirt

virsh edit command. See Section 14.6, “Editing a Guest Virtual Machine's configuration file” for details.

<clock> element is used to determine how the guest virtual machine clock is synchronized with the host physical machine clock. The clock element has the following attributes:

offsetdetermines how the guest virtual machine clock is offset from the host physical machine clock. The offset attribute has the following possible values:Expand Table 8.1. Offset attribute values Value Description utc The guest virtual machine clock will be synchronized to UTC when booted. localtime The guest virtual machine clock will be synchronized to the host physical machine's configured timezone when booted, if any. timezone The guest virtual machine clock will be synchronized to a given timezone, specified by the timezoneattribute.variable The guest virtual machine clock will be synchronized to an arbitrary offset from UTC. The delta relative to UTC is specified in seconds, using the adjustmentattribute. The guest virtual machine is free to adjust the Real Time Clock (RTC) over time and expect that it will be honored following the next reboot. This is in contrast toutcmode, where any RTC adjustments are lost at each reboot.Note

The value utc is set as the clock offset in a virtual machine by default. However, if the guest virtual machine clock is run with the localtime value, the clock offset needs to be changed to a different value in order to have the guest virtual machine clock synchronized with the host physical machine clock.- The

timezoneattribute determines which timezone is used for the guest virtual machine clock. - The

adjustmentattribute provides the delta for guest virtual machine clock synchronization. In seconds, relative to UTC.

Example 8.1. Always synchronize to UTC

<clock offset="utc" />

<clock offset="utc" />Example 8.2. Always synchronize to the host physical machine timezone

<clock offset="localtime" />

<clock offset="localtime" />Example 8.3. Synchronize to an arbitrary timezone

<clock offset="timezone" timezone="Europe/Paris" />

<clock offset="timezone" timezone="Europe/Paris" />Example 8.4. Synchronize to UTC + arbitrary offset

<clock offset="variable" adjustment="123456" />

<clock offset="variable" adjustment="123456" />8.10.1. timer Child Element for clock

name is required, all other attributes are optional.

name attribute dictates the type of the time source to use, and can be one of the following:

| Value | Description |

|---|---|

| pit | Programmable Interval Timer - a timer with periodic interrupts. |

| rtc | Real Time Clock - a continuously running timer with periodic interrupts. |

| tsc | Time Stamp Counter - counts the number of ticks since reset, no interrupts. |

| kvmclock | KVM clock - recommended clock source for KVM guest virtual machines. KVM pvclock, or kvm-clock lets guest virtual machines read the host physical machine’s wall clock time. |

8.10.2. track

track attribute specifies what is tracked by the timer. Only valid for a name value of rtc.

| Value | Description |

|---|---|

| boot | Corresponds to old host physical machine option, this is an unsupported tracking option. |

| guest | RTC always tracks guest virtual machine time. |

| wall | RTC always tracks host time. |

8.10.3. tickpolicy

tickpolicy attribute assigns the policy used to pass ticks on to the guest virtual machine. The following values are accepted:

| Value | Description |

|---|---|

| delay | Continue to deliver at normal rate (so ticks are delayed). |

| catchup | Deliver at a higher rate to catch up. |

| merge | Ticks merged into one single tick. |

| discard | All missed ticks are discarded. |

8.10.4. frequency, mode, and present

frequency attribute is used to set a fixed frequency, and is measured in Hz. This attribute is only relevant when the name element has a value of tsc. All other timers operate at a fixed frequency (pit, rtc).

mode determines how the time source is exposed to the guest virtual machine. This attribute is only relevant for a name value of tsc. All other timers are always emulated. Command is as follows: <timer name='tsc' frequency='NNN' mode='auto|native|emulate|smpsafe'/>. Mode definitions are given in the table.

| Value | Description |

|---|---|

| auto | Native if TSC is unstable, otherwise allow native TSC access. |

| native | Always allow native TSC access. |

| emulate | Always emulate TSC. |

| smpsafe | Always emulate TSC and interlock SMP |

present is used to override the default set of timers visible to the guest virtual machine..

| Value | Description |

|---|---|

| yes | Force this timer to the visible to the guest virtual machine. |

| no | Force this timer to not be visible to the guest virtual machine. |

8.10.5. Examples Using Clock Synchronization

Example 8.5. Clock synchronizing to local time with RTC and PIT timers

<clock offset="localtime"> <timer name="rtc" tickpolicy="catchup" track="guest virtual machine" /> <timer name="pit" tickpolicy="delay" /> </clock>

<clock offset="localtime">

<timer name="rtc" tickpolicy="catchup" track="guest virtual machine" />

<timer name="pit" tickpolicy="delay" />

</clock>Note

- Guest virtual machine may have only one CPU

- APIC timer must be disabled (use the "noapictimer" command line option)

- NoHZ mode must be disabled in the guest (use the "nohz=off" command line option)

- High resolution timer mode must be disabled in the guest (use the "highres=off" command line option)

- The PIT clocksource is not compatible with either high resolution timer mode, or NoHz mode.

8.11. Using PMU to Monitor Guest Virtual Machine Performance

-cpu host flag must be set.

yum install perf.

# yum install perf.perf for more information on the perf commands.

8.12. Guest Virtual Machine Power Management

pm enables ('yes') or disables ('no') BIOS support for S3 (suspend-to-disk) and S4 (suspend-to-mem) ACPI sleep states. If nothing is specified, then the hypervisor will be left with its default value.

Chapter 9. Guest virtual machine device configuration

- Emulated devices are purely virtual devices that mimic real hardware, allowing unmodified guest operating systems to work with them using their standard in-box drivers. Red Hat Enterprise Linux 6 supports up to 216 virtio devices.

- Virtio devices are purely virtual devices designed to work optimally in a virtual machine. Virtio devices are similar to emulated devices, however, non-Linux virtual machines do not include the drivers they require by default. Virtualization management software like the Virtual Machine Manager (virt-manager) and the Red Hat Virtualization Hypervisor (RHV-H) install these drivers automatically for supported non-Linux guest operating systems. Red Hat Enterprise Linux 6 supports up to 700 scsi disks.

- Assigned devices are physical devices that are exposed to the virtual machine. This method is also known as 'passthrough'. Device assignment allows virtual machines exclusive access to PCI devices for a range of tasks, and allows PCI devices to appear and behave as if they were physically attached to the guest operating system. Red Hat Enterprise Linux 6 supports up to 32 assigned devices per virtual machine.

Note

/etc/security/limits.conf, which can be overridden by /etc/libvirt/qemu.conf). Other limitation factors include the number of slots available on the virtual bus, as well as the system-wide limit on open files set by sysctl.

Note

allow_unsafe_interrupts option to the vfio_iommu_type1 module. This may either be done persistently by adding a .conf file (for example local.conf) to /etc/modprobe.d containing the following:

options vfio_iommu_type1 allow_unsafe_interrupts=1

options vfio_iommu_type1 allow_unsafe_interrupts=1echo 1 > /sys/module/vfio_iommu_type1/parameters/allow_unsafe_interrupts

# echo 1 > /sys/module/vfio_iommu_type1/parameters/allow_unsafe_interrupts9.1. PCI Devices

Procedure 9.1. Preparing an Intel system for PCI device assignment

Enable the Intel VT-d specifications

The Intel VT-d specifications provide hardware support for directly assigning a physical device to a virtual machine. These specifications are required to use PCI device assignment with Red Hat Enterprise Linux.The Intel VT-d specifications must be enabled in the BIOS. Some system manufacturers disable these specifications by default. The terms used to refer to these specifications can differ between manufacturers; consult your system manufacturer's documentation for the appropriate terms.Activate Intel VT-d in the kernel

Activate Intel VT-d in the kernel by adding theintel_iommu=onparameter to the end of the GRUB_CMDLINX_LINUX line, within the quotes, in the/etc/sysconfig/grubfile.The example below is a modifiedgrubfile with Intel VT-d activated.GRUB_CMDLINE_LINUX="rd.lvm.lv=vg_VolGroup00/LogVol01 vconsole.font=latarcyrheb-sun16 rd.lvm.lv=vg_VolGroup_1/root vconsole.keymap=us $([ -x /usr/sbin/rhcrashkernel-param ] && /usr/sbin/ rhcrashkernel-param || :) rhgb quiet intel_iommu=on"

GRUB_CMDLINE_LINUX="rd.lvm.lv=vg_VolGroup00/LogVol01 vconsole.font=latarcyrheb-sun16 rd.lvm.lv=vg_VolGroup_1/root vconsole.keymap=us $([ -x /usr/sbin/rhcrashkernel-param ] && /usr/sbin/ rhcrashkernel-param || :) rhgb quiet intel_iommu=on"Copy to Clipboard Copied! Toggle word wrap Toggle overflow Regenerate config file

Regenerate /etc/grub2.cfg by running:grub2-mkconfig -o /etc/grub2.cfg

grub2-mkconfig -o /etc/grub2.cfgCopy to Clipboard Copied! Toggle word wrap Toggle overflow Note that if you are using a UEFI-based host, the target file should be/etc/grub2-efi.cfg.Ready to use

Reboot the system to enable the changes. Your system is now capable of PCI device assignment.

Procedure 9.2. Preparing an AMD system for PCI device assignment

Enable the AMD IOMMU specifications

The AMD IOMMU specifications are required to use PCI device assignment in Red Hat Enterprise Linux. These specifications must be enabled in the BIOS. Some system manufacturers disable these specifications by default.Enable IOMMU kernel support

Appendamd_iommu=onto the end of the GRUB_CMDLINX_LINUX line, within the quotes, in/etc/sysconfig/grubso that AMD IOMMU specifications are enabled at boot.Regenerate config file

Regenerate /etc/grub2.cfg by running:grub2-mkconfig -o /etc/grub2.cfg

grub2-mkconfig -o /etc/grub2.cfgCopy to Clipboard Copied! Toggle word wrap Toggle overflow Note that if you are using a UEFI-based host, the target file should be/etc/grub2-efi.cfg.Ready to use

Reboot the system to enable the changes. Your system is now capable of PCI device assignment.

9.1.1. Assigning a PCI Device with virsh

pci_0000_01_00_0, and a fully virtualized guest machine named guest1-rhel6-64.

Procedure 9.3. Assigning a PCI device to a guest virtual machine with virsh

Identify the device

First, identify the PCI device designated for device assignment to the virtual machine. Use thelspcicommand to list the available PCI devices. You can refine the output oflspciwithgrep.This example uses the Ethernet controller highlighted in the following output:lspci | grep Ethernet 00:19.0 Ethernet controller: Intel Corporation 82567LM-2 Gigabit Network Connection 01:00.0 Ethernet controller: Intel Corporation 82576 Gigabit Network Connection (rev 01) 01:00.1 Ethernet controller: Intel Corporation 82576 Gigabit Network Connection (rev 01)

# lspci | grep Ethernet 00:19.0 Ethernet controller: Intel Corporation 82567LM-2 Gigabit Network Connection 01:00.0 Ethernet controller: Intel Corporation 82576 Gigabit Network Connection (rev 01) 01:00.1 Ethernet controller: Intel Corporation 82576 Gigabit Network Connection (rev 01)Copy to Clipboard Copied! Toggle word wrap Toggle overflow This Ethernet controller is shown with the short identifier00:19.0. We need to find out the full identifier used byvirshin order to assign this PCI device to a virtual machine.To do so, use thevirsh nodedev-listcommand to list all devices of a particular type (pci) that are attached to the host machine. Then look at the output for the string that maps to the short identifier of the device you wish to use.This example highlights the string that maps to the Ethernet controller with the short identifier00:19.0. In this example, the:and.characters are replaced with underscores in the full identifier.Copy to Clipboard Copied! Toggle word wrap Toggle overflow Record the PCI device number that maps to the device you want to use; this is required in other steps.Review device information

Information on the domain, bus, and function are available from output of thevirsh nodedev-dumpxmlcommand:Copy to Clipboard Copied! Toggle word wrap Toggle overflow Note

An IOMMU group is determined based on the visibility and isolation of devices from the perspective of the IOMMU. Each IOMMU group may contain one or more devices. When multiple devices are present, all endpoints within the IOMMU group must be claimed for any device within the group to be assigned to a guest. This can be accomplished either by also assigning the extra endpoints to the guest or by detaching them from the host driver usingvirsh nodedev-detach. Devices contained within a single group may not be split between multiple guests or split between host and guest. Non-endpoint devices such as PCIe root ports, switch ports, and bridges should not be detached from the host drivers and will not interfere with assignment of endpoints.Devices within an IOMMU group can be determined using the iommuGroup section of thevirsh nodedev-dumpxmloutput. Each member of the group is provided via a separate "address" field. This information may also be found in sysfs using the following:An example of the output from this would be:ls /sys/bus/pci/devices/0000:01:00.0/iommu_group/devices/

$ ls /sys/bus/pci/devices/0000:01:00.0/iommu_group/devices/Copy to Clipboard Copied! Toggle word wrap Toggle overflow To assign only 0000.01.00.0 to the guest, the unused endpoint should be detached from the host before starting the guest:0000:01:00.0 0000:01:00.1

0000:01:00.0 0000:01:00.1Copy to Clipboard Copied! Toggle word wrap Toggle overflow virsh nodedev-detach pci_0000_01_00_1

$ virsh nodedev-detach pci_0000_01_00_1Copy to Clipboard Copied! Toggle word wrap Toggle overflow Determine required configuration details

Refer to the output from thevirsh nodedev-dumpxml pci_0000_00_19_0command for the values required for the configuration file.The example device has the following values: bus = 0, slot = 25 and function = 0. The decimal configuration uses those three values:bus='0' slot='25' function='0'

bus='0' slot='25' function='0'Copy to Clipboard Copied! Toggle word wrap Toggle overflow Add configuration details

Runvirsh edit, specifying the virtual machine name, and add a device entry in the<source>section to assign the PCI device to the guest virtual machine.Copy to Clipboard Copied! Toggle word wrap Toggle overflow Alternately, runvirsh attach-device, specifying the virtual machine name and the guest's XML file:virsh attach-device guest1-rhel6-64 file.xml

virsh attach-device guest1-rhel6-64 file.xmlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Start the virtual machine

virsh start guest1-rhel6-64

# virsh start guest1-rhel6-64Copy to Clipboard Copied! Toggle word wrap Toggle overflow

9.1.2. Assigning a PCI Device with virt-manager

virt-manager tool. The following procedure adds a Gigabit Ethernet controller to a guest virtual machine.

Procedure 9.4. Assigning a PCI device to a guest virtual machine using virt-manager

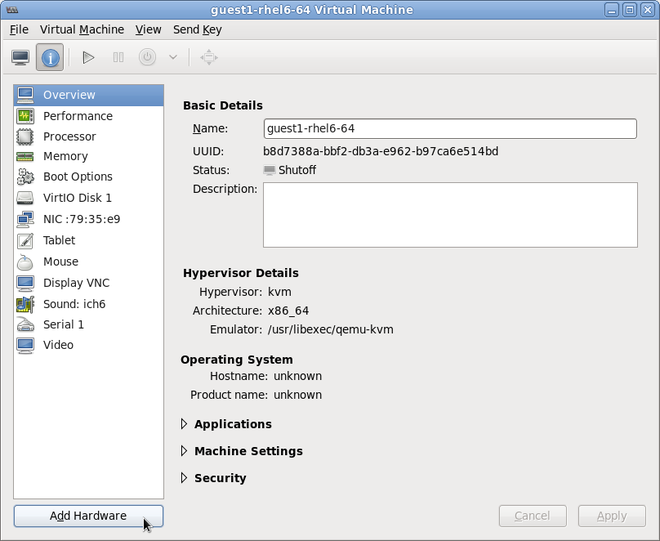

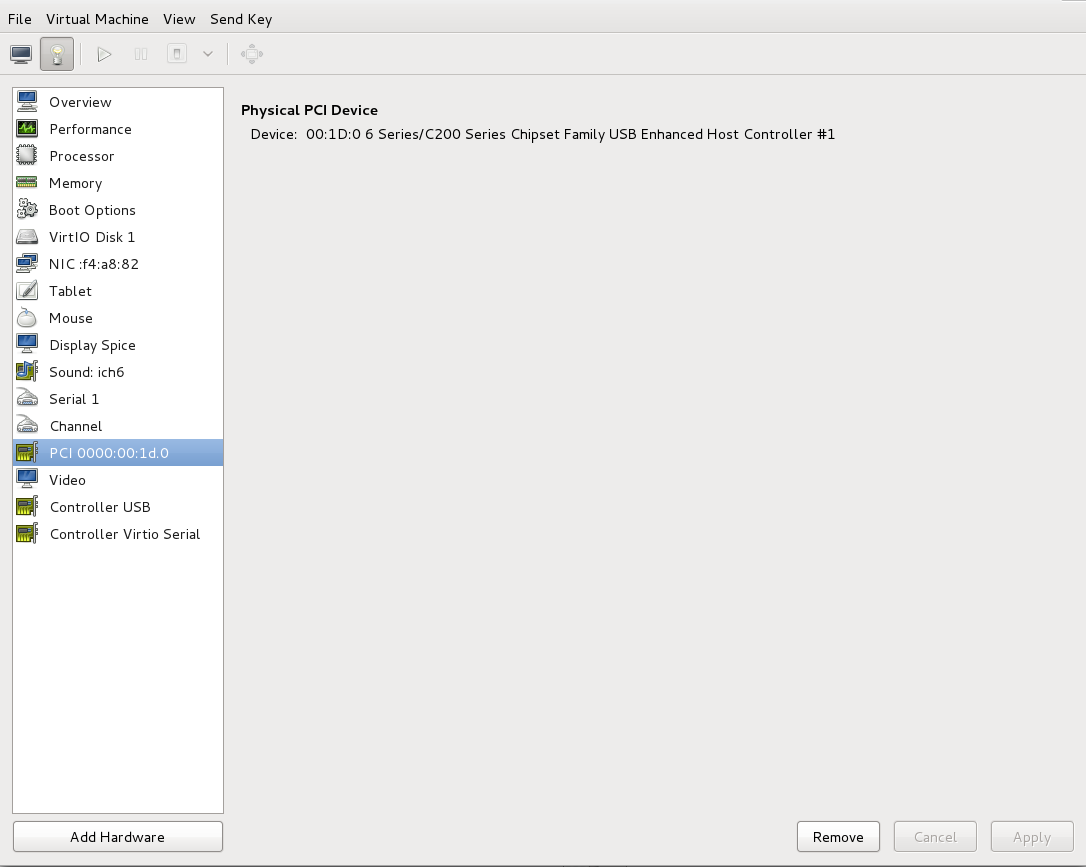

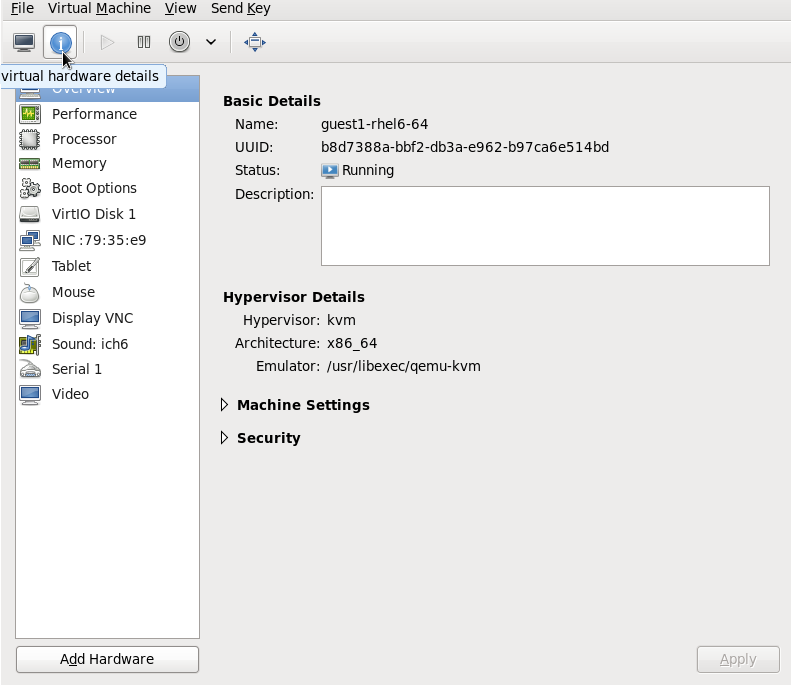

Open the hardware settings

Open the guest virtual machine and click the button to add a new device to the virtual machine.

Figure 9.1. The virtual machine hardware information window

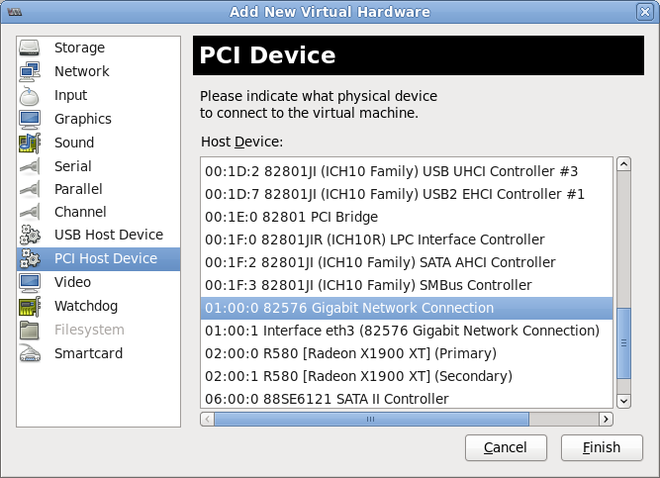

Select a PCI device

Select PCI Host Device from the Hardware list on the left.Select an unused PCI device. If you select a PCI device that is in use by another guest an error may result. In this example, a spare 82576 network device is used. Click Finish to complete setup.

Figure 9.2. The Add new virtual hardware wizard

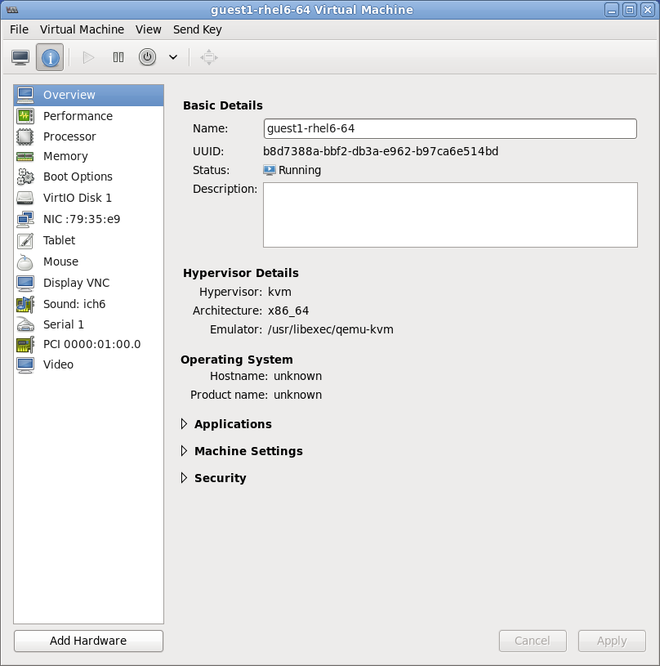

Add the new device

The setup is complete and the guest virtual machine now has direct access to the PCI device.

Figure 9.3. The virtual machine hardware information window

Note

9.1.3. PCI Device Assignment with virt-install

--host-device parameter.

Procedure 9.5. Assigning a PCI device to a virtual machine with virt-install

Identify the device

Identify the PCI device designated for device assignment to the guest virtual machine.lspci | grep Ethernet 00:19.0 Ethernet controller: Intel Corporation 82567LM-2 Gigabit Network Connection 01:00.0 Ethernet controller: Intel Corporation 82576 Gigabit Network Connection (rev 01) 01:00.1 Ethernet controller: Intel Corporation 82576 Gigabit Network Connection (rev 01)

# lspci | grep Ethernet 00:19.0 Ethernet controller: Intel Corporation 82567LM-2 Gigabit Network Connection 01:00.0 Ethernet controller: Intel Corporation 82576 Gigabit Network Connection (rev 01) 01:00.1 Ethernet controller: Intel Corporation 82576 Gigabit Network Connection (rev 01)Copy to Clipboard Copied! Toggle word wrap Toggle overflow Thevirsh nodedev-listcommand lists all devices attached to the system, and identifies each PCI device with a string. To limit output to only PCI devices, run the following command:Copy to Clipboard Copied! Toggle word wrap Toggle overflow Record the PCI device number; the number is needed in other steps.Information on the domain, bus and function are available from output of thevirsh nodedev-dumpxmlcommand:Copy to Clipboard Copied! Toggle word wrap Toggle overflow Note

If there are multiple endpoints in the IOMMU group and not all of them are assigned to the guest, you will need to manually detach the other endpoint(s) from the host by running the following command before you start the guest:virsh nodedev-detach pci_0000_00_19_1

$ virsh nodedev-detach pci_0000_00_19_1Copy to Clipboard Copied! Toggle word wrap Toggle overflow Refer to the Note in Section 9.1.1, “Assigning a PCI Device with virsh” for more information on IOMMU groups.Add the device

Use the PCI identifier output from thevirsh nodedevcommand as the value for the--host-deviceparameter.Copy to Clipboard Copied! Toggle word wrap Toggle overflow Complete the installation

Complete the guest installation. The PCI device should be attached to the guest.

9.1.4. Detaching an Assigned PCI Device

virsh or virt-manager so it is available for host use.

Procedure 9.6. Detaching a PCI device from a guest with virsh

Detach the device

Use the following command to detach the PCI device from the guest by removing it in the guest's XML file:virsh detach-device name_of_guest file.xml

# virsh detach-device name_of_guest file.xmlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Re-attach the device to the host (optional)

If the device is inmanagedmode, skip this step. The device will be returned to the host automatically.If the device is not usingmanagedmode, use the following command to re-attach the PCI device to the host machine:virsh nodedev-reattach device

# virsh nodedev-reattach deviceCopy to Clipboard Copied! Toggle word wrap Toggle overflow For example, to re-attach thepci_0000_01_00_0device to the host:virsh nodedev-reattach pci_0000_01_00_0

virsh nodedev-reattach pci_0000_01_00_0Copy to Clipboard Copied! Toggle word wrap Toggle overflow The device is now available for host use.

Procedure 9.7. Detaching a PCI Device from a guest with virt-manager

Open the virtual hardware details screen

In virt-manager, double-click on the virtual machine that contains the device. Select the Show virtual hardware details button to display a list of virtual hardware.

Figure 9.4. The virtual hardware details button

Select and remove the device

Select the PCI device to be detached from the list of virtual devices in the left panel.

Figure 9.5. Selecting the PCI device to be detached

Click the button to confirm. The device is now available for host use.

9.1.5. Creating PCI Bridges

Note

9.1.6. PCI Passthrough

<source> element) is directly assigned to the guest using generic device passthrough, after first optionally setting the device's MAC address to the configured value, and associating the device with an 802.1Qbh capable switch using an optionally specified <virtualport> element (see the examples of virtualport given above for type='direct' network devices). Due to limitations in standard single-port PCI ethernet card driver design - only SR-IOV (Single Root I/O Virtualization) virtual function (VF) devices can be assigned in this manner; to assign a standard single-port PCI or PCIe Ethernet card to a guest, use the traditional <hostdev> device definition.

<type='hostdev'> interface can have an optional driver sub-element with a name attribute set to "vfio". To use legacy KVM device assignment you can set name to "kvm" (or simply omit the <driver> element, since <driver='kvm'> is currently the default).

Note

<hostdev> device, the difference being that this method allows specifying a MAC address and <virtualport> for the passed-through device. If these capabilities are not required, if you have a standard single-port PCI, PCIe, or USB network card that does not support SR-IOV (and hence would anyway lose the configured MAC address during reset after being assigned to the guest domain), or if you are using a version of libvirt older than 0.9.11, you should use standard <hostdev> to assign the device to the guest instead of <interface type='hostdev'/>.

Figure 9.6. XML example for PCI device assignment

9.1.7. Configuring PCI Assignment (Passthrough) with SR-IOV Devices

<hostdev>, but as SR-IOV VF network devices do not have permanent unique MAC addresses, it causes issues where the guest virtual machine's network settings would have to be re-configured each time the host physical machine is rebooted. To remedy this, you would need to set the MAC address prior to assigning the VF to the host physical machine and you would need to set this each and every time the guest virtual machine boots. In order to assign this MAC address as well as other options, refer to the procedure described in Procedure 9.8, “Configuring MAC addresses, vLAN, and virtual ports for assigning PCI devices on SR-IOV”.

Procedure 9.8. Configuring MAC addresses, vLAN, and virtual ports for assigning PCI devices on SR-IOV

<hostdev> element cannot be used for function-specific items like MAC address assignment, vLAN tag ID assignment, or virtual port assignment because the <mac>, <vlan>, and <virtualport> elements are not valid children for <hostdev>. As they are valid for <interface>, support for a new interface type was added (<interface type='hostdev'>). This new interface device type behaves as a hybrid of an <interface> and <hostdev>. Thus, before assigning the PCI device to the guest virtual machine, libvirt initializes the network-specific hardware/switch that is indicated (such as setting the MAC address, setting a vLAN tag, or associating with an 802.1Qbh switch) in the guest virtual machine's XML configuration file. For information on setting the vLAN tag, refer to Section 18.14, “Setting vLAN Tags”.

Shutdown the guest virtual machine

Usingvirsh shutdowncommand (refer to Section 14.9.1, “Shutting Down a Guest Virtual Machine”), shutdown the guest virtual machine named guestVM.virsh shutdown guestVM

# virsh shutdown guestVMCopy to Clipboard Copied! Toggle word wrap Toggle overflow Gather information

In order to use<interface type='hostdev'>, you must have an SR-IOV-capable network card, host physical machine hardware that supports either the Intel VT-d or AMD IOMMU extensions, and you must know the PCI address of the VF that you wish to assign.Open the XML file for editing