Kernel Administration Guide

Using modules, kpatch, or kdump to manage Linux kernel in RHEL

Abstract

Preface

The Kernel Administration Guide describes working with the kernel and shows several practical tasks. Beginning with information on using kernel modules, the guide then covers interaction with the sysfs facility, manual upgrade of the kernel and using kpatch. The guide also introduces the crash dump mechanism, which steps through the process of setting up and testing vmcore collection in the event of a kernel failure.

The Kernel Administration Guide also covers selected use cases of managing the kernel and includes reference material about command line options, kernel tunables (also known as switches), and a brief discussion of kernel features.

Chapter 1. Working with kernel modules

This Chapter explains:

- What is a kernel module.

- How to use the kmod utilities to manage modules and their dependencies.

- How to configure module parameters to control behavior of the kernel modules.

- How to load modules at boot time.

In order to use the kernel module utilities described in this chapter, first ensure the kmod package is installed on your system by running, as root:

yum install kmod

# yum install kmod1.1. What is a kernel module?

The Linux kernel is monolithic by design. However, it is compiled with optional or additional modules as required by each use case. This means that you can extend the kernel’s capabilities through the use of dynamically-loaded kernel modules. A kernel module can provide:

- A device driver which adds support for new hardware.

-

Support for a file system such as

GFS2orNFS.

Like the kernel itself, modules can take parameters that customize their behavior. Though the default parameters work well in most cases. In relation to kernel modules, user-space tools can do the following operations:

- Listing modules currently loaded into a running kernel.

- Querying all available modules for available parameters and module-specific information.

- Loading or unloading (removing) modules dynamically into or from a running kernel.

Many of these utilities, which are provided by the kmod package, take module dependencies into account when performing operations. As a result, manual dependency-tracking is rarely necessary.

On modern systems, kernel modules are automatically loaded by various mechanisms when needed. However, there are occasions when it is necessary to load or unload modules manually. For example, when one module is preferred over another although either is able to provide basic functionality, or when a module performs unexpectedly.

1.2. Kernel module dependencies

Certain kernel modules sometimes depend on one or more other kernel modules. The /lib/modules/<KERNEL_VERSION>/modules.dep file contains a complete list of kernel module dependencies for the respective kernel version.

The dependency file is generated by the depmod program, which is a part of the kmod package. Many of the utilities provided by kmod take module dependencies into account when performing operations so that manual dependency-tracking is rarely necessary.

The code of kernel modules is executed in kernel-space in the unrestricted mode. Because of this, you should be mindful of what modules you are loading.

1.3. Listing currently-loaded modules

You can list all kernel modules that are currently loaded into the kernel by running the lsmod command, for example:

The lsmod output specifies three columns:

Module

- The name of a kernel module currently loaded in memory.

Size

- The amount of memory the kernel module uses in kilobytes.

Used by

- A decimal number representing how many dependencies there are on the Module field.

- A comma separated string of dependent Module names. Using this list, you can first unload all the modules depending on the module you want to unload.

Finally, note that lsmod output is less verbose and considerably easier to read than the content of the /proc/modules pseudo-file.

1.4. Displaying information about a module

You can display detailed information about a kernel module using the modinfo <MODULE_NAME> command.

When entering the name of a kernel module as an argument to one of the kmod utilities, do not append a .ko extension to the end of the name. Kernel module names do not have extensions; their corresponding files do.

Example 1.1. Listing information about a kernel module with lsmod

To display information about the e1000e module, which is the Intel PRO/1000 network driver, enter the following command as root:

1.5. Loading kernel modules at system runtime

The optimal way to expand the functionality of the Linux kernel is by loading kernel modules. The following procedure describes how to use the modprobe command to find and load a kernel module into the currently running kernel.

Prerequisites

- Root permissions.

-

The

kmodpackage is installed. - The respective kernel module is not loaded. To ensure this is the case, see Listing Currently Loaded Modules.

Procedure

Select a kernel module you want to load.

The modules are located in the

/lib/modules/$(uname -r)/kernel/<SUBSYSTEM>/directory.Load the relevant kernel module:

modprobe <MODULE_NAME>

# modprobe <MODULE_NAME>Copy to Clipboard Copied! Toggle word wrap Toggle overflow NoteWhen entering the name of a kernel module, do not append the

.ko.xzextension to the end of the name. Kernel module names do not have extensions; their corresponding files do.Optionally, verify the relevant module was loaded:

lsmod | grep <MODULE_NAME>

$ lsmod | grep <MODULE_NAME>Copy to Clipboard Copied! Toggle word wrap Toggle overflow If the module was loaded correctly, this command displays the relevant kernel module. For example:

lsmod | grep serio_raw serio_raw 16384 0

$ lsmod | grep serio_raw serio_raw 16384 0Copy to Clipboard Copied! Toggle word wrap Toggle overflow

The changes described in this procedure will not persist after rebooting the system. For information on how to load kernel modules to persist across system reboots, see Loading kernel modules automatically at system boot time.

Additional resources

-

For further details about

modprobe, see themodprobe(8)manual page.

1.6. Unloading kernel modules at system runtime

At times, you find that you need to unload certain kernel modules from the running kernel. The following procedure describes how to use the modprobe command to find and unload a kernel module at system runtime from the currently loaded kernel.

Prerequisites

- Root permissions.

-

The

kmodpackage is installed.

Procedure

Execute the

lsmodcommand and select a kernel module you want to unload.If a kernel module has dependencies, unload those prior to unloading the kernel module. For details on identifying modules with dependencies, see Listing Currently Loaded Modules and Kernel module dependencies.

Unload the relevant kernel module:

modprobe -r <MODULE_NAME>

# modprobe -r <MODULE_NAME>Copy to Clipboard Copied! Toggle word wrap Toggle overflow When entering the name of a kernel module, do not append the

.ko.xzextension to the end of the name. Kernel module names do not have extensions; their corresponding files do.WarningDo not unload kernel modules when they are used by the running system. Doing so can lead to an unstable or non-operational system.

Optionally, verify the relevant module was unloaded:

lsmod | grep <MODULE_NAME>

$ lsmod | grep <MODULE_NAME>Copy to Clipboard Copied! Toggle word wrap Toggle overflow If the module was unloaded successfully, this command does not display any output.

After finishing this procedure, the kernel modules that are defined to be automatically loaded on boot, will not stay unloaded after rebooting the system. For information on how to counter this outcome, see Preventing kernel modules from being automatically loaded at system boot time.

Additional resources

-

For further details about

modprobe, see themodprobe(8)manual page.

1.7. Loading kernel modules automatically at system boot time

The following procedure describes how to configure a kernel module so that it is loaded automatically during the boot process.

Prerequisites

- Root permissions.

-

The

kmodpackage is installed.

Procedure

Select a kernel module you want to load during the boot process.

The modules are located in the

/lib/modules/$(uname -r)/kernel/<SUBSYSTEM>/directory.Create a configuration file for the module:

echo <MODULE_NAME> > /etc/modules-load.d/<MODULE_NAME>.conf

# echo <MODULE_NAME> > /etc/modules-load.d/<MODULE_NAME>.confCopy to Clipboard Copied! Toggle word wrap Toggle overflow NoteWhen entering the name of a kernel module, do not append the

.ko.xzextension to the end of the name. Kernel module names do not have extensions; their corresponding files do.Optionally, after reboot, verify the relevant module was loaded:

lsmod | grep <MODULE_NAME>

$ lsmod | grep <MODULE_NAME>Copy to Clipboard Copied! Toggle word wrap Toggle overflow The example command above should succeed and display the relevant kernel module.

The changes described in this procedure will persist after rebooting the system.

Additional resources

-

For further details about loading kernel modules during the boot process, see the

modules-load.d(5)manual page.

1.8. Preventing kernel modules from being automatically loaded at system boot time

The following procedure describes how to add a kernel module to a denylist so that it will not be automatically loaded during the boot process.

Prerequisites

- Root permissions.

-

The

kmodpackage is installed. - Ensure that a kernel module in a denylist is not vital for your current system configuration.

Procedure

Select a kernel module that you want to put in a denylist:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow The

lsmodcommand displays a list of modules loaded to the currently running kernel.Alternatively, identify an unloaded kernel module you want to prevent from potentially loading.

All kernel modules are located in the

/lib/modules/<KERNEL_VERSION>/kernel/<SUBSYSTEM>/directory.

Create a configuration file for a denylist:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow The example shows the contents of the

blacklist.conffile, edited by thevimeditor. Theblacklistline ensures that the relevant kernel module will not be automatically loaded during the boot process. Theblacklistcommand, however, does not prevent the module from being loaded as a dependency for another kernel module that is not in a denylist. Therefore theinstallline causes the/bin/falseto run instead of installing a module.The lines starting with a hash sign are comments to make the file more readable.

NoteWhen entering the name of a kernel module, do not append the

.ko.xzextension to the end of the name. Kernel module names do not have extensions; their corresponding files do.Create a backup copy of the current initial ramdisk image before rebuilding:

cp /boot/initramfs-$(uname -r).img /boot/initramfs-$(uname -r).bak.$(date +%m-%d-%H%M%S).img

# cp /boot/initramfs-$(uname -r).img /boot/initramfs-$(uname -r).bak.$(date +%m-%d-%H%M%S).imgCopy to Clipboard Copied! Toggle word wrap Toggle overflow The command above creates a backup

initramfsimage in case the new version has an unexpected problem.Alternatively, create a backup copy of other initial ramdisk image which corresponds to the kernel version for which you want to put kernel modules in a denylist:

cp /boot/initramfs-<SOME_VERSION>.img /boot/initramfs-<SOME_VERSION>.img.bak.$(date +%m-%d-%H%M%S)

# cp /boot/initramfs-<SOME_VERSION>.img /boot/initramfs-<SOME_VERSION>.img.bak.$(date +%m-%d-%H%M%S)Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Generate a new initial ramdisk image to reflect the changes:

dracut -f -v

# dracut -f -vCopy to Clipboard Copied! Toggle word wrap Toggle overflow If you are building an initial ramdisk image for a different kernel version than you are currently booted into, specify both target

initramfsand kernel version:dracut -f -v /boot/initramfs-<TARGET_VERSION>.img <CORRESPONDING_TARGET_KERNEL_VERSION>

# dracut -f -v /boot/initramfs-<TARGET_VERSION>.img <CORRESPONDING_TARGET_KERNEL_VERSION>Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Reboot the system:

reboot

$ rebootCopy to Clipboard Copied! Toggle word wrap Toggle overflow

The changes described in this procedure will take effect and persist after rebooting the system. If you improperly put a key kernel module in a denylist, you can face an unstable or non-operational system.

Additional resources

-

For further details concerning the

dracututility, refer to thedracut(8)manual page. - For more information on preventing automatic loading of kernel modules at system boot time on Red Hat Enterprise Linux 8 and earlier versions, see How do I prevent a kernel module from loading automatically?

1.9. Signing kernel modules for secure boot

Red Hat Enterprise Linux 7 includes support for the UEFI Secure Boot feature, which means that Red Hat Enterprise Linux 7 can be installed and run on systems where UEFI Secure Boot is enabled. Note that Red Hat Enterprise Linux 7 does not require the use of Secure Boot on UEFI systems.

If Secure Boot is enabled, the UEFI operating system boot loaders, the Red Hat Enterprise Linux kernel, and all kernel modules must be signed with a private key and authenticated with the corresponding public key. If they are not signed and authenticated, the system will not be allowed to finish the booting process.

The Red Hat Enterprise Linux 7 distribution includes:

- Signed boot loaders

- Signed kernels

- Signed kernel modules

In addition, the signed first-stage boot loader and the signed kernel include embedded Red Hat public keys. These signed executable binaries and embedded keys enable Red Hat Enterprise Linux 7 to install, boot, and run with the Microsoft UEFI Secure Boot Certification Authority keys that are provided by the UEFI firmware on systems that support UEFI Secure Boot.

Not all UEFI-based systems include support for Secure Boot.

The information provided in the following sections describes the steps to self-sign privately built kernel modules for use with Red Hat Enterprise Linux 7 on UEFI-based build systems where Secure Boot is enabled. These sections also provide an overview of available options for importing your public key into a target system where you want to deploy your kernel modules.

To sign and load kernel modules, you need to:

1.9.1. Prerequisites

To be able to sign externally built kernel modules, install the utilities listed in the following table on the build system.

| Utility | Provided by package | Used on | Purpose |

|---|---|---|---|

|

|

| Build system | Generates public and private X.509 key pair |

|

|

| Build system | Perl script used to sign kernel modules |

|

|

| Build system | Perl interpreter used to run the signing script |

|

|

| Target system | Optional utility used to manually enroll the public key |

|

|

| Target system | Optional utility used to display public keys in the system key ring |

The build system, where you build and sign your kernel module, does not need to have UEFI Secure Boot enabled and does not even need to be a UEFI-based system.

1.9.2. Kernel module authentication

In Red Hat Enterprise Linux 7, when a kernel module is loaded, the module’s signature is checked using the public X.509 keys on the kernel’s system key ring, excluding keys on the kernel’s system black-list key ring. The following sections provide an overview of sources of keys/keyrings, examples of loaded keys from different sources in the system. Also, the user can see what it takes to authenticate a kernel module.

1.9.2.1. Sources for public keys used to authenticate kernel modules

During boot, the kernel loads X.509 keys into the system key ring or the system black-list key ring from a set of persistent key stores as shown in the table below.

| Source of X.509 keys | User ability to add keys | UEFI Secure Boot state | Keys loaded during boot |

|---|---|---|---|

| Embedded in kernel | No | - |

|

| UEFI Secure Boot "db" | Limited | Not enabled | No |

| Enabled |

| ||

| UEFI Secure Boot "dbx" | Limited | Not enabled | No |

| Enabled |

| ||

|

Embedded in | No | Not enabled | No |

| Enabled |

| ||

| Machine Owner Key (MOK) list | Yes | Not enabled | No |

| Enabled |

|

If the system is not UEFI-based or if UEFI Secure Boot is not enabled, then only the keys that are embedded in the kernel are loaded onto the system key ring. In that case you have no ability to augment that set of keys without rebuilding the kernel.

The system black list key ring is a list of X.509 keys which have been revoked. If your module is signed by a key on the black list then it will fail authentication even if your public key is in the system key ring.

You can display information about the keys on the system key rings using the keyctl utility. The following is a shortened example output from a Red Hat Enterprise Linux 7 system where UEFI Secure Boot is not enabled.

keyctl list %:.system_keyring 3 keys in keyring: ...asymmetric: Red Hat Enterprise Linux Driver Update Program (key 3): bf57f3e87... ...asymmetric: Red Hat Enterprise Linux kernel signing key: 4249689eefc77e95880b... ...asymmetric: Red Hat Enterprise Linux kpatch signing key: 4d38fd864ebe18c5f0b7...

# keyctl list %:.system_keyring

3 keys in keyring:

...asymmetric: Red Hat Enterprise Linux Driver Update Program (key 3): bf57f3e87...

...asymmetric: Red Hat Enterprise Linux kernel signing key: 4249689eefc77e95880b...

...asymmetric: Red Hat Enterprise Linux kpatch signing key: 4d38fd864ebe18c5f0b7...The following is a shortened example output from a Red Hat Enterprise Linux 7 system where UEFI Secure Boot is enabled.

The above output shows the addition of two keys from the UEFI Secure Boot "db" keys as well as the Red Hat Secure Boot (CA key 1), which is embedded in the shim.efi boot loader. You can also look for the kernel console messages that identify the keys with an UEFI Secure Boot related source. These include UEFI Secure Boot db, embedded shim, and MOK list.

dmesg | grep 'EFI: Loaded cert' [5.160660] EFI: Loaded cert 'Microsoft Windows Production PCA 2011: a9290239... [5.160674] EFI: Loaded cert 'Microsoft Corporation UEFI CA 2011: 13adbf4309b... [5.165794] EFI: Loaded cert 'Red Hat Secure Boot (CA key 1): 4016841644ce3a8...

# dmesg | grep 'EFI: Loaded cert'

[5.160660] EFI: Loaded cert 'Microsoft Windows Production PCA 2011: a9290239...

[5.160674] EFI: Loaded cert 'Microsoft Corporation UEFI CA 2011: 13adbf4309b...

[5.165794] EFI: Loaded cert 'Red Hat Secure Boot (CA key 1): 4016841644ce3a8...1.9.2.2. Kernel module authentication requirements

This section explains what conditions have to be met for loading kernel modules on systems with enabled UEFI Secure Boot functionality.

If UEFI Secure Boot is enabled or if the module.sig_enforce kernel parameter has been specified, you can only load signed kernel modules that are authenticated using a key on the system key ring. In addition, the public key must not be on the system black list key ring.

If UEFI Secure Boot is disabled and if the module.sig_enforce kernel parameter has not been specified, you can load unsigned kernel modules and signed kernel modules without a public key. This is summarized in the table below.

| Module signed | Public key found and signature valid | UEFI Secure Boot state | sig_enforce | Module load | Kernel tainted |

|---|---|---|---|---|---|

| Unsigned | - | Not enabled | Not enabled | Succeeds | Yes |

| Not enabled | Enabled | Fails | - | ||

| Enabled | - | Fails | - | ||

| Signed | No | Not enabled | Not enabled | Succeeds | Yes |

| Not enabled | Enabled | Fails | - | ||

| Enabled | - | Fails | - | ||

| Signed | Yes | Not enabled | Not enabled | Succeeds | No |

| Not enabled | Enabled | Succeeds | No | ||

| Enabled | - | Succeeds | No |

1.9.3. Generating a public and private X.509 key pair

You need to generate a public and private X.509 key pair to succeed in your efforts of using kernel modules on a Secure Boot-enabled system. You will later use the private key to sign the kernel module. You will also have to add the corresponding public key to the Machine Owner Key (MOK) for Secure Boot to validate the signed module. For instructions to do so, see Section 1.9.4.2, “System administrator manually adding public key to the MOK list”.

Some of the parameters for this key pair generation are best specified with a configuration file.

Create a configuration file with parameters for the key pair generation:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Create an X.509 public and private key pair as shown in the following example:

openssl req -x509 -new -nodes -utf8 -sha256 -days 36500 \ -batch -config configuration_file.config -outform DER \ -out my_signing_key_pub.der \ -keyout my_signing_key.priv

# openssl req -x509 -new -nodes -utf8 -sha256 -days 36500 \ -batch -config configuration_file.config -outform DER \ -out my_signing_key_pub.der \ -keyout my_signing_key.privCopy to Clipboard Copied! Toggle word wrap Toggle overflow The public key will be written to the

my_signing_key_pub.derfile and the private key will be written to themy_signing_key.privfile.Enroll your public key on all systems where you want to authenticate and load your kernel module.

For details, see Section 1.9.4, “Enrolling public key on target system”.

Apply strong security measures and access policies to guard the contents of your private key. In the wrong hands, the key could be used to compromise any system which is authenticated by the corresponding public key.

1.9.4. Enrolling public key on target system

When Red Hat Enterprise Linux 7 boots on a UEFI-based system with Secure Boot enabled, the kernel loads onto the system key ring all public keys that are in the Secure Boot db key database, but not in the dbx database of revoked keys. The sections below describe different ways of importing a public key on a target system so that the system key ring is able to use the public key to authenticate a kernel module.

1.9.4.1. Factory firmware image including public key

To facilitate authentication of your kernel module on your systems, consider requesting your system vendor to incorporate your public key into the UEFI Secure Boot key database in their factory firmware image.

1.9.4.2. System administrator manually adding public key to the MOK list

The Machine Owner Key (MOK) facility feature can be used to expand the UEFI Secure Boot key database. When Red Hat Enterprise Linux 7 boots on a UEFI-enabled system with Secure Boot enabled, the keys on the MOK list are also added to the system key ring in addition to the keys from the key database. The MOK list keys are also stored persistently and securely in the same fashion as the Secure Boot database keys, but these are two separate facilities. The MOK facility is supported by shim.efi, MokManager.efi, grubx64.efi, and the Red Hat Enterprise Linux 7 mokutil utility.

Enrolling a MOK key requires manual interaction by a user at the UEFI system console on each target system. Nevertheless, the MOK facility provides a convenient method for testing newly generated key pairs and testing kernel modules signed with them.

To add your public key to the MOK list:

Request the addition of your public key to the MOK list:

mokutil --import my_signing_key_pub.der

# mokutil --import my_signing_key_pub.derCopy to Clipboard Copied! Toggle word wrap Toggle overflow You will be asked to enter and confirm a password for this MOK enrollment request.

Reboot the machine.

The pending MOK key enrollment request will be noticed by

shim.efiand it will launchMokManager.efito allow you to complete the enrollment from the UEFI console.Enter the password you previously associated with this request and confirm the enrollment.

Your public key is added to the MOK list, which is persistent.

Once a key is on the MOK list, it will be automatically propagated to the system key ring on this and subsequent boots when UEFI Secure Boot is enabled.

1.9.5. Signing kernel module with the private key

Assuming you have your kernel module ready:

Use a Perl script to sign your kernel module with your private key:

perl /usr/src/kernels/$(uname -r)/scripts/sign-file \ sha256 \ my_signing_key.priv\ my_signing_key_pub.der\ my_module.ko

# perl /usr/src/kernels/$(uname -r)/scripts/sign-file \ sha256 \ my_signing_key.priv\ my_signing_key_pub.der\ my_module.koCopy to Clipboard Copied! Toggle word wrap Toggle overflow NoteThe Perl script requires that you provide both the files that contain your private and the public key as well as the kernel module file that you want to sign.

Your kernel module is in ELF image format and the Perl script computes and appends the signature directly to the ELF image in your kernel module file. The

modinfoutility can be used to display information about the kernel module’s signature, if it is present. For information on usingmodinfo, see Section 1.4, “Displaying information about a module”.The appended signature is not contained in an ELF image section and is not a formal part of the ELF image. Therefore, utilities such as

readelfwill not be able to display the signature on your kernel module.Your kernel module is now ready for loading. Note that your signed kernel module is also loadable on systems where UEFI Secure Boot is disabled or on a non-UEFI system. That means you do not need to provide both a signed and unsigned version of your kernel module.

1.9.6. Loading signed kernel module

Once your public key is enrolled and is in the system key ring, use mokutil to add your public key to the MOK list. Then manually load your kernel module with the modprobe command.

Optionally, verify that your kernel module will not load before you have enrolled your public key.

For details on how to list currently loaded kernel modules, see Section 1.3, “Listing currently-loaded modules”.

Verify what keys have been added to the system key ring on the current boot:

keyctl list %:.system_keyring

# keyctl list %:.system_keyringCopy to Clipboard Copied! Toggle word wrap Toggle overflow Since your public key has not been enrolled yet, it should not be displayed in the output of the command.

Request enrollment of your public key:

mokutil --import my_signing_key_pub.der

# mokutil --import my_signing_key_pub.derCopy to Clipboard Copied! Toggle word wrap Toggle overflow Reboot, and complete the enrollment at the UEFI console:

reboot

# rebootCopy to Clipboard Copied! Toggle word wrap Toggle overflow Verify the keys on the system key ring again:

keyctl list %:.system_keyring

# keyctl list %:.system_keyringCopy to Clipboard Copied! Toggle word wrap Toggle overflow Copy the module into the

/extra/directory of the kernel you want:cp my_module.ko /lib/modules/$(uname -r)/extra/

# cp my_module.ko /lib/modules/$(uname -r)/extra/Copy to Clipboard Copied! Toggle word wrap Toggle overflow Update the modular dependency list:

depmod -a

# depmod -aCopy to Clipboard Copied! Toggle word wrap Toggle overflow Load the kernel module and verify that it was successfully loaded:

modprobe -v my_module lsmod | grep my_module

# modprobe -v my_module # lsmod | grep my_moduleCopy to Clipboard Copied! Toggle word wrap Toggle overflow Optionally, to load the module on boot, add it to the

/etc/modules-loaded.d/my_module.conffile:echo "my_module" > /etc/modules-load.d/my_module.conf

# echo "my_module" > /etc/modules-load.d/my_module.confCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Chapter 2. Working with sysctl and kernel tunables

2.1. What is a kernel tunable?

Kernel tunables are used to customize the behavior of Red Hat Enterprise Linux at boot, or on demand while the system is running. Some hardware parameters are specified at boot time only and cannot be altered once the system is running, most however, can be altered as required and set permanent for the next boot.

2.2. How to work with kernel tunables

There are three ways to modify kernel tunables.

-

Using the

sysctlcommand -

By manually modifying configuration files in the

/etc/sysctl.d/directory -

Through a shell, interacting with the virtual file system mounted at

/proc/sys

Not all boot time parameters are under control of the sysfs subsystem, some hardware specific option must be set on the kernel command line, the Kernel Parameters section of this guide addresses those options

2.2.1. Using the sysctl command

The sysctl command is used to list, read, and set kernel tunables. It can filter tunables when listing or reading and set tunables temporarily or permanently.

Listing variables

sysctl -a

# sysctl -aCopy to Clipboard Copied! Toggle word wrap Toggle overflow Reading variables

sysctl kernel.version kernel.version = #1 SMP Fri Jan 19 13:19:54 UTC 2018

# sysctl kernel.version kernel.version = #1 SMP Fri Jan 19 13:19:54 UTC 2018Copy to Clipboard Copied! Toggle word wrap Toggle overflow Writing variables temporarily

sysctl <tunable class>.<tunable>=<value>

# sysctl <tunable class>.<tunable>=<value>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Writing variables permanently

sysctl -w <tunable class>.<tunable>=<value> >> /etc/sysctl.conf

# sysctl -w <tunable class>.<tunable>=<value> >> /etc/sysctl.confCopy to Clipboard Copied! Toggle word wrap Toggle overflow

2.2.2. Modifying files in /etc/sysctl.d

To override a default at boot, you can also manually populate files in /etc/sysctl.d.

Create a new file in

/etc/sysctl.dvim /etc/sysctl.d/99-custom.conf

# vim /etc/sysctl.d/99-custom.confCopy to Clipboard Copied! Toggle word wrap Toggle overflow Include the variables you wish to set, one per line, in the following form

<tunable class>.<tunable> = <value> + <tunable class>.<tunable> = <value>

<tunable class>.<tunable> = <value> + <tunable class>.<tunable> = <value>Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Save the file

-

Either reboot the machine to make the changes take effect

or

Executesysctl -p /etc/sysctl.d/99-custom.confto apply the changes without rebooting

2.3. What tunables can be controlled?

Tunables are divided into groups by kernel sybsystem. A Red Hat Enterprise Linux system has the following classes of tunables:

| Class | Subsystem |

|---|---|

| abi | Execution domains and personalities |

| crypto | Cryptographic interfaces |

| debug | Kernel debugging interfaces |

| dev | Device specific information |

| fs | Global and specific filesystem tunables |

| kernel | Global kernel tunables |

| net | Network tunables |

| sunrpc | Sun Remote Procedure Call (NFS) |

| user | User Namespace limits |

| vm | Tuning and management of memory, buffer, and cache |

2.3.1. Network interface tunables

System administrators are able to adjust the network configuration on a running system through the networking tunables.

Networking tunables are included in the /proc/sys/net directory, which contains multiple subdirectories for various networking topics. To adjust the network configuration, system administrators need to modify the files within such subdirectories.

The most frequently used directories are:

-

/proc/sys/net/core/ -

/proc/sys/net/ipv4/

The /proc/sys/net/core/ directory contains a variety of settings that control the interaction between the kernel and networking layers. By adjusting some of those tunables, you can improve performance of a system, for example by increasing the size of a receive queue, increasing the maximum connections or the memory dedicated to network interfaces. Note that the performance of a system depends on different aspects according to the individual issues.

The /proc/sys/net/ipv4/ directory contains additional networking settings, which are useful when preventing attacks on the system or when using the system to act as a router. The directory contains both IP and TCP variables. For detailed explaination of those variables, see /usr/share/doc/kernel-doc-<version>/Documentation/networking/ip-sysctl.txt.

Other directories within the /proc/sys/net/ipv4/ directory cover different aspects of the network stack:

-

/proc/sys/net/ipv4/conf/- allows you to configure each system interface in different ways, including the use of default settings for unconfigured devices and settings that override all special configurations -

/proc/sys/net/ipv4/neigh/- contains settings for communicating with a host directly connected to the system and also contains different settings for systems more than one step away -

/proc/sys/net/ipv4/route/- contains specifications that apply to routing with any interfaces on the system

This list of network tunables is relevant to IPv4 interfaces and are accessible from the /proc/sys/net/ipv4/{all,<interface_name>}/ directory.

Description of the following parameters have been adopted from the kernel documentation sites.[1]

- log_martians

Log packets with impossible addresses to kernel log.

Expand Type Default Boolean

0

Enabled if one or more of

conf/{all,interface}/log_martiansis set to TRUEFurther Resources

- accept_redirects

Accept ICMP redirect messages.

Expand Type Default Boolean

1

accept_redirects for the interface is enabled under the following conditions:

-

Both

conf/{all,interface}/accept_redirectsare TRUE (when forwarding for the interface is enabled) -

At least one of

conf/{all,interface}/accept_redirectsis TRUE (forwarding for the interface is disabled)

For more information refer to How to enable or disable ICMP redirects

-

Both

- forwarding

Enable IP forwarding on an interface.

Expand Type Default Boolean

0

Further Resources

- mc_forwarding

Do multicast routing.

Expand Type Default Boolean

0

- Read only value

- A multicast routing daemon is required.

-

conf/all/mc_forwardingmust also be set to TRUE to enable multicast routing for the interface

Further Resources

- For an explanation of the read only behavior, see Why system reports "permission denied on key" while setting the kernel parameter "net.ipv4.conf.all.mc_forwarding"?

- medium_id

Arbitrary value used to differentiate the devices by the medium they are attached to.

Expand Type Default Integer

0

Notes

- Two devices on the same medium can have different id values when the broadcast packets are received only on one of them.

- The default value 0 means that the device is the only interface to its medium

- value of -1 means that medium is not known.

- Currently, it is used to change the proxy_arp behavior:

- the proxy_arp feature is enabled for packets forwarded between two devices attached to different media.

Further Resources - For examples, see Using the "medium_id" feature in Linux 2.2 and 2.4

- proxy_arp

Do proxy arp.

Expand Type Default Boolean

0

proxy_arp for the interface is enabled if at least one of

conf/{all,interface}/proxy_arpis set to TRUE, otherwise it is disabled

- proxy_arp_pvlan

Private VLAN proxy arp.

Expand Type Default Boolean

0

Allow proxy arp replies back to the same interface, to support features like RFC 3069

- secure_redirects

Accept ICMP redirect messages only to gateways listed in the interface’s current gateway list.

Expand Type Default Boolean

1

Notes

- Even if disabled, RFC1122 redirect rules still apply.

- Overridden by shared_media.

-

secure_redirects for the interface is enabled if at least one of

conf/{all,interface}/secure_redirectsis set to TRUE

- send_redirects

Send redirects, if router.

Expand Type Default Boolean

1

Notes

send_redirects for the interface is enabled if at least one ofconf/{all,interface}/send_redirectsis set to TRUE

- bootp_relay

Accept packets with source address 0.b.c.d destined not to this host as local ones.

Expand Type Default Boolean

0

Notes

- A BOOTP daemon must be enabled to manage these packets

-

conf/all/bootp_relaymust also be set to TRUE to enable BOOTP relay for the interface - Not implemented, see DHCP Relay Agent in the Red Hat Enterprise Linux Networking Guide

- accept_source_route

Accept packets with SRR option.

Expand Type Default Boolean

1

Notes

-

conf/all/accept_source_routemust also be set to TRUE to accept packets with SRR option on the interface

-

- accept_local

Accept packets with local source addresses.

Expand Type Default Boolean

0

Notes

- In combination with suitable routing, this can be used to direct packets between two local interfaces over the wire and have them accepted properly.

-

rp_filtermust be set to a non-zero value in order for accept_local to have an effect.

- route_localnet

Do not consider loopback addresses as martian source or destination while routing.

Expand Type Default Boolean

0

Notes

- This enables the use of 127/8 for local routing purposes.

- rp_filter

Enable source Validation

Expand Type Default Integer

0

Expand Value Effect 0No source validation.

1Strict mode as defined in RFC3704 Strict Reverse Path

2Loose mode as defined in RFC3704 Loose Reverse Path

Notes

- Current recommended practice in RFC3704 is to enable strict mode to prevent IP spoofing from DDos attacks.

- If using asymmetric routing or other complicated routing, then loose mode is recommended.

-

The highest value from

conf/{all,interface}/rp_filteris used when doing source validation on the {interface}

- arp_filter

Expand Type Default Boolean

0

Expand Value Effect 0(default) The kernel can respond to arp requests with addresses from other interfaces. It usually makes sense, because it increases the chance of successful communication.

1Allows you to have multiple network interfaces on the samesubnet, and have the ARPs for each interface be answered based on whether or not the kernel would route a packet from the ARP’d IP out that interface (therefore you must use source based routing for this to work). In other words it allows control of cards (usually 1) that respond to an arp request.

Note

- IP addresses are owned by the complete host on Linux, not by particular interfaces. Only for more complex setups like load-balancing, does this behavior cause problems.

-

arp_filterfor the interface is enabled if at least one ofconf/{all,interface}/arp_filteris set to TRUE

- arp_announce

Define different restriction levels for announcing the local source IP address from IP packets in ARP requests sent on interface

Expand Type Default Integer

0

Expand Value Effect 0(default) Use any local address, configured on any interface

1Try to avoid local addresses that are not in the target’s subnet for this interface. This mode is useful when target hosts reachable via this interface require the source IP address in ARP requests to be part of their logical network configured on the receiving interface. When we generate the request we check all our subnets that include the target IP and preserve the source address if it is from such subnet. If there is no such subnet we select source address according to the rules for level 2.

2Always use the best local address for this target. In this mode we ignore the source address in the IP packet and try to select local address that we prefer for talks with the target host. Such local address is selected by looking for primary IP addresses on all our subnets on the outgoing interface that include the target IP address. If no suitable local address is found we select the first local address we have on the outgoing interface or on all other interfaces, with the hope we receive reply for our request and even sometimes no matter the source IP address we announce.

Notes

-

The highest value from

conf/{all,interface}/arp_announceis used. - Increasing the restriction level gives more chance for receiving answer from the resolved target while decreasing the level announces more valid sender’s information.

-

The highest value from

- arp_ignore

Define different modes for sending replies in response to received ARP requests that resolve local target IP addresses

Expand Type Default Integer

0

Expand Value Effect 0(default): reply for any local target IP address, configured on any interface

1reply only if the target IP address is local address configured on the incoming interface

2reply only if the target IP address is local address configured on the incoming interface and both with the sender’s IP address are part from same subnet on this interface

3do not reply for local addresses configured with scope host, only resolutions for global and link addresses are replied

4-7reserved

8do not reply for all local addresses The max value from conf/{all,interface}/arp_ignore is used when ARP request is received on the {interface}

Notes

- arp_notify

Define mode for notification of address and device changes.

Expand Type Default Boolean

0

Expand Value Effect 0

do nothing

1

Generate gratuitous arp requests when device is brought up or hardware address changes.

Notes

- arp_accept

Define behavior for gratuitous ARP frames who’s IP is not already present in the ARP table

Expand Type Default Boolean

0

Expand Value Effect 0

do not create new entries in the ARP table

1

create new entries in the ARP table.

Notes

Both replies and requests type gratuitous arp trigger the ARP table to be updated, if this setting is on. If the ARP table already contains the IP address of the gratuitous arp frame, the arp table is updated regardless if this setting is on or off.

- app_solicit

The maximum number of probes to send to the user space ARP daemon via netlink before dropping back to multicast probes (see mcast_solicit).

Expand Type Default Integer

0

Notes

See mcast_solicit

- disable_policy

Disable IPSEC policy (SPD) for this interface

Expand Type Default Boolean

0

needinfo

- disable_xfrm

Disable IPSEC encryption on this interface, whatever the policy

Expand Type Default Boolean

0

needinfo

- igmpv2_unsolicited_report_interval

The interval in milliseconds in which the next unsolicited IGMPv1 or IGMPv2 report retransmit takes place.

Expand Type Default Integer

10000

Notes

Milliseconds

- igmpv3_unsolicited_report_interval

The interval in milliseconds in which the next unsolicited IGMPv3 report retransmit takes place.

Expand Type Default Integer

1000

Notes

Milliseconds

- tag

Allows you to write a number, which can be used as required.

Expand Type Default Integer

0

- xfrm4_gc_thresh

The threshold at which we start garbage collecting for IPv4 destination cache entries.

Expand Type Default Integer

1

Notes

At twice this value the system refuses new allocations.

2.3.2. Global kernel tunables

System administrators are able to configure and monitor general settings on a running system through the global kernel tunables.

Global kernel tunables are included in the /proc/sys/kernel/ directory either directly as named control files or grouped in further subdirectories for various configuration topics. To adjust the global kernel tunables, system administrators need to modify the control files.

Descriptions of the following parameters have been adopted from the kernel documentation sites.[2]

- dmesg_restrict

Indicates whether unprivileged users are prevented from using the

dmesgcommand to view messages from the kernel’s log buffer.For further information, see Kernel sysctl documentation.

- core_pattern

Specifies a core dumpfile pattern name.

Expand Max length Default 128 characters

"core"

For further information, see Kernel sysctl documentation.

- hardlockup_panic

Controls the kernel panic when a hard lockup is detected.

Expand Type Value Effect Integer

0

kernel does not panic on hard lockup

Integer

1

kernel panics on hard lockup

In order to panic, the system needs to detect a hard lockup first. The detection is controlled by the nmi_watchdog parameter.

Further Resources

- softlockup_panic

Controls the kernel panic when a soft lockup is detected.

Expand Type Value Effect Integer

0

kernel does not panic on soft lockup

Integer

1

kernel panics on soft lockup

By default, on RHEL7 this value is 0.

For more information about

softlockup_panic, see kernel_parameters.

- kptr_restrict

Indicates whether restrictions are placed on exposing kernel addresses via

/procand other interfaces.Expand Type Default Integer

0

Expand Value Effect 0

hashes the kernel address before printing

1

replaces printed kernel pointers with 0’s under certain conditions

2

replaces printed kernel pointers with 0’s unconditionally

To learn more, see Kernel sysctl documentation.

- nmi_watchdog

Controls the hard lockup detector on x86 systems.

Expand Type Default Integer

0

Expand Value Effect 0

disables the lockup detector

1

enables the lockup detector

The hard lockup detector monitors each CPU for its ability to respond to interrupts.

For more details, see Kernel sysctl documentation.

- watchdog_thresh

Controls frequency of watchdog

hrtimer, NMI events, and soft/hard lockup thresholds.Expand Default threshold Soft lockup threshold 10 seconds

2 *

watchdog_threshSetting this tunable to zero disables lockup detection altogether.

For more info, consult Kernel sysctl documentation.

- panic, panic_on_oops, panic_on_stackoverflow, panic_on_unrecovered_nmi, panic_on_warn, panic_on_rcu_stall, hung_task_panic

These tunables specify under what circumstances the kernel should panic.

To see more details about a group of

panicparameters, see Kernel sysctl documentation.

- printk, printk_delay, printk_ratelimit, printk_ratelimit_burst, printk_devkmsg

These tunables control logging or printing of kernel error messages.

For more details about a group of

printkparameters, see Kernel sysctl documentation.

- shmall, shmmax, shm_rmid_forced

These tunables control limits for shared memory.

For more information about a group of

shmparameters, see Kernel sysctl documentation.

- threads-max

Controls the maximum number of threads created by the

fork()system call.Expand Min value Max value 20

Given by FUTEX_TID_MASK (0x3fffffff)

The

threads-maxvalue is checked against the available RAM pages. If the thread structures occupy too much of the available RAM pages,threads-maxis reduced accordingly.For more details, see Kernel sysctl documentation.

- pid_max

PID allocation wrap value.

To see more information, refer to Kernel sysctl documentation.

- numa_balancing

This parameter enables or disables automatic NUMA memory balancing. On NUMA machines, there is a performance penalty if remote memory is accessed by a CPU.

For more details, see Kernel sysctl documentation.

- numa_balancing_scan_period_min_ms, numa_balancing_scan_delay_ms, numa_balancing_scan_period_max_ms, numa_balancing_scan_size_mb

These tunables detect if pages are properly placed of if the data should be migrated to a memory node local to where the task is running.

For more details about a group of

numa_balancing_scanparameters, see Kernel sysctl documentation.

Chapter 3. Listing of kernel parameters and values

3.1. Kernel command-line parameters

Kernel command-line parameters, also known as kernel arguments, are used to customize the behavior of Red Hat Enterprise Linux at boot time only.

3.1.1. Setting kernel command-line parameters

This section explains how to change a kernel command-line parameter on AMD64 and Intel 64 systems and IBM Power Systems servers using the GRUB2 boot loader, and on IBM Z using zipl.

Kernel command-line parameters are saved in the boot/grub/grub.cfg configuration file, which is generated by the GRUB2 boot loader. Do not edit this configuration file. Changes to this file are only made by configuration scripts.

Changing kernel command-line parameters in GRUB2 for AMD64 and Intel 64 systems and IBM Power Systems Hardware.

-

Open the

/etc/default/grubconfiguration file asrootusing a plain text editor such as vim or Gedit. In this file, locate the line beginning with

GRUB_CMDLINE_LINUXsimilar to the following:GRUB_CMDLINE_LINUX="rd.lvm.lv=rhel/swap crashkernel=auto rd.lvm.lv=rhel/root rhgb quiet"

GRUB_CMDLINE_LINUX="rd.lvm.lv=rhel/swap crashkernel=auto rd.lvm.lv=rhel/root rhgb quiet"Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Change the value of the required kernel command-line parameter. Then, save the file and exit the editor.

Regenerate the GRUB2 configuration using the edited

defaultfile. If your system uses BIOS firmware, execute the following command:grub2-mkconfig -o /boot/grub2/grub.cfg

# grub2-mkconfig -o /boot/grub2/grub.cfgCopy to Clipboard Copied! Toggle word wrap Toggle overflow On a system with UEFI firmware, execute the following instead:

grub2-mkconfig -o /boot/efi/EFI/redhat/grub.cfg

# grub2-mkconfig -o /boot/efi/EFI/redhat/grub.cfgCopy to Clipboard Copied! Toggle word wrap Toggle overflow

After finishing the procedure above, the boot loader is reconfigured, and the kernel command-line parameter that you have specified in its configuration file is applied after the next reboot.

Changing kernel command-line parameters in zipl for IBM Z Hardware

-

Open the

/etc/zipl.confconfiguration file asrootusing a plain text editor such as vim or Gedit. -

In this file, locate the

parameters=section, and edit the requiremed parameter, or add it if not present. Then, save the file and exit the editor. Regenerate the zipl configuration:

zipl

# ziplCopy to Clipboard Copied! Toggle word wrap Toggle overflow NoteExecuting only the

ziplcommand with no additional options uses default values. See thezipl(8)man page for information about available options.

After finishing the procedure above, the boot loader is reconfigured, and the kernel command-line parameter that you have specified in its configuration file is applied after the next reboot.

3.1.2. What kernel command-line parameters can be controlled

For complete list of kernel command-line parameters, see https://www.kernel.org/doc/Documentation/admin-guide/kernel-parameters.txt.

3.1.2.1. Hardware specific kernel command-line parameters

- pci=option[,option…]

Specify behavior of the PCI hardware subsystem

Expand Setting Effect earlydump

[X86] Dump the PCI configuration space before the kernel changes anything

off

[X86] Do not probe for the PCI bus

noaer

[PCIE] If the PCIEAER kernel parameter is enabled, this kernel boot option can be used to disable the use of PCIE advanced error reporting.

noacpi

[X86] Do not use the Advanced Configuration and Power Interface (ACPI) for Interrupt Request (IRQ) routing or for PCI scanning.

bfsort

Sort PCI devices into breadth-first order. This sorting is done to get a device order compatible with older (⇐ 2.4) kernels.

nobfsort

Do not sort PCI devices into breadth-first order.

Additional PCI options are documented in the on disk documentation found in the

kernel-doc-<version>.noarchpackage. Where '<version>' needs to be replaced with the corresponding kernel version.- acpi=option

Specify behavior of the Advanced Configuration and Power Interface

Expand Setting Effect acpi=off

Disable ACPI

acpi=ht

Use ACPI boot table parsing, but do not enable ACPI interpreter

This disables any ACPI functionality that is not required for Hyper Threading.acpi=force

Require the ACPI subsystem to be enabled

acpi=strict

Make the ACPI layer be less tolerant of platforms that are not fully compliant with the ACPI specification.

acpi_sci=<value>

Set up ACPI SCI interrupt, where <value> is one of edge,level,high,low.

acpi=noirq

Do not use ACPI for IRQ routing

acpi=nocmcff

Disable firmware first (FF) mode for corrected errors. This disables parsing the HEST CMC error source to check if firmware has set the FF flag. This can result in duplicate corrected error reports.

Chapter 4. Kernel features

This chapter explains the purpose and use of kernel features that enable many user space tools and includes resources for further investigation of those tools.

4.1. Control groups

4.1.1. What is a control group?

Control Group Namespaces are a Technology Preview in Red Hat Enterprise Linux 7.5

Linux Control Groups (cgroups) enable limits on the use of system hardware, ensuring that an individual process running inside a cgroup only utilizes as much as has been allowed in the cgroups configuration.

Control Groups restrict the volume of usage on a resource that has been enabled by a namespace. For example, the network namespace allows a process to access a particular network card, the cgroup ensures that the process does not exceed 50% usage of that card, ensuring bandwidth is available for other processes.

Control Group Namespaces provide a virtualized view of individual cgroups through the /proc/self/ns/cgroup interface.

The purpose is to prevent leakage of privileged data from the global namespaces to the cgroup and to enable other features, such as container migration.

Because it is now much easier to associate a container with a single cgroup, containers have a much more coherent cgroup view, it also enables tasks inside the container to have a virtualized view of the cgroup it belongs to.

4.1.2. What is a namespace?

Namespaces are a kernel feature that allow a virtual view of isolated system resources. By isolating a process from system resources, you can specify and control what a process is able to interact with. Namespaces are an essential part of Control Groups.

4.1.3. Supported namespaces

The following namespaces are supported from Red Hat Enterprise Linux 7.5 and later

Mount

- The mount namespace isolates file system mount points, enabling each process to have a distinct filesystem space within wich to operate.

UTS

- Hostname and NIS domain name

IPC

- System V IPC, POSIX message queues

PID

- Process IDs

Network

- Network devices, stacks, ports, etc.

User

- User and group IDs

Control Groups

- Isolates cgroups

Usage of Control Groups is documented in the Resource Management Guide

4.2. Kernel source checker

The Linux Kernel Module Source Checker (ksc) is a tool to check for non whitelist symbols in a given kernel module. Red Hat Partners can also use the tool to request review of a symbol for whitelist inclusion, by filing a bug in Red Hat bugzilla database.

4.2.1. Usage

The tool accepts the path to a module with the "-k" option

ksc -k e1000e.ko Checking against architecture x86_64 Total symbol usage: 165 Total Non white list symbol usage: 74 ksc -k /path/to/module

# ksc -k e1000e.ko

Checking against architecture x86_64

Total symbol usage: 165 Total Non white list symbol usage: 74

# ksc -k /path/to/module

Output is saved in $HOME/ksc-result.txt. If review of the symbols for whitelist addition is requested, then the usage description for each non-whitelisted symbol must be added to the ksc-result.txt file. The request bug can then be filed by running ksc with the "-p" option.

KSC currently does not support xz compression The ksc tool is unable to process the xz compression method and reports the following error:

Invalid architecture, (Only kernel object files are supported)

Invalid architecture, (Only kernel object files are supported)Until this limitation is resolved, system administrators need to manually uncompress any third party modules using xz compression, before running the ksc tool.

4.3. Direct access for files (DAX)

Direct Access for files, known as 'file system dax', or 'fs dax', enables applications to read and write data on a dax-capable storage device without using the page cache to buffer access to the device.

This functionality is available when using the 'ext4' or 'xfs' file system, and is enabled either by mounting the file system with -o dax or by adding dax to the options section for the mount entry in /etc/fstab.

Further information, including code examples can be found in the kernel-doc package and is stored at /usr/share/doc/kernel-doc-<version>/Documentation/filesystems/dax.txt where '<version>' is the corresponding kernel version number.

4.4. Memory protection keys for userspace (also known as PKU, or PKEYS)

Memory Protection Keys provide a mechanism for enforcing page-based protections, but without requiring modification of the page tables when an application changes protection domains. It works by dedicating 4 previously ignored bits in each page table entry to a "protection key", giving 16 possible keys.

Memory Protection Keys are hardware feature of some Intel CPU chipsets. To determine if your processor supports this feature, check for the presence of pku in /proc/cpuinfo

grep pku /proc/cpuinfo

$ grep pku /proc/cpuinfoTo support this feature, the CPUs provide a new user-accessible register (PKRU) with two separate bits (Access Disable and Write Disable) for each key. Two new instructions (RDPKRU and WRPKRU) exist for reading and writing to the new register.

Further documentation, including programming examples can be found in /usr/share/doc/kernel-doc-*/Documentation/x86/protection-keys.txt which is provided by the kernel-doc package.

4.5. Kernel adress space layout randomization

Kernel Adress Space Layout Randomization (KASLR) consists of two parts which work together to enhance the security of the Linux kernel:

- kernel text KASLR

- memory management KASLR

The physical address and virtual address of kernel text itself are randomized to a different position separately. The physical address of the kernel can be anywhere under 64TB, while the virtual address of the kernel is restricted between [0xffffffff80000000, 0xffffffffc0000000], the 1GB space.

Memory management KASLR has three sections whose starting address is randomized in a specific area. KASLR can thus prevent inserting and redirecting the execution of the kernel to a malicious code if this code relies on knowing where symbols of interest are located in the kernel address space.

Memory management KASLR sections are:

- direct mapping section

- vmalloc section

- vmemmap section

KASLR code is now compiled into the Linux kernel, and it is enabled by default. To disable it explicitly, add the nokaslr kernel option to the kernel command line.

4.6. Advanced Error Reporting (AER)

4.6.1. What is AER

Advanced Error Reporting (AER) is a kernel feature that provides enhanced error reporting for Peripheral Component Interconnect Express (PCIe) devices. The AER kernel driver attaches root ports which support PCIe AER capability in order to:

- Gather the comprehensive error information if errors occurred

- Report error to the users

- Perform error recovery actions

Example 4.1. Example AER output

When AER captures an error, it sends an error message to the console. If the error is repairable, the console output is a warning.

4.6.2. Collecting and displaying AER messages

In order to collect and display AER messages, use the rasdaemon program.

Procedure

Install the

rasdaemonpackage.yum install rasdaemon

~]# yum install rasdaemonCopy to Clipboard Copied! Toggle word wrap Toggle overflow Enable and start the

rasdaemonservice.systemctl enable --now rasdaemon

~]# systemctl enable --now rasdaemonCopy to Clipboard Copied! Toggle word wrap Toggle overflow Run the

ras-mc-ctlcommand that displays a summary of the logged errors (the--summaryoption) or displays the errors stored at the error database (the--errorsoption).ras-mc-ctl --summary ras-mc-ctl --errors

~]# ras-mc-ctl --summary ~]# ras-mc-ctl --errorsCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Chapter 5. Manually upgrading the kernel

The Red Hat Enterprise Linux kernel is custom-built by the Red Hat Enterprise Linux kernel team to ensure its integrity and compatibility with supported hardware. Before Red Hat releases a kernel, it must first pass a rigorous set of quality assurance tests.

Red Hat Enterprise Linux kernels are packaged in the RPM format so that they are easy to upgrade and verify using the Yum or PackageKit package managers. PackageKit automatically queries the Red Hat Content Delivery Network servers and informs you of packages with available updates, including kernel packages.

This chapter is therefore only useful for users who need to manually update a kernel package using the rpm command instead of yum.

Whenever possible, use either the Yum or PackageKit package manager to install a new kernel because they always install a new kernel instead of replacing the current one, which could potentially leave your system unable to boot.

Custom kernels are not supported by Red Hat. However, guidance can be obtained from the solution article.

For more information on installing kernel packages with yum, see the relevant section in the System Administrator’s Guide.

For information on Red Hat Content Delivery Network, see the relevant section in the System Administrator’s Guide.

5.1. Overview of kernel packages

Red Hat Enterprise Linux contains the following kernel packages:

- kernel — Contains the kernel for single-core, multi-core, and multi-processor systems.

- kernel-debug — Contains a kernel with numerous debugging options enabled for kernel diagnosis, at the expense of reduced performance.

- kernel-devel — Contains the kernel headers and makefiles sufficient to build modules against the kernel package.

- kernel-debug-devel — Contains the development version of the kernel with numerous debugging options enabled for kernel diagnosis, at the expense of reduced performance.

kernel-doc — Documentation files from the kernel source. Various portions of the Linux kernel and the device drivers shipped with it are documented in these files. Installation of this package provides a reference to the options that can be passed to Linux kernel modules at load time.

By default, these files are placed in the

/usr/share/doc/kernel-doc-kernel_version/directory.- kernel-headers — Includes the C header files that specify the interface between the Linux kernel and user-space libraries and programs. The header files define structures and constants that are needed for building most standard programs.

- linux-firmware — Contains all of the firmware files that are required by various devices to operate.

- perf — This package contains the perf tool, which enables performance monitoring of the Linux kernel.

- kernel-abi-whitelists — Contains information pertaining to the Red Hat Enterprise Linux kernel ABI, including a lists of kernel symbols that are needed by external Linux kernel modules and a yum plug-in to aid enforcement.

- kernel-tools — Contains tools for manipulating the Linux kernel and supporting documentation.

5.2. Preparing to upgrade

Before upgrading the kernel, it is recommended that you take some precautionary steps.

First, ensure that working boot media exists for the system in case a problem occurs. If the boot loader is not configured properly to boot the new kernel, you can use this media to boot into Red Hat Enterprise Linux

USB media often comes in the form of flash devices sometimes called pen drives, thumb disks, or keys, or as an externally-connected hard disk device. Almost all media of this type is formatted as a VFAT file system. You can create bootable USB media on media formatted as ext2, ext3, ext4, or VFAT.

You can transfer a distribution image file or a minimal boot media image file to USB media. Make sure that sufficient free space is available on the device. Around 4 GB is required for a distribution DVD image, around 700 MB for a distribution CD image, or around 10 MB for a minimal boot media image.

You must have a copy of the boot.iso file from a Red Hat Enterprise Linux installation DVD, or installation CD-ROM #1, and you need a USB storage device formatted with the VFAT file system and around 16 MB of free space.

For more information on using USB storage devices, review How to format a USB key and How to manually mount a USB flash drive in a non-graphical environment solution articles.

The following procedure does not affect existing files on the USB storage device unless they have the same path names as the files that you copy onto it. To create USB boot media, perform the following commands as the root user:

-

Install the syslinux package if it is not installed on your system. To do so, as root, run the

yum install syslinuxcommand. Install the SYSLINUX bootloader on the USB storage device:

syslinux /dev/sdX1

# syslinux /dev/sdX1Copy to Clipboard Copied! Toggle word wrap Toggle overflow …where sdX is the device name.

Create mount points for

boot.isoand the USB storage device:mkdir /mnt/isoboot /mnt/diskboot

# mkdir /mnt/isoboot /mnt/diskbootCopy to Clipboard Copied! Toggle word wrap Toggle overflow Mount

boot.iso:mount -o loop boot.iso /mnt/isoboot

# mount -o loop boot.iso /mnt/isobootCopy to Clipboard Copied! Toggle word wrap Toggle overflow Mount the USB storage device:

mount /dev/sdX1 /mnt/diskboot

# mount /dev/sdX1 /mnt/diskbootCopy to Clipboard Copied! Toggle word wrap Toggle overflow Copy the ISOLINUX files from the

boot.isoto the USB storage device:cp /mnt/isoboot/isolinux/* /mnt/diskboot

# cp /mnt/isoboot/isolinux/* /mnt/diskbootCopy to Clipboard Copied! Toggle word wrap Toggle overflow Use the

isolinux.cfgfile fromboot.isoas thesyslinux.cfgfile for the USB device:grep -v local /mnt/isoboot/isolinux/isolinux.cfg > /mnt/diskboot/syslinux.cfg

# grep -v local /mnt/isoboot/isolinux/isolinux.cfg > /mnt/diskboot/syslinux.cfgCopy to Clipboard Copied! Toggle word wrap Toggle overflow Unmount

boot.isoand the USB storage device:umount /mnt/isoboot /mnt/diskboot

# umount /mnt/isoboot /mnt/diskbootCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Reboot the machine with the boot media and verify that you are able to boot with it before continuing.

Alternatively, on systems with a floppy drive, you can create a boot diskette by installing the mkbootdisk package and running the mkbootdisk command as root. See man mkbootdisk man page after installing the package for usage information.

To determine which kernel packages are installed, execute the command yum list installed "kernel-*" at a shell prompt. The output comprises some or all of the following packages, depending on the system’s architecture, and the version numbers might differ:

yum list installed "kernel-*" kernel.x86_64 3.10.0-54.0.1.el7 @rhel7/7.0 kernel-devel.x86_64 3.10.0-54.0.1.el7 @rhel7 kernel-headers.x86_64 3.10.0-54.0.1.el7 @rhel7/7.0

# yum list installed "kernel-*"

kernel.x86_64 3.10.0-54.0.1.el7 @rhel7/7.0

kernel-devel.x86_64 3.10.0-54.0.1.el7 @rhel7

kernel-headers.x86_64 3.10.0-54.0.1.el7 @rhel7/7.0From the output, determine which packages need to be downloaded for the kernel upgrade. For a single processor system, the only required package is the kernel package. See Section 5.1, “Overview of kernel packages” for descriptions of the different packages.

5.3. Downloading the upgraded kernel

There are several ways to determine if an updated kernel is available for the system.

- Security Errata — See Security Advisories in Red Hat Customer Portal for information on security errata, including kernel upgrades that fix security issues.

- The Red Hat Content Delivery Network — For a system subscribed to the Red Hat Content Delivery Network, the yum package manager can download the latest kernel and upgrade the kernel on the system. The Dracut utility creates an initial RAM file system image if needed, and configure the boot loader to boot the new kernel. For more information on installing packages from the Red Hat Content Delivery Network, see the relevant section of the System Administrator’s Guide. For more information on subscribing a system to the Red Hat Content Delivery Network, see the relevant section of the System Administrator’s Guide.

If yum was used to download and install the updated kernel from the Red Hat Network, follow the instructions in Section 5.5, “Verifying the initial RAM file system image” and Section 5.6, “Verifying the boot loader” only, do not change the kernel to boot by default. Red Hat Network automatically changes the default kernel to the latest version. To install the kernel manually, continue to Section 5.4, “Performing the upgrade”.

5.4. Performing the upgrade

After retrieving all of the necessary packages, it is time to upgrade the existing kernel.

It is strongly recommended that you keep the old kernel in case there are problems with the new kernel.

At a shell prompt, change to the directory that contains the kernel RPM packages. Use -i argument with the rpm command to keep the old kernel. Do not use the -U option, since it overwrites the currently installed kernel, which creates boot loader problems. For example:

rpm -ivh kernel-kernel_version.arch.rpm

# rpm -ivh kernel-kernel_version.arch.rpmThe next step is to verify that the initial RAM file system image has been created. See Section 5.5, “Verifying the initial RAM file system image” for details.

5.5. Verifying the initial RAM file system image

The job of the initial RAM file system image is to preload the block device modules, such as for IDE, SCSI or RAID, so that the root file system, on which those modules normally reside, can then be accessed and mounted. On Red Hat Enterprise Linux 7 systems, whenever a new kernel is installed using either the Yum, PackageKit, or RPM package manager, the Dracut utility is always called by the installation scripts to create an initramfs (initial RAM file system image).

If you make changes to the kernel attributes by modifying the /etc/sysctl.conf file or another sysctl configuration file, and if the changed settings are used early in the boot process, then rebuilding the Initial RAM File System Image by running the dracut -f command might be necessary. An example is if you have made changes related to networking and are booting from network-attached storage.

On all architectures other than IBM eServer System i (see the section called “Verifying the initial RAM file system image and kernel on IBM eServer System i”), you can create an initramfs by running the dracut command. However, you usually do not need to create an initramfs manually: this step is automatically performed if the kernel and its associated packages are installed or upgraded from RPM packages distributed by Red Hat.

You can verify that an initramfs corresponding to your current kernel version exists and is specified correctly in the grub.cfg configuration file by following this procedure:

Verifying the initial RAM file system image

As

root, list the contents in the/bootdirectory and find the kernel (vmlinuz-kernel_version) andinitramfs-kernel_versionwith the latest (most recent) version number:Example 5.1. Ensuring that the kernel and initramfs versions match

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Example 5.1, “Ensuring that the kernel and initramfs versions match” shows that:

-

we have three kernels installed (or, more correctly, three kernel files are present in the

/bootdirectory), -

the latest kernel is

vmlinuz-3.10.0-78.el7.x86_64, and an

initramfsfile matching our kernel version,initramfs-3.10.0-78.el7.x86_64kdump.img, also exists.ImportantIn the

/bootdirectory you might find severalinitramfs-kernel_versionkdump.imgfiles. These are special files created by the Kdump mechanism for kernel debugging purposes, are not used to boot the system, and can safely be ignored. For more information onkdump, see the Red Hat Enterprise Linux 7 Kernel Crash Dump Guide.

-

we have three kernels installed (or, more correctly, three kernel files are present in the

If your

initramfs-kernel_versionfile does not match the version of the latest kernel in the/bootdirectory, or, in certain other situations, you might need to generate aninitramfsfile with the Dracut utility. Simply invokingdracutasrootwithout options causes it to generate aninitramfsfile in/bootfor the latest kernel present in that directory:dracut

# dracutCopy to Clipboard Copied! Toggle word wrap Toggle overflow You must use the

-f,--forceoption if you wantdracutto overwrite an existinginitramfs(for example, if yourinitramfshas become corrupt). Otherwisedracutrefuses to overwrite the existinginitramfsfile:dracut Does not override existing initramfs (/boot/initramfs-3.10.0-78.el7.x86_64.img) without --force# dracut Does not override existing initramfs (/boot/initramfs-3.10.0-78.el7.x86_64.img) without --forceCopy to Clipboard Copied! Toggle word wrap Toggle overflow You can create an initramfs in the current directory by calling

dracut initramfs_name kernel_version:dracut "initramfs-$(uname -r).img" $(uname -r)

# dracut "initramfs-$(uname -r).img" $(uname -r)Copy to Clipboard Copied! Toggle word wrap Toggle overflow If you need to specify specific kernel modules to be preloaded, add the names of those modules (minus any file name suffixes such as

.ko) inside the parentheses of theadd_dracutmodules+="module more_modules "directive of the/etc/dracut.confconfiguration file. You can list the file contents of aninitramfsimage file created by dracut by using thelsinitrd initramfs_filecommand:Copy to Clipboard Copied! Toggle word wrap Toggle overflow See

man dracutandman dracut.conffor more information on options and usage.Examine the

/boot/grub2/grub.cfgconfiguration file to ensure that aninitramfs-kernel_version.imgfile exists for the kernel version you are booting. For example:grep initramfs /boot/grub2/grub.cfg initrd16 /initramfs-3.10.0-123.el7.x86_64.img initrd16 /initramfs-0-rescue-6d547dbfd01c46f6a4c1baa8c4743f57.img

# grep initramfs /boot/grub2/grub.cfg initrd16 /initramfs-3.10.0-123.el7.x86_64.img initrd16 /initramfs-0-rescue-6d547dbfd01c46f6a4c1baa8c4743f57.imgCopy to Clipboard Copied! Toggle word wrap Toggle overflow See Section 5.6, “Verifying the boot loader” for more information.

Verifying the initial RAM file system image and kernel on IBM eServer System i

On IBM eServer System i machines, the initial RAM file system and kernel files are combined into a single file, which is created with the addRamDisk command. This step is performed automatically if the kernel and its associated packages are installed or upgraded from the RPM packages distributed by Red Hat thus, it does not need to be executed manually. To verify that it was created, run the following command as root to make sure the /boot/vmlinitrd-kernel_version file already exists:

ls -l /boot/

# ls -l /boot/The kernel_version needs to match the version of the kernel just installed.

Reversing the changes made to the initial RAM file system image

In some cases, for example, if you misconfigure the system and it no longer boots, you need to reverse the changes made to the Initial RAM File System Image by following this procedure:

Reversing Changes Made to the Initial RAM File System Image

- Reboot the system choosing the rescue kernel in the GRUB menu.

-

Change the incorrect setting that caused the

initramfsto malfunction. Recreate the

initramfswith the correct settings by running the following command as root:dracut --kver kernel_version --force

# dracut --kver kernel_version --forceCopy to Clipboard Copied! Toggle word wrap Toggle overflow

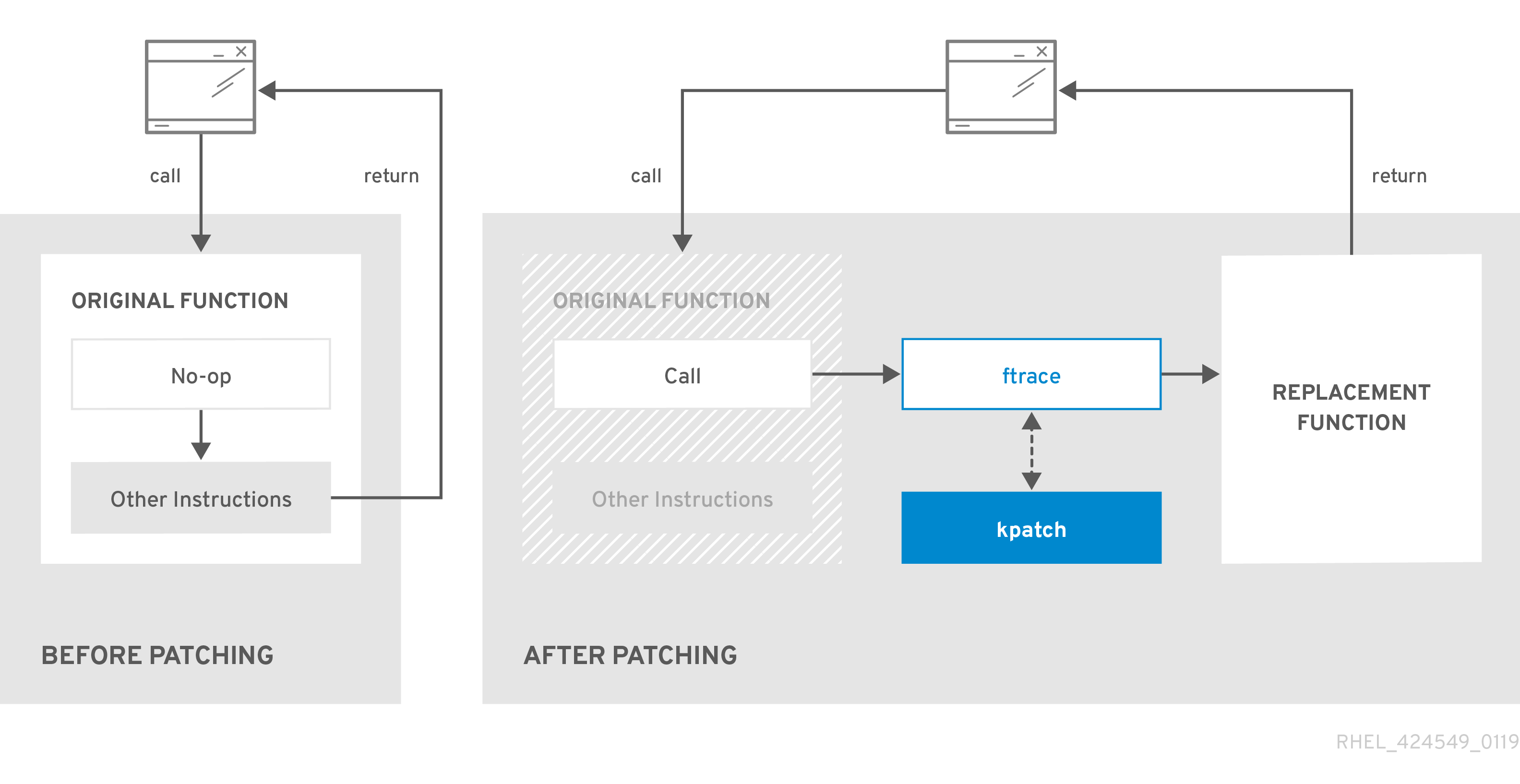

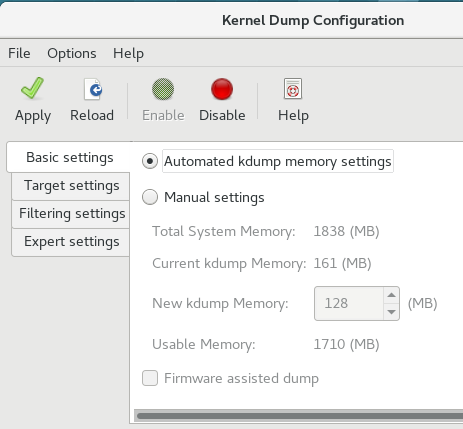

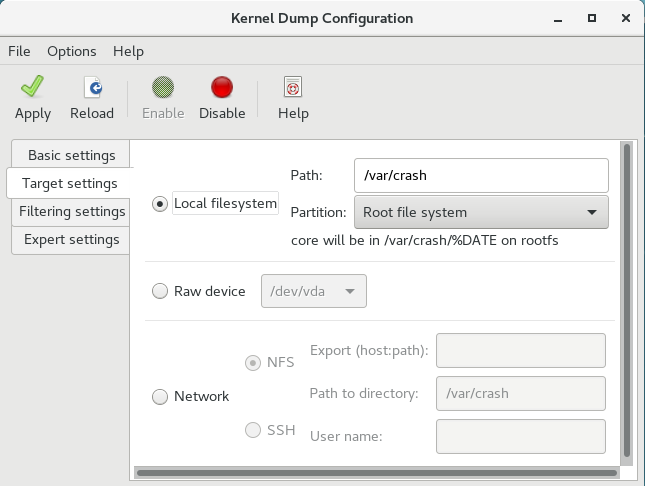

The above procedure might be useful if, for example, you incorrectly set the vm.nr_hugepages in the sysctl.conf file. Because the sysctl.conf file is included in initramfs, the new vm.nr_hugepages setting gets applied in initramfs and causes rebuilding of the initramfs. However, because the setting is incorrect, the new initramfs is broken and the newly built kernel does not boot, which necessitates correcting the setting using the above procedure.