Managing file systems

Creating, modifying, and administering file systems in Red Hat Enterprise Linux 8

Abstract

Providing feedback on Red Hat documentation

We appreciate your feedback on our documentation. Let us know how we can improve it.

Submitting feedback through Jira (account required)

- Log in to the Jira website.

- Click Create in the top navigation bar.

- Enter a descriptive title in the Summary field.

- Enter your suggestion for improvement in the Description field. Include links to the relevant parts of the documentation.

- Click Create at the bottom of the dialogue.

Chapter 1. Overview of available file systems

Choosing the file system that is appropriate for your application is an important decision due to the large number of options available and the trade-offs involved.

The following sections describe the file systems that Red Hat Enterprise Linux 8 includes by default, and recommendations on the most suitable file system for your application.

1.1. Types of file systems

Red Hat Enterprise Linux 8 supports a variety of file systems (FS). Different types of file systems solve different kinds of problems, and their usage is application-specific. At the most general level, available file systems can be grouped into the following major types:

| Type | File system | Attributes and use cases |
|---|---|---|
| Disk or local FS | XFS | XFS is the default file system in RHEL. Red Hat recommends deploying XFS as your local file system unless there are specific reasons to do otherwise: for example, compatibility or corner cases around performance. |
| | ext4 | ext4 has the benefit of familiarity in Linux, having evolved from the older ext2 and ext3 file systems. In many cases, it rivals XFS on performance. Support limits for ext4 file system and file sizes are lower than those on XFS. |
| Network or client-and-server FS | NFS | Use NFS to share files between multiple systems on the same network. |
| | SMB | Use SMB for file sharing with Microsoft Windows systems. |
| Shared storage or shared disk FS | GFS2 | GFS2 provides shared write access to members of a compute cluster. The emphasis is on stability and reliability, with as much of the functional experience of a local file system as possible. SAS Grid, Tibco MQ, IBM WebSphere MQ, and Red Hat Active MQ have been deployed successfully on GFS2. |
| Volume-managing FS | Stratis (Technology Preview) | Stratis is a volume manager built on a combination of XFS and LVM. The purpose of Stratis is to emulate capabilities offered by volume-managing file systems like Btrfs and ZFS. It is possible to build this stack manually, but Stratis reduces configuration complexity, implements best practices, and consolidates error information. |

1.2. Local file systems

Local file systems are file systems that run on a single, local server and are directly attached to storage.

For example, a local file system is the only choice for internal SATA or SAS disks, and is used when your server has internal hardware RAID controllers with local drives. Local file systems are also the most common file systems used on SAN-attached storage when the device exported on the SAN is not shared.

All local file systems are POSIX-compliant and are fully compatible with all supported Red Hat Enterprise Linux releases. POSIX-compliant file systems provide support for a well-defined set of system calls, such as read(), write(), and lseek().

Choose a local file system based on how large the file system needs to be, what unique features it must have, and how it performs under your workload.

Available local file systems:

- XFS

- ext4

1.3. The XFS file system

XFS is a highly scalable, high-performance, robust, and mature 64-bit journaling file system that supports very large files and file systems on a single host. It is the default file system in Red Hat Enterprise Linux 8. XFS was originally developed in the early 1990s by SGI and has a long history of running on extremely large servers and storage arrays.

The features of XFS include:

- Reliability

- Metadata journaling, which ensures file system integrity after a system crash by keeping a record of file system operations that can be replayed when the system is restarted and the file system remounted

- Extensive run-time metadata consistency checking

- Scalable and fast repair utilities

- Quota journaling. This avoids the need for lengthy quota consistency checks after a crash.

- Scalability and performance

- Supported file system size up to 1024 TiB

- Ability to support a large number of concurrent operations

- B-tree indexing for scalability of free space management

- Sophisticated metadata read-ahead algorithms

- Optimizations for streaming video workloads

- Allocation schemes

- Extent-based allocation

- Stripe-aware allocation policies

- Delayed allocation

- Space pre-allocation

- Dynamically allocated inodes

- Other features

- Reflink-based file copies

- Tightly integrated backup and restore utilities

- Online defragmentation

- Online file system growing

- Comprehensive diagnostics capabilities

- Extended attributes (xattr). This allows the system to associate several additional name/value pairs per file.

- Project or directory quotas. This allows quota restrictions over a directory tree.

- Subsecond timestamps

Performance characteristics

XFS performs well on large systems with enterprise workloads. A large system is one with a relatively high number of CPUs, multiple HBAs, and connections to external disk arrays. XFS also performs well on smaller systems that have a multi-threaded, parallel I/O workload.

XFS has relatively low performance for single-threaded, metadata-intensive workloads: for example, a workload that creates or deletes large numbers of small files in a single thread.

1.4. The ext4 file system

The ext4 file system is the fourth generation of the ext file system family. It was the default file system in Red Hat Enterprise Linux 6.

The ext4 driver can read and write to ext2 and ext3 file systems, but the ext4 file system format is not compatible with ext2 and ext3 drivers.

ext4 adds several new and improved features, such as:

- Supported file system size up to 50 TiB

- Extent-based metadata

- Delayed allocation

- Journal checksumming

- Large storage support

The extent-based metadata and the delayed allocation features provide a more compact and efficient way to track utilized space in a file system. These features improve file system performance and reduce the space consumed by metadata. Delayed allocation allows the file system to postpone selection of the permanent location for newly written user data until the data is flushed to disk. Because the file system has better information at that point, it can make larger, more contiguous allocations, which improves performance.

File system repair time using the fsck utility in ext4 is much faster than in ext2 and ext3. Some file system repairs have demonstrated up to a six-fold increase in performance.

1.5. Comparison of XFS and ext4

XFS is the default file system in RHEL. This section compares the usage and features of XFS and ext4.

- Metadata error behavior

In ext4, you can configure the behavior when the file system encounters metadata errors. The default behavior is to simply continue the operation. When XFS encounters an unrecoverable metadata error, it shuts down the file system and returns the EFSCORRUPTED error.

- Quotas

In ext4, you can enable quotas when creating the file system or later on an existing file system. You can then configure the quota enforcement using a mount option.

XFS quotas are not a remountable option. You must activate quotas on the initial mount.

Running the quotacheck command on an XFS file system has no effect. The first time you turn on quota accounting, XFS checks quotas automatically.

- File system resize

- XFS has no utility to reduce the size of a file system. You can only increase the size of an XFS file system. In comparison, ext4 supports both extending and reducing the size of a file system.

- Inode numbers

The ext4 file system does not support more than 2^32 inodes.

XFS supports dynamic inode allocation. The amount of space inodes can consume on an XFS file system is calculated as a percentage of the total file system space. To prevent the system from running out of inodes, an administrator can tune this percentage after the file system has been created, provided there is free space left on the file system.

Certain applications cannot properly handle inode numbers larger than 2^32 on an XFS file system. In these applications, 32-bit stat calls might fail with the EOVERFLOW return value. Inode numbers exceed 2^32 under the following conditions:

- The file system is larger than 1 TiB with 256-byte inodes.

- The file system is larger than 2 TiB with 512-byte inodes.
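The two thresholds above follow from simple arithmetic. The sketch below uses a simplified model in which an inode number is roughly the inode's byte offset on the device divided by the inode size. This is an assumption: real XFS inode numbers also encode the allocation group, so treat the result as an approximation rather than the exact on-disk layout.

```shell
# Rough model (assumption): an XFS inode number behaves like the inode's byte
# offset on the device divided by the inode size. Real XFS inode numbers also
# encode the allocation group, so this is only an approximation.

# Print the number of inode-sized slots in a file system.
# $1 = file system size in TiB, $2 = inode size in bytes
inode_slots() {
    echo $(( ($1 << 40) / $2 ))
}

limit=$(( 1 << 32 ))   # inode numbers 0 .. limit-1 fit in 32 bits

echo "32-bit limit:           $limit"
echo "1 TiB, 256-byte inodes: $(inode_slots 1 256)"
echo "2 TiB, 512-byte inodes: $(inode_slots 2 512)"
```

All three printed values are 4294967296 (2^32). At these sizes the file system already has 2^32 inode-sized slots, so a larger file system can contain inodes whose numbers no longer fit in 32 bits.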

If your application fails with large inode numbers, mount the XFS file system with the -o inode32 option to enforce inode numbers below 2^32. Note that using inode32 does not affect inodes that are already allocated with 64-bit numbers.

Important

Do not use the inode32 option unless a specific environment requires it. The inode32 option changes allocation behavior. As a consequence, the ENOSPC error might occur if no space is available to allocate inodes in the lower disk blocks.

1.6. Choosing a local file system

To choose a file system that meets your application requirements, you must understand the target system on which you will deploy the file system. In general, use XFS unless you have a specific use case for ext4.

- XFS

- For large-scale deployments, use XFS, particularly when handling large files (hundreds of megabytes) and high I/O concurrency. XFS performs optimally in environments with high bandwidth (greater than 200 MB/s) and more than 1000 IOPS. However, it consumes more CPU resources for metadata operations than ext4 and does not support file system shrinking.

- ext4

- For smaller systems or environments with limited I/O bandwidth, ext4 might be a better fit. It performs better in single-threaded, lower I/O workloads and environments with lower throughput requirements. ext4 also supports offline shrinking, which can be beneficial if resizing the file system is a requirement.

Benchmark your application’s performance on your target server and storage system to ensure the selected file system meets your performance and scalability requirements.

| Scenario | Recommended file system |
|---|---|
| No special use case | XFS |
| Large server | XFS |
| Large storage devices | XFS |
| Large files | XFS |
| Multi-threaded I/O | XFS |
| Single-threaded I/O | ext4 |
| Limited I/O capability (under 1000 IOPS) | ext4 |
| Limited bandwidth (under 200 MB/s) | ext4 |
| CPU-bound workload | ext4 |
| Support for offline shrinking | ext4 |

1.7. Network file systems

Network file systems, also referred to as client/server file systems, enable client systems to access files that are stored on a shared server. This makes it possible for multiple users on multiple systems to share files and storage resources.

Such file systems are built from one or more servers that export a set of file systems to one or more clients. The client nodes do not have access to the underlying block storage, but rather interact with the storage using a protocol that allows for better access control.

- Available network file systems

- The most common client/server file system for RHEL customers is the NFS file system. RHEL provides both an NFS server component to export a local file system over the network and an NFS client to import these file systems.

- RHEL also includes a CIFS client that supports the popular Microsoft SMB file servers for Windows interoperability. The userspace Samba server provides Windows clients with a Microsoft SMB service from a RHEL server.

1.10. Volume-managing file systems

Volume-managing file systems integrate the entire storage stack for the purposes of simplicity and in-stack optimization.

- Available volume-managing file systems

- Red Hat Enterprise Linux 8 provides the Stratis volume manager as a Technology Preview. Stratis uses XFS for the file system layer and integrates it with LVM, Device Mapper, and other components.

Stratis was first released in Red Hat Enterprise Linux 8.0. It was conceived to fill the gap created when Red Hat deprecated Btrfs. Stratis 1.0 is an intuitive, command-line-based volume manager that can perform significant storage management operations while hiding the complexity from the user:

- Volume management

- Pool creation

- Thin storage pools

- Snapshots

- Automated read cache

Stratis offers powerful features, but it currently lacks certain capabilities of comparable offerings, such as Btrfs or ZFS. Most notably, it does not support CRCs with self-healing.

Chapter 2. Managing local storage by using RHEL system roles

To manage LVM and local file systems (FS) by using Ansible, you can use the storage role. Using the storage role enables you to automate administration of file systems on disks and logical volumes on multiple machines.

For more information about RHEL system roles and how to apply them, see Introduction to RHEL system roles.

2.1. Creating an XFS file system on a block device by using the storage RHEL system role

You can use the storage RHEL system role to automate the creation of an XFS file system on block devices.

The storage role can create a file system only on an unpartitioned, whole disk or a logical volume (LV). It cannot create the file system on a partition.

Prerequisites

- You have prepared the control node and the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions for these nodes.

Procedure

Create a playbook file, for example, ~/playbook.yml, that applies the storage RHEL system role to the managed nodes.

The settings in the playbook include the following:

name: barefs

The volume name (barefs in the example) is currently arbitrary. The storage role identifies the volume by the disk device listed under the disks attribute.

fs_type: <file_system>

You can omit the fs_type parameter if you want to use the default file system, XFS.

disks: <list_of_disks_and_volumes>

A YAML list of disk and LV names. To create the file system on an LV, provide the LVM setup under the disks attribute, including the enclosing volume group. For details, see Creating or resizing a logical volume by using the storage RHEL system role.

Do not provide the path to the LV device.
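Below is a minimal sketch of such a playbook, written to ~/playbook.yml with a shell here-document. The host name managed-node-01.example.com and the disk sdb are assumptions, so replace them with the values for your environment. Treat the role's README as the authoritative list of variables.

```shell
# Write an example playbook to ~/playbook.yml. Quoting the 'EOF' delimiter
# makes the shell copy the YAML literally, without any expansion.
# The host and disk names are placeholders (assumptions).
cat > ~/playbook.yml <<'EOF'
---
- name: Manage local storage
  hosts: managed-node-01.example.com
  tasks:
    - name: Create an XFS file system on a block device
      ansible.builtin.include_role:
        name: rhel-system-roles.storage
      vars:
        storage_volumes:
          - name: barefs      # arbitrary; the role identifies the volume by its disk
            type: disk
            disks:
              - sdb           # whole, unpartitioned disk (assumed name)
            fs_type: xfs      # optional, because XFS is the default
EOF
```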

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.storage/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

2.2. Persistently mounting a file system by using the storage RHEL system role

You can use the storage RHEL system role to persistently mount file systems to ensure they remain available across system reboots and are automatically mounted on startup. If the file system on the device you specified in the playbook does not exist, the role creates it.

Prerequisites

- You have prepared the control node and the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions for these nodes.

Procedure
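The first step below creates the playbook. As a minimal sketch, assuming the disk sdb and the mount point /mnt/data (both illustrative), the playbook could look like the following. The mount_point setting is what makes the role mount the volume and keep it mounted across reboots.

```shell
# Example playbook (assumed host, disk, and mount point) that creates the
# file system if it does not exist and mounts it persistently.
cat > ~/playbook.yml <<'EOF'
---
- name: Manage local storage
  hosts: managed-node-01.example.com
  tasks:
    - name: Persistently mount a file system
      ansible.builtin.include_role:
        name: rhel-system-roles.storage
      vars:
        storage_volumes:
          - name: barefs
            type: disk
            disks:
              - sdb
            fs_type: xfs
            mount_point: /mnt/data   # mounted here and recorded in /etc/fstab
EOF
```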

Create a playbook file, for example, ~/playbook.yml, that applies the storage RHEL system role to the managed nodes. For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.storage/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

2.3. Creating or resizing a logical volume by using the storage RHEL system role

You can use the storage RHEL system role to create and resize LVM logical volumes. The role automatically creates volume groups if they do not exist.

Use the storage role to perform the following tasks:

- To create an LVM logical volume in a volume group consisting of many disks

- To resize an existing file system on LVM

- To express an LVM volume size in percentage of the pool’s total size

If the volume group does not exist, the role creates it. If a logical volume exists in the volume group, it is resized if the size does not match what is specified in the playbook.

If you are reducing a logical volume, to prevent data loss you must ensure that the file system on that logical volume is not using the space in the logical volume that is being reduced.

Prerequisites

- You have prepared the control node and the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions for these nodes.

Procedure

Create a playbook file, for example, ~/playbook.yml, that applies the storage RHEL system role to the managed nodes.

The settings in the playbook include the following:

size: <size>

You must specify the size by using units (for example, GiB) or a percentage (for example, 60%).
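Here is a minimal sketch of a playbook for this task. It assumes three spare disks named sdb, sdc, and sdd, and uses the volume group name myvg from the verification step. The logical volume name mylv, its 2 GiB size, the ext4 file system, and the mount point are illustrative values.

```shell
# Example playbook: volume group "myvg" spanning three disks with one logical
# volume. Disk names, LV name, size, and mount point are assumptions.
cat > ~/playbook.yml <<'EOF'
---
- name: Manage local storage
  hosts: managed-node-01.example.com
  tasks:
    - name: Create or resize a logical volume
      ansible.builtin.include_role:
        name: rhel-system-roles.storage
      vars:
        storage_pools:
          - name: myvg              # volume group; created if it does not exist
            type: lvm
            disks:
              - sdb
              - sdc
              - sdd
            volumes:
              - name: mylv
                size: 2 GiB         # units, or a percentage of the pool such as 60%
                fs_type: ext4
                mount_point: /mnt/data
EOF
```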

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.storage/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

Verify that the specified volume has been created or resized to the requested size:

# ansible managed-node-01.example.com -m command -a 'lvs myvg'

2.4. Enabling online block discard by using the storage RHEL system role

You can mount an XFS file system with the online block discard option to automatically discard unused blocks.

Prerequisites

- You have prepared the control node and the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions for these nodes.

Procedure
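The first step below creates the playbook. The following minimal sketch assumes the disk sdb (illustrative) and uses the /mnt/data mount point that the verification step checks. It enables online block discard by passing discard in mount_options.

```shell
# Example playbook (assumed host and disk) that mounts an XFS file system
# with the discard mount option.
cat > ~/playbook.yml <<'EOF'
---
- name: Manage local storage
  hosts: managed-node-01.example.com
  tasks:
    - name: Enable online block discard
      ansible.builtin.include_role:
        name: rhel-system-roles.storage
      vars:
        storage_volumes:
          - name: barefs
            type: disk
            disks:
              - sdb
            fs_type: xfs
            mount_point: /mnt/data     # the path that the verification step checks
            mount_options: discard     # mount with online block discard
EOF
```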

Create a playbook file, for example, ~/playbook.yml, that applies the storage RHEL system role to the managed nodes. For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.storage/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

Verify that the online block discard option is enabled:

# ansible managed-node-01.example.com -m command -a 'findmnt /mnt/data'

2.5. Creating and mounting a file system by using the storage RHEL system role

You can use the storage RHEL system role to create and mount file systems that persist across reboots. The role automatically adds entries to /etc/fstab to ensure persistent mounting.

Prerequisites

- You have prepared the control node and the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions for these nodes.

Procedure

Create a playbook file, for example, ~/playbook.yml, that applies the storage RHEL system role to the managed nodes.

The settings in the playbook include the following:

disks: <list_of_devices>

A YAML list of device names that the role uses when it creates the volume.

fs_type: <file_system>

Specifies the file system the role should set on the volume. You can select xfs, ext3, ext4, swap, or unformatted.

fs_label: <file_system_label>

Optional: sets the label of the file system.

mount_point: <directory>

Optional: if the volume should be automatically mounted, set the mount_point variable to the directory to which the volume should be mounted.
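Here is a minimal sketch that combines these settings. It assumes the disk sdb and the label data, which are both hypothetical values.

```shell
# Example playbook that creates a labeled XFS file system and mounts it.
# The disk name and label are hypothetical.
cat > ~/playbook.yml <<'EOF'
---
- name: Manage local storage
  hosts: managed-node-01.example.com
  tasks:
    - name: Create and mount a file system
      ansible.builtin.include_role:
        name: rhel-system-roles.storage
      vars:
        storage_volumes:
          - name: barefs
            type: disk
            disks:
              - sdb                # hypothetical device
            fs_type: xfs
            fs_label: data         # hypothetical label
            mount_point: /mnt/data
EOF
```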

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.storage/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

2.6. Configuring a RAID volume by using the storage RHEL system role

With the storage system role, you can configure a RAID volume on RHEL by using Red Hat Ansible Automation Platform and Ansible-Core. Create an Ansible playbook with the parameters to configure a RAID volume to suit your requirements.

Device names might change in certain circumstances, for example, when you add a new disk to a system. Therefore, to prevent data loss, use persistent naming attributes in the playbook. For more information about persistent naming attributes, see Overview of persistent naming attributes.

Prerequisites

- You have prepared the control node and the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions for these nodes.

Procedure
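The first step below creates the playbook. As a minimal sketch, the following playbook builds a RAID 0 array from a volume named data, which should match the /dev/md/data device queried in the verification step. The four disk names, the 32 KiB chunk size, and the mount point are assumptions. As noted above, persistent device names are safer than kernel names such as sdd.

```shell
# Example playbook for an MD RAID volume. Disk names, chunk size, and mount
# point are assumptions; prefer persistent device names in production.
cat > ~/playbook.yml <<'EOF'
---
- name: Manage local storage
  hosts: managed-node-01.example.com
  tasks:
    - name: Create a RAID volume
      ansible.builtin.include_role:
        name: rhel-system-roles.storage
      vars:
        storage_volumes:
          - name: data                   # the array should appear as /dev/md/data
            type: raid
            disks: [sdd, sde, sdf, sdg]
            raid_level: raid0
            raid_chunk_size: 32 KiB
            mount_point: /mnt/data
            state: present
EOF
```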

Create a playbook file, for example, ~/playbook.yml, that applies the storage RHEL system role to the managed nodes. For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.storage/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

Verify that the array was correctly created:

# ansible managed-node-01.example.com -m command -a 'mdadm --detail /dev/md/data'

2.7. Configuring an LVM pool with RAID by using the storage RHEL system role

With the storage system role, you can configure an LVM pool with RAID on RHEL by using Red Hat Ansible Automation Platform. You can set up an Ansible playbook with the available parameters to configure an LVM pool with RAID.

Prerequisites

- You have prepared the control node and the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions for these nodes.

Procedure
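The first step below creates the playbook. The following minimal sketch assumes two disks, sdh and sdi, and the pool and volume names my_pool and my_volume, all of which are illustrative. Setting raid_level on the pool places the volume group on an MD RAID array built from the listed disks.

```shell
# Example playbook for an LVM pool on RAID 1. Disk, pool, and volume names,
# the size, and the mount point are illustrative.
cat > ~/playbook.yml <<'EOF'
---
- name: Manage local storage
  hosts: managed-node-01.example.com
  tasks:
    - name: Configure an LVM pool with RAID
      ansible.builtin.include_role:
        name: rhel-system-roles.storage
      vars:
        storage_pools:
          - name: my_pool
            type: lvm
            disks: [sdh, sdi]
            raid_level: raid1            # MD RAID array beneath the volume group
            volumes:
              - name: my_volume
                size: 1 GiB
                mount_point: /mnt/app/shared
                fs_type: xfs
                state: present
EOF
```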

Create a playbook file, for example, ~/playbook.yml, that applies the storage RHEL system role to the managed nodes. For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.storage/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

Verify that your pool is on RAID:

# ansible managed-node-01.example.com -m command -a 'lsblk'

2.8. Configuring a stripe size for RAID LVM volumes by using the storage RHEL system role

You can use the storage RHEL system role to configure stripe sizes for RAID LVM volumes.

Prerequisites

- You have prepared the control node and the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions for these nodes.

Procedure
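The first step below creates the playbook. The following minimal sketch assumes two disks, sdh and sdi. The names my_pool and my_volume match the verification step, and the 256 KiB stripe size is an example value. Here raid_level and raid_stripe_size are set on the volume rather than the pool, so the RAID layout applies to the logical volume itself.

```shell
# Example playbook for a RAID 0 logical volume with an explicit stripe size.
# Disk names, size, stripe size, and mount point are illustrative.
cat > ~/playbook.yml <<'EOF'
---
- name: Manage local storage
  hosts: managed-node-01.example.com
  tasks:
    - name: Configure a stripe size for a RAID LVM volume
      ansible.builtin.include_role:
        name: rhel-system-roles.storage
      vars:
        storage_pools:
          - name: my_pool
            type: lvm
            disks: [sdh, sdi]
            volumes:
              - name: my_volume
                size: 1 GiB
                mount_point: /mnt/app/shared
                fs_type: xfs
                raid_level: raid0        # RAID layout on the logical volume
                raid_stripe_size: 256 KiB
                state: present
EOF
```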

Create a playbook file, for example, ~/playbook.yml, that applies the storage RHEL system role to the managed nodes. For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.storage/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

Verify that the stripe size is set to the required size:

# ansible managed-node-01.example.com -m command -a 'lvs -o+stripesize /dev/my_pool/my_volume'

2.9. Configuring an LVM-VDO volume by using the storage RHEL system role

You can use the storage RHEL system role to create a VDO volume on LVM (LVM-VDO) with enabled compression and deduplication.

Because the storage system role uses LVM-VDO, it can create only one volume per pool.

Prerequisites

- You have prepared the control node and the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions for these nodes.

Procedure

Create a playbook file, for example, ~/playbook.yml, that applies the storage RHEL system role to the managed nodes.

The settings in the playbook include the following:

vdo_pool_size: <size>

The actual size that the volume takes on the device. You can specify the size in human-readable format, such as 10 GiB. If you do not specify a unit, it defaults to bytes.

size: <size>

The virtual size of the VDO volume.
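The following minimal sketch uses these settings. It assumes the disk sdb and the names myvg and mylv1 that appear in the verification output. The 10 GiB physical size and 30 GiB virtual size are illustrative, so the LSize column you see reflects the virtual size you choose rather than the 3.00t in the sample output.

```shell
# Example playbook for an LVM-VDO volume with compression and deduplication.
# Disk name, sizes, and mount point are illustrative.
cat > ~/playbook.yml <<'EOF'
---
- name: Manage local storage
  hosts: managed-node-01.example.com
  tasks:
    - name: Create LVM-VDO volume under volume group 'myvg'
      ansible.builtin.include_role:
        name: rhel-system-roles.storage
      vars:
        storage_pools:
          - name: myvg
            disks:
              - sdb
            volumes:
              - name: mylv1
                compression: true
                deduplication: true
                vdo_pool_size: 10 GiB    # physical space used on the device
                size: 30 GiB             # virtual size of the VDO volume
                mount_point: /mnt/app/shared
EOF
```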

For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.storage/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook ~/playbook.yml

Verification

View the current status of compression and deduplication:

$ ansible managed-node-01.example.com -m command -a 'lvs -o+vdo_compression,vdo_compression_state,vdo_deduplication,vdo_index_state'
  LV    VG   Attr       LSize Pool   Origin Data% Meta% Move Log Cpy%Sync Convert VDOCompression VDOCompressionState VDODeduplication VDOIndexState
  mylv1 myvg vwi-a-v--- 3.00t vpool0                                              enabled        online              enabled          online

2.10. Creating a LUKS2 encrypted volume by using the storage RHEL system role

You can use the storage role to create and configure a volume encrypted with LUKS by running an Ansible playbook.

Prerequisites

- You have prepared the control node and the managed nodes.

- The account you use to connect to the managed nodes has sudo permissions for these nodes.

Procedure

Store your sensitive variables in an encrypted file:

Create the vault:

$ ansible-vault create ~/vault.yml
New Vault password: <vault_password>
Confirm New Vault password: <vault_password>

After the ansible-vault create command opens an editor, enter the sensitive data in the <key>: <value> format:

luks_password: <password>

Save the changes, and close the editor. Ansible encrypts the data in the vault.
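The next step creates the playbook. The following minimal sketch loads luks_password from ~/vault.yml and encrypts the disk sdb, which is the device queried in the verification step. The file system type and mount point are illustrative.

```shell
# Example playbook that creates a LUKS2-encrypted XFS volume. The password
# comes from the vault file created in the previous step.
cat > ~/playbook.yml <<'EOF'
---
- name: Manage local storage
  hosts: managed-node-01.example.com
  vars_files:
    - ~/vault.yml                    # provides luks_password
  tasks:
    - name: Create and configure a volume encrypted with LUKS
      ansible.builtin.include_role:
        name: rhel-system-roles.storage
      vars:
        storage_volumes:
          - name: barefs
            type: disk
            disks:
              - sdb
            fs_type: xfs
            mount_point: /mnt/data
            encryption: true
            encryption_luks_version: luks2
            encryption_password: "{{ luks_password }}"
EOF
```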

Create a playbook file, for example, ~/playbook.yml, that applies the storage RHEL system role to the managed nodes. For details about all variables used in the playbook, see the /usr/share/ansible/roles/rhel-system-roles.storage/README.md file on the control node.

Validate the playbook syntax:

$ ansible-playbook --ask-vault-pass --syntax-check ~/playbook.yml

Note that this command only validates the syntax and does not protect against a wrong but valid configuration.

Run the playbook:

$ ansible-playbook --ask-vault-pass ~/playbook.yml

Verification

Find the luksUUID value of the LUKS encrypted volume:

# ansible managed-node-01.example.com -m command -a 'cryptsetup luksUUID /dev/sdb'
4e4e7970-1822-470e-b55a-e91efe5d0f5c

View the encryption status of the volume:

Verify the created LUKS encrypted volume:

Chapter 3. Managing partitions using the web console

Learn how to manage file systems on RHEL 8 using the web console.

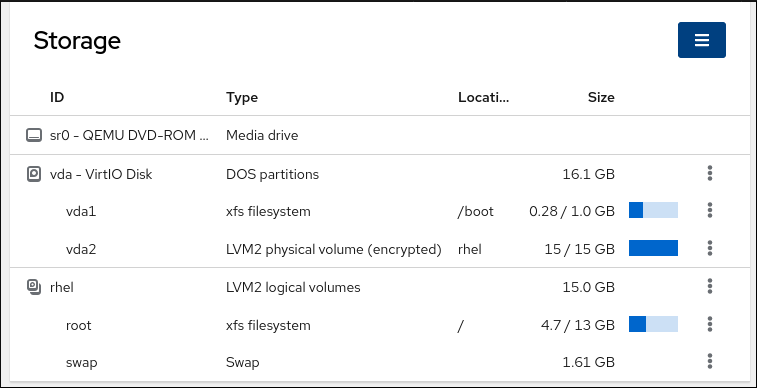

3.1. Displaying partitions formatted with file systems in the web console

The Storage section in the web console displays all available file systems in the Filesystems table. In addition to the list of partitions formatted with file systems, you can also use this page to create new storage.

Prerequisites

- The cockpit-storaged package is installed on your system.

- You have installed the RHEL 8 web console.

- You have enabled the cockpit service.

- Your user account is allowed to log in to the web console.

For instructions, see Installing and enabling the web console.

Procedure

Log in to the RHEL 8 web console.

For details, see Logging in to the web console.

Click the Storage tab.

In the Storage table, you can see all available partitions formatted with file systems, their ID, types, locations, sizes, and how much space is available on each partition.

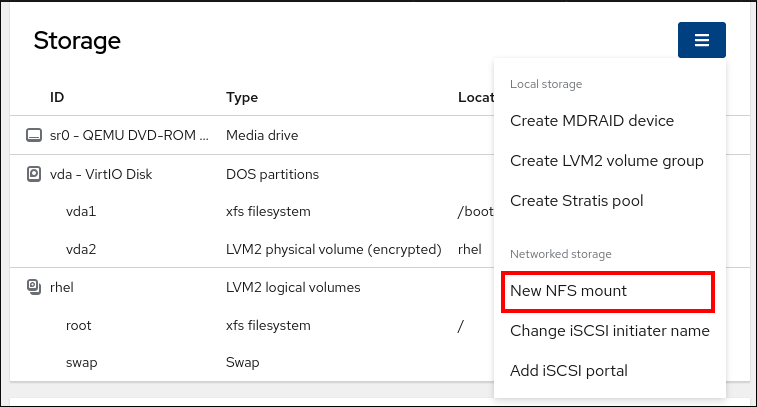

You can also use the drop-down menu in the upper-right corner to create new local or networked storage.

3.2. Creating partitions in the web console

To create a new partition:

- Use an existing partition table

- Create a partition

Prerequisites

- The cockpit-storaged package is installed on your system.

- The web console must be installed and accessible. For details, see Installing the web console.

- An unformatted volume connected to the system is visible in the Storage table of the Storage tab.

Procedure

Log in to the RHEL 8 web console.

For details, see Logging in to the web console.

- Click the Storage tab.

- In the Storage table, click the device which you want to partition to open the page and options for that device.

- On the device page, click the menu button and select Create partition table.

In the Initialize disk dialog box, select the following:

Partitioning:

- Compatible with all systems and devices (MBR)

- Compatible with modern system and hard disks > 2TB (GPT)

- No partitioning

Overwrite:

Select the Overwrite existing data with zeros checkbox if you want the RHEL web console to rewrite the whole disk with zeros. This option is slower because the program has to go through the whole disk, but it is more secure. Use this option if the disk includes any data and you need to overwrite it.

If you do not select the Overwrite existing data with zeros checkbox, the RHEL web console rewrites only the disk header. This increases the speed of formatting.

- Click .

- Click the menu button next to the partition table you created. It is named Free space by default.

- Click .

- In the Create partition dialog box, enter a Name for the file system.

- Add a Mount point.

In the Type drop-down menu, select a file system:

- XFS file system supports large logical volumes, switching physical drives online without outage, and growing an existing file system. Leave this file system selected if you do not have a different strong preference.

ext4 file system supports:

- Logical volumes

- Switching physical drives online without outage

- Growing a file system

- Shrinking a file system

Optionally, you can encrypt the partition with LUKS (Linux Unified Key Setup), which protects the volume with a passphrase.

- Enter the Size of the volume you want to create.

Select the Overwrite existing data with zeros checkbox if you want the RHEL web console to rewrite the whole disk with zeros. This option is slower because the program has to go through the whole disk, but it is more secure. Use this option if the disk includes any data and you need to overwrite it.

If you do not select the Overwrite existing data with zeros checkbox, the RHEL web console rewrites only the disk header. This increases the speed of formatting.

If you want to encrypt the volume, select the type of encryption in the Encryption drop-down menu.

If you do not want to encrypt the volume, select No encryption.

- In the At boot drop-down menu, select when you want to mount the volume.

In the Mount options section:

- Select the Mount read only checkbox if you want to mount the volume as read-only.

- Select the Custom mount options checkbox and add the mount options if you want to change the default mount options.

Create the partition:

- If you want to create and mount the partition, click the button.

- If you only want to create the partition, click the button.

Formatting can take several minutes depending on the volume size and which formatting options are selected.

Verification

- To verify that the partition has been successfully added, switch to the Storage tab and check that the new partition is listed in the Storage table.

3.3. Deleting partitions in the web console

You can remove partitions in the web console interface.

Prerequisites

- The cockpit-storaged package is installed on your system.

- You have installed the RHEL 8 web console.

- You have enabled the cockpit service.

- Your user account is allowed to log in to the web console.

For instructions, see Installing and enabling the web console.

Procedure

Log in to the RHEL 8 web console.

For details, see Logging in to the web console.

- Click the Storage tab.

- Click the device from which you want to delete a partition.

- On the device page, in the GPT partitions section, click the menu button next to the partition you want to delete.

From the drop-down menu, select .

The RHEL web console terminates all processes that are currently using the partition and unmounts the partition before deleting it.

Verification

- To verify that the partition has been successfully removed, switch to the Storage tab and check the Storage table.

Chapter 6. Overview of persistent naming attributes

As a system administrator, you need to refer to storage volumes using persistent naming attributes to build storage setups that are reliable over multiple system boots.

6.1. Disadvantages of non-persistent naming attributes

Red Hat Enterprise Linux provides a number of ways to identify storage devices. It is important to use the correct option to identify each device to avoid inadvertently accessing the wrong device, particularly when installing to or reformatting drives.

Traditionally, non-persistent names in the form of /dev/sd(letter)(partition number), such as /dev/sdb1, are used on Linux to refer to storage devices. A major and minor number range and the associated sd names are allocated to each device when it is detected. This means that the association between the major and minor number range and the associated sd names can change if the order of device detection changes.

Such a change in the ordering might occur in the following situations:

- The parallelization of the system boot process might detect storage devices in a different order with each system boot.

- A disk fails to power up or respond to the SCSI controller. As a result, the normal device probe does not detect it. The disk is not accessible to the system, and subsequent devices have their major and minor number range, including the associated sd names, shifted down. For example, if a disk normally referred to as sdb is not detected, a disk that is normally referred to as sdc would instead appear as sdb.

- A SCSI controller (host bus adapter, or HBA) fails to initialize, causing all disks connected to that HBA to not be detected. Any disks connected to subsequently probed HBAs are assigned different major and minor number ranges, and different associated sd names.

- The order of driver initialization changes if different types of HBAs are present in the system. This causes the disks connected to those HBAs to be detected in a different order. This might also occur if HBAs are moved to different PCI slots on the system.

- Disks connected to the system with Fibre Channel, iSCSI, or FCoE adapters might be inaccessible at the time the storage devices are probed, for example, because a storage array or intervening switch is powered off. This might occur when a system reboots after a power failure, if the storage array takes longer to come online than the system takes to boot. Although some Fibre Channel drivers support a mechanism to specify a persistent SCSI target ID to WWPN mapping, this does not reserve the major and minor number ranges and the associated sd names; it only provides consistent SCSI target ID numbers.

These reasons make it undesirable to use the major and minor number range or the associated sd names when referring to devices, such as in the /etc/fstab file. The wrong device might be mounted, which can result in data corruption.
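The shift described in the list above can be illustrated with a small simulation. The following shell sketch rests on a simplifying assumption: each detected disk receives the next free sd letter in the order it is probed. The function name assign_sd_names and the disk labels diskA, diskB, and diskC are hypothetical; real kernel allocation also involves drivers and major and minor number ranges, which this model ignores.

```shell
# assign_sd_names DISK...: print the sd name each detected disk would get,
# assuming (simplified model) names are handed out strictly in probe order.
assign_sd_names() {
    # awk's %c prints the character whose code is 96 + NR: a, b, c, ...
    # This sketch only covers the first 26 disks (sda to sdz).
    printf '%s\n' "$@" | awk '{ printf "%s -> sd%c\n", $0, 96 + NR }'
}

# All three disks are detected:
assign_sd_names diskA diskB diskC
# diskB fails to power up, so diskC moves down to sdb:
assign_sd_names diskA diskC
```

In the second call, diskC is reported as sdb, which is the kind of change that can make an /etc/fstab entry written with an sd name point at the wrong disk.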

Occasionally, however, it is still necessary to refer to the sd names even when another mechanism is used, such as when errors are reported by a device. This is because the Linux kernel uses sd names (and also SCSI host/channel/target/LUN tuples) in kernel messages regarding the device.

6.2. File system and device identifiers

File system identifiers are tied to the file system itself, while device identifiers are linked to the physical block device. Understanding the difference is important for proper storage management.

File system identifiers

File system identifiers are tied to a particular file system created on a block device. The identifier is also stored as part of the file system. If you copy the file system to a different device, it still carries the same file system identifier. However, if you rewrite the device, such as by formatting it with the mkfs utility, the device loses the attribute.

File system identifiers include:

- Unique identifier (UUID)

- Label

Device identifiers

Device identifiers are tied to a block device: for example, a disk or a partition. If you rewrite the device, such as by formatting it with the mkfs utility, the device keeps the attribute, because it is not stored in the file system.

Device identifiers include:

- World Wide Identifier (WWID)

- Partition UUID

- Serial number

Recommendations

- Some file systems, such as those on logical volumes, span multiple devices. Red Hat recommends accessing these file systems using file system identifiers rather than device identifiers.
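To audit an existing configuration against these recommendations, you might classify the device field of each /etc/fstab entry. The following is a minimal sketch; the helper id_kind is hypothetical and covers only the forms discussed in this chapter. It treats /dev/disk/by-id/ links as device identifiers because they are mostly based on the WWID or serial number.

```shell
# id_kind SPEC: report which kind of identifier the first field of an
# /etc/fstab entry uses (hypothetical helper, not part of any RHEL package).
id_kind() {
    case "$1" in
        UUID=*|LABEL=*|/dev/disk/by-uuid/*|/dev/disk/by-label/*)
            echo "file system identifier" ;;
        PARTUUID=*|/dev/disk/by-partuuid/*|/dev/disk/by-id/*)
            echo "device identifier" ;;
        /dev/sd*)
            echo "non-persistent name" ;;
        *)
            echo "unknown" ;;
    esac
}

# Example: classify every device field in /etc/fstab, skipping comments.
# awk '$1 !~ /^#/ && NF { print $1 }' /etc/fstab | while read -r spec; do
#     printf '%s: %s\n' "$spec" "$(id_kind "$spec")"
# done
```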

6.3. Device names managed by the udev mechanism in /dev/disk/

The udev mechanism is used for all types of devices in Linux and is not limited to storage devices. It provides different kinds of persistent naming attributes in the /dev/disk/ directory. For storage devices, Red Hat Enterprise Linux contains udev rules that create symbolic links in the /dev/disk/ directory. This enables you to refer to storage devices by:

- Their content

- A unique identifier

- Their serial number.

Although udev naming attributes are persistent, in that they do not change on their own across system reboots, some are also configurable.

6.3.1. File system identifiers

The UUID attribute in /dev/disk/by-uuid/

Entries in this directory provide a symbolic name that refers to the storage device by a unique identifier (UUID) in the content (that is, the data) stored on the device. For example:

/dev/disk/by-uuid/3e6be9de-8139-11d1-9106-a43f08d823a6


You can use the UUID to refer to the device in the /etc/fstab file using the following syntax:

UUID=3e6be9de-8139-11d1-9106-a43f08d823a6

You can configure the UUID attribute when creating a file system, and you can also change it later on.

The Label attribute in /dev/disk/by-label/

Entries in this directory provide a symbolic name that refers to the storage device by a label in the content (that is, the data) stored on the device.

For example:

/dev/disk/by-label/Boot


You can use the label to refer to the device in the /etc/fstab file using the following syntax:

LABEL=Boot

You can configure the Label attribute when creating a file system, and you can also change it later on.
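The UUID= and LABEL= forms above are the device field of an /etc/fstab entry. The following minimal sketch builds a complete entry around them and refuses a plain /dev/sd name. The helper fstab_entry and the mount point /data are hypothetical, and the sketch assumes the defaults mount options with 0 for both the dump flag and the fsck pass, which suits XFS; other file systems might need a different fsck pass.

```shell
# fstab_entry SPEC MOUNTPOINT FSTYPE: print an /etc/fstab line that refers to
# the file system by UUID= or LABEL= rather than by a /dev/sd name.
fstab_entry() {
    case "$1" in
        UUID=?*|LABEL=?*) ;;
        *) echo "fstab_entry: expected UUID=... or LABEL=..., got '$1'" >&2
           return 1 ;;
    esac
    # Fields: device, mount point, type, options, dump flag, fsck pass.
    printf '%s %s %s defaults 0 0\n' "$1" "$2" "$3"
}

fstab_entry UUID=3e6be9de-8139-11d1-9106-a43f08d823a6 /data xfs
fstab_entry LABEL=Boot /boot xfs
```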

6.3.2. Device identifiers

The WWID attribute in /dev/disk/by-id/

The World Wide Identifier (WWID) is a persistent, system-independent identifier that the SCSI Standard requires from all SCSI devices. The WWID identifier is guaranteed to be unique for every storage device, and independent of the path that is used to access the device. The identifier is a property of the device but is not stored in the content (that is, the data) on the devices.

This identifier can be obtained by issuing a SCSI Inquiry to retrieve the Device Identification Vital Product Data (page 0x83) or Unit Serial Number (page 0x80).

Red Hat Enterprise Linux automatically maintains the proper mapping from the WWID-based device name to a current /dev/sd name on that system. Applications can use the /dev/disk/by-id/ name to reference the data on the disk, even if the path to the device changes, and even when accessing the device from different systems.

Example 6.1. WWID mappings

| WWID symlink | Non-persistent device | Note |
|---|---|---|
| | | A device with a page |
| | | A device with a page |
| | | A disk partition |

In addition to these persistent names provided by the system, you can also use udev rules to implement persistent names of your own, mapped to the WWID of the storage.
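A minimal sketch of such a rule follows. It assumes that udev exposes the WWID of a SCSI disk in the ID_SERIAL property, which is common on RHEL but should be checked for your device with udevadm info --query=property --name=/dev/sdX. The helper udev_wwid_rule, the link name mydata, and the rules file name are hypothetical; the WWID is the example value from the DM Multipath section of this chapter. The file name starts with 99- so that the rule runs after 60-persistent-storage.rules, which sets ID_SERIAL.

```shell
# udev_wwid_rule WWID NAME: print a udev rule that adds /dev/NAME as a
# symbolic link to the whole disk whose ID_SERIAL property equals WWID.
# Assumption: ID_SERIAL carries the WWID, as is typical for SCSI disks.
udev_wwid_rule() {
    printf 'ACTION=="add|change", SUBSYSTEM=="block", ENV{DEVTYPE}=="disk", ENV{ID_SERIAL}=="%s", SYMLINK+="%s"\n' "$1" "$2"
}

# Hypothetical usage (run as root); file and link names are examples:
# udev_wwid_rule 3600508b400105df70000e00000ac0000 mydata \
#     > /etc/udev/rules.d/99-mydata.rules
# udevadm control --reload
# udevadm trigger
```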

The Partition UUID attribute in /dev/disk/by-partuuid

The Partition UUID (PARTUUID) attribute identifies partitions as defined by the GPT partition table.

Example 6.2. Partition UUID mappings

| PARTUUID symlink | Non-persistent device |
|---|---|
| | |
| | |
| | |
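PARTUUID values come in two shapes. On GPT disks, the value is the full UUID stored in the partition entry. On MBR disks, which have no per-partition UUID, blkid and lsblk report the 32-bit disk signature followed by the partition number in hexadecimal, such as 4cd1448a-01 in Example 6.5. The following hypothetical helper tells the two apart; it is a sketch based on those formats, not a Red Hat tool.

```shell
# partuuid_kind PARTUUID: classify a PARTUUID value.
#   GPT: a full 8-4-4-4-12 UUID stored in the partition table entry.
#   MBR: 8 hex digits of disk signature, a dash, the partition number in hex.
partuuid_kind() {
    if printf '%s\n' "$1" | grep -Eqix '[0-9a-f]{8}-[0-9a-f]{2}'; then
        # ${1%-*} keeps the signature; $((0x..)) converts the hex partition number.
        echo "mbr disk-signature=${1%-*} partition=$((0x${1##*-}))"
    elif printf '%s\n' "$1" | grep -Eqix '[0-9a-f]{8}(-[0-9a-f]{4}){3}-[0-9a-f]{12}'; then
        echo "gpt"
    else
        echo "unknown"
    fi
}

partuuid_kind 4cd1448a-01
```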

The Path attribute in /dev/disk/by-path/

This attribute provides a symbolic name that refers to the storage device by the hardware path used to access the device.

The Path attribute fails if any part of the hardware path (for example, the PCI ID, target port, or LUN number) changes. The Path attribute is therefore unreliable. However, the Path attribute may be useful in one of the following scenarios:

- You need to identify a disk that you are planning to replace later.

- You plan to install a storage service on a disk in a specific location.

6.4. The World Wide Identifier with DM Multipath

You can configure Device Mapper (DM) Multipath to map between the World Wide Identifier (WWID) and non-persistent device names.

If there are multiple paths from a system to a device, DM Multipath uses the WWID to detect this. DM Multipath then presents a single "pseudo-device" in the /dev/mapper/ directory, named after the WWID: for example, /dev/mapper/3600508b400105df70000e00000ac0000.

The multipath -l command shows the mapping to the non-persistent identifiers:

- Host:Channel:Target:LUN

- /dev/sd name

- major:minor number

Example 6.3. WWID mappings in a multipath configuration

An example output of the multipath -l command:

DM Multipath automatically maintains the proper mapping of each WWID-based device name to its corresponding /dev/sd name on the system. These names are persistent across path changes, and they are consistent when accessing the device from different systems.

When the user_friendly_names feature of DM Multipath is used, the WWID is mapped to a name of the form /dev/mapper/mpathN. By default, this mapping is maintained in the file /etc/multipath/bindings. These mpathN names are persistent as long as that file is maintained.

If you use user_friendly_names, then additional steps are required to obtain consistent names in a cluster.
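With user_friendly_names, you can look up which mpathN alias is bound to a WWID by reading /etc/multipath/bindings, where each non-comment line holds an alias followed by a WWID. The following sketch assumes that format; the function name mpath_alias is hypothetical, and the bindings file path can be passed as a second argument, for example to check a copy taken from another cluster node.

```shell
# mpath_alias WWID [BINDINGS_FILE]: print the user-friendly alias bound to
# WWID. Each non-comment line of the bindings file is "alias wwid".
# Exits with status 1 if the WWID has no binding.
mpath_alias() {
    awk -v wwid="$1" '!/^#/ && $2 == wwid { print $1; found = 1 }
                      END { exit !found }' "${2:-/etc/multipath/bindings}"
}

# Example (on a system that uses user_friendly_names):
# mpath_alias 3600508b400105df70000e00000ac0000
```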

6.5. Limitations of the udev device naming convention

The following are some limitations of the udev naming convention:

- It is possible that the device might not be accessible at the time the query is performed because the udev mechanism might rely on the ability to query the storage device when the udev rules are processed for a udev event. This is more likely to occur with Fibre Channel, iSCSI, or FCoE storage devices when the device is not located in the server chassis.

- The kernel might send udev events at any time, causing the rules to be processed and possibly causing the /dev/disk/by-*/ links to be removed if the device is not accessible.

- There might be a delay between when the udev event is generated and when it is processed, such as when a large number of devices are detected and the user-space udevd service takes some amount of time to process the rules for each one. This might cause a delay between when the kernel detects the device and when the /dev/disk/by-*/ names are available.

- External programs such as blkid invoked by the rules might open the device for a brief period of time, making the device inaccessible for other uses.

- The device names managed by the udev mechanism in /dev/disk/ might change between major releases, requiring you to update the links.
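Because of these delays, a script that uses a /dev/disk/by-*/ link right after a device change usually runs udevadm settle first and then checks that the link really exists. The following sketch adds a bounded wait; the function name wait_for_path and the default timeout of 10 seconds are assumptions, not values defined by udev.

```shell
# wait_for_path PATH [SECONDS]: poll once per second until PATH exists.
# Returns 0 when it appears, 1 after SECONDS (default 10) without it.
# The body runs in a subshell ( ... ) so "remaining" does not leak out.
wait_for_path() (
    remaining=${2:-10}
    while [ ! -e "$1" ]; do
        [ "$remaining" -gt 0 ] || return 1
        remaining=$((remaining - 1))
        sleep 1
    done
)

# Hypothetical usage after changing a file system UUID:
# udevadm settle
# wait_for_path /dev/disk/by-uuid/3e6be9de-8139-11d1-9106-a43f08d823a6 30 ||
#     echo "by-uuid link did not appear" >&2
```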

6.6. Listing persistent naming attributes

You can find out the persistent naming attributes of non-persistent storage devices.

Procedure

To list the UUID and Label attributes, use the lsblk utility:

$ lsblk --fs storage-device

For example:

Example 6.4. Viewing the UUID and Label of a file system

$ lsblk --fs /dev/sda1
NAME FSTYPE LABEL UUID                                 MOUNTPOINT
sda1 xfs    Boot  afa5d5e3-9050-48c3-acc1-bb30095f3dc4 /boot

To list the PARTUUID attribute, use the lsblk utility with the --output +PARTUUID option:

$ lsblk --output +PARTUUID

For example:

Example 6.5. Viewing the PARTUUID attribute of a partition

$ lsblk --output +PARTUUID /dev/sda1
NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT PARTUUID
sda1   8:1    0 512M  0 part /boot      4cd1448a-01

To list the WWID attribute, examine the targets of symbolic links in the /dev/disk/by-id/ directory. For example:

Example 6.6. Viewing the WWID of all storage devices on the system
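As a scriptable way to examine these link targets, the following sketch prints each symbolic link in a directory together with its target. For /dev/disk/by-id/, this shows the WWID-based names and the sd devices they currently point to. The helper list_links is hypothetical; it relies on the readlink command, which RHEL provides through coreutils.

```shell
# list_links DIR: print every symbolic link in DIR and the path it points to.
list_links() (
    for link in "$1"/*; do
        [ -L "$link" ] || continue          # skip non-links and an unmatched glob
        printf '%s -> %s\n' "${link##*/}" "$(readlink "$link")"
    done
)

# Example:
# list_links /dev/disk/by-id
```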

6.7. Modifying persistent naming attributes

You can change the UUID or Label persistent naming attribute of a file system.

Changing udev attributes happens in the background and might take a long time. The udevadm settle command waits until the change is fully registered, which ensures that your next command will be able to use the new attribute correctly.

In the following commands:

- Replace new-uuid with the UUID you want to set; for example, 1cdfbc07-1c90-4984-b5ec-f61943f5ea50. You can generate a UUID using the uuidgen command.

- Replace new-label with a label; for example, backup_data.

Prerequisites

- If you are modifying the attributes of an XFS file system, unmount it first.

Procedure

To change the UUID or Label attributes of an XFS file system, use the xfs_admin utility:

# xfs_admin -U new-uuid -L new-label storage-device
# udevadm settle

To change the UUID or Label attributes of an ext4, ext3, or ext2 file system, use the tune2fs utility:

# tune2fs -U new-uuid -L new-label storage-device
# udevadm settle

To change the UUID or Label attributes of a swap volume, use the swaplabel utility:

# swaplabel --uuid new-uuid --label new-label swap-device
# udevadm settle
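A script that sets new-uuid can check the value before it touches the device. The following is a minimal sketch; the helper is_uuid is hypothetical and only checks the textual 8-4-4-4-12 hexadecimal layout, not whether the UUID is already in use.

```shell
# is_uuid VALUE: succeed if VALUE looks like a UUID in the 8-4-4-4-12
# hexadecimal layout, for example 1cdfbc07-1c90-4984-b5ec-f61943f5ea50.
is_uuid() {
    # A UUID is exactly 36 characters; the length check also rules out
    # multi-line input that grep would otherwise match line by line.
    [ "${#1}" -eq 36 ] &&
        printf '%s\n' "$1" | grep -Eqx '[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}'
}

# Hypothetical usage; storage-device is the placeholder from the procedure:
# new_uuid=$(uuidgen)
# is_uuid "$new_uuid" && xfs_admin -U "$new_uuid" storage-device && udevadm settle
```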

Chapter 7. Partition operations with parted

parted is a program to manipulate disk partitions. It supports multiple partition table formats, including MS-DOS and GPT. It is useful for creating space for new operating systems, reorganizing disk usage, and copying data to new hard disks.

7.1. Viewing the partition table with parted

Display the partition table of a block device to see the partition layout and details about individual partitions. You can view the partition table on a block device using the parted utility.

Procedure

Start the parted utility. For example, the following command opens the device /dev/sda:

# parted /dev/sda

View the partition table:

(parted) print

Optional: Switch to the device you want to examine next:

(parted) select block-device

For a detailed description of the print command output, see the following:

Model: ATA SAMSUNG MZNLN256 (scsi) - The disk type, manufacturer, model number, and interface.

Disk /dev/sda: 256GB - The file path to the block device and the storage capacity.

Partition Table: msdos - The disk label type.

Number - The partition number. For example, the partition with minor number 1 corresponds to /dev/sda1.

Start and End - The location on the device where the partition starts and ends.

Type - Valid types are metadata, free, primary, extended, or logical.

File system - The file system type. If the File system field of a device shows no value, this means that its file system type is unknown. The parted utility cannot recognize the file system on encrypted devices.

Flags - Lists the flags set for the partition. Available flags are boot, root, swap, hidden, raid, lvm, or lba.
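For scripts, parted can print the same table in a machine-readable form with the -m (--machine) and -s (--script) options, for example parted -m -s /dev/sda unit MiB print. Each partition then appears on one line of colon-separated fields (number, start, end, size, file system, name, flags) terminated by a semicolon. The following sketch, built on that format, extracts the partition number, boundaries, and file system type, printing - where the File system field is empty. The function name parted_table is hypothetical.

```shell
# parted_table: read `parted -m -s DEVICE unit MiB print` output on stdin and
# print "number start end fstype" for each partition row.
parted_table() {
    awk -F: '
        /^[0-9]+:/ {                       # partition rows start with a number
            sub(/;$/, "")                  # drop the trailing semicolon
            if ($5 == "free") next         # free-space rows appear with "print free"
            printf "%s %s %s %s\n", $1, $2, $3, ($5 == "" ? "-" : $5)
        }'
}

# Hypothetical usage:
# parted -m -s /dev/sda unit MiB print | parted_table
```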

7.2. Creating a partition table on a disk with parted

Use the parted utility to create a partition table on a block device.

Formatting a block device with a partition table deletes all data stored on the device.

Procedure

Start the interactive parted shell:

# parted block-device

Determine if there already is a partition table on the device:

(parted) print

If the device already contains partitions, they will be deleted in the following steps.

Create the new partition table:

(parted) mklabel table-type

Replace table-type with the intended partition table type:

- msdos for MBR

- gpt for GPT

Example 7.1. Creating a GUID Partition Table (GPT) table

To create a GPT table on the disk, use:

(parted) mklabel gpt

The changes start applying after you enter this command.

View the partition table to confirm that it is created:

(parted) print

Exit the parted shell:

(parted) quit
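The same procedure can also run non-interactively with parted --script (-s), which is convenient in scripts but removes the chance to review the print output first. A minimal safeguard, sketched below, asks the operator to type the device path back before the destructive command runs. The helper confirm_device and the device /dev/sdX are hypothetical placeholders.

```shell
# confirm_device DEVICE: read one line from standard input and succeed only
# if it is exactly DEVICE. The prompt goes to standard error.
confirm_device() (
    printf 'All data on %s will be lost. Type the device path to continue: ' "$1" >&2
    read -r answer || return 1     # no input at all counts as "no"
    [ "$answer" = "$1" ]
)

# Hypothetical usage; /dev/sdX stands for your block-device:
# confirm_device /dev/sdX && parted --script /dev/sdX mklabel gpt print
```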

7.3. Creating a partition with parted

As a system administrator, you can create new partitions on a disk by using the parted utility.

The required partitions are swap, /boot/, and / (root).

Prerequisites

- A partition table on the disk.

- If the partition you want to create is larger than 2TiB, format the disk with the GUID Partition Table (GPT).
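The 2TiB threshold comes from the MBR format itself: each partition entry stores its sector count as a 32-bit number, so with 512-byte sectors a partition can be at most (2^32 - 1) x 512 bytes, just under 2 TiB. The following sketch applies that rule to a planned size in MiB. The helper fits_mbr is hypothetical, and it assumes 512-byte logical sectors; disks with 4096-byte logical sectors have a higher limit.

```shell
# fits_mbr SIZE_MIB: succeed if a partition of SIZE_MIB mebibytes fits in an
# MBR partition table entry. Assumes 512-byte logical sectors: the 32-bit
# sector count allows at most (2^32 - 1) x 512 bytes, just under 2097152 MiB.
fits_mbr() {
    case "$1" in
        ''|*[!0-9]*) echo "fits_mbr: expected a whole number of MiB" >&2; return 2 ;;
    esac
    [ "$1" -lt 2097152 ]
}

# A 1.5 TiB partition (1572864 MiB) fits; a 3 TiB one (3145728 MiB) needs GPT.
fits_mbr 1572864 && echo "MBR is sufficient"
fits_mbr 3145728 || echo "use GPT"
```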

Procedure

Start the parted utility:

# parted block-device

View the current partition table to determine if there is enough free space:

(parted) print

- Resize the partition in case there is not enough free space.

From the partition table, determine:

- The start and end points of the new partition.

- On MBR, what partition type it should be.

Create the new partition:

(parted) mkpart part-type name fs-type start end

- Replace part-type with primary, logical, or extended. This applies only to the MBR partition table.

- Replace name with an arbitrary partition name. This is required for GPT partition tables.

- Replace fs-type with xfs, ext2, ext3, ext4, fat16, fat32, hfs, hfs+, linux-swap, ntfs, or reiserfs. The fs-type parameter is optional. Note that the parted utility does not create the file system on the partition.

- Replace start and end with the sizes that determine the starting and ending points of the partition, counting from the beginning of the disk. You can use size suffixes, such as 512MiB, 20GiB, or 1.5TiB. The default size is in megabytes.
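The MiB, GiB, and TiB suffixes are binary units (1 TiB = 1024 GiB = 1048576 MiB), while a bare number is in megabytes (10^6 bytes). When you compute start and end points in a script, it can help to convert everything to MiB first. The following sketch handles only the MiB, GiB, and TiB suffixes and rejects anything else; the function name to_mib is hypothetical.

```shell
# to_mib SIZE: convert a parted-style binary size such as 512MiB, 20GiB, or
# 1.5TiB to whole MiB. Decimal units (MB, GB) and bare numbers are rejected.
to_mib() (
    case "$1" in
        *MiB) factor=1;       num=${1%MiB} ;;
        *GiB) factor=1024;    num=${1%GiB} ;;
        *TiB) factor=1048576; num=${1%TiB} ;;
        *) echo "to_mib: unsupported unit in '$1'" >&2; return 1 ;;
    esac
    case "$num" in
        ''|*[!0-9.]*) echo "to_mib: not a number: '$num'" >&2; return 1 ;;
    esac
    # awk handles fractions such as 1.5; %d drops any fractional MiB.
    awk -v n="$num" -v f="$factor" 'BEGIN { printf "%d\n", n * f }'
)

to_mib 1.5TiB    # prints 1572864
```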

Example 7.2. Creating a small primary partition

To create a primary partition from 1024MiB until 2048MiB on an MBR table, use:

(parted) mkpart primary 1024MiB 2048MiB

The changes start applying after you enter the command.


View the partition table to confirm that the created partition is in the partition table with the correct partition type, file system type, and size:

(parted) print

Exit the parted shell:

(parted) quit

Register the new device node:

# udevadm settle

Verify that the kernel recognizes the new partition:

# cat /proc/partitions
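In a script, it is simpler to test /proc/partitions directly than to inspect the cat output by eye. The file starts with a header line and a blank line, followed by one line per block device with the major number, minor number, size in 1 KiB blocks, and name. The helper in_proc_partitions below is a hypothetical sketch based on that layout; negating it confirms that a removed partition is gone.

```shell
# in_proc_partitions NAME [FILE]: succeed if the kernel partition list
# (default /proc/partitions) contains a device named NAME, such as sda3.
in_proc_partitions() {
    # Data lines have four fields: major, minor, #blocks, name. Lines whose
    # first field is not a number (the header, the blank line) are skipped.
    awk -v name="$1" '$1 ~ /^[0-9]+$/ && $4 == name { found = 1 }
                      END { exit !found }' "${2:-/proc/partitions}"
}

# Hypothetical usage after creating /dev/sda3:
# udevadm settle && in_proc_partitions sda3 && echo "kernel sees sda3"
```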

7.4. Removing a partition with parted

Using the parted utility, you can remove a disk partition to free up disk space.

Procedure

Start the interactive parted shell:

# parted block-device

- Replace block-device with the path to the device where you want to remove a partition: for example, /dev/sda.

View the current partition table to determine the minor number of the partition to remove:

(parted) print

Remove the partition:

(parted) rm minor-number

- Replace minor-number with the minor number of the partition you want to remove.

The changes start applying as soon as you enter this command.

Verify that you have removed the partition from the partition table:

(parted) print

Exit the parted shell:

(parted) quit

Verify that the kernel registers that the partition is removed:

# cat /proc/partitions

Remove the partition from the /etc/fstab file, if it is present. Find the line that declares the removed partition, and remove it from the file.

Regenerate mount units so that your system registers the new /etc/fstab configuration:

# systemctl daemon-reload

If you have deleted a swap partition or removed pieces of LVM, remove all references to the partition from the kernel command line:

List active kernel options and see if any option references the removed partition:

# grubby --info=ALL

Remove the kernel options that reference the removed partition:

# grubby --update-kernel=ALL --remove-args="option"

To register the changes in the early boot system, rebuild the initramfs file system:

# dracut --force --verbose
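The two lookups in this cleanup can be scripted so that you review exactly what would be removed before editing anything. The following sketch defines two hypothetical helpers: fstab_without prints /etc/fstab without the lines whose device field matches a given value, and kernel_args_matching reads grubby --info=ALL output and lists the distinct kernel arguments that contain a pattern. Neither helper changes the system. The sketch assumes that the partition is referenced in /etc/fstab by exactly the value you pass, and that grubby prints kernel arguments on args="..." lines.

```shell
# fstab_without SPEC [FSTAB]: print the fstab file without the entries whose
# first field is exactly SPEC (for example /dev/sdb1 or UUID=...).
# The file itself is not modified.
fstab_without() {
    awk -v spec="$1" '$1 != spec' "${2:-/etc/fstab}"
}

# kernel_args_matching PATTERN: read `grubby --info=ALL` output on stdin and
# print each distinct kernel argument that contains PATTERN as plain text.
kernel_args_matching() {
    awk -v pat="$1" '
        /^args="/ {
            line = $0
            sub(/^args="/, "", line)     # strip the leading args="
            sub(/"$/, "", line)          # and the closing quote
            n = split(line, arg, " ")
            for (i = 1; i <= n; i++)
                if (index(arg[i], pat) && !seen[arg[i]]++)
                    print arg[i]
        }'
}

# Review first, then apply by hand (run as root; values are examples):
# fstab_without /dev/sdb1 | diff /etc/fstab -
# grubby --info=ALL | kernel_args_matching rhel/swap
# grubby --update-kernel=ALL --remove-args="resume=/dev/mapper/rhel-swap"
```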

7.5. Resizing a partition with parted

Using the parted utility, extend a partition to use unused disk space, or shrink a partition to use its capacity for different purposes.

Prerequisites

- Back up the data before shrinking a partition.

- If the resized partition will be larger than 2TiB, the disk must use the GUID Partition Table (GPT).

- If you want to shrink the partition, first shrink the file system so that it is not larger than the resized partition.

XFS does not support shrinking.

Procedure

Start the parted utility:

# parted block-device

View the current partition table:

(parted) print

From the partition table, determine:

- The minor number of the partition.

- The location of the existing partition and its new ending point after resizing.

Resize the partition:

(parted) resizepart 1 2GiB

- Replace 1 with the minor number of the partition that you are resizing.

- Replace 2GiB with the size that determines the new ending point of the resized partition, counting from the beginning of the disk. You can use size suffixes, such as 512MiB, 20GiB, or 1.5TiB. The default size is in megabytes.

View the partition table to confirm that the resized partition is in the partition table with the correct size:

(parted) print

Exit the parted shell:

(parted) quit

Verify that the kernel registers the resized partition:

# cat /proc/partitions

- Optional: If you extended the partition, extend the file system on it as well.
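Because resizepart takes the new end point rather than the new size, it can help to compute the end from the partition's current start, as shown by print. The following sketch does that arithmetic in whole MiB; the helper resizepart_end is hypothetical, and parted might align the resulting boundary slightly.

```shell
# resizepart_end START_MIB NEW_SIZE_MIB: print the END argument for
# "(parted) resizepart" so that the partition ends up NEW_SIZE_MIB long.
resizepart_end() (
    for v in "$1" "$2"; do
        case "$v" in
            ''|*[!0-9]*) echo "resizepart_end: expected whole MiB values" >&2; return 1 ;;
        esac
    done
    # The end point is measured from the start of the disk, not the partition.
    echo "$(( $1 + $2 ))MiB"
)

# A partition that starts at 1MiB and should become 2047MiB long:
resizepart_end 1 2047    # prints 2048MiB, for: (parted) resizepart 1 2048MiB
```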

Chapter 8. Strategies for repartitioning a disk

The approach to repartitioning a disk depends on which of the following is available:

- Unpartitioned free space is available.

- An unused partition is available.

- Free space in an actively used partition is available.

The following examples are simplified for clarity and do not reflect the exact partition layout when actually installing Red Hat Enterprise Linux.

8.1. Using unpartitioned free space

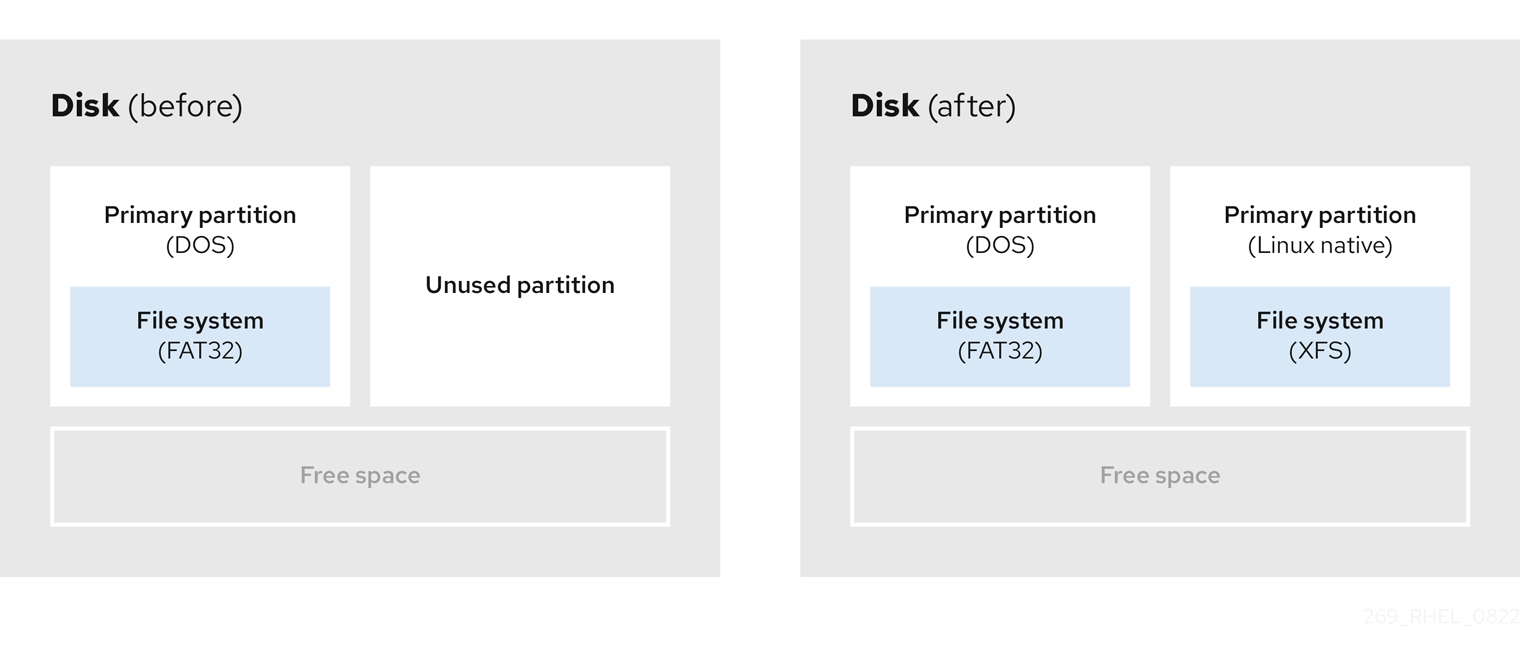

Partitions that are already defined and do not span the entire hard disk leave unallocated space that is not part of any defined partition. The following diagram shows what this might look like.

Figure 8.1. Disk with unpartitioned free space

The first diagram represents a disk with one primary partition and an undefined partition with unallocated space. The second diagram represents a disk with two defined partitions with allocated space.

An unused hard disk also falls into this category. The only difference is that none of the space is part of any defined partition.

On a new disk, you can create the necessary partitions from the unused space. Most preinstalled operating systems are configured to take up all available space on a disk drive.
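To see how much unpartitioned space a disk has, you can ask parted to include free-space rows in its output, for example parted -m -s /dev/sda unit MiB print free. In machine-readable mode, these rows carry free in the field where a partition normally shows its file system type. The following sketch sums those rows; the helper free_mib is hypothetical and expects sizes printed in MiB.

```shell
# free_mib: read `parted -m -s DEVICE unit MiB print free` output on stdin
# and print the total size of the free-space rows in MiB.
free_mib() {
    awk -F: '
        /^[0-9]+:/ {
            sub(/;$/, "")                  # drop the trailing semicolon
            if ($5 == "free") {            # free rows: number:start:end:size:free
                size = $4
                sub(/MiB$/, "", size)
                total += size
            }
        }
        END { printf "%.2f\n", total }'
}

# Hypothetical usage:
# parted -m -s /dev/sdX unit MiB print free | free_mib
```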

8.2. Using space from an unused partition

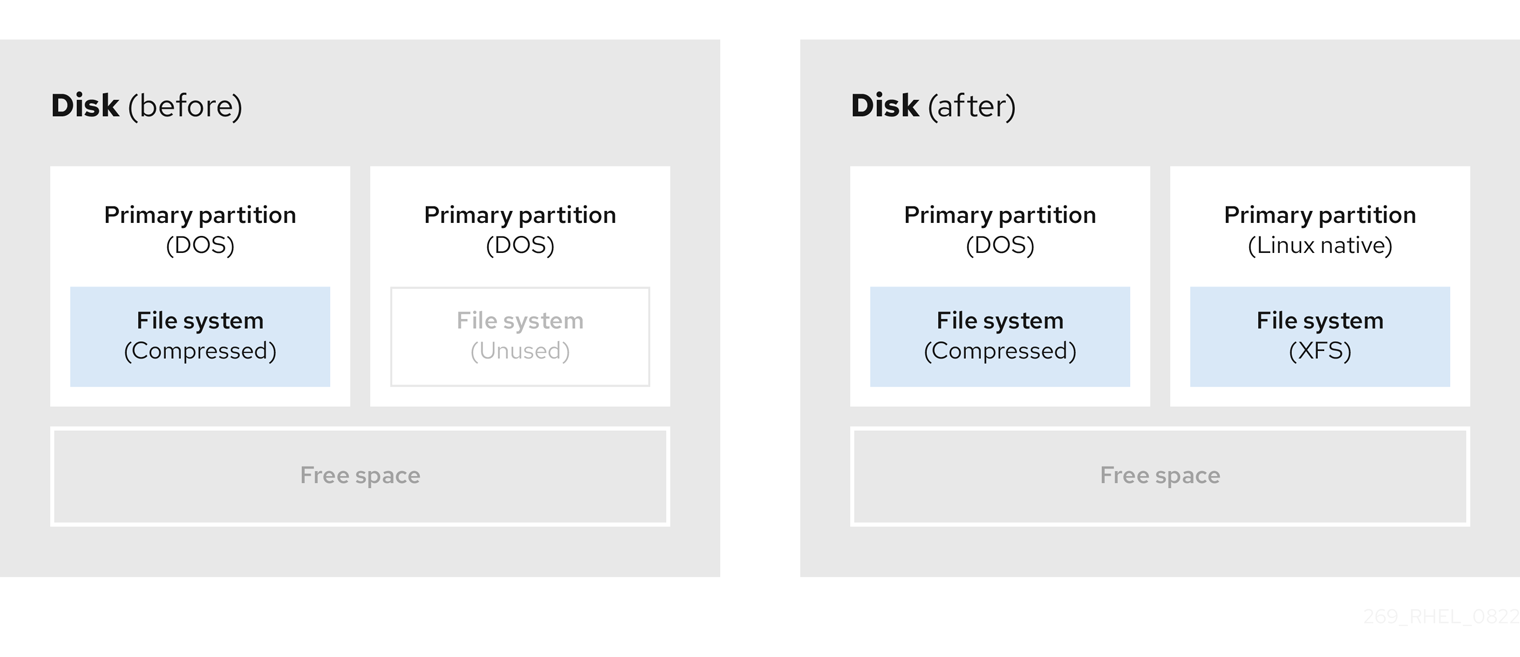

In the following example, the first diagram represents a disk with an unused partition. The second diagram represents reallocating an unused partition for Linux.

Figure 8.2. Disk with an unused partition

To use the space allocated to the unused partition, delete the partition and then create the appropriate Linux partition instead. Alternatively, during the installation process, delete the unused partition and manually create new partitions.

8.3. Using free space from an active partition

This scenario can be difficult to manage because the required free space is inside an active partition that is already in use. In most cases, hard disks of computers with preinstalled software contain one larger partition holding the operating system and data.

If you want to use an operating system (OS) on an active partition, you must reinstall the OS. Be aware that some computers, which include pre-installed software, do not include installation media to reinstall the original OS. Check whether this applies to your OS before you destroy an original partition and the OS installation.

To optimize the use of available free space, you can use destructive or non-destructive repartitioning.

8.3.1. Destructive repartitioning

Destructive repartitioning destroys the partition on your hard drive and creates several smaller partitions instead. Back up any needed data from the original partition, because this method deletes the complete contents.

After creating a smaller partition for your existing operating system, you can:

- Reinstall software.

- Restore your data.

- Start your Red Hat Enterprise Linux installation.

The following diagram is a simplified representation of using the destructive repartitioning method.

Figure 8.3. Destructive repartitioning action on disk

This method deletes all data previously stored in the original partition.

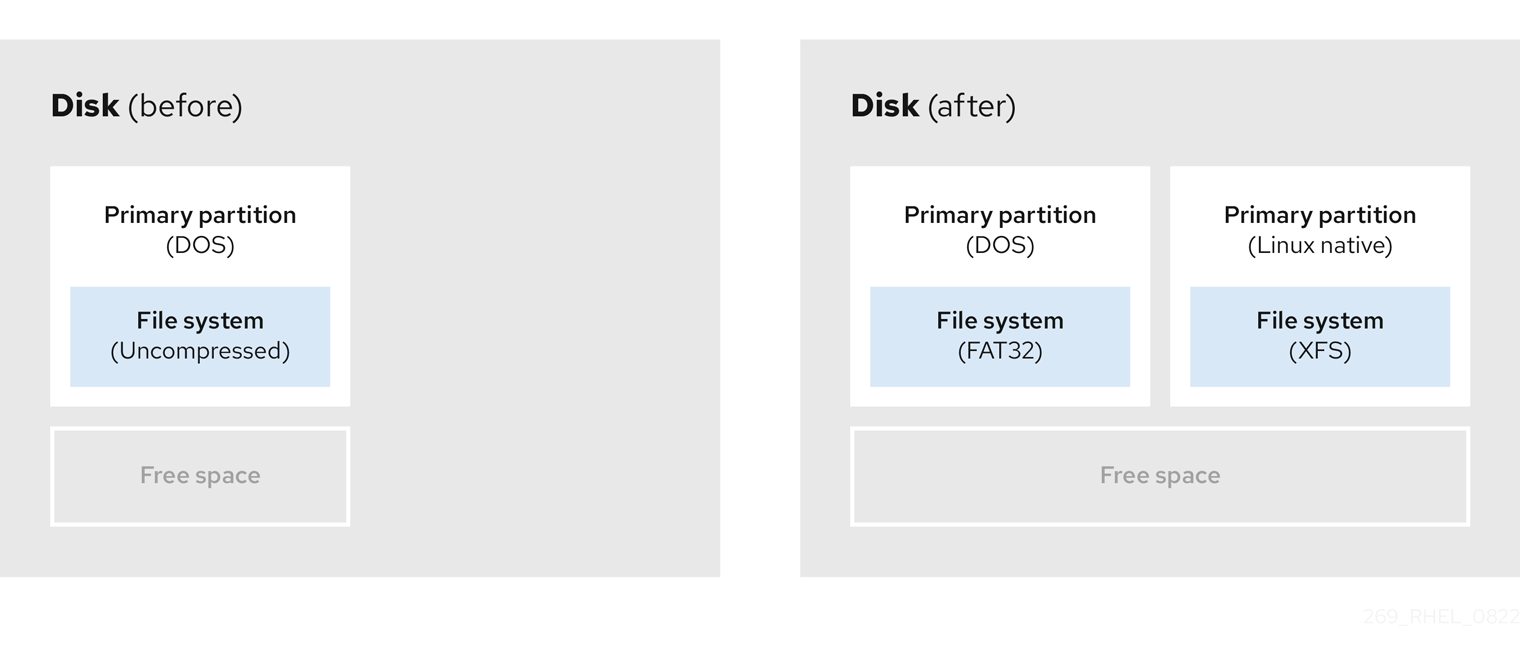

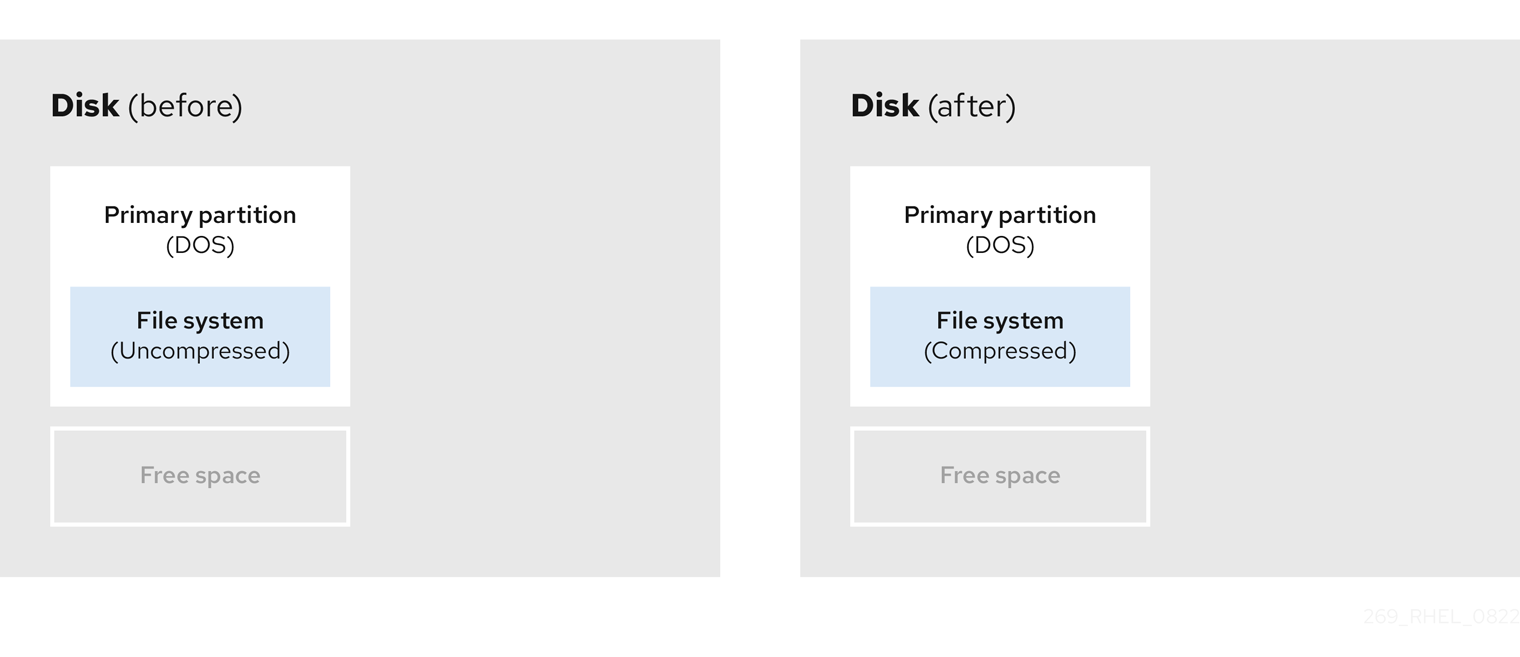

8.3.2. Non-destructive repartitioning

Non-destructive repartitioning resizes partitions without any data loss. This method is reliable, but it takes longer on large drives.

The following methods can help you perform non-destructive repartitioning.

- Compress existing data

The storage location of some data cannot be changed. This can prevent you from resizing a partition to the required size and ultimately force you into destructive repartitioning. Compressing the data in an existing partition can help you resize your partitions as needed and maximize the available free space.

The following diagram is a simplified representation of this process.

Figure 8.4. Data compression on a disk

To avoid any possible data loss, create a backup before continuing with the compression process.

- Resize the existing partition

By resizing an existing partition, you can free up space. The results vary depending on your resizing software. In most cases, you can create a new unformatted partition of the same type as the original partition.

The steps you take after resizing depend on the software you use. In the following example, the best practice is to delete the new DOS (Disk Operating System) partition and create a Linux partition instead. Verify what is most suitable for your disk before you start the resizing process.

Figure 8.5. Partition resizing on a disk

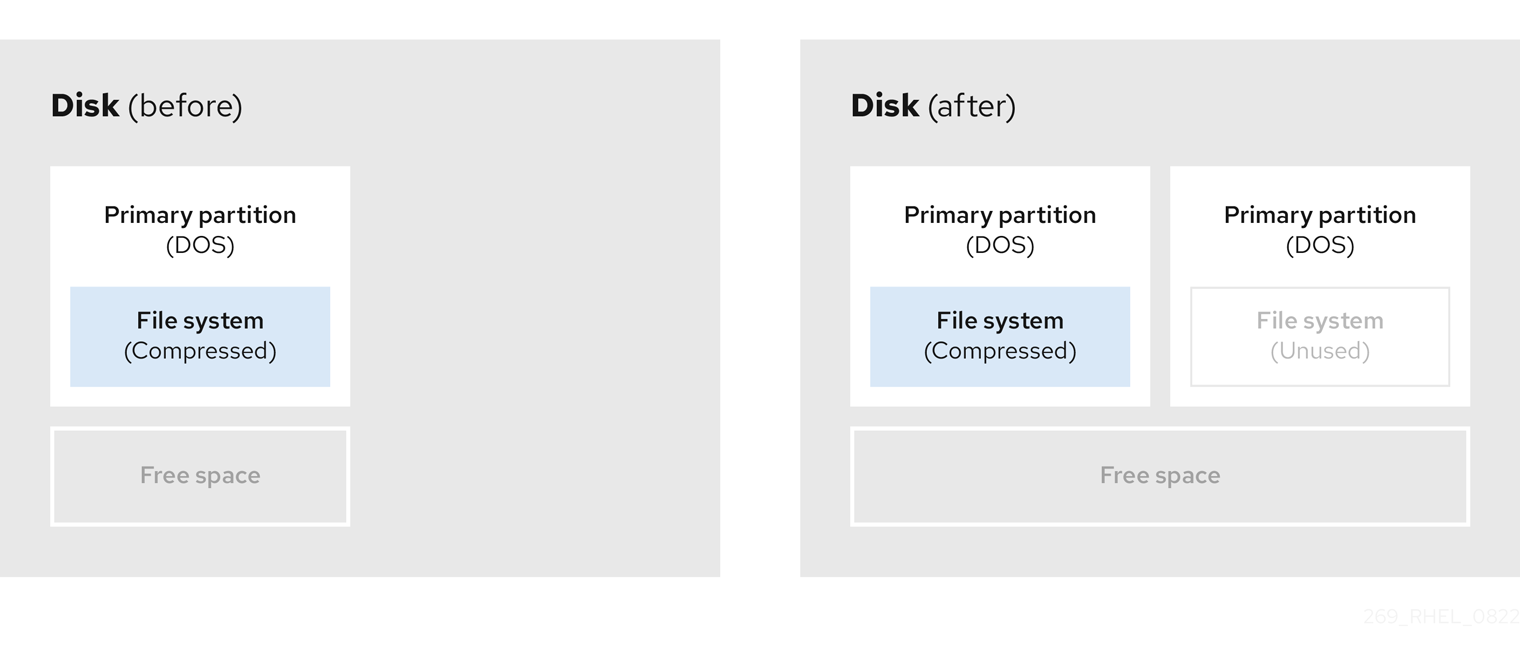

- Optional: Create new partitions

Some resizing software supports Linux-based systems. In such cases, there is no need to delete the newly created partition after resizing. Whether you need to create a new partition afterwards depends on the software you use.

The following diagram represents the disk state, before and after creating a new partition.

Figure 8.6. Disk with final partition configuration

Chapter 9. Getting started with XFS

This is an overview of how to create and maintain XFS file systems.

9.1. The XFS file system

XFS is a highly scalable, high-performance, robust, and mature 64-bit journaling file system that supports very large files and file systems on a single host. It is the default file system in Red Hat Enterprise Linux 8. XFS was originally developed in the early 1990s by SGI and has a long history of running on extremely large servers and storage arrays.

The features of XFS include:

- Reliability

- Metadata journaling, which ensures file system integrity after a system crash by keeping a record of file system operations that can be replayed when the system is restarted and the file system remounted

- Extensive run-time metadata consistency checking

- Scalable and fast repair utilities

- Quota journaling. This avoids the need for lengthy quota consistency checks after a crash.

- Scalability and performance

- Supported file system size up to 1024 TiB

- Ability to support a large number of concurrent operations

- B-tree indexing for scalability of free space management

- Sophisticated metadata read-ahead algorithms

- Optimizations for streaming video workloads

- Allocation schemes

- Extent-based allocation

- Stripe-aware allocation policies

- Delayed allocation

- Space pre-allocation

- Dynamically allocated inodes

- Other features

- Reflink-based file copies

- Tightly integrated backup and restore utilities

- Online defragmentation

- Online file system growing

- Comprehensive diagnostics capabilities

- Extended attributes (xattr). This allows the system to associate several additional name/value pairs per file.

- Project or directory quotas. This allows quota restrictions over a directory tree.

- Subsecond timestamps
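Several of the features listed above correspond directly to everyday commands. The following sketch assumes an XFS file system mounted at a hypothetical mount point such as /mnt/data. It checks the xfs_info output for reflink=1 before making a reflink-based copy with cp --reflink=always, and falls back to an ordinary copy otherwise. The function names are illustrative only.

```shell
#!/bin/bash
# Sketch: use XFS reflink-based copies when the file system supports them.
# The mount point and file names passed in are hypothetical examples.

# Succeed if xfs_info output (read from stdin) reports reflink=1.
supports_reflink() {
    grep -q 'reflink=1'
}

# Copy SRC to DST sharing data blocks (copy-on-write) when reflink is enabled
# on the file system mounted at MNT; otherwise fall back to a regular copy.
reflink_copy() {
    local mnt=$1 src=$2 dst=$3
    if xfs_info "$mnt" | supports_reflink; then
        cp --reflink=always "$src" "$dst"
    else
        cp "$src" "$dst"
    fi
}

# Other features from the list, as commands on a mounted file system:
#   xfs_growfs /mnt/data   # online growing after the underlying device was enlarged
#   xfs_fsr /mnt/data      # online defragmentation
```

A reflink copy completes almost immediately regardless of file size. Data blocks are duplicated only when one of the copies is later modified.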

Performance characteristics

XFS performs well on large systems with enterprise workloads. A large system is one with a relatively high number of CPUs, multiple HBAs, and connections to external disk arrays. XFS also performs well on smaller systems that have a multi-threaded, parallel I/O workload.

XFS performs relatively poorly on single-threaded, metadata-intensive workloads: for example, a workload that creates or deletes large numbers of small files in a single thread.

9.2. Comparison of tools used with ext4 and XFS

This section compares which tools to use to accomplish common tasks on the ext4 and XFS file systems.

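| Task | ext4 | XFS |
|---|---|---|
| Create a file system | mkfs.ext4 | mkfs.xfs |
| File system check | e2fsck | xfs_repair |
| Resize a file system | resize2fs | xfs_growfs (grow only; an XFS file system cannot be shrunk) |
| Save an image of a file system | e2image | xfs_metadump and xfs_mdrestore |
| Label or tune a file system | tune2fs | xfs_admin |
| Back up a file system | dump and restore | xfsdump and xfsrestore |
| Quota management | quota | xfs_quota |
| File mapping | filefrag | xfs_bmap |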
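For the file system check task, ext4 uses e2fsck and XFS uses xfs_repair, and both accept -n for a read-only check. The following sketch picks the command from the file system type, which the blkid utility can report. The device names are hypothetical, the helper only prints the command, and the file system should be unmounted before you run the check.

```shell
#!/bin/bash
# Sketch: print the read-only check command that matches a file system type.
# Device names are hypothetical; run the printed command on an unmounted device.

check_command() {
    local fstype=$1 device=$2
    case "$fstype" in
        ext4) echo "e2fsck -n $device" ;;      # -n: open read-only, answer "no" to all questions
        xfs)  echo "xfs_repair -n $device" ;;  # -n: no-modify mode, only report what would be repaired
        *)    echo "unsupported file system type: $fstype" >&2; return 1 ;;
    esac
}

# Usage on a real device:
#   fstype=$(blkid -o value -s TYPE /dev/sdb1)
#   check_command "$fstype" /dev/sdb1
```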

Chapter 10. Creating an XFS file system

As a system administrator, you can create an XFS file system on a block device to enable it to store files and directories.

10.1. Creating an XFS file system with mkfs.xfs

This procedure describes how to create an XFS file system on a block device.

Procedure

To create the file system:

- If the device is a regular partition, an LVM volume, an MD volume, a disk, or a similar device, use the following command:

# mkfs.xfs block-device

- Replace block-device with the path to the block device. For example, /dev/sdb1, /dev/disk/by-uuid/05e99ec8-def1-4a5e-8a9d-5945339ceb2a, or /dev/my-volgroup/my-lv.

- In general, the default options are optimal for common use.

- When using mkfs.xfs on a block device containing an existing file system, add the -f option to overwrite that file system.

- To create the file system on a hardware RAID device, check if the system correctly detects the stripe geometry of the device:

- If the stripe geometry information is correct, no additional options are needed. Create the file system:

# mkfs.xfs block-device

- If the information is incorrect, specify stripe geometry manually with the su and sw parameters of the -d option. The su parameter specifies the RAID chunk size, and the sw parameter specifies the number of data disks in the RAID device. For example:

# mkfs.xfs -d su=64k,sw=4 /dev/sda3

Use the following command to wait for the system to register the new device node:

# udevadm settle
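The sw value counts only the disks that hold data, not the disks that hold parity or mirror copies. The following sketch derives it for common layouts. It assumes RAID 0 uses all disks for data, RAID 5 loses one disk to parity, RAID 6 loses two, and RAID 10 mirrors half of the disks. Take the RAID level, disk count, and chunk size from your RAID controller configuration. The function names are hypothetical, and the helper only prints the mkfs.xfs command.

```shell
#!/bin/bash
# Sketch: derive mkfs.xfs stripe parameters for a hardware RAID device.
# RAID level, disk count, and chunk size are assumptions taken from the
# RAID controller configuration; the command is printed, not executed.

# Print the number of data disks (the sw value) for a RAID level and disk count.
data_disks() {
    local level=$1 disks=$2
    case "$level" in
        0)  echo "$disks" ;;            # striping only: every disk holds data
        5)  echo $((disks - 1)) ;;      # one disk's worth of parity
        6)  echo $((disks - 2)) ;;      # two disks' worth of parity
        10) echo $((disks / 2)) ;;      # half of the disks hold mirror copies
        *)  echo "unsupported RAID level: $level" >&2; return 1 ;;
    esac
}

# Print the mkfs.xfs command with explicit su (chunk size) and sw values.
mkfs_xfs_raid_command() {
    local device=$1 level=$2 disks=$3 chunk=$4
    local sw
    sw=$(data_disks "$level" "$disks") || return 1
    echo "mkfs.xfs -d su=$chunk,sw=$sw $device"
}
```

For example, a RAID 5 array of five disks with a 64 KiB chunk yields su=64k,sw=4, which matches the manual stripe geometry example in the procedure.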

Chapter 11. Backing up an XFS file system

As a system administrator, you can use the xfsdump utility to back up an XFS file system into a file or onto a tape. This provides a simple backup mechanism.

11.1. Features of XFS backup

This section describes key concepts and features of backing up an XFS file system with the xfsdump utility.

You can use the xfsdump utility to:

- Perform backups to regular file images.

Only one backup can be written to a regular file.

- Perform backups to tape drives.

The xfsdump utility also enables you to write multiple backups to the same tape. A backup can span multiple tapes.

To back up multiple file systems to a single tape device, simply write the backup to a tape that already contains an XFS backup. This appends the new backup to the previous one. By default, xfsdump never overwrites existing backups.

- Create incremental backups.

The xfsdump utility uses dump levels to determine a base backup to which other backups are relative. Numbers from 0 to 9 refer to increasing dump levels. An incremental backup only backs up files that have changed since the last dump of a lower level:

- To perform a full backup, perform a level 0 dump on the file system.

- A level 1 dump is the first incremental backup after a full backup. The next incremental backup would be level 2, which only backs up files that have changed since the last level 1 dump; and so on, to a maximum of level 9.

- Exclude files from a backup using size, subtree, or inode flags to filter them.
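The dump-level rule can be stated precisely: a new dump at level N is relative to the most recent earlier dump whose level is lower than N. The following sketch applies that rule to a hypothetical list of earlier dump levels in chronological order. It is for illustration only, because xfsdump keeps its own inventory of previous dumps and determines the base dump itself. The function name is hypothetical.

```shell
#!/bin/bash
# Sketch: find the base dump of a new dump, following the dump-level rule.
# Arguments: the new dump level, then the levels of earlier dumps in
# chronological order (oldest first). Prints the 1-based position of the
# base dump; prints nothing if no lower-level dump exists (always the case
# for a level 0 full dump).

base_dump_index() {
    local new_level=$1
    shift
    local levels=("$@")
    local i
    # Walk backwards from the most recent dump to the oldest one.
    for ((i = ${#levels[@]} - 1; i >= 0; i--)); do
        if (( levels[i] < new_level )); then
            echo $((i + 1))
            return 0
        fi
    done
}
```

With the history 0 1 2 1, a new level 2 dump is relative to the fourth dump (the latest level 1), while a new level 1 dump is relative to the first dump (the level 0 full backup).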

11.2. Backing up an XFS file system with xfsdump

This procedure describes how to back up the content of an XFS file system into a file or a tape.

Prerequisites

- An XFS file system that you can back up.

- Another file system or a tape drive where you can store the backup.

Procedure

Use the following command to back up an XFS file system:

# xfsdump -l level [-L label] \
    -f backup-destination path-to-xfs-filesystem

- Replace level with the dump level of your backup. Use 0 to perform a full backup or 1 to 9 to perform subsequent incremental backups.

- Replace backup-destination with the path where you want to store your backup. The destination can be a regular file, a tape drive, or a remote tape device. For example, /backup-files/Data.xfsdump for a file or /dev/st0 for a tape drive.

- Replace path-to-xfs-filesystem with the mount point of the XFS file system you want to back up. For example, /mnt/data/. The file system must be mounted.

- When backing up multiple file systems and saving them on a single tape device, add a session label to each backup using the -L label option so that it is easier to identify them when restoring. Replace label with any name for your backup: for example, backup_data.

Example 11.1. Backing up multiple XFS file systems

To back up the content of XFS file systems mounted on the /boot/ and /data/ directories and save them as files in the /backup-files/ directory:

# xfsdump -l 0 -f /backup-files/boot.xfsdump /boot
# xfsdump -l 0 -f /backup-files/data.xfsdump /data

To back up multiple file systems on a single tape device, add a session label to each backup using the -L label option:

# xfsdump -l 0 -L "backup_boot" -f /dev/st0 /boot
# xfsdump -l 0 -L "backup_data" -f /dev/st0 /data
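When many file systems share one tape, deriving each session label from the mount point keeps the labels consistent. The following sketch follows the backup_boot and backup_data naming from the example above. That backup_<name> scheme is a convention of this sketch, not something xfsdump requires. The tape device and mount points are placeholders, and the commands are printed unless DRY_RUN=0 is set.

```shell
#!/bin/bash
# Sketch: level 0 dumps of several XFS file systems to one tape device,
# each with a session label derived from its mount point.
# The backup_<name> label scheme and the tape device are assumptions.

run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then echo "$*"; else "$@"; fi
}

# /boot -> backup_boot, /srv/data/ -> backup_srv_data
session_label() {
    local mnt=${1#/}              # drop the leading slash
    mnt=${mnt%/}                  # drop a trailing slash, if any
    echo "backup_${mnt//\//_}"    # replace the remaining slashes with underscores
}

dump_all_to_tape() {
    local tape=$1
    shift
    local mnt
    for mnt in "$@"; do
        # Each dump is appended to the tape after the previous one.
        run xfsdump -l 0 -L "$(session_label "$mnt")" -f "$tape" "$mnt"
    done
}

# Example (prints the commands only):
# dump_all_to_tape /dev/st0 /boot /data
```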

Chapter 12. Restoring an XFS file system from backup

As a system administrator, you can use the xfsrestore utility to restore an XFS backup that was created with the xfsdump utility and stored in a file or on a tape.

12.1. Features of restoring XFS from backup

The xfsrestore utility restores file systems from backups produced by xfsdump. The xfsrestore utility has two modes:

- The simple mode enables users to restore an entire file system from a level 0 dump. This is the default mode.

- The cumulative mode enables file system restoration from an incremental backup: that is, level 1 to level 9.
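In cumulative mode, which the -r option of xfsrestore selects, you restore the level 0 dump first. You then apply each incremental dump in order of increasing level to the same destination. The following sketch prints that sequence. It assumes, hypothetically, that each dump file name encodes its level, as in data.level0.xfsdump or data.level1.xfsdump, so that the script can sort the files. The function names are illustrative only.

```shell
#!/bin/bash
# Sketch: print xfsrestore commands for a cumulative restore.
# Assumes (hypothetically) that each dump file name ends in "levelN.xfsdump",
# for example data.level0.xfsdump, data.level1.xfsdump, data.level2.xfsdump.

# Extract N from ".../name.levelN.xfsdump".
level_of() {
    local name=${1##*level}
    echo "${name%%.xfsdump}"
}

# Print one "xfsrestore -r" command per dump file, lowest level first,
# all restoring into the same destination directory.
cumulative_restore_commands() {
    local dest=$1
    shift
    local f
    for f in "$@"; do
        printf '%s %s\n' "$(level_of "$f")" "$f"
    done | sort -n | while read -r _ f; do
        echo "xfsrestore -r -f $f $dest"
    done
}

# Example:
# cumulative_restore_commands /mnt/restore data.level2.xfsdump data.level0.xfsdump data.level1.xfsdump
```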