Managing storage devices

Configuring and managing local and remote storage devices

Abstract

- Create disk partitions according to your requirements. Use disk encryption to protect the data on a block device.

- Create a Redundant Array of Independent Disks (RAID) to store data across multiple drives and avoid data loss.

- Use iSCSI and NVMe over Fabrics to access storage over a network.

- Set up Stratis to manage pools of physical storage devices.

Providing feedback on Red Hat documentation

We appreciate your feedback on our documentation. Let us know how we can improve it.

Submitting feedback through Jira (account required)

- Log in to the Jira website.

- Click Create in the top navigation bar.

- Enter a descriptive title in the Summary field.

- Enter your suggestion for improvement in the Description field. Include links to the relevant parts of the documentation.

- Click Create at the bottom of the dialogue.

Chapter 1. Overview of available storage options

There are several local, remote, and cluster-based storage options available on RHEL 9.

Local storage implies that the storage devices are either installed on the system or directly attached to the system.

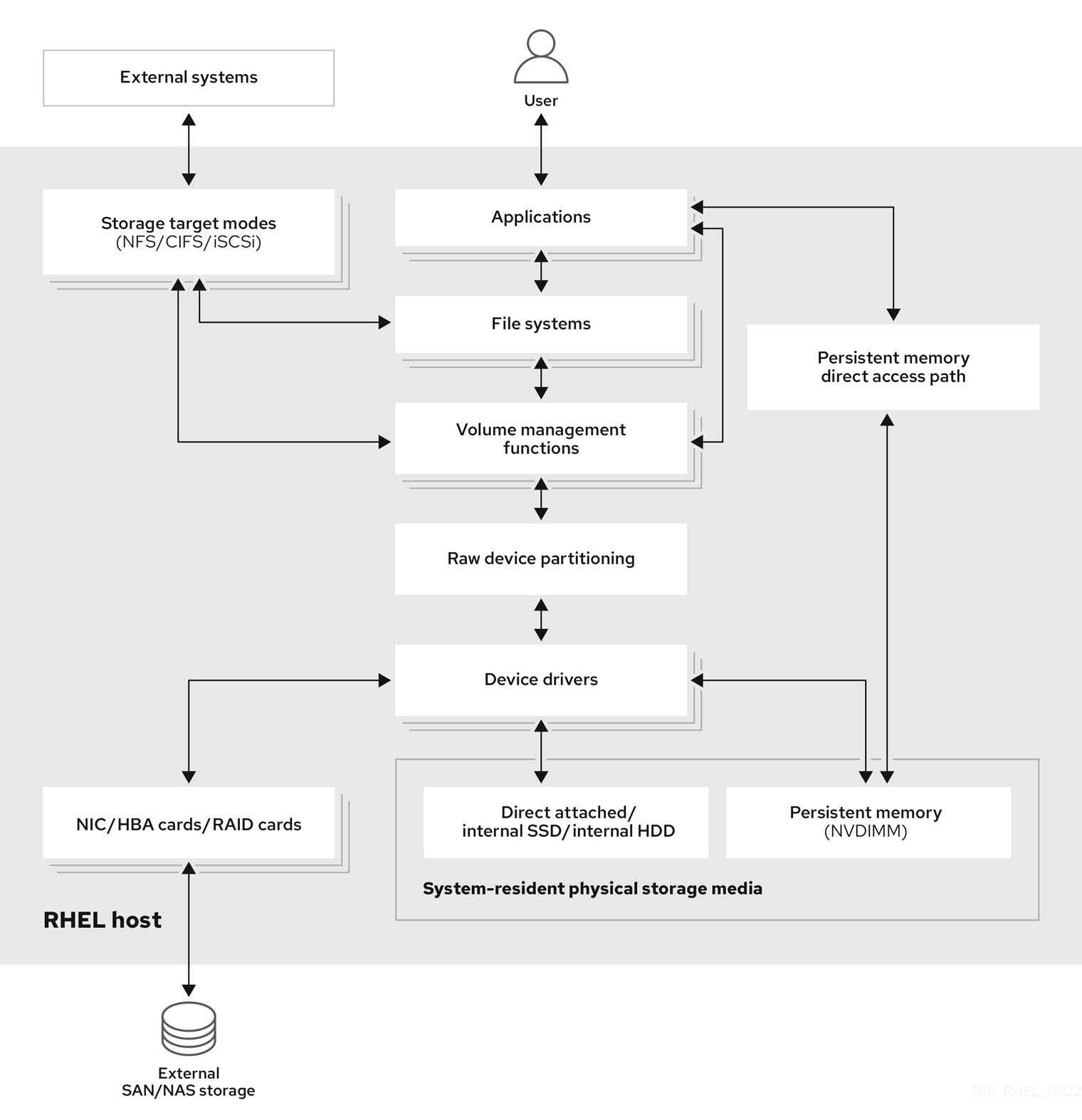

With remote storage, devices are accessed over LAN, the internet, or using a Fibre channel network. The following high level Red Hat Enterprise Linux storage diagram describes the different storage options.

Figure 1.1. High level Red Hat Enterprise Linux storage diagram

1.1. Local storage overview

Red Hat Enterprise Linux 9 offers several local storage options.

- Basic disk administration

Using parted and fdisk, you can create, modify, delete, and view disk partitions. The following are the partitioning layout standards:

- Master Boot Record (MBR)

- It is used with BIOS-based computers. You can create primary, extended, and logical partitions.

- GUID Partition Table (GPT)

- It uses globally unique identifiers (GUIDs) and provides a unique disk GUID and unique partition GUIDs.

- Storage consumption options

- Non-Volatile Dual In-line Memory Modules (NVDIMM) Management

- It is a combination of memory and storage. You can enable and manage various types of storage on NVDIMM devices connected to your system.

- Block Storage Management

- Data is stored in the form of blocks where each block has a unique identifier.

- File Storage

- Data is stored at file level on the local system. These data can be accessed locally using XFS (default) or ext4, and over a network by using NFS and SMB.

- Logical volumes

- Logical Volume Manager (LVM)

- It creates logical devices from physical devices. Logical volume (LV) is a combination of the physical volumes (PV) and volume groups (VG).

- Virtual Data Optimizer (VDO)

It reduces data by using deduplication, compression, and thin provisioning. Placing a logical volume below VDO helps with:

- Extending the VDO volume

- Spanning the VDO volume over multiple devices

- Local file systems

- XFS

- The default RHEL file system.

- Ext4

- A legacy file system.

- Stratis

- Stratis is a hybrid user-and-kernel local storage management system that supports advanced storage features.

1.2. Remote storage overview

The following are the remote storage options available in RHEL 9:

- Storage connectivity options

- iSCSI

- RHEL 9 uses the targetcli tool to add, remove, view, and monitor iSCSI storage interconnects.

- Fibre Channel (FC)

RHEL 9 provides the following native Fibre Channel drivers:

- lpfc

- qla2xxx

- zfcp

- Non-volatile Memory Express (NVMe)

An interface that allows host software utilities to communicate with solid-state drives. Use the following types of fabric transport to configure NVMe over fabrics:

- NVMe over fabrics using Remote Direct Memory Access (NVMe/RDMA)

- NVMe over fabrics using Fibre Channel (NVMe/FC)

- NVMe over fabrics using TCP (NVMe/TCP)

- Device Mapper multipathing (DM Multipath)

- Allows you to configure multiple I/O paths between server nodes and storage arrays into a single device. These I/O paths are physical SAN connections that can include separate cables, switches, and controllers.

- Network file system

- NFS

- SMB

1.3. GFS2 file system overview

The Red Hat Global File System 2 (GFS2) file system is a 64-bit symmetric cluster file system which provides a shared name space and manages coherency between multiple nodes sharing a common block device. A GFS2 file system is intended to provide a feature set which is as close as possible to a local file system, while at the same time enforcing full cluster coherency between nodes. To achieve this, the nodes employ a cluster-wide locking scheme for file system resources. This locking scheme uses communication protocols such as TCP/IP to exchange locking information.

In a few cases, the Linux file system API does not allow the clustered nature of GFS2 to be totally transparent. For example, programs using POSIX locks in GFS2 should avoid using the GETLK function because, in a clustered environment, the process ID may be for a different node in the cluster. In most cases, however, the functionality of a GFS2 file system is identical to that of a local file system.

The Red Hat Enterprise Linux Resilient Storage Add-On provides GFS2, and it depends on the Red Hat Enterprise Linux High Availability Add-On to provide the cluster management required by GFS2.

The gfs2.ko kernel module implements the GFS2 file system and is loaded on GFS2 cluster nodes.

To get the best performance from GFS2, it is important to take into account the performance considerations which stem from the underlying design. Just like a local file system, GFS2 relies on the page cache in order to improve performance by local caching of frequently used data. In order to maintain coherency across the nodes in the cluster, cache control is provided by the glock state machine.

Chapter 2. Persistent naming attributes

The way you identify and manage storage devices ensures the stability and predictability of the system. RHEL 9 uses two primary naming schemes for this purpose: traditional device names and persistent naming attributes.

Traditional device names

The Linux kernel assigns traditional device names based on the order in which they appear in the system or their enumeration. For example, the first SATA drive is usually labeled as /dev/sda, the second as /dev/sdb, and so on. While these names are straightforward, they are subject to change when devices are added or removed, the hardware configuration is modified, or the system is rebooted. This can pose challenges for scripting and configuration files. Furthermore, traditional names lack descriptive information about the purpose or characteristics of the device.

Persistent naming attributes

Persistent naming attributes (PNAs) are based on unique characteristics of the storage devices, making them more stable and predictable when presented to the system, even across system reboots. One of the key benefits of PNAs is their resilience to changes in hardware configurations, making them ideal for maintaining consistent naming conventions. When using PNAs, you can reference storage devices within scripts, configuration files, and management tools without concerns about unexpected name changes. Additionally, PNAs often include valuable metadata, such as the device type or manufacturer information (for example, a combination of vendor, model name, and serial number), which makes them more descriptive for device identification and management. PNAs are ultimately used to create device links in the /dev/disk directory to access individual devices. udev rules determine how the device link names are constructed and managed.

The following is a list of directories you can find in /dev/disk/:

Directories with content that remains unique even after system restart:

- by-id: Based on hardware attributes, with the vendor/model/serial_string combination.

- by-path: Based on physical hardware placement. For devices or disks physically attached to a machine, this is the slot or port where they are connected physically to the host bus on the motherboard. However, for devices or disks attached over a network, this contains the network address specification.

- by-partlabel: Based on a label assigned to a device partition. These labels are assigned by the user.

- by-partuuid: Based on a unique number in the form of a UUID that is autogenerated.

- by-uuid: Based on a unique number in the form of a UUID that is autogenerated.

Directory with content that remains unique during the current system run, but not after system restart:

- by-diskseq: diskseq is the 'disk sequence number' that starts at 1 when the system boots. The system assigns this number to a newly attached disk, and each disk after that gets the next number in sequence. When the system reboots, the counter restarts at 1.

Directories with content that is used specifically for loop devices:

- by-loop-ref

- by-loop-inode
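To see how these directories map link names to kernel device nodes on a running system, you can resolve each symbolic link. The following is a minimal sketch, not an official tool: the list_links helper is a hypothetical name introduced for illustration, and it assumes that the GNU readlink utility is available. Directories that do not exist on your system are skipped silently.

```shell
#!/bin/sh
# Print "<link name> -> <resolved target>" for every symlink in a directory.
# list_links is a hypothetical helper name used only in this sketch.
list_links() {
    dir=$1
    [ -d "$dir" ] || return 0          # skip directories that do not exist
    for link in "$dir"/*; do
        [ -L "$link" ] || continue     # ignore anything that is not a symlink
        printf '%s -> %s\n' "${link##*/}" "$(readlink -f "$link")"
    done
}

# Walk some of the directories described above.
for sub in by-id by-path by-partlabel by-partuuid by-uuid by-diskseq; do
    echo "== /dev/disk/$sub"
    list_links "/dev/disk/$sub"
done
```

Several links in different directories typically resolve to the same kernel name, which is why any of them can be used to reference the device.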

2.1. Persistent attributes for identifying file systems and block devices

In RHEL 9 storage, persistent naming attributes (PNAs) provide consistent and reliable naming for storage devices across system reboots, hardware changes, or other events. These attributes identify storage devices consistently, even if the storage devices are added, removed, or reconfigured.

PNAs are used to identify both file systems and block devices, but they serve different purposes:

- Persistent attributes for identifying file systems

Universally unique identifier (UUID)

UUIDs are primarily used to uniquely identify file systems on storage devices. Each file system instance has its own UUID assigned automatically, and this identifier remains constant even if the file system is unmounted, remounted, or the device is detached and reattached.

Label

Labels are user-assigned names for file systems. While they can be used to identify and reference file systems, they are not as standardized as UUIDs. Since a user assigns the file system label, its uniqueness depends on their choice. Labels are often used as alternatives to UUIDs to specify file systems in configuration files.

When you assign a label to a file system, it becomes part of the file system metadata. This label remains associated with the file system even if it is unmounted, remounted, or the device is detached and reattached.

- Persistent attributes for identifying block devices

Universally unique identifier (UUID)

UUIDs can be used to identify storage block devices. When a storage device is formatted, a UUID is often assigned to the device itself. Such UUIDs are usually generated and assigned to virtual block devices layered on top of other block devices, where the real devices are at the bottom level. For example, device-mapper (DM) based devices and their related subsystems, such as Logical Volume Manager (LVM) and crypt, use UUIDs for device identification, such as Logical Volume UUID (LVUUID) and crypt UUID.

Also, multiple-device (MD) based devices have UUIDs assigned. The virtual devices usually also mark the underlying devices with component UUIDs. For example, LVM marks its underlying devices with a Physical Volume UUID (PVUUID) and MD marks its underlying devices with an MD component UUID.

These UUIDs are embedded within the virtual block device metadata and are used as persistent naming attributes. They allow you to uniquely identify the block device, even if you change the file system that resides on it. UUIDs are also assigned for device partitions.

Such UUIDs can coexist with other device IDs. For example, an sda device at the bottom of the device stack, which is identified by its vendor/model/serial_number combination or WWID, can also have a PVUUID assigned by LVM. This is then recognized by LVM itself to build up the Volume Group (VG) or Logical Volume (LV) in a layer above it.

Label or Name

Labels or names can be assigned to certain block devices too. This applies to partitions, which can have user-assigned labels. Some of the virtual block devices, like device-mapper (DM) based devices and multiple-device (MD) based devices, also use names to identify devices.

World Wide Identifier (WWID)

WWID encompasses a family of globally unique identifiers that are associated with storage block devices or storage components in general. They are commonly used in enterprise-level Storage Area Networks (SANs), like Fibre Channel (FC), to identify a storage node by its World Wide Node Name (WWNN), or an actual port or connection to a storage device on the node by its World Wide Port Name (WWPN). WWIDs ensure consistent communication between servers and SAN storage devices and help manage redundant paths to storage devices.

Other types of devices, like NVMe devices, may use a form of WWIDs too. These devices do not necessarily need to be accessed over a network or SAN, and they do not have to be enterprise-level devices either.

The WWID format does not follow a single standard. For example, SCSI uses formats such as NAA, T10, and EUI. NVMe uses formats such as EUI-64, NGUID, and UUID.

Serial string

The serial string is a unique string identifier assigned to each storage block device by the manufacturer. It can be used to differentiate among storage devices and may be used in combination with other attributes like UUIDs or WWIDs for device management.

Within udev rules, and consequently in the /dev/disk/by-id content, the 'short serial string' typically represents the actual serial string as reported by the device itself. The 'serial string', in contrast, is composed of several components, usually <bus_type>-<vendor_name>_<model_name>_<short_serial_string>.

WWIDs and serial strings are preferred for real devices. For virtual devices, UUIDs or names are preferred.

2.2. udev device naming rules

The userspace device manager (udev) subsystem allows you to define rules for assigning persistent names to devices. These rules are stored in files with a .rules extension. There are two primary locations for storing the udev rules:

- The /usr/lib/udev/rules.d/ directory contains default rules that come with an installed package.

- The /etc/udev/rules.d/ directory is intended for custom udev rules.

If a rules file in /usr/lib/udev/rules.d/ is modified, it will be overwritten by the rules file of the package during an update. Hence, add any manual or custom rule in /etc/udev/rules.d/, where it is retained until removed explicitly. Before use, udev rules from both directories are merged. If a rules file in /etc/udev/rules.d/ has the same name as one in /usr/lib/udev/rules.d/, the one in /etc/udev/rules.d/ takes precedence.
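The merge and precedence behavior can be illustrated with a short script. This is a simplified sketch that considers only the two directories named above and only compares file names. The effective_rules function is a hypothetical helper, not part of udev, and the lexical ordering of the merged list is an assumption about how the files are processed.

```shell
#!/bin/sh
# List rules files as "<file name> <directory that supplies it>".
# A file in the custom directory overrides a same-named file in the
# default directory. effective_rules is a hypothetical helper name.
effective_rules() {
    default_dir=$1
    custom_dir=$2
    for f in "$default_dir"/*.rules "$custom_dir"/*.rules; do
        [ -e "$f" ] && echo "${f##*/}"       # collect bare file names
    done | LC_ALL=C sort -u | while read -r name; do
        if [ -e "$custom_dir/$name" ]; then
            echo "$name $custom_dir"         # custom copy takes precedence
        else
            echo "$name $default_dir"
        fi
    done
}

effective_rules /usr/lib/udev/rules.d /etc/udev/rules.d
```

A file name that appears with /etc/udev/rules.d in the output either exists only there or overrides a packaged file of the same name.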

The purpose of these rules is to ensure that storage devices are consistently and predictably identified, even across system reboots and configuration changes.

udev rules define actions to execute based on incoming events that notify about adding, changing or removing a device. This also helps to collect values for the persistent storage attributes and direct udev to create the /dev content based on the collected information. The udev rules are written in human-readable format using key-value pairs.

In the case of storage devices, udev rules control creation of symbolic links in the /dev/disk/ directory. These symbolic links provide user-friendly aliases for storage devices, making it more convenient to refer to and manage these devices.

You can create custom udev rules to specify how devices should be named based on various attributes such as serial numbers, World Wide Name (WWN) identifiers, or other device-specific characteristics. By defining specific naming rules, you have precise control over how devices are identified within the system. To create a specific custom symbolic link in /dev for a device see the udev(7) man page on your system.
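As an illustration of the key-value format, the following sketch prints a rule that adds an extra symbolic link for a disk with a given short serial string. The make_symlink_rule function, the serial value EXAMPLE1234, and the link name backup_disk are hypothetical. The sketch also assumes that your device exposes the ID_SERIAL_SHORT property, which you can check in the udev database before relying on it.

```shell
#!/bin/sh
# Print a udev rule that adds /dev/<link_name> for the disk whose
# ID_SERIAL_SHORT property equals <serial>.
# make_symlink_rule is a hypothetical helper used only in this sketch.
make_symlink_rule() {
    serial=$1
    link_name=$2
    printf 'SUBSYSTEM=="block", ENV{DEVTYPE}=="disk", ENV{ID_SERIAL_SHORT}=="%s", SYMLINK+="%s"\n' \
        "$serial" "$link_name"
}

# Example values are placeholders, not taken from a real device.
make_symlink_rule EXAMPLE1234 backup_disk
```

To try such a rule, you could save the printed line in a file with a .rules extension under /etc/udev/rules.d/, using a file name that sorts after the default storage rules, and then reload the rules with udevadm control --reload and replay events with udevadm trigger.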

While udev rules are very flexible, it is important to be aware of udev limitations:

- Accessibility Timing: Some storage devices might not be accessible at the time of a udev query.

- Event-Based Processing: The kernel can send udev events at any time, potentially triggering rule processing and link removal if a device is inaccessible.

- Processing Delay: There might be a delay between event generation and processing, especially with numerous devices, causing a lag between kernel detection and link availability.

- Device Accessibility: External programs invoked by udev rules, like blkid, might briefly open the device, making it temporarily inaccessible for other tasks.

- Link Updates: Device names managed by udev in /dev/disk/ can change between major releases, requiring link updates.

The following table lists the symlinks available in /dev/disk.

| Device type | Nonpersistent Name (Kernel Name) | Persistent Symlink Names |
|---|---|---|
| Real Devices | | |
| nvme (Non-Volatile Memory Express) | /dev/nvme* | /dev/disk/by-id/nvme-<wwid> |
| scsi (Small Computer System Interface) | /dev/sd*, /dev/sr* | /dev/disk/by-id/scsi-<model>_<serial> |
| ata (Advanced Technology Attachment)/atapi (ATA Packet Interface) | /dev/sd*, /dev/sr* | /dev/disk/by-id/ata-<model>_<serial> |
| cciss (Compaq Command Interface for SCSI-3 Support) | /dev/cciss* | /dev/disk/by-id/cciss-<model>_<serial> |
| virtio (Virtual Input Output) | /dev/vd* | /dev/disk/by-id/virtio-<serial> |
| pmem (Persistent Memory) | /dev/pmem* | /dev/disk/by-id/pmem-<uuid> |
| mmc (MultiMedia Card) | /dev/mmcblk* | /dev/disk/by-id/mmc-<name>_<serial> |
| memstick (Memory Stick) | /dev/msblk* | /dev/disk/by-id/memstick-<name>_<serial> |
| Virtual devices | | |
| loop | /dev/loop* | /dev/disk/by-loop-inode/<id_loop_backing_device>-<id_loop_backing_inode> |
| dm (device-mapper) | /dev/dm-* | /dev/mapper/<name> |
| md (multiple device) | /dev/md* | /dev/md/<devname> |
| Partitions (either on top of a real or a virtual device) | | |
| (any) | (any) | /dev/disk/by-partuuid/<uuid> |
| LVM PVs (Logical Volume Manager Physical Volumes; either on top of a real or a virtual device) | | |
| (any) | (any) | /dev/disk/by-id/lvm-pv-uuid-<pvuuid> |

2.2.1. Obtaining the device links value for an existing device

You can obtain the value of the device links for an existing device from the current udev database.

Prerequisites

- The device is present and connected to the system.

Procedure

List all the assigned device symbolic links (DEVLINKS) to the base kernel device node (DEVNAME) under /dev for an existing device:

# udevadm info --name /dev/nvme0n1 --query property --property DEVLINKS --value

Replace nvme0n1 with your device name.

You can also obtain the base kernel name that all the devlinks point to by using the following command:

# udevadm info --name /dev/nvme0n1 --query property --property DEVNAME --value
/dev/nvme0n1

The kernel name and any of its devlinks can be used interchangeably.

To get the full list of devlinks, you can also pass one of the devlinks to the --name option instead of the kernel name.
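The DEVLINKS property is a single space-separated string, which can be hard to read for devices with many links. The following sketch splits such a value into one link per line. The split_devlinks function is a hypothetical helper, and the udevadm call mirrors the DEVNAME command shown in this procedure with the DEVLINKS property substituted. The call runs only if udevadm and the example device /dev/nvme0n1 are present.

```shell
#!/bin/sh
# Print one devlink per line, sorted, from a space-separated DEVLINKS value.
# split_devlinks is a hypothetical helper used only in this sketch.
split_devlinks() {
    printf '%s\n' "$1" | tr -s ' ' '\n' | LC_ALL=C sort
}

# Query the udev database only when the tool and the example device exist.
if command -v udevadm >/dev/null 2>&1 && [ -b /dev/nvme0n1 ]; then
    split_devlinks "$(udevadm info --name /dev/nvme0n1 --query property --property DEVLINKS --value)"
fi
```

Replace /dev/nvme0n1 with your device name, or with any one of its devlinks.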

Chapter 3. Disk partitions

To divide a disk into one or more logical areas, use a disk partitioning utility. Partitioning enables separate management of each partition.

3.1. Overview of partitions

The hard disk stores information about the location and size of each disk partition in the partition table. Using information from the partition table, the operating system treats each partition as a logical disk. Some of the advantages of disk partitioning include:

- Reduce the likelihood of administrative oversights of Physical Volumes

- Ensure sufficient backup

- Provide efficient disk management

3.2. Comparison of partition table types

To enable partitions on a device, format the block device with a partition table. The following table compares the properties of the different types of partition tables that you can create on a block device.

This section does not cover the DASD partition table, which is specific to the IBM Z architecture.

| Partition table | Maximum number of partitions | Maximum partition size |
|---|---|---|
| Master Boot Record (MBR) | 4 primary, or 3 primary and 1 extended partition with 12 logical partitions | 2 TiB if using 512-byte sector drives |
| GUID Partition Table (GPT) | 128 | 8 ZiB if using 512-byte sector drives |

3.3. MBR disk partitions

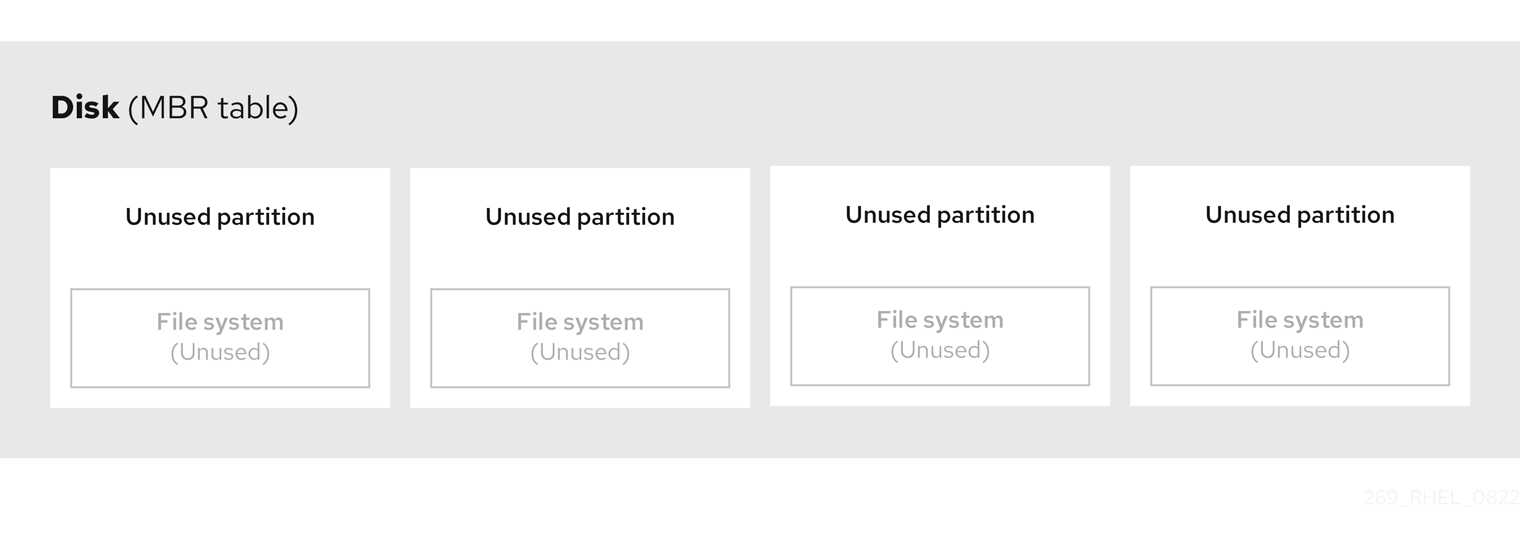

The partition table is stored at the very start of the disk, before any file system or user data. For a more clear example, the partition table is shown as being separate in the following diagrams.

Figure 3.1. Disk with MBR partition table

As the previous diagram shows, the partition table is divided into four sections of four unused primary partitions. A primary partition is a partition on a hard disk drive that contains only one logical drive (or section). Each logical drive holds the information necessary to define a single partition, meaning that the partition table can define no more than four primary partitions.

Each partition table entry contains important characteristics of the partition:

- The points on the disk where the partition starts and ends

- The state of the partition, as only one partition can be flagged as active

- The type of partition

The starting and ending points define the size and location of the partition on the disk. The boot loaders of some operating systems use the active flag, which means that the operating system in the partition that is marked "active" is booted.

The type is a number that identifies the anticipated usage of a partition. Some operating systems use the partition type to:

- Denote a specific file system type

- Flag the partition as being associated with a particular operating system

- Indicate that the partition contains a bootable operating system

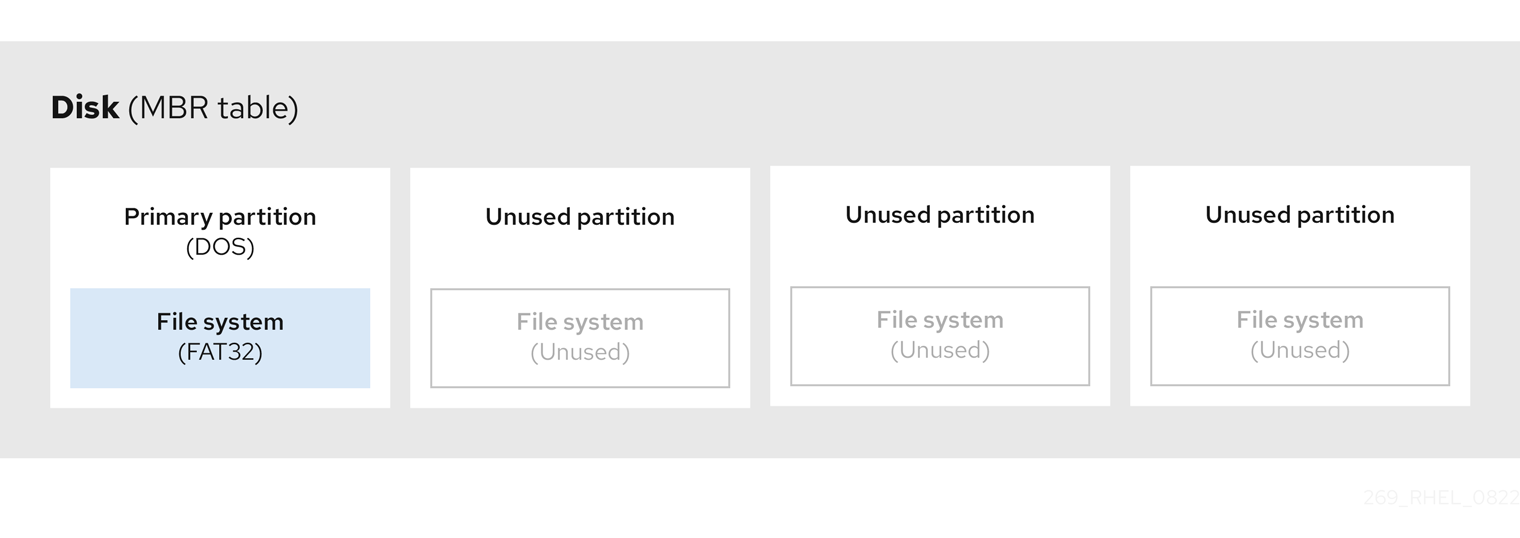

The following diagram shows an example of a drive with a single partition. In this example, the first partition is labeled as DOS partition type:

Figure 3.2. Disk with a single partition

3.4. Extended MBR partitions

To create additional partitions, if needed, set the type to extended.

An extended partition is similar to a disk drive. It has its own partition table, which points to one or more logical partitions, contained entirely within the extended partition. The following diagram shows a disk drive with two primary partitions, and one extended partition containing two logical partitions, along with some unpartitioned free space.

Figure 3.3. Disk with two primary partitions and one extended MBR partition

You can have at most four primary and extended partitions in total, but there is no fixed limit to the number of logical partitions. However, Linux can access a maximum of 15 partitions on a single disk drive.

3.5. MBR partition types

The table below shows a list of some of the most commonly used MBR partition types and hexadecimal numbers to represent them.

| MBR partition type | Value | MBR partition type | Value |
|---|---|---|---|
| Empty | 00 | Novell Netware 386 | 65 |
| DOS 12-bit FAT | 01 | PIC/IX | 75 |
| XENIX root | 02 | Old MINIX | 80 |
| XENIX usr | 03 | Linux/MINIX | 81 |
| DOS 16-bit <=32M | 04 | Linux swap | 82 |
| Extended | 05 | Linux native | 83 |
| DOS 16-bit >=32 | 06 | Linux extended | 85 |
| OS/2 HPFS | 07 | Amoeba | 93 |
| AIX | 08 | Amoeba BBT | 94 |
| AIX bootable | 09 | BSD/386 | a5 |
| OS/2 Boot Manager | 0a | OpenBSD | a6 |
| Win95 FAT32 | 0b | NEXTSTEP | a7 |
| Win95 FAT32 (LBA) | 0c | BSDI fs | b7 |
| Win95 FAT16 (LBA) | 0e | BSDI swap | b8 |
| Win95 Extended (LBA) | 0f | Syrinx | c7 |
| Venix 80286 | 40 | CP/M | db |
| Novell | 51 | DOS access | e1 |
| PReP Boot | 41 | DOS R/O | e3 |
| GNU HURD | 63 | DOS secondary | f2 |
| Novell Netware 286 | 64 | BBT | ff |

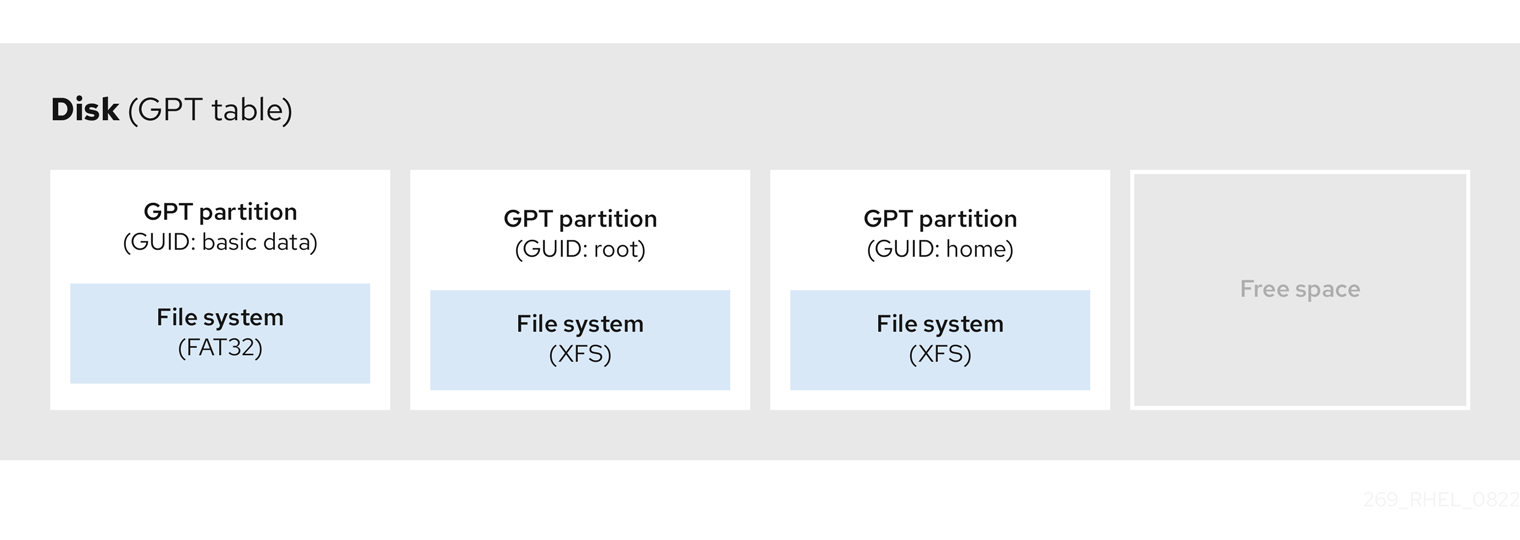

3.6. GUID partition table

The GUID partition table (GPT) is a partitioning scheme based on the Globally Unique Identifier (GUID).

GPT addresses the limitations of the Master Boot Record (MBR) partition table. The MBR partition table cannot address storage larger than 2 TiB, equal to approximately 2.2 TB. GPT, in contrast, supports hard disks with larger capacity. The maximum addressable disk size is 8 ZiB when using 512-byte sector drives, and 64 ZiB when using 4096-byte sector drives. In addition, by default, GPT supports creation of up to 128 primary partitions. You can extend the maximum number of primary partitions by allocating more space to the partition table.
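These limits follow from multiplying the number of addressable sectors by the sector size. The following sketch reproduces the arithmetic under the assumption that MBR stores sector addresses in 32 bits and GPT in 64 bits; these address widths are not stated in this section. Because 2^73 does not fit in shell integer arithmetic, the script adds exponents instead of multiplying. The function names are hypothetical.

```shell
#!/bin/sh
# Integer log2 of a power of two, for example 512 -> 9.
log2() {
    n=$1
    e=0
    while [ "$n" -gt 1 ]; do
        n=$((n / 2))
        e=$((e + 1))
    done
    echo "$e"
}

# Maximum addressable size for an LBA width (bits) and a sector size (bytes),
# printed in binary units. Works on exponents to avoid integer overflow.
max_addressable() {
    lba_bits=$1
    sector_bytes=$2
    exp=$((lba_bits + $(log2 "$sector_bytes")))   # size = 2^exp bytes
    idx=$((exp / 10))                             # each unit step is 2^10
    rem=$((exp % 10))
    set -- B KiB MiB GiB TiB PiB EiB ZiB YiB
    shift "$idx"
    echo "$((1 << rem)) $1"
}

max_addressable 32 512     # MBR (assumed 32-bit LBA): 2 TiB
max_addressable 64 512     # GPT (assumed 64-bit LBA): 8 ZiB
max_addressable 64 4096    # GPT with 4096-byte sectors: 64 ZiB
```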

A GPT has partition types based on GUIDs. Certain partitions require a specific GUID. For example, the system partition for Extensible Firmware Interface (EFI) boot loaders requires the GUID C12A7328-F81F-11D2-BA4B-00A0C93EC93B.

GPT disks use logical block addressing (LBA) and a partition layout as follows:

- For backward compatibility with MBR disks, the system reserves the first sector (LBA 0) of GPT for MBR data, and applies the name "protective MBR".

Primary GPT

- The header begins on the second logical block (LBA 1) of the device. The header contains the disk GUID, the location of the primary partition table, the location of the secondary GPT header, and CRC32 checksums of itself, and the primary partition table. It also specifies the number of partition entries on the table.

- By default, the primary GPT includes 128 partition entries. Each partition has an entry size of 128 bytes, a partition type GUID and a unique partition GUID.

Secondary GPT

- For recovery, it is useful as a backup table in case the primary partition table is corrupted.

- The last logical sector of the disk contains the secondary GPT header and recovers GPT information, in case the primary header is corrupted.

It contains:

- The disk GUID

- The location of the secondary partition table and the primary GPT header

- CRC32 checksums of itself

- The secondary partition table

- The number of possible partition entries

Figure 3.4. Disk with a GUID Partition Table

For a successful installation of the boot loader onto a GPT disk, a BIOS boot partition must be present. Reuse is possible only if the disk already contains a BIOS boot partition. This includes disks initialized by the Anaconda installation program.

3.7. Partition types

There are multiple ways to manage partition types:

- The fdisk utility supports the full range of partition types by specifying hexadecimal codes.

- The systemd-gpt-auto-generator, a unit generator utility, uses the partition type to automatically identify and mount devices.

- The parted utility maps out the partition type with flags. The parted utility handles only certain partition types, for example LVM, swap or RAID.

The parted utility supports setting the following flags:

- boot

- root

- swap

- hidden

- raid

- lvm

- lba

- legacy_boot

- irst

- esp

- palo

On Red Hat Enterprise Linux 9 with parted 3.5, you can use the additional flags chromeos_kernel and bls_boot.

The parted utility optionally accepts a file system type argument while creating a partition. For a list of the required conditions, see Creating a partition with parted. Use the value to:

- Set the partition flags on MBR.

- Set the partition UUID type on GPT. For example, the swap, fat, or hfs file system types set different GUIDs. The default value is the Linux Data GUID.

The argument does not modify the file system on the partition. It only differentiates between the supported flags and GUIDs.

The following file system types are supported:

- xfs

- ext2

- ext3

- ext4

- fat16

- fat32

- hfs

- hfs+

- linux-swap

- ntfs

- reiserfs

3.8. Partition naming scheme

Red Hat Enterprise Linux uses a file-based naming scheme, with file names in the form of /dev/xxyN.

Device and partition names consist of the following structure:

/dev/

- Name of the directory that contains all device files. Hard disks contain partitions, thus the files representing all possible partitions are located in /dev.

xx

- The first two letters of the partition name indicate the type of device that contains the partition.

y

- This letter indicates the specific device containing the partition. For example, /dev/sda for the first hard disk and /dev/sdb for the second. You can use more letters in systems with more than 26 drives, for example, /dev/sdaa1.

N

- The final part is a number that represents the partition. The first four (primary or extended) partitions are numbered 1 through 4. Logical partitions start at 5. For example, /dev/sda3 is the third primary or extended partition on the first hard disk, and /dev/sdb6 is the second logical partition on the second hard disk. Drive partition numbering applies only to MBR partition tables. Note that N does not always mean partition.
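The structure of a /dev/xxyN name can be taken apart with plain shell parameter expansion. The following sketch uses a hypothetical describe_partition_name function. It handles only names that follow the xxyN pattern described above, and its primary or logical classification reflects the MBR numbering rule, so it does not apply to GPT disks or to devices with other naming patterns.

```shell
#!/bin/sh
# Split a /dev/xxyN name into device type (xx), device letters (y), and
# partition number (N), and classify N by the MBR numbering rule.
# describe_partition_name is a hypothetical helper used only in this sketch.
describe_partition_name() {
    name=${1#/dev/}               # drop the /dev/ directory prefix
    rest=${name#??}               # everything after the two type letters
    dtype=${name%"$rest"}         # the two type letters (xx)
    letters=${rest%%[0-9]*}       # device letters (y), possibly several
    number=${rest#"$letters"}     # trailing partition number (N), may be empty
    if [ -z "$number" ]; then
        kind="whole disk"
    elif [ "$number" -le 4 ]; then
        kind="primary or extended"
    else
        kind="logical"
    fi
    echo "type=$dtype device=$letters partition=${number:-none} ($kind)"
}

describe_partition_name /dev/sda3     # third primary or extended partition
describe_partition_name /dev/sdb6     # second logical partition
describe_partition_name /dev/sdaa1    # device name with two letters
```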

Even if Red Hat Enterprise Linux can identify and refer to all types of disk partitions, it might not be able to read the file system and therefore access stored data on every partition type. However, in many cases, it is possible to successfully access data on a partition dedicated to another operating system.

3.9. Mount points and disk partitions

In Red Hat Enterprise Linux, each partition forms a part of the storage, necessary to support a single set of files and directories. Mounting a partition makes the storage of that partition available, starting at the specified directory known as a mount point.

For example, if partition /dev/sda5 is mounted on /usr/, it means that all files and directories under /usr/ physically reside on /dev/sda5. The file /usr/share/doc/FAQ/txt/Linux-FAQ resides on /dev/sda5, while the file /etc/gdm/custom.conf does not.

Continuing the example, it is also possible that one or more directories below /usr/ would be mount points for other partitions. For example, /usr/local/man/whatis resides on /dev/sda7, rather than on /dev/sda5, if /usr/local includes a mounted /dev/sda7 partition.
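The rule in this example is that a file resides on the partition whose mount point is the longest matching prefix of the file path. The following sketch applies that rule to a small mount table. The device_for_path function is a hypothetical helper, and the /dev/sda1 entry for the root directory is an assumption added so that paths outside /usr/ resolve to something. On a running system, tools such as findmnt or df report this information directly.

```shell
#!/bin/sh
# Print the device whose mount point is the longest prefix of the given path.
# The mount table is read from stdin as "<device> <mount point>" lines.
# device_for_path is a hypothetical helper used only in this sketch.
device_for_path() {
    path=$1
    best_dev=
    best_len=-1
    while read -r dev mnt; do
        case "$path/" in
            "${mnt%/}/"*)                       # mount point is a path prefix
                if [ "${#mnt}" -gt "$best_len" ]; then
                    best_dev=$dev
                    best_len=${#mnt}
                fi
                ;;
        esac
    done
    echo "$best_dev"
}

# Mount table from the example; the root entry is an assumed placeholder.
table='/dev/sda1 /
/dev/sda5 /usr
/dev/sda7 /usr/local'

printf '%s\n' "$table" | device_for_path /usr/share/doc/FAQ/txt/Linux-FAQ
printf '%s\n' "$table" | device_for_path /usr/local/man/whatis
printf '%s\n' "$table" | device_for_path /etc/gdm/custom.conf
```

The three calls print /dev/sda5, /dev/sda7, and /dev/sda1, matching the example above.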

Chapter 4. Getting started with partitions

Use disk partitioning to divide a disk into one or more logical areas which enables work on each partition separately. The hard disk stores information about the location and size of each disk partition in the partition table. Using the table, each partition then appears as a logical disk to the operating system. You can then read and write on those individual disks.

For an overview of the advantages and disadvantages to using partitions on block devices, see the Red Hat Knowledgebase solution What are the advantages and disadvantages to using partitioning on LUNs, either directly or with LVM in between?.

4.1. Creating a partition table on a disk with parted

Use the parted utility to format a block device with a partition table.

Formatting a block device with a partition table deletes all data stored on the device.

Procedure

Start the interactive parted shell:

# parted block-device

Determine if there already is a partition table on the device:

(parted) print

If the device already contains partitions, they will be deleted in the following steps.

Create the new partition table:

(parted) mklabel table-type

Replace table-type with the intended partition table type:

- msdos for MBR

- gpt for GPT

Example 4.1. Creating a GUID Partition Table (GPT) table

To create a GPT table on the disk, use:

(parted) mklabel gpt

The changes start applying after you enter this command.

View the partition table to confirm that it is created:

(parted) print

Exit the parted shell:

(parted) quit
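If you prefer to script this procedure, parted also accepts commands on the command line together with its --script option, which suppresses interactive prompts. The following sketch only prints the command instead of running it, because creating a partition table destroys data. The mklabel_command function is a hypothetical helper that accepts only the two table types covered in this procedure.

```shell
#!/bin/sh
# Print (do not run) a non-interactive parted command that creates a
# partition table. mklabel_command is a hypothetical helper; it accepts
# only the msdos and gpt table types described in this procedure.
mklabel_command() {
    device=$1
    table_type=$2
    case "$table_type" in
        msdos|gpt) ;;
        *)
            echo "unsupported table type: $table_type" >&2
            return 1
            ;;
    esac
    echo "parted --script $device mklabel $table_type"
}

# block-device is a placeholder, as in the procedure above.
mklabel_command block-device gpt
```

Review the printed command and replace block-device with the intended device before you run it as root.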

4.2. Viewing the partition table with parted

Display the partition table of a block device to see the partition layout and details about individual partitions. You can view the partition table on a block device using the parted utility.

Procedure

Start the parted utility. For example, the following command opens the device /dev/sda:

# parted /dev/sda

View the partition table:

(parted) print

Optional: Switch to the device you want to examine next:

(parted) select block-device

For a detailed description of the print command output, see the following:

- Model: ATA SAMSUNG MZNLN256 (scsi)

  The disk type, manufacturer, model number, and interface.

- Disk /dev/sda: 256GB

  The file path to the block device and the storage capacity.

- Partition Table: msdos

  The disk label type.

- Number

  The partition number. For example, the partition with minor number 1 corresponds to /dev/sda1.

- Start and End

  The location on the device where the partition starts and ends.

- Type

  Valid types are metadata, free, primary, extended, or logical.

- File system

  The file system type. If the File system field of a device shows no value, this means that its file system type is unknown. The parted utility cannot recognize the file system on encrypted devices.

- Flags

  Lists the flags set for the partition. Available flags are boot, root, swap, hidden, raid, lvm, or lba.

4.3. Creating a partition with parted

As a system administrator, you can create new partitions on a disk by using the parted utility.

The required partitions are swap, /boot/, and / (root).

Prerequisites

- A partition table on the disk.

- If the partition you want to create is larger than 2TiB, format the disk with the GUID Partition Table (GPT).
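The 2TiB limit follows from the MBR format, which stores sector addresses as 32-bit numbers. The following sketch works through the arithmetic, assuming 512-byte logical sectors unless another sector size is passed; the mbr_max_bytes and needs_gpt function names are illustrative only, and the result is a rounded boundary rather than an exact per-partition limit.

```shell
# Size of the range that an MBR partition table can address, in bytes:
# 2^32 sectors multiplied by the logical sector size (assumed 512).
mbr_max_bytes() {
    sector_size=${1:-512}
    echo $(( 4294967296 * sector_size ))
}

# Succeeds when a partition of the given size (in bytes) is larger
# than what MBR can address, so the disk needs a GPT table.
needs_gpt() {
    size_bytes=$1
    sector_size=${2:-512}
    [ "$size_bytes" -gt "$(mbr_max_bytes "$sector_size")" ]
}

# Example: mbr_max_bytes 512 prints 2199023255552, which is 2 TiB.
```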

Procedure

Start the parted utility:

# parted block-device

View the current partition table to determine if there is enough free space:

(parted) print

- Resize the partition in case there is not enough free space.

From the partition table, determine:

- The start and end points of the new partition.

- On MBR, what partition type it should be.

Create the new partition:

(parted) mkpart part-type name fs-type start end

- Replace part-type with primary, logical, or extended. This applies only to the MBR partition table.

- Replace name with an arbitrary partition name. This is required for GPT partition tables.

- Replace fs-type with xfs, ext2, ext3, ext4, fat16, fat32, hfs, hfs+, linux-swap, ntfs, or reiserfs. The fs-type parameter is optional. Note that the parted utility does not create the file system on the partition.

- Replace start and end with the sizes that determine the starting and ending points of the partition, counting from the beginning of the disk. You can use size suffixes, such as 512MiB, 20GiB, or 1.5TiB. The default size is in megabytes.

Example 4.2. Creating a small primary partition

To create a primary partition from 1024MiB until 2048MiB on an MBR table, use:

(parted) mkpart primary 1024MiB 2048MiB

The changes start applying after you enter the command.

View the partition table to confirm that the created partition is in the partition table with the correct partition type, file system type, and size:

(parted) print

Exit the parted shell:

(parted) quit

Register the new device node:

# udevadm settle

Verify that the kernel recognizes the new partition:

# cat /proc/partitions
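The final check can also be automated instead of reading the table by eye. The following is a minimal sketch; kernel_sees_partition is a hypothetical helper name, and the optional second argument exists only so that the check can be tried against a saved copy of the table.

```shell
# Succeeds when the named partition (for example, sda1) is listed.
# The table is expected in the /proc/partitions layout: a header line,
# a blank line, then rows of "major minor #blocks name".
kernel_sees_partition() {
    name=$1
    table=${2:-/proc/partitions}
    awk -v want="$name" 'NR > 2 && $4 == want { found = 1 } END { exit !found }' "$table"
}

# Example (not run here):
#   kernel_sees_partition sda1 && echo "sda1 is registered"
```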

4.4. Setting a partition type with fdisk

You can set a partition type or flag by using the fdisk utility.

Prerequisites

- A partition on the disk.

Procedure

Start the interactive fdisk shell:

# fdisk block-device

View the current partition table to determine the minor partition number:

Command (m for help): print

You can see the current partition type in the Type column and its corresponding type ID in the Id column.

Enter the partition type command and select a partition using its minor number:

Command (m for help): type
Partition number (1,2,3 default 3): 2

Optional: View the list of hexadecimal codes:

Hex code (type L to list all codes): L

Set the partition type:

Hex code (type L to list all codes): 8e

Write your changes and exit the fdisk shell:

Command (m for help): write
The partition table has been altered.
Syncing disks.

Verify your changes:

# fdisk --list block-device
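The hexadecimal code entered in the procedure, 8e, is the MBR type ID for Linux LVM. As a small illustration, the sketch below maps a few commonly used MBR type IDs to short names. It is not the full list that fdisk prints with L, and mbr_type_name is a hypothetical helper, not an fdisk feature.

```shell
# Map a handful of well-known MBR type IDs to short names.
mbr_type_name() {
    # Normalize to lowercase so that 8E and 8e are treated the same.
    code=$(printf '%s' "$1" | tr 'A-F' 'a-f')
    case "$code" in
        5|05) echo "Extended" ;;
        82) echo "Linux swap" ;;
        83) echo "Linux" ;;
        8e) echo "Linux LVM" ;;
        fd) echo "Linux raid autodetect" ;;
        *) echo "unknown"; return 1 ;;
    esac
}

# Example: mbr_type_name 8e prints "Linux LVM".
```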

4.5. Resizing a partition with parted

Using the parted utility, extend a partition to use unused disk space, or shrink a partition to use its capacity for different purposes.

Prerequisites

- Back up the data before shrinking a partition.

- If the partition you want to create is larger than 2TiB, format the disk with the GUID Partition Table (GPT).

- If you want to shrink the partition, first shrink the file system so that it is not larger than the resized partition.

XFS does not support shrinking.

Procedure

Start the parted utility:

# parted block-device

View the current partition table:

(parted) print

From the partition table, determine:

- The minor number of the partition.

- The location of the existing partition and its new ending point after resizing.

Resize the partition:

(parted) resizepart 1 2GiB

- Replace 1 with the minor number of the partition that you are resizing.

- Replace 2GiB with the size that determines the new ending point of the resized partition, counting from the beginning of the disk. You can use size suffixes, such as 512MiB, 20GiB, or 1.5TiB. The default size is in megabytes.

View the partition table to confirm that the resized partition is in the partition table with the correct size:

(parted) print

Exit the parted shell:

(parted) quit

Verify that the kernel registers the new partition:

# cat /proc/partitions

Optional: If you extended the partition, extend the file system on it as well.
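The order of operations differs between shrinking and extending: when shrinking, the file system is reduced before the partition, and when extending, the partition grows first. The following sketch only prints that order for a given current and new end point (both assumed to be whole numbers in the same unit, such as MiB); resize_plan is a hypothetical helper and modifies nothing.

```shell
# Print the order of operations for a resize. Nothing is modified.
resize_plan() {
    current_end=$1
    new_end=$2
    if [ "$new_end" -lt "$current_end" ]; then
        echo "1. back up the data"
        echo "2. shrink the file system"
        echo "3. shrink the partition with resizepart"
    elif [ "$new_end" -gt "$current_end" ]; then
        echo "1. extend the partition with resizepart"
        echo "2. extend the file system"
    else
        echo "nothing to do"
    fi
}

# Example: resize_plan 2048 1024 prints the three shrink steps.
```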

4.6. Removing a partition with parted

Using the parted utility, you can remove a disk partition to free up disk space.

Procedure

Start the interactive parted shell:

# parted block-device

- Replace block-device with the path to the device where you want to remove a partition: for example, /dev/sda.

View the current partition table to determine the minor number of the partition to remove:

(parted) print

Remove the partition:

(parted) rm minor-number

- Replace minor-number with the minor number of the partition you want to remove.

The changes start applying as soon as you enter this command.

Verify that you have removed the partition from the partition table:

(parted) print

Exit the parted shell:

(parted) quit

Verify that the kernel registers that the partition is removed:

# cat /proc/partitions

Remove the partition from the /etc/fstab file, if it is present. Find the line that declares the removed partition, and remove it from the file.

Regenerate mount units so that your system registers the new /etc/fstab configuration:

# systemctl daemon-reload

If you have deleted a swap partition or removed pieces of LVM, remove all references to the partition from the kernel command line:

- List active kernel options and see if any option references the removed partition:

# grubby --info=ALL

- Remove the kernel options that reference the removed partition:

# grubby --update-kernel=ALL --remove-args="option"

To register the changes in the early boot system, rebuild the initramfs file system:

# dracut --force --verbose
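Finding the /etc/fstab line that declares the removed partition can be scripted. The following is a minimal sketch under one stated limitation: it matches only entries whose first field is the device path, so entries that refer to the partition by UUID or LABEL are not detected. The fstab_entries_for function name and its optional file argument are hypothetical.

```shell
# Print the fstab lines whose first field is the given device path.
# Commented-out lines do not match because their first field differs.
fstab_entries_for() {
    device=$1
    fstab=${2:-/etc/fstab}
    awk -v dev="$device" '$1 == dev' "$fstab"
}

# Example (not run here; /dev/sda3 is a placeholder):
#   fstab_entries_for /dev/sda3
```

If the function prints nothing, check the file manually for UUID= or LABEL= entries before assuming that no reference remains.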

Chapter 5. Strategies for repartitioning a disk

There are different approaches to repartitioning a disk, depending on which of the following applies:

- Unpartitioned free space is available.

- An unused partition is available.

- Free space in an actively used partition is available.

The following examples are simplified for clarity and do not reflect the exact partition layout when actually installing Red Hat Enterprise Linux.

5.1. Using unpartitioned free space

Partitions that are already defined and do not span the entire hard disk leave unallocated space that is not part of any defined partition. The following diagram shows what this might look like.

Figure 5.1. Disk with unpartitioned free space

The first diagram represents a disk with one primary partition and an undefined partition with unallocated space. The second diagram represents a disk with two defined partitions with allocated space.

An unused hard disk also falls into this category. The only difference is that none of the space is part of any defined partition.

On a new disk, you can create the necessary partitions from the unused space. Most preinstalled operating systems are configured to take up all available space on a disk drive.

5.2. Using space from an unused partition

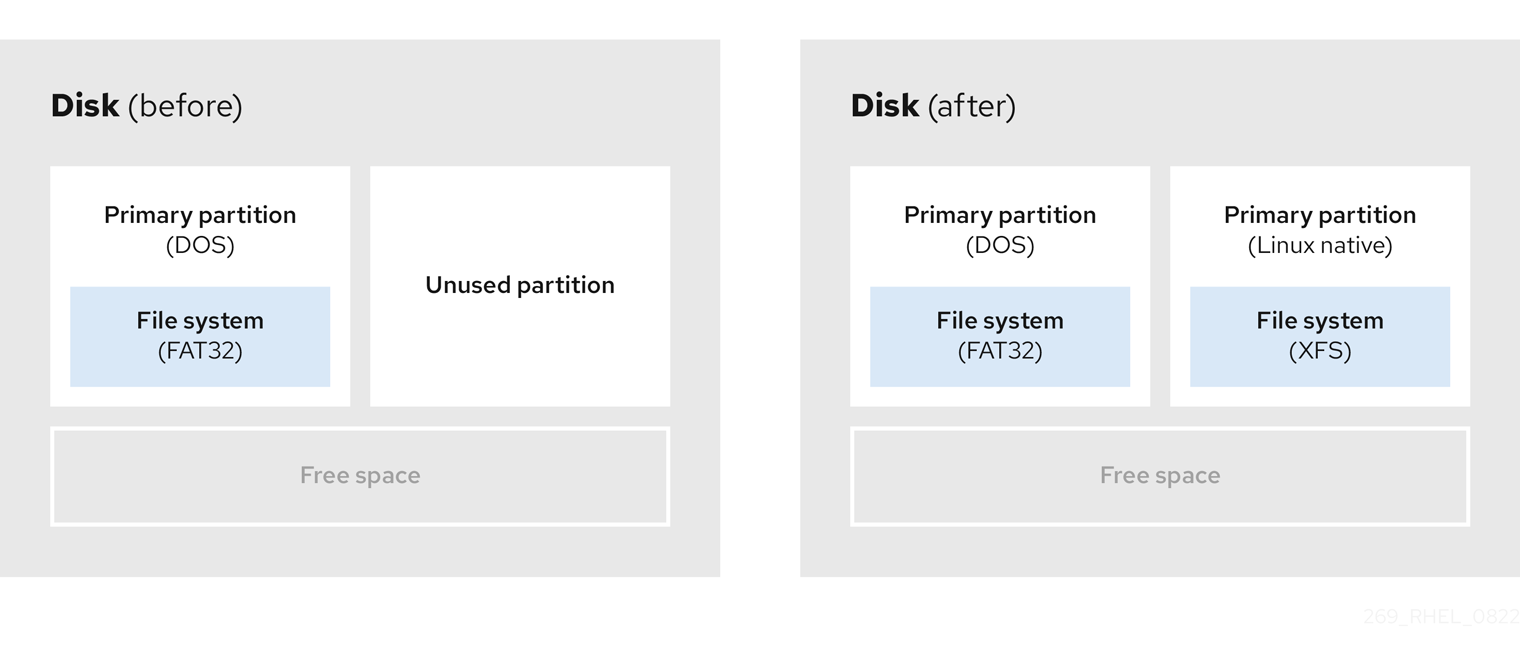

In the following example, the first diagram represents a disk with an unused partition. The second diagram represents reallocating an unused partition for Linux.

Figure 5.2. Disk with an unused partition

To use the space allocated to the unused partition, delete the partition and then create the appropriate Linux partition instead. Alternatively, during the installation process, delete the unused partition and manually create new partitions.

5.3. Using free space from an active partition

This process can be difficult to manage because the required free space is inside an active partition that is already in use. In most cases, hard disks of computers with preinstalled software contain one larger partition holding the operating system and data.

If you want to use an operating system (OS) on an active partition, you must reinstall the OS. Be aware that some computers, which include pre-installed software, do not include installation media to reinstall the original OS. Check whether this applies to your OS before you destroy an original partition and the OS installation.

To optimize the use of available free space, you can use destructive or non-destructive repartitioning.

5.3.1. Destructive repartitioning

Destructive repartitioning destroys the partition on your hard drive and creates several smaller partitions instead. Back up any needed data from the original partition because this method deletes the complete contents.

After creating a smaller partition for your existing operating system, you can:

- Reinstall software.

- Restore your data.

- Start your Red Hat Enterprise Linux installation.

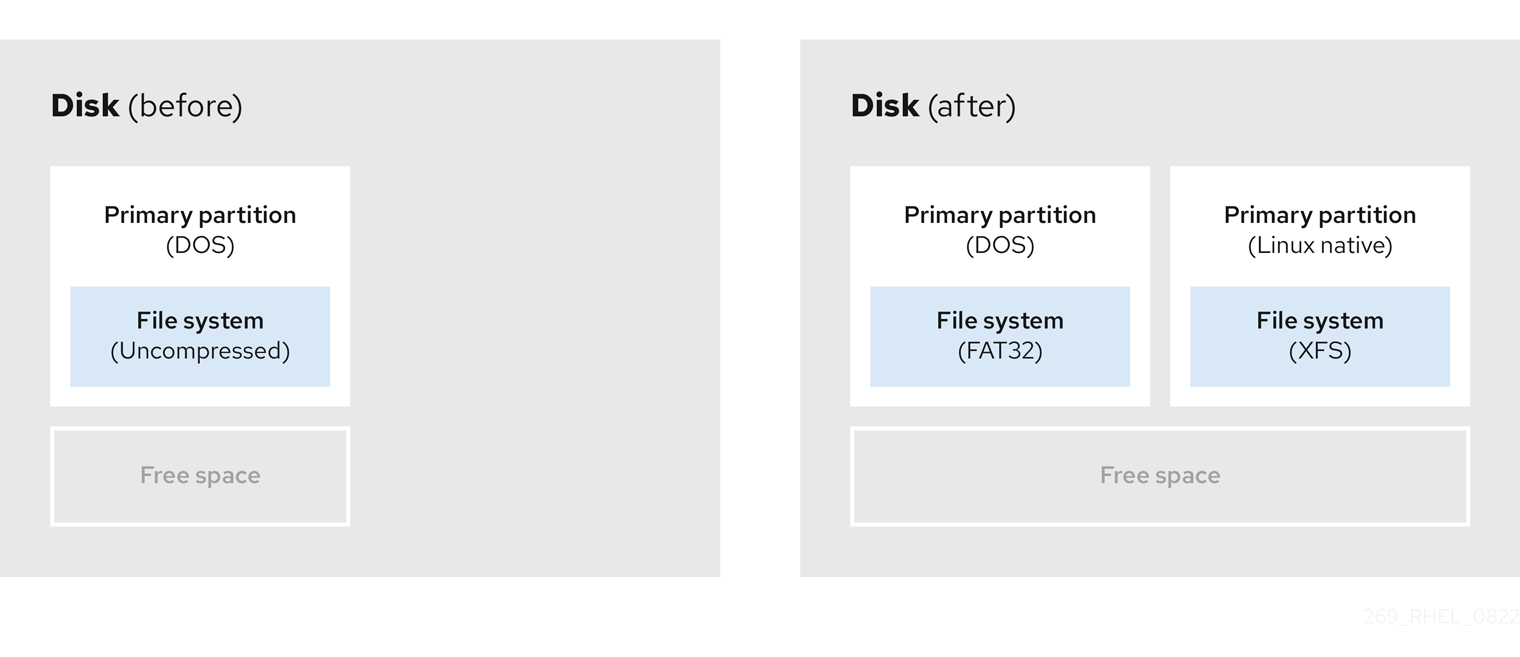

The following diagram is a simplified representation of using the destructive repartitioning method.

Figure 5.3. Destructive repartitioning action on disk

This method deletes all data previously stored in the original partition.

5.3.2. Non-destructive repartitioning

Non-destructive repartitioning resizes partitions without any data loss. This method is reliable; however, it takes longer on large drives.

The following methods can help initiate non-destructive repartitioning.

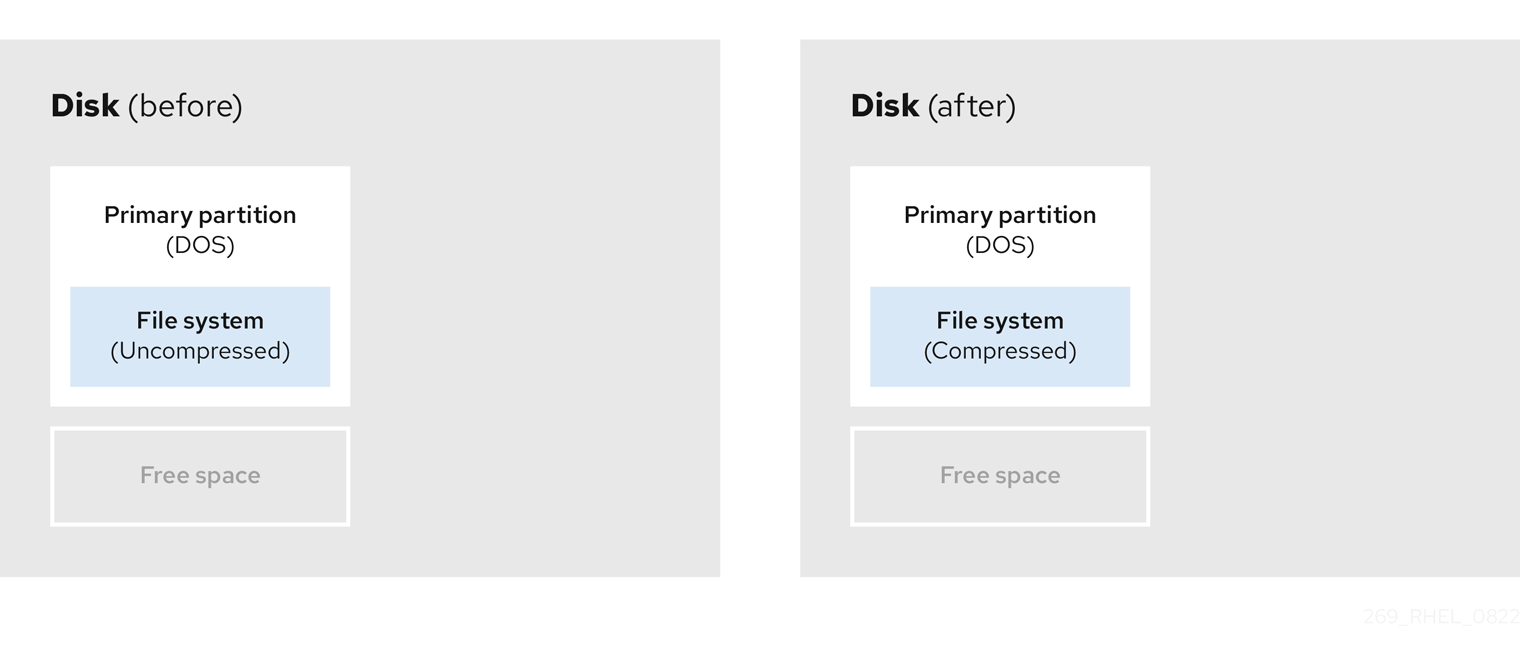

- Compress existing data

The storage location of some data cannot be changed. This can prevent the resizing of a partition to the required size, and ultimately lead to a destructive repartition process. Compressing data in an already existing partition can help you resize your partitions as needed. It can also help to maximize the free space available.

The following diagram is a simplified representation of this process.

Figure 5.4. Data compression on a disk

To avoid any possible data loss, create a backup before continuing with the compression process.

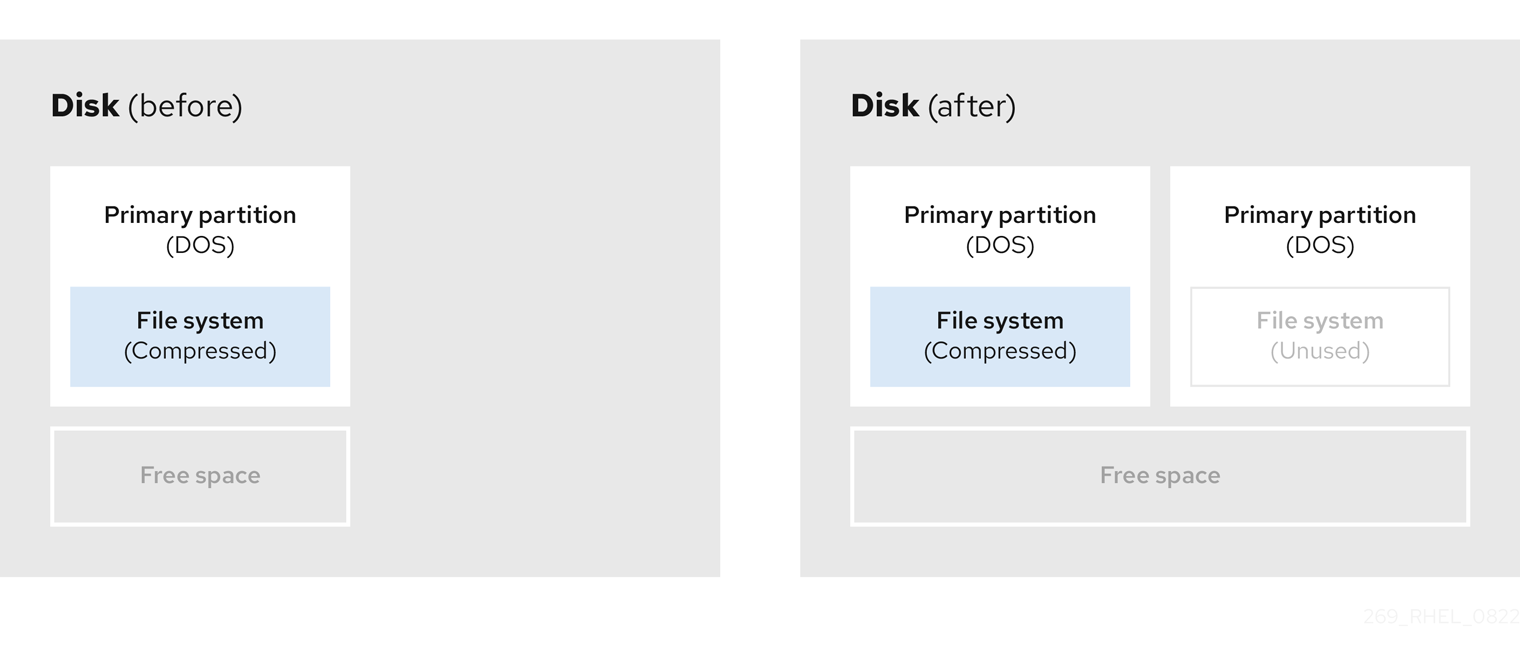

- Resize the existing partition

By resizing an already existing partition, you can free up more space. Depending on your resizing software, the results may vary. In the majority of cases, you can create a new unformatted partition of the same type as the original partition.

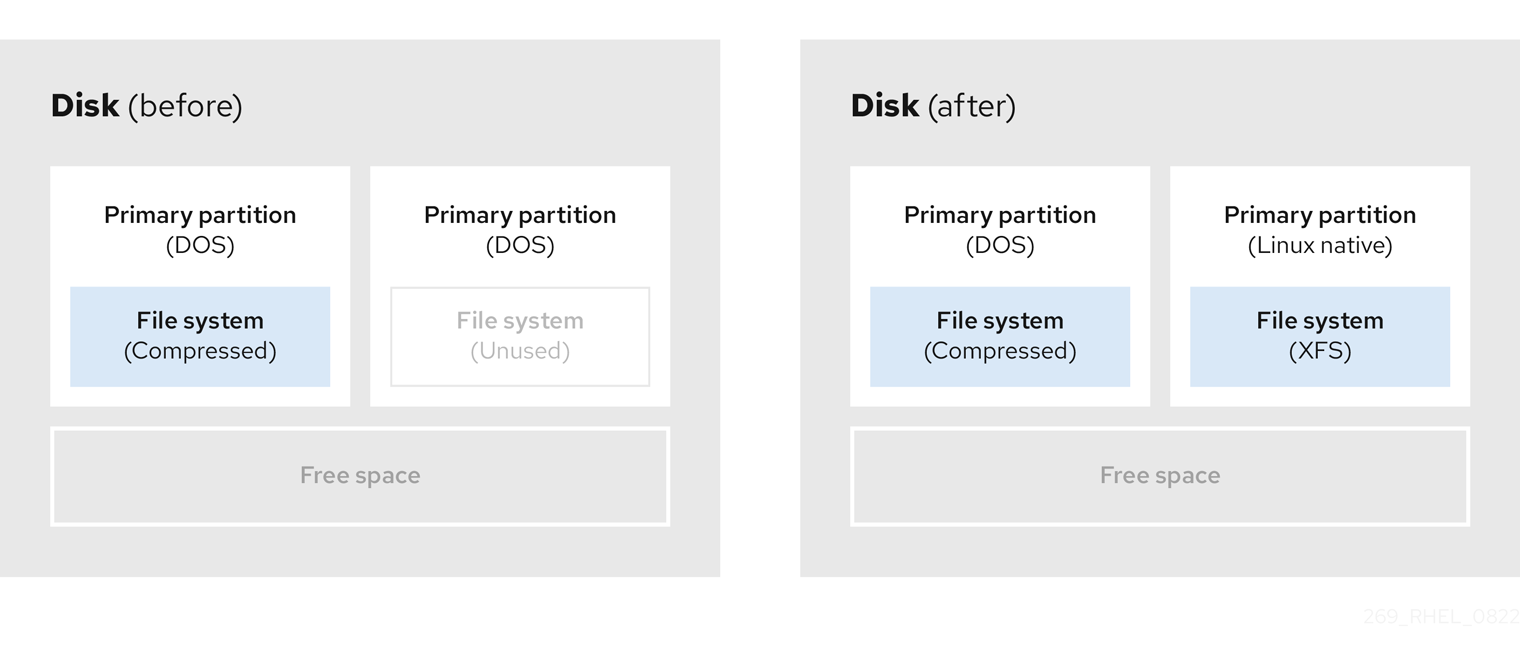

The steps you take after resizing can depend on the software you use. In the following example, the best practice is to delete the new DOS (Disk Operating System) partition, and create a Linux partition instead. Verify what is most suitable for your disk before initiating the resizing process.

Figure 5.5. Partition resizing on a disk

- Optional: Create new partitions

Some resizing software supports Linux-based systems. In such cases, there is no need to delete the newly created partition after resizing. Creating a new partition afterwards depends on the software you use.

The following diagram represents the disk state, before and after creating a new partition.

Figure 5.6. Disk with final partition configuration

Chapter 6. Configuring an iSCSI target

Red Hat Enterprise Linux uses the targetcli shell as a command-line interface to perform the following operations:

- Add, remove, view, and monitor iSCSI storage interconnects to utilize iSCSI hardware.

- Export local storage resources that are backed by either files, volumes, local SCSI devices, or by RAM disks to remote systems.

The targetcli tool has a tree-based layout including built-in tab completion, auto-complete support, and inline documentation.

6.1. Installing targetcli

Install the targetcli tool to add, monitor, and remove iSCSI storage interconnects.

Procedure

Install the targetcli tool:

# dnf install targetcli

Start the target service:

# systemctl start target

Configure target to start at boot time:

# systemctl enable target

Open port 3260 in the firewall and reload the firewall configuration:

# firewall-cmd --permanent --add-port=3260/tcp
Success

# firewall-cmd --reload
Success

Verification

View the targetcli layout:

6.2. Creating an iSCSI target

You can create an iSCSI target to let the iSCSI initiator of the client access the storage devices on the server. Both targets and initiators have unique identifying names.
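The identifying names used in this chapter follow the iSCSI qualified name (IQN) layout: iqn, a year and month, a reversed domain name, and an optional identifier after a colon. The following sketch is a loose format check under that assumption, not a complete validator; is_iqn is a hypothetical helper name.

```shell
# Succeeds when the argument looks like iqn.yyyy-mm.reversed-domain[:identifier].
is_iqn() {
    printf '%s\n' "$1" | grep -Eq '^iqn\.[0-9]{4}-(0[1-9]|1[0-2])\.[a-z0-9]([a-z0-9.-]*[a-z0-9])?(:.+)?$'
}

# Example:
#   is_iqn iqn.2006-04.com.example:444 && echo "name looks valid"
```

Checking a name before passing it to create can catch a missing iqn. prefix or a malformed date early.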

Prerequisites

- Installed and running targetcli. For more information, see Installing targetcli.

Procedure

Navigate to the iSCSI directory. You can also use the cd command to navigate to the iSCSI directory.

/> iscsi/

Use one of the following options to create an iSCSI target:

- Creating an iSCSI target using a default target name:

/iscsi> create
Created target iqn.2003-01.org.linux-iscsi.hostname.x8664:sn.78b473f296ff
Created TPG1

- Creating an iSCSI target using a specific name:

/iscsi> create iqn.2006-04.com.example:444
Created target iqn.2006-04.com.example:444
Created TPG1
Here iqn.2006-04.com.example:444 is target_iqn_name

Replace iqn.2006-04.com.example:444 with the specific target name.

Verify the newly created target:

6.3. iSCSI Backstore

An iSCSI backstore enables support for different methods of storing an exported LUN’s data on the local machine. Creating a storage object defines the resources that the backstore uses.

An administrator can choose any of the following backstore devices that Linux-IO (LIO) supports:

- fileio backstore

  Create a fileio storage object if you are using regular files on the local file system as disk images. For creating a fileio backstore, see Creating a fileio storage object.

- block backstore

  Create a block storage object if you are using any local block device and logical device. For creating a block backstore, see Creating a block storage object.

- pscsi backstore

  Create a pscsi storage object if your storage object supports direct pass-through of SCSI commands. For creating a pscsi backstore, see Creating a pscsi storage object.

- ramdisk backstore

  Create a ramdisk storage object if you want to create a temporary RAM backed device. For creating a ramdisk backstore, see Creating a Memory Copy RAM disk storage object.

6.4. Creating a fileio storage object

fileio storage objects can support either the write_back or write_thru operations. The write_back operation enables the local file system cache. This improves performance but increases the risk of data loss.

It is recommended to use write_back=false to disable the write_back operation in favor of the write_thru operation.

Prerequisites

- Installed and running targetcli. For more information, see Installing targetcli.

Procedure

Navigate to the fileio/ from the backstores/ directory:

/> backstores/fileio

Create a fileio storage object:

/backstores/fileio> create file1 /tmp/disk1.img 200M write_back=false
Created fileio file1 with size 209715200

Verification

Verify the created fileio storage object:

/backstores/fileio> ls
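The size reported in the create output, 209715200, is the 200M argument expressed in bytes using binary multiples (200 x 1024 x 1024). The following sketch reproduces that arithmetic. It assumes that the other single-letter suffixes follow the same binary convention and handles whole numbers only; size_to_bytes is a hypothetical helper, not a targetcli command.

```shell
# Convert a size such as 200M or 1G to bytes using binary multiples.
size_to_bytes() {
    size=$1
    # Strip one trailing suffix letter, if present.
    number=${size%[KkMmGgTt]}
    case "$size" in
        *[Kk]) echo $(( number * 1024 )) ;;
        *[Mm]) echo $(( number * 1024 * 1024 )) ;;
        *[Gg]) echo $(( number * 1024 * 1024 * 1024 )) ;;
        *[Tt]) echo $(( number * 1024 * 1024 * 1024 * 1024 )) ;;
        *) echo "$number" ;;
    esac
}

# Example: size_to_bytes 200M prints 209715200.
```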

6.5. Creating a block storage object

The block driver allows any block device that appears in the /sys/block/ directory to be used with Linux-IO (LIO). This includes physical devices, such as HDDs, SSDs, CDs, and DVDs, and logical devices, such as software or hardware RAID volumes, or LVM volumes.

Prerequisites

- Installed and running targetcli. For more information, see Installing targetcli.

Procedure

Navigate to the block/ from the backstores/ directory:

/> backstores/block/

Create a block backstore:

/backstores/block> create name=block_backend dev=/dev/sdb
Generating a wwn serial.
Created block storage object block_backend using /dev/sdb.

Verification

Verify the created block storage object:

/backstores/block> ls

6.6. Creating a pscsi storage object

You can configure as a backstore any storage object that supports direct pass-through of SCSI commands without SCSI emulation and with an underlying SCSI device that appears with lsscsi in the /proc/scsi/scsi, such as a SAS hard drive. SCSI-3 and higher is supported with this subsystem.

pscsi should only be used by advanced users. Advanced SCSI commands, such as those for Asymmetric Logical Unit Access (ALUA) or Persistent Reservations (for example, those used by VMware ESX and vSphere), are usually not implemented in the device firmware and can cause malfunctions or crashes. When in doubt, use the block backstore for production setups instead.

Prerequisites

- Installed and running targetcli. For more information, see Installing targetcli.

Procedure

Navigate to the pscsi/ from the backstores/ directory:

/> backstores/pscsi/

Create a pscsi backstore for a physical SCSI device, a TYPE_ROM device using /dev/sr0 in this example:

/backstores/pscsi> create name=pscsi_backend dev=/dev/sr0
Generating a wwn serial.
Created pscsi storage object pscsi_backend using /dev/sr0

Verification

Verify the created pscsi storage object:

/backstores/pscsi> ls

6.7. Creating a Memory Copy RAM disk storage object

Memory Copy RAM disks (ramdisk) provide RAM disks with full SCSI emulation and separate memory mappings using memory copy for initiators. This provides capability for multi-sessions and is particularly useful for fast and volatile mass storage for production purposes.

Prerequisites

- Installed and running targetcli. For more information, see Installing targetcli.

Procedure

Navigate to the ramdisk/ from the backstores/ directory:

/> backstores/ramdisk/

Create a 1GB RAM disk backstore:

/backstores/ramdisk> create name=rd_backend size=1GB
Generating a wwn serial.
Created rd_mcp ramdisk rd_backend with size 1GB.

Verification

Verify the created ramdisk storage object:

/backstores/ramdisk> ls

6.8. Creating an iSCSI portal

You can create an iSCSI portal. This adds an IP address and a port to the target that keeps the target enabled.

Prerequisites

- Installed and running targetcli. For more information, see Installing targetcli.

- An iSCSI target associated with a Target Portal Group (TPG). For more information, see Creating an iSCSI target.

Procedure

Navigate to the TPG directory:

/iscsi> iqn.2006-04.com.example:444/tpg1/

Use one of the following options to create an iSCSI portal:

- Creating a default portal uses the default iSCSI port 3260 and allows the target to listen to all IP addresses on that port:

/iscsi/iqn.20...mple:444/tpg1> portals/ create
Using default IP port 3260
Binding to INADDR_Any (0.0.0.0)
Created network portal 0.0.0.0:3260

- Creating a portal using a specific IP address:

/iscsi/iqn.20...mple:444/tpg1> portals/ create 192.168.122.137
Using default IP port 3260
Created network portal 192.168.122.137:3260

Verification

Verify the newly created portal:

6.9. Creating an iSCSI LUN

A logical unit number (LUN) is a physical device that is backed by the iSCSI backstore. Each LUN has a unique number.

Prerequisites

- Installed and running targetcli. For more information, see Installing targetcli.

- An iSCSI target associated with a Target Portal Group (TPG). For more information, see Creating an iSCSI target.

- Created storage objects. For more information, see iSCSI Backstore.

Procedure

Create LUNs of already created storage objects:

Verify the created LUNs:

Default LUN name starts at 0.

Important

By default, LUNs are created with read-write permissions. If a new LUN is added after ACLs are created, the LUN automatically maps to all available ACLs and can cause a security risk. To create a LUN with read-only permissions, see Creating a read-only iSCSI LUN.

Configure ACLs. For more information, see Creating an iSCSI ACL.
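The sections so far build up a target one object at a time: a backstore, then the target, then a LUN that exports the backstore. As a rough sketch of how these pieces fit together, the following function prints the one-shot targetcli invocations for a single block backstore without running them. It assumes that targetcli accepts a path and a command as arguments and that the luns node has a create command that takes a backstore path; print_target_setup and all values passed to it are placeholders.

```shell
# Print, without running them, the targetcli commands that would export
# one block backstore as a LUN. All argument values are placeholders.
print_target_setup() {
    target_iqn=$1
    backstore_name=$2
    device=$3
    echo "targetcli /backstores/block create name=$backstore_name dev=$device"
    echo "targetcli /iscsi create $target_iqn"
    echo "targetcli /iscsi/$target_iqn/tpg1/luns create /backstores/block/$backstore_name"
}

# Example:
#   print_target_setup iqn.2006-04.com.example:444 block_backend /dev/sdb
```

Printing the commands first makes it possible to review the full sequence before any object is created.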

6.10. Creating a read-only iSCSI LUN

By default, LUNs are created with read-write permissions. You can create a read-only LUN.

Prerequisites

- Installed and running targetcli. For more information, see Installing targetcli.

- An iSCSI target associated with a Target Portal Group (TPG). For more information, see Creating an iSCSI target.

- Created storage objects. For more information, see iSCSI Backstore.

Procedure

Set read-only permissions:

/> set global auto_add_mapped_luns=false
Parameter auto_add_mapped_luns is now 'false'.

This prevents the auto mapping of LUNs to existing ACLs, allowing the manual mapping of LUNs.

Navigate to the initiator_iqn_name directory:

/> iscsi/target_iqn_name/tpg1/acls/initiator_iqn_name/

Create the LUN:

/iscsi/target_iqn_name/tpg1/acls/initiator_iqn_name> create mapped_lun=next_sequential_LUN_number tpg_lun_or_backstore=backstore write_protect=1

Example:

/iscsi/target_iqn_name/tpg1/acls/2006-04.com.example:888> create mapped_lun=1 tpg_lun_or_backstore=/backstores/block/block2 write_protect=1
Created LUN 1.
Created Mapped LUN 1.

Verify the created LUN:

/iscsi/target_iqn_name/tpg1/acls/2006-04.com.example:888> ls
o- 2006-04.com.example:888 .. [Mapped LUNs: 2]
| o- mapped_lun0 .............. [lun0 block/disk1 (rw)]
| o- mapped_lun1 .............. [lun1 block/disk2 (ro)]

The mapped_lun1 line now has (ro) at the end (unlike mapped_lun0’s (rw)) stating that it is read-only.

Configure ACLs. For more information, see Creating an iSCSI ACL.

6.11. Creating an iSCSI ACL

The targetcli service uses Access Control Lists (ACLs) to define access rules and grant each initiator access to a Logical Unit Number (LUN).

Both targets and initiators have unique identifying names. You must know the unique name of the initiator to configure ACLs. The /etc/iscsi/initiatorname.iscsi file, provided by the iscsi-initiator-utils package, contains the iSCSI initiator names.

Prerequisites

- The targetcli service is installed and running.

- An iSCSI target associated with a Target Portal Group (TPG).

Procedure

Optional: To disable auto mapping of LUNs to ACLs, see Creating a read-only iSCSI LUN.

Navigate to the acls directory:

/> iscsi/target_iqn_name/tpg_name/acls/

Use one of the following options to create an ACL:

- Use the initiator_iqn_name from the /etc/iscsi/initiatorname.iscsi file on the initiator:

- Use a custom_name and update the initiator to match it:

For information about updating the initiator name, see Creating an iSCSI initiator.

Verification

Verify the created ACL:

6.12. Setting up the Challenge-Handshake Authentication Protocol for the target

By using the Challenge-Handshake Authentication Protocol (CHAP), users can protect the target with a password. The initiator must be aware of this password to be able to connect to the target.

Prerequisites

- Created iSCSI ACL. For more information, see Creating an iSCSI ACL.

Procedure

- Set attribute authentication:

      /iscsi/iqn.20...mple:444/tpg1> set attribute authentication=1
      Parameter authentication is now '1'.

- Set userid and password:

      /tpg1> set auth userid=redhat
      Parameter userid is now 'redhat'.
      /iscsi/iqn.20...689dcbb3/tpg1> set auth password=redhat_passwd
      Parameter password is now 'redhat_passwd'.

- Navigate to the acls directory:

      /> iscsi/target_iqn_name/tpg1/acls/initiator_iqn_name/

- Set attribute authentication:

      /iscsi/iqn.20...:605fcc6a48be> set attribute authentication=1
      Parameter authentication is now '1'.

- Set userid and password:

      /iscsi/iqn.20...:605fcc6a48be> set auth userid=redhat
      Parameter userid is now 'redhat'.
      /iscsi/iqn.20...:605fcc6a48be> set auth password=redhat_passwd
      Parameter password is now 'redhat_passwd'.
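The interactive steps above can also be scripted, because targetcli accepts a path and a command as command-line arguments. The following is a minimal sketch that mirrors those steps, assuming the example IQNs and credentials used in this chapter and a target portal group named tpg1. The function name chap_commands is hypothetical, and the function only prints the commands so that you can review them before running them as root.

```shell
# Print the targetcli commands that enable CHAP on a target portal group
# and on one ACL entry. Nothing is executed; the output is for review.
# Assumptions: the TPG is named tpg1, and the ACL for the initiator exists.
chap_commands() {
    target_iqn=$1
    initiator_iqn=$2
    userid=$3
    password=$4
    tpg="/iscsi/${target_iqn}/tpg1"
    acl="${tpg}/acls/${initiator_iqn}"

    # Same three settings, first on the TPG and then on the ACL entry.
    for node in "$tpg" "$acl"; do
        printf 'targetcli %s set attribute authentication=1\n' "$node"
        printf 'targetcli %s set auth userid=%s\n' "$node" "$userid"
        printf 'targetcli %s set auth password=%s\n' "$node" "$password"
    done
}

chap_commands iqn.2006-04.com.example:444 iqn.2006-04.com.example:888 \
    redhat redhat_passwd
```

Printing instead of executing keeps the password out of a half-applied configuration if one of the paths is wrong.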

6.13. Removing an iSCSI object using targetcli tool

You can remove the iSCSI objects by using the targetcli tool.

Procedure

- Log off from the target:

      # iscsiadm -m node -T iqn.2006-04.com.example:444 -u

  For more information about how to log in to the target, see Creating an iSCSI initiator.

- Remove the entire target, including all ACLs, LUNs, and portals:

      /> iscsi/ delete iqn.2006-04.com.example:444

  Replace iqn.2006-04.com.example:444 with the target_iqn_name.

- To remove an iSCSI backstore:

      /> backstores/backstore-type/ delete block_backend

  Replace backstore-type with either fileio, block, pscsi, or ramdisk. Replace block_backend with the backstore-name you want to delete.

- To remove parts of an iSCSI target, such as an ACL:

      /> /iscsi/iqn-name/tpg/acls/ delete iqn.2006-04.com.example:444

Verification

View the changes:

      /> iscsi/ ls

Chapter 7. Configuring an iSCSI initiator

An iSCSI initiator forms a session to connect to the iSCSI target. By default, the iSCSI service is started lazily: it starts the first time you run an iscsiadm command that requires iscsid or the iSCSI kernel modules. If root is not on an iSCSI device, or if no nodes are marked with node.startup = automatic, the iSCSI service does not start until such a command is executed.

Execute the systemctl start iscsid command as root to force the iscsid service to run and iSCSI kernel modules to load.

7.1. Creating an iSCSI initiator

Create an iSCSI initiator to connect to the iSCSI target to access the storage devices on the server.

Prerequisites

You have an iSCSI target’s hostname and IP address:

- If you are connecting to a storage target that external software created, obtain the target’s hostname and IP address from the storage administrator.

- If you are creating an iSCSI target, see Creating an iSCSI target.

Procedure

- Install iscsi-initiator-utils on the client machine:

      # dnf install iscsi-initiator-utils

- Start the iscsid service:

      # systemctl start iscsid

- Check the initiator name:

      # cat /etc/iscsi/initiatorname.iscsi
      InitiatorName=iqn.2006-04.com.example:888

- If the ACL was given a custom name in Creating an iSCSI ACL, update the initiator name to match the ACL:

  - Open the /etc/iscsi/initiatorname.iscsi file and modify the initiator name:

        # vi /etc/iscsi/initiatorname.iscsi
        InitiatorName=custom-name

  - Restart the iscsid service:

        # systemctl restart iscsid

- Discover the target, and then log in to it by using the target IQN that the discovery displays. In the discovery command, use the target-ip-address of your target in place of the example address 10.64.24.179.

  You can use this procedure for any number of initiators connected to the same target if their respective initiator names are added to the ACL as described in Creating an iSCSI ACL.

- Find the iSCSI disk name and create a file system on this iSCSI disk:

      # grep "Attached SCSI" /var/log/messages
      # mkfs.ext4 /dev/disk_name

  Replace disk_name with the iSCSI disk name displayed in the /var/log/messages file.

- Mount the file system:

      # mkdir /mount/point
      # mount /dev/disk_name /mount/point

  Replace /mount/point with the mount point of the partition.

- Edit the /etc/fstab file to mount the file system automatically when the system boots:

      # vi /etc/fstab
      /dev/disk_name /mount/point ext4 _netdev 0 0

  Replace disk_name with the iSCSI disk name and /mount/point with the mount point of the partition.
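For the discovery and login step, a common approach with iscsiadm is a SendTargets discovery against the portal, followed by a node login to the reported target. The following sketch prints those two commands for the example portal address and target IQN used in this chapter. The function name login_commands is hypothetical, and the exact invocation is an assumption based on the standard iscsiadm options (-t st for SendTargets, -l for login), so review the output before running it as root.

```shell
# Print the iscsiadm commands for discovering a portal and logging in to
# one of its targets. Nothing is executed; the output is for review.
login_commands() {
    portal_ip=$1
    target_iqn=$2

    # SendTargets discovery: asks the portal which targets it offers.
    printf 'iscsiadm -m discovery -t st -p %s\n' "$portal_ip"

    # Node login: opens a session to the target named by the discovery.
    printf 'iscsiadm -m node -T %s -l\n' "$target_iqn"
}

login_commands 10.64.24.179 iqn.2006-04.com.example:444
```

The login command is the counterpart of the logout command that uses the -u option.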

7.2. Setting up the Challenge-Handshake Authentication Protocol for the initiator

By using the Challenge-Handshake Authentication Protocol (CHAP), users can protect the target with a password. The initiator must be aware of this password to be able to connect to the target.

Prerequisites

- You have created an iSCSI initiator. For more information, see Creating an iSCSI initiator.

- You have set up CHAP for the target. For more information, see Setting up the Challenge-Handshake Authentication Protocol for the target.

Procedure

- Enable CHAP authentication in the iscsid.conf file:

      # vi /etc/iscsi/iscsid.conf
      node.session.auth.authmethod = CHAP

  By default, node.session.auth.authmethod is set to None.

- Add the target username and password in the iscsid.conf file:

      node.session.auth.username = redhat
      node.session.auth.password = redhat_passwd

- Restart the iscsid service:

      # systemctl restart iscsid
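If you prefer to script the edits to iscsid.conf instead of using an editor, the following is a minimal sketch of one way to do it. The helper name set_conf_key is hypothetical. It assumes that each setting occupies one line of the form key = value, and it replaces any existing active or commented-out line for the same key.

```shell
# Set "key = value" in an iscsid.conf-style file.
# Any existing line for the same key, active or commented out, is dropped
# and the new setting is appended at the end of the file.
set_conf_key() {
    file=$1
    key=$2
    value=$3

    # Escape dots so that the key is matched literally by grep -E.
    key_re=$(printf '%s' "$key" | sed 's/\./\\./g')

    tmp=$(mktemp) || return 1
    grep -v -E "^[#[:space:]]*${key_re}[[:space:]]*=" "$file" > "$tmp"
    printf '%s = %s\n' "$key" "$value" >> "$tmp"

    # Overwrite in place so that the file keeps its owner and permissions.
    cat "$tmp" > "$file"
    rm -f "$tmp"
}

# Example (as root, after backing up the file):
#   set_conf_key /etc/iscsi/iscsid.conf node.session.auth.authmethod CHAP
#   set_conf_key /etc/iscsi/iscsid.conf node.session.auth.username redhat
#   set_conf_key /etc/iscsi/iscsid.conf node.session.auth.password redhat_passwd
```

Restart the iscsid service after changing the file, as in the last step of the procedure.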

7.3. Monitoring an iSCSI session by using the iscsiadm utility

You can monitor the iSCSI session by using the iscsiadm utility.

By default, the iSCSI service is started lazily: it starts the first time you run an iscsiadm command that requires iscsid or the iSCSI kernel modules. If root is not on an iSCSI device, or if no nodes are marked with node.startup = automatic, the iSCSI service does not start until such a command is executed.

Use the systemctl start iscsid command as root to force the iscsid service to run and iSCSI kernel modules to load.

Procedure

- Install iscsi-initiator-utils on the client machine:

      # dnf install iscsi-initiator-utils

- Find information about the running sessions:

      # iscsiadm -m session -P 3

  This command displays the session or device state, session ID (sid), some negotiated parameters, and the SCSI devices accessible through the session.

- For shorter output, for example, to display only the sid-to-node mapping, run the command with a lower print level than 3. The list of running sessions is printed in the following format:

      driver [sid] target_ip:port,target_portal_group_tag proper_target_name
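The short session format is easy to split into fields for scripting. The following sketch parses lines in the format shown above with awk. The function name parse_sessions and the output layout are hypothetical. The sketch also tolerates a colon after the driver name, which is an assumption about what some iscsiadm versions print.

```shell
# Read session lines on standard input and print one key=value record per
# session. Expected input format:
#   driver [sid] target_ip:port,target_portal_group_tag proper_target_name
parse_sessions() {
    awk '{
        driver = $1
        sub(/:$/, "", driver)        # accept "tcp:" as well as "tcp"
        sid = $2
        gsub(/\[|\]/, "", sid)       # "[2]" becomes "2"
        split($3, portal, ",")       # "ip:port,tag" splits at the comma
        printf "sid=%s driver=%s portal=%s tpgt=%s target=%s\n",
               sid, driver, portal[1], portal[2], $4
    }'
}

# Example: iscsiadm -m session | parse_sessions
```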

7.4. DM Multipath overrides of the device timeout

The recovery_tmo sysfs option controls the timeout for a particular iSCSI device. The following options globally override the recovery_tmo values:

- The replacement_timeout configuration option globally overrides the recovery_tmo value for all iSCSI devices.

- For all iSCSI devices that are managed by DM Multipath, the fast_io_fail_tmo option in DM Multipath globally overrides the recovery_tmo value.

  The fast_io_fail_tmo option in DM Multipath also overrides the fast_io_fail_tmo option in Fibre Channel devices.

The DM Multipath fast_io_fail_tmo option takes precedence over replacement_timeout. Every time the multipathd service is reloaded, it resets recovery_tmo to the value of the fast_io_fail_tmo configuration option. Use the DM Multipath fast_io_fail_tmo configuration option to override recovery_tmo in devices managed by DM Multipath.
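The precedence described above can be summarized as a small decision function. This is a simplified model for illustration only, not how iscsid or multipathd are implemented. The function name effective_recovery_tmo and its arguments are hypothetical, and the sketch treats an empty second argument as "fast_io_fail_tmo is not configured".

```shell
# Print the recovery_tmo value that ends up in effect for one iSCSI device.
#   $1  replacement_timeout configured for iSCSI
#   $2  fast_io_fail_tmo configured in DM Multipath, or empty if unset
#   $3  "yes" if the device is managed by DM Multipath
effective_recovery_tmo() {
    replacement_timeout=$1
    fast_io_fail_tmo=$2
    multipath_managed=$3

    if [ "$multipath_managed" = "yes" ] && [ -n "$fast_io_fail_tmo" ]; then
        # DM Multipath takes precedence for the devices it manages.
        printf '%s\n' "$fast_io_fail_tmo"
    else
        printf '%s\n' "$replacement_timeout"
    fi
}

effective_recovery_tmo 120 5 yes   # prints 5
effective_recovery_tmo 120 5 no    # prints 120
```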

Chapter 8. Using Fibre Channel devices

Red Hat Enterprise Linux 9 provides the following native Fibre Channel drivers:

- lpfc

- qla2xxx

- zfcp

8.1. Re-scanning Fibre Channel logical units after resizing a LUN

If you changed the logical unit number (LUN) size on the external storage, use the echo command to update the kernel’s view of the size.

Procedure

- Determine which devices are paths for a multipath logical unit:

      # multipath -ll

- Re-scan Fibre Channel logical units on a system that uses multipathing:

      # echo 1 > /sys/block/<device_ID>/device/rescan

  Replace <device_ID> with the ID of your device, for example sda.
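When a multipath logical unit has several paths, the rescan has to be repeated for each path device. The following sketch loops over the device names that you pass to it. The function name rescan_devices and the SYSFS_ROOT variable are hypothetical; SYSFS_ROOT exists only so that the function can be tried against a scratch directory and defaults to /sys.

```shell
# Write 1 to the rescan attribute of each named block device.
# Devices without a writable rescan attribute are reported and skipped.
rescan_devices() {
    sysfs=${SYSFS_ROOT:-/sys}
    for dev in "$@"; do
        node="$sysfs/block/$dev/device/rescan"
        if [ -w "$node" ]; then
            echo 1 > "$node"
            echo "rescanned $dev"
        else
            echo "skipped $dev: $node is not writable" >&2
        fi
    done
}

# Example (as root), with the path devices reported by multipath -ll:
#   rescan_devices sda sdb
```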

8.2. Determining the link loss behavior of device using Fibre Channel

If a driver implements the Transport dev_loss_tmo callback, access attempts to a device through a link will be blocked when a transport problem is detected.

Procedure

- Determine the state of a remote port:

      $ cat /sys/class/fc_remote_ports/rport-host:bus:remote-port/port_state

  This command returns one of the following outputs:

  - Blocked when the remote port along with devices accessed through it are blocked.

  - Online if the remote port is operating normally.

  If the problem is not resolved within dev_loss_tmo seconds, the rport and devices will be unblocked. All I/O running on that device along with any new I/O sent to that device will fail.

- When a link loss exceeds dev_loss_tmo, the scsi_device and sdN devices are removed. Typically, the Fibre Channel class does not alter the device, for example, /dev/sda remains /dev/sda. This is because the target binding is saved by the Fibre Channel driver and when the target port returns, the SCSI addresses are recreated faithfully. However, this cannot be guaranteed; the device is restored only if no additional change is made to the LUN configuration on the storage box.
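To check every remote port at once instead of one port at a time, you can loop over the fc_remote_ports directory. The following is a minimal sketch. The function name list_rport_states and the SYSFS_ROOT variable are hypothetical; SYSFS_ROOT exists only so that the function can be tried against a scratch directory and defaults to /sys.

```shell
# Print "<rport name> <port_state>" for every Fibre Channel remote port.
# Prints nothing if the system has no remote ports.
list_rport_states() {
    sysfs=${SYSFS_ROOT:-/sys}
    for state_file in "$sysfs"/class/fc_remote_ports/rport-*/port_state; do
        # If the glob matched nothing, it is left as-is and is not readable.
        [ -r "$state_file" ] || continue
        rport=$(basename "$(dirname "$state_file")")
        printf '%s %s\n' "$rport" "$(cat "$state_file")"
    done
}

# Example: list_rport_states
```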

8.3. Fibre Channel configuration files

The following is the list of configuration files in the /sys/class/ directory that provide the user-space API to Fibre Channel.

The items use the following variables:

H - Host number

B - Bus number

T - Target

L - Logical unit (LUNs)

R - Remote port number

If your system uses multipath software, consult your hardware vendor before changing any of the values described in this section.

Transport configuration in /sys/class/fc_transport/targetH:B:T/

port_id - 24-bit port ID/address

node_name - 64-bit node name

port_name - 64-bit port name
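These attributes are plain files that you can read directly. The following sketch prints the three transport attributes for one target. The function name show_fc_target and the SYSFS_ROOT variable are hypothetical; SYSFS_ROOT exists only so that the function can be tried against a scratch directory and defaults to /sys.

```shell
# Print port_id, node_name, and port_name for one Fibre Channel target.
#   $1  target address in the form H:B:T, for example 1:0:0
show_fc_target() {
    sysfs=${SYSFS_ROOT:-/sys}
    dir="$sysfs/class/fc_transport/target$1"
    for attr in port_id node_name port_name; do
        # Skip attributes that are missing or unreadable.
        if [ -r "$dir/$attr" ]; then
            printf '%s=%s\n' "$attr" "$(cat "$dir/$attr")"
        fi
    done
}

# Example: show_fc_target 1:0:0
```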

Remote port configuration in /sys/class/fc_remote_ports/rport-H:B-R/

- port_id

- node_name

- port_name

- dev_loss_tmo

  Controls when the SCSI device gets removed from the system. After dev_loss_tmo triggers, the SCSI device is removed. In the multipath.conf file, you can set dev_loss_tmo to infinity.

  In Red Hat Enterprise Linux 9, if you do not set the