Fuse on OpenShift Guide

Installing and developing with Red Hat Fuse on OpenShift

Abstract

Preface

Red Hat Fuse on OpenShift enables you to deploy Fuse applications on OpenShift Container Platform.

Chapter 1. Before You Begin

Release Notes

See the Release Notes for important information about this release.

Version Compatibility and Support

See the Red Hat JBoss Fuse Supported Configurations page for details of version compatibility and support.

Support for Windows O/S

The developer tooling (oc client and Container Development Kit) for Fuse on OpenShift is fully supported on the Windows O/S. The examples shown in Linux command-line syntax can also work on the Windows O/S, provided they are modified appropriately to obey Windows command-line syntax.

1.1. Comparison: Fuse Standalone and Fuse on OpenShift

There are several major functionality differences:

- An application deployment with Fuse on OpenShift consists of an application and all required runtime components packaged inside a Docker image. Applications are not deployed to a runtime as with Fuse Standalone; instead, the application image itself is a complete runtime environment that is deployed and managed through OpenShift.

- Patching in an OpenShift environment is different from Fuse Standalone, as each application image is a complete runtime environment. To apply a patch, the application image is rebuilt and redeployed within OpenShift. Core OpenShift management capabilities allow for rolling upgrades and side-by-side deployment to maintain availability of your application during upgrade.

- Provisioning and clustering capabilities provided by Fabric in Fuse have been replaced with equivalent functionality in Kubernetes and OpenShift. There is no need to create or configure individual child containers as OpenShift automatically does this for you as part of deploying and scaling your application.

- Fabric endpoints are not used within an OpenShift environment. Kubernetes services must be used instead.

- Messaging services are created and managed using the A-MQ for OpenShift image and not included directly within a Karaf container. Fuse on OpenShift provides an enhanced version of the camel-amq component to allow for seamless connectivity to messaging services in OpenShift through Kubernetes.

- Live updates to running Karaf instances using the Karaf shell are strongly discouraged, because updates are not preserved if an application container is restarted or scaled up. This is a fundamental tenet of immutable architecture and essential to achieving scalability and flexibility within OpenShift.

- Maven dependencies directly linked to Red Hat Fuse components are supported by Red Hat. Third-party Maven dependencies introduced by users are not supported.

- The SSH Agent is not included in the Apache Karaf micro-container, so you cannot connect to it using the bin/client console client.

- Protocol compatibility and Camel components within a Fuse on OpenShift application: non-HTTP based communications must use TLS and SNI to be routable from outside OpenShift into a Fuse service (Camel consumer endpoint).

Chapter 2. Getting Started for Administrators

If you are an OpenShift administrator, you can prepare an OpenShift cluster for Fuse on OpenShift deployments by:

- Configuring authentication to the Red Hat Container Registry.

- Installing the Fuse on OpenShift images and templates.

2.1. Configuring Red Hat Container Registry Authentication

You must configure authentication to the Red Hat container registry before you can import and use the Red Hat Fuse on OpenShift image streams and templates.

Procedure

Log in to the OpenShift Server as an administrator:

oc login -u system:admin

Log in to the OpenShift project where you want to install the image streams. We recommend that you use the openshift project for the Fuse on OpenShift image streams.

oc project openshift

Create a docker-registry secret using either your Red Hat Customer Portal account or your Red Hat Developer Program account credentials. Replace <pull_secret_name> with the name of the secret that you wish to create.

oc create secret docker-registry <pull_secret_name> \
  --docker-server=registry.redhat.io \
  --docker-username=CUSTOMER_PORTAL_USERNAME \
  --docker-password=CUSTOMER_PORTAL_PASSWORD \
  --docker-email=EMAIL_ADDRESS

Note: You need to create a docker-registry secret in every new namespace where the image streams reside and in every namespace that uses registry.redhat.io.

To use the secret for pulling images for pods, add the secret to your service account. The name of the service account must match the name of the service account that the pod uses. The following example uses default, which is the default service account.

oc secrets link default <pull_secret_name> --for=pull

To use the secret for pushing and pulling build images, the secret must be mountable inside of a pod. To mount the secret, use the following command:

oc secrets link builder <pull_secret_name>
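Because the secret has to exist in every namespace that pulls from registry.redhat.io, it can help to wrap the create and link steps in a small function. The following is a minimal sketch under stated assumptions: the function name setup_pull_secret and the REGISTRY_* variables are illustrative names, not part of Fuse or OpenShift, and the oc commands are the same ones used in the procedure.

```shell
#!/usr/bin/env bash
# Sketch: repeat the pull-secret steps from the procedure in one namespace.
# setup_pull_secret and the REGISTRY_* variables are illustrative names.

setup_pull_secret() {
  local namespace="$1" secret_name="$2"

  # Create the docker-registry secret in the target namespace.
  oc create secret docker-registry "$secret_name" \
    --docker-server=registry.redhat.io \
    --docker-username="$REGISTRY_USERNAME" \
    --docker-password="$REGISTRY_PASSWORD" \
    --docker-email="$REGISTRY_EMAIL" \
    -n "$namespace" || return 1

  # Let pods that run under the default service account pull with the secret.
  oc secrets link default "$secret_name" --for=pull -n "$namespace" || return 1

  # Make the secret mountable for builds run by the builder service account.
  oc secrets link builder "$secret_name" -n "$namespace"
}

# Example call (assumes you are logged in and the variables are exported):
#   for ns in openshift test; do setup_pull_secret "$ns" my-pull-secret; done
```

The function stops at the first failing step, so a namespace is never left with a linked but missing secret.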

If you do not want to use your Red Hat account username and password to create the secret, you should create an authentication token by using a registry service account.


2.2. Installing Fuse Imagestreams and Templates on the OpenShift 4.x Server

OpenShift Container Platform 4.1 uses the Samples Operator, which operates in the openshift namespace, to install and update the Red Hat Enterprise Linux (RHEL)-based OpenShift Container Platform imagestreams and templates. To install the Fuse on OpenShift imagestreams and templates:

- Reconfigure the Samples Operator.

- Add the Fuse imagestreams and templates to the Skipped Imagestreams and Skipped Templates fields:

  - Skipped Imagestreams: Imagestreams that are in the Samples Operator’s inventory, but that the cluster administrator wants the Operator to ignore or not manage.

  - Skipped Templates: Templates that are in the Samples Operator’s inventory, but that the cluster administrator wants the Operator to ignore or not manage.

Prerequisites

- You have access to OpenShift Server.

- You have configured authentication to the Red Hat Container Registry.

- Optionally, if you want the Fuse templates to be visible in the OpenShift dashboard after you install them, you must first install the service catalog and the template service broker as described in the OpenShift documentation (https://docs.openshift.com/container-platform/4.1/applications/service_brokers/installing-service-catalog.html).

Procedure

Start the OpenShift 4 Server.

Log in to the OpenShift Server as an administrator.

oc login -u system:admin

Verify that you are using the project for which you created a docker-registry secret.

oc project openshift

View the current configuration of the Samples Operator.

oc get configs.samples.operator.openshift.io -n openshift-cluster-samples-operator -o yaml

Configure the Samples Operator to ignore the Fuse templates and image streams that are added.

oc edit configs.samples.operator.openshift.io -n openshift-cluster-samples-operator

Add the Fuse imagestreams and templates to the Skipped Imagestreams and Skipped Templates sections respectively.

Install the Fuse on OpenShift image streams.

BASEURL=https://raw.githubusercontent.com/jboss-fuse/application-templates/application-templates-2.1.fuse-740025-redhat-00003
oc create -n openshift -f ${BASEURL}/fis-image-streams.json

Install the quickstart templates.

Install the templates for the Fuse Console.

oc create -n openshift -f https://raw.githubusercontent.com/jboss-fuse/application-templates/application-templates-2.1.fuse-740025-redhat-00003/fis-console-cluster-template.json
oc create -n openshift -f https://raw.githubusercontent.com/jboss-fuse/application-templates/application-templates-2.1.fuse-740025-redhat-00003/fis-console-namespace-template.json

Note: For information on deploying the Fuse Console, see Set up Fuse Console on OpenShift.

(Optional) View the installed Fuse on OpenShift images and templates:

oc get template -n openshift
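For the Samples Operator step in this procedure, the skipped lists live under spec in the operator's configuration object. The sketch below writes a small patch file and wraps an oc patch call as an alternative to editing interactively. It rests on assumptions: the entry names are placeholders (use the names found in the image stream and template files you install), the file name is arbitrary, and the configuration object is assumed to be named cluster.

```shell
#!/usr/bin/env bash
# Sketch: build a merge patch that sets the Samples Operator's skipped lists.
# The entry names below are placeholders, not real Fuse object names.

cat > samples-skip-patch.yaml <<'EOF'
spec:
  skippedImagestreams:
    - example-fuse-imagestream
  skippedTemplates:
    - example-fuse-template
EOF

# Assumption: the Samples Operator configuration object is named "cluster".
apply_samples_skip_patch() {
  oc patch configs.samples.operator.openshift.io cluster \
    --type merge -p "$(cat samples-skip-patch.yaml)"
}

# Example call (assumes you are logged in as a cluster administrator):
#   apply_samples_skip_patch
```

A merge patch replaces each list as a whole, so if the skipped lists already contain entries, include them in the patch file as well or use oc edit as shown in the procedure.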

2.3. Installing Apicurito Operator on the OpenShift 4.x Server

Red Hat Fuse on OpenShift provides Apicurito, a web-based API designer that you can use to design REST APIs. The new Apicurito Operator simplifies installing and upgrading Apicurito on OpenShift Container Platform 4.1. For Fuse 7.4, the Apicurito Operator is available in the OperatorHub. Follow the steps below to install the operator.

Prerequisites

- You have access to OpenShift Server.

- You have configured authentication to the Red Hat Container Registry.

Procedure

- Start the OpenShift 4.x Server.

- Navigate to the OpenShift console in your browser. Log in to the console with your credentials.

- Click Catalog and then click OperatorHub.

- Enter Apicurito in the search field and press the Enter key. You can see the Apicurito Operator in the right-hand side panel.

- Click Apicurito Operator. The Apicurito Operator install window is displayed.

- Click Install. The Create Operator Subscription form is displayed. The form has options for:

  - Installation mode

  - Update Channel

  - Approval Strategy

  Select the options according to your preferences, or keep the default values.

- Click Subscribe. The Apicurito Operator is now installed in the selected namespace.

- To verify, click Catalog and then click Installed Operators. You can see the Apicurito Operator in the list.

- Click Apicurito Operator in the Name column. Click Create New under Provided APIs. A new custom resource (CR) is created with the default values. The following fields are supported as part of the CR:

  - size: how many pods the Apicurito operator will have.

  - image: the Apicurito image. Changing this image in an existing installation will trigger an upgrade of the operator.

- Click Create. This creates a new apicurito-service.

2.4. Installing Fuse Imagestreams and Templates on the OpenShift 3.x Server

After you configure authentication to the Red Hat container registry, import and use the Red Hat Fuse on OpenShift image streams and templates.

Procedure

Start the OpenShift Server.

Log in to the OpenShift Server as an administrator.

oc login -u system:admin

Verify that you are using the project for which you created a docker-registry secret.

oc project openshift

Install the Fuse on OpenShift image streams.

BASEURL=https://raw.githubusercontent.com/jboss-fuse/application-templates/application-templates-2.1.fuse-740025-redhat-00003
oc create -n openshift -f ${BASEURL}/fis-image-streams.json

Install the quickstart templates.

Install the templates for the Fuse Console.

oc create -n openshift -f https://raw.githubusercontent.com/jboss-fuse/application-templates/application-templates-2.1.fuse-740025-redhat-00003/fis-console-cluster-template.json
oc create -n openshift -f https://raw.githubusercontent.com/jboss-fuse/application-templates/application-templates-2.1.fuse-740025-redhat-00003/fis-console-namespace-template.json

Note: For information on deploying the Fuse Console, see Set up Fuse Console on OpenShift.

Install the Apicurito template:

oc create -n openshift -f ${BASEURL}/fuse-apicurito.yml

(Optional) View the installed Fuse on OpenShift images and templates:

oc get template -n openshift
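Several steps in this procedure install files from the same release tag, so a loop over file names can cut down on repetition. The following is a minimal sketch, not the documented command: install_templates is a hypothetical helper, and the file names in the example call are placeholders that you would replace with the template files you actually want from the repository.

```shell
#!/usr/bin/env bash
# Sketch: install several template files from the same release tag.
# install_templates is an illustrative helper; the file names you pass in
# are relative to BASEURL.

BASEURL=https://raw.githubusercontent.com/jboss-fuse/application-templates/application-templates-2.1.fuse-740025-redhat-00003

install_templates() {
  local namespace="$1"
  shift
  local template
  for template in "$@"; do
    # "oc create" fails if the object already exists; "oc replace --force"
    # deletes and recreates it, which also covers updating an older copy.
    oc create -n "$namespace" -f "${BASEURL}/${template}" ||
      oc replace --force -n "$namespace" -f "${BASEURL}/${template}" ||
      return 1
  done
}

# Example call with placeholder file names:
#   install_templates openshift first-template.json second-template.json
```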

Chapter 3. Installing Fuse on OpenShift as a Non-admin User

You can start using Fuse on OpenShift by creating an application and deploying it to OpenShift. First you need to install Fuse on OpenShift images and templates.

3.1. Installing Fuse on OpenShift Images and Templates as a Non-admin User

Prerequisites

- You have access to an OpenShift server, either a virtual OpenShift server provided by CDK or a remote OpenShift server.

- You have configured authentication to the Red Hat Container Registry.


Procedure

In preparation for building and deploying the Fuse on OpenShift project, log in to the OpenShift Server as follows.

oc login -u developer -p developer https://OPENSHIFT_IP_ADDR:8443

Where OPENSHIFT_IP_ADDR is a placeholder for the OpenShift server’s IP address, because this IP address is not always the same.

Note: The developer user (with developer password) is a standard account that is automatically created on the virtual OpenShift Server by CDK. If you are accessing a remote server, use the URL and credentials provided by your OpenShift administrator.

Create a new project namespace called test (assuming it does not already exist).

oc new-project test

If the test project namespace already exists, switch to it.

oc project test

Install the Fuse on OpenShift image streams:

BASEURL=https://raw.githubusercontent.com/jboss-fuse/application-templates/application-templates-2.1.fuse-740025-redhat-00003
oc create -n test -f ${BASEURL}/fis-image-streams.json

The command output displays the Fuse image streams that are now available in your Fuse on OpenShift project.

Install the quickstart templates.

Install the templates for the Fuse Console.

oc create -n test -f ${BASEURL}/fis-console-cluster-template.json
oc create -n test -f ${BASEURL}/fis-console-namespace-template.json

Note: For information on deploying the Fuse Console, see Set up Fuse Console on OpenShift.

(Optional) View the installed Fuse on OpenShift images and templates.

oc get template -n test

In your browser, navigate to the OpenShift console:

- Use https://OPENSHIFT_IP_ADDR:8443 and replace OPENSHIFT_IP_ADDR with your OpenShift server’s IP address.

- Log in to the OpenShift console with your credentials (for example, with username developer and password developer).
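The two project steps in this procedure (create the test project, or switch to it if it already exists) can be combined into one helper. This is a small sketch under the assumption that you are already logged in with oc; ensure_project is an illustrative name.

```shell
#!/usr/bin/env bash
# Sketch: switch to a project if it exists, otherwise create it.
# ensure_project is an illustrative helper name.

ensure_project() {
  local project="$1"
  # "oc get project" succeeds only if the project exists and is visible to you.
  if oc get project "$project" >/dev/null 2>&1; then
    oc project "$project"
  else
    oc new-project "$project"
  fi
}

# Example call:
#   ensure_project test
```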

Chapter 4. Getting Started for Developers

4.1. Preparing Development Environment

The fundamental requirement for developing and testing Fuse on OpenShift projects is having access to an OpenShift Server. You have the following basic alternatives:

4.1.1. Installing Container Development Kit (CDK) on Your Local Machine

As a developer, if you want to get started quickly, the most practical alternative is to install Red Hat CDK on your local machine. Using CDK, you can boot a virtual machine (VM) instance that runs an image of OpenShift on Red Hat Enterprise Linux (RHEL) 7. An installation of CDK consists of the following key components:

- A virtual machine (libvirt, VirtualBox, or Hyper-V)

- Minishift to start and manage the Container Development Environment

Red Hat CDK is intended for development purposes only. It is not intended for other purposes, such as production environments, and may not address known security vulnerabilities. For full support of running mission-critical applications inside of docker-formatted containers, you need an active RHEL 7 or RHEL Atomic subscription. For more details, see Support for Red Hat Container Development Kit (CDK).

Prerequisites

Java Version

On your developer machine, make sure you have installed a Java version that is supported by Fuse 7.4. For details of the supported Java versions, see Supported Configurations.

Procedure

To install the CDK on your local machine:

- For Fuse on OpenShift, we recommend that you install version 3.9 of CDK. Detailed instructions for installing and using CDK 3.9 are provided in the Red Hat CDK 3.9 Getting Started Guide.

- Configure your OpenShift credentials to gain access to the Red Hat container registry by following the instructions in Configuring Red Hat Container Registry authentication.

- Install the Fuse on OpenShift images and templates manually as described in Chapter 2, Getting Started for Administrators.

  Note: Your version of CDK might have Fuse on OpenShift images and templates pre-installed. However, you must install (or update) the Fuse on OpenShift images and templates after you configure your OpenShift credentials.

- Before you proceed with the examples in this chapter, you should read and thoroughly understand the contents of the Red Hat CDK 3.9 Getting Started Guide.

4.1.2. Getting Remote Access to an Existing OpenShift Server

Your IT department might already have set up an OpenShift cluster on some server machines. In this case, the following requirements must be satisfied for getting started with Fuse on OpenShift:

- The server machines must be running a supported version of OpenShift Container Platform (as documented in the Supported Configurations page). The examples in this guide have been tested against version 3.11.

- Ask the OpenShift administrator to install the latest Fuse on OpenShift container base images and the Fuse on OpenShift templates on the OpenShift servers.

- Ask the OpenShift administrator to create a user account for you, having the usual developer permissions (enabling you to create, deploy, and run OpenShift projects).

- Ask the administrator for the URL of the OpenShift Server (which you can use either to browse to the OpenShift console or connect to OpenShift using the oc command-line client) and the login credentials for your account.

4.1.3. Installing Client-Side Tools

We recommend that you have the following tools installed on your developer machine:

- Apache Maven 3.6.x: Required for local builds of OpenShift projects. Download the appropriate package from the Apache Maven download page. Make sure that you have version 3.6.x or later installed, otherwise Maven might have problems resolving dependencies when you build your project.

- Git: Required for the OpenShift S2I source workflow and generally recommended for source control of your Fuse on OpenShift projects. Download the appropriate package from the Git Downloads page.

- OpenShift client: If you are using CDK, you can add the oc binary to your PATH using minishift oc-env, which displays the command you need to type into your shell (the output of oc-env will differ depending on OS and shell type):

$ minishift oc-env
export PATH="/Users/john/.minishift/cache/oc/v1.5.0:$PATH"
# Run this command to configure your shell:
# eval $(minishift oc-env)

  For more details, see Using the OpenShift Client Binary in CDK 3.9 Getting Started Guide.

  If you are not using CDK, follow the instructions in the CLI Reference to install the oc client tool.

- (Optional) Docker client: Advanced users might find it convenient to have the Docker client tool installed (to communicate with the docker daemon running on an OpenShift server). For information about specific binary installations for your operating system, see the Docker installation site.

  For more details, see Reusing the docker Daemon in CDK 3.9 Getting Started Guide.

Important: Make sure that you install versions of the oc tool and the docker tool that are compatible with the version of OpenShift running on the OpenShift Server.

Additional Resources

(Optional) Red Hat JBoss CodeReady Studio: Red Hat JBoss CodeReady Studio is an Eclipse-based development environment that includes support for developing Fuse on OpenShift applications. For details about how to install this development environment, see Install Red Hat JBoss CodeReady Studio.

4.1.4. Configuring Maven Repositories

Configure the Maven repositories, which hold the archetypes and artifacts that you will need for building a Fuse on OpenShift project on your local machine.

Procedure

- Open your Maven settings.xml file, which is usually located in ~/.m2/settings.xml (on Linux or macOS) or Documents and Settings\<USER_NAME>\.m2\settings.xml (on Windows).

- Add the following Maven repositories:

  - Maven central: https://repo1.maven.org/maven2

  - Red Hat GA repository: https://maven.repository.redhat.com/ga

  - Red Hat EA repository: https://maven.repository.redhat.com/earlyaccess/all

  You must add the preceding repositories to both the dependency repositories section and the plug-in repositories section of your settings.xml file.
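As an illustration of where these entries go, the sketch below writes a standalone example settings file with the three repositories listed in both the repositories and pluginRepositories sections. The profile id, the repository ids, and the output file name are assumptions chosen for this example; only the URLs come from the list above. The file is written to the current directory so that an existing ~/.m2/settings.xml is not overwritten.

```shell
#!/usr/bin/env bash
# Sketch: write an example Maven settings file with the three repositories.
# The profile id and repository ids are illustrative names.

cat > settings-example.xml <<'EOF'
<settings xmlns="http://maven.apache.org/SETTINGS/1.0.0">
  <profiles>
    <profile>
      <id>fuse-repos</id>
      <repositories>
        <repository>
          <id>maven-central</id>
          <url>https://repo1.maven.org/maven2</url>
        </repository>
        <repository>
          <id>redhat-ga</id>
          <url>https://maven.repository.redhat.com/ga</url>
        </repository>
        <repository>
          <id>redhat-ea</id>
          <url>https://maven.repository.redhat.com/earlyaccess/all</url>
        </repository>
      </repositories>
      <pluginRepositories>
        <pluginRepository>
          <id>maven-central</id>
          <url>https://repo1.maven.org/maven2</url>
        </pluginRepository>
        <pluginRepository>
          <id>redhat-ga</id>
          <url>https://maven.repository.redhat.com/ga</url>
        </pluginRepository>
        <pluginRepository>
          <id>redhat-ea</id>
          <url>https://maven.repository.redhat.com/earlyaccess/all</url>
        </pluginRepository>
      </pluginRepositories>
    </profile>
  </profiles>
  <activeProfiles>
    <activeProfile>fuse-repos</activeProfile>
  </activeProfiles>
</settings>
EOF
```

You can try the file without touching your own settings by passing it to Maven with the -s option, or copy the profile into your existing settings.xml.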


4.2. Creating and Deploying Applications on Fuse on OpenShift

You can start using Fuse on OpenShift by creating an application and deploying it to OpenShift using one of the following OpenShift Source-to-Image (S2I) application development workflows:

- S2I binary workflow

- S2I with build input from a binary source. This workflow is characterized by the fact that the build is partly executed on the developer’s own machine. After building a binary package locally, this workflow hands off the binary package to OpenShift. For more details, see Binary Source from the OpenShift 3.5 Developer Guide.

- S2I source workflow

- S2I with build input from a Git source. This workflow is characterized by the fact that the build is executed entirely on the OpenShift server. For more details, see Git Source from the OpenShift 3.5 Developer Guide.

4.2.1. Creating and Deploying a Project Using the S2I Binary Workflow

In this section, you will use the OpenShift S2I binary workflow to create, build, and deploy a Fuse on OpenShift project.

Procedure

Create a new Fuse on OpenShift project using a Maven archetype. For this example, we use an archetype that creates a sample Spring Boot Camel project. Open a new shell prompt and enter the following Maven command:

mvn org.apache.maven.plugins:maven-archetype-plugin:2.4:generate \
  -DarchetypeCatalog=https://maven.repository.redhat.com/ga/io/fabric8/archetypes/archetypes-catalog/2.2.0.fuse-740017-redhat-00003/archetypes-catalog-2.2.0.fuse-740017-redhat-00003-archetype-catalog.xml \
  -DarchetypeGroupId=org.jboss.fuse.fis.archetypes \
  -DarchetypeArtifactId=spring-boot-camel-xml-archetype \
  -DarchetypeVersion=2.2.0.fuse-740017-redhat-00003

The archetype plug-in switches to interactive mode to prompt you for the remaining fields. When prompted, enter org.example.fis for the groupId value and fuse74-spring-boot for the artifactId value. Accept the defaults for the remaining fields.

If the previous command exited with the BUILD SUCCESS status, you should now have a new Fuse on OpenShift project under the fuse74-spring-boot subdirectory. You can inspect the XML DSL code in the fuse74-spring-boot/src/main/resources/spring/camel-context.xml file. The demonstration code defines a simple Camel route that continuously sends a message containing a random number to the log.

In preparation for building and deploying the Fuse on OpenShift project, log in to the OpenShift Server as follows.

oc login -u developer -p developer https://OPENSHIFT_IP_ADDR:8443

Where OPENSHIFT_IP_ADDR is a placeholder for the OpenShift server’s IP address, because this IP address is not always the same.

Note: The developer user (with developer password) is a standard account that is automatically created on the virtual OpenShift Server by CDK. If you are accessing a remote server, use the URL and credentials provided by your OpenShift administrator.

Run the following command to ensure that Fuse on OpenShift images and templates are already installed and you can access them.

oc get template -n openshift

If the images and templates are not pre-installed, or if the provided versions are out of date, install (or update) the Fuse on OpenShift images and templates manually. For more information on how to install Fuse on OpenShift images, see Chapter 2, Getting Started for Administrators.

Create a new project namespace called test (assuming it does not already exist), as follows.

oc new-project test

If the test project namespace already exists, you can switch to it using the following command.

oc project test

You are now ready to build and deploy the fuse74-spring-boot project. Assuming you are still logged into OpenShift, change to the directory of the fuse74-spring-boot project, and then build and deploy the project, as follows.

cd fuse74-spring-boot
mvn fabric8:deploy -Popenshift

A successful build ends with the BUILD SUCCESS status.

Note: The first time you run this command, Maven has to download a lot of dependencies, which takes several minutes. Subsequent builds will be faster.

Navigate to the OpenShift console in your browser and log in to the console with your credentials (for example, with username developer and password developer).

In the OpenShift console, scroll down to find the test project namespace. Click the test project to open the test project namespace. The Overview tab of the test project opens, showing the fuse74-spring-boot application.

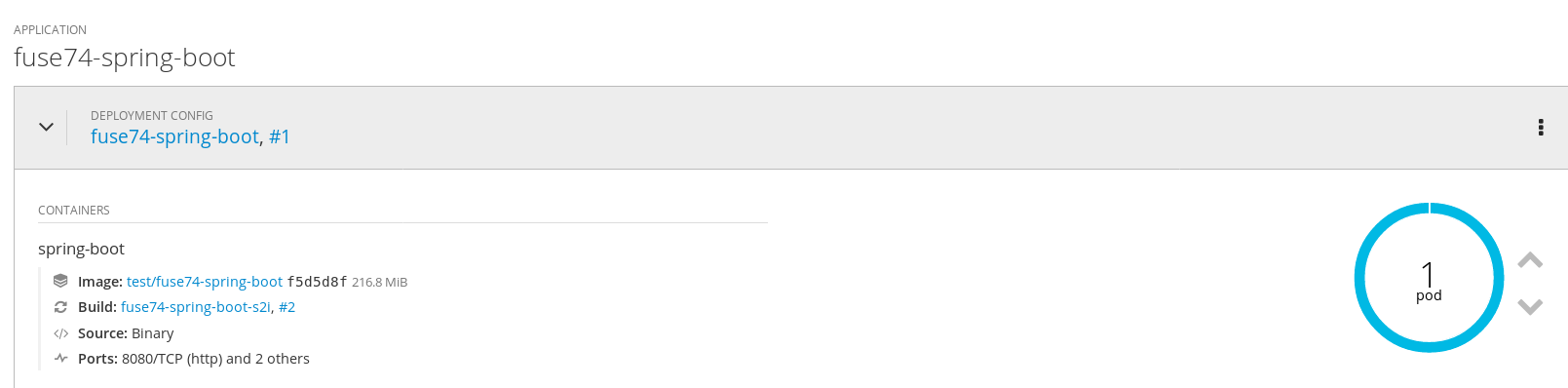

Click the arrow on the left of the fuse74-spring-boot deployment to expand and view the details of this deployment, as shown.

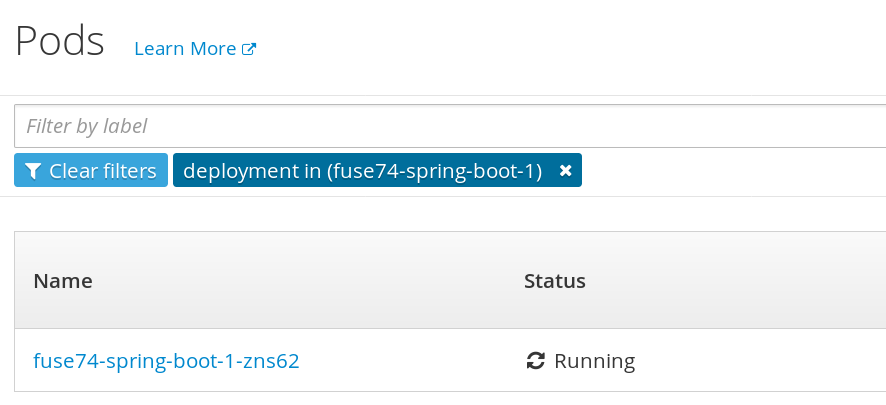

Click in the center of the pod icon (blue circle) to view the list of pods for fuse74-spring-boot.

Click on the pod Name (in this example, fuse74-spring-boot-1-kxdjm) to view the details of the running pod.

Click on the Logs tab to view the application log and scroll down the log to find the random number log messages generated by the Camel application.

Click Overview on the left-hand navigation bar to return to the applications overview in the test namespace. To shut down the running pod, click the down arrow beside the pod icon. When a dialog prompts you with the question Scale down deployment fuse74-spring-boot-1?, click Scale Down.

(Optional) If you are using CDK, you can shut down the virtual OpenShift Server completely by returning to the shell prompt and entering the following command:

minishift stop
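The console steps for viewing the log and scaling down also have command-line counterparts. The sketch below is one way to express them, not part of the documented procedure. It assumes that the deployment is an OpenShift DeploymentConfig named after the Maven artifactId (fuse74-spring-boot); the helper names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: command-line counterparts of the console steps for viewing the
# application log and scaling down. Assumption: the application runs as a
# DeploymentConfig (dc) whose name matches the Maven artifactId.

show_app_log() {
  local app="$1" namespace="$2"
  # List the pods in the namespace, then print the log of the latest deployment.
  oc get pods -n "$namespace" || return 1
  oc logs "dc/${app}" -n "$namespace"
}

scale_down_app() {
  local app="$1" namespace="$2"
  # Zero replicas stops the running pod without deleting the deployment.
  oc scale "dc/${app}" --replicas=0 -n "$namespace"
}

# Example calls (assume you are logged in with oc):
#   show_app_log fuse74-spring-boot test
#   scale_down_app fuse74-spring-boot test
```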

4.2.2. Undeploying and Redeploying the Project

You can undeploy or redeploy your projects, as follows:

Procedure

To undeploy the project, enter the command:

mvn fabric8:undeploy

To redeploy the project, enter the commands:

mvn fabric8:undeploy
mvn fabric8:deploy -Popenshift

4.2.3. Set up Fuse Console on OpenShift

In OpenShift, you can access the Fuse Console in two ways:

- From a specific pod so that you can monitor that single running Fuse container.

- By adding the centralized Fuse Console catalog item to your project so that you can monitor all the running Fuse containers in your project.

You can deploy the Fuse Console either from the OpenShift Console or from the command line.

- On OpenShift 4, if you want to manage Fuse 7.4 services with the Fuse Console, you must install the community version (Hawtio) as described in the Fuse 7.4 release notes.

- Security and user management for the Fuse Console is handled by OpenShift.

- The Fuse Console templates configure end-to-end encryption by default so that your Fuse Console requests are secured end-to-end, from the browser to the in-cluster services.

- Role-based access control (for users accessing the Fuse Console after it is deployed) is not yet available for Fuse on OpenShift.

Prerequisites

- Install the Fuse on OpenShift image streams and the templates for the Fuse Console as described in Fuse on OpenShift Guide.

- If you want to deploy the Fuse Console in cluster mode on the OpenShift Container Platform environment, you need the cluster admin role and the cluster mode template. Run the following command:

oc adm policy add-cluster-role-to-user cluster-admin system:serviceaccount:openshift-infra:template-instance-controller

The cluster mode template is only available, by default, on the latest version of the OpenShift Container Platform. It is not provided with the OpenShift Online default catalog.

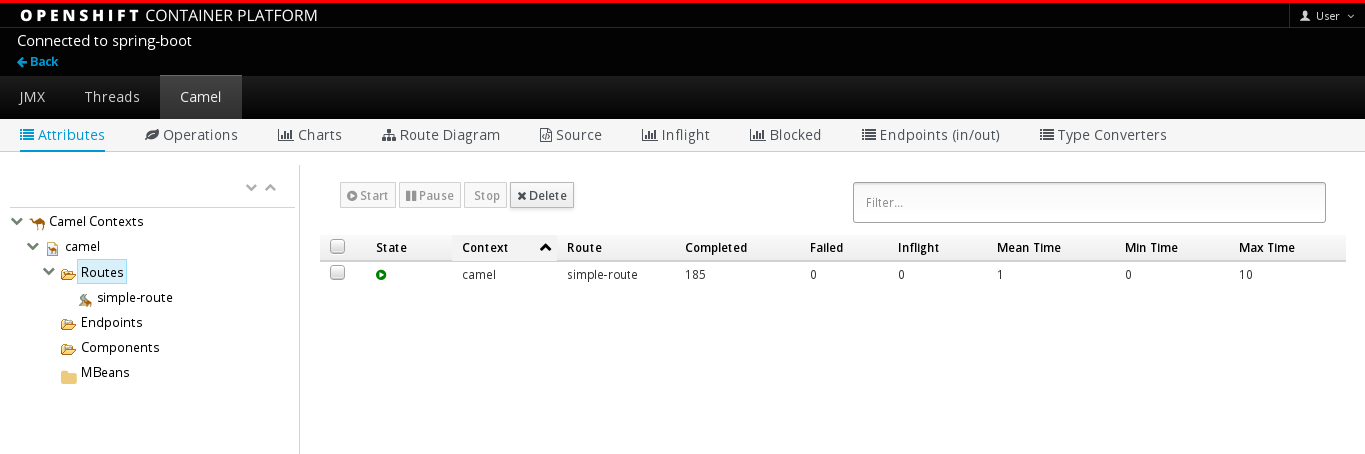

4.2.3.1. Monitoring a single Fuse pod from the Fuse Console

You can open the Fuse Console for a Fuse pod running on OpenShift:

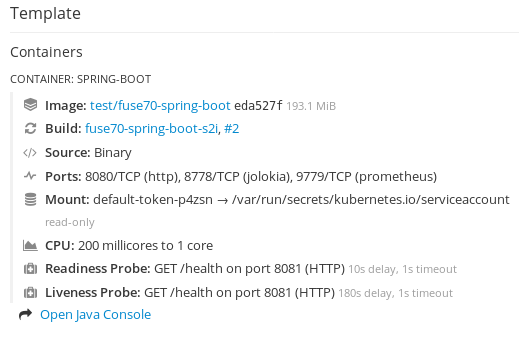

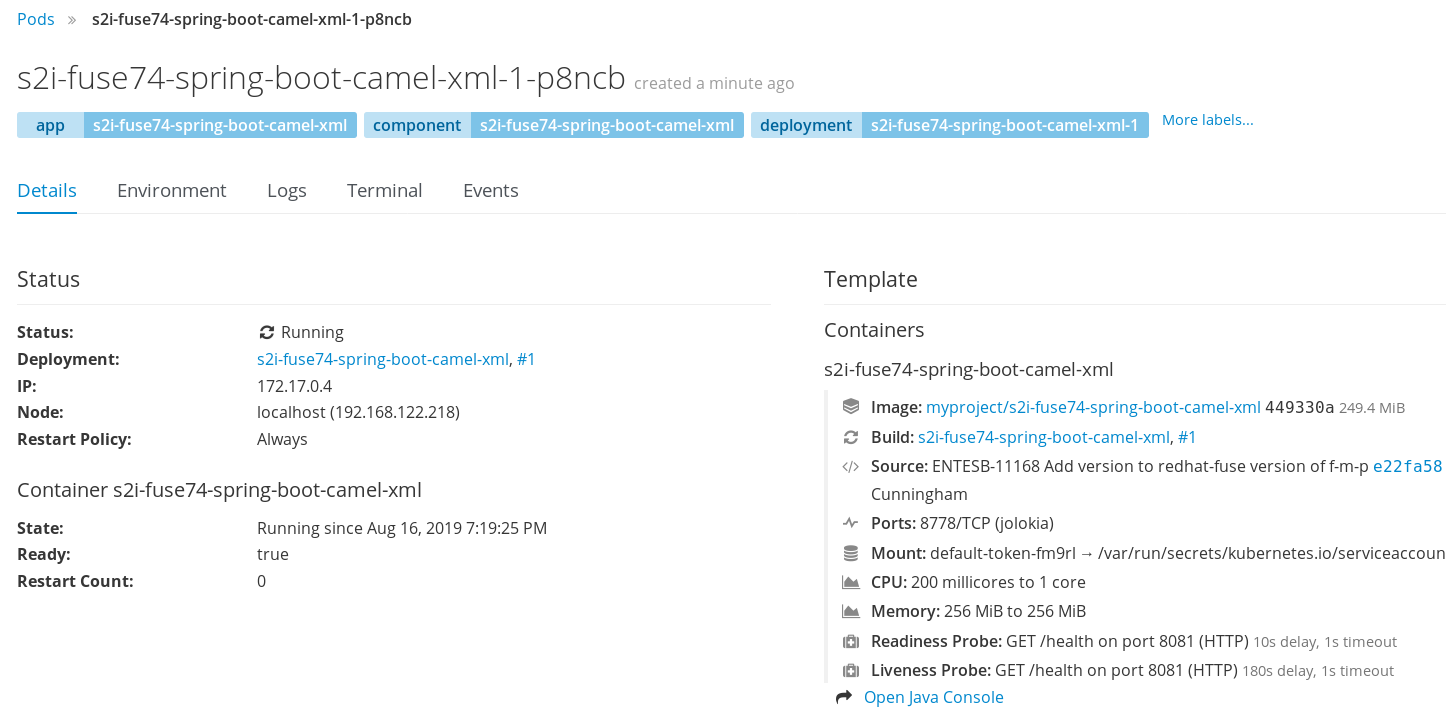

From the Applications → Pods view in your OpenShift project, click on the pod name to view the details of the running Fuse pod. On the right-hand side of this page, you see a summary of the container template:

From this view, click on the Open Java Console link to open the Fuse Console.

Note: To configure OpenShift to display a link to the Fuse Console in the pod view, the pod running a Fuse on OpenShift image must declare a TCP port with a name attribute set to jolokia.

4.2.3.2. Deploying the Fuse Console from the OpenShift Console

To deploy the Fuse Console on your OpenShift cluster from the OpenShift Console, follow these steps.

Procedure

- In the OpenShift console, open an existing project or create a new project.

Add the Fuse Console to your OpenShift project:

Select Add to Project → Browse Catalog.

The Select an item to add to the current project page opens.

In the Search field, type Fuse Console.

The Red Hat Fuse 7.x Console and Red Hat Fuse 7.x Console (cluster) items should appear as the search result.

If the Red Hat Fuse Console items do not appear as the search result, or if the items that appear are not the latest version, you can install the Fuse Console templates manually as described in the "Prepare the OpenShift server" section of the Fuse on OpenShift Guide.

Click one of the Red Hat Fuse Console items:

- Red Hat Fuse 7.x Console - This version of the Fuse Console discovers and connects to Fuse applications deployed in the current OpenShift project.

- Red Hat Fuse 7.x Console (cluster) - This version of the Fuse Console can discover and connect to Fuse applications deployed across multiple projects on the OpenShift cluster.

In the Red Hat Fuse Console wizard, click Next. The Configuration page of the wizard opens.

Optionally, you can change the default values of the configuration parameters.

Click Create.

The Results page of the wizard indicates that the Red Hat Fuse Console has been created.

- Click the Continue to the project overview link to verify that the Fuse Console application is added to the project.

To open the Fuse Console, click the provided URL link and then log in.

An Authorize Access page opens in the browser listing the required permissions.

Click Allow selected permissions.

The Fuse Console opens in the browser and shows the Fuse pods running in the project.

Click Connect for the application that you want to view.

A new browser window opens showing the application in the Fuse Console.

4.2.3.3. Deploying the Fuse Console from the command line

Table 4.1, “Fuse Console templates” describes the two OpenShift templates that you can use to access the Fuse Console from the command line, depending on the type of Fuse application deployment.

| Type | Description |
|---|---|
| cluster | Use an OAuth client that requires the cluster-admin role to be created. The Fuse Console can discover and connect to Fuse applications deployed across multiple namespaces or projects. |
| namespace | Use a service account as OAuth client, which only requires the admin role in a project to be created. This restricts the Fuse Console access to this single project, and as such acts as a single tenant deployment. |

Optionally, you can view a list of the template parameters by running the following command:

oc process --parameters -f https://raw.githubusercontent.com/jboss-fuse/application-templates/application-templates-2.1.fuse-740025-redhat-00003/fis-console-namespace-template.json

Procedure

To deploy the Fuse Console from the command line:

Create a new application based on a Fuse Console template by running one of the following commands (where myproject is the name of your project):

For the Fuse Console cluster template, where myhost is the hostname to access the Fuse Console:

oc new-app -n myproject -f https://raw.githubusercontent.com/jboss-fuse/application-templates/application-templates-2.1.fuse-740025-redhat-00003/fis-console-cluster-template.json -p ROUTE_HOSTNAME=myhost

For the Fuse Console namespace template:

oc new-app -n myproject -f https://raw.githubusercontent.com/jboss-fuse/application-templates/application-templates-2.1.fuse-740025-redhat-00003/fis-console-namespace-template.json

Note: You can omit the ROUTE_HOSTNAME parameter for the namespace template because OpenShift automatically generates one.

Obtain the status and the URL of your Fuse Console deployment by running this command:

oc status

- To access the Fuse Console from a browser, use the provided URL (for example, https://fuse-console.192.168.64.12.nip.io).

4.2.3.4. Ensuring that data displays correctly in the Fuse Console

If the display of the queues and connections in the Fuse Console is missing queues, missing connections, or displaying inconsistent icons, adjust the Jolokia collection size parameter that specifies the maximum number of elements in an array that Jolokia marshals in a response.

Procedure

In the upper right corner of the Fuse Console, click the user icon and then click Preferences.

- Increase the value of the Maximum collection size option (the default is 50,000).

- Click Close.
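To see why a limit that is too small can make queues or connections appear to be missing, the following plain-Java sketch mimics a collection being cut off at a maximum size before it is returned. It only illustrates the truncation effect: the class and method names (CollectionLimitSketch, marshal) are hypothetical, and this is not Jolokia's own code.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical illustration only: not part of Jolokia or the Fuse Console.
class CollectionLimitSketch {

    // Returns at most maxCollectionSize elements; anything beyond the limit is dropped.
    static <T> List<T> marshal(List<T> elements, int maxCollectionSize) {
        int limit = Math.min(elements.size(), maxCollectionSize);
        return new ArrayList<>(elements.subList(0, limit));
    }

    public static void main(String[] args) {
        List<String> queues = Arrays.asList("orders", "invoices", "payments");
        // With a limit of 2, the third queue never reaches the caller.
        System.out.println(marshal(queues, 2));
        // With a sufficiently large limit, every queue is returned.
        System.out.println(marshal(queues, 50_000));
    }
}
```

If the console shows fewer entries than you expect, a truncation of this kind is one possible cause, which is why raising the Maximum collection size option can help.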

4.2.4. Creating and Deploying a Project Using the S2I Source Workflow

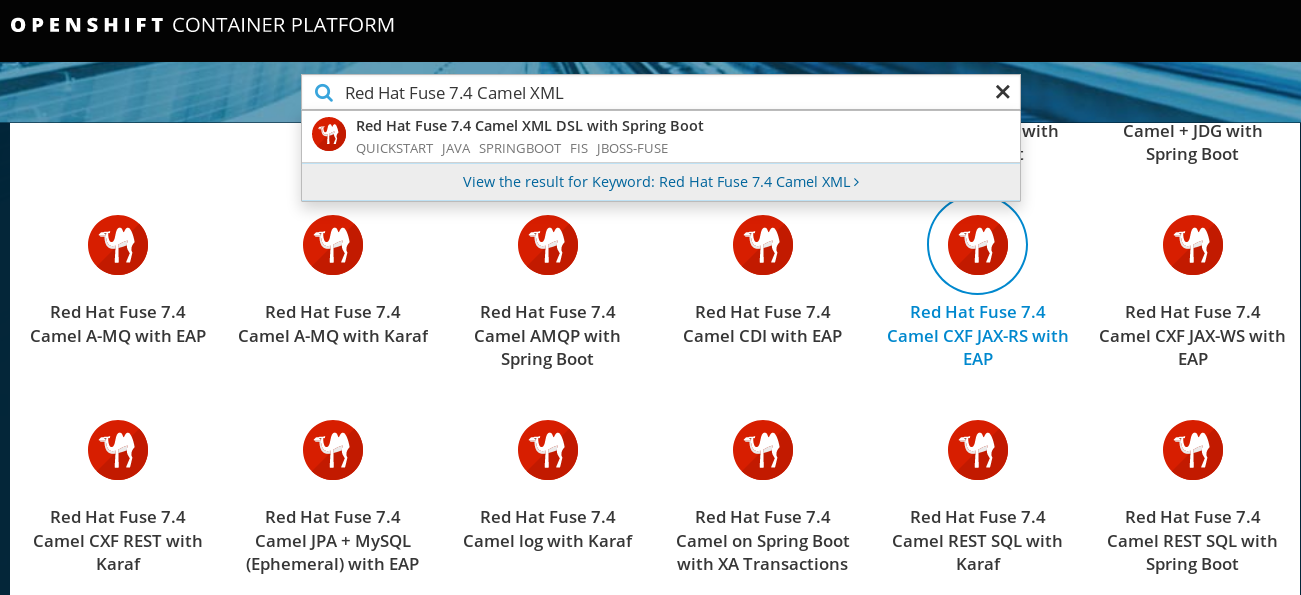

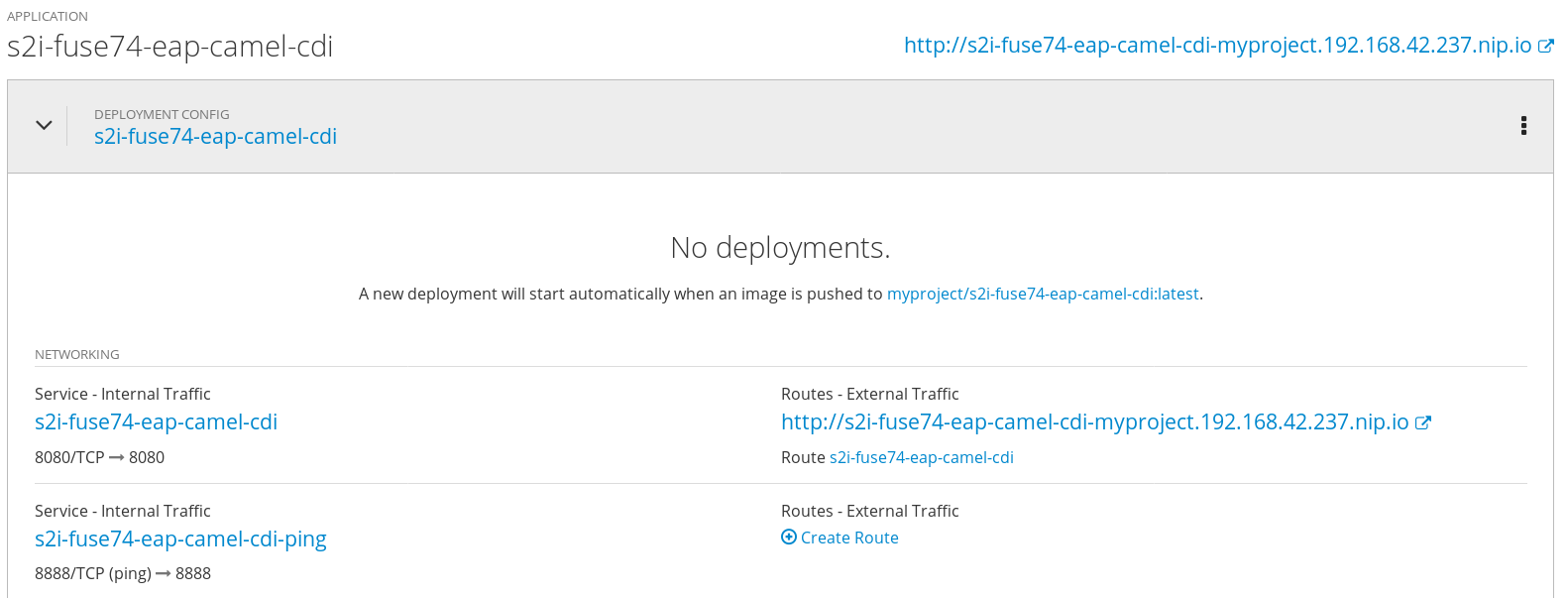

In this section, you will use the OpenShift S2I source workflow to build and deploy a Fuse on OpenShift project based on a template. The starting point for this demonstration is a quickstart project stored in a remote Git repository. Using the OpenShift console, you will download, build, and deploy this quickstart project in the OpenShift server.

Procedure

-

Navigate to the OpenShift console in your browser (https://OPENSHIFT_IP_ADDR:8443, replace

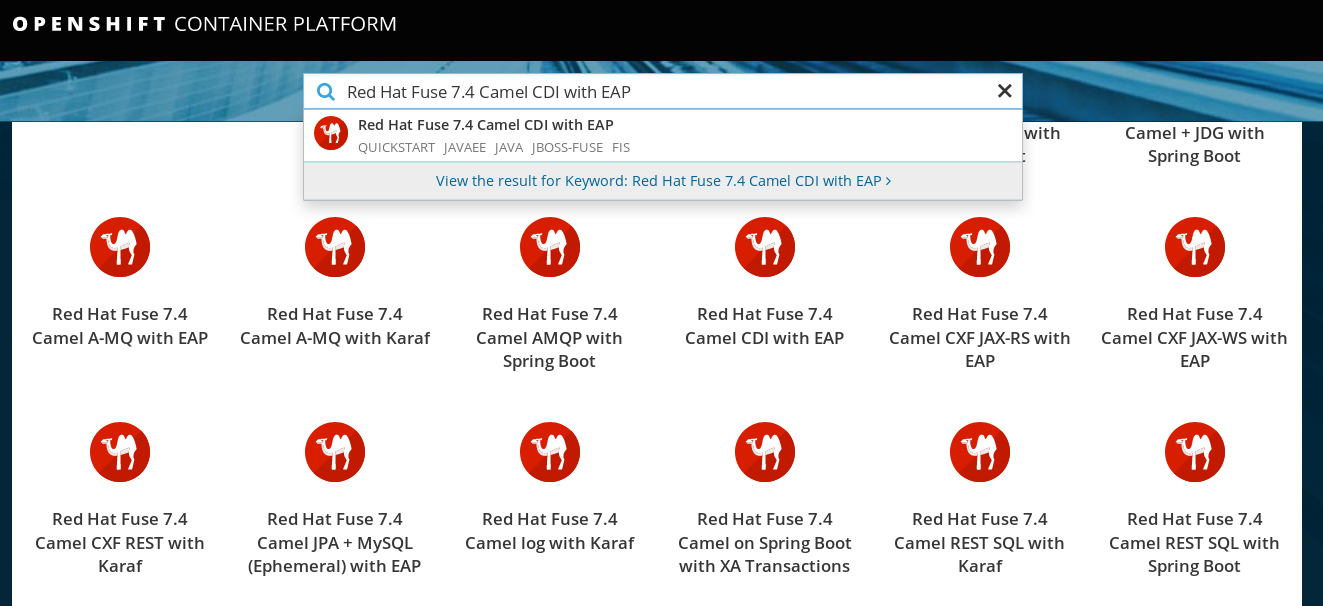

OPENSHIFT_IP_ADDRwith the IP address that was displayed in the case of CDK) and log in to the console with your credentials (for example, with usernamedeveloperand password,developer). In the Catalog search field, enter

Red Hat Fuse 7.4 Camel XMLas the search string and select the Red Hat Fuse 7.4 Camel XML DSL with Spring Boot template.

- The Information step of the template wizard opens. Click Next.

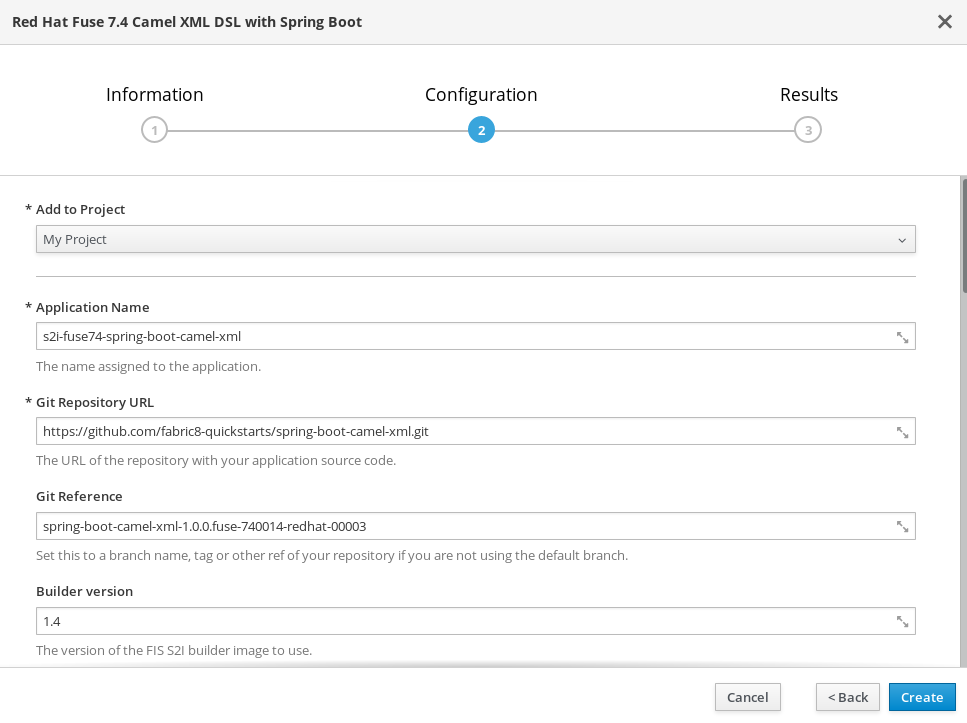

The Configuration step of the template wizard opens, as shown. From the Add to Project dropdown, select My Project.

Note: Alternatively, if you prefer to create a new project for this example, select Create Project from the Add to Project dropdown. A Project Name field then appears for you to fill in the name of the new project.

You can accept the default values for the rest of the settings in the Configuration step. Click Create.

Note: If you want to modify the application code (instead of just running the quickstart as is), you need to fork the original quickstart Git repository and fill in the appropriate values in the Git Repository URL and Git Reference fields.

- The Results step of the template wizard opens. Click Close.

- In the right-hand My Projects pane, click My Project. The Overview tab of the My Project project opens, showing the s2i-fuse74-spring-boot-camel-xml application.

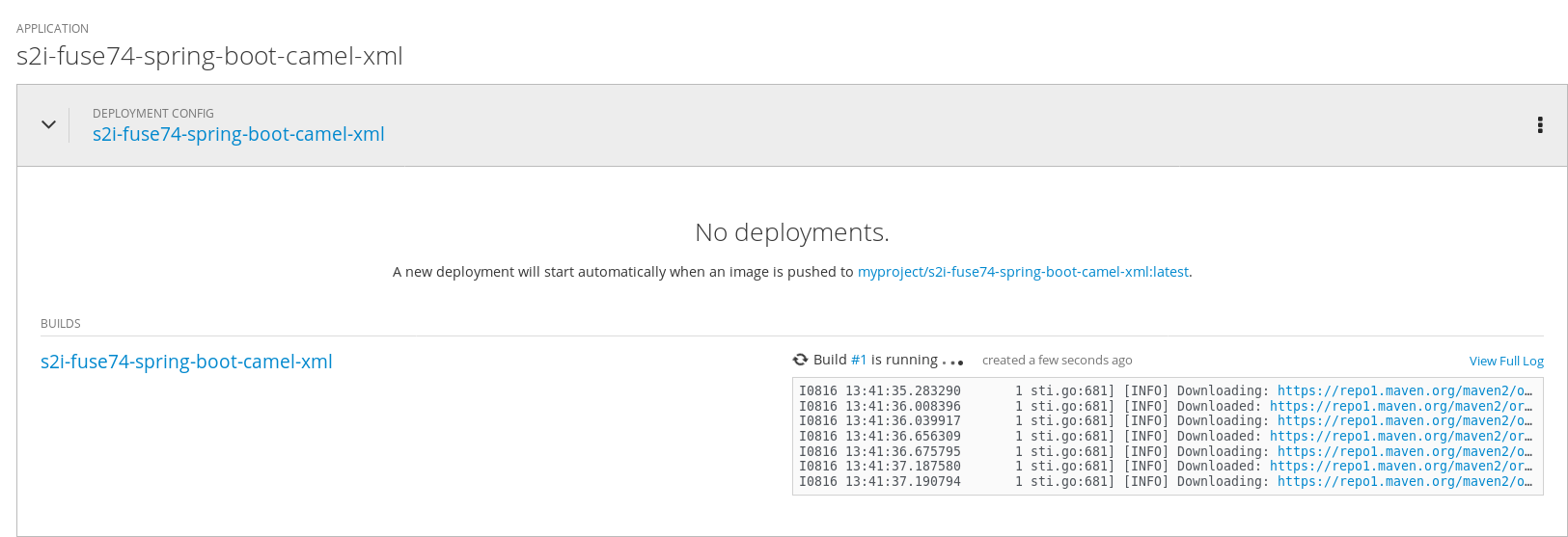

Click the arrow on the left of the s2i-fuse74-spring-boot-camel-xml deployment to expand and view the details of this deployment, as shown.

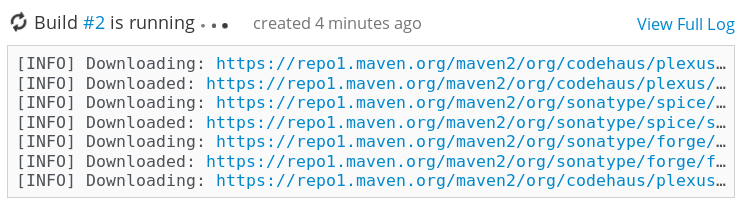

In this view, you can see the build log. If the build should fail for any reason, the build log can help you to diagnose the problem.

Note: The build can take several minutes to complete, because a lot of dependencies must be downloaded from remote Maven repositories. To speed up build times, we recommend you deploy a Nexus server on your local network.

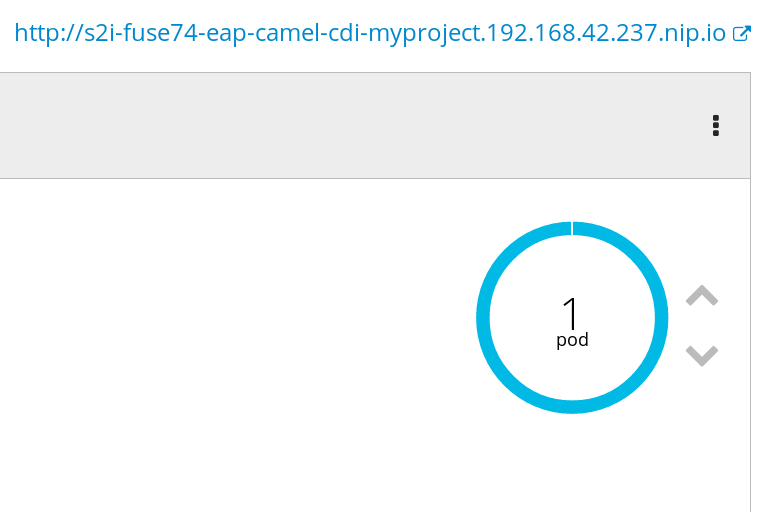

If the build completes successfully, the pod icon shows as a blue circle with 1 pod running. Click in the centre of the pod icon (blue circle) to view the list of pods for s2i-fuse74-spring-boot-camel-xml.

Note: If multiple pods are running, you see a list of running pods at this point. Otherwise (if there is just one pod), you go straight to the details view of the running pod.

The pod details view opens. Click on the Logs tab to view the application log and scroll down the log to find the log messages generated by the Camel application.

- Click Overview on the left-hand navigation bar to return to the overview of the applications in the My Project namespace. To shut down the running pod, click the down arrow beside the pod icon. When a dialog prompts you with the question Scale down deployment s2i-fuse74-spring-boot-camel-xml-1?, click Scale Down.

(Optional) If you are using CDK, you can shut down the virtual OpenShift Server completely by returning to the shell prompt and entering the following command:

minishift stop


Chapter 5. Developing an Application for the Spring Boot Image

This chapter explains how to develop applications for the Spring Boot image.

5.1. Creating a Spring Boot Project using Maven Archetype

To create a Spring Boot project using Maven archetypes, follow these steps.

Procedure

- Go to the appropriate directory on your system.

- In a shell prompt, enter the following mvn command to create a Spring Boot project.

mvn org.apache.maven.plugins:maven-archetype-plugin:2.4:generate \
  -DarchetypeCatalog=https://maven.repository.redhat.com/ga/io/fabric8/archetypes/archetypes-catalog/2.2.0.fuse-740017-redhat-00003/archetypes-catalog-2.2.0.fuse-740017-redhat-00003-archetype-catalog.xml \
  -DarchetypeGroupId=org.jboss.fuse.fis.archetypes \
  -DarchetypeArtifactId=spring-boot-camel-xml-archetype \
  -DarchetypeVersion=2.2.0.fuse-740017-redhat-00003

The archetype plug-in switches to interactive mode to prompt you for the remaining fields.

- When prompted, enter org.example.fis for the groupId value and fuse74-spring-boot for the artifactId value. Accept the defaults for the remaining fields.

- Follow the instructions in the quickstart on how to build and deploy the example.

For the full list of available Spring Boot archetypes, see Spring Boot Archetype Catalog.

5.2. Structure of the Camel Spring Boot Application

The directory structure of a Camel Spring Boot application is as follows:

Where the following files are important for developing an application:

- pom.xml

Includes additional dependencies. Camel components that are compatible with Spring Boot are available in the starter version, for example camel-jdbc-starter or camel-infinispan-starter. Once the starters are included in the pom.xml, they are automatically configured and registered with the Camel context at boot time. Users can configure the properties of the components using the application.properties file.

- application.properties

Allows you to externalize your configuration and work with the same application code in different environments. For details, see Externalized Configuration.

For example, in this Camel application you can configure certain properties such as the name of the application or the IP addresses, and so on.

- Application.java

This file is required to run your application. Here you import the camel-context.xml file to configure routes using the Spring DSL.

The Application.java file specifies the @SpringBootApplication annotation, which is equivalent to @Configuration, @EnableAutoConfiguration and @ComponentScan with their default attributes.

Application.java

@SpringBootApplication
// load the regular Spring XML file from the classpath that contains the Camel XML DSL
@ImportResource({"classpath:spring/camel-context.xml"})

It must have a main method to run the Spring Boot application.

- camel-context.xml

The src/main/resources/spring/camel-context.xml file is an important file for developing the application, as it contains the Camel routes.

Note: You can find more information on developing Spring Boot applications at Developing your first Spring Boot Application.

- src/main/fabric8/deployment.yml

Provides additional configuration that is merged with the default OpenShift configuration file generated by the fabric8-maven-plugin.

Note: This file is not part of the Spring Boot application itself, but it is used in all quickstarts to limit resources such as CPU and memory usage.

5.3. Spring Boot Archetype Catalog

The Spring Boot Archetype catalog includes the following examples.

| Name | Description |
|---|---|
| | Demonstrates how to use Apache Camel with Spring Boot based on a fabric8 Java base image. |
| | Demonstrates how to connect a Spring Boot application to an ActiveMQ broker and use JMS messaging between two Camel routes using Kubernetes or OpenShift. |
| | Demonstrates how to configure a Spring Boot application using Kubernetes ConfigMaps and Secrets. |
| | Demonstrates how to use Apache Camel to integrate a Spring Boot application running on Kubernetes or OpenShift with a remote Kie Server. |
| | Demonstrates how to connect a Spring Boot application to a JBoss Data Grid or Infinispan server using the Hot Rod protocol. |
| | Demonstrates how to use SQL via JDBC along with Camel’s REST DSL to expose a RESTful API. |
| | Spring Boot, Camel and XA Transactions. This example demonstrates how to run a Camel Service on Spring Boot that supports XA transactions on two external transactional resources: a JMS resource (A-MQ) and a database (PostgreSQL). This quickstart requires that the PostgreSQL database and the A-MQ broker are deployed and running first. One simple way to run them is to use the templates provided in the OpenShift service catalog. |
| | Demonstrates how to configure Camel routes in Spring Boot via a Blueprint configuration file. |
| | Demonstrates how to use Apache CXF with Spring Boot based on a fabric8 Java base image. The quickstart uses Spring Boot to configure an application that includes a CXF JAXRS endpoint with Swagger enabled. |
| | Demonstrates how to use Apache CXF with Spring Boot based on a fabric8 Java base image. The quickstart uses Spring Boot to configure an application that includes a CXF JAXWS endpoint. |

A Technology Preview quickstart is also available. The Spring Boot Camel XA Transactions quickstart demonstrates how to use Spring Boot to run a Camel service that supports XA transactions. This quickstart shows the use of two external transactional resources: a JMS (AMQ) broker and a database (PostgreSQL). You can find this quickstart here: https://github.com/jboss-fuse/spring-boot-camel-xa.

Technology Preview features are not supported with Red Hat production service level agreements (SLAs), might not be functionally complete, and Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process. For more information, see Red Hat Technology Preview features support scope.

5.4. BOM file for Spring Boot

The purpose of a Maven Bill of Materials (BOM) file is to provide a curated set of Maven dependency versions that work well together, preventing you from having to define versions individually for every Maven artifact.

Ensure you are using the correct Fuse BOM based on the version of Spring Boot you are using (Spring Boot 1 or Spring Boot 2).

The Fuse BOM for Spring Boot offers the following advantages:

- Defines versions for Maven dependencies, so that you do not need to specify the version when you add a dependency to your POM.

- Defines a set of curated dependencies that are fully tested and supported for a specific version of Fuse.

- Simplifies upgrades of Fuse.

Only the set of dependencies defined by a Fuse BOM are supported by Red Hat.

5.4.1. Incorporate the BOM file

To incorporate a BOM file into your Maven project, specify a dependencyManagement element in your project’s pom.xml file (or, possibly, in a parent POM file), as shown in the examples for both Spring Boot 2 and Spring Boot 1 below:

After specifying the BOM using the dependency management mechanism, you can add Maven dependencies to your POM without specifying the version of the artifact. For example, to add a dependency for the camel-hystrix component, add the following XML fragment to the dependencies element in your POM:

<dependency>
  <groupId>org.apache.camel</groupId>
  <artifactId>camel-hystrix-starter</artifactId>
</dependency>

Note how the Camel artifact ID is specified with the -starter suffix: you specify the Camel Hystrix component as camel-hystrix-starter, not as camel-hystrix. The Camel starter components are packaged in a way that is optimized for the Spring Boot environment.

5.5. Spring Boot Maven plugin

The Spring Boot Maven plugin is provided by Spring Boot and it is a developer utility for building and running a Spring Boot project:

- Building: create an executable JAR package for your Spring Boot application by entering the command mvn package in the project directory. The output of the build is placed in the target/ subdirectory of your Maven project.

- Running: for convenience, you can run the newly built application with the command mvn spring-boot:start.

To incorporate the Spring Boot Maven plugin into your project POM file, add the plugin configuration to the project/build/plugins section of your pom.xml file, as shown in the following example:

Chapter 6. Apache Camel in Spring Boot

6.1. Introduction to Camel Spring Boot

The Camel Spring Boot component provides auto configuration for Apache Camel. Auto-configuration of the Camel context auto-detects Camel routes available in the Spring context and registers the key Camel utilities such as producer template, consumer template, and the type converter as beans.

Every Camel Spring Boot application should use dependencyManagement with productized versions (see the quickstart pom). You can omit the version tags of dependencies declared afterwards, so that they do not override the versions from the BOM.

The camel-spring-boot JAR comes with a spring.factories file, so as soon as you add that dependency to your classpath, Spring Boot automatically configures Camel.

6.2. Introduction to Camel Spring Boot Starter

Apache Camel includes a Spring Boot starter module that allows you to develop Spring Boot applications using starters.

For more details, see sample application in the source code.

To use the starter, add the following snippet to your Spring Boot pom.xml file:

<dependency>
  <groupId>org.apache.camel</groupId>
  <artifactId>camel-spring-boot-starter</artifactId>
</dependency>

The starter allows you to add classes with your Camel routes, as shown in the snippet below. Once these routes are added to the class path, the routes are started automatically.

You can customize the Camel application in the application.properties or application.yml file.

Camel Spring Boot supports referring to beans by their ID in the configuration files (application.properties or the YAML file) when you configure any of the Camel starter components. In the src/main/resources/application.properties (or YAML) file, you can configure Camel options that refer to other beans by specifying the bean ID. For example, the xslt component can refer to a custom bean by the ID myExtensionFactory as follows:

camel.component.xslt.saxon-extension-functions=myExtensionFactory

You can then create the bean using the Spring Boot @Bean annotation as follows:

@Bean(name = "myExtensionFactory")
public ExtensionFunctionDefinition myExtensionFactory() {
    // build and return your ExtensionFunctionDefinition here
}

Or, in case of a Jackson ObjectMapper in the camel-jackson data-format:

camel.dataformat.json-jackson.object-mapper=myJacksonMapper

6.3. Auto-configured Camel context

Camel auto-configuration provides a CamelContext instance and creates a SpringCamelContext. It also initializes and performs shutdown of that context. This Camel context is registered in the Spring application context under the camelContext bean name, and you can access it like any other Spring bean.

For example, you can access the camelContext as shown below:

6.4. Auto-detecting Camel routes

Camel auto-configuration collects all the RouteBuilder instances from the Spring context and automatically injects them into the CamelContext. This simplifies the process of creating a new Camel route with the Spring Boot starter. You can create the routes by adding a @Component-annotated class to your classpath.

To create a new route RouteBuilder bean in your @Configuration class, see below:

6.5. Camel properties

Spring Boot auto configuration automatically connects to Spring Boot external configuration such as properties placeholders, OS environment variables, or system properties with Camel properties support.

These properties are defined in the application.properties file:

route.from = jms:invoices

Use as a system property:

java -Droute.to=jms:processed.invoices -jar mySpringApp.jar

Use as placeholders in a Camel route, for example from("{{route.from}}").to("{{route.to}}").
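The lookup behind such placeholders can be pictured with a small plain-Java sketch. It assumes the usual Spring Boot precedence, where a system property wins over a value from application.properties, and it resolves {{key}} tokens accordingly. The class and method names (PlaceholderSketch, resolve) are hypothetical, and this is an illustration rather than Camel's or Spring Boot's actual implementation.

```java
import java.util.Properties;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical illustration only: not Camel's property placeholder implementation.
class PlaceholderSketch {

    // Matches tokens of the form {{some.key}}.
    private static final Pattern PLACEHOLDER = Pattern.compile("\\{\\{([^}]+)\\}\\}");

    // Replaces every {{key}} in text, preferring systemProps over fileProps.
    static String resolve(String text, Properties fileProps, Properties systemProps) {
        Matcher matcher = PLACEHOLDER.matcher(text);
        StringBuffer out = new StringBuffer();
        while (matcher.find()) {
            String key = matcher.group(1).trim();
            String value = systemProps.getProperty(key, fileProps.getProperty(key));
            if (value == null) {
                throw new IllegalArgumentException("No value found for placeholder: " + key);
            }
            matcher.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        matcher.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        // Stands in for application.properties.
        Properties fileProps = new Properties();
        fileProps.setProperty("route.from", "jms:invoices");
        // Stands in for -Droute.to=jms:processed.invoices on the command line.
        Properties systemProps = new Properties();
        systemProps.setProperty("route.to", "jms:processed.invoices");

        System.out.println(resolve("{{route.from}}", fileProps, systemProps));
        System.out.println(resolve("{{route.to}}", fileProps, systemProps));
    }
}
```

Passing both property sources as arguments keeps the sketch deterministic; in a real application the values come from the Spring environment.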

6.6. Custom Camel context configuration

To perform operations on the CamelContext bean created by Camel auto-configuration, you need to register a CamelContextConfiguration instance in your Spring context as shown below:

The method CamelContextConfiguration.beforeApplicationStart(CamelContext) is called before the Spring context is started, so the CamelContext instance passed to this callback is fully auto-configured. You can add many instances of CamelContextConfiguration into your Spring context and all of them will be executed.

6.7. Disabling JMX

JMX is enabled by default. To disable JMX for the auto-configured CamelContext, set the camel.springboot.jmxEnabled property to false.

For example, you could add the following property to your application.properties file:

camel.springboot.jmxEnabled = false

6.8. Auto-configured consumer and producer templates

Camel auto configuration provides pre-configured ConsumerTemplate and ProducerTemplate instances. You can inject them into your Spring-managed beans:

By default, consumer templates and producer templates come with the endpoint cache sizes set to 1000. You can change those values using the following Spring properties:

camel.springboot.consumerTemplateCacheSize = 100
camel.springboot.producerTemplateCacheSize = 200

6.9. Auto-configured TypeConverter

Camel auto configuration registers a TypeConverter instance named typeConverter in the Spring context.

6.10. Spring type conversion API bridge

Spring provides its own type conversion API, which is similar to the Camel type converter API. Because of the similarities between the two APIs, Camel Spring Boot automatically registers a bridge converter (SpringTypeConverter) that delegates to the Spring conversion API. This means that, out of the box, Camel treats Spring converters like Camel ones.

This allows you to access both Camel and Spring converters using the Camel TypeConverter API, as shown below:

Here, Camel Spring Boot delegates conversion to Spring’s ConversionService instances available in the application context. If no ConversionService instance is available, Camel Spring Boot auto-configuration creates one.

6.11. Disabling type conversions features

To disable registering type conversion features of Camel Spring Boot such as TypeConverter instance or Spring bridge, set the camel.springboot.typeConversion property to false as shown below:

camel.springboot.typeConversion = false

6.12. Adding XML routes

By default, you can put Camel XML routes in the classpath under the directory camel, which camel-spring-boot will auto detect and include. From Camel version 2.17 onwards you can configure the directory name or disable this feature using the configuration option, as shown below:

# turn off
camel.springboot.xmlRoutes = false

# scan in the com/foo/routes classpath
camel.springboot.xmlRoutes = classpath:com/foo/routes/*.xml

The XML files should be Camel XML routes and not CamelContext such as:

When using Spring XML files with <camelContext>, you can configure Camel in the Spring XML file as well as in the application.properties file. For example, to set a name on Camel and turn on stream caching, add:

camel.springboot.name = MyCamel
camel.springboot.stream-caching-enabled=true

6.13. Adding XML Rest-DSL

By default, you can put Camel Rest-DSL XML routes in the classpath under the directory camel-rest, which camel-spring-boot will auto detect and include. You can configure the directory name or disable this feature using the configuration option, as shown below:

# turn off
camel.springboot.xmlRests = false

# scan in the com/foo/rests classpath
camel.springboot.xmlRests = classpath:com/foo/rests/*.xml

The Rest-DSL XML files should be Camel XML rests and not CamelContext such as:

6.14. Testing with Camel Spring Boot

When Camel runs on Spring Boot, Spring Boot automatically embeds Camel and all of its routes that are annotated with @Component. When testing with Spring Boot, you use @SpringBootTest instead of @ContextConfiguration to specify which configuration class to use.

When you have multiple Camel routes in different RouteBuilder classes, Camel Spring Boot will include all these routes. Hence, when you wish to test routes from only one RouteBuilder class you can use the following patterns to include or exclude which RouteBuilders to enable:

- java-routes-include-pattern: Used for including RouteBuilder classes that match the pattern.

- java-routes-exclude-pattern: Used for excluding RouteBuilder classes that match the pattern. Exclude takes precedence over include.

You can specify these patterns in your unit test classes as properties to @SpringBootTest annotation, as shown below:

@RunWith(CamelSpringBootRunner.class)
@SpringBootTest(classes = {MyApplication.class},
    properties = {"camel.springboot.java-routes-include-pattern=**/Foo*"})
public class FooTest {
    // test methods go here
}

In the FooTest class, the include pattern is **/Foo*, which is an Ant-style pattern. Here, the pattern starts with a double asterisk, which matches any leading package name. /Foo* means the class name must start with Foo, for example, FooRoute. You can run a test using the following Maven command:

mvn test -Dtest=FooTest
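To make the include and exclude pattern semantics more concrete, here is a minimal plain-Java sketch of Ant-style matching applied to class names. It assumes that the class name is compared as a slash-separated path (com/example/FooRoute) and it translates the pattern into a regular expression. The class and method names (AntPatternSketch, matches) are hypothetical; this is not the matcher that Camel itself uses.

```java
import java.util.regex.Pattern;

// Hypothetical illustration only: a simplified Ant-style matcher, not Camel's own.
class AntPatternSketch {

    // Translates an Ant-style pattern into an equivalent regular expression.
    static Pattern toRegex(String antPattern) {
        StringBuilder regex = new StringBuilder();
        int i = 0;
        while (i < antPattern.length()) {
            if (antPattern.startsWith("**/", i)) {
                regex.append("(?:.*/)?");   // zero or more leading package segments
                i += 3;
            } else if (antPattern.startsWith("**", i)) {
                regex.append(".*");         // anything, including separators
                i += 2;
            } else if (antPattern.charAt(i) == '*') {
                regex.append("[^/]*");      // anything within a single segment
                i++;
            } else if (antPattern.charAt(i) == '?') {
                regex.append("[^/]");       // exactly one character within a segment
                i++;
            } else {
                regex.append(Pattern.quote(String.valueOf(antPattern.charAt(i))));
                i++;
            }
        }
        return Pattern.compile(regex.toString());
    }

    // Checks a fully qualified class name against an Ant-style pattern.
    static boolean matches(String antPattern, String className) {
        String path = className.replace('.', '/');
        return toRegex(antPattern).matcher(path).matches();
    }

    public static void main(String[] args) {
        System.out.println(matches("**/Foo*", "com.example.FooRoute")); // true
        System.out.println(matches("**/Foo*", "com.example.BarRoute")); // false
    }
}
```

Under these assumptions, **/Foo* accepts FooRoute in any package but rejects a class whose simple name does not start with Foo.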

6.15. See Also

Chapter 7. Running a Camel Service on Spring Boot with XA Transactions

The Spring Boot Camel XA transactions quickstart demonstrates how to run a Camel Service on Spring Boot that supports XA transactions on two external transactional resources: a JMS resource (A-MQ) and a database (PostgreSQL). These external resources are provided by OpenShift and must be started before you run this quickstart.

7.1. StatefulSet resources

This quickstart uses OpenShift StatefulSet resources to guarantee the uniqueness of transaction managers, and it requires a PersistentVolume to store transaction logs. The application supports scaling on the StatefulSet resource. Each instance has its own in-process recovery manager. A special controller guarantees that when the application is scaled down, all terminated instances complete their work correctly without leaving pending transactions. The controller rolls back the scale-down operation if the recovery manager has not been able to flush all pending work before terminating. This quickstart uses the Spring Boot Narayana recovery controller.

7.2. Spring Boot Narayana Recovery Controller

The Spring Boot Narayana recovery controller lets you gracefully handle the scaling down phase of a StatefulSet by cleaning up pending transactions before termination. If a scaling down operation is executed and the pod is not clean after termination, the previous number of replicas is restored, effectively canceling the scaling down operation.

All pods of the StatefulSet require access to a shared volume that is used to store the termination status of each pod belonging to the StatefulSet. The pod-0 of the StatefulSet periodically checks the status and scales the StatefulSet to the right size if there is a mismatch.

In order for the recovery controller to work, edit permissions on the current namespace are required (a role binding is included in the set of resources published to OpenShift). The recovery controller can be disabled using the CLUSTER_RECOVERY_ENABLED environment variable. In this case, no special permissions are required on the service account, but any scale-down operation may leave pending transactions on the terminated pod without notice.
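The scale-down decision described in this section can be modeled in a few lines of plain Java. This is only a conceptual sketch, not the recovery controller's code: the class ScaleDownSketch, the reconcile method, and the shape of the status data (a map from pod name to a "clean" flag) are all assumptions made for illustration.

```java
import java.util.Map;

final class ScaleDownSketch {

    // Decide the replica count after a scale-down attempt.
    // terminatedClean is hypothetical status data: it maps each terminated
    // pod name to whether that pod flushed all pending transactions before
    // it terminated. In the real controller this status lives on the shared
    // volume; here it is passed in directly.
    static int reconcile(int previousReplicas, int requestedReplicas,
                         Map<String, Boolean> terminatedClean) {
        boolean allClean = terminatedClean.values().stream()
                .allMatch(Boolean::booleanValue);
        // A pod that is not clean cancels the scale-down by restoring
        // the previous number of replicas.
        return allClean ? requestedReplicas : previousReplicas;
    }

    public static void main(String[] args) {
        // Scaling from 3 to 1 replicas terminates pods 1 and 2.
        System.out.println(reconcile(3, 1, Map.of("app-1", true, "app-2", true)));  // 1
        System.out.println(reconcile(3, 1, Map.of("app-1", true, "app-2", false))); // 3
    }
}
```

In this model the pod names app-1 and app-2 are placeholders. The point is that one pod with pending work is enough to restore the previous replica count.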

7.3. Configuring Spring Boot Narayana Recovery Controller

The following example shows how to configure Narayana to work on OpenShift with the recovery controller.

Procedure

This is a sample application.properties file. Replace the following options in the Kubernetes yaml descriptor.

You need a shared volume to store both transactions and information related to termination. It can be mounted in the StatefulSet yaml descriptor as follows.

Camel Extension for Spring Boot Narayana Recovery Controller

If Camel is found in the Spring Boot application context, the Camel context is automatically stopped before flushing all pending transactions.

7.4. Running Camel Spring Boot XA Quickstart on OpenShift

This procedure shows how to run the quickstart on a running single-node OpenShift cluster.

Procedure

Download the Camel Spring Boot XA project.

git clone https://github.com/jboss-fuse/spring-boot-camel-xa

Navigate to the spring-boot-camel-xa directory and run the following command.

mvn clean install

Log in to the OpenShift Server.

oc login -u developer -p developer

Create a new project namespace called test (assuming it does not already exist).

oc new-project test

If the test project namespace already exists, switch to it.

oc project test

Install dependencies.

- From the OpenShift catalog, install postgresql using theuser as the username and Thepassword1! as the password.

- From the OpenShift catalog, install the A-MQ broker using theuser as the username and Thepassword1! as the password.

Change the PostgreSQL database to accept prepared transactions.

oc env dc/postgresql POSTGRESQL_MAX_PREPARED_TRANSACTIONS=100

Create a persistent volume claim for the transaction log.

oc create -f persistent-volume-claim.yml

Build and deploy your quickstart.

mvn fabric8:deploy -P openshift

Scale it up to the desired number of replicas.

oc scale statefulset spring-boot-camel-xa --replicas 3

Note: The pod name is used as the transaction manager id (spring.jta.transaction-manager-id property). The current implementation also limits the length of transaction manager ids. So please note that:

- The name of the StatefulSet is an identifier for the transaction system, so it must not be changed.

- You should name the StatefulSet so that all of its pod names are at most 23 characters long. Pod names are created by OpenShift using the convention: <statefulset-name>-0, <statefulset-name>-1 and so on. Narayana does its best to avoid having multiple recovery managers with the same id, so when the pod name is longer than the limit, the last 23 bytes are taken as the transaction manager id (after stripping some characters like -).
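The effect of the 23-character limit can be illustrated with a short sketch. This is not Narayana's implementation: the class name TransactionManagerIdSketch is hypothetical, the sketch assumes that only the - character is stripped, and it treats characters and bytes as equivalent (true for ASCII pod names).

```java
final class TransactionManagerIdSketch {

    // Limit stated in the note above.
    static final int MAX_LENGTH = 23;

    // Derive a transaction manager id from a pod name.
    // Assumption: only '-' is stripped; the exact set of stripped
    // characters is not specified in this guide.
    static String fromPodName(String podName) {
        if (podName.length() <= MAX_LENGTH) {
            return podName; // short names are used unchanged
        }
        String stripped = podName.replace("-", "");
        if (stripped.length() <= MAX_LENGTH) {
            return stripped;
        }
        // Keep the trailing part, which contains the pod ordinal.
        return stripped.substring(stripped.length() - MAX_LENGTH);
    }

    public static void main(String[] args) {
        // 22 characters, so the name fits as is.
        System.out.println(fromPodName("spring-boot-camel-xa-0"));
        // 32 characters, so the name is stripped and truncated.
        System.out.println(fromPodName("my-very-long-statefulset-name-10"));
    }
}
```

Keeping the tail of the name preserves the pod ordinal, which is what distinguishes one pod from another. Names within the limit avoid any truncation, which is why the shorter StatefulSet name is the safer choice.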

Once the quickstart is running, get the base service URL using the following command.

NARAYANA_HOST=$(oc get route spring-boot-camel-xa -o jsonpath={.spec.host})

7.5. Testing Successful XA Transactions

The following workflow shows how to test successful XA transactions.

Procedure

Get the list of messages in the audit_log table.

curl -w "\n" http://$NARAYANA_HOST/api/

The list is empty at the beginning. Now you can put the first element.

curl -w "\n" -X POST http://$NARAYANA_HOST/api/?entry=hello

After waiting for some time, get the new list.

curl -w "\n" http://$NARAYANA_HOST/api/

The new list contains two messages, hello and hello-ok. The hello-ok message confirms that the message has been sent to an outgoing queue and then logged. You can add multiple messages and see the logs.
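The same two calls can be made from Java instead of curl. The following is a minimal sketch that uses the JDK HTTP client (Java 11 or later). The class name AuditLogRequests and the five-second wait are assumptions for illustration, and the sketch reads the host from the NARAYANA_HOST environment variable set in the previous section.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

final class AuditLogRequests {

    // GET http://<host>/api/ lists the messages in the audit_log table.
    static HttpRequest list(String host) {
        return HttpRequest.newBuilder(URI.create("http://" + host + "/api/"))
                .GET()
                .build();
    }

    // POST http://<host>/api/?entry=<value> adds a message.
    static HttpRequest post(String host, String entry) {
        String query = "entry=" + URLEncoder.encode(entry, StandardCharsets.UTF_8);
        return HttpRequest.newBuilder(URI.create("http://" + host + "/api/?" + query))
                .POST(HttpRequest.BodyPublishers.noBody())
                .build();
    }

    public static void main(String[] args) throws Exception {
        String host = System.getenv("NARAYANA_HOST");
        if (host == null) {
            System.err.println("Set NARAYANA_HOST to the route host first.");
            return;
        }
        HttpClient client = HttpClient.newHttpClient();
        client.send(post(host, "hello"), HttpResponse.BodyHandlers.ofString());
        Thread.sleep(5000); // arbitrary wait before reading the list again
        System.out.println(client.send(list(host), HttpResponse.BodyHandlers.ofString()).body());
    }
}
```

Building the requests in separate methods keeps the URL layout in one place, so the same helpers can be reused to post other entries.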

7.6. Testing Failed XA Transactions

The following workflow shows how to test failed XA transactions.

Procedure

Send a message named fail.

curl -w "\n" -X POST http://$NARAYANA_HOST/api/?entry=fail

After waiting for some time, get the new list.

curl -w "\n" http://$NARAYANA_HOST/api/

This message produces an exception at the end of the route, so the transaction is always rolled back. You should not find any trace of the message in the audit_log table.
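The all-or-nothing behavior observed in the two tests can be modeled with an in-memory sketch. This is not the quickstart's code and it does not perform a real XA two-phase commit. It uses snapshots of two lists (standing in for the audit_log table and the outgoing queue) to show why an exception at the end of the route leaves no trace. The class XaRollbackSketch and the order of the steps inside process are assumptions.

```java
import java.util.ArrayList;
import java.util.List;

final class XaRollbackSketch {

    private final List<String> auditLog = new ArrayList<>(); // stands in for the audit_log table
    private final List<String> queue = new ArrayList<>();    // stands in for the outgoing JMS queue

    // Process one entry as a single unit of work spanning both resources.
    void process(String entry) {
        // Snapshots play the role of the transaction's "before" state.
        List<String> logSnapshot = new ArrayList<>(auditLog);
        List<String> queueSnapshot = new ArrayList<>(queue);
        try {
            auditLog.add(entry);         // write to the database
            queue.add(entry);            // send to the outgoing queue
            auditLog.add(entry + "-ok"); // log the confirmation
            if ("fail".equals(entry)) {
                throw new IllegalStateException("simulated failure at the end of the route");
            }
        } catch (RuntimeException e) {
            // Roll back both resources together.
            auditLog.clear();
            auditLog.addAll(logSnapshot);
            queue.clear();
            queue.addAll(queueSnapshot);
        }
    }

    List<String> auditLog() {
        return List.copyOf(auditLog);
    }

    public static void main(String[] args) {
        XaRollbackSketch sketch = new XaRollbackSketch();
        sketch.process("hello");
        sketch.process("fail");
        System.out.println(sketch.auditLog()); // [hello, hello-ok]
    }
}
```

In the real quickstart the transaction manager coordinates the database and the broker. The sketch only mirrors the observable outcome: hello and hello-ok remain, and fail leaves nothing behind.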

Chapter 8. Integrating a Camel Application with the A-MQ Broker

This tutorial shows how to deploy a quickstart using the A-MQ image.

8.1. Building and Deploying a Spring Boot Camel A-MQ Quickstart

This example requires a JBoss A-MQ 6 image and deployment template. If you are using CDK 3.1.1+, JBoss A-MQ 6 images and templates should already be installed in the openshift namespace by default.

Prerequisites

- Ensure that OpenShift is running correctly and the Fuse image streams are already installed in OpenShift. See Getting Started for Administrators.

- Ensure that Maven repositories are configured for Fuse. See Configuring Maven Repositories.

Procedure

Get ready to build and deploy the quickstart:

Log in to OpenShift as a developer.

oc login -u developer -p developer

Create a new project amq-quickstart.

oc new-project amq-quickstart

Determine the version of the A-MQ 6 images and templates installed.

oc get template -n openshift

You should be able to find a template named amqXX-basic, where XX is the version of A-MQ installed in OpenShift.

Deploy the A-MQ 6 image in the amq-quickstart namespace (replace XX with the actual version of A-MQ found in the previous step).

oc process openshift//amqXX-basic -p APPLICATION_NAME=broker -p MQ_USERNAME=admin -p MQ_PASSWORD=admin -p MQ_QUEUES=test -p MQ_PROTOCOL=amqp -n amq-quickstart | oc create -f -

Note: This oc command could fail if you use an older version of oc. This syntax works with oc versions 3.5.x (based on Kubernetes 1.5.x).

Add a user role that is needed for discovery of mesh endpoints (through the Kubernetes REST API agent).

oc policy add-role-to-user view system:serviceaccount:amq-quickstart:default

Create the quickstart project using the Maven workflow.

mvn org.apache.maven.plugins:maven-archetype-plugin:2.4:generate \
  -DarchetypeCatalog=https://maven.repository.redhat.com/ga/io/fabric8/archetypes/archetypes-catalog/2.2.0.fuse-740017-redhat-00003/archetypes-catalog-2.2.0.fuse-740017-redhat-00003-archetype-catalog.xml \
  -DarchetypeGroupId=org.jboss.fuse.fis.archetypes \
  -DarchetypeArtifactId=spring-boot-camel-amq-archetype \
  -DarchetypeVersion=2.2.0.fuse-740017-redhat-00003

The archetype plug-in switches to interactive mode to prompt you for the remaining fields.

When prompted, enter org.example.fis for the groupId value and fuse74-spring-boot-camel-amq for the artifactId value. Accept the defaults for the remaining fields.

Navigate to the quickstart directory fuse74-spring-boot-camel-amq.

cd fuse74-spring-boot-camel-amq

Customize the client credentials for logging on to the broker by setting the ACTIVEMQ_BROKER_USERNAME and ACTIVEMQ_BROKER_PASSWORD environment variables. In the fuse74-spring-boot-camel-amq project, edit the src/main/fabric8/deployment.yml file, as follows:

Run the mvn command to deploy the quickstart to the OpenShift server.

mvn fabric8:deploy -Popenshift

To verify that the quickstart is running successfully:

- Navigate to the OpenShift console.

- Select the project amq-quickstart.

- Click Applications.

- Select Pods.

- Click fis-spring-boot-camel-am-1-xxxxx.

- Click Logs.

The output shows that the messages are sent successfully.

- To view the routes on the web interface, click Open Java Console and check the messages in the A-MQ queue.

Chapter 9. Integrating Spring Boot with Kubernetes

The Spring Cloud Kubernetes plug-in currently enables you to integrate the following features of Spring Boot and Kubernetes:

9.1. Spring Boot Externalized Configuration

In Spring Boot, externalized configuration is the mechanism that enables you to inject configuration values from external sources into Java code. In your Java code, injection is typically enabled by annotating with the @Value annotation (to inject into a single field) or the @ConfigurationProperties annotation (to inject into multiple properties on a Java bean class).

The configuration data can come from a wide variety of different sources (or property sources). In particular, configuration properties are often set in a project’s application.properties file (or application.yaml file, if you prefer).

9.1.1. Kubernetes ConfigMap

A Kubernetes ConfigMap is a mechanism that can provide configuration data to a deployed application. A ConfigMap object is typically defined in a YAML file, which is then uploaded to the Kubernetes cluster, making the configuration data available to deployed applications.

9.1.2. Kubernetes Secrets

A Kubernetes Secret is a mechanism for providing sensitive data (such as passwords, certificates, and so on) to deployed applications.

9.1.3. Spring Cloud Kubernetes Plug-In

The Spring Cloud Kubernetes plug-in implements the integration between Kubernetes and Spring Boot. In principle, you could access the configuration data from a ConfigMap using the Kubernetes API. It is much more convenient, however, to integrate Kubernetes ConfigMap directly with the Spring Boot externalized configuration mechanism, so that Kubernetes ConfigMaps behave as an alternative property source for Spring Boot configuration. This is essentially what the Spring Cloud Kubernetes plug-in provides.
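The idea of a ConfigMap acting as an additional property source can be sketched in plain Java. This is a conceptual model only and does not use the Spring or Spring Cloud Kubernetes APIs. The class PropertySourcesSketch, the property keys, and the lookup order (ConfigMap before application.properties) are assumptions chosen for illustration.

```java
import java.util.List;
import java.util.Map;
import java.util.Optional;

final class PropertySourcesSketch {

    // Resolve a key against an ordered list of property sources.
    // The first source that defines the key wins.
    static Optional<String> resolve(String key, List<Map<String, String>> sources) {
        return sources.stream()
                .filter(source -> source.containsKey(key))
                .map(source -> source.get(key))
                .findFirst();
    }

    public static void main(String[] args) {
        // Hypothetical values that would come from a Kubernetes ConfigMap.
        Map<String, String> configMap = Map.of("greeting.message", "Hello from ConfigMap");
        // Hypothetical values from the project's application.properties file.
        Map<String, String> applicationProperties = Map.of(
                "greeting.message", "Hello",
                "greeting.name", "World");

        List<Map<String, String>> sources = List.of(configMap, applicationProperties);
        System.out.println(resolve("greeting.message", sources).orElse("(unset)")); // Hello from ConfigMap
        System.out.println(resolve("greeting.name", sources).orElse("(unset)"));    // World
    }
}
```

With this model, application code asks for a key without knowing which source supplied it, which is the convenience that a property-source integration offers over calling the Kubernetes API directly.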

9.1.4. Enabling Spring Boot with Kubernetes Integration

You can enable Kubernetes integration by adding it as a Maven dependency to the pom.xml file.

Procedure

Enable the Kubernetes integration by adding the following Maven dependency to the pom.xml file of your Spring Boot Maven project.

To complete the integration,