Administration Guide

Configuring and Managing Red Hat Gluster Storage

Abstract

Part I. Preface

Chapter 1. Preface

1.1. About Red Hat Gluster Storage

1.2. About glusterFS

1.3. About On-premises Installation

Part II. Overview

Chapter 2. Architecture and Concepts

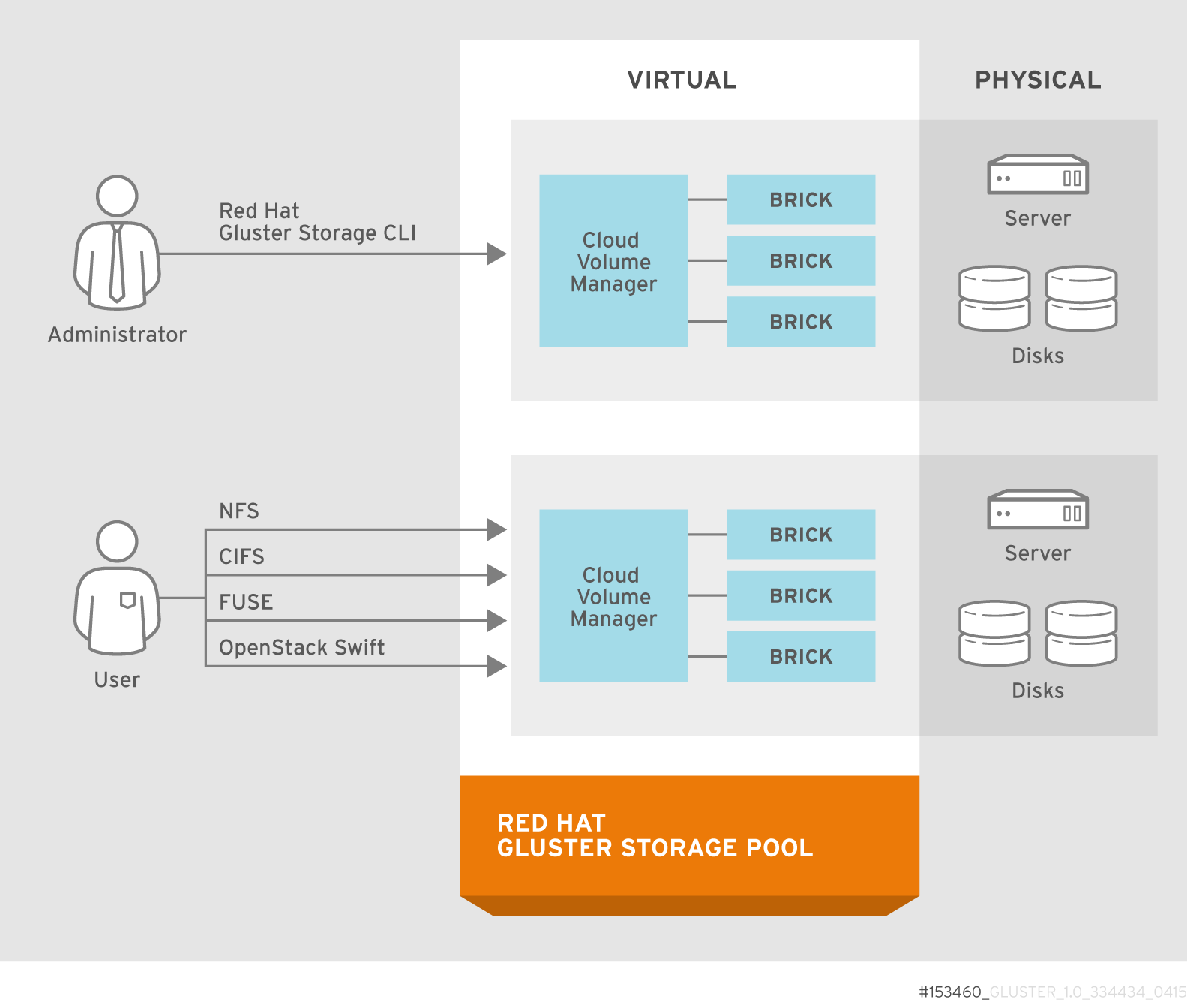

2.1. Architecture

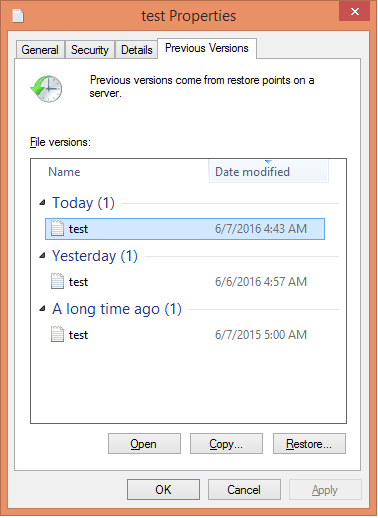

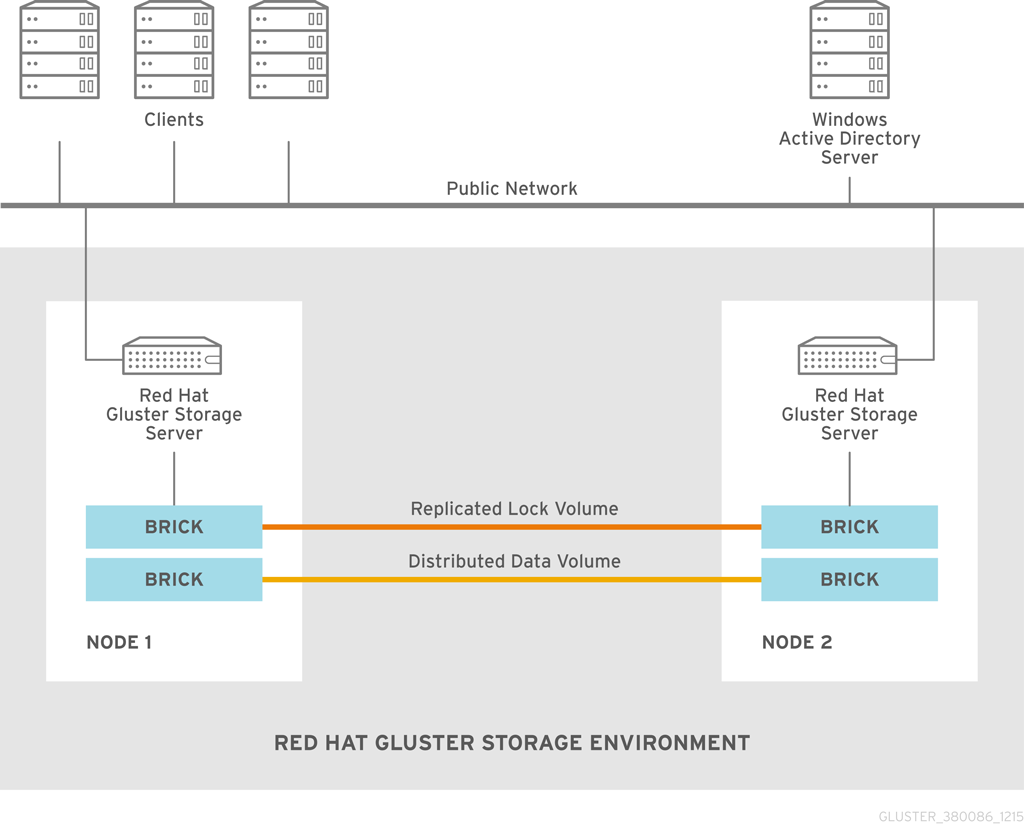

Figure 2.1. Red Hat Gluster Storage Architecture

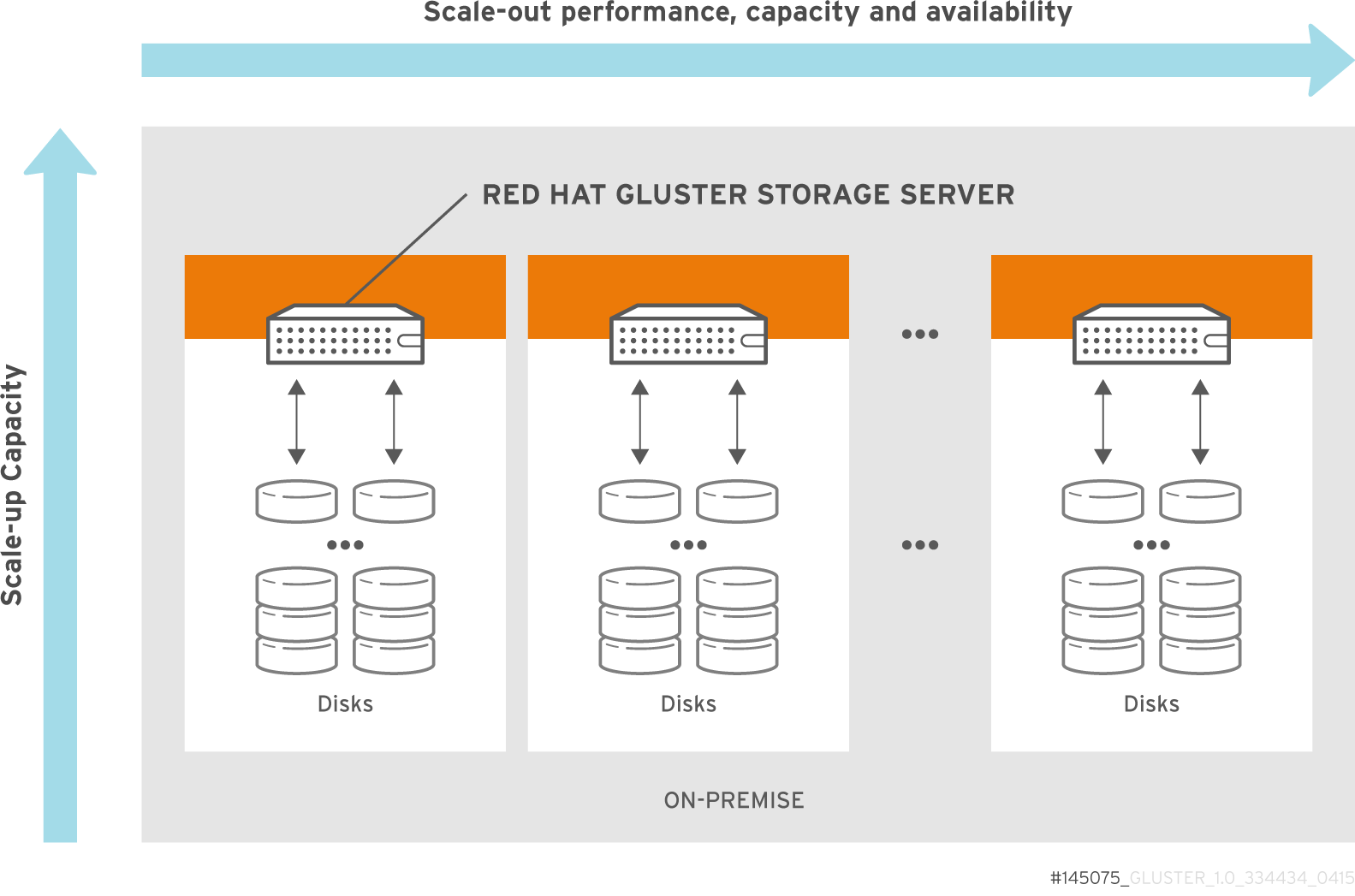

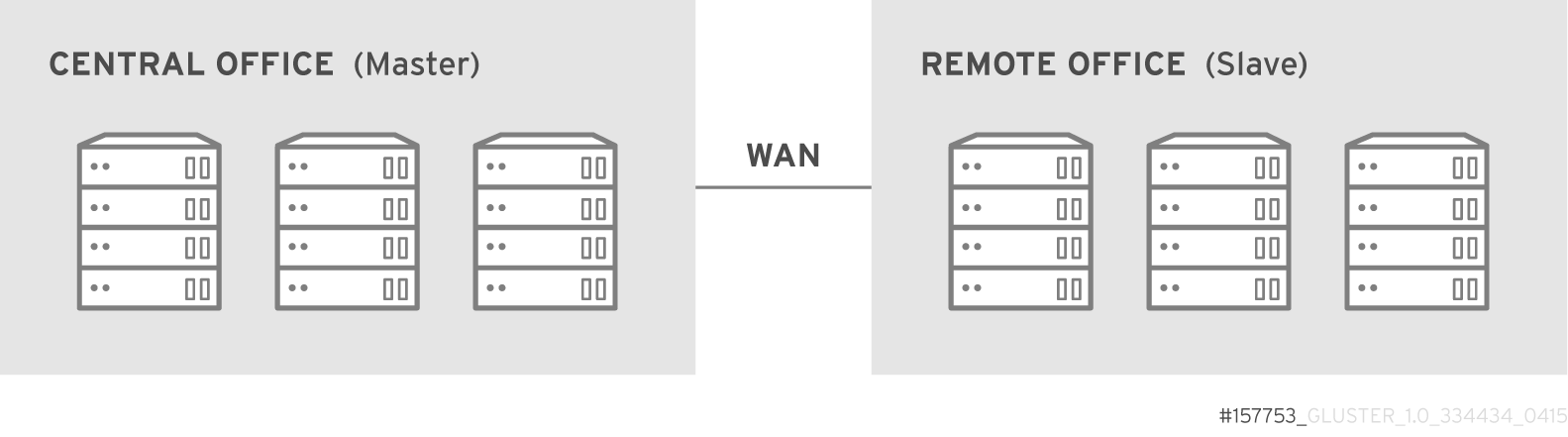

2.2. On-premises Architecture

Figure 2.2. Red Hat Gluster Storage for On-premises Architecture

2.3. Storage Concepts

- Brick

- The glusterFS basic unit of storage, represented by an export directory on a server in the trusted storage pool. A brick is expressed by combining a server with an export directory in the following format:

SERVER:EXPORTFor example:myhostname:/exports/myexportdir/ - Volume

- A volume is a logical collection of bricks. Most of the Red Hat Gluster Storage management operations happen on the volume.

- Translator

- A translator connects to one or more subvolumes, does something with them, and offers a subvolume connection.

- Subvolume

- A brick after being processed by at least one translator.

- Volfile

- Volume (vol) files are configuration files that determine the behavior of your Red Hat Gluster Storage trusted storage pool. At a high level, GlusterFS has three entities, that is, Server, Client and Management daemon. Each of these entities have their own volume files. Volume files for servers and clients are generated by the management daemon upon creation of a volume.Server and Client Vol files are located in

/var/lib/glusterd/vols/VOLNAMEdirectory. The management daemon vol file is named asglusterd.voland is located in/etc/glusterfs/directory.Warning

You must not modify any vol file in/var/lib/glusterdmanually as Red Hat does not support vol files that are not generated by the management daemon. - glusterd

- glusterd is the glusterFS Management Service that must run on all servers in the trusted storage pool.

- Cluster

- A trusted pool of linked computers working together, resembling a single computing resource. In Red Hat Gluster Storage, a cluster is also referred to as a trusted storage pool.

- Client

- The machine that mounts a volume (this may also be a server).

- File System

- A method of storing and organizing computer files. A file system organizes files into a database for the storage, manipulation, and retrieval by the computer's operating system.Source: Wikipedia

- Distributed File System

- A file system that allows multiple clients to concurrently access data which is spread across servers/bricks in a trusted storage pool. Data sharing among multiple locations is fundamental to all distributed file systems.

- Virtual File System (VFS)

- VFS is a kernel software layer that handles all system calls related to the standard Linux file system. It provides a common interface to several kinds of file systems.

- POSIX

- Portable Operating System Interface (for Unix) (POSIX) is the name of a family of related standards specified by the IEEE to define the application programming interface (API), as well as shell and utilities interfaces, for software that is compatible with variants of the UNIX operating system. Red Hat Gluster Storage exports a fully POSIX compatible file system.

- Metadata

- Metadata is data providing information about other pieces of data.

- FUSE

- Filesystem in User space (FUSE) is a loadable kernel module for Unix-like operating systems that lets non-privileged users create their own file systems without editing kernel code. This is achieved by running file system code in user space while the FUSE module provides only a "bridge" to the kernel interfaces.Source: Wikipedia

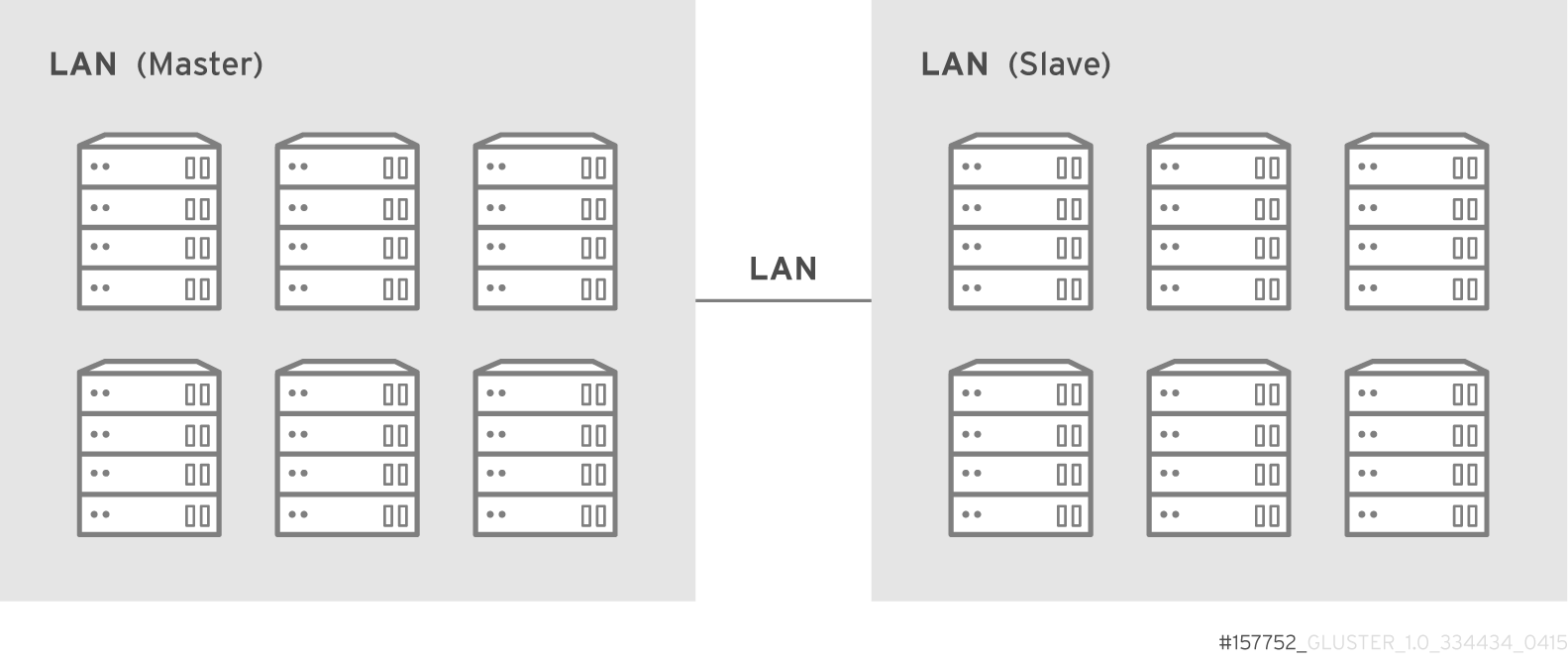

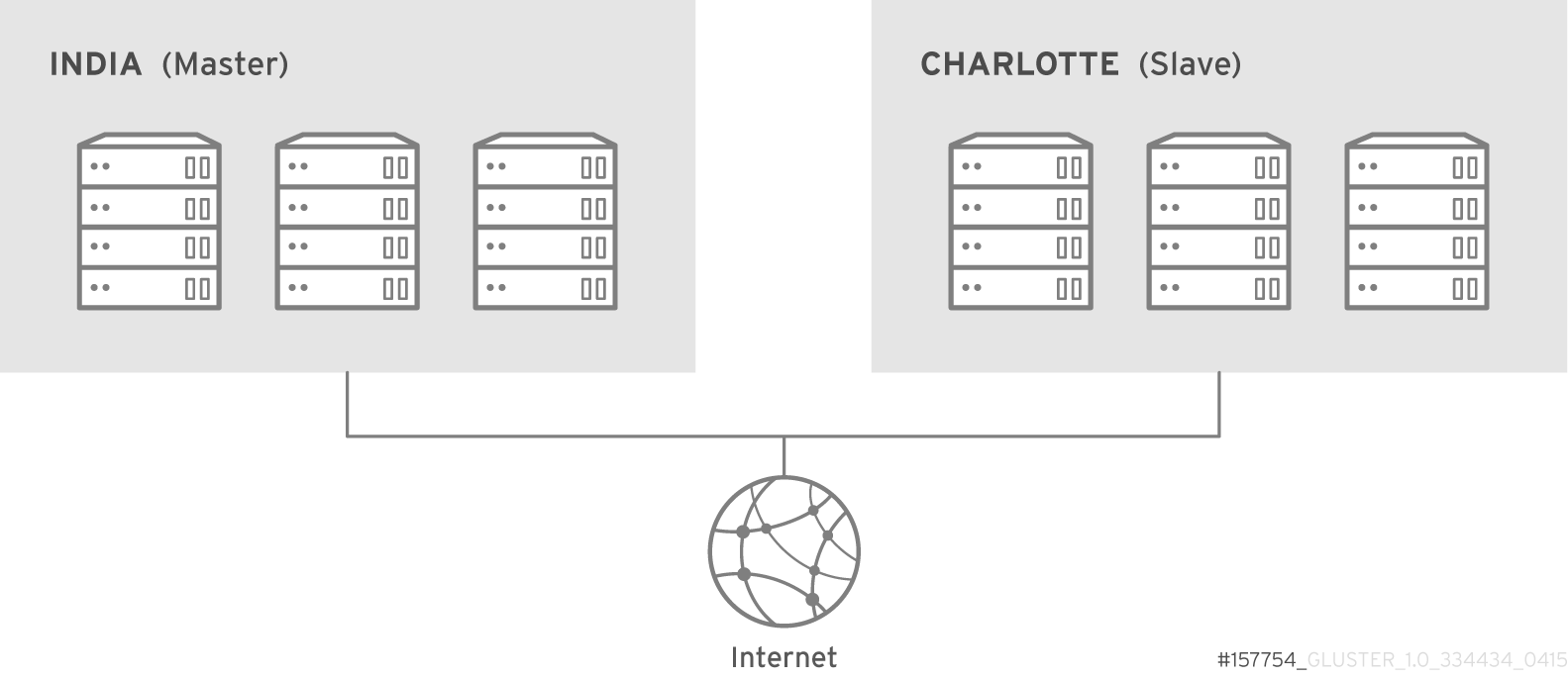

- Geo-Replication

- Geo-replication provides a continuous, asynchronous, and incremental replication service from one site to another over Local Area Networks (LAN), Wide Area Networks (WAN), and the Internet.

- N-way Replication

- Local synchronous data replication that is typically deployed across campus or Amazon Web Services Availability Zones.

- Petabyte

- A petabyte is a unit of information equal to one quadrillion bytes, or 1000 terabytes. The unit symbol for the petabyte is PB. The prefix peta- (P) indicates a power of 1000:1 PB = 1,000,000,000,000,000 B = 1000^5 B = 10^15 B.The term "pebibyte" (PiB), using a binary prefix, is used for the corresponding power of 1024.Source: Wikipedia

- RAID

- Redundant Array of Independent Disks (RAID) is a technology that provides increased storage reliability through redundancy. It combines multiple low-cost, less-reliable disk drives components into a logical unit where all drives in the array are interdependent.

- RRDNS

- Round Robin Domain Name Service (RRDNS) is a method to distribute load across application servers. RRDNS is implemented by creating multiple records with the same name and different IP addresses in the zone file of a DNS server.

- Server

- The machine (virtual or bare metal) that hosts the file system in which data is stored.

- Block Storage

- Block special files, or block devices, correspond to devices through which the system moves data in the form of blocks. These device nodes often represent addressable devices such as hard disks, CD-ROM drives, or memory regions. As of Red Hat Gluster Storage 3.4 and later, block storage supports only OpenShift Container Storage converged and independent mode use cases. Block storage can be created and configured for this use case by using the

gluster-blockcommand line tool. For more information, see Container-Native Storage for OpenShift Container Platform. - Scale-Up Storage

- Increases the capacity of the storage device in a single dimension. For example, adding additional disk capacity in a trusted storage pool.

- Scale-Out Storage

- Increases the capability of a storage device in single dimension. For example, adding more systems of the same size, or adding servers to a trusted storage pool that increases CPU, disk capacity, and throughput for the trusted storage pool.

- Trusted Storage Pool

- A storage pool is a trusted network of storage servers. When you start the first server, the storage pool consists of only that server.

- Namespace

- An abstract container or environment that is created to hold a logical grouping of unique identifiers or symbols. Each Red Hat Gluster Storage trusted storage pool exposes a single namespace as a POSIX mount point which contains every file in the trusted storage pool.

- User Space

- Applications running in user space do not directly interact with hardware, instead using the kernel to moderate access. User space applications are generally more portable than applications in kernel space. glusterFS is a user space application.

- Hashed subvolume

- A Distributed Hash Table Translator subvolume to which the file or directory name is hashed to.

- Cached subvolume

- A Distributed Hash Table Translator subvolume where the file content is actually present. For directories, the concept of cached-subvolume is not relevant. It is loosely used to mean subvolumes which are not hashed-subvolume.

- Linkto-file

- For a newly created file, the hashed and cached subvolumes are the same. When directory entry operations like rename (which can change the name and hence hashed subvolume of the file) are performed on the file, instead of moving the entire data in the file to a new hashed subvolume, a file is created with the same name on the newly hashed subvolume. The purpose of this file is only to act as a pointer to the node where the data is present. In the extended attributes of this file, the name of the cached subvolume is stored. This file on the newly hashed-subvolume is called a linkto-file. The linkto file is relevant only for non-directory entities.

- Directory Layout

- The directory layout helps determine where files in a gluster volume are stored.When a client creates or requests a file, the DHT translator hashes the file's path to create an integer. Each directory in a gluster subvolume holds files that have integers in a specific range, so the hash of any given file maps to a specific subvolume in the gluster volume. The directory layout determines which integer ranges are assigned to a given directory across all subvolumes.Directory layouts are assigned when a directory is first created, and can be reassigned by running a rebalance operation on the volume. If a brick or subvolume is offline when a directory is created, it will not be part of the layout until after a rebalance is run.You should rebalance a volume to recalculate its directory layout after bricks are added to the volume. See Section 11.11, “Rebalancing Volumes” for more information.

- Fix Layout

- A command that is executed during the rebalance process.The rebalance process itself comprises of two stages:

- Fixes the layouts of directories to accommodate any subvolumes that are added or removed. It also heals the directories, checks whether the layout is non-contiguous, and persists the layout in extended attributes, if needed. It also ensures that the directories have the same attributes across all the subvolumes.

- Migrates the data from the cached-subvolume to the hashed-subvolume.

Part III. Configure and Verify

Chapter 3. Considerations for Red Hat Gluster Storage

3.1. Firewall and Port Access

3.1.1. Configuring the Firewall

iptables command to open a port:

iptables -A INPUT -m state --state NEW -m tcp -p tcp --dport 5667 -j ACCEPT service iptables save

# iptables -A INPUT -m state --state NEW -m tcp -p tcp --dport 5667 -j ACCEPT

# service iptables saveImportant

firewall-cmd --zone=zone_name --add-service=glusterfs firewall-cmd --zone=zone_name --add-service=glusterfs --permanent

# firewall-cmd --zone=zone_name --add-service=glusterfs

# firewall-cmd --zone=zone_name --add-service=glusterfs --permanentfirewall-cmd --zone=zone_name --add-port=port/protocol firewall-cmd --zone=zone_name --add-port=port/protocol --permanent

# firewall-cmd --zone=zone_name --add-port=port/protocol

# firewall-cmd --zone=zone_name --add-port=port/protocol --permanentfirewall-cmd --zone=public --add-port=5667/tcp firewall-cmd --zone=public --add-port=5667/tcp --permanent

# firewall-cmd --zone=public --add-port=5667/tcp

# firewall-cmd --zone=public --add-port=5667/tcp --permanent3.1.2. Port Access Requirements

| Connection source | TCP Ports | UDP Ports | Recommended for | Used for |

|---|---|---|---|---|

| Any authorized network entity with a valid SSH key | 22 | - | All configurations | Remote backup using geo-replication |

| Any authorized network entity; be cautious not to clash with other RPC services. | 111 | 111 | All configurations | RPC port mapper and RPC bind |

| Any authorized SMB/CIFS client | 139 and 445 | 137 and 138 | Sharing storage using SMB/CIFS | SMB/CIFS protocol |

| Any authorized NFS clients | 2049 | 2049 | Sharing storage using Gluster NFS or NFS-Ganesha | Exports using NFS protocol |

| All servers in the Samba-CTDB cluster | 4379 | - | Sharing storage using SMB and Gluster NFS | CTDB |

| Any authorized network entity | 24007 | - | All configurations | Management processes using glusterd |

| Any authorized network entity | 55555 | - | All configurations |

Gluster events daemon

If you are upgrading from a previous version of Red Hat Gluster Storage to the latest version 3.5.4, the port used for glusterevents daemon should be modified to be in the ephemral range.

|

| NFSv3 clients | 662 | 662 | Sharing storage using NFS-Ganesha and Gluster NFS | statd |

| NFSv3 clients | 32803 | 32803 | Sharing storage using NFS-Ganesha and Gluster NFS | NLM protocol |

| NFSv3 clients sending mount requests | - | 32769 | Sharing storage using Gluster NFS | Gluster NFS MOUNT protocol |

| NFSv3 clients sending mount requests | 20048 | 20048 | Sharing storage using NFS-Ganesha | NFS-Ganesha MOUNT protocol |

| NFS clients | 875 | 875 | Sharing storage using NFS-Ganesha | NFS-Ganesha RQUOTA protocol (fetching quota information) |

| Servers in pacemaker/corosync cluster | 2224 | - | Sharing storage using NFS-Ganesha | pcsd |

| Servers in pacemaker/corosync cluster | 3121 | - | Sharing storage using NFS-Ganesha | pacemaker_remote |

| Servers in pacemaker/corosync cluster | - | 5404 and 5405 | Sharing storage using NFS-Ganesha | corosync |

| Servers in pacemaker/corosync cluster | 21064 | - | Sharing storage using NFS-Ganesha | dlm |

| Any authorized network entity | 49152 - 49664 | - | All configurations | Brick communication ports. The total number of ports required depends on the number of bricks on the node. One port is required for each brick on the machine. |

| Gluster Clients | 1023 or 49152 | - | Applicable when system ports are already being used in the machines. | Communication between brick and client processes. |

| Connection source | TCP Ports | UDP Ports | Recommended for | Used for |

|---|---|---|---|---|

| NFSv3 servers | 662 | 662 | Sharing storage using NFS-Ganesha and Gluster NFS | statd |

| NFSv3 servers | 32803 | 32803 | Sharing storage using NFS-Ganesha and Gluster NFS | NLM protocol |

3.2. Feature Compatibility Support

Note

| Feature | Version |

|---|---|

| Arbiter bricks | 3.2 |

| Bitrot detection | 3.1 |

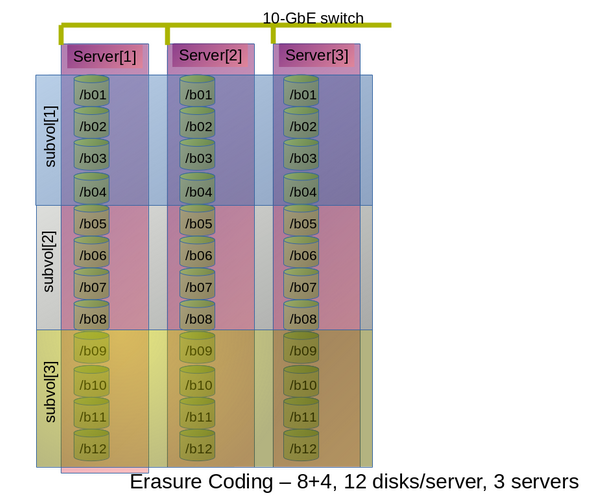

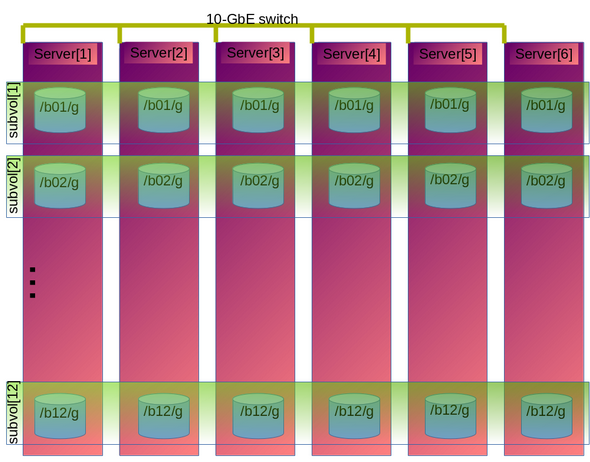

| Erasure coding | 3.1 |

| Google Compute Engine | 3.1.3 |

| Metadata caching | 3.2 |

| Microsoft Azure | 3.1.3 |

| NFS version 4 | 3.1 |

| SELinux | 3.1 |

| Sharding | 3.2.0 |

| Snapshots | 3.0 |

| Snapshots, cloning | 3.1.3 |

| Snapshots, user-serviceable | 3.0.3 |

| Tiering (Deprecated) | 3.1.2 |

| Volume Shadow Copy (VSS) | 3.1.3 |

| Volume Type | Sharding | Tiering (Deprecated) | Quota | Snapshots | Geo-Rep | Bitrot |

|---|---|---|---|---|---|---|

| Arbitrated-Replicated | Yes | No | Yes | Yes | Yes | Yes |

| Distributed | No | Yes | Yes | Yes | Yes | Yes |

| Distributed-Dispersed | No | Yes | Yes | Yes | Yes | Yes |

| Distributed-Replicated | Yes | Yes | Yes | Yes | Yes | Yes |

| Replicated | Yes | Yes | Yes | Yes | Yes | Yes |

| Sharded | N/A | No | No | No | Yes | No |

| Tiered (Deprecated) | No | N/A | Limited[a] | Limited[a] | Limited[a] | Limited[a] |

| Feature | FUSE | Gluster-NFS | NFS-Ganesha | SMB |

|---|---|---|---|---|

| Arbiter | Yes | Yes | Yes | Yes |

| Bitrot detection | Yes | Yes | No | Yes |

| dm-cache | Yes | Yes | Yes | Yes |

| Encryption (TLS-SSL) | Yes | Yes | Yes | Yes |

| Erasure coding | Yes | Yes | Yes | Yes |

| Export subdirectory | Yes | Yes | Yes | N/A |

| Geo-replication | Yes | Yes | Yes | Yes |

| Quota (Deprecated)

Warning

Using QUOTA feature is considered to be deprecated in Red Hat Gluster Storage 3.5.3. Red Hat no longer recommends to use this feature and does not support it on new deployments and existing deployments that upgrade to Red Hat Gluster Storage 3.5.3.

See Chapter 9, Managing Directory Quotas for more details.

| Yes | Yes | Yes | Yes |

| RDMA (Deprecated)

Warning

Using RDMA as a transport protocol is considered deprecated in Red Hat Gluster Storage 3.5. Red Hat no longer recommends its use, and does not support it on new deployments and existing deployments that upgrade to Red Hat Gluster Storage 3.5.3.

| Yes | No | No | No |

| Snapshots | Yes | Yes | Yes | Yes |

| Snapshot cloning | Yes | Yes | Yes | Yes |

| Tiering (Deprecated)

Warning

Tiering is considered deprecated as of Red Hat Gluster Storage 3.5. Red Hat no longer recommends its use, and does not support tiering in new deployments and existing deployments that upgrade to Red Hat Gluster Storage 3.5.3.

| Yes | Yes | N/A | N/A |

Chapter 4. Adding Servers to the Trusted Storage Pool

Important

firewall-cmd --get-active-zones

# firewall-cmd --get-active-zonesfirewall-cmd --zone=zone_name --add-service=glusterfs firewall-cmd --zone=zone_name --add-service=glusterfs --permanent

# firewall-cmd --zone=zone_name --add-service=glusterfs

# firewall-cmd --zone=zone_name --add-service=glusterfs --permanentNote

gluster volume status VOLNAME command is executed from two of the nodes simultaneously.

4.1. Adding Servers to the Trusted Storage Pool

gluster peer probe [server] command is used to add servers to the trusted server pool.

Note

Adding Three Servers to a Trusted Storage Pool

Prerequisites

- The

glusterdservice must be running on all storage servers requiring addition to the trusted storage pool. See Chapter 22, Starting and Stopping the glusterd service for service start and stop commands. Server1, the trusted storage server, is started.- The host names of the target servers must be resolvable by DNS.

- Run

gluster peer probe [server]from Server 1 to add additional servers to the trusted storage pool.Note

- Self-probing

Server1will result in an error because it is part of the trusted storage pool by default. - All the servers in the Trusted Storage Pool must have RDMA devices if either RDMA or RDMA,TCP volumes are created in the storage pool. The peer probe must be performed using IP/hostname assigned to the RDMA device.

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Verify the peer status from all servers using the following command:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Important

Note

for peer in `gluster peer status | grep Hostname | awk -F':' '{print $2}' | awk '{print $1}'`; do clockdiff $peer; done

# for peer in `gluster peer status | grep Hostname | awk -F':' '{print $2}' | awk '{print $1}'`; do clockdiff $peer; done4.2. Removing Servers from the Trusted Storage Pool

Warning

gluster peer detach server to remove a server from the storage pool.

Removing One Server from the Trusted Storage Pool

Prerequisites

- The

glusterdservice must be running on the server targeted for removal from the storage pool. See Chapter 22, Starting and Stopping the glusterd service for service start and stop commands. - The host names of the target servers must be resolvable by DNS.

- Run

gluster peer detach [server]to remove the server from the trusted storage pool.gluster peer detach (server) All clients mounted through the peer which is getting detached needs to be remounted, using one of the other active peers in the trusted storage pool, this ensures that the client gets notification on any changes done on the gluster configuration and if the same has been done do you want to proceed? (y/n) y peer detach: success

# gluster peer detach (server) All clients mounted through the peer which is getting detached needs to be remounted, using one of the other active peers in the trusted storage pool, this ensures that the client gets notification on any changes done on the gluster configuration and if the same has been done do you want to proceed? (y/n) y peer detach: successCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Verify the peer status from all servers using the following command:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Chapter 5. Setting Up Storage Volumes

Warning

Note

yum groupinstall "Infiniband Support" to install Infiniband packages.

Volume Types

- Distributed

- Distributes files across bricks in the volume.Use this volume type where scaling and redundancy requirements are not important, or provided by other hardware or software layers.See Section 5.4, “Creating Distributed Volumes” for additional information about this volume type.

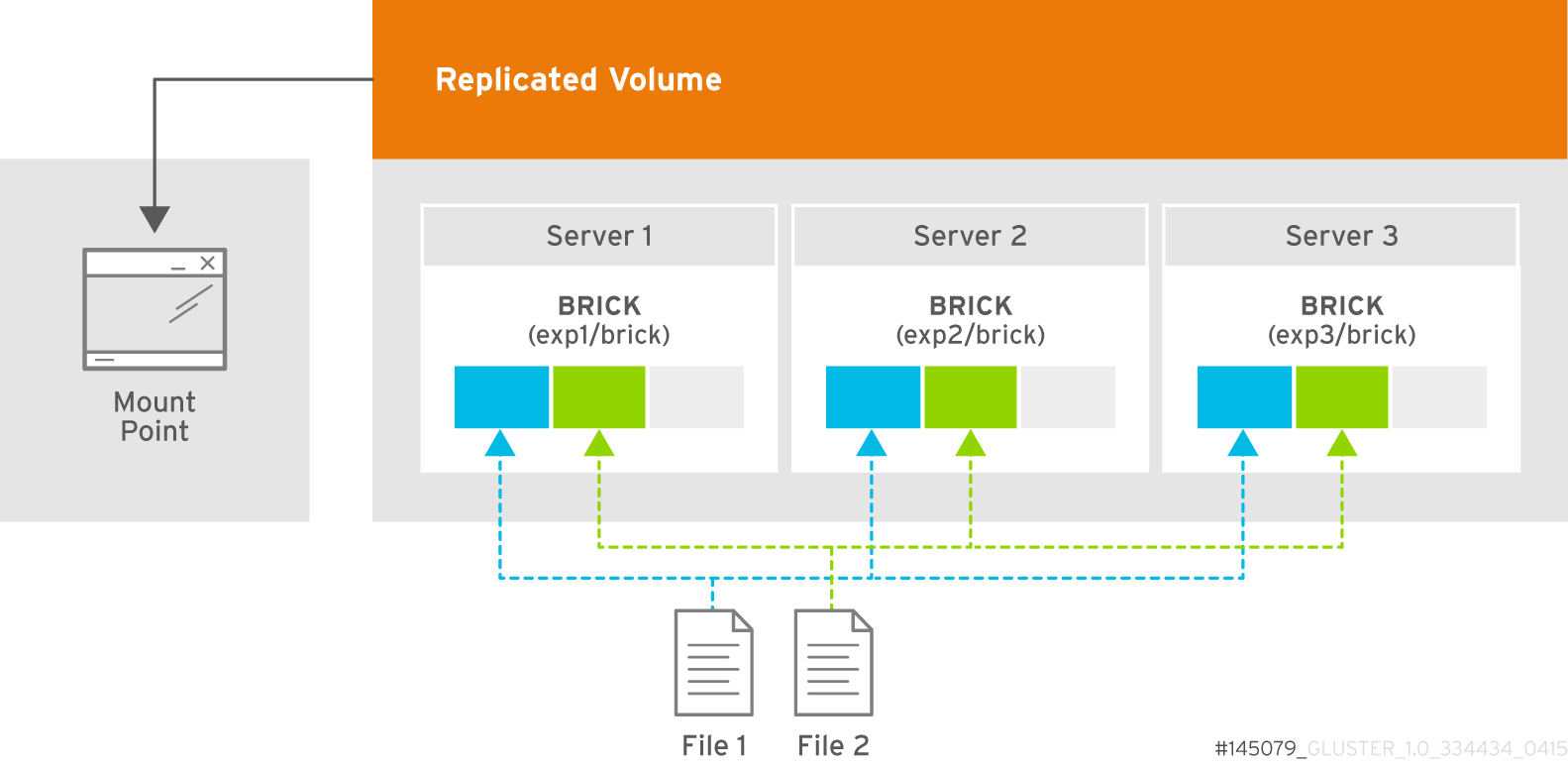

- Replicated

- Replicates files across bricks in the volume.Use this volume type in environments where high-availability and high-reliability are critical.See Section 5.5, “Creating Replicated Volumes” for additional information about this volume type.

- Distributed Replicated

- Distributes files across replicated bricks in the volume.Use this volume type in environments where high-reliability and scalability are critical. This volume type offers improved read performance in most environments.See Section 5.6, “Creating Distributed Replicated Volumes” for additional information about this volume type.

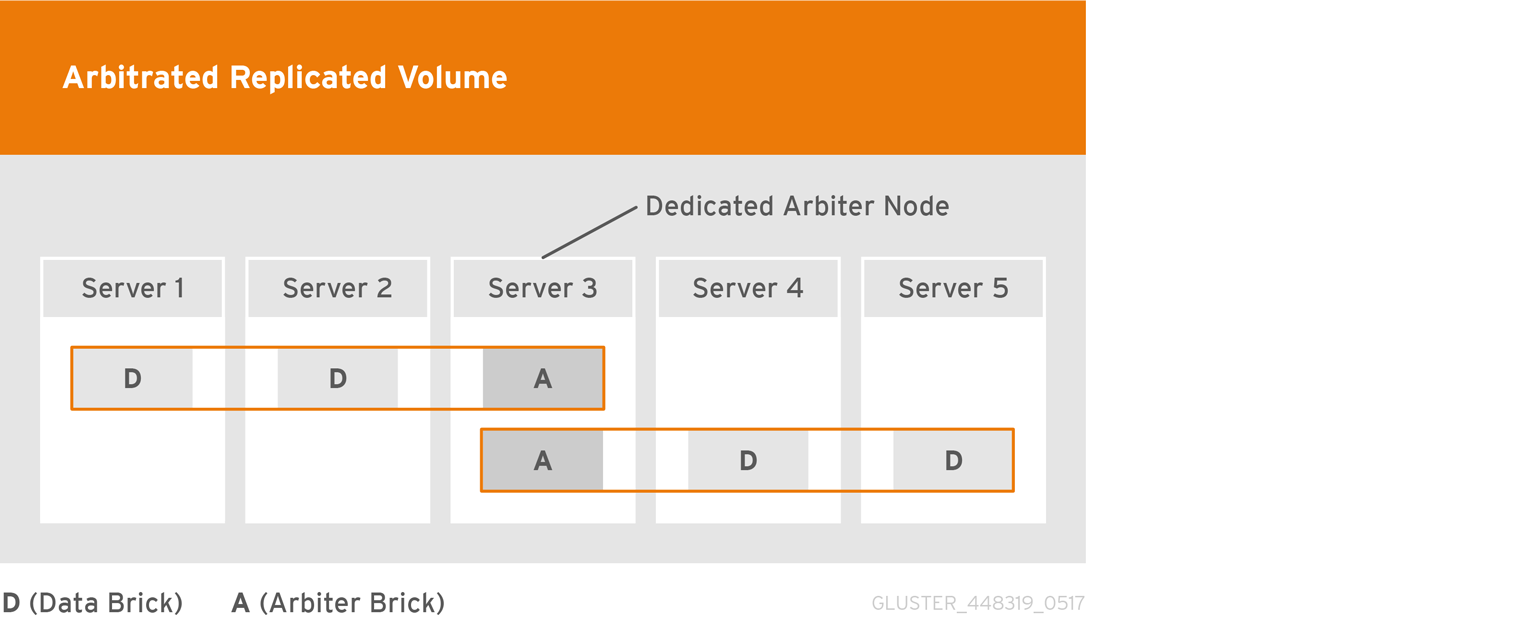

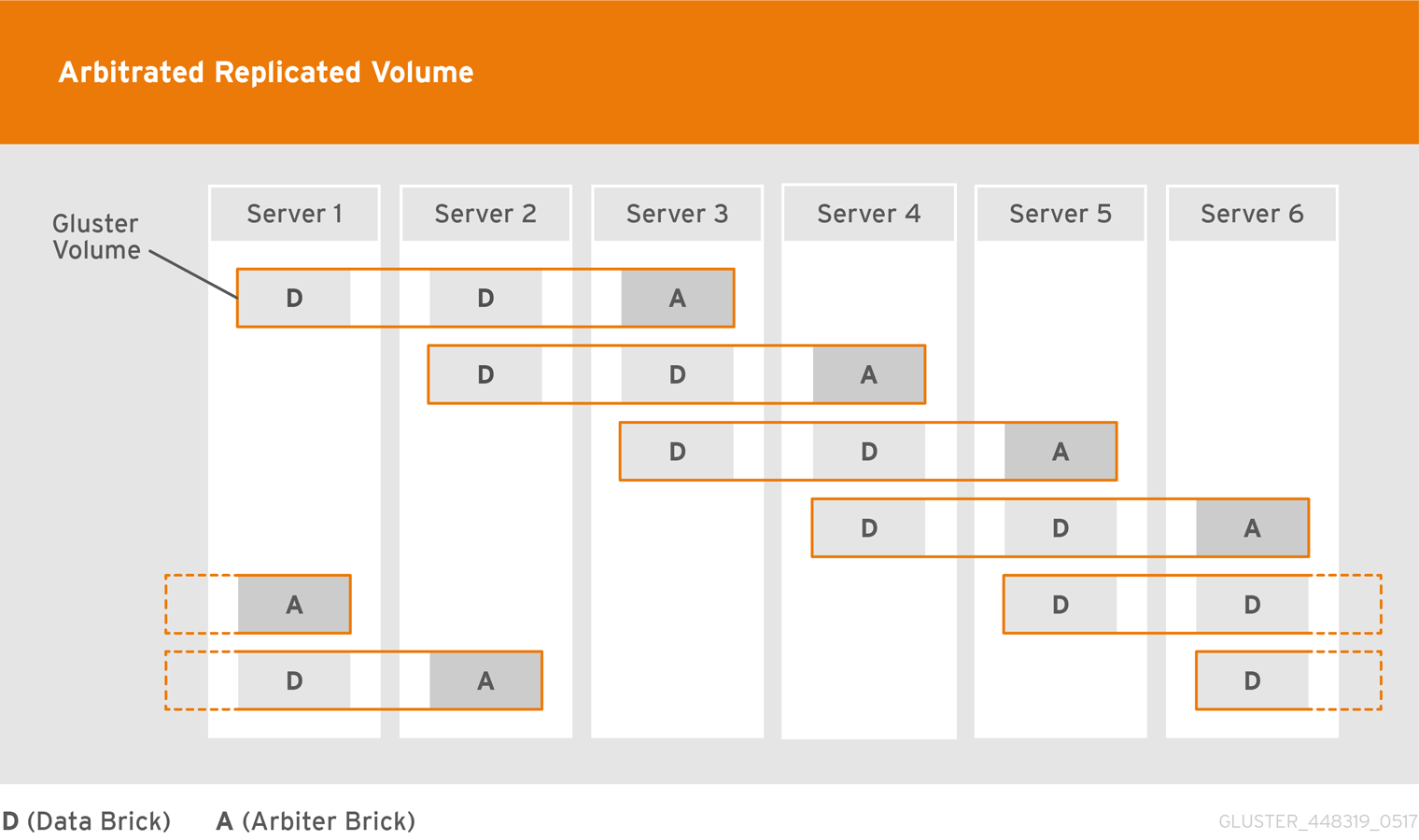

- Arbitrated Replicated

- Replicates files across two bricks in a replica set, and replicates only metadata to the third brick.Use this volume type in environments where consistency is critical, but underlying storage space is at a premium.See Section 5.7, “Creating Arbitrated Replicated Volumes” for additional information about this volume type.

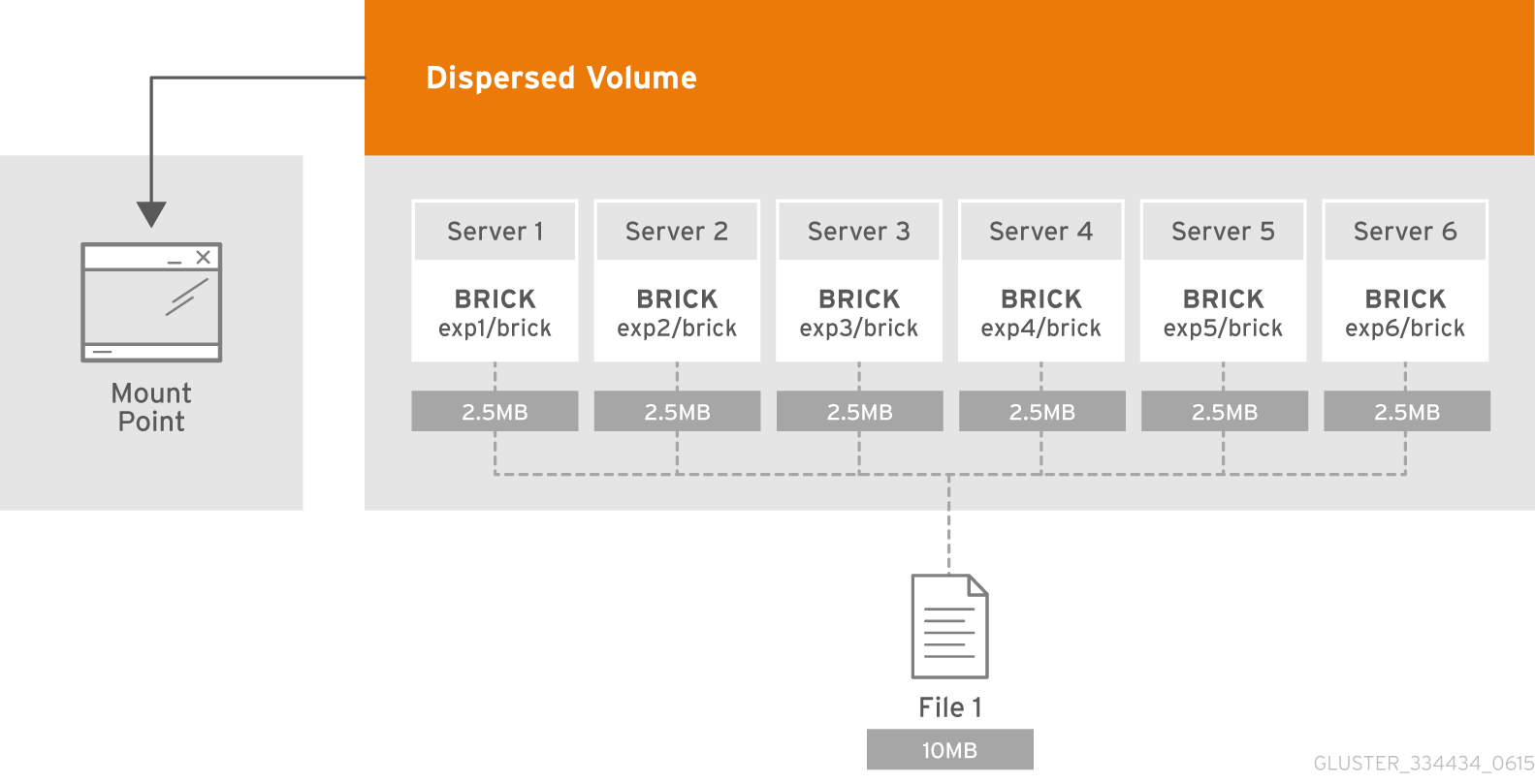

- Dispersed

- Disperses the file's data across the bricks in the volume.Use this volume type where you need a configurable level of reliability with a minimum space waste.See Section 5.8, “Creating Dispersed Volumes” for additional information about this volume type.

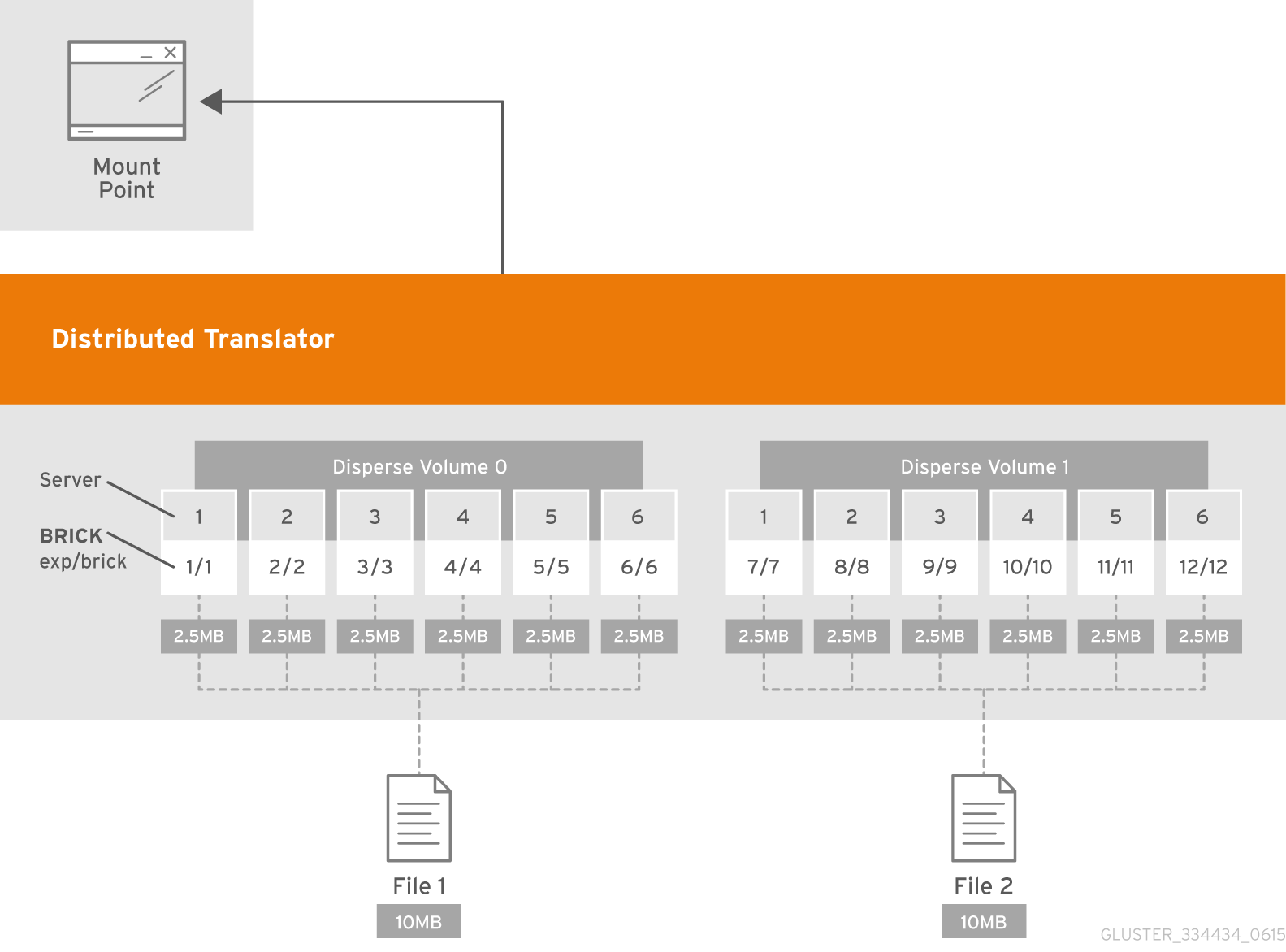

- Distributed Dispersed

- Distributes file's data across the dispersed sub-volume.Use this volume type where you need a configurable level of reliability with a minimum space waste.See Section 5.9, “Creating Distributed Dispersed Volumes” for additional information about this volume type.

5.1. Setting up Gluster Storage Volumes using gdeploy

- Setting-up the backend on several machines can be done from one's laptop/desktop. This saves time and scales up well when the number of nodes in the trusted storage pool increase.

- Flexibility in choosing the drives to configure. (sd, vd, ...).

- Flexibility in naming the logical volumes (LV) and volume groups (VG).

5.1.1. Getting Started

- Generate the passphrase-less SSH keys for the nodes which are going to be part of the trusted storage pool by running the following command:

ssh-keygen -t rsa -N ''

# ssh-keygen -t rsa -N ''Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Set up key-based SSH authentication access between the gdeploy controller and servers by running the following command:

ssh-copy-id -i root@server

# ssh-copy-id -i root@serverCopy to Clipboard Copied! Toggle word wrap Toggle overflow Note

If you are using a Red Hat Gluster Storage node as the deployment node and not an external node, then the key-based SSH authentication must be set up for the Red Hat Gluster Storage node from where the installation is performed. - Enable the repository required to install Ansible by running the following command:For Red Hat Enterprise Linux 8

subscription-manager repos --enable=ansible-2-for-rhel-8-x86_64-rpms

# subscription-manager repos --enable=ansible-2-for-rhel-8-x86_64-rpmsCopy to Clipboard Copied! Toggle word wrap Toggle overflow For Red Hat Enterprise Linux 7subscription-manager repos --enable=rhel-7-server-ansible-2-rpms

# subscription-manager repos --enable=rhel-7-server-ansible-2-rpmsCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Install

ansibleby executing the following command:yum install ansible

# yum install ansibleCopy to Clipboard Copied! Toggle word wrap Toggle overflow - You must also ensure the following:

- Devices should be raw and unused

- Default system locale must be set to

en_USFor information on system locale, refer to the Setting the System Locale of the Red Hat Enterprise Linux 7 System Administrator's Guide. - For multiple devices, use multiple volume groups, thinpool, and thinvol in the

gdeployconfiguration file

- Using a node in a trusted storage pool

- Using a machine outside the trusted storage pool

The gdeploy package is bundled as part of the initial installation of Red Hat Gluster Storage.

You must ensure that the Red Hat Gluster Storage is subscribed to the required channels. For more information see, Subscribing to the Red Hat Gluster Storage Server Channels in the Red Hat Gluster Storage 3.5 Installation Guide.

yum install gdeploy

# yum install gdeploygdeploy see, Installing Ansible to Support Gdeploy section in the Red Hat Gluster Storage 3.5 Installation Guide.

5.1.2. Setting up a Trusted Storage Pool

/usr/share/doc/gdeploy/examples/gluster.conf.sample

/usr/share/doc/gdeploy/examples/gluster.conf.sampleNote

/dev/sdb, /dev/sdc, /dev/sdd with the volume name as sample_volname will be created.

gdeploy -c txt.conf

# gdeploy -c txt.confNote

/usr/share/doc/gdeploy/examples/gluster.conf.sample. To invoke the new configuration file, run gdeploy -c /path_to_file/config.txt command.

only setup the backend see, Section 5.1.3, “Setting up the Backend ”

only create a volume see, Section 5.1.4, “Creating Volumes”

only mount clients see, Section 5.1.5, “Mounting Clients”

5.1.3. Setting up the Backend

/usr/share/doc/gdeploy/examples/gluster.conf.sample

/usr/share/doc/gdeploy/examples/gluster.conf.sample- Using the [backend-setup] module

- Creating Physical Volume (PV), Volume Group (VG), and Logical Volume (LV) individually

Note

xfsprogs package must be installed before setting up the backend bricks using gdeploy.

Important

5.1.3.1. Using the [backend-setup] Module

- Generic

- Specific

If the disk names are uniform across the machines then backend setup can be written as below. The backend is setup for all the hosts in the `hosts’ section.

If the disks names vary across the machines in the cluster then backend setup can be written for specific machines with specific disk names. gdeploy is quite flexible in allowing to do host specific setup in a single configuration file.

5.1.3.2. Creating Backend by Setting up PV, VG, and LV

5.1.4. Creating Volumes

/usr/share/doc/gdeploy/examples/gluster.conf.sample

/usr/share/doc/gdeploy/examples/gluster.conf.samplegdeploy -c txt.conf

# gdeploy -c txt.conf

Note

5.1.5. Mounting Clients

/usr/share/doc/gdeploy/examples/gluster.conf.sample

/usr/share/doc/gdeploy/examples/gluster.conf.sampleNote

fstype) is NFS, then mention it as nfs-version. The default version is 3.

gdeploy -c txt.conf

# gdeploy -c txt.conf5.1.6. Configuring a Volume

5.1.6.1. Adding and Removing a Brick

Modify the [volume] section in the configuration file to add a brick. For example:

[volume] action=add-brick volname=10.0.0.1:glustervol bricks=10.0.0.1:/rhgs/new_brick

[volume]

action=add-brick

volname=10.0.0.1:glustervol

bricks=10.0.0.1:/rhgs/new_brickgdeploy -c txt.conf

# gdeploy -c txt.confModify the [volume] section in the configuration file to remove a brick. For example:

[volume] action=remove-brick volname=10.0.0.1:glustervol bricks=10.0.0.2:/rhgs/brick state=commit

[volume]

action=remove-brick

volname=10.0.0.1:glustervol

bricks=10.0.0.2:/rhgs/brick

state=commitstate are stop, start, and force.

gdeploy -c txt.conf

# gdeploy -c txt.conf5.1.6.2. Rebalancing a Volume

[volume] action=rebalance volname=10.70.46.13:glustervol state=start

[volume]

action=rebalance

volname=10.70.46.13:glustervol

state=startstate are stop, and fix-layout.

gdeploy -c txt.conf

# gdeploy -c txt.conf5.1.6.3. Starting, Stopping, or Deleting a Volume

Modify the [volume] section in the configuration file to start a volume. For example:

[volume] action=start volname=10.0.0.1:glustervol

[volume]

action=start

volname=10.0.0.1:glustervolgdeploy -c txt.conf

# gdeploy -c txt.confModify the [volume] section in the configuration file to start a volume. For example:

[volume] action=stop volname=10.0.0.1:glustervol

[volume]

action=stop

volname=10.0.0.1:glustervolgdeploy -c txt.conf

# gdeploy -c txt.confModify the [volume] section in the configuration file to start a volume. For example:

[volume] action=delete volname=10.70.46.13:glustervol

[volume]

action=delete

volname=10.70.46.13:glustervolgdeploy -c txt.conf

# gdeploy -c txt.conf5.1.7. Configuration File

- [hosts]

- [devices]

- [disktype]

- [diskcount]

- [stripesize]

- [vgs]

- [pools]

- [lvs]

- [mountpoints]

- [peer]

- [clients]

- [volume]

- [backend-setup]

- [pv]

- [vg]

- [lv]

- [RH-subscription]

- [yum]

- [shell]

- [update-file]

- [service]

- [script]

- [firewalld]

- [geo-replication]

- hosts

This is a mandatory section which contains the IP address or hostname of the machines in the trusted storage pool. Each hostname or IP address should be listed in a separate line.

For example:[hosts] 10.0.0.1 10.0.0.2

[hosts] 10.0.0.1 10.0.0.2Copy to Clipboard Copied! Toggle word wrap Toggle overflow - devices

This is a generic section and is applicable to all the hosts listed in the [hosts] section. However, if sections of hosts such as the [hostname] or [IP-address] is present, then the data in the generic sections like [devices] is ignored. Host specific data take precedence. This is an optional section.

For example:[devices] /dev/sda /dev/sdb

[devices] /dev/sda /dev/sdbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Note

When configuring the backend setup, the devices should be either listed in this section or in the host specific section. - disktype

This section specifies the disk configuration that is used while setting up the backend. gdeploy supports RAID 10, RAID 6, RAID 5, and JBOD configurations. This is an optional section and if the field is left empty, JBOD is taken as the default configuration. Valid values for this field are

raid10,raid6,raid5, andjbod.For example:[disktype] raid6

[disktype] raid6Copy to Clipboard Copied! Toggle word wrap Toggle overflow - diskcount

This section specifies the number of data disks in the setup. This is a mandatory field if a RAID disk type is specified under

[disktype]. If the [disktype] is JBOD the [diskcount] value is ignored. This parameter is host specific.For example:[diskcount] 10

[diskcount] 10Copy to Clipboard Copied! Toggle word wrap Toggle overflow - stripesize

This section specifies the stripe_unit size in KB.

Case 1: This field is not necessary if the [disktype] is JBOD, and any given value will be ignored.Case 2: This is a mandatory field if [disktype] is specified as RAID 5 or RAID 6.For [disktype] RAID 10, the default value is taken as 256KB. Red Hat does not recommend changing this value. If you specify any other value the following warning is displayed:"Warning: We recommend a stripe unit size of 256KB for RAID 10"

"Warning: We recommend a stripe unit size of 256KB for RAID 10"Copy to Clipboard Copied! Toggle word wrap Toggle overflow Note

Do not add any suffixes like K, KB, M, etc. This parameter is host specific and can be added in the hosts section.For example:[stripesize] 128

[stripesize] 128Copy to Clipboard Copied! Toggle word wrap Toggle overflow - vgs

This section is deprecated in gdeploy 2.0. Please see [backend-setup] for more details for gdeploy 2.0. This section specifies the volume group names for the devices listed in [devices]. The number of volume groups in the [vgs] section should match the one in [devices]. If the volume group names are missing, the volume groups will be named as GLUSTER_vg{1, 2, 3, ...} as default.

For example:[vgs] CUSTOM_vg1 CUSTOM_vg2

[vgs] CUSTOM_vg1 CUSTOM_vg2Copy to Clipboard Copied! Toggle word wrap Toggle overflow - pools

This section is deprecated in gdeploy 2.0. Please see [backend-setup] for more details for gdeploy 2.0. This section specifies the pool names for the volume groups specified in the [vgs] section. The number of pools listed in the [pools] section should match the number of volume groups in the [vgs] section. If the pool names are missing, the pools will be named as GLUSTER_pool{1, 2, 3, ...}.

For example:[pools] CUSTOM_pool1 CUSTOM_pool2

[pools] CUSTOM_pool1 CUSTOM_pool2Copy to Clipboard Copied! Toggle word wrap Toggle overflow - lvs

This section is deprecated in gdeploy 2.0. Please see [backend-setup] for more details for gdeploy 2.0. This section provides the logical volume names for the volume groups specified in [vgs]. The number of logical volumes listed in the [lvs] section should match the number of volume groups listed in [vgs]. If the logical volume names are missing, it is named as GLUSTER_lv{1, 2, 3, ...}.

For example:[lvs] CUSTOM_lv1 CUSTOM_lv2

[lvs] CUSTOM_lv1 CUSTOM_lv2Copy to Clipboard Copied! Toggle word wrap Toggle overflow - mountpoints

This section is deprecated in gdeploy 2.0. Please see [backend-setup] for more details for gdeploy 2.0. This section specifies the brick mount points for the logical volumes. The number of mount points should match the number of logical volumes specified in [lvs] If the mount points are missing, the mount points will be names as /gluster/brick{1, 2, 3…}.

For example:[mountpoints] /rhgs/brick1 /rhgs/brick2

[mountpoints] /rhgs/brick1 /rhgs/brick2Copy to Clipboard Copied! Toggle word wrap Toggle overflow - peer

This section specifies the configurations for the Trusted Storage Pool management (TSP). This section helps in making all the hosts specified in the [hosts] section to either probe each other to create the trusted storage pool or detach all of them from the trusted storage pool. The only option in this section is the option names 'action' which can have it's values to be either probe or detach.

For example:[peer] action=probe

[peer] action=probeCopy to Clipboard Copied! Toggle word wrap Toggle overflow - clients

This section specifies the client hosts and client_mount_points to mount the gluster storage volume created. The 'action' option is to be specified for the framework to determine the action that has to be performed. The options are 'mount' and 'unmount'. The Client hosts field is mandatory. If the mount points are not specified, default will be taken as /mnt/gluster for all the hosts.

The option fstype specifies how the gluster volume is to be mounted. Default is glusterfs (FUSE mount). The volume can also be mounted as NFS. Each client can have different types of volume mount, which has to be specified with a comma separated. The following fields are included:* action * hosts * fstype * client_mount_points

* action * hosts * fstype * client_mount_pointsCopy to Clipboard Copied! Toggle word wrap Toggle overflow For example:Copy to Clipboard Copied! Toggle word wrap Toggle overflow - volume

The section specifies the configuration options for the volume. The following fields are included in this section:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - action

This option specifies what action must be performed in the volume. The choices can be [create, delete, add-brick, remove-brick].

create: This choice is used to create a volume.delete: If the delete choice is used, all the options other than 'volname' will be ignored.add-brick or remove-brick: If the add-brick or remove-brick is chosen, extra option bricks with a comma separated list of brick names(in the format <hostname>:<brick path> should be provided. In case of remove-brick, state option should also be provided specifying the state of the volume after brick removal. - volname

This option specifies the volume name. Default name is glustervol

Note

- In case of a volume operation, the 'hosts' section can be omitted, provided volname is in the format <hostname>:<volname>, where hostname is the hostname / IP of one of the nodes in the cluster

- Only single volume creation/deletion/configuration is supported.

- transport

This option specifies the transport type. Default is tcp. Options are tcp or rdma (Deprecated) or tcp,rdma.

- replica

This option will specify if the volume should be of type replica. options are yes and no. Default is no. If 'replica' is provided as yes, the 'replica_count' should be provided.

- disperse

This option specifies if the volume should be of type disperse. Options are yes and no. Default is no.

- disperse_count

This field is optional even if 'disperse' is yes. If not specified, the number of bricks specified in the command line is taken as the disperse_count value.

- redundancy_count

If this value is not specified, and if 'disperse' is yes, it's default value is computed so that it generates an optimal configuration.

- force

This is an optional field and can be used during volume creation to forcefully create the volume.

For example:Copy to Clipboard Copied! Toggle word wrap Toggle overflow - backend-setup

Available in gdeploy 2.0. This section sets up the backend for using with GlusterFS volume. If more than one backend-setup has to be done, they can be done by numbering the section like [backend-setup1], [backend-setup2], ...

backend-setup section supports the following variables:- devices: This replaces the [pvs] section in gdeploy 1.x. devices variable lists the raw disks which should be used for backend setup. For example:

[backend-setup] devices=sda,sdb,sdc

[backend-setup] devices=sda,sdb,sdcCopy to Clipboard Copied! Toggle word wrap Toggle overflow This is a mandatory field. - dalign:The Logical Volume Manager can use a portion of the physical volume for storing its metadata while the rest is used as the data portion. Align the I/O at the Logical Volume Manager (LVM) layer using the dalign option while creating the physical volume. For example:

[backend-setup] devices=sdb,sdc,sdd,sde dalign=256k

[backend-setup] devices=sdb,sdc,sdd,sde dalign=256kCopy to Clipboard Copied! Toggle word wrap Toggle overflow For JBOD, use an alignment value of 256K. For hardware RAID, the alignment value should be obtained by multiplying the RAID stripe unit size with the number of data disks. If 12 disks are used in a RAID 6 configuration, the number of data disks is 10; on the other hand, if 12 disks are used in a RAID 10 configuration, the number of data disks is 6.The following example is appropriate for 12 disks in a RAID 6 configuration with a stripe unit size of 128 KiB:[backend-setup] devices=sdb,sdc,sdd,sde dalign=1280k

[backend-setup] devices=sdb,sdc,sdd,sde dalign=1280kCopy to Clipboard Copied! Toggle word wrap Toggle overflow The following example is appropriate for 12 disks in a RAID 10 configuration with a stripe unit size of 256 KiB:[backend-setup] devices=sdb,sdc,sdd,sde dalign=1536k

[backend-setup] devices=sdb,sdc,sdd,sde dalign=1536kCopy to Clipboard Copied! Toggle word wrap Toggle overflow To view the previously configured physical volume settings for the dalign option, run thepvs -o +pe_start devicecommand. For example:pvs -o +pe_start /dev/sdb PV VG Fmt Attr PSize PFree 1st PE /dev/sdb lvm2 a-- 9.09t 9.09t 1.25m

# pvs -o +pe_start /dev/sdb PV VG Fmt Attr PSize PFree 1st PE /dev/sdb lvm2 a-- 9.09t 9.09t 1.25mCopy to Clipboard Copied! Toggle word wrap Toggle overflow You can also set the dalign option in the PV section. - vgs: This is an optional variable. This variable replaces the [vgs] section in gdeploy 1.x. vgs variable lists the names to be used while creating volume groups. The number of VG names should match the number of devices or should be left blank. gdeploy will generate names for the VGs. For example:

[backend-setup] devices=sda,sdb,sdc vgs=custom_vg1,custom_vg2,custom_vg3

[backend-setup] devices=sda,sdb,sdc vgs=custom_vg1,custom_vg2,custom_vg3Copy to Clipboard Copied! Toggle word wrap Toggle overflow A pattern can be provided for the vgs like custom_vg{1..3}, this will create three vgs.[backend-setup] devices=sda,sdb,sdc vgs=custom_vg{1..3}[backend-setup] devices=sda,sdb,sdc vgs=custom_vg{1..3}Copy to Clipboard Copied! Toggle word wrap Toggle overflow - pools: This is an optional variable. The variable replaces the [pools] section in gdeploy 1.x. pools lists the thin pool names for the volume.

[backend-setup] devices=sda,sdb,sdc vgs=custom_vg1,custom_vg2,custom_vg3 pools=custom_pool1,custom_pool2,custom_pool3

[backend-setup] devices=sda,sdb,sdc vgs=custom_vg1,custom_vg2,custom_vg3 pools=custom_pool1,custom_pool2,custom_pool3Copy to Clipboard Copied! Toggle word wrap Toggle overflow Similar to vg, pattern can be provided for thin pool names. For example custom_pool{1..3} - lvs: This is an optional variable. This variable replaces the [lvs] section in gdeploy 1.x. lvs lists the logical volume name for the volume.

[backend-setup] devices=sda,sdb,sdc vgs=custom_vg1,custom_vg2,custom_vg3 pools=custom_pool1,custom_pool2,custom_pool3 lvs=custom_lv1,custom_lv2,custom_lv3

[backend-setup] devices=sda,sdb,sdc vgs=custom_vg1,custom_vg2,custom_vg3 pools=custom_pool1,custom_pool2,custom_pool3 lvs=custom_lv1,custom_lv2,custom_lv3Copy to Clipboard Copied! Toggle word wrap Toggle overflow Patterns for LV can be provided similar to vg. For example custom_lv{1..3}. - mountpoints: This variable deprecates the [mountpoints] section in gdeploy 1.x. Mountpoints lists the mount points where the logical volumes should be mounted. Number of mount points should be equal to the number of logical volumes. For example:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow - ssd - This variable is set if caching has to be added. For example, the backed setup with ssd for caching should be:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Note

Specifying the name of the data LV is necessary while adding SSD. Make sure the datalv is created already. Otherwise ensure to create it in one of the earlier `backend-setup’ sections.

- PV

Available in gdeploy 2.0. If the user needs to have more control over setting up the backend, and does not want to use backend-setup section, then pv, vg, and lv modules are to be used. The pv module supports the following variables.

- action: Mandatory. Supports two values, 'create' and 'resize'Example: Creating physical volumes

[pv] action=create devices=vdb,vdc,vdd

[pv] action=create devices=vdb,vdc,vddCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example: Creating physical volumes on a specific host[pv:10.0.5.2] action=create devices=vdb,vdc,vdd

[pv:10.0.5.2] action=create devices=vdb,vdc,vddCopy to Clipboard Copied! Toggle word wrap Toggle overflow - devices: Mandatory. The list of devices to use for pv creation.

- expand: Used when

action=resize.Example: Expanding an already created pv[pv] action=resize devices=vdb expand=yes

[pv] action=resize devices=vdb expand=yesCopy to Clipboard Copied! Toggle word wrap Toggle overflow - shrink: Used when

action=resize.Example: Shrinking an already created pv[pv] action=resize devices=vdb shrink=100G

[pv] action=resize devices=vdb shrink=100GCopy to Clipboard Copied! Toggle word wrap Toggle overflow - dalign:The Logical Volume Manager can use a portion of the physical volume for storing its metadata while the rest is used as the data portion. Align the I/O at the Logical Volume Manager (LVM) layer using the dalign option while creating the physical volume. For example:

[pv] action=create devices=sdb,sdc,sdd,sde dalign=256k

[pv] action=create devices=sdb,sdc,sdd,sde dalign=256kCopy to Clipboard Copied! Toggle word wrap Toggle overflow For JBOD, use an alignment value of 256K. For hardware RAID, the alignment value should be obtained by multiplying the RAID stripe unit size with the number of data disks. If 12 disks are used in a RAID 6 configuration, the number of data disks is 10; on the other hand, if 12 disks are used in a RAID 10 configuration, the number of data disks is 6.The following example is appropriate for 12 disks in a RAID 6 configuration with a stripe unit size of 128 KiB:[pv] action=create devices=sdb,sdc,sdd,sde dalign=1280k

[pv] action=create devices=sdb,sdc,sdd,sde dalign=1280kCopy to Clipboard Copied! Toggle word wrap Toggle overflow The following example is appropriate for 12 disks in a RAID 10 configuration with a stripe unit size of 256 KiB:[pv] action=create devices=sdb,sdc,sdd,sde dalign=1536k

[pv] action=create devices=sdb,sdc,sdd,sde dalign=1536kCopy to Clipboard Copied! Toggle word wrap Toggle overflow To view the previously configured physical volume settings for the dalign option, run thepvs -o +pe_start devicecommand. For example:pvs -o +pe_start /dev/sdb PV VG Fmt Attr PSize PFree 1st PE /dev/sdb lvm2 a-- 9.09t 9.09t 1.25m

# pvs -o +pe_start /dev/sdb PV VG Fmt Attr PSize PFree 1st PE /dev/sdb lvm2 a-- 9.09t 9.09t 1.25mCopy to Clipboard Copied! Toggle word wrap Toggle overflow You can also set the dalign option in the backend-setup section.

- VG

Available in gdeploy 2.0. This module is used to create and extend volume groups. The vg module supports the following variables.

- action - Action can be one of create or extend.

- pvname - PVs to use to create the volume. For more than one PV use comma separated values.

- vgname - The name of the vg. If no name is provided GLUSTER_vg will be used as default name.

- one-to-one - If set to yes, one-to-one mapping will be done between pv and vg.

If action is set to extend, the vg will be extended to include pv provided.Example1: Create a vg named images_vg with two PVs[vg] action=create vgname=images_vg pvname=sdb,sdc

[vg] action=create vgname=images_vg pvname=sdb,sdcCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example2: Create two vgs named rhgs_vg1 and rhgs_vg2 with two PVs[vg] action=create vgname=rhgs_vg pvname=sdb,sdc one-to-one=yes

[vg] action=create vgname=rhgs_vg pvname=sdb,sdc one-to-one=yesCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example3: Extend an existing vg with the given disk.[vg] action=extend vgname=rhgs_images pvname=sdc

[vg] action=extend vgname=rhgs_images pvname=sdcCopy to Clipboard Copied! Toggle word wrap Toggle overflow - LV

Available in gdeploy 2.0. This module is used to create, setup-cache, and convert logical volumes. The lv module supports the following variables:

action - The action variable allows three values `create’, `setup-cache’, `convert’, and `change’. If the action is 'create', the following options are supported:- lvname: The name of the logical volume, this is an optional field. Default is GLUSTER_lv

- poolname - Name of the thinpool volume name, this is an optional field. Default is GLUSTER_pool

- lvtype - Type of the logical volume to be created, allowed values are `thin’ and `thick’. This is an optional field, default is thick.

- size - Size of the logical volume volume. Default is to take all available space on the vg.

- extent - Extent size, default is 100%FREE

- force - Force lv create, do not ask any questions. Allowed values `yes’, `no’. This is an optional field, default is yes.

- vgname - Name of the volume group to use.

- pvname - Name of the physical volume to use.

- chunksize - The size of the chunk unit used for snapshots, cache pools, and thin pools. By default this is specified in kilobytes. For RAID 5 and 6 volumes, gdeploy calculates the default chunksize by multiplying the stripe size and the disk count. For RAID 10, the default chunksize is 256 KB. See Section 19.2, “Brick Configuration” for details.

Warning

Red Hat recommends using at least the default chunksize. If the chunksize is too small and your volume runs out of space for metadata, the volume is unable to create data. This includes the data required to increase the size of the metadata pool or to migrate data away from a volume that has run out of metadata space. Red Hat recommends monitoring your logical volumes to ensure that they are expanded or more storage created before metadata volumes become completely full. - poolmetadatasize - Sets the size of pool's metadata logical volume. Allocate the maximum chunk size (16 GiB) if possible. If you allocate less than the maximum, allocate at least 0.5% of the pool size to ensure that you do not run out of metadata space.

Warning

If your metadata pool runs out of space, you cannot create data. This includes the data required to increase the size of the metadata pool or to migrate data away from a volume that has run out of metadata space. Monitor your metadata pool using thelvs -o+metadata_percentcommand and ensure that it does not run out of space. - virtualsize - Creates a thinly provisioned device or a sparse device of the given size

- mkfs - Creates a filesystem of the given type. Default is to use xfs.

- mkfs-opts - mkfs options.

- mount - Mount the logical volume.

If the action is setup-cache, the below options are supported:- ssd - Name of the ssd device. For example sda/vda/ … to setup cache.

- vgname - Name of the volume group.

- poolname - Name of the pool.

- cache_meta_lv - Due to requirements from dm-cache (the kernel driver), LVM further splits the cache pool LV into two devices - the cache data LV and cache metadata LV. Provide the cache_meta_lv name here.

- cache_meta_lvsize - Size of the cache meta lv.

- cache_lv - Name of the cache data lv.

- cache_lvsize - Size of the cache data.

- force - Force

If the action is convert, the below options are supported:- lvtype - type of the lv, available options are thin and thick

- force - Force the lvconvert, default is yes.

- vgname - Name of the volume group.

- poolmetadata - Specifies cache or thin pool metadata logical volume.

- cachemode - Allowed values writeback, writethrough. Default is writethrough.

- cachepool - This argument is necessary when converting a logical volume to a cache LV. Name of the cachepool.

- lvname - Name of the logical volume.

- chunksize - The size of the chunk unit used for snapshots, cache pools, and thin pools. By default this is specified in kilobytes. For RAID 5 and 6 volumes, gdeploy calculates the default chunksize by multiplying the stripe size and the disk count. For RAID 10, the default chunksize is 256 KB. See Section 19.2, “Brick Configuration” for details.

Warning

Red Hat recommends using at least the default chunksize. If the chunksize is too small and your volume runs out of space for metadata, the volume is unable to create data. Red Hat recommends monitoring your logical volumes to ensure that they are expanded or more storage created before metadata volumes become completely full. - poolmetadataspare - Controls creation and maintanence of pool metadata spare logical volume that will be used for automated pool recovery.

- thinpool - Specifies or converts logical volume into a thin pool's data volume. Volume’s name or path has to be given.

If the action is change, the below options are supported:- lvname - Name of the logical volume.

- vgname - Name of the volume group.

- zero - Set zeroing mode for thin pool.

Example 1: Create a thin LVCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example 2: Create a thick LVCopy to Clipboard Copied! Toggle word wrap Toggle overflow If there are more than one LVs, then the LVs can be created by numbering the LV sections, like [lv1], [lv2] … - RH-subscription

Available in gdeploy 2.0. This module is used to subscribe, unsubscribe, attach, enable repos etc. The RH-subscription module allows the following variables:

This module is used to subscribe, unsubscribe, attach, enable repos etc. The RH-subscription module allows the following variables:If the action is register, the following options are supported:- username/activationkey: Username or activationkey.

- password/activationkey: Password or activation key

- auto-attach: true/false

- pool: Name of the pool.

- repos: Repos to subscribe to.

- disable-repos: Repo names to disable. Leaving this option blank will disable all the repos.

- ignore_register_errors: If set to no, gdeploy will exit if system registration fails.

- If the action is attach-pool the following options are supported:pool - Pool name to be attached.ignore_attach_pool_errors - If set to no, gdeploy fails if attach-pool fails.

- If the action is enable-repos the following options are supported:repos - List of comma separated repos that are to be subscribed to.ignore_enable_errors - If set to no, gdeploy fails if enable-repos fail.

- If the action is disable-repos the following options are supported:repos - List of comma separated repos that are to be subscribed to.ignore_disable_errors - If set to no, gdeploy fails if disable-repos fail

- If the action is unregister the systems will be unregistered.ignore_unregister_errors - If set to no, gdeploy fails if unregistering fails.

Example 1: Subscribe to Red Hat Subscription network:Copy to Clipboard Copied! Toggle word wrap Toggle overflow Example 2: Disable all the repos:[RH-subscription2] action=disable-repos repos=*

[RH-subscription2] action=disable-repos repos=*Copy to Clipboard Copied! Toggle word wrap Toggle overflow Example 3: Enable a few repos[RH-subscription3] action=enable-repos repos=rhel-7-server-rpms,rh-gluster-3-for-rhel-7-server-rpms,rhel-7-server-rhev-mgmt-agent-rpms ignore_enable_errors=no

[RH-subscription3] action=enable-repos repos=rhel-7-server-rpms,rh-gluster-3-for-rhel-7-server-rpms,rhel-7-server-rhev-mgmt-agent-rpms ignore_enable_errors=noCopy to Clipboard Copied! Toggle word wrap Toggle overflow - yum

Available in gdeploy 2.0. This module is used to install or remove rpm packages, with the yum module we can add repos as well during the install time.

The action variable allows two values `install’ and `remove’.If the action is install the following options are supported:- packages - Comma separated list of packages that are to be installed.

- repos - The repositories to be added.

- gpgcheck - yes/no values have to be provided.

- update - Whether yum update has to be initiated.

If the action is remove then only one option has to be provided:- remove - The comma separated list of packages to be removed.

For exampleCopy to Clipboard Copied! Toggle word wrap Toggle overflow Install a package on a particular host.[yum2:host1] action=install gpgcheck=no packages=rhevm-appliance

[yum2:host1] action=install gpgcheck=no packages=rhevm-applianceCopy to Clipboard Copied! Toggle word wrap Toggle overflow - shell

Available in gdeploy 2.0. This module allows user to run shell commands on the remote nodes.

Currently shell provides a single action variable with value execute. And a command variable with any valid shell command as value.The below command will execute vdsm-tool on all the nodes.[shell] action=execute command=vdsm-tool configure --force

[shell] action=execute command=vdsm-tool configure --forceCopy to Clipboard Copied! Toggle word wrap Toggle overflow - update-file

Available in gdeploy 2.0. update-file module allows users to copy a file, edit a line in a file, or add new lines to a file. action variable can be any of copy, edit, or add.

When the action variable is set to copy, the following variables are supported.- src - The source path of the file to be copied from.

- dest - The destination path on the remote machine to where the file is to be copied to.

When the action variable is set to edit, the following variables are supported.- dest - The destination file name which has to be edited.

- replace - A regular expression, which will match a line that will be replaced.

- line - Text that has to be replaced.

When the action variable is set to add, the following variables are supported.- dest - File on the remote machine to which a line has to be added.

- line - Line which has to be added to the file. Line will be added towards the end of the file.

Example 1: Copy a file to a remote machine.[update-file] action=copy src=/tmp/foo.cfg

[update-file] action=copy src=/tmp/foo.cfgCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example 2: Edit a line in the remote machine, in the below example lines that have allowed_hosts will be replaced with allowed_hosts=host.redhat.com[update-file] action=edit replace=allowed_hosts line=allowed_hosts=host.redhat.com

[update-file] action=edit replace=allowed_hosts line=allowed_hosts=host.redhat.comCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example 3: Add a line to the end of a fileFor Red Hat Enterprise Linux 7:[update-file] action=add dest=/etc/ntp.conf line=server clock.redhat.com iburst

[update-file] action=add dest=/etc/ntp.conf line=server clock.redhat.com iburstCopy to Clipboard Copied! Toggle word wrap Toggle overflow For Red Hat Enterprise Linux 8:[update-file] action=add dest=/etc/chrony.conf line=server 0.rhel.pool.ntp.org iburst

[update-file] action=add dest=/etc/chrony.conf line=server 0.rhel.pool.ntp.org iburstCopy to Clipboard Copied! Toggle word wrap Toggle overflow - service

Available in gdeploy 2.0. The service module allows user to start, stop, restart, reload, enable, or disable a service. The action variable specifies these values.

When action variable is set to any of start, stop, restart, reload, enable, disable the variable servicename specifies which service to start, stop etc.- service - Name of the service to start, stop etc.

For Red Hat Enterprise Linux 7:Example: enable and start ntp daemon.[service1] action=enable service=ntpd

[service1] action=enable service=ntpdCopy to Clipboard Copied! Toggle word wrap Toggle overflow [service2] action=restart service=ntpd

[service2] action=restart service=ntpdCopy to Clipboard Copied! Toggle word wrap Toggle overflow For Red Hat Enterprise Linux 8:Example: enable and start chrony daemon.[service1] action=enable service=chrony

[service1] action=enable service=chronyCopy to Clipboard Copied! Toggle word wrap Toggle overflow [service2] action=restart service=chrony

[service2] action=restart service=chronyCopy to Clipboard Copied! Toggle word wrap Toggle overflow - script

Available in gdeploy 2.0. script module enables user to execute a script/binary on the remote machine. action variable is set to execute. Allows user to specify two variables file and args.

- file - An executable on the local machine.

- args - Arguments to the above program.

Example: Execute script disable-multipath.sh on all the remote nodes listed in `hosts’ section.[script] action=execute file=/usr/share/ansible/gdeploy/scripts/disable-multipath.sh

[script] action=execute file=/usr/share/ansible/gdeploy/scripts/disable-multipath.shCopy to Clipboard Copied! Toggle word wrap Toggle overflow - firewalld

Available in gdeploy 2.0. firewalld module allows the user to manipulate firewall rules. action variable supports two values `add’ and `delete’. Both add and delete support the following variables:

- ports/services - The ports or services to add to firewall.

- permanent - Whether to make the entry permanent. Allowed values are true/false

- zone - Default zone is public

For example:[firewalld] action=add ports=111/tcp,2049/tcp,54321/tcp,5900/tcp,5900-6923/tcp,5666/tcp,16514/tcp services=glusterfs

[firewalld] action=add ports=111/tcp,2049/tcp,54321/tcp,5900/tcp,5900-6923/tcp,5666/tcp,16514/tcp services=glusterfsCopy to Clipboard Copied! Toggle word wrap Toggle overflow - geo-replication

Available in gdeploy 2.0.2, geo-replication module allows the user to configure geo-replication, control and verify geo-replication sessions. The following are the supported variables:

action- The action to be performed for the geo-replication session.- create - To create a geo-replication session.

- start - To start a created geo-replication session.

- stop - To stop a started geo-replication session.

- pause - To pause a geo-replication session.

- resume - To resume a paused geo-replication session.

- delete - To delete a geo-replication session.

georepuser- Username to be used for the action being performedImportant

Ifgeorepuservariable is omitted, the user is assumed to be root user.mastervol- Master volume details in the following format:Master_HostName:Master_VolName

Master_HostName:Master_VolNameCopy to Clipboard Copied! Toggle word wrap Toggle overflow slavevol- Slave volume details in the following format:Slave_HostName:Slave_VolName

Slave_HostName:Slave_VolNameCopy to Clipboard Copied! Toggle word wrap Toggle overflow slavenodes- Slave node IP addresses in the following format:Slave1_IPAddress,Slave2_IPAddress

Slave1_IPAddress,Slave2_IPAddressCopy to Clipboard Copied! Toggle word wrap Toggle overflow Important

Slave IP addresses must be comma (,) separated.force- Force the system to perform the action. Allowed values areyesorno.start- Start the action specified in the configuration file. Allowed values areyesorno. Default value isyes.

For example:Copy to Clipboard Copied! Toggle word wrap Toggle overflow

5.1.8. Deploying NFS Ganesha using gdeploy

5.1.8.1. Prerequisites

You must subscribe to subscription manager and obtain the NFS Ganesha packages before continuing further.

[RH-subscription1] action=register username=<user>@redhat.com password=<password> pool=<pool-id>

[RH-subscription1]

action=register

username=<user>@redhat.com

password=<password>

pool=<pool-id>gdeploy -c txt.conf

# gdeploy -c txt.confTo enable the required repos, add the following details in the configuration file:

[RH-subscription2] action=enable-repos repos=rhel-7-server-rpms,rh-gluster-3-for-rhel-7-server-rpms,rh-gluster-3-nfs-for-rhel-7-server-rpms,rhel-ha-for-rhel-7-server-rpms,rhel-7-server-ansible-2-rpms

[RH-subscription2]

action=enable-repos

repos=rhel-7-server-rpms,rh-gluster-3-for-rhel-7-server-rpms,rh-gluster-3-nfs-for-rhel-7-server-rpms,rhel-ha-for-rhel-7-server-rpms,rhel-7-server-ansible-2-rpmsgdeploy -c txt.conf

# gdeploy -c txt.confTo enable the firewall ports, add the following details in the configuration file:

[firewalld] action=add ports=111/tcp,2049/tcp,54321/tcp,5900/tcp,5900-6923/tcp,5666/tcp,16514/tcp services=glusterfs,nlm,nfs,rpc-bind,high-availability,mountd,rquota

[firewalld]

action=add

ports=111/tcp,2049/tcp,54321/tcp,5900/tcp,5900-6923/tcp,5666/tcp,16514/tcp

services=glusterfs,nlm,nfs,rpc-bind,high-availability,mountd,rquotaNote

gdeploy -c txt.conf

# gdeploy -c txt.confTo install the required package, add the following details in the configuration file

gdeploy -c txt.conf

# gdeploy -c txt.conf5.1.8.2. Supported Actions

- Creating a Cluster

- Destroying a Cluster

- Adding a Node

- Deleting a Node

- Exporting a Volume

- Unexporting a Volume

- Refreshing NFS Ganesha Configuration

This action creates a fresh NFS-Ganesha setup on a given volume. For this action the nfs-ganesha in the configuration file section supports the following variables:

- ha-name: This is an optional variable. By default it is ganesha-ha-360.

- cluster-nodes: This is a required argument. This variable expects comma separated values of cluster node names, which is used to form the cluster.

- vip: This is a required argument. This variable expects comma separated list of ip addresses. These will be the virtual ip addresses.

- volname: This is an optional variable if the configuration contains the [volume] section

gdeploy -c txt.conf

# gdeploy -c txt.conf

The action, destroy-cluster cluster disables NFS Ganesha. It allows one variable, cluster-nodes.

gdeploy -c txt.conf

# gdeploy -c txt.confThe add-node action allows three variables:

nodes: Accepts a list of comma separated hostnames that have to be added to the clustervip: Accepts a list of comma separated ip addresses.cluster_nodes: Accepts a list of comma separated nodes of the NFS Ganesha cluster.

Note

gdeploy -c txt.conf

# gdeploy -c txt.conf

The delete-node action takes one variable, nodes, which specifies the node or nodes to delete from the NFS Ganesha cluster in a comma delimited list.

This action exports a volume. export-volume action supports one variable, volname.

gdeploy -c txt.conf

# gdeploy -c txt.conf

This action unexports a volume. unexport-volume action supports one variable, volname.

gdeploy -c txt.conf

# gdeploy -c txt.conf

This action will add/delete or add a config block to the configuration file and runs refresh-config on the cluster.

refresh-config supports the following variables:

- del-config-lines

- block-name

- volname

- ha-conf-dir

- update_config_lines

Note

refresh-config with client block has few limitations:

- Works for only one client

- User cannot delete a line from a config block

gdeploy -c txt.conf

# gdeploy -c txt.confgdeploy -c txt.conf

# gdeploy -c txt.confgdeploy -c txt.conf

# gdeploy -c txt.confgdeploy -c txt.conf

# gdeploy -c txt.conf5.1.9. Deploying Samba / CTDB using gdeploy

5.1.9.1. Prerequisites

You must subscribe to subscription manager and obtain the Samba packages before continuing further.

[RH-subscription1] action=register username=<user>@redhat.com password=<password> pool=<pool-id>

[RH-subscription1]

action=register

username=<user>@redhat.com

password=<password>

pool=<pool-id>gdeploy -c txt.conf

# gdeploy -c txt.confTo enable the required repos, add the following details in the configuration file:

[RH-subscription2] action=enable-repos repos=rhel-7-server-rpms,rh-gluster-3-for-rhel-7-server-rpms,rh-gluster-3-samba-for-rhel-7-server-rpms,rhel-7-server-ansible-2-rpms

[RH-subscription2]

action=enable-repos

repos=rhel-7-server-rpms,rh-gluster-3-for-rhel-7-server-rpms,rh-gluster-3-samba-for-rhel-7-server-rpms,rhel-7-server-ansible-2-rpms[RH-subscription2] action=enable-repos rh-gluster-3-for-rhel-8-x86_64-rpms,ansible-2-for-rhel-8-x86_64-rpms,rhel-8-for-x86_64-baseos-rpms,rhel-8-for-x86_64-appstream-rpms,rhel-8-for-x86_64-highavailability-rpms,rh-gluster-3-samba-for-rhel-8-x86_64-rpms

[RH-subscription2]

action=enable-repos

rh-gluster-3-for-rhel-8-x86_64-rpms,ansible-2-for-rhel-8-x86_64-rpms,rhel-8-for-x86_64-baseos-rpms,rhel-8-for-x86_64-appstream-rpms,rhel-8-for-x86_64-highavailability-rpms,rh-gluster-3-samba-for-rhel-8-x86_64-rpms

gdeploy -c txt.conf

# gdeploy -c txt.confTo enable the firewall ports, add the following details in the configuration file:

[firewalld] action=add ports=54321/tcp,5900/tcp,5900-6923/tcp,5666/tcp,4379/tcp services=glusterfs,samba,high-availability

[firewalld]

action=add

ports=54321/tcp,5900/tcp,5900-6923/tcp,5666/tcp,4379/tcp

services=glusterfs,samba,high-availabilitygdeploy -c txt.conf

# gdeploy -c txt.confTo install the required package, add the following details in the configuration file

gdeploy -c txt.conf

# gdeploy -c txt.conf5.1.9.2. Setting up Samba

- Enabling Samba on an existing volume

- Enabling Samba while creating a volume

If a Red Hat Gluster Storage volume is already present, then the user has to mention the action as smb-setup in the volume section. It is necessary to mention all the hosts that are in the cluster, as gdeploy updates the glusterd configuration files on each of the hosts.

Note

gdeploy -c txt.conf

# gdeploy -c txt.conf

If Samba has be set up while creating a volume, the a variable smb has to be set to yes in the configuration file.

gdeploy -c txt.conf

# gdeploy -c txt.confNote

smb_username and smb_mountpoint are necessary if samba has to be setup with the acls set correctly.

5.1.9.3. Setting up CTDB

ctdb_nodes parameter, as shown in the following example.

gdeploy -c txt.conf

# gdeploy -c txt.conf5.1.10. Enabling SSL on a Volume

5.1.10.1. Creating a Volume and Enabling SSL

gdeploy -c txt.conf

# gdeploy -c txt.conf5.1.10.2. Enabling SSL on an Existing Volume:

gdeploy -c txt.conf

# gdeploy -c txt.conf5.1.11. Gdeploy log files

/home/username/.gdeploy/logs/gdeploy.log instead of the /var/log directory.

GDEPLOY_LOGFILE environment variable. For example, to set the gdeploy log location to /var/log/gdeploy/gdeploy.log for this session, run the following command:

export GDEPLOY_LOGFILE=/var/log/gdeploy/gdeploy.log

$ export GDEPLOY_LOGFILE=/var/log/gdeploy/gdeploy.log/home/username/.bash_profile file for that user.

5.2. About Encrypted Disk

- For RHEL 6, refer to Disk Encryption Appendix of the Red Hat Enterprise Linux 6 Installation Guide.

Important

Red Hat Gluster Storage is not supported on Red Hat Enterprise Linux 6 (RHEL 6) from 3.5 Batch Update 1 onwards. See Version Details table in section Red Hat Gluster Storage Software Components and Versions of the Installation Guide - For RHEL 7, refer to Encryption of the Red Hat Enterprise Linux 7 Security Guide.

- Starting in RHEL 7.5, Red Hat has implemented an additional component that can be used to enable LUKS disks remotely during startup called as Network Bound Disk Encryption (NBDE). For more information on NBDE, refer to Configuring Automated Unlocking of Encrypted Volumes using Policy-Based Decryption of the Red Hat Enterprise Linux 7 Security Guide.

- For RHEL 8, refer to Encrypting Block Devices Using LUKS of the Red Hat Enterprise Linux 8 Security Guide.

5.3. Formatting and Mounting Bricks

5.3.1. Creating Bricks Manually

Important

- Red Hat supports formatting a Logical Volume using the XFS file system on the bricks.

- Red Hat supports heterogeneous subvolume sizes for distributed volumes (either pure distributed, distributed-replicated or distributed-dispersed). Red Hat does not support heterogeneous brick sizes for bricks of the same subvolume.For example, you can have a distributed-replicated 3x3 volume with 3 bricks of 10GiB, 3 bricks of 50GiB and 3 bricks of 100GiB as long as the 3 10GiB bricks belong to the same replicate and similarly the 3 50GiB and 100GiB bricks belong to the same replicate set. In this way you will have 1 subvolume of 10GiB, another of 50GiB and 100GiB. The distributed hash table balances the number of assigned files to each subvolume so that the subvolumes get filled proportionally to their size.

5.3.1.1. Creating a Thinly Provisioned Logical Volume

- Create a physical volume(PV) by using the

pvcreatecommand.pvcreate --dataalignment alignment_value device

# pvcreate --dataalignment alignment_value deviceCopy to Clipboard Copied! Toggle word wrap Toggle overflow For example:pvcreate --dataalignment 1280K /dev/sdb

# pvcreate --dataalignment 1280K /dev/sdbCopy to Clipboard Copied! Toggle word wrap Toggle overflow Here,/dev/sdbis a storage device.Use the correctdataalignmentoption based on your device. For more information, see Section 19.2, “Brick Configuration”Note

The device name and the alignment value will vary based on the device you are using. - Create a Volume Group (VG) from the PV using the

vgcreatecommand:vgcreate --physicalextentsize alignment_value volgroup device

# vgcreate --physicalextentsize alignment_value volgroup deviceCopy to Clipboard Copied! Toggle word wrap Toggle overflow For example:vgcreate --physicalextentsize 1280K rhs_vg /dev/sdb

# vgcreate --physicalextentsize 1280K rhs_vg /dev/sdbCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Create a thin-pool using the following commands:

lvcreate --thin volgroup/poolname --size pool_sz --chunksize chunk_sz --poolmetadatasize metadev_sz --zero n

# lvcreate --thin volgroup/poolname --size pool_sz --chunksize chunk_sz --poolmetadatasize metadev_sz --zero nCopy to Clipboard Copied! Toggle word wrap Toggle overflow For example:lvcreate --thin rhs_vg/rhs_pool --size 2T --chunksize 1280K --poolmetadatasize 16G --zero n

# lvcreate --thin rhs_vg/rhs_pool --size 2T --chunksize 1280K --poolmetadatasize 16G --zero nCopy to Clipboard Copied! Toggle word wrap Toggle overflow Ensure you read Chapter 19, Tuning for Performance to select appropriate values forchunksizeandpoolmetadatasize. - Create a thinly provisioned volume that uses the previously created pool by running the

lvcreatecommand with the--virtualsizeand--thinoptions:lvcreate --virtualsize size --thin volgroup/poolname --name volname

# lvcreate --virtualsize size --thin volgroup/poolname --name volnameCopy to Clipboard Copied! Toggle word wrap Toggle overflow For example:lvcreate --virtualsize 1G --thin rhs_vg/rhs_pool --name rhs_lv

# lvcreate --virtualsize 1G --thin rhs_vg/rhs_pool --name rhs_lvCopy to Clipboard Copied! Toggle word wrap Toggle overflow It is recommended that only one LV should be created in a thin pool. - Format bricks using the supported XFS configuration, mount the bricks, and verify the bricks are mounted correctly. To enhance the performance of Red Hat Gluster Storage, ensure you read Chapter 19, Tuning for Performance before formatting the bricks.

Important

Snapshots are not supported on bricks formatted with external log devices. Do not use-l logdev=deviceoption withmkfs.xfscommand for formatting the Red Hat Gluster Storage bricks.mkfs.xfs -f -i size=512 -n size=8192 -d su=128k,sw=10 device

# mkfs.xfs -f -i size=512 -n size=8192 -d su=128k,sw=10 deviceCopy to Clipboard Copied! Toggle word wrap Toggle overflow DEVICE is the created thin LV. The inode size is set to 512 bytes to accommodate for the extended attributes used by Red Hat Gluster Storage. - Run

# mkdir /mountpointto create a directory to link the brick to.mkdir /rhgs

# mkdir /rhgsCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Add an entry in

/etc/fstab:/dev/volgroup/volname /mountpoint xfs rw,inode64,noatime,nouuid,x-systemd.device-timeout=10min 1 2

/dev/volgroup/volname /mountpoint xfs rw,inode64,noatime,nouuid,x-systemd.device-timeout=10min 1 2Copy to Clipboard Copied! Toggle word wrap Toggle overflow For example:/dev/rhs_vg/rhs_lv /rhgs xfs rw,inode64,noatime,nouuid,x-systemd.device-timeout=10min 1 2

/dev/rhs_vg/rhs_lv /rhgs xfs rw,inode64,noatime,nouuid,x-systemd.device-timeout=10min 1 2Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Run

mount /mountpointto mount the brick. - Run the

df -hcommand to verify the brick is successfully mounted:df -h /dev/rhs_vg/rhs_lv 16G 1.2G 15G 7% /rhgs

# df -h /dev/rhs_vg/rhs_lv 16G 1.2G 15G 7% /rhgsCopy to Clipboard Copied! Toggle word wrap Toggle overflow - If SElinux is enabled, then the SELinux labels that has to be set manually for the bricks created using the following commands:

semanage fcontext -a -t glusterd_brick_t /rhgs/brick1 restorecon -Rv /rhgs/brick1

# semanage fcontext -a -t glusterd_brick_t /rhgs/brick1 # restorecon -Rv /rhgs/brick1Copy to Clipboard Copied! Toggle word wrap Toggle overflow

5.3.2. Using Subdirectory as the Brick for Volume

/rhgs directory is the mounted file system and is used as the brick for volume creation. However, for some reason, if the mount point is unavailable, any write continues to happen in the /rhgs directory, but now this is under root file system.

/bricks. After the file system is available, create a directory called /rhgs/brick1 and use it for volume creation. Ensure that no more than one brick is created from a single mount. This approach has the following advantages:

- When the

/rhgsfile system is unavailable, there is no longer/rhgs/brick1directory available in the system. Hence, there will be no data loss by writing to a different location. - This does not require any additional file system for nesting.

- Create the

brick1subdirectory in the mounted file system.mkdir /rhgs/brick1

# mkdir /rhgs/brick1Copy to Clipboard Copied! Toggle word wrap Toggle overflow Repeat the above steps on all nodes. - Create the Red Hat Gluster Storage volume using the subdirectories as bricks.

gluster volume create distdata01 ad-rhs-srv1:/rhgs/brick1 ad-rhs-srv2:/rhgs/brick2

# gluster volume create distdata01 ad-rhs-srv1:/rhgs/brick1 ad-rhs-srv2:/rhgs/brick2Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Start the Red Hat Gluster Storage volume.

gluster volume start distdata01

# gluster volume start distdata01Copy to Clipboard Copied! Toggle word wrap Toggle overflow - Verify the status of the volume.

gluster volume status distdata01

# gluster volume status distdata01Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Note

df -h /dev/rhs_vg/rhs_lv1 16G 1.2G 15G 7% /rhgs1 /dev/rhs_vg/rhs_lv2 16G 1.2G 15G 7% /rhgs2

# df -h

/dev/rhs_vg/rhs_lv1 16G 1.2G 15G 7% /rhgs1

/dev/rhs_vg/rhs_lv2 16G 1.2G 15G 7% /rhgs2gluster volume create test-volume server1:/rhgs1/brick1 server2:/rhgs1/brick1 server1:/rhgs2/brick2 server2:/rhgs2/brick2

# gluster volume create test-volume server1:/rhgs1/brick1 server2:/rhgs1/brick1 server1:/rhgs2/brick2 server2:/rhgs2/brick25.3.3. Reusing a Brick from a Deleted Volume

# mkfs.xfs -f -i size=512 device to reformat the brick to supported requirements, and make it available for immediate reuse in a new volume.

Note

5.3.4. Cleaning An Unusable Brick

- Delete all previously existing data in the brick, including the

.glusterfssubdirectory. - Run

# setfattr -x trusted.glusterfs.volume-id brickand# setfattr -x trusted.gfid brickto remove the attributes from the root of the brick. - Run

# getfattr -d -m . brickto examine the attributes set on the volume. Take note of the attributes. - Run

# setfattr -x attribute brickto remove the attributes relating to the glusterFS file system.Thetrusted.glusterfs.dhtattribute for a distributed volume is one such example of attributes that need to be removed.

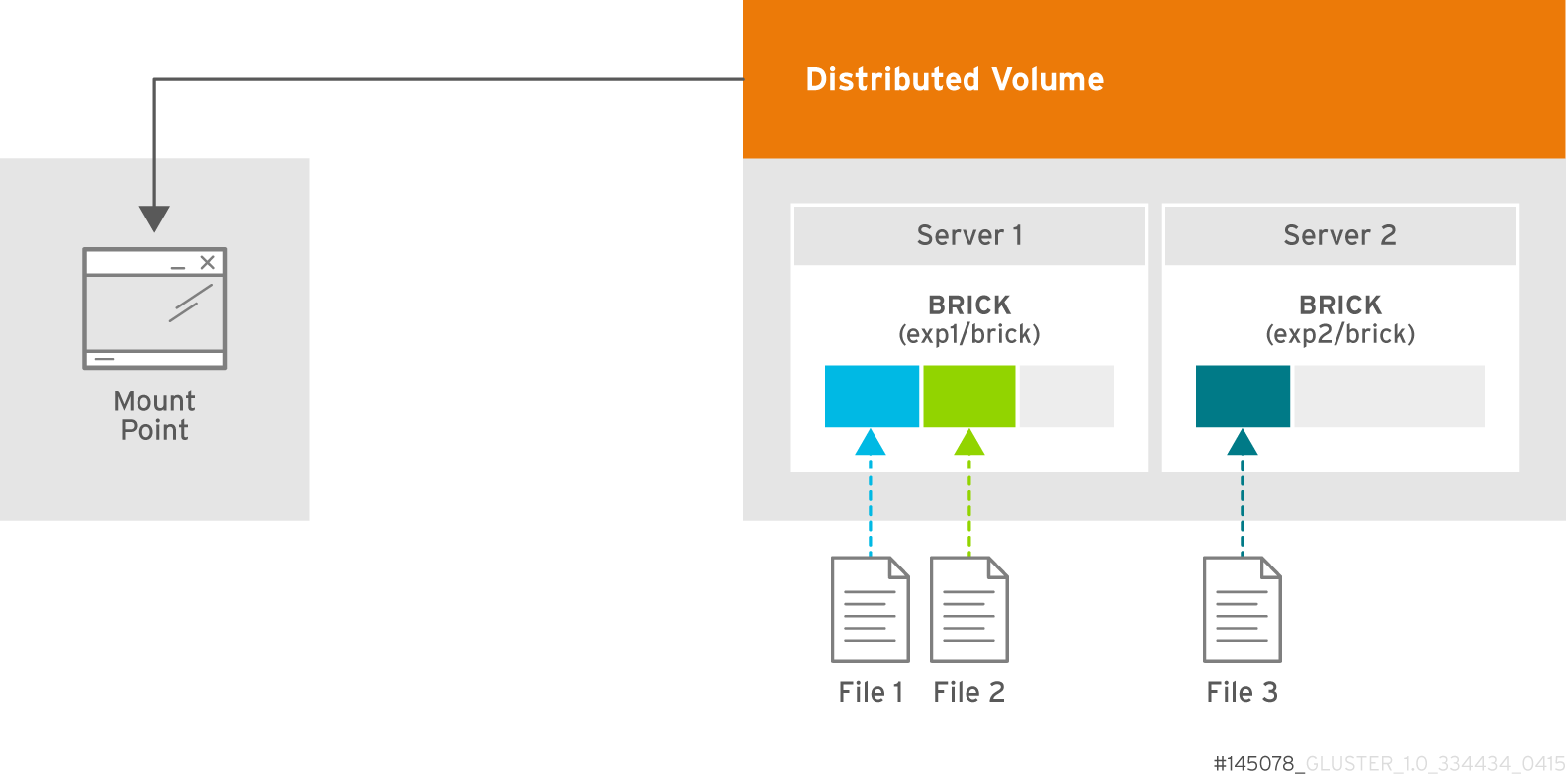

5.4. Creating Distributed Volumes

Figure 5.1. Illustration of a Distributed Volume

Warning

- No in-service upgrades - distributed only volumes need to be taken offline during upgrades.

- Temporary inconsistencies of directory entries and inodes during eventual node failures.

- I/O operations will block or fail due to node unavailability or eventual node failures.

- Permanent loss of data.

Create a Distributed Volume