Installation Guide

Installing Red Hat Gluster Storage 3.5

Abstract

Chapter 1. Planning Red Hat Gluster Storage Installation

1.1. About Red Hat Gluster Storage

Red Hat Gluster Storage Server for On-Premise enables enterprises to treat physical storage as a virtualized, scalable, and centrally managed pool of storage by using commodity server and storage hardware.

Red Hat Gluster Storage Server for Public Cloud packages GlusterFS as an Amazon Machine Image (AMI) for deploying scalable NAS in the AWS public cloud. This powerful storage server provides a highly available, scalable, virtualized, and centrally managed pool of storage for Amazon users.

A storage node can be a physical server, a virtual machine, or a public cloud machine image running one instance of Red Hat Gluster Storage.

1.2. Prerequisites

Important

1.2.1. Multi-site Cluster Latency

# ping -c100 -q site_ip_address/site_hostname
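As a rough illustration, the summary line that `ping -q` prints can be parsed to compare the average round-trip time against a latency budget. The function names `avg_rtt_ms` and `within_budget`, and the 5 ms budget in the usage comment, are assumptions made for this sketch rather than values taken from this guide; substitute the limit that applies to your deployment.

```shell
#!/bin/sh
# Extract the average round-trip time (ms) from the summary that `ping -q` prints.
# Expects a line such as: rtt min/avg/max/mdev = 0.412/0.498/0.731/0.055 ms
avg_rtt_ms() {
    awk -F'=' '/min\/avg\/max/ { split($2, f, "/"); print f[2] }'
}

# Return success when the average RTT is at or below the given budget (ms).
within_budget() {
    avg="$1"; budget="$2"
    awk -v a="$avg" -v b="$budget" 'BEGIN { exit !(a <= b) }'
}

# Example usage (site_hostname and the 5 ms budget are placeholders):
#   avg=$(ping -c100 -q site_hostname | avg_rtt_ms)
#   within_budget "$avg" 5 || echo "latency too high: ${avg} ms"
```

Keeping the parsing and the comparison in separate functions lets the same check be reused against saved `ping` output.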

Note

1.2.2. File System Requirements

Note

ext3 or ext4 to upgrade to a supported version of Red Hat Gluster Storage using the XFS back-end file system.

1.2.3. Logical Volume Manager

1.2.4. Network Time Configuration

1.2.4.1. Configuring time synchronization using Chrony

Note

# clockdiff node-hostname

1.2.4.2. Configuring time synchronization using Network Time Protocol

ntpd daemon to automatically synchronize the time during the boot process as follows:

- Edit the NTP configuration file /etc/ntp.conf using a text editor such as vim or nano.

# nano /etc/ntp.conf

- Add or edit the list of public NTP servers in the ntp.conf file as follows:

server 0.rhel.pool.ntp.org
server 1.rhel.pool.ntp.org
server 2.rhel.pool.ntp.org

The Red Hat Enterprise Linux 6 version of this file already contains the required information. Edit the contents of this file if customization is required. For more information regarding the supported Red Hat Enterprise Linux version for a particular Red Hat Gluster Storage release, see Section 1.7, “Red Hat Gluster Storage Support Matrix”.

- Optionally, increase the initial synchronization speed by appending the iburst directive to each line:

server 0.rhel.pool.ntp.org iburst
server 1.rhel.pool.ntp.org iburst
server 2.rhel.pool.ntp.org iburst

- After the list of servers is complete, set the required permissions in the same file. Ensure that only localhost has unrestricted access:

restrict default kod nomodify notrap nopeer noquery
restrict -6 default kod nomodify notrap nopeer noquery
restrict 127.0.0.1
restrict -6 ::1

- Save all changes, exit the editor, and restart the NTP daemon:

# service ntpd restart

- Ensure that the ntpd daemon starts at boot time:

# chkconfig ntpd on
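The optional `iburst` step can also be scripted. The following is a minimal sketch, assuming GNU `sed`; the helper name `add_iburst` is hypothetical, and the function only prints the edited configuration so that the result can be reviewed before `/etc/ntp.conf` is replaced.

```shell
#!/bin/sh
# Print the given ntp.conf with "iburst" appended to every "server" line
# that does not already carry the directive. The input file is not modified.
add_iburst() {
    sed -E '/^server[[:space:]]/{/[[:space:]]iburst([[:space:]]|$)/!s/[[:space:]]*$/ iburst/;}' "$1"
}

# Example usage:
#   add_iburst /etc/ntp.conf > /tmp/ntp.conf.new
#   diff /etc/ntp.conf /tmp/ntp.conf.new
```

Printing to standard output instead of editing in place keeps the step easy to undo and safe to run more than once.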

ntpdate command for a one-time synchronization of NTP. For more information about this feature, see the Red Hat Enterprise Linux Deployment Guide.

1.3. Hardware Compatibility

1.4. Port Information

iptables command to open a port:

# iptables -A INPUT -m state --state NEW -m tcp -p tcp --dport 5667 -j ACCEPT
# service iptables save

# firewall-cmd --zone=zone_name --add-service=glusterfs
# firewall-cmd --zone=zone_name --add-service=glusterfs --permanent

# firewall-cmd --zone=zone_name --add-port=port/protocol
# firewall-cmd --zone=zone_name --add-port=port/protocol --permanent

# firewall-cmd --zone=public --add-port=5667/tcp
# firewall-cmd --zone=public --add-port=5667/tcp --permanent

| Connection source | TCP Ports | UDP Ports | Recommended for | Used for |

|---|---|---|---|---|

| Any authorized network entity with a valid SSH key | 22 | - | All configurations | Remote backup using geo-replication |

| Any authorized network entity; be cautious not to clash with other RPC services. | 111 | 111 | All configurations | RPC port mapper and RPC bind |

| Any authorized SMB/CIFS client | 139 and 445 | 137 and 138 | Sharing storage using SMB/CIFS | SMB/CIFS protocol |

| Any authorized NFS clients | 2049 | 2049 | Sharing storage using Gluster NFS (Deprecated) or NFS-Ganesha | Exports using NFS protocol |

| All servers in the Samba-CTDB cluster | 4379 | - | Sharing storage using SMB and Gluster NFS (Deprecated) | CTDB |

| Any authorized network entity | 24007 | - | All configurations | Management processes using glusterd |

| Any authorized network entity | 24009 | - | All configurations | Gluster events daemon |

| NFSv3 clients | 662 | 662 | Sharing storage using NFS-Ganesha and Gluster NFS (Deprecated) | statd |

| NFSv3 clients | 32803 | 32803 | Sharing storage using NFS-Ganesha and Gluster NFS (Deprecated) | NLM protocol |

| NFSv3 clients sending mount requests | - | 32769 | Sharing storage using Gluster NFS (Deprecated) | Gluster NFS MOUNT protocol |

| NFSv3 clients sending mount requests | 20048 | 20048 | Sharing storage using NFS-Ganesha | NFS-Ganesha MOUNT protocol |

| NFS clients | 875 | 875 | Sharing storage using NFS-Ganesha | NFS-Ganesha RQUOTA protocol (fetching quota information) |

| Servers in pacemaker/corosync cluster | 2224 | - | Sharing storage using NFS-Ganesha | pcsd |

| Servers in pacemaker/corosync cluster | 3121 | - | Sharing storage using NFS-Ganesha | pacemaker_remote |

| Servers in pacemaker/corosync cluster | - | 5404 and 5405 | Sharing storage using NFS-Ganesha | corosync |

| Servers in pacemaker/corosync cluster | 21064 | - | Sharing storage using NFS-Ganesha | dlm |

| Any authorized network entity | 49152 - 49664 | - | All configurations | Brick communication ports. The total number of ports required depends on the number of bricks on the node. One port is required for each brick on the machine. |

| Connection source | TCP Ports | UDP Ports | Recommended for | Used for |

|---|---|---|---|---|

| NFSv3 servers | 662 | 662 | Sharing storage using NFS-Ganesha and Gluster NFS (Deprecated) | statd |

| NFSv3 servers | 32803 | 32803 | Sharing storage using NFS-Ganesha and Gluster NFS (Deprecated) | NLM protocol |
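To make the port tables concrete, the sketch below prints the `firewall-cmd` invocations for the ports that the first table lists under "All configurations". The helper name `print_firewall_cmds` and the default zone `public` are illustrative assumptions; the function only prints commands, so the list can be reviewed and trimmed before anything is run as root.

```shell
#!/bin/sh
# Ports that the table marks as recommended for all configurations.
GLUSTER_PORTS="22/tcp 111/tcp 111/udp 24007/tcp 24009/tcp 49152-49664/tcp"

# Print runtime and permanent firewall-cmd rules for each port.
# $1 is the firewalld zone; "public" is used when it is omitted.
print_firewall_cmds() {
    zone="${1:-public}"
    for port in $GLUSTER_PORTS; do
        echo "firewall-cmd --zone=$zone --add-port=$port"
        echo "firewall-cmd --zone=$zone --add-port=$port --permanent"
    done
}

# Example usage: review the output, then run it as root.
#   print_firewall_cmds public
#   print_firewall_cmds public | sh
```

Ports for optional services such as SMB or NFS-Ganesha can be appended to `GLUSTER_PORTS` in the same `port/protocol` form.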

1.5. Red Hat Gluster Storage Software Components and Versions

| RHGS version | glusterfs and glusterfs-fuse | RHGS op-version | SMB | NFS | gDeploy | Ansible |

|---|---|---|---|---|---|---|

| 3.4 | 3.12.2-18 | 31302 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0 | gdeploy-2.0.2-27 | - |

| 3.4 Batch 1 Update | 3.12.2-25 | 31303 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0 | gdeploy-2.0.2-30 | - |

| 3.4 Batch 2 Update | 3.12.2-32 | 31304 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0 | gdeploy-2.0.2-31 | gluster-ansible-infra-1.0.2-2 |

| 3.4 Batch 3 Update | 3.12.2-40 | 31305 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0 | gdeploy-2.0.2-31 | gluster-ansible-infra-1.0.2-2 |

| 3.4 Batch 4 Update | 3.12.2-47 | 31305 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0 | gdeploy-2.0.2-32 | gluster-ansible-infra-1.0.3-3 |

| 3.4.4 Async Update | 3.12.2-47.5 | 31306 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0 | gdeploy-2.0.2-32 | gluster-ansible-infra-1.0.3-3 |

| 3.5 (RHEL 6) | 6.0-22 | 70000 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | - | - | - |

| 3.5 (RHEL 7) | 6.0-21 | 70000 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0, NFSv4.1 | gdeploy-2.0.2-35 | gluster-ansible-infra-1.0.4-3 |

| 3.5 Batch Update 1 (RHEL 7) | 6.0-29 | 70000 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0, NFSv4.1 | gdeploy-2.0.2-35 | gluster-ansible-infra-1.0.4-5 |

| 3.5 Async Update (RHEL 7) | 6.0-30.1 | 70000 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0, NFSv4.1 | gdeploy-2.0.2-35 | gluster-ansible-infra-1.0.4-5 |

| 3.5 Batch 2 Update (RHEL 7) | 6.0-37 | 70000 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0, NFSv4.1 | gdeploy-2.0.2-35 | gluster-ansible-infra-1.0.4-5 |

| 3.5 Batch 2 Update (RHEL 8) | 6.0-37 | 70000 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0, NFSv4.1 | gdeploy-3.0.0-7 | gluster-ansible-infra-1.0.4-10 |

| 3.5.2 Async Update (RHEL 7) | 6.0-37.1 | 70000 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0, NFSv4.1 | gdeploy-2.0.2-35 | gluster-ansible-infra-1.0.4-5 |

| 3.5.2 Async Update (RHEL 8) | 6.0-37.1 | 70000 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0, NFSv4.1 | gdeploy-3.0.0-7 | gluster-ansible-infra-1.0.4-17 |

| 3.5 Batch 3 Update (RHEL 7) | 6.0-49.2 | 70000 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0, NFSv4.1 | gdeploy-2.0.2-36 | gluster-ansible-infra-1.0.4-5 |

| 3.5 Batch 3 Update (RHEL 8) | 6.0-49 | 70000 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0, NFSv4.1 | gdeploy-3.0.0-8 | gluster-ansible-infra-1.0.4-11 |

| 3.5 Batch 4 Update (RHEL 7) | 6.0-56 | 70100 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0, NFSv4.1 | gdeploy-2.0.2-36 | gluster-ansible-infra-1.0.4-5 |

| 3.5 Batch 4 Update (RHEL 8) | 6.0-56 | 70100 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0, NFSv4.1 | gdeploy-3.0.0-8 | gluster-ansible-infra-1.0.4-19 |

| 3.5.4 Async Update (RHEL 7) | 6.0-56.2 | 70100 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0, NFSv4.1 | gdeploy-2.0.2-36 | gluster-ansible-infra-1.0.4-19 |

| 3.5.4 Async Update (RHEL 8) | 6.0-56.2 | 70100 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0, NFSv4.1 | gdeploy-2.0.2-36 | gluster-ansible-infra-1.0.4-19 |

| 3.5 Batch 5 Update (RHEL 7) | 6.0-59 | 70200 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0, NFSv4.1 | gdeploy-2.0.2-36 | gluster-ansible-infra-1.0.4-19 |

| 3.5 Batch 5 Update (RHEL 8) | 6.0-59 | 70200 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0, NFSv4.1 | gdeploy-3.0.0-8 | gluster-ansible-infra-1.0.4-19 |

| 3.5 Batch 6 Update (RHEL 7) | 6.0-61 | 70200 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0, NFSv4.1 | gdeploy-2.0.2-36 | gluster-ansible-infra-1.0.4-19 |

| 3.5 Batch 6 Update (RHEL 8) | 6.0-61 | 70200 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0, NFSv4.1 | gdeploy-3.0.0-8 | gluster-ansible-infra-1.0.4-19 |

| 3.5 Batch 7 Update (RHEL 7) | 6.0-63 | 70200 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0, NFSv4.1 | gdeploy-2.0.2-36 | gluster-ansible-infra-1.0.4-19 |

| 3.5 Batch 7 Update (RHEL 8) | 6.0-63 | 70200 | SMB 1, 2.0, 2.1, 3.0, 3.1.1 | NFSv3, NFSv4.0, NFSv4.1 | gdeploy-3.0.0-11 | gluster-ansible-infra-1.0.4-21 |

Note

1.6. Feature Compatibility Support

Important

| Feature | Version |

|---|---|

| Arbiter bricks | 3.2 |

| Bitrot detection | 3.1 |

| Erasure coding | 3.1 |

| Google Compute Engine | 3.1.3 |

| Metadata caching | 3.2 |

| Microsoft Azure | 3.1.3 |

| NFS version 4 | 3.1 |

| SELinux | 3.1 |

| Sharding | 3.2.0 |

| Snapshots | 3.0 |

| Snapshots, cloning | 3.1.3 |

| Snapshots, user-serviceable | 3.0.3 |

| Tiering (Deprecated) | 3.1.2 |

| Volume Shadow Copy (VSS) | 3.1.3 |

| Volume Type | Sharding | Tiering (Deprecated) | Quota | Snapshots | Geo-Rep | Bitrot |

|---|---|---|---|---|---|---|

| Arbitrated-Replicated | Yes | No | Yes | Yes | Yes | Yes |

| Distributed | No | Yes | Yes | Yes | Yes | Yes |

| Distributed-Dispersed | No | Yes | Yes | Yes | Yes | Yes |

| Distributed-Replicated | Yes | Yes | Yes | Yes | Yes | Yes |

| Replicated | Yes | Yes | Yes | Yes | Yes | Yes |

| Sharded | N/A | No | No | No | Yes | No |

| Tiered (Deprecated) | No | N/A | Limited[a] | Limited[a] | Limited[a] | Limited[a] |

Note

| Feature | FUSE | Gluster-NFS | NFS-Ganesha | SMB |

|---|---|---|---|---|

| Arbiter | Yes | Yes | Yes | Yes |

| Bitrot detection | Yes | Yes | No | Yes |

| dm-cache | Yes | Yes | Yes | Yes |

| Encryption (TLS-SSL) | Yes | Yes | Yes | Yes |

| Erasure coding | Yes | Yes | Yes | Yes |

| Export subdirectory | Yes | Yes | Yes | N/A |

| Geo-replication | Yes | Yes | Yes | Yes |

| Quota (Deprecated) | Yes | Yes | Yes | Yes |

| RDMA (Deprecated) | Yes | No | No | No |

| Snapshots | Yes | Yes | Yes | Yes |

| Snapshot cloning | Yes | Yes | Yes | Yes |

| Snapshot mount | Yes | No | No | No |

| Tiering (Deprecated) | Yes | Yes | N/A | N/A |

Warning

Using the quota feature is considered deprecated in Red Hat Gluster Storage 3.5.3. Red Hat no longer recommends the use of this feature, and does not support it on new deployments and existing deployments that upgrade to Red Hat Gluster Storage 3.5.3.

Warning

Using RDMA as a transport protocol is considered deprecated in Red Hat Gluster Storage 3.5. Red Hat no longer recommends the use of this feature, and does not support it on new deployments and existing deployments that upgrade to Red Hat Gluster Storage 3.5.3.

Warning

Using the tiering feature is considered deprecated in Red Hat Gluster Storage 3.5. Red Hat no longer recommends the use of this feature, and does not support it on new deployments and existing deployments that upgrade to Red Hat Gluster Storage 3.5.3.

1.7. Red Hat Gluster Storage Support Matrix

| Red Hat Enterprise Linux version | Red Hat Gluster Storage version |

|---|---|

| 6.5 | 3.0 |

| 6.6 | 3.0.2, 3.0.3, 3.0.4 |

| 6.7 | 3.1, 3.1.1, 3.1.2 |

| 6.8 | 3.1.3 |

| 6.9 | 3.2 |

| 6.9 | 3.3 |

| 6.9 | 3.3.1 |

| 6.10 | 3.4, 3.5 |

| 7.1 | 3.1, 3.1.1 |

| 7.2 | 3.1.2 |

| 7.2 | 3.1.3 |

| 7.3 | 3.2 |

| 7.4 | 3.2 |

| 7.4 | 3.3 |

| 7.4 | 3.3.1 |

| 7.5 | 3.3.1, 3.4 |

| 7.6 | 3.3.1, 3.4 |

| 7.7 | 3.5, 3.5.1 |

| 7.8 | 3.5.1, 3.5.2 |

| 7.9 | 3.5.3, 3.5.4, 3.5.5, 3.5.6, 3.5.7 |

| 8.2 | 3.5.2, 3.5.3 |

| 8.3 | 3.5.3 |

| 8.4 | 3.5.4 |

| 8.5 | 3.5.5, 3.5.6 |

| 8.6 | 3.5.7 |

Chapter 2. Installing Red Hat Gluster Storage

Warning

Important

- Technology preview packages will also be installed with this installation of Red Hat Gluster Storage Server. For the list of technology preview features, see the Technology Previews chapter in the Red Hat Gluster Storage 3.5 Release Notes.

- When you clone a virtual machine that has Red Hat Gluster Storage Server installed, you need to remove the /var/lib/glusterd/glusterd.info file (if present) before you clone. If you do not remove this file, all cloned machines will have the same UUID. The file will be automatically recreated with a UUID on initial start-up of the glusterd daemon on the cloned virtual machines.
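The clean-up before cloning amounts to deleting a single file. A minimal sketch follows; the function name `remove_glusterd_info` and its optional root-directory argument (useful when the template's disk image is mounted somewhere other than `/`) are assumptions made for illustration.

```shell
#!/bin/sh
# Remove the glusterd identity file so that each clone generates its own UUID.
# $1 is an optional root directory prefix; it defaults to the running system.
remove_glusterd_info() {
    root="${1:-}"
    info="$root/var/lib/glusterd/glusterd.info"
    if [ -f "$info" ]; then
        rm -f "$info" && echo "removed $info"
    else
        echo "nothing to remove: $info not present"
    fi
}

# Example usage on the virtual machine that will be cloned (run as root):
#   remove_glusterd_info
```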

2.1. Obtaining Red Hat Gluster Storage

2.1.1. Obtaining Red Hat Gluster Storage Server for On-Premise

- Visit the Red Hat Customer Service Portal at https://access.redhat.com/login and enter your user name and password to log in.

- Click Downloads to visit the Software & Download Center.

- In the Red Hat Gluster Storage Server area, click to download the latest version of the software.

2.1.2. Obtaining Red Hat Gluster Storage Server for Public Cloud

2.2. Installing from an ISO Image

Important

2.2.1. Installing Red Hat Gluster Storage 3.5 on Red Hat Enterprise Linux

Important

- Download the ISO image file for Red Hat Gluster Storage Server as described in Section 2.1, “Obtaining Red Hat Gluster Storage”.

- In the Welcome to Red Hat Gluster Storage 3.5 screen, select the language that will be used for the rest of the installation and click Continue. This selection will also become the default for the installed system, unless changed later.

Note

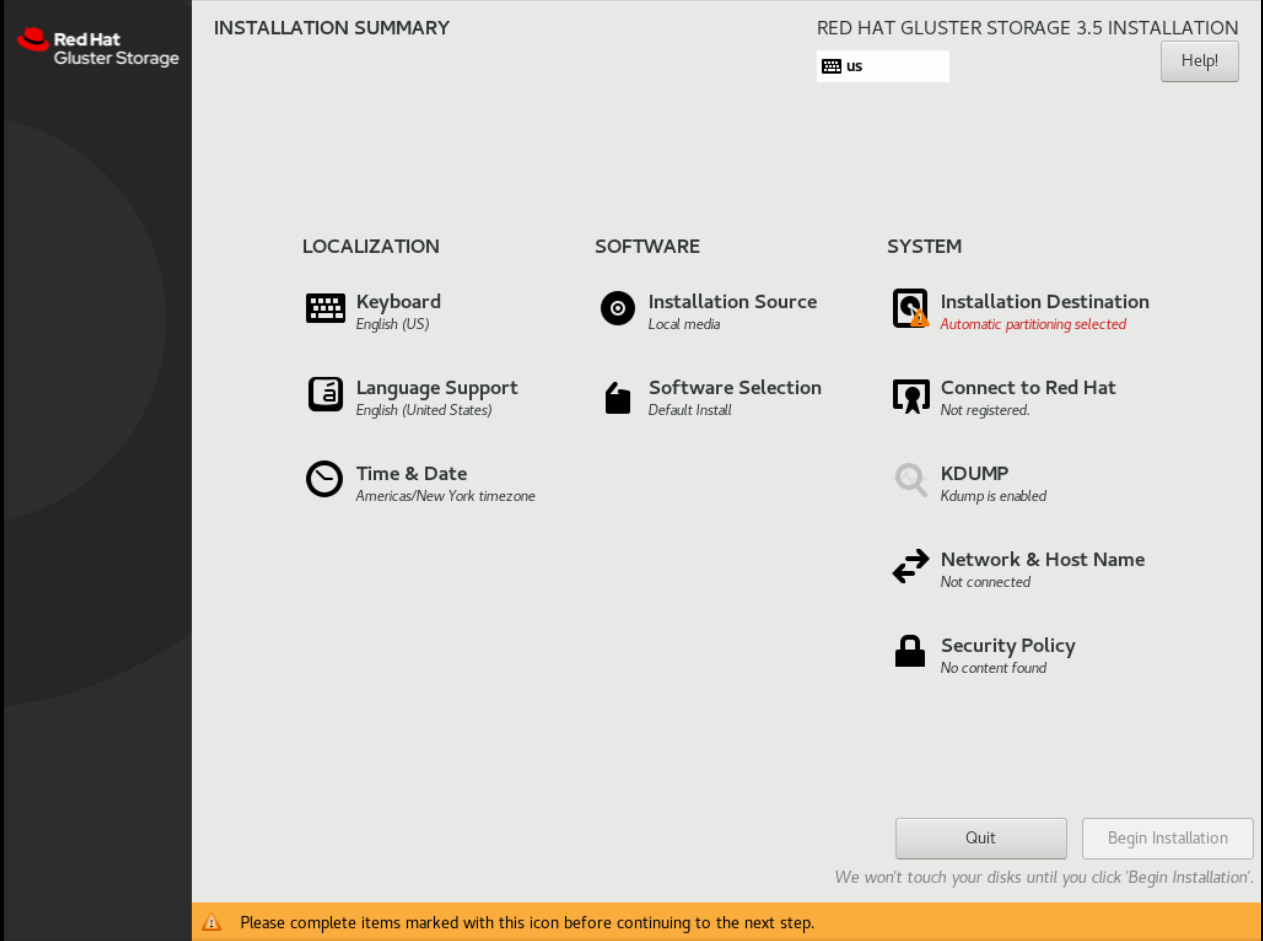

One language is pre-selected by default on top of the list. If network access is configured at this point (for example, if you booted from a network server instead of local media), the pre-selected language will be determined based on automatic location detection using the GeoIP module. - The Installation Summary screen is the central location for setting up an installation.

Figure 2.1. Installation Summary For Red Hat Gluster Storage 3.5 on Red Hat Enterprise Linux 8

Figure 2.2. Installation Summary for Red Hat Gluster Storage 3.5 on Red Hat Enterprise Linux 7

Instead of directing you through consecutive screens, the Red Hat Gluster Storage 3.5 installation program on Red Hat Enterprise Linux 7.7 and later allows you to configure the installation in the order you choose.

Select a menu item to configure a section of the installation. When you have completed configuring a section, or if you would like to complete that section later, click the Done button located in the upper left corner of the screen.

Only sections marked with a warning symbol are mandatory. A note at the bottom of the screen warns you that these sections must be completed before the installation can begin. The remaining sections are optional. Beneath each section's title, the current configuration is summarized. Using this, you can determine whether you need to visit the section to configure it further.

The following list provides a brief description of each menu item on the Installation Summary screen:

- Date & Time

To configure NTP, perform the following steps:

Important

Setting up NTP is mandatory for Gluster installation.

- Click Date & Time and specify a time zone to maintain the accuracy of the system clock.

- Toggle the Network Time switch to ON.

Note

By default, the Network Time switch is enabled if you are connected to the network.

- Click the configuration icon to add new NTP servers or select existing servers.

- Once you have made your addition or selection, click Done to return to the Installation Summary screen.

Note

NTP servers might be unavailable at the time of installation. In such a case, enabling them will not set the time automatically. When the servers are available, the date and time will be updated.

- Language Support

To install support for additional locales and language dialects, select Language Support.

- Keyboard Configuration

To add multiple keyboard layouts to your system, select Keyboard.

- Installation Source

To specify a file or a location to install Red Hat Enterprise Linux from, select Installation Source. On this screen, you can choose between locally available installation media, such as a DVD or an ISO file, or a network location.

- Network & Hostname

To configure essential networking features for your system, select Network & Hostname.

Important

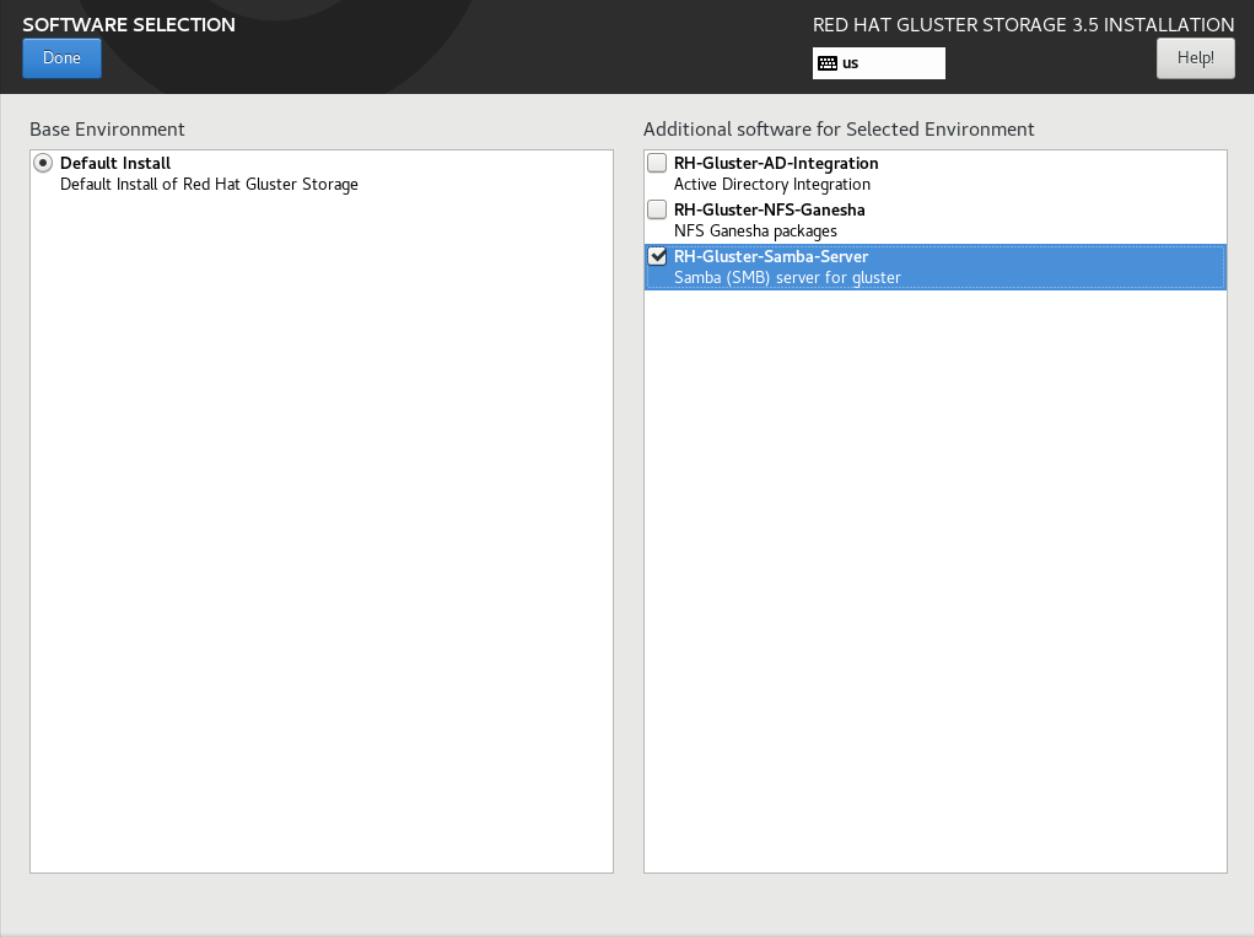

When the installation of Red Hat Gluster Storage 3.5 on Red Hat Enterprise Linux 7.7 and later finishes and the system boots for the first time, any network interfaces which you configured during the installation will be activated. However, the installation does not prompt you to configure network interfaces on some common installation paths, for example, when you install Red Hat Gluster Storage 3.5 on Red Hat Enterprise Linux 7.5 from a DVD to a local hard drive.

When you install Red Hat Gluster Storage 3.5 on Red Hat Enterprise Linux 7.7 and later from a local installation source to a local storage device, be sure to configure at least one network interface manually if you require network access when the system boots for the first time. You will also need to set the connection to connect automatically after boot when editing the configuration.

- Software Selection

To specify which packages will be installed, select Software Selection. If you require the following optional Add-Ons, then select the required Add-Ons and click Done:

- RH-Gluster-AD-Integration

- RH-Gluster-NFS-Ganesha

- RH-Gluster-Samba-Server

- Installation Destination

To select the disks and partition the storage space on which you will install Red Hat Gluster Storage, select Installation Destination. For more information, see the Installation Destination section in the Red Hat Enterprise Linux 7 Installation Guide.

- Kdump

Kdump is a kernel crash dumping mechanism which, in the event of a system crash, captures information that can be invaluable in determining the cause of the crash. Use this option to select whether or not to use Kdump on the system.

- After making the necessary configurations, click Begin Installation on the Installation Summary screen.

Warning

Up to this point in the installation process, no lasting changes have been made on your computer. When you click Begin Installation, the installation program will allocate space on your hard drive and start to transfer Red Hat Gluster Storage into this space. Depending on the partitioning option that you chose, this process might include erasing data that already exists on your computer.

To revise any of the choices that you made up to this point, return to the relevant section of the Installation Summary screen. To cancel installation completely, click Quit or switch off your computer.

If you have finished customizing the installation and are certain that you want to proceed, click Begin Installation.

After you click Begin Installation, allow the installation process to complete. If the process is interrupted, for example, by you switching off or resetting the computer, or by a power outage, you will probably not be able to use your computer until you restart and complete the Red Hat Gluster Storage installation process.

- Once you click Begin Installation, the progress screen appears. Red Hat Gluster Storage reports the installation progress on the screen as it writes the selected packages to your system. Following is a brief description of the options on this screen:

- Root Password

The Root Password menu item is used to set the password for the root account. The root account is used to perform critical system management and administration tasks. The password can be configured either while the packages are being installed or afterwards, but you will not be able to complete the installation process until it has been configured.

- User Creation

Creating a user account is optional and can be done after installation, but it is recommended to do it on this screen. A user account is used for normal work and to access the system. Best practice suggests that you always access the system via a user account and not the root account.

- After the installation is completed, click Reboot to reboot your system and begin using Red Hat Gluster Storage.

2.3. Subscribing to the Red Hat Gluster Storage Server Channels

Note

Register the System with Subscription Manager

Run the following command and enter your Red Hat Network user name and password to register the system with Subscription Manager:

# subscription-manager register

Identify Available Entitlement Pools

Run the following commands to find entitlement pools containing the repositories required to install Red Hat Gluster Storage:

# subscription-manager list --available | grep -A8 "Red Hat Enterprise Linux Server"
# subscription-manager list --available | grep -A8 "Red Hat Storage"

Attach Entitlement Pools to the System

Use the pool identifiers located in the previous step to attach the Red Hat Enterprise Linux Server and Red Hat Gluster Storage entitlements to the system. Run the following command to attach the entitlements:

# subscription-manager attach --pool=[POOLID]

For example:

# subscription-manager attach --pool=8a85f9814999f69101499c05aa706e47

Enable the Required Channels for Red Hat Gluster Storage on Red Hat Enterprise Linux

For Red Hat Gluster Storage 3.5 on Red Hat Enterprise Linux 6.7 and later

- Run the following commands to enable the repositories required to install Red Hat Gluster Storage:

# subscription-manager repos --enable=rhel-6-server-rpms
# subscription-manager repos --enable=rhel-scalefs-for-rhel-6-server-rpms
# subscription-manager repos --enable=rhs-3-for-rhel-6-server-rpms

- If you require Samba, then enable the following repository:

# subscription-manager repos --enable=rh-gluster-3-samba-for-rhel-6-server-rpms

- NFS-Ganesha is not supported on Red Hat Enterprise Linux 6 based installations.

For Red Hat Gluster Storage 3.5 on Red Hat Enterprise Linux 7.7 and later

- Run the following commands to enable the repositories required to install Red Hat Gluster Storage:

# subscription-manager repos --enable=rhel-7-server-rpms
# subscription-manager repos --enable=rh-gluster-3-for-rhel-7-server-rpms

- If you require Samba, then enable the following repository:

# subscription-manager repos --enable=rh-gluster-3-samba-for-rhel-7-server-rpms

- For Red Hat Gluster Storage 3.5, if NFS-Ganesha is required, then enable the following repositories:

# subscription-manager repos --enable=rh-gluster-3-nfs-for-rhel-7-server-rpms --enable=rhel-ha-for-rhel-7-server-rpms

- For Red Hat Gluster Storage 3.5, if you require CTDB, then enable the following repository:

# subscription-manager repos --enable=rh-gluster-3-samba-for-rhel-7-server-rpms

For Red Hat Gluster Storage 3.5 on Red Hat Enterprise Linux 8.2 and later

- Run the following commands to enable the repositories required to install Red Hat Gluster Storage:

# subscription-manager repos --enable=rhel-8-for-x86_64-baseos-rpms
# subscription-manager repos --enable=rhel-8-for-x86_64-appstream-rpms
# subscription-manager repos --enable=rh-gluster-3-for-rhel-8-x86_64-rpms

- If you require Samba, then enable the following repository:

# subscription-manager repos --enable=rh-gluster-3-samba-for-rhel-8-x86_64-rpms

- For Red Hat Gluster Storage 3.5, if NFS-Ganesha is required, then enable the following repositories:

# subscription-manager repos --enable=rh-gluster-3-nfs-for-rhel-8-x86_64-rpms --enable=rhel-8-for-x86_64-highavailability-rpms

- For Red Hat Gluster Storage 3.5, if you require CTDB, then enable the following repository:

# subscription-manager repos --enable=rh-gluster-3-samba-for-rhel-8-x86_64-rpms

Verify if the Channels are Enabled

Run the following command to verify if the channels are enabled:

# yum repolist
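The repository lists in this section can be collected into one helper so that a single `subscription-manager repos` call enables everything for a given release. This is a sketch only: the function name `gluster_repo_args` and its `samba` and `nfs` option words are hypothetical, and the repository IDs are copied from the commands in this section.

```shell
#!/bin/sh
# Print the --enable arguments for the base Gluster repositories of a RHEL
# major version (6, 7, or 8), plus optional "samba" and "nfs" add-ons.
gluster_repo_args() {
    rhel="$1"; shift
    case "$rhel" in
        6) repos="rhel-6-server-rpms rhel-scalefs-for-rhel-6-server-rpms rhs-3-for-rhel-6-server-rpms" ;;
        7) repos="rhel-7-server-rpms rh-gluster-3-for-rhel-7-server-rpms" ;;
        8) repos="rhel-8-for-x86_64-baseos-rpms rhel-8-for-x86_64-appstream-rpms rh-gluster-3-for-rhel-8-x86_64-rpms" ;;
        *) echo "unsupported RHEL version: $rhel" >&2; return 1 ;;
    esac
    for opt in "$@"; do
        case "$rhel:$opt" in
            6:samba) repos="$repos rh-gluster-3-samba-for-rhel-6-server-rpms" ;;
            7:samba) repos="$repos rh-gluster-3-samba-for-rhel-7-server-rpms" ;;
            8:samba) repos="$repos rh-gluster-3-samba-for-rhel-8-x86_64-rpms" ;;
            7:nfs)   repos="$repos rh-gluster-3-nfs-for-rhel-7-server-rpms rhel-ha-for-rhel-7-server-rpms" ;;
            8:nfs)   repos="$repos rh-gluster-3-nfs-for-rhel-8-x86_64-rpms rhel-8-for-x86_64-highavailability-rpms" ;;
            *) echo "option '$opt' is not available on RHEL $rhel" >&2; return 1 ;;
        esac
    done
    args=""
    for r in $repos; do args="$args --enable=$r"; done
    echo "${args# }"
}

# Example usage (run as root; the substitution is deliberately left unquoted
# so that each --enable argument is passed separately):
#   subscription-manager repos $(gluster_repo_args 7 samba nfs)
```

Because NFS-Ganesha is not supported on Red Hat Enterprise Linux 6, the helper rejects `nfs` for version 6 instead of guessing a repository name.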

Configure the Client System to Access Red Hat Satellite

Configure the client system to access Red Hat Satellite. Refer to the section Registering Clients with Red Hat Satellite Server in the Red Hat Satellite 5.6 Client Configuration Guide.

Register to the Red Hat Satellite Server

Run the following command to register the system to the Red Hat Satellite Server:

# rhn_register

Register to the Standard Base Channel

In the select operating system release page, select All available updates and follow the prompts to register the system to the standard base channel for Red Hat Enterprise Linux 6, rhel-6-server-rpms. The standard base channel for Red Hat Enterprise Linux 7 is rhel-7-server-rpms. The standard base channel for Red Hat Enterprise Linux 8 is rhel-8-for-x86_64-baseos-rpms.

Subscribe to the Required Red Hat Gluster Storage Server Channels

For Red Hat Gluster Storage 3.5 on Red Hat Enterprise Linux 6.7 and later

- Run the following command to subscribe the system to the required Red Hat Gluster Storage server channels:

# rhn-channel --add \
    --channel rhel-6-server-rpms \
    --channel rhel-scalefs-for-rhel-6-server-rpms \
    --channel rhs-3-for-rhel-6-server-rpms

- If you require Samba, then execute the following command to enable the required channel:

# rhn-channel --add --channel rh-gluster-3-samba-for-rhel-6-server-rpms

- NFS-Ganesha is not supported on Red Hat Enterprise Linux 6 based installations.

For Red Hat Gluster Storage 3.5 on Red Hat Enterprise Linux 7.7 and later

- Run the following command to subscribe the system to the required Red Hat Gluster Storage server channels for Red Hat Enterprise Linux 7:

# rhn-channel --add \
    --channel rhel-7-server-rpms \
    --channel rh-gluster-3-for-rhel-7-server-rpms

- If you require Samba, then execute the following command to enable the required channel:

# rhn-channel --add --channel rh-gluster-3-samba-for-rhel-7-server-rpms

- For Red Hat Gluster Storage 3.5, if NFS-Ganesha is required, then enable the following channels:

# rhn-channel --add --channel rhel-x86_64-server-7-rh-gluster-3-nfs --channel rhel-x86_64-server-ha-7

- For Red Hat Gluster Storage 3.5, if CTDB is required, then enable the following channel:

# rhn-channel --add --channel rh-gluster-3-samba-for-rhel-7-server-rpms

For Red Hat Gluster Storage 3.5 on Red Hat Enterprise Linux 8.2 and later

- Run the following command to subscribe the system to the required Red Hat Gluster Storage server channels for Red Hat Enterprise Linux 8:

# rhn-channel --add \
    --channel rh-gluster-3-for-rhel-8-x86_64-rpms

- If you require Samba, then execute the following command to enable the required channel:

# rhn-channel --add --channel rh-gluster-3-samba-for-rhel-8-x86_64-rpms

- For Red Hat Gluster Storage 3.5, if NFS-Ganesha is required, then enable the following channels:

# rhn-channel --add --channel rh-gluster-3-nfs-for-rhel-8-x86_64-rpms --channel rhel-8-for-x86_64-highavailability-rpms

- For Red Hat Gluster Storage 3.5, if CTDB is required, then enable the following channel:

# rhn-channel --add --channel rh-gluster-3-samba-for-rhel-8-x86_64-rpms

Verify if the System is Registered Successfully

Run the following command to verify that the system is registered successfully:

# rhn-channel --list
rhel-x86_64-server-7
rhel-x86_64-server-7-rh-gluster-3
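The verification above can also be scripted. The following is a minimal sketch, not part of the product tooling: `missing_channels` is a hypothetical helper name, and it assumes that `rhn-channel --list` prints one channel label per line, as in the sample output.

```shell
#!/bin/sh
# Hypothetical helper: prints each required channel that is absent from the
# channel list supplied on standard input (one channel label per line).
missing_channels() {
    list=$(cat)
    for channel in "$@"; do
        # -x matches the whole line, -F treats the label as a fixed string.
        printf '%s\n' "$list" | grep -qxF -- "$channel" || printf '%s\n' "$channel"
    done
}

# Usage on a registered system (as root):
#   rhn-channel --list | missing_channels rhel-x86_64-server-7 rhel-x86_64-server-7-rh-gluster-3
```

An empty result means every channel passed as an argument is present in the list.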

2.4. Installing Red Hat Gluster Storage Server on Red Hat Enterprise Linux (Layered Install)

Important

Important

/var partition that is large enough (50GB - 100GB) for log files, geo-replication related miscellaneous files, and other files.

Perform a base install of Red Hat Enterprise Linux Server

Red Hat Gluster Storage is supported on Red Hat Enterprise Linux 7 (RHEL 7) and Red Hat Enterprise Linux 8 (RHEL 8). We highly recommend that you choose the latest RHEL version.

Important

Red Hat Gluster Storage is not supported on Red Hat Enterprise Linux 6 (RHEL 6) from 3.5 Batch Update 1 onwards. See Table 1.3, “Version Details”.

Register the System with Subscription Manager

To register the system with Subscription Manager, see Section 2.3, “Subscribing to the Red Hat Gluster Storage Server Channels”.

Kernel Version Requirement

Red Hat Gluster Storage requires kernel-2.6.32-431.17.1.el6 or higher on the system. Verify the installed and running kernel versions by running the following commands:

# rpm -q kernel
kernel-2.6.32-431.el6.x86_64
kernel-2.6.32-431.17.1.el6.x86_64

# uname -r
2.6.32-431.17.1.el6.x86_64

Update all packages

Ensure that all packages are up to date by running the following command:

# yum update

Important

If any kernel packages are updated, reboot the system with the following command:

# shutdown -r now

Install Red Hat Gluster Storage

Run the following command to install Red Hat Gluster Storage:

# yum install redhat-storage-server

- To install Samba, see Chapter 3, Deploying Samba on Red Hat Gluster Storage

- To install NFS-Ganesha, see Chapter 4, Deploying NFS-Ganesha on Red Hat Gluster Storage

Reboot

Reboot the system.

2.5. Installing from a PXE Server

Network Boot or Boot Services. Once you properly configure PXE booting, the computer can boot the Red Hat Gluster Storage Server installation system without any other media.

- Ensure that the network cable is attached. The link indicator light on the network socket should be lit, even if the computer is not switched on.

- Switch on the computer.

- A menu screen appears. Press the number key that corresponds to the preferred option.

2.6. Installing from Red Hat Satellite Server

2.6.1. Using Red Hat Satellite Server 6.x

Note

- Create a new manifest file and upload the manifest in the Satellite 6 server.

- Search for the required Red Hat Gluster Storage repositories and enable them.

- Synchronize all repositories enabled for Red Hat Gluster Storage.

- Create a new content view and add all the required products.

- Publish the content view and create an activation key.

- Register the required clients

# rpm -Uvh satellite-server-host-address/pub/katello-ca-consumer-latest.noarch.rpm
# subscription-manager register --org="Organization_Name" --activationkey="Activation_Key"

- Identify available entitlement pools

# subscription-manager list --available

- Attach entitlement pools to the system

# subscription-manager attach --pool=Pool_ID

- Subscribe to required channels

- Enable the RHEL and Gluster channel

For Red Hat Enterprise Linux 8

# subscription-manager repos --enable=rhel-8-for-x86_64-baseos-rpms --enable=rhel-8-for-x86_64-appstream-rpms
# subscription-manager repos --enable=rh-gluster-3-for-rhel-8-x86_64-rpms

For Red Hat Enterprise Linux 7

# subscription-manager repos --enable=rhel-7-server-rpms --enable=rh-gluster-3-for-rhel-7-server-rpms

- If you require Samba, enable its repository

For Red Hat Enterprise Linux 8

# subscription-manager repos --enable=rh-gluster-3-samba-for-rhel-8-x86_64-rpms

For Red Hat Enterprise Linux 7

# subscription-manager repos --enable=rh-gluster-3-samba-for-rhel-7-server-rpms

- If you require NFS-Ganesha, enable its repository

For Red Hat Enterprise Linux 8

# subscription-manager repos --enable=rh-gluster-3-nfs-for-rhel-8-x86_64-rpms

For Red Hat Enterprise Linux 7

# subscription-manager repos --enable=rh-gluster-3-nfs-for-rhel-7-server-rpms

- If you require HA, enable its repository

For Red Hat Enterprise Linux 8

# subscription-manager repos --enable=rhel-8-for-x86_64-highavailability-rpms

For Red Hat Enterprise Linux 7

# subscription-manager repos --enable=rhel-ha-for-rhel-7-server-rpms

- If you require gdeploy, enable the Ansible repository

For Red Hat Enterprise Linux 8

# subscription-manager repos --enable=ansible-2-for-rhel-8-x86_64-rpms

For Red Hat Enterprise Linux 7

# subscription-manager repos --enable=rhel-7-server-ansible-2-rpms

- Install Red Hat Gluster Storage

# yum install redhat-storage-server
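The repository choices in the steps above depend on the RHEL major version and on which optional components are needed. The following is a minimal sketch of that selection. The function names `rhgs_repos` and `rhgs_repos_command` and the component keywords (`samba`, `nfs`, `ha`, `gdeploy`) are hypothetical; the repository IDs are the ones listed in this section. The sketch only prints the `subscription-manager` command and does not run it.

```shell
#!/bin/sh
# Prints the repository IDs listed above for a RHEL major version (7 or 8).
# Optional components are passed as extra arguments: samba, nfs, ha, gdeploy.
rhgs_repos() {
    major=$1
    shift
    case "$major" in
        8) echo rhel-8-for-x86_64-baseos-rpms
           echo rhel-8-for-x86_64-appstream-rpms
           echo rh-gluster-3-for-rhel-8-x86_64-rpms ;;
        7) echo rhel-7-server-rpms
           echo rh-gluster-3-for-rhel-7-server-rpms ;;
        *) echo "unsupported RHEL major version: $major" >&2
           return 1 ;;
    esac
    for component in "$@"; do
        case "$major:$component" in
            8:samba)   echo rh-gluster-3-samba-for-rhel-8-x86_64-rpms ;;
            7:samba)   echo rh-gluster-3-samba-for-rhel-7-server-rpms ;;
            8:nfs)     echo rh-gluster-3-nfs-for-rhel-8-x86_64-rpms ;;
            7:nfs)     echo rh-gluster-3-nfs-for-rhel-7-server-rpms ;;
            8:ha)      echo rhel-8-for-x86_64-highavailability-rpms ;;
            7:ha)      echo rhel-ha-for-rhel-7-server-rpms ;;
            8:gdeploy) echo ansible-2-for-rhel-8-x86_64-rpms ;;
            7:gdeploy) echo rhel-7-server-ansible-2-rpms ;;
            *) echo "unknown component: $component" >&2
               return 1 ;;
        esac
    done
}

# Builds the subscription-manager command line without running it.
rhgs_repos_command() {
    repos=$(rhgs_repos "$@") || return 1
    printf 'subscription-manager repos'
    for repo in $repos; do
        printf ' --enable=%s' "$repo"
    done
    printf '\n'
}

rhgs_repos_command 7 samba
```

Printing the command instead of executing it lets you review the repository list before applying it as root.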

2.6.2. Using Red Hat Satellite Server 5.x

For more information on how to create an activation key, see Activation Keys in the Reference Guide.

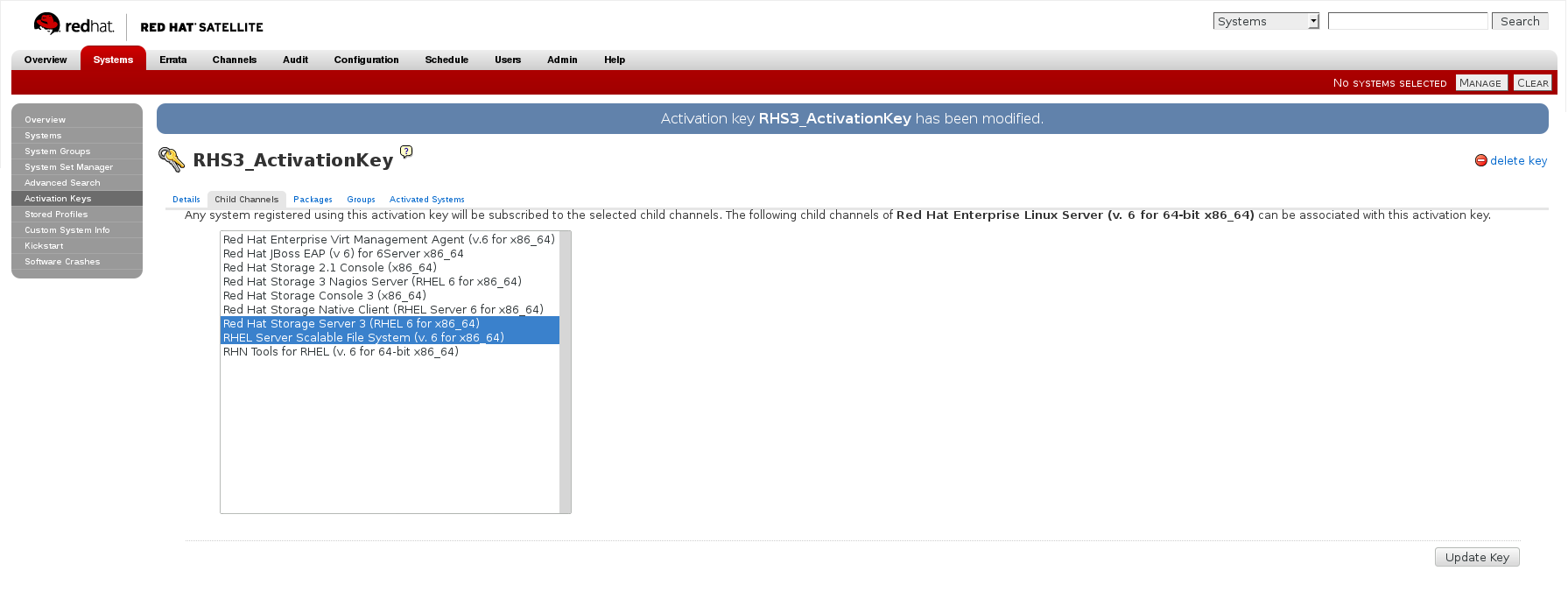

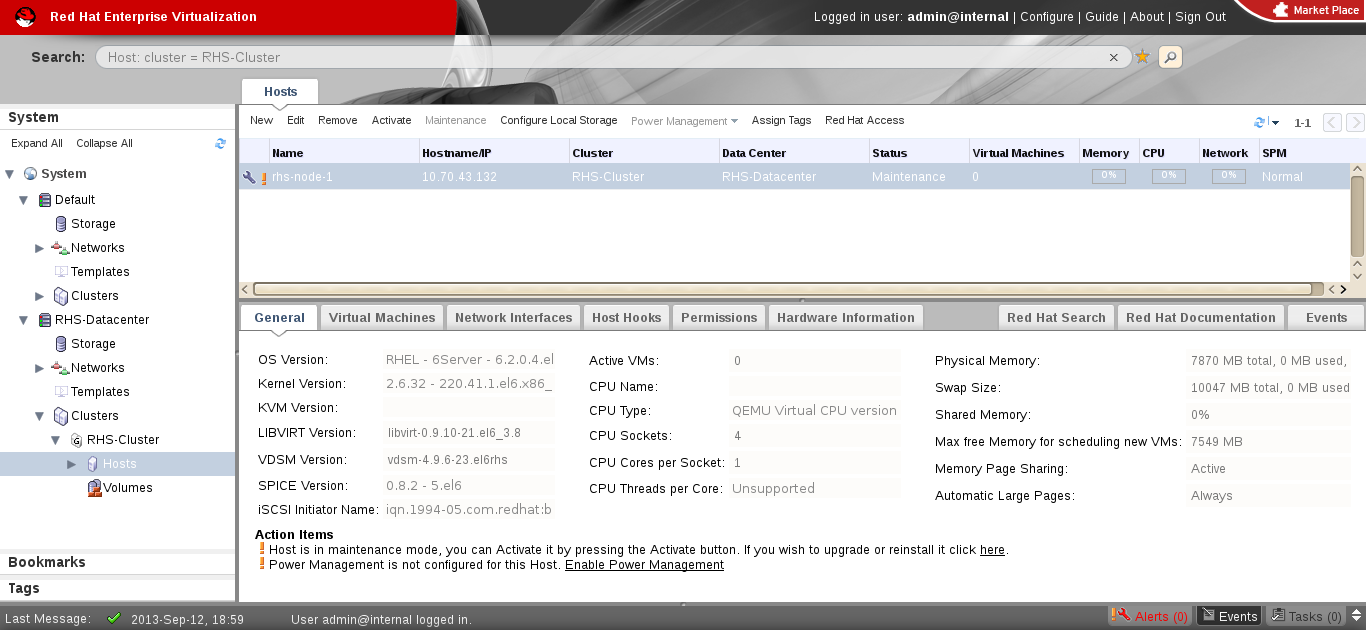

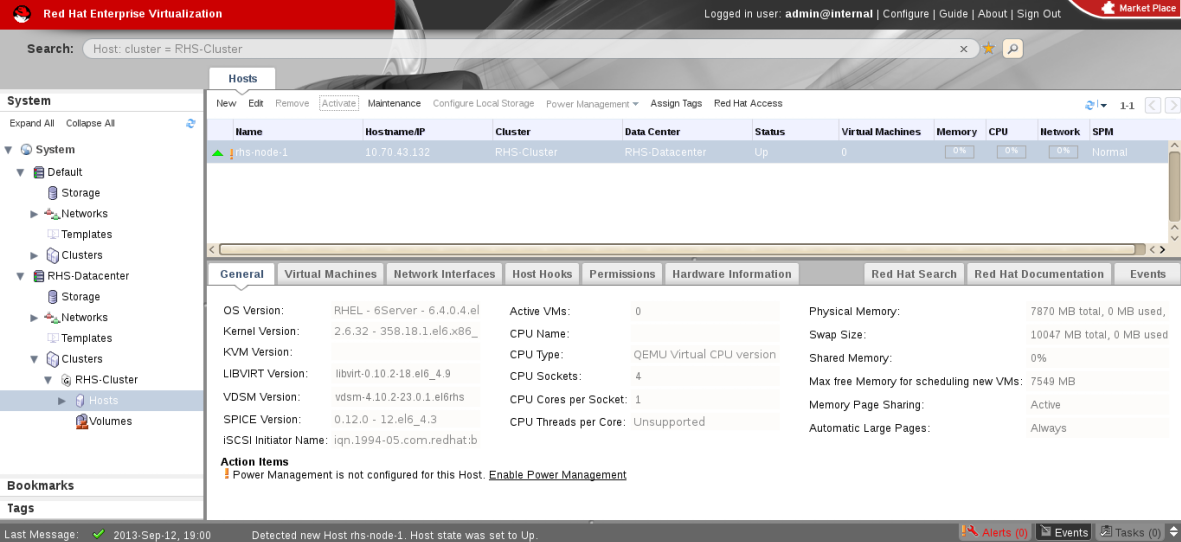

- In the Details tab of the Activation Keys screen, select Red Hat Enterprise Linux Server (v.6 for 64-bit x86_64) from the Base Channels drop-down list.

Figure 2.3. Base Channels

- In the Child Channels tab of the Activation Keys screen, select the following child channels:

RHEL Server Scalable File System (v. 6 for x86_64)
Red Hat Gluster Storage Server 3 (RHEL 6 for x86_64)

If you require the Samba package, then select the following child channel:

Red Hat Gluster 3 Samba (RHEL 6 for x86_64)

Figure 2.4. Child Channels

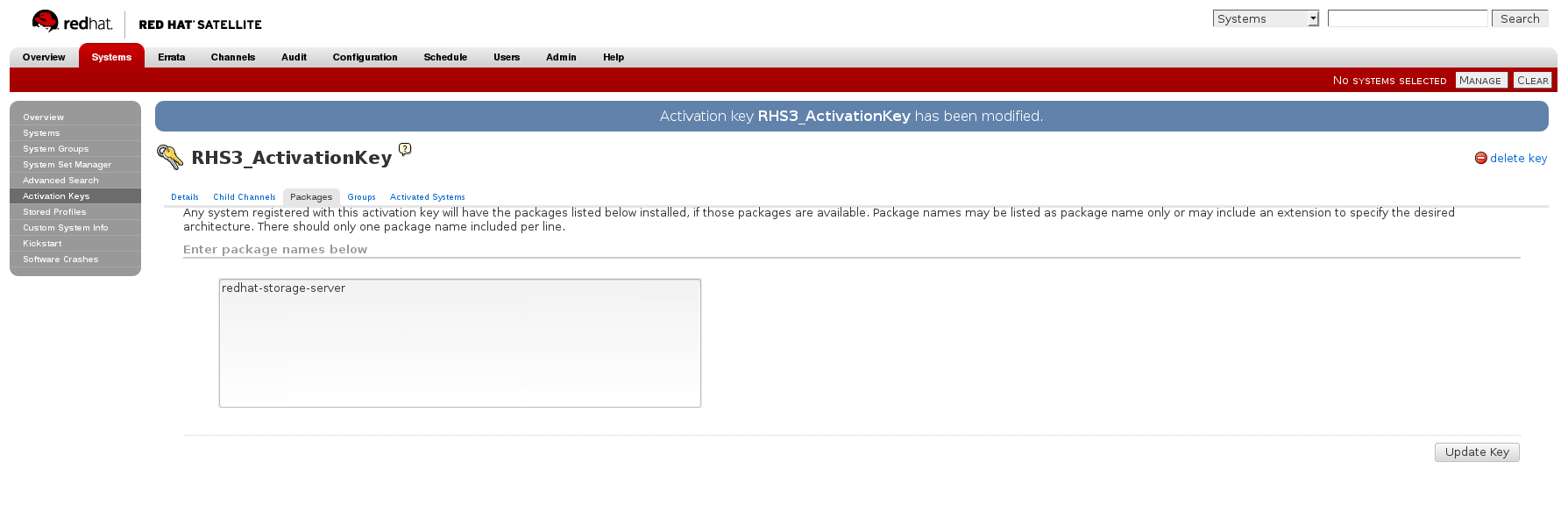

- In the Packages tab of the Activation Keys screen, enter the following package name:

redhat-storage-server

Figure 2.5. Package

- If you require the Samba package, then enter the following package name:

samba

For more information on creating a kickstart profile, see Kickstart in the Reference Guide.

- When creating a kickstart profile, the following Base Channel and Tree must be selected.

Base Channel: Red Hat Enterprise Linux Server (v.6 for 64-bit x86_64)

Tree: ks-rhel-x86_64-server-6-6.5

- Do not associate any child channels with the kickstart profile.

- Associate the previously created activation key with the kickstart profile.

Important

- By default, the kickstart profile chooses md5 as the hash algorithm for user passwords.

You must change this algorithm to sha512 by providing the following settings in the auth field of the Kickstart Details, Advanced Options page of the kickstart profile:

--enableshadow --passalgo=sha512

- After creating the kickstart profile, you must change the root password in the Kickstart Details, Advanced Options page of the kickstart profile and add a root password based on the prepared sha512 hash algorithm.

For more information on installing Red Hat Gluster Storage Server using a kickstart profile, see Kickstart in the Reference Guide.

2.7. Managing the glusterd Service

glusterd service automatically starts on all the servers in the trusted storage pool. The service can be manually started and stopped by using the glusterd service commands. For more information on creating trusted storage pools, see the Red Hat Gluster Storage 3.5 Administration Guide.

glusterd also offers elastic volume management.

gluster CLI commands to decouple logical storage volumes from physical hardware. This allows the user to grow, shrink, and migrate storage volumes without any application downtime. As storage is added to the cluster, the volumes are distributed across the cluster. This distribution ensures that the cluster is always available despite changes to the underlying hardware.

2.7.1. Manually Starting and Stopping glusterd

glusterd service.

- Manually start glusterd as follows:

# /etc/init.d/glusterd start

or

# service glusterd start

- Manually stop glusterd as follows:

# /etc/init.d/glusterd stop

or

# service glusterd stop

2.8. Installing Ansible to Support Gdeploy

Note

- Execute the following command to enable the repository required to install Ansible:

For Red Hat Enterprise Linux 8

# subscription-manager repos --enable=ansible-2-for-rhel-8-x86_64-rpms

For Red Hat Enterprise Linux 7

# subscription-manager repos --enable=rhel-7-server-ansible-2-rpms

- Install ansible by executing the following command:

# yum install ansible

2.9. Installing Native Client

Note

- Red Hat Gluster Storage server supports the Native Client version that matches the server version, and the preceding version of Native Client. For a list of releases, see https://access.redhat.com/solutions/543123.

- From Red Hat Gluster Storage 3.5 Batch Update 7 onwards, glusterfs-6.0-62 and higher versions of the glusterFS Native Client are available only via rh-gluster-3-client-for-rhel-8-x86_64-rpms for Red Hat Gluster Storage based on Red Hat Enterprise Linux 8 (RHEL 8) and rh-gluster-3-client-for-rhel-7-server-rpms for Red Hat Gluster Storage based on RHEL 7.

Use the Command Line to Register and Subscribe a System to Red Hat Subscription Management

Prerequisites

- Know the user name and password of the Red Hat Subscription Manager account with Red Hat Gluster Storage entitlements.

- Run the subscription-manager register command to list the available pools. Select the appropriate pool and enter your Red Hat Subscription Manager user name and password to register the system with Red Hat Subscription Manager.

# subscription-manager register

- Depending on your client, run one of the following commands to subscribe to the correct repositories.

- For Red Hat Enterprise Linux 8 clients:

# subscription-manager repos --enable=rh-gluster-3-client-for-rhel-8-x86_64-rpms

- For Red Hat Enterprise Linux 7.x clients:

# subscription-manager repos --enable=rhel-7-server-rpms --enable=rh-gluster-3-client-for-rhel-7-server-rpms

Note

The following command can also be used, but Red Hat Gluster Storage may deprecate support for this repository in future releases.

# subscription-manager repos --enable=rhel-7-server-rh-common-rpms

- For Red Hat Enterprise Linux 6.1 and later clients:

# subscription-manager repos --enable=rhel-6-server-rpms --enable=rhel-6-server-rhs-client-1-rpms

For more information, see Section 3.1 Registering and attaching a system using the Command Line in Using and Configuring Red Hat Subscription Management.

- Verify that the system is subscribed to the required repositories.

# yum repolist

Use the Web Interface to Register and Subscribe a System

Prerequisites

- Know the user name and password of the Red Hat Subscription Management (RHSM) account with Red Hat Gluster Storage entitlements.

- Log on to Red Hat Subscription Management (https://access.redhat.com/management).

- Click the Systems link at the top of the screen.

- Click the name of the system to which the Red Hat Gluster Storage Native Client channel must be appended.

- Click in the Subscribed Channels section of the screen.

- Expand the node for Additional Services Channels for Red Hat Enterprise Linux 8 for x86_64, Red Hat Enterprise Linux 7 for x86_64, Red Hat Enterprise Linux 6 for x86_64 or Red Hat Enterprise Linux 5 for x86_64 depending on the client platform.

- Click the button to finalize the changes.

When the page refreshes, select the Details tab to verify the system is subscribed to the appropriate channels.

Install Native Client Packages

Prerequisites

- Run the yum install command to install the native client RPM packages.

# yum install glusterfs glusterfs-fuse

Chapter 3. Deploying Samba on Red Hat Gluster Storage

3.1. Prerequisites

- You must install Red Hat Gluster Storage Server on the target server.

Warning

- For a layered installation of Red Hat Gluster Storage, ensure that you have only the default Red Hat Enterprise Linux server installation, without the Samba or CTDB packages installed from Red Hat Enterprise Linux.

- Samba version 3 is deprecated from Red Hat Gluster Storage 3.0 Update 4. Further updates will not be provided for samba-3.x. It is recommended that you upgrade to Samba-4.x, which is provided in a separate channel or repository, for all updates including the security updates.

- CTDB version 2.5 is not supported from Red Hat Gluster Storage 3.1 Update 2. To use CTDB in Red Hat Gluster Storage 3.1.2 and later, you must upgrade the system to CTDB 4.x, which is provided in the Samba channel of Red Hat Gluster Storage.

- Downgrade of Samba from Samba 4.x to Samba 3.x is not supported.

- Ensure that Samba is upgraded on all the nodes simultaneously, as running different versions of Samba in the same cluster will lead to data corruption.

- Enable the channel where the Samba packages are available:

For Red Hat Gluster Storage 3.5 on Red Hat Enterprise Linux 6.x

- If you have registered your machine using Red Hat Subscription Manager or Satellite server-6.x, enable the repository by running the following command:

# subscription-manager repos --enable=rh-gluster-3-samba-for-rhel-6-server-rpms

For Red Hat Gluster Storage 3.5 on Red Hat Enterprise Linux 7.7 and later

- If you have registered your machine using Red Hat Subscription Manager or Satellite server-7.x, enable the repository by running the following command:

# subscription-manager repos --enable=rh-gluster-3-samba-for-rhel-7-server-rpms

For Red Hat Gluster Storage 3.5 on Red Hat Enterprise Linux 8.2 and later

- If you have registered your machine using Red Hat Subscription Manager or Satellite server-8.x, enable the repository by running the following command:

# subscription-manager repos --enable=rh-gluster-3-samba-for-rhel-8-x86_64-rpms

3.2. Installing Samba Using ISO

Figure 3.1. Customize Packages

3.3. Installing Samba Using yum

# yum groupinstall RH-Gluster-Samba-Server

# yum groupinstall RH-Gluster-AD-Integration

- To install the basic Samba packages, execute the following command:

# yum install samba

- If you require the smbclient on the server, then execute the following command:

# yum install samba-client

- If you require an Active directory setup, then execute the following commands:

# yum install samba-winbind
# yum install samba-winbind-clients
# yum install samba-winbind-krb5-locator

- Verify if the following packages are installed.

Note

Chapter 4. Deploying NFS-Ganesha on Red Hat Gluster Storage

- Installing NFS-Ganesha using yum

- Installing NFS-Ganesha during an ISO Installation

Warning

4.1. Prerequisites

On Red Hat Enterprise Linux 8, enable the channel where the NFS-Ganesha packages are available:

- If you have registered your machine using Red Hat Subscription Manager, enable the repository by running the following command:

# subscription-manager repos --enable=rh-gluster-3-nfs-for-rhel-8-x86_64-rpms

To add the HA repository, execute the following command:

# subscription-manager repos --enable=rhel-8-for-x86_64-highavailability-rpms

- If you have registered your machine using Satellite server, enable the channel by running the following command:

# rhn-channel --add --channel rh-gluster-3-nfs-for-rhel-8-x86_64-rpms

To subscribe to the HA channel, execute the following command:

# rhn-channel --add --channel rhel-8-for-x86_64-highavailability-rpms

On Red Hat Enterprise Linux 7, enable the channel where the NFS-Ganesha packages are available:

- If you have registered your machine using Red Hat Subscription Manager, enable the repository by running the following command:

# subscription-manager repos --enable=rh-gluster-3-nfs-for-rhel-7-server-rpms

To add the HA repository, execute the following command:

# subscription-manager repos --enable=rhel-ha-for-rhel-7-server-rpms

- If you have registered your machine using Satellite server, enable the channel by running the following command:

# rhn-channel --add --channel rhel-x86_64-server-7-rh-gluster-3-nfs

To subscribe to the HA channel, execute the following command:

# rhn-channel --add --channel rhel-x86_64-server-ha-7

4.2. Installing NFS-Ganesha during an ISO Installation

- While installing Red Hat Storage using an ISO, in the Customizing the Software Selection screen, select RH-Gluster-NFS-Ganesha and click Next.

- Proceed with the remaining installation steps for installing Red Hat Gluster Storage. For more information on how to install Red Hat Storage using an ISO, see Installing from an ISO Image.

4.3. Installing NFS-Ganesha using yum

- The glusterfs-ganesha package can be installed using the following command:

# yum install glusterfs-ganesha

NFS-Ganesha is installed along with the above package. nfs-ganesha-gluster and HA packages are also installed.

Important

Run the following command to install nfs-ganesha-selinux on Red Hat Enterprise Linux 7:

# yum install nfs-ganesha-selinux

Run the following command to install nfs-ganesha-selinux on Red Hat Enterprise Linux 8:

# dnf install glusterfs-ganesha

Note

Chapter 5. Upgrading to Red Hat Gluster Storage 3.5

Important

Upgrade support limitations

- While upgrading RHGS from versions lower than RHGS-3.5.4 to version RHGS-3.5.4 or higher, both servers and clients must upgrade to RHGS-3.5.4 or higher versions before bumping up the operating version of the cluster.

- Virtual Data Optimizer (VDO) volumes, which are supported in Red Hat Enterprise Linux 7.5, are not currently supported in Red Hat Gluster Storage. VDO is supported only when used as part of Red Hat Hyperconverged Infrastructure for Virtualization 2.0. See Understanding VDO for more information.

- Servers must be upgraded prior to upgrading clients.

- If you are upgrading from Red Hat Gluster Storage 3.1 Update 2 or earlier, you must upgrade servers and clients simultaneously.

- If you use NFS-Ganesha, your supported upgrade path to Red Hat Gluster Storage 3.5 depends on the version from which you are upgrading. If you are upgrading from version 3.3 or earlier, use Offline Upgrade to Red Hat Gluster Storage 3.3 and then perform an in-service upgrade from version 3.3 to 3.4. Then upgrade from version 3.4 to 3.5 using Section 5.2, “In-Service Software Upgrade from Red Hat Gluster Storage 3.4 to Red Hat Gluster Storage 3.5”. If you are upgrading from version 3.4 to 3.5, use Section 5.2, “In-Service Software Upgrade from Red Hat Gluster Storage 3.4 to Red Hat Gluster Storage 3.5” directly.
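The first limitation in this list (all servers and clients at RHGS-3.5.4 or higher before the operating version is bumped) lends itself to a pre-check. The following is a minimal sketch under stated assumptions: the function names are hypothetical, the RHGS version of each server and client is assumed to have been collected by some other means into one dotted version per line, and GNU `sort -V` is assumed to be available for ordering plain dotted version numbers.

```shell
#!/bin/sh
# Succeeds when dotted version $1 is at least $2 (GNU sort -V ordering).
version_at_least() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Reads one RHGS version per line from standard input and prints every
# version below the minimum given as $1; fails if any such version was seen.
all_nodes_at_least() {
    minimum=$1
    status=0
    while IFS= read -r version; do
        [ -n "$version" ] || continue
        if ! version_at_least "$version" "$minimum"; then
            echo "below $minimum: $version"
            status=1
        fi
    done
    return "$status"
}

# Usage with a hypothetical file that holds one version per node:
#   all_nodes_at_least 3.5.4 < node-versions.txt && echo "safe to bump the op-version"
```

The check only compares version strings; it does not contact any node or change the cluster.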

5.1. Offline Upgrade to Red Hat Gluster Storage 3.5

Warning

Important

5.1.1. Upgrading to Red Hat Gluster Storage 3.5 for Systems Subscribed to Red Hat Subscription Manager

Procedure 5.1. Before you upgrade

- Back up the following configuration directory and files in a location that is not on the operating system partition.

/var/lib/glusterd
/etc/samba
/etc/ctdb
/etc/glusterfs
/var/lib/samba
/var/lib/ctdb
/var/run/gluster/shared_storage/nfs-ganesha

Note

With the release of 3.5 Batch Update 3, the mount point of shared storage is changed from /var/run/gluster/ to /run/gluster/.

If you use NFS-Ganesha, back up the following files from all nodes:

/run/gluster/shared_storage/nfs-ganesha/exports/export.*.conf
/etc/ganesha/ganesha.conf
/etc/ganesha/ganesha-ha.conf

If upgrading from Red Hat Gluster Storage 3.3 to 3.4 or subsequent releases, back up all xattr by executing the following command individually on the brick root(s) for all nodes:

# find ./ -type d ! -path "./.*" ! -path "./" | xargs getfattr -d -m. -e hex > /var/log/glusterfs/xattr_dump_brick_name

- Unmount gluster volumes from all clients. On a client, use the following command to unmount a volume from a mount point.

# umount mount-point

- If you use NFS-Ganesha, run the following on a gluster server to disable the nfs-ganesha service:

# gluster nfs-ganesha disable

- On a gluster server, disable the shared volume.

# gluster volume set all cluster.enable-shared-storage disable

- Stop all volumes

# for vol in `gluster volume list`; do gluster --mode=script volume stop $vol; sleep 2s; done

- Verify that all volumes are stopped.

# gluster volume info

- Stop the glusterd services on all servers using the following commands:

# service glusterd stop
# pkill glusterfs
# pkill glusterfsd

- Stop the pcsd service.

# systemctl stop pcsd
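The backup step at the start of this procedure can be sketched as a small script. This is an illustration only, not a supported tool: `backup_gluster_config` is a hypothetical name, the archive location in the usage line is a placeholder, and the sketch covers only the directories named in that step (not the NFS-Ganesha files or the xattr dump).

```shell
#!/bin/sh
# Paths named in the backup step of this procedure.
BACKUP_PATHS="/var/lib/glusterd /etc/samba /etc/ctdb /etc/glusterfs /var/lib/samba /var/lib/ctdb /var/run/gluster/shared_storage/nfs-ganesha"

# Archives every listed path that exists under root directory $1 into the
# tar file $2. Paths that are absent on this node are skipped.
backup_gluster_config() {
    root=$1
    archive=$2
    set --
    for path in $BACKUP_PATHS; do
        if [ -e "$root$path" ]; then
            # Store paths relative to the root directory.
            set -- "$@" "${path#/}"
        fi
    done
    if [ "$#" -eq 0 ]; then
        echo "nothing to back up under $root" >&2
        return 1
    fi
    tar -C "$root" -czf "$archive" "$@"
}

# Usage (as root; /mnt/backup is a placeholder for a location that is not on
# the operating system partition):
#   backup_gluster_config / /mnt/backup/gluster-config.tar.gz
```

Taking the root directory as a parameter makes it possible to try the function against a scratch directory tree before running it on a server.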

Procedure 5.2. Upgrade using yum

Note

# migrate-rhs-classic-to-rhsm --status

# migrate-rhs-classic-to-rhsm --rhn-to-rhsm

# migrate-rhs-classic-to-rhsm --status

- If you use Samba:

- For Red Hat Enterprise Linux 6.7 or higher, enable the following repository:

# subscription-manager repos --enable=rh-gluster-3-samba-for-rhel-6-server-rpms

For Red Hat Enterprise Linux 7, enable the following repository:

# subscription-manager repos --enable=rh-gluster-3-samba-for-rhel-7-server-rpms

For Red Hat Enterprise Linux 8, enable the following repository:

# subscription-manager repos --enable=rh-gluster-3-samba-for-rhel-8-x86_64-rpms

- Ensure that Samba is upgraded on all the nodes simultaneously, as running different versions of Samba in the same cluster will lead to data corruption.

Stop the CTDB and SMB services.

On Red Hat Enterprise Linux 7 and Red Hat Enterprise Linux 8:

# systemctl stop ctdb

On Red Hat Enterprise Linux 6:

# service ctdb stop

To verify that services are stopped, run:

# ps axf | grep -E '(ctdb|smb|winbind|nmb)[d]'

- Upgrade the server to Red Hat Gluster Storage 3.5.

# yum update

Wait for the update to complete.

Important

Run the following command to install nfs-ganesha-selinux on Red Hat Enterprise Linux 7:

# yum install nfs-ganesha-selinux

Run the following command to install nfs-ganesha-selinux on Red Hat Enterprise Linux 8:

# dnf install glusterfs-ganesha

- If you use Samba/CTDB, update the following files to replace META="all" with META="<ctdb_volume_name>", for example, META="ctdb":

/var/lib/glusterd/hooks/1/start/post/S29CTDBsetup.sh - This script ensures the file system and its lock volume are mounted on all Red Hat Gluster Storage servers that use Samba, and ensures that CTDB starts at system boot.

/var/lib/glusterd/hooks/1/stop/pre/S29CTDB-teardown.sh - This script ensures that the file system and its lock volume are unmounted when the CTDB volume is stopped.

Note

For RHEL based Red Hat Gluster Storage upgrading to 3.5 Batch Update 4 with Samba, the write-behind translator has to be manually disabled for all existing Samba volumes.

# gluster volume set <volname> performance.write-behind off

- Reboot the server to ensure that kernel updates are applied.

- Ensure that glusterd and pcsd services are started.

systemctl start glusterd systemctl start pcsd

# systemctl start glusterd # systemctl start pcsdCopy to Clipboard Copied! Toggle word wrap Toggle overflow Note

During upgrade of servers, the glustershd.log file throws some “Invalid argument” errors during every index crawl (10 minutes by default) on the upgraded nodes. This is expected, and the errors can be ignored until the op-version is bumped up, after which they are no longer triggered. Sample error message:

[2021-05-25 17:58:38.007134] E [MSGID: 114031] [client-rpc-fops_v2.c:216:client4_0_mkdir_cbk] 0-spvol-client-40: remote operation failed. Path: (null) [Invalid argument]

If you are in op-version '70000' or lower, do not bump up the op-version to '70100' or higher until all the servers and clients are upgraded to the newer version.

- When all nodes have been upgraded, run the following command to update the op-version of the cluster. This helps to prevent any compatibility issues within the cluster.

# gluster volume set all cluster.op-version 70200

Note

70200 is the cluster.op-version value for Red Hat Gluster Storage 3.5. After upgrading the cluster op-version, enable granular-entry-heal for the volume using the following command:

gluster volume heal $VOLNAME granular-entry-heal enable

The feature is now enabled by default after upgrading to Red Hat Gluster Storage 3.5, but this comes into effect only after bumping up the op-version. Refer to Section 1.5, “Red Hat Gluster Storage Software Components and Versions” for the correct cluster.op-version value for other versions.

Important

If the op-version is bumped up to '70100' after upgrading the servers and before upgrading the clients, some internal metadata files under the root of the mount point, named '.glusterfs-anonymous-inode-(gfid)', are exposed to the older clients. The clients must not do any I/O on this directory, or remove or touch its contents. Once the clients are upgraded to version 3.5.4 or higher, this directory becomes invisible to them.

- If you want to migrate from Gluster NFS to NFS Ganesha as part of this upgrade, install the NFS-Ganesha packages as described in Chapter 4, Deploying NFS-Ganesha on Red Hat Gluster Storage, and configure the NFS Ganesha cluster using the information in the NFS Ganesha section of the Red Hat Gluster Storage 3.5 Administration Guide.
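
Before running the op-version step earlier in this procedure, it can help to compare the current op-version with the highest value the servers support. The sketch below is an illustration under stated assumptions: the helper names are hypothetical, and it assumes that gluster volume get prints the option name in the first column and its value in the second. It only reflects what the servers report; it does not tell you whether the clients have been upgraded.

```shell
# Hypothetical helper: print the value of a cluster-wide option such as
# cluster.op-version or cluster.max-op-version.
# Assumption: "gluster volume get all <option>" prints "<option> <value>".
cluster_option() {
    gluster volume get all "$1" | awk -v opt="$1" '$1 == opt { print $2 }'
}

# Hypothetical helper: report whether the op-version can still be raised.
check_op_version() {
    current=$(cluster_option cluster.op-version)
    maximum=$(cluster_option cluster.max-op-version)
    if [ -z "$current" ] || [ -z "$maximum" ]; then
        echo "could not read op-version" >&2
        return 2
    fi
    if [ "$current" -lt "$maximum" ]; then
        echo "op-version $current can be raised to $maximum"
    else
        echo "op-version $current is already at the maximum"
    fi
}
```

Run check_op_version on any server in the trusted storage pool once all servers have been upgraded.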

- Start all volumes.

# for vol in `gluster volume list`; do gluster --mode=script volume start $vol; sleep 2s; done

- If you are using NFS-Ganesha:

- Copy the volume's export information from your backup copy of ganesha.conf to the new /etc/ganesha/ganesha.conf file.

The export information in the backed up file is similar to the following:

%include "/var/run/gluster/shared_storage/nfs-ganesha/exports/export.v1.conf"
%include "/var/run/gluster/shared_storage/nfs-ganesha/exports/export.v2.conf"
%include "/var/run/gluster/shared_storage/nfs-ganesha/exports/export.v3.conf"

Note

With the release of 3.5 Batch Update 3, the mount point of shared storage is changed from /var/run/gluster/ to /run/gluster/.

- Copy the backup volume export files from the backup directory to /etc/ganesha/exports by running the following command from the backup directory:

# cp export.* /etc/ganesha/exports/
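
After restoring the export information, you may want to confirm that every %include line in ganesha.conf points at a file that exists. The following is a minimal sketch; the function name check_ganesha_includes is hypothetical and is not part of NFS-Ganesha or Red Hat Gluster Storage. If the include paths point into the shared storage mount, run it only after that volume is mounted.

```shell
# Hypothetical helper: list every %include target in a ganesha.conf-style
# file that does not exist on disk. Returns non-zero if any are missing.
check_ganesha_includes() {
    # Extract the quoted path from lines such as:
    #   %include "/path/to/export.v1.conf"
    missing=$(sed -n 's/^[[:space:]]*%include[[:space:]]*"\([^"]*\)".*$/\1/p' "$1" |
        while IFS= read -r path; do
            [ -f "$path" ] || echo "missing export file: $path"
        done)
    if [ -n "$missing" ]; then
        echo "$missing"
        return 1
    fi
}

# Example call:
# check_ganesha_includes /etc/ganesha/ganesha.conf
```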

- Enable the shared volume.

# gluster volume set all cluster.enable-shared-storage enable

- Ensure that the shared storage volume is mounted on the server. If the volume is not mounted, run the following command:

# mount -t glusterfs hostname:gluster_shared_storage /var/run/gluster/shared_storage

- Ensure that the /var/run/gluster/shared_storage/nfs-ganesha directory is created.

# cd /var/run/gluster/shared_storage/
# mkdir nfs-ganesha

- Enable firewall settings for new services and ports. See Getting Started in the Red Hat Gluster Storage 3.5 Administration Guide.
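
The two shared storage steps above (mount the volume if needed, then create the nfs-ganesha directory) can be combined so that they are safe to repeat. This is a sketch only: ensure_shared_storage is a hypothetical name, and it relies on the mountpoint utility from util-linux being installed.

```shell
# Hypothetical helper: make sure the shared storage volume is mounted at
# the given mount point, then create the nfs-ganesha directory inside it.
# Arguments: server hostname, mount point.
ensure_shared_storage() {
    host=$1
    mnt=$2
    # mountpoint -q exits 0 only when something is mounted at $mnt.
    if ! mountpoint -q "$mnt"; then
        mount -t glusterfs "$host:gluster_shared_storage" "$mnt" || return 1
    fi
    # -p makes this a no-op when the directory already exists.
    mkdir -p "$mnt/nfs-ganesha"
}

# Example call (hostname is a placeholder):
# ensure_shared_storage hostname /var/run/gluster/shared_storage
```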

- If you use Samba/CTDB:

- Mount /gluster/lock before starting CTDB by executing the following commands:

# mount <ctdb_volume_name>
# mount -t glusterfs server:/ctdb_volume_name /gluster/lock/

- Verify that the lock volume mounted correctly by checking for lock in the output of the mount command on any Samba server.

# mount | grep 'lock'
...
<hostname>:/<ctdb_volume_name>.tcp on /gluster/lock type fuse.glusterfs (rw,relatime,user_id=0,group_id=0,default_permissions,allow_other,max_read=131072)

- If all servers that host volumes accessed via SMB have been updated, then start the CTDB and Samba services by executing the following commands.

On Red Hat Enterprise Linux 7 and Red Hat Enterprise Linux 8:

# systemctl start ctdb

On Red Hat Enterprise Linux 6:

# service ctdb start

- To verify that the CTDB and SMB services have started, execute the following command:

ps axf | grep -E '(ctdb|smb|winbind|nmb)[d]'
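
If you prefer a per-daemon result to scanning the ps output, the check above can be approximated with pgrep. The sketch below is illustrative: check_daemons is a hypothetical name, and which daemons to pass (for example winbindd or nmbd) depends on your Samba configuration.

```shell
# Hypothetical helper: report, for each daemon name given, whether a
# process with exactly that name is running (pgrep -x matches exactly).
check_daemons() {
    rc=0
    for daemon in "$@"; do
        if pgrep -x "$daemon" >/dev/null; then
            echo "$daemon: running"
        else
            echo "$daemon: not running"
            rc=1
        fi
    done
    return "$rc"
}

# Example call:
# check_daemons ctdbd smbd
```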

- If you use NFS-Ganesha:

- Copy the ganesha.conf and ganesha-ha.conf files, and the /etc/ganesha/exports directory to the /var/run/gluster/shared_storage/nfs-ganesha directory.

# cd /etc/ganesha/
# cp ganesha.conf ganesha-ha.conf /var/run/gluster/shared_storage/nfs-ganesha/
# cp -r exports/ /var/run/gluster/shared_storage/nfs-ganesha/

- Update the path of any export entries in the ganesha.conf file.

# sed -i 's/\/etc\/ganesha/\/var\/run\/gluster\/shared_storage\/nfs-ganesha/' /var/run/gluster/shared_storage/nfs-ganesha/ganesha.conf

- Run the following to clean up any existing cluster configuration:

/usr/libexec/ganesha/ganesha-ha.sh --cleanup /var/run/gluster/shared_storage/nfs-ganesha

- If you have upgraded to Red Hat Enterprise Linux 7.4 or later, set the following SELinux Boolean:

# setsebool -P ganesha_use_fusefs on

- Start the nfs-ganesha service and verify that all nodes are functional.

# gluster nfs-ganesha enable

- Enable NFS-Ganesha on all volumes.

# gluster volume set volname ganesha.enable on
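
Because the last step has to be run once per volume, a loop over gluster volume list can save typing. This is a sketch under one explicit assumption: it skips the gluster_shared_storage volume, on the basis that the shared storage volume is not meant to be exported over NFS. The function name enable_ganesha_on_all is hypothetical.

```shell
# Hypothetical helper: enable NFS-Ganesha exports on every volume except
# the shared storage volume.
enable_ganesha_on_all() {
    for vol in $(gluster volume list); do
        # Assumption: the shared storage volume should not be exported.
        [ "$vol" = "gluster_shared_storage" ] && continue
        gluster volume set "$vol" ganesha.enable on || return 1
    done
}
```

The loop stops at the first failure so that the failing volume is easy to identify from the gluster error message.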

5.1.2. Upgrading to Red Hat Gluster Storage 3.5 for Systems Subscribed to Red Hat Network Satellite Server

Procedure 5.3. Before you upgrade

- Back up the following configuration directory and files in a location that is not on the operating system partition.

/var/lib/glusterd
/etc/samba
/etc/ctdb
/etc/glusterfs
/var/lib/samba
/var/lib/ctdb
/var/run/gluster/shared_storage/nfs-ganesha

Note

With the release of 3.5 Batch Update 3, the mount point of shared storage is changed from /var/run/gluster/ to /run/gluster/.

If you use NFS-Ganesha, back up the following files from all nodes:

/run/gluster/shared_storage/nfs-ganesha/exports/export.*.conf
/etc/ganesha/ganesha.conf
/etc/ganesha/ganesha-ha.conf
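
One way to take the backup described above is to collect the listed paths into a single compressed archive. The following is a minimal sketch, not a Red Hat tool: backup_config is a hypothetical name, the archive location in the example is a placeholder, and paths that do not exist on a given node are skipped.

```shell
# Hypothetical helper: archive the given paths into one tar.gz file,
# skipping any path that does not exist on this node.
# Arguments: archive file, then one or more paths to back up.
backup_config() {
    archive=$1
    shift
    # Rebuild the argument list so that it holds only existing paths:
    # each pass drops the first argument and re-appends it if it exists.
    for p in "$@"; do
        shift
        [ -e "$p" ] && set -- "$@" "$p"
    done
    if [ "$#" -eq 0 ]; then
        echo "nothing to back up" >&2
        return 1
    fi
    tar -czf "$archive" "$@"
}

# Example call (archive location is a placeholder outside the OS partition):
# backup_config /mnt/backup/gluster-config.tar.gz \
#     /var/lib/glusterd /etc/samba /etc/ctdb /etc/glusterfs \
#     /var/lib/samba /var/lib/ctdb /etc/ganesha
```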

If upgrading from Red Hat Gluster Storage 3.3 to 3.4 or subsequent releases, back up all xattr by executing the following command individually on the brick root(s) for all nodes:

# find ./ -type d ! -path "./.*" ! -path "./" | xargs getfattr -d -m. -e hex > /var/log/glusterfs/xattr_dump_brick_name

- Unmount gluster volumes from all clients. On a client, use the following command to unmount a volume from a mount point.

# umount mount-point

- If you use NFS-Ganesha, run the following on a gluster server to disable the nfs-ganesha service:

# gluster nfs-ganesha disable

- On a gluster server, disable the shared volume.

# gluster volume set all cluster.enable-shared-storage disable

- Stop all volumes.

# for vol in `gluster volume list`; do gluster --mode=script volume stop $vol; sleep 2s; done

- Verify that all volumes are stopped.

# gluster volume info

- Stop the glusterd services on all servers using the following command:

# service glusterd stop
# pkill glusterfs
# pkill glusterfsd

- Stop the pcsd service.

# systemctl stop pcsd
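
For the "Verify that all volumes are stopped" step in this procedure, reading the full gluster volume info output can be tedious on clusters with many volumes. The sketch below filters it down to the volumes that are still running. It assumes the output contains "Volume Name: <name>" and "Status: <state>" lines for each volume; started_volumes is a hypothetical name. Run it before stopping glusterd, because the gluster command needs the daemon.

```shell
# Hypothetical helper: print the name of every volume that still reports
# "Status: Started". Prints nothing when no volume is running.
started_volumes() {
    gluster volume info | awk -F': ' '
        $1 == "Volume Name" { name = $2 }
        $1 == "Status" && $2 == "Started" { print name }
    '
}
```

An empty result means no volume is in the Started state.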

Procedure 5.4. Upgrade using Satellite

- Create an Activation Key at the Red Hat Network Satellite Server, and associate it with the following channels. For more information, see Section 2.6, “Installing from Red Hat Satellite Server”.

- For Red Hat Enterprise Linux 6.7 or higher:

Base Channel: Red Hat Enterprise Linux Server (v.6 for 64-bit x86_64)
Child channels:
RHEL Server Scalable File System (v. 6 for x86_64)
Red Hat Gluster Storage Server 3 (RHEL 6 for x86_64)

If you use Samba, add the following channel:

Red Hat Gluster 3 Samba (RHEL 6 for x86_64)

- For Red Hat Enterprise Linux 7:

Base Channel: Red Hat Enterprise Linux Server (v.7 for 64-bit x86_64)
Child channels:
RHEL Server Scalable File System (v. 7 for x86_64)
Red Hat Gluster Storage Server 3 (RHEL 7 for x86_64)

If you use Samba, add the following channel:

Red Hat Gluster 3 Samba (RHEL 7 for x86_64)

- Unregister your system from Red Hat Network Satellite by following these steps:

- Log in to the Red Hat Network Satellite server.