Data Virtualization Reference

TECHNOLOGY PREVIEW - Reference for Data Virtualization

Abstract

Chapter 1. Data Virtualization reference

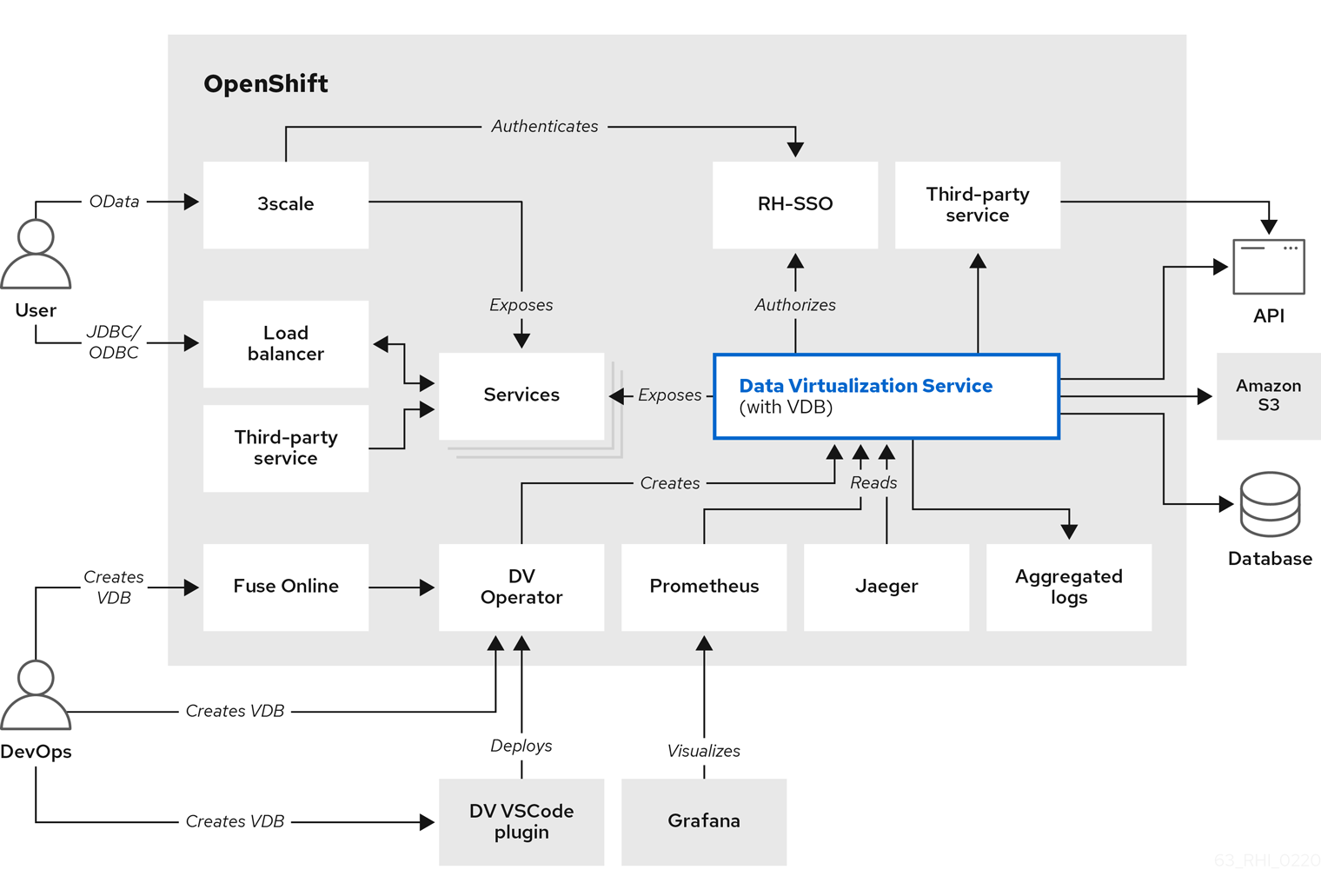

Data Virtualization offers a highly scalable, high-performance solution to information integration. By allowing integrated and enriched data to be consumed relationally or as JSON, XML, and other formats over multiple protocols, Data Virtualization simplifies data access for developers and consuming applications.

Commercial development support, production support, and training for Data Virtualization are available through Red Hat. Data Virtualization is a professional open source project and a critical component of Red Hat Data Integration.

Before you delve into Data Virtualization, it is important to learn a few of its basic constructs, for example: What is a virtual database? What is a model? For more information, see the Teiid Basics.

If not otherwise specified, versions referenced in this document refer to Teiid project versions. Teiid or Data Virtualization running on various platforms will have both platform and product-specific versioning.

Data virtualization is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process. For more information about the support scope of Red Hat Technology Preview features, see https://access.redhat.com/support/offerings/techpreview/.

Chapter 2. Virtual databases

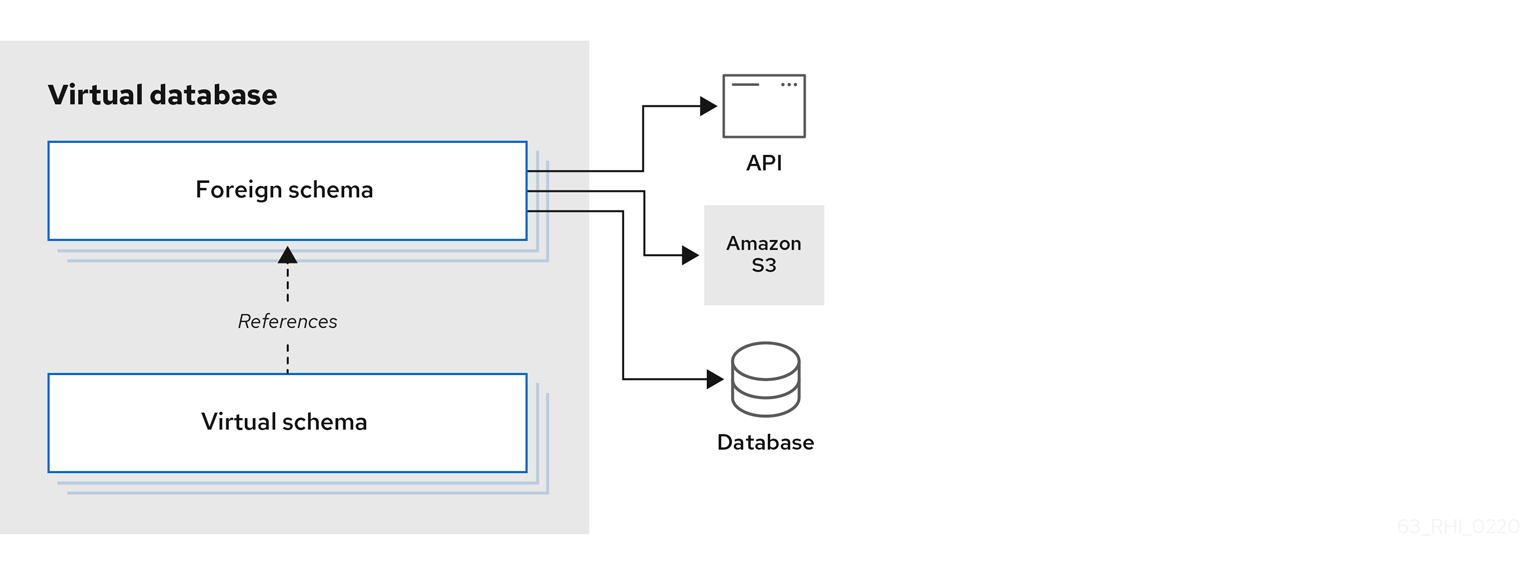

A virtual database (VDB) is a metadata container for components used to integrate data from multiple data sources, so that they can be accessed in an integrated manner through a single, uniform API.

A virtual database typically contains multiple schema components (also called models), and each schema contains the metadata (tables, procedures, functions). There are two different types of schemas:

- Foreign schema

- Also called a source or physical schema, a foreign schema represents external or remote data sources, such as relational databases (for example, Oracle, Db2, or MySQL); files, such as CSV or Microsoft Excel; or web services, such as SOAP or REST.

- Virtual schema

- A view layer, or logical schema layer, that is defined using schema objects from foreign schemas. For example, when you create a view table that aggregates multiple foreign tables from different sources, the resulting view shields users from the complexities of the data sources that define the view.

Note that a virtual database contains only metadata. Any use case involving Data Virtualization must begin with a virtual database model, so it is important to learn how to design and develop a VDB.

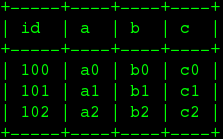

The following example of a virtual database model defines a single foreign schema component that makes a connection to a PostgreSQL database.

The SQL DDL commands in the example implement the SQL/MED specification.
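A minimal sketch of such a VDB, written in Teiid-style DDL. The database name my_example, the server name pgsql, the resource-name value, and the schema names accounts and public are placeholder assumptions for illustration, not values the product requires.

```sql
-- Hypothetical VDB with one foreign (source) schema backed by PostgreSQL.
CREATE DATABASE my_example;
USE DATABASE my_example;

-- Declare the translator (data wrapper) that knows how to talk to PostgreSQL.
CREATE FOREIGN DATA WRAPPER postgresql;

-- Named connection reference; 'pgsql' is an assumed resource name for a configured data source.
CREATE SERVER pgsql FOREIGN DATA WRAPPER postgresql OPTIONS ("resource-name" 'pgsql');

-- Source schema whose tables, procedures, and functions are imported from the server
-- instead of being defined by hand.
CREATE SCHEMA accounts SERVER pgsql;
IMPORT FOREIGN SCHEMA public FROM SERVER pgsql INTO accounts;
```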

The following sections describe in greater detail how the statements in the preceding example are used to define a virtual database. Before that, you need to learn about the different elements of the source schema component.

External data sources

As shown in the preceding example, the "source schema" component of a virtual database is a collection of schema objects (tables, procedures, and functions) that represent an external data source’s metadata locally. In the example, schema objects are not defined directly, but are imported from the server. Details of the connection to the external data source are provided through a resource-name, which is a named connection reference to an external data source.

To connect to these external data sources and issue queries that fetch their metadata, Data Virtualization defines two types of resources.

Translator

A translator, also known as a DATA WRAPPER, is a component that provides an abstraction layer between the Data Virtualization query engine and a physical data source. The translator knows how to convert query commands from Data Virtualization into source-specific commands and execute them. The translator also has the intelligence to convert data that the physical source returns into a form that the Data Virtualization query engine can process. For example, when working with a web service translator, the translator converts SQL procedures from the Data Virtualization layer into HTTP calls, and JSON responses are converted to tabular results.

Data Virtualization provides various translators as part of the system, or you can develop your own by using the provided Java libraries. For information about the available translators, see Translators.

2.1. Virtual database properties

DATABASE properties

- domain-ddl

- schema-ddl

- query-timeout

Sets the default query timeout in milliseconds for queries executed against this VDB. 0 indicates that the server default query timeout should be used. Defaults to 0. Has no effect if the server default query timeout is set to a lesser value. Note that clients can still set their own timeouts that will be managed on the client side.

- connection.XXX

For use by the ODBC transport and OData to set default connection/execution properties. For more information about related properties, see Driver Connection in the Client Developer’s Guide. Note that these are set on the connection after it has been established.

```sql
CREATE DATABASE vdb OPTIONS ("connection.partialResultsMode" true);
```

- authentication-type

Authentication type of configured security domain. Allowed values currently are (GSS, USERPASSWORD). The default is set on the transport (typically USERPASSWORD).

- password-pattern

Regular expression matched against the connecting user’s name that determines if USERPASSWORD authentication is used. password-pattern takes precedence over authentication-type. The default is authentication-type.

- gss-pattern

Regular expression matched against the connecting user’s name that determines if GSS authentication is used. gss-pattern takes precedence over password-pattern. The default is password-pattern.

- max-sessions-per-user (11.2+)

Maximum number of sessions allowed for each user, as identified by the user name, of this VDB. No setting or a negative number indicates no per-user maximum, but the session service maximum still applies. This is enforced at each Data Virtualization server member in a cluster, and not cluster-wide. Derived sessions that are created for tasks under an existing session do not count against this maximum.

- model.visible

Used to override the visibility of imported VDB models, where model is the name of the imported model.

- include-pg-metadata

By default, PostgreSQL metadata is always added to the VDB unless you set the system property org.teiid.addPGMetadata to false. This property enables adding PG metadata per VDB. For more information, see System Properties in the Administrator’s Guide. If you are using ODBC to access your VDB, the VDB must include PG metadata.

- lazy-invalidate

By default, TTL expiration is invalidating. For more information, see Internal Materialization in the Caching guide. Setting lazy-invalidate to true makes TTL refreshes non-invalidating.

- deployment-name

Effectively reserved. Will be set at deploy time by the server to the name of the server deployment.
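The following sketch sets several of these DATABASE properties in one statement. All values are illustrative assumptions; in particular, the gss-pattern regular expression and the session limit are hypothetical.

```sql
-- Hypothetical VDB-level settings; tune the values for your environment.
CREATE DATABASE vdb OPTIONS (
    "query-timeout" 30000,                 -- default query timeout: 30 seconds, in milliseconds
    "authentication-type" 'USERPASSWORD',  -- default authentication for this VDB
    "gss-pattern" 'svc_.*',                -- hypothetical regex: matching user names use GSS instead
    "max-sessions-per-user" 10,            -- enforced per server member, not cluster-wide
    "include-pg-metadata" 'true'           -- required if clients connect over ODBC
);
```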

Schema and model properties

- visible

Marks the schema as visible when the value is true (the default setting). When the visible flag is set to false, the schema’s metadata is hidden from any metadata requests. Setting the property to false does not prohibit you from issuing queries against this schema. For information about how to control access to data, see Data roles.

- multisource

Sets the schema to multi-source mode, where the data exists in partitions in multiple different sources. It is assumed that metadata of the schema is the same across all data sources.

- multisource.columnName

In a multi-source schema, an additional column that designates the partition is implicitly added to all tables to identify the source. This property defines the name of that column; its type is always string.

- multisource.addColumn

This flag specifies to add an implicit partition column to all the tables in this schema. A true value adds the column. Default is false.
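A sketch of these schema-level options. It assumes that two servers named pgsql_east and pgsql_west were already defined with CREATE SERVER, and that CREATE SCHEMA accepts a comma-separated server list for multi-source mode; all names are hypothetical.

```sql
-- Hidden virtual schema: its metadata is not reported, but it can still be queried.
CREATE VIRTUAL SCHEMA internal OPTIONS ("visible" 'false');

-- Hypothetical multi-source schema: identical metadata, data partitioned across two sources.
CREATE SCHEMA accounts SERVER pgsql_east, pgsql_west OPTIONS (
    "multisource" 'true',
    "multisource.columnName" 'SOURCE_NAME',  -- name of the implicit partition column
    "multisource.addColumn" 'true'           -- add that column to every table
);
```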

- allowed-languages

Specifies a comma-separated list of programming languages that can be used for any purpose in the VDB. Names are case-sensitive, and the list cannot include whitespace between entries. For example, <property name="allowed-languages" value="javascript"/>

- allow-language

Specifies that a role has permission to use a language that is listed in the allowed-languages property. For example, an allow-language permission on a data role can specify that users with the role RoleA have permission to use JavaScript.
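A DDL sketch of the same idea. It assumes the data role DDL form GRANT USAGE ON LANGUAGE; the role name RoleA comes from the text above, and mapping the role to actual users is omitted because it depends on your security configuration.

```sql
-- Allow JavaScript in this VDB at all.
CREATE DATABASE vdb OPTIONS ("allowed-languages" 'javascript');
USE DATABASE vdb;

-- Hypothetical role; only members of RoleA may use the language.
CREATE ROLE RoleA;
GRANT USAGE ON LANGUAGE javascript TO RoleA;
```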

2.2. DDL metadata for schema objects

Tables and views exist in the same namespace in a schema. Indexes are not considered schema scoped objects, but are rather scoped to the table or view they are defined against. Procedures and functions are defined in separate namespaces, but a function that is defined by virtual procedure language exists as both a function and a procedure of the same name. Domain types are not schema-scoped; they are scoped to the entire VDB.

Data types

For information about data types, see simple data type in the BNF for SQL grammar.

Foreign tables

A FOREIGN table is a table that is defined on a source schema and represents a real relational table in a source database such as Oracle or Microsoft SQL Server. For relational databases, Data Virtualization can automatically retrieve the database schema information upon deployment of the VDB, if you want to auto-import the existing schema. However, you can use the following FOREIGN table semantics when you want to explicitly define tables on a PHYSICAL schema, or to represent non-relational data as relational in custom translators.

For more information about creating foreign tables, see CREATE TABLE in BNF for SQL grammar.

Example: Create foreign table (Created on PHYSICAL model)
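A sketch of an explicitly defined foreign table that uses several of the table options listed below; the table name, column names, and source table name are hypothetical.

```sql
-- Hypothetical source table declared by hand in a PHYSICAL (source) schema.
CREATE FOREIGN TABLE Customer (
    id integer PRIMARY KEY,
    firstname varchar(25),
    lastname varchar(25),
    dob timestamp
) OPTIONS (
    NAMEINSOURCE 'customers',          -- table name in the source database
    CARDINALITY 10000,                 -- row-count estimate used for planning
    UPDATABLE 'TRUE',
    ANNOTATION 'Customer master data'
);
```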

TABLE OPTIONS: (the following options are well known; any other properties defined are considered extension metadata)

| Property | Data type or allowed values | Description |
|---|---|---|
| UUID | string | Unique identifier for the view. |
| CARDINALITY | int | Costing information. Number of rows in the table. Used for planning purposes. |
| UPDATABLE | 'TRUE' \| 'FALSE' | Defines whether or not the view is allowed to update. |
| ANNOTATION | string | Description of the view. |
| DETERMINISM | NONDETERMINISTIC, COMMAND_DETERMINISTIC, SESSION_DETERMINISTIC, USER_DETERMINISTIC, VDB_DETERMINISTIC, DETERMINISTIC | |

COLUMN OPTIONS: (the following options are well known; any other properties defined are considered extension metadata)

| Property | Data type or allowed values | Description |
|---|---|---|
| UUID | string | A unique identifier for the column. |
| NAMEINSOURCE | string | If this is a column name on the FOREIGN table, this value represents the name of the column in the source database. If omitted, the column name is used when querying for data against the source. |
| CASE_SENSITIVE | 'TRUE' \| 'FALSE' | |
| SELECTABLE | 'TRUE' \| 'FALSE' | TRUE when this column is available for selection from the user query. |
| UPDATABLE | 'TRUE' \| 'FALSE' | Defines if the column is updatable. Defaults to true if the view/table is updatable. |
| SIGNED | 'TRUE' \| 'FALSE' | |
| CURRENCY | 'TRUE' \| 'FALSE' | |
| FIXED_LENGTH | 'TRUE' \| 'FALSE' | |
| SEARCHABLE | 'SEARCHABLE' \| 'UNSEARCHABLE' \| 'LIKE_ONLY' \| 'ALL_EXCEPT_LIKE' | Column searchability. Usually dictated by the data type. |
| MIN_VALUE | | |
| MAX_VALUE | | |
| CHAR_OCTET_LENGTH | integer | |
| ANNOTATION | string | |
| NATIVE_TYPE | string | |
| RADIX | integer | |
| NULL_VALUE_COUNT | long | Costing information. Number of NULLS in this column. |
| DISTINCT_VALUES | long | Costing information. Number of distinct values in this column. |

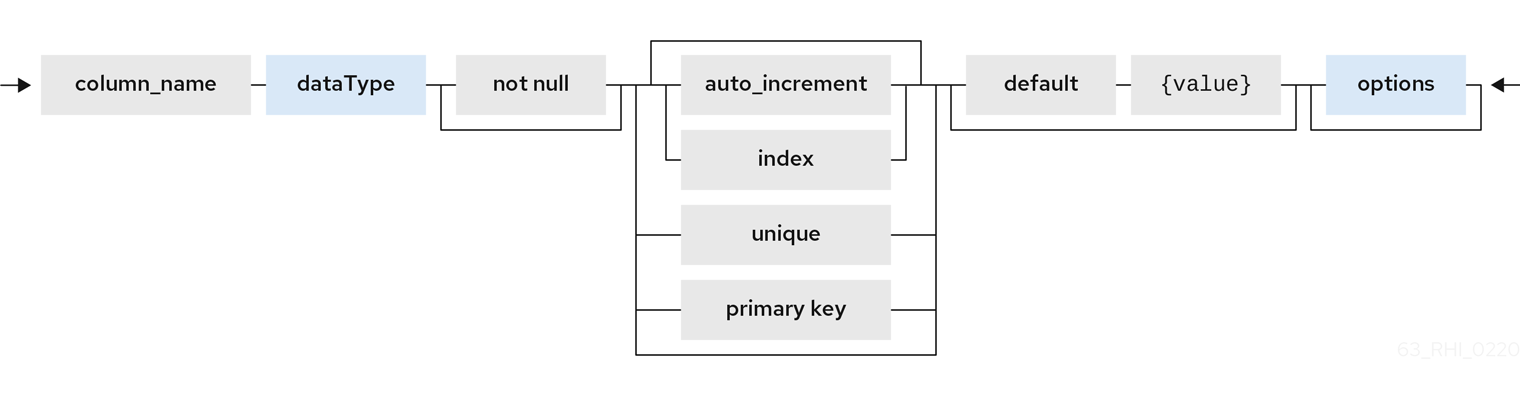

Columns may also be marked as NOT NULL, auto_increment, or with a DEFAULT value.

A column of type bigdecimal/decimal/numeric can be declared without a precision/scale, which defaults to an internal maximum for precision with half scale, or with a precision which will default to a scale of 0.

A column of type timestamp can be declared without a scale which will default to an internal maximum of 9 fractional seconds.
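The following hypothetical table illustrates these column declarations together with a few of the column options listed earlier; the names and option values are assumptions chosen for illustration.

```sql
-- Hypothetical foreign table showing column-level declarations and options.
CREATE FOREIGN TABLE Orders (
    order_id long NOT NULL AUTO_INCREMENT,  -- value generated by the source on insert
    status string DEFAULT 'NEW',            -- used when an insert omits the column
    amount decimal(12, 2),                  -- explicit precision and scale
    discount decimal(5),                    -- precision 5; scale defaults to 0
    ratio decimal,                          -- no precision/scale: internal maximum, half scale
    created timestamp,                      -- no scale: up to 9 fractional second digits
    note string OPTIONS (
        NAMEINSOURCE 'order_note',          -- column name in the source
        NATIVE_TYPE 'text',                 -- type name as reported by the source
        SEARCHABLE 'UNSEARCHABLE'           -- searchability metadata for this column
    )
);
```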

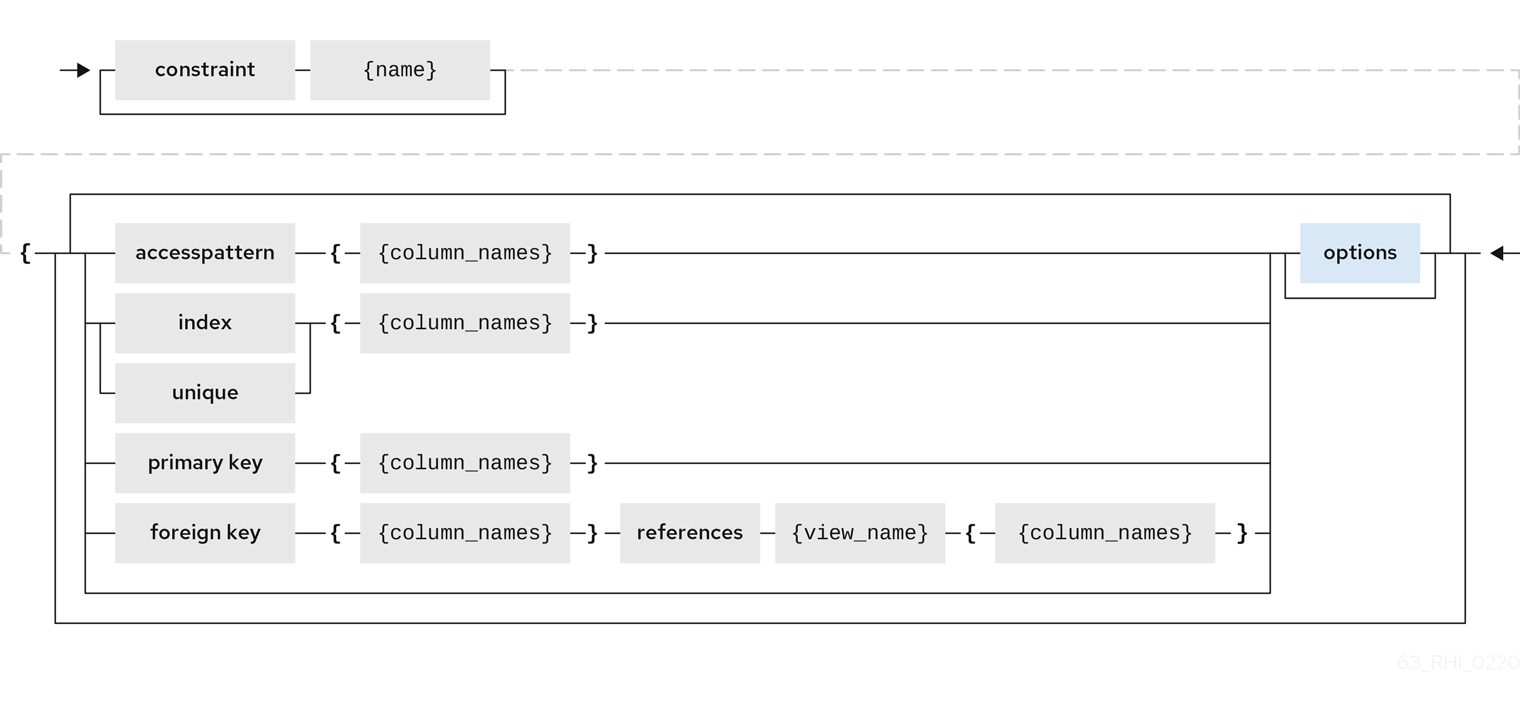

Table Constraints

Constraints can be defined on table/view to define indexes and relationships to other tables/views. This information is used by the Data Virtualization optimizer to plan queries, or use the indexes in materialization tables to optimize the access to the data.

Constraints are the same as those you can define in an RDBMS.

Example of CONSTRAINTs
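A sketch of table constraints on two hypothetical foreign tables. The optimizer can use the primary key, unique, index, and foreign key information when planning queries such as joins between them.

```sql
-- Hypothetical parent table with a named primary key, a unique key, and an index.
CREATE FOREIGN TABLE Supplier (
    id integer,
    name string,
    email string,
    CONSTRAINT pk_supplier PRIMARY KEY (id),
    UNIQUE (email),
    INDEX (name)
);

-- Hypothetical child table whose foreign key describes its relationship to Supplier.
CREATE FOREIGN TABLE Product (
    product_id integer PRIMARY KEY,
    supplier_id integer,
    CONSTRAINT fk_supplier FOREIGN KEY (supplier_id) REFERENCES Supplier (id)
);
```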

ALTER TABLE

For the full SQL grammar for the ALTER TABLE statement, see ALTER TABLE in the BNF for SQL grammar.

Using the ALTER command, you can add, change, and delete columns, modify the values of any OPTIONS, and add constraints. The following examples show how to use the ALTER command to modify table objects.
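A sketch of common ALTER forms, assuming a foreign table named Supplier already exists; the column name phone and the option values are hypothetical.

```sql
-- Add a column, then attach a source name to it.
ALTER FOREIGN TABLE Supplier ADD COLUMN phone string;
ALTER FOREIGN TABLE Supplier ALTER COLUMN phone OPTIONS (ADD NAMEINSOURCE 'phone_no');

-- Change a table-level option value.
ALTER FOREIGN TABLE Supplier OPTIONS (SET CARDINALITY 5000);

-- Remove the column again.
ALTER FOREIGN TABLE Supplier DROP COLUMN phone;
```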

Views

A view is a virtual table. A view contains rows and columns, like a real table. The columns in a view are columns from one or more real tables from the source or other view models. They can also be expressions made up of multiple columns, or aggregated columns. When column definitions are not defined on the view table, they are derived from the projected columns of the view’s select transformation that is defined after the AS keyword.

You can add functions, JOIN statements, and WHERE clauses to a view definition so that the data is presented as if it were coming from a single table.

Access patterns are not currently meaningful to views, but are still allowed by the grammar. Other constraints on views are also not enforced, unless they are specified on an internal materialized view, in which case they will be automatically added to the materialization target table. However, non-access pattern View constraints are still useful for other purposes, such as to convey relationships for optimization and for discovery by clients.

BNF for CREATE VIEW

| Property | Data type or allowed values | Description |

|---|---|---|

| MATERIALIZED | 'TRUE'|'FALSE' | Defines if a table is materialized. |

| MATERIALIZED_TABLE | 'table.name' | If this view is being materialized to an external database, this defines the name of the table that is being materialized to. |

Example: Create view table (created on VIRTUAL schema)
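A sketch of a view whose columns are derived from its transformation query; the schema accounts and the tables Supplier and Product are hypothetical. With MATERIALIZED set and no MATERIALIZED_TABLE given, the view is expected to use internal materialization.

```sql
-- Hypothetical view in a VIRTUAL schema; its columns come from the SELECT projection.
CREATE VIEW SupplierProducts OPTIONS (MATERIALIZED 'TRUE', UPDATABLE 'FALSE') AS
    SELECT s.name AS supplier_name, COUNT(p.product_id) AS product_count
    FROM accounts.Supplier AS s
    LEFT OUTER JOIN accounts.Product AS p ON p.supplier_id = s.id
    GROUP BY s.name;
```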

Note that the columns are implicitly defined by the transformation query (SELECT statement). Columns can also be defined inline, but if they are, they can only be altered to modify their properties. You cannot ADD or DROP columns.

ALTER TABLE

For the BNF for ALTER VIEW, see ALTER TABLE.

Using the ALTER COMMAND you can change the transformation query of the VIEW. You are NOT allowed to alter the column information. Transformation queries must be valid.
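A sketch of replacing a view transformation, assuming a hypothetical view SupplierProducts that projects supplier_name and product_count; only the query changes, and the projected columns stay the same.

```sql
-- Switch the hypothetical view from an outer join to an inner join.
-- The new query must still project the same columns.
ALTER VIEW SupplierProducts AS
    SELECT s.name AS supplier_name, COUNT(p.product_id) AS product_count
    FROM accounts.Supplier AS s
    INNER JOIN accounts.Product AS p ON p.supplier_id = s.id
    GROUP BY s.name;
```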

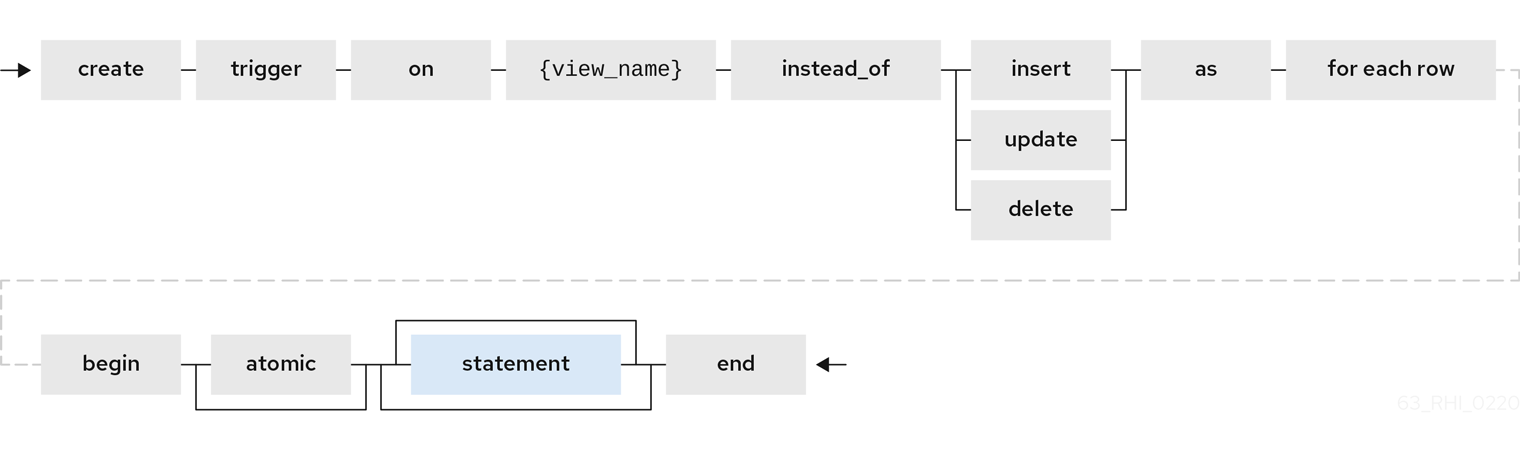

INSTEAD OF triggers on VIEW (Update VIEW)

A view comprising multiple base tables must use an INSTEAD OF trigger to insert records, apply updates, and implement deletes that reference data in the tables. Depending on the complexity of the select transformation, INSTEAD OF triggers are sometimes provided automatically when the UPDATABLE option on the view is set to TRUE. However, by using the CREATE TRIGGER mechanism, you can provide or override the default behavior.

Example: Define INSTEAD OF trigger on View for INSERT
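A sketch, assuming a hypothetical view CustomerView (id, name, city) built over two source tables, accounts.Customer and accounts.Address; each row inserted into the view is split into one insert per base table.

```sql
-- Hypothetical INSTEAD OF INSERT handler; NEW holds the values being inserted.
CREATE TRIGGER ON CustomerView INSTEAD OF INSERT AS
FOR EACH ROW
BEGIN ATOMIC
    INSERT INTO accounts.Customer (id, name) VALUES (NEW.id, NEW.name);
    INSERT INTO accounts.Address (customer_id, city) VALUES (NEW.id, NEW.city);
END;
```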

For Update

Example: Define instead of trigger on View for UPDATE
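A sketch, assuming a hypothetical view CustomerView (id, name, city) over accounts.Customer and accounts.Address. It uses the CHANGING group to update only the base table whose columns actually changed; NEW holds the new values and OLD the previous ones.

```sql
-- Hypothetical INSTEAD OF UPDATE handler.
CREATE TRIGGER ON CustomerView INSTEAD OF UPDATE AS
FOR EACH ROW
BEGIN ATOMIC
    IF (CHANGING.name)
    BEGIN
        UPDATE accounts.Customer SET name = NEW.name WHERE id = OLD.id;
    END
    IF (CHANGING.city)
    BEGIN
        UPDATE accounts.Address SET city = NEW.city WHERE customer_id = OLD.id;
    END
END;
```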

While updating you have access to previous and new values of the columns. For more information about update procedures, see Update procedures.

AFTER triggers on source tables

A source table can have any number of uniquely named triggers registered to handle change events that are reported by a change data capture system.

Similar to view triggers, an AFTER INSERT trigger provides access to new values through the NEW group, an AFTER DELETE trigger provides access to old values through the OLD group, and an AFTER UPDATE trigger provides access to both.

Example: Define AFTER trigger on Customer

```sql
CREATE TRIGGER ON Customer AFTER INSERT AS
FOR EACH ROW
BEGIN ATOMIC
    INSERT INTO CustomerOrders (CustomerName, CustomerID) VALUES (NEW.Name, NEW.ID);
END
```

You will typically define a handler for each operation: INSERT, UPDATE, and DELETE.

For more detailed information about update procedures, see Update procedures.
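Following that advice, a sketch of companion handlers for the Customer AFTER INSERT trigger shown above. The trigger names customer_update and customer_delete are hypothetical; naming triggers is what allows several of them on the same source table.

```sql
-- Hypothetical AFTER UPDATE handler: both OLD and NEW are available.
CREATE TRIGGER customer_update ON Customer AFTER UPDATE AS
FOR EACH ROW
BEGIN ATOMIC
    UPDATE CustomerOrders SET CustomerName = NEW.Name WHERE CustomerID = OLD.ID;
END;

-- Hypothetical AFTER DELETE handler: only OLD is available.
CREATE TRIGGER customer_delete ON Customer AFTER DELETE AS
FOR EACH ROW
BEGIN ATOMIC
    DELETE FROM CustomerOrders WHERE CustomerID = OLD.ID;
END;
```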

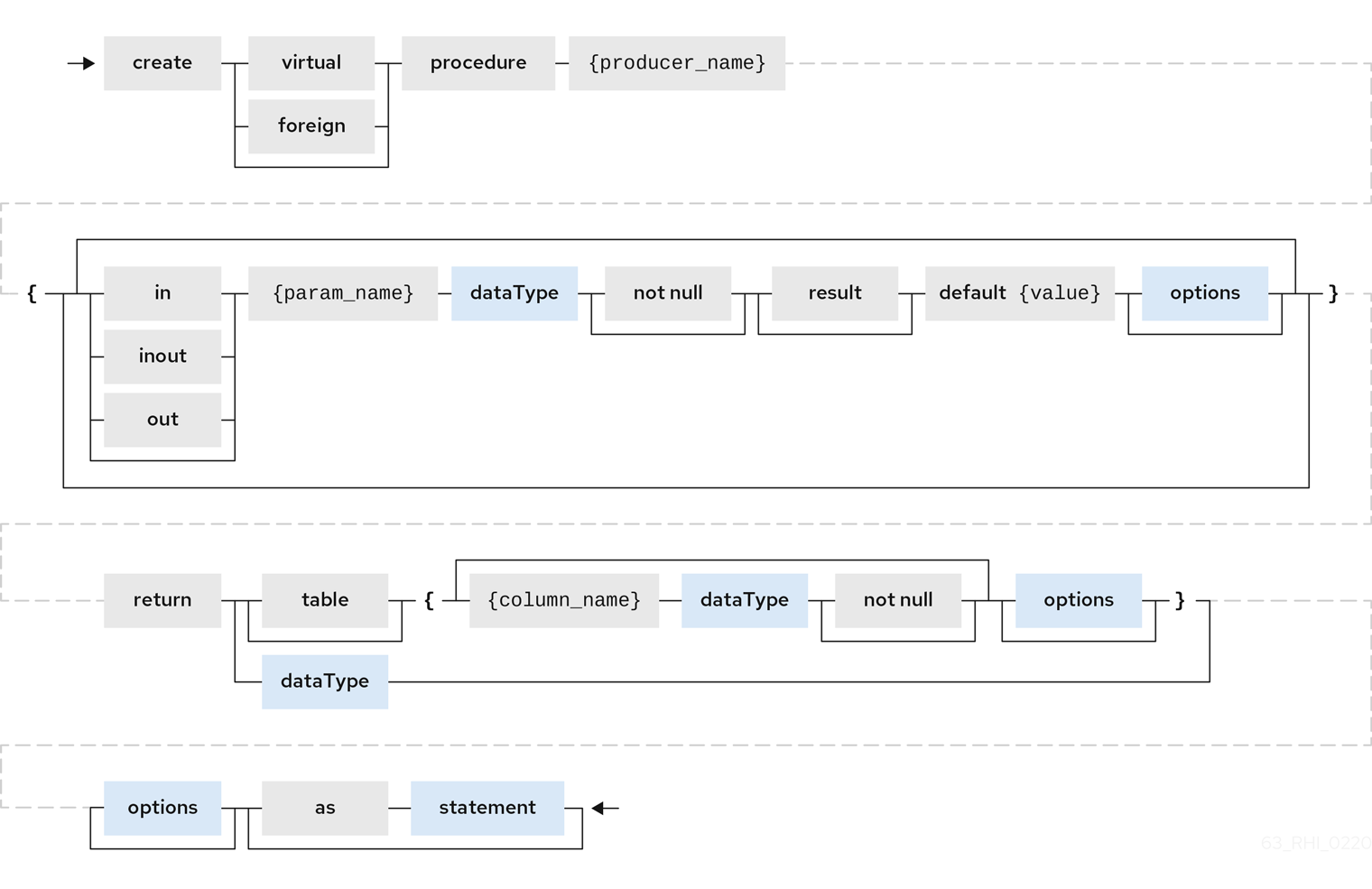

Create procedure/function

You can define the following types of procedures and functions:

- Source Procedure ("CREATE FOREIGN PROCEDURE")

- A stored procedure in the source.

- Source Function ("CREATE FOREIGN FUNCTION")

- A function that depends on capabilities in the data source, and that Data Virtualization pushes down to the source instead of evaluating in the Data Virtualization engine.

- Virtual Procedure ("CREATE VIRTUAL PROCEDURE")

- Similar to a stored procedure, but defined using the Data Virtualization procedure language and evaluated in the Data Virtualization engine.

- Function/UDF ("CREATE VIRTUAL FUNCTION")

- A user-defined function that can be defined using the Teiid procedure language, or that can have its implementation defined by a Java class. For more information about writing the Java code for a UDF, see Support for user-defined functions (non-pushdown) in the Translator Development Guide.

For more information about creating functions or procedures, see the BNF for SQL grammar.
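A sketch of the two source (FOREIGN) forms. The procedure getOrders, the function soundex_src, and its source name soundex are hypothetical and would need matching objects in the source database.

```sql
-- Hypothetical stored procedure that already exists in the source database.
CREATE FOREIGN PROCEDURE getOrders (IN customerId integer)
    RETURNS TABLE (order_id integer, total decimal(12, 2));

-- Hypothetical source function: pushed down to the source, not evaluated in the engine.
CREATE FOREIGN FUNCTION soundex_src (x string) RETURNS string
    OPTIONS (NAMEINSOURCE 'soundex');
```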

Variable arguments

Instead of using just an IN parameter, the last non optional parameter can be declared VARIADIC to indicate that it can be repeated 0 or more times when the procedure is called.

Example: Vararg procedure

```sql
CREATE FOREIGN PROCEDURE proc (x integer, VARIADIC z integer)
RETURNS (x string);
```

FUNCTION OPTIONS: (the following options are well known; any other properties defined are considered extension metadata)

| Property | Data Type or Allowed Values | Description |

|---|---|---|

| UUID | string | unique Identifier |

| NAMEINSOURCE | string | If this is a source function/procedure, the name in the physical source, if different from the logical name given above |

| ANNOTATION | string | Description of the function/procedure |

| CATEGORY | string | Function Category |

| DETERMINISM | NONDETERMINISTIC, COMMAND_DETERMINISTIC, SESSION_DETERMINISTIC, USER_DETERMINISTIC, VDB_DETERMINISTIC, DETERMINISTIC | Not used on virtual procedures |

| NULL-ON-NULL | 'TRUE'|'FALSE' | |

| JAVA_CLASS | string | Java Class that defines the method in case of UDF |

| JAVA_METHOD | string | The Java method name on the above defined java class for the UDF implementation |

| VARARGS | 'TRUE'|'FALSE' | Indicates that the last argument of the function can be repeated 0 to any number of times. default false. It is more proper to use a VARIADIC parameter. |

| AGGREGATE | 'TRUE'|'FALSE' | Indicates the function is a user defined aggregate function. Properties specific to aggregates are listed below. |

Note that NULL-ON-NULL, VARARGS, and all of the AGGREGATE properties are also valid relational extension metadata properties that can be used on source procedures marked as functions.
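A sketch of a Java-backed UDF declaration that uses the options above. The class org.example.TempConv and its static method celsiusToFahrenheit are hypothetical and would have to be supplied as a library on the VDB classpath.

```sql
-- Hypothetical non-pushdown UDF; the engine invokes the named Java method.
CREATE VIRTUAL FUNCTION celsiusToFahrenheit (celsius double) RETURNS double
    OPTIONS (
        JAVA_CLASS 'org.example.TempConv',   -- assumed class name
        JAVA_METHOD 'celsiusToFahrenheit',   -- assumed static method on that class
        DETERMINISM 'DETERMINISTIC',         -- same input always yields the same output
        "NULL-ON-NULL" 'TRUE'                -- option name quoted because it contains hyphens
    );
```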

You can also create FOREIGN functions that are based on source-specific functions. For more information about creating foreign functions that use functions that are provided by the data source, see Source supported functions in the Translator development guide.

AGGREGATE FUNCTION OPTIONS:

| Property | Data type or allowed values | Description |
|---|---|---|
| ANALYTIC | 'TRUE' \| 'FALSE' | Indicates the aggregate function must be windowed. The default value is false. |
| ALLOWS-ORDERBY | 'TRUE' \| 'FALSE' | Indicates that the aggregate function can use an ORDER BY clause. The default value is false. |
| ALLOWS-DISTINCT | 'TRUE' \| 'FALSE' | Indicates the aggregate function can use the DISTINCT keyword. The default value is false. |
| DECOMPOSABLE | 'TRUE' \| 'FALSE' | Indicates the single argument aggregate function can be decomposed as agg(agg(x)) over subsets of data. The default value is false. |
| USES-DISTINCT-ROWS | 'TRUE' \| 'FALSE' | Indicates the aggregate function effectively uses distinct rows rather than all rows. The default value is false. |

Note that virtual functions defined using the Teiid procedure language cannot be aggregate functions.

Providing the JAR libraries - If you have defined a UDF (virtual) function without a Teiid procedure definition, then it must be accompanied by its implementation in Java. For information about how to configure the Java library as a dependency to the VDB, see Support for User-Defined Functions in the Translator development guide.

PROCEDURE OPTIONS: (the following options are well known; any other properties defined are considered extension metadata)

| Property | Data Type or Allowed Values | Description |

|---|---|---|

| UUID | string | Unique Identifier |

| NAMEINSOURCE | string | In the case of a source procedure, the name in the physical source, if different from the logical name |

| ANNOTATION | string | Description of the procedure |

| UPDATECOUNT | int | If this procedure updates the underlying sources, the update count. When the update count is > 1, the XA protocol is enforced for execution. |

Example: Define virtual procedure
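A sketch of a virtual procedure that returns a result set; the procedure name and the tables it reads from are hypothetical. The result of the final SELECT becomes the procedure result.

```sql
-- Hypothetical virtual procedure, evaluated in the engine.
CREATE VIRTUAL PROCEDURE CustomerActivity (IN customerId integer)
    RETURNS TABLE (name string, activitydate date, amount decimal)
AS
BEGIN
    SELECT c.name, o.order_date, o.total
    FROM accounts.Customer AS c
    INNER JOIN accounts.CustomerOrder AS o ON o.customer_id = c.id
    WHERE c.id = CustomerActivity.customerId;  -- parameter referenced with its procedure name
END;
```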

For more information about virtual procedures and virtual procedure language, see Virtual procedures, and Procedure language.

Example: Define virtual function
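A sketch of a scalar virtual function written in the procedure language rather than Java. The function name and arguments are hypothetical, and the body assumes the procedure language RETURN statement.

```sql
-- Hypothetical scalar function; no JAVA_CLASS is needed because the body is given inline.
CREATE VIRTUAL FUNCTION addSalesTax (amount decimal, rate decimal) RETURNS decimal
AS
BEGIN
    RETURN amount * (1 + rate);
END;
```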

Procedure columns may also be marked as NOT NULL, or with a DEFAULT value. On a source procedure, if you want the parameter to be defaultable in the source procedure without supplying a default value in Data Virtualization, the parameter must use the extension property teiid_rel:default_handling set to omit.

There can only be a single RESULT parameter, and it must be an OUT parameter. A RESULT parameter is the same as having a single non-table RETURNS type. If both are declared, they are expected to match; otherwise, an exception is thrown. One is no more correct than the other. "RETURNS type" is shorthand syntax, especially for functions, while the parameter form is useful for additional metadata (an explicit name, extension metadata, also defining a returns table, and so on).

A return parameter is treated as the first parameter of the procedure at runtime, regardless of where it appears in the argument list. This matches the expectation of Data Virtualization and JDBC calling semantics that expect assignments in the form "? = EXEC …".
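A sketch combining these parameter rules in one hypothetical source procedure: the OUT ... RESULT parameter is equivalent to declaring RETURNS integer, and the region parameter is left to the source's own default when the caller does not supply it.

```sql
-- Hypothetical source procedure; at runtime, total is treated as the first parameter.
CREATE FOREIGN PROCEDURE countOrders (
    OUT total integer RESULT,                                           -- single RESULT parameter
    IN customerId integer NOT NULL,                                     -- must be supplied
    IN region string OPTIONS ("teiid_rel:default_handling" 'omit')      -- source default applies
);
```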

Relational extension OPTIONS:

| Property | Data Type or Allowed Values | Description |

|---|---|---|

| native-query | Parameterized String | Applies to both functions and procedures. The replacement for the function syntax rather than the standard prefix form with parentheses. For more information, see Parameterizable native queries in Translators. |

| non-prepared | boolean | Applies to JDBC procedures using the native-query option. If true a PreparedStatement will not be used to execute the native query. |

Example: Native query

```sql
CREATE FOREIGN FUNCTION func (x integer, y integer)
RETURNS integer OPTIONS ("teiid_rel:native-query" '$1 << $2');
```

Example: Sequence native query

```sql
CREATE FOREIGN FUNCTION seq_nextval ()
RETURNS integer
OPTIONS ("teiid_rel:native-query" 'seq.nextval');
```

Use source function representations to expose sequence functionality.

Extension metadata

When defining the extension metadata in the case of custom translators, the properties on tables/views/procedures/columns can be whatever you need. It is recommended that you use a consistent prefix that denotes what the properties relate to. Prefixes starting with teiid_ are reserved for use by Data Virtualization. Property keys are not case sensitive when accessed through the runtime APIs, but they are case sensitive when accessing SYS.PROPERTIES.

The usage of SET NAMESPACE for custom prefixes or namespaces is no longer allowed.

```sql
CREATE VIEW MyView (...)
OPTIONS ("my-translator:mycustom-prop" 'anyvalue')
```

| Prefix | Description |
|---|---|
| teiid_rel | Relational Extensions. Uses include function and native query metadata |
| teiid_sf | Salesforce Extensions. |
| teiid_mongo | MongoDB Extensions |
| teiid_odata | OData Extensions |
| teiid_accumulo | Accumulo Extensions |
| teiid_excel | Excel Extensions |
| teiid_ldap | LDAP Extensions |
| teiid_rest | REST Extensions |
| teiid_pi | PI Database Extensions |

2.3. DDL metadata for domains

Domains are simple type declarations that define a set of valid values for a given type name. They can be created at the database level only.

Create domain

```sql
CREATE DOMAIN <Domain name> [ AS ] <data type>
[ [NOT] NULL ]
```

The domain name may be any non-keyword identifier.

See the BNF for Data Types.

Once a domain is defined it may be referenced as the data type for a column, parameter, etc.

Example: Virtual database DDL
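A sketch of a VDB that declares a domain and uses it as a column type; the names shortname, viewLayer, and Names are hypothetical.

```sql
CREATE DATABASE vdb;
USE DATABASE vdb;

-- Domains are declared at the database level, not inside a schema.
CREATE DOMAIN shortname AS varchar(50) NOT NULL;

CREATE VIRTUAL SCHEMA viewLayer;
SET SCHEMA viewLayer;

-- The column uses the domain; system metadata reports its type as shortname.
CREATE VIEW Names (name shortname) AS SELECT 'Alice';
```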

When the system metadata is queried, the type for the column is shown as the domain name.

Limitations

Domain names might not be recognized in the following places where a data type is expected:

- create temp table

- execute immediate

- arraytable

- objecttable

- texttable

- xmltable

When you query a pg_attribute, the ODBC/pg metadata will show the name of the base type, rather than the domain name.

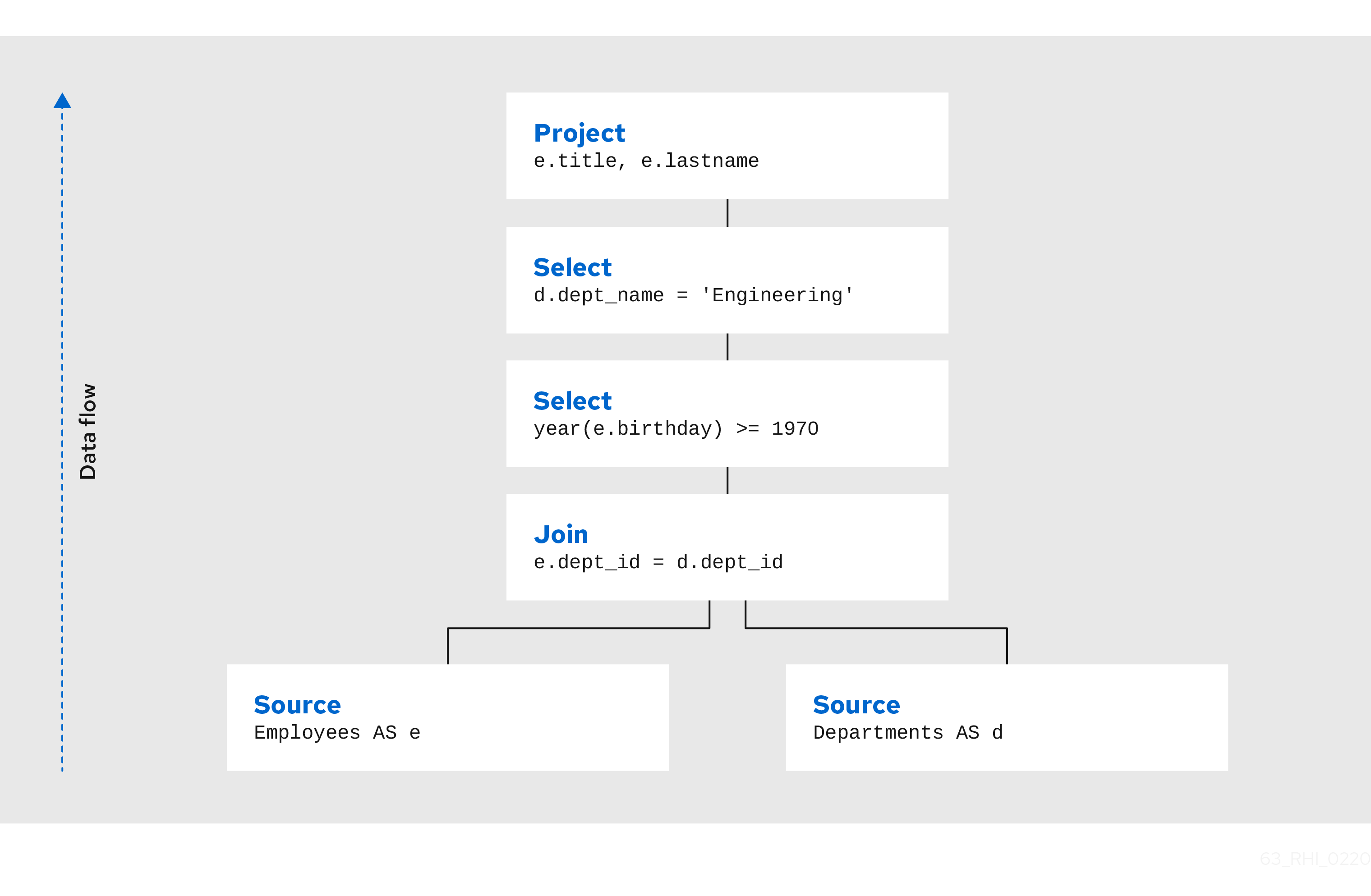

Chapter 3. SQL compatibility

Data Virtualization provides nearly all of the functionality of SQL-92 DML. SQL-99 and later features are constantly being added based upon community need. The following does not attempt to cover SQL exhaustively, but rather highlights how SQL is used within Data Virtualization. For details about the exact form of SQL that Data Virtualization accepts, see the BNF for SQL grammar.

3.1. Identifiers

SQL commands contain references to tables and columns. These references are in the form of identifiers, which uniquely identify the tables and columns in the context of the command. All queries are processed in the context of a virtual database, or VDB. Because information can be federated across multiple sources, tables and columns must be scoped in some manner to avoid conflicts. This scoping is provided by schemas, which contain the information for each data source or set of views.

Fully-qualified table and column names are of the following form, where the separate 'parts' of the identifier are delimited by periods.

- TABLE: <schema_name>.<table_spec>

- COLUMN: <schema_name>.<table_spec>.<column_name>

Syntax rules

- Identifiers can consist of alphanumeric characters, or the underscore (_) character, and must begin with an alphabetic character. Any Unicode character may be used in an identifier.

- Identifiers in double quotes can have any contents. The double quote character can be used if it is escaped with an additional double quote; for example, "some "" id".

- Because different data sources organize tables in different ways, with some prepending catalog, schema, or user information, Data Virtualization allows table specification to be a dot-delimited construct.

When a table specification contains a dot, resolving allows a partial name to match any number of the end segments in the name. For example, a table with the fully-qualified name vdbname."sourceschema.sourcetable" would match the partial name sourcetable.

- Columns, column aliases, and schemas cannot contain a dot (.) character.

- Identifiers, even when quoted, are not case-sensitive in Data Virtualization.

Some examples of valid, fully-qualified table identifiers are:

- MySchema.Portfolios

- "MySchema.Portfolios"

- MySchema.MyCatalog.dbo.Authors

Some examples of valid fully-qualified column identifiers are:

- MySchema.Portfolios.portfolioID

- "MySchema.Portfolios"."portfolioID"

- MySchema.MyCatalog.dbo.Authors.lastName

Fully-qualified identifiers can always be used in SQL commands. Partially- or unqualified forms can also be used, as long as the resulting names are unambiguous in the context of the command. Different forms of qualification can be mixed in the same query.

If you use an alias containing a period (.) character, it is a known issue that the alias name will be treated the same as a qualified name and may conflict with fully qualified object names.
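A few sketches of the qualification rules, using the MySchema.Portfolios table from the examples above; the column portfolioName is a hypothetical addition.

```sql
-- Fully qualified column and table names.
SELECT MySchema.Portfolios.portfolioID FROM MySchema.Portfolios;

-- Mixed forms: an alias-qualified column and an unqualified one (valid while unambiguous).
SELECT p.portfolioID, portfolioName FROM MySchema.Portfolios AS p;

-- Quoted identifiers, including a column alias that contains an escaped double quote.
SELECT "portfolioID" AS "some "" id" FROM "MySchema.Portfolios";

-- Identifiers are not case-sensitive, so this resolves to the same table and column.
SELECT PORTFOLIOID FROM myschema.portfolios;
```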

Reserved words

Reserved words in Data Virtualization include the standard SQL 2003 Foundation, SQL/MED, and SQL/XML reserved words, as well as Data Virtualization specific words such as BIGINTEGER, BIGDECIMAL, or MAKEDEP. For more information about reserved words, see the Reserved Keywords and Reserved Keywords For Future Use sections in BNF for SQL grammar.

3.2. Operator precedence

Data Virtualization parses and evaluates operators with higher precedence before those with lower precedence. Operators with equal precedence are left-associative (left-to-right). The following table lists operator precedence from high to low:

| Operator | Description |
|---|---|
| [] | array element reference |
| +, - | positive/negative value expression |
| *, / | multiplication/division |
| +, - | addition/subtraction |
| \|\| | concat |
| criteria | For information, see Criteria. |
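A few expressions that show these precedence rules. The arithmetic results in the comments follow directly from the table; the WHERE example assumes the MySchema.Portfolios table used in the identifier examples.

```sql
-- Multiplication binds tighter than addition: 2 + (3 * 4) = 14.
SELECT 2 + 3 * 4;

-- Parentheses override precedence: (2 + 3) * 4 = 20.
SELECT (2 + 3) * 4;

-- Equal precedence is left-associative: (10 - 4) - 3 = 3.
SELECT 10 - 4 - 3;

-- Concatenation binds tighter than criteria: parsed as ('a' || 'b') = 'ab'.
SELECT portfolioID FROM MySchema.Portfolios WHERE 'a' || 'b' = 'ab';
```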

3.3. Expressions

Identifiers, literals, and functions can be combined into expressions. Expressions can be used in a query with nearly any keyword, including SELECT, FROM (if specifying join criteria), WHERE, GROUP BY, HAVING, or ORDER BY.

You can use the following types of expressions in Data Virtualization:

3.3.1. Column Identifiers

Column identifiers are used to specify the output columns in SELECT statements, the columns and their values for INSERT and UPDATE statements, and criteria used in WHERE and FROM clauses. They are also used in GROUP BY, HAVING, and ORDER BY clauses. The syntax for column identifiers was defined in the Identifiers section above.

3.3.2. Literals

Literal values represent fixed values. These can be any of the 'standard' data types. For information about data types, see Data types.

Syntax rules

- Integer values will be assigned an integral data type big enough to hold the value (integer, long, or biginteger).

- Floating point values will always be parsed as a double.

- The keyword 'null' is used to represent an absent or unknown value and is inherently untyped. In many cases, a null literal value will be assigned an implied type based on context. For example, in the function '5 + null', the null value will be assigned the type 'integer' to match the type of the value '5'. A null literal used in the SELECT clause of a query with no implied context will be assigned to type 'string'.

Some examples of simple literal values are:

```sql
'abc'          -- string
'isn''t true'  -- escaped single tick
5              -- integer
-37.75e01      -- scientific notation
100.0          -- exact numeric type BigDecimal
true
false
'\u0027'       -- Unicode character
X'0F0A'        -- binary
```

Date/Time literals can use either JDBC escaped literal syntax:

```sql
{d'...'}    -- date literal
{t'...'}    -- time literal
{ts'...'}   -- timestamp literal
```

Or the ANSI keyword syntax:

```sql
DATE '...'       -- date literal
TIME '...'       -- time literal
TIMESTAMP '...'  -- timestamp literal
```

Either way, the string literal value portion of the expression is expected to follow the defined format: "yyyy-MM-dd" for date, "hh:mm:ss" for time, and "yyyy-MM-dd[ hh:mm:ss[.fff…]]" for timestamp.

Aggregate functions

Aggregate functions take sets of values from a group produced by an explicit or implicit GROUP BY and return a single scalar value computed from the group.

You can use the following aggregate functions in Data Virtualization:

- COUNT(*)

- Count the number of values (including nulls and duplicates) in a group. Returns an integer - an exception will be thrown if a larger count is computed.

- COUNT(x)

- Count the number of values (excluding nulls) in a group. Returns an integer - an exception will be thrown if a larger count is computed.

- COUNT_BIG(*)

- Count the number of values (including nulls and duplicates) in a group. Returns a long - an exception will be thrown if a larger count is computed.

- COUNT_BIG(x)

- Count the number of values (excluding nulls) in a group. Returns a long - an exception will be thrown if a larger count is computed.

- SUM(x)

- Sum of the values (excluding nulls) in a group.

- AVG(x)

- Average of the values (excluding nulls) in a group.

- MIN(x)

- Minimum value in a group (excluding null).

- MAX(x)

- Maximum value in a group (excluding null).

- ANY(x)/SOME(x)

- Returns TRUE if any value in the group is TRUE (excluding null).

- EVERY(x)

- Returns TRUE if every value in the group is TRUE (excluding null).

- VAR_POP(x)

- Biased variance (excluding null) logically equals (sum(x^2) - sum(x)^2/count(x))/count(x); returns a double; null if count = 0.

- VAR_SAMP(x)

- Sample variance (excluding null) logically equals (sum(x^2) - sum(x)^2/count(x))/(count(x) - 1); returns a double; null if count < 2.

- STDDEV_POP(x)

- Standard deviation (excluding null) logically equals SQRT(VAR_POP(x)).

- STDDEV_SAMP(x)

- Sample standard deviation (excluding null) logically equals SQRT(VAR_SAMP(x)).

- TEXTAGG(expression [as name], … [DELIMITER char] [QUOTE char | NO QUOTE] [HEADER] [ENCODING id] [ORDER BY …])

- CSV text aggregation of all expressions in each row of a group. When DELIMITER is not specified, a comma (,) is used as the delimiter. All non-null values will be quoted. A double quote (") is the default quote character. Use QUOTE to specify a different value, or NO QUOTE for no value quoting. If HEADER is specified, the result contains the header row as the first line; the header line will be present even if there are no rows in a group. This aggregation returns a blob.

TEXTAGG(col1, col2 as name DELIMITER '|' HEADER ORDER BY col1)

- XMLAGG(xml_expr [ORDER BY …]) – XML concatenation of all XML expressions in a group (excluding null). The ORDER BY clause cannot reference alias names or use positional ordering.

- JSONARRAY_AGG(x [ORDER BY …]) – creates a JSON array result as a Clob including null value. The ORDER BY clause cannot reference alias names or use positional ordering. For more information, see JSONARRAY function.

Example: Integer value expression

jsonArray_Agg(col1 order by col1 nulls first)

could return

[null,null,1,2,3]

- STRING_AGG(x, delim) – creates a LOB result from the concatenation of x using the delimiter delim. If either argument is null, no value is concatenated. Both arguments are expected to be character (string/clob) or binary (varbinary, blob), and the result will be CLOB or BLOB respectively. DISTINCT and ORDER BY are allowed in STRING_AGG.

Example: String aggregate expression

string_agg(col1, ',' ORDER BY col1 ASC)

could return

'a,b,c'

- LIST_AGG(x [, delim]) WITHIN GROUP (ORDER BY …) – a form of STRING_AGG that uses the same syntax as Oracle. Here x can be any type that can be converted to a string. The delim value, if specified, must be a literal, and the ORDER BY value is required. This is only a parsing alias for an equivalent string_agg expression.

Example: List aggregate expression

listagg(col1, ',') WITHIN GROUP (ORDER BY col1 ASC)

could return

'a,b,c'

- ARRAY_AGG(x [ORDER BY …]) – Creates an array with a base type that matches the expression x. The ORDER BY clause cannot reference alias names or use positional ordering.

- agg([DISTINCT|ALL] arg … [ORDER BY …]) – A user defined aggregate function.
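The null handling of COUNT and the variance formulas listed above can be checked with a small Java model. This sketch encodes the documented formulas only, not the engine's code; StatAggregates and its methods are hypothetical names, and a group is assumed to be a List<Double> in which null stands for a SQL null.

```java
import java.util.List;
import java.util.Objects;

// Hypothetical model of the documented aggregate formulas; not the engine's code.
class StatAggregates {
    // COUNT(*): every row in the group, including nulls.
    static int countStar(List<Double> group) {
        return group.size();
    }

    // COUNT(x): only the non-null values.
    static int count(List<Double> group) {
        return (int) group.stream().filter(Objects::nonNull).count();
    }

    // VAR_POP(x) = (sum(x^2) - sum(x)^2/count(x)) / count(x); null when count = 0.
    static Double varPop(List<Double> group) {
        int n = count(group);
        if (n == 0) {
            return null;
        }
        return deviation(group) / n;
    }

    // VAR_SAMP(x) = (sum(x^2) - sum(x)^2/count(x)) / (count(x) - 1); null when count < 2.
    static Double varSamp(List<Double> group) {
        int n = count(group);
        if (n < 2) {
            return null;
        }
        return deviation(group) / (n - 1);
    }

    // STDDEV_POP(x) = SQRT(VAR_POP(x)).
    static Double stddevPop(List<Double> group) {
        Double variance = varPop(group);
        if (variance == null) {
            return null;
        }
        return Math.sqrt(variance);
    }

    // sum(x^2) - sum(x)^2/count(x), computed over the non-null values only.
    private static double deviation(List<Double> group) {
        double sum = 0.0;
        double sumOfSquares = 0.0;
        int n = 0;
        for (Double x : group) {
            if (x == null) {
                continue; // nulls are excluded
            }
            sum += x;
            sumOfSquares += x * x;
            n++;
        }
        return sumOfSquares - (sum * sum) / n;
    }
}
```

For the group 2, 4, 4, 4, 5, 5, 7, 9, null, this sketch gives COUNT(*) = 9, COUNT(x) = 8, VAR_POP = 4.0, and STDDEV_POP = 2.0.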

Syntax rules

- Some aggregate functions may contain the keyword 'DISTINCT' before the expression, indicating that duplicate expression values should be ignored. DISTINCT is not allowed in COUNT(*) and is not meaningful in MIN or MAX (the result would be unchanged), but it can be used in COUNT, SUM, and AVG.

- Aggregate functions cannot be used in FROM, GROUP BY, or WHERE clauses without an intervening query expression.

- Aggregate functions cannot be nested within another aggregate function without an intervening query expression.

- Aggregate functions may be nested inside other functions.

- Any aggregate function may take an optional FILTER clause of the form

FILTER ( WHERE condition )

The condition may be any boolean value expression that does not contain a subquery or a correlated variable. The filter will logically be evaluated for each row prior to the grouping operation. If false, the aggregate function will not accumulate a value for the given row.
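To illustrate how FILTER and DISTINCT combine, the following Java sketch models SUM([DISTINCT] amount) FILTER (WHERE condition) over a single group. FilteredSum and its Row class are hypothetical names, and returning null when no value is accumulated follows standard SQL SUM behavior, which is an assumption here rather than something this section states.

```java
import java.util.ArrayList;
import java.util.Collection;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.function.Predicate;

// Hypothetical model of SUM([DISTINCT] amount) FILTER (WHERE ...) over one group.
class FilteredSum {
    // Hypothetical row shape used only for this sketch.
    static final class Row {
        final String status;
        final Integer amount;

        Row(String status, Integer amount) {
            this.status = status;
            this.amount = amount;
        }
    }

    static Long sum(List<Row> group, Predicate<Row> filter, boolean distinct) {
        // A set drops duplicate values when DISTINCT is requested.
        Collection<Integer> values = distinct ? new LinkedHashSet<Integer>() : new ArrayList<Integer>();
        for (Row row : group) {
            // FILTER is evaluated per row before accumulation; nulls are never summed.
            if (!filter.test(row) || row.amount == null) {
                continue;
            }
            values.add(row.amount);
        }
        if (values.isEmpty()) {
            return null; // assumption: SUM over no values is null, as in standard SQL
        }
        long total = 0;
        for (int v : values) {
            total += v;
        }
        return total;
    }
}
```

With rows ("paid", 10), ("paid", 10), ("open", 5), and ("paid", null), filtering on status 'paid' gives 20 without DISTINCT and 10 with DISTINCT.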

For more information on aggregates, see the sections on GROUP BY or HAVING.

3.3.3. Window functions

Data Virtualization provides ANSI SQL 2003 window functions. A window function allows an aggregate function to be applied to a subset of the result set, without the need for a GROUP BY clause. A window function is similar to an aggregate function, but requires the use of an OVER clause or window specification.

Usage:

In the preceding syntax, aggregate can refer to any aggregate function. Keywords exist for the following analytical functions: ROW_NUMBER, RANK, DENSE_RANK, PERCENT_RANK, and CUME_DIST. There are also the FIRST_VALUE, LAST_VALUE, LEAD, LAG, NTH_VALUE, and NTILE analytical functions. For more information, see Analytical function definitions.

Syntax rules

- Window functions can only appear in the SELECT and ORDER BY clauses of a query expression.

- Window functions cannot be nested in one another.

- Partitioning and order by expressions cannot contain subqueries or outer references.

- An aggregate ORDER BY clause cannot be used when windowed.

- The window specification ORDER BY clause cannot reference alias names or use positional ordering.

- Windowed aggregates may not use DISTINCT if the window specification is ordered.

- Analytical value functions may not use DISTINCT and require the use of an ordering in the window specification.

- RANGE or ROWS requires the ORDER BY clause to be specified. The default frame if not specified is RANGE UNBOUNDED PRECEDING. If no end is specified, the default is CURRENT ROW. No combination of start and end is allowed such that the end is before the start; for example, UNBOUNDED FOLLOWING is not allowed as a start, nor is UNBOUNDED PRECEDING allowed as an end.

- RANGE cannot be used with n PRECEDING or n FOLLOWING.

Analytical function definitions

- Ranking functions

- RANK() – Assigns a number to each unique ordering value within each partition starting at 1, such that the next rank is equal to the count of prior rows plus one.

- DENSE_RANK() – Assigns a number to each unique ordering value within each partition starting at 1, such that the next rank is sequential.

- PERCENT_RANK() – Computed as (RANK - 1) / ( RC - 1) where RC is the total row count of the partition.

- CUME_DIST() – Computed as PR / RC, where PR is the rank of the row including peers and RC is the total row count of the partition.

By default, all ranking values are integers; an exception will be thrown if a larger value is needed. Use the system property org.teiid.longRanks to have RANK, DENSE_RANK, and ROW_NUMBER return long values instead.

- Value functions

- FIRST_VALUE(val) – Return the first value in the window frame with the given ordering.

- LAST_VALUE(val) – Return the last observed value in the window frame with the given ordering.

- LEAD(val [, offset [, default]]) - Access the ordered value in the window that is offset rows ahead of the current row. If there is no such row, then the default value will be returned. If not specified the offset is 1 and the default is null.

- LAG(val [, offset [, default]]) - Access the ordered value in the window that is offset rows behind the current row. If there is no such row, then the default value will be returned. If not specified the offset is 1 and the default is null.

- NTH_VALUE(val, n) - Returns the nth val in window frame. The index must be greater than 0. If no such value exists, then null is returned.

- Row value functions

- ROW_NUMBER() – Sequentially assigns a number to each row in a partition starting at 1.

- NTILE(n) – Divides the partition into n tiles that differ in size by at most 1. Larger tiles will be created sequentially starting at the first. n must be greater than 0.
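The offset and default behavior of LEAD and LAG, and the tile sizing of NTILE, can be sketched in Java as follows. The partition is assumed to be already sorted by the window ORDER BY, and ValueFunctions and its methods are hypothetical names used only for illustration.

```java
import java.util.List;

// Hypothetical model of LEAD, LAG, and NTILE over one ordered partition.
class ValueFunctions {
    // LEAD(val, offset, default): the value offset rows after row i, or the default.
    static <T> T lead(List<T> ordered, int i, int offset, T defaultValue) {
        int target = i + offset;
        if (target >= 0 && target < ordered.size()) {
            return ordered.get(target);
        }
        return defaultValue;
    }

    // LAG(val, offset, default): the same lookup, looking backwards.
    static <T> T lag(List<T> ordered, int i, int offset, T defaultValue) {
        return lead(ordered, i, -offset, defaultValue);
    }

    // NTILE(n): returns the 1-based tile of each row. Tile sizes differ by at most 1,
    // and the larger tiles come first.
    static int[] ntile(int rowCount, int n) {
        if (n <= 0) {
            throw new IllegalArgumentException("n must be greater than 0");
        }
        int[] tiles = new int[rowCount];
        int base = rowCount / n;
        int remainder = rowCount % n; // the first 'remainder' tiles get one extra row
        int row = 0;
        for (int tile = 1; tile <= n && row < rowCount; tile++) {
            int size = base + (tile <= remainder ? 1 : 0);
            for (int k = 0; k < size; k++) {
                tiles[row++] = tile;
            }
        }
        return tiles;
    }
}
```

For example, ntile(10, 3) in this sketch produces tiles of sizes 4, 3, and 3.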

Processing

Window functions are logically processed just before creating the output from the SELECT clause. Window functions can use nested aggregates if a GROUP BY clause is present. There is no guaranteed effect on the output ordering from the presence of window functions. The SELECT statement must have an ORDER BY clause to have a predictable ordering.

An ORDER BY in the OVER clause follows the same pushdown and processing rules as a top level ORDER BY. In general, this means you should specify NULLS FIRST/LAST, as null handling may differ between engine and pushdown processing. Also see the system properties controlling sort behavior if you need different default behavior.

Data Virtualization processes all window functions with the same window specification together. In general, a full pass over the row values coming into the SELECT clause is required for each unique window specification. For each window specification the values are grouped according to the PARTITION BY clause. If no PARTITION BY clause is specified, then the entire input is treated as a single partition.

The frame for the output value is determined based upon the definition of the analytical function or the ROWS/RANGE clause. The default frame is RANGE UNBOUNDED PRECEDING, which also implies the default end bound of CURRENT ROW. With RANGE, a row and its peers (rows with equal ordering values) are computed together; with ROWS, each row is computed individually. Most analytical functions, such as ROW_NUMBER, have an implicit RANGE/ROWS, which is why a different one cannot be specified. For example, ROW_NUMBER() OVER (order) can be expressed instead as count(*) OVER (order ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW). Thus it assigns a different value to every row regardless of the number of peers.
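The difference between the RANGE and ROWS frames is easiest to see with a running SUM over ordered rows that contain peers. This Java sketch is an illustration only; FrameSketch is a hypothetical name, and the rows are assumed to be already sorted by the ordering key.

```java
// Hypothetical model of a running SUM with the two frame types.
class FrameSketch {
    // ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW: each row ends its own frame.
    static long[] runningSumRows(int[] values) {
        long[] out = new long[values.length];
        long running = 0;
        for (int i = 0; i < values.length; i++) {
            running += values[i];
            out[i] = running;
        }
        return out;
    }

    // RANGE UNBOUNDED PRECEDING (the default): the frame ends at the last peer of the
    // current row, so rows with equal ordering keys share a single result.
    static long[] runningSumRange(int[] keys, int[] values) {
        long[] out = new long[values.length];
        long running = 0;
        int i = 0;
        while (i < values.length) {
            int j = i;
            // Accumulate the whole peer group [i, j).
            while (j < values.length && keys[j] == keys[i]) {
                running += values[j];
                j++;
            }
            for (int k = i; k < j; k++) {
                out[k] = running;
            }
            i = j;
        }
        return out;
    }
}
```

With ordering keys 1, 2, 2, 3 and values 10, 20, 20, 30, the ROWS variant gives 10, 30, 50, 80, while the RANGE variant gives 10, 50, 50, 80 because the two peers share a frame end. With every value set to 1, the ROWS variant yields 1, 2, 3, 4, which matches the ROW_NUMBER equivalence described above.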

Example: Windowed results

SELECT name, salary, max(salary) over (partition by name) as max_sal,

rank() over (order by salary desc) as rank, dense_rank() over (order by salary desc) as dense_rank,

row_number() over (order by salary desc) as row_num FROM employees

| name | salary | max_sal | rank | dense_rank | row_num |

|---|---|---|---|---|---|

| John | 100000 | 100000 | 2 | 2 | 2 |

| Henry | 50000 | 50000 | 5 | 4 | 5 |

| John | 60000 | 100000 | 3 | 3 | 3 |

| Suzie | 60000 | 150000 | 3 | 3 | 4 |

| Suzie | 150000 | 150000 | 1 | 1 | 1 |
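The rank, dense_rank, and row_num columns in the example can be reproduced with a short Java sketch of the ranking definitions. It assumes the salaries are already sorted by the window ORDER BY, in descending order to match the sample output. RankingSketch is a hypothetical name, and treating PERCENT_RANK of a single-row partition as 0 is an assumption taken from standard SQL.

```java
// Hypothetical model of the ranking functions over one sorted partition.
class RankingSketch {
    // RANK: peers share the rank of the first row in their group; otherwise prior rows + 1.
    static int[] rank(long[] sorted) {
        int[] out = new int[sorted.length];
        for (int i = 0; i < sorted.length; i++) {
            out[i] = (i > 0 && sorted[i] == sorted[i - 1]) ? out[i - 1] : i + 1;
        }
        return out;
    }

    // DENSE_RANK: the next distinct ordering value gets the next sequential rank.
    static int[] denseRank(long[] sorted) {
        int[] out = new int[sorted.length];
        for (int i = 0; i < sorted.length; i++) {
            if (i == 0) {
                out[i] = 1;
            } else {
                out[i] = sorted[i] == sorted[i - 1] ? out[i - 1] : out[i - 1] + 1;
            }
        }
        return out;
    }

    // ROW_NUMBER: 1, 2, 3, ... regardless of peers.
    static int[] rowNumber(long[] sorted) {
        int[] out = new int[sorted.length];
        for (int i = 0; i < sorted.length; i++) {
            out[i] = i + 1;
        }
        return out;
    }

    // PERCENT_RANK = (RANK - 1) / (RC - 1); 0 for a single-row partition (assumption).
    static double[] percentRank(long[] sorted) {
        int[] ranks = rank(sorted);
        int rc = sorted.length;
        double[] out = new double[rc];
        for (int i = 0; i < rc; i++) {
            out[i] = rc == 1 ? 0.0 : (ranks[i] - 1) / (double) (rc - 1);
        }
        return out;
    }

    // CUME_DIST = PR / RC, where PR counts the rows up to and including the last peer.
    static double[] cumeDist(long[] sorted) {
        int rc = sorted.length;
        double[] out = new double[rc];
        int i = 0;
        while (i < rc) {
            int j = i;
            while (j < rc && sorted[j] == sorted[i]) {
                j++;
            }
            for (int k = i; k < j; k++) {
                out[k] = j / (double) rc;
            }
            i = j;
        }
        return out;
    }
}
```

For the salaries 150000, 100000, 60000, 60000, 50000, this sketch returns ranks 1, 2, 3, 3, 5, dense ranks 1, 2, 3, 3, 4, and row numbers 1 through 5, which agree with the table.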

3.3.4. Case and searched case

In Data Virtualization, to include conditional logic in a scalar expression, you can use the following two forms of the CASE expression:

- CASE <expr> ( WHEN <expr> THEN <expr>)+ [ELSE expr] END

- CASE ( WHEN <criteria> THEN <expr>)+ [ELSE expr] END

Each form allows for an output based on conditional logic. The first form starts with an initial expression and evaluates WHEN expressions until the values match, and outputs the THEN expression. If no WHEN is matched, the ELSE expression is output. If no WHEN is matched and no ELSE is specified, a null literal value is output. The second form (the searched case expression) searches the WHEN clauses, each of which specifies an arbitrary criterion to evaluate. If any criterion evaluates to true, the THEN expression is evaluated and output. If no WHEN is true, the ELSE is evaluated or NULL is output if none exists.

Example case statements

SELECT CASE columnA WHEN '10' THEN 'ten' WHEN '20' THEN 'twenty' END AS myExample

SELECT CASE WHEN columnA = '10' THEN 'ten' WHEN columnA = '20' THEN 'twenty' END AS myExample

3.3.5. Scalar subqueries

Subqueries can be used to produce a single scalar value in the SELECT, WHERE, or HAVING clauses only. A scalar subquery must have a single column in the SELECT clause and should return either 0 or 1 row. If no rows are returned, null will be returned as the scalar subquery value. For information about other types of subqueries, see Subqueries.

3.3.6. Parameter references

Parameters are specified using a ? symbol. You can use parameters only with PreparedStatement or CallableStatement objects in JDBC. Each parameter is linked to a value specified by a 1-based index in the JDBC API.

3.3.7. Arrays

Array values may be constructed using parentheses around an expression list with an optional trailing comma, or with an explicit ARRAY constructor.

Example: Empty arrays

()

(,)

ARRAY[]

Example: Single element array

(expr,)

ARRAY[expr]

A trailing comma is required for the parser to recognize a single element expression as an array with parentheses, rather than a simple nested expression.

Example: General array syntax

(expr, expr ... [,])

ARRAY[expr, ...]

If all of the elements in the array have the same type, the array will have a matching base type. If the element types differ, the array base type will be object.

An array element reference takes the form of:

array_expr[index_expr]

index_expr must resolve to an integer value. This syntax is effectively the same as the array_get system function and expects 1-based indexing.

3.4. Criteria

Criteria can be any of the following items:

- Predicates that evaluate to true or false.

- Logical criteria that combine criteria (AND, OR, NOT).

- A value expression of type Boolean.

Usage

criteria AND|OR criteria

NOT criteria

(criteria)

expression (=|<>|!=|<|>|<=|>=) (expression|((ANY|ALL|SOME) subquery|(array_expression)))

expression IS [NOT] DISTINCT FROM expression

IS DISTINCT FROM considers null values to be equivalent and never produces an UNKNOWN value.

Because the optimizer is not tuned to handle IS DISTINCT FROM, if you use it in a join predicate that is not pushed down, the resulting plan does not perform as well as a regular comparison.
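The difference between = and IS DISTINCT FROM under SQL three-valued logic can be modeled in Java by using a null Boolean for UNKNOWN. This is an illustrative sketch; NullComparison is a hypothetical name, and both operands are assumed to already have the same type.

```java
import java.util.Objects;

// Hypothetical model of null-aware comparison; null stands for SQL UNKNOWN.
class NullComparison {
    // a = b: UNKNOWN (null) if either side is null.
    static Boolean sqlEquals(Object a, Object b) {
        if (a == null || b == null) {
            return null;
        }
        return a.equals(b);
    }

    // a IS DISTINCT FROM b: two nulls are treated as equal, and the result is never UNKNOWN.
    static boolean isDistinctFrom(Object a, Object b) {
        return !Objects.equals(a, b);
    }
}
```

In this model, NULL = NULL is UNKNOWN, whereas NULL IS DISTINCT FROM NULL is false.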

expression IS [NOT] NULL

expression [NOT] IN (expression [,expression]*)|subquery

expression [NOT] LIKE pattern [ESCAPE char]

LIKE matches the string expression against the given string pattern. The pattern may contain % to match any number of characters, and _ to match any single character. The escape character can be used to escape the match characters % and _.
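One way to picture LIKE semantics is to translate the pattern into a java.util.regex expression. The following sketch is not the engine's implementation. LikeMatcher is a hypothetical name, and for simplicity the sketch treats the escape character as making any following character literal.

```java
import java.util.regex.Pattern;

// Hypothetical LIKE-to-regex translation; not the engine's implementation.
class LikeMatcher {
    // % becomes .*, _ becomes ., an escaped character is literal, everything else is quoted.
    static Pattern toRegex(String like, Character escape) {
        StringBuilder regex = new StringBuilder();
        for (int i = 0; i < like.length(); i++) {
            char c = like.charAt(i);
            if (escape != null && c == escape) {
                if (i + 1 >= like.length()) {
                    throw new IllegalArgumentException("dangling escape character");
                }
                regex.append(Pattern.quote(String.valueOf(like.charAt(++i))));
            } else if (c == '%') {
                regex.append(".*");
            } else if (c == '_') {
                regex.append('.');
            } else {
                regex.append(Pattern.quote(String.valueOf(c)));
            }
        }
        // DOTALL lets % and _ also match line terminators.
        return Pattern.compile(regex.toString(), Pattern.DOTALL);
    }

    // The whole value must match the pattern.
    static boolean like(String value, String pattern, Character escape) {
        return toRegex(pattern, escape).matcher(value).matches();
    }
}
```

For example, like("314-555-0101", "314%1", null) is true in this sketch, and with '\' as the escape character, the pattern a\_c matches only the literal string a_c.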

expression [NOT] SIMILAR TO pattern [ESCAPE char]

SIMILAR TO is a cross between LIKE and standard regular expression syntax. % and _ are still used, rather than .* and ., respectively.

Data Virtualization does not exhaustively validate SIMILAR TO pattern values. Instead, the pattern is converted to an equivalent regular expression. Do not rely on general regular expression features when using SIMILAR TO. If additional features are needed, use LIKE_REGEX. Avoid the use of non-literal patterns, because Data Virtualization has a limited ability to process SQL pushdown predicates.

expression [NOT] LIKE_REGEX pattern

You can use LIKE_REGEX with standard regular expression syntax for matching. This differs from SIMILAR TO and LIKE in that the escape character is no longer used: \ is already the standard escape mechanism in regular expressions, and % and _ have no special meaning. The runtime engine uses the JRE implementation of regular expressions. For more information, see the java.util.regex.Pattern class.

Data Virtualization does not exhaustively validate LIKE_REGEX pattern values. It is possible to use JRE-only regular expression features that are not specified by the SQL specification. Additionally, not all sources can use the same regular expression flavor or extensions. In pushdown situations, be careful to ensure that the pattern that you use has the same meaning in Data Virtualization, and across all applicable sources.

EXISTS (subquery)

expression [NOT] BETWEEN minExpression AND maxExpression

Data Virtualization converts BETWEEN into the equivalent form expression >= minExpression AND expression <= maxExpression.

expression

Where expression has type Boolean.

Syntax rules

- The precedence ordering from highest to lowest is comparison, NOT, AND, OR.

- Criteria nested by parenthesis will be logically evaluated prior to evaluating the parent criteria.

Some examples of valid criteria are:

- (balance > 2500.0)

- 100*(50 - x)/(25 - y) > z

- concat(areaCode,concat('-',phone)) LIKE '314%1'

Null values represent an unknown value. Comparison with a null value will evaluate to unknown, which can never be true even if NOT is used.

Criteria precedence

Data Virtualization parses and evaluates conditions with higher precedence before those with lower precedence. Conditions with equal precedence are left-associative. The following table lists condition precedence from high to low:

| Condition | Description |

|---|---|

| SQL operators | See Expressions |

| EXISTS, LIKE, SIMILAR TO, LIKE_REGEX, BETWEEN, IN, IS NULL, IS DISTINCT, <, <=, >, >=, =, <> | Comparison |

| NOT | Negation |

| AND | Conjunction |

| OR | Disjunction |

To prevent lookaheads, the parser does not accept all possible criteria sequences. For example, a = b is null is not accepted, because by the left-associative parsing we first recognize a =, then look for a common value expression. b is null is not a valid common value expression. Thus, nesting must be used, for example, (a = b) is null. For more information about parsing rules, see BNF for SQL grammar.

3.5. Scalar functions

Data Virtualization provides an extensive set of built-in scalar functions. For more information, see DML commands and Data types. In addition, Data Virtualization provides the capability for user-defined functions or UDFs. For information about adding UDFs, see User-defined functions in the Translator Development Guide. After you add UDFs, you can call them in the same way that you call other functions.

3.5.1. Numeric functions

Numeric functions return numeric values (integer, long, float, double, biginteger, bigdecimal). They generally take numeric values as inputs, though some take strings.

| Function | Definition | Datatype constraint |

|---|---|---|

| + - * / | Standard numeric operators | x in {integer, long, float, double, biginteger, bigdecimal}, return type is same as x [a] |

| ABS(x) | Absolute value of x | See standard numeric operators above |

| ACOS(x) | Arc cosine of x | x in {double, bigdecimal}, return type is double |

| ASIN(x) | Arc sine of x | x in {double, bigdecimal}, return type is double |

| ATAN(x) | Arc tangent of x | x in {double, bigdecimal}, return type is double |

| ATAN2(x,y) | Arc tangent of x and y | x, y in {double, bigdecimal}, return type is double |

| CEILING(x) | Ceiling of x | x in {double, float}, return type is double |

| COS(x) | Cosine of x | x in {double, bigdecimal}, return type is double |

| COT(x) | Cotangent of x | x in {double, bigdecimal}, return type is double |

| DEGREES(x) | Convert x radians to degrees | x in {double, bigdecimal}, return type is double |

| EXP(x) | e^x | x in {double, float}, return type is double |

| FLOOR(x) | Floor of x | x in {double, float}, return type is double |

| FORMATBIGDECIMAL(x, y) | Formats x using format y | x is bigdecimal, y is string, returns string |

| FORMATBIGINTEGER(x, y) | Formats x using format y | x is biginteger, y is string, returns string |

| FORMATDOUBLE(x, y) | Formats x using format y | x is double, y is string, returns string |

| FORMATFLOAT(x, y) | Formats x using format y | x is float, y is string, returns string |

| FORMATINTEGER(x, y) | Formats x using format y | x is integer, y is string, returns string |

| FORMATLONG(x, y) | Formats x using format y | x is long, y is string, returns string |

| LOG(x) | Natural log of x (base e) | x in {double, float}, return type is double |

| LOG10(x) | Log of x (base 10) | x in {double, float}, return type is double |

| MOD(x, y) | Modulus (remainder of x / y) | x in {integer, long, float, double, biginteger, bigdecimal}, return type is same as x |

| PARSEBIGDECIMAL(x, y) | Parses x using format y | x, y are strings, returns bigdecimal |

| PARSEBIGINTEGER(x, y) | Parses x using format y | x, y are strings, returns biginteger |

| PARSEDOUBLE(x, y) | Parses x using format y | x, y are strings, returns double |

| PARSEFLOAT(x, y) | Parses x using format y | x, y are strings, returns float |

| PARSEINTEGER(x, y) | Parses x using format y | x, y are strings, returns integer |

| PARSELONG(x, y) | Parses x using format y | x, y are strings, returns long |

| PI() | Value of Pi | return is double |

| POWER(x,y) | x to the y power | x in {double, bigdecimal, biginteger}, return is the same type as x |

| RADIANS(x) | Convert x degrees to radians | x in {double, bigdecimal}, return type is double |

| RAND() | Returns a random number, using generator established so far in the query or initializing with system clock if necessary. | Returns double. |

| RAND(x) | Returns a random number, using a new generator seeded with x. This should typically be called in an initialization query. It only affects the random values returned by the Data Virtualization RAND function and not the values from RAND functions evaluated by sources. | x is integer, returns double. |

| ROUND(x,y) | Round x to y places; negative values of y indicate places to the left of the decimal point | x in {integer, float, double, bigdecimal} y is integer, return is same type as x. |

| SIGN(x) | 1 if x > 0, 0 if x = 0, -1 if x < 0 | x in {integer, long, float, double, biginteger, bigdecimal}, return type is integer |

| SIN(x) | Sine value of x | x in {double, bigdecimal}, return type is double |

| SQRT(x) | Square root of x | x in {long, double, bigdecimal}, return type is double |

| TAN(x) | Tangent of x | x in {double, bigdecimal}, return type is double |

| BITAND(x, y) | Bitwise AND of x and y | x, y in {integer}, return type is integer |

| BITOR(x, y) | Bitwise OR of x and y | x, y in {integer}, return type is integer |

| BITXOR(x, y) | Bitwise XOR of x and y | x, y in {integer}, return type is integer |

| BITNOT(x) | Bitwise NOT of x | x in {integer}, return type is integer |

[a] The precision and scale of non-bigdecimal arithmetic function results match those of Java. The results of bigdecimal operations match Java, except for division, which uses a preferred scale of max(16, dividend.scale + divisor.precision + 1), which then has trailing zeros removed by setting the scale to max(dividend.scale, normalized scale).
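The bigdecimal division rule in footnote [a] can be written out with java.math.BigDecimal. This sketch follows the stated formula only. DecimalDivision is a hypothetical name, and the HALF_UP rounding mode is an assumption because the footnote does not name one.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;

// Hypothetical model of the documented bigdecimal division scale rule.
class DecimalDivision {
    static BigDecimal divide(BigDecimal dividend, BigDecimal divisor) {
        // Preferred scale: max(16, dividend.scale + divisor.precision + 1).
        int preferredScale = Math.max(16, dividend.scale() + divisor.precision() + 1);
        // Rounding mode is an assumption; the footnote does not specify one.
        BigDecimal quotient = dividend.divide(divisor, preferredScale, RoundingMode.HALF_UP);
        // Remove trailing zeros, but never go below the dividend's scale.
        int normalizedScale = quotient.stripTrailingZeros().scale();
        // Only zero digits are dropped here, so no rounding is needed.
        return quotient.setScale(Math.max(dividend.scale(), normalizedScale));
    }
}
```

Under this sketch, 1 / 3 yields 0.3333333333333333 (scale 16), 10.00 / 4 yields 2.50 (the dividend's scale of 2 is kept), and 6 / 2 yields 3.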

Parsing numeric datatypes from strings

Data Virtualization offers a set of functions you can use to parse numbers from strings. For each string, you need to provide the formatting of the string. These functions use the convention established by the java.text.DecimalFormat class to define the formats you can use with these functions. For more information about how this class defines numeric string formats, see the java.text.DecimalFormat class documentation.

For example, you could use these function calls, with the formatting string that adheres to the java.text.DecimalFormat convention, to parse strings and return the datatype you need:

| Input String | Function Call to Format String | Output Value | Output Datatype |

|---|---|---|---|

| '$25.30' | parseDouble(cost, '$#,##0.00;($#,##0.00)') | 25.3 | double |

| '25%' | parseFloat(percent, '#,##0%') | 25 | float |

| '2,534.1' | parseFloat(total, '#,##0.###;-#,##0.###') | 2534.1 | float |

| '1.234E3' | parseLong(amt, '0.###E0') | 1234 | long |

| '1,234,567' | parseInteger(total, '#,##0;-#,##0') | 1234567 | integer |

Formatting numeric datatypes as strings

Data Virtualization offers a set of functions you can use to convert numeric datatypes into strings. For each string, you need to provide the formatting. These functions use the convention established by the java.text.DecimalFormat class to define the formats you can use with these functions. For more information about how this class defines numeric string formats, see the java.text.DecimalFormat class documentation.

For example, you could use these function calls, with the formatting string that adheres to the java.text.DecimalFormat convention, to format the numeric datatypes into strings:

| Input Value | Input Datatype | Function Call to Format String | Output String |

|---|---|---|---|

| 25.3 | double | formatDouble(cost, '$#,##0.00;($#,##0.00)') | '$25.30' |

| 25 | float | formatFloat(percent, '#,##0%') | '25%' |

| 2534.1 | float | formatFloat(total, '#,##0.###;-#,##0.###') | '2,534.1' |

| 1234 | long | formatLong(amt, '0.###E0') | '1.234E3' |

| 1234567 | integer | formatInteger(total, '#,##0;-#,##0') | '1,234,567' |
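Because these functions follow java.text.DecimalFormat conventions, patterns like the ones in the tables can be tried directly with that class. The sketch below pins the symbols to Locale.US so that ',' and '.' keep their US meaning regardless of the JVM's default locale. That choice and the NumberFormats helper name are assumptions, not part of the documented functions.

```java
import java.text.DecimalFormat;
import java.text.DecimalFormatSymbols;
import java.text.ParseException;
import java.util.Locale;

// Hypothetical helper showing DecimalFormat patterns; not the engine's implementation.
class NumberFormats {
    // US symbols so that ',' groups and '.' separates decimals (assumption).
    static DecimalFormat decimalFormat(String pattern) {
        return new DecimalFormat(pattern, DecimalFormatSymbols.getInstance(Locale.US));
    }

    static String formatNumber(Number value, String pattern) {
        return decimalFormat(pattern).format(value);
    }

    static Number parseNumber(String text, String pattern) throws ParseException {
        return decimalFormat(pattern).parse(text);
    }
}
```

With this helper, formatting 25.3 with '$#,##0.00;($#,##0.00)' gives '$25.30', formatting -25.3 with the same pattern gives '($25.30)' through the negative subpattern, and formatting 1234 with '0.###E0' gives '1.234E3'.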

3.5.2. String functions

String functions generally take strings as inputs and return strings as outputs.

Unless specified, all of the arguments and return types in the following table are strings and all indexes are 1-based. The 0 index is considered to be before the start of the string.

| Function | Definition | Datatype constraint |

|---|---|---|

| x \|\| y | Concatenation operator | x,y in {string, clob}, return type is string or character large object (CLOB). |

| ASCII(x) | Provide ASCII value of the left most character[1] in x. The empty string as input will return null. | return type is integer |

| CHR(x) CHAR(x) | Provide the character[1] for ASCII value x. | x in {integer}. For the engine’s implementations of the ASCII and CHR functions, characters are limited to UCS2 values only. For pushdown, there is little consistency among sources for character values beyond character code 255. |

| CONCAT(x, y) | Concatenates x and y with ANSI semantics. If x and/or y is null, returns null. | x, y in {string} |

| CONCAT2(x, y) | Concatenates x and y with non-ANSI null semantics. If both x and y are null, returns null. If only x or y is null, returns the other value. | x, y in {string} |

| ENDSWITH(x, y) | Checks if y ends with x. If x or y is null, returns null. | x, y in {string}, returns boolean |

| INITCAP(x) | Make first letter of each word in string x capital and all others lowercase. | x in {string} |

| INSERT(str1, start, length, str2) | Insert str2 into str1 | str1 in {string}, start in {integer}, length in {integer}, str2 in {string} |

| LCASE(x) | Lowercase of x | x in {string} |

| LEFT(x, y) | Get left y characters of x | x in {string}, y in {integer}, return string |

| LENGTH(x) CHAR_LENGTH(x) CHARACTER_LENGTH(x) | Length of x | return type is integer |

| LOCATE(x, y) POSITION(x IN y) | Find position of x in y starting at beginning of y. | x in {string}, y in {string}, return integer |

| LOCATE(x, y, z) | Find position of x in y starting at z. | x in {string}, y in {string}, z in {integer}, return integer |

| LPAD(x, y) | Pad input string x with spaces on the left to the length of y. | x in {string}, y in {integer}, return string |

| LPAD(x, y, z) | Pad input string x on the left to the length of y using character z. | x in {string}, y in {integer}, z in {character}, return string |

| LTRIM(x) | Left trim x of blank chars. | x in {string}, return string |

| QUERYSTRING(path [, expr [AS name] …]) | Returns a properly encoded query string appended to the given path. Null valued expressions are omitted, and a null path is treated as ''. Names are optional for column reference expressions. | path, expr in {string}. name is an identifier. |

| REPEAT(str1,instances) | Repeat string1 a specified number of times | str1 in {string}, instances in {integer} return string. |

| RIGHT(x, y) | Get right y characters of x | x in {string}, y in {integer}, return string |

| RPAD(x, y) | Pad input string x with spaces on the right to the length of y | x in {string}, y in {integer}, return string |

| RPAD(x, y, z) | Pad input string x on the right to the length of y using character z | x in {string}, y in {integer}, z in {character}, return string |

| RTRIM(x) | Right trim x of blank chars | x is string, return string |

| SPACE(x) | Repeat the space character x number of times | x is integer, return string |

| SUBSTRING(x, y) SUBSTRING(x FROM y) | [b] Get substring from x, from position y to the end of x | y in {integer} |

| SUBSTRING(x, y, z) SUBSTRING(x FROM y FOR z) | [b] Get substring from x from position y with length z | y, z in {integer} |

| TRANSLATE(x, y, z) | Translate string x by replacing each character in y with the character in z at the same position. | x in {string} |

| TRIM([[LEADING\|TRAILING\|BOTH] [x] FROM] y) | Trim the leading, trailing, or both ends of a string y of character x. If LEADING/TRAILING/BOTH is not specified, BOTH is used. If no trim character x is specified, then the blank space ' ' is used. | x in {character}, y in {string} |

| UCASE(x) | Uppercase of x | x in {string} |

| UNESCAPE(x) | Unescaped version of x. Possible escape sequences are \b - backspace, \t - tab, \n - line feed, \f - form feed, \r - carriage return. \uXXXX, where X is a hex value, can be used to specify any unicode character. \XXX, where X is an octal digit, can be used to specify an octal byte value. If any other character appears after an escape character, that character will appear in the output and the escape character will be ignored. | x in {string} |

[1] Non-ASCII range characters or integers used in these functions may produce different results or exceptions depending on where the function is evaluated (Data Virtualization vs. source). Data Virtualization uses Java default int to char and char to int conversions, which operate over UTF16 values.

[b] The behavior of the substring function with respect to negative from/length arguments and out of bounds from/length arguments is not consistent across sources. The default Data Virtualization behavior is:

- Return a null value when the from value is out of bounds or the length is less than 0.

- A zero from index is effectively the same as 1.

- A negative from index is first counted from the end of the string.

Some sources, however, can return an empty string instead of null, and some sources are not compatible with negative indexing.
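The default rules in footnote [b] can be sketched in Java as follows. `SubstringSketch.substring` is a hypothetical helper, not the product's code. It assumes that "out of bounds" means the resolved start position falls before the first character or after the last one. It also treats a null length as the two-argument SUBSTRING(x, y) form.

```java
class SubstringSketch {
    // Hypothetical helper following footnote [b]; 'from' is 1-based,
    // and a null 'length' stands for the two-argument form.
    static String substring(String x, int from, Integer length) {
        int start; // 0-based start index
        if (from < 0) {
            start = x.length() + from; // negative: count back from the end
        } else if (from == 0) {
            start = 0;                 // zero behaves like 1
        } else {
            start = from - 1;
        }
        if (start < 0 || start >= x.length()) {
            return null;               // out-of-bounds from value (assumed definition)
        }
        if (length == null) {
            return x.substring(start); // to the end of x
        }
        if (length < 0) {
            return null;               // negative length
        }
        int end = (int) Math.min((long) start + length, x.length());
        return x.substring(start, end);
    }

    public static void main(String[] args) {
        System.out.println(substring("Data Virtualization", 6, null)); // Virtualization
        System.out.println(substring("Data Virtualization", 0, 4));    // Data
        System.out.println(substring("Data Virtualization", -6, 3));   // zat
        System.out.println(substring("Data", 10, 2));                  // null
    }
}
```

A source that returns an empty string, or that rejects negative indexes, would diverge from this sketch in exactly the cases that return null or count from the end.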

TO_CHARS

Return a CLOB from the binary large object (BLOB) with the given encoding.

TO_CHARS(x, encoding [, wellformed])

BASE64, HEX, UTF-8-BOM and the built-in Java Charset names are valid values for the encoding [b]. x is a BLOB, encoding is a string, wellformed is a boolean, and the function returns a CLOB. The two-argument form defaults to wellformed=true. If wellformed is false, the conversion function immediately validates the result, so that an unmappable character or malformed input raises an exception.
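For Java Charset names, the effect of the wellformed flag can be approximated with `java.nio.charset.CharsetDecoder`. The following sketch is a hypothetical helper that covers only charset names, not BASE64, HEX, or UTF-8-BOM. Mapping wellformed=false to `CodingErrorAction.REPORT` follows the description above. Mapping wellformed=true to `CodingErrorAction.REPLACE` is an assumption carried over from the TO_BYTES description.

```java
import java.nio.ByteBuffer;
import java.nio.charset.CharacterCodingException;
import java.nio.charset.Charset;
import java.nio.charset.CharsetDecoder;
import java.nio.charset.CodingErrorAction;
import java.nio.charset.StandardCharsets;

class ToCharsSketch {
    // Hypothetical stand-in for TO_CHARS(x, encoding, wellformed), Java charset names only.
    static String toChars(byte[] x, String encoding, boolean wellformed) {
        // wellformed=false: report malformed or unmappable input as an error.
        // wellformed=true: assumed here to substitute the charset's replacement character.
        CodingErrorAction action = wellformed ? CodingErrorAction.REPLACE : CodingErrorAction.REPORT;
        CharsetDecoder decoder = Charset.forName(encoding).newDecoder()
                .onMalformedInput(action)
                .onUnmappableCharacter(action);
        try {
            return decoder.decode(ByteBuffer.wrap(x)).toString();
        } catch (CharacterCodingException e) {
            throw new IllegalArgumentException("invalid " + encoding + " input", e);
        }
    }

    public static void main(String[] args) {
        byte[] truncated = {0x61, (byte) 0xC3}; // 'a' followed by an incomplete two-byte UTF-8 sequence
        System.out.println(toChars("caf\u00e9".getBytes(StandardCharsets.UTF_8), "UTF-8", false));
        System.out.println(toChars(truncated, "UTF-8", true).length()); // 2: 'a' plus one replacement character
        try {
            toChars(truncated, "UTF-8", false);
        } catch (IllegalArgumentException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```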

TO_BYTES

Return a BLOB from the CLOB with the given encoding.

TO_BYTES(x, encoding [, wellformed])

BASE64, HEX, UTF-8-BOM and the built-in Java Charset names are valid values for the encoding [b]. x is a CLOB, encoding is a string, wellformed is a boolean, and the function returns a BLOB. The two-argument form defaults to wellformed=true. If wellformed is false, the conversion function immediately validates the result, so that an unmappable character or malformed input raises an exception. If wellformed is true, unmappable characters are replaced by the default replacement character for the character set. Binary formats, such as BASE64 and HEX, are checked for correctness regardless of the wellformed parameter.
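The wellformed rules for TO_BYTES can be approximated in Java with `java.nio.charset.CharsetEncoder` for charset names and `java.util.Base64` for the BASE64 format. `ToBytesSketch.toBytes` is hypothetical and does not handle HEX or UTF-8-BOM. It assumes that BASE64 input is Base64 text that is decoded into bytes.

```java
import java.nio.ByteBuffer;
import java.nio.CharBuffer;
import java.nio.charset.CharacterCodingException;
import java.nio.charset.Charset;
import java.nio.charset.CharsetEncoder;
import java.nio.charset.CodingErrorAction;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.Base64;

class ToBytesSketch {
    // Hypothetical stand-in for TO_BYTES(x, encoding, wellformed); HEX and UTF-8-BOM are not covered.
    static byte[] toBytes(String x, String encoding, boolean wellformed) {
        if (encoding.equalsIgnoreCase("BASE64")) {
            // Binary formats are validated regardless of wellformed:
            // Base64.Decoder.decode throws IllegalArgumentException on malformed input.
            return Base64.getDecoder().decode(x);
        }
        // wellformed=true: unmappable characters become the charset's replacement bytes.
        // wellformed=false: unmappable or malformed input raises an exception.
        CodingErrorAction action = wellformed ? CodingErrorAction.REPLACE : CodingErrorAction.REPORT;
        CharsetEncoder encoder = Charset.forName(encoding).newEncoder()
                .onMalformedInput(action)
                .onUnmappableCharacter(action);
        try {
            ByteBuffer encoded = encoder.encode(CharBuffer.wrap(x));
            byte[] bytes = new byte[encoded.remaining()];
            encoded.get(bytes);
            return bytes;
        } catch (CharacterCodingException e) {
            throw new IllegalArgumentException("cannot encode input as " + encoding, e);
        }
    }

    public static void main(String[] args) {
        System.out.println(new String(toBytes("aGVsbG8=", "BASE64", true), StandardCharsets.US_ASCII)); // hello
        System.out.println(Arrays.toString(toBytes("caf\u00e9", "US-ASCII", true)));                    // [99, 97, 102, 63]
        try {
            toBytes("caf\u00e9", "US-ASCII", false);
        } catch (IllegalArgumentException e) {
            System.out.println("rejected: " + e.getMessage());
        }
    }
}
```

With US-ASCII, the encoder's default replacement is the byte for '?', which is why the unmappable é becomes 63 when wellformed is true.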

[b] For more information about Charset names, see the Charset docs.

REPLACE

Replace all occurrences of a given string with another.

REPLACE(x, y, z)

Replace all occurrences of y with z in x. x, y, and z are strings, and the return value is a string.
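REPLACE is described in terms of strings rather than patterns, so y is presumably matched as literal text. Under that assumption, Java's `String.replace(CharSequence, CharSequence)` behaves the same way. The following hypothetical helper contrasts it with the regex-based `String.replaceAll`.

```java
class ReplaceSketch {
    // Hypothetical REPLACE(x, y, z): every occurrence of y is replaced, and y is matched literally.
    static String replace(String x, String y, String z) {
        return x.replace(y, z);
    }

    public static void main(String[] args) {
        System.out.println(replace("a.b.c", ".", "-"));  // a-b-c
        // By contrast, a regular expression "." matches any character.
        System.out.println("a.b.c".replaceAll(".", "-")); // -----
    }
}
```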

REGEXP_REPLACE

Replace one or all occurrences of a given pattern with another string.

REGEXP_REPLACE(str, pattern, sub [, flags])

Replace one or more occurrences of pattern with sub in str. All arguments are strings, and the return value is a string.

The pattern parameter is expected to be a valid Java regular expression.

The flags argument can be any concatenation of any of the valid flags with the following meanings:

| Flag | Name | Meaning |

|---|---|---|

| g | Global | Replace all occurrences, not just the first. |

| m | Multi-line | Match over multiple lines. |

| i | Case insensitive | Match without case sensitivity. |

Usage:

The following will return "xxbye Wxx" using the global and case insensitive options.

Example regexp_replace

regexp_replace('Goodbye World', '[g-o].', 'x', 'gi')
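Because the pattern is a Java regular expression, the flags map onto `java.util.regex`. The following hypothetical helper reproduces the example above. Treating m as `Pattern.MULTILINE` is an assumption, because the table only says "match over multiple lines". Java's `Matcher` also gives `$` and backslash special meaning in the replacement string, and that may or may not match the product's handling of sub.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

class RegexpReplaceSketch {
    // Hypothetical helper: translate the g, m, and i flags into java.util.regex behavior.
    static String regexpReplace(String str, String pattern, String sub, String flags) {
        boolean global = false;
        int patternFlags = 0;
        for (char flag : flags.toCharArray()) {
            switch (flag) {
                case 'g': global = true; break;                            // replace every match
                case 'm': patternFlags |= Pattern.MULTILINE; break;        // assumption for "multi-line"
                case 'i': patternFlags |= Pattern.CASE_INSENSITIVE; break; // ignore case
                default: throw new IllegalArgumentException("unknown flag: " + flag);
            }
        }
        Matcher matcher = Pattern.compile(pattern, patternFlags).matcher(str);
        return global ? matcher.replaceAll(sub) : matcher.replaceFirst(sub);
    }

    public static void main(String[] args) {
        System.out.println(regexpReplace("Goodbye World", "[g-o].", "x", "gi")); // xxbye Wxx
        System.out.println(regexpReplace("Goodbye World", "[g-o].", "x", "i"));  // xodbye World
        System.out.println(regexpReplace("Goodbye World", "[g-o].", "x", "g"));  // Gxdbye Wxx
    }
}
```

Without g, only the first match ("Go") is replaced. Without i, the uppercase G is not in the range [g-o], so matching starts at the following "oo".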

3.5.3. Date and time functions

Date and time functions return or operate on dates, times, or timestamps.

Date and time functions use the convention established within the java.text.SimpleDateFormat class to define the formats you can use with these functions. You can learn more about how this class defines formats by visiting the Javadocs for SimpleDateFormat.

| Function | Definition | Datatype constraint |

|---|---|---|

| CURDATE() CURRENT_DATE[()] | Return current date - will return the same value for all invocations in the user command. | returns date. |

| CURTIME() | Return current time - will return the same value for all invocations in the user command. See also CURRENT_TIME. | returns time |

| NOW() | Return current timestamp (date and time with millisecond precision) - will return the same value for all invocations in the user command or procedure instruction. See also CURRENT_TIMESTAMP. | returns timestamp |

| CURRENT_TIME[(precision)] | Return current time - will return the same value for all invocations in the user command. The Data Virtualization time type does not track fractional seconds, so the precision argument is effectively ignored. Without a precision, it is the same as CURTIME(). | returns time |

| CURRENT_TIMESTAMP[(precision)] | Return current timestamp (date and time with millisecond precision) - will return the same value for all invocations with the same precision in the user command or procedure instruction. Without a precision, it is the same as NOW(). Because the current timestamp has only millisecond precision by default, setting the precision to greater than 3 has no effect. | returns timestamp |

| DAYNAME(x) | Return name of day in the default locale | x in {date, timestamp}, returns string |

| DAYOFMONTH(x) | Return day of month | x in {date, timestamp}, returns integer |

| DAYOFWEEK(x) | Return day of week (Sunday=1, Saturday=7) | x in {date, timestamp}, returns integer |

| DAYOFYEAR(x) | Return day number in year | x in {date, timestamp}, returns integer |

| EPOCH(x) | Return seconds since the unix epoch with microsecond precision | x in {date, timestamp}, returns double |

| EXTRACT(YEAR|MONTH|DAY|HOUR|MINUTE|SECOND|QUARTER|EPOCH FROM x) | Return the given field value from the date value x. Produces the same result as the associated YEAR, MONTH, DAYOFMONTH, HOUR, MINUTE, SECOND, QUARTER, and EPOCH functions. The SQL specification also allows for TIMEZONE_HOUR and TIMEZONE_MINUTE as extraction targets. In Data Virtualization, all date values are in the timezone of the server. | x in {date, time, timestamp}, epoch returns double, the others return integer |

| FORMATDATE(x, y) | Format date x using format y. | x is date, y is string, returns string |

| FORMATTIME(x, y) | Format time x using format y. | x is time, y is string, returns string |

| FORMATTIMESTAMP(x, y) | Format timestamp x using format y. | x is timestamp, y is string, returns string |

| FROM_MILLIS (millis) | Return the Timestamp value for the given milliseconds. | long UTC timestamp in milliseconds |

| FROM_UNIXTIME (unix_timestamp) | Return the Unix timestamp as a String value with the default format of yyyy/mm/dd hh:mm:ss. | long Unix timestamp (in seconds) |

| HOUR(x) | Return hour (in military 24-hour format). | x in {time, timestamp}, returns integer |

| MINUTE(x) | Return minute. | x in {time, timestamp}, returns integer |

| MODIFYTIMEZONE (timestamp, startTimeZone, endTimeZone) | Returns a timestamp based upon the incoming timestamp adjusted for the differential between the start and end time zones. If the server is in GMT-6, then modifytimezone({ts '2006-01-10 04:00:00.0'},'GMT-7', 'GMT-8') will return the timestamp {ts '2006-01-10 05:00:00.0'} as read in GMT-6. The value has been adjusted 1 hour ahead to compensate for the difference between GMT-7 and GMT-8. | startTimeZone and endTimeZone are strings, returns a timestamp |

| MODIFYTIMEZONE (timestamp, endTimeZone) | Return a timestamp in the same manner as modifytimezone(timestamp, startTimeZone, endTimeZone), but will assume that the startTimeZone is the same as the server process. | Timestamp is a timestamp; endTimeZone is a string, returns a timestamp |

| MONTH(x) | Return month. | x in {date, timestamp}, returns integer |

| MONTHNAME(x) | Return name of month in the default locale. | x in {date, timestamp}, returns string |

| PARSEDATE(x, y) | Parse date from x using format y. | x, y in {string}, returns date |

| PARSETIME(x, y) | Parse time from x using format y. | x, y in {string}, returns time |

| PARSETIMESTAMP(x, y) | Parse timestamp from x using format y. | x, y in {string}, returns timestamp |

| QUARTER(x) | Return quarter. | x in {date, timestamp}, returns integer |

| SECOND(x) | Return seconds. | x in {time, timestamp}, returns integer |

| TIMESTAMPCREATE(date, time) | Create a timestamp from a date and time. | date in {date}, time in {time}, returns timestamp |

| TO_MILLIS (timestamp) | Return the UTC timestamp in milliseconds. | timestamp value |

| UNIX_TIMESTAMP (unix_timestamp) | Return the long Unix timestamp (in seconds). | unix_timestamp String in the default format of yyyy/mm/dd hh:mm:ss |

| WEEK(x) | Return week in year 1-53. For customization information, see System Properties in the Administrator’s Guide. | x in {date, timestamp}, returns integer |

| YEAR(x) | Return four-digit year | x in {date, timestamp}, returns integer |
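Several of these functions correspond directly to standard Java classes. The following sketch illustrates FORMATTIMESTAMP, DAYOFWEEK, FROM_MILLIS, and TO_MILLIS with `java.text.SimpleDateFormat`, `java.util.Calendar`, and `java.sql.Timestamp`. The helper names are hypothetical. For reproducible output, the sketch pins the time zone to UTC and the locale to `Locale.US`, whereas the product uses the server time zone and the Java default locale.

```java
import java.sql.Timestamp;
import java.text.SimpleDateFormat;
import java.util.Calendar;
import java.util.Locale;
import java.util.TimeZone;

class DateFunctionsSketch {
    // Assumption: "server" time zone pinned to UTC for reproducible output.
    static final TimeZone SERVER_ZONE = TimeZone.getTimeZone("UTC");

    // FORMATTIMESTAMP(x, y): format x with the SimpleDateFormat pattern y.
    static String formatTimestamp(Timestamp x, String y) {
        SimpleDateFormat format = new SimpleDateFormat(y, Locale.US);
        format.setTimeZone(SERVER_ZONE);
        return format.format(x);
    }

    // DAYOFWEEK(x): Sunday=1 ... Saturday=7, the same numbering as Calendar.DAY_OF_WEEK.
    static int dayOfWeek(Timestamp x) {
        Calendar calendar = Calendar.getInstance(SERVER_ZONE, Locale.US);
        calendar.setTime(x);
        return calendar.get(Calendar.DAY_OF_WEEK);
    }

    // FROM_MILLIS(millis) and TO_MILLIS(timestamp): UTC milliseconds to and from a timestamp.
    static Timestamp fromMillis(long millis) {
        return new Timestamp(millis);
    }

    static long toMillis(Timestamp timestamp) {
        return timestamp.getTime();
    }

    public static void main(String[] args) {
        Timestamp epoch = fromMillis(0L);
        System.out.println(formatTimestamp(epoch, "yyyy-MM-dd HH:mm:ss")); // 1970-01-01 00:00:00
        System.out.println(dayOfWeek(epoch));                               // 5 (Thursday)
        System.out.println(toMillis(fromMillis(86_400_000L)));              // 86400000
    }
}
```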

Timestampadd

Add a specified interval amount to the timestamp.

Syntax

TIMESTAMPADD(interval, count, timestamp)

Arguments

| Name | Description |

|---|---|

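| interval | A datetime interval unit, which can be one of the following keywords: SQL_TSI_FRAC_SECOND, SQL_TSI_SECOND, SQL_TSI_MINUTE, SQL_TSI_HOUR, SQL_TSI_DAY, SQL_TSI_WEEK, SQL_TSI_MONTH, SQL_TSI_QUARTER, SQL_TSI_YEAR. |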

| count | A long or integer count of units to add to the timestamp. Negative values subtract that number of units. Long values are allowed for symmetry with TIMESTAMPDIFF, but the effective range is still limited to integer values. |

| timestamp | A datetime expression. |
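The following Java sketch shows one way the interval keywords could map onto `java.time` arithmetic. `TimestampAddSketch.timestampAdd` is hypothetical and makes three choices of its own: it leaves out SQL_TSI_FRAC_SECOND, it treats a quarter as three months, and it uses `Math.toIntExact` to reject counts outside the integer range. How the product handles out-of-range counts is not stated here.

```java
import java.time.LocalDateTime;

class TimestampAddSketch {
    // Hypothetical mapping of interval keywords onto java.time arithmetic.
    static LocalDateTime timestampAdd(String interval, long count, LocalDateTime timestamp) {
        // Long counts are accepted, but only the integer range is usable.
        int n = Math.toIntExact(count);
        switch (interval) {
            case "SQL_TSI_SECOND":  return timestamp.plusSeconds(n);
            case "SQL_TSI_MINUTE":  return timestamp.plusMinutes(n);
            case "SQL_TSI_HOUR":    return timestamp.plusHours(n);
            case "SQL_TSI_DAY":     return timestamp.plusDays(n);
            case "SQL_TSI_WEEK":    return timestamp.plusWeeks(n);
            case "SQL_TSI_MONTH":   return timestamp.plusMonths(n);
            case "SQL_TSI_QUARTER": return timestamp.plusMonths(3L * n);
            case "SQL_TSI_YEAR":    return timestamp.plusYears(n);
            default: throw new IllegalArgumentException("unsupported interval: " + interval);
        }
    }

    public static void main(String[] args) {
        // Same inputs as the SELECT examples in this section.
        System.out.println(timestampAdd("SQL_TSI_MONTH", 12, LocalDateTime.of(2016, 10, 10, 0, 0)));       // 2017-10-10T00:00
        System.out.println(timestampAdd("SQL_TSI_SECOND", 12, LocalDateTime.of(2016, 10, 10, 23, 59, 59))); // 2016-10-11T00:00:11
    }
}
```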

Example


SELECT TIMESTAMPADD(SQL_TSI_MONTH, 12,'2016-10-10')

SELECT TIMESTAMPADD(SQL_TSI_SECOND, 12,'2016-10-10 23:59:59')

Timestampdiff

Calculates the number of date part intervals crossed between the two timestamps and returns a long value.

Syntax

TIMESTAMPDIFF(interval, startTime, endTime)

Arguments

| Name | Description |

|---|---|

| interval | A datetime interval unit, using the same keywords as Timestampadd. |

| startTime | A datetime expression. |

| endTime | A datetime expression. |

Example


SELECT TIMESTAMPDIFF(SQL_TSI_MONTH,'2000-01-02','2016-10-10')

SELECT TIMESTAMPDIFF(SQL_TSI_SECOND,'2000-01-02 00:00:00','2016-10-10 23:59:59')

SELECT TIMESTAMPDIFF(SQL_TSI_FRAC_SECOND,'2000-01-02 00:00:00.0','2016-10-10 23:59:59.999999')

If (endTime > startTime), a non-negative number will be returned. If (endTime < startTime), a non-positive number will be returned. The date part difference is counted regardless of how close the timestamps are. For example, '2000-01-02 00:00:00.0' is still considered 1 hour ahead of '2000-01-01 23:59:59.999999'.
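Counting "date part intervals crossed" amounts to truncating both values to the interval unit and then counting whole units between the truncated values. The following hypothetical Java sketch does that with `java.time` and is not the product's implementation. It reproduces two cases from this section: the 1-hour difference across midnight, and the 1-month difference between 2013-03-24 and 2013-04-01. It omits SQL_TSI_FRAC_SECOND and SQL_TSI_WEEK, because the week-boundary convention is not stated here.

```java
import java.time.LocalDateTime;
import java.time.YearMonth;
import java.time.temporal.ChronoUnit;

class TimestampDiffSketch {
    // Hypothetical helper that counts interval boundaries crossed between start and end.
    static long timestampDiff(String interval, LocalDateTime start, LocalDateTime end) {
        switch (interval) {
            case "SQL_TSI_SECOND":  return crossed(ChronoUnit.SECONDS, start, end);
            case "SQL_TSI_MINUTE":  return crossed(ChronoUnit.MINUTES, start, end);
            case "SQL_TSI_HOUR":    return crossed(ChronoUnit.HOURS, start, end);
            case "SQL_TSI_DAY":     return crossed(ChronoUnit.DAYS, start, end);
            case "SQL_TSI_MONTH":   return ChronoUnit.MONTHS.between(YearMonth.from(start), YearMonth.from(end));
            case "SQL_TSI_QUARTER": return quarterIndex(end) - quarterIndex(start);
            case "SQL_TSI_YEAR":    return (long) end.getYear() - start.getYear();
            default: throw new IllegalArgumentException("not covered by this sketch: " + interval);
        }
    }

    // Truncate both values to the start of their unit, then count whole units between them.
    private static long crossed(ChronoUnit unit, LocalDateTime start, LocalDateTime end) {
        return unit.between(start.truncatedTo(unit), end.truncatedTo(unit));
    }

    // Running quarter number, so the difference counts quarter boundaries.
    private static long quarterIndex(LocalDateTime t) {
        return t.getYear() * 4L + (t.getMonthValue() - 1) / 3;
    }

    public static void main(String[] args) {
        LocalDateTime before = LocalDateTime.of(2000, 1, 1, 23, 59, 59, 999_999_000);
        LocalDateTime after = LocalDateTime.of(2000, 1, 2, 0, 0);
        System.out.println(timestampDiff("SQL_TSI_HOUR", before, after)); // 1
        System.out.println(timestampDiff("SQL_TSI_HOUR", after, before)); // -1

        LocalDateTime march24 = LocalDateTime.of(2013, 3, 24, 0, 0);
        LocalDateTime april1 = LocalDateTime.of(2013, 4, 1, 0, 0);
        System.out.println(timestampDiff("SQL_TSI_MONTH", march24, april1)); // 1: a month boundary is crossed
        System.out.println(ChronoUnit.MONTHS.between(march24, april1));     // 0: no complete month elapsed
    }
}
```

The last line shows the contrast with a whole-unit count. `ChronoUnit.MONTHS.between` on the raw values counts only complete months, which resembles the older behavior described under Compatibility issues.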

Compatibility issues

- In SQL, Timestampdiff typically returns an integer. However, the Data Virtualization implementation returns a long. You might receive an exception if you expect a value outside the integer range from a pushed-down timestampdiff.

- In Teiid 8.2 and earlier versions, the timestampdiff implementation returned values based on the number of whole canonical interval approximations crossed (365 days in a year, 91 days in a quarter, 30 days in a month, etc.). For example, the difference in months between 2013-03-24 and 2013-04-01 was 0, but based on the date parts crossed it is 1. For information about backwards compatibility, see System Properties in the Administrator’s Guide.

Parsing date datatypes from strings

Data Virtualization does not implicitly convert strings that contain dates presented in different formats, such as '19970101' and '31/1/1996', to date-related datatypes. You can, however, use the parseDate, parseTime, and parseTimestamp functions, described in the date and time functions table, to explicitly convert strings with a different format to the appropriate datatype. These functions use the convention established within the java.text.SimpleDateFormat class to define the formats you can use with these functions. For more information about how this class defines date and time string formats, see Javadocs for SimpleDateFormat. Note that the format strings are specific to your Java default locale.

For example, you could use these function calls, with the formatting string that adheres to the java.text.SimpleDateFormat convention, to parse strings and return the datatype you need:

| String | Function call to parse string |

|---|---|

| '19970101' | parseDate(myDateString, 'yyyyMMdd') |

| '31/1/1996' | parseDate(myDateString, 'dd''/''MM''/''yyyy') |

| '22:08:56 CST' | parseTime(myTime, 'HH:mm:ss z') |

| '03.24.2003 at 06:14:32' | parseTimestamp(myTimestamp, 'MM.dd.yyyy'' at ''hh:mm:ss') |
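The format strings in the table are SQL string literals, so each doubled quote ('') stands for one single quote in the underlying Java pattern. The following Java sketch parses the same strings with `java.text.SimpleDateFormat` after that unescaping. The helper is hypothetical, and it pins `Locale.US` and UTC so the output is reproducible. The quoted literal `' at '` includes the spaces that surround "at" in the input text, because SimpleDateFormat matches quoted literal text character by character.

```java
import java.text.ParseException;
import java.text.SimpleDateFormat;
import java.util.Date;
import java.util.Locale;
import java.util.TimeZone;

class ParseDateSketch {
    // Assumption: fixed zone and locale instead of the server defaults.
    private static final TimeZone ZONE = TimeZone.getTimeZone("UTC");

    // Parse text with a Java SimpleDateFormat pattern, then print it in a fixed canonical form.
    static String parseToCanonical(String text, String javaPattern) {
        SimpleDateFormat parser = new SimpleDateFormat(javaPattern, Locale.US);
        parser.setTimeZone(ZONE);
        SimpleDateFormat printer = new SimpleDateFormat("yyyy-MM-dd HH:mm:ss", Locale.US);
        printer.setTimeZone(ZONE);
        try {
            Date parsed = parser.parse(text);
            return printer.format(parsed);
        } catch (ParseException e) {
            throw new IllegalArgumentException("'" + text + "' does not match " + javaPattern, e);
        }
    }

    public static void main(String[] args) {
        // SQL 'dd''/''MM''/''yyyy' corresponds to the Java pattern dd'/'MM'/'yyyy.
        System.out.println(parseToCanonical("19970101", "yyyyMMdd"));                                // 1997-01-01 00:00:00
        System.out.println(parseToCanonical("31/1/1996", "dd'/'MM'/'yyyy"));                         // 1996-01-31 00:00:00
        System.out.println(parseToCanonical("03.24.2003 at 06:14:32", "MM.dd.yyyy' at 'hh:mm:ss")); // 2003-03-24 06:14:32
    }
}
```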

Specifying time zones