Deploying Camel K integrations on OpenShift

TECHNOLOGY PREVIEW - Getting started with Red Hat Integration - Camel K

Abstract

Preface

Making open source more inclusive

Red Hat is committed to replacing problematic language in our code, documentation, and web properties. We are beginning with these four terms: master, slave, blacklist, and whitelist. Because of the enormity of this endeavor, these changes will be implemented gradually over several upcoming releases. For more details, see our CTO Chris Wright’s message.

Chapter 1. Introduction to Camel K

This chapter introduces the concepts, features, and cloud-native architecture provided by Red Hat Integration - Camel K.

Red Hat Integration - Camel K is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production.

These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process. For more information about the support scope of Red Hat Technology Preview features, see https://access.redhat.com/support/offerings/techpreview.

1.1. Camel K overview

Red Hat Integration - Camel K is a lightweight integration framework built from Apache Camel K that runs natively in the cloud on OpenShift. Camel K is specifically designed for serverless and microservice architectures. You can use Camel K to instantly run your integration code written in Camel Domain Specific Language (DSL) directly on OpenShift. Camel K is a subproject of the Apache Camel open source community: https://github.com/apache/camel-k.

Camel K is implemented in the Go programming language and uses the Kubernetes Operator SDK to automatically deploy integrations in the cloud. For example, this includes automatically creating services and routes on OpenShift. This provides much faster turnaround times when deploying and redeploying integrations in the cloud, such as a few seconds or less instead of minutes.

The Camel K runtime provides significant performance optimizations. The Quarkus cloud-native Java framework is enabled by default to provide faster start up times, and lower memory and CPU footprints. When running Camel K in developer mode, you can make live updates to your integration DSL and view results instantly in the cloud on OpenShift, without waiting for your integration to redeploy.

Using Camel K with OpenShift Serverless and Knative Serving, containers are created only as needed and are autoscaled up under load and down to zero when idle. This reduces cost by removing the overhead of server provisioning and maintenance, and enables you to focus on application development instead.

Using Camel K with OpenShift Serverless and Knative Eventing, you can manage how components in your system communicate in an event-driven architecture for serverless applications. This provides flexibility and creates efficiencies through decoupled relationships between event producers and consumers using a publish-subscribe or event-streaming model.

Additional resources

1.2. Camel K Technology Preview features

The Camel K Technology Preview includes the following main platforms and features:

1.2.1. Platform and component versions

- OpenShift Container Platform 4.6 or 4.7

- OpenShift Serverless 1.13

- Quarkus 1.7 Java runtime

- Apache Camel K 1.3

- Apache Camel 3.7

- Apache Camel Quarkus 1.5

- OpenJDK 11

1.2.2. Technology Preview features

- Knative Serving for autoscaling and scale-to-zero

- Knative Eventing for event-driven architectures

- Performance optimizations using Quarkus runtime by default

- Camel integrations written in Java, XML, or YAML DSL

- Development tooling with Visual Studio Code

- Monitoring of integrations using Prometheus in OpenShift

- Quickstart tutorials, including new Transformations and SaaS examples

- Kamelet catalog of connectors to external systems such as AWS, Jira, and Salesforce

The Technology Preview includes building Camel K integration images with OpenShift only. Installing Camel K with the Buildah or Kaniko image builder is not included in the Technology Preview and has community-only support.

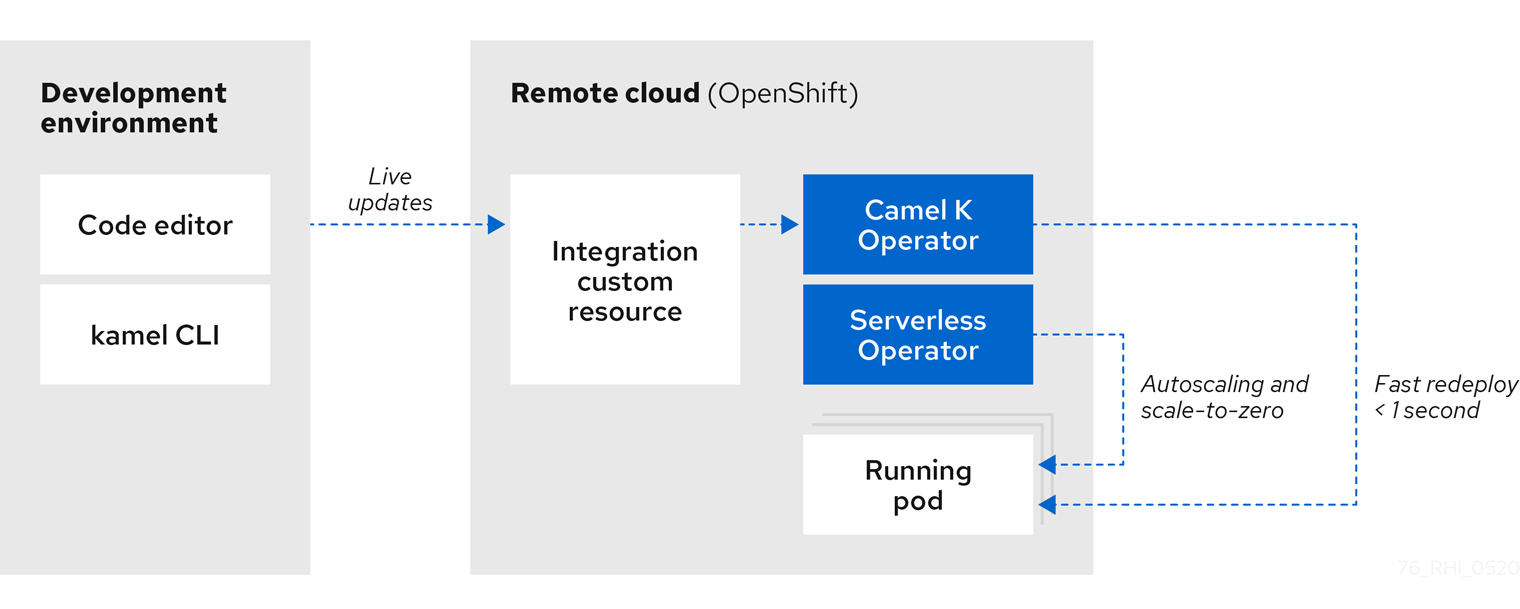

1.3. Camel K cloud-native architecture

The following diagram shows a simplified view of the Camel K cloud-native architecture:

Camel K automatically wraps the Camel integration in a Kubernetes custom resource and uploads it to the cloud. This architecture provides the following benefits:

- Cloud-native integration and developer experience on OpenShift for faster development cycles

- Automatic installation of Camel K and deployment of integrations using the Camel K Operator

- Live code updates using Camel K developer mode, without needing to redeploy

- Autoscaling up and down to zero with Knative using the OpenShift Serverless Operator

- Performance optimizations and cost savings using the Quarkus Java runtime:

  - Pre-compilation and pre-initialization of code at build time

  - Fast start up, deploy, and redeploy times

  - Low memory and CPU footprint

- Automatic dependency resolution of Camel integration code

- Configuring advanced features using Camel K traits on the command line and modeline

Additional resources

1.3.1. Kamelets

Kamelets hide the complexity of connecting to external systems behind a simple interface, which contains all the information needed to instantiate them, even for users who are not familiar with Camel.

Kamelets are implemented as custom resources that you can install on an OpenShift cluster and use in Camel K integrations. They contain high-level connectors in the form of route templates. Kamelets abstract the details of connecting to external systems. You can also combine Kamelets to create complex Camel integrations, just like using standard Camel components.

Additional resources

1.4. Camel K development tooling

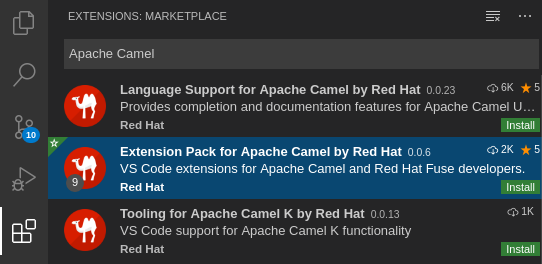

The Camel K Technology Preview provides development tooling extensions for Visual Studio (VS) Code, Red Hat CodeReady Workspaces, and Eclipse Che. The Camel-based tooling extensions include features such as automatic completion of Camel DSL code, Camel K modeline configuration, and Camel K traits. The Didact tutorial tooling extensions provide automatic execution of Camel K quick start tutorial commands.

The following VS Code development tooling extensions are available:

- VS Code Extension Pack for Apache Camel by Red Hat

  - Tooling for Apache Camel K extension

  - Language Support for Apache Camel extension

  - Additional extensions for OpenShift, Java, XML, and more

- Didact Tutorial Tools for VS Code extension

For details on how to set up these VS Code extensions for Camel K, see Section 3.1, “Setting up your Camel K development environment”.

Note: The VS Code Extension Pack for Apache Camel by Red Hat is a community offering.

Red Hat CodeReady Workspaces and Eclipse Che also provide these features using the vscode-camelk plug-in.

1.5. Camel K distributions

| Distribution | Description | Location |
|---|---|---|
| Operator image | Container image for the Red Hat Integration - Camel K Operator | |
| Maven repository | Maven artifacts for Red Hat Integration - Camel K | |
| Source code | Source code for Red Hat Integration - Camel K | |
| Quickstarts | Quick start tutorials | |

You must have a subscription for Red Hat Integration and be logged into the Red Hat Customer Portal to access the Red Hat Integration - Camel K distributions.

Chapter 2. Installing Camel K

This chapter explains how to install Red Hat Integration - Camel K and OpenShift Serverless on OpenShift, and how to install the required Camel K and OpenShift client tools in your development environment.

2.1. Installing Camel K from the OpenShift OperatorHub

You can install the Red Hat Integration - Camel K Operator on your OpenShift cluster from the OperatorHub. The OperatorHub is available from the OpenShift Container Platform web console and provides an interface for cluster administrators to discover and install Operators. For more details on the OperatorHub, see the OpenShift documentation.

Prerequisite

- You must have cluster administrator access to an OpenShift cluster

You do not need to create a pull secret when installing Camel K from the OpenShift OperatorHub. The Camel K Operator automatically reuses the OpenShift cluster-level authentication to pull the Camel K image from registry.redhat.io.

Procedure

- In the OpenShift Container Platform web console, log in using an account with cluster administrator privileges.

- Create a new OpenShift project:

  - In the left navigation menu, click Home > Project > Create Project.

  - Enter a project name, for example, my-camel-k-project, and click Create.

- In the left navigation menu, click Operators > OperatorHub.

- In the Filter by keyword text box, enter Camel K to find the Red Hat Integration - Camel K Operator.

- Read the information about the Operator, and then click Install to display the Operator subscription page.

- Select the following subscription settings:

  - Update Channel > techpreview

  - Installation Mode > A specific namespace on the cluster > my-camel-k-project

  - Approval Strategy > Automatic

  Note: The Installation Mode > All namespaces on the cluster and Approval Strategy > Manual settings are also available if required by your environment.

- Click Install, and wait a few moments until the Camel K Operator is ready for use.

Additional resources

2.2. Installing OpenShift Serverless from the OperatorHub

You can install the OpenShift Serverless Operator on your OpenShift cluster from the OperatorHub. The OperatorHub is available from the OpenShift Container Platform web console and provides an interface for cluster administrators to discover and install Operators. For more details on the OperatorHub, see the OpenShift documentation.

The OpenShift Serverless Operator supports both Knative Serving and Knative Eventing features. For more details, see Getting started with OpenShift Serverless.

Prerequisite

- You must have cluster administrator access to an OpenShift cluster

Procedure

- In the OpenShift Container Platform web console, log in using an account with cluster administrator privileges.

- In the left navigation menu, click Operators > OperatorHub.

- In the Filter by keyword text box, enter Serverless to find the OpenShift Serverless Operator.

- Read the information about the Operator, and click Install to display the Operator subscription page.

- Select the default subscription settings:

  - Update Channel > Select the channel that matches your OpenShift version, for example, 4.6

  - Installation Mode > All namespaces on the cluster

  - Approval Strategy > Automatic

  Note: The Approval Strategy > Manual setting is also available if required by your environment.

- Click Install, and wait a few moments until the Operator is ready for use.

- Install the required Knative components using the steps in the OpenShift documentation.

Additional resources

- Installing OpenShift Serverless in the OpenShift documentation

2.3. Installing the Camel K and OpenShift command line tools

Camel K and OpenShift provide command line tools to deploy and manage your integrations in the cloud. This section explains how to install the following Command Line Interface (CLI) tools:

- kamel - Camel K CLI

- oc - OpenShift Container Platform CLI

- kn - OpenShift Serverless CLI

These command line tools are all available on Linux, Windows, and Mac.

Prerequisites

You must have access to an OpenShift cluster on which the Camel K Operator and OpenShift Serverless Operator are installed:

Procedure

- In the OpenShift Container Platform web console, log in using an account with developer or administrator privileges.

- Click the help icon in the toolbar, and select Command Line Tools.

- Download and extract the oc - OpenShift CLI archive if this tool is not already installed. For more details, see the OpenShift CLI documentation.

- Download and extract the kn - OpenShift Serverless CLI archive if this tool is not already installed. For more details, see the OpenShift Serverless CLI documentation.

- Download and extract the kamel - Camel K CLI archive to install.

- Add the kamel binary file to your system path. For example, on Linux, you can put kamel in /usr/bin.

- Log into your OpenShift cluster using the oc client tool, for example:

      oc login --token=my-token --server=https://my-cluster.example.com:6443

- Enter the following command to verify the installation of the kamel client tool:

      kamel --help
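After completing the procedure, it can be useful to confirm that all three tools are reachable from your shell before moving on. The following is a minimal sketch, not part of the product: the function name check_cli_tools is hypothetical, and it only checks that each tool is on the PATH, not that its version matches your cluster.

```shell
#!/bin/sh
# Report which of the given CLI tools can be found on the current PATH.
# Prints one "found:" or "missing:" line per tool and returns non-zero
# if at least one tool is missing. (Hypothetical helper for illustration.)
check_cli_tools() {
  missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found: $tool"
    else
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}
```

For the tools installed in this section, you would call it as check_cli_tools oc kn kamel.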

Additional resources

Chapter 3. Getting started with Camel K

This chapter explains how to set up your development environment and how to develop and deploy simple Camel K integrations written in Java, XML, and YAML. It also shows how to use the kamel command line to manage Camel K integrations at runtime. For example, this includes running, describing, logging, and deleting integrations.

- Section 3.1, “Setting up your Camel K development environment”

- Section 3.2, “Developing Camel K integrations in Java”

- Section 3.3, “Developing Camel K integrations in XML”

- Section 3.4, “Developing Camel K integrations in YAML”

- Section 3.5, “Running Camel K integrations”

- Section 3.6, “Running Camel K integrations in development mode”

- Section 3.7, “Running Camel K integrations using modeline”

3.1. Setting up your Camel K development environment

You must set up your environment with the recommended development tooling before you can automatically deploy the Camel K quick start tutorials. This section explains how to install the recommended Visual Studio (VS) Code IDE and the extensions that it provides for Camel K.

- VS Code is recommended for ease of use and the best developer experience of Camel K. This includes automatic completion of Camel DSL code and Camel K traits, and automatic execution of tutorial commands. However, you can manually enter your code and tutorial commands using your chosen IDE instead of VS Code.

- The VS Code Camel Extension Pack is a community offering.

Prerequisites

You must have access to an OpenShift cluster on which the Camel K Operator and OpenShift Serverless Operator are installed:

- Section 2.3, “Installing the Camel K and OpenShift command line tools”

Procedure

- Install VS Code on your development platform. For example, on Red Hat Enterprise Linux:

  - Install the required key and repository:

        sudo rpm --import https://packages.microsoft.com/keys/microsoft.asc
        sudo sh -c 'echo -e "[code]\nname=Visual Studio Code\nbaseurl=https://packages.microsoft.com/yumrepos/vscode\nenabled=1\ngpgcheck=1\ngpgkey=https://packages.microsoft.com/keys/microsoft.asc" > /etc/yum.repos.d/vscode.repo'

  - Update the cache and install the VS Code package:

        yum check-update
        sudo yum install code

  For details on installing on other platforms, see the VS Code installation documentation.

- Enter the code command to launch the VS Code editor. For more details, see the VS Code command line documentation.

- Install the VS Code Camel Extension Pack, which includes the extensions required for Camel K. For example, in VS Code:

  - In the left navigation bar, click Extensions.

  - In the search box, enter Apache Camel.

  - Select the Extension Pack for Apache Camel by Red Hat, and click Install.

  For more details, see the instructions for the Extension Pack for Apache Camel by Red Hat.

3.2. Developing Camel K integrations in Java

This section shows how to develop a simple Camel K integration in Java DSL. Writing an integration in Java to be deployed using Camel K is the same as defining your routing rules in Camel. However, you do not need to build and package the integration as a JAR when using Camel K.

You can use any Camel component directly in your integration routes. Camel K automatically handles the dependency management and imports all the required libraries from the Camel catalog using code inspection.

Procedure

- Enter the kamel init command to generate a simple Java integration file. For example:

      kamel init HelloCamelK.java

- Open the generated integration file in your IDE and edit as appropriate. For example, the HelloCamelK.java integration automatically includes the Camel timer and log components to help you get started.
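To illustrate the kind of route described above, the following shell sketch writes a minimal Java integration that uses the timer and log components. The route details (the timer name, the one-second period, and the message text) are assumptions chosen for illustration, and the file is not the exact output of kamel init. The helper name write_hello_integration is hypothetical.

```shell
#!/bin/sh
# Write an illustrative Java integration into the given directory
# (defaults to the current directory). The route below is an assumed
# example of a timer-to-log route, not the file generated by kamel init.
write_hello_integration() {
  target_dir=${1:-.}
  cat > "$target_dir/HelloCamelK.java" <<'EOF'
import org.apache.camel.builder.RouteBuilder;

public class HelloCamelK extends RouteBuilder {
  @Override
  public void configure() throws Exception {
    // Fire once per second and send a fixed message to the log component
    from("timer:hello?period=1000")
      .setBody().constant("Hello Camel K")
      .to("log:info");
  }
}
EOF
}
```

The file contains only the route class, with no build descriptor or packaging step, which matches the point made above that you do not need to build and package the integration as a JAR.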

Next steps

3.3. Developing Camel K integrations in XML

This section explains how to develop a simple Camel K integration in classic XML DSL. Writing an integration in XML to be deployed using Camel K is the same as defining your routing rules in Camel.

You can use any Camel component directly in your integration routes. Camel K automatically handles the dependency management and imports all the required libraries from the Camel catalog using code inspection.

Procedure

- Enter the kamel init command to generate a simple XML integration file. For example:

      kamel init hello-camel-k.xml

- Open the generated integration file in your IDE and edit as appropriate. For example, the hello-camel-k.xml integration automatically includes the Camel timer and log components to help you get started.

Next steps

3.4. Developing Camel K integrations in YAML

This section explains how to develop a simple Camel K integration in YAML DSL. Writing an integration in YAML to be deployed using Camel K is the same as defining your routing rules in Camel.

You can use any Camel component directly in your integration routes. Camel K automatically handles the dependency management and imports all the required libraries from the Camel catalog using code inspection.

Procedure

- Enter the kamel init command to generate a simple YAML integration file. For example:

      kamel init hello.camelk.yaml

- Open the generated integration file in your IDE and edit as appropriate. For example, the hello.camelk.yaml integration automatically includes the Camel timer and log components to help you get started.

Important: Integrations written in YAML must have a file name with the pattern *.camelk.yaml or a first line of # camel-k: language=yaml.
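The YAML naming rule can be checked before you run an integration. The following sketch tests a file against the two conditions stated above. The function name is_camelk_yaml is hypothetical, and the check compares the first line literally, so it is an approximation of whatever matching Camel K itself performs.

```shell
#!/bin/sh
# Succeed if the file qualifies as a Camel K YAML integration according to
# the rule in this section: either the name matches *.camelk.yaml, or the
# first line is exactly "# camel-k: language=yaml".
# (Hypothetical helper; literal first-line comparison is an assumption.)
is_camelk_yaml() {
  file=$1
  case "$file" in
    *.camelk.yaml) return 0 ;;
  esac
  [ -f "$file" ] || return 1
  first_line=$(head -n 1 "$file")
  [ "$first_line" = "# camel-k: language=yaml" ]
}
```

For example, is_camelk_yaml hello.camelk.yaml succeeds on the file name alone, while a file named routes.yaml succeeds only if it starts with the modeline comment.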

Additional resources

3.5. Running Camel K integrations

You can run Camel K integrations in the cloud on your OpenShift cluster from the command line using the kamel run command.

Prerequisites

- Section 3.1, “Setting up your Camel K development environment”.

- You must already have a Camel integration written in Java, XML, or YAML DSL.

Procedure

- Log into your OpenShift cluster using the oc client tool, for example:

      oc login --token=my-token --server=https://my-cluster.example.com:6443

- Open a project in which the Camel K Operator is installed, for example:

      oc project my-camel-k-project

- Ensure that the Camel K Operator is running, for example:

      oc get pod

  Returns output similar to the following:

      NAME                               READY   STATUS    RESTARTS   AGE
      camel-k-operator-86b8d94b4-pk7d6   1/1     Running   0          6m28s

- Type the kamel run command to run your integration in the cloud on OpenShift. For example:

  Java example

      kamel run HelloCamelK.java

  Returns:

      integration "hello-camel-k" created

  XML example

      kamel run hello-camel-k.xml

  Returns:

      integration "hello-camel-k" created

  YAML example

      kamel run hello.camelk.yaml

  Returns:

      integration "hello" created

- Type kamel get to check the status of the integration:

      NAME    PHASE          KIT
      hello   Building Kit   kit-bq666mjej725sk8sn12g

  When the integration runs for the first time, Camel K builds the integration kit for the container image, which downloads all the required Camel modules and adds them to the image classpath.

- Type kamel get again to verify that the integration is running:

      NAME    PHASE     KIT
      hello   Running   kit-bq666mjej725sk8sn12g

- Type the kamel log command to print the log to stdout. For example:

      kamel log hello

  The command prints the log output of the running integration.

- Press Ctrl-C to terminate logging in the terminal.
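The status check in this procedure can be scripted by reading the PHASE column from the kamel get output. The following is a minimal sketch that assumes the three-column layout shown above (NAME, PHASE, KIT) with the KIT column populated. The function name integration_phase is hypothetical.

```shell
#!/bin/sh
# Print the PHASE column for one integration, reading `kamel get` output
# on stdin. The phase may contain spaces (for example "Building Kit"), so
# it is taken as every field between the first column (NAME) and the last
# column (KIT). Assumes the KIT column is not empty.
integration_phase() {
  awk -v name="$1" '
    NR > 1 && $1 == name {
      phase = $2
      for (i = 3; i < NF; i++) phase = phase " " $i
      print phase
    }'
}
```

You would use it as kamel get | integration_phase hello, for example in a loop that waits until the printed phase is Running.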

Additional resources

- For more details on the kamel run command, enter kamel run --help

- For faster deployment turnaround times, see Section 3.6, “Running Camel K integrations in development mode”

- For details of development tools to run integrations, see VS Code Tooling for Apache Camel K by Red Hat

- See also Section 5.1, “Managing Camel K integrations”
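The integration names reported by kamel run in this section (hello-camel-k for HelloCamelK.java and hello-camel-k.xml, hello for hello.camelk.yaml) follow a recognizable pattern. The sketch below reproduces that pattern for these three examples. It is an approximation inferred from the output shown here, not Camel K's actual naming code, and the function name integration_name is hypothetical.

```shell
#!/bin/sh
# Approximate the integration name that corresponds to a source file name:
# drop the directory, keep the part before the first dot, insert a dash at
# each lower-case-to-upper-case boundary, and convert to lower case.
# (Inferred from the examples in this section; an assumption, not the
# real implementation.)
integration_name() {
  base=$(basename "$1")
  stem=${base%%.*}
  printf '%s\n' "$stem" |
    sed 's/\([a-z0-9]\)\([A-Z]\)/\1-\2/g' |
    tr '[:upper:]' '[:lower:]'
}
```

Knowing the derived name in advance is convenient because later commands such as kamel log take the integration name rather than the file name.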

3.6. Running Camel K integrations in development mode

You can run Camel K integrations in development mode on your OpenShift cluster from the command line. Using development mode, you can iterate quickly on integrations in development and get fast feedback on your code.

Running the kamel run command with the --dev option deploys the integration in the cloud immediately and shows the integration logs in the terminal. You can then change the code and see the changes applied automatically to the remote integration Pod on OpenShift. The terminal displays all redeployments of the remote integration in the cloud.

The artifacts generated by Camel K in development mode are identical to those that you run in production. The purpose of development mode is faster development.

Prerequisites

- Section 3.1, “Setting up your Camel K development environment”.

- You must already have a Camel integration written in Java, XML, or YAML DSL.

Procedure

- Log into your OpenShift cluster using the oc client tool, for example:

      oc login --token=my-token --server=https://my-cluster.example.com:6443

- Ensure that the Camel K Operator is running, for example:

      oc get pod

  Returns:

      NAME                               READY   STATUS    RESTARTS   AGE
      camel-k-operator-86b8d94b4-pk7d6   1/1     Running   0          6m28s

- Enter the kamel run command with --dev to run your integration in development mode on OpenShift in the cloud. The following shows a simple Java example:

      kamel run HelloCamelK.java --dev

  The terminal shows the integration logs.

- Edit the content of your integration DSL file, save your changes, and see the changes displayed instantly in the terminal.

- Press Ctrl-C to terminate logging in the terminal.
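The Operator check in this procedure can also be automated so that a script stops early when the Operator is not ready. The following sketch reads oc get pod output on standard input and assumes the column layout shown above (NAME, READY, STATUS, RESTARTS, AGE) and a pod name that begins with camel-k-operator. The function name operator_is_running is hypothetical.

```shell
#!/bin/sh
# Succeed if the `oc get pod` output on stdin contains a pod whose name
# starts with "camel-k-operator" and whose STATUS column is "Running".
# Assumes the default five-column output shown in this section.
operator_is_running() {
  awk '$1 ~ /^camel-k-operator/ && $3 == "Running" { found = 1 }
       END { exit !found }'
}
```

A typical use is oc get pod | operator_is_running && kamel run HelloCamelK.java --dev, which starts development mode only when the check passes.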

Additional resources

- For more details on the kamel run command, enter kamel run --help

- For details of development tools to run integrations, see VS Code Tooling for Apache Camel K by Red Hat

- Section 5.1, “Managing Camel K integrations”

- Section 7.6, “Configuring Camel K integration dependencies”

3.7. Running Camel K integrations using modeline

You can use the Camel K modeline to specify multiple configuration options in a Camel K integration source file, which are executed at runtime. This creates efficiencies by saving you the time of re-entering multiple command line options and helps to prevent input errors.

The following example shows a modeline entry from a Java integration file that configures traits for Prometheus monitoring and 3scale API Management, and includes a dependency on an external Maven library:

    // camel-k: language=java trait=prometheus.enabled=true trait=3scale.enabled=true dependency=mvn:org.my/app:1.0

Prerequisites

- Section 3.1, “Setting up your Camel K development environment”.

- You must already have a Camel integration written in Java, XML, or YAML DSL.

Procedure

- Add a Camel K modeline entry to your integration file, for example, Hello.java.

- Enter the following command to run the integration:

      kamel run Hello.java

  Returns:

      Modeline options have been loaded from source files
      Full command: kamel run Hello.java --trait=prometheus.enabled=true --dependency mvn:org.my/application:1.0

  The kamel run command outputs any modeline options specified in the integration.
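The relationship between a modeline and the full command that kamel run reports can be sketched in a few lines of shell. The function below reads the first camel-k: line of a source file and prints each key=value option as a --key=value flag. It skips the language option because the output above does not show it as a flag. This is an illustration of the mapping, not Camel K's parser, and the function name modeline_to_flags is hypothetical.

```shell
#!/bin/sh
# Print the command line flags that correspond to the first Camel K
# modeline found in the given source file. Each key=value option becomes
# --key=value; the "language" option is skipped (assumption based on the
# sample output in this section).
modeline_to_flags() {
  # Keep only the text that follows the "camel-k:" marker on the first match
  opts=$(sed -n '/camel-k:/{s/^.*camel-k:[[:space:]]*//;p;q;}' "$1")
  flags=""
  for opt in $opts; do
    case "$opt" in
      language=*) ;;                       # describes the file, not a flag
      *=*) flags="$flags --$opt" ;;        # for example trait=... or dependency=...
    esac
  done
  echo "${flags# }"
}
```

Keeping these options in the source file means that a plain kamel run Hello.java is enough, which is the time saving and error prevention that this section describes.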

Additional resources

- Section 9.2, “Camel K modeline options”

- For details of development tools to run modeline integrations, see Introducing IDE support for Apache Camel K Modeline.

Chapter 4. Camel K quick start developer tutorials

Red Hat Integration - Camel K provides quick start developer tutorials based on integration use cases available from https://github.com/openshift-integration. This chapter provides details on how to set up and deploy the following tutorials:

- Section 4.1, “Deploying a basic Camel K Java integration”

- Section 4.2, “Deploying a Camel K Serverless integration with Knative”

- Section 4.3, “Deploying a Camel K transformations integration”

- Section 4.4, “Deploying a Camel K Serverless event streaming integration”

- Section 4.5, “Deploying a Camel K Serverless API-based integration”

- Section 4.6, “Deploying a Camel K SaaS integration”

4.1. Deploying a basic Camel K Java integration

This tutorial demonstrates how to run a simple Java integration in the cloud on OpenShift, apply configuration and routing to an integration, and run an integration as a Kubernetes CronJob.

Prerequisites

- See the tutorial readme in GitHub: https://github.com/openshift-integration/camel-k-example-basic.

- You must have cluster administrator access to an OpenShift cluster to install Camel K. See Section 2.1, “Installing Camel K from the OpenShift OperatorHub”.

- You must have the kamel command installed. See Section 2.3, “Installing the Camel K and OpenShift command line tools”.

- Visual Studio (VS) Code is optional but recommended for the best developer experience. See Section 3.1, “Setting up your Camel K development environment”.

Procedure

Clone the tutorial Git repository:

git clone git@github.com:openshift-integration/camel-k-example-basic.git

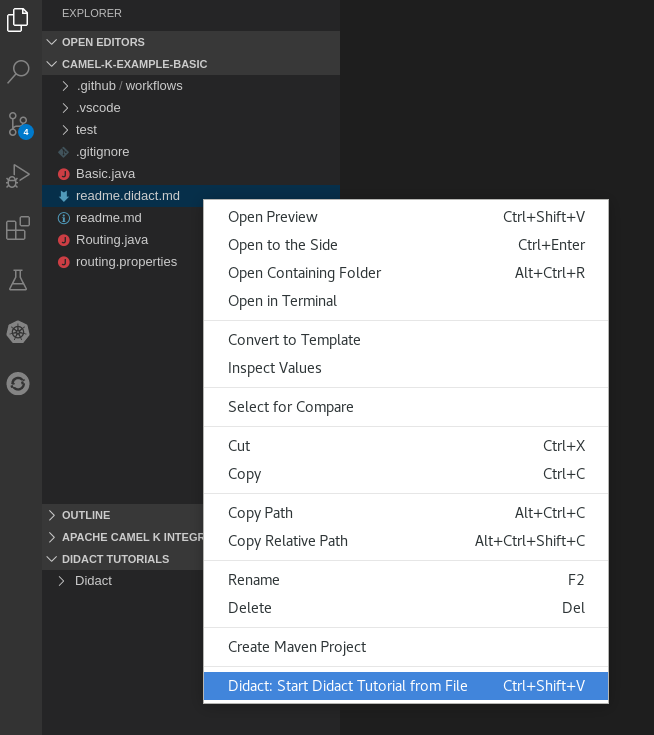

git clone git@github.com:openshift-integration/camel-k-example-basic.gitCopy to Clipboard Copied! Toggle word wrap Toggle overflow - In VS Code, select File → Open Folder → camel-k-example-basic.

In the VS Code navigation tree, right-click the

readme.didact.mdfile and select Didact: Start Didact Tutorial from File. For example:

This opens a new Didact tab in VS Code to display the tutorial instructions.

Follow the tutorial instructions and click the provided links to run the commands automatically.

Alternatively, if you do not have VS Code installed with the Didact extension, you can manually enter the commands from https://github.com/openshift-integration/camel-k-example-basic.

Additional resources

4.2. Deploying a Camel K Serverless integration with Knative

This tutorial demonstrates how to deploy Camel K integrations with OpenShift Serverless in an event-driven architecture. This tutorial uses a Knative Eventing broker to communicate using an event publish-subscribe pattern in a Bitcoin trading demonstration.

This tutorial also shows how to use Camel K integrations to connect to a Knative event mesh with multiple external systems. The Camel K integrations also use Knative Serving to automatically scale up and down to zero as needed.

Prerequisites

- See the tutorial readme in GitHub: https://github.com/openshift-integration/camel-k-example-knative.

You must have cluster administrator access to an OpenShift cluster to install Camel K and OpenShift Serverless:

- You must have the kamel command installed. See Section 2.3, “Installing the Camel K and OpenShift command line tools”.

- Visual Studio (VS) Code is optional but recommended for the best developer experience. See Section 3.1, “Setting up your Camel K development environment”.

Procedure

- Clone the tutorial Git repository:

      git clone git@github.com:openshift-integration/camel-k-example-knative.git

- In VS Code, select File → Open Folder → camel-k-example-knative.

- In the VS Code navigation tree, right-click the readme.didact.md file and select Didact: Start Didact Tutorial from File. This opens a new Didact tab in VS Code to display the tutorial instructions.

- Follow the tutorial instructions and click the provided links to run the commands automatically.

  Alternatively, if you do not have VS Code installed with the Didact extension, you can manually enter the commands from https://github.com/openshift-integration/camel-k-example-knative.

Additional resources

4.3. Deploying a Camel K transformations integration

This tutorial demonstrates how to run a Camel K Java integration on OpenShift that transforms data such as XML to JSON, and stores it in a database such as PostgreSQL.

The tutorial example uses a CSV file to query an XML API and uses the data collected to build a valid GeoJSON file, which is stored in a PostgreSQL database.

Prerequisites

- See the tutorial readme in GitHub: https://github.com/openshift-integration/camel-k-example-transformations.

- You must have cluster administrator access to an OpenShift cluster to install Camel K. See Section 2.1, “Installing Camel K from the OpenShift OperatorHub”.

- You must have the kamel command installed. See Section 2.3, “Installing the Camel K and OpenShift command line tools”.

- You must follow the instructions in the tutorial readme to install the PostgreSQL Operator by Dev4Devs.com, which is required on your OpenShift cluster.

- Visual Studio (VS) Code is optional but recommended for the best developer experience. See Section 3.1, “Setting up your Camel K development environment”.

Procedure

- Clone the tutorial Git repository:

git clone git@github.com:openshift-integration/camel-k-example-transformations.git

- In VS Code, select File → Open Folder → camel-k-example-transformations.

- In the VS Code navigation tree, right-click the readme.didact.md file and select Didact: Start Didact Tutorial from File. This opens a new Didact tab in VS Code to display the tutorial instructions. Follow the tutorial instructions and click the provided links to run the commands automatically.

Alternatively, if you do not have VS Code installed with the Didact extension, you can manually enter the commands from https://github.com/openshift-integration/camel-k-example-transformations.

Additional resources

4.4. Deploying a Camel K Serverless event streaming integration

This tutorial demonstrates using Camel K and OpenShift Serverless with Knative Eventing for an event-driven architecture.

The tutorial shows how to install Camel K and Serverless with Knative in an AMQ Streams cluster with an AMQ Broker cluster, and how to deploy an event streaming project to run a global hazard alert demonstration application.

Prerequisites

- See the tutorial readme in GitHub: https://github.com/openshift-integration/camel-k-example-event-streaming.

- You must have cluster administrator access to an OpenShift cluster to install Camel K and OpenShift Serverless.

- You must have the kamel command installed. See Section 2.3, “Installing the Camel K and OpenShift command line tools”.

- You must follow the instructions in the tutorial readme to install the additional required Operators on your OpenShift cluster:

- AMQ Streams Operator

- AMQ Broker Operator

- Visual Studio (VS) Code is optional but recommended for the best developer experience. See Section 3.1, “Setting up your Camel K development environment”.

Procedure

- Clone the tutorial Git repository:

git clone git@github.com:openshift-integration/camel-k-example-event-streaming.git

- In VS Code, select File → Open Folder → camel-k-example-event-streaming.

- In the VS Code navigation tree, right-click the readme.didact.md file and select Didact: Start Didact Tutorial from File. This opens a new Didact tab in VS Code to display the tutorial instructions. Follow the tutorial instructions and click the provided links to run the commands automatically.

Alternatively, if you do not have VS Code installed with the Didact extension, you can manually enter the commands from https://github.com/openshift-integration/camel-k-example-event-streaming.

Additional resources

4.5. Deploying a Camel K Serverless API-based integration

This tutorial demonstrates using Camel K and OpenShift Serverless with Knative Serving for an API-based integration, and managing an API with 3scale API Management on OpenShift.

The tutorial shows how to configure Amazon S3-based storage, design an OpenAPI definition, and run an integration that calls the demonstration API endpoints.

Prerequisites

- See the tutorial readme in GitHub: https://github.com/openshift-integration/camel-k-example-api.

- You must have cluster administrator access to an OpenShift cluster to install Camel K and OpenShift Serverless.

- You must have the kamel command installed. See Section 2.3, “Installing the Camel K and OpenShift command line tools”.

- You can also install the optional Red Hat Integration - 3scale Operator on your OpenShift system to manage the API. See Deploying 3scale using the Operator.

- Visual Studio (VS) Code is optional but recommended for the best developer experience. See Section 3.1, “Setting up your Camel K development environment”.

Procedure

- Clone the tutorial Git repository:

git clone git@github.com:openshift-integration/camel-k-example-api.git

- In VS Code, select File → Open Folder → camel-k-example-api.

- In the VS Code navigation tree, right-click the readme.didact.md file and select Didact: Start Didact Tutorial from File. This opens a new Didact tab in VS Code to display the tutorial instructions. Follow the tutorial instructions and click the provided links to run the commands automatically.

Alternatively, if you do not have VS Code installed with the Didact extension, you can manually enter the commands from https://github.com/openshift-integration/camel-k-example-api.

Additional resources

4.6. Deploying a Camel K SaaS integration

This tutorial demonstrates how to run a Camel K Java integration on OpenShift that connects two widely-used Software as a Service (SaaS) providers.

The tutorial example shows how to integrate the Salesforce and ServiceNow SaaS providers using REST-based Camel components. In this simple example, each new Salesforce Case is copied to a corresponding ServiceNow Incident that includes the Salesforce Case Number.

Prerequisites

- See the tutorial readme in GitHub: https://github.com/openshift-integration/camel-k-example-saas.

- You must have cluster administrator access to an OpenShift cluster to install Camel K. See Section 2.1, “Installing Camel K from the OpenShift OperatorHub”.

- You must have the kamel command installed. See Section 2.3, “Installing the Camel K and OpenShift command line tools”.

- You must have Salesforce login credentials and ServiceNow login credentials.

- Visual Studio (VS) Code is optional but recommended for the best developer experience. See Section 3.1, “Setting up your Camel K development environment”.

Procedure

- Clone the tutorial Git repository:

git clone git@github.com:openshift-integration/camel-k-example-saas.git

- In VS Code, select File → Open Folder → camel-k-example-saas.

- In the VS Code navigation tree, right-click the readme.didact.md file and select Didact: Start Didact Tutorial from File. This opens a new Didact tab in VS Code to display the tutorial instructions. Follow the tutorial instructions and click the provided links to run the commands automatically.

Alternatively, if you do not have VS Code installed with the Didact extension, you can manually enter the commands from https://github.com/openshift-integration/camel-k-example-saas.

Additional resources

Chapter 5. Managing Camel K integrations

You can manage Red Hat Integration - Camel K integrations using the Camel K command line or using development tools. This chapter explains how to manage Camel K integrations on the command line and provides links to additional resources that explain how to use the VS Code development tools.

5.1. Managing Camel K integrations

Camel K provides different options for managing Camel K integrations on your OpenShift cluster on the command line. This section shows simple examples of using the following commands:

- kamel get

- kamel describe

- kamel log

- kamel delete

Prerequisites

- Section 3.1, “Setting up your Camel K development environment”

- You must already have a Camel integration written in Java, XML, or YAML DSL

Procedure

- Ensure that the Camel K Operator is running on your OpenShift cluster, for example:

oc get pod

Returns:

NAME                               READY   STATUS    RESTARTS   AGE
camel-k-operator-86b8d94b4-pk7d6   1/1     Running   0          6m28s

- Enter the kamel run command to run your integration in the cloud on OpenShift. For example:

kamel run hello.camelk.yaml

Returns:

integration "hello" created

- Check the status of the integration:

kamel get

Returns:

NAME    PHASE          KIT
hello   Building Kit   kit-bqatqib5t4kse5vukt40

- Enter the kamel describe command to print detailed information about the integration:

kamel describe integration hello

- Enter the kamel log command to print the log to stdout:

kamel log hello

Returns:

...
[1] 2020-04-14 16:03:41.205 INFO [Camel (camel-k) thread #1 - timer://yaml] info - Exchange[ExchangePattern: InOnly, BodyType: String, Body: Hello Camel K from yaml]
[1] 2020-04-14 16:03:42.205 INFO [Camel (camel-k) thread #1 - timer://yaml] info - Exchange[ExchangePattern: InOnly, BodyType: String, Body: Hello Camel K from yaml]
[1] 2020-04-14 16:03:43.204 INFO [Camel (camel-k) thread #1 - timer://yaml] info - Exchange[ExchangePattern: InOnly, BodyType: String, Body: Hello Camel K from yaml]
...

- Press Ctrl-C to terminate logging in the terminal.

- Enter the kamel delete command to delete the integration deployed on OpenShift:

kamel delete hello

Returns:

Integration hello deleted
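The commands in this procedure assume that a hello.camelk.yaml file exists in the current directory. As a minimal sketch, the following shell snippet writes one. The route content is an assumption inferred from the log output shown above (a timer://yaml endpoint that logs "Hello Camel K from yaml") and from the Camel K YAML DSL as it is commonly written for this release. It is not copied from the original example, so adjust it if your Camel K version expects a different syntax.

```shell
# Write a hypothetical hello.camelk.yaml (assumed content, see the note above).
# The quoted 'EOF' prevents the shell from expanding anything inside the file.
cat > hello.camelk.yaml <<'EOF'
# Fire a timer event every second, set a constant body, and log it.
- from:
    uri: "timer:yaml"
    parameters:
      period: "1000"
    steps:
      - set-body:
          constant: "Hello Camel K from yaml"
      - to: "log:info"
EOF

echo "wrote hello.camelk.yaml"
```

You can then pass the file to kamel run hello.camelk.yaml as described in the procedure.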

Additional resources

- For more details on logging, see Section 5.2, “Managing Camel K integration logging levels”

- For faster deployment turnaround times, see Section 3.6, “Running Camel K integrations in development mode”

- For details of development tools to manage integrations, see VS Code Tooling for Apache Camel K by Red Hat

5.2. Managing Camel K integration logging levels

Camel K uses Apache Log4j 2 as the logging framework for integrations. You can configure the logging levels of various loggers on the command line at runtime by specifying the logging.level prefix as an integration property. For example:

--property logging.level.org.apache.camel=DEBUG

Procedure

- Enter the kamel run command and specify the logging level using the --property option. For example:

kamel run --property logging.level.org.apache.camel=DEBUG HelloCamelK.java --dev

- Press Ctrl-C to terminate logging in the terminal.

Additional resources

- For more details on the logging framework, see the Apache Log4j 2 documentation

- For details of development tools to view logging, see VS Code Tooling for Apache Camel K by Red Hat

Chapter 6. Monitoring Camel K integrations

Red Hat Integration - Camel K monitoring is based on the Prometheus monitoring system: https://prometheus.io/. This chapter explains how to use the available options for monitoring Red Hat Integration - Camel K integrations at runtime. You can use the Prometheus Operator that is already deployed as part of OpenShift Monitoring to monitor your own applications.

6.1. Enabling user workload monitoring in OpenShift

OpenShift 4.3 or higher includes an embedded Prometheus Operator already deployed as part of OpenShift Monitoring. This section explains how to enable monitoring of your own application services in OpenShift Monitoring. This option avoids the additional overhead of installing and managing a separate Prometheus instance.

Monitoring of Camel K integrations using a separate Prometheus Operator is not included in the Technology Preview.

Prerequisites

- You must have cluster administrator access to an OpenShift cluster on which the Camel K Operator is installed. See Section 2.1, “Installing Camel K from the OpenShift OperatorHub”.

Procedure

- Enter the following command to check if the cluster-monitoring-config ConfigMap object exists in the openshift-monitoring project:

oc -n openshift-monitoring get configmap cluster-monitoring-config

- Create the cluster-monitoring-config ConfigMap if this does not already exist:

oc -n openshift-monitoring create configmap cluster-monitoring-config

- Edit the cluster-monitoring-config ConfigMap:

oc -n openshift-monitoring edit configmap cluster-monitoring-config

- Under data:config.yaml:, set enableUserWorkload to true.
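After the edit, the ConfigMap would look roughly like the content below. This is a sketch: it writes the expected content to a local file so that you can compare it with what oc edit shows. The local file name is arbitrary, and the layout assumes the enableUserWorkload key named in the last step sits directly under config.yaml.

```shell
# Write a reference copy of the expected ConfigMap to a local file.
# Nothing here contacts the cluster; the file is for comparison only.
cat > cluster-monitoring-config.yaml <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: cluster-monitoring-config
  namespace: openshift-monitoring
data:
  config.yaml: |
    enableUserWorkload: true
EOF

echo "wrote cluster-monitoring-config.yaml"
```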

Additional resources

6.2. Configuring Camel K integration metrics

You can configure monitoring of Camel K integrations automatically using the Camel K Prometheus trait at runtime. This automates the configuration of dependencies and integration Pods to expose a metrics endpoint, which is then discovered and displayed by Prometheus. The Camel Quarkus MicroProfile Metrics extension automatically collects and exposes the default Camel K metrics in the OpenMetrics format.

Prerequisites

- You must have already enabled monitoring of your own services in OpenShift. See Section 6.1, “Enabling user workload monitoring in OpenShift”.

Procedure

- Enter the following command to run your Camel K integration with the Prometheus trait enabled:

kamel run myIntegration.java -t prometheus.enabled=true

Alternatively, you can enable the Prometheus trait globally once, by updating the integration platform as follows:

oc patch ip camel-k --type=merge -p '{"spec":{"traits":{"prometheus":{"configuration":{"enabled":"true"}}}}}'

- View monitoring of Camel K integration metrics in Prometheus. For example, for embedded Prometheus, select Monitoring > Metrics in the OpenShift administrator or developer web console.

- Enter the Camel K metric that you want to view. For example, in the Administrator console, under Insert Metric at Cursor, enter application_camel_context_uptime_seconds, and click Run Queries.

- Click Add Query to view additional metrics.

Additional resources

6.3. Adding custom Camel K integration metrics

You can add custom metrics to your Camel K integrations by using the Camel MicroProfile Metrics component and annotations in your Java code. Prometheus then automatically discovers and displays these custom metrics.

This section shows examples of adding Camel MicroProfile Metrics annotations to Camel K integration and service implementation code.

Prerequisites

- You must have already enabled monitoring of your own services in OpenShift. See Section 6.1, “Enabling user workload monitoring in OpenShift”.

Procedure

- Register the custom metrics in your Camel integration code using Camel MicroProfile Metrics component annotations. The Metrics.java example integration uses the following numbered callouts:

- (1) Uses the Camel K modeline to automatically configure the Prometheus trait and Maven dependencies

- (2) error: Metric for the number of errors corresponding to the number of events that have not been processed

- (3) generated: Metric for the number of events to be processed

- (4) attempt: Metric for the number of calls made to the service bean to process incoming events

- (5) redelivery: Metric for the number of retries made to process the event

- (6) success: Metric for the number of events successfully processed

- Add Camel MicroProfile Metrics annotations to any implementation files as needed. In the example, the service bean that the Camel K integration calls generates random failures and carries these annotations.

- Follow the steps in Section 6.2, “Configuring Camel K integration metrics” to run the integration and view the custom Camel K metrics in Prometheus.

In this case, the example already uses the Camel K modeline in Metrics.java to automatically configure Prometheus and the required Maven dependencies for Service.java.

Additional resources

Chapter 7. Configuring Camel K integrations

This chapter explains available options for configuring Red Hat Integration - Camel K integrations using properties:

- Section 7.1, “Configuring Camel K integrations using properties”

- Section 7.2, “Configuring Camel K integrations using property files”

- Section 7.3, “Configuring Camel K properties using an OpenShift ConfigMap”

- Section 7.4, “Configuring Camel K properties using an OpenShift Secret”

- Section 7.5, “Configuring Camel integration components”

- Section 7.6, “Configuring Camel K integration dependencies”

7.1. Configuring Camel K integrations using properties

You can configure properties for Camel K integrations on the command line at runtime. When you define a property in an integration using a property placeholder, for example, {{my.message}}, you can specify the property value on the command line, for example --property my.message=Hello. You can specify multiple properties in a single command.

Procedure

- Develop a Camel integration that uses a property, for example a simple route that includes a {{my.message}} property placeholder.

- Enter the kamel run command using the --property option to set the property value at runtime. For example:

kamel run --property my.message="Hola Mundo" HelloCamelK.java --dev

Returns output similar to the following:

...
[1] 2020-04-13 15:39:59.213 INFO [main] ApplicationRuntime - Listener org.apache.camel.k.listener.RoutesDumper@6e0dec4a executed in phase Started
[1] 2020-04-13 15:40:00.237 INFO [Camel (camel-k) thread #1 - timer://java] info - Exchange[ExchangePattern: InOnly, BodyType: String, Body: Hola Mundo from java]
...
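As a minimal sketch of the kind of route this procedure describes, the following shell snippet writes a HelloCamelK.java file that uses the {{my.message}} placeholder. The route body is an assumption inferred from the log output above (a timer://java endpoint that logs "<message> from java"). It is not the original example source.

```shell
# Write a hypothetical HelloCamelK.java (assumed content, see the note above).
# The quoted 'EOF' keeps {{my.message}} and the Java code free of shell expansion.
cat > HelloCamelK.java <<'EOF'
import org.apache.camel.builder.RouteBuilder;

public class HelloCamelK extends RouteBuilder {
  @Override
  public void configure() throws Exception {
    // Fire once per second. {{my.message}} is replaced with the value
    // supplied by the --property option when the integration starts.
    from("timer:java?period=1000")
      .setBody().simple("{{my.message}} from java")
      .to("log:info");
  }
}
EOF

echo "wrote HelloCamelK.java"
```

Run it with the kamel run --property command shown in the procedure to see the placeholder replaced in the log.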

7.2. Configuring Camel K integrations using property files

You can configure multiple properties for Camel K integrations by specifying a property file on the command line at runtime. When you define properties in an integration using property placeholders, for example, {{my.items}}, you can specify the property values on the command line using a properties file, for example --property-file my-integration.properties.

Procedure

- Define your integration properties file. The following shows a simple example from a routing.properties file:

# List of items for random generation
items=*radiator *engine *door window

# Marker to identify priority items
priority-marker=*

- Develop a Camel integration that uses properties defined in the properties file. The Routing.java example integration uses the {{items}} and {{priority-marker}} property placeholders.

- Enter the kamel run command with the --property-file option. For example:

kamel run Routing.java --property-file routing.properties --dev
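To make the role of the two properties concrete, the following shell sketch classifies each item by whether it starts with the priority marker. It only illustrates the intent suggested by the comments in routing.properties. The classify_items function and its output labels are hypothetical, and the sketch does not reproduce the logic of Routing.java.

```shell
# Values copied from the routing.properties example above.
items='*radiator *engine *door window'
priority_marker='*'

# Hypothetical helper: print each item with a "priority" or "standard" label.
classify_items() {
  # Disable globbing so the literal "*" marker is not expanded to file names.
  set -f
  for item in $items; do
    case "$item" in
      # The quoted marker is matched literally; the trailing * is a wildcard.
      "$priority_marker"*) echo "priority: $item" ;;
      *) echo "standard: $item" ;;
    esac
  done
  set +f
}

classify_items
```

With the values above, the first three items are labeled as priority and window is labeled as standard.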

7.3. Configuring Camel K properties using an OpenShift ConfigMap

You can configure multiple properties for Camel K integrations using an OpenShift ConfigMap. When you define properties in an integration using property placeholders, for example, {{my.message}}, you can specify the property values at runtime using a ConfigMap. You can also specify additional properties such as logging levels in the application.properties section of the ConfigMap.

Procedure

- Develop a Camel integration that uses properties, for example a simple route that includes the {{my.message}} property placeholder.

- Define a ConfigMap that contains your configuration properties. The example in this procedure sets the value of the my.message property and sets the logging level for the org.apache.camel package in the application.properties section.

- Create the ConfigMap in the same OpenShift namespace as your integration:

oc apply -f my-configmap.yaml

Returns:

configmap/my-configmap created

- Run the integration with the --configmap option to specify the configuration properties in the ConfigMap:

kamel run --configmap=my-configmap HelloCamelK.java --dev

Returns:

...
[1] 2020-04-14 14:18:20.654 DEBUG [Camel (camel-k) thread #1 - timer://java] DefaultReactiveExecutor - Queuing reactive work: CamelInternalProcessor - UnitOfWork - afterProcess - DefaultErrorHandler[sendTo(log://info)] - ID-hello-camel-k-5df4bcd7dc-zq4vw-1586873876659-0-25
[1] 2020-04-14 14:18:20.654 DEBUG [Camel (camel-k) thread #1 - timer://java] SendProcessor - >>>> log://info Exchange[ID-hello-camel-k-5df4bcd7dc-zq4vw-1586873876659-0-25]
[1] 2020-04-14 14:18:20.655 INFO [Camel (camel-k) thread #1 - timer://java] info - Exchange[ExchangePattern: InOnly, BodyType: String, Body: Bonjour le monde from java]
...
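As a minimal sketch, the following shell snippet writes a my-configmap.yaml file that matches this procedure. The property values are assumptions inferred from the output above (the body "Bonjour le monde from java" and DEBUG-level log lines). The original ConfigMap definition is not reproduced here.

```shell
# Write a hypothetical my-configmap.yaml (assumed values, see the note above).
cat > my-configmap.yaml <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  name: my-configmap
data:
  # Properties in this section are made available to the integration.
  application.properties: |
    my.message=Bonjour le monde
    logging.level.org.apache.camel=DEBUG
EOF

echo "wrote my-configmap.yaml"
```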

Additional resources

7.4. Configuring Camel K properties using an OpenShift Secret

You can configure multiple properties for Camel K integrations using an OpenShift Secret. When you define properties in an integration using property placeholders, for example, {{my.message}}, you can specify the property values at runtime using a Secret. You can also specify additional properties such as logging levels in the application.properties section of the Secret.

Configuring integration properties using a Secret is similar to configuring using a ConfigMap. The main difference is that you may need to base64-encode the content of the application.properties in the Secret.

Procedure

- Develop a Camel integration that uses properties, for example a simple route that includes the {{my.message}} property placeholder.

- Define a Secret that contains your configuration properties. The example in this procedure sets the value of the my.message property to Hello World and sets the logging level for the org.apache.camel package to DEBUG. These settings are specified in base64-encoded format in the application.properties section.

- Create the Secret in the same OpenShift namespace as your integration:

oc apply -f my-secret.yaml

Returns:

secret/my-secret created

- Run the integration with the --secret option to specify the configuration properties in the Secret:

kamel run --secret=my-secret HelloCamelK.java --dev

Returns:

[1] 2020-04-14 14:30:29.788 DEBUG [Camel (camel-k) thread #1 - timer://java] DefaultReactiveExecutor - Queuing reactive work: CamelInternalProcessor - UnitOfWork - afterProcess - DefaultErrorHandler[sendTo(log://info)] - ID-hello-camel-k-68f85d99b9-srd92-1586874486770-0-144
[1] 2020-04-14 14:30:29.789 DEBUG [Camel (camel-k) thread #1 - timer://java] SendProcessor - >>>> log://info Exchange[ID-hello-camel-k-68f85d99b9-srd92-1586874486770-0-144]
[1] 2020-04-14 14:30:29.789 INFO [Camel (camel-k) thread #1 - timer://java] info - Exchange[ExchangePattern: InOnly, BodyType: String, Body: Hello World from java]
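The base64 step can be sketched as follows. The snippet encodes the two properties named in this procedure and writes them into a my-secret.yaml file. The variable names and the exact file layout are illustrative assumptions, and the original Secret definition is not reproduced here.

```shell
# Plain-text properties to store in the Secret (values taken from this procedure).
props='my.message=Hello World
logging.level.org.apache.camel=DEBUG
'

# Encode the properties. tr removes the line wrapping that some base64
# implementations insert, because the Secret value must be a single line.
encoded=$(printf '%s' "$props" | base64 | tr -d '\n')

# Write the Secret definition. EOF is unquoted so that $encoded is expanded.
cat > my-secret.yaml <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: my-secret
data:
  application.properties: $encoded
EOF

echo "wrote my-secret.yaml"
```

Encoding is not encryption: anyone who can read the Secret can decode the value, so access to the Secret still needs to be restricted.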

Additional resources

7.5. Configuring Camel integration components

You can configure Camel components programmatically in your integration code or by using configuration properties on the command line at runtime. You can configure Camel components using the following syntax:

camel.component.${scheme}.${property}=${value}

For example, to change the queue size of the Camel seda component for staged event-driven architecture, you can configure the following property on the command line:

camel.component.seda.queueSize=10

Procedure

- Enter the kamel run command and specify the Camel component configuration using the --property option. For example:

kamel run --property camel.component.seda.queueSize=10 examples/Integration.java
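The property syntax can be sketched by assembling the key from its three parts. The shell variable names below are illustrative, and the snippet only prints the resulting command rather than running it.

```shell
# The three parts of a component property, using the seda example above.
scheme=seda
property=queueSize
value=10

# Assemble camel.component.<scheme>.<property>=<value>.
component_property="camel.component.${scheme}.${property}=${value}"

# Print the command that would pass this property to the integration.
echo "kamel run --property ${component_property} examples/Integration.java"
```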

7.6. Configuring Camel K integration dependencies

Camel K automatically resolves a wide range of dependencies that are required to run your integration code. However, you can explicitly add dependencies on the command line at runtime using the kamel run --dependency option.

The following example integration uses Camel K automatic dependency resolution:

...
from("imap://admin@myserver.com")
  .to("seda:output")
...

Because this integration has an endpoint starting with the imap: prefix, Camel K can automatically add the camel-mail component to the list of required dependencies. The seda: endpoint belongs to camel-core, which is automatically added to all integrations, so Camel K does not add additional dependencies for this component.

Camel K automatic dependency resolution is transparent to the user at runtime. This is very useful in development mode because you can quickly add all the components that you need without exiting the development loop.

You can explicitly add a dependency using the kamel run --dependency or -d option. You might need to use this to specify dependencies that are not included in the Camel catalog. You can specify multiple dependencies on the command line.

Procedure

- Enter the kamel run command and specify dependencies using the -d option. For example:

kamel run -d mvn:com.google.guava:guava:26.0-jre -d camel-mina2 Integration.java

You can disable automatic dependency resolution by disabling the dependencies trait: --trait dependencies.enabled=false. However, this is not recommended in most cases.

Chapter 8. Camel K trait configuration reference

This chapter provides reference information about advanced features and core capabilities that you can configure on the command line at runtime using traits. Camel K provides feature traits to configure specific features and technologies. Camel K provides platform traits to configure internal Camel K core capabilities.

The Red Hat Integration - Camel K Technology Preview includes the OpenShift and Knative profiles. The Kubernetes profile has community-only support.

This Technology Preview includes Java, XML, and YAML DSL for integrations. Other languages such as Groovy, JavaScript, and Kotlin have community-only support.

This chapter includes the following sections:

Camel K feature traits

- Section 8.2.1, “3scale Trait”

- Section 8.2.2, “Affinity Trait”

- Section 8.2.3, “Cron Trait”

- Section 8.2.4, “Gc Trait”

- Section 8.2.5, “Istio Trait”

- Section 8.2.6, “Jolokia Trait”

- Section 8.2.7, “Knative Trait”

- Section 8.2.8, “Knative Service Trait”

- Section 8.2.9, “Master Trait”

- Section 8.2.10, “Prometheus Trait”

- Section 8.2.11, “Quarkus Trait”

- Section 8.2.12, “Route Trait”

- Section 8.2.13, “Service Trait”

Camel K core platform traits

- Section 8.3.1, “Builder Trait”

- Section 8.3.3, “Camel Trait”

- Section 8.3.2, “Container Trait”

- Section 8.3.4, “Dependencies Trait”

- Section 8.3.5, “Deployer Trait”

- Section 8.3.6, “Deployment Trait”

- Section 8.3.7, “Environment Trait”

- Section 8.3.8, “Jvm Trait”

- Section 8.3.9, “Openapi Trait”

- Section 8.3.10, “Owner Trait”

- Section 8.3.11, “Platform Trait”

8.1. Camel K trait and profile configuration

This section explains the important Camel K concepts of traits and profiles, which are used to configure advanced Camel K features at runtime.

Camel K traits

Camel K traits are advanced features and core capabilities that you can configure on the command line to customize Camel K integrations. For example, this includes feature traits that configure interactions with technologies such as 3scale API Management, Quarkus, Knative, and Prometheus. Camel K also provides internal platform traits that configure important core platform capabilities such as Camel support, containers, dependency resolution, and JVM support.

Camel K profiles

Camel K profiles define the target cloud platforms on which Camel K integrations run. The Camel K Technology Preview supports the OpenShift and Knative profiles.

When you run an integration on OpenShift, Camel K uses the Knative profile when OpenShift Serverless is installed on the cluster. Camel K uses the OpenShift profile when OpenShift Serverless is not installed.

You can also specify the profile at runtime using the kamel run --profile option.
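
As a minimal sketch of the --profile option, the following shell function prints a kamel run command that pins the profile instead of relying on automatic detection. The function name kamel_profile_cmd is hypothetical, and the lowercase spelling of the profile names is an assumption; check kamel run --help for the values that your version accepts.

```shell
# Print (rather than run) a kamel command that pins the profile explicitly.
# Assumption: profile names are written in lowercase on the command line.
kamel_profile_cmd() {
  profile=$1
  file=$2
  case "$profile" in
    openshift|knative) ;;  # the two profiles covered by this Technology Preview
    *) echo "unsupported profile: $profile" >&2; return 1 ;;
  esac
  printf 'kamel run --profile %s %s\n' "$profile" "$file"
}

kamel_profile_cmd openshift my-integration.yaml
```

Printing the command first makes it easy to review before running it against a cluster.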

Camel K provides useful defaults for all traits, taking into account the target profile on which the integration runs. However, advanced users can configure Camel K traits for custom behavior. Some traits only apply to specific profiles such as OpenShift or Knative. For more details, see the available profiles in each trait description.

Camel K trait configuration

Each Camel K trait has a unique ID that you can use to configure the trait on the command line. For example, the following command disables creating an OpenShift Service for an integration:

kamel run --trait service.enabled=false my-integration.yaml

You can also use the -t option to specify traits.
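
To set several trait properties at once, repeat the option once per setting. The sketch below assembles such a command from a list of settings; the helper name kamel_with_traits is hypothetical, and the format check only reflects the trait-id.property=value pattern used in this chapter.

```shell
# Print a kamel run command with one -t flag per trait setting.
# Each setting is expected in the form <trait-id>.<property>=<value>.
kamel_with_traits() {
  file=$1
  shift
  cmd="kamel run"
  for setting in "$@"; do
    case "$setting" in
      ?*.?*=*) cmd="$cmd -t $setting" ;;
      *) echo "expected <trait-id>.<property>=<value>, got: $setting" >&2; return 1 ;;
    esac
  done
  printf '%s %s\n' "$cmd" "$file"
}

kamel_with_traits my-integration.yaml service.enabled=false
```

The final line prints the short-option equivalent of the command shown earlier in this section.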

Camel K trait properties

You can use the enabled property to enable or disable each trait. All traits have their own internal logic to determine if they need to be enabled when the user does not activate them explicitly.

Disabling a platform trait may compromise the platform functionality.

Some traits have an auto property, which you can use to enable or disable automatic configuration of the trait based on the environment. For example, this includes traits such as 3scale, Cron, and Knative. This automatic configuration can enable or disable the trait when the enabled property is not explicitly set, and can change the trait configuration.

Most traits have additional properties that you can configure on the command line. For more details, see the descriptions for each trait in the sections that follow.

8.2. Camel K feature traits

8.2.1. 3scale Trait

The 3scale trait can be used to automatically create annotations that allow 3scale to discover the generated service and make it available for API management.

The 3scale trait is disabled by default.

This trait is available in the following profiles: Kubernetes, Knative, OpenShift.

8.2.1.1. Configuration

Trait properties can be specified when running any integration with the CLI:

kamel run --trait 3scale.[key]=[value] --trait 3scale.[key2]=[value2] Integration.java

The following configuration options are available:

| Property | Type | Description |
|---|---|---|
| 3scale.enabled | bool | Can be used to enable or disable a trait. All traits share this common property. |
| 3scale.auto | bool | Enables automatic configuration of the trait. |
| 3scale.scheme | string | The scheme to use to contact the service (default …). |
| 3scale.path | string | The path where the API is published (default …). |
| 3scale.port | int | The port where the service is exposed (default …). |
| 3scale.description-path | string | The path where the Open-API specification is published (default …). |
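
Because the 3scale trait is disabled by default, a command that uses it has to set 3scale.enabled=true explicitly. The following sketch prints such a command; the helper name threescale_cmd and the values https, 8443, and /api are illustrative assumptions, not defaults taken from this reference.

```shell
# Print a kamel run command that enables the 3scale trait with explicit settings.
# All argument values in the example call are hypothetical.
threescale_cmd() {
  scheme=$1
  port=$2
  path=$3
  file=$4
  case "$port" in
    ''|*[!0-9]*) echo "3scale.port must be an integer, got: $port" >&2; return 1 ;;
  esac
  printf 'kamel run --trait 3scale.enabled=true --trait 3scale.scheme=%s --trait 3scale.port=%s --trait 3scale.path=%s %s\n' \
    "$scheme" "$port" "$path" "$file"
}

threescale_cmd https 8443 /api Integration.java
```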

8.2.2. Affinity Trait

The Affinity trait constrains which nodes the integration pod(s) are eligible to be scheduled on, based on labels on the node, or with inter-pod affinity and anti-affinity, based on labels on pods that are already running on the nodes.

The Affinity trait is disabled by default.

This trait is available in the following profiles: Kubernetes, Knative, OpenShift.

8.2.2.1. Configuration

Trait properties can be specified when running any integration with the CLI:

kamel run --trait affinity.[key]=[value] --trait affinity.[key2]=[value2] Integration.java

The following configuration options are available:

| Property | Type | Description |
|---|---|---|
| affinity.enabled | bool | Can be used to enable or disable a trait. All traits share this common property. |
| affinity.pod-affinity | bool | Always co-locates multiple replicas of the integration in the same node (default false). |
| affinity.pod-anti-affinity | bool | Never co-locates multiple replicas of the integration in the same node (default false). |
| affinity.node-affinity-labels | []string | Defines a set of nodes the integration pod(s) are eligible to be scheduled on, based on labels on the node. |
| affinity.pod-affinity-labels | []string | Defines a set of pods (namely those matching the label selector, relative to the given namespace) that the integration pod(s) should be co-located with. |
| affinity.pod-anti-affinity-labels | []string | Defines a set of pods (namely those matching the label selector, relative to the given namespace) that the integration pod(s) should not be co-located with. |

8.2.2.2. Examples

To schedule the integration pod(s) on a specific node using the built-in node label kubernetes.io/hostname:

kamel run -t affinity.node-affinity-labels="kubernetes.io/hostname in(node-66-50.hosted.k8s.tld)" ...

To schedule a single integration pod per node (using the Exists operator):

kamel run -t affinity.pod-anti-affinity-labels="camel.apache.org/integration" ...

To co-locate the integration pod(s) with other integration pod(s):

kamel run -t affinity.pod-affinity-labels="camel.apache.org/integration in(it1, it2)" ...

The *-labels options follow the requirements from Label selectors. They can take multiple values, in which case the requirements list is ANDed. For example, to schedule a single integration pod per node AND not co-locate it with the Camel K operator pod(s):

kamel run -t affinity.pod-anti-affinity-labels="camel.apache.org/integration" -t affinity.pod-anti-affinity-labels="camel.apache.org/component=operator" ...

More information can be found in the official Kubernetes documentation about Assigning Pods to Nodes.

8.2.3. Cron Trait

The Cron trait can be used to customize the behaviour of periodic timer/cron based integrations.

While an integration normally requires a pod to be always up and running, some periodic tasks, such as batch jobs, only need to be activated at specific hours of the day or at a periodic interval of minutes. For such tasks, the cron trait can materialize the integration as a Kubernetes CronJob instead of a standard deployment, which saves resources when the integration does not need to be executed.

Integrations that start from the following components are evaluated by the cron trait: timer, cron, quartz.

The rules for using a Kubernetes CronJob are the following:

- timer: when periods can be written as cron expressions. For example, timer:tick?period=60000.

- cron, quartz: when the cron expression does not contain seconds (or the "seconds" part is set to 0). For example, cron:tab?schedule=0/2+*+*+*+? or quartz:trigger?cron=0+0/2+*+*+*+?.
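
To illustrate what "can be written as a cron expression" means for a timer period, the sketch below converts a period in milliseconds into a five-field cron expression. It is a deliberately conservative assumption, not the rule that the Camel K operator applies: it accepts only whole-minute periods shorter than an hour that divide 60 evenly. The function name period_to_cron is hypothetical.

```shell
# Convert a timer period (milliseconds) to a cron expression, when possible.
# Assumption: only whole-minute periods that divide an hour evenly are accepted.
period_to_cron() {
  ms=$1
  case "$ms" in
    ''|*[!0-9]*) return 1 ;;            # not a non-negative integer
  esac
  [ "$ms" -gt 0 ] || return 1
  [ $((ms % 60000)) -eq 0 ] || return 1 # not a whole number of minutes
  minutes=$((ms / 60000))
  [ "$minutes" -lt 60 ] || return 1     # an hour or longer is out of scope here
  [ $((60 % minutes)) -eq 0 ] || return 1
  echo "0/$minutes * * * ?"
}

period_to_cron 60000   # prints: 0/1 * * * ?
```

A period of 1000 milliseconds is rejected because a cron schedule without a seconds field cannot express it. In a Camel URI, the spaces in the expression are written as + characters.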

This trait is available in the following profiles: Kubernetes, Knative, OpenShift.

8.2.3.1. Configuration

Trait properties can be specified when running any integration with the CLI:

kamel run --trait cron.[key]=[value] --trait cron.[key2]=[value2] Integration.java

The following configuration options are available:

| Property | Type | Description |
|---|---|---|
| cron.enabled | bool | Can be used to enable or disable a trait. All traits share this common property. |
| cron.schedule | string | The CronJob schedule for the whole integration. If multiple routes are declared, they must have the same schedule for this mechanism to work correctly. |
| cron.components | string | A comma separated list of the Camel components that need to be customized in order for them to work when the schedule is triggered externally by Kubernetes. A specific customizer is activated for each specified component. E.g. for the … Supported components are currently: … |
| cron.fallback | bool | Use the default Camel implementation of the … |
| cron.concurrency-policy | string | Specifies how to treat concurrent executions of a Job. Valid values are: - "Allow": allows CronJobs to run concurrently; - "Forbid" (default): forbids concurrent runs, skipping next run if previous run hasn’t finished yet; - "Replace": cancels currently running job and replaces it with a new one |
| cron.auto | bool | Automatically deploy the integration as CronJob when all routes are either starting from a periodic consumer (only … It’s required that all periodic consumers have the same period and it can be expressed as cron schedule (e.g. … |

8.2.4. Gc Trait

The GC Trait garbage-collects all resources that are no longer necessary upon integration updates.

This trait is available in the following profiles: Kubernetes, Knative, OpenShift.

8.2.4.1. Configuration

Trait properties can be specified when running any integration with the CLI:

kamel run --trait gc.[key]=[value] --trait gc.[key2]=[value2] Integration.java

The following configuration options are available:

| Property | Type | Description |
|---|---|---|
| gc.enabled | bool | Can be used to enable or disable a trait. All traits share this common property. |
| gc.discovery-cache | ./pkg/trait.discoveryCacheType | Discovery client cache to be used, either … |

8.2.5. Istio Trait

The Istio trait lets you configure properties related to the Istio service mesh, such as sidecar injection and outbound IP ranges.

This trait is available in the following profiles: Kubernetes, Knative, OpenShift.

8.2.5.1. Configuration

Trait properties can be specified when running any integration with the CLI:

kamel run --trait istio.[key]=[value] --trait istio.[key2]=[value2] Integration.java

The following configuration options are available:

| Property | Type | Description |
|---|---|---|
| istio.enabled | bool | Can be used to enable or disable a trait. All traits share this common property. |
| istio.allow | string | Configures a (comma-separated) list of CIDR subnets that should not be intercepted by the Istio proxy (…). |
| istio.inject | bool | Forces the value for labels … |
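
Because istio.allow takes a single comma-separated value, several subnets have to be joined before they are passed on the command line. The sketch below shows one way to do that; the helper name istio_allow_flag and the two CIDR ranges are hypothetical and chosen only for illustration.

```shell
# Join CIDR subnets into one comma-separated istio.allow setting.
istio_allow_flag() {
  list=""
  for cidr in "$@"; do
    list="${list:+$list,}$cidr"   # prepend a comma only after the first entry
  done
  printf '%s\n' "-t istio.allow=$list"
}

# Print the full command for review; the subnets are example values.
echo "kamel run -t istio.enabled=true $(istio_allow_flag 10.0.0.0/8 172.30.0.0/16) Integration.java"
```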

8.2.6. Jolokia Trait

The Jolokia trait activates and configures the Jolokia Java agent.

See https://jolokia.org/reference/html/agents.html

This trait is available in the following profiles: Kubernetes, Knative, OpenShift.

8.2.6.1. Configuration

Trait properties can be specified when running any integration with the CLI:

kamel run --trait jolokia.[key]=[value] --trait jolokia.[key2]=[value2] Integration.java

The following configuration options are available:

| Property | Type | Description |
|---|---|---|
| jolokia.enabled | bool | Can be used to enable or disable a trait. All traits share this common property. |

| jolokia.ca-cert | string |