Getting Started with Camel K

Develop and run your first Camel K application


Preface

Making open source more inclusive

Red Hat is committed to replacing problematic language in our code, documentation, and web properties. We are beginning with these four terms: master, slave, blacklist, and whitelist. Because of the enormity of this endeavor, these changes will be implemented gradually over several upcoming releases. For more details, see our CTO Chris Wright’s message.

Chapter 1. Introduction to Camel K

This chapter introduces the concepts, features, and cloud-native architecture provided by Red Hat Integration - Camel K.

1.1. Camel K overview

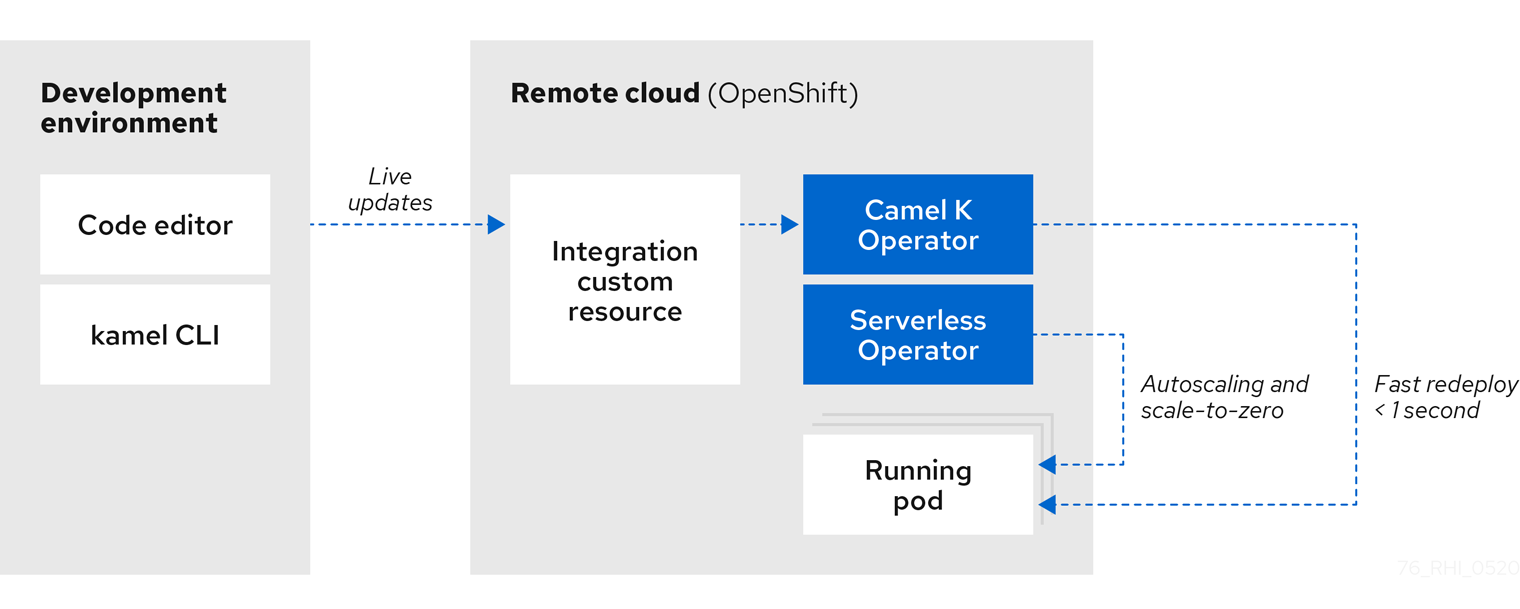

Red Hat Integration - Camel K is a lightweight integration framework built from Apache Camel K that runs natively in the cloud on OpenShift. Camel K is specifically designed for serverless and microservice architectures. You can use Camel K to instantly run your integration code written in Camel Domain Specific Language (DSL) directly on OpenShift. Camel K is a subproject of the Apache Camel open source community: https://github.com/apache/camel-k.

Camel K is implemented in the Go programming language and uses the Kubernetes Operator SDK to automatically deploy integrations in the cloud. For example, it automatically creates services and routes on OpenShift. This provides much faster turnaround times when deploying and redeploying integrations in the cloud: a few seconds or less instead of minutes.

The Camel K runtime provides significant performance optimizations. The Quarkus cloud-native Java framework is enabled by default to provide faster startup times and lower memory and CPU footprints. When you run Camel K in development mode, you can make live updates to your integration DSL and view the results instantly in the cloud on OpenShift, without waiting for your integration to redeploy.

When you use Camel K with OpenShift Serverless and Knative Serving, containers are created only as needed and are autoscaled up under load and down to zero when idle. This reduces cost by removing the overhead of server provisioning and maintenance, and lets you focus on application development instead.
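To make "autoscaled up and down to zero" concrete, the following Java sketch models a concurrency-based autoscaling rule similar in spirit to the one used by the Knative Pod Autoscaler: the desired number of pods is the observed number of concurrent requests divided by a per-pod target, rounded up and capped at a maximum, and zero when there is no traffic. The class name, the target of 100 concurrent requests per pod, and the cap of 10 pods are assumptions for illustration; the real autoscaler also averages metrics over time windows and waits for a grace period before scaling to zero.

```java
/**
 * Simplified model of concurrency-based autoscaling with scale-to-zero.
 * Hypothetical illustration only: the real Knative autoscaler averages
 * metrics over time windows and delays scale-to-zero.
 */
final class ScaleToZeroModel {
    private final double targetConcurrencyPerPod; // assumed per-pod target
    private final int maxReplicas;                // assumed upper bound

    ScaleToZeroModel(double targetConcurrencyPerPod, int maxReplicas) {
        if (targetConcurrencyPerPod <= 0) {
            throw new IllegalArgumentException("target must be positive");
        }
        this.targetConcurrencyPerPod = targetConcurrencyPerPod;
        this.maxReplicas = maxReplicas;
    }

    /** Returns the desired replica count for the observed concurrent requests. */
    int desiredReplicas(double observedConcurrency) {
        if (observedConcurrency <= 0) {
            return 0; // no traffic: scale to zero
        }
        int desired = (int) Math.ceil(observedConcurrency / targetConcurrencyPerPod);
        return Math.min(desired, maxReplicas); // never exceed the configured cap
    }

    public static void main(String[] args) {
        ScaleToZeroModel model = new ScaleToZeroModel(100, 10);
        for (double load : new double[] {0, 1, 100, 250, 5000}) {
            System.out.println(load + " concurrent requests -> "
                    + model.desiredReplicas(load) + " pod(s)");
        }
    }
}
```

With these assumed settings, 250 concurrent requests map to 3 pods, and any load above 1000 requests is capped at 10 pods.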

Using Camel K with OpenShift Serverless and Knative Eventing, you can manage how components in your system communicate in an event-driven architecture for serverless applications. This provides flexibility and creates efficiencies through decoupled relationships between event producers and consumers using a publish-subscribe or event-streaming model.
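The following Java sketch is a toy, in-memory model of the publish-subscribe decoupling described above: producers publish events to a broker without knowing who consumes them, and each subscription (modeled loosely on a Knative Eventing trigger) receives only the events whose type matches its filter. The ToyBroker class and the event type names are hypothetical and are not part of the Knative or Camel K APIs.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

/**
 * Toy model of broker/trigger style publish-subscribe. Producers only know
 * the broker; subscribers register a type filter. Hypothetical illustration.
 */
final class ToyBroker {
    /** A minimal event with a type attribute and a payload. */
    static final class Event {
        final String type;
        final String data;

        Event(String type, String data) {
            this.type = type;
            this.data = data;
        }
    }

    /** A subscription that accepts only one event type. */
    private static final class Trigger {
        final String typeFilter;
        final Consumer<Event> subscriber;

        Trigger(String typeFilter, Consumer<Event> subscriber) {
            this.typeFilter = typeFilter;
            this.subscriber = subscriber;
        }
    }

    private final List<Trigger> triggers = new ArrayList<>();

    /** Registers a subscriber for one event type; producers never see it. */
    void subscribe(String typeFilter, Consumer<Event> subscriber) {
        triggers.add(new Trigger(typeFilter, subscriber));
    }

    /** Delivers the event to every matching subscriber and returns the count. */
    int publish(Event event) {
        int delivered = 0;
        for (Trigger t : triggers) {
            if (t.typeFilter.equals(event.type)) {
                t.subscriber.accept(event);
                delivered++;
            }
        }
        return delivered;
    }

    public static void main(String[] args) {
        ToyBroker broker = new ToyBroker();
        broker.subscribe("order.created", e -> System.out.println("billing got " + e.data));
        broker.subscribe("order.created", e -> System.out.println("shipping got " + e.data));
        broker.subscribe("order.cancelled", e -> System.out.println("refunds got " + e.data));
        broker.publish(new Event("order.created", "order-42")); // reaches billing and shipping only
    }
}
```

Adding a new consumer only requires another subscribe call; the producer code does not change, which is the decoupling the paragraph describes.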


1.2. Camel K features

Camel K includes the following main platforms and features:

1.2.1. Platform and component versions

- OpenShift Container Platform 4.6 or 4.10

- OpenShift Serverless 1.18.0

- Quarkus 2.2 Java runtime

- Apache Camel K 1.6.6

- Apache Camel 3.11.1

- Apache Camel Quarkus 2.2

- OpenJDK 11

1.2.2. Camel K features

- Knative Serving for autoscaling and scale-to-zero

- Knative Eventing for event-driven architectures

- Performance optimizations using Quarkus runtime by default

- Camel integrations written in Java or YAML DSL

- Development tooling with Visual Studio Code

- Monitoring of integrations using Prometheus in OpenShift

- Quickstart tutorials

- Kamelet Catalog of connectors to external systems such as AWS, Jira, and Salesforce

The following diagram shows a simplified view of the Camel K cloud-native architecture:


1.2.3. Kamelets

Kamelets hide the complexity of connecting to external systems behind a simple interface, which contains all the information needed to instantiate them, even for users who are not familiar with Camel.

Kamelets are implemented as custom resources that you can install on an OpenShift cluster and use in Camel K integrations. Kamelets are route templates that use Camel components to connect to external systems, so you do not need a deep understanding of the underlying component. You can also combine Kamelets to create complex Camel integrations, just like using standard Camel components.
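As a simplified illustration of the route-template idea, the following Java sketch fills {{name}} property placeholders in a template string with user-supplied values and rejects the template if a required parameter is missing, which is roughly what happens conceptually when you configure a Kamelet. The RouteTemplateSketch class and the example template are hypothetical; real Kamelets are declared as custom resources with a property schema, and Camel performs the binding.

```java
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/**
 * Simplified model of instantiating a route template: {{name}} placeholders
 * are replaced by parameter values, and missing parameters are rejected.
 * Hypothetical illustration, not the Kamelet implementation.
 */
final class RouteTemplateSketch {
    private static final Pattern PLACEHOLDER =
            Pattern.compile("\\{\\{([A-Za-z0-9_.-]+)\\}\\}");

    /** Replaces every {{name}} with its value, failing if any parameter is missing. */
    static String instantiate(String template, Map<String, String> params) {
        Set<String> missing = new TreeSet<>();
        Matcher m = PLACEHOLDER.matcher(template);
        StringBuilder out = new StringBuilder();
        while (m.find()) {
            String name = m.group(1);
            String value = params.get(name);
            if (value == null) {
                missing.add(name);
                value = m.group(); // keep the placeholder; we fail below anyway
            }
            m.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        m.appendTail(out);
        if (!missing.isEmpty()) {
            throw new IllegalArgumentException("Missing required parameters: " + missing);
        }
        return out.toString();
    }

    public static void main(String[] args) {
        // Hypothetical template resembling a timer-based source.
        String template = "from(\"timer:tick?period={{period}}\").setBody().constant(\"{{message}}\")";
        System.out.println(instantiate(template, Map.of("period", "1000", "message", "Hello")));
    }
}
```

The user of the template supplies only the parameter values; the component wiring stays hidden inside the template, which is the abstraction that Kamelets provide.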


1.3. Camel K development tooling

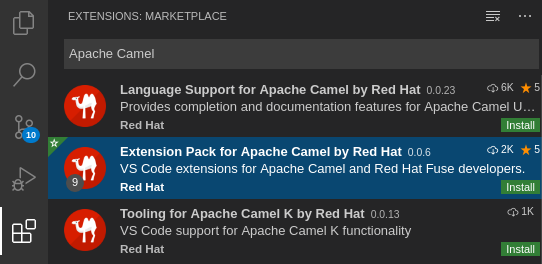

Camel K provides development tooling extensions for Visual Studio (VS) Code, Red Hat CodeReady Workspaces, and Eclipse Che. The Camel-based tooling extensions include features such as automatic completion of Camel DSL code, Camel K modeline configuration, and Camel K traits. The Didact tutorial tooling extensions automatically run the Camel K quick start tutorial commands.

The following VS Code development tooling extensions are available:

- VS Code Extension Pack for Apache Camel by Red Hat

  - Tooling for Apache Camel K extension

  - Language Support for Apache Camel extension

  - Additional extensions for OpenShift, Java and more

- Didact Tutorial Tools for VS Code extension

For details on how to set up these VS Code extensions for Camel K, see Setting up your Camel K development environment.

Note: The Camel K VS Code extensions are community features.

Red Hat CodeReady Workspaces and Eclipse Che also provide these features using the vscode-camelk plug-in.

1.4. Camel K distributions

| Distribution | Description | Location |
|---|---|---|
| Operator image | Container image for the Red Hat Integration - Camel K Operator: | |
| Maven repository | Maven artifacts for Red Hat Integration - Camel K. Red Hat provides Maven repositories that host the content we ship with our products. These repositories are available to download from the software downloads page. For Red Hat Integration - Camel K the following repositories are required: Installation of Red Hat Integration - Camel K in offline mode is not supported in this release. | |
| Source code | Source code for Red Hat Integration - Camel K | |
| Quickstarts | Quick start tutorials: | |

You must have a subscription for Red Hat Integration and be logged in to the Red Hat Customer Portal to access the Red Hat Integration - Camel K distributions.

Chapter 2. Preparing your OpenShift cluster

This chapter explains how to install Red Hat Integration - Camel K and OpenShift Serverless on OpenShift, and how to install the required Camel K and OpenShift Serverless command-line client tools in your development environment.

2.1. Installing Camel K

You can install the Red Hat Integration - Camel K Operator on your OpenShift cluster from the OperatorHub. The OperatorHub is available from the OpenShift Container Platform web console and provides an interface for cluster administrators to discover and install Operators.

After you install the Camel K Operator, you can install the Camel K CLI tool for command line access to all Camel K features.

Prerequisites

- You have access to an OpenShift 4.6 (or later) cluster with the correct access level, the ability to create projects and install operators, and the ability to install CLI tools on your local system.

  Note: You do not need to create a pull secret when installing Camel K from the OpenShift OperatorHub. The Camel K Operator automatically reuses the OpenShift cluster-level authentication to pull the Camel K image from registry.redhat.io.

- You installed the OpenShift CLI tool (oc) so that you can interact with the OpenShift cluster at the command line. For details on how to install the OpenShift CLI, see Installing the OpenShift CLI.

Procedure

- In the OpenShift Container Platform web console, log in by using an account with cluster administrator privileges.

- Create a new OpenShift project:

  - In the left navigation menu, click Home > Project > Create Project.

  - Enter a project name, for example, my-camel-k-project, and then click Create.

- In the left navigation menu, click Operators > OperatorHub.

- In the Filter by keyword text box, type Camel K and then click the Red Hat Integration - Camel K Operator card.

- Read the information about the Operator and then click Install. The Operator installation page opens.

- Select the following subscription settings:

  - Update Channel > latest

  - Installation Mode > A specific namespace on the cluster > my-camel-k-project

  - Approval Strategy > Automatic

  Note: The Installation mode > All namespaces on the cluster and Approval Strategy > Manual settings are also available if required by your environment.

- Click Install, and then wait a few moments until the Camel K Operator is ready for use.

- Download and install the Camel K CLI tool:

  - From the Help menu (?) at the top of the OpenShift web console, select Command line tools.

  - Scroll down to the kamel - Red Hat Integration - Camel K - Command Line Interface section.

  - Click the link to download the binary for your local operating system (Linux, Mac, Windows).

  - Unzip and install the CLI in your system path.

  - To verify that you can access the Camel K CLI, open a command window and then type the following:

```
kamel --help
```

  This command shows information about Camel K CLI commands.

Next step

(Optional) Specifying Camel K resource limits

2.1.1. Specifying Camel K resource limits

When you install Camel K, the OpenShift pod for Camel K does not have any limits set for CPU and memory (RAM) resources. If you want to define resource limits for Camel K, you must edit the Camel K subscription resource that was created during the installation process.

Prerequisites

- You have cluster administrator access to an OpenShift project in which the Camel K Operator is installed as described in Installing Camel K.

- You know the resource limits that you want to apply to the Camel K subscription. For more information about resource limits, see the following documentation:

  - Setting deployment resources in the OpenShift documentation.

  - Managing Resources for Containers in the Kubernetes documentation.

Procedure

- Log in to the OpenShift web console.

- Select Operators > Installed Operators > Operator Details > Subscription.

- Select Actions > Edit Subscription.

  The file for the subscription opens in the YAML editor.

- Under the spec section, add a config.resources section and provide values for memory and cpu as shown in the following example:

```yaml
spec:
  channel: default        # keep the channel that your subscription already uses
  config:
    resources:
      limits:             # maximum resources that the Camel K Operator pod can use
        memory: 512Mi
        cpu: 500m
      requests:           # resources requested for the pod when it is scheduled
        cpu: 200m
        memory: 128Mi
```

- Save your changes.

OpenShift updates the subscription and applies the resource limits that you specified.
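The values in the subscription example use Kubernetes quantity notation: the m suffix means millicores (500m is half a CPU core) and the Mi suffix means mebibytes (2^20 bytes). The following Java sketch is a hypothetical pre-flight helper, not part of OpenShift or Camel K, that converts these values to base units and checks that each request does not exceed its limit. It handles only the suffixes shown plus a few common binary ones.

```java
import java.math.BigDecimal;

/**
 * Hypothetical helper: converts Kubernetes CPU and memory quantities to base
 * units and checks requests against limits. Supports "m" for CPU and the
 * binary suffixes Ki/Mi/Gi/Ti for memory only.
 */
final class ResourceQuantities {

    /** Parses CPU quantities such as "500m" or "1.5" into millicores. */
    static long cpuMillicores(String q) {
        if (q.endsWith("m")) {
            return Long.parseLong(q.substring(0, q.length() - 1));
        }
        // Whole or fractional cores: 1.5 -> 1500 millicores.
        return new BigDecimal(q).multiply(BigDecimal.valueOf(1000)).longValueExact();
    }

    /** Parses memory quantities such as "512Mi" into bytes (binary suffixes only). */
    static long memoryBytes(String q) {
        String[] suffixes = {"Ki", "Mi", "Gi", "Ti"};
        for (int i = 0; i < suffixes.length; i++) {
            if (q.endsWith(suffixes[i])) {
                long value = Long.parseLong(q.substring(0, q.length() - 2));
                return value << (10 * (i + 1)); // Ki = 2^10, Mi = 2^20, Gi = 2^30, Ti = 2^40
            }
        }
        return Long.parseLong(q); // no suffix: plain bytes
    }

    /** Returns true when every request is less than or equal to the matching limit. */
    static boolean requestsWithinLimits(String cpuRequest, String cpuLimit,
                                        String memRequest, String memLimit) {
        return cpuMillicores(cpuRequest) <= cpuMillicores(cpuLimit)
                && memoryBytes(memRequest) <= memoryBytes(memLimit);
    }

    public static void main(String[] args) {
        // Values from the subscription example.
        System.out.println("cpu limit (millicores): " + cpuMillicores("500m"));
        System.out.println("memory limit (bytes): " + memoryBytes("512Mi"));
        System.out.println("requests within limits: "
                + requestsWithinLimits("200m", "500m", "128Mi", "512Mi"));
    }
}
```

Kubernetes rejects a container whose request is greater than its limit, so a check like this catches a typo before you save the subscription.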

2.2. Installing OpenShift Serverless

You can install the OpenShift Serverless Operator on your OpenShift cluster from the OperatorHub. The OperatorHub is available from the OpenShift Container Platform web console and provides an interface for cluster administrators to discover and install Operators.

The OpenShift Serverless Operator supports both Knative Serving and Knative Eventing features. For more details, see Installing the OpenShift Serverless Operator.

Prerequisites

- You have cluster administrator access to an OpenShift project in which the Camel K Operator is installed.

- You installed the OpenShift CLI tool (oc) so that you can interact with the OpenShift cluster at the command line. For details on how to install the OpenShift CLI, see Installing the OpenShift CLI.

Procedure

- In the OpenShift Container Platform web console, log in by using an account with cluster administrator privileges.

- In the left navigation menu, click Operators > OperatorHub.

- In the Filter by keyword text box, enter Serverless to find the OpenShift Serverless Operator.

- Read the information about the Operator and then click Install to display the Operator subscription page.

- Select the default subscription settings:

  - Update Channel > Select the channel that matches your OpenShift version, for example, 4.10

  - Installation Mode > All namespaces on the cluster

  - Approval Strategy > Automatic

  Note: The Approval Strategy > Manual setting is also available if required by your environment.

- Click Install, and wait a few moments until the Operator is ready for use.

- Install the required Knative components (Knative Serving and Knative Eventing) by following the steps in the OpenShift documentation.

- (Optional) Download and install the OpenShift Serverless CLI tool:

  - From the Help menu (?) at the top of the OpenShift web console, select Command line tools.

  - Scroll down to the kn - OpenShift Serverless - Command Line Interface section.

  - Click the link to download the binary for your local operating system (Linux, Mac, Windows).

  - Unzip and install the CLI in your system path.

  - To verify that you can access the kn CLI, open a command window and then type the following:

```
kn --help
```

  This command shows information about OpenShift Serverless CLI commands.

  For more details, see the OpenShift Serverless CLI documentation.

Additional resources

- Installing OpenShift Serverless in the OpenShift documentation

2.3. Configuring Maven repository for Camel K

You can provide the Maven settings for the Camel K operator in a ConfigMap or a Secret.

Procedure

- To create a ConfigMap from a file, run the following command:

```
oc create configmap maven-settings --from-file=settings.xml
```

- Reference the created ConfigMap in the IntegrationPlatform resource, from the spec.build.maven.settings field.

  Example

```yaml
apiVersion: camel.apache.org/v1
kind: IntegrationPlatform
metadata:
  name: camel-k
spec:
  build:
    maven:
      settings:
        configMapKeyRef:
          key: settings.xml       # key created by --from-file=settings.xml
          name: maven-settings    # name of the ConfigMap created above
```

  Alternatively, you can edit the IntegrationPlatform resource directly to reference the ConfigMap that contains the Maven settings, by using the following command:

```
oc edit ip camel-k
```
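If you do not already have a settings.xml file to put in the maven-settings ConfigMap, the following Java sketch writes a minimal one that declares an extra remote repository through an active profile. The profile id, repository id, and URL are hypothetical placeholders; replace them with the repositories that your environment requires. Because the file is named settings.xml, oc create configmap --from-file=settings.xml stores it under the settings.xml key that the configMapKeyRef example refers to.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;

/**
 * Writes a minimal Maven settings.xml with one extra remote repository,
 * activated through a profile. All ids and URLs are placeholders, and
 * values are not XML-escaped, so pass plain identifiers and URLs only.
 */
final class MavenSettingsWriter {

    static String settingsXml(String repoId, String repoUrl) {
        return String.join("\n",
                "<?xml version=\"1.0\" encoding=\"UTF-8\"?>",
                "<settings xmlns=\"http://maven.apache.org/SETTINGS/1.0.0\">",
                "  <profiles>",
                "    <profile>",
                "      <id>camel-k-repos</id>",
                "      <repositories>",
                "        <repository>",
                "          <id>" + repoId + "</id>",
                "          <url>" + repoUrl + "</url>",
                "        </repository>",
                "      </repositories>",
                "    </profile>",
                "  </profiles>",
                "  <activeProfiles>",
                "    <activeProfile>camel-k-repos</activeProfile>",
                "  </activeProfiles>",
                "</settings>",
                "");
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical repository; replace with your own.
        String xml = settingsXml("my-repo", "https://repo.example.com/maven2");
        // The file name becomes the ConfigMap key (settings.xml).
        Files.writeString(Path.of("settings.xml"), xml);
        System.out.println(xml);
    }
}
```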

Configuring CA certificates for remote Maven repositories

You can provide the CA certificates, used by the Maven commands to connect to the remote Maven repositories, in a Secret.

Procedure

- Create a Secret from a file by using the following command:

```
oc create secret generic maven-ca-certs --from-file=ca.crt
```

- Reference the created Secret in the IntegrationPlatform resource, from the spec.build.maven.caSecret field, as shown below:

```yaml
apiVersion: camel.apache.org/v1
kind: IntegrationPlatform
metadata:
  name: camel-k
spec:
  build:
    maven:
      caSecret:
        key: ca.crt             # key created by --from-file=ca.crt
        name: maven-ca-certs    # name of the Secret created above
```
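Before you create the maven-ca-certs Secret, you might want to confirm that the CA bundle actually contains certificates, so that an empty or truncated file is caught early rather than during a Maven build. The following Java sketch is a hypothetical helper that counts complete PEM certificate blocks in a file such as ca.crt. It checks only the PEM framing, not whether each certificate is valid.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;

/**
 * Hypothetical pre-flight check for a CA bundle: counts complete
 * BEGIN/END CERTIFICATE blocks. Does not parse or validate certificates.
 */
final class CaBundleCheck {
    private static final String BEGIN = "-----BEGIN CERTIFICATE-----";
    private static final String END = "-----END CERTIFICATE-----";

    /** Returns the number of complete certificate blocks in the PEM text. */
    static int countCertificates(String pem) {
        int count = 0;
        int from = 0;
        while (true) {
            int begin = pem.indexOf(BEGIN, from);
            if (begin < 0) {
                break;
            }
            int end = pem.indexOf(END, begin + BEGIN.length());
            if (end < 0) {
                break; // a truncated final block is not counted
            }
            count++;
            from = end + END.length();
        }
        return count;
    }

    public static void main(String[] args) throws IOException {
        Path bundle = Path.of(args.length > 0 ? args[0] : "ca.crt");
        int n = countCertificates(Files.readString(bundle));
        if (n == 0) {
            System.err.println("No PEM certificates found in " + bundle);
            System.exit(1);
        }
        System.out.println(n + " certificate(s) found in " + bundle);
    }
}
```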

Chapter 3. Developing and running Camel K integrations

This chapter explains how to set up your development environment and how to develop and deploy simple Camel K integrations written in Java and YAML. It also shows how to use the kamel command line to manage Camel K integrations at runtime. For example, this includes running, describing, logging, and deleting integrations.

- Section 3.1, “Setting up your Camel K development environment”

- Section 3.2, “Developing Camel K integrations in Java”

- Section 3.3, “Developing Camel K integrations in YAML”

- Section 3.4, “Running Camel K integrations”

- Section 3.5, “Running Camel K integrations in development mode”

- Section 3.6, “Running Camel K integrations using modeline”

3.1. Setting up your Camel K development environment

You must set up your environment with the recommended development tooling before you can automatically deploy the Camel K quick start tutorials. This section explains how to install the recommended Visual Studio (VS) Code IDE and the extensions that it provides for Camel K.

- The Camel K VS Code extensions are community features.

- VS Code is recommended for ease of use and the best developer experience of Camel K. This includes automatic completion of Camel DSL code and Camel K traits, and automatic execution of tutorial commands. However, you can manually enter your code and tutorial commands using your chosen IDE instead of VS Code.

Prerequisites

You must have access to an OpenShift cluster on which the Camel K Operator and OpenShift Serverless Operator are installed.

Procedure

- Install VS Code on your development platform. For example, on Red Hat Enterprise Linux:

  - Install the required key and repository:

```
$ sudo rpm --import https://packages.microsoft.com/keys/microsoft.asc
$ sudo sh -c 'echo -e "[code]\nname=Visual Studio Code\nbaseurl=https://packages.microsoft.com/yumrepos/vscode\nenabled=1\ngpgcheck=1\ngpgkey=https://packages.microsoft.com/keys/microsoft.asc" > /etc/yum.repos.d/vscode.repo'
```

  - Update the cache and install the VS Code package:

```
$ yum check-update
$ sudo yum install code
```

  For details on installing on other platforms, see the VS Code installation documentation.

- Enter the code command to launch the VS Code editor. For more details, see the VS Code command line documentation.

- Install the VS Code Camel Extension Pack, which includes the extensions required for Camel K. For example, in VS Code:

  - In the left navigation bar, click Extensions.

  - In the search box, enter Apache Camel.

  - Select the Extension Pack for Apache Camel by Red Hat, and click Install.

  For more details, see the instructions for the Extension Pack for Apache Camel by Red Hat.

- Install the VS Code Didact extension, which you can use to automatically run quick start tutorial commands by clicking links in the tutorial. For example, in VS Code:

  - In the left navigation bar, click Extensions.

  - In the search box, enter Didact.

  - Select the extension, and click Install.

  For more details, see the instructions for the Didact extension.

3.2. Developing Camel K integrations in Java

This section shows how to develop a simple Camel K integration in Java DSL. Writing an integration in Java to be deployed using Camel K is the same as defining your routing rules in Camel. However, you do not need to build and package the integration as a JAR when using Camel K.

You can use any Camel component directly in your integration routes. Camel K automatically handles the dependency management and imports all the required libraries from the Camel catalog using code inspection.
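To illustrate the idea of dependency detection by code inspection, the following Java sketch scans Java DSL source for the endpoint URIs passed to from(...) and to(...) and maps each URI scheme to a camel-<scheme> artifact name. For the HelloCamelK route this yields camel-log and camel-timer, which matches the camel-log and camel-timer features reported in the integration logs later in this chapter. This is a toy approximation: the regular expression and the naming rule are assumptions, and Camel K itself resolves components through the Camel catalog and inspects many more DSL constructs.

```java
import java.util.Set;
import java.util.TreeSet;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/**
 * Toy dependency detector: finds endpoint URIs in from("...") and to("...")
 * calls and maps each URI scheme to a camel-<scheme> artifact name.
 * Hypothetical illustration, not the Camel K implementation.
 */
final class DependencyInspector {
    // Matches from("scheme: or to("scheme: and captures the scheme.
    private static final Pattern ENDPOINT =
            Pattern.compile("\\b(?:from|to)\\(\\s*\"([a-zA-Z][a-zA-Z0-9+.-]*):");

    static Set<String> detectDependencies(String javaDslSource) {
        Set<String> deps = new TreeSet<>(); // sorted, without duplicates
        Matcher m = ENDPOINT.matcher(javaDslSource);
        while (m.find()) {
            deps.add("camel-" + m.group(1)); // for example, "timer" -> "camel-timer"
        }
        return deps;
    }

    public static void main(String[] args) {
        String route = "from(\"timer:java?period=1s\")\n"
                + "  .setBody().simple(\"Hello Camel K from ${routeId}\")\n"
                + "  .to(\"log:info\");";
        System.out.println(detectDependencies(route)); // prints [camel-log, camel-timer]
    }
}
```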

Prerequisites

- Setting up your Camel K development environment.

Procedure

- Enter the kamel init command to generate a simple Java integration file. For example:

```
$ kamel init HelloCamelK.java
```

- Open the generated integration file in your IDE and edit as appropriate. For example, the HelloCamelK.java integration automatically includes the Camel timer and log components to help you get started:

```java
// camel-k: language=java

import org.apache.camel.builder.RouteBuilder;

public class HelloCamelK extends RouteBuilder {
  @Override
  public void configure() throws Exception {

      // Write your routes here, for example:
      from("timer:java?period=1s")
        .routeId("java")
        .setBody()
          .simple("Hello Camel K from ${routeId}")
        .to("log:info");

  }
}
```


3.3. Developing Camel K integrations in YAML

This section explains how to develop a simple Camel K integration in YAML DSL. Writing an integration in YAML to be deployed using Camel K is the same as defining your routing rules in Camel.

You can use any Camel component directly in your integration routes. Camel K automatically handles the dependency management and imports all the required libraries from the Camel catalog using code inspection.

Prerequisites

- Setting up your Camel K development environment.

Procedure

- Enter the kamel init command to generate a simple YAML integration file. For example:

```
$ kamel init hello.camelk.yaml
```

- Open the generated integration file in your IDE and edit as appropriate. For example, the hello.camelk.yaml integration automatically includes the Camel timer and log components to help you get started:

```yaml
# Write your routes here, for example:
- from:
    uri: "timer:yaml"
    parameters:
      period: "1s"
    steps:
      - set-body:
          constant: "Hello Camel K from yaml"
      - to: "log:info"
```

3.4. Running Camel K integrations

You can run Camel K integrations in the cloud on your OpenShift cluster from the command line using the kamel run command.

Prerequisites

- Setting up your Camel K development environment.

- You must already have a Camel integration written in Java or YAML DSL.

Procedure

- Log in to your OpenShift cluster by using the oc client tool, for example:

```
$ oc login --token=my-token --server=https://my-cluster.example.com:6443
```

- Ensure that the Camel K Operator is running, for example:

```
$ oc get pod
NAME                               READY   STATUS    RESTARTS   AGE
camel-k-operator-86b8d94b4-pk7d6   1/1     Running   0          6m28s
```

- Enter the kamel run command to run your integration in the cloud on OpenShift. For example:

  Java example

```
$ kamel run HelloCamelK.java
integration "hello-camel-k" created
```

  YAML example

```
$ kamel run hello.camelk.yaml
integration "hello" created
```

- Enter the kamel get command to check the status of the integration:

```
$ kamel get
NAME    PHASE          KIT
hello   Building Kit   myproject/kit-bq666mjej725sk8sn12g
```

  When the integration runs for the first time, Camel K builds the integration kit for the container image, which downloads all the required Camel modules and adds them to the image classpath.

- Enter kamel get again to verify that the integration is running:

```
$ kamel get
NAME    PHASE     KIT
hello   Running   myproject/kit-bq666mjej725sk8sn12g
```

- Enter the kamel log command to print the log to stdout:

```
$ kamel log hello
[1] 2021-08-11 17:58:40,573 INFO [org.apa.cam.k.Runtime] (main) Apache Camel K Runtime 1.7.1.fuse-800025-redhat-00001
[1] 2021-08-11 17:58:40,653 INFO [org.apa.cam.qua.cor.CamelBootstrapRecorder] (main) bootstrap runtime: org.apache.camel.quarkus.main.CamelMainRuntime
[1] 2021-08-11 17:58:40,844 INFO [org.apa.cam.k.lis.SourcesConfigurer] (main) Loading routes from: SourceDefinition{name='camel-k-embedded-flow', language='yaml', location='file:/etc/camel/sources/camel-k-embedded-flow.yaml', }
[1] 2021-08-11 17:58:41,216 INFO [org.apa.cam.imp.eng.AbstractCamelContext] (main) Routes startup summary (total:1 started:1)
[1] 2021-08-11 17:58:41,217 INFO [org.apa.cam.imp.eng.AbstractCamelContext] (main) Started route1 (timer://yaml)
[1] 2021-08-11 17:58:41,217 INFO [org.apa.cam.imp.eng.AbstractCamelContext] (main) Apache Camel 3.10.0.fuse-800010-redhat-00001 (camel-1) started in 136ms (build:0ms init:100ms start:36ms)
[1] 2021-08-11 17:58:41,268 INFO [io.quarkus] (main) camel-k-integration 1.6.6 on JVM (powered by Quarkus 1.11.7.Final-redhat-00009) started in 2.064s.
[1] 2021-08-11 17:58:41,269 INFO [io.quarkus] (main) Profile prod activated.
[1] 2021-08-11 17:58:41,269 INFO [io.quarkus] (main) Installed features: [camel-bean, camel-core, camel-k-core, camel-k-runtime, camel-log, camel-support-common, camel-timer, camel-yaml-dsl, cdi]
[1] 2021-08-11 17:58:42,423 INFO [info] (Camel (camel-1) thread #0 - timer://yaml) Exchange[ExchangePattern: InOnly, BodyType: String, Body: Hello Camel K from yaml]
...
```

- Press Ctrl-C to terminate logging in the terminal.
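The PHASE column in the kamel get output, and the phase messages printed by kamel run --dev, follow the sequence Initialization, Building Kit, Deploying, Running. The following Java sketch models only that happy path as an enum. The IntegrationPhase type is a hypothetical illustration based on the output in this chapter; the operator defines further phases and transitions (for example, for errors) that are not modeled here.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Simplified model of the integration phases shown by kamel get.
 * Only the linear happy path is modeled; hypothetical illustration.
 */
enum IntegrationPhase {
    INITIALIZATION("Initialization"),
    BUILDING_KIT("Building Kit"),
    DEPLOYING("Deploying"),
    RUNNING("Running");

    private final String label;

    IntegrationPhase(String label) {
        this.label = label;
    }

    /** The text that appears in the PHASE column. */
    String label() {
        return label;
    }

    /** Returns the next phase on the happy path; RUNNING stays RUNNING. */
    IntegrationPhase next() {
        return this == RUNNING ? RUNNING : values()[ordinal() + 1];
    }

    /** Lists the phase labels an integration passes through from a given phase. */
    static List<String> pathFrom(IntegrationPhase start) {
        List<String> path = new ArrayList<>();
        IntegrationPhase p = start;
        path.add(p.label());
        while (p != RUNNING) {
            p = p.next();
            path.add(p.label());
        }
        return path;
    }

    public static void main(String[] args) {
        // Prints: [Initialization, Building Kit, Deploying, Running]
        System.out.println(pathFrom(INITIALIZATION));
    }
}
```

This is why the first kamel get call in the procedure shows Building Kit and a later call shows Running.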

Additional resources

- For more details on the kamel run command, enter kamel run --help.

- For faster deployment turnaround times, see Running Camel K integrations in development mode.

- For details of development tools to run integrations, see VS Code Tooling for Apache Camel K by Red Hat

- See also Managing Camel K integrations

3.5. Running Camel K integrations in development mode

You can run Camel K integrations in development mode on your OpenShift cluster from the command line. Using development mode, you can iterate quickly on integrations in development and get fast feedback on your code.

When you specify the kamel run command with the --dev option, it deploys the integration in the cloud immediately and shows the integration logs in the terminal. You can then change the code and see the changes applied instantly to the remote integration Pod on OpenShift. The terminal automatically displays all redeployments of the remote integration in the cloud.

The artifacts generated by Camel K in development mode are identical to those that you run in production. The purpose of development mode is faster development.
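A simplified way to picture the development-mode feedback loop: every time you save a source file, compare its content with the last version that was deployed, and request a redeployment only when something actually changed. The following Java sketch models that decision with SHA-256 digests. The DevModeChangeDetector class is a hypothetical illustration of the idea, not a description of how the kamel CLI is implemented.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Base64;
import java.util.HashMap;
import java.util.Map;

/**
 * Hypothetical model of the dev-mode decision "did this save change the
 * integration source?", based on content digests per file name.
 */
final class DevModeChangeDetector {
    private final Map<String, String> lastDigest = new HashMap<>();

    /** Returns true if the file is new or its content differs from the last save. */
    boolean shouldRedeploy(String fileName, String content) {
        String digest = sha256(content);
        String previous = lastDigest.put(fileName, digest); // null on first save
        return !digest.equals(previous);
    }

    private static String sha256(String content) {
        try {
            MessageDigest md = MessageDigest.getInstance("SHA-256");
            byte[] hash = md.digest(content.getBytes(StandardCharsets.UTF_8));
            return Base64.getEncoder().encodeToString(hash);
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("SHA-256 is required on every Java platform", e);
        }
    }

    public static void main(String[] args) {
        DevModeChangeDetector detector = new DevModeChangeDetector();
        String v1 = "from(\"timer:java?period=1s\").to(\"log:info\");";
        String v2 = "from(\"timer:java?period=5s\").to(\"log:info\");";
        System.out.println(detector.shouldRedeploy("HelloCamelK.java", v1)); // true: first save
        System.out.println(detector.shouldRedeploy("HelloCamelK.java", v1)); // false: unchanged
        System.out.println(detector.shouldRedeploy("HelloCamelK.java", v2)); // true: edited
    }
}
```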

Prerequisites

- Setting up your Camel K development environment.

- You must already have a Camel integration written in Java or YAML DSL.

Procedure

- Log in to your OpenShift cluster by using the oc client tool, for example:

```
$ oc login --token=my-token --server=https://my-cluster.example.com:6443
```

- Ensure that the Camel K Operator is running, for example:

```
$ oc get pod
NAME                               READY   STATUS    RESTARTS   AGE
camel-k-operator-86b8d94b4-pk7d6   1/1     Running   0          6m28s
```

- Enter the kamel run command with --dev to run your integration in development mode on OpenShift in the cloud. The following shows a simple Java example:

```
$ kamel run HelloCamelK.java --dev
Condition "IntegrationPlatformAvailable" is "True" for Integration hello-camel-k: test/camel-k
Integration hello-camel-k in phase "Initialization"
Integration hello-camel-k in phase "Building Kit"
Condition "IntegrationKitAvailable" is "True" for Integration hello-camel-k: kit-c49sqn4apkb4qgn55ak0
Integration hello-camel-k in phase "Deploying"
Progress: integration "hello-camel-k" in phase Initialization
Progress: integration "hello-camel-k" in phase Building Kit
Progress: integration "hello-camel-k" in phase Deploying
Integration hello-camel-k in phase "Running"
Condition "DeploymentAvailable" is "True" for Integration hello-camel-k: deployment name is hello-camel-k
Progress: integration "hello-camel-k" in phase Running
Condition "CronJobAvailable" is "False" for Integration hello-camel-k: different controller strategy used (deployment)
Condition "KnativeServiceAvailable" is "False" for Integration hello-camel-k: different controller strategy used (deployment)
Condition "Ready" is "False" for Integration hello-camel-k
Condition "Ready" is "True" for Integration hello-camel-k
[1] Monitoring pod hello-camel-k-7f85df47b8-js7cb
...
[1] 2021-08-11 18:34:44,069 INFO [org.apa.cam.k.Runtime] (main) Apache Camel K Runtime 1.7.1.fuse-800025-redhat-00001
[1] 2021-08-11 18:34:44,167 INFO [org.apa.cam.qua.cor.CamelBootstrapRecorder] (main) bootstrap runtime: org.apache.camel.quarkus.main.CamelMainRuntime
[1] 2021-08-11 18:34:44,362 INFO [org.apa.cam.k.lis.SourcesConfigurer] (main) Loading routes from: SourceDefinition{name='HelloCamelK', language='java', location='file:/etc/camel/sources/HelloCamelK.java', }
[1] 2021-08-11 18:34:46,180 INFO [org.apa.cam.imp.eng.AbstractCamelContext] (main) Routes startup summary (total:1 started:1)
[1] 2021-08-11 18:34:46,180 INFO [org.apa.cam.imp.eng.AbstractCamelContext] (main) Started java (timer://java)
[1] 2021-08-11 18:34:46,180 INFO [org.apa.cam.imp.eng.AbstractCamelContext] (main) Apache Camel 3.10.0.fuse-800010-redhat-00001 (camel-1) started in 243ms (build:0ms init:213ms start:30ms)
[1] 2021-08-11 18:34:46,190 INFO [io.quarkus] (main) camel-k-integration 1.6.6 on JVM (powered by Quarkus 1.11.7.Final-redhat-00009) started in 3.457s.
[1] 2021-08-11 18:34:46,190 INFO [io.quarkus] (main) Profile prod activated.
[1] 2021-08-11 18:34:46,191 INFO [io.quarkus] (main) Installed features: [camel-bean, camel-core, camel-java-joor-dsl, camel-k-core, camel-k-runtime, camel-log, camel-support-common, camel-timer, cdi]
[1] 2021-08-11 18:34:47,200 INFO [info] (Camel (camel-1) thread #0 - timer://java) Exchange[ExchangePattern: InOnly, BodyType: String, Body: Hello Camel K from java]
[1] 2021-08-11 18:34:48,180 INFO [info] (Camel (camel-1) thread #0 - timer://java) Exchange[ExchangePattern: InOnly, BodyType: String, Body: Hello Camel K from java]
[1] 2021-08-11 18:34:49,180 INFO [info] (Camel (camel-1) thread #0 - timer://java) Exchange[ExchangePattern: InOnly, BodyType: String, Body: Hello Camel K from java]
...
```

- Edit the content of your integration DSL file, save your changes, and see the changes displayed instantly in the terminal. For example:

```
...
integration "hello-camel-k" updated
...
[2] 2021-08-11 18:40:54,173 INFO [org.apa.cam.k.Runtime] (main) Apache Camel K Runtime 1.7.1.fuse-800025-redhat-00001
[2] 2021-08-11 18:40:54,209 INFO [org.apa.cam.qua.cor.CamelBootstrapRecorder] (main) bootstrap runtime: org.apache.camel.quarkus.main.CamelMainRuntime
[2] 2021-08-11 18:40:54,301 INFO [org.apa.cam.k.lis.SourcesConfigurer] (main) Loading routes from: SourceDefinition{name='HelloCamelK', language='java', location='file:/etc/camel/sources/HelloCamelK.java', }
[2] 2021-08-11 18:40:55,796 INFO [org.apa.cam.imp.eng.AbstractCamelContext] (main) Routes startup summary (total:1 started:1)
[2] 2021-08-11 18:40:55,796 INFO [org.apa.cam.imp.eng.AbstractCamelContext] (main) Started java (timer://java)
[2] 2021-08-11 18:40:55,797 INFO [org.apa.cam.imp.eng.AbstractCamelContext] (main) Apache Camel 3.10.0.fuse-800010-redhat-00001 (camel-1) started in 174ms (build:0ms init:147ms start:27ms)
[2] 2021-08-11 18:40:55,803 INFO [io.quarkus] (main) camel-k-integration 1.6.6 on JVM (powered by Quarkus 1.11.7.Final-redhat-00009) started in 3.025s.
[2] 2021-08-11 18:40:55,808 INFO [io.quarkus] (main) Profile prod activated.
[2] 2021-08-11 18:40:55,809 INFO [io.quarkus] (main) Installed features: [camel-bean, camel-core, camel-java-joor-dsl, camel-k-core, camel-k-runtime, camel-log, camel-support-common, camel-timer, cdi]
[2] 2021-08-11 18:40:56,810 INFO [info] (Camel (camel-1) thread #0 - timer://java) Exchange[ExchangePattern: InOnly, BodyType: String, Body: Hello Camel K from java]
[2] 2021-08-11 18:40:57,793 INFO [info] (Camel (camel-1) thread #0 - timer://java) Exchange[ExchangePattern: InOnly, BodyType: String, Body: Hello Camel K from java]
...
```

- Press Ctrl-C to terminate logging in the terminal.

Additional resources

- For more details on the kamel run command, enter kamel run --help.

- For details of development tools to run integrations, see VS Code Tooling for Apache Camel K by Red Hat.

- Managing Camel K integrations

- Configuring Camel K integration dependencies

3.6. Running Camel K integrations using modeline

You can use the Camel K modeline to specify multiple configuration options in a Camel K integration source file, which are applied when you run the integration. This saves you from re-entering multiple command-line options and helps to prevent input errors.

The following example shows a modeline entry from a Java integration file that enables 3scale and limits the integration container memory.

Prerequisites

- Setting up your Camel K development environment

- You must already have a Camel integration written in Java or YAML DSL.

Procedure

- Add a Camel K modeline entry to your integration file. For example:

  ThreeScaleRest.java

```java
// camel-k: trait=3scale.enabled=true trait=container.limit-memory=256Mi

import org.apache.camel.builder.RouteBuilder;

public class ThreeScaleRest extends RouteBuilder {

  @Override
  public void configure() throws Exception {
      rest().get("/")
        .route()
        .setBody().constant("Hello");
  }
}
```

  The modeline on the first line enables both the 3scale and container traits, to expose the route through 3scale and to limit the container memory.

- Run the integration, for example:

```
kamel run ThreeScaleRest.java
```

  The kamel run command outputs any modeline options specified in the integration, for example:

```
Modeline options have been loaded from source files
Full command: kamel run ThreeScaleRest.java --trait=3scale.enabled=true --trait=container.limit-memory=256Mi
```
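The following Java sketch shows the basic transformation behind the Full command output above: each space-separated option on a line that starts with // camel-k: becomes a --option flag appended to kamel run. ModelineSketch is a hypothetical, simplified parser; the real kamel CLI also supports other comment styles, such as # camel-k: in YAML files, and performs its own parsing and validation, which this sketch does not reproduce.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Simplified modeline handling: lines starting with "// camel-k:" are split
 * into options, and each option becomes a "--option" flag for kamel run.
 * Hypothetical illustration, not the kamel implementation.
 */
final class ModelineSketch {
    private static final String MARKER = "// camel-k:";

    static List<String> fullCommand(String fileName, String source) {
        List<String> command = new ArrayList<>(List.of("kamel", "run", fileName));
        for (String line : source.split("\\R")) { // split on any line break
            String trimmed = line.trim();
            if (!trimmed.startsWith(MARKER)) {
                continue;
            }
            for (String option : trimmed.substring(MARKER.length()).trim().split("\\s+")) {
                if (!option.isEmpty()) {
                    command.add("--" + option); // trait=x becomes --trait=x
                }
            }
        }
        return command;
    }

    public static void main(String[] args) {
        String source = "// camel-k: trait=3scale.enabled=true trait=container.limit-memory=256Mi\n"
                + "import org.apache.camel.builder.RouteBuilder;\n";
        // Prints: Full command: kamel run ThreeScaleRest.java --trait=3scale.enabled=true --trait=container.limit-memory=256Mi
        System.out.println("Full command: "
                + String.join(" ", fullCommand("ThreeScaleRest.java", source)));
    }
}
```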

Additional resources

- Camel K modeline options

- For details of development tools to run modeline integrations, see Introducing IDE support for Apache Camel K Modeline.

Chapter 4. Upgrading Camel K

You can upgrade an installed Camel K operator automatically, but the operator upgrade does not automatically upgrade your Camel K integrations. You must manually trigger the upgrade for the Camel K integrations. This chapter explains how to upgrade both the Camel K operator and Camel K integrations.

4.1. Upgrading Camel K operator

The subscription of an installed Camel K operator specifies an update channel, for example, the 1.6.0 channel, which is used to track and receive updates for the operator. To upgrade the operator to start tracking and receiving updates from a newer channel, you can change the update channel in the subscription. For more information about changing the update channel for an installed Operator, see Upgrading installed operators.

- Installed Operators cannot change to a channel that is older than the current channel.

If the approval strategy in the subscription is set to Automatic, the upgrade process initiates as soon as a new Operator version is available in the selected channel. If the approval strategy is set to Manual, you must manually approve pending upgrades.

Prerequisites

- Camel K operator is installed using Operator Lifecycle Manager (OLM).

Procedure

- In the Administrator perspective of the OpenShift Container Platform web console, navigate to Operators → Installed Operators.

- Click the Camel K Operator.

- Click the Subscription tab.

- Click the name of the update channel under Channel.

- Click the newer update channel that you want to change to, for example, latest. Click Save to start the upgrade to the latest Camel K version.

For subscriptions with an Automatic approval strategy, the upgrade begins automatically. Navigate back to the Operators → Installed Operators page to monitor the progress of the upgrade. When complete, the status changes to Succeeded and Up to date.

For subscriptions with a Manual approval strategy, you can manually approve the upgrade from the Subscription tab.

4.2. Upgrading Camel K integrations

When you upgrade the Camel K operator, the operator prepares the integrations to be upgraded but does not trigger an upgrade for each one, to avoid service interruptions. As a result, integration custom resources are not automatically upgraded to the newer version. For example, the operator may be at version 1.6.0 while integrations still report the previous version, 1.4.1, in the status.version field of their custom resource.

Prerequisites

- The Camel K operator is installed and upgraded using Operator Lifecycle Manager (OLM).

Procedure

- Open a terminal and run the following command to upgrade a Camel K integration, replacing myintegration with the name of your integration:

kamel rebuild myintegration

This command clears the status of the integration resource, and the operator then redeploys the integration using the artifacts from the upgraded version, for example, version 1.6.0.
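Because the operator leaves each integration at its previous status.version, one way to find the integrations that still need kamel rebuild is to compare that field with the operator version. The following Java sketch assumes you have already collected each integration's name and status.version, for example from the Integration custom resources; the class name and the input map are hypothetical, and the sketch only builds the commands rather than running them. Treating any mismatch, not just an older version, as a rebuild candidate means the same check also applies after a downgrade.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper: lists integrations whose status.version differs from the operator version.
class RebuildPlanner {

    // Returns one "kamel rebuild <name>" command per out-of-date integration, sorted by name.
    static List<String> rebuildCommands(String operatorVersion, Map<String, String> statusVersions) {
        List<String> commands = new ArrayList<>();
        for (Map.Entry<String, String> e : new TreeMap<>(statusVersions).entrySet()) {
            if (!operatorVersion.equals(e.getValue())) {
                commands.add("kamel rebuild " + e.getKey());
            }
        }
        return commands;
    }

    public static void main(String[] args) {
        Map<String, String> versions = new TreeMap<>();
        versions.put("myintegration", "1.4.1"); // still on the previous version
        versions.put("other", "1.6.0");         // already matches the operator
        rebuildCommands("1.6.0", versions).forEach(System.out::println);
    }
}
```

With the sample values in main, only myintegration is reported, because other already matches the operator version 1.6.0.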

4.3. Downgrading Camel K

You can downgrade to an older version of the Camel K operator by installing a previous version of the operator. You must trigger this manually using the oc CLI. For more information about installing a specific version of an Operator using the CLI, see Installing a specific version of an Operator.

Because OLM does not support downgrading, you must remove the existing Camel K operator and then install the specific version of the operator.

After you install the older version of the operator, use the kamel rebuild command to downgrade the integrations to the operator version, for example:

kamel rebuild myintegration

Chapter 5. Camel K quick start developer tutorials

Red Hat Integration - Camel K provides quick start developer tutorials based on integration use cases available from https://github.com/openshift-integration. This chapter provides details on how to set up and deploy the following tutorials:

- Section 5.1, “Deploying a basic Camel K Java integration”

- Section 5.2, “Deploying a Camel K Serverless integration with Knative”

- Section 5.3, “Deploying a Camel K transformations integration”

- Section 5.4, “Deploying a Camel K Serverless event streaming integration”

- Section 5.5, “Deploying a Camel K Serverless API-based integration”

- Section 5.6, “Deploying a Camel K SaaS integration”

- Section 5.7, “Deploying a Camel K JDBC integration”

- Section 5.8, “Deploying a Camel K JMS integration”

- Section 5.9, “Deploying a Camel K Kafka integration”

5.1. Deploying a basic Camel K Java integration

This tutorial demonstrates how to run a simple Java integration in the cloud on OpenShift, apply configuration and routing to an integration, and run an integration as a Kubernetes CronJob.
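Running an integration as a CronJob only makes sense when its schedule can be written as a cron expression. The following Java sketch illustrates that idea with a deliberately simplified, hypothetical rule: a fixed timer period qualifies only if it is a whole number of minutes that divides an hour evenly, in which case it maps to a */N minute schedule. This rule is an assumption made for illustration; the conditions that Camel K actually applies may differ, so follow the tutorial readme for the real behavior.

```java
import java.util.Optional;

// Hypothetical, simplified rule: a fixed timer period becomes a cron schedule only
// when it is a whole number of minutes that divides an hour evenly.
class TimerToCron {

    static Optional<String> toCronSchedule(long periodMillis) {
        final long minuteMillis = 60_000L;
        if (periodMillis <= 0 || periodMillis % minuteMillis != 0) {
            return Optional.empty(); // not a whole number of minutes
        }
        long minutes = periodMillis / minuteMillis;
        if (minutes >= 60 || 60 % minutes != 0) {
            return Optional.empty(); // */N would not repeat at a fixed interval across the hour boundary
        }
        return Optional.of("*/" + minutes + " * * * *");
    }

    public static void main(String[] args) {
        System.out.println(toCronSchedule(60_000L)); // one minute
        System.out.println(toCronSchedule(90_000L)); // 1.5 minutes: no cron equivalent under this rule
    }
}
```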

Prerequisites

- See the tutorial readme in GitHub: https://github.com/openshift-integration/camel-k-example-basic/tree/1.6.x.

- You must have installed the Camel K operator and the kamel CLI. See Installing Camel K.

- Visual Studio (VS) Code is optional but recommended for the best developer experience. See Setting up your Camel K development environment.

Procedure

Clone the tutorial Git repository:

$ git clone git@github.com:openshift-integration/camel-k-example-basic.git

- In VS Code, select File → Open Folder → camel-k-example-basic.

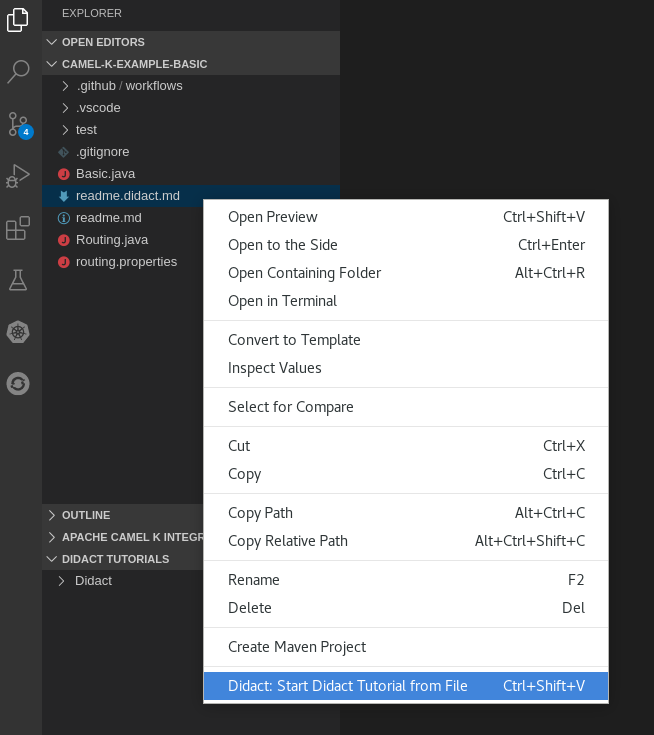

In the VS Code navigation tree, right-click the

readme.didact.mdfile and select Didact: Start Didact Tutorial from File. For example:

This opens a new Didact tab in VS Code to display the tutorial instructions.

Follow the tutorial instructions and click the provided links to run the commands automatically.

Alternatively, if you do not have VS Code installed with the Didact extension, you can manually enter the commands from https://github.com/openshift-integration/camel-k-example-basic.

Additional resources

5.2. Deploying a Camel K Serverless integration with Knative

This tutorial demonstrates how to deploy Camel K integrations with OpenShift Serverless in an event-driven architecture. This tutorial uses a Knative Eventing broker to communicate using an event publish-subscribe pattern in a Bitcoin trading demonstration.

This tutorial also shows how to use Camel K integrations to connect to a Knative event mesh with multiple external systems. The Camel K integrations also use Knative Serving to automatically scale up and down to zero as needed.
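The publish-subscribe pattern that the broker provides can be pictured with a small in-memory model: publishers send events to the broker without knowing who consumes them, and each trigger forwards only the events whose attributes match its filter. The following Java sketch is a conceptual illustration of that idea, not the Knative API; the class names, the type values, and the subscriber names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Conceptual, in-memory model of a broker with filtering triggers (not the Knative API).
class InMemoryBroker {

    // A trigger delivers an event to its subscriber when every filter attribute matches exactly.
    static final class Trigger {
        final Map<String, String> filter;
        final Consumer<Map<String, String>> subscriber;

        Trigger(Map<String, String> filter, Consumer<Map<String, String>> subscriber) {
            this.filter = filter;
            this.subscriber = subscriber;
        }

        boolean matches(Map<String, String> event) {
            for (Map.Entry<String, String> f : filter.entrySet()) {
                if (!f.getValue().equals(event.get(f.getKey()))) {
                    return false;
                }
            }
            return true; // an empty filter matches every event
        }
    }

    private final List<Trigger> triggers = new ArrayList<>();

    void subscribe(Map<String, String> filter, Consumer<Map<String, String>> subscriber) {
        triggers.add(new Trigger(filter, subscriber));
    }

    // Fans the event out to every matching trigger and returns the number of deliveries.
    int publish(Map<String, String> event) {
        int delivered = 0;
        for (Trigger t : triggers) {
            if (t.matches(event)) {
                t.subscriber.accept(event);
                delivered++;
            }
        }
        return delivered;
    }

    public static void main(String[] args) {
        InMemoryBroker broker = new InMemoryBroker();
        broker.subscribe(Map.of("type", "market.btc.usd"),
                e -> System.out.println("predictor received " + e.get("data")));
        broker.subscribe(Map.of("type", "prediction"),
                e -> System.out.println("viewer received " + e.get("data")));
        broker.publish(Map.of("type", "market.btc.usd", "data", "42000"));
    }
}
```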

Prerequisites

- See the tutorial readme in GitHub: https://github.com/openshift-integration/camel-k-example-knative/tree/1.6.x.

- You must have cluster administrator access to an OpenShift cluster to install Camel K and OpenShift Serverless.

- Visual Studio (VS) Code is optional but recommended for the best developer experience. See Setting up your Camel K development environment.

Procedure

Clone the tutorial Git repository:

$ git clone git@github.com:openshift-integration/camel-k-example-knative.git

- In VS Code, select File → Open Folder → camel-k-example-knative.

- In the VS Code navigation tree, right-click the readme.didact.md file and select Didact: Start Didact Tutorial from File. This opens a new Didact tab in VS Code to display the tutorial instructions. Follow the tutorial instructions and click the provided links to run the commands automatically.

Alternatively, if you do not have VS Code installed with the Didact extension, you can manually enter the commands from https://github.com/openshift-integration/camel-k-example-knative.

Additional resources

5.3. Deploying a Camel K transformations integration

This tutorial demonstrates how to run a Camel K Java integration on OpenShift that transforms data such as XML to JSON, and stores it in a database such as PostgreSQL.

The tutorial example uses a CSV file to query an XML API and uses the data collected to build a valid GeoJSON file, which is stored in a PostgreSQL database.
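As an illustration of the final transformation step, the following Java sketch turns one already-collected record into a GeoJSON Feature with a Point geometry. The comma-separated name,latitude,longitude layout and the class name are hypothetical, not the tutorial's actual data or code, and the sketch leaves out both the XML API query and the PostgreSQL write. Note that GeoJSON writes point coordinates as longitude first, then latitude.

```java
// Hypothetical sketch: converts "name,latitude,longitude" into a GeoJSON Feature string.
class CsvToGeoJson {

    static String toFeature(String csvLine) {
        String[] fields = csvLine.split(",");
        if (fields.length != 3) {
            throw new IllegalArgumentException("expected name,latitude,longitude: " + csvLine);
        }
        String name = fields[0].trim();
        double lat = Double.parseDouble(fields[1].trim());
        double lon = Double.parseDouble(fields[2].trim());
        // GeoJSON positions are [longitude, latitude], so lon comes first.
        return "{\"type\":\"Feature\","
                + "\"geometry\":{\"type\":\"Point\",\"coordinates\":[" + lon + "," + lat + "]},"
                + "\"properties\":{\"name\":\"" + escape(name) + "\"}}";
    }

    // Minimal JSON string escaping for backslashes and double quotes only.
    private static String escape(String s) {
        return s.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    public static void main(String[] args) {
        System.out.println(toFeature("Valencia,39.4699,-0.3763"));
    }
}
```

For the sample record in main, the coordinates array is [-0.3763,39.4699], with longitude first.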

Prerequisites

- See the tutorial readme in GitHub: https://github.com/openshift-integration/camel-k-example-transformations/tree/1.6.x.

- You must have cluster administrator access to an OpenShift cluster to install Camel K. See Installing Camel K.

- You must install the PostgreSQL Operator by Dev4Ddevs.com on your OpenShift cluster by following the instructions in the tutorial readme.

- Visual Studio (VS) Code is optional but recommended for the best developer experience. See Setting up your Camel K development environment.

Procedure

Clone the tutorial Git repository:

$ git clone git@github.com:openshift-integration/camel-k-example-transformations.git

- In VS Code, select File → Open Folder → camel-k-example-transformations.

- In the VS Code navigation tree, right-click the readme.didact.md file and select Didact: Start Didact Tutorial from File. This opens a new Didact tab in VS Code to display the tutorial instructions. Follow the tutorial instructions and click the provided links to run the commands automatically.

Alternatively, if you do not have VS Code installed with the Didact extension, you can manually enter the commands from https://github.com/openshift-integration/camel-k-example-transformations.

Additional resources

5.4. Deploying a Camel K Serverless event streaming integration

This tutorial demonstrates using Camel K and OpenShift Serverless with Knative Eventing for an event-driven architecture.

The tutorial shows how to install Camel K and Serverless with Knative in an AMQ Streams cluster with an AMQ Broker cluster, and how to deploy an event streaming project to run a global hazard alert demonstration application.

Prerequisites

- See the tutorial readme in GitHub: https://github.com/openshift-integration/camel-k-example-event-streaming/tree/1.6.x.

- You must have cluster administrator access to an OpenShift cluster to install Camel K and OpenShift Serverless.

You must follow the instructions in the tutorial readme to install the additional required Operators on your OpenShift cluster:

- AMQ Streams Operator

- AMQ Broker Operator

- Visual Studio (VS) Code is optional but recommended for the best developer experience. See Setting up your Camel K development environment.

Procedure

Clone the tutorial Git repository:

$ git clone git@github.com:openshift-integration/camel-k-example-event-streaming.git

- In VS Code, select File → Open Folder → camel-k-example-event-streaming.

- In the VS Code navigation tree, right-click the readme.didact.md file and select Didact: Start Didact Tutorial from File. This opens a new Didact tab in VS Code to display the tutorial instructions. Follow the tutorial instructions and click the provided links to run the commands automatically.

Alternatively, if you do not have VS Code installed with the Didact extension, you can manually enter the commands from https://github.com/openshift-integration/camel-k-example-event-streaming.

Additional resources

5.5. Deploying a Camel K Serverless API-based integration

This tutorial demonstrates using Camel K and OpenShift Serverless with Knative Serving for an API-based integration, and managing an API with 3scale API Management on OpenShift.

The tutorial shows how to configure Amazon S3-based storage, design an OpenAPI definition, and run an integration that calls the demonstration API endpoints.

Prerequisites

- See the tutorial readme in GitHub: https://github.com/openshift-integration/camel-k-example-api/tree/1.6.x.

- You must have cluster administrator access to an OpenShift cluster to install Camel K and OpenShift Serverless.

- You can also install the optional Red Hat Integration - 3scale Operator on your OpenShift system to manage the API. See Deploying 3scale using the Operator.

- Visual Studio (VS) Code is optional but recommended for the best developer experience. See Setting up your Camel K development environment.

Procedure

Clone the tutorial Git repository:

$ git clone git@github.com:openshift-integration/camel-k-example-api.git

- In VS Code, select File → Open Folder → camel-k-example-api.

- In the VS Code navigation tree, right-click the readme.didact.md file and select Didact: Start Didact Tutorial from File. This opens a new Didact tab in VS Code to display the tutorial instructions. Follow the tutorial instructions and click the provided links to run the commands automatically.

Alternatively, if you do not have VS Code installed with the Didact extension, you can manually enter the commands from https://github.com/openshift-integration/camel-k-example-api.

Additional resources

5.6. Deploying a Camel K SaaS integration

This tutorial demonstrates how to run a Camel K Java integration on OpenShift that connects two widely used Software as a Service (SaaS) providers.

The tutorial example shows how to integrate the Salesforce and ServiceNow SaaS providers using REST-based Camel components. In this simple example, each new Salesforce Case is copied to a corresponding ServiceNow Incident that includes the Salesforce Case Number.
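The heart of this integration is a field mapping from a Salesforce Case to a ServiceNow Incident. The following Java sketch shows such a mapping on plain maps, keeping the Case Number in the Incident so the two records can be matched. The field names (CaseNumber, Subject, and Description for the Case; short_description and description for the Incident) are assumptions based on the standard objects of each platform, not necessarily the fields the tutorial maps, and the sketch omits the REST calls to both services.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical mapping from a Salesforce Case to a ServiceNow Incident payload.
class CaseToIncident {

    static Map<String, String> toIncident(Map<String, String> salesforceCase) {
        String caseNumber = salesforceCase.get("CaseNumber");
        if (caseNumber == null || caseNumber.isEmpty()) {
            throw new IllegalArgumentException("Case has no CaseNumber");
        }
        Map<String, String> incident = new LinkedHashMap<>();
        // Include the Case Number in the Incident so the two records can be correlated.
        incident.put("short_description",
                "[Salesforce Case " + caseNumber + "] " + salesforceCase.getOrDefault("Subject", ""));
        incident.put("description", salesforceCase.getOrDefault("Description", ""));
        return incident;
    }

    public static void main(String[] args) {
        Map<String, String> sfCase = Map.of(
                "CaseNumber", "00001026",
                "Subject", "Printer offline",
                "Description", "The office printer does not respond.");
        System.out.println(toIncident(sfCase));
    }
}
```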

Prerequisites

- See the tutorial readme in GitHub: https://github.com/openshift-integration/camel-k-example-saas/tree/1.6.x.

- You must have cluster administrator access to an OpenShift cluster to install Camel K. See Installing Camel K.

- You must have Salesforce login credentials and ServiceNow login credentials.

- Visual Studio (VS) Code is optional but recommended for the best developer experience. See Setting up your Camel K development environment.

Procedure

Clone the tutorial Git repository:

$ git clone git@github.com:openshift-integration/camel-k-example-saas.git

- In VS Code, select File → Open Folder → camel-k-example-saas.

- In the VS Code navigation tree, right-click the readme.didact.md file and select Didact: Start Didact Tutorial from File. This opens a new Didact tab in VS Code to display the tutorial instructions. Follow the tutorial instructions and click the provided links to run the commands automatically.

Alternatively, if you do not have VS Code installed with the Didact extension, you can manually enter the commands from https://github.com/openshift-integration/camel-k-example-saas.

Additional resources

5.7. Deploying a Camel K JDBC integration

This tutorial demonstrates how to get started with Camel K and an SQL database through JDBC drivers. It shows how to set up an integration that produces data into a PostgreSQL database (you can use any relational database of your choice) and how to read data from the same database.

Prerequisites

- See the tutorial readme in GitHub: https://github.com/openshift-integration/camel-k-example-jdbc/tree/1.6.x.

- You must have cluster administrator access to an OpenShift cluster to install Camel K.

- Visual Studio (VS) Code is optional but recommended for the best developer experience. See Setting up your Camel K development environment.

Procedure

Clone the tutorial Git repository:

$ git clone git@github.com:openshift-integration/camel-k-example-jdbc.git

- In VS Code, select File → Open Folder → camel-k-example-jdbc.

- In the VS Code navigation tree, right-click the readme.didact.md file and select Didact: Start Didact Tutorial from File. This opens a new Didact tab in VS Code to display the tutorial instructions. Follow the tutorial instructions and click the provided links to run the commands automatically.

Alternatively, if you do not have VS Code installed with the Didact extension, you can manually enter the commands from https://github.com/openshift-integration/camel-k-example-jdbc.

Additional resources

5.8. Deploying a Camel K JMS integration

This tutorial demonstrates how to use JMS to connect to a message broker in order to consume and produce messages. There are two examples:

- JMS Sink: this tutorial demonstrates how to produce a message to a JMS broker.

- JMS Source: this tutorial demonstrates how to consume a message from a JMS broker.
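The two examples differ only in the direction of the message flow relative to the broker: a sink writes messages to it, and a source reads messages from it. The following Java sketch models that difference with an in-memory BlockingQueue standing in for a JMS queue. It does not use the JMS API, and the class and method names are hypothetical.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

// In-memory stand-in for a broker queue; real integrations use a JMS client instead.
class SinkSourceSketch {

    // Sink: the integration produces a message to the broker.
    static void sink(BlockingQueue<String> queue, String message) throws InterruptedException {
        queue.put(message);
    }

    // Source: the integration consumes a message, waiting up to the timeout; returns null if none arrives.
    static String source(BlockingQueue<String> queue, long timeoutMillis) throws InterruptedException {
        return queue.poll(timeoutMillis, TimeUnit.MILLISECONDS);
    }

    public static void main(String[] args) throws InterruptedException {
        BlockingQueue<String> queue = new LinkedBlockingQueue<>();
        sink(queue, "Hello from the sink");
        System.out.println("source received: " + source(queue, 1000));
    }
}
```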

Prerequisites

- See the tutorial readme in GitHub: https://github.com/openshift-integration/camel-k-example-jms/tree/1.6.x.

- You must have cluster administrator access to an OpenShift cluster to install Camel K.

- Visual Studio (VS) Code is optional but recommended for the best developer experience. See Setting up your Camel K development environment.

Procedure

Clone the tutorial Git repository:

$ git clone git@github.com:openshift-integration/camel-k-example-jms.git

- In VS Code, select File → Open Folder → camel-k-example-jms.

- In the VS Code navigation tree, right-click the readme.didact.md file and select Didact: Start Didact Tutorial from File. This opens a new Didact tab in VS Code to display the tutorial instructions. Follow the tutorial instructions and click the provided links to run the commands automatically.

Alternatively, if you do not have VS Code installed with the Didact extension, you can manually enter the commands from https://github.com/openshift-integration/camel-k-example-jms.

Additional resources

5.9. Deploying a Camel K Kafka integration

This tutorial demonstrates how to use Camel K with Apache Kafka. It shows how to set up a Kafka topic using Red Hat OpenShift Streams for Apache Kafka and how to use that topic with Camel K.

Prerequisites

- See the tutorial readme in GitHub: https://github.com/openshift-integration/camel-k-example-kafka/tree/1.6.x.

- You must have cluster administrator access to an OpenShift cluster to install Camel K.

- Visual Studio (VS) Code is optional but recommended for the best developer experience. See Setting up your Camel K development environment.

Procedure

Clone the tutorial Git repository:

$ git clone git@github.com:openshift-integration/camel-k-example-kafka.git

- In VS Code, select File → Open Folder → camel-k-example-kafka.

- In the VS Code navigation tree, right-click the readme.didact.md file and select Didact: Start Didact Tutorial from File. This opens a new Didact tab in VS Code to display the tutorial instructions. Follow the tutorial instructions and click the provided links to run the commands automatically.

Alternatively, if you do not have VS Code installed with the Didact extension, you can manually enter the commands from https://github.com/openshift-integration/camel-k-example-kafka.