Debezium User Guide

For use with Debezium 1.7

Abstract

Preface

Debezium is a set of distributed services that capture row-level changes in your databases so that your applications can see and respond to those changes. Debezium records all row-level changes committed to each database table. Each application reads the transaction logs of interest to view all operations in the order in which they occurred.

This guide provides details about using the following Debezium topics:

- Chapter 1, High level overview of Debezium

- Chapter 2, Required custom resource upgrades

- Chapter 3, Debezium connector for Db2

- Chapter 4, Debezium connector for MongoDB

- Chapter 5, Debezium connector for MySQL

- Chapter 6, Debezium Connector for Oracle (Technology Preview)

- Chapter 7, Debezium connector for PostgreSQL

- Chapter 8, Debezium connector for SQL Server

- Chapter 9, Monitoring Debezium

- Chapter 10, Debezium logging

- Chapter 11, Configuring Debezium connectors for your application

- Chapter 12, Applying transformations to modify messages exchanged with Apache Kafka

Making open source more inclusive

Red Hat is committed to replacing problematic language in our code, documentation, and web properties. We are beginning with these four terms: master, slave, blacklist, and whitelist. Because of the enormity of this endeavor, these changes will be implemented gradually over several upcoming releases. For more details, see our CTO Chris Wright’s message.

Chapter 1. High level overview of Debezium

Debezium is a set of distributed services that capture changes in your databases. Your applications can consume and respond to those changes. Debezium captures each row-level change in each database table in a change event record and streams these records to Kafka topics. Applications read these streams, which provide the change event records in the same order in which they were generated.

More details are in the following sections:

1.1. Debezium Features

Debezium is a set of source connectors for Apache Kafka Connect. Each connector ingests changes from a different database by using that database’s features for change data capture (CDC). Unlike other approaches, such as polling or dual writes, log-based CDC as implemented by Debezium:

- Ensures that all data changes are captured.

- Produces change events with a very low delay while avoiding increased CPU usage required for frequent polling. For example, for MySQL or PostgreSQL, the delay is in the millisecond range.

- Requires no changes to your data model, such as a "Last Updated" column.

- Can capture deletes.

- Can capture old record state and additional metadata such as transaction ID and causing query, depending on the database’s capabilities and configuration.

Five Advantages of Log-Based Change Data Capture is a blog post that provides more details.

Debezium connectors capture data changes with a range of related capabilities and options:

- Snapshots: optionally, an initial snapshot of a database’s current state can be taken if a connector is started and not all logs still exist. Typically, this is the case when the database has been running for some time and has discarded transaction logs that are no longer needed for transaction recovery or replication. There are different modes for performing snapshots, including support for incremental snapshots, which can be triggered at connector runtime. For more details, see the documentation for the connector that you are using.

- Filters: you can configure the set of captured schemas, tables and columns with include/exclude list filters.

- Masking: the values from specific columns can be masked, for example, when they contain sensitive data.

- Monitoring: most connectors can be monitored by using JMX.

- Ready-to-use single message transformations (SMTs) for message routing, filtering, event flattening, and more. For more information about the SMTs that Debezium provides, see Applying transformations to modify messages exchanged with Apache Kafka.

The documentation for each connector provides details about the connector’s features and configuration options.
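To make the filter and masking capabilities more concrete, the following Python sketch mimics their effect on table names and row values. It is an illustration only, not Debezium code: the function names `is_captured` and `mask_columns` are hypothetical, and the sketch assumes that each filter list is a comma-separated string of regular expressions that must match the whole table identifier.

```python
import re


def is_captured(table_id, include_list=None, exclude_list=None):
    """Return True if a fully-qualified table name passes the filters.

    Assumption: each list is a comma-separated string of regular
    expressions, and an expression must match the whole identifier.
    Splitting on commas is a simplification of this sketch.
    """
    def matches(patterns):
        return any(re.fullmatch(p.strip(), table_id) for p in patterns.split(","))

    if include_list:
        return matches(include_list)
    if exclude_list:
        return not matches(exclude_list)
    return True  # no filter configured: capture every table


def mask_columns(row, masked_columns, mask="****"):
    """Replace the values of sensitive columns with a fixed mask string."""
    return {k: (mask if k in masked_columns else v) for k, v in row.items()}


print(is_captured("MYSCHEMA.ORDERS", include_list=r"MYSCHEMA\.ORDERS"))
print(mask_columns({"id": 1, "ssn": "123-45-6789"}, {"ssn"}))
```

In a real deployment you set these options as connector configuration properties rather than writing code; see the documentation for your connector for the exact property names and matching rules.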

1.2. Description of Debezium architecture

You deploy Debezium by means of Apache Kafka Connect. Kafka Connect is a framework and runtime for implementing and operating:

- Source connectors such as Debezium that send records into Kafka

- Sink connectors that propagate records from Kafka topics to other systems

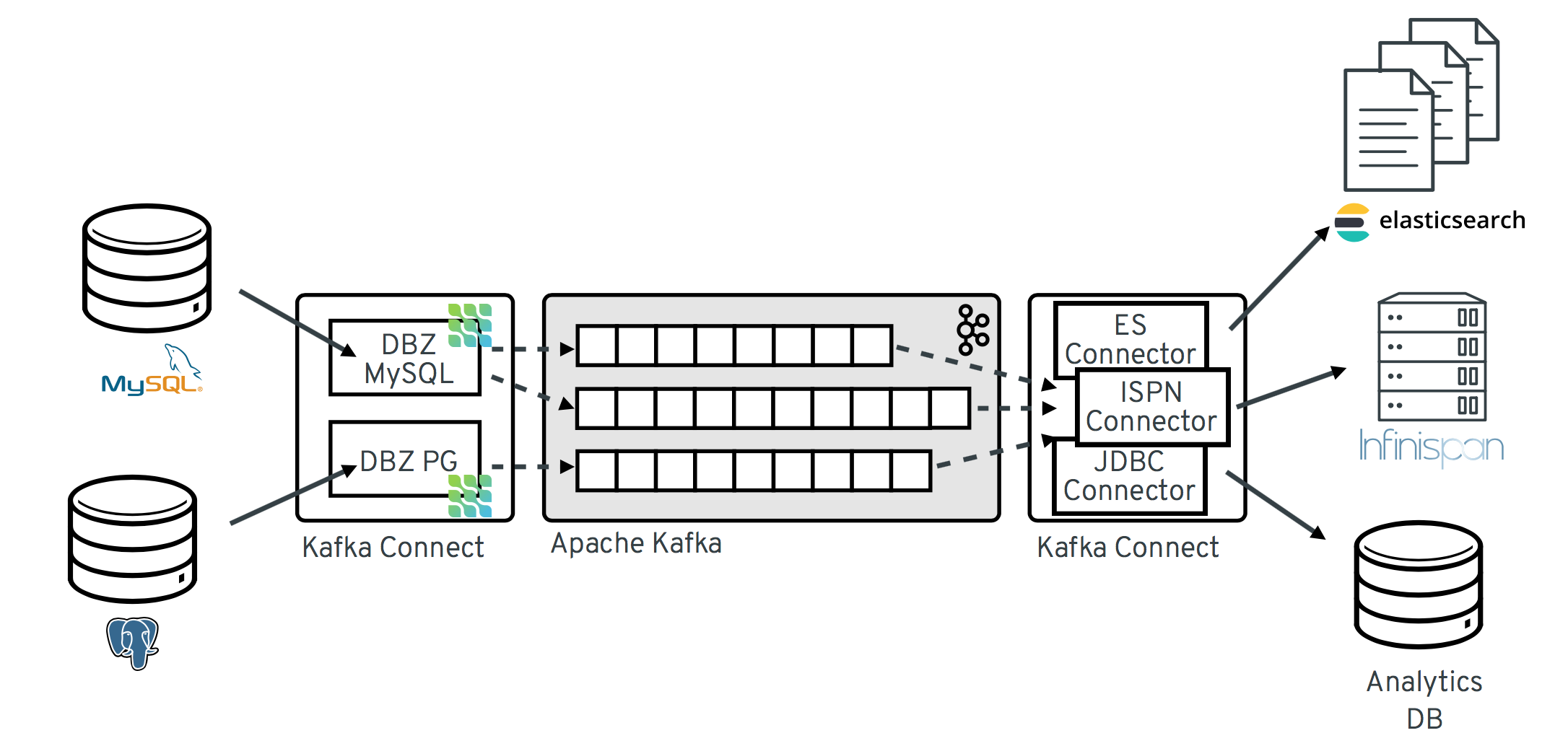

The following image shows the architecture of a change data capture pipeline based on Debezium:

As shown in the image, the Debezium connectors for MySQL and PostgreSQL are deployed to capture changes to these two types of databases. Each Debezium connector establishes a connection to its source database:

- The MySQL connector uses a client library for accessing the binlog.

- The PostgreSQL connector reads from a logical replication stream.

Kafka Connect operates as a separate service alongside the Kafka broker.

By default, changes from one database table are written to a Kafka topic whose name corresponds to the table name. If needed, you can adjust the destination topic name by configuring Debezium’s topic routing transformation. For example, you can:

- Route records to a topic whose name is different from the table’s name

- Stream change event records for multiple tables into a single topic

After change event records are in Apache Kafka, different connectors in the Kafka Connect eco-system can stream the records to other systems and databases such as Elasticsearch, data warehouses and analytics systems, or caches such as Infinispan. Depending on the chosen sink connector, you might need to configure Debezium’s new record state extraction transformation. This Kafka Connect SMT propagates the after structure from Debezium’s change event to the sink connector. This is in place of the verbose change event record that is propagated by default.
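The effect of the new record state extraction transformation can be pictured with a short Python sketch. This is a conceptual illustration, not the SMT’s implementation: the function name `extract_new_state` is hypothetical, the sample event is invented for the example, and the real transformation has additional options (for example, for handling delete events) that are not modeled here.

```python
def extract_new_state(change_event):
    """Return only the 'after' structure of a change event envelope."""
    return change_event.get("after")


# A simplified change event envelope with the before/after/source/op fields.
event = {
    "before": {"id": 1, "name": "old"},
    "after": {"id": 1, "name": "new"},
    "source": {"connector": "db2"},
    "op": "u",
}

flat = extract_new_state(event)
print(flat)
```

A sink connector that expects plain rows would receive only the flattened structure instead of the full envelope.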

Chapter 2. Required custom resource upgrades

Debezium is a Kafka connector plugin that is deployed to an Apache Kafka cluster that runs on AMQ Streams on OpenShift. To prepare for OpenShift CRD v1, in the current version of AMQ Streams the required version of the custom resource definitions (CRD) API is now set to v1beta2. The v1beta2 version of the API replaces the previously supported v1beta1 and v1alpha1 API versions. Support for the v1alpha1 and v1beta1 API versions is now deprecated in AMQ Streams. Those earlier versions are now removed from most AMQ Streams custom resources, including the KafkaConnect and KafkaConnector resources that you use to configure Debezium connectors.

The CRDs that are based on the v1beta2 API version use the OpenAPI structural schema. Custom resources based on the superseded v1alpha1 or v1beta1 APIs do not support structural schemas, and are incompatible with the current version of AMQ Streams. Before you upgrade to AMQ Streams 2.0, you must upgrade existing custom resources to use API version kafka.strimzi.io/v1beta2. You can upgrade custom resources any time after you upgrade to AMQ Streams 1.7. You must complete the upgrade to the v1beta2 API before you upgrade to AMQ Streams 2.0 or newer.

To facilitate the upgrade of CRDs and custom resources, AMQ Streams provides an API conversion tool that automatically upgrades them to a format that is compatible with v1beta2. For more information about the tool and for the complete instructions about how to upgrade AMQ Streams, see Deploying and Upgrading AMQ Streams on OpenShift.

The requirement to update custom resources applies only to Debezium deployments that run on AMQ Streams on OpenShift. The requirement does not apply to Debezium on Red Hat Enterprise Linux.

Chapter 3. Debezium connector for Db2

Debezium’s Db2 connector can capture row-level changes in the tables of a Db2 database. For information about the Db2 Database versions that are compatible with this connector, see the Debezium Supported Configurations page.

This connector is strongly inspired by the Debezium implementation of SQL Server, which uses a SQL-based polling model that puts tables into "capture mode". When a table is in capture mode, the Debezium Db2 connector generates and streams a change event for each row-level update to that table.

A table that is in capture mode has an associated change-data table, which Db2 creates. For each change to a table that is in capture mode, Db2 adds data about that change to the table’s associated change-data table. A change-data table contains an entry for each state of a row. It also has special entries for deletions. The Debezium Db2 connector reads change events from change-data tables and emits the events to Kafka topics.

The first time a Debezium Db2 connector connects to a Db2 database, the connector reads a consistent snapshot of the tables for which the connector is configured to capture changes. By default, this is all non-system tables. There are connector configuration properties that let you specify which tables to put into capture mode, or which tables to exclude from capture mode.

When the snapshot is complete the connector begins emitting change events for committed updates to tables that are in capture mode. By default, change events for a particular table go to a Kafka topic that has the same name as the table. Applications and services consume change events from these topics.

The connector requires the use of the abstract syntax notation (ASN) libraries, which are available as a standard part of Db2 for Linux. To use the ASN libraries, you must have a license for IBM InfoSphere Data Replication (IIDR). You do not have to install IIDR to use the ASN libraries.

Information and procedures for using a Debezium Db2 connector are organized as follows:

- Section 3.1, “Overview of Debezium Db2 connector”

- Section 3.2, “How Debezium Db2 connectors work”

- Section 3.3, “Descriptions of Debezium Db2 connector data change events”

- Section 3.4, “How Debezium Db2 connectors map data types”

- Section 3.5, “Setting up Db2 to run a Debezium connector”

- Section 3.6, “Deployment of Debezium Db2 connectors”

- Section 3.7, “Monitoring Debezium Db2 connector performance”

- Section 3.8, “Managing Debezium Db2 connectors”

- Section 3.9, “Updating schemas for Db2 tables in capture mode for Debezium connectors”

3.1. Overview of Debezium Db2 connector

The Debezium Db2 connector is based on the ASN Capture/Apply agents that enable SQL Replication in Db2. A capture agent:

- Generates change-data tables for tables that are in capture mode.

- Monitors tables in capture mode and stores change events for updates to those tables in their corresponding change-data tables.

The Debezium connector uses a SQL interface to query change-data tables for change events.

The database administrator must put the tables for which you want to capture changes into capture mode. For convenience and for automating testing, there are Debezium user-defined functions (UDFs) in C that you can compile and then use to do the following management tasks:

- Start, stop, and reinitialize the ASN agent

- Put tables into capture mode

- Create the replication (ASN) schemas and change-data tables

- Remove tables from capture mode

Alternatively, you can use Db2 control commands to accomplish these tasks.

After the tables of interest are in capture mode, the connector reads their corresponding change-data tables to obtain change events for table updates. The connector emits a change event for each row-level insert, update, and delete operation to a Kafka topic that has the same name as the changed table. This is default behavior that you can modify. Client applications read the Kafka topics that correspond to the database tables of interest and can react to each row-level change event.

Typically, the database administrator puts a table into capture mode in the middle of the life of a table. This means that the connector does not have the complete history of all changes that have been made to the table. Therefore, when the Db2 connector first connects to a particular Db2 database, it starts by performing a consistent snapshot of each table that is in capture mode. After the connector completes the snapshot, the connector streams change events from the point at which the snapshot was made. In this way, the connector starts with a consistent view of the tables that are in capture mode, and does not drop any changes that were made while it was performing the snapshot.

Debezium connectors are tolerant of failures. As the connector reads and produces change events, it records the log sequence number (LSN) of the change-data table entry. The LSN is the position of the change event in the database log. If the connector stops for any reason, including communication failures, network problems, or crashes, upon restarting it continues reading the change-data tables where it left off. This includes snapshots. That is, if the snapshot was not complete when the connector stopped, upon restart the connector begins a new snapshot.

3.2. How Debezium Db2 connectors work

To optimally configure and run a Debezium Db2 connector, it is helpful to understand how the connector performs snapshots, streams change events, determines Kafka topic names, and handles schema changes.

Details are in the following topics:

- Section 3.2.1, “How Debezium Db2 connectors perform database snapshots”

- Section 3.2.2, “How Debezium Db2 connectors read change-data tables”

- Section 3.2.3, “Default names of Kafka topics that receive Debezium Db2 change event records”

- Section 3.2.4, “About the Debezium Db2 connector schema change topic”

- Section 3.2.5, “Debezium Db2 connector-generated events that represent transaction boundaries”

3.2.1. How Debezium Db2 connectors perform database snapshots

Db2’s replication feature is not designed to store the complete history of database changes. Consequently, when a Debezium Db2 connector connects to a database for the first time, it takes a consistent snapshot of tables that are in capture mode and streams this state to Kafka. This establishes the baseline for table content.

By default, when a Db2 connector performs a snapshot, it does the following:

1. Determines which tables are in capture mode, and thus must be included in the snapshot. By default, all non-system tables are in capture mode. Connector configuration properties, such as table.exclude.list and table.include.list, let you specify which tables should be in capture mode.

2. Obtains a lock on each of the tables in capture mode. This ensures that no schema changes can occur in those tables during the snapshot. The level of the lock is determined by the snapshot.isolation.mode connector configuration property.

3. Reads the highest (most recent) LSN position in the server’s transaction log.

4. Captures the schema of all tables that are in capture mode. The connector persists this information in its internal database history topic.

5. Optionally, releases the locks obtained in step 2. Typically, these locks are held for only a short time.

At the LSN position read in step 3, the connector scans the capture mode tables as well as their schemas. During the scan, the connector:

- Confirms that the table was created before the start of the snapshot. If it was not, the snapshot skips that table. After the snapshot is complete, and the connector starts emitting change events, the connector produces change events for any tables that were created during the snapshot.

- Produces a read event for each row in each table that is in capture mode. All read events contain the same LSN position, which is the LSN position that was obtained in step 3.

- Emits each read event to the Kafka topic that has the same name as the table.

- Records the successful completion of the snapshot in the connector offsets.
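The scan phase described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the connector’s code: the `tables` structure, the `created_at` timestamps, the integer LSN, and the `source.lsn` field name are hypothetical stand-ins for what the connector reads from Db2.

```python
def snapshot_read_events(tables, snapshot_lsn, snapshot_started_at):
    """Yield (topic, event) pairs for rows in tables that predate the snapshot.

    `tables` is a hypothetical in-memory stand-in: a list of dicts with
    'topic', 'created_at', and 'rows' keys.
    """
    for table in tables:
        if table["created_at"] >= snapshot_started_at:
            # Created during the snapshot: skipped here, streamed later.
            continue
        for row in table["rows"]:
            yield table["topic"], {
                "before": None,
                "after": row,
                "source": {"lsn": snapshot_lsn},  # same LSN for every read event
                "op": "r",
            }


tables = [
    {"topic": "mydatabase.MYSCHEMA.ORDERS", "created_at": 10,
     "rows": [{"ID": 1}, {"ID": 2}]},
    {"topic": "mydatabase.MYSCHEMA.NEW_TABLE", "created_at": 150,
     "rows": [{"ID": 9}]},
]
events = list(snapshot_read_events(tables, snapshot_lsn=758, snapshot_started_at=100))
for topic, event in events:
    print(topic, event)
```

Every emitted event carries the LSN obtained in step 3, and the table that was created after the snapshot started contributes no read events.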

3.2.1.1. Ad hoc snapshots

The use of ad hoc snapshots is a Technology Preview feature. Technology Preview features are not supported with Red Hat production service-level agreements (SLAs) and might not be functionally complete; therefore, Red Hat does not recommend implementing any Technology Preview features in production environments. This Technology Preview feature provides early access to upcoming product innovations, enabling you to test functionality and provide feedback during the development process. For more information about support scope, see Technology Preview Features Support Scope.

By default, a connector runs an initial snapshot operation only after it starts for the first time. Following this initial snapshot, under normal circumstances, the connector does not repeat the snapshot process. Any future change event data that the connector captures comes in through the streaming process only.

However, in some situations the data that the connector obtained during the initial snapshot might become stale, lost, or incomplete. To provide a mechanism for recapturing table data, Debezium includes an option to perform ad hoc snapshots. The following changes in a database might be cause for performing an ad hoc snapshot:

- The connector configuration is modified to capture a different set of tables.

- Kafka topics are deleted and must be rebuilt.

- Data corruption occurs due to a configuration error or some other problem.

You can re-run a snapshot for a table for which you previously captured a snapshot by initiating a so-called ad-hoc snapshot. Ad hoc snapshots require the use of signaling tables. You initiate an ad hoc snapshot by sending a signal request to the Debezium signaling table.

When you initiate an ad hoc snapshot of an existing table, the connector appends content to the topic that already exists for the table. If a previously existing topic was removed, Debezium can create a topic automatically if automatic topic creation is enabled.

Ad hoc snapshot signals specify the tables to include in the snapshot. The snapshot can capture the entire contents of the database, or capture only a subset of the tables in the database.

You specify the tables to capture by sending an execute-snapshot message to the signaling table. Set the type of the execute-snapshot signal to incremental, and provide the names of the tables to include in the snapshot, as described in the following table:

| Field | Default | Value |
|---|---|---|
| type | incremental | Specifies the type of snapshot that you want to run. |
| data-collections | N/A | An array that contains the fully-qualified names of the tables to be snapshotted. |

Triggering an ad hoc snapshot

You initiate an ad hoc snapshot by adding an entry with the execute-snapshot signal type to the signaling table. After the connector processes the message, it begins the snapshot operation. The snapshot process reads the first and last primary key values and uses those values as the start and end point for each table. Based on the number of entries in the table, and the configured chunk size, Debezium divides the table into chunks, and proceeds to snapshot each chunk, in succession, one at a time.

Currently, the execute-snapshot action type triggers incremental snapshots only. For more information, see Incremental snapshots.

3.2.1.2. Incremental snapshots

The use of incremental snapshots is a Technology Preview feature. Technology Preview features are not supported with Red Hat production service-level agreements (SLAs) and might not be functionally complete; therefore, Red Hat does not recommend implementing any Technology Preview features in production environments. This Technology Preview feature provides early access to upcoming product innovations, enabling you to test functionality and provide feedback during the development process. For more information about support scope, see Technology Preview Features Support Scope.

To provide flexibility in managing snapshots, Debezium includes a supplementary snapshot mechanism, known as incremental snapshotting. Incremental snapshots rely on the Debezium mechanism for sending signals to a Debezium connector.

In an incremental snapshot, instead of capturing the full state of a database all at once, as in an initial snapshot, Debezium captures each table in phases, in a series of configurable chunks. You can specify the tables that you want the snapshot to capture and the size of each chunk. The chunk size determines the number of rows that the snapshot collects during each fetch operation on the database. The default chunk size for incremental snapshots is 1024 rows.

As an incremental snapshot proceeds, Debezium uses watermarks to track its progress, maintaining a record of each table row that it captures. This phased approach to capturing data provides the following advantages over the standard initial snapshot process:

- You can run incremental snapshots in parallel with streamed data capture, instead of postponing streaming until the snapshot completes. The connector continues to capture near real-time events from the change log throughout the snapshot process, and neither operation blocks the other.

- If the progress of an incremental snapshot is interrupted, you can resume it without losing any data. After the process resumes, the snapshot begins at the point where it stopped, rather than recapturing the table from the beginning.

- You can run an incremental snapshot on demand at any time, and repeat the process as needed to adapt to database updates. For example, you might re-run a snapshot after you modify the connector configuration to add a table to its table.include.list property.

Incremental snapshot process

When you run an incremental snapshot, Debezium sorts each table by primary key and then splits the table into chunks based on the configured chunk size. Working chunk by chunk, it then captures each table row in a chunk. For each row that it captures, the snapshot emits a READ event. That event represents the value of the row when the snapshot for the chunk began.

As a snapshot proceeds, it’s likely that other processes continue to access the database, potentially modifying table records. To reflect such changes, INSERT, UPDATE, or DELETE operations are committed to the transaction log as per usual. Similarly, the ongoing Debezium streaming process continues to detect these change events and emits corresponding change event records to Kafka.
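The chunking step can be illustrated with a small Python sketch. It is a simplification made for this example: the helper names `chunk_by_primary_key` and `read_events` are hypothetical, and the sketch sorts an in-memory list, whereas a connector would fetch each chunk from the database by querying on the primary key.

```python
def chunk_by_primary_key(rows, key, chunk_size):
    """Sort rows by primary key and yield them in chunks of chunk_size rows."""
    ordered = sorted(rows, key=lambda r: r[key])
    for start in range(0, len(ordered), chunk_size):
        yield ordered[start:start + chunk_size]


def read_events(chunk):
    """Build one READ event per row, reflecting the row when the chunk was read."""
    return [{"before": None, "after": row, "op": "r"} for row in chunk]


rows = [{"pk": 5}, {"pk": 1}, {"pk": 3}, {"pk": 2}, {"pk": 4}]
chunks = list(chunk_by_primary_key(rows, "pk", chunk_size=2))
for chunk in chunks:
    print(read_events(chunk))
```

With five rows and a chunk size of 2, the sketch produces three chunks, the last of which holds a single row.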

How Debezium resolves collisions among records with the same primary key

In some cases, the UPDATE or DELETE events that the streaming process emits are received out of sequence. That is, the streaming process might emit an event that modifies a table row before the snapshot captures the chunk that contains the READ event for that row. When the snapshot eventually emits the corresponding READ event for the row, its value is already superseded. To ensure that incremental snapshot events that arrive out of sequence are processed in the correct logical order, Debezium employs a buffering scheme for resolving collisions. Only after collisions between the snapshot events and the streamed events are resolved does Debezium emit an event record to Kafka.

Snapshot window

To assist in resolving collisions between late-arriving READ events and streamed events that modify the same table row, Debezium employs a so-called snapshot window. The snapshot window demarcates the interval during which an incremental snapshot captures data for a specified table chunk. Before the snapshot window for a chunk opens, Debezium follows its usual behavior and emits events from the transaction log directly downstream to the target Kafka topic. But from the moment that the snapshot for a particular chunk opens, until it closes, Debezium performs a de-duplication step to resolve collisions between events that have the same primary key.

For each data collection, Debezium emits two types of events, and stores the records for them both in a single destination Kafka topic. The snapshot records that it captures directly from a table are emitted as READ operations. Meanwhile, as users continue to update records in the data collection, and the transaction log is updated to reflect each commit, Debezium emits UPDATE or DELETE operations for each change.

As the snapshot window opens, and Debezium begins processing a snapshot chunk, it delivers snapshot records to a memory buffer. During the snapshot window, the primary keys of the READ events in the buffer are compared to the primary keys of the incoming streamed events. If no match is found, the streamed event record is sent directly to Kafka. If Debezium detects a match, it discards the buffered READ event, and writes the streamed record to the destination topic, because the streamed event logically supersedes the static snapshot event. After the snapshot window for the chunk closes, the buffer contains only READ events for which no related transaction log events exist. Debezium emits these remaining READ events to the table’s Kafka topic.

The connector repeats the process for each snapshot chunk.
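The de-duplication performed during a snapshot window can be sketched in a few lines of Python. This is an illustrative model of the buffering scheme described above, not Debezium’s implementation: the function name `process_chunk_window` and the event dictionaries are assumptions made for the example.

```python
def process_chunk_window(snapshot_rows, streamed_events, key="pk"):
    """Return the events emitted for one snapshot window, in emission order.

    snapshot_rows: rows read for the chunk (these become READ events).
    streamed_events: change events seen while the window is open.
    """
    # Window opens: buffer one READ event per row, indexed by primary key.
    buffer = {
        row[key]: {"op": "r", "before": None, "after": row}
        for row in snapshot_rows
    }
    emitted = []
    for event in streamed_events:
        # The key comes from 'after' (insert/update) or 'before' (delete).
        state = event["after"] if event["after"] is not None else event["before"]
        buffer.pop(state[key], None)  # streamed event supersedes a buffered READ
        emitted.append(event)         # streamed events always go to Kafka
    # Window closes: emit the READ events that had no competing log event.
    emitted.extend(buffer.values())
    return emitted


snapshot_rows = [{"pk": 1, "value": "a"}, {"pk": 2, "value": "b"}]
streamed = [
    {"op": "u", "before": {"pk": 2, "value": "b"}, "after": {"pk": 2, "value": "B"}},
]
out = process_chunk_window(snapshot_rows, streamed)
for event in out:
    print(event)
```

In this run the update to row 2 is emitted and the buffered READ event for row 2 is discarded, so consumers never see the stale snapshot value after the newer update.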

Triggering an incremental snapshot

Currently, the only way to initiate an incremental snapshot is to send an ad hoc snapshot signal to the signaling table on the source database. You submit signals to the table as SQL INSERT queries. After Debezium detects the change in the signaling table, it reads the signal, and runs the requested snapshot operation.

The query that you submit specifies the tables to include in the snapshot, and, optionally, specifies the kind of snapshot operation. Currently, the only valid option for snapshot operations is the default value, incremental.

To specify the tables to include in the snapshot, provide a data-collections array that lists the tables, for example, {"data-collections": ["public.MyFirstTable", "public.MySecondTable"]}

The data-collections array for an incremental snapshot signal has no default value. If the data-collections array is empty, Debezium detects that no action is required and does not perform a snapshot.

Prerequisites

- A signaling data collection exists on the source database and the connector is configured to capture it.

- The signaling data collection is specified in the signal.data.collection property.

Procedure

Send a SQL query to add the ad hoc incremental snapshot request to the signaling table:

INSERT INTO <signalTable> (id, type, data) VALUES ('<id>', '<snapshotType>', '{"data-collections": ["<tableName>","<tableName>"],"type":"<snapshotType>"}');

For example,

INSERT INTO myschema.debezium_signal (id, type, data) VALUES('ad-hoc-1', 'execute-snapshot', '{"data-collections": ["schema1.table1", "schema2.table2"],"type":"incremental"}');

The values of the id, type, and data parameters in the command correspond to the fields of the signaling table.

The following table describes these parameters:

Table 3.2. Descriptions of fields in a SQL command for sending an incremental snapshot signal to the signaling table

| Value | Description |
|---|---|
| myschema.debezium_signal | Specifies the fully-qualified name of the signaling table on the source database. |
| ad-hoc-1 | The id parameter specifies an arbitrary string that is assigned as the id identifier for the signal request. Use this string to identify logging messages to entries in the signaling table. Debezium does not use this string. Rather, during the snapshot, Debezium generates its own id string as a watermarking signal. |
| execute-snapshot | The type parameter specifies the operation that the signal is intended to trigger. |
| data-collections | A required component of the data field of a signal that specifies an array of table names to include in the snapshot. The array lists tables by their fully-qualified names, using the same format as you use to specify the name of the connector’s signaling table in the signal.data.collection configuration property. |
| incremental | An optional type component of the data field of a signal that specifies the kind of snapshot operation to run. Currently, the only valid option is the default value, incremental. Specifying a type value in the SQL query that you submit to the signaling table is optional. If you do not specify a value, the connector runs an incremental snapshot. |
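If you submit signals from an application rather than by hand, it can help to build the JSON payload programmatically and pass the values as query parameters. The following Python sketch shows one way to do that. It is an illustration under stated assumptions: SQLite stands in for the source database only so that the example is self-contained, the column types of the signaling table are assumed, and the helper name `build_signal` is hypothetical.

```python
import json
import sqlite3


def build_signal(signal_id, tables, snapshot_type="incremental"):
    """Return the (id, type, data) values for an execute-snapshot signal."""
    data = json.dumps({"data-collections": tables, "type": snapshot_type})
    return signal_id, "execute-snapshot", data


# SQLite is a stand-in for the source database, for illustration only.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE debezium_signal (id TEXT PRIMARY KEY, type TEXT, data TEXT)"
)

row = build_signal("ad-hoc-1", ["schema1.table1", "schema2.table2"])
# Parameter binding avoids quoting mistakes in the embedded JSON string.
conn.execute("INSERT INTO debezium_signal (id, type, data) VALUES (?, ?, ?)", row)
conn.commit()

stored = conn.execute("SELECT id, type, data FROM debezium_signal").fetchone()
print(stored)
```

Against a real Db2 source you would run the equivalent INSERT through your usual database client or driver, targeting the table named in the signal.data.collection property.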

The following example shows the JSON for an incremental snapshot event that is captured by a connector.

Example: Incremental snapshot event message

    {
        "before":null,
        "after": {
            "pk":"1",
            "value":"New data"
        },
        "source": {
            ...
            "snapshot":"incremental" (1)
        },
        "op":"r", (2)
        "ts_ms":"1620393591654",
        "transaction":null
    }

| Item | Field name | Description |
|---|---|---|
| 1 | snapshot | Specifies the type of snapshot operation to run. |
| 2 | op | Specifies the event type. |
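A consumer can use these two fields to recognize records that originate from an incremental snapshot. The following Python sketch shows the idea. It is a minimal example, not part of Debezium: the function name `is_incremental_snapshot_read` is hypothetical, and the sample message reproduces only the fields shown in the example event.

```python
import json


def is_incremental_snapshot_read(message):
    """True if a change event is a READ produced by an incremental snapshot."""
    event = json.loads(message)
    source = event.get("source") or {}
    return event.get("op") == "r" and source.get("snapshot") == "incremental"


message = json.dumps({
    "before": None,
    "after": {"pk": "1", "value": "New data"},
    "source": {"snapshot": "incremental"},
    "op": "r",
    "ts_ms": "1620393591654",
    "transaction": None,
})

print(is_incremental_snapshot_read(message))
```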

The Debezium connector for Db2 does not support schema changes while an incremental snapshot is running.

3.2.2. How Debezium Db2 connectors read change-data tables

After a complete snapshot, when a Debezium Db2 connector starts for the first time, the connector identifies the change-data table for each source table that is in capture mode. The connector does the following for each change-data table:

- Reads change events that were created between the last stored, highest LSN and the current, highest LSN.

- Orders the change events according to the commit LSN and the change LSN for each event. This ensures that the connector emits the change events in the order in which the table changes occurred.

- Passes commit and change LSNs as offsets to Kafka Connect.

- Stores the highest LSN that the connector passed to Kafka Connect.

After a restart, the connector resumes emitting change events from the offset (commit and change LSNs) where it left off. While the connector is running and emitting change events, if you remove a table from capture mode or add a table to capture mode, the connector detects the change, and modifies its behavior accordingly.
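The selection and ordering of change events can be sketched in Python as follows. This is a simplified model, not the connector’s code: the `commit_lsn` and `change_lsn` keys, the integer LSN values, and the choice of an exclusive lower bound are assumptions made for the example.

```python
def next_batch(change_rows, last_stored_lsn, current_max_lsn):
    """Select and order change rows in (last_stored_lsn, current_max_lsn].

    Each row is a dict with hypothetical 'commit_lsn' and 'change_lsn' keys.
    """
    pending = [
        r for r in change_rows
        if last_stored_lsn < r["commit_lsn"] <= current_max_lsn
    ]
    # Order by commit LSN first, then by change LSN within a commit.
    return sorted(pending, key=lambda r: (r["commit_lsn"], r["change_lsn"]))


rows = [
    {"commit_lsn": 12, "change_lsn": 2},
    {"commit_lsn": 10, "change_lsn": 1},
    {"commit_lsn": 12, "change_lsn": 1},
    {"commit_lsn": 15, "change_lsn": 1},
]
last_stored_lsn = 10
batch = next_batch(rows, last_stored_lsn, current_max_lsn=12)

# After emitting the batch, remember the highest LSN that was passed on.
new_stored_lsn = batch[-1]["commit_lsn"] if batch else last_stored_lsn
print(batch, new_stored_lsn)
```

Storing the highest emitted LSN is what lets the connector continue from where it left off after a restart.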

3.2.3. Default names of Kafka topics that receive Debezium Db2 change event records

By default, the Db2 connector writes change events for all of the INSERT, UPDATE, and DELETE operations that occur in a table to a single Apache Kafka topic that is specific to that table. The connector uses the following convention to name change event topics:

databaseName.schemaName.tableName

The following list provides definitions for the components of the default name:

- databaseName: The logical name of the connector as specified by the database.server.name connector configuration property.

- schemaName: The name of the schema in which the operation occurred.

- tableName: The name of the table in which the operation occurred.

For example, consider a Db2 installation with the mydatabase database, which contains four tables: PRODUCTS, PRODUCTS_ON_HAND, CUSTOMERS, and ORDERS that are in the MYSCHEMA schema. The connector would emit events to these four Kafka topics:

- mydatabase.MYSCHEMA.PRODUCTS

- mydatabase.MYSCHEMA.PRODUCTS_ON_HAND

- mydatabase.MYSCHEMA.CUSTOMERS

- mydatabase.MYSCHEMA.ORDERS

The connector applies similar naming conventions to label its internal database history topics, schema change topics, and transaction metadata topics.
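The default naming convention for change event topics can be expressed as a one-line function. The Python sketch below only restates the convention described above; the function name `default_topic_name` is hypothetical.

```python
def default_topic_name(server_name, schema_name, table_name):
    """Join the logical server name, schema, and table with dots."""
    return f"{server_name}.{schema_name}.{table_name}"


tables = ["PRODUCTS", "PRODUCTS_ON_HAND", "CUSTOMERS", "ORDERS"]
topics = [default_topic_name("mydatabase", "MYSCHEMA", t) for t in tables]
print(topics)
```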

If the default topic names do not meet your requirements, you can configure custom topic names. To configure custom topic names, you specify regular expressions in the logical topic routing SMT. For more information about using the logical topic routing SMT to customize topic naming, see Topic routing.
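The idea behind regular-expression-based rerouting can be tried out in Python before you configure the SMT. The sketch below is only a model of the concept: the function name `route_topic`, the pattern, and the target topic name are hypothetical. Note also that Python writes group references in the replacement as \1, whereas Java regular expressions, which the SMT runs on, use $1.

```python
import re


def route_topic(topic, pattern, replacement):
    """Return the rerouted topic name, or the original if the pattern does not match."""
    if re.fullmatch(pattern, topic):
        return re.sub(pattern, replacement, topic, count=1)
    return topic


# Hypothetical rule: send all PRODUCTS* tables to one combined topic.
pattern = r"(.*)\.MYSCHEMA\.PRODUCTS.*"
replacement = r"\1.MYSCHEMA.ALL_PRODUCTS"

for topic in ("mydatabase.MYSCHEMA.PRODUCTS",
              "mydatabase.MYSCHEMA.PRODUCTS_ON_HAND",
              "mydatabase.MYSCHEMA.ORDERS"):
    print(topic, "->", route_topic(topic, pattern, replacement))
```

Topics that do not match the pattern keep their default names, which matches the behavior you would typically want when only a few tables need to be rerouted.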

3.2.4. About the Debezium Db2 connector schema change topic

You can configure a Debezium Db2 connector to produce schema change events that describe schema changes that are applied to captured tables in the database.

Debezium emits a message to the schema change topic when:

- A new table goes into capture mode.

- A table is removed from capture mode.

- During a database schema update, there is a change in the schema for a table that is in capture mode.

The connector writes schema change events to a Kafka schema change topic that has the name <serverName> where <serverName> is the logical server name that is specified in the database.server.name connector configuration property. Messages that the connector sends to the schema change topic contain a payload that includes the following elements:

databaseName- The name of the database to which the statements are applied. The value of databaseName serves as the message key.

pos- The position in the binlog where the statements appear.

tableChanges- A structured representation of the entire table schema after the schema change. The tableChanges field contains an array that includes entries for each column of the table. Because the structured representation presents data in JSON or Avro format, consumers can easily read messages without first processing them through a DDL parser.

For a table that is in capture mode, the connector not only stores the history of schema changes in the schema change topic, but also in an internal database history topic. The internal database history topic is for connector use only and it is not intended for direct use by consuming applications. Ensure that applications that require notifications about schema changes consume that information only from the schema change topic.

Never partition the database history topic. For the database history topic to function correctly, it must maintain a consistent, global order of the event records that the connector emits to it.

To ensure that the topic is not split among partitions, set the partition count for the topic by using one of the following methods:

- If you create the database history topic manually, specify a partition count of 1.

- If you use the Apache Kafka broker to create the database history topic automatically, set the value of the Kafka num.partitions configuration option to 1.

The format of messages that a connector emits to its schema change topic is in an incubating state and can change without notice.

Example: Message emitted to the Db2 connector schema change topic

The following example shows a message in the schema change topic. The message contains a logical representation of the table schema.

{

"schema": {

...

},

"payload": {

"source": {

"version": "1.7.2.Final",

"connector": "db2",

"name": "db2",

"ts_ms": 1588252618953,

"snapshot": "true",

"db": "testdb",

"schema": "DB2INST1",

"table": "CUSTOMERS",

"change_lsn": null,

"commit_lsn": "00000025:00000d98:00a2",

"event_serial_no": null

},

"databaseName": "TESTDB", 1

"schemaName": "DB2INST1",

"ddl": null, 2

"tableChanges": [ 3

{

"type": "CREATE", 4

"id": "\"DB2INST1\".\"CUSTOMERS\"", 5

"table": { 6

"defaultCharsetName": null,

"primaryKeyColumnNames": [ 7

"ID"

],

"columns": [ 8

{

"name": "ID",

"jdbcType": 4,

"nativeType": null,

"typeName": "int identity",

"typeExpression": "int identity",

"charsetName": null,

"length": 10,

"scale": 0,

"position": 1,

"optional": false,

"autoIncremented": false,

"generated": false

},

{

"name": "FIRST_NAME",

"jdbcType": 12,

"nativeType": null,

"typeName": "varchar",

"typeExpression": "varchar",

"charsetName": null,

"length": 255,

"scale": null,

"position": 2,

"optional": false,

"autoIncremented": false,

"generated": false

},

{

"name": "LAST_NAME",

"jdbcType": 12,

"nativeType": null,

"typeName": "varchar",

"typeExpression": "varchar",

"charsetName": null,

"length": 255,

"scale": null,

"position": 3,

"optional": false,

"autoIncremented": false,

"generated": false

},

{

"name": "EMAIL",

"jdbcType": 12,

"nativeType": null,

"typeName": "varchar",

"typeExpression": "varchar",

"charsetName": null,

"length": 255,

"scale": null,

"position": 4,

"optional": false,

"autoIncremented": false,

"generated": false

}

]

}

}

]

}

}

| Item | Field name | Description |
|---|---|---|
| 1 | databaseName, schemaName | Identifies the database and the schema that contain the change. |
| 2 | ddl | Always null for the Db2 connector. |
| 3 | tableChanges | An array of one or more items that contain the schema changes generated by a DDL command. |
| 4 | type | Describes the kind of change. The value is one of the following: CREATE (table created), ALTER (table altered), or DROP (table dropped). |
| 5 | id | Full identifier of the table that was created, altered, or dropped. |
| 6 | table | Represents table metadata after the applied change. |
| 7 | primaryKeyColumnNames | List of columns that compose the table’s primary key. |
| 8 | columns | Metadata for each column in the changed table. |
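Because the tableChanges field is structured data, a consumer can inspect it with ordinary dictionary access instead of a DDL parser. The following Python sketch is illustrative only: the summarize_table_changes function is a hypothetical helper, and the payload is an abbreviated copy of the example message above.

```python
def summarize_table_changes(payload: dict) -> list:
    """Return one summary per entry in the payload's tableChanges array."""
    summaries = []
    for change in payload.get("tableChanges", []):
        table = change["table"]
        summaries.append({
            "type": change["type"],
            "id": change["id"],
            "primary_key": table["primaryKeyColumnNames"],
            "columns": [column["name"] for column in table["columns"]],
        })
    return summaries


# Abbreviated payload modeled on the schema change message shown above.
payload = {
    "databaseName": "TESTDB",
    "schemaName": "DB2INST1",
    "ddl": None,
    "tableChanges": [
        {
            "type": "CREATE",
            "id": '"DB2INST1"."CUSTOMERS"',
            "table": {
                "primaryKeyColumnNames": ["ID"],
                "columns": [
                    {"name": "ID", "typeName": "int identity"},
                    {"name": "FIRST_NAME", "typeName": "varchar"},
                    {"name": "LAST_NAME", "typeName": "varchar"},
                    {"name": "EMAIL", "typeName": "varchar"},
                ],
            },
        }
    ],
}

summaries = summarize_table_changes(payload)
for summary in summaries:
    print(summary["type"], summary["id"], summary["columns"])
```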

In messages that the connector sends to the schema change topic, the message key is the name of the database that contains the schema change. In the following example, the payload field contains the key:

{

"schema": {

"type": "struct",

"fields": [

{

"type": "string",

"optional": false,

"field": "databaseName"

}

],

"optional": false,

"name": "io.debezium.connector.db2.SchemaChangeKey"

},

"payload": {

"databaseName": "TESTDB"

}

}

3.2.5. Debezium Db2 connector-generated events that represent transaction boundaries

Debezium can generate events that represent transaction boundaries and that enrich change data event messages.

Debezium registers and receives metadata only for transactions that occur after you deploy the connector. Metadata for transactions that occur before you deploy the connector is not available.

Debezium generates transaction boundary events for the BEGIN and END delimiters in every transaction. Transaction boundary events contain the following fields:

status- BEGIN or END.

id- String representation of the unique transaction identifier.

event_count (for END events)- Total number of events emitted by the transaction.

data_collections (for END events)- An array of pairs of data_collection and event_count elements that indicates the number of events that the connector emits for changes that originate from a data collection.

Example

{

"status": "BEGIN",

"id": "00000025:00000d08:0025",

"event_count": null,

"data_collections": null

}

{

"status": "END",

"id": "00000025:00000d08:0025",

"event_count": 2,

"data_collections": [

{

"data_collection": "testDB.dbo.tablea",

"event_count": 1

},

{

"data_collection": "testDB.dbo.tableb",

"event_count": 1

}

]

}

The connector emits transaction events to the <database.server.name>.transaction topic.
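A consumer might use the END event to confirm that it has accounted for every event in a transaction. The following Python sketch assumes that the per-collection counts in data_collections add up to event_count, as they do in the example above. The function name check_end_event is hypothetical.

```python
def check_end_event(event: dict) -> bool:
    """Return True if the per-collection counts add up to the transaction total."""
    if event["status"] != "END":
        raise ValueError("expected an END transaction boundary event")
    per_collection_total = sum(
        entry["event_count"] for entry in event["data_collections"]
    )
    return per_collection_total == event["event_count"]


# END event copied from the example above.
end_event = {
    "status": "END",
    "id": "00000025:00000d08:0025",
    "event_count": 2,
    "data_collections": [
        {"data_collection": "testDB.dbo.tablea", "event_count": 1},
        {"data_collection": "testDB.dbo.tableb", "event_count": 1},
    ],
}

consistent = check_end_event(end_event)
print(consistent)
```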

Data change event enrichment

When transaction metadata is enabled, the connector enriches the change event Envelope with a new transaction field. This field provides information about every event in the form of a composite of fields:

id- String representation of unique transaction identifier.

total_order- The absolute position of the event among all events generated by the transaction.

data_collection_order- The per-data collection position of the event among all events that were emitted by the transaction.

Following is an example of a message:

{

"before": null,

"after": {

"pk": "2",

"aa": "1"

},

"source": {

...

},

"op": "c",

"ts_ms": "1580390884335",

"transaction": {

"id": "00000025:00000d08:0025",

"total_order": "1",

"data_collection_order": "1"

}

}

3.3. Descriptions of Debezium Db2 connector data change events

The Debezium Db2 connector generates a data change event for each row-level INSERT, UPDATE, and DELETE operation. Each event contains a key and a value. The structure of the key and the value depends on the table that was changed.

Debezium and Kafka Connect are designed around continuous streams of event messages. However, the structure of these events may change over time, which can be difficult for consumers to handle. To address this, each event contains the schema for its content or, if you are using a schema registry, a schema ID that a consumer can use to obtain the schema from the registry. This makes each event self-contained.

The following skeleton JSON shows the basic four parts of a change event. However, how you configure the Kafka Connect converter that you choose to use in your application determines the representation of these four parts in change events. A schema field is in a change event only when you configure the converter to produce it. Likewise, the event key and event payload are in a change event only if you configure a converter to produce it. If you use the JSON converter and you configure it to produce all four basic change event parts, change events have this structure:

{

"schema": { 1

...

},

"payload": { 2

...

},

"schema": { 3

...

},

"payload": { 4

...

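}

}

| Item | Field name | Description |
|---|---|---|
| 1 | schema | The first schema field is part of the event key. It describes the structure of the key’s payload. |
| 2 | payload | The first payload field is part of the event key. It contains the key for the row that was changed. |
| 3 | schema | The second schema field is part of the event value. It describes the structure of the value’s payload. |
| 4 | payload | The second payload field is part of the event value. It contains the actual data for the row that was changed. |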

By default, the connector streams change event records to topics with names that are the same as the event’s originating table. See topic names.

The Debezium Db2 connector ensures that all Kafka Connect schema names adhere to the Avro schema name format. This means that the logical server name must start with a Latin letter or an underscore, that is, a-z, A-Z, or _. Each remaining character in the logical server name and each character in the database and table names must be a Latin letter, a digit, or an underscore, that is, a-z, A-Z, 0-9, or _. If there is an invalid character, it is replaced with an underscore character.

This can lead to unexpected conflicts if the logical server name, a database name, or a table name contains invalid characters, and the only characters that distinguish names from one another are invalid and thus replaced with underscores.
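The following Python sketch illustrates how such a conflict can arise. It is a simplified approximation of the replacement rule described above, not the connector's actual implementation, and the function name sanitize_name_part is hypothetical.

```python
import re


def sanitize_name_part(name: str) -> str:
    """Replace every character that is not a-z, A-Z, 0-9, or _ with an underscore.

    Simplified model of the rule for database and table names; the
    additional first-character rule for the logical server name is omitted.
    """
    return re.sub(r"[^A-Za-z0-9_]", "_", name)


# Two distinct table names that differ only in an invalid character.
first = sanitize_name_part("order-items")
second = sanitize_name_part("order items")

print(first, second, first == second)
```

In this sketch, both names collapse to the same adjusted name, which is the kind of conflict that the preceding paragraph warns about.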

Also, Db2 names for databases, schemas, and tables can be case sensitive. This means that the connector could emit event records for more than one table to the same Kafka topic.

Details are in the following topics:

3.3.1. About keys in Debezium Db2 change events

A change event’s key contains the schema for the changed table’s key and the changed row’s actual key. Both the schema and its corresponding payload contain a field for each column in the changed table’s PRIMARY KEY (or unique constraint) at the time the connector created the event.

Consider the following customers table, which is followed by an example of a change event key for this table.

Example table

CREATE TABLE customers ( ID INTEGER IDENTITY(1001,1) NOT NULL PRIMARY KEY, FIRST_NAME VARCHAR(255) NOT NULL, LAST_NAME VARCHAR(255) NOT NULL, EMAIL VARCHAR(255) NOT NULL UNIQUE );

Example change event key

Every change event that captures a change to the customers table has the same event key schema. For as long as the customers table has the previous definition, every change event that captures a change to the customers table has the following key structure. In JSON, it looks like this:

{

"schema": { 1

"type": "struct",

"fields": [ 2

{

"type": "int32",

"optional": false,

"field": "ID"

}

],

"optional": false, 3

"name": "mydatabase.MYSCHEMA.CUSTOMERS.Key" 4

},

"payload": { 5

"ID": 1004

}

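}

| Item | Field name | Description |
|---|---|---|
| 1 | schema | The schema portion of the key specifies a Kafka Connect schema that describes what is in the key’s payload portion. |
| 2 | fields | Specifies each field that is expected in the payload, including each field’s name, type, and whether it is optional. |
| 3 | optional | Indicates whether the event key must contain a value in its payload field. In this example, a value in the key’s payload is required. |
| 4 | mydatabase.MYSCHEMA.CUSTOMERS.Key | Name of the schema that defines the structure of the key’s payload. This schema describes the structure of the primary key for the table that was changed. Key schema names have the format connector-name.database-name.table-name.Key. In this example, mydatabase is the name of the connector that generated the event, MYSCHEMA is the schema that contains the changed table, and CUSTOMERS is the table that was changed. |
| 5 | payload | Contains the key for the row for which this change event was generated. In this example, the key contains a single ID field whose value is 1004. |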

3.3.2. About values in Debezium Db2 change events

The value in a change event is a bit more complicated than the key. Like the key, the value has a schema section and a payload section. The schema section contains the schema that describes the Envelope structure of the payload section, including its nested fields. Change events for operations that create, update or delete data all have a value payload with an envelope structure.

Consider the same sample table that was used to show an example of a change event key:

Example table

CREATE TABLE customers ( ID INTEGER IDENTITY(1001,1) NOT NULL PRIMARY KEY, FIRST_NAME VARCHAR(255) NOT NULL, LAST_NAME VARCHAR(255) NOT NULL, EMAIL VARCHAR(255) NOT NULL UNIQUE );

The event value portion of every change event for the customers table specifies the same schema. The event value’s payload varies according to the event type:

create events

The following example shows the value portion of a change event that the connector generates for an operation that creates data in the customers table:

{

"schema": { 1

"type": "struct",

"fields": [

{

"type": "struct",

"fields": [

{

"type": "int32",

"optional": false,

"field": "ID"

},

{

"type": "string",

"optional": false,

"field": "FIRST_NAME"

},

{

"type": "string",

"optional": false,

"field": "LAST_NAME"

},

{

"type": "string",

"optional": false,

"field": "EMAIL"

}

],

"optional": true,

"name": "mydatabase.MYSCHEMA.CUSTOMERS.Value", 2

"field": "before"

},

{

"type": "struct",

"fields": [

{

"type": "int32",

"optional": false,

"field": "ID"

},

{

"type": "string",

"optional": false,

"field": "FIRST_NAME"

},

{

"type": "string",

"optional": false,

"field": "LAST_NAME"

},

{

"type": "string",

"optional": false,

"field": "EMAIL"

}

],

"optional": true,

"name": "mydatabase.MYSCHEMA.CUSTOMERS.Value",

"field": "after"

},

{

"type": "struct",

"fields": [

{

"type": "string",

"optional": false,

"field": "version"

},

{

"type": "string",

"optional": false,

"field": "connector"

},

{

"type": "string",

"optional": false,

"field": "name"

},

{

"type": "int64",

"optional": false,

"field": "ts_ms"

},

{

"type": "boolean",

"optional": true,

"default": false,

"field": "snapshot"

},

{

"type": "string",

"optional": false,

"field": "db"

},

{

"type": "string",

"optional": false,

"field": "schema"

},

{

"type": "string",

"optional": false,

"field": "table"

},

{

"type": "string",

"optional": true,

"field": "change_lsn"

},

{

"type": "string",

"optional": true,

"field": "commit_lsn"

}

],

"optional": false,

"name": "io.debezium.connector.db2.Source", 3

"field": "source"

},

{

"type": "string",

"optional": false,

"field": "op"

},

{

"type": "int64",

"optional": true,

"field": "ts_ms"

}

],

"optional": false,

"name": "mydatabase.MYSCHEMA.CUSTOMERS.Envelope" 4

},

"payload": { 5

"before": null, 6

"after": { 7

"ID": 1005,

"FIRST_NAME": "john",

"LAST_NAME": "doe",

"EMAIL": "john.doe@example.org"

},

"source": { 8

"version": "1.7.2.Final",

"connector": "db2",

"name": "myconnector",

"ts_ms": 1559729468470,

"snapshot": false,

"db": "mydatabase",

"schema": "MYSCHEMA",

"table": "CUSTOMERS",

"change_lsn": "00000027:00000758:0003",

"commit_lsn": "00000027:00000758:0005"

},

"op": "c", 9

"ts_ms": 1559729471739 10

}

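}

| Item | Field name | Description |
|---|---|---|
| 1 | schema | The value’s schema, which describes the structure of the value’s payload. A change event’s value schema is the same in every change event that the connector generates for a particular table. |
| 2 | name | In the schema section, each name field specifies the schema for a field in the value’s payload. mydatabase.MYSCHEMA.CUSTOMERS.Value is the schema for the payload’s before and after fields. |
| 3 | name | io.debezium.connector.db2.Source is the schema for the payload’s source field. |
| 4 | name | mydatabase.MYSCHEMA.CUSTOMERS.Envelope is the schema for the overall structure of the payload. |
| 5 | payload | The value’s actual data. This is the information that the change event is providing. |
| 6 | before | An optional field that specifies the state of the row before the event occurred. When the op field is c for create, as it is in this example, the before field is null because the change event is for new content. |
| 7 | after | An optional field that specifies the state of the row after the event occurred. In this example, the after field contains the values of the new row’s ID, FIRST_NAME, LAST_NAME, and EMAIL columns. |
| 8 | source | Mandatory field that describes the source metadata for the event. The source structure in this example includes the Debezium version, the connector type and name, a timestamp, whether the event is part of a snapshot, the names of the database, schema, and table, and the change and commit LSNs. |
| 9 | op | Mandatory string that describes the type of operation that caused the connector to generate the event. In this example, c indicates that the operation created a row. Valid values are c (create), u (update), d (delete), and r (read, which applies only to snapshots). |
| 10 | ts_ms | Optional field that displays the time at which the connector processed the event. The time is based on the system clock in the JVM running the Kafka Connect task. |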

update events

The value of a change event for an update in the sample customers table has the same schema as a create event for that table. Likewise, the update event value’s payload has the same structure. However, the event value payload contains different values in an update event. Here is an example of a change event value in an event that the connector generates for an update in the customers table:

{

"schema": { ... },

"payload": {

"before": { 1

"ID": 1005,

"FIRST_NAME": "john",

"LAST_NAME": "doe",

"EMAIL": "john.doe@example.org"

},

"after": { 2

"ID": 1005,

"FIRST_NAME": "john",

"LAST_NAME": "doe",

"EMAIL": "noreply@example.org"

},

"source": { 3

"version": "1.7.2.Final",

"connector": "db2",

"name": "myconnector",

"ts_ms": 1559729995937,

"snapshot": false,

"db": "mydatabase",

"schema": "MYSCHEMA",

"table": "CUSTOMERS",

"change_lsn": "00000027:00000ac0:0002",

"commit_lsn": "00000027:00000ac0:0007"

},

"op": "u", 4

"ts_ms": 1559729998706 5

}

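}

| Item | Field name | Description |
|---|---|---|
| 1 | before | An optional field that specifies the state of the row before the event occurred. In an update event value, the before field contains a field for each table column and the value that was in that column before the update. In this example, the EMAIL value is john.doe@example.org. |
| 2 | after | An optional field that specifies the state of the row after the event occurred. You can compare the before and after structures to determine what the update to this row was. In this example, the EMAIL value is now noreply@example.org. |
| 3 | source | Mandatory field that describes the source metadata for the event. The source field structure contains the same fields as in a create event, but some values, such as the timestamp and the LSNs, are different. |
| 4 | op | Mandatory string that describes the type of operation. In an update event value, the op field value is u, which signifies that this row changed because of an update. |
| 5 | ts_ms | Optional field that displays the time at which the connector processed the event. The time is based on the system clock in the JVM running the Kafka Connect task. |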
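A consumer can compare the before and after structures of an update event to find out which columns changed. The following Python sketch shows one way to do this. The changed_columns function is a hypothetical helper, and the payload is abbreviated from the update example above.

```python
def changed_columns(payload: dict) -> dict:
    """Map each changed column to its (old, new) pair of values."""
    before = payload.get("before") or {}
    after = payload.get("after") or {}
    return {
        column: (before.get(column), after.get(column))
        for column in sorted(before.keys() | after.keys())
        if before.get(column) != after.get(column)
    }


# Abbreviated payload of the update event shown above.
update_payload = {
    "before": {
        "ID": 1005,
        "FIRST_NAME": "john",
        "LAST_NAME": "doe",
        "EMAIL": "john.doe@example.org",
    },
    "after": {
        "ID": 1005,
        "FIRST_NAME": "john",
        "LAST_NAME": "doe",
        "EMAIL": "noreply@example.org",
    },
    "op": "u",
}

diff = changed_columns(update_payload)
print(diff)
```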

Updating the columns for a row’s primary/unique key changes the value of the row’s key. When a key changes, Debezium outputs three events: a DELETE event and a tombstone event with the old key for the row, followed by an event with the new key for the row.

delete events

The value in a delete change event has the same schema portion as create and update events for the same table. The event value payload in a delete event for the sample customers table looks like this:

{

"schema": { ... },

"payload": {

"before": { 1

"ID": 1005,

"FIRST_NAME": "john",

"LAST_NAME": "doe",

"EMAIL": "noreply@example.org"

},

"after": null, 2

"source": { 3

"version": "1.7.2.Final",

"connector": "db2",

"name": "myconnector",

"ts_ms": 1559730445243,

"snapshot": false,

"db": "mydatabase",

"schema": "MYSCHEMA",

"table": "CUSTOMERS",

"change_lsn": "00000027:00000db0:0005",

"commit_lsn": "00000027:00000db0:0007"

},

"op": "d", 4

"ts_ms": 1559730450205 5

}

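}

| Item | Field name | Description |
|---|---|---|
| 1 | before | Optional field that specifies the state of the row before the event occurred. In a delete event value, the before field contains the values that were in the row before it was deleted. |
| 2 | after | Optional field that specifies the state of the row after the event occurred. In a delete event value, the after field is null, which signifies that the row no longer exists. |
| 3 | source | Mandatory field that describes the source metadata for the event. In a delete event value, the source field structure is the same as for create and update events for the same table, but some values, such as the timestamp and the LSNs, are different. |
| 4 | op | Mandatory string that describes the type of operation. The op field value is d, which signifies that this row was deleted. |
| 5 | ts_ms | Optional field that displays the time at which the connector processed the event. The time is based on the system clock in the JVM running the Kafka Connect task. |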

A delete change event record provides a consumer with the information it needs to process the removal of this row. The old values are included because some consumers might require them in order to properly handle the removal.

Db2 connector events are designed to work with Kafka log compaction. Log compaction enables removal of some older messages as long as at least the most recent message for every key is kept. This lets Kafka reclaim storage space while ensuring that the topic contains a complete data set and can be used for reloading key-based state.

When a row is deleted, the delete event value still works with log compaction, because Kafka can remove all earlier messages that have that same key. However, for Kafka to remove all messages that have that same key, the message value must be null. To make this possible, after Debezium’s Db2 connector emits a delete event, the connector emits a special tombstone event that has the same key but a null value.
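The effect of the tombstone can be illustrated with a simplified model of log compaction. The following Python sketch is an approximation under stated assumptions: it keeps only the latest value for each key and then drops keys whose latest value is null. A real Kafka broker compacts asynchronously and retains tombstones for a configurable period before it removes them. The compact function is hypothetical.

```python
def compact(log: list) -> dict:
    """Simplified compaction: latest value per key, minus tombstoned keys."""
    latest = {}
    for key, value in log:
        latest[key] = value  # later messages replace earlier ones
    return {key: value for key, value in latest.items() if value is not None}


# Keys and abbreviated values for two rows of the sample customers table.
log_with_tombstone = [
    (1004, {"op": "c"}),
    (1005, {"op": "c"}),
    (1005, {"op": "u"}),
    (1005, {"op": "d"}),
    (1005, None),  # tombstone: same key, null value
]

# The same log without the final tombstone event.
log_without_tombstone = log_with_tombstone[:-1]

print(compact(log_with_tombstone))
print(compact(log_without_tombstone))
```

In this model, the delete event alone leaves one message for key 1005 in the topic, whereas the tombstone allows every message for that key to be removed.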

3.4. How Debezium Db2 connectors map data types

Db2’s data types are described in Db2 SQL Data Types.

The Db2 connector represents changes to rows with events that are structured like the table in which the row exists. The event contains a field for each column value. How that value is represented in the event depends on the Db2 data type of the column. This section describes these mappings.

Details are in the following sections:

Basic types

The following table describes how the connector maps each of the Db2 data types to a literal type and a semantic type in event fields.

- literal type describes how the value is represented using Kafka Connect schema types: INT8, INT16, INT32, INT64, FLOAT32, FLOAT64, BOOLEAN, STRING, BYTES, ARRAY, MAP, and STRUCT.

- semantic type describes how the Kafka Connect schema captures the meaning of the field using the name of the Kafka Connect schema for the field.

| Db2 data type | Literal type (schema type) | Semantic type (schema name) and Notes |

|---|---|---|

|

|

| Only snapshots can be taken from tables with BOOLEAN type columns. Currently SQL Replication on Db2 does not support BOOLEAN, so Debezium cannot perform CDC on those tables. Consider using a different type. |

|

|

| n/a |

|

|

| n/a |

|

|

| n/a |

|

|

| n/a |

|

|

| n/a |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| n/a |

|

|

| n/a |

|

|

| n/a |

|

|

| n/a |

|

|

| n/a |

|

|

|

|

|

|

|

|

|

|

| n/a |

|

|

| n/a |

|

|

| n/a |

|

|

|

|

If present, a column’s default value is propagated to the corresponding field’s Kafka Connect schema. Change events contain the field’s default value unless an explicit column value had been given. Consequently, there is rarely a need to obtain the default value from the schema.

Temporal types

Other than Db2’s DATETIMEOFFSET data type, which contains time zone information, how temporal types are mapped depends on the value of the time.precision.mode connector configuration property. The following sections describe these mappings:

time.precision.mode=adaptive

When the time.precision.mode configuration property is set to adaptive, the default, the connector determines the literal type and semantic type based on the column’s data type definition. This ensures that events exactly represent the values in the database.

| Db2 data type | Literal type (schema type) | Semantic type (schema name) and Notes |

|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

time.precision.mode=connect

When the time.precision.mode configuration property is set to connect, the connector uses Kafka Connect logical types. This may be useful when consumers can handle only the built-in Kafka Connect logical types and are unable to handle variable-precision time values. However, because Db2 supports tenth of a microsecond precision, events that a connector generates with the connect time precision mode lose precision when the database column has a fractional second precision value that is greater than 3.

| Db2 data type | Literal type (schema type) | Semantic type (schema name) and Notes |

|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

Timestamp types

The DATETIME, SMALLDATETIME and DATETIME2 types represent a timestamp without time zone information. Such columns are converted into an equivalent Kafka Connect value based on UTC. For example, the DATETIME2 value "2018-06-20 15:13:16.945104" is represented by an io.debezium.time.MicroTimestamp with the value "1529507596945104".

The timezone of the JVM running Kafka Connect and Debezium does not affect this conversion.
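The arithmetic behind this example can be checked with a short Python sketch. It interprets the timestamp text as UTC and counts whole microseconds since the epoch. The function names are hypothetical, and the code does not use any Debezium API.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def to_micro_timestamp(text: str) -> int:
    """Convert a zone-less timestamp string to microseconds since the epoch, in UTC."""
    parsed = datetime.strptime(text, "%Y-%m-%d %H:%M:%S.%f")
    parsed = parsed.replace(tzinfo=timezone.utc)
    # Integer division of timedeltas avoids floating-point rounding.
    return (parsed - EPOCH) // timedelta(microseconds=1)


def from_micro_timestamp(micros: int) -> datetime:
    """Convert microseconds since the epoch back to a UTC datetime."""
    return EPOCH + timedelta(microseconds=micros)


micros = to_micro_timestamp("2018-06-20 15:13:16.945104")
print(micros)
print(from_micro_timestamp(micros).isoformat())
```

Because the conversion attaches UTC explicitly, the result does not depend on the local time zone of the machine that runs the code.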

| Db2 data type | Literal type (schema type) | Semantic type (schema name) and Notes |

|---|---|---|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

3.5. Setting up Db2 to run a Debezium connector

For Debezium to capture change events that are committed to Db2 tables, a Db2 database administrator with the necessary privileges must configure tables in the database for change data capture. After you begin to run Debezium you can adjust the configuration of the capture agent to optimize performance.

For details about setting up Db2 for use with the Debezium connector, see the following sections:

3.5.1. Configuring Db2 tables for change data capture

To put tables into capture mode, Debezium provides a set of user-defined functions (UDFs) for your convenience. The procedure here shows how to install and run these management UDFs. Alternatively, you can run Db2 control commands to put tables into capture mode. The administrator must then enable CDC for each table that you want Debezium to capture.

Prerequisites

- You are logged in to Db2 as the db2inst1 user.

- On the Db2 host, the Debezium management UDFs are available in the $HOME/asncdctools/src directory. UDFs are available from the Debezium examples repository.

Procedure

Compile the Debezium management UDFs on the Db2 server host by using the bldrtn command provided with Db2:

cd $HOME/asncdctools/src

./bldrtn asncdc

Start the database if it is not already running. Replace DB_NAME with the name of the database that you want Debezium to connect to.

db2 start db DB_NAME

Ensure that JDBC can read the Db2 metadata catalog:

cd $HOME/sqllib/bnd

db2 bind db2schema.bnd blocking all grant public sqlerror continue

Ensure that the database was recently backed up. The ASN agents must have a recent starting point to read from. If you need to perform a backup, run the following commands, which prune the data so that only the most recent version is available. If you do not need to retain the older versions of the data, specify /dev/null for the backup location.

Back up the database. Replace DB_NAME and BACK_UP_LOCATION with appropriate values:

db2 backup db DB_NAME to BACK_UP_LOCATION

Restart the database:

db2 restart db DB_NAME

Connect to the database to install the Debezium management UDFs. It is assumed that you are logged in as the db2inst1 user, so the UDFs should be installed for the db2inst1 user.

db2 connect to DB_NAME

Copy the Debezium management UDFs and set permissions for them:

cp $HOME/asncdctools/src/asncdc $HOME/sqllib/function

chmod 777 $HOME/sqllib/function

Enable the Debezium UDF that starts and stops the ASN capture agent:

db2 -tvmf $HOME/asncdctools/src/asncdc_UDF.sql

Create the ASN control tables:

db2 -tvmf $HOME/asncdctools/src/asncdctables.sql

Enable the Debezium UDF that adds tables to capture mode and removes tables from capture mode:

db2 -tvmf $HOME/asncdctools/src/asncdcaddremove.sql

After you set up the Db2 server, use the UDFs to control Db2 replication (ASN) with SQL commands. Some of the UDFs expect a return value, in which case you use the SQL VALUES statement to invoke them. For other UDFs, use the SQL CALL statement.

Start the ASN agent:

VALUES ASNCDC.ASNCDCSERVICES('start','asncdc');

The preceding statement returns one of the following results:

- asncap is already running

- start --><COMMAND>

In this case, enter the specified <COMMAND> in the terminal window as shown in the following example:

/database/config/db2inst1/sqllib/bin/asncap capture_schema=asncdc capture_server=SAMPLE &

Put tables into capture mode. Invoke the following statement for each table that you want to put into capture mode. Replace MYSCHEMA with the name of the schema that contains the table that you want to put into capture mode. Likewise, replace MYTABLE with the name of the table to put into capture mode:

CALL ASNCDC.ADDTABLE('MYSCHEMA', 'MYTABLE');

Reinitialize the ASN service:

VALUES ASNCDC.ASNCDCSERVICES('reinit','asncdc');
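If you need to put many tables into capture mode, you might generate the statements from a list. The following Python sketch only builds the SQL text shown above and does not connect to Db2. The function name and the table list are hypothetical, and the sketch assumes that schema and table names contain no single quotes.

```python
def capture_mode_statements(tables: list) -> list:
    """Build one ADDTABLE call per (schema, table) pair, then a reinit call."""
    statements = [
        f"CALL ASNCDC.ADDTABLE('{schema}', '{table}');"
        for schema, table in tables
    ]
    # Reinitialize the ASN service once, after all tables are added.
    statements.append("VALUES ASNCDC.ASNCDCSERVICES('reinit','asncdc');")
    return statements


# Hypothetical list of tables to capture.
tables_to_capture = [("MYSCHEMA", "CUSTOMERS"), ("MYSCHEMA", "ORDERS")]

statements = capture_mode_statements(tables_to_capture)
for statement in statements:
    print(statement)
```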

Additional resources

3.5.2. Effect of Db2 capture agent configuration on server load and latency

When a database administrator enables change data capture for a source table, the capture agent begins to run. The agent reads new change event records from the transaction log and replicates the event records to a capture table. Between the time that a change is committed in the source table, and the time that the change appears in the corresponding change table, there is always a small latency interval. This latency interval represents a gap between when changes occur in the source table and when they become available for Debezium to stream to Apache Kafka.

Ideally, for applications that must respond quickly to changes in data, you want to maintain close synchronization between the source and capture tables. You might imagine that running the capture agent to continuously process change events as rapidly as possible would increase throughput and reduce latency by populating change tables with new event records as soon as possible after the events occur, in near real time. However, this is not necessarily the case. There is a performance penalty to pay in the pursuit of more immediate synchronization. Each time that the capture agent queries the database for new event records, it increases the CPU load on the database host. The additional load on the server can have a negative effect on overall database performance, and potentially reduce transaction efficiency, especially during times of peak database use.

It’s important to monitor database metrics so that you know if the database reaches the point where the server can no longer support the capture agent’s level of activity. If you experience performance issues while running the capture agent, adjust capture agent settings to reduce CPU load.

3.5.3. Db2 capture agent configuration parameters

On Db2, the IBMSNAP_CAPPARMS table contains parameters that control the behavior of the capture agent. You can adjust the values for these parameters to balance the configuration of the capture process to reduce CPU load and still maintain acceptable levels of latency.

Specific guidance about how to configure Db2 capture agent parameters is beyond the scope of this documentation.

In the IBMSNAP_CAPPARMS table, the following parameters have the greatest effect on reducing CPU load:

COMMIT_INTERVAL

- Specifies the number of seconds that the capture agent waits to commit data to the change data tables.

- A higher value reduces the load on the database host and increases latency.

- The default value is 30.

SLEEP_INTERVAL

- Specifies the number of seconds that the capture agent waits to start a new commit cycle after it reaches the end of the active transaction log.

- A higher value reduces the load on the server and increases latency.

- The default value is 5.

Additional resources

- For more information about capture agent parameters, see the Db2 documentation.

3.6. Deployment of Debezium Db2 connectors

You can use either of the following methods to deploy a Debezium Db2 connector:

The Debezium Db2 connector requires the Db2 JDBC driver to connect to Db2 databases. For information about how to obtain the driver, see Obtaining the Db2 JDBC driver.

Additional resources

3.6.1. Obtaining the Db2 JDBC driver

Due to licensing requirements, the Db2 JDBC driver file is not included in the Debezium Db2 connector archive. Regardless of the deployment method that you use, you must download the driver file to complete the deployment.

The following steps describe how to obtain the driver and use it in your environment.

Procedure

From a browser, navigate to the IBM Support site and download the JDBC driver that matches your version of Db2.

- If you use a Dockerfile to build the connector, copy the downloaded file to the directory that contains the Debezium Db2 connector files, for example, the <kafka_home>/libs directory.

- If you use AMQ Streams to add the connector to your Kafka Connect image:

  - Deploy the driver to a Maven repository or to another HTTP server that is available to your OpenShift cluster.

  - Add the artifact URL to the KafkaConnect custom resource.

After you apply the KafkaConnector resource to deploy the connector, the connector is configured to use the specified driver.

3.6.2. Db2 connector deployment using AMQ Streams

Beginning with Debezium 1.7, the preferred method for deploying a Debezium connector is to use AMQ Streams to build a Kafka Connect container image that includes the connector plug-in.

During the deployment process, you create and use the following custom resources (CRs):

- A KafkaConnect CR that defines your Kafka Connect instance and includes information about the connector artifacts that need to be included in the image.

- A KafkaConnector CR that provides details that include information the connector uses to access the source database. After AMQ Streams starts the Kafka Connect pod, you start the connector by applying the KafkaConnector CR.

In the build specification for the Kafka Connect image, you can specify the connectors that are available to deploy. For each connector plug-in, you can also specify other components that you want to make available for deployment. For example, you can add Service Registry artifacts, or the Debezium scripting component. When AMQ Streams builds the Kafka Connect image, it downloads the specified artifacts, and incorporates them into the image.

The spec.build.output parameter in the KafkaConnect CR specifies where to store the resulting Kafka Connect container image. Container images can be stored in a Docker registry, or in an OpenShift ImageStream. To store images in an ImageStream, you must create the ImageStream before you deploy Kafka Connect. ImageStreams are not created automatically.
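
Because ImageStreams are not created automatically, you might create one with a resource such as the following sketch before you deploy Kafka Connect. The name is an assumption: it is chosen to match the debezium-streams-connect:latest image name that this chapter uses in its KafkaConnect example, and the resource is assumed to be created in the same namespace as Kafka Connect.

```yaml
# Minimal ImageStream definition (assumed name; adjust it to match
# the image name in spec.build.output of your KafkaConnect CR).
apiVersion: image.openshift.io/v1
kind: ImageStream
metadata:
  name: debezium-streams-connect
```

You can save the definition to a file and apply it with oc create -f <file_name> before you apply the KafkaConnect CR.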

If you use a KafkaConnect resource to create a cluster, afterwards you cannot use the Kafka Connect REST API to create or update connectors. You can still use the REST API to retrieve information.

Additional resources

- Configuring Kafka Connect in Using AMQ Streams on OpenShift.

- Creating a new container image automatically using AMQ Streams in Deploying and Upgrading AMQ Streams on OpenShift.

3.6.3. Using AMQ Streams to deploy a Debezium Db2 connector

With earlier versions of AMQ Streams, to deploy Debezium connectors on OpenShift, it was necessary to first build a Kafka Connect image for the connector. The current preferred method for deploying connectors on OpenShift is to use a build configuration in AMQ Streams to automatically build a Kafka Connect container image that includes the Debezium connector plug-ins that you want to use.

During the build process, the AMQ Streams Operator transforms input parameters in a KafkaConnect custom resource, including Debezium connector definitions, into a Kafka Connect container image. The build downloads the necessary artifacts from the Red Hat Maven repository or another configured HTTP server. The newly created container is pushed to the container registry that is specified in .spec.build.output, and is used to deploy a Kafka Connect pod. After AMQ Streams builds the Kafka Connect image, you create KafkaConnector custom resources to start the connectors that are included in the build.

Prerequisites

- You have access to an OpenShift cluster on which the cluster Operator is installed.

- The AMQ Streams Operator is running.

- An Apache Kafka cluster is deployed as documented in Deploying and Upgrading AMQ Streams on OpenShift.

- You have a Red Hat Integration license.

- Kafka Connect is deployed on AMQ Streams.

- The OpenShift oc CLI client is installed or you have access to the OpenShift Container Platform web console.

- Depending on how you intend to store the Kafka Connect build image, you need registry permissions or you must create an ImageStream resource:

  - To store the build image in an image registry, such as Red Hat Quay.io or Docker Hub

    - An account and permissions to create and manage images in the registry.

  - To store the build image as a native OpenShift ImageStream

    - An ImageStream resource is deployed to the cluster. You must explicitly create an ImageStream for the cluster. ImageStreams are not available by default.
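
If you store the build image in an external registry rather than in an ImageStream, the output section of the KafkaConnect build specification might look like the following sketch. The registry path and the Secret name are hypothetical placeholders. The pushSecret field is assumed to name an existing OpenShift Secret that holds credentials for the registry.

```yaml
# Fragment of a KafkaConnect custom resource (spec.build.output only).
# Registry organization and Secret name are hypothetical placeholders.
spec:
  build:
    output:
      type: docker
      image: quay.io/<my_organization>/debezium-streams-connect:latest
      pushSecret: <my_registry_credentials_secret>
```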

Procedure

- Log in to the OpenShift cluster.

- Create a Debezium KafkaConnect custom resource (CR) for the connector, or modify an existing one. For example, create a KafkaConnect CR that specifies the metadata.annotations and spec.build properties, as shown in the following example. Save the file with a name such as dbz-connect.yaml.

Example 3.1. A dbz-connect.yaml file that defines a KafkaConnect custom resource that includes a Debezium connector

    apiVersion: kafka.strimzi.io/v1beta2
    kind: KafkaConnect
    metadata:
      name: debezium-kafka-connect-cluster
      annotations:
        strimzi.io/use-connector-resources: "true" # 1
    spec:
      version: 3.00
      build: # 2
        output: # 3
          type: imagestream # 4
          image: debezium-streams-connect:latest
        plugins: # 5
          - name: debezium-connector-db2
            artifacts:
              - type: zip # 6
                url: https://maven.repository.redhat.com/ga/io/debezium/debezium-connector-db2/1.7.2.Final-redhat-<build_number>/debezium-connector-db2-1.7.2.Final-redhat-<build_number>-plugin.zip # 7
              - type: zip
                url: https://maven.repository.redhat.com/ga/io/apicurio/apicurio-registry-distro-connect-converter/2.0-redhat-<build-number>/apicurio-registry-distro-connect-converter-2.0-redhat-<build-number>.zip
              - type: zip
                url: https://maven.repository.redhat.com/ga/io/debezium/debezium-scripting/1.7.2.Final/debezium-scripting-1.7.2.Final.zip
      bootstrapServers: debezium-kafka-cluster-kafka-bootstrap:9093

Table 3.13. Descriptions of Kafka Connect configuration settings

Item Description

1

Sets the strimzi.io/use-connector-resources annotation to "true" to enable the Cluster Operator to use KafkaConnector resources to configure connectors in this Kafka Connect cluster.

2

The spec.build configuration specifies where to store the build image and lists the plug-ins to include in the image, along with the location of the plug-in artifacts.

3

The build.output specifies the registry in which the newly built image is stored.

4

Specifies the name and image name for the image output. Valid values for output.type are docker to push into a container registry like Docker Hub or Quay, or imagestream to push the image to an internal OpenShift ImageStream. To use an ImageStream, an ImageStream resource must be deployed to the cluster. For more information about specifying the build.output in the KafkaConnect configuration, see the AMQ Streams Build schema reference documentation.

5

The plugins configuration lists all of the connectors that you want to include in the Kafka Connect image. For each entry in the list, specify a plug-in name, and information about the artifacts that are required to build the connector. Optionally, for each connector plug-in, you can include other components that you want to be available for use with the connector. For example, you can add Service Registry artifacts, or the Debezium scripting component.

6

The value of artifacts.type specifies the file type of the artifact specified in the artifacts.url. Valid types are zip, tgz, or jar. Debezium connector archives are provided in .zip file format. JDBC driver files are in .jar format. The type value must match the type of the file that is referenced in the url field.

7

The value of artifacts.url specifies the address of an HTTP server, such as a Maven repository, that stores the file for the connector artifact. The OpenShift cluster must have access to the specified server.

- Apply the KafkaConnect build specification to the OpenShift cluster by entering the following command:

    oc create -f dbz-connect.yaml

  Based on the configuration specified in the custom resource, the Streams Operator prepares a Kafka Connect image to deploy.

  After the build completes, the Operator pushes the image to the specified registry or ImageStream, and starts the Kafka Connect cluster. The connector artifacts that you listed in the configuration are available in the cluster.

- Create a KafkaConnector resource to define an instance of each connector that you want to deploy.

  For example, create the following KafkaConnector CR, and save it as db2-inventory-connector.yaml.

Example 3.2. A db2-inventory-connector.yaml file that defines the KafkaConnector custom resource for a Debezium connector

    apiVersion: kafka.strimzi.io/v1beta2
    kind: KafkaConnector
    metadata:
      labels:
        strimzi.io/cluster: debezium-kafka-connect-cluster
      name: inventory-connector-db2 # 1
    spec:
      class: io.debezium.connector.db2.Db2Connector # 2
      tasksMax: 1 # 3
      config: # 4
        database.history.kafka.bootstrap.servers: 'debezium-kafka-cluster-kafka-bootstrap.debezium.svc.cluster.local:9092'
        database.history.kafka.topic: schema-changes.inventory
        database.hostname: db2.debezium-db2.svc.cluster.local # 5
        database.port: 3306 # 6
        database.user: debezium # 7
        database.password: dbz # 8
        database.dbname: mydatabase # 9
        database.server.name: inventory_connector_db2 # 10
        database.include.list: public.inventory # 11

Table 3.14. Descriptions of connector configuration settings

Item Description

1

The name of the connector to register with the Kafka Connect cluster.

2

The name of the connector class.

3

The number of tasks that can operate concurrently.

4

The connector’s configuration.

5

The address of the host database instance.

6

The port number of the database instance.

7

The name of the user account through which Debezium connects to the database.

8

The password for the database user account.

9

The name of the database to capture changes from.

10

The logical name of the database instance or cluster.

The specified name must be formed only from alphanumeric characters or underscores.

Because the logical name is used as the prefix for any Kafka topics that receive change events from this connector, the name must be unique among the connectors in the cluster.

The namespace is also used in the names of related Kafka Connect schemas, and the namespaces of a corresponding Avro schema if you integrate the connector with the Avro converter.

11

The list of tables from which the connector captures change events.

Create the connector resource by running the following command:

oc create -n <namespace> -f <kafkaConnector>.yaml

For example,

oc create -n debezium -f db2-inventory-connector.yaml

The connector is registered to the Kafka Connect cluster and starts to run against the database that is specified by