Development Guide

For Use with Red Hat JBoss Enterprise Application Platform 7.0

Abstract

Chapter 1. Get Started Developing Applications

1.1. Introduction

1.1.1. About Red Hat JBoss Enterprise Application Platform 7

Red Hat JBoss Enterprise Application Platform 7 (JBoss EAP) is a middleware platform built on open standards and compliant with the Java Enterprise Edition 7 specification. It integrates WildFly Application Server 10 with messaging, high-availability clustering, and other technologies.

JBoss EAP includes a modular structure that allows service enabling only when required, improving startup speed.

The management console and management command-line interface (CLI) make editing XML configuration files unnecessary and add the ability to script and automate tasks.

JBoss EAP provides two operating modes for JBoss EAP instances: standalone server or managed domain. The standalone server operating mode represents running JBoss EAP as a single server instance. The managed domain operating mode allows for the management of multiple JBoss EAP instances from a single control point.

In addition, JBoss EAP includes APIs and development frameworks for quickly developing secure and scalable Java EE applications.

1.2. Become Familiar with Java Enterprise Edition 7

1.2.1. Overview of EE 7 Profiles

Java Enterprise Edition 7 (EE 7) includes support for multiple profiles, or subsets of APIs. The only two profiles that the EE 7 specification defines are the Full Profile and the Web Profile.

EE 7 Full Profile includes all APIs and specifications included in the EE 7 specification. EE 7 Web Profile includes a selected subset of APIs, which are designed to be useful to web developers.

JBoss EAP is a certified implementation of the Java Enterprise Edition 7 Full Profile and Web Profile specifications.

Java Enterprise Edition 7 Web Profile

The Web Profile is one of two profiles defined by the Java Enterprise Edition 7 specification, and is designed for web application development. The Web Profile supports the following APIs:

Java EE 7 Web Profile Requirements:

- Java Platform, Enterprise Edition 7

Java Web Technologies:

- Servlet 3.1 (JSR 340)

- JSP 2.3

- Expression Language (EL) 3.0

- JavaServer Faces (JSF) 2.2 (JSR 344)

Java Standard Tag Library (JSTL) for JSP 1.2

NoteA known security risk in JBoss EAP exists where the Java Standard Tag Library (JSTL) allows the processing of external entity references in untrusted XML documents which could access resources on the host system and, potentially, allow arbitrary code execution.

To avoid this, the JBoss EAP server has to be run with system property

org.apache.taglibs.standard.xml.accessExternalEntitycorrectly set, usually with an empty string as value. This can be done in two ways:Configuring the system properties and restarting the server.

org.apache.taglibs.standard.xml.accessExternalEntity

org.apache.taglibs.standard.xml.accessExternalEntityCopy to Clipboard Copied! Toggle word wrap Toggle overflow -

Passing

-Dorg.apache.taglibs.standard.xml.accessExternalEntity=""as an argument to thestandalone.shordomain.shscripts.

- Debugging Support for Other Languages 1.0 (JSR 45)

Enterprise Application Technologies:

- Contexts and Dependency Injection (CDI) 1.1 (JSR 346)

- Dependency Injection for Java 1.0 (JSR 330)

- Enterprise JavaBeans 3.2 Lite (JSR 345)

- Java Persistence API 2.1 (JSR 338)

- Common Annotations for the Java Platform 1.1 (JSR 250)

- Java Transaction API (JTA) 1.2 (JSR 907)

- Bean Validation 1.1 (JSR 349)

The other profile defined by the Java EE 7 specification is the Full Profile, and includes several more APIs.

Java Enterprise Edition 7 Full Profile

The Java Enterprise Edition 7 (EE 7) specification defines a concept of profiles, and defines two of them as part of the specification. The Full Profile supports the following APIs, as well as those supported in the Java Enterprise Edition 7 Web Profile:

Included in the EE 7 Full Profile:

- Batch 1.0

- JSON-P 1.0

- Concurrency 1.0

- WebSocket 1.1

- JMS 2.0

- JPA 2.1

- JCA 1.7

- JAX-RS 2.0

- JAX-WS 2.2

- Servlet 3.1

- JSF 2.2

- JSP 2.3

- EL 3.0

- CDI 1.1

- CDI Extensions

- JTA 1.2

- Interceptors 1.2

- Common Annotations 1.1

- Managed Beans 1.0

- EJB 3.2

- Bean Validation 1.1

1.3. Setting Up the Development Environment

1.3.1. Download JBoss Developer Studio

JBoss Developer Studio can be downloaded from the Red Hat Customer Portal.

- Log in to the Red Hat Customer Portal.

- Click Downloads.

- In the Product Downloads list, click Red Hat JBoss Developer Studio.

Select the desired version in the Version drop-down menu.

NoteIt is recommended to use JBoss Developer Studio version 9.1 or later.

- Find the Red Hat JBoss Developer Studio 9.x.x Stand-alone Installer entry in the table and click Download.

- Save the JAR file to the desired directory.

1.3.2. Install JBoss Developer Studio

- Open a terminal and navigate to the directory containing the downloaded JAR file.

Run the following command to launch the GUI installation program:

java -jar jboss-devstudio-BUILD_VERSION-installer-standalone.jar

$ java -jar jboss-devstudio-BUILD_VERSION-installer-standalone.jarCopy to Clipboard Copied! Toggle word wrap Toggle overflow NoteAlternatively, you may be able to double-click the JAR file to launch the installation program.

- Click Next to start the installation process.

- Select I accept the terms of this license agreement and click Next.

Adjust the installation path and click Next.

NoteIf the installation path folder does not exist, a prompt will appear. Click OK to create the folder.

- Choose a JVM, or leave the default JVM selected, and click Next.

- Click Next when asked to select platforms and servers.

- Review the installation details, and click Next.

- Click Next when the installation process is complete.

- Configure the desktop shortcuts for JBoss Developer Studio, and click Next.

- Click Done.

1.3.3. Start JBoss Developer Studio

To start JBoss Developer Studio, you can double-click on the desktop shortcut created during the installation, or you can start it from a command line. Follow the below steps to start JBoss Developer Studio using the command line.

- Open a terminal and navigate to the JBoss Developer Studio installation directory.

Run the following command to start JBoss Developer Studio:

./jbdevstudio

$ ./jbdevstudioCopy to Clipboard Copied! Toggle word wrap Toggle overflow NoteFor Windows Server, use the

jbdevstudio.batfile.

1.3.4. Add the JBoss EAP Server to JBoss Developer Studio

These instructions assume that you have not yet added any JBoss EAP servers to JBoss Developer Studio. Use the following steps to add your JBoss EAP server using the Define New Server wizard.

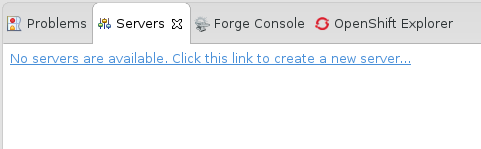

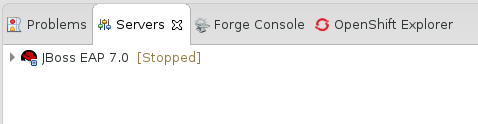

Open the Servers tab.

NoteIf the Servers tab is not shown, add it to the panel by selecting Window → Show View → Servers.

Click on the No servers are available. Click this link to create a new server link.

Figure 1.1. Add a New Server

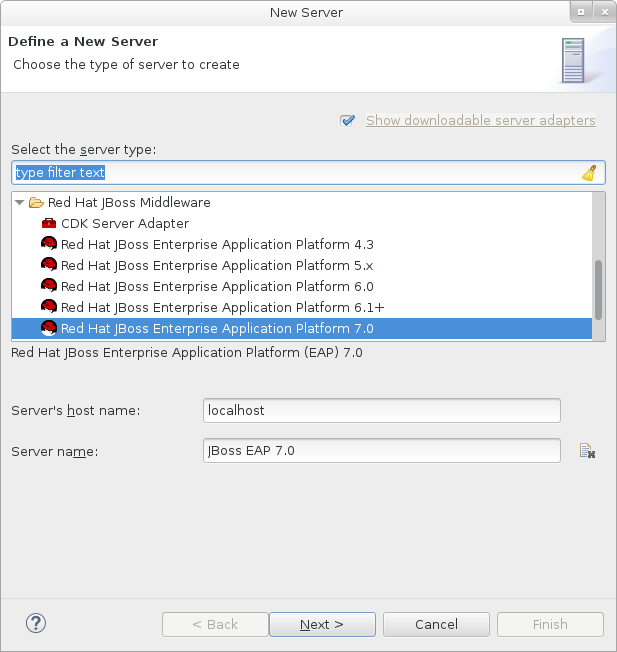

Expand Red Hat JBoss Middleware and choose JBoss Enterprise Application Platform 7.0. Enter a server name, for example,

JBoss EAP 7.0, then click Next.Figure 1.2. Define a New Server

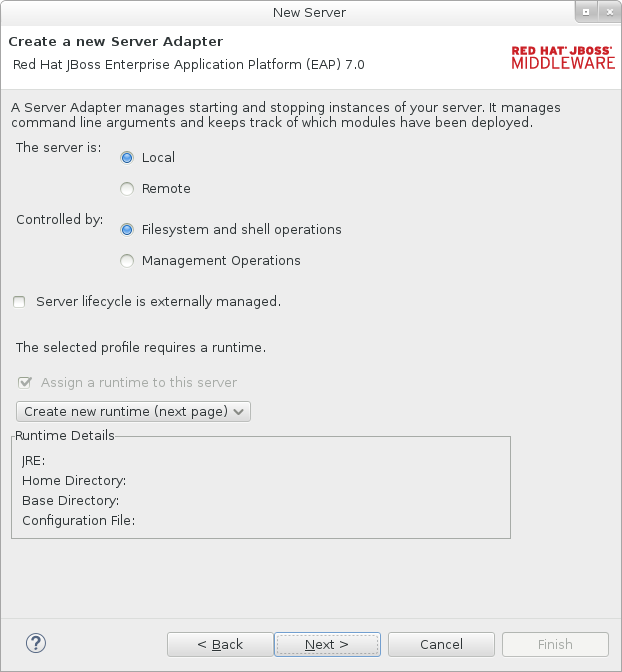

Create a server adapter to manage starting and stopping the server. Keep the defaults and click Next.

Figure 1.3. Create a New Server Adapter

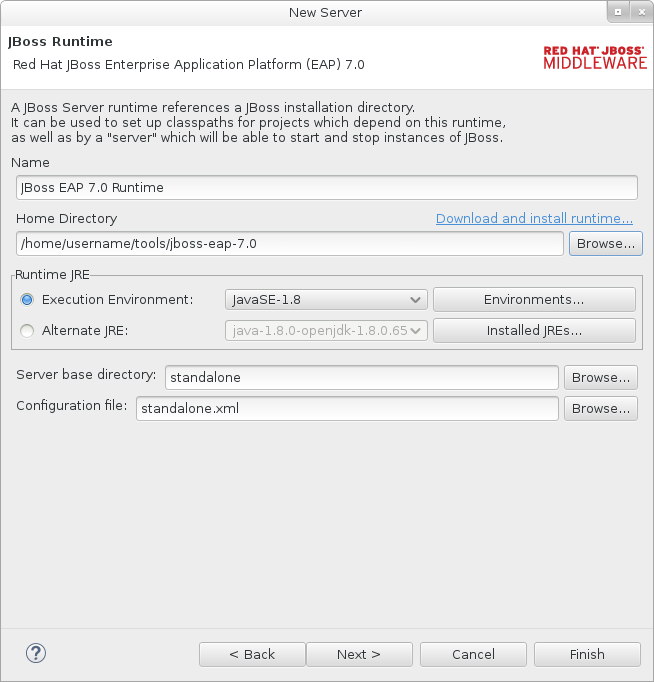

Enter a name, for example

JBoss EAP 7.0 Runtime. Click Browse next to Home Directory and navigate to your JBoss EAP installation directory. Then click Next.Figure 1.4. Add New Server Runtime Environment

NoteSome quickstarts require that you run the server with a different profile or additional arguments. For example, to deploy a quickstart that requires the full profile, you must define a new server and specify

standalone-full.xmlin the Configuration file field. Be sure to give the new server a descriptive name.Configure existing projects for the new server. Because you do not have any projects at this point, click Finish.

Figure 1.5. Modify Resources for the New Server

The JBoss EAP 7.0 server is now listed in the Servers tab.

Figure 1.6. Server List

1.4. Using the Quickstart Examples

1.4.1. About Maven

Apache Maven is a distributed build automation tool used in Java application development to create, manage, and build software projects. Maven uses standard configuration files called Project Object Model (POM) files to define projects and manage the build process. POMs describe the module and component dependencies, build order, and targets for the resulting project packaging and output using an XML file. This ensures that the project is built in a correct and uniform manner.

Maven achieves this by using a repository. A Maven repository stores Java libraries, plug-ins, and other build artifacts. The default public repository is the Maven 2 Central Repository, but repositories can be private and internal within a company with a goal to share common artifacts among development teams. Repositories are also available from third-parties. For more information, see the Apache Maven project and the Introduction to Repositories guide.

JBoss EAP includes a Maven repository that contains many of the requirements that Java EE developers typically use to build applications on JBoss EAP.

For more information, see Using Maven with JBoss EAP.

1.4.1.1. Using Maven with the Quickstarts

The artifacts and dependencies needed to build and deploy applications to JBoss EAP 7 are hosted on a public repository. Starting with the JBoss EAP 7 quickstarts, it is no longer necessary to configure your Maven settings.xml file to use these repositories when building the quickstarts. The Maven repositories are now configured in the quickstart project POM files. This method of configuration is provided to make it easier to get started with the quickstarts, however, is generally not recommended for production projects because it can slow down your build.

Red Hat JBoss Developer Studio includes Maven, so there is no need to download and install it separately. It is recommended to use JBoss Developer Studio version 9.1 or later.

If you plan to use the Maven command line to build and deploy your applications, then you must first download Maven from the Apache Maven project and install it using the instructions provided in the Maven documentation.

1.4.2. Download and Run the Quickstart Code Examples

1.4.2.1. Download the Quickstarts

JBoss EAP comes with a comprehensive set of quickstart code examples designed to help users begin writing applications using various Java EE 7 technologies. The quickstarts can be downloaded from the Red Hat Customer Portal.

- Log in to the Red Hat Customer Portal.

- Click Downloads.

- In the Product Downloads list, click Red Hat JBoss Enterprise Application Platform.

- Select the desired version in the Version drop-down menu.

- Find the Red Hat JBoss Enterprise Application Platform 7.0.0 Quickstarts entry in the table and click Download.

- Save the ZIP file to the desired directory.

- Extract the ZIP file.

1.4.2.2. Run the Quickstarts in JBoss Developer Studio

Once the quickstarts have been downloaded, they can be imported into JBoss Developer Studio and deployed to JBoss EAP.

Import a Quickstart into JBoss Developer Studio

Each quickstart ships with a POM file that contains its project and configuration information. Use this POM file to easily import the quickstart into JBoss Developer Studio.

If your quickstart project folder is located within the IDE workspace when you import it into JBoss Developer Studio, the IDE generates an invalid project name and WAR archive name. Be sure your quickstart project folder is located outside the IDE workspace before you begin.

- Start JBoss Developer Studio.

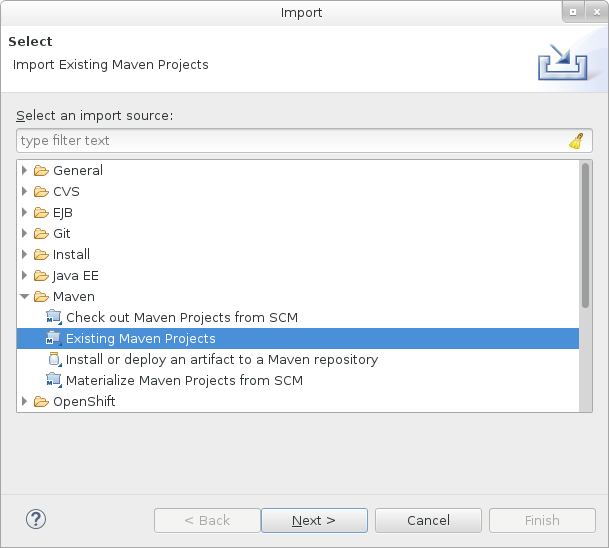

- Select File → Import.

Choose Maven → Existing Maven Projects, then click Next.

Figure 1.7. Import Existing Maven Projects

Browse to the desired quickstart’s directory (for example the

helloworldquickstart), and click OK. The Projects list box is populated with thepom.xmlfile of the selected quickstart project.Figure 1.8. Select Maven Projects

- Click Finish.

Run the helloworld Quickstart

Running the helloworld quickstart is a simple way to verify that the JBoss EAP server is configured and running correctly.

- If you have not yet defined a server, add the JBoss EAP server to JBoss Developer Studio.

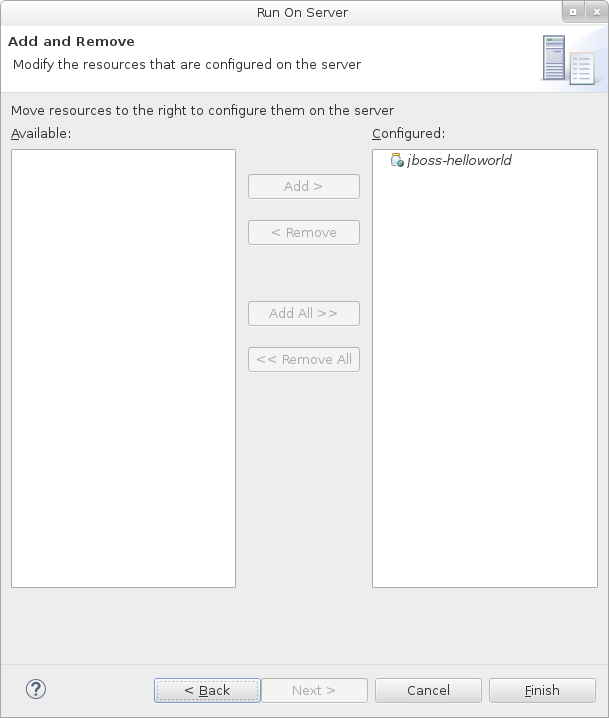

Right-click the jboss-helloworld project in the Project Explorer tab and select Run As → Run on Server.

Figure 1.9. Run As - Run on Server

Select JBoss EAP 7.0 from the server list and click Next.

Figure 1.10. Run on Server

The jboss-helloworld quickstart is already listed to be configured on the server. Click Finish to deploy the quickstart.

Figure 1.11. Modify Resources Configured on the Server

Verify the results.

-

In the Server tab, the

JBoss EAP 7.0server status changes toStarted. The Console tab shows messages detailing the JBoss EAP server start and the

helloworldquickstart deployment.WFLYUT0021: Registered web context: /jboss-helloworld WFLYSRV0010: Deployed "jboss-helloworld.war" (runtime-name : "jboss-helloworld.war")

WFLYUT0021: Registered web context: /jboss-helloworld WFLYSRV0010: Deployed "jboss-helloworld.war" (runtime-name : "jboss-helloworld.war")Copy to Clipboard Copied! Toggle word wrap Toggle overflow -

The

helloworldapplication is available at http://localhost:8080/jboss-helloworld and displays the textHello World!.

-

In the Server tab, the

Run the bean-validation Quickstart

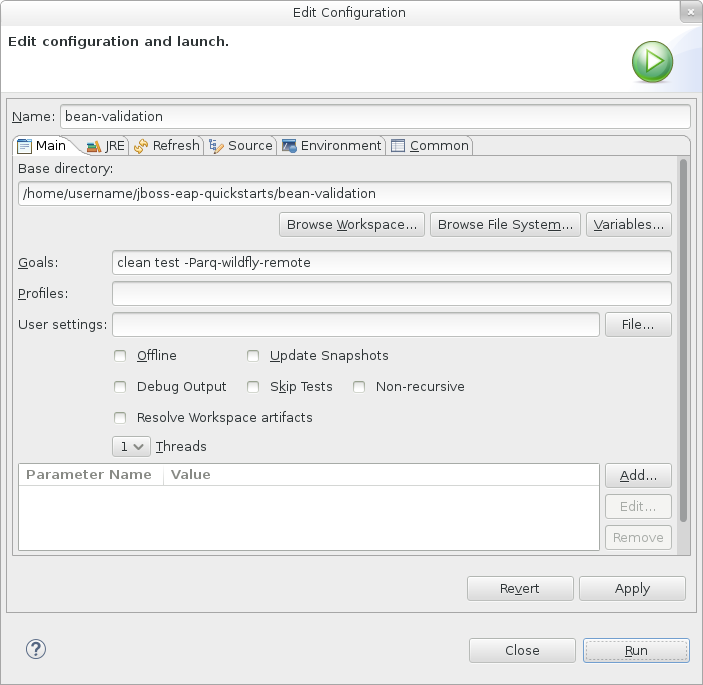

Some quickstarts, such as the bean-validation quickstart, do not provide a user interface layer and instead provide Arquillian tests to demonstrate functionality.

-

Import the

bean-validationquickstart into JBoss Developer Studio. - In the Servers tab, right-click on the server and choose Start to start the JBoss EAP server. If you do not see a Servers tab or have not yet defined a server, add the JBoss EAP server to Red Hat JBoss Developer Studio.

-

Right-click on the

jboss-bean-validationproject in the Project Explorer tab and select Run As → Maven Build. Enter the following in the Goals input field and then click Run.

clean test -Parq-wildfly-remote

clean test -Parq-wildfly-remoteCopy to Clipboard Copied! Toggle word wrap Toggle overflow Figure 1.12. Edit Configuration

Verify the results.

The Console tab shows the results of the

bean-validationArquillian tests:Copy to Clipboard Copied! Toggle word wrap Toggle overflow

1.4.2.3. Run the Quickstarts from the Command Line

You can easily build and deploy the quickstarts from the command line using Maven. If you do not yet have Maven installed, see the Apache Maven project to download and install it.

A README.md file is provided at the root directory of the quickstarts that contains general information about system requirements, configuring Maven, adding users, and running the quickstarts.

Each quickstart also contains its own README.md file that provides the specific instructions and Maven commands to run that quickstart.

Run the helloworld Quickstart from the Command Line

-

Review the

README.mdfile in the root directory of the helloworld quickstart. Start the JBoss EAP server.

EAP_HOME/bin/standalone.sh

$ EAP_HOME/bin/standalone.shCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Navigate to the helloworld quickstart directory.

Build and deploy the quickstart using the Maven command provided in the quickstart’s

README.mdfile.mvn clean install wildfly:deploy

mvn clean install wildfly:deployCopy to Clipboard Copied! Toggle word wrap Toggle overflow -

The helloworld application is now available at http://localhost:8080/jboss-helloworld and displays the text

Hello World!.

1.4.3. Review the Quickstart Tutorials

1.4.3.1. Explore the helloworld Quickstart

The helloworld quickstart shows you how to deploy a simple servlet to JBoss EAP. The business logic is encapsulated in a service, which is provided as a Contexts and Dependency Injection (CDI) bean and injected into the Servlet. This quickstart is a starting point to be sure you have configured and started your server properly.

Detailed instructions to build and deploy this quickstart using the command line can be found in the README.html file at the root of the helloworld quickstart directory. This topic shows you how to use Red Hat JBoss Developer Studio to run the quickstart and assumes you have installed Red Hat JBoss Developer Studio, configured Maven, and imported and successfully run the helloworld quickstart.

Prerequisites

- Install Red Hat JBoss Developer Studio.

- Follow the instructions to run the quickstarts in JBoss Developer Studio.

-

Verify that the

helloworldquickstart was successfully deployed to JBoss EAP by opening a web browser and accessing the application at http://localhost:8080/jboss-helloworld

Examine the Directory Structure

The code for the helloworld quickstart can be found in the QUICKSTART_HOME/helloworld directory. The helloworld quickstart is comprised of a Servlet and a CDI bean. It also contains a beans.xml file in the application’s WEB-INF directory that has a version number of 1.1 and a bean-discovery-mode of all. This marker file identifies the WAR as a bean archive and tells JBoss EAP to look for beans in this application and to activate the CDI.

The src/main/webapp/ directory contains the files for the quickstart. All the configuration files for this example are located in the WEB-INF/ directory within src/main/webapp/, including the beans.xml file. The src/main/webapp/ directory also includes an index.html file, which uses a simple meta refresh to redirect the user’s browser to the Servlet, which is located at http://localhost:8080/jboss-helloworld/HelloWorld. The quickstart does not require a web.xml file.

Examine the Code

The package declaration and imports have been excluded from these listings. The complete listing is available in the quickstart source code.

Review the

HelloWorldServletcode.The

HelloWorldServlet.javafile is located in thesrc/main/java/org/jboss/as/quickstarts/helloworld/directory. This servlet sends the information to the browser.HelloWorldServlet Class Code Example

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Expand Table 1.1. HelloWorldServlet Details Line Note 43

All you need to do is add the

@WebServletannotation and provide a mapping to a URL used to access the servlet.46-48

Every web page needs correctly formed HTML. This quickstart uses static Strings to write the minimum header and footer output.

50-51

These lines inject the HelloService CDI bean which generates the actual message. As long as we don’t alter the API of HelloService, this approach allows us to alter the implementation of HelloService at a later date without changing the view layer.

58

This line calls into the service to generate the message "Hello World", and write it out to the HTTP request.

Review the

HelloServicecode.The

HelloService.javafile is located in thesrc/main/java/org/jboss/as/quickstarts/helloworld/directory. This service simply returns a message. No XML or annotation registration is required.HelloService Class Code Example

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

1.4.3.2. Explore the numberguess Quickstart

The numberguess quickstart shows you how to create and deploy a simple non-persistant application to JBoss EAP. Information is displayed using a JSF view and business logic is encapsulated in two CDI beans. In the numberguess quickstart, you have ten attempts to guess a number between 1 and 100. After each attempt, you’re told whether your guess was too high or too low.

The code for the numberguess quickstart can be found in the QUICKSTART_HOME/numberguess directory where QUICKSTART_HOME is the directory where you downloaded and unzipped the JBoss EAP quickstarts. The numberguess quickstart is comprised of a number of beans, configuration files, and Facelets (JSF) views, and is packaged as a WAR module.

Detailed instructions to build and deploy this quickstart using the command line can be found in the README.html file at the root of the numberguess quickstart directory. The following examples use Red Hat JBoss Developer Studio to run the quickstart.

Prerequisites

- Install Red Hat JBoss Developer Studio.

-

Follow the instructions to run the quickstarts in Red Hat JBoss Developer Studio, replacing

helloworldwith thenumberguessquickstart in the instructions. -

Verify the

numberguessquickstart was deployed successfully to JBoss EAP by opening a web browser and accessing the application at this URL: http://localhost:8080/jboss-numberguess

Examine the Configuration Files

All the configuration files for this example are located in the QUICKSTART_HOME/numberguess/src/main/webapp/WEB-INF/ directory of the quickstart.

Examine the

faces-config.xmlfile.This quickstart uses the JSF 2.2 version of

faces-config.xmlfilename. A standardized version of Facelets is the default view handler in JSF 2.2 so it requires no configuration. This file consists of only the root element and is simply a marker file to indicate JSF should be enabled in the application.Copy to Clipboard Copied! Toggle word wrap Toggle overflow Examine the

beans.xmlfile.The

beans.xmlfile contains a version number of 1.1 and abean-discovery-modeofall. This file is a marker file that identifies the WAR as a bean archive and tells JBoss EAP to look for beans in this application and to activate the CDI.Copy to Clipboard Copied! Toggle word wrap Toggle overflow

This quickstart does not need a web.xml file.

1.4.3.2.1. Examine the JSF Code

JSF uses the .xhtml file extension for source files, but delivers the rendered views with the .jsf extension. The home.xhtml file is located in the src/main/webapp/ directory.

JSF Source Code

The following line numbers correspond to those seen when viewing the file in JBoss Developer Studio.

| Line | Note |

|---|---|

| 36-40 | These are the messages which can be sent to the user: "Higher!" and "Lower!" |

| 45-48 | As the user guesses, the range of numbers they can guess gets smaller. This sentence changes to make sure they know the number range of a valid guess. |

| 55-58 | This input field is bound to a bean property using a value expression. |

| 58 | A validator binding is used to make sure the user does not accidentally input a number outside of the range in which they can guess. If the validator was not here, the user might use up a guess on an out of bounds number. |

| 59-61 | There must be a way for the user to send their guess to the server. Here we bind to an action method on the bean. |

1.4.3.2.2. Examine the Class Files

All of the numberguess quickstart source files can be found in the QUICKSTART_HOME/numberguess/src/main/java/org/jboss/as/quickstarts/numberguess/ directory. The package declaration and imports have been excluded from these listings. The complete listing is available in the quickstart source code.

Review the

Random.javaQualifier CodeA qualifier is used to remove ambiguity between two beans, both of which are eligible for injection based on their type. For more information on qualifiers, see Use a Qualifier to Resolve an Ambiguous Injection. The

@Randomqualifier is used for injecting a random number.Copy to Clipboard Copied! Toggle word wrap Toggle overflow Review the

MaxNumber.javaQualifier CodeThe

@MaxNumberqualifieris used for injecting the maximum number allowed.Copy to Clipboard Copied! Toggle word wrap Toggle overflow Review the

Generator.javaCodeThe

Generatorclass creates the random number via a producer method, exposing the maximum possible number via the same. This class is application-scoped, so you don’t get a different random each time.Copy to Clipboard Copied! Toggle word wrap Toggle overflow Review the

Game.javaCodeThe session-scoped

Gameclass is the primary entry point of the application. It is responsible for setting up or resetting the game, capturing and validating the user’s guess, and providing feedback to the user with aFacesMessage. It uses the post-construct lifecycle method to initialize the game by retrieving a random number from the@Random Instance<Integer>bean.Notice the

@Namedannotation in the class. This annotation is only required when you want to make the bean accessible to a JSF view by using Expression Language (EL), in this case#{game}.Copy to Clipboard Copied! Toggle word wrap Toggle overflow

1.5. Configure the Default Welcome Web Application

JBoss EAP includes a default Welcome application, which displays at the root context on port 8080 by default.

This default Welcome application can be replaced with your own web application. This can be configured in one of two ways:

You can also disable the welcome content.

Changing the welcome-content File Handler

Modify the existing welcome-content file handler’s path to point to the new deployment.

/subsystem=undertow/configuration=handler/file=welcome-content:write-attribute(name=path,value="/path/to/content")

/subsystem=undertow/configuration=handler/file=welcome-content:write-attribute(name=path,value="/path/to/content")Alternatively, you could create a different file handler to be used by the server’s root.

/subsystem=undertow/configuration=handler/file=NEW_FILE_HANDLER:add(path="/path/to/content") /subsystem=undertow/server=default-server/host=default-host/location=\/:write-attribute(name=handler,value=NEW_FILE_HANDLER)

/subsystem=undertow/configuration=handler/file=NEW_FILE_HANDLER:add(path="/path/to/content")

/subsystem=undertow/server=default-server/host=default-host/location=\/:write-attribute(name=handler,value=NEW_FILE_HANDLER)Reload the server for the changes to take effect.

reload

reloadChanging the default-web-module

Map a deployed web application to the server’s root.

/subsystem=undertow/server=default-server/host=default-host:write-attribute(name=default-web-module,value=hello.war)

/subsystem=undertow/server=default-server/host=default-host:write-attribute(name=default-web-module,value=hello.war)Reload the server for the changes to take effect.

reload

reloadDisabling the Default Welcome Web Application

Disable the welcome application by removing the location entry (/) for the default-host.

/subsystem=undertow/server=default-server/host=default-host/location=\/:remove

/subsystem=undertow/server=default-server/host=default-host/location=\/:removeReload the server for the changes to take effect.

reload

reloadChapter 2. Using Maven with JBoss EAP

2.1. Learn about Maven

2.1.1. About the Maven Repository

Apache Maven is a distributed build automation tool used in Java application development to create, manage, and build software projects. Maven uses standard configuration files called Project Object Model, or POM, files to define projects and manage the build process. POMs describe the module and component dependencies, build order, and targets for the resulting project packaging and output using an XML file. This ensures that the project is built in a correct and uniform manner.

Maven achieves this by using a repository. A Maven repository stores Java libraries, plug-ins, and other build artifacts. The default public repository is the Maven 2 Central Repository, but repositories can be private and internal within a company with a goal to share common artifacts among development teams. Repositories are also available from third-parties. JBoss EAP includes a Maven repository that contains many of the requirements that Java EE developers typically use to build applications on JBoss EAP. To configure your project to use this repository, see Configure the JBoss EAP Maven Repository.

For more information about Maven, see Welcome to Apache Maven.

For more information about Maven repositories, see Apache Maven Project - Introduction to Repositories.

2.1.2. About the Maven POM File

The Project Object Model, or POM, file is a configuration file used by Maven to build projects. It is an XML file that contains information about the project and how to build it, including the location of the source, test, and target directories, the project dependencies, plug-in repositories, and goals it can execute. It can also include additional details about the project including the version, description, developers, mailing list, license, and more. A pom.xml file requires some configuration options and will default all others.

The schema for the pom.xml file can be found at http://maven.apache.org/maven-v4_0_0.xsd.

For more information about POM files, see the Apache Maven Project POM Reference.

Minimum Requirements of a Maven POM File

The minimum requirements of a pom.xml file are as follows:

- project root

- modelVersion

- groupId - the id of the project’s group

- artifactId - the id of the artifact (project)

- version - the version of the artifact under the specified group

Example: Basic pom.xml File

A basic pom.xml file might look like this:

2.1.3. About the Maven Settings File

The Maven settings.xml file contains user-specific configuration information for Maven. It contains information that must not be distributed with the pom.xml file, such as developer identity, proxy information, local repository location, and other settings specific to a user.

There are two locations where the settings.xml can be found:

-

In the Maven installation: The settings file can be found in the

$M2_HOME/conf/directory. These settings are referred to asglobalsettings. The default Maven settings file is a template that can be copied and used as a starting point for the user settings file. -

In the user’s installation: The settings file can be found in the

${user.home}/.m2/directory. If both the Maven and usersettings.xmlfiles exist, the contents are merged. Where there are overlaps, the user’ssettings.xmlfile takes precedence.

Example: Maven Settings file

The schema for the settings.xml file can be found at http://maven.apache.org/xsd/settings-1.0.0.xsd.

2.1.4. About Maven Repository Managers

A repository manager is a tool that allows you to easily manage Maven repositories. Repository managers are useful in multiple ways:

- They provide the ability to configure proxies between your organization and remote Maven repositories. This provides a number of benefits, including faster and more efficient deployments and a better level of control over what is downloaded by Maven.

- They provide deployment destinations for your own generated artifacts, allowing collaboration between different development teams across an organization.

For more information about Maven repository managers, see Best Practice - Using a Repository Manager.

Commonly used Maven repository managers

- Sonatype Nexus

- See Sonatype Nexus documentation for more information about Nexus.

- Artifactory

- See JFrog Artifactory documentation for more information about Artifactory.

- Apache Archiva

- See Apache Archiva: The Build Artifact Repository Manager for more information about Apache Archiva.

In an Enterprise environment, where a repository manager is usually used, Maven should query all artifacts for all projects using this manager. Because Maven uses all declared repositories to find missing artifacts, if it can not find what it is looking for, it will try and look for it in the repository central (defined in the built-in parent POM). To override this central location, you can add a definition with central so that the default repository central is now your repository manager as well. This works well for established projects, but for clean or 'new' projects it causes a problem as it creates a cyclic dependency.

2.2. Install Maven and the JBoss EAP Maven Repository

2.2.1. Download and Install Maven

If you plan to use Maven command line to build and deploy your applications to JBoss EAP, you must download and install Maven. If you plan to use Red Hat JBoss Developer Studio to build and deploy your applications, you can skip this procedure as Maven is distributed with Red Hat JBoss Developer Studio.

- Go to Apache Maven Project - Download Maven and download the latest distribution for your operating system.

- See the Maven documentation for information on how to download and install Apache Maven for your operating system.

2.2.2. Install the JBoss EAP Maven Repository

There are three ways to install the JBoss EAP Maven repository.

- You can install the JBoss EAP Maven repository on your local file system. For detailed instructions, see Install the JBoss EAP Maven Repository Locally.

- You can install the JBoss EAP Maven repository on the Apache Web Server. For more information, see Install the JBoss EAP Maven Repository for Use with Apache httpd.

- You can install the JBoss EAP Maven repository using the Nexus Maven Repository Manager. For more information, see Repository Management Using Nexus Maven Repository Manager.

You can use the JBoss EAP Maven repository available online, or download and install it locally using any one of the three listed methods.

2.2.3. Install the JBoss EAP Maven Repository Locally

This example covers the steps to download the JBoss EAP Maven Repository to the local file system. This option is easy to configure and allows you to get up and running quickly on your local machine. It can help you become familiar with using Maven for development but is not recommended for team production environments.

Follow these steps to download and install the JBoss EAP Maven repository to the local file system.

- Open a web browser and access this URL: https://access.redhat.com/jbossnetwork/restricted/listSoftware.html?product=appplatform.

- Find Red Hat JBoss Enterprise Application Platform 7.0 Maven Repository in the list.

-

Click the Download button to download a

.zipfile containing the repository. Unzip the file on the local file system into a directory of your choosing.

This creates a new

jboss-eap-7.0.0.GA-maven-repository/directory, which contains the Maven repository in a subdirectory namedmaven-repository/.

If you want to continue to use an older local repository, you must configure it separately in the Maven settings.xml configuration file. Each local repository must be configured within its own <repository> tag.

When downloading a new Maven repository, remove the cached repository/ subdirectory located under the .m2/ directory before attempting to use it.

2.2.4. Install the JBoss EAP Maven Repository for Use with Apache httpd

This example will cover the steps to download the JBoss EAP Maven Repository for use with Apache httpd. This option is good for multi-user and cross-team development environments because any developer that can access the web server can also access the Maven repository.

You must first configure Apache httpd. See Apache HTTP Server Project documentation for instructions.

- Open a web browser and access this URL: https://access.redhat.com/jbossnetwork/restricted/listSoftware.html?product=appplatform.

- Find Red Hat JBoss Enterprise Application Platform 7.0 Maven Repository in the list.

-

Click the Download button to download a

.zipfile containing the repository. - Unzip the files in a directory that is web accessible on the Apache server.

Configure Apache to allow read access and directory browsing in the created directory.

This configuration allows a multi-user environment to access the Maven repository on Apache httpd.

2.3. Use the Maven Repository

2.3.1. Configure the JBoss EAP Maven Repository

- Overview

There are two approaches to direct Maven to use the JBoss EAP Maven Repository in your project:

Configure the JBoss EAP Maven Repository Using the Maven Settings

This is the recommended approach. Maven settings used with a repository manager or repository on a shared server provide better control and manageability of projects. Settings also provide the ability to use an alternative mirror to redirect all lookup requests for a specific repository to your repository manager without changing the project files. For more information about mirrors, see http://maven.apache.org/guides/mini/guide-mirror-settings.html.

This method of configuration applies across all Maven projects, as long as the project POM file does not contain repository configuration.

This section describes how to configure the Maven settings. You can configure the Maven install global settings or the user’s install settings.

Configure the Maven Settings File

Locate the Maven

settings.xmlfile for your operating system. It is usually located in the${user.home}/.m2/directory.-

For Linux or Mac, this is

~/.m2/ -

For Windows, this is

\Documents and Settings\.m2\or\Users\.m2\

-

For Linux or Mac, this is

-

If you do not find a

settings.xmlfile, copy thesettings.xmlfile from the${user.home}/.m2/conf/directory into the${user.home}/.m2/directory. Copy the following XML into the

<profiles>element of thesettings.xmlfile. Determine the URL of the JBoss EAP repository and replace JBOSS_EAP_REPOSITORY_URL with it.Copy to Clipboard Copied! Toggle word wrap Toggle overflow The following is an example configuration that accesses the online JBoss EAP Maven repository.

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Copy the following XML into the

<activeProfiles>element of thesettings.xmlfile.<activeProfile>jboss-enterprise-maven-repository</activeProfile>

<activeProfile>jboss-enterprise-maven-repository</activeProfile>Copy to Clipboard Copied! Toggle word wrap Toggle overflow If you modify the

settings.xmlfile while Red Hat JBoss Developer Studio is running, you must refresh the user settings.-

From the menu, choose

Window → Preferences. -

In the

Preferenceswindow, expandMavenand chooseUser Settings. Click the

Update Settingsbutton to refresh the Maven user settings in Red Hat JBoss Developer Studio.The Update Maven User Settings screen shot

-

From the menu, choose

If your Maven repository contains outdated artifacts, you may encounter one of the following Maven error messages when you build or deploy your project:

- Missing artifact ARTIFACT_NAME

- [ERROR] Failed to execute goal on project PROJECT_NAME; Could not resolve dependencies for PROJECT_NAME

To resolve the issue, delete the cached version of your local repository to force a download of the latest Maven artifacts. The cached repository is located here: ${user.home}/.m2/repository/

Configure the JBoss EAP Maven Repository Using the Project POM

You should avoid this method of configuration as it overrides the global and user Maven settings for the configured project.

You must plan carefully if you decide to configure repositories using project POM file. Transitively included POMs are an issue with this type of configuration since Maven has to query the external repositories for missing artifacts and this slows the build process. It can also cause you to lose control over where your artifacts are coming from.

The URL of the repository will depend on where the repository is located: on the file system, or web server. For information on how to install the repository, see: Install the JBoss EAP Maven Repository. The following are examples for each of the installation options:

- File System

- file:///path/to/repo/jboss-eap-maven-repository

- Apache Web Server

- http://intranet.acme.com/jboss-eap-maven-repository/

- Nexus Repository Manager

- https://intranet.acme.com/nexus/content/repositories/jboss-eap-maven-repository

Configuring the Project’s POM File

-

Open your project’s

pom.xmlfile in a text editor. -

Add the following repository configuration. If there is already a

<repositories>configuration in the file, then add the<repository>element to it. Be sure to change the<url>to the actual repository location.

-

Add the following plug-in repository configuration. If there is already a

<pluginRepositories>configuration in the file, then add the<pluginRepository>element to it.

Determine the URL of the JBoss EAP Repository

The repository URL depends on where the repository is located. You can configure Maven to use any of the following repository locations.

- To use the online JBoss EAP Maven repository, specify the following URL: https://maven.repository.redhat.com/ga/

- To use a JBoss EAP Maven repository installed on the local file system, you must download the repository and then use the local file path for the URL. For example: file:///path/to/repo/jboss-eap-7.0-maven-repository/maven-repository/

- If you install the repository on an Apache Web Server, the repository URL will be similar to the following: http://intranet.acme.com/jboss-eap-7.0-maven-repository/maven-repository/

- If you install the JBoss EAP Maven repository using the Nexus Repository Manager, the URL will look something like the following: https://intranet.acme.com/nexus/content/repositories/jboss-eap-7.0-maven-repository/maven-repository/

Remote repositories are accessed using common protocols such as http:// for a repository on an HTTP server or file:// for a repository on a file server.

2.3.2. Configure Maven for Use with Red Hat JBoss Developer Studio

The artifacts and dependencies needed to build and deploy applications to Red Hat JBoss Enterprise Application Platform are hosted on a public repository. You must direct Maven to use this repository when you build your applications. This topic covers the steps to configure Maven if you plan to build and deploy applications using Red Hat JBoss Developer Studio.

Maven is distributed with Red Hat JBoss Developer Studio, so it is not necessary to install it separately. However, you must configure Maven for use by the Java EE Web Project wizard for deployments to JBoss EAP. The procedure below demonstrates how to configure Maven for use with JBoss EAP by editing the Maven configuration file from within Red Hat JBoss Developer Studio.

Configure Maven in Red Hat JBoss Developer Studio

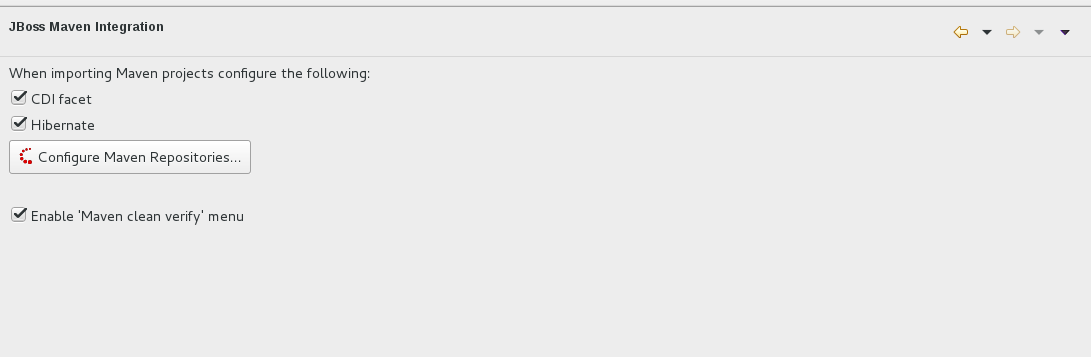

Click

Window → Preferences, expand JBoss Tools and select JBoss Maven Integration.JBoss Maven Integration Pane in the Preferences Window

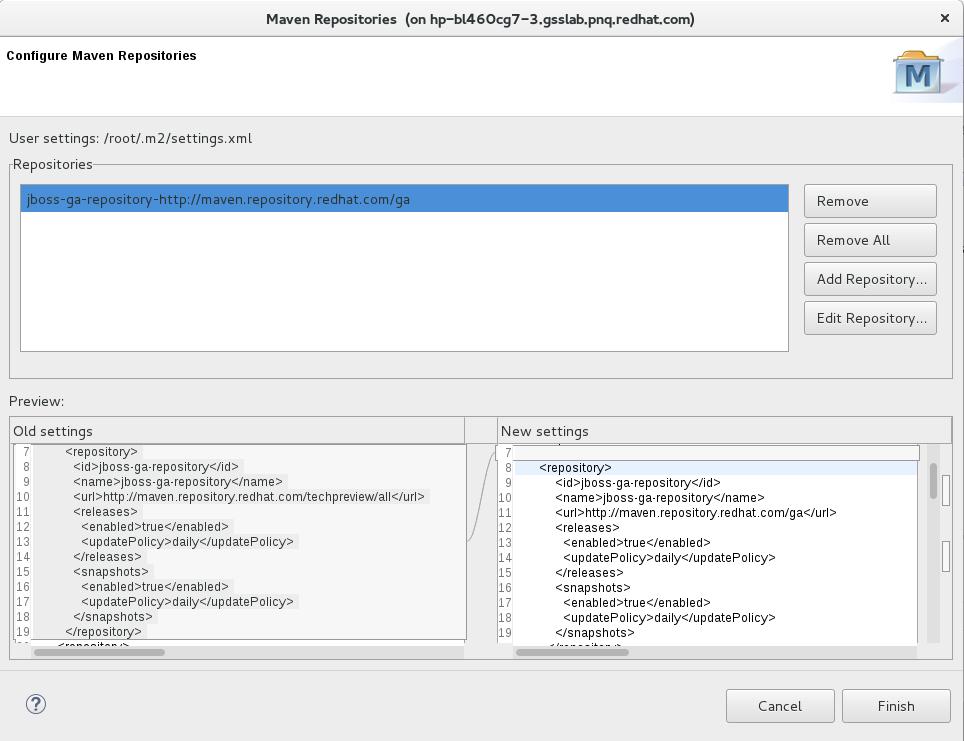

- Click Configure Maven Repositories.

Click Add Repository to configure the JBoss Enterprise Maven repository. Complete the

Add Maven Repositorydialog as follows:-

Set the Profile ID, Repository ID, and Repository Name values to

jboss-ga-repository. -

Set the Repository URL value to

http://maven.repository.redhat.com/ga. - Click the Active by default checkbox to enable the Maven repository.

Click OK.

Add Maven Repository

-

Set the Profile ID, Repository ID, and Repository Name values to

Review the repositories and click Finish.

Review Maven Repositories

-

You are prompted with the message "Are you sure you want to update the file

MAVEN_HOME/settings.xml?". Click Yes to update the settings. Click OK to close the dialog.

The JBoss EAP Maven repository is now configured for use with Red Hat JBoss Developer Studio.

2.3.3. Manage Project Dependencies

This topic describes the usage of Bill of Materials (BOM) POMs for Red Hat JBoss Enterprise Application Platform.

A BOM is a Maven pom.xml (POM) file that specifies the versions of all runtime dependencies for a given module. Version dependencies are listed in the dependency management section of the file.

A project uses a BOM by adding its groupId:artifactId:version (GAV) to the dependency management section of the project pom.xml file and specifying the <scope>import</scope> and <type>pom</type> element values.

In many cases, dependencies in project POM files use the provided scope. This is because these classes are provided by the application server at runtime and it is not necessary to package them with the user application.

Supported Maven Artifacts

As part of the product build process, all runtime components of JBoss EAP are built from source in a controlled environment. This helps to ensure that the binary artifacts do not contain any malicious code, and that they can be supported for the life of the product. These artifacts can be easily identified by the -redhat version qualifier, for example 1.0.0-redhat-1.

Adding a supported artifact to the build configuration pom.xml file ensures that the build is using the correct binary artifact for local building and testing. Note that an artifact with a -redhat version is not necessarily part of the supported public API, and may change in future revisions. For information about the public supported API, see the JavaDoc documentation included in the release.

For example, to use the supported version of Hibernate, add something similar to the following to your build configuration.

Notice that the above example includes a value for the <version/> field. However, it is recommended to use Maven dependency management for configuring dependency versions.

Dependency Management

Maven includes a mechanism for managing the versions of direct and transitive dependencies throughout the build. For general information about using dependency management, see the Apache Maven Project: Introduction to the Dependency Mechanism.

Using one or more supported Red Hat dependencies directly in your build does not guarantee that all transitive dependencies of the build will be fully supported Red Hat artifacts. It is common for Maven builds to use a mix of artifact sources from the Maven central repository and other Maven repositories.

There is a dependency management BOM included in the JBoss EAP Maven repository, which specifies all the supported JBoss EAP binary artifacts. This BOM can be used in a build to ensure that Maven will prioritize supported JBoss EAP dependencies for all direct and transitive dependencies in the build. In other words, transitive dependencies will be managed to the correct supported dependency version where applicable. The version of this BOM matches the version of the JBoss EAP release.

In JBoss EAP 7 the name of this BOM was changed from eap6-supported-artifacts to eap-runtime-artifacts. The purpose of this change is to make it more clear that the artifacts in this POM are part of the JBoss EAP runtime, but are not necessarily part of the supported public API. Some of the jars contain internal API and functionality which may change between releases.

JBoss EAP Java EE Specs BOM

The jboss-javaee-7.0 BOM contains the Java EE Specification API JARs used by JBoss EAP.

To use this BOM in a project, add a dependency for the GAV that contains the version of the JSP and Servlet API JARs needed to build and deploy the application.

The following example uses the 1.0.3.Final-redhat-1 version of the jboss-javaee-7.0 BOM.

JBoss EAP BOMs and Quickstarts

The quickstarts provide the primary use case examples for the Maven repository. The following table lists the Maven BOMs used by the quickstarts.

| BOM Artifact ID | Use Case |

|---|---|

| jboss-eap-javaee7 | Supported JBoss EAP JavaEE 7 APIs plus additional JBoss EAP API jars |

| jboss-eap-javaee7-with-spring3 | jboss-eap-javaee7 plus recommended Spring 3 versions |

| jboss-eap-javaee7-with-spring4 | jboss-eap-javaee7 plus recommended Spring 4 versions |

| jjboss-eap-javaee7-with-tools | jboss-eap-javaee7 plus development tools such as Arquillian |

These BOMs from JBoss EAP 6 have been consolidated into fewer BOMs to make usage simpler for most use cases. The Hibernate, logging, transactions, messaging, and other public API jars are now included in jboss-javaee7-eap instead of a requiring a separate BOM for each case.

The following example uses the 7.0.0.GA version of the jboss-eap-javaee7 BOM.

JBoss EAP Client BOMs

The client BOMs do not create a dependency management section or define dependencies. Instead, they are an aggregate of other BOMs and are used to package the set of dependencies necessary for a remote client use case.

The wildfly-ejb-client-bom and wildfly-jms-client-bom BOMs are managed by the jboss-eap-javaee7 BOM, so there is no need to manage the versions in your project dependencies.

The following is an example of how to add the wildfly-ejb-client-bom and wildfly-jms-client-bom client BOM dependencies to your project.

For more information about Maven Dependencies and BOM POM files, see Apache Maven Project - Introduction to the Dependency Mechanism.

Chapter 3. Class Loading and Modules

3.1. Introduction

3.1.1. Overview of Class Loading and Modules

JBoss EAP uses a modular class loading system for controlling the class paths of deployed applications. This system provides more flexibility and control than the traditional system of hierarchical class loaders. Developers have fine-grained control of the classes available to their applications, and can configure a deployment to ignore classes provided by the application server in favor of their own.

The modular class loader separates all Java classes into logical groups called modules. Each module can define dependencies on other modules in order to have the classes from that module added to its own class path. Because each deployed JAR and WAR file is treated as a module, developers can control the contents of their application’s class path by adding module configuration to their application.

3.1.2. Modules

A module is a logical grouping of classes used for class loading and dependency management. JBoss EAP identifies two different types of modules: static and dynamic. The main difference between the two is how they are packaged.

Static Modules

Static modules are defined in the EAP_HOME/modules/ directory of the application server. Each module exists as a subdirectory, for example EAP_HOME/modules/com/mysql/. Each module directory then contains a slot subdirectory, which defaults to main and contains the module.xml configuration file and any required JAR files. All the application server-provided APIs are provided as static modules, including the Java EE APIs as well as other APIs.

Example MySQL JDBC Driver module.xml File

The module name (com.mysql) must match the directory structure for the module, excluding the slot name (main).

Creating custom static modules can be useful if many applications are deployed on the same server that use the same third-party libraries. Instead of bundling those libraries with each application, a module containing these libraries can be created and installed by an administrator. The applications can then declare an explicit dependency on the custom static modules.

The modules provided in JBoss EAP distributions are located in the system directory within the EAP_HOME/modules directory. This keeps them separate from any modules provided by third parties. Any Red Hat provided products that layer on top of JBoss EAP also install their modules within the system directory.

Users must ensure that custom modules are installed into the EAP_HOME/modules directory, using one directory per module. This ensures that custom versions of modules that already exist in the system directory are loaded instead of the shipped versions. In this way, user-provided modules will take precedence over system modules.

If you use the JBOSS_MODULEPATH environment variable to change the locations in which JBoss EAP searches for modules, then the product will look for a system subdirectory structure within one of the locations specified. A system structure must exist somewhere in the locations specified with JBOSS_MODULEPATH.

Dynamic Modules

Dynamic modules are created and loaded by the application server for each JAR or WAR deployment (or subdeployment in an EAR). The name of a dynamic module is derived from the name of the deployed archive. Because deployments are loaded as modules, they can configure dependencies and be used as dependencies by other deployments.

Modules are only loaded when required. This usually only occurs when an application is deployed that has explicit or implicit dependencies.

3.1.3. Module Dependencies

A module dependency is a declaration that one module requires the classes of one or more other modules in order to function. When JBoss EAP loads a module, the modular class loader parses the dependencies of that module and adds the classes from each dependency to its class path. If a specified dependency cannot be found, the module will fail to load.

See the Modules section for complete details about modules and the modular class loading system.

Deployed applications (a JAR or WAR, for example) are loaded as dynamic modules and make use of dependencies to access the APIs provided by JBoss EAP.

There are two types of dependencies: explicit and implicit.

- Explicit Dependencies

-

Explicit dependencies are declared by the developer in a configuration file. A static module can declare dependencies in its

module.xmlfile. A dynamic module can declare dependencies in the deployment’sMANIFEST.MForjboss-deployment-structure.xmldeployment descriptor. - Implicit Dependencies

Implicit dependencies are added automatically by JBoss EAP when certain conditions or meta-data are found in a deployment. The Java EE 7 APIs supplied with JBoss EAP are examples of modules that are added by detection of implicit dependencies in deployments.

Deployments can also be configured to exclude specific implicit dependencies by using the

jboss-deployment-structure.xmldeployment descriptor file. This can be useful when an application bundles a specific version of a library that JBoss EAP will attempt to add as an implicit dependency.

See the Add an Explicit Module Dependency to a Deployment section for details on using the jboss-deployment-structure.xml deployment descriptor.

Optional Dependencies

Explicit dependencies can be specified as optional. Failure to load an optional dependency will not cause a module to fail to load. However, if the dependency becomes available later it will not be added to the module’s class path. Dependencies must be available when the module is loaded.

Export a Dependency

A module’s class path contains only its own classes and that of its immediate dependencies. A module is not able to access the classes of the dependencies of one of its dependencies. However, a module can specify that an explicit dependency is exported. An exported dependency is provided to any module that depends on the module that exports it.

For example, Module A depends on Module B, and Module B depends on Module C. Module A can access the classes of Module B, and Module B can access the classes of Module C. Module A cannot access the classes of Module C unless:

- Module A declares an explicit dependency on Module C, or

- Module B exports its dependency on Module C.

Global Modules

A global module is a module that JBoss EAP provides as a dependency to every application. Any module can be made global by adding it to JBoss EAP’s list of global modules. It does not require changes to the module.

See the Define Global Modules section of the JBoss EAP Configuration Guide for details.

3.1.3.1. Display Module Dependencies Using the Management CLI

You can use the following management operation to view information about a particular module and its dependencies:

/core-service=module-loading:module-info(name=$MODULE_NAME)

/core-service=module-loading:module-info(name=$MODULE_NAME)Example of module-info output

3.1.4. Class Loading in Deployments

For the purposes of class loading, JBoss EAP treats all deployments as modules. These are called dynamic modules. Class loading behavior varies according to the deployment type.

- WAR Deployment

-

A WAR deployment is considered to be a single module. Classes in the

WEB-INF/libdirectory are treated the same as classes in theWEB-INF/classesdirectory. All classes packaged in the WAR will be loaded with the same class loader. - EAR Deployment

EAR deployments are made up of more than one module, and are defined by the following rules:

-

The

lib/directory of the EAR is a single module called the parent module. - Each WAR deployment within the EAR is a single module.

- Each EJB JAR deployment within the EAR is a single module.

-

The

Subdeployment modules (the WAR and JAR deployments within the EAR) have an automatic dependency on the parent module. However, they do not have automatic dependencies on each other. This is called subdeployment isolation, and can be disabled per deployment, or for the entire application server.

Explicit dependencies between subdeployment modules can be added by the same means as any other module.

3.1.5. Class Loading Precedence

The JBoss EAP modular class loader uses a precedence system to prevent class loading conflicts.

During deployment, a complete list of packages and classes is created for each deployment and each of its dependencies. The list is ordered according to the class loading precedence rules. When loading classes at runtime, the class loader searches this list, and loads the first match. This prevents multiple copies of the same classes and packages within the deployments class path from conflicting with each other.

The class loader loads classes in the following order, from highest to lowest:

Implicit dependencies: These dependencies are automatically added by JBoss EAP, such as the JAVA EE APIs. These dependencies have the highest class loader precedence because they contain common functionality and APIs that are supplied by JBoss EAP.

Refer to Implicit Module Dependencies for complete details about each implicit dependency.

Explicit dependencies: These dependencies are manually added to the application configuration using the application’s

MANIFEST.MFfile or the new optional JBoss deployment descriptorjboss-deployment-structure.xmlfile.Refer to Add an Explicit Module Dependency to a Deployment to learn how to add explicit dependencies.

-

Local resources: These are class files packaged up inside the deployment itself, e.g. from the

WEB-INF/classesorWEB-INF/libdirectories of a WAR file. -

Inter-deployment dependencies: These are dependencies on other deployments in a EAR deployment. This can include classes in the

libdirectory of the EAR or classes defined in other EJB jars.

3.1.6. Dynamic Module Naming Conventions

JBoss EAP loads all deployments as modules, which are named according to the following conventions.

Deployments of WAR and JAR files are named using the following format:

deployment.DEPLOYMENT_NAME

deployment.DEPLOYMENT_NAMECopy to Clipboard Copied! Toggle word wrap Toggle overflow For example,

inventory.warandstore.jarwill have the module names ofdeployment.inventory.waranddeployment.store.jarrespectively.Subdeployments within an Enterprise Archive (EAR) are named using the following format:

deployment.EAR_NAME.SUBDEPLOYMENT_NAME

deployment.EAR_NAME.SUBDEPLOYMENT_NAMECopy to Clipboard Copied! Toggle word wrap Toggle overflow For example, the subdeployment of

reports.warwithin the enterprise archiveaccounts.earwill have the module name ofdeployment.accounts.ear.reports.war.

3.1.7. jboss-deployment-structure.xml

jboss-deployment-structure.xml is an optional deployment descriptor for JBoss EAP. This deployment descriptor provides control over class loading in the deployment.

The XML schema for this deployment descriptor is in /docs/schema/jboss-deployment-structure-1_2.xsd

3.2. Add an Explicit Module Dependency to a Deployment

Explicit module dependencies can be added to applications to add the classes of those modules to the class path of the application at deployment.

JBoss EAP automatically adds some dependencies to deployments. See Implicit Module Dependencies for details.

Prerequisites

- A working software project that you want to add a module dependency to.

- You must know the name of the module being added as a dependency. See Included Modules for the list of static modules included with JBoss EAP. If the module is another deployment then see Dynamic Module Naming to determine the module name.

Dependencies can be configured using two methods:

-

Adding entries to the

MANIFEST.MFfile of the deployment. -

Adding entries to the

jboss-deployment-structure.xmldeployment descriptor.

Add a Dependency Configuration to MANIFEST.MF

Maven projects can be configured to create the required dependency entries in the MANIFEST.MF file.

-

If the project does not have one, create a file called

MANIFEST.MF. For a web application (WAR) add this file to theMETA-INFdirectory. For an EJB archive (JAR) add it to theMETA-INFdirectory. Add a dependencies entry to the

MANIFEST.MFfile with a comma-separated list of dependency module names:Dependencies: org.javassist, org.apache.velocity, org.antlr

Dependencies: org.javassist, org.apache.velocity, org.antlrCopy to Clipboard Copied! Toggle word wrap Toggle overflow To make a dependency optional, append

optionalto the module name in the dependency entry:Dependencies: org.javassist optional, org.apache.velocity

Dependencies: org.javassist optional, org.apache.velocityCopy to Clipboard Copied! Toggle word wrap Toggle overflow A dependency can be exported by appending

exportto the module name in the dependency entry:Dependencies: org.javassist, org.apache.velocity export

Dependencies: org.javassist, org.apache.velocity exportCopy to Clipboard Copied! Toggle word wrap Toggle overflow The

annotationsflag is needed when the module dependency contains annotations that need to be processed during annotation scanning, such as when declaring EJB interceptors. Without this, an EJB interceptor declared in a module cannot be used in a deployment. There are other situations involving annotation scanning when this is needed too.Dependencies: org.javassist, test.module annotations

Dependencies: org.javassist, test.module annotationsCopy to Clipboard Copied! Toggle word wrap Toggle overflow By default items in the

META-INFof a dependency are not accessible. Theservicesdependency makes items fromMETA-INF/servicesaccessible so thatservicesin the modules can be loaded.Dependencies: org.javassist, org.hibernate services

Dependencies: org.javassist, org.hibernate servicesCopy to Clipboard Copied! Toggle word wrap Toggle overflow To scan a

beans.xmlfile and make its resulting beans available to the application, themeta-infdependency can be used.Dependencies: org.javassist, test.module meta-inf

Dependencies: org.javassist, test.module meta-infCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Add a Dependency Configuration to the jboss-deployment-structure.xml

If the application does not have one, create a new file called

jboss-deployment-structure.xmland add it to the project. This file is an XML file with the root element of<jboss-deployment-structure>.<jboss-deployment-structure> </jboss-deployment-structure>

<jboss-deployment-structure> </jboss-deployment-structure>Copy to Clipboard Copied! Toggle word wrap Toggle overflow For a web application (WAR) add this file to the

WEB-INFdirectory. For an EJB archive (JAR) add it to theMETA-INFdirectory.-

Create a

<deployment>element within the document root and a<dependencies>element within that. Within the

<dependencies>node, add a module element for each module dependency. Set thenameattribute to the name of the module.<module name="org.javassist" />

<module name="org.javassist" />Copy to Clipboard Copied! Toggle word wrap Toggle overflow A dependency can be made optional by adding the

optionalattribute to the module entry with the value oftrue. The default value for this attribute isfalse.<module name="org.javassist" optional="true" />

<module name="org.javassist" optional="true" />Copy to Clipboard Copied! Toggle word wrap Toggle overflow A dependency can be exported by adding the

exportattribute to the module entry with the value oftrue. The default value for this attribute isfalse.<module name="org.javassist" export="true" />

<module name="org.javassist" export="true" />Copy to Clipboard Copied! Toggle word wrap Toggle overflow When the module dependency contains annotations that need to be processed during annotation scanning, the

annotationsflag is used.<module name="test.module" annotations="true" />

<module name="test.module" annotations="true" />Copy to Clipboard Copied! Toggle word wrap Toggle overflow The

Servicesdependency specifies whether and howservicesfound in this dependency are used. The default isnone. Specifying a value ofimportfor this attribute is equivalent to adding a filter at the end of the import filter list which includes theMETA-INF/servicespath from the dependency module. Setting a value ofexportfor this attribute is equivalent to the same action on the export filter list.<module name="org.hibernate" services="import" />

<module name="org.hibernate" services="import" />Copy to Clipboard Copied! Toggle word wrap Toggle overflow The

META-INFdependency specifies whether and howMETA-INFentries in this dependency are used. The default isnone. Specifying a value ofimportfor this attribute is equivalent to adding a filter at the end of the import filter list which includes theMETA-INF/**path from the dependency module. Setting a value ofexportfor this attribute is equivalent to the same action on the export filter list.<module name="test.module" meta-inf="import" />

<module name="test.module" meta-inf="import" />Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Example: jboss-deployment-structure.xml with Two Dependencies

JBoss EAP adds the classes from the specified modules to the class path of the application when it is deployed.

Creating a Jandex Index

The annotations flag requires that the module contain a Jandex index. In JBoss EAP 7.0, this is generated automatically. However, to add the index manually, perhaps for backwards compatibility, create a new "index JAR" to add to the module. Use the Jandex JAR to build the index, and then insert it into a new JAR file.

Creating a Jandex index::

Create the index:

java -jar modules/system/layers/base/org/jboss/jandex/main/jandex-jandex-2.0.0.Final-redhat-1.jar $JAR_FILE

java -jar modules/system/layers/base/org/jboss/jandex/main/jandex-jandex-2.0.0.Final-redhat-1.jar $JAR_FILECopy to Clipboard Copied! Toggle word wrap Toggle overflow Create a temporary working space:

mkdir /tmp/META-INF

mkdir /tmp/META-INFCopy to Clipboard Copied! Toggle word wrap Toggle overflow Move the index file to the working directory

mv $JAR_FILE.ifx /tmp/META-INF/jandex.idx

mv $JAR_FILE.ifx /tmp/META-INF/jandex.idxCopy to Clipboard Copied! Toggle word wrap Toggle overflow Option 1: Include the index in a new JAR file

jar cf index.jar -C /tmp META-INF/jandex.idx

jar cf index.jar -C /tmp META-INF/jandex.idxCopy to Clipboard Copied! Toggle word wrap Toggle overflow Then place the JAR in the module directory and edit

module.xmlto add it to the resource roots.Option 2: Add the index to an existing JAR

java -jar /modules/org/jboss/jandex/main/jandex-1.0.3.Final-redhat-1.jar -m $JAR_FILE

java -jar /modules/org/jboss/jandex/main/jandex-1.0.3.Final-redhat-1.jar -m $JAR_FILECopy to Clipboard Copied! Toggle word wrap Toggle overflow

Tell the module import to utilize the annotation index, so that annotation scanning can find the annotations.

Option 1: If you are adding a module dependency using MANIFEST.MF, add

annotationsafter the module name. For example change:Dependencies: test.module, other.module

Dependencies: test.module, other.moduleCopy to Clipboard Copied! Toggle word wrap Toggle overflow to

Dependencies: test.module annotations, other.module

Dependencies: test.module annotations, other.moduleCopy to Clipboard Copied! Toggle word wrap Toggle overflow Option 2: If you are adding a module dependency using

jboss-deployment-structure.xmladdannotations="true"on the module dependency.NoteAn annotation index is required when an application wants to use annotated Java EE components defined in classes within the static module. In JBoss EAP 7.0, annotation indexes for static modules are automatically generated, so you do not need to create them. However, you must tell the module import to use the annotations by adding the dependencies to either the

MANIFEST.MFor thejboss-deployment-structure.xmlfile.

3.3. Generate MANIFEST.MF entries using Maven

Maven projects using the Maven JAR, EJB, or WAR packaging plug-ins can generate a MANIFEST.MF file with a Dependencies entry. This does not automatically generate the list of dependencies, but only creates the MANIFEST.MF file with the details specified in the pom.xml.

Before generating the MANIFEST.MF entries using Maven, you will require:

-

A working Maven project, which is using one of the JAR, EJB, or WAR plug-ins (

maven-jar-plugin,maven-ejb-plugin, ormaven-war-plugin). - You must know the name of the project’s module dependencies. Refer to Included Modules for the list of static modules included with JBoss EAP. If the module is another deployment, then refer to Dynamic Module Naming to determine the module name.

Generate a MANIFEST.MF File Containing Module Dependencies

Add the following configuration to the packaging plug-in configuration in the project’s

pom.xmlfile.Copy to Clipboard Copied! Toggle word wrap Toggle overflow Add the list of module dependencies to the

<Dependencies>element. Use the same format that is used when adding the dependencies to theMANIFEST.MFfile:<Dependencies>org.javassist, org.apache.velocity</Dependencies>

<Dependencies>org.javassist, org.apache.velocity</Dependencies>Copy to Clipboard Copied! Toggle word wrap Toggle overflow The

optionalandexportattributes can also be used here:<Dependencies>org.javassist optional, org.apache.velocity export</Dependencies>

<Dependencies>org.javassist optional, org.apache.velocity export</Dependencies>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Build the project using the Maven assembly goal:

mvn assembly:single

[Localhost ]$ mvn assembly:singleCopy to Clipboard Copied! Toggle word wrap Toggle overflow When the project is built using the assembly goal, the final archive contains a

MANIFEST.MFfile with the specified module dependencies.Example: Configured Module Dependencies in

pom.xmlNoteThe example here shows the WAR plug-in but it also works with the JAR and EJB plug-ins (maven-jar-plugin and maven-ejb-plugin).

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

3.4. Prevent a Module Being Implicitly Loaded

You can configure a deployable application to prevent implicit dependencies from being loaded. This can be useful when an application includes a different version of a library or framework than the one that will be provided by the application server as an implicit dependency.

Prerequisites

- A working software project that you want to exclude an implicit dependency from.

- You must know the name of the module to exclude. Refer to Implicit Module Dependencies for a list of implicit dependencies and their conditions.

Add dependency exclusion configuration to jboss-deployment-structure.xml

If the application does not have one, create a new file called

jboss-deployment-structure.xmland add it to the project. This is an XML file with the root element of<jboss-deployment-structure>.<jboss-deployment-structure> </jboss-deployment-structure>

<jboss-deployment-structure> </jboss-deployment-structure>Copy to Clipboard Copied! Toggle word wrap Toggle overflow For a web application (WAR) add this file to the

WEB-INFdirectory. For an EJB archive (JAR) add it to theMETA-INFdirectory.Create a

<deployment>element within the document root and an<exclusions>element within that.<deployment> <exclusions> </exclusions> </deployment>

<deployment> <exclusions> </exclusions> </deployment>Copy to Clipboard Copied! Toggle word wrap Toggle overflow Within the exclusions element, add a

<module>element for each module to be excluded. Set thenameattribute to the name of the module.<module name="org.javassist" />

<module name="org.javassist" />Copy to Clipboard Copied! Toggle word wrap Toggle overflow Example: Excluding Two Modules

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

3.5. Exclude a Subsystem from a Deployment

Excluding a subsystem provides the same effect as removing the subsystem, but it applies only to a single deployment. You can exclude a subsystem from a deployment by editing the jboss-deployment-structure.xml configuration file.

Exclude a Subsystem

-

Edit the

jboss-deployment-structure.xmlfile. Add the following XML inside the

<deployment>tags:<exclude-subsystems> <subsystem name="SUBSYSTEM_NAME" /> </exclude-subsystems>

<exclude-subsystems> <subsystem name="SUBSYSTEM_NAME" /> </exclude-subsystems>Copy to Clipboard Copied! Toggle word wrap Toggle overflow -

Save the

jboss-deployment-structure.xmlfile.

The subsystem’s deployment unit processors will no longer run on the deployment.

Example: jboss-deployment-structure.xml File

3.6. Use the Class Loader Programmatically in a Deployment

3.6.1. Programmatically Load Classes and Resources in a Deployment

You can programmatically find or load classes and resources in your application code. The method you choose will depend on a number of factors. This topic describes the methods available and provides guidelines for when to use them.

Load a Class Using the Class.forName() Method

You can use the Class.forName() method to programmatically load and initialize classes. This method has two signatures:

- Class.forName(String className): This signature takes only one parameter, the name of the class you need to load. With this method signature, the class is loaded by the class loader of the current class and initializes the newly loaded class by default.

- Class.forName(String className, boolean initialize, ClassLoader loader): This signature expects three parameters: the class name, a boolean value that specifies whether to initialize the class, and the ClassLoader that should load the class.

The three argument signature is the recommended way to programmatically load a class. This signature allows you to control whether you want the target class to be initialized upon load. It is also more efficient to obtain and provide the class loader because the JVM does not need to examine the call stack to determine which class loader to use. Assuming the class containing the code is named CurrentClass, you can obtain the class’s class loader using CurrentClass.class.getClassLoader() method.

The following example provides the class loader to load and initialize the TargetClass class:

Class<?> targetClass = Class.forName("com.myorg.util.TargetClass", true, CurrentClass.class.getClassLoader());

Class<?> targetClass = Class.forName("com.myorg.util.TargetClass", true, CurrentClass.class.getClassLoader());Find All Resources with a Given Name

If you know the name and path of a resource, the best way to load it directly is to use the standard Java development kit Class or ClassLoader API.

Load a Single Resource: To load a single resource located in the same directory as your class or another class in your deployment, you can use the

Class.getResourceAsStream()method.InputStream inputStream = CurrentClass.class.getResourceAsStream("targetResourceName");InputStream inputStream = CurrentClass.class.getResourceAsStream("targetResourceName");Copy to Clipboard Copied! Toggle word wrap Toggle overflow Load All Instances of a Single Resource: To load all instances of a single resource that are visible to your deployment’s class loader, use the

Class.getClassLoader().getResources(String resourceName)method, whereresourceNameis the fully qualified path of the resource. This method returns an Enumeration of allURLobjects for resources accessible by the class loader with the given name. You can then iterate through the array of URLs to open each stream using theopenStream()method.The following example loads all instances of a resource and iterates through the results.

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Because the URL instances are loaded from local storage, it is not necessary to use the openConnection() or other related methods. Streams are much simpler to use and minimize the complexity of the code.

Load a Class File From the Class Loader: If a class has already been loaded, you can load the class file that corresponds to that class using the following syntax:

InputStream inputStream = CurrentClass.class.getResourceAsStream(TargetClass.class.getSimpleName() + ".class");

InputStream inputStream = CurrentClass.class.getResourceAsStream(TargetClass.class.getSimpleName() + ".class");Copy to Clipboard Copied! Toggle word wrap Toggle overflow If the class is not yet loaded, you must use the class loader and translate the path: