OpenShift Container Storage is now OpenShift Data Foundation starting with version 4.9.

Deployment Guide

Deploying Red Hat Openshift Container Storage 3.11

Abstract

Making open source more inclusive

Red Hat is committed to replacing problematic language in our code, documentation, and web properties. We are beginning with these four terms: master, slave, blacklist, and whitelist. Because of the enormity of this endeavor, these changes will be implemented gradually over several upcoming releases. For more details, see our CTO Chris Wright’s message.

Part I. Planning

Chapter 1. Identify your Workloads

This chapter provides a list of workloads that are supported with Red Hat Openshift Container Storage.

Persistent volumes backed by block storage are the recommended method for the following workloads:

- Jenkins

- ElasticSearch

- Prometheus

If using file storage for transactional workloads, turn off the performance translators as described in Chapter 11, Setting up Custom Volume Options.

Chapter 2. Identify your Use Case

This chapter provides a brief introduction to the two use cases available in Containerized Red Hat Gluster Storage.

Red Hat Openshift Container Storage does not support a simultaneous deployment of converged and independent mode with the Ansible workflow. Therefore, you must deploy either converged mode or independent mode: you cannot mix both modes during deployment.

Red Hat only supports Heketi inside OpenShift Container Platform in OCS.

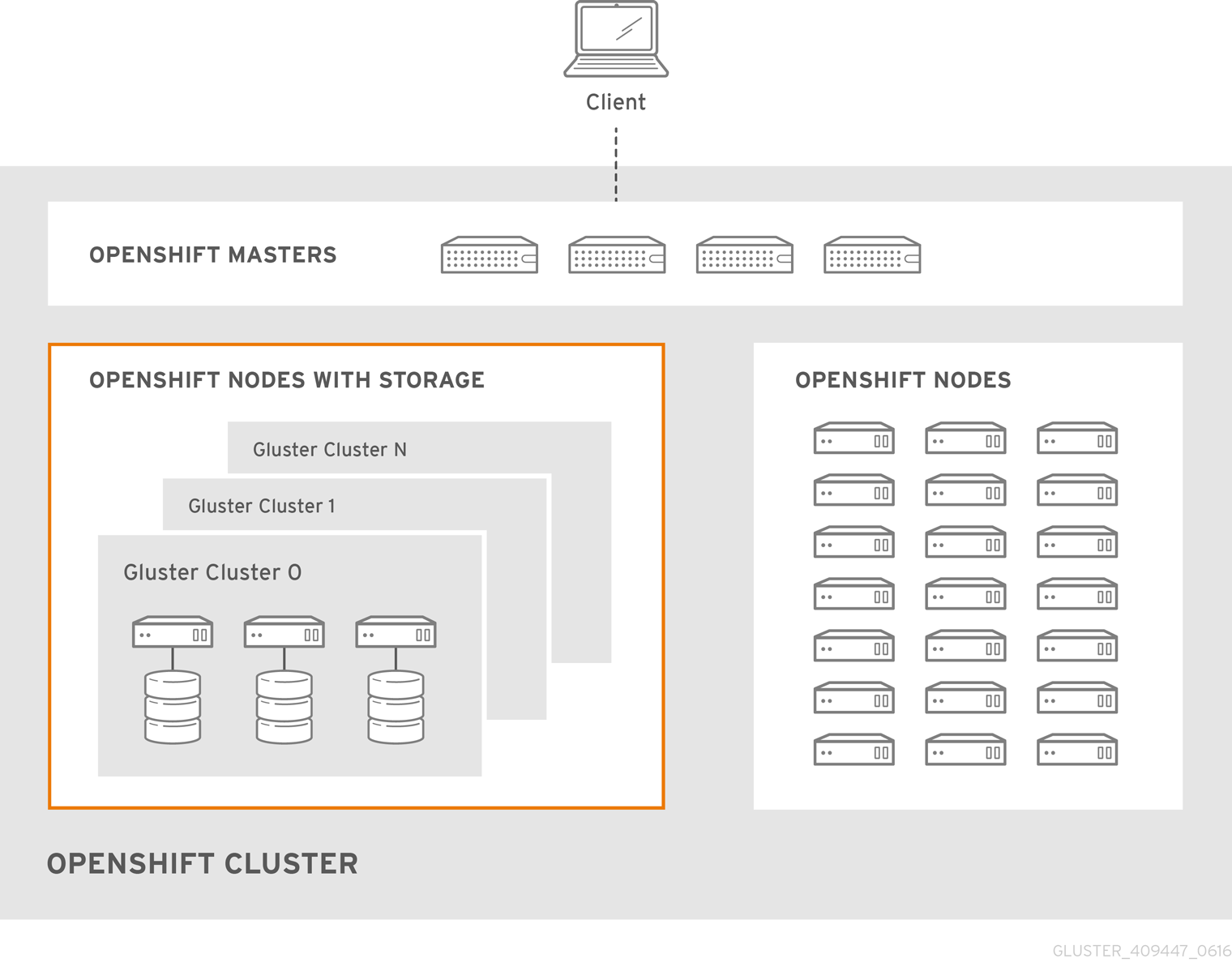

2.1. Converged Mode

Converged mode was earlier called Container-Native Storage.

This deployment delivers a hyper-converged solution, where the storage containers that host Red Hat Gluster Storage co-reside with the compute containers and serve out storage from the hosts that have local or direct attached storage to the compute containers. This solution integrates Red Hat Gluster Storage deployment and management with OpenShift services. As a result, persistent storage is delivered within an OpenShift pod that provides both compute and file storage.

Converged Mode for OpenShift Container Platform is built around three key technologies:

- OpenShift provides the platform as a service (PaaS) infrastructure based on Kubernetes container management. Basic OpenShift architecture is built around multiple master systems where each system contains a set of nodes.

- Red Hat Gluster Storage provides the containerized distributed storage based on Red Hat Gluster Storage 3.5 container. Each Red Hat Gluster Storage volume is composed of a collection of bricks, where each brick is the combination of a node and an export directory.

- Heketi provides the Red Hat Gluster Storage volume life-cycle management. It creates the Red Hat Gluster Storage volumes dynamically and supports multiple Red Hat Gluster Storage clusters.

The solution workflow is as follows:

- Administrators create multiple persistent volumes (PV) and register these volumes with OpenShift.

- Developers then submit a persistent volume claim (PVC).

- A PV is identified and selected from a pool of available PVs and bound to the PVC.

- The OpenShift pod then uses the PV for persistent storage.

Figure 2.1. Architecture - Converged Mode for OpenShift Container Platform

Red Hat Openshift Container Storage does not support a simultaneous deployment of converged and independent mode with the Ansible workflow. Therefore, you must deploy either converged mode or independent mode: you cannot mix both modes during deployment.

2.2. Independent mode

Independent mode was earlier called Container-Ready Storage.

When Red Hat Gluster Storage is deployed as stand-alone storage, providing storage to containers, that is called independent mode. In this mode, the life cycle of the storage platform is maintained independently from the life cycle of the container platform.

When Red Hat Gluster Storage is deployed on top of an OpenShift cluster, it is called converged mode.

Independent mode provides dynamically provisioned storage, statically provisioned storage, RWO support, and RWX support. Further, it provides full support for OpenShift Container Platform infrastructure services like logging, metrics, and registry services.

For users of persistent storage, the deployment modes are completely transparent. Administrators, however, will see a variation in how they set up, manage, and scale the system. In independent mode, storage is managed like Red Hat Gluster Storage.

Following are some of the key drivers of choosing independent mode of deployment:

- OpenShift Container Platform administrators might not want to manage storage. Independent mode separates storage management from container management.

- Leverage legacy storage (SAN, Arrays, Old filers): Storage arrays from traditional storage vendors often have either limited or no support for OpenShift. Independent mode allows you to leverage existing legacy storage for OpenShift Containers.

- Cost effective: In environments where costs related to new infrastructure are a challenge, you can repurpose existing storage arrays to back OpenShift under independent mode. Independent mode is perfect for situations where you can run Red Hat Gluster Storage inside a VM and serve out LUNs or disks from these storage arrays to OpenShift, offering all the features that the OpenShift storage subsystem has to offer, including dynamic provisioning. This is a very useful solution in those environments with potential infrastructure additions.

Independent mode may have Heketi and other provisioners (components of independent mode) deployed on top of OpenShift cluster nodes. Red Hat only supports Heketi inside OpenShift Container Platform in OCS. Heketi is a service endpoint for automated Red Hat Gluster Storage volume provisioning. It receives requests from Kubernetes to allocate Red Hat Gluster Storage volumes that back OpenShift PVs. Heketi manages allocation and de-allocation of Red Hat Gluster Storage volumes dynamically.

Red Hat Openshift Container Storage does not support a simultaneous deployment of converged and independent mode with the Ansible workflow. Therefore, you must deploy either converged mode or independent mode: you cannot mix both modes during deployment.

In independent mode, Heketi must have complete control of the Gluster cluster.

Chapter 3. Verify Prerequisites

This chapter provides the prerequisites that must be verified, before deployment, for the two use cases available in Containerized Red Hat Gluster Storage.

Support for Red Hat Enterprise Linux Atomic Host is deprecated as of Red Hat OpenShift Container Storage 3.11.5. Red Hat no longer recommends the use of Red Hat Enterprise Linux Atomic Host and does not support its use in new deployments. Existing deployments that upgrade to Red Hat OpenShift Container Storage 3.11.5 remain supported.

3.1. Converged mode

3.1.1. Supported Versions

For supported versions of OpenShift Container Platform with Red Hat Gluster Storage Server and Container-Native Storage, please see https://access.redhat.com/articles/3403951.

3.1.2. Environment Requirements

The requirements for Red Hat Enterprise Linux Atomic Host, Red Hat OpenShift Container Platform, Red Hat Enterprise Linux, and Red Hat Gluster Storage are described in this section. A Red Hat Gluster Storage Container Native with OpenShift Container Platform environment consists of Red Hat OpenShift Container Platform installed on either Red Hat Enterprise Linux Atomic Host or Red Hat Enterprise Linux.

3.1.2.1. Installing Red Hat Openshift Container Storage with OpenShift Container Platform on Red Hat Enterprise Linux 7

This section describes the procedures to install Red Hat Gluster Storage Container Native with OpenShift Container Platform on Red Hat Enterprise Linux 7 based OpenShift Container Platform 3.11.

3.1.2.1.1. Setting up the Openshift Master as the Client

You can use the OpenShift Master as a client to execute the oc commands across the cluster when installing OpenShift. Generally, this is set up as a non-scheduled node in the cluster. This is the default configuration when using the OpenShift installer. You can also choose to install the client on your local machine to access the cluster remotely. For more information, see https://access.redhat.com/documentation/en-us/openshift_container_platform/3.11/html/cli_reference/cli-reference-get-started-cli#installing-the-cli.

Install heketi-client package

Execute the following commands to install the heketi-client package.

# subscription-manager repos --enable=rh-gluster-3-client-for-rhel-7-server-rpms

# yum install heketi-client

3.1.2.2. OpenShift Container Platform Options

- Container logs by default are not configured to be rotated or limited to a maximum size by docker. A log file can grow so large that it fills up the disk space if the container runs for long enough and generates enough logs.

To set log limits for containers on a host, --log-opt can be configured with max-size and max-file so that a container's logs are rolled over when they reach a maximum size and only a certain number of files are saved before being discarded.

# cat /etc/sysconfig/docker
OPTIONS='--insecure-registry=172.30.0.0/16 --selinux-enabled --log-opt max-size=50m --log-opt max-file=5'

If the above options are not set, the pod can be evicted once the logs get large.
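As a rough illustration of the log-limit check, the following shell sketch tests whether a docker OPTIONS string carries both --log-opt max-size and --log-opt max-file. The helper name has_log_limits is hypothetical and is not part of docker or OpenShift; the file path is the one shown in this section.

```shell
# has_log_limits OPTIONS_STRING  (hypothetical helper)
# Succeeds only when both log limits appear in the given string.
has_log_limits() {
    case "$1" in
        *"--log-opt max-size="*) ;;
        *) return 1 ;;
    esac
    case "$1" in
        *"--log-opt max-file="*) ;;
        *) return 1 ;;
    esac
    return 0
}

# Check this host only if the docker sysconfig file is readable.
if [ -r /etc/sysconfig/docker ]; then
    if has_log_limits "$(grep '^OPTIONS=' /etc/sysconfig/docker)"; then
        echo "log limits configured"
    else
        echo "log limits missing"
    fi
fi
```

The function only inspects the text of the options; it does not confirm that the docker daemon has been restarted with them.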

3.1.3. Red Hat OpenShift Container Platform and Red Hat Openshift Container Storage Requirements

The following list provides the Red Hat OpenShift Container Platform and Red Hat Openshift Container Storage requirements:

All OpenShift nodes on Red Hat Enterprise Linux systems must have glusterfs-client RPMs (glusterfs, glusterfs-client-xlators, glusterfs-libs, glusterfs-fuse) installed. You can verify if the RPMs are installed by running the following command:

# yum list glusterfs glusterfs-client-xlators glusterfs-libs glusterfs-fuse

The client RPMs must have the same version as the gluster-rhgs-server. The gluster-rhgs-server version is based on the selected OCS version.

For more information on installing native client packages, see https://access.redhat.com/documentation/en-us/red_hat_gluster_storage/3.5/html-single/administration_guide/index#Installing_Native_Client

3.1.4. Deployment and Scaling Guidelines

To prevent potential deployment or scaling issues, review the following guidelines before deploying converged mode with OpenShift Container Platform.

Ensure that the Trusted Storage Pool is appropriately sized and you have room for dynamic scaling on demand. This action ensures that you do not scale beyond the following maximum limits:

Sizing guidelines on converged mode

- Persistent volumes backed by the file interface: For typical operations, size for 500-800 persistent volumes backed by files per four-node converged mode cluster. The maximum limit of supported persistent volumes backed by the file interface is 2000 persistent volumes per four-node cluster in a converged mode deployment. Because micro-services can scale dynamically on demand, it is recommended that the initial sizing keep sufficient headroom for scaling. If additional scaling is needed, add a new four-node converged mode cluster to support additional persistent volumes.

The default limit for file-based persistent volumes per trusted storage pool is set to 1000 and the maximum supported limit is 2000. For the steps required to go beyond the default limit of 1000, up to the maximum of 2000, see How to have more PV’s beyond default limit in OCS?

- Persistent volumes backed by block-based storage: Size for a maximum of 300 persistent volumes per four-node converged mode cluster.

- Persistent volumes backed by file and block: Size for 300-500 persistent volumes (backed by files) and 100-200 persistent volumes (backed by block). 1000 gluster volumes including file PVs and block-hosting volumes.

- Minimum Red Hat Openshift Container Storage cluster size (4): It is recommended to have a minimum of 4 nodes in the Red Hat Openshift Container Storage cluster to adequately meet high-availability requirements. Although 3 nodes are required to create a persistent volume claim, the failure of one node in a 3 node cluster prevents the persistent volume claim from being created. The fourth node provides high-availability and allows the persistent volume claim to be created even if a node fails.

Minimum requirements: Each physical or virtual node that hosts a converged mode peer requires the following:

- a minimum of 8 GB RAM and 30 MB per persistent volume

- the same disk type

- the heketidb utilises a 2 GB distributed replica volume

- a minimum of 2 physical core pairs

2 physical core pairs translate to 4 vCPU for non hyper-threaded systems and 8 vCPU for hyper-threaded systems.
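The minimum requirements above can be turned into quick arithmetic. The following sketch assumes that the 30 MB per persistent volume is added on top of the 8 GB base and that 1 GB is 1024 MB. Both helper names are hypothetical.

```shell
# min_ram_mb PV_COUNT  (hypothetical helper)
# 8 GB base plus 30 MB per persistent volume, expressed in MB.
min_ram_mb() {
    echo $(( 8 * 1024 + 30 * $1 ))
}

# vcpus_for_core_pairs PAIRS HYPERTHREADED  (hypothetical helper)
# HYPERTHREADED is "yes" or "no". One core pair maps to 2 vCPU without
# hyper-threading and 4 vCPU with it, matching the 4 and 8 vCPU figures above.
vcpus_for_core_pairs() {
    if [ "$2" = "yes" ]; then
        echo $(( $1 * 4 ))
    else
        echo $(( $1 * 2 ))
    fi
}

min_ram_mb 500              # prints 23192 (MB for a node serving 500 PVs)
vcpus_for_core_pairs 2 no   # prints 4
vcpus_for_core_pairs 2 yes  # prints 8
```

Treat the RAM figure as a floor for planning rather than a tuned value; actual usage depends on the workload.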

Deployment guidelines on converged mode

- In converged mode, you can install the Red Hat Openshift Container Storage nodes, Heketi, and all provisioner pods on OpenShift Container Platform Infrastructure nodes or OpenShift Container Platform Application nodes.

- Red Hat Gluster Storage Container Native with OpenShift Container Platform supports up to 14 snapshots per volume by default (snap-max-hard-limit =14 in Heketi Template).

The required kernel version is kernel-3.10.0-862.14.4.el7.x86_64 or higher. Verify the installed and running kernel versions by running the following commands:

# rpm -q kernel
kernel-3.10.0-862.14.4.el7.x86_64

# uname -r
3.10.0-862.14.4.el7.x86_64
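The kernel check can also be scripted. The sketch below compares the running kernel with the required minimum using GNU `sort -V`. This is an approximation: version sort is not identical to RPM version comparison, and the helper name kernel_at_least is hypothetical.

```shell
# kernel_at_least CURRENT REQUIRED  (hypothetical helper)
# Succeeds when CURRENT sorts at or after REQUIRED under GNU version sort.
kernel_at_least() {
    lowest=$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)
    [ "$lowest" = "$2" ]
}

# Compare the running kernel against the minimum named in this section.
if kernel_at_least "$(uname -r)" "3.10.0-862.14.4.el7.x86_64"; then
    echo "running kernel meets the minimum"
else
    echo "running kernel is older than the minimum"
fi
```

When in doubt, the output of rpm -q kernel and uname -r remains the authoritative check.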

3.2. Independent mode

3.2.1. Supported Versions

For supported versions of OpenShift Container Platform with Red Hat Gluster Storage Server and Container-Native Storage, please see https://access.redhat.com/articles/3403951.

3.2.2. Environment Requirements

The requirements for Red Hat Enterprise Linux Atomic Host, Red Hat OpenShift Container Platform, Red Hat Enterprise Linux, and Red Hat Gluster Storage are described in this section. A Red Hat Gluster Storage Container Native with OpenShift Container Platform environment consists of Red Hat OpenShift Container Platform installed on either Red Hat Enterprise Linux Atomic Host or Red Hat Enterprise Linux.

3.2.2.1. Installing Red Hat Openshift Container Storage with OpenShift Container Platform on Red Hat Enterprise Linux 7

This section describes the procedures to install Red Hat Gluster Storage Container Native with OpenShift Container Platform on Red Hat Enterprise Linux 7 based OpenShift Container Platform 3.11.

3.2.2.1.1. Setting up the Openshift Master as the Client

You can use the OpenShift Master as a client to execute the oc commands across the cluster when installing OpenShift. Generally, this is set up as a non-scheduled node in the cluster. This is the default configuration when using the OpenShift installer. You can also choose to install the client on your local machine to access the cluster remotely. For more information, see https://access.redhat.com/documentation/en-us/openshift_container_platform/3.11/html/cli_reference/cli-reference-get-started-cli#installing-the-cli.

Install heketi-client package

Execute the following commands to install the heketi-client package.

# subscription-manager repos --enable=rh-gluster-3-client-for-rhel-7-server-rpms

# yum install heketi-client

3.2.2.2. OpenShift Container Platform Options

- Container logs by default are not configured to be rotated or limited to a maximum size by docker. A log file can grow so large that it fills up the disk space if the container runs for long enough and generates enough logs.

To set log limits for containers on a host, --log-opt can be configured with max-size and max-file so that a container's logs are rolled over when they reach a maximum size and only a certain number of files are saved before being discarded.

# cat /etc/sysconfig/docker
OPTIONS='--insecure-registry=172.30.0.0/16 --selinux-enabled --log-opt max-size=50m --log-opt max-file=5'

If the above options are not set, the pod can be evicted once the logs get large.

3.2.3. Red Hat OpenShift Container Platform and Red Hat Openshift Container Storage Requirements

The following list provides the Red Hat OpenShift Container Platform requirements:

All OpenShift nodes on Red Hat Enterprise Linux systems must have glusterfs-client RPMs (glusterfs, glusterfs-client-xlators, glusterfs-libs, glusterfs-fuse) installed. You can verify if the RPMs are installed by running the following command:

# yum list glusterfs glusterfs-client-xlators glusterfs-libs glusterfs-fuse

Ensure that the latest version of glusterfs-client RPMs are installed. The client RPMs must have the same version as the gluster-rhgs-server version. The gluster-rhgs-server version is based on the selected OCS version.

For more information on installing native client packages, see https://access.redhat.com/documentation/en-us/red_hat_gluster_storage/3.5/html-single/administration_guide/index#Installing_Native_Client

3.2.4. Red Hat Gluster Storage Requirements

The following list provides the details regarding the Red Hat Gluster Storage requirements:

- Installation of Heketi packages must have valid subscriptions to Red Hat Gluster Storage Server repositories.

- Red Hat Gluster Storage installations must adhere to the requirements outlined in the Red Hat Gluster Storage Installation Guide.

- The versions of Red Hat Enterprise OpenShift and Red Hat Gluster Storage integrated must be compatible, according to the information in Section 3.1.1, “Supported Versions”.

- A fully qualified domain name must be set for Red Hat Gluster Storage server node. Ensure that the correct DNS records exist and that the fully qualified domain name is resolvable via both forward and reverse DNS lookup.

To access GlusterFS volumes, the mount.glusterfs command must be available on all schedulable nodes. For RPM-based systems, the glusterfs-fuse package must be installed:

# yum install glusterfs-fuse

This package comes installed on every RHEL system. However, it is recommended to update to the latest available version from Red Hat Gluster Storage. To do this, the following RPM repository must be enabled:

# subscription-manager repos --enable=rh-gluster-3-client-for-rhel-7-server-rpms

If glusterfs-fuse is already installed on the nodes, ensure that the latest version is installed:

# yum update glusterfs-fuse

Restrictions for using Snapshot

After a snapshot is created, it must be accessed through the user-serviceable snapshots feature only. This can be used to copy the old versions of files into the required location.

Reverting the volume to a snapshot state is not supported and should never be done as it might damage the consistency of the data.

- On a volume with snapshots, volume changing operations, such as volume expansion, must not be performed.

3.2.5. Deployment and Scaling Guidelines

To prevent potential deployment or scaling issues, review the following guidelines before deploying independent mode with OpenShift Container Platform.

Ensure that the Trusted Storage Pool is appropriately sized and you have room for dynamic scaling on demand. This action ensures that you do not scale beyond the following maximum limits:

Sizing guidelines on Independent mode

- Persistent volumes backed by the file interface: For typical operations, size for 500-800 persistent volumes backed by files per four-node independent mode cluster. The maximum limit of supported persistent volumes backed by the file interface is 2000 persistent volumes per four-node cluster in an independent mode deployment. Because micro-services can scale dynamically on demand, it is recommended that the initial sizing keep sufficient headroom for scaling. If additional scaling is needed, add a new four-node independent mode cluster to support additional persistent volumes.

The default limit for file-based persistent volumes per trusted storage pool is set to 1000 and the maximum supported limit is 2000. For the steps required to go beyond the default limit of 1000, up to the maximum of 2000, see How to have more PV’s beyond default limit in OCS?

- Persistent volumes backed by block-based storage: Size for a maximum of 300 persistent volumes per four-node independent mode cluster.

- Persistent volumes backed by file and block: Size for 300-500 persistent volumes (backed by files) and 100-200 persistent volumes (backed by block). 1000 gluster volumes including file PVs and block-hosting volumes.

- Volume types: 3-way distributed-replicated volumes and arbitrated volumes are the only supported volume types.

- Minimum Red Hat Openshift Container Storage cluster size (4): It is recommended to have a minimum of 4 nodes in the Red Hat Openshift Container Storage cluster to adequately meet high-availability requirements. Although 3 nodes are required to create a persistent volume claim, the failure of one node in a 3 node cluster prevents the persistent volume claim from being created. The fourth node provides high-availability and allows the persistent volume claim to be created even if a node fails.

Minimum requirements: Each physical or virtual node that hosts a Red Hat Gluster Storage independent mode peer requires the following:

- a minimum of 8 GB RAM and 30 MB per persistent volume.

- the same disk type.

- the heketidb utilises a 2 GB distributed replica volume.

- a minimum of 2 physical core pairs.

2 physical core pairs translate to 4 vCPU for non hyper-threaded systems and 8 vCPU for hyper-threaded systems.

Deployment guidelines on independent mode

- In independent mode, you can install Heketi and all provisioner pods on OpenShift Container Platform Infrastructure nodes or on OpenShift Container Platform Application nodes.

- Red Hat Gluster Storage Container Native with OpenShift Container Platform supports up to 14 snapshots per volume by default (snap-max-hard-limit =14 in Heketi Template).

The required kernel version is kernel-3.10.0-862.14.4.el7.x86_64 or higher. Verify the installed and running kernel versions by running the following commands:

# rpm -q kernel
kernel-3.10.0-862.14.4.el7.x86_64

# uname -r
3.10.0-862.14.4.el7.x86_64

Part II. Deploy

Chapter 4. Deploying Containerized Storage in Converged Mode

Before following the deployment workflow for your preferred solution, make sure to review Section 4.1, “Specify Advanced Installer Variables” to understand the Ansible variable and playbook recommendations and requirements.

To set up storage for containers on top of an OpenShift Cluster, select the workflow that meets your objectives.

| Deployment workflow | Registry | Metrics | Logging | Applications |

|---|---|---|---|---|

| Section 4.2, “Deploying Red Hat Openshift Container Storage in Converged Mode” | ✔ | |||

| Section 4.3, “Deploying Red Hat Openshift Container Storage in Converged Mode with Registry” | ✔ | |||

| ✔ | ✔ | |||

| ✔ | ✔ | ✔ | ✔ |

- Red Hat Openshift Container Storage does not support a simultaneous deployment of converged and independent mode with the Ansible workflow. Therefore, you must deploy either converged mode or independent mode: you cannot mix both modes during deployment.

- S3 is deployed manually and not through the Ansible installer. For more information on manual deployment, see https://access.redhat.com/documentation/en-us/red_hat_openshift_container_storage/3.11/html-single/operations_guide/#S3_Object_Store

The new registry name, registry.redhat.io, is used throughout this guide.

However, if you have not yet migrated to the new registry, replace all occurrences of registry.redhat.io with registry.access.redhat.com wherever applicable.

4.1. Specify Advanced Installer Variables

The cluster installation process, as documented in https://access.redhat.com/documentation/en-us/openshift_container_platform/3.11/html-single/installing_clusters/#install-planning, can be used to install one or both of the GlusterFS node groups:

- glusterfs: A general storage cluster for use by user applications.

- glusterfs-registry: A dedicated storage cluster for use by infrastructure applications such as an integrated OpenShift Container Registry.

It is recommended to deploy both groups to avoid potential impacts on performance in I/O and volume creation. Both of these are defined in the inventory hosts file.

The definition of the clusters is done by including the relevant names in the [OSEv3:children] group, creating similarly named groups, and then populating the groups with the node information. The clusters can then be configured through a variety of variables in the [OSEv3:vars] group. glusterfs variables begin with openshift_storage_glusterfs_ and glusterfs-registry variables begin with openshift_storage_glusterfs_registry_. A few other variables, such as openshift_hosted_registry_storage_kind, interact with the GlusterFS clusters.

It is recommended to specify image names and version tags for all containerized components. This is to prevent components such as the Red Hat Gluster Storage pods from upgrading after an outage, which might lead to a cluster of widely disparate software versions. The relevant variables are as follows:

- openshift_storage_glusterfs_image

- openshift_storage_glusterfs_block_image

- openshift_storage_glusterfs_heketi_image

The following are the recommended values for this release of Red Hat Openshift Container Storage:

- openshift_storage_glusterfs_image=registry.redhat.io/rhgs3/rhgs-server-rhel7:v3.11.8

- openshift_storage_glusterfs_block_image=registry.redhat.io/rhgs3/rhgs-gluster-block-prov-rhel7:v3.11.8

- openshift_storage_glusterfs_heketi_image=registry.redhat.io/rhgs3/rhgs-volmanager-rhel7:v3.11.8

- openshift_storage_glusterfs_s3_server_image=registry.redhat.io/rhgs3/rhgs-s3-server-rhel7:v3.11.8
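To illustrate the recommendation to pin version tags, the following sketch checks whether an image reference ends in an explicit tag other than latest. The helper name image_is_pinned is hypothetical, and the check is purely textual: it does not contact the registry or confirm that the tag exists.

```shell
# image_is_pinned IMAGE  (hypothetical helper)
# Succeeds when IMAGE carries an explicit tag that is not "latest".
image_is_pinned() {
    ref=${1##*/}              # drop registry and namespace, keep "name[:tag]"
    case "$ref" in
        *:latest) return 1 ;; # a floating tag does not pin a version
        *:?*)     return 0 ;; # any other non-empty tag counts as pinned
        *)        return 1 ;; # no tag at all
    esac
}

# Example with one of the values listed in this section.
if image_is_pinned "registry.redhat.io/rhgs3/rhgs-server-rhel7:v3.11.8"; then
    echo "image tag is pinned"
fi
```

Stripping everything up to the last slash first keeps a registry port, such as a host written with :5000, from being mistaken for a tag.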

For a complete list of variables, see https://github.com/openshift/openshift-ansible/tree/release-3.11/roles/openshift_storage_glusterfs on GitHub.

Once the variables are configured, there are several playbooks available depending on the circumstances of the installation:

- The main playbook for cluster installations can be used to deploy the GlusterFS clusters in tandem with an initial installation of OpenShift Container Platform. This includes deploying an integrated OpenShift Container Registry that uses GlusterFS storage.

- /usr/share/ansible/openshift-ansible/playbooks/openshift-glusterfs/config.yml can be used to deploy the clusters onto an existing OpenShift Container Platform installation.

- /usr/share/ansible/openshift-ansible/playbooks/openshift-glusterfs/registry.yml can be used to deploy the clusters onto an existing OpenShift Container Platform installation. In addition, this will deploy an integrated OpenShift Container Registry, which uses GlusterFS storage.

Important: There must not be a pre-existing registry in the OpenShift Container Platform cluster.

- playbooks/openshift-glusterfs/uninstall.yml can be used to remove existing clusters matching the configuration in the inventory hosts file. This is useful for cleaning up the Red Hat Openshift Container Storage environment in the case of a failed deployment due to configuration errors.

Note: The GlusterFS playbooks are not guaranteed to be idempotent. Running the playbooks more than once for a given installation is currently not supported without deleting the entire GlusterFS installation (including disk data) and starting over.

4.2. Deploying Red Hat Openshift Container Storage in Converged Mode

In your inventory file, include the following variables in the [OSEv3:vars] section, adjusting them as needed for your configuration:

Note: openshift_storage_glusterfs_block_host_vol_size takes an integer, which is the size of the volume in Gi.

In your inventory file, add glusterfs in the [OSEv3:children] section to enable the [glusterfs] group:

[OSEv3:children]
masters
etcd
nodes
glusterfs

Add a [glusterfs] section with entries for each storage node that will host the GlusterFS storage. For each node, set glusterfs_devices to a list of raw block devices that will be completely managed as part of a GlusterFS cluster. There must be at least one device listed. Each device must be bare, with no partitions or LVM PVs. Specifying the variable takes the form:

<hostname_or_ip> glusterfs_zone=<zone_number> glusterfs_devices='[ "</path/to/device1/>", "</path/to/device2>", ... ]'

For example:

[glusterfs]
node103.example.com glusterfs_zone=1 glusterfs_devices='["/dev/sdd"]'
node104.example.com glusterfs_zone=2 glusterfs_devices='["/dev/sdd"]'
node105.example.com glusterfs_zone=3 glusterfs_devices='["/dev/sdd"]'

Add the hosts listed under [glusterfs] to the [nodes] group:

[nodes]
...
node103.example.com openshift_node_group_name="node-config-infra"
node104.example.com openshift_node_group_name="node-config-infra"
node105.example.com openshift_node_group_name="node-config-infra"

The preceding steps detail options that need to be added to a larger, complete inventory file. To use the complete inventory file to deploy GlusterFS, provide the file path as an option to the following playbooks:

For an initial OpenShift Container Platform installation:

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/prerequisites.yml
ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/deploy_cluster.yml

For a standalone installation onto an existing OpenShift Container Platform cluster:

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/openshift-glusterfs/config.yml

- To verify the deployment, see Section 4.8, “Verify your Deployment”.
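The [glusterfs] host entries in this section follow a regular pattern, so they can be generated rather than typed by hand. The following is a minimal sketch and is not part of openshift-ansible: the function name glusterfs_host_lines is hypothetical, and it assumes one zone per host, numbered in order, with the same device list on every node.

```shell
# Hypothetical helper: print a [glusterfs] inventory section.
# $1 is the device list; the remaining arguments are hostnames.
# Assumption: each host gets its own zone, numbered from 1.
glusterfs_host_lines() {
    devices=$1
    shift
    zone=1
    echo "[glusterfs]"
    for host in "$@"; do
        printf "%s glusterfs_zone=%d glusterfs_devices='%s'\n" "$host" "$zone" "$devices"
        zone=$((zone + 1))
    done
}

glusterfs_host_lines '["/dev/sdd"]' node103.example.com node104.example.com node105.example.com
```

The output can be pasted into the inventory file and adjusted if several nodes share a zone or use different devices.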

4.3. Deploying Red Hat Openshift Container Storage in Converged Mode with Registry

In your inventory file, include the following variables in the [OSEv3:vars] section, adjusting them as needed for your configuration:

In your inventory file, set the following variables under [OSEv3:vars]:

[OSEv3:vars]
...
openshift_hosted_registry_storage_kind=glusterfs
openshift_hosted_registry_storage_volume_size=5Gi
openshift_hosted_registry_selector='node-role.kubernetes.io/infra=true'

Add glusterfs_registry in the [OSEv3:children] section to enable the [glusterfs_registry] group:

[OSEv3:children]
masters
etcd
nodes
glusterfs_registry

Add a [glusterfs_registry] section with entries for each storage node that will host the GlusterFS storage. For each node, set glusterfs_devices to a list of raw block devices that will be completely managed as part of a GlusterFS cluster. There must be at least one device listed. Each device must be bare, with no partitions or LVM PVs. Specifying the variable takes the form:

<hostname_or_ip> glusterfs_zone=<zone_number> glusterfs_devices='[ "</path/to/device1/>", "</path/to/device2>", ... ]'

For example:

[glusterfs_registry]
node106.example.com glusterfs_zone=1 glusterfs_devices='["/dev/sdd"]'
node107.example.com glusterfs_zone=2 glusterfs_devices='["/dev/sdd"]'
node108.example.com glusterfs_zone=3 glusterfs_devices='["/dev/sdd"]'

Add the hosts listed under [glusterfs_registry] to the [nodes] group:

[nodes]
...
node106.example.com openshift_node_group_name="node-config-compute"
node107.example.com openshift_node_group_name="node-config-compute"
node108.example.com openshift_node_group_name="node-config-compute"

The preceding steps detail options that need to be added to a larger, complete inventory file. To use the complete inventory file to deploy Red Hat Gluster Storage, provide the file path as an option to the following playbooks:

For an initial OpenShift Container Platform installation:

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/prerequisites.yml
ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/deploy_cluster.yml

For a standalone installation onto an existing OpenShift Container Platform cluster:

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/openshift-glusterfs/config.yml

- To verify the deployment, see Section 4.8, “Verify your Deployment”.

4.4. Deploying Red Hat Openshift Container Storage in Converged Mode with Logging and Metrics

In your inventory file, set the following variables under [OSEv3:vars]:

Note: For more details about all the variables, see https://github.com/openshift/openshift-ansible/tree/release-3.11/roles/openshift_storage_glusterfs.

Add glusterfs_registry in the [OSEv3:children] section to enable the [glusterfs_registry] group:

[OSEv3:children]
masters
etcd
nodes
glusterfs_registry

Add a [glusterfs_registry] section with entries for each storage node that will host the GlusterFS storage. For each node, set glusterfs_devices to a list of raw block devices that will be completely managed as part of a GlusterFS cluster. There must be at least one device listed. Each device must be bare, with no partitions or LVM PVs. Specifying the variable takes the form:

<hostname_or_ip> glusterfs_zone=<zone_number> glusterfs_devices='[ "</path/to/device1/>", "</path/to/device2>", ... ]'

For example:

[glusterfs_registry]
node106.example.com glusterfs_zone=1 glusterfs_devices='["/dev/sdd"]'
node107.example.com glusterfs_zone=2 glusterfs_devices='["/dev/sdd"]'
node108.example.com glusterfs_zone=3 glusterfs_devices='["/dev/sdd"]'

Add the hosts listed under [glusterfs_registry] to the [nodes] group:

[nodes]
...
node106.example.com openshift_node_group_name="node-config-compute"
node107.example.com openshift_node_group_name="node-config-compute"
node108.example.com openshift_node_group_name="node-config-compute"

The preceding steps detail options that need to be added to a larger, complete inventory file. To use the complete inventory file to deploy Red Hat Gluster Storage, provide the file path as an option to the following playbooks:

For an initial OpenShift Container Platform installation:

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/prerequisites.yml
ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/deploy_cluster.yml

For a standalone installation onto an existing OpenShift Container Platform cluster:

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/openshift-glusterfs/config.yml
ansible-playbook -i <path_to_the_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/openshift-logging/config.yml
ansible-playbook -i <path_to_the_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/openshift-metrics/config.yml

- To verify the deployment, see Section 4.8, “Verify your Deployment”.

4.5. Deploying Red Hat Openshift Container Storage in Converged mode for Applications with Registry, Logging, and Metrics

In your inventory file, set the following variables under [OSEv3:vars]:

Note: Ensure that you set openshift_storage_glusterfs_block_deploy=false in this deployment scenario.

Add glusterfs and glusterfs_registry in the [OSEv3:children] section to enable the [glusterfs] and [glusterfs_registry] groups:

[OSEv3:children]
...
glusterfs
glusterfs_registry

Add [glusterfs] and [glusterfs_registry] sections with entries for each storage node that will host the GlusterFS storage. For each node, set glusterfs_devices to a list of raw block devices that will be completely managed as part of a GlusterFS cluster. There must be at least one device listed. Each device must be bare, with no partitions or LVM PVs. Specifying the variable takes the form:

<hostname_or_ip> glusterfs_zone=<zone_number> glusterfs_devices='[ "</path/to/device1/>", "</path/to/device2>", ... ]'

For example:

Add the hosts listed under [glusterfs] and [glusterfs_registry] to the [nodes] group:

The preceding steps detail options that need to be added to a larger, complete inventory file. To use the complete inventory file to deploy Red Hat Gluster Storage, provide the file path as an option to the following playbooks:

For an initial OpenShift Container Platform installation:

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/prerequisites.yml
ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/deploy_cluster.yml

For a standalone installation onto an existing OpenShift Container Platform cluster:

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/openshift-glusterfs/config.yml
ansible-playbook -i <path_to_the_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/openshift-logging/config.yml
ansible-playbook -i <path_to_the_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/openshift-metrics/config.yml

- To verify the deployment, see Section 4.8, “Verify your Deployment”.

4.6. Single OCS cluster installation

It is possible to support both general-application storage and infrastructure storage in a single OCS cluster. To do this, the inventory file options will change slightly for logging and metrics. This is because when there is only one cluster, the gluster-block StorageClass would be glusterfs-storage-block.

The registry PV will be created on this single cluster if the second cluster, [glusterfs_registry], does not exist. For high availability, it is very important to have four nodes for this cluster. Special attention should be given to choosing the size for openshift_storage_glusterfs_block_host_vol_size.

This is the hosting volume for gluster-block devices that will be created for logging and metrics. Make sure that the size can accommodate all these block volumes and have sufficient storage if another hosting volume must be created.

openshift_storage_glusterfs_block_host_vol_size takes an integer, which is the size of the volume in Gi.
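As a rough aid for choosing this value, the planned block volume sizes can be summed and padded with some headroom. The sketch below is only an illustration: the function name block_host_vol_size is hypothetical, and the 10% headroom and the example volume sizes are assumptions, not documented recommendations.

```shell
# Hypothetical helper: suggest a block hosting volume size in Gi.
# $1: headroom in percent (an assumed safety margin, not a documented value)
# remaining arguments: planned gluster-block volume sizes in Gi
block_host_vol_size() {
    headroom_pct=$1
    shift
    total=0
    for size in "$@"; do
        total=$((total + size))
    done
    # Apply the headroom and round up to a whole Gi.
    echo $(( (total * (100 + headroom_pct) + 99) / 100 ))
}

# Example: three 10Gi logging volumes and one 20Gi metrics volume
# (hypothetical sizes), with 10% headroom.
block_host_vol_size 10 10 10 10 20
```

With these inputs the function prints 55, which would then be used as the integer value of openshift_storage_glusterfs_block_host_vol_size.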

4.7. Configure Heketi to Place Bricks Across Zones

Heketi uses node zones as a hint for brick placement. To force Heketi to strictly place replica bricks in different zones, the "strict zone checking" feature of Heketi must be enabled. When this feature is enabled, a volume is created successfully only if each brick set is spread across sufficiently many zones.

Ensure that the OCS nodes are labeled with the correct zones before configuring StorageClass to use Heketi’s strict zoning.

You can configure this feature by adding the "volumeoptions" field with the desired setting in the parameters section of the StorageClass. For example:

volumeoptions: "user.heketi.zone-checking strict"

OR

volumeoptions: "user.heketi.zone-checking none"

The settings are as follows:

- strict: Requires at least 3 nodes to be present in different zones (assuming replica 3).

- none: Previous (and current default) behavior.

A sample StorageClass file with "strict zone checking" feature configured is shown below:
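A minimal sketch of such a StorageClass follows, written to a file through a shell heredoc so that it can be reviewed before use. The class name, resturl, secret name, and secret namespace are placeholder assumptions to replace with the values from your own deployment; only the volumeoptions line comes from the text above.

```shell
# Write a sample StorageClass with strict zone checking enabled.
# Assumption: name, resturl, and secret values are placeholders.
sc_file=${SC_FILE:-glusterfs-storage-strict.yaml}
cat > "$sc_file" <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: glusterfs-storage-strict
provisioner: kubernetes.io/glusterfs
parameters:
  resturl: "http://heketi-storage.example.com"
  restuser: "admin"
  secretNamespace: "app-storage"
  secretName: "heketi-storage-admin-secret"
  volumetype: "replicate:3"
  volumeoptions: "user.heketi.zone-checking strict"
EOF
echo "wrote $sc_file"
```

After adjusting the placeholders, the file can be loaded with oc create -f.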

Existing storage class specifications are not editable. You can create a new storage class with the required volume options for all future applications. However, if you need to change the settings of an existing storage class then the existing storage class must first be deleted and then a new storage class with the same name as the previous class has to be re-created.

Execute the following commands to delete and re-create the glusterfs-storage storage class with the new settings:

Export the storage class object to a yaml file:

# oc get sc glusterfs-storage --export=true -o yaml > glusterfs-storage.yaml

Use your preferred editor to add the new parameters.

Delete and re-create the storage class object:

# oc delete sc glusterfs-storage
# oc create -f glusterfs-storage.yaml

4.8. Verify your Deployment

Execute the following steps to verify the deployment.

Installation Verification for converged mode

Examine the installation for the app-storage namespace by running the following commands. This can be done from an OCP master node or the ansible deploy host that has the OC CLI installed.

Examine the installation for the infra-storage namespace by running the following commands. This can be done from an OCP master node or the ansible deploy host that has the OC CLI installed.

Check the existence of the registry PVC backed by OCP infrastructure Red Hat Openshift Container Storage. This volume was statically provisioned by openshift-ansible deployment.

oc get pvc -n default
NAME             STATUS    VOLUME                                     CAPACITY   ACCESSMODES   STORAGECLASS   AGE
registry-claim   Bound     pvc-7ca4c8de-10ca-11e8-84d3-069df2c4f284   25Gi       RWX                          1h

Check the registry DeploymentConfig to verify it’s using this glusterfs volume.

oc describe dc/docker-registry -n default | grep -A3 Volumes
  Volumes:
   registry-storage:
    Type:       PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)
    ClaimName:  registry-claim

Storage Provisioning Verification for Converged Mode

The Storage Class resources can be used to create new PV claims for verification of the RHOCS deployment. Validate PV provisioning using the following OCP Storage Class created during the RHOCS deployment:

- Use the glusterfs-storage-block OCP Storage Class resource to create new PV claims if you deployed RHOCS using Section 4.2, “Deploying Red Hat Openshift Container Storage in Converged Mode”.

Use the glusterfs-registry-block OCP Storage Class resource to create new PV claims if you deployed RHOCS using one of the following workflows:

- Section 4.3, “Deploying Red Hat Openshift Container Storage in Converged Mode with Registry”

- Section 4.4, “Deploying Red Hat Openshift Container Storage in Converged Mode with Logging and Metrics”

- Section 4.5, “Deploying Red Hat Openshift Container Storage in Converged mode for Applications with Registry, Logging, and Metrics”

# oc create -f pvc-file.yaml
# oc create -f pvc-block.yaml

Validate that the two PVCs and respective PVs are created correctly:

# oc get pvc
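For reference, here is a minimal sketch of what pvc-file.yaml and pvc-block.yaml might contain. The claim names and sizes are illustrative assumptions. The file claim assumes the glusterfs-storage StorageClass, and the block claim assumes glusterfs-storage-block; substitute glusterfs-registry-block if you deployed using one of the other workflows listed above.

```shell
# Write two sample PVC definitions: one file-backed, one block-backed.
# Assumption: claim names, sizes, and StorageClass names are examples only.
cat > pvc-file.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rhocs-file-claim1
spec:
  storageClassName: glusterfs-storage
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
EOF

cat > pvc-block.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: rhocs-block-claim1
spec:
  storageClassName: glusterfs-storage-block
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
EOF
```

Once both claims are created, oc get pvc should list them with a STATUS of Bound if provisioning succeeded.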

Using the heketi-client for Verification

The heketi-client package needs to be installed on the ansible deploy host or on an OCP master. Once it is installed, two new files should be created to easily export the required environment variables to run the heketi-client commands (or heketi-cli). The content of each file as well as useful heketi-cli commands are detailed here.

Create a new file (e.g. "heketi-exports-app") with the following contents:

export HEKETI_POD=$(oc get pods -l glusterfs=heketi-storage-pod -n app-storage -o jsonpath="{.items[0].metadata.name}")
export HEKETI_CLI_SERVER=http://$(oc get route/heketi-storage -n app-storage -o jsonpath='{.spec.host}')
export HEKETI_CLI_KEY=$(oc get pod/$HEKETI_POD -n app-storage -o jsonpath='{.spec.containers[0].env[?(@.name=="HEKETI_ADMIN_KEY")].value}')
export HEKETI_ADMIN_KEY_SECRET=$(echo -n ${HEKETI_CLI_KEY} | base64)
export HEKETI_CLI_USER=admin

Source the file to create the HEKETI app-storage environment variables:

Create a new file (e.g. "heketi-exports-infra") with the following contents:

export HEKETI_POD=$(oc get pods -l glusterfs=heketi-registry-pod -n infra-storage -o jsonpath="{.items[0].metadata.name}")
export HEKETI_CLI_SERVER=http://$(oc get route/heketi-registry -n infra-storage -o jsonpath='{.spec.host}')
export HEKETI_CLI_USER=admin
export HEKETI_CLI_KEY=$(oc get pod/$HEKETI_POD -n infra-storage -o jsonpath='{.spec.containers[0].env[?(@.name=="HEKETI_ADMIN_KEY")].value}')
export HEKETI_ADMIN_KEY_SECRET=$(echo -n ${HEKETI_CLI_KEY} | base64)

Source the file to create the HEKETI infra-storage environment variables:
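Before running heketi-cli, it can help to confirm that sourcing one of these files actually populated the variables, since an oc lookup that fails leaves them empty. The helper below is a hypothetical sketch (heketi_env_ok is not part of heketi-client) and assumes the file names suggested above.

```shell
# Hypothetical helper: confirm that the variables exported by a
# heketi-exports-* file are set before calling heketi-cli.
heketi_env_ok() {
    for name in HEKETI_CLI_SERVER HEKETI_CLI_USER HEKETI_CLI_KEY; do
        eval "value=\${$name:-}"
        if [ -z "$value" ]; then
            echo "missing: $name" >&2
            return 1
        fi
    done
    return 0
}

# Usage, once the export file exists (file name as suggested above):
#   source heketi-exports-app
#   heketi_env_ok && heketi-cli cluster list
```

The same check applies after sourcing heketi-exports-infra to work against the infra-storage cluster.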

4.9. Creating an Arbiter Volume (optional)

Arbiter volumes support all persistent volume types with similar consistency and lower disk space requirements. An arbitrated replicated volume, or arbiter volume, acts like a three-way replicated volume where every third brick is a special type of brick called an arbiter. Arbiter bricks do not store file data; they only store file names, structure, and metadata. The arbiter uses client quorum to compare this metadata with the metadata of the other nodes to ensure consistency in the volume and prevent split-brain conditions.

Advantages of arbitrated replicated volumes:

- Similar consistency: When an arbiter is configured, arbitration logic uses client-side quorum in auto mode to prevent file operations that would lead to split-brain conditions.

- Less disk space required: Because an arbiter brick only stores file names and metadata, an arbiter brick can be much smaller than the other bricks in the volume.

For more information about Arbitrated Replicated Volumes, see https://access.redhat.com/documentation/en-us/red_hat_gluster_storage/3.5/html-single/administration_guide/index#Creating_Arbitrated_Replicated_Volumes

Before creating the arbiter volume, make sure heketi-client packages are installed.

# subscription-manager repos --enable=rh-gluster-3-for-rhel-7-server-rpms

# yum install heketi-client

If you want to upgrade your existing Heketi server, see https://access.redhat.com/documentation/en-us/red_hat_openshift_container_storage/3.11/html-single/deployment_guide/index#upgrade_heketi_rhgs

Arbiter volumes may not be appropriate for small-file or unpredictable file-size workloads, as these could fill up the arbiter bricks faster than the data bricks. If you want to use an arbiter volume, we recommend that you choose a conservative average file size based on the size of the data brick and the number of files so that the arbiter brick can accommodate your workload.
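To see how the average file size drives arbiter capacity, the estimate can be worked through numerically. The sketch below assumes a rule of thumb of roughly 4 KiB of arbiter space per file, with the file count approximated as data brick size divided by average file size; treat both the rule and the function name arbiter_brick_kib as assumptions and check them against the Administration Guide linked above.

```shell
# Estimate the minimum arbiter brick size in KiB.
# Assumed rule of thumb: 4 KiB of arbiter space per file, where the file
# count is approximated as data brick size / average file size.
# $1: data brick size in GiB, $2: average file size in KiB
arbiter_brick_kib() {
    brick_kib=$(( $1 * 1024 * 1024 ))
    files=$(( brick_kib / $2 ))
    echo $(( files * 4 ))
}

# Example: a 200 GiB data brick with a 64 KiB average file size.
arbiter_brick_kib 200 64
```

For this example the function prints 13107200, that is, 12.5 GiB of arbiter space. Halving the assumed average file size doubles the estimate, which is why a conservative (small) average is the safer choice.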

4.9.1. Creating an Arbiter Volume

An arbiter volume can be created using the Heketi CLI or by updating the storageclass file.

4.9.1.1. Creating an Arbiter Volume using Heketi CLI

To create an arbiter volume using the Heketi CLI, request a replica 3 volume and provide the Heketi-specific volume option “user.heketi.arbiter true”, which instructs the system to create the arbiter variant of replica 3.

For example:

# heketi-cli volume create --size=4 --gluster-volume-options='user.heketi.arbiter true'

4.9.1.2. Creating an Arbiter Volume using the Storageclass file

To create an arbiter volume using the storageclass file, include the following two parameters in the storageclass file:

- user.heketi.arbiter true

- (Optional) user.heketi.average-file-size 1024

Following is a sample storageclass file:
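A minimal sketch of such a storageclass file is given here, written through a shell heredoc. The class name, resturl, and secret values are placeholder assumptions; the two options in volumeoptions are the parameters listed above, joined with a comma.

```shell
# Write a sample StorageClass that requests arbiter volumes.
# Assumption: name, resturl, and secret values are placeholders.
sc_file=${SC_FILE:-glusterfs-storage-arbiter.yaml}
cat > "$sc_file" <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: glusterfs-storage-arbiter
provisioner: kubernetes.io/glusterfs
parameters:
  resturl: "http://heketi-storage.example.com"
  restuser: "admin"
  secretNamespace: "app-storage"
  secretName: "heketi-storage-admin-secret"
  volumetype: "replicate:3"
  volumeoptions: "user.heketi.arbiter true,user.heketi.average-file-size 1024"
EOF
echo "wrote $sc_file"
```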

4.9.2. Creating Block Hosting Volume as an Arbiter Volume

There are no changes to the storageclass file.

To create a block hosting volume as an arbiter volume, execute the following:

Edit the configuration file under Glusterfs section in Heketi deployment configuration by adding the following environment variable and value:

HEKETI_BLOCK_HOSTING_VOLUME_OPTIONS: group gluster-block,user.heketi.arbiter true

Create a block volume using the Heketi CLI.

# heketi-cli blockvolume create --size=100

Ensure that the block hosting volume is an arbiter volume.

# gluster v info

Note: For information about managing arbiter volumes, see Chapter 10, Managing Arbitrated Replicated Volumes.
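The check against the gluster v info output can be scripted. The sketch below assumes that gluster marks arbiter bricks with an "(arbiter)" suffix in the brick list; the helper name has_arbiter_brick is hypothetical.

```shell
# Hypothetical helper: read `gluster v info` output on stdin and
# succeed if any brick is marked as an arbiter.
# Assumption: arbiter bricks are listed with an "(arbiter)" suffix.
has_arbiter_brick() {
    grep -q '(arbiter)'
}

# Usage on a Red Hat Gluster Storage node:
#   gluster v info <volume_name> | has_arbiter_brick && echo "arbiter volume"
```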

Chapter 5. Deploying Container Storage in Independent Mode

Before following the deployment workflow for your preferred solution, make sure to complete Section 5.1, “Setting up a RHGS Cluster” and review the section on specifying installer variables to understand ansible variable and playbook recommendations and requirements.

To set up storage to containers as a stand-alone Red Hat Gluster Storage cluster, select the workflow that meets your objectives.

| Deployment workflow | Registry | Metrics | Logging | Applications |
|---|---|---|---|---|
| Section 5.3, “Deploying Red Hat Openshift Container Storage in Independent Mode” | ✔ | | | |
| | ✔ | ✔ | ✔ | ✔ |

- Red Hat Openshift Container Storage does not support a simultaneous deployment of converged and independent mode with ansible workflow. Therefore, you must deploy either converged mode or independent mode: you cannot mix both modes during deployment.

- S3 is deployed manually and not through the Ansible installer. For more information on manual deployment, see https://access.redhat.com/documentation/en-us/red_hat_openshift_container_storage/3.11/html-single/operations_guide/#S3_Object_Store

The new registry name, registry.redhat.io, is used throughout this guide.

However, if you have not migrated to the new registry yet, replace all occurrences of registry.redhat.io with registry.access.redhat.com wherever applicable.

5.1. Setting up a RHGS Cluster

In an independent mode setup, a dedicated Red Hat Gluster Storage cluster is available external to the OpenShift Container Platform. The storage is provisioned from the Red Hat Gluster Storage cluster.

5.1.1. Installing Red Hat Gluster Storage Server on Red Hat Enterprise Linux (Layered Install)

Layered install involves installing Red Hat Gluster Storage over Red Hat Enterprise Linux.

It is recommended to create a separate /var partition that is large enough (50GB - 100GB) for log files, geo-replication related miscellaneous files, and other files.

Perform a base install of Red Hat Enterprise Linux 7 Server

Independent mode is supported only on Red Hat Enterprise Linux 7.

Register the System with Subscription Manager

Run the following command and enter your Red Hat Network username and password to register the system with the Red Hat Network:

# subscription-manager register

Identify Available Entitlement Pools

Run the following commands to find entitlement pools containing the repositories required to install Red Hat Gluster Storage:

# subscription-manager list --available

Attach Entitlement Pools to the System

Use the pool identifiers located in the previous step to attach the Red Hat Enterprise Linux Server and Red Hat Gluster Storage entitlements to the system. Run the following command to attach the entitlements:

# subscription-manager attach --pool=[POOLID]

For example:

# subscription-manager attach --pool=8a85f9814999f69101499c05aa706e47

Enable the Required Channels

Run the following commands to enable the repositories required to install Red Hat Gluster Storage 3.5 on Red Hat Enterprise Linux 7.7:

# subscription-manager repos --enable=rhel-7-server-rpms
# subscription-manager repos --enable=rh-gluster-3-for-rhel-7-server-rpms
# subscription-manager repos --enable=rhel-7-server-extras-rpms

Verify if the Channels are Enabled

Run the following command to verify if the channels are enabled:

# yum repolist

Update all packages

Ensure that all packages are up to date by running the following command.

# yum update

Kernel Version Requirement

Independent mode requires the kernel-3.10.0-862.14.4.el7.x86_64 version or higher to be used on the system. Verify the installed and running kernel versions by running the following command:

# rpm -q kernel
kernel-3.10.0-862.14.4.el7.x86_64

# uname -r
3.10.0-862.14.4.el7.x86_64

Important: If any kernel packages are updated, reboot the system with the following command.

# shutdown -r now

Install Red Hat Gluster Storage

Run the following command to install Red Hat Gluster Storage:

# yum install redhat-storage-server

To enable gluster-block, execute the following command:

# yum install gluster-block

Reboot

Reboot the system.
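After the reboot, the kernel version requirement stated earlier in this procedure can be re-checked with a short script instead of comparing version strings by eye. This is a sketch only: the function name kernel_at_least is hypothetical, and it relies on the -V (version sort) option of GNU sort.

```shell
# Succeed if kernel release $1 is at least the required release $2.
# Relies on GNU sort's -V (version sort) to order the two strings.
kernel_at_least() {
    lowest=$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)
    [ "$lowest" = "$2" ]
}

required=3.10.0-862.14.4.el7.x86_64
if kernel_at_least "$(uname -r)" "$required"; then
    echo "kernel OK"
else
    echo "kernel older than $required"
fi
```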

5.1.2. Configuring Port Access

This section provides information about the ports that must be open for the independent mode.

Red Hat Gluster Storage Server uses the listed ports. You must ensure that the firewall settings do not prevent access to these ports.

Execute the following commands to open the required ports for both runtime and permanent configurations on all Red Hat Gluster Storage nodes:

# firewall-cmd --zone=zone_name --add-port=24010/tcp --add-port=3260/tcp --add-port=111/tcp --add-port=22/tcp --add-port=24007/tcp --add-port=49152-49664/tcp

# firewall-cmd --zone=zone_name --add-port=24010/tcp --add-port=3260/tcp --add-port=111/tcp --add-port=22/tcp --add-port=24007/tcp --add-port=49152-49664/tcp --permanent

- Ports 24010 and 3260 are for gluster-blockd and iSCSI targets respectively.

- The port range starting at 49152 defines the range of ports that can be used by GlusterFS for communication to its volume bricks. In the above example, the total number of bricks allowed is 512. Configure the port range based on the maximum number of bricks that could be hosted on each node.

- If the option client.bind-insecure is set, Gluster native clients (including the gfapi clients) use the first available port which starts at port 1023 or 49152.
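The brick port range can be derived from the maximum number of bricks expected on a node. The sketch below follows the convention of the example above, where 512 bricks correspond to 49152-49664; the function name brick_port_range is hypothetical, and the script only prints the firewall-cmd commands so that they can be reviewed before being run.

```shell
# Compute the brick port range for a maximum number of bricks per node.
# Assumption: the range starts at 49152 and, following the example above,
# ends at 49152 + max_bricks (512 bricks -> 49152-49664).
brick_port_range() {
    start=49152
    echo "${start}-$((start + $1))"
}

range=$(brick_port_range 512)
# Print (rather than run) the commands so they can be reviewed first.
echo "firewall-cmd --zone=zone_name --add-port=${range}/tcp"
echo "firewall-cmd --zone=zone_name --add-port=${range}/tcp --permanent"
```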

5.1.3. Enabling Kernel Modules

You must ensure that the dm_thin_pool and target_core_user modules are loaded on the Red Hat Gluster Storage nodes. Execute the following commands to load the modules:

# modprobe target_core_user

# modprobe dm_thin_pool

Execute the following commands to verify that the modules are loaded:

# lsmod | grep dm_thin_pool

# lsmod | grep target_core_user

Note: To ensure these operations are persisted across reboots, create the following files and update each file with the content as mentioned:

# cat /etc/modules-load.d/dm_thin_pool.conf
dm_thin_pool

# cat /etc/modules-load.d/target_core_user.conf
target_core_user

You must ensure that the dm_multipath module is loaded on all OpenShift Container Platform nodes.

# modprobe dm_multipath

Execute the following command to verify that the module is loaded:

# lsmod | grep dm_multipath

Note: To ensure these operations are persisted across reboots, create the following file and update it with the content as mentioned:

# cat /etc/modules-load.d/dm_multipath.conf
dm_multipath
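The cat commands above show the expected file contents but not how to create the files. One possible way is sketched below. The helper names persist_module and module_loaded are hypothetical, and the optional second argument exists only so that each helper can be tried against a scratch location first.

```shell
#!/bin/sh
# Sketch: persist kernel modules across reboots and check whether they are loaded.
# Assumptions: persist_module and module_loaded are hypothetical helpers;
# the optional second argument is only there for trial runs.

persist_module() {
    module=$1
    conf_dir=${2:-/etc/modules-load.d}
    # Each file holds just the module name, matching the cat output above.
    printf '%s\n' "$module" > "${conf_dir}/${module}.conf"
}

module_loaded() {
    module=$1
    list=${2:-/proc/modules}
    # /proc/modules is the list that lsmod formats for display.
    grep -q "^${module} " "$list"
}
```

Run as root, persist_module dm_thin_pool and persist_module target_core_user would cover the Red Hat Gluster Storage nodes, and persist_module dm_multipath the OpenShift Container Platform nodes.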

5.1.4. Starting and Enabling Services

Execute the following commands to start and enable sshd, glusterd, and gluster-blockd:

# systemctl start sshd

# systemctl enable sshd

# systemctl start glusterd

# systemctl enable glusterd

# systemctl start gluster-blockd

# systemctl enable gluster-blockd
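The same start and enable sequence can be expressed as a loop, which may be convenient when the steps are repeated on every storage node. This is a sketch only: the function name start_and_enable and the SYSTEMCTL override (useful for a dry run with echo) are hypothetical.

```shell
#!/bin/sh
# Sketch: start and enable each named service, stopping at the first failure.
# Assumptions: start_and_enable is a hypothetical helper; SYSTEMCTL is a
# hypothetical override that defaults to the real systemctl.

start_and_enable() {
    for service in "$@"; do
        ${SYSTEMCTL:-systemctl} start "$service" || return 1
        ${SYSTEMCTL:-systemctl} enable "$service" || return 1
    done
}
```

For example, start_and_enable sshd glusterd gluster-blockd issues the six commands listed above in the same order.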

5.1.5. Creating 2 TB (or more) Block Volume

To create a block volume of 2 TB or more (up to a maximum of 2.5 TB) in independent mode, configure the GB_CLI_TIMEOUT parameter as follows:

- Edit the /etc/sysconfig/gluster-blockd configuration file. Uncomment the GB_CLI_TIMEOUT parameter and set its value to 900.
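The edit described above could also be scripted. The sketch below assumes that the parameter sits on its own line in the form #GB_CLI_TIMEOUT=<value> (or is already uncommented); the helper name set_gb_cli_timeout is hypothetical. Verify the file layout on your system before relying on it.

```shell
#!/bin/sh
# Sketch: uncomment GB_CLI_TIMEOUT and set it to 900.
# Assumptions: the parameter is on its own line as "#GB_CLI_TIMEOUT=<n>"
# or "GB_CLI_TIMEOUT=<n>"; set_gb_cli_timeout is a hypothetical helper.

set_gb_cli_timeout() {
    conf_file=${1:-/etc/sysconfig/gluster-blockd}
    timeout=${2:-900}
    tmp_file=$(mktemp) || return 1
    # Rewrite only the (possibly commented) parameter line.
    if sed -E "s/^[[:space:]]*#?[[:space:]]*GB_CLI_TIMEOUT=.*/GB_CLI_TIMEOUT=${timeout}/" \
        "$conf_file" > "$tmp_file"; then
        # Copy the content back so the original file keeps its ownership and mode.
        cat "$tmp_file" > "$conf_file"
        status=$?
    else
        status=1
    fi
    rm -f "$tmp_file"
    return "$status"
}
```

Called without arguments, the helper edits /etc/sysconfig/gluster-blockd in place; pass a different path to try it on a copy first.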

5.2. Specify Advanced Installer Variables

The cluster installation process, as documented in https://access.redhat.com/documentation/en-us/openshift_container_platform/3.11/html-single/installing_clusters/#install-planning, can be used to install one or both of the GlusterFS node groups:

- glusterfs: A general storage cluster for use by user applications.

- glusterfs-registry: A dedicated storage cluster for use by infrastructure applications such as an integrated OpenShift Container Registry.

It is recommended to deploy both groups to avoid potential impacts on performance in I/O and volume creation. Both of these are defined in the inventory hosts file.

The clusters are defined by including the relevant names in the [OSEv3:children] group, creating similarly named groups, and then populating the groups with the node information. The clusters can then be configured through a variety of variables in the [OSEv3:vars] group. glusterfs variables begin with openshift_storage_glusterfs_ and glusterfs-registry variables begin with openshift_storage_glusterfs_registry_. A few other variables, such as openshift_hosted_registry_storage_kind, interact with the GlusterFS clusters.

It is recommended to specify version tags for all containerized components. This is primarily to prevent components from upgrading after an outage, which might lead to a cluster of widely disparate software versions. The relevant variables are:

- openshift_storage_glusterfs_image

- openshift_storage_glusterfs_block_image

- openshift_storage_glusterfs_heketi_image

The image variable for gluster-block is necessary only if the corresponding deployment variable (the variable ending in _block_deploy) is true.

The recommended values for this release of Red Hat Openshift Container Storage are as follows:

- openshift_storage_glusterfs_image=registry.redhat.io/rhgs3/rhgs-server-rhel7:v3.11.8

- openshift_storage_glusterfs_block_image=registry.redhat.io/rhgs3/rhgs-gluster-block-prov-rhel7:v3.11.8

- openshift_storage_glusterfs_heketi_image=registry.redhat.io/rhgs3/rhgs-volmanager-rhel7:v3.11.8

- openshift_storage_glusterfs_s3_server_image=registry.redhat.io/rhgs3/rhgs-s3-server-rhel7:v3.11.8

For a complete list of variables, see https://github.com/openshift/openshift-ansible/tree/release-3.11/roles/openshift_storage_glusterfs on GitHub.
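Because unpinned images are the risk that the version tags guard against, a quick check of the inventory file may help. The sketch below assumes that the image variables appear as name=value lines, as in the list above; the helper unpinned_images is hypothetical and is not part of openshift-ansible.

```shell
#!/bin/sh
# Sketch: list GlusterFS image variables that are not pinned to a version tag.
# Assumptions: the inventory holds "name=value" lines as shown above;
# unpinned_images is a hypothetical helper, not part of openshift-ansible.

unpinned_images() {
    inventory=$1
    awk -F= '
        /^openshift_storage_glusterfs_[a-z0-9_]*image=/ {
            # Unpinned: no ":tag" after the last "/" or the floating "latest" tag.
            if ($2 !~ ":[^:/]+$" || $2 ~ ":latest$") print $1
        }
    ' "$inventory"
}
```

Running unpinned_images <path_to_inventory_file> prints the name of each image variable that still needs a tag, and prints nothing when all of them are pinned.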

Once the variables are configured, there are several playbooks available depending on the circumstances of the installation:

- The main playbook for cluster installations can be used to deploy the GlusterFS clusters in tandem with an initial installation of OpenShift Container Platform.

- This includes deploying an integrated OpenShift Container Registry that uses GlusterFS storage.

- /usr/share/ansible/openshift-ansible/playbooks/openshift-glusterfs/config.yml can be used to deploy the clusters onto an existing OpenShift Container Platform installation.

- /usr/share/ansible/openshift-ansible/playbooks/openshift-glusterfs/registry.yml can be used to deploy the clusters onto an existing OpenShift Container Platform installation. In addition, this deploys an integrated OpenShift Container Registry, which uses GlusterFS storage.

Important: The OpenShift Container Platform cluster must not contain a pre-existing registry.

Note: The GlusterFS playbooks are not guaranteed to be idempotent. Running the playbooks more than once for a given installation is not supported without deleting the entire GlusterFS installation (including disk data) and starting over.

5.3. Deploying Red Hat Openshift Container Storage in Independent Mode

In your inventory file, add glusterfs in the [OSEv3:children] section to enable the [glusterfs] group:

[OSEv3:children]
masters
etcd
nodes
glusterfs

Include the following variables in the [OSEv3:vars] section, adjusting them as needed for your configuration:

Note: openshift_storage_glusterfs_block_host_vol_size takes an integer, which is the size of the volume in Gi.

Add a [glusterfs] section with entries for each storage node that will host the GlusterFS storage. For each node, set glusterfs_devices to a list of raw block devices that will be completely managed as part of a GlusterFS cluster. There must be at least one device listed. Each device must be bare, with no partitions or LVM PVs. Also, set glusterfs_ip to the IP address of the node. Specifying the variable takes the form:

<hostname_or_ip> glusterfs_zone=<zone_number> glusterfs_ip=<ip_address> glusterfs_devices='[ "</path/to/device1/>", "</path/to/device2>", ... ]'

For example:

[glusterfs]
gluster1.example.com glusterfs_zone=1 glusterfs_ip=192.168.10.11 glusterfs_devices='[ "/dev/xvdc", "/dev/xvdd" ]'
gluster2.example.com glusterfs_zone=2 glusterfs_ip=192.168.10.12 glusterfs_devices='[ "/dev/xvdc", "/dev/xvdd" ]'
gluster3.example.com glusterfs_zone=3 glusterfs_ip=192.168.10.13 glusterfs_devices='[ "/dev/xvdc", "/dev/xvdd" ]'
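When there are many storage nodes, the host entries can be generated rather than typed. The sketch below prints one entry in the form shown above. The helper name glusterfs_host_line is hypothetical, and its arguments are the hostname, the zone number, the IP address, and then one or more device paths.

```shell
#!/bin/sh
# Sketch: print one [glusterfs] host entry in the form shown above.
# Assumption: glusterfs_host_line is a hypothetical helper; arguments are
# hostname, zone, IP address, then at least one raw block device path.

glusterfs_host_line() {
    if [ "$#" -lt 4 ]; then
        echo "usage: glusterfs_host_line <host> <zone> <ip> <device>..." >&2
        return 1
    fi
    host=$1 zone=$2 ip=$3
    shift 3
    devices=""
    for device in "$@"; do
        # Build the quoted, comma-separated list that goes inside the brackets.
        devices="${devices:+${devices}, }\"${device}\""
    done
    printf "%s glusterfs_zone=%s glusterfs_ip=%s glusterfs_devices='[ %s ]'\n" \
        "$host" "$zone" "$ip" "$devices"
}

echo "[glusterfs]"
glusterfs_host_line gluster1.example.com 1 192.168.10.11 /dev/xvdc /dev/xvdd
```

The helper refuses to print an entry without a device, which matches the requirement that at least one device is listed for each node.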

The preceding steps detail options that need to be added to a larger, complete inventory file. To use the complete inventory file to deploy GlusterFS, provide the file path as an option to the following playbooks:

For an initial OpenShift Container Platform installation:

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/prerequisites.yml

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/deploy_cluster.yml

For a standalone installation onto an existing OpenShift Container Platform cluster:

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/openshift-glusterfs/config.yml

Brick multiplexing is a feature that allows adding multiple bricks into one process. This reduces resource consumption and allows more bricks to run than before with the same memory consumption. Execute the following commands on one of the Red Hat Gluster Storage nodes on each cluster to enable brick multiplexing:

- Execute the following command to enable brick multiplexing:

# gluster vol set all cluster.brick-multiplex on

For example:

# gluster vol set all cluster.brick-multiplex on
Brick-multiplexing is supported only for container workloads(Independent or Converged mode). Also it is advised to make sure that either all volumes are in stopped state or no bricks are running before this option is modified.Do you still want to continue? (y/n) y
volume set: success

- Restart the heketidb volumes:

# gluster vol stop heketidbstorage
Stopping volume will make its data inaccessible. Do you want to continue? (y/n) y
volume stop: heketidbstorage: success

# gluster vol start heketidbstorage
volume start: heketidbstorage: success
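The commands above prompt for confirmation. If the steps need to run unattended, the gluster CLI's --mode=script option suppresses those prompts. The sketch below shows one way to combine the three commands. The function name enable_brick_multiplex and the GLUSTER override (for a dry run with echo) are hypothetical.

```shell
#!/bin/sh
# Sketch: enable brick multiplexing and restart heketidbstorage without prompts.
# Assumptions: enable_brick_multiplex is a hypothetical helper; GLUSTER is a
# hypothetical override for dry runs and defaults to the real gluster CLI.

enable_brick_multiplex() {
    gluster_cmd=${GLUSTER:-gluster}
    # --mode=script answers the y/n confirmation questions automatically.
    "$gluster_cmd" --mode=script vol set all cluster.brick-multiplex on || return 1
    "$gluster_cmd" --mode=script vol stop heketidbstorage || return 1
    "$gluster_cmd" --mode=script vol start heketidbstorage
}
```

Because the prompts are skipped, confirm beforehand that stopping heketidbstorage is acceptable at that moment.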

5.4. Deploying Red Hat Openshift Container Storage in Independent mode for Applications with Registry, Logging, and Metrics

In your inventory file, set the following variables under [OSEv3:vars]:

Note: Ensure that you set openshift_storage_glusterfs_block_deploy=false in this deployment scenario.

Add glusterfs and glusterfs_registry in the [OSEv3:children] section to enable the [glusterfs] and [glusterfs_registry] groups:

[OSEv3:children]
...
glusterfs
glusterfs_registry

Add [glusterfs] and [glusterfs_registry] sections with entries for each storage node that will host the GlusterFS storage. For each node, set glusterfs_devices to a list of raw block devices that will be completely managed as part of a GlusterFS cluster. There must be at least one device listed. Each device must be bare, with no partitions or LVM PVs. Specifying the variable takes the form:

<hostname_or_ip> glusterfs_zone=<zone_number> glusterfs_ip=<ip_address> glusterfs_devices='[ "</path/to/device1/>", "</path/to/device2>", ... ]'

For example:

The preceding steps detail options that need to be added to a larger, complete inventory file. To use the complete inventory file to deploy GlusterFS, provide the file path as an option to the following playbooks:

For an initial OpenShift Container Platform installation:

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/prerequisites.yml

ansible-playbook -i <path_to_inventory_file> /usr/share/ansible/openshift-ansible/playbooks/deploy_cluster.yml