OpenShift Container Storage is now OpenShift Data Foundation starting with version 4.9.

Scaling storage

Instructions for scaling operations in OpenShift Data Foundation

Abstract

Making open source more inclusive

Red Hat is committed to replacing problematic language in our code, documentation, and web properties. We are beginning with these four terms: master, slave, blacklist, and whitelist. Because of the enormity of this endeavor, these changes will be implemented gradually over several upcoming releases. For more details, see our CTO Chris Wright’s message.

Providing feedback on Red Hat documentation

We appreciate your input on our documentation. Do let us know how we can make it better. To give feedback:

For simple comments on specific passages:

- Make sure you are viewing the documentation in the Multi-page HTML format. In addition, ensure you see the Feedback button in the upper right corner of the document.

- Use your mouse cursor to highlight the part of text that you want to comment on.

- Click the Add Feedback pop-up that appears below the highlighted text.

- Follow the displayed instructions.

For submitting more complex feedback, create a Bugzilla ticket:

- Go to the Bugzilla website.

- In the Component section, choose documentation.

- Fill in the Description field with your suggestion for improvement. Include a link to the relevant part(s) of documentation.

- Click Submit Bug.

Preface

To scale the storage capacity of OpenShift Data Foundation in internal mode, you can do either of the following:

- Scale up storage nodes - Add storage capacity to the existing Red Hat OpenShift Data Foundation worker nodes

- Scale out storage nodes - Add new worker nodes containing storage capacity

For scaling your storage in external mode, see Red Hat Ceph Storage documentation.

Chapter 1. Requirements for scaling storage nodes

Before you proceed to scale the storage nodes, refer to the following sections to understand the node requirements for your specific Red Hat OpenShift Data Foundation instance:

- Platform requirements

Storage device requirements

Always ensure that you have plenty of storage capacity.

If storage ever fills completely, it is not possible to add capacity or delete or migrate content away from the storage to free up space completely. Full storage is very difficult to recover.

Capacity alerts are issued when cluster storage capacity reaches 75% (near-full) and 85% (full) of total capacity. Always address capacity warnings promptly, and review your storage regularly to ensure that you do not run out of storage space.

If you do run out of storage space completely, contact Red Hat Customer Support.

1.1. Supported Deployments for Red Hat OpenShift Data Foundation

User-provisioned infrastructure:

- Amazon Web Services (AWS)

- VMware

- Bare metal

- IBM Power

- IBM Z or LinuxONE

Installer-provisioned infrastructure:

- Amazon Web Services (AWS)

- Microsoft Azure

- Red Hat Virtualization

- VMware

Chapter 2. Scaling up storage capacity

Depending on the type of your deployment, you can choose one of the following procedures to scale up storage capacity.

- For AWS, VMware, Red Hat Virtualization or Azure infrastructures using dynamic or automated provisioning of storage devices, see Section 2.2, “Scaling up storage by adding capacity to your OpenShift Data Foundation nodes”.

- For bare metal, VMware or Red Hat Virtualization infrastructures using local storage devices, see Section 2.3, “Scaling up storage by adding capacity to your OpenShift Data Foundation nodes using local storage devices”.

- For IBM Z or LinuxONE infrastructures using local storage devices, see Section 2.4, “Scaling up storage by adding capacity to your OpenShift Data Foundation nodes on IBM Z or LinuxONE infrastructure”.

- For IBM Power using local storage devices, see Section 2.5, “Scaling up storage by adding capacity to your OpenShift Data Foundation nodes on IBM Power infrastructure using local storage devices”.

If you want to scale using a storage class other than the one provisioned during deployment, you must also define an additional storage class before you scale. See Creating a storage class for details.

OpenShift Data Foundation does not support heterogeneous OSD sizes.

2.1. Creating a Storage Class

You can define a new storage class to dynamically provision storage from an existing provider.

Prerequisites

- Administrator access to OpenShift web console.

Procedure

- Log in to OpenShift Web Console.

- Click Storage → StorageClasses.

Click Create Storage Class.

- Enter the storage class Name and Description.

- Select the required Reclaim Policy and Provisioner.

- Click Create to create the Storage Class.

Verification steps

- Click Storage → StorageClasses and verify that you can see the new storage class.

2.2. Scaling up storage by adding capacity to your OpenShift Data Foundation nodes

You can add storage capacity and performance to your configured Red Hat OpenShift Data Foundation worker nodes on the following infrastructures:

- AWS

- VMware vSphere

- Red Hat Virtualization

- Microsoft Azure

Prerequisites

- A running OpenShift Data Foundation Platform.

- Administrative privileges on the OpenShift Web Console.

- To scale using a storage class other than the one provisioned during deployment, first define an additional storage class. See Creating a storage class for details.

Procedure

- Log in to the OpenShift Web Console.

- Click Operators → Installed Operators.

- Click OpenShift Data Foundation Operator.

Click the Storage Systems tab.

- Click the Action Menu (⋮) on the far right of the storage system name to extend the options menu.

- Select Add Capacity from the options menu.

Select the Storage Class.

Set the storage class to

gp2on AWS,thinon VMware,ovirt-csi-scon Red Hat Virtualization ormanaged_premiumon Microsoft Azure if you are using the default storage class generated during deployment. If you have created other storage classes, select whichever is appropriate.ImportantUsing storage classes other than the default for your provider is a Technology Preview feature.

Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information, see Technology Preview Features Support Scope.

The Raw Capacity field shows the size set during storage class creation. The total amount of storage consumed is three times this amount, because OpenShift Data Foundation uses a replica count of 3.

- Click Add.

-

To check the status, navigate to Storage → OpenShift Data Foundation and verify that

Storage Systemin the Status card has a green tick.

Verification steps

Verify the Raw Capacity card.

- In the OpenShift Web Console, click Storage → OpenShift Data Foundation.

- In the Status card of the Overview tab, click Storage System and then click the storage system link from the pop up that appears.

In the Block and File tab, check the Raw Capacity card.

Note that the capacity increases based on your selections.

NoteThe raw capacity does not take replication into account and shows the full capacity.

Verify that the new OSDs and their corresponding new Persistent Volume Claims (PVCs) are created.

To view the state of the newly created OSDs:

- Click Workloads → Pods from the OpenShift Web Console.

Select

openshift-storagefrom the Project drop-down list.NoteIf the Show default projects option is disabled, use the toggle button to list all the default projects.

To view the state of the PVCs:

- Click Storage → Persistent Volume Claims from the OpenShift Web Console.

Select

openshift-storagefrom the Project drop-down list.NoteIf the Show default projects option is disabled, use the toggle button to list all the default projects.

Optional: If cluster-wide encryption is enabled on the cluster, verify that the new OSD devices are encrypted.

Identify the nodes where the new OSD pods are running.

oc get -o=custom-columns=NODE:.spec.nodeName pod/<OSD-pod-name>

$ oc get -o=custom-columns=NODE:.spec.nodeName pod/<OSD-pod-name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow <OSD-pod-name>Is the name of the OSD pod.

For example:

oc get -o=custom-columns=NODE:.spec.nodeName pod/rook-ceph-osd-0-544db49d7f-qrgqm

oc get -o=custom-columns=NODE:.spec.nodeName pod/rook-ceph-osd-0-544db49d7f-qrgqmCopy to Clipboard Copied! Toggle word wrap Toggle overflow

For each of the nodes identified in the previous step, do the following:

Create a debug pod and open a chroot environment for the selected hosts.

oc debug node/<node-name>

$ oc debug node/<node-name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow <node-name>Is the name of the node.

chroot /host

$ chroot /hostCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Check for the

cryptkeyword beside theocs-devicesetnames.lsblk

$ lsblkCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Cluster reduction is supported only with the Red Hat Support Team’s assistance..

2.3. Scaling up storage by adding capacity to your OpenShift Data Foundation nodes using local storage devices

You can add storage capacity (additional storage devices) to your configured local storage based OpenShift Data Foundation worker nodes on the following infrastructures:

- Bare metal

- VMware

- Red Hat Virtualization

Prerequisites

- You must be logged into the OpenShift Container Platform cluster.

You must have installed the local storage operator. Use any of the following procedures applicable to your infrastructure:

-

If you upgraded to OpenShift Data Foundation version 4.9 from a previous version, and have not already created the

LocalVolumeDiscoveryandLocalVolumeSetobjects, follow the procedure described in Post-update configuration changes for clusters backed by local storage. - You must have three OpenShift Container Platform worker nodes with the same storage type and size attached to each node (for example, 2TB NVMe drive) as the original OpenShift Data Foundation StorageCluster was created with.

Procedure

To add capacity, you can either use a storage class that you provisioned during the deployment or any other storage class that matches the filter.

- In the OpenShift Web Console, click Operators → Installed Operators.

- Click OpenShift Data Foundation Operator.

Click Storage Systems tab.

- Click the Action menu (⋮) next to the visible list to extend the options menu.

- Select Add Capacity from the options menu.

- Select the Storage Class for which you added disks or the new storage class depending on your requirement. Available Capacity displayed is based on the local disks available in storage class.

- Click Add.

- To check the status, navigate to Storage → OpenShift Data Foundation and verify that Storage System in the Status card has a green tick.

Verification steps

Verify the Raw Capacity card.

- In the OpenShift Web Console, click Storage → OpenShift Data Foundation.

- In the Status card of the Overview tab, click Storage System and then click the storage system link from the pop up that appears.

In the Block and File tab, check the Raw Capacity card.

Note that the capacity increases based on your selections.

NoteThe raw capacity does not take replication into account and shows the full capacity.

Verify that the new OSDs and their corresponding new Persistent Volume Claims (PVCs) are created.

To view the state of the newly created OSDs:

- Click Workloads → Pods from the OpenShift Web Console.

Select

openshift-storagefrom the Project drop-down list.NoteIf the Show default projects option is disabled, use the toggle button to list all the default projects.

To view the state of the PVCs:

- Click Storage → Persistent Volume Claims from the OpenShift Web Console.

Select

openshift-storagefrom the Project drop-down list.NoteIf the Show default projects option is disabled, use the toggle button to list all the default projects.

Optional: If cluster-wide encryption is enabled on the cluster, verify that the new OSD devices are encrypted.

Identify the node(s) where the new OSD pod(s) are running.

oc get -o=custom-columns=NODE:.spec.nodeName pod/<OSD-pod-name>

$ oc get -o=custom-columns=NODE:.spec.nodeName pod/<OSD-pod-name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow <OSD-pod-name>Is the name of the OSD pod.

For example:

oc get -o=custom-columns=NODE:.spec.nodeName pod/rook-ceph-osd-0-544db49d7f-qrgqm

oc get -o=custom-columns=NODE:.spec.nodeName pod/rook-ceph-osd-0-544db49d7f-qrgqmCopy to Clipboard Copied! Toggle word wrap Toggle overflow

For each of the nodes identified in the previous step, do the following:

Create a debug pod and open a chroot environment for the selected host(s).

oc debug node/<node-name>

$ oc debug node/<node-name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow <node-name>Is the name of the node.

chroot /host

$ chroot /hostCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Check for the

cryptkeyword beside theocs-devicesetnames.lsblk

$ lsblkCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Cluster reduction is supported only with the Red Hat Support Team’s assistance.

2.4. Scaling up storage by adding capacity to your OpenShift Data Foundation nodes on IBM Z or LinuxONE infrastructure

You can add storage capacity and performance to your configured Red Hat OpenShift Data Foundation worker nodes.

Prerequisites

- A running OpenShift Data Foundation Platform.

- Administrative privileges on the OpenShift Web Console.

- To scale using a storage class other than the one provisioned during deployment, first define an additional storage class. See Creating a storage class for details.

Procedure

Add additional hardware resources with zFCP disks.

List all the disks.

lszdev

$ lszdevCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow A SCSI disk is represented as a

zfcp-lunwith the structure<device-id>:<wwpn>:<lun-id>in the ID section. The first disk is used for the operating system. The device id for the new disk can be the same.Append a new SCSI disk.

chzdev -e 0.0.8204:0x400506630b1b50a4:0x3001301a00000000

$ chzdev -e 0.0.8204:0x400506630b1b50a4:0x3001301a00000000Copy to Clipboard Copied! Toggle word wrap Toggle overflow NoteThe device ID for the new disk must be the same as the disk to be replaced. The new disk is identified with its WWPN and LUN ID.

List all the FCP devices to verify the new disk is configured.

lszdev zfcp-lun TYPE ID ON PERS NAMES zfcp-lun 0.0.8204:0x102107630b1b5060:0x4001402900000000 yes no sda sg0 zfcp-lun 0.0.8204:0x500507630b1b50a4:0x4001302a00000000 yes yes sdb sg1 zfcp-lun 0.0.8204:0x400506630b1b50a4:0x3001301a00000000 yes yes sdc sg2

$ lszdev zfcp-lun TYPE ID ON PERS NAMES zfcp-lun 0.0.8204:0x102107630b1b5060:0x4001402900000000 yes no sda sg0 zfcp-lun 0.0.8204:0x500507630b1b50a4:0x4001302a00000000 yes yes sdb sg1 zfcp-lun 0.0.8204:0x400506630b1b50a4:0x3001301a00000000 yes yes sdc sg2Copy to Clipboard Copied! Toggle word wrap Toggle overflow

- Navigate to the OpenShift Web Console.

- Click Operators on the left navigation bar.

- Select Installed Operators.

- In the window, click OpenShift Data Foundation Operator.

In the top navigation bar, scroll right and click Storage Systems tab.

- Click the Action menu (⋮) next to the visible list to extend the options menu.

Select Add Capacity from the options menu.

The Raw Capacity field shows the size set during storage class creation. The total amount of storage consumed is three times this amount, because OpenShift Data Foundation uses a replica count of 3.

- Click Add.

- To check the status, navigate to Storage → OpenShift Data Foundation and verify that Storage System in the Status card has a green tick.

Verification steps

Verify the Raw Capacity card.

- In the OpenShift Web Console, click Storage → OpenShift Data Foundation.

- In the Status card of the Overview tab, click Storage System and then click the storage system link from the pop up that appears.

In the Block and File tab, check the Raw Capacity card.

Note that the capacity increases based on your selections.

NoteThe raw capacity does not take replication into account and shows the full capacity.

Verify that the new OSDs and their corresponding new Persistent Volume Claims (PVCs) are created.

To view the state of the newly created OSDs:

- Click Workloads → Pods from the OpenShift Web Console.

Select

openshift-storagefrom the Project drop-down list.NoteIf the Show default projects option is disabled, use the toggle button to list all the default projects.

To view the state of the PVCs:

- Click Storage → Persistent Volume Claims from the OpenShift Web Console.

Select

openshift-storagefrom the Project drop-down list.NoteIf the Show default projects option is disabled, use the toggle button to list all the default projects.

Optional: If cluster-wide encryption is enabled on the cluster, verify that the new OSD devices are encrypted.

Identify the node(s) where the new OSD pod(s) are running.

oc get -o=custom-columns=NODE:.spec.nodeName pod/<OSD-pod-name>

$ oc get -o=custom-columns=NODE:.spec.nodeName pod/<OSD-pod-name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow <OSD-pod-name>Is the name of the OSD pod.

For example:

oc get -o=custom-columns=NODE:.spec.nodeName pod/rook-ceph-osd-0-544db49d7f-qrgqm

oc get -o=custom-columns=NODE:.spec.nodeName pod/rook-ceph-osd-0-544db49d7f-qrgqmCopy to Clipboard Copied! Toggle word wrap Toggle overflow

For each of the nodes identified in the previous step, do the following:

Create a debug pod and open a chroot environment for the selected host(s).

oc debug node/<node-name>

$ oc debug node/<node-name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow <node-name>Is the name of the node.

chroot /host

$ chroot /hostCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Check for the

cryptkeyword beside theocs-devicesetnames.lsblk

$ lsblkCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Cluster reduction is supported only with the Red Hat Support Team’s assistance..

You can add storage capacity (additional storage devices) to your configured local storage based OpenShift Data Foundation worker nodes on IBM Power infrastructures.

Prerequisites

- You must be logged into OpenShift Container Platform cluster.

You must have installed the local storage operator. Use the following procedure:

- You must have three OpenShift Container Platform worker nodes with the same storage type and size attached to each node (for example, 0.5TB SSD) as the original OpenShift Data Foundation StorageCluster was created with.

Procedure

To add storage capacity to OpenShift Container Platform nodes with OpenShift Data Foundation installed, you need to

Find the available devices that you want to add, that is, a minimum of one device per worker node. You can follow the procedure for finding available storage devices in the respective deployment guide.

NoteMake sure you perform this process for all the existing nodes (minimum of 3) for which you want to add storage.

Add the additional disks to the

LocalVolumecustom resource (CR).oc edit -n openshift-local-storage localvolume localblock

$ oc edit -n openshift-local-storage localvolume localblockCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output:

Copy to Clipboard Copied! Toggle word wrap Toggle overflow Make sure to save the changes after editing the CR.

Example output:

localvolume.local.storage.openshift.io/localblock edited

localvolume.local.storage.openshift.io/localblock editedCopy to Clipboard Copied! Toggle word wrap Toggle overflow You can see in this CR that new devices are added.

-

sdx

-

Display the newly created Persistent Volumes (PVs) with the

storageclassname used in thelocalVolumeCR.oc get pv | grep localblock | grep Available

$ oc get pv | grep localblock | grep AvailableCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output:

local-pv-a04ffd8 500Gi RWO Delete Available localblock 24s local-pv-a0ca996b 500Gi RWO Delete Available localblock 23s local-pv-c171754a 500Gi RWO Delete Available localblock 23s

local-pv-a04ffd8 500Gi RWO Delete Available localblock 24s local-pv-a0ca996b 500Gi RWO Delete Available localblock 23s local-pv-c171754a 500Gi RWO Delete Available localblock 23sCopy to Clipboard Copied! Toggle word wrap Toggle overflow - Navigate to the OpenShift Web Console.

- Click Operators on the left navigation bar.

- Select Installed Operators.

- In the window, click OpenShift Data Foundation Operator.

In the top navigation bar, scroll right and click Storage System tab.

- Click the Action menu (⋮) next to the visible list to extend the options menu.

Select Add Capacity from the options menu.

From this dialog box, set the Storage Class name to the name used in the

localVolumeCR. Available Capacity displayed is based on the local disks available in storage class.- Click Add.

- To check the status, navigate to Storage → OpenShift Data Foundation and verify that Storage System in the Status card has a green tick.

Verification steps

Verify the available Capacity.

- In the OpenShift Web Console, click Storage → OpenShift Data Foundation.

-

Click the Storage Systems tab and then click on

ocs-storagecluster-storagesystem. Navigate to Overview → Block and File tab, then check the Raw Capacity card.

Note that the capacity increases based on your selections.

NoteThe raw capacity does not take replication into account and shows the full capacity.

Verify that the new OSDs and their corresponding new Persistent Volume Claims (PVCs) are created.

To view the state of the newly created OSDs:

- Click Workloads → Pods from the OpenShift Web Console.

Select

openshift-storagefrom the Project drop-down list.NoteIf the Show default projects option is disabled, use the toggle button to list all the default projects.

To view the state of the PVCs:

- Click Storage → Persistent Volume Claims from the OpenShift Web Console.

Select

openshift-storagefrom the Project drop-down list.NoteIf the Show default projects option is disabled, use the toggle button to list all the default projects.

Optional: If cluster-wide encryption is enabled on the cluster, verify that the new OSD devices are encrypted.

Identify the node(s) where the new OSD pod(s) are running.

oc get -o=custom-columns=NODE:.spec.nodeName pod/<OSD-pod-name>

$ oc get -o=custom-columns=NODE:.spec.nodeName pod/<OSD-pod-name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow <OSD-pod-name>Is the name of the OSD pod.

For example:

oc get -o=custom-columns=NODE:.spec.nodeName pod/rook-ceph-osd-0-544db49d7f-qrgqm

oc get -o=custom-columns=NODE:.spec.nodeName pod/rook-ceph-osd-0-544db49d7f-qrgqmCopy to Clipboard Copied! Toggle word wrap Toggle overflow

For each of the nodes identified in the previous step, do the following:

Create a debug pod and open a chroot environment for the selected host(s).

oc debug node/<node-name>

$ oc debug node/<node-name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow <node-name>Is the name of the node.

chroot /host

$ chroot /hostCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Check for the

cryptkeyword beside theocs-devicesetnames.lsblk

$ lsblkCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Cluster reduction is supported only with the Red Hat Support Team’s assistance.

Chapter 3. Scaling out storage capacity

To scale out storage capacity, you need to perform the following steps:

- Add a new node.

- Verify that the new node is added successfully.

- Scale up the storage capacity.

OpenShift Data Foundation does not support heterogeneous OSD sizes.

3.1. Adding a node

You can add nodes to increase the storage capacity when existing worker nodes are already running at their maximum supported OSDs, which is increment of 3 OSDs of the capacity selected during initial configuration.

Depending on the type of your deployment, you can choose one of the following procedures to add a storage node:

- For AWS or Azure or Red Hat Virtualization installer-provisioned infrastructures, see Adding a node on an installer-provisioned infrastructure.

- For AWS or VMware user-provisioned infrastructure, see Adding a node on an user-provisioned infrastructure.

- For bare metal, IBM Z or LinuxONE, VMware, or Red Hat Virtualization infrastructures, see Adding a node using a local storage device.

- For IBM Power, see Adding a node using a local storage device on IBM Power.

3.1.1. Adding a node on an installer-provisioned infrastructure

You can add a node on the following installer provisioned infrastructures:

- AWS

- Azure

- Red Hat Virtualization

- VMware

Prerequisites

- You must be logged into OpenShift Container Platform cluster.

Procedure

- Navigate to Compute → Machine Sets.

On the machine set where you want to add nodes, select Edit Machine Count.

- Add the amount of nodes, and click Save.

- Click Compute → Nodes and confirm if the new node is in Ready state.

Apply the OpenShift Data Foundation label to the new node.

- For the new node, click Action menu (⋮) → Edit Labels.

- Add cluster.ocs.openshift.io/openshift-storage, and click Save.

It is recommended to add 3 nodes, one each in different zones. You must add 3 nodes and perform this procedure for all of them.

Verification steps

- To verify that the new node is added, see Verifying the addition of a new node.

3.1.2. Adding a node on an user-provisioned infrastructure

You can add a node on an AWS or VMware user-provisioned infrastructure.

Prerequisites

- You must be logged into OpenShift Container Platform cluster.

Procedure

Depending on whether you are adding a node on an AWS or a VMware user-provisioned infrastructure, perform the following steps:

For AWS:

- Create a new AWS machine instance with the required infrastructure. See Platform requirements.

- Create a new OpenShift Container Platform node using the new AWS machine instance.

For VMware:

- Create a new virtual machine (VM) on vSphere with the required infrastructure. See Platform requirements.

- Create a new OpenShift Container Platform worker node using the new VM.

Check for certificate signing requests (CSRs) related to OpenShift Data Foundation that are in

Pendingstate.oc get csr

$ oc get csrCopy to Clipboard Copied! Toggle word wrap Toggle overflow Approve all the required OpenShift Data Foundation CSRs for the new node.

oc adm certificate approve <Certificate_Name>

$ oc adm certificate approve <Certificate_Name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow <Certificate_Name>- Is the name of the CSR.

- Click Compute → Nodes, confirm if the new node is in Ready state.

Apply the OpenShift Data Foundation label to the new node using any one of the following:

- From User interface

- For the new node, click Action Menu (⋮) → Edit Labels.

-

Add

cluster.ocs.openshift.io/openshift-storage, and click Save.

- From Command line interface

Apply the OpenShift Data Foundation label to the new node.

oc label node <new_node_name> cluster.ocs.openshift.io/openshift-storage=""

$ oc label node <new_node_name> cluster.ocs.openshift.io/openshift-storage=""Copy to Clipboard Copied! Toggle word wrap Toggle overflow <new_node_name>- Is the name of the new node.

It is recommended to add 3 nodes, one each in different zones. You must add 3 nodes and perform this procedure for all of them.

Verification steps

- To verify that the new node is added, see Verifying the addition of a new node.

3.1.3. Adding a node using a local storage device

You can add a node on the following:

- Bare metal

- IBM Z or LinuxONE

- VMware

- Red Hat Virtualization

Prerequisites

- You must be logged into the OpenShift Container Platform cluster.

- You must have three OpenShift Container Platform worker nodes with the same storage type and size attached to each node (for example, 2TB SSD or 2TB NVMe drive) as the original OpenShift Data Foundation StorageCluster was created with.

-

If you upgraded to OpenShift Data Foundation version 4.9 from a previous version, and have not already created the

LocalVolumeDiscoveryandLocalVolumeSetobjects, follow the procedure described in Post-update configuration changes for clusters backed by local storage.

Procedure

Depending on the type of infrastructure, perform the following steps:

For VMware:

- Create a new virtual machine (VM) on vSphere with the required infrastructure. See Platform requirements.

- Create a new OpenShift Container Platform worker node using the new VM.

For Red Hat Virtualization:

- Create a new VM on Red Hat Virtualization with the required infrastructure. See Platform requirements.

- Create a new OpenShift Container Platform worker node using the new VM.

For bare metal:

- Get a new bare metal machine with the required infrastructure. See Platform requirements.

- Create a new OpenShift Container Platform node using the new bare metal machine.

For IBM Z or LinuxONE:

- Get a new IBM Z or LinuxONE machine with the required infrastructure. See Platform requirements.

- Create a new OpenShift Container Platform node using the new IBM Z or LinuxONE machine.

Check for certificate signing requests (CSRs) related to OpenShift Data Foundation that are in

Pendingstate.oc get csr

$ oc get csrCopy to Clipboard Copied! Toggle word wrap Toggle overflow Approve all the required OpenShift Data Foundation CSRs for the new node.

oc adm certificate approve <Certificate_Name>

$ oc adm certificate approve <Certificate_Name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow <Certificate_Name>- Is the name of the CSR.

- Click Compute → Nodes, confirm if the new node is in Ready state.

Apply the OpenShift Data Foundation label to the new node using any one of the following:

- From User interface

- For the new node, click Action Menu (⋮) → Edit Labels.

-

Add

cluster.ocs.openshift.io/openshift-storage, and click Save.

- From Command line interface

Apply the OpenShift Data Foundation label to the new node.

oc label node <new_node_name> cluster.ocs.openshift.io/openshift-storage=""

$ oc label node <new_node_name> cluster.ocs.openshift.io/openshift-storage=""Copy to Clipboard Copied! Toggle word wrap Toggle overflow <new_node_name>- Is the name of the new node.

Click Operators → Installed Operators from the OpenShift Web Console.

From the Project drop-down list, make sure to select the project where the Local Storage Operator is installed.

- Click Local Storage.

Click the Local Volume Discovery tab.

-

Beside the

LocalVolumeDiscovery, click Action menu (⋮) → Edit Local Volume Discovery. -

In the YAML, add the hostname of the new node in the

valuesfield under the node selector. - Click Save.

-

Beside the

Click the Local Volume Sets tab.

-

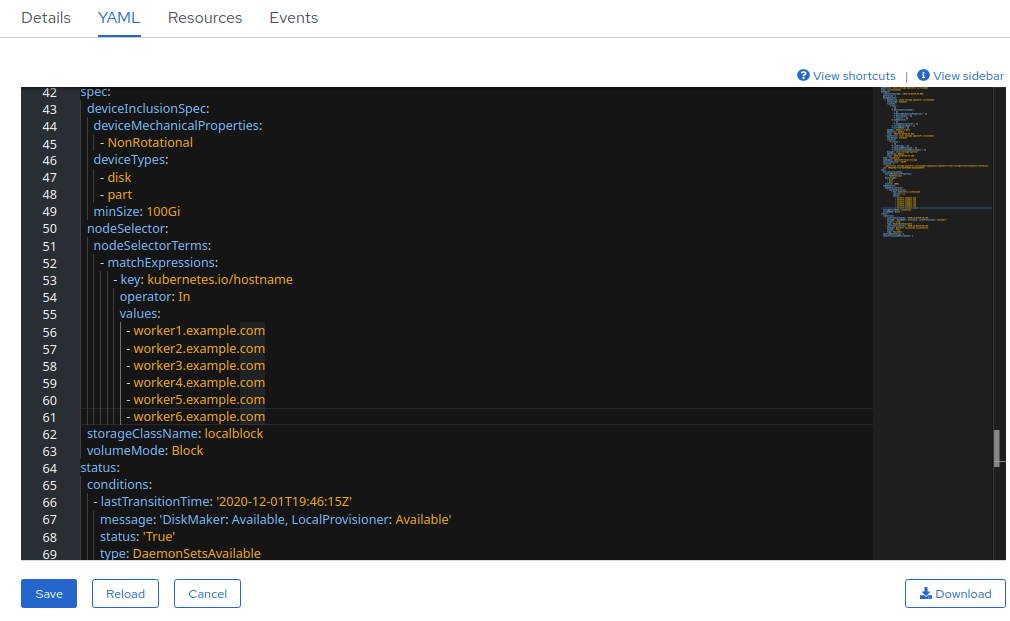

Beside the

LocalVolumeSet, click Action menu (⋮) → Edit Local Volume Set. In the YAML, add the hostname of the new node in the

valuesfield under thenode selector.Figure 3.1. YAML showing the addition of new hostnames

- Click Save.

-

Beside the

It is recommended to add 3 nodes, one each in different zones. You must add 3 nodes and perform this procedure for all of them.

Verification steps

- To verify that the new node is added, see Verifying the addition of a new node.

3.1.4. Adding a node using a local storage device on IBM Power

Prerequisites

- You must be logged into the OpenShift Container Platform cluster.

- You must have three OpenShift Container Platform worker nodes with the same storage type and size attached to each node (for example, 2TB SSD drive) as the original OpenShift Data Foundation StorageCluster was created with.

-

If you have upgraded from a previous version of OpenShift Data Foundation and have not already created a

LocalVolumeDiscoveryobject, follow the procedure described in Post-update configuration changes for clusters backed by local storage.

Procedure

- Get a new IBM Power machine with the required infrastructure. See Platform requirements.

Create a new OpenShift Container Platform node using the new IBM Power machine.

Check for certificate signing requests (CSRs) related to OpenShift Data Foundation that are in

Pendingstate.oc get csr

$ oc get csrCopy to Clipboard Copied! Toggle word wrap Toggle overflow Approve all the required OpenShift Data Foundation CSRs for the new node.

oc adm certificate approve <Certificate_Name>

$ oc adm certificate approve <Certificate_Name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow <Certificate_Name>- Is the name of the CSR.

- Click Compute → Nodes, confirm if the new node is in Ready state.

Apply the OpenShift Data Foundation label to the new node using any one of the following:

- From User interface

- For the new node, click Action Menu (⋮) → Edit Labels.

-

Add

cluster.ocs.openshift.io/openshift-storageand click Save.

- From Command line interface

Apply the OpenShift Data Foundation label to the new node.

oc label node <new_node_name> cluster.ocs.openshift.io/openshift-storage=""

$ oc label node <new_node_name> cluster.ocs.openshift.io/openshift-storage=""Copy to Clipboard Copied! Toggle word wrap Toggle overflow

<new_node_name>- Is the name of the new node.

Click Operators → Installed Operators from the OpenShift Web Console.

From the Project drop-down list, make sure to select the project where the Local Storage Operator is installed.

- Click Local Storage.

Click the Local Volume Discovery tab.

-

Click the Action menu (⋮) next to

LocalVolumeDiscovery→ Edit Local Volume Discovery. -

In the YAML, add the hostname of the new node in the

valuesfield under the node selector. - Click Save.

-

Click the Action menu (⋮) next to

Click the Local Volume tab.

-

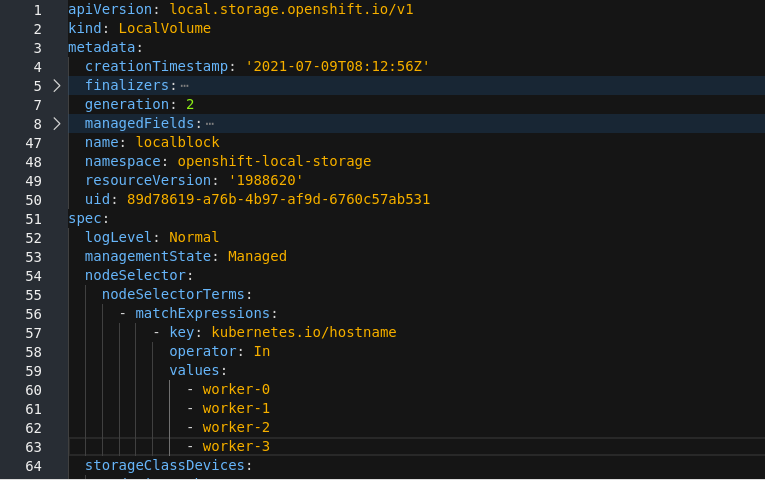

Beside the

LocalVolume, click Action menu (⋮) → Edit Local Volume. In the YAML, add the hostname of the new node in the

valuesfield under thenode selector.Figure 3.2. YAML showing the addition of new hostnames

- Click Save.

-

Beside the

It is recommended to add 3 nodes, one each in different zones. You must add 3 nodes and perform this procedure for all of them.

Verification steps

- To verify that the new node is added, see Verifying the addition of a new node.

3.1.5. Verifying the addition of a new node

Execute the following command and verify that the new node is present in the output:

oc get nodes --show-labels | grep cluster.ocs.openshift.io/openshift-storage= |cut -d' ' -f1

$ oc get nodes --show-labels | grep cluster.ocs.openshift.io/openshift-storage= |cut -d' ' -f1Copy to Clipboard Copied! Toggle word wrap Toggle overflow Click Workloads → Pods, confirm that at least the following pods on the new node are in Running state:

-

csi-cephfsplugin-* -

csi-rbdplugin-*

-

3.2. Adding capacity to a newly added node

To add capacity to a newly added node, either use the Add Capacity option to expand the storage cluster with 3 OSDs or use the new flexible scaling feature that allows you to expand the storage cluster by any number of OSDs if it is enabled.

3.2.1. Add capacity with 3 OSDs using the Add Capacity option

To add capacity by 3 OSDs for dynamic and local storage using the Add Capacity option in the user interface, see Scaling up storage by adding capacity. The Add Capacity option is available for storage clusters with or without the flexible scaling feature enabled.

3.2.2. Add capacity using YAML

With flexible scaling enabled, you can add capacity by 1 or more OSDs at a time using the YAML instead of the default set of 3 OSDs. However, you need to make sure that you add disks in a way that the cluster remains balanced.

Flexible scaling is supported only for the internal-attached mode of storage cluster creation.

To enable flexible scaling, create a cluster with 3 nodes, and fewer than 3 availability zones. The OpenShift Web Console detects the 3 nodes spread across fewer than 3 availability zones and enables flexible scaling.

You can not enable or disable the flexible scaling feature after creating the storage cluster.

3.2.2.1. Verifying if flexible scaling is enabled

Procedure

In the Web Console, click Home → Search.

-

Enter

StorageClusterin the search field. -

Click

ocs-storagecluster. In the YAML tab, search for the keys

flexibleScalinginspecsection andfailureDomaininstatussection.If

flexible scalingis true andfailureDomainis set to host, flexible scaling feature is enabled.spec: flexibleScaling: true […] status: failureDomain: host

spec: flexibleScaling: true […] status: failureDomain: hostCopy to Clipboard Copied! Toggle word wrap Toggle overflow

-

Enter

3.2.2.2. Adding capacity using the YAML in multiples of 1 OSD

To add OSDs to your storage cluster flexibly through the YAML, perform the following steps:

Prerequisites

- Administrator access to the OpenShift Container Platform web console.

- A storage cluster with flexible scaling enabled.

- Additional disks available for adding capacity.

Procedure

In the OpenShift Web Console, click Home → Search.

-

Search for

ocs-storageclusterin the search field and click onocs-storageclusterfrom the search result. - Click the Action menu (⋮) next to the storage cluster you want to scale up.

- Click Edit Storage Cluster. You are redirected to the YAML.

-

Search for

-

In the YAML, search for the key

count. This count parameter scales up the capacity. Increase the count by the number of OSDs you want to add to your cluster.

ImportantEnsure the

countparameter in the YAML is incremented depending on the number of available disks and also make sure that you add disks in a way that the cluster remains balanced.- Click Save.

You might need to wait a couple of minutes for the storage cluster to reach the Ready state.

Verification steps

Verify the Raw Capacity card.

- In the OpenShift Web Console, click Storage → OpenShift Data Foundation.

- In the Status card of the Overview tab, click Storage System and then click the storage system link from the pop up that appears.

In the Block and File tab, check the Raw Capacity card.

Note that the capacity increases based on your selections.

NoteThe raw capacity does not take replication into account and shows the full capacity.

Verify that the new OSDs and their corresponding new Persistent Volume Claims (PVCs) are created.

To view the state of the newly created OSDs:

- Click Workloads → Pods from the OpenShift Web Console.

Select

openshift-storagefrom the Project drop-down list.NoteIf the Show default projects option is disabled, use the toggle button to list all the default projects.

To view the state of the PVCs:

- Click Storage → Persistent Volume Claims from the OpenShift Web Console.

Select

openshift-storagefrom the Project drop-down list.NoteIf the Show default projects option is disabled, use the toggle button to list all the default projects.

Optional: If cluster-wide encryption is enabled on the cluster, verify that the new OSD devices are encrypted.

Identify the node(s) where the new OSD pod(s) are running.

oc get -o=custom-columns=NODE:.spec.nodeName pod/<OSD-pod-name>

$ oc get -o=custom-columns=NODE:.spec.nodeName pod/<OSD-pod-name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow <OSD-pod-name>Is the name of th OSD pod.

For example:

oc get -o=custom-columns=NODE:.spec.nodeName pod/rook-ceph-osd-0-544db49d7f-qrgqm

oc get -o=custom-columns=NODE:.spec.nodeName pod/rook-ceph-osd-0-544db49d7f-qrgqmCopy to Clipboard Copied! Toggle word wrap Toggle overflow

For each of the nodes identified in the previous step, do the following:

Create a debug pod and open a chroot environment for the selected host(s).

oc debug node/<node name>

$ oc debug node/<node name>Copy to Clipboard Copied! Toggle word wrap Toggle overflow <node name>Is the name of the node.

chroot /host

$ chroot /hostCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Check for the

cryptkeyword beside theocs-devicesetname(s).lsblk

$ lsblkCopy to Clipboard Copied! Toggle word wrap Toggle overflow

Cluster reduction is supported only with the Red Hat Support Team’s assistance.