This documentation is for a release that is no longer maintained

See documentation for the latest supported version.

User guide

Using Red Hat OpenShift Dev Spaces 3.17

Abstract

Chapter 1. Getting started with Dev Spaces

If your organization is already running an OpenShift Dev Spaces instance, you can get started as a new user by learning how to start a new workspace, manage your workspaces, and authenticate yourself to a Git server from a workspace:

- Section 1.1, “Starting a workspace from a Git repository URL”

- Section 1.1.1, “Optional parameters for the URLs for starting a new workspace”

- Section 1.2, “Starting a workspace from a raw devfile URL”

- Section 1.3, “Basic actions you can perform on a workspace”

- Section 1.4, “Authenticating to a Git server from a workspace”

- Section 1.5, “Using the fuse-overlayfs storage driver for Podman and Buildah”

1.1. Starting a workspace from a Git repository URL

With OpenShift Dev Spaces, you can use a URL in your browser to start a new workspace that contains a clone of a Git repository. This way, you can clone a Git repository that is hosted on GitHub, GitLab, Bitbucket or Microsoft Azure DevOps server instances.

You can also use the Git Repository URL field on the Create Workspace page of your OpenShift Dev Spaces dashboard to enter the URL of a Git repository to start a new workspace.

- If you use an SSH URL to start a new workspace, you must propagate the SSH key. See Configuring DevWorkspaces to use SSH keys for Git operations for more information.

- If the SSH URL points to a private repository, you must apply an access token to be able to fetch the devfile.yaml content. You can do this either by accepting an SCM authentication page or by following a Personal Access Token procedure.

Configure a personal access token to access private repositories. See Section 6.1.2, “Using a Git-provider access token”.

Prerequisites

- Your organization has a running instance of OpenShift Dev Spaces.

- You know the FQDN URL of your organization’s OpenShift Dev Spaces instance: https://<openshift_dev_spaces_fqdn>.

- Optional: You have authentication to the Git server configured.

- Your Git repository maintainer keeps the devfile.yaml or .devfile.yaml file in the root directory of the Git repository. (For alternative file names and file paths, see Section 1.1.1, “Optional parameters for the URLs for starting a new workspace”.)

Tip: You can also start a new workspace by supplying the URL of a Git repository that contains no devfile. Doing so results in a workspace with Universal Developer Image and with Microsoft Visual Studio Code - Open Source as the workspace IDE.

Procedure

To start a new workspace with a clone of a Git repository:

- Optional: Visit your OpenShift Dev Spaces dashboard pages to authenticate to your organization’s instance of OpenShift Dev Spaces.

- Visit the URL to start a new workspace using the basic syntax:

https://<openshift_dev_spaces_fqdn>#<git_repository_url>

Tip: You can extend this URL with optional parameters:

https://<openshift_dev_spaces_fqdn>#<git_repository_url>?<optional_parameters>

Tip: You can use Git+SSH URLs to start a new workspace. See Configuring DevWorkspaces to use SSH keys for Git operations.

Example 1.1. A URL for starting a new workspace

- https://<openshift_dev_spaces_fqdn>#https://github.com/che-samples/cpp-hello-world

- https://<openshift_dev_spaces_fqdn>#git@github.com:che-samples/cpp-hello-world.git

Example 1.2. The URL syntax for starting a new workspace with a clone of a GitHub instance repository

- https://<openshift_dev_spaces_fqdn>#https://<github_host>/<user_or_org>/<repository> starts a new workspace with a clone of the default branch.

- https://<openshift_dev_spaces_fqdn>#https://<github_host>/<user_or_org>/<repository>/tree/<branch_name> starts a new workspace with a clone of the specified branch.

- https://<openshift_dev_spaces_fqdn>#https://<github_host>/<user_or_org>/<repository>/pull/<pull_request_id> starts a new workspace with a clone of the branch of the pull request.

- https://<openshift_dev_spaces_fqdn>#git@<github_host>:<user_or_org>/<repository>.git starts a new workspace from a Git+SSH URL.

Example 1.3. The URL syntax for starting a new workspace with a clone of a GitLab instance repository

- https://<openshift_dev_spaces_fqdn>#https://<gitlab_host>/<user_or_org>/<repository> starts a new workspace with a clone of the default branch.

- https://<openshift_dev_spaces_fqdn>#https://<gitlab_host>/<user_or_org>/<repository>/-/tree/<branch_name> starts a new workspace with a clone of the specified branch.

- https://<openshift_dev_spaces_fqdn>#git@<gitlab_host>:<user_or_org>/<repository>.git starts a new workspace from a Git+SSH URL.

Example 1.4. The URL syntax for starting a new workspace with a clone of a Bitbucket Server repository

- https://<openshift_dev_spaces_fqdn>#https://<bb_host>/scm/<project-key>/<repository>.git starts a new workspace with a clone of the default branch.

- https://<openshift_dev_spaces_fqdn>#https://<bb_host>/users/<user_slug>/repos/<repository>/ starts a new workspace with a clone of the default branch, if the repository was created under the user profile.

- https://<openshift_dev_spaces_fqdn>#https://<bb_host>/users/<user-slug>/repos/<repository>/browse?at=refs%2Fheads%2F<branch-name> starts a new workspace with a clone of the specified branch.

- https://<openshift_dev_spaces_fqdn>#git@<bb_host>:<user_slug>/<repository>.git starts a new workspace from a Git+SSH URL.

Example 1.5. The URL syntax for starting a new workspace with a clone of a Microsoft Azure DevOps Git repository

- https://<openshift_dev_spaces_fqdn>#https://<organization>@dev.azure.com/<organization>/<project>/_git/<repository> starts a new workspace with a clone of the default branch.

- https://<openshift_dev_spaces_fqdn>#https://<organization>@dev.azure.com/<organization>/<project>/_git/<repository>?version=GB<branch> starts a new workspace with a clone of the specified branch.

- https://<openshift_dev_spaces_fqdn>#git@ssh.dev.azure.com:v3/<organization>/<project>/<repository> starts a new workspace from a Git+SSH URL.
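The URL patterns in the examples above all follow the same rule: the Git repository URL is appended unchanged after a # that follows the instance URL. A minimal Python sketch of that rule is shown below. The function name and the devspaces.example.com host are hypothetical and used only for illustration.

```python
def workspace_start_url(dev_spaces_fqdn: str, git_repository_url: str) -> str:
    """Return a URL that starts a new workspace with a clone of the repository."""
    # The repository URL follows '#' as-is; HTTPS and Git+SSH forms are both accepted.
    return f"https://{dev_spaces_fqdn}#{git_repository_url}"


# Hypothetical instance host, combined with the sample repository from Example 1.1.
print(workspace_start_url(
    "devspaces.example.com",
    "https://github.com/che-samples/cpp-hello-world",
))
```

Provider-specific forms, such as /tree/<branch_name> on GitHub, belong to the repository URL itself, so they can be passed to the helper without further handling.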

After you enter the URL to start a new workspace in a browser tab, the workspace starting page appears.

When the new workspace is ready, the workspace IDE loads in the browser tab.

A clone of the Git repository is present in the filesystem of the new workspace.

The workspace has a unique URL:

https://<openshift_dev_spaces_fqdn>/<user_name>/<unique_url>.


1.1.1. Optional parameters for the URLs for starting a new workspace

When you start a new workspace, OpenShift Dev Spaces configures the workspace according to the instructions in the devfile. When you use a URL to start a new workspace, you can append optional parameters to the URL that further configure the workspace. You can use these parameters to specify a workspace IDE, start duplicate workspaces, and specify a devfile file name or path.

- Section 1.1.1.1, “URL parameter concatenation”

- Section 1.1.1.2, “URL parameter for the IDE”

- Section 1.1.1.3, “URL parameter for the IDE image”

- Section 1.1.1.4, “URL parameter for starting duplicate workspaces”

- Section 1.1.1.5, “URL parameter for the devfile file name”

- Section 1.1.1.6, “URL parameter for the devfile file path”

- Section 1.1.1.7, “URL parameter for the workspace storage”

- Section 1.1.1.8, “URL parameter for additional remotes”

- Section 1.1.1.9, “URL parameter for a container image”

1.1.1.1. URL parameter concatenation

The URL for starting a new workspace supports concatenation of multiple optional URL parameters by using & with the following URL syntax:

https://<openshift_dev_spaces_fqdn>#<git_repository_url>?<url_parameter_1>&<url_parameter_2>&<url_parameter_3>
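The concatenation rule can be sketched as a small Python helper. This is an illustration only: the function name and the devspaces.example.com host are hypothetical, and the sketch assumes that parameter values are written exactly as they appear in this guide, without percent-encoding.

```python
def with_parameters(start_url: str, parameters: list[str]) -> str:
    """Append optional URL parameters after a single '?', joined by '&'."""
    if not parameters:
        return start_url
    return start_url + "?" + "&".join(parameters)


# Hypothetical instance host; the parameters match Example 1.6.
base = "https://devspaces.example.com#https://github.com/che-samples/cpp-hello-world"
print(with_parameters(base, [
    "new",  # a parameter without a value
    "che-editor=che-incubator/intellij-community/latest",
    "devfilePath=tests/testdevfile.yaml",
]))
```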

Example 1.6. A URL for starting a new workspace with the URL of a Git repository and optional URL parameters

The complete URL for the browser:

https://<openshift_dev_spaces_fqdn>#https://github.com/che-samples/cpp-hello-world?new&che-editor=che-incubator/intellij-community/latest&devfilePath=tests/testdevfile.yaml

Explanation of the parts of the URL:

https://<openshift_dev_spaces_fqdn>

#https://github.com/che-samples/cpp-hello-world

?new&che-editor=che-incubator/intellij-community/latest&devfilePath=tests/testdevfile.yaml

1.1.1.2. URL parameter for the IDE

You can use the che-editor= URL parameter to specify a supported IDE when starting a workspace.

Use the che-editor= parameter when you cannot add or edit a /.che/che-editor.yaml file in the source-code Git repository to be cloned for workspaces.

The che-editor= parameter overrides the /.che/che-editor.yaml file.

This parameter accepts two types of values:

- che-editor=<editor_key>

https://<openshift_dev_spaces_fqdn>#<git_repository_url>?che-editor=<editor_key>

Table 1.1. The URL parameter <editor_key> values for supported IDEs

| IDE | <editor_key> value | Note |
|---|---|---|
| Microsoft Visual Studio Code - Open Source | che-incubator/che-code/latest | This is the default IDE that loads in a new workspace when the URL parameter or che-editor.yaml is not used. |

- che-editor=<url_to_a_file>

https://<openshift_dev_spaces_fqdn>#<git_repository_url>?che-editor=<url_to_a_file>

<url_to_a_file> is the URL to a file with devfile content.

Tip:

- The URL must point to the raw file content.

- To use this parameter with a che-editor.yaml file, copy the file with another name or path, and remove the line with inline from the file.

- The che-editors.yaml file features the devfiles of all supported IDEs.

1.1.1.3. URL parameter for the IDE image

You can use the editor-image parameter to set the custom IDE image for the workspace.

- If the Git repository contains a /.che/che-editor.yaml file, the custom editor will be overridden with the new IDE image.

- If there is no /.che/che-editor.yaml file in the Git repository, the default editor will be overridden with the new IDE image.

- If you want to override the supported IDE and change the target editor image, you can use both parameters together: the che-editor and editor-image URL parameters.

The URL parameter to override the IDE image is editor-image=:

https://<openshift_dev_spaces_fqdn>#<git_repository_url>?editor-image=<container_registry/image_name:image_tag>

Example:

https://<openshift_dev_spaces_fqdn>#https://github.com/eclipse-che/che-docs?editor-image=quay.io/che-incubator/che-code:next

or

https://<openshift_dev_spaces_fqdn>#https://github.com/eclipse-che/che-docs?che-editor=che-incubator/che-code/latest&editor-image=quay.io/che-incubator/che-code:next

1.1.1.4. URL parameter for starting duplicate workspaces

Visiting a URL for starting a new workspace results in a new workspace according to the devfile and with a clone of the linked Git repository.

In some situations, you might need to have multiple workspaces that are duplicates in terms of the devfile and the linked Git repository. You can do this by visiting the same URL for starting a new workspace with a URL parameter.

The URL parameter for starting a duplicate workspace is new:

https://<openshift_dev_spaces_fqdn>#<git_repository_url>?new


If you currently have a workspace that you started using a URL, then visiting the URL again without the new URL parameter results in an error message.

1.1.1.5. URL parameter for the devfile file name

When you visit a URL for starting a new workspace, OpenShift Dev Spaces searches the linked Git repository for a devfile with the file name .devfile.yaml or devfile.yaml. The devfile in the linked Git repository must follow this file-naming convention.

In some situations, you might need to specify a different, unconventional file name for the devfile.

The URL parameter for specifying an unconventional file name of the devfile is df=<filename>.yaml:

https://<openshift_dev_spaces_fqdn>#<git_repository_url>?df=<filename>.yaml

<filename>.yaml is an unconventional file name of the devfile in the linked Git repository.

The df=<filename>.yaml parameter also has a long version: devfilePath=<filename>.yaml.

1.1.1.6. URL parameter for the devfile file path

When you visit a URL for starting a new workspace, OpenShift Dev Spaces searches the root directory of the linked Git repository for a devfile with the file name .devfile.yaml or devfile.yaml. The file path of the devfile in the linked Git repository must follow this path convention.

In some situations, you might need to specify a different, unconventional file path for the devfile in the linked Git repository.

The URL parameter for specifying an unconventional file path of the devfile is devfilePath=<relative_file_path>:

https://<openshift_dev_spaces_fqdn>#<git_repository_url>?devfilePath=<relative_file_path>

<relative_file_path> is an unconventional file path of the devfile in the linked Git repository.

1.1.1.7. URL parameter for the workspace storage

If the URL for starting a new workspace does not contain a URL parameter specifying the storage type, the new workspace is created in ephemeral or persistent storage, whichever is defined as the default storage type in the CheCluster Custom Resource.

The URL parameter for specifying a storage type for a workspace is storageType=<storage_type>:

https://<openshift_dev_spaces_fqdn>#<git_repository_url>?storageType=<storage_type>

Possible <storage_type> values:

- ephemeral

- per-user (persistent)

- per-workspace (persistent)

With the ephemeral or per-workspace storage type, you can run multiple workspaces concurrently, which is not possible with the default per-user storage type.
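Because only three storage types are listed, a script that generates these URLs can reject any other value before the URL is used. The following Python sketch shows one way to do that. The function and constant names are hypothetical.

```python
# The three values listed for <storage_type> in this section.
STORAGE_TYPES = ("ephemeral", "per-user", "per-workspace")


def storage_type_parameter(storage_type: str) -> str:
    """Return the storageType URL parameter, rejecting unlisted values."""
    if storage_type not in STORAGE_TYPES:
        raise ValueError(f"unsupported storage type: {storage_type!r}")
    return f"storageType={storage_type}"


print(storage_type_parameter("per-workspace"))
```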


1.1.1.8. URL parameter for additional remotes

When you visit a URL for starting a new workspace, OpenShift Dev Spaces configures the origin remote to be the Git repository that you specified with # after the FQDN URL of your organization’s OpenShift Dev Spaces instance.

The URL parameter for cloning and configuring additional remotes for the workspace is remotes=:

https://<openshift_dev_spaces_fqdn>#<git_repository_url>?remotes={{<name_1>,<url_1>},{<name_2>,<url_2>},{<name_3>,<url_3>},...}

- If you do not enter the name origin for any of the additional remotes, the remote from <git_repository_url> will be cloned and named origin by default, and its expected branch will be checked out automatically.

- If you enter the name origin for one of the additional remotes, its default branch will be checked out automatically, but the remote from <git_repository_url> will NOT be cloned for the workspace.
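The remotes= syntax and the origin rule described above can be sketched in Python as follows. The function names and the example remote are hypothetical, and the sketch assumes the values are written without percent-encoding, as in the syntax line above.

```python
def remotes_parameter(remotes: list[tuple[str, str]]) -> str:
    """Format (name, url) pairs as remotes={{name_1,url_1},{name_2,url_2},...}."""
    pairs = ",".join("{" + name + "," + url + "}" for name, url in remotes)
    return "remotes={" + pairs + "}"


def origin_url(remotes: list[tuple[str, str]], git_repository_url: str) -> str:
    """Return the URL that becomes the origin remote of the workspace."""
    for name, url in remotes:
        if name == "origin":
            # An additional remote named origin replaces <git_repository_url>.
            return url
    return git_repository_url


extra = [("upstream", "https://github.com/eclipse-che/che-docs")]
print(remotes_parameter(extra))
```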

1.1.1.9. URL parameter for a container image

You can use the image parameter to use a custom reference to a container image in the following scenarios:

- The Git repository contains no devfile, and you want to start a new workspace with the custom image.

- The Git repository contains a devfile, and you want to override the first container image listed in the components section of the devfile.

The URL parameter for the path to the container image is image=:

https://<openshift_dev_spaces_fqdn>#<git_repository_url>?image=<container_image_url>

Example:

https://<openshift_dev_spaces_fqdn>#https://github.com/eclipse-che/che-docs?image=quay.io/devfile/universal-developer-image:ubi8-latest

1.2. Starting a workspace from a raw devfile URL

With OpenShift Dev Spaces, you can open a devfile URL in your browser to start a new workspace.

You can use the Git Repo URL field on the Create Workspace page of your OpenShift Dev Spaces dashboard to enter the URL of a devfile to start a new workspace.

To initiate a clone of the Git repository in the filesystem of a new workspace, the devfile must contain project info.

Prerequisites

- Your organization has a running instance of OpenShift Dev Spaces.

- You know the FQDN URL of your organization’s OpenShift Dev Spaces instance: https://<openshift_dev_spaces_fqdn>.

Procedure

To start a new workspace from a devfile URL:

- Optional: Visit your OpenShift Dev Spaces dashboard pages to authenticate to your organization’s instance of OpenShift Dev Spaces.

- Visit the URL to start a new workspace from a public repository using the basic syntax:

https://<openshift_dev_spaces_fqdn>#<devfile_url>

You can pass your personal access token to the URL to access a devfile from private repositories:

https://<openshift_dev_spaces_fqdn>#https://<token>@<host>/<path_to_devfile>

<token> is your personal access token that you generated on the Git provider’s website.

This works for GitHub, GitLab, Bitbucket, Microsoft Azure, and other providers that support Personal Access Token.

Important: Automated Git credential injection does not work in this case. To configure the Git credentials, use the configure personal access token guide.

Tip: You can extend this URL with optional parameters:

https://<openshift_dev_spaces_fqdn>#<devfile_url>?<optional_parameters>

Example 1.7. A URL for starting a new workspace from a public repository

https://<openshift_dev_spaces_fqdn>#https://raw.githubusercontent.com/che-samples/cpp-hello-world/main/devfile.yaml

Example 1.8. A URL for starting a new workspace from a private repository

https://<openshift_dev_spaces_fqdn>#https://<token>@raw.githubusercontent.com/che-samples/cpp-hello-world/main/devfile.yaml

Verification

After you enter the URL to start a new workspace in a browser tab, the workspace starting page appears. When the new workspace is ready, the workspace IDE loads in the browser tab.

The workspace has a unique URL:

https://<openshift_dev_spaces_fqdn>/<user_name>/<unique_url>.
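The public and private devfile URL forms from the procedure can be sketched in Python as follows. The function names, the devspaces.example.com host, and the example-token value are hypothetical placeholders.

```python
def devfile_start_url(dev_spaces_fqdn: str, devfile_url: str) -> str:
    """Return a URL that starts a workspace from a raw devfile URL."""
    return f"https://{dev_spaces_fqdn}#{devfile_url}"


def private_devfile_url(token: str, host: str, path_to_devfile: str) -> str:
    """Place a personal access token in front of the host: https://<token>@<host>/<path>."""
    return f"https://{token}@{host}/{path_to_devfile.lstrip('/')}"


# "example-token" stands in for a real personal access token.
raw = private_devfile_url(
    "example-token",
    "raw.githubusercontent.com",
    "che-samples/cpp-hello-world/main/devfile.yaml",
)
print(devfile_start_url("devspaces.example.com", raw))
```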


1.3. Basic actions you can perform on a workspace

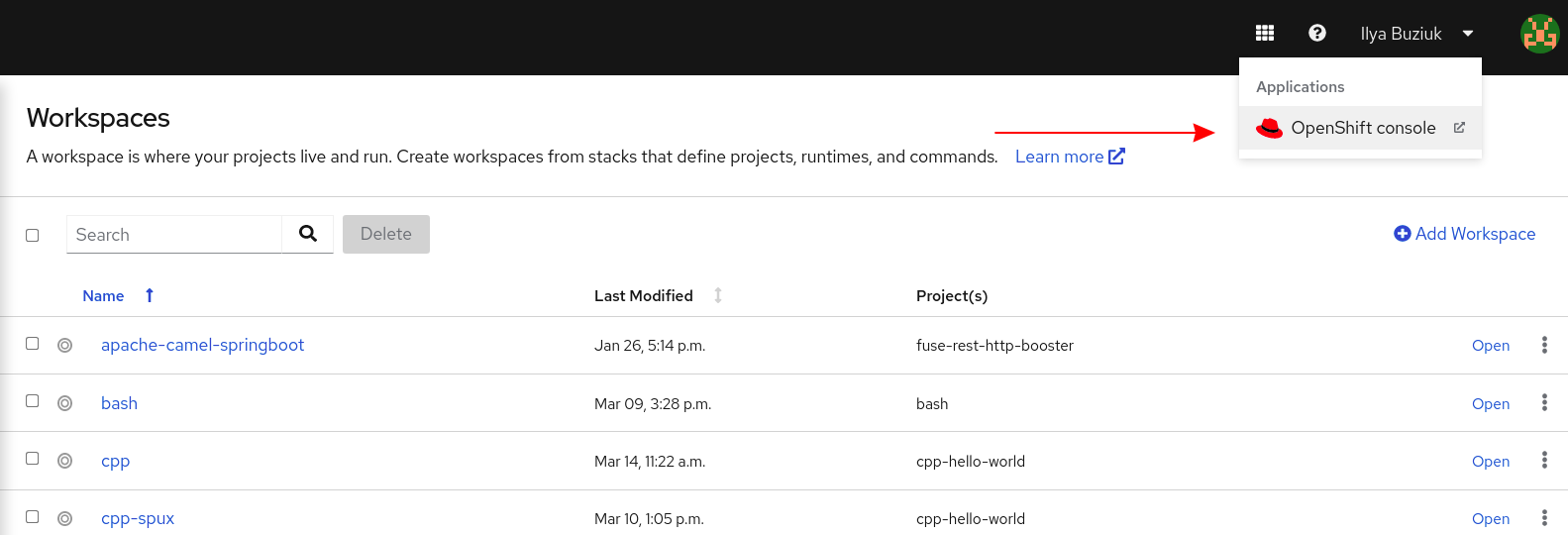

You manage your workspaces and verify their current states in the Workspaces page (https://<openshift_dev_spaces_fqdn>/dashboard/#/workspaces) of your OpenShift Dev Spaces dashboard.

After you start a new workspace, you can perform the following actions on it in the Workspaces page:

| Action | GUI steps in the Workspaces page |
|---|---|
| Reopen a running workspace | Click Open. |
| Restart a running workspace | Go to ⋮ > Restart Workspace. |
| Stop a running workspace | Go to ⋮ > Stop Workspace. |
| Start a stopped workspace | Click Open. |
| Delete a workspace | Go to ⋮ > Delete Workspace. |

1.4. Authenticating to a Git server from a workspace

In a workspace, you can run Git commands that require user authentication like cloning a remote private Git repository or pushing to a remote public or private Git repository.

User authentication to a Git server from a workspace is configured by the administrator or, in some cases, by the individual user:

- Your administrator sets up an OAuth application on GitHub, GitLab, Bitbucket, or Microsoft Azure Repos for your organization’s Red Hat OpenShift Dev Spaces instance.

- As a workaround, some users create and apply their own Kubernetes Secrets for their personal Git-provider access tokens or configure SSH keys for Git operations.


1.5. Using the fuse-overlayfs storage driver for Podman and Buildah

By default, newly created workspaces that do not specify a devfile use the Universal Developer Image (UDI). The UDI contains development tools and dependencies commonly used by developers.

Podman and Buildah are included in the UDI, allowing developers to build and push container images from their workspace.

By default, Podman and Buildah in the UDI are configured to use the vfs storage driver. For more efficient image management, use the fuse-overlayfs storage driver which supports copy-on-write in rootless environments.

You must meet the following requirements to use fuse-overlayfs in a workspace:

- For OpenShift versions older than 4.15, the administrator has enabled /dev/fuse access on the cluster by following https://access.redhat.com/documentation/en-us/red_hat_openshift_dev_spaces/3.17/html-single/administration_guide/index#administration-guide:configuring-fuse.

- The workspace has the necessary annotations for using the /dev/fuse device. See Section 1.5.1, “Accessing /dev/fuse”.

- The storage.conf file in the workspace container has been configured to use fuse-overlayfs. See Section 1.5.2, “Enabling fuse-overlayfs with a ConfigMap”.


1.5.1. Accessing /dev/fuse

You must have access to /dev/fuse to use fuse-overlayfs. This section describes how to make /dev/fuse accessible to workspace containers.

Prerequisites

- For OpenShift versions older than 4.15, the administrator has enabled access to /dev/fuse by following https://access.redhat.com/documentation/en-us/red_hat_openshift_dev_spaces/3.17/html-single/administration_guide/index#administration-guide:configuring-fuse.

- You have determined which workspace to use fuse-overlayfs with.

Procedure

- Use the pod-overrides attribute to add the required annotations defined in https://access.redhat.com/documentation/en-us/red_hat_openshift_dev_spaces/3.17/html-single/administration_guide/index#administration-guide:configuring-fuse to the workspace. The pod-overrides attribute allows merging certain fields in the workspace pod’s spec.

For OpenShift versions older than 4.15:

$ oc patch devworkspace <DevWorkspace_name> \
  --patch '{"spec":{"template":{"attributes":{"pod-overrides":{"metadata":{"annotations":{"io.kubernetes.cri-o.Devices":"/dev/fuse","io.openshift.podman-fuse":""}}}}}}}' \
  --type=merge

For OpenShift version 4.15 and later:

$ oc patch devworkspace <DevWorkspace_name> \
  --patch '{"spec":{"template":{"attributes":{"pod-overrides":{"metadata":{"annotations":{"io.kubernetes.cri-o.Devices":"/dev/fuse"}}}}}}}' \
  --type=merge
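The two patch bodies differ only in the io.openshift.podman-fuse annotation. As an illustration, the following Python sketch generates the JSON for either case so that the nesting does not have to be typed by hand. The function name is hypothetical; the annotation keys and values are the ones used in the oc patch commands in this procedure.

```python
import json


def fuse_patch(openshift_older_than_4_15: bool) -> str:
    """Return the merge-patch JSON that adds the /dev/fuse annotations."""
    annotations = {"io.kubernetes.cri-o.Devices": "/dev/fuse"}
    if openshift_older_than_4_15:
        # This extra annotation is only part of the patch for OpenShift older than 4.15.
        annotations["io.openshift.podman-fuse"] = ""
    patch = {
        "spec": {"template": {"attributes": {"pod-overrides": {
            "metadata": {"annotations": annotations}
        }}}}
    }
    # Compact separators keep the output on one line for use on a command line.
    return json.dumps(patch, separators=(",", ":"))


print(fuse_patch(openshift_older_than_4_15=False))
```

The printed string can be passed as the value of the --patch option together with --type=merge.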

Verification steps

- Start the workspace and verify that /dev/fuse is available in the workspace container.

$ stat /dev/fuse

After completing this procedure, follow the steps in Section 1.5.2, “Enabling fuse-overlayfs with a ConfigMap” to use fuse-overlayfs for Podman.

1.5.2. Enabling fuse-overlayfs with a ConfigMap

You can define the storage driver for Podman and Buildah in the ~/.config/containers/storage.conf file. Here are the default contents of the /home/user/.config/containers/storage.conf file in the UDI container:

storage.conf

[storage]
driver = "vfs"

To use fuse-overlayfs, storage.conf can be set to the following:

storage.conf

[storage]
driver = "overlay"

[storage.options.overlay]
mount_program="/usr/bin/fuse-overlayfs"

mount_program is the absolute path to the fuse-overlayfs binary. The /usr/bin/fuse-overlayfs path is the default for the UDI.

You can do this manually after starting a workspace. Another option is to build a new image based on the UDI with changes to storage.conf and use the new image for workspaces.

Otherwise, you can update the /home/user/.config/containers/storage.conf for all workspaces in your project by creating a ConfigMap that mounts the updated file. See Section 6.2, “Mounting ConfigMaps”.

Prerequisites

- For OpenShift versions older than 4.15, the administrator has enabled access to /dev/fuse by following https://access.redhat.com/documentation/en-us/red_hat_openshift_dev_spaces/3.17/html-single/administration_guide/index#administration-guide:configuring-fuse.

- A workspace that has the required annotations set by following Section 1.5.1, “Accessing /dev/fuse”.

Because a ConfigMap mounted by following this guide mounts its data to all workspaces, following this procedure sets the storage driver to fuse-overlayfs for all workspaces. Ensure that your workspaces contain the required annotations to use fuse-overlayfs by following Section 1.5.1, “Accessing /dev/fuse”.

Procedure

- Apply a ConfigMap that mounts a /home/user/.config/containers/storage.conf file in your project.

Warning: Creating this ConfigMap will cause all of your running workspaces to restart.
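A ConfigMap of this kind might look like the manifest generated by the following Python sketch. Treat it as an assumption-laden illustration rather than the reference manifest: the ConfigMap name fuse-overlay is hypothetical, and the controller.devfile.io labels and annotations are assumed from the DevWorkspace Operator conventions for automatically mounted ConfigMaps, so verify them against Section 6.2, “Mounting ConfigMaps” before use. The storage.conf content is the fuse-overlayfs configuration shown earlier in this section.

```python
import json

# The fuse-overlayfs storage.conf content from this section.
STORAGE_CONF = """[storage]
driver = "overlay"

[storage.options.overlay]
mount_program="/usr/bin/fuse-overlayfs"
"""


def storage_conf_configmap(name: str = "fuse-overlay") -> dict:
    """Build a ConfigMap manifest that mounts storage.conf (name is hypothetical)."""
    return {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {
            "name": name,
            # Assumed labels: ask the operator to mount this ConfigMap to workspaces.
            "labels": {
                "controller.devfile.io/mount-to-devworkspace": "true",
                "controller.devfile.io/watch-configmap": "true",
            },
            # Assumed annotations: mount each key as a file under the given directory.
            "annotations": {
                "controller.devfile.io/mount-as": "subpath",
                "controller.devfile.io/mount-path": "/home/user/.config/containers",
            },
        },
        "data": {"storage.conf": STORAGE_CONF},
    }


# JSON manifests are accepted by oc apply in the same way as YAML manifests.
print(json.dumps(storage_conf_configmap(), indent=2))
```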

Verification steps

- Start the workspace containing the required annotations and verify that the storage driver is overlay.

$ podman info | grep overlay

Note: The following error might occur for existing workspaces:

ERRO[0000] User-selected graph driver "overlay" overwritten by graph driver "vfs" from database - delete libpod local files ("/home/user/.local/share/containers/storage") to resolve. May prevent use of images created by other tools

In this case, delete the libpod local files as mentioned in the error message.

1.6. Running containers with kubedock

Kubedock is a minimal container engine implementation that gives you a Podman-/docker-like experience inside an OpenShift Dev Spaces workspace. Kubedock is especially useful when dealing with ad-hoc, ephemeral, and testing containers, such as in the use cases listed below:

- Executing application tests that rely on the Testcontainers framework.

- Using Quarkus Dev Services.

- Running a container stored in a remote container registry for local development purposes.

The image you want to use with kubedock must be compliant with OpenShift Container Platform guidelines. Otherwise, running the image with kubedock will result in a failure even if the same image runs locally without issues.

Enabling kubedock

After enabling the kubedock environment variable, kubedock will run the following podman commands:

- podman run

- podman ps

- podman exec

- podman cp

- podman logs

- podman inspect

- podman kill

- podman rm

- podman wait

- podman stop

- podman start

Other commands such as podman build are started by the local Podman.

Using podman commands with kubedock has the following limitations:

- The podman build -t <image> . && podman run <image> command will fail. Use podman build -t <image> . && podman push <image> && podman run <image> instead.

- The podman generate kube command is not supported.

- The --env option causes the podman run command to fail.

Prerequisites

- An image compliant with OpenShift Container Platform guidelines.

Procedure

- Add the KUBEDOCK_ENABLED=true environment variable to the devfile.

- Optional: Use the KUBEDOCK_PARAM variable to specify additional kubedock parameters. The list of variables is available here. Alternatively, you can use the following command to view the available options:

# kubedock server --help

Example

You must configure the Podman or docker API to point to kubedock by setting CONTAINER_HOST=tcp://127.0.0.1:2475 or DOCKER_HOST=tcp://127.0.0.1:2475 when running containers.

At the same time, you must configure Podman to point to local Podman when building the container.
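The split between kubedock and local Podman can be illustrated with a Python sketch that selects the environment for a podman subcommand. The function and constant names are hypothetical. The endpoint and the list of subcommands come from this section, and the sketch assumes that removing CONTAINER_HOST from the environment is enough to make Podman use the local engine.

```python
import os

# Subcommands that kubedock runs, as listed in this section.
KUBEDOCK_COMMANDS = {
    "run", "ps", "exec", "cp", "logs", "inspect",
    "kill", "rm", "wait", "stop", "start",
}
KUBEDOCK_ENDPOINT = "tcp://127.0.0.1:2475"


def podman_environment(subcommand, base_env=None):
    """Return an environment that routes the subcommand to kubedock or local Podman."""
    env = dict(os.environ if base_env is None else base_env)
    if subcommand in KUBEDOCK_COMMANDS:
        env["CONTAINER_HOST"] = KUBEDOCK_ENDPOINT
    else:
        # For example, podman build is started by the local Podman.
        env.pop("CONTAINER_HOST", None)
    return env


print(podman_environment("run", {}).get("CONTAINER_HOST"))
print(podman_environment("build", {}).get("CONTAINER_HOST"))
```

The returned dictionary can be passed as the env argument of subprocess.run when invoking podman from a script.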

Chapter 2. Using Dev Spaces in team workflow

Learn about the benefits of using OpenShift Dev Spaces in your organization in the following articles:

2.1. Badge for first-time contributors

To enable a first-time contributor to start a workspace with a project, add a badge with a link to your OpenShift Dev Spaces instance.

Figure 2.1. Factory badge

Procedure

- Substitute your OpenShift Dev Spaces URL (https://<openshift_dev_spaces_fqdn>) and repository URL (<your_repository_url>), and add the link to your repository in the project README.md file.

[](https://<openshift_dev_spaces_fqdn>/#https://<your_repository_url>)

- The README.md file in your Git provider web interface displays the factory badge. Click the badge to open a workspace with your project in your OpenShift Dev Spaces instance.
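For repositories that generate their README.md, the badge link can be produced by a short Python helper such as the following sketch. The function name, the alt text, and the badge_image_url parameter are hypothetical; supply the URL of the badge image that your organization uses.

```python
def factory_badge_markdown(dev_spaces_fqdn, your_repository_url, badge_image_url,
                           alt_text="Contribute"):
    """Return a Markdown image link that opens a workspace for the repository."""
    # Follows the link syntax https://<openshift_dev_spaces_fqdn>/#https://<your_repository_url>.
    link = f"https://{dev_spaces_fqdn}/#https://{your_repository_url}"
    return f"[![{alt_text}]({badge_image_url})]({link})"


# Hypothetical instance host and badge image URL.
print(factory_badge_markdown(
    "devspaces.example.com",
    "github.com/che-samples/cpp-hello-world",
    "https://example.com/badge.svg",
))
```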

2.2. Reviewing pull and merge requests

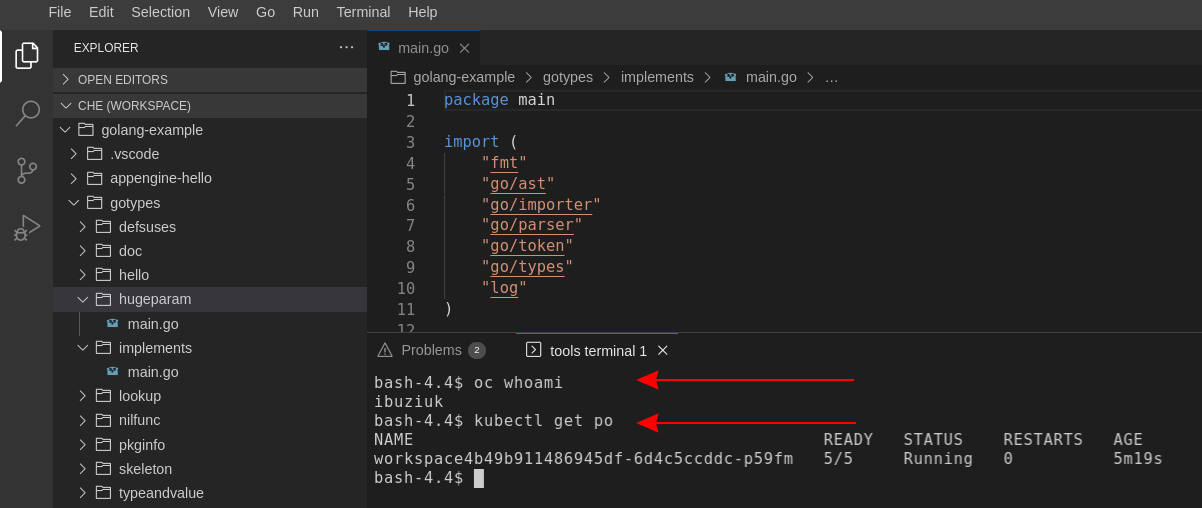

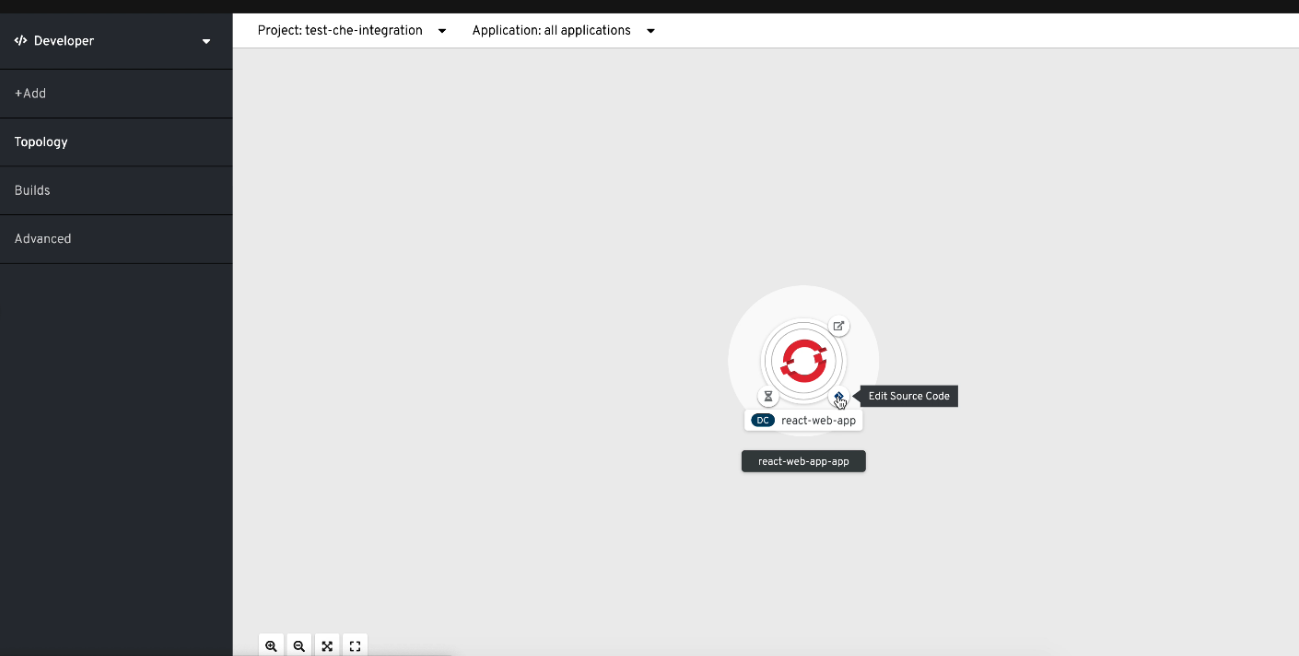

A Red Hat OpenShift Dev Spaces workspace contains all the tools you need to review pull and merge requests from start to finish. By clicking an OpenShift Dev Spaces link, you get access to a Red Hat OpenShift Dev Spaces-supported web IDE with a ready-to-use workspace where you can run a linter, unit tests, the build, and more.

Prerequisites

- You have access to the repository hosted by your Git provider.

- You have access to an OpenShift Dev Spaces instance.

Procedure

- Open the feature branch to review in OpenShift Dev Spaces. A clone of the branch opens in a workspace with tools for debugging and testing.

- Check the pull or merge request changes.

Run your desired debugging and testing tools:

- Run a linter.

- Run unit tests.

- Run the build.

- Run the application to check for problems.

- Navigate to the UI of your Git provider to leave a comment and pull or merge your assigned request.

Verification

- Optional: Open a second workspace using the main branch of the repository to reproduce a problem.

2.3. Try in Web IDE GitHub action

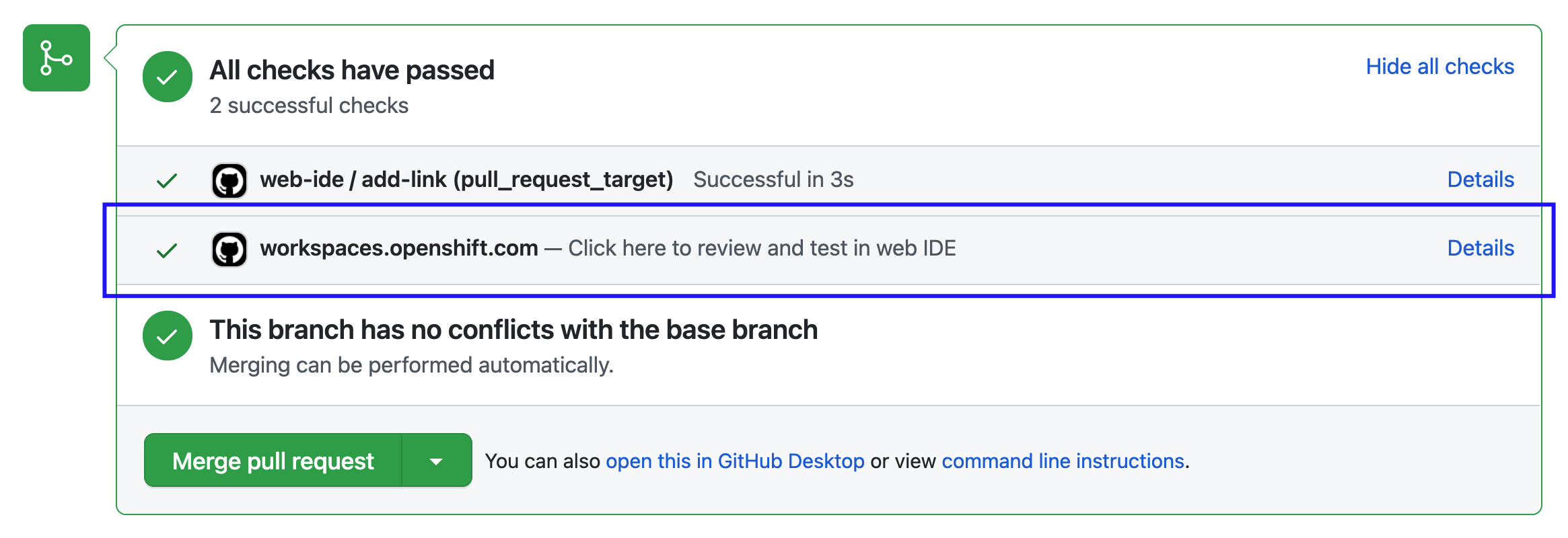

The Try in Web IDE GitHub action can be added to a GitHub repository workflow to help reviewers quickly test pull requests on Eclipse Che hosted by Red Hat. The action achieves this by listening to pull request events and providing a factory URL by creating a comment, a status check, or both. This factory URL creates a new workspace from the pull request branch on Eclipse Che hosted by Red Hat.

The Che documentation repository (https://github.com/eclipse/che-docs) is a real-life example where the Try in Web IDE GitHub action helps reviewers quickly test pull requests. Experience the workflow by navigating to a recent pull request and opening a factory URL.

Figure 2.2. Pull request comment created by the Try in Web IDE GitHub action. Clicking the badge opens a new workspace for reviewers to test the pull request.

Figure 2.3. Pull request status check created by the Try in Web IDE GitHub action. Clicking the "Details" link opens a new workspace for reviewers to test the pull request.

2.3.1. Adding the action to a GitHub repository workflow

This section describes how to integrate the Try in Web IDE GitHub action into a GitHub repository workflow.

Prerequisites

- A GitHub repository

- A devfile in the root of the GitHub repository.

Procedure

- In the GitHub repository, create a .github/workflows directory if it does not exist already.

- Create an example.yml file in the .github/workflows directory (Example 2.1. example.yml). The file defines a workflow named Try in Web IDE example, with a job that runs the v1 version of the redhat-actions/try-in-web-ide community action. The workflow is triggered on the pull_request_target event, on the opened activity type.

- Optionally configure the activity types from the on.pull_request_target.types field to customize when the workflow triggers. Activity types such as reopened and synchronize can be useful.

  Example 2.2. Triggering the workflow on both opened and synchronize activity types

      on:
        pull_request_target:
          types: [opened, synchronize]

- Optionally configure the add_comment and add_status GitHub action inputs within example.yml. These inputs are sent to the Try in Web IDE GitHub action to customize whether comments and status checks are to be made.
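
As a rough sketch of what an example.yml workflow like the one in this procedure might contain: the workflow name, event, activity type, action reference, and the add_comment and add_status inputs come from the text above, while the job name, step name, runner label, and the github_token input are assumptions made for illustration.

```yaml
# .github/workflows/example.yml (illustrative sketch, not the official example)
name: Try in Web IDE example

on:
  pull_request_target:
    types: [opened]

jobs:
  add-link:                      # hypothetical job name
    runs-on: ubuntu-latest       # assumed runner label
    steps:
      - name: Web IDE Pull Request Check   # hypothetical step name
        uses: redhat-actions/try-in-web-ide@v1
        with:
          # Assumed input: token the action uses to write the comment and status check.
          github_token: ${{ secrets.GITHUB_TOKEN }}
          # Optional inputs named in the procedure; the values here are illustrative.
          add_comment: true
          add_status: true
```

Setting add_comment or add_status to false in a sketch like this would be the way to turn off the pull request comment or the status check, respectively.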

2.3.2. Providing a devfile

Providing a devfile in the root directory of the repository is recommended to define the development environment of the workspace created by the factory URL. In this way, the workspace contains everything users need to review pull requests, such as plugins, development commands, and other environment setup.

The Che documentation repository devfile is an example of a well-defined and effective devfile.

Chapter 3. Customizing workspace components

To customize workspace components:

- Choose a Git repository for your workspace.

- Use a devfile.

- Configure an IDE.

- Add OpenShift Dev Spaces specific attributes in addition to the generic devfile specification.

Chapter 4. Introduction to devfile in Dev Spaces

Devfiles are YAML text files used for development environment customization. Configure a devfile to suit your specific needs and share the customized devfile across multiple workspaces to ensure an identical user experience and identical build, run, and deploy behaviours across your team.

Red Hat OpenShift Dev Spaces is expected to work with most of the popular images defined in the components section of a devfile. For production purposes, it is recommended to use one of the Universal Base Images as a base image for defining the Cloud Development Environment.

Some images cannot be used as-is for defining a Cloud Development Environment because Visual Studio Code - Open Source ("Code - OSS") cannot start in containers that are missing openssl and libbrotli. Install the missing libraries explicitly at the Dockerfile level, for example: RUN yum install compat-openssl11 libbrotli

Devfile and Universal Developer Image

You do not need a devfile to start a workspace. If you do not include a devfile in your project repository, Red Hat OpenShift Dev Spaces automatically loads a default devfile with a Universal Developer Image (UDI).

Devfile Registry

Devfile Registry contains ready-to-use community-supported devfiles for different languages and technologies. Devfiles included in the registry should be treated as samples rather than templates.

Chapter 5. IDEs in workspaces

5.1. Supported IDEs

The default IDE in a new workspace is Microsoft Visual Studio Code - Open Source. Alternatively, you can choose another supported IDE:

| IDE | id | Note |
|---|---|---|
| Microsoft Visual Studio Code - Open Source | | This is the default IDE that loads in a new workspace when the URL parameter or |

5.2. Repository-level IDE configuration in OpenShift Dev Spaces

You can store IDE configuration files directly in the remote Git repository that contains your project source code. This way, one common IDE configuration is applied to all new workspaces that feature a clone of that repository. Such IDE configuration files might include the following:

- The /.che/che-editor.yaml file that stores a definition of the chosen IDE.

- IDE-specific configuration files that one would typically store locally for a desktop IDE. For example, the /.vscode/extensions.json file.

5.3. Microsoft Visual Studio Code - Open Source

The OpenShift Dev Spaces build of Microsoft Visual Studio Code - Open Source is the default IDE of a new workspace.

You can automate installation of Microsoft Visual Studio Code extensions from the Open VSX registry at workspace startup. See Automating installation of Microsoft Visual Studio Code extensions at workspace startup.

- Use Tasks to find and run the commands specified in devfile.yaml.

- Use Dev Spaces commands by clicking Dev Spaces in the Status Bar or finding them through the Command Palette:

- Dev Spaces: Open Dashboard

- Dev Spaces: Open OpenShift Console

- Dev Spaces: Stop Workspace

- Dev Spaces: Restart Workspace

- Dev Spaces: Restart Workspace from Local Devfile

- Dev Spaces: Open Documentation

Configure IDE preferences on a per-workspace basis by invoking the Command Palette and selecting Preferences: Open Workspace Settings.

You might see your organization’s branding in this IDE if your organization customized it through a branded build.

5.3.1. Automating installation of Microsoft Visual Studio Code extensions at workspace startup

To have the Microsoft Visual Studio Code - Open Source IDE automatically install chosen extensions, you can add an extensions.json file to the remote Git repository that contains your project source code and that will be cloned into workspaces.

Prerequisites

- The public Open VSX registry at open-vsx.org is selected and accessible over the internet. See Selecting an Open VSX registry instance.

  Tip

  To install recommended extensions in a restricted environment, consider the following options instead:

Procedure

- Get the publisher and extension names of each chosen extension:

  - Find the extension on the Open VSX registry website and copy the URL of the extension’s listing page.

  - Extract the <publisher> and <extension> names from the copied URL:

    https://www.open-vsx.org/extension/<publisher>/<extension>

- Create a .vscode/extensions.json file in the remote Git repository.

- Add the <publisher> and <extension> names to the recommendations list in the extensions.json file, each in the form <publisher>.<extension>.

Verification

- Start a new workspace by using the URL of the remote Git repository that contains the created extensions.json file.

- In the IDE of the workspace, press Ctrl+Shift+X or go to Extensions to find each of the extensions listed in the file.

- The extension has the label This extension is enabled globally.

5.4. Defining a common IDE

While the URL parameter for the IDE (see Section 1.1.1.2, “URL parameter for the IDE”) enables you to start a workspace with your personal choice of supported IDE, you might find it more convenient to define the same IDE for all workspaces that use the same source code Git repository. To do so, use the che-editor.yaml file. This file also supports detailed IDE configuration.

If you intend to start most or all of your organization’s workspaces with the same IDE other than Microsoft Visual Studio Code - Open Source, an alternative is for the administrator of your organization’s OpenShift Dev Spaces instance to specify another supported IDE as the default IDE at the OpenShift Dev Spaces instance level. This can be done with .spec.devEnvironments.defaultEditor in the CheCluster Custom Resource.
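
For orientation, the administrator-side setting mentioned above might look like the following fragment of the CheCluster Custom Resource. This is a sketch only: the resource name and namespace are assumptions, and the editor id is borrowed from the che-editor.yaml examples in this chapter.

```yaml
# Illustrative CheCluster fragment; name and namespace are assumed values.
apiVersion: org.eclipse.che/v2
kind: CheCluster
metadata:
  name: devspaces
  namespace: openshift-devspaces
spec:
  devEnvironments:
    # Instance-wide default IDE, used when a workspace does not select its own.
    defaultEditor: che-incubator/che-idea/latest
```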

5.4.1. Setting up che-editor.yaml

By using the che-editor.yaml file, you can set a common default IDE for your team and provide new contributors with the most suitable IDE for your project source code. You can also use the che-editor.yaml file when you need to set a different IDE default for a particular source code Git repository rather than the default IDE of your organization’s OpenShift Dev Spaces instance.

Procedure

- In the remote Git repository of your project source code, create a /.che/che-editor.yaml file with lines that specify the relevant parameter.

Verification

- Start a new workspace with a clone of the Git repository.

- Verify that the specified IDE loads in the browser tab of the started workspace.

5.4.2. Parameters for che-editor.yaml

The simplest way to select an IDE in the che-editor.yaml is to specify the id of an IDE from the table of supported IDEs:

| IDE | id | Note |
|---|---|---|
| Microsoft Visual Studio Code - Open Source | | This is the default IDE that loads in a new workspace when the URL parameter or |

Example 5.1. id selects an IDE from the plugin registry

id: che-incubator/che-idea/latest


As alternatives to providing the id parameter, the che-editor.yaml file supports a reference to the URL of another che-editor.yaml file or an inline definition for an IDE outside of a plugin registry:

Example 5.2. reference points to a remote che-editor.yaml file

reference: https://<hostname_and_path_to_a_remote_file>/che-editor.yaml

Example 5.3. inline specifies a complete definition for a customized IDE without a plugin registry

For more complex scenarios, the che-editor.yaml file supports the registryUrl and override parameters:

Example 5.4. registryUrl points to a custom plugin registry rather than to the default OpenShift Dev Spaces plugin registry

    id: <editor_id>
    registryUrl: <url_of_custom_plugin_registry>

<editor_id> is the id of the IDE in the custom plugin registry.

Example 5.5. override of the default value of one or more defined properties of the IDE

The override parameter is specified alongside id:, registryUrl:, or reference:.
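
A che-editor.yaml file that combines id with override might be sketched as follows. The container name and the resource values are hypothetical; the properties that can actually be overridden depend on the definition of the selected IDE.

```yaml
# /.che/che-editor.yaml (illustrative sketch)
id: che-incubator/che-idea/latest
override:
  containers:
    - name: che-idea        # assumed name of a container in the IDE definition
      memoryLimit: 1280Mi   # illustrative values
      cpuLimit: 1510m
      cpuRequest: 102m
```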

Chapter 6. Using credentials and configurations in workspaces

You can use your credentials and configurations in your workspaces.

To do so, mount your credentials and configurations to the Dev Workspace containers in the OpenShift cluster of your organization’s OpenShift Dev Spaces instance:

- Mount your credentials and sensitive configurations as Kubernetes Secrets.

- Mount your non-sensitive configurations as Kubernetes ConfigMaps.

If you need to allow the Dev Workspace Pods in the cluster to access container registries that require authentication, create an image pull Secret for the Dev Workspace Pods.

The mounting process uses the standard Kubernetes mounting mechanism and requires applying additional labels and annotations to your existing resources. Resources are mounted when starting a new workspace or restarting an existing one.

You can create permanent mount points for various components:

- Maven configuration, such as the user-specific settings.xml file

- SSH key pairs

- Git-provider access tokens

- Git configuration

- AWS authorization tokens

- Configuration files

- Persistent storage


6.1. Mounting Secrets

To mount confidential data into your workspaces, use Kubernetes Secrets.

Using Kubernetes Secrets, you can mount usernames, passwords, SSH key pairs, authentication tokens (for example, for AWS), and sensitive configurations.

Mount Kubernetes Secrets to the Dev Workspace containers in the OpenShift cluster of your organization’s OpenShift Dev Spaces instance.

Prerequisites

- An active oc session with administrative permissions to the destination OpenShift cluster. See Getting started with the CLI.

- In your user project, you created a new Secret or determined an existing Secret to mount to all Dev Workspace containers.

Procedure

Add the labels, which are required for mounting the Secret, to the Secret.

    $ oc label secret <Secret_name> \
        controller.devfile.io/mount-to-devworkspace=true \
        controller.devfile.io/watch-secret=true

Optional: Use the annotations to configure how the Secret is mounted.

Table 6.1. Optional annotations

| Annotation | Description |
|---|---|
| controller.devfile.io/mount-path: | Specifies the mount path. Defaults to /etc/secret/<Secret_name>. |
| controller.devfile.io/mount-as: | Specifies how the resource should be mounted: file, subpath, or env. Defaults to file. mount-as: file mounts the keys and values as files within the mount path. mount-as: subpath mounts the keys and values within the mount path using subpath volume mounts. mount-as: env mounts the keys and values as environment variables in all Dev Workspace containers. |

Example 6.1. Mounting a Secret as a file
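
A Secret for this example might be sketched as follows. The Secret name is hypothetical; the labels and the mount-path annotation are the ones described in the procedure above, and the mount path is chosen so that the settings.xml key appears at /home/user/.m2/settings.xml.

```yaml
# Illustrative sketch; the Secret name is an assumption.
apiVersion: v1
kind: Secret
metadata:
  name: mvn-settings-secret
  labels:
    controller.devfile.io/mount-to-devworkspace: 'true'
    controller.devfile.io/watch-secret: 'true'
  annotations:
    # Each key under data becomes a file in this directory (default mount-as: file).
    controller.devfile.io/mount-path: '/home/user/.m2'
data:
  settings.xml: <Base64_encoded_content>
```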

When you start a workspace, the /home/user/.m2/settings.xml file will be available in the Dev Workspace containers.

With Maven, you can set a custom path for the settings.xml file. For example:

$ mvn --settings /home/user/.m2/settings.xml clean install

6.1.1. Creating image pull Secrets

To allow the Dev Workspace Pods in the OpenShift cluster of your organization’s OpenShift Dev Spaces instance to access container registries that require authentication, create an image pull Secret.

You can create image pull Secrets by using oc, a .dockercfg file, or a config.json file.

6.1.1.1. Creating an image pull Secret with oc

Prerequisites

- An active oc session with administrative permissions to the destination OpenShift cluster. See Getting started with the CLI.

Procedure

In your user project, create an image pull Secret with your private container registry details and credentials:

    $ oc create secret docker-registry <Secret_name> \
        --docker-server=<registry_server> \
        --docker-username=<username> \
        --docker-password=<password> \
        --docker-email=<email_address>

Add the following labels to the image pull Secret:

$ oc label secret <Secret_name> controller.devfile.io/devworkspace_pullsecret=true controller.devfile.io/watch-secret=true

6.1.1.2. Creating an image pull Secret from a .dockercfg file

If you already store the credentials for the private container registry in a .dockercfg file, you can use that file to create an image pull Secret.

Prerequisites

- An active oc session with administrative permissions to the destination OpenShift cluster. See Getting started with the CLI.

- base64 command line tools are installed in the operating system you are using.

Procedure

Encode the .dockercfg file to Base64:

$ cat .dockercfg | base64 | tr -d '\n'

Create a new OpenShift Secret in your user project that contains the encoded content.

Apply the Secret:

    $ oc apply -f - <<EOF
    <Secret_prepared_in_the_previous_step>
    EOF
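
The Secret prepared in the second step might look like the following sketch. It assumes the same pull-secret labels that Section 6.1.1.1 applies with oc label and the standard kubernetes.io/dockercfg Secret type; the placeholders are illustrative.

```yaml
# Illustrative sketch of an image pull Secret built from a .dockercfg file.
apiVersion: v1
kind: Secret
metadata:
  name: <Secret_name>
  labels:
    controller.devfile.io/devworkspace_pullsecret: 'true'
    controller.devfile.io/watch-secret: 'true'
data:
  # Output of the Base64 encoding step, on a single line.
  .dockercfg: <Base64_content_of_.dockercfg>
type: kubernetes.io/dockercfg
```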

6.1.1.3. Creating an image pull Secret from a config.json file

If you already store the credentials for the private container registry in a $HOME/.docker/config.json file, you can use that file to create an image pull Secret.

Prerequisites

- An active oc session with administrative permissions to the destination OpenShift cluster. See Getting started with the CLI.

- base64 command line tools are installed in the operating system you are using.

Procedure

Encode the $HOME/.docker/config.json file to Base64.

$ cat config.json | base64 | tr -d '\n'

Create a new OpenShift Secret in your user project that contains the encoded content.

Apply the Secret:

    $ oc apply -f - <<EOF
    <Secret_prepared_in_the_previous_step>
    EOF
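
The Secret prepared in the second step might look like the following sketch. It assumes the pull-secret labels from Section 6.1.1.1 and the standard kubernetes.io/dockerconfigjson Secret type; the placeholders are illustrative.

```yaml
# Illustrative sketch of an image pull Secret built from a config.json file.
apiVersion: v1
kind: Secret
metadata:
  name: <Secret_name>
  labels:
    controller.devfile.io/devworkspace_pullsecret: 'true'
    controller.devfile.io/watch-secret: 'true'
data:
  # Output of the Base64 encoding step, on a single line.
  .dockerconfigjson: <Base64_content_of_config.json>
type: kubernetes.io/dockerconfigjson
```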

6.1.2. Using a Git-provider access token

OAuth for GitHub, GitLab, Bitbucket, or Microsoft Azure Repos needs to be configured by the administrator of your organization’s OpenShift Dev Spaces instance. If your administrator could not configure it for OpenShift Dev Spaces users, the workaround is for you to use a personal access token. You can configure personal access tokens on the User Preferences page of your OpenShift Dev Spaces dashboard: https://<openshift_dev_spaces_fqdn>/dashboard/#/user-preferences?tab=personal-access-tokens, or apply it manually as a Kubernetes Secret in the namespace.

Mounting your access token as a Secret enables the OpenShift Dev Spaces Server to access the remote repository that is cloned during workspace creation, including access to the repository’s /.che and /.vscode folders.

Apply the Secret in your user project of the OpenShift cluster of your organization’s OpenShift Dev Spaces instance.

After applying the Secret, you can create workspaces with clones of private Git repositories that are hosted on GitHub, GitLab, Bitbucket Server, or Microsoft Azure Repos.

You can create and apply multiple access-token Secrets per Git provider. You must apply each of those Secrets in your user project.

Prerequisites

- You have logged in to the cluster.

  Tip

  On OpenShift, you can use the oc command-line tool to log in to the cluster:

  $ oc login https://<openshift_dev_spaces_fqdn> --username=<my_user>

Procedure

Generate your access token on your Git provider’s website.

Important

Personal access tokens are sensitive information and should be kept confidential. Treat them like passwords. If you are having trouble with authentication, ensure you are using the correct token and have the appropriate permissions for cloning repositories:

- Open a terminal locally on your computer.

- Use the git command to clone the repository using your personal access token. The format of the git command varies based on the Git provider. As an example, GitHub personal access token verification can be done using the following command:

git clone https://<PAT>@github.com/username/repo.git

Replace <PAT> with your personal access token, and username/repo with the appropriate repository path. If the token is valid and has the necessary permissions, the cloning process should be successful. Otherwise, this is an indicator of an incorrect personal access token, insufficient permissions, or other issues.

Important

For GitHub Enterprise Cloud, verify that the token is authorized for use within the organization.

Go to https://<openshift_dev_spaces_fqdn>/api/user/id in the web browser to get your OpenShift Dev Spaces user ID.

Prepare a new OpenShift Secret.

Visit https://<openshift_dev_spaces_fqdn>/api/kubernetes/namespace to get your OpenShift Dev Spaces user namespace as name.

Switch to your OpenShift Dev Spaces user namespace in the cluster.

Tip

On OpenShift:

The oc command-line tool can return the namespace you are currently on in the cluster, which you can use to check your current namespace:

$ oc project

You can switch to your OpenShift Dev Spaces user namespace on a command line if needed:

$ oc project <your_user_namespace>

Apply the Secret.

Tip

On OpenShift, you can use the oc command-line tool:

    $ oc apply -f - <<EOF
    <Secret_prepared_in_step_5>
    EOF
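
The Secret prepared in this procedure might look like the following sketch. The label and annotation keys are assumptions based on the upstream Eclipse Che conventions for personal access token Secrets, and every value in angle brackets is a placeholder; check them against your OpenShift Dev Spaces instance before use.

```yaml
# Illustrative sketch of a personal access token Secret (keys are assumptions).
kind: Secret
apiVersion: v1
metadata:
  name: personal-access-token-<your_choice_of_name_for_this_token>
  labels:
    app.kubernetes.io/component: scm-personal-access-token
    app.kubernetes.io/part-of: che.eclipse.org
  annotations:
    # User ID returned by the /api/user/id endpoint mentioned above.
    che.eclipse.org/che-userid: <devspaces_user_id>
    che.eclipse.org/scm-personal-access-token-name: <git_provider_name>
    che.eclipse.org/scm-url: <git_provider_endpoint>
stringData:
  token: <content_of_access_token>
type: Opaque
```

Using stringData rather than data in a sketch like this avoids a separate Base64 encoding step for the token.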

Verification

- Start a new workspace by using the URL of a remote Git repository that the Git provider hosts.

- Make some changes and push to the remote Git repository from the workspace.


6.2. Mounting ConfigMaps

To mount non-confidential configuration data into your workspaces, use Kubernetes ConfigMaps.

Using Kubernetes ConfigMaps, you can mount non-sensitive data such as configuration values for an application.

Mount Kubernetes ConfigMaps to the Dev Workspace containers in the OpenShift cluster of your organization’s OpenShift Dev Spaces instance.

Prerequisites

- An active oc session with administrative permissions to the destination OpenShift cluster. See Getting started with the CLI.

- In your user project, you created a new ConfigMap or determined an existing ConfigMap to mount to all Dev Workspace containers.

Procedure

Add the labels, which are required for mounting the ConfigMap, to the ConfigMap.

    $ oc label configmap <ConfigMap_name> \
        controller.devfile.io/mount-to-devworkspace=true \
        controller.devfile.io/watch-configmap=true

Optional: Use the annotations to configure how the ConfigMap is mounted.

Table 6.2. Optional annotations

| Annotation | Description |
|---|---|
| controller.devfile.io/mount-path: | Specifies the mount path. Defaults to /etc/config/<ConfigMap_name>. |
| controller.devfile.io/mount-as: | Specifies how the resource should be mounted: file, subpath, or env. Defaults to file. mount-as: file mounts the keys and values as files within the mount path. mount-as: subpath mounts the keys and values within the mount path using subpath volume mounts. mount-as: env mounts the keys and values as environment variables in all Dev Workspace containers. |

Example 6.2. Mounting a ConfigMap as environment variables
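
A ConfigMap for this example might be sketched as follows. The name my-settings is hypothetical; the labels and the mount-as annotation are the ones described in the procedure above.

```yaml
# Illustrative sketch; the ConfigMap name is an assumption.
kind: ConfigMap
apiVersion: v1
metadata:
  name: my-settings
  labels:
    controller.devfile.io/mount-to-devworkspace: 'true'
    controller.devfile.io/watch-configmap: 'true'
  annotations:
    # Expose every key under data as an environment variable.
    controller.devfile.io/mount-as: env
data:
  <env_var_1>: <value_1>
  <env_var_2>: <value_2>
```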

When you start a workspace, the <env_var_1> and <env_var_2> environment variables will be available in the Dev Workspace containers.

6.2.1. Mounting Git configuration

The user.name and user.email fields in the Git configuration are set automatically from the Git provider that is connected to OpenShift Dev Spaces by a Git-provider access token or a token generated through OAuth, if the username and email are set on the provider’s user profile page.

Follow the instructions below to mount a Git config file in a workspace.

Prerequisites

- You have logged in to the cluster.

Procedure

Prepare a new OpenShift ConfigMap.

Apply the ConfigMap.

    $ oc apply -f - <<EOF
    <ConfigMap_prepared_in_step_1>
    EOF
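
The ConfigMap prepared in the first step might look like the following sketch. The ConfigMap name, the /etc/ mount path, and the gitconfig key are assumptions chosen so that the content lands in a system-level Git configuration file; the user values are placeholders.

```yaml
# Illustrative sketch; name, mount path, and key are assumptions.
kind: ConfigMap
apiVersion: v1
metadata:
  name: workspace-userdata-gitconfig-configmap
  namespace: <user_namespace>
  labels:
    controller.devfile.io/mount-to-devworkspace: 'true'
    controller.devfile.io/watch-configmap: 'true'
  annotations:
    # subpath mounts only this file instead of replacing the whole directory.
    controller.devfile.io/mount-as: subpath
    controller.devfile.io/mount-path: /etc/
data:
  gitconfig: |
    [user]
      name = <git_user_name>
      email = <git_user_email>
```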

Verification

- Start a new workspace by using the URL of a remote Git repository that the Git provider hosts.

- Once the workspace is started, open a new terminal in the tools container and run git config --get-regexp user.*. Your Git user name and email should appear in the output.

6.3. Enabling artifact repositories in a restricted environment

By configuring technology stacks, you can work with artifacts from in-house repositories using self-signed certificates:

6.3.1. Maven

You can enable a Maven artifact repository in Maven workspaces that run in a restricted environment.

Prerequisites

- You are not running any Maven workspace.

- You know your user namespace, which is <username>-devspaces where <username> is your OpenShift Dev Spaces username.

Procedure

- In the <username>-devspaces namespace, apply the Secret for the TLS certificate. Encode the certificate in Base64 with line wrapping disabled.

- In the <username>-devspaces namespace, apply the ConfigMap to create the settings.xml file.

- Optional: When using JBoss EAP-based devfiles, apply a second settings-xml ConfigMap in the <username>-devspaces namespace, with the same content, a different name, and the /home/jboss/.m2 mount path.

- In the <username>-devspaces namespace, apply the ConfigMap for the TrustStore initialization script. Separate versions of the script exist for Java 8 and Java 11.

- Start a Maven workspace.

- Open a new terminal in the tools container.

- Run ~/init-truststore.sh.
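
The TLS certificate Secret from the first step might be sketched as follows. The Secret name, the tls.cer key, and the /home/user/certs mount path are assumptions made for illustration; the labels and annotations are the ones described in Section 6.1.

```yaml
# Illustrative sketch; name, key, and mount path are assumptions.
kind: Secret
apiVersion: v1
metadata:
  name: tls-cer
  labels:
    controller.devfile.io/mount-to-devworkspace: 'true'
    controller.devfile.io/watch-secret: 'true'
  annotations:
    controller.devfile.io/mount-path: /home/user/certs
    controller.devfile.io/mount-as: file
data:
  # Base64 encoding with line wrapping disabled.
  tls.cer: >-
    <Base64_encoded_content_of_public_cert>
```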

6.3.2. Gradle

You can enable a Gradle artifact repository in Gradle workspaces that run in a restricted environment.

Prerequisites

- You are not running any Gradle workspace.

Procedure

- Apply the Secret for the TLS certificate. Encode the certificate in Base64 with line wrapping disabled.

- Apply the ConfigMap for the TrustStore initialization script.

- Apply the ConfigMap for the Gradle init script.

- Start a Gradle workspace.

- Open a new terminal in the tools container.

- Run ~/init-truststore.sh.

6.3.3. npm

You can enable an npm artifact repository in npm workspaces that run in a restricted environment.

Prerequisites

- You are not running any npm workspace.

Applying a ConfigMap that sets environment variables might cause a workspace boot loop.

If you encounter this behavior, remove the ConfigMap and edit the devfile directly.

Procedure

- Apply the Secret for the TLS certificate. Encode the certificate in Base64 with line wrapping disabled.

- Apply the ConfigMap to set the required environment variables in the tools container.
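
The environment-variable ConfigMap for npm might be sketched as follows. The ConfigMap name, the certificate path, and the registry URL are assumptions; NODE_EXTRA_CA_CERTS and NPM_CONFIG_REGISTRY are standard Node.js and npm environment variables.

```yaml
# Illustrative sketch; name, certificate path, and registry URL are assumptions.
kind: ConfigMap
apiVersion: v1
metadata:
  name: disconnected-env
  labels:
    controller.devfile.io/mount-to-devworkspace: 'true'
    controller.devfile.io/watch-configmap: 'true'
  annotations:
    controller.devfile.io/mount-as: env
data:
  # Path where the TLS certificate Secret is assumed to be mounted.
  NODE_EXTRA_CA_CERTS: /home/user/certs/tls.cer
  NPM_CONFIG_REGISTRY: >-
    https://<npm_server>/<path_to_npm_repository>/
```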

6.3.3.1. Disabling self-signed certificate validation

Run the command below to disable strict SSL/TLS checking in npm, which bypasses the validation of your self-signed certificates. Note that this is a potential security risk. For a better solution, configure a self-signed certificate you trust with NODE_EXTRA_CA_CERTS.

Procedure

Run the following command in the terminal:

npm config set strict-ssl false

6.3.3.2. Configuring NODE_EXTRA_CA_CERTS to use a certificate

Use the command below to set NODE_EXTRA_CA_CERTS to point to where you have your SSL/TLS certificate.

Procedure

Run the following commands in the terminal:

    export NODE_EXTRA_CA_CERTS=/public-certs/nexus.cer
    npm install

/public-certs/nexus.cer is the path to the self-signed SSL/TLS certificate of the Nexus artifact repository.

6.3.4. Python

You can enable a Python artifact repository in Python workspaces that run in a restricted environment.

Prerequisites

- You are not running any Python workspace.

Applying a ConfigMap that sets environment variables might cause a workspace boot loop.

If you encounter this behavior, remove the ConfigMap and edit the devfile directly.

Procedure

- Apply the Secret for the TLS certificate. Encode the certificate in Base64 with line wrapping disabled.

- Apply the ConfigMap to set the required environment variables in the tools container.
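
The environment-variable ConfigMap for Python might be sketched as follows. The ConfigMap name, the certificate path, and the index URL are assumptions; PIP_INDEX_URL and PIP_CERT are standard pip environment variables.

```yaml
# Illustrative sketch; name, certificate path, and index URL are assumptions.
kind: ConfigMap
apiVersion: v1
metadata:
  name: disconnected-env
  labels:
    controller.devfile.io/mount-to-devworkspace: 'true'
    controller.devfile.io/watch-configmap: 'true'
  annotations:
    controller.devfile.io/mount-as: env
data:
  PIP_INDEX_URL: >-
    https://<pypi_server>/<path_to_pypi_repository>/simple
  # Path where the TLS certificate Secret is assumed to be mounted.
  PIP_CERT: /home/user/certs/tls.cer
```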

6.3.5. Go

You can enable a Go artifact repository in Go workspaces that run in a restricted environment.

Prerequisites

- You are not running any Go workspace.

Applying a ConfigMap that sets environment variables might cause a workspace boot loop.

If you encounter this behavior, remove the ConfigMap and edit the devfile directly.

Procedure

- Apply the Secret for the TLS certificate. Encode the certificate in Base64 with line wrapping disabled.

- Apply the ConfigMap to set the required environment variables in the tools container.
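
The environment-variable ConfigMap for Go might be sketched as follows. The ConfigMap name, the certificate path, and the proxy URL are assumptions; GOPROXY is a standard Go environment variable, and SSL_CERT_FILE is honored by Go's certificate loading on Linux.

```yaml
# Illustrative sketch; name, certificate path, and proxy URL are assumptions.
kind: ConfigMap
apiVersion: v1
metadata:
  name: disconnected-env
  labels:
    controller.devfile.io/mount-to-devworkspace: 'true'
    controller.devfile.io/watch-configmap: 'true'
  annotations:
    controller.devfile.io/mount-as: env
data:
  GOPROXY: >-
    https://<go_module_proxy>
  # Path where the TLS certificate Secret is assumed to be mounted.
  SSL_CERT_FILE: /home/user/certs/tls.cer
```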

6.3.6. NuGet

You can enable a NuGet artifact repository in NuGet workspaces that run in a restricted environment.

Prerequisites

- You are not running any NuGet workspace.

Applying a ConfigMap that sets environment variables might cause a workspace boot loop.

If you encounter this behavior, remove the ConfigMap and edit the devfile directly.

Procedure

- Apply the Secret for the TLS certificate. Encode the certificate in Base64 with line wrapping disabled.

- Apply the ConfigMap to set the environment variable for the path of the TLS certificate file in the tools container.

- Apply the ConfigMap to create the nuget.config file.

Chapter 7. Requesting persistent storage for workspaces

OpenShift Dev Spaces workspaces and workspace data are ephemeral and are lost when the workspace stops.

To preserve the workspace state in persistent storage while the workspace is stopped, request a Kubernetes PersistentVolume (PV) for the Dev Workspace containers in the OpenShift cluster of your organization’s OpenShift Dev Spaces instance.

You can request a PV by using the devfile or a Kubernetes PersistentVolumeClaim (PVC).

An example of a PV is the /projects/ directory of a workspace, which is mounted by default for non-ephemeral workspaces.

Persistent Volumes come at a cost: attaching a persistent volume slows workspace startup.

Starting another, concurrently running workspace with a ReadWriteOnce PV might fail.


7.1. Requesting persistent storage in a devfile

When a workspace requires its own persistent storage, request a PersistentVolume (PV) in the devfile, and OpenShift Dev Spaces will automatically manage the necessary PersistentVolumeClaims.

Prerequisites

- You have not started the workspace.

Procedure

- Add a volume component in the devfile.

- Add a volumeMount for the relevant container in the devfile.

Example 7.1. A devfile that provisions a PV for a workspace to a container

When a workspace is started with the following devfile, the cache PV is provisioned to the golang container in the ./cache container path:
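
A devfile of this kind might be sketched as follows. The golang container and the cache volume are named in the text above; the devfile name, image, memory limit, volume size, and the exact mount path are assumptions made for illustration.

```yaml
# Illustrative sketch; metadata, image, limits, size, and path are assumptions.
schemaVersion: 2.1.0
metadata:
  name: mydevfile
components:
  - name: golang
    container:
      image: golang
      memoryLimit: 512Mi
      mountSources: true
      command: ['sleep', 'infinity']
      volumeMounts:
        # References the volume component below by name.
        - name: cache
          path: /.cache
  - name: cache
    volume:
      size: 2Gi
```

The volume is declared as its own component and then referenced by name from the container's volumeMounts, which corresponds to the two steps of the procedure.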

7.2. Requesting persistent storage in a PVC

You can opt to apply a PersistentVolumeClaim (PVC) to request a PersistentVolume (PV) for your workspaces in the following cases:

- Not all developers of the project need the PV.

- The PV lifecycle goes beyond the lifecycle of a single workspace.

- The data included in the PV are shared across workspaces.

You can apply a PVC to the Dev Workspace containers even if the workspace is ephemeral and its devfile contains the controller.devfile.io/storage-type: ephemeral attribute.

Prerequisites

- You have not started the workspace.

- An active oc session with administrative permissions to the destination OpenShift cluster. See Getting started with the CLI.

- A PVC is created in your user project to mount to all Dev Workspace containers.

Procedure

Add the

controller.devfile.io/mount-to-devworkspace: truelabel to the PVC.oc label persistentvolumeclaim <PVC_name> \ controller.devfile.io/mount-to-devworkspace=true

$ oc label persistentvolumeclaim <PVC_name> \ controller.devfile.io/mount-to-devworkspace=trueCopy to Clipboard Copied! Toggle word wrap Toggle overflow Optional: Use the annotations to configure how the PVC is mounted:

Table 7.1. Optional annotations

| Annotation | Description |
| --- | --- |
| controller.devfile.io/mount-path: | The mount path for the PVC. Defaults to /tmp/<PVC_name>. |
| controller.devfile.io/read-only: | Set to 'true' or 'false' to specify whether the PVC is to be mounted as read-only. Defaults to 'false', resulting in the PVC mounted as read/write. |

Example 7.2. Mounting a read-only PVC
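The manifest for this example is not reproduced here. As a hedged sketch, a PVC that carries the label and both optional annotations could look like the following. The PVC name my-shared-pvc, the mount path /example/directory, the access mode, and the 3Gi size are assumptions for illustration. Only the label and annotation keys come from this section.

```shell
# Write a sample PVC manifest with the mount label and a read-only annotation.
cat > read-only-pvc.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: my-shared-pvc                        # hypothetical PVC name
  labels:
    controller.devfile.io/mount-to-devworkspace: 'true'
  annotations:
    controller.devfile.io/mount-path: /example/directory   # hypothetical path
    controller.devfile.io/read-only: 'true'
spec:
  accessModes:
    - ReadWriteOnce                          # assumed access mode
  resources:
    requests:
      storage: 3Gi                           # assumed size
  volumeMode: Filesystem
EOF

# To create the PVC, apply the file in your user project
# (requires an active oc session):
#   oc apply -f read-only-pvc.yaml -n <user_namespace>
```

The label and annotation values are quoted because Kubernetes requires them to be strings, not booleans.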

Chapter 8. Integrating with OpenShift

8.1. Managing workspaces with OpenShift APIs

On your organization’s OpenShift cluster, OpenShift Dev Spaces workspaces are represented as DevWorkspace custom resources of the same name. As a result, if there is a workspace named my-workspace in the OpenShift Dev Spaces dashboard, there is a corresponding DevWorkspace custom resource named my-workspace in the user’s project on the cluster.

Because each DevWorkspace custom resource on the cluster represents an OpenShift Dev Spaces workspace, you can manage OpenShift Dev Spaces workspaces by using OpenShift APIs with clients such as the oc command-line tool.

Each DevWorkspace custom resource contains details derived from the devfile of the Git repository cloned for the workspace. For example, a devfile might provide devfile commands and workspace container configurations.
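As a small sketch of inspecting those details with oc, the two helper functions below print either the whole custom resource or only the names of its components. The function names are hypothetical, and the jsonpath expression assumes that the devfile components are stored under spec.template.components.

```shell
# Print the full DevWorkspace custom resource as YAML.
# $1 = workspace name, $2 = user namespace
show_workspace() {
  oc get devworkspace "$1" -n "$2" -o yaml
}

# Print only the names of the components derived from the devfile.
# $1 = workspace name, $2 = user namespace
list_workspace_components() {
  oc get devworkspace "$1" -n "$2" \
    -o jsonpath='{.spec.template.components[*].name}'
}
```

For example, calling show_workspace my-workspace user1-dev prints the custom resource for the my-workspace workspace in the user1-dev project.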

8.1.1. Listing all workspaces

As a user, you can list your workspaces by using the command line.

Prerequisites

- An active oc session with permissions to get the DevWorkspace resources in your project on the cluster. See Getting started with the CLI.

- You know the relevant OpenShift Dev Spaces user namespace on the cluster.

Tip: You can visit https://<openshift_dev_spaces_fqdn>/api/kubernetes/namespace to get your OpenShift Dev Spaces user namespace as name.

- You are in the OpenShift Dev Spaces user namespace on the cluster.

Tip: On OpenShift, you can use the command-line oc tool to display your current namespace or switch to a namespace.

Procedure

To list your workspaces, enter the following on a command line:

$ oc get devworkspaces

Example 8.1. Output

NAMESPACE   NAME                 DEVWORKSPACE ID             PHASE     INFO
user1-dev   spring-petclinic     workspace6d99e9ffb9784491   Running   https://url-to-workspace.com
user1-dev   golang-example       workspacedf64e4a492cd4701   Stopped   Stopped
user1-dev   python-hello-world   workspace69c26884bbc141f2   Failed    Container tooling has state CrashLoopBackOff

You can view PHASE changes live by adding the --watch flag to this command.

Users with administrative permissions on the cluster can list all workspaces from all OpenShift Dev Spaces users by including the --all-namespaces flag.
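The two flags can be sketched as the following helper functions. The function names are hypothetical. The flags are the ones named in the preceding paragraphs.

```shell
# Follow PHASE changes of the workspaces in one namespace until interrupted.
# $1 = user namespace
watch_workspaces() {
  oc get devworkspaces -n "$1" --watch
}

# List the workspaces of all users; requires administrative permissions.
list_all_workspaces() {
  oc get devworkspaces --all-namespaces
}
```

The watch variant keeps running and prints a new line on each change, so stop it with Ctrl+C when you are done.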

8.1.2. Creating workspaces

If your use case does not permit use of the OpenShift Dev Spaces dashboard, you can create workspaces with OpenShift APIs by applying custom resources to the cluster.

Creating workspaces through the OpenShift Dev Spaces dashboard provides a better user experience and configuration benefits compared to using the command line:

- As a user, you are automatically logged in to the cluster.

- OpenShift clients work automatically.

- OpenShift Dev Spaces and its components automatically convert the target Git repository’s devfile into the DevWorkspace and DevWorkspaceTemplate custom resources on the cluster.

- Access to the workspace is secured by default with the routingClass: che in the DevWorkspace of the workspace.

- Recognition of the DevWorkspaceOperatorConfig configuration is managed by OpenShift Dev Spaces.

- Recognition of configurations in spec.devEnvironments specified in the CheCluster custom resource including:

  - Persistent storage strategy is specified with devEnvironments.storage.

  - Default IDE is specified with devEnvironments.defaultEditor.

  - Default plugins are specified with devEnvironments.defaultPlugins.

  - Container build configuration is specified with devEnvironments.containerBuildConfiguration.

Prerequisites

- An active oc session with permissions to create DevWorkspace resources in your project on the cluster. See Getting started with the CLI.

- You know the relevant OpenShift Dev Spaces user namespace on the cluster.

Tip: You can visit https://<openshift_dev_spaces_fqdn>/api/kubernetes/namespace to get your OpenShift Dev Spaces user namespace as name.

- You are in the OpenShift Dev Spaces user namespace on the cluster.

Tip: On OpenShift, you can use the command-line oc tool to display your current namespace or switch to a namespace.

Note: OpenShift Dev Spaces administrators who intend to create workspaces for other users must create the DevWorkspace custom resource in a user namespace that is provisioned by OpenShift Dev Spaces or by the administrator. See https://access.redhat.com/documentation/en-us/red_hat_openshift_dev_spaces/3.17/html-single/administration_guide/index#administration-guide:configuring-namespace-provisioning.

Procedure

- To prepare the DevWorkspace custom resource, copy the contents of the target Git repository’s devfile.

Example 8.2. Copied devfile contents with schemaVersion: 2.2.0

components:
  - name: tooling-container
    container:
      image: quay.io/devfile/universal-developer-image:ubi8-latest

Tip: For more details, see the devfile v2 documentation.

- Create a DevWorkspace custom resource, pasting the devfile contents from the previous step under the spec.template field.

Example 8.3. A DevWorkspace custom resource

  - 1: Name of the DevWorkspace custom resource. This will be the name of the new workspace.

  - 2: User namespace, which is the target project for the new workspace.

  - 3: Determines whether the workspace must be started when the DevWorkspace custom resource is created.

  - 4: URL reference to the Microsoft Visual Studio Code - Open Source IDE devfile.

  - 5: Details about the Git repository to clone into the workspace when it starts.

  - 6: List of components such as workspace containers and volume components.

- Apply the DevWorkspace custom resource to the cluster.
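The body of Example 8.3 is not reproduced here. As a hedged sketch, a DevWorkspace custom resource that matches the six callouts could look like the following. The file name my-devworkspace.yaml, the project name my-project-name, the Git remote, and the apply_workspace helper are hypothetical. The editor devfile URL is left as a placeholder because it depends on your OpenShift Dev Spaces instance. The numbered comments correspond to the callouts of Example 8.3.

```shell
# Write a sample DevWorkspace custom resource to a local file.
cat > my-devworkspace.yaml <<'EOF'
kind: DevWorkspace
apiVersion: workspace.devfile.io/v1alpha2
metadata:
  name: my-devworkspace            # 1: name of the new workspace
  namespace: user1-dev             # 2: user namespace (target project)
spec:
  routingClass: che
  started: true                    # 3: start the workspace on creation
  contributions:                   # 4: reference to the editor devfile
    - name: ide
      uri: <editor_devfile_url>
  template:
    projects:                      # 5: Git repository to clone
      - name: my-project-name
        git:
          remotes:
            origin: https://github.com/eclipse-che/che-docs
    components:                    # 6: devfile contents from the previous step
      - name: tooling-container
        container:
          image: quay.io/devfile/universal-developer-image:ubi8-latest
EOF

# Apply a DevWorkspace manifest to the cluster.
# $1 = path to the manifest file
apply_workspace() {
  oc apply -f "$1"
}
```

After replacing the placeholder with a real editor devfile URL, calling apply_workspace my-devworkspace.yaml creates the workspace in the namespace set in the manifest.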

Verification

- Verify that the workspace is starting by checking the PHASE status of the DevWorkspace.

$ oc get devworkspaces -n <user_project> --watch

Example 8.4. Output

NAMESPACE   NAME              DEVWORKSPACE ID             PHASE      INFO
user1-dev   my-devworkspace   workspacedf64e4a492cd4701   Starting   Waiting for workspace deployment

- When the workspace has successfully started, its PHASE status changes to Running in the output of the oc get devworkspaces command.

Example 8.5. Output

NAMESPACE   NAME              DEVWORKSPACE ID             PHASE     INFO
user1-dev   my-devworkspace   workspacedf64e4a492cd4701   Running   https://url-to-workspace.com

You can then open the workspace by using one of these options:

  - Visit the URL provided in the INFO section of the output of the oc get devworkspaces command.

  - Open the workspace from the OpenShift Dev Spaces dashboard.

8.1.3. Stopping workspaces

You can stop a workspace by setting the spec.started field in the DevWorkspace custom resource to false.

Prerequisites

- An active oc session on the cluster. See Getting started with the CLI.

- You know the workspace name.

Tip: You can find the relevant workspace name in the output of $ oc get devworkspaces.

- You know the relevant OpenShift Dev Spaces user namespace on the cluster.

Tip: You can visit https://<openshift_dev_spaces_fqdn>/api/kubernetes/namespace to get your OpenShift Dev Spaces user namespace as name.

- You are in the OpenShift Dev Spaces user namespace on the cluster.

Tip: On OpenShift, you can use the command-line oc tool to display your current namespace or switch to a namespace.

Procedure

Run the following command to stop a workspace:

$ oc patch devworkspace <workspace_name> \
  -p '{"spec":{"started":false}}' \
  --type=merge -n <user_namespace> && \
  oc wait --for=jsonpath='{.status.phase}'=Stopped \
  dw/<workspace_name> -n <user_namespace>

8.1.4. Starting stopped workspaces

You can start a stopped workspace by setting the spec.started field in the DevWorkspace custom resource to true.

Prerequisites

- An active oc session on the cluster. See Getting started with the CLI.

- You know the workspace name.

Tip: You can find the relevant workspace name in the output of $ oc get devworkspaces.

- You know the relevant OpenShift Dev Spaces user namespace on the cluster.

Tip: You can visit https://<openshift_dev_spaces_fqdn>/api/kubernetes/namespace to get your OpenShift Dev Spaces user namespace as name.

- You are in the OpenShift Dev Spaces user namespace on the cluster.

Tip: On OpenShift, you can use the command-line oc tool to display your current namespace or switch to a namespace.

Procedure

Run the following command to start a stopped workspace:

$ oc patch devworkspace <workspace_name> \
  -p '{"spec":{"started":true}}' \
  --type=merge -n <user_namespace> && \
  oc wait --for=jsonpath='{.status.phase}'=Running \
  dw/<workspace_name> -n <user_namespace>
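The commands for stopping and starting a workspace differ only in the value of spec.started and in the phase they wait for. As a sketch, both can be wrapped in one helper function. The function name set_workspace_started and the 300-second timeout are assumptions. The --timeout flag is added because oc wait otherwise gives up after its default of 30 seconds, which a starting workspace can exceed.

```shell
# Start or stop a workspace and wait until it reaches the matching phase.
# $1 = workspace name, $2 = user namespace, $3 = true (start) or false (stop)
set_workspace_started() {
  case "$3" in
    true)  phase=Running ;;
    false) phase=Stopped ;;
    *)
      echo "usage: set_workspace_started <workspace> <namespace> true|false" >&2
      return 2
      ;;
  esac

  # Patch spec.started, then block until status.phase has the expected value.
  oc patch devworkspace "$1" -n "$2" --type=merge \
    -p "{\"spec\":{\"started\":$3}}" &&
  oc wait --for=jsonpath='{.status.phase}'="$phase" \
    "dw/$1" -n "$2" --timeout=300s
}
```

For example, set_workspace_started my-devworkspace user1-dev false stops the workspace, and the same call with true starts it again.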

8.1.5. Removing workspaces

You can remove a workspace by simply deleting the DevWorkspace custom resource.

Deleting the DevWorkspace custom resource will also delete other workspace resources if they were created by OpenShift Dev Spaces: for example, the referenced DevWorkspaceTemplate and per-workspace PersistentVolumeClaims.

Remove workspaces by using the OpenShift Dev Spaces dashboard whenever possible.

Prerequisites

- An active oc session on the cluster. See Getting started with the CLI.

- You know the workspace name.

Tip: You can find the relevant workspace name in the output of $ oc get devworkspaces.

- You know the relevant OpenShift Dev Spaces user namespace on the cluster.

Tip: You can visit https://<openshift_dev_spaces_fqdn>/api/kubernetes/namespace to get your OpenShift Dev Spaces user namespace as name.

- You are in the OpenShift Dev Spaces user namespace on the cluster.

Tip: On OpenShift, you can use the command-line oc tool to display your current namespace or switch to a namespace.

Procedure

Run the following command to remove a workspace:

oc delete devworkspace <workspace_name> -n <user_namespace>