This documentation is for a release that is no longer maintained

See documentation for the latest supported version.

User guide

Using Red Hat OpenShift Dev Spaces 3.2

Abstract

Chapter 1. Adopting OpenShift Dev Spaces

To get started with adopting OpenShift Dev Spaces for your organization, you can read the following:

1.1. Developer workspaces

Red Hat OpenShift Dev Spaces provides developer workspaces with everything you need to code, build, test, run, and debug applications:

- Project source code

- Web-based integrated development environment (IDE)

- Tool dependencies needed by developers to work on a project

- Application runtime: a replica of the environment where the application runs in production

Pods manage each component of an OpenShift Dev Spaces workspace. Therefore, everything running in an OpenShift Dev Spaces workspace is running inside containers. This makes an OpenShift Dev Spaces workspace highly portable.

The embedded browser-based IDE is the point of access for everything running in an OpenShift Dev Spaces workspace. This makes an OpenShift Dev Spaces workspace easy to share.

1.2. Using a badge with a link to enable a first-time contributor to start a workspace

To enable a first-time contributor to start a workspace with a project, add a badge with a link to your OpenShift Dev Spaces instance.

Figure 1.1. Factory badge

Procedure

- Substitute your OpenShift Dev Spaces URL (https://devspaces-<openshift_deployment_name>.<domain_name>) and repository URL (<your-repository-url>), and add the link to your repository in the project README.md file.

[](https://devspaces-<openshift_deployment_name>.<domain_name>/#https://<your-repository-url>)

- The README.md file in your Git provider web interface displays the factory badge. Click the badge to open a workspace with your project in your OpenShift Dev Spaces instance.
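The badge line for the README.md file can be generated with a small shell helper. This is a minimal sketch: the function name `factory_badge`, the alt text, and the badge image URL argument are hypothetical, and the example host and repository are placeholders.

```shell
# factory_badge <instance_url> <repository_url> <badge_image_url>
# Prints a Markdown badge whose link starts a workspace for the repository.
# The badge image URL is an assumption: pass whichever image your project uses.
factory_badge() {
  local instance_url="$1"
  local repo_url="$2"
  local badge_image_url="$3"
  # Strip a trailing slash from the instance URL, then append "/#<repository_url>".
  printf '[![Open in Dev Spaces](%s)](%s/#%s)\n' \
    "$badge_image_url" "${instance_url%/}" "$repo_url"
}

# Example call with placeholder values:
factory_badge 'https://devspaces.example.com' \
  'https://github.com/org/repo' 'https://example.com/badge.svg'
```

Paste the printed line into the README.md file of the repository.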

1.3. Benefits of reviewing pull and merge requests in Red Hat OpenShift Dev Spaces

A Red Hat OpenShift Dev Spaces workspace contains all the tools you need to review pull and merge requests from start to finish. By clicking an OpenShift Dev Spaces link, you get access to a Red Hat OpenShift Dev Spaces-supported web IDE with a ready-to-use workspace where you can run a linter, unit tests, the build, and more.

Prerequisites

- You have access to the repository hosted by your Git provider.

- You use a Red Hat OpenShift Dev Spaces-supported browser: Google Chrome or Mozilla Firefox.

- You have access to an OpenShift Dev Spaces instance.

Procedure

- Open the feature branch to review in OpenShift Dev Spaces. A clone of the branch opens in a workspace with tools for debugging and testing.

- Check the pull or merge request changes.

- Run your desired debugging and testing tools:

  - Run a linter.

  - Run unit tests.

  - Run the build.

  - Run the application to check for problems.

- Navigate to the UI of your Git provider to leave a comment and pull or merge your assigned request.

Verification

- (optional) Open a second workspace using the main branch of the repository to reproduce a problem.

1.4. Supported languages

| Language | Builders, runtimes, and databases | Maturity |
|---|---|---|
| Apache Camel K | | GA |
| Java | | GA |
| Node.js | | GA |
| Python | | GA |
| C/C++ | | Technology preview |
| C# | | Technology preview |
| Go | | Technology preview |
| PHP | | Technology preview |

Chapter 2. User onboarding

If your organization is already running an OpenShift Dev Spaces instance, you can get started as a new user by learning how to start a new workspace, manage your workspaces, and authenticate yourself to a Git server from a workspace:

2.1. Starting a new workspace with a clone of a Git repository

Working with OpenShift Dev Spaces in your browser involves multiple URLs:

- The URL of your organization’s OpenShift Dev Spaces instance, used as part of all the following URLs

- The URL of the Workspaces page of your OpenShift Dev Spaces dashboard with the workspace control panel

- The URLs for starting a new workspace

- The URLs of your workspaces in use

With OpenShift Dev Spaces, you can visit a URL in your browser to start a new workspace that contains a clone of a Git repository. This way, you can clone a Git repository that is hosted on GitHub, a GitLab instance, or a Bitbucket server.

You can also use the Git Repo URL * field on the Create Workspace page of your OpenShift Dev Spaces dashboard to enter the URL of a Git repository to start a new workspace.

Prerequisites

- Your organization has a running instance of OpenShift Dev Spaces.

- You know the FQDN URL of your organization’s OpenShift Dev Spaces instance: https://devspaces-<openshift_deployment_name>.<domain_name>.

- Optional: You have authentication to the Git server configured.

- Your Git repository maintainer keeps the devfile.yaml or .devfile.yaml file in the root directory of the Git repository. (For alternative file names and file paths, see Section 2.2, “Optional parameters for the URLs for starting a new workspace”.)

Tip: You can also start a new workspace by supplying the URL of a Git repository that contains no devfile. Doing so results in a workspace with the Che-Theia IDE and the Universal Developer Image.

Procedure

To start a new workspace with a clone of a Git repository:

- Optional: Visit your OpenShift Dev Spaces dashboard pages to authenticate to your organization’s instance of OpenShift Dev Spaces.

- Visit the URL to start a new workspace using the basic syntax:

https://devspaces-<openshift_deployment_name>.<domain_name>#<git_repository_url>

Tip: You can extend this URL with optional parameters:

https://devspaces-<openshift_deployment_name>.<domain_name>#<git_repository_url>?<optional_parameters>

Example 2.1. A URL for starting a new workspace

https://devspaces-<openshift_deployment_name>.<domain_name>#https://github.com/che-samples/cpp-hello-world

Example 2.2. The URL syntax for starting a new workspace with a clone of a GitHub-hosted repository

With GitHub and GitLab, you can even use the URL of a specific branch of the repository to be cloned:

- https://devspaces-<openshift_deployment_name>.<domain_name>#https://github.com/<user_or_org>/<repository> starts a new workspace with a clone of the default branch.

- https://devspaces-<openshift_deployment_name>.<domain_name>#https://github.com/<user_or_org>/<repository>/tree/<branch_name> starts a new workspace with a clone of the specified branch.

- https://devspaces-<openshift_deployment_name>.<domain_name>#https://github.com/<user_or_org>/<repository>/pull/<pull_request_id> starts a new workspace with a clone of the branch of the pull request.

After you enter the URL to start a new workspace in a browser tab, it renders the workspace-starting page.

When the new workspace is ready, the workspace IDE loads in the browser tab.

A clone of the Git repository is present in the filesystem of the new workspace.

The workspace has a unique URL: https://devspaces-<openshift_deployment_name>.<domain_name>#workspace<unique_url>.

Although this is not possible in the address bar, you can add a URL for starting a new workspace as a bookmark by using the browser bookmark manager:

- In Mozilla Firefox, go to ☰ > Bookmarks > Manage bookmarks Ctrl+Shift+O > Bookmarks Toolbar > Organize > Add bookmark.

- In Google Chrome, go to ⋮ > Bookmarks > Bookmark manager > Bookmarks bar > ⋮ > Add new bookmark.
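The URL syntax in this section can be assembled in a script, for example to generate links for several repositories. The following is a minimal sketch: the function names and the example host `devspaces.example.com` are hypothetical, and the GitHub path layout is the one shown in Example 2.2.

```shell
# workspace_url <instance_url> <git_repository_url>
# Prints the URL that starts a new workspace with a clone of the repository.
workspace_url() {
  local instance_url="$1"
  local git_repo_url="$2"
  # Basic syntax: <instance_url>#<git_repository_url>
  printf '%s#%s\n' "${instance_url%/}" "$git_repo_url"
}

# github_branch_url <user_or_org> <repository> <branch_name>
# Prints the GitHub URL of a specific branch, as used in Example 2.2.
github_branch_url() {
  printf 'https://github.com/%s/%s/tree/%s\n' "$1" "$2" "$3"
}

# Example call with a placeholder host:
workspace_url 'https://devspaces.example.com' \
  'https://github.com/che-samples/cpp-hello-world'
# prints: https://devspaces.example.com#https://github.com/che-samples/cpp-hello-world
```

To link to a branch, pass the output of `github_branch_url` as the second argument of `workspace_url`.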

2.2. Optional parameters for the URLs for starting a new workspace

When you start a new workspace, OpenShift Dev Spaces configures the workspace according to the instructions in the devfile. When you use a URL to start a new workspace, you can append optional parameters to the URL that further configure the workspace. You can use these parameters to specify a workspace IDE, start duplicate workspaces, and specify a devfile file name or path.

- Section 2.2.1, “URL parameter concatenation”

- Section 2.2.2, “URL parameter for the workspace IDE”

- Section 2.2.3, “URL parameter for starting duplicate workspaces”

- Section 2.2.4, “URL parameter for the devfile file name”

- Section 2.2.5, “URL parameter for the devfile file path”

- Section 2.2.6, “URL parameter for the workspace storage”

2.2.1. URL parameter concatenation

The URL for starting a new workspace supports concatenation of multiple optional URL parameters by using & with the following URL syntax:

https://devspaces-<openshift_deployment_name>.<domain_name>#<git_repository_url>?<url_parameter_1>&<url_parameter_2>&<url_parameter_3>

Example 2.3. A URL for starting a new workspace with the URL of a Git repository and optional URL parameters

The complete URL for the browser:

https://devspaces-<openshift_deployment_name>.<domain_name>#https://github.com/che-samples/cpp-hello-world?new&che-editor=che-incubator/intellij-community/latest&devfilePath=tests/testdevfile.yaml

Explanation of the parts of the URL:

- https://devspaces-<openshift_deployment_name>.<domain_name> is the URL of your OpenShift Dev Spaces instance.

- #https://github.com/che-samples/cpp-hello-world is the URL of the Git repository to be cloned.

- ?new&che-editor=che-incubator/intellij-community/latest&devfilePath=tests/testdevfile.yaml is the concatenated optional URL parameters.

2.2.2. URL parameter for the workspace IDE

If the URL for starting a new workspace doesn’t contain a URL parameter specifying the integrated development environment (IDE), the workspace loads with the default IDE: Che Theia.

The URL parameter for specifying another supported IDE is che-editor=<editor_key>:

https://devspaces-<openshift_deployment_name>.<domain_name>#<git_repository_url>?che-editor=<editor_key>

| IDE | <editor_key> value | Note |
|---|---|---|
| Che Theia | | Default editor when the URL parameter or che-editor.yaml is not used. |

2.2.3. URL parameter for starting duplicate workspaces

Visiting a URL for starting a new workspace results in a new workspace according to the devfile and with a clone of the linked Git repository.

In some situations, you may need to have multiple workspaces that are duplicates in terms of the devfile and the linked Git repository. You can do this by visiting the same URL for starting a new workspace with a URL parameter.

The URL parameter for starting a duplicate workspace is new:

https://devspaces-<openshift_deployment_name>.<domain_name>#<git_repository_url>?new

If you currently have a workspace that you started using a URL, then visiting the URL again without the new URL parameter results in an error message.

2.2.4. URL parameter for the devfile file name

When you visit a URL for starting a new workspace, OpenShift Dev Spaces searches the linked Git repository for a devfile with the file name .devfile.yaml or devfile.yaml. The devfile in the linked Git repository must follow this file-naming convention.

In some situations, you may need to specify a different, unconventional file name for the devfile.

The URL parameter for specifying an unconventional file name of the devfile is df=<filename>.yaml:

https://devspaces-<openshift_deployment_name>.<domain_name>#<git_repository_url>?df=<filename>.yaml

<filename>.yaml is an unconventional file name of the devfile in the linked Git repository.

The df=<filename>.yaml parameter also has a long version: devfilePath=<filename>.yaml.

2.2.5. URL parameter for the devfile file path

When you visit a URL for starting a new workspace, OpenShift Dev Spaces searches the root directory of the linked Git repository for a devfile with the file name .devfile.yaml or devfile.yaml. The file path of the devfile in the linked Git repository must follow this path convention.

In some situations, you may need to specify a different, unconventional file path for the devfile in the linked Git repository.

The URL parameter for specifying an unconventional file path of the devfile is devfilePath=<relative_file_path>:

https://devspaces-<openshift_deployment_name>.<domain_name>#<git_repository_url>?devfilePath=<relative_file_path>

<relative_file_path> is an unconventional file path of the devfile in the linked Git repository.

2.2.6. URL parameter for the workspace storage

If the URL for starting a new workspace does not contain a URL parameter specifying the storage type, the new workspace is created in ephemeral or persistent storage, whichever is defined as the default storage type in the CheCluster Custom Resource.

The URL parameter for specifying a storage type for a workspace is storageType=<storage_type>:

https://devspaces-<openshift_deployment_name>.<domain_name>#<git_repository_url>?storageType=<storage_type>

Possible <storage_type> values:

- ephemeral

- per-user (persistent)

- per-workspace (persistent)
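The optional parameters described in Section 2.2 can be combined programmatically. The following sketch joins any number of parameters with `?` and `&` as described in Section 2.2.1. The function name and the example host are hypothetical. The parameter values are taken from Example 2.3.

```shell
# workspace_url_with_params <instance_url> <git_repository_url> [parameter ...]
# Appends optional URL parameters: the first with "?", the rest with "&".
workspace_url_with_params() {
  local url="${1%/}#$2"
  local sep='?'
  local param
  shift 2
  for param in "$@"; do
    url="${url}${sep}${param}"
    sep='&'
  done
  printf '%s\n' "$url"
}

# Example call with a placeholder host and the parameters from Example 2.3:
workspace_url_with_params 'https://devspaces.example.com' \
  'https://github.com/che-samples/cpp-hello-world' \
  'new' \
  'che-editor=che-incubator/intellij-community/latest' \
  'devfilePath=tests/testdevfile.yaml'
```

Passing each parameter as a separate argument keeps the separator logic in one place and avoids a stray `&` when only one parameter is used.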

2.3. Basic actions you can perform on a workspace

You manage your workspaces and verify their current states in the Workspaces page (https://devspaces-<openshift_deployment_name>.<domain_name>/dashboard/#/workspaces) of your OpenShift Dev Spaces dashboard.

After you start a new workspace, you can perform the following actions on it in the Workspaces page:

| Action | GUI steps in the Workspaces page |
|---|---|
| Reopen a running workspace | Click Open. |
| Restart a running workspace | Go to ⋮ > Restart Workspace. |
| Stop a running workspace | Go to ⋮ > Stop Workspace. |
| Start a stopped workspace | Click Open. |
| Delete a workspace | Go to ⋮ > Delete Workspace. |

2.4. Authenticating to a Git server from a workspace

In a workspace, you can run Git commands that require user authentication, such as cloning a remote private Git repository or pushing to a remote public or private Git repository.

User authentication to a Git server from a workspace can be configured by the administrator or user:

- Your administrator sets up an OAuth application on GitHub, GitLab, or Bitbucket for your organization’s Red Hat OpenShift Dev Spaces instance.

- As a user, you create and apply your own Kubernetes Secrets for your Git credentials store and access token.

Chapter 3. Customizing workspace components

To customize workspace components:

- Choose a Git repository for your workspace.

- Use a devfile.

- Select and customize your in-browser IDE.

- Add OpenShift Dev Spaces specific attributes in addition to the generic devfile specification.

Chapter 4. Introduction to devfile in Red Hat OpenShift Dev Spaces

Devfiles are YAML text files used for development environment customization. Configure a devfile to suit your specific needs and share it across multiple workspaces to ensure an identical user experience and identical build, run, and deploy behaviors across your team.
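As an illustration of what such a YAML file can look like, the following sketch prints a very small devfile. Everything in it is an assumption for illustration: the schema version, the project name `my-project`, the component name `tools`, the container image, and the memory limit are not taken from this guide and might need adjusting for your OpenShift Dev Spaces version.

```shell
# print_minimal_devfile
# Prints a minimal devfile with a single container component.
# All values are illustrative assumptions, not defaults of OpenShift Dev Spaces.
print_minimal_devfile() {
  cat <<'EOF'
schemaVersion: 2.1.0
metadata:
  name: my-project
components:
  - name: tools
    container:
      image: quay.io/devfile/universal-developer-image:ubi8-latest
      memoryLimit: 2Gi
EOF
}

print_minimal_devfile
```

Redirecting the output to a devfile.yaml file in the root directory of a repository is one way to try it out.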

Devfile and Universal Developer Image

You do not need a devfile to start a workspace. If you do not include a devfile in your project repository, Red Hat OpenShift Dev Spaces automatically loads a default devfile with a Universal Developer Image (UDI).

OpenShift Dev Spaces devfile registry

The OpenShift Dev Spaces devfile registry contains ready-to-use devfiles for different languages and technologies.

Devfiles included in the registry are specific to Red Hat OpenShift Dev Spaces and should be treated as samples rather than templates. They might require updates to work with other versions of the components featured in the samples.

Chapter 5. Selecting a workspace IDE

The default in-browser IDE in a new workspace is Che Theia.

You can select another supported in-browser IDE by either method:

- When you start a new workspace by visiting a URL, you can choose an IDE for that workspace by adding the che-editor parameter to the URL. See Section 5.1, “Selecting an in-browser IDE for one new workspace”.

- You can specify an IDE in the .che/che-editor.yaml file of the Git repository for all new workspaces that will feature a clone of that repository. See Section 5.2, “Selecting an in-browser IDE for all workspaces that clone the same Git repository”.

| IDE | id | Note |
|---|---|---|
| Che Theia | | Default editor when the URL parameter or che-editor.yaml is not used. |

5.1. Selecting an in-browser IDE for one new workspace

You can select your preferred in-browser IDE when using a URL for starting a new workspace. This way, each developer using OpenShift Dev Spaces can start a workspace with a clone of the same project repository and the personal choice of the in-browser IDE.

Procedure

- Include the URL parameter for the workspace IDE in the URL for starting a new workspace.

- Visit the URL in the browser.

Verification

- Verify that the selected in-browser IDE loads in the browser tab of the started workspace.

5.2. Selecting an in-browser IDE for all workspaces that clone the same Git repository

5.2.1. Setting up che-editor.yaml

To define the same in-browser IDE for all workspaces that will clone the same remote Git repository of your project, you can use the che-editor.yaml file.

This way, you can set a common default editor for your team and provide new contributors with the most suitable editor for your project. You can also use the che-editor.yaml file when you need to set a different IDE default for a particular project repository rather than the default IDE of your organization’s OpenShift Dev Spaces instance.

Procedure

- In the remote Git repository of your project, create a /.che/che-editor.yaml file with lines that specify the relevant parameter, as described in the next section.

Verification

- Visit the URL for starting a new workspace.

- Verify that the selected in-browser IDE loads in the browser tab of the started workspace.
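Creating the file can be scripted. The following is a minimal sketch that writes a che-editor.yaml file containing only an id line into a local clone of the repository. The function name `write_che_editor` is hypothetical, and the example id is the one used in Example 5.1.

```shell
# write_che_editor <repository_directory> <editor_id>
# Creates <repository_directory>/.che/che-editor.yaml with a single id line.
write_che_editor() {
  local repo_dir="$1"
  local editor_id="$2"
  mkdir -p "$repo_dir/.che"
  printf 'id: %s\n' "$editor_id" > "$repo_dir/.che/che-editor.yaml"
}

# Example call against a temporary directory standing in for a repository clone:
demo_repo=$(mktemp -d)
write_che_editor "$demo_repo" 'che-incubator/che-code/insiders'
cat "$demo_repo/.che/che-editor.yaml"
```

Commit and push the file so that new workspaces cloning the repository pick it up.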

5.2.2. Parameters for che-editor.yaml

The simplest way to select an IDE in the che-editor.yaml is to specify the id of an IDE that is available in the table of supported in-browser IDEs in Chapter 5, Selecting a workspace IDE:

Example 5.1. id selects an IDE from the plug-in registry

id: che-incubator/che-code/insiders

As alternatives to providing the id parameter, the che-editor.yaml file supports a reference to the URL of another che-editor.yaml file or an inline definition for an IDE outside of a plug-in registry:

Example 5.2. reference points to a remote che-editor.yaml file

reference: https://<hostname_and_path_to_a_remote_file>/che-editor.yaml

Example 5.3. inline specifies a complete definition for a customized IDE without a plug-in registry

For more complex scenarios, the che-editor.yaml file supports the registryUrl and override parameters:

Example 5.4. registryUrl points to a custom plug-in registry rather than to the default OpenShift Dev Spaces plug-in registry

id: <editor_id>
registryUrl: <url_of_custom_plug-in_registry>

<editor_id> is the id of the IDE in the custom plug-in registry.

Example 5.5. override of the default value of one or more defined properties of the IDE

- id:, registryUrl:, or reference:.

Chapter 6. Using credentials and configurations in workspaces

You can use your credentials and configurations in your workspaces.

To do so, mount your credentials and configurations to the DevWorkspace containers in the OpenShift cluster of your organization’s OpenShift Dev Spaces instance:

- Mount your credentials and sensitive configurations as Kubernetes Secrets.

- Mount your non-sensitive configurations as Kubernetes ConfigMaps.

If you need to allow the DevWorkspace Pods in the cluster to access container registries that require authentication, create an image pull Secret for the DevWorkspace Pods.

The mounting process uses the standard Kubernetes mounting mechanism and requires applying additional labels and annotations to your existing resources. Resources are mounted when starting a new workspace or restarting an existing one.

You can create permanent mount points for various components:

- Maven configuration, such as the user-specific settings.xml file

- SSH key pairs

- AWS authorization tokens

- Configuration files

- Persistent storage

- Git credentials

6.1. Using Git credentials

As an alternative to the OAuth for GitHub, GitLab, or Bitbucket that is configured by the administrator of your organization’s OpenShift Dev Spaces instance, you can apply your Git credentials, a credentials store and access token, as Kubernetes Secrets.

6.1.1. Using a Git credentials store

If the administrator of your organization’s OpenShift Dev Spaces instance has not configured OAuth for GitHub, GitLab, or Bitbucket, you can apply your Git credentials store as a Kubernetes Secret.

Mounting your Git credentials store as a Secret results in the DevWorkspace Operator applying your Git credentials to the .gitconfig file in the workspace container.

Apply the Kubernetes Secret in your user project of the OpenShift cluster of your organization’s OpenShift Dev Spaces instance.

When you apply the Secret, a Git configuration file with the path to the mounted Git credentials store is automatically configured and mounted to the DevWorkspace containers in the cluster at /etc/gitconfig. This makes your Git credentials store available in your workspaces.

Prerequisites

- An active oc session, with administrative permissions, to the OpenShift cluster. See Getting started with the CLI.

- The base64 command line tools are installed in the operating system you are using.

Procedure

- In your home directory, locate and open your .git-credentials file if you already have it. Alternatively, if you do not have this file, save a new .git-credentials file, using the Git credentials storage format. Each credential is stored on its own line in the file:

https://<username>:<token>@<git_server_hostname>

Example 6.1. A line in a .git-credentials file

https://trailblazer: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@github.com

- Select credentials from your .git-credentials file for the Secret. Encode the selected credentials to Base64 for the next step.

Tip: To encode all lines in the file:

$ cat .git-credentials | base64 | tr -d '\n'

To encode a selected line:

$ echo -n '<copied_and_pasted_line_from_.git-credentials>' | base64

- Create a new OpenShift Secret in your user project. The Secret contains the following:

  - The controller.devfile.io/git-credential label, which marks the Secret as containing Git credentials.

  - A custom absolute path in the DevWorkspace containers. The Secret is mounted as the credentials file at this path. The default path is /.

  - The selected content from .git-credentials that you encoded to Base64 in the previous step.

Tip: You can create and apply multiple Git credentials Secrets in your user project. All of them will be copied into one Secret that will be mounted to the DevWorkspace containers. For example, if you set the mount path to /etc/secret, then the one Secret with all of your Git credentials will be mounted at /etc/secret/credentials. You must set all Git credentials Secrets in your user project to the same mount path. You can set the mount path to an arbitrary path because the mount path will be automatically set in the Git configuration file configured at /etc/gitconfig.

- Apply the Secret.

$ oc apply -f - <<EOF
<Secret_prepared_in_the_previous_step>
EOF
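The procedure above can be sketched as two shell functions: one encodes the credentials file without line wrapping, and one prints a Secret manifest carrying the label, mount path, and encoded data that the procedure describes. The function names are hypothetical. The `controller.devfile.io/mount-path` annotation name and the `credentials` data key are assumptions based on the DevWorkspace Operator conventions and should be checked against your cluster version.

```shell
# encode_credentials <file>
# Base64-encodes a credentials file and removes line wrapping.
encode_credentials() {
  base64 < "$1" | tr -d '\n'
}

# git_credentials_secret <secret_name> <mount_path> <base64_credentials>
# Prints a Secret manifest. The annotation name and the data key are assumptions.
git_credentials_secret() {
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: $1
  labels:
    controller.devfile.io/git-credential: 'true'
    controller.devfile.io/watch-secret: 'true'
  annotations:
    controller.devfile.io/mount-path: $2
data:
  credentials: $3
EOF
}
```

A possible invocation, assuming an active oc session in your user project, is `git_credentials_secret git-credentials-secret /etc/secret "$(encode_credentials ~/.git-credentials)" | oc apply -f -`. The Secret name in that command is a placeholder.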

6.1.2. Using a Git provider access token

If the administrator of your organization’s OpenShift Dev Spaces instance has not configured OAuth for GitHub, GitLab, or Bitbucket, you can apply your personal access token as a Kubernetes Secret.

Mounting your access token as a Secret enables the OpenShift Dev Spaces Server to access the remote repository that is cloned during workspace creation, including access to the repository’s /.che and /.vscode folders.

Apply the Kubernetes Secret in your user project of the OpenShift cluster of your organization’s OpenShift Dev Spaces instance.

After you have applied the Secret, you can create new workspaces from a private GitHub, GitLab, or Bitbucket-server repository.

In your user project, you can create and apply multiple access-token Secrets per Git provider.

Prerequisites

- An active oc session, with administrative permissions, to the OpenShift cluster. See Getting started with the CLI.

- The base64 command line tools are installed in the operating system you are using.

Procedure

- Copy your access token and encode it to Base64.

$ echo -n '<your_access_token>' | base64

- Prepare a new OpenShift Secret in your user project. The Secret specifies the following:

  - Your Che user ID. You can retrieve <che-endpoint>/api/user to get the Che user data.

  - The Git provider name: github or gitlab or bitbucket-server.

  - The Git provider URL.

  - Your Git provider user ID. Follow the API documentation to retrieve the user object:

    - GitHub: Get a user. See the id value in the response.

    - GitLab: List users: For normal users, use the username filter: /users?username=:username. See the id value in the response.

    - Bitbucket Server: Get users. See the id value in the response.

- Apply the Secret.

$ oc apply -f - <<EOF
<Secret_prepared_in_the_previous_step>
EOF
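A sketch of how the access-token Secret might be assembled is shown below. The four annotated values correspond to the Che user ID, Git provider name, Git provider URL, and Git provider user ID listed in the procedure. The function names are hypothetical, and the label names, annotation names, Secret name prefix, and `token` data key are assumptions recalled from the upstream Eclipse Che conventions. Verify them against the documentation for your version before use.

```shell
# encode_token <access_token>
# Base64-encodes the token without a trailing newline or line wrapping.
encode_token() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# access_token_secret <name_suffix> <che_user_id> <git_provider_name> \
#                     <git_provider_url> <git_provider_user_id> <base64_token>
# Prints a Secret manifest. Label and annotation names are assumptions.
access_token_secret() {
  cat <<EOF
kind: Secret
apiVersion: v1
metadata:
  name: personal-access-token-$1
  labels:
    app.kubernetes.io/component: scm-personal-access-token
    app.kubernetes.io/part-of: che.eclipse.org
  annotations:
    che.eclipse.org/che-userid: $2
    che.eclipse.org/scm-personal-access-token-name: $3
    che.eclipse.org/scm-url: $4
    che.eclipse.org/scm-userid: '$5'
data:
  token: $6
type: Opaque
EOF
}
```

The printed manifest can be piped to `oc apply -f -` in your user project.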

6.2. Enabling artifact repositories in a restricted environment

By configuring technology stacks, you can work with artifacts from in-house repositories using self-signed certificates:

6.2.1. Enabling Maven artifact repositories

You can enable a Maven artifact repository in Maven workspaces that run in a restricted environment.

Prerequisites

- You are not running any Maven workspace.

- You know your user namespace, which is <username>-devspaces where <username> is your OpenShift Dev Spaces username.

Procedure

- In the <username>-devspaces namespace, apply the Secret for the TLS certificate. The certificate data in the Secret uses Base64 encoding with disabled line wrapping.

- In the <username>-devspaces namespace, apply the ConfigMap to create the settings.xml file.

- Optional: When using EAP-based devfiles, apply a second settings-xml ConfigMap in the <username>-devspaces namespace with the same content, a different name, and the /home/jboss/.m2 mount path.

- In the <username>-devspaces namespace, apply the ConfigMap for the TrustStore initialization script. The script differs for Java 8 and Java 11.

- Start a Maven workspace.

- Open a new terminal in the tools container.

- Run ~/init-truststore.sh.
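The first step, a Secret that carries a Base64-encoded TLS certificate with line wrapping disabled, can be sketched as follows. The function name, the mount path `/home/user/certs`, the `tls.cer` data key, and the `mount-to-devworkspace`, `mount-path`, and `mount-as` label and annotation names are assumptions based on the DevWorkspace Operator mounting conventions. They are not values confirmed by this guide.

```shell
# tls_certificate_secret <secret_name> <certificate_file>
# Prints a Secret manifest that mounts the certificate as a file.
# Mount path, data key, and label/annotation names are illustrative assumptions.
tls_certificate_secret() {
  local encoded
  # Strip newlines from the base64 output to disable line wrapping.
  encoded=$(base64 < "$2" | tr -d '\n')
  cat <<EOF
kind: Secret
apiVersion: v1
metadata:
  name: $1
  labels:
    controller.devfile.io/mount-to-devworkspace: 'true'
    controller.devfile.io/watch-secret: 'true'
  annotations:
    controller.devfile.io/mount-path: /home/user/certs
    controller.devfile.io/mount-as: file
data:
  tls.cer: $encoded
EOF
}
```

Piping the output to `oc apply -n <username>-devspaces -f -` would apply it in your user namespace.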

6.2.2. Enabling Gradle artifact repositories

You can enable a Gradle artifact repository in Gradle workspaces that run in a restricted environment.

Prerequisites

- You are not running any Gradle workspace.

Procedure

- Apply the Secret for the TLS certificate. The certificate data in the Secret uses Base64 encoding with disabled line wrapping.

- Apply the ConfigMap for the TrustStore initialization script.

- Apply the ConfigMap for the Gradle init script.

- Start a Gradle workspace.

- Open a new terminal in the tools container.

- Run ~/init-truststore.sh.

6.2.3. Enabling npm artifact repositories

You can enable an npm artifact repository in npm workspaces that run in a restricted environment.

Prerequisites

- You are not running any npm workspace.

Applying a ConfigMap that sets environment variables might cause a workspace boot loop.

If you encounter this behavior, remove the ConfigMap and edit the devfile directly.

Procedure

- Apply the Secret for the TLS certificate. The certificate data in the Secret uses Base64 encoding with disabled line wrapping.

- Apply the ConfigMap that sets the environment variables in the tools container.

6.2.4. Enabling Python artifact repositories

You can enable a Python artifact repository in Python workspaces that run in a restricted environment.

Prerequisites

- You are not running any Python workspace.

Applying a ConfigMap that sets environment variables might cause a workspace boot loop.

If you encounter this behavior, remove the ConfigMap and edit the devfile directly.

Procedure

- Apply the Secret for the TLS certificate. The certificate data in the Secret uses Base64 encoding with disabled line wrapping.

- Apply the ConfigMap that sets the environment variables in the tools container.

6.2.5. Enabling Go artifact repositories

You can enable a Go artifact repository in Go workspaces that run in a restricted environment.

Prerequisites

- You are not running any Go workspace.

Applying a ConfigMap that sets environment variables might cause a workspace boot loop.

If you encounter this behavior, remove the ConfigMap and edit the devfile directly.

Procedure

- Apply the Secret for the TLS certificate. The certificate data in the Secret uses Base64 encoding with disabled line wrapping.

- Apply the ConfigMap that sets the environment variables in the tools container.

6.2.6. Enabling NuGet artifact repositories

You can enable a NuGet artifact repository in NuGet workspaces that run in a restricted environment.

Prerequisites

- You are not running any NuGet workspace.

Applying a ConfigMap that sets environment variables might cause a workspace boot loop.

If you encounter this behavior, remove the ConfigMap and edit the devfile directly.

Procedure

- Apply the Secret for the TLS certificate. The certificate data in the Secret uses Base64 encoding with disabled line wrapping.

- Apply the ConfigMap to set the environment variable for the path of the TLS certificate file in the tools container.

- Apply the ConfigMap to create the nuget.config file.

6.3. Creating image pull Secrets

To allow the DevWorkspace Pods in the OpenShift cluster of your organization’s OpenShift Dev Spaces instance to access container registries that require authentication, create an image pull Secret.

You can create image pull Secrets by using oc or a .dockercfg file or a config.json file.

6.3.1. Creating an image pull Secret with oc

Prerequisites

- An active oc session with administrative permissions to the destination OpenShift cluster. See Getting started with the CLI.

Procedure

In your user project, create an image pull Secret with your private container registry details and credentials:

$ oc create secret docker-registry <Secret_name> \
    --docker-server=<registry_server> \
    --docker-username=<username> \
    --docker-password=<password> \
    --docker-email=<email_address>

Add the following labels to the image pull Secret:

$ oc label secret <Secret_name> controller.devfile.io/devworkspace_pullsecret=true controller.devfile.io/watch-secret=true

6.3.2. Creating an image pull Secret from a .dockercfg file

If you already store the credentials for the private container registry in a .dockercfg file, you can use that file to create an image pull Secret.

Prerequisites

- An active oc session with administrative permissions to the destination OpenShift cluster. See Getting started with the CLI.

- base64 command line tools are installed in the operating system you are using.

Procedure

Encode the .dockercfg file to Base64:

$ cat .dockercfg | base64 | tr -d '\n'

Create a new OpenShift Secret in your user project:

Apply the Secret:

$ oc apply -f - <<EOF
<Secret_prepared_in_the_previous_step>
EOF
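The Secret manifest for the second step of this procedure is not reproduced above. The following is a minimal sketch of what it could look like. It uses the standard Kubernetes Secret type for .dockercfg content and assumes the Secret needs the same two labels that the oc-based procedure applies. The placeholder names are illustrative.

```yaml
# Sketch only: an image pull Secret built from a .dockercfg file.
# <Secret_name> and the data value are placeholders to replace.
apiVersion: v1
kind: Secret
metadata:
  name: <Secret_name>
  labels:
    # Assumption: the same labels as in the oc-based procedure.
    controller.devfile.io/devworkspace_pullsecret: 'true'
    controller.devfile.io/watch-secret: 'true'
type: kubernetes.io/dockercfg
data:
  # Single-line output of the Base64 encoding step.
  .dockercfg: <Base64_content_of_.dockercfg>
```

The Base64 value must be on one line, which is why the encoding step removes line breaks with tr.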

6.3.3. Creating an image pull Secret from a config.json file

If you already store the credentials for the private container registry in a $HOME/.docker/config.json file, you can use that file to create an image pull Secret.

Prerequisites

- An active oc session with administrative permissions to the destination OpenShift cluster. See Getting started with the CLI.

- base64 command line tools are installed in the operating system you are using.

Procedure

Encode the $HOME/.docker/config.json file to Base64:

$ cat config.json | base64 | tr -d '\n'

Create a new OpenShift Secret in your user project:

Apply the Secret:

$ oc apply -f - <<EOF
<Secret_prepared_in_the_previous_step>
EOF
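The Secret manifest for the second step of this procedure is not reproduced above. As a minimal sketch, it could look like the following. The type and data key are the standard Kubernetes ones for config.json content. The labels are an assumption carried over from the oc-based procedure, and the placeholder names are illustrative.

```yaml
# Sketch only: an image pull Secret built from a config.json file.
# <Secret_name> and the data value are placeholders to replace.
apiVersion: v1
kind: Secret
metadata:
  name: <Secret_name>
  labels:
    # Assumption: the same labels as in the oc-based procedure.
    controller.devfile.io/devworkspace_pullsecret: 'true'
    controller.devfile.io/watch-secret: 'true'
type: kubernetes.io/dockerconfigjson
data:
  # Single-line output of the Base64 encoding step.
  .dockerconfigjson: <Base64_content_of_config.json>
```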

6.4. Mounting Secrets

To mount confidential data into your workspaces, use Kubernetes Secrets.

Using Kubernetes Secrets, you can mount usernames, passwords, SSH key pairs, authentication tokens (for example, for AWS), and sensitive configurations.

Mount Kubernetes Secrets to the DevWorkspace containers in the OpenShift cluster of your organization’s OpenShift Dev Spaces instance.

Prerequisites

- An active oc session with administrative permissions to the destination OpenShift cluster. See Getting started with the CLI.

- In your user project, you created a new Secret or determined an existing Secret to mount to all DevWorkspace containers.

Procedure

Add the labels, which are required for mounting the Secret, to the Secret.

$ oc label secret <Secret_name> \
    controller.devfile.io/mount-to-devworkspace=true \
    controller.devfile.io/watch-secret=true

Optional: Use the annotations to configure how the Secret is mounted.

Table 6.1. Optional annotations

| Annotation | Description |
| --- | --- |
| controller.devfile.io/mount-path: | Specifies the mount path. Defaults to /etc/secret/<Secret_name>. |
| controller.devfile.io/mount-as: | Specifies how the resource should be mounted: file, subpath, or env. Defaults to file. mount-as: file mounts the keys and values as files within the mount path. mount-as: subpath mounts the keys and values within the mount path using subpath volume mounts. mount-as: env mounts the keys and values as environment variables in all DevWorkspace containers. |

Example 6.2. Mounting a Secret as a file

When you start a workspace, the /home/user/.m2/settings.xml file will be available in the DevWorkspace containers.
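A Secret that produces this result could look like the following sketch. The Secret name mvn-settings-secret is a hypothetical name chosen for illustration. The mount path and the settings.xml key are inferred from the resulting file path, and mount-as is left at its default of file.

```yaml
# Sketch only: a Secret mounted as a file under /home/user/.m2.
# The name is hypothetical; replace the data value with your own content.
apiVersion: v1
kind: Secret
metadata:
  name: mvn-settings-secret
  labels:
    controller.devfile.io/mount-to-devworkspace: 'true'
    controller.devfile.io/watch-secret: 'true'
  annotations:
    # Directory in which each key is mounted as a file.
    controller.devfile.io/mount-path: '/home/user/.m2'
data:
  # The key becomes the file name: /home/user/.m2/settings.xml
  settings.xml: <Base64_encoded_content>
```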

With Maven, you can set a custom path for the settings.xml file. For example:

$ mvn --settings /home/user/.m2/settings.xml clean install

6.5. Mounting ConfigMaps

To mount non-confidential configuration data into your workspaces, use Kubernetes ConfigMaps.

Using Kubernetes ConfigMaps, you can mount non-sensitive data such as configuration values for an application.

Mount Kubernetes ConfigMaps to the DevWorkspace containers in the OpenShift cluster of your organization’s OpenShift Dev Spaces instance.

Prerequisites

- An active oc session with administrative permissions to the destination OpenShift cluster. See Getting started with the CLI.

- In your user project, you created a new ConfigMap or determined an existing ConfigMap to mount to all DevWorkspace containers.

Procedure

Add the labels, which are required for mounting the ConfigMap, to the ConfigMap.

$ oc label configmap <ConfigMap_name> \
    controller.devfile.io/mount-to-devworkspace=true \
    controller.devfile.io/watch-configmap=true

Optional: Use the annotations to configure how the ConfigMap is mounted.

Table 6.2. Optional annotations

| Annotation | Description |
| --- | --- |
| controller.devfile.io/mount-path: | Specifies the mount path. Defaults to /etc/config/<ConfigMap_name>. |
| controller.devfile.io/mount-as: | Specifies how the resource should be mounted: file, subpath, or env. Defaults to file. mount-as: file mounts the keys and values as files within the mount path. mount-as: subpath mounts the keys and values within the mount path using subpath volume mounts. mount-as: env mounts the keys and values as environment variables in all DevWorkspace containers. |

Example 6.3. Mounting a ConfigMap as environment variables

When you start a workspace, the <env_var_1> and <env_var_2> environment variables will be available in the DevWorkspace containers.
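A ConfigMap that produces this result could look like the following sketch. The ConfigMap name my-settings and the value placeholders are hypothetical and chosen only for illustration.

```yaml
# Sketch only: a ConfigMap whose keys become environment variables.
# The name and the values are hypothetical placeholders.
kind: ConfigMap
apiVersion: v1
metadata:
  name: my-settings
  labels:
    controller.devfile.io/mount-to-devworkspace: 'true'
    controller.devfile.io/watch-configmap: 'true'
  annotations:
    # Expose each key as an environment variable instead of a file.
    controller.devfile.io/mount-as: env
data:
  <env_var_1>: <value_1>
  <env_var_2>: <value_2>
```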

Chapter 7. Requesting persistent storage for workspaces

OpenShift Dev Spaces workspaces and workspace data are ephemeral and are lost when the workspace stops.

To preserve the workspace state in persistent storage while the workspace is stopped, request a Kubernetes PersistentVolume (PV) for the DevWorkspace containers in the OpenShift cluster of your organization’s OpenShift Dev Spaces instance.

You can request a PV by using the devfile or a Kubernetes PersistentVolumeClaim (PVC).

An example of a PV is the /projects/ directory of a workspace, which is mounted by default for non-ephemeral workspaces.

Persistent Volumes come at a cost: attaching a persistent volume slows workspace startup.

Starting another, concurrently running workspace with a ReadWriteOnce PV may fail.

7.1. Requesting persistent storage in a devfile

When a workspace requires its own persistent storage, request a PersistentVolume (PV) in the devfile, and OpenShift Dev Spaces will automatically manage the necessary PersistentVolumeClaims.

Prerequisites

- You have not started the workspace.

Procedure

Add a volume component in the devfile:

Add a volumeMount for the relevant container in the devfile:

Example 7.1. A devfile that provisions a PV for a workspace to a container

When a workspace is started with the following devfile, the cache PV is provisioned to the golang container in the ./cache container path:
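The devfile for this example is not reproduced here. The following is a minimal sketch of a devfile that combines a volume component with a volumeMount in a container component. The devfile name, the container image, the memory limit, and the volume size are assumptions chosen for illustration. The mount path is written as the absolute path /.cache, which is an assumption about how the container path mentioned above is expressed in the devfile.

```yaml
# Sketch only: a devfile that requests a PV named "cache" and mounts it
# into the "golang" container. Name, image, and sizes are illustrative.
schemaVersion: 2.1.0
metadata:
  name: mydevfile
components:
  - name: golang
    container:
      image: golang
      memoryLimit: 512Mi
      mountSources: true
      command: ['sleep', 'infinity']
      volumeMounts:
        # Must match the name of the volume component below.
        - name: cache
          path: /.cache
  - name: cache
    volume:
      size: 2Gi
```

The volumeMounts entry refers to the volume component by name, so the two names must match.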

7.2. Requesting persistent storage in a PVC

You may opt to apply a PersistentVolumeClaim (PVC) to request a PersistentVolume (PV) for your workspaces in the following cases:

- Not all developers of the project need the PV.

- The PV lifecycle goes beyond the lifecycle of a single workspace.

- The data included in the PV are shared across workspaces.

You can apply a PVC to the DevWorkspace containers even if the workspace is ephemeral and its devfile contains the controller.devfile.io/storage-type: ephemeral attribute.
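For reference, the ephemeral attribute mentioned above is set at the top level of a devfile. The following fragment is a sketch in which the devfile name is a placeholder.

```yaml
# Sketch only: marking a workspace as ephemeral in its devfile.
schemaVersion: 2.1.0
metadata:
  name: <devfile_name>
attributes:
  controller.devfile.io/storage-type: ephemeral
```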

Prerequisites

- You have not started the workspace.

- An active oc session with administrative permissions to the destination OpenShift cluster. See Getting started with the CLI.

- A PVC is created in your user project to mount to all DevWorkspace containers.

Procedure

Add the controller.devfile.io/mount-to-devworkspace: true label to the PVC.

$ oc label persistentvolumeclaim <PVC_name> \
    controller.devfile.io/mount-to-devworkspace=true

Optional: Use the annotations to configure how the PVC is mounted:

Table 7.1. Optional annotations

| Annotation | Description |
| --- | --- |
| controller.devfile.io/mount-path: | The mount path for the PVC. Defaults to /tmp/<PVC_name>. |
| controller.devfile.io/read-only: | Set to 'true' or 'false' to specify whether the PVC is to be mounted as read-only. Defaults to 'false', resulting in the PVC mounted as read-write. |
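As an illustration of the label and annotations in this procedure, a PVC manifest could look like the following sketch. The access mode, the storage size, and the <mount_path> placeholder are assumptions chosen for the example and are not prescribed by this guide.

```yaml
# Sketch only: a PVC mounted read-only into all DevWorkspace containers.
# Access mode, size, and mount path are illustrative assumptions.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: <PVC_name>
  labels:
    controller.devfile.io/mount-to-devworkspace: 'true'
  annotations:
    controller.devfile.io/mount-path: <mount_path>
    controller.devfile.io/read-only: 'true'
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
```

Declaring the label in the manifest has the same effect as applying it afterward with oc label.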

Chapter 8. Integrating with OpenShift

8.1. Automatic OpenShift token injection

This section describes how to use the OpenShift user token that is automatically injected into workspace containers. The token allows running OpenShift Dev Spaces CLI commands against the OpenShift cluster.

Procedure

- Open the OpenShift Dev Spaces dashboard and start a workspace.

- Once the workspace is started, open a terminal in the container that contains the OpenShift Dev Spaces CLI.

- Execute OpenShift Dev Spaces CLI commands against the OpenShift cluster. You can use the CLI to deploy applications, inspect and manage cluster resources, and view logs. The commands run with the injected OpenShift user token.

The automatic token injection currently works only on the OpenShift infrastructure.

Chapter 9. Troubleshooting OpenShift Dev Spaces

This section provides troubleshooting procedures for the most frequent issues that users encounter.

9.1. Viewing OpenShift Dev Spaces workspaces logs

This section describes how to view OpenShift Dev Spaces workspaces logs.

9.1.1. Viewing logs from language servers and debug adapters

9.1.1.1. Checking important logs

This section describes how to check important logs.

Procedure

- In the OpenShift web console, click Applications → Pods to see a list of all the active workspaces.

- Click on the name of the running Pod where the workspace is running. The Pod screen contains the list of all containers with additional information.

- Choose a container and click the container name.

Note: The most important logs are the theia-ide container and the plug-ins container logs.

- On the container screen, navigate to the Logs section.

9.1.1.2. Detecting memory problems

This section describes how to detect memory problems related to a plug-in running out of memory. The following are the two most common problems related to a plug-in running out of memory:

- The plug-in container runs out of memory

This can happen during plug-in initialization when the container does not have enough RAM to execute the entrypoint of the image. The user can detect this in the logs of the plug-in container. In this case, the logs contain OOMKilled, which implies that the processes in the container requested more memory than is available in the container.

- A process inside the container runs out of memory without the container noticing this

For example, the Java language server (Eclipse JDT Language Server, started by the vscode-java extension) throws an OutOfMemoryError. This can happen any time after the container is initialized, for example, when a plug-in starts a language server or when a process runs out of memory because of the size of the project it has to handle.

To detect this problem, check the logs of the primary process running in the container. For example, to check the log file of Eclipse JDT Language Server for details, see the relevant plug-in-specific sections.

9.1.1.3. Logging the client-server traffic for debug adapters

This section describes how to log the exchange between Che-Theia and a debug adapter into the Output view.

Prerequisites

- A debug session must be started for the Debug adapters option to appear in the list.

Procedure

- Click File → Settings and then open Preferences.

- Expand the Debug section in the Preferences view.

- Set the trace preference value to true (default is false).

All the communication events are logged.

- To watch these events, click View → Output and select Debug adapters from the drop-down list at the upper right corner of the Output view.

9.1.1.4. Viewing logs for Python

This section describes how to view logs for the Python language server.

Procedure

Navigate to the Output view and select Python in the drop-down list.

9.1.1.5. Viewing logs for Go

This section describes how to view logs for the Go language server.

9.1.1.5.1. Finding the Go path

This section describes how to find where the GOPATH variable points to.

Procedure

Execute the Go: Current GOPATH command.

9.1.1.5.2. Viewing the Debug Console log for Go

This section describes how to view the log output from the Go debugger.

Procedure

Set the showLog attribute to true in the debug configuration.

To enable debugging output for a component, add the package to the comma-separated list value of the logOutput attribute:

The debug console prints the additional information.

9.1.1.5.3. Viewing the Go logs output in the Output panel

This section describes how to view the Go logs output in the Output panel.

Procedure

- Navigate to the Output view.

- Select Go in the drop-down list.

9.1.1.6. Viewing logs for the NodeDebug NodeDebug2 adapter

No specific diagnostics exist other than the general ones.

9.1.1.7. Viewing logs for Typescript

9.1.1.7.1. Enabling the Language Server Protocol (LSP) tracing

Procedure

- To enable the tracing of messages sent to the Typescript (TS) server, in the Preferences view, set the typescript.tsserver.trace attribute to verbose. Use this to diagnose the TS server issues.

- To enable logging of the TS server to a file, set the typescript.tsserver.log attribute to verbose. Use this log to diagnose the TS server issues. The log contains the file paths.

9.1.1.7.2. Viewing the Typescript language server log

This section describes how to view the Typescript language server log.

Procedure

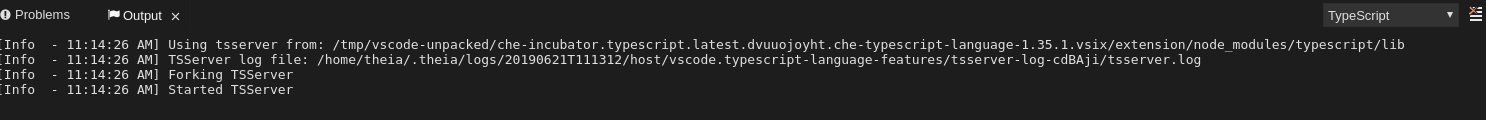

To get the path to the log file, see the Typescript Output console:

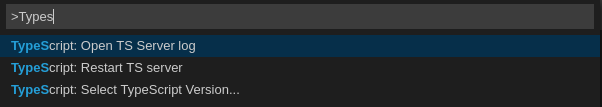

To open the log file, use the Open TS Server log command.

9.1.1.7.3. Viewing the Typescript logs output in the Output panel

This section describes how to view the Typescript logs output in the Output panel.

Procedure

- Navigate to the Output view.

- Select TypeScript in the drop-down list.

9.1.1.8. Viewing logs for Java

Other than the general diagnostics, there are Language Support for Java (Eclipse JDT Language Server) plug-in actions that the user can perform.

9.1.1.8.1. Verifying the state of the Eclipse JDT Language Server

Procedure

Check if the container that is running the Eclipse JDT Language Server plug-in is running the Eclipse JDT Language Server main process.

- Open a terminal in the container that is running the Eclipse JDT Language Server plug-in (an example name for the container: vscode-javaxxx).

- Inside the terminal, run the ps aux | grep jdt command to check if the Eclipse JDT Language Server process is running in the container. If the process is running, the output is:

usr/lib/jvm/default-jvm/bin/java --add-modules=ALL-SYSTEM --add-opens java.base/java.util

This message also shows the Visual Studio Code Java extension used. If it is not running, the language server has not been started inside the container.

- Check all logs described in Section 9.1, “Viewing OpenShift Dev Spaces workspaces logs”.

9.1.1.8.2. Verifying the Eclipse JDT Language Server features

Procedure

If the Eclipse JDT Language Server process is running, check if the language server features are working:

- Open a Java file and use the hover or autocomplete functionality. In case of an erroneous file, the user sees Java in the Outline view or in the Problems view.

9.1.1.8.3. Viewing the Java language server log

Procedure

The Eclipse JDT Language Server has its own workspace where it logs errors, information about executed commands, and events.

- To open this log file, open a terminal in the container that is running the Eclipse JDT Language Server plug-in. You can also view the log file by running the Java: Open Java Language Server log file command.

- Run cat <PATH_TO_LOG_FILE> where PATH_TO_LOG_FILE is /home/theia/.theia/workspace-storage/<workspace_name>/redhat.java/jdt_ws/.metadata/.log.

9.1.1.8.4. Logging the Java language server protocol (LSP) messages

Procedure

To log the LSP messages to the Visual Studio Code Output view, enable tracing by setting the java.trace.server attribute to verbose.

Additional resources

For troubleshooting instructions, see the Visual Studio Code Java GitHub repository.

9.1.1.9. Viewing logs for Intelephense

9.1.1.9.1. Logging the Intelephense client-server communication

Procedure

To configure the PHP Intelephense language support to log the client-server communication in the Output view:

- Click File → Settings.

- Open the Preferences view.

- Expand the Intelephense section and set the trace.server.verbose preference value to verbose to see all the communication events (the default value is off).

9.1.1.9.2. Viewing Intelephense events in the Output panel

This procedure describes how to view Intelephense events in the Output panel.

Procedure

- Click View → Output.

- Select Intelephense in the drop-down list for the Output view.

9.1.1.10. Viewing logs for PHP-Debug

This procedure describes how to configure the PHP Debug plug-in to log the PHP Debug plug-in diagnostic messages into the Debug Console view. Configure this before the start of the debug session.

Procedure

- In the launch.json file, add the "log": true attribute to the php configuration.

- Start the debug session.

- The diagnostic messages are printed into the Debug Console view along with the application output.

9.1.1.11. Viewing logs for XML

Other than the general diagnostics, there are XML plug-in specific actions that the user can perform.

9.1.1.11.1. Verifying the state of the XML language server

Procedure

- Open a terminal in the container named vscode-xml-<xxx>.

- Run ps aux | grep java to verify that the XML language server has started. If the process is running, the output is:

java ***/org.eclipse.ls4xml-uber.jar

If it is not, see Section 9.1, “Viewing OpenShift Dev Spaces workspaces logs”.

9.1.1.11.2. Checking XML language server feature flags

Procedure

Check if the features are enabled. The XML plug-in provides multiple settings that can enable and disable features:

- xml.format.enabled: Enable the formatter

- xml.validation.enabled: Enable the validation

- xml.documentSymbols.enabled: Enable the document symbols

- To diagnose whether the XML language server is working, create a simple XML element, such as <hello></hello>, and confirm that it appears in the Outline panel on the right.

- If the document symbols do not show, ensure that the xml.documentSymbols.enabled attribute is set to true. If it is true, and there are no symbols, the language server may not be hooked to the editor. If there are document symbols, then the language server is connected to the editor.

- Ensure that the features that the user needs are set to true in the settings (they are set to true by default). If any of the features are not working, or not working as expected, file an issue against the Language Server.

9.1.1.11.3. Enabling XML Language Server Protocol (LSP) tracing

Procedure

To log LSP messages to the Visual Studio Code Output view, enable tracing by setting the xml.trace.server attribute to verbose.

9.1.1.11.4. Viewing the XML language server log

Procedure

The log from the language server can be found in the plug-in sidecar at /home/theia/.theia/workspace-storage/<workspace_name>/redhat.vscode-xml/lsp4xml.log.

9.1.1.12. Viewing logs for YAML

This section describes the YAML plug-in specific actions that the user can perform, in addition to the general diagnostics.

9.1.1.12.1. Verifying the state of the YAML language server

This section describes how to verify the state of the YAML language server.

Procedure

Check if the container running the YAML plug-in is running the YAML language server.

- In the editor, open a terminal in the container that is running the YAML plug-in (an example name of the container: vscode-yaml-<xxx>).

- In the terminal, run the ps aux | grep node command. This command searches all the node processes running in the current container.

- Verify that a command node **/server.js is running.

The node **/server.js running in the container indicates that the language server is running. If it is not running, the language server has not started inside the container. In this case, see Section 9.1, “Viewing OpenShift Dev Spaces workspaces logs”.

9.1.1.12.2. Checking the YAML language server feature flags

Procedure

To check the feature flags:

Check if the features are enabled. The YAML plug-in provides multiple settings that can enable and disable features, such as:

- yaml.format.enable: Enables the formatter

- yaml.validate: Enables validation

- yaml.hover: Enables the hover function

- yaml.completion: Enables the completion function

- To check if the plug-in is working, type the simplest YAML, such as hello: world, and then open the Outline panel on the right side of the editor.

- Verify if there are any document symbols. If yes, the language server is connected to the editor.

- If any feature is not working, verify that the settings listed above are set to true (they are set to true by default). If a feature is not working, file an issue against the Language Server.

9.1.1.12.3. Enabling YAML Language Server Protocol (LSP) tracing

Procedure

To log LSP messages to the Visual Studio Code Output view, enable tracing by setting the yaml.trace.server attribute to verbose.

9.1.1.13. Viewing logs for .NET with OmniSharp-Theia plug-in

9.1.1.13.1. OmniSharp-Theia plug-in

OpenShift Dev Spaces uses the OmniSharp-Theia plug-in as a remote plug-in. It is located at github.com/redhat-developer/omnisharp-theia-plugin. In case of an issue, report it, or contribute your fix in the repository.

This plug-in registers omnisharp-roslyn as a language server and provides project dependencies and language syntax for C# applications.

The language server runs on .NET SDK 2.2.105.

9.1.1.13.2. Verifying the state of the OmniSharp-Theia plug-in language server

Procedure

To check if the container running the OmniSharp-Theia plug-in is running OmniSharp, execute the ps aux | grep OmniSharp.exe command. If the process is running, the following is an example output:

/tmp/theia-unpacked/redhat-developer.che-omnisharp-plugin.0.0.1.zcpaqpczwb.omnisharp_theia_plugin.theia/server/bin/mono /tmp/theia-unpacked/redhat-developer.che-omnisharp-plugin.0.0.1.zcpaqpczwb.omnisharp_theia_plugin.theia/server/omnisharp/OmniSharp.exe

If the output is different, the language server has not started inside the container. Check the logs described in Section 9.1, “Viewing OpenShift Dev Spaces workspaces logs”.

9.1.1.13.3. Checking OmniSharp Che-Theia plug-in language server features

Procedure

- If the OmniSharp.exe process is running, check if the language server features are working by opening a .cs file and trying the hover or completion features, or opening the Problems or Outline view.

9.1.1.13.4. Viewing OmniSharp-Theia plug-in logs in the Output panel

Procedure

If OmniSharp.exe is running, it logs all information in the Output panel. To view the logs, open the Output view and select C# from the drop-down list.

9.1.1.14. Viewing logs for .NET with NetcoredebugOutput plug-in

9.1.1.14.1. NetcoredebugOutput plug-in

The NetcoredebugOutput plug-in provides the netcoredbg tool. This tool implements the Visual Studio Code Debug Adapter protocol and allows users to debug .NET applications under the .NET Core runtime.

The container where the NetcoredebugOutput plug-in is running contains .NET SDK v.2.2.105.

9.1.1.14.2. Verifying the state of the NetcoredebugOutput plug-in

Procedure

Search for a

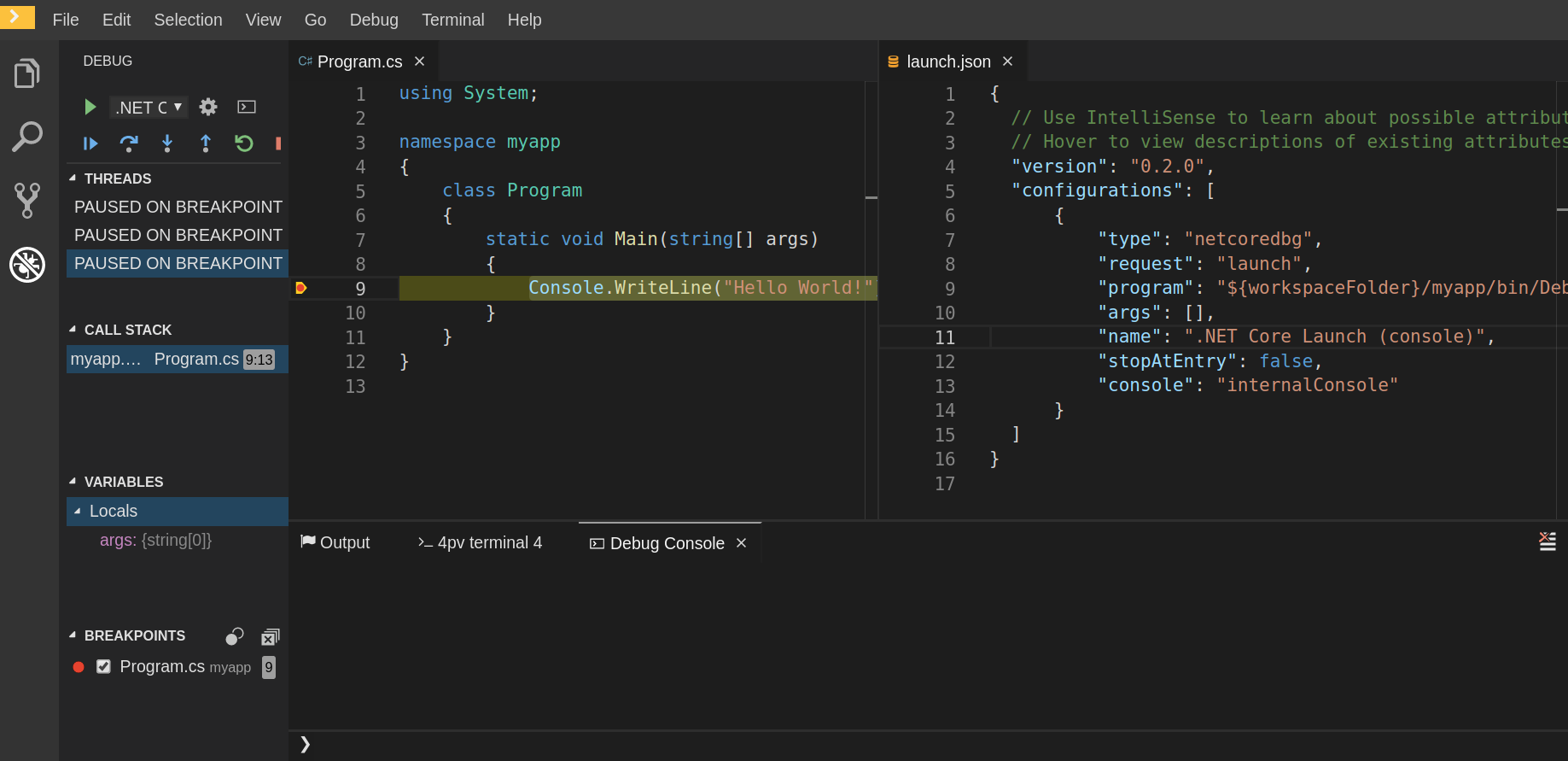

netcoredbgdebug configuration in thelaunch.jsonfile.Example 9.1. Sample debug configuration

Copy to Clipboard Copied! Toggle word wrap Toggle overflow -

Test the autocompletion feature within the braces of the

configurationsection of thelaunch.jsonfile. If you can findnetcoredbg, the Che-Theia plug-in is correctly initialized. If not, see Section 9.1, “Viewing OpenShift Dev Spaces workspaces logs”.

9.1.1.14.3. Viewing NetcoredebugOutput plug-in logs in the Output panel

This section describes how to view NetcoredebugOutput plug-in logs in the Output panel.

Procedure

Open the Debug console.

9.1.1.15. Viewing logs for Camel

9.1.1.15.1. Verifying the state of the Camel language server

Procedure

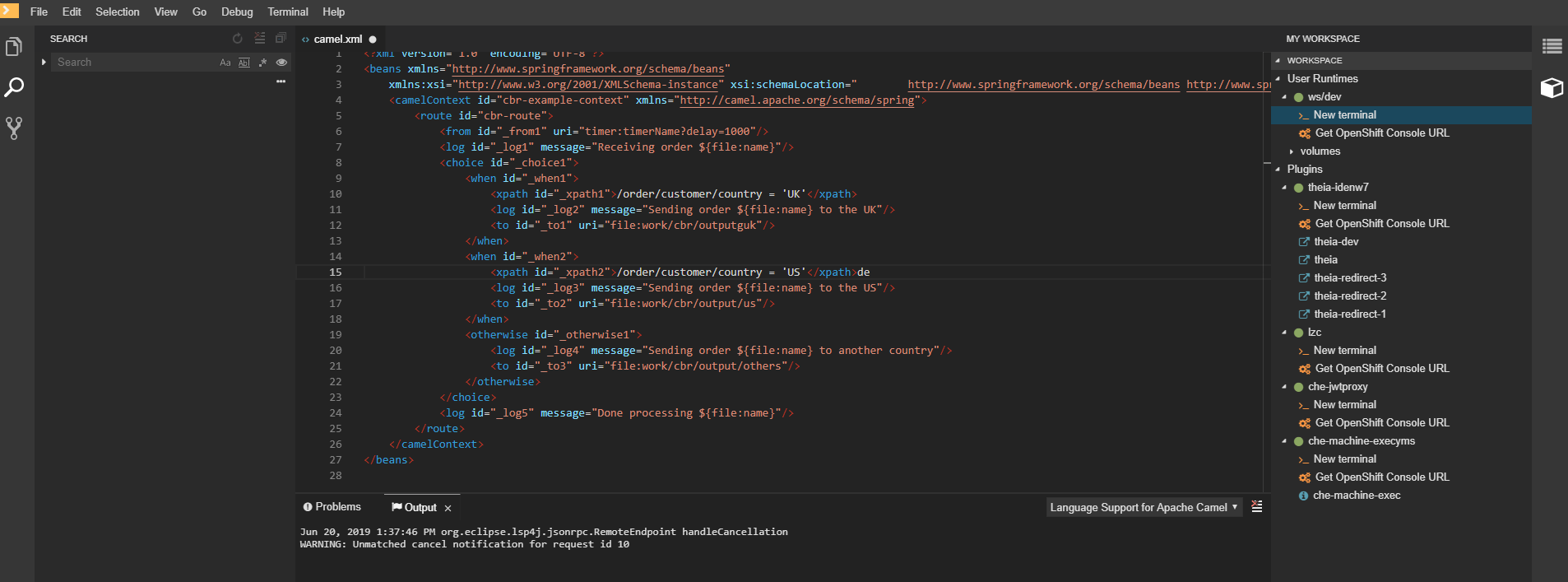

The user can inspect the log output of the sidecar container using the Camel language tools that are stored in the vscode-apache-camel<xxx> Camel container.

To verify the state of the language server:

- Open a terminal inside the vscode-apache-camel<xxx> container.

- Run the ps aux | grep java command. The following is an example language server process:

java -jar /tmp/vscode-unpacked/camel-tooling.vscode-apache-camel.latest.euqhbmepxd.camel-tooling.vscode-apache-camel-0.0.14.vsix/extension/jars/language-server.jar

- If you cannot find it, see Section 9.1, “Viewing OpenShift Dev Spaces workspaces logs”.

9.1.1.15.2. Viewing Camel logs in the Output panel

The Camel language server is a Spring Boot application that writes its log to the ${java.io.tmpdir}/log-camel-lsp.out file. Typically, ${java.io.tmpdir} points to the /tmp directory, so the filename is /tmp/log-camel-lsp.out.

Procedure

The Camel language server logs are printed in the Output channel named Language Support for Apache Camel.

The output channel is created only at the first created log entry on the client side. It may be absent when everything is going well.

9.1.2. Viewing Che-Theia IDE logs

This section describes how to view Che-Theia IDE logs.

9.1.2.1. Viewing Che-Theia editor logs using the OpenShift CLI

Observing Che-Theia editor logs gives better insight into the plug-ins loaded by the editor. This section describes how to access the Che-Theia editor logs using the OpenShift CLI (command-line interface).

Prerequisites

- OpenShift Dev Spaces is deployed in an OpenShift cluster.

- A workspace is created.

- The user is located in an OpenShift Dev Spaces installation project.

Procedure

Obtain the list of the available Pods:

$ oc get pods

Example

$ oc get pods
NAME                                              READY  STATUS   RESTARTS  AGE
devspaces-9-xz6g8                                 1/1    Running  1         15h
workspace0zqb2ew3py4srthh.go-cli-549cdcf69-9n4w2  4/4    Running  0         1h

Obtain the list of the available containers in the particular Pod:

$ oc get pods <name-of-pod> --output jsonpath='{.spec.containers[*].name}'

Example:

$ oc get pods workspace0zqb2ew3py4srthh.go-cli-549cdcf69-9n4w2 -o jsonpath='{.spec.containers[*].name}'
go-cli che-machine-exechr7 theia-idexzb vscode-gox3r

Get logs from the theia/ide container:

$ oc logs --follow <name-of-pod> --container <name-of-container>

Example:

9.2. Investigating failures at a workspace start using the Verbose mode

Verbose mode provides extended log output that helps you investigate failures at workspace start.

In addition to usual log entries, the Verbose mode also lists the container logs of each workspace.

9.2.1. Restarting an OpenShift Dev Spaces workspace in Verbose mode after start failure

This section describes how to restart an OpenShift Dev Spaces workspace in Verbose mode after a failure during the workspace start. The Dashboard offers to restart the workspace in Verbose mode after the workspace fails to start.

Prerequisites

- A running instance of OpenShift Dev Spaces.

- An existing workspace that fails to start.

Procedure

- Using Dashboard, try to start a workspace.

- When it fails to start, click on the displayed Open in Verbose mode link.

- Check the Logs tab to find a reason for the workspace failure.

9.2.2. Starting an OpenShift Dev Spaces workspace in Verbose mode

This section describes how to start the Red Hat OpenShift Dev Spaces workspace in Verbose mode.

Prerequisites

- A running instance of Red Hat OpenShift Dev Spaces.

- An existing workspace defined on this instance of OpenShift Dev Spaces.

Procedure

- Open the Workspaces tab.

- On the left side of a row dedicated to the workspace, access the drop-down menu displayed as three horizontal dots and select the Open in Verbose mode option. Alternatively, this option is also available in the workspace details, under the Actions drop-down menu.

- Check the Logs tab to find a reason for the workspace failure.

9.3. Troubleshooting slow workspaces

Sometimes, workspaces can take a long time to start. Tuning can reduce this start time. Depending on the options, administrators or users can do the tuning.

This section includes several tuning options for starting workspaces faster or improving workspace runtime performance.

9.3.1. Improving workspace start time

- Caching images with Image Puller

Role: Administrator

When starting a workspace, OpenShift pulls the images from the registry. A workspace can include many containers, so OpenShift pulls one image per container in the Pod. Depending on the size of the images and the available bandwidth, this can take a long time.

Image Puller is a tool that caches images on each OpenShift node. Pre-pulling images in this way can improve start times. See https://access.redhat.com/documentation/en-us/red_hat_openshift_dev_spaces/3.2/html-single/administration_guide/index#caching-images-for-faster-workspace-start.

- Choosing better storage type

Role: Administrator and user

Every workspace has a shared volume attached. This volume stores the project files, so that when restarting a workspace, changes are still available. Depending on the storage, attach time can take up to a few minutes, and I/O can be slow.

- Installing offline

Role: Administrator

Components of OpenShift Dev Spaces are OCI images. Set up Red Hat OpenShift Dev Spaces in offline mode (air-gap scenario) to avoid extra downloads at runtime: in this mode, everything must be available from the beginning. See https://access.redhat.com/documentation/en-us/red_hat_openshift_dev_spaces/3.2/html-single/administration_guide/index#installing-devspaces-in-a-restricted-environment-on-openshift.

- Optimizing workspace plug-ins

Role: User

When selecting various plug-ins, each plug-in can bring its own sidecar container, which is an OCI image. OpenShift pulls the images of these sidecar containers.

Reduce the number of plug-ins, or disable them to see if start time is faster. See also https://access.redhat.com/documentation/en-us/red_hat_openshift_dev_spaces/3.2/html-single/administration_guide/index#caching-images-for-faster-workspace-start.

- Reducing the number of public endpoints

Role: Administrator

For each endpoint, OpenShift creates a Route object. Depending on the underlying configuration, this creation can be slow.

To avoid this problem, reduce the exposure. For example, to automatically detect a new port listening inside containers and redirect traffic for the processes using a local IP address (127.0.0.1), the Che-Theia IDE plug-in has three optional routes.

Reducing the number of endpoints, and checking the endpoints of all plug-ins, can make the workspace start faster.

- CDN configuration

The IDE editor uses a CDN (Content Delivery Network) to serve content. Check that the content is served to the client from a CDN (or from a local route for an offline setup).

To check this, open Developer Tools in the browser and look for vendors in the Network tab. vendors.<random-id>.js or editor.main.* should come from CDN URLs.

9.3.2. Improving workspace runtime performance

- Providing enough CPU resources

Plug-ins consume CPU resources. For example, when a plug-in provides IntelliSense features, adding more CPU resources may lead to better performance.

Ensure the CPU settings in the devfile definition, devfile.yaml, are correct.

- Providing enough memory

Plug-ins consume CPU and memory resources. For example, when a plug-in provides IntelliSense features, collecting data can consume all the memory allocated to the container.

Providing more memory to the plug-in can increase performance. Ensure that the memory settings are correct in both places:

- In the plug-in definition (meta.yaml file): the setting that specifies the memory limit for the plug-in.

- In the devfile definition (devfile.yaml file): the setting that specifies the memory limit for this plug-in.
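The original listings for these CPU and memory settings are not available here. As a rough illustration only, the following sketch shows where resource settings live in a devfile v2 container component, rather than in a plug-in definition. The component name, image, and all values are assumptions chosen for the example; adjust them to your project.

```yaml
schemaVersion: 2.1.0
metadata:
  name: resource-limits-sketch   # hypothetical devfile name
components:
  - name: tools                  # hypothetical component name
    container:
      # Assumed image; replace with the image your workspace uses.
      image: quay.io/devfile/universal-developer-image:ubi8-latest
      cpuRequest: 500m           # CPU reserved for the container (illustrative value)
      cpuLimit: "2"              # upper bound on CPU use (illustrative value)
      memoryRequest: 512Mi       # memory reserved for the container (illustrative value)
      memoryLimit: 2Gi           # upper bound on memory use (illustrative value)
```

If a container repeatedly runs out of memory or is CPU-throttled, raising the corresponding limit is usually the first thing to try.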

9.4. Troubleshooting network problems

This section describes how to prevent or resolve issues related to network policies. OpenShift Dev Spaces requires the availability of the WebSocket Secure (WSS) connections. Secure WebSocket connections improve confidentiality and also reliability because they reduce the risk of interference by bad proxies.

Prerequisites

- The WebSocket Secure (WSS) connections on port 443 must be available on the network. Firewalls and proxies may need additional configuration.

- Use a supported web browser:

- Google Chrome

- Mozilla Firefox

Procedure

- Verify that the browser supports the WebSocket protocol. See: Searching a websocket test.

- Verify firewall settings: WebSocket Secure (WSS) connections on port 443 must be available.

- Verify proxy server settings: The proxy transmits and intercepts WebSocket Secure (WSS) connections on port 443.

Chapter 10. Adding a Visual Studio Code extension to a workspace

Previously, with the devfiles v1 format, you used the devfile to specify IDE-specific plug-ins and Visual Studio Code extensions. Now, with devfiles v2, you use a specific meta-folder rather than the devfile to specify the plug-ins and extensions.

10.1. OpenShift Dev Spaces plug-in registries overview

Every OpenShift Dev Spaces instance has a registry of default plug-ins and extensions. The Che-Theia IDE gets information about these plug-ins and extensions from the registry and installs them.

Check this OpenShift Dev Spaces registry project for an overview of the default plug-ins, extensions, and source code. An online instance that refreshes after every commit to the main branch is located here. You can use a different plug-in or extension registry for Che-Theia unless you work in an air-gapped environment, where only the default registry is available.

The plug-in and extension overview for Che-Code Visual Studio Code editor is located in the OpenVSX instance. Air gap is not yet supported for this editor.

10.2. Adding an extension to .vscode/extensions.json

The easiest way to add a Visual Studio Code extension to a workspace is to add it to the .vscode/extensions.json file. The main advantage of this method is that it works with all supported OpenShift Dev Spaces IDEs.

If you use the Che-Theia IDE, the extension is installed and configured automatically. If you use a different supported IDE with the Che-Code Visual Studio Code fork, the IDE displays a pop-up with a recommendation to install the extension.

Prerequisites

- You have the .vscode/extensions.json file in the root of the GitHub repository.

Procedure

- Add the extension ID to the .vscode/extensions.json file. Use a . sign to separate the publisher and extension. The following example uses the ID of the Red Hat Visual Studio Code Java extension:

    {
      "recommendations": [
        "redhat.java"
      ]
    }

If the specified set of extension IDs isn’t available in the OpenShift Dev Spaces registry, the workspace starts without the extension.

10.3. Adding plug-in parameters to .che/che-theia-plugins.yaml

You can add extra parameters to a plug-in by modifying the .che/che-theia-plugins.yaml file. These modifications include:

- Defining the plug-ins for workspace installation.

- Changing the default memory limit.

- Overriding default preferences.

10.3.1. Defining the plug-ins for workspace installation

Define the plug-ins to be installed in the workspace.

Prerequisites

- You have the .che/che-theia-plugins.yaml file in the root of the GitHub repository.

Procedure

- Add the ID of the plug-in to the .che/che-theia-plugins.yaml file. Use the / sign to separate the publisher and plug-in name. The following example uses the ID of the Red Hat Visual Studio Code Java extension:

    - id: redhat/java/latest

10.3.2. Changing the default memory limit

Override container settings such as the memory limit.

Prerequisites

- You have the .che/che-theia-plugins.yaml file in the root of the GitHub repository.

Procedure

- Add an override section to the .che/che-theia-plugins.yaml file under the id of the plug-in. Specify the memory limit for the extension. In the following example, OpenShift Dev Spaces automatically installs the Red Hat Visual Studio Code Java extension in the OpenShift Dev Spaces workspace and sets the memory limit of the extension's sidecar container to two gibibytes (2Gi):

    - id: redhat/java/latest
      override:
        sidecar:
          memoryLimit: 2Gi

10.3.3. Overriding default preferences

Override the default preferences of the Visual Studio Code extension for the workspace.

Prerequisites

- You have the .che/che-theia-plugins.yaml file in the root of the GitHub repository.

Procedure

- Add an override section to the .che/che-theia-plugins.yaml file under the id of the extension. Specify the preferences in the preferences section. In the following example, OpenShift Dev Spaces automatically installs the Red Hat Visual Studio Code Java extension in the OpenShift Dev Spaces workspace and sets the java.server.launchMode preference to LightWeight:

    - id: redhat/java/latest
      override:
        preferences:
          java.server.launchMode: LightWeight
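The sidecar override from section 10.3.2 and the preferences override from this section both sit under the override key of a plug-in entry. The following is a minimal sketch of a .che/che-theia-plugins.yaml file that sets both for the same plug-in. It assumes that the two override subsections can be combined in one entry; the source shows them only separately, so verify this against your OpenShift Dev Spaces instance.

```yaml
# .che/che-theia-plugins.yaml (sketch; combining both overrides is an assumption)
- id: redhat/java/latest
  override:
    sidecar:
      memoryLimit: 2Gi                          # memory limit of the plug-in sidecar container
    preferences:
      java.server.launchMode: LightWeight       # extension preference applied in the workspace
```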

You can also define the preferences in the .vscode/settings.json file, either by changing the preferences in the UI of your IDE or by adding them to the .vscode/settings.json file:

    {
      "my.preferences": "my-value"
    }

10.4. Defining Visual Studio Code extension attributes in the devfile

If it’s not possible to add extra files in the GitHub repository, you can define some of the plug-in or extension attributes by inlining them in the devfile. You can use this procedure with both .vscode/extensions.json and .che/che-theia-plugins.yaml file contents.

10.4.1. Inlining .vscode/extensions.json file

Use .vscode/extensions.json file contents to inline the extension attributes in the devfile.

Procedure

- Add an attributes section to your devfile.yaml file.

- Add .vscode/extensions.json to the attributes section. Add a | sign after the colon separator. Paste the contents of the .vscode/extensions.json file after the | sign.
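The original listing for this procedure is not available here. The following sketch shows what the inlined result might look like, reusing the Red Hat Visual Studio Code Java extension ID from section 10.2. The schemaVersion value and the devfile name are assumptions made for the example.

```yaml
schemaVersion: 2.1.0              # assumed devfile schema version
metadata:
  name: my-devfile                # hypothetical devfile name
attributes:
  # The | sign starts a literal block, so the JSON below is kept verbatim.
  .vscode/extensions.json: |
    {
      "recommendations": [
        "redhat.java"
      ]
    }
```

The JSON must be indented consistently under the attribute key so that YAML treats it as a single block of text.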

10.4.2. Inlining .che/che-theia-plugins.yaml file

Use .che/che-theia-plugins.yaml file contents to inline the plug-in attributes in the devfile.

Procedure

- Add an attributes section to your devfile.yaml file.

- Add .che/che-theia-plugins.yaml to the attributes section. Add a | sign after the colon separator. Paste the contents of the .che/che-theia-plugins.yaml file after the | sign.
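The original listing for this procedure is not available here. The following sketch shows what the inlined result might look like, reusing the Red Hat Visual Studio Code Java extension ID from section 10.3.1. The schemaVersion value and the devfile name are assumptions made for the example.

```yaml
schemaVersion: 2.1.0              # assumed devfile schema version
metadata:
  name: my-devfile                # hypothetical devfile name
attributes:
  # The | sign starts a literal block holding the plug-in list as text.
  .che/che-theia-plugins.yaml: |
    - id: redhat/java/latest
```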