Serving

Getting started with Knative Serving and configuring services

Chapter 1. Getting started with Knative Serving

1.1. Serverless applications

Serverless applications are created and deployed as Kubernetes services, defined by a route and a configuration, and contained in a YAML file. To deploy a serverless application using OpenShift Serverless, you must create a Knative Service object.

Example Knative Service object YAML file

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: showcase
  namespace: default
spec:
  template:
    spec:
      containers:
        - image: quay.io/openshift-knative/showcase
          env:
            - name: GREET
              value: Ciao

You can create a serverless application by using one of the following methods:

- Create a Knative service from the OpenShift Container Platform web console. For OpenShift Container Platform, see Creating applications using the Developer perspective for more information.

- Create a Knative service by using the Knative (kn) CLI.

- Create and apply a Knative Service object as a YAML file, by using the oc CLI.

1.1.1. Creating serverless applications by using the Knative CLI

Using the Knative (kn) CLI to create serverless applications provides a more streamlined and intuitive user interface than modifying YAML files directly. You can use the kn service create command to create a basic serverless application.

Prerequisites

- OpenShift Serverless Operator and Knative Serving are installed on your cluster.

- You have installed the Knative (kn) CLI.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

Procedure

Create a Knative service:

$ kn service create <service-name> --image <image> --tag <tag-value>

Where:

- --image is the URI of the image for the application.

- --tag is an optional flag that can be used to add a tag to the initial revision that is created with the service.

Example command

$ kn service create showcase \
    --image quay.io/openshift-knative/showcase

Example output

Creating service 'showcase' in namespace 'default':

  0.271s The Route is still working to reflect the latest desired specification.
  0.580s Configuration "showcase" is waiting for a Revision to become ready.
  3.857s ...
  3.861s Ingress has not yet been reconciled.
  4.270s Ready to serve.

Service 'showcase' created with latest revision 'showcase-00001' and URL:
http://showcase-default.apps-crc.testing

1.1.2. Creating serverless applications using YAML

Creating Knative resources by using YAML files uses a declarative API, which enables you to describe applications declaratively and in a reproducible manner. To create a serverless application by using YAML, you must create a YAML file that defines a Knative Service object, then apply it by using oc apply.

After the service is created and the application is deployed, Knative creates an immutable revision for this version of the application. Knative also performs network programming to create a route, ingress, service, and load balancer for your application and automatically scales your pods up and down based on traffic.

Prerequisites

- OpenShift Serverless Operator and Knative Serving are installed on your cluster.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

- Install the OpenShift CLI (oc).

Procedure

Create a YAML file containing the following sample code:

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: showcase
  namespace: default
spec:
  template:
    spec:
      containers:
        - image: quay.io/openshift-knative/showcase
          env:
            - name: GREET
              value: Bonjour

Navigate to the directory where the YAML file is contained, and deploy the application by applying the YAML file:

$ oc apply -f <filename>

If you do not want to switch to the Developer perspective in the OpenShift Container Platform web console or use the Knative (kn) CLI or YAML files, you can create Knative components by using the Administrator perspective of the OpenShift Container Platform web console.

1.1.3. Creating serverless applications using the Administrator perspective

Serverless applications are created and deployed as Kubernetes services, defined by a route and a configuration, and contained in a YAML file. To deploy a serverless application using OpenShift Serverless, you must create a Knative Service object.

Example Knative Service object YAML file

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: showcase
  namespace: default
spec:
  template:
    spec:
      containers:
        - image: quay.io/openshift-knative/showcase
          env:
            - name: GREET
              value: Ciao

After the service is created and the application is deployed, Knative creates an immutable revision for this version of the application. Knative also performs network programming to create a route, ingress, service, and load balancer for your application and automatically scales your pods up and down based on traffic.

Prerequisites

To create serverless applications using the Administrator perspective, ensure that you have completed the following steps.

- The OpenShift Serverless Operator and Knative Serving are installed.

- You have logged in to the web console and are in the Administrator perspective.

Procedure

- Navigate to the Serverless → Serving page.

- In the Create list, select Service.

- Manually enter YAML or JSON definitions, or drag and drop a file into the editor.

- Click Create.
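Because the editor accepts JSON as well as YAML, one way to produce a definition to paste or drop into it is to build the Service object programmatically. The following Python sketch is illustrative only: it mirrors the example Service object shown earlier and prints it as JSON. The variable names are hypothetical and nothing here is specific to the web console.

```python
import json

# The same Knative Service object as the YAML example, expressed as a dict.
service = {
    "apiVersion": "serving.knative.dev/v1",
    "kind": "Service",
    "metadata": {"name": "showcase", "namespace": "default"},
    "spec": {
        "template": {
            "spec": {
                "containers": [
                    {
                        "image": "quay.io/openshift-knative/showcase",
                        "env": [{"name": "GREET", "value": "Ciao"}],
                    }
                ]
            }
        }
    },
}

# Serialize to indented JSON, ready to paste into the editor or save to a file.
manifest = json.dumps(service, indent=2)
print(manifest)
```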

1.1.4. Creating a service using offline mode

You can execute kn service commands in offline mode, so that no changes happen on the cluster, and instead the service descriptor file is created on your local machine. After the descriptor file is created, you can modify the file before propagating changes to the cluster.

The offline mode of the Knative CLI is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

Prerequisites

- OpenShift Serverless Operator and Knative Serving are installed on your cluster.

- You have installed the Knative (kn) CLI.

Procedure

In offline mode, create a local Knative service descriptor file:

$ kn service create showcase \
    --image quay.io/openshift-knative/showcase \
    --target ./ \
    --namespace test

Example output

Service 'showcase' created in namespace 'test'.

- The --target ./ flag enables offline mode and specifies ./ as the directory for storing the new directory tree.

  If you do not specify an existing directory, but use a filename, such as --target my-service.yaml, then no directory tree is created. Instead, only the service descriptor file my-service.yaml is created in the current directory.

  The filename can have the .yaml, .yml, or .json extension. Choosing .json creates the service descriptor file in the JSON format.

- The --namespace test option places the new service in the test namespace.

  If you do not use --namespace, and you are logged in to an OpenShift Container Platform cluster, the descriptor file is created in the current namespace. Otherwise, the descriptor file is created in the default namespace.
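The rules for where the descriptor file ends up can be summarized in a short Python sketch. This models only the behavior described above; it is not the kn implementation. The function name is hypothetical, and the namespace parameter defaults to "default" to represent the case where you are not logged in to a cluster.

```python
from pathlib import PurePosixPath

# File extensions that make --target a single descriptor file.
DESCRIPTOR_EXTENSIONS = {".yaml", ".yml", ".json"}


def descriptor_path(target: str, service: str, namespace: str = "default") -> PurePosixPath:
    """Return where the descriptor is written, following the rules above."""
    target_path = PurePosixPath(target)
    if target_path.suffix in DESCRIPTOR_EXTENSIONS:
        # A filename target: only that single file is created.
        return target_path
    # A directory target: <target>/<namespace>/ksvc/<service>.yaml
    return target_path / namespace / "ksvc" / f"{service}.yaml"


print(descriptor_path("./", "showcase", "test"))       # test/ksvc/showcase.yaml
print(descriptor_path("my-service.yaml", "showcase"))  # my-service.yaml
```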

Examine the created directory structure:

$ tree ./

Example output

./
└── test
    └── ksvc
        └── showcase.yaml

2 directories, 1 file

- The current ./ directory specified with --target contains the new test/ directory that is named after the specified namespace.

- The test/ directory contains the ksvc directory, named after the resource type.

- The ksvc directory contains the descriptor file showcase.yaml, named according to the specified service name.

Examine the generated service descriptor file:

$ cat test/ksvc/showcase.yaml

Example output

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  creationTimestamp: null
  name: showcase
  namespace: test
spec:
  template:
    metadata:
      annotations:
        client.knative.dev/user-image: quay.io/openshift-knative/showcase
      creationTimestamp: null
    spec:
      containers:
      - image: quay.io/openshift-knative/showcase
        name: ""
        resources: {}
status: {}

List information about the new service:

$ kn service describe showcase --target ./ --namespace test

Example output

Name:       showcase
Namespace:  test
Age:
URL:
Revisions:

Conditions:
  OK TYPE    AGE REASON

- The --target ./ option specifies the root directory for the directory structure containing namespace subdirectories.

  Alternatively, you can directly specify a YAML or JSON filename with the --target option. The accepted file extensions are .yaml, .yml, and .json.

- The --namespace option specifies the namespace, which communicates to kn the subdirectory that contains the necessary service descriptor file.

  If you do not use --namespace, and you are logged in to an OpenShift Container Platform cluster, kn searches for the service in the subdirectory that is named after the current namespace. Otherwise, kn searches in the default/ subdirectory.

Use the service descriptor file to create the service on the cluster:

$ kn service create -f test/ksvc/showcase.yaml

Example output

Creating service 'showcase' in namespace 'test':

  0.058s The Route is still working to reflect the latest desired specification.
  0.098s ...
  0.168s Configuration "showcase" is waiting for a Revision to become ready.
 23.377s ...
 23.419s Ingress has not yet been reconciled.
 23.534s Waiting for load balancer to be ready
 23.723s Ready to serve.

Service 'showcase' created to latest revision 'showcase-00001' is available at URL:
http://showcase-test.apps.example.com

1.2. Verifying your serverless application deployment

To verify that your serverless application has been deployed successfully, you must get the application URL created by Knative, and then send a request to that URL and observe the output. OpenShift Serverless supports the use of both HTTP and HTTPS URLs; however, the output from oc get ksvc always prints URLs using the http:// format.

1.2.1. Verifying your serverless application deployment

Prerequisites

- OpenShift Serverless Operator and Knative Serving are installed on your cluster.

- You have installed the OpenShift CLI (oc).

- You have created a Knative service.

Procedure

Find the application URL:

$ oc get ksvc <service_name>

Example output

NAME       URL                                   LATESTCREATED    LATESTREADY      READY   REASON
showcase   http://showcase-default.example.com   showcase-00001   showcase-00001   True

Make a request to your cluster and observe the output.

Example HTTP request (using HTTPie tool)

$ http showcase-default.example.com

Example HTTPS request

$ https showcase-default.example.com

Example output

HTTP/1.1 200 OK
Content-Type: application/json
Server: Quarkus/2.13.7.Final-redhat-00003 Java/17.0.7
X-Config: {"sink":"http://localhost:31111","greet":"Ciao","delay":0}
X-Version: v0.7.0-4-g23d460f
content-length: 49

{
    "artifact": "knative-showcase",
    "greeting": "Ciao"
}

Optional. If you do not have the HTTPie tool installed on your system, you can use the curl tool instead:

Example HTTP request

$ curl http://showcase-default.example.com

Example output

{"artifact":"knative-showcase","greeting":"Ciao"}

Optional. If you receive an error relating to a self-signed certificate in the certificate chain, you can add the --verify=no flag to the HTTPie command to ignore the error:

$ https --verify=no showcase-default.example.com

Example output

HTTP/1.1 200 OK
Content-Type: application/json
Server: Quarkus/2.13.7.Final-redhat-00003 Java/17.0.7
X-Config: {"sink":"http://localhost:31111","greet":"Ciao","delay":0}
X-Version: v0.7.0-4-g23d460f
content-length: 49

{
    "artifact": "knative-showcase",
    "greeting": "Ciao"
}

Important: Self-signed certificates must not be used in a production deployment. This method is only for testing purposes.

Optional. If your OpenShift Container Platform cluster is configured with a certificate that is signed by a certificate authority (CA) but not yet globally configured for your system, you can specify this with the curl command. The path to the certificate can be passed to the curl command by using the --cacert flag:

$ curl https://showcase-default.example.com --cacert <file>

Example output

{"artifact":"knative-showcase","greeting":"Ciao"}
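If you prefer to script the verification, the same check can be sketched in Python with only the standard library. This is a minimal sketch, not part of the product tooling: the function names are hypothetical, the optional cacert argument plays the role of the curl --cacert flag, and the sample body is the example output shown above.

```python
import json
import ssl
import urllib.request
from typing import Optional


def greeting_matches(body: str, expected: str) -> bool:
    """Return True if the JSON response body carries the expected greeting."""
    return json.loads(body).get("greeting") == expected


def fetch_body(url: str, cacert: Optional[str] = None) -> str:
    """GET the URL, optionally trusting a CA file (similar to curl --cacert)."""
    context = ssl.create_default_context(cafile=cacert) if cacert else None
    with urllib.request.urlopen(url, context=context, timeout=10) as response:
        return response.read().decode("utf-8")


# Check the example response body without contacting a cluster.
sample = '{"artifact":"knative-showcase","greeting":"Ciao"}'
print(greeting_matches(sample, "Ciao"))  # True
```

Against a live service, you would pass the URL reported by oc get ksvc to fetch_body and feed the result to greeting_matches.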

Chapter 2. Autoscaling

2.1. Autoscaling

Knative Serving provides automatic scaling, or autoscaling, for applications to match incoming demand. For example, if an application is receiving no traffic, and scale-to-zero is enabled, Knative Serving scales the application down to zero replicas. If scale-to-zero is disabled, the application is scaled down to the minimum number of replicas configured for applications on the cluster. Replicas can also be scaled up to meet demand if traffic to the application increases.

Autoscaling settings for Knative services can be global settings that are configured by cluster administrators (or dedicated administrators for Red Hat OpenShift Service on AWS and OpenShift Dedicated), or per-revision settings that are configured for individual services.

You can modify per-revision settings for your services by using the OpenShift Container Platform web console, by modifying the YAML file for your service, or by using the Knative (kn) CLI.

Any limits or targets that you set for a service are measured against a single instance of your application. For example, setting the target annotation to 50 configures the autoscaler to scale the application so that each replica handles 50 requests at a time.
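As a rough illustration of how a per-instance target translates into a replica count, the following Python sketch divides the observed concurrency by the target and rounds up. This is a simplified model and an assumption for illustration, not the autoscaler's actual algorithm, which also takes averaging windows, target utilization, and scale bounds into account. The function name is hypothetical.

```python
import math


def estimated_replicas(concurrent_requests: int, target_per_replica: int) -> int:
    """Estimate replicas so that each handles at most the target concurrency.

    Simplified model: ignores averaging windows, utilization, and scale bounds.
    """
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    return math.ceil(concurrent_requests / target_per_replica)


# With a target of 50, 120 concurrent requests need three replicas.
print(estimated_replicas(120, 50))  # 3
```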

2.2. Scale bounds

Scale bounds determine the minimum and maximum numbers of replicas that can serve an application at any given time. You can set scale bounds for an application to help prevent cold starts or control computing costs.

2.2.1. Minimum scale bounds

The minimum number of replicas that can serve an application is determined by the min-scale annotation. If scale to zero is not enabled, the min-scale value defaults to 1.

The min-scale value defaults to 0 replicas if the following conditions are met:

- The min-scale annotation is not set

- Scaling to zero is enabled

- The class KPA is used
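The default described above can be written as a small decision function. The Python sketch below covers only the case where the min-scale annotation is not set. The function name is hypothetical, and the fallback of 1 for any combination other than "scale-to-zero enabled with the KPA class" is an assumption that extends the stated rule.

```python
def default_min_scale(scale_to_zero_enabled: bool, autoscaler_class: str = "KPA") -> int:
    """Default min-scale when the min-scale annotation is not set."""
    if scale_to_zero_enabled and autoscaler_class == "KPA":
        return 0
    # Assumption: every other combination keeps at least one replica.
    return 1


print(default_min_scale(True))   # 0
print(default_min_scale(False))  # 1
```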

Example service spec with min-scale annotation

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: showcase
  namespace: default
spec:
  template:
    metadata:
      annotations:
        autoscaling.knative.dev/min-scale: "0"
...

2.2.1.1. Setting the min-scale annotation by using the Knative CLI

Using the Knative (kn) CLI to set the min-scale annotation provides a more streamlined and intuitive user interface than modifying YAML files directly. You can use the kn service command with the --scale-min flag to create or modify the min-scale value for a service.

Prerequisites

- Knative Serving is installed on the cluster.

- You have installed the Knative (kn) CLI.

Procedure

Set the minimum number of replicas for the service by using the --scale-min flag:

$ kn service create <service_name> --image <image_uri> --scale-min <integer>

Example command

$ kn service create showcase --image quay.io/openshift-knative/showcase --scale-min 2

2.2.2. Maximum scale bounds

The maximum number of replicas that can serve an application is determined by the max-scale annotation. If the max-scale annotation is not set, there is no upper limit for the number of replicas created.

Example service spec with max-scale annotation

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: showcase
  namespace: default
spec:
  template:
    metadata:
      annotations:
        autoscaling.knative.dev/max-scale: "10"
...

2.2.2.1. Setting the max-scale annotation by using the Knative CLI

Using the Knative (kn) CLI to set the max-scale annotation provides a more streamlined and intuitive user interface than modifying YAML files directly. You can use the kn service command with the --scale-max flag to create or modify the max-scale value for a service.

Prerequisites

- Knative Serving is installed on the cluster.

- You have installed the Knative (kn) CLI.

Procedure

Set the maximum number of replicas for the service by using the --scale-max flag:

$ kn service create <service_name> --image <image_uri> --scale-max <integer>

Example command

$ kn service create showcase --image quay.io/openshift-knative/showcase --scale-max 10

2.3. Concurrency

Concurrency determines the number of simultaneous requests that can be processed by each replica of an application at any given time. Concurrency can be configured as a soft limit or a hard limit:

- A soft limit is a targeted requests limit, rather than a strictly enforced bound. For example, if there is a sudden burst of traffic, the soft limit target can be exceeded.

- A hard limit is a strictly enforced upper bound requests limit. If concurrency reaches the hard limit, surplus requests are buffered and must wait until there is enough free capacity to execute the requests.

  Important: Using a hard limit configuration is only recommended if there is a clear use case for it with your application. Having a low, hard limit specified may have a negative impact on the throughput and latency of an application, and might cause cold starts.

If you specify both a soft target and a hard limit, the autoscaler targets the soft target number of concurrent requests, but enforces the hard limit value as the maximum number of requests.

If the hard limit value is less than the soft limit value, the soft limit value is tuned down, because there is no need to target more requests than the number that can actually be handled.
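The interaction between the two values can be expressed as a one-line rule. The following Python sketch is an illustration of that rule only. The function name is hypothetical, and it treats a hard limit of 0 as "no limit", matching the default containerConcurrency value.

```python
def effective_soft_target(soft_target: int, hard_limit: int) -> int:
    """Soft target after applying the hard limit; a hard limit of 0 means no limit."""
    if hard_limit > 0:
        # No point targeting more requests than a replica can accept.
        return min(soft_target, hard_limit)
    return soft_target


print(effective_soft_target(200, 50))  # 50
print(effective_soft_target(50, 200))  # 50
print(effective_soft_target(200, 0))   # 200
```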

2.3.1. Configuring a soft concurrency target

A soft limit is a targeted requests limit, rather than a strictly enforced bound. For example, if there is a sudden burst of traffic, the soft limit target can be exceeded. You can specify a soft concurrency target for your Knative service by setting the autoscaling.knative.dev/target annotation in the spec, or by using the kn service command with the correct flags.

Procedure

Optional: Set the autoscaling.knative.dev/target annotation for your Knative service in the spec of the Service custom resource:

Example service spec

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: showcase
  namespace: default
spec:
  template:
    metadata:
      annotations:
        autoscaling.knative.dev/target: "200"

Optional: Use the kn service command to specify the --concurrency-target flag:

$ kn service create <service_name> --image <image_uri> --concurrency-target <integer>

Example command to create a service with a concurrency target of 50 requests

$ kn service create showcase --image quay.io/openshift-knative/showcase --concurrency-target 50

2.3.2. Configuring a hard concurrency limit

A hard concurrency limit is a strictly enforced upper bound requests limit. If concurrency reaches the hard limit, surplus requests are buffered and must wait until there is enough free capacity to execute the requests. You can specify a hard concurrency limit for your Knative service by modifying the containerConcurrency spec, or by using the kn service command with the correct flags.

Procedure

Optional: Set the containerConcurrency spec for your Knative service in the spec of the Service custom resource:

Example service spec

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: showcase
  namespace: default
spec:
  template:
    spec:
      containerConcurrency: 50

The default value is 0, which means that there is no limit on the number of simultaneous requests that are permitted to flow into one replica of the service at a time.

A value greater than 0 specifies the exact number of requests that are permitted to flow into one replica of the service at a time. This example would enable a hard concurrency limit of 50 requests.

Optional: Use the kn service command to specify the --concurrency-limit flag:

$ kn service create <service_name> --image <image_uri> --concurrency-limit <integer>

Example command to create a service with a concurrency limit of 50 requests

$ kn service create showcase --image quay.io/openshift-knative/showcase --concurrency-limit 50

2.3.3. Concurrency target utilization

This value specifies the percentage of the concurrency limit that is actually targeted by the autoscaler. This is also known as specifying the hotness at which a replica runs, which enables the autoscaler to scale up before the defined hard limit is reached.

For example, if the containerConcurrency value is set to 10, and the target-utilization-percentage value is set to 70 percent, the autoscaler creates a new replica when the average number of concurrent requests across all existing replicas reaches 7. Requests numbered 7 to 10 are still sent to the existing replicas, but additional replicas are started in anticipation of being required after the containerConcurrency value is reached.
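The arithmetic in this example can be checked with a short Python sketch. The function name is hypothetical; it computes only the per-replica concurrency at which the autoscaler starts adding replicas.

```python
def scale_up_threshold(container_concurrency: int, utilization_percent: float) -> float:
    """Per-replica concurrency at which the autoscaler starts adding replicas."""
    return container_concurrency * utilization_percent / 100.0


# containerConcurrency of 10 at 70 percent utilization gives a threshold of 7.
print(scale_up_threshold(10, 70))  # 7.0
```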

Example service configured using the target-utilization-percentage annotation

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: showcase
  namespace: default
spec:
  template:
    metadata:
      annotations:
        autoscaling.knative.dev/target-utilization-percentage: "70"
...

2.4. Scale-to-zero

Knative Serving provides automatic scaling, or autoscaling, for applications to match incoming demand.

2.4.1. Enabling scale-to-zero

You can use the enable-scale-to-zero spec to enable or disable scale-to-zero globally for applications on the cluster.

Prerequisites

- You have installed OpenShift Serverless Operator and Knative Serving on your cluster.

- You have cluster administrator permissions on OpenShift Container Platform, or you have cluster or dedicated administrator permissions on Red Hat OpenShift Service on AWS or OpenShift Dedicated.

- You are using the default Knative Pod Autoscaler. The scale-to-zero feature is not available if you are using the Kubernetes Horizontal Pod Autoscaler.

Procedure

Modify the enable-scale-to-zero spec in the KnativeServing custom resource (CR):

Example KnativeServing CR

apiVersion: operator.knative.dev/v1beta1
kind: KnativeServing
metadata:
  name: knative-serving
spec:
  config:
    autoscaler:
      enable-scale-to-zero: "false" # (1)

(1) The enable-scale-to-zero spec can be either "true" or "false". If set to true, scale-to-zero is enabled. If set to false, applications are scaled down to the configured minimum scale bound. The default value is "true".

2.4.2. Configuring the scale-to-zero grace period

Knative Serving provides automatic scaling down to zero pods for applications. You can use the scale-to-zero-grace-period spec to define an upper bound time limit that Knative waits for scale-to-zero machinery to be in place before the last replica of an application is removed.

Prerequisites

- You have installed OpenShift Serverless Operator and Knative Serving on your cluster.

- You have cluster administrator permissions on OpenShift Container Platform, or you have cluster or dedicated administrator permissions on Red Hat OpenShift Service on AWS or OpenShift Dedicated.

- You are using the default Knative Pod Autoscaler. The scale-to-zero feature is not available if you are using the Kubernetes Horizontal Pod Autoscaler.

Procedure

Modify the scale-to-zero-grace-period spec in the KnativeServing custom resource (CR):

Example KnativeServing CR

apiVersion: operator.knative.dev/v1beta1
kind: KnativeServing
metadata:
  name: knative-serving
spec:
  config:
    autoscaler:
      scale-to-zero-grace-period: "30s" # (1)

(1) The grace period time in seconds. The default value is 30 seconds.

Chapter 3. Configuring Serverless applications

3.1. Multi-container support for Serving

You can deploy a multi-container pod by using a single Knative service. This method is useful for separating application responsibilities into smaller, specialized parts.

3.1.1. Configuring a multi-container service

Multi-container support is enabled by default. You can create a multi-container pod by specifying multiple containers in the service.

Procedure

Modify your service to include additional containers. Only one container can handle requests, so specify ports for exactly one container. Here is an example configuration with two containers:

Multiple containers configuration

apiVersion: serving.knative.dev/v1
kind: Service
...
spec:
  template:
    spec:
      containers:
        - name: first-container
          image: gcr.io/knative-samples/helloworld-go
          ports:
            - containerPort: 8080
        - name: second-container
          image: gcr.io/knative-samples/helloworld-java
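The "exactly one container with ports" rule is easy to check before you apply a multi-container definition. The Python sketch below is a hypothetical pre-flight check, not something Knative provides. It operates on the containers list as plain dictionaries and uses the two containers from the example above.

```python
from typing import Any, Dict, List


def serving_container(containers: List[Dict[str, Any]]) -> Dict[str, Any]:
    """Return the single container that declares ports, or raise ValueError."""
    with_ports = [c for c in containers if c.get("ports")]
    if len(with_ports) != 1:
        raise ValueError(
            f"expected exactly one container with ports, found {len(with_ports)}"
        )
    return with_ports[0]


containers = [
    {
        "name": "first-container",
        "image": "gcr.io/knative-samples/helloworld-go",
        "ports": [{"containerPort": 8080}],
    },
    {
        "name": "second-container",
        "image": "gcr.io/knative-samples/helloworld-java",
    },
]
print(serving_container(containers)["name"])  # first-container
```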

3.2. EmptyDir volumes

emptyDir volumes are empty volumes that are created when a pod is created, and are used to provide temporary working disk space. emptyDir volumes are deleted when the pod they were created for is deleted.

3.2.1. Configuring the EmptyDir extension

The kubernetes.podspec-volumes-emptydir extension controls whether emptyDir volumes can be used with Knative Serving. To enable using emptyDir volumes, you must modify the KnativeServing custom resource (CR) to include the following YAML:

Example KnativeServing CR

apiVersion: operator.knative.dev/v1beta1
kind: KnativeServing
metadata:
  name: knative-serving
spec:
  config:
    features:
      kubernetes.podspec-volumes-emptydir: enabled
...

3.3. Persistent Volume Claims for Serving

Some serverless applications require permanent data storage. By configuring different volume types, you can provide data storage for Knative services. Serving supports mounting volume types such as secret, configMap, projected, and emptyDir.

You can configure persistent volume claims (PVCs) for your Knative services. Persistent volume types are implemented as plugins. To determine whether any persistent volume types are available, check the available or installed storage classes in your cluster. Persistent volumes are supported, but require a feature flag to be enabled.

The mounting of large volumes can lead to a considerable delay in the start time of the application.

3.3.1. Enabling PVC support

Procedure

To enable Knative Serving to use PVCs and write to them, modify the KnativeServing custom resource (CR) to include the following YAML:

Enabling PVCs with write access

...
spec:
  config:
    features:
      "kubernetes.podspec-persistent-volume-claim": enabled
      "kubernetes.podspec-persistent-volume-write": enabled
...

- The kubernetes.podspec-persistent-volume-claim extension controls whether persistent volumes (PVs) can be used with Knative Serving.

- The kubernetes.podspec-persistent-volume-write extension controls whether PVs are available to Knative Serving with the write access.

To claim a PV, modify your service to include the PV configuration. For example, you might have a persistent volume claim with the following configuration:

Note: Use the storage class that supports the access mode you are requesting. For example, you can use the ocs-storagecluster-cephfs storage class for the ReadWriteMany access mode.

The ocs-storagecluster-cephfs storage class is supported and comes from Red Hat OpenShift Data Foundation.

PersistentVolumeClaim configuration

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: example-pv-claim
  namespace: my-ns
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: ocs-storagecluster-cephfs
  resources:
    requests:
      storage: 1Gi

In this case, to claim a PV with write access, modify your service as follows:

Knative service PVC configuration

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  namespace: my-ns
...
spec:
  template:
    spec:
      containers:
          ...
          volumeMounts:
            - mountPath: /data
              name: mydata
              readOnly: false
      volumes:
        - name: mydata
          persistentVolumeClaim:
            claimName: example-pv-claim
            readOnly: false

Note: To successfully use persistent storage in Knative services, you need additional configuration, such as the user permissions for the Knative container user.

3.4. Init containers

Init containers are specialized containers that are run before application containers in a pod. They are generally used to implement initialization logic for an application, which may include running setup scripts or downloading required configurations. You can enable the use of init containers for Knative services by modifying the KnativeServing custom resource (CR).

Init containers may cause longer application start-up times and should be used with caution for serverless applications, which are expected to scale up and down frequently.

3.4.1. Enabling init containers

Prerequisites

- You have installed OpenShift Serverless Operator and Knative Serving on your cluster.

- You have cluster administrator permissions on OpenShift Container Platform, or you have cluster or dedicated administrator permissions on Red Hat OpenShift Service on AWS or OpenShift Dedicated.

Procedure

Enable the use of init containers by adding the kubernetes.podspec-init-containers flag to the KnativeServing CR:

Example KnativeServing CR

apiVersion: operator.knative.dev/v1beta1
kind: KnativeServing
metadata:
  name: knative-serving
spec:
  config:
    features:
      kubernetes.podspec-init-containers: enabled
...

3.5. Resolving image tags to digests

If the Knative Serving controller has access to the container registry, Knative Serving resolves image tags to a digest when you create a revision of a service. This is known as tag-to-digest resolution, and helps to provide consistency for deployments.

3.5.1. Tag-to-digest resolution

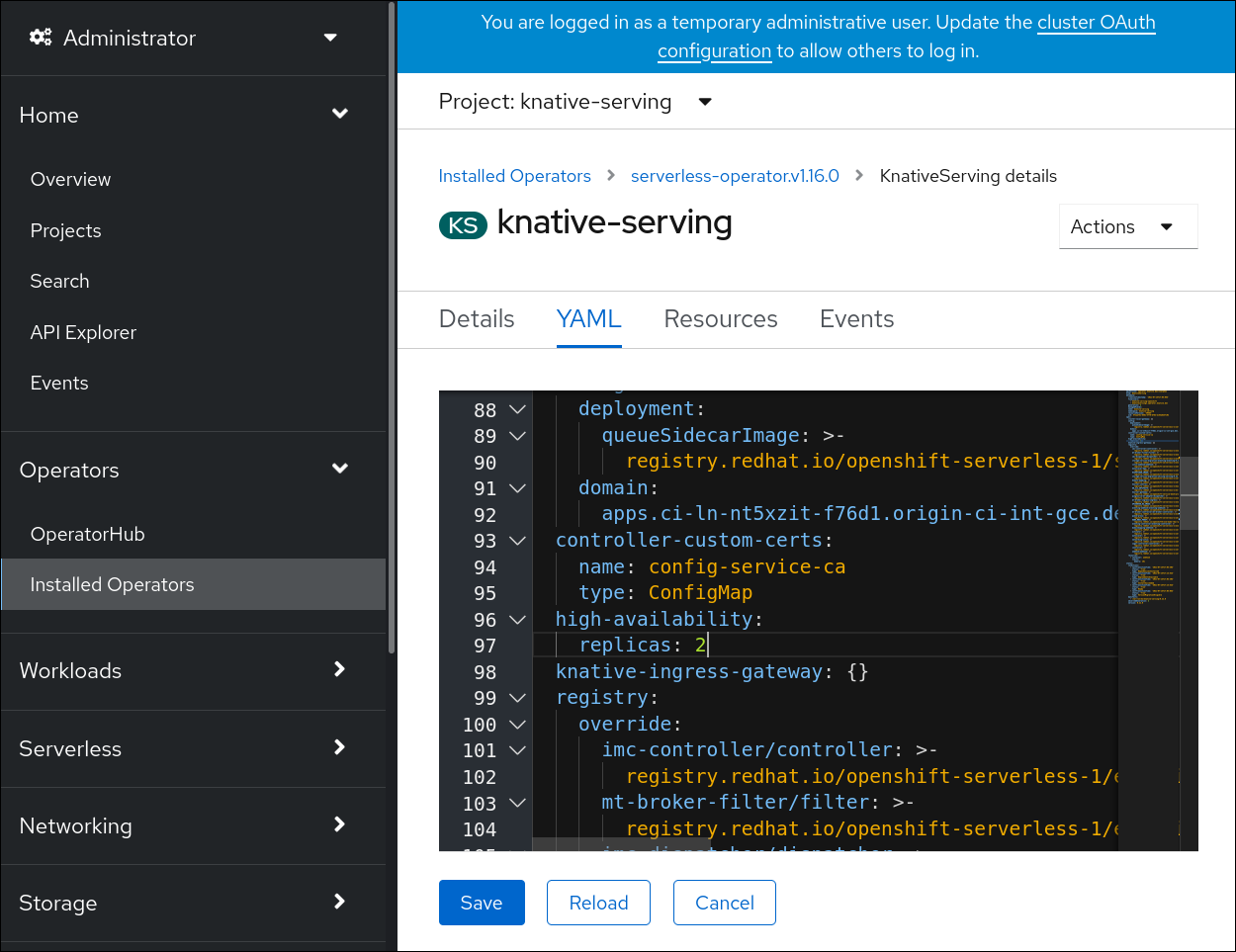

To give the controller access to the container registry on OpenShift Container Platform, you must create a secret and then configure controller custom certificates. You can configure controller custom certificates by modifying the controller-custom-certs spec in the KnativeServing custom resource (CR). The secret must reside in the same namespace as the KnativeServing CR.

If a secret is not included in the KnativeServing CR, this setting defaults to using public key infrastructure (PKI). When using PKI, the cluster-wide certificates are automatically injected into the Knative Serving controller by using the config-service-sa config map. The OpenShift Serverless Operator populates the config-service-sa config map with cluster-wide certificates and mounts the config map as a volume to the controller.

3.5.1.1. Configuring tag-to-digest resolution by using a secret

If the controller-custom-certs spec uses the Secret type, the secret is mounted as a secret volume. Knative components consume the secret directly, assuming that the secret has the required certificates.

Prerequisites

- You have cluster administrator permissions on OpenShift Container Platform, or you have cluster or dedicated administrator permissions on Red Hat OpenShift Service on AWS or OpenShift Dedicated.

- You have installed the OpenShift Serverless Operator and Knative Serving on your cluster.

Procedure

Create a secret:

Example command

$ oc -n knative-serving create secret generic custom-secret --from-file=<secret_name>.crt=<path_to_certificate>

Configure the controller-custom-certs spec in the KnativeServing custom resource (CR) to use the Secret type:

Example KnativeServing CR

apiVersion: operator.knative.dev/v1beta1
kind: KnativeServing
metadata:
  name: knative-serving
  namespace: knative-serving
spec:
  controller-custom-certs:
    name: custom-secret
    type: Secret

3.6. Configuring TLS authentication

You can use Transport Layer Security (TLS) to encrypt Knative traffic and for authentication.

TLS is the only supported method of traffic encryption for Knative Kafka. Red Hat recommends using both SASL and TLS together for Knative broker for Apache Kafka resources.

If you want to enable internal TLS with a Red Hat OpenShift Service Mesh integration, you must enable Service Mesh with mTLS instead of the internal encryption explained in the following procedure.

For OpenShift Container Platform and Red Hat OpenShift Service on AWS, see the documentation for Enabling Knative Serving metrics when using Service Mesh with mTLS.

3.6.1. Enabling TLS authentication for internal traffic

OpenShift Serverless supports TLS edge termination by default, so that HTTPS traffic from end users is encrypted. However, internal traffic behind the OpenShift route is forwarded to applications by using plain data. By enabling TLS for internal traffic, the traffic sent between components is encrypted, which makes this traffic more secure.

If you want to enable internal TLS with a Red Hat OpenShift Service Mesh integration, you must enable Service Mesh with mTLS instead of the internal encryption explained in the following procedure.

Internal TLS encryption support is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

Prerequisites

- You have installed the OpenShift Serverless Operator and Knative Serving.

- You have installed the OpenShift (oc) CLI.

Procedure

Create or update your KnativeServing resource and make sure that it includes the internal-encryption: "true" field in the spec:

...
spec:
  config:
    network:
      internal-encryption: "true"
...

Restart the activator pods in the knative-serving namespace to load the certificates:

$ oc delete pod -n knative-serving --selector app=activator

3.7. Configuring Kourier

Kourier is a lightweight Kubernetes-native Ingress for Knative Serving. Kourier acts as a gateway for Knative, routing HTTP traffic to Knative services.

3.7.1. Customizing kourier-bootstrap for Kourier gateways

The Envoy proxy component in Kourier handles inbound and outbound HTTP traffic for the Knative services. By default, Kourier contains an Envoy bootstrap configuration in the kourier-bootstrap configuration map in the knative-serving-ingress namespace. You can change this configuration.

Prerequisites

- You have installed the OpenShift Serverless Operator and Knative Serving.

- You have cluster administrator permissions on OpenShift Container Platform, or you have cluster or dedicated administrator permissions on Red Hat OpenShift Service on AWS or OpenShift Dedicated.

Procedure

Specify a custom bootstrapping configuration map by changing the spec.ingress.kourier.bootstrap-configmap field in the KnativeServing custom resource (CR):

Example KnativeServing CR

apiVersion: operator.knative.dev/v1beta1
kind: KnativeServing
metadata:
  name: knative-serving
  namespace: knative-serving
spec:
  config:
    network:
      ingress-class: kourier.ingress.networking.knative.dev
  ingress:
    kourier:
      bootstrap-configmap: my-configmap
      enabled: true
# ...

3.8. Restrictive network policies

3.8.1. Clusters with restrictive network policies

If you are using a cluster that multiple users have access to, your cluster might use network policies to control which pods, services, and namespaces can communicate with each other over the network. If your cluster uses restrictive network policies, it is possible that Knative system pods are not able to access your Knative application. For example, if your namespace has the following network policy, which denies all requests, Knative system pods cannot access your Knative application:

Example NetworkPolicy object that denies all requests to the namespace

kind: NetworkPolicy
apiVersion: networking.k8s.io/v1
metadata:
  name: deny-by-default
  namespace: example-namespace
spec:
  podSelector:
  ingress: []

3.8.2. Enabling communication with Knative applications on a cluster with restrictive network policies

To allow access to your applications from Knative system pods, you must add a label to each of the Knative system namespaces, and then create a NetworkPolicy object in your application namespace that allows access to the namespace for other namespaces that have this label.

A network policy that denies requests to non-Knative services on your cluster still prevents access to these services. However, by allowing access from Knative system namespaces to your Knative application, you are allowing access to your Knative application from all namespaces in the cluster.

If you do not want to allow access to your Knative application from all namespaces on the cluster, you might want to use JSON Web Token authentication for Knative services instead. JSON Web Token authentication for Knative services requires Service Mesh.

Prerequisites

- Install the OpenShift CLI (oc).

- OpenShift Serverless Operator and Knative Serving are installed on your cluster.

Procedure

Add the knative.openshift.io/system-namespace=true label to each Knative system namespace that requires access to your application:

Label the knative-serving namespace:

$ oc label namespace knative-serving knative.openshift.io/system-namespace=true

Label the knative-serving-ingress namespace:

$ oc label namespace knative-serving-ingress knative.openshift.io/system-namespace=true

Label the knative-eventing namespace:

$ oc label namespace knative-eventing knative.openshift.io/system-namespace=true

Label the knative-kafka namespace:

$ oc label namespace knative-kafka knative.openshift.io/system-namespace=true

Create a NetworkPolicy object in your application namespace to allow access from namespaces with the knative.openshift.io/system-namespace label:

Example NetworkPolicy object

apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: <network_policy_name>
  namespace: <namespace>
spec:
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          knative.openshift.io/system-namespace: "true"
  podSelector: {}
  policyTypes:
  - Ingress
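The labeling steps in this procedure repeat the same command for each system namespace, so they can be generated in a loop. The following is a minimal sketch, assuming that all four namespaces exist on your cluster. The function name print_label_commands is hypothetical, and the function only prints the commands so that you can review them before running anything.

```shell
#!/bin/sh
# Hypothetical helper: prints one `oc label` command per namespace argument.
# It does not contact the cluster.
print_label_commands() {
  for ns in "$@"; do
    printf 'oc label namespace %s knative.openshift.io/system-namespace=true\n' "$ns"
  done
}

# Namespaces taken from the procedure above; drop any that your cluster does not use.
print_label_commands knative-serving knative-serving-ingress knative-eventing knative-kafka
```

After reviewing the printed commands, you can run them by piping the output to sh.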

Chapter 4. Debugging Serverless applications

You can use a variety of methods to troubleshoot a Serverless application.

4.1. Checking terminal output

You can check your deploy command output to see whether deployment succeeded or not. If your deployment process was terminated, you should see an error message in the output that describes the reason why the deployment failed. This kind of failure is most likely due to either a misconfigured manifest or an invalid command.

Procedure

Open the command output on the client where you deploy and manage your application. The following example is an error that you might see after a failed oc apply command:

Error from server (InternalError): error when applying patch:
{"metadata":{"annotations":{"kubectl.kubernetes.io/last-applied-configuration":"{\"apiVersion\":\"serving.knative.dev/v1\",\"kind\":\"Route\",\"metadata\":{\"annotations\":{},\"name\":\"route-example\",\"namespace\":\"default\"},\"spec\":{\"traffic\":[{\"configurationName\":\"configuration-example\",\"percent\":50}]}}\n"}},"spec":{"traffic":[{"configurationName":"configuration-example","percent":50}]}}
to:
&{0xc421d98240 0xc421e77490 default route-example STDIN 0xc421db0488 264682 false}
for: "STDIN": Internal error occurred: admission webhook "webhook.knative.dev" denied the request: mutation failed: The route must have traffic percent sum equal to 100.
ERROR: Non-zero return code '1' from command: Process exited with status 1

This output indicates that you must configure the route traffic percent to be equal to 100.

4.2. Checking pod status

You might need to check the status of your Pod object to identify the issue with your Serverless application.

Procedure

List all pods for your deployment by running the following command:

$ oc get pods

Example output

NAME                                                      READY   STATUS             RESTARTS   AGE
configuration-example-00001-deployment-659747ff99-9bvr4   2/2     Running            0          3h
configuration-example-00002-deployment-5f475b7849-gxcht   1/2     CrashLoopBackOff   2          36s

In the output, you can see all pods with selected data about their status.

View the detailed information on the status of a pod by running the following command:

$ oc get pod <pod_name> --output yaml

In the output, the conditions and containerStatuses fields might be particularly useful for debugging.

4.3. Checking revision status

You might need to check the status of your revision to identify the issue with your Serverless application.

Procedure

If you configure your route with a Configuration object, get the name of the Revision object created for your deployment by running the following command:

$ oc get configuration <configuration_name> --output jsonpath="{.status.latestCreatedRevisionName}"

You can find the configuration name in the Route.yaml file, which specifies routing settings by defining an OpenShift Route resource.

If you configure your route with a revision directly, look up the revision name in the Route.yaml file.

Query for the status of the revision by running the following command:

$ oc get revision <revision-name> --output yaml

A ready revision should have the reason: ServiceReady, status: "True", and type: Ready conditions in its status. If these conditions are present, you might want to check pod status or Istio routing. Otherwise, the resource status contains the error message.

4.4. Checking Ingress status

You might need to check the status of your Ingress to identify the issue with your Serverless application.

Procedure

Check the IP address of your Ingress by running the following command:

$ oc get svc -n istio-system istio-ingressgateway

The istio-ingressgateway service is the LoadBalancer service used by Knative.

If there is no external IP address, run the following command:

$ oc describe svc istio-ingressgateway -n istio-system

This command prints the reason why IP addresses were not provisioned. Most likely, it is due to a quota issue.

4.5. Checking route status

In some cases, the Route object has issues. You can check its status by using the OpenShift CLI (oc).

Procedure

View the status of the Route object with which you deployed your application by running the following command:

$ oc get route <route_name> --output yaml

Substitute <route_name> with the name of your Route object.

The conditions object in the status object states the reason in case of a failure.

4.6. Checking Ingress and Istio routing

Sometimes, when Istio is used as an Ingress layer, the Ingress and Istio routing have issues. You can see the details on them by using the OpenShift CLI (oc).

Procedure

List all Ingress resources and their corresponding labels by running the following command:

$ oc get ingresses.networking.internal.knative.dev -o=custom-columns='NAME:.metadata.name,LABELS:.metadata.labels'

Example output

NAME            LABELS
helloworld-go   map[serving.knative.dev/route:helloworld-go serving.knative.dev/routeNamespace:default serving.knative.dev/service:helloworld-go]

In this output, labels serving.knative.dev/route and serving.knative.dev/routeNamespace indicate the Route where the Ingress resource resides. Your Route and Ingress should be listed.

If your Ingress does not exist, the route controller assumes that the Revision objects targeted by your Route or Service object are not ready. Proceed with other debugging procedures to diagnose Revision readiness status.

If your Ingress is listed, examine the ClusterIngress object created for your route by running the following command:

$ oc get ingresses.networking.internal.knative.dev <ingress_name> --output yaml

In the status section of the output, if the condition with type=Ready has the status of True, then Ingress is working correctly. Otherwise, the output contains error messages.

If Ingress has the status of Ready, then there is a corresponding VirtualService object. Verify the configuration of the VirtualService object by running the following command:

$ oc get virtualservice -l networking.internal.knative.dev/ingress=<ingress_name> -n <ingress_namespace> --output yaml

The network configuration in the VirtualService object must match that of the Ingress and Route objects. Because the VirtualService object does not expose a Status field, you might need to wait for its settings to propagate.

Chapter 5. Kourier and Istio ingresses

OpenShift Serverless supports the following two ingress solutions:

- Kourier

- Istio using Red Hat OpenShift Service Mesh

The default is Kourier.

5.1. Kourier and Istio ingress solutions

5.1.1. Kourier

Kourier is the default ingress solution for OpenShift Serverless. It has the following properties:

- It is based on the Envoy proxy.

- It is simple and lightweight.

- It provides the basic routing functionality that Serverless needs to provide its set of features.

- It supports basic observability and metrics.

- It supports basic TLS termination of Knative Service routing.

- It provides only limited configuration and extension options.

5.1.2. Istio using OpenShift Service Mesh

Using Istio as the ingress solution for OpenShift Serverless enables an additional feature set that is based on what Red Hat OpenShift Service Mesh offers:

- Native mTLS between all connections

- Serverless components are part of a service mesh

- Additional observability and metrics

- Authorization and authentication support

- Custom rules and configuration, as supported by Red Hat OpenShift Service Mesh

However, the additional features come with a higher overhead and resource consumption. For details, see the Red Hat OpenShift Service Mesh documentation.

See the "Integrating Service Mesh with OpenShift Serverless" section of Serverless documentation for Istio requirements and installation instructions.

5.1.3. Traffic configuration and routing

Regardless of whether you use Kourier or Istio, the traffic for a Knative Service is configured in the knative-serving namespace by the net-kourier-controller or the net-istio-controller respectively.

The controller reads the KnativeService and its child custom resources to configure the ingress solution. Both ingress solutions provide an ingress gateway pod that becomes part of the traffic path. Both ingress solutions are based on Envoy. By default, Serverless has two routes for each KnativeService object:

- A cluster-external route that is forwarded by the OpenShift router, for example myapp-namespace.example.com.

- A cluster-local route containing the cluster domain, for example myapp.namespace.svc.cluster.local. This domain can and should be used to call Knative services from Knative or other user workloads.
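The two hostname shapes in the preceding list can be assembled from the service name and namespace. The following is a minimal sketch, assuming the default naming shown in the examples; the helper names external_host and local_host are hypothetical, and the cluster domain on your cluster might differ from cluster.local.

```shell
#!/bin/sh
# Hypothetical helpers that assemble the two default hostnames of a Knative service.
# external_host: <name>-<namespace>.<domain>, as in myapp-namespace.example.com
external_host() {
  printf '%s-%s.%s\n' "$1" "$2" "$3"
}

# local_host: <name>.<namespace>.svc.cluster.local (assumes the default cluster domain)
local_host() {
  printf '%s.%s.svc.cluster.local\n' "$1" "$2"
}

external_host myapp namespace example.com   # prints myapp-namespace.example.com
local_host myapp namespace                  # prints myapp.namespace.svc.cluster.local
```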

The ingress gateway can forward requests either in the serve mode or the proxy mode:

- In the serve mode, requests go directly to the Queue-Proxy sidecar container of the Knative service.

- In the proxy mode, requests first go through the Activator component in the knative-serving namespace.

The choice of mode depends on the configuration of Knative, the Knative service, and the current traffic. For example, if a Knative Service is scaled to zero, requests are sent to the Activator component, which acts as a buffer until a new Knative service pod is started.

Chapter 6. Traffic splitting

6.1. Traffic splitting overview

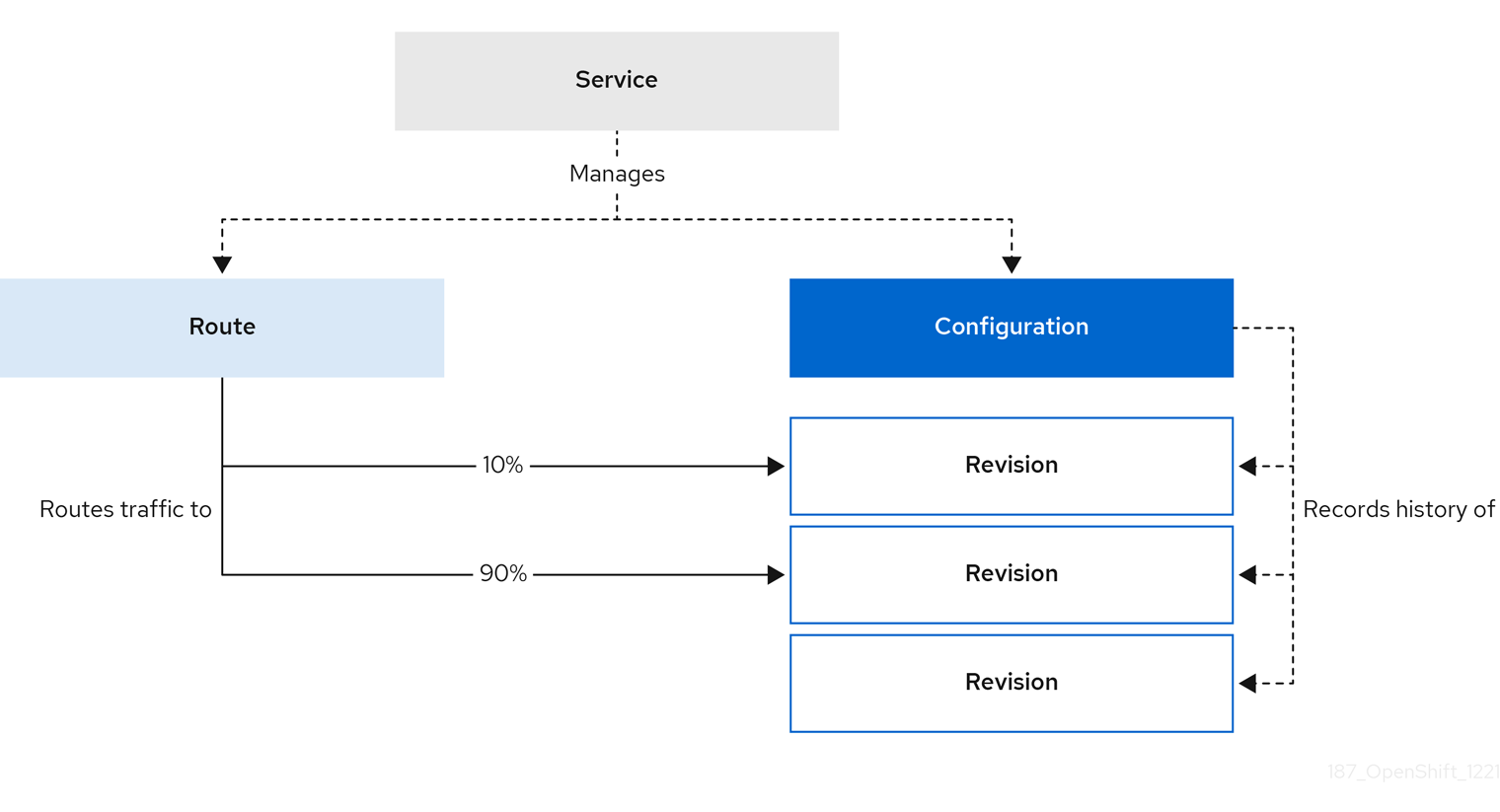

In a Knative application, traffic can be managed by creating a traffic split. A traffic split is configured as part of a route, which is managed by a Knative service.

Configuring a route allows requests to be sent to different revisions of a service. This routing is determined by the traffic spec of the Service object.

A traffic spec declaration consists of one or more revisions, each responsible for handling a portion of the overall traffic. The percentages of traffic routed to each revision must add up to 100%, which is ensured by a Knative validation.
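Because Knative rejects a traffic spec whose percentages do not add up to 100, it can help to check the numbers locally before applying a change. The following is a minimal sketch of such a check; the function name traffic_sums_to_100 is hypothetical and is not part of the oc or kn CLIs.

```shell
#!/bin/sh
# Hypothetical pre-flight check: succeeds only if the given percentages sum to 100.
traffic_sums_to_100() {
  total=0
  for p in "$@"; do
    total=$((total + p))
  done
  [ "$total" -eq 100 ]
}

# Example: a 50/50 split plus a tagged revision that receives no traffic.
if traffic_sums_to_100 50 50 0; then
  echo "valid traffic split"
else
  echo "invalid traffic split"
fi
```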

The revisions specified in a traffic spec can either be a fixed, named revision, or can point to the “latest” revision, which tracks the head of the list of all revisions for the service. The "latest" revision is a type of floating reference that updates if a new revision is created. Each revision can have a tag attached that creates an additional access URL for that revision.

The traffic spec can be modified by:

- Editing the YAML of a Service object directly.

- Using the Knative (kn) CLI --traffic flag.

- Using the OpenShift Container Platform web console.

When you create a Knative service, it does not have any default traffic spec settings.

6.2. Traffic spec examples

The following example shows a traffic spec where 100% of traffic is routed to the latest revision of the service. Under status, you can see the name of the latest revision that latestRevision resolves to:

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: example-service
  namespace: default
spec:
...
  traffic:
  - latestRevision: true
    percent: 100
status:
  ...
  traffic:
  - percent: 100
    revisionName: example-service

The following example shows a traffic spec where 100% of traffic is routed to the revision tagged as current, and the name of that revision is specified as example-service. The revision tagged as latest is kept available, even though no traffic is routed to it:

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: example-service
  namespace: default
spec:
...
  traffic:
  - tag: current
    revisionName: example-service
    percent: 100
  - tag: latest
    latestRevision: true
    percent: 0

The following example shows how the list of revisions in the traffic spec can be extended so that traffic is split between multiple revisions. This example sends 50% of traffic to the revision tagged as current, and 50% of traffic to the revision tagged as candidate. The revision tagged as latest is kept available, even though no traffic is routed to it:

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: example-service
  namespace: default
spec:
...
  traffic:
  - tag: current
    revisionName: example-service-1
    percent: 50
  - tag: candidate
    revisionName: example-service-2
    percent: 50
  - tag: latest
    latestRevision: true
    percent: 0

6.3. Traffic splitting using the Knative CLI

Using the Knative (kn) CLI to create traffic splits provides a more streamlined and intuitive user interface over modifying YAML files directly. You can use the kn service update command to split traffic between revisions of a service.

6.3.1. Creating a traffic split by using the Knative CLI

Prerequisites

- The OpenShift Serverless Operator and Knative Serving are installed on your cluster.

- You have installed the Knative (kn) CLI.

- You have created a Knative service.

Procedure

Specify the revision of your service and what percentage of traffic you want to route to it by using the --traffic flag with a standard kn service update command:

Example command

$ kn service update <service_name> --traffic <revision>=<percentage>

Where:

- <service_name> is the name of the Knative service that you are configuring traffic routing for.

- <revision> is the revision that you want to configure to receive a percentage of traffic. You can either specify the name of the revision, or a tag that you assigned to the revision by using the --tag flag.

- <percentage> is the percentage of traffic that you want to send to the specified revision.

Optional: The --traffic flag can be specified multiple times in one command. For example, if you have a revision tagged as @latest and a revision named stable, you can specify the percentage of traffic that you want to split to each revision as follows:

Example command

$ kn service update showcase --traffic @latest=20,stable=80

If you have multiple revisions and do not specify the percentage of traffic that should be split to the last revision, the --traffic flag can calculate this automatically. For example, if you have a third revision named example, and you use the following command:

Example command

$ kn service update showcase --traffic @latest=10,stable=60

The remaining 30% of traffic is split to the example revision, even though it was not specified.
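The remainder in the last example is simple arithmetic: 100 minus the percentages that were assigned explicitly. The following is a minimal sketch that computes the remainder and prints the equivalent fully explicit command. The function name remaining_percent is hypothetical, and the script only prints the command rather than running it.

```shell
#!/bin/sh
# Hypothetical helper: prints the percentage left over after the explicit assignments.
remaining_percent() {
  used=0
  for p in "$@"; do
    used=$((used + p))
  done
  echo $((100 - used))
}

# 10% to @latest and 60% to stable leave 30% for the example revision.
rest="$(remaining_percent 10 60)"
echo "kn service update showcase --traffic @latest=10,stable=60,example=${rest}"
```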

6.4. CLI flags for traffic splitting

The Knative (kn) CLI supports traffic operations on the traffic block of a service as part of the kn service update command.

6.4.1. Knative CLI traffic splitting flags

The following table displays a summary of traffic splitting flags, value formats, and the operation the flag performs. The Repetition column denotes whether a flag can be repeated with that particular value format in a single kn service update command.

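| Flag | Value(s) | Operation | Repetition |
|---|---|---|---|
| --traffic | RevisionName=Percent | Gives Percent traffic to RevisionName | Yes |
| --traffic | Tag=Percent | Gives Percent traffic to the revision having Tag | Yes |
| --traffic | @latest=Percent | Gives Percent traffic to the latest ready revision | No |
| --tag | RevisionName=Tag | Gives Tag to RevisionName | Yes |
| --tag | @latest=Tag | Gives Tag to the latest ready revision | No |
| --untag | Tag | Removes Tag from revision | Yes |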

6.4.1.1. Multiple flags and order precedence

All traffic-related flags can be specified using a single kn service update command. kn defines the precedence of these flags. The order of the flags specified when using the command is not taken into account.

The flags are evaluated by kn in the following order of precedence:

- --untag: All the referenced revisions with this flag are removed from the traffic block.

- --tag: Revisions are tagged as specified in the traffic block.

- --traffic: The referenced revisions are assigned a portion of the traffic split.

You can add tags to revisions and then split traffic according to the tags you have set.

6.4.1.2. Custom URLs for revisions

Assigning a --tag flag to a service by using the kn service update command creates a custom URL for the revision that is created when you update the service. The custom URL follows the pattern https://<tag>-<service_name>-<namespace>.<domain> or http://<tag>-<service_name>-<namespace>.<domain>.
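The URL pattern above can be filled in mechanically once you know the tag, service name, namespace, and domain. The following is a minimal sketch; the function name tag_url and the domain apps.example.com are hypothetical placeholders, and the actual domain depends on your cluster configuration.

```shell
#!/bin/sh
# Hypothetical helper: builds a tag URL from the documented pattern
# <scheme>://<tag>-<service_name>-<namespace>.<domain>
# Arguments: $1=scheme (http or https), $2=tag, $3=service name, $4=namespace, $5=domain
tag_url() {
  printf '%s://%s-%s-%s.%s\n' "$1" "$2" "$3" "$4" "$5"
}

tag_url https v2 showcase default apps.example.com
# prints https://v2-showcase-default.apps.example.com
```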

The --tag and --untag flags use the following syntax:

- Require one value.

- Denote a unique tag in the traffic block of the service.

- Can be specified multiple times in one command.

6.4.1.2.1. Example: Assign a tag to a revision

The following example assigns the tag example-tag to the latest revision of the service:

$ kn service update <service_name> --tag @latest=example-tag

6.4.1.2.2. Example: Remove a tag from a revision

You can remove a tag to remove the custom URL, by using the --untag flag.

If a revision has its tags removed, and it is assigned 0% of the traffic, the revision is removed from the traffic block entirely.

The following command removes the tag example-tag from the revision that it is assigned to:

$ kn service update <service_name> --untag example-tag

6.5. Splitting traffic between revisions

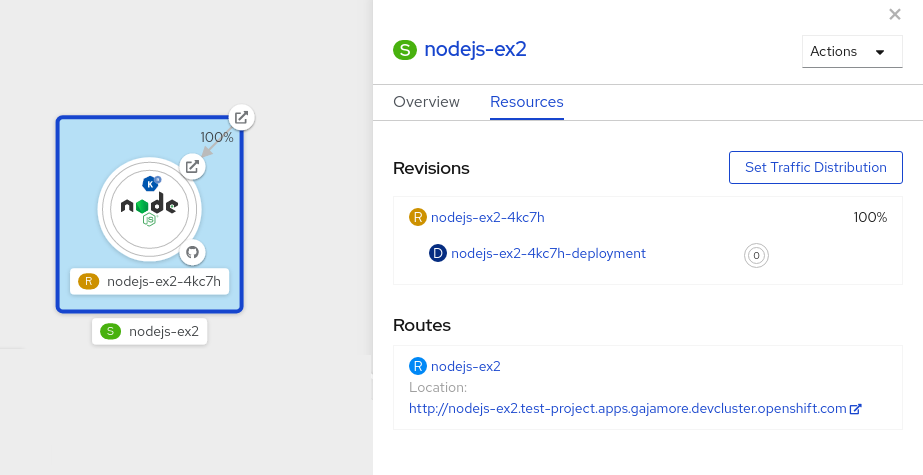

After you create a serverless application, the application is displayed in the Topology view of the Developer perspective in the OpenShift Container Platform web console. The application revision is represented by the node, and the Knative service is indicated by a quadrilateral around the node.

Any new change in the code or the service configuration creates a new revision, which is a snapshot of the code at a given time. For a service, you can manage the traffic between the revisions of the service by splitting and routing it to the different revisions as required.

6.5.1. Managing traffic between revisions by using the OpenShift Container Platform web console

Prerequisites

- The OpenShift Serverless Operator and Knative Serving are installed on your cluster.

- You have logged in to the OpenShift Container Platform web console.

Procedure

To split traffic between multiple revisions of an application in the Topology view:

- Click the Knative service to see its overview in the side panel.

Click the Resources tab, to see a list of Revisions and Routes for the service.

Figure 6.1. Serverless application

- Click the service, indicated by the S icon at the top of the side panel, to see an overview of the service details.

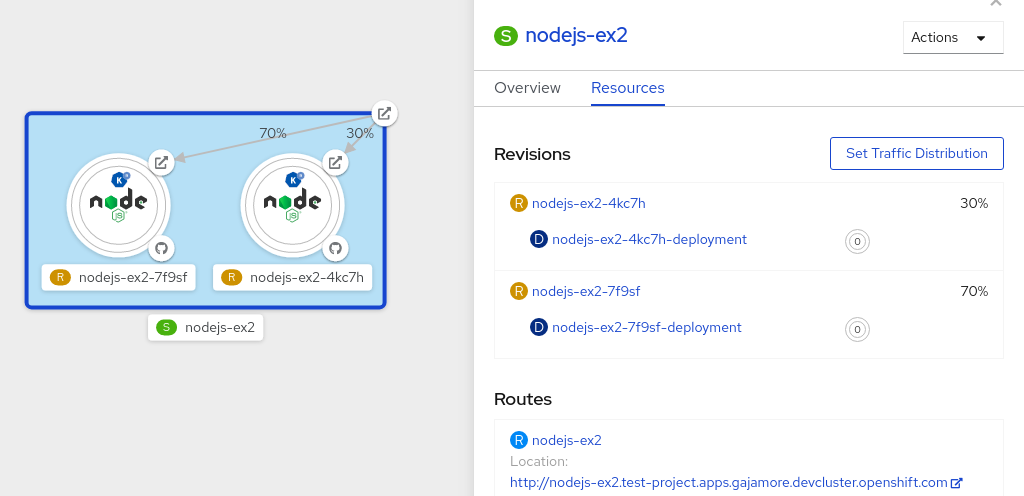

- Click the YAML tab and modify the service configuration in the YAML editor, and click Save. For example, change the timeoutseconds from 300 to 301. This change in the configuration triggers a new revision. In the Topology view, the latest revision is displayed and the Resources tab for the service now displays the two revisions. In the Resources tab, click to see the traffic distribution dialog box:

- Add the split traffic percentage portion for the two revisions in the Splits field.

- Add tags to create custom URLs for the two revisions.

Click Save to see two nodes representing the two revisions in the Topology view.

Figure 6.2. Serverless application revisions

6.6. Rerouting traffic using blue-green strategy

You can safely reroute traffic from a production version of an app to a new version, by using a blue-green deployment strategy.

6.6.1. Routing and managing traffic by using a blue-green deployment strategy

Prerequisites

- The OpenShift Serverless Operator and Knative Serving are installed on the cluster.

- Install the OpenShift CLI (oc).

Procedure

- Create and deploy an app as a Knative service.

Find the name of the first revision that was created when you deployed the service, by viewing the output from the following command:

$ oc get ksvc <service_name> -o=jsonpath='{.status.latestCreatedRevisionName}'

Example command

$ oc get ksvc showcase -o=jsonpath='{.status.latestCreatedRevisionName}'

Example output

showcase-00001

Add the following YAML to the service spec to send inbound traffic to the revision:

...
spec:
  traffic:
    - revisionName: <first_revision_name>
      percent: 100 # All traffic goes to this revision
...

Verify that you can view your app at the URL output you get from running the following command:

$ oc get ksvc <service_name>

- Deploy a second revision of your app by modifying at least one field in the template spec of the service and redeploying it. For example, you can modify the image of the service, or an env environment variable. You can redeploy the service by applying the service YAML file, or by using the kn service update command if you have installed the Knative (kn) CLI.

Find the name of the second, latest revision that was created when you redeployed the service, by running the command:

$ oc get ksvc <service_name> -o=jsonpath='{.status.latestCreatedRevisionName}'

At this point, both the first and second revisions of the service are deployed and running.

Update your existing service to create a new, test endpoint for the second revision, while still sending all other traffic to the first revision:

Example of updated service spec with test endpoint

...
spec:
  traffic:
    - revisionName: <first_revision_name>
      percent: 100 # All traffic is still being routed to the first revision
    - revisionName: <second_revision_name>
      percent: 0 # No traffic is routed to the second revision
      tag: v2 # A named route
...

After you redeploy this service by reapplying the YAML resource, the second revision of the app is now staged. No traffic is routed to the second revision at the main URL, and Knative creates a new service named v2 for testing the newly deployed revision.

Get the URL of the new service for the second revision, by running the following command:

$ oc get ksvc <service_name> --output jsonpath="{.status.traffic[*].url}"

You can use this URL to validate that the new version of the app is behaving as expected before you route any traffic to it.

Update your existing service again, so that 50% of traffic is sent to the first revision, and 50% is sent to the second revision:

Example of updated service spec splitting traffic 50/50 between revisions

...
spec:
  traffic:
    - revisionName: <first_revision_name>
      percent: 50
    - revisionName: <second_revision_name>
      percent: 50
      tag: v2
...

When you are ready to route all traffic to the new version of the app, update the service again to send 100% of traffic to the second revision:

Example of updated service spec sending all traffic to the second revision

...
spec:
  traffic:
    - revisionName: <first_revision_name>
      percent: 0
    - revisionName: <second_revision_name>
      percent: 100
      tag: v2
...

Tip

You can remove the first revision instead of setting it to 0% of traffic if you do not plan to roll back the revision. Non-routeable revision objects are then garbage-collected.

- Visit the URL of the first revision to verify that no more traffic is being sent to the old version of the app.
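The three traffic blocks in this procedure differ only in their percentages, so they can be generated from one template. The following is a minimal sketch, assuming two revisions and the v2 tag used above. The function name traffic_block and the revision name showcase-00002 are hypothetical, and the script only prints YAML for you to merge into the service definition.

```shell
#!/bin/sh
# Hypothetical helper: prints a traffic block that sends the given percentage
# to the second revision and the remainder to the first revision.
# Arguments: $1=first revision name, $2=second revision name, $3=percent for the second revision
traffic_block() {
  first=$1 second=$2 new_percent=$3
  old_percent=$((100 - new_percent))
  cat <<EOF
spec:
  traffic:
    - revisionName: ${first}
      percent: ${old_percent}
    - revisionName: ${second}
      percent: ${new_percent}
      tag: v2
EOF
}

# Stage (0), split (50), and full cutover (100) use the same template.
traffic_block showcase-00001 showcase-00002 50
```

Stepping the last argument through 0, 50, and 100 reproduces the staged, split, and cutover states of the blue-green rollout.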

Chapter 7. External and Ingress routing

7.1. Routing overview

Knative leverages OpenShift Container Platform TLS termination to provide routing for Knative services. When a Knative service is created, an OpenShift Container Platform route is automatically created for the service. This route is managed by the OpenShift Serverless Operator. The OpenShift Container Platform route exposes the Knative service through the same domain as the OpenShift Container Platform cluster.

You can disable Operator control of OpenShift Container Platform routing so that you can configure a Knative route to directly use your TLS certificates instead.

Knative routes can also be used alongside the OpenShift Container Platform route to provide additional fine-grained routing capabilities, such as traffic splitting.

7.2. Customizing labels and annotations

OpenShift Container Platform routes support the use of custom labels and annotations, which you can configure by modifying the metadata spec of a Knative service. Custom labels and annotations are propagated from the service to the Knative route, then to the Knative ingress, and finally to the OpenShift Container Platform route.

7.2.1. Customizing labels and annotations for OpenShift Container Platform routes

Prerequisites

- You must have the OpenShift Serverless Operator and Knative Serving installed on your OpenShift Container Platform cluster.

- Install the OpenShift CLI (oc).

Procedure

Create a Knative service that contains the label or annotation that you want to propagate to the OpenShift Container Platform route:

To create a service by using YAML:

Example service created by using YAML

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: <service_name>
  labels:
    <label_name>: <label_value>
  annotations:
    <annotation_name>: <annotation_value>
...

To create a service by using the Knative (kn) CLI, enter:

Example service created by using a kn command

$ kn service create <service_name> \
  --image=<image> \
  --annotation <annotation_name>=<annotation_value> \
  --label <label_name>=<label_value>

Verify that the OpenShift Container Platform route has been created with the annotation or label that you added by inspecting the output from the following command:

Example command for verification

$ oc get routes.route.openshift.io \
     -l serving.knative.openshift.io/ingressName=<service_name> \
     -l serving.knative.openshift.io/ingressNamespace=<service_namespace> \
     -n knative-serving-ingress -o yaml \
         | grep -e "<label_name>: \"<label_value>\"" -e "<annotation_name>: <annotation_value>"

7.3. Configuring routes for Knative services

If you want to configure a Knative service to use your TLS certificate on OpenShift Container Platform, you must disable the automatic creation of a route for the service by the OpenShift Serverless Operator and instead manually create a route for the service.

When you complete the following procedure, the default OpenShift Container Platform route in the knative-serving-ingress namespace is not created. However, the Knative route for the application is still created in this namespace.

7.3.1. Configuring OpenShift Container Platform routes for Knative services

Prerequisites

- The OpenShift Serverless Operator and Knative Serving component must be installed on your OpenShift Container Platform cluster.

- Install the OpenShift CLI (oc).

Procedure

Create a Knative service that includes the serving.knative.openshift.io/disableRoute=true annotation:

Important

The serving.knative.openshift.io/disableRoute=true annotation instructs OpenShift Serverless to not automatically create a route for you. However, the service still shows a URL and reaches a status of Ready. This URL does not work externally until you create your own route with the same hostname as the hostname in the URL.

Create a Knative Service resource:

Example resource

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: <service_name>
  annotations:
    serving.knative.openshift.io/disableRoute: "true"
spec:
  template:
    spec:
      containers:
      - image: <image>
...

Apply the Service resource:

$ oc apply -f <filename>

Optional. Create a Knative service by using the kn service create command:

Example kn command

$ kn service create <service_name> \
  --image=gcr.io/knative-samples/helloworld-go \
  --annotation serving.knative.openshift.io/disableRoute=true

Verify that no OpenShift Container Platform route has been created for the service:

Example command

$ oc get routes.route.openshift.io \
  -l serving.knative.openshift.io/ingressName=$KSERVICE_NAME \
  -l serving.knative.openshift.io/ingressNamespace=$KSERVICE_NAMESPACE \
  -n knative-serving-ingress

You will see the following output:

No resources found in knative-serving-ingress namespace.

Create a Route resource in the knative-serving-ingress namespace:

apiVersion: route.openshift.io/v1
kind: Route
metadata:
  annotations:
    haproxy.router.openshift.io/timeout: 600s 1
  name: <route_name> 2
  namespace: knative-serving-ingress 3
spec:
  host: <service_host> 4
  port:
    targetPort: http2
  to:
    kind: Service
    name: kourier
    weight: 100
  tls:
    insecureEdgeTerminationPolicy: Allow
    termination: edge 5
    key: |-
      -----BEGIN PRIVATE KEY-----
      [...]
      -----END PRIVATE KEY-----
    certificate: |-
      -----BEGIN CERTIFICATE-----
      [...]
      -----END CERTIFICATE-----
    caCertificate: |-
      -----BEGIN CERTIFICATE-----
      [...]
      -----END CERTIFICATE-----
  wildcardPolicy: None

- 1: The timeout value for the OpenShift Container Platform route. You must set the same value as the max-revision-timeout-seconds setting (600s by default).

- 2: The name of the OpenShift Container Platform route.

- 3: The namespace for the OpenShift Container Platform route. This must be knative-serving-ingress.

- 4: The hostname for external access. You can set this to <service_name>-<service_namespace>.<domain>.

- 5: The certificates you want to use. Currently, only edge termination is supported.

Apply the Route resource:

$ oc apply -f <filename>
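The host and timeout values in the Route resource follow simple patterns, so they can be derived rather than typed by hand. The following is a minimal sketch. The function names route_host and route_timeout and the sample domain apps.example.com are assumptions for illustration only.

```shell
# Hypothetical helper: compose the external hostname in the form
# <service_name>-<service_namespace>.<domain>.
route_host() {
  printf '%s-%s.%s\n' "$1" "$2" "$3"
}

# Hypothetical helper: turn a timeout in seconds into the value used by the
# haproxy.router.openshift.io/timeout annotation, for example 600 becomes 600s.
route_timeout() {
  printf '%ss\n' "$1"
}

# Sample values only; substitute your own service, namespace, and domain.
route_host hello default apps.example.com
route_timeout 600
```

Deriving the host this way keeps the manually created route aligned with the hostname in the URL that the Knative service reports.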

7.4. Global HTTPS redirection

HTTPS redirection provides redirection for incoming HTTP requests. These redirected HTTP requests are encrypted. You can enable HTTPS redirection for all services on the cluster by configuring the httpProtocol spec for the KnativeServing custom resource (CR).

7.4.1. HTTPS redirection global settings

Example KnativeServing CR that enables HTTPS redirection

apiVersion: operator.knative.dev/v1beta1
kind: KnativeServing
metadata:
  name: knative-serving
spec:
  config:
    network:
      httpProtocol: "redirected"
...

7.5. URL scheme for external routes

The URL scheme of external routes defaults to HTTPS for enhanced security. This scheme is determined by the default-external-scheme key in the KnativeServing custom resource (CR) spec.

7.5.1. Setting the URL scheme for external routes

Default spec

...
spec:
  config:
    network:
      default-external-scheme: "https"
...

You can override the default spec to use HTTP by modifying the default-external-scheme key:

HTTP override spec

...
spec:
  config:
    network:
      default-external-scheme: "http"
...

7.6. HTTPS redirection per service

You can enable or disable HTTPS redirection for a service by configuring the networking.knative.dev/http-protocol annotation.

7.6.1. Redirecting HTTPS for a service

The following example shows how you can use this annotation in a Knative Service YAML object:

apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: example
  namespace: default
  annotations:
    networking.knative.dev/http-protocol: "redirected"
spec:
  ...

7.7. Cluster local availability

By default, Knative services are published to a public IP address. Being published to a public IP address means that Knative services are public applications, and have a publicly accessible URL.

Publicly accessible URLs are accessible from outside of the cluster. However, developers might need to build back-end services that are only accessible from inside the cluster, known as private services. Developers can label individual services in the cluster with the networking.knative.dev/visibility=cluster-local label to make them private.

For OpenShift Serverless 1.15.0 and newer versions, the serving.knative.dev/visibility label is no longer available. You must update existing services to use the networking.knative.dev/visibility label instead.

7.7.1. Setting cluster availability to cluster local

Prerequisites

- The OpenShift Serverless Operator and Knative Serving are installed on the cluster.

- You have created a Knative service.

Procedure

Set the visibility for your service by adding the networking.knative.dev/visibility=cluster-local label:

$ oc label ksvc <service_name> networking.knative.dev/visibility=cluster-local

Verification

Check that the URL for your service is now in the format http://<service_name>.<namespace>.svc.cluster.local, by entering the following command and reviewing the output:

$ oc get ksvc

Example output

NAME    URL                                      LATESTCREATED   LATESTREADY   READY   REASON
hello   http://hello.default.svc.cluster.local   hello-tx2g7     hello-tx2g7   True
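To check the URL format in a script rather than by eye, a shell pattern match is enough. This is a minimal sketch: the function name is_cluster_local_url is an assumption, and the URL is passed in as a plain string.

```shell
# Hypothetical helper: succeed only if the URL has the form
# http://<service_name>.<namespace>.svc.cluster.local
is_cluster_local_url() {
  case "$1" in
    http://*.*.svc.cluster.local) return 0 ;;
    *) return 1 ;;
  esac
}

# Sample check with the URL from the example output above.
if is_cluster_local_url "http://hello.default.svc.cluster.local"; then
  echo "cluster-local"
else
  echo "not cluster-local"
fi
```

In practice, the URL could be read from the service status, for example with oc get ksvc <service_name> -o jsonpath='{.status.url}', and passed to the helper.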

7.7.2. Enabling TLS authentication for cluster local services

For cluster local services, the Kourier local gateway kourier-internal is used. If you want to use TLS traffic against the Kourier local gateway, you must configure your own server certificates in the local gateway.

Prerequisites

- You have installed the OpenShift Serverless Operator and Knative Serving.

- You have administrator permissions.

- You have installed the OpenShift (oc) CLI.

Procedure

Deploy server certificates in the knative-serving-ingress namespace:

$ export san="knative"

Note

Subject Alternative Name (SAN) validation is required so that these certificates can serve the request to <app_name>.<namespace>.svc.cluster.local.

Generate a root key and certificate:

$ openssl req -x509 -sha256 -nodes -days 365 -newkey rsa:2048 \
    -subj '/O=Example/CN=Example' \
    -keyout ca.key \
    -out ca.crt

Generate a server key that uses SAN validation:

$ openssl req -out tls.csr -newkey rsa:2048 -nodes -keyout tls.key \
  -subj "/CN=Example/O=Example" \
  -addext "subjectAltName = DNS:$san"

Create server certificates:

$ openssl x509 -req -extfile <(printf "subjectAltName=DNS:$san") \
  -days 365 -in tls.csr \
  -CA ca.crt -CAkey ca.key -CAcreateserial -out tls.crt

Configure a secret for the Kourier local gateway:

Deploy a secret in the knative-serving-ingress namespace from the certificates created by the previous steps:

$ oc create -n knative-serving-ingress secret tls server-certs \
    --key=tls.key \
    --cert=tls.crt --dry-run=client -o yaml | oc apply -f -

Update the KnativeServing custom resource (CR) spec to use the secret that you created for the Kourier gateway:

Example KnativeServing CR

...
spec:
  config:
    kourier:
      cluster-cert-secret: server-certs
...

The Kourier controller sets the certificate without restarting the service, so that you do not need to restart the pod.

You can access the Kourier internal service with TLS through port 443 by mounting and using the ca.crt from the client.

7.8. Kourier Gateway service type

The Kourier Gateway is exposed by default as the ClusterIP service type. This service type is determined by the service-type ingress spec in the KnativeServing custom resource (CR).

Default spec

...
spec:
  ingress:
    kourier:
      service-type: ClusterIP
...

7.8.1. Setting the Kourier Gateway service type

You can override the default service type to use a load balancer service type instead by modifying the service-type spec:

LoadBalancer override spec

...
spec:
  ingress:
    kourier:
      service-type: LoadBalancer
...

7.9. Using HTTP2 and gRPC

OpenShift Serverless supports only insecure or edge-terminated routes. Insecure or edge-terminated routes do not support HTTP2 on OpenShift Container Platform. These routes also do not support gRPC because gRPC is transported by HTTP2. If you use these protocols in your application, you must call the application using the ingress gateway directly. To do this you must find the ingress gateway’s public address and the application’s specific host.

7.9.1. Interacting with a serverless application using HTTP2 and gRPC

This method applies to OpenShift Container Platform 4.10 and later. For older versions, see the following section.

Prerequisites

- Install OpenShift Serverless Operator and Knative Serving on your cluster.

- Install the OpenShift CLI (oc).

- Create a Knative service.

- Upgrade to OpenShift Container Platform 4.10 or later.

- Enable HTTP/2 on the OpenShift Ingress controller.

Procedure

Add the serverless.openshift.io/default-enable-http2=true annotation to the KnativeServing Custom Resource:

$ oc annotate knativeserving <your_knative_CR> -n knative-serving serverless.openshift.io/default-enable-http2=true

After the annotation is added, you can verify that the appProtocol value of the Kourier service is h2c:

$ oc get svc -n knative-serving-ingress kourier -o jsonpath="{.spec.ports[0].appProtocol}"

Example output

h2c

Now you can use the gRPC framework over the HTTP/2 protocol for external traffic, for example:

import (
    "google.golang.org/grpc"
    "google.golang.org/grpc/credentials/insecure"
)

grpc.Dial(
    YOUR_URL, 1
    grpc.WithTransportCredentials(insecure.NewCredentials()), 2
)


7.10. Using Serving with OpenShift ingress sharding

You can use Knative Serving with OpenShift ingress sharding to split ingress traffic based on domains. This allows you to manage and route network traffic to different parts of a cluster more efficiently.

Even with OpenShift ingress sharding in place, OpenShift Serverless traffic is still routed through a single Knative Ingress Gateway and the activator component in the knative-serving project.

For more information about isolating the network traffic, see Using Service Mesh to isolate network traffic with OpenShift Serverless.

Prerequisites

- You have installed the OpenShift Serverless Operator and Knative Serving.

- You have cluster administrator permissions on OpenShift Container Platform, or you have cluster or dedicated administrator permissions on Red Hat OpenShift Service on AWS or OpenShift Dedicated.

7.10.1. Configuring OpenShift ingress shards

Before configuring Knative Serving, you must configure OpenShift ingress shards.

Procedure

Use a label selector in the IngressController CR to configure OpenShift Serverless to match specific ingress shards with different domains:

Example IngressController CR

apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  name: ingress-dev 1
  namespace: openshift-ingress-operator
spec:
  routeSelector:
    matchLabels:
      router: dev 2
  domain: "dev.serverless.cluster.example.com" 3
  # ...
---
apiVersion: operator.openshift.io/v1
kind: IngressController
metadata:
  name: ingress-prod 4
  namespace: openshift-ingress-operator
spec:
  routeSelector:
    matchLabels:
      router: prod 5
  domain: "prod.serverless.cluster.example.com" 6
  # ...

7.10.2. Configuring custom domains in the KnativeServing CR