Eventing

Using event-driven architectures with OpenShift Serverless

Abstract

Chapter 1. Knative Eventing

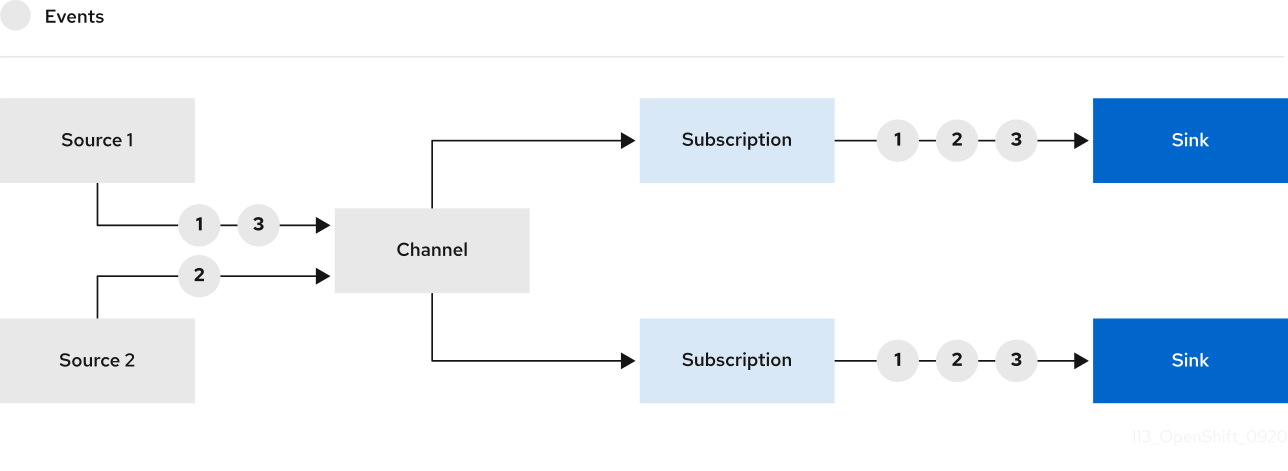

Knative Eventing on OpenShift Container Platform enables developers to use an event-driven architecture with serverless applications. An event-driven architecture is based on the concept of decoupled relationships between event producers and event consumers.

Event producers create events, and event sinks, or consumers, receive events. Knative Eventing uses standard HTTP POST requests to send and receive events between event producers and sinks. These events conform to the CloudEvents specifications, which enables creating, parsing, sending, and receiving events in any programming language.

1.1. Knative Eventing use cases

Knative Eventing supports the following use cases:

- Publish an event without creating a consumer

  You can send events to a broker as an HTTP POST, and use binding to decouple the destination configuration from your application that produces events.

- Consume an event without creating a publisher

  You can use a trigger to consume events from a broker based on event attributes. The application receives events as an HTTP POST.
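As a hedged illustration of consuming events by attribute, a trigger might be declared as in the following sketch. The trigger name, the filter value, and the event-display subscriber are assumptions chosen for this example rather than values required by Knative Eventing.

```yaml
# Minimal sketch of a Trigger; every name and the filter value are illustrative assumptions.
apiVersion: eventing.knative.dev/v1
kind: Trigger
metadata:
  name: example-trigger            # hypothetical name
spec:
  broker: default                  # broker that the trigger consumes events from
  filter:
    attributes:
      # Deliver only events whose CloudEvents "type" attribute matches this value exactly.
      type: dev.knative.apiserver.resource.add
  subscriber:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event-display          # hypothetical Knative service that receives the HTTP POST
```

Omitting the filter section would deliver every event from the broker to the subscriber.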

To enable delivery to multiple types of sinks, Knative Eventing defines the following generic interfaces that can be implemented by multiple Kubernetes resources:

- Addressable resources

  Able to receive and acknowledge an event delivered over HTTP to an address defined in the status.address.url field of the resource. The Kubernetes Service resource also satisfies the addressable interface.

- Callable resources

  Able to receive an event delivered over HTTP and transform it, returning 0 or 1 new events in the HTTP response payload. These returned events may be further processed in the same way that events from an external event source are processed.
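To make the addressable interface more concrete, the following fragments sketch how an address might appear in a resource status and how an event source could point at that resource. The URL is a placeholder and the broker name is an assumption; actual values depend on the resource type and the cluster.

```yaml
# Illustrative fragment of an addressable resource's status.
# The URL is a placeholder assumption, not a real endpoint.
status:
  address:
    url: http://<ingress_host>/<namespace>/<broker_name>
---
# Illustrative sink stanza of an event source that targets the resource by reference.
# Knative resolves the reference to the address published in the status shown above.
sink:
  ref:
    apiVersion: eventing.knative.dev/v1
    kind: Broker
    name: default                  # hypothetical broker name
```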

Chapter 2. Event sources

2.1. Event sources

A Knative event source can be any Kubernetes object that generates or imports cloud events, and relays those events to another endpoint, known as a sink. Sourcing events is critical to developing a distributed system that reacts to events.

You can create and manage Knative event sources in the OpenShift Container Platform web console, the Knative (kn) CLI, or by applying YAML files.

Currently, OpenShift Serverless supports the following event source types:

- API server source

  Brings Kubernetes API server events into Knative. The API server source sends a new event each time a Kubernetes resource is created, updated or deleted.

- Ping source

  Produces events with a fixed payload on a specified cron schedule.

- Kafka event source

  Connects an Apache Kafka cluster to a sink as an event source.

You can also create a custom event source.

2.1.1. Creating an event source

A Knative event source can be any Kubernetes object that generates or imports cloud events, and relays those events to another endpoint, known as a sink.

Prerequisites

- The OpenShift Serverless Operator and Knative Eventing are installed on your OpenShift Container Platform cluster.

- You have logged in to the web console.

- You have cluster-admin privileges on OpenShift Container Platform, or you have cluster or dedicated administrator permissions on Red Hat OpenShift Service on AWS or OpenShift Dedicated.

Procedure

- In the OpenShift Container Platform web console, navigate to Serverless → Eventing.

- In the Create list, select Event Source. You will be directed to the Event Sources page.

- Select the event source type that you want to create.

2.2. Creating an API server source

The API server source is an event source that can be used to connect an event sink, such as a Knative service, to the Kubernetes API server. The API server source watches for Kubernetes events and forwards them to the Knative Eventing broker.

2.2.1. Creating an API server source by using the web console

After Knative Eventing is installed on your cluster, you can create an API server source by using the web console. Using the OpenShift Container Platform web console provides a streamlined and intuitive user interface to create an event source.

Prerequisites

- You have logged in to the OpenShift Container Platform web console.

- The OpenShift Serverless Operator and Knative Eventing are installed on the cluster.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

- You have installed the OpenShift CLI (oc).

Procedure

If you want to re-use an existing service account, you can modify your existing ServiceAccount resource to include the required permissions instead of creating a new resource.

- Create a service account, role, and role binding for the event source as a YAML file.

- Apply the YAML file:

  $ oc apply -f <filename>

- Navigate to +Add → Event Source. The Event Sources page is displayed.

- Optional: If you have multiple providers for your event sources, select the required provider from the Providers list to filter the available event sources from the provider.

- Select ApiServerSource and then click Create Event Source. The Create Event Source page is displayed.

- Configure the ApiServerSource settings by using the Form view or YAML view:

  Note: You can switch between the Form view and YAML view. The data is persisted when switching between the views.

  - Enter v1 as the APIVERSION and Event as the KIND.

  - Select the Service Account Name for the service account that you created.

  - In the Target section, select your event sink. This can be either a Resource or a URI:

    - Select Resource to use a channel, broker, or service as an event sink for the event source.

    - Select URI to specify a Uniform Resource Identifier (URI) where the events are routed to.

- Click Create.
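The service account, role, and role binding that this procedure refers to can be sketched as follows. This is a minimal example under stated assumptions: the resource names (events-sa, event-watcher, k8s-ra-event-watcher) and the default namespace are illustrative, and the role grants only read access to Kubernetes events.

```yaml
# Sketch of the RBAC resources for an API server source.
# All names and the namespace are assumptions; adjust them to your project.
apiVersion: v1
kind: ServiceAccount
metadata:
  name: events-sa
  namespace: default
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: event-watcher
  namespace: default
rules:
  - apiGroups:
      - ""                         # core API group, where Event resources live
    resources:
      - events
    verbs:                         # read-only access is enough to watch events
      - get
      - list
      - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: k8s-ra-event-watcher
  namespace: default
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: event-watcher
subjects:
  - kind: ServiceAccount
    name: events-sa
    namespace: default
```

If you re-use an existing service account, only the Role and RoleBinding would be needed, with the subject pointing at that account.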

Verification

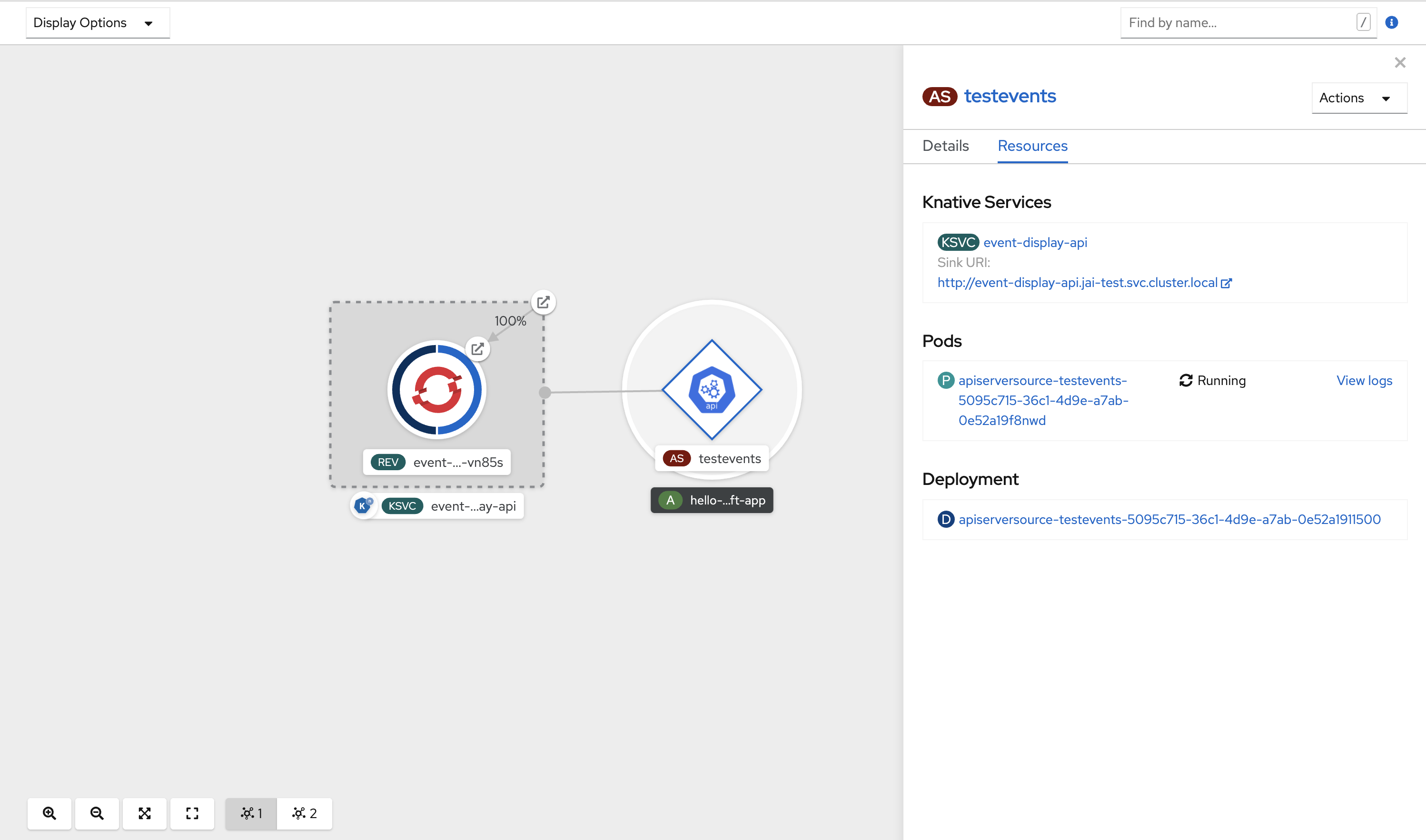

After you have created the API server source, check that it is connected to the event sink by viewing it in the Topology view.

If a URI sink is used, you can modify the URI by right-clicking on URI sink → Edit URI.

Deleting the API server source

- Navigate to the Topology view.

- Right-click the API server source and select Delete ApiServerSource.

2.2.2. Creating an API server source by using the Knative CLI

You can use the kn source apiserver create command to create an API server source by using the kn CLI. Using the kn CLI to create an API server source provides a more streamlined and intuitive user interface than modifying YAML files directly.

Prerequisites

- The OpenShift Serverless Operator and Knative Eventing are installed on the cluster.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

- You have installed the OpenShift CLI (oc).

- You have installed the Knative (kn) CLI.

Procedure

If you want to re-use an existing service account, you can modify your existing ServiceAccount resource to include the required permissions instead of creating a new resource.

- Create a service account, role, and role binding for the event source as a YAML file.

- Apply the YAML file:

  $ oc apply -f <filename>

- Create an API server source that has an event sink. In the following example, the sink is a broker:

  $ kn source apiserver create <event_source_name> --sink broker:<broker_name> --resource "event:v1" --service-account <service_account_name> --mode Resource

- To check that the API server source is set up correctly, create a Knative service that dumps incoming messages to its log:

  $ kn service create event-display --image quay.io/openshift-knative/showcase

- If you used a broker as an event sink, create a trigger to filter events from the default broker to the service:

  $ kn trigger create <trigger_name> --sink ksvc:event-display

- Create events by launching a pod in the default namespace:

  $ oc create deployment event-origin --image quay.io/openshift-knative/showcase

- Check that the controller is mapped correctly by inspecting the output generated by the following command:

  $ kn source apiserver describe <source_name>

Verification

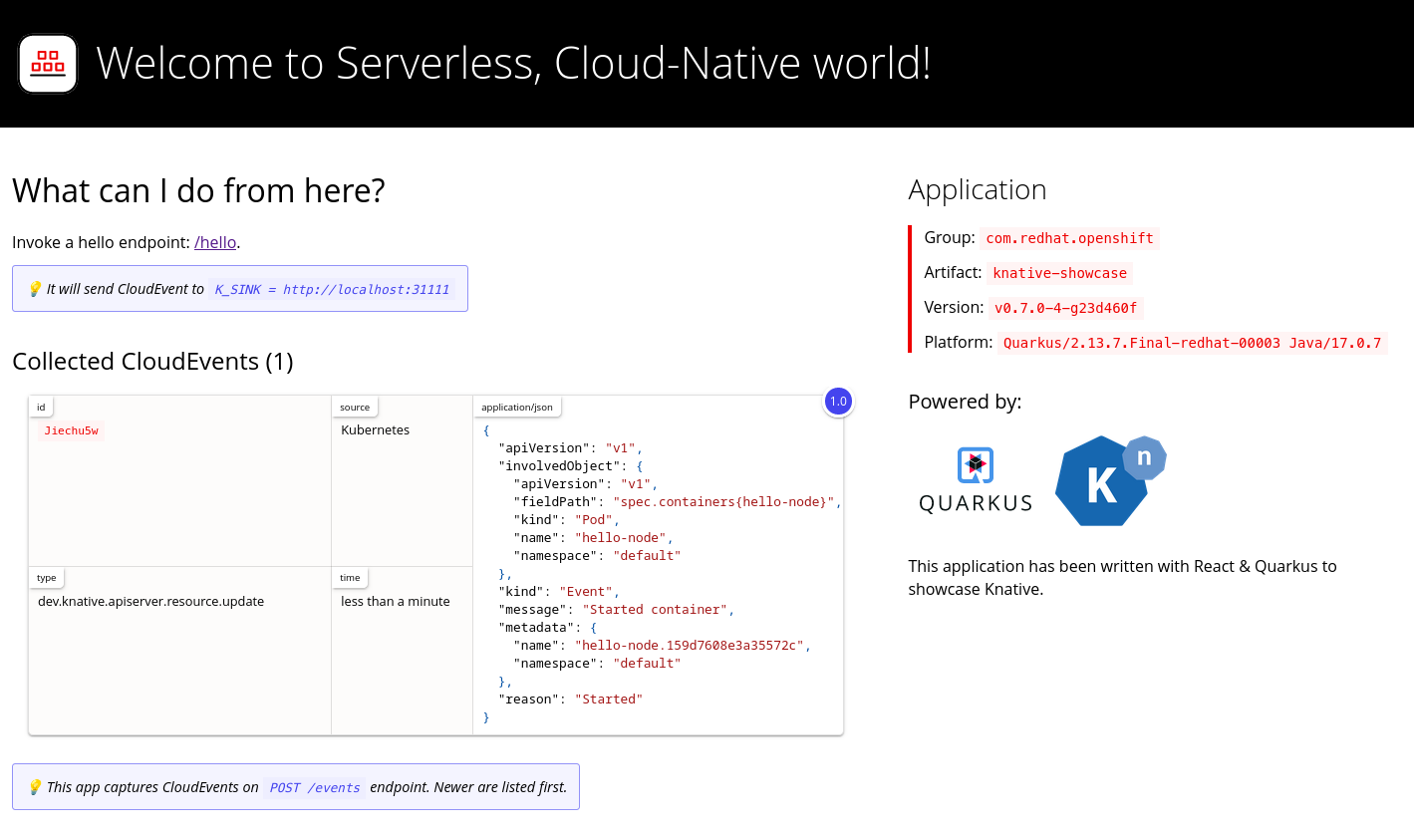

To verify that the Kubernetes events were sent to Knative, look at the event-display logs or use web browser to see the events.

To view the events in a web browser, open the link returned by the following command:

kn service describe event-display -o url

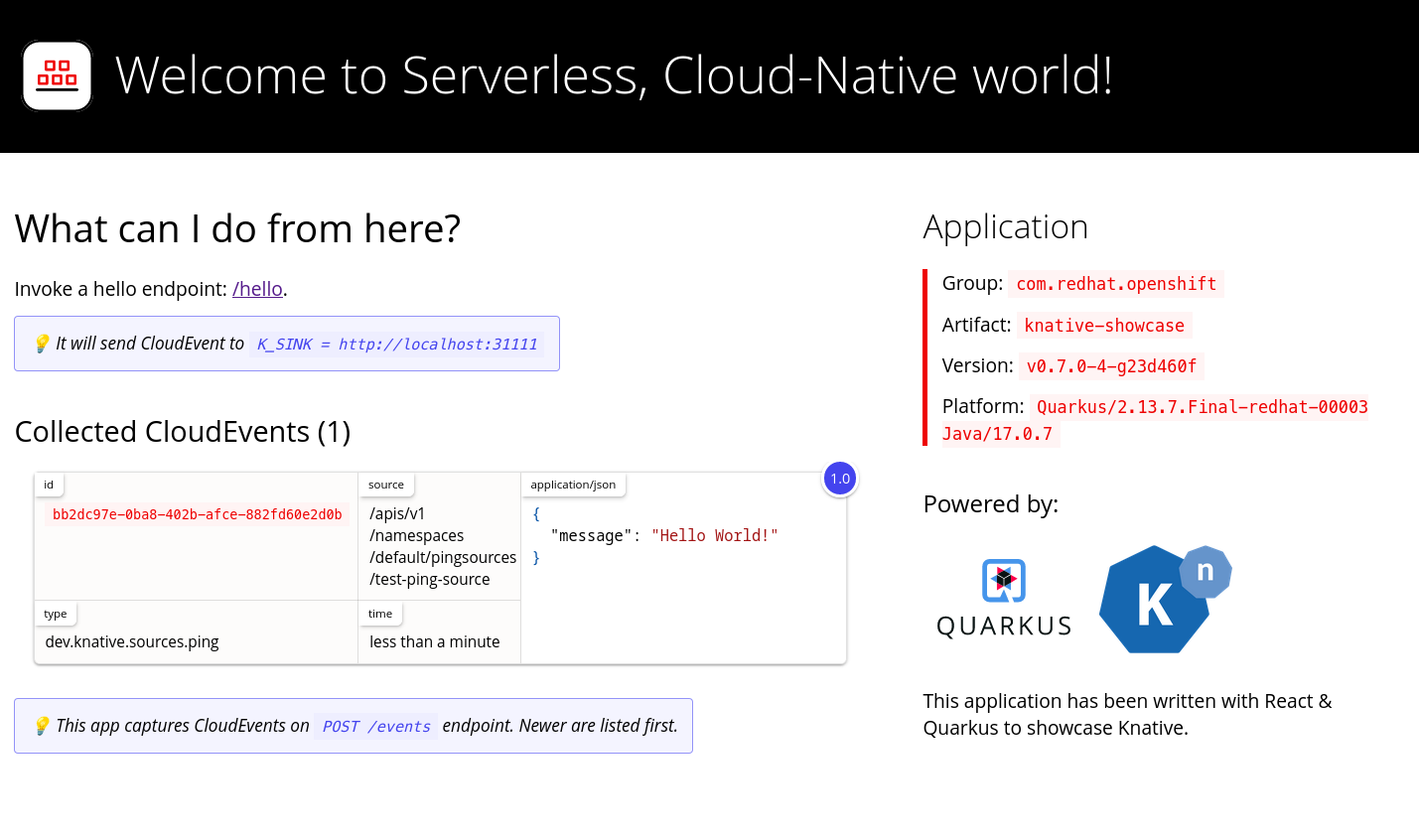

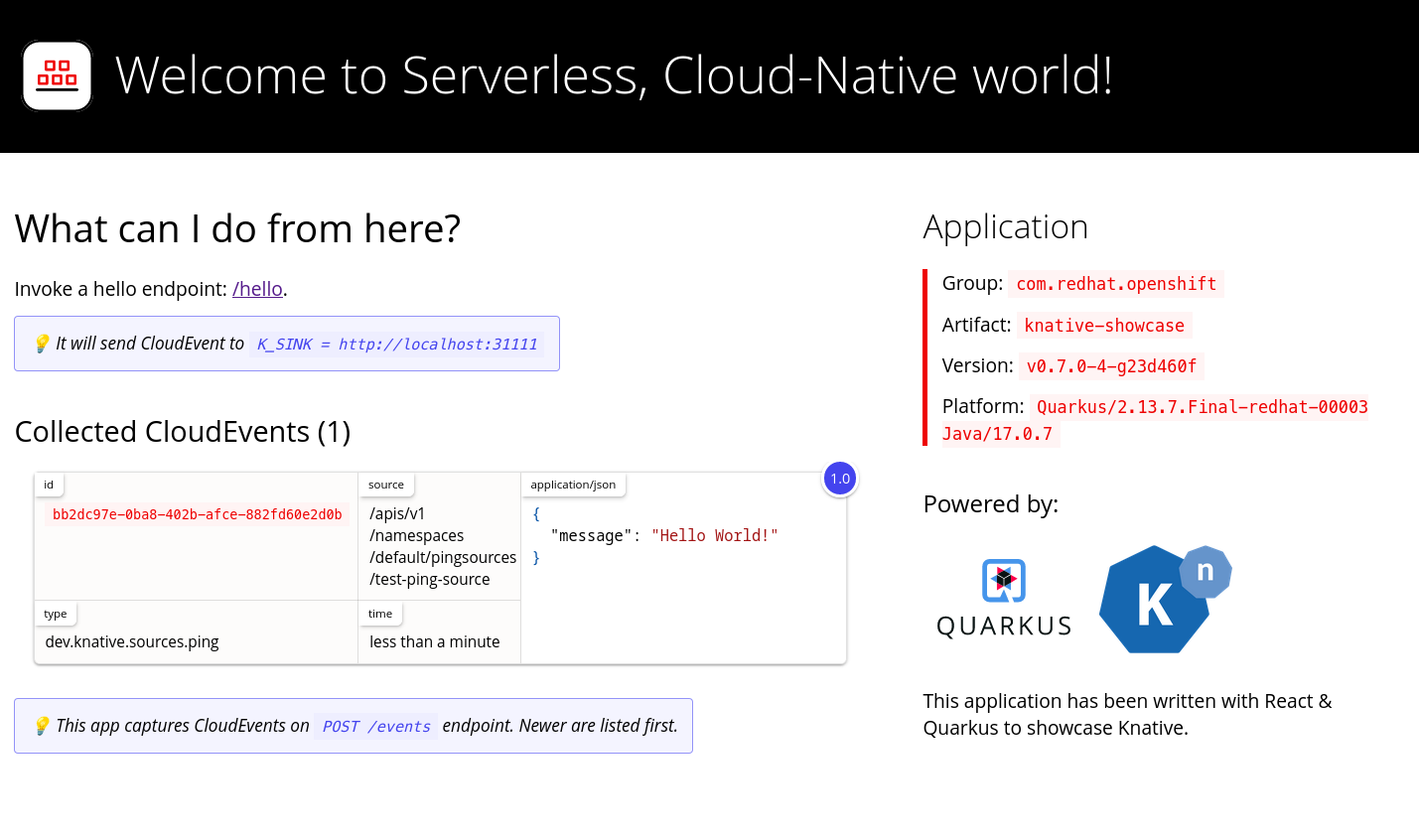

$ kn service describe event-display -o urlCopy to Clipboard Copied! Toggle word wrap Toggle overflow Figure 2.1. Example browser page

Alternatively, to see the logs in the terminal, view the event-display logs for the pods by entering the following command:

oc logs $(oc get pod -o name | grep event-display) -c user-container

$ oc logs $(oc get pod -o name | grep event-display) -c user-containerCopy to Clipboard Copied! Toggle word wrap Toggle overflow Example output

Copy to Clipboard Copied! Toggle word wrap Toggle overflow

Deleting the API server source

- Delete the trigger:

  $ kn trigger delete <trigger_name>

- Delete the event source:

  $ kn source apiserver delete <source_name>

- Delete the service account, role, and role binding:

  $ oc delete -f authentication.yaml

2.2.2.1. Knative CLI sink flag

When you create an event source by using the Knative (kn) CLI, you can use the --sink flag to specify the sink that receives events from that resource. The sink can be any addressable or callable resource that can receive incoming events from other resources.

The following example creates a sink binding that uses a service, http://event-display.svc.cluster.local, as the sink:

Example command using the sink flag

$ kn source binding create bind-heartbeat \
  --namespace sinkbinding-example \
  --subject "Job:batch/v1:app=heartbeat-cron" \
  --sink http://event-display.svc.cluster.local \
  --ce-override "sink=bound"

In the --sink value, svc in http://event-display.svc.cluster.local determines that the sink is a Knative service. Other default sink prefixes include channel and broker.
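For comparison, the sink binding in the example command could be expressed declaratively roughly as follows. This is a sketch of how the flags are assumed to map onto SinkBinding fields, not output captured from the kn CLI.

```yaml
# Approximate declarative form of the "kn source binding create" example.
# The flag-to-field mapping shown here is an assumption for illustration.
apiVersion: sources.knative.dev/v1
kind: SinkBinding
metadata:
  name: bind-heartbeat
  namespace: sinkbinding-example
spec:
  subject:                         # --subject "Job:batch/v1:app=heartbeat-cron"
    apiVersion: batch/v1
    kind: Job
    selector:
      matchLabels:
        app: heartbeat-cron
  sink:                            # --sink http://event-display.svc.cluster.local
    uri: http://event-display.svc.cluster.local
  ceOverrides:                     # --ce-override "sink=bound"
    extensions:
      sink: bound
```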

2.2.3. Creating an API server source by using YAML files

Creating Knative resources by using YAML files uses a declarative API, which enables you to describe event sources declaratively and in a reproducible manner. To create an API server source by using YAML, you must create a YAML file that defines an ApiServerSource object, then apply it by using the oc apply command.

Prerequisites

- The OpenShift Serverless Operator and Knative Eventing are installed on the cluster.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

- You have created the default broker in the same namespace as the one defined in the API server source YAML file.

- You have installed the OpenShift CLI (oc).

Procedure

If you want to re-use an existing service account, you can modify your existing ServiceAccount resource to include the required permissions instead of creating a new resource.

- Create a service account, role, and role binding for the event source as a YAML file.

- Apply the YAML file:

  $ oc apply -f <filename>

- Create an API server source as a YAML file.

- Apply the ApiServerSource YAML file:

  $ oc apply -f <filename>

- To check that the API server source is set up correctly, create a Knative service as a YAML file that dumps incoming messages to its log.

- Apply the Service YAML file:

  $ oc apply -f <filename>

- Create a Trigger object as a YAML file that filters events from the default broker to the service created in the previous step.

- Apply the Trigger YAML file:

  $ oc apply -f <filename>

- Create events by launching a pod in the default namespace:

  $ oc create deployment event-origin --image=quay.io/openshift-knative/showcase

- Check that the controller is mapped correctly by entering the following command and inspecting the output:

  $ oc get apiserversource.sources.knative.dev testevents -o yaml
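The ApiServerSource and Trigger files that this procedure refers to might look like the following sketch. The source name testevents matches the oc get command in the procedure; the service account name events-sa and the trigger name are assumptions, and the default broker and event-display service are the ones described in the prerequisites and steps.

```yaml
# Sketch of an API server source that forwards Kubernetes Event resources to the default broker.
apiVersion: sources.knative.dev/v1
kind: ApiServerSource
metadata:
  name: testevents
spec:
  mode: Resource                   # send the full resource in the event payload
  serviceAccountName: events-sa    # hypothetical; use the service account you created
  resources:
    - apiVersion: v1
      kind: Event
  sink:
    ref:
      apiVersion: eventing.knative.dev/v1
      kind: Broker
      name: default
---
# Sketch of a trigger that routes events from the default broker to the event-display service.
apiVersion: eventing.knative.dev/v1
kind: Trigger
metadata:
  name: event-display-trigger      # hypothetical name
spec:
  broker: default
  subscriber:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event-display
```

Keeping the two objects in separate files, as the procedure does, lets you delete the trigger and the source independently later.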

Verification

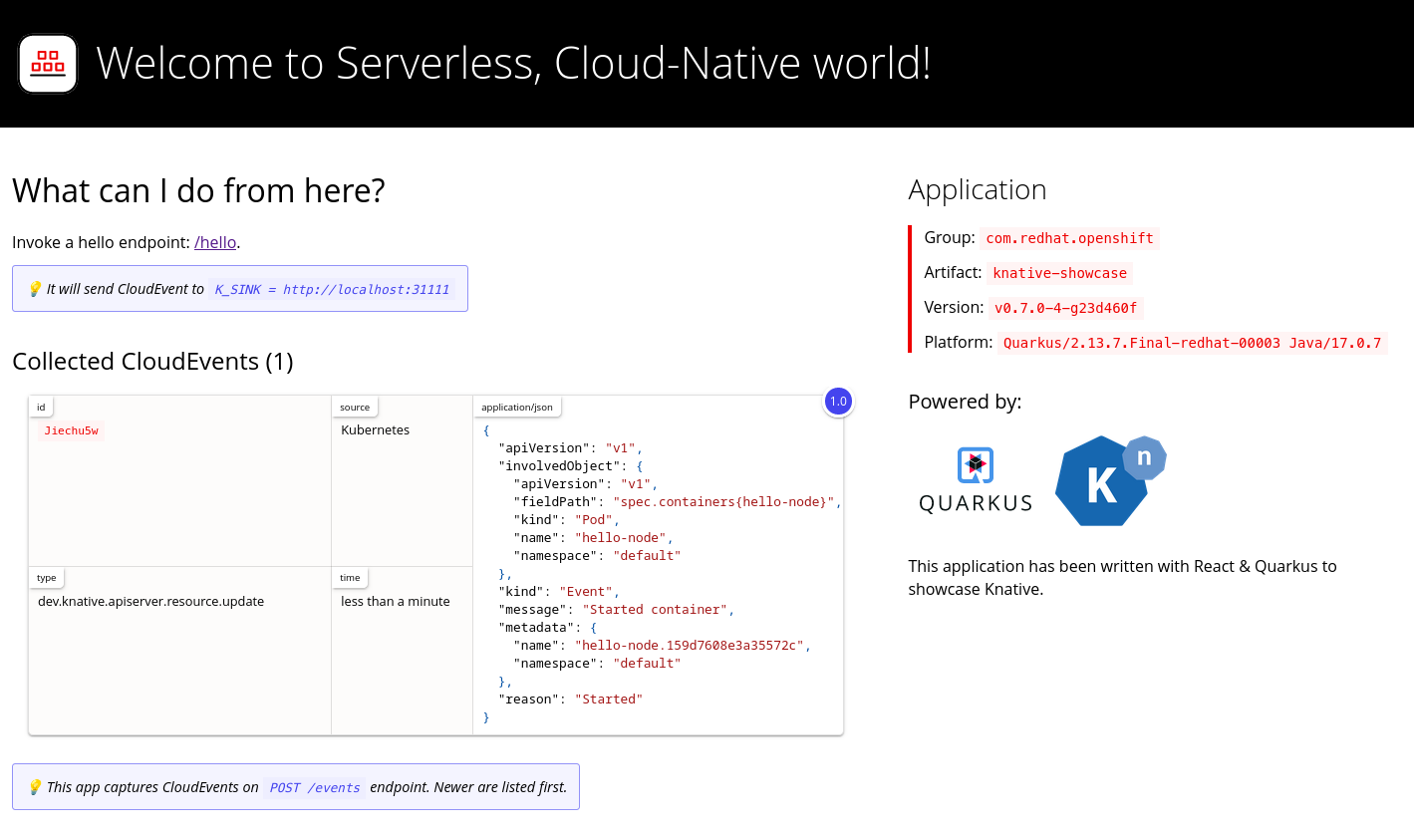

To verify that the Kubernetes events were sent to Knative, you can look at the event-display logs or use a web browser to see the events.

- To view the events in a web browser, open the link returned by the following command:

  $ oc get ksvc event-display -o jsonpath='{.status.url}'

Figure 2.2. Example browser page

- To see the logs in the terminal, view the event-display logs for the pods by entering the following command:

  $ oc logs $(oc get pod -o name | grep event-display) -c user-container

Deleting the API server source

- Delete the trigger:

  $ oc delete -f trigger.yaml

- Delete the event source:

  $ oc delete -f k8s-events.yaml

- Delete the service account, role, and role binding:

  $ oc delete -f authentication.yaml

2.3. Creating a ping source

A ping source is an event source that can be used to periodically send ping events with a constant payload to an event consumer. A ping source can be used to schedule sending events, similar to a timer.

2.3.1. Creating a ping source by using the web console

After Knative Eventing is installed on your cluster, you can create a ping source by using the web console. Using the OpenShift Container Platform web console provides a streamlined and intuitive user interface to create an event source.

Prerequisites

- You have logged in to the OpenShift Container Platform web console.

- The OpenShift Serverless Operator, Knative Serving and Knative Eventing are installed on the cluster.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

Procedure

- To verify that the ping source is working, create a simple Knative service that dumps incoming messages to the logs of the service.

  - Navigate to +Add → YAML.

  - Copy the example YAML.

  - Click Create.

- Create a ping source in the same namespace as the service created in the previous step, or any other sink that you want to send events to.

  - Navigate to +Add → Event Source. The Event Sources page is displayed.

  - Optional: If you have multiple providers for your event sources, select the required provider from the Providers list to filter the available event sources from the provider.

  - Select Ping Source and then click Create Event Source. The Create Event Source page is displayed.

    Note: You can configure the PingSource settings by using the Form view or YAML view and can switch between the views. The data is persisted when switching between the views.

  - Enter a value for Schedule. In this example, the value is */2 * * * *, which creates a PingSource that sends a message every two minutes.

  - Optional: You can enter a value for Data, which is the message payload.

  - In the Target section, select your event sink. This can be either a Resource or a URI:

    - Select Resource to use a channel, broker, or service as an event sink for the event source. In this example, the event-display service created in the previous step is used as the target Resource.

    - Select URI to specify a Uniform Resource Identifier (URI) where the events are routed to.

  - Click Create.
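The example YAML for the event-display service in the first step of this procedure might look like the following sketch. It assumes the same showcase image that the kn service create commands in this guide use.

```yaml
# Sketch of a Knative service that logs incoming events.
# The image is assumed to be the showcase image used elsewhere in this guide.
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: event-display
spec:
  template:
    spec:
      containers:
        - image: quay.io/openshift-knative/showcase
```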

Verification

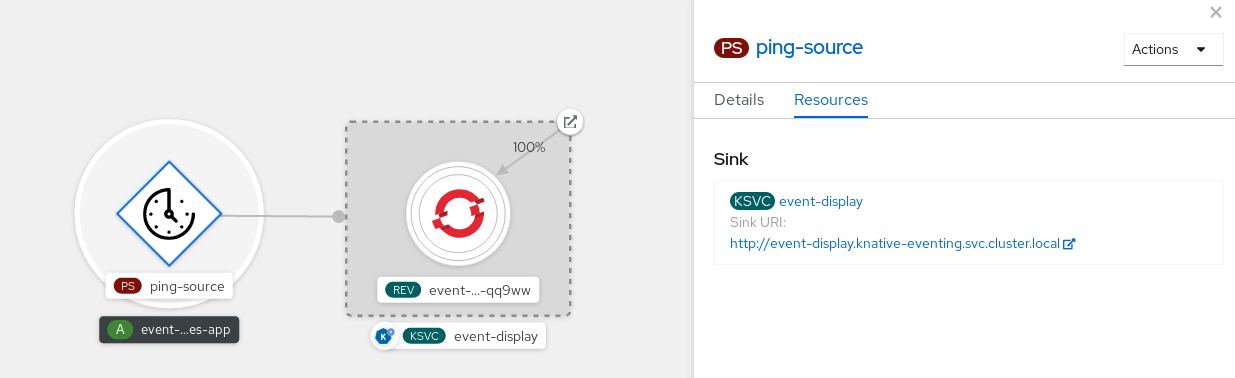

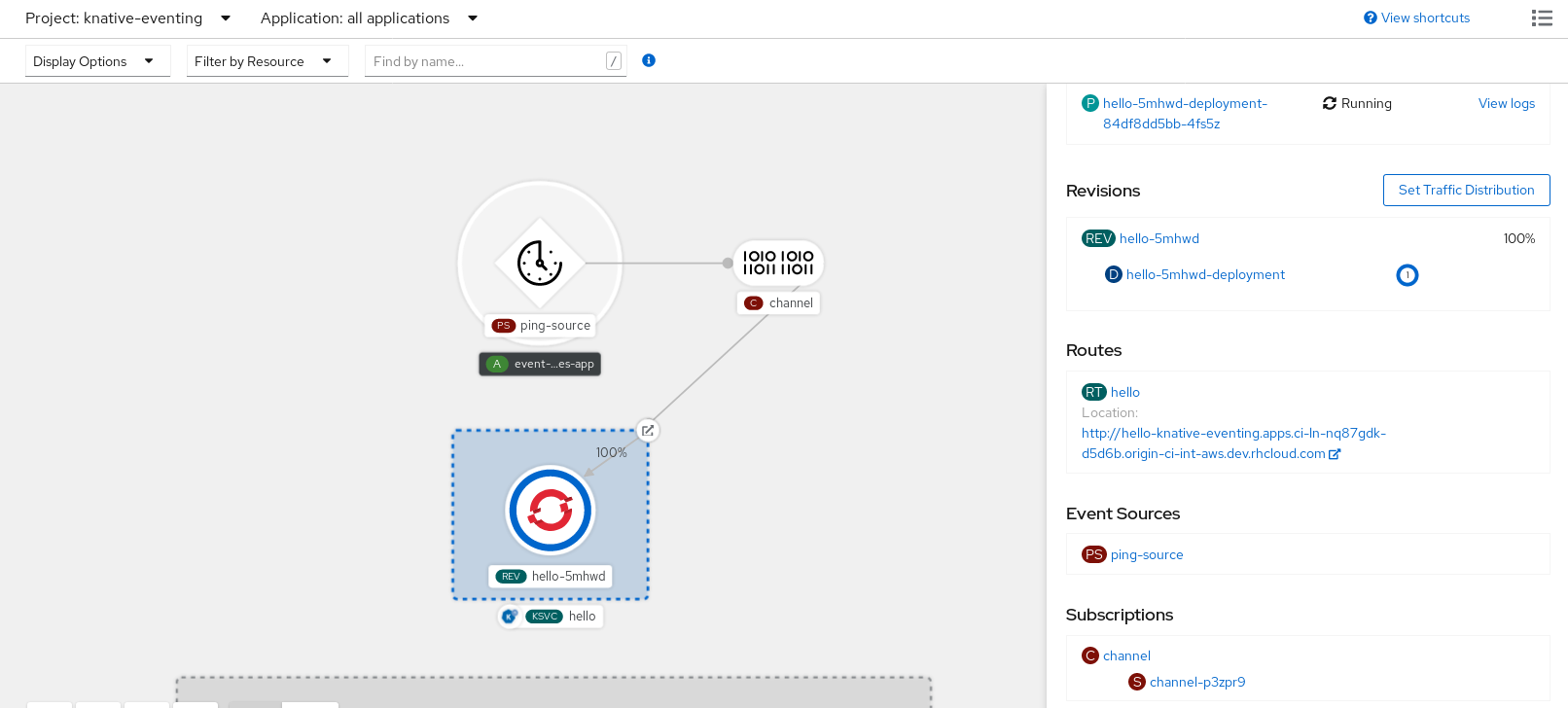

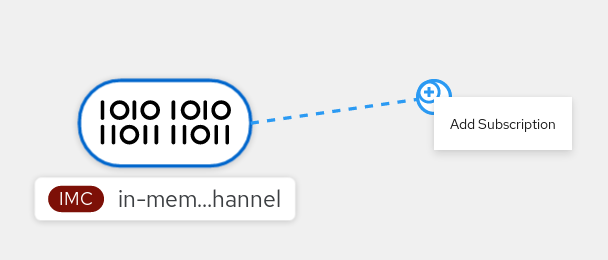

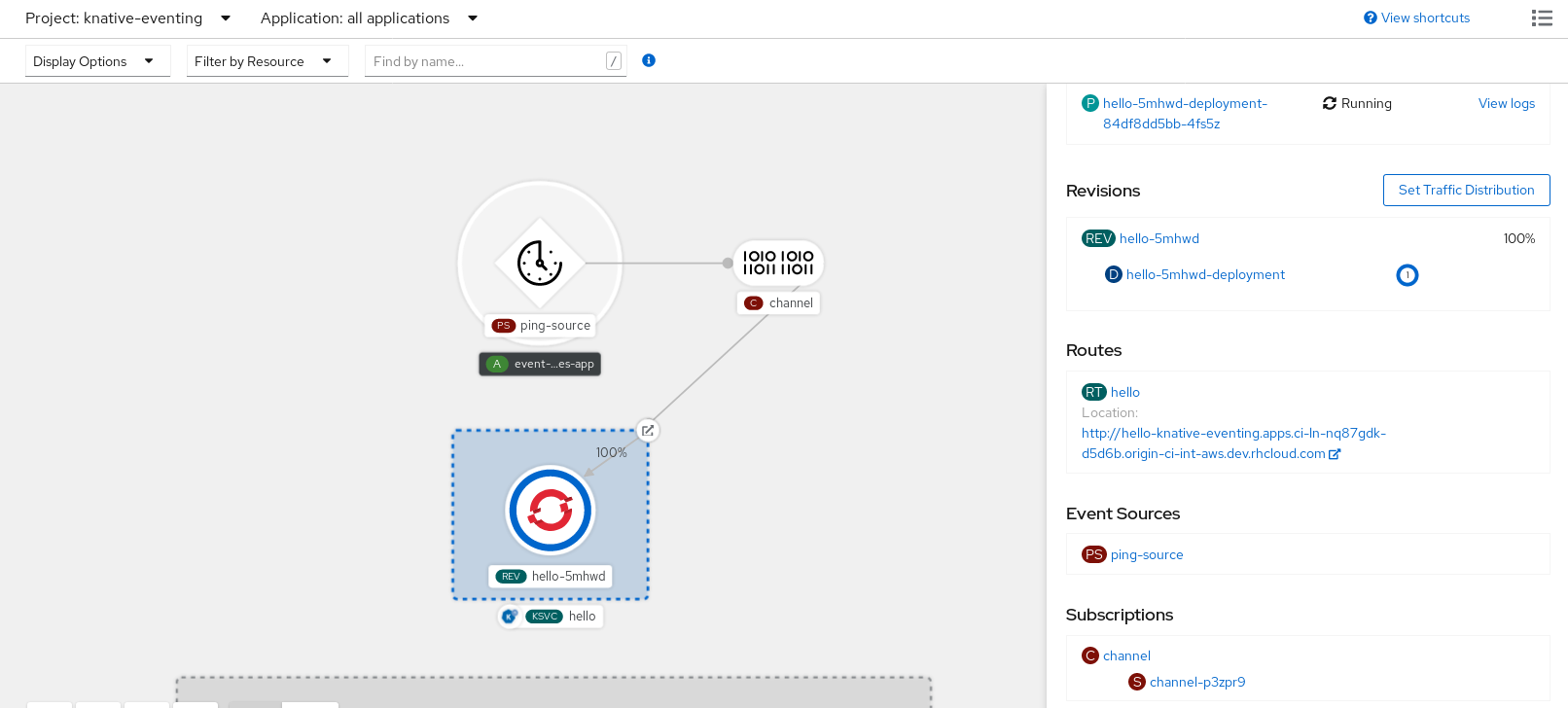

You can verify that the ping source was created and is connected to the sink by viewing the Topology page.

- Navigate to Topology.

- View the ping source and sink.

- View the event-display service in the web browser. You should see the ping source events in the web UI.

Deleting the ping source

- Navigate to the Topology view.

- Right-click the ping source and select Delete Ping Source.

2.3.2. Creating a ping source by using the Knative CLI

You can use the kn source ping create command to create a ping source by using the Knative (kn) CLI. Using the Knative CLI to create event sources provides a more streamlined and intuitive user interface than modifying YAML files directly.

Prerequisites

- The OpenShift Serverless Operator, Knative Serving and Knative Eventing are installed on the cluster.

- You have installed the Knative (kn) CLI.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

- Optional: If you want to use the verification steps for this procedure, install the OpenShift CLI (oc).

Procedure

- To verify that the ping source is working, create a simple Knative service that dumps incoming messages to the service logs:

  $ kn service create event-display \
    --image quay.io/openshift-knative/showcase

- For each set of ping events that you want to request, create a ping source in the same namespace as the event consumer:

  $ kn source ping create test-ping-source \
    --schedule "*/2 * * * *" \
    --data '{"message": "Hello world!"}' \
    --sink ksvc:event-display

- Check that the controller is mapped correctly by entering the following command and inspecting the output:

  $ kn source ping describe test-ping-source

Verification

You can verify that the ping events were sent to the Knative event sink by looking at the logs of the sink pod.

By default, Knative services terminate their pods if no traffic is received within a 60-second period. The example shown in this guide creates a ping source that sends a message every 2 minutes, so each message should be observed in a newly created pod.

- Watch for new pods created:

  $ watch oc get pods

- Cancel watching the pods using Ctrl+C, then look at the logs of the created pod:

  $ oc logs $(oc get pod -o name | grep event-display) -c user-container

Deleting the ping source

- Delete the ping source:

  $ kn source ping delete <ping_source_name>

2.3.2.1. Knative CLI sink flag

When you create an event source by using the Knative (kn) CLI, you can use the --sink flag to specify the sink that receives events from that resource. The sink can be any addressable or callable resource that can receive incoming events from other resources.

The following example creates a sink binding that uses a service, http://event-display.svc.cluster.local, as the sink:

Example command using the sink flag

$ kn source binding create bind-heartbeat \
  --namespace sinkbinding-example \
  --subject "Job:batch/v1:app=heartbeat-cron" \
  --sink http://event-display.svc.cluster.local \
  --ce-override "sink=bound"

In the --sink value, svc in http://event-display.svc.cluster.local determines that the sink is a Knative service. Other default sink prefixes include channel and broker.

2.3.3. Creating a ping source by using YAML

Creating Knative resources by using YAML files uses a declarative API, which enables you to describe event sources declaratively and in a reproducible manner. To create a serverless ping source by using YAML, you must create a YAML file that defines a PingSource object, then apply it by using oc apply.

Example PingSource object

1. The schedule of the event, specified by using a cron expression.

2. The event message body, expressed as a JSON-encoded data string.

3. The details of the event consumer. In this example, a Knative service named event-display is used.
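A PingSource object that matches the three callouts above might look like the following sketch. The source name, schedule, and payload are taken from the kn example earlier in this chapter; treat the overall layout as an illustration rather than the exact listing that the callouts originally annotated.

```yaml
# Sketch of a PingSource; comments (1) to (3) correspond to the numbered callouts.
apiVersion: sources.knative.dev/v1
kind: PingSource
metadata:
  name: test-ping-source
spec:
  schedule: "*/2 * * * *"                  # (1) cron expression: every two minutes
  data: '{"message": "Hello world!"}'      # (2) JSON-encoded event body
  sink:                                    # (3) event consumer
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event-display
```

Quoting the schedule keeps YAML from misreading the leading asterisk as an alias indicator.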

Prerequisites

- The OpenShift Serverless Operator, Knative Serving and Knative Eventing are installed on the cluster.

- You have installed the OpenShift CLI (oc).

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

Procedure

- To verify that the ping source is working, create a simple Knative service that dumps incoming messages to the service’s logs.

  - Create a service YAML file.

  - Create the service:

    $ oc apply -f <filename>

- For each set of ping events that you want to request, create a ping source in the same namespace as the event consumer.

  - Create a YAML file for the ping source.

  - Create the ping source:

    $ oc apply -f <filename>

- Check that the controller is mapped correctly by entering the following command:

  $ oc get pingsource.sources.knative.dev <ping_source_name> -oyaml

Verification

You can verify that the ping events were sent to the Knative event sink by looking at the sink pod’s logs.

By default, Knative services terminate their pods if no traffic is received within a 60-second period. The example shown in this guide creates a PingSource that sends a message every 2 minutes, so each message should be observed in a newly created pod.

- Watch for new pods created:

  $ watch oc get pods

- Cancel watching the pods using Ctrl+C, then look at the logs of the created pod:

  $ oc logs $(oc get pod -o name | grep event-display) -c user-container

Deleting the ping source

- Delete the ping source:

  $ oc delete -f <filename>

  Example command

  $ oc delete -f ping-source.yaml

2.4. Source for Apache Kafka

You can create an Apache Kafka source that reads events from an Apache Kafka cluster and passes these events to a sink. You can create a Kafka source by using the OpenShift Container Platform web console, the Knative (kn) CLI, or by creating a KafkaSource object directly as a YAML file and using the OpenShift CLI (oc) to apply it.

See the documentation for Installing Knative broker for Apache Kafka.

2.4.1. Creating an Apache Kafka event source by using the web console

After the Knative broker implementation for Apache Kafka is installed on your cluster, you can create an Apache Kafka source by using the web console. Using the OpenShift Container Platform web console provides a streamlined and intuitive user interface to create a Kafka source.

Prerequisites

- The OpenShift Serverless Operator, Knative Eventing, and the KnativeKafka custom resource are installed on your cluster.

- You have logged in to the web console.

- You have access to a Red Hat AMQ Streams (Kafka) cluster that produces the Kafka messages you want to import.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

Procedure

- Navigate to the +Add page and select Event Source.

- In the Event Sources page, select Kafka Source in the Type section.

- Configure the Kafka Source settings:

  - Add a comma-separated list of Bootstrap Servers.

  - Add a comma-separated list of Topics.

  - Add a Consumer Group.

  - Select the Service Account Name for the service account that you created.

  - In the Target section, select your event sink. This can be either a Resource or a URI:

    - Select Resource to use a channel, broker, or service as an event sink for the event source.

    - Select URI to specify a Uniform Resource Identifier (URI) where the events are routed to.

- Enter a Name for the Kafka event source.

- Click Create.

Verification

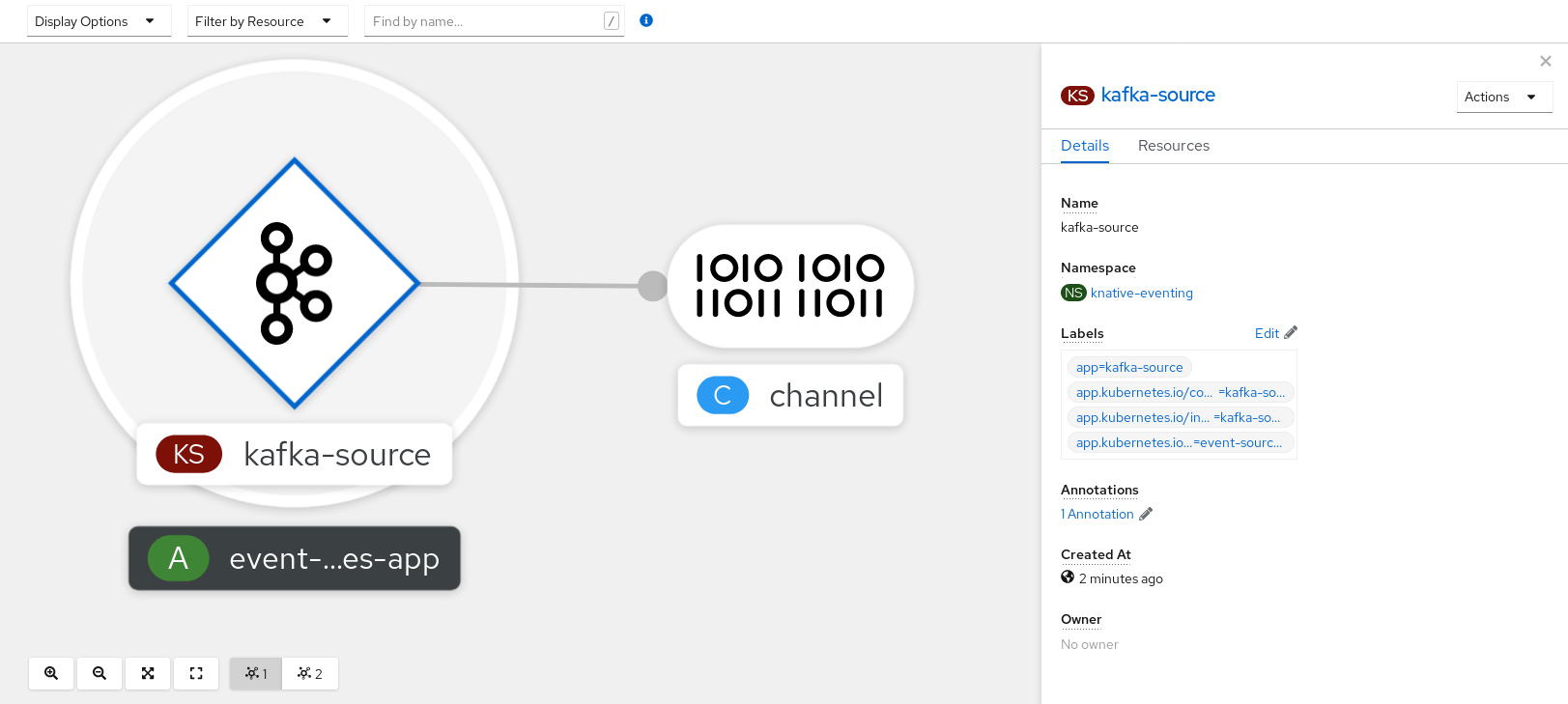

You can verify that the Kafka event source was created and is connected to the sink by viewing the Topology page.

- Navigate to Topology.

- View the Kafka event source and sink.

2.4.2. Creating an Apache Kafka event source by using the Knative CLI

You can use the kn source kafka create command to create a Kafka source by using the Knative (kn) CLI. Using the Knative CLI to create event sources provides a more streamlined and intuitive user interface than modifying YAML files directly.

Prerequisites

- The OpenShift Serverless Operator, Knative Eventing, Knative Serving, and the KnativeKafka custom resource (CR) are installed on your cluster.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

- You have access to a Red Hat AMQ Streams (Kafka) cluster that produces the Kafka messages you want to import.

- You have installed the Knative (kn) CLI.

- Optional: You have installed the OpenShift CLI (oc) if you want to use the verification steps in this procedure.

Procedure

- To verify that the Kafka event source is working, create a Knative service that dumps incoming events into the service logs:

  $ kn service create event-display \
    --image quay.io/openshift-knative/showcase

- Create a KafkaSource CR:

  $ kn source kafka create <kafka_source_name> \
    --servers <cluster_kafka_bootstrap>.kafka.svc:9092 \
    --topics <topic_name> --consumergroup my-consumer-group \
    --sink event-display

  Note: Replace the placeholder values in this command with values for your source name, bootstrap servers, and topics.

  The --servers, --topics, and --consumergroup options specify the connection parameters to the Kafka cluster. The --consumergroup option is optional.

- Optional: View details about the KafkaSource CR you created:

  $ kn source kafka describe <kafka_source_name>

Verification steps

- Trigger the Kafka instance to send a message to the topic:

  $ oc -n kafka run kafka-producer \
    -ti --image=quay.io/strimzi/kafka:latest-kafka-2.7.0 --rm=true \
    --restart=Never -- bin/kafka-console-producer.sh \
    --broker-list <cluster_kafka_bootstrap>:9092 --topic my-topic

  Enter the message in the prompt. This command assumes that:

  - The Kafka cluster is installed in the kafka namespace.

  - The KafkaSource object has been configured to use the my-topic topic.

- Verify that the message arrived by viewing the logs:

  $ oc logs $(oc get pod -o name | grep event-display) -c user-container

2.4.2.1. Knative CLI sink flag

When you create an event source by using the Knative (kn) CLI, you can specify a sink where events are sent to from that resource by using the --sink flag. The sink can be any addressable or callable resource that can receive incoming events from other resources.

The following example creates a sink binding that uses a service, http://event-display.svc.cluster.local, as the sink:

Example command using the sink flag

$ kn source binding create bind-heartbeat \
    --namespace sinkbinding-example \
    --subject "Job:batch/v1:app=heartbeat-cron" \
    --sink http://event-display.svc.cluster.local \
    --ce-override "sink=bound"

In the --sink value, svc in http://event-display.svc.cluster.local determines that the sink is a Knative service. Other default sink prefixes include channel and broker.

2.4.3. Creating an Apache Kafka event source by using YAML

Creating Knative resources by using YAML files uses a declarative API, which enables you to describe applications declaratively and in a reproducible manner. To create a Kafka source by using YAML, you must create a YAML file that defines a KafkaSource object, then apply it by using the oc apply command.

Prerequisites

- The OpenShift Serverless Operator, Knative Eventing, and the KnativeKafka custom resource are installed on your cluster.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

- You have access to a Red Hat AMQ Streams (Kafka) cluster that produces the Kafka messages you want to import.

- Install the OpenShift CLI (oc).

Procedure

Create a KafkaSource object as a YAML file.

Important: Only the v1beta1 version of the API for KafkaSource objects on OpenShift Serverless is supported. Do not use the v1alpha1 version of this API, as this version is now deprecated.

Apply the KafkaSource YAML file:

$ oc apply -f <filename>
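The KafkaSource YAML file created in the first step of this procedure might look like the following minimal sketch. The apiVersion follows the v1beta1 requirement stated in this procedure. The angle-bracket placeholders, the bootstrap address in the kafka namespace, and the choice of a Knative service named event-display as the sink are illustrative assumptions.

```yaml
apiVersion: sources.knative.dev/v1beta1
kind: KafkaSource
metadata:
  name: <source_name>            # placeholder: name of the Kafka source
spec:
  consumerGroup: <group_name>    # placeholder: consumer group used to read the topics
  bootstrapServers:
    - <cluster_kafka_bootstrap>.kafka.svc:9092   # assumes the Kafka cluster runs in the kafka namespace
  topics:
    - <topic_name>               # placeholder: one or more topics to import
  sink:
    ref:                         # assumption: a Knative service receives the events
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event-display
```

Save the manifest under any file name and pass that name to the oc apply command.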

Verification

Verify that the Kafka event source was created by entering the following command:

$ oc get pods

Example output

NAME                                    READY   STATUS    RESTARTS   AGE
kafkasource-kafka-source-5ca0248f-...   1/1     Running   0          13m

2.4.4. Configuring SASL authentication for Apache Kafka sources

Simple Authentication and Security Layer (SASL) is used by Apache Kafka for authentication. If you use SASL authentication on your cluster, users must provide credentials to Knative for communicating with the Kafka cluster; otherwise events cannot be produced or consumed.

Prerequisites

- You have cluster or dedicated administrator permissions on OpenShift Container Platform.

- The OpenShift Serverless Operator, Knative Eventing, and the KnativeKafka CR are installed on your OpenShift Container Platform cluster.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

- You have a username and password for a Kafka cluster.

- You have chosen the SASL mechanism to use, for example, PLAIN, SCRAM-SHA-256, or SCRAM-SHA-512.

- If TLS is enabled, you also need the ca.crt certificate file for the Kafka cluster.

- You have installed the OpenShift (oc) CLI.

Procedure

Create the certificate files as secrets in your chosen namespace:

$ oc create secret -n <namespace> generic <kafka_auth_secret> \
    --from-file=ca.crt=caroot.pem \
    --from-literal=password="SecretPassword" \
    --from-literal=saslType="SCRAM-SHA-512" \
    --from-literal=user="my-sasl-user"

The SASL type (saslType) can be PLAIN, SCRAM-SHA-256, or SCRAM-SHA-512.

Create or modify your Kafka source so that its spec contains the SASL and TLS configuration for the Kafka cluster.

The caCert spec is not required if you are using a public cloud Kafka service.
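A sketch of what this spec configuration can look like, assuming the secret keys created in the previous step (user, password, saslType, and ca.crt). The source name is a placeholder, and the field layout under spec.net should be checked against the KafkaSource API installed on your cluster.

```yaml
apiVersion: sources.knative.dev/v1beta1
kind: KafkaSource
metadata:
  name: <source_name>            # placeholder
spec:
  # bootstrapServers, topics, and sink are omitted here for brevity
  net:
    sasl:
      enable: true
      user:
        secretKeyRef:
          name: <kafka_auth_secret>
          key: user              # key created with --from-literal=user
      password:
        secretKeyRef:
          name: <kafka_auth_secret>
          key: password          # key created with --from-literal=password
      type:
        secretKeyRef:
          name: <kafka_auth_secret>
          key: saslType          # PLAIN, SCRAM-SHA-256, or SCRAM-SHA-512
    tls:
      enable: true
      caCert:                    # can be left out for a public cloud Kafka service
        secretKeyRef:
          name: <kafka_auth_secret>
          key: ca.crt
```

Referencing the secret by key keeps the credentials out of the KafkaSource manifest itself.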

2.4.5. Configuring KEDA autoscaling for KafkaSource

You can configure Knative Eventing sources for Apache Kafka (KafkaSource) to be autoscaled using the Custom Metrics Autoscaler Operator, which is based on the Kubernetes Event Driven Autoscaler (KEDA).

Configuring KEDA autoscaling for KafkaSource is a Technology Preview feature only. Technology Preview features are not supported with Red Hat production service level agreements (SLAs) and might not be functionally complete. Red Hat does not recommend using them in production. These features provide early access to upcoming product features, enabling customers to test functionality and provide feedback during the development process.

For more information about the support scope of Red Hat Technology Preview features, see Technology Preview Features Support Scope.

Prerequisites

- The OpenShift Serverless Operator, Knative Eventing, and the KnativeKafka custom resource are installed on your cluster.

Procedure

In the KnativeKafka custom resource, enable KEDA scaling.

Apply the KnativeKafka YAML file:

$ oc apply -f <filename>
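As a rough sketch only, enabling KEDA scaling in the KnativeKafka custom resource might look like the following. The feature flag name controller-autoscaler-keda and its placement under spec.config.kafka-features are assumptions based on the upstream Knative Kafka feature configuration, and the resource name and namespace are typical defaults rather than values from this section. Confirm all of them against the Operator version installed on your cluster.

```yaml
apiVersion: operator.serverless.openshift.io/v1alpha1
kind: KnativeKafka
metadata:
  name: knative-kafka            # assumed default resource name
  namespace: knative-eventing    # assumed default namespace
spec:
  config:
    kafka-features:
      controller-autoscaler-keda: enabled   # assumed flag name; verify before use
```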

2.5. Custom event sources

If you need to ingress events from an event producer that is not included in Knative, or from a producer that emits events which are not in the CloudEvent format, you can do this by creating a custom event source. You can create a custom event source by using one of the following methods:

- Use a PodSpecable object as an event source, by creating a sink binding.

- Use a container as an event source, by creating a container source.

2.5.1. Sink binding

The SinkBinding object supports decoupling event production from delivery addressing. Sink binding is used to connect event producers to an event consumer, or sink. An event producer is a Kubernetes resource that embeds a PodSpec template and produces events. A sink is an addressable Kubernetes object that can receive events.

The SinkBinding object injects environment variables into the PodTemplateSpec of the subject, that is, the event producer, which means that the application code does not need to interact directly with the Kubernetes API to locate the event destination. These environment variables are as follows:

K_SINK: The URL of the resolved sink.

K_CE_OVERRIDES: A JSON object that specifies overrides to the outbound event.

The SinkBinding object currently does not support custom revision names for services.

2.5.1.1. Creating a sink binding by using YAML

Creating Knative resources by using YAML files uses a declarative API, which enables you to describe event sources declaratively and in a reproducible manner. To create a sink binding by using YAML, you must create a YAML file that defines a SinkBinding object, then apply it by using the oc apply command.

Prerequisites

- The OpenShift Serverless Operator, Knative Serving and Knative Eventing are installed on the cluster.

- Install the OpenShift CLI (oc).

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

Procedure

To check that sink binding is set up correctly, create a Knative event display service, or event sink, that dumps incoming messages to its log.

Create a service YAML file.

Create the service:

$ oc apply -f <filename>
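The service YAML file for this step could look like the following sketch. The service name event-display matches the name used by the log commands in this procedure. The container image is the one used with kn service create elsewhere in this chapter, and is an assumption for this YAML variant.

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: event-display
spec:
  template:
    spec:
      containers:
        - image: quay.io/openshift-knative/showcase   # assumed image; logs the events it receives
```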

Create a sink binding instance that directs events to the service.

Create a sink binding YAML file. In this procedure, any Job with the label app: heartbeat-cron is bound to the event sink.

Create the sink binding:

$ oc apply -f <filename>
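A sink binding YAML file for this step might look like the following sketch. The name bind-heartbeat matches the name queried later in this procedure, and the sink reference assumes the event-display Knative service created in the previous step.

```yaml
apiVersion: sources.knative.dev/v1
kind: SinkBinding
metadata:
  name: bind-heartbeat
spec:
  subject:
    apiVersion: batch/v1
    kind: Job                    # targets Jobs, including those created by a cron job
    selector:
      matchLabels:
        app: heartbeat-cron      # any Job with this label is bound to the sink
  sink:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event-display        # assumed sink name
```

Selecting by label rather than by name lets the binding apply to every Job that the cron job creates.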

Create a CronJob object.

Create a cron job YAML file.

Important: To use sink binding, you must manually add a bindings.knative.dev/include=true label to your Knative resources.

For example, to add this label to a CronJob resource, add the following lines to the Job resource YAML definition:

jobTemplate:
  metadata:
    labels:
      app: heartbeat-cron
      bindings.knative.dev/include: "true"

Create the cron job:

$ oc apply -f <filename>
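A cron job YAML file that carries both labels could look like the following sketch. The schedule, container name, image placeholder, and environment variables are illustrative assumptions. Only the labels under jobTemplate come from this procedure.

```yaml
apiVersion: batch/v1
kind: CronJob
metadata:
  name: heartbeat-cron
spec:
  schedule: "* * * * *"          # assumed schedule: run every minute
  jobTemplate:
    metadata:
      labels:
        app: heartbeat-cron                     # matched by the sink binding selector
        bindings.knative.dev/include: "true"    # opts the Job in to sink binding
    spec:
      template:
        spec:
          restartPolicy: Never
          containers:
            - name: single-heartbeat            # hypothetical container name
              image: <heartbeats_image_uri>     # placeholder: an image that posts events to K_SINK
              env:
                - name: POD_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.name
                - name: POD_NAMESPACE
                  valueFrom:
                    fieldRef:
                      fieldPath: metadata.namespace
```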

Check that the controller is mapped correctly by entering the following command and inspecting the output:

$ oc get sinkbindings.sources.knative.dev bind-heartbeat -oyaml

Verification

You can verify that the Kubernetes events were sent to the Knative event sink by looking at the message dumper function logs.

Enter the command:

$ oc get pods

Enter the command:

$ oc logs $(oc get pod -o name | grep event-display) -c user-container

2.5.1.2. Creating a sink binding by using the Knative CLI

You can use the kn source binding create command to create a sink binding by using the Knative (kn) CLI. Using the Knative CLI to create event sources provides a more streamlined and intuitive user interface than modifying YAML files directly.

Prerequisites

- The OpenShift Serverless Operator, Knative Serving and Knative Eventing are installed on the cluster.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

- Install the Knative (kn) CLI.

- Install the OpenShift CLI (oc).

The following procedure requires you to create YAML files.

If you change the names of the YAML files from those used in the examples, you must ensure that you also update the corresponding CLI commands.

Procedure

To check that sink binding is set up correctly, create a Knative event display service, or event sink, that dumps incoming messages to its log:

$ kn service create event-display --image quay.io/openshift-knative/showcase

Create a sink binding instance that directs events to the service:

$ kn source binding create bind-heartbeat --subject Job:batch/v1:app=heartbeat-cron --sink ksvc:event-display

Create a CronJob object.

Create a cron job YAML file.

Important: To use sink binding, you must manually add a bindings.knative.dev/include=true label to your Knative CRs.

For example, to add this label to a CronJob CR, add the following lines to the Job CR YAML definition:

jobTemplate:
  metadata:
    labels:
      app: heartbeat-cron
      bindings.knative.dev/include: "true"

Create the cron job:

$ oc apply -f <filename>

Check that the controller is mapped correctly by entering the following command and inspecting the output:

$ kn source binding describe bind-heartbeat

Verification

You can verify that the Kubernetes events were sent to the Knative event sink by looking at the message dumper function logs.

View the message dumper function logs by entering the following commands:

$ oc get pods

$ oc logs $(oc get pod -o name | grep event-display) -c user-container

2.5.1.2.1. Knative CLI sink flag

When you create an event source by using the Knative (kn) CLI, you can specify a sink where events are sent to from that resource by using the --sink flag. The sink can be any addressable or callable resource that can receive incoming events from other resources.

The following example creates a sink binding that uses a service, http://event-display.svc.cluster.local, as the sink:

Example command using the sink flag

$ kn source binding create bind-heartbeat \
    --namespace sinkbinding-example \
    --subject "Job:batch/v1:app=heartbeat-cron" \
    --sink http://event-display.svc.cluster.local \
    --ce-override "sink=bound"

In the --sink value, svc in http://event-display.svc.cluster.local determines that the sink is a Knative service. Other default sink prefixes include channel and broker.

2.5.1.3. Creating a sink binding by using the web console

After Knative Eventing is installed on your cluster, you can create a sink binding by using the web console. Using the OpenShift Container Platform web console provides a streamlined and intuitive user interface to create an event source.

Prerequisites

- You have logged in to the OpenShift Container Platform web console.

- The OpenShift Serverless Operator, Knative Serving, and Knative Eventing are installed on your OpenShift Container Platform cluster.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

Procedure

Create a Knative service to use as a sink:

- Navigate to +Add → YAML.

- Copy the example YAML.

- Click Create.

Create a CronJob resource that is used as an event source and sends an event every minute.

- Navigate to +Add → YAML.

- Copy the example YAML. Ensure that you include the bindings.knative.dev/include: true label. The default namespace selection behavior of OpenShift Serverless uses inclusion mode.

- Click Create.

Create a sink binding in the same namespace as the service created in the previous step, or any other sink that you want to send events to.

- Navigate to +Add → Event Source. The Event Sources page is displayed.

- Optional: If you have multiple providers for your event sources, select the required provider from the Providers list to filter the available event sources from the provider.

- Select Sink Binding and then click Create Event Source. The Create Event Source page is displayed.

Note: You can configure the Sink Binding settings by using the Form view or YAML view and can switch between the views. The data is persisted when switching between the views.

- In the apiVersion field, enter batch/v1.

- In the Kind field, enter Job.

Note: The CronJob kind is not supported directly by OpenShift Serverless sink binding, so the Kind field must target the Job objects created by the cron job, rather than the cron job object itself.

- In the Target section, select your event sink. This can be either a Resource or a URI:

  - Select Resource to use a channel, broker, or service as an event sink for the event source. In this example, the event-display service created in the previous step is used as the target Resource.

  - Select URI to specify a Uniform Resource Identifier (URI) where the events are routed to.

- In the Match labels section:

  - Enter app in the Name field.

  - Enter heartbeat-cron in the Value field.

Note: When you use cron jobs with sink binding, you must use the label selector rather than the resource name. This is because jobs created by a cron job do not have a predictable name, and contain a randomly generated string in their name. For example, heartbeat-cron-1cc23f.

- Click Create.

Verification

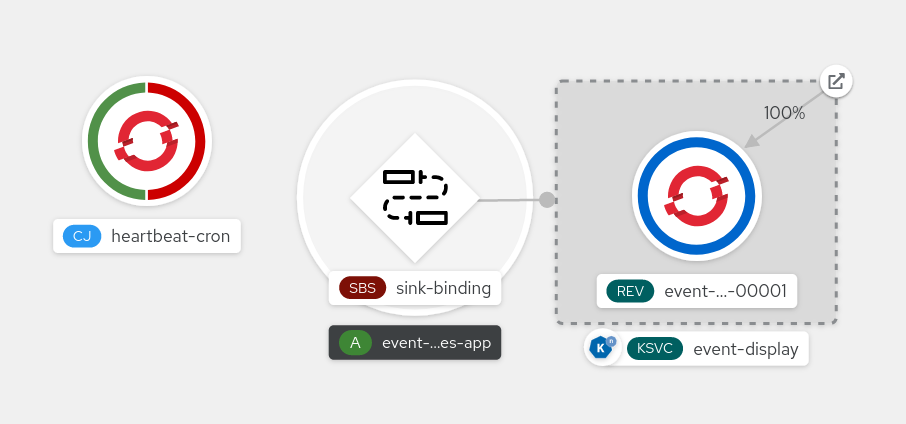

You can verify that the sink binding, sink, and cron job have been created and are working correctly by viewing the Topology page and pod logs.

- Navigate to Topology.

View the sink binding, sink, and heartbeats cron job.

- Observe that successful jobs are being registered by the cron job once the sink binding is added. This means that the sink binding is successfully reconfiguring the jobs created by the cron job.

Browse the event-display service to see events produced by the heartbeats cron job.

2.5.1.4. Sink binding reference

You can use a PodSpecable object as an event source by creating a sink binding. You can configure multiple parameters when creating a SinkBinding object.

SinkBinding objects support the following parameters:

| Field | Description | Required or optional |
|---|---|---|
| apiVersion | Specifies the API version, for example sources.knative.dev/v1. | Required |
| kind | Identifies this resource object as a SinkBinding object. | Required |
| metadata | Specifies metadata that uniquely identifies the SinkBinding object. | Required |
| spec | Specifies the configuration information for this SinkBinding object. | Required |
| spec.sink | A reference to an object that resolves to a URI to use as the sink. | Required |
| spec.subject | References the resources for which the runtime contract is augmented by binding implementations. | Required |
| spec.ceOverrides | Defines overrides to control the output format and modifications to the event sent to the sink. | Optional |

2.5.1.4.1. Subject parameter

The Subject parameter references the resources for which the runtime contract is augmented by binding implementations. You can configure multiple fields for a Subject definition.

The Subject definition supports the following fields:

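| Field | Description | Required or optional |
|---|---|---|
| apiVersion | API version of the referent. | Required |
| kind | Kind of the referent. | Required |
| namespace | Namespace of the referent. If omitted, this defaults to the namespace of the object. | Optional |
| name | Name of the referent. | Do not use if you configure selector. |
| selector | Selector of the referents. | Do not use if you configure name. |
| selector.matchExpressions | A list of label selector requirements. | Only use one of either matchExpressions or matchLabels. |
| selector.matchExpressions.key | The label key that the selector applies to. | Required if using matchExpressions. |
| selector.matchExpressions.operator | Represents a key's relationship to a set of values. Valid operators are In, NotIn, Exists, and DoesNotExist. | Required if using matchExpressions. |
| selector.matchExpressions.values | An array of string values. If the operator parameter value is In or NotIn, the values array must be non-empty. If the operator parameter value is Exists or DoesNotExist, the values array must be empty. | Required if using matchExpressions. |
| selector.matchLabels | A map of key-value pairs. Each key-value pair in the matchLabels map is equivalent to an element of matchExpressions, where the key field is the map key, the operator is In, and the values array contains only the map value. | Only use one of either matchExpressions or matchLabels. |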

Subject parameter examples

Given the following YAML, the Deployment object named mysubject in the default namespace is selected:

Given the following YAML, any Job object with the label working=example in the default namespace is selected:

Given the following YAML, any Pod object with the label working=example or working=sample in the default namespace is selected:
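The three selections described in these examples could be expressed with subject definitions along the following lines. This is a sketch: each fragment belongs under the spec of a SinkBinding object, and the API versions shown (apps/v1, batch/v1, and v1) are the usual ones for these kinds and are assumed here.

```yaml
# Selects the Deployment named mysubject in the default namespace
subject:
  apiVersion: apps/v1
  kind: Deployment
  namespace: default
  name: mysubject
---
# Selects any Job with the label working=example in the default namespace
subject:
  apiVersion: batch/v1
  kind: Job
  namespace: default
  selector:
    matchLabels:
      working: example
---
# Selects any Pod with the label working=example or working=sample
# in the default namespace
subject:
  apiVersion: v1
  kind: Pod
  namespace: default
  selector:
    matchExpressions:
      - key: working
        operator: In
        values:
          - example
          - sample
```

The first fragment uses name, so it omits selector. The other two use selector, so they omit name.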

2.5.1.4.2. CloudEvent overrides

A ceOverrides definition provides overrides that control the CloudEvent’s output format and modifications sent to the sink. You can configure multiple fields for the ceOverrides definition.

A ceOverrides definition supports the following fields:

| Field | Description | Required or optional |
|---|---|---|
| extensions | Specifies which attributes are added or overridden on the outbound event. Each extensions key-value pair is set independently on the event as an attribute extension. | Optional |

Only valid CloudEvent attribute names are allowed as extensions. You cannot set the spec-defined attributes from the extensions override configuration. For example, you cannot modify the type attribute.

CloudEvent Overrides example
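A ceOverrides definition consistent with the output in this example could look like the following sketch. The SinkBinding name bind-heartbeat is reused from the earlier procedures as an assumption, and the subject and sink fields are left out.

```yaml
apiVersion: sources.knative.dev/v1
kind: SinkBinding
metadata:
  name: bind-heartbeat           # assumed name, reused from earlier procedures
spec:
  # subject and sink are omitted here for brevity
  ceOverrides:
    extensions:
      extra: this is an extra attribute
      additional: "42"           # quoted so that the value stays a string
```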

This sets the K_CE_OVERRIDES environment variable on the subject:

Example output

{ "extensions": { "extra": "this is an extra attribute", "additional": "42" } }

2.5.1.4.3. The include label

To use a sink binding, you need to assign the bindings.knative.dev/include: "true" label to either the resource or the namespace that the resource is included in. If the resource definition does not include the label, a cluster administrator can attach it to the namespace by running:

$ oc label namespace <namespace> bindings.knative.dev/include=true

2.5.1.5. Integrating Service Mesh with a sink binding

Prerequisites

- You have integrated Service Mesh with OpenShift Serverless.

Procedure

Create a Service in a namespace that is a member of the ServiceMeshMemberRoll.

Apply the Service resource.

$ oc apply -f <filename>

Create a SinkBinding resource.

Apply the SinkBinding resource.

$ oc apply -f <filename>

Create a CronJob.

Apply the CronJob resource.

$ oc apply -f <filename>
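As a sketch of the first step in this procedure, a Service that joins the mesh might look like the following. The two sidecar.istio.io annotations are assumptions based on the usual way of enabling Istio sidecar injection for Knative services, and the namespace placeholder and image are illustrative. Check them against your Service Mesh integration.

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: event-display
  namespace: <namespace>         # placeholder: a member of the ServiceMeshMemberRoll
spec:
  template:
    metadata:
      annotations:
        sidecar.istio.io/inject: "true"                  # assumed: enables sidecar injection
        sidecar.istio.io/rewriteAppHTTPProbers: "true"   # assumed: routes probes through the sidecar
    spec:
      containers:
        - image: quay.io/openshift-knative/showcase      # assumed image
```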

Verification

To verify that the events were sent to the Knative event sink, look at the message dumper function logs.

Enter the following command:

$ oc get pods

Enter the following command:

$ oc logs $(oc get pod -o name | grep event-display) -c user-container


2.5.2. Container source

Container sources create a container image that generates events and sends events to a sink. You can use a container source to create a custom event source, by creating a container image and a ContainerSource object that uses your image URI.

2.5.2.1. Guidelines for creating a container image

Two environment variables are injected by the container source controller: K_SINK and K_CE_OVERRIDES. These variables are resolved from the sink and ceOverrides spec, respectively. Events are sent to the sink URI specified in the K_SINK environment variable. The message must be sent as a POST using the CloudEvent HTTP format.

Example container images

The following is an example of a heartbeats container image:

The following is an example of a container source that references the previous heartbeats container image:
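A ContainerSource object of this kind might look like the following sketch. The object name, image placeholder, --period argument, environment variable values, and sink name are all illustrative assumptions. Only the overall shape, a pod template plus a sink, comes from this section.

```yaml
apiVersion: sources.knative.dev/v1
kind: ContainerSource
metadata:
  name: test-heartbeats          # hypothetical name
spec:
  template:
    spec:
      containers:
        - name: heartbeats
          image: <heartbeats_image_uri>   # placeholder: URI of your heartbeats image
          args:
            - --period=1                  # assumed argument of the heartbeats image
          env:
            - name: POD_NAME
              value: "mypod"
            - name: POD_NAMESPACE
              value: "event-test"
  sink:
    ref:
      apiVersion: serving.knative.dev/v1
      kind: Service
      name: event-display        # assumed sink; resolved into K_SINK by the controller
```

The container does not need to know the sink address in advance, because the controller resolves the sink and injects it as K_SINK.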

2.5.2.2. Creating and managing container sources by using the Knative CLI

You can use the kn source container commands to create and manage container sources by using the Knative (kn) CLI. Using the Knative CLI to create event sources provides a more streamlined and intuitive user interface than modifying YAML files directly.

Create a container source

$ kn source container create <container_source_name> --image <image_uri> --sink <sink>

Delete a container source

$ kn source container delete <container_source_name>

Describe a container source

$ kn source container describe <container_source_name>

List existing container sources

$ kn source container list

List existing container sources in YAML format

$ kn source container list -o yaml

Update a container source

This command updates the image URI for an existing container source:

$ kn source container update <container_source_name> --image <image_uri>

2.5.2.3. Creating a container source by using the web console

After Knative Eventing is installed on your cluster, you can create a container source by using the web console. Using the OpenShift Container Platform web console provides a streamlined and intuitive user interface to create an event source.

Prerequisites

- You have logged in to the OpenShift Container Platform web console.

- The OpenShift Serverless Operator, Knative Serving, and Knative Eventing are installed on your OpenShift Container Platform cluster.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

Procedure

- Navigate to +Add → Event Source. The Event Sources page is displayed.

- Select Container Source and then click Create Event Source. The Create Event Source page is displayed.

Configure the Container Source settings by using the Form view or YAML view:

Note: You can switch between the Form view and YAML view. The data is persisted when switching between the views.

- In the Image field, enter the URI of the image that you want to run in the container created by the container source.

- In the Name field, enter the name of the image.

- Optional: In the Arguments field, enter any arguments to be passed to the container.

- Optional: In the Environment variables field, add any environment variables to set in the container.

In the Target section, select your event sink. This can be either a Resource or a URI:

- Select Resource to use a channel, broker, or service as an event sink for the event source.

- Select URI to specify a Uniform Resource Identifier (URI) where the events are routed to.

- After you have finished configuring the container source, click Create.

2.5.2.4. Container source reference

You can use a container as an event source, by creating a ContainerSource object. You can configure multiple parameters when creating a ContainerSource object.

ContainerSource objects support the following fields:

| Field | Description | Required or optional |
|---|---|---|
| apiVersion | Specifies the API version, for example sources.knative.dev/v1. | Required |
| kind | Identifies this resource object as a ContainerSource object. | Required |
| metadata | Specifies metadata that uniquely identifies the ContainerSource object. | Required |
| spec | Specifies the configuration information for this ContainerSource object. | Required |
| spec.sink | A reference to an object that resolves to a URI to use as the sink. | Required |
| spec.template | A template spec for the ContainerSource object. | Required |
| spec.ceOverrides | Defines overrides to control the output format and modifications to the event sent to the sink. | Optional |

Template parameter example

2.5.2.4.1. CloudEvent overrides

A ceOverrides definition provides overrides that control the CloudEvent’s output format and modifications sent to the sink. You can configure multiple fields for the ceOverrides definition.

A ceOverrides definition supports the following fields:

| Field | Description | Required or optional |
|---|---|---|
| extensions | Specifies which attributes are added or overridden on the outbound event. Each extensions key-value pair is set independently on the event as an attribute extension. | Optional |

Only valid CloudEvent attribute names are allowed as extensions. You cannot set the spec-defined attributes from the extensions override configuration. For example, you cannot modify the type attribute.

CloudEvent Overrides example

This sets the K_CE_OVERRIDES environment variable on the subject:

Example output

{ "extensions": { "extra": "this is an extra attribute", "additional": "42" } }

2.5.2.5. Integrating Service Mesh with ContainerSource

Prerequisites

- You have integrated Service Mesh with OpenShift Serverless.

Procedure

Create a Service in a namespace that is a member of the ServiceMeshMemberRoll.

Apply the Service resource.

$ oc apply -f <filename>

Create a ContainerSource object in a namespace that is a member of the ServiceMeshMemberRoll, with its sink set to the event-display service.

Apply the ContainerSource resource.

$ oc apply -f <filename>

Verification

To verify that the events were sent to the Knative event sink, look at the message dumper function logs.

Enter the following command:

$ oc get pods

Enter the following command:

$ oc logs $(oc get pod -o name | grep event-display) -c user-container


2.6. Connecting an event source to an event sink

When you create an event source by using the OpenShift Container Platform web console, you can specify a target event sink that events are sent to from that source. The event sink can be any addressable or callable resource that can receive incoming events from other resources.

2.6.1. Connect an event source to an event sink

Prerequisites

- The OpenShift Serverless Operator, Knative Serving, and Knative Eventing are installed on your OpenShift Container Platform cluster.

- You have logged in to the web console.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

- You have created an event sink, such as a Knative service, channel or broker.

Procedure

- Create an event source of any type, by navigating to +Add → Event Source and selecting the event source type that you want to create.

In the Target section of the Create Event Source form view, select your event sink. This can be either a Resource or a URI:

- Select Resource to use a channel, broker, or service as an event sink for the event source.

- Select URI to specify a Uniform Resource Identifier (URI) where the events are routed to.

- Click Create.

Verification

You can verify that the event source was created and is connected to the sink by viewing the Topology page.

- Navigate to Topology.

- View the event source and click the connected event sink to see the sink details in the right panel.

Chapter 3. Event sinks

3.1. Event sinks

When you create an event source, you can specify an event sink where events are sent to from the source. An event sink is an addressable or a callable resource that can receive incoming events from other resources. Knative services, channels, and brokers are all examples of event sinks. There is also a specific Apache Kafka sink type available.

Addressable objects receive and acknowledge an event delivered over HTTP to an address defined in their status.address.url field. As a special case, the core Kubernetes Service object also fulfills the addressable interface.

Callable objects are able to receive an event delivered over HTTP and transform the event, returning 0 or 1 new events in the HTTP response. These returned events may be further processed in the same way that events from an external event source are processed.
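
To make the addressable interface concrete, the following excerpt sketches what the status of an addressable resource might look like. It assumes a broker named example-broker in the default namespace. The name and the URL are illustrative assumptions, not output captured from a cluster.

```yaml
# Illustrative excerpt only: a hypothetical addressable resource.
# Event producers send HTTP POST requests to the URL in status.address.url.
apiVersion: eventing.knative.dev/v1
kind: Broker
metadata:
  name: example-broker        # hypothetical name
  namespace: default
status:
  address:
    url: http://broker-ingress.knative-eventing.svc.cluster.local/default/example-broker
```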

3.1.1. Knative CLI sink flag

When you create an event source by using the Knative (kn) CLI, you can use the --sink flag to specify the sink that the source sends events to. The sink can be any addressable or callable resource that can receive incoming events from other resources.

The following example creates a sink binding that uses a service, http://event-display.svc.cluster.local, as the sink:

Example command using the sink flag

$ kn source binding create bind-heartbeat \
  --namespace sinkbinding-example \
  --subject "Job:batch/v1:app=heartbeat-cron" \
  --sink http://event-display.svc.cluster.local \
  --ce-override "sink=bound"

In the --sink value, svc in http://event-display.svc.cluster.local determines that the sink is a Knative service. Other default sink prefixes include channel and broker.

You can configure which CRs can be used with the --sink flag for Knative (kn) CLI commands by customizing kn.
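
The binding from the example command can also be written declaratively. The following manifest is a sketch of an equivalent SinkBinding object, assuming the sources.knative.dev/v1 API. The field values are derived from the flags in the example command, and the mapping is an approximation rather than the literal output of kn.

```yaml
# Sketch of a SinkBinding roughly equivalent to the kn example command.
apiVersion: sources.knative.dev/v1
kind: SinkBinding
metadata:
  name: bind-heartbeat
  namespace: sinkbinding-example
spec:
  # --subject "Job:batch/v1:app=heartbeat-cron"
  subject:
    apiVersion: batch/v1
    kind: Job
    selector:
      matchLabels:
        app: heartbeat-cron
  # --sink http://event-display.svc.cluster.local
  sink:
    uri: http://event-display.svc.cluster.local
  # --ce-override "sink=bound"
  ceOverrides:
    extensions:
      sink: bound
```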

3.2. Creating event sinks

When you create an event source, you can specify an event sink that the source sends events to. An event sink is an addressable or a callable resource that can receive incoming events from other resources. Knative services, channels, and brokers are all examples of event sinks. There is also a specific Apache Kafka sink type available.

For information about creating resources that can be used as event sinks, see the following documentation:

3.3. Sink for Apache Kafka

Apache Kafka sinks are a type of event sink that are available if a cluster administrator has enabled Apache Kafka on your cluster. You can send events directly from an event source to a Kafka topic by using a Kafka sink.

3.3.1. Creating an Apache Kafka sink by using YAML

You can create a Kafka sink that sends events to a Kafka topic. By default, a Kafka sink uses the binary content mode, which is more efficient than the structured mode. To create a Kafka sink by using YAML, you must create a YAML file that defines a KafkaSink object, then apply it by using the oc apply command.

Prerequisites

- The OpenShift Serverless Operator, Knative Eventing, and the KnativeKafka custom resource (CR) are installed on your cluster.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

- You have access to a Red Hat AMQ Streams (Kafka) cluster that produces the Kafka messages you want to import.

- You have installed the OpenShift CLI (oc).

Procedure

- Create a KafkaSink object definition as a YAML file.

- To create the Kafka sink, apply the KafkaSink YAML file:

  $ oc apply -f <filename>

- Configure an event source, such as an API server source, so that the sink is specified in its spec.
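
The objects described in this procedure can be sketched as follows. This is a minimal example under stated assumptions: the API versions (eventing.knative.dev/v1alpha1 for KafkaSink and sources.knative.dev/v1 for ApiServerSource) and the watched resource kind are assumptions to verify against your installed version, and every value in angle brackets is a placeholder.

```yaml
# Sketch of a KafkaSink definition. Values in angle brackets are placeholders.
apiVersion: eventing.knative.dev/v1alpha1
kind: KafkaSink
metadata:
  name: <sink_name>
  namespace: <namespace>
spec:
  topic: <topic_name>           # Kafka topic that receives the events
  bootstrapServers:
    - <bootstrap_server>        # host name and port of a Kafka bootstrap server
---
# Sketch of an API server source that names the Kafka sink in its spec.
apiVersion: sources.knative.dev/v1
kind: ApiServerSource
metadata:
  name: <source_name>
  namespace: <namespace>
spec:
  serviceAccountName: <service_account_name>
  mode: Resource
  resources:
    - apiVersion: v1
      kind: Event               # assumed example of a watched resource kind
  sink:
    ref:
      apiVersion: eventing.knative.dev/v1alpha1
      kind: KafkaSink
      name: <sink_name>
```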

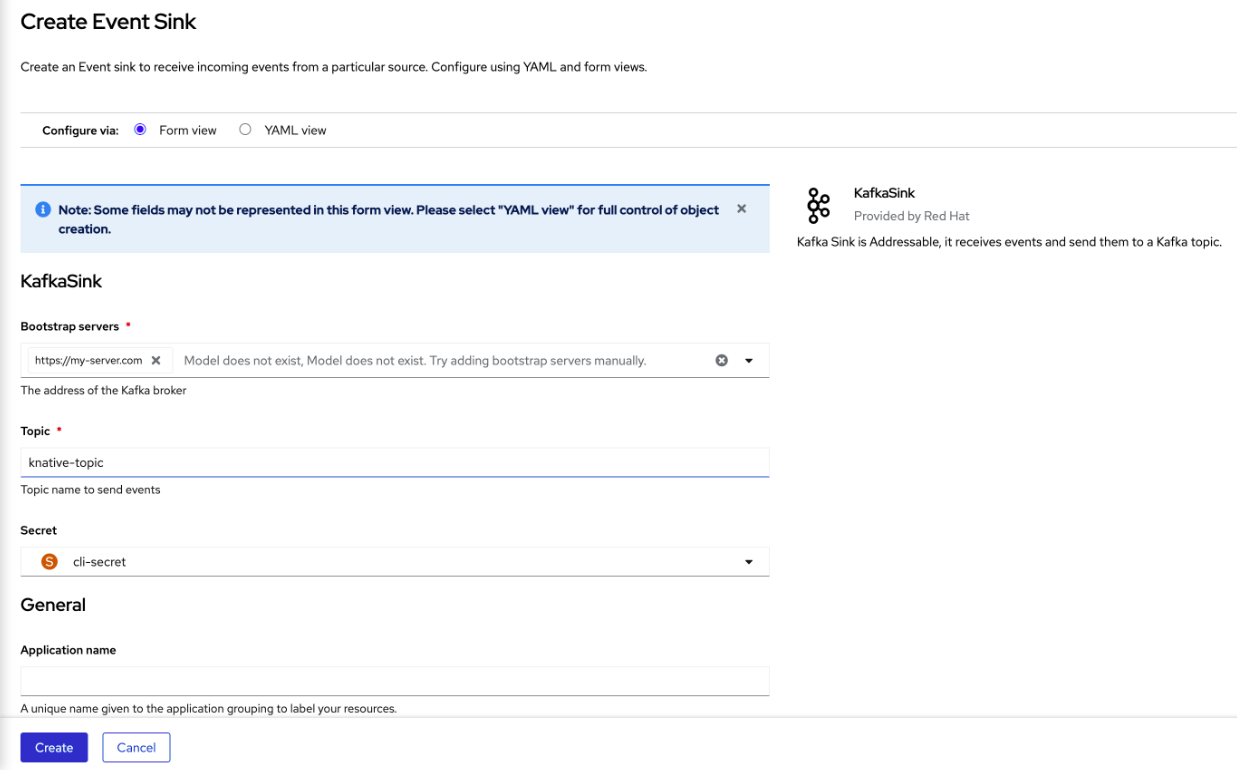

3.3.2. Creating an event sink for Apache Kafka by using the OpenShift Container Platform web console

You can create a Kafka sink that sends events to a Kafka topic in the OpenShift Container Platform web console. By default, a Kafka sink uses the binary content mode, which is more efficient than the structured mode.

As a developer, you can create an event sink to receive events from a particular source and send them to a Kafka topic.

Prerequisites

- You have installed the OpenShift Serverless Operator, with Knative Serving, Knative Eventing, and Knative broker for Apache Kafka APIs, from the OperatorHub.

- You have created a Kafka topic in your Kafka environment.

Procedure

- Navigate to the +Add view.

- Click Event Sink in the Eventing catalog.

- Search for KafkaSink in the catalog items and click it.

- Click Create Event Sink.

- In the form view, type the URL of the bootstrap server, which is a combination of host name and port.

- Type the name of the topic to send event data to.

- Type the name of the event sink.

- Click Create.

Verification

- Navigate to the Topology view.

- Click the created event sink to view its details in the right panel.

3.3.3. Configuring security for Apache Kafka sinks

Transport Layer Security (TLS) is used by Apache Kafka clients and servers to encrypt traffic between Knative and Kafka, as well as for authentication. TLS is the only supported method of traffic encryption for the Knative broker implementation for Apache Kafka.

Simple Authentication and Security Layer (SASL) is used by Apache Kafka for authentication. If you use SASL authentication on your cluster, users must provide credentials to Knative for communicating with the Kafka cluster; otherwise events cannot be produced or consumed.

Prerequisites

- The OpenShift Serverless Operator, Knative Eventing, and the KnativeKafka custom resources (CRs) are installed on your OpenShift Container Platform cluster.

- Kafka sink is enabled in the KnativeKafka CR.

- You have created a project or have access to a project with the appropriate roles and permissions to create applications and other workloads in OpenShift Container Platform.

- You have a Kafka cluster CA certificate stored as a .pem file.

- You have a Kafka cluster client certificate and a key stored as .pem files.

- You have installed the OpenShift (oc) CLI.

- You have chosen the SASL mechanism to use, for example, PLAIN, SCRAM-SHA-256, or SCRAM-SHA-512.

Procedure

- Create the certificate files as a secret in the same namespace as your KafkaSink object:

  Important: Certificates and keys must be in PEM format.

  - For authentication using SASL without encryption:

    $ oc create secret -n <namespace> generic <secret_name> \
      --from-literal=protocol=SASL_PLAINTEXT \
      --from-literal=sasl.mechanism=<sasl_mechanism> \
      --from-literal=user=<username> \
      --from-literal=password=<password>

  - For authentication using SASL and encryption using TLS:

    The ca.crt can be omitted to use the system's root CA set if you are using a public cloud managed Kafka service.

  - For authentication and encryption using TLS:

    $ oc create secret -n <namespace> generic <secret_name> \
      --from-literal=protocol=SSL \
      --from-file=ca.crt=<my_caroot.pem_file_path> \
      --from-file=user.crt=<my_cert.pem_file_path> \
      --from-file=user.key=<my_key.pem_file_path>

    The ca.crt can be omitted to use the system's root CA set if you are using a public cloud managed Kafka service.

- Create or modify a KafkaSink object and add a reference to your secret in the auth spec.

- Apply the KafkaSink object:

  $ oc apply -f <filename>
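
As a sketch of how these pieces fit together, the following manifests show a secret for SASL authentication with TLS encryption, written declaratively, and a KafkaSink object that references it. The key names (protocol, sasl.mechanism, user, password, ca.crt) follow the oc create secret commands in this procedure. The SASL_SSL protocol value and the eventing.knative.dev/v1alpha1 API version are assumptions to verify against your installed version, and values in angle brackets are placeholders.

```yaml
# Sketch only: a declarative secret for SASL over TLS.
apiVersion: v1
kind: Secret
metadata:
  name: <secret_name>
  namespace: <namespace>        # must match the namespace of the KafkaSink object
type: Opaque
stringData:
  protocol: SASL_SSL            # assumed value for SASL authentication with TLS encryption
  sasl.mechanism: <sasl_mechanism>
  user: <username>
  password: <password>
  # PEM-encoded CA certificate. As noted in the procedure, this entry can be
  # omitted when using a public cloud managed Kafka service.
  ca.crt: |
    <contents_of_my_caroot.pem>
---
# Sketch of a KafkaSink that references the secret in its auth spec.
apiVersion: eventing.knative.dev/v1alpha1
kind: KafkaSink
metadata:
  name: <sink_name>
  namespace: <namespace>
spec:
  topic: <topic_name>
  bootstrapServers:
    - <bootstrap_server>
  auth:
    secret:
      ref:
        name: <secret_name>
```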

3.4. JobSink

Event processing usually completes within a short time frame, such as a few minutes. This ensures that the HTTP connection remains open and the service does not scale down prematurely.

Maintaining long-running connections increases the risk of failure, potentially leading to processing restarts and repeated request retries.

You can use JobSink to support long-running asynchronous jobs and tasks by using the full Kubernetes batch/v1 Job resource and its features, together with Kubernetes job queuing systems such as Kueue.

3.4.1. Using JobSink

When an event is sent to a JobSink, Eventing creates a Job and mounts the received event as a JSON file at /etc/jobsink-event/event.

Procedure

- Create a JobSink object definition as a YAML file.

- Apply the JobSink YAML file:

  $ oc apply -f <job-sink-file.yaml>

- Verify JobSink is ready:

  $ oc get jobsinks.sinks.knative.dev

  Example output:

  NAME              URL                                                                          AGE   READY   REASON
  job-sink-logger   http://job-sink.knative-eventing.svc.cluster.local/default/job-sink-logger   5s    True

- Trigger a JobSink. JobSink can be triggered by any event source or trigger.

- Verify a Job is created:

  $ oc logs job-sink-loggerszoi6-dqbtq

  Example output:

  {"specversion":"1.0","id":"123","source":"my/curl/command","type":"my.demo.event","datacontenttype":"application/json","data":{"details":"JobSinkDemo"}}

JobSink creates a Job for each unique event it receives.

An event is uniquely identified by the combination of its source and id attributes.

If an event with the same attributes is received while a Job for that event already exists, another Job will not be created.
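
The procedure above can be sketched with the following manifests. The JobSink name job-sink-logger matches the example output. The API version sinks.knative.dev/v1alpha1 is taken from the resource name in the oc get command but should be verified for your version. The container image, the completions and parallelism values, and the trigger with its broker name are illustrative assumptions.

```yaml
# Sketch of a JobSink whose Job prints the mounted event file.
apiVersion: sinks.knative.dev/v1alpha1
kind: JobSink
metadata:
  name: job-sink-logger
spec:
  job:
    apiVersion: batch/v1
    kind: Job
    spec:
      completions: 1            # illustrative value
      parallelism: 1            # illustrative value
      template:
        spec:
          restartPolicy: Never
          containers:
            - name: main
              image: docker.io/library/bash:5   # assumed image
              command: ["cat"]
              args:
                - "/etc/jobsink-event/event"    # default event file path
---
# Sketch of a trigger that routes events from a broker to the JobSink.
# The trigger name and the broker name are hypothetical.
apiVersion: eventing.knative.dev/v1
kind: Trigger
metadata:
  name: job-sink-trigger
spec:
  broker: default
  subscriber:
    ref:
      apiVersion: sinks.knative.dev/v1alpha1
      kind: JobSink
      name: job-sink-logger
```

Because the Job only runs cat on the event file, its pod log contains the received event as JSON, which matches the shape of the example output.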

3.4.2. Reading the Job event file

Procedure

- Read the event file and deserialize it by using any CloudEvents JSON deserializer. For example, you can read and process the event by using the CloudEvents Go SDK.

3.4.3. Setting custom event file mount path

You can set a custom event file mount path in your JobSink definition.

Procedure

- Inside your container definition, include the volumeMounts configuration and set the mount path as required.
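
A custom mount path might be configured as in the following sketch. The volume name jobsink-event, the JobSink name, the image, and the /etc/custom-path directory are assumptions for illustration. Check the volume name against the JobSink reference for your version.

```yaml
# Sketch: mount the event file under /etc/custom-path instead of the default path.
apiVersion: sinks.knative.dev/v1alpha1
kind: JobSink
metadata:
  name: job-sink-custom-mount-path    # hypothetical name
spec:
  job:
    apiVersion: batch/v1
    kind: Job
    spec:
      template:
        spec:
          restartPolicy: Never
          containers:
            - name: main
              image: docker.io/library/bash:5   # assumed image
              command: ["cat"]
              args:
                - "/etc/custom-path/event"      # event file at the custom path
              volumeMounts:
                - name: "jobsink-event"         # assumed name of the event volume
                  mountPath: "/etc/custom-path"
                  readOnly: true
```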

3.4.4. Cleaning up finished jobs

You can clean up finished jobs by setting a ttlSecondsAfterFinished value in your JobSink definition. For example, setting the value to 600 removes completed jobs 600 seconds (10 minutes) after they finish.

Procedure

- In your definition, set the value of ttlSecondsAfterFinished to the required amount, for example 600.
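
The following sketch shows where ttlSecondsAfterFinished might sit in a JobSink definition. The field belongs to the batch/v1 Job spec. The JobSink name, image, and command are illustrative assumptions.

```yaml
# Sketch: finished Jobs are removed 600 seconds (10 minutes) after they finish.
apiVersion: sinks.knative.dev/v1alpha1
kind: JobSink
metadata:
  name: job-sink-logger
spec:
  job:
    apiVersion: batch/v1
    kind: Job
    spec:
      ttlSecondsAfterFinished: 600
      template:
        spec:
          restartPolicy: Never
          containers:
            - name: main
              image: docker.io/library/bash:5   # assumed image
              command: ["cat"]
              args:
                - "/etc/jobsink-event/event"
```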

3.4.5. Simulating FailJob action

Procedure

- Trigger a FailJob action by including a bug-simulating command in your JobSink definition.
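
One way to simulate a failure is to have the container exit with a specific code and map that code to the FailJob action through the podFailurePolicy of the Job. The following is a sketch under stated assumptions: the JobSink name, the image, the exit code 42, and the backoffLimit value are all illustrative.

```yaml
# Sketch: the container exits with code 42, which the pod failure policy
# maps to the FailJob action so that the whole Job is marked as failed.
apiVersion: sinks.knative.dev/v1alpha1
kind: JobSink
metadata:
  name: job-sink-failure              # hypothetical name
spec:
  job:
    apiVersion: batch/v1
    kind: Job
    spec:
      backoffLimit: 6
      podFailurePolicy:
        rules:
          - action: FailJob
            onExitCodes:
              containerName: main
              operator: In
              values: [42]
      template:
        spec:
          restartPolicy: Never        # required when podFailurePolicy is set
          containers:
            - name: main
              image: docker.io/library/bash:5   # assumed image
              command: ["bash"]
              args:
                - -c
                - echo "Hello world!" && sleep 5 && exit 42   # simulated bug
```

With this policy, a pod that exits with code 42 fails the Job immediately instead of being retried up to the backoffLimit.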

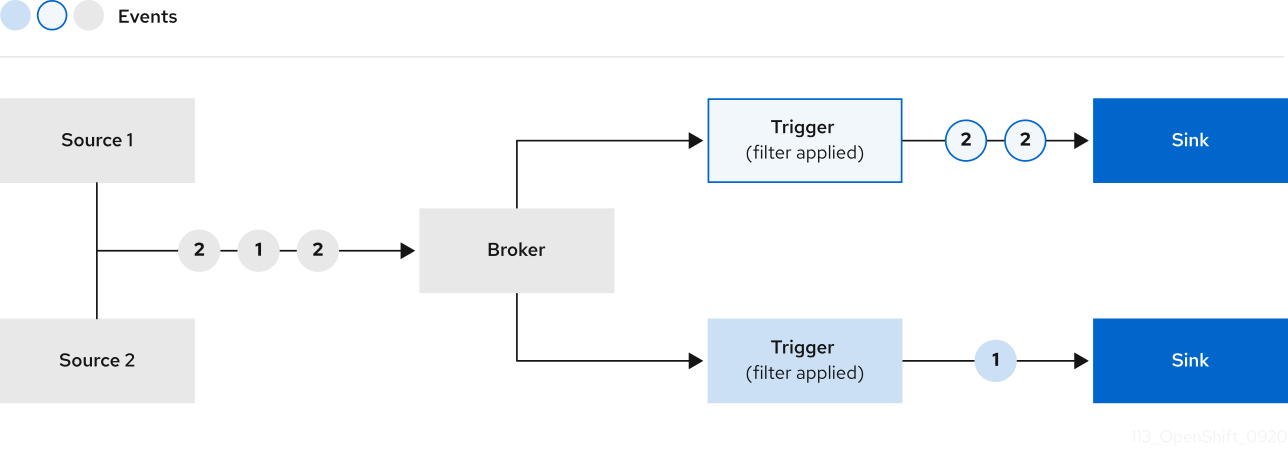

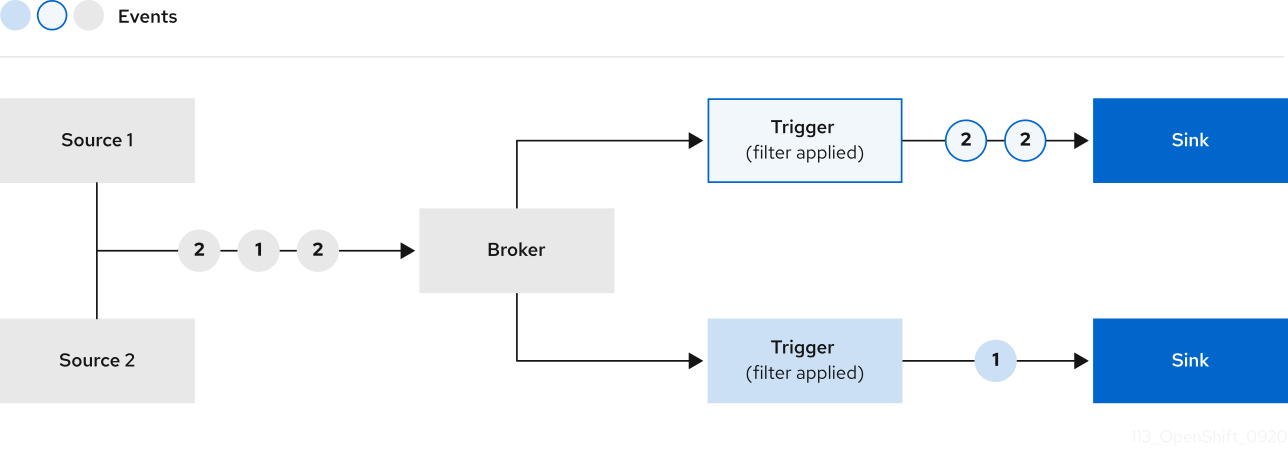

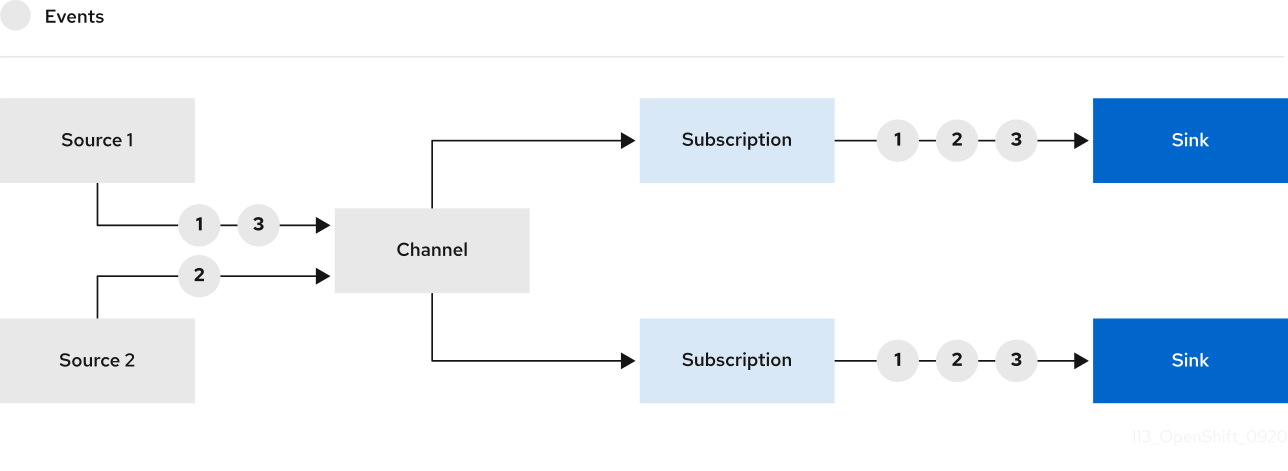

Chapter 4. Brokers

4.1. Brokers