Advanced Overcloud Customization

Methods for configuring advanced features using Red Hat OpenStack Platform director

Abstract

Chapter 1. Introduction

The Red Hat OpenStack Platform director provides a set of tools to provision and create a fully featured OpenStack environment, also known as the Overcloud. The Director Installation and Usage Guide covers the preparation and configuration of the Overcloud. However, a proper production-level Overcloud might require additional configuration, including:

- Basic network configuration to integrate the Overcloud into your existing network infrastructure.

- Network traffic isolation on separate VLANs for certain OpenStack network traffic types.

- SSL configuration to secure communication on public endpoints.

- Storage options such as NFS, iSCSI, Red Hat Ceph Storage, and multiple third-party storage devices.

- Registration of nodes to the Red Hat Content Delivery Network or your internal Red Hat Satellite 5 or 6 server.

- Various system level options.

- Various OpenStack service options.

This guide provides instructions for augmenting your Overcloud through the director. At this point, the director has registered the nodes and configured the necessary services for Overcloud creation. Now you can customize your Overcloud using the methods in this guide.

The examples in this guide are optional steps for configuring the Overcloud. These steps are only required to provide the Overcloud with additional functionality. Use only the steps that apply to the needs of your environment.

Chapter 2. Understanding Heat Templates

The custom configurations in this guide use Heat templates and environment files to define certain aspects of the Overcloud. This chapter provides a basic introduction to Heat templates so that you can understand the structure and format of these templates in the context of the Red Hat OpenStack Platform director.

2.1. Heat Templates

The director uses Heat Orchestration Templates (HOT) as a template format for its Overcloud deployment plan. Templates in HOT format are mostly expressed in YAML format. The purpose of a template is to define and create a stack, which is a collection of resources that heat creates, and the configuration of the resources. Resources are objects in OpenStack and can include compute resources, network configuration, security groups, scaling rules, and custom resources.

The structure of a Heat template has three main sections:

- Parameters

- These are settings passed to heat, which provide a way to customize a stack, and any default values for parameters without passed values. These are defined in the parameters section of a template.

- Resources

- These are the specific objects to create and configure as part of a stack. OpenStack contains a set of core resources that span across all components. These are defined in the resources section of a template.

- Outputs

- These are values passed from heat after the stack’s creation. You can access these values either through the heat API or client tools. These are defined in the outputs section of a template.

Here is an example of a basic heat template:
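The sketch below is one way such a template might look. The parameter names (key_name, flavor, image) and their default values are illustrative assumptions, so substitute values that exist in your environment.

```yaml
heat_template_version: 2013-05-23

description: A very basic Heat template.

parameters:
  key_name:
    type: string
    default: my_key        # assumed name of an existing key pair
    description: Name of an existing key pair to use for the instance
  flavor:
    type: string
    default: m1.small      # assumed flavor name
    description: Flavor for the instance to be created
  image:
    type: string
    default: cirros        # assumed image name
    description: ID or name of the image to use for the instance

resources:
  my_instance:
    type: OS::Nova::Server
    properties:
      name: My Cirros Instance
      image: { get_param: image }
      flavor: { get_param: flavor }
      key_name: { get_param: key_name }

outputs:
  instance_name:
    description: The name of the instance
    value: { get_attr: [ my_instance, name ] }
```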

This template uses the resource type OS::Nova::Server to create an instance called my_instance with a particular flavor, image, and key. The stack can return the value of instance_name, which is called My Cirros Instance.

When Heat processes a template, it creates a stack for the template and a set of child stacks for resource templates. This creates a hierarchy of stacks that descend from the main stack you define with your template. You can view the stack hierarchy using the following command:

    $ openstack stack list --nested

2.2. Environment Files

An environment file is a special type of template that provides customization for your Heat templates. This includes three key parts:

- Resource Registry

- This section defines custom resource names, linked to other heat templates. This essentially provides a method to create custom resources that do not exist within the core resource collection. These are defined in the resource_registry section of an environment file.

- Parameters

- These are common settings you apply to the top-level template’s parameters. For example, if you have a template that deploys nested stacks, such as resource registry mappings, the parameters only apply to the top-level template and not templates for the nested resources. Parameters are defined in the parameters section of an environment file.

- Parameter Defaults

- These parameters modify the default values for parameters in all templates. For example, if you have a Heat template that deploys nested stacks, such as resource registry mappings, the parameter defaults apply to all templates. In other words, they apply to the top-level template and to the templates that define all nested resources. The parameter defaults are defined in the parameter_defaults section of an environment file.

It is recommended to use parameter_defaults instead of parameters when creating custom environment files for your Overcloud. This is so the parameters apply to all stack templates for the Overcloud.

An example of a basic environment file:
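A minimal sketch of such a file follows. The resource name, template file name, and parameter names match the description below; the values my_network and 192.168.0.1 are placeholders assumed for illustration.

```yaml
# my_env.yaml
resource_registry:
  # Map a custom resource type to the template that implements it
  OS::Nova::Server::MyServer: myserver.yaml

parameter_defaults:
  # Applies to the main template and to all nested templates
  NetworkName: my_network      # placeholder value

parameters:
  # Applies only to the top-level template
  MyIP: 192.168.0.1            # placeholder value
```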

For example, this environment file (my_env.yaml) might be included when creating a stack from a certain Heat template (my_template.yaml). The my_env.yaml file creates a new resource type called OS::Nova::Server::MyServer. The myserver.yaml file is a Heat template file that provides an implementation for this resource type that overrides any built-in ones. You can include the OS::Nova::Server::MyServer resource in your my_template.yaml file.

The MyIP parameter applies only to the main Heat template that deploys along with this environment file. In this example, it only applies to the parameters in my_template.yaml.

The NetworkName parameter applies to both the main Heat template (in this example, my_template.yaml) and the templates associated with resources included in the main template, such as the OS::Nova::Server::MyServer resource and its myserver.yaml template in this example.

2.3. Core Overcloud Heat Templates

The director contains a core heat template collection for the Overcloud. This collection is stored in /usr/share/openstack-tripleo-heat-templates.

There are many heat templates and environment files in this collection. However, the main files and directories to note in this template collection are:

- overcloud.j2.yaml - This is the main template file used to create the Overcloud environment. This file uses Jinja2 syntax to iterate over certain sections in the template to create custom roles. The Jinja2 formatting is rendered into YAML during the overcloud deployment process.

- overcloud-resource-registry-puppet.j2.yaml - This is the main environment file used to create the Overcloud environment. It provides a set of configurations for Puppet modules stored on the Overcloud image. After the director writes the Overcloud image to each node, Heat starts the Puppet configuration for each node using the resources registered in this environment file. This file uses Jinja2 syntax to iterate over certain sections in the template to create custom roles. The Jinja2 formatting is rendered into YAML during the overcloud deployment process.

- roles_data.yaml - A file that defines the roles in an overcloud and maps services to each role.

- capabilities-map.yaml - A mapping of environment files for an overcloud plan. Use this file to describe and enable environment files through the director’s web UI. Custom environment files detected in an overcloud plan but not listed in capabilities-map.yaml are listed in the Other subtab of 2 Specify Deployment Configuration > Overall Settings on the web UI.

- environments - Contains additional Heat environment files that you can use with your Overcloud creation. These environment files enable extra functions for your resulting OpenStack environment. For example, the directory contains an environment file for enabling Cinder NetApp backend storage (cinder-netapp-config.yaml).

- network - A set of Heat templates to help create isolated networks and ports.

- puppet - Templates mostly driven by configuration with puppet. The aforementioned overcloud-resource-registry-puppet.j2.yaml environment file uses the files in this directory to drive the application of the Puppet configuration on each node.

- puppet/services - A directory containing heat templates for all services in the composable service architecture.

- extraconfig - Templates used to enable extra functionality. For example, the extraconfig/pre_deploy/rhel-registration directory provides the ability to register your nodes' Red Hat Enterprise Linux operating systems to the Red Hat Content Delivery Network or your own Red Hat Satellite server.

- firstboot - Provides example first_boot scripts that the director uses when initially creating the nodes.

2.4. Including Environment Files in Overcloud Creation

The deployment command (openstack overcloud deploy) uses the -e option to include an environment file to customize your Overcloud. You can include as many environment files as necessary. However, the order of the environment files is important as the parameters and resources defined in subsequent environment files take precedence. For example, you might have two environment files:

environment-file-1.yaml
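The first file could look like the following sketch. The RabbitFDLimit value matches the result described later in this section; the template-1.yaml path and the 'Japan' time zone are illustrative assumptions, chosen only so that the second file has something to override.

```yaml
resource_registry:
  # Assumed path; overridden by environment-file-2.yaml
  OS::TripleO::NodeExtraConfigPost: /home/stack/templates/template-1.yaml

parameter_defaults:
  # Not set in environment-file-2.yaml, so this value survives
  RabbitFDLimit: 65536
  # Assumed value; overridden by environment-file-2.yaml
  TimeZone: 'Japan'
```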

environment-file-2.yaml

    resource_registry:
      OS::TripleO::NodeExtraConfigPost: /home/stack/templates/template-2.yaml
    parameter_defaults:
      TimeZone: 'Hongkong'

Then deploy with both environment files included:

    $ openstack overcloud deploy --templates -e environment-file-1.yaml -e environment-file-2.yaml

In this example, both environment files contain a common resource type (OS::TripleO::NodeExtraConfigPost) and a common parameter (TimeZone). The openstack overcloud deploy command runs through the following process:

- Loads the default configuration from the core Heat template collection as per the --templates option.

- Applies the configuration from environment-file-1.yaml, which overrides any common settings from the default configuration.

- Applies the configuration from environment-file-2.yaml, which overrides any common settings from the default configuration and environment-file-1.yaml.

This results in the following changes to the default configuration of the Overcloud:

- OS::TripleO::NodeExtraConfigPost resource is set to /home/stack/templates/template-2.yaml as per environment-file-2.yaml.

- TimeZone parameter is set to Hongkong as per environment-file-2.yaml.

- RabbitFDLimit parameter is set to 65536 as per environment-file-1.yaml. environment-file-2.yaml does not change this value.

This provides a method for defining custom configuration for your Overcloud without values from multiple environment files conflicting.

2.5. Using Customized Core Heat Templates

When creating the overcloud, the director uses a core set of Heat templates located in /usr/share/openstack-tripleo-heat-templates. If you want to customize this core template collection, use a Git workflow to track changes and merge updates. Use the following git processes to help manage your custom template collection:

Initializing a Custom Template Collection

Use the following procedure to create an initial Git repository containing the Heat template collection:

- Copy the template collection to the stack user's directory. This example copies it to the ~/templates directory:

      $ cd ~/templates
      $ cp -r /usr/share/openstack-tripleo-heat-templates .

- Change to the custom template directory and initialize a Git repository:

      $ cd openstack-tripleo-heat-templates
      $ git init .

- Stage all templates for the initial commit:

      $ git add *

- Create an initial commit:

      $ git commit -m "Initial creation of custom core heat templates"

This creates an initial master branch containing the latest core template collection. Use this branch as a basis for your custom branch and merge new template versions to this branch.

Creating a Custom Branch and Committing Changes

Use a custom branch to store your changes to the core template collection. Use the following procedure to create a my-customizations branch and add customizations to it:

- Create the my-customizations branch and switch to it:

      $ git checkout -b my-customizations

- Edit the files in the custom branch.

- Stage the changes in git:

      $ git add [edited files]

- Commit the changes to the custom branch:

      $ git commit -m "[Commit message for custom changes]"

This adds your changes as commits to the my-customizations branch. When the master branch updates, you can rebase my-customizations off master, which causes git to add these commits on to the updated template collection. This helps with tracking your customizations and replaying them on future template updates.

Updating the Custom Template Collection

Sometimes when updating the undercloud, the openstack-tripleo-heat-templates package might also update. Use the following procedure to update your custom template collection:

- Save the openstack-tripleo-heat-templates package version as an environment variable:

      $ export PACKAGE=$(rpm -qv openstack-tripleo-heat-templates)

- Change to your template collection directory and create a new branch for the updated templates:

      $ cd ~/templates/openstack-tripleo-heat-templates
      $ git checkout -b $PACKAGE

- Remove all files in the branch and replace them with the new versions:

      $ git rm -rf *
      $ cp -r /usr/share/openstack-tripleo-heat-templates/* .

- Stage all templates for the commit:

      $ git add *

- Create a commit for the package update:

      $ git commit -m "Updates for $PACKAGE"

- Merge the branch into master. If using a Git management system, such as GitLab, use the management workflow. If using git locally, merge by switching to the master branch and running the git merge command:

      $ git checkout master
      $ git merge $PACKAGE

The master branch now contains the latest version of the core template collection. You can now rebase the my-customizations branch from this updated collection.

Rebasing the Custom Branch

Use the following procedure to update the my-customizations branch:

- Change to the my-customizations branch:

      $ git checkout my-customizations

- Rebase the branch off master:

      $ git rebase master

This updates the my-customizations branch and replays the custom commits made to this branch.

If git reports any conflicts during the rebase, use this procedure:

- Check which files contain the conflicts:

      $ git status

- Resolve the conflicts of the template files identified.

- Add the resolved files:

      $ git add [resolved files]
      $ git commit

- Continue the rebase:

      $ git rebase --continue

Deploying Custom Templates

Use the following procedure to deploy the custom template collection:

- Make sure you have switched to the my-customizations branch:

      $ git checkout my-customizations

- Run the openstack overcloud deploy command with the --templates option to specify your local template directory:

      $ openstack overcloud deploy --templates /home/stack/templates/openstack-tripleo-heat-templates [OTHER OPTIONS]

The director uses the default template directory (/usr/share/openstack-tripleo-heat-templates) if you specify the --templates option without a directory.

Red Hat recommends using the methods from the following sections instead of modifying the heat template collection:

- Section 4.2, “Pre-Configuration: Customizing Specific Overcloud Roles”

- Section 4.3, “Pre-Configuration: Customizing All Overcloud Roles”

- Section 4.4, “Post-Configuration: Customizing All Overcloud Roles”

- Section 4.5, “Puppet: Customizing Hieradata for Roles”

- Section 4.7, “Puppet: Applying Custom Manifests”

Chapter 3. Parameters

Each Heat template in the director’s template collection contains a parameters section. This section defines all parameters specific to a particular overcloud service. This includes the following:

- overcloud.j2.yaml - Default base parameters

- roles_data.yaml - Default parameters for composable roles

- puppet/services/*.yaml - Default parameters for specific services

You can modify the values for these parameters using the following method:

- Create an environment file for your custom parameters.

- Include your custom parameters in the parameter_defaults section of the environment file.

- Include the environment file with the openstack overcloud deploy command.

The next few sections contain examples to demonstrate how to configure specific parameters for services in the puppet/services directory.

3.1. Example 1: Configuring the Timezone

The Heat template for setting the timezone (puppet/services/time/timezone.yaml) contains a TimeZone parameter. If you leave the TimeZone parameter blank, the overcloud sets the time to UTC as a default. The director recognizes the standard timezone names defined in the timezone database /usr/share/zoneinfo/. For example, if you wanted to set your time zone to Japan, you would examine the contents of /usr/share/zoneinfo to locate a suitable entry:

    $ ls /usr/share/zoneinfo/
    Africa Asia Canada Cuba EST GB GMT-0 HST iso3166.tab Kwajalein MST NZ-CHAT posix right Turkey UTC Zulu
    America Atlantic CET EET EST5EDT GB-Eire GMT+0 Iceland Israel Libya MST7MDT Pacific posixrules ROC UCT WET
    Antarctica Australia Chile Egypt Etc GMT Greenwich Indian Jamaica MET Navajo Poland PRC ROK Universal W-SU
    Arctic Brazil CST6CDT Eire Europe GMT0 Hongkong Iran Japan Mexico NZ Portugal PST8PDT Singapore US zone.tab

The output listed above includes time zone files, and directories containing additional time zone files. For example, Japan is an individual time zone file in this result, but Africa is a directory containing additional time zone files:

    $ ls /usr/share/zoneinfo/Africa/
    Abidjan Algiers Bamako Bissau Bujumbura Ceuta Dar_es_Salaam El_Aaiun Harare Kampala Kinshasa Lome Lusaka Maseru Monrovia Niamey Porto-Novo Tripoli
    Accra Asmara Bangui Blantyre Cairo Conakry Djibouti Freetown Johannesburg Khartoum Lagos Luanda Malabo Mbabane Nairobi Nouakchott Sao_Tome Tunis
    Addis_Ababa Asmera Banjul Brazzaville Casablanca Dakar Douala Gaborone Juba Kigali Libreville Lubumbashi Maputo Mogadishu Ndjamena Ouagadougou Timbuktu Windhoek

Add the entry in an environment file to set your timezone to Japan:

    parameter_defaults:
      TimeZone: 'Japan'

3.2. Example 2: Disabling Layer 3 High Availability (L3HA)

The Heat template for the OpenStack Networking (neutron) API (puppet/services/neutron-api.yaml) contains a parameter to enable and disable Layer 3 High Availability (L3HA). The default for the parameter is false. However, you can enable it using the following in an environment file:

    parameter_defaults:
      NeutronL3HA: true

3.3. Example 3: Configuring the Telemetry Dispatcher

The OpenStack Telemetry (ceilometer) service includes a component for a time series data storage (gnocchi). The puppet/services/ceilometer-base.yaml Heat Template allows you to switch between gnocchi and the standard database. You accomplish this with the CeilometerMeterDispatcher parameter, which you set to either:

- gnocchi - Use the new time series database for the Ceilometer dispatcher. This is the default option.

- database - Use the standard database for the Ceilometer dispatcher.

To switch to a standard database, add the following to an environment file:

    parameter_defaults:
      CeilometerMeterDispatcher: database

3.4. Example 4: Configuring RabbitMQ File Descriptor Limit

For certain configurations, you might need to increase the file descriptor limit for the RabbitMQ server. The puppet/services/rabbitmq.yaml Heat template allows you to set the RabbitFDLimit parameter to the limit you require. Add the following to an environment file:

    parameter_defaults:
      RabbitFDLimit: 65536

3.5. Example 5: Enabling and Disabling Parameters

In some cases, you might need to initially set a parameter during a deployment, then disable the parameter for a future deployment operation, such as updates or scaling operations. For example, to include a custom RPM during the overcloud creation, you would include the following:

    parameter_defaults:
      DeployArtifactURLs: ["http://www.example.com/myfile.rpm"]

If you need to disable this parameter from a future deployment, it is not enough to remove the parameter. Instead, you set the parameter to an empty value:

    parameter_defaults:
      DeployArtifactURLs: []

This ensures the parameter is no longer set for subsequent deployment operations.

3.6. Identifying Parameters to Modify

Red Hat OpenStack Platform director provides many parameters for configuration. In some cases, you might experience difficulty identifying a certain option to configure and the corresponding director parameter. If there is an option you want to configure through the director, use the following workflow to identify and map the option to a specific overcloud parameter:

- Identify the option you aim to configure. Make a note of the service that uses the option.

- Check the corresponding Puppet module for this option. The Puppet modules for Red Hat OpenStack Platform are located under /etc/puppet/modules on the director node. Each module corresponds to a particular service. For example, the keystone module corresponds to OpenStack Identity (keystone).

  - If the Puppet module contains a variable that controls the chosen option, move to the next step.

  - If the Puppet module does not contain a variable that controls the chosen option, then no hieradata exists for this option. If possible, you can set the option manually after the overcloud completes deployment.

- Check the director’s core Heat template collection for the Puppet variable in the form of hieradata. The templates in puppet/services/* usually correspond to the Puppet modules of the same services. For example, the puppet/services/keystone.yaml template provides hieradata to the keystone module.

  - If the Heat template sets hieradata for the Puppet variable, the template should also disclose the director-based parameter to modify.

  - If the Heat template does not set hieradata for the Puppet variable, use the configuration hooks to pass the hieradata using an environment file. See Section 4.5, “Puppet: Customizing Hieradata for Roles” for more information on customizing hieradata.

Workflow Example

You might aim to change the notification format for OpenStack Identity (keystone). Using the workflow, you would:

- Identify the OpenStack parameter to configure (notification_format).

- Search the keystone Puppet module for the notification_format setting. For example:

      $ grep notification_format /etc/puppet/modules/keystone/manifests/*

  In this case, the keystone module manages this option using the keystone::notification_format variable.

- Search the keystone service template for this variable. For example:

      $ grep "keystone::notification_format" /usr/share/openstack-tripleo-heat-templates/puppet/services/keystone.yaml

  The output shows the director using the KeystoneNotificationFormat parameter to set the keystone::notification_format hieradata.

The following table shows the eventual mapping:

| Director Parameter | Puppet Hieradata | OpenStack Identity (keystone) option |
|---|---|---|
| KeystoneNotificationFormat | keystone::notification_format | notification_format |

This means setting the KeystoneNotificationFormat in an overcloud’s environment file would set the notification_format option in the keystone.conf file during the overcloud’s configuration.
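As a sketch, an environment file that sets this parameter might look like the following. The value basic is an assumption used for illustration; check the keystone documentation for the notification formats that your release accepts.

```yaml
parameter_defaults:
  # Passed through keystone::notification_format hieradata to the
  # notification_format option in keystone.conf
  KeystoneNotificationFormat: basic   # assumed example value
```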

Chapter 4. Configuration Hooks

The configuration hooks provide a method to inject your own configuration functions into the Overcloud deployment process. This includes hooks for injecting custom configuration before and after the main Overcloud services configuration, and hooks for modifying and including Puppet-based configuration.

4.1. First Boot: Customizing First Boot Configuration

The director provides a mechanism to perform configuration on all nodes upon the initial creation of the Overcloud. The director achieves this through cloud-init, which you can call using the OS::TripleO::NodeUserData resource type.

In this example, you will update the nameserver with a custom IP address on all nodes. You must first create a basic heat template (/home/stack/templates/nameserver.yaml) that runs a script to append each node’s resolv.conf with a specific nameserver. You can use the OS::Heat::MultipartMime resource type to send the configuration script.
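A minimal sketch of such a template is shown below. It wraps the script in Heat's OS::Heat::MultipartMime resource and returns that resource as the stack ID. The resource names (userdata, nameserver_config) and the 192.168.1.1 address are illustrative assumptions.

```yaml
heat_template_version: 2014-10-16

description: Append a nameserver entry to resolv.conf on first boot

resources:
  # Multipart user data that cloud-init receives on first boot
  userdata:
    type: OS::Heat::MultipartMime
    properties:
      parts:
      - config: {get_resource: nameserver_config}

  # The script itself; the address is an example value
  nameserver_config:
    type: OS::Heat::SoftwareConfig
    properties:
      config: |
        #!/bin/bash
        echo "nameserver 192.168.1.1" >> /etc/resolv.conf

outputs:
  # The parent template reads the user data from this output
  OS::stack_id:
    value: {get_resource: userdata}
```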

Next, create an environment file (/home/stack/templates/firstboot.yaml) that registers your heat template as the OS::TripleO::NodeUserData resource type.

    resource_registry:
      OS::TripleO::NodeUserData: /home/stack/templates/nameserver.yaml

To add the first boot configuration, add the environment file to the stack along with your other environment files when first creating the Overcloud. For example:

    $ openstack overcloud deploy --templates \
        ...
        -e /home/stack/templates/firstboot.yaml \
        ...

The -e applies the environment file to the Overcloud stack.

This adds the configuration to all nodes when they are first created and boot for the first time. Subsequent inclusions of these templates, such as when updating the Overcloud stack, do not run these scripts.

You can register the OS::TripleO::NodeUserData resource to only one heat template. Subsequent usage overrides the heat template to use.

4.2. Pre-Configuration: Customizing Specific Overcloud Roles

Previous versions of this document used the OS::TripleO::Tasks::*PreConfig resources to provide pre-configuration hooks on a per role basis. The director’s Heat template collection requires dedicated use of these hooks, which means you should not use them for custom use. Instead, use the OS::TripleO::*ExtraConfigPre hooks outlined below.

The Overcloud uses Puppet for the core configuration of OpenStack components. The director provides a set of hooks to provide custom configuration for specific node roles after the first boot completes and before the core configuration begins. These hooks include:

- OS::TripleO::ControllerExtraConfigPre

- Additional configuration applied to Controller nodes before the core Puppet configuration.

- OS::TripleO::ComputeExtraConfigPre

- Additional configuration applied to Compute nodes before the core Puppet configuration.

- OS::TripleO::CephStorageExtraConfigPre

- Additional configuration applied to Ceph Storage nodes before the core Puppet configuration.

- OS::TripleO::ObjectStorageExtraConfigPre

- Additional configuration applied to Object Storage nodes before the core Puppet configuration.

- OS::TripleO::BlockStorageExtraConfigPre

- Additional configuration applied to Block Storage nodes before the core Puppet configuration.

- OS::TripleO::[ROLE]ExtraConfigPre

- Additional configuration applied to custom nodes before the core Puppet configuration. Replace [ROLE] with the composable role name.

In this example, you first create a basic heat template (/home/stack/templates/nameserver.yaml) that runs a script to write to a node’s resolv.conf with a variable nameserver.
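The following sketch shows one possible shape for this template. The resource names, the nameserver_ip parameter, and the _NAMESERVER_IP_ placeholder come from the description in this section. The type of the server parameter, the actions list, and the deploy_stdout output are assumptions made for illustration.

```yaml
heat_template_version: 2014-10-16

description: Write a nameserver entry before the core configuration

parameters:
  server:
    type: string          # assumed type; supplied by the parent template
  nameserver_ip:
    type: string
  DeployIdentifier:
    type: string

resources:
  CustomExtraConfigPre:
    type: OS::Heat::SoftwareConfig
    properties:
      group: script
      config:
        str_replace:
          template: |
            #!/bin/sh
            echo "nameserver _NAMESERVER_IP_" > /etc/resolv.conf
          params:
            _NAMESERVER_IP_: {get_param: nameserver_ip}

  CustomExtraDeploymentPre:
    type: OS::Heat::SoftwareDeployment
    properties:
      server: {get_param: server}
      config: {get_resource: CustomExtraConfigPre}
      # Assumption: run on creation and on updates;
      # use ['CREATE'] to run only at creation
      actions: ['CREATE', 'UPDATE']
      input_values:
        deploy_identifier: {get_param: DeployIdentifier}

outputs:
  deploy_stdout:
    description: Deployment reference, used to trigger pre-deploy on changes
    value: {get_attr: [CustomExtraDeploymentPre, deploy_stdout]}
```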

In this example, the resources section contains the following:

- CustomExtraConfigPre

- This defines a software configuration. In this example, we define a Bash script and Heat replaces _NAMESERVER_IP_ with the value stored in the nameserver_ip parameter.

- CustomExtraDeploymentPre

- This executes a software configuration, which is the software configuration from the CustomExtraConfigPre resource. Note the following:

  - The config parameter makes a reference to the CustomExtraConfigPre resource so Heat knows what configuration to apply.

  - The server parameter retrieves a map of the Overcloud nodes. This parameter is provided by the parent template and is mandatory in templates for this hook.

  - The actions parameter defines when to apply the configuration. In this case, we only apply the configuration when the Overcloud is created. Possible actions include CREATE, UPDATE, DELETE, SUSPEND, and RESUME.

  - input_values contains a parameter called deploy_identifier, which stores the DeployIdentifier from the parent template. This parameter provides a timestamp to the resource for each deployment update. This ensures the resource reapplies on subsequent overcloud updates.

Next, create an environment file (/home/stack/templates/pre_config.yaml) that registers your heat template to the role-based resource type. For example, to apply only to Controller nodes, use the ControllerExtraConfigPre hook:

    resource_registry:
      OS::TripleO::ControllerExtraConfigPre: /home/stack/templates/nameserver.yaml

    parameter_defaults:
      nameserver_ip: 192.168.1.1

To apply the configuration, add the environment file to the stack along with your other environment files when creating or updating the Overcloud. For example:

    $ openstack overcloud deploy --templates \
        ...
        -e /home/stack/templates/pre_config.yaml \
        ...

This applies the configuration to all Controller nodes before the core configuration begins on either the initial Overcloud creation or subsequent updates.

You can register each resource to only one Heat template per hook. A subsequent registration of the same resource overrides the Heat template to use.

4.3. Pre-Configuration: Customizing All Overcloud Roles

The Overcloud uses Puppet for the core configuration of OpenStack components. The director provides a hook to configure all node types after the first boot completes and before the core configuration begins:

- OS::TripleO::NodeExtraConfig

- Additional configuration applied to all node roles before the core Puppet configuration.

In this example, you first create a basic heat template (/home/stack/templates/nameserver.yaml) that runs a script to append each node’s resolv.conf with a variable nameserver.

In this example, the resources section contains the following:

- CustomExtraConfigPre

- This defines a software configuration. In this example, we define a Bash script and Heat replaces _NAMESERVER_IP_ with the value stored in the nameserver_ip parameter.

- CustomExtraDeploymentPre

- This executes a software configuration, which is the software configuration from the CustomExtraConfigPre resource. Note the following:

  - The config parameter makes a reference to the CustomExtraConfigPre resource so Heat knows what configuration to apply.

  - The server parameter retrieves a map of the Overcloud nodes. This parameter is provided by the parent template and is mandatory in templates for this hook.

  - The actions parameter defines when to apply the configuration. In this case, we only apply the configuration when the Overcloud is created. Possible actions include CREATE, UPDATE, DELETE, SUSPEND, and RESUME.

  - The input_values parameter contains a sub-parameter called deploy_identifier, which stores the DeployIdentifier from the parent template. This parameter provides a timestamp to the resource for each deployment update. This ensures the resource reapplies on subsequent overcloud updates.
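For the all-roles hook, the script appends to resolv.conf instead of writing a new file. The fragment below is a sketch of only the software configuration resource under that assumption; the parameters section and the CustomExtraDeploymentPre resource described in the list above are omitted, and the exact script line is an assumption.

```yaml
resources:
  CustomExtraConfigPre:
    type: OS::Heat::SoftwareConfig
    properties:
      group: script
      config:
        str_replace:
          template: |
            #!/bin/sh
            # ">>" appends the entry and keeps existing resolv.conf content
            echo "nameserver _NAMESERVER_IP_" >> /etc/resolv.conf
          params:
            # Heat substitutes the placeholder with the parameter value
            _NAMESERVER_IP_: {get_param: nameserver_ip}
```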

Next, create an environment file (/home/stack/templates/pre_config.yaml) that registers your heat template as the OS::TripleO::NodeExtraConfig resource type.

    resource_registry:
      OS::TripleO::NodeExtraConfig: /home/stack/templates/nameserver.yaml

    parameter_defaults:
      nameserver_ip: 192.168.1.1

To apply the configuration, add the environment file to the stack along with your other environment files when creating or updating the Overcloud. For example:

    $ openstack overcloud deploy --templates \
        ...
        -e /home/stack/templates/pre_config.yaml \
        ...

This applies the configuration to all nodes before the core configuration begins on either the initial Overcloud creation or subsequent updates.

You can register the OS::TripleO::NodeExtraConfig resource to only one Heat template. A subsequent registration overrides the Heat template to use.

4.4. Post-Configuration: Customizing All Overcloud Roles

Previous versions of this document used the OS::TripleO::Tasks::*PostConfig resources to provide post-configuration hooks on a per role basis. The director’s Heat template collection requires dedicated use of these hooks, which means you should not use them for custom use. Instead, use the OS::TripleO::NodeExtraConfigPost hook outlined below.

A situation might occur where you have completed the creation of your Overcloud but want to add additional configuration to all roles, either on initial creation or on a subsequent update of the Overcloud. In this case, you use the following post-configuration hook:

- OS::TripleO::NodeExtraConfigPost

- Additional configuration applied to all node roles after the core Puppet configuration.

In this example, you first create a basic heat template (/home/stack/templates/nameserver.yaml) that runs a script to append each node’s resolv.conf with a variable nameserver.

In this example, the resources section contains the following:

- CustomExtraConfig

- This defines a software configuration. In this example, we define a Bash script and Heat replaces _NAMESERVER_IP_ with the value stored in the nameserver_ip parameter.

- CustomExtraDeployments

- This executes a software configuration, which is the software configuration from the CustomExtraConfig resource. Note the following:

  - The config parameter makes a reference to the CustomExtraConfig resource so Heat knows what configuration to apply.

  - The servers parameter retrieves a map of the Overcloud nodes. This parameter is provided by the parent template and is mandatory in templates for this hook.

  - The actions parameter defines when to apply the configuration. In this case, we only apply the configuration when the Overcloud is created. Possible actions include CREATE, UPDATE, DELETE, SUSPEND, and RESUME.

  - input_values contains a parameter called deploy_identifier, which stores the DeployIdentifier from the parent template. This parameter provides a timestamp to the resource for each deployment update. This ensures the resource reapplies on subsequent overcloud updates.
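The post-configuration template differs from the pre-configuration ones mainly in that it receives a servers map and deploys to the whole group. A minimal sketch, assuming the OS::Heat::SoftwareDeploymentGroup resource type for CustomExtraDeployments; the template version, description, script line, and actions list are likewise assumptions rather than verified content.

```yaml
heat_template_version: 2014-10-16

description: >
  Extra nameserver configuration applied after core configuration (sketch)

parameters:
  # Map of all Overcloud nodes, provided by the parent template
  servers:
    type: json
  nameserver_ip:
    type: string
  DeployIdentifier:
    type: string

resources:
  CustomExtraConfig:
    type: OS::Heat::SoftwareConfig
    properties:
      group: script
      config:
        str_replace:
          template: |
            #!/bin/sh
            echo "nameserver _NAMESERVER_IP_" >> /etc/resolv.conf
          params:
            _NAMESERVER_IP_: {get_param: nameserver_ip}

  CustomExtraDeployments:
    # Assumption: group deployment type that accepts a map of servers
    type: OS::Heat::SoftwareDeploymentGroup
    properties:
      servers: {get_param: servers}
      config: {get_resource: CustomExtraConfig}
      # Assumption: run on both stack creation and stack updates
      actions: ['CREATE', 'UPDATE']
      input_values:
        deploy_identifier: {get_param: DeployIdentifier}
```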

Next, create an environment file (/home/stack/templates/post_config.yaml) that registers your heat template as the OS::TripleO::NodeExtraConfigPost resource type.

    resource_registry:
      OS::TripleO::NodeExtraConfigPost: /home/stack/templates/nameserver.yaml

    parameter_defaults:
      nameserver_ip: 192.168.1.1

To apply the configuration, add the environment file to the stack along with your other environment files when creating or updating the Overcloud. For example:

    $ openstack overcloud deploy --templates \
        ...
        -e /home/stack/templates/post_config.yaml \
        ...

This applies the configuration to all nodes after the core configuration completes on either initial Overcloud creation or subsequent updates.

You can register the OS::TripleO::NodeExtraConfigPost resource to only one Heat template. A subsequent registration overrides the Heat template to use.

4.5. Puppet: Customizing Hieradata for Roles

The Heat template collection contains a set of parameters to pass extra configuration to certain node types. These parameters save the configuration as hieradata for the node’s Puppet configuration. These parameters are:

- ControllerExtraConfig

- Configuration to add to all Controller nodes.

- NovaComputeExtraConfig

- Configuration to add to all Compute nodes.

- BlockStorageExtraConfig

- Configuration to add to all Block Storage nodes.

- ObjectStorageExtraConfig

- Configuration to add to all Object Storage nodes.

- CephStorageExtraConfig

- Configuration to add to all Ceph Storage nodes.

- [ROLE]ExtraConfig

- Configuration to add to a composable role. Replace [ROLE] with the composable role name.

- ExtraConfig

- Configuration to add to all nodes.

To add extra configuration to the post-deployment configuration process, create an environment file that contains these parameters in the parameter_defaults section. For example, to increase the reserved memory for Compute hosts to 1024 MB and set the VNC keymap to Japanese:

    parameter_defaults:
      NovaComputeExtraConfig:
        nova::compute::reserved_host_memory: 1024
        nova::compute::vnc_keymap: ja

Include this environment file when running openstack overcloud deploy.

You can only define each parameter once. Subsequent usage overrides previous values.

4.6. Puppet: Customizing Hieradata for Individual Nodes

You can set Puppet hieradata for individual nodes using the Heat template collection. To accomplish this, you need to acquire the system UUID saved as part of the introspection data for a node:

    $ openstack baremetal introspection data save 9dcc87ae-4c6d-4ede-81a5-9b20d7dc4a14 | jq .extra.system.product.uuid

This outputs a system UUID. For example:

    "F5055C6C-477F-47FB-AFE5-95C6928C407F"

Use this system UUID in an environment file that defines node-specific hieradata and registers the per_node.yaml template to a pre-configuration hook. For example:

    resource_registry:
      OS::TripleO::ComputeExtraConfigPre: /usr/share/openstack-tripleo-heat-templates/puppet/extraconfig/pre_deploy/per_node.yaml

    parameter_defaults:
      NodeDataLookup: '{"F5055C6C-477F-47FB-AFE5-95C6928C407F": {"nova::compute::vcpu_pin_set": [ "2", "3" ]}}'

Include this environment file when running openstack overcloud deploy.

The per_node.yaml template generates a set of hieradata files on nodes that correspond to each system UUID and contain the hieradata you defined. If a UUID is not defined, the resulting hieradata file is empty. In the previous example, the per_node.yaml template runs on all Compute nodes (as per the OS::TripleO::ComputeExtraConfigPre hook), but only the Compute node with system UUID F5055C6C-477F-47FB-AFE5-95C6928C407F receives hieradata.

This provides a method of tailoring each node to specific requirements.

4.7. Puppet: Applying Custom Manifests

In certain circumstances, you might need to install and configure some additional components on your Overcloud nodes. You can achieve this with a custom Puppet manifest that applies to nodes after the main configuration completes. As a basic example, you might intend to install motd on each node. To accomplish this, first create a Heat template (/home/stack/templates/custom_puppet_config.yaml) that launches the Puppet configuration.

This includes the /home/stack/templates/motd.pp within the template and passes it to nodes for configuration. The motd.pp file itself contains the Puppet classes to install and configure motd.
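A minimal sketch of what custom_puppet_config.yaml might contain follows. The resource names ExtraPuppetConfig and ExtraPuppetDeployments, the template version, and the use of OS::Heat::SoftwareDeploymentGroup are assumptions for illustration; the get_file path assumes motd.pp sits in the same directory as the template.

```yaml
heat_template_version: 2014-10-16

description: >
  Run extra Puppet configuration to set a new MOTD (illustrative sketch)

parameters:
  # Map of all Overcloud nodes, provided by the parent template
  servers:
    type: json

resources:
  # Hypothetical resource name: wraps the manifest as a software configuration
  ExtraPuppetConfig:
    type: OS::Heat::SoftwareConfig
    properties:
      # "puppet" selects the Puppet hook instead of a shell script
      group: puppet
      # Embeds motd.pp from the template directory at deploy time
      config: {get_file: motd.pp}

  # Hypothetical resource name: applies the manifest to every node
  ExtraPuppetDeployments:
    type: OS::Heat::SoftwareDeploymentGroup
    properties:
      servers: {get_param: servers}
      config: {get_resource: ExtraPuppetConfig}
```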

Next, create an environment file (/home/stack/templates/puppet_post_config.yaml) that registers your heat template as the OS::TripleO::NodeExtraConfigPost resource type.

    resource_registry:
      OS::TripleO::NodeExtraConfigPost: /home/stack/templates/custom_puppet_config.yaml

Finally, include this environment file along with your other environment files when creating or updating the Overcloud stack:

    $ openstack overcloud deploy --templates \
        ...
        -e /home/stack/templates/puppet_post_config.yaml \
        ...

This applies the configuration from motd.pp to all nodes in the Overcloud.

Chapter 5. Overcloud Registration

The Overcloud provides a method to register nodes to either the Red Hat Content Delivery Network, a Red Hat Satellite 5 server, or a Red Hat Satellite 6 server.

5.1. Registering the Overcloud with an Environment File

Copy the registration files from the Heat template collection:

    $ cp -r /usr/share/openstack-tripleo-heat-templates/extraconfig/pre_deploy/rhel-registration ~/templates/.

Edit the ~/templates/rhel-registration/environment-rhel-registration.yaml and modify the following values to suit your registration method and details.

- rhel_reg_method

- Choose the registration method. Either portal, satellite, or disable.

- rhel_reg_type

- The type of unit to register. Leave blank to register as a system.

- rhel_reg_auto_attach

- Automatically attach compatible subscriptions to this system. Set to true to enable. To disable this feature, remove this parameter from your environment file.

- rhel_reg_service_level

- The service level to use for auto attachment.

- rhel_reg_release

- Use this parameter to set a release version for auto attachment. Leave blank to use the default from Red Hat Subscription Manager.

- rhel_reg_pool_id

- The subscription pool ID to use. Use this if not auto-attaching subscriptions. To locate this ID, run sudo subscription-manager list --available --all --matches="*OpenStack*" from the undercloud node, and use the resulting Pool ID value.

- rhel_reg_sat_url

- The base URL of the Satellite server to register Overcloud nodes. Use the Satellite’s HTTP URL and not the HTTPS URL for this parameter. For example, use http://satellite.example.com and not https://satellite.example.com. The Overcloud creation process uses this URL to determine whether the server is a Red Hat Satellite 5 or Red Hat Satellite 6 server. If a Red Hat Satellite 6 server, the Overcloud obtains the katello-ca-consumer-latest.noarch.rpm file, registers with subscription-manager, and installs katello-agent. If a Red Hat Satellite 5 server, the Overcloud obtains the RHN-ORG-TRUSTED-SSL-CERT file and registers with rhnreg_ks.

- rhel_reg_server_url

- The hostname of the subscription service to use. The default is for Customer Portal Subscription Management, subscription.rhn.redhat.com. If this option is not used, the system is registered with Customer Portal Subscription Management. The subscription server URL uses the form of https://hostname:port/prefix.

- rhel_reg_base_url

- Gives the hostname of the content delivery server to use to receive updates. The default is https://cdn.redhat.com. Since Satellite 6 hosts its own content, the URL must be used for systems registered with Satellite 6. The base URL for content uses the form of https://hostname:port/prefix.

- rhel_reg_org

- The organization to use for registration. To locate this ID, run sudo subscription-manager orgs from the undercloud node. Enter your Red Hat credentials when prompted, and use the resulting Key value.

- rhel_reg_environment

- The environment to use within the chosen organization.

- rhel_reg_repos

- A comma-separated list of repositories to enable.

- rhel_reg_activation_key

- The activation key to use for registration.

- rhel_reg_user; rhel_reg_password

- The username and password for registration. If possible, use activation keys for registration.

- rhel_reg_machine_name

- The machine name. Leave this blank to use the hostname of the node.

- rhel_reg_force

- Set to true to force your registration options. For example, when re-registering nodes.

- rhel_reg_sat_repo

- The repository containing Red Hat Satellite 6’s management tools, such as katello-agent. Check that the repository name corresponds to your Red Hat Satellite version and that the repository is synchronized on the Satellite server. For example, rhel-7-server-satellite-tools-6.2-rpms corresponds to Red Hat Satellite 6.2.

The deployment command (openstack overcloud deploy) uses the -e option to add environment files. Add both ~/templates/rhel-registration/environment-rhel-registration.yaml and ~/templates/rhel-registration/rhel-registration-resource-registry.yaml. For example:

    $ openstack overcloud deploy --templates [...] -e /home/stack/templates/rhel-registration/environment-rhel-registration.yaml -e /home/stack/templates/rhel-registration/rhel-registration-resource-registry.yaml

Registration is set as the OS::TripleO::NodeExtraConfig Heat resource. This means you can only use this resource for registration. See Section 4.2, “Pre-Configuration: Customizing Specific Overcloud Roles” for more information.

5.2. Example 1: Registering to the Customer Portal

The following registers the overcloud nodes to the Red Hat Customer Portal using the my-openstack activation key and subscribes to pool 1a85f9223e3d5e43013e3d6e8ff506fd.
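A sketch of the relevant values in environment-rhel-registration.yaml for this case might look as follows. Only the parameters the description mentions are shown; the organization ID is a hypothetical placeholder, and parameters not listed are assumed to keep the values from the copied file.

```yaml
parameter_defaults:
  # Register against the Red Hat Customer Portal
  rhel_reg_method: "portal"
  rhel_reg_activation_key: "my-openstack"
  # Hypothetical organization ID; use the Key value from
  # "sudo subscription-manager orgs"
  rhel_reg_org: "1234567"
  # Explicit pool, so auto-attach is not used
  rhel_reg_pool_id: "1a85f9223e3d5e43013e3d6e8ff506fd"
```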

5.3. Example 2: Registering to a Red Hat Satellite 6 Server

The following registers the overcloud nodes to a Red Hat Satellite 6 Server at sat6.example.com and uses the my-openstack activation key to subscribe to pool 1a85f9223e3d5e43013e3d6e8ff506fd. In this situation, the activation key also provides the repositories to enable.
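A sketch of the corresponding values might look as follows. The organization value is a hypothetical placeholder, and the tools repository assumes Red Hat Satellite 6.2; adjust both to match your server.

```yaml
parameter_defaults:
  rhel_reg_method: "satellite"
  # HTTP (not HTTPS) base URL of the Satellite server
  rhel_reg_sat_url: "http://sat6.example.com"
  # Assumes Satellite 6.2; must be synchronized on the Satellite server
  rhel_reg_sat_repo: "rhel-7-server-satellite-tools-6.2-rpms"
  # The activation key also determines which repositories are enabled
  rhel_reg_activation_key: "my-openstack"
  # Hypothetical organization value
  rhel_reg_org: "1"
  rhel_reg_pool_id: "1a85f9223e3d5e43013e3d6e8ff506fd"
```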

5.4. Example 3: Registering to a Red Hat Satellite 5 Server

The following registers the overcloud nodes to a Red Hat Satellite 5 Server at sat5.example.com, uses the my-openstack activation key, and automatically attaches subscriptions. In this situation, the activation key also provides the repositories to enable.
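A sketch of the corresponding values might look as follows. The organization value is a hypothetical placeholder; no pool ID is set because subscriptions attach automatically.

```yaml
parameter_defaults:
  rhel_reg_method: "satellite"
  # HTTP (not HTTPS) base URL of the Satellite server
  rhel_reg_sat_url: "http://sat5.example.com"
  # The activation key also determines which repositories are enabled
  rhel_reg_activation_key: "my-openstack"
  # Hypothetical organization value
  rhel_reg_org: "1"
  # Attach compatible subscriptions automatically
  rhel_reg_auto_attach: "true"
```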

Chapter 6. Composable Services and Custom Roles

The Overcloud usually consists of nodes in predefined roles such as Controller nodes, Compute nodes, and different storage node types. Each of these default roles contains a set of services defined in the core Heat template collection on the director node. However, the architecture of the core Heat templates provides a method to:

- Create custom roles

- Add and remove services from each role

This chapter explores the architecture of custom roles, composable services, and methods for using them.

Guidelines and Limitations

Note the following guidelines and limitations for the composable node architecture:

- You can assign any systemd managed service to a supported standalone custom role.

- You cannot split Pacemaker-managed services. This is because Pacemaker manages the same set of services on each node within the Overcloud cluster. Splitting Pacemaker-managed services can cause cluster deployment errors. These services should remain on the Controller role.

- You cannot change to custom roles and composable services during the upgrade process from Red Hat OpenStack Platform 9 to 10. The upgrade scripts can only accommodate the default Overcloud roles.

- You can create additional custom roles after the initial deployment and deploy them to scale existing services.

- You cannot modify the list of services for any role after deploying an Overcloud. Modifying the service lists after Overcloud deployment can cause deployment errors and leave orphaned services on nodes.

Supported Custom Role Architecture

Custom roles and composable services are new features in Red Hat OpenStack Platform 10 and only a limited number of composable service combinations have been tested and verified at this early stage. Red Hat supports the following architectures when using custom roles and composable services:

- Architecture 1 - Monolithic Controller

- All controller services are contained within one Controller role. This is the default. See Section 6.8, “Service Architecture: Monolithic Controller” for more details.

- Architecture 2 - Split Controller

- The controller services are split into two roles:

  - Controller PCMK - Core Pacemaker-managed services such as database and load balancing

  - Controller Systemd - systemd-managed OpenStack Platform services

  See Section 6.9, “Service Architecture: Split Controller” for more details.

- Architecture 3 - Standalone roles

- Use Architecture 1 or Architecture 2, except split the OpenStack Platform services into custom roles. See Section 6.10, “Service Architecture: Standalone Roles” for more details.

6.1. Examining Custom Role Architecture

The Overcloud creation process defines its roles using a template that contains role data. The default template is located at /usr/share/openstack-tripleo-heat-templates/roles_data.yaml and defines all the default role types: Controller, Compute, BlockStorage, ObjectStorage, and CephStorage.

If creating a custom roles_data.yaml file, the Controller role must always be the first role defined. This role is treated as the primary role.

Each role contains the following parameters:

- name

- (Mandatory) The name of the role, which is a plain text name with no spaces or special characters. Check that the chosen name does not cause conflicts with other resources. For example, use Networker as a name instead of Network. For recommendations on role names, see the examples in Section 6.9, “Service Architecture: Split Controller”.

- CountDefault

- (Optional) Defines the default number of nodes to deploy for this role.

- HostnameFormatDefault

- (Optional) Defines the default hostname format for the role. The default naming convention uses the following format:

      [STACK NAME]-[ROLE NAME]-[NODE ID]

  For example, the default Controller nodes are named:

      overcloud-controller-0
      overcloud-controller-1
      overcloud-controller-2
      ...

- ServicesDefault

- (Optional) Defines the default list of services to include on the node. See Section 6.2, “Examining Composable Service Architecture” for more information.

These options provide a means to create new roles and also define which services to include.
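As an illustration of how these four parameters fit together, a role entry in a roles_data file might look like the sketch below. The service list is deliberately shortened, and the %stackname% and %index% placeholder syntax is an assumption recalled from the upstream template collection; compare with the default roles_data.yaml before relying on it.

```yaml
- name: Controller
  # Number of nodes deployed when no count is given
  CountDefault: 1
  # Assumed placeholder syntax; yields names such as overcloud-controller-0
  HostnameFormatDefault: '%stackname%-controller-%index%'
  ServicesDefault:
    - OS::TripleO::Services::Keystone
    - OS::TripleO::Services::HeatApi
    - OS::TripleO::Services::HeatEngine
    # Remaining default services omitted from this sketch
```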

The openstack overcloud deploy command integrates the parameters from the roles_data.yaml file into the overcloud.j2.yaml Heat template. At certain points, the overcloud.j2.yaml Heat template iterates over the list of roles from roles_data.yaml and creates parameters and resources specific to each respective role.

For example, the resource definition for each role in the overcloud.j2.yaml Heat template appears as the following snippet:

This snippet shows how the Jinja2-based template incorporates the {{role.name}} variable to define the name of each role as an OS::Heat::ResourceGroup resource. This in turn uses each name parameter from roles_data.yaml to name each respective OS::Heat::ResourceGroup resource.
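The iteration can be pictured with the simplified sketch below. It is not the actual content of overcloud.j2.yaml: the loop variable, the resource_def type, and the omission of all other properties are assumptions made to keep the pattern visible.

```yaml
resources:
{% for role in roles %}
  # One resource group per role, named after the role's "name" value
  {{role.name}}:
    type: OS::Heat::ResourceGroup
    properties:
      # Resolves to a per-role count parameter, for example NetworkerCount
      count: {get_param: {{role.name}}Count}
      resource_def:
        # Assumed per-role resource type
        type: OS::TripleO::{{role.name}}
{% endfor %}
```

After Jinja2 rendering, a roles file containing Controller and Compute would produce two resource groups named Controller and Compute.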

6.2. Examining Composable Service Architecture

The core Heat template collection contains a collection of composable service templates in the puppet/services subdirectory. You can view these services with the following command:

    $ ls /usr/share/openstack-tripleo-heat-templates/puppet/services

Each service template contains a description that identifies its purpose. For example, the keystone.yaml service template contains the following description:

    description: >
      OpenStack Identity (`keystone`) service configured with Puppet

These service templates are registered as resources specific to a Red Hat OpenStack Platform deployment. This means you can call each resource using a unique Heat resource namespace defined in the overcloud-resource-registry-puppet.j2.yaml file. All services use the OS::TripleO::Services namespace for their resource type. For example, the keystone.yaml service template is registered to the OS::TripleO::Services::Keystone resource type:

    $ grep "OS::TripleO::Services::Keystone" /usr/share/openstack-tripleo-heat-templates/overcloud-resource-registry-puppet.j2.yaml
      OS::TripleO::Services::Keystone: puppet/services/keystone.yaml

The overcloud.j2.yaml Heat template includes a section of Jinja2-based code to define a service list for each custom role in the roles_data.yaml file:

For the default roles, this creates the following service list parameters: ControllerServices, ComputeServices, BlockStorageServices, ObjectStorageServices, and CephStorageServices.

You define the default services for each custom role in the roles_data.yaml file. For example, the default Controller role contains the following content:

These services are then defined as the default list for the ControllerServices parameter.

You can also use an environment file to override the default list for the service parameters. For example, you can define ControllerServices as a parameter_default in an environment file to override the services list from the roles_data.yaml file.
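A sketch of such an override is shown below. The list is intentionally incomplete and serves only to show the shape of the parameter; a real override needs every service the Controller role should run, because the value replaces the default list rather than extending it.

```yaml
parameter_defaults:
  # Replaces the ServicesDefault list that roles_data.yaml
  # defines for the Controller role
  ControllerServices:
    - OS::TripleO::Services::Keystone
    - OS::TripleO::Services::HeatApi
    - OS::TripleO::Services::HeatEngine
    # Remaining required services omitted from this sketch
```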

6.3. Enabling Disabled Services

Some services are disabled by default. These services are registered as null operations (OS::Heat::None) in the overcloud-resource-registry-puppet.j2.yaml file. For example, the Block Storage backup service (cinder-backup) is disabled:

    OS::TripleO::Services::CinderBackup: OS::Heat::None

To enable this service, include an environment file that links the resource to its respective Heat templates in the puppet/services directory. Some services have predefined environment files in the environments directory. For example, the Block Storage backup service uses the environments/cinder-backup.yaml file, which contains the following:

    resource_registry:
      OS::TripleO::Services::CinderBackup: ../puppet/services/pacemaker/cinder-backup.yaml
      ...

This overrides the default null operation resource and enables the service. Include this environment file when running the openstack overcloud deploy command.

    $ openstack overcloud deploy --templates -e /usr/share/openstack-tripleo-heat-templates/environments/cinder-backup.yaml

For another example of how to enable disabled services, see the Installation section of the OpenStack Data Processing guide. This section contains instructions on how to enable the OpenStack Data Processing service (sahara) on the overcloud.

6.4. Adding and Removing Services from Roles

The basic method of adding or removing services involves creating a copy of the default service list for a node role and then adding or removing services. For example, you might aim to remove OpenStack Orchestration (heat) from the Controller nodes. In this situation, create a custom copy of the default roles_data.yaml file:

    $ cp /usr/share/openstack-tripleo-heat-templates/roles_data.yaml ~/templates/roles_data-no_heat.yaml

Edit the roles_data file and modify the service list for the Controller’s ServicesDefault parameter. Scroll to the OpenStack Orchestration services and remove them:
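The edit might look like the following sketch of the Controller entry. The Orchestration service names are the four OS::TripleO::Services::Heat* resources; they are shown commented out here only to mark what is removed, and the rest of the default service list is abbreviated.

```yaml
- name: Controller
  ServicesDefault:
    - OS::TripleO::Services::Keystone
    # Removed to drop OpenStack Orchestration from the Controller role:
    # - OS::TripleO::Services::HeatApi
    # - OS::TripleO::Services::HeatApiCfn
    # - OS::TripleO::Services::HeatApiCloudwatch
    # - OS::TripleO::Services::HeatEngine
    # Other default Controller services stay unchanged (omitted here)
```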

Include this new roles_data file when running the openstack overcloud deploy command. For example:

    $ openstack overcloud deploy --templates -r ~/templates/roles_data-no_heat.yaml

This deploys an Overcloud without OpenStack Orchestration services installed on the Controller nodes.

You can also disable services in the roles_data file using a custom environment file. Redirect the services to disable to the OS::Heat::None resource. For example:

    resource_registry:
      OS::TripleO::Services::HeatApi: OS::Heat::None
      OS::TripleO::Services::HeatApiCfn: OS::Heat::None
      OS::TripleO::Services::HeatApiCloudwatch: OS::Heat::None
      OS::TripleO::Services::HeatEngine: OS::Heat::None

6.5. Creating a New Role

In this example, the aim is to create a new Networker role to host OpenStack Networking (neutron) agents only. In this situation, you create a custom roles_data file that includes the new role information.

Create a custom copy of the default roles_data.yaml file:

    $ cp /usr/share/openstack-tripleo-heat-templates/roles_data.yaml ~/templates/roles_data-network_node.yaml

Edit the new roles_data file and create a new Networker role containing base and core OpenStack Networking services. For example:

It is also a good idea to set the CountDefault to 1 so that a default Overcloud always includes the Networking node.
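A sketch of such a Networker role entry is shown below. The service names are recalled from the upstream TripleO template collection and the hostname format syntax is assumed; verify both against the roles_data.yaml and puppet/services directory shipped with your version before use.

```yaml
- name: Networker
  # Ensures a default Overcloud includes one Networker node
  CountDefault: 1
  # Assumed placeholder syntax; yields names such as overcloud-networker-0
  HostnameFormatDefault: '%stackname%-networker-%index%'
  ServicesDefault:
    # Base services (assumed names)
    - OS::TripleO::Services::CACerts
    - OS::TripleO::Services::Kernel
    - OS::TripleO::Services::Ntp
    - OS::TripleO::Services::Snmp
    - OS::TripleO::Services::Timezone
    - OS::TripleO::Services::TripleoPackages
    - OS::TripleO::Services::TripleoFirewall
    # OpenStack Networking agents (assumed names)
    - OS::TripleO::Services::NeutronDhcpAgent
    - OS::TripleO::Services::NeutronL3Agent
    - OS::TripleO::Services::NeutronMetadataAgent
    - OS::TripleO::Services::NeutronOvsAgent
```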

If scaling the services in an existing overcloud, keep the existing services on the Controller role. If creating a new overcloud and you only want the OpenStack Networking agents to remain on the standalone role, remove the OpenStack Networking agents from the Controller role definition:

You might need to define a new flavor for this role so that you can tag specific nodes. For this example, use the following commands to create a networker flavor:

    $ openstack flavor create --id auto --ram 6144 --disk 40 --vcpus 4 networker
    $ openstack flavor set --property "cpu_arch"="x86_64" --property "capabilities:boot_option"="local" --property "capabilities:profile"="networker" networker

Tag nodes into the new flavor using the following command:

    $ openstack baremetal node set --property capabilities='profile:networker,boot_option:local' 58c3d07e-24f2-48a7-bbb6-6843f0e8ee13

Define the Networker node count and flavor using the following environment file snippet:

    parameter_defaults:
      OvercloudNetworkerFlavor: networker
      NetworkerCount: 1

Include the new roles_data file and environment file when running the openstack overcloud deploy command. For example:

    $ openstack overcloud deploy --templates -r ~/templates/roles_data-network_node.yaml -e ~/templates/node-count-flavor.yaml

When the deployment completes, this creates a three-node Overcloud consisting of one Controller node, one Compute node, and one Networker node. To view the Overcloud’s list of nodes, run the following command:

    $ nova list

6.6. Creating a Generic Node with No Services

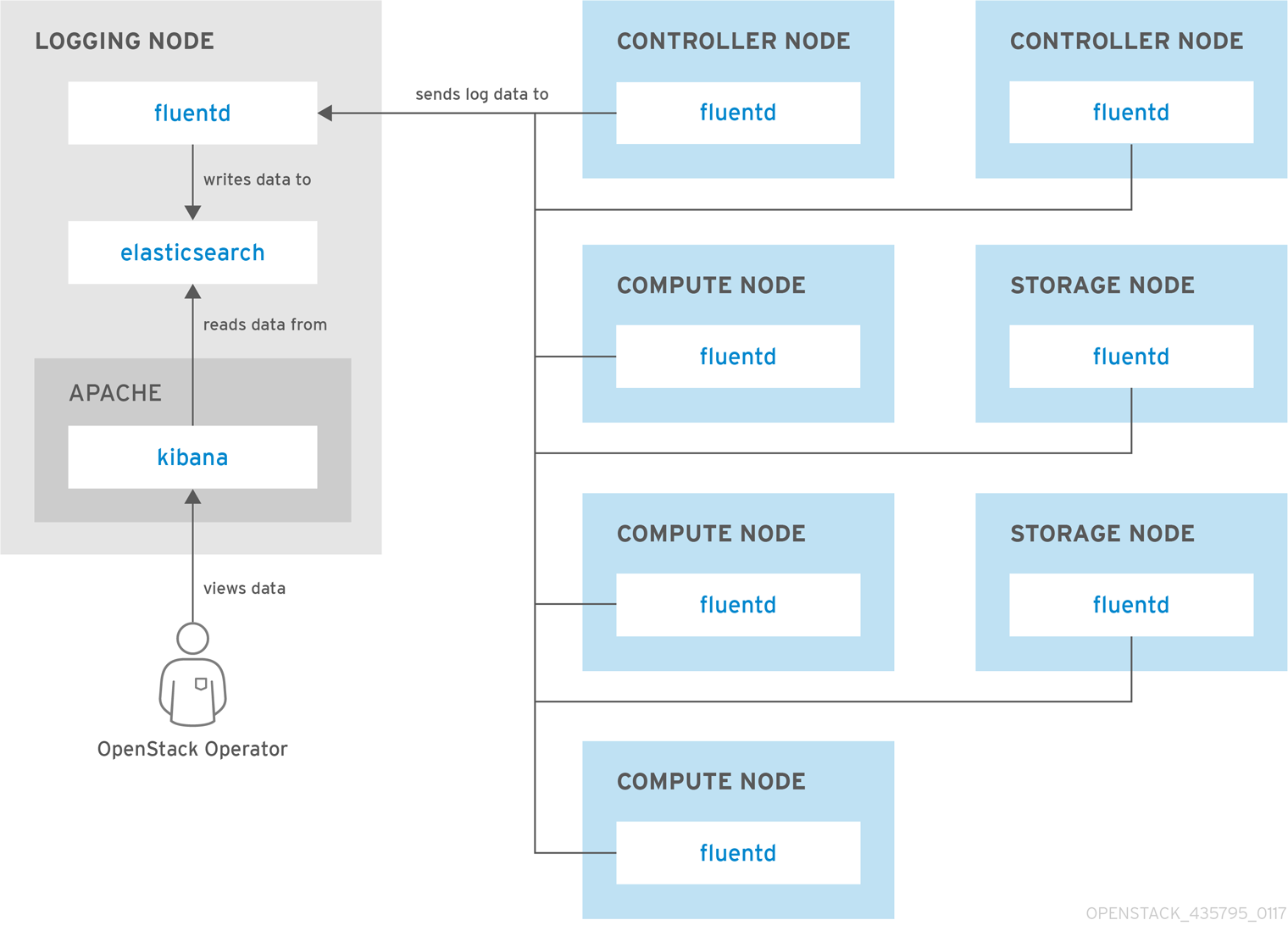

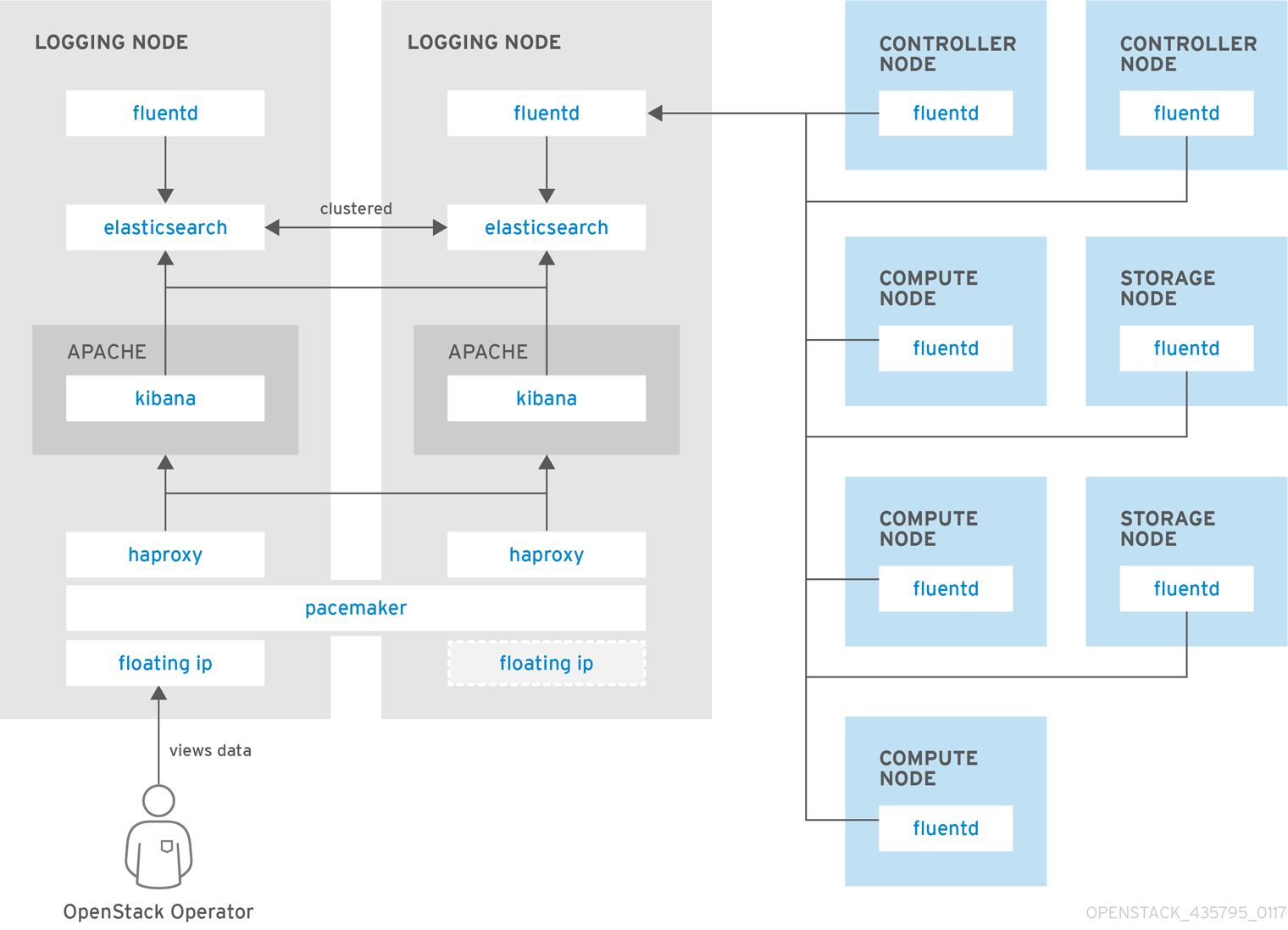

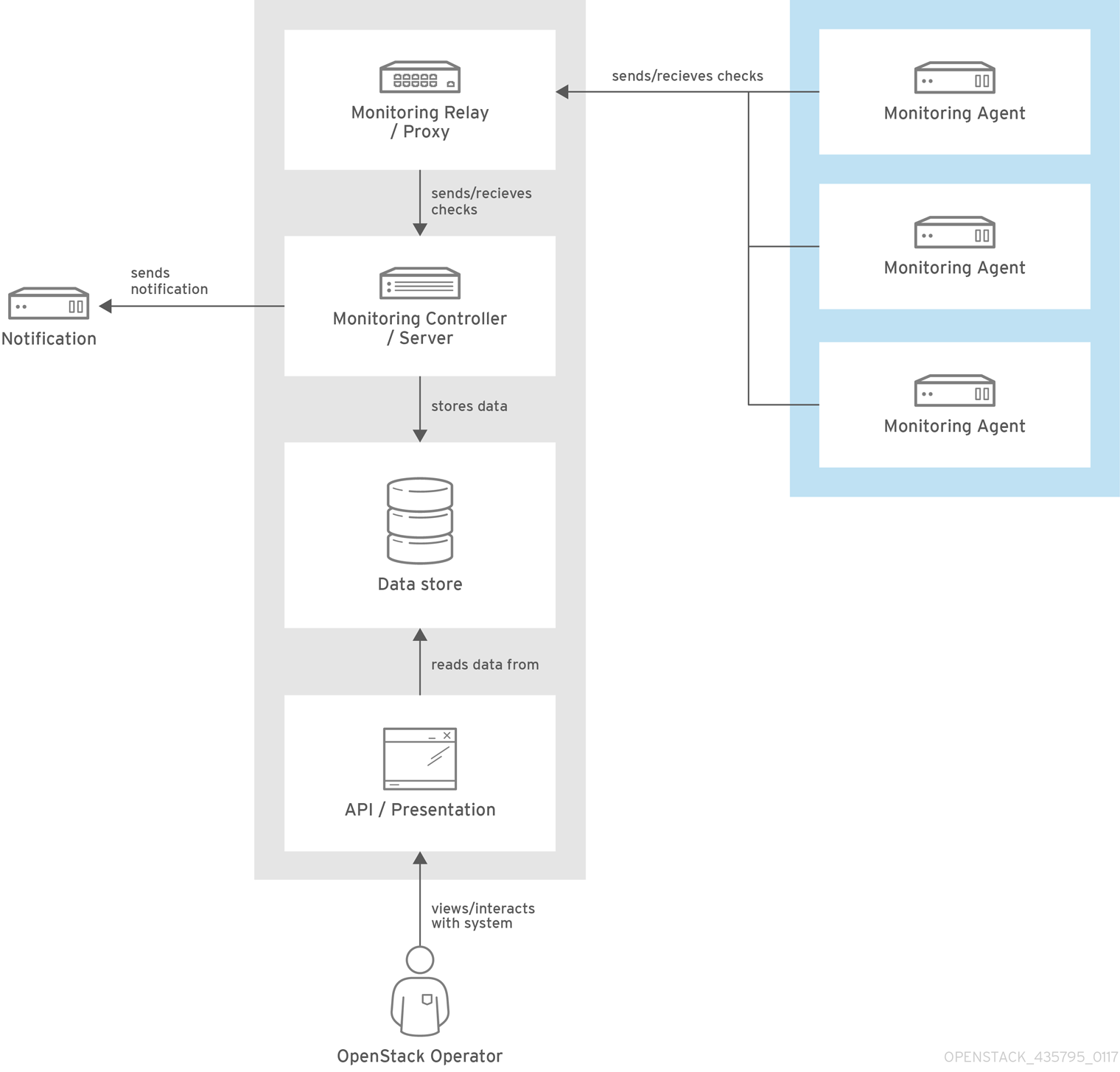

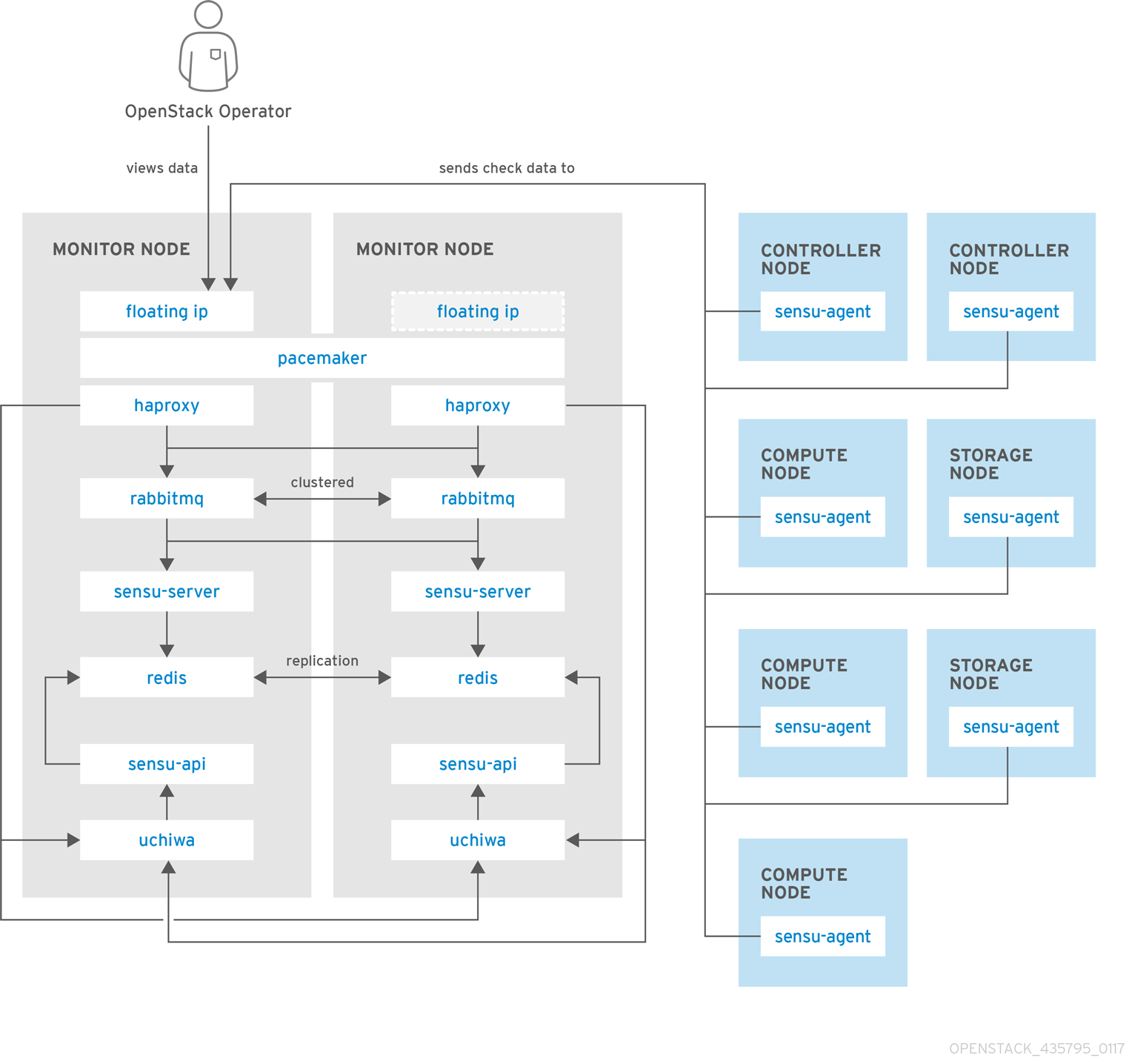

Red Hat OpenStack Platform provides the ability to create generic Red Hat Enterprise Linux 7 nodes without any OpenStack services configured. This is useful in situations where you need to host software outside of the core Red Hat OpenStack Platform environment. For example, OpenStack Platform provides integration with monitoring tools such as Kibana and Sensu (see Chapter 12, Monitoring Tools Configuration). While Red Hat does not provide support for the monitoring tools themselves, the director can create a generic Red Hat Enterprise Linux 7 node to host these tools.

The generic node still uses the base overcloud-full image rather than a base Red Hat Enterprise Linux 7 image. This means the node has some Red Hat OpenStack Platform software installed but not enabled or configured.

Creating a generic node requires a new role without a ServicesDefault list:

- name: Generic

Include the role in your custom roles_data file (roles_data_with_generic.yaml). Make sure to keep the existing Controller and Compute roles.
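One possible way to build the custom roles file is sketched below. It assumes the source roles file is a top-level YAML list whose entries start with "- name:" in the first column; the function name add_generic_role is hypothetical and not part of the director tooling.

```shell
# add_generic_role SRC DST
# Copies the roles file SRC to DST and appends a role named Generic that has
# no ServicesDefault list. The existing roles in SRC are kept unchanged.
add_generic_role() {
  src=$1
  dst=$2
  # Refuse to add the role twice.
  if grep -q '^- name: Generic$' "$src"; then
    echo "Generic role already present in $src" >&2
    return 1
  fi
  cp "$src" "$dst" || return 1
  # The leading newline guards against a source file that lacks a final newline.
  printf '\n- name: Generic\n' >> "$dst"
}
```

For example, running add_generic_role /usr/share/openstack-tripleo-heat-templates/roles_data.yaml ~/templates/roles_data_with_generic.yaml would start from the default roles file, so the Controller and Compute roles remain in place.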

You can also include an environment file (generic-node-params.yaml) to specify how many generic Red Hat Enterprise Linux 7 nodes you require and the flavor when selecting nodes to provision. For example:

parameter_defaults:
  OvercloudGenericFlavor: baremetal
  GenericCount: 1

Include both the roles file and the environment file when running the openstack overcloud deploy command. For example:

$ openstack overcloud deploy --templates -r ~/templates/roles_data_with_generic.yaml -e ~/templates/generic-node-params.yaml

This deploys a three-node environment with one Controller node, one Compute node, and one generic Red Hat Enterprise Linux 7 node.

6.7. Creating Hyper-Converged Compute and Ceph Services

Hyper-Converged Compute and Ceph Services are a Technology Preview feature. Technology Preview features are not fully supported under Red Hat Subscription Service Level Agreements (SLAs), may not be functionally complete, and are not intended for production use. However, these features provide early access to upcoming product innovations, enabling customers to test functionality and provide feedback during the development process. For more information on the support scope for features marked as technology previews, see https://access.redhat.com/support/offerings/techpreview/.

Ceph OSD services normally run on their own Ceph Storage nodes. However, composable services provide a method to configure the Ceph OSD services on Compute nodes instead.

For example, the default service list for each role includes the following:

Compute nodes:

Ceph Storage nodes:

The Ceph Storage role contains services common to the Compute role, which means you can ignore them. One service remains: OS::TripleO::Services::CephOSD.

Create a custom version of the default roles_data file:

$ cp /usr/share/openstack-tripleo-heat-templates/roles_data.yaml ~/templates/roles_data-ceph_osd_on_compute.yaml

Edit the file to add OS::TripleO::Services::CephOSD to the Compute’s service list:

You can also safely remove the OS::TripleO::Services::CephExternal service from the Compute service list because the Overcloud does not integrate with an external Ceph Storage cluster.
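The two edits described above can also be scripted. The following is a sketch only: the function name enable_ceph_osd_on_compute is hypothetical, and it assumes that each role starts with "- name:" in the first column and that service entries under ServicesDefault are indented by four spaces. Check both assumptions against your copy of the roles file before relying on the result.

```shell
# enable_ceph_osd_on_compute ROLES_FILE
# Prints a modified copy of ROLES_FILE to standard output. In the Compute role
# only, it adds the CephOSD service and drops the CephExternal service.
enable_ceph_osd_on_compute() {
  awk '
    # Remember which role the following lines belong to.
    /^- name:/ { role = $3 }
    # Drop the external Ceph service from the Compute role only.
    role == "Compute" && /OS::TripleO::Services::CephExternal/ { next }
    { print }
    # Add the OSD service directly under the Compute ServicesDefault key.
    role == "Compute" && /^[[:space:]]+ServicesDefault:/ {
      print "    - OS::TripleO::Services::CephOSD"
    }
  ' "$1"
}
```

Redirecting the output, for example to ~/templates/roles_data-ceph_osd_on_compute.yaml, produces the custom roles file without editing it by hand. The sketch does not check whether CephOSD is already present, so run it against an unmodified copy of the default file.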

Include this role file when running the openstack overcloud deploy command. For example:

$ openstack overcloud deploy --templates -r ~/templates/roles_data-ceph_osd_on_compute.yaml -e ~/templates/storage-environment.yaml

Note that this command also includes a custom environment file for storage (storage-environment.yaml), which contains parameters specific to Ceph Storage.

After the Overcloud deployment, verify the Ceph OSD installation on a Compute node. Log into a Compute node and run the following:

[root@overcloud-novacompute-0 ~]# ps ax | grep ceph
17437 ? Ss 0:00 /bin/bash -c ulimit -n 32768; /usr/bin/ceph-osd -i 0 --pid-file /var/run/ceph/osd.0.pid -c /etc/ceph/ceph.conf --cluster ceph -f
17438 ? Sl 0:00 /usr/bin/ceph-osd -i 0 --pid-file /var/run/ceph/osd.0.pid -c /etc/ceph/ceph.conf --cluster ceph -f

6.8. Service Architecture: Monolithic Controller

The default architecture for composable services uses a monolithic Controller that contains the core Red Hat OpenStack Platform Services. These default services are defined in the roles file included with the director’s Heat template collection (/usr/share/openstack-tripleo-heat-templates/roles_data.yaml).

Some services are disabled by default. See Section 6.3, “Enabling Disabled Services” for information on how to enable these services.

6.9. Service Architecture: Split Controller

You can split the services on the Controller nodes into two separate roles:

- Controller PCMK - Contains only the core services that Pacemaker manages, including database and load balancing.

- Controller systemd - Contains all OpenStack services.

The remaining default roles (Compute, Ceph Storage, Object Storage, Block Storage) remain unaffected.

Use the following tables as a guide to creating a split controller architecture.

Some services are disabled by default. See Section 6.3, “Enabling Disabled Services” for information on how to enable these services.

Controller PCMK

The following services are the minimum services required for the Controller PCMK role.

Controller systemd

The following table represents the services available on the Controller systemd role:

6.10. Service Architecture: Standalone Roles

The following tables list the supported custom role collections that you can create and scale with the composable service architecture in Red Hat OpenStack Platform. Group these collections together as individual roles and use them to isolate and split services in combination with the previous architectures:

Some services are disabled by default. See Section 6.3, “Enabling Disabled Services” for information on how to enable these services.

Note that all roles use a set of common services, which include:

- OS::TripleO::Services::CACerts

- OS::TripleO::Services::FluentdClient

- OS::TripleO::Services::Kernel

- OS::TripleO::Services::Ntp

- OS::TripleO::Services::SensuClient

- OS::TripleO::Services::Sshd

- OS::TripleO::Services::Snmp

- OS::TripleO::Services::Timezone

- OS::TripleO::Services::TripleoFirewall

- OS::TripleO::Services::TripleoPackages

- OS::TripleO::Services::VipHosts

Once you have chosen the roles to include in your overcloud, remove the associated services (except for the common services) from the main Controller roles. For example, if creating a standalone Keystone role, remove the OS::TripleO::Services::Apache and OS::TripleO::Services::Keystone services from the Controller nodes. The only exceptions are the services with limited custom role support (see Table 6.1, “Custom Roles Support”).
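For illustration, a standalone Keystone role entry might look like the fragment written by the following sketch. It combines the common services listed above with the two Keystone-related services named in the example. The output file name keystone-role.yaml and the ROLE_FRAGMENT variable are hypothetical, and the role descriptions in this section remain the authoritative source for each role's service list.

```shell
# Hypothetical output file for the role fragment.
ROLE_FRAGMENT="${ROLE_FRAGMENT:-keystone-role.yaml}"

# Write a role entry that can be pasted into a custom roles_data file.
# The first two services are specific to Keystone; the rest are the
# common services that every role uses.
cat > "$ROLE_FRAGMENT" <<'EOF'
- name: Keystone
  ServicesDefault:
    - OS::TripleO::Services::Apache
    - OS::TripleO::Services::Keystone
    - OS::TripleO::Services::CACerts
    - OS::TripleO::Services::FluentdClient
    - OS::TripleO::Services::Kernel
    - OS::TripleO::Services::Ntp
    - OS::TripleO::Services::SensuClient
    - OS::TripleO::Services::Sshd
    - OS::TripleO::Services::Snmp
    - OS::TripleO::Services::Timezone
    - OS::TripleO::Services::TripleoFirewall
    - OS::TripleO::Services::TripleoPackages
    - OS::TripleO::Services::VipHosts
EOF
```

After adding an entry like this to the custom roles file, remove OS::TripleO::Services::Apache and OS::TripleO::Services::Keystone from the Controller role in the same file, as described above.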

The following table lists each role and its support status. The sections after the table describe the services associated with each role.

| Role | Support Status |
|---|---|
| Ceph Storage Monitor | Supported |
| Ceph Storage OSD | Supported |
| Ceph Storage RadosGW | Limited. If splitting, this service needs to be part of a Controller systemd role. |
| Cinder API | Supported |
| Controller PCMK | Supported |
| Glance | Supported |
| Heat | Supported |
| Horizon | Supported |
| Ironic | Limited. If splitting, this service needs to be part of a Controller systemd role. |
| Keystone | Supported |
| Manila | Limited. If splitting, this service needs to be part of a Controller systemd role. |
| Networker | Supported |
| Neutron API | Supported |
| Nova | Supported |
| Nova Compute | Supported |
| OpenDaylight | Technology Preview |
| Sahara | Limited. If splitting, this service needs to be part of a Controller systemd role. |
| Swift API | Supported |
| Swift Storage | Supported |
| Telemetry | Supported |

Ceph Storage Monitor

The following services configure Ceph Storage Monitor.

Ceph Storage OSD

The following services configure Ceph Storage OSDs.

Ceph Storage RadosGW

The following services configure Ceph Storage RadosGW. If separating these services, they need to be part of a Controller systemd role.

Cinder API

The following services configure the OpenStack Block Storage API.

Controller PCMK

The following services are the minimum services required for the Controller PCMK role.

Glance

The following services configure the OpenStack Image service.

Heat

The following services configure the OpenStack Orchestration service.

Horizon

The following services configure the OpenStack Dashboard.

Ironic

The following services configure the OpenStack Bare Metal Provisioning service. If separating these services, they need to be part of a Controller systemd role.

Keystone

The following services configure the OpenStack Identity service. When performing minor updates, make sure to update this role before updating other services.

Manila

The following services configure the OpenStack Shared File Systems service. If separating these services, they need to be part of a Controller systemd role.

Networker

The following services configure the OpenStack Networking agents.

Neutron API

The following services configure the OpenStack Networking API.

Nova

The following services configure the OpenStack Compute services.

Nova Compute

The following services configure an OpenStack Compute node.

OpenDaylight

The following services configure OpenDaylight. These services are a Technology Preview for Red Hat OpenStack Platform 10.

Sahara

The following services configure the OpenStack Clustering service. If separating these services, they need to be part of a Controller systemd role.

Swift API

The following services configure the OpenStack Object Storage API.

Swift Storage

The following services configure the OpenStack Object Storage service.

Telemetry

The following services configure the OpenStack Telemetry services.

6.11. Composable Service Reference

The following table contains a list of all available composable services in Red Hat OpenStack Platform.

Some services are disabled by default. See Section 6.3, “Enabling Disabled Services” for information on how to enable these services.

| Service | Description |
|---|---|
| | OpenStack Telemetry Alarming ( |
| | OpenStack Telemetry Alarming ( |
| | OpenStack Telemetry Alarming ( |
| | OpenStack Telemetry Alarming ( |
| | Apache service configured with Puppet. Note this is typically included automatically with other services which run through Apache. |
| | HAProxy service configured with Puppet |
| | OpenStack Telemetry ( |
| | OpenStack Telemetry ( |
| | OpenStack Telemetry ( |
| | OpenStack Telemetry ( |
| | OpenStack Telemetry ( |
| | (Disabled by default) Ceph Client service |
| | (Disabled by default) Ceph External service |
| | (Disabled by default) Ceph Monitor service |
| | (Disabled by default) Ceph OSD service |
| | OpenStack Block Storage ( |
| | (Disabled by default) OpenStack Block Storage ( |
| | OpenStack Block Storage ( |
| | OpenStack Block Storage ( |
| | OpenStack Telemetry ( |
| | OpenStack Networking ( |
| | (Disabled by default) OpenStack Networking ( |
| | (Disabled by default) OpenStack Networking ( |
| | OpenStack Networking ( |
| | (Disabled by default) Fluentd client configured with Puppet |
| | OpenStack Image ( |
| | OpenStack Image ( |
| | OpenStack Telemetry Metrics ( |
| | OpenStack Telemetry Metrics ( |
| | OpenStack Telemetry Metrics ( |
| | HAProxy service (Pacemaker-managed) configured with Puppet |
| | OpenStack Orchestration ( |
| | OpenStack Orchestration ( |
| | OpenStack Orchestration ( |
| | OpenStack Orchestration ( |
| | OpenStack Dashboard ( |
| | (Disabled by default) OpenStack Bare Metal Provisioning ( |
| | (Disabled by default) OpenStack Bare Metal Provisioning ( |

|